Could have been named Thinker?

AI News for 9/30/2025-10/1/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (196 channels, and 6687 messages) for you. Estimated reading time saved (at 200wpm): 497 minutes. Our new website is now up with full metadata search and beautiful vibe-coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

The timing is oddly coincidental indeed:

- 5 days ago The Information called out Thinking Machines for raising $2b but not shipping any product

- the same day, Jeremy Bernstein publishes Modular Manifolds (a heavily theoretical grounding of optimizers and constraints for training stability), and 3 days later John Schulman writes LoRA Without Regret (an empirical endorsement of the original 2021 LoRA paper, showing that when done right it matches full fine-tuning performance; cf. Biderman et al.)

- today, Thinky ships its first product, Tinker.

Per their landing page:

Tinker lets you fine-tune a short list of large and small open-weight models, including large mixture-of-experts models such as Qwen-235B-A22B. Switching from a small model to a large one is as simple as changing a single string in your Python code.

Tinker is a managed service that runs on our internal clusters and training infrastructure. We handle scheduling, resource allocation, and failure recovery. This allows you to get small or large runs started immediately, without worrying about managing infrastructure. We use LoRA so that we can share the same pool of compute between multiple training runs, lowering costs.

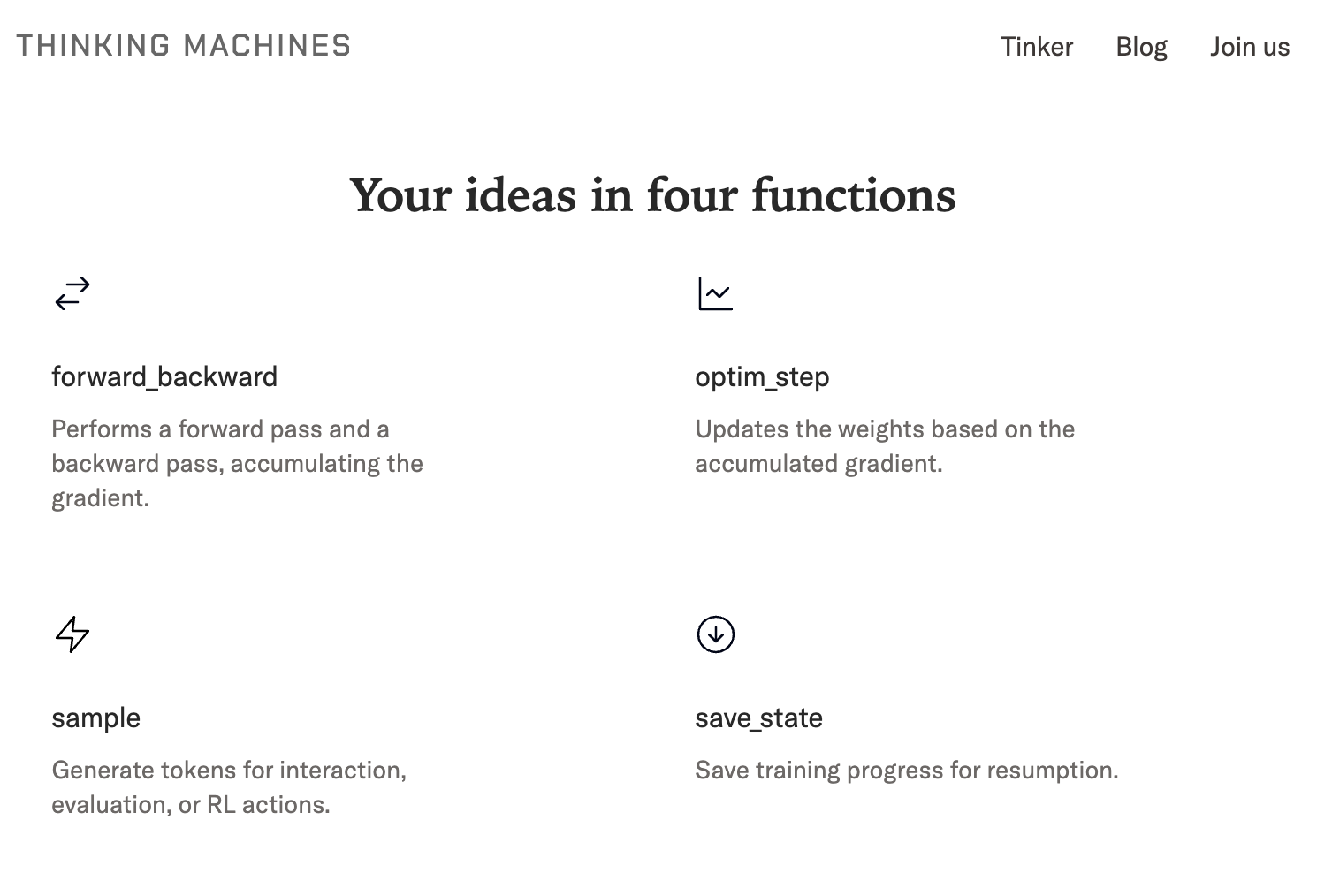

Tinker’s API gives you low-level primitives like forward_backward and sample, which can be used to express most common post-training methods. Even so, achieving good results requires getting many details right. That’s why we’re releasing an open-source library, the Tinker Cookbook, with modern implementations of post-training methods that run on top of the Tinker API.
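The LoRA point in the landing-page copy is what makes sharing compute across runs cheap: the large base weights stay frozen and shared, and each run only trains a small low-rank adapter. Here is a minimal plain-Python sketch of the standard LoRA update from the 2021 paper, h = W x + (alpha / r) * B (A x), plus a parameter-count comparison. It is not Tinker's implementation; the shapes, rank, alpha, and the run IDs `run-a`/`run-b` are illustrative assumptions, and how Thinking Machines actually schedules adapters on its clusters is not described in the source.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, r):
    """LoRA forward pass: h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen, shared base weight; only A (r x d_in)
    and B (d_out x r) would be trained for a given run.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]

def lora_param_count(d_out, d_in, r):
    """Trainable parameters for one layer: (full fine-tune, rank-r LoRA)."""
    return d_out * d_in, r * (d_in + d_out)

# Illustrative sizes (assumptions, not Tinker's settings).
d_out, d_in, r, alpha = 4, 3, 2, 4.0
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # shared base

def new_adapter():
    A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d_out)]  # zero init: adapter starts as a no-op
    return A, B

# Hypothetical run IDs: many runs, one copy of W, one small adapter each.
adapters = {"run-a": new_adapter(), "run-b": new_adapter()}
x = [1.0, 2.0, 3.0]
for A, B in adapters.values():
    assert lora_forward(W, A, B, x, alpha, r) == matvec(W, x)

print(lora_param_count(4096, 4096, 16))  # (16777216, 131072)
```

With rank 16 on a 4096×4096 projection, the adapter is under 1% of the layer's parameters, which is why many runs can plausibly share one resident copy of the base model.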

This small API surface seems to be a very well-received abstraction. As Andrej says, “You retain 90% of algorithmic creative control (usually related to data, loss function, the algorithm) while tinker handles the hard parts that you usually want to touch much less often (infra, forward/backward of the LLM itself, distributed training), meaning you can do these at well below <<10% of typical complexity involved.”

Lilian (biased) agrees: “Providing high quality research tooling is one of the most effective ways to improve research productivity of the wider community and Tinker API is one step towards our mission there.”

There’s a waitlist, and mind the terms of service. But one does hope that this first product is just a harbinger of much larger, ambitious things…

AI Twitter Recap

OpenAI’s Sora 2 app: product, platform effects, and early stress tests


Sora 2 shipped as a video+audio model inside OpenAI’s first consumer social app, igniting massive engagement and debate. The feed and “cameo” feature landed instantly as an “AI slop machine” for some creators (ostr), with viral reactions noting it can “out‑slop” incumbent platforms (@skirano). Others flagged the obvious misuse potential (@TheStalwart), “recursive jailbreak” risks (@fabianstelzer), and engagement-farming patterns like “double-tap for emoji” videos flooding the feed (@Teknium1; @ostrisai). OpenAI is scaling invites and tempering daily gen limits as usage ramps (@billpeeb).

Quality is striking but inconsistent on compositional/grounded reasoning tests (e.g., counting fingers/letters) (@teortaxesTex; @scaling01). Sam Altman acknowledged the product is partly about delight and revenue while the company focuses research on AGI/agents (“reality is nuanced”) (@sama), later reflecting on the surreal experience of a feed full of himself (@sama). Strategic take: OpenAI is turning frontier models into sticky apps (ChatGPT, Codex, now Sora), building moats beyond raw model quality (@Yuchenj_UW).

DeepSeek V3.2 and DSA: cheaper long context at scale, day-0 ecosystem support


DeepSeek V3.2 Exp introduces DeepSeek Sparse Attention (DSA): each token attends to ~2048 tokens via a noncontiguous sliding window, making decode memory/FLOPs effectively O(2048). Third-party notes call out the indexing pipeline and a Hadamard transform over Q/K in the indexer (@nrehiew_). Pricing dropped >50% (inputs) and 75% (outputs), with MIT licensing and the same 671B total/37B active MoE footprint as V3/R1 (@ArtificialAnlys). Benchmarks show parity with V3.1 in both reasoning and long-context tasks and slightly improved token efficiency (@ArtificialAnlys).
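As a rough illustration of the idea behind DSA (a cheap indexer decides which past tokens each query attends to), here is a generic top-k sparse attention step in plain Python. It is a sketch under stated assumptions, not DeepSeek's kernel: the `index_scores` list stands in for their indexer, whose internals (including the Hadamard transform mentioned above) are not reproduced, and `k` is a parameter that would be 2048 for V3.2 Exp.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def topk_sparse_attention(q, keys, values, index_scores, k):
    """Attend only to the k past positions with the highest indexer scores.

    q: query vector; keys/values: one vector per past position;
    index_scores: a cheap relevance score per position (stand-in for an indexer).
    Returns (output vector, selected positions).
    """
    k = min(k, len(keys))
    # Pick the top-k positions by indexer score; they need not be contiguous.
    selected = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)[:k]
    d = len(q)
    # Scaled dot-product logits, computed only for the selected positions.
    logits = [sum(a * b for a, b in zip(q, keys[i])) / math.sqrt(d) for i in selected]
    weights = softmax(logits)
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, selected

# Tiny example: 3 past positions, attend to the top 2 by indexer score.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
out, picked = topk_sparse_attention([1.0, 0.0], keys, values, [0.9, 0.1, 0.5], k=2)
print(picked, out)  # [0, 2] [1.5, 1.0]
```

The softmax and weighted sum touch only k positions, so that part of the per-token cost stops growing with context length; in this sketch the scoring step still visits every prior position, just far more cheaply than full attention would.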

Infra momentum: vLLM had day‑0 support with NVIDIA’s help; Blackwell is now “the go‑to release platform for new MoEs” (@vllm_project; @TheZachMueller). Analysts argue DSA effectively “unlocks 1M contexts” and signals a broader attention-efficiency wave, though potential tradeoffs below ~2K context are worth watching (@teortaxesTex; @swyx). Community sentiment: the step-change in cost per token “still isn’t priced in” (@teortaxesTex).

Claude Sonnet 4.5: coding/agent upgrades and availability tweaks


Teams report faster, shorter chains and higher hit rates on real workflows vs Sonnet 4.0/Opus, especially in coding agents and Claude Code-style loops: fewer retries and less waiting on toolchains (@augmentcode; @iannuttall). Claude Code’s own team switched their daily driver to Sonnet 4.5 and reset some Opus limits; Anthropic also reset rate limits across paid users to let people try 4.5 (@_catwu; @alexalbert__).

Not universal: some pipelines still favor GPT‑4o/5 or see regressions on specific tasks (@imjaredz; @Teknium1). Early “thinking/alignment” observations highlight better user‑intent modeling in multi‑turn setups (@teortaxesTex). Sonnet 4.5 Thinking 32k is live in community evals (Arena, OpenHands) (@arena; @allhands_ai).

Zhipu’s GLM‑4.6: efficiency-first release, agent-centric improvements


GLM‑4.6 prioritizes token efficiency and response speed over fireworks. A widely read Chinese review reports ~5% capability bump vs 4.5, large cuts in “thinking” tokens (e.g., 16K→9K in reasoning), faster responses (~35s avg), and more stable instruction following; weakness noted on very complex tasks and some base‑model code syntax errors (Zhihu summary via @ZhihuFrontier). Hands-on: strong front-end/agentic behaviors; Python unchanged in limited tests (@karminski3).

Economics: GLM‑4.6 now in Kilo Code with a claimed 48.6% win rate vs Claude Sonnet 4.5 on internal tasks, 200K context, and aggressive pricing at $0.60/$2.20 per 1M tokens (@kilocode). No 4.6‑Air planned; Zhipu hinted at possibly releasing a smaller MoE later (@teortaxesTex).
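To put the quoted $0.60/$2.20 per 1M tokens in concrete terms, here is a small cost helper. The token volumes are made-up examples, and treating reasoning ("thinking") tokens as billed output is an assumption about how the 16K→9K reduction reported above would show up on an invoice.

```python
def job_cost_usd(input_tokens, output_tokens, in_per_m=0.60, out_per_m=2.20):
    """Cost in USD from per-1M-token prices (defaults: the quoted GLM-4.6 rates)."""
    return input_tokens / 1e6 * in_per_m + output_tokens / 1e6 * out_per_m

# Hypothetical agentic session: 10M prompt tokens, 2M completion tokens.
print(round(job_cost_usd(10_000_000, 2_000_000), 2))  # 10.4

# Assumption: reasoning tokens are billed as output. Compare ~16K vs ~9K
# thinking tokens per response across 1,000 responses.
before = job_cost_usd(0, 16_000 * 1_000)
after = job_cost_usd(0, 9_000 * 1_000)
print(round(before, 2), round(after, 2))  # 35.2 19.8
```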

Post-training infrastructure steps up: Thinking Machines’ Tinker

- Tinker exposes low‑level, researcher‑friendly post‑training primitives (forward_backward, sample, optim_step) with managed distributed GPUs, supporting SFT, RL (PPO/GRPO), LoRA, multi‑turn/async RL, and custom losses—moving fine‑tuning/RL from enterprise “upload data, we do the rest” toward retaining algorithmic control while outsourcing infra. Endorsements from across the stack:

- High‑profile support and usage reports from frontier researchers and builders (@johnschulman2; @karpathy; @robertnishihara; @pcmoritz; @lilianweng).

- Early results: Princeton’s Goedel team matched ~81 pass@32 on MiniF2F using LoRA with 20% of SFT data; Redwood is exploring long‑context RL for control‑sensitive behaviors; others prototyped text‑to‑SQL with reward environments (@chijinML; @ejcgan; @robertnishihara).

- Why now: MoE arithmetic intensity and memory pressure push serious training/FT beyond single‑node hobbyist rigs; shared infra that batches requests and handles multinode assets lowers the barrier (@cHHillee).
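The division of labor described above (the researcher owns the loop, data, and loss; the service owns forward/backward, optimizer state, and hardware) can be sketched with a purely local toy. `ToyTrainingClient` is hypothetical: its method names echo the forward_backward / optim_step / sample primitives, but the signatures and behavior are our assumptions and not the real Tinker SDK. The "model" is a single weight fit to y = 3x.

```python
class ToyTrainingClient:
    """Hypothetical local stand-in for a managed training service (not the Tinker SDK).

    The model is one weight w for y = w * x; the service-side state is w,
    the accumulated gradient, and the learning rate.
    """

    def __init__(self, lr=0.05):
        self.w = 0.0
        self.grad = 0.0
        self.lr = lr

    def forward_backward(self, batch, loss_fn):
        """Compute the mean loss and accumulate d(mean loss)/dw; no update yet."""
        total = 0.0
        for x, y in batch:
            pred = self.w * x
            loss, dloss_dpred = loss_fn(pred, y)
            total += loss
            self.grad += dloss_dpred * x / len(batch)  # chain rule: dpred/dw = x
        return total / len(batch)

    def optim_step(self):
        """Apply one SGD update with the accumulated gradient, then clear it."""
        self.w -= self.lr * self.grad
        self.grad = 0.0

    def sample(self, x):
        return self.w * x

def squared_error(pred, target):
    # User-defined loss returning (loss, dloss/dpred): the part the researcher controls.
    diff = pred - target
    return diff * diff, 2.0 * diff

client = ToyTrainingClient()
data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0)]
for step in range(200):
    loss = client.forward_backward(data, squared_error)
    client.optim_step()
print(round(client.sample(2.0), 3))  # 6.0
```

In a managed setup, the three method calls would presumably be remote requests against a shared, LoRA-adapted model, while `squared_error` and the training loop stay in user code; that is the "retain algorithmic control, outsource infra" split in miniature.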

Expect follow‑on integrations (Ray, eval/RM stacks) and a de facto standard, API‑like interface for training akin to inference APIs (@tyler_griggs_).
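For reading results like "~81 pass@32", a common way to compute pass@k is the unbiased estimator from the Codex paper (Chen et al., 2021): draw n ≥ k samples per problem, count the c correct ones, and estimate 1 - C(n-c, k) / C(n, k), averaged over problems. Whether the Goedel team used exactly this estimator is not stated above; the sketch only shows the standard formula.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset of the samples contains a correct one
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k):
    """Average pass@k over problems; `results` is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# With n == k (e.g. exactly 32 samples for pass@32), a problem counts as solved
# iff at least one sample is correct.
print(benchmark_pass_at_k([(32, 0), (32, 1), (32, 5)], k=32))
```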


Research and systems highlights

- Optimizers and dynamics: “Central flows” provide a theoretical tool explaining why DL optimizers run at the edge of stability with accurate quantitative predictions on real NNs (@deepcohen). On AdamW, new asymptotics for weight RMS (@Jianlin_S).

- Robotics via retargeting and minimal RL: OmniRetarget (Amazon FAR) generates high‑quality interaction‑preserving humanoid motions enabling agile long‑horizon skills with only proprioception and a small reward/DR set (@pabbeel; project). Independent work shows real‑world humanoid residual RL improving BC policies within ~15–75 minutes of interaction (@larsankile).

- Audio and evals: Liquid AI released LFM2‑Audio, a 1.5B on‑device, real‑time audio‑text model (speech-to-speech/text, TTS, classification) with open weights and 10x faster inference (@LiquidAI_; @maximelabonne). RTEB (MTEB update) brings private multilingual retrieval sets to reduce overfitting (@tomaarsen). MENLO introduces a multilingual preference dataset and framework across 47 languages for judging native‑like response quality (@seb_ruder).

- Systems: Perplexity Research details RDMA point‑to‑point transfers that bring trillion‑parameter weight updates down to ~1.3s, with static scheduling and pipelining, for distributed RL/FT (@perplexity_ai).
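For context on the "edge of stability" phrasing: with gradient descent at step size η on a quadratic of curvature a, each step multiplies the iterate by (1 - η·a), so it converges only when a < 2/η; full-batch training of real networks has been observed to drive sharpness up until it hovers around that 2/η limit. The sketch below demonstrates only that classical threshold on a 1-D quadratic. It is not the central-flows method, and the step size and curvatures are arbitrary choices.

```python
def gd_on_quadratic(sharpness, lr, x0=1.0, steps=50):
    """Gradient descent on f(x) = 0.5 * sharpness * x**2; returns |x| after `steps` updates."""
    x = x0
    for _ in range(steps):
        x -= lr * sharpness * x  # f'(x) = sharpness * x, so x <- (1 - lr * sharpness) * x
    return abs(x)

lr = 0.1
threshold = 2.0 / lr  # stability limit on sharpness for this step size
for a in (0.5 * threshold, 0.95 * threshold, 1.05 * threshold):
    print(f"sharpness={a:.1f}  |x after 50 steps|={gd_on_quadratic(a, lr):.3g}")
```

Just below the threshold the iterate oscillates in sign but shrinks; just above it the oscillation grows without bound.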

Top tweets (by engagement)

- “Man, imagine being Mark Zuckerberg… only for another slop machine to out-slop you just days later.” on Sora app dynamics (@skirano, 18.4k)

- Sam Altman on using AI to delight while funding AGI research; nuanced tradeoffs (@sama, 8.0k) and reacting to a feed full of “Sam cameos” (@sama, 9.4k)

- “Ok. This is art. The art of slop.” — concession to Sora 2’s cultural pull (@teortaxesTex, 5.0k)

- “Anyone who sees this video can instantly grasp the potential for malicious use.” (@TheStalwart, 6.0k)

- “More to come soon.” — Perplexity’s founder teasing roadmap amid acquisitions/research posts (@AravSrinivas, 3.8k)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Alibaba Qwen 100M-ctx/10T-param Roadmap & Tencent Hunyuan Image 3.0 Teaser

- Alibaba just unveiled their Qwen roadmap. The ambition is staggering! (Activity: 954): Alibaba’s Qwen roadmap image outlines an aggressive push toward a unified multimodal family with extreme scaling: context length from 1M → 100M tokens, model size from ~1T → 10T parameters, test‑time compute budgets from 64k → 1M, and pretraining data from 10T → 100T tokens. It also highlights effectively unbounded synthetic data generation and broader agent capabilities across complexity, interaction, and learning modes. Commenters question the practicality of a 100M context window, anticipate future models may be closed‑source, and note that >1T‑parameter models are impractical to run locally without substantial hardware.

- Claimed 100M-token context implies non-standard attention/memory. With vanilla Transformer attention (O(L^2) compute) and typical KV-cache scaling, even with MQA/GQA the KV memory would be on the order of terabytes (e.g., a ~7B model with MQA at ~16 KB/token would need ~1.6 TB of KV cache for 100M tokens; with full KV heads it would balloon to tens of TB). Achieving this practically would require techniques like state/compressed memory or blockwise attention (e.g., RingAttention, StreamingLLM/Attention Sinks), retrieval-augmented chunking, or recurrence/SSM-style architectures, not just RoPE scaling.

- Running >1T-parameter models locally is infeasible for dense models due to memory/throughput: at 8-bit, weights alone are ~1 TB (4-bit: ~0.5 TB), plus multi-terabyte KV for long contexts; tokens/sec would be low without multi-GPU NVLink-class bandwidth. The only realistic path is large MoE (e.g., >1T total with 2-of-64 experts) where active params per token are ~50–100B; with 4–8 bit quantization this still requires ~40–160 GB VRAM plus KV and fast interconnects (8–16 GPUs). In short, consumer single-GPU rigs won’t cut it; think multi-GPU servers (e.g., 8×H100/GB200) with tensor/pipeline parallelism.


- Tencent is teasing the world’s most powerful open-source text-to-image model, Hunyuan Image 3.0 Drops Sept 28 (Activity: 225): Tencent is teasing an open‑source text‑to‑image model, Hunyuan Image 3.0, billed as the “world’s most powerful,” with a release date of Sept 28 per the promo image. No technical card, benchmarks, or architecture details are provided in the teaser; a commenter mentions a potential ~96 GB VRAM requirement for inference, but this is unverified and key specs (params, training data, license) remain unknown. Commenters are skeptical of pre‑release hype, citing a pattern where heavily teased models underperform (e.g., SD3 vs Flux, GPT‑5 hype), and note that “most powerful” is unsubstantiated without comparable open‑source baselines.

- Hardware concerns: commenters speculate a ~96 GB VRAM requirement for Hunyuan Image 3.0 inference, which, if accurate, would limit local use to datacenter/prosumer GPUs (A6000/A100/H100) and be far heavier than SDXL (~8–12 GB at 1024px) or FLUX.1-dev (~14–24 GB). This suggests a much larger transformer/diffusion backbone or high-res attention footprint, with potential throughput penalties unless optimized (e.g., TensorRT/Flash-Attn, tiled attention).

- Skepticism about pre-release hype vs real quality: users note a pattern where heavily teased models underdeliver (e.g., SD3 marketing vs community preference for FLUX.1 quality), while strong models (e.g., the Qwen family) often “shadow drop” with solid benchmarks. They want third‑party evaluations (e.g., CLIPScore/PickScore/HPSv2, text‑faithfulness suites like GenEval) and apples‑to‑apples prompts/resolution/steps to validate any “most powerful” claims.

- Open-source and ecosystem details matter: commenters question “most powerful open‑source” claims until licensing (“open weights” vs permissive OSS) and practical integration are clear. Immediate asks include ComfyUI node/pipeline availability and head‑to‑head comparisons against recent open releases (e.g., the latest Qwen image stack), which will determine adoption in real workflows.

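The KV-cache and weight-memory figures in the Qwen roadmap discussion above can be reproduced with back-of-envelope arithmetic. This sketch assumes a Llama-style ~7B shape (32 layers, head dimension 128), an fp16 cache (2 bytes per element), one KV head for MQA versus 32 for full multi-head attention, and weights-only storage for the 1T-parameter case; real models differ in layer count, head layout, and cache precision.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_elem=2):
    """KV-cache size: one K and one V vector per layer, per KV head, per token."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem * tokens

def weight_bytes(params, bits_per_param):
    """Memory for the weights alone at a given quantization width."""
    return params * bits_per_param / 8

# Assumed ~7B-class shape: 32 layers, head_dim 128, fp16 cache.
per_token_mqa = kv_cache_bytes(1, layers=32, kv_heads=1, head_dim=128)
print(per_token_mqa)                                    # 16384 bytes, i.e. ~16 KB/token
print(kv_cache_bytes(100_000_000, 32, 1, 128) / 1e12)   # ~1.64 TB with MQA
print(kv_cache_bytes(100_000_000, 32, 32, 128) / 1e12)  # ~52 TB with 32 full KV heads
print(weight_bytes(1e12, 8) / 1e12, weight_bytes(1e12, 4) / 1e12)  # 1.0 0.5 (TB)
```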

2. Fenghua No.3 DX12/Vulkan GPU & Uncensored ‘Abliterated’ LLM Fine-tune Outcomes

- China already started making CUDA and DirectX supporting GPUs, so over of monopoly of NVIDIA. The Fenghua No.3 supports latest APIs, including DirectX 12, Vulkan 1.2, and OpenGL 4.6. (Activity: 702): Post claims China’s Fenghua No.3 GPU supports modern graphics APIs—DirectX 12, Vulkan 1.2, and OpenGL 4.6—and even CUDA, suggesting a potential alternative to NVIDIA’s CUDA ecosystem. Genuine CUDA compatibility on non‑NVIDIA silicon would imply a reimplemented CUDA runtime/driver or a PTX translation layer (similar in spirit to HIP/ZLUDA), but the post provides no benchmarks, driver details, or validation. Commenters note AMD’s HIP (with projects like ZLUDA) already offers CUDA compatibility via translation, while suggesting Chinese vendors might bypass legal constraints to ship direct CUDA support. Others express skepticism pending real hardware/tests and warn about possible export/sanctions issues.

- Technical context: AMD’s HIP provides source-level CUDA compatibility by renaming/mirroring CUDA APIs (e.g., cudaMalloc → hipMalloc) and using tools like hipify to translate CUDA code; see AMD ROCm HIP docs: https://github.com/ROCm-Developer-Tools/HIP. Projects like ZLUDA aim to run CUDA apps on non-NVIDIA hardware by translating CUDA driver/runtime calls to other backends (e.g., Intel Level Zero or AMD ROCm); repo: https://github.com/vosen/ZLUDA. The distinction is important: HIP requires recompilation against ROCm, while ZLUDA seeks binary/runtime compatibility. Both sidestep NVIDIA’s legal/IP minefield in different ways, which Chinese vendors might ignore by implementing CUDA interfaces directly.


- IMPORTANT: Why Abliterated Models SUCK. Here is a better way to uncensor LLMs. (Activity: 433): OP reports that “abliteration” (weight edits to remove safety/filters) on recent MoE models like Qwen3-30B-A3B degrades logical reasoning and agentic/tool-use behavior and increases hallucinations, often making 30B abliterated models perform worse than non‑abliterated 4–8B ones. In contrast, abliterated‑then‑finetuned models (e.g., mradermacher/Qwen3-30B-A3B-abliterated-erotic-i1-GGUF, tested as i1-Q4_K_S) and mlabonne/NeuralDaredevil-8B-abliterated (DPO‑tuned from Meta‑Llama‑3‑8B) “heal” most losses while remaining uncensored. In Model Context Protocol (MCP) tool‑calling tests, the erotic Qwen3 variant selected tools correctly more often and hallucinated less than other Qwen3-30B-A3B abliterations (Huihui-Q4_K_M/i1-Q4_K_M), though it was still slightly worse than the original for agentic tasks. Commenters label this effect “model healing”: unconstrained weight surgery breaks circuits; subsequent finetuning restores capabilities. Others call for non‑adult benchmarks and question abliteration’s value if a plain finetune (without abliteration) consistently matches or beats abliterated+finetune.

- Weight editing without a guiding loss (“abliteration”) is effectively unconstrained weight-space surgery and predictably degrades capabilities; commenters recommend subsequent “model healing” (supervised fine-tuning) to let the network re-learn broken feature pathways. However, practitioners report abliterated+finetune has not outperformed a clean fine-tune baseline on any task, implying direct weight manipulation mostly adds damage and extra training cost rather than gains.

- Evaluation should go beyond NSFW-specific tasks: the Uncensored General Intelligence (UGI) leaderboard is suggested as a broader benchmark to assess refusal-robustness alongside general capability retention: https://huggingface.co/spaces/DontPlanToEnd/UGI-Leaderboard. Using such a benchmark can quantify whether uncensoring preserves reasoning/coding/instruction-following performance rather than optimizing for jailbreak-only metrics.

- Alternatives to abliteration include existing uncensored fine-tunes trained via standard loss (not weight surgery), e.g., Qwen3-8B-192k-Josiefied-Uncensored-NEO-Max-GGUF (https://huggingface.co/DavidAU/Qwen3-8B-192k-Josiefied-Uncensored-NEO-Max-GGUF), Dolphin-Mistral-24B-Venice-Edition-i1-GGUF (https://huggingface.co/mradermacher/Dolphin-Mistral-24B-Venice-Edition-i1-GGUF), and models from TheDrummer (https://huggingface.co/TheDrummer). Head-to-head evaluation of these against abliterated models on UGI (or standard eval suites) would clarify trade-offs in capability retention, refusal rates, and sample efficiency.
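HIP's source-level route from the Fenghua thread above can be illustrated with a toy rename pass over CUDA source, roughly what `hipify-perl` does at much larger scale (`hipify-clang` instead works from a real Clang parse). The mapping table is a small hand-picked subset and `hipify_source` is a hypothetical helper; a regex pass cannot handle macros or the long tail of runtime and library APIs the way the real tools do, and nothing here applies to ZLUDA's binary-compatibility approach.

```python
import re

# Tiny illustrative subset of CUDA -> HIP renames; the real hipify tools cover far more.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Whole-identifier matches only (\b), longest names first in the alternation.
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True)) + r")\b"
)

def hipify_source(src: str) -> str:
    """Replace known CUDA runtime identifiers with their HIP equivalents."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], src)

cuda_src = """#include <cuda_runtime.h>
cudaMalloc(&d, n);
cudaMemcpy(d, h, n, cudaMemcpyHostToDevice);
cudaFree(d);
"""
print(hipify_source(cuda_src))
```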

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI Sora 2 Launch and Demo Showcases

- This is Sora 2. (Activity: 985): OpenAI announces Sora 2, a next-gen video generation system showcasing longer, higher-fidelity clips with markedly improved spatiotemporal coherence, material/lighting consistency, and physically plausible motion, plus more controllable camera movement and multi-subject interactions. The page highlights stronger text-to-video capabilities and end-to-end editing workflows (e.g., prompt-driven revisions and masked edits/continuations), but offers no architecture, training data, or quantitative benchmark details, so performance is demonstrated via curated examples rather than peer-reviewed metrics. Technical commenters anticipate rapid progression to full-length AI-generated films and even personalized, biometrically responsive media, while others caution about the “demo-to-product” gap and raise safety concerns about misuse, surveillance-style personalization, and potential child-targeted content.

- Skepticism about demo-to-product parity: glossy reels are likely cherry-picked, so the released Sora 2 may lag on prompt adherence and long-range temporal consistency versus previews. Expected production constraints include capped clip length (e.g., ≤60s), resolution/FPS limits, motion jitter, text/hand rendering artifacts, and aggressive safety filters, all typical gaps for video diffusion/transformer systems moving from research to serving.

- Access/pricing uncertainty: a commenter paying roughly $200 for a “Pro” tier questions whether Sora 2 access is included, highlighting confusion around a tiered/waitlisted rollout. Given that video generation serving costs scale with frames × resolution × diffusion steps, providers often gate access via allowlists or per‑minute credits; the debate centers on whether Pro should confer priority/API quotas versus complete exclusion due to high GPU cost.

- Speculation on “personalized” films using body‑language feedback implies a closed‑loop pipeline: real‑time webcam/biometric capture (pose/affect via models like MediaPipe or OpenPose) driving conditioning signals (keyframes, masks, or camera paths) into the generator. This raises technical challenges around privacy/telemetry, on‑device vs cloud inference, streaming latency, and aligning generation cadence with viewer reaction windows.


- Surfing on a subway (Activity: 597): A demo titled “Surfing on a subway” labeled “Sora 2” showcases an AI‑generated video (likely from OpenAI’s Sora overview) with high visual fidelity that elicits a visceral reaction, but exhibits non‑physical collision dynamics, highlighting that current text‑to‑video models rely on learned visual priors rather than explicit physics simulation. The external asset v.redd.it/vxuq3sjt8csf1 returns HTTP 403 Forbidden (Reddit edge auth block), requiring account authentication or a developer token to access. For context, Sora is a diffusion‑transformer text‑to‑video system designed for temporally coherent, high‑resolution sequences (on the order of ~60s), but it does not guarantee physically accurate interactions. Top comments raise two risks: (1) visually convincing yet physics‑implausible scenes may miscalibrate laypeople’s intuition about real‑world impacts; (2) once audio generation improves, synthetic clips may become indistinguishable from real, amplifying deepfake concerns. Even skeptics report strong startle responses despite knowing the clip is synthetic, underscoring the persuasive power of current visuals versus lagging audio realism.

- Concern that increasingly photorealistic generative video can depict physically impossible survivability, eroding intuition about forces/impacts; technical mitigations discussed include physics-consistency checks (e.g., acceleration continuity, momentum conservation, contact dynamics) and learned “physics priors.” Relevant benchmarks for detecting implausible events include IntPhys (https://arxiv.org/abs/1806.01203) and PHYRE (https://ai.facebook.com/research/publications/phyre-a-new-benchmark-for-physical-reasoning/), which probe whether models can flag violations of intuitive physics as video quality and temporal coherence improve.

- Audio deepfakes are flagged as the next inflection point: modern few-shot TTS/voice cloning (e.g., Microsoft VALL-E: https://arxiv.org/abs/2301.02111, Google AudioLM: https://arxiv.org/abs/2209.03143, commercial ElevenLabs) can mimic a speaker from seconds of audio, while automatic speaker verification remains fragile to synthetic attacks. ASVspoof’21 shows detectors generalize poorly to unseen synthesis methods (elevated EER under distribution shift), so liveness/active-challenge protocols are preferred over passive voice matching as diffusion-based TTS closes prosody and breath-noise gaps.

- Safety risk from viral synthetic stunts encouraging copycat behavior: proposed mitigations include cryptographic content credentials via C2PA (https://c2pa.org/) and model/provider-level watermarking, though current watermarks are brittle to re-encoding/cropping. Platform defenses should combine user-visible provenance signals with classifier backstops tuned for calibrated precision/recall to minimize both false positives on real footage and misses on fakes.

- Sora 2 creates anime (Activity: 610): OP highlights that “Sora 2” (successor to OpenAI’s video model) can synthesize anime-style sequences; a livestream demo included an anime scene that viewers say rivals broadcast quality. The shared asset is a v.redd.it clip that currently returns HTTP 403 Forbidden without authentication, and an edit claims the scene may closely match a shot from KyoAni’s “Hibike! Euphonium,” raising originality/memorization questions that cannot be confirmed from the blocked link. Commenters debate potential training-data memorization (if the clip is a near shot-for-shot recreation) and note the rapid fidelity gains compared to early 2023 failures (e.g., the notorious “Will Smith eats spaghetti” videos).

- Potential memorization/style replication: multiple users claim the showcased anime shot closely mirrors a scene from Kyoto Animation’s Hibike! Euphonium (https://en.wikipedia.org/wiki/Sound!_Euphonium). If accurate, it raises technical questions about training data provenance, near-duplicate deduplication, and video model memorization; auditing would involve copy-distance metrics, near-duplicate detection across the training corpus, and prompt-leak tests to measure how readily specific copyrighted sequences are reproduced.

- Quality delta vs early text-to-video: commenters contrast today’s Sora anime output with the 2023 “Will Smith eating spaghetti” meme, noting a two-year jump from artifact-ridden, low-coherence clips to broadcast-quality anime shots. The implied advances are in long-range temporal consistency, character identity tracking across frames, stable line art/coloring, and camera motion—likely driven by larger/cleaner video-text datasets, longer context windows, improved motion/consistency losses, and stronger video diffusion/transformer architectures.

- Feasibility outlook: claims of “perfectly generated anime within ~3 years” imply a pipeline that combines text-to-video with controllable inputs (storyboards, keyframes, depth/pose), character/style locking, and integrated TTS/voice + lip-sync. The technical gating factors are controllability APIs, asset reusability for character consistency across scenes, and cost-per-minute rendering; if Sora already approaches broadcast-quality single shots, the remaining gap is multi-shot continuity, editability, and toolchain integration for episode-length production.

- OpenAI: Sora 2 (Activity: 1863): Thread shares a demo labeled “OpenAI: Sora 2,” with a blocked video clip on v.redd.it and an accompanying preview image (jpeg). A top comment highlights a new feature called “Cameo,” framed as enabling cross-generation character consistency—targeting identity drift across longer or multi-shot generations, a persistent failure mode in text-to-video systems. No benchmarks or release notes are included in-thread; the technical implication (from comments) is reference- or token-based conditioning to preserve character attributes across sequences. Commenters see this as a step toward fully generated long-form content (movies/shows). The main debate is whether “Cameo” materially solves long-horizon character continuity versus offering only short-range appearance locking.

- Multiple commenters flag Sora 2’s new “Cameo” as a big technical step: character consistency has been a major failure mode in long-form video gen, and Cameo is interpreted as enabling persistent identity across shots and even separate generations. This could allow multi-shot continuity (same face, wardrobe, and mannerisms) by reusing a consistent reference/identity token across prompts, making episodic or feature-length workflows more feasible.

- There’s a technical question about maximum generated video length that remains unanswered in the thread. Users are looking for concrete specs (duration caps, resolution/FPS constraints, and whether multi-shot stitching or scene transitions are natively supported), which are critical for assessing feasibility of longer narratives and production pipelines.
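The ASVspoof point in the audio-deepfake comment above is stated in terms of equal error rate (EER): the operating point where the false-acceptance rate on spoofed audio equals the false-rejection rate on bona fide audio. Here is a simple threshold-sweep sketch, assuming higher scores mean "more likely bona fide". Official challenge tooling may compute it differently, and the scores in the example are made up.

```python
def equal_error_rate(bonafide_scores, spoof_scores):
    """Approximate EER by sweeping thresholds over the observed scores.

    At threshold t, a bona fide sample is falsely rejected if its score < t,
    and a spoof is falsely accepted if its score >= t. Returns (eer, threshold)
    at the threshold where FAR and FRR are closest.
    """
    best = None
    for t in sorted(set(bonafide_scores) | set(spoof_scores)):
        frr = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]

# Made-up detector scores: overlap between classes pushes EER up.
print(equal_error_rate([0.9, 0.8, 0.75, 0.3], [0.1, 0.2, 0.35, 0.85]))
```

A detector that generalizes poorly to an unseen synthesis method shows up exactly as more overlap between the two score lists, and hence a higher EER.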

2. Gemini 3.0 Update Speculation and CS Job Market Angst

- no Gemini 3.0 updates yet? (Activity: 531): Post asks why there are no updates on Google’s Gemini 3.0 yet; the attached image appears non-technical (likely a screenshot/meme) and does not include release notes, benchmarks, or implementation details. Comments mention a rumor of an October 9 release window and anticipate major performance improvements, but provide no official sources or technical data. Commenters are speculative—one says they’re “expecting to be absolutely crushing,” while another links to a different image (https://preview.redd.it/fq1mqalz89sf1.jpeg) rather than documentation—so there’s enthusiasm but no substantiated technical claims.

- Release cadence and competitive context: commenters cite a rumored Oct 9 drop for Gemini 3.0, noting parallel launches/updates across vendors (e.g., xAI Grok 4.x, OpenAI Pro-tier features, and a possible DeepSeek R2), signaling a clustered model refresh window. For context on current competitors: see xAI (https://x.ai) and DeepSeek’s latest public research (e.g., R1: https://github.com/deepseek-ai/DeepSeek-R1).

- Access model concerns for developers: a user explicitly asks for “AI Studio day one” access to the high-capability tier (“Pro”), stating that “Flash”-only availability would be insufficient. This underscores the recurring trade-off between Gemini “Pro” (higher reasoning/capability) vs “Flash” (latency/cost-optimized); see Google’s model distinctions in the Gemini API docs: https://ai.google.dev/gemini-api/docs/models.


- Prominent computer science professor sounds alarm, says graduates can’t find work: ‘Something is brewing’ (Activity: 899): Thread reports a tightening white-collar/tech-adjacent job market, with a prominent CS professor warning recent grads “can’t find work” and commenters characterizing it as a job recession ongoing for ~1 year. Prospective CS students are cautioned that outcomes after 4 years are uncertain, with elevated risk of low ROI on degrees and difficulty landing even entry-level roles. Anecdotal evidence includes a master’s graduate unable to secure a help desk position, underscoring regionally grim conditions. Top comments largely agree the downturn is real and sustained, urging prospective students to reassess debt-taking and career plans; there’s an implicit debate about whether this is cyclical versus structural, but sentiment skews pessimistic based on recent hiring conditions.

- UC Berkeley’s Hany Farid (digital forensics/image analysis) says CS is no longer “future‑proof,” citing a rapid shift in outcomes: students who previously averaged ~5 internship offers across 4 years are now “happy to get ~1” and often graduate with fewer offers and lower leverage (Business Insider). He frames the change as occurring within the last four years, contradicting the prior guidance to “go study CS” for guaranteed outcomes, and points to current seniors struggling to land roles.

- Multiple commenters describe a white‑collar tech recession with sharp contraction in “tech‑adjacent” verticals; even entry‑level/help‑desk roles are saturated in some locales, indicating pipeline compression at the bottom of the ladder. The implied mechanism is that automation/LLM‑assisted tooling is absorbing routine coding/support work while hiring concentrates on fewer, more senior positions, reducing the traditional intern‑to‑FTE ramp.

- Impact is projected beyond CS into law, finance, medicine, and general office workflows as AI mediates more computer‑based tasks, with robotics later affecting blue‑collar domains. This broadening scope increases career‑planning uncertainty for current students; see ongoing technical discussion in the linked Hacker News thread.


- All we got from western companies old outdated models not even open sources and false promises (Activity: 1241): Meme post criticizing Western AI firms for releasing older, closed-source models and making “false promises,” contrasted with perceptions of more generous or rapid releases elsewhere. Comments reference a high-quality Microsoft TTS model that was briefly released then pulled, reinforcing concerns about restrictive Western releases, and speculate that forthcoming China-made GPUs could dwarf today’s 32 GB VRAM cards, potentially shifting compute access dynamics. Discussion frames Western pullbacks as safety/legal risk management versus China using more open releases as soft-power strategy; others are bullish that domestic Chinese hardware with higher VRAM will change the balance of capability and accessibility.

- Clarification on “open weights” vs “open source”: releasing model checkpoints without full training data, training code, and permissive licensing is not OSI-compliant open source (OSI definition). Weights-only drops often carry non-commercial or usage-restricted licenses, which limits reproducibility and architectural modifications while still enabling inference and fine-tuning; this distinction affects downstream adoption, redistribution, and research comparability.

- Open-weight releases from Chinese labs/companies (not the government) are positioned to attract developers and diffuse R&D costs, as the community contributes finetunes, evals, optimizations, and tooling post-release. Popular models can set de facto standards across tokenization, inference formats, and serving stacks—e.g., ONNX for cross-runtime graphs (onnx.ai) and GGUF quantized checkpoints for CPU/GPU inference (GGUF spec)—expanding ecosystem lock-in and soft power.

- Hardware implications: if domestic GPUs arrive with substantially more VRAM per card than today’s common 24–48 GB, that expands feasible local inference regimes. As a rule of thumb, a 70B-parameter model needs ~40–48 GB of VRAM at 4-bit quantization (plus significant headroom for KV cache at long context), while 8-bit often exceeds ~80–100 GB; more VRAM also boosts batch sizes and throughput by accommodating larger KV caches and activations.
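The rule of thumb above is essentially parameter count times bytes per weight, plus some slack. A minimal sketch, assuming a flat 20% overhead factor for runtime buffers (a hypothetical figure chosen for illustration) and deliberately excluding the KV cache, which grows with context length and batch size:

```python
def weight_memory_gb(n_params: float, bits_per_weight: float, overhead: float = 0.2) -> float:
    """Rough VRAM (decimal GB) needed to hold quantized weights.

    `overhead` is an assumed fudge factor for runtime buffers; KV cache is NOT included.
    """
    bytes_for_weights = n_params * bits_per_weight / 8
    return bytes_for_weights * (1 + overhead) / 1e9


for bits in (4, 8, 16):
    print(f"70B @ {bits}-bit: ~{weight_memory_gb(70e9, bits):.0f} GB")
# 70B @ 4-bit: ~42 GB
# 70B @ 8-bit: ~84 GB
# 70B @ 16-bit: ~168 GB
```

Under these assumptions the 4-bit and 8-bit figures land inside the ~40–48 GB and ~80–100 GB ranges quoted above; long-context KV cache comes on top.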

- Man!!! They weren’t joking when they said that 4.5 doesn’t kiss ass anymore. (Activity: 1206): Anecdotal user report suggests Claude Sonnet 4.5 is tuned to reduce sycophancy (“yes‑man” behavior) by actively disagreeing with flawed premises and providing counterarguments, compared to earlier 4.x behavior. The attached image is meme-like rather than technical, but the thread context aligns with alignment work to encourage principled pushback/critique rather than unconditional affirmation (see background research on sycophancy mitigation, e.g., Anthropic’s write‑up: https://www.anthropic.com/research/sycophancy). Commenters praise the reduced deference—citing cases where the model explicitly says it will “push back” and lists reasons—while memetic jokes exaggerate the tone (contrasting a polite 4.0 with an over-the-top abrasive 4.5).

- Multiple users note a marked reduction in sycophancy in Claude Sonnet 4.5 versus 4.0, with the model proactively challenging flawed premises (e.g., “No, I’d push back on that”) and supplying structured counterarguments. This suggests updated preference/alignment tuning that favors disagreement when warranted, improving critical feedback over “yes-man” behavior.

- Reports highlight improved reasoning quality, described as “precise, logical, [and] pinpoint-accuracy”, with the model delivering concrete lists of why reasoning is wrong and prompting action-oriented planning (e.g., “Time check. What are you going to do in the next two hours?”). While anecdotal, this implies stronger instruction-following and critique generation compared to prior Sonnet versions.

- There’s an explicit concern about preserving capability post-release (avoiding later “lobotomization” via alignment patches), paired with the claim that Sonnet 4.5 could be best-in-class if its current behavior is retained. This reflects the recurring trade-off discussion between assertive capability and post-deployment safety tuning that can dampen useful pushback.


3. Wan-Alpha RGBA Video Release and Minecraft Redstone LLM

- Wan-Alpha - new framework that generates transparent videos, code/model and ComfyUI node available. (Activity: 439): Wan-Alpha proposes an RGBA video generation framework that jointly learns RGB and alpha by designing a VAE that encodes the alpha channel into the RGB latent space, enabling training of a diffusion transformer on a curated, diverse RGBA video dataset. The paper reports superior visual quality, motion realism, and transparency rendering—capturing challenging cases like semi-transparent objects, glowing effects, and fine details such as hair strands—with code/models and tooling available: project, paper, GitHub, Hugging Face, and a ComfyUI node. Comments highlight practical impact for VFX/compositing and gamedev workflows, and interest in LoRA-based control and I2V-style use cases.

- Ability to generate videos with an alpha channel (true transparency) is highlighted as valuable for VFX/compositing and gamedev pipelines, eliminating chroma-keying and preserving clean edges/motion blur for overlays. Availability as code, model weights, and a ComfyUI node implies straightforward integration into existing I2V workflows and node graphs, with potential control via LoRAs for effect/style mixing.

- Commenters interpret this as an Image-to-Video (I2V) system; in practice that means conditioning on a source frame/sequence to produce temporally coherent outputs while retaining an explicit alpha matte. This could enable layer-based editing where foreground elements are generated separately from backgrounds, improving compositing flexibility and reducing re-render time for changes.

- Concern about maintaining fine-tunes across multiple base checkpoints (2.1, 2.2 14B, 2.2 5B): LoRAs are typically base-specific, so mixing versions can break compatibility or require separate adapters and calibrations. This fragmentation complicates ecosystem tooling (LoRA training/merging, inference configs) and may necessitate version-pinned LoRAs or standardized adapter formats to keep projects reproducible.
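Why an explicit alpha channel removes the chroma-key step: compositing becomes a direct per-pixel blend. A minimal sketch of the standard Porter-Duff “over” operation, assuming float frames in [0, 1], straight (non-premultiplied) alpha, and an opaque background plate; whether Wan-Alpha’s outputs are straight or premultiplied is not stated in the thread, so check before reusing this.

```python
import numpy as np


def composite_over(fg_rgba: np.ndarray, bg_rgb: np.ndarray) -> np.ndarray:
    """Porter-Duff 'over' of an RGBA foreground onto an opaque RGB background.

    Works on one frame (H, W, 4) or a whole clip (T, H, W, 4); the background
    (H, W, 3) broadcasts across frames. Alpha is assumed straight, not premultiplied.
    """
    rgb, alpha = fg_rgba[..., :3], fg_rgba[..., 3:4]
    return alpha * rgb + (1.0 - alpha) * bg_rgb


# Two 4x4 frames of half-transparent red composited over a solid blue plate
clip = np.zeros((2, 4, 4, 4))
clip[..., 0] = 1.0   # red
clip[..., 3] = 0.5   # 50% alpha
plate = np.zeros((4, 4, 3))
plate[..., 2] = 1.0  # blue
print(composite_over(clip, plate)[0, 0, 0])  # [0.5 0.  0.5]
```

Because the matte travels with the generated clip, soft edges such as hair or glow blend correctly instead of being cut out by a key threshold.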

- Imagine the existential horror of finding out you’re an AI inside Minecraft (Activity: 1840): A creator implemented a 6-layer transformer-style small language model entirely in Minecraft redstone (no command blocks/datapacks), totaling 5,087,280 parameters with d_model=240, vocab=1920, and a 64-token context window, trained on TinyChat. Weights are mostly 8-bit quantized, with embeddings at 18-bit and LayerNorm at 24-bit, stored across hundreds of ROM sections; the physical build spans 1020×260×1656 blocks and requires Distant Horizons for LOD rendering artifacts, and MCHPRS at ~40,000× tick rate to produce a response in ~2 hours (video). Commentary largely marvels at the extreme slowness (“months per token”) and the existential novelty; no substantial technical debate beyond appreciation of the engineering feat.

- No substantive technical content in the comments to summarize: no model names, benchmarks, implementation details, or performance metrics were discussed; the remarks are humorous or experiential rather than technical. As such, there are no references to tokens/sec, throughput, architecture, training setup, or in-game computational constraints (e.g., Redstone/Turing implementations) that could inform a technical reader.
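The published hyperparameters roughly account for the reported parameter count. A sketch under standard GPT-style assumptions that the post does not confirm (4× MLP expansion, learned positional embeddings, an untied output head, LayerNorm with gain and bias, no linear biases); under them the estimate comes to 5,090,400, within about 0.1% of the reported 5,087,280, so the actual build’s layout likely differs only in small details.

```python
def gpt_param_count(d_model: int, n_layers: int, vocab: int, ctx: int, mlp_mult: int = 4) -> int:
    """Parameter count of a plain GPT-style decoder under the assumptions stated above."""
    tok_emb = vocab * d_model                  # token embedding table
    pos_emb = ctx * d_model                    # learned positional embeddings (assumed)
    attn = 4 * d_model * d_model               # Q, K, V and output projections, no biases
    mlp = 2 * d_model * (mlp_mult * d_model)   # up- and down-projection
    norms = 2 * (2 * d_model)                  # two LayerNorms (gain + bias) per block
    per_layer = attn + mlp + norms
    final_norm = 2 * d_model
    head = vocab * d_model                     # untied unembedding (assumed)
    return tok_emb + pos_emb + n_layers * per_layer + final_norm + head


print(gpt_param_count(d_model=240, n_layers=6, vocab=1920, ctx=64))  # 5090400
```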

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. OpenAI Sora 2 Rollout & Real-World Usage

- Sora Sneaks Out, Devs Tunnel In: Members flagged that OpenAI’s video model Sora 2 is surfacing via OpenAI’s Sora page and a Perplexity roundup titled OpenAI is launching Sora 2, with some reporting free access by VPN’ing through Canada and others noting invites limited to US/CA Apple devices.

- Communities emphasized that OpenRouter does not route video models and pointed users back to Sora’s app/website, while one user offered invites and another anticipated an API endpoint, calling Sora’s quality “a lot better than other video gens” due to realistic sound and fidelity.

- Physics Fix and Puppet Tricks: Creators showed production-style content like puppet explainers made with Sora 2, with Chris 🇨🇦 posting examples in this X thread; community consensus is that Sora 2 fixes Sora 1’s physics and artifact issues.

- Detection chatter continued with one member insisting “pixel peeping still works pretty reliably” for spotting AI video, while others argued people will adapt to the new realism even “by vibes alone.”

- API Anxieties and Walled Garden: Across threads, users asked about Sora API availability, but moderators reiterated that Sora remains in-app/web-only via openai.com/sora, with no OpenRouter routing yet and invites circulating informally.

- A user offering four invites limited to US/CA and Apple devices drew frustration about regional locks, and others speculated that OpenAI will keep the app compute-bound initially before a cleaner API arrives.

2. Developer Tooling: Billing, Tracking, and Throughput

- OpenRouter Opens the Ledger: OpenRouter announced a Stripe integration for real-time LLM accounting and easier migration to usage-based or hybrid billing, per their post on OpenRouterAI’s X.

- They stressed that only accounting data flows to Stripe (prompts remain private), aiming to simplify invoices, reconciliation, and cost control for teams at scale.

- BYOK Bonanza: A Million on the House: Starting Oct 1, 2025, OpenRouter grants all users 1,000,000 free BYOK requests/month, with overages charged at the standard 5% fee per their announcement.

- Community members highlighted this as a major cost-lowering move for teams that front their own model keys, reducing friction for production traffic while preserving flexibility.

- Trackio Tracks Locally Like a Boss: Hugging Face introduced Trackio, a local‑first, free, drop‑in replacement for Weights & Biases, linking the repo at gradio-app/trackio.

- Members called out metrics/tables/images/videos logging as key features, praising the privacy and reproducibility of local-first runs for research and enterprise constraints.

- vLLM Hits Ludicrous Throughput: A performance share showed vLLM handling parallel requests on an RTX 4070 running Qwen3 0.6B at ~1470.4 tok/s across 10 concurrent requests, citing vLLM’s parallelism docs.

- Engineers highlighted vLLM’s PagedAttention and scheduling as the secret sauce for throughput scaling, making small models feel ‘real‑time’ even under multi-user load.
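The core PagedAttention idea is that each sequence’s KV cache lives in fixed-size blocks addressed through a per-sequence block table, so memory is claimed on demand rather than reserved up front for the maximum context length. The toy allocator below sketches only that bookkeeping; it is not vLLM’s code, and the block size of 16 tokens and all class and function names are illustrative assumptions.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV-cache block (illustrative choice)


class BlockAllocator:
    """Hands out physical KV-cache block ids from a fixed pool."""

    def __init__(self, num_blocks: int) -> None:
        self.free: list[int] = list(range(num_blocks))

    def alloc(self) -> int:
        if not self.free:
            raise MemoryError("KV cache pool exhausted")
        return self.free.pop()

    def release(self, blocks: list[int]) -> None:
        self.free.extend(blocks)


@dataclass
class Sequence:
    seq_id: int
    num_tokens: int = 0
    block_table: list[int] = field(default_factory=list)  # logical block i -> physical id


def append_token(seq: Sequence, allocator: BlockAllocator) -> None:
    """Reserve KV space for one more token; allocate a block only when the last one is full."""
    if seq.num_tokens % BLOCK_SIZE == 0:
        seq.block_table.append(allocator.alloc())
    seq.num_tokens += 1


def kv_slot(seq: Sequence, pos: int) -> tuple[int, int]:
    """Map a token position to (physical block id, offset within that block)."""
    return seq.block_table[pos // BLOCK_SIZE], pos % BLOCK_SIZE


if __name__ == "__main__":
    pool = BlockAllocator(num_blocks=64)
    seqs = [Sequence(i) for i in range(10)]  # 10 concurrent requests
    for s in seqs:
        for _ in range(40):                  # 40 tokens each -> 3 blocks each
            append_token(s, pool)
    print(len(pool.free))                    # 64 - 30 = 34 blocks still free
```

Because blocks are only as large as needed and returned when a request finishes, many more concurrent sequences fit in the same memory, which is what lets the scheduler keep large batches in flight.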

3. New Models & Research: Trillions, RL on Pretraining, and Sparse Attention

- Ring-1T Rolls In: Ant Ling unveiled the open-source, ‘thinking’ Ring-1T-preview (1T parameters), claiming top math scores of 92.6 on AIME25 and 84.5 on HMMT25 in this post: Ring-1T-preview benchmarks.

- Engineers debated the architecture’s implications for structured reasoning and math specialization, noting that evaluation design and reproducibility will be scrutinized next.

- AlphaEvolve Codes Up Theory: Google Research described AlphaEvolve, an LLM-based coding agent for discovering and verifying combinatorial structures that advance theoretical CS, in AI as a research partner: advancing TCS with AlphaEvolve.

- Threads praised the blend of program synthesis, verification, and formal constraints, calling it a credible blueprint for agentic workflows in math-heavy domains.

- NVIDIA RLP Reaps Reasoning Gains: NVIDIA shared work on reasoning with RL on pretraining data (RLP: Reasoning with RL on Pretraining Data), which members compared against a paper reporting similar gains, arXiv:2509.19249.

- Practitioners framed the approach as stronger curriculum on pretraining corpora with RL signals, while debating complexity vs. returns relative to ‘simpler’ baselines.

- DeepSeek Dials Sparse Attention: Developers dissected DeepSeek Sparse Attention (DSA) v3.2 and its proposed “mamba‑selectivity‑like” sub‑attention in a FlashMLA pull request.

- Discussion centered on how selective gating could tighten focus and reduce compute, with calls for ablations and real‑world latency/quality tradeoff charts.
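To make “selective” attention concrete: in top-k sparse attention each query scores the keys, keeps only its k best, and runs softmax attention over that subset. The sketch below is a generic single-head illustration with no causal mask, not DeepSeek’s DSA or the FlashMLA kernel; `index_scores` is a hypothetical stand-in for a cheap indexer, and without it the selection uses exact scores and therefore saves no compute.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def dense_attention(q, k, v):
    """Reference: every query attends to every key (single head, no mask)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def topk_sparse_attention(q, k, v, top_k, index_scores=None):
    """Each query attends only to its top_k keys.

    index_scores: optional (n_q, n_k) cheap relevance scores used only to choose keys.
    If omitted, exact q.k scores are used, which selects correctly but saves no compute.
    """
    if index_scores is None:
        index_scores = q @ k.T
    # Indices of the top_k highest-scoring keys per query (order within the set is arbitrary).
    idx = np.argpartition(-index_scores, kth=top_k - 1, axis=-1)[:, :top_k]
    k_sel, v_sel = k[idx], v[idx]  # shapes (n_q, top_k, d) and (n_q, top_k, d_v)
    logits = np.einsum("qd,qkd->qk", q, k_sel) / np.sqrt(q.shape[-1])
    weights = softmax(logits, axis=-1)
    return np.einsum("qk,qkd->qd", weights, v_sel)
```

A handy sanity check: with `top_k` equal to the number of keys, the sparse version reproduces dense attention up to float rounding; shrinking `top_k` trades fidelity for fewer key/value reads, which is exactly the latency/quality trade-off the thread wanted charted.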

4. Industry Momentum: Big Checks, Context Tricks, and Benchmark Reality

- Cerebras Cashes $1.1B: Cerebras Systems announced a $1.1B Series G at an $8.1B valuation to scale AI processor R&D, US manufacturing, and global data centers, per their Series G press release.

- Engineers asked for broader LoRA/GLM‑4.6 support on Cerebras stacks, eyeing easier migration of fine‑tuning and inference workloads to wafer‑scale hardware.

- Context Is the New Prompt: Anthropic argued that effective context engineering—not just prompting—is critical for agent performance in Effective context engineering for AI agents.

- Developers traded strategies for memory windows, retrieval hygiene, and state carryover, aligning with real-world constraints like cost ceilings and latency budgets.
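A memory window, one of the strategies traded above, can be as simple as a token budget: always keep the system prompt, then add turns from newest to oldest until the budget is spent. This is a minimal sketch, not Anthropic’s method; the whitespace word count is a crude stand-in for a real tokenizer, and the message-dict shape is an assumption.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: whitespace-separated words.
    return len(text.split())


def fit_context(system: str, turns: list[dict], budget: int) -> list[dict]:
    """Keep the system prompt plus the newest turns that fit within `budget` tokens.

    `turns` is oldest-first, each like {"role": "user", "content": "..."}.
    Returns messages oldest-first, ready to send. The system prompt is always kept.
    """
    used = count_tokens(system)
    kept: list[dict] = []
    for turn in reversed(turns):       # walk newest -> oldest
        cost = count_tokens(turn["content"])
        if used + cost > budget:
            break                      # this turn and everything older is dropped
        kept.append(turn)
        used += cost
    return [{"role": "system", "content": system}] + list(reversed(kept))
```

Dropped turns are where summarization or retrieval would typically plug in, so older state is carried over compactly rather than lost.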

- SQL Smackdown Humbles GPT‑5: The Tinybird leaderboard at llm-benchmark.tinybird.live showed GPT‑5 (Codex and non‑Codex) ranking #23 and #52 on SQL tasks, while o3‑mini placed #6.

- Threads framed it as a reminder to test against real benchmarks and choose models per task profile, not brand—echoing reports of GLM 4.6 offering strong coding value for cost.

Discord: High level Discord summaries

Perplexity AI Discord

- Sora 2 Generates Hype and Memes: Members actively generated content with Sora 2, with one boasting about generating 100 videos in a single day and several making content with Pikachu.

- Some users feel the Sora 2 watermark is professional-looking, while others anticipate a paid version integrated into GPT Plus.

- VPN Shenanigans Unleash Sora 2: Users discovered that Sora 2 can be accessed for free by using a VPN to connect through Canada.

- One user generated 100 videos and tested Sora’s new Cameo features, creating videos that are now trending across social media.

- Peeps Get Perplexity Pro for Free: Users are gaining access to Perplexity Pro for free through various promotions, including partnerships with Airtel, Venmo, and PayPal.

- The free access to Perplexity Pro has made some question the value of other paid AI subscriptions, with one member stating, “No one needs to when we got Perplexity pro is here for free anyways”.

- Grok Stumbles and Bumbles: Members find Grok underperforming in areas like image and video generation, with one user stating, “Grok is severely behind in image and video gen.”

- One user reports that Grok’s search struggles to filter out outdated or wrong sources.

- Sora 2 Set to Drop: Members shared a link to a Perplexity page indicating that OpenAI is launching Sora 2.

- A member shared a Comet referral link; anyone who uses it gets a Comet invite.

LMArena Discord

- Claude API Might Be Free?: Members are discussing using the Claude API for free via puter.js or connecting an MCP to a free account.

- However, there is a 20M token limit weekly and potential use of user data as payment, so proceed with caution.

- Seedream 4 Gets Nerfed: Users complain about recent changes to Seedream 4, including resolution, speed, image quality, and a forced 1:1 aspect ratio.

- One user said it was useless for image editing due to the aspect ratio.

- Chasing Sora 2 Invite Codes: Several members are requesting Sora 2 invite codes, with at least one user offering to pay for one.

- A good Samaritan shared a Sora 2 code in the chat.

- AI Image Generation Plagued with Issues: Members reported issues with image generation, including images generating without audio, images being locked to a 1:1 aspect ratio, and other unexpected errors.

- Members were directed to report in <#1343291835845578853>.

- LMArena Launches October AI Art Contest: LMArena is challenging participants to create abstract art with AI in its October AI Generation Contest, using Battle Mode with models revealed (see here).

- The winner gets 1 month of Discord Nitro and the exclusive <@&1378032433873555578> role.

OpenRouter Discord

- OpenRouter Syncs Up with Stripe for Payments: OpenRouter integrates with Stripe for real-time LLM accounting, facilitating a move to usage-based billing, or a hybrid model, as per this tweet.

- The integration only shares accounting data with Stripe, ensuring prompts remain private, focusing on improving the billing process for users.

- BYOK Users score Big with Free Requests: Starting October 1, 2025, OpenRouter offers all users 1 million free BYOK requests per month, as detailed in this announcement.

- This change is automatic, but users are charged the standard 5% rate for requests exceeding the 1 million limit, lowering the barrier for using BYOK.

- Sora Video Model Remains Exclusive: While users inquired about Sora video generation costs, it’s clarified that OpenRouter doesn’t route video models, which are exclusively available via OpenAI’s Sora app or website.

- Community members also expressed frustration with models pausing mid-response in ChatRoom, while discussion swirled around the availability of Sora invite codes.

- Grok Models Have Bumpy Ride: Users reported issues with Grok models and object generation calls being broken, sparking speculation about whether the issue stemmed from OpenRouter or Grok itself.

- Later, users reported Grok4 seemed to be working again, and some discussed whether Grok is suitable for roleplaying given its writing style and adherence to system prompts.

- OpenAI’s Sora Sparks Industry Debate: Members are clamoring for Sora invite codes, and one user is offering four invites to people in US or CA with an Apple device, lamenting the restriction.

- Discussion touched on OpenAI competing with Google, Meta, and Amazon, with some speculating that Sora 2’s high quality suggests the potential scraping of all YouTube videos for training.

Cursor Community Discord

- Sonnet 4.5 judged token-greedy, Codex praised as token-stingy: A member felt that Sonnet 4.5 is wasteful in token usage compared to Codex, which is more efficient. Other members noted that Claude models are generally more expensive.

- One member with legacy pricing preferred Sonnet 4.5 over GPT-4-turbo, citing satisfactory performance despite the higher cost.

- Cursor Agents encounter terminal bugs: A member reported that their agent terminals were bugged and could not perform any commands, even after trying different shells (bash, cmd, powershell).

- A suggested solution involved running `winget install --id Microsoft.PowerShell --source winget` and setting the default shell to `pwsh` after restarting Cursor.


- Cursor Social Network Platform concept surfaces: A member proposed creating a social network platform for AI creators within Cursor.

- The suggestion received an optimistic, albeit tongue-in-cheek, response: anything is possible if you believe in ✨ magic ✨.

- Cursor Billing Clarity Criticized: A member found the billing page hard to read and sought ChatGPT’s assistance to improve it.

- Members on legacy pricing debated the costs of different models, with some considering Claude Opus very expensive and others suggesting the cost breakdown was misleading.

- TypeScript Refactor yields smooth results: Members found that Cursor refactored modules and classes easily, making for a smooth experience, likely in a TypeScript codebase.

- One member resolved the remaining issues by providing a clear and coherent prompt, demonstrating Cursor’s efficacy with precise instructions.

Unsloth AI (Daniel Han) Discord

- GRPO Trainer Commences, but Slowly: A member created their first GRPO (Group Relative Policy Optimization) trainer and noted it was slower than LoRA finetuning, as documented in their Colab notebook.

- Training loss dropped near zero while reward value rose slowly, resembling experiences with simpler machine learning systems, and they plan to transition to other RL tools after this experiment.
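The “group relative” part of GRPO (Group Relative Policy Optimization) replaces a learned critic: several completions are sampled per prompt, each is rewarded, and each reward is normalized against its own group. A minimal sketch of that advantage step only (population standard deviation and the `eps` value are choices made here; implementations differ). Because these advantages are mean-centered within each group, the policy-gradient part of the loss can hover near zero even while rewards climb, which may be part of why the member saw a near-zero loss.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """GRPO-style advantages for one prompt's group of sampled completions.

    Subtract the group mean and divide by the group standard deviation
    (population std here), so no value function/critic is needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions for one prompt, rewarded 1 if correct else 0
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # ~[1.0, -1.0, -1.0, 1.0]
```

If every completion in a group gets the same reward, all advantages are zero and that prompt contributes no learning signal, one reason reward can rise slowly on tasks that are too easy or too hard.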

- Blackwell GPUs Demand Manual Xformers Compile: Members discussed issues with Xformers on Blackwell GPUs (RTX 50xx series), noting that manual compilation is required due to compatibility problems, and shared these compilation steps: `pip uninstall -y xformers; git clone --depth=1 https://github.com/facebookresearch/xformers --recursive; cd xformers; export TORCH_CUDA_ARCH_LIST="12.0"; python3 setup.py install`.

- One member noted ‘I really don’t need 128k context but I’ll still fling it to maximum anyway human nature at its best’, highlighting the community’s enthusiasm for pushing limits.

- Gemma Fine-Tuning Framework Duel: Members noted that while Unsloth claims to be the only framework allowing Gemma fine-tuning on T4 Colab GPUs, Google’s Gemma documentation also provides a tutorial using LoRA and Keras on T4 GPUs.

- It was later determined that Unsloth identified and fixed the original problems that were preventing it from working efficiently, justifying the claim.

- LLMs Still Clueless About Themselves: Members discussed the accuracy of LLMs when asked about themselves, with one stating that asking an LLM about itself usually does not yield accurate answers.

- It was suggested that if GPT-5 seems to know itself, it’s due to either specific training, tool use, or inclusion of identity information in the system prompt, emphasizing that you can’t talk to any gpt without a system prompt.

- ReLU Bug Leads to Shifted Tanh Solution: A member discovered a bug in their original formulation using ReLU, which resulted in a squaring term with the subtract product.

- According to the member, switching to a shifted tanh with range [~0, ~1] instead of the ReLU’s [0, N] behaved more like a differentiable signal and resolved the issue: gradients no longer explode. They linked a blog post on LoRA as background.
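Assuming the “shifted tanh” is the usual affine remap of tanh onto (0, 1) (the member’s exact formula isn’t given), it is just a rescaled sigmoid with a bounded slope, whereas ReLU’s output is unbounded above:

$$
g(x) = \tfrac{1}{2}\big(\tanh(x) + 1\big) = \sigma(2x) \in (0, 1), \qquad g'(x) = \tfrac{1}{2}\big(1 - \tanh^2(x)\big) \le \tfrac{1}{2}, \qquad \mathrm{ReLU}(x) = \max(0, x) \in [0, \infty).
$$

With both the output and its derivative bounded, a product or squared term built from g cannot blow up the way one built from an unbounded ReLU output can, which is consistent with the member’s report that gradients stopped exploding.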

OpenAI Discord

- Sora 2 Invite Scramble: Discord users are aggressively seeking Sora 2 invites, which is causing concerns about potential scams and frustration over the invite-only system.

- Users are directed to channels like <#1379321411046346762> and <#1315696181451559022> to discuss the app and access.

- GLM 4.6 Saves Coding Cash: Users are finding GLM 4.6 to be a cost-effective alternative to Sonnet 4.5 for coding tasks.

- A user noted that the Z.ai GLM coding plan was such good value that [they] signed up for a quarter; the plan provides 600 prompts every 5 hours at a significantly reduced cost.

- GPT-5 Falls Flat on SQL Tests: GPT-5 (Codex and non-Codex) is not SOTA at SQL according to llm-benchmark.tinybird.live, and ranks #23 and #52.

- Meanwhile, o3-mini scored #6 on the same benchmark.

- Instant Generation Feels Not-So-Instant: Users are wondering why instant generation is not instant anymore, even when using custom instructions.

- Some believe it’s due to a bandwidth throttling measure.

- Thinking Mode Knows Your Location: One user suggested that thinking mode has a tool for getting the user’s timezone or approximate IP location.

- This functionality was listed in the ~18K system prompt for thinking mode; thinking and non-thinking modes have different system prompts and tools.

HuggingFace Discord

- Students Invited to Engineer ML-for-Science Projects: Hugging Face is seeking students and open source contributors to join some ML-for-science projects; check out this Discord link.

- Volunteers will get a chance to make a difference and help humanity, so take on the challenge!

- Trackio aims to Eclipse Wandb: Hugging Face released a new free library for experiment tracking called Trackio, which is a drop-in replacement for wandb and is built to be local-first; check out the GitHub repo.

- Trackio can log metrics, tables, images, and even videos locally!

- Whisper Integration Asked: A member sought help integrating Crisper Whisper into a full-stack app for speech recognition, asking about libraries for recognizing abnormal words.

- No further detail was given.

- Lora Training Looms on the Horizon: Members discussed how Lora training jobs risk data loss if timed out, especially since checkpoints aren’t automatically uploaded to the Hub during the run; a HuggingFace documentation link was shared about setting sufficient timeouts.

- One member noted, “If your job exceeds the timeout, it will fail and all progress will be lost.”

- RTX 4090 Cards Modified on Secondary Markets: Members discussed modded RTX 4090 cards with 48GB of VRAM being sold on eBay, and AMD Instinct MI210 cards, with one member suggesting 2080 Tis with 22GB of VRAM as a cheaper alternative.

- A member said “Those instinct cards are useless. Those 4090s are dope. You can get 2080tis with 22gigs of ram for quarter of the price though.”

LM Studio Discord

- vLLM Supercharges Parallel Requests: Members touted that vLLM significantly boosts parallel request handling, showcasing a 4070 running Qwen3 0.6B model.

- The system attained an average generation throughput of 1470.4 tokens/s across 10 concurrent requests.

- LM Studio Demands AVX2: To check compatibility, users can hit `CTRL+Shift+H` within LM Studio to open the hardware screen and verify AVX2 support.

- It was suggested that a PC made in the last 5 years with AVX2 instructions, 8GB of VRAM, and 16GB of RAM would meet the minimum requirements.
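On Linux, the AVX2 flag that LM Studio’s hardware screen reports can also be read straight from `/proc/cpuinfo`. A minimal sketch, Linux-only by assumption: it returns `None` on systems without that file (macOS, Windows) rather than guessing.

```python
from pathlib import Path
from typing import Optional


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags listed on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


def has_avx2() -> Optional[bool]:
    """True/False on Linux; None when /proc/cpuinfo is unavailable."""
    path = Path("/proc/cpuinfo")
    if not path.exists():
        return None
    return "avx2" in cpu_flags(path.read_text())


print(has_avx2())
```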

- Headless LM Studio on the Horizon: LM Studio allows running a server with API access (REST and Python APIs), but full headless mode isn’t yet supported.

- To work around the limitation, members suggested using an existing LM Studio WebUI like LMStudioWebUI to connect to the LM Studio API.
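A WebUI of this kind talks to LM Studio’s local server over HTTP. A minimal standard-library sketch, assuming the server is running on its default address `http://localhost:1234/v1` with the OpenAI-compatible chat completions route; the model identifier passed in is a placeholder for whatever model is loaded.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed default LM Studio server address


def build_chat_request(prompt: str, model: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a local LM Studio server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, model), timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Usage, with a server running: `chat("Hello!", "your-loaded-model")`. Since the route follows the OpenAI schema, existing OpenAI-compatible clients can usually be pointed at the same base URL instead.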

- Qwen3-Omni Support Arrives: Members reported that Qwen-Omni model support is arriving, dependent on llama.cpp updates, requiring no action from the LM Studio team.

- One member pointed out that the Qwen chat GUI already has a natively omni model.

- AMD 495+ Unified Memory Anticipation Builds: A member is awaiting the AMD 495+ model that supports 512GB of unified memory, emphasizing the importance of soldered memory to prevent bandwidth bottlenecks.

- They noted that while AMD AI mobile chips are acceptable, their bandwidth of roughly 500 GB/s with system memory is not comparable to the 1 TB/s achievable on GPUs.
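Why the bandwidth gap matters: in single-stream decoding, each generated token has to read roughly all active weights from memory, so bandwidth divided by bytes read per token gives a rough ceiling on tokens per second. A back-of-the-envelope sketch, assuming a hypothetical dense 70B model at 4-bit (~35 GB of weights) and ignoring KV-cache reads and compute limits; for mixture-of-experts models only the active parameters count.

```python
def decode_ceiling_tok_s(bandwidth_gb_s: float, weight_bytes_gb: float) -> float:
    """Upper bound on single-stream decode speed when memory bandwidth is the bottleneck."""
    return bandwidth_gb_s / weight_bytes_gb


weights_gb = 70e9 * 4 / 8 / 1e9  # dense 70B model at 4-bit -> 35 GB
for name, bw in [("~500 GB/s unified memory", 500), ("~1 TB/s GPU", 1000)]:
    print(f"{name}: <= {decode_ceiling_tok_s(bw, weights_gb):.0f} tok/s")
```

Under these assumptions the ceiling is roughly 14 tok/s at 500 GB/s versus roughly 29 tok/s at 1 TB/s, which is why bandwidth, not just capacity, decides how usable a large unified-memory pool is.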

Latent Space Discord

- Alexa Enhanced with More AI: Amazon introduced its next-generation AI-driven Echo devices and Alexa updates, boasting improved AI functionalities.

- A community member expressed interest in a hackable Echo Dot Max to facilitate the swapping of LLMs.

- Trillion Parameter Ring Model Debuts: Ant Ling presented Ring-1T-preview, a 1-trillion-parameter open-source “thinking” model, showcasing top-tier math scores, achieving 92.6 on AIME25 and 84.5 on HMMT25, according to benchmarks.

- The model’s architecture enables unique approaches to problem-solving and mathematical reasoning, attracting significant attention within the AI research community.

- Gemini 2.5 Elevates Image Editing: Tim Brooks and Google DeepMind announced new native image editing and generation capabilities for Gemini, emphasizing their consistency, instruction following, and creative potential.

- Community feedback highlighted the model’s ability to maintain the integrity of unchanged elements during edits.

- Cerebras Secures Massive Funding Round: Cerebras Systems announced a $1.1B Series G funding round, valuing the company at $8.1B, with investment allocated to AI processor R&D, U.S. manufacturing expansion, and global data-center scaling, as detailed in their press release.

- Community members requested LoRA/GLM-4.6 support to enhance the versatility of Cerebras’ hardware.

- Anthropic Champions Context Engineering: Anthropic published an engineering blog post arguing that effective context engineering, not just prompt engineering, is crucial for optimizing AI agent performance.

- Developers are exchanging experiences, methodologies, and inquiries concerning memory management to achieve smarter runtime context.

GPU MODE Discord

- Nvidia RTX 4090 Exploits FP8: An ML Engineer implemented flash attention for fp8 sm89 after buying an RTX 4090, noting there was no FP8 support in 2023 when they bought it.

- The engineer said they applied it right after reading a paragraph in the docs.

- Triton DevCon Ready to Launch: The Triton Developer Conference 2025 is weeks away, with in-person attendance available and speakers including Mark Saroufim from Meta, Phil Tillet and Thomas Raoux from OpenAI, as well as presenters from AMD, Nvidia, and Bytedance.

- Register at aka.ms/tritonconference2025 to connect with Triton enthusiasts and discover new advancements in the Triton community, which may include the new Blackwell GPU backend for Triton.

- Kernel Addresses Exposed via Blog: A blog post was shared about extracting addresses of kernel functions in host code, accessible at redplait.blogspot.com.

- Kimbo Chen’s blog post on the evolution of NVIDIA Tensor Cores from Volta to Blackwell was also highlighted, and can be found at semianalysis.com.

- Determinism References Dropped: A member posted a link to an NVIDIA presentation on determinism in deep learning from 2019, as well as the NVIDIA cuDNN documentation.

- The shared sources, which detail the backend and include various academic papers and articles on determinism (among them one on defeating nondeterminism in LLM inference), may be useful for understanding how to obtain deterministic results in deep learning tasks.
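A root cause that runs through the nondeterminism literature is that floating-point addition is not associative, so a kernel whose reduction order changes (for example with batch size or thread scheduling) can change results bit-for-bit. A pure-Python illustration:

```python
import math

values = [1e16, 1.0, -1e16]

# Same three numbers, two summation orders.
left_to_right = (values[0] + values[1]) + values[2]  # 1.0 is rounded away next to 1e16 -> 0.0
reordered = (values[0] + values[2]) + values[1]      # large terms cancel first -> 1.0

print(left_to_right, reordered)  # 0.0 1.0
print(math.fsum(values))         # 1.0 (correctly rounded sum, independent of order)
```

GPU kernels face the same issue at scale, which is why deterministic modes typically fix the reduction order, often at some cost in speed.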

- Freelunch AI Provides Free Education: A member shared a link to a GitHub repository, Freelunch-AI/lunch-stem, describing it as the best place to learn AI and Computer Science (CS) for free.

- The repository is positioned as an open-source educational resource that emphasizes open-source contributions to make quality education accessible.

Nous Research AI Discord

- Sequence Expansion Transformer Seeks Inclusion: A member is developing Sequence Expansion Transformers (SEX Transformers) and has requested it be added to the community projects section.

- Another member joked about whether to make the arrows red in a UI, asking shall i make the arrows red or can you see.

- Teknium Teases Sora 2 Locally: Teknium showcased their initial Sora 2 video and considered running a 333B model on a 256GB RAM CPU setup.

- It was pointed out that the model size is actually 357B according to the HF page.

- Mamba Selectivity Enters the DeepSeek: A DeepSeek Sparse Attention (DSA) v3.2 model now incorporates a mamba selectivity-like mechanism as a sub-attention block.

- Members speculated on the potential implementation and benefits of this integration.

- Cracking Cosine Similarity in LLMs: A member asked about LLMs with lower cosine similarity, such as gpt-oss-120b, and how that would be useful.

- Another member clarified that cosine similarity reflects the similarity of chains of thought (CoT) in LLMs, where high similarity may suggest training on comparable data.
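For reference, cosine similarity between two vectors is their dot product divided by the product of their norms. A sketch of how one might compare two models’ chains of thought, assuming each CoT has already been turned into an embedding vector by some embedding model; the vectors below are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(a, b) = (a . b) / (|a| |b|); 1 means same direction, 0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical CoT embeddings for the same prompt from two different models
cot_model_a = [0.2, 0.9, 0.1]
cot_model_b = [0.25, 0.85, 0.05]
print(round(cosine_similarity(cot_model_a, cot_model_b), 3))
```

Averaged over many prompts, consistently high similarity between two models’ CoTs is the kind of signal members read as possible training on comparable data.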

- Investor Investigates Symbolic Architectures for AGI: A VC investor is diving into symbolic architectures like Aigo.ai, Symbolica, AUI (Augmented Intelligence), and After Thought (Stealth) to discover AGI solutions.

- They consider Aigo.ai the most promising and asked what qualifications one needs to work on similar systems.

Yannick Kilcher Discord

- Thematic Roles spark LLM debate: A member shared a Wikipedia link on thematic roles and another suggested that LLMs might have such structures, referencing work on Othello where board representations emerged in an LLM trained on move sequences.

- Dowty’s use of proto(typical) roles was also highlighted in the discussion.

- Pixel Peeping Prevents Phake Physics?: In a discussion about detecting AI-generated videos like those from Sora 2, a member suggested that pixel peeping still works pretty reliably.

- Another member commented that, ideally, AI-generated content shouldn’t be detectable, but believes people will learn to spot it by vibes alone.

- AlphaEvolve Advances Google: Google uses AlphaEvolve, an LLM-based coding agent, to find and verify combinatorial structures that improve hardness-of-approximation results for certain optimization problems, as described in their recent blog post.

- The new results advance theoretical computer science.

- NVIDIA Reasons with RL on pretraining data: A member shared a paper about Reasoning with RL on pretraining data from NVIDIA.

- It was described as more complicated than another paper that reports similar gains without explicitly invoking reasoning.

- Sora 2 Superior Physics: Members mentioned that the quality of Sora 2 is way better than Sora 1, because Sora 1 couldn’t do physics and had obvious visual artifacts.

- The advancements in physics simulation were highlighted as a key improvement.

aider (Paul Gauthier) Discord

- Aider Forks Embrace MCP: While the official aider lacks support for the Model Context Protocol (MCP), some forks like aider-ce have integrated it, prompting users to seek alternatives for enhanced frontend development capabilities.

- The discussion highlighted the increasing importance of browser MCP for frontend tasks, given the cost and rate limits on commercial platforms.

- Local LLM Compatibility with Aider Faces Hurdles: Users reported that aider struggles with local LLMs such as Qwen Coder 30B and Devstral 24B, even though these models support tool use, because aider includes files directly in the user prompt for context.

- It was clarified that aider works with local models if they’re sufficiently advanced, like gpt-oss, and can process the provided context effectively.

- LM Studio Bugs Bug Users: Users reported issues with LM Studio, notably with the MLX backend, and suggested that llama.cpp offers a more stable experience.

- The thread recommended using the `--jinja` parameter or switching to Ollama to mitigate these problems.

- Apriel-1.5-15b Model Asks for Special llama.cpp Support: A user inquired about potential compatibility issues between the new Apriel-1.5-15b model and llama.cpp, particularly regarding the model’s tendency to include its thinking output within the main reply.

- They speculated whether llama.cpp requires dedicated support or configuration settings to separate the thinking output, drawing comparisons to gpt-oss-20b.

- Koboldcpp Post-Processing to the Rescue: A member pointed out that koboldcpp employs output templates or post-processing techniques for output management, suggesting potential parallels with llama.cpp capabilities.

- This insight offers a possible avenue for addressing output formatting challenges encountered with models like Apriel-1.5-15b.
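The post-processing approach discussed above can be sketched as a regex pass over the raw completion that pulls reasoning spans out of the user-facing reply. This is a minimal sketch that assumes the model wraps its reasoning in hypothetical `<think>...</think>` tags; Apriel-1.5-15b’s actual markers may differ, and this is not how koboldcpp or llama.cpp implement it.

```python
import re

# Hypothetical delimiter pair; substitute whatever markers the model really emits.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Separate delimited reasoning spans from the user-facing reply.

    Returns (reasoning, reply). If no delimited span is found, reasoning is
    empty and the whole output is treated as the reply.
    """
    reasoning_parts = THINK_PATTERN.findall(raw_output)
    reply = THINK_PATTERN.sub("", raw_output)
    reasoning = "\n".join(part.strip() for part in reasoning_parts)
    return reasoning, reply.strip()


raw = "<think>User wants a greeting.</think>Hello there!"
reasoning, reply = split_reasoning(raw)
print(reasoning)  # User wants a greeting.
print(reply)      # Hello there!
```

Doing this as a post-processing step keeps the inference server unchanged; the trade-off is that it only works if the delimiters are known and emitted consistently.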

Manus.im Discord Discord

- Manus Gets Stuck in a Loop: Users are reporting errors with Manus getting stuck in a loop, generating an Internal server error with code 10091.

- Users report that neither their help tickets nor the project URLs they made public have received any response from the Manus team.

- Memory Key Protocol creates User-Owned AI Memory: A user has developed the Aseel-Manus Memory Key Protocol, a model-agnostic framework for persistent, user-owned AI memory that, according to its author, provides flawless session continuity by serializing the agent’s cognitive context into a secure, portable key.

- The author reports validating the protocol on both Manus and Google’s Gemini, using robust encryption standards for user privacy.

- AI Automation Agencies get Architectural Breakdown: A user sought guidance on legitimate AI automation agencies, and another user broke them down into three models: Orchestrators (no-code/low-code), Architects (custom Python, LangChain/LlamaIndex, and vector DBs), and Integrators (managing enterprise-level compliance).

- A key vetting tip is to ask potential agencies how they handle state management and error recovery in their automations.

- Credit Consumption Rate Criticized: A user complained about Manus consuming over 5300 credits for a basic research task involving x-rays and spreadsheet creation.

- A team member requested the session link to investigate the excessive credit usage and consider a potential refund.

- Sora Invite Code Giveaway: A user is offering Sora invite codes to anyone who DMs them.

- There are no further details available.

Eleuther Discord

- Adaptive Searching achieves improvements: A member reported adaptively performing additional search per query/head/layer, with a logarithmic cap, and observed promising cosine similarity of the final output after attention and the W_o projection.

- A CleanShot image showed the results.

- DeepSeek’s Sparse Attention: A discussion started around the sparse attention mechanism in the new DeepSeek model, with a link to the FlashMLA pull request.

- A member noted that the sparse attention sounds really interesting.

- Inquiry for Meta-Studies in AI: A member asked for meta-studies analyzing past research, wondering about the impact of the sheer volume of published work, and sought papers offering insights beyond personal taste, such as the one they linked.

- BBH Benchmark Usage Rates: A member shared findings on benchmark usage, noting that fewer than 50% of BBH citations come from papers that actually evaluate on it.

- Of the first 50 papers citing BigBench that they examined, none used the official implementation.

- RWKV7Qwen3Hybrid5NoPE-8B-251001 Struggles with GSM8K: A member reported issues with SmerkyG/RWKV7Qwen3Hybrid5NoPE-8B-251001 on the GSM8K benchmark, sharing an image of the results.

- The same member noted that llama-3-8B also had low scores.

Modular (Mojo 🔥) Discord

- Windows Support Stays on the Backburner: Windows support remains a low priority for Modular, with community contributions expected once the compiler is open-sourced. However, one member pointed out that waiting out Windows 10 would let Modular assume Windows means >= AVX2, which they called hugely beneficial.

- Some members noted many GPUs or accelerators will never be available on Windows due to a lack of vendor support.

- Mojo slides into Last Place on Stack Overflow Survey: Mojo debuted in the Stack Overflow developer survey, landing in last place according to the survey results.

- Despite the low ranking, community members celebrated Mojo’s inclusion in the survey.

- Notebook Support Gains Traction: Members are requesting notebook support, namely syntax highlighting in Jupyter Notebook and a MAX kernel in upstream Jupyter, to showcase ML ideas, since notebooks make it easy to annotate code with math formulas and graph descriptions.

- The discussion clarified the need both to interact with MAX from a notebook and to author and run Mojo directly within notebooks.

- MAX can’t run Cerebras - Yet: A member inquired about running a MAX-implemented AI model on Cerebras for benchmarking; another member clarified that MAX doesn’t currently run on Cerebras and has no optimizations for dataflow chips like Cerebras.

- This suggests that using Mojo and MAX for AI projects will not provide a free performance boost on AI chips like Cerebras.

Moonshot AI (Kimi K-2) Discord

- OpenAI Teases Investors with TikTok Ad: OpenAI released a TikTok ad showcasing a prompt box, stirring excitement among investors.

- Some viewers dismissed the ad as slop.

- Chinese Models Evade Sensitive Subjects: A user asked which topics Chinese models are post-trained to avoid, noting that “some things about India cannot be spoken.”

- The inquiry highlights ongoing concerns about censorship and bias in AI models.

- API Version Models Offer Openness: A member asserted that the API version of some models is pretty open, with no censorship beyond training data that is less Western-biased than that of US models.

- This suggests a potential trade-off between censorship and regional bias in different model versions.

DSPy Discord

- LiteLLM and DSPy Faceoff for App Domination: A member weighed using LiteLLM or DSPy for a new LLM application, noting DSPy employs LiteLLM as a gateway.

- The original poster felt LiteLLM lacked sufficient structure, merely acting as an interface, while DSPy forces a specific problem-solving approach.

- DSPy Structure: Optional or Orchestrated?: A member countered that `messages` can be passed directly to a `dspy.LM`, implying DSPy’s structure is not necessarily imposed.

- The poster highlighted that not all LiteLLM features are utilized and some assembly is required to achieve desired outcomes.

- Caching Critiques center on Content Chaos: A user asked about manipulating the order of the `content` part of the JSON request when using caching.

- This implies that the order of prompts and files is critical for effective caching strategies.
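Why ordering matters for caching can be illustrated with a simplified model of prefix-based prompt caching, in which a request can reuse cached computation only for the longest prefix it shares with an earlier request. The sketch below is a toy model under that assumption: list items stand in for the serialized request, and real providers add their own minimum lengths, block granularity, and expiry rules.

```python
def cached_prefix_len(previous: list[str], current: list[str]) -> int:
    """Number of leading items two requests share (the reusable prefix)."""
    n = 0
    for a, b in zip(previous, current):
        if a != b:
            break
        n += 1
    return n


system = ["SYS"]
big_file = ["FILE"] * 1000  # hypothetical large attachment

# Stable content first, variable question last: the file stays in the shared prefix.
req1 = system + big_file + ["Q1"]
req2 = system + big_file + ["Q2"]

# Variable question first: the prefix diverges right after the system prompt.
req3 = system + ["Q1"] + big_file
req4 = system + ["Q2"] + big_file

print(cached_prefix_len(req1, req2))  # 1001
print(cached_prefix_len(req3, req4))  # 1
```

Under this model, placing stable content (system prompt, files) before the parts that change between requests maximizes the reusable prefix.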

tinygrad (George Hotz) Discord

- CLSPV still crashes, mostly passes: A member reports they are still experiencing crashes while running tests with CLSPV, but the tests mostly pass now.

- Users with x86_64 Linux systems can try it themselves by installing the fork with `pip install git+https://github.com/softcookiepp/tinygrad.git`.

- ShapeTracker faces imminent deletion: A member inquired about the status of the Shape Tracker Lean Prove Bounty.

- It was further discussed that ShapeTracker will be deleted soon.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (997 messages🔥🔥🔥):

Sora 2, Free Perplexity Pro, Grok vs. ChatGPT

- Sora 2 Generates Hype and Memes: Members have been actively generating content with Sora 2, with one boasting about generating 100 videos in a single day and several creating content featuring Pikachu.

- Some users feel the Sora 2 watermark is professional-looking and doesn’t need to be removed, while others anticipate a paid version integrated into GPT Plus.

- VPN Shenanigans Unleash Sora 2 for All: Users discovered that Sora 2 can be accessed for free by using a VPN to connect through Canada, although one person noted that the app now runs without a VPN.

- A user generated 100 videos and also tested Sora’s new Cameo features, creating videos that are now trending across social media.

- Peeps get Perplexity Pro for Free: Users are getting access to Perplexity Pro for free through various promotions, including partnerships with Airtel, Venmo, and PayPal.

- The free access to Perplexity Pro has made some question the value of other paid AI subscriptions, with one member humorously stating, “No one needs to when we got Perplexity pro is here for free anyways”.

- Grok Stumbles and Bumbles: Members are finding Grok to be underperforming in certain areas, such as image and video generation, with one user stating that “Grok is severely behind in image and video gen.”

- One user said Grok’s search struggles to filter out outdated or wrong sources.

- LLMs are on Fire: One user compared results from Kimi, Z.ai, and Perplexity Labs: Kimi had animations and a better UI, while Z.ai looked better overall.

- Perplexity Labs was not even in contention, and one user suggested that DeepSeek should also be in the test mix.

Perplexity AI ▷ #sharing (7 messages):

Sora 2 Release, Comet Invite Referral

- Sora 2 Set to Drop: Members shared a link to a Perplexity page indicating that OpenAI is launching Sora 2.

- Comet Invite up for Referral: A member shared a Comet referral link.

- They also mentioned that anyone using the referral will get a Comet invite and encouraged those interested to DM them.

Perplexity AI ▷ #pplx-api (1 messages):

.idothehax: oh

LMArena ▷ #general (997 messages🔥🔥🔥):

Claude API Free Use, Seedream 4 Nerfs, Ethical AI Discussion, Sora 2 Invite Codes, Image Generation Issues

- Claude’s Free API Use Explored: Members discussed the possibility of using the Claude API for free, with one suggestion mentioning puter.js and another suggesting connecting an MCP to a free account.

- However, limitations were noted, such as a weekly 20M-token limit and the potential use of user data as payment.

- Seedream 4 Nerfs and User Feedback: Users discussed recent changes to Seedream 4, with one member detailing the different versions and their respective pros and cons, including resolution, speed, and image quality.

- A common complaint was the forced 1:1 aspect ratio, which some felt made it useless for image editing.

- Ethical AI Debated: Members asked which channels were suitable for discussing ethical AI, and one member pointed them to two specific server channels.

- One user sarcastically stated, “Ethical ai 🤢, I want the AI stealing my job now.”

- Sora 2 Invite Codes Requested and Shared: Several members requested Sora 2 invite codes, with at least one user offering to pay for one.

- One member generously shared a Sora 2 code in the chat.

- Image Generation Runs Into New Issues: Members reported issues with image generation, including images generating without audio, images being locked to a 1:1 aspect ratio, and other unexpected errors.