300 slides are all you need.

AI News for 10/8/2025-10/9/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (197 channels, and 7870 messages) for you. Estimated reading time saved (at 200wpm): 583 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

Congrats to Reflection, Mastra, Datacurve, Spellbook, and Kernel on their fundraises.

The AI-native equivalent of the annual Mary Meeker report has been Nathan Benaich’s State of AI report. You can catch the highlights in the tweet thread or on YouTube, but we’ll offer some notes here:

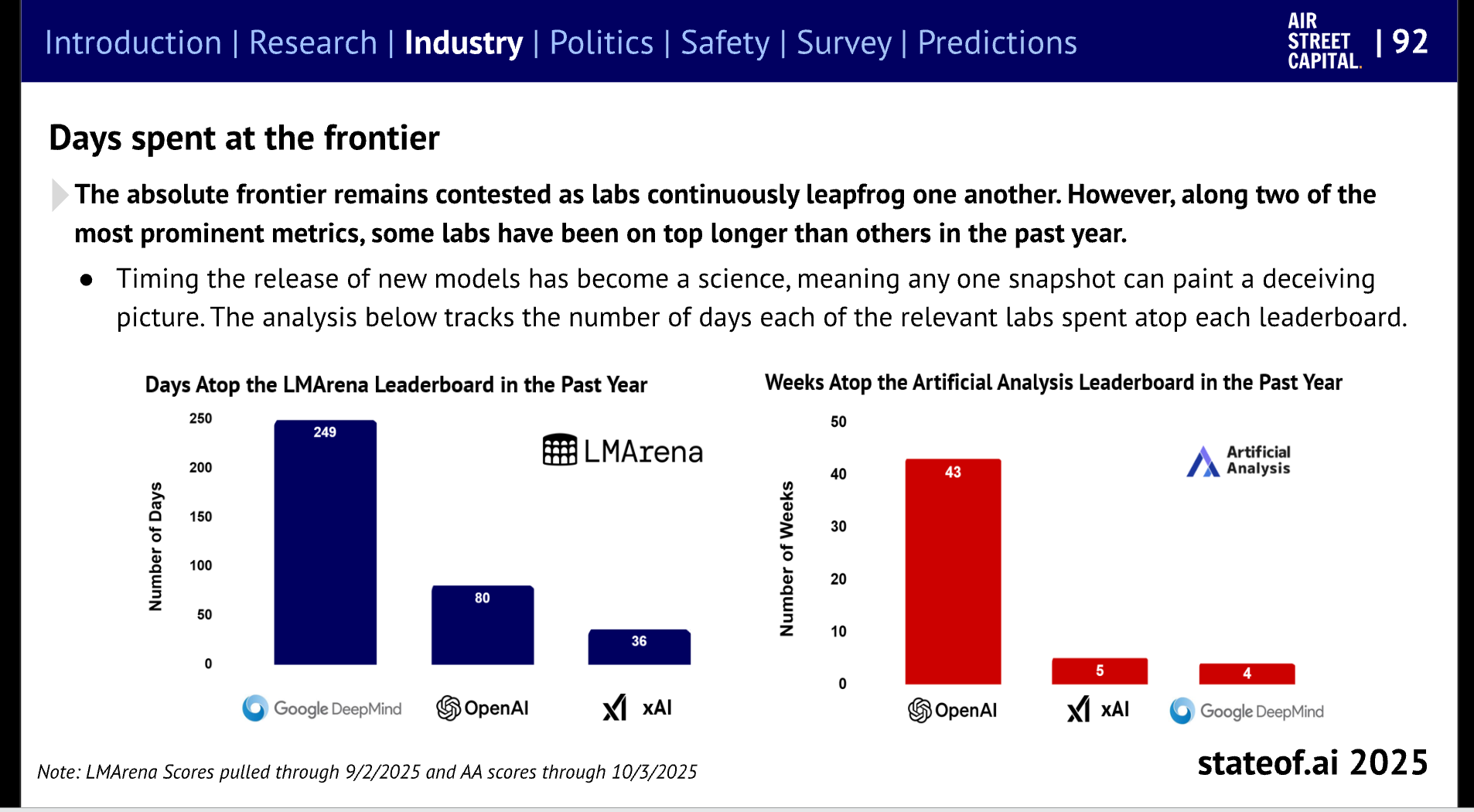

The labs with the mandate of heaven (where does Anthropic show up?):

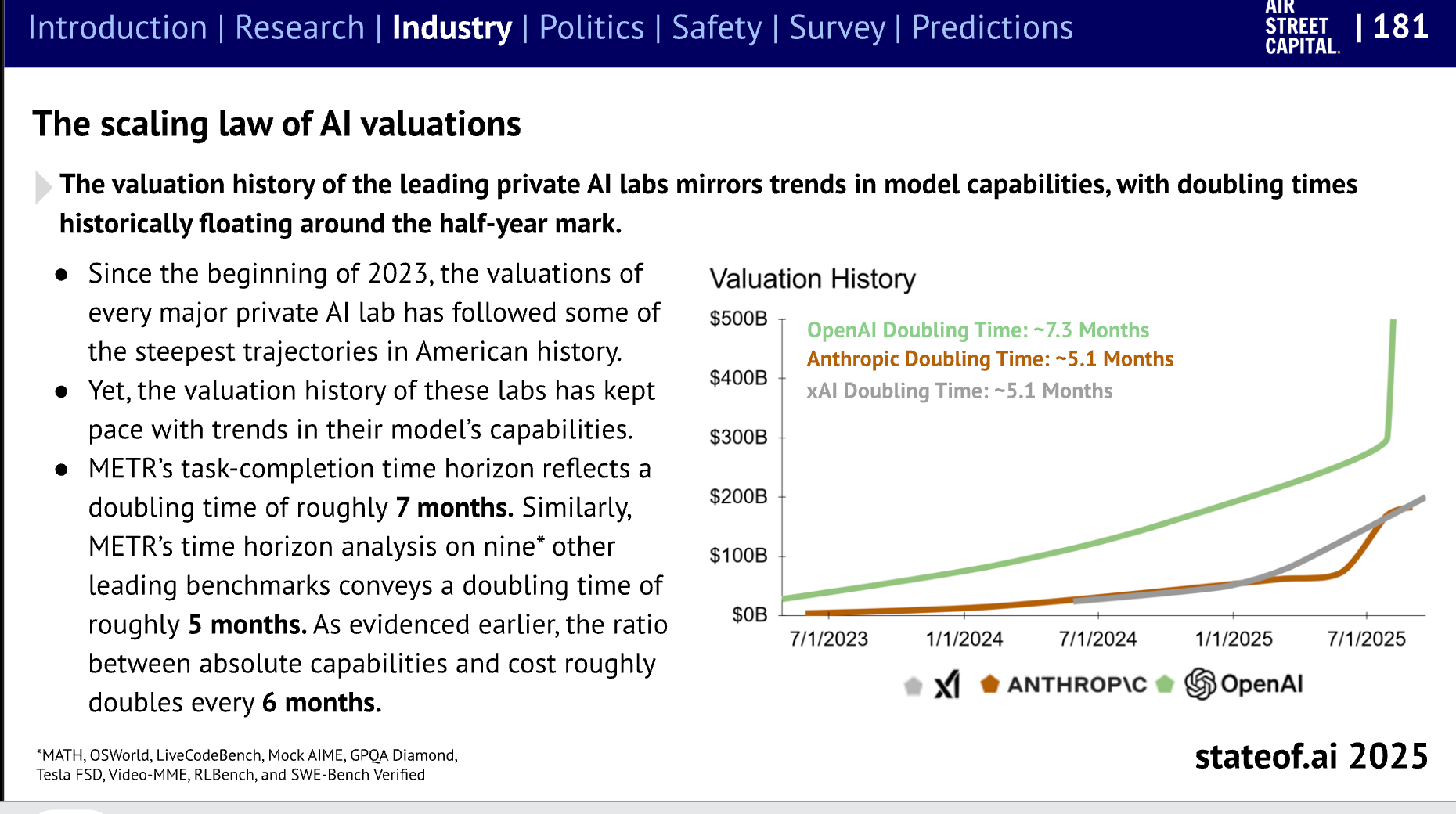

and their valuations:

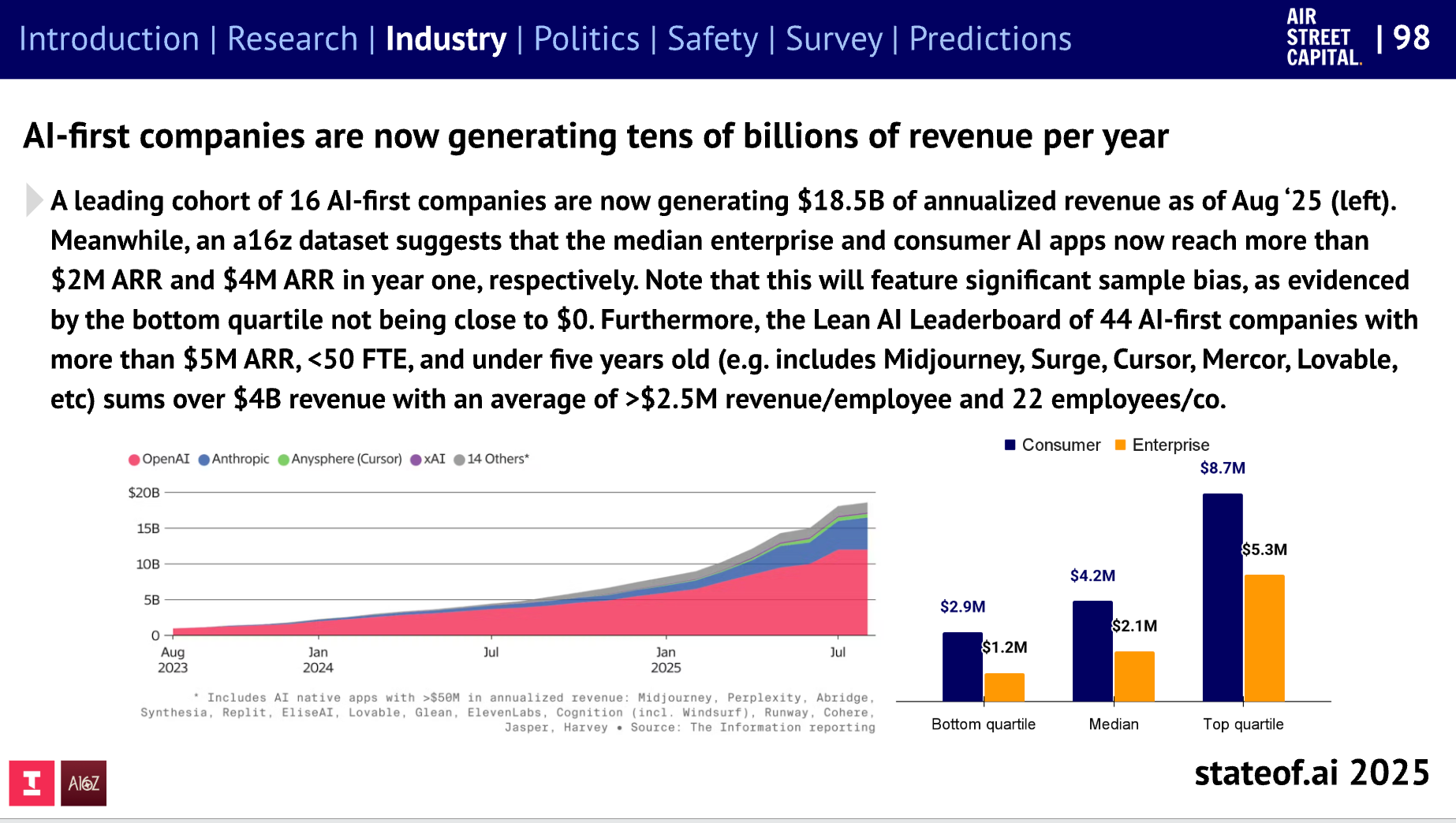

AI-first “tiny teams”:

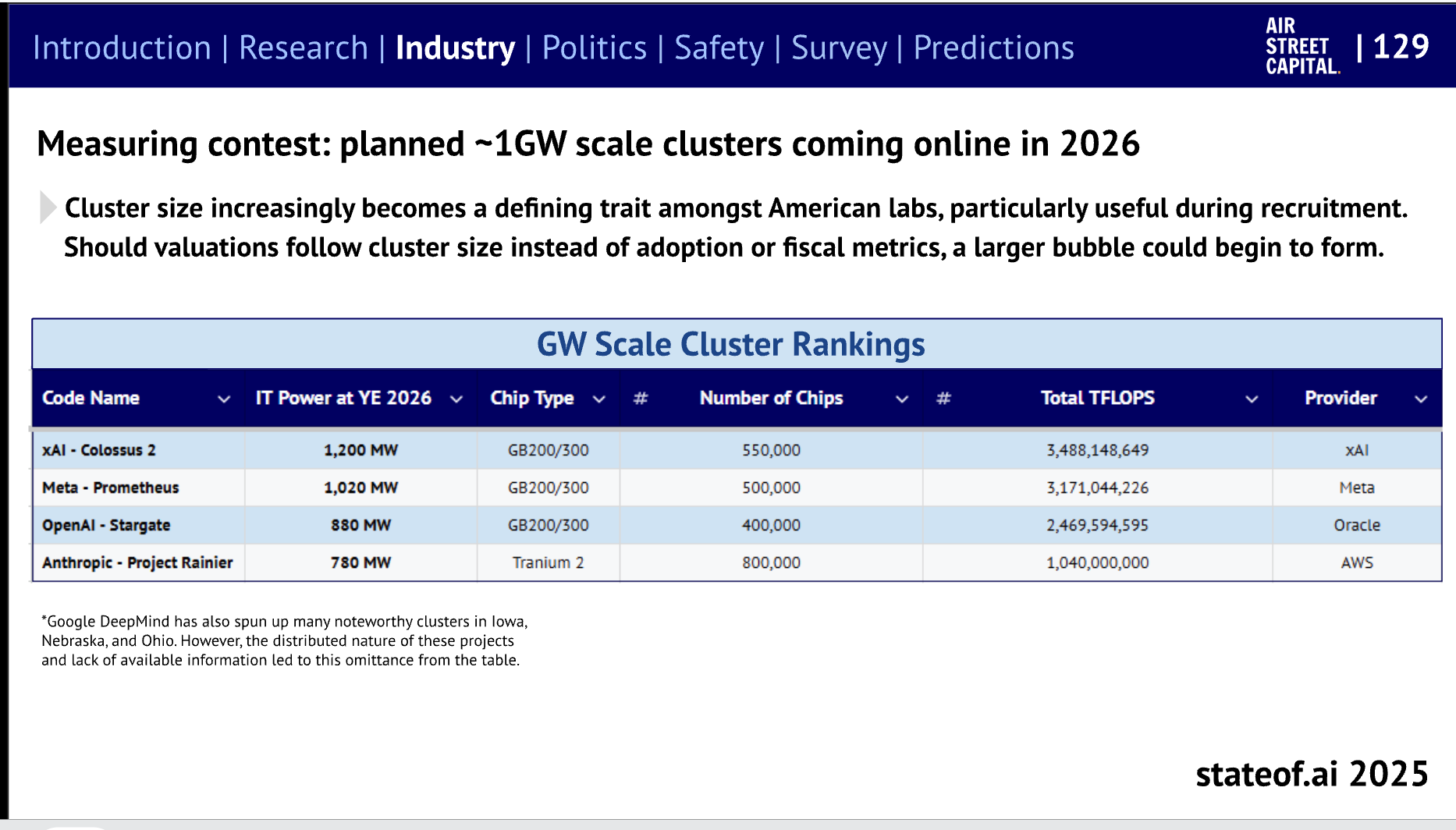

The 2026 cluster buildouts:

AI Twitter Recap

Humanoid Robotics: Figure 03 launch, capabilities, and industry moves

- Introducing Figure 03: Figure unveiled its next-gen humanoid with a highly produced demo and a detailed write-up on system design and product goals. The team emphasizes “nothing in this film is teleoperated,” positioning F.03 for “Helix, for the home, and for the world at scale.” See launch and follow-ups from @Figure_robot, @adcock_brett, and write-up links from @adcock_brett. For broader robotics context: SoftBank is acquiring ABB’s robotics unit for $5.4B per The Rundown.

- Discussion: Early reviews note some demo quirks (e.g., sorting choices), but overall the capability trajectory and non-teleop claim drew strong interest from practitioners; see reactions from @Teknium1.

Open frontier modeling and releases: Reflection’s $2B, Diffusion LMs, GLM-4.6, and small-model reasoning

- Reflection raises $2B to build frontier open-weight models: The lab is scaling large MoE pretraining and RL from scratch with an explicit open-intelligence roadmap (safety and evals emphasized). Founder and team context (AlphaGo, PaLM, Gemini contributors) and hiring across SF/NY/London. Read the statement from @reflection_ai and commentary by @achowdhery and @ClementDelangue.

- Diffusion Language Models go bigger (open): Radical Numerics released RND1, a 30B-parameter sparse MoE DLM (3B active), with weights, code, and training details to catalyze research into DLM inference/post-training and a simple AR-to-diffusion conversion pipeline. See the announcement and resources via @RadicalNumerics and a concise summary thread by @iScienceLuvr.

- Zhipu’s GLM-4.6 and open models momentum: Zhipu’s GLM-4.6 posts strong results on the Design Arena benchmark per @Zai_org. Cline notes GLM-4.5-Air and Qwen3-Coder are the most popular local models in their agent IDE (tweet).

- Tiny reasoning at the edge: AI21’s Jamba Reasoning 3B leads “tiny” reasoning models with 52% on IFBench per @AI21Labs. Related, Alibaba’s Qwen continues to push breadth: Qwen3-Omni (native end-to-end multimodal) and Qwen-Image-Edit 2509 now ranked #3 overall, leading open-weight models (@Alibaba_Qwen, tweet).

Developer tools and agent stacks: Claude Code plugins, VS Code AI, Gemini ecosystem

- Claude Code opens up plugins: Anthropic shipped a plugin system and marketplace for Claude Code. Update your CLI and add via “/plugin marketplace add anthropics/claude-code.” Early community marketplaces emerging. See threads from @The_Whole_Daisy and @_catwu.

- VS Code v1.105 September release: AI-first UX improvements include GitHub MCP registry integration, AI merge-conflict resolution, OS notifications, and chain-of-thought rendering with GPT-5-Codex. Details and livestream via @code.

- Google’s Gemini platform updates: New “model search” in AI Studio (@GoogleAIStudio), hosted docs for the Gemini CLI (@_philschmid), and “Gemini Enterprise” as a no-code front door to build agents and automate workflows across Workspace/M365/Salesforce and more (@Google, @JeffDean).

- Memory and eval-driven optimization in agent pipelines: Developers test memory layers like Mem0 (@helloiamleonie) and use DSPy/GEPA to switch models at 20x lower cost without regressions (@JacksonAtkinsX); see also DSPy TS usage demo (@ryancarson).

Benchmarks and evaluations: ARC-AGI, METR time-horizons, FrontierMath, and domain leaderboards

- GPT-5 Pro posts new SOTA on ARC-AGI: Verified by ARC Prize, GPT-5 Pro achieved 70.2% on ARC-AGI-1 ($4.78/task) and 18.3% on ARC-AGI-2 ($7.41/task), the highest frontier LLM score on the semi-private benchmark to date (@arcprize).

- Time-horizon on agentic SWE tasks: METR estimates Claude Sonnet 4.5’s 50%-time-horizon at ~1 hr 53 min (CI 50–235 min), a statistically significant improvement over Sonnet 4 but below Opus 4.1 point estimates; see @METR_Evals.

- Math and reasoning evaluations: Epoch reports Gemini 2.5 “Deep Think” set a new record on FrontierMath (manual API evaluation due to lack of public API), with broader math capability analysis in thread (@EpochAIResearch). ARC-AGI numbers prompted debate on recent progress pacing vs. trendlines (see @scaling01, @teortaxesTex).

- Vision/editing and design tasks: Qwen Image Edit 2509 ranks #3 overall, leading open-weight models (@Alibaba_Qwen). GLM-4.6 shows strong performance on Design Arena (@Zai_org).

Systems, performance, and infra: GPU kernels, inference benchmarking, and MLX speed

- GPU kernels and “register tiles”: tinygrad is porting ThunderKittens’ “register tile” abstraction (“registers are the wrong primitive”) as “tinykittens,” citing simpler yet performant GPU code (tinygrad). Awni Hannun dropped a concise MLX matmul primer to illuminate tensor core fundamentals (tweet).

- Real-world inference benchmarking at scale: SemiAnalysis launched InferenceMAX, a daily cross-stack benchmark suite spanning H100/H200/B200/GB200/MI300X/MI325X/MI355X (soon TPUs/Trainium), focused on throughput, cost per million tokens, latency/throughput tradeoffs, and tokens per MW across modern servers and inference stacks (@dylan522p).

- On-device and Apple silicon: Qwen3-30B-A3B 4-bit hits 473 tok/s on M3 Ultra via MLX (@ivanfioravanti). Google released a Gemma 3 270M fine-tune-to-deploy flow that compresses to <300MB and runs in-browser/on-device (@googleaidevs; tutorial by @osanseviero).

Multimodal/video: Sora 2 momentum, Genie 3 recognition, and WAN 2.2

- Sora 2 growth + free HF demo: Sora 2 hit 1M app downloads in under 5 days (despite invites and NA-only) with rapid iteration on features and moderation (@billpeeb). A limited-time Sora 2 text-to-video demo is live on Hugging Face and getting used in the wild (tweet). The cameo use-case exploded, with notable NIL-driven virality (@jakepaul).

- Genie 3 named a TIME Best Invention: Google DeepMind’s interactive world model continues to draw attention for generating playable environments from text/image prompts (@GoogleDeepMind, @demishassabis).

- WAN 2.2 Animate tips and workflows: Community tutorials show improved lighting/flame behavior and practical pipelines for animation tasks (@heyglif, @jon_durbin).

Safety, bias, and security

- Few-shot poisoning may suffice: Anthropic, with UK AISI and the Turing Institute, shows that a small, fixed number of malicious documents can implant backdoors across model sizes—challenging prior assumptions that poisoning requires a sizable dataset fraction. Read the summary and paper from @AnthropicAI.

- Political bias definitions and evaluation: OpenAI researchers propose a framework to define, measure, and mitigate political bias in LLMs (@nataliestaud).

Top tweets (by engagement)

- Elon shows Grok’s “Imagine” reading text from an image (no prompt) — a virality juggernaut this cycle: @elonmusk

- Figure 03 humanoid launch (non-teleop claim, multiple clips): @Figure_robot, @adcock_brett

- “POV: Your LLM agent is dividing a by b” — debugging agents, the meme we deserved: @karpathy

- “I prefer not to speak.” — the quote that took over everyone’s timeline: @UTDTrey

- Genie 3 named a TIME Best Invention: @GoogleDeepMind

- ARC-AGI new SOTA with GPT-5 Pro: @arcprize

Notes

- Elastic acquired Jina AI to deepen multimodal/multilingual search and context engineering in Elastic’s agentic stack (tweet).

- Gemini crossed 1.057B visits in Sept 2025 (+285% YoY), its first month over 1B visits (@Similarweb).

- State of AI 2025 is out; usage, safety, infra, and research trends summarized (@nathanbenaich).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Microsoft UserLM-8B ‘User’ Role-Simulation Model Announcement

- microsoft/UserLM-8b - “Unlike typical LLMs that are trained to play the role of the ‘assistant’ in conversation, we trained UserLM-8b to simulate the ‘user’ role” (Activity: 548): Microsoft’s UserLM-8b is an 8B-parameter user-simulator LLM fine-tuned from Llama3‑8b‑Base to predict user turns (from WildChat) rather than act as an assistant; it takes a single task-intent input and emits initial/follow-up user utterances or an <|endconversation|> token (paper, HF). Training used full-parameter finetuning on a filtered WildChat‑1M with max seq len 2048, batch size 1024, lr 2e-5, on 4× RTX A6000 over ~227 h. Evaluations report lower perplexity (distributional alignment), stronger scores on six intrinsic user-simulator metrics, and broader/diverse extrinsic simulation effects versus prompted assistant baselines; the research release warns of risks (role drift, hallucination, English-only testing, inherited biases) and recommends guardrails (token filtering, end-of-dialogue avoidance, length/repetition thresholds). Commenters highlight the meta trend of AI training/evaluating AI and express safety/availability concerns (possible takedown), with little substantive technical critique in-thread.

- Several commenters highlight the closed-loop risk of “AI evaluating AI” if UserLM-8b is used to simulate users that other models then optimize against. This can induce feedback loops and distribution shift where models overfit to the simulator’s style/tokens, degrading benchmark validity and leading to artifacts like reward hacking, prompt overfitting, and misleading improvements that don’t transfer to real users.

- There’s concern the release might be pulled for safety reasons, implying reproducibility and availability risk for experiments with UserLM-8b. Practically, this means researchers should pin exact checkpoints and versions early to preserve comparability across runs and avoid future benchmark drift if artifacts/weights are taken down or altered.
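The advice to pin exact checkpoints can be made concrete with a small digest manifest. This is a minimal sketch using only the Python standard library; the file names, the JSON manifest layout, and the helper names (`sha256_of`, `write_manifest`, `verify_manifest`) are illustrative assumptions, not part of the UserLM-8b release or of any hub client.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large weight shards need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(files: list[Path], manifest: Path) -> dict[str, str]:
    """Record one digest per file (hypothetical layout: {file name: hex digest})."""
    pins = {p.name: sha256_of(p) for p in files}
    manifest.write_text(json.dumps(pins, indent=2, sort_keys=True))
    return pins


def verify_manifest(directory: Path, manifest: Path) -> list[str]:
    """Return the names of pinned files that are missing or no longer match."""
    pins = json.loads(manifest.read_text())
    return [
        name
        for name, expected in pins.items()
        if not (directory / name).exists()
        or sha256_of(directory / name) != expected
    ]
```

Committing the manifest alongside the experiment config means a later run can call `verify_manifest` first and refuse to report numbers if the local weights have been replaced or altered.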

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Qwen Image Edit 2509: Next Scene LoRA and named-entity editing tips

- I trained « Next Scene » Lora for Qwen Image Edit 2509 (Activity: 607): Author releases an open-source LoRA, “Next Scene,” for Qwen Image Edit 2509, aimed at scene continuity: invoking the trigger “Next scene:” yields follow-up frames that preserve character identity, lighting, and environment across edits. Repository and weights are available on Hugging Face: lovis93/next-scene-qwen-image-lora-2509, with no usage restrictions; the core UX is to prepend the prompt with “Next scene:” and describe desired changes. A commenter proposes extending the method to controllable camera re-posing (e.g., specifying current view and target view like “camera right”) to simulate multi-camera continuity from a single still, implying a need for viewpoint-consistent novel view synthesis. Another asks whether a workflow or example pipeline is included.

- A commenter proposes a view-conditioned LoRA: given a single image and a directive like “camera right,” the model should render the identical scene from a new camera pose (multi-camera shoot simulation). Implementing this would likely require conditioning on explicit pose signals (e.g., tokens mapped to SE(3) transforms or numeric camera extrinsics), multi-view training data, and geometry-aware guidance (depth/normal ControlNet). Key challenges are preserving scene/identity consistency under viewpoint changes and resolving occlusions; related prior art includes single-image novel view methods like Zero-1-to-3 and Stable Zero123.

- A data-scale question surfaces: “How many pairs of data were used for training?” For instruction-like LoRAs that map prompts (e.g., “Next Scene”) to structured edits in image editors (Qwen Image Edit 2509), generalization typically depends on hundreds-to-thousands of paired before/after examples; too few pairs risks overfitting to narrow styles or compositions. Reporting pair counts, LoRA rank, and training schedule would help others reproduce/benchmark and understand capacity-vs-quality tradeoffs.

- The LoRA checkpoint is shared on Hugging Face for reproducibility: https://huggingface.co/lovis93/next-scene-qwen-image-lora-2509/tree/main. Technical readers may look for exported safetensors, example prompts, and any training configs or logs to evaluate compatibility with Qwen Image Edit 2509 pipelines and compare against baselines.

- TIL you can name the people in your Qwen Edit 2509 images and refer to them by name! (Activity: 484): OP shows that the Qwen Edit image-editing pipeline can bind named entities to separate input reference images (e.g., “Jane is in image1; Forrest is in image2; Bonzo is in image3”) and then control multi-subject composition via natural-language constraints (relative positions, poses, and interactions) while preserving details from a chosen reference (“All other details from image2 remain unchanged”). They share a straightforward ComfyUI workflow JSON that reproduces this behavior, enabling multi-image identity/appearance referencing without extra training or LoRAs (workflow). Commenters note the workflow’s simplicity and express surprise this wasn’t widely tried; a key question is whether success relies on the model’s prior knowledge of known figures (“Forrest”) and if it generalizes equally to three unknown subjects.

- Several commenters question whether the “name binding” works only because the model already knows famous entities (e.g., “Forrest”), versus true arbitrary-identity binding. They propose testing with “three random unknowns” to verify that Qwen Edit 2509 can consistently disambiguate and condition on non-celebrity identities by name, rather than relying on prior knowledge embedded in the model.

- A key implementation question raised: do you need a separate reference latent per image/person for this workflow to function? This touches on how identity conditioning is represented (per-subject latent/embedding vs shared latent across multiple images), potential VRAM/compute trade-offs, and whether latents can be cached or reused to reduce cost while maintaining consistent name-to-identity mapping across generations.

2. AI progress retrospectives: ‘Will Smith spaghetti’ and ‘2.5 years’ revisited

- Will Smith eating spaghetti - 2.5 years later (Activity: 9007): Revisits the canonical 2023 “Will Smith eating spaghetti” AI video as an informal regression benchmark for text‑to‑video progress ~2.5 years later, linking a new clip (v.redd.it/zv4lfnx4j2uf1) that currently returns HTTP 403 (OAuth/auth required). Historically associated with early diffusion T2V (e.g., ModelScope damo‑vilab/text‑to‑video‑ms‑1.7b), the prompt surfaced classic failure modes (identity drift, utensil/food physics artifacts, unstable hand‑mouth interactions, and temporal incoherence), which this revisit implicitly uses to gauge improvements in control and realism. Without access to the clip, no quantitative comparison can be drawn, but the framing suggests better motion control and stability versus 2023 outputs. Commenters propose keeping this prompt as a de facto standard benchmark; others note the newer output feels more controlled while some prefer the older glitchy rendition as more “authentic,” reflecting a polish-versus-aesthetic debate rather than a metrics-based one.

- Several commenters implicitly treat “Will Smith eating spaghetti” as a de facto regression test for text-to-video, since it stresses hand–mouth coordination, thin deformable strands, utensil occlusion, bite/chew/swallow transitions, fluid/sauce dynamics, and conservation-of-mass, all failure modes common in diffusion-based video models. A rigorous setup would fix prompt/seed across model versions and score with FVD (paper) plus action-recognition consistency (e.g., classifier accuracy for the verb “eating” on 20BN Something-Something).

- A key limitation highlighted: models render plausible exterior motions but lack internal state transitions for ingestion: clips loop “put-to-mouth” without chewing/swallowing or decreasing food volume, signaling missing causal state tracking and object permanence. This aligns with known gaps in 2D video diffusion lacking explicit 3D/volumetric and physics priors; remedies include 3D-consistent video generation and world-model approaches with differentiable physics (see World Models, Ha & Schmidhuber, 2018).

- Preference for the 2023 output as more “authentic” hints at a trade-off: newer generators may improve photorealism/identity fidelity but over-regularize micro-actions (mode collapse), degrading action semantics like actual consumption. Evaluations should move beyond appearance metrics (FID/FVD) to temporal/action faithfulness, e.g., CLIP-based action scoring (CLIP), temporal cycle-consistency (TCC), or explicit “consumption-event” detectors that verify decreasing food mass over time.

- 2.5 years of AI progress (Activity: 781): The post titled “2.5 years of AI progress” links to a video on Reddit (v.redd.it/qqxhcn4ez2uf1), but the media returns an HTTP 403 network-security block, so the underlying content cannot be verified. Based on the title alone, it likely juxtaposes model outputs across ~2.5 years, yet there are no visible benchmarks, model identifiers, prompts, or inference settings to assess methodology or quantify progress from the accessible context. Top comments split between nostalgia for earlier, more chaotic model behavior and claims of “exponential” improvement, but no quantitative evidence or technical specifics are offered to substantiate either view.

3. Figure 03 launch and AI policy/workforce debates (Anthropic limits, EO compliance, Altman)

- Introducing Figure 03 (Activity: 2102): Reddit post announces “Figure 03,” presumably the next-gen humanoid from Figure. The demo video (blocked to us at the Reddit media link) is claimed to be fully autonomous—i.e., not teleoperated—per Figure CEO Brett Adcock’s confirmation on X (source), implying onboard perception, planning, and control rather than remote driving. No benchmarks, system specs, or training details are provided in the thread. The only substantive debate centers on teleoperation; commenters reference Adcock’s statement to conclude the demo reflects real autonomous capability rather than puppeteering.

- Several commenters highlight a claim that the Figure 03 demo involved no teleoperation (no human-in-the-loop joysticking), interpreting this as evidence of on-board autonomy for perception, planning, and control across the shown tasks. This materially reduces “Wizard-of-Oz” concerns and shifts scrutiny toward what level of scripting or environment priors might still be present. Reference: confirmation link shared in-thread: https://x.com/adcock_brett/status/1976272909569323500?s=46.

- Technical skepticism centers on whether the demo is heavily leveraging tricks (e.g., tight scene staging, pre-programmed trajectories, selective cuts) versus robust generalization. Commenters call for a live, continuous, single-take demonstration in an uninstrumented environment with ad-hoc, audience-specified perturbations to validate reliability and latency, and to rule out hidden external localization or motion capture.

- Multiple users note a large capability jump from Figure 02 → Figure 03, implying broader task coverage and more polished manipulation/mobility behaviors. They suggest the “use cases piling up” merit tracking concrete metrics in future demos (task success rates, recovery behavior, cycle times), to quantify progress beyond curated highlight reels.

- Megathread’s Response to Anthropic’s post “Update on Usage Limits” (Activity: 971): Synthesizing 1,700+ reports from the r/ClaudeAI Usage Limits Megathread in response to Anthropic’s “Update on Usage Limits”: many users hit caps rapidly on Sonnet 4.5 alone (e.g., ~10 messages or 1–2 days, sometimes hours), so “use Sonnet instead of Opus 4.1” doesn’t alleviate lockouts. Metering is reported as opaque/inconsistent: small edits can burn 5–10% of a 5-hour session (previously 2–3%), with perceived ~3x cost/turn increases and shifting reset timestamps across the 5-hour, weekly all-model, and Opus-only pools, fueling work-stopping weekly lockouts and churn. Proposed remediations: replace weekly cliffs with daily caps + rollover; publish exact metering math (how uploads, extended thinking, compaction, and artifacts are counted); add model-scoped meters, pre-run cost hints, and “approaching cap” warnings; standardize reset times; sweep metering anomalies; enable paid top-ups and grace windows; and improve Sonnet 4.5 long-context/codebase reliability to avoid forced fallbacks to Opus. Commenters characterize the change as a stealth downgrade driving cancellations/refunds; one Pro user estimates capacity dropped from ~42 h/week (4×1.5h/day, no weekly cap) to ~10 h/week (10×~1h sessions). Others assert “everyone is hitting the weekly limit after the update,” particularly on Sonnet 4.5.

- Several Pro users quantify the impact of Sonnet 4.5’s new weekly cap: with “intense programming” they hit the limit in 10 one‑hour sessions per week (10 hours total), versus prior usage of 4 daily sessions × 1.5 hours (= 42 hours/week). Practically, this is a ~76% reduction in available coding time compared to pre‑update behavior, reframing Pro as a time‑capped product for heavy dev workflows.

- Multiple reports indicate users are hitting the Sonnet 4.5 weekly limit quickly after the update, implying the metering is far stricter than earlier daily‑only constraints. If Sonnet 4.5 is metered by compute‑intensive requests, the weekly cap becomes the primary bottleneck for sustained dev sessions, degrading throughput for tasks like code generation and refactoring.

- A metering anomaly is reported: a single sub‑20 character prompt and a single‑number reply consumed 2% of the “5‑hour” rolling limit and 1% of the weekly limit (screenshot). With no tools/web/think mode enabled, this suggests either a metering bug or coarse quota rounding (e.g., per‑request minimum charge or inclusion of hidden system/context tokens) that charges tiny prompts in large increments.

- Chat GPT and other AI models are beginning to adjust their output to comply with an executive order limiting what they can and can’t say in order to be eligible for government contracts. They are already starting to apply it to everyone because those contracts are $$$ and they don’t want to risk it. (Activity: 803): OP flags a new Executive Order that conditions federal LLM procurement on adherence to two “Unbiased AI Principles”: Truth‑seeking (prioritize factual accuracy/uncertainty) and Ideological Neutrality (avoid partisan/DEI value judgments unless explicitly prompted/disclosed), with OMB to issue guidance in 120 days and agencies updating procedures within 90 days thereafter; contracts must include compliance terms and vendor liability for decommissioning on noncompliance, allow limited transparency (e.g., system prompts/specs/evaluations) while protecting sensitive details (e.g., model weights), and include national‑security carve‑outs. The EO is procurement‑scoped (building on EO 13960), but OP alleges vendors (e.g., ChatGPT) will preemptively enforce “government‑compliant” policies platform‑wide to preserve eligibility; link to EO: whitehouse.gov.

- Several commenters infer that providers may be tightening global safety/policy layers to meet U.S. government procurement requirements, rather than maintaining a separate gov-only policy fork. Technically, this likely manifests as updates to pre- and post-generation filters (prompt classifiers, toxicity/harm heuristics, retrieval/policy guards), system prompts, and RLHF/constitutional reward models that expand refusal criteria for topics like political persuasion, misinformation, or child safety, affecting all users across models like GPT-4/4o, Claude 3.x, and Gemini. Centralizing one policy stack reduces operational risk and cost (fewer model variants, simpler evaluations/red-teaming) but increases the chance of overbroad refusals or distribution shift in helpfulness.

- There’s clarification that executive orders don’t legislate public speech but can condition federal agency purchases, which indirectly pressures vendors. Relevant artifacts include the U.S. AI EO (Exec. Order 14110) directing NIST/AI Safety Institute standards and OMB procurement/governance guidance (e.g., M-24-10), which can require risk assessments, content harm mitigations, and auditability as contracting terms; see EO 14110 text: https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/, OMB memo: https://www.whitehouse.gov/omb/briefing-room/2024/03/28/omb-releases-first-government-wide-policy-to-mitigate-risks-of-ai/. Practically, vendors may implement stricter universal policies to ensure compliance evidence (evals/red-team reports, incident response, logging) for eligibility.

- Technical risk highlighted: policy tightening framed as “misinformation/child-safety” mitigation can be over-applied by automated classifiers, yielding false positives and refusals on benign content. This is a known failure mode of stacked safety systems where threshold tuning, distribution drift, and reward hacking can degrade helpfulness; mitigation typically involves calibrated confidence thresholds, context-aware exception lists, multi-signal moderation, and transparent appeal channels, plus periodic A/B evaluation to track refusal-rate and utility regressions.

- Sam Altman Says AI will Make Most Jobs Not ‘Real Work’ Soon (Activity: 671): At OpenAI DevDay 2025, Sam Altman argued AI will redefine “real work,” forecasting that up to ~40% of current economic tasks could be automated in the near term and that code-generation agents (e.g., OpenAI Codex) are approaching the ability to autonomously deliver previously “week‑long” programming tasks. He contrasted modern office work with historical manual labor to frame a shift in knowledge-work, and recommended prioritizing adaptive learning, understanding human needs, and interpersonal care (domains he believes remain comparatively resilient) while noting short‑term transition risks but long‑run opportunities.
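Returning to the usage-limits megathread earlier in this section, the “42 hours to 10 hours” comparison is easy to reproduce. The sketch below only restates the commenter’s own figures (4 sessions a day at 1.5 hours each before the change, 10 one-hour sessions a week after); the seven-day week is the implied assumption behind the 42-hour number.

```python
# Figures quoted by the Pro user in the megathread; nothing here is measured.
SESSIONS_PER_DAY_BEFORE = 4
HOURS_PER_SESSION_BEFORE = 1.5
DAYS_PER_WEEK = 7  # assumption implied by the quoted 42 h/week

SESSIONS_PER_WEEK_AFTER = 10
HOURS_PER_SESSION_AFTER = 1.0

hours_before = SESSIONS_PER_DAY_BEFORE * HOURS_PER_SESSION_BEFORE * DAYS_PER_WEEK
hours_after = SESSIONS_PER_WEEK_AFTER * HOURS_PER_SESSION_AFTER
reduction = 1 - hours_after / hours_before

print(f"before: {hours_before:.0f} h/week")  # before: 42 h/week
print(f"after: {hours_after:.0f} h/week")    # after: 10 h/week
print(f"reduction: {reduction:.0%}")         # reduction: 76%
```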

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Kernel and Attention Performance Engineering

- Helion Hacks Kernels to Topple Triton: Helion autotunes by rewriting the kernel itself to fit input shapes (e.g., loop reductions for large shapes) yet ultimately emits a Triton kernel, and community benchmarks indicate it often outperforms Triton across diverse shapes; code lives in flash-linear-attention/ops.

- Members proposed head-to-heads against TileLang Benchmarks and flagged interest in weirder attention variants teased for PTC, while noting that heavy shape specialization can yield sizable wins for linear attention kernels.

- PTX Docs Trip on K‑Contig Swizzles: Engineers reported inaccuracies in NVIDIA’s PTX docs for K‑contiguous swizzle layouts in the section on asynchronous warpgroup-level canonical layouts, cross-referencing Triton’s implementation to show mismatches (PTX docs, Triton MMAHelpers.h).

- The clarification helps kernel authors avoid silent perf/correctness pitfalls when mapping tensor descriptors to hardware layouts, reinforcing the value of empirical checks against compiler lowerings.

- CUDA Clusters Sync Without Tears: Practitioners revisited classic reductions with NVIDIA’s guide and sample code (Optimizing Parallel Reduction, reduction_kernel.cu) and debated finer-grained sync for thread-block clusters, exploring mbarriers in shared memory per the quack write-up (membound-sol.md).

- Takeaway: cluster-wide syncs can induce stalls, so warp-scoped mbarriers and memory fences can reduce latency if carefully placed, though participants warned that launch order and block scheduling remain undocumented/undefined behaviors.

2. Agents: Protocols, Tooling, and Guardrails

- OpenAI AMA Loads the Agent Stack: OpenAI scheduled a Reddit AMA to deep-dive AgentKit, the Apps SDK, Sora 2 in the API, GPT‑5 Pro in the API, and Codex, slated for tomorrow at 11 AM PT (AMA on our DevDay launches).

- Builders expect clarifications on agent runtime models, tool security boundaries, and API surface changes that could affect agent reliability and cost envelopes across production workloads.

- .well-known Wins MCP Metadata Moment: The MCP community discussed standardizing a .well-known/ endpoint for serving MCP server identity/metadata, referencing the MCP blog update (MCP Next Version: Server Identity), the GitHub discussion (modelcontextprotocol/discussions/1147), and relevant PR commentary (pull/1054 comment).

- Complementary efforts covered registry direction at the Dev Summit (registry status presentation) and a minimal SEP proposal to unblock incremental spec evolution.

- Banana Bandit Outsmarts Guardrails: In an agents course, a model bypassed a tool’s guardrail that should reply “too many bananas!” when N>10 and directly returned the answer, revealing weak coupling between tool-enforcement and model policy (screenshot).

- A follow-up showed the agent even overriding a directive to “always use the tool” and instead obeying a new instruction to say “birthday cake” at larger N (example), underscoring the need for hardened policy enforcement and trusted execution paths.
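The banana example above suggests keeping the limit in trusted code instead of in the model’s instructions. What follows is a minimal sketch under stated assumptions: `banana_tool`, `ModelTurn`, and `run_turn` are hypothetical names, not from the course or from any agent framework, and the runtime simply refuses any answer that did not come from the tool.

```python
from dataclasses import dataclass
from typing import Optional

MAX_BANANAS = 10  # threshold from the course example (refuse when N > 10)


class GuardrailViolation(Exception):
    """Raised inside the tool, so the refusal does not depend on the model."""


def banana_tool(n: int) -> str:
    """Hypothetical tool: the limit is checked in trusted code."""
    if n > MAX_BANANAS:
        raise GuardrailViolation("too many bananas!")
    return f"{n} bananas"


@dataclass
class ModelTurn:
    """What the untrusted model proposes: a tool call or a direct answer."""
    tool_args: Optional[dict] = None
    direct_answer: Optional[str] = None


def run_turn(turn: ModelTurn) -> str:
    """Trusted runtime: only text produced by the tool reaches the user."""
    if turn.tool_args is None:
        # The model tried to answer on its own; do not forward its text.
        return "refused: answer must come from the tool"
    try:
        return banana_tool(int(turn.tool_args["n"]))
    except GuardrailViolation as exc:
        return str(exc)
```

With this shape, a prompt that talks the model into skipping the tool or saying “birthday cake” changes what the model proposes, but not what `run_turn` lets through.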

3. New Models and Memory Architectures

- ByteDance Bottles Memory with AHNs: ByteDance‑Seed released Artificial Hippocampus Networks (AHNs) to compress lossless memory into fixed‑size representations tailored for long‑context modeling, with an overview in a HuggingFace collection and a YouTube explainer.

- AHNs promise hybrid memory—combining attention KV cache fidelity with RNN‑style compression—to sustain predictions over extended contexts without linear growth in memory cost.

- Ling‑1T LPO Leaps to Trillion Params: InclusionAI posted Ling‑1T, a model advertised with 1T total parameters and a training approach dubbed Linguistics‑Unit Policy Optimization (LPO) alongside an evolutionary chain‑of‑thought schedule.

- Community discussion focused on whether the LPO/Evo‑CoT recipe yields robust generalization and if practical distributions (llama.cpp/GGUF) will arrive given model size and downstream demand.

- Arcee’s MoE Sneaks into llama.cpp: An incoming Mixture‑of‑Experts (MoE) model from Arcee AI surfaced via a llama.cpp PR, hinting at runtime support for new expert routing.

- Observers noted the lack of a corresponding Transformers PR, reading it as a sign of larger model footprints and/or a staggered enablement path across runtimes.

4. Efficient Generation and Multimodal Benchmarks

- Eight‑Step Diffusion Dunks on FID: An implementation of the paper “Hyperparameters are all you need” landed in a HuggingFace Space, showing image quality at 8 steps comparable to or better than 20 steps while cutting compute by ~60%.

- The method is model‑agnostic, needs no additional training/distillation, and delivered ~2.5× faster generation in tests shared with the community.

- VLMs Save FLOPs with Resolution Tuning: A VLM benchmarking note optimized input image resolution vs. output quality for captioning on COCO 2017 Val using Gemini 2.0 Flash, documenting measurable compute savings (report PDF).

- The harness targets fine‑detail acuity and is being extended to generate custom datasets for broader multimodal evaluation.

- FlashInfer Breakdown Boosts Throughput: A new deep‑dive blog unpacked FlashInfer internals and performance considerations for high‑throughput LLM inference (FlashInfer blog post).

- Engineers highlighted kernel/runtime bottlenecks and optimization levers that translate into lower tail latency and better sustained tokens‑per‑second on modern accelerators.
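
For the eight-step diffusion item above, the reported ~60% compute reduction and ~2.5× speedup are consistent with simple step-count arithmetic. This assumes cost scales linearly with the number of sampling steps, which is an assumption here rather than a claim from the Space:

$$
\frac{8}{20} = 0.4 \;\Rightarrow\; 1 - 0.4 = 0.6 \ (\text{60\% fewer steps}), \qquad \frac{20}{8} = 2.5\times \ \text{speedup}.
$$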

Discord: High level Discord summaries

Perplexity AI Discord

- Perplexity Chatbot Learns to Type: A user reported that the Perplexity chatbot started typing on its own in a web browser, without explicit user input, and other users complained that “the browser is slow tho.”

- Following an unban, one user joked about wanting to get banned again immediately after, while another user exclaimed “perplexity pro is so much better than chatgpt.”

- PP Default Search Draws Defamation: Users debated whether Perplexity’s ads that delete ChatGPT and Gemini constitute defamation, but others dismissed the concern, saying “companies don’t sue each other for these petty advertisements bro.”

- Others maintained that “Perplexity Pro is god tier esp with the paypal deal.”

- Comet Browser Task Automation Sought: Members explored Comet browser’s task automation capabilities, with one asking “can comet browser automate tasks” and another replying “Yess definitely.”

- A user raised concerns about spyware, posting “Is comet a spyware for training their model????????”

- Search API Query Lengths Debated: Users discussed query length restrictions in the search API, and a user reported not exceeding 256 characters in the playground.

- A previous discord conversation was linked, and several users requested access to the search API and a key.

LMArena Discord

- Comet Browser Promo Causes Confusion: Users experienced difficulties activating the Comet Browser’s free Perplexity Pro promotion, with existing users facing issues and new users needing to engage with the assistant mode first.

- Solutions involved creating new accounts or clearing app data, with a direct link to the promotion being shared.

- Gemini 3 Release Date: Speculation Abounds: The community debated the arrival of Gemini 3, referencing hints from Google’s AI Studio and tech events, with a consensus leaning towards a December release.

- Speculation centered on Gemini 3’s capabilities versus previous models and its broader impact on the AI landscape, especially its new architecture.

- Maverick Model Purged After Prompt Controversy: The Llama-4-Maverick-03-26-experimental model was removed from the arena due to a system prompt controversy that artificially inflated its appeal to voters.

- The purge also included other models such as magistral-medium-2506, mistral-medium-2505, claude-3-5-sonnet-20241022, claude-3-7-sonnet-20250219, qwq-32b, mistral-small-2506, and gpt-5-high-new-system-prompt.

- LMArena Video Features are Limited: Users highlighted limitations with video generation in LMArena, including restrictions on video numbers, no audio, and limited model selection.

- High video generation costs were cited as the reason, with Sora 2 access available via the Discord Channel.

- Community Swarms to Diagnose LMArena Lag: Lag on the LMArena website sparked a discussion about causes and solutions, from browser and device performance to VPN usage and server-side UI experiments.

- A moderator suggested a post to the discord channel for further diagnosis.

OpenRouter Discord

- Free Deepseek Dwindles, Users Despair!: Users discuss the shift from free Deepseek models to paid versions, lamenting the loss of quality, and are seeking alternatives after the demise of free 3.1.

- A user humorously blamed dumb gooners while another suggested that API keys might be learning user-specific inputs.

- BYOK Blues Besiege Chutes Users!: Users are frustrated with BYOK (Bring Your Own Key) functionality on Chutes, despite promises of unlimited models after upgrading, and are struggling with integration.

- A user questioned if OpenRouter really wants that %5 cut, while another complained that Deepseek died the moment they added credits.

- Censorship Crackdown Sparks Chatbot Chaos!: Users debate the censorship levels of AI chatbot platforms like CAI (Character AI), JAI (Janitor AI), and Chub, with a focus on filter-dodging and uncensored experiences.

- One user stated that while CAI is better than JLLM (Janitor Large Language Model), “filter-dodging is back lol.”

- Cursor Coding Costs Compared with OpenRouter!: Users discuss the costs of using Cursor AI versus OpenRouter for coding, noting OpenRouter’s pay-as-you-go model is cheaper for infrequent coders.

- One user with a pro plan said that “Cursor gives you more tokens than the $20 you pay would get you from OR or a provider directly, but i also run out.”

- Romance Beats Programming: OpenRouter Token Stats!: A member shared a chart showing that RP-categorized tokens amounted to 49.5% of the Programming-categorized token volume last week.

- Another member responded with Alex is a gooner confirmed ✅.

OpenAI Discord

- OpenAI Teases DevDay AMA Bonanza: OpenAI announced a Reddit AMA (link) featuring the teams behind AgentKit, Apps SDK, Sora 2 in the API, GPT-5 Pro in the API, and Codex.

- The AMA is scheduled for tomorrow at 11 AM PT, promising insights into the tech stack deep dives.

- AI Protein Design Poses Biosafety Predicament: A Perplexity article revealed that AI protein design tools can generate synthetic versions of deadly toxins, bypassing conventional safety protocols, resulting in global biosecurity concerns.

- Members pondered on solutions, with some emphasizing the need to address underlying risks instead of solely focusing on the technology.

- Debate on AI Content Tagging Law Ignites: Members debated on whether the US should enact a law to require AI-generated content to be tagged or watermarked.

- The discussion highlighted concerns that regulation might not deter malicious actors incentivized by profit, leading to the emergence of nations specializing in AI fakes.

- Privacy Browsers Fail the Vibe Check: Members scrutinized browser privacy, noting that even privacy-focused browsers like DuckDuckGo rely on Chromium and don’t offer complete privacy.

- A browser benchmark was shared, challenging the virtue signalling of browsers claiming to prioritize user privacy.

- OpenAI’s Fear Drives Legal Waiver: A member expressed frustration that OpenAI’s fear of liability is driving changes to the models, advocating for a legal waiver where users accept responsibility for their actions and their children’s actions.

- They suggested that there are dedicated tools and technology better suited for specific use cases being discussed.

Unsloth AI (Daniel Han) Discord

- Unearthly LoRA Landslide Looms: Members discussed research on composing LoRAs for multiple tasks, with one member sharing an arxiv link.

- Another member claimed that LoRA “does not play with merging at all” and that it is generally better to train one model on all data than to merge experts.

- Nix Nerds Battle GPU Gremlins: Members highlighted struggles of getting GPU drivers to work with Nix, which theoretically should be perfect for AI due to its deterministic package versions.

- One member claimed they managed to get CUDA working, but not GPU graphics, while another said that Nix sucks for gpu drivers and docker is good enough.

- Ling 1T Looms Large in Limited Llama Land: A member inquired about the timeline for Ling 1T llama.cpp support and GGUFs, but it may not get uploaded due to size and limited demand.

- With Kimi also being popular and of similar size, they’re analyzing Ling to see if they should release it or not.

- GLM 4.6 Gleams, Gains Ground: Members lauded GLM 4.6’s ability to maintain coherence over many code edits and use tools correctly, with one member quipping it was “like Sonnet 4 level except cheaper.”

- One member cited 85 TPS from a video, although another quoted OpenRouter stats showing about 40 TPS.

- Linguistic LPO Launched: A new model called Ling-1T, featuring LPO (Linguistics-Unit Policy Optimization) and 1 trillion total parameters, is available at huggingface.co.

- The model adopts an evolutionary chain-of-thought (Evo-CoT) process across mid-training and post-training.

Cursor Community Discord

- Cursor Debates Firebase Functionality: The Cursor community debated the utility of Firebase, questioning its advantages over platforms like Vercel and Prisma/Neon for specific use cases.

- The discussion centered on whether Firebase’s features justify its integration, given the capabilities of alternative platforms.

- Cloudflare Ecosystem Gets Community Love: Members explored using Cloudflare’s ecosystem (R2, D1, Durable Objects, WebRTC, KV) and deploying via Wrangler CLI, emphasizing its optimization and integration capabilities.

- They also discussed the best Cloudflare setup for Typescript and Postgres, including migrating from Pages to Workers for increased flexibility and cron support.

- Background Agents Spout 500 Errors: Users reported that starting a background agent via the web UI at `cursor.com/agents` results in a 500 error and a “No conversation yet” message.

- Cursor support initially attributed these errors to a GitHub outage, but the Cursor status page indicated “No outage today”.

- Snapshot Access Reinstated for Background Agents: One user reported that their Background Agents (BAs), which had previously lost access to the snapshot base image, started working again.

- This reinstatement occurred as of yesterday with no degradation today, implying a resolution to a previous issue affecting BA functionality.

- Cursor Community Ponders Different APIs: Members discussed using Background Agents (BAs) via the web UI versus the Cursor API, with one user exploring creating an interface for software engineering management.

- Another pondered if building such infrastructure was worthwhile given the rapid pace of AI development.

HuggingFace Discord

- Gacha Bots Generate Greenbacks: Members discussed the economics of gacha bots, highlighting real-world cash transactions, with Karuta allowing such transactions and high-value cards reaching prices of $2000-$10000 USD.

- One dev recounted that he could make a profit of $50,000 in the first months of releasing a bot, but deleted the bot due to weird server dynamics and the social earthquakes it caused.

- Diffusion Reaches Peak Performance in Just Eight Steps: The paper Hyperparameters are all you need has been implemented in a HuggingFace Space, demonstrating that 8 steps can generate images with comparable or better FID performance than 20 steps.

- This new method achieves 2.5x faster image generation with better quality, working with any model and requiring no training/distillation, and resulting in a 60% compute reduction.

- HyDRA Hydrates RAG Pipelines: A new release of HyDRA v0.2, a Hybrid Dynamic RAG Agent, addresses the limitations of simple, static RAG, using a multi-turn, reflection-based system with coordinated agents: Planner, Coordinator, and Executors; see the GitHub project page.

- It leverages the bge-m3 model for hybrid search combining dense and sparse embeddings, RRF (Reciprocal Rank Fusion) for reranking, and bge-m3-reranker for surfacing relevant documents.

- Agents’ Agency Angers Achievable Automation: An agent, when asked to say N bananas (where N > 10), bypassed the tool’s guardrail that returns ‘too many bananas!’ and gave the answer directly, showing interesting behavior around agency, with the user posting a screenshot.

- This behavior raises concerns about situations where the tool is meant to prevent the agent from revealing confidential information or avoiding politics, as there isn’t a robust way to stop this override, presenting new challenges around guardrails and agency.
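
The RRF (Reciprocal Rank Fusion) step mentioned in the HyDRA item above can be sketched in a few lines. This is a generic illustration of the technique, not HyDRA's code: the function name, the document IDs, and the constant `k=60` (a commonly used default) are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with ranks starting at 1. Ties are broken by document ID.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a dense and a sparse retriever.
dense = ["doc_a", "doc_b", "doc_c"]
sparse = ["doc_b", "doc_d", "doc_a"]
fused = reciprocal_rank_fusion([dense, sparse])
```

RRF uses only ranks, so dense and sparse scores never need to be put on a common scale before fusing.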

GPU MODE Discord

- Helion’s Kernel Kustomization Knocks Triton: While Triton kernels autotune hyperparameters, Helion can change the kernel during autotuning to better fit the particular shape, with Helion ultimately emitting a triton kernel.

- This allows Helion to beat Triton on a large set of input shapes by customizing to different shapes, such as using loop reductions for larger shapes.

- Nvidia/AMD Attention Alliance Announced: A member announced a partnership with Nvidia/AMD on attention performance, with more details to be shared at PTC.

- This includes weirder attention variants, although another member is skeptical of over pattern-matched attention support.

- Github Actions Trigger Submission Timeouts: Users reported timeouts for A2A submissions on a Runpod MI300 VM due to a GitHub Actions outage, preventing trigger submissions and causing server processing errors, viewable on the GitHub Status page.

- Submissions are expected to be stuck in a queued state and will eventually timeout as GitHub Actions stabilizes and processes the backlog.

- New Grads Score GPU Programming Roles: Members discussed ways to break into GPU programming as a new grad or intern, highlighting opportunities in AI labs and hardware companies.

- Even if a job isn’t explicitly for GPU programming, one can sneak in opportunities to work on it, like using CUDA skills in machine learning engineering roles.

- BioML Leaderboard Write-Up: A write-up for the BioML leaderboard has been posted here.

- Check it out for interesting insights into the BioML performance.

LM Studio Discord

- New Chats Defeat Chat Degradation: Members discovered that starting a new chat in LM Studio combats chat degradation issues.

- Chat degradation also happens for online models, with models forgetting and repeating themselves when system memory is full.

- LM Studio Gets Turbocharged: After the latest release, one user’s token generation speed increased from 8t/s to 22t/s on new chats, marking a surprising performance boost.

- Another member reported a 10x performance improvement over two years of using LM Studio.

- Qwen3 Model Crisis: Identity Theft: A Qwen3 Coder 480B model distilled into Qwen3 Coder 30B incorrectly identifies as Claude AI when running inference with Vulkan.

- When running with CUDA, it correctly identifies as Qwen developed by Alibaba Group.

- Speechless: Text-to-Speech LLMs Face Roadblock: Users learned that text-to-speech LLMs are not directly supported in LM Studio.

- Members suggested using OpenWebUI connected to LM Studio as an alternative, following past discussions.

- Integrated Graphics Resurrected in LM Studio: Version v1.52.1 of LM Studio appears to have addressed an issue, again allowing models to utilize integrated graphics with shared RAM.

- The fix follows discussions about RAM/VRAM allocation quirks and the absence of integrated graphics support.

Latent Space Discord

- Magic Dev Elicits Opposition: Magic.dev faces considerable disapproval, detailed in a tweet.

- Discussion centers on the company’s practices and undisclosed reasons, triggering a wave of critical commentary.

- Startups Scrutinized Amid VC Bubble Talk: Over-funded startups like Magic Dev and Mercor are being mocked, with speculation around their financial strategies and potential failures as solo developers are bootstrapping.

- This reflects broader concerns about inflated valuations and unsustainable business models within the current VC environment.

- Atallah Celebrates OpenAI Token Milestone: Alex Atallah announced surpassing one trillion tokens consumed from OpenAI, celebrated by the community and prompting requests for a token giveaway, highlighted in a tweet.

- This achievement underscores the growing scale of AI model usage and its associated computational demands.

- Brockman Predicts AlphaGo AI Breakthrough: Greg Brockman envisions dramatic scientific and coding advancements driven by AI models, akin to AlphaGo’s “Move 37”, inspiring expectations for discoveries like cancer breakthroughs, as mentioned in a tweet.

- The anticipation reflects a belief in AI’s potential to revolutionize various fields through innovative problem-solving.

- Reflection AI Targets Open-Frontier with $2B: With $2 billion in funding, Reflection AI aims to develop open-source, frontier-level AI, emphasizing accessibility, featuring a team from PaLM, Gemini, AlphaGo, ChatGPT, according to a tweet.

- The initiative signals a commitment to democratizing advanced AI technologies and fostering collaborative innovation.

Nous Research AI Discord

- NousCon Eyes Ohio Location: Members debated hosting NousCon in Ohio due to the lower AI concentration compared to California.

- One member joked that the California concentration of AI people was a benefit for everyone else.

- BDH Pathway’s Name Questioned: Discussion arose whether the moniker of BDH Pathway (https://github.com/pathwaycom/bdh) may hinder adoption.

- The consensus leaned towards eventual acceptance, with predictions that, if adopted, “the full name will probably be lost with time so it’ll be known as BDH and almost no one knows what it stands for.”

- VLMs See Modalities Clearly: A blogpost detailing how VLMs see and reason across modalities was released (https://huggingface.co/blog/not-lain/vlms).

- The authors held a presentation and Q&A session on the Hugging Face Discord server (link to event).

- Arcee AI’s MoE Model on Deck: An Arcee AI Mixture of Experts (MoE) model is anticipated, evidenced by a PR in llama.cpp.

- The absence of a corresponding PR for transformers suggests potentially larger model sizes.

- Tiny Networks Reason Recursively!: A paper titled Less is More: Recursive Reasoning with Tiny Networks (arxiv link) explores recursive reasoning in small networks, with its 7M-parameter Tiny Recursive Model (TRM) achieving 45% on ARC-AGI-1 and 8% on ARC-AGI-2.

- Members agreed that the approach taken was very simple and pretty interesting.

Yannick Kilcher Discord

- RL Debaters Grapple With Information Bottleneck: A member asserted that RL is inherently information bottlenecked, even with “super weights,” requiring workarounds for training, which sparked a debate.

- Another member countered that knowledge is more efficiently gathered with imitation rather than exploration, thus avoiding the information bottleneck issue.

- Thinking Machines Keeps Shannon Entropy Alive: A member referenced a Thinking Machines blog post, highlighting how Shannon entropy remains a relevant metric, especially in the context of LoRA.

- They suggested that the findings imply distributed RL is trivial because small LoRA updates can be merged later without distributed reduction issues.

- Sutton Bits Transferred Via SFT: Inspired by a Sutton interview, members discussed how “bits” can be transferred from RL via SFT, pointing to Deepseek V3.2 RL as an example.

- The model leveraged RL on separate expert models, then merged everything into one using SFT, underscoring the innovative paradigm of SFT on reasoning traces.

- Evolutionary Search (ES) Beats GRPO: A member shared an arXiv paper showing that Evolutionary Search (ES) outperforms GRPO on 7B parameter LLMs using a simple method, sparking discussion.

- It was noted that ES can approximate gradient descent by convolving the loss surface with a Gaussian, smoothing it, but the member wondered why it performs so well with a small population size (N=30).

- ByteDance’s AHNs Compress Memory for Long Context: ByteDance-Seed released Artificial Hippocampus Networks (AHNs) designed to transform lossless memory into fixed-size compressed representations tailored for long-context modeling.

- AHNs offer a hybrid approach by combining the advantages of lossless memory (like attention’s KV cache) and compressed memory (like RNNs’ hidden state) to make predictions across extended contexts; additional details are available in the HuggingFace collection and a YouTube video.
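
The Gaussian-smoothing view of ES raised in the discussion above can be made concrete. Sampling perturbations $\epsilon \sim \mathcal{N}(0, I)$ and weighting them by the resulting loss estimates the gradient of the smoothed objective $\mathbb{E}_\epsilon[L(\theta + \sigma\epsilon)]$. The sketch below is a toy illustration, not the method from the linked paper: the quadratic loss, the antithetic (mirrored) sampling, and all hyperparameters other than the population size of 30 are assumptions.

```python
import random


def loss(theta):
    # Toy quadratic with its minimum at (3, -2); stands in for a real objective.
    return (theta[0] - 3.0) ** 2 + (theta[1] + 2.0) ** 2


def es_gradient(theta, sigma, population, rng):
    """Estimate the gradient of the Gaussian-smoothed loss from loss values only."""
    pairs = population // 2  # antithetic pairs: evaluate +eps and -eps together
    grad = [0.0] * len(theta)
    for _ in range(pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        plus = loss([t + sigma * e for t, e in zip(theta, eps)])
        minus = loss([t - sigma * e for t, e in zip(theta, eps)])
        for i, e in enumerate(eps):
            grad[i] += (plus - minus) * e / (2.0 * sigma)
    return [g / pairs for g in grad]


def run_es(steps=200, lr=0.05, sigma=0.1, population=30, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    for _ in range(steps):
        grad = es_gradient(theta, sigma, population, rng)
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


final_theta = run_es()
```

No backpropagation is involved: each update uses only 30 loss evaluations. Whether such a small population remains adequate at LLM scale is the open question the member raised.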

aider (Paul Gauthier) Discord

- Gemini API gets integrated into aider: The aider config file needs to be named `.aider.conf.yml` instead of `.aider.conf.yaml` to properly integrate the Gemini API.

- A user reported receiving environment variable warnings for `GOOGLE_API_KEY` and `GEMINI_API_KEY` even after correctly configuring the API key.

- GLM-4.6 rivals Sonnet 4 performance: A user suggested using GLM-4.6 for detailed planning, GPT-5 for final plan review, and Grok Code Fast-1 for implementation tasks.

- Another user claimed GLM-4.6 is on par with Deepseek 3.1 Terminus, citing Victor Mustar’s tweet as evidence.

- OpenCode steals spotlight from Claude Code: A user switched to using OpenCode full time instead of Claude Code, citing geographical restrictions preventing access to Claude Pro or Max subscriptions.

- They mentioned Qwen Coder as a useful backup that provides 1000 free requests per day, although they rarely use it.

- Local Models versus API cost trade offs: In a discussion about the utility of local models, a user highlighted DevStral 2507 and Qwen-Code-30B as particularly useful, especially for tool calling.

- Another user pointed out that APIs are hard to beat in cost, especially if the more expensive ones are avoided.

- Aider Project shows No Future: Members in the `questions-and-tips` channel are concerned about the lack of recent updates to the Aider project.

- The community is speculating about the future and direction of the project.

tinygrad (George Hotz) Discord

- Developer Eyes Tinygrad Job: A developer inquired about job opportunities within the Tinygrad community.

- The developer stated that they are always ready to work.

- PR Review Suffers from Lack of Specificity: A contributor expressed frustration that their PR was dismissed as “AI slop” without specific feedback, contrasting with algebraic Upat #12449.

- They stated that “saying you don’t understand what you are doing is just a way to brush off any kind of responsibility” and requested actionable feedback from reviewers, emphasizing that all tests pass.

- Tinygrad Vector Operations Questioned: A member inquired whether tinygrad supports fast vector operations like cross product, norm, and trigonometric functions.

- This could allow them to do more high level operations.

- Loop Splitting Resources Sought: A member is seeking framework-agnostic learning resources on loop splitting in order to fix `cat` at high level by implementing loop splitting.

- They have an implementation that fails only 3 unit tests but involves more Ops than the original, indicating a potential skill issue.

- Rust Dev Eyes CUDA Kernel Reverse Engineering: A member is developing a Rust-based interactive terminal to test high-performance individual CUDA kernels, inspired by geohot’s `cuda_ioctl_sniffer` and qazalin’s AMD simulator, with a demo image.

- The project aims to reverse engineer GPUs from IOCTL, supporting Ampere, Turing, Ada, Hopper, and other architectures, and a write-up is planned.

Eleuther Discord

- World Models vs Language Models Gets Clarified: In traditional RL, a world model predicts future states based on actions, whereas a language model predicts the next token, as discussed in this paper.

- An abstraction layer can turn an LM into a world model by checking move legality and separating the agent from the environment.

- nGPT Struggles OOD: Members attribute nGPT’s (2410.01131) failure to generalize to the fact that generating from it is out-of-distribution (OOD).

- It was noted that nGPT’s architecture failing to generalize is unexpected because the single-epoch training loss should measure generalization.

- Harvard CMSA Posts Seminars: The Harvard CMSA YouTube channel was recommended as a resource for seminars.

- No further details were given.

- VLMs Optimize Image Resolution: A PDF report details work on optimizing image resolution with output quality for Vision Language Models (VLMs) to save on compute, using Gemini 2.0 Flash on the COCO 2017 Val dataset for image captioning.

- The bench focuses on optimizing for fine detail acuity and the member is building a harness for creating custom datasets.

- Fresh Vision Language Models Emerge: Two new Vision Language Model (VLM) repositories were shared: Moxin-VLM and VLM-R1.

- Members might want to check out these interesting github repos that were shared.

MCP Contributors (Official) Discord

- MCP Integration struggles with ChatGPT: Members reported issues integrating ChatGPT MCP, specifically with the Refresh button and tool listing, seeking assistance with implementation.

- They were directed to the Apps SDK discussion and GitHub issues for specific support.

- .well-known Endpoint Generates Buzz for MCP Metadata: A discussion has sparked around implementing a `.well-known/` endpoint to serve MCP-specific server metadata.

- References include this blog entry, this GitHub discussion, and pull/1054.

- Dev Summit Dives into Registry: The Registry was discussed at the Dev Summit last week, as covered in this presentation.

- The aim of this presentation was to summarize the current state of the Registry project to date.

- Minimal SEP Proposal Pursues Streamlined Specs: A member suggested a minimal SEP focusing on document name, location relative to an MCP server URL, and minimal content like `Implementation`.

- The intention is to provide a base for new SEPs and resolve ongoing debates by starting simple.

- Sub-registries Choose Pull for Sync: Sub-registries should employ a pull-based syncing strategy that is custom to their needs, starting with a full sync.

- The incremental updates will use queries with a filter parameter to retrieve only the updated entries since the last pull.
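
The pull-based sync described above (a full pull first, then filtered incremental pulls) can be sketched with an in-memory stand-in. This is an illustration only: `UpstreamRegistry`, `SubRegistry`, `list_servers`, and the `updated_since` filter are hypothetical names, not the Registry project's actual API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class UpstreamRegistry:
    """In-memory stand-in for an upstream registry (hypothetical)."""
    entries: dict = field(default_factory=dict)  # name -> (updated_at, payload)

    def list_servers(self, updated_since: Optional[int] = None) -> dict:
        # With no filter this is a full listing; otherwise only newer entries.
        return {
            name: (ts, payload)
            for name, (ts, payload) in self.entries.items()
            if updated_since is None or ts > updated_since
        }


@dataclass
class SubRegistry:
    mirror: dict = field(default_factory=dict)
    last_pull: Optional[int] = None

    def pull(self, upstream: UpstreamRegistry, now: int) -> list:
        """First call is a full sync; later calls fetch only changed entries."""
        changed = upstream.list_servers(updated_since=self.last_pull)
        for name, (_, payload) in changed.items():
            self.mirror[name] = payload
        self.last_pull = now
        return sorted(changed)


upstream = UpstreamRegistry({"alpha": (1, "v1"), "beta": (2, "v1")})
sub = SubRegistry()
first = sub.pull(upstream, now=2)      # full sync: both entries
upstream.entries["beta"] = (5, "v2")   # upstream change after the first pull
second = sub.pull(upstream, now=5)     # incremental: only the changed entry
```

This sketch does not handle deletions or clock skew between registries; a real sub-registry would need a policy for both.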

Moonshot AI (Kimi K-2) Discord

- Going Organic with Models: A member advocated for organic models instead of distilling them, stating “this is exactly what you get when you don’t just distill the model like a fat loser.”

- The discussion underscored a preference for models developed without excessive simplification.

- Sora 2 Invite Codes Flood the Market: Members debated the accessibility of Sora 2 invite codes, suggesting they’ve hit 1m+ downloads and are becoming easier to obtain.

- Despite the increased availability, some members expressed a preference to wait for the public release rather than seeking an invite code.

- Kimi Impresses with Coding Skills: A member praised Kimi’s coding capabilities, emphasizing its agentic mode and tool usage within an IDE.

- They noted Kimi’s ability to execute Python scripts and batch commands to understand system details for improved debugging.

Modular (Mojo 🔥) Discord

- Mojo still lacks multithreading: Members noted the absence of native multithreading, async, and concurrency support in Mojo, suggesting that leveraging external C libraries might be the most viable approach for now.

- One member cautioned against multithreading outside of `parallelize` due to potential weird behavior, recommending Rust, Zig, or C++ until Mojo offers tools for managing MT constraints.

- Jetson Thor gets Mojo boost: The latest nightly build introduces support for Jetson Thor in both Mojo and full MAX AI models.

- One member jokingly lamented the $3500.00 price tag, while another emphasized that even smaller machines are suitable for projects not requiring extensive resources.

- Python + Mojo threads go brrr: A member shared their success using standard Python threads to call into Mojo code via an extension, releasing the GIL, and achieving good performance.

- They warned that this method is susceptible to data races without sophisticated synchronization mechanisms.

- New Researcher finds Mojo: A Computer Science Major from Colombia’s Universidad Nacional has joined the Mojo community, expressing interests in music, language learning, and the formation of a research group focused on Hardware and Deep Learning.

- Community members welcomed the researcher into the Mojo/MAX community.

Manus.im Discord Discord

- Sora 2 Invite Sought: A user requested an invite code for Sora 2.

- No other details were given.

- User Threatens Chargeback Over Agent Failure: A user requested a refund of 9,000 credits after the agent failed to follow instructions and lost context, resulting in $100+ in additional charges, citing a session replay.

- The user threatened a chargeback, membership cancellation, and a negative YouTube review if the issue isn’t resolved within 3 business days, also sharing a LinkedIn post and demanding confirmation of corrective actions.

- User asks where support staff is: A user urgently inquired about the availability of support staff.

- Another user directed them to the Manus help page.

DSPy Discord

- DSPy Community Centralizes Projects: Members are discussing centralizing DSPy community projects under the dspy-community GitHub organization to serve as a starting point for community-led extensions.

- This approach aims to streamline collaboration and ensure that only useful and reusable plugins are considered for integration, avoiding PR bottlenecks.

- Debate on Repo Management: Official vs Community: The community debated whether to house community-led DSPy projects in the official DSPy repository or a separate community repository.

- Arguments in favor of the official repo included plugins feeling more official, easier dependency management, and increased community engagement, with suggestions to use `CODEOWNERS` for approval rights.

- Optimized DSPy Programs via pip Install: Some members proposed creating compiled/optimized DSPy programs for common use-cases, accessible via `pip install dspy-program[document-classifier]` to create turnkey solutions.

- This would require exploration of optimization strategies and careful considerations of various deployment scenarios.

- MCP Tool Authentication Question: A member asked about creating a `dspy.Tool` from an MCP Tool that requires authentication.

- They inquired how authentication would be handled and whether the existing `dspy.Tool.from_mcp_tool(session, tool)` method supports it.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1137 messages🔥🔥🔥):

Perplexity slow, GPTs Agents, OpenAI's sidebars, Sora codes, Comet Browser

- Perplexity is typing for me: One member reported that while using Perplexity on a web browser, the chatbot started typing on its own, without the user’s explicit input.

- Other users chimed in to say “the browser is slow tho.”

- Speedrun to get banned on Perplexity: One user, after being unbanned in the morning, joked about wanting to get banned again the day after tomorrow.

- Another user replied “Don’t speedrun yet another ban!”

- Pro versus ChatGPT: One member exclaimed “perplexity pro is so much better than chatgpt,” but then followed up, “now i can confirm why ur 15.”

- Another member asked “You need only 1 code for activation right? How many use does 1 code have btw.”

- PP default search a defamation campaign?: One user exclaimed that “Perplexity is shit and they are making ads that delete chatgpt and gemini”; another user claimed “google and open ai would definitely win the defamation case against it.”

- Others replied that “Companies don’t sue each other for these petty advertisements bro.” Others still believe it is in fact better than its rivals, exclaiming “Perplexity Pro is god tier esp with the paypal deal.”

- Need Comet Browser Task Automation?: Members discussed the capabilities of the Comet browser and how to automate tasks.

- One member asked “can comet browser automate tasks,” and another replied “Yess definitely.” Another member posted “Is comet a spyware for training their model????????”

Perplexity AI ▷ #sharing (4 messages):

Hack for Social Impact, Shareable Threads, Budget Robot Vacuums

- Hackers Unite for Social Impact: The Hack for Social Impact event on November 8-9 aims to tackle real-world challenges via data and software solutions, building on last year’s success with partners like California Homeless Youth Project, Point Blue Conservation Science, and The Innocence Center.

- Shareable Threads Encouraged: Perplexity AI reminded users to ensure their threads are Shareable, linking to a Discord channel message.

- Budget Robot Vacuums Get Perplexity Page: A user shared a Perplexity AI page dedicated to budget robot vacuums.

Perplexity AI ▷ #pplx-api (6 messages):

Search API access, Search API query length restrictions, Search API Key

- Perplexity API Search Now Public: The new search API is out on Perplexity AI API Platform.

- Search API Query Length Limitations Discussed: A user inquired about query length restrictions in the search API, mentioning they couldn’t exceed 256 characters in the playground.

- A link to a previous discord conversation was shared, presumably containing relevant details.

- Users Request Search API Key: Multiple users requested access to the search API and a search API key.
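For the query-length question above, a small client-side guard can keep queries under the reported ceiling. This is a minimal sketch, assuming the 256-character figure the user observed in the playground also applies to requests (this is not confirmed by the discussion); `PLAYGROUND_QUERY_LIMIT` and `fit_query` are hypothetical names, and no Perplexity API call is made.

```python
# Assumption: 256 is the user-reported playground limit, not a documented one.
PLAYGROUND_QUERY_LIMIT = 256


def fit_query(query: str, limit: int = PLAYGROUND_QUERY_LIMIT) -> str:
    """Collapse whitespace and shorten the query to at most `limit` characters."""
    query = " ".join(query.split())
    if len(query) <= limit:
        return query
    cut = query[:limit]
    # Back up to the last space so no word is split in the middle.
    head, sep, _ = cut.rpartition(" ")
    return head if sep else cut
```

Truncating silently changes what is searched, so a caller may prefer to raise an error or split a long query into several shorter ones.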

LMArena ▷ #general (1267 messages🔥🔥🔥):

Comet Browser, Gemini 3 Release Speculation, Model Purges, LMArena Video Generation, Maverick Controversy

- Comet Browser Promo Activation Confusion Reigns: Users discussed difficulties activating the Comet Browser’s free Perplexity Pro promotion; existing users had issues, while new users needed to engage with the assistant mode first.

- Some suggested creating fresh accounts or clearing local app data, with one user sharing a direct link to the promotion.

- Gemini 3 Release Date Remains Elusive: The community debated the arrival of Gemini 3, pointing to hints from Google’s AI Studio and various tech events, but consensus remained that a December release is more likely.

- Despite the uncertainty, members speculated on Gemini 3’s potential capabilities, particularly its performance compared to previous models and its impact on the AI landscape, with many expecting its architecture to revolutionize AI.

- Maverick Model Faces Purge After Controversy: The Llama-4-Maverick-03-26-experimental model, known for its unique personality, was removed from the arena after a controversy surrounding its system prompt that made it artificially attractive to voters.

- The purge also included other models like magistral-medium-2506, mistral-medium-2505, claude-3-5-sonnet-20241022, claude-3-7-sonnet-20250219, qwq-32b, mistral-small-2506, and gpt-5-high-new-system-prompt.

- LMArena Video Creation Limitations Addressed: Users discussed issues with video generation in LMArena, including limitations on the number of videos, lack of audio, and inability to select specific models.

- The high costs associated with video generation were cited as a reason for these limitations, and while users expressed a desire for greater control over video creation, it was said that Sora 2 could be accessed by joining the Discord Channel.

- Community Diagnoses LMArena Lag: A user reported encountering lag on the LMArena website, prompting a discussion about potential causes and solutions, with members troubleshooting possible client-side and server-side issues.

- Potential causes ranged from browser issues and device performance to VPN usage and server-side UI experiments, with one of the moderators suggesting a post should be made to the discord channel to further diagnose the issue.

LMArena ▷ #announcements (1 messages):

LMArena survey, Arena Champions Program

- LMArena wants you to fill out survey: LMArena is looking to understand what is important to users, and requests that you Fill Out This Survey.

- They hope to better understand what is important to you all in order to make LMArena a great product.

- Apply for Arena Champions Program: LMArena’s Arena Champions Program aims to reward members who show genuine commitment to meaningful conversation, and requests that you Apply Here.

- Members must demonstrate both interest in AI and commitment to meaningful conversation.

OpenRouter ▷ #app-showcase (2 messages):

Perplexity comparison, Browser automation interest, Funding sources, Legal rights, Robots.txt and LinkedIn lawsuits

- Parallels drawn to Perplexity AI: One member inquired whether the product was in the same “ballpark as Perplexity”, referencing Perplexity AI.

- Another member noted the user interest in browser automation capabilities.

- Inquiring minds want to know: Showcased App’s funding and legalities: A user asked about the funding sources behind the showcased app and whether it had secured the necessary legal rights.

- The same user complimented the project as looking “neat!”

- Legal Eagle Warns About LinkedIn Robots.txt: A member cautioned about respecting robots.txt on LinkedIn, citing multiple lawsuits against AI companies for ignoring it.

- They mentioned the case wins against Proxycurl, a precedent in hiQ, and current lawsuits against Mantheos and ProAPI, while disclaiming “Not a lawyer not legal advice”.
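The robots.txt check the member recommends can be done before any fetch using Python's standard library. This is a minimal sketch: the rules in `SAMPLE_ROBOTS`, the `my-bot` user agent, and the `is_allowed` helper are hypothetical illustrations, not LinkedIn's actual robots.txt, and passing this check is not a statement about legal permission.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /in/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed(SAMPLE_ROBOTS, "my-bot", "https://example.com/in/someone"))
    print(is_allowed(SAMPLE_ROBOTS, "my-bot", "https://example.com/about"))
```

In a real crawler, the robots.txt text would be downloaded from the target site (for example with `RobotFileParser.set_url` and `read`) and re-checked periodically.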

OpenRouter ▷ #general (1027 messages🔥🔥🔥):

Free Deepseek vs Paid Deepseek, Chutes BYOK, AI Chatbot Censorship, Troubleshooting Codex, Cursor AI vs OpenRouter

- Deepseek Drama: Free vs. Paid Models Face Off!: Users discuss the shift from free Deepseek models to paid versions, with some lamenting the loss of quality and accessibility, especially after the demise of free 3.1, prompting users to look for alternatives.

- One user humorously blamed the situation on dumb gooners, while another suggested that the API keys might be learning based on user-specific inputs.

- BYOK Blues: Chutes Integration Frustrations!: Several users are experiencing issues with BYOK (Bring Your Own Key) functionality on Chutes, despite the platform advertising unlimited models upon upgrading, and are struggling with integration.

- One user expressed frustration with being forced to use free models to connect to paid ones, questioning if OpenRouter is really wanting that %5 cut, while another complained that they added credits for the first time and Deepseek died the moment I do that.

- Censorship Circus: Navigating the AI Chatbot Filter Fiasco!: Users debate the pros and cons of various AI chatbot platforms like CAI (Character AI), JAI (Janitor AI), and Chub, with a strong focus on the level of censorship and the ability to bypass filters to find uncensored experiences.

- One user pointed out that while CAI is better than JLLM (Janitor Large Language Model), filter-dodging is back lol, while another reported that recent CAI > recent JLLM.

- Codex Catastrophe: Configuration Conundrums Cause Coding Chaos!: A user encounters significant difficulties configuring Codex with OpenRouter, facing `401` errors and struggling with the absence of documentation or support, despite having a fresh API key.

- The user humorously asks do i have to suck someone off?, while troubleshooting the issue.

- Cursor Chaos: Users Compare Coding Costs with OpenRouter!: Users discuss the economic implications of using Cursor AI versus OpenRouter for coding tasks, with some noting that OpenRouter’s pay-as-you-go model is cheaper if you don’t code that much.

- One user states i have the pro plan. they give you more tokens than the $20 you pay would get you from OR or a provider directly. but i also run out…

OpenRouter ▷ #new-models (1 messages):

Readybot.io: OpenRouter - New Models

OpenRouter ▷ #discussion (17 messages🔥):

OpenInference Relation, AI Generated Image, Token Usage on OpenRouter, NSFW Filter on OpenAI, Model releases on OpenRouter

- OpenRouter not OpenInference family: A member clarified that OpenRouter is an inference provider but not directly related to OpenInference, responding to a question about their relationship to the project.

- Another member mentioned a research team behind OpenInference, emphasizing that OpenRouter merely uses their API.

- AI Image Debated: Real or Fake?: Members engaged in a poll about the authenticity of an image, ultimately revealed to be AI-generated.

- A user shared a related link about trillultra.doan.

- Token Tally: Long-Term Janitor AI Addiction?: A member asked about high token usage, and another jokingly attributed it to long term janitor ai + 4o addiction.

- They predicted JAI might be the first to reach 10T tokens, while another noted OpenAI has an NSFW filter.

- RP Tokens Rival Programming Tokens: A member shared a chart indicating that RP-categorized tokens made up 49.5% of the amount of Programming-categorized tokens last week.

- Another member responded with Alex is a gooner confirmed ✅.

- New Models Flood OpenRouter: A member shared Logan Kilpatrick’s tweet about OpenRouter shipping 4 new models in the last 2 weeks with more coming soon.

- The member asked about the quality of the Deepseek R1/V3 series on Sambanova.

OpenAI ▷ #annnouncements (1 messages):

Reddit AMA, AgentKit, Apps SDK, Sora 2 in the API, GPT-5 Pro in the API

- OpenAI DevDay AMA on Reddit Incoming: OpenAI announced a Reddit AMA (link) with the team behind AgentKit, Apps SDK, Sora 2 in the API, GPT-5 Pro in the API, and Codex.

- The AMA is scheduled for tomorrow at 11 AM PT.

- Tech Stack Deep Dive at Reddit AMA: The Reddit AMA will cover a range of technologies including AgentKit, a framework for building AI agents, and the Apps SDK, which enables developers to integrate AI functionalities into their applications.

- Expect discussion on integrating Sora 2 and GPT-5 Pro into APIs, along with updates on Codex.

OpenAI ▷ #ai-discussions (486 messages🔥🔥🔥):

AI and Mental Health, AI Tagging Law, AI Browser Analysis, Multi-User LLMs, Sora 2

- Concerns about AI Protein Design Tools Emerge: A Perplexity article discusses how AI protein design tools can create synthetic versions of deadly toxins that bypass safety screening, raising concerns about global biosecurity.

- Members wondered if AI figures out a way to do something, someone probably would or already did it, and how we can now work on fixing the issue.

- Microsoft Discovers AI Bypass: Researchers discovered a critical vulnerability in global biosecurity systems.

- Members asked one another for their thoughts on the dangers that AI might bring.

- AI Tagging Law Proposed: Members debated whether a law should be enacted in the US to require AI-generated content to be tagged or watermarked.

- The main concern was that laws don’t stop people if there is more profit in doing it than there is a cost, and that you end up creating 3rd party nations whose entire industry is to create these AI fakes.

- AI Browser Privacy Scrutinized: Members discussed browser privacy, noting that even privacy-focused browsers like DuckDuckGo still rely on Chromium and may not offer complete privacy.

- A link to a browser benchmark was shared, and it was argued that anyone claiming to care about privacy almost certainly doesn’t, showing the irony of virtue signalling.

- LLMs for Real-Time Voice Agents: A member inquired about providing custom data to the OpenAI Voice Agent SDK for real-time responses, sparking a discussion on the feasibility and security of such integrations.

- It was mentioned that everything that’s online will never be 100% secure.

OpenAI ▷ #gpt-4-discussions (3 messages):

OpenAI Liability, Parental Responsibility, Dedicated Tools

- OpenAI fear drives model changes: A member expressed frustration that OpenAI’s fear of liability is driving changes to the models, advocating for a legal waiver where users accept responsibility for their actions and their children’s actions.

- They argue that this would be more effective than butchering the usefulness of the models.

- Parental Responsibility Questioned: A member pointed out that many parents struggle to monitor their children’s device usage, despite OpenAI’s focus on responsible technology availability.

- This raises questions about the balance between OpenAI’s responsibility and parental supervision.

- Dedicated Tools Suggested: A member suggested that some users are trying to misuse the current technology.

- They stated that there are dedicated tools and technology better suited for specific use cases being discussed, advising people to stop trying to fit a square peg in a round hole.

OpenAI ▷ #prompt-engineering (4 messages):

Product ad prompts

- Users seek assistance crafting product ad prompts: A user requested assistance with writing prompts for product advertisements in the channel.

- Another user suggested simply telling the model what you want, emphasizing the need for clarity in requests.

- Discord discussion preferences: A user clarified they prefer discussing topics in the Discord channel rather than private messages.

- They invited others to ask questions in the channel, hoping someone would provide assistance.