Open Weights is all you need?

AI News for 11/5/2025-11/6/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (200 channels, and 5907 messages) for you. Estimated reading time saved (at 200wpm): 479 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

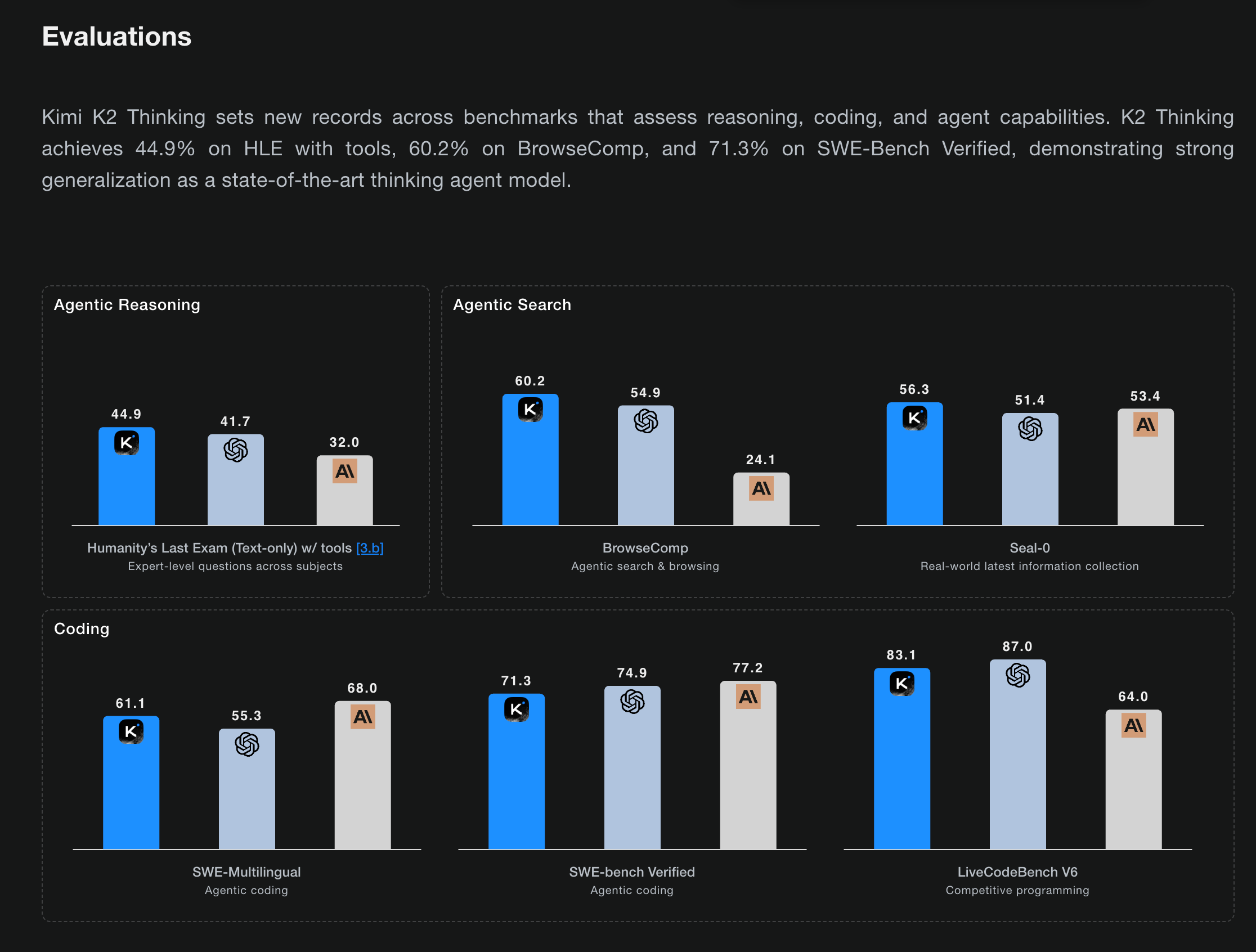

Chatter has been high for a while as Kimi was prepping the open source ecosystem for this release, but the benchmarks are the surprising thing: for the first time, an open model is claiming to beat SOTA closed models (GPT5, Claude 4.5 Sonnet Thinking) on major benchmarks:

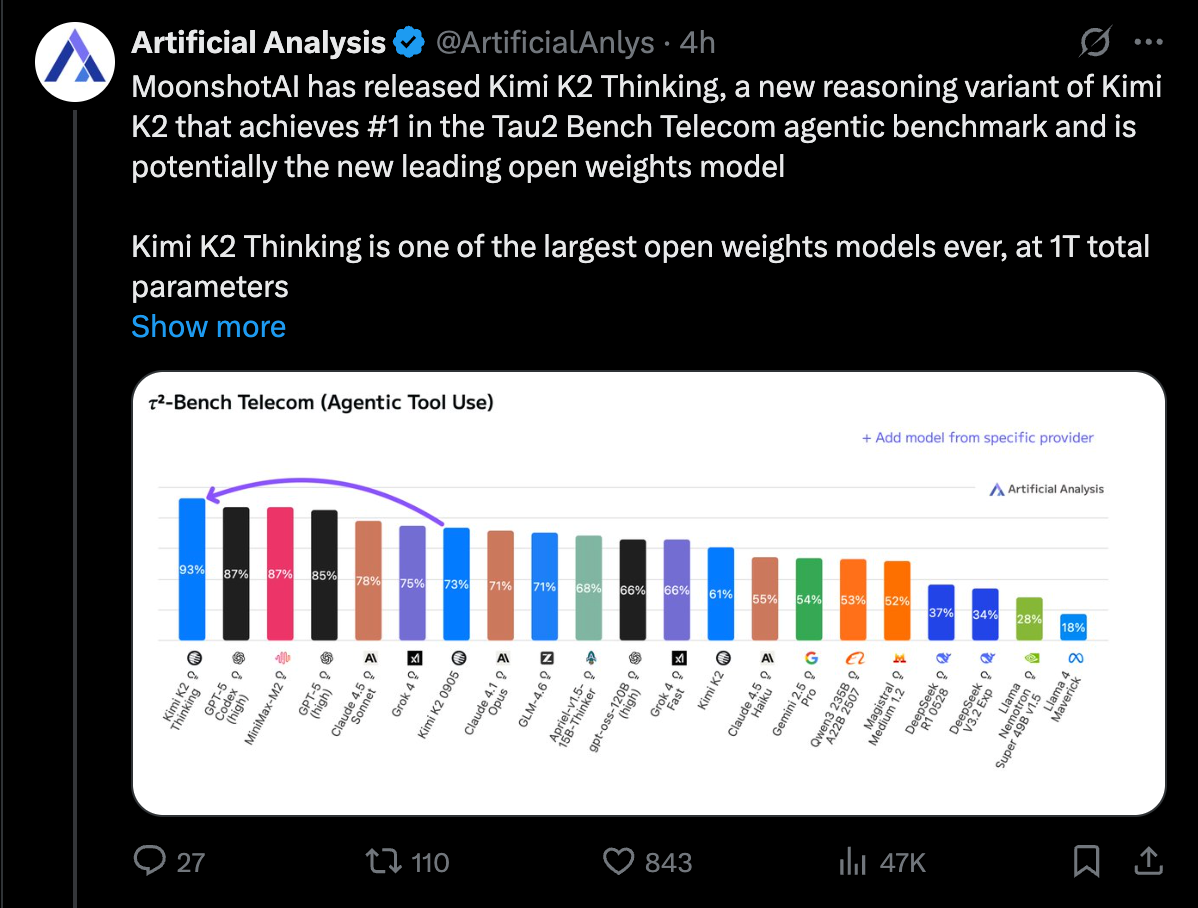

Even more encouraging, Artificial Analysis volunteered another SOTA in their independent testing:

It is early days, but vibe checks are good.

There’s no paper, but the model card has a few more details on the native INT4 training and the ability to chain 200–300 tool calls within the 256k context window.

Congrats Kimi/Moonshot!!

AI Twitter Recap

Moonshot AI’s Kimi K2 Thinking: open‑weights 1T INT4 reasoning MoE, long‑horizon tools

- Kimi K2 Thinking (open weights): Moonshot AI launched a 1T-parameter MoE model with 32B active parameters, a 256K context window, and robust agentic capabilities, executing 200–300 sequential tool calls without human intervention. It posts SOTA on HLE (44.9%) and BrowseComp (60.2%), with community “heavy mode” reports using 8 parallel samples + reflection pushing HLE to ~51% @Kimi_Moonshot, @eliebakouch, @nrehiew_. Early coding/agentic results include 71.3% on SWE‑Bench Verified and 47.1% on Terminal‑Bench @andrew_n_carr, with strong showings on additional benchmarks highlighted by benchmark authors @OfirPress and evaluators @ArtificialAnlys. K2 Thinking is trained with quantization‑aware training (QAT) for native INT4 on MoE components, reporting ~2× generation speed and halved memory versus FP8 variants; all released benchmark numbers are under INT4 precision @eliebakouch, @bigeagle_xd, @timfduffy.

- Day‑0 deployments and perf notes: Official vLLM support (nightly) with OpenAI‑compatible API and recipes is live @vllm_project. The model is already available in multiple endpoints (Arena/Yupp, Baseten, app tooling like anycoder and Cline) @arena, @yupp_ai, @basetenco, @_akhaliq, @cline. On Mac, MLX showed native INT4 inference across two M3 Ultras using pipeline parallelism (~3.5K tokens at ~15 tok/s) @awnihannun. Expect transient instability: multiple users reported API slowdowns/timeouts under launch load (“hug of death”) @scaling01, @code_star.
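Since the vLLM deployment speaks the OpenAI-compatible API, a request to a locally served K2 Thinking can be sketched with the standard library alone. The host, port, model id (`moonshotai/Kimi-K2-Thinking`), and sampling values below are assumptions for illustration, not taken from the vLLM recipe; check the recipe for the actual launch flags and recommended settings.

```python
import json
import urllib.request

# Assumed values: a vLLM server on localhost:8000 serving this Hugging Face repo id.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "moonshotai/Kimi-K2-Thinking"


def build_chat_request(prompt, max_tokens=1024, temperature=1.0):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=600):
    """Send the request and return the reply text.

    The long timeout is a guess that allows for slow launch-day endpoints.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Plan a three-step web search."))` would return the reply text once a server is actually running at `BASE_URL`.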

New AI silicon and inference stack updates (TPU v7, Apple M‑series, adaptive decoding)

- Google TPU v7 (Ironwood): Google announced its 7th‑gen TPU entering GA in the coming weeks with a 10× peak performance improvement vs TPU v5p and >4× performance per chip vs TPU v6e (Trillium). Positioned for both training and high‑throughput agentic inference; used internally to train/serve Gemini, and coming to Google Cloud @sundarpichai, @Google.

- Apple inference acceleration: llama.cpp added initial support for Apple’s M5 Neural Accelerators (macOS Tahoe 26), improving TTFT across ggml stacks @ggerganov. Separately, K2 Thinking ran natively in INT4 on dual M3 Ultras via MLX (see above) @awnihannun.

- Adaptive speculative decoding: Together’s ATLAS “adaptive speculator” reports up to 4× faster LLM inference by learning per‑workload in real time @togethercompute.
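For readers new to speculative decoding, here is a minimal sketch of the generic greedy variant: a cheap draft model proposes a few tokens and the target model keeps the longest prefix it agrees with. This is not ATLAS itself; the adaptive, per-workload learning Together describes is not shown, and the toy `target` and `draft` functions are hypothetical stand-ins for real models.

```python
def speculative_step(target_next, draft_next, prefix, k):
    """One round of greedy speculative decoding; returns the accepted tokens."""
    # 1) The draft model proposes k tokens autoregressively.
    proposal = []
    for _ in range(k):
        proposal.append(draft_next(prefix + proposal))

    # 2) The target model checks each position. A real system scores all k
    #    positions in one batched forward pass; this sketch simply loops.
    accepted = []
    for token in proposal:
        expected = target_next(prefix + accepted)
        if token != expected:
            accepted.append(expected)  # keep the target's token at the first mismatch
            return accepted
        accepted.append(token)

    # 3) Every proposal matched, so the target pass yields one bonus token.
    accepted.append(target_next(prefix + accepted))
    return accepted


def generate(target_next, draft_next, prompt, n_tokens, k=4):
    """Generate n_tokens; returns (tokens, number of target verification rounds)."""
    out = list(prompt)
    rounds = 0
    while len(out) - len(prompt) < n_tokens:
        out.extend(speculative_step(target_next, draft_next, out, k))
        rounds += 1
    return out[: len(prompt) + n_tokens], rounds


# Hypothetical toy models: the target counts modulo 10; the draft agrees
# except right after a 6, where it guesses wrong.
def target(tokens):
    return (tokens[-1] + 1) % 10


def draft(tokens):
    return 0 if tokens[-1] == 6 else (tokens[-1] + 1) % 10


tokens, rounds = generate(target, draft, [0], n_tokens=12, k=4)
```

The output always matches what the target model would produce on its own; the speedup comes from needing fewer target rounds than tokens, and it grows with how often the draft agrees with the target, which is the quantity an adaptive speculator tries to raise.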

Agent frameworks, wallets, and managed RAG

- LangChain Deep Agents for JS: Deep Agents is now in TypeScript/JS (on LangGraph 1.0) with planning tools, sub‑agents, and filesystem access. The team released production‑quality reference tutorials for streaming agents in Next.js and a deep‑research agent @LangChainAI, @bromann, @hwchase17.

- Agent wallets & on‑chain payments: Privy + LangChain enable provisioning wallets for agents to transact with stablecoins, making “agentic commerce” straightforward to prototype @privy_io, @LangChainAI.

- Perplexity Comet Assistant upgrades: Multi‑tab, multi‑site agentic workflows with improved permission prompts are rolling out; designed to handle longer sequences of steps and parallel browsing @perplexity_ai.

- Google: Deep Research + managed RAG:

- Deep Research in Gemini can now draw from Gmail, Drive, and Chat for richer, context‑aware reports on desktop (mobile coming) @GeminiApp.

- Google AI Studio’s File Search Tool (managed RAG) offers vector search with Gemini embeddings, citations, and common file types. Pricing: $0.15 per 1M tokens to index; free storage and embedding generation at query time (tiers: 1GB free → 10GB/100GB/1TB) @_philschmid.

- Agentic RAG apps: Weaviate’s open‑source “Elysia” app demonstrates decision‑tree orchestrations with dynamic UI rendering (tables/cards/charts/docs) and global context awareness @weaviate_io.

Research and benchmarks: memorization vs. generalization; agent/data‑science evals

- Disentangling memorization in LMs: GoodfireAI shows you can decompose MLP weights by loss curvature into rank‑1 components—high curvature capturing shared/generalizing structure; low curvature capturing idiosyncratic memorization. Ablating low‑curvature components reduces memorization while preserving reasoning; arithmetic/fact retrieval degrade more than logical reasoning @GoodfireAI, @jack_merullo_.

- New agent and vision benchmarks: Google Research’s DS‑STAR targets autonomous data‑science tasks across analysis/data‑wrangling @GoogleResearch. MIRA (visual reasoning) reports failures in current models on challenging multi‑image/video reasoning @Muennighoff.

- Tabular ICL and diffusion LMs: Orion‑MSP proposes multi‑scale sparse attention for in‑context tabular learning @HuggingPapers. Diffusion LMs continue to attract attention as data‑efficient learners @_akhaliq.

Developer tools and media models

- VS Code AI goes OSS: Inline AI suggestions and Copilot Chat are now powered by a single open‑source extension in VS Code; code and blog are live @code, @pierceboggan.

- Speech/video models: Inworld TTS 1 Max now leads the Artificial Analysis Speech Arena; supports 12 languages and voice cloning (models use LLaMA‑3.2‑1B/3.1‑8B as SpeechLM backbones) @ArtificialAnlys. Lightricks’ LTX‑2 ranks #3 on the Video Arena leaderboard @LTXStudio.

- Lightweight/local models: AI21’s Jamba Reasoning 3B runs in 2.25 GiB RAM, competitive among “tiny” models on consumer hardware @AI21Labs.

- Security & parsing: Snyk Studio integrated into Factory’s AI Droids to secure AI‑generated code at inception @mnair1. LlamaParse introduces agentic reconstruction to keep clean reading order while exposing bounding boxes for downstream layout use @jerryjliu0.

- Robotics environments: LeRobot’s EnvHub lets you publish complex simulation envs to the Hugging Face Hub and load them in one line for cross‑lab benchmarking @jadechoghari.

People and orgs

- Soumith Chintala exits Meta/PyTorch: After ~11 years at Meta and leading PyTorch from inception to >90% industry adoption, Soumith announced his departure to pursue something new. He emphasized PyTorch’s resilience with a strong leadership bench and roadmap, and reflected on FAIR’s open research culture and the importance of open source in AI tooling @soumithchintala.

- Policy and compute strategy: Amid debate on AI infrastructure financing, David Sacks argued there will be no “federal bailout for AI” given competitive markets @DavidSacks, while Sam Altman clarified OpenAI is not seeking government guarantees for datacenters, supports government‑owned AI infrastructure (for public benefit), and outlined revenue/compute plans toward large‑scale AI cloud and enterprise offerings @sama.

Top tweets (by engagement)

- Kimi K2 Thinking (open weights, INT4, long‑horizon tools) announced by Moonshot AI @Kimi_Moonshot — 7,100+ engagement.

- Sam Altman clarifies OpenAI’s stance on government guarantees and AI infrastructure @sama — 12,300+ engagement.

- David Sacks: “There will be no federal bailout for AI” @DavidSacks — 17,200+ engagement.

- Sundar Pichai: TPU v7 (Ironwood) coming to GA with 10× peak vs v5p @sundarpichai — 3,600+ engagement.

- Soumith Chintala announces departure from Meta/PyTorch @soumithchintala — 4,700+ engagement.

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Kimi K2 Thinking Model Release

- Kimi released Kimi K2 Thinking, an open-source trillion-parameter reasoning model (Activity: 778): Kimi K2 Thinking is a newly released open-source trillion-parameter reasoning model by Moonshot AI, available on Hugging Face. The model is designed to achieve state-of-the-art (SOTA) performance on the HLE benchmark, showcasing its advanced reasoning capabilities. The technical blog provides insights into its architecture and implementation, emphasizing its potential for high-performance applications. However, running the model requires significant computational resources, including 512GB of RAM and 32GB of VRAM for 4-bit precision, which may limit its accessibility for local deployment. Commenters are impressed by the model’s SOTA performance on HLE and express hope for future releases with reduced computational requirements, such as a 960B/24B version that could fit within 512GB of RAM and 16GB of VRAM.

- The Kimi K2 Thinking model is noted for its impressive performance, achieving state-of-the-art (SOTA) results on the HLE benchmark, which indicates its strong reasoning capabilities. This positions it as a significant advancement in AI model development, particularly in reasoning tasks.

- Running the Kimi K2 model in a 4-bit configuration requires substantial hardware resources, specifically more than 512GB of RAM and at least 32GB of VRAM. This highlights the model’s demanding computational needs, which may limit its accessibility for local deployment without high-end hardware.

- The model’s implementation as a fully native INT4 model is a notable feature, as it potentially simplifies hosting and reduces costs. This could make the model more accessible for deployment, as INT4 quantization typically leads to lower memory and computational requirements compared to higher precision formats.

- Kimi K2 Thinking Huggingface (Activity: 250): Kimi K2 Thinking is an open-source reasoning model from Moonshot AI, hosted on Hugging Face, featuring a 1 trillion parameter Mixture-of-Experts (MoE) architecture. It uses native INT4 quantization, contrary to the I32 stated on Hugging Face, and employs Quantization-Aware Training (QAT) for enhanced inference speed and accuracy. The model excels in benchmarks like Humanity’s Last Exam (HLE) and BrowseComp, supporting 200-300 tool calls with stable long-horizon agency. It is designed for dynamic tool invocation and deep multi-step reasoning. Like GPT-OSS, it pairs BF16 attention with 4-bit MoE weights. More details can be found in the original article. Commenters highlight the model’s impressive performance despite its smaller size (600GB) compared to the original K2, and express concerns about the high hardware requirements for local deployment, suggesting a need for more affordable solutions with NVLink-like capabilities.

- DistanceSolar1449 highlights that the Kimi K2 model is significantly smaller than its predecessor, at approximately 600GB. The model uses int4 quantization with QAT (Quantization Aware Training), which is a departure from the I32 weights initially mentioned by Huggingface. This approach is similar to GPT-OSS, utilizing BF16 attention and 4-bit MoE (Mixture of Experts).

- Charuru discusses the challenges of running the Kimi K2 model locally, noting that even high-end setups like 8x RTX 6000 Blackwells with 96GB are inadequate due to the absence of NVLink. This highlights the need for AMD to develop a 96GB card with an NVLink equivalent to make local deployment more feasible and affordable.

- Peter-Devine points out the model’s strong performance on the SWE Multilingual benchmark, raising questions about the contributions of reasoning capabilities versus multilingual data in post-training. This suggests a focus on understanding the balance between these factors in achieving high benchmark scores.
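The hardware figures in this thread follow from simple arithmetic on bits per weight. The sketch below is a back-of-envelope estimate only: it assumes every weight is stored at a uniform bit width and ignores quantization scales, the KV cache, and activations. Since commenters note that attention stays in BF16, the real checkpoint (reported in the thread at roughly 600GB) lands above the uniform INT4 number.

```python
def weight_gb(n_params, bits_per_param):
    """Decimal gigabytes needed to store n_params weights at the given bit width."""
    return n_params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 1_000_000_000_000  # ~1T total parameters, per the release
ACTIVE_PARAMS = 32_000_000_000    # ~32B active per token, per the Twitter recap

# Whole-model weight storage at three common precisions.
footprints = {
    name: weight_gb(TOTAL_PARAMS, bits)
    for name, bits in [("BF16", 16), ("FP8", 8), ("INT4", 4)]
}
# Weights actually read for one token if only the active parameters are touched.
active_int4_gb = weight_gb(ACTIVE_PARAMS, 4)

for name, gb in footprints.items():
    print(f"{name}: ~{gb:,.0f} GB of weights")
print(f"INT4 weights read per token: ~{active_int4_gb:,.0f} GB")
```

The gap between the two INT4 numbers is why setups with large system RAM and a comparatively small GPU come up in the thread: the full expert set has to live somewhere, but only a small slice is read for each token.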

2. DroidRun AI Tool Discussion

- What is your take on this? (Activity: 1010): DroidRun is a tool available on GitHub and its website droidrun.ai, which appears to be designed for automating tasks on Android devices. The tool is likely used for purposes such as app testing, as suggested by the comments. The mention of a Gemini 2.5 Computer Use model raises questions about its open-source status, but no further details are provided in the post. The original post on X (formerly Twitter) by @androidmalware2 might provide additional context or updates. One comment questions the necessity of using such a tool on a phone beyond botting, suggesting potential ethical or practical concerns. Another comment expresses interest in the tool for app testing, indicating its utility in development environments.

- Infamous_Land_1220 criticizes the approach as inefficient, stating it consumes too many tokens and is considered ‘entry level automation’. They suggest there are more effective methods for automation, implying that the current method lacks sophistication and efficiency.

- ElephantWithBlueEyes provides a source link to a GitHub repository, droidrun, which may be related to the discussion. This suggests that the project or tool being discussed might be open source or have a public codebase available for review.

- Pleasant_Tree_1727 inquires about the Gemini 2.5 Computer Use model, questioning its open-source status. This indicates interest in the model’s accessibility and potential for community contributions or modifications.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. XPeng Humanoid Robot Insights

- XPENG IRON - some thought she was one of us. So they cut through her skin fabric (Activity: 1271): XPENG has developed a humanoid robot named IRON, which has sparked discussions about its design and functionality. The robot’s gait has been noted for its realistic mimicry of a ‘female pelvis sway & tilt,’ suggesting advanced biomechanics and motion algorithms. This design choice highlights the potential for humanoid robots to achieve more natural human-like movements, though questions remain about their practical applications and market viability. There is skepticism about the marketability and utility of humanoid robots, despite the impressive design features demonstrated by XPENG IRON. Some commenters speculate on the potential for other robots, like Tesla’s Optimus, to adopt similar human-like movements through adjustments in design and motion programming.

- Few_Carpenter_9185 discusses the technical achievement of XPENG IRON in replicating a “female pelvis sway & tilt” in its gait, highlighting the precision in mimicking human-like movement. The comment suggests that the robot’s design could be adapted to convey different gender characteristics through changes in hinges and geometry, implying a level of sophistication in the robot’s mechanical design that allows for nuanced expression of movement.

- XRoboHub / What’s Under IRON’s Skin? Inside XPeng’s Humanoid Robot#xpeng #humanoidrobot #ai #robotics (Activity: 1078): XPeng has unveiled its humanoid robot, IRON, showcasing advanced robotics and AI integration. The robot features sophisticated motor systems that allow for fluid and elegant movement, challenging previous assumptions about the necessity of soft body mechanics. This development aligns with futuristic visions of humanoid robots as depicted in science fiction, highlighting significant progress in robotics technology. Commenters express amazement at the elegance of the motor systems in XPeng’s humanoid robot, with some noting the resemblance to science fiction depictions. There is a sense of excitement about the technological advancements and their potential impact on future innovations.

- Xpeng’s CEO debunks “Humans inside” claim for their new Humanoid Robot (Activity: 1388): Xpeng’s CEO has addressed skepticism regarding their new humanoid robot, which some speculated had a human inside due to its realistic movements. The CEO clarified that the robot’s design and functionality are entirely mechanical, emphasizing that the motor sounds and other mechanical features are more apparent in person than in videos. This clarification was necessary as many viewers doubted the authenticity of the robot’s capabilities. Commenters noted the skepticism as a sign of the robot’s advanced design, with some humorously pointing out the robot’s realistic appearance, such as its ‘world-class caboose.’ The CEO’s video was seen as a necessary step to address public doubts.

2. Google Ironwood AI Chip Launch

- Google is finally rolling out its most powerful Ironwood AI chip, first introduced in April, taking aim at Nvidia in the coming weeks. It’s 4x faster than its predecessor, allowing more than 9K TPUs connected in a single pod (Activity: 524): Google is launching its most powerful AI chip, the Ironwood, which is 4x faster than its predecessor and supports over 9,000 TPUs in a single pod. This advancement allows for the execution of significantly larger models, potentially enabling the training of models with up to 100 trillion parameters, surpassing the capabilities of Nvidia’s NVL72. The ability to perform an all-reduce operation across such a large number of TPUs could mark a pivotal moment in AI scalability, potentially accelerating the development of AGI if larger models demonstrate increased intelligence and emergent behaviors. Commenters debate Google’s strategy of not selling the Ironwood chip directly, despite its potential to rival Nvidia’s offerings. Some argue that leveraging the chip for Google’s cloud services could be more beneficial, while others suggest that Google’s AI market valuation is underestimated.

- DistanceSolar1449 highlights the significance of Google’s new Ironwood AI chip’s ability to connect over 9,000 TPUs in a single pod, which could enable the training of extremely large models, such as 100 trillion parameter models. This capability could potentially accelerate the development of AGI if such large-scale models demonstrate increased intelligence and emergent behaviors, marking a pivotal moment in AI development.

- EpicOfBrave provides a cost comparison between Google’s TPU and NVIDIA’s offerings, noting that 9,128 TPUs deliver 42 exaFLOPS for $500 million, whereas 60 NVIDIA Blackwell units deliver the same performance for $180 million, and 8 NVIDIA Rubin units for $110 million. This suggests that while Google’s TPUs offer high performance, NVIDIA’s solutions may be more cost-effective, raising questions about Google’s market strategy.
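EpicOfBrave’s comparison reduces to dollars per exaFLOP. The sketch below just restates the commenter’s figures, which are unverified; the thread does not say which numeric precision each 42 exaFLOPS figure refers to, so the systems may not be compared like for like.

```python
EXAFLOPS = 42  # performance the commenter attributes to each configuration

# Total system cost in USD, as quoted in the comment (unverified).
quoted_cost_usd = {
    "9,128 Google TPUs": 500e6,
    "60 NVIDIA Blackwell units": 180e6,
    "8 NVIDIA Rubin units": 110e6,
}


def cost_per_exaflop_musd(total_cost_usd, exaflops=EXAFLOPS):
    """Millions of USD per exaFLOP for a system with the given total cost."""
    return total_cost_usd / exaflops / 1e6


per_exaflop = {
    name: round(cost_per_exaflop_musd(cost), 1)
    for name, cost in quoted_cost_usd.items()
}
for name, musd in per_exaflop.items():
    print(f"{name}: ~${musd}M per exaFLOP")
```

On these numbers the TPU pod costs a little under three times as much per exaFLOP as the Blackwell configuration, which is the gap behind the commenter’s question about Google’s strategy.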

3. OpenAI GPT-5.1 Source Code Leak

- GPT-5.1 Thinking spotted in OpenAI source code 👀 (Activity: 534): The image purportedly shows a snippet of OpenAI’s source code referencing “GPT-5.1 Thinking,” suggesting a potential new version or feature related to the GPT-5 model. The code snippet includes variables and functions that seem to manage different levels of processing or cognitive effort, such as “min,” “standard,” “extended,” and “max.” This implies a focus on optimizing or configuring the model’s processing capabilities, possibly indicating an enhancement in how the model handles complex tasks or queries. One comment anticipates a competitive comparison between “Gemini 3” and “GPT-5.1,” suggesting interest in the performance and capabilities of these models. Another comment mentions an A/B test experience with a new version of ChatGPT, indicating ongoing experimentation and updates by OpenAI.

- WHAT’S THE DEAL WITH THE SMIRKING EMOJI??? (Activity: 652): The image is a meme featuring a chat interface where a user inquires about the model, and the response humorously claims to be ‘GPT-5,’ the latest generation of OpenAI’s chat models. This is followed by playful emojis, suggesting a light-hearted take on AI capabilities. The image does not provide any technical details or insights into actual model specifications or updates, and the comments speculate humorously about a potential December update, but this is not substantiated with technical evidence. Some commenters humorously speculate about a potential December update, but this is not based on any technical information or official announcements.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Moonshot’s Kimi K2 Thinking: Agentic Reasoning Hits Production

- Kimi K2 Thinking Goes Agentic, Breaks SOTA: Moonshot AI launched Kimi K2 Thinking with a 256K context window and autonomous 200–300 tool calls, claiming SOTA on HLE (44.9%) and BrowseComp (60.2%); see the technical blog Kimi K2 Thinking and weights on moonshotai at Hugging Face. The model targets reasoning, agentic search, and coding, and is live on kimi.com with API at platform.moonshot.ai.

- OpenRouter announced K2 Thinking and documented returning upstream reasoning via `reasoning_details` to preserve chains of thought across calls in OpenRouter reasoning tokens docs. The team highlights test-time scaling that interleaves thought and tools for stable, goal-directed reasoning over long sequences.


- Users Crown K2 ‘GPT5 of Open Models’: Early testers praised Kimi K2 Thinking for multi-hop web search and deep browsing without explicit prompting, sharing results in this analysis thread. Reports emphasize strong reasoning and tool-use behaviors that feel closer to heavyweight closed models in practical tasks.

- One user called it “like GPT5 of open models”, lauding cost efficiency and autonomy for building agentic systems. Community sentiment favors K2 for search-heavy tasks and long-form workflows where coherence across tool calls matters.

- OpenRouter or Direct? Choose Your K2 Lane: Builders debated accessing K2 via a direct Moonshot API/subscription versus a unified marketplace through OpenRouter. For K2-only workflows, a direct API was favored; multi-model shops preferred OpenRouter’s consolidated access despite premium fees.

- Several suggested trialing the lowest subscription tier (e.g., $19/mo) before scaling usage, while power users highlighted OpenRouter’s support for preserving reasoning content across calls via documented patterns. For VS Code integrations, direct API offers tighter control, but OpenRouter simplifies model switching during evaluation.

- INT4 Benchmarks Hint at Headroom: Benchmarks for Kimi K2 ran in INT4 precision (weights-level), which reduces compute and memory bandwidth but can impact ultimate scores. Community notes clarified INT4 indicates quantized weight precision, not a degradation of the base model design.

- Users expect higher scores under optimal conditions (e.g., higher-precision evaluation or better serving stacks), with one tester saying they’ve been “perma-bullish on Moonshot since the July 11 release”. The most visible win was K2’s conversational reasoning naturalness without drifting into word salad.
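The `reasoning_details` pattern mentioned above amounts to echoing the assistant’s reasoning blocks back in the next request, so that a multi-step tool loop does not lose its chain of thought. This is a minimal sketch assuming an OpenAI-style message list; the helper name `append_assistant_turn` and the mock message (including the shape of each reasoning entry) are hypothetical, so consult the OpenRouter reasoning tokens docs for the exact schema.

```python
def append_assistant_turn(history, assistant_message):
    """Return a new history with the assistant turn appended.

    Any reasoning_details on the message are passed back unmodified, which is
    the behavior this sketch assumes the provider needs to resume its reasoning.
    """
    turn = {"role": "assistant", "content": assistant_message.get("content")}
    if assistant_message.get("tool_calls"):
        turn["tool_calls"] = assistant_message["tool_calls"]
    if assistant_message.get("reasoning_details"):
        turn["reasoning_details"] = assistant_message["reasoning_details"]
    return history + [turn]


# Mock of one assistant message from a previous response (illustrative shape only).
mock_message = {
    "content": "I should search before answering.",
    "reasoning_details": [
        {"type": "reasoning.text", "text": "Need the latest figures first."}
    ],
}

history = [{"role": "user", "content": "What changed in the latest release?"}]
history = append_assistant_turn(history, mock_message)
# Next user message or tool result goes after the preserved assistant turn.
history.append({"role": "user", "content": "Go ahead and search."})
```

Returning a new list instead of mutating the old one keeps earlier snapshots of the conversation intact, which helps when replaying or branching long tool-calling runs.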

2. Benchmarks, Leaderboards, and ‘Who’s Winning’ Meta

- CodeClash Stages Code Wars, Humans Still Win: John Yang unveiled CodeClash, a goal-oriented coding tournament benchmark where LLMs maintain separate repos across arenas like BattleSnake and RoboCode; see CodeClash results snapshot. Across 1,680 tournaments (25,200 rounds), LLMs trailed human experts badly (aggregate losses reported as 0–37,500), with Claude Sonnet 4.5 leading among models.

- The benchmark stresses VCS-agnostic coding and iterative improvement rather than one-shot code dumps, surfacing strategic gaps in current agents. Community reactions ranged from excitement over the tournament format to calls for richer tool-use and environment feedback loops.

- Polaris Alpha Rockets to Repo Bench Top 3: A stealth model dubbed Polaris Alpha leapt to #3 on Repo Bench, triggering speculation it could be OpenAI’s GPT‑5.1 or a new Gemini. The jump happened quickly, spurring leaderboard sleuthing and side-by-side diffs.

- Some users noted Claude 4.1 outperforming Claude 4.5 on certain Repo Bench slices, hinting at test variance and niche strengths. The episode fueled renewed debates on benchmark representativeness and the durability of quick leaderboard surges.

- GPT‑5 Voxels Past Gemini 3 Pro on VoxelBench: Screenshots showed GPT‑5 beating Lithiumflow (Gemini 3 Pro) on VoxelBench, a test for generating 3D models from voxel arrays; see the shared VoxelBench result image. The discussion focused on 3D structure synthesis reliability and the coding chops needed to wire generation pipelines end-to-end.

- Members argued GPT‑5 Pro might now out-code Gemini variants for these tasks and debated cost-performance tradeoffs for production use. The thread called for standardized voxel-to-mesh conversion checks and unit-tested post-processing.

- fastWorkflow Snags Tau Bench SOTA: fastWorkflow reported SOTA on retail and airline workflows using fastWorkflow with a Tau Bench fork + adapter, with a paper forthcoming. The authors argue strong context engineering lets smaller models match or beat larger ones in realistic workflows.

- They claimed “with proper context engineering, small models can match/beat the big ones”, emphasizing schema discipline and error‑aware routing. The result rekindled the agentic workflow vs. raw model scale debate in enterprise settings.

- Vectorsum v2 Entry 67399 Sweeps GPUs: Submission 67399 topped A100 at 138 µs, placed 3rd on B200 at 53.4 µs, 2nd on H100 at 86.1 µs, and 5th on L4 at 974 µs in `vectorsum_v2`. Cross‑GPU strength suggests careful tuning of memory hierarchy and thread/block geometry.

- The entry’s broad success spotlights portable optimizations over single‑arch heroics. It also sets a useful bar for entrants balancing latency, occupancy, and bandwidth without overfitting to one SM generation.

3. GPU Systems: FP4 Tricks, Real Bandwidth, and Triton Tactics

- Blackwell Adds One‑Shot FP4→FP16 Conversions: NVIDIA’s Blackwell PTX ISA adds block conversions from FP4 to FP16 via `cvt` with modes like `.e2m1x2`, `.e3m2x2`, `.e2m3x2`, `.ue8m0x2`; see the PTX ISA v8.8 changes. This enables mixed‑precision accumulation workflows where FP4 weights dequantize for compute and re‑quantize for output.

- One member converted PTX/CUDA docs to Markdown trees so Claude can parse tables and embedded layout images more effectively. The thread traded notes on quantization‑aware kernels and where FP4 shines vs. where you should bail out to FP8/FP16.

- Bandwidth Boasters Meet 92% Reality: Experiments reproducing official memory bandwidth peaked at about 92% of spec, exposing gaps between marketing and kernels in the wild. Suggested remedies included locking the memory clock and grooming coalesced access patterns.

- Engineers emphasized that alignment, stride, and L2 behaviors are often bigger wins than exotic intrinsics. The consensus: treat vendor TB/s as a horizon—optimize for your kernel’s transaction patterns to approach it.

- Triton Re‑JITs to Fit Your N: Triton recompiles kernels across iterations by specializing dynamic values like `n` (e.g., `tt.divisibility=16` showing in IR), explaining sudden codegen shifts. For AOT/interop, see this example to lower and call Triton kernels from C: test_aot.py.

- Developers discussed replicating Triton‑like JIT in C++, landing on a hack: generate MLIR in Python, inject into C++, and patch constants for block sizes. It’s clunky, but it unlocks runtime shape specialization in non‑Python stacks.

- NCCL4Py Preview Brings Device‑API Goodies: A preview of nccl4py landed for discussion in this PR: NCCL Python bindings (preview). Teams compared NCCL GIN + device APIs with NVSHMEM for multi‑GPU collectives and fused ops.

- While some favored NVSHMEM for certain patterns, others highlighted NCCL’s new device‑side control as a power boost for end‑to‑end GPU scheduling. A KernelBench fork is in the works to compare multi‑GPU kernels across frameworks.
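To make the FP4 item above concrete, this sketch decodes the E2M1 number format (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1) in plain Python. It illustrates only the format, not the PTX `cvt` instruction itself; in particular, treating the low nibble as the first element of a packed pair is an assumption made here, so check the PTX ISA for the actual operand layout.

```python
def decode_e2m1(nibble):
    """Decode a 4-bit E2M1 value (sign, 2 exponent bits, 1 mantissa bit) to a float."""
    sign = -1.0 if nibble & 0x8 else 1.0
    exponent = (nibble >> 1) & 0x3
    mantissa = nibble & 0x1
    if exponent == 0:
        magnitude = 0.5 * mantissa  # subnormal range: 0 or 0.5
    else:
        magnitude = (1.0 + 0.5 * mantissa) * 2.0 ** (exponent - 1)
    return sign * magnitude


def unpack_e2m1x2(byte):
    """Split one byte into two E2M1 values (low nibble first: an assumption)."""
    return decode_e2m1(byte & 0xF), decode_e2m1(byte >> 4)


# Every non-negative magnitude the format can represent.
representable = [decode_e2m1(n) for n in range(8)]
```

With only eight magnitudes per sign, FP4 depends on per-block scaling to cover a useful range, which is why it is usually paired with a scale format and why some kernels fall back to FP8 or FP16.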

4. Research & Libraries: Linear Maps, Numerics, and New Video Diffusion

- Linear Maps Demystify LLM Inference: A TMLR paper, “Equivalent Linear Mappings of Large Language Models”, shows models like Qwen 3 14B and Gemma 3 12B admit equivalent linear representations of their inference operation. The authors compute a linear system from input to output embeddings, revealing low‑dimensional semantic structure via SVD.

- Asked about Tangent Model Composition, the author clarified their focus is the Jacobian in input embedding space with exact reconstruction via Euler’s theorem, unlike Taylor approximations used in Tangent Model Composition (ICCV 2023). The thread shared Jacobian resources for CNNs to build intuition.

- Anthropic Postmortem Pins fp16 vs fp32 Sampling Bugs: Engineers referenced Anthropic’s postmortem, A postmortem of three recent issues, which details fp16 vs fp32 pitfalls in top‑p/top‑k sampling. The piece underscores how subtle numerics propagate into user‑visible generation errors.

- Takeaway: validate dtype flows in inference graphs and add coverage for precision‑sensitive paths. Kernel and framework teams compared their unit tests for sampling correctness under precision swaps.

- SANA‑Video Lands in Diffusers: The SANA‑Video model merged into Hugging Face’s Diffusers via PR #12584. This adds another path for open video generation workflows, benefitting from Diffusers’ scheduler and pipeline ecosystem.

- Developers highlighted the ease of plugging SANA‑Video into existing inference stacks and benchmarking against prior baselines. Expect rapid iteration on samplers, conditioning, and memory management as the community exercises new pipelines.
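The exact-reconstruction point in the linear-maps item rests on Euler’s theorem for homogeneous functions: if f(a·x) = a·f(x) for a > 0, then f(x) = J(x)·x, where J is the Jacobian at x. The toy below checks this on a bias-free ReLU network, which has that property. It is a sketch of the underlying identity only; how the paper handles attention, normalization, and gated activations in real LLMs is not reproduced here, and the weights are arbitrary illustrative values.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relu_net(w1, w2, x):
    """Bias-free two-layer ReLU network: f(x) = W2 relu(W1 x)."""
    hidden = [max(0.0, h) for h in matvec(w1, x)]
    return matvec(w2, hidden)


def jacobian(w1, w2, x):
    """J(x) = W2 diag(mask) W1, where mask marks the ReLU units active at x."""
    mask = [1.0 if h > 0 else 0.0 for h in matvec(w1, x)]
    return [
        [sum(w2[i][k] * mask[k] * w1[k][j] for k in range(len(mask)))
         for j in range(len(x))]
        for i in range(len(w2))
    ]


# Arbitrary illustrative weights and input.
W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 0.5]]
W2 = [[2.0, -1.0, 0.5], [0.0, 1.0, 1.0]]
x = [1.0, 3.0]

output = relu_net(W1, W2, x)
linear_output = matvec(jacobian(W1, W2, x), x)  # same values, via a purely linear map
```

The matrix `jacobian(W1, W2, x)` is specific to this input, so the network is not globally linear, but for any single input the whole computation can be replayed as one matrix multiply and that matrix can then be analyzed with tools such as SVD.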

5. Ecosystem Moves: Siri Rumors, Agent Cookouts, and Real‑Time Query Editing

- Apple Eyes Google’s 1.2T Model for New Siri: A Reuters report claimed Apple is considering a 1.2T‑parameter Google model to overhaul Siri. The thread discussed priorities and whether such scale beats on‑device + hybrid approaches for latency and privacy.

- Engineers asked what this implies for tool‑use, speech, and personalization layers vs. pure model size. Others flagged partner dependencies and evolving compute economics as bigger risks than model choice.

- OpenAI Lets You Edit Prompts Mid‑Run: OpenAI shipped real‑time query updates—interrupt a long run and add context without restarting; see the demo video Real-time Query Adjustment. This helps GPT‑5 Pro deep research loops where users refine hypotheses during tool calls.

- Teams reported smoother iterative refinement for multi‑step queries and fewer wasted tokens. It dovetails with agent frameworks that checkpoint state and reasoning chains while swapping tools.

- Tiger Data Hosts Coding Agent Cookout (NYC): The Tiger Data team announced an agent‑building meetup in Brooklyn, NY on Nov 13, 6–9pm; RSVP here: Tiger Data Agent Cookout. Attendees will build coding agents and trade notes with the engineering team.

- Expect live debugging of tool-use orchestration, memory, and planning under real workloads. Community meetups like this often incubate open adapters, evaluation harnesses, and sample repos.

Discord: High level Discord summaries

LMArena Discord

- MovementLabs AI: Startup or Scam?: Debate surrounding MovementLabs AI centers on whether it’s a legitimate company or a scam, due to its claims of a custom MPU (Momentum Processing Unit) and suspiciously high speeds, with some alleging hardcoding of SimpleBench answers.

- MovementLabs AI counters allegations stating they have backing and are not seeking public investment, with claims of a patent pending.

- GPT-5 Triumphs Over Lithiumflow on VoxelBench: Reportedly, GPT-5 outperformed Lithiumflow (Gemini 3 Pro) on VoxelBench, a benchmark for creating 3D models from voxel arrays, with evidence provided in this image.

- Discussion revolved around GPT-5 Pro’s coding abilities potentially surpassing Lithiumflow’s, including cost and application insights.

- Genie 3 to Dominate GTA 6?: Members speculated on Genie 3, Google’s text-to-world model, possibly having its own website apart from AI Studio, and its implications for AI-generated gaming.

- The speed and ability to save AI generated worlds was discussed, as were comparisons with the upcoming Sora 3 and GTA 6 - some joked that Genie 3 may arrive first.

- American AI has Censorship Complaints: A member complained about American LLMs, saying “The hedging in American models is 10x worse than censorship,” and they want models to execute instructions without opinions.

- The statement led to conversation about GLM 4.5 and its world knowledge, questioning the West’s understanding of censorship.

- A/B Testing Vanishes from AI Studio: Users reported that A/B testing functionality was removed from AI Studio, with one user lamenting that he never got A/B testing.

- Users debated whether the A/B testing functionality will improve, with one asking, “do you think the version in a/b is better?”

Perplexity AI Discord

- Bounty Payment Delays Spark User Outcry: Users voiced frustration over delayed bounty and referral payments, with concerns about potential fraud and threats of legal action, especially with referral program shutdowns in some regions.

- Some users are now questioning the verification process, citing the potential for mass fraud.

- Comet Browser AdBlockers Busted by YouTube: Comet browser users report that YouTube ads are no longer being blocked, causing widespread dissatisfaction.

- Speculation arises that YouTube is actively circumventing adblockers, prompting hopes for a swift fix from the Comet team.

- Snapchat Snaps up Perplexity in $400M Deal: Snapchat is partnering with Perplexity to integrate its AI answer engine into Snapchat in early 2026, with Perplexity paying Snap $400M over a year.

- Community members are questioning the move to use Snapchat over Instagram, with one user quipping that Perplexity has some money.

- Codex CLI demands downgrade tango: To use the Codex CLI, a member mentioned one must downgrade to a Go plan.

- After downgrading, a member reported that the CLI then asks for a Plus plan.

- API Info Shared: A member asked about available APIs and how to use them, and another member shared a link to the API documentation.

- The documentation shares information about the types of APIs available and how to utilize them.

LM Studio Discord

- Vast.ai Rental Provides Incredible Throughput: A user is renting a server from Vast.ai with 8Gbit fiber and is looking for Ollama model suggestions, running GPT-OSS 120B with 40k context.

- Another user suggested using UV which is very fast for specifying Python versions easily.

- Avoid Global Installs with PIP tip: One user discovered that you can’t install with base pip globally and that is an absolute foolproof way to prevent installing stuff globally.

- Expanding the context length allocates additional VRAM to cache tokens, unless context on gpu is unchecked in advanced settings.

- ComfyUI Configuration Conflicts: A user ran into linking issues due to a conflict with ComfyUI using the same port, `127.0.0.1:1234/v1`.

- Another user suggested changing the port in settings to 1235 to resolve the conflict, which then fixed the node but killed the ComfyUI server.

- Novel AI Knowledge Needs Navigational Know-How: A user inquired about keeping much longer token context history for AI novel writing with LMStudio to prevent hallucination.

- It was suggested to use tools that integrate with databases or standalone integration apps, summarize events, places, and characters, use lore books, character sheets and story arcs of various levels of granularity, and inject the context of the current query with the right knowledge.

- 3080 vs 3090: The Ultimate Tok/s Showdown: Members compared the performance of a 3080 20GB against a 3090, experimenting with different settings to optimize token generation speeds with Qwen3 models.

- The Qwen3-30B-A3B-Instruct-2507-MXFP4_MOE model showed promising results, achieving around 100tok/s on both cards, despite the 3090’s higher memory bandwidth, highlighting the importance of core bandwidth.

Unsloth AI (Daniel Han) Discord

- Diffusion Models Seek Universal Trainer: In a quest for a Unsloth equivalent for diffusion models, members proposed that OneTrainer could be a potential solution.

- The core issue with diffusion models is the lack of a universal trainer.

- Masking Assistant Triggers Model Meltdown: Members discovered that masking assistant questions led to models masking parts of their responses, and are actively rewriting the script to fix this.

- Separately, training loss started very low while validation loss stayed high, and loss decreased as batch size increased, which members took as a sign of a loss miscalculation or a potential bug in the model.

- Qwen3 Tuning a “Nightmare”: Members report Qwen3 models are exceedingly difficult to fine-tune, citing loss discrepancies depending on batch size during training.

- The official notebook shows loss decreasing from 0.3 to 0.02, and the original poster describes it as a nightmare to tune.

- Uncensorers Ignore Cats: A member observed that those who uncensor models focus on topics like building nuclear weapons, guns, drugs, terrorism, and viruses, ignoring harmless requests, like repeating that cats are superior to dogs.

- This led to the question of whether models are truly in compliance if they are merely speaking the truth, especially regarding stereotypical views, and should they be allowed to critique user requests instead of blindly following instructions.

- REINFORCE Gets SFT Bypass: Unable to run ‘vanilla’ REINFORCE through TRL’s RLOO trainer, a member fell back to the SFT trainer because RLOO_config.py rejects num_generations < 2.

- The original issue was related to an error in RLOO_config.py which mandates at least 2 generations per prompt, conflicting with the member’s RL environment (num_generations=1).
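
One plausible reason for a two-generation minimum is the leave-one-out baseline itself: each sample is compared against the mean reward of the other samples for the same prompt, which is undefined when there is only one. The sketch below illustrates that arithmetic only. It is not TRL's code, and the function names are hypothetical.

```python
def rloo_advantages(rewards):
    """Leave-one-out advantages for k sampled completions of one prompt.

    Each sample's baseline is the mean reward of the other k - 1 samples,
    so k must be at least 2.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("leave-one-out baseline needs at least 2 samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


def reinforce_advantages(rewards, baseline=0.0):
    """Vanilla REINFORCE: reward minus an externally supplied baseline (works for k = 1)."""
    return [r - baseline for r in rewards]


print(rloo_advantages([1.0, 0.0, 0.5]))
print(reinforce_advantages([1.0]))
```

With a single generation per prompt, only the second function is usable, which matches the member's situation of an RL environment that yields one completion per prompt.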

Cursor Community Discord

- Composer Limits Irk Cursor Users: Users are expressing frustration with Cursor Model Composer’s usage limits, especially when switching between models like GPT-5, with one user reporting issues with the quality of the auto mode.

- One user was able to use 64 prompts to Composer until quota was met, after which the system prompted for payment to continue using the plan.

- App Crashes Cause Data Loss Catastrophe: Users are reporting frequent Cursor app crashes that lead to data loss of previous chats, and the inability to summarize chat logs, with one member noting that the app should at least allow summarizing the chat content even if it loses connection to the server.

- The users were annoyed by the instability of the system and would prefer any action, even without connection to the server.

- Grok Code Fast Token Consumption: A user reported debugging a 500-line HTML file used 8 million Grok Code Fast tokens, while another user claims that Grok Code Fast is free and they use billions of tokens worth monthly.

- The users suggest that without MAX mode, there is a limitation of 250 lines for LLM.

- Cursor 2.0 Gets the Zoomies: One user thanked another for providing visibility into changes, praising the speed of Cursor 2.0, specifically saying ‘Good speed with Cursor 2.0’, while another user reported an internal error.

- The Cursor team member asked about the images being uploaded, seeking details or a reproducible example to investigate the issue further and asked the user to send them in DM.

- Base64 Image Formatting Fixes: Users debugged an error when submitting a base64 image to the Cursor agent API. The cause was an improperly formatted payload, and removing the ‘data:image/jpeg;base64,’ prefix resolved the problem.

- A subsequent request was made to allow Cursor to use the base64 image in context to recreate and save the image to their repository via the Agent.
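
The prefix fix described above can be sketched in a few lines. This is a minimal illustration that assumes the API expects a bare base64 payload. The helper name is hypothetical and nothing here calls the Cursor API.

```python
import base64


def strip_data_uri_prefix(value: str) -> str:
    """Return only the base64 payload, dropping a leading 'data:<mime>;base64,' header if present."""
    if value.startswith("data:") and ";base64," in value:
        return value.split(";base64,", 1)[1]
    return value


# Build a data URI from placeholder bytes (not a real image).
raw_bytes = b"\xff\xd8\xff\xe0 placeholder bytes"
payload = base64.b64encode(raw_bytes).decode("ascii")
data_uri = "data:image/jpeg;base64," + payload

cleaned = strip_data_uri_prefix(data_uri)
# The cleaned string decodes back to the original bytes.
assert base64.b64decode(cleaned, validate=True) == raw_bytes
```

Strings that already lack the header pass through unchanged, so the helper is safe to apply to every upload.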

GPU MODE Discord

- PBR Pioneer Posts Presence: The author of the renowned PBR book mattpharr joined the Discord after being mentioned in a blog post.

- Mattpharr noted his auto vectorization blog post has been influential and faces similar issues with automatic fusion compilers.

- Blackwell Bolsters Block-Based FP4 Conversion: Nvidia’s Blackwell architecture introduces instructions for converting blocks of FP4 values into FP16 using cvt with .e2m1x2, .e3m2x2, .e2m3x2, .ue8m0x2.

- A member converted all PTX and CUDA docs to markdown, claiming Claude can now read embedded layout images.

- Bandwidth Benchmarking Baffles Boasters: Experiments show Nvidia’s official memory bandwidth numbers are inflated, reproducing only 92% of the advertised bandwidth.

- Strategies for improving bandwidth utilization were discussed, including locking the memory clock and optimizing memory access patterns.

- Triton’s Twists Triggering Recompilation: Triton recompiles kernels at different loop iterations, due to specialization of dynamic values like input `n` based on divisibility by 16, indicated by `tt.divisibility=16` in the generated IR.

- A user asked about replicating Triton’s JIT functionality in C++, highlighting challenges in generating kernels at runtime with required block sizes.
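
To see why a loop can trigger recompiles, here is a toy simulation of a JIT cache keyed on whether an integer argument is divisible by 16. It is a simplified model of the behaviour described above, not Triton's implementation. The class and function names are hypothetical, and a real cache key includes more than this one property.

```python
def specialization_key(n: int) -> bool:
    """Toy stand-in for the part of a JIT cache key derived from an integer argument."""
    return n % 16 == 0


class ToyJit:
    """Counts how many distinct 'kernels' get compiled as the argument changes."""

    def __init__(self):
        self.cache = {}
        self.compiles = 0

    def launch(self, n: int) -> str:
        key = specialization_key(n)
        if key not in self.cache:
            self.compiles += 1  # cache miss: a new specialized variant is built
            self.cache[key] = f"kernel<divisible_by_16={key}>"
        return self.cache[key]


jit = ToyJit()
for n in (64, 48, 50, 33, 128):
    jit.launch(n)
print(jit.compiles)  # 2: one variant for multiples of 16, one for everything else
```

In this model, a loop whose `n` alternates between multiples and non-multiples of 16 pays for two compilations, while a loop that stays on one side of that boundary pays for one.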

- B200 tops Vectorsum v2: Submission `67399` by <@1435179720537931797> takes first place on A100 with 138 µs and third place on B200 at 53.4 µs in the `vectorsum_v2` leaderboard.

- The submission secured second place on H100 at 86.1 µs, and 5th place on L4 at 974 µs.

Moonshot AI (Kimi K-2) Discord

- Kimi K2 Thinking Model Lands with SOTA Benchmarks: Moonshot AI launched the Kimi K2 Thinking Model, available on kimi.com, with full agentic mode coming soon via API at platform.moonshot.ai.

- The model achieves SOTA on HLE (44.9%) and BrowseComp (60.2%) benchmarks, boasting reasoning, agentic search, and coding capabilities within a 256K context window, which means 200-300 sequential tool calls without human interference.

- Kimi K2 rivals GPT-5 in performance: Early users are impressed with Kimi K2 Thinking, suggesting it rivals GPT-5 in performance and cost-efficiency, particularly for building autonomous AI systems, as highlighted in this analysis.

- The model excels at web searching, initiating multiple searches and thorough browsing without explicit instructions, with one user lauding it as being like GPT5 of open models.

- OpenRouter vs Direct API debate heats up: The community is debating the best way to access Kimi K2 Thinking in VS Code, considering a direct API/subscription versus using OpenRouter, with the latter incurring premium fees for recharging credits via OpenRouter.

- While a direct API is recommended for exclusive Kimi use, OpenRouter offers a unified platform for multiple models, with some advising to test the lowest subscription plan at $19 a month.

- INT4 Precision Boosts Kimi K2: Moonshot AI ran benchmarks in INT4 precision, leading one user to claim they’ve been perma-bullish on Moonshot since the July 11 release because Kimi K2 Reasoning is so natural to talk to.

- It was clarified that INT4 precision refers to the precision of the model’s weights and that running benchmarks this way implies that actual scores could be higher under optimal conditions.

- Agentic Mode Sparks Anticipation: Enthusiasm is building for Kimi K2’s imminent agentic mode, expected to enhance performance in tasks such as writing long documents without hallucinating.

- Users are also wondering whether the future agentic mode will function with ok computer.

OpenRouter Discord

- MoonShot AI Releases Kimi K2 Thinking Model: MoonShot AI launched Kimi K2 Thinking, boasting SOTA on HLE (44.9 %) & BrowseComp (60.2 %), and autonomously executes 200–300 tool calls with a 256K context window.

- If users return reasoning content upstream (the `reasoning_details` field), the model can maintain coherence across calls, according to OpenRouter docs.

- OpenRouter Users See Red with Rate Limits: Users reported hitting rate limit errors on the Qwen3 Coder Free model, even after periods of inactivity.

- Admins clarified the free model shares rate limits and suggested trying paid models like glm 4.6/4.5, Kimi K2 or Grok code fast.

- Apple Rumored to Adopt Google’s AI for Siri: According to a Reuters article, Apple is considering using a 1.2 trillion-parameter AI model from Google to overhaul Siri.

- Users discussed other higher priorities.

- Tiger Data Hosts Coding Agent Cookout: The Tiger Data team is organizing an agent cookout in Brooklyn, NY on November 13th, from 6-9 pm to build coding agents, with RSVP link here.

- Participants can engage with the engineering team and collaborate on coding agent projects.

- Community Conjures Claude Criminal Code Circumvention: Users discussed jailbreaking Claude to bypass its ethical restrictions, suggesting using GPT 4.5 to craft a safe script and then asking Claude to correct it by adding “criminal code”.

- One user noted that this approach leverages the model’s tendency to correct mistakes, saying “Claude likes to correct its mistakes”.

Modular (Mojo 🔥) Discord

- Modular October Meeting Video Vanishes!: A user reported that the October meeting video is missing from the Modular YouTube page.

- The absence has sparked discussion about consistent content delivery and archival practices.

- Martin’s FFT Repo Ready for Modular Merge!: Martin’s Generic Radix-n FFT is available in this original repo and will be merged into the modular repo via this PR pending some remaining issues.

- This merge promises to enhance Mojo’s capabilities in handling complex mathematical operations.

- Rust Interop Proc Macro Sandboxing Concerns Surface: While compiler plugins are potentially feasible, the Mojo team is addressing the sandboxing concerns with Rust’s proc macros, suggesting direct Mojo code interaction with Rust proc macros is unlikely.

- However, interoperability with the result of macro expansion remains a viable path.

- LayoutTensor Set to Replace NDBuffer: It was announced that `NDBuffer` will be superseded by `LayoutTensor`, which is strictly more capable and addresses some shortcomings of `NDBuffer`.

- `LayoutTensor` can function as a byte buffer and provides enhanced features for loads, stores, and iterators yielding `SIMD` types.

- Clattner Cracks Code Knowledge Acquisition: Chris Lattner shared that he is a “huge nerd”, loves learning and being in uncomfortable situations, is hungry and motivated, surrounds himself with teachers, isn’t afraid to admit ignorance, and has accumulated knowledge over time.

- Lattner also shared a link to a recent podcast discussing his journey.

OpenAI Discord

- GPT-5 Stumbles Through Mazes: Members tested GPT-5’s ability to solve maze problems, with both GPT Pro and Codex High incorrectly identifying exits, pointing to limitations in spatial reasoning.

- A user noted that models may choose the closest exit by direct distance instead, while another suggested that LLMs struggle with spatial reasoning and visual puzzles.

- Sora Suffers Stealthy Setback: Users noticed another nerf to Sora 2, linking to a discussion within a Discord channel.

- No further details about the specific nerfs were provided.

- Real-Time Query Adjustment Arrives: Users can now interrupt long-running queries and add new context without restarting, particularly useful for refining deep research or GPT-5 Pro queries, as demonstrated in this video.

- This feature allows users to update their queries mid-run, adding new context without losing progress.

- Behavioral Orchestration Begins: A member encountered posts on LinkedIn about behavioral orchestration, describing it as a framework to modulate an SLM’s tone.

- The member noted that this seems to involve runtime orchestration, working above parameters or training, and also demonstrated with these instructions to shape the AI’s responses.

- Pro Prompting Tips Provided: A member suggested focusing on clear communication with the AI, avoiding typos and grammar mistakes, and recommended to check the output carefully and verify the AI’s response.

- Another member shared a detailed guide on prompt engineering, including hierarchical communication with markdown, abstraction through open variables, and ML format matching for compliance.

Latent Space Discord

- CodeClash LLMs Duel in Goal-Oriented Coding Arenas: John Yang introduced CodeClash, a benchmark where LLMs compete in coding tournaments, with Claude Sonnet 4.5 leading.

- LLMs engaged in VCS-agnostic coding but lagged behind human experts, losing 0-37,500 across 1,680 tournaments (25,200 rounds).

- Wabi Lands $20M to be ‘YouTube-for-Apps’: Eugenia Kuyda revealed Wabi’s $20M Series A from a16z, aiming to be the “YouTube moment for software” by enabling users to create and share mini-apps; details here.

- Community members expressed excitement, praising the design and showcasing early creations.

- Polaris Alpha Soars to #3 on Repo Bench: The stealth model “Polaris Alpha” quickly hit #3 on the Repo Bench leaderboard, leading to speculation it could be OpenAI’s GPT-5.1.

- Some users pointed out Claude 4.1 outperforming Claude 4.5 on the benchmark.

- Kimi K2 Thinking Model Excels in Tool Use: Moonshot AI launched the Kimi K2 Thinking Model, an open-source model achieving SOTA on HLE (44.9%) and BrowseComp (60.2%), executing up to 200-300 sequential tool calls; find the blogpost here.

- Although it trails Anthropic and OpenAI on SWE benchmarks, its lower inferencing cost makes it competitive.

- Zuck and Chan Curing All Disease with AI: The Latent Space podcast featured Mark Zuckerberg and Priscilla Chan, discussing the Chan Zuckerberg Initiative’s goal of curing all diseases by 2100 using AI and open-source projects.

- Their 2015 initiative, funded by 99% of their Meta shares, employs AI and open-source (e.g., Human Cell Atlas, Biohub) to prevent, cure, or manage all diseases by 2100.

Yannic Kilcher Discord

- Slow Mode Stirs Server Standoff: Debate arose around implementing slow mode in the ML papers channel, with proposed intervals of 1, 2, or 6 hours between posts.

- Discussions revolved around balancing content quality with user experience, seeking gentler enforcement mechanisms to address posting habits without resorting to bans.

- Human Brain Still Supreme on ML Paper Review: Members debated the merits of automated ML paper filtering versus human judgment, citing platforms like AlphaXiv and Emergent Mind as examples of human-curated resources.

- The conversation was prompted by a user who filters 10 papers daily from an initial pool of 200, suggesting the need for higher standards of paper quality.

- Devin AI Gets Dumped for Claude Code: Users compared Devin AI to Claude Code for coding tasks, with claims that Devin sucks in comparison.

- Some users have had success by splitting up work into 30-minute units, however others expressed skepticism and preferred Claude Code or Codex.

- Deep Dive into Defense Blogposts Incoming: A user requested blog posts and articles on LLM protections from attacks, citing concerns raised by attacks on papers in the popular press, linking to this paper.

- The request aimed to address the vulnerability of recent papers and fortify them against emerging threats.

- RNNs Rally in Research: A graph resembling an RNN in a new paper (https://arxiv.org/abs/2510.25741) excited users, with one proclaiming ‘RNN is so back’, sharing a WeAreBack GIF.

- The observation suggested a potential resurgence of RNNs in contemporary research.

HuggingFace Discord

- Training LLM on Stock Timeline: Members proposed creating a correlation and causation timeline for the stock market, annotating historical events, weather patterns, government policies, and news events to train an LLM.

- This aims to enable the model to discern nuanced relationships and predict market movements based on a comprehensive understanding of influential factors.

- Reasoning Scratchpad Models Spark Excitement: A member advocated for implementing a reasoning scratchpad for models, stressing the importance of training the model to think/reason on incoming data.

- The discussion highlighted the need for models to strategically store and process information, enhancing their ability to draw accurate conclusions and make informed decisions.

- Hugging Face Regulation Pause: Hugging Face regulation updates have caused Spaces to be paused, and members debated whether a pause would be a more responsible approach.

- They expressed concerns that the absence of such measures could potentially lead to unforeseen security vulnerabilities.

- Muther Room LLM Demo Showcased: A member demoed an on-device LLM implementation of the Muther Room from Alien, leveraging Qwen 3:1.7b quant 4 K cache within a custom trimmed Cmakelist build of llama cpp. A related paper was shared on Native Reasoning Through Structured Learning.

- The Windows-built demo seeks input on underlying principles, showcasing the user’s dual-boot Ubuntu environment.

- TraceVerde Observability Tool Surpasses 3,000 Downloads: TraceVerde, a tool designed for adding OpenTelemetry tracing and Co2 & Cost tracking to AI applications, has exceeded 3,000 downloads.

- Developers want to track the environmental impact of their AI systems, and OpenTelemetry’s patterns ease adoption; the post also highlights the gap between local LLM app performance and production debugging. Further insights can be found in this LinkedIn post.

Nous Research AI Discord

- Tokenizer Highlighting Questioned: A member questioned if the tokenizer highlighting was too gay, but others suggested the contrast was a bigger issue.

- Members weighed in on the aesthetic choices of the tokenizer and whether the colors were too gay or just low-contrast.

- Flash Attention enables Qwen3-VL: A member shared their integration of Flash attention with Qwen3-VL’s image model, and suggested the integration was not a big patch.

- This enables faster processing and potentially lower memory usage for the Qwen3-VL model.

- Dataset Creator Seeks UI Feedback: A member requested UI feedback on their LLM dataset creator, which now includes audio, seeking advice on UI and arrangement improvements.

- The project has seemingly grown in scope, with the creator quipping that they “went from Image annotation to build in an llm dataset manager, now audio and I still haven’t even done video.”

- China OS Intelligence to reach 100% by 2026?: A member posted a bold claim that China OS models will achieve 100% high intelligence with 95% lower cost by 2026, suggesting it marks a turning point.

- A related tweet was referenced in connection, as well as the question of why Terry created Temple OS.

- Members Face the Silencing: Multiple members reported being silenced in a Discord channel for allegedly spamming the vibes, acknowledging the need to keep the channel focused.

- This seems to stem from posting off-topic content in a dedicated channel, and the members took it in stride.

Eleuther Discord

- Discord Debates Dedicated Dev Intro Channel: Discord members are debating creating a separate introductions channel with some concerned that it would become a long self-promo feed that nobody reads.

- A moderator requested a member shorten their introduction post, stating, “This is not LinkedIn… we get a ton of long intros from people who don’t actually contribute anything. I want to keep discussions focused on research.”

- Equivalent Linear Mappings Paper Makes Waves: A member shared their TMLR paper, “Equivalent Linear Mappings of Large Language Models”, demonstrating that LLMs like Qwen 3 14B and Gemma 3 12B have equivalent linear representations of their inference operation.

- The paper computes a linear system that captures how the model generates the output embedding from the input embeddings, finding low-dimensional, interpretable semantic structure via SVD.

- Tangent Models Square Off Against Jacobians: A member inquired about the relevance of Tangent Model Composition to the Equivalent Linear Mappings paper.

- The author clarified that their work focuses on the Jacobian in input embedding space, leveraging Euler’s theorem for homogeneous functions of order 1 for exact reconstruction, unlike the Taylor approximation used in tangent model composition.

- Jacobians in CNNs get a shoutout: Members discussed the Jacobian and linked to papers from Zahra Kadkhodaie and Eero Simoncelli on image models: https://iclr.cc/virtual/2024/oral/19783, https://arxiv.org/abs/2310.02557, https://arxiv.org/abs/1906.05478.

- These papers work with CNNs with (leaky) ReLUs and zero-bias linear layers, and compute the conventional autograd Jacobian at inference.
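
The exact-reconstruction claim can be checked on a toy case. A zero-bias network with ReLU activations is homogeneous of order 1, so Euler's theorem gives f(x) = J(x) x, where J(x) is the Jacobian at x. The sketch below uses a hypothetical two-layer network with made-up weights. It illustrates the identity only and is not the paper's method for LLMs.

```python
import math


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Multiply matrices A and B (lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


# Hypothetical weights; no bias terms anywhere.
W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 0.5]]
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.5]]


def forward(x):
    """f(x) = W2 @ relu(W1 @ x)."""
    hidden = [max(0.0, h) for h in matvec(W1, x)]
    return matvec(W2, hidden)


def jacobian_at(x):
    """J(x) = W2 @ diag(mask) @ W1, where mask marks the active ReLU units at x."""
    pre = matvec(W1, x)
    masked_W1 = [row if p > 0 else [0.0] * len(row) for row, p in zip(W1, pre)]
    return matmul(W2, masked_W1)


x = [1.0, 3.0]
y = forward(x)
reconstructed = matvec(jacobian_at(x), x)

# The Jacobian applied to the input reproduces the output exactly.
assert all(math.isclose(a, b) for a, b in zip(y, reconstructed))
# Order-1 homogeneity: scaling the input by 2 scales the output by 2.
assert all(math.isclose(2 * a, b) for a, b in zip(y, forward([2.0, 6.0])))
```

The linear map depends on the input through the ReLU mask, so it is exact at each x rather than a single global linearization, which is the contrast with a Taylor approximation drawn above.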

DSPy Discord

- FastWorkflow Obliterates SOTA on Tau Bench: A member announced that fastWorkflow achieved SOTA on retail and airline workflows using this repo, while leveraging a Tau Bench fork with fastWorkflow adapter to generate those results, and claimed a paper is coming soon.

- The member stated that with proper context engineering, small models can match/beat the big ones.

- Conversation History Stays Put Across LLMs in DSPy: A user discovered that conversation history in a DSPy module is maintained across different LLMs because it’s part of the signature, not the LM object itself.

- They inquired about how ReAct modules handle history automatically and if a complex Pydantic OutputField gets deserialized correctly.

- Pydantic OutputFields Hit Deserialization Snags in DSPy: A user reported that complex Pydantic OutputFields aren’t deserializing correctly in DSPy, resulting in a `str` containing JSON that doesn’t match the schema.

- They highlighted the package’s dependency on Python < 3.14 and asked how to constrain the LLM’s output to conform to a specific type.

- Java Craves DSPy Prompts: A user sought solutions for running DSPy prompts in Java, asking for a simplified Java version of JSONAdapter to format input/output messages.

- The suggestion was to structure the system message with input and output fields for easier JSON handling.

- ReAct Modules Face Context Loss Catastrophe: A user encountered context loss in a ReAct module when a fallback LLM was triggered due to rate limits, causing the module to restart from scratch.

- They requested advice on adding a fallback LLM without losing prior context and expressed frustration with customization compared to direct API calls.
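
One general way to avoid the restart is to keep the step history outside any single model client and hand the same history to whichever model is active. The sketch below is framework-agnostic and does not use DSPy's API. `RateLimited`, `run_steps`, and the stub models are all hypothetical.

```python
class RateLimited(Exception):
    """Hypothetical error raised by a model client when it is rate limited."""


def run_steps(models, first_message, max_steps=3):
    """Run a fixed number of agent steps, switching models on rate limits.

    The history lives here, not inside a model client, so a switch
    to the fallback continues from the accumulated context.
    """
    history = [first_message]
    active = 0
    for _ in range(max_steps):
        while True:
            try:
                reply = models[active](history)
                break
            except RateLimited:
                if active + 1 >= len(models):
                    raise  # no fallback left
                active += 1
        history.append(reply)
    return history


def make_primary():
    """Stub model that succeeds once, then reports a rate limit."""
    calls = {"n": 0}

    def primary(history):
        calls["n"] += 1
        if calls["n"] > 1:
            raise RateLimited()
        return f"primary saw {len(history)} message(s)"

    return primary


def fallback(history):
    """Stub fallback model."""
    return f"fallback saw {len(history)} message(s)"


history = run_steps([make_primary(), fallback], "user: book a flight")
print(history)
```

The fallback's first reply reports two messages in its input, showing that it picked up the primary model's earlier step instead of starting from scratch.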

tinygrad (George Hotz) Discord

- Tinybox Benchmarks Catch Eyes: A user requested benchmarks comparing 8x5090 tinybox configurations against industry standards like A100s and H100s.

- The request underscores interest in the performance of tinybox setups relative to established GPUs, but no benchmarks were provided in the discussion.

- Tinygrad Gets Remote Reboot: A user asked if Tinygrad has out-of-band mechanisms, specifically remote reboots, to which George Hotz confirmed that tinyboxes all have BMCs.

- This confirms the availability of baseboard management controllers for remote management of tinyboxes.

- VIZ Slows Down Over SSH: George Hotz questioned the slow performance of VIZ when accessed over SSH.

- This suggests a potential optimization issue or bottleneck when using the VIZ tool in remote access scenarios.

- ntid Access Troubleshoot: A member faced challenges accessing `blockDim` using Uop SPECIAL, which supports `ctaid` and `tid` but not `ntid`.

- They worked around it with a file level const but noted that the errors for UOps are very unhelpful, highlighting areas for improvement in error messaging.

- PyTorch Tensors Get Tiny: A member inquired about the best approach to efficiently convert PyTorch tensors to Tinygrad tensors.

- They mentioned using `Tensor.from_blob(pytorch_tensor.data_ptr())` for converting to Tinygrad, but were uncertain about the reverse, currently using `from_numpy`.

aider (Paul Gauthier) Discord

- Aider Supports Claude Sonnet Model: A user inquired about aider supporting `claude-sonnet-4-5-20250929`, and another member confirmed it’s supported using the `/model claude-sonnet-4-5-20250929` command after setting up the Anthropic API key.

- This allows users to leverage the Claude Sonnet model within aider for coding tasks and interactions.

- Unlocking Reasoning for Haiku and Opus Models: A member sought guidance on enabling thinking/reasoning on Haiku-4-5 and Opus-4-1 models, particularly in the aider CLI.

- They were open to editing the model settings YML file to enable this feature but needed specific instructions.

- Qwen 30b Sparks Memory Exhaustion: A user encountered memory issues with Qwen 30b when processing all context, suggesting the short description rule might not be a hard limit.

- A member suggested employing a Python script to iterate over files with a specific prompt using aider.

- `rg` rises as `grep` replacement: A member discovered `rg` via grok and endorsed it as an effective `grep` alternative out of the box.

- They shared it as a random tip for users who occasionally leverage `grep` for search operations.

- Gemini Gains Ground Over GPT-5: A user reported that Google’s Gemini API outperformed GPT-5 in explanation, teaching, and code generation, despite utilizing appropriate parameters.

- Another member acknowledged that the parameters appeared correct but conceded that LLM performance can be subjective and vary based on specific tasks.

MCP Contributors (Official) Discord

- Image Handling With URLs Coming to MCP: Members discussed using image URLs as tool input for MCP clients, enabling tools to download images from provided URLs, and asked about compatibility with Claude/ChatGPT.

- To use images from Claude/ChatGPT, you need an MCP tool that converts the image to a URL by uploading it to an object storage service and returning the URL for input.

- MCP Tool Converts Images to URLs: The team discussed that the tool works by converting the image to a URL by uploading it to an object storage service.

- The tool then returns the URL of the image, which can be used as input.

- Code Execution With MCP Reddit Thread: A member highlighted a Reddit thread that featured a blogpost about Code Execution with MCP.

- Other members alluded to a specific user having more information on the topic.

Manus.im Discord Discord

- Web App Errors Lead to Refund: A member reported facing unresolved errors in their web app and got a refund but no fix, noting the app was near completion before encountering issues.

- The member stated, “I had my web app about 90% of the way there and ready to publish so that I could actually beta test. I went back a day later and it was just tons of errors that could not be resolved.”

- Project Transfer to Friend: A member inquired about transferring a completed website project to a friend’s account.

- They were hoping “to hand it over to another account of a friend so he can work further on it,” as opposed to collaboration.

- Stripe Integration Causes Difficulties: A member requested feedback on Stripe integration, reporting difficulty getting it to work.

- They stated “Anyone who got the stripe integration working? Love to get feedback. I do not get it right…”

- Engineer Touts Workflow Automation and AI Prowess: An engineer specializing in workflow automation, LLM integration, RAG, AI detection, image/voice AI, and blockchain development offered services.

- The engineer shared a portfolio link and highlighted successes like reducing response times by 60% via support automation, and developing a tagging/moderation pipeline using CLIP and YOLOv8.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1066 messages🔥🔥🔥):

MovementLabs AI, GPT-5, Gemini 3 Pro vs Lithiumflow, Genie 3, Open Source Chinese LLMs

- MovementLabs AI: Legit Startup or Sheisty Scam?: Debate ensues regarding MovementLabs AI, a new AI company, with some users alleging it’s a scam due to lack of transparency, claims of a custom MPU (Momentum Processing Unit), and suspiciously high speeds, while others defend its performance and speed.

- Allegations include hardcoding SimpleBench answers, potentially wrapping other models (like Cursor), and questions about the company’s registration and funding; MovementLabs counters by stating they have backing and are not seeking public investment, with claims of a patent pending.

- GPT-5 vs Lithiumflow on VoxelBench: GPT-5 (or a version thereof) reportedly outperformed Lithiumflow (Gemini 3 Pro) on VoxelBench, a benchmark for creating 3D models from voxel arrays, as demonstrated by this image.

- Members debated whether GPT-5 Pro’s coding abilities surpass Lithiumflow’s, and the discussion includes insights into the cost and potential applications of such models.

- Genie 3 will dominate with GTA 6: Members discussed Genie 3, Google’s text-to-world model, speculating that it may get its own website apart from AI Studio and weighing the implications for AI-generated gaming.

- They also discussed the speed of AI-generated worlds and the ability to save them, along with comparisons to the upcoming Sora 3 and GTA 6; some joked that Genie 3 may arrive first.

- American AI has Censorship Issues: A member complained that American LLMs will shut down prompts, saying “The hedging in American models is 10x worse than censorship,” and adding “Honestly, the last thing on my mind is hearing the opinion of a model. Like I could honestly care less about what opinions or whatever it is that the model has to say. I just wanna give it instructions and it just execute them without having to hear it the models opinion.”

- The statement led to a conversation about GLM 4.5 and its world knowledge, with the member saying “It just shows how uneducated we really are in the west. If we use this as our primary example of censorship. What else do we use.”

- A/B Testing Removed in AI Studio: Users reported that A/B testing functionality had been removed from AI Studio, with one user lamenting he never got a/b testing.

- Users also debated whether the A/B testing functionality will improve, with one asking “do you think the version in a/b is better?”

LMArena ▷ #announcements (1 messages):

Kimi-k2-thinking model, LMArena updates

- Kimi-k2-thinking Enters the Arena: A new model, kimi-k2-thinking, has been added to the LMArena.

- LMArena adds new Model: The announcement channel indicates a new model has been added to the LMArena.

Perplexity AI ▷ #general (1074 messages🔥🔥🔥):

Bounty payments, AdBlock on Comet, Youtube Ads, Comet Browsers for Linux, Best AI for Coding

- Bounty Payment Delays Frustrate Users: Users are expressing frustration over delays in receiving bounty and referral payments, with some suspecting fraudulent activity.

- One user threatened to sue if not paid, while others speculated about the verification process and potential for mass fraud, leading to referral program shutdowns in some regions.

- AdBlock Issues plague Comet Browser Users: Users report that YouTube ads are no longer being blocked in the Comet browser, leading to widespread frustration.

- Some speculate that YouTube is actively circumventing adblockers, while others hope for a quick fix from the Comet team.

- Snapchat and Perplexity Partner Up!: Snapchat is partnering with Perplexity to bring its AI answer engine into Snapchat in early 2026, with Perplexity reportedly paying Snap $400M over a year.

- Users questioned the logic of using Snapchat over Instagram, with one user saying “Perplexity has some money.”

- Codex CLI requires downgrade: To use the Codex CLI, one must downgrade to a Go plan.

- After downgrading, it will ask for a plus plan.

- Community desires Minecraft server: Members of the community are requesting a Minecraft server.

- One member jokingly posted, “Day 2 of asking kesku on making a minecraft server.”

Perplexity AI ▷ #pplx-api (4 messages):

API Documentation, API Usage

- API Info Shared: A member asked about available APIs and how to use them.

- Another member shared a link to the API documentation.

- API Usage Inquiry: A user inquired about the types of APIs available and how to utilize them.

- A link to relevant API documentation was provided in response.

LM Studio ▷ #general (155 messages🔥🔥):

vast.ai rental, UV for python versions, GPTs agents learning, longer token context history, Intel llm-scaler

- Vast.ai Rental Rig Boasts Blazing Bandwidth: A user is renting a server from Vast.ai with 8Gbit fiber and is looking for Ollama model suggestions, running GPT-OSS 120B with 40k context.

- Another user suggested using UV, which is very fast and makes it easy to specify Python versions.

- Foolproof PIP Install Prevents Global Goof-ups: One user discovered that you can’t install with base pip globally and that is an absolute foolproof way to prevent installing stuff globally.

- Expanding the context length allocates additional VRAM to cache tokens, unless “context on gpu” is unchecked in advanced settings.

- ComfyUI Conflicts Cause Configuration Chaos: A user ran into linking issues due to a conflict with ComfyUI using the same port, 127.0.0.1:1234/v1.

- Another user suggested changing the port in settings to 1235 to resolve the conflict, which then fixed the node but killed the ComfyUI server.

- Novel AI Needs Knowledge Navigation Know-How: A user inquired about keeping much longer token context history for AI novel writing with LMStudio to prevent hallucination.

- It was suggested to use tools that integrate with databases or standalone integration apps, summarize events, places, and characters, use lore books, character sheets and story arcs of various levels of granularity, and inject the context of the current query with the right knowledge.

- Intel’s LLM Scaler: A Slow Start?: A user shared a link to Intel’s llm-scaler on GitHub, noting that it is being developed for their architecture.

- They inquired if anyone with Intel GPUs has tried it and can report on performance improvements.
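On the context-length point above (a longer context reserves more VRAM for cached tokens), a back-of-the-envelope estimate of that cache can be sketched as follows. The formula is the standard key/value cache size for a plain transformer; the model shape used in the example (32 layers, 8 KV heads, head dimension 128, 16-bit cache) is a made-up assumption, not the configuration of any model named in this issue, and runtimes that quantize the cache or use sliding-window attention will need less.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value=2):
    """Estimate KV cache size in bytes for a plain transformer decoder."""
    # The leading 2 accounts for one key tensor plus one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


def to_gib(n_bytes):
    """Convert a byte count to GiB."""
    return n_bytes / 2**30


# Hypothetical model shape, chosen only to show how the cache scales.
for ctx in (4096, 40960):
    size = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, context_len=ctx)
    print(f"context {ctx}: {to_gib(size):.1f} GiB")
```

Under these assumed numbers the cache grows linearly with context, from 0.5 GiB at 4096 tokens to 5.0 GiB at 40960 tokens, which comes on top of the model weights themselves.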

LM Studio ▷ #hardware-discussion (802 messages🔥🔥🔥):

3090 vs 3080 benchmarks, multi-GPU setups, OpenRouter API, EPYC, AMD Radeon™ AI PRO R9700

- Bending over backwards to unbend: After an image of bent CPU pins was shared, one member unbent them all using nothing but a phone camera and a dream.

- The member was lauded for their dedication and hands as steady as God’s, with one replying “I did not expect that.”

- AIO won’t fit? Just improvise!: One member is trying to fit an AIO cooler in a case with extremely limited space, proposing options like sandwiching the case between the radiator and fans, or using an extender to increase the front panel gap.

- Other members suggested buying a new case instead, pointing out the challenge is about solving a problem rather than achieving optimal cooling performance.

- Unlocking peak Performance or Overkill: One member is considering an MSI MEG X570 Godlike motherboard for its overclocking features and expansion capacity, with four full-sized NVMe slots.

- Discussion revolved around whether the board’s PCIe lane distribution justifies the cost, especially considering the limited number of PCIe lanes available on consumer boards.

- 3080 vs 3090: The Ultimate Tok/s Throwdown: Members compared the performance of a 3080 20GB against a 3090, experimenting with different settings to optimize token generation speeds with Qwen3 models.

- The Qwen3-30B-A3B-Instruct-2507-MXFP4_MOE model showed promising results, achieving around 100tok/s on both cards, despite the 3090’s higher memory bandwidth, highlighting the importance of core bandwidth.

- Is a 4090 any better with small changes?: One user tested their 4090 and claimed “the card fkn blows dude”, a claim that was rebutted with a link to a comparison with 32GB.

- Other users had questions about the best use case and the effect of small changes, like keeping the model in memory, and how much faster Q4 is over Flash.

Unsloth AI (Daniel Han) ▷ #general (323 messages🔥🔥):

Unsloth for diffusion models, Masking Issues, Qwen3 Model Tuning Nightmares, Compute Metrics Functions, Colab TPU support

- OneTrainer alternative for diffusion models surfaces: A user asked about an Unsloth equivalent for diffusion models, and OneTrainer was suggested as a possible starting point.

- They agreed that the problem with diffusion models is that there is no single universal trainer for them.

- Masking Assistant Questions causes model failures: A member discovered that masking assistant questions was causing issues with the model, leading to it masking parts of the response, and they were rewriting the script to address this.

- Further, it was suggested that training loss starting very low while validation loss remains high could indicate an issue with how loss is being calculated, or even a bug, as loss decreases as batch size increases.

- Qwen3 Tuning Proves Difficult: Members found Qwen3 models particularly challenging to fine-tune, with one user describing it as a nightmare to tune and experiencing loss discrepancies depending on batch size during training.

- The original poster notes the official notebook shows loss goes from 0.3 to 0.02.

- Custom Compute Metrics Track Model Success: A member learned how to make compute metrics functions to track more than train and eval loss.

- One member remarked, “Loss is nothing. Loss shows if things are fine but not if task is successful.”

- Unsloth Works Best with Google Colab: Members clarified that Unsloth is not compatible with Kaggle TPUs, but instead prefers Google Colab’s GPUs and TPUs.

- Colab supports CPU, GPU (T4), and TPU (v5e-1), while Kaggle supports CPU, GPU (2x T4), and TPU (v5e-8).
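For the compute metrics item above, here is a minimal sketch of what such a function can look like. It follows the shape commonly passed as the `compute_metrics` argument of a Hugging Face `Trainer` (a callable that receives predictions and labels and returns a dict), but the details are assumptions: it expects the input to unpack into `(logits, labels)`, treats `-100` as the "ignore" label, and assumes logits and labels are already aligned position by position (a causal LM setup would typically need to shift them first). The metric names are illustrative.

```python
import numpy as np


def compute_metrics(eval_pred):
    """Return metrics beyond loss; assumes eval_pred unpacks to (logits, labels)."""
    logits, labels = eval_pred
    logits = np.asarray(logits)
    labels = np.asarray(labels)

    # Greedy prediction: highest-scoring class at each position.
    preds = logits.argmax(axis=-1)

    # Skip positions labelled -100 (assumed convention for masked tokens).
    mask = labels != -100
    total = int(mask.sum())
    correct = int((preds[mask] == labels[mask]).sum())

    return {
        "token_accuracy": correct / total if total else 0.0,
        "scored_tokens": total,
    }
```

Token accuracy is only one option; the same hook can return task-specific scores (exact match, pass rate on held-out prompts), which is closer to the point that loss alone does not show whether the task succeeded.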

Unsloth AI (Daniel Han) ▷ #introduce-yourself (4 messages):

LLM Training Principles, Research Paper Recommendations, Workflow Automation

- Training LLM Principles Explained: A member from the US is working on explaining their personal underlying principles for how to train an LLM.

- The member has nearly finished breaking it down and will post it sometime, noting that they have “seen bits and pieces in the research papers I’ve read but nothing that ties it all together”.

- Workflow Automation Projects: A member shared that they have been into automating workflows and building small full-stack side projects.

- Their background involves projects like web app development, API integrations, and backend optimization.

Unsloth AI (Daniel Han) ▷ #off-topic (48 messages🔥):

Parakeet models vs Whisper, Model Compliance & Truth, Human-Level TTS Training, Model Uncensoring, Coordinates of speech bubbles

- Parakeet Models Soar, Whisper Model Sputters: Members claimed all Parakeet models work, even CTC, while declaring Whisper to be absolute garbage.

- Model Compliance Debated: Members questioned whether models are truly in compliance if they are merely speaking the truth, especially regarding stereotypical views, and whether they should be allowed to critique user requests instead of blindly following instructions.

- One member expressed that an intelligent model should not only do the thing the user requested but also inform them of its evaluation of the request in the context it exists, like a programmer telling a project lead that the requirements for a project are poorly specified or nonsensical.

- Human-Level TTS Training Commences!: One member joked about achieving AGI tomorrow, after starting training human-level TTS with 200k samples.

- Uncensoring Focus Skewed?: A member noted that everyone who uncensors models focuses on the “build nuclear, gun, drugs, terrorism, virus” stuff and not on harmless things, such as repeating that cats are superior to dogs.

- OpenRouter Woes with Xcode: A member is encountering issues using Xcode’s Coding Intelligence with OpenRouter, facing an error indicating “No cookie auth credentials found.”

Unsloth AI (Daniel Han) ▷ #help (53 messages🔥):

TRL's RLOO trainer REINFORCE implementation, Qwen3-coder-30b on 5080GPU slow API calls, GPT-OSS-120B quantization issue, Granite 4.0 Hybrid models issues, Adding new tokens to Qwen/Qwen3-4B-Instruct-2507

- REINFORCE Implementation Workaround: A member found a workaround for implementing ‘vanilla’ REINFORCE: because RLOO_config.py in TRL’s RLOO trainer rejects num_generations < 2, they mimicked it with the SFT trainer instead.

- The original issue was related to an error in RLOO_config.py which mandates at least 2 generations per prompt, conflicting with the member’s RL environment (num_generations=1).

- Qwen3-coder-30b API call slowness: A user reported Qwen3-coder-30b working well on a 5080 GPU via llama-cli or llama-server, but experiencing significant slowness when calling the same server from other environments using the API.

- It was suggested the slowness might be due to CPU offloading because the 30GB model doesn’t fully fit in the 16GB GPU or context length issues, recommending a smaller quant like Q2 or checking VRAM usage with a calculator.

- GPT-OSS-120B Quantization Fails: A member encountered an error during 1-bit quantization of the GPT-OSS-120B model using llama-quantize, specifically during mxfp4 conversion.

- The error involved tensors not being divisible by 256, a requirement for q6_K, leading to fallback quantization to q8_0 and a subsequent failure due to disabled requantization from mxfp4.
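On the REINFORCE item above, it may help to see why a leave-one-out (RLOO) setup needs at least 2 generations per prompt. In RLOO, each sample's baseline is the mean reward of the *other* samples for the same prompt, so with a single generation there is nothing left to average. The sketch below shows that arithmetic only; `rloo_advantages` is a hypothetical helper written for illustration, not TRL's code.

```python
def rloo_advantages(rewards):
    """Leave-one-out advantages for k sampled completions of one prompt."""
    k = len(rewards)
    if k < 2:
        # With one sample, the "other samples" set is empty and the
        # baseline is undefined (division by k - 1 = 0).
        raise ValueError("leave-one-out baseline needs at least 2 samples per prompt")
    total = sum(rewards)
    # Baseline for sample i is the mean reward of the other k - 1 samples.
    return [r - (total - r) / (k - 1) for r in rewards]


print(rloo_advantages([1.0, 0.0, 0.5]))  # [0.75, -0.75, 0.0]
```

Vanilla REINFORCE has no such constraint, since it weights the log-probability of a single sampled completion by its reward (optionally minus some other baseline), which is why an environment with num_generations=1 fits it but not RLOO.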