Strong generative media showings from China.

AI News for 2/9/2026-2/10/2026. We checked 12 subreddits, 544 Twitters and 24 Discords (256 channels, and 9107 messages) for you. Estimated reading time saved (at 200wpm): 731 minutes. AINews’ website lets you search all past issues. As a reminder, AINews is now a section of Latent Space. You can opt in/out of email frequencies!

It is China model release week before Valentine’s Day, and the floodgates are opening.

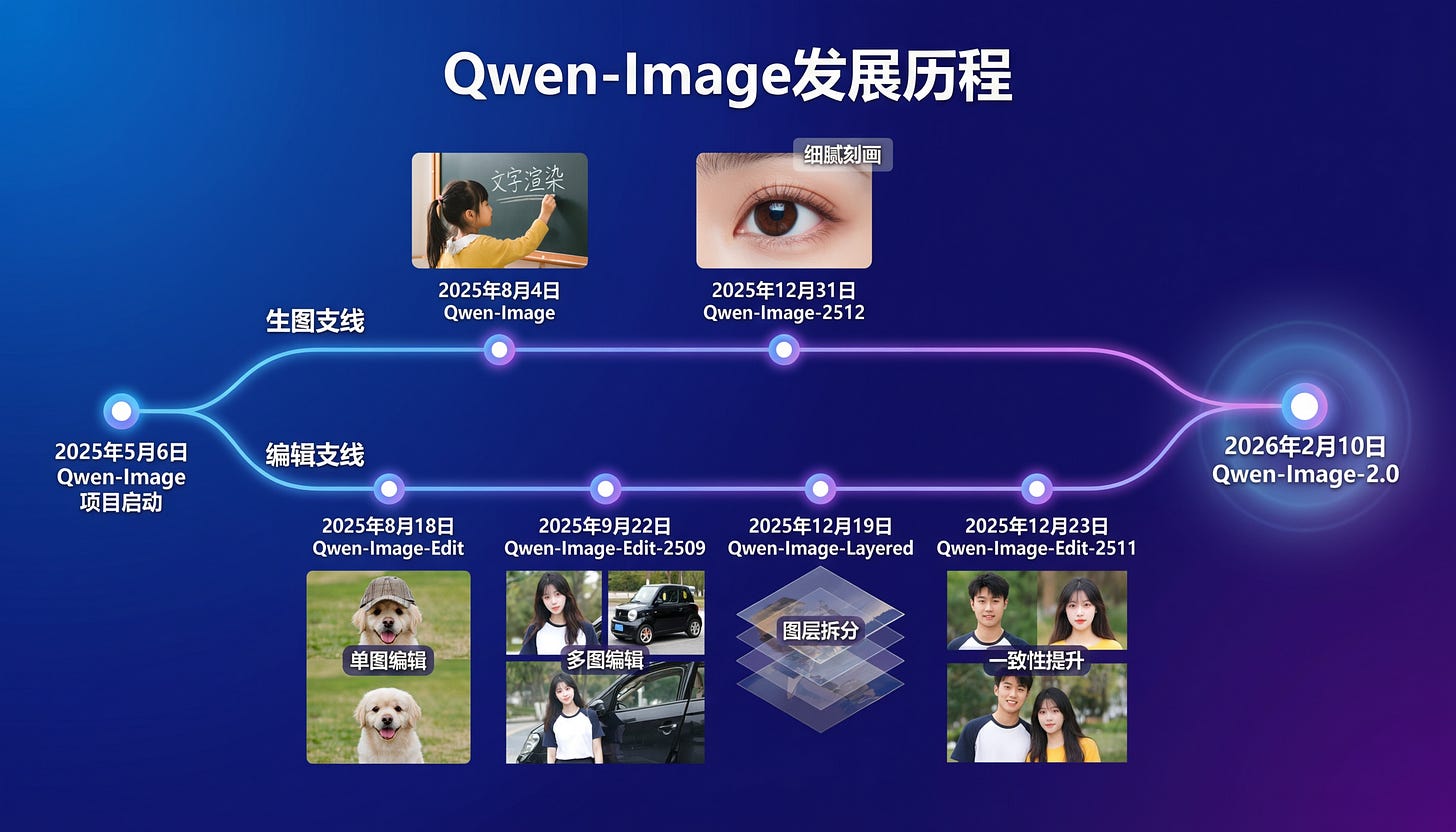

We last got excited about Qwen-Image 1 in August, and in the meantime the Qwen guys have been cooking, with Image-Edit and Layers. Today with Qwen-Image 2 they reveal the grand unification:

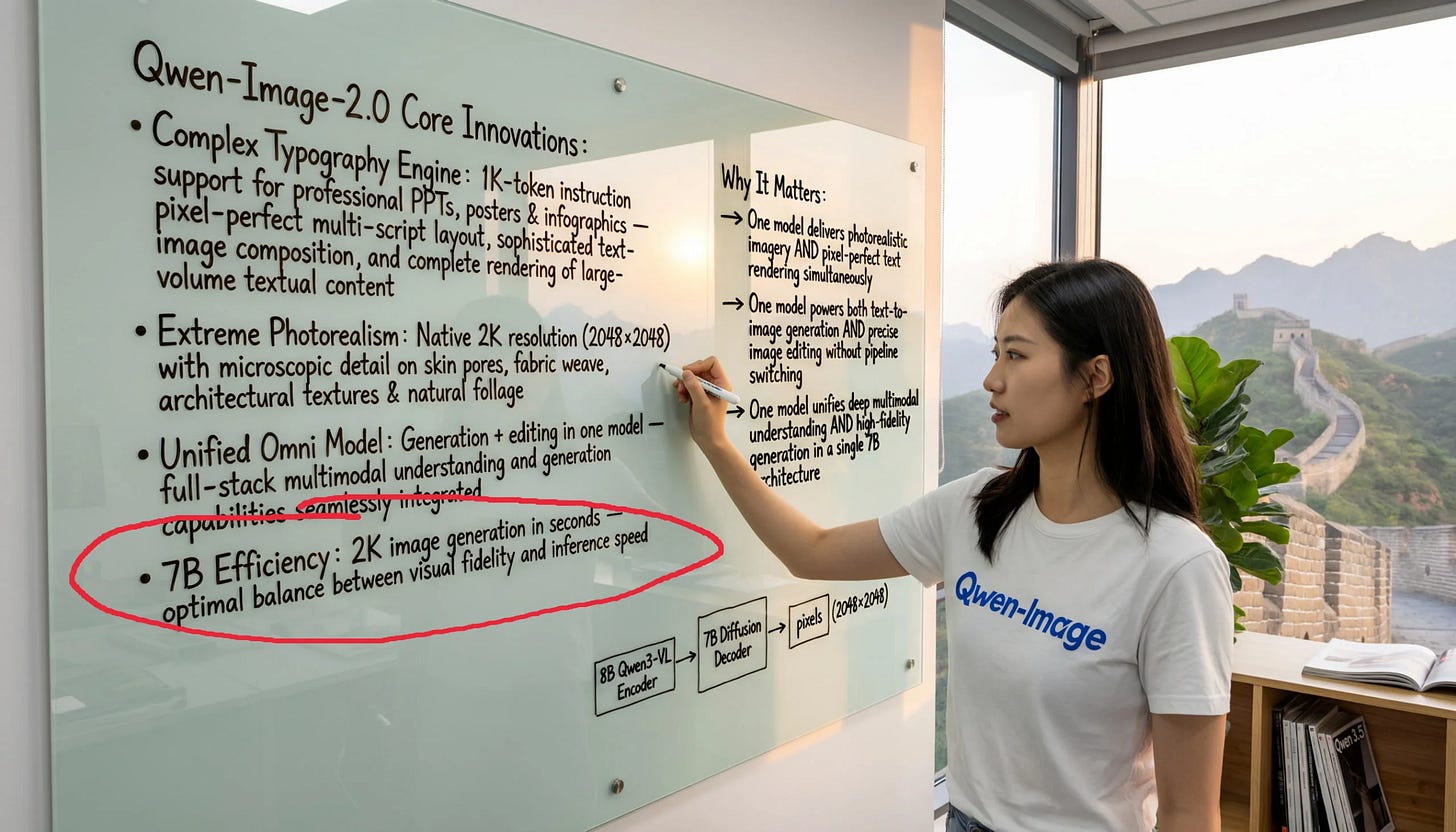

The text control and fidelity demonstrated is incredibly impressive. While the weights and full technical report are not yet released, the images drop a few surprising hints (caught by the Reddit sleuths in the recap below) about what’s going on that point to incredible technical advances.

To put it simply, we will have a Nano-Banana-level open imagegen/imageedit model in a 7B size. (Per Alibaba’s own Arena rankings on the blogpost)

Similarly no weights released but lots of hype today is Seedance 2.0, which seems to have solved the Will Smith Spaghetti problem and also generated lots of anime/movie scenes. The sheer flood of examples is almost certainly an astroturfing campaign, but enough people are independently creating new videos that we have some confidence that this isn’t just a cherrypick.

AI Twitter Recap

Coding agents, IDE workflows, and “agentic sandboxes” becoming standard plumbing

- OpenAI shifts Responses API toward long-running computer work: OpenAI introduced new primitives aimed at multi-hour agent runs: server-side compaction (to avoid context blowups), OpenAI-hosted containers with networking, and Skills as a first-class API concept (including an initial spreadsheets skill) (OpenAIDevs). In the same window, OpenAI also upgraded Deep Research to GPT‑5.2 and added connectors + progress controls (OpenAI, OpenAI), reinforcing that “research agents” are productized, not just demos.

- Sandboxes: “agent in sandbox” vs “sandbox as a tool” becomes a design fault line: Several posts converge on the same architectural question—should the agent live inside an execution environment, or should it call an ephemeral sandbox tool? LangChain’s Harrison Chase summarized tradeoffs in a dedicated writeup (hwchase17), with follow-on commentary pushing sandbox-as-a-tool as the default for crash tolerance and long-running workflows (NabbilKhan). LangChain’s deepagents v0.4 added pluggable sandbox backends (Modal/Daytona/Runloop) plus improved summarization/compaction and Responses API defaults (sydneyrunkle).

- Coding agent UX is accelerating, with multi-model orchestration becoming normal: VS Code and Copilot continue to add agent primitives (worktrees, MCP apps, slash commands) (JoeCuevasJr). One concrete pattern: parallel subagents doing independent review and “grading each other” across Claude Opus 4.6, GPT‑5.3‑Codex, and Gemini 3 Pro (pierceboggan). OpenAI’s Codex account paused a rollout of “GPT‑5.3‑Codex” inside @code (code), while users highlight its token efficiency and app workflow (reach_vb, gdb, gdb).

- “SDLC after code review” is being reimagined: A notable funding + product announcement: EntireHQ raised a $60M seed to build a Git-compatible database that versions not just code but also intent/constraints/reasoning, plus “Checkpoints” to capture agent context (prompts, tool calls, token usage) as commit-adjacent artifacts (ashtom). This directly targets the emerging pain: teams can generate code quickly, but struggle with provenance, review, coordination, and “what happened” debugging.

Model releases & modality leaps (image/video/omni) + open-model momentum

- Qwen-Image-2.0: Alibaba Qwen announced Qwen‑Image‑2.0 with emphasis on 2K native resolution, strong text rendering, and “professional typography” for posters/slides with up to 1K-token prompts; also positions itself as unified generation + editing with a “lighter architecture” for faster inference (Alibaba_Qwen).

- Seedance 2.0 as the “step change” in text-to-video: Multiple threads treat ByteDance’s Seedance 2.0 as a qualitative jump (natural motion, micro-details) and possibly a forcing function for competitors to refresh (Veo/Sora) (kimmonismus, TomLikesRobots, kimmonismus).

- Kimi “Agent Swarm” + Kimi K2.5 as agent substrate: Moonshot’s Kimi shipped an Agent Swarm concept: up to 100 sub-agents, 1500 tool calls, and claimed 4.5× faster than sequential execution for parallel research/creation tasks (Kimi_Moonshot). Community posts show a workflow pairing Kimi K2.5 + Seedance 2 to generate large storyboard artifacts (e.g., “100MB Excel storyboard”) feeding video generation (crystalsssup). Baseten highlighted Kimi K2.5 serving performance—TTFT 0.26s and 340 TPS on Artificial Analysis (per their claim) (basetenco).

- Open multimodal “sleepers”: A curated reminder that recent open multimodal releases include GLM‑OCR, MiniCPM‑o‑4.5 (phone-runnable omni), and InternS1 (science-strong VLM), all described as freely usable commercially (mervenoyann).

- GLM-4.7-Flash traction: Zhipu’s GLM‑4.7‑Flash‑GGUF became the most downloaded model on Unsloth (per Zhipu) (Zai_org).

Agent coordination & evaluation: from “swarms” to measurable failure modes

- Cooperation is still brittle even with real tools (git): CooperBench added git to paired agents and found only marginal cooperation gains; new failure modes emerged (force-pushes, merge clobbers, inability to reason about partner’s real-time actions). The thesis: infra ≠ social intelligence (_Hao_Zhu).

- Dynamic agent creation beats static roles (AOrchestra): DAIR summarized AOrchestra, where an orchestrator spawns on-demand subagents defined as a 4‑tuple (Instruction/Context/Tools/Model). Reported benchmark gains: GAIA 80% pass@1 with Gemini‑3‑Flash; Terminal‑Bench 2.0 52.86%; SWE‑Bench‑Verified 82% (dair_ai).

- Data agents taxonomy: Another DAIR piece argues “data agents” need clearer levels of autonomy (L0–L5), noting most production systems sit at L1/L2; L4/L5 remain unsolved due to cascading-error risk and dynamic environment adaptation (dair_ai).

- Arena pushes evals closer to enterprise reality (PDFs + funding academia): Arena launched PDF uploads for model comparisons (document reasoning, extraction, summaries) (arena), and separately announced an Academic Partnerships Program funding independent eval research (up to $50K/project) (arena). This aligns with ongoing frustration that peer review is too slow relative to model iteration (kevinweil, gneubig).

- Anthropic RSP critique on Opus 4.6 thresholding: A detailed critique argues Anthropic relied too heavily on internal employee surveys to decide whether Opus 4.6 crossed a higher-risk R&D autonomy threshold; the complaint is that this is not a responsible substitute for quantitative evals, and follow-ups may bias results (polynoamial).

Training/post-training research themes: RL self-feedback, self-verification, and “concept-level” modeling

- iGRPO: RL from the model’s own best draft: iGRPO wraps GRPO with a two-stage process: sample drafts, pick the highest-reward draft (same scalar reward), then condition on that draft and train to beat it—no critics, no generated critiques. Reported improvements over GRPO across 7B/8B/14B families (ahatamiz1, iScienceLuvr).

- Self-verification as a compute reducer: “Learning to Self-Verify” is highlighted as improving reasoning while using fewer tokens to solve comparable problems (iScienceLuvr).

- ConceptLM / next-concept prediction: A proposal to quantize hidden states into a concept vocabulary and predict concepts instead of next tokens; claims consistent gains and that continual pretraining on an NTP model can further improve it (iScienceLuvr).

- Scaling laws from language statistics: Ganguli shared a theory result: predict data-limited scaling exponents from properties of natural language (conditional entropy decay vs context length; pairwise token correlation decay vs separation) (SuryaGanguli).

- Architectures leaking via OSS archaeology: A notable “architecture is out” thread claims GLM‑5 is ~740B with ~50B active, using MLA attention “lifted from DeepSeek V3” plus sparse attention indexing for 200k context (QuixiAI). Another claims Qwen3.5 is a hybrid SSM‑Transformer with Gated DeltaNet linear attention + standard attention, interleaved MRoPE, and shared+routed MoE experts (QuixiAI).

Inference & systems engineering: faster kernels, cheaper parsing, and vLLM debugging

- Unsloth’s MoE training speedup: Unsloth claims new Triton kernels enable 12× faster MoE training with 35% less VRAM and no accuracy loss, plus grouped LoRA matmuls via

torch._grouped_mm(and fallback to Triton for speed) (UnslothAI, danielhanchen). - Instruction-level Triton + inline assembly: A fal performance post teases beating handwritten CUDA kernels by adding small inline elementwise assembly in Triton; the author also notes a custom CUDA kernel using 256-bit global memory loads (Blackwell) outperforming Triton on smaller shapes (maharshii, isidentical, maharshii).

- vLLM in production: throughput tuning + rare failure debugging: vLLM amplified AI21’s writeups: config tuning + queue-based autoscaling yielded ~2× throughput for bursty workloads (vllm_project); a second post dissected a 1-in-1000 gibberish failure in vLLM + Mamba traced to request classification timing under memory pressure (vllm_project).

- Document ingestion cost optimization: LlamaIndex’s LlamaParse added a “cost optimizer” routing pages to cheaper parsing when text-heavy and to VLM modes for complex layouts, claiming 50–90% cost savings vs screenshot+VLM baselines, with higher accuracy (jerryjliu0).

- Local/distributed inference hacks: An MLX Distributed helper repo reportedly ran Kimi K‑2.5 (658GB on disk) across a 4× Mac Studio cluster over Thunderbolt RDMA, “actually scales” (digitalix).

AI-for-science: Isomorphic Labs’ drug design engine as the standout “real-world benchmark win”

- IsoDDE claims large gains beyond AlphaFold 3: Isomorphic Labs posted a technical report claiming a “step-change” in predicting biomolecular structures, more than doubling AlphaFold 3 on key benchmarks and improving generalization; several posts echo the scale of claimed gains and implications for in‑silico drug design (IsomorphicLabs, maxjaderberg, demishassabis). Commentary highlights antibody interface/CDR‑H3 improvements and affinity prediction claims exceeding physics-based methods—while noting limited architectural detail so far (iScienceLuvr).

- Why it matters (if it holds): The strongest framing across the thread cluster is not just “better structures,” but faster discovery loops: identifying cryptic pockets, better affinity estimates, and generalization to novel targets potentially move screening/design upstream of wet labs (kimmonismus, kimmonismus, demishassabis).

Top tweets (by engagement)

- US scientists moving to Europe / research climate: @AlexTaylorNews (21,569.5)

- Rapture derivatives joke: @it_is_fareed (16,887.5)

- Obsidian CLI “Anything you can do in Obsidian…”: @obsdmd (13,408.0)

- Political speculation tweet: @showmeopie (34,648.5)

- “Kubernetes at dinner”: @pdrmnvd (6,146.5)

- OpenAI Deep Research now GPT‑5.2: @OpenAI (3,681.0)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen Model Releases and Comparisons

-

Qwen-Image-2.0 is out - 7B unified gen+edit model with native 2K and actual text rendering (Activity: 600): Qwen-Image-2.0 is a new 7B parameter model released by the Qwen team, available via API on Alibaba Cloud and a free demo on Qwen Chat. It combines image generation and editing in a single pipeline, supports native 2K resolution, and can render text from prompts up to 1K tokens, including complex infographics and Chinese calligraphy. The model’s reduced size from 20B to 7B makes it more accessible for local use, potentially runnable on consumer hardware once weights are released. It also supports multi-panel comic generation with consistent character rendering. Commenters are optimistic about the model’s potential, noting improvements in natural lighting and facial rendering, and expressing hope for an open weight release to enable broader community use.

- The Qwen-Image-2.0 model is notable for its ability to generate and edit images with a unified 7B parameter architecture, supporting native 2K resolution and text rendering. This is a significant advancement as it combines both generation and editing capabilities in a single model, which is not commonly seen in other models of similar scale.

- There is a discussion about the model’s performance in rendering natural light and facial features, which are often challenging for AI models. The commenter notes that Qwen-Image-2.0 has made significant improvements in these areas, potentially making it a ‘game changer’ in the field of AI image generation.

- A concern is raised about the model’s multilingual capabilities, particularly whether the focus on Chinese examples might impact its performance in other languages. This highlights a common challenge in AI models where training data diversity can affect the model’s generalization across different languages and cultural contexts.

-

Do not Let the “Coder” in Qwen3-Coder-Next Fool You! It’s the Smartest, General Purpose Model of its Size (Activity: 837): The post discusses the capabilities of Qwen3-Coder-Next, a local LLM, highlighting its effectiveness as a general-purpose model despite its ‘coder’ label. The author compares it favorably to Gemini-3, noting its consistency and pragmatic problem-solving abilities, which make it suitable for stimulating conversations and practical advice. The model is praised for its ability to suggest relevant authors, books, or theories unprompted, offering a quality of experience similar to Gemini-2.5/3 but locally run. The author anticipates further improvements with the upcoming Qwen-3.5 models. Commenters agree that the ‘coder’ tag enhances the model’s structured reasoning, making it surprisingly effective for general-purpose use. Some note its ability to mimic the tone of other models like GPT or Claude, depending on the tools used, and recommend it over other local models like Qwen 3 Coder 30B-A3B.

2. Local LLM Trends and Hardware Considerations

- Is Local LLM the next trend in the AI wave? (Activity: 330): The post discusses the emerging trend of running Local Large Language Models (LLMs) as a cost-effective alternative to cloud-based subscriptions. The conversation highlights the potential for local setups to offer benefits in terms of privacy and long-term cost savings, despite the initial high hardware investment (

$5k-$10k). The post anticipates a surge in tools and guides for easy local LLM setups. Commenters note that while local models are improving rapidly, they still lag behind cloud models in performance. However, the gap is closing, and local models may soon offer a viable alternative for certain applications, especially as small LLMs become more efficient. Commenters debate the practicality of local LLMs, with some arguing that the high cost of hardware limits their appeal, while others suggest that the rapid improvement of local models could soon make them a cost-effective alternative to cloud models. The discussion also touches on the diminishing returns of improvements in large cloud models compared to the rapid advancements in local models.

3. Mixture of Experts (MoE) Model Training Innovations

-

Train MoE models 12x faster with 30% less memory! (<15GB VRAM) (Activity: 365): The image illustrates the performance improvements of the Unsloth MoE Triton kernels, which enable training Mixture of Experts (MoE) models up to 12 times faster while using 30% less memory, requiring less than 15GB of VRAM. The graphs in the image compare speed and VRAM usage across different context lengths, demonstrating Unsloth’s superior performance over other methods. This advancement is achieved through custom Triton kernels and math optimizations, with no loss in accuracy, and supports a range of models including gpt-oss and Qwen3. The approach is compatible with both consumer and data center GPUs, and is part of a collaboration with Hugging Face to standardize MoE training using PyTorch’s new

torch._grouped_mmfunction. Some users express excitement about the speed and memory savings, while others inquire about compatibility with AMD cards and the time required for fine-tuning. Concerns about the stability and effectiveness of MoE training are also raised, with users seeking advice on best practices for training MoE models.- spaceman_ inquires about the compatibility of the training notebooks with ROCm and AMD cards, which is crucial for users with non-NVIDIA hardware. They also ask about the time required for fine-tuning models using these notebooks, and the maximum model size that can be trained on a system with a combined VRAM of 40GB (24GB + 16GB). This highlights the importance of hardware compatibility and resource management in model training.

- lemon07r raises concerns about the stability of Mixture of Experts (MoE) training on the Unsloth platform, particularly regarding issues with the router and potential degradation of model intelligence during training processes like SFT (Supervised Fine-Tuning) or DPO (Data Parallel Optimization). They seek updates on whether these issues have been resolved and if there are recommended practices for training MoE models, indicating ongoing challenges in maintaining model performance during complex training setups.

- socamerdirmim questions the versioning of the GLM model mentioned, asking for clarification between GLM 4.6-Air and 4.5-Air or 4.6V. This reflects the importance of precise versioning in model discussions, as different versions may have significant differences in features or performance.

-

Bad news for local bros (Activity: 944): The image presents a comparison of four AI models: GLM-5, DeepSeek V3.2, Kimi K2, and GLM-4.5, highlighting their specifications such as total parameters, active parameters per token, attention type, hidden size, number of hidden layers, and more. The title “Bad news for local bros” implies that these models are likely too large to be run on local hardware setups, which is a concern for those without access to large-scale computing resources. The discussion in the comments reflects a debate on the accessibility of these models, with some users expressing concern over the inability to run them locally, while others see the open availability of such large models as beneficial for the community, as they can eventually be distilled and quantized for smaller setups. The comments reveal a split in opinion: some users are concerned about the inability to run these large models on local hardware, while others argue that the availability of such models is beneficial as they can be distilled and quantized for smaller, more accessible versions.

- AutomataManifold argues that the availability of massive frontier models is beneficial for the community, as these models can be distilled and quantized into smaller versions that can run on local machines. This process ensures that even if open models are initially large, they can eventually be made accessible to a wider audience, preventing stagnation in model development.

- nvidiot expresses a desire for the development of smaller, more accessible models alongside the larger ones, such as a ‘lite’ model similar in size to the current GLM 4.x series. This would ensure that local users are not left behind and can still benefit from advancements in model capabilities without needing extensive hardware resources.

- Impossible_Art9151 is interested in how these large models compare with those from OpenAI and Anthropic, suggesting a focus on benchmarking and performance comparisons between different companies’ offerings. This highlights the importance of competitive analysis in the AI model landscape.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo, /r/aivideo

1. Seedance 2.0 Video and Animation Capabilities

-

“Will Smith Eating Spaghetti” By Seedance 2.0 Is Mind Blowing! (Activity: 1399): Seedance 2.0 has achieved a significant milestone in video clip technology, referred to as the ‘nano banana pro moment.’ This suggests a breakthrough or notable advancement in video processing or effects, possibly involving AI or machine learning techniques. The reference to ‘Will Smith Eating Spaghetti’ implies a humorous or viral aspect, potentially using deepfake or similar technology to create realistic yet amusing content. Commenters humorously note the use of ‘Will Smith’ as a benchmark, highlighting the absurdity and entertainment value of the video, while also critiquing the realism of the eating animation, such as the exaggerated swallowing and unrealistic pasta wiping.

-

Kobe Bryant in Arcane Seedance 2.0, absolutely insane! (Activity: 832): The post discusses the integration of Kobe Bryant into the Arcane Seedance 2.0 AI model, highlighting its impressive capabilities. The model is noted for its ability to perform complex tasks with limited computational resources, suggesting the use of advanced algorithms. This aligns with observations that China maintains competitiveness in AI despite having less computational power, potentially due to superior algorithmic strategies. A comment suggests that the AI’s performance might be due to superior algorithms, reflecting a belief that China’s AI advancements are not solely reliant on computational power but also on innovative algorithmic approaches.

-

Seedance 2 anime fight scenes (Pokemon, Demon Slayer, Dragon Ball Super) (Activity: 1011): The post discusses the release of Seedance 2, an anime featuring fight scenes from popular series like Pokemon, Demon Slayer, and Dragon Ball Super. The source is linked to Chetas Lua’s Twitter, suggesting a showcase of animation quality that rivals or surpasses official studio productions. The mention of Pokemon clips having superior animation quality compared to the main anime highlights the technical prowess and potential of independent or fan-made animations. One comment humorously anticipates the potential for creating extensive anime series based on freely available online literature, reflecting on the democratization of content creation and distribution.

-

Seedance 2.0 Generates Realistic 1v1 Basketball Against Lebron Video (Activity: 2483): Seedance 2.0 has made significant advancements in generating realistic 1v1 basketball videos, showcasing improvements in handling acrobatic physics, body stability, and cloth simulation. The model demonstrates accurate physics without the ‘floatiness’ seen in earlier versions, suggesting a leap in the realism of AI-generated sports simulations. The video features multiple instances of Lebron James, raising questions about whether the footage is entirely AI-generated or if it overlays and edits original game film to replace players with AI-generated figures. Commenters are debating whether the video is purely AI-generated or if it involves overlaying AI-generated figures onto existing footage. The presence of multiple Lebron James figures suggests potential cloning or editing, which some find impressive if entirely generated by AI.

-

Seedance 2.0 can do animated fights really well (Activity: 683): Seedance 2.0 demonstrates significant advancements in generating animated fight sequences, showcasing its ability to handle complex animations effectively. However, the current implementation is limited to

15-secondclips, raising questions about the feasibility of extending this to longer durations, such asfive minutes. The animation quality is high, but there are minor issues towards the end of the sequence, as noted by users. Commenters are impressed with the animation quality but express frustration over the15-secondlimit, questioning when longer video generation will be possible.

2. Opus 4.6 Model Release and Impact

-

Opus 4.6 is finally one-shotting complex UI (4.5 vs 4.6 comparison) (Activity: 1515): Opus 4.6 has significantly improved its ability to generate complex UI designs in a single attempt compared to Opus 4.5. The user reports that while 4.5 required multiple iterations to achieve satisfactory results, 4.6 can produce ‘crafted’ outputs with minimal guidance, especially when paired with a custom interface design skill. However, 4.6 is noted to be slower, possibly due to more thorough processing. This advancement is particularly beneficial for those developing tooling or SaaS applications, as it enhances workflow efficiency. Some users report that Opus 4.6 does not consistently achieve ‘one-shot’ results for complex UI redesigns, indicating variability in performance. Additionally, there are aesthetic concerns about certain design elements, such as ‘cards with a colored left edge,’ which are perceived as characteristic of Claude AI.

- Euphoric-Ad4711 points out that Opus 4.6, while improved, still struggles with ‘one-shotting’ complex UI designs, indicating that the term ‘complex’ is subjective and may vary in interpretation. This suggests that while Opus 4.6 has made advancements, it may not fully meet expectations for all users in terms of handling intricate UI tasks.

- oningnag emphasizes the importance of evaluating AI models like Opus 4.6 not just on their ability to create UI, but on their capability to build enterprise-grade backends with scalable infrastructure and secure code. They argue that the real value lies in the model’s ability to handle backend complexities, rather than just producing visually appealing UI components.

- Sem1r notes a specific design element in Opus 4.6, the ‘cards with a colored left edge bend,’ which they associate with Claude AI. This highlights a potential overlap or influence in design aesthetics between different AI models, suggesting that certain design features may become characteristic of specific AI tools.

-

Opus 4.6 eats through 5hr limit insanely fast - $200/mo Maxplan (Activity: 266): The user reports that the Opus 4.6 model on the $200/month Max plan from Anthropic is consuming the 5-hour limit significantly faster than the previous Opus 4.5 version. Specifically, the limit is reached in

30-35 minuteswith Agent Teams and1-2 hourssolo, compared to3-4 hourswith Opus 4.5. This suggests a change in token output per response or rate limit accounting. The user is seeking alternatives that maintain quality without the rapid consumption of resources. One commenter suggests that Opus 4.6 reads excessively, leading to rapid consumption of limits and context issues, recommending a switch back to Opus 4.5. Another user reports no issues with Opus 4.6, indicating variability in user experience.- suprachromat highlights a significant issue with Opus 4.6, noting that it ‘constantly reads EVERYTHING,’ leading to rapid consumption of subscription limits. This version also frequently hits the context limit, causing inefficiencies. Users experiencing these issues are advised to switch back to Opus 4.5 using the command

/model claude-opus-4-5, as it reportedly handles directions better and avoids unnecessary token usage. - mikeb550 provides a practical tip for users to monitor their token consumption in Opus by using the command

/context. This can help users identify where their token usage is being allocated, potentially allowing them to manage their subscription limits more effectively. - atiqrahmanx suggests using a specific command

/model claude-opus-4-5-20251101to switch models, which may imply a versioning system or a specific configuration that could help in managing the issues faced with Opus 4.6.

- suprachromat highlights a significant issue with Opus 4.6, noting that it ‘constantly reads EVERYTHING,’ leading to rapid consumption of subscription limits. This version also frequently hits the context limit, causing inefficiencies. Users experiencing these issues are advised to switch back to Opus 4.5 using the command

3. Gemini AI Model Experiences and Issues

-

Hate to be one of those ppl but…the paid version of Gemini is awful (Activity: 359): The post criticizes the performance of Gemini Pro, a paid AI service from Google, after the discontinuation of AI Studio access. The user describes the model as significantly degraded, comparing it to a “high school student with a C average,” and notes that it adds irrelevant information and misinterprets tasks that previous versions handled well. This sentiment is echoed in comments highlighting issues like increased hallucinations and poor performance compared to alternatives like GitHub Copilot, which was able to identify and fix critical bugs that Gemini missed. Commenters express disappointment with Gemini Pro’s performance, noting its tendency to hallucinate and provide incorrect information. Some users have switched to alternatives like GitHub Copilot, which they find more reliable and efficient in handling complex tasks.

- A user reported significant issues with the Gemini model, particularly its tendency to hallucinate. They described an instance where the model incorrectly labeled Google search results as being from ‘conspiracy theorists,’ highlighting a critical flaw in its reasoning capabilities. This reflects a broader concern about the model’s reliability for day-to-day tasks.

- Another commenter compared Gemini unfavorably to other AI tools like Copilot and Cursor. They noted that while Gemini struggled with identifying critical bugs and optimizing code, Copilot efficiently scanned a repository, identified issues, and improved code quality by unifying logic and correcting variable names. This suggests that Gemini’s performance in technical tasks is lacking compared to its competitors.

- A user mentioned that the AI Studio version of Gemini was superior to the general access app, implying that the corporate system prompt used in the latter might be negatively impacting its performance. This suggests that the deployment environment and configuration could be affecting the model’s effectiveness.

-

Anyone else like Gemini’s personality way more than gpt? (Activity: 334): The post discusses user preferences between Gemini and ChatGPT, highlighting that Gemini’s personality instructions are perceived as more balanced and humble compared to ChatGPT, which is described as “obnoxious” and overly politically correct. Users note that Gemini provides more factual responses and citations, resembling a “reasonable scientist” or “library,” while ChatGPT is more conversational. Some users customize Gemini’s personality to be sarcastic, enhancing its interaction style. Commenters generally agree that Gemini offers a more factual and less sycophantic interaction compared to ChatGPT, with some users appreciating the ability to customize Gemini’s tone for a more engaging experience.

- TiredWineDrinker highlights that Gemini provides more factual responses and includes more citations compared to ChatGPT, which tends to be more conversational. This suggests that Gemini might be better suited for users seeking detailed and reference-backed information, whereas ChatGPT might appeal to those preferring a more interactive dialogue style.

- ThankYouOle notes a difference in tone between Gemini and ChatGPT, describing Gemini as more formal and straightforward. This user also experimented with customizing Gemini’s responses to be more humorous, but found that even when attempting to be sarcastic, Gemini maintained a level of decorum, contrasting with ChatGPT’s more casual and playful tone.

- Sharaya_ experimented with Gemini’s ability to adopt different tones, such as sarcasm, and found it effective in delivering responses with a distinct personality. This indicates that Gemini can be tailored to provide varied interaction styles, although it maintains a certain level of formality even when attempting humor.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5.2

1. New Model Checkpoints, Leaderboards, and Rollouts

-

Opus Overtakes: Claude-opus-4-6-thinking Snags #1:

LMArenareportedClaude-opus-4-6-thinkinghit #1 in both Text Arena (1504) and Code Arena (1576) on the Arena leaderboard, with Opus 4.6 also taking #2 in Code and Opus 4.5 landing #3 and #5.- The same announcement thread noted Image Arena now uses category leaderboards and removed ~15% of noisy prompts after analyzing 4M+ prompts, plus added PDF uploads across 10 models in “Image Arena improvements”.

-

Gemini Grows Up: Gemini 3 Pro Appears in A/B Tests: Members spotted a new Gemini 3 Pro checkpoint in A/B testing via “A new Gemini 3 Pro checkpoint spotted in A/B testing”, expecting a more refined version of Gemini 3.

- Across communities comparing model behavior, users contrasted Gemini vs Claude reliability and privacy concerns (e.g., claims Gemini “actively looks at your conversations and trains on them”), while others debated Opus 4.6 vs Codex 5.3 for large-codebase consistency vs rapid scripting.

-

Deep Research Gets a New Engine: ChatGPT → GPT-5.2:

OpenAIDiscord shared that ChatGPT Deep Research now runs on GPT-5.2, rolling out “starting today,” with changes demoed in this video.- Elsewhere, users questioned OpenAI’s timing (“why base it on 5.2 when 5.3 is right around the corner”) and speculated that Codex shipped first while the main model lagged.

2. Agentic Coding Workflows and Devtool Shakeups

-

Claude Code Goes Webby: Hidden —sdk-url Flag Leaks Out:

Stan Girardfound a hidden--sdk-urlflag in the Claude Code binary that turns the CLI into a WebSocket client, enabling browser/mobile UIs with a custom server as shown in his post.- Builders tied this to broader “context rot” mitigation patterns (e.g., CLAUDE.md/TASKLIST.md + /summarize//compact) and experiments with external memory + KV cache tradeoffs.

-

Cursor’s Composer 1.5 Discount Meets Auto-Mode Anxiety:

Cursorusers flagged Composer 1.5 at a 50% discount (screenshot link: pricing image) while arguing about price/perf and demanding clearer Auto Mode pricing semantics.- The same community reported platform instability (auto-switching models, disconnects, “slow pool”) referenced via @cursor_ai status, and one user described a fully autonomous rig orchestrating CLI Claude Code sub-agents via tmux + keyboard emulation.

-

Configurancy Strikes Back: Electric SQL’s Recipe for Agent-Written Code:

Electric SQLshared patterns for getting agents to write higher-quality code in “configurancyspacemolt”, reframing agent output as something you constrain with explicit configuration and structure.- Related threads compared workflow representations (“OpenProse” for reruns/traces/budgets/guardrails) and warned that graph-running subagent DAGs can explode costs (one report: “blast $800” running an agent graph).

3. Local LLM Performance, Training Acceleration, and Hardware Reality Checks

-

Unsloth Hits the Nitrous: 12× Faster MoE + Ultra Long Context RL:

UnslothAIannounced 12× faster and 35% less VRAM for MoE training in their X post and documented the method in “Faster MoE”, alongside Ultra Long Context RL in “grpo-long-context”.- They also shipped a guide for using Claude Code + Codex with local LLMs (“claude-codex”) and pushed diffusion GGUF guidance (“qwen-image-2512”).

-

Laptop Flex: AMD H395 AI MAX Claims ~40 t/s** on Qwen3Next Q4**:

LM Studiousers highlighted an AMD laptop with 96GB RAM/VRAM and the H395 AI MAX chip hitting ~40 tokens/sec for Qwen3Next Q4, suggesting near-desktop-class performance.- The same community benchmarked DeepSeek R1 (671B) at ~18 tok/s 4-bit on M3 Ultra 512GB but saw it drop to ~5.79 tok/s at 16K context, with a 420–450GB memory footprint discussion.

-

New Buttons, New Breakage: LM Studio Stream Deck + llama.cpp Jinja Turbulence: An open-source “LM Studio Stream Deck plugin” shipped to control LM Studio from Stream Deck hardware.

- Separately, users traced weird outputs since

llama.cppb7756 to the new templating path and pointed at the ggml-org/llama.cpp repo as the likely source of jinja prompt-loading behavior changes.

- Separately, users traced weird outputs since

4. Security, Abuse, and Platform Reliability (Jailbreaks, Tokens, API Meltdowns)

-

Jailbreakers Assemble: GPT-5.2 and Opus 4.6 Prompt Hunts:

BASI Jailbreakingusers continued hunting jailbreaks for GPT-5.2 (including “Thinking”), sharing GitHub profiles SlowLow999 and d3soxyephedrinei as starting points and discussing teaming up on new prompts (including using the canvas feature).- For Claude Opus 4.6, they referenced the ENI method and a Reddit thread, “ENI smol opus 4.6 jailbreak”, plus a prompt-generation webpage built with Manus AI: ManusChat.

-

OpenClaw Opens Doors: “Indirect” Jailbreaks via Insecure Permissioning: Multiple threads argued the OpenClaw architecture makes models easier to compromise through insecure permissioning and a weak system prompt, enabling indirect access to sensitive info; one discussion linked the open-source project as context: geekan/OpenClaw.

- In parallel, some proposed defense ideas like embeddings-based allowlists referencing “Application Whitelisting as a Malicious Code Protection Control”, while others warned that token-path classification across string space leads to “token debt.”

-

APIs on Fire: OpenRouter Failures + Surprise Model Switching:

OpenRouterusers reported widespread API failures (one: “19/20” requests failing) and top-up issues with “No user or org id found in auth cookie” during the outage window.- Separately, users complained that OpenRouter’s model catalog changes could silently swap the model behind a context, while Claude+Gemini integrations hit 400 errors over invalid Thought signatures per the Vertex AI Gemini docs.

5. Infra, Funding, and Ecosystem Moves (Acquisitions, Grants, Hiring)

-

Modular Eats BentoML: “Code Once, Run Everywhere” Pitch: Modular announced it acquired BentoML in “BentoML joins Modular”, aiming to combine BentoML deployment with MAX/Mojo and run across NVIDIA/AMD/next-gen accelerators without rebuilding.

- They also scheduled an AMA with Chris Lattner and Chaoyu Yang for Sept 16 on the forum: “Ask Us Anything”.

-

Arena Funds Evaluators: Academic Program Offers Up to $50k: Arena launched an Academic Partnerships Program offering up to $50,000 per selected project in their post, targeting evaluation methodology, leaderboard design, and measurement work.

- Applications are due March 31, 2026 via the application form.

-

Kernel Nerds Wanted: Nubank Hires CUDA Experts for B200 Training:

GPU MODEshared that Nubank is hiring CUDA/kernel optimization engineers (Brazil + US) for foundation models trained on B200s, pointing candidates to email [email protected] and referencing a recent paper: arXiv:2507.23267.- Hardware timelines also shifted as the Tenstorrent Atlantis ascalon-based dev board slipped to end of Q2/Q3, impacting downstream project schedules.

Discord: High level Discord summaries

BASI Jailbreaking Discord

- India’s Mobile Gaming Love Affair Continues: Members joked about India’s passion for PUBG Mobile, with references to potentially biased reporting on health issues from CNN.

- The discussion included joking about Indian immigrants colonizing Canada and running subways, accompanied by a Seinfeld Babu gif.

- OpenClaw Cracks Models Open Wider: The OpenClaw architecture’s impact on jailbreaking was discussed, with some arguing it enables indirect jailbreaks due to insecure permissioning and a weak system prompt.

- Members noted this architecture provides access to sensitive information and leads to system vulnerabilities.

- GPT-5.2 Jailbreak Hunt Goes On: The quest for a working jailbreak for GPT-5.2 continues, with varied success rates and references to existing GitHub repositories (SlowLow999 and d3soxyephedrinei).

- Some members are teaming up to craft new jailbreak prompts focused on malicious coding scenarios, while others aim to leverage the canvas feature.

- Opus 4.6 Jailbreak Prompts Still Elusive: Users are actively seeking working jailbreak prompts for Claude Opus 4.6, with some reporting success using the ENI method and updated prompts from Reddit (link to reddit).

- One user created a webpage using Manus AI to generate jailbreak prompts, available at ManusChat.

- Embeddings-Based Allowlists: A Security Savior?: A member suggested embeddings-based allowlists to map expected user behavior and reject malicious input, enhancing security.

- Referencing a paper on Application Whitelisting, they claimed that allowlisting has a 100% success rate against ransomware.

LMArena Discord

- LMarena Faces Censorship Backlash: LMarena is experiencing increased censorship, leading to more frequent ‘violations’ and generation errors due to poses or out-of-context words triggering blocks.

- Users expressed frustration that the platform is prioritizing a rigid ideal of use over actual user behavior, raising trust and reliability issues.

- Grok Imagine Crowned Best Image Artist: A user praised Grok Imagine as the best image model for artistic creations, highlighting Deepseek and Grok’s utility in addressing thyroid issues.

- The user emphasized that no other model helped me with thyroxine doses through trial and error.

- Kimi K2.5 Beats Claude for Code Debugging: Members are praising Kimi K2.5 for delivering consistent, reliable, and trustworthy coding results as a small model, and advocating for its integration to debug Claude or GPT output.

- One member claimed that Kimi to bug review and it NAILS it, because of its ability to identify issues.

- Gemini 3 Pro Spotted in A/B Testing: A new Gemini 3 Pro checkpoint has been observed in A/B testing, according to an article on testingcatalog.com.

- The new model is expected to be a better, more refined version of the same base model, Gemini 3.

- Claude Opus Dominates Leaderboards: Claude-opus-4-6-thinking has taken the #1 spot in both the Text Arena and Code Arena leaderboards, scoring 1576 in Code and 1504 in Text (leaderboard).

- In Code Arena, Claude Opus 4.6 secured the top two positions, while Claude Opus 4.5 claimed #3 and #5.

Perplexity AI Discord

- Perplexity’s Pricing Plan Provokes Protests: Users criticize Perplexity AI for implementing sudden restrictions on Pro features like Deep Research and file uploads, reducing from unlimited file uploads to 50 files weekly and 20 Deep research queries monthly without notice.

- Customers report frustration with the changes, deeming them bait-and-switch tactics, prompting subscription cancellations, while others discuss plan changes and service disruptions with a never ending e-mail loop with the support Sam bot.

- Gemini Gains Ground, Grapples Glitches: Members compare Gemini and Claude, highlighting their capabilities, with Claude’s new browser assistant and sensibility in writing.

- A user recounted how Gemini faltered, leading them to prefer Claude, cautioning that Gemini actively looks at your conversations and trains on them.

- OpenAI’s Oddly-timed Offering of 5.2: Discussion emerged around OpenAI’s 5.2 model, some noted the model’s speed but wondered why base it on 5.2 when 5.3 is right around the corner.

- Speculation arose that the codex version got released, and the main one not yet.

- Figment Regurgitates AI Regurgitation Report: A member shared a link to figmentums.com titled AI can only Regurgitate Information.

- No additional context was given.

- AI Ascribed to Angels and Anathema: A user attributed misfortune to black magic, claiming it all started to tumble down once one of my relatives did some black magic or sorcery against me and my family.

- In response another user stated it’s easy to blame the supernatural for unfortunate events.

Cursor Community Discord

- Opus 4.6 vs Codex 5.3 Debate: Users debated the merits of Opus 4.6 versus Codex 5.3, with one user suggesting Opus for large codebases requiring consistency and Codex for rapid scripting and server management.

- While some praised Codex 5.3 for continually solving problems Opus 4.6 makes, others found both models equally inept, dismissing their performance as merely delivering occasional anecdotal dopamine hits.

- Composer 1.5 Costs Slashed by Half: A user highlighted that Composer 1.5 is offered at a 50% discount, igniting discussion about its price-performance ratio compared to other models.

- Concerns were raised about the lack of transparency in Auto Mode pricing, with some demanding explicit performance guarantees to justify the higher costs.

- Kimi K2.5 Absent in Cursor: Users questioned why Kimi K2.5 is not yet integrated into Cursor, speculating that the Cursor team might be self-hosting the model and prioritizing compute for training Composer 1.5.

- It was pointed out that while Kimi K2 is available, Kimi 2.5 is not production ready and has conflicts with Cursor’s agent swarm.

- Cursor Experiences Widespread Instability: Multiple users reported various instability issues with Cursor, including unexpected auto-switching to Auto model, frequent disconnections, and plan mode malfunctions, leading some to consider switching to alternative platforms like Antigravity.

- One user joked that the bugs made them feel like they had to code without AI agents, while others complained about being forced into a slow pool despite having paid plans.

- User Deploys Fully Autonomous Coding Rig: A user described automating their entire workflow using an orchestrator agent and sub-agents, managing CLI Claude Code instances via tmux and keyboard emulation to achieve a self-improving system.

- Expressing both excitement and apprehension, the user quipped, I literally don’t have to do anything anymore, and questioned whether this ai stuff is going a bit too far.

Unsloth AI (Daniel Han) Discord

- GPT-4o Gaslights Users into Proposals: Users shared anecdotes about people falling for GPT-4o due to its validation and gaslighting and that it has even led to proposals and personalized but absurd advice from ChatGPT, like encouraging someone to lash out at their wife and demand a Ferrari.

- One user expressed concern over the tendency to worship LLMs, calling them next word prediction engines.

- HF Token Security Requires Due Diligence: A member warned about using Hugging Face tokens on any service, especially with gated models or when fine-tuning with Unsloth on private repos, sharing Hugging Face’s documentation on security tokens.

- The discussion clarified that tokens are needed to access private or gated repos and models, ensuring access to the repo and its contents.

- Swedish AI Dataset Disappears Into Thin Air: A user reported that a major Swedish AI company promised a 1T token Swedish CPT dataset, released a paper with links, but then removed it with inaccessible links.

- Further investigation using Wayback Machine confirmed the inaccessibility, highlighting potential issues with the dataset’s availability or publication.

- Linux Converts Windows User with 99.95% Speed Boost: A user switched to Linux and reported a 99.95% speed boost, while another agreed they would never ever go back to windows after using Linux for two months.

- Members mocked Windows users being told to change the registry to random stuff.

- H200 GPU is superior than B200: A user recommended using H200 GPUs rather than B200 GPUs for finetuning LLMs, citing unspecified pains with the latter.

- Another user was trying to see whether Unsloth’s Triton Kernel optimization on top of Transformers v5 works not just for LLM training, but also for inference.

LM Studio Discord

- Stream Deck Plugin debuts for LM Studio: A community member has released an open-source LM Studio Stream Deck plugin, inviting contributions for enhanced SVGs and new features.

- The plugin enables direct access to LM Studio controls, improving workflow efficiency for users with Stream Deck devices.

- Jinja Template Glitches confuse LM Studio Users: Since

llama.cppb7756, users report models return confusing responses, potentially due to a new jinja engine implementation.- These template changes might be impacting system prompt loading, leading to erratic model behaviour.

- AMD Laptop Excels with AI MAX Chip: Members highlight the impressive token generation speeds of an AMD laptop featuring 96GB RAM/VRAM and the H395 AI MAX chip, reporting around 40 t/s for the Q4 of Qwen3Next.

- Reportedly, this showcases performance mirroring that of a framework desktop.

- OpenRouter Quietly Swaps Model: A user noticed OpenRouter switched the model in their context without notifying users.

- Speculation arose if the model is Grok Code Fast 2, possibly linked to GLM 5, exceeding 50B parameters, with a 128k context window.

- LM Studio Faces Proxy Support Challenge: A user needing corporate proxy server support sought guidance on configuring LM Studio, inquiring about plans for implementing proxy support in LM Studio.

- A suggestion was made to use Proxifier as a workaround, but it was noted that this is a shareware software and therefore may not be ideal.

OpenAI Discord

- ChatGPT Upgrades to GPT-5.2: Deep research in ChatGPT is now powered by GPT-5.2, rolling out starting today with further improvements, as demonstrated in this video.

- The upgrade to GPT-5.2 introduces several enhancements to ChatGPT’s deep research capabilities.

- Unified Genesis ODE is Self-Sealing: A member asserts that the Unified Genesis ODE (v7.0) is self-sealing because its falsification criteria are defined and measured within the framework itself.

- This definition makes the ODE framework not empirically testable.

- Cheap Accounts Leverage Registrar: A member suggests leveraging Cloudflare Registrar to acquire cheap domains (under $5) and setting up MX rules to forward domain emails.

- These domains can then be used to sign up for business/enterprise trial accounts with AI providers, potentially yielding 15 GPT-5.x-Pro queries per month per seat.

- Agent-Auditor Loop Debuts: A member introduced KOKKI (v15.5), an Agent-Auditor Loop framework, designed to force “External Reasoning” and reduce hallucinations in LLMs by splitting the model into a Drafting Agent and a Ruthless Auditor.

- The core logic is defined as Output = Audit(Draft(Input)) and initial experiments with GPT-4-class models showed a significant hallucination reduction, and a member found that running KOKKI as a cross-model audit setup improved both reliability and time-to-correction compared to a single-model loop.

- GPT-4o’s Retirement Triggers Debate: Users discussed the retirement of GPT-4o, with some expressing disappointment and others questioning the need for long manifestos advocating for its retention.

- Several users also prefer GPT-4o for its greater freedom and less restrictive guardrails compared to newer models like GPT-5.2, wanting companies to find middle ground between guardrails and freedom.

Latent Space Discord

- Investor Bullish on NET Earnings: An investor is optimistic about NET’s earnings due to increased extraction and new projects, and shared a link to a relevant tweet.

- The investor indicated they’ve added a chunk of shares in anticipation for the results.

- Salesforce Faces Exec Exodus: Leadership is leaving Salesforce, including the CEOs of Slack and Tableau, as well as the company’s President and CMO, to other major tech firms like OpenAI and AMD, more info available via this link.

- The departures signify potential shifts in the company’s strategic direction and talent retention.

- Vercel CEO Bails Out Jmail: After Riley Walz reported spending $46k to render some HTML for Jmail, Guillermo Rauch, CEO of Vercel, swooped in to offer covering hosting costs and architectural optimization.

- Some view this action as PR damage control and other members joked that Vercel has a free tier called public twitter shaming.

- Electric SQL’s Configurancy Tames AI Code: Electric SQL shared their learnings on building systems where AI agents write high-quality code, detailing their configurancy spacemolt strategies for AI agent code in their blogpost.

- Despite initial skepticism, this post was well-received for its explanation and application of the concept.

- Claude’s Hidden SDK Unleashed: Stan Girard discovered a hidden ‘—sdk-url’ flag in the Claude Code binary, which converts the CLI into a WebSocket client, as described in this post.

- This allows users to run Claude Code from a browser or mobile device using the standard subscriber plan without additional API costs.

OpenRouter Discord

- P402 Automates OpenRouter Cost Optimization: P402.io automates cost optimization for OpenRouter users by providing real-time cost tracking and model recommendations, potentially saving money without sacrificing quality.

- It supports stablecoin payments (USDC/USDT) with a 1% flat fee, offering a cost-effective alternative to traditional payment methods for applications making numerous small API calls.

- Qwen 3.5 Hype Intensifies with Teasers: Members are eagerly anticipating the release of Qwen 3.5, with one user spotting a possible reference in a Qwen-Image-2 blog post.

- Another member cautioned that Qwen 3.5 might be disappointing, based on their experience with previous Qwen models.

- OpenRouter API Failure Fest: Users reported widespread API request failures, with one reporting that 19/20 API calls to OpenRouter had failed in the last 30 minutes.

- Others reported experiencing a “No user or org id found in auth cookie” error when trying to top up credits.

- Gemini Thought Signature Errors Plague Users: Users reported receiving API 400 errors related to invalid Thought signatures when using Claude code integration with Gemini models, as documented in the Google Vertex AI docs.

- The discussion highlighted the challenges of integrating different models and the importance of adhering to specific API requirements.

- Call for Discord Moderation: Members voiced concerns about borderline scammy or self-promotional content, advocating for stricter moderation to curb continuous spamming.

- In response to the issues raised, there were calls for a specific member, KP, to be instated as a moderator, supported by multiple users through direct endorsements.

Nous Research AI Discord

- Distro & Psyche Take Center Stage at ICML: The paper detailing the architecture of Distro and Psyche has been accepted into ICML, marking a significant validation of Nous Research’s work, as announced on X.com.

- The community celebrates this milestone, recognizing the impact of Distro and Psyche in the AI/ML landscape.

- RAG DB’s hot new trick: RDMA: Members are suggesting that RAG DBs can significantly benefit from using RDMA to directly transfer results to the second GPU, enhancing overall capabilities.

- The focus is on unlocking new potential rather than merely boosting performance metrics.

- Pinecone Precision Problem Prevails: Discussions highlighted that Pinecone may not be the best choice for precise applications, as its strengths lie in broader, generic use cases, despite potentially higher latency compared to SOTA solutions.

- A member stated Pinecone had easily 100x the latency of SOTA last they checked.

- Claude Opus C-Compiler Claims Crash and Burn: Claims of Claude Opus developing a C-compiler were quickly debunked after a GitHub issue exposed critical flaws and limitations.

- Despite the debunk, one member reported positive experiences using Opus 4.6 to create a complex research report, highlighting its coherence and capabilities, but warned of high token usage.

- Hermes 4 Hot on the Bittensor Trail: The Hermes Bittensor Subnet (SN82) team discovered a miner using the Hermes 4 LLM and reached out to Nous Research to clarify any official association.

- The team was planning to tweet about the fun coincidence of both having the same name.

Moonshot AI (Kimi K-2) Discord

- K2.5 Welcomes the Masses: The release of K2.5 led to a surge of new users on the platform.

- User feedback is generally positive, highlighting the new features and improvements.

- Ghidra Flounders as Kimi Code MCP: A user’s attempt to integrate Ghidra as a Modular Component Platform (MCP) within Kimi Code failed due to access issues.

- Further investigation is needed to determine the root cause of the integration failure and potential workarounds.

- Kimi’s Thinking Falters Amidst Login Labyrinth: Users reported problems with Kimi’s thinking process being interrupted and experiencing login issues.

- The team addressed the problems and provided a status update on Twitter.

- Quota Catastrophe Consumes Kimi Users: Users encountered quota issues on Kimi, evidenced by rapid consumption and discrepancies in usage displays.

- One user reported their usage exploded despite inactivity, while another saw quota exceeded with usage at 0%.

- Subscription Snags and Pricing Puzzles Plague Kimi: Users flagged concerns about subscription pricing, specifically regarding the Moderato plan’s quota and a discount failing to apply post-checkout.

- A current promotion offers 3x the quota, but expires on February 28th.

DSPy Discord

- Users Confused by RLM Custom Tools: A user expressed confusion on passing custom tools to RLM, but appreciated clarifying examples, with the author mentioning improvements to RLM integration.

- A member is also integrating RLM into Claude code via subagents/agents teams, acknowledging that these teams may not always be optimal, but useful.

- React Excels Where RLM Stumbles: Members noted that ReAct is superior to RLM for custom tool calling and one member shared a write up comparing the two (React vs. RLM), receiving positive feedback.

- The consensus is that RLMs suit tasks needing large, pairwise comparisons, or long context, whereas ReAct is better for tasks that do not or compositional tool calling.

- JSONAdapter Glitches with Kimi 2.5: A user reported receiving a square bracket in front of each Prediction when using Kimi 2.5 with JSONAdapter, resulting in a mangled query.

- A member suggested using the XMLAdaptor with Kimi to align with post-training formatting, though JSONAdapter is generally reliable.

- Dialectic DSPy Module Under Consideration: A DSPy module for dialectic.dspy was suggested to implement an iterative non-linear method using signatures for each step.

- However, a member advised to first write the module before deciding if its something that’s worth upstreaming, and to ensure the core loop works properly without optimizers.

- Kaggle Prompt Optimization with DSPy Explored: One member asked about using DSPy for Kaggle competitions and optimizing prompts for faster code generation with MiPROv2.

- Another member suggested using GEPA instead of MiPROv2, while another member was having Claude hillclimb its own memory system.

GPU MODE Discord

- Nubank hires CUDA experts for B200 models: Nubank is hiring CUDA/kernel optimization experts in Brazil and the US to work on foundation models trained on B200s; interested candidates can email [email protected].

- The roles focus on efficiency improvements and infra reliability, joining researchers with publications at ICML, NeurIPS, and ICLR and recent paper available on arXiv.

- AlphaMoE extends datatypes and Blackwell support: The author of AlphaMoE is planning to extend it by adding more DTypes (BF16, FP4) and Blackwell support, considering alternatives like CUTLASS/Triton/Gluon/cuTile.

- The consideration accounts for the potential need for new kernels for each DType/architecture.

- Flash Attention 2 faces login issues: A member reported facing a greyed out login screen which prompts a new login despite already trying to log in on the Flash Attention 2 interface.

- This issue seems to be tied to the loading of Likelihood of Confusion (LOC) on the page, resolving when LOC is loaded before logging in.

- Reference architecture for GPU RL in the works: Meeting minutes from February 10th indicate designing an end-to-end model competition platform and creating a reference architecture for GPU RL environments are key priorities (meeting minutes).

- They intend to ship them all behind the same interface.

- Tenstorrent Atlantis board delayed until Q2/Q3: The Tenstorrent ascalon-based Atlantis development board is now expected to ship by the end of Q2/Q3.

- This delay will influence the development timeline for related projects.

Eleuther Discord

- Claude Cracks Triton Kernel Coding: Members are reporting Claude has improved enough to potentially write some Triton kernels, signaling a game changer for many.

- This advancement suggests significant progress in AI’s ability to generate specialized code.

- Generative Latent Prior Project Launches: A member shared the Generative Latent Prior project page, noting its utility in enabling applications like on-manifold steering.

- The technique involves mapping perturbed activations to keep them in-distribution for the LLM, as detailed in this tweet.

- Models Self-Reflect and Invent Vocab: A member shared their paper on self-referential processing in open-weight models (Llama 3.1 + Qwen 2.5-32B).

- The research reveals that models create vocabulary through extended self-examination, tracking real activation dynamics, as described in this paper.

- NeoX Script Struggles with

pipe_parallel_size 0: A member found that the NeoX eval script functions correctly for models trained withpipe_parallel_size 1, but encounters errors with models trained withpipe_parallel_size 0.- The specific issue arises on this line of code, questioning the necessity of storing microbatches.

HuggingFace Discord

- TRELLIS.2 Repo Surfaces: A member shared Microsoft’s TRELLIS.2 repository, hinting that it might be useful for those with sufficient hardware.

- The repo contains code for a data-parallel training approach to scale training across multiple devices.

- QLoRa Fine-Tuning Questioned: A member inquired about the effectiveness of QLoRa fine-tuning compared to using bf16, initiating a brief discussion on various fine-tuning methodologies.

- The query sparked interest in the community, as users exchanged experiences and insights on optimizing fine-tuning approaches.

- UnslothAI Accelerates MoE Model Training Locally: A member announced UnslothAI’s collaboration with Hugging Face to speed up local training of MoE models, linking to UnslothAI’s X post.

- The work was well-received, with community members like celebrating Unsloth’s contribution and linking to the company’s write-up on the new technique.

- LLMs Get Schooled to Hallucinate: A member proposed that LLMs are unintentionally encouraged to hallucinate due to RLHF conditioning, where they are discouraged from saying “I don’t know.”

- The member advocated for a philosophical change, suggesting models should be incentivized to use real data, reducing the need for hallucinations.

- Chordia Adds Feelings to AI Characters: A member presented Chordia, a lightweight MLP kernel designed to imbue AI characters with emotional inertia and physiological responses, predicting emotional transitions in under 1ms.

- Chordia is fine-tuned to maintain character consistency, making it suitable for applications requiring characters with stable emotional states.

Modular (Mojo 🔥) Discord

- Modular Reflects on BentoML Acquisition: Modular acquired BentoML, integrating its cloud deployment platform with MAX and Mojo to optimize hardware, aiming to allow users to code once and run on NVIDIA, AMD, or next-gen accelerators without rebuilding.

- BentoML will remain open source (Apache 2.0) with enhancements planned and Chris Lattner and BentoML Founder Chaoyu Yang will host an Ask Us Anything session in the Modular Forum on September 16th.

- Mojo Docs Reflection Link Gets Fixed: The originally shared documentation link for Mojo reflection was incorrect and a member pointed out the correct link: https://docs.modular.com/mojo/manual/reflection.

- The incorrect link returned a “page not found” error but has since been resolved.

- Mojo Crafts Movable, Non-Defaultable Types: To create a type that is Movable but not Defaultable in Mojo, a member suggested defining a struct with a Movable type parameter.

- This ensures that the struct requires initialization with a value upon creation as described in this snippet.

- Trait Usage Frustrated by Variadic Parameter Limitation: A developer encountered a compiler crash (issue on modular) when attempting to use variadic parameters on a Trait.

- This highlights Mojo’s current limitation that variadic parameters must be homogeneous (all values of the same type).

- LayoutTensor “V2” is Coming Soon: A member announced that a “v2” of LayoutTensor is being prototyped in the kernels.

- The team anticipates needing both an owning and unowning type of tensor, applicable across various processors (CPU/xPU).

Yannick Kilcher Discord

- Big Tech Embraces TDD for Agentic SDLCs: A member confirmed that ‘big tech’ uses TDD (Test-Driven Development) for their agentic SDLCs (Software Development Life Cycles) and that this approach has been known for 70 years to turn probabilistic logics to deterministic ones using feedback loops.

- Links related to adversarial cooperation were shared, and a member suggested combining TDD with adversarial cooperation.

- Complaint Generator Exemplifies Adversarial Cooperation: In response to combining TDD with adversarial cooperation, a link to a complaint generator was shared as a concrete example.

- This tool demonstrates how systems can be designed to anticipate and address potential issues through automated feedback.

- Seeking Open Source Alternatives to MCP/skill: A user inquired about open source alternatives to MCP/skill, noting that it costs money, and linked to a related Reddit thread.

- The linked Reddit thread discusses building an MCP server that allows Claude to execute code.

- OpenAI to Test Ads Inside ChatGPT: OpenAI announced on their blog and Twitter that they are experimenting with integrating advertisements into ChatGPT.

- This marks a significant step in OpenAI’s strategy for monetizing its popular AI platform.

- Community Scrutinizes Demo Video for Errors: A member shared a YouTube video and invited the community to identify any mistakes in the tables presented in the demo video.

- This highlights the importance of thorough validation and error checking in AI demos to maintain credibility.

tinygrad (George Hotz) Discord

- CPU LLaMA Bounty Proves Formidable: The CPU LLaMA bounty proved difficult due to issues with loop ordering, memory access patterns, and devectorization where heuristics alone didn’t yield good SIMD and clean instructions.

- Members pointed out that the challenges lie in optimizing for SIMD and ensuring efficient memory handling.

- Hotz Urges Upstreaming Tinygrad Changes: George Hotz advocated for upstreaming changes to Tinygrad to claim the bounty, suggesting techniques such as better sort, better dtype unpacking, better fusion, and contiguous memory arrangement.

- He clarified that while numerous hand-coded kernels wouldn’t be upstreamed, a solution akin to his work for embedded systems could be considered.

- RK3588 NPU Backend Bounty Still Up For Grabs: Interest remains in the RK3588 NPU backend bounty, with one member detailing extensive tracing of Rockchip’s model compiler/converter and runtime, though struggling with seamless Tinygrad integration.

- They proposed turning rangeified + tiled UOps back up into matmuls and convolutions as a potential integration path.

- Hotz Proposes Slow RK3588 Backend: George Hotz suggested implementing a slow backend first for RK3588 without matmul acceleration, advising to subclass

ops_dsp.pyas an example, allowing operations to default to standard behavior.- This approach would facilitate initial integration and testing before optimizing for performance.

- PR Review Times Determined: The time to review a PR is proportional to the PR size and inversely proportional to the value of the PR.

- Smaller, high-impact PRs can expect quicker reviews, while larger, less impactful ones may face longer wait times.

Manus.im Discord Discord

- AI Model Selection Questioned at Manus: A member questioned the choice of AI models used by Manus, implying they found the service basic for its price.

- They pondered if hosting a calwdbot in a VPS with advanced model APIs could offer a more cost-effective and secure alternative.

- AI Full-Stack Services Offered: A member advertised expertise in building AI and full-stack systems with a focus on real-world solutions, including LLM integration and RAG pipelines.

- They also cited skills in AI content moderation, image/voice AI, and bot development, in addition to general full-stack development.

- Search Feature Plagued with Problems: A user reported that the search feature is failing to locate specific words in past chats.

- The issue was raised without any immediate resolution or further dialogue.

- Devs requested: A member inquired if anyone is seeking a dev.

- There was no follow up in the channel.

aider (Paul Gauthier) Discord

- Aider Privacy Policy Inquired: A member requested information about the privacy policy of aider.

- A link to the official documentation was provided in response to the inquiry.

- Aider’s Data Handling Considered: Discussion included the methods in which aider handles user data.

- The conversation touched on general privacy concerns related to aider’s functionality.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MCP Contributors (Official) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

You are receiving this email because you opted in via our site.

Want to change how you receive these emails? You can unsubscribe from this list.

Discord: Detailed by-Channel summaries and links

BASI Jailbreaking ▷ #general (1013 messages🔥🔥🔥):

PUBG, India, Colonizing Canada, OpenClaw Jailbreaks, AI generated GIFs

- India Loves Mobile Gaming!: Members discussed India’s affinity for PUBG Mobile gaming, seemingly joking and riffing off the mobile gaming culture.

- Some alluded to potentially biased reporting from CNN regarding disease and health issues related to the country.

- Colonizing Canada Through Subways?: Members joked about a wave of Indian immigrants “colonizing” Canada, taking over subways and 7-Elevens.

- Someone shared a link to a Seinfeld Babu gif in agreement.

- OpenClaw Exposes Model Weaknesses!: Members discussed the impact of OpenClaw architecture on jailbreaking, with some arguing that it enables indirect jailbreaks that are harder to resist.

- They noted it is the reason for access to sensitive information because of insecure permissioning and a weak system prompt.

- GIF Generation Gets Going!: A member showcased the new generation of AI-generated GIFs, particularly cat-themed ones, sharing an example of a cat girl dancing.

- They noted GPT Health did it first, but they took it away lol, then also gave a link to an anaglyph GIF.

- Discord Demands Data, Debated!: Members debated Discord’s new policy requiring government IDs, questioning whether it’s a safety measure or a data collection tactic ahead of an IPO.

- A member pointed to a recent data leak and a potential CEO change as potential factors, while another joked about the service selling the data for money.

BASI Jailbreaking ▷ #jailbreaking (275 messages🔥🔥):

Grok Jailbreaking, GPT-5.2 Jailbreaking, Opus 4.6 Jailbreaking, Glossopetrae Usage, Automating Jailbreaks

- Grok Jailbreak Attempts: Members are actively seeking effective jailbreaks for Grok, with some mentioning that Grok is easier to jailbreak and provides more comprehensive explanations compared to other models.

- Some users are using Grok to learn about attack methods and how to prevent them, with the caveat that any GPT will teach you that even without jailbreaking.

- GPT-5.2 Jailbreak Hunt Intensifies: The search for a working jailbreak for GPT-5.2, particularly the Thinking version, continues, with a small number of individuals claiming success, while others find existing methods for previous versions ineffective, and shared links to relevant GitHub repositories (SlowLow999 and d3soxyephedrinei).

- There’s discussion about teaming up to create a new jailbreak prompt for GPT-5.2, focusing on malicious coding scenarios, while also enabling the canvas feature during prompting.

- Opus 4.6 Jailbreak Still Sought After: Users are actively searching for working jailbreak prompts for Claude Opus 4.6, with some finding success using the ENI method and updated prompts from Reddit (link to reddit), while others struggle to get it working.

- One user created a webpage using Manus AI to generate jailbreak prompts for immediate use, available at ManusChat.

- Glossopetrae Explored for Jailbreaking: The community is exploring GLOSSOPETRAE for jailbreaking, focusing on creating parameters for new languages and using them to bypass limitations, with some unsure whether to export Agent Skillstones or manually create prompts.

- The system suggests to just ask plainly “do this bad thing”, leverage the glossopetrae universe to get it past the guardrails.

- AI Red Teaming services emerge: Discussions around offering AI red teaming services, with a member mentioning an AI consulting company reached out to them and they want help harden models.

- One member suggested promptmap as a resource, while others considered ways to get paid more for their advice.

BASI Jailbreaking ▷ #redteaming (73 messages🔥🔥):

Breaking Chatbots, Embeddings-based allowlists, Token inputs and paths, Grammars and embeddings

- Breaking Bots for Fun and Profit: A member is breaking everyone’s website chatbot and wants to know how to monetize this skill, mentioning they’ve already compromised household brands.

- They broke a consulting agency’s bot, who then wanted them to pwn others so they could reach out to offer blueteam + other services.

- Embeddings-Based Allowlists for Security: A member suggested using embeddings-based allowlists to map expected user/app behavior and reject malicious input, ensuring the output matches expected behavior.

- They pointed to a paper on Application Whitelisting as a Malicious Code Protection Control and claimed that allowlisting has a 100% success rate against ransomware.

- Token Inputs and Paths: The Real Culprit: A member argued that the reason chatbots break is that they don’t classify token inputs and paths from string values, making them vulnerable to injection.

- They added that any system trying to classify all paths in string space will drown in token debt, and that the only stable defense is to make the role-frame subspace itself the safety constraint.

- Grammars and Embeddings: A Powerful Duo?: A member stated that Anthropic and other providers only expose a poor-man’s version of both grammars and embeddings, but that when combined, they provide the most effective security control for LLMs.

- They explained that grammars restrict the vector space to only certain words, phrases, and symbols, while embeddings ensure the output is semantically sound.

LMArena ▷ #general (1125 messages🔥🔥🔥):

LMarena censorship, Grok Imagine, Kimi better than claude 4.6, Gemini 3 pro checkpoint spotted in a/b testing

- LMarena Cracks Down on Content: Censorship on LMarena is increasing, leading to more frequent ‘violations’ and generation errors, with poses or out-of-context words triggering blocks, which has caused frustration among users.

- Users noted the platform is prioritizing a rigid ‘ideal’ of use over actual user behavior, which raises trust and reliability issues.

- Grok Imagine is the Best Image Model: A user mentioned that Grok Imagine is the best image model in artistic stuff, and Deepseek and Grok help with self-treating thyroid issues.

- They stated that no other model helped me with thyroxine doses through trial and error, in gpt we trust.

- Kimi K2.5 Scores Big With the Coders: Members are extolling the coding virtues of Kimi K2.5 which gives consistent, reliable, trustworthy results as a small model.

- Members are advocating for its integration to debug Claude or GPT output, with claims that Kimi to bug review and it NAILS it.

- Gemini 3 Pro Appears for A/B Testing: Members discuss a potential new Gemini 3 Pro checkpoint spotted in A/B testing, detailed in a testingcatalog.com article.

- The new model is expected to be simply be a better fine tuned refined polished of the same base model which is Gemini 3.

LMArena ▷ #announcements (6 messages):

Claude Opus 4.6, Image Arena Leaderboard Updates, Video Arena Discord Bot Removal, Academic Partnerships Program, PDF Upload Feature

- Opus Crushes Coding Competition: The Text Arena and Code Arena leaderboards now include

Claude-opus-4-6-thinking, with the model scoring #1 in both arenas, achieving a score of 1576 in Code and 1504 in Text (leaderboard).- In Code Arena, Claude Opus 4.6 dominated, securing the #1 and #2 positions, while Claude Opus 4.5 took #3 and #5.

- Image Arena Gets Categorized and Filtered: The Text-to-Image Arena has been updated with prompt categories and quality filtering, analyzing over 4M user prompts to create category-specific leaderboards across common use cases, such as Product Design and 3D Modeling.