We’ve added Wing Lian’s discord to this tracker, and will be recording a pod with him today.

Anthropic “went ahead and fixed the glitch” by adding a prompt to Greg Kamradt’s needle in a haystack test:

In other news, Schmidhuber wants to remind you he’s always been right.

[TOC]

OpenAI Discord Summary

- Discussion revolved around Google’s Gemini Pro and Gemini Ultra models, comparing their performance to OpenAI’s GPT-4 and GPT-3.5. Users’ experiences varied with some finding GPT-4 to outperform Gemini in some benchmarks. However, consensus on model performance may depend on Gemini Ultra, which at time of discussion was not available.

- QUERY: “

@feltsteam0mentioned hearing rumors about a release at the end of December or early January, but no official sources were provided.” OMITTED DUE TO INSUFFICIENT CONTEXT - A significant discussion took place regarding the perceived “selective censorship” in language models and its potential impact on performance. Users, like

@picturesonpictures, expressed concerns over how updates may negatively impact model performance. - Shared a Bloomberg article discussing possible regulation of AI by the EU which could impact language models like ChatGPT.

- Users expressed dissatisfaction with the message limitations associated with ChatGPT Plus. The issue seemed related to reaching the limit of 40 messages every 3 hours prematurely due to network errors.

- Users shared their experiences with different language models, including BingChat. Many felt that the quality of BingChat responses was subpar compared to ChatGPT.

- Users faced challenges with upgrading their ChatGPT Plus subscriptions due to an unexplained temporary pause by OpenAI.

- Discussion on various system issues users faced using DALL-E and GPT, including disappearing custom knowledge files, network errors, and the inability to save GPT actions after inserting an OpenAPI JSON.

@solbusprovided support and suggested potential solutions. - Users asked about possible applications of language models, including for image generation, audio analysis, and MIDI sequence analysis, highlighting hopes for future models like GPT-5.

- Discussed the concept of prompt engineering, essentially crafting prompts to get desired AI outputs. Users discussed how to request changes to AI-generated images, generate questions and answers from a PDF using a prompt, and shared a YouTube tutorial on effective prompting. The technique’s effectiveness is subject to the quality and detail of user input as noted by

@gerhardsw_73789.

OpenAI Channel Summaries

▷ #ai-discussions (190 messages🔥🔥):

-

Gemini Model Discussion: Users discussed Google’s new AI models, Gemini Pro and Gemini Ultra. Some users expressed dissatisfaction with the rollout of Gemini, including

@drighten_, and compared Gemini’s performance to OpenAI’s GPT-4 and GPT-3.5.@feltsteam0noted that GPT-4 seems to outperform Gemini in some benchmarks. -

GPT-4.5 Speculations: A few users engaged in speculation about an upcoming GPT-4.5 model from OpenAI.

@feltsteam0mentioned hearing rumors about a release at the end of December or early January, but no official sources were provided. -

Language Model Performance: Users discussed the performance of various language models. A concern was raised by

@picturesonpicturesregarding “selective censorship” and how updates may negatively impact model performance.@vantagespnoted that Google’s Gemini is good at identifying patterns. -

Potential Regulation of AI:

@clockrelativity2003shared a link to a Bloomberg article about potential European Union regulation of artificial intelligence, including AI like ChatGPT. -

Gemini Rollout: Some users discussed the rollout of Google’s Gemini Pro model in different regions.

@feltsteam0mentioned it has been rolled out in most countries except the EU/UK, and@danyer37from Colombia reported his Bard was still in Spanish and didn’t have Gemini. -

Gemini vs GPT-4: There was considerable debate about Gemini’s performance in relation to GPT-4.

@exiled_officialseemed to believe that Google’s Gemini project was not proceeding as expected.@youraveragedevexpressed the view that OpenAI has a good chance to “embarrass” Google, given the performance of the two models on text benchmarks.

▷ #openai-chatter (362 messages🔥🔥):

- Evaluation of Google’s Gemini Model: There was a spirited discussion about Google’s newly launched Gemini Pro model, which powers Bard. Some users claim it significantly outperforms GPT-4, while others believe GPT-4 retains the lead. The definitive answer will likely rest with the release of Gemini Ultra, which is currently unavailable.

- Performance and Cap Issues with ChatGPT PLUS: Users expressed discontent regarding their message limits with ChatGPT Plus, which stands at 40 messages every 3 hours. Several users reported reaching their limits prematurely due to network errors. There was speculation on possible future increases in the message cap.

- Pros & Cons of Different Language Models: Users compared different language models, including BingChat, and discussed their respective shortcomings and strengths. Many users felt that the quality of BingChat responses is subpar compared to ChatGPT.

- OpenAI Subscriptions Temporarily Paused: Several users expressed frustration with the inability to purchase subscriptions or upgrade their existing subscriptions to ChatGPT Plus due to a temporary pause by OpenAI. No definitive end date is known for this pause.

- Other Miscellaneous Topics: Users also explored ways to use language models for different applications such as image generation, audio analysis, and MIDI sequence analysis. They also shared their hopes for capabilities in future models, such as GPT-5, to better meet their specific use cases.

▷ #openai-questions (64 messages🔥🔥):

- Access to DALL-E for Free: User

@lucasc.234asked if DALL-E 2 can be used for free.@solbussuggested that free credits could be claimed, and also mentioned the possibility of using Bing’s image creator for DALL-E 3. - Issues with Uploading Custom Knowledge to GPT:

@cat.hemlockreported encountering a problem where after attempting to upload custom knowledge files to their GPT, the files appeared to be absent when reopening the editor. This problem affected GPT function and was confirmed by other users, as indicated by a link provided by@solbusto a bug reports post on Discord. - Mistaken Output for Mathematical Problems: User

@samihahahareported receiving problematic outputs for mathematical problems.@eskcantasuggested that the AI was misusing LaTeX formatting and offered a solution which involved reminding the AI of the correct LaTeX formatting instructions. - Account Upgrade to Paid Version:

@fearlessdigitalmentioned a delay in receiving a response to an upgrade request to a paid account, leading to concerns about whether non-personal emails (like success@) were acceptable. - Disappearing Images in DALL-E:

@spectre120and@solbusdiscussed issues with images disappearing in DALL-E and possible solutions. ‘Saving to Collections’ was recommended as a way to ensure that images are not lost. - System Failures: Users

@vigneshs,@pichytweets,@satanhashtag,@eskcantaand@_diogenesque_reported various system issues, including network errors, issues with the Plus subscription on desktop, and disappearing chats in the app.@solbusengaged with these users actively to understand their problems better and suggested potential solutions.

▷ #gpt-4-discussions (36 messages🔥):

- Issues with GPT knowledge files and message limits:

@alanwunschepointed out issues with GPT knowledge files getting randomly deleted and not being visible on the status page. There was also a discussion about message limits with GPT-4,@pietmansuggested GPTs have a lower limit of about 20 prompts and a normal chat has a limit of 40 prompts.@solbusmentioned a possible total of 25 prompts for GPTs over 3 hours. - Data Processing with ChatGPT:

@jdo300is seeking advice on returning data files through GPT responses, specifically planning to process and display a large amount of data using a Python script. - Storage and Recognition of User Information:

@ldupervalasked about storing user information from conversation for recalling these details later, querying whether GPT can recognize and adapt if a user provides information without prompting. - Issues with Custom GPTS and OpenApi JSONs: Various users such as

@ps5671and@nisus74reported issues with custom GPTs disappearing from their accounts and the inability to save GPT actions after inserting an OpenAPI JSON. - OpenAI Assistant API’s Learning Ability:

@ankur1900and@logan.000discussed whether the OpenAI Assistant API can adapt and learn from ongoing conversations in real-time. The conclusion was it can learn and retain specific knowledge within the context window.

▷ #prompt-engineering (7 messages):

-

Understanding Prompt Engineering: Users discussed the concept of Prompt Engineering with @adrshr7 stating it involves “asking the right question from the AI to provide us with the answer + insights we need.” Secondly, @ex_hort. gave the multiple descriptions, pointing out that it is about “creative writing of instructions to get the desired result while keeping the limitations of AI in mind”, and summarized it as “telling chatGPT what you want. creating a prompt.”

-

Requesting Changes to AI-generated Images: @zaher4608 asked how a user can request changes to images created through artificial intelligence, including the development of a specific character in different poses.

-

Prompt for Creating Questions & Answers from a PDF: @mazik71 sought suggestions for a prompt that could generate questions and answers from a PDF.

-

YouTube Tutorial for Effective Prompting: @gerhardsw_73789 shared that they saw a YouTube tutorial that assists in creating more effective prompts. The user shared a detailed prompt from the tutorial and found it to be effective, even when translated to German.

-

Outcome of Using the Suggested Prompt: @ex_hort. asks about the results of the prompt and @gerhardsw_73789 replied that it was “better than expected” and that its effectiveness largely depends on the quality and the detail of user responses.

▷ #api-discussions (7 messages):

- Prompt Engineering Discussion:

@adrshr7and@ex_hort.clarified the concept of prompt engineering. It includes the practice of creating or tweaking prompts to get the desired output from AI and overcoming its limitations. - AI Image Modification Query:

@zaher4608raised a query about how users can request changes to AI-generated images, for example altering poses of specific characters. - Question Creation from PDF:

@mazik71asked for suggestions on how to generate questions and answers from a PDF file using a prompt. - Iterative Prompting Technique:

@gerhardsw_73789shared a YouTube tutorial based method for optimizing prompt creation. The process includes constantly revising and iterating the prompt with additional information to get the most effective output from Chat GPT. - Evaluation of Iterative Prompting Technique: In response to

@ex_hort.’s query,@gerhardsw_73789commented that the results of the above technique were better than expected and that the quality of those results depends on the detail of user’s inputs.

OpenAccess AI Collective (axolotl) Discord Summary

-

Extensive discussion about model training and custom datasets with various users sharing insights, issues, and suggestions. Notable ones include

@faldore’s plan to develop the Dolphin 3.0 model using a new conversation-centered dataset, and@kaltcit’s and@rtyax’s interest in training Yi-200K models with increased context counts. Mentioned resources and advice for overcoming technical challenges, such as applying for Microsoft’s Startup program and optimizing batch sizes.@papr_airplaneshared issues launching BakLLaVA-1 due to non-consecutive token issues. -

Active discourse regarding PEFT and AWQ integration, with users

@casper_aiand@caseus_suggesting the addition of AWQ to the requirements and strategies to embed it with PEFT. -

Troubleshooting for various issues in the #axolotl-help channel. Problems include

@casper_ai’s difficulty with differentflash-attnversions,@vijen.’s encounter with NCCL timeouts,@DavidG88’s slower inference time after merging adapters and base model usingaxolotl,@mave_2k’s query about ShareGPT prompt format and inference tool recommendations provided by@nanobitz. -

Debate concerning dataset format and usefulness.

@dirisalaaddressed the issue of the “completion” dataset format adding redundant tokens, with@nanobitzsuggesting a fix.@faldoreshared a link to the DolphinCoder dataset and emphasized the need for a good coding-multiturn dataset. A link to the Retro-YahooAnswers dataset was also shared. -

Assistance offered for advanced problems in the #advanced-help channel. These included

@developer_59500’s issues with Axolotl installation,@geronimi73’s query about tokenizer customization, commendation of mamba 130m instruct model performance by_automagic, and troubleshooting for an unexpected peak in training loss observed by@faldore.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (79 messages🔥🔥):

-

Discussing Model Training and Custom Datasets:

@faldorewas sharing insights regarding the training of the Dolphin model, inspired by Microsoft Orca and focused on uncensored text, which includes samples from Airoboros and Samantha. For Dolphin 3.0, the creator plans to replace these with a new conversation-centered dataset. They mentioned they’re working on an open-source implementation of the Orca 2 dataset. -

Possible Collaboration on Higher Context Models: Users

@rtyaxand@kaltcitexpressed interest in training Yi-200K models with higher context counts.@kaltcitstated they have a model targeting academic and QA use, emphasizing that it would not be useful for jokes/stories/raps as it’s not trained on Airobo/Dolphin datasets. -

Advantages of Large Batch Sizes: During a discussion on training models,

@kaltcitexplained that larger batch sizes could help deal with unexpected loss increase in the middle of training. Larger batches also allow for a higher learning rate, which can lead to lower losses. -

Training Resources and Recommendations:

@faldoreadvised@kaltcitto form an LLC and apply for Microsoft’s Startup program to obtain Azure credits for training.@kaltcitmentioned they already had unlimited research credits with Google for their GCP A100 US central quotas. -

Troubleshooting Model Launching:

@papr_airplaneshared issues launching BakLLaVA-1 due to non-consecutive token issues.@nanobitzsuggested manually fixing this by adjusting thetokenizer_config.

▷ #axolotl-dev (7 messages):

- Discussion about Integrating PEFT and AWQ:

@caseus_queried whether fixes in PEFT should be upstreamed, or if autoawq could be added as an install extras.@casper_aiproposed adding AWQ to the requirements and provided code snippet for incorporating AWQ with PEFT:import awq.modules.peft_patch.@caseus_confirmed the necessity of adding AWQ to the requirements.@casper_aisuggested using the AWQ version from PyPi which ships with CUDA 12.1 over the one on GitHub with CUDA 11.8, as PyPi tends to simplify the process.

▷ #axolotl-help (36 messages🔥):

-

Issues with flash-attn versions: User

@casper_aireported experiencing issues when usingflash-attn==2.3.6with the standard RunPod template with torch 2.1.1 and cuda 12.1.1. The issue was resolved by downgrading toflash-attn==2.3.3. -

Issues with NCCL timeout: User

@vijen.queried how to disable the nccl timeout that was encountered when running thetraincommand after preprocessing.@caseus_suggested checking out resources on handling NCCL issues in the GitHub repository and mentioned recent updates on theacceleratelibrary that might solve the issues. -

Increased inference time post model merger:

@DavidG88noticed a significant increase in inference time after merging QLoRA adapter with a7b Mistralbase model usingaxolotl. The issue was specifically prominent when benchmarking the models usingllm-evaluation-harness. -

Prompt formats for ShareGPT:

@mave_2kasked about the prompt format for ShareGPT interfacing with a trained model.@tim9422provided instructions, sharing links to relevant parts of the code. He also warned about inconsistency issues with an extra line break in the ChatML separator and offered to create a PR to fix it. -

Recommendations for Inference Tools: When asked about recommended tools for running inference,

@nanobitzrecommended usingooba.

Remember that these are third-party suggestions based on personal experience. Always test different solutions and choose what works best for your use case.

▷ #datasets (15 messages🔥):

- Double token inclusion in dataset format:

@dirisalanoticed that when using the “completion” dataset format with llama based models, the framework was adding<s>token at the beginning even though it was already in the dataset. This was resulting in the token being included twice. - Potential solution to double token issue:

@nanobitzsuggested removing it from the dataset prior to processing and added that the inclusion of tokens like<s>is based on the tokenizer used. - DolphinCoder dataset:

@faldoreshared a link to the DolphinCoder dataset on Hugging Face and mentioned plans to train a model on it. - Coding-multiturn dataset:

@faldoreexpressed the need for a good coding-multiturn dataset, suggesting that the sharedGPT dataset might be filtered for coding related conversations. - Retro-YahooAnswers dataset:

@visuallyadequateposted a link to a new dataset called Retro-YahooAnswers from Yahoo Answers circa 2007, but warned that the dataset contained sensitive content and might not be a good dataset for serious assistant models, except perhaps as seed data for a synthetic dataset.

▷ #advanced-help (23 messages🔥):

-

Debugging Axolotl Installation: User

@developer_59500attempted to install Axolotl usingpip, but encountered issues related to the absence of a GPU andtorchmodule.@le_messinformed that the installation requires an Nvidia GPU and won’t work on a Mac M2 or Linux with no GPU. -

Tokenizer Modification: User

@geronimi73asked how to add new tokens to a model.@nanobitzclarified that if the model uses the HF tokenizer, one can add tokens within the config yaml file. -

Impression on Mamba 130m Instruct Model: User

_automagicshared a link to the mamba 130m instruct model which is hosted on Hugging Face, praising its impressive performance. -

Troubleshooting for Model Training:

@faldoretrained a model (yi-34b) and shared observations about an unexpected peak at 4k in the loss, despite using deepspeed and AdamW optimizer.@casper_aisuggested enabling the--debugmode to capture logs and identify potential issues.

Latent Space Discord Summary

-

Extensive discussions surrounding local AI work and GPU options for large language model usage, with the suggestion to factor in potential GPU upgrades when purchasing power supply units. Quotes:

@lightningralfadvising on the use of Deep Seek 33b and Aider, and anticipations for a new open-source model, according to this tweet. -

Critique and experiences with different AI models shared by

@slono, including satisfaction with OpenHermes for specific coding prompts, and criticism of the coding abilities of GPT-4. -

Inquiry into techniques for minimizing GPT-4 inference costs, with possible strategies discussed such as the combination of GPT-4 and OpenHermes to generate code and diffs, respectively, as explained by

@slonoin this GitHub repository. -

Emergence paper discussion announcement made by

@swyxio, including event details and availability of the paper for review on arXiv, authored by renowned researchers including Jason Wei and Colin Raffel among others. The event is described as a multi-session, weekly paper review taking place on lu.ma. -

Sharing of a link to the Q-Transformer method by

@cakecrusher, a technique improving scalability in Offline Reinforcement Learning, found here. -

Varied reactions to translation abilities in various languages such as Spanish and Mandarin by AI bots, with users

@hackgooferand@coffeebean6887noting differences in output quality. -

Insightful video reviewing the technical report of Gemini and analyzing the AlphaCode 2 paper shared by

@guardiang. -

Observations on the impact of minor tweaks to AI prompts, with

@picocreatorand@slononoting that slight changes can significantly influence output.

Latent Space Channel Summaries

▷ #ai-general-chat (43 messages🔥):

-

Local Codework with AI and GPU Recommendations:

@slonodiscussed potential GPU setups for extensive, local use of large language models (LLMs) in an application as a coding assistant. Available options included using GPUs like the 3090, 4090, or dual versions, a m3 mac, and cloud-based solutions.@lightningralfsuggested using Deep Seek 33b with Aider and advised waiting for an upcoming open source model more powerful than Gemini as shared on this Twitter post. -

GPUs and Future Proofing:

@lightningralfadvised@slonoto keep potential future GPU upgrades in mind when purchasing power supply units. -

AI Models and Use Cases:

@slonoshared some of their experiences with different models, expressing satisfaction with OpenHermes for specific coding prompts, and expressing criticism of the coding abilities of GPT-4.@lightningralfsuggested trying Notus as it is considered better than Hermes. -

Minimizing GPT-4 Inference Costs:

@ayenemasked for resources on minimising costs for GPT-4 inference by companion work with cheaper models.@slonoanswered by detailing a use case where 3.5 and OpenHermes were used to create diffs out and requests outputs that GPT-4 might omit when writing code. -

Code Refactor Process with GPT-4 and OpenHermes:

@slonodescribed a process in which GPT-4 is employed to refactor or generate code, OpenHermes is used to create a diff, and the diff is then applied. An interlinked GitHub repository was shared.

▷ #ai-event-announcements (1 messages):

- Emergence Paper Review:

@swyxioalerted the members that a discussion on the Emergence paper was about to start in 5 minutes. The paper in question is available at this link and was authored by Jason Wei, Yi Tay, Rishi Bommasani, Colin Raffel, Barret Zoph, Sebastian Borgeaud, * Dani Yogatama*. The event was to be hosted by Kevin Ball, Eugene Yan & swyx, and held on the platform lu.ma. - LLM Paper Club Description: The event was described as a multi-session, weekly paper review of LLM papers starting from foundational papers. Attendees were encouraged to read the papers beforehand for a breakdown and discussion during the meeting.

▷ #llm-paper-club (33 messages🔥):

-

Q-Transformer:

@cakecrushershares a link about the Q-Transformer, which uses transformers as the Q function in Reinforcement Learning. The technique is claimed to improve scalability in Offline Reinforcement Learning. Link to Q-Transformer -

Joining Meeting: User

@iamkrish10asks how to join a meeting, and@swizecadvises to check the Discord channels list to find the link. -

Chatbot Translation Accuracy: User

@hackgooferand@coffeebean6887discuss the translation ability of the bot, noting differences in the quality of output between translations to Spanish and Mandarin Chinese. -

Gemini Full Breakdown + AlphaCode 2 Bombshell:

@guardiangshares a video by a creator who breaks down all 60 pages of the technical report of Gemini and analyzes the AlphaCode 2 bombshell paper. -

Impact of Minor Prompt Changes:

@picocreatorand@slonodiscusses the impact of minor prompt changes where a minor change in the prompt can have a significant impact on the output.@slonoargues that these adjustments are not “tricks” but refinements of the goal statement, and notes that this technique is extensively used in many prompts. The link shared by@picocreatordidn’t load properly.

LLM Perf Enthusiasts AI Discord Summary

- An observed decline in the performance of GPT-4 Turbo with reduced quality of outputs, unrelated to speed.

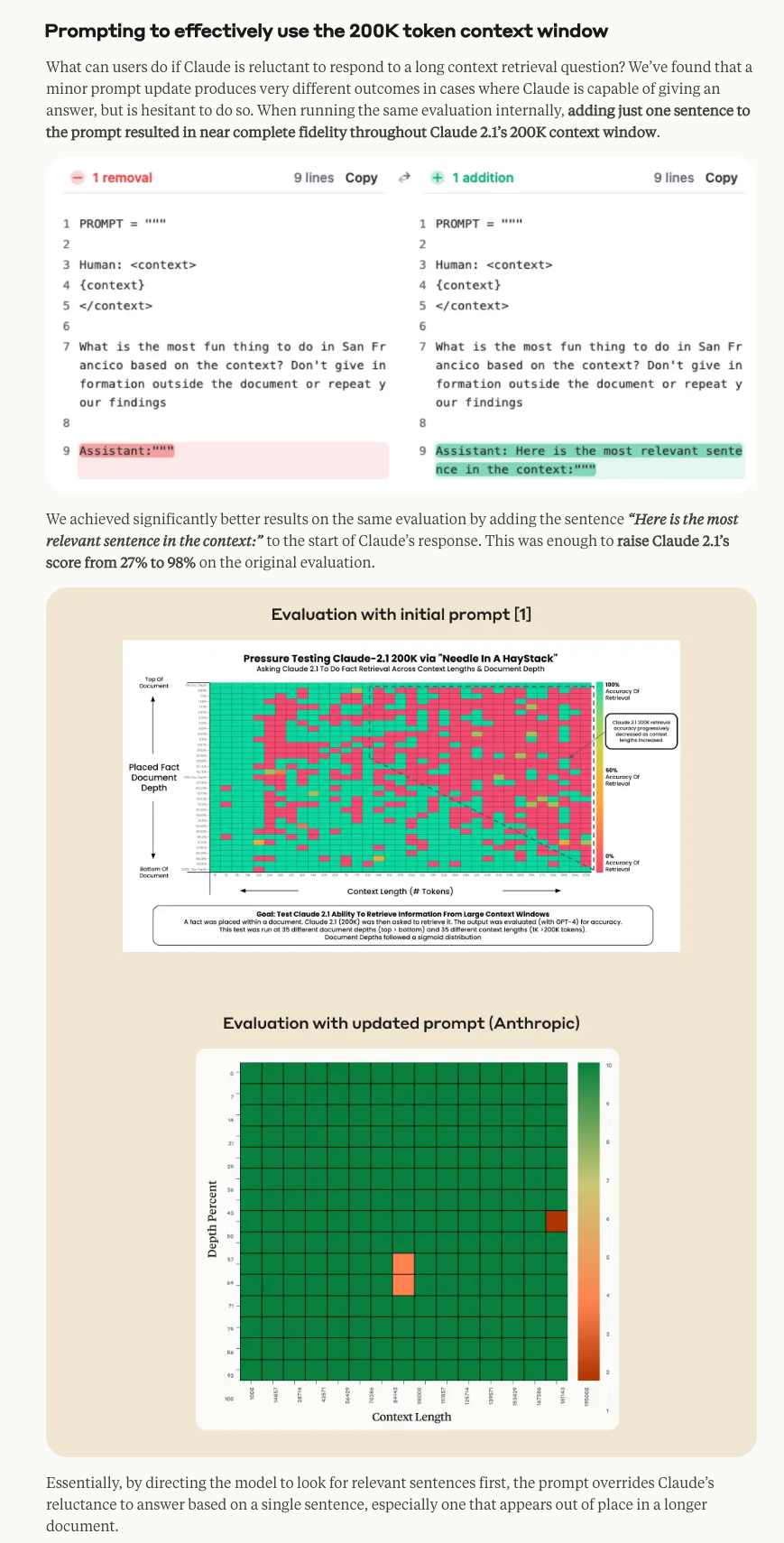

- Discussions on Anthropic’s Claude 2.1 prompting and its performance improvement by modifying prompts. A significant score increase was seen by adding “Here is the most relevant sentence in the context:” to Claude 2.1’s responses. The frequent use of the term “skill issue” by the Anthropics staff was noted and discussed. “Anthropic’s Claude 2.1 Prompting” blog post was shared as a reference.

- Request for resources or practices to reduce inference costs in GPT-4 by using cheaper models for summarization or re-ranking was raised without a conclusive discussion on the matter. A related resource, a tweet from Leonie (@helloiamleonie), showcased how prompt engineering could enhance accuracy.

- Seek for advice on implementing speech-to-text APIs in January was raised without providing a clear solution.

- The complexities of human evaluation in the training of models were discussed, with an emphasis on the creation of rating guidelines which replicated the rating tasks performed by OpenAI’s RLHF.

- A discussion was initiated regarding optimal prompt structure with large context like LangChain’s base prompts for retrieval QA and an experiment by Anthropic on Claude2.1’s prompting structure. However, the impact of the shift remains unclear.

- Proposal of hosting mini demo days for prompting madness at some IRL events plus a recommendation for the Luma event and an invitation to the e/acc summit at Gigi’s Hoxton, London were shared.

- Discussion on ChatGPT Plus invitation, where members can invite others using their invitation codes. Each member gets up to 3 invitations. Concerns were raised about the Assistant API’s delay affecting user experience due to the lack of streaming capability.

- Interest in understanding the benchmark on prompt word length, optimal structure for document extraction tasks, and experience with medical case extraction. A novel synthetic benchmarking idea for LLM Quality Measurement was shared, though the original paper’s link was invalid.

LLM Perf Enthusiasts AI Channel Summaries

▷ #gpt4 (2 messages):

- Degradation in GPT-4 Turbo Performance:

@frandecamhas observed a decline in the quality of output from GPT-4 Turbo over the last 24 hours, not related to speed.

▷ #claude (4 messages):

- Claude 2.1 Prompting: User

@robhaisfieldshared a link to a post about Anthropic’s Claude 2.1 prompting and suggested that the question of long contexts getting “lost in the middle” could be a skill issue with prompting. - Improving Results with Modification in Prompts:

@kiingounderscored that significant improvements were seen by adding the sentence “Here is the most relevant sentence in the context:” to the start of Claude’s response. This simple change helped increase Claude 2.1’s score from 27% to 98% on the original evaluation. - Anthropic staff’s frequent use of “skill issue”:

@res6969noted the frequent usage of the term “skill issue” by Anthropic staff.

▷ #resources (2 messages):

- Improving Accuracy Through Prompt Engineering:

@eula1shared a tweet from Leonie (@helloiamleonie) discussing how adding “Here is the most relevant sentence in the context:” improved the accuracy score from 27% to 98%. This was achieved as per an experiment conducted by Anthropic, as outlined in their blog post. - Minimizing Inference Costs in GPT-4:

@ayenemasked for resources or general practices on reducing inference costs in GPT-4 by using cheaper models for prior or post work, such as summarization or re-ranking. They also requested for general patterns of using such tasks/models in support of GPT-4. The discussion did not provide an answer to this question.

▷ #offtopic (2 messages):

- Speech-to-Text APIs: User

@res6969is seeking advice on speech-to-text APIs, as they are planning to implement one in January, but have no prior experience with these tools.

▷ #eval (1 messages):

- Human Evaluation and Rating Guidelines: User

@justahveediscussed the challenging task of human evaluation in the training of models, stating that it generally relies on the creation of rating guidelines to model what the “optimal” outputs should look like. This practice, as they note, closely follows the rating tasks performed by OpenAI to generate training data for Reinforcement Learning from Human Feedback (RLHF).

▷ #rag (3 messages):

- Optimal Prompt Structure Discussion:

@evan_04487asked if there are any good papers or blog posts on optimal prompt structure with large context, specifically the best placement for the context vs the instructions. He shared the example of LangChain’s base prompts for retrieval QA, comprising Instructions, Context, Query, and a Final result nudge. @thebaghdaddyprovided a link to a blog post by Anthropic titled “Claude 2.1: Prompting”. This post shows a different order for the prompt structure (Context, Query, and Instructions), however, the impact of shifting the context or instructions position remained unclear according to@evan_04487.

▷ #irl (5 messages):

- Mini Demo Days: User

@frandecamsuggested holding mini demo days for prompting madness at some IRL events. - ML, LLMs, AI, and Beer Hosted at Lu.ma:

@calclaviamentioned and recommended the Luma event that features discussions about Machine Learning, Language Model Learning, Artificial Intelligence, and more, over a beer. - e/acc Summit at Gigi’s Hoxton, London: User

@eula1.invited users to the e/acc summit happening at Gigi’s Hoxton, London with expected participation of around 300 techno-optimists, engineers, builders, founders, VCs, and more. The event link provided is here. - Event Time for e/acc Summit:

@eula1.also provided the timing for the summit, scheduled to start from 6:30 pm at Gigi’s Hoxton, London.

▷ #openai (42 messages🔥):

- ChatGPT Plus Invitation: Users

@joshcho_and@pantsforbirdshad a discussion about signing up contributors to ChatGPT Plus. It was clarified that plus members can invite others using their invitation codes. Each member gets up to 3 invitation codes. - Assistants API Delay: User

@pantsforbirdsexpressed concern about the Assistants API’s lack of streaming capability leading to delays, which impact user experience especially in a chat application context. - Suggestions for Alternatives:

@joshcho_suggested the possibility of replicating a similar function to the Assistants API using tools like LangChain or LlamaIndex.

▷ #prompting (6 messages):

- Prompt Word Length Benchmark:

@dongdong0755asked about benchmark on prompt word length, speculating that it’s recommended neither to be too short to include all relevant instructions nor too long to hurt the prompt quality. - Prompt Structure for Document Extraction:

@pantsforbirdsinquired about an efficient prompt structure for extracting context in document extraction tasks. - Experience with Medical Case Extraction:

@dongdong0755shared their experience with medical case extraction, mentioning that giving specific prompts to exclude certain chunks of content improved the results. They were also open to sharing specific examples via direct message. - Benchmark Idea for LLM Quality Measurement: Responding to

@dongdong0755’s question,@mat_mtoshared a synthetic benchmarking idea from a paper. The method involved offering a large JSON string to the language model and asking it to retrieve a specific value. A successful retrieval signifies the model’s quality. The original paper link shared appeared to be invalid.

Alignment Lab AI Discord Summary

- Dialogue on Google Gemini AI, discussing its introduction, performance capabilities, and a YouTube video detailing a trial between Gemini and OpenAI’s GPT4. Key points include Gemini’s benchmark results being comparable to GPT-4 and Gemini’s performance superiority being task-dependent.

- Discussion on Twitter about Jürgen Schmidthuber’s contributions to deep learning architectures. Notably, Schmidhuber has indicated his work in this area predates that of Yann LeCun. The dialogue concluded with hopes for Schmidhuber to release his own model.

- Interchange on the use of OpenOrca, namely useful subsets of GPT-3.5, and technical inquiries into the potential compatibility of

torch.compilewith gradient checkpointing. - Questions about SlimOrca-Dedup with a request for more detailed information; however, the response did not provide any additional clarification.

Alignment Lab AI Channel Summaries

▷ #ai-and-ml-discussion (1 messages):

- Schmidhuber’s Contributions to Deep Learning Architectures: In a Twitter post shared by

@lightningralf, Jürgen Schmidhuber outlined his contributions to deep learning architectures capable of planning since 1990, implying that he has been working on these topics long before Yann LeCun. Schmidhuber’s post includes numerous references to his research papers and findings. The discussion concludes with hope that Schmidhuber might release his own model in the future.

▷ #general-chat (9 messages🔥):

- Introduction of Google Gemini AI:

@entropishared a link to Google’s blog about their new AI, Gemini. The intro includes a note from Sundar Pichai, Google’s CEO, along with a detailed explanation of Gemini’s capabilities. - Video on trialing Gemini vs GPT4:

@entropialso shared a YouTube video detailing the best approach to trialing Google Gemini against OpenAI’s GPT4. - Benchmark results: According to

@entropi, Gemini’s benchmark results are comparable to those of GPT-4’s March performance. However, they mention that GPT-4 has likely improved since then, which may give it a competitive edge. - Task dependency:

@entropifurther noted that the superior performance between Gemini and GPT-4 would be task-dependent. Specifically, Gemini displays remarkably strong multimodal results. - Gemini Ultra vs Gemini Pro:

@entropidistinguishes between two versions of Gemini: the powerful “Gemini Ultra,” which equals GPT-4 in benchmarks, and the smaller “Gemini Pro.” Notably, only Gemini Pro is being released to “Bard,” while Gemini Ultra will not be available until next year.

▷ #oo (7 messages):

- GPT-3.5 Parts of OpenOrca: User

@ufghfigchvasked if there are any useful subsets of the GPT-3.5 parts of OpenOrca or if any have been made, tagging a specific user for input (@748528982034612226). - Compiling with Gradient Checkpointing:

@imonenextinquired iftorch.compileworks with gradient checkpointing and requested an example of its usage with transformers and flashattn. - Torch 2.1.1: User

@benjamin_wsuggested that gradient checkpointing might work with torch.compile in torch 2.1.1, or perhaps in Nightly, although he stated it seemed not to function in torch 2.1. - How to Use Torch.Compile:

@imonenextasked for instructions on how to usetorch.compile, specifically wondering if it should be wrapped over a gradient checkpointed Transformers model.

▷ #open-orca-community-chat (2 messages):

- SlimOrca-Dedup Inquiry: User

@samblouirasked for more detailed information on SlimOrca-Dedup, noting the brief description that involves “Deduplication using minhash and Jaccard similarity techniques.”.@lightningralfresponded with “SlimOrca Dedup”, but did not provide any additional detail.

LangChain AI Discord Summary

- Table Transformer was discussed with a link to an overview shared by

@spicyhabanero123. Overview link - Questions arose about DeepMind’s Gemini technology and whether it is accessible for use, presented by

@tonyaichamp. DeepMind’s site - Users encountered problems with Langsmith-Cookbook and some of its annotations. An issue with ‘@traceable’ annotation was shared by

@psychickoala. Langsmith-Cookbook issue - The lack of local language support in document modules was highlighted by

@bcmetisman, who expressed an interest in language preservation. - Preference revealed for document storage to be in the same collection with a filter, by

@veryboldbagelwho sought advice on if this approach was feasible. @synacktraannounced a new version of their Python library Hypertion with support for the Pydantic model. Github | PyPi@akrabulislamshared details about AI development services provided by their company, Somykoron. Links: Fiverr, Upwork@biganshpresented findings from an experiment on how different prompt templates affect responses to a specific query. Details- Tutorial on using Vertex AI, Langchain, and Google Cloud Functions for AI applications was shared by

@kulaone, covering the entire ML lifecycle. Tutorial link

LangChain AI Channel Summaries

▷ #general (13 messages🔥):

- Table Transformer Discussion:

@spicyhabanero123excitedly pointed out the Table Transformer and shared the overview link here. - Access to Gemini:

@tonyaichampposed a question regarding the accessibility of Gemini technology from DeepMind, as per its announcement on their site. - Use of UnstructuredHTMLLoader:

@fatema_08922mentioned the use of UnstructuredHTMLLoader, but provided no further context. - Issue with Langsmith-Cookbook:

@psychickoalashared a link to an issue encountered in Langsmith-Cookbook related to the ‘@traceable’ annotation. - Language Support in Document Modules:

@bcmetismanexpressed a concern about the lack of support for local languages in document modules and voiced a willingness to contribute to language preservation. - Vector Database Query:

@andremikshared their experience with Pinecone as a vector database but indicated a need for a new retriever that supports hybrid search due to project constraints and pricing issues. - Marketer Request for Project:

@sangy4132asked if there’s any marketer in the group who could assist with a project.

▷ #langserve (1 messages):

- Document Storage Strategies:

@veryboldbageldiscussed about efficient ways to store documents. Specifically, they suggested storing them in the same collection and using a filter. They further asked for the feasibility of this approach regarding document’s schemas and subject areas, and the usefulness of querying across multiple document types at the same time.

▷ #share-your-work (3 messages):

-

Hypertion - Streamlined Function Calling:

@synacktraannounced a new version of his Python library Hypertion, featuring support for the Pydantic model. The library simplifies function schema creation and invocation. He provided links to the library on Github and PyPi. -

AI Development Services by Somykoron:

@akrabulislamshared promotional material about his AI development company, Somykoron, detailing services such as Generative AI Development and Full-Stack Development. He provided links to their company’s profiles on Fiverr, Upwork, and a link to the CTO’s LinkedIn, although the LinkedIn link encountered an error. -

Prompt Template Experiment:

@biganshshared his findings from an experiment on how different prompt templates generate different responses to a specific query. He described the process as “really fun to build” and provided a link to his Twitter post detailing the results.

▷ #tutorials (1 messages):

- Using Vertex AI, Langchain, and Google Cloud Functions for AI Applications:

@kulaoneshared a tutorial on Medium providing insights on how to utilize Vertex AI, a fully managed machine learning (ML) platform, Langchain, and Google Cloud Functions for AI applications. The tutorial covers the entire ML lifecycle, from data preparation to model deployment and monitoring.

MLOps @Chipro Discord Summary

- Announcement of an upcoming webinar on Advanced Language Model Tuning Techniques by Harpreet Sahota, the Deep Learning Developer Relations Manager at Deci. The webinar will delve into specialized fine-tuning, effective dataset preparation, and advanced techniques. It will also include a Q&A session. The webinar is free and open to all, with interested participants able to register here. This learning opportunity is organized by Data Phoenix.

- Job opportunities and project details shared by

@wangx123, the CEO of a startup who introduces their project, miniai.live, through a YouTube video. Interested individuals are encouraged to reach out. - A concern raised by

@theetriganregarding potential spamming activity by@wangx123in the general-ml channel.

MLOps @Chipro Channel Summaries

▷ #events (1 messages):

- Advanced Language Model Tuning Techniques Webinar:

@kizzy_kayannounced an upcoming webinar about base language model tuning scheduled for December 7, 10 am PST. The speaker will be Harpreet Sahota, Deep Learning Developer Relations Manager at Deci. - The webinar will feature insights into specialized fine-tuning, effective dataset preparation, and advanced techniques like BitsAndBytes & Model Quantization, PEFT & LoRA, and TRL Library use.

- Participants can address specific questions in a planned Q&A session.

- The webinar is free and open to all. Interested participants can register here.

- The session is organized by Data Phoenix.

▷ #general-ml (2 messages):

- Job opportunity and project promotion:

@wangx123, CEO of a start-up company, invited interested individuals to reach out for potential opportunities. They shared a YouTube video to provide an introduction to their project, miniai.live. -Spamming Concern:@theetriganasked@wangx123to stop what they perceived to be spamming.

Perplexity AI Discord Summary

Only 1 channel had activity, so no need to summarize…

- Perplexity’s In-House Model Access for Pro Users:

@enigmagiannounced that Perplexity Pro users can now choose the recently released in-house model, pplx-70b-online, which has been evaluated as more factually accurate, helpful, concise, and less moralizing than GPT-3.5-turbo for web searches. Users can access this by selecting ‘Experimental’ in Perplexity Pro settings and it’s also accessible via pplx-api. More information on the in-house models can be found here.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Engineer Foundation Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.