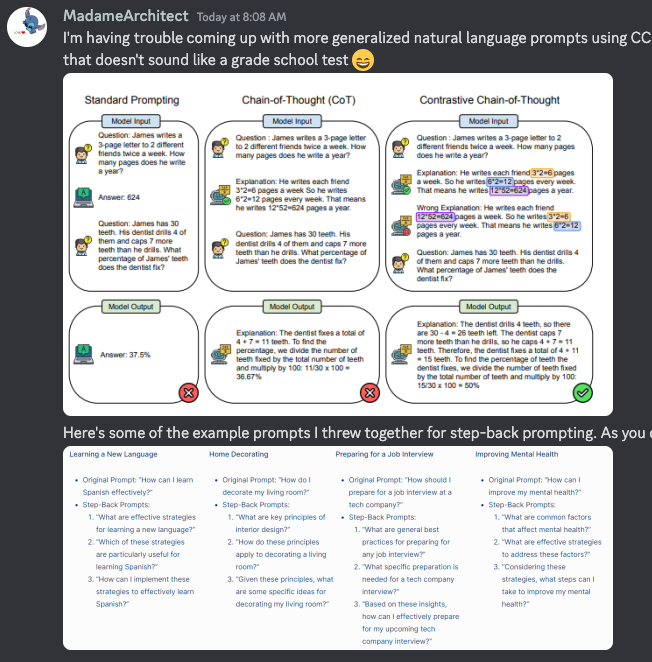

The OpenAI #prompt-engineering channel has some great discussions of prompt techniques from longtime regular MadameArchitect, covering contrastive Chain of Thought and step-back prompting.

Rombodawg’s guide to “Perfecting Merge-kit MoE’s” is also a great read on the state of model merging and MoEs (a topic from 2 days ago).

—


OpenAI Discord Summary

- Infinite Creativity Meets Mortal Bounds: The ai-discussions channel broached the philosophical theme of infinite ideas vs. finite lifespan, contemplating the constraints of human capacity in accessing boundless novelty.

- Sentience in Silicon? Debating AI Consciousness: A lively debate in ai-discussions centered on whether AIs like ChatGPT possess consciousness, with participants disagreeing over both its definition and its presence in AI.

- Hyperdimensional Vectors Lead AI Understanding: Misunderstandings about AI’s utilization of character-based versus token-based embeddings were addressed in ai-discussions, clarifying modern AI’s reliance on hyperdimensional vector space models.

- Legalities of AI’s Vocal Mimicry: A discourse in ai-discussions regarding the ethics of AI-generated voices emerged after a YouTube video was shared, sparking a conversation about copyright and impersonation concerns in AI applications.

- Unlocking GPT’s Potential with Prompt Personalization: Users in the gpt-4-discussions and prompt-engineering channels explored customizing GPT to harness its capabilities fully, including giving it personality profiles and working around the 8000-character instruction limit by uploading files with instructions.

- Technical Trees of Exploration in AI: In api-discussions and prompt-engineering, the concept of a “Tree of Prompts” was dissected, presenting a structured approach that tailors artificial intelligence interactions based on specific tasks, while also debating best practices in prompt engineering and their implications for AI output.

- Multilingual Discord Dynamics Spark Discussion: An offhand comment in api-discussions about French language usage on Discord led to a brief touch on server language policies and the hypothetical benefit of a universal translator feature within the platform.

OpenAI Channel Summaries

▷ #ai-discussions (200 messages🔥🔥):

- Infinite Ideas vs. Finite Lifespan: Discussion sparked by @notbrianzach about the infinity of ideas in contrast to the finite lifespan and implications of this concept, with @darthgustav. asserting that, while infinite novelty exists, accessibility to this infinity is limited and bound by our finite capacities and lifespan.

- Exploring the Notion of AI Consciousness: @metaldrgn and @davidwletsch_57978_74310 engaged in a debate on whether ChatGPT exhibits a form of consciousness. Interpretations of understanding and consciousness were contested, with @metaldrgn suggesting a structured approach to consciousness in AI and @darthgustav. providing counterpoints on how transformers like GPT-3 function without conscious understanding.

- Discussing Hyperdimensional Computing and AI: @red_code speculated on character-based embeddings and AI comprehension, leading to @darthgustav. correcting misconceptions and noting that modern AI utilizes token-based, hyperdimensional vector space models, which have been around since before the article referenced by @red_code.

- Token Vectors and Multilingual Abilities: @_jonpo discovered GPT-4’s ability to render ancient languages and semiotics, and @darthgustav. commented on the extensive language and character systems known to the AI, leveraging this semiotic encoding for information-dense prompts.

- Ethical Considerations of AI-Generated Voices: @undyingderp presented a YouTube link and questioned the ethics of AI replicating artist voices. Discussions followed, with @7877 and @.dooz discussing copyright and impersonation laws, the latter referencing a YouTube channel’s legal issues related to mimicking David Attenborough, suggesting that similar legal frameworks could apply to AI-generated voice content mimicking artists like Lil Baby.

Links mentioned:

- Real Ones: Provided to YouTube by IIP-DDS. Real Ones · PALLBEARERDONDADA. 4 Pockets Full. ℗ PALLBEARERDONDADA. Released on: 2024-01-03. Composer: Yoshan Weatherspoon. Auto-generate…

- A New Approach to Computation Reimagines Artificial Intelligence | Quanta Magazine: By imbuing enormous vectors with semantic meaning, we can get machines to reason more abstractly — and efficiently — than before.

▷ #gpt-4-discussions (192 messages🔥🔥):

- Experimenting with GPT Personalities: User @66paddy was happy with the results of giving GPT a personality profile and feeding it transcripts of the person’s interviews and shows to analyze speech patterns and mannerisms.

- Debate on Usage Caps for GPT Development: A discussion took place regarding the usage cap for GPT in the context of developers tweaking their models. @artofvisual wished such backend tweaks didn’t count as regular usage, while @darthgustav. emphasized that lifting caps could negatively impact regular users and performance.

- Custom GPT Issues and Solutions: Users encountered several issues with custom GPTs. For instance, @d_smoov77 sought advice for creating a translation GPT for fictional languages and received tips from members like @elektronisade, @madame_architect, and @_jonpo on potential workarounds.

- Privacy Concerns in GPT Store: User @realspacekangaroo expressed concerns about their name being compulsory on their published GPTs, leading to a clarification from @elektronisade that the store requires either a name or site to be visible for verification, and a later solution found for using just the domain name.

- Pondering the Monetization of Custom GPTs: Conversations about the potential for monetizing GPTs (@thesethrose, @darthgustav., and @_jonpo) reflected a consensus that success depends on the value provided and popularity of the GPT, with the recognition that the current discovery features in the GPT Store are lacking.

▷ #prompt-engineering (257 messages🔥🔥):

- GPT File Upload Tricks Revealed: User @rico_builder inquired about strategies for circumventing the 8000-character limit for GPT instructions. User @darthgustav. advised using specific conditions for file analysis rather than generic instructions, while @eskcanta stated that uploading a file with instructions is an effective method and that up to 80K characters tend to work well.

- ChatGPT Purpose Debate: A discussion unfolded involving @darthgustav., @madame_architect, and @clad3815 about the intended purposes of ChatGPT. @darthgustav. emphasized that the platform’s purpose is not explicitly defined and should not be limited to specific uses.

- Hallucination Benchmark Test: User @_jonpo shared a specific test for hallucination using a made-up term, “Namesake Bias,” which GPT-4 previously passed by stating the term does not exist but had recently begun to fail on the updated model.

- YouTube Video Transcript Challenges: Conversations around difficulties in getting GPT to read YouTube videos included @ima8.’s experience with unsuccessfully prompting GPT to transcribe a video and @solbus suggesting a GPT model from the OpenAI GPT Store for the task.

- Exploring Prompt Engineering Concepts: Various prompt engineering methods were discussed, with @madame_architect and @darthgustav. examining techniques like “Contrastive Chain of Thought Prompting,” “Self-Critique” prompting, and “Tree of Prompts,” discussing their potential strengths and applications.
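The contrastive chain-of-thought idea mentioned above can be sketched as a prompt template: a demonstration carries both a valid and an invalid line of reasoning, so the model sees what to imitate and what to avoid. This is only a minimal illustration, not the prompts actually used by @madame_architect or @darthgustav.; the function name, the field labels, and the arithmetic example are all assumptions made for this sketch.

```python
def build_contrastive_cot_prompt(demo, question):
    """Assemble a prompt with one correct and one incorrect worked explanation.

    The `demo` keys (question, correct, incorrect) are hypothetical labels
    chosen for this sketch, not a standard format.
    """
    return "\n".join([
        f"Question: {demo['question']}",
        f"Correct explanation: {demo['correct']}",
        f"Incorrect explanation: {demo['incorrect']}",
        "",
        f"Question: {question}",
        "Correct explanation:",  # the model is asked to continue from here
    ])


# Illustrative demonstration: pens are priced per group of 3, not per pen.
demo = {
    "question": "A shop sells pens at 3 for $2. How much do 12 pens cost?",
    "correct": "12 pens is 12 / 3 = 4 groups of 3. Each group costs $2, so 4 * 2 = $8.",
    "incorrect": "Each pen costs $2, so 12 * 2 = $24.",
}

prompt = build_contrastive_cot_prompt(
    demo, "A box holds 4 mugs. How many boxes are needed for 20 mugs?"
)
print(prompt)
```

The resulting string would be sent as the user message to whatever model is being tested; whether the negative example helps on a given task is something to measure rather than assume.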

▷ #api-discussions (257 messages🔥🔥):

- Bypassing the 8k Instruction Limit: User @rico_builder inquired about surpassing the 8000-character limit for GPT instructions. User @eskcanta advised that uploading a file with the desired instructions is a practical workaround, which works well up to about 80,000 characters. @eskcanta also shared that the AI can recognize and utilize additional uploaded supplementary files.

- Exploring ‘Tree of Prompts’ in AI: User @darthgustav. introduced a concept called “Tree of Prompts,” which is described as a method that combines various prompting techniques based on the task at hand to optimize AI performance. The strategy tries to match the strengths of particular prompt architectures to specific conditions and contexts.

- Prompt Engineering Techniques & Research: User @madame_architect discussed various prompt engineering techniques and research papers with @darthgustav., mentioning specific approaches like Contrastive Chain of Thought (CCOT), and inviting suggestions on optimizing prompts and AI output. They also considered the weight of system instructions in self-critique and discussed the CommaQA dataset as an innovative synthetic data set for benchmarking.

- Hallucination Test on GPT Models: User @_jonpo talked about a specific test regarding hallucination that GPT-4 could originally pass, which was no longer the case, suggesting that the model’s ability to discern made-up information might be changing.

- GPT Customization With JSON Strategies for Gaming: User @clad3815 shared how they use GPT to analyze and strategize for playing Pokemon, utilizing JSON to structure the AI’s understanding and interaction with viewers, highlighting the need for optimization and cost-effectiveness in output.

- French Language Limitation in Discord: Discussion around language limitations within Discord arose when user @clad3815 was joking with @darthgustav. about using French. The latter mentioned a possible rule issue with non-English languages in the server and the benefits of a universal translator feature.

Nous Research AI Discord Summary

- GPT-4 Turbo Surpasses Its Predecessors: @atgctg noticed a significant performance gap between GPT-4 and GPT-4 Turbo, while @night_w0lf mentioned that GPT-4 Turbo seems to have incorporated user interaction data from services like chatgptplus. @everyoneisgross and @carsonpoole joined the discussion, hinting at the use of chain of thought (CoT) prompting to improve coding challenge performance. For further reading on the subject, users can refer to links such as Training language models to follow instructions with human feedback.

- Semantic Chunking Quandaries: @gabriel_syme discussed the challenges of semantic chunking with GPT-4 and the inefficiency of GPT-4 Turbo over 4k tokens for completion, sparking a debate on model performance with inputs varying between 100 and 2k tokens.

- LLM Self-Correction Strategies Critiqued: Discussions centered on self-correction in large language models (LLMs), with users like @gabriel_syme and @everyoneisgross debating the merits and demerits of backtracking in LLMs after @miracles_r_true shared Gladys Tyen’s breakdown from the Google Research Blog.

- Mixtral’s Repetition Problem and AI Model Preferences: Conversations expressed concerns about text repetition in Mixtral variants after 4,000+ tokens and dissatisfaction with the Ollama Bakllava model’s performance. User @everyoneisgross recommended LM STUDIO for stability in local chat and service hosting but noted a lack of OpenAI API call functionality, an issue shared by @manojbh while discussing LLM interfaces.

- Search for an All-in-One LLM Tool: Users like @everyoneisgross and @manojbh voiced the need for a tool combining API calls, local modeling, and cloud server capabilities, discussing the limitations and uses of various LLM interfaces such as ollama, lmstudio, and openchat.

Nous Research AI Channel Summaries

▷ #ctx-length-research (2 messages):

- Seeking Context Extension Comparisons: @dreamgen asked if there is any systematic comparison regarding how much fine-tuning (FT) is required to achieve a certain quality with different context extension methods. They’re curious about the performance of basic rope-scaling with and without FT, across various numbers of FT tokens.

- Does BOS Token Affect Length Extrapolation?: @euclaise shared a tweet, pondering if having a Beginning Of Sentence (BOS) token may negatively impact length extrapolation. The tweet by @kaiokendev1 discusses attention in Mistral-OpenHermes 2.5 7B layers when the position is not explicitly provided, noting that tokens may inherently signal their position as of Layer 0.

Links mentioned:

Tweet from Jade (@Euclaise_): I wonder if having a BOS token damages length extrapolation ↘️ Quoting Kaio Ken (@kaiokendev1) Attention in Mistral-OpenHermes 2.5 7B layers when not injecting any position information Tokens impl…

▷ #off-topic (27 messages🔥):

- GIFs and Emoji Banter: Users shared various emojis and GIFs, for instance, @Error.PDF linked to a Pedro Pascal GIF and later used a catastrophe emoji (<:catastrophe:1151299904346521610>), while also declaring an end to “Marcus x Yann” followed by a Ryan Gosling emoji (<:gosling2:1151280275205140540>).

- Reflective Optimizations for Custom ChatGPT Instructions: @.beowulfbr sought tips on custom instructions for enhancing ChatGPT responses. User @everyoneisgross proposed sharing a script that matches meditation techniques to prompts, which could be adapted for various conversational styles.

- Semantic Chunking Challenges Shared: @gabriel_syme inquired about experiences with semantic chunking using embeddings and discussed with @everyoneisgross challenges like handling variable chunk sizes from 100 to 2k tokens caused by using distance thresholds.

- Model Efficiency Discussed Between Experts: In an exchange regarding the scalability and cost of semantic chunking and embeddings, @gabriel_syme commented that while embeddings are affordable, the time required could translate into money. He confirmed his practice of sentence-level embedding for window chunking.

- Model Performance According to Input Quality: @everyoneisgross reflected on the importance of input quality for AI models, noting that smaller models need more carefully formatted chunks to avoid generating nonsensical output. He also mentioned the potential benefit of overlapping chunks, especially given their low cost.
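The threshold-based semantic chunking described above can be sketched as follows: embed each sentence, then start a new chunk wherever adjacent sentences are further apart than a distance threshold. This is a rough illustration under stated assumptions, not the pipeline @gabriel_syme used. In particular, `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the `0.8` threshold is arbitrary.

```python
import math
import re
from collections import Counter


def toy_embed(sentence):
    """Hypothetical stand-in embedding: a bag-of-words count vector.

    A real pipeline would call an embedding model here instead.
    """
    return Counter(re.findall(r"[a-z0-9']+", sentence.lower()))


def cosine_distance(a, b):
    """Return 1 - cosine similarity for two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def semantic_chunks(sentences, threshold=0.8, embed=toy_embed):
    """Group consecutive sentences, splitting where the distance exceeds threshold."""
    if not sentences:
        return []
    vectors = [embed(s) for s in sentences]
    chunks = [[sentences[0]]]
    for prev, cur, sentence in zip(vectors, vectors[1:], sentences[1:]):
        if cosine_distance(prev, cur) > threshold:
            chunks.append([sentence])    # topic shift: open a new chunk
        else:
            chunks[-1].append(sentence)  # same topic: extend the current chunk
    return chunks


sentences = [
    "The cat sat on the mat.",
    "The cat chased the mouse.",
    "Quantization shrinks model weights.",
    "Quantization trades accuracy for model size.",
]
chunks = semantic_chunks(sentences)
for chunk in chunks:
    print(chunk)
```

Because the split points depend only on where the distance crosses the threshold, nothing bounds the chunk length, which is consistent with the 100 to 2k token spread mentioned in the discussion. A size cap or overlapping windows would be separate additions.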

Links mentioned:

Pedro Pascal GIF - Pedro Pascal - Discover & Share GIFs: Click to view the GIF

▷ #interesting-links (66 messages🔥🔥):

- Google Research Intern Breaks Down LLM Self-Correction: User @miracles_r_true shared a link to a Google Research Blog by Gladys Tyen discussing a breakdown of self-correction in large language models (LLMs) into mistake finding and output correction. Various users, including @gabriel_syme and @everyoneisgross, debated the effectiveness of backtracking in LLMs and mentioned other papers on similar topics.

- Artificial General Intelligence (AGI) might be closer than we think: A Twitter post by @Schindler___ about an AGI architecture received skeptical feedback from users like @teknium and @jdnuva on the practical challenges of implementing effective memory systems in AI.

- Misinformation on AI Spread via Twitter: Users @ldj, @youngphlo, and @leontello commented on a misleading tweet regarding GPU usage for GPT models, criticizing false information and hyperbole with counterpoints and humor.

- Memory, the Last Frontier in AI: User @teknium discussed the complexities involved in building a memory system for a chatbot, touching on the challenges of coherency, importance, and the mutable nature of memories.

- Ensemble Forecasting and Distillation Innovations: Links shared by @tofhunterrr, @admiral_snow, and @mixtureofloras to GitHub repositories present an ensemble forecasting model and a self-critique refining model for LLMs, highlighting ongoing innovation in the AI modeling space.

Links mentioned:

- Tweet from Schindler (@Schindler___): (1/2) Proposition of an architecture for AGI. Samantha from the movie Her is here: An autonomous AI for conversations capable of freely thinking and speaking, continuously learning and evolving. Creat…

- Can large language models identify and correct their mistakes? – Google Research Blog

- GitHub - Nabeegh-Ahmed/llm-distillation: Contribute to Nabeegh-Ahmed/llm-distillation development by creating an account on GitHub.

- GitHub - vicgalle/distilled-self-critique: distilled Self-Critique refines the outputs of a LLM with only synthetic data

- Is Mamba the End of ChatGPT As We Know It?: The Great New Question

- GitHub - SebastianBodza/EnsembleForecasting: Using multiple LLMs for ensemble Forecasting

▷ #general (463 messages🔥🔥🔥):

- Gap between GPT-4 and GPT-4 Turbo Astonishes: @atgctg highlighted the significant performance gap between GPT-4 and GPT-4 Turbo, comparing it to the difference between GPT-4 and GPT-3.5, suggesting that GPT-4 Turbo’s training included user interactions from services like chatgptplus and chatgpt, according to @night_w0lf.

- Turbo Training Tailored by User Preferences: @night_w0lf asserted that the training of GPT-4 Turbo was based on user conversations and preferences from chat. @teknium concurred, mentioning a substantial increment in RLHF data.

- Semantic Chunking Challenges: @gabriel_syme encountered difficulties in semantic chunking with GPT-4, taking 2 hours for folder processing. Further discussion with @everyoneisgross revealed max inputs at 2k tokens, chunk requests approximately 250 words, and even slower performance with Turbo, limited to 4k tokens for completion.

- GPT-4 Tunes for Code Evaluation: @carsonpoole explored using chain of thought (CoT) prompting with Mistral 7b on coding challenges (ARC), surpassing the Open LLM Leaderboard by a significant margin. This sparked a debate about CoT prompting usability and its potential for standardizing evaluations, with differing viewpoints from @teknium, @euclaise, and others.

- Extensive Discussion on Model Training and Evaluations: Various members, including @teknium, @euclaise, @carsonpoole, and @antonb5162, shared insights on models, training tactics, and benchmarking. Topics included RLAIF, DPO, quantum size benchmarks for Hermes models, and the implications of using different RL methods like PPO, DPO, or even hypotheticals like P3O.

Links mentioned:

- Training language models to follow instructions with human feedback

- Tweet from Blaze (Balázs Galambosi) (@gblazex): Looking further into LLM benchmark x-correlations: - Top row: how each benchmark relates to human judgement (Arena Elo) - Other rows: any benchmark pair & their relationship - On the right: samples = …

- Training language models to follow instructions with human feedback: Making language models bigger does not inherently make them better at following a user’s intent. For example, large language models can generate outputs that are untruthful, toxic, or simply not h…

- Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes: Deploying large language models (LLMs) is challenging because they are memory inefficient and compute-intensive for practical applications. In reaction, researchers train smaller task-specific models …

- Medusa: Simple framework for accelerating LLM generation with multiple decoding heads

- TOGETHER

- Extraction | 🦜️🔗 Langchain: Open In Collab

- Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback: We apply preference modeling and reinforcement learning from human feedback (RLHF) to finetune language models to act as helpful and harmless assistants. We find this alignment training improves perfo…

- Paving the way to efficient architectures: StripedHyena-7B, open source models offering a glimpse into a world beyond Transformers

- Tweet from anton (@abacaj): Tried a LoRA on mistral again, and it immediately picked up multiturn conversations correctly with < 2k samples. Really shows how much better bigger models are over smaller models

- IconicAI/DDD · Datasets at Hugging Face

- rombodawg/Everyone-Coder-4x7b-Base · Hugging Face

- Perfecting Merge-kit MoE’s

- GitHub - VikParuchuri/surya: Accurate line-level text detection and recognition (OCR) in any language

- GitHub - huggingface/text-generation-inference: Large Language Model Text Generation Inference

- allenai/soda · Datasets at Hugging Face

▷ #ask-about-llms (16 messages🔥):

- Mixtral Variant Creative Writing Hits Repetition Snag: @jdnuva raised an issue about repetitive text in Mixtral variants beyond 4,000+ tokens, even with substantial repetition penalties.

- Disappointment with Ollama Bakllava: @manojbh expressed dissatisfaction with the ollama Bakllava model, suggesting it is not very good, backed by @n8programs who described it as “very braindead.”

- A User’s UI Preferences for LLM Tools: @everyoneisgross and @manojbh discussed the benefits and drawbacks of various LLM interfaces like ollama, lmstudio, openchat, with @everyoneisgross recommending LM STUDIO due to its stability for local chat and service hosting but noting it doesn’t seem to include OpenAI API call functionality. @manojbh yearned for a tool combining API calls, local modeling, and cloud server capabilities.

- GPT-4 Amongst the Heralded Models: @everyoneisgross opined that nothing surpasses GPT-4, but also accepted that LM Studio doesn’t appear to facilitate direct OpenAI API calls, unlike GPT4ALL.

- Model Preferences and Creative Puzzles in the Community: @garacybe shared their model preferences, including the transition from OpenHermes 2.5 to Dolphin 2.6 Mistral DPO Laser, and proposed an alternative to MoE (Mixture of Experts) models with a conceptual bootleg MoE for enhanced creativity.

▷ #project-obsidian (3 messages):

- Inquiry about VRAM Requirements: User @manojbh asked about the VRAM requirements for running a program locally.

- Prompt for Updates Ignites Response: @manojbh followed up seeking any updates on the VRAM inquiry.

- Hermes Vision Alpha as a Lead: In response to @manojbh, @qnguyen3 suggested checking out Hermes Vision Alpha.

LM Studio Discord Summary

- OOM Errors Demystified: @heyitsyorkie clarified that an Out of Memory (OOM) error occurs notably when reaching a full 125k context during a chat. (Source)

- GPU and CPU Tango: Insight was provided into GPU layers on Macs, indicating that they are On/Off for metal acceleration and combine CPU RAM and VRAM. Additionally, discussions emerged about the limitations of TPUs, specifically coral TPUs limited to TensorFlow Lite, and compatibility issues with LMStudio on various hardware, expressing a preference for x86 architecture over ARM. (Source)

- Model Overload Anxiety: Concerns were raised by @cardpepe over the escalating sizes of models, longing for the days of smaller 13B parameter models compared to the behemoths of 25GB+ now. In terms of performance, the conversation swung to optimizing model runtimes with hardware, specifically the capacity of an RTX 4090 managing various large language models. (Source)

- Memgpt Memory Mirage: Doubts were cast on the effectiveness of memgpt in dealing with context limitations, prompted by a developer’s feedback indicating less than positive results, suggesting stalled project development. (Source)

- Unique Function Calling Feature in OpenAI: It was pointed out that exclusive function calling is a feature specific to OpenAI’s GPT 3.5 Turbo, not found in open-source models. This triggered a discussion on improving upon existing models with enhancements to memory and context management. (Source)

LM Studio Channel Summaries

▷ #💬-general (292 messages🔥🔥):

- OOM Error Clarified: @heyitsyorkie explained an Out of Memory (OOM) error occurs when all context and memory are used up, especially when reaching a full 125k context during a chat (source message).

- LM Studio Updates on the Horizon: Users discussed that new features, such as model sorting, have been requested for LM Studio and might be implemented soon (source message).

- Understanding GPU Layers on Mac: @heyitsyorkie provided insight into GPU layers on Macs, mentioning they are On/Off for metal acceleration and combine CPU RAM and VRAM (source message).

- Linux User Model Load Issue: @heyitsyorkie directed a Linux user facing model loading issues to a specific channel for solutions, noting the necessity of a version update to v0.2.10 for Phi 2 support (source message).

- Advice on Saving Discord Posts: @dagbs shared a workaround for bookmarking useful Discord posts by linking them in a personal Discord server (source message).

Links mentioned:

- Spaces Overview

- PsiPi/NousResearch_Nous-Hermes-2-Vision-GGUF · Hugging Face

- Run ANY Open-Source Model LOCALLY (LM Studio Tutorial): Get UPDF Pro with an Exclusive 63% Discount Now: https://bit.ly/46bDM38Use the #UPDF to make your study and work more efficient! The best #adobealternative t…

- Local AI Agent with ANY Open-Source LLM using LMStudio: Hello and welcome to an explanation and tutorial on building your first open-source AI Agent Workforce with LM Studio! We will also learn how to set it up s…

- Don’t ask to ask, just ask

- GitHub - lmstudio-ai/model-catalog: A collection of standardized JSON descriptors for Large Language Model (LLM) files.

- GitHub - THUDM/CogVLM: a state-of-the-art-level open visual language model | 多模态预训练模型

▷ #🤖-models-discussion-chat (43 messages🔥):

- Model Size Concerns from Cardpepe: @cardpepe expressed weariness about the increasing sizes of models, preferring the times when 13 billion parameter models were the standard and now feeling uncomfortable with the 25GB+ sizes, a point underlined with emojis of concern.

- Mixtral and Its Quirks: Both @cardpepe and @dagbs discussed the use of various models like Mixtral, where @cardpepe mentioned a preference for models reminiscent of GPT-3.5 and found Mixtral close, but not quite the same.

- Quantization Troubles: @technot80 faced an error with the message “invalid unordered_map<K, T> key” when attempting to load a WhiteRabbitNeo-33B model. @heyitsyorkie explained that this suggests failed quantization, a known issue with some models.

- Discussion on Model Optimization: @sp00n9 and @dagbs engaged in a conversation about quantization, with @dagbs explaining that higher quantization (Q8) might be preferable to lower quantization (Q3) but also that it’s a balance between model performance and results.

- LM Studio App Compatibility Issues: @coolbreezerandy6969 inquired about issues loading newer GGUF models with the Linux LM Studio AppImage, indicating potential compatibility problems or updates needed.

▷ #🧠-feedback (17 messages🔥):

- Confusion over Rolling Window Policies: @flared_vase_16017 expressed confusion regarding the 2nd and 3rd context overflow policies. They later clarified their understanding that the 3rd policy doesn’t preserve the system prompt, contrary to what others have said. @yagilb confirmed that the rolling window policy does not preserve the system prompt.

- Feature Request for System Prompt Preservation: @flared_vase_16017 and @logandark discussed the need for a rolling window that retains the system prompt. @heyitsyorkie mentioned a feature request link that was posted earlier, but @logandark clarified that they seek a specific feature for maintaining only the system prompt.

- Anticipation for LM Studio Discord Banner: @dagbs inquired about getting a LM Studio Discord banner. @heyitsyorkie responded that reaching level 3 in boosts is required, while @dagbs mentioned that level 3 is only for animated versions.

- Frustration with Preset Selection in LM Studio: @logandark expressed frustration with LM Studio changing their preset settings. @heyitsyorkie provided a solution suggesting to set the default preset under the “my models” tab.
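The requested behaviour, a rolling window that keeps the system prompt, can be sketched roughly as below. This is not LM Studio’s implementation, which the discussion does not show. The message format, the `rolling_window` helper, and the whitespace-based `count_tokens` estimate are all assumptions made for illustration.

```python
def count_tokens(text):
    """Crude token estimate: whitespace-separated words (not a real tokenizer)."""
    return len(text.split())


def rolling_window(messages, max_tokens, keep_system=True):
    """Keep the newest messages that fit in max_tokens.

    With keep_system=True, system messages are pinned and paid for first;
    with keep_system=False, they are dropped like any other old message.
    """
    system = [m for m in messages if m["role"] == "system"] if keep_system else []
    rest = [m for m in messages if not (keep_system and m["role"] == "system")]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + kept[::-1]  # restore chronological order


history = [
    {"role": "system", "content": "You are terse."},
    {"role": "user", "content": "first question here"},
    {"role": "assistant", "content": "first answer here"},
    {"role": "user", "content": "second question here"},
]

pinned = rolling_window(history, max_tokens=9, keep_system=True)
plain = rolling_window(history, max_tokens=9, keep_system=False)
print([m["role"] for m in pinned])
print([m["role"] for m in plain])
```

With the same budget, the pinned variant gives up one older turn in exchange for keeping the system prompt, which is the trade-off the feature request implies.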

▷ #🎛-hardware-discussion (16 messages🔥):

- No Easy GPU Expansion via USB: @fabguy mentioned that Nano devices require a specific compilation and currently it’s not feasible to add GPU support via USB.

- Tensor Processing Unit Limitations: @strangematter raised that coral TPUs are efficient for vision tasks but noted their limitation to TensorFlow Lite, possibly requiring conversion scripts to work with other frameworks.

- Compatibility Queries for LMStudio: @sencersultanoglu inquired about installing LMStudio on an NVIDIA Jetson AGX Orin Development Kit with ARM CPU, leading @heyitsyorkie to point out compatibility errors due to glibc with Ubuntu 20.

- LMStudio x86 Architecture Preference: Further clarifying the compatibility question, @heyitsyorkie confirmed that LMStudio is typically for x86 architecture, not ARM, but suggested building llama.cpp for ARM systems.

- Model Performance Advice for 4090 GPU: In a discussion about runtimes, @heyitsyorkie advised @ericericericericericericeric on the performance expectations of various large language models on a single RTX 4090 GPU rig, noting that Mixtral is quite slow on such a setup.

▷ #🧪-beta-releases-chat (5 messages):

- Query on Necessity of Duplicated RAM Info: @kadeshar questioned whether displaying RAM usage twice is necessary, suggesting that the blue bar could instead consistently display the app version.

- Channel Organization Suggestion: @dagbs humorously pointed out where to place suggestions by indicating the appropriate channel with an emoji (<#1128339362015346749> 😄).

- Improvement Suggested for VRAM/RAM Requirement Calculation: @kadeshar recommended that VRAM/RAM usage should subtract the amount employed by the currently loaded model for accurate requirement calculations.

- Inconsistency in VRAM/RAM Offloading Highlighted: @kadeshar noted that the program does not wait for models to be fully offloaded from VRAM/RAM before it calculates requirements.

- Color-coding Channels for Clarity: @dagbs proposed color differentiation between Beta Releases and AMD ROCm Beta channels to make them easier to tell apart.

▷ #autogen (3 messages):

- Smooth Operation Confirmed: @thelefthandofurza expressed satisfaction with getting something up and running, noting its smooth operation and looking forward to future additions.

- Awaiting Group Chat Feature through UI: @tyler8893 highlighted that group chat might not yet be available through the UI and speculated that there’s an example involving a JSON file from a GitHub repository.

▷ #langchain (1 messages):

- Exclusive Function Calling in OpenAI’s GPT 3.5 Turbo: @cryptocoder pointed out that function calling is unique to OpenAI, mentioning that their model, GPT 3.5 Turbo, was specifically trained for this feature. They highlighted the absence of this capability in open-source models.

▷ #memgpt (20 messages🔥):

- Skepticism around memgpt’s efficacy: @sitic expressed curiosity about memgpt, noting a lack of user reviews and questioning its ability to handle context limitations as advertised.

- Developer feedback points to issues: @flared_vase_16017 referenced a GitHub issue comment from the sillytavern dev that seemed less than positive about memgpt (Issue #1212).

- memgpt underperforms and forgotten: @dagbs shared personal experience that memgpt failed to “remember” anything through its usage, leading to the conclusion that the project may have stalled.

- Discussing potential improvements to memory and function calling: @flared_vase_16017 and @dagbs engaged in a detailed discussion about the potential for leaner models that efficiently manage language and reasoning by importing context selectively, and debated how to optimize memory retrieval speed and accuracy by combining current context and database storage.

- Reviving old code with modern LLMs: Sharing a past project, @dagbs highlighted the possibility of integrating 2018-era database and function calling code with new language models like those in LM Studio to potentially replicate or improve upon what memgpt intended to achieve.

Links mentioned:

[FEATURE_REQUEST] Add Superboogav2 for “long-term-memory” · Issue #1212 · SillyTavern/SillyTavern: Have you searched for similar requests? Yes Is your feature request related to a problem? If so, please describe. Oobabooga recently made a new version of their vector based memory system that does…

HuggingFace Discord Summary

- Discord Takes a Dive & miniSDXL Model Enters the Open Source Arena: During a reported Discord outage that affected users like @lunarflu, the community also buzzed about the new open-source miniSDXL model on HuggingFace, which @kopyl shared.

- Getting the Most Out of Machine Learning Models: The community dove into practical issues like installing kohya_ss from GitHub and working out why a learning rate was decaying, with a scheduler suggested as the likely cause. Meanwhile, @tonic_1 invites PRs for memory management improvements in their e5-mistral7B embeddings model, and a glossary of local LLM terminology, The Llama Hitchiking Guide to Local LLMs, was recently published.

- Visions of the Future: Music, Small Models, and Sky Creations: Members shared advancements ranging from music waveform separation to the Zyte-1B model’s performance on an M1 chip, noted by @venkycs. Creative types might be interested in tools for generating custom HDRI skies, as suggested by @nebmotion.

- When Brain Waves Meet Diffusers: In the diffusion discussions, @louis030195 presents a novel experiment integrating brain data (EEG) with diffusion models, while @chad_in_the_house debates the necessity of MATLAB over Python for machine learning work.

- Vision Transformers and the Quest for Clarity: From understanding GT formats for fine-tuning document VQA models to categorizing vast amounts of image data, members discussed various resources, including a Transformers tutorial for image classification and practical applications of tools for image enhancement.

HuggingFace Discord Channel Summaries

▷ #general (183 messages🔥🔥):

- Discord Experiences Outage: @lunarflu mentioned that there was a Discord outage, confirmed by @jo_pmt_79880.

- miniSDXL Model Goes Open Source: @kopyl shared an open source miniSDXL model and provided the link (miniSDXL on HuggingFace). @vishyouluck expressed interest in using the model.

- Installing kohya_ss Becomes a Group Effort: @schwifty4u sought help with installing kohya_ss, leading to an extended troubleshooting session with @meatfucker, who guided them through using command line defaults and the setup process.

- AI Enthusiasts Tackle Learning Rate Mysteries: @bluebug reported issues with their model not learning and later questioned why their learning rate was decaying, prompting @doctorpangloss to suggest that a learning rate scheduler might be the cause.

- Newcomers Seek General Assistance: @typoilu sought resources on transformers, and other users asked for help with practical issues such as choosing a GPU provider, finding animation models for images, and deploying LLMs easily.
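A decaying learning rate like the one @bluebug noticed is what a scheduler produces by design. The sketch below assumes a linear warmup-then-decay schedule, which is a common default in training loops; the function name and the numbers are hypothetical and were not part of the discussion.

```python
def linear_schedule_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate at `step` under linear warmup followed by linear decay to zero.

    Illustrative only: a hand-rolled schedule, not any specific library's scheduler.
    """
    if warmup_steps > 0 and step < warmup_steps:
        # Warmup phase: ramp up from 0 towards base_lr.
        return base_lr * step / warmup_steps
    # Decay phase: fall linearly from base_lr to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    # Printing the schedule makes the "mysterious" decay visible.
    for step in (0, 250, 500, 750, 1000):
        print(step, linear_schedule_lr(step, total_steps=1000, base_lr=5e-5))
```

If the logged learning rate follows a curve like this, the decay comes from the schedule rather than from a bug; a constant schedule would keep it flat.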

Links mentioned:

- Attention Is All You Need: The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and d…

- Paste ofCode

- lowres (That Time I got Reincarnated to a Hugging Face Organization)

- kopyl/miniSDXL · Hugging Face

- NobodyExistsOnTheInternet/toxicqa · Datasets at Hugging Face

- GitHub - bmaltais/kohya_ss: Contribute to bmaltais/kohya_ss development by creating an account on GitHub.

▷ #cool-finds (14 messages🔥):

- AI Separates Music Waveforms: @callmebojo shared an impactful paper on waveform separation, a significant tool for music producers who resample tracks. Read the study here: Source Separation.

- Zyte-1B Packs a Tiny Punch: @venkycs highlighted the tiny yet powerful Zyte-1B model, an advancement over TinyLlama that uses Direct Preference Optimization (DPO). Check out this model on HuggingFace: Zyte-1.1b Model.

- Song Genre Classification Showcase: @andysingal presented a resource showing how to classify songs using Hugging Face and Ray on Vertex AI. Find out more in this Medium post: Is it Pop or Rock?.

- High-Speed Inference with Zyte-1B: @venkycs discussed testing the Zyte-1B model with impressive inference speeds on an M1 chip. They mentioned a Colab included in the LM studio report for trying it out.

- Train a Race Car on Your PC: @tan007 shared a video tutorial on training a virtual, driverless race car using AWS DeepRacer, even on a Windows PC. Watch the guide here: Train a Self-Driving Race Car.

- Create Skies in HDRI with Ease: @nebmotion introduced a tool for generating custom HDRI skies quickly, offering a range of features including time-of-day control and high-resolution output. Dive into the creator here: HDRI Magic Power Sky Aurora Creator.

Links mentioned:

- aihub-app/zyte-1B · Hugging Face

- Train a Self-Driving Race Car on Your PC! (AWS DeepRacer DRfC): Hello everyone, now you can train your own driverless car virtually without hardware, even on your Windows PC! Dive into this detailed guide on training an a…

- Is it Pop or Rock? Classify songs with Hugging Face 🤗 and Ray on Vertex AI: The article covers how it is possible to fine-tune an audio classification model using HuggingFace and Ray on Vertex AI.

- HDRI Creator - XYZ360: Create amazing custom HDRI skies today. Have full control of time, world location and clouds. Stop hunting HDRI’s and create your own!

▷ #i-made-this (8 messages🔥):

- Tonic1’s Embeddings Model on Hugging Face: @tonic_1 presents the e5-mistral7B embeddings model from Microsoft, running on GPUZero. They invite contributions, especially PRs to manage memory more safely. The model is available to explore on Hugging Face’s Spaces at https://huggingface.co/spaces/Tonic/e5.

- Osanseviero’s Glossary for Local LLMs: @osanseviero created The Llama Hitchiking Guide to Local LLMs, a glossary to keep up with new concepts like MoE, LASER, and more, which they shared on Twitter and on their blog.

- Proposal for a Terminology Learning Tool: @stroggoz suggests creating a Duolingo-style application focused on teaching all the new terminology in the language model domain, following the glossary shared by @osanseviero.

- Recurrent Neural Notes on Alternative to RLHF: @mateomd_dev discusses a paper introducing an alternative to Reinforcement Learning from Human Feedback (RLHF), highlighted by Andrew Ng on LinkedIn. The insights are featured in the latest issue of Recurrent Neural Notes, available here: The RNN #6.

Links mentioned:

- E5 - a Hugging Face Space by Tonic

- Tweet from Omar Sanseviero (@osanseviero): The Llama Hitchiking Guide to Local LLMs It’s hard to keep up with so many new concepts. What’s MoE? LASER? SuperHOT? Bagel? Tri Dao? 😱🤯 Take a look at this brief read covering (very brief…

- The RNN #6 - Is RLHF Becoming Obsolete? : Revolutionizing AI Alignment with DPO

▷ #reading-group (2 messages):

- Audio Mishap in Meeting Recording: After rewatching the session, @mr.osophy apologized that other participants’ audio was not recorded during a meeting. They mentioned it might have been caused by disconnecting and reconnecting AirPods, which changed the sound output settings.

- Suggestion for a New Idea Met with Enthusiasm: @osanseviero expressed support for what seems to be a proposal, commenting, “Yes this sounds like a neat idea!”

▷ #diffusion-discussions (6 messages):

- Innovative Approach to Generate Spectrograms: @louis030195 is exploring the use of the diffusers library and a Hugging Face model to generate a spectrogram from brain data (EEG). They provided a code snippet in which they attempt to use diffusion models for image generation from brain wave data.

- Questioning the Approach: @vipitis questioned the presented method and suggested plotting the EEG data directly.

- MATLAB vs. Python for Machine Learning: @muhammadmehroz asked about the necessity of learning MATLAB for Machine Learning. @chad_in_the_house responded that MATLAB could be useful in robotics, but Python is sufficient for pure ML, also remarking that MATLAB coding is “pretty terrible”.

▷ #computer-vision (14 messages🔥):

- Discovering the GT Parse Format: @swetha98 sought help understanding the ground truth parse format for single-question, single-answer examples when fine-tuning the Donut model for DocVQA. @nielsr_ clarified that a single dictionary per image is required, as shown in his notebook.

- Spot the Similarity in Screenshots: @amarcel expressed the need to group 50k similar screenshots without a dataset to train on. @nielsr_ recommended embedding each image using an off-the-shelf vision model to compute cosine similarity or perform k-means clustering on the embeddings.

- Classifying Vision with Transformers: @vikas.p provided a link to a Hugging Face tutorial on image classification with Transformers (Image classification guide) in response to a user looking for group classification of screenshots.

- Enhance Vision Tasks with Coral Accelerators?: @strangematter inquired about the performance impact of using Coral accelerators for vision tasks compared to typical GPU or CPU usage.

- Impressive Tool Performance on Mediocre Images: @damian896636 shared a positive experience using an unnamed tool that significantly improved the quality of uploaded images.
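The screenshot-grouping suggestion above (embed each image, then compare embeddings by cosine similarity) can be sketched as follows. This is a minimal illustration under stated assumptions: the embeddings are hand-written toy vectors standing in for the output of a vision model, the file names and the 0.9 threshold are hypothetical, and a simple greedy threshold grouping stands in for k-means.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def group_by_similarity(
    embeddings: Dict[str, Sequence[float]], threshold: float = 0.9
) -> List[List[str]]:
    """Greedy grouping: each image joins the first group whose first member is similar enough."""
    groups: List[List[str]] = []
    for name, vector in embeddings.items():
        for group in groups:
            representative = embeddings[group[0]]
            if cosine_similarity(vector, representative) >= threshold:
                group.append(name)
                break
        else:
            # No existing group matched, so this image starts a new one.
            groups.append([name])
    return groups


if __name__ == "__main__":
    # Toy vectors; real embeddings would come from an off-the-shelf vision model.
    toy_embeddings = {
        "login_1.png": [0.9, 0.1, 0.0],
        "login_2.png": [0.88, 0.12, 0.01],
        "settings_1.png": [0.0, 0.2, 0.95],
    }
    print(group_by_similarity(toy_embeddings))
```

Greedy grouping needs no preset number of clusters, which may suit an unlabeled pile of screenshots; k-means on the same embeddings is the alternative mentioned in the discussion when the cluster count is known.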

Links mentioned:

- google/pix2struct-screen2words-base · Hugging Face

- Image classification

- Transformers-Tutorials/Donut/DocVQA/Fine_tune_Donut_on_DocVQA.ipynb at master · NielsRogge/Transformers-Tutorials: This repository contains demos I made with the Transformers library by HuggingFace. - NielsRogge/Transformers-Tutorials

▷ #NLP (48 messages🔥):

- Troubleshooting GPU Utilization in Model Training: @meatfucker suggested an issue might be related to using CPU Torch instead of GPU, but @frosty04212 confirmed that GPU utilization is at 99%, which rules out a CPU Torch issue.

- Successful Inference Depends on the Model Base: After discussion, @Cubie | Tom advised @frosty04212 not to start from a specific NER model but from a more general one, which led to a successful outcome when using roberta-base. @frosty04212 expressed gratitude for the resolution.

- HuggingFace Token Classification Guide Under Scrutiny: @frosty04212 encountered problems when following HuggingFace’s token classification training guide and urged the HuggingFace team to find a solution.

- Inference Inconsistencies on NVIDIA Cards Challenged: @frosty04212 reported inconsistencies when running inference on different machines and requested assistance with the possible guide issues.

- Expression of Community Support: @cakiki appreciated @Cubie | Tom’s successful assistance, expressing gratitude with emojis.

▷ #diffusion-discussions (6 messages):

- Brain Waves to Spectrogram via Diffusers: @louis030195 is exploring diffusion models to generate a spectrogram from EEG brain data. They shared a Python snippet that outlines the basic framework of their diffusion model, utilizing band power data from an EEG for the diffusion_rate and time_steps parameters.

- Simpler Alternatives to Plotting EEG Data?: @vipitis questioned the complexity of using a diffusion model to plot EEG data, suggesting that the data could be plotted directly.

- MATLAB for Machine Learning?: @muhammadmehroz inquired if learning MATLAB is necessary for machine learning. @chad_in_the_house opined that MATLAB has value in robotics, but for machine learning alone, Python suffices, and also remarked that MATLAB coding is not ideal.

Mistral Discord Summary

- Benchmarking Tools Shared: In a discussion started by @tao8617, @bozoid. pointed to resources like AllenAI’s catwalk and EleutherAI’s lm-evaluation-harness for model benchmarking outside of the papers, following a mention by @i_am_dom of real-life performance aligning with the MoE paper metrics.

- Vector Language Theory and Few-shot Prompting: @red_code introduced the concept of using vectors to represent letters and constructing word vectors, while @robolicious sought community insight on few-shot prompting with Mistral, mentioning the use of LangChain for templates.

- Performance and Deployment Insights: Discussions in #deployment revealed that vLLM may be slower than f16, with reported token output rates of 15 tokens/s and 30 tokens/s depending on hardware. @nickbro0355 shared a blog post about creating a local LLM assistant and, along with @richardclove, asked about cost-effective deployment methods.

- Finetuning Forums and API Stability: @stefatorus canvassed for finetuning support on Mistral and preferred outsourcing infrastructure, and @sublimatorniq teased that finetuning support is underway. In #la-plateforme, stability for production use and consistent versioning were affirmed, and a type mismatch issue in Mistral’s JavaScript client was flagged, citing the client.d.ts and client.js files.

- Engagement and Showcasing: @azetisme brought attention to a French YouTuber’s claim that Mistral might eclipse ChatGPT (Micode’s YouTube video). Also, @jakobdylanc corrected the terminology from “Mistral API” to “La plateforme de Mistral” and linked to llmcord on GitHub.

Mistral Channel Summaries

▷ #general (177 messages🔥🔥):

- Searching for Model Benchmarking Evaluation Pipelines: In response to @tao8617’s query about the evaluation pipeline for model benchmarking, @i_am_dom stated that real-life performance seems to reflect the claimed numbers in the MoE paper, despite occasional instability. @bozoid. provided links to AllenAI’s catwalk and EleutherAI’s evaluation harness as resources for model benchmarking, which were not mentioned in the actual papers but are relevant tools (AllenAI’s catwalk, EleutherAI’s lm-evaluation-harness).

- Introducing Vector Language Theory: @red_code proposed the idea of treating letters as vectors and combining them to form word vectors, and subsequently creating sentence and paragraph vectors from them.

- Discussion on Direct Preference Optimization (DPO): @.nikhil2 mentioned Andrew Ng’s blog post about DPO already being implemented in Mistral, following a discussion of a paper related to DPO (Andrew Ng’s blog post).

- Mistral’s Internal Structure and SelfExtend Inquiry: @hharryr asked for materials explaining the internal structure of Mistral 7b, and @cognitivetech inquired about seeing the 7B model with SelfExtend. @timotheeee1 replied, identifying Mistral’s structure as a standard decoder-only transformer with GQA.

- OpenAI API and App Development Discussions: There was a lengthy discussion about developing skills and finding employment in the coding world. @richardclove suggested starting with contributing to open source, which transitioned into a discussion on how to approach coding as a beginner. Users shared personal experiences and recommended looking at projects like open-source AI models on Hugging Face or building a full-stack application integrating the OpenAI API. The conversation highlighted the importance of building a portfolio and learning by doing and contributing to real-world projects.

Links mentioned:

- Continue

- HuggingChat

- mistralai (Mistral AI_)

- app.py · openskyml/mixtral-46.7b-chat at main

- Supported Models

- GitHub - TabbyML/tabby: Self-hosted AI coding assistant: Self-hosted AI coding assistant. Contribute to TabbyML/tabby development by creating an account on GitHub.

- GitHub - allenai/catwalk: This project studies the performance and robustness of language models and task-adaptation methods.: This project studies the performance and robustness of language models and task-adaptation methods. - GitHub - allenai/catwalk: This project studies the performance and robustness of language model…

- GitHub - EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models.: A framework for few-shot evaluation of language models. - GitHub - EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models.

▷ #models (2 messages):

- The Squeeze Isn’t Worth the Juice?: @akshay_1 suggested that the “juice is not worth the squeeze” about an unspecified situation; what the “juice” and the “squeeze” referred to was not detailed.

- Few-shot Prompts with Mistral: @robolicious inquired about the community’s experience with few-shot prompting on Mistral, noting that they typically utilize LangChain for templates.
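For readers unfamiliar with the few-shot prompting @robolicious asked about, the idea is to place a handful of worked input/output pairs ahead of the real query. The sketch below uses plain Python string assembly rather than LangChain; the function name, the `Input:`/`Output:` layout, and the example data are all hypothetical, and no claim is made that this layout is what works best with Mistral.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble an instruction, worked examples, and the new query into one prompt string."""
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final Output is left empty so the model completes it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each input as positive or negative.",
        [("I loved this model.", "positive"), ("The API kept timing out.", "negative")],
        "Response times were great this weekend.",
    )
    print(prompt)
```

A template library does the same substitution with more structure; keeping the examples as data makes it easy to swap or reorder them while testing.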

▷ #deployment (7 messages):

- F16 vs vLLM Performance Insights: @dreamgen mentioned that vLLM is slower than f16, and others added specific token output rates. @charlescearl_45005 added that a p3 instance was outputting 15 tokens/s while an M3 Pro with untuned mistral-instruct and a llama.cpp 4-bit compressed model managed 30 tokens/s.

- Personal LLM Assistant Over Cloud Services: @nickbro0355 shared a blog post on building a local, sassy, and sarcastic LLM assistant, emphasizing local operation over cloud services (John the Nerd Blog).

- Scroll for System Prompts Query: @nickbro0355 pointed users to a resource on how to obtain system prompts with Mixtral, suggesting to scroll down for the information.

- Seeking Cost-Effective Deployment Options: @richardclove asked for the cheapest reliable way to deploy a completion endpoint without paying for a license, SageMaker, or the Hugging Face package.

- Request for Inexpensive Access to the Language Model: .unyx expressed a desire to try the language model but lacks the necessary setup, and requested advice on an inexpensive solution.

Links mentioned:

Building a fully local LLM voice assistant to control my smart home: I’ve had my days with Siri and Google Assistant. While they have the ability to control your devices, they cannot be customized and inherently rely on cloud services. In hopes of learning someth…

▷ #finetuning (20 messages🔥):

- In Search of Finetuning Support: @stefatorus inquired about finetuning support on the Mistral Platform and mentioned that Mistral-small is open-weights, not open source.

- Outsourcing Infra Preferred: @stefatorus expressed a preference to “outsource” infrastructure rather than managing it, despite @richardclove highlighting the benefits of a monthly cost over a pay-per-use model.

- Finetuning on the Horizon: @sublimatorniq relayed that finetuning support for the Mistral Platform is currently under development as per <@803073039716974593>.

- Economical API Uses: @stefatorus explained the economic side, stating that in-house hosting would cost 500-1000 EUR monthly, while they pay 300 EUR for pay-as-you-go credits, efficiently handling traffic spikes.

- Comparing Mistral and Big Players: @stefatorus expressed a desire to financially support developers directly, noting that the Mistral team is a main competitor to OpenAI, impressive given its smaller size and resources compared to corporations like Google.

▷ #showcase (1 messages):

jakobdylanc: Mistral API ❌ La plateforme de Mistral ✅

https://github.com/jakobdylanc/llmcord

▷ #random (1 messages):

- French YouTuber Takes on Mistral: @azetisme highlights a YouTube video by Micode de Underscore titled “ChatGPT vient de se faire détrôner par des génies français”. The video discusses how Mistral may have surpassed ChatGPT. Video here. The description indicates a high hype level and mentions potential dangers with the new advancements.

Links mentioned:

ChatGPT vient de se faire détrôner par des génies français: hype/20👀 À ne pas manquer, ChatGPT vient de devenir dangereux : https://youtu.be/ghVWFZ5esnUPas du tout obligé mais si vous vous abonnez ça m’aide vraiment …

▷ #la-plateforme (42 messages🔥):

- Smoother Sailing on Saturdays: @casper_ai and @sublimatorniq discussed improved response times during weekend testing of the platform, and will monitor for changes on weekdays.

- Type Mismatch Tease: @dreamgen pointed to a type mismatch in Mistral’s JavaScript client with links to the relevant GitHub commit in client.d.ts and client.js.

- Timeouts with Big Tasks: @dreamgen reported timeouts on tasks with large numbers of output tokens using mistral-medium, recommended regression testing, and shared a workaround using streaming and accumulation.

- A Plea for API Update Alerts: @_definitely_not_sam_ asked for a dedicated channel for API updates and advance notice of changes to maintain the Golang client properly.

- Is Mistral Ready for Production?: Discussions led by @lerela confirmed the API is stable and versioned, and that the current system can be used for production, with rate limits available in user accounts.
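The streaming-and-accumulation workaround mentioned above can be sketched generically: instead of waiting for one large response that may time out, the client reads chunks as they arrive and joins them at the end. The generator below is a hypothetical stand-in for a streaming API response, not the Mistral client's actual interface, and the chunk contents are invented for illustration.

```python
from typing import Iterable, Iterator


def fake_token_stream() -> Iterator[str]:
    """Hypothetical stand-in for a streaming API response; yields text chunks."""
    yield from ["Long ", "outputs ", "arrive ", "in ", "pieces."]


def accumulate_stream(chunks: Iterable[str]) -> str:
    """Collect streamed chunks into the full completion text."""
    parts = []
    for chunk in chunks:
        if chunk:  # Skip empty keep-alive or terminator chunks.
            parts.append(chunk)
    return "".join(parts)


if __name__ == "__main__":
    print(accumulate_stream(fake_token_stream()))
```

Because data keeps flowing while the model generates, the connection is less likely to sit idle long enough to hit a request timeout, which is presumably why the workaround helps with long outputs.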

Links mentioned:

- Mistral AI API | Mistral AI Large Language Models: Chat Completion and Embeddings APIs

- client-js/src/client.d.ts at f7049e5afa9db744aa1502b71ddd9746062520ff · mistralai/client-js: JS Client library for Mistral AI platform. Contribute to mistralai/client-js development by creating an account on GitHub.

- client-js/src/client.js at f7049e5afa9db744aa1502b71ddd9746062520ff · mistralai/client-js: JS Client library for Mistral AI platform. Contribute to mistralai/client-js development by creating an account on GitHub.

Perplexity AI Discord Summary

- New Release Hits the Playground: @ok.alex announced the upgrade to Perplexity Android App version 2.9.0, emphasizing the new widget feature and the addition of Gemini Pro & Experimental models (Perplexity Android App Update).

- Chatbots Under the Microscope: Comparing Perplexity AI, Bing Chat, and Phind, @esyriz observed that all incorporate ChatGPT with web search. Discussions highlighted Bing’s limitations and the anticipated integration of Whisper into the Perplexity app this month, discussed by @icelavaman and others.

- Innovation Spotlight: Perplexity AI Gains Traction: Highlighting articles from Zhihu and Forbes, users discussed Perplexity AI’s user-focused model and its ranking in the AI traffic domain. Further discussions included RabbitMQ’s deployments and AI-created art videos “AI Bahamut” and “The Abandoned”.

- Unleashing Vim’s Potential with Perplexity API: @takets introduced a vim/neovim plugin that interfaces with the Perplexity API, fostering integration into a text editor environment.

- Diving into API Quirks and Fixes: Users reported discrepancies between the Perplexity API and the main app, with particular issues in summarizing and comparing numbers. Several users wanted model responses to include source URLs, reflecting a need in professional settings to verify LLM-generated data, yet @icelavaman stated it’s currently not on the feature roadmap.

Perplexity AI Channel Summaries

▷ #announcements (1 messages):

- Perplexity Android App Hits 2.9.0: @ok.alex announced the update of the Perplexity Android App to version 2.9.0 with a new widget feature and inclusion of Gemini Pro & Experimental models. Feedback is encouraged in the dedicated feedback channel.

▷ #general (88 messages🔥🔥):

- Comparing Chatbot Options: @esyriz inquired about the differences between Perplexity AI, Bing Chat, and Phind. They noted that all of them use ChatGPT with web search, and mentioned that Bing Chat has GPT-4 for free. @icelavaman pointed out Bing’s limitations, such as fewer sources, no file uploads, and no focus options.

- Whisper Integration Update: @zwaetschgeraeuber asked if Whisper would be integrated into the Perplexity app, and @icelavaman confirmed an update was expected within the month.

- Model Performance and Preferences: @zwaetschgeraeuber ranked their preferences for AI models in answering search queries, listing Gemini as the top due to its straight answers compared to GPT, Claude, and Perplexity 70b. They also mentioned that Perplexity 70b sometimes makes grammar mistakes in German.

- Apple Watch and Perplexity: @srbig questioned the possibility of using Perplexity on an Apple Watch. @ok.alex responded with a link but did not confirm the capability. @srbig followed up asking about using different models like Claude 2.1 or GPT-4, and @icelavaman clarified it’s not possible via the watch.

- Issues with Mistral Medium: @moyaoasis reported that Mistral Medium was not working and asked if the problem was on their end after switching from the Brave browser to MS Edge.

Links mentioned:

- ChatGPT, Google Bard, Microsoft Bing, Claude, and Perplexity: Which is the Right AI Tool?: Compare chatbots in terms of conversational capabilities and limitations to find the right AI assistant for your needs.

- Pricing

- Universe Space GIF - Universe Space Stars - Discover & Share GIFs: Click to view the GIF

- ChatGPT vs Perplexity AI: Does Perplexity Use ChatGPT? - AI For Folks: The AI landscape is constantly shifting, and can be confusing. Many companies overlay different technologies for their own use. In this article, we’ll compare

- Billing and Subscription: Explore Perplexity’s blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

▷ #sharing (15 messages🔥):

- Perplexity AI Navigates Growth: An analysis in a Chinese column at Zhihu examines three “growth secrets” behind Perplexity AI’s success: timely feature integration, deep user understanding, and increasing usage frequency. Despite skepticism about its technology, Perplexity AI is rising in AI application traffic ranks, reaching the top 10.

- Forbes Highlights Perplexity.ai’s Innovation: A Forbes article showcases Perplexity.ai’s disruption of the search engine landscape, noting a shift from an ad-centric model to a user-centric answer engine using large language models (LLMs).

- Learning About RabbitMQ: @sven_dc and @arvin6573 shared a link to RabbitMQ’s official page, highlighting VMware’s commercial offerings which include deployments for Kubernetes and cloud hosting support.

- Showcasing AI-Created Art: @foreveralways. expressed gratitude and shared two 4k resolution YouTube videos titled “AI Bahamut - Pika AI (4k)” and “The Abandoned - Pika AI (4k)” which seem to be projects involving Pika Labs, RunwayML, and AIARTGEN. Links to the videos: “AI Bahamut” and “The Abandoned”.

- Usage of Share Button Reminder: @me.lk reminded @termina4tor_gworld to make their thread public by clicking the share button after posting a Perplexity AI search query link.

Links mentioned:

- RabbitMQ: easy to use, flexible messaging and streaming — RabbitMQ

- How Perplexity.ai Is Pioneering The Future Of Search: Powered by large language models (LLMs), Perplexity is an “answer engine” that places users, not advertisers, at its center.

- 行业洞察|揭秘 Perplexity AI:深入探讨其迅猛崛起的三个实用增长策略,探究其成功之路!: 本周,我们开启新的【行业洞察】专栏,首篇聚焦—— Perplexity AI 今天我们将讨论Perplexity AI迅速增长的三个 增长秘诀:及时功能整合:利用关键的AI进展,实现功能之间的协同作用。深入用户需求:以用户为中心…

- AI Bahamut - Pika AI (4k): @Pika_Labs @AIARTGEN @RunwayML

- The Abandoned - Pika AI (4k): @Pika_Labs @RunwayML @AIARTGEN

▷ #pplx-api (21 messages🔥):

- Seeking Clarity on API Search Capabilities: @crit93 inquired about a discrepancy in summarizing LinkedIn posts, noting that the Perplexity API isn’t performing like the main app. @dawn.dusk and @icelavaman clarified that the two are indeed different and that real-time browsing is possible with the correct use of the “site:” operator.

- API Responses May Vary: @adriancowham reported inconsistencies between results given by the pplx API and the pplx labs playground when asking which of two numbers is greater. They are seeking tips on how to get the API to closely mirror the playground’s more accurate responses.

- Vim Integration for pplx API: @takets shared a GitHub link for a vim/neovim plugin they created that acts as a client for the Perplexity API.

- Awaiting URL Indication Feature: @jdub1991 inquired about an operator to make the pplx model indicate sources and URLs, as seen in the consumer interface, but @icelavaman confirmed it’s not a planned feature, while @brknclock1215 expressed a common professional need to cross-check LLM-generated information.

- Handling Authorization Errors: @crit93 faced a “Missing authentication” problem even while using the correct API key, and @icelavaman advised sharing the issue in a different channel for proper assistance.

Links mentioned:

GitHub - nekowasabi/vim-perplexity: Contribute to nekowasabi/vim-perplexity development by creating an account on GitHub.

LlamaIndex Discord Summary

- Call for LlamaIndex Showcases: @jerryjliu0 is seeking users to share their LlamaIndex projects or blog posts for broader exposure. Interested parties should contact @jerryjliu0 directly with their work.

- Exploring Tabular Data Understanding with LlamaIndex: The Chain-of-Table technique, which uses LLMs to interpret tabular data, is highlighted. For a deeper dive, check the teaser on Twitter. Additional posts cover the importance of reranking in RAG pipelines, a template for a RAG-powered voice assistant, and a pointer to the original LinkedIn discussion source. Tweets related: RAG Pipeline Insight, Voice Assistant Template, Directing to LinkedIn Source.

- RAG System Response Optimization and LlamaIndex Navigation Exploration: @liqvid1 is tackling slow response times in a RAG system, potentially due to excessive LLM calls. Suggested solutions include hosted APIs and pragmatic prompt design. @lolipopman discussed using LlamaIndex for navigation, with support from @desk_and_chair.

- Vector Store Interoperability and Name Clarifications: @cd_chandra raised interoperability concerns about different vector stores and the need to track metadata. In a separate thread, @_joaquind questioned the tight integration between LlamaIndex and OpenAI, with @cheesyfishes providing historical context for the relationship. An unresolved issue with models returning the wrong row from sorted table data was also noted.

- Probing the GRIT Dataset and Upgrading Chatbot Responses: @saswatdas sought clarification on the GRIT dataset with a call for community insights (GitHub repository for GRIT). Additionally, @sl33p1420 introduced a new resource aimed at enhancing chatbot response quality, available at Revolutionizing Chatbot Performance.

LlamaIndex Discord Channel Summaries

▷ #announcements (1 messages):

- Show off your LlamaIndex projects: @jerryjliu0 encourages users to share their LlamaIndex related projects or blog posts for promotion. DM @jerryjliu0 if you have something to share!

▷ #blog (4 messages):

- Discovering Chain-of-Table Technique: LlamaIndex introduced a method using LLMs to step-by-step understand tabular data, addressing the limitations of direct text-to-table and Text-to-SQL approaches. The process was teased with a link: https://twitter.com/llama_index/status/1746217167706894467.

- Reranking in RAG Pipelines: @MountainMicky shared insights on the necessity of including a reranker in advanced Retrieval-Augmented Generation (RAG) pipelines to ensure precise context is returned for complex queries. Highlighted in a tweet: https://twitter.com/llama_index/status/1746340454281666972.

- LlamaIndex Points to Original LinkedIn Source: In this message, LlamaIndex directed users to a LinkedIn post as the original source for the latest discussion: https://twitter.com/llama_index/status/1746340495486488607.

- Template for RAG-Powered Voice Assistant: @HarshadSurya1c’s full-stack template for building a voice assistant using the create-llama CLI tool was announced. This serves as an example to scaffold backend/frontend applications: https://twitter.com/llama_index/status/1746574062363848870.

▷ #general (47 messages🔥):

- RAG System Response Time Troubles: @liqvid1 discusses building a RAG system with OpenAI’s GPT-4. They’re experiencing slow response times, approximately 25 minutes, and seek advice on whether it’s due to their local MacBook specs or another issue. @cheesyfishes suggests it’s likely due to the number of LLM calls rather than the local system. @desk_and_chair and @mr.dronie make additional suggestions, including considering lower model versions or using hosted APIs for better performance.

- LlamaIndex as a Navigation Tool: @lolipopman inquires about LlamaIndex’s capability to serve links for navigation purposes, like directing users to specific subpages. Through a constructed example, they suggest how a chatbot could guide users. @desk_and_chair responds by hinting at the potential for using the system prompt of the Query Engine instance to include links in responses.

- Vector Store Compatibility Queries: @cd_chandra poses questions on how to maintain cross-compatibility between vector stores created in different ways and how to track metadata like the embedding model used. @cheesyfishes responds by suggesting a PR for additional fields in the pgvector store class and recommends including the model name in the metadata field for tracking.

- LlamaIndex’s Tight OpenAI Integration Questioned: @_joaquind questions why LlamaIndex is so intertwined with OpenAI rather than more open-source options, suggesting a possible mismatch given its name. @cheesyfishes clarifies the history behind the naming and discusses the library’s increasing support for open-source LLMs, while acknowledging OpenAI’s dominance in complex applications.

- Sorting Issues with Table Data in Models: @andrew s raises a concern about a model that fails to return the correct row post-sorting, instead returning the last row of the unsorted dataset. They are looking for insights from the community on this issue.
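
The recommendation to record the embedding model in the metadata field can be sketched as follows. This is an illustrative, library-free sketch, not the pgvector store class or any LlamaIndex API; `StoredVector`, `make_record`, `find_incompatible`, the `embedding_model` and `embedding_dim` keys, and the model names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StoredVector:
    text: str
    embedding: list[float]
    metadata: dict[str, str] = field(default_factory=dict)


def make_record(text: str, embedding: list[float], model_name: str) -> StoredVector:
    """Attach the embedding model and dimension at write time (hypothetical metadata keys)."""
    meta = {"embedding_model": model_name, "embedding_dim": str(len(embedding))}
    return StoredVector(text, embedding, meta)


def find_incompatible(records: list[StoredVector], query_model: str, query_dim: int) -> list[StoredVector]:
    """Return records whose vectors should not be compared with the query embedding."""
    return [
        r for r in records
        if r.metadata.get("embedding_model") != query_model
        or r.metadata.get("embedding_dim") != str(query_dim)
    ]


records = [
    make_record("doc one", [0.1, 0.2, 0.3], "model-a"),       # placeholder model names
    make_record("doc two", [0.4, 0.5, 0.6, 0.7], "model-b"),
]
mismatched = find_incompatible(records, query_model="model-a", query_dim=3)
print([r.text for r in mismatched])
```

Checking before querying makes a mixed store fail loudly instead of returning similarity scores computed across unrelated embedding spaces.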

▷ #ai-discussion (2 messages):

- Seeking GRIT dataset clarity: User @saswatdas requested help with accessing the GRIT dataset used by the Ferret model, released by Apple. They shared the repository link https://github.com/apple/ml-ferret/ and expressed confusion.

- New Chapter Alert for Chatbot Enthusiasts: @sl33p1420 published a new chapter focused on improving chatbot response quality, especially for applications requiring higher response precision. The chapter is available at Revolutionizing Chatbot Performance and continues the series on building a complete chatbot using llama_index.

Links mentioned:

- Revolutionizing Chatbot Performance: Unleashing Three Potent Strategies for RAG Enhancement

DiscoResearch Discord Summary

- Engage in GPU Gear Up: The discussions touched on optimal hardware for running models like mixtral-7b-8expert, with a recommendation favoring a setup of dual NVIDIA 4090s and mentioning the capabilities of quantized models to run on a single 4090/3090 with specific VRAM requirements. A comprehensive GPU guide by Tim Dettmers was shared, alongside a comparison of the NVIDIA 4090 with the Mac Studio M2 Ultra in terms of performance and energy efficiency.

- Big Data, German Precision: @philipmay released the German DPR dataset for machine learning applications and requested community feedback and improvement suggestions. The conversation highlighted concerns about the use of the formal “Sie” in RAG/LLM contexts and the promise of a revised dataset version. Discussions unfolded around dataset generation risks and referenced an article on Model Collapse.

- Collaboration and Anticipation in the Air: Members showed eagerness to team up for a deep dive, as indicated by @huunguyen, and @thewindmom expressed enthusiasm for upcoming developments. Curiosity surfaced about the potential of integrating with mergekit to enhance capabilities.

- Navigating Tonality in Language Models: The dialog mentioned the challenges posed by formal address in German datasets and the exploration of methods to convert between formal and informal speech. Contributors discussed operational improvements and the potential use of few-shot examples and chat features to refine results.

DiscoResearch Channel Summaries

▷ #mixtral_implementation (4 messages):

- Seeking Hardware for Mixtral-7b-8expert: @leefde inquired about a hardware setup to run mixtral-7b-8expert and scale to larger models. @jp1_ recommended a configuration with dual NVIDIA 4090s for a balance of performance and cost, suitable for models up to 70b in size.

- Running Quantized Models on Single GPU: @thewindmom mentioned that a quantized version can be operated on a single 4090/3090 with 3.5bpw on 24 GB VRAM. For larger models, they suggested configurations with dual 3090s/4090s/A6000s/6000 Adas/L40s.

- Comprehensive GPU Guide Shared: A link to Tim Dettmers’ deep learning GPU guide was provided by @thewindmom, offering insights into important GPU features for making a cost-efficient choice. The guide includes performance comparisons and instructional charts.

- Mac Studio M2 Ultra as a Competitor: Mentioned by @thewindmom, the Mac Studio M2 Ultra was presented as an alternative, capable of holding up to 192 GB unified memory, where speed comparisons indicate that the 4090 runs 10% and 25% faster than the 3090 and M2 Ultra respectively for llama inference.

- Discussion about GPU Performance & Energy Consumption: @jp1_ pointed out that the NVIDIA 4090 not only outperforms the 3090 and M2 Ultra but is also more energy-efficient, and delivers a much larger performance boost with specific optimizations like fp8 training and tensorrt.
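
As a rough sanity check on the single-GPU claim above, weight memory is approximately parameters × bits per weight ÷ 8. The sketch below assumes a total of about 46.7 billion parameters for the Mixtral 8x7B model (an assumption, not a figure from the discussion) and counts weights only; the KV cache, activations, and framework overhead come on top, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


MIXTRAL_PARAMS = 46.7e9  # assumed total parameter count, not taken from the discussion

quantized_gb = weight_memory_gb(MIXTRAL_PARAMS, 3.5)  # the 3.5 bpw quantization mentioned above
fp16_gb = weight_memory_gb(MIXTRAL_PARAMS, 16)        # unquantized half precision, for contrast

print(f"3.5 bpw: {quantized_gb:.1f} GB")  # prints "3.5 bpw: 20.4 GB"
print(f"fp16:    {fp16_gb:.1f} GB")       # prints "fp16:    93.4 GB"
```

Under that assumption the 3.5bpw weights leave only a few GB of headroom on a 24 GB card, which is consistent with the suggestion of dual-GPU setups for anything larger.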

Links mentioned:

- The Best GPUs for Deep Learning in 2023 — An In-depth Analysis: Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.

- mlx-examples/lora at main · ml-explore/mlx-examples: Examples in the MLX framework. Contribute to ml-explore/mlx-examples development by creating an account on GitHub.

▷ #general (5 messages):

- Potential Team-Up on Deep Dive: User @huunguyen expresses willingness to collaborate with @191303852396511232 on a deep dive and suggested doing it together.

- Eyes on the Prize: @_jp1_ posts an eyes emoji indicating interest or anticipation.

- Excitement for the Upcoming 🔥: @thewindmom responds to @_jp1_, showing enthusiasm for the anticipated developments with a “hyped for that 🔥” comment.

- Speculations on mergekit and Launch Acceleration: @thewindmom asks about possible integration with mergekit, suggesting a significant boost in capability with “also merged with mergekit 🚀?”

- Comparing the Compute Powers of 4090 vs. M2 Ultra: @thewindmom shares a detailed analysis on the energy efficiency and performance of the 4090 GPU compared to the M2 Ultra chip, noting differences in memory bandwidth and their impact on model processing, inviting others to correct any misunderstandings.

▷ #embedding_dev (34 messages🔥):

- German Data for Machine Learning: @philipmay released the German DPR dataset and asked for feedback and suggestions for improvement.

- Formal vs. Informal in the Spotlight: @sebastian.bodza pointed out that the imperative with the formal “Sie” is used frequently in the RAG/LLM context, which could hurt performance when embedding.

- Tackling the “Sie” Problem: @philipmay acknowledged the issue with the formal address and plans a second version of the dataset. He also mentioned considering a conversion using 3.5-turbo.

- Dataset Generation Discussed: @devnull0, @philipmay and @.sniips discussed possible licensing and quality problems when generating datasets with OpenAI models and mentioned an article on Model Collapse.

- Iterative Improvement and Feedback: In an exchange with @bjoernp and @sebastian.bodza, @philipmay sought feedback on a prompt for converting formal to informal address and on the possible use of few-shot examples and chat features for better results.
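
The few-shot, chat-style approach to converting formal to informal address could look roughly like the sketch below. It only builds the message list and makes no API call; the system prompt wording, the example sentence pairs, and the `build_messages` helper are illustrative assumptions, not the prompt shared in the channel. The role/content dictionaries follow the common chat-completion message convention.

```python
SYSTEM_PROMPT = (
    "Rewrite the German sentence using the informal 'du' form instead of the "
    "formal 'Sie' form. Keep the meaning unchanged and return only the rewritten sentence."
)

# Hypothetical few-shot pairs: (formal input, informal output).
FEW_SHOT_EXAMPLES = [
    ("Bitte geben Sie Ihre Adresse ein.", "Bitte gib deine Adresse ein."),
    ("Können Sie mir helfen?", "Kannst du mir helfen?"),
]


def build_messages(sentence: str) -> list[dict[str, str]]:
    """Build a chat message list: system prompt, few-shot turns, then the sentence to convert."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for formal, informal in FEW_SHOT_EXAMPLES:
        # Each example is presented as a completed user/assistant exchange.
        messages.append({"role": "user", "content": formal})
        messages.append({"role": "assistant", "content": informal})
    messages.append({"role": "user", "content": sentence})
    return messages


messages = build_messages("Wie finden Sie die passende Grafikkarte?")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Presenting the examples as prior turns, rather than pasting them into one long instruction, is one way to use the chat format to show the model the exact output shape expected.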

Links mentioned:

- The Curse of Recursion: Training on Generated Data Makes Models Forget: Stable Diffusion revolutionised image creation from descriptive text. GPT-2, GPT-3(.5) and GPT-4 demonstrated astonishing performance across a variety of language tasks. ChatGPT introduced such langua…

- SebastianBodza/wikipedia-22-12-de-dpr · Datasets at Hugging Face

- GitHub - telekom/wikipedia-22-12-de-dpr: German dataset for DPR model training: German dataset for DPR model training. Contribute to telekom/wikipedia-22-12-de-dpr development by creating an account on GitHub.


LangChain AI Discord Summary

- LangChain Leaps Forward: Community members like @gitcommitshow and @lhc1921 shared valuable resources, including LangChain’s contributing guide and integration instructions, to help those seeking LangChain integration guidance. Meanwhile, @hiranga.g reported success in running LangServe, and subsequently pinpointed `.withTypes()` for handling nested input types in API design.

- Deployment Dilemmas and Streaming Woes: Contributors like @daii3696 aired issues on Azure streaming functionalities, while @greywolf0324 struggled with deploying the GGUF model with LangChain on AWS SageMaker. Related concerns were raised by @stampdelin, who encountered warnings in PyCharm after a LangChain upgrade, and by @__ksolo__, who asked about optimal strategies for document Q&A.

- Model Wrangling and Memory Juggling: @rajib2189 and @esxr_ elevated the guild’s work share with creative integrations and local solutions, like combining Langchain with AWS Bedrock and local LLMs with macOS Spotlight, showcased in their YouTube videos and GitHub repositories. Also, discussions by @roi_fosca about LangChain memory integrations using LCEL expressions, RedisChatMessageHistory, and ConversationSummaryBufferMemory indicated deep dives into LangChain’s capabilities.

- Bug Hunting and Parameter Puzzles: @muhammad_ichsan reported a potential `verbose` parameter bug in LlamaCpp, with @lhc1921 suggesting a fix involving case sensitivity.

- Expanding Framework Horizons: @aliarmani inquired about agentic memory management tools for crafting an advanced conversational agent, while @meeffe delved into improving efficiency by combining Python source code with documentation insights from tools like ReadTheDocs.

LangChain AI Channel Summaries

▷ #general (28 messages🔥):

- Seeking LangChain Integration Guidance: @gitcommitshow inquired about documentation for creating LangChain integrations. @lhc1921 responded with links to the contributing guidelines and detailed integration instructions on LangChain’s documentation site.

- Azure Streaming Conundrum: @daii3696 experienced issues with streaming functionality when deploying services on Azure with Kubernetes; quite a puzzle as everything worked locally.

- AWS SageMaker Deployment Dilemma: @greywolf0324 encountered challenges while attempting to deploy the GGUF model with LangChain on AWS SageMaker, and seemed to be “stuck on from deployment” before finding a solution.

- Verbose Parameter Bug Report: @muhammad_ichsan reported a potential bug with the `verbose` parameter in the LlamaCpp model on Google Colab. @lhc1921 suggested a potential fix, pointing out a case sensitivity issue.

- Exploring Retrospective Learning Tools: @aliarmani sought advice on tools and frameworks that excel in agentic memory management for developing an advanced conversational agent system; a true memory lane query.

- LangChain Llamafile Pros and Cons: @rawwerks pitched the idea that LangChain combined with llamafile could rival existing architectures like RAG, enabling privacy, local deployment, and other benefits. Yet, @lhc1921 rebutted with a caution that ollama is not ready for production-grade inference.

- Optimal Question Strategy for Document Q&A: @__ksolo__ queried about best practices in Q&A over documents, contemplating whether to ask multiple questions in one prompt or separate them; a strategic question quest.

- Python Import Warning Post LangChain Upgrade: @stampdelin faced a mysterious warning in PyCharm after upgrading LangChain, highlighting the troubles of software upgrades.

- Choosing Models from OpenAI: .citizensnipz. wanted to specify which OpenAI model to use, as all the requests defaulted to GPT-3.5, and found no guidance in the docs for switching to versions 4 or 4 turbo.

- Combining Python Source with Docs for Development: @meeffe discussed combining a Python source code loader with a tool like the ReadTheDocs loader to integrate documentation insights into a codebase, pondering memory and agent tools for improved efficiency.

- Memory Integration in LangChain: @roi_fosca discussed integrating memory into LangChain using LCEL expressions and RedisChatMessageHistory, but was concerned about potential token limit issues and inquired about using ConversationSummaryBufferMemory with LCEL expressions.
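
The token-limit concern about chat history can be illustrated with the summary-buffer idea: keep recent messages verbatim and fold older ones into a running summary once a budget is exceeded. The sketch below is a library-free illustration of that idea only, not LangChain's ConversationSummaryBufferMemory implementation or its API; `SummaryBuffer`, `count_tokens`, and `toy_summarize` are hypothetical stand-ins (in practice an LLM call would do the summarizing and a real tokenizer the counting).

```python
from typing import Callable


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


class SummaryBuffer:
    def __init__(self, max_tokens: int, summarize: Callable[[str, list[str]], str]):
        self.max_tokens = max_tokens
        self.summarize = summarize
        self.summary = ""
        self.messages: list[str] = []

    def add(self, message: str) -> None:
        self.messages.append(message)
        evicted: list[str] = []
        # Evict the oldest messages until the verbatim buffer fits; always keep the newest one.
        while len(self.messages) > 1 and sum(map(count_tokens, self.messages)) > self.max_tokens:
            evicted.append(self.messages.pop(0))
        if evicted:
            self.summary = self.summarize(self.summary, evicted)

    def context(self) -> str:
        parts = [f"Summary: {self.summary}"] if self.summary else []
        return "\n".join(parts + self.messages)


def toy_summarize(previous: str, evicted: list[str]) -> str:
    # Placeholder summarizer: keep only the first word of each evicted message.
    heads = [word for m in evicted for word in m.split()[:1]]
    return " ".join(filter(None, [previous, *heads]))


buffer = SummaryBuffer(max_tokens=6, summarize=toy_summarize)
for msg in ["alpha one two", "beta three four", "gamma five six"]:
    buffer.add(msg)
print(buffer.context())
```

The verbatim part of the context stays bounded by `max_tokens`, while the summary grows only as fast as the summarizer lets it, which is the trade-off the question about token limits is really about.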

Links mentioned: