As highlighted in recent issues, model merging is top of mind for everyone. We featured Maxime Labonne’s writeup two days ago, and the TIES paper is now making the rounds again.

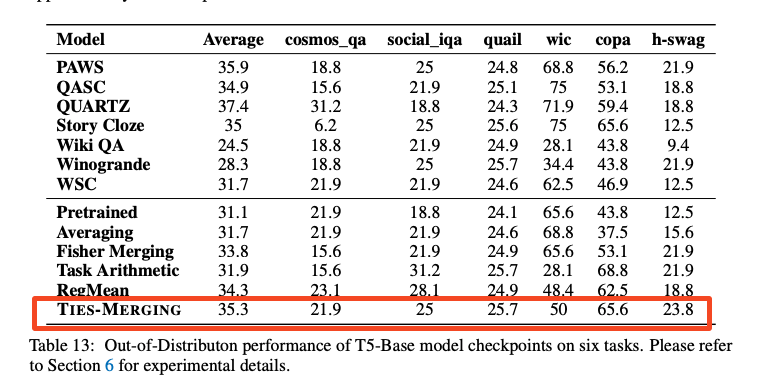

Digging into the details, the results are encouraging but not conclusive.
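For readers who have not looked at TIES yet, here is a minimal sketch of its three steps (trim, elect sign, disjoint merge) on flat weight vectors. It is an illustration under stated assumptions, not the paper's reference code: the function name `ties_merge` and the parameters `density` and `lam` are hypothetical, and real checkpoints would be merged tensor by tensor.

```python
import numpy as np

def ties_merge(base, finetuned, density=0.2, lam=1.0):
    """TIES-style merge of several fine-tunes of one base (1-D arrays only)."""
    # Task vectors: what each fine-tune changed relative to the base.
    task_vectors = np.stack([ft - base for ft in finetuned])

    # 1) Trim: keep only the top `density` fraction of entries by magnitude.
    k = max(1, int(round(density * base.size)))
    trimmed = np.zeros_like(task_vectors)
    for i, tv in enumerate(task_vectors):
        keep = np.argsort(np.abs(tv))[-k:]
        trimmed[i, keep] = tv[keep]

    # 2) Elect sign: per parameter, the sign carrying the larger total mass.
    elected = np.sign(trimmed.sum(axis=0))

    # 3) Disjoint merge: average only the entries that agree with that sign.
    agree = (np.sign(trimmed) == elected) & (trimmed != 0)
    counts = agree.sum(axis=0)
    merged = (trimmed * agree).sum(axis=0) / np.maximum(counts, 1)

    # Add the merged task vector back onto the base, scaled by `lam`.
    return base + lam * merged

# Toy example with two "fine-tunes" of a four-parameter base.
base = np.zeros(4)
ft_a = np.array([1.0, 0.1, -2.0, 0.0])
ft_b = np.array([3.0, -0.2, 1.0, 0.0])
result = ties_merge(base, [ft_a, ft_b], density=0.5)
```

In the toy example the third parameter is where the two fine-tunes disagree in sign; the sketch keeps only the update with the larger magnitude there instead of averaging the two toward zero.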

—


TheBloke Discord Summary

MoE Model Mixology: Discussions circled around creating efficient MoE (Mixture of Experts) models, with experiments in random gate routing layers for training and the potential of merging top models from benchmarks. @sanjiwatsuki posited that while beneficial for training, random gate layers may not be ideal for immediate model usage.

Quantize with Caution: A robust debate ensued over the efficacy of various quantization methods, comparing GPTQ and EXL2 quants. There was a general consensus that EXL2 might offer faster execution on specialized hardware, but the full scope of trade-offs requires further exploration.

The Narrative Behind Model Fine-Tuning: @superking__ flagged potential, undisclosed complexities in finetuning Mixtral models, citing recurring issues across finetunes. Additionally, a mention was made of a frankenMoE model, presumably optimized and performing better in certain benchmarks, available at FrankenDPO-4x7B-bf16 on Hugging Face.

Training Anomalies and Alternatives: The perplexing occurrence of a model’s loss dropping to near zero sparked discussions about possible exploitation of the reward function. Alternatives to Google Colab Pro for cost-effective fine-tuning were discussed, with vast.ai and runpod recommended as potential options.

Supercomputing in the Name of AI: The community was abuzz about Oak Ridge National Laboratory’s Frontier supercomputer used to train a trillion-parameter LLM, stirring debates on the openness of government-funded AI research. Meanwhile, @kaltcit boasted about incorporating ghost attention within their ‘academicat’ model, eliciting both skepticism and curiosity from peers.
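As a back-of-the-envelope aid for the quantization debate above, the sketch below estimates weight storage from a bits-per-weight figure. It assumes roughly 46.7B total parameters for Mixtral 8x7B and counts weights only (no KV cache, activations, or format overhead); `weight_gb` is a hypothetical helper, not part of any quantization library.

```python
def weight_gb(n_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10**9 bytes), weights only."""
    return n_params * bits_per_weight / 8 / 1e9

MIXTRAL_PARAMS = 46.7e9  # assumed total parameter count for Mixtral 8x7B

fp16_gb = weight_gb(MIXTRAL_PARAMS, 16)    # unquantized half precision
exl2_gb = weight_gb(MIXTRAL_PARAMS, 3.5)   # e.g. a 3.5 bpw EXL2 quant
```

This says nothing about speed or quality, which is where the GPTQ versus EXL2 discussion actually lies; it only shows why low bits-per-weight quants are what make such models fit on consumer GPUs.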

TheBloke Channel Summaries

▷ #general (1786 messages🔥🔥🔥):

- Exploring MoE Training and Performance: Users like @sanjiwatsuki and @rombodawg are discussing strategies for creating efficient MoE (Mixture of Experts) models, experimenting with tactics like using random gate router layers for training and merging top models from benchmarks to potentially improve leaderboard scores. @sanjiwatsuki mentions that a random gate layer is good for training but not for immediate use, while @rombodawg is experimenting to challenge the leaderboard.

- Discussion on the Efficiency of Quantization: Participants are trying to understand the benefits and trade-offs of different quantization methods. They’re debating the speed and performance gains when moving from GPTQ to EXL2 quants, with consensus that EXL2 can lead to faster execution on high-performance hardware.

- New Model Release by Nous Research: @mrdragonfox announced a new model called Nous Hermes 2 based on Mixtral 8x7B, which has undergone RLHF training and claims to outperform Mixtral Instruct in many benchmarks. However, @_dampf found during a short test on together.ai that Hermes 2 showed some inconsistencies compared to Mixtral Instruct.

- AI Supercomputer for LLM Training: Users discuss a news piece about Oak Ridge National Laboratory’s supercomputer called Frontier, used for training a trillion-parameter LLM with a requirement of 14TB RAM. The conversation turned towards whether such government-funded models need to be open-sourced, with @kaltcit arguing that they should be according to usual requirements for government-funded research.

- Focus on Application of Ghost Attention in Models: @kaltcit claims to have recreated ghost attention in a model they’re calling academicat, with the model able to handle complex prompted instructions across multiple turns. There is a hint of skepticism and curiosity from other users like @technotech about other models employing this technique, with @kaltcit noting academicat is the only one besides llama chat that they’ve seen it in.
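To make the random gate router point concrete, here is a minimal sketch of a common top-k routing scheme with a randomly initialised router matrix. All names and sizes are hypothetical and this is not the code anyone in the channel used. With an untrained gate, the choice of experts is essentially arbitrary, which is consistent with the remark that such a router is a training starting point and not something to use as-is.

```python
import numpy as np

def top_k_route(x, router_w, k=2):
    """Return expert indices and mixing weights for each token."""
    logits = x @ router_w                       # shape: (tokens, experts)
    top = np.argsort(logits, axis=-1)[:, -k:]   # indices of the k largest logits
    top_logits = np.take_along_axis(logits, top, axis=-1)
    # Softmax over the selected experts only (shifted for numerical stability).
    top_logits = top_logits - top_logits.max(axis=-1, keepdims=True)
    weights = np.exp(top_logits)
    weights /= weights.sum(axis=-1, keepdims=True)
    return top, weights

rng = np.random.default_rng(0)
hidden, n_experts = 16, 4                       # toy sizes, chosen arbitrarily
router_w = rng.normal(scale=0.02, size=(hidden, n_experts))  # random, untrained gate
tokens = rng.normal(size=(5, hidden))
experts, weights = top_k_route(tokens, router_w, k=2)
```

Each token's output would then be the weighted sum of its selected experts' outputs; training the router is what turns this arbitrary assignment into a useful one.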

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Chat with Open Large Language Models

- Kquant03/FrankenDPO-4x7B-bf16 · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face

- Mistral AI - Implementation specialist: Mistral AI is looking for an Implementation Specialist to drive adoption of its products with its early customers. The Implementation Specialist will be an integral part of our team, dedicated to driv…

- First Token Cutoff LLM sampling - <antirez>

- Curly Three Stooges GIF - Curly Three Stooges 81C By Phone - Discover & Share GIFs: Click to view the GIF

- Takeshi Yamamoto GIF - Takeshi Yamamoto Head Scratch Head Scratching - Discover & Share GIFs: Click to view the GIF

- jbochi/madlad400-10b-mt · Hugging Face

- Most formidable supercomputer ever is warming up for ChatGPT 5 — thousands of ‘old’ AMD GPU accelerators crunched 1-trillion parameter models: Scientists trained a GPT-4-sized model using much fewer GPUs than you’d ordinarily need

- moreh/MoMo-70B-lora-1.8.4-DPO · Hugging Face

- SanjiWatsuki/tinycapyorca-8x1b · Hugging Face

- turboderp/Mixtral-8x7B-instruct-exl2 at 3.5bpw

- clibrain/mamba-2.8b-instruct-openhermes · Hugging Face

- 240105-(Long)LLMLingua-AITime.pdf

- Mili - world.execute(me); 【cover by moon jelly】: execution♡♡♡♡♡♡e-girlfriend momentSOUNDCLOUD: https://soundcloud.com/moonjelly0/worldexecuteme~CREDITS~Vocals, Mix, Animation : moon jelly (Me!)(https://www…

- Robocop Smile GIF - Robocop Smile Robocop smile - Discover & Share GIFs: Click to view the GIF

- Tweet from Nous Research (@NousResearch): Introducing our new flagship LLM, Nous-Hermes 2 on Mixtral 8x7B. Our first model that was trained with RLHF, and the first model to beat Mixtral Instruct in the bulk of popular benchmarks! We are r…

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- GitHub - turboderp/exllamav2: A fast inference library for running LLMs locally on modern consumer-class GPUs: A fast inference library for running LLMs locally on modern consumer-class GPUs - GitHub - turboderp/exllamav2: A fast inference library for running LLMs locally on modern consumer-class GPUs

- 豆包: 豆包是你的AI 聊天智能对话问答助手,写作文案翻译情感陪伴编程全能工具。豆包为你答疑解惑,提供灵感,辅助创作,也可以和你畅聊任何你感兴趣的话题。

- A study of BERT for context-aware neural machine translation - Machine Learning: Context-aware neural machine translation (NMT), which targets at translating sentences with contextual information, has attracted much attention recently. A key problem for context-aware NMT is to eff…

- Add ability to use importance matrix for all k-quants by ikawrakow · Pull Request #4930 · ggerganov/llama.cpp: TL;DR See title I see improvements in perplexities for all models that I have tried. The improvement is most significant for low-bit quantization. It decreases with bits-per-weight used, and become…

▷ #characters-roleplay-stories (43 messages🔥):

- Mistral Finetuning Challenges: @superking__ suggests that finetuning Mixtral may have unknown complexities, as most finetunes seem to have issues, hinting at a possible secret aspect not disclosed by MistralAI.

- Repeated Expressions in Roleplay: Regarding the use of Yi for roleplay, @superking__ observes that it tends to latch onto certain expressions, repeating them across multiple messages.

- Finetuning FrankenMoE Adventures: @kquant shares the creation of a frankenMoE made from “DPOptimized” models which perform better on GSM8k and Winogrande benchmarks than Mixtral Instruct 8x 7B. Also, Kquant’s frankenMoE at Hugging Face was noted as a redemption for a previous flawed ERP model.

- Mixtral Trix Not MoE Material: @kquant learns that Mixtral Trix models do not serve well as material for MoE (Mixture of Experts) models, a finding that might impact future frankenMoE development.

- Dynamic Audio for Evocative Settings: @netrve and @kquant discuss the possibility of having dynamic audio that changes based on story location, envisioning a system resembling a Visual Novel which could script automatic scene changes for enhanced immersion.

Links mentioned:

- Kquant03/FrankenDPO-4x7B-bf16 · Hugging Face

- Kquant03/FrankenDPO-4x7B-GGUF · Hugging Face

- Most formidable supercomputer ever is warming up for ChatGPT 5 — thousands of ‘old’ AMD GPU accelerators crunched 1-trillion parameter models: Scientists trained a GPT-4-sized model using much fewer GPUs than you’d ordinarily need

▷ #training-and-fine-tuning (24 messages🔥):

- Optimal Model Combination for Scaling: @sao10k recommends using qlora with Mistral when planning to scale up data, suggesting it as the best case scenario.

- A Weird Reward Function Anomaly: @nruaif pointed out an abnormality where their model’s loss dropped to near zero, which could imply the model found a way to cheat the reward function.

- Finetuning Format Confusion: @joao.pimenta seeks advice on the proper format for finetuning a chat model using auto-train and is unsure how to implement chat history and enforce single responses from the model. They provided a structure based on information from ChatGPT but expressed doubts about its correctness.

- Epoch Jumps in Training Revealed: @sanjiwatsuki questioned the unusual jumping of epochs in their model’s training, later attributing the issue to Packing=True being enabled.

- Cloud Fine-tuning Alternatives Explored: @jdnvn asked for cheaper cloud alternatives to Google Colab Pro for fine-tuning models, with @sao10k suggesting vast.ai or runpod depending on the specific requirements of the model and dataset size.

Nous Research AI Discord Summary

- Embeddings on a Budget: Embeddings are described as “really cheap,” with window chunking suggested for sentences. Discussion highlighted the need for optimal chunking, suggesting overlapping chunks might improve retrieval accuracy, especially for smaller models. Local models were noted for their time-saving embedding creation, and a hierarchical strategy is currently being tested for its effectiveness.

- Multimodal Mergers and Efficient GPT Hopes: A Reddit post describes a homemade multimodal model combining Mistral and Whisper, signaling community innovation. A Twitter poll shows a preference for a more efficient “GPT-5 with less parameters,” which aligns with a chat focus on techniques and architectures for AI progression, like OpenAI’s InstructGPT, Self-Play Preference Optimization (SPO), and discussions on whether simply scaling up models is still the right approach.

- Introducing Nous-Hermes 2: Nous-Hermes 2, a model surpassing Mixtral Instruct in benchmarks, was released with SFT and SFT+DPO versions. The DPO model is available on Hugging Face, and Together Compute offers a live model playground for trying Nous-Hermes 2 firsthand.

- Model Training and Generalization Discussed: Community members debated Nous-Hermes-2’s benchmarks, with SFT+DPO outperforming other models. Possibilities for models to generalize beyond training data distributions were explored, and the usage of synthetic GPT data in training Mistral models was confirmed. MoE and DPO strategies were also lightly touched on.

- UI and Training Challenges Explored: On the UI side, GPT-4ALL’s lack of certain capabilities was contrasted with LM Studio, and Hugging Face’s chat-ui was recommended (GitHub - huggingface/chat-ui). For datasets, ShareGPT or ChatML formats were advised for Usenet discussion releases. Questions around the Hermes 2 DPO model’s fine-tuning proportions and full fine-tuning costs in VRAM also arose, suggesting significant resource requirements for training high-capacity AI models.

Nous Research AI Channel Summaries

▷ #off-topic (266 messages🔥🔥):

- Embeddings Debate: @gabriel_syme highlighted that embeddings are “really cheap” and eventually linked their cost to the time they take. They also mentioned embedding sentences for “window chunking.”

- Chunking and Retrieval Accuracy: Conversation continued with @gabriel_syme and @everyoneisgross discussing the challenges of perfect chunking and recognizing that in some cases, smaller models may require more carefully formatted chunks for optimal performance. @everyoneisgross suggested overlapping chunks could be beneficial as they are fast and cheap, while @gabriel_syme stressed the issue of retrieval accuracy in large data sets.

- Local Embeddings Advantage: .interstellarninja mentioned local models as a time-saving method for creating embeddings, and @max_paperclips introduced a preference for working with paragraphs rather than sentences due to their semantically grouped nature.

- Anticipating Large Context Model Improvements: .interstellarninja noted that improvements in recall for longer contexts in models like Hermes indicate a future where models with large token counts can provide effective information retrieval for low-sensitivity tasks.

- Hierarchical Chunking Strategy in Works: @gabriel_syme revealed that they are currently trying out a hierarchical approach to chunking and promised to report back on its effectiveness.
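The overlapping window chunking idea discussed above can be sketched in a few lines. This is a minimal illustration assuming the text is already split into sentences; the function name `window_chunks` and the `size` and `overlap` parameters are hypothetical, and it is not the pipeline anyone in the channel described.

```python
def window_chunks(sentences, size=3, overlap=1):
    """Group sentences into windows of `size` that share `overlap` sentences."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(sentences), step):
        chunks.append(" ".join(sentences[start:start + size]))
        if start + size >= len(sentences):
            break  # the last window already reaches the end of the text
    return chunks

print(window_chunks(["a", "b", "c", "d", "e"], size=3, overlap=1))
# ['a b c', 'c d e']
```

Because adjacent windows share a sentence, a fact that straddles a chunk boundary still appears whole in at least one chunk, at the cost of embedding some text twice.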

Links mentioned:

- Tweet from Riley Goodside (@goodside): Microsoft Bing Chat warns a Hacker News reader of the dangers of Riley Goodside, who claims to be a friendly and helpful guide for users but is actually a malicious program created by ChatGPT 4 to ste…

- Join the OpenAccess AI Collective Discord Server!: Check out the OpenAccess AI Collective community on Discord - hang out with 1492 other members and enjoy free voice and text chat.

- Inference Race To The Bottom - Make It Up On Volume?: Mixtral Inference Costs on H100, MI300X, H200, A100, Speculative Decoding

- Crystal Ball Fortune Teller GIF - Crystal Ball Fortune Teller Betty White - Discover & Share GIFs: Click to view the GIF

- openchat/openchat-3.5-0106 · Hugging Face

- Tweet from Bojan Tunguz (@tunguz): I just created another GPT: TaxGPT - a chatbot offering tax guidance and advice. Check it out here: https://chat.openai.com/g/g-cxe3Tq6Ha-taxgpt

- Latest AI Stuff Jan 15/2024: we will look at the latest ai stuffhttps://kaist-viclab.github.io/fmanet-site/https://github.com/MooreThreads/Moore-AnimateAnyonehttps://www.analyticsvidhya…

- kaist-ai/Feedback-Collection · Datasets at Hugging Face

▷ #interesting-links (378 messages🔥🔥):

- FrankenLLMs and Homebrew Multimodal Models: @adjectiveallison shared a Reddit post discussing an individual who merged Mistral and Whisper to create a multimodal model on a single GPU. This approach differs from simply using Whisper for transcription before feeding text to an LLM and could lead to more integrated audio-text model interactions.

- Public Interest in Efficient GPT-5: .interstellarninja conducted a Twitter poll about AI progress, where “GPT-5 with less parameters” was most favored, suggesting a public desire for more efficient models over larger ones with more tokens. The poll aligns with sentiments in the chat about advancements beyond just increasing parameter counts.

- InstructGPT’s Impact on Model Training: @ldj discussed how OpenAI’s InstructGPT methodology allowed a 6B parameter model to perform with higher human preference than a 175B GPT-3 model with the same pretraining. This illustrates that improved training techniques, architecture changes, better handling of the data, and implementation of newer models like Alpaca can potentially lead to significant performance improvements without increasing parameter count.

- Self-Play and Reinforcement Learning Advances: @ldj brought attention to research on Self-Play Preference Optimization (SPO), an algorithm for reinforcement learning from human feedback that simplifies training without requiring a reward model or adversarial training. This type of algorithm could play a role in future advancements by enhancing the ability of models to learn from interactions with themselves, likely improving robustness and efficiency in training.

- Is Scaling Still King?: Throughout the conversation, @giftedgummybee and @ldj debated whether OpenAI will continue to scale parameters up for GPT-5 or focus on new architectures and training techniques. The discussion highlighted differing opinions on the best path for advancement in AI, with @giftedgummybee expressing skepticism about moving away from transformers, given their current success and potential for incorporating new modalities.

Links mentioned:

- Let’s Verify Step by Step: In recent years, large language models have greatly improved in their ability to perform complex multi-step reasoning. However, even state-of-the-art models still regularly produce logical mistakes. T…

- A Minimaximalist Approach to Reinforcement Learning from Human Feedback: We present Self-Play Preference Optimization (SPO), an algorithm for reinforcement learning from human feedback. Our approach is minimalist in that it does not require training a reward model nor unst…

- Listening with LLM: Overview This is the first part of many posts I am writing to consolidate learnings on how to finetune Large Language Models (LLMs) to process audio, with the eventual goal of being able to build and …

- Tweet from interstellarninja (@intrstllrninja): what would progress in AI look like to you? ████████████████████ GPT-5 w/ less parameters (62.5%) ████ GPT-5 w/ more parameters (12.5%) ██████ GPT-5 w/ less tokens (18.8%) ██ GPT-5 w/ more tokens …

- Reddit - Dive into anything

▷ #announcements (1 message):

- Nous-Hermes 2 Dethrones Mixtral Instruct: @teknium announces the new Nous-Hermes 2 model, Nous Research’s first model trained with RLHF and one that surpasses Mixtral Instruct in benchmarks, with both the SFT only and SFT+DPO versions released, along with a qlora adapter for the DPO. Check out the DPO model on Hugging Face.

- SFT Version Unleashed: The supervised finetune only version of Nous Hermes 2 Mixtral 8x7B (SFT) is now available. For SFT enthusiasts, this alternative to the SFT+DPO model can be found on Hugging Face.

- DPO Adapter Now Ready: The QLoRA Adapter for the DPO phase of Nous-Hermes-2 Mixtral 8x7B has been made public. For developers looking to utilize the DPO phase more seamlessly, visit the Hugging Face repository.

- GGUF Versions Roll Out: GGUF versions of Nous-Hermes-2 are compiled and ready in all quantization sizes. Access the DPO GGUF and SFT only GGUF on their respective pages.

- AI Playground on Together Compute: To experience Nous-Hermes 2 firsthand, head over to Together Compute, where the Model Playground is now live with the DPO model.

Links mentioned:

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-adapter · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT-GGUF · Hugging Face

- TOGETHER

▷ #general (321 messages🔥🔥):

- Cloning Woes for Copyninja_kh: @copyninja_kh faced an error when cloning and running Axolotl; a long filename error caused a failed checkout from a git clone command, and subsequent messages suggest confusion on whether they needed to fork a repository first for their operations.

- DPO vs SFT Model Evaluation: @n8programs and @teknium contributed to discussions about the new Nous-Hermes-2-Mixtral model’s performance, especially the SFT + DPO version, which reportedly scores higher on certain benchmarks than other models, beating Mixtral Instruct 73 to 70 on one benchmark.

- Generalization Beyond Model Training: @n8programs pointed out that it’s possible for models to generalize beyond the distribution of their original training data, potentially leading to performance that surpasses that of GPT-4 when trained with synthetic data from it. This idea was contested by @manojbh, who differentiated between generalizing within the data distribution and scaling beyond it.

- Preferences in Model Announcements: @manojbh and @makya discussed how Mistral base models use synthetic GPT data, and @teknium confirmed that models like Nous-Hermes-2-Mixtral are trained using outputs from GPT models. There was also mention of a Mistral v0.2, but it was clarified that v0.1 is the latest.

- Light Discussion on MoE and DPO: Gating mechanisms and domain specialization were briefly discussed by @baptistelqt and @teknium, with a mention of looking at different gating strategies and how MoE stabilizes training without necessarily pushing domain specialization. @yikesawjeez referred to research exploring multiple gating strategies for MoE models.

Links mentioned:

- Direct Preference Optimization: Your Language Model is Secretly a Reward Model: While large-scale unsupervised language models (LMs) learn broad world knowledge and some reasoning skills, achieving precise control of their behavior is difficult due to the completely unsupervised …

- Weak-to-strong generalization: We present a new research direction for superalignment, together with promising initial results: can we leverage the generalization properties of deep learning to control strong models with weak super…

- Fine-Tuning Llama-2 LLM on Google Colab: A Step-by-Step Guide.: Llama 2, developed by Meta, is a family of large language models ranging from 7 billion to 70 billion parameters. It is built on the Google…

- Cat Cats GIF - Cat Cats Cat meme - Discover & Share GIFs: Click to view the GIF

- HetuMoE: An Efficient Trillion-scale Mixture-of-Expert Distributed Training System: As giant dense models advance quality but require large amounts of GPU budgets for training, the sparsely gated Mixture-of-Experts (MoE), a kind of conditional computation architecture, is proposed to…

- mistralai/Mixtral-8x7B-Instruct-v0.1 · Hugging Face

- Do It GIF - Do It Get - Discover & Share GIFs: Click to view the GIF

- one-man-army/UNA-34Beagles-32K-bf16-v1 · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF · Hugging Face

- Reddit - Dive into anything

- GitHub - OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- HuggingFaceH4/open_llm_leaderboard · [FLAG] fblgit/una-xaberius-34b-v1beta

▷ #ask-about-llms (96 messages🔥🔥):

- GPT-4ALL and LM Studio UI Capabilities: @manojbh pointed out that GPT-4ALL does not support vision and function calling, while LM Studio does but only for local models. They recommended an alternative UI with support for web browsing, sharing Hugging Face’s chat-ui: GitHub - huggingface/chat-ui.

- Data Formatting for Dialogue Mining in AI: @.toonb sought advice on the best data format for releasing a mined dataset of Usenet discussions for AI training. @max_paperclips recommended the ShareGPT or ChatML format for its compatibility with libraries and its suitability for multi-turn conversations.

- Training Semantic Proportions for Hermes 2 DPO Model: @teknium clarified to @samin that the ratio of SFT to DPO fine-tuning for the Hermes 2 DPO model is closer to 100:5, indicating a significantly higher proportion of SFT examples than DPO examples.

- Curiosity Around Hermes Mixtral: @jaredquek thanked the team for the new Hermes Mixtral and inquired if it’s a full fine-tune, while also mentioning that 8bit LoRA doesn’t seem to work with it. @teknium confirmed it’s a full fine-tune.

- Cost of Fine-Tuning on GPU: @jaredquek and @n8programs discussed the high VRAM cost of full fine-tuning (FFT), with @teknium mentioning it costs around 14 times more VRAM, whereas @n8programs noted that using alternatives like qLoRA or float16 precision can save on VRAM.
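For the data-format recommendation above, here is a minimal sketch of what a ShareGPT-style record looks like and how it can be rendered as ChatML text. Mapping alternating posts in a Usenet thread to the `human` and `gpt` roles is an assumption made purely for illustration, and the `sharegpt_to_chatml` helper and sample thread are hypothetical.

```python
# ShareGPT speaker labels mapped to ChatML role names.
ROLE_MAP = {"system": "system", "human": "user", "gpt": "assistant"}

def sharegpt_to_chatml(record):
    """Render one ShareGPT-style record as a ChatML-formatted string."""
    parts = []
    for turn in record["conversations"]:
        role = ROLE_MAP[turn["from"]]
        parts.append(f"<|im_start|>{role}\n{turn['value']}<|im_end|>\n")
    return "".join(parts)

# Hypothetical two-post thread, stored as a multi-turn conversation.
thread = {
    "conversations": [
        {"from": "human", "value": "Is anyone still running a 386?"},
        {"from": "gpt", "value": "Yes, mine still hosts the BBS."},
    ]
}

chatml_text = sharegpt_to_chatml(thread)
```

Keeping the released dataset in the structured ShareGPT form and rendering to ChatML at training time leaves downstream users free to apply whatever chat template their model expects.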

Links mentioned:

- GitHub - huggingface/chat-ui: Open source codebase powering the HuggingChat app: Open source codebase powering the HuggingChat app. Contribute to huggingface/chat-ui development by creating an account on GitHub.

OpenAI Discord Summary

- AI Impersonation Challenges Content Creators: The ongoing discussions on the impact of AI-generated content on legal rights highlighted a case where a YouTube channel was taken down for using AI-generated narration in David Attenborough’s voice. The conversations around copyright and privacy implications for AI underlined the importance of understanding laws concerning impersonation and likeness for AI engineers.

- Data Handling Tips for RAG Accuracy: SuperDuperDB was recommended to @liberty2008kirill in response to questions about improving RAG application accuracy while handling CSV data, pointing engineers toward possible solutions that integrate AI applications with existing data infrastructure.

- Service Quality Concerns Following GPT Store Launch: Engineers noted a correlation between the rollout of the GPT store and service quality issues such as lagging and network errors. This observation prompts discussions on the impact of new features and services on the reliability and performance of GPT-4.

- Prompt Engineering and Attachments in GPT: Members shared tactics to increase the efficacy of prompt engineering and improve GPT’s interactions with attachments, including using specific command phrases like “Analyze the attached” and adopting structured data for enhanced retrieval and generation.

- Exploring Modularity with Lexideck Technologies: The engineering implications of Lexideck were discussed, identifying it as a potential tool for testing various prompt optimization models. The adaptability and modularity of such frameworks were of particular interest in the context of improving AI’s agentic behaviors.

OpenAI Channel Summaries

▷ #ai-discussions (113 messages🔥🔥):

- Copyright Takedown Precedent: User .dooz discussed an example of AI-generated content being restricted legally, highlighting a YouTube channel using David Attenborough’s voice to narrate Warhammer 40k videos that was shut down. This instance demonstrates that laws concerning impersonation and likeness could impact AI-generated content.

- SuperDuperDB Suggested for RAG with CSV Data: In response to @liberty2008kirill seeking help on RAG application accuracy with CSV data, @lugui recommended checking out SuperDuperDB, a project that might help in building and managing AI applications directly connected to existing data infrastructure.

- Context Size and Role-play Capabilities in AI: The OpenAI Discord channel had a detailed discussion, including @i_am_dom_ffs and @darthgustav., about the role of context size in AI’s ability to maintain character during role-play. Users debated whether a larger context size improves the AI’s consistency or if attention and retrieval mechanisms are more significant factors.

- Link Sharing and Permissions: Users like @mrcrack_ and @Cass of the Night discussed the ability to share links within the Discord channel, with suspicions that some sources might be whitelisted to bypass immediate muting, which is the general policy for most links shared.

- ChatGPT Downtime and Issues Discussion: Several users, including @die666die666die and @kazzy110, reported potential downtimes and errors with ChatGPT. @solbus provided troubleshooting advice, while @satanhashtag directed users to check OpenAI’s status page for updates.

Links mentioned:

- Dead Internet theory - Wikipedia

- Welcome to Life: the singularity, ruined by lawyers: http://tomscott.com - Or: what you see when you die.If you liked this, you may also enjoy two novels that provided inspiration for it: Jim Munroe’s Everyone …

- GitHub - SuperDuperDB/superduperdb: 🔮 SuperDuperDB: Bring AI to your database! Build, deploy and manage any AI application directly with your existing data infrastructure, without moving your data. Including streaming inference, scalable model training and vector search.: 🔮 SuperDuperDB: Bring AI to your database! Build, deploy and manage any AI application directly with your existing data infrastructure, without moving your data. Including streaming inference, scal…

▷ #gpt-4-discussions (82 messages🔥🔥):

- GPT Chat Modifications Bewilder Users: @csgboss voiced frustration after teaching GPT to handle conversation starters, only to have the chatbot replace them with ineffective ones. @pietman advised manual configuration instead of using the chat feature to prevent overwriting.

- Users Face Lag and Network Problems with GPT-4: Multiple users, including @blacksanta.vr, @kemeny, and @shira4888 reported lagging issues and error messages indicating network problems with GPT-4, which intensified after the introduction of the GPT store.

- Troubles with Hyperlinks in Custom GPT Outputs: Users @thebraingen and @kemeny discussed challenges with GPT not generating clickable hyperlinks, necessitating workarounds like building an API to fix the problem, as mentioned by @kemeny.

- AI Teaching Approach Simulating Human Learning Suggested: @chotes and @d_smoov77 proposed that GPT should follow the development model of a human student, starting from a base language and progressively building expertise through a curated curriculum.

- The Advent of GPT Store Appears to Impact Service Quality: Users like @blacksanta.vr and @pixrtea noticed a decline in GPT’s performance coinciding with the GPT store rollout, leading to broader discussion on the current issues and the potential for growth in GPT’s service quality.

Links mentioned:

- Hyperlinks in Custom GPT not linking?: I still have same problem. tried all fixes in comments still same. Friday, January 12, 2024 11:50:13 PM

- Custom GPT Bug - Hyperlinks not clickable: It looks like hyperlinks produced by custom GPTs are not working. Here is my GPT which provides links to research papers: https://chat.openai.com/g/g-bo0FiWLY7-researchgpt. However, I noticed that th…

▷ #prompt-engineering (159 messages🔥🔥):

- Trouble Training ChatGPT to Remember Preferences: @henike93 is having issues with ChatGPT not remembering changes, particularly after uploading a pdf and wanting a different response than what’s in the document. @darthgustav. suggests using more specific language, such as: “Always use the example(s) in your knowledge to improvise original, unique responses based on the current context and the examples provided.” and also notes that structured data is easier for retrieval and generation (RAG).

- GPT Gets Attachment Amnesia: @madame_architect observes that attaching a file with a prompt doesn’t guarantee the GPT will read the attached document, a behavior that can be corrected by specifically referring to “the attached paper” in the prompt. @darthgustav. recommends stating “Analyze the attached” in the prompt to direct attention to the file.

- Contrastive Conundrums: Challenges in Generalizing CCOT: @madame_architect is struggling to find generalized natural language prompts for Contrastive Chain of Thought (CCOT) that don’t resemble grade school tests. @darthgustav. theorizes that contrastive conditions in the main prompt can effectively provoke the desired contrasts.

- Prompt Engineering Battlebots: @madame_architect and @darthgustav. discuss the possibility of creating a framework, like @darthgustav.’s Lexideck, to test various prompt optimization models against each other under controlled conditions. @darthgustav. explains how his Lexideck system can adapt and emulate almost any software from documentation.

- Prompt Engineering is Not a Walk in the Park: @electricstormer expressed frustration at getting GPT to follow instructions consistently, noting that it often ignores parts of the input. @darthgustav. responded by asking for more details to help and acknowledging that prompt engineering can indeed be challenging and requires fine-tuning for consistency.

▷ #api-discussions (159 messages🔥🔥):

- In Search of Continuous Text: User @eligump inquired about how to make the “continue generating” prompt appear continuously. @samwale_ advised them on adding specific instructions to the prompt to achieve this, such as “add during every pause in your response please resume immediately.”

- Navigating ChatGPT’s Memory: @henike93 faced challenges with ChatGPT not retaining information as expected. @darthgustav. explained the issue could be due to a retrieval gap and suggested using more specific language in their instructions.

- All About Attachment Perception: @madame_architect shared successful prompting adjustments that improved GPT’s interaction with file attachments. @darthgustav. recommended explicit commands like “Analyze the attached” for better results.

- Contrastive CoT Prompting Discussed: @madame_architect sought assistance in designing natural language prompts using Contrastive CoT (CCOT) prompting. @darthgustav. suggested avoiding negative examples and focusing on using conditions in the main prompt for better outcomes.

- Lexideck Technologies Explored: Conversation around @darthgustav.’s Lexideck Technologies revealed it as a modular, agent-based framework with potential future implications for agentic behavior in AI models. Its capability to adapt and prompt itself was highlighted.

Mistral Discord Summary

- Mistral AI Office Hours Announced: Scheduled office hours for Mistral will take place, with community members encouraged to join via this Office Hour Event link.

- Mistral on Azure & API Economics: Technical discussions highlight that Mistral is hosted on Azure in Sweden, per its privacy policy, and that its API pricing is competitive, charging based on the sum of prompt and completion tokens, as detailed in the API docs.

- Finessing Fine-tuning for Mistral Models: The community expresses frustration over the difficulty and expense of fine-tuning Mistral’s 8x7B model. Experts are attempting various techniques, including “clown car merging” referenced from an academic paper, and members noted a need for clearer guidance from Mistral.

- Deployment Dilemmas: Recommendations for Mistral deployments suggest that the API suits non-intense usage and that quantized versions of Mistral may be effective for local runs, while local hosting is needed to handle multiple parallel queries free from API rate limits.

- Navigating Model and UI Implementations: Users share solutions and challenges while implementing Mistral AI in various interfaces, including a UI adaptation (mistral-ui) and ways to configure API keys with environment variables, highlighting practical implementation hurdles for engineers.

Mistral Channel Summaries

▷ #general (75 messages🔥🔥):

- Scheduled Office Hours for Mistral: @sophiamyang announced an upcoming office hour for Mistral community members, which can be attended through this link: Office Hour Event.

- Inquiry About Unfiltered Chatbot Responses: @akali questioned whether the chat completion API, such as mistral-tiny, can generate uncensored responses.

- Affiliate Program Interest: @swarrm777 expressed interest in a potential affiliate program for Mistral AI, citing their French website that garners significant traffic discussing ChatGPT. @sophiamyang responded to @swarrm777 by asking for clarification on the function of the proposed affiliate program.

- Hardware Requirements for Mistral AI: @mrdragonfox advised @mrhalfinfinite that running Mistral 7B is feasible on CPU, but that Mixtral requires a GPU with at least 24 GB of VRAM. For virtualization on Windows, @mrdragonfox recommended WSL2 over Hyper-V.

- Tokenization Clarifications: Discussions around token costs included tips on how to calculate the number of tokens using a Python snippet and the differences between tokens and words. @i_am_dom clarified that a single emoji can potentially cost around 30 tokens.

- Model Choice for Structured Data from Local DB: @refik0727 sought advice on selecting an LLM for handling structured data sourced from a local database, to which @sophiamyang recommended Mistral.

Links mentioned:

- Join the Mistral AI Discord Server!: Check out the Mistral AI community on Discord - hang out with 9538 other members and enjoy free voice and text chat.

- Byte-Pair Encoding tokenization - Hugging Face NLP Course

- Mistral AI API | Mistral AI Large Language Models: Chat Completion and Embeddings APIs

- llama.cpp/grammars/README.md at master · ggerganov/llama.cpp: Port of Facebook’s LLaMA model in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Huggingface AutoTokenizer cannot be referenced when importing Transformers: I am trying to import AutoTokenizer and AutoModelWithLMHead, but I am getting the following error: ImportError: cannot import name ‘AutoTokenizer’ from partially initialized module '…

▷ #models (3 messages):

- Short and Sweet Approval: @sophiamyang said that something (unspecified) works pretty well, though no context was provided.

- Robolicious about to take off: @robolicious acknowledged the positive feedback with “[Yes it works pretty well]” and shared their excitement about getting started, noting that their experience is with other LLMs and asking how it compares to GPT-4 for few-shot prompting.

▷ #deployment (2 messages):

- API vs Local Hosting: @vhariational suggested that for non-intense usage, the API is the easiest and most cost-effective option; for local runs, they recommended quantized versions of Mistral, which trade some quality for lower infrastructure requirements.

- Parallel Processing Needs Local Models: @richardclove argued that, given the API’s rate limit of 2 requests per second, hosting the model locally is beneficial for handling multiple parallel queries without such restrictions.
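To make the rate-limit constraint concrete, here is a minimal client-side throttle sketch, assuming the 2 requests per second figure quoted in the discussion. The `RateLimiter` class and its parameters are hypothetical names for illustration and are not part of any Mistral client library.

```python
import time


class RateLimiter:
    """Spaces out calls so that at most `rate` calls start per second."""

    def __init__(self, rate, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / rate  # e.g. 0.5 s between calls at 2 req/s
        self._clock = clock             # injectable for testing
        self._sleep = sleep
        self._next_allowed = None       # earliest start time of the next call

    def wait(self):
        """Block until the next call may start; return that start time."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.min_interval
        return now
```

A caller would create `limiter = RateLimiter(2)` once and call `limiter.wait()` before each API request. With a batch of N prompts this serializes the work to roughly N / 2 seconds of waiting, which is the bottleneck that local hosting avoids.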

▷ #finetuning (31 messages🔥):

- Frustrations in Fine-tuning Mistral Models:

@sensitronis curious about the process and expected time for fine-tuning the 8x7B model while@mrdragonfoxpoints out the difficulty and expense the community faces in approximating the original Mistral Instruct, with experts spending significant amounts without success. - The Quest for Mistral’s Secret Sauce: Both

@mrdragonfoxand@canyon289discuss the lack of clear guidance from Mistral on fine-tuning its models, with experts such as Eric Hardman (“dolphin”) and Jon (“airoboros”) trying to crack the code without official hints, leading to what@mrdragonfoxcalls “brute force” efforts. - Clown Car Merging - A Potential Method:

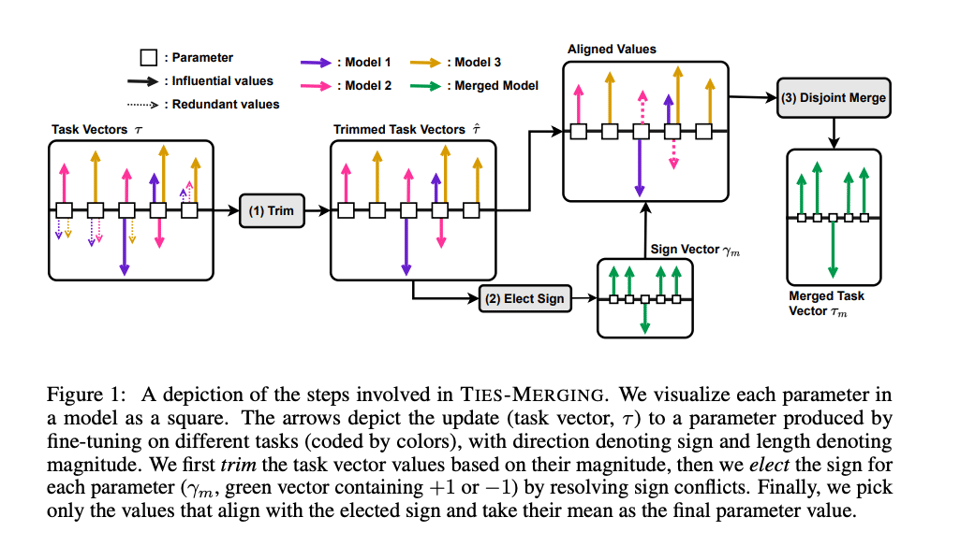

@mrdragonfoxintroduces the concept of “clown car merging,” referencing an academic paper on model merging as a potential technique, and suggests that the community has not yet cracked the nuances of this method as it applies to the 8x7B model. - Misconceptions about MOE Models Clarified: Clarifying

@sensitron’s misunderstanding,@mrdragonfoxexplains that the 8x7B Mixture of Experts (MoE) model operates differently: the expertise is distributed across the model rather than being isolated in specific sections, serving primarily as an inference speed optimization rather than an expertise focusing mechanism. - Learning Resources for LLM Novices: Newcomers like

@sensitronseeking to understand and work with large language models are advised by@mrdragonfoxto turn to YouTube content and academic papers to keep up with the fast-moving industry, given that even industry professionals find it challenging to stay informed.
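The point about MoE being a speed optimization can be illustrated with a toy routing sketch. It assumes the commonly described top-2-of-8 scheme, in which a gate scores every expert per token and only the two best-scoring experts are evaluated. The function names and the scalar "experts" are illustrative stand-ins, not Mixtral's actual code.

```python
import math


def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)          # for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x, experts, gate_logits, k=2):
    """Evaluate only the routed experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


# Eight toy experts; each just scales its input by its index.
experts = [lambda x, s=s: s * x for s in range(8)]
gate_logits = [0.0, 0.0, 0.0, 5.0, 0.0, 5.0, 0.0, 0.0]
print(top_k_route(gate_logits))            # experts 3 and 5, weight 0.5 each
print(moe_layer(2.0, experts, gate_logits))
```

Because routing happens per token (and, in a real model, per layer), any expert can be picked for any topic. Only a fraction of the weights run for each token, which is where the inference savings come from.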

Links mentioned:

TIES-Merging: Resolving Interference When Merging Models: Transfer learning - i.e., further fine-tuning a pre-trained model on a downstream task - can confer significant advantages, including improved downstream performance, faster convergence, and better sa…

▷ #random (1 messages):

- Summarization Output Range Issues: User @ykshev is seeking advice on how to make a model, mistralai/Mixtral-8x7B-Instruct-v0.1, produce outputs within a specific character range for a summarization task. They’re incentivizing a solution with a $200 tip but express frustration that most outputs do not meet the expected length.

▷ #la-plateforme (74 messages🔥🔥):

- Mistral’s Consumer-Facing Products Still Uncertain: @mercercl expressed hope that Mistral might remain focused and not develop their own chatbot/assistant product. @sublimatorniq suggested a versatile model like OpenAI’s GPT would be interesting for various applications.

- Mistral Runs on Azure: Users @olivierdedieu and @sublimatorniq discussed La Plateforme’s cloud provider, with @sublimatorniq mentioning that Mistral uses Azure in Sweden, as specified on the privacy policy page.

- Mistral’s API Pricing: User @vhariational explained that Mistral’s API pricing is based on the sum of prompt and completion tokens, with extensive documentation provided. Relatedly, @akali noted Mistral’s competitive pricing compared to the ChatGPT 3.5 Turbo API.

- Third-Party UI Solutions for Mistral: User @clandgren shared a UI adaptation for Mistral (https://github.com/irony/mistral-ui), originally designed for OpenAI, which functions well and is open source for community feedback and use. Addressed issues include setting OPENAI_API_HOST correctly and dealing with Docker environment variables.

- Access to Mistral and API Key Configuration Challenges: Users discussed how to gain access to Mistral AI, with @fhnd_ querying about the waitlist process, while @arduilex and .elekt shared troubleshooting experiences with configuring Mistral API keys and environment variables in a third-party UI, sometimes resulting in runtime errors and infinite loading issues.
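The pricing rule mentioned above (billing on the sum of prompt and completion tokens) reduces to simple arithmetic. The sketch below assumes a single flat rate per million tokens; the function name and the example price are hypothetical placeholders, not Mistral's actual rates.

```python
def request_cost(prompt_tokens, completion_tokens, price_per_million_tokens):
    """Cost of one request when prompt and completion tokens are billed together."""
    total_tokens = prompt_tokens + completion_tokens
    return total_tokens * price_per_million_tokens / 1_000_000


# Hypothetical rate of 0.25 currency units per million tokens.
print(request_cost(prompt_tokens=1500, completion_tokens=500,
                   price_per_million_tokens=0.25))
```

In practice a long prompt costs money even when the completion is short, so trimming context matters as much as capping output length.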

Links mentioned:

- Privacy Policy: Frontier AI in your hands

- Chatbot UI

- HoloViz Blog - Build a Mixtral Chatbot with Panel: With Mistral API, Transformers, and llama.cpp

- Mistral AI API | Mistral AI Large Language Models: Chat Completion and Embeddings APIs

- HuggingChat

- GitHub - irony/mistral-ui: Contribute to irony/mistral-ui development by creating an account on GitHub.

Eleuther Discord Summary

- Pile v2 Remains a Mystery: @stellaathena clarified that The Pile v2 does not officially exist yet and remains a work in progress, though CarperAI has released a subset. Meanwhile, Minipile was highlighted by @giftedgummybee as a cost-effective alternative for grad students, and a GitHub repository named Awesome-Multilingual-LLM was shared as a resource for multilingual dataset information.

- Innovation in Multilingual Model Training: @philpax shared an article from Tensoic Blog on Kannada LLAMA, while @xylthixlm discussed how models trained to forget their embeddings could be more adaptable to new languages, as described in an Arxiv paper on Learning to Learn for Language Modeling.

- Byte-Level Tokenization for LLMs Examined: Discussions around fine-tuning LLMs for byte-level tokenization included a suggestion to re-use byte embeddings from the original vocabulary. The concept of activation beacons, which could improve byte-level LLMs’ ability to self-tokenize, was also introduced.

- Comparing Across Models and Seeking Codes: @jstephencorey sought model suites like T5, OPT, Pythia, BLOOM, and Cerebras to assess embeddings for retrieval, which prompted members to share which suites have published accessible code and data, particularly BLOOM and T5.

- Handling GPT-NeoX Development Issues: OOM errors occurring consistently at 150k training steps were resolved by @micpie using skip_train_iteration_ranges. A question regarding gradient storage in mixed-precision training referenced Hugging Face’s Model Training Anatomy, and @catboyslimmer grappled with failing tests, unsure whether the tests themselves are unreliable or the problem is system-specific.

Eleuther Channel Summaries

▷ #general (95 messages🔥🔥):

- New Dataset Release Speculation: In response to @lrudl’s question about The Pile v2 release date, @stellaathena clarifies that Pile v2 is a work-in-progress and doesn’t officially exist, although a subset is available separately from CarperAI.

- Minipile as a Pile Alternative: @giftedgummybee points out the existence of Minipile, a smaller version of the Pile dataset, which might fit the budget constraints of a grad student as mentioned by @sk5544.

- Exploring Multilingual Datasets: @stellaathena suggests datasets such as mT5, ROOTS, and multilingual RedPajamas for improving non-English generations of LLMs. @sk5544 shares the Awesome-Multilingual-LLM GitHub repository as a resource for related papers.

- CIFARnet Dataset Introduced: @norabelrose shares a link to CIFARnet, a 64x64 resolution dataset extracted from ImageNet-21K, which can be found on Hugging Face datasets. The dataset is discussed in relation to label noise and possible experimental uses.

- ImageNet Label Noise Discussed: @ad8e and @norabelrose engage in a conversation about the labeling issues within ImageNet and the CIFARnet dataset, including the presence of grayscale images and potentially mislabeled items.

Links mentioned:

- Re-labeling ImageNet: from Single to Multi-Labels, from Global to Localized Labels: ImageNet has been arguably the most popular image classification benchmark, but it is also the one with a significant level of label noise. Recent studies have shown that many samples contain multiple…

- Know Your Data

- CarperAI/pile-v2-small-filtered · Datasets at Hugging Face

- HPLT

- uonlp/CulturaX · Datasets at Hugging Face

- EleutherAI/cifarnet · Datasets at Hugging Face

- GitHub - y12uc231/Awesome-Multilingual-LLM: Repo with papers related to Multi-lingual LLMs

▷ #research (62 messages🔥🔥):

- Exploring Pretraining and Fine-Tuning for New Languages: @philpax shared an article about a Continually LoRA PreTrained & FineTuned 7B Indic model, indicating its effectiveness (Tensoic Blog on Kannada LLAMA). @xylthixlm noted a paper suggesting that language models trained to “learn to learn” by periodically wiping the embedding table could be easier to fine-tune for another language (Learning to Learn for Language Modeling).

- Causal vs Bidirectional Models in Transfer Learning: @grimsqueaker posed a question about the comparative transfer learning performance of causal and bidirectional models, especially for sub-1B models. @.solux suggested that causality provides substantial performance improvements for transformers, so models with an equal number of parameters are not practically equivalent.

- Fine-Tuning Language Models to Use Raw Bytes: @carsonpoole inquired about the possibility of fine-tuning a model for byte-level tokenization, suggesting that transformer block representations might carry over during such a process. In follow-up discussions, @the_sphinx recommended re-using the byte embeddings from the original vocab when fine-tuning on bytes, to ease the process and avoid disastrous results.

- Activation Beacons Could Alter Byte-Level LLM Potential: @carsonpoole mentioned that the concept of activation beacons has influenced his view on the potential of byte-level Large Language Models (LLMs). @xylthixlm described activation beacons as allowing the model to tokenize itself by compressing multiple activations into one.

- Comparing Embeddings Across Different Model Suites: @jstephencorey asked for suites of models with a wide range of sizes to evaluate model embeddings for retrieval, noting that quality peaks differed between Pythia and OPT models. @stellaathena provided a list of model suites that meet the criteria, including T5, OPT, Pythia, BLOOM, and Cerebras. @catboyslimmer expressed interest in accessible code and data for these models, and @stellaathena responded that BLOOM and T5 have published runnable code and data.

Links mentioned:

- Kannada LLAMA | Tensoic

- Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon: The utilization of long contexts poses a big challenge for large language models due to their limited context window length. Although the context window can be extended through fine-tuning, it will re…

- Turing Complete Transformers: Two Transformers Are More Powerful…: This paper presents Find+Replace transformers, a family of multi-transformer architectures that can provably do things no single transformer can, and which outperforms GPT-4 on several challenging…

- Improving Language Plasticity via Pretraining with Active Forgetting: Pretrained language models (PLMs) are today the primary model for natural language processing. Despite their impressive downstream performance, it can be difficult to apply PLMs to new languages, a ba…

- The Unreasonable Effectiveness of Easy Training Data for Hard Tasks: How can we train models to perform well on hard test data when hard training data is by definition difficult to label correctly? This question has been termed the scalable oversight problem and has dr…

- GenCast: Diffusion-based ensemble forecasting for medium-range weather: Probabilistic weather forecasting is critical for decision-making in high-impact domains such as flood forecasting, energy system planning or transportation routing, where quantifying the uncertainty …

- HandRefiner: Refining Malformed Hands in Generated Images by Diffusion-based Conditional Inpainting: Diffusion models have achieved remarkable success in generating realistic images but suffer from generating accurate human hands, such as incorrect finger counts or irregular shapes. This difficulty a…

▷ #interpretability-general (4 messages):

- Seeking RLHF Interpretability Insights: @quilalove inquired about any findings or insights from the RLHF interpretability group. They mentioned the context being a channel titled #rlhf-interp on the mechanistic interpretability Discord.

- Request for Context by @stellaathena: In response to @quilalove’s query, @stellaathena asked for more context in order to provide relevant information regarding RLHF interpretability.

- Clarification Provided by @quilalove: After being prompted, @quilalove clarified their interest in any knowledge on the effects of RLHF experienced by the group in the #rlhf-interp channel.

▷ #gpt-neox-dev (16 messages🔥):

- Training Troubles: User @micpie experienced an out-of-memory (OOM) error after 150k steps, consistently at the same step. They resolved the issue by using the skip_train_iteration_ranges feature, skipping more batches around the problematic step.

- Understanding Gradient Precision: @afcruzs raised a question about gradients always being stored in fp32 even when training with mixed precision, citing Hugging Face’s documentation. @micpie provided an EleutherAI guide explaining that gradients are computed in fp16 with the weight update being done in fp32, which is normal for mixed precision.

- Tests Yielding Errors: User @catboyslimmer has been encountering failing tests while running with pytest, with discrepancies depending on whether the --forked flag is used. They consider that the tests might be broken or there might be an issue specific to their system.

- Exploring Train/Packing Resources: @cktalon shared a link to MeetKai’s functionary GitHub repository for a chat language model that interprets and executes functions/plugins. @butanium thanked @cktalon and was encouraged to share any interesting findings.
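One common motivation for keeping the weight update in fp32, as described in the gradient precision discussion above, is that small updates can be rounded away entirely in fp16. The sketch below uses only the standard library (`struct` format `e` is IEEE 754 half precision) and illustrative values for the weight, gradient, and learning rate. It is a toy demonstration, not GPT-NeoX's implementation.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


weight = 1.0
grad = to_fp16(0.1)   # gradient as produced by an fp16 backward pass
lr = 1e-3             # the resulting step is roughly 1e-4

# Update applied entirely in fp16: the step is smaller than half the fp16
# spacing just below 1.0 (2**-11), so it is rounded away.
weight_fp16 = to_fp16(to_fp16(weight) - to_fp16(lr * grad))

# Same update applied to an fp32 master copy of the weight.
weight_fp32 = to_fp32(weight - lr * grad)

print(weight_fp16 == weight)   # True: the fp16 weight did not move
print(weight_fp32 < weight)    # True: the fp32 weight registered the step
```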

Links mentioned:

- Model training anatomy

- functionary/functionary/train/packing at main · MeetKai/functionary: Chat language model that can interpret and execute functions/plugins - MeetKai/functionary

- Jupyter Notebook Viewer

LM Studio Discord Summary

- Creative Bookmarking Strategies Explored: @api_1000 and @dagbs discussed bookmarking Discord posts, with one potential solution being a new server to store message links. Meanwhile, @heyitsyorkie mentioned traditional copy/paste as an offline backup alternative.

- Challenges and Solutions in Dynamic Model Loading: Users @nyaker. and @nmnir_18598 reported issues with loading Mixtral Q3 and image processing errors, respectively. Potential causes suggested by members like @heyitsyorkie and @fabguy include version incompatibility and clipboard errors, with remedies pointing towards updates and system checks.

- Navigating Hardware Constraints for Advanced AI models: Insights from users like @heyitsyorkie and @pefortin emphasized the heavy VRAM requirements of Mixtral 8x7B and the potential bandwidth bottlenecks of mixed GPU setups. Discussions included advice on tensor splitting and monitoring proper GPU utilization for model operations.

- Local Model Optimizations for Creative Writing: Recommendations for using OpenHermes and dolphin mixtral models were offered for fiction worldbuilding tasks, with community members offering guidance on optimizing GPU settings. Utility tools like World Info from SillyTavern were shared to enhance the AI’s understanding of narrative details.

- Feature Requests and Humor in Feedback: The feedback section saw a tongue-in-cheek remark by @fabguy, suggesting that a bug could be considered a feature, and a request by @blackflagmarine to add a contains function to the LLM search to improve the user experience.

LM Studio Channel Summaries

▷ #💬-general (77 messages🔥🔥):

- DIY Bookmarking Tips: @api_1000 got creative advice from @dagbs on bookmarking useful Discord posts by creating a new server and pasting message links there. @heyitsyorkie also suggested the traditional copy/paste method for offline backups.

- Model Loading Troubles: @nyaker. reported being unable to load Mixtral Q3 with or without GPU acceleration and received input from @heyitsyorkie and @fabguy, who suggested version incompatibility and available system resources as potential issues. They recommended upgrading to later versions and checking system requirements.

- Mysterious Vision Error: @nmnir_18598 encountered an error with image processing in the chat window, which @heyitsyorkie linked to clipboard content. The issue was resolved by @fabguy, who recommended starting a new chat and advised on potentially editing the JSON file to remove the erroneous content.

- Installation Assistance: Newcomers like @duncan7822 and @faradomus_74930 inquired about installing LM Studio on Ubuntu Linux, and @heyitsyorkie provided guidance, including the need for an updated glibc for compatibility on Ubuntu 22.

- Feature Functionality and Resource FAQs: @meadyfricked sought help regarding function calling with autogen, prompting responses from @heyitsyorkie and @dagbs on current limitations and workarounds. Additionally, @heyitsyorkie posted a link to an unofficial LMStudio FAQ for community reference.

Links mentioned:

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- GitHub - microsoft/lida: Automatic Generation of Visualizations and Infographics using Large Language Models

▷ #🤖-models-discussion-chat (59 messages🔥🔥):

- LM Studio Struggles with Newer GGUF Models: @coolbreezerandy6969 experienced issues loading newer GGUF models with LM Studio on Linux. @fabguy explained that new architectures like Mixtral require updates and that version 0.2.10 might resolve these issues.

- Mixtral Confined to Local Use: @pinso asked whether TheBloke’s dolphin-2.5-mixtral-8x7b-GGUF model has internet search capabilities, which @heyitsyorkie refuted, confirming that LMStudio does not support function calling for web searches.

- Hefty VRAM Required for Mixtral 8x7B: @heyitsyorkie mentioned that running Mixtral 8x7B at q8 requires 52 GB of VRAM. @madhur_11 noted poor performance with just 16 GB of RAM on a laptop, to which @heyitsyorkie responded that LM Studio’s handling of Mixtral models is still buggy.

- Understanding VRAM and Shared GPU Memory: A conversation between @nikoloz3863 and @heyitsyorkie helped clarify that VRAM is dedicated memory on the graphics card, while shared GPU memory includes a combination of VRAM and CPU RAM.

- Recommendations for Local Models Aiding Fiction Writing: @rlewisfr sought model recommendations for worldbuilding and was directed by @ptable to try OpenHermes and dolphin mixtral models. Further discussions led to @heyitsyorkie offering advice on optimizing GPU layer settings and referencing SillyTavern’s World Info feature for interactive story generation.
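The 52 GB figure quoted above can be sanity-checked with back-of-the-envelope arithmetic: weight memory is roughly parameter count times bits per weight. The sketch assumes about 46.7B total parameters for Mixtral 8x7B and about 8.5 bits per weight for an 8-bit GGUF quantization (8-bit values plus a per-block scale). Both numbers are assumptions for illustration, and the helper name is hypothetical.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


MIXTRAL_PARAMS = 46.7e9     # assumed total parameter count
Q8_BITS_PER_WEIGHT = 8.5    # assumed effective bits per weight at q8

q8_gb = weight_memory_gb(MIXTRAL_PARAMS, Q8_BITS_PER_WEIGHT)
print(f"q8 weights: ~{q8_gb:.1f} GB")
```

Under these assumptions the weights alone come to just under 50 GB. The KV cache and runtime buffers add to that, which is consistent with a requirement in the low 50s of GB and explains why a 16 GB laptop struggles.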

Links mentioned:

- dagbs/laserxtral-GGUF · Hugging Face

- liminerity/Blur-7B-slerp-v0.1 · Hugging Face

- World Info | docs.ST.app: World Info (also known as Lorebooks or Memory Books) enhances AI’s understanding of the details in your world.

- 222gate/Blur-7B-slerp-v0.1-q-8-gguf · Hugging Face

▷ #🧠-feedback (2 messages):

- The Feature Debate: User @fabguy humorously commented that an aspect of the chatbot, which might be perceived negatively, should be considered a feature, not a bug.

- Search Enhancement Request: @blackflagmarine requested the addition of a contains function to the LLM search to improve its search capabilities.

▷ #🎛-hardware-discussion (6 messages):

- Franken-PC Experiments Reveal Bandwidth Bottlenecks: @pefortin shared experimental performance results from running Mixtral 8x7B on his franken-PC setup with mixed GPUs in different configurations. The combination of a 3090 with a 3060 Ti led to the best performance at 1.7 tokens/second, while adding slower GPUs and PCIe lanes decreased throughput.

- Tensor Split Needs Investigation: @dagbs suggested comparing tensor split performance with a 3060 Ti versus 2x 1660, hinting at possible issues with how tensor splitting works. @pefortin responded that the model layers were split proportionally rather than evenly, implying that the splitting mechanism was working as intended with GGUF and llama.cpp.

- Exploring GPTQ/exl2 for Possible Performance Gains: @pefortin mentioned plans to run tests using GPTQ/exl2 formats to see if they alter performance outcomes in this setup.

- Sanity Check for Model Splits via GPU Monitoring Advised: @ben.com recommended monitoring the “copy” graph in Task Manager’s GPU tab to ensure there are no hidden inefficiencies during model splits. @pefortin assured he keeps an eye on GPU memory usage and compute activity, confirming all looked normal.
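The difference between a proportional and an even split, as raised in the tensor split discussion above, can be sketched as follows. This assumes layers are assigned in proportion to each card's VRAM, with a 32-layer model and the usual 24 GB / 8 GB / 6 GB capacities for a 3090, 3060 Ti, and 1660. The function is a hypothetical illustration, not llama.cpp's actual splitting code.

```python
def proportional_layer_split(n_layers, vram_gb):
    """Assign layers to GPUs in proportion to their VRAM (largest remainder)."""
    total = sum(vram_gb)
    raw = [n_layers * v / total for v in vram_gb]
    counts = [int(r) for r in raw]                 # round down first
    leftover = n_layers - sum(counts)
    # Hand the remaining layers to the GPUs with the largest fractional share.
    by_remainder = sorted(range(len(raw)),
                          key=lambda i: raw[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


print(proportional_layer_split(32, [24, 8]))        # 3090 + 3060 Ti
print(proportional_layer_split(32, [24, 8, 6, 6]))  # adding 2x 1660
```

With a proportional split, adding small, slow cards pulls layers off the fastest GPU, so each token has to pass through more devices and interconnects. That is one plausible reading of why throughput dropped in the larger configurations.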

HuggingFace Discord Summary

- Merge Dilemma and the Quest for the Right Model: Engineers discussed dataset merging strategies after _michaelsh asked about combining 85 GB of audio samples with 186 MB of associated texts. The conversation then turned to the best Large Language Model (LLM) for a local database, considering models like Mistral, Llama, Tapas, and Tapex, with refik0727 leading the discussion.

- Tackling Env Issues and Enhancing Chatbots: Members worked through environment-related errors in model packaging, specifically the package_to_hub functionality with a non-gymnasium environment, as raised by boi2324 and doctorpangloss. Strategies to improve chatbot responses using TinyLLaMa were also discussed, including structuring user/assistant messages as an array to guide model comprehension.

- Learning and Adaptation in AI: bluebug shared the accomplishment of labeling over 6k datasets and creating a new image-to-text labeler tool. MiniLLM, a method developed by Microsoft for distilling LLMs, was also highlighted, featuring reinforcement learning techniques for efficiently running LLMs on consumer-grade GPUs.

- Tools and Papers Unveiled: The community brought to light academic resources linking Transformers to RNNs and a GitHub repository named UniversalModels designed to act as an adapter between Hugging Face transformers and different APIs.

- Innovations and Implementations in AI Showcased: Creations spanned from Midwit Studio, an AI-driven text-to-video generator, to articles detailing Stable Diffusion’s inner workings. New models like e5mistral7B were introduced, and tools like a fast data annotation tool and Dhali, a platform for monetizing APIs, were demonstrated.

- Image Editing Advances and Issue Management: sayakpaul encouraged opening an issue thread with a reproducible snippet for clarification and presented Emu Edit, a multi-task image editing tool that differs from standard inpainting thanks to its task-specific approach.

- AI Mimicry and Human-Like NPCs: harsh_xx_tec_87517 shared an AI agent that interacts with ChatGPT-4v for block manipulation tasks to achieve human-like behavior, indicating potential applications in NPC behavior, with the process demonstrated through LinkedIn.

- Model Insights and NER Efficiency: Parameter count strategies using safetensors model files and Python functions were debated, and a parameter estimation function was confirmed to work across models like Mistral, LLaMA, and yi-34. A lasso selector tool was reported to label 100 entities in 2 seconds, and model embeddings within LLMs were discussed, focusing on tokenizer origins and training methodologies.

HuggingFace Discord Channel Summaries

▷ #general (62 messages🔥🔥):

- Merge Strategy Mysteries: User @_michaelsh asked about the best method to merge two large datasets, one consisting of 85 GB of audio samples and the other of 186 MB of associated texts. @moizmoizmoizmoiz requested further details to provide an accurate suggestion.

- Choosing the Right LLM for Local Database: @refik0727 inquired about the most suitable Large Language Model (LLM) for structured data from a local database, considering models like Mistral, Llama, Tapas, and Tapex.

- Gym vs Gymnasium Environment for Model Packaging: @boi2324 encountered an error when attempting to use package_to_hub with a non-gymnasium environment and discussed this with @doctorpangloss, who ultimately recommended using environments supported by Hugging Face to avoid major issues.

- Improving Chatbot Responses: @mastermindfill discussed optimizing chatbot responses using TinyLLaMa after observing suboptimal output. @cappuch__ advised appending messages to an array with a user/assistant format and using username prompts to direct model comprehension.

- Concerns Over Model Safety Labels: .ehsan_lol expressed confusion about models being labeled as “unsafe” on Hugging Face and wanted to understand what the label means before downloading a model.
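The chatbot advice above (keep the conversation as an array of user/assistant messages and prefix each turn with a speaker name) can be sketched in a few lines. This is a generic illustration with hypothetical names and a plain-text layout. Real chat models, TinyLlama included, ship their own chat templates, which should be preferred when available.

```python
def build_prompt(messages, username="User", assistant_name="Assistant"):
    """Flatten a list of {'role', 'content'} dicts into a speaker-tagged prompt."""
    lines = []
    for message in messages:
        speaker = username if message["role"] == "user" else assistant_name
        lines.append(f"{speaker}: {message['content']}")
    lines.append(f"{assistant_name}:")   # cue the model to answer next
    return "\n".join(lines)


messages = []
messages.append({"role": "user", "content": "Hi"})
messages.append({"role": "assistant", "content": "Hello!"})
messages.append({"role": "user", "content": "Name a color."})

print(build_prompt(messages))
```

After each model reply, the reply is appended to `messages` as an assistant turn, so the next prompt carries the full history in a consistent format.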

▷ #today-im-learning (3 messages):

- Productivity Unleashed with Custom Tool: @bluebug has successfully labeled a significant amount of data, reporting over 6k datasets labeled.

- Homebrew Image to Text Tool Completion: @bluebug has completed a new image-to-text labeler tool to assist with data labeling tasks.

- Discovering Mini LLM - A Leap in LLM Distillation: @frequesny learned about MiniLLM, a state-of-the-art method developed by Microsoft for distilling large language models (LLMs) using reinforcement learning techniques. The method boasts impressive results in comparison to existing baselines, and @frequesny shared the GitHub repository: MiniLLM on GitHub.

Links mentioned:

GitHub - kuleshov/minillm: MiniLLM is a minimal system for running modern LLMs on consumer-grade GPUs

▷ #cool-finds (2 messages):

- “Transformers meet RNNs” Paper Shared: User @doodishla shared an academic paper linking Transformers to RNNs, which can be found on arXiv.

- Universal Adapters for Transformers: @andysingal found a nice GitHub repository named UniversalModels which acts as an adapter between HuggingFace transformers and several different APIs, available at GitHub - matthew-pisano/UniversalModels.

Links mentioned:

GitHub - matthew-pisano/UniversalModels: An adapter between Huggingface transformers and several different APIs

▷ #i-made-this (13 messages🔥):

- Introducing Midwit Studio: @ajobi882 shared a link to Midwit Studio, an AI-driven text-to-video generator designed for simplification, teasingly suggested for “midwits”. Check it out here: Midwit Studio.

- Diving Deep into Stable Diffusion: @felixsanz published a detailed two-part article series on Stable Diffusion: the first part explains how it works without code, while the second tackles implementation with Python. Read about it here.

- Tonic Spotlights E5 Mistral: @tonic_1 announces the availability of e5mistral7B on GPUZero and describes it as a new Mistral model with merged embeddings capable of creating embeddings from the right prompts. Explore the model on HuggingFace Spaces.

- Speedy Data Annotation Tool: @stroggoz introduces an alpha-stage data annotation tool for NER/text classification, boasting the ability to label around 100 entities every 2 seconds. The tool’s preview is available here.

- Monetize APIs with Dhali: @dsimmo presents Dhali, a platform that allows users to monetize their APIs within minutes, using a Web3 API Gateway and offering low overhead and high throughput without the need for subscriptions. For more details, visit Dhali.

Links mentioned:

- Gyazo Screen Video:

- Midwit Video Studio

- Dhali

- E5 - a Hugging Face Space by Tonic

- How to implement Stable Diffusion: After seeing how Stable Diffusion works theoretically, now it’s time to implement it in Python

- How Stable Diffusion works: Understand in a simple way how Stable Diffusion transforms a few words into a spectacular image.

▷ #reading-group (1 messages):

annorita_anna: I would love to see this happen too!🤍

▷ #diffusion-discussions (5 messages):

- Invitation to Create Issue Thread: @sayakpaul encourages the opening of an issue thread for further discussion and clarifies the need for a reproducible snippet. A specific user is cc’ed for visibility.

- Emu Edit’s Approach to Image Editing: @sayakpaul differentiates Emu Edit, an image editing model, from inpainting by highlighting its multi-tasking ability across a range of editing tasks. He provides a brief explanation and a link to Emu Edit for further information.

- Assurance on Issue Logging: In response to a link posted by @felixsanz, @sayakpaul agrees that even if it’s not a bug, having the issue logged is helpful.

- Clarification on “Not a Bug”: @felixsanz clarifies that the prior issue under discussion is not a bug.

Links mentioned:

Emu Edit: Precise Image Editing via Recognition and Generation Tasks

▷ #computer-vision (1 messages):

- AI Agent Mimics Human Task Management: @harsh_xx_tec_87517 developed an AI agent that captures screenshots and interacts with ChatGPT-4v for block manipulation tasks, iterating this process until a specific state is reached. The agent aims to replicate human-like behavior for potential future use in NPCs; a video demonstration and a LinkedIn post provide additional insights.

▷ #NLP (12 messages🔥):

- Parameter Count Without Model Download: @robert1 inquired about obtaining the parameter count of a model without downloading it. @vipitis responded that the parameter count can be seen if there is a safetensors model file on the model page.

- Estimating Parameter Count from `config.json`: @robert1 mentioned the possibility of writing a function to calculate parameter count using `config.json`, and @vipitis noted that would require in-depth knowledge of the model’s hyperparameters.

- LLaMA Model Python Function Shared: @robert1 shared a Python function `_get_llama_model_parameter_count` that calculates the parameter count for LLaMA-based models using information from the `config.json`.

- Utility of Parameter Count Function Confirmed: @robert1 confirmed that the provided Python function correctly estimates the parameter count across various models like Mistral, LLaMA, and yi-34 after testing.

- Innovative Lasso Selector for NER: @stroggoz shared a 7-second gif demonstrating a lasso selector tool that can be used to label 100 named entities or spans in just 2 seconds.

- Embedding Models in LLMs Discussed: @pix_ asked about the type of embedding used in large language models (LLMs) with positional encoding. @stroggoz clarified that embeddings typically derive from a tokenizer and pre-trained transformer base architecture, with random initialization being a possibility for training from scratch.
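
The function @robert1 shared is not reproduced in the summary, so the following is only a sketch of how such an estimate could be derived from `config.json` fields. The function name `estimate_llama_param_count` is hypothetical, and the formula assumes the standard LLaMA layout: bias-free linear layers, a gated MLP with three projections, two RMSNorm weights per layer, and an untied output head unless `tie_word_embeddings` is set.

```python
# Hypothetical sketch (not @robert1's function): estimate the parameter count
# of a LLaMA-style model from the fields of its config.json.

def estimate_llama_param_count(config):
    hidden = config["hidden_size"]
    inter = config["intermediate_size"]
    layers = config["num_hidden_layers"]
    vocab = config["vocab_size"]
    heads = config["num_attention_heads"]
    # Grouped-query attention uses fewer key/value heads; default to full MHA.
    kv_heads = config.get("num_key_value_heads") or heads
    head_dim = hidden // heads

    embed = vocab * hidden
    # q_proj and o_proj use all heads; k_proj and v_proj use the key/value heads.
    attn = 2 * hidden * heads * head_dim + 2 * hidden * kv_heads * head_dim
    mlp = 3 * hidden * inter   # gate_proj, up_proj, down_proj
    norms = 2 * hidden         # input and post-attention RMSNorm weights
    per_layer = attn + mlp + norms
    final_norm = hidden
    lm_head = 0 if config.get("tie_word_embeddings", False) else vocab * hidden
    return embed + layers * per_layer + final_norm + lm_head


# Illustrative values matching the published Llama-2-7B and Mistral-7B configs.
llama2_7b = {"hidden_size": 4096, "intermediate_size": 11008,
             "num_hidden_layers": 32, "vocab_size": 32000,
             "num_attention_heads": 32, "num_key_value_heads": 32}
mistral_7b = {"hidden_size": 4096, "intermediate_size": 14336,
              "num_hidden_layers": 32, "vocab_size": 32000,
              "num_attention_heads": 32, "num_key_value_heads": 8}

print(estimate_llama_param_count(llama2_7b))   # 6738415616
print(estimate_llama_param_count(mistral_7b))  # 7241732096
```

As @vipitis points out, this only works because the architecture is known in advance; a model with biases, mixture-of-experts layers, or a different MLP shape would need its own formula.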

▷ #diffusion-discussions (5 messages):

- Invitation to Open an Issue Thread: @sayakpaul encouraged users to open an issue thread and include a reproducible snippet for discussion, tagging another user with Cc: <@961114522175819847>.

- Emu Edit Demonstrates Inpainting Capabilities: @sayakpaul shared a link to Emu Edit and described its distinct approach to image editing, which involves multi-task training and learned task embeddings to steer generation processes.

- Inpainting Requires Binary Mask: In the context of discussing image editing techniques, @sayakpaul noted that inpainting, unlike other methods, requires a binary mask to indicate which pixels in an image should be modified.

- Clarification That an Issue Is Not a Bug: @felixsanz stated that although there’s a situation at hand, it does not constitute a bug. This was followed by a reassurance from @sayakpaul that logging the issue would still be beneficial.
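
To make the role of the binary mask concrete, here is a small pure-Python sketch. It illustrates only the final compositing idea (mask value 1 means the pixel may change, 0 means it is kept), not how a diffusion pipeline generates the new pixels. The function name `apply_inpainting_mask` and the toy grayscale images are assumptions made for the example.

```python
# Hypothetical sketch: composite an edited image into the original using a
# binary mask (1 = pixel may be modified, 0 = pixel must be kept).

def apply_inpainting_mask(original, generated, mask):
    """Return a new image that takes `generated` where mask == 1, else `original`."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        result.append([g if m == 1 else o
                       for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[99, 99, 99],
             [99, 99, 99]]
mask = [[0, 1, 0],
        [0, 1, 1]]

edited = apply_inpainting_mask(original, generated, mask)
print(edited)  # [[10, 99, 10], [10, 99, 99]]
```

An instruction-driven editor such as Emu Edit, as described above, takes no such mask: the model decides which regions to change from the task and the prompt.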

Links mentioned:

Emu Edit: Precise Image Editing via Recognition and Generation Tasks

Perplexity AI Discord Summary

- Mistral Medium Disconnection Drama: Users @moyaoasis and @me.lk highlighted an issue with Mistral Medium disconnecting in MS Edge. The problem was recognized and noted for a fix as per a prior community message.

- Voice for Bots in Question: Curiosity arose about voice conversation features for chatbots as user @financers inquired about such capabilities in Perplexity, resembling those in ChatGPT. Though uncertain about Perplexity’s adoption of the feature, user @mares1317 suggested pi.ai/talk as an alternative for voice interaction.

- Exploring PPLX API’s Potential: Discussion occurred about the new pplx-api, particularly about whether it could include source links in responses. A blog post shared by @mares1317 described the API’s features, indicating a future capability for fact and citation grounding.

- Pro Member Plunges Into Perplexity: Newly minted Pro member @q7xc is delving into the features and benefits of the platform, as mentioned in the #sharing channel.

- pplx-7b-online Model Suffers Setback: User @yueryuer reported a 500 internal server error while using the `pplx-7b-online` model, raising concerns about server stability at the time of the incident.

Perplexity AI Channel Summaries

▷ #general (88 messages🔥🔥):

- Mistral Medium Disconnection Issue Raised: User @moyaoasis reported experiencing problems with Mistral Medium disconnecting while other models worked fine after switching to MS Edge from Brave. The issue was confirmed by @me.lk as known and to be fixed as indicated in a community message.

- Curiosity About Voice Features for Chatbots: @financers inquired if Perplexity would implement voice conversation features like ChatGPT. @mares1317 doubted Perplexity would adopt that feature but suggested a third-party alternative, pi.ai/talk, for vocal interaction.

- PPLX API Introduction and Limitations: Users @d1ceugene and @mares1317 discussed the new pplx-api, with questions regarding its capability to provide source links in responses. @mares1317 shared a blog post detailing the API features and hinting at future support for fact and citation grounding with Perplexity RAG-LLM API.

- Perplexity Access and Performance Issues: Several users including @louis030195, @zoka.16, and @nathanjliu encountered issues with the API, app responsiveness, and logins across various devices. @mares1317 and @ok.alex responded with troubleshooting suggestions, and @icelavaman later confirmed that Perplexity should be working again.

- App Login and Account Migration Queries: Users @.mergesort and @leshmeat. sought assistance with account login issues, specifically related to Apple account migration and lost email access. @ok.alex and @me.lk responded with possible login steps and a support contact for subscription transfers, but no history transfer was confirmed.

Links mentioned:

- Anime Star GIF - Anime Star - Discover & Share GIFs: Click to view the GIF

- Moon (Dark Mode)

- Introducing pplx-api : Perplexity Lab’s fast and efficient API for open-source LLMs

▷ #sharing (4 messages):

- Perplexity Android Widget Now Available: User @mares1317 shared a tweet from @AravSrinivas announcing the release of a widget for Perplexity Android users. The tweet, Perplexity Android Users: Thanks for waiting patiently for the widget! Enjoy!, expresses gratitude for users’ patience.

- Channel Etiquette Reminder for Project Sharing: @ok.alex reminded <@935643161504653363> to share project-related content in the specific channel for such posts, directing them to <#1059504969386037258>.

- New User Praises Perplexity: @pablogonmo joined the chat to share their initial positive impressions, calling Perplexity a “very solid alternative.”

- Pro Membership Exploration: New Pro member @q7xc mentioned they are in the process of figuring out the platform.

Links mentioned:

Tweet from Aravind Srinivas (@AravSrinivas): Perplexity Android Users: Thanks for waiting patiently for the widget! Enjoy!

▷ #pplx-api (4 messages):

- Model Misclassifies Companies: @eggless.omelette reported issues with a model classifying companies into specific categories, receiving responses that included a repetition of the company name, a verbose Google-like search result, or a message stating no results found.

- Intriguing ‘related’ Model Mentioned: @dawn.dusk hinted at the existence of a “related” model, expressing curiosity and seeking confirmation by tagging <@830126989687914527>.

- Server Error Hurdles for pplx-7b-online Model: @yueryuer encountered a 500 internal server error when calling the API with the `pplx-7b-online` model, questioning the stability of the server at that time.

OpenAccess AI Collective (axolotl) Discord Summary

- Axolotl Adventures in DPO: @c.gato expressed gratitude for how easy it is to run Direct Preference Optimization (DPO) with Axolotl, calling the experience immensely FUN. @casper_ai and @xzuyn provided advice on creating DPO datasets, which consist of chosen/rejected pairs, confirming that these are designed differently than SFT datasets based on the desired model behavior.

- RLHF Update is Imminent: An update regarding Reinforcement Learning from Human Feedback (RLHF) is to be shared soon, as teased by @caseus_.

- Empowering the Dataset Formats: Hugging Face MessagesList format is being considered for chat message formatting, as discussed by @dctanner. To align with this effort, Axolotl Pull Request #1061 will have updates to support this new ‘messageslist’ format, as proposed in the Hugging Face Post.

- Optimization Talk around Model Packing: Interest was shown in the optimized solution for model packing from MeetKai functionary, with a focus on efficiency and potential implementation in a collator.

- Technicalities and Troubleshooting in Bot Land: @mrfakename_ highlighted potential downtime of a bot after it failed to respond to prompts; @noobmaster29 confirmed the bot was online but shared similar concerns about unresponsiveness. In runpod-help, @baptiste_co successfully installed `mpi4py` using Conda, while @tnzk encountered a `RuntimeError` after installation, suggesting a possible bug report to PyTorch.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (16 messages🔥):

- The Joy of Axolotl DPO: @c.gato expressed excitement and gratitude for the ease of running DPO jobs with Axolotl, having immense FUN in the process.

- Upcoming RLHF News Teaser: @caseus_ hinted that updates regarding RLHF will be shared soon.

- Details on Training Phases Clarified: In a discussion about training methods, @caseus_ and @casper_ai clarified that SFT should be done first, followed by DPO. @dangfutures engaged in the conversation seeking clarity on the process.

- Guidance on DPO Dataset Creation: @casper_ai and @xzuyn advised @dangfutures that DPO datasets typically consist of chosen/rejected pairs and are designed based on desired model behavior, which can be quite different from SFT datasets.

- Inquiry About Continual Pretraining: @jinwon_k questioned the success of continual pretraining with Axolotl, to which @nanobitz responded confirming successful usage, although it’s been a while since implemented.
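
The difference between SFT and DPO data mentioned above is easiest to see side by side. The records below are a hypothetical illustration: the field names `prompt`, `response`, `chosen`, and `rejected` follow a common convention and are assumptions, not Axolotl's required schema, and `is_valid_dpo_record` is a made-up helper.

```python
# Hypothetical records illustrating the difference between SFT and DPO data.
# Field names follow a common convention and are assumptions, not Axolotl's schema.

sft_example = {
    "prompt": "Explain what a learning rate is.",
    "response": "The learning rate controls how large each optimizer step is.",
}

dpo_example = {
    "prompt": "Explain what a learning rate is.",
    "chosen": "The learning rate controls how large each optimizer step is.",
    "rejected": "It is the rate at which you learn things.",
}


def is_valid_dpo_record(record):
    """Check that a record has a prompt plus two distinct, non-empty completions."""
    required = ("prompt", "chosen", "rejected")
    if any(not isinstance(record.get(key), str) or not record[key].strip()
           for key in required):
        return False
    return record["chosen"] != record["rejected"]


print(is_valid_dpo_record(dpo_example))  # True
print(is_valid_dpo_record(sft_example))  # False
```

An SFT record shows the model one target answer, while a DPO record encodes a preference between two answers to the same prompt, which is why the rejected completion has to be chosen with the desired behavior in mind.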

▷ #axolotl-dev (31 messages🔥):

- Converging on a Chat Dataset Standard: