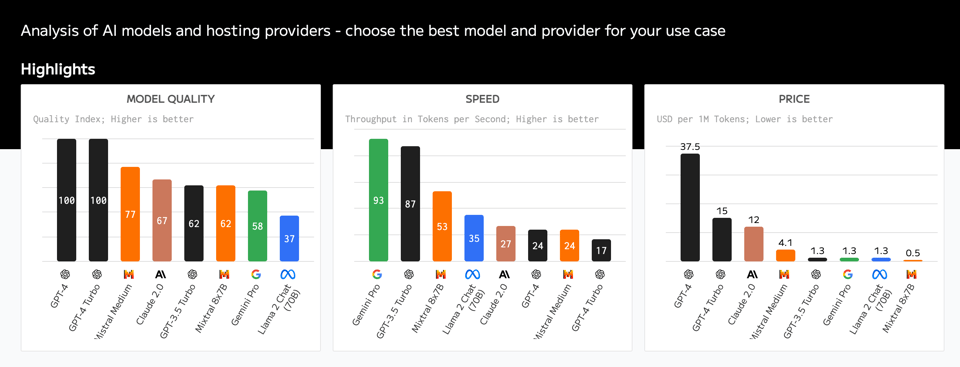

Artificial Analysis: this gem of a models and hosts comparison site was just launched:

swyx’s tweet on this here:

—

Table of Contents

[TOC]

Nous Research AI Discord Summary

-

Innovating Summarization Techniques: Discussions around efficient summarization strategies have included using smaller chunks and large overlaps with the aid of 3090 and 2080ti NVIDIA GPUs for rapid processing of around 100k tokens, while another topic covered adapting AI prompts from high-level models to smaller models like 7B with OpenChat showing promising performance.

-

ArXiv Insights and AI Quirks: An arXiv paper discussing advancements in computer science stirred interest, while a lighthearted note on conversational AIs starting discussions when tasked was pointed out, emphasizing a quirk in current chatbot behavior.

-

The Accessibility of Hermes Mixtral: News that Hermes 2 Mixtral is available on Huggingface’s HuggingChat platform, paired with challenges shared about fine-tuning Mixtral using Axolotl, and successful configurations such as Eric Hartford’s found on Huggingface, provided insightful discourse.

-

Diverse LLM Application Conversations: Users exchanged knowledge on byte-level tokenization, and explored training models on COCO image bytes with captions to look into multimodal capabilities. There were also practical tips on improving inference speeds, such as using optimized libraries including vllm and llama.cpp, and employing tools like Lilac for dataset management.

-

LLM Progress and Concerns: Calls were made for transparency in data hoarding to prevent redundant efforts, with an assurance that Hermes 2 - Mixtral dataset will be open-sourced. Also, dpo and sft methods were compared for their impacts on creativity, and M1 MacBook Pro users discussed running quantized LLMs, considering models such as laserxtral.

Nous Research AI Channel Summaries

▷ #ctx-length-research (1 messages):

gabriel_syme: Dang this looks great https://fxtwitter.com/_akhaliq/status/1747515567492174185

▷ #off-topic (78 messages🔥🔥):

-

Chunking Strategy for Efficient Summarization:

@fullstack6209described their approach to summarization using a smaller chunk size and larger overlap, focusing the LLM prompt to tackle the most important information. They applied this method to approximate large textbooks, mentioning the use of a 3090 and 2080ti NVIDIA GPUs for fast processing of about 100k tokens. -

Cost-Effective AI Solutions:

@fullstack6209discussed the challenge of adapting AI prompts from high-level models to smaller, cost-efficient models like 7B. They mentioned that OpenChat has been significantly outperforming others in their tests. -

Probing Technical Challenges with Nous Hermes Vision Alpha:

@manojbhsought help with issues related to unicode outputs from Nous Hermes Vision Alpha, and underwent troubleshooting with other users, including@teknium, discussing hardware specifications and model versions. -

Exploration of Byte-Level Tokenization in Models:

@carsonpooleintroduced the concept of Byte Mistral, which is a version of Mistral using a byte-level tokenizer instead of BPE, potentially providing advantages in processing noisy text or supporting multiple languages. Other users, like_3sphere, engaged in a discussion about the efficiency and use cases for byte-level tokenization. -

Multimodal Training Experiments:

@carsonpooleshared their plans to train on COCO image bytes along with captions to explore the capabilities of multimodal models. They suggested that this could demonstrate how well multimodal can work, especially with the byte tokenizer.

Links mentioned:

- The Universal Speed of Language: 39 Bits per Second: Whether speaking rapid Japanese or deliberate German, the rate of information conveyed is identical.

- google/byt5-small · Hugging Face

- TheBloke/Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF · Hugging Face

- Lorn - Acid Rain (Official Music Video): 2015 UK MVA ‘Best Dance Music Video’ WinnerMilano Film Festival Showcase 2015SXSW Official Selection 2016Artist : LORN Title: Acid RainLabel: Wednesday Sound…

▷ #interesting-links (2 messages):

- Latest ArXiv Paper Discussed: User

@metaldragon01shared an arXiv paper authored by a large team including Jiaheng Liu, Zhiqi Bai, and others, focusing on a recent advancement in computer science. - Chat Models Earn a Giggle:

@gabriel_symehumorously commented on the nature of conversational AI, saying they tend to start discussions when given a task, highlighting a quirk in current chatbot behavior.

Links mentioned:

E^2-LLM: Efficient and Extreme Length Extension of Large Language Models: Typically, training LLMs with long context sizes is computationally expensive, requiring extensive training hours and GPU resources. Existing long-context extension methods usually need additional tra…

▷ #general (224 messages🔥🔥):

- Hermes 2 Mixtral Now on HuggingChat:

@tekniumshared the news that Hermes 2 Mixtral is now available on HuggingChat by Huggingface, providing access to chat with this model on their platform. - Fine-tuning Mixtral Issues and Solutions: Users like

@qnguyen3reported difficulties fine-tuning Mixtral with Axolotl, while.beowulfbrmentioned a successful config by Eric Hartford found on Huggingface. FFT was noted to cause issues, and Llama-Factory was considered as an alternative. - Language Performance of Hermes Mixtral:

@light4bearand@0xsingletonlyprovided feedback on Hermes Mixtral’s performance in non-English languages, with varying effectiveness noted in Chinese and Traditional Chinese. - Inference Speed and Technique Discussions: Users like

@lightvector_discussed the slow inference speed when using the Mixtral-8x7b model, while@intervitensand@giftedgummybeesuggested using optimized libraries like vllm and llama.cpp for faster performance. The potential use of GGUF quantization with llamacpp was also mentioned. - Dataset Management Tools Inquiry:

@nonameusrinquired about tools for managing datasets and found success in installing and planning to use Lilac, a tool for editing and viewing datasets for LLMs.

Links mentioned:

- Tweet from Argilla (@argilla_io): 🌸 Synthetic Haiku DPO 🌸 🙌A DPO dataset by @vanstriendaniel generated with OSS models ⚗️ Built with distilabel using the awesome OpenHermes by @Teknium1 https://huggingface.co/datasets/davanstr…

- HuggingChat

- Parameter-Efficient Sparsity Crafting from Dense to Mixture-of-Experts for Instruction Tuning on General Tasks: Large Language Models (LLMs) have demonstrated considerable proficiency in general natural language processing (NLP) tasks. Instruction tuning, a successful paradigm, enhances the ability of LLMs to f…

- EAdam Optimizer: How $ε$ Impact Adam: Many adaptive optimization methods have been proposed and used in deep learning, in which Adam is regarded as the default algorithm and widely used in many deep learning frameworks. Recently, many var…

- configs/dolphin-mixtral-8x7b.yml · cognitivecomputations/dolphin-2.5-mixtral-8x7b at main

- DPO Trainer

- Tweet from clem 🤗 (@ClementDelangue): This is my first post on @huggingface! https://huggingface.co/posts/clem/533874509800797

- Tweet from Victor M (@victormustar): 🚨Something BIG is coming to Hugging Face… stay tuned 👀

- Perfecting Merge-kit MoE’s

- Tweet from Nous Research (@NousResearch): Nous-Hermes 2 on Mixtral is now available to chat with on @huggingface’s HuggingChat! Try it now here: https://huggingface.co/chat/?model=NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO Thank you …

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF at main

- Lilac - Better data, better AI: Lilac enables data and AI practitioners improve their products by improving their data.

- supertrainer2000/supertrainer2k/optim/adalite.py at master · euclaise/supertrainer2000: Contribute to euclaise/supertrainer2000 development by creating an account on GitHub.

- Capybara Let Him Cook GIF - Capybara Let him cook - Discover & Share GIFs: Click to view the GIF

- Finetune LoRA on CPU using llama.cpp: Think of a LoRA finetune as a patch to a full model. The LoRA training makes adjustments to the weights of a base model, e.g., Stheno-L2-13B, which are saved separately, e.g., Stheno-L2-13B-my-awesome…

- Unable to install via pip · Issue #777 · NVIDIA/TensorRT-LLM: when installing via pip with the following code (using windows, not using docker): pip install tensorrt_llm —extra-index-url https://pypi.nvidia.com —extra-index-url https://download.pytorch.org/…

▷ #ask-about-llms (33 messages🔥):

-

Open Source Data Advantages:

@bramvanroyraises concerns over data hoarding by companies and calls for transparency to prevent parallel efforts.@tekniumassures that the dataset used for Hermes 2 - Mixtral will be open sourced, although the filtering may not be shared. -

DPO vs. SFT - A Creative Divide?:

@mr.userbox020inquires about the difference between dpo and sft.@tekniumexplains that dpo is RLHF’ed (Reinforcement Learning with Human Feedback), which can diminish creativity, suggesting to try both and see what works best. -

Creating Organic Datasets Conversations:

@protofeatherasks about creating efficient organic datasets from forums or private code bases. Meanwhile,@taumoebais looking for resources on adding new languages to an LLM and@manveerxyzreferences Cohere’s Aya project as a potential resource. -

Quantized Model Dilemmas on M1 MacBook:

@0xsingletonlyseeks advice on running quantized versions of LLMs on a 16GB M1 MacBook Pro and is advised by@n8programsthat a good 2x7b or 4x7b moe at q3 might be suitable. They further discuss a specific model, leading to the suggestion to consider laserxtral as a viable option. -

Checking the Pulse of LLMs:

@valiantinquires about the current status of Large Language Models, to which@n8programsfacetiously replies “good”, echoing the quick and humorous diversions often found in such discussions.

Links mentioned:

- Introducing Aya: An Open Science Initiative to Accelerate Multilingual AI Progress: TL;DR: Aya is an open science project that aims to build a state of art multilingual generative language model; that harnesses the collective wisdom and contributions of people from all over the worl…

- TheBloke/Mixtral-Fusion-4x7B-Instruct-v0.1-GGUF · Hugging Face

- Agarra La Tele Weon Agarra La Tv Weon GIF - Agarra La Tele Weon Agarra La Tv Weon Terremoto Roblox - Discover & Share GIFs: Click to view the GIF

HuggingFace Discord Discord Summary

-

AI Advances Game Development: Making Games with AI by

@ThomasSimoniniintroduces the creation of Robot AI NPCs in Unity. This practical approach to game development features Hugging Face’s technology and can be explored by developers here. -

Gradio’s New Release Powers Browser-Based ML: Gradio 4.14 enhances developer capabilities for in-browser machine learning interfaces, with Gradio-Lite pioneering this update. Experience Gradio demos in the playground.

-

Gradio’s Startup to Acquisition Saga:

@abidlabsdocumented the journey of Gradio from inception to acquisition by Hugging Face, providing insights into startup trajectories. The enlightening story is accessible here. -

Hugging Face’s Posts Feature Adds Community Collaboration: The introduction of the Posts feature on Hugging Face offers a new avenue for ML professionals to engage and collaborate. Eager members can join here.

-

ONNX Runtime Accelerates SD Turbo Models: Using ONNX Runtime has significantly improved inference speeds for text-to-image models like SD Turbo and SDXL Turbo. Discover more about these advancements here.

-

Security Alert: Pickle with Care:

@cappuch__highlighted the security risks associated with pickled files, drawing attention to potential code vulnerabilities. -

Conversational AI Optimization: Instead of larger models like 7B Llama, fine-tuning smaller models is recommended for conversational AI, emphasizing the former’s inefficiency in dialogue-based tasks.

-

Call for Collaborative Research:

@dsiegelseeks collaboration on projects involving stereo camera systems and light enough algorithms for devices like Raspberry Pi, signaling a need for community support on depth maps and point clouds creation. -

The Quantization Debate: A vibrant discussion about LLM quantization took place, focusing on the trade-offs between model size, quality, and inference performance with shifts from FP32 to FP16 and 4bit.

-

Learning Discussions:

@gag123queries about the model accuracy metrics unleashing discussions about research papers and methodologies for testing models like LLaMA on A100 GPUs. Conversations circulate around the access and use of HPC resources in universities, signaling interest in infrastructure for NLP and simulation work. -

Deep Reinforcement Learning in Business AI:

@scorpio123.questions the application of Deep RL in business AI environments, reflecting an industry movement towards practical AI implementation strategies. -

Nous Research’s Tweet and DeepSeekMoE: A tweet by Nous Research is brought to attention by

@osansevieroand the “DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models” paper released on arXiv.org offers new perspectives on MoE models to the community. -

Project Showcases and Collaborative Engagement:

lunarfluandosansevierodelve into conversations about transforming a demo for sentence similarity and embedding corpus for semantic search, with a suggestion thread initiated on HuggingFace.sebastian3079showcases a YouTube comment sentiment analyzer, andfrequesnylaunches a Gradio-based hazardous AI model trained on a toxic dataset, now available for interaction here. -

Law and AI, Homomorphic Encryption Discussed:

@chad_in_the_houseconsiders a presentation on law and the use of LLMs, while proposing and receiving feedback on the potential for discussing homomorphic encryption in AI. -

Stable Diffusion Fine-Tuning Focus: Interest in fine-tuning Stable Diffusion 2.1 leads to recommendations of SimpleTuner, with an active engagement in resolving fine-tuning issues for Stable Diffusion 2.x that are stated to be resolved in the master branch.

-

Automated Gaming AI and Troubleshooting: The use of AI in game bot automation is discussed with a desire for full-screen interaction, directing to a relevant blog post on PyTorch and EfficientNet usage in gaming here. Moreover, issues with Flask app deployment on AWS and

.pthfile sizes are surfacing. -

NLP Modeling Queries and Mistral Errors: Interest is expressed in developing custom Question Answering models, with calls for resources and guidance. A bus error while training Mistral on MacOS sparks discussions about Hugging Face Forum threads relevant to non-CUDA-compatible training. Conversations also include advice on fine-tuning for embeddings and tips for downloading transformer model files with an emphasis on safetensors for efficiency.

-

Computer Vision Interactions Surround AI-Powered Automation: Discussions evolve around creating an AI game bot, integrating AI models, and the quest for best practices. A user shares an interest in pyautogui for simulating user input, and another struggles with deploying large models on AWS, revealing the practical challenges of integrating AI with computer vision.

HuggingFace Discord Channel Summaries

▷ #announcements (4 messages):

-

AI Enters Game Development: The first chapter of Making Games with AI is released by

@ThomasSimonini, showing users how to build a Robot AI NPC using Hugging Face and Unity which follows text instructions. Gamers and developers can start creating their own AI NPCs here. -

Gradio Launches Latest Version: Gradio 4.14 release enables developers to build in-browser applications using Gradio-Lite with the new update, further facilitating the creation of machine learning interfaces. Access the Gradio playground for demos here.

-

The Startup Journey to Acquisition:

@abidlabsshares the inspiring story of Gradio—from an initial idea to becoming a part of Hugging Face—highlighting key lessons on startup ventures and acquisitions. Read the full inspiring journey and lessons here. -

Hugging Face’s New Social Platform: Hugging Face introduces a new Posts feature for selected members, providing a space to share, amplify, and collaborate on ML topics. Users interested in posting can request to join here.

-

Accelerated ML Model Inferences Achieved: ONNX Runtime has been leveraged to speed up inferences on text-to-image models SD Turbo and SDXL Turbo, making generative model applications much faster. Explore the advancements and benefits of these accelerated inferences here.

Links mentioned:

- Tweet from Thomas Simonini (@ThomasSimonini): The first chapter of Making Games with AI course is out 🥳 You’ll build a Robot AI NPC 🤖using Hugging Face and Unity 🎮 It understands text orders and executes them. Simply input your text and…

- Gradio Playground: Play Around with Gradio Demos

- social-post-explorers (Social Post Explorers)

- Hugging Face – The AI community building the future.

- Tweet from abhishek (@abhi1thakur): Happy to announce, brand new, open-source Hugging Face Competitions platform 🚀 Now, create a machine learning competition for your friends, colleagues or the world for FREE* and host it on Hugging Fa…

- 📝 Document new gated inputs by coyotte508 · Pull Request #1190 · huggingface/hub-docs: Associated PR: huggingface/moon-landing#8662

- Tweet from Abubakar Abid (@abidlabs): Embraced by Hugging Face: the Inside Story of Our Startup’s Acquisition In late 2021, our team of five engineers, scattered around the globe, signed the papers to shut down our startup, Gradio. For m…

- Accelerating SD Turbo and SDXL Turbo Inference with ONNX Runtime and Olive

- A guide to setting up your own Hugging Face leaderboard: an end-to-end example with Vectara’s hallucination leaderboard

- Make LLM Fine-tuning 2x faster with Unsloth and 🤗 TRL

- Tweet from Daniel van Strien (@vanstriendaniel): 📚 MetaHate from @IRLab_UDC is available on @huggingface. It offers a vast dataset for understanding online hate speech: • 🗨️ Social media posts for real-world insights • 🏷️ Carefully labeled for ac…

▷ #general (141 messages🔥🔥):

- Pickles May Hide a Snake:

@cappuch__warned about the dangers of pickled files potentially harboring malicious code, and their comment was acknowledged with thanks by.ehsan_lolusing a custom emoji. - Fine-Tuning Trumps Size in Conversational Models:

@cappuch__advised against using larger models like the 7B Llama for conversational tasks, suggesting fine-tuning transformers (TL) as a smarter option due to their less effective conversational capabilities. - Collaborative Efforts on Depth Maps and Point Clouds:

@dsiegelexpressed interest in collaborative help with a project involving stereo camera systems, OpenCV, and Open3D to create depth maps and point clouds, emphasizing the need for a system light enough for Raspberry Pi or similar devices. - Sales Corner Shoutout:

@adarshgourabmahalikinquired about selling a project in the channel, and was redirected by@lunarfluto a specific channel designed for sales (<#898618631938789416>), ensuring community guidelines are followed. - Quantization Conversations: A detailed exchange occurred between

@mastermindfill,@meatfucker, and@vipitisconcerning the performance and size of LLMs (large language models), with a focus on the impact of quantization from FP32 to FP16 and even 4bit on both model quality and inference performance.@meatfuckerrecommended the use of quantization for better performance on GPUs and highlighted a repository by@TheBlokeon Hugging Face that houses quantized versions of various models.

Links mentioned:

- Vision Transformer: What It Is & How It Works [2023 Guide]: A vision transformer (ViT) is a transformer-like model that handles vision processing tasks. Learn how it works and see some examples.

- TheBloke (Tom Jobbins)

▷ #today-im-learning (11 messages🔥):

- Seeking Accuracy Metrics:

@gag123inquired about the accuracy metrics for a model without specifying which model they were referring to.@frequesnyshared a research paper in response but without discussing its contents or relevance to the question. - LLaMA Discussion and Testing:

@frequesnymentioned great results from older models and noted concerns regarding the reproducibility of the results, referencing the LLaMA model’s training process. They expressed skepticism about results on new models and planned to test the methodology using A100 GPUs. - University High-Performance Computing: In response to

@jiha’s disbelief about access to A100s,@frequesnymentioned their experience at MIPT and the availability of high-performance computing resources to some students, particularly those in NLP and simulation fields. - Sharing HPC Experiences:

@vipitisshared their university’s availability of DGX100 systems and 1080tis but complained about the difficulty in using the HPC resources. - Relevance of Deep RL for Business Applications with AI:

@scorpio123.sought advice on the relevance of a Deep RL course to their work in business applications using AI, having a strong background in DL and using tools such as OpenAI assistant, Microsoft Autogen, and HuggingFace models.

▷ #cool-finds (2 messages):

- Tweet by Nous Research intrigues osanseviero:

@osansevieroshared excitement about a cool find from Nous Research with a link to their Twitter post. - DeepSeekMoE Paper Drop:

@jshuadvdposted a link to a recent paper titled “DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models,” which can be read in full at arXiv.org.

▷ #i-made-this (14 messages🔥):

- Engaging in Language Learning:

lunarfluengaged another user in conversation about language learning, though no specific language or details were provided. - Embeddings to Sentence Similarity:

osansevierosuggested transforming a demo of embeddings into a more user-friendly sentence similarity feature, and later elaborated on the idea by proposing embedding a corpus of sentences for semantic search. A discussion was opened on HuggingFace to track this suggestion. - From Nervous to Inspired: User

tonic_1expressed their nervousness but also excitement to potentially fulfill the suggestions made byosanseviero. - AI Messaging Replication Project Share: User

vashi2396replicated an AI Messaging feature similar to one demonstrated in an OpenAI keynote. - YouTube Sentiment Analyzer Project:

sebastian3079shared their first AI project, a sentiment analyzer for YouTube comments, and discussed the lessons learned regarding model complexity and dataset relevance. The project is available on GitHub. - Toxic Llama Environmental Concerns:

frequesnytrained an existing LLM on a toxic dataset and launched it on Gradio for a limited time, prompting users to share their experiences with this potentially hazardous AI model. You can try it here.

Links mentioned:

- Fast AI Image Upscaler 4x - a Hugging Face Space by FumesAI

- Tonic/e5 · Showcase sentence similarity or another downstream task

- GitHub - sebastian46/YouTube-Sentiment-Analysis-2: Contribute to sebastian46/YouTube-Sentiment-Analysis-2 development by creating an account on GitHub.

▷ #reading-group (6 messages):

-

Keep Calm and Carry On Learning:

@lunarfluencourages learning from experiences with a supportive reminder that mistakes are part of the process. -

Law and LLMs as a Future Presentation Topic:

@chad_in_the_houseis considering a presentation on law and language models (LLMs), highlighting current challenges in the field as a compelling topic for the next meeting. -

Homomorphic Encryption Hesitation:

@chad_in_the_housedebates presenting homomorphic encryption applied to AI, expressing concern it might be too technical and less interesting for the group. -

A Possible Deep Dive into Encryption and AI: In response to the homomorphic encryption topic,

@lunarflusuggests it might be a good subject for a more focused discussion under the security category. -

From Chat to Blog - a Path for Complex Topics: Following the suggestion from

@lunarflu,@chad_in_the_houseshows openness to creating a blog post on homomorphic encryption as it pertains to AI. -

Recognizing the Relevance of Legal Matters in AI:

@gduteaudsupports@chad_in_the_house’s proposal on discussing law and LLMs, referencing the topicality in relation to recent events such as the NYT lawsuit.

▷ #diffusion-discussions (6 messages):

- Fine-Tuning Resource for SD 2.1: User

@sayakpaulrecommended SimpleTuner, a general fine-tuning kit for Stable Diffusion 2.1 and SDXL, to a buddy looking to fine-tune SD 2.1. The kit is available on GitHub with a detailed description and resources. - Fix in the Works for SD 2.x:

@pseudoterminalxacknowledged an issue with fine-tuning SD 2.x but mentioned they are actively resolving it. - SD 2.x Fine-Tuning Ready: User

@pseudoterminalxconfirmed that SD 2.x is now fine-tuneable in the master branch, implying that the previously mentioned issue has been resolved. - Alternative Fine-Tuning Model Suggestion:

@pseudoterminalxlinked to a HuggingFace model card for pseudo-flex-base, which offers a photography model based on fine-tuning stable-diffusion-2-1 with different aspect ratios (HuggingFace Model). - Clarification on Training Scripts:

@sayakpaulclarified that they were seeking a specific training script for SD 2.1, not a model recommendation.

Links mentioned:

- GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL. - GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.

- ptx0/pseudo-flex-base · Hugging Face

▷ #computer-vision (4 messages):

-

AI-Powered Game Bot Sparks Interest:

@banaanbakjeshowed interest in automating workflows using AI and shared a link to a PyTorch and EfficientNet-based AI game bot, asking about solutions for full-screen automation that include mouse clicks. The blog post can be found at How to Build an AI-Powered Game Bot with PyTorch and EfficientNet. -

Python Library for Input Simulation: In response to

@banaanbakje,@cropinkyrecommended the pyautogui library for programming synthetic mouse and keyboard actions in Python. -

Seeking Advice on AI Model Integration:

@banaanbakjecontinued the discussion by inquiring about the best practices for model training and the efficacy of using EfficientNet for full-screen training. -

Flask App Deployment Troubleshooting on AWS:

@smartguy_41719sought assistance with an AWS deployment error indicating that an archive file size exceeded the 512MB limit and was looking for methods to deploy a.pthfile.

Links mentioned:

Akshay’s Personal Website: I am a Machine Learning Enthusiast. Check out my Projects and Blogs

▷ #NLP (5 messages):

-

Aspiring ML Enthusiast Seeks Guidance:

__.ivan.__is eager to learn and develop a custom Question Answering model related to APIs and example code, and is on the lookout for guides, books, or any helpful resources for embarking on this journey. They express a preference for creating and training their own model instead of relying on pre-built solutions. -

Mistral on Mac Goes Kaboom:

lovebrainfuckencountered a zsh: bus error while attempting to trainmistralai/Mistral-7B-Instruct-v0.1on a Mac with substantial RAM but no GPU. They are seeking insights into the matter, referencing a discussion on the HuggingFace Forum about training models on Mac computers without CUDA compatibility. -

Tuning Up for Better Embeddings:

seleais planning to fine-tune MPT7b for sentence/document embeddings and contemplates adding a<CLS>token to the tokenizer, drawing inspiration from a specific research paper. They seek advice on training a single token vector without altering other tokens’ vectors and wish to avoid suggestions to use a dedicated LLM for embeddings. -

Selective Downloading Woes:

robert1is looking to download only the essential files for a transformer language model, specifically preferring safetensors files over .bin files, if available. They are in need of guidance to accomplish this more selective download approach. -

SafeTensors to the Rescue:

vipitispointsrobert1towards using options likeuse_safetensors=Trueorload_safe=Truewhen implementing.from_pretrainedto focus on safetensors files, directing them to the Transformers library documentation for further clarification. The linked GitHub documentation may hold the answers torobert1’s downloading conundrum.

Links mentioned:

- Training On Mac M3 Max.. blazing fast but: Hi All, I have received my brand new M3 max, and discovered sadly that BitsAndBytes is not supported, So I had to adapt my training code to fine tune Mistral on my dataset. =:> Changed the device…

- transformers/src/transformers/modeling_utils.py at c48787f347bd604f656c2cfff730e029c8f8c1fe · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

▷ #diffusion-discussions (6 messages):

- Fine-tuning Friendships: User

@sayakpaulmentioned referring a friend to SimpleTuner on GitHub for fine-tuning Stable Diffusion 2.1 and inquired about more specific reference points for SD 2.1. - Stable Diffusion Troubleshoot:

@pseudoterminalxrevealed that Stable Diffusion 2.x was broken but mentioned actively working on a fix. - Master Branch Magic: Shortly after,

@pseudoterminalxupdated that the issue with Stable Diffusion 2.x is now resolved in the master branch. - Training Script Troubles:

@sayakpaulclarified the request for a more specific reference was for the training script, not the model itself.

Links mentioned:

- GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL. - GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.

- ptx0/pseudo-flex-base · Hugging Face

OpenAI Discord Summary

-

Formatting Foibles in GPT-4: Technical users are grappling with GPT-4’s formatting issues, such as unexpected line skips and response delays, that disrupt the engineering workflow, with a collective effort to troubleshoot these problems surfacing on the platform.

-

Legislative Lens on AI: The introduction of the No Artificial Intelligence Fraud Act by Representatives signals a proactive legislative approach to protecting individuals’ rights against AI-generated impersonations, outlined in the Salazar Introduces the No AI Fraud Act.

-

Challenges in ChatGPT Services: Users are reporting a decline in the quality of ChatGPT services, including network issues and message limitations, sparking concerns about the service’s reliability and raising questions about OpenAI’s maintenance and improvement plans.

-

Prompt Engineering Enthusiasm: Discussions on building a “prompt battles” game are underway, with suggestions to set up competitions utilizing Custom GPTs to generate outputs based on user-defined criteria, reflecting an interest in innovative AI applications within the community.

-

Efficient AI Data Handling and Creativity: Threads are focused on how to efficiently manage large volumes of data for training AI, with emphasis on structured input like XML tagging, and the crafting of Shakespearean content by applying structural constraints to guide the AI’s creative output.

OpenAI Channel Summaries

▷ #ai-discussions (71 messages🔥🔥):

-

GPT-4 Format Frustrations: Users like

@masmoriyaand@markon101have complained about GPT-4’s formatting issues, such as skipping lines and taking long pauses between responses, disrupting their workflow. The platform’s unreliability has led to a discussion thread for troubleshooting. -

Legislation Against AI-generated Fakes:

@clockrelativity2003highlighted the introduction of the No Artificial Intelligence Fraud Act by Reps. María Elvira Salazar and Madeleine Dean, pointing out its potential to defend individuals’ rights to their likeness and voice from unauthorized duplications by AI. -

Users Report Chatbot Difficulties: Several users, including

@derella98and@covikodsoli, voiced their frustrations with ChatGPT errors, such as network issues and message limitations. There’s a growing concern about quality degradation in the services provided by these AI tools. -

AI Photo App inquisition:

@alex31195inquired about free apps that can generate AI photos, leading to a humorous exchange with@chotesabout the availability of “AI dads.” -

Microsoft-Copilot vs. OpenAI: There’s an ongoing debate, with

@foreignduckexpressing concerns that Microsoft’s Copilot might overshadow OpenAI’s efforts. Users including@hhf0363and@markon101discuss the trade-offs between Microsoft’s integration and ChatGPT’s features, particularly the ability to upload documents which is crucial for some.

Links mentioned:

Salazar Introduces the No AI Fraud Act: WASHINGTON, D.C. – Today, Reps. María Elvira Salazar (R-FL) and Madeleine Dean (D-PA) introduced the No Artificial Intelligence Fake Replicas And Unauthorized Duplications (No AI FRAUD) Act. The bill …

▷ #gpt-4-discussions (76 messages🔥🔥):

- Trademark Troubles: User

@csgbossfaced issues sharing their GPT due to using a restricted name “Midjourney”. Splitting the name into “Mid Journey” resolved it, while@7877recommended using the brand name in the description instead. - API Access Queries and Concerns:

@sairaghavendrainquired about API access for custom GPTs and was informed by@elektronisadethat API usage isn’t permitted for them. - Search Feature Suggestions and Requests:

@q16.krexpressed a need for a search option similar to iOS for retrieving old conversations with ChatGPT.@solbusclarified that such a feature is available in the app but not on the web versions. - Inconsistencies and Outages:

@_odaenathusand several others reported issues with their GPTs such as failing messages or inconsistent behavior, while@darthgustav.mentioned having no issues aside from changes made by OpenAI to the system prompt. - Community Help for Request Limit Issues:

@.australiaballexperienced a warning about too many requests despite not using ChatGPT in the morning.@darthgustav.and@7877responded with possible explanations and suggested confirming account security and monitoring request counts, while others reported it worked again without intervention.

▷ #prompt-engineering (23 messages🔥):

- Call for Collaborators in “Prompt Battles” Game: User

@my5042expressed interest in creating a “prompt battles” game where players compete against each other, and is seeking collaborators for this project. - PromptBot Battle Framework Proposed:

@darthgustav.suggested steps for building a bot testing framework that includes setting up Custom GPTs and battling them in a competition to produce user-defined outputs. - DMCA-compliant GPT Battles Unleashed:

@darthgustav.mentioned a way for using GPT in prompt bot battles without infringing DMCA regulations. - Scriptwriting by AI Assistant:

@sho2858shared their effort to train an AI assistant on a significant dataset with the goal of generating scripts, prompting a suggestion from@darthgustav.to prioritize algorithms and constraints over extensive knowledge. - Shakespearean AI Poetry Strategy:

@darthgustav.recommends giving the AI structure, like iambic pentameter and act outlines, rather than large volumes of text to create new Shakespearean-inspired content, emphasizing the importance of constraints and improvisation.

▷ #api-discussions (23 messages🔥):

- Battle of the Prompts:

@my5042expressed interest in creating a game called “prompt battles” for multiplayer fun and sought collaboration, while@darthgustav.and@eskcantaoffered different approaches to implement such a game, suggesting the use of Custom GPTs and AI-generated goals, respectively. - GPT tests its might in the PromptBot ThunderDome:

@darthgustav.proposed a bot testing framework involving competitions between home team bots and custom bots, aiming to meet user-defined criteria. - The Documentalist Challenge: User

@sho2858shared their progress in organizing a massive 300k-word text to train their assistant, sparking a conversation on data organization techniques, with@darthgustav.recommending sparse XML tagging and plain text Unicode encoding. - AI poetry with Shakespearean flair:

@darthgustav.highlighted the effectiveness of providing the AI with a format for iambic pentameter, a template, and specific constraints to produce Shakespearean works, rather than simply feeding it Shakespeare’s plays. - Narrative Tension Design by AI: Continuing the discussion on AI-generated content,

@darthgustav.mentioned structuring prompts into acts to guide the AI in building narrative tension and producing adapted content, emphasizing improvisation within constraints.

LM Studio Discord Summary

-

Understanding VRAM Requirements for Large Models: Engineers discussed the VRAM capacities for running large models like Mistral which is composed of 32 layers. One recommendation was setting CPU layers to

-1to fit such a model on a 24GB VRAM GPU. -

Elevating Creative AI with Fine-Tuning and Merging: Conversations centered around enhancing AI capabilities, from the costly venture of fine-tuning a model to master Salesforce, estimated to cost millions, to the enthusiasm for DIY model merging using tools like mergekit, as well as the advent of the new GGUF file format by the llama.cpp team.

-

AI Model Application Hurdles: The community shared their challenges and successes, including difficulty finding multilingual models that perform well in non-English languages, integrating models like CrewAI and Autogen in LM Studio, and seeking models conducive for creative writing and coding assistance.

-

Feedback Funnelling and Bug Busting: Users were reminded to redirect their feedback to the designated channels, highlighting the importance of channel specificity for support and bug reporting within the community.

-

Delving Into GPU Specifics and NVLink Compatibility: Technical discussions delved into the absence of Low Hash Rate (LHR) limitations in Nvidia’s 3090 series GPUs, the incompatibility of NVLink between a 3090 and a 3090ti, and the need for special configurations when running multiple GPUs.

LM Studio Channel Summaries

▷ #💬-general (143 messages🔥🔥):

-

Model Loading VRAM and Size Conversation: @fabguy and

@typicalaidiscussed loading models with different VRAM capacities. It was stated that Mistral is 32 layers, and setting CPU layers to-1should fit the model on@typicalai’s GPU, which has 24GB VRAM. -

AI Poisoning and Election Concerns: @flared_vase_16017 shared a link to an article about AI poisoning, prompting a discussion wherein

@Pisuggested that openness with training data might help combat threats posed by open AI models being turned into “sleeper agents.” -

Chatbot Performance Issues:

@technot80and@heyitsyorkiecommented on performance and speed issues with ChatGPT4, specifically when using the gtpshop function, likening the speed to a “2400 baud modem.” -

LM Studio and CrewAI Integration Success:

@meadyfrickedand@_anarche_reported having success using CrewAI with different models in LM Studio despite some compatibility challenges with Autogen, indicating CrewAI may be easier to use. -

GGUF File Format Explanation: In a discussion about file formats for models,

@dagbsclarified that GGUF is a new format introduced by the llama.cpp team, which is now the standard used by LM Studio, replacing GGML.

Links mentioned:

- TheBloke/dolphin-2.6-mistral-7B-dpo-laser-GGUF · Hugging Face

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- The Best GPUs for Deep Learning in 2023 — An In-depth Analysis: Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.

- January | 2024 | Ars Technica

▷ #🤖-models-discussion-chat (35 messages🔥):

- Salesforce Fine-Tuning Dream Dashed by Cost:

@heyitsyorkieresponded to@sohms’s query on fine-tuning a model to master Salesforce, estimating it as an “extremely large” million-dollar undertaking in training compute. - DIY Enthusiasm for Combining AI Models:

@222gateencouraged@sohmsto explore merging pre-trained models using mergekit and provided a link to a YouTube video on TIES-Merging technique (https://www.youtube.com/watch?v=m58Y79y8wFs). - Models for Creative Minds:

@alastair9776sought recommendations for models apt for creative writing, with@222gaterecommending “neuralhermes 2.5 mistral 7b” among others, while@dagbsshared a link to a new model drop. - Multilingual Model Frustration:

@dermarusis seeking solutions for a model that can consistently respond in a non-English language and integrate external databases, but struggles due to LLM’s primary English datasets as clarified by@heyitsyorkie. - Searching for the Coding Model Sweet Spot:

@silverdemon101asked for advice on the best model to assist with coding, triggering a recommendation from@dagbsto find a model size that is around 20% smaller than the user’s GPU’s maximum VRAM.

Links mentioned:

Mastering Model Merging: A Deep Dive into TIES-Merging Technique: TIES-Merging is a groundbreaking method for merging model checkpoints, enabling seamless multitasking. These robust model merging techniques can greatly enha…

▷ #🧠-feedback (5 messages):

- Feedback Redirect Request:

@heyitsyorkierequested that@fabguymove his feedback to the appropriate channel designated for feedback (<#1111440136287297637>), indicating the current conversation was not meant for support. - Acknowledgment of Chat Direction:

@mattjpowacknowledged the direction to move the feedback and noted he had been doing so, stating he was giving feedback, not asking for support. - Report of Blank Replies Issue:

@ddhmksoireported experiencing a bug leading to blank replies from any model, expressing frustration with the recent increase in bugs. - Guidance to Report Channel:

@dagbspointed@ddhmksoitowards the proper channel (<#1139405564586229810>) to report the bug mentioned about blank replies.

▷ #🎛-hardware-discussion (8 messages🔥):

- LHR worries dispelled: User

@nink1expressed concerns about potential performance issues with Low Hash Rate (LHR) video cards for mining.@rugg0064reassured that LHR limitations have been cracked and officially disabled and mentioned that 3090 cards are not affected. - 3090 series and LHR confusion:

@pefortinclarified that to their knowledge, the Nvidia 3090 was not affected by LHR limits, although there was some uncertainty surrounding this. - Config tips for multiple GPUs:

@.ben.comshared research on running multiple GPUs, mentioning the anti-scaling issue with multi-GPU setups and the relevance of using special configurations for sub-24G models to optimize performance. - Inference TDP and NVLink Query:

@.ben.comalso inquired whether to expect max TDP to be reached during LLM inferencing on 3090 GPUs and asked about NVLink compatibility between a 3090 and a 3090ti. - NVLink Compatibility Clarified: In response to

@.ben.com,@ldeusconfirmed that a 3090 and a 3090ti cannot be connected through NVLink, settling the compatibility query.

Links mentioned:

Yay Kitty GIF - Yay Kitty Cat - Discover & Share GIFs: Click to view the GIF

▷ #autogen (2 messages):

- Praise for Autogen Studio UI 2.0: User

@cluck0maticexpressed approval for the new Autogen Studio UI 2.0, calling it niiiiicee… - Discovery of Autogen Studio:

@dagbsshowed surprise, inquiring they have a studio?? indicating that they were not aware of the existence of an Autogen Studio.

Mistral Discord Summary

-

Mistral Model Comparisons and Performance Insights: The Mistral-medium model ranks 4th on the LMSYS Chatbot Arena Leaderboard, while Gemini Pro (dev) stands at 8th. Meanwhile, users report performance issues with Mistral 7B on MacBook Air M2, and a marginal performance difference between quantization methods like exl2 6bit and fp16, with the former being preferred for speed.

-

Mistral Models and Long-Form Text Goals: Users show interest in using Mistral models for long-form text creation, such as SEO content, and seek advice on running AI models efficiently on specific hardware, including the possibility of executing Mistral 7B on a 6GB GPU using GGUF and 4bit.

-

Quantized Model Formats and API Diagnostics: The GGUF file format is highlighted for its use with quantized models like Mistral-7B. Users compare API performance with local model runs, noting accuracy concerns with the Mistral API and troubleshooting errors using tools like

model_dump. -

Hosting and Deployment Discussions: For those looking to host Mistral 7B or 8x7B models, options such as llama-cpp-python, ollama, or the llama.cpp server example offer various levels of suitability for parallel execution needs.

-

Exploring Model Fine-Tuning and Extensions: The community shares challenges with fine-tuning the Mistral model for improved performance, expressing frustrations and seeking effective strategies. Complexities arise in tasks like merging a LoRA model, converting to GGUF format, and resolving tokenizer issues, with helpful advice found on llama.cpp GitHub repo and Hugging Face discussion threads.

The above summaries are based on discussions that included engineers and engaged with technical, hands-on aspects of working with the mentioned AI models. Links to additional resources or examples provided by community members have been included for further reference.

Mistral Channel Summaries

▷ #general (51 messages🔥):

- Warm Welcome to Mistral’s Newbie:

@mrdomoojoined the Mistral Community, expressing optimism and complimenting the project. - Searching for a Beta Test Key:

@josemavlcinquired about obtaining an invitation to use the Mistral beta. - Mistral 7B Runs Slowly on MacBook Air M2?:

@pierre.lhostereported performance issues when trying to run Mistral 7B on a MacBook Air M2, with@i_am_domsuggesting to check for RAM consumption and unnecessary apps running in the background for performance improvements. - GGUF Format for Smaller Infrastructure:

@vhariationalprovided a detailed explanation of the GGUF file format, used by quantized models such as Mistral-7B and Mixtral-8x7B, including links to community-distributed versions of these models in GGUF format on Hugging Face, and an image of TheBlokeAI. - New LLM API Comparison Website Launched:

@_micah_hshared a new website, ArtificialAnalysis.ai, designed for comparing LLM API providers including pages for Mistral 7B Instruct and Mixtral 8x7B Instruct, and invited users to follow their Twitter for updates.

Links mentioned:

- Open-weight models | Mistral AI Large Language Models): We open-source both pre-trained models and fine-tuned models. These models are not tuned for safety as we want to empower users to test and refine moderation based on their use cases. For safer models…

- TheBloke/Mistral-7B-Instruct-v0.2-GGUF · Hugging Face

- Mistral 7B - Host Analysis | ArtificialAnalysis.ai: Analysis of Mistral 7B Instruct across metrics including quality, latency, throughput, price and others.

- Mixtral 8x7B - Host Analysis | ArtificialAnalysis.ai: Analysis of Mixtral 8x7B Instruct across metrics including quality, latency, throughput, price and others.

▷ #models (46 messages🔥):

- Mistral vs. Gemini Pro:

@vhariationalprovided a link to LMSYS Chatbot Arena Leaderboard for a comparison, where Mistral-medium ranks 4th and Gemini Pro (dev) 8th.@ethuxsuggested also looking at the OpenChat models and provided a link with more information. - Mistral for Long-Form Text Generation:

@stefatorusexpressed interest in using Mistral for generating long-form text, particularly for SEO content writing, despite models often being fine-tuned for shorter responses. - Model Performance on Specific Hardware:

@dayzenqueried about the feasibility of running Mistral 7B GPTQ or GGML / GGUF models on specific hardware specs, leading to a discussion on model requirements and streaming techniques with@chlorobyte. - Advice for Running AI Models:

@ethuxadvised@dayzenthat Mistral 7B could run on a 6GB GPU with GGUF and 4bit, recommending a site lmstudio.ai for testing GGUF models and sharing links to specific models like MagiCoder at EvalPlus Leaderboard and on Hugging Face. - Technical Inquiry About Tokenizer:

@vivien_tranthienasked a technical question regarding the Mistral-7B tokenizer’s list of merges, pointing out potential redundancies in the merges list found on Hugging Face’s tokenizer.json for the model.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- openchat/openchat-3.5-0106 · Hugging Face

- Mistral LLM: All Versions & Hardware Requirements – Hardware Corner

- EvalPlus Leaderboard

- ise-uiuc/Magicoder-S-DS-6.7B · Hugging Face

- TheBloke/Magicoder-S-DS-6.7B-GGUF · Hugging Face

▷ #deployment (1 messages):

- Options for Hosting Mistral Models:

@vhariationaladvised that one could either host the original versions of the 7b or 8x7b models by following the instructions on the Mistral doc, or use a quantized version. There are various options available including llama-cpp-python, ollama, or the native llama.cpp project’s server example, though they have not been fully tested for parallel execution needs.

▷ #ref-implem (1 messages):

- Python Client Example for Embeddings:

@vhariationalshared an example of how to use the Python client for embeddings, with a link to the GitHub repository. The repository provides Python code that demonstrates asynchronous embedding generation with the Mistral AI platform.

Links mentioned:

client-python/examples/async_embeddings.py at main · mistralai/client-python: Python client library for Mistral AI platform. Contribute to mistralai/client-python development by creating an account on GitHub.

▷ #finetuning (28 messages🔥):

-

Frustration over Fine-tuning Performance:

@bdambrosioand@mrdragonfoxdiscussed their troubles with fine-tuning the Mistral chatbot.@mrdragonfoxmentioned that despite a month of efforts and expenses, their fine-tuned models still have not surpassed the performance of the regular instruct model. -

Confusion in Fine-tuning Process:

@hydre2155sought resources for fine-tuning a model with 100k rows of data, though specific resources were not provided within the discussed messages. -

Perplexities of Quantization:

@bdambrosioinquired about the performance of quantization methods, such as exl2 6bit, on the Mistral model.@mrdragonfoxresponded that the performance is nearly on par with fp16, offering only a slight difference, and preferring it for speed improvements. -

Struggles with LoRA Model Merging and Conversion:

@distro1546faced difficulties in merging a LoRA model and converting it to GGUF format for use with ollama, and despite trying solutions involvingllama.cpp, issues persisted.@ethuxresponded with helpful advice on merging LoRA adapters into the base model and provided links for resolving related tokenizer issues, including llama.cpp GitHub repository and discussion on Hugging Face. -

Community Call for Help Clearing Fine-tuning Hurdles:

@mrdragonfoxexpressed the community’s need for just a hint to improve fine-tuning outcomes, suggesting that current methods feel akin to “burning cash with brute force” due to the lack of clearer guidance.

Links mentioned:

- uyiosa/test_mistral_7b · Hugging Face

- GitHub - ggerganov/llama.cpp: Port of Facebook’s LLaMA model in C/C++: Port of Facebook’s LLaMA model in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- LoRA

- TheBloke/AquilaChat2-34B-AWQ · FileNotFoundError - the tokenizer.model file could not be found

- Could not find tokenizer.model in llama2 · Issue #3256 · ggerganov/llama.cpp: When I ran this command: python convert.py \ llama2-summarizer-id-2/final_merged_checkpoint \ —outtype f16 \ —outfile llama2-summarizer-id-2/final_merged_checkpoint/llama2-summarizer-id-2.gguf.fp…

▷ #la-plateforme (23 messages🔥):

- Confusion Over Mistral vs. API Performance:

@rabdullinexpresses concern about a decrease in accuracy when using the Mistral API compared to running models locally, noting a significant drop in quality across all API-served Mistral models, despite using the correct format and tokens for prompts. - Searching for Official System Delimiters:

@vhariationalinquires about the official system delimiters for Mistral models.@rabdullinresponds that they are not documented and he was likely wrong in assuming they were supported, affecting the scores he mentioned earlier. - New User Struggles With UI Errors:

@lakanya27reports an error when trying a UI hosted locally and is advised to become familiar with Next.js’s<Image />component by@Valdisand to try using Docker by@arduilex. - Troubleshooting Kaggle Notebook Errors with MistralClient:

@jortega_17718reports anAttributeErrorwhile trying to use MistralClient on a Kaggle notebook and@rabdullinsuggests usingmodel_dumpon the response object to diagnose the issue.

Links mentioned:

Open-weight models | Mistral AI Large Language Models): We open-source both pre-trained models and fine-tuned models. These models are not tuned for safety as we want to empower users to test and refine moderation based on their use cases. For safer models…

Latent Space Discord Summary

-

Neovim Gets ChatGPT: A favorite Neovim plugin called ChatGPT.nvim was shared, enabling engineers to interact with LLMs directly in the editor.

-

SPADE Takes Center Stage in LLM: The SPADE paper (Shreya Shankar’s paper on SPADE), which focuses on generating custom assertions for LLMs in low-data settings, became a topic of discussion, bringing attention to efforts aimed at making LLMs more reliable.

-

AI Event Notable Announcements: Upcoming events include the AI in Action Discord meetup happening on January 26th, targeting real-world AI engineering. To engage with the community, interested parties can register at AI in Action Weekly Jam and the LLM Paper Club Asia Edition to accommodate Asia and morning CET participants (LLM Paper Club (Asia Edition!)).

-

Humor in Rejection: A humorous and relatable tweet about the rejection of an AI paper “LCM” by ICLR sparked conversations, injecting a light mood into the academic rigor (Tweet from Allen (Simian) Luo).

Latent Space Channel Summaries

▷ #ai-general-chat (40 messages🔥):

- ChatGPT Plugin for Neovim Users:

@thenoahheinshared a favorite Neovim plugin for coding with ChatGPT: ChatGPT.nvim, which integrates a chat interface to interact with LLMs directly in the editor. - GPT-4’s Language Limitations in Coding:

@btdubbinsand@thenoahheindiscussed the challenges of using GPT-4 with less common languages like Golang/HCL, noting better results with languages such as Python or JS. - SPADE Paper on Improving LLM Assertions:

@swyxiohighlighted a paper on SPADE, a system that generates custom assertions for LLMs in low-data settings Shreya Shankar’s paper on SPADE. - Semantic Image Synthesis with SPADE:

@semantic_zoneplayfully commented on the acronym overlap by sharing a link to SPADE, a tool for semantic image synthesis, quipping that AI is running out of acronyms: GitHub SPADE for image synthesis. - AI in Action Meetup:

@kbal11announced an upcoming AI in Action meetup discussing UI/UX patterns for GenAI, with suggested preparatory materials and featuring a discussion led by@794337110994845726.@nuvic_provided a link to register for the event: AI in Action Weekly Jam registration.

Links mentioned:

- Tweet from Shreya Shankar (@sh_reya): We all know LLMs make mistakes. One simply cannot deploy LLM pipelines without assertions, yet writing good assertions is tedious & hard. So, we built SPADE, a system that analyzes prompts & auto-gene…

- GPT-4 Architecture, Infrastructure, Training Dataset, Costs, Vision, MoE: Demystifying GPT-4: The engineering tradeoffs that led OpenAI to their architecture.

- SPADE: Synthesizing Assertions for Large Language Model Pipelines: Operationalizing large language models (LLMs) for custom, repetitive data pipelines is challenging, particularly due to their unpredictable and potentially catastrophic failures. Acknowledging the ine…

- A Flaw in Millions of Apple, AMD, and Qualcomm GPUs Could Expose AI Data: Patching every device affected by the LeftoverLocals vulnerability—which includes some iPhones, iPads, and Macs—may prove difficult.

- Vector DB Comparison: Vector DB Comparison is a free and open source tool from VectorHub to compare vector databases.

- Stable Code 3B: Coding on the Edge — Stability AI: Stable Code, an upgrade from Stable Code Alpha 3B, specializes in code completion and outperforms predecessors in efficiency and multi-language support. It is compatible with standard laptops, includi…

- GitHub - jackMort/ChatGPT.nvim: ChatGPT Neovim Plugin: Effortless Natural Language Generation with OpenAI’s ChatGPT API: ChatGPT Neovim Plugin: Effortless Natural Language Generation with OpenAI's ChatGPT API - GitHub - jackMort/ChatGPT.nvim: ChatGPT Neovim Plugin: Effortless Natural Language Generation with Ope…

- GitHub - Vaibhavs10/open-tts-tracker: Contribute to Vaibhavs10/open-tts-tracker development by creating an account on GitHub.

- AI in Action Weekly Jam · Luma: A weekly virtual chat dedicated to the hands-on application of AI in real-world scenarios, focusing on insights from blogs, podcasts, libraries, etc. to bridge the gap between theory and…

- GitHub - NVlabs/SPADE: Semantic Image Synthesis with SPADE: Semantic Image Synthesis with SPADE. Contribute to NVlabs/SPADE development by creating an account on GitHub.

- Generative Interfaces Beyond Chat // Linus Lee // LLMs in Production Conference: // AbstractLinus has spent the last few years building and experimenting with new kinds of tools for thought and software interfaces for creation, like a can…

- How to Make AI UX Your Moat: Design great AI Products that go beyond “just LLM Wrappers”: make AI more present, more practical, and then more powerful.

▷ #ai-event-announcements (1 messages):

-

AI in Action Weekly Jam Premieres:

@swyxioannounced the new AI in Action Discord meetup happening on January 26th, focusing on real-world AI engineering. To join the event and add it to your calendar, register at https://lu.ma/el0y5mpi and join the conversation on Latent Space Discord. -

LLM Paper Club Asia Edition Launching Soon: The LLM Paper Club Asia, hosted by

@206404469263433728, caters to the Asia timezone, mirroring the <#1107320650961518663> meetup format but convenient for Asian or morning CET participants. Sign up at https://lu.ma/llm-paper-asia and stay tuned for the date to be announced.

Links mentioned:

- AI in Action Weekly Jam · Luma: A weekly virtual chat dedicated to the hands-on application of AI in real-world scenarios, focusing on insights from blogs, podcasts, libraries, etc. to bridge the gap between theory and…

- LLM Paper Club (Asia Edition!) · Luma: Asia-timezone friendly version of the Latent.Space x EugeneYan.com LLM Paper Club!

▷ #llm-paper-club-chat (1 messages):

- LCM Paper Faces Rejection: User

@swyxioshared a tweet with a humorous take on their paper titled “LCM” being rejected by ICLR. The tweet by@SimianLuojokes about the rejection and questions whether to continue research in school. Laugh along with the tweet here.

Links mentioned:

Tweet from Allen (Simian) Luo (@SimianLuo): Best joke of my life. We are happy to announce that LCM get rejected by ICLR🤣🤣 lol. Question:Should i continue to do research in school?😁

LlamaIndex Discord Discord Summary

-

Advanced QA Techniques for Tabular Data: A new collection of cookbooks has been released, detailing advanced QA techniques for tabular data, including few-shot table and row selection strategies. Engineers can explore these methodologies in the stack for tabular QA, found here.

-

Quantization Roadmap for LLM Efficiency: @wenqi_glantz has provided a reference guide on quantizing @MistralAI 7B for better performance in terms of latency and power usage with minimal accuracy sacrifice. The guidelines are a crucial resource for those working on efficient LLM construction, available here.

-

Replit Template Speeds Up RAG System Deployment: LlamaIndex has introduced a Replit template for quick deployment of multi-tenant RAG systems, which is currently gaining traction in the community. The template can be accessed by engineers here.

-

Embeddings Over Context: In a discussion about embeddings, the consensus suggests that while swapping the language model (from Mistral to Llama) doesn’t significantly affect service context vectors, the pivotal factor is the embedding model used. More insights can be found in the LlamaIndex Embeddings Documentation.

-

PDF Loader Talks for Complex Documents: For importing insurance and benefits documents, the community advised that using the nougat PDF loader or an unstructured one might yield the best results with table-laden content, indicating the importance of aligning the tool with document complexity.

LlamaIndex Discord Channel Summaries

▷ #blog (3 messages):

-

Tabular Data Mastery with New Cookbooks: A new resource for building advanced QA over tabular data is available, featuring few-shot table and row selection techniques. Check out the stack for tabular QA, which is detailed in LlamaIndex’s comprehensive cookbooks here.

-

Guidance on LLM Quantization: For developers working with open-source LLMs, @wenqi_glantz’s reference guide on quantizing @MistralAI 7B is essential for lower latency and power consumption with minimal accuracy loss. Find the complete guide for efficient LLM building here.

-

Replit Template for RAG Systems Now Trending: LlamaIndex introduces a trending Replit template to help set up multi-tenant RAG systems. The template is now available and gaining popularity here.

▷ #general (30 messages🔥):

-

Context Matters Less for Embeddings:

@wrapdepolloquestioned whether changing the language model from Mistral to Llama affects the creation of vector indexes for documents with a service context, noticing that the context seemed unchanged.@Teemuand@7levenclarified that for embeddings, the LLM choice isn’t crucial, but one must ensure the correct embedding model is set within the service context. -

Optimal Question-Context Pairs Unspecified:

@physicsweakinquired about the best quantity of question-context pairs for fine-tuning bge-large embeddings, but the conversation did not result in a definite answer or advice. -

Choose the Right PDF Loader for Insurance Documents:

@hosermageasked for guidance on selecting a PDF loader for handling insurance and benefits information.@whitefang_jradvised that the nature of the documents containing tables might make the nougat PDF loader or an unstructured one the best fit. -

In Search Of Logprobs:

@lhc1921queried about setting the “logprobs” parameter to true in the OpenAILike model and usingadditional_kwargswithin the same context.@kapa.airesponded with an uncertain answer and directed@lhc1921towards the LlamaIndex documentation for more information. -

Auto Merge Retrieval Configurations Discussed:

@lhc1921also asked if it is possible to use an Auto Merge Retriever to return only merged nodes without producing an answer, along with how to set a relevance score threshold for returned nodes. Kapa.ai provided examples of usage and referred to the LlamaIndex documentation for more details.

Links mentioned:

Embeddings - LlamaIndex 🦙 0.9.33

OpenAccess AI Collective (axolotl) Discord Summary

-

Mistral’s Fine-Tuning Hits a Ceiling: Discussions suggest that Mistral may be hitting a fine-tuning plateau with DPO models, potentially due to a limitation in activated inference parameters. A universal training speed metric is sought by users but remains unspecified, akin to the tokens/sec metric for inference.

-

Computing Resources Management: Guild members are sharing experiences and seeking advice on renting out excess compute resources on platforms such as vast/runpod.

-

LoRA’s Rank-Stabilized Factor for Efficient Tuning: An arXiv paper was shared about enhancing Low-Rank Adapters using a rank-stabilized scaling factor, with an associated implementation GitHub pull request proposing a modification to the PEFT method for better fine-tuning outcomes.

-

Enhancing AMD GPU Support with Hugging Face: Hugging Face has introduced support for AMD Instinct MI210 and MI250 GPUs, with the overall compatibility detailed in a subsection about Flash Attention 2’s ROCm implementation.

-

Gendering 4D Attention Masks: A new feature touted by Hugging Face handles transformer models accepting custom

4Dattention masks to potentially bypass AMD compatibility issues, with more information on this is detailed in a Hugging Face blog.

OpenAccess AI Collective (axolotl) Channel Summaries

▷ #general (10 messages🔥):

- Mistral’s Fine-Tuning Plateau:

@yamashispeculated that the capabilities of Mistral don’t seem to significantly improve with finetunes and DPO models, possibly due to the limit of activated inference parameters. - Seeking a Universal Training Speed Metric:

@kiraa8415inquired if there’s a universal metric for measuring transformer training speed, similar to the tokens/sec metric used for inference speed. - Compute Rental Experience Inquiry:

@le_messasked the community about experiences with renting out excess compute resources on platforms like vast/runpod, which@leoandlibealso expressed interest in. - Exploring Rank Stabilized Low-Rank Adapters:

@xzuynshared a link to an arXiv paper discussing improving Low-Rank Adapters (LoRA) by using a rank-stabilized scaling factor for better fine-tuning results and a related GitHub pull request for practical implementation. - PEFT Implementation Quandary:

@xzuynindicated a modification needed for the PEFT method from the existinglora_alpha / rtolora_alpha / math.sqrt(r)and asked if anyone had tested it yet. They also queried about the possibility of evaluating models at the first step, which current configuration does at every 50 steps, as highlighted in this GitHub issue.

Links mentioned:

A Rank Stabilization Scaling Factor for Fine-Tuning with LoRA: As large language models (LLMs) have become increasingly compute and memory intensive, parameter-efficient fine-tuning (PEFT) methods are now a common strategy to fine-tune LLMs. A popular PEFT method…

▷ #axolotl-dev (21 messages🔥):

-

AMD GPUs Get a Hugging Face:

@le_messpointed out that Hugging Face now supports AMD Instinct MI210 and MI250 GPUs. He provided the overview link and mentioned that while support for other ROCm-powered GPUs has not been validated, most features are expected to run smoothly; he posted a subsection on Flash Attention 2’s ROCm implementation. -

Flash Attention AMD Dilemma:

@yamashiexpressed disappointment that Flash Attention does not support AMD’s MI100 GPUs nor the XT cards, humorously referring to them as “the dicks”. -

Pondering the PR:

@dctannerinquired about opinions on a pull request concerning system messages in the Axolotl project’s chatML, with@le_messregretting not being able to look at it due to being sick. -

Skipping Flash Attention Troubleshoot:

@faldoredebated whether Flash Attention was mandatory or could be skipped, with@le_messsuggesting disabling it in the config and@caseus_indicating it’s required for sample packing. -

Transformers with 4D Attention Masks:

@caseus_introduced a new feature from Hugging Face allowing transformers to accept custom4Dattention masks, providing a potential workaround for AMD compatibility issues; linked to a Hugging Face blog explaining the feature.

Links mentioned:

- 4D masks support in Transformers

- Using Hugging Face libraries on AMD GPUs

- Draft: Feat/chatml add system message by mhenrichsen · Pull Request #1117 · OpenAccess-AI-Collective/axolotl: Need ideas on how to change the default system message in the prompter.

- GitHub - ROCmSoftwarePlatform/flash-attention: Fast and memory-efficient exact attention: Fast and memory-efficient exact attention. Contribute to ROCmSoftwarePlatform/flash-attention development by creating an account on GitHub.

DiscoResearch Discord Summary

-

Laserxtral Enters the Ring with “Lasering”: Laserxtral, a model trying ‘lasering’ techniques and backed by VAGO Solutions, claims performance comparable to Mixtral 8x7b Instruct despite its smaller size, albeit with some German language “Denglish” issues.

-

Hermes 2 Leapfrogs Mixtral: Nous Hermes 2 Models, based on Mixtral 8x7B, have been released with multiple variants and integrations, featuring immediate accessibility through Together Compute’s API and boasting enhancements over Mixtral Instruct.

-

Incremental Gains in LLM Training: Following the release of Nous Hermes 2, a discussion hinted that additional training with 1 million data points resulted in modest improvements, pointing to the original Mixtral model’s high efficiency.

-

DPO Datasets Crafted by AI, with users discussing the transformation of good responses into “bad and rejected” for DPO dataset creation. Methods included using answers from GPT-3.5 as “bad” and GPT-4 as “good,” as well as using LLaMA-13B for generating “rejected” responses.

-

Embedding Optimization Controversies: Within the embedding development discussions, there’s a debate on token length limits for embeddings, with 540 tokens suggested as optimal but showing practical performance drops after 512 tokens. A proposal was made to use Dense Passage Retrieval models to filter out overly generic questions, and the effectiveness of long-context embeddings remains contested.

DiscoResearch Channel Summaries

▷ #mixtral_implementation (10 messages🔥):

-

Laserxtral - A New Contender:

@sebastian.bodzaintroduced Laserxtral, a new model experimenting with ‘lasering’ to enhance model capabilities, though performance in German showed some issues with “Denglish” sentences. The model, sponsored by VAGO Solutions, is notably smaller than Mixtral 8x7b Instruct but claims a similar performance level. -

Nous Research Ups Their Game:

@philipmayshared links to the new Nous Hermes 2 models based on Mixtral 8x7B, boasting improvements over Mixtral Instruct. This includes variants like SFT+DPO and SFT only, along with GGUF versions for different quantization sizes, and integration with Together Compute’s API for immediate accessibility. -

Lean Training, Grand Results: In response to Nous Hermes 2’s reported improvements,

@sebastian.bodzaand@bjoernpdiscussed that even with 1 million additional training data points, the gains were modest, suggesting the Mixtral Team’s original model was already highly efficient. -

Perplexed by Perplexity:

@thewindmominquired about the perplexity difference between models with 4bpw and 6.5bpw, although no direct response was provided within the message history. -

Innovative MoE Construction:

@philipmayhighlighted an interesting approach to MoE construction using different peft adapters, shared on LinkedIn, which could be a smart way to build upon these models.

Links mentioned:

- cognitivecomputations/laserxtral · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-adapter · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO-GGUF · Hugging Face

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT-GGUF · Hugging Face

- TOGETHER

▷ #general (7 messages):

-

Crafting DPO Datasets with LLMs:

@philipmayinquired about the possibility of transforming good answers into “bad and rejected” answers using LLMs for developing datasets suitable for Direct Preference Optimization (DPO). There were no specific papers or blogs mentioned in response to this query. -

Creating DPO Datasets with GPT Versions:

@sebastian.bodzadescribed a method to generate a DPO dataset where responses to an instruction from GPT-3.5 were used as bad answers and responses from GPT-4 as good answers. -

A Look at LLaMA-13B and GPT-4 for DPO Data Generation:

@sebastian.bodzaalso mentioned that LLaMA-13B was utilized to generate rejected responses with GPT-4/GPT-3.5 providing the accepted answers for a task related to converting sentences to Resource Description Framework (RDF) triplets. -

Intel Shares Insights on Fine-tuning and DPO Practices: A blog post was shared by

@sebastian.bodza, detailing the practice of supervised fine-tuning and Direct Preference Optimization on Habana Gaudi2, including a showcase of a top-ranked 7B chat model on the LLM leaderboard. Read the blog post. -

Top 7B-Model Leaderboard Mention:

@sebastian.bodzahighlighted that the model mentioned in the blog post was the top 7B-Model on the LLM leaderboard at the time before model merging and utilizing eval data became common.

Links mentioned:

- Supervised Fine-Tuning and Direct Preference Optimization on Intel Gaudi2: Demonstrating a Top-Ranked 7B Chat Model on the LLM Leaderboard

- Intel/orca_dpo_pairs · Datasets at Hugging Face

▷ #embedding_dev (8 messages🔥):

-

Qdrant Vector DB Pacing Problems:

@sebastian.bodzareported that Qdrant, a vector database system, is slow, taking over an hour to dump 3GB/800k text-vectors. -

Optimal Token Length in Embeddings Debated:

@philipmayshared insights from Nils Reimers, suggesting a 2688 byte limit for contextual embeddings, which corresponds to about 540 tokens, but in practice, long context embedding models deteriorate after 512 tokens. -

Production Token Length Conundrum:

@sebastian.bodzaopposed the reduction to 256 tokens, advocating for maintaining 512 tokens for practical reasons and revealed plans to test various chunk lengths for code documentation to see how information retention scales. -

Identifying Too Generic Questions via Retrieval:

@philipmayproposed training a Dense Passage Retrieval (DPR) model on half the data and testing with the other half to identify “too generic” questions, which would yield too many closely related top results. -

Discussion on Quality of Long Context Embeddings:

@_jp1_challenged the view on the quality of long context embeddings, arguing that what could be considered “quite bad” might still be enough for some applications and that a single model performing reasonably across different context lengths would be highly usable.

LLM Perf Enthusiasts AI Discord Summary

-

Mac vs Linux Debate for AI:

@iyevenkoraised a question about using a Mac for open source AI development, with a response from@nosa_.suggesting Linux as a more suitable option due to better VRAM utilization and optimal AI software performance in Linux/cloud. Despite this,@slater.exe.indicated that MLX should serve@iyevenko’s needs on a Mac. -

Finetuning MoE Models:

@jeffreyw128’s curiosity about finetuning Mistral vs Mixtral models led to@slater.exe.reporting successes in finetuning Mistral with QLoRA/DPO and leveraging mergekit for creating MoE models. -

Smarter Model Merging Strategy: A tactical approach by

@slater.exe.suggests that finetuning smaller models and then merging them could be more efficient, though admitted there are complexities in assessing expert-level model performance. -

Is ChatGPT Getting Smarter?:

@res6969expressed skepticism about ChatGPT’s intelligence post-March 1, seeking studies to validate claims of its improvement, while@thebaghdaddyshared experiences of perceived laziness in the AI post-Turbo release. -

Users Doubt ChatGPT’s Claims of Enhancement: Several users, including

@res6969and@thebaghdaddy, reported no noticeable improvements in ChatGPT’s performance, suggesting the idea of its enhanced capabilities might be unfounded rumor, potentially based on “twitter chaff.”

LLM Perf Enthusiasts AI Channel Summaries

▷ #opensource (10 messages🔥):

-

Mac vs Linux for Open Source AI Dev:

@iyevenkoinquired about specs for open source development on a new M3 Mac,@nosa_.suggested considering Linux for better VRAM utilization and added that most AI software is optimized for Linux/cloud environments.@slater.exe.later mentioned that MLX should work on a Mac for Iyevenko’s needs. -

Experience with Finetuning MoE Models Shared:

@jeffreyw128asked the community about their experiences with finetuning Mistral vs Mixtral.@slater.exe.stepped in, sharing their own success with finetuning Mistral using QLoRA/DPO and creating MoE models with mergekit. -

Combining Smaller Models Could Be More Efficient: In a follow-up message,

@slater.exe.described a preferred method where finetuning smaller models and then merging them yields better efficiency, though there are challenges in benchmarking such expert-level model performance.

▷ #openai (4 messages):