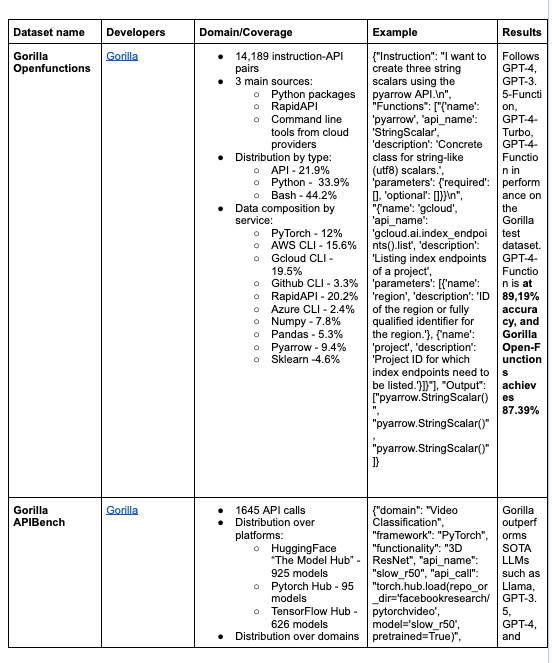

Skunkworks is working on collating function calling datasets - key to turning everything into functions!

It’s also important to familiarize yourself with the underlying data formats and sources:

What other datasets are out there for tuning function calls? Can we synthesize some?


LM Studio Discord Summary

- LM Studio’s Latest Updates and Compatibility: LM Studio’s FAQ was updated, clarifying its closed-source status, perpetual freeness for personal use, and non-invasive data handling, with the updated FAQ available here. The new LM Studio beta release includes fixes for memory warnings and generation consistency, with @yagilb hinting at anticipated 2-bit quantization support shown in this pull request.

- Model Selection Advice for Gaming: For a Skyrim mod, Dolphin 2.7 Mixtral 8x7B or MegaDolphin was suggested, and Dolphin 2.6 Mistral 7B DPO with Q4_K_M quantization was chosen for performance. The Ferret model clarification indicated it’s a Mistral 7B finetune, not a vision model, with references to its GitHub repository and Hugging Face page.

- Performance Bottlenecks and Hardware Discussions: In discussions of model execution, it was noted that a single, powerful GPU often outperforms multi-GPU setups due to potential bottlenecks. When configuring hardware for LLMs, matching the model size to available GPU memory is key, with older server-class GPUs like the Tesla P40 being a cost-efficient upgrade choice.

- Exploration of New AI Tools and Requests: Microsoft’s AutoGen Studio was introduced as a new tool for large language model applications, yet issues were reported and its full usefulness seems gated by the need for API fees for open-source models, prompting discussions on integration channels for other projects.

- Use Cases for Linux Server Users: Users on Linux were directed towards utilizing `llama.cpp` rather than LM Studio, considering there is no headless mode available and `llama.cpp` offers a more suitable backend for server use.

Additional shared links covered a variety of projects, including Microsoft’s AutoGen Studio, LLMFarm (an iOS alternative to LM Studio), and various Hugging Face model repositories. The GitHub link for NexusRaven-V2 and the remark about memory challenges with local models were each a single message, too sparse to summarize with any context.

LM Studio Channel Summaries

▷ #💬-general (62 messages🔥🔥):

- LM Studio FAQ Refreshed: @heyitsyorkie updated the LM Studio FAQ, outlining key features like it being closed source, always free for personal use, with no data collection from users. The FAQ can be found here.

- LM Studio Closed Source Clarification: @heyitsyorkie responded to @esraa_45467’s query about accessing LM Studio code by stating it’s closed source and cannot be viewed.

- No Headless Mode for LM Studio: @heyitsyorkie mentioned that LM Studio must be running to use the local inference server (lis), as there is no headless mode available, so it cannot be operated solely through a script.

- Support for macOS and iOS Environments: @heyitsyorkie confirmed to @pierre_hugo_ that LM Studio is not supported on the MacBook Air 2018 with an Intel CPU and advised @dagbs on the feasibility of using jailbreak to run it headlessly, whereas @technot80 shared information about an alternative app, LLMFarm, for iOS.

- Language Limitations and Conversational Oddities: It was mentioned that LM Studio primarily supports English, following the influx of Spanish-speaking users from a recent video. Users also discussed the humorous translations produced when switching between languages in the models, particularly a translation from Spanish to Chinese.

Links mentioned:

- Despacio Despacito GIF - Despacio Despacito Luisfonsi - Discover & Share GIFs: Click to view the GIF

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- GitHub - guinmoon/LLMFarm: llama and other large language models on iOS and MacOS offline using GGML library.

▷ #🤖-models-discussion-chat (80 messages🔥🔥):

- Model Recommendation Inquiry for Skyrim Mod: @gamerred was looking for a suitable model to use with a Skyrim mod, and @dagbs advised that anything in the Dolphin series would do, recommending Dolphin 2.7 Mixtral 8x7B or MegaDolphin for those who have the capability to run larger models. @gamerred decided on using Dolphin 2.6 Mistral 7B DPO with quantization Q4_K_M for faster responses in-game.

- Confusion over Ferret’s Functionality: @ahmd3ssam encountered the error “Vision model is not loaded. Cannot process images” when attempting to use Ferret; @heyitsyorkie clarified that Ferret is a Mistral 7B finetune and not a vision model, pointing to its GitHub repository and its Hugging Face page.

- Optimizing AI Performance for In-Game Use: @dagbs suggested using Dolphin models with lower quantization for better in-game performance due to VRAM limitations, further noting that as conversations lengthen, responses might slow down if not using a rolling message history. @fabguy added that disabling GPU in LMStudio might improve the experience, as the game and the AI compete for GPU resources.

- Prompt Formatting Advice: @.ben.com sought the correct prompt format for laserxtral, leading to a brief discussion, with @dagbs confirming that the “ChatML” preset is appropriate for all Dolphin-based models.

- Machine Requirements for LLM: In a query about the best model to fit on a 7900 XTX, @heyitsyorkie replied to @_anarche_ that up to a 33-billion parameter model can be accommodated, such as Llama 1 models Guanaco and WizardVicuna.
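Two of the points above, the ChatML prompt format used by Dolphin-based models and a rolling message history to keep long conversations responsive, can be sketched in a few lines. This is only an illustration, not LM Studio’s implementation: the function names and the `max_turns` parameter are hypothetical, and in LM Studio itself the “ChatML” preset applies the template for you.

```python
from typing import Dict, List

# A message is {"role": "system" | "user" | "assistant", "content": "..."}
Message = Dict[str, str]


def trim_history(messages: List[Message], max_turns: int) -> List[Message]:
    """Keep every system message plus only the most recent `max_turns` other messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Guard against max_turns == 0, because rest[-0:] would return the whole list.
    recent = rest[-max_turns:] if max_turns > 0 else []
    return system + recent


def to_chatml(messages: List[Message]) -> str:
    """Render messages in ChatML and open an assistant turn for the model to complete."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)
```

Trimming before rendering keeps the prompt length roughly constant, which is the effect @dagbs describes: the model processes the same amount of context on every turn instead of an ever-growing transcript.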

Links mentioned:

- Herika - The ChatGPT Companion: ‘Herika - The ChatGPT Companion’ is a revolutionary mod that aims to integrate Skyrim with Artificial Intelligence technology. It specifically adds a follower, Herika, whose responses and interactions

- GitHub - apple/ml-ferret: Contribute to apple/ml-ferret development by creating an account on GitHub.

▷ #🧠-feedback (5 messages):

- New LM Studio Beta Released: @yagilb announced a new LM Studio beta release which includes bug fixes such as a warning for potential memory issues, a fix for erratic generations after multiple regenerations, and server response consistency. They also noted the removal of ggml format support and the temporary disabling of certain features.

- Inquiry About 2-bit Quant Support: @logandark inquired about 2-bit quantization support, linking to a GitHub pull request discussing the feature.

- Awaiting Next Beta for New Features: In response to a question about the addition of 2-bit quant, @yagilb confirmed that new betas are expected to be released later in the day and may include the discussed feature.

- Eager Users Anticipating Updates: @logandark expressed thanks and eagerness following the update on the upcoming beta from @yagilb.

Links mentioned:

- SOTA 2-bit quants by ikawrakow · Pull Request #4773 · ggerganov/llama.cpp: TL;DR This PR adds new “true” 2-bit quantization (but due to being implemented within the block-wise quantization approach of ggml/llama.cpp we end up using 2.0625 bpw, see below for more de…

▷ #🎛-hardware-discussion (81 messages🔥🔥):

- Single GPU Tops Multi-GPU Setups for Speed: @pefortin shared insights that using a single GPU for model execution is generally faster than distributing the workload across multiple GPUs. They mentioned that the slowest component, like a less powerful GPU in their multi-GPU setup, can bottleneck performance.

- Quantization and Hardware Capabilities Discussion: @juansinisterra, looking for advice on quantization levels suitable for their hardware, received suggestions to match the model size to available GPU memory from @heyitsyorkie and to explore using both GPU and CPU for model execution from @fabguy.

- Cloud as an Alternative for Model Execution: Users discussed options for running models in the cloud, with @dagbs warning about legal implications and trust concerns when it comes to uncensored content and recommending cost-effective, older server-class GPUs like the Tesla P40 for personal hardware upgrades.

- Navigating the GPU Market for AI Applications: Discussions arose over the cost-effectiveness of various GPUs like the 7900 XTX compared to 3090s, with @heyitsyorkie and .ben.com discussing different GPUs’ VRAM and suitability for tasks like Large Language Models (LLMs).

- Configuring Models based on Specific Hardware: @heyitsyorkie advised @lex05 on the kind of models they can run with their hardware setup, suggesting 7b Q_4 models and reading model cards to determine appropriate configurations for their RTX 4060 with 8GB VRAM.
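The advice to match model size to available GPU memory comes down to simple arithmetic: weight memory is roughly parameter count times bits per weight, divided by eight. The sketch below is a back-of-the-envelope estimate only. The figure of about 4.5 bits per weight for a 4-bit K-quant and the flat 1.5 GiB allowance for KV cache and runtime buffers are assumptions for illustration, and real usage depends on context length and backend.

```python
def weight_size_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    return n_params * bits_per_weight / 8 / 1024**3


def fits_in_vram(
    n_params: float,
    bits_per_weight: float,
    vram_gib: float,
    overhead_gib: float = 1.5,  # assumed allowance for KV cache and buffers
) -> bool:
    """Rough check of whether the weights plus a fixed overhead fit in GPU memory."""
    return weight_size_gib(n_params, bits_per_weight) + overhead_gib <= vram_gib


if __name__ == "__main__":
    # 7B model at ~4.5 bits per weight on an 8 GB card
    print(round(weight_size_gib(7e9, 4.5), 2), fits_in_vram(7e9, 4.5, 8))
    # 33B model at ~4.5 bits per weight on a 24 GB card
    print(round(weight_size_gib(33e9, 4.5), 2), fits_in_vram(33e9, 4.5, 24))
```

Under these assumptions a 4-bit 7B model needs under 4 GiB for weights, which is consistent with the 7b Q_4 suggestion for an 8GB card, while the same model at 16 bits per weight would not fit.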

Links mentioned:

- ggml/docs/gguf.md at master · ggerganov/ggml: Tensor library for machine learning. Contribute to ggerganov/ggml development by creating an account on GitHub.

- MSI Radeon RX 7900 XTX GAMING TRIO CLASSIC 24GB Graphics Card | Ebuyer.com

▷ #🧪-beta-releases-chat (8 messages🔥):

- Ubuntu 22.04 Compatibility Confirmed: User @ephemeraldust inquired about running the application on Ubuntu 22.04 server, to which @heyitsyorkie responded that it’s compiled on 22.04 so it should work fine. However, they were also informed that there is no headless mode or CLI options available, and the app must remain open for use.

- Seeking CLI Accessibility: @ephemeraldust was looking to access the application via command-line interface (CLI), which led @heyitsyorkie to suggest looking into `llama.cpp` for a more suitable solution.

- Time-Saving Tip Appreciated: @ephemeraldust thanked @heyitsyorkie for the advice regarding `llama.cpp`, acknowledging the potential time saved.

- Clarity on LM Studio and llama.cpp Uses: @heyitsyorkie clarified that LM Studio serves as a user-friendly front-end for Mac/Windows users, while `llama.cpp` is the backend that Linux server users should utilize.

▷ #autogen (6 messages):

- Microsoft’s AutoGen Studio Unveiled: @senecalouck shared a link to Microsoft’s AutoGen Studio on GitHub, highlighting its aim to enable next-gen large language model applications.

- LM Studio Issues with AutoGen Studio Highlighted: @dagbs tried out AutoGen Studio and reported having issues unrelated to LM Studio, also mentioning that others are experiencing functional difficulties with the tool.

- Functionality Hinges on API Fees: @senecalouck noted that the usefulness of AutoGen Studio is currently limited for open-source models and tooling without paying API fees, due to a lack of good function calling capability.

- Request for CrewAI Integration Channel: @senecalouck requested the creation of a CrewAI integration channel, indicating they have a project that could be of interest to the community.

- Open Interpreter Channel Suggestion: @dagbs expressed surprise at the lack of an Open Interpreter channel, given that its base configurations mention LM Studio, hinting at its possible relevance for the community.

Links mentioned:

autogen/samples/apps/autogen-studio at main · microsoft/autogen: Enable Next-Gen Large Language Model Applications. Join our Discord: https://discord.gg/pAbnFJrkgZ - microsoft/autogen

▷ #langchain (1 messages):

sublimatorniq: https://github.com/nexusflowai/NexusRaven-V2

▷ #memgpt (1 messages):

pefortin: Yeah, local models struggle on how and when to use memory.

Eleuther Discord Summary

- Confusion Cleared on ACL Submission: ludgerpaehler sought clarification regarding the ACL submission deadline; submissions must indeed be sent to the ARR OpenReview portal by the 15th of February, following the ACL’s outlined process.

- Evaluation Harness Key Error Reported: alexrs_ filed an issue on the evaluation-harness reporting a KeyError when using certain metrics from huggingface/evaluate.

- Mamba and ZOH Discretization Debate: There was a noteworthy discussion concerning the use of Zero-Order Hold (ZOH) discretization within the Mamba model, with insights regarding its relevance to linear state space models and ODE solutions.

- Call for Reproducible Builds Amid Python Upgrade: During an update to Python, @catboyslimmer encountered test failures and difficulties with the Apex build while attempting to modernize gpt-neox. They emphasized the urgent need for a reproducible build process.

- BaseLM Refactor and Implementational Directive: Recent refactoring removed `BaseLM`, requiring users to implement functions like `_loglikelihood_tokens` themselves. However, plans to reintroduce similar features were referenced in a Pull Request, and the team discussed potential solutions for boilerplate code.

Eleuther Channel Summaries

▷ #general (119 messages🔥🔥):

- Confusion about ACL Submission Process: ludgerpaehler needed clarification on the ACL deadline process for submissions. They inquired whether the ACL submission must be sent to the ARR OpenReview portal by the 15th of February according to the ACL submission dates and process.

- Issue with Evaluation Harness and Evaluate Metrics: alexrs_ encountered problems with the evaluation-harness and reported an issue where certain metrics from huggingface/evaluate are causing a KeyError.

- Exploring Memmapped Datasets: lucaslingle sought insights for implementing memmapped datasets and referenced the Pythia codebase’s mention of 5gb-sized memmapped files. hailey_schoelkopf clarified that this size limitation was due to Huggingface upload constraints, but larger sizes should work for Megatron once combined.

- Request for Alternatives to `wandb`: .the_alt_man requested suggestions for a drop-in replacement for `wandb` with integrated hyperparameter tuning and plotting, leading to discussions about the architectural choices behind monitoring and tune scheduling.

- Discussion on Hypernetworks versus MoE Layers: Hawk started a conversation asking if anyone had tried hypernet layers as an alternative to MoE layers; zphang noted that hypernetwork technology might not be advanced enough yet for this application.
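For readers unfamiliar with memmapped datasets, the idea is to store token ids as a flat binary file and let the operating system page in only the slices that are read. The sketch below uses only the Python standard library and a made-up file layout (raw unsigned 16-bit ids with no header). It is not the Pythia or Megatron on-disk format, and the function names are hypothetical.

```python
import mmap
import os
import tempfile
from array import array
from typing import List


def write_tokens(path: str, token_ids: List[int]) -> None:
    """Store token ids as a flat binary file of unsigned 16-bit integers."""
    with open(path, "wb") as f:
        array("H", token_ids).tofile(f)


def read_window(path: str, start: int, length: int) -> List[int]:
    """Read `length` tokens starting at token index `start` via a read-only memory map."""
    item = array("H").itemsize
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        window = array("H")
        # Only the bytes covered by this slice are copied out of the mapping.
        window.frombytes(mm[start * item:(start + length) * item])
        return window.tolist()


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "tokens.bin")
    write_tokens(path, list(range(1000)))
    print(read_window(path, 10, 5))
```

Because reads are addressed by byte offset, the file can be far larger than RAM, which is why the 5gb shard size mentioned above is an upload constraint and not a limit of the technique.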

Links mentioned:

- Accelerating PyTorch with CUDA Graphs: Today, we are pleased to announce a new advanced CUDA feature, CUDA Graphs, has been brought to PyTorch. Modern DL frameworks have complicated software stacks that incur significant overheads associat…

- mamba_small_bench/cifar_10.py at main · apapiu/mamba_small_bench: Trying out the Mamba architecture on small examples (cifar-10, shakespeare char level etc.) - apapiu/mamba_small_bench

- KeyError on some metrics from huggingface/evaluate · Issue #1302 · EleutherAI/lm-evaluation-harness: Context I am currently utilizing the lm-evaluation-harness in conjunction with the metrics provided by huggingface/evaluate. Specifically, I am using bertscore. This metric returns a dictionary wit…

▷ #research (69 messages🔥🔥):

- Quality vs Diversity in Generative Models: @ai_waifu mentioned that there’s a tradeoff between quality and diversity that is not well captured by negative log-likelihood (NLL) metrics. Although GANs may perform poorly from an NLL perspective, they can still produce visually appealing images without heavy penalties for mode dropping.

- Exploring 3D CAD Generation with GFlownets: @johnryan465 inquired about literature on GFlownet application to 3D CAD model generation, finding none. @carsonpoole suggested that large synthetic datasets of CAD images paired with actual geometry could be useful.

- Introducing Contrastive Preference Optimization: A study shared by @xylthixlm showcases a new training method for Large Language Models (LLMs) called Contrastive Preference Optimization (CPO), which focuses on training models to avoid generating adequate but not perfect translations, in response to supervised fine-tuning’s shortcomings.

- Mamba and ZOH Discretization Discussion: @michaelmelons sparked a conversation questioning why the Mamba model employs Zero-Order Hold (ZOH) discretization for its matrices. @useewhynot and @mrgonao offered insights, relating to linear state space models and the solution of ODEs regarding A’s discretization.

- Tokenization and Byte-Level Encoding Exploration: Discussion led by @carsonpoole and @rallio. examined the impacts of resetting embedding weights in models and the debate on whether to use Llama tokenizers or raw bytes for inputs. This topic evolved into a broader conversation about tokenizer inefficiencies, particularly in handling proper nouns and noisy data, with users @catboyslimmer and @fern.bear highlighting the understudied nature of tokenization and its distributional consequences.
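As background for the ZOH discussion: for a linear state space model x'(t) = A x(t) + B u(t), Zero-Order Hold assumes the input is constant over each step of length Δ, which gives the exact discrete update x_{k+1} = Ā x_k + B̄ u_k with Ā = exp(ΔA) and B̄ = (ΔA)⁻¹(exp(ΔA) − I)ΔB. The sketch below covers only the diagonal case, where everything is elementwise, using pure Python. It is an illustration of the formula and not Mamba’s actual kernel, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def zoh_discretize(
    a_diag: List[float], b: List[float], delta: float
) -> Tuple[List[float], List[float]]:
    """ZOH discretization for a diagonal A: a_bar = exp(delta*a), b_bar = (exp(delta*a) - 1) / a * b."""
    a_bar = [math.exp(delta * a) for a in a_diag]
    b_bar = [
        # expm1 keeps precision for small delta*a; the a == 0 limit is delta * b.
        (math.expm1(delta * a) / a) * bi if a != 0.0 else delta * bi
        for a, bi in zip(a_diag, b)
    ]
    return a_bar, b_bar


def step(state: List[float], u: float, a_bar: List[float], b_bar: List[float]) -> List[float]:
    """One discrete recurrence step: x_{k+1} = a_bar * x_k + b_bar * u_k (elementwise)."""
    return [ab * x + bb * u for x, ab, bb in zip(state, a_bar, b_bar)]
```

With a constant input, iterating `step` reproduces the continuous-time ODE solution exactly at the sample points, which is the property that connects ZOH to the ODE-solution view raised in the discussion.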

Links mentioned:

- Contrastive Preference Optimization: Pushing the Boundaries of LLM Performance in Machine Translation: Moderate-sized large language models (LLMs), those with 7B or 13B parameters, exhibit promising machine translation (MT) performance. However, even the top-performing 13B LLM-based translation mod…

- GitHub - google-deepmind/alphageometry: Contribute to google-deepmind/alphageometry development by creating an account on GitHub.

▷ #interpretability-general (1 messages):

- Discovering Developmental Interpretability: User @David_mcsharry shared an intriguing update, mentioning their discovery of developmental interpretability, which appears to be relevant to the topics of interest in the interpretability-general channel.

▷ #lm-thunderdome (7 messages):

- Seeking Clarity on BaseLM Removal: User @daniellepintz inquired about the removal of the useful `BaseLM` class from the EleutherAI repository, which included methods like `loglikelihood` and `loglikelihood_rolling`. It was found in the code at BaseLM Reference.

- Refactoring Behind BaseLM Removal: @hailey_schoelkopf clarified that the removal of `BaseLM` was due to a refactoring process designed to improve batch generation and data-parallel evaluation. They indicated that there is a plan to re-add similar functionality, hinted at by Pull Request #1279.

- Implementation Requirements Persist: In response to @daniellepintz noticing that users must implement `_loglikelihood_tokens` and `loglikelihood_rolling`, @stellaathena admitted that when creating a novel model API, such implementation steps are largely unavoidable.

- Boilerplate Abstraction Still Possible: @hailey_schoelkopf acknowledged that while some boilerplate might be abstracted away, functions like `loglikelihood_tokens` and `generate_until` may entail some unavoidable custom coding. However, reusing or subclassing HFLM could potentially be a solution for users.

- Potential Issue with HF Datasets Version: @hailey_schoelkopf suggested pinning the HF datasets version to 2.15 for the time being, noting that versions 2.16 and above might be causing issues for users due to changes in dataset loading scripting.
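To make concrete what a method like `_loglikelihood_tokens` has to compute, the sketch below scores a continuation under a model by summing per-token log-probabilities and checking whether each token was the model’s top choice. It deliberately does not import or subclass anything from lm-evaluation-harness: the callable interface, the function signature, and `toy_model` are all hypothetical stand-ins, and the harness’s real methods handle batching and request objects that are omitted here.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: given a token prefix, return log-probabilities for the next token.
NextTokenLogprobs = Callable[[List[int]], Dict[int, float]]


def loglikelihood_tokens(
    model: NextTokenLogprobs, context: List[int], continuation: List[int]
) -> Tuple[float, bool]:
    """Return (sum of log-probs of the continuation, whether greedy decoding would produce it)."""
    total = 0.0
    is_greedy = True
    prefix = list(context)
    for tok in continuation:
        logprobs = model(prefix)
        total += logprobs[tok]
        if max(logprobs, key=logprobs.get) != tok:
            is_greedy = False
        prefix.append(tok)
    return total, is_greedy


def toy_model(prefix: List[int]) -> Dict[int, float]:
    """Toy stand-in that ignores the prefix: token 0 has p=0.5, tokens 1 and 2 have p=0.25."""
    return {0: math.log(0.5), 1: math.log(0.25), 2: math.log(0.25)}
```

A new model backend has to supply its own equivalent of the `model` callable here, which is the unavoidable custom work @stellaathena refers to; the summation loop around it is the kind of boilerplate a shared base class could absorb.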

Links mentioned:

- lm-evaluation-harness/lm_eval/base.py at 3ccea2b2854dd3cc9ff5ef1772e33de21168c305 · EleutherAI/lm-evaluation-harness: A framework for few-shot evaluation of language models. - EleutherAI/lm-evaluation-harness

- Loglikelihood refactor attempt 2 using template lm by anjor · Pull Request #1279 · EleutherAI/lm-evaluation-harness: Replaces #1215

▷ #gpt-neox-dev (9 messages🔥):

- Potential Python Version Upgrade Issues: @catboyslimmer raised a potential issue about the Python version update pull request #1122 on EleutherAI/gpt-neox failing some tests locally, noting that these failures could predate his changes and detailing his plan to test building in Docker and backporting into a poetry file.

- Docker Build Success Amid Testing Needs: @catboyslimmer mentioned that the build is successful in Docker but admitted that additional testing is likely needed, expressing a lack of certainty about potential issues caused by the changes.

- Complications With Apex Build: @catboyslimmer encountered difficulties with building Apex, considering extracting a fused kernel directly from Apex to resolve the issue without delving deeper into the underlying problem with Apex.

- Magic in Running Scripts Multiple Times: In response to @catboyslimmer’s building issues, @stellaathena suggested repeating the build script execution a few times, which can sometimes resolve the problem, though @catboyslimmer doubts this will work due to versioning and dependency issues.

- Horrified by the Lack of Reproducibility: @catboyslimmer expressed feelings of horror regarding their current non-reproducible build process and is compelled to set up a more reliable build system as soon as possible.

Links mentioned:

Python version update by segyges · Pull Request #1122 · EleutherAI/gpt-neox: Don’t know if this is ready or not; in my local testing it fails some of the pytest tests, but it’s plausible to likely it was doing so before. Bumps image to ubuntu 22.04 and uses the system …

Nous Research AI Discord Summary

- GPT-4-Turbo Troubles Ahead: .beowulfbr voiced dissatisfaction with GPT-4-Turbo, highlighting nonsensical code output and a decline in performance. Meanwhile, the ChatGPT versus GPT-4-Turbo debate stirred up, with comparisons being made on iteration efficiency and bug presence.

- Seeking Simplified Chat Models: @gabriel_syme and @murchiston want chat models to skip the chit-chat and get things done, while @giftedgummybee proposes instructing AI to “use the top 100 most common English words” for clearer communication.

- Anticipating Code Model Contenders: The Discord buzzed with talk of Stable Code 3B’s release, the unveiling of InternLM2 on Hugging Face, and the potential debut of DeciCoder-6B, as discussed by @osanseviero.

- Innovations in Text Recognition: Traditional OCR is highlighted as currently more reliable than multimodal models for tasks like multilingual invoice analysis, as evidenced by the suggestion to use Tesseract OCR. This approach is contrasted with AI models like GPT-4 Vision and multimodal alternatives.

- Advancements in AI Geometry: Updates on AlphaGeometry from DeepMind drew a mixed response from the community, with a mention of DeepMind’s research evoking both humor and technical interest related to LLM integration and mathematical reasoning capabilities.

Nous Research AI Channel Summaries

▷ #off-topic (27 messages🔥):

- GPT-4-Turbo Under Fire: .beowulfbr shared frustration with GPT-4-Turbo, mentioning it produced completely nonsensical code when prompted for implementation help. The user reported a noticeable decline in quality and errors, even after correcting the AI.

- ChatGPT versus GPT-4-Turbo Debate: User giftedgummybee argued that the API is better compared to ChatGPT, while .beowulfbr criticized ChatGPT for bugs and for taking twice as many iterations to arrive at the correct outcome in comparison to the API version.

- The Persistence of LLM Errors: giftedgummybee and night_w0lf discussed the tendency for LLMs to repeat mistakes and revert to a “stupid LLM mode” if not properly guided, possibly referencing a need for more thorough prompts or “waluigi-ing the model.”

- TTS Software Discussions: everyoneisgross raised a question about offline TTS (Text-to-Speech) utilities being used in scripts, expressing challenges with Silero, while tofhunterrr and leontello suggested alternatives like the `say` command on Mac and the open-source TTS Bark, respectively.

- Coqui TTS as a Recommended Solution: leontello recommended checking out Coqui TTS, a tool that allows trying out various TTS alternatives with just a few lines of code.

Links mentioned:

- Nous Research Deep Learning GIF - Nous Research Research Nous - Discover & Share GIFs: Click to view the GIF

- Deep Learning Yann Lecun GIF - Deep Learning Yann LeCun LeCun - Discover & Share GIFs: Click to view the GIF

▷ #interesting-links (15 messages🔥):

- Chat Models Get Chatty: @gabriel_syme and @murchiston express frustration over chat models being verbose and engaging in unnecessary dialogue instead of executing tasks promptly; they desire a straightforward “make it so” approach without the added waffle.

- Simplification Tactic for Chat Models: @giftedgummybee suggests enforcing simplicity by telling the AI to “use the top 100 most common English words” as a way to combat overly complicated responses from chat models.

- InternLM2-Chat-20B Release: @euclaise shares a link to the Hugging Face repository detailing InternLM2, an open-source chat model with a 200K context window, heralded for stellar performance across various tasks, including reasoning and instruction following.

- Call for Digital Art and AI Symposium Proposals: @everyoneisgross highlights the call for proposals for the Rising Algorithms Symposium in Wellington, asking for contributions that explore the intersection between art and AI.

- Vector-based Random Matrix Adaptation (VeRA): @mister_poodle shares an arXiv paper introducing VeRA, a technique that reduces the number of trainable parameters in finetuning large language models without compromising performance, while @charlesmartin14 remains skeptical of its effectiveness without evidence of results.
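The parameter savings claimed for VeRA can be illustrated with a quick count per adapted weight matrix. As described in the paper, LoRA trains two low-rank matrices, while VeRA freezes a pair of shared random matrices and trains only two scaling vectors. The sketch below encodes that reading; the layer width and ranks in the usage example are made-up values for illustration, not figures from the paper.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA trains A (rank x d_in) and B (d_out x rank) for each adapted matrix."""
    return rank * d_in + d_out * rank


def vera_trainable_params(d_out: int, rank: int) -> int:
    """VeRA trains a length-`rank` and a length-`d_out` scaling vector; the random matrices stay frozen."""
    return rank + d_out


if __name__ == "__main__":
    d = 4096  # hypothetical square projection matrix
    print(lora_trainable_params(d, d, rank=16))  # LoRA at rank 16
    print(vera_trainable_params(d, rank=256))    # VeRA at a much higher rank
```

Even at a far higher rank, the VeRA count stays in the thousands per matrix, whereas LoRA grows with the product of rank and layer width. Whether quality holds up at that budget is exactly what @charlesmartin14 questions.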

Links mentioned:

- VeRA: Vector-based Random Matrix Adaptation: Low-rank adapation (LoRA) is a popular method that reduces the number of trainable parameters when finetuning large language models, but still faces acute storage challenges when scaling to even large…

- 2024 ADA Symposium – Call for Proposals: Aotearoa Digital Arts Network Symposium &… Rising Algorithms: Navigate, Automate, Dream, 24 – 26 May 2024, Te Whanganui-a-Tara Wellington

- ReFT: Reasoning with Reinforced Fine-Tuning: One way to enhance the reasoning capability of Large Language Models (LLMs) is to conduct Supervised Fine-Tuning (SFT) using Chain-of-Thought (CoT) annotations. This approach does not show sufficientl…

- Solution Suicide GIF - Solution Suicide Rick And Morty - Discover & Share GIFs: Click to view the GIF

▷ #general (110 messages🔥🔥):

- Anticipation of Stable Code 3B’s release: Enthusiasm and skepticism were expressed regarding the announcement of the new Stable Code 3B, a state-of-the-art model designed for code completion, with @.beowulfbr calling it “disappointing” due to being behind a paywall.

- Confusion around new models: Discussion centered on upcoming models like StableLM Code, with users such as @gabriel_syme and @giftedgummybee trying to distill the information from teasers on Twitter, questioning whether they have been released or not.

- Debate on coding model benchmarks: Members like @night_w0lf trust specific evaluation platforms like EvalPlus for judging the performance of code models, while others like @teknium and @antonb5162 discuss the validity of HumanEval scores and the reliability of various models.

- Interest in new coding models: @osanseviero highlights the release of DeciCoder-6B, attracting attention with its performance claims and open-source availability.

- Crowd-sourced OSS model funding: @carsonpoole expresses interest in sponsoring open-source software (OSS) models related to mistral, mixtral, or phi, seeking collaborations with the community.

Links mentioned:

- Tweet from Google DeepMind (@GoogleDeepMind): Introducing AlphaGeometry: an AI system that solves Olympiad geometry problems at a level approaching a human gold-medalist. 📐 It was trained solely on synthetic data and marks a breakthrough for AI…

- Tweet from Wavecoder (@TeamCodeLLM_AI): We are in the process of preparing for open-source related matters. Please stay tuned. Once everything is ready, we will announce the latest updates through this account.

- Tweet from Deci AI (@deci_ai): We’re back and excited to announce two new models: DeciCoder-6B and DeciDiffuion 2.0! 🙌 Here’s the 101: DeciCoder-6B 📋 ✅ A multi-language, codeLLM with support for 8 programming languages. ✅ Rel…

- Cat Cats GIF - Cat Cats Cat eating - Discover & Share GIFs: Click to view the GIF

- Tweet from Blaze (Balázs Galambosi) (@gblazex): New SOTA coding model by @MSFTResearch 81.7 HumanEval & only 6.7B! (vs 85.4 GPT4) Only nerfed version of dataset is going open source I think. But the techniques are laid out in the paper. Nerfed vs …

- Stable Code 3B: Coding on the Edge - Stability AI: Stable Code, an upgrade from Stable Code Alpha 3B, specializes in code completion and outperforms predecessors in efficiency and multi-language support. It is compatible with standard laptops, includi…

- Meet: Real-time meetings by Google. Using your browser, share your video, desktop, and presentations with teammates and customers.

- Cat Cats GIF - Cat Cats Cat meme - Discover & Share GIFs: Click to view the GIF

- Giga Gigacat GIF - Giga Gigacat Cat - Discover & Share GIFs: Click to view the GIF

- Tweet from Div Garg (@DivGarg9): We just solved the long-horizon planning & execution issue with Agents 🤯! Excited to announce that @MultiON_AI can now take actions well over 500+ steps without loosing context & cross-operate on 10…

- Tweet from Omar Sanseviero (@osanseviero): Spoiler alert: this might be one of the most exciting weeks for code LLMs since Code Llama

- FastChat/fastchat/llm_judge/README.md at main · lm-sys/FastChat: An open platform for training, serving, and evaluating large language models. Release repo for Vicuna and Chatbot Arena. - lm-sys/FastChat

- Tweet from Andriy Burkov (@burkov): If you really want to do something useful in AI, instead of training another tiny llama, pick up this project https://hazyresearch.stanford.edu/blog/2024-01-11-m2-bert-retrieval and train a 1B-paramet…

- GitHub - evalplus/evalplus: EvalPlus for rigourous evaluation of LLM-synthesized code

- GitHub - draganjovanovich/sharegpt-vim-editor: sharegpt jsonl vim editor. Contribute to draganjovanovich/sharegpt-vim-editor development by creating an account on GitHub.

▷ #ask-about-llms (40 messages🔥):

- In Search of Speed and Efficiency with AI Models: @realsedlyf inquired about the performance of OpenHermes2.5 gptq with vllm compared to using transformers, wondering if it’s faster.

- Benchmarking Code Generation Models: @leontello posed a question regarding trusted code generation benchmarks and leaderboards, while @night_w0lf pointed to a recent post in the general channel which apparently contains relevant information, but no specific URL was mentioned.

- Multimodals vs. Traditional OCR for Multilingual Invoice Analysis: @.beowulfbr suggested that a friend try Tesseract OCR as an alternative to multimodal models like Qwen-VL, indicating OCR’s superiority in accuracy, especially for invoices in various languages.

- OCR Prevails Over Multimodal Models for Text Recognition: @bernaferrari and @n8programs discussed the limitations of LLMs in image recognition, suggesting that while GPT-4 Vision shows promise, traditional OCR systems are still more effective at tasks like reading car plates.

- DeepMind’s AlphaGeometry Sparks a Mix of Interest and Humor: @bernaferrari shared DeepMind’s latest research on AlphaGeometry, with community reactions ranging from @teknium joking about their own math skills to @mr.userbox020 comparing the system to a combination of LLM and code interpreter architectures.

Links mentioned:

- AlphaGeometry: An Olympiad-level AI system for geometry: Our AI system surpasses the state-of-the-art approach for geometry problems, advancing AI reasoning in mathematics

- GitHub - tesseract-ocr/tesseract: Tesseract Open Source OCR Engine (main repository)

Mistral Discord Summary

- Launchpad for LLM APIs Comparison: A new website introduced by @_micah_h offers a comparison between different hosting APIs for models like Mistral 7B Instruct and Mixtral 8x7B Instruct, with a focus on technical metrics evaluation. The platforms for Mistral 7B Instruct and Mixtral 8x7B Instruct were shared, alongside their Twitter page for updates.

- Mistral Models’ Verbosity & Token Questions: Users in the models channel discussed challenges with Mistral’s verbose responses and proper use of bos_token in chat templates. Integrating the tokens correctly doesn’t seem to influence model scores significantly; however, verbosity issues are recognized and being addressed.

- Fine-Tuning Facets and Snags: The finetuning channel saw exchanges on topics like using `--vocabtype bpe` for tokenizers without `tokenizer.model`, formatting datasets for instruct model fine-tuning, and challenges with fine-tuned models not retaining previous task knowledge.

- Deep Chat and Mistral Performance Optimization: Deep Chat allows running models like Mistral directly in the browser using local resources, with its open-source project available on GitHub. Meanwhile, FluxNinja Aperture was introduced in the showcase channel as a solution for concurrency scheduling, detailed in their blog post.

- Mistral-7B Instruct Deployment Angel: The rollout of the Mistral-7B Instruct model was announced in the la-plateforme channel, directing users to stay tuned to the artificialanalysis.ai group, particularly after a tweet update. The analysis of the model can be found at ArtificialAnalysis.ai.

Mistral Channel Summaries

▷ #general (76 messages🔥🔥):

-

New Comparative Website for LLM API Providers: @_micah_h launched a website to compare different hosting APIs for models like Mistral 7B Instruct and Mixtral 8x7B Instruct, providing a platform for Mistral 7B and a platform for Mixtral 8x7B. The Twitter page was also shared for updates.

-

Perplexity AI’s Pricing Source and Limitations Discussed: @_micah_h addressed a question about Perplexity AI’s input token pricing by pointing to a changelog note revealing the removal of 13B pricing, while also agreeing with @blueridanus that the limitation to 4k is somewhat unfair.

-

Local Deployment and Free Versions of Mistral Discussed: @rozonline inquired about free versions of Mistral, and @blueridanus suggested deploying locally or trying Perplexity AI’s playground for some free credits.

-

Adding Third-Party PHP Client to Mistral’s Documentation: @gbourdin requested that a PHP client library for Mistral API, available on GitHub, be mentioned in Mistral’s client documentation page.

-

Public Resources on Mistral AI’s Privacy and Data Processing: @ethux provided @khalifa007 with links to Mistral AI’s Privacy Policy and Data Processing Agreement for information regarding personal data handling.

Links mentioned:

-

LLM in a flash: Efficient Large Language Model Inference with Limited Memory: Large language models (LLMs) are central to modern natural language processing, delivering exceptional performance in various tasks. However, their substantial computational and memory requirements pr…

-

Privacy Policy: Frontier AI in your hands

-

Mistral AI | Open-weight models: Frontier AI in your hands

-

Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4

-

Data Processing Agreement: Frontier AI in your hands

-

LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys

-

Mixtral of Experts: We introduce Mixtral 8x7B, a Sparse Mixture of Experts (SMoE) language model. Mixtral has the same architecture as Mistral 7B, with the difference that each layer is composed of 8 feedforward blocks (…

-

Self-Consuming Generative Models Go MAD: Seismic advances in generative AI algorithms for imagery, text, and other data types has led to the temptation to use synthetic data to train next-generation models. Repeating this process creates an …

-

Client code | Mistral AI Large Language Models: We provide client codes in both Python and Javascript.

-

GitHub - partITech/php-mistral: MistralAi php client: MistralAi php client. Contribute to partITech/php-mistral development by creating an account on GitHub.

-

Mistral 7B - Host Analysis | ArtificialAnalysis.ai: Analysis of Mistral 7B Instruct across metrics including quality, latency, throughput, price and others.

-

Mixtral 8x7B - Host Analysis | ArtificialAnalysis.ai: Analysis of Mixtral 8x7B Instruct across metrics including quality, latency, throughput, price and others.

▷ #models (71 messages🔥🔥):

-

Mistral’s Verbose Responses Puzzle Users: User @rabdullin reported that Mistral’s hosted models are failing to follow the brevity shown in few-shot prompts, in contrast to local models like Mistral 7B Instruct v1. In response, @sophiamyang shared that verbosity in Mistral models is a known issue and that the team is actively working on a fix.

-

Formatting Fiasco: Confusion arose regarding the proper use of `bos_token` in the chat template, with @rabdullin initially positing that the Mistral API might tokenize incorrectly because his template placed `bos_token` inside a loop. However, @sophiamyang clarified that the Mistral models expect the `bos_token` once at the beginning. @rabdullin adjusted his template and found that verbosity remained and that changing the token placement had no significant impact on model scores.

-

Benchmark Blues: @rabdullin is eager to benchmark Mistral models against closed-source benchmarks from products and services, mentioning discrepancies in rankings between versions and unexpected verbosity affecting scores. @sophiamyang asked for examples that can be shared and investigated by the Mistral team.

-

Template Troubles: @rabdullin questioned the implications of his template’s incorrect format, which led to a back-and-forth over the role of `<s>` and `</s>` tokens in prompt design. A reference to the GitHub Llama_2 tokenizer seemed to mirror @rabdullin’s structure, but it remains unresolved whether the format influenced API behavior.

-

Model Misidentification: There was some confusion about the existence of a Mistral 13B model, sparked by @dfilipp9 following an external hardware guide listing an alleged MistralMakise-Merged-13B-GGUF model. @rabdullin pointed out that only Mistral 7B or 8x7B models exist, and they are available on HuggingFace.
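The `bos_token` placement discussed above can be illustrated with a minimal sketch. It assumes `<s>` as BOS, `</s>` as EOS, and `[INST]`/`[/INST]` around user turns; the helper name `build_prompt` and the exact whitespace are illustrative assumptions, so the model’s `tokenizer_config.json` remains the authoritative reference for the real template.

```python
BOS, EOS = "<s>", "</s>"


def build_prompt(turns):
    """Render (user, assistant) turns with BOS emitted once, before the loop.

    `turns` is a list of (user_text, assistant_text_or_None) pairs; a None
    assistant reply marks the turn the model is expected to complete.
    This helper is hypothetical and only illustrates token placement.
    """
    parts = [BOS]  # BOS is added here, not inside the loop below
    for user, assistant in turns:
        parts.append(f"[INST] {user} [/INST]")
        if assistant is not None:
            parts.append(f" {assistant}{EOS}")
    return "".join(parts)


prompt = build_prompt([("Hi", "Hello!"), ("Summarize this in one line.", None)])
print(prompt)
```

Moving `parts.append(BOS)` inside the loop would reproduce the per-turn BOS placement that the discussion identified as unexpected.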

Links mentioned:

-

tokenizer_config.json · mistralai/Mistral-7B-Instruct-v0.2 at main

-

tokenizer_config.json · mistralai/Mixtral-8x7B-Instruct-v0.1 at main

-

Mistral LLM: All Versions & Hardware Requirements – Hardware Corner

-

2-Zylinder-Kompressor Twister 3800 D | AGRE | ZGONC): Kompressoren Bei ZGONC kaufen! 2-Zylinder-Kompressor Twister 3800 D, AGRE Spannung in Volt: 400, Leistung in Watt: 3.000,… - Raunz´ned - kauf!

▷ #finetuning (33 messages🔥):

-

Tokenizer Troubles Resolved: User @ethux confirmed that for the AquilaChat model, which lacks `tokenizer.model`, the workaround is to use `--vocabtype bpe` when running `convert.py`. This advice helped @distro1546 successfully quantize their fine-tuned AquilaChat model.

-

Chit Chat Isn’t Child’s Play: @distro1546 faced issues with a fine-tuned Mistral not behaving like an assistant and learned from @ethux that the normal (non-instruct) model is unsuitable for chat. They are considering fine-tuning the instruct version instead.

-

Transforming Transformers: @distro1546 also reported that text generation continued until manually interrupted and sought advice on how to address it. They also asked about merging a LoRA model with Mistral Instruct using the base model.

-

Format Finesse Anyone?: @distro1546 sought clarification on dataset formatting for fine-tuning instruct models, and @denisjannot advised that the correct format is `[INST]question[/INST]answer</s>` without an initial `<s>` token.

-

Fine-Tuning Frustration: @kam414 sought assistance for an issue where their fine-tuned model fails to retain knowledge of previous tasks after learning a new one. Their dataset is small, at 200 rows, and the loss metrics were unsatisfactory.
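As a rough illustration of the dataset format advised above, the sketch below renders question/answer rows as `[INST]question[/INST]answer</s>`. The function name, the field names, and the sample rows are hypothetical. The omission of a leading `<s>` follows the advice in the thread; the comment about the tokenizer adding BOS is an assumption about the training setup, not something stated in the discussion.

```python
def format_example(question: str, answer: str) -> str:
    """Format one training row as [INST]question[/INST]answer</s>.

    No leading <s> is written. Assumption: the tokenizer adds BOS itself
    when the text is encoded, so writing it here would duplicate it.
    """
    return f"[INST]{question}[/INST]{answer}</s>"


# Hypothetical rows, for illustration only.
rows = [
    {"question": "What is the capital of France?", "answer": "Paris."},
    {"question": "2 + 2?", "answer": "4"},
]
texts = [format_example(r["question"], r["answer"]) for r in rows]
for text in texts:
    print(text)
```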

Links mentioned:

-

Add mistral’s new 7B-instruct-v0.2 · Issue #1499 · jmorganca/ollama: Along with many releases, Mistral vastly improved their existing 7B model with a version named v0.2. It has 32k context instead of 8k and better benchmark scores: https://x.com/dchaplot/status/1734…

-

TheBloke/AquilaChat2-34B-AWQ · FileNotFoundError - the tokenizer.model file could not be found

-

Could not find tokenizer.model in llama2 · Issue #3256 · ggerganov/llama.cpp: When I ran this command: python convert.py \ llama2-summarizer-id-2/final_merged_checkpoint \ --outtype f16 \ --outfile llama2-summarizer-id-2/final_merged_checkpoint/llama2-summarizer-id-2.gguf.fp…

-

How to Finetune Mistral AI 7B LLM with Hugging Face AutoTrain - KDnuggets: Learn how to fine-tune the state-of-the-art LLM.

▷ #showcase (6 messages):

-

Deep Chat Integrates LLMs Directly on Browsers: User @ovi8773 shared their open-source project named Deep Chat, which allows running LLMs like Mistral in the browser without needing a server. The Deep Chat GitHub repo and Playground were shared for users to experiment with the web component.

-

Genuine Excitement for In-Browser LLM: @gbourdin expressed excitement about the potential of running LLMs in the browser, as introduced by @ovi8773.

-

In-Browser Acceleration Clarification: @Valdis asked for clarification on how “Deep Chat” works, leading @ovi8773 to confirm that the LLM runs inference on the local machine via the browser, using WebAssembly and hardware acceleration.

-

Concurrency Challenges with Mistral AI Highlighted: User @tuscan_ninja shared a blog post discussing the current concurrency and GPU limitation challenges with the Mistral 7B model. They introduced FluxNinja Aperture as a solution offering concurrency scheduling and request prioritization to improve performance (FluxNinja Blog Post).

-

User Seeking Moderation Role Information: User @tominix356 mentioned @707162732578734181 to inquire about the moderation role, with no further context provided.

Links mentioned:

-

Balancing Cost and Efficiency in Mistral with Concurrency Scheduling | FluxNinja Aperture: FluxNinja Aperture’s Concurrency Scheduling feature efficiently reduces infrastructure costs for operating Mistral, while simultaneously ensuring optimal performance and user experience.

-

GitHub - OvidijusParsiunas/deep-chat: Fully customizable AI chatbot component for your website: Fully customizable AI chatbot component for your website - GitHub - OvidijusParsiunas/deep-chat: Fully customizable AI chatbot component for your website

-

Playground | Deep Chat: Deep Chat Playground

▷ #la-plateforme (5 messages):

-

A Handy Python Tip for Model Outputs: User @rabdullin shared a handy tip for handling model responses: use `model_dump` on the `response` object to export it, and pass `mode="json"` if you want to save it as JSON.

-

Anyscale Performance Benchmarked: User @freqai commented on the performance of Anyscale, noting that they rarely see anywhere near those values, with an average closer to 2 for Anyscale.

-

Clarification on Shared Graph: @sublimatorniq clarified that the earlier graph they shared was not their own and that they should have provided the source.

-

Launch of Mistral-7B Instruct: @sublimatorniq announced the launch of the Mistral-7B Instruct model and encouraged following the artificialanalysis.ai group on Twitter for future updates. The source of the info was given as a post in another channel with the ID #1144547040928481394.
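The `model_dump` tip above can be sketched as follows, assuming the response object is a Pydantic v2 model (which is where `model_dump` comes from). `ChatResponse` is a hypothetical stand-in, not the actual client class, and its fields are invented for illustration.

```python
import json
from datetime import datetime, timezone

from pydantic import BaseModel  # assumes Pydantic v2, which provides model_dump


class ChatResponse(BaseModel):
    """Hypothetical stand-in for a client response object."""

    id: str
    created: datetime
    content: str


response = ChatResponse(
    id="demo-1",
    created=datetime(2024, 1, 18, tzinfo=timezone.utc),
    content="Hello!",
)

as_python = response.model_dump()           # keeps Python types such as datetime
as_json = response.model_dump(mode="json")  # coerces values to JSON-safe types

serialized = json.dumps(as_json, indent=2)  # safe to write to a .json file
print(serialized)
```

Without `mode="json"`, the `datetime` value stays a Python object and `json.dumps` would raise a `TypeError` on it, which is the practical reason to pass the flag before saving.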

Links mentioned:

Mistral 7B - Host Analysis | ArtificialAnalysis.ai: Analysis of Mistral 7B Instruct across metrics including quality, latency, throughput, price and others.

HuggingFace Discord Summary

-

Rapid Response to Repository Alerts: An immediate fix was applied to a repository metadata issue with users confirming a resolution. This alleviated earlier concerns about error messages stating “400 metadata is required” on repositories.

-

BERT and NER Get a Fine-Tuning Facelift: Solutions were shared for correctly labeling BERT’s configuration, along with detailed guidance on NER dataset creation. Users discussed the handling of `#` tokens and the significance of proper labeling in BERT’s `config.json`.

-

Harnessing Deep Learning for Diverse Applications: From AR hit-testing resources and automated transcriptions to AI-powered note-takers for school and ad recommendations across modalities, discussions included innovative applications for existing models. Concerns about timeout issues with large language models like `Deci/DeciLM-7B` and `phi-2` were raised, with suggestions to run Python as admin and test with smaller models like `gpt2`.

-

The Evolution of Model Serving and Deployment: An invite to an ML Model Serving webinar was extended, covering the deployment of ML and LLMs. Users explored multi-stack app deployment on HuggingFace Spaces, chaining models for improved performance, and deploying local LLM assistants attuned to privacy.

-

New Frontiers in Fine-Tuning and Dataset Sharing: Members shared resources, including a new Multimodal Dataset for Visual Question Answering and developments in ontology learning using LLMs. Attention was directed toward fine-tuning scripts such as `train_sd2x.py`, with one user adding untested LoRA support for Stable Diffusion 2.x. Projects such as SimpleTuner were mentioned for their contributions towards model perfection.

HuggingFace Discord Channel Summaries

▷ #general (84 messages🔥🔥):

-

Quick Fix for Repository Metadata Issue: @lunarflu acknowledged an issue with “400 metadata is required” on repos and worked on a remedy. @jo_pmt_79880 confirmed that it was resolved quickly, after humorously noting their initial panic.

-

BERT Label Fixes and Fine-Tuning: @Cubie | Tom offered a solution for @redopan706 on using correct labels instead of `LABEL_0`, `LABEL_1` in BERT’s `config.json`. @stroggoz also provided @redopan706 with detailed guidance on data structuring for NER dataset creation and on handling `#` tokens in outputs.

-

Deployment and Utilization of Multi-Model Architectures: Users discussed how to deploy multi-stack apps on HuggingFace Spaces, with @thethe_realghost seeking assistance. @vishyouluck asked for model chaining advice and shared experiences with model performance and the use of a “refiner” for image outputs.

-

Model Recommendations for Various Tasks: @zmkeeney inquired about models for text-to-text tasks, and @doctorpangloss provided a comprehensive response touching on the suitability of models for market research, website development, brand creation, and consulting firm support.

-

AI-Powered Note Taking Inquiry: @blakeskoepka asked about AI note takers for school, which prompted a succinct suggestion from @hoangt12345 about utilizing class recordings and automated transcriptions.

Links mentioned:

-

Join Pareto.AI’s Screen Recording Team: We’re looking for skilled content creators who are Windows users to screen-record activities they’ve gained proficiency or mastery over for AI training.

-

config.json · yy07/bert-base-japanese-v3-wrime-sentiment at main

▷ #today-im-learning (4 messages):

-

Invitation to ML Model Serving Webinar: @kizzy_kay shared an invite for a webinar titled “A Whirlwind Tour of ML Model Serving Strategies (Including LLMs)” scheduled for January 25, 10 am PST, featuring Ramon Perez from Seldon. The event is free with required registration and will cover deployment strategies for traditional ML and LLMs.

-

Beginner’s Query on Learning ML: @mastermindfill asked for guidance on starting with machine learning and mentioned they have begun watching the ML series by 3blue1brown. No further recommendations or responses were provided within the given message history.

Links mentioned:

Webinar “A Whirlwind Tour of ML Model Serving Strategies (Including LLMs)” · Luma: Data Phoenix team invites you all to our upcoming webinar that’s going to take place on January 25th, 10 am PST. Topic: A Whirlwind Tour of ML Model Serving Strategies (Including…

▷ #cool-finds (8 messages🔥):

-

Local LLM Assistant for Sarcasm and Privacy: @tea3200 highlighted a blog post, Local LLM Assistant, about setting up a local LLM assistant that functions without cloud services, with a focus on privacy and flexibility to add new capabilities.

-

VQA Dataset now on Hugging Face: @andysingal has contributed a Multimodal Dataset for visual question answering to the Hugging Face community, originally from Mateusz Malinowski and Mario Fritz. You can access the dataset here: Andyrasika/VQA-Dataset.

-

Ollama: API for AI Interaction: @andysingal shared a GitHub repository for Ollama API, which allows developers to deploy a RESTful API server to interact with Ollama and Stable Diffusion (Ollama API on GitHub).

-

AlphaGeometry: AI for Olympiad-level Geometry: @tea3200 brought attention to DeepMind’s new AI system, AlphaGeometry, that excels at complex geometry problems. DeepMind has published a research blog post about it here.

-

Ontology Learning with LLMs: @davidello19 recommended an arXiv paper about using LLMs for ontology learning (LLMs for Ontology Learning) and also shared a more accessible article on the same topic (Integrating Ontologies with LLMs).

-

Improving AI with Juggernaut XL: @rxience mentioned enhanced performance using Juggernaut XL combined with good prompting in their implemented space on Hugging Face, which can be found here.

Links mentioned:

-

H94 IP Adapter FaceID SDXL - a Hugging Face Space by r-neuschulz

-

AlphaGeometry: An Olympiad-level AI system for geometry: Our AI system surpasses the state-of-the-art approach for geometry problems, advancing AI reasoning in mathematics

-

Building a fully local LLM voice assistant to control my smart home: I’ve had my days with Siri and Google Assistant. While they have the ability to control your devices, they cannot be customized and inherently rely on cloud services. In hopes of learning someth…

-

GitHub - Dublit-Development/ollama-api: Deploy a RESTful API Server to interact with Ollama and Stable Diffusion: Deploy a RESTful API Server to interact with Ollama and Stable Diffusion - GitHub - Dublit-Development/ollama-api: Deploy a RESTful API Server to interact with Ollama and Stable Diffusion

-

Integrating Ontologies with Large Language Models for Decision-Making: The intersection of Ontologies and Large Language Models (LLMs) is opening up new horizons for decision-making tools. Leveraging the unique…

▷ #i-made-this (6 messages):

-

CrewAI Gets an Automation Boost: @yannie_ shared their GitHub project, which automatically creates a crew and tasks in CrewAI. Check out the repo here.

-

Instill VDP Hits Open Beta: @xiaofei5116 announced that Instill VDP is now live on Product Hunt. This versatile data pipeline offers an open-source, no-code/low-code ETL solution and is detailed on their Product Hunt page.

-

Feedback Celebrated for Instill VDP: @shihchunhuang expressed admiration for the Instill VDP project, acknowledging it as an amazing initiative.

-

Multimodal VQA Dataset Available: @andysingal mentioned adding the Multimodal Dataset from Mateusz Malinowski and Mario Fritz to their repo for community use. Find it on HuggingFace here, ensuring credit is given to its creators.

-

Innovative FaceID Space Created: @rxience launched a HuggingFace space that allows zero-shot transfer of face structure onto newly prompted portraits, inviting others to test the FaceID SDXL space.

Links mentioned:

-

H94 IP Adapter FaceID SDXL - a Hugging Face Space by r-neuschulz

-

GitHub - yanniedog/crewai-autocrew: Automatically create a crew and tasks in CrewAI: Automatically create a crew and tasks in CrewAI. Contribute to yanniedog/crewai-autocrew development by creating an account on GitHub.

-

Instill VDP - Open source unstructured data ETL for AI first applications | Product Hunt: Versatile Data Pipeline (VDP): An open-source, no-code/low-code solution for quick AI workflow creation. It handles unstructured data, ensuring efficient data connections, flexible pipelines, and smoo…

▷ #reading-group (2 messages):

- Exploring LLM Applications in the Legal Field: @chad_in_the_house expressed interest in integrating Large Language Models (LLMs) in legal settings, such as assisting lawyers or judges. @gduteaud found this to be a super interesting topic as well.

▷ #diffusion-discussions (12 messages🔥):

-

Seeking Ad Recommendation Datasets: @andysingal inquired about datasets suitable for ad recommendations, which include text, images, and videos. However, no responses or suggestions were provided.

-

Directing Towards Fine-Tuning Scripts: @sayakpaul requested a pointer to a fine-tuning script, and @pseudoterminalx responded that the script is called `train_sd2x.py`.

-

Training Script for t2i-adapter Unavailable for SD 1.5/2: @square1111 asked about a training script for t2i-adapter for stable diffusion versions 1.5/2, and later pointed out that it is not implemented for those versions, referencing the Hugging Face Diffusers GitHub repository.

-

Fine-Tuning for Indian Cuisine on the Menu: @vishyouluck expressed intent to fine-tune a diffusion model for knowledge on Indian recipes and ingredients using SimpleTuner on GitHub. @pseudoterminalx suggested trying SDXL 1.0 with a LoRA approach.

-

LoRA Support for SD 2.x Added: @pseudoterminalx noted they’ve added LoRA support for Stable Diffusion 2.x but also flagged that they have not tested it yet and mentioned that validations might not work as expected.

Links mentioned:

-

diffusers/examples/t2i_adapter at main · huggingface/diffusers: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch - huggingface/diffusers

-

GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL. - GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.

▷ #computer-vision (1 messages):

- In Search of AR Hit-Testing Insights: User @skibbydoo asked for resources on how hit-testing for AR (Augmented Reality) is implemented, specifically relating to plane detection and real-time meshing on mobile devices. Their search hasn’t yielded substantial information yet.

▷ #NLP (53 messages🔥):

-

LLM Setup Woes for @kingpoki: @kingpoki is struggling to get any large language model working with Hugging Face Transformers in Python, encountering timeout errors with no clear exceptions thrown. They attempted to use models like `Deci/DeciLM-7B` and `phi-2` among others, and their robust system specs make hardware an unlikely cause.

-

Troubleshooting with @vipitis: @vipitis engaged in troubleshooting, suggesting various fixes such as updating `accelerate` and `huggingface_hub`, running Python as an admin, and trying out smaller models like `gpt2`. Multiple other avenues were discussed, such as avoiding overriding stderr, decreasing `max_new_tokens`, and verifying if CPU inference works, but no resolution was reported by the end.

-

Subtitles Syncing Inquiry by @dtrifuno: @dtrifuno sought advice on how to match high-quality human transcription text with model-generated speech transcripts containing timestamps. No conclusive solution was provided within the available message history.

-

Question on Context Window Limits by @cornwastaken: @cornwastaken enquired about a resource or repository that details the context window lengths of large language models for a use case involving large documents and question answering. No responses to this question were included in the message history.

-

Docker Setup Query from @theintegralanomaly: @theintegralanomaly asked for assistance on how to pre-download HuggingFace embeddings model data to avoid long initialization times in a new docker container. @Cubie | Tom suggested a potential workflow involving `git clone`, incorporating the model into the docker image, and directing the application to use the local files.
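No solution to the subtitle-syncing question above was recorded in the channel. Purely as an illustration of one possible approach, and not something proposed in the discussion, the sketch below aligns the human transcript with the timestamped machine transcript at word level using the standard library’s `difflib.SequenceMatcher`. The function name and the sample data are hypothetical.

```python
from difflib import SequenceMatcher


def transfer_timestamps(human_words, asr_words):
    """Give each human-transcribed word the timestamp of its matching ASR word.

    `asr_words` is a list of (word, start_seconds) pairs. Words with no exact
    match get None and would need interpolation in a real pipeline.
    """
    asr_tokens = [word.lower() for word, _ in asr_words]
    human_tokens = [word.lower().strip(".,!?") for word in human_words]
    matcher = SequenceMatcher(a=human_tokens, b=asr_tokens, autojunk=False)
    stamps = [None] * len(human_words)
    for block in matcher.get_matching_blocks():
        for offset in range(block.size):
            stamps[block.a + offset] = asr_words[block.b + offset][1]
    return list(zip(human_words, stamps))


# Hypothetical data: the ASR output misheard "and" as "an".
human = "Hello, and welcome to the show.".split()
asr = [("hello", 0.0), ("an", 0.4), ("welcome", 0.6),
       ("to", 1.1), ("the", 1.2), ("show", 1.4)]
aligned = transfer_timestamps(human, asr)
for word, start in aligned:
    print(word, start)
```

Exact word matching is the simplest choice; a fuzzier comparison would recover more timestamps at the cost of occasional wrong matches.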

Links mentioned:

-

Enable your device for development - Windows apps: Activate Developer Mode on your PC to develop apps.

▷ #diffusion-discussions (12 messages🔥):

-

Looking for Diverse Ad Datasets: User @andysingal inquired about datasets for ad recommendation that include text, image, and video formats.

-

Pointing to Fine-tuning Scripts: @sayakpaul sought assistance for a fine-tuning script, which was addressed with the suggestion to use the `train_sd2x.py` script by @pseudoterminalx.

-

Training Script for t2i-adapter Lacks 1.5/2 Support: @square1111 shared a discovery that the t2i-adapter training script does not support sd1.5/2, linking to the GitHub repository for reference.

-

Fine-tuning for Indian Cuisine Imagery: @vishyouluck expressed an intention to fine-tune a diffusion model for Indian recipes and ingredients using SimpleTuner, while seeking advice on the appropriate base model. The recommendation provided by @pseudoterminalx was to use sdxl 1.0 and consider a LoRA.

-

Unverified LoRA Support for SD 2.x: @pseudoterminalx mentioned adding LoRA support for Stable Diffusion 2.x but cautioned that it is untested and validations might fail.

Links mentioned:

-

diffusers/examples/t2i_adapter at main · huggingface/diffusers: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch - huggingface/diffusers

-

GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL. - GitHub - bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1 and SDXL.

OpenAI Discord Summary

GPT’s Negation Challenge Sparks Discussion at Dev Event: During an event, a developer acknowledged a problem that @darthgustav. had also raised: the AI tends to ignore negations in prompts, which can lead to errors.

Could GPT Assistant Join the Free Tier?: @mischasimpson hinted, based on a live tutorial they watched, that the GPT assistant may soon be accessible without cost, which indicates a possible shift towards making advanced AI tools available on OpenAI’s free tier.

Customizing Education with GPT: Users @mischasimpson and @darthgustav. discussed the use of GPT for generating personalized reading exercises for children, touching on the simplicity of process and the potential to track completion and performance.

The Curious Case of the Mythical GPT-4.5 Turbo: In a conversation spiked with speculation, @okint believed to have encountered a version of the AI dubbed “gpt-4.5-turbo.” However, others like @7877 and @luarstudios were quick to remind the community to be wary of possible AI fabrications, as such a version might be nonexistent.

Managing Expectations of GPT’s Capabilities: Users @solbus and @.bren_._ provided clarity on the actual workings of Custom GPTs, dispelling misconceptions that they can be trained directly on knowledge files and explaining that true model training requires OpenAI services or building a large language model from scratch.

OpenAI Channel Summaries

▷ #ai-discussions (47 messages🔥):

-

GPT Model Hallucinations Addressed: @lugui mentioned that calling the AI’s reference to system internals a “leak” is an exaggeration because that information is public. They clarified that the AI does not know its own IP or the server’s IP and that any such information it gives out is likely a hallucination.

-

Clarity on Custom GPT Web Browsing: In response to @luarstudios’ issue with web browsing capabilities, the discussion hinted at potential misunderstandings around the AI’s features, indicating that it might not be able to directly access external sources.

-

LAM (Large Action Model) Buzz and Backend Speculations: @exx1 described “Large Action Model” as a buzzword that combines various models, including GPT-y with vision capabilities, and speculated that it could be using OpenAI models in the backend.

-

Enthusiasm and Skepticism Over GPT-4.5:

- While @michael_6138_97508 advised @murph12f on fine-tuning options and the use of datasets from sources like Kaggle, @lugui confirmed the possibility of fine-tuning with books.

- In another thread, @okint was convinced they had encountered “gpt-4.5-turbo,” but others, like @7877, reminded them that AI is prone to make up information and that such a version might not exist yet.

-

Discord Bot Discussion for Deleting Messages: @names8619 asked about using ChatGPT premium to delete Discord posts, and @7877 suggested developing a Discord bot while warning that unauthorized methods, like the ones shown in a YouTube video, could lead to bans.

Links mentioned:

How to Mass Delete Discord Messages: In this video I will be showing you how to mass delete discord messages in dms, channels, servers, ect with UnDiscord which is a easy extension that allows y…

▷ #gpt-4-discussions (43 messages🔥):

-

Confusion Over GPT Chat Renewal Time: @7877 clarifies to @elegante94 that the cooldown for the next GPT chat is every 3 hours, not every hour, addressing confusion about the renewal rate.

-

In Search of an AI Note-Taker: After discussion, @satanhashtag suggests a specific channel <#998381918976479273> in response to @blakeskoepka looking for an AI note-taking application for school.

-

The Perils of Naming Your GPT: @ufodriverr expresses frustration over their GPT, Unity GPT, being banned potentially due to trademark issues without a clear path to appeal, despite efforts to address the issue and observing other similar-named GPTs not being banned.

-

Limitations of GPT and Knowledge Files Explained: @solbus corrects misconceptions by explaining that Custom GPTs do not train on knowledge files but can reference them, and true model training requires access to OpenAI’s fine-tuning services or training your own large language model from scratch.

-

GPT Builder’s Misrepresentation and Learning Curves: @.bren_._ points out that the GPT Builder does not actually train on PDFs in a zip file, despite initial claims, sparking a discussion on ChatGPT’s capabilities and how to achieve custom behaviors with it.

▷ #prompt-engineering (31 messages🔥):

-

Surprise Agreement on Negation Prompts: @darthgustav. expressed satisfaction that a developer echoed their concerns at a GPT event, specifically about the model’s tendency to overlook negations in prompts, leading to undesirable behavior.

-

GPT Assistant May Become Free: @mischasimpson shared insights while watching a live tutorial, suggesting that the GPT assistant might eventually be accessible on the free tier.

-

Enhancing Reading Practices with GPT: @mischasimpson and @darthgustav. discussed using GPT for creating tailored reading assignments for students, with @darthgustav. advising to keep it simple and offering to help with the project.

-

Prompt Crafting Challenges: @kobra7777 inquired about ensuring ChatGPT follows complete prompts, with @darthgustav. explaining that the model targets roughly 1k tokens and may require additional instructions for longer tasks.

-

Testing Task Management with GPT: @sugondese8995 shared examples and sought ways to improve testing for a custom GPT built for task management, while @rendo1 asked for clarification on desired improvements.

▷ #api-discussions (31 messages🔥):

-

Surprise Echo at Dev Event: User @darthgustav. expressed surprise that a developer repeated their comment on negative prompts and their challenges, highlighting that the AI might ignore the negation and perform the unwanted behavior instead.

-

Anticipation for GPT Assistant: @mischasimpson mentioned watching a live tutorial about the GPT assistant which hinted at the possibility of it becoming available to the free tier.

-

Crafting Custom Reading Prompts for Children: @darthgustav. and @mischasimpson discussed creating an easy method for parents to generate reading exercises for children using OpenAI’s tools. Complexity and tracking issues were mentioned, including how to know when AI-generated tasks are complete and how children perform.

-

Test Prompt Crafting for Custom GPTs: @sugondese8995 shared their method of using ChatGPT to build test prompts and expected outputs for a custom GPT designed for task management and sought feedback for improvement.

-

Seeking Better GPT Prompt Adherence: @kobra7777 inquired about strategies to ensure GPT follows prompts entirely. @darthgustav. provided insight, mentioning the model’s approximate token output target and strategies to manage longer requests.

Latent Space Discord Summary

- ByteDance Unleashes Quartet of AI Apps: ByteDance has launched four new AI apps, Cici AI, Coze, ChitChop, and BagelBell, powered by OpenAI’s language models, as reported in Forbes by Emily Baker-White.

- AutoGen UI Amps Up Agent Creation: AutoGen Studio UI 2.0 has been released, featuring an enhanced interface that facilitates custom agent creation, as demonstrated in a YouTube tutorial.

- Artificial Analysis Puts AI Models to the Test: The new Artificial Analysis benchmarking website allows users to compare AI models and hosting providers, focusing on the balance between price and latency, discussed via a Twitter post.

- AI in Coding Evolution: AlphaCodium from Codium AI represents a new leap in code generation models. Separately, SGLang from lmsys introduces an innovative LLM interface and runtime, potentially achieving up to 5x faster performance as noted on lmsys’ blog.

- SPIN Your LLM From Weak to Strong: A novel fine-tuning method called Self-Play fIne-tuNing (SPIN) enhances LLMs via self-generated data, effectively boosting their capabilities as outlined in this paper.

- ICLR Accepts MoE Paper for Spotlight: A paper on Mixture of Experts (MoE) and expert merging called MC-SMoE has been accepted for a spotlight presentation at ICLR, presenting significant resource efficiency improvements, read here.

Latent Space Channel Summaries

▷ #ai-general-chat (17 messages🔥):

- ByteDance Launches New AI Apps: @coffeebean6887 shared a Forbes article revealing that TikTok’s parent company, ByteDance, has launched four new AI apps named Cici AI, Coze, ChitChop, and BagelBell. The article by Emily Baker-White discusses the offerings of these apps and their reliance on OpenAI’s language models.

- AutoGen UI 2.0 Released: @swyxio mentioned the release of AutoGen Studio UI 2.0, providing a YouTube link to a video titled “AutoGen Studio UI 2.0: Easiest Way to Create Custom Agents”.

- Artificial Analysis Drops a Benchmark Bomb: @swyxio highlighted a Twitter post that discusses a new AI benchmark comparison website called Artificial Analysis. The website compares models and hosting providers, helping users choose the best price vs latency trade-offs.

- Syntax to Semantics - The Future of Code?: In an intriguing blog post, @fanahova contemplates the shift of coding from syntax to semantics, questioning whether everyone will become an AI engineer.

- Next-Gen Code Generation Model on the Horizon: @swyxio confidentially informed the chat about AlphaCodium, a new state-of-the-art code generation model being developed by Codium AI. Feedback is sought for its launch announcement and the related blog post.

- Innovations in LLM Interfaces and Runtimes: @swyxio also shared news about a novel LLM interface and runtime called SGLang introduced by lmsys, which incorporates RadixAttention. This could potentially compete with other LLM systems like Guidance and vLLM, with a statement that SGLang can perform up to 5x faster. Details are available in their blog post.

Links mentioned:

- TikTok Owner ByteDance Quietly Launched 4 Generative AI Apps Powered By OpenAI’s GPT: The websites and policies for new apps Cici AI, ChitChop, Coze, and BagelBell don’t mention that they were made by ByteDance.

- AI in Action Weekly Jam · Luma: A weekly virtual chat dedicated to the hands-on application of AI in real-world scenarios, focusing on insights from blogs, podcasts, libraries, etc. to bridge the gap between theory and…

- Tweet from lmsys.org (@lmsysorg): We are thrilled to introduce SGLang, our next-generation interface and runtime for LLM inference! It greatly improves the execution and programming efficiency of complex LLM programs by co-designing t…

- State-of-the-art Code Generation with AlphaCodium - From Prompt Engineering to Flow Engineering | CodiumAI: Read about State-of-the-art Code Generation with AlphaCodium - From Prompt Engineering to Flow Engineering in our blog.

- Tweet from Alessio Fanelli (@FanaHOVA): Engineering used to be the bridge between semantics (what the biz wanted) and syntax (how you implemented it) 🌉 https://www.alessiofanelli.com/posts/syntax-to-semantics Code has slowly gotten more s…

- AutoGen Studio UI 2.0: Easiest Way to Create Custom Agents: AutoGen now has a User Interface for creating powerful AI agents without writing code. In this video, we will look at different components of this newly rele…

- Tweet from swyx (@swyx): found this absolute GEM in today’s @smolmodels scrape of AI discords: https://artificialanalysis.ai/ a new benchmark comparison site • by an independent third party • clearly outlines the quality…

- The “Normsky” architecture for AI coding agents — with Beyang Liu + Steve Yegge of SourceGraph: Listen now | Combining Norvig and Chomsky for a new paradigm,

▷ #ai-event-announcements (1 messages):

- Innovative Fine-Tuning Method for LLMs Introduced: @eugeneyan invited the group with role @&1107197669547442196 to discuss the new Self-Play fIne-tuNing (SPIN) method, alongside @713143846539755581. This method enhances LLMs through self-generated data, negating the need for additional human-annotated data. Read the paper here.
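To make the self-play idea a little more concrete, here is a minimal sketch of a SPIN-style logistic loss for a single prompt. It assumes the log-probabilities of one human-written response and one self-generated response are already available under both the current model and the previous-iteration model. The function name `spin_logistic_loss`, the `lam` scaling parameter, and its default value are illustrative assumptions and are not taken from the paper's code.

```python
import math

def spin_logistic_loss(logp_real_new, logp_real_old,
                       logp_synth_new, logp_synth_old, lam=0.1):
    """Hypothetical helper: SPIN-style logistic loss for one prompt.

    logp_real_*  : log-prob of the human-written response
    logp_synth_* : log-prob of the response generated by the previous model
    *_new / *_old: under the current model / the previous-iteration model
    lam          : assumed scaling factor (illustrative default)
    """
    # How much the current model has shifted toward each response.
    real_gain = logp_real_new - logp_real_old
    synth_gain = logp_synth_new - logp_synth_old
    margin = lam * (real_gain - synth_gain)
    # Logistic loss log(1 + exp(-margin)), computed in a numerically stable way.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss shrinks as the current model raises the likelihood of the human-written response relative to its own earlier generations, which is the sense in which the model "plays against itself" without new human-annotated data.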

Links mentioned:

- Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models: Harnessing the power of human-annotated data through Supervised Fine-Tuning (SFT) is pivotal for advancing Large Language Models (LLMs). In this paper, we delve into the prospect of growing a strong L…

- Join the /dev/invest + Latent Space Discord Server!: Check out the /dev/invest + Latent Space community on Discord - hang out with 2695 other members and enjoy free voice and text chat.

▷ #llm-paper-club (32 messages🔥):

- Eager Readers Gather for Self-Play Study: @eugeneyan invited participants for a discussion on the Self-Play paper by linking to its abstract on arXiv. The paper presents Self-Play fIne-tuNing (SPIN), a fine-tuning method where a Large Language Model (LLM) plays against itself to refine its abilities without new human-annotated data.

- Anticipation for Self-Instruction Insights: @swyxio hyped the upcoming discussion on a paper related to self-improvement. The mentioned paper, Self-Instruct, demonstrates a 33% absolute improvement over the original GPT3 model using a self-generated instruction-following framework.

- The Webcam Effect on Discord Limits: Users including @swizec, @youngphlo, and @gulo0001 discussed technical difficulties caused by Discord’s user limit when webcams are on. To address large meeting requirements, @youngphlo shared that Discord’s “stage” feature allows hundreds of viewers in a more webinar-like setting and linked to the relevant Discord Stage Channels FAQ.

- Social-format Discord Meetups Announced: @swyxio announced two new Discord clubs starting next week and a social meetup in San Francisco, encouraging community members to participate and socialize. An additional meetup in Seattle is also being planned.

- Spotlight on MoE Research at ICLR: @swyxio highlighted the acceptance of a paper focusing on Mixture of Experts (MoE) and expert merging as a Spotlight paper at ICLR. The paper, MC-SMoE, showcases methods for reducing memory usage by up to 80%, along with computational needs, through merging and compressing experts.
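The linked tweet mentions routing statistics, and one simple way such statistics could guide expert merging is a usage-weighted average of expert weights. The sketch below illustrates only that idea under stated assumptions: the function name `merge_experts`, the flat-list weight representation, and the example numbers are all hypothetical, and this is not the MC-SMoE algorithm itself.

```python
def merge_experts(expert_weights, routing_counts):
    """Hypothetical helper: merge several experts into one.

    expert_weights : list of equal-length lists of floats, one per expert
    routing_counts : how many tokens the router sent to each expert
    Returns a single weight list, averaged in proportion to usage.
    """
    total = sum(routing_counts)
    if total == 0:
        raise ValueError("routing_counts must contain at least one routed token")
    merged = [0.0] * len(expert_weights[0])
    for weights, count in zip(expert_weights, routing_counts):
        share = count / total  # fraction of routed tokens handled by this expert
        for i, w in enumerate(weights):
            merged[i] += share * w
    return merged

# Made-up example: the first expert handled 3 of 4 routed tokens,
# so it dominates the merged weights.
experts = [[1.0, 2.0], [3.0, 4.0]]
counts = [3, 1]
merged = merge_experts(experts, counts)
```

Replacing a group of experts with one merged expert is what would reduce the memory footprint; how experts are grouped and further compressed is left out of this sketch.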

Links mentioned:

- Join the /dev/invest + Latent Space Discord Server!: Check out the /dev/invest + Latent Space community on Discord - hang out with 2695 other members and enjoy free voice and text chat.

- Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models: Harnessing the power of human-annotated data through Supervised Fine-Tuning (SFT) is pivotal for advancing Large Language Models (LLMs). In this paper, we delve into the prospect of growing a strong L…

- Self-Instruct: Aligning Language Models with Self-Generated Instructions: Large “instruction-tuned” language models (i.e., finetuned to respond to instructions) have demonstrated a remarkable ability to generalize zero-shot to new tasks. Nevertheless, they depend he…

- Tweet from Prateek Yadav (@prateeky2806): 🎉 Thrilled to announce our MOE Expert Merging paper has been accepted to @iclr_conf as a SpotLight paper. ! We reduce the inference memory cost of MOE models by utilizing routing statistics-based me…

- GitHub - jondurbin/airoboros at datascience.fm: Customizable implementation of the self-instruct paper. - GitHub - jondurbin/airoboros at datascience.fm

- Solving olympiad geometry without human demonstrations - Nature: A new neuro-symbolic theorem prover for Euclidean plane geometry trained from scratch on millions of synthesized theorems and proofs outperforms the previous best method and reaches the performance of…

▷ #llm-paper-club-chat (65 messages🔥🔥):

- Zooming In on Video Call Limits: In a discussion on increasing user limits for voice channels, @swyxio and @yikesawjeez noticed that despite settings saying 99 users, the actual limit appears to be 25 when video or streaming is turned on. A Reddit post clarified when the 25-user limit applies.

- Benchmarking Model Progress: @swyxio shared a humorous observation regarding Microsoft’s modest improvement over GPT-4 with MedPaLM, noting that a true 8% improvement would indicate a completely new model. Further, they shared personal benchmark notes from GitHub for deeper insights.

- To Share or Not to Share Paper Notes: @swyxio suggested that @mhmazur make a pull request (PR) to Eugene’s paper notes repo. @eugeneyan later provided the link to the repo on GitHub for reference.

- Exploring the Importance of Reasoning Continuity: @yikesawjeez shared a thread from Twitter discussing how maintaining a logical flow in prompts can matter more than factual accuracy for large language models’ performance. This counterintuitive discovery was further examined in a paper available on arXiv.

- Pondering the Challenges of Healthcare Data: In a snippet featuring doubts about synthetic data circumventing HIPAA regulations, @dsquared70 and @nuvic_ touched on the complexities and costs involved in using AI within regulated environments. @swyxio mentioned that the use of GPT-4 in this context makes companies like Scale AI essentially act as “GPT-4 whitewashing shops.”

Links mentioned:

- Tia clinches $100M to build out clinics, virtual care as investors bank on women’s health startups: Tia, a startup building what it calls a “modern medical home for women,” secured a hefty $100 million funding round to scale its virtual and in-person care. | Tia, a startup building what it…

- Tweet from Aran Komatsuzaki (@arankomatsuzaki): The Impact of Reasoning Step Length on Large Language Models Appending “you must think more steps” to “Let’s think step by step” increases the reasoning steps and significantly improve…

- Large Language Models Cannot Self-Correct Reasoning Yet: Large Language Models (LLMs) have emerged as a groundbreaking technology with their unparalleled text generation capabilities across various applications. Nevertheless, concerns persist regarding the …

- MAUVE: Measuring the Gap Between Neural Text and Human Text using Divergence Frontiers: As major progress is made in open-ended text generation, measuring how close machine-generated text is to human language remains a critical open problem. We introduce MAUVE, a comparison measure for o…

- Show Your Work: Scratchpads for Intermediate Computation with Language Models: Large pre-trained language models perform remarkably well on tasks that can be done “in one pass”, such as generating realistic text or synthesizing computer programs. However, they struggle w…

- We removed advertising cookies, here’s what happened: This is not another abstract post about what the ramifications of the cookieless future might be; Sentry actually removed cookies from our…

-