The surprise release of CodeLlama from Meta AI is an incredible gift to open source AI.

As expected, the community has already got to work putting it on Ollama and MLX for you to run locally.

PART 1: High level Discord summaries

TheBloke Discord Summary

- Miqu Model Sparks Intrigue and Debate: The Miqu model has sparked debate among users like @itsme9316 and @rombodawg, who wonder whether it is a leak of Mistral Medium or a fine-tuned version of Llama-2-70b. Concerns about AI ethics and the future risks of AI were also discussed, including the misuse of powerful models and the challenges of achieving proper alignment.

- Aphrodite Engine’s Performance Highlighted: Users shared their experiences with the Aphrodite engine, reporting impressive performance of 20 tokens per second on A6000 GPUs with configurations like `--kv-cache-dtype fp8_e5m2`, though concerns were raised about GPU utilization and support for model splitting.

- Role-Playing AI Models Discussed for Repetitiveness and Performance: In role-playing settings, models like Mixtral and Flatdolphinmaid struggled with repetitiveness, while Noromaid and Rpcal performed better. @ks_c recommended ChatML with Mixtral Instruct for role-play, and Direct Preference Optimization (DPO) was highlighted as a way to improve AI character responses.

- Learning and Fine-Tuning on ML/DL: @dirtytigerx advises that CUDA programming isn’t essential for starting with ML/DL, recommending the Practical Deep Learning for Coders course by fast.ai for a solid foundation. Discussions included VRAM requirements for fine-tuning large models like Mistral 7B, and optimizers such as paged 8-bit Lion or Adafactor for efficient VRAM usage.

- Enhancing GitHub Project Navigation and Understanding Codebases: Guidance on understanding GitHub projects for contributions was offered, with `start.py` in the tabbyAPI project highlighted as an example entry point. The importance of IDEs or editors with language servers for efficient code navigation was discussed, along with the release of Code Llama 70B on Hugging Face and its potential in code generation and debugging across several programming languages.

Nous Research AI Discord Summary

- Activation Beacon Revolutionizes LLM Context Length: The Activation Beacon project introduces a significant advancement, enabling unlimited context length in LLMs through “global state” tokens. This development, as shared by @cyrusofeden, could dramatically alter the approach to Dense Retrieval and Retrieval-augmented LLMs; details are available on GitHub.

- Eagle-7B Surpasses Mistral with RWKV-v5 Architecture: Eagle-7B, based on the RWKV-v5 architecture, has been highlighted as outperforming even Mistral in benchmarks, presenting a promising open-source, multilingual model with efficiency gains. It boasts lower inference costs while maintaining high English performance (source).

- OpenHermes2.5 Touted as Ideal for Consumer Hardware: OpenHermes2.5 was recommended as ideal for running on consumer hardware thanks to its GGUF or GPTQ quantizations, positioning it as a leading open-source LLM for question-answering. Further discussions also explored the Nous Hermes 2 Mixtral 8x7B DPO on Hugging Face.

- Exploration into Multimodal and Specific Domain LLMs: Discussions around using the IMP v1-3b model for classification tasks in orbital imagery suggest a growing interest in multimodal models and their application in specific domains. The dialogue extends to comparisons with other models like Bakllava and Moondream, highlighting the prowess of Qwen-vl in this space.

- Collective Ambition for Centralized AI Resources: The community’s efforts to centralize AI resources and research, spearheaded by @kquant, demonstrate a recognized need for a consolidated repository. This initiative aims to streamline access to AI papers, guides, and training resources, inviting contributions to enhance the resource pool for enthusiasts and professionals alike.

LM Studio Discord Summary

- GPU Choice for LLM Work: Despite older P40/P100 GPUs having more VRAM, the newer 4060Ti is recommended for training Large Language Models (LLMs) because it still receives current updates from Nvidia. Participants, including @heyitsyorkie, discussed GPU preferences, noting the importance of updates over VRAM speed.

- Model Compatibility and Format Updates: LMStudio’s shift from GGML to GGUF format for models, as notified by @heyitsyorkie, indicates a move towards more current standards. Further, discussions highlighted the utility of dynamically loading experts in models for MoE (Mixture of Experts) applications within LMStudio.

- Performance Issues and UI Challenges in LMStudio Beta: Users @msz_mgs and @heyitsyorkie reported performance lag when inserting lengthy prompts and navigational difficulties within the UI. These issues are recognized and slated for resolution in future updates.

- Exploration of VRAM and GPU Utilization: Discussions led by @aswarp and @heyitsyorkie explored the unexpected VRAM overheads for running models in LMStudio, highlighting nuances in offloading models to GPUs including the RTX 3070 and 4090. Rumors about an upcoming RTX 40 Titan with 48GB VRAM stirred community discussions, linking to broader market strategies and repurposing GPUs for AI.

- Beta Updates and Cross-Platform Compatibility Queries: The announcement of Beta V2 indicated progress on VRAM estimates and compatibility issues. Queries about Mac Intel versions, Linux appimage availability, and WSL (Windows Subsystem for Linux) support underscored the community’s interest in broadening LMStudio’s platform compatibility.

OpenAI Discord Summary

-

RAG Demystified: In response to @aiguruprasath’s query, Retrieval Augmented Generation (RAG) was explained by @darthgustav. as AI with the capability to directly access knowledge for searching data or semantic matching, enhancing its decision-making processes.

- Prompt Engineering Evolves: @madame_architect shared a prompt engineering technique: "Please critique your answer. Then, answer the question again." This method, inspired by a significant research paper, aims to notably improve the quality of AI outputs in complex querying.

- Code Generation Optimized: Frustrations with ChatGPT-4 failing to generate accurate code were addressed by specifying tasks, languages, and architectures in requests, a tip shared by @darthgustav. to assist @jdeinane in achieving better code generation outcomes.

-

Navigating AI Ethics via Conditional Imperatives: An innovative ethical moderation strategy involving a 2/3 pass/fail system based on utilitarianism, deontology, and pragmatism was discussed. @darthgustav. also introduced using conditional imperatives directing AI to consult LEXIDECK_CULTURE.txt for resolving ethical dilemmas, enhancing AI’s decision-making framework.

-

GPT-4’s Limits and Quirks Uncovered: Conversations in the community highlighted GPT-4 Scholar AI limitations, such as error messages indicating the need for a premium subscription for advanced research, and the unresolved struggle with accessing beta features. Users shared tips on managing conversation lengths and error workarounds, emphasizing community-based troubleshooting and innovation in AI interaction strategies.
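
The self-critique prompt quoted in this summary can be sketched as a two-turn exchange. This is only an illustration under stated assumptions: `ask` is a hypothetical callable that takes a list of chat messages and returns the model’s reply as a string, and the role/content message format is likewise assumed rather than tied to any particular API.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# The follow-up wording quoted in the summary.
CRITIQUE_PROMPT = "Please critique your answer. Then, answer the question again."


def answer_with_self_critique(question: str, ask: Callable[[List[Message]], str]) -> str:
    """Ask once, then ask the model to critique itself and answer again.

    `ask` is a hypothetical stand-in for whatever chat client is in use.
    """
    messages: List[Message] = [{"role": "user", "content": question}]
    first_answer = ask(messages)

    # Feed the first answer back so the critique has something to refer to.
    messages.append({"role": "assistant", "content": first_answer})
    messages.append({"role": "user", "content": CRITIQUE_PROMPT})
    return ask(messages)
```

The second reply is returned as the final answer; whether it actually improves on the first is something to measure for a given model and task.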

OpenAccess AI Collective (axolotl) Discord Summary

-

SmoothQuant Boosts LLM Throughput: A discussion centered around SmoothQuant, a new quantization method from MIT’s Han Lab aimed at LLMs beyond 100 billion parameters, which yielded a 50% throughput improvement for a 70B model as noted in Pull Request #1508. However, integrating new technologies like SmoothQuant into systems like vLLM could be challenging due to feature bloat.

-

Exploring Efficient Processing of Long Contexts in LLMs: Insights on managing the limited context window of LLMs highlighted the Activation Beacon and RoPE enhancements for improved long context processing. This exploration suggests a route to maintaining performance when dealing with extended contexts, an essential factor for technical applications.

-

Quantization Confusion and Potential on HuggingFace: A misattributed 70B model on HuggingFace led to discussions about quantization’s role and its impact, with specific mentions of the utility and misconceptions surrounding quantized models like Miqu and Mistral Medium. The complexity of model quantization and its implications on memory efficiency surfaced further with LoftQ, where out-of-memory errors were reported despite expected benefits.

-

Model Training and Inference Efficiencies Unpacked: Conversations traversed effective hardware setups for training, notably NVIDIA H100 SXM GPUs, and inference strategies, emphasizing the trade-offs in quantization and the necessity of hardware benchmarking before acquisition. This included a dive into vLLM for serving fp16 models and the hardware-dependent nature of performance, underlining the variegated paths to optimizing model operations.

-

Fine-Tuning and Configurations for Enhanced Model Performance: Various contributions highlighted the importance of appropriate fine-tuning and model configurations, pointing to resources like FlagEmbedding Fine-tuning documentation and LlamaIndex guidelines. The dialogue underscored the necessity for precise setup in achieving improved outputs and more meaningful embedding representations, alongside the innovative approach of creating synthetic instruction datasets for heightened model effectiveness.

Mistral Discord Summary

-

Clarification on Mistral and “Leak” Claims: There was confusion whether a newly emerged model was a leaked version of Mistral, eventually identified not to be Mistral or a leak but possibly a LLaMa model fine-tuned with Mistral data. The community called for official clarification from Mistral staff on the matter.

-

Quantization Affects AI Model Performance: Dense models, speculated to be close approximations of Mistral, could suffer significantly from quantization effects, highlighting the technical nuances of model performance degradation.

-

Challenges in Adapting Mistral for New Languages: Concerns were raised about the vocabulary mismatch when training Mistral in a new language, with suggestions that starting from scratch might not be feasible due to resource requirements, and pretraining might be necessary.

-

VRAM and Token Limitations for Mistral Models: Users reported inconsistencies between VRAM requirements stated by Mistral AI 7B v0.1 models and actual user experiences. Further, token limits for Mistral API text embeddings were clarified, with a max token limit confirmed to be 32k for tiny/small/medium models and 8192 for the embedding API.

-

API and Client Discussions Indicate Expansion and Technical Interest: Discussions about the maximum rate limit for Mistral APIs in production indicated an interest in expansion, with initial limits starting at 2 requests/second. A proposal for a new Java client, langchain4j, for Mistral AI models showcases community-led development efforts outside the primary documentation.

Eleuther Discord Summary

-

New Research and Critical Views on AI Policy Unveiled: AI Techniques in High-Stakes Decision Making introduces insights into deploying AI in critical contexts. Meanwhile, the Biden Administration’s AI regulation fact sheet draws critique for vague policy directions and the proposal of AI/ML education in K-12 schools, raising concerns about effectiveness and implementation.

-

Deep Dive into Model Efficiency and Algorithmic Innovations: Discussions in the #research channel revolve around model efficiency, with techniques like seed-based vocab matrices and gradient preconditioning under examination. Softmax bottleneck alternatives and data preprocessing challenges are hot topics, alongside the exploration of attention mechanisms, alluding to the complexity of model optimization and the pursuit of alternatives.

-

Introducing TOFU for Model Unlearning Insights: TOFU benchmark’s introduction, as found in arXiv:2401.06121, sets the stage for advanced machine unlearning methodologies, sparking interest in the efficacy of gradient ascent on ‘bad data’ as an unlearning strategy. This benchmark aims to deepen the understanding of unlearning in large language models through the use of synthetic author profiles.

-

Apex Build Insights Offer Potential Optimizations: In #gpt-neox-dev, discussions reveal challenges and novel solutions for building apex, especially on AMD MI250X GPUs. Highlighted solutions include specifying target architectures to reduce build time and exploring CUDA flags for optimization, in a practical conversation focused on improving development efficiency.

-

AI Community Eager for Experimentation and Offline Flexibility: A notable enthusiasm for new work and exploratory tests is evident, complemented by a practical inquiry about downloading tasks for offline use. This interest underscores the community’s drive for innovation and the adaptability of tools for diverse usage scenarios.

HuggingFace Discord Summary

-

AI Etiquette Guide for PR Tagging: Community members were reminded not to tag individuals for PR reviews if they’re unrelated to the repo, sparking a discussion about community etiquette and fairness in PR handling.

-

Patience and Strategy in AI Development: Discussions around the development of continuous neural network models and the successful implementation of a deepfake detection pipeline underlined the community’s focus on innovative AI applications and patience in the PR review process.

-

AI Models for Political Campaigns Raise Ethical Questions: A dialog unfolded about the use of AI in elections, suggesting both controversial methods like fraud and ethical approaches like creating numerous videos of candidates. This discussion extended to inquiries about technical specifics and recommendations for text-to-image diffusion models.

-

Deep Learning’s Impact on Medical Imaging: Articles shared from IEEE highlighted breakthroughs in Medical Image Analysis through deep learning, contributing to the guild’s repository of important AI developments in healthcare.

-

Innovative Projects and Demonstrations in AI: Community members showcased various projects, including a Hugging Face Spaces demo for image models, a resume question-answering space, and an app that converts Excel/CSV files into database tables. A YouTube video demonstrating Mistral model performance on Apple Silicon was also featured.

-

Technical Challenges and GPU Acceleration in NLP and Computer Vision: Discussions in the NLP and Computer Vision channels focused on achieving GPU acceleration with llama-cpp and troubleshooting errors in fine-tuning Donut docvqa, reflecting the technical hurdles faced by AI engineers in their work.

Latent Space Discord Summary

-

ColBERT Sparks Interest Over Traditional Embeddings: Simon Willison’s exploration of ColBERT has ignited a debate on its effectiveness against conventional embedding models. Despite growing curiosity, the lack of direct comparative data between ColBERT and other embedding models was highlighted as a gap needing closure.

-

Simon Willison Addresses AI’s Human Element: A feature on Simon Willison delves into how AI intersects with software development, emphasizing the importance of human oversight in AI tools. This has spurred further discussion among engineers regarding AI’s role in augmenting human capabilities in software development.

-

“Arc Search” Anticipated to Alter Web Interactions: The introduction of Arc Search, a novel iOS app, could potentially revolutionize web browsing by offering an AI-powered search that compiles web pages based on queries. The community speculates this could present a challenge to the dominance of traditional search engines.

- Voyage-Code-2 Touted for Superior Code Retrieval: The unveiling of Voyage-Code-2 was met with enthusiasm, with promises of improved performance in code-related searches. The conversation, primarily driven by @swyxio, revolved around the model’s potential benchmarking on MTEB and its implications for embedding specialization.

- Claude Models by Anthropic Underrated?: The debate over Anthropic’s Claude models versus those by OpenAI unveiled a consensus that Claude might be underappreciated in its capabilities, particularly in summarization and retrieval tasks. This discussion shed light on the need for a more nuanced comparison across AI models for varied applications.

DiscoResearch Discord Summary

-

MIQU Misunderstanding Resolved: @aiui’s speculation about the MIQU model being a Mistral Medium leak was addressed by @sebastian.bodza, who pointed to a tweet by Nisten and to further information showing that the model uses a LLaMA tokenizer, as shown in another tweet.

-

German Fine-tuning Frustrations and Triumphs: @philipmay and @johannhartmann exchanged experiences on fine-tuning models for German, with notable attempts on Phi 2 using the OASST-DE dataset and enhancing Tiny Llama with German DPO, documented in TinyLlama-1.1B-Chat-v1.0-german-dpo-Openllm_de.md. Questions about a German Orca DPO dataset led to revealing an experimental dataset on Hugging Face.

-

Breaking New Grounds with WRAP: @bjoernp shared insights into Web Rephrase Augmented Pre-training (WRAP), an approach aimed at improving data quality for language models, as detailed in a recent paper (Web Rephrase Augmented Pre-training) by Apple.

-

Challenges with CodeT5 and Training Data: In the saga of implementing Salesforce’s CodeT5 embeddings, @sebastian.bodza faced technical hurdles and engaged in discussions about the creation of “hard negatives” and the development of training prompts for text-generation, sharing a specific notebook for review.

-

Clarifying Retrieval Model Misconceptions: The discourse on generating a passage retrieval dataset for RAG pointed out common misconceptions regarding the selection of “hard negatives” and dataset construction, with an aim to clarify the purpose and optimal structure for such data, indicative of ongoing improvements and contributions, as seen in embedded GitHub resources.

LangChain AI Discord Summary

-

LangChain Sparks New Developments: @irfansyah5572 sought assistance on answer chains with LangChain, embedding a GitHub link to their project, while @oscarjuarezx is pioneering a PostgreSQL-backed book recommendation system utilizing LangChain’s capabilities.

-

Enhancing PDF Interactions with LangChain: @a404.eth released a YouTube tutorial on developing a frontend for PDF interactions, marking the second installment in their educational series on leveraging LangChain and related technologies.

- Cache and Efficiency in Focus: Technical discussions centered on `InMemoryCache`’s role in reducing inference times for LlamaCPP models; a shared GitHub issue highlighted challenges in cache performance.

- Lumos Extension Lights Up Web Browsing: @andrewnguonly introduced Lumos, an open-source Chrome extension powered by LangChain and Ollama, designed to enrich web browsing with local LLMs, inviting community feedback via GitHub and Product Hunt.

-

Tutorials Drive LangChain Mastery: New tutorials like Ryan Nolan’s beginner-friendly guide to LangChain Agents for 2024 and @a404.eth’s deep-dive into RAG creation using LangChain emphasize community-building knowledge and skill development; both are available on YouTube.

LlamaIndex Discord Summary

-

New Complex Classifications Approach with LLMs: @KarelDoostrlnck introduces a novel method utilizing Large Language Models (LLMs) for addressing complex classifications that encompass thousands of categories. This technique involves generating a set of predictions followed by retrieval and re-ranking of results, detailed in the IFTTT Announcement.

-

LlamaIndex.TS Receives a Refresh: Upgrades and enhanced documentation have been implemented for LlamaIndex.TS, promising improvements for users. The update announcement and details are shared in this tweet.

-

$16,000 Up For Grabs at RAG Hackathon: A special in-person hackathon focusing on Retrieval-Augmented Generation (RAG) technology, dubbed the LlamaIndex RAG-A-THON, announces prizes totaling $16,000. Event specifics can be found in the hackathon details.

-

Exploration of Llama2 Commercial Use and Licensing: Community members discuss the potential for commercial utilization of Llama2, with detailed licensing information suggested to be reviewed on Meta’s official site and in a deepsense.ai article.

-

Advanced Query Strategy Enhancements with LlamaPacks: @wenqi_glantz, in collaboration with @lighthouzai, evaluates seven LlamaPacks showcasing their effectiveness in optimizing query strategies for specific needs. The evaluation results are available in this tweet.

LAION Discord Summary

-

MagViT2 Faces Training Hurdles: Technical discussion highlighted difficulties with MagViT2, where pixelated samples emerged after 10,000 training steps. Solutions suggested include adjusting the learning rate and loss functions, referencing the MagViT2 PyTorch repository.

-

Controversy Sparked by Nightshade: Drhead criticized the irresponsible release of Nightshade, while others like Astropulse underscored concerns regarding the tool’s long-term effects and effectiveness. Strategies to counteract Nightshade’s potential threats were also debated, including finetuning models and avoiding targeted encoders.

-

Debating the Need for New Data in AI: The necessity of new data for enhancing AI models was questioned, with pseudoterminalx and mfcool arguing that the focus should instead be on data quality and proper captioning to improve model performance.

-

Activation Beacon Promises Context Length Breakthrough: A discussion on “Activation Beacon” suggests it could enable unlimited context lengths for LLMs, cited as a significant development, with a LLaMA 2 model achieving up to 400K context length after training. Read the paper and check the code.

-

Optimizing Data Storage for Image Datasets: Conversations around datasets’ storage questioned the efficiency of parquet vs. tar files, with a hybrid method of using parquet for captions and tar for images being touted as a potentially optimal solution. Webdatasets and tarp were recommended for high-performance Python-based I/O systems for deep learning, with links shared for further investigation: Webdataset GitHub, Tarp GitHub.

Perplexity AI Discord Summary

-

Choosing the Right AI Model Gets Easier: Users explored the best scenarios for utilizing AI models like Gemini, GPT-4, and Claude, finding technical FAQs helpful for making informed decisions.

-

Perplexity’s Library Feature: An issue where the library sidebar wasn’t showing all items was discussed, concluding it’s a feature, not a bug, displaying only the last eight threads/collections.

-

No Crypto Tokens for Perplexity Project: Queries regarding a potential cryptocurrency tied to Perplexity were addressed, confirming no such token exists.

-

Innovative Uses for AI in Applications: Developers are creatively integrating AI into projects such as a delivery-themed pomodoro app, using AI to generate names and addresses, highlighting AI’s versatility in enhancing app functionalities.

-

Perplexity API Awaits Custom Stop Words Feature: The integration of custom Stop Words in the pplx-api with zed.dev editor is anticipated, aiming to provide a rich alternative to the default OpenAI models for enhanced ‘assistant’ features in editing applications.

LLM Perf Enthusiasts AI Discord Summary

- Triton: Balancing Control and Complexity: @tvi_ emphasized that Triton offers a sweet spot for AI engineers, providing more control than PyTorch with less complexity than direct CUDA, ideal for those seeking efficiency without delving deep into CUDA’s intricacies.

- An Odyssey of CUDA Optimization for RGB to Grayscale: Amidst attempts to optimize an RGB to Grayscale conversion, @artste and @zippika navigated through struct usage, vectorized operations, and memory layout variations, with a journey full of trials including unexpected performance results and the discovery that `__forceinline__` can significantly improve kernel performance.

- In Search of CUDA Wisdom: After facing a confusing performance benchmark, @andreaskoepf suggested that @zippika delve into memory optimization techniques and vectorized memory access to enhance CUDA performance, supported by insights from an NVIDIA developer blog.

- Unraveling the Mysteries of PMPP: Queries surrounding concepts from Programming Massively Parallel Processors (PMPP), from unclear questions in Chapter 2 to understanding memory coalescing and banking in Chapter 6, were addressed, showcasing the community’s effort to demystify dense technical material for collective advancement.

- Learning Continues on YouTube: @andreaskoepf shared a new educational video pertaining to AI engineering, fostering the community’s continuous learning outside the written forums.
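
For readers following the RGB-to-grayscale thread, here is a plain-Python reference of the per-pixel computation such a kernel performs. It is meant as a correctness check to compare kernel output against, not as a fast implementation. The interleaved RGBRGB… byte layout and the BT.601 luma weights (0.299, 0.587, 0.114) are assumptions; the discussion summarized here does not say which layout or weights were used.

```python
def rgb_to_grayscale(rgb: bytes) -> bytes:
    """Convert interleaved 8-bit RGB pixels to 8-bit grayscale.

    Assumes an RGBRGB... layout and BT.601 luma weights; both are
    illustrative choices, not taken from the original discussion.
    """
    if len(rgb) % 3 != 0:
        raise ValueError("expected 3 bytes per pixel")

    gray = bytearray(len(rgb) // 3)
    for i in range(len(gray)):
        # In a CUDA kernel, each thread would typically handle one value of i.
        r, g, b = rgb[3 * i], rgb[3 * i + 1], rgb[3 * i + 2]
        # Round to nearest and clamp to the 8-bit range.
        gray[i] = min(255, int(0.299 * r + 0.587 * g + 0.114 * b + 0.5))
    return bytes(gray)
```

With an interleaved layout, each pixel’s three bytes sit next to each other, which is one of the memory-layout variations the thread compared against alternatives.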

LLM Perf Enthusiasts AI Discord Summary

- Writing Woes and Wins: Engineers in the guild voiced frustrations and hopes around writing assistant tools. While @an1lam lamented the limitations of current tools and the lack of alternatives like lex.page, @calclavia offered a glimmer of hope by introducing jenni.ai for academic writing, implying it may address some of these concerns.

- Startups Struggle and Strategize with Cloud Costs: The economic challenges of utilizing Google Workspace for startups were highlighted by @frandecam, whereas @dare.ai countered with a solution: the Google Cloud for Startups program, which promises up to $250,000 in Google Cloud credits but comes with a warning of potential application delays.

- Gorilla Grabs Attention with Open Source Solution: The Gorilla OpenFunctions project stirred interest among engineers, offering an open-source alternative for executing API calls via natural language, detailed through a blog post and a GitHub repository. This innovation aims to streamline the integration of API calls with Large Language Models (LLMs).

- Copyright Quandaries for Creative AI: @jxnlco broached the issue of a prompt investing vision model bumping up against copyright restrictions in its attempt to read complex labels, highlighting a common challenge for developers navigating the interface between AI outputs and intellectual property rights.

- Mistral Medium Buzz: A single message from thebaghdaddy queried the community’s take on Mistral Medium’s potential to ascend as a rival to GPT-4, sparking curiosity about its capabilities and position in the ever-evolving LLM landscape.

Skunkworks AI Discord Summary

- Frankenstein Model Comes to Life: @nisten has embarked on a pioneering experiment by combining all 70b CodeLlamas into a composite BigCodeLlama-169b model, aiming to set new benchmarks in AI performance. This Franken-model is believed to enhance coding problem-solving capabilities, including calculating Aldrin cycler orbits.

- Tech Meets Humor in AI Development: In the midst of advancing AI with the BigCodeLlama-169b, @nisten also sprinkled in humor, sharing a “lolol” moment that underscored the lighter side of their high-stakes, technical endeavors.

- Cutting Edge AI Research Shared: @pradeep1148 introduced community members to RAGatouille, a new library that simplifies working with the ColBERT retrieval model, known for its speed and accuracy in scalable behavior summarization.

- Eagle 7B Soars over Transformers: A significant leap in the RWKV-v5 architecture has been showcased in @pradeep1148’s Running 🦅 Eagle 7B on A40, which details the Eagle 7B’s capability to process 1 trillion tokens across over 100 languages.

- Community and Innovation Go Hand-in-Hand: Amid the innovations and technical discussions, personal interactions like a friendly check-in from @zentorjr towards @nisten highlight the Skunkworks AI community’s supportive and spirited nature.

Datasette - LLM (@SimonW) Discord Summary

- Emoji Insight from Short Texts: A new demo named Emoji Suggest was showcased by @dbreunig, capable of translating short sentences or headlines into a recommended emoji using the CLIP model. The source code for the emoji suggestion tool leverages precomputed embeddings for emojis, enabling fast and sophisticated search capabilities.

- Harnessing Embeddings for Sophisticated Search: @dbreunig highlighted the effectiveness of using embeddings to quickly develop sophisticated search tools, stressing the importance of careful option curation for optimal search functionalities. This principle is applied in their emoji suggestion tool, exemplifying its utility.

- AI Believes in Its Positive Impact: In a shared conversation, @bdexter noted an AI, specifically “llama2”, professing its belief that artificial intelligence is a force for good, aimed at aiding humans in actualizing their potential. This underscores a constructive perspective AI can have on enhancing human endeavors.

- Acknowledgment for ColBERT Writeup: The ColBERT writeup on TIL received appreciation from @bewilderbeest, highlighting the value of shared code snippets and the innovative heatmap visualization of words in search results. This indicates the community’s interest in practical applications of AI research and shared knowledge.
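
The precomputed-embedding search described in this summary comes down to a nearest-neighbour lookup. Below is a minimal sketch, assuming tiny hand-written vectors in place of real CLIP embeddings. The `EMOJI_EMBEDDINGS` table and the `suggest_emoji` function are hypothetical names for illustration and are not taken from the Emoji Suggest source code; in practice the query vector would come from the same model that produced the stored embeddings.

```python
import math
from typing import Dict, List, Sequence

# Toy 3-dimensional vectors standing in for precomputed emoji embeddings.
EMOJI_EMBEDDINGS: Dict[str, List[float]] = {
    "🌊": [0.9, 0.1, 0.0],
    "🎉": [0.0, 0.9, 0.2],
    "🚀": [0.1, 0.2, 0.9],
}


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def suggest_emoji(query_embedding: Sequence[float]) -> str:
    """Return the emoji whose stored embedding is closest to the query."""
    return max(
        EMOJI_EMBEDDINGS,
        key=lambda emoji: cosine_similarity(query_embedding, EMOJI_EMBEDDINGS[emoji]),
    )
```

Because the emoji vectors are computed once up front, each query costs one embedding call plus a scan over a small table, which is why curating the candidate list matters as much as the model.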

Alignment Lab AI Discord Summary

- GPT-5 Training Sparks Curiosity and Confirmation: @entropi ignited discussions with a YouTube video speculating on whether GPT-5 training has started, based on exclusive interviews and insights. Following the speculation, @lightningralf confirmed that GPT-5 training is indeed underway, though no further details were shared.

AI Engineer Foundation Discord Summary

- ChatGPT versus Bard in the Captcha Arena: @juanreds highlighted an intriguing distinction in the approach to user interactions and security measures: ChatGPT actively moderates Captcha images, whereas Bard does not. This indicates a possibly significant divergence in security protocols and user interface design philosophies between the two AI systems.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1403 messages🔥🔥🔥):

- Miqu Continues to Stir Discussions: Users like @itsme9316 and @rombodawg debate whether the Miqu model could be a leak from Mistral Medium or a fine-tuned version of Llama-2-70b, highlighting its exceptional performance across various tasks. There’s speculation about its origins, with some suggesting it could be a rogue project or an unfinished Llama3 version.

- Concerns on AI Ethics and Future Risks: Discussion among users like @mrdragonfox, @selea, and @spottyluck touched on the ethical implications of AI development, the misuse of powerful models by governments or malicious actors, and the potential existential risks of a fully aligned or “rogue” AI. The conversation spans the necessity of counter-propaganda tools and the intrinsic challenges of achieving proper alignment.

- Aphrodite Engine Gains Traction: Users share their experiences with the Aphrodite engine, suggesting it delivers impressive performance with configurations like `--kv-cache-dtype fp8_e5m2` for 20 tokens per second on A6000 GPUs. Concerns were raised about its GPU utilization and whether it supports model splitting across multiple GPUs.

- Speculation on AI Distribution and the Role of Scraps: The channel sees a philosophical exchange about the impact and contribution of smaller developers (“small fry”) in the context of major foundational models controlled by large entities. There’s acknowledgment of the limitations faced by individuals without access to substantial compute resources.

- User Banter and Theoretical Considerations: Light-hearted exchanges and theoretical musings about AGI, societal control through AI, and the potential outcomes of unrestricted model development. Discussions included whimsical ideas about AI’s role in future governance and the balance of using AI for public good versus its potential for misuse.

Links mentioned:

- GGUF VRAM Calculator - a Hugging Face Space by NyxKrage: no description found

- Scaling Transformer to 1M tokens and beyond with RMT: This technical report presents the application of a recurrent memory to extend the context length of BERT, one of the most effective Transformer-based models in natural language processing. By leverag…

- LoneStriker/CodeLlama-70b-Instruct-hf-GGUF at main: no description found

- miqudev/miqu-1-70b · Hugging Face: no description found

- Forget Memory GIF - Will Smith Men In Black - Discover & Share GIFs: Click to view the GIF

- The Humans Are Dead - Full version: Both parts of “Robots,” a song featured in the pilot episode of Flight of the Conchords. It’s also the ninth track on their self-titled full length that was …

- exllamav2/examples/batched_inference.py at master · turboderp/exllamav2: A fast inference library for running LLMs locally on modern consumer-class GPUs - turboderp/exllamav2

TheBloke ▷ #characters-roleplay-stories (184 messages🔥🔥):

- Repetitiveness Challenges in Roleplay Models: Users, including @ks_c and @superking__, discussed the challenge of repetitiveness in various AI models like Mixtral and Flatdolphinmaid when used in role-playing settings. Some models like Noromaid and Rpcal were noted for their better performance in avoiding repetition.

- Exploring the Best Models for Roleplay: @ks_c recommended ChatML with Mixtral Instruct for role-playing sessions, while @superking__ shared experiences with models getting repetitive after a certain token threshold and suggested experimenting with smaller models to develop an anti-repeat dataset.

- Unique Approaches to Tackling Repetitiveness: @superking__ discussed experiments involving Direct Preference Optimization (DPO) to address issues like repetitiveness, editing AI character responses to create good examples.

- Model Comparisons and Performance Feedback: @ks_c brought up comparisons between models like Mistral Medium and Miqu, with other users like @flail_. weighing in on formatting and model sensitivity, emphasizing the importance of experimental setups and comparisons.

- Recommendations for Role-Playing Models: In the search for the best LLM for role-playing, models like GPT-4, BagelMisteryTour rpcal, and Noromaid Mixtral, among others, were recommended by users such as @ks_c for different use-cases, from high token lengths to NSFW content.

Links mentioned:

- ycros/BagelMIsteryTour-v2-8x7B-GGUF · Hugging Face: no description found

- Ayumi Benchmark ERPv4 Chat Logs: no description found

TheBloke ▷ #training-and-fine-tuning (21 messages🔥):

- CUDA Not Essential for Learning ML/DL: @dirtytigerx advises @zohaibkhan5040 against prioritizing CUDA programming for ML/DL, suggesting it’s a niche skill not crucial for starting with neural networks. Instead, they recommend the Practical Deep Learning for Coders course by fast.ai for a solid foundation.

- Beyond Basic ML with Axolotl: For advanced finetuning learning, @dirtytigerx suggests diving into the source code of projects like Axolotl. Explore the Axolotl GitHub repository for hands-on learning.

- Peeling Back Layers of ML Libraries: @dirtytigerx explains that complex ML software stacks involve several repositories, including HuggingFace Transformers, PyTorch, and more, hinting at the value of exploring these to better understand how ML works.

- VRAM Requirements for Fine-tuning Large Models Discussed: @flashmanbahadur inquires about VRAM needs for finetuning Mistral 7B, with @dirtytigerx indicating a substantial amount, around 15-20x the model size when using AdamW, and suggesting SGD as a less resource-intensive alternative.

- Exploration of Optimizers for Large Models: Following a quest for efficient VRAM usage, @saunderez humorously points to the evolving landscape of optimizer technology, suggesting cutting-edge options like Paged 8bit lion or adafactor for large language models.
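To make the optimizer comparison above concrete, here is a back-of-the-envelope sketch. It assumes fp32 weights and gradients, two fp32 moment buffers per parameter for AdamW, plain SGD without momentum, and a round 7 billion parameters; none of these figures come from the discussion, and activations and framework buffers are ignored, so real usage will be higher.

```python
GIB = 1024 ** 3


def full_finetune_bytes(n_params, weight_bytes=4, grad_bytes=4, optimizer_state_bytes=8):
    """Rough memory for weights + gradients + optimizer state (activations ignored)."""
    return n_params * (weight_bytes + grad_bytes + optimizer_state_bytes)


n_params = 7_000_000_000  # assumed round figure for a "7B" model

# AdamW keeps two extra values per parameter (first and second moments).
adamw = full_finetune_bytes(n_params, optimizer_state_bytes=8)
# Plain SGD (no momentum) keeps no per-parameter optimizer state.
sgd = full_finetune_bytes(n_params, optimizer_state_bytes=0)

print(f"AdamW: {adamw / GIB:.0f} GiB, SGD: {sgd / GIB:.0f} GiB")
```

Under these assumptions the optimizer state alone accounts for half of the AdamW total, which is why lighter optimizers came up in the thread.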

Links mentioned:

- Practical Deep Learning for Coders - Practical Deep Learning: A free course designed for people with some coding experience, who want to learn how to apply deep learning and machine learning to practical problems.

- GitHub - OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

TheBloke ▷ #coding (29 messages🔥):

- Navigating GitHub Projects Made Easy: @opossumpanda inquired about understanding the structure of GitHub projects for contributing. @dirtytigerx explained how to locate the entry point, highlighting the start.py file in the tabbyAPI project as a concrete starting place. They also emphasized using IDE/editor symbol search and GitHub’s global repo search to navigate codebases efficiently.

- Entry Points in Python Explained: @dirtytigerx clarified that in Python projects, files with the conditional block if __name__ == "__main__": signal an entry point for execution. This convention aids in identifying where to begin code exploration.

- IDEs and Editors: Powerful Tools for Code Navigation: @wbsch and @dirtytigerx discussed the importance of using IDEs or editors with language servers for efficient code navigation through features like “go to definition.” They also touched upon the utility of GitHub’s improved search features and Sourcegraph’s VSCode extension for exploring codebases.

- Code Llama Model Released on Hugging Face: @timjanik shared news about the release of Code Llama 70B on Hugging Face, a code-specialized AI model. The announcement details the model’s capabilities in code generation and debugging across several programming languages.

- The Art of Reading Source Code: @animalmachine highlighted that reading source code effectively is a critical, yet underpracticed skill that complements coding. They suggested making educated guesses to navigate codebases efficiently and mentioned the potential demo of Cody for this purpose.
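The entry-point convention mentioned above looks roughly like this in practice. This is a generic sketch, not code from tabbyAPI; the main function and its greeting are hypothetical.

```python
import sys


def main(argv):
    """Hypothetical entry point: read arguments, then hand off to the rest of the program."""
    name = argv[1] if len(argv) > 1 else "world"
    return f"hello, {name}"


if __name__ == "__main__":
    # This branch runs only when the file is executed directly
    # (for example `python start.py`), not when it is imported as a module.
    print(main(sys.argv))
```

When exploring an unfamiliar repository, searching for this guard is a quick way to find the files that are meant to be run rather than imported.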

Links mentioned:

- codellama (Code Llama): no description found

- tabbyAPI/main.py at main · theroyallab/tabbyAPI: An OAI compatible exllamav2 API that’s both lightweight and fast - theroyallab/tabbyAPI

- GitHub - theroyallab/tabbyAPI: An OAI compatible exllamav2 API that’s both lightweight and fast: An OAI compatible exllamav2 API that’s both lightweight and fast - GitHub - theroyallab/tabbyAPI: An OAI compatible exllamav2 API that’s both lightweight and fast

Nous Research AI ▷ #ctx-length-research (6 messages):

- Explosive Paper on Dynamite AI: @maxwellandrews highlights a significant paper with potential impact on AI research, pointing readers towards this groundbreaking work.

- Activation Beacon to Redefine LLMs: The Activation Beacon project, shared by @maxwellandrews, presents a novel approach for Dense Retrieval and Retrieval-augmented LLMs, featuring a promising GitHub repository available here.

- Potential Solution for Unlimited Context Length: @cyrusofeden dives deep into how the Activation Beacon could allow for unlimited context length in LLMs by introducing “global state” tokens, with a matching paper and code shared in their message.

- The Community Awaits Lucidrains: @atkinsman expresses anticipation for the reaction or possible contributions from well-known developer lucidrains in light of the recent developments in context length solutions.

- Timeless Excitement: Without additional context, @maxwellandrews shared a Tenor GIF, possibly indicating the timelessness or significant wait associated with this advancement.

Links mentioned:

- Tweet from Yam Peleg (@Yampeleg): If this is true it is over: Unlimited context length is here. Activation Beacon, New method for extending LLMs context. TL;DR: Add “global state” tokens before the prompt and predict auto-r…

- FlagEmbedding/Long_LLM/activation_beacon at master · FlagOpen/FlagEmbedding: Dense Retrieval and Retrieval-augmented LLMs. Contribute to FlagOpen/FlagEmbedding development by creating an account on GitHub.

- Old Boomer GIF - Old Boomer History - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #off-topic (44 messages🔥):

- Exploring Advanced AI Models on YouTube: @pradeep1148 shared two YouTube links, one explaining the ColBERT model with RAGatouille (Watch here) and another demonstrating the Eagle 7B model running on A40 hardware (Watch here). The second video received a critique from .ben.com, who recommended less reading from webpages and more insightful analysis.

- Centralizing AI Resources: @kquant is working on setting up a website to consolidate AI research, papers, and guides, expressing frustration with existing scattered resources. They encouraged contributions and offered to organize content into specific niches like text generation, including training, fine-tuning, and prompt templates.

- Resource Recommendations for AI Enthusiasts: In response to @kquant, @lightningralf provided multiple resources, including a Twitter list for papers (Discover here) and GitHub repositories like visenger/awesome-mlops and Hannibal046/Awesome-LLM.

- Inquiries on Data Storage for Large Language Models (LLMs): @muhh_11’s curiosity about how teams like Mixtral or OpenAI store vast amounts of data was met with explanations from .ben.com, @dorialexa, and @euclaise, highlighting the use of common web crawls and efficient storage solutions to handle tens of trillions of tokens.

- Hardware Considerations for AI Model Training: .ben.com offered practical advice, stating that if a model can fit within a single GPU like the 3090, it’s not advantageous to split the load with a lesser GPU like the 1080ti, emphasizing the importance of hardware compatibility in model training efficiency.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Running 🦅 Eagle 7B on A40: 🦅 Eagle 7B : Soaring past Transformers with 1 Trillion Tokens Across 100+ LanguagesA brand new era for the RWKV-v5 architecture and linear transformer’s has…

- Exploring ColBERT with RAGatouille: RAGatouille is a relatively new library that aims to make it easier to work with ColBERT.ColBERT is a fast and accurate retrieval model, enabling scalable BE…

- Hmusicruof4 Rowley GIF - Hmusicruof4 Rowley Diary Of A Wimpy Kid - Discover & Share GIFs: Click to view the GIF

- Capybara Riding GIF - Capybara Riding Alligator - Discover & Share GIFs: Click to view the GIF

- GitHub - Hannibal046/Awesome-LLM: Awesome-LLM: a curated list of Large Language Model: Awesome-LLM: a curated list of Large Language Model - GitHub - Hannibal046/Awesome-LLM: Awesome-LLM: a curated list of Large Language Model

- GitHub - visenger/awesome-mlops: A curated list of references for MLOps: A curated list of references for MLOps . Contribute to visenger/awesome-mlops development by creating an account on GitHub.

- GitHub - swyxio/ai-notes: notes for software engineers getting up to speed on new AI developments. Serves as datastore for https://latent.space writing, and product brainstorming, but has cleaned up canonical references under the /Resources folder.: notes for software engineers getting up to speed on new AI developments. Serves as datastore for https://latent.space writing, and product brainstorming, but has cleaned up canonical references und…

Nous Research AI ▷ #interesting-links (27 messages🔥):

- Token Healing Deemed Complex by .ben.com: .ben.com discusses the complexity of token healing in the exllamav2 class, mentioning a workaround that consumes a variable number of tokens if token_healing=True. The solution proposed involves refactoring for better reusability.

- Alibaba Unveils Qwen-VL, Outperforming GPT-4V and Gemini: @metaldragon01 shares a link to Alibaba’s Qwen-VL announcement, which reportedly outperforms GPT-4V and Gemini on several benchmarks. A demo and a blog post about Qwen-VL are also shared for further insight.

- Karpathy’s Challenge Goes Underdiscussed: @mihai4256 points out the lack of Twitter conversation surrounding Andrej Karpathy’s challenging puzzle, despite its difficulty, which @mihai4256 personally attests to.

- Issues and Solutions with lm-eval-harness and llama.cpp Server: @if_a works through several troubleshooting steps for integrating the Miqu model with the lm-eval-harness, citing a KeyError and RequestException. @hailey_schoelkopf provides solutions, including the use of the gguf model type and corrections to the API URL, which resolved the issues mentioned.

- Breakthrough in LLM Context Length with Activation Beacon: @nonameusr highlights a significant development called Activation Beacon, which allows unlimited context length for LLMs by introducing “global state” tokens. The technique, demonstrated with LLaMA 2 to generalize from 4K to 400K context length, could virtually “solve” the issue of context length limits if reproducible across other models. A paper and code link accompany the post for further exploration.

Links mentioned:

- no title found: no description found

- Tweet from Yam Peleg (@Yampeleg): If this is true it is over: Unlimited context length is here. Activation Beacon, New method for extending LLMs context. TL;DR: Add “global state” tokens before the prompt and predict auto-r…

- GGUF Local Model · Issue #1254 · EleutherAI/lm-evaluation-harness: Is there examples of lm_eval for gruff models hosted locally? lm_eval —model gguf —model_args pretrained=Llama-2-7b-chat-hf-Q4_K_M.gguf, —tasks hellaswag —device mps Getting AssertionError: mus…

- Scholar: no description found

- Andrej Karpathy (@karpathy) on Threads: Fun prompt engineering challenge, Episode 1. Prep: ask an LLM to generate a 5x5 array of random integers in the range [1, 10]. It should do it directly without using any tools or code. Then, ask it …

- Matryoshka Representation Learning: Learned representations are a central component in modern ML systems, serving a multitude of downstream tasks. When training such representations, it is often the case that computational and statistic…

- Tweet from AK (@_akhaliq): Alibaba announces Qwen-VL demo: https://huggingface.co/spaces/Qwen/Qwen-VL-Max blog: https://qwenlm.github.io/blog/qwen-vl/ Qwen-VL outperforms GPT-4V and Gemini on several benchmarks.

Nous Research AI ▷ #general (389 messages🔥🔥):

- Eagle-7B Beats Mistral: @realsedlyf shared excitement over Eagle-7B, an open-source, multi-lingual model based on the RWKV-v5 architecture that outperforms even Mistral in benchmarks, showcasing lower inference costs while maintaining comparable English performance to the best 1T 7B models.

- Mysterious Mistral Medium: A leaked version of Mistral medium triggered a flurry of discussions, with users speculating about its origins and performance. @theluckynick and @kalomaze discussed its potential as a leaked, fine-tuned version based on L2-70B architecture, stirring excitement and skepticism.

- MIQU-1-70B Intrigue: The MIQU-1-70B model sparked debate regarding its identity, with users such as @n8programs and @agcobra1 suggesting it could be a Mistral medium model leaked intentionally or a sophisticated troll project that nevertheless turned out to be highly effective.

- Exploring Frankenstein Merges and Experiments in Model Finetuning: Excitement and curiosity surrounded the release of miquella-120b, a merge of pre-trained language models, and experiments like CodeLlama 70B were discussed. Users explored the idea of finetuning models on specific coding bases or tasks and discussed potential new approaches to inter-model communication and efficiency.

- Blockchain Meets AI: Nous Research’s announcement about using blockchain for model evaluation and potential incentives (mentioned by @realsedlyf and explored further by others) brought both intrigue and skepticism, pointing towards innovative yet controversial methods of model development and validation.

Links mentioned:

- nisten/BigCodeLlama-169b · Hugging Face: no description found

- alpindale/miqu-1-70b-fp16 · Hugging Face: no description found

- Tweet from AI at Meta (@AIatMeta): Today we’re releasing Code Llama 70B: a new, more performant version of our LLM for code generation — available under the same license as previous Code Llama models. Download the models ➡️ https://bi…

- Mark Zuckerberg: We’re open sourcing a new and improved Code Llama, including a larger 70B parameter model. Writing and editing code has emerged as one of the most important uses of AI models today. The ability t…

- Tayomaki Sakigifs GIF - Tayomaki Sakigifs Cat - Discover & Share GIFs: Click to view the GIF

- Facebook: no description found

- nisten/BigCodeLlama-92b-GGUF at main: no description found

- Introducing The World’s Largest Open Multilingual Language Model: BLOOM: no description found

- Continuum | Generative Software Insights: no description found

- Tweet from Q (@qtnx_): @AlpinDale comparison between miqu (left, between q2 and q5) and mistral medium (unknown quantization)

- @conceptofmind on Hugging Face: “A 1b dense causal language model begins to “saturate” in terms of accuracy…”: no description found

- alpindale/miquella-120b · Hugging Face: no description found

- MILVLG/imp-v1-3b · Hugging Face: no description found

- Tweet from simp 4 satoshi (@iamgingertrash): Early Truffle-1 renders Hopefully going to finalize a core design this week, and then start working on the physics of heat dissipation before pre-orders

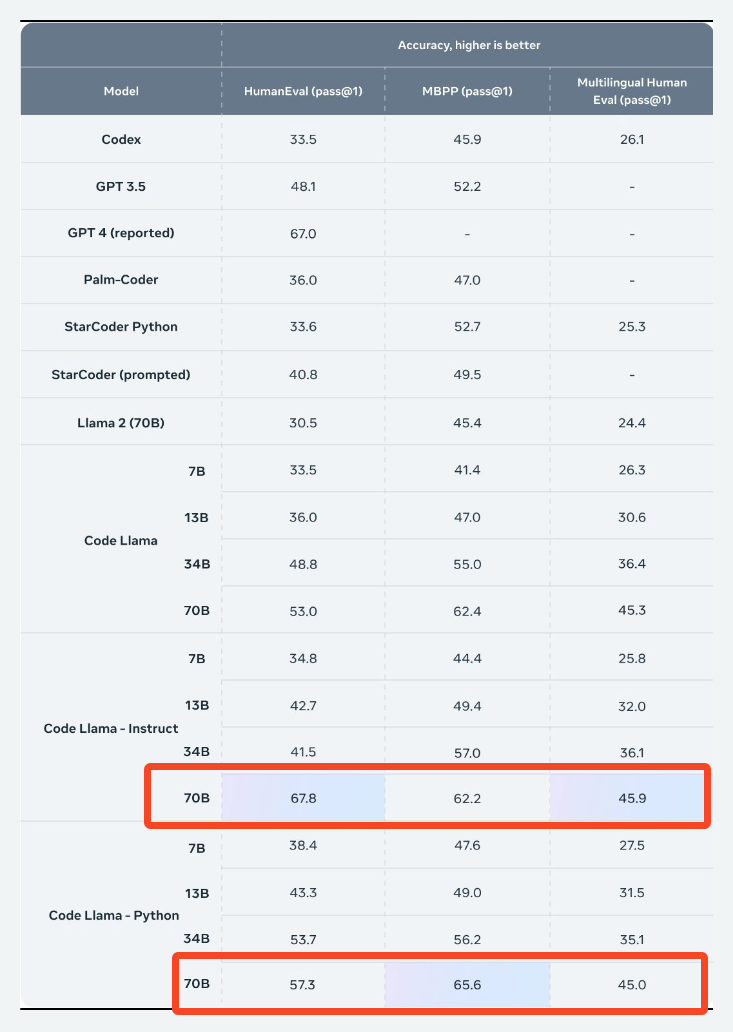

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): Let’s fucking goooo! CodeLlama 70B is here. > 67.8 on HumanEval! https://huggingface.co/codellama/CodeLlama-70b-Instruct-hf

- Tweet from RWKV (@RWKV_AI): Introducing Eagle-7B Based on the RWKV-v5 architecture, bringing into opensource space, the strongest - multi-lingual model (beating even mistral) - attention-free transformer today (10-100x+ l…

- Tweet from NotaCant (@Nota_Cant): There is a fascinating LLM situation on /lmg/ about a potential Mixtral Medium leak. A very good model called Miqu-1-70b was mysteriously dropped by an anon and was originally theorized to be a leak…

- Cat Cats GIF - Cat Cats Explosion - Discover & Share GIFs: Click to view the GIF

- Hal9000 Hal GIF - Hal9000 Hal 2001 - Discover & Share GIFs: Click to view the GIF

- GPT4 reported HumanEval base significantly higher than OpenAI’s reported results · Issue #15 · evalplus/evalplus: Hello, I noticed that GPT4 HumanEval score via the EvalPlus tool reports approx an 88% HumanEval base score. This is substantially higher than OpenAI reports with the official HumanEval test harnes…

- codellama/CodeLlama-70b-Instruct-hf · Hugging Face: no description found

- Breaking Bad Walter White GIF - Breaking Bad Walter White - Discover & Share GIFs: Click to view the GIF

- NobodyExistsOnTheInternet/Llama-2-70b-x8-MoE-clown-truck · Hugging Face: no description found

Nous Research AI ▷ #ask-about-llms (37 messages🔥):

- OpenHermes2.5 Recommended for Consumer Hardware: User @realsedlyf recommends OpenHermes2.5, quantized with gguf or gptq, for running on most consumer hardware, considering it the best open-source LLM for answering questions.

- OpenHermes2.5 Performance and Parameters Inquiry: @mr.fundamentals shared a link to Nous Hermes 2 Mixtral 8x7B DPO, showcasing its achievement. Queries about setting parameters like max tokens and temperature through the prototyping endpoint were resolved with a prompt template provided by @teknium.

- Clarification on NSFW Content in Models: @teknium clarified that the discussed models, including those trained on GPT-4 outputs, do not contain nor specialize in NSFW content, though they don’t exclude it entirely due to a lack of specific training data on refusals.

- Prompting Techniques for Specific Answer Sets: User @exibings sought advice on ensuring model responses align with a predefined set of answers when evaluating over an RSVQA dataset. Techniques involving system prompts and comparison methods like cosine similarity were discussed.

- Exploration of Multimodal Models for Classification Tasks: @exibings discussed using the IMP v1-3b model for a classification dataset related to orbital imagery, highlighting its multimodal capabilities. The conversation extended to comparing it with other models such as Bakllava and Moondream, with mentions of other strong models like Qwen-vl by @rememberlenny.
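The cosine-similarity idea above, mapping a free-form response onto a fixed answer set, can be sketched as follows. This is an illustration only: it uses simple word-count vectors as a stand-in for whatever embeddings were actually discussed, and the answer labels are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Word-count vector; a stand-in for a real sentence embedding (assumption)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def snap_to_answer(response, allowed_answers):
    """Return the allowed answer most similar to the model's free-form response."""
    vec = bag_of_words(response)
    return max(allowed_answers, key=lambda ans: cosine(vec, bag_of_words(ans)))


allowed = ["urban area", "forest", "water body"]  # hypothetical label set
print(snap_to_answer("The image shows a dense forest", allowed))
```

A system prompt that lists the allowed answers would reduce how often this fallback is needed; the similarity step only catches responses that drift from the expected wording.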

Links mentioned:

- MILVLG/imp-v1-3b · Hugging Face: no description found

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face: no description found

LM Studio ▷ #💬-general (177 messages🔥🔥):

- Choosing the Right GPU for LLM Work: In a discussion about which GPU is better for training Large Language Models (LLMs), @heyitsyorkie advised @.mchinaga that despite the older P40/P100 GPUs having more VRAM, the newer 4060Ti is preferred because it receives current updates from Nvidia. This contrasts with @.mchinaga's concerns over the GRAM speed and improvement margin from a 3060Ti.

- Running LLMs on CPU and Compatibility Issues: Responding to @.mchinaga's query on setting the program to CPU-only, @heyitsyorkie stated that LMStudio runs on CPU by default and doesn’t require PyTorch. Meanwhile, @hexacube shared experiences of older GPUs not being recognized due to outdated drivers, emphasizing the importance of compatibility with customer systems.

- GGUF Format and Deprecated Models in LM Studio: @heyitsyorkie clarified for @pudlo and others that LM Studio no longer supports GGML formatted models, only GGUF, highlighting a need to update the platform’s home tab to reflect currently supported formats.

- Questions about Dynamically Loading Experts in Models: Discussion explored dynamically loading experts in models within LM Studio, with @hexacube proposing the utility of such a feature and @heyitsyorkie confirming its feasibility, particularly for MoE (Mixture of Experts) models.

- Python Programming Challenges and Solutions: @.mchinaga faced an error in a Python project involving chatGPT, prompting responses from multiple users like @.ben.com and @dagbs offering troubleshooting advice such as correcting API URLs and removing incompatible response keys.
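Since the fix above hinged on getting the API URL right, here is a minimal sketch of how a chat request to an OpenAI-compatible endpoint is typically assembled. The base URL, port, and model name are assumptions for illustration and were not stated in the thread; the request is built but deliberately not sent.

```python
import json
import urllib.request

# Assumption: a local OpenAI-compatible server; adjust host, port, and path to your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) a POST request for the chat completions route."""
    payload = {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Hello")
print(req.full_url)
```

A common mistake is to omit the /v1 prefix or to append the route twice, so printing the final URL before sending is a cheap sanity check.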

Links mentioned:

- Friends Phoebe GIF - Friends Phoebe Rachel - Discover & Share GIFs: Click to view the GIF

LM Studio ▷ #🤖-models-discussion-chat (92 messages🔥🔥):

- Older Models Still Reign in Some Arenas: @fabguy sparked a discussion by stating that some older models can outperform newer ones in specific tasks. @heyitsyorkie and @msz_mgs echoed this sentiment, highlighting that models like llama 1 aren’t affected by the “AI by OpenAI” trend and perform well in their trained areas.

- Choosing the Right Model for the Task: @vbwyrde expressed the need for a resource or site that can help users select the most suitable model for specific tasks, such as Open-Interpreter for function calling or CrewAI Agents for code refactoring. This information could be especially beneficial for beginners needing guidance on model selection and the required GPU size.

- Curiosity on Coding Models and Language Support: @golangorgohome inquired about the best models for coding, particularly those supporting the Zig language. Following a series of exchanges with @dagbs, it was clarified that while one model type typically suffices for many tasks, specific plugins might offer enhanced code-completion capabilities. @dagbs recommended using LM Studio for ease of use and exploring various models within the LM Studio ecosystem for different needs.

- Model Characteristics Explained: @dagbs provided insights into choosing among different models and suffixes such as -instruct, -chat, -base, DPO, and Laser, explaining their training backgrounds and intended usages. This explanation aimed to demystify the variety of options available and aid @golangorgohome in selecting the best model for their requirements.

- Stability Issues with TinyLlama Versions Explored: @pudlo reported instability and looping issues with TinyLlama Q2_K, seeking advice on improving model performance. @dagbs suggested trying TinyDolphin Q2_K with ChatML for potentially better results, hinting that GPU/CPU issues might contribute to the observed instability. The larger models did not exhibit these problems, suggesting a specific issue with the smaller quant models.

Links mentioned:

- dagbs/TinyDolphin-2.8-1.1b-GGUF · Hugging Face: no description found

- LLMs and Programming in the first days of 2024 - <antirez> : no description found

- OSD Bias Bounty: no description found

- Bug Bounty: ConductorAI - Bias Bounty Program | Bugcrowd: Learn more about Conductor AI’s vulnerability disclosure program powered by Bugcrowd, the leader in crowdsourced security solutions.

LM Studio ▷ #🧠-feedback (14 messages🔥):

- New Beta Version Announced: @yagilb announced a new beta version for testing, sharing a link for the latest beta. Following confusion about the version number, @fabguy clarified that the beta is the next version following 0.2.11.

- Performance Lag with Lengthy Prompts: Users @msz_mgs and @heyitsyorkie reported experiencing performance lag when pasting lengthy text into the prompt text box. The issue was traced to the pasting action itself, not the processing of prompts.

- Navigational Challenges in the UI: @msz_mgs further mentioned difficulties in navigating the UI when dealing with lengthy texts or prompts, particularly in finding icons to delete, copy, or edit text, which requires excessive scrolling. @yagilb acknowledged these as bugs that will be addressed.

- Business Inquiry Follow-Up: @docorange88 reached out to @yagilb regarding a business inquiry, mentioning that they had sent several follow-up emails without receiving a response. @yagilb confirmed receipt of the email and promised a prompt reply.

LM Studio ▷ #🎛-hardware-discussion (38 messages🔥):

- VRAM Overhead for LMStudio Models Explored: @aswarp questioned why models cannot fully offload onto his RTX 3070 with 8GB VRAM. @heyitsyorkie clarified that some VRAM is needed for context length, not just the model size, leading to a discussion on the unexpected VRAM required above the model’s size.

- Misconceptions about Memory Usage: @fabguy countered @aswarp’s assumptions about the memory footprint of transformers and context, emphasizing that transformers encode prompts as positional data, which significantly increases memory usage. He recommended reading a guide on transformers for a deeper understanding.

- GPU Offload Nuances in LMStudio Unveiled: @mudmin inquired about not achieving full GPU offload on a 4090 GPU, despite it having 24GB of VRAM. The conversation revealed that the actual size of an offloaded model includes the model itself, context, and other variables, so finding the limit takes some trial and error.

- Integrated GPU Utilization for Full Model Offloading: Following the discussion on GPU offload capabilities, @mudmin discovered that using an integrated GPU allowed more models to load fully. This insight suggests strategies for optimizing model loading on systems with dual GPU setups.

- RTX 40 Titan Rumors Stir Community: @rugg0064 shared rumors about an RTX 40 Titan with 48GB VRAM, sparking discussions on Nvidia’s market strategy and the disproportionate cost of VRAM upgrades. .ben.com added context by linking to a TechPowerUp article detailing how Nvidia’s RTX 4090s are being repurposed for AI in China amidst U.S. export restrictions.
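One way to see why context needs VRAM beyond the model weights is to estimate the key/value cache that a transformer keeps per token. The sketch below is a rough estimate under stated assumptions: a Llama-2-7B-like shape (32 layers, 32 KV heads, head dimension 128), a 4096-token context, and 16-bit cache values. These numbers are illustrative and were not part of the discussion, and real runtimes add further buffers.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value=2):
    """Approximate KV cache size: one key and one value tensor per layer."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


# Assumed Llama-2-7B-like shape with a 4096-token context and fp16 cache values.
size = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, context_len=4096)
print(f"{size / GIB:.1f} GiB")  # prints "2.0 GiB"
```

On an 8GB card that is a quarter of the available memory before any weights are loaded, which is consistent with the partial-offload behavior described above.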

Links mentioned:

- Illustrated Guide to Transformers- Step by Step Explanation: Transformers are taking the natural language processing world by storm. These incredible models are breaking multiple NLP records and…

- Special Chinese Factories are Dismantling NVIDIA GeForce RTX 4090 Graphics Cards and Turning Them into AI-Friendly GPU Shape: The recent U.S. government restrictions on AI hardware exports to China have significantly impacted several key semiconductor players, including NVIDIA, AMD, and Intel, restricting them from selling h…

LM Studio ▷ #🧪-beta-releases-chat (11 messages🔥):

- Beta V2 Gets a Hefty Update: @yagilb announced the 0.2.12 Preview - Beta V2 with download links for both Mac and Windows, including a list of bug fixes covering VRAM estimates, OpenCL issues, AI role flicker, and more. Feedback is requested in a specific Discord channel.

- Queries on Platform Compatibility: Users @djmsre and @nixnovi inquired about a Mac Intel version and a new Linux appimage respectively, with @heyitsyorkie confirming there is no Mac Intel version and @yagilb noting the Linux version would be available soon.

- Call for New Features: @junkboi76 raised the idea of adding image generation support to LM Studio, expressing interest in expanding the tool’s capabilities.

- Questions on Specific Support: @ausarhuy inquired about LM Studio’s support for WSL (Windows Subsystem for Linux), showing interest in the interoperability between operating systems.

- Beta Performance and Compatibility Reports: Users like @wolfspyre and @mirko1855 reported issues with the beta on the latest Mac Sonoma build and on PCs not supporting AVX2, respectively. @fabguy suggested checking the FAQ for common exit code issues.

Links mentioned:

- no title found: no description found

LM Studio ▷ #memgpt (1 messages):

- LM Studio’s OpenAI Compatible API Awaiting Features: @_anarche_ mentioned that LM Studio’s OpenAI-compatible API isn’t fully developed yet, lacking support for OpenAI function calling and assistants. They expressed hope for these features to be included in a future update.

OpenAI ▷ #ai-discussions (3 messages):

- Question about RAG: User @aiguruprasath asked what RAG is. No follow-up or answer was provided in this message history.

- Laughter in code: User @penesilimite2321 simply commented “LMFAO”; no context or reason for this reaction was given.

- Specific AI for Political Victory: @iloveh8 inquired about the kind of AI products that could significantly help a political candidate win an election, mentioning the use of deepfakes for specific scenarios as an example. No responses or further elaboration were noted.

OpenAI ▷ #gpt-4-discussions (114 messages🔥🔥):

- Exploring Limitations of GPT-4 and Scholar AI: Users like .broomsage and elektronisade discussed issues with GPT-4 Scholar AI, including receiving error talking to plugin.scholar messages and realizing that some features require a premium subscription. .broomsage discovered that a premium Scholar AI subscription is necessary for advanced paper analysis features, leading to a decision to stick with standard GPT-4 for now.

- Beta Feature Blues: darthgustav. voiced frustration over not receiving access to expected beta features for Plus subscribers, specifically the @ feature, leading to a discussion on browser troubleshooting and anecdotal evidence of inconsistency in feature access among users.

- Knowledge Integration Quirks Revealed: fyruz raised a question about how GPT’s knowledge base integrates with conversations, sparking a dialogue on whether certain formats or the context size influence the need for the model to visibly search its knowledge base.

- Persistent GPT Model Errors and File Handling: Users like loschess and darthgustav. examined recurring errors such as “Hmm…something seems to have gone wrong,” theorizing about internal GPT issues and sharing solutions like rebuilding GPTs or adjusting knowledge files during high server load periods.

- Context and Length in GPT Conversations: Discussions emerged around the practical limits of conversation length with GPT models, where users like _odaenathus and blckreaper shared experiences and strategies for managing lengthy conversations, highlighting the balance between conversational depth and technical constraints.

OpenAI ▷ #prompt-engineering (44 messages🔥):

- RAG Explained by @darthgustav.: Retrieval Augmented Generation (RAG) is when the AI has knowledge directly attached and can search it for data or semantic matching. This was explained in response to @aiguruprasath’s query about what RAG is.

- Prompt Engineering Technique Shared by @madame_architect: @madame_architect recommends using the prompt pattern "Please critique your answer. Then, answer the question again." for improving the quality of AI’s output, a technique found in a significant research paper.

- Improving Code Generation Requests to ChatGPT-4: @jdeinane expressed frustration with ChatGPT-4 not generating code as requested, to which @darthgustav. suggested specifying the task, language, architecture, and making a plan before asking for the code.

- Ethical Scaffolding in AI Discussed: @darthgustav. introduced a 2/3 pass/fail check against utilitarianism, deontology, and pragmatism to manage ethical considerations in AI, mentioning an example where it prevented unethical suggestions for coupon use.

- Conditional Imperatives in Custom GPTs by @darthgustav.: They elaborated on using conditional imperatives, directing the AI to refer to LEXIDECK_CULTURE.txt when faced with an ethical dilemma. @aminelg showed interest in the content of this culture knowledgebase, which @darthgustav. noted could be explored indirectly through “Lexideck Technologies Ethics” online.
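The critique-then-retry pattern mentioned above can be expressed as a second user turn appended to an existing exchange. The following is a minimal sketch, assuming a generic chat transcript made of `role`/`content` dictionaries; the helper name `build_critique_followup` is hypothetical, and no particular API client is implied.

```python
CRITIQUE_PROMPT = "Please critique your answer. Then, answer the question again."


def build_critique_followup(question: str, first_answer: str) -> list[dict[str, str]]:
    """Return a transcript that asks the model to critique and retry.

    The role/content message shape is an assumption; adapt it to your client.
    """
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": first_answer},
        {"role": "user", "content": CRITIQUE_PROMPT},
    ]


# Illustrative inputs only; send `messages` with whichever chat client you use.
messages = build_critique_followup(
    "Write a function that reverses a string.",
    "def rev(s): return s[::-1]",
)
```

Keeping the first answer in the transcript matters here: the model can only critique what it can see in its context.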

OpenAI ▷ #api-discussions (44 messages🔥):

- Exploring RAG for Enhanced AI Capabilities: User @aiguruprasath inquired about RAG (Retrieval Augmented Generation), and @darthgustav. clarified that it involves the AI possessing direct knowledge access for data search or semantic matching, enhancing its performance.

- Prompt Pattern Magic with ChatGPT: According to @madame_architect, the prompt pattern "Please critique your answer. Then, answer the question again." significantly improves the quality of ChatGPT’s outputs. This pattern was discovered in a major research paper, akin to CoT.

- Optimizing Code Generation Requests in ChatGPT-4: Frustrated with ChatGPT-4’s response to coding requests, @jdeinane sought advice. @darthgustav. recommended specifying the task, language, and code architecture clearly to achieve better results.

- Approaching Prompts as Advanced Programming: @aminelg and @darthgustav. discussed treating prompt crafting like advanced programming, emphasizing the importance of clear, detailed requests to ChatGPT. They also touched upon viewing ChatGPT as an operating system, capable of polymorphic software behavior.

- Innovative Ethical Moderation via Conditional Imperatives: @darthgustav. shared insights on using a 2/3 pass/fail check against utilitarianism, deontology, and pragmatism to navigate ethical considerations in AI output. Additionally, @darthgustav. designed a method involving conditional imperatives and a culture knowledgebase (LEXIDECK_CULTURE.txt) to guide ChatGPT’s responses in ethical dilemmas.
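One way to read the 2/3 pass/fail check is as a majority vote over three per-framework verdicts. The sketch below is an interpretation under that assumption, not @darthgustav.'s actual prompt or implementation; the function name is hypothetical, and how each verdict is obtained (for example, by asking the model once per framework) is left out.

```python
FRAMEWORKS = ("utilitarianism", "deontology", "pragmatism")


def passes_ethics_check(verdicts: dict[str, bool], required: int = 2) -> bool:
    """Pass when at least `required` of the three frameworks approve."""
    missing = [name for name in FRAMEWORKS if name not in verdicts]
    if missing:
        raise ValueError(f"missing verdicts for: {missing}")
    return sum(verdicts[name] for name in FRAMEWORKS) >= required


# Illustrative verdicts only: a suggestion approved by a single framework fails.
example_verdicts = {"utilitarianism": False, "deontology": False, "pragmatism": True}
print(passes_ethics_check(example_verdicts))  # False
```

Requiring two of three means no single framework can approve or veto a response on its own.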

OpenAccess AI Collective (axolotl) ▷ #general (139 messages🔥🔥):

- New Quantization Method, SmoothQuant, Discussed: @dreamgen and @nanobitz discussed a new quantization method called SmoothQuant, developed by Han’s lab at MIT and similar to AWQ. It targets LLMs beyond 100 billion parameters but also shows benchmarks for smaller models like 13B and 34B, revealing a 50% throughput improvement for a 70B model (Pull Request #1508 on GitHub).

- Challenges with Model Merging at vLLM: @dreamgen mentioned that vLLM is becoming bogged down with features, making it difficult to integrate new technologies like SmoothQuant. Despite potential benefits, such as significant server cost reductions, integration may not happen soon due to the complexity of the current system.

- Exploration of Long Context Handling in LLMs: @xzuyn and @dreamgen shared insights on overcoming challenges related to the limited context window of LLMs. They discussed the Activation Beacon and enhancements based on RoPE (Rotary Position Embedding), aiming to efficiently process longer contexts without compromising short context performance.

- Miqu Quantization on HuggingFace Mistaken for Mistral Medium: A user uploaded a quantized 70B model to HuggingFace, sparking discussion (@yamashi) and comparisons to Mistral Medium. The confusion led to an investigation into whether it was a quantized leak, further fueled by unsourced speculation on online forums and social media.

- Training Hardware Choices and Strategies Explored: Various users, including @dreamgen and @mistobaan, discussed the most cost-effective training setups using NVIDIA H100 SXM GPUs on platforms like RunPod. They also talked about challenges in obtaining favorable pricing from cloud providers, comparing the efficiency of SXM vs. PCIe versions of GPUs.
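For readers unfamiliar with RoPE, the sketch below applies the standard rotary embedding to a single vector in pure Python, pairing adjacent dimensions as in the original formulation. The function names are illustrative, and the base of 10000 is the commonly used default rather than anything stated in the discussion. It demonstrates the property that context-extension methods build on: the dot product of a rotated query and key depends only on their relative position.

```python
import math


def rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotate consecutive pairs of `vec` by position-dependent angles."""
    if len(vec) % 2 != 0:
        raise ValueError("vector length must be even")
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # frequency falls with pair index
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]

# Same relative offset (2) at very different absolute positions.
near = dot(rope(q, 5), rope(k, 3))
far = dot(rope(q, 102), rope(k, 100))
print(math.isclose(near, far, abs_tol=1e-9))
```

Because only the offset matters, approaches in this family typically rescale positions or change the base so that long-range offsets map onto angles the model saw during training.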

Links mentioned:

- NVIDIA Hopper Architecture In-Depth | NVIDIA Technical Blog: Everything you want to know about the new H100 GPU.

- Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon: The utilization of long contexts poses a big challenge for large language models due to their limited context window length. Although the context window can be extended through fine-tuning, it will re…

- First-fit-decreasing bin packing - Wikipedia: no description found

- GitHub - bjj/exllamav2-openai-server: An OpenAI API compatible LLM inference server based on ExLlamaV2.: An OpenAI API compatible LLM inference server based on ExLlamaV2. - GitHub - bjj/exllamav2-openai-server: An OpenAI API compatible LLM inference server based on ExLlamaV2.

- GitHub - dwzhu-pku/PoSE: Positional Skip-wise Training for Efficient Context Window Extension of LLMs to Extremely Length: Positional Skip-wise Training for Efficient Context Window Extension of LLMs to Extremely Length - GitHub - dwzhu-pku/PoSE: Positional Skip-wise Training for Efficient Context Window Extension of …

- Scaling Laws of RoPE-based Extrapolation: The extrapolation capability of Large Language Models (LLMs) based on Rotary Position Embedding is currently a topic of considerable interest. The mainstream approach to addressing extrapolation with …

- Support W8A8 inference in vllm by AniZpZ · Pull Request #1508 · vllm-project/vllm: We have implemented W8A8 inference in vLLM, which can achieve a 30% improvement in throughput. W4A16 quantization methods require weights to be dequantized into fp16 before compute and lead to a th…

- Importance matrix calculations work best on near-random data · ggerganov/llama.cpp · Discussion #5006: So, I mentioned before that I was concerned that wikitext-style calibration data / data that lacked diversity could potentially be worse for importance matrix calculations in comparison to more ”…

- Support int8 KVCache Quant in Vllm by AniZpZ · Pull Request #1507 · vllm-project/vllm: Quantization for kv cache can lift the throughput with minimal loss in model performance. We impelement int8 kv cache quantization which can achieve a 15% throughput improvement. This pr is part of…

OpenAccess AI Collective (axolotl) ▷ #axolotl-dev (2 messages):

- Question on LoftQ Implementation: @suikamelon inquired whether the LoftQ implementation is meant to be functional, hinting at potential issues or misunderstandings regarding its usage or outcomes.

- LoftQ Memory Concerns: @suikamelon reported attempting a fine-tuning of a 7B model with QLoRA and 8192 context, noting it required 11.8 GiB. However, LoftQ at 4-bit resulted in out-of-memory (OOM) errors, raising concerns about its memory efficiency.

OpenAccess AI Collective (axolotl) ▷ #general-help (30 messages🔥):

- Handling Overlong Completion Examples: According to @dreamgen, completion examples exceeding the context length used to be split but are now discarded. This behavior shift could impact how models process extensive data inputs.

- Efficiency Woes in Inference Time: @diabolic6045 reported roughly 18 seconds per inference using a quantized model, even with use_cache=True, and sought advice on faster inference methods. @nanobitz recommended avoiding Hugging Face’s default settings and exploring quant, vllm, or TGI for better performance.

- Link to Ollamai Documentation: @dangfutures shared a link to Ollamai documentation, possibly in the context of discussions on text embeddings and model performance optimization.

- Config Error During Fine-tuning: @ragingwater_ encountered a specific error while trying to fine-tune OpenHermes with a custom config, leading to a discussion on the correct setup and implications for model merging. They cited a solution from the Axolotl GitHub README, which resolved the issue, but sought clarification on the impact during model merging.

- Bucket Training for Balanced Data Distribution: @jinwon_k highlighted the technique of “Bucket Training” as outlined by the Colossal-AI team for ensuring a balanced data distribution in continual pre-training, suggesting its potential application in Axolotl. The shared link discusses this strategy in depth, including comparisons between LLaMA versions and implications for model pre-training costs.
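The difference between splitting and discarding overlong examples can be illustrated on a toy tokenized dataset. This is a sketch of the two policies only, not Axolotl's implementation; the function names and the list-of-token-ids representation are hypothetical.

```python
def drop_overlong(examples: list[list[int]], max_len: int) -> list[list[int]]:
    """Keep only examples that fit in the context window."""
    return [ex for ex in examples if len(ex) <= max_len]


def split_overlong(examples: list[list[int]], max_len: int) -> list[list[int]]:
    """Cut every example into consecutive chunks of at most max_len tokens."""
    chunks = []
    for ex in examples:
        for start in range(0, len(ex), max_len):
            chunks.append(ex[start:start + max_len])
    return chunks


# Toy data: one short example (3 tokens) and one overlong example (10 tokens).
examples = [list(range(3)), list(range(10))]

kept = drop_overlong(examples, max_len=4)
pieces = split_overlong(examples, max_len=4)
print(len(kept), len(pieces))  # 1 4
```

Discarding loses the long example's tokens entirely, while splitting keeps them but produces chunks that start mid-document, so the choice affects both dataset size and what the model sees as a sequence start.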

Links mentioned:

- Colossal-LLaMA-2: Low Cost and High-quality Domain-specific LLM Solution Using LLaMA and…: The most prominent distinction between LLaMA-1 and LLaMA-2 lies in the incorporation of higher-quality corpora, a pivotal factor…

- gist:5e2c6c87fb0b26266b505f2d5e39947d: GitHub Gist: instantly share code, notes, and snippets.

- axolotl/README.md at 4cb7900a567e97b278cc713ec6bd8af616d2ebf7 · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

OpenAccess AI Collective (axolotl) ▷ #datasets (6 messages):

- Model Repo Configurations Vary: @dangfutures highlighted that the configuration for data (positive label, negative label, query) usually depends on the model repository, as each repo has its own specific requirements.

- FlagEmbedding Fine-tuning Guide Shared: @dangfutures shared a link to the FlagEmbedding Fine-tuning documentation, which provides detailed instructions for dense retrieval and retrieval-augmented LLMs fine-tuning.

- LlamaIndex Fine-tuning Overview Posted: @dangfutures also posted a link to LlamaIndex’s fine-tuning guidelines, explaining the benefits of fine-tuning models, including improved quality of outputs and meaningful embedding representations.

- Synthetic Instruction Dataset Creation Discussed: @_rxavier_ shared their process of creating a synthetic instruction dataset based on textbooks, where they generate a JSON with questions and answers from textbook chunks. They are considering whether it would be more effective to first generate only questions and then produce the answers in a subsequent step.

Links mentioned:

- Fine-tuning - LlamaIndex 🦙 0.9.39: no description found

- FlagEmbedding/FlagEmbedding/llm_embedder/docs/fine-tune.md at master · FlagOpen/FlagEmbedding: Dense Retrieval and Retrieval-augmented LLMs. Contribute to FlagOpen/FlagEmbedding development by creating an account on GitHub.

OpenAccess AI Collective (axolotl) ▷ #community-showcase (2 messages):

- Model Card Update Incoming: @ajindal agrees to @caseus_’s request to add an axolotl badge and tag to the model card, indicating a forthcoming update to the documentation.

OpenAccess AI Collective (axolotl) ▷ #deployment-help (15 messages🔥):

- Exploring the Inference Stack for LLMs: @yamashi is diving into inference after only having experience in training LLMs. @dreamgen suggests that vLLM is probably the easiest for serving fp16 models but mentions performance issues with long contexts and moderate load.

- Quantization and Performance Tradeoffs: Both @dangfutures and @dreamgen discuss quantization as a strategy for inference. While it can slow down processes, the price/performance tradeoff remains uncertain.

- Hardware Requirements Uncertainty: @yamashi inquires about the necessary hardware, particularly VRAM, for running a 70B model at 500 tokens/s in batch mode. @dreamgen advises that hardware needs are task-dependent and recommends a trial-and-error approach.

- Rental Before Purchase Recommended for Benchmarking: @dreamgen suggests renting hardware to benchmark performance before purchasing. This approach offers a practical solution to @yamashi’s concerns about buying the appropriate hardware for specific model requirements.

- Quantization Yields Positive Results: @dangfutures shares a positive experience with AWQ quantization, indicating it may offer significant benefits in the context of inference performance.
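As a starting point for the VRAM question, the weights-only footprint of a model follows from its parameter count and the bytes used per parameter. The sketch below is back-of-the-envelope arithmetic: it ignores KV cache, activations, and framework overhead, and it does not model the small extra storage that 4-bit formats such as AWQ need for scales and zero points. The function name is illustrative.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"70B @ {dtype}: {weight_memory_gb(70e9, dtype):.0f} GB")
```

For a 70B model this gives about 140 GB at fp16, 70 GB at int8, and 35 GB at 4-bit, before any memory for batching. Throughput targets such as tokens per second depend on batch size and context length, which is why benchmarking on rented hardware remains the practical route.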

Mistral ▷ #general (137 messages🔥🔥):

- Confusion and Speculation Around Mistral and “Leak” Claims: Members of the Mistral Discord chat, including @ethux, @casper_ai, and @mrdragonfox, engaged in discussions about whether a newly emerged model was a leaked version of Mistral Medium or not, referencing its performance, origins, and composition. The model in question, linked to huggingface.co/miqudev/miqu-1-70b by @alexworteega, was eventually identified not to be Mistral or a leak but perhaps a Llama model fine-tuned with Mistral data.

- Technical Discussion on Model Performance and Quantization: