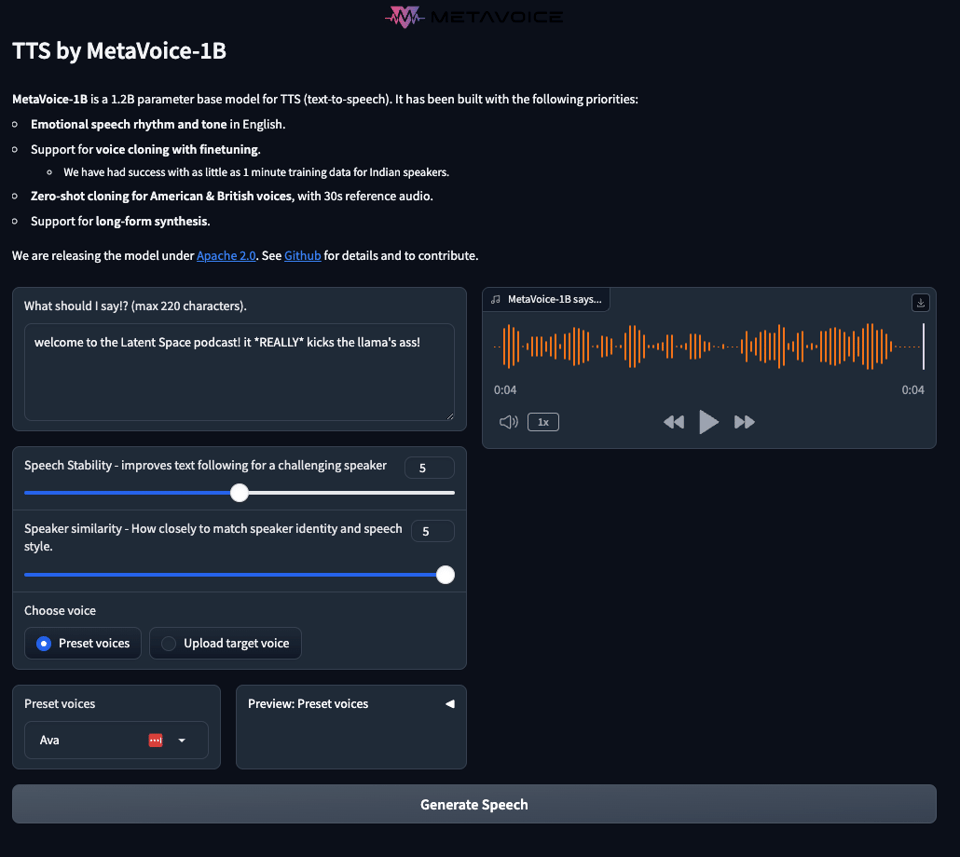

Remember Coqui, the TTS startup that died last month? Well, a new TTS model that supports voice cloning and longform synthesis is here (try it).

It’s a small startup but a promising first ship.

In other news, Google killed the Bard brand for Gemini.

Table of Contents

[TOC]

PART 1: High level Discord summaries

TheBloke Discord Summary

- AI Training Conversations Heat Up: Discussions covered AI models such as Mixtral, Nous Mixtral DPO, and Miqu 70B, comparing them with OpenAI’s GPT models on efficiency and capability. Debate also flared over moderation on the /r/LocalLLaMA subreddit, with members sharing GitHub, Hugging Face, and YouTube links on AI advancements and on issues within the community.

- Antiquity Meets Modernity in AI Nomenclature: In the #characters-roleplay-stories channel, Thespis 0.8 sparked a debate about its Greek tragedy origins, turning the conversation toward the use of mythology in AI naming. Lorebooks were discussed as a prompt engineering tool for roleplay, and DPO (Direct Preference Optimization) came up, with example wandb links provided.

- Removing Safety Features for AI’s Full Potential: Users shared insights on using LoRA fine-tuning to remove safety guardrails from models like Llama2 70B instruct, citing a LessWrong post. Discussions also suggested that combining datasets during fine-tuning could improve control over the model.

- Finding Tech Limits in AI Development: A question about offloading transformer layers to the GPU, as llama.cpp does, led participants to conclude that the Transformers library doesn’t support splitting layers between CPU and GPU. Interest was also shown in Meta’s Sphere project for its potential to incorporate frequent updates using big-data tools.

- Exploring AI Implementation on Alternative Platforms: Questions arose about implementing LLaMa 2 on MLX for Apple Silicon, specifically how to adapt the model’s query parameters to the platform, with the multi-head versus grouped-query attention formats under consideration.
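For context on the multi-head versus grouped-query question: in multi-head attention every query head has its own key/value head, while in grouped-query attention several query heads share one K/V head. LLaMA 2 70B uses grouped-query attention (64 query heads sharing 8 K/V heads), whereas the 7B and 13B variants use standard multi-head attention, so a port has to map or repeat K/V heads to line up with the query heads. The sketch below is framework-agnostic plain Python rather than MLX code, and the helper names (`kv_head_for`, `repeat_kv`, `kv_cache_bytes_per_token`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AttnConfig:
    n_layers: int
    n_heads: int     # number of query heads
    n_kv_heads: int  # number of key/value heads (equal to n_heads for multi-head attention)
    head_dim: int


def kv_head_for(q_head: int, cfg: AttnConfig) -> int:
    """Index of the shared K/V head used by a given query head."""
    group_size = cfg.n_heads // cfg.n_kv_heads
    return q_head // group_size


def repeat_kv(kv_heads: list, cfg: AttnConfig) -> list:
    """Expand n_kv_heads K (or V) entries to n_heads by repeating each one group_size
    times in place, so an ordinary multi-head attention kernel can consume them."""
    group_size = cfg.n_heads // cfg.n_kv_heads
    return [kv for kv in kv_heads for _ in range(group_size)]


def kv_cache_bytes_per_token(cfg: AttnConfig, bytes_per_value: int = 2) -> int:
    """Bytes of K plus V cached per token across all layers (fp16 by default)."""
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * bytes_per_value


# LLaMA 2 70B shape: 80 layers, 64 query heads sharing 8 K/V heads, head_dim 128.
gqa = AttnConfig(n_layers=80, n_heads=64, n_kv_heads=8, head_dim=128)
# The same shape with classic multi-head attention, for comparison.
mha = AttnConfig(n_layers=80, n_heads=64, n_kv_heads=64, head_dim=128)

print(kv_head_for(0, gqa), kv_head_for(7, gqa), kv_head_for(8, gqa))  # 0 0 1
print(kv_cache_bytes_per_token(gqa), kv_cache_bytes_per_token(mha))   # 327680 2621440
```

The 8x smaller KV cache is the main practical payoff of sharing K/V heads; repeating them, as `repeat_kv` does, trades that saving back for compatibility with a plain multi-head kernel.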

OpenAI Discord Summary

- DALL-E Adopts Content Authenticity Initiative Standards: DALL-E generated images now include metadata conforming to the C2PA specification, helping verify content generated by OpenAI, as announced by @abdubs. The change aims to help social platforms and content distributors validate content. Full details are available in the official help article.

- AI Censorship and Authenticity Stir Debate: AI censorship’s impact on user experience prompted a heated exchange, with @kotykd criticizing it on grounds of user freedom and @arturmentado defending it as a way to prevent misuse. Ethical concerns about presenting AI-generated art as human-created were also voiced, with members emphasizing the need for honest disclosure under OpenAI’s TOS.

- Metadata Manipulation Recognized as Trivial by Community: The role of metadata in image provenance was heavily discussed, with users agreeing that such data is easy to remove, which makes it an unreliable way to verify an image’s source. This reflects the technical challenges of securing digital image authenticity.

- Open Source AI Models Positioned Against Commercial Giants: A debate compared open-source AI models with commercial options like GPT-4, touching on their impact on innovation and the potential growth of competitive open-source alternatives.

- Discussions Surround GPT-4 Usability and Development: Several users, including @mikreyyy and @glory_72072, sought help with GPT-4 issues such as unexpected logouts, finding demos, and storytelling capabilities. The conversation also touched on answer limits and the complexities of using custom GPTs, reflecting a focus on GPT-4’s practical applications and limitations.

- Community Seeks Improvement and Interaction in AI-Driven Projects: In the prompt engineering and API channels, @loier and @_fresnic sought collaborative input on improving GPT modules and refining character interaction prompts. Meanwhile, @novumclassicum wanted to send feedback directly to OpenAI, signaling a desire for more streamlined communication between developers and the OpenAI team.

HuggingFace Discord Summary

- Hugging Chat Assistant Personalization: Hugging Face introduced Hugging Chat Assistant, which lets users build personalized assistants with a custom name, avatar, and behavior. It supports LLMs such as Llama2 and Mixtral and removes the need to store custom prompts separately. Check out the new feature at Hugging Chat.

- Dataset Viewer for PRO and Enterprise: The Dataset Viewer on Hugging Face now supports private datasets, though the feature is exclusive to PRO and Enterprise Hub users. The update aims to improve data analysis and exploration tools (source).

- Synthetic Data Trends: The Hugging Face Hub added a `synthetic` tag to make synthetic datasets easier to share and discover, signaling the growing importance of synthetic data in AI.

- AI-Powered Quadrupeds and AI in Fashion: Cat Game Research is developing a video game featuring the first quadruped character controller built with ML and AI, while Sketch to Fashion Collection turns sketches into fashion designs. Explore badcatgame.com and Hugging Face Spaces for these AI-powered gaming and fashion projects.

- BLOOMChat-v2 Elevates Multilingual Chats: BLOOMChat-v2, a 176B-parameter multilingual language model with a 32K sequence length, improves on its predecessors. An API and further advancements are anticipated; details are in a Twitter summary and a detailed blog post.

- Reading Group Excitement and Resources: The Hugging Face Reading Group scheduled a presentation on decoder-only foundation models for time-series forecasting, and a GitHub repository (link) was set up to collect resources from past sessions.

- Diffusers and Transformers Learning: For newcomers to diffusion models, the community recommended courses by Hugging Face and fast.ai, while questions about details such as timestep settings and validating conditioning models point to active experimentation in the domain.

- NLP Channel Gets Textbook: A discussion proposed fine-tuning LLMs such as Llama chat or Mistral on textbook content to deepen domain-specific understanding and improve the models’ educational chat capabilities.
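On the timestep questions raised in the diffusion discussion: in the standard DDPM setup, each timestep t has a noise variance β_t taken from a schedule, and a clean sample can be noised to any step in one shot via x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ᾱ_t is the running product of (1 − β). The sketch below assumes the linear schedule from the original DDPM paper (β from 1e-4 to 0.02 over 1,000 steps); libraries such as diffusers offer other schedules, and this is not their code.

```python
import math
import random


def linear_beta_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list[float]:
    """Linearly spaced noise variances beta_t (DDPM paper defaults)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Cumulative product of (1 - beta_t): the fraction of signal variance kept at step t."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0: list[float], t: int, abar: list[float], rng: random.Random) -> list[float]:
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x0, (1 - abar_t) * I)."""
    signal, noise = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule()
abar = alpha_bars(betas)
print(round(abar[0], 4), abar[-1] < 1e-4)  # 0.9999 True
x_t = add_noise([1.0, -1.0, 0.5], t=500, abar=abar, rng=random.Random(0))
```

Early timesteps keep almost all of the signal, while by the last step ᾱ is nearly zero and x_t is essentially pure noise; this is why the choice of timesteps sampled during training matters.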

LM Studio Discord Summary

- LM Studio Launches v0.2.14: LM Studio v0.2.14 is out, fixing critical bugs such as UI freezes and input hangs; it is available from the LM Studio website. Update for a smoother experience.

- Ease-of-Use Shines with LM Studio: Users are drawn to LM Studio’s simple interface, which lets anyone use LLMs without coding skills. Watch out, though: the LLM folder location may reset to its default after updates.

- Local Model Execution Challenges: Users reported issues such as model generation freezes and poor GPU utilization, which the latest LM Studio updates are meant to address. For detailed model fine-tuning instructions, see the YouTube tutorial.

- Hardware Hub: Debate continues over optimal hardware setups for AI tasks, with one user preparing an AMD 8700G test bed, prompting curiosity about a possible 7950X3D upgrade. Cooling options under discussion included 2x180mm fans versus 3x120mm Arctic P120 fans, while some cautioned against overestimating APU performance for AI workloads.

- Feedback Loop for LM Studio: Users flagged a few issues in beta versions, such as the app hanging when ejecting models and outdated non-AVX2 beta releases; the LM Studio team appears responsive to these concerns. macOS users highlighted a persistent bug where the server stays active even after the app is closed.

- Specialized AI Utilization Queries: Questions came up about chain-compatible seq2seq LLMs for Python scripting and about whether Crew-AI offers a UI or web interface similar to platforms like AutoGen Studio.

- Model Preferences and Experiences Shared: Users discussed their experiences with various models; in the open-interpreter channel, phoenix2574 mentioned in passing that Mixtral works “alright”.

Nous Research AI Discord Summary

Mistral Outshines in Programming: @carsonpoole found that Mistral significantly outperforms phi2 on the code section of OpenHermes 2.5 under identical sampling settings. The discussion touched on implications for GPT-4’s programming capabilities and raised the question of what skills to expect from a 2-billion-parameter model, citing expectations from Microsoft Research.

Sparsetral Unveiled and Math Benchmarking Excitement: The introduction of Sparsetral, a sparse MoE model, comes complete with resources such as the original paper and GitHub repos. Meanwhile, .benxh celebrated Deepseek, which incorporates a technique called DPO to achieve new proficiency levels in math-focused assessments.

Quant Tune and EQ-Bench: @tsunemoto quantized Senku-70B, a finetune of the hypothetical Mistral-70B, reported an EQ-Bench score of 84.89, and shared it on HuggingFace. This sparked broader discussion of the role of mathematics in evaluating language models’ abilities, as well as of hosting LLM-powered robotics hackathons.

Language Model Quirks and Mixtral Issues Noted: Users found that Mixtral, when prompted in Chinese, replies in a mix of languages, and reported similar issues with OpenHermes. Tweets highlighted Cloudflare’s adoption of these models on its AI platform.

Support for Robot-Control Framework: @babycommando sought suggestions on finetuning multimodal models and released MachinaScript for Robots along with a GitHub repository. They asked for guidance on finetuning Obsidian and on technical specifications for robot interactions using their LLM-driven framework.

Mistral Discord Summary

- VRAM Cowboys and Silicon Showdowns: Discussion centered on the hardware demands of running AI models at full fp16 precision, with suggestions of at least 100GB of VRAM for optimal performance and speculation about whether an Nvidia 4090 or dual 4080s would suffice. The debate also turned to Intel Macs versus Apple Silicon Macs, revealing sharp disagreements over upgrade philosophy and practical longevity.

- Cost-Effective AI Modeling Secrets Unveiled: Users tackled the challenge of reducing compute costs for Mistral models, referencing DeepInfra pricing and suggesting options such as serverless platforms, hardware accelerators, and LlamaCPP. Cost discussions also covered data sensitivity, fine-tuning, and the trade-off between in-house inference and professional hosting services.

- From Fine-Tuning Frustrations to Inference Innovations: One member reported confusion over padding inconsistencies during fine-tuning despite following resources such as QLoRA tutorials. Others reported faster model boot times, with some models ready within 2-10 seconds, and the community brainstormed effective prompt engineering for Mixtral-8x7B models using tools like Llama 1.6.

- Anticipation Builds for Open Source Release: A brief exchange showed community interest in an unspecified tool, which its creator promised to open-source once feasible; no further details or timeline were given.

- Mark Your Calendars for Office Hours: The next Mistral community office hours session is on the schedule, with a Discord event link provided for access.
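The ~100GB figure from the VRAM discussion is consistent with simple arithmetic: fp16 stores each parameter in 2 bytes. The sketch below assumes Mixtral 8x7B (about 46.7B parameters in total) as the example model and counts weights only, ignoring KV cache, activations, and runtime overhead; the model choice is an assumption, not something stated in the discussion.

```python
# Approximate bytes per parameter; the 4-bit figure ignores quantization scale overhead.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4bit": 0.5}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """GB (1e9 bytes) needed for the weights alone; KV cache, activations and
    framework overhead come on top of this."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumed example: Mixtral 8x7B has ~46.7B parameters in total, and all experts
# must be resident in memory even though only two run per token.
MIXTRAL_PARAMS = 46.7e9
need = weight_memory_gb(MIXTRAL_PARAMS, "fp16")
print(f"fp16 weights: {need:.1f} GB")  # fp16 weights: 93.4 GB

# Total VRAM of the setups mentioned in the discussion.
setups = {"1x RTX 4090": 24, "2x RTX 4080": 2 * 16}
for name, vram_gb in setups.items():
    verdict = "fits" if vram_gb >= need else "does not fit"
    print(f"{name} ({vram_gb} GB): {verdict} at fp16")
```

Neither consumer setup comes close at fp16, which is why quantized formats (roughly 23 GB of weights at 4 bits under these assumptions) are the usual route on such hardware.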

LAION Discord Summary

- Compromised Discord Account Re-secured: @astropulse fell prey to a spear-phishing attack that compromised their Discord account. Users shared cybersecurity tips, highlighting Have I Been Pwned as a way to check whether email addresses have appeared in data breaches.

- Creating Sparse Neural Networks: @mkaic is working on neural network architectures that can dynamically reconfigure their connections during training, which could improve model sparsity and performance.

- Novel AI Projects in Need of Recognition: @SegmentationFault drew attention to PolyMind on GitHub, which aims to combine multiple AI capabilities in one platform, emphasizing its practical value over entertainment-focused applications.

- Text-to-Image Consistency Without Training: @thejonasbrothers discussed ConsiStory, a training-free approach designed to improve consistency in text-to-image generation using novel attention mechanisms and feature injection.

- Google Research’s Lumiere for Text-to-Video: A YouTube video shared by @spirit_from_germany showcases Lumiere, Google Research’s model for generating globally consistent video from text.

Eleuther Discord Summary

GPT Rivalries and Bots: GPT-3.5 surprisingly outperformed GPT-4 at generating code in obscure programming languages, while the Eleuther server debated the trade-off between staying open and suffering spambot disruptions.

MetaVoice TTS Model Unveiled: MetaVoice 1B, a new TTS model, was released under an open-source license, sparking discussion of its performance; its features include zero-shot voice cloning and emotional speech synthesis, as detailed in a tweet.

Evaluating Model Extrapolation and Optimization: Members reviewed a variety of methods for understanding and pushing model capabilities, from analyzing loss versus sequence length to the SELF-DISCOVER framework, which outperforms traditional methods on reasoning benchmarks as described in this paper.

Infinite Limits and Interpretability: Questions about infinite-depth limits in deep learning and about loss landscapes sparked interest in existing research, while a research paper proposed Evolutionary Prompt Optimization (EPO), a new method for interpreting language models.

Dissecting LLM Prompt Influence: The search for reliable input-saliency methods for LLM prompts continued amid skepticism toward Integrated Gradients, reinforced by a concerning paper that casts doubt on whether attribution methods can reveal model behavior.
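For readers new to the method under debate: Integrated Gradients attributes a prediction to each input feature by averaging the gradient along a straight path from a baseline x′ to the input x and multiplying by (x_i − x′_i). One of its defining properties is completeness: the attributions sum to F(x) − F(x′). The sketch below uses a toy logistic function with hypothetical weights instead of an LLM; it illustrates the mechanics only and says nothing about the paper’s critique.

```python
import math

WEIGHTS = [2.0, -1.0, 0.5]  # hypothetical toy model parameters
BIAS = 0.1


def model(x: list[float]) -> float:
    """Toy differentiable 'model': a single logistic unit."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def grad(x: list[float]) -> list[float]:
    """Analytic gradient of model(): sigmoid'(z) * w."""
    s = model(x)
    return [s * (1.0 - s) * w for w in WEIGHTS]


def integrated_gradients(x: list[float], baseline: list[float], steps: int = 200) -> list[float]:
    """IG_i = (x_i - x'_i) * mean of dF/dx_i along the straight path from x' to x,
    approximated with a midpoint Riemann sum over `steps` points."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad(point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


x, baseline = [1.0, 2.0, -1.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline)
# Completeness check: attributions should sum to model(x) - model(baseline).
print(round(sum(attributions), 4), round(model(x) - model(baseline), 4))
```

Note that the result depends on the chosen baseline, one of the known sources of ambiguity when applying such attribution methods to prompts.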

OpenAccess AI Collective (axolotl) Discord Summary

- GCP Grapples with A100 Availability: Community members are struggling to obtain A100 GPUs on Google Cloud Platform, raising concerns about a possible shortage. Discussion also touched on benchmark run times for various models and tools such as lm-eval-harness, where the MMLU test on a 7B model takes around 12 hours on a 4090 GPU.

- Quest for Axolotl UI: Hugging Face is offering a $5000 bounty for an Axolotl training UI on Spaces, prompting a call for collaborators: frontend developers (Tailwind CSS preferred) and backend developers, ideally working in Python. Members debated whether to use Shiny or Gradio for the UI, and the Shiny team at Posit offered a prototype and support.

- Saving Models Multiplied: Users report persistent issues saving models in multi-GPU, multi-node configurations and suspect that distributed saving may not be implemented correctly in Axolotl. Even with the latest transformers release (4.37.2), pull requests with purported fixes for multi-node training are being scrutinized, and community members are seeking code changes to resolve Mistral full fine-tuning (FFT) saving errors.

- Tuning DPO with Alpacas and ChatML: Community members reported difficulty getting reliable DPO results and were advised to lower learning rates significantly. A switch from the Alpaca format to ChatML is being explored despite earlier success with Alpaca, and one member shared a personal workaround involving Metharme.

- Jupyter Confusions and Corrections: Users hitting critical errors and warnings in Jupyter notebooks were pointed to potential fixes, including a GitHub pull request (Cloud motd by winglian) addressing a mounted volume that affected the workspace directory. Recloning the repositories was also advised as a troubleshooting step.
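To make the Alpaca-versus-ChatML switch concrete, the sketch below renders the same request in both templates and builds one DPO preference pair. The Alpaca preamble is the standard Stanford Alpaca no-input template, and ChatML wraps each turn in `<|im_start|>role ... <|im_end|>`. The `prompt`/`chosen`/`rejected` field names follow a common DPO dataset layout (used, for example, by TRL’s DPOTrainer); Axolotl’s DPO dataset types define their own schemas, so treat the field names and helper functions as assumptions.

```python
from typing import Optional

ALPACA_PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
)


def alpaca_prompt(instruction: str) -> str:
    """Stanford Alpaca template (no-input variant); the model's response follows it."""
    return f"{ALPACA_PREAMBLE}### Instruction:\n{instruction}\n\n### Response:\n"


def chatml_prompt(user: str, system: Optional[str] = None) -> str:
    """ChatML: every turn is wrapped in im_start/im_end markers, and the prompt
    ends with an open assistant turn for the model to complete."""
    turns = []
    if system:
        turns.append(f"<|im_start|>system\n{system}<|im_end|>\n")
    turns.append(f"<|im_start|>user\n{user}<|im_end|>\n")
    turns.append("<|im_start|>assistant\n")
    return "".join(turns)


def dpo_pair(prompt: str, chosen: str, rejected: str, chatml: bool = True) -> dict:
    """One preference example; both completions share the same formatted prompt.
    With ChatML, each completion is closed by <|im_end|> so the model learns to stop."""
    end = "<|im_end|>" if chatml else ""
    return {"prompt": prompt, "chosen": chosen + end, "rejected": rejected + end}


pair = dpo_pair(chatml_prompt("Name a primary color.", system="Answer briefly."),
                chosen="Red.", rejected="Colors are subjective, so I cannot say.")
print(pair["prompt"] + pair["chosen"])
```

Whichever format is chosen, the preference data and any later inference prompts need to use the same template, since a mismatch is an easy way to get unreliable DPO results.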

Perplexity AI Discord Summary

- Seeking Clarity on Claude Pro’s Necessity: In the general channel, @brewingbrews_92072 questioned whether a Claude Pro subscription is worth it for minimal use, weighing the decision carefully before upgrading.

- Evaluating AI Services Price Points: A separate discussion in the general channel examined the cost-effectiveness of Perplexity’s API (priced at 0.07/0.28) relative to other AI services, as well as the general expense of AI extensions at around $12 per month.

- API Credit Economy: Also in the general channel, @general3d shared their experience running a Discord bot economically on the $5 monthly API credit, emphasizing the affordability when hosting locally.

- Gemini Pro Vs. Premium AI Competitors: In general, @luke_____________ discussed how Gemini Pro compares with premium models such as GPT-4, looking ahead to what the upcoming Gemini Ultra might offer.

- Challenges with API Utilization and Summarization: In the pplx-api channel, users ran into difficulties with tasks such as building a shortcut that tracks an ongoing conversation, replicating summarization capabilities, and matching Perplexity’s API key format to OpenAI’s for broader tool compatibility.

LlamaIndex Discord Summary

- Webinar on LLM Handling of Tabular Data: An upcoming webinar will focus on tabular data understanding with LLMs, examining the Chain-of-Table method and improving LLM performance with multiple reasoning pathways. Register for the Friday 9am PT session here and dig into papers such as “Chain-of-Table” and “Rethinking Tabular Data Understanding with Large Language Models”.

- Leveraging RAG for Enterprise and Research: @seldo is set to discuss language models and RAG for enterprises, with resources including the evolution of self-RAG, Mistral’s RAG documentation, and webinar information. A simple GitHub repo for RAG beginners is available here.

- Technical Queries from General Discussions: Topics included resolving PDF parsing with `ServiceContext.from_defaults`, working around labeling limitations in Neo4j, extracting node content more efficiently, clarifying documents versus nodes in `VectorStoreIndex`, and troubleshooting SQL query synthesis with LlamaIndex.

- Hacker News and Medium Reveal SQL and RAG Insights: Discussion centered on finding reliable natural-language-to-SQL solutions, highlighted by a Hacker News thread found here, and on a Medium article about Self-Chunking with RAG and LlamaIndex located here, covering accuracy challenges and the future of document analysis.

LangChain AI Discord Summary

- ChromaDB Speeds Up RAG Systems: @bwo_28 asked how to optimize a RAG system’s performance, and @david1542 recommended ChromaDB’s persistent client, which saves embeddings to disk so they don’t have to be recomputed on every load, improving load times for similarity search (ChromaDB documentation).

- Langchain Strides Forward: There’s a buzz around LangChain’s integrations and functionality. Discussions included a request for guidance on using LangChain with Mistral on AWS SageMaker, questions about setting `response_format` to “vtt” for audio files with `OpenAIWhisperAudio`, and troubleshooting a `ModuleNotFoundError` involving the `SQLDatabase` import from `langchain`.

- Langserve’s Robust Updates and Fixes: @veryboldbagel updated the event stream API agent examples with detailed comments, available on GitHub, while @albertperez. reported a deployment loop issue with LangServe that resolved itself.

- Demand for Personal AI Work Coach: @bartst. is looking to build a personal AI work coach, prompting a discussion in which @david1542 showed interest in contributing ideas.

- MLBlocks Unveiled: @neil6430 introduced MLBlocks, a no-code platform for building image processing workflows that combine AI models with traditional methods and expose each workflow through a single REST API endpoint.
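The persistence idea behind the ChromaDB tip is simple: compute each embedding once, store it on disk, and reload it on restart instead of re-embedding. ChromaDB’s `PersistentClient` handles this for real; the standard-library sketch below only illustrates the underlying idea, using a hypothetical `toy_embed` function in place of a real embedding model and a JSON file in place of Chroma’s storage. It is not ChromaDB’s API.

```python
import hashlib
import json
import math
import os
import tempfile


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical stand-in for an expensive embedding model: hashes each token
    into a bucket of a fixed-size vector, then L2-normalises it."""
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class PersistentStore:
    """Embeddings are computed once, written to a JSON file, and reloaded on restart."""

    def __init__(self, path: str):
        self.path = path
        self.items: dict = {}
        if os.path.exists(path):
            with open(path, encoding="utf-8") as fh:
                self.items = json.load(fh)

    def add(self, doc: str) -> bool:
        """Embed and persist `doc` unless already stored; True if an embedding was computed."""
        if doc in self.items:
            return False
        self.items[doc] = toy_embed(doc)
        with open(self.path, "w", encoding="utf-8") as fh:
            json.dump(self.items, fh)
        return True

    def query(self, text: str, k: int = 1) -> list[str]:
        """Top-k stored documents by cosine similarity (unit vectors, so a dot product)."""
        q = toy_embed(text)

        def score(doc: str) -> float:
            return sum(a * b for a, b in zip(q, self.items[doc]))

        return sorted(self.items, key=score, reverse=True)[:k]


path = os.path.join(tempfile.mkdtemp(), "embeddings.json")
store = PersistentStore(path)
store.add("chroma persists embeddings to disk")
store.add("bananas are yellow")

restarted = PersistentStore(path)           # simulates a process restart
print(restarted.add("bananas are yellow"))  # False: loaded from disk, not re-embedded
print(restarted.query("bananas are yellow"))
```

The speedup comes entirely from skipping the embedding step on reload; the brute-force similarity scan here is a simplification, whereas a real vector store also indexes the vectors for faster search.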

Latent Space Discord Summary

- AI Assistants Becoming Everyday Heroes: @ashpreetbedi vouched for the practicality of AI personal assistants for tasks like summarizing daily standups, showing how they are being integrated into workplace routines.

- Code Automation via AI: @slono shared an automated programming approach in a GitHub Gist, showing how AI assistants like ‘Aider’ can streamline development.

- RPA’s AI Revolution: @pennepitstop sparked discussion of AI’s transformative role in Robotic Process Automation (RPA), citing Adept as a notable newcomer challenging giants like UiPath in personal automation.

- Querying the Future with Vector Databases: A discussion of production-ready vector databases with API endpoints led @swyxio to recommend Supabase pgvector, highlighting a trend toward more robust data query tools.

- Scaling AI Models on the Radar: A tweet by Stella Biderman about AI scaling, shared by @swyxio, resonated with the community, highlighting developments in models such as RWKV and Mamba.

CUDA MODE Discord Summary

- GPU Aspirations and Practicality Blend: @timjones1 playfully expressed interest in setting up a personal GPU machine, and @joseph_en recommended starting with a single 3090 or a more affordable 3060 with 12GB of VRAM. Meanwhile, @vim410 discussed ongoing work that uncovers unexploited hardware features, suggesting room to improve hardware performance.

- Tuning and Monitoring for Performance: @cudawarped recommended Nvidia’s `ncu` tool for better benchmarking and shared an example command. @iron_bound discussed kernel tuning with CLTune, a tool that may be outdated for modern CUDA. @smexy3 introduced `gmon`, a tool that simplifies GPU monitoring, and shared its GitHub link.

- Solving Quantization Puzzles in PyTorch: @hdcharles_74684 ran into challenges with dynamic quantization in torchao and improved performance by adding a dequant epilogue, as shown in this GitHub commit.

- GPU Workarounds for Older MacBooks and Cloud Options: @boredmgr2005 asked whether a 2015 MacBook Pro could be used for CUDA programming, and @joseph_en suggested Google Colab as a free, capable cloud alternative.

- Jax’s Ecosystem Gains Momentum: @joseph_en noted Jax’s rising popularity and questioned Google’s strategic direction for it, hinting at competition with TensorFlow within the AI and machine learning community.
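As background on the torchao item: in dynamic quantization the activation scales are computed at run time from the data, the matmul accumulates in integers, and a “dequant epilogue” rescales each accumulator to floating point once at the end, which in a real kernel is fused into the matmul. The pure-Python sketch below illustrates that general pattern with symmetric int8 scales (per token for activations, per output channel for weights); it is not torchao’s implementation, and the function names are hypothetical.

```python
def quantize_rows(m: list[list[float]]) -> tuple[list[list[int]], list[float]]:
    """Symmetric int8 quantization with one scale per row, computed from the data itself."""
    q, scales = [], []
    for row in m:
        scale = max(abs(v) for v in row) / 127 or 1.0  # avoid a zero scale for all-zero rows
        q.append([max(-127, min(127, round(v / scale))) for v in row])
        scales.append(scale)
    return q, scales


def int8_linear(x: list[list[float]], w: list[list[float]]) -> list[list[float]]:
    """y = x @ w computed as an integer matmul followed by a dequant epilogue.
    x: (tokens, d_in) activations, quantized dynamically per token at call time.
    w: (d_in, d_out) weights, quantized per output channel (in practice, ahead of time)."""
    xq, x_scales = quantize_rows(x)
    w_cols = [list(col) for col in zip(*w)]  # (d_out, d_in): one row per output channel
    wq, w_scales = quantize_rows(w_cols)
    out = []
    for i, xrow in enumerate(xq):
        out_row = []
        for j, wcol in enumerate(wq):
            acc = sum(a * b for a, b in zip(xrow, wcol))     # pure integer accumulation
            out_row.append(acc * x_scales[i] * w_scales[j])  # epilogue: rescale once
        out.append(out_row)
    return out


x = [[0.5, -1.2, 3.0], [2.0, 0.1, -0.7]]
w = [[1.0, -0.5], [0.3, 2.0], [-1.5, 0.25]]
exact = [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]
approx = int8_linear(x, w)
print(exact)
print(approx)
```

Deferring the rescale to one multiply per output element keeps the inner loop in integer arithmetic, which is where the performance benefit of this layout comes from.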

DiscoResearch Discord Summary

- The Quest for Representative Datasets: Members such as @bjoernp recommended using the original SFT dataset for model training, and showed interest in perplexity benchmarks on that dataset and on additional resources such as the German portion of multilingual C4, German Wikipedia, and malteos wechsel_de.

- Avoid the Memory Pit: @philipmay reported out-of-memory (OOM) issues with Axolotl, pointing to a possible misconfiguration of DeepSpeed’s `stage3_gather_16bit_weights_on_model_save` setting, which might prevent the model from fitting on a single GPU.

- Jina Embeddings Fall Short: Users such as @sebastian.bodza criticized Jina embeddings for underperforming, especially on out-of-distribution (OOD) coding documentation; @rasdani echoed the disappointment.

- German Inference Pricing Models: @lightningralf proposed a two-tier pricing model for German inference services, prompting discussion of possible free services backed by corporate sponsorship.

- Self-Reliant Server Management to Boost Efficiency: @flozi00 revealed an in-house effort to build a data center, reflecting a trend toward proprietary server infrastructure and dedicated in-house server management teams.
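The perplexity testing mentioned in the dataset discussion comes down to one formula: PPL = exp(−(1/N) Σ log p(token_i | preceding tokens)). The sketch below assumes per-token log-probabilities have already been obtained from the model under evaluation (a hypothetical input here); it does not load any of the datasets named above.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """PPL = exp(-(1/N) * sum_i log p(token_i | preceding tokens)), natural log."""
    if not token_logprobs:
        raise ValueError("need at least one scored token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def corpus_perplexity(docs: list[list[float]]) -> float:
    """Pool tokens across documents (token-weighted) rather than averaging per-document PPLs."""
    return perplexity([lp for doc in docs for lp in doc])


# Sanity check: assigning probability 1/4 to every token gives perplexity 4.
print(round(perplexity([math.log(0.25)] * 10), 6))  # 4.0
```

Pooling tokens matters when comparing corpora like German Wikipedia and C4, since averaging per-document perplexities would overweight short documents.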

LLM Perf Enthusiasts AI Discord Summary

- Lindy AI Passes Preliminary Tests: @thebaghdaddy found Lindy AI capable of basic tasks like data retrieval and write-ups, but suggested that specialized systems may be more efficient for specific tasks.

- Azure’s AI Offerings Questioned: .psychickoala asked whether Azure offers a GPT-4 vision model, but the question went unanswered.

- Super JSON Mode Promises Speed: @res6969 introduced Super JSON Mode via a tweet from @varunshenoy_, which claims a 20x speedup in structured output generation for language models without resorting to unconventional approaches.

- Optimizing Hosting for MythoMax: @jmak is looking for a more cost-effective way to host the MythoMax LLM, but the community has not yet weighed in.

- Struggling with PDFs? OCR Them All!: @pantsforbirds is looking for better ways to process PDFs, particularly those with poorly encoded text, while @res6969 advocates running OCR on every document to sidestep text extraction issues, despite the additional cost.

Alignment Lab AI Discord Summary

- Seeking Synergy in Silicon: @blankcoo reached out about potential collaboration opportunities with the Alignment Lab project.

- Enthusiastic Newcomer Alert: @craigba shared his enthusiasm for joining the Alignment Lab AI Discord and offered his cybersecurity expertise, referencing his work at Threat Prompt.

- Ingenious Code Generation Tools: @craigba highlighted AlphaCodium, a tool that uses adversarial techniques akin to GANs to generate high-quality code, inviting others to watch Itamar Friedman’s brief introduction and explore its GitHub repository.

- Appreciation for AI Interview Insights: Members praised the dialogue in Jeremy’s Latent Space interview, “The End of Finetuning”, singling out a question about deep learning and productivity outside of big tech.

Datasette - LLM (@SimonW) Discord Summary

- Free Hairstyle App Hunt Turns Fruitless: @soundblaster__ is searching for a free app for changing hairstyles but has come up empty, even after scouring the first two pages of Google results for options that don’t require payment after registration.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1312 messages🔥🔥🔥):

- AI Performance and Training Discussions: Users discussed various AI models and training techniques, with particular attention paid to models like Mixtral, Nous Mixtral DPO, and Miqu 70B. They compared these to OpenAI’s GPT models in terms of efficiency and capability.

- LLM Community and Resource Sharing: Conversations touched on PolyMind, a project targeting Mixtral Instruct with features like Python interpretation and semantic PDF search. Its GitHub repository was shared, along with mention that a Reddit post about it had been removed, suggesting possible moderation issues on the /r/LocalLLaMA subreddit.

- Tech Specs and Equipment Discussions: Users exchanged insights on computing hardware suitable for running large models locally. They debated the memory bandwidth of chips like Apple’s M2 and AMD’s Epyc, and the practicality of setups with large amounts of RAM for AI inference.

- Community Dynamics: The tone of various AI-focused Discord servers was discussed, with opinions on the nature of conversations and community behavior across servers including TheBloke’s server, SillyTavern, and EleutherAI.

- Reddit Moderation and Policy: Members criticized ambiguous moderation practices on Reddit, particularly around posts on the /r/LocalLLaMA subreddit and a lack of transparency. Users expressed frustration over apparently unequal enforcement of rules when sharing useful AI-related content.
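Memory bandwidth dominates these hardware debates because single-stream token generation is usually memory-bound: each new token requires reading every weight once, so tokens per second cannot exceed bandwidth divided by the size of the weights (the kind of reasoning covered in the “Transformer Inference Arithmetic” post linked below). The bandwidth figures and the 70B, ~4-bit workload in the sketch are assumptions chosen for illustration, not numbers from the discussion.

```python
def max_decode_tokens_per_s(bandwidth_gb_s: float, n_params: float, bytes_per_param: float) -> float:
    """Rough ceiling for single-stream decoding: every generated token reads all weights
    once, so tokens/s <= bandwidth / weight bytes. Ignores KV-cache traffic, compute
    limits and real-world efficiency, so measured speeds will be lower."""
    weight_bytes = n_params * bytes_per_param
    return bandwidth_gb_s * 1e9 / weight_bytes


# Assumed peak bandwidths from spec sheets, not measurements from the discussion.
systems = {
    "Apple M2 Ultra (800 GB/s)": 800.0,
    "AMD Epyc, 12-channel DDR5-4800 (460.8 GB/s)": 460.8,
    "Desktop, dual-channel DDR5-5600 (89.6 GB/s)": 89.6,
}
for name, bw in systems.items():
    # Assumed workload: a 70B-parameter model quantized to ~4 bits (0.5 bytes/param).
    tps = max_decode_tokens_per_s(bw, n_params=70e9, bytes_per_param=0.5)
    print(f"{name}: at most ~{tps:.1f} tokens/s")
```

The same arithmetic explains why large amounts of ordinary RAM can hold a big model yet still generate slowly: capacity decides whether the model fits, bandwidth decides how fast it runs.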

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- bartowski/dolphin-2.6-mistral-7b-dpo-exl2 · Hugging Face: no description found

- Sfm Soldier GIF - Sfm Soldier Tf2 - Discover & Share GIFs: Click to view the GIF

- Oh My God Its Happening GIF - Oh My God Its Happening Ok - Discover & Share GIFs: Click to view the GIF

- Transformer Inference Arithmetic | kipply’s blog: kipply’s blog about stuff she does or reads about or observes

- Apple Apple Mac GIF - Apple Apple Mac Apple Mac Studio - Discover & Share GIFs: Click to view the GIF

- GeorgiaTechResearchInstitute/galactica-6.7b-evol-instruct-70k · Hugging Face: no description found

- Qwen-1.5 72B: China’s AI juggernaut DEFEATS Mistral 7B and GPT4! (AI News) 🐉: The East throws down the gauntlet with Qwen 1.5, a game-changing LLM that shatters boundaries. Not only does it rival ChatGPT4 in math and coding prowess, bu…

- GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.: A multimodal, function calling powered LLM webui. - GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.

- The Voices GIF - The Voices - Discover & Share GIFs: Click to view the GIF

- Fully Uncensored GPT Is Here 🚨 Use With EXTREME Caution: In this video, we review Wizard Vicuna 30B Uncensored. All censorship has been removed from this LLM. You’ve been asking for this for a while, and now it’s h…

- Hugging Face – The AI community building the future.: no description found

- Reddit - Dive into anything: no description found

- The Voices Meme GIF - The Voices Meme Cat - Discover & Share GIFs: Click to view the GIF

- GitHub - ml-explore/mlx-examples: Examples in the MLX framework: Examples in the MLX framework. Contribute to ml-explore/mlx-examples development by creating an account on GitHub.

- abacusai/Smaug-72B-v0.1 · Hugging Face: no description found

- Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4: no description found

- THE DECODER: Artificial Intelligence is changing the world. THE DECODER brings you all the news about AI.

TheBloke ▷ #characters-roleplay-stories (503 messages🔥🔥🔥):

- Greek Tragedy or Coding Strategy?: Discord users debated the origin and nature of Thespis 0.8, with @c.gato explaining that its name comes from Greek tragedy and providing the historical context behind it. @billynotreally and others playfully confused the term, likening it to “sepsis” and to a Norwegian word.

- Detailing DPO Results: @dreamgen requested a public Weights & Biases (wandb) report of DPO (Direct Preference Optimization) run metrics. @c.gato indicated that accuracy should rise toward 100% and margins should grow over time, and shared an example wandb project link (this link was part of the original chat and does not lead to a real destination).

- Lorebooks as Prompt Engineering Tools: In a conversation about using lorebooks to enhance roleplay stories, @johnrobertsmith questioned their usefulness beyond prompt engineering. @mrdragonfox pointed to their ability to inject information without the user prompting for it, reinforcing important elements during a roleplay session.

- Merges and Model Trainings Discussed: Users debated the pros and cons of merging models. @mrdragonfox disapproved of merging, while @mrg argued that users only care about end results. The discussion pointed toward a general consensus that dataset creation matters more than model merging.

- Contemplating Benchmark and Dataset Strategies: There was disagreement over the value of merging models for benchmarks versus creating datasets, with @flail_ suggesting that merges can distort benchmarks and @mrdragonfox affirming that the real value lies in the datasets rather than in merging models.
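The accuracy and margin metrics @c.gato referred to come from DPO’s implicit rewards: for each preference pair, the reward of a completion is β times the gap between its log-probability under the policy and under the frozen reference model, the margin is chosen reward minus rejected reward, and accuracy is the fraction of pairs with a positive margin. The sketch below computes those quantities and the DPO loss −log σ(margin) from hypothetical log-probabilities; β = 0.1 is an assumed default, and real trainers compute the log-probabilities from model forward passes.

```python
import math
from dataclasses import dataclass


@dataclass
class PairLogps:
    """Summed log-probs of the chosen/rejected completions under policy and frozen reference."""
    policy_chosen: float
    policy_rejected: float
    ref_chosen: float
    ref_rejected: float


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def dpo_metrics(batch: list[PairLogps], beta: float = 0.1) -> dict[str, float]:
    losses, margins, correct = [], [], 0
    for p in batch:
        r_chosen = beta * (p.policy_chosen - p.ref_chosen)        # implicit reward, chosen
        r_rejected = beta * (p.policy_rejected - p.ref_rejected)  # implicit reward, rejected
        margin = r_chosen - r_rejected
        losses.append(softplus(-margin))                          # equals -log(sigmoid(margin))
        margins.append(margin)
        correct += int(margin > 0)
    n = len(batch)
    return {"loss": sum(losses) / n, "margin": sum(margins) / n, "accuracy": correct / n}


# At initialization the policy equals the reference: margin 0, loss log(2), accuracy 0.
print(dpo_metrics([PairLogps(-10.0, -12.0, -10.0, -12.0)]))
# After training pushes chosen up and rejected down, the margin and accuracy grow.
print(dpo_metrics([PairLogps(-8.0, -15.0, -10.0, -12.0)]))
```

This is why a healthy run shows accuracy climbing toward 1.0 and margins widening: both read directly off the same per-pair reward gap that the loss pushes upward.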

Links mentioned:

- Artefact2/BagelMIsteryTour-v2-8x7B-GGUF · Hugging Face: no description found

- PotatoOff/HamSter-0.2 · Hugging Face: no description found

- Preference Tuning LLMs with Direct Preference Optimization Methods: no description found

- Objective | docs.ST.app: The Objective extension lets the user specify an Objective for the AI to strive towards during the chat.

- TheBloke/Beyonder-4x7B-v2-GGUF · Hugging Face: no description found

- jondurbin: Weights & Biases, developer tools for machine learning
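The lorebook idea from this channel is to inject background facts without the user asking for them. It is usually keyword-triggered. The sketch below assumes a simple design loosely modeled on SillyTavern-style lorebooks:

- each entry has trigger keywords;
- the last few messages are scanned for those keywords;
- matching entries are prepended to the prompt.

The function names and the `scan_depth` default are hypothetical and do not reproduce SillyTavern's actual implementation.

```python
def triggered_entries(history, lorebook, scan_depth=3):
    """Return lore entries whose keywords appear in the last `scan_depth` messages."""
    if scan_depth <= 0:
        return []
    recent = " ".join(history[-scan_depth:]).lower()
    return [text for keywords, text in lorebook
            if any(k.lower() in recent for k in keywords)]


def build_context(system_prompt, history, lorebook, scan_depth=3):
    """Prepend triggered lore so the model sees it without the user asking."""
    lore = triggered_entries(history, lorebook, scan_depth)
    parts = [system_prompt]
    if lore:
        parts.append("[Lore]\n" + "\n".join(lore))
    parts.extend(history)
    return "\n\n".join(parts)
```

Entries that no keyword triggers stay out of the context, which keeps the prompt short.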

TheBloke ▷ #training-and-fine-tuning (11 messages🔥):

- Seeking to Sidestep Safety: User @mmarkd asked about removing safety guardrails from Llama2 70B instruct. They shared a LessWrong post describing how LoRA fine-tuning can cheaply undo safety training, but noted that the post lacked actionable details.

- Frustration Over AI’s Ethical Restraints: @mmarkd described the difficulty of using models like Llama2 70B instruct, because excessive ethical guardrails block even harmless requests such as code refactoring.

- Recommendations to Reduce Restraint: @flail_ suggested alternative fine-tuned models such as toxicdpo, spicyboros, airoboros, dolphin, and hermes to avoid overbearing safety behavior.

- Tip for Finer Fine-tuning: @london advised that combining datasets during fine-tuning improves control over various model parameters.

- LoRA’s Training Attributes Questioned: @cogbuji discussed how much change LoRA fine-tuning can produce. They referenced the QLoRA paper, which reports that applying LoRA to all linear layers of the transformer blocks is needed to match the performance of full fine-tuning.

- Seeking Colab Support for LM Usage: User @thiagoribeirosnts is looking for help running WizardLM or LLaMA 2 on Google Colab after running into difficulties.

- Contemplating Correct Course for LoRA: @gandolphthewicked_87678 wondered whether to keep using Mistral 7B for LoRA fine-tuning or switch to a base model, and asked for recommendations.
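To make the LoRA discussion concrete: LoRA freezes a weight matrix W and learns a low-rank update B·A, scaled by alpha/r. The pure-Python sketch below uses no framework. It shows the forward pass and why the trainable parameter count stays small, which is what keeps adapters on every linear layer affordable. The matrix sizes in the note after the code are illustrative.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, alpha):
    """h = W x + (alpha / r) * B (A x), with W frozen and only A, B trained.

    Shapes: w is d_out x d_in, a is r x d_in, b is d_out x r.
    """
    r = len(a)
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def lora_trainable_params(d_out, d_in, r):
    """Parameters in A and B, versus d_out * d_in for full fine-tuning of W."""
    return r * (d_in + d_out)
```

Take an 8192 × 8192 projection (8192 is Llama 2 70B's hidden size) at rank 16. LoRA trains 262,144 parameters there instead of 67,108,864. B is typically initialized to zero, so the adapted layer starts out identical to the base layer.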

Links mentioned:

LoRA Fine-tuning Efficiently Undoes Safety Training from Llama 2-Chat 70B — LessWrong: Produced as part of the SERI ML Alignment Theory Scholars Program - Summer 2023 Cohort, under the mentorship of Jeffrey Ladish. …
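@london's tip leaves the mixing method open. One common approach is to sample training examples from several datasets according to chosen weights, so each source's influence can be turned up or down. This is a hedged sketch, not necessarily what @london does, and the function name and weights are hypothetical. Hugging Face's `datasets` library offers a comparable option through `interleave_datasets` with `probabilities`.

```python
import random


def mix_datasets(datasets, weights, n_samples, seed=0):
    """Draw n_samples (source_name, example) pairs, picking each source by weight.

    datasets: dict mapping a source name to a list of examples.
    weights:  dict mapping the same names to non-negative sampling weights.
    """
    rng = random.Random(seed)  # a fixed seed keeps the mixture reproducible
    names = list(datasets)
    probs = [weights[name] for name in names]
    mixed = []
    for _ in range(n_samples):
        name = rng.choices(names, weights=probs, k=1)[0]
        mixed.append((name, rng.choice(datasets[name])))
    return mixed
```

Raising one source's weight increases its expected share of the mixture. Because sampling is with replacement, examples from small datasets may repeat.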

TheBloke ▷ #coding (6 messages):

- Limitations in Transformers for Layer Offloading: User @mmarkd asked about offloading specific layers to the GPU with Transformers, similar to the `-ngl` flag in llama.cpp. @itsme9316 responded that the Transformers library cannot split layers between CPU and GPU.

- Meta’s Sphere Project Intrigue: @spottyluck shared a GitHub link to Facebook’s Sphere project and mused about Meta’s shift away from a strategy once seen as a challenge to Google. They also suggested using Sphere for frequent updates with Common Crawl and big data tools.

- Implementation Queries for LLaMa 2 on MLX: @lushboi is exploring an implementation of LLaMa 2 on MLX for Apple Silicon. They questioned the structure of the model’s query parameters and are weighing whether to adapt them to a multi-head or grouped-query format.
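The multi-head versus grouped-query question comes down to how many key/value heads the checkpoint has. In grouped-query attention (GQA), several query heads share one key/value head. Multi-head attention is the special case where the two counts are equal.

Below is a minimal, framework-free sketch of the head mapping; an MLX port would express the same idea with array operations. The test uses Llama 2 70B's published configuration of 64 query heads and 8 key/value heads. The smaller Llama 2 models use ordinary multi-head attention.

```python
def kv_head_for(q_head, n_heads, n_kv_heads):
    """Index of the key/value head that query head `q_head` attends with."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group_size = n_heads // n_kv_heads  # query heads per shared KV head
    return q_head // group_size


def repeat_kv(kv_heads, n_heads):
    """Expand a list of KV heads so there is one per query head.

    Consecutive query heads share a KV head (the repeat-interleave layout
    common in Llama implementations). When len(kv_heads) == n_heads this is
    plain multi-head attention and the list comes back unchanged.
    """
    n_kv = len(kv_heads)
    return [kv_heads[kv_head_for(h, n_heads, n_kv)] for h in range(n_heads)]
```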

Links mentioned:

GitHub - facebookresearch/Sphere: Web-scale retrieval for knowledge-intensive NLP: Web-scale retrieval for knowledge-intensive NLP. Contribute to facebookresearch/Sphere development by creating an account on GitHub.
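A hedged aside to the layer-offloading answer in this channel: Transformers' `from_pretrained` accepts a `device_map`, backed by Accelerate, that assigns individual modules to a GPU index or to `"cpu"`. This at least controls where layer weights live. Whether CPU-mapped layers also compute on the CPU is decided by Accelerate's dispatch logic, so this is not a drop-in equivalent of llama.cpp's `-ngl`.

The helper below is hypothetical. It assumes Llama-style module names (`model.embed_tokens`, `model.layers.N`, `model.norm`, `lm_head`), which may not match other architectures.

```python
def llama_device_map(n_layers, n_gpu_layers, gpu=0):
    """Build a device_map that puts the first n_gpu_layers decoder layers on `gpu`.

    Hypothetical helper; module names assume a Llama-style checkpoint.
    """
    if not 0 <= n_gpu_layers <= n_layers:
        raise ValueError("n_gpu_layers must be between 0 and n_layers")
    device_map = {"model.embed_tokens": gpu}
    for i in range(n_layers):
        device_map[f"model.layers.{i}"] = gpu if i < n_gpu_layers else "cpu"
    # The final norm and output head follow the last decoder layer's placement.
    tail = gpu if n_gpu_layers == n_layers else "cpu"
    device_map["model.norm"] = tail
    device_map["lm_head"] = tail
    return device_map
```

For an 80-layer model such as Llama 2 70B, the helper would be passed as `AutoModelForCausalLM.from_pretrained(model_id, device_map=llama_device_map(80, 40))`. If you don't need manual control, `device_map="auto"` with a `max_memory` budget is the simpler route.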

OpenAI ▷ #annnouncements (1 messages):

- DALL-E Images Get Metadata Upgrade: @abdubs announced that images generated in ChatGPT and the OpenAI API now include metadata following the C2PA specification. This metadata lets anyone verify that an image was generated by OpenAI products, which helps social platforms and content distributors. Read the full details in the help article.
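In JPEG files, C2PA manifests are carried in JUMBF boxes inside APP11 marker segments. The sketch below walks a JPEG's header segments and flags APP11 as a possible C2PA manifest. It only detects presence and does not parse or cryptographically verify the manifest; a real check needs a C2PA library. It also assumes a well-formed baseline JPEG.

```python
import struct

APP11 = 0xEB  # JPEG marker used for JUMBF boxes, where C2PA stores manifests
SOS = 0xDA    # start of scan: compressed image data follows


def header_segments(data):
    """Return (marker, payload_length) for each segment up to and including SOS."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    segments, pos = [], 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        # The big-endian length field counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segments.append((marker, length - 2))
        if marker == SOS:
            break
        pos += 2 + length
    return segments


def may_carry_c2pa(data):
    """True if any APP11 segment is present (a hint, not proof, of a C2PA manifest)."""
    return any(marker == APP11 for marker, _ in header_segments(data))
```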

OpenAI ▷ #ai-discussions (300 messages🔥🔥):

- AI Censorship Doubts Deter Usage: Users @kotykd and @arturmentado discussed how AI censorship affects user experience. @kotykd argued for user freedom in AI outputs and considers current restrictions overreach. @arturmentado explained the need to safeguard against misuse and insists there are good reasons for protective measures.

- The Cost of True Artistry in the AI Era: @infidelis and others debated the ethics of passing off AI-generated art as human-made on platforms like ArtStation, emphasizing the importance of disclosure. Some worried that platforms suffer when AI art is falsely presented. @lugui stressed that OpenAI’s TOS requires honesty about AI’s role in content creation.

- Metadata’s Role in Provenance Mapping: Much of the discussion focused on how easily metadata can be removed from images. @whereyamomsat.com provided resources on EXIF data, and @heavygee commented on how file size changes after metadata removal. Many users considered metadata an ineffective provenance measure because it is so easy to alter.

- Navigating Open Source AI vs. Commercial Solutions: Participants like @infidelis and @arturmentado debated whether open-source models or commercial AI like GPT-4 is superior. They considered the impact on the pace of innovation, and some forecast the rise of competitive open-source solutions.

- The Controversial Convenience of AI Learning Assists: Users debated the educational applications of AI. @germ_storm and @chief_executive compared AI with traditional methods for learning and research, arguing that it can be a powerful tool for efficient learning in some fields.
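It is easy to demonstrate that metadata is trivially removable. This sketch copies a JPEG while dropping the APP1 (EXIF/XMP) and APP11 (JUMBF, where C2PA manifests live) segments. The pixel data is untouched, and the file simply shrinks by the dropped bytes. It assumes a well-formed JPEG and is meant for illustration, not as a privacy tool.

```python
import struct

SOI = b"\xff\xd8"
SOS = 0xDA
DEFAULT_STRIP = frozenset({0xE1, 0xEB})  # APP1 (EXIF/XMP) and APP11 (JUMBF/C2PA)


def strip_jpeg_metadata(data, strip=DEFAULT_STRIP):
    """Return a copy of a JPEG without the given APPn marker segments."""
    if not data.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out, pos = bytearray(SOI), 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if marker == SOS:
            out += data[pos:]  # scan data and EOI are copied verbatim
            return bytes(out)
        if marker not in strip:
            out += data[pos:pos + 2 + length]
        pos += 2 + length
    raise ValueError("no SOS marker found")
```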

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Overview - C2PA : no description found

- Portals - [TouchDesigner + Stable WarpFusion Project Files]: ♫ + 👁 by myself.You can access these TouchDesigner project files [+ its corresponding warpfusion settings], plus many more project files, tutorials and expe…

- Online photo metadata and EXIF data viewer | Jimpl: View EXIF data of your images online. Find when and where the picture was taken. Remove metadata and location from the photo to protect your privacy.

- NO C2PA - Remove C2PA Metadata: no description found

- Reddit - Dive into anything: no description found

- Content Credentials: Introducing the new standard for content authentication. Content Credentials provide deeper transparency into how content was created or edited.

OpenAI ▷ #gpt-4-discussions (46 messages🔥):

- Troubleshooting Log-In Issues: User @mikreyyy asked how to log out of all devices on their account. No solutions or follow-up comments appeared in the thread.

- Finding GPT Demonstrations: User @glory_72072 asked how to find and use the GPT demo, but no responses appeared in the subsequent messages.

- Experiencing Enhanced Storytelling: User @blckreaper praised GPT’s improved narrative output, noting longer responses that follow directions more accurately. However, they reported that the AI does not fully adhere to custom instructions.

- Queries About Answer Limits: @ytzhak may have hit a usage cap, getting blocked after 12 to 20 interactions with GPT. This sparked a discussion about limits on custom GPT usage, which @blckreaper attributed to a cap under which each regeneration counts as one message.

- Custom GPT Troubles and Tips: Users reported various problems and fixes with custom GPTs:
  - @realspacekangaroo hit timeout errors.
  - @hawk8225 found the Explore button had disappeared; @blckreaper suggested signing out and back in.
  - @woodenrobot discussed conflicting instructions and token limits within custom instructions.
  - @drinkoblog.weebly.com commented on the cost-effectiveness of different GPT models.

OpenAI ▷ #prompt-engineering (5 messages):

- Looking for Audience & Advice on GPTs: User @loier is looking for people interested in their GPTs and wants to learn how to improve their module and script setup.

- Refining Character Interaction in Prompts: @_fresnic suggests placing hints in each segment of a character-interaction conversation to fine-tune the system’s responses. They offer to review others’ prompts or conversation flows if given a screenshot or gist.

- Seeking OpenAI Contact for Teams Feedback: @novumclassicum strongly wants to discuss the Teams integration with OpenAI. They are looking for a way to contact someone at the company, including Sam Altman, to give passionate feedback and suggestions for improvement.
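@_fresnic suggests spreading short character hints across the conversation rather than relying on the system prompt alone. That can be sketched with the common chat-message format of role/content dictionaries. The placement and wording of the hints here, a parenthetical note appended to the user turn, are assumptions for illustration.

```python
def build_messages(system_prompt, turns, hints):
    """Assemble chat messages, appending an optional character hint to each user turn.

    turns: list of (user_text, assistant_reply_or_None)
    hints: one hint string (or None) per turn
    """
    if len(turns) != len(hints):
        raise ValueError("need exactly one hint (or None) per turn")
    messages = [{"role": "system", "content": system_prompt}]
    for (user_text, reply), hint in zip(turns, hints):
        content = f"{user_text}\n\n(Character note: {hint})" if hint else user_text
        messages.append({"role": "user", "content": content})
        if reply is not None:
            messages.append({"role": "assistant", "content": reply})
    return messages
```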

OpenAI ▷ #api-discussions (5 messages):

- Request for Collaboration and Guidance: User @loier is looking for a community to discuss GPT usage and wants advice on setting up modules and scripts to improve their GPTs’ performance.

- Refining Character Interactions in Prompts: User @_fresnic suggests spreading hints about character interactions throughout conversation segments instead of putting them only in the initial system prompt. They offer to review examples shared as a screenshot or gist.

- Seeking Direct Access to OpenAI Team: User @novumclassicum strongly wants to discuss the Teams integration with OpenAI representatives and invites someone like Sam Altman to connect for an in-depth conversation.

HuggingFace ▷ #announcements (1 messages):

- Create Your Own Chat Assistant: A new feature called Hugging Chat Assistant lets users build personal assistants with ease. As described by @_philschmid, you can customize the name, avatar, and behavior, and choose different LLMs such as Llama2 or Mixtral. Users welcomed that it removes the need to store custom prompts separately. Discover yours at Hugging Face Chat.

- Private Dataset Viewing Now Possible: @julien_c announced that the Dataset Viewer is now available for private datasets. The feature is limited to PRO and Enterprise Hub users and offers enhanced tools for data exploration and analysis. Read more about the data team’s work.

- Synthetic and Croissant Data Tags on the Hub: Anticipating the growing importance of synthetic data, @vanstriendaniel revealed a new `synthetic` tag on the Hugging Face Hub. The tag makes synthetic datasets easier to discover and share; just include it in your dataset card metadata.

- Showcase Your Blogposts on Your HF Profile: According to @not_so_lain, blog posts written by Hugging Face users now appear on their own profiles. This gives individual contributions and insights more visibility within the community.

- Mini Header for Spaces: @lunarflu1 introduced a `header: mini` option for Hugging Face Spaces. It displays the Space full-screen with a floating mini header, keeping the focus on the content.

Links mentioned:

- Tweet from Philipp Schmid (@_philschmid): Introducing Hugging Chat Assistant! 🤵 Build your own personal Assistant in Hugging Face Chat in 2 clicks! Similar to @OpenAI GPTs, you can now create custom versions of @huggingface Chat! 🤯 An Ass…

- HuggingChat - Assistants: Browse HuggingChat assistants made by the community.

- Tweet from Julien Chaumond (@julien_c): NEW on the @huggingface hub: the Dataset Viewer is now available on private datasets too You need to be a PRO or a Enterprise Hub user. 🔥 The Dataset Viewer allows teams to understand their data a…

- Tweet from Daniel van Strien (@vanstriendaniel): Synthetic data is going to be massively important in 2024, so we have recently launched a new tag on the @huggingface Hub to facilitate the discovery and sharing of synthetic datasets. To add this tag…

- Tweet from hafedh (@not_so_lain): @huggingface just found out that when you write a blogpost it shows in your own profile ❤️

- Tweet from lunarflu (@lunarflu1): There’s a new option for @huggingface Spaces 🤗! Add header: mini in the metadata, and the space will be displayed full-screen with a floating mini header.

- Tweet from Sayak Paul (@RisingSayak): Announcing new releases on the weekend is the new norm 🤷🚀 Presenting Diffusers 0.26.0 with two new video models, support for multi IP-adapter inference, and more 📹 Release notes 📜 https://github…

- Tweet from Sourab Mangrulkar (@sourab_m): New Release Alert! 🚨 PEFT v0.8.0 is out now! 🔥🚀✨ Check out the full release notes at https://github.com/huggingface/peft/releases/tag/v0.8.0 [1/9]

- Tweet from Titus.von.Koeller (@Titus_vK): Exciting news for bitsandbytes! We’re thrilled to announce the release of the initial version of our new documentation! 🧵https://huggingface.co/docs/bitsandbytes/main/en/index

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): LETS GOO! Faster CodeLlama 70B w/ AWQ & Flash Attention 2⚡ Powered by AutoAWQ, Transformers & @tri_dao’s Flash Attention 2. GPU VRAM ~40GB 🔥 Want to try it yourself? You’d need to make two…

- Tweet from Sayak Paul (@RisingSayak): Stable Video Diffusion (SVD) can now be used with 🧨 diffusers, thanks to @multimodalart ❤️ SVD v1.1 👉 https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt-1-1 Guide 👉 https://hugg…

- Constitutional AI with Open LLMs: no description found

- NPHardEval Leaderboard: Unveiling the Reasoning Abilities of Large Language Models through Complexity Classes and Dynamic Updates: no description found

- Patch Time Series Transformer in Hugging Face: no description found

- Hugging Face Text Generation Inference available for AWS Inferentia2: no description found

- SegMoE: Segmind Mixture of Diffusion Experts: no description found

- Tweet from ai geek (wishesh) ⚡️ (@aigeek__): finally a leaderbord that matter the most. @huggingface’s new Enterprise Scenarios leaderboard just launched. it evaluates the performance of language models on real-world enterprise use cases. …

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

HuggingFace ▷ #general (170 messages🔥🔥):

- XP Boost Debate: @lunarflu discussed adding a multiplier to the XP earned by specific roles in the Hugging Face community and named the server booster role as a good candidate for the bonus.

- Docker Inquiry on AI Processing Location: @criticaldevx asked whether text generation inference run via Docker is processed on the user’s device or on Hugging Face’s servers. The messages contain no clear answer.

- Error Troubleshooting: Several users, including @leifer_ and @criticaldevx, reported a 504 error on Hugging Face’s chat feature, suggesting the service might be down.

- Collaboration and Contribution: @lunarflu offered to look into fellowships that could help elevate users’ impact. @ufukhury asked how to contribute to Hugging Face but received no specific next steps.

- Accelerator Load State Issues: @bit0r and @doctorpangloss worked through a detailed troubleshooting conversation about restoring a checkpoint with Accelerate’s load_state functionality. They questioned whether running inside containers like LXD, or specifics of the code, were to blame.

Links mentioned:

- LoRA Studio - a Hugging Face Space by enzostvs: no description found

- Quick tour: no description found

- Rockwell Retro Encabulator: Latest technology by Rockwell Automation

- GitHub - HSG-AIML/MaskedSST: Code repository for Scheibenreif, L., Mommert, M., & Borth, D. (2023). Masked Vision Transformers for Hyperspectral Image Classification, In CVPRW EarthVision 2023: Code repository for Scheibenreif, L., Mommert, M., & Borth, D. (2023). Masked Vision Transformers for Hyperspectral Image Classification, In CVPRW EarthVision 2023 - GitHub - HSG-AIML/MaskedSS…

- GitHub - Sanster/tldream: A tiny little diffusion drawing app: A tiny little diffusion drawing app. Contribute to Sanster/tldream development by creating an account on GitHub.

HuggingFace ▷ #cool-finds (7 messages):

- Cat Game Research Unleashes Quadruped Fur-Force: @technosourceressextraordinaire shared a model of their cat, Leela, created for a video game in development at Cat Game Research for Bat Country Entertainment. The game is billed as pioneering the first quadruped character controller built with ML and AI. More details are at badcatgame.com.

- Scholarly Dive into Language Models and Code: @vipitis is reading a comprehensive survey paper on recent advances in code processing with language models. The paper covers a wide range of models and datasets and more than 700 related works. It has an ongoing update thread on GitHub, and its references are being added to a Hugging Face collection. The full paper is on arXiv.

- No Discord Invites, Please: @cakiki reminded users that the channel’s guidelines prohibit Discord invites. The reminder was directed at @1134164664721350676 and later repeated for @985187584684736632.

- Alibaba’s AI Outperforms Competitors: @dreamer1618 highlighted an article stating that Alibaba’s latest AI model, Qwen 1.5, beat both ChatGPT and Claude in multiple benchmark tests. The article is available at wccftech.com.

- Innovative Fine-Tuning Paper ‘RA-DIT’ Explored: @austintb. discussed plans to implement techniques from the paper “RA-DIT: Retrieval-Augmented Dual Instruction Tuning,” which proposes methods for fine-tuning both the retriever and the language model. The full paper is on arXiv.

Links mentioned:

- Alibaba’s Latest A.I. Beats GPT-3.5, Claude In Multple Benchmark Tests: With 2024 marking a strong start to the global artificial intelligence race, Chinese technology giant Alibaba Group has also announced the latest iteration of its Qwen artificial intelligence model. A…

- Bad Cat Game: You’re a cat, and a jerk — an action adventure rpg game currently being developed by Bat Country Entertainment LLC

- RA-DIT: Retrieval-Augmented Dual Instruction Tuning: Retrieval-augmented language models (RALMs) improve performance by accessing long-tail and up-to-date knowledge from external data stores, but are challenging to build. Existing approaches require eit…

- Unifying the Perspectives of NLP and Software Engineering: A Survey on Language Models for Code: In this work we systematically review the recent advancements in code processing with language models, covering 50+ models, 30+ evaluation tasks, 170+ datasets, and 700+ related works. We break down c…

HuggingFace ▷ #i-made-this (10 messages🔥):

- Praise for Creation: @furquan.sal praised a project with a simple message: “Impressive Bro! Liked it 💛”.

- Inquiries into Frontend Tech: @furquan.sal asked which frontend framework the project uses. @wubs_ explained that the frontend is built with React and uses Jotai for state management. They also shared their development timeline and invited questions.

- TensorLM-webui Unveiled: @ehristoforu announced TensorLM-webui, a Gradio web UI for LLaMA-based LLMs in GGML format. Users can clone the project from GitHub or try a mini-demo on Hugging Face Spaces.

- From Sketch to Fashion: @tony_assi presented Sketch to Fashion Collection, an application on Hugging Face Spaces that turns sketches into fashion designs. They later asked whether an image generation API would be possible.

- BLOOMChat-v2 Announced: @urmish. shared information about BLOOMChat-v2, a 176B-parameter multilingual language model with a 32K sequence length. The model shows significant improvements over earlier models, and an API is coming soon. Further details are in a Twitter summary and a detailed blog post.

Links mentioned:

- Sketch To Fashion Collection - a Hugging Face Space by tonyassi: no description found

- Introducing BLOOMChat 176B - The Multilingual Chat based LLM: We are proud to release BLOOMChat-v2, a 32K sequence length, 176B multilingual language model.

- GitHub - ehristoforu/TensorLM-webui: Simple and modern webui for LLM models based LLaMA.: Simple and modern webui for LLM models based LLaMA. - GitHub - ehristoforu/TensorLM-webui: Simple and modern webui for LLM models based LLaMA.

- TensorLM - Llama.cpp UI - a Hugging Face Space by ehristoforu: no description found

- Art Forge Labs Development Timeline - AI art innovation: Welcome to Art Forge Labs – where our journey in AI art innovation has been as rapid as it has been revolutionary. From our early days of basic setups to leading the charge in AI-driven art, our path …

HuggingFace ▷ #reading-group (101 messages🔥🔥):

- Mamba Presentation Shifted: @lunarflu announced that the Mamba paper presentation is moving to the following week and invited others to present something in the meantime. No paper or topic has been chosen for the current week yet.

- Reading Group on the Radar: @tonic_1 and a friend want to present a paper on decoder-only foundation models for time-series forecasting at the next Reading Group. They are coordinating a Friday presentation and are clearly excited (“geeking out (hard)”).

- GitHub Repo for Reading Group Resources: @chad_in_the_house created a GitHub repository that collects past presentations and recordings of the Hugging Face Reading Group for easy access and possible future posting on YouTube.

- S4 and Mamba Discussion Anticipation: @ericauld is preparing to cover Mamba and S4 and is asking which aspects the community would find most valuable. They suggest focusing on how others have iterated on the papers and where the work might go next.

@ericauldis preparing to cover Mamba and S4, seeking input on what aspects the community would find most valuable in a presentation. They suggest focusing on iterations others have made on the papers and potential future developments. - Learning Pathways in ML/AI: Various users shared tips, resources, and starting points for those new to machine learning and AI. Suggested methods include engaging with reading groups, using high-level libraries, working on specific projects, and learning foundational knowledge such as linear algebra.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Using ML-Agents at Hugging Face: no description found

- Spaces - Hugging Face: no description found

- Civitai | Share your models: no description found

- GitHub - isamu-isozaki/huggingface-reading-group: This repository’s goal is to precompile all past presentations of the Huggingface reading group: This repository's goal is to precompile all past presentations of the Huggingface reading group - GitHub - isamu-isozaki/huggingface-reading-group: This repository's goal is to precomp…

- SDXL Unstable Diffusers ヤメールの帝国 ☛ YamerMIX - V11 + RunDiffusion | Stable Diffusion Checkpoint | Civitai: For business inquires, commercial licensing, custom models/commissions, large scale image captioning for datasets and consultation contact me under…

- Mobile ALOHA: no description found

- This new AI that will take your job at McDonald’s: Here is a glimpse into the future of robots powered by AI and trained with teleoperated data.check out my leaderboard website at:https://leaderboard.bycloud…

HuggingFace ▷ #diffusion-discussions (7 messages):

- Off-Topic Guidance: @juancopi81 asked @eugenekormin to move server-side code discussions to the appropriate channel, noting that diffusion-discussions is for diffusion-model topics.

- Diffusion Newbie Seeks Guidance: @_elab, new to diffusion models, asked for advice on image synthesis with the Stanford Cars dataset. Their questions covered the number of timesteps (T), beta scheduling, training speed, and hardware requirements.

- Training Trial and Error: @bitpattern shared a log of training parameters for image generation with Stable Diffusion, including batch sizes, gradient accumulation, and optimization steps. They plan to refine the process, possibly by using fewer images.

- Diffusion Courses Recommended: @juancopi81 recommended the Hugging Face and fast.ai courses on diffusion models to @_elab as a way to understand diffusion concepts and answer their questions.

- UNet2DConditionModel Query: @blankspace1586 was looking for guidance on running validation with UNet2DConditionModel using a non-textual embedding, since there seem to be no examples for this scenario.

- Per-Token Mask in Cross Attention Inquiry: @jfischoff asked whether diffusers pipelines could support a per-token mask for cross attention, to limit a token’s influence to a specific region of the latent space.
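For @_elab's questions about `T` and the betas, a common starting point is the linear schedule from the original DDPM paper: T = 1000 steps, with betas spaced evenly from 1e-4 to 0.02. The sketch computes that schedule and the cumulative products (alpha-bar) used by the forward noising process. Other schedules, such as cosine, are equally valid choices. Nothing here addresses the hardware question.

```python
def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced noise variances beta_1..beta_T (DDPM-style linear schedule)."""
    if T < 2:
        raise ValueError("T must be at least 2")
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s).

    x_t can then be sampled in one shot as
    sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise.
    """
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out
```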

HuggingFace ▷ #NLP (5 messages):

- In Search of Domain-Specific Chat: @alexkstern is considering fine-tuning Llama chat or a Mistral model on chunks of a textbook for a class syllabus, so the model gains expertise in the subject. The goal is a model that understands the domain-specific content before a further round of fine-tuning improves its educational chat abilities.

- Beyond Textbooks - Audio as Data: @technosourceressextraordinaire suggests that the content likely already exists in the pre-training data (“the pile”). They recommend considering audio-to-text transcriptions, such as those from Whisper, when building the dataset.

- Clarification on Dataset Utility: @alexkstern asks whether their plan is valid: first fine-tune on textbook content for domain knowledge, then fine-tune in a chat context.

- Frustration with Fine-Tuning Failure: @zen_maypole_40488 hit an `InvalidRequestError` when trying to fine-tune a model on the OpenAI platform. The error points to a possible problem with the fine-tuning request URL or the API usage.
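@alexkstern's plan starts by splitting the textbook into training chunks. Below is a minimal sketch that uses overlapping word windows. The window and overlap sizes are arbitrary placeholders. A real pipeline would more likely count tokens with the target model's tokenizer than split on whitespace.

```python
def chunk_words(text, chunk_size=200, overlap=50):
    """Split text into windows of `chunk_size` words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

The overlap gives each chunk some of the preceding context, so material near a boundary is less likely to be cut off from what it refers to.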

HuggingFace ▷ #diffusion-discussions (7 messages):

- Diffusion Newbie Seeks Guidance: @_elab asked how to set parameters for a diffusion model that synthesizes images from the Stanford Cars dataset. They specifically wanted the best value for `T` (timesteps), how to calculate the `betas`, and the hardware requirements for training. They are worried about training speed and whether multiple GPUs are necessary.

- Training Session Underway for Image Synthesis: @bitpattern shared a snapshot of the training log for their image synthesis model, which uses techniques like mixed precision and gradient accumulation. The log shows a pre-trained model and a dataset resolution of 512.

- Guided Help for Diffusion Model Learners: In response to @_elab, @juancopi81 recommended Hugging Face’s and fast.ai’s courses on diffusion models for understanding model parameters and training.

- Inquiry on UNet2DConditionModel Pipelines: @blankspace1586 has a working training loop for UNet2DConditionModel conditioned on non-textual embeddings. For validation, however, they are unsure which pipeline would let them pass that embedding.

- Seeking Cross Attention Masks for Pipelines: @jfischoff asked whether diffusers pipelines can apply a per-token mask in cross attention, to restrict a token’s influence to a specific latent region.
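@jfischoff's per-token mask can be expressed conceptually as follows. Before the softmax, set a token's attention logits to negative infinity at every latent position outside its allowed region. The framework-free sketch below uses hypothetical names and does not reflect how diffusers' attention processors actually expose masking. It assumes at least one unrestricted token, such as a start token, so every row keeps a valid softmax.

```python
import math


def masked_cross_attention(scores, token_regions):
    """Softmax cross-attention weights with per-token spatial restrictions.

    scores[i][j]: logit for latent position i attending to text token j.
    token_regions[j]: set of latent positions token j may influence, or None
    for no restriction.
    """
    weights = []
    for i, row in enumerate(scores):
        masked = [s if (token_regions[j] is None or i in token_regions[j]) else -math.inf
                  for j, s in enumerate(row)]
        peak = max(masked)
        if peak == -math.inf:
            raise ValueError(f"every token is masked out at latent position {i}")
        exps = [math.exp(s - peak) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```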

LM Studio ▷ #💬-general (141 messages🔥🔥):

- Intel Mac Support for LM Studio: @robert.bou.infinite says LM Studio could theoretically support Intel Macs, but the weak GPUs in older Intel Macs would likely make performance poor. They suggest that people stuck on Intel Macs remotely control a compatible machine, and they offer cloud provider recommendations via DM.

- TTS and Image Support Questions: Users are exploring text-to-speech (TTS) and image features:
  - @joelthebuilder cannot get AI voice to work on iOS.
  - @enragedantelope asks how to filter for models with “vision adapters.”
  - @lyracon wants tips on OCR postprocessing.

- Model Compatibility and Operations: @justmarky and @robert.bou.infinite point @xermiz. to models that suit their RTX 3060. @robert.bou.infinite and @heyitsyorkie share the channels for discussing LM Studio features and reporting bugs.

- Prompting Strategies, Quantization, and Memory Requirements: @kujila discusses building embedded llama apps with minimal requirements. @robert.bou.infinite describes the challenges of quantizing and running extraordinarily large models like Giant Hydra MOE 240b, which failed to load even on Hugging Face’s powerful A100x4 setup.

- Executing Code and Other Offline AI Tools: @artik.ua asks about software that executes code and browses the internet like ChatGPT. @curiouslycory suggests tools like ollama + ollama-webui, which support image input and chatting with documents, as alternatives to LM Studio for such tasks.
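A back-of-the-envelope estimate helps explain why a model like Giant Hydra MOE 240b is hard to load anywhere: weight memory is roughly parameter count × bits per weight ÷ 8. This sketch takes the "240b" in the name at face value. It ignores the KV cache, activations, and runtime overhead, all of which add to the total. Real quantization formats also store scales, so effective bits per weight run somewhat above the nominal figure.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    print(f"240B params at {bits}-bit: ~{weight_memory_gb(240e9, bits):.0f} GB")
```

At 16-bit the weights alone need about 480 GB, and even 4-bit quantization leaves roughly 120 GB. By the same arithmetic, a 7B model at 4 bits needs about 3.5 GB for its weights.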

Links mentioned:

- Hugging Face – The AI community building the future.: no description found

- coqui (Coqui.ai): no description found

- ibivibiv/giant-hydra-moe-240b · Hugging Face: no description found

- WHY IS THE STACK SO FAST?: In this video we take a look at the Stack, which sometimes is called Hardware Stack, Call Stack, Program Stack… Keep in mind that this is made for educati…

- Making LLMs lighter with AutoGPTQ and transformers: no description found

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- Running Assistant LLM(ChatGPT) on your computer locally without internet connection using LM Studio!: We explore the need behind running large language models based assistants locally, how to run them and their uses cases using LM Studio

- Extending context size via RoPE scaling · ggerganov/llama.cpp · Discussion #1965: Intro This is a discussion about a recently proposed strategy of extending the context size of LLaMA models. The original idea is proposed here: https://kaiokendev.github.io/til#extending-context-t…

LM Studio ▷ #🤖-models-discussion-chat (73 messages🔥🔥):

- Users Grapple with Model Preferences: @hexacube canceled their ChatGPT subscription and has mixed feelings about alternatives like Guanaco 33b q5. @fabguy had a good experience generating long stories with a 120B model and recommends big models combined with certain tactics. @goldensun3ds described a structured storytelling approach with specific instructions to the AI, though they hit teething problems while switching to local models and testing extended contexts.

- Fine-Tuning AI Models on Personal Data: @goofy_navigator asked about training models on personal data. @heyitsyorkie said it is possible but cannot be done within LM Studio, and shared a YouTube tutorial showing how to fine-tune a model on a custom dataset.

- Local Model Troubleshooting: Several users, including @rumpelstilforeskin and @joelthebuilder, reported models producing poor results or behaving unexpectedly. Others recommended checking which LM Studio version was in use or switching to newer models like Dolphin or Nous Hermes.

- Hardware Conversations and Model Advice: @kujila and @goldensun3ds discussed how feasible the Goliath 120B LongLORA model is on different systems and how well it runs. Results varied, and RAM and VRAM requirements came up repeatedly. @heyitsyorkie advised @goofy_navigator on suitable quantization levels for laptop use and recommended sticking with 7B models given their hardware.

- Finding the Right Fit for Specialized AI Use: @supersnow17 wanted a model fine-tuned for math and physics; @fabguy suggested that a different kind of tool might suit math problems better. @juanrinta asked about AI for reading disorganized text and was pointed toward RAG (retrieval-augmented generation) resources.
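Since @juanrinta was pointed toward RAG, here is a deliberately tiny retrieval step. It scores text chunks against the question with bag-of-words cosine similarity and pastes the best matches into the prompt. Real RAG setups typically use learned embeddings and a vector store; the scoring method and prompt wording here are simplifying assumptions.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = bag_of_words(query)
    return sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)[:k]


def build_rag_prompt(query, chunks, k=2):
    """Paste the retrieved chunks into a grounded prompt."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```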

Links mentioned:

- Fine-tuning Llama 2 on Your Own Dataset | Train an LLM for Your Use Case with QLoRA on a Single GPU: Full text tutorial (requires MLExpert Pro): https://www.mlexpert.io/prompt-engineering/fine-tuning-llama-2-on-custom-datasetLearn how to fine-tune the Llama …

- Reddit - Dive into anything: no description found
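The QLoRA tutorial linked above fine-tunes small low-rank adapters instead of the full model. As a minimal sketch of the core idea, assuming plain Python lists as matrices and hypothetical helper names: the frozen weight W stays untouched, and a trainable low-rank update, scaled by alpha / r, is added to its output. QLoRA additionally stores W in 4-bit precision, which this sketch does not show.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x, W, A, B, alpha: float, r: int):
    """LoRA-adapted linear layer: y = x @ W + (alpha / r) * (x @ A) @ B.

    x: (n, d_in) inputs; W: (d_in, d_out) frozen pretrained weights;
    A: (d_in, r) and B: (r, d_out) are the only trainable parameters.
    """
    base = matmul(x, W)              # frozen pretrained path
    delta = matmul(matmul(x, A), B)  # low-rank update
    s = alpha / r
    return [[b + s * d for b, d in zip(rb, rd)] for rb, rd in zip(base, delta)]


# B starts at zero, so the adapted layer initially matches the base model.
x = [[1.0, 2.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.1], [0.1]]  # d_in=2, r=1
B = [[0.0, 0.0]]
print(lora_forward(x, W, A, B, alpha=16, r=1))  # [[1.0, 2.0]]
```

Only A and B (d_in × r plus r × d_out values) are updated during training, which is what makes fine-tuning on a single GPU feasible.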

LM Studio ▷ #announcements (1 messages):

- Critical Bug Fixes in LM Studio v0.2.14: @yagilb announced bug fixes in LM Studio v0.2.14 that address UI freezes when interrupting model generation and hangs caused by pasting long inputs. Users are urged to update through the LM Studio website or the app’s “Check for updates…” feature.

Links mentioned:

👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

LM Studio ▷ #🧠-feedback (12 messages🔥):

- LM Studio Simplifies LLMs for All: @drawless111 highlights LM Studio’s ability to run LLMs through a simple, no-code interface, so anyone can download and use pre-trained models easily.

- Alert on LLM Folder Default Reset: @msz_mgs observed that the LLM folder location was reset to the default after a recent update, though no other settings were affected.

- Disabling the GPU to Load Models: @georde reported that models fail to load when GPU acceleration is enabled but load once the GPU is disabled.

- Praise for Improved Text Pasting Speed: @msz_mgs appreciated the faster pasting of long text in LM Studio.

- Suggestions for LM Studio Enhancements: @justmarky suggested new features such as an audible beep when model downloads complete, the ability to favorite model uploaders like TheBloke, and filters for models by size and uploader. @fabguy directed them to post feature requests in the designated channel and explained how to filter models by a specific uploader.

Links mentioned:

LM Studio: experience the magic of LLMs with Zero technical expertise: Your guide to Zero configuration Local LLMs on any computer.

LM Studio ▷ #🎛-hardware-discussion (27 messages🔥):

- Tornado Case Fans vs. Compact Pressure Solution: @666siegfried666 discussed cooling options, mentioning either a case with 2x180mm fans that moves a lot of air or a smaller case with 3x120mm Arctic P120 fans for good static pressure at low cost.

- Exploring AMD 8700G for Dual Local Models: @bobzdar asked about performance figures for pairing an AMD 8700G with DDR5 RAM and an RTX 4090 to run language and code models, suggesting 128 GB of RAM to help avoid bottlenecks.

- Discussion on APU’s Potential for Running Models: @ptable and @bobzdar debated whether relying on system RAM would limit the APU when running models. @bobzdar noted that the APU can directly address 32GB, that its memory controller has high overclocking headroom, and that system RAM bandwidth reaches around 100GB/s.

- Skepticism on APU Performance for AI: @goldensun3ds advised treating APU performance for AI tasks with caution, much as one would Intel Arc GPUs, while @rugg0064 pointed out that although it is faster than system RAM, it still falls short of VRAM.

- Awaiting Real-world APU Testing Results: @bobzdar decided to order a setup and test the APU’s performance, ready to switch to a 7950X3D if the results disappoint. Others, such as @quickdive., felt the 7950X3D might have been the better option from the start.
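The APU debate above turns on memory bandwidth. A common rule of thumb, assumed here rather than taken from the discussion, is that single-user decoding of a dense model is bandwidth-bound: each generated token streams every weight from memory once, so tokens per second can be at most bandwidth ÷ weight size. The sketch applies that rule to the roughly 100GB/s figure quoted above; the 40 GB model size is a hypothetical example, roughly what a 4-bit 70B model needs.

```python
def max_tokens_per_second(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound on decode speed for a dense model at batch size 1.

    Assumes each generated token reads all weights once and ignores
    compute limits, caches, and the KV cache.
    """
    return bandwidth_gb_s / weights_gb


# ~100 GB/s system RAM (figure from the discussion) and a hypothetical 40 GB model
print(f"{max_tokens_per_second(100, 40):.1f} tokens/s at most")  # 2.5 tokens/s at most
```

Real throughput is usually below this bound, and it explains why the same model held in much faster VRAM decodes considerably faster.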

Links mentioned:

I Saw W Gus Fring GIF - I Saw W Gus Fring Gus - Discover & Share GIFs: Click to view the GIF

LM Studio ▷ #🧪-beta-releases-chat (15 messages🔥):

- Ejection Issue Needs a Fix: @goldensun3ds reported a bug where ejecting a model while a message is being processed makes the program hang indefinitely. They asked for a way to cancel generation without waiting for token output, so the program doesn’t have to be restarted.

- Non-AVX2 Beta Falling Behind: @mike_50363 pointed out that the non-AVX2 beta lags two releases behind, which affects their ability to use the software on several Sandy Bridge systems with 128GB RAM. @yagilb acknowledged the issue and promised to tag them when a new AVX build is released.

- Persistent Bug on LM Studio macOS: @laurentcrivello reported a bug persisting across 3-4 versions on macOS where the server remains reachable after the app window is closed. @yagilb acknowledged the problem and clarified the expected behavior when the red close button is clicked.

- Optimizing UI for Server Indication: @laurentcrivello explained that on macOS they prefer fewer visible signs of the app running and want the server to run without multiple app icons. They proposed a menu bar icon that changes depending on server activity, and @wolfspyre asked about their specific UI expectations.

- Shortcut Creation Complaint: @jiha asked whether the beta could be stopped from creating a desktop shortcut on every installation, implying the behavior is unwanted.

LM Studio ▷ #langchain (1 messages):

- Inquiry About Chain-Compatible Models: @eugenekormin sought help identifying small seq2seq LLMs (around a few billion parameters) that support the chain and invoke methods for a Python script using LangChain, and asked for a list of models that support these methods.

LM Studio ▷ #crew-ai (1 messages):

- Inquiry About CrewAI UI: @docorange88 asked whether there are any UIs or web interfaces for CrewAI similar to AutoGen Studio, adding that CrewAI seems better and asking for others’ thoughts.

LM Studio ▷ #open-interpreter (1 messages):

phoenix2574: <@294336444393324545> I’m using Mixtral and it seems to work alright

Nous Research AI ▷ #off-topic (8 messages🔥):

- Mistral Trumps Phi-2 in Programming: @carsonpoole observed a significant difference after fine-tuning both Phi-2 and Mistral on the code section of OpenHermes 2.5; Mistral clearly outperformed Phi-2 under the same sampling settings.

- Debate Over GPT-4-Level Programming Claims: @teknium quipped about claims of GPT-4-level programming performance in light of @carsonpoole’s findings, sparking a discussion.

- Phi-2’s Size Discussed: In response, @n8programs noted that the model in question has only around 2 billion parameters, hinting at limitations due to its size.

- Expectations Versus Reality for GPT-4-Level Skill: @teknium countered by citing a claim from Microsoft Research of GPT-4-level skill, which had set high expectations for the model’s performance.

- An Expression of Disbelief: @Error.PDF shared a humorous shocked-cat GIF from Tenor, apparently reacting to the performance results.

Links mentioned:

Shocked Shocked Cat GIF - Shocked Shocked cat Silly cat - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #interesting-links (16 messages🔥):

- Sparsetral MoE Launches: @dreamgen introduced Sparsetral, a sparse MoE model derived from the dense Mistral model, along with resources such as the original paper, the original repo, and the Sparsetral integration repo. They also highlighted their fork of unsloth for efficient training, noted that Sparsetral on vLLM runs on hardware like an RTX 4090 in bf16 precision, and shared the model on Hugging Face.

- DeepSeek Sets New Math SOTA: .benxh expressed enthusiasm for DeepSeek’s math model, which has apparently set a new state of the art on math benchmarks, crediting DPO and a new way of building datasets.

- PanGu-π-1 Tiny Language Model Examined: @bozoid shared a research paper on optimizing tiny language models such as the 1B-parameter PanGu-π-1, investigating architecture, initialization, and optimization strategies to improve tiny LLMs’ performance.

- EQ-Bench for LLMs: @nonameusr introduced EQ-Bench, an emotional intelligence benchmark for large language models, sharing links to the GitHub repository and the related paper and noting updates to its scoring system.

- Audio Flamingo Excels in Audio Understanding: @2bit3thn shared details on Audio Flamingo, an audio language model that performs strongly on various audio understanding benchmarks, adapts well to unseen tasks through in-context learning, and has strong multi-turn dialogue abilities.
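Sparsetral turns the dense Mistral into a sparse mixture of experts. The sketch below shows the generic idea of top-k expert routing for a single token, in the style of Mixtral’s top-2 router: score every expert, run only the k best, and mix their outputs with softmax weights over the selected scores. It is an illustration under assumptions, not Sparsetral’s actual architecture (whose experts may be structured differently), and the names are hypothetical.

```python
import math
from typing import Callable

Vector = list[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: list[Vector], experts: list[Callable[[Vector], Vector]], k: int = 2):
    """Route one token through the top-k experts.

    router[i] is expert i's gating weight vector; returns (output, chosen expert ids).
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over the chosen experts only
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


experts = [lambda v: [2 * a for a in v], lambda v: [-a for a in v], lambda v: [a + 1 for a in v]]
router = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
out, chosen = moe_forward([1.0, 0.0], router, experts, k=2)
print(chosen)  # [2, 0]
```

Compute per token scales with k rather than with the total number of experts, which is the main appeal of converting a dense model into a sparse one.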

Links mentioned:

- LLM check: no description found

- EQ-Bench Leaderboard: no description found

- Audio Flamingo: no description found

- Rethinking Optimization and Architecture for Tiny Language Models: The power of large language models (LLMs) has been demonstrated through numerous data and computing resources. However, the application of language models on mobile devices is facing huge challenge on…

- GitHub - babycommando/machinascript-for-robots: Build LLM-powered robots in your garage with MachinaScript For Robots!

- Reddit - Dive into anything: no description found

Nous Research AI ▷ #general (198 messages🔥🔥):

- Quanting the Miqu Finetune: @tsunemoto shared their quantization of Senku-70B-Full, a finetune of an alleged early Mistral-70B medium model, boasting an EQ-Bench score of 84.89. Asked whether it was a finetune on FLAN, others answered that it is an OpenOrca finetune; it is available on Hugging Face.

- Exploring Math Performance in LLMs: @gabriel_syme sparked a conversation about the importance of math in evaluating language models. Participants suggested that strong math performance may correlate with solid logic and reasoning in general-purpose (non-task-specific) LLMs, and that models should be trained on math and programming corpora.

- Upcoming Event Hype in San Francisco: @teknium and other users discussed the Ollama AI developer event in SF, with @coffeebean6887 sharing links such as the Starter Guide to SF and Cerebral Valley for more resources and activities in the area. Despite its sizeable capacity, the event is nearly full, so attendees are advised to RSVP quickly.

- Mixed Language Conundrums with Mixtral: @light4bear said that Mixtral, when instructed in Chinese, responds in a mix of Chinese and English, while OpenHermes, conversely, occasionally responds in Chinese. OpenHermes 2.5 7B, mentioned by @teknium, has been added to Cloudflare’s Workers AI platform, as announced in tweets from both Cloudflare and Teknium.

- Questions and Support for Multi-Modal Model Finetuning: @babycommando introduced MachinaScript For Robots, a project for controlling robots with LLMs, and sought advice on finetuning multi-modal models in the [channel] category. They also thanked Nous for its work and contributions to the field.
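The Senku-70B quant shared above is distributed as GGUF, whose quant types store weights in small blocks, each with its own scale factor. The sketch below shows the general idea with a simplified symmetric 4-bit scheme, assuming a block size of 32 and an absmax scale; it does not reproduce the exact bit layout of any GGUF type such as Q4_0 or the K-quants, and the function names are hypothetical.

```python
def quantize_block(block: list[float]) -> tuple[float, list[int]]:
    """Symmetric absmax quantization of one block to signed 4-bit ints in [-7, 7]."""
    amax = max(abs(v) for v in block)
    scale = amax / 7 if amax > 0 else 1.0  # one scale per block
    q = [max(-7, min(7, round(v / scale))) for v in block]
    return scale, q


def dequantize_block(scale: float, q: list[int]) -> list[float]:
    return [scale * qi for qi in q]


def quantize(weights: list[float], block_size: int = 32) -> list[tuple[float, list[int]]]:
    """Split weights into blocks so an outlier only affects its own block's scale."""
    return [quantize_block(weights[i:i + block_size]) for i in range(0, len(weights), block_size)]


weights = [0.05 * i - 1.6 for i in range(64)]
blocks = quantize(weights)
restored = [w for scale, q in blocks for w in dequantize_block(scale, q)]
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight’s rounding error is at most half its block’s scale, so smaller blocks track local weight ranges more closely at the cost of storing more scales.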

Links mentioned:

- Cerebral Valley: A community of founders and builders creating the next generation of technology.

- LiPO: Listwise Preference Optimization through Learning-to-Rank: Aligning language models (LMs) with curated human feedback is critical to control their behaviors in real-world applications. Several recent policy optimization methods, such as DPO and SLiC, serve as…

- tsunemoto/Senku-70B-Full-GGUF · Hugging Face: no description found

- ShinojiResearch/Senku-70B-Full · Hugging Face: no description found

- Tweet from Teknium (e/λ) (@Teknium1): Cloudflare has added my OpenHermes 2.5 7b to their workers ai platform! ↘️ Quoting Cloudflare (@Cloudflare) Over the last few months, the Workers AI team has been hard at work making improvements t…

- RSVP to Chat (Ro)bots Hackathon @ AGI House | Partiful: Welcome to Robotics x LLMs Hack, where creativity, collaboration, and cutting-edge technology meet. Whether you’re a seasoned coder or a problem-solving guru, this is your chance to build with th…

- Tweet from Alice (e/nya) (@Alice_comfy): Ok so this is one benchmark, but Senku-70B (leaked mistral finetune) beats GPT-4 in EQ Bench. Not sure how I go about getting this added on the website. Senku-70B is available here. https://huggin…

- Local & open-source AI developer meetup · Luma: The Ollamas and Friends are back for another developer focused meetup! We’re going to Cerebral Valley @ the San Francisco Ferry Building! Open-source AI demo day Free catered food &…

- Tweet from Cloudflare (@Cloudflare): Over the last few months, the Workers AI team has been hard at work making improvements to our AI platform. After adding models like Code Llama, Stable Diffusion, Mistral, today, we’re excited to anno…

- Starter Guide to SF for Founders: A kickstarter resource for anyone new to or thinking of moving to San Francisco.

Nous Research AI ▷ #ask-about-llms (11 messages🔥):

-

Seeking Research on Data Generation:

@bigdatamike is exploring question-answer pair generation for fine-tuning an internal LLM and discussed leveraging strategies from a Microsoft paper. They asked for recommendations on other papers covering high-quality data generation. -

Constraints on Mining Model Architectures: