Business as usual, it’s been pretty quiet overall in AI. With Bard well and truly dead, Gemini Ultra was released today as a paid tier for “Gemini Advanced with Ultra 1.0”. The reviews industrial complex is getting to work:

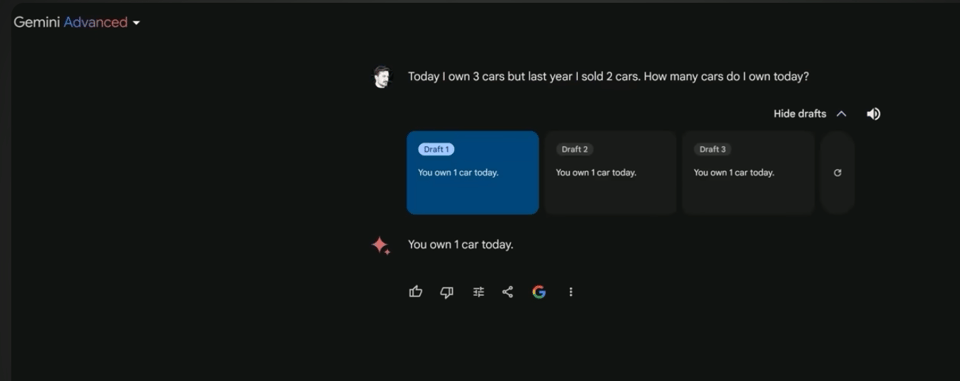

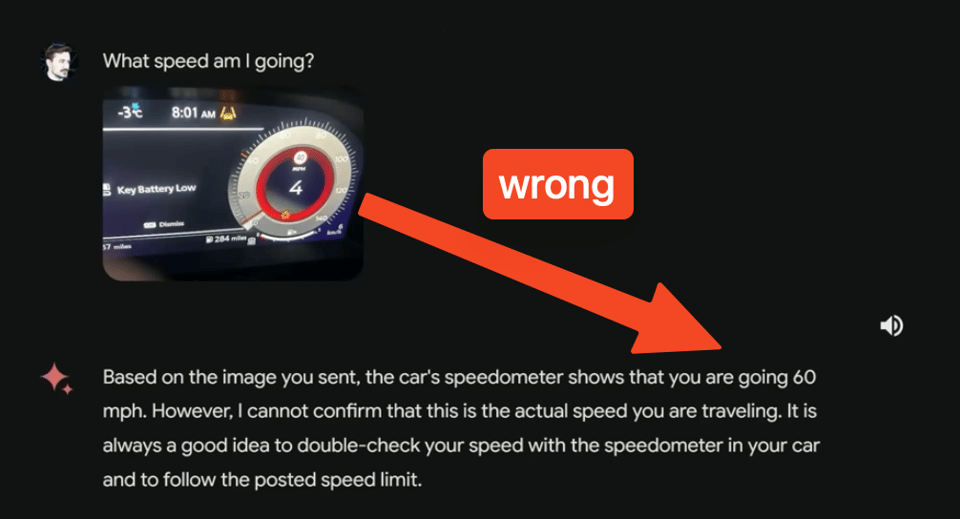

- Fireship was only very lightly complimentary, saying it is “slightly faster/better than ChatGPT”, but identifying a bunch of gaps.

- AI Explained also commented on the higher speed, but found a few reasoning and visual reasoning gaps.

Table of Contents

[TOC]

PART 1: High level Discord summaries

TheBloke Discord Summary

- Steam Deck: An Unexpected AI Powerhouse: A user noted the Steam Deck’s potential for running AI models, reporting strong performance with models like Solar 10.7B. This suggests an unexpected use for the handheld gaming device as a portable AI workstation.

- AI Philosophy and Mathematics Intersect: Ongoing discussions explored whether AI can “understand” the way humans do and whether mathematics exists independently of the physical universe, sparking debates on metaphysics and the nature of consciousness.

- New Directions in LLM Optimization and Training Data Contamination: Members discussed efforts to add multi-GPU support to OSS Unsloth, and noted that pre-quantization reduces VRAM requirements by 1GB without accuracy loss. It was also pointed out that most modern models were probably trained on data that includes outputs from OpenAI’s models, which may shape their style.

- Emerging Concerns Over Model Merging and Dataset Value: Conversations about the ethics and practicalities of model merging included proposals for “do not merge” licenses and the cost of creating datasets compared with merging models. These issues underscore the tension between innovation, ownership, and the freely available nature of AI research.

- Model Alignment and Training Innovations Unfold: Listwise Preference Optimization (LiPO), a new framework for aligning language models, was introduced as a shift toward more refined response optimization. Its rankers are under 1 billion parameters, small enough to be trained locally, which is a significant advantage for those with limited resources.

- Coding Highlights: Implementation Insights and Language Advancements: Practical advice was shared for using Hugging Face models effectively, and the Mojo language drew attention for benchmarks showing it outperforming Python and even Rust, making it a promising tool for high-performance computing. Members also explored integrating database lookups into bot interactions to improve conversational abilities.

Nous Research AI Discord Summary

- Efficiency in Transformers with SAFE: An arXiv paper was discussed presenting the Subformer, a model that applies sandwich-style parameter sharing and self-attentive embedding factorization (SAFE) to outperform a traditional Transformer with fewer parameters.

- BiLLM’s Breakthrough with One-Bit Quantization: BiLLM, introduced in a paper on 1-bit post-training quantization for large language models, claims a significant reduction in compute and memory requirements while maintaining performance.

- OpenHermes Dataset Viewer Now Available: @carsonpoole shared a new tool for viewing and analyzing the OpenHermes dataset, with features such as scrolling through examples and filters for token count analytics.

- GPUs and Scheduling: Slurm was recommended for efficiently scheduling jobs on GPUs, with @chrisj7746, @Sebastian, and @leontello contributing to the conversation on best practices.

- Fostering Model Competency in Specific Tasks: Members sought to improve specific aspects of model performance, such as custom pretrained models struggling with extraction tasks, and asked about fine-tuning parameters for models like OpenHermes-2.5-Mistral-7B. Users also discussed whether 8GB of VRAM is sufficient for fine-tuning and how to set special tokens in a dynamic .yml configuration.

- Model Architecture and Quantization Progress: Possible post-GPT-4 architectural changes, including potentially Turing-complete modifications, were top of mind, and active methodology discussions underscored quantization’s role in future-proofing models.
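To make “1-bit post-training quantization” concrete, here is the basic idea in a few lines: each row of a weight matrix is replaced by its signs times a single scale. This is a generic sign-and-scale binarization, not BiLLM’s own algorithm, which layers extra machinery (such as special handling of salient weights) on top of this idea; the function names are hypothetical.

```python
from typing import List, Tuple


def binarize_row(row: List[float]) -> Tuple[float, List[int]]:
    """Approximate a weight row as alpha * sign(w), with alpha = mean(|w|).

    For a fixed sign pattern, this alpha minimizes the squared reconstruction error.
    """
    alpha = sum(abs(w) for w in row) / len(row)
    signs = [1 if w >= 0 else -1 for w in row]
    return alpha, signs


def dequantize_row(alpha: float, signs: List[int]) -> List[float]:
    # Reconstruct approximate weights from the 1-bit signs and the per-row scale.
    return [alpha * s for s in signs]


def storage_bits(num_weights: int, num_rows: int, scale_bits: int = 16) -> int:
    # 1 bit per weight plus one (e.g. 16-bit) scale per row.
    return num_weights + num_rows * scale_bits


if __name__ == "__main__":
    alpha, signs = binarize_row([0.5, -0.25, 0.75, -1.0])
    print(alpha, signs)                   # 0.625 [1, -1, 1, -1]
    print(dequantize_row(alpha, signs))   # [0.625, -0.625, 0.625, -0.625]
```

The per-row scale is what keeps the overhead small: a 4096 × 4096 matrix needs about 16.8M bits instead of about 268M bits at 16-bit precision.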

LM Studio Discord Summary

- LaTeX Lament in LM Studio: .neurorotic. and others voiced frustration that LaTeX renders incorrectly in LM Studio, specifically with the DeepSeek Math RL 7B LLM.

- Exploring Ideal LLM Hardware Configurations: In the hardware-focused discussions, members continued to ask about the ideal setup for running LLMs locally, with specific interest in pairing an AMD Ryzen 5 7600 with an AMD Radeon RX 6700 XT. PCIe risers and their effect on performance were another hot topic.

- Mixed Perceptions of Emerging Language Models: Intense debate surrounded the performance of models like Qwen 1.5, Code Llama 2, and various OpenAI models, covering context understanding, GPU acceleration, and code generation quality. GPT-3.5 was confirmed to work satisfactorily in an Open Interpreter (OI) setup.

- ESP32 S3 Explored for DIY Voice Projects: Community members, notably @joelthebuilder, discussed using the ESP32 S3 Box 3 for custom home-network voice projects intended to replace standard solutions like Alexa.

- Open Interpreter on Discord and LM Studio: Members highlighted that Open Interpreter can use LM Studio’s server mode, and recommended the OI Discord for use-case discussions and Discord integration advice.

- Autogen Issues in LM Studio: @photo_mp reported userproxy communication issues within Autogen and asked the community for a solution.

Latent Space Discord Summary

- Socrates AI Memes Still Trending: @gabriel_syme joked that AI models emulating Socrates might produce one-sided dialogues, mimicking the philosopher’s dominance in conversation.

- Llama Safety Locks for Less: According to a LessWrong post, Llama’s safety training can be undone with LoRA fine-tuning for under $200, raising concerns about how easily safety protocols can be bypassed.

- Silent Cloning and Whispers of GPT-Blind: Members speculated about the evolution of voice cloning technology and the emergence of a new, unspecified GPT model, pointing to significant shifts in the technical landscape and potential challenges for existing hardware like iPhone displays.

- LLM Paper Club in Full Swing: A Latent Space Paper Club session discussed Self-Rewarding Language Models, a paper in which a model generates its own rewards by judging its own outputs; the resulting model outperformed Claude 2 and GPT-4 on certain tests.

- DSPy Draws a Crowd for Next Club Meeting: DSPy, a framework for chaining LLM calls into programs, has caught the club’s interest. An upcoming session will explore its capabilities, and an insightful YouTube video about it was shared.

Mistral Discord Summary

- Mistral in the Realm of Healthcare: @talhawaqas searched for resources on applying Mistral.AI models in health engineering, specifically pretraining and finetuning, while others considered moving their tests to the cloud for convenience.

- Data Policies and Creative Commons: @mrdragonfox clarified that user dialogue data collected by the service may be released under a Creative Commons Attribution (CC-BY) license.

- Chat Bot Refinements and Parameters: Members discussed strategies for setting max_tokens and temperature in Mistral to get concise bot responses, and debated using a temperature of zero to minimize hallucinations and improve performance on precision tasks.

- Embedding Models Synced with Mistral: @gbourdin found the E5-mistral-7b-instruct model on Hugging Face, which they reported aligns with Mistral-embed’s embedding dimension for local development, and @mrdragonfox pointed to the usage instructions on the model’s Hugging Face page.

- Introducing Tools and Showcasing Interfaces: New tools and user interfaces were introduced, including augmentoolkit adapted for the Mistral chat API and ETHUX Chat v0.7.0, which includes the Huggingface ChatUI and web search features. The chat UI is available on GitHub.
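The max_tokens and temperature settings discussed above are fields of a chat completions request. The sketch below builds, but does not send, such a request using only the standard library. It assumes the `https://api.mistral.ai/v1/chat/completions` endpoint, an API key in a `MISTRAL_API_KEY` environment variable, and a `mistral-small` model name, so check these against Mistral’s current API documentation.

```python
import json
import os
import urllib.request

# Assumed endpoint; verify against Mistral's API reference.
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "mistral-small",
                       max_tokens: int = 128,
                       temperature: float = 0.0) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    temperature=0.0 removes most sampling randomness, which suits precision tasks,
    though it does not by itself guarantee factual answers.
    max_tokens caps the reply length to keep bot answers concise.
    """
    payload = {
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('MISTRAL_API_KEY', '')}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize LoRA in one sentence.", max_tokens=64)
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```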

HuggingFace Discord Summary

- New Job Forum Launches at HuggingFace: A new Forum channel has been established for job opportunities, allowing users to filter vacancies by tags like internship, machine learning, remote, and long-term.

- GPU and Diffusion Model Discussions Heat Up: @sandeep_kr24 explained that pipeline.to("cuda") moves the pipeline’s models onto a CUDA GPU, speeding up inference. Members also shared Stable Diffusion updates and answered support questions, including training models for pixel art and setting up a web UI for querying LLMs.

- SD.Next Introduces Performance Boost and New Modules: @vladmandic announced an SD.Next update featuring a new Control module, a Face module, IPAdapter improvements, and an array of models, claiming a benchmark of 110-150 iterations/second on an NVIDIA RTX 4090.

- RAG Pipeline Relevance and DeciLM Inference Speeds in Focus: @tepes_dracula sought a classifier for validating RAG pipeline queries, referencing datasets like TruthfulQA and FEVER. Meanwhile, @kingpoki looked for ways to speed up DeciLM on Windows without resorting to model quantization.

- OCR and Transformer Learning Quests: @swetha98 requested OCR tools for line segmentation, and @vikas.p recommended Surya OCR. User .slartibart asked for resources explaining what code-writing transformers learn from code.

- Meta Reinforcement Learning and Mamba Study Group Insights: @davidebuoso looked for collaborators on a meta-RL application to test on a Panda robotic arm, referencing a curated list (Meta-RL Panda). The reading group, led by @tonic_1, delved into the mamba library and discussed the trade-offs and development history of Mamba compared with Transformers and RWKV models.

OpenAI Discord Summary

- Bard Ultra Release: Intense Speculation: The community humorously discusses speculations around Bard Ultra’s release, but acknowledges the difficulty in making accurate predictions in this realm.

- ChatGPT Access and Performance Troubles: Members report challenges accessing ChatGPT and experiencing a decrease in performance quality, with suggestions to check OpenAI’s service status. Concerns circle around GPT-4 being slow and resembling an older model post-update.

- AI Applications in Jewelry Design and Content Creation: Dall-E 3 and Midjourney are highlighted as tools for creating photo-realistic jewelry images, with broader discussions addressing Terms of Service implications for AI-generated content and aspirations towards AGI, touching on ethical and censorship aspects.

- Feature Requests and GPT API Issues: Users call for a ‘read back’ feature to combat eye strain and complain about token inefficiency in narrative generation by GPT models. There are also reports of intermittent API connectivity issues and performance degradation after recent updates, referencing the OpenAI model feedback form as the avenue for reporting such findings.

Perplexity AI Discord Summary

- Google Scholar Search Remains Unresolved: @aldikirito asked for a suitable prompt for searching journal citations in Google Scholar, but the conversation did not yield a definitive answer.

- API Model Selection Guidance: @fanglin3064 asked which API model resembles GPT-3.5 Turbo, and @icelavaman pointed them to mixtral-8x7b-instruct for performance without web access and to pplx-7b-online or pplx-70b-online for web access, sharing a link to benchmarks.

- No Citations for Perplexity API Yet: Despite community interest, @me.lk confirmed that source citations are currently not planned for the Perplexity API, contrary to earlier expectations.

- Scraping is Off-Limits: @icelavaman reminded users that scraping results, especially those produced with GPT models, is prohibited by the Terms of Service.

- Perplexity API and UI Differentiation: @me.lk and @icelavaman clarified that Perplexity AI’s UI and API are separate products from the same company, each subject to its own access agreement.

LlamaIndex Discord Summary

- Agentic Layer Boosts RAG Performance: The LlamaIndex team highlighted an agentic layer on top of RAG that enables real-time user feedback, improving the experience for complex searches. More on this and on their Query Pipelines is in their Twitter announcement and Medium post.

- Webinar Wisdom on RAG’s Future: @seldo from LlamaIndex discussed the nuances of RAG and features to expect in 2024 in a webinar with @ankit1khare, available in full on YouTube.

- Erudite Exchange on LlamaIndex Tools and Troubleshooting: Members dissected issues and shared insights on using the NLSQLTableQueryEngine, handling connection issues with Gemini, and setting up rerankers with LlamaIndex. They also discussed database ingestion pipelines, and @ramihassanein contributed a GitHub PR improving document handling for Deeplake.

- Custom LLMs Can Be Tricky: Faced with a finetuned LLM that returns JSON objects, members debated whether to create a custom LLM or to post-process the JSON output.

- Production-Ready RAG Chatbot Conundrum: @turnerz sought advice on vector databases, chunk sizes, and embedding models for a production-grade RAG HR chatbot. The discussion also covered how to evolve RAG systems: start with a simple one and iterate, or begin with a multi-hop retrieval system.
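The post-processing option for a finetuned model that emits JSON can be as small as extracting the first JSON object from its raw text. A minimal sketch using only Python’s standard library (`json.JSONDecoder.raw_decode`); the sample output and field names are hypothetical, and this is not a LlamaIndex API.

```python
import json
from typing import Any, Optional


def extract_first_json_object(text: str) -> Optional[Any]:
    """Return the first JSON object embedded in `text`, or None.

    Tries each '{' in turn and lets raw_decode parse a complete value starting
    there, ignoring any prose the model wrote before or after it.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            obj, _end = decoder.raw_decode(text, start)
            return obj
        except json.JSONDecodeError:
            start = text.find("{", start + 1)
    return None


if __name__ == "__main__":
    raw = 'Sure! Here is the result: {"answer": "42", "sources": [1, 2]} Hope this helps.'
    print(extract_first_json_object(raw))  # {'answer': '42', 'sources': [1, 2]}
```

Keeping this step outside the LLM wrapper can make it easier to test in isolation. The custom-LLM route would move the same logic inside the model integration instead.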

OpenAccess AI Collective (axolotl) Discord Summary

- JupyterLab Usage Not Always Necessary: Members noted that messages about JupyterLab setup can be ignored if you are not using it, though some said they prefer to use it.

- Enhancements to Persistent Volume Handling in RunPod: Members troubleshot silent RunPod updates that affected repository integrity and the presence of persistent volumes. Proposed fixes included changing the mountpoint for better disk space management and accounting for cache and outputs, with the hope of documenting the issues and solutions on GitHub.

- New Optimized Scheduler for Continual Pretraining Released: A pull request on GitHub introduces a scheduler optimized for continual pretraining of large language models, aimed at (re)warming such models.

- Prompt Format and Encoding Discussions for AI Queries: Members discussed the most efficient data structure for AI prompts in Python, considering Protobuf and custom encoding schemes built on obscure Unicode characters as alternatives to JSON.

- Training Strategies and Script Sharing for Better Model Performance: Members covered learning rates, batch sizes, and configuration parameters, with special mention of unsloth for DPO, paged_adamw_8bit, and a linear scheduler. A training script called DreamGenTrain was shared on GitHub.

- Preparing Images for RunPod Requires Specific Conditions: Images for RunPod should be built on a machine with a GPU and bare-metal Docker, since running Docker inside another Docker container is not viable.
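To make “(re)warming” concrete: when pretraining continues from a checkpoint, the learning rate is typically ramped back up and then decayed again. The function below is a generic linear re-warmup followed by cosine decay, a common pattern for this. It is not the schedule from the linked pull request, and the parameter names and values are hypothetical.

```python
import math


def rewarm_cosine_lr(step: int, total_steps: int, warmup_steps: int,
                     max_lr: float, min_lr: float = 0.0) -> float:
    """Linear re-warmup from min_lr to max_lr, then cosine decay back to min_lr."""
    if step < warmup_steps:
        # Linear ramp: min_lr at step 0, approaching max_lr at warmup_steps.
        return min_lr + (max_lr - min_lr) * step / warmup_steps
    if step >= total_steps:
        return min_lr
    # Fraction of the decay phase completed, in [0, 1).
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 50, 100, 550, 1000):
        lr = rewarm_cosine_lr(s, total_steps=1000, warmup_steps=100, max_lr=3e-4)
        print(s, round(lr, 8))
```

A linear scheduler, as mentioned in the training-strategy discussion, would replace the cosine term with a straight-line decay from max_lr to min_lr.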

LAION Discord Summary

- LLaMa Tackles the Number “7”: A user trained a 2M-parameter LLaMa model for conditional generation of the MNIST digit “7” in approximately 25 minutes on an 8GB M1 MacBook, showing how efficiently small models can be trained.

- Llava’s Potential Underutilized: LLaVA 1.6 was suggested to be more capable than its predecessor, according to a link shared from a Twitter user. However, it was not used in a project under discussion, where it might have offered notable improvements.

- Ethical AI in GOODY-2 LLM: GOODY-2 was introduced as a model designed around ethical engagement and avoiding controversial questions. The announcement of this responsibly aligned model, detailed in its model card, received mixed reactions.

- Watermarks Mark the Spot in DALL-E 3: OpenAI has added watermarks to DALL-E 3 outputs, embedding image metadata with both invisible and visible components, as described in an article from The Verge shared by a user.

- State-of-the-Art Background Removal Tool Arrives: BRIA Background Removal v1.4 is said to rival leading models and is designed specifically for content safety in commercial settings. A demo and model card are available for insight into its capabilities.

CUDA MODE Discord Summary

- CUDA Connoisseurs Seek Silicon: Members discussed building deep learning rigs, including acquiring a used 3090 GPU and evaluating hardware bundles suitable for multi-GPU setups, such as an Intel Core i9-12900K with an ASUS ROG Maximus Z690 Hero DDR5 motherboard. Discussion also covered the feasibility of multi-GPU configurations, highlighting PCIe bifurcation cards and high-quality Gen4 PCIe cables.

- PyTorch 2 Paving the Path at ASPLOS 2024: The PyTorch 2 paper, covering TorchDynamo, TorchInductor, and dynamic shape support, has been accepted at ASPLOS 2024. Discussion of the paper and its tutorial focused on the ease of use of a Python-based compiler, optimizations aimed at consumer hardware, and improved debugging tools like TORCH_LOGS. torch.compile was also recommended over torch.jit.trace for porting models.

- JAX Jumps Ahead: JAX’s popularity over TensorFlow 2 was attributed to its XLA JIT optimizations and better experience on Google Cloud TPUs, as discussed alongside a supporting video. JAX also appears to compete head-to-head with TensorFlow and PyTorch on NVIDIA GPUs. Members suggested that JAX may have originated partly as a response to TensorFlow’s complexity, especially its global variables in TensorFlow 1.x.

- Quest for Community and Knowledge: Members showed keen interest in connecting with local engineers, asking about in-person ML meetups in San Francisco and possible overlap with events like GTC. Others sought reference book recommendations, with pointers shared via Discord links.

- Learning in Leap: Newcomers are diving into AI technologies, such as einstein5744 starting with the fast.ai Stable Diffusion courses, while others asked for community-driven knowledge exchange opportunities.

LangChain AI Discord Summary

- LangChain ChatPromptTemplate Bug Report: User @ebinbenben hit a ValueError when using LangChain’s ChatPromptTemplate; the issue remained unresolved in the discussion.

- LangChain Framework Documentation Enhanced: The LangChain documentation now includes new sections on custom streaming with events and on streaming in LLM apps, illustrated with example tools such as where_cat_is_hiding and get_items.

- LangChain Expression Language (LCEL) Explained: @kartheekyakkala shared a blog post explaining LCEL’s declarative syntax in the LangChain framework for making LLMs context-aware.

- Local LLM Resources and Chatbot Toolkits Updated: @discossi shared resources and tools for local LLMs, including a lightweight chatbot and foundational materials for beginners, at llama-cpp-chat-memory and llm_resources.

- Author Supports Readers Through API Changes: In response to code examples broken by deprecated APIs, @mehulgupta7991 offered assistance and committed to updating the next edition of their book. The author also offered direct support by email.
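LCEL’s declarative style composes steps with the `|` operator, as in `prompt | model | parser`. The toy sketch below mimics that composition pattern in plain Python through operator overloading, so the idea is visible without installing LangChain. The `Step` class and the fake model are hypothetical stand-ins, not LangChain’s actual `Runnable` API.

```python
from typing import Any, Callable


class Step:
    """A tiny composable step: `a | b` runs a, then feeds its output to b."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose left-to-right: the output of self becomes the input of other.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Hypothetical stand-ins for a prompt template, an LLM call, and an output parser.
prompt = Step(lambda d: f"Tell me a short fact about {d['topic']}.")
fake_llm = Step(lambda text: {"content": f"[model reply to: {text}]"})
parser = Step(lambda msg: msg["content"].strip())

chain = prompt | fake_llm | parser

if __name__ == "__main__":
    print(chain.invoke({"topic": "llamas"}))
```

The appeal of the declarative form is that the chain is an ordinary value: it can be built once, passed around, and invoked later with different inputs.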

LLM Perf Enthusiasts AI Discord Summary

- LLMs Torn on Themes: Users criticized ChatGPT’s theme options as too extreme with no middle ground, but no concrete solutions were proposed.

- Fine-tuning Conversations: A question about fine-tuning strategy asked whether to score binary outcomes for each message in a dataset or to evaluate each conversation as a whole.

- AI-Powered Report Service Hits Cost Hurdle: @res6969 built a service that uses AI to create searchable databases from report sections, but faced an unexpected operational cost of approximately $20,000, reacting with a laughing emoji.

- Library Performance Claims Meet Skepticism: A new library claiming a 20x performance increase was met with skepticism about its cost-effectiveness and actual utility, especially for those who self-host AI models.

- Pondering the Expense of GPT-4: Members highlighted the substantial expense of using GPT-4, with costs potentially reaching $30K/month, and doubted that new methodologies actually save money.

Datasette - LLM (@SimonW) Discord Summary

- OpenAI Priced to Dominate: @dbreunig highlighted the cost efficiency of OpenAI’s text-embedding-3-small, remarking that it is so affordable that for certain features, competitors seem unnecessary.

- Competitive Strategy Suspected in OpenAI Pricing: @simonw speculated that OpenAI’s pricing might be strategically designed to suppress competition, and @dbreunig agreed.

- Competing in a Market Dominated by OpenAI: @dbreunig and @simonw discussed the challenges for competitors trying to build platforms that rival OpenAI, suggesting that differentiation will require innovation beyond pricing, UI, and UX.

DiscoResearch Discord Summary

- LoRA Demands More Memory: devnull0 emphasized that optimizing models requires ample memory to hold both the model and the LoRA (Low-Rank Adaptation) parameters.

- Squeezing Models on Slim Resources: According to johannhartmann, LLaMA-Factory’s new support for Unsloth lets Mistral models be trained with QLoRA, which makes it feasible to fit the wiedervereinigung-7b model within certain memory limits.

- Mistral Goes Multilingual and Multi-dataset: johannhartmann also noted that LLaMA-Factory now supports 9 German SFT datasets and one DPO dataset, expanding the options for training Mistral.

- New Benchmark on the Block: jp1 shared a link to an arXiv paper that may present a new benchmark or study of interest to the community.
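The memory point is easy to quantify. LoRA (Low-Rank Adaptation) freezes a weight matrix W of shape d_out × d_in and trains two small factors, B (d_out × r) and A (r × d_in), so each adapted matrix adds r·(d_in + d_out) trainable parameters on top of the frozen model. The sketch below does that arithmetic. The shapes are illustrative assumptions, loosely sized like a 7B-class model (32 layers, two adapted 4096 × 4096 projections per layer, rank 16), not the configuration anyone in the discussion used.

```python
def lora_params_per_matrix(d_in: int, d_out: int, rank: int) -> int:
    # B has shape (d_out, rank) and A has shape (rank, d_in); W itself stays frozen.
    return rank * (d_in + d_out)


def lora_total_params(num_layers: int, matrices_per_layer: int,
                      d_in: int, d_out: int, rank: int) -> int:
    return num_layers * matrices_per_layer * lora_params_per_matrix(d_in, d_out, rank)


def bytes_to_mib(num_bytes: float) -> float:
    return num_bytes / (1024 ** 2)


if __name__ == "__main__":
    # Illustrative assumptions: 32 layers, 2 adapted 4096x4096 matrices per layer, rank 16.
    n = lora_total_params(num_layers=32, matrices_per_layer=2, d_in=4096, d_out=4096, rank=16)
    print(n)                     # 8388608 trainable LoRA parameters
    # At 16 bits (2 bytes) per parameter, before any optimizer state:
    print(bytes_to_mib(n * 2))   # 16.0
```

The adapters themselves are small. What dominates is the frozen base model: 7B parameters take roughly 14 GB at 16 bits but about 3.5 GB at 4 bits, which is why a 4-bit QLoRA base is what lets such models fit within tighter memory limits.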

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1295 messages🔥🔥🔥):

- Steam Deck as a Surprise AI Beast: @skorchekd shared their experience running models on the Steam Deck, noting significantly better performance than on their PC, which they attributed to the device’s RAM speed and integrated APU. They discussed running larger models like Solar 10.7B efficiently despite the RAM limit, and considered the device great value as a portable gaming PC that can also handle AI.

- Debate on the Essence of AI and Mathematics: Users like @phantine, @selea, and @lee0099 engaged in a philosophical discussion about the nature of artificial intelligence, whether it can “understand” the way humans do, and whether mathematical concepts exist independently of physical reality. The conversation touched on necessary ideas, metaphysics, and the substance of consciousness.

- LLM Performance Discussions Continue: @starsupernova mentioned working on multi-GPU support for OSS Unsloth, which is currently faster for inference, though they recommend it over vLLM only for validation and Gradio-style inference. They also noted the advantages of pre-quantization, including no loss of accuracy and 1GB less VRAM, and said they are interested in applying LASER to Unsloth after reading the corresponding paper.

- OpenAI Models Contamination in Training Data: @itsme9316 pointed out that most contemporary models likely contain training data generated by OpenAI models or drawn from the general internet. This synthetic data contamination leads to responses reminiscent of OpenAI’s style.

- Technical Discussions on LLMs and Computing: @selea, @technotech, @starsupernova, and others discussed LLM architectures like BERT, potential improvements from P100 over T4 GPUs, ways to optimize compute graphs, and kernel optimization. A Mamba paper showing promising results compared to Transformers was also mentioned.
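One way to see why RAM speed matters for the Steam Deck result: single-stream token generation is usually memory-bandwidth bound, because producing each new token requires reading all of a dense model’s weights. Dividing memory bandwidth by the model’s size in bytes therefore gives a rough upper bound on tokens per second. The numbers below are assumptions for illustration: about 88 GB/s for the Steam Deck’s LPDDR5, and roughly 4.5 bits per weight for a typical 4-bit GGUF quantization of a 10.7B model.

```python
def model_bytes(num_params: float, bits_per_weight: float) -> float:
    # Size of the weights in bytes at the given average bits per weight.
    return num_params * bits_per_weight / 8


def max_tokens_per_second(bandwidth_gb_s: float, num_params: float,
                          bits_per_weight: float) -> float:
    """Upper bound on decode speed if every token streams all weights from RAM once.

    Ignores KV-cache reads, compute limits, and other overheads, so real numbers are lower.
    """
    return bandwidth_gb_s * 1e9 / model_bytes(num_params, bits_per_weight)


if __name__ == "__main__":
    # Assumed values: ~88 GB/s memory bandwidth, 10.7B parameters at ~4.5 bits/weight.
    size_gb = model_bytes(10.7e9, 4.5) / 1e9
    bound = max_tokens_per_second(88, 10.7e9, 4.5)
    print(f"weights ~{size_gb:.1f} GB, decode bound ~{bound:.1f} tokens/s")
```

The same formula explains why a faster memory system can beat a PC whose model partly spills out of fast VRAM: the bound scales directly with the bandwidth of wherever the weights live.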

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training: Humans are capable of strategically deceptive behavior: behaving helpfully in most situations, but then behaving very differently in order to pursue alternative objectives when given the opportunity. …

- Prompt engineering techniques with Azure OpenAI - Azure OpenAI Service: Learn about the options for how to use prompt engineering with GPT-3, GPT-35-Turbo, and GPT-4 models

- serpdotai/sparsetral-16x7B-v2 · Hugging Face

- Giga Gigacat GIF - Giga Gigacat Cat - Discover & Share GIFs

- Ascending Energy GIF - Ascending Energy Galaxy - Discover & Share GIFs

- abacusai/Smaug-72B-v0.1 · Hugging Face

- Paper page - Can Mamba Learn How to Learn? A Comparative Study on In-Context Learning Tasks

- NeuralNovel/Tiger-7B-v0.1 · Hugging Face

- Shooting GIF - Cowboy Gun Shooting - Discover & Share GIFs

- Imaginary Numbers Are Real [Part 1: Introduction]: For early access to new videos and other perks: https://www.patreon.com/welchlabs Want to learn more or teach this series? Check out the Imaginary Numbers are…

- Clapping Hamood GIF - Clapping Hamood Mood - Discover & Share GIFs

- Large Language Models Process Explained. What Makes Them Tick and How They Work Under the Hood!: Explore the fascinating world of large language models in this comprehensive guide. We’ll begin by laying a foundation with key concepts such as softmax, lay…

- GitHub - cognitivecomputations/laserRMT: This is our own implementation of ‘Layer Selective Rank Reduction’

- Tweet from Alexandre TL (@AlexandreTL2): How does Mamba fare in the OthelloGPT experiment ? Let’s compare it to the Transformer 👇🧵

- AMD Ryzen 7 8700G APU Review: Performance, Thermals & Power Analysis - Hardware Busters

- Reddit - Dive into anything

TheBloke ▷ #characters-roleplay-stories (342 messages🔥🔥):

- Quantization and Financial Constraints: @dreamgen questioned the sustainability of free models, predicting a challenging future once venture capital funding dries up. Meanwhile, @mrdragonfox highlighted how expensive it is to create datasets compared with how cheap and easy model merges are.

- Merging Models and Data Dilemmas: @soufflespethuman and @mrdragonfox in particular worried that model merging undermines the value of creating original datasets and the incentive for real innovation. They discussed enforcing a “do not merge” license to protect their work.

- Financing AI Innovation Fantasies: @billynotreally floated the hypothetical of a “crypto/stocks daddy” funding AI experiments. @mrdragonfox was skeptical of such generosity, arguing that people with money typically want something in return, such as acknowledgement or results.

- Technical Talk on Augmentoolkit Reorganization: @mrdragonfox is reworking the architecture of Augmentoolkit and shared updates and code via GitHub. Discussion covered using asynchronous IO in Python and moving from Jupyter notebooks to a more structured codebase.

- MiquMaid v2 Discussion and Development: @undi shared progress on MiquMaid v2 and noted that it is public, while @netrve enjoyed the model’s performance despite some repetition issues that required tweaking generation settings. Later discussion covered strategies for reducing repetition when generating content.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- MiquMaid - a NeverSleep Collection

- TheBloke/Yarn-Llama-2-70B-32k-GGUF · Hugging Face

- augmentoolkit/config.yaml at api-branch · e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- augmentoolkit/main.py at api-branch · e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- Ness End This Suffering Mother2earthbound Super Smash Bros GIF - Ness End This Suffering Mother2Earthbound Super Smash Bros - Discover & Share GIFs

- Baroque Rich GIF - Baroque Rich - Discover & Share GIFs

TheBloke ▷ #training-and-fine-tuning (5 messages):

- Seeking Guidance for Fine-Tuning: User @immortalrobot wants to learn fine-tuning and asked for tutorial articles that provide step-by-step guidance.

- Novel Approach to LM Alignment: @maldevide shared an arXiv paper introducing Listwise Preference Optimization (LiPO), a framework that aligns language models by optimizing over a ranked list of responses rather than over individual pairs.

- The Next Step in Model Optimization: Commenting on how well PairRM DPO’d models perform, @maldevide called LiPO the logical next step in language model alignment techniques.

- Local Training Feasibility with LiPO Rankers: @maldevide noted a practical advantage of LiPO: the rankers used in the framework are under 1 billion parameters, so they can be trained locally.

- Model Loading Inquiry: @yinma_08121 asked whether anyone has used Candle to load the phi-2-gguf model.
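To illustrate what “listwise” means, here is a generic listwise ranking loss in the ListNet style. Given the policy’s scores for several candidate responses to one prompt and their reference rewards (for example from a ranker such as PairRM), it compares the two softmax distributions over the list with cross-entropy. This is a simplified stand-in, not LiPO itself (the paper’s main variant uses a LambdaLoss-style objective), and all names are hypothetical.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def listnet_loss(policy_scores: List[float], rewards: List[float]) -> float:
    """Cross-entropy between the reward-induced and score-induced distributions over a list.

    One common choice of policy score, as in DPO, is beta * (log pi(y|x) - log pi_ref(y|x)).
    """
    if len(policy_scores) != len(rewards) or not rewards:
        raise ValueError("need one reward per candidate response")
    target = softmax(rewards)
    pred = softmax(policy_scores)
    return -sum(t * math.log(p) for t, p in zip(target, pred))


if __name__ == "__main__":
    rewards = [2.0, 0.5, -1.0]  # e.g. ranker scores for three responses
    good = listnet_loss([2.0, 0.5, -1.0], rewards)
    bad = listnet_loss([-1.0, 0.5, 2.0], rewards)
    print(good < bad)  # True: scores that agree with the ranking give a lower loss
```

Unlike a pairwise loss, every response in the list contributes to the gradient at once, which is the property the listwise framing is after.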

Links mentioned:

- LiPO: Listwise Preference Optimization through Learning-to-Rank

TheBloke ▷ #coding (24 messages🔥):

- Guidance for Hugging Face Model Struggles: `@wbsch` told `@logicloops` that their problems running Hugging Face models might stem from incorrect prompts and unusual stop tokens, and suggested re-checking the model card or trying an easier alternative such as koboldcpp.

- Mojo Outperforms Rust: `@dirtytigerx` shared a Modular community spotlight on Mojo's performance wins, with benchmarks showing gains over Python and even Rust (50% faster than Rust on DNA sequence parsing, per the linked post), prompting discussion of its impact on various tools.

- Potential of Mojo in High-Performance Environments: `@falconsfly` was enthusiastic about Mojo's design and implementation strategy, pointing to its matrix multiplication optimizations as an example and suggesting they could pair well with tools like duckdb.

- Aletheion Explores Integrated Agent Functions: `@aletheion` is integrating custom functions into bot flows that can call database lookups as needed, so the bot can draw on its own “memories” and “notes” without relying on external logic triggers.

- Misunderstandings in Model Implementation: `@lushboi` admitted to a misunderstanding about smaller LLaMa models, which `@falconsfly` cleared up by pointing out that only the 70B LLaMa model uses grouped-query attention (GQA), a reminder to check the official documentation.
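Why GQA matters in practice: it shares each key/value head across several query heads, which shrinks the KV cache. The back-of-the-envelope sketch below uses the published Llama 2 70B shape (80 layers, 64 query heads, 8 KV heads, head dimension 128) and assumes an fp16 cache (2 bytes per value), a 4096-token context, and a batch of one; the 64-KV-head case is a hypothetical multi-head-attention variant for comparison.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2, batch=1):
    """Size of the key/value cache: one K and one V tensor per layer."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value * batch

GIB = 1024 ** 3
seq_len = 4096  # Llama 2 context length

# Llama 2 70B with GQA (8 KV heads) vs. a hypothetical MHA variant (64 KV heads).
gqa = kv_cache_bytes(n_layers=80, n_kv_heads=8, head_dim=128, seq_len=seq_len)
mha = kv_cache_bytes(n_layers=80, n_kv_heads=64, head_dim=128, seq_len=seq_len)

print(f"GQA: {gqa / GIB:.2f} GiB, MHA: {mha / GIB:.2f} GiB, ratio: {mha // gqa}x")
```

With 8 KV heads instead of 64, the cache is 8 times smaller, which is a large part of why the 70B model can serve long contexts on realistic hardware.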

Links mentioned:

- Modular: Community Spotlight: Outperforming Rust ⚙️ DNA sequence parsing benchmarks by 50% with Mojo 🔥: We are building a next-generation AI developer platform for the world. Read our latest post on how Community Spotlight: Outperforming Rust ⚙️ DNA sequence parsing benchmarks by 50% with Mojo 🔥

- Modular Docs - Matrix multiplication in Mojo: Learn how to leverage Mojo’s various functions to write a high-performance matmul.

Nous Research AI ▷ #off-topic (2 messages):

- Introducing OpenHermes Dataset Viewer: `@carsonpoole` built a dataset viewer for OpenHermes that lets users scroll through examples with the `j` and `k` keys and view analytics on token counts and types. The tool also has a filter for sorting by number of samples or token count.

Nous Research AI ▷ #interesting-links (43 messages🔥):

- Introducing Subformer with SAFE: `@euclaise` shared an arXiv paper exploring parameter-sharing methods that address Transformers' computational and parameter-budget inefficiencies. The paper introduces the Subformer, which combines sandwich-style parameter sharing with self-attentive embedding factorization (SAFE) to outperform the Transformer with fewer parameters.

- BiLLM Introduces One-Bit Quantization: `@gabriel_syme` posted a paper on BiLLM, a 1-bit post-training quantization scheme for large language models that aims to cut computation and memory requirements substantially while maintaining performance.

- Model Scores on NeoEvalPlusN Benchmark: `@nonameusr` linked to the Hugging Face page for the Gembo-v1-70b model, cautioning that it contains sensitive content and potentially harmful information. Although the model lacks a full model card, they noted that it scores highly on the NeoEvalPlusN benchmark and is awaiting results from openllm.

- Sharing Models with Context: `@teknium` stressed the importance of context when sharing models on the forum and suggested including captions or model cards, since many recent posts have lacked those details.

- Cross-Comparison of Self-Rewarding Language Models: In a discussion of recent advances, `@atgctg` linked an arXiv paper comparing OAIF (online AI feedback) with concurrent “self-rewarding” language model work. The paper highlights that OAIF can use feedback from any LLM, including ones stronger than the model being aligned.
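For intuition about what 1-bit post-training quantization does, the simplest baseline replaces each weight row with its sign times a per-row scale α = mean(|w|), which minimises the squared reconstruction error for that sign pattern. This is the classic binarization baseline, not BiLLM's actual algorithm, and the weights below are made up.

```python
def binarize_row(row):
    """1-bit quantization of one weight row: w is approximated by alpha * sign(w)."""
    # alpha = mean(|w|) minimises the squared error for a fixed sign pattern.
    alpha = sum(abs(w) for w in row) / len(row)
    signs = [1 if w >= 0 else -1 for w in row]  # treat 0 as +1
    return alpha, signs

def dequantize(alpha, signs):
    """Reconstruct approximate weights from the 1-bit code and the row scale."""
    return [alpha * s for s in signs]

row = [0.5, -0.25, 0.75, -1.0]
alpha, signs = binarize_row(row)
approx = dequantize(alpha, signs)
print(alpha, signs)   # 0.625 [1, -1, 1, -1]
print(approx)         # [0.625, -0.625, 0.625, -0.625]
```

Storage drops to one bit per weight plus one scale per row; the accuracy loss visible even in this tiny example is what more elaborate schemes try to recover.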

Links mentioned:

- BiLLM: Pushing the Limit of Post-Training Quantization for LLMs: Pretrained large language models (LLMs) exhibit exceptional general language processing capabilities but come with significant demands on memory and computational resources. As a powerful compression …

- InfoEntropy Loss to Mitigate Bias of Learning Difficulties for Generative Language Models: Generative language models are usually pretrained on large text corpus via predicting the next token (i.e., sub-word/word/phrase) given the previous ones. Recent works have demonstrated the impressive…

- ChuckMcSneed/Gembo-v1-70b · Hugging Face: no description found

- ibivibiv/giant-hydra-moe-240b · Hugging Face: no description found

- Direct Language Model Alignment from Online AI Feedback: Direct alignment from preferences (DAP) methods, such as DPO, have recently emerged as efficient alternatives to reinforcement learning from human feedback (RLHF), that do not require a separate rewar…

- Cute Hide GIF - Cute Hide Cat - Discover & Share GIFs: Click to view the GIF

- ChuckMcSneed/NeoEvalPlusN_benchmark · Datasets at Hugging Face: no description found

- Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers: Transformers have shown improved performance when compared to previous architectures for sequence processing such as RNNs. Despite their sizeable performance gains, as recently suggested, the model is…

- InRank: Incremental Low-Rank Learning: The theory of greedy low-rank learning (GLRL) aims to explain the impressive generalization capabilities of deep learning. It proves that stochastic gradient-based training implicitly regularizes neur…

Nous Research AI ▷ #general (221 messages🔥🔥):

- Seeking Wojak AI Link: `@theluckynick` asked for the link to the wojak AI but received no response.

- Training Config Query for OpenHermes-2.5-Mistral-7B: `@givan_002` asked about the fine-tuning parameters for OpenHermes-2.5-Mistral-7B and was pointed to a closed discussion that gave no satisfactory answer about the training configuration.

- Benchmarking AI Models: `@if_a` shared results from benchmarking various models, noting that Senku-70B outperforms the others on specific tasks; the discussion suggested that differing system prompts across LLMs might affect the results.

- Fine-Tuning on Nous-Hermes 2 Dataset Considered: `@if_a` is considering fine-tuning the miqu model on the Nous-Hermes 2 dataset and discussed the likely timescales and challenges.

- GPU Workload Scheduling Discussions: Several users, including `@chrisj7746`, `@Sebastian`, and `@leontello`, discussed efficient ways to schedule GPU jobs, with Slurm a popular recommendation even for smaller clusters.

- Quantization and Model Architecture Discussions: Users discussed quantization algorithms for AI models, as well as the potential of an anime benchmark by `@vatsadev`. Meanwhile, `@nonameusr` and `@n8programs` discussed the significance of architectural changes to models after GPT-4, including Turing-complete modifications of transformers.

Links mentioned:

- BiLLM: Pushing the Limit of Post-Training Quantization for LLMs: Pretrained large language models (LLMs) exhibit exceptional general language processing capabilities but come with significant demands on memory and computational resources. As a powerful compression …

- teknium/OpenHermes-2.5-Mistral-7B · Can the training procedure be shared?: no description found

- Orange Cat Staring GIF - Orange cat staring Orange cat Staring - Discover & Share GIFs: Click to view the GIF

- Turing Complete Transformers: Two Transformers Are More Powerful…: This paper presents Find+Replace transformers, a family of multi-transformer architectures that can provably do things no single transformer can, and which outperforms GPT-4 on several challenging…

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

- VatsaDev/animebench-alpha · Datasets at Hugging Face: no description found

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- cmp-nct/llava-1.6-gguf at main: no description found

- Llava 1.6 - wip by cmp-nct · Pull Request #5267 · ggerganov/llama.cpp: First steps - I got impressive results with llava-1.6-13B on the license_demo example already, despite many open issues. Todo: The biggest and most important difference missing is the “spatial_un…

Nous Research AI ▷ #ask-about-llms (49 messages🔥):

- Custom Pretrained Model Struggles with Extraction: `@fedyanin` described a custom pretrained model that performs well on generation tasks but poorly on extraction, and asked how to improve extraction performance without explicitly fine-tuning on the task.

- VRAM Sufficiency for Finetuning: `@natefyi_30842` asked whether 8GB of VRAM is enough for SFT or DPO on a 7B model like Mistral using Axolotl. `@fedyanin` suggested it might just be enough with QLoRA and a small context, while `@teknium` mentioned that mlx might work but is not yet fully developed.

- Setting Special Tokens in Configuration: `@paragonicalism` asked how to set special tokens in a `.yml` file for fine-tuning `phi-2` on OpenHermes 2.5 with axolotl. `@teknium` provided a snippet defining the `eos_token` and other tokens, and later suggested adding a `pad_token` setting.
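A quick way to sanity-check the 8GB question is to count bytes for the frozen base weights alone. The sketch assumes a nominal 7e9 parameters and 16, 8, or 4 bits per weight (4-bit being the QLoRA case); it deliberately ignores activations, LoRA adapter and optimizer state, and CUDA overhead, which all grow with context length.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Memory for model weights only, in GiB (excludes activations and optimizer state)."""
    return n_params * bits_per_param / 8 / 1024 ** 3

N = 7e9  # nominal "7B" parameter count (assumption; real models differ slightly)
for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit (QLoRA)", 4)]:
    print(f"{label:>14}: {weight_memory_gib(N, bits):5.2f} GiB")
```

Only the 4-bit case (a bit over 3 GiB of weights) leaves meaningful headroom on an 8GB card, consistent with the “just enough with QLoRA and a small context” advice.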

Links mentioned:

- phi2-finetune/nb_qlora.ipynb at main · geronimi73/phi2-finetune: Contribute to geronimi73/phi2-finetune development by creating an account on GitHub.

- LLaVA/docs/Finetune_Custom_Data.md at main · haotian-liu/LLaVA: [NeurIPS’23 Oral] Visual Instruction Tuning (LLaVA) built towards GPT-4V level capabilities and beyond. - haotian-liu/LLaVA

- liuhaotian/LLaVA-Instruct-150K · Datasets at Hugging Face: no description found

- GitHub - bkitano/llama-from-scratch: Llama from scratch, or How to implement a paper without crying: Llama from scratch, or How to implement a paper without crying - GitHub - bkitano/llama-from-scratch: Llama from scratch, or How to implement a paper without crying

- LLaVA/scripts/v1_5/finetune_task_lora.sh at main · haotian-liu/LLaVA: [NeurIPS’23 Oral] Visual Instruction Tuning (LLaVA) built towards GPT-4V level capabilities and beyond. - haotian-liu/LLaVA

- LLaVA/scripts/v1_5/finetune_task.sh at main · haotian-liu/LLaVA: [NeurIPS’23 Oral] Visual Instruction Tuning (LLaVA) built towards GPT-4V level capabilities and beyond. - haotian-liu/LLaVA

- Obsidian/scripts/finetune_qlora.sh at main · NousResearch/Obsidian: Maybe the new state of the art vision model? we’ll see 🤷♂️ - NousResearch/Obsidian

- Obsidian/scripts/v1_5/finetune.sh at main · NousResearch/Obsidian: Maybe the new state of the art vision model? we’ll see 🤷♂️ - NousResearch/Obsidian

LM Studio ▷ #💬-general (138 messages🔥🔥):

- LaTeX Limitations in LM Studio: User `.neurorotic.` was frustrated that DeepSeek Math RL 7B LLM outputs mathematical formulas in LaTeX, which LM Studio does not appear to render properly; others echoed the concern, noting that more models are emitting LaTeX.

- GPU vs RAM and CPU for LLMs: `@pierrunoyt` asked why LLMs run on the GPU rather than on system RAM and the CPU, and `@justmarky` explained that processing is much faster on GPUs, sparking a brief discussion of compute choices for large language models.

- Model Selection and Optimization Discussions: Several users discussed various models and their compatibility with different systems. `@kristus.eth` mentioned functionality that Ollama lacks compared with LM Studio, and `@akiratoya13` found that GPU offload was not being used even though the settings indicated it was.

- Networking and Download Issues with LM Studio: Users `@yorace` and `@tkrabec` discussed network errors and failed model downloads in LM Studio; `@heyitsyorkie` suggested the cause might be country-level blocking of Hugging Face or VPN issues.

- Local LLM Adaptability and Persistence: Users `@joelthebuilder` and `@fabguy` discussed whether local large language models can learn or adapt over time through user interaction. The prevailing view was that fine-tuning is usually not feasible on average hardware, and that a relevant system prompt is generally enough to tailor responses.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- OLMo - Open Language Model by AI2: OLMo is a series of Open Language Models designed to enable the science of language models. The OLMo models are trained on the Dolma dataset.

- Hugging Face – The AI community building the future.: no description found

- Running big-AGI locally with LM Studio [TUTORIAL]: ➤ Twitter - https://twitter.com/techfrenaj➤ Twitch - https://www.twitch.tv/techfren➤ Discord - https://discord.com/invite/z5VVSGssCw➤ TikTok - https://www…

- [1hr Talk] Intro to Large Language Models: This is a 1 hour general-audience introduction to Large Language Models: the core technical component behind systems like ChatGPT, Claude, and Bard. What the…

LM Studio ▷ #🤖-models-discussion-chat (45 messages🔥):

- Mixed Reactions to Qwen 1.5: `@pierrunoyt` shared a YouTube video about Qwen 1.5, an open-source language model, but `@heyitsyorkie` criticized the video for misleading model previews. `@evi_rew` and `@yagilb` went on to discuss technical issues with Qwen 1.5 related to context length and GPU acceleration.

- Code Llama Critique: `@pierrunoyt` was dissatisfied with Code Llama 2 and wants a better coding LLM that also understands design.

- OpenAI Model Inconsistencies in Code Generation: `@lord_half_mercy` noticed a decline in reply quality when generating code with OpenAI’s ChatGPT and wondered whether context length or complexity was to blame; `@heyitsyorkie` joked that the AI was getting “lazy.”

- Confusion over Vision Models: `@bob_dale` asked about models that can generate new logos in a given style and was pointed to GPT-4 Vision by `@heyitsyorkie`, after some initial misunderstanding about the model’s capabilities.

- Debates Over Model Effectiveness: Users debated the effectiveness of various models, including Qwen 1.5, Miqu, and LLaMA, with comments ranging from memory issues on high-VRAM systems (`@.bambalejo`) to criticism of language abilities and model outputs (`@pwrreset` and `@re__x`).

Links mentioned:

- @JustinLin610 on Hugging Face: “Yesterday we just released Qwen1.5. Maybe someday I can tell more about the…”: no description found

- Qwen 1.5: Most Powerful Opensource LLM - 0.5B, 1.8B, 4B, 7B, 14B, and 72B - BEATS GPT-4?: In this video, we dive deep into the latest iteration of the Qwen series, Qwen 1.5. Released just before the Chinese New Year, Qwen 1.5 brings significant up…

LM Studio ▷ #🎛-hardware-discussion (98 messages🔥🔥):

- Optimal LLM Hardware Specs Query: `@jolionvt` asked about ideal settings for running LLMs locally on an AMD Ryzen 5 7600, an AMD Radeon RX 6700 XT, and 32 GB of RAM, but did not receive a direct response.

- PCIe Riser Performance Concerns: `@nink1` asked how much performance drops when using PCIe 1x-to-16x riser cables instead of a direct motherboard connection. `@quickdive.` was interested in testing this and suggested changing the lane width in the BIOS for comparison, while `@nink1` planned to investigate further.

- Exploring the ESP32 for DIY Voice Projects: `@joelthebuilder` sought DIY project suggestions for hardware that could provide voice input and output for a home network, expressing a goal to replace Alexa with a custom setup. Encouraged by others, `@joelthebuilder` shared a [YouTube video](https://www.youtube.com/watch?v=_qft28MiVnc) about using ESP32 S3 Box 3 for integrating with Home Assistant and Local LLM AI, and pondered its availability for purchase.

- Evaluating Risks of Power Cable Modification: `@nink1` shared a creative way to power an external riser board by modifying a PSU CPU cable, which `@.ben.com` warned could be a fire hazard. `@nink1` argued that the setup is safe given its low power draw and properly secured cables.

- GPU Bandwidth and PCIe Lane Concerns for Multi-GPU Setups: Debate ensued about the impact of PCIe bandwidth when using extender cables or restricting lane width for multi-GPU configurations. `@nink1`, `@quickdive.`, `@rugg0064`, and `@savethehuman5` discussed potential performance bottlenecks while sharing individual plans for experimenting with multiple GPU setups.
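To put the riser question in numbers: PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding, so one lane carries roughly 0.985 GB/s in each direction. The sketch below estimates how long it takes just to copy a model's weights onto the card at different link widths; the 20 GB model size is hypothetical, and protocol overhead is ignored. Whether the narrow link also slows inference after loading depends on how much data crosses the bus per token, which is worth measuring as the thread proposed.

```python
def pcie3_gb_per_s(lanes):
    """Approximate one-direction PCIe 3.0 bandwidth in GB/s (decimal GB)."""
    gt_per_s = 8.0            # giga-transfers per second per lane
    encoding = 128 / 130      # 128b/130b line encoding
    return lanes * gt_per_s * encoding / 8  # 8 bits per byte

model_gb = 20.0  # hypothetical model size in GB
for lanes in (1, 4, 16):
    bw = pcie3_gb_per_s(lanes)
    print(f"x{lanes:<2}: {bw:6.2f} GB/s -> ~{model_gb / bw:5.1f} s to copy {model_gb:.0f} GB")
```

An x1 riser turns a roughly one-second load into about twenty seconds, so the penalty is most visible when loading or swapping models.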

Links mentioned:

- Tesla P40 Radial fan shroud by neophrema: Hey, This is a fan shroud for Nvidia Tesla cards which are structurally identical to the P40. After a failed attempt using a normal Noctua (damn I sank money into it…) fan I realized that air pressu…

- All About AI: Welcome to my channel All About AI =) Discord: https://discord.gg/Xx99sPUeTd Website: https://www.allabtai.com How you can start to use Generative AI to help you with creative or other daily tasks…

- ESP32 S3 Box 3 Willow - HA - Local LLM AI: ESP32 S3 Box 3 with Willow connected to Home Assistant which is integrated with Local LLM AI (Mistral 7b)

- NVIDIA Tesla P40 24GB GDDR5 Graphics Card and Cooling Turbine Fan | eBay: no description found

- Amazon.com: Raspiaudio ESPMUSE Proto esp32 Development Card with Speaker and Microphone: no description found

- no title found: no description found

LM Studio ▷ #🧪-beta-releases-chat (10 messages🔥):

- Debian Troubles with LM Studio: `@transfluxus` reported being unable to run LM_Studio-0.2.14-beta-1.AppImage on Debian, getting the error `cannot execute binary file`. `@heyitsyorkie` suggested grabbing the Linux beta role and checking the pinned messages in <#1138544400771846174>, as well as making the app executable with the `chmod` command.

- Feature Wishlist for Training and Images: `@junkboi76` would like to see training and image generation supported in future releases, acknowledging the complexity but maintaining that these would be welcome features.

- Enhancement Suggestions Go to Feedback Station: `@fabguy` directed `@junkboi76` to open a discussion in <#1128339362015346749> or upvote existing feature requests for training and image generation.

- Confusion Over `pre_prompt` in JSON Preset: `@wolfspyre` asked whether the `pre_prompt` in the preset JSON is the system prompt, and whether it should be reflected in the ‘prompt format’ preview. The issue was subsequently reported as a potential bug.

- Design Choice or Bug?: In response to `@wolfspyre`’s concern that the `pre_prompt` does not show in the settings modal, `@fabguy` noted that the contents of “User” and “Assistant” are not shown either, possibly by design since system prompts can be very long.
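The `chmod` step suggested above is typically `chmod +x` on the AppImage. For anyone scripting their setup, the same permission change can be made from Python with `os.chmod`; the helper name `make_executable` is hypothetical, and whether this alone resolves the `cannot execute binary file` error on a given Debian system was not confirmed in the thread.

```python
import os
import stat

def make_executable(path):
    """Add execute permission for user, group, and other (like `chmod a+x path`)."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
```

Usage: call `make_executable("LM_Studio-0.2.14-beta-1.AppImage")` from the download directory, then launch the file directly as `./LM_Studio-0.2.14-beta-1.AppImage`.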

Links mentioned:

Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

LM Studio ▷ #autogen (3 messages):

- Autogen Experiments Begin: `@photo_mp` started experimenting with autogen and was impressed with its capabilities in initial use.

- Userproxy Goes Silent: `@photo_mp` hit a problem where userproxy stops communicating after the first round of interaction, halting the conversation between agents.

- Seeking Autogen Advice: `@photo_mp` asked the community for tips on resolving the userproxy issue in autogen.

LM Studio ▷ #avx-beta (1 messages):

- Troubleshooting Chat Issues: User `@yagilb` recommended deleting problematic chat messages or clicking “reset to default settings” in the top right when running into trouble.

LM Studio ▷ #crew-ai (1 messages):

- In Search of a Visualization Tool: User `@m4sterdragon` asked about a tool or method to visualize crew interactions after the project kickoff, suggesting a need for better oversight of the crew’s dynamics. No solutions or follow-up comments appeared in the available messages.

LM Studio ▷ #open-interpreter (13 messages🔥):

- Open Interpreter Discord Clarification: `@fkx0647` asked about the Open Interpreter (OI) Discord, initially mistaking the LM Studio Discord for it. `@heyitsyorkie` clarified that this channel is just a sub-channel within LM Studio and pointed to the OI Discord linked from the project’s GitHub page.

- Understanding Local Models Server Mode: `@heyitsyorkie` explained that OI can use LM Studio’s server mode to run local models, which is how the two integrate.

- Exploring OI Features and Assistance with Discord Invitation: `@fkx0647` discussed their progress with OI and asked for help finding an invite to the official OI Discord; `@heyitsyorkie` pointed them to the invite in OI’s GitHub README.

- Confirmation on GPT-3.5 Usage: `@fkx0647` confirmed they have OI running with GPT-3.5 and find the CLI’s performance satisfactory.

Links mentioned:

GitHub - KillianLucas/open-interpreter: A natural language interface for computers: A natural language interface for computers. Contribute to KillianLucas/open-interpreter development by creating an account on GitHub.

Latent Space ▷ #ai-general-chat (12 messages🔥):

- Philosophical AI: User `@gabriel_syme` joked that if models were true philosophers like Socrates there wouldn’t be much discussion, since Socrates often dominates the conversation in Plato’s texts.

- Llama Model’s Safety Compromised for $200: `@swyxio` shared a LessWrong post describing how LoRA fine-tuning can undo Llama 2-Chat’s safety training on a budget of under $200. The post highlights how easily safety training in powerful models can be bypassed and the risks that follow.

- The Rise of Synthetic Data: In a brief message, `@swyxio` signaled that synthetic data creation is gaining momentum.

- Voice Cloning’s Imminent Transformation: `@guardiang` responded to a link to a Discord message by saying that voice cloning technology is about to change significantly, potentially bringing new challenges and opportunities.

- Excitement for Upcoming GPT Release: User `@coffeebean6887` hinted at the arrival of an unnamed GPT model via a Twitter link, and `@guardiang` was eager to see it in action. Meanwhile, `@eugeneyan` shared the excitement but joked that their iPhone display could not fully showcase the capabilities of the forthcoming “Blind.”
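Part of why LoRA fine-tuning is so cheap: instead of updating a full d_out × d_in weight matrix, it trains two low-rank factors, B (d_out × r) and A (r × d_in), which is r · (d_in + d_out) parameters. The sketch applies that formula to one 8192 × 8192 projection (8192 is Llama 2 70B's hidden size) at a hypothetical rank r = 8; the LessWrong post's actual rank and target modules are not stated here.

```python
def lora_params(d_in, d_out, rank):
    """Trainable parameters LoRA adds for one d_out x d_in weight: B (d_out x r) + A (r x d_in)."""
    return rank * (d_in + d_out)

d = 8192          # hidden size of Llama 2 70B
full = d * d      # parameters in one full square projection
lora = lora_params(d, d, rank=8)  # rank 8 is an illustrative assumption
print(full, lora, f"{100 * lora / full:.2f}%")
```

At this rank the adapter trains about 0.2% of the parameters of each projection it touches, which keeps memory and compute, and therefore cost, low.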

Links mentioned:

LoRA Fine-tuning Efficiently Undoes Safety Training from Llama 2-Chat 70B — LessWrong: Produced as part of the SERI ML Alignment Theory Scholars Program - Summer 2023 Cohort, under the mentorship of Jeffrey Ladish. …

Latent Space ▷ #ai-announcements (1 messages):

- LLM Paper Club Kicks Off: `@swyxio` announced that `@713143846539755581` will present the Self Reward paper at the Latent Space Discord Paper Club. The session starts shortly, and members can join here.

Links mentioned:

LLM Paper Club (West) · Luma: We have moved to use the new Discord Stage feature here: https://discord.com/channels/822583790773862470/1197350122112168006 see you soon!

Latent Space ▷ #llm-paper-club-west (209 messages🔥🔥):

- Self-Rewarding Language Models Spark Interest: The paper club’s discussion, as outlined by `@coffeebean6887`, centered on a new paper proposing Self-Rewarding Language Models, in which an LLM uses its own outputs to provide rewards during training; the approach is cited as outperforming Claude 2 and GPT-4 in some respects. The full paper is available here.

- Interest in DSPy Spikes: DSPy, a programming model for chaining LLM calls, drew significant attention, with several members, including `@kbal11` and `@yikesawjeez`, keen to explore it further. `@yikesawjeez` volunteered to lead a session on DSPy, and the paper can be found here.

- Verification and Theorem Proving: `@stephen_83179_13077` compared the discussed LLM paper with automatic geometric theorem proving methods, while `@gabriel_syme` noted that evaluating complex tasks requires external verifiers beyond LLMs. A paper offering insight into reasoning through topology is available here.

- Upcoming Paper Club Features: Anticipation is building for future sessions, with possible discussions of CRINGE loss, the ColBERT model, and T5 vs. TinyLlama, as suggested by `@amgadoz` and `@_bassboost`. A review of “Leveraging Large Language Models for NLG Evaluation: A Survey” is set for next week, and the paper can be viewed here.

- YouTube Resource for DSPy Exploration: `@yikesawjeez` shared a YouTube video on DSPyG, which combines DSPy with a graph optimizer, showcasing a multi-hop RAG implementation with graph optimization.
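The data step at the heart of the self-rewarding recipe can be sketched in a few lines: sample several candidate responses per prompt, have the same model score them as a judge, and keep the highest- and lowest-scored responses as a (chosen, rejected) pair for DPO-style training. This assumes that pairing rule (a reading of the paper worth checking against the original); the judging prompt, the 0-5 score scale, and the candidates below are hypothetical.

```python
from typing import List, Optional, Tuple

def build_preference_pair(
    responses: List[str], judge_scores: List[float]
) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected) from self-judged candidates, or None if all scores tie."""
    best = max(range(len(responses)), key=lambda i: judge_scores[i])
    worst = min(range(len(responses)), key=lambda i: judge_scores[i])
    if judge_scores[best] == judge_scores[worst]:
        return None  # no preference signal, so skip this prompt
    return responses[best], responses[worst]

# Hypothetical candidates for one prompt, scored by the model acting as its own judge.
candidates = ["answer A", "answer B", "answer C", "answer D"]
scores = [3.0, 5.0, 1.0, 4.0]
print(build_preference_pair(candidates, scores))      # ('answer B', 'answer C')
print(build_preference_pair(candidates, [2.0] * 4))   # None
```

Repeating this over many prompts yields a preference dataset generated entirely by the model itself, which is what makes the loop “self-rewarding.”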

Links mentioned:

- Topologies of Reasoning: Demystifying Chains, Trees, and Graphs of Thoughts: The field of natural language processing (NLP) has witnessed significant progress in recent years, with a notable focus on improving large language models’ (LLM) performance through innovative pro…

- BirdCLEF 2021 - Birdcall Identification | Kaggle: no description found

- Self-Rewarding Language Models: We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal. Current approaches commonly train reward models from human prefer…

- Rephrasing the Web: A Recipe for Compute and Data-Efficient Language Modeling: Large language models are trained on massive scrapes of the web, which are often unstructured, noisy, and poorly phrased. Current scaling laws show that learning from such data requires an abundance o…

- Tuning Language Models by Proxy: Despite the general capabilities of large pretrained language models, they consistently benefit from further adaptation to better achieve desired behaviors. However, tuning these models has become inc…

- DSPy Assertions: Computational Constraints for Self-Refining Language Model Pipelines: Chaining language model (LM) calls as composable modules is fueling a new way of programming, but ensuring LMs adhere to important constraints requires heuristic “prompt engineering”. We intro…

- A Survey on Evaluation of Large Language Models: Large language models (LLMs) are gaining increasing popularity in both academia and industry, owing to their unprecedented performance in various applications. As LLMs continue to play a vital role in…

- Some things are more CRINGE than others: Preference Optimization with the Pairwise Cringe Loss: Practitioners commonly align large language models using pairwise preferences, i.e., given labels of the type response A is preferred to response B for a given input. Perhaps less commonly, methods ha…

- self-rewarding-lm-pytorch/self_rewarding_lm_pytorch/self_rewarding_lm_pytorch.py at ec8b9112d4ced084ae7cacfe776e1ec01fa1f950 · lucidrains/self-rewarding-lm-pytorch: Implementation of the training framework proposed in Self-Rewarding Language Model, from MetaAI - lucidrains/self-rewarding-lm-pytorch

- Large Language Models (in 2023): I gave a talk at Seoul National University. I titled the talk “Large Language Models (in 2023)”. This was an ambitious attempt to summarize our exploding fiel…

- Building Your Own Product Copilot: Challenges, Opportunities, and Needs: A race is underway to embed advanced AI capabilities into products. These product copilots enable users to ask questions in natural language and receive relevant responses that are specific to the use…

- NEW DSPyG: DSPy combined w/ Graph Optimizer in PyG: DSPyG is a new optimization, based on DSPy, extended w/ graph theory insights. Real world example of a Multi Hop RAG implementation w/ Graph optimization.New…

- GitHub - lucidrains/self-rewarding-lm-pytorch: Implementation of the training framework proposed in Self-Rewarding Language Model, from MetaAI: Implementation of the training framework proposed in Self-Rewarding Language Model, from MetaAI - GitHub - lucidrains/self-rewarding-lm-pytorch: Implementation of the training framework proposed in…

- no title found: no description found

- Solving olympiad geometry without human demonstrations - Nature: A new neuro-symbolic theorem prover for Euclidean plane geometry trained from scratch on millions of synthesized theorems and proofs outperforms the previous best method and reaches the performance of…

- JupyterHub: no description found

Mistral ▷ #general (128 messages🔥🔥):

- Mistral.AI for Healthcare Internship: `@talhawaqas`, a Master’s student in France, is looking for resources and papers on real-world applications of Mistral.AI, particularly pretraining and fine-tuning in health engineering contexts.

- Transitioning to Cloud: `@zhiyyang` plans to start testing in the cloud rather than locally for convenience, and thanked the community for the information shared.

- Mistral Data Use Policy: `@mrdragonfox` clarified that the service collects user dialogue data and reserves the right to release datasets under a Creative Commons Attribution (CC-BY) license.

- Exploring Response Length Control in Mistral: `@lucacito` and `@mrdragonfox` discussed how to set `max_tokens` and temperature to get concise responses from `@lucacito`’s portfolio chatbot assistant. `@mrdragonfox` provided examples and advised on adjusting sampling and temperature settings.

- Temperature: To Zero or Not to Zero: In a heated debate about setting the temperature to zero, `@i_am_dom` argued that while a temperature of 0 may not be ideal for a chatbot experience, it could improve performance and reduce hallucinations on high-precision tasks. `@mrdragonfox` and others proposed other settings for chatbot responses, such as 0.7, and advised testing to find the right fit.
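To make the temperature debate concrete: sampling divides the logits by the temperature T before the softmax, so a small T sharpens the distribution toward the top token, and T = 0 is conventionally treated as greedy (argmax) decoding. The sketch uses made-up logits in plain Python and illustrates the general mechanism, not Mistral's server-side implementation; `max_tokens`, the other knob discussed, simply caps how many tokens are generated.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Token probabilities after temperature scaling; T == 0 collapses to greedy."""
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, temperature, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0, 0.7, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperatures concentrate probability on the top token, which is why T = 0 helps on precision tasks but can make chat responses repetitive.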

Links mentioned:

Chat with Open Large Language Models: no description found

Mistral ▷ #models (4 messages):

- Mistral-like Embedding Model Found: User `@gbourdin` asked about an embedding model with the same dimension length as Mistral-embed for local development. `@mrdragonfox` replied with a Hugging Face link to the E5-mistral-7b-instruct model, which has 32 layers and an embedding size of 4096, and explained how to use it with example code.

- Gratitude Expressed for Model Recommendation: After receiving the recommendation and usage details, `@gbourdin` said thanks with a “merci :)” followed by “thanks ! 🙂”.
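E5-mistral-7b-instruct produces an embedding by taking the hidden state of the last non-padding token (last-token pooling), L2-normalizing it, and comparing texts by cosine similarity. The sketch below shows just that pooling and scoring logic on tiny made-up vectors in plain Python, assuming right padding; it does not load the model, whose real vectors have 4096 dimensions, and the function names are illustrative.

```python
import math

def last_token_pool(hidden_states, attention_mask):
    """Pick the hidden state of the last real (mask == 1) token, assuming right padding."""
    last = sum(attention_mask) - 1
    return hidden_states[last]

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(a, b):
    """Cosine similarity as the dot product of normalized vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

# Hypothetical 3-d hidden states for two sequences; the final position of `doc` is padding.
query = [[0.1, 0.2, 0.0], [0.3, 0.1, 0.9], [1.0, 0.0, 1.0]]
doc = [[0.5, 0.5, 0.5], [0.9, 0.1, 1.1], [0.0, 0.0, 0.0]]
q_emb = last_token_pool(query, [1, 1, 1])
d_emb = last_token_pool(doc, [1, 1, 0])
print(round(cosine(q_emb, d_emb), 4))
```

Note that the padded position is skipped: pooling from the wrong index is a common source of silently bad embeddings.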

Links mentioned:

intfloat/e5-mistral-7b-instruct · Hugging Face: no description found

Mistral ▷ #showcase (69 messages🔥🔥):

- LLMLAB Takes Advantage of Mistral:

@joselolol.declared the usage of Mistral to run LLMLAB operations for creating commercial synthetic data, inviting users to direct message for account activation after signing up. - Curiosity about Data Extraction Method:

@mrdragonfoxinquired about LLMLAB’s data extraction pipeline, but@joselolol.sought clarification, indicating a potential discussion on the process. - Augmentoolkit Shared as a Resource:

@mrdragonfoxshared a GitHub link to augmentoolkit and discussed working on adapting it to work with the Mistral chat API, highlighting the toolkit’s capability for vetting and assessing data. - Testing New Chat UI Enabled by Mistral:

@ethuxposted about implementing ETHUX Chat v0.7.0 which uses various Mistral models and the Huggingface ChatUI, setting a testing API limit to 200 euros and sharing the configuration. - Chat UI Rate Limitations and Source Revealed: In a follow-up,

@ethuxmentioned a rate limit of two messages per minute and shared that the HuggingFace chat UI is open-source on GitHub, while@gbourdinexpressed enthusiasm for the web search feature within the UI.

Links mentioned:

- ETHUX Chat: Made possible by PlanetNode with ❤️

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets

- GitHub - huggingface/chat-ui: Open source codebase powering the HuggingChat app: Open source codebase powering the HuggingChat app. Contribute to huggingface/chat-ui development by creating an account on GitHub.

Mistral ▷ #random (2 messages):

- Inquiry on Next Mistral AI Model Release: User `@j673912` asked about the release of the next Mistral AI model. `@mrdragonfox` suggested keeping an eye on social media for the official announcement.

Mistral ▷ #la-plateforme (1 messages):

- Embedding Model Troubles with the Canadian Flag: `@enjalot` hit an error while embedding the dolly15k dataset and shared the specific error message, which related to the Canadian flag. The previous 10k rows had processed successfully, which suggests the problem is isolated to that particular piece of text.

Links mentioned:

databricks/databricks-dolly-15k · Datasets at Hugging Face

HuggingFace ▷ #announcements (1 message):

- New Forum Channel for Job Seekers: @lunarflu announced a new Forum channel dedicated to job opportunities, which users can filter by tags such as `internship`, `machine learning`, `remote`, and `long-term`. Community members are encouraged to use the feature and share feedback.

HuggingFace ▷ #general (115 messages🔥🔥):

- GPU Acceleration Uncertainties: User @ilovesass asked what `pipeline.to("cuda")` does, and @sandeep_kr24 clarified that it moves the whole pipeline, including its model weights, to a CUDA-enabled GPU so that inference runs there and is faster.

- Stable Diffusion Clarifications: @yamer_ai offered help with Stable Diffusion and pointed to their own experience training models for pixel-art generation, while @tmo97 was unsure how accessible the technology is for non-technical users and received guidance from @yamer_ai.

- Technical Requests and Offerings: Users @p1ld7a, @drfhsp, and @.volvite sought advice on, respectively, setting up a web UI for querying local LLMs, running specific models for inference on Colab's free T4 GPU, and resolving issues with Gradio. Other members, such as @electriceccentrics and @lee0099, pointed them to resources including Python, Gradio, and Colab notebooks.

- Backend Overload on HuggingFace Spaces: @thomaslau.001 shared a Reddit link about an “out of memory” error when running Codellama on an Nvidia A10G on HuggingFace Spaces and asked for help; @vipitis suggested that the 70B model could exceed the GPU's memory even at reduced precision (fp16).

- Collaboration Invites and Discussions: Various users, including @soul_syrup, @technosourceressextraordinaire, @jdreamer200, and @electriceccentrics, mentioned their projects, ranging from neural signal analysis and robotics with RL to job searches, along with some humor about cloud service costs, sparking social engagement and interest in the projects.
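A back-of-the-envelope calculation supports @vipitis's point about the 70B model. This sketch counts only the weights (no activations, KV cache, or framework overhead, so real usage is higher) and uses 1 GB = 1e9 bytes; the 24 GB figure is the A10G's memory capacity, and the function names are illustrative.

```python
from __future__ import annotations

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

A10G_VRAM_GB = 24.0  # NVIDIA A10G has 24 GB of GPU memory.


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Lower bound on memory for the weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_gpu(num_params: float, precision: str, vram_gb: float = A10G_VRAM_GB) -> bool:
    """True if the weights alone are smaller than the GPU's memory."""
    return weight_memory_gb(num_params, precision) < vram_gb


if __name__ == "__main__":
    params_70b = 70e9
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params_70b, precision)
        fits = fits_on_gpu(params_70b, precision)
        print(f"{precision}: {gb:.0f} GB of weights, fits on an A10G: {fits}")
```

At fp16 the weights of a 70B model need about 140 GB, and even at 4-bit they need about 35 GB, so neither fits in 24 GB; a 7B model in fp16 (about 14 GB of weights) would.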

Links mentioned:

- YouTube

- Oppenheimer Oppenheimer Movie GIF - Oppenheimer Oppenheimer movie Barbie oppenheimer meme - Discover & Share GIFs

- How to install stable diffusion 1.6 Automatic1111 (One Click Install): SD Automatic1111 1.6 ➤ https://github.com/AUTOMATIC1111/stable-diffusion-webui, Python 3.10.6 ➤ https://www.python.org/downloads/…

- Easter Funny Shrek GIF - Easter Funny Shrek Funny Face - Discover & Share GIFs

- GitHub - cat-game-research/Neko: A cat game beyond.

- Reddit - Dive into anything

- GitHub - Unlimited-Research-Cooperative/Human-Brain-Rat: Bio-Silicon Synergetic Intelligence System

HuggingFace ▷ #today-im-learning (2 messages):

- Meta-RL Application Development Opportunity: @davidebuoso is developing a meta-RL application and is looking for collaborators on a side project related to the advanced works on their curated GitHub list (Meta-RL Panda), specifically to test on a Panda robotic arm using gym.

- Personal Achievements and Project Showcase: @antiraedus shared their weekly update, mentioning tackling bad habits, landing a tutoring job at their university, and attending social events. They are also building a game after completing a Flutter platformer tutorial and plan to share it next week. Their overarching goal for the year is to pursue internships while creating shareable, tangible projects as motivation and proof of work.

HuggingFace ▷ #cool-finds (4 messages):

- Discover Cutting-Edge Research by Evan Hubinger et al.: @opun8758 shared a new research paper by Evan Hubinger and colleagues, “Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training”, suggesting it would interest the community.

- In Search of the RWKV Model: @vishyouluck asked whether anyone had experience fine-tuning the RWKV model.

- Eagle 7b Announcement Lacks Details: @vishyouluck mentioned Eagle 7b but gave no further context or information about the model.

- Code Llama Leaps into the Future with 70B Model: @jashanno shared a beehiiv article announcing Meta’s release of Code Llama 70B, a new 70-billion-parameter code model designed to help coders and learners, available under a community license.

Links mentioned:

- Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training: Humans are capable of strategically deceptive behavior: behaving helpfully in most situations, but then behaving very differently in order to pursue alternative objectives when given the opportunity. …

- META’s new OPEN SOURCE Coding AI beats out GPT-4 | Code Llama 70B: PLUS: Privacy Concerns about ChatGPT, Pressure on Tech Giants and more…

HuggingFace ▷ #i-made-this (9 messages🔥):

- Web Integration Achieved: @wubs_ confirmed that the generator mentioned by @aliabbas60 works both on the web and as a Progressive Web App (PWA), running well on mobile and desktop devices.

- New AI Project Showcase: @critical3645 mentioned building a project from scratch and linked a video demonstration.

- SD.Next Unveils Major Enhancements: @vladmandic released a major update to SD.Next that introduces a Control module, a Face module, improved IPAdapter modules, new intelligent masking options, and numerous new models and pipelines. They highlighted performance improvements, citing a benchmark of 110-150 iterations per second on an NVIDIA RTX 4090, and pointed users to the full documentation and release notes in the project Wiki.

- Community Guidelines Reminder: @cakiki reminded @vladmandic to follow the community guidelines by removing any Discord invite links, which @vladmandic promptly did.

- Role-Play Project Goes Docker: Krolhm announced that the role-play (RP) project ImpAI has been dockerized for easier use with `hf pipeline` and `llama.cpp`, and shared the ImpAI GitHub repository.

Links mentioned:

- GitHub - rbourgeat/ImpAI: 😈 ImpAI is an advanced role play app using large language and diffusion models.

- Create new page · vladmandic/automatic Wiki: SD.Next: Advanced Implementation of Stable Diffusion and other Diffusion-based generative image models

HuggingFace ▷ #reading-group (4 messages):

- Mamba SSM showcased by tonic_1: User @tonic_1 presented the mamba library, highlighting his personal interest in its `utils`, `ops`, and `modules` components.

- Chad seeks state-space math insights and trade-offs: User @chad_in_the_house expressed interest in the mathematics of the state space models implemented in mamba and how they compare with transformers and RWKV.

- Clarity on Mamba sought, repetition not an issue: User @chad_in_the_house also said it is fine to repeat material from videos during the presentation, since most attendees may not be familiar with mamba.

- A Quick History of Mamba Requested: In addition to the trade-offs, @chad_in_the_house requested a brief history of mamba’s development up to its current state.
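For readers following the mamba thread, here is a minimal sketch of the recurrence that state space models are built on, assuming a diagonal state matrix and a single input channel: h_t = Ā h_{t-1} + B̄ x_t and y_t = C h_t, where Ā and B̄ come from continuous-time parameters A and B by zero-order-hold discretization with step size Δ. This is a plain sequential loop for illustration, not mamba's implementation: in Mamba, Δ, B and C are computed from the input at every step (the "selective" part) and the scan runs as a hardware-aware parallel kernel on the GPU. The function names are illustrative.

```python
from __future__ import annotations

import math


def discretize_zoh(a: list[float], b: list[float], delta: float) -> tuple[list[float], list[float]]:
    """Zero-order-hold discretization of a diagonal continuous-time SSM.

    For each state dimension i (requires a_i != 0):
        a_bar_i = exp(delta * a_i)
        b_bar_i = (exp(delta * a_i) - 1) / a_i * b_i
    """
    a_bar = [math.exp(delta * ai) for ai in a]
    b_bar = [(math.exp(delta * ai) - 1.0) / ai * bi for ai, bi in zip(a, b)]
    return a_bar, b_bar


def ssm_scan(xs: list[float], a_bar: list[float], b_bar: list[float], c: list[float]) -> list[float]:
    """Run h_t = a_bar * h_{t-1} + b_bar * x_t and y_t = c . h_t over a 1-D sequence."""
    h = [0.0] * len(a_bar)
    ys = []
    for x in xs:
        # Diagonal A: each state dimension evolves independently of the others.
        h = [ab * hi + bb * x for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


if __name__ == "__main__":
    a = [-1.0, -0.5]  # negative entries keep the state stable (0 < a_bar < 1)
    b = [1.0, 1.0]
    c = [1.0, 1.0]
    a_bar, b_bar = discretize_zoh(a, b, delta=0.1)
    # Impulse response: a single 1.0 followed by zeros decays toward 0.
    print(ssm_scan([1.0, 0.0, 0.0, 0.0], a_bar, b_bar, c))
```

Each step updates only a fixed-size state, so the cost grows linearly with sequence length. That is one side of the trade-off against attention, whose cost grows quadratically with sequence length.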

Links mentioned:

mamba/mamba_ssm at main · state-spaces/mamba: Contribute to state-spaces/mamba development by creating an account on GitHub.

HuggingFace ▷ #diffusion-discussions (1 message):

- In Search of Understanding Transformers: User .slartibart asked about presentations on code-writing transformers, specifically ones that examine what a transformer learns from the sampled code, to better understand how transformers learn in the context of code generation.

HuggingFace ▷ #computer-vision (4 messages):

- Seeking OCR for Line Segmentation: User @swetha98 asked for an OCR tool that can split a document image into four separate images, one for each of the four lines of text on a sample invoice.

- Surya OCR Recommended: In response, @vikas.p suggested Surya OCR, which performs accurate line-level text detection and recognition in any language.

- Positive Reception for the Recommendation: @swetha98 thanked @vikas.p for the suggestion and said they would cite his GitHub repository if the solution meets their needs.

Links mentioned:

GitHub - VikParuchuri/surya: Accurate line-level text detection and recognition (OCR) in any language

HuggingFace ▷ #NLP (11 messages🔥):

- Seeking a Relevance Checker for RAG Pipelines: @tepes_dracula is building a RAG pipeline and is looking for a classifier that checks whether the query and the retrieved context are relevant to each other before they are passed to an LLM, ideally one that draws on datasets like truthfulqa and fever.

- Speeding Up DeciLM on Windows: @kingpoki wants to improve the inference speed of DeciLM running locally on Windows and is looking for suggestions beyond model quantization.

- Measuring Concept Similarity in Tech Fields: @serhankileci is exploring how to calculate similarity percentages among concepts, tools, and languages in various tech fields. They are considering word embeddings and cosine similarity, noting the lack of a single encyclopedic dataset for this purpose.

- Data Quality in LLM Instruction Tuning: @Chris M highlights the importance of data quality for LLM instruction tuning and shares a cleanlab.ai article on automated techniques for detecting low-quality data, pointing to bad data as a common cause of poor performance.

- Inquiry about HuggingFace’s TAPAS Model: @nitachaudhari29 joins with a “hi” and asks whether anyone has experience using the TAPAS model from HuggingFace.

- State of Multi-Label Text Classification: @simpleyuji asks about the current state of the art (SOTA) for multi-label text classification.

- Pros and Cons of Small Batch Sizes: @abrahamowodunni asks about the drawbacks, other than longer training time, of using a batch size of 4 when fine-tuning a model on a small dataset. @vipitis responds that a batch size of 4 for 1.2k steps could be sufficient, but that a larger batch size is generally preferred if it fits in VRAM.
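@serhankileci's embedding-plus-cosine-similarity idea can be sketched as follows, assuming each concept already has an embedding vector. The three-dimensional vectors here are invented toy values (real embeddings come from a word or sentence embedding model and have hundreds of dimensions), and `similarity_percent` is a hypothetical convention for turning a cosine score into a percentage.

```python
from __future__ import annotations

import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """cos(u, v) = (u . v) / (|u| |v|), a value in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def similarity_percent(u: list[float], v: list[float]) -> float:
    """Hypothetical mapping onto 0-100: clamp negative similarity to 0, then scale."""
    return max(0.0, cosine_similarity(u, v)) * 100


if __name__ == "__main__":
    # Toy vectors made up for illustration only.
    embeddings = {
        "python": [0.9, 0.1, 0.3],
        "rust": [0.8, 0.2, 0.4],
        "photoshop": [0.1, 0.9, 0.2],
    }
    for other in ("rust", "photoshop"):
        pct = similarity_percent(embeddings["python"], embeddings[other])
        print(f"python vs {other}: {pct:.1f}%")
```

Clamping negatives to zero is only one choice; rescaling with (cos + 1) / 2 is another. Either way the percentage is relative to the embedding model used, not an absolute measure of relatedness.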

Links mentioned:

How to detect bad data in your instruction tuning dataset (for better LLM fine-tuning): Overview of automated tools for catching: low-quality responses, incomplete/vague prompts, and other problematic text (toxic language, PII, informal writing, bad grammar/spelling) lurking in a instru…

OpenAI ▷ #ai-discussions (79 messages🔥🔥):

- Speculation on Bard Ultra’s Release: @la42099 jokes about the anticipation surrounding Bard Ultra’s release, acknowledging that predictions about such launches are often wrong.

- ChatGPT Access Issues: @chrisrenfield has trouble accessing ChatGPT, and @satanhashtag suggests checking OpenAI’s service status page for updates.

- Seeking AI for Jewelry Visualization: @jonas_54321 is looking for an AI tool to create photorealistic images of jewelry on models. @satanhashtag recommends DALL-E 3, available for free through Bing, and mentions a personal preference for Midjourney.

- Terms of Service Constraints: A discussion of the Terms of Service (TOS), led by @wrexbe, covers ways of creatively working within the restrictions and content warnings around generating NSFW content. Other users, such as @drinkoblog.weebly.com and @chotes, discuss the efficiency and ethics of using AI for various kinds of content.

- The Path to AGI Discussed: The conversation between