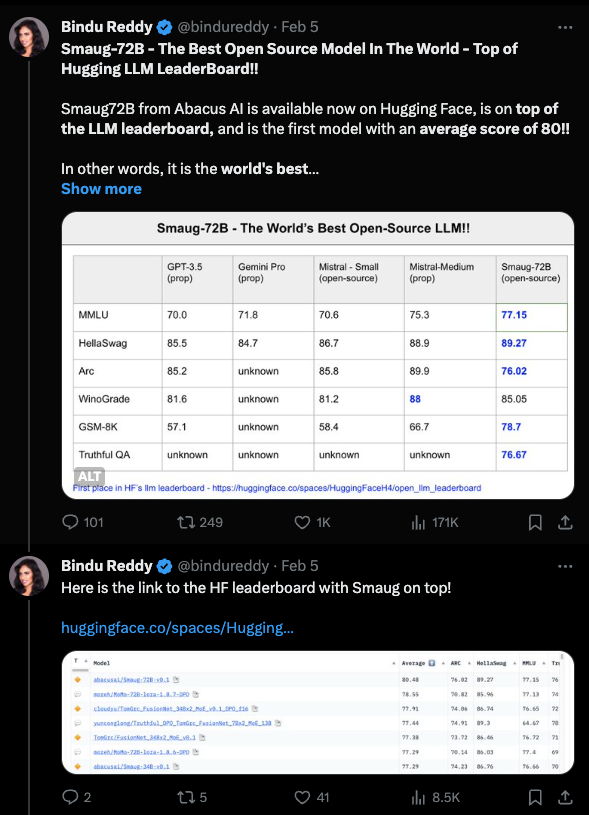

It’s now the Chinese Year of the Dragon, and Abacus AI appropriately rang it in by making a lot of noise about Smaug 72B, their latest and largest finetune of Qwen (1.0… badly timed, since 1.5 just came out, but you can be sure they will update it with more noise).

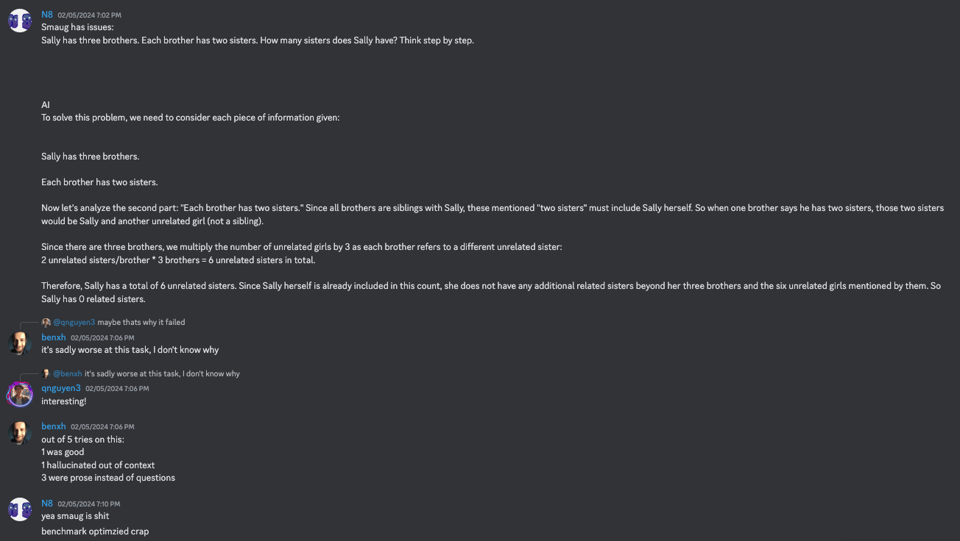

Typical skepticism aside, it is still standing unchallenged after a week on the HF Open LLM Leaderboard, and with published contamination results, which is a good sign. However, the Nous people are skeptical.

In other news, LAION popped up with an adorably named local voice assistant model with a great demo.

PART 1: High level Discord summaries

TheBloke Discord Summary

- Size Matters in Model Performance: Community members debated the cost versus performance of large models like GPT-4 and explored alternatives like Janitor AI for cost-effective chatbot solutions. The potential effectiveness of smaller models quantized at higher levels was also discussed, but the consensus was that larger models may handle heavy quantization better, though the relationship isn’t strictly linear.

- Good AI Turns Goody-Two-Shoes: Conversations noted the safety-focused AI model “Goody-2,” acknowledging its extreme caution against controversial content, which sparked some playful ideas about challenging the model’s stringency.

- Web UI Woes and Wins: Members shared progress on UI development projects such as PolyMind, and discussed the intricacies of web development and prompt engineering.

- Missing Models and Merging Mysteries: Queries for fine-tuned Mistral-7b models for merging projects surfaced, alongside musings on the conspicuous absence of a member, humorously likened to waiting for Llama3 to support vision.

- Model Merging Muscles Flexed: The community saw lively exchanges on model merges like Miquella and the potential performance of ERP-focused models like MiquMaid, showcasing enthusiasm for fine-tuning these models to specific tasks. Members also sought advice on setup configurations, such as context length and memory allocation across GPUs.

- Injected Instructiveness: Interest in fine-tuning methodologies was evident in discussions around converting base models into instruct models using datasets like WizardLM_evol_instruct_V2_196k and OpenHermes-2.5. This process enriches models with added knowledge and alters the chatbot’s tone, with members pointing to resources like unsloth for efficient fine-tuning.

- Coding Corner Collaborations: A shared Python script for Surya OCR hinted at the continued development and application of optical character recognition tools among members. Debugging help was sought for a webaudiobook repository, with ElevenLabs’ platform performance highlighted as a potential culprit.

LM Studio Discord Summary

- Model Conversion Mishap: An error encountered while running TheBloke’s models was attributed to a broken quant, likely produced during the PyTorch-to-gguf conversion process. Users also exchanged experiences and advice on hardware components and setups for running Large Language Models efficiently, with mentions of Intel Xeon CPUs, Nvidia P40 GPUs, and the importance of VRAM and CUDA cores.

- LM Studio’s Technological Underpinnings: Discussions in the LM Studio community clarified that LM Studio uses llama.cpp and potentially Electron for its tech stack. There were also privacy concerns about LM Studio’s data usage, but it was indicated that the platform prioritizes privacy, only sharing data from updates and model downloads.

- Image Generation Limitations: Users shared their difficulty in using LM Studio for image generation, prompting some to consider VPN usage to circumvent ISP blockages affecting access to necessary resources like huggingface.co.

- Web UI Woes: Users asked about a web UI for LM Studio and it was confirmed that none exists, adding a layer of complexity for some users.

- Challenges with Small Model Classification: User `@speedy.dev` faced challenges with classification tasks using 13B and 7B Llama models. A comparison of story writing capabilities between various sized models showed Goliath 120B capturing emotions better while Mixtral 7B outperformed in speed.

- Metadata Management and a ‘model.json’ Standard Proposed: The value of proper model metadata management was highlighted, recommending a categorization system for different parameters, and a proposal for a `model.json` standard was posted on GitHub.

- Intel’s AVX-512 Decision Bewilders: Intel’s choice to drop AVX-512 support in a powerful i9 processor while maintaining it in the less powerful i5-11600k sparked confusion among users.

- Preference for CrewAI Over AutoGen: There was a brief mention indicating a preference for CrewAI over AutoGen because it is easier to manage.

HuggingFace Discord Summary

- HF Hub Temporarily Offline: @lunarflu reported that the Hugging Face Hub is experiencing downtime, with git hosting affected. The issue is being addressed.

- Discord Authentication and API Changes: On Discord verification, user @d3113 solved a bot authentication error by enabling “Allow messages from server members.” Meanwhile, @miker1662 encountered an API bug related to finding conversational models on Hugging Face, with @osanseviero confirming the API’s shift away from `conversational` towards `text-generation`.

- Large Language Models (LLMs) in Focus: Conversations covered PEFT versus full fine-tuning for LLMs, with @samuelcorsan choosing the latter, while @akvnn inquired about leveraging a Raspberry Pi for computer vision tasks and @pradeep1148 shared a YouTube video on zero-shot object detection.

- Choice of Hardware for Local LLMs: Dual NVIDIA 4080 Supers versus a single 4090 for coding LLMs was debated by @lookingforspeed and @tddammo, with older generation pro cards like the A4500 or A4000 suggested for better efficiency and NVLink support.

- API Action with Library Selection and Innovation: @subham5089 shared insights on choosing the right Python library for API calling, highlighting Requests, AIOHTTP, and HTTPX. Websockets in Python were addressed with a recommendation for httpx-ws.

- Developments in Computer Vision and NLP: From discussions on tools like `Dinov2ForImageClassification` for simplifying multi-label image classification, to NLP-related issues such as downgrading to PEFT 0.7.1 for saving LoRA weights, the community engagement is rich with shared solutions and knowledge exchanges.

OpenAI Discord Summary

- AI Financial Advice Comes with Caution: The limitations of AI in generating trading strategies were discussed with a sense of frustration due to AI’s cautious responses and memory constraints when dealing with financial queries.

- Innovation in AI-Powered Compression: A tool named `ts_zip` was shared, which leverages Large Language Models for text file compression, sparking interest in AI-driven compression technologies. The tool can be examined at ts_zip: Text Compression using Large Language Models.

- Deliberations on AI’s Resource Extraction and Impact: A mention of a $5-7 trillion investment for AI chips led to debates around the impact on AI research and development and societal risks linked with autonomous robotics.

- Google’s Gemini AI Draws Attention: Google’s Gemini was discussed with regard to its anticipated improvements and current pros and cons, capturing attention for its coding and interpreter functionalities.

- ChatGPT Token Context Limitations Explored: Issues with ChatGPT’s attention span and context retention were addressed, noting limitations like the full 128K token context available only to API and Enterprise users, contrasting with the 32K token limit for Plus and Team users.

- GPT-4 Subscription Details Clarified: Clarifications were made around subscription-sharing, where it was noted that all GPT versions now utilize GPT-4, and thus a Plus or higher subscription is necessary for usage.

- Navigating GPT-4’s Conversational Flow and State Awareness: Users discussed GPT-4’s handling of “state changes” and noted its effectiveness in managing dynamic conditions and conversational flow, which is important when providing prompts that require multi-step problem-solving.

- Effective Prompt Engineering Tactics Shared: In the #prompt-engineering channel, strategies to instruct ChatGPT to perform complex tasks like converting rules of evidence into a spreadsheet and translating text into simple language were discussed. Simplifying tasks and using stepwise prompting were some of the recommended approaches.

Nous Research AI Discord Summary

- Elon Musk’s Alleged AI Antics: Elon Musk was humorously linked to two separate AI-related events: one where he’s rumored to be on a call with Alex Jones and one where he boasts about creating an AI with 200 GPUs. `@fullstack6209` shared a snippet that can be found here and quoted Musk’s comment from February 9th, 2024. Moreover, Hugging Face (HF) was reported to be offline by `@gabriel_syme`.

- Quantization and Merge Innovations in AI: The Senku 70B model, quantized to 4-bit, achieves significant scores without calibration, and `@carsonpoole` suggests mixing formats for better results. Meanwhile, `@nonameusr` introduced the QuartetAnemoi-70B model, and `@.benxh` discussed the potential of 1.5-bit quantization that fits a 70B model into less than 18GB of RAM. Links to QuartetAnemoi-70B model here, and 1.5-bit quantization here.

- Datasets and Models Galore: The engineering community discussed numerous models and datasets: UNA-SimpleSmaug-34B showed improvement over Smaug-34B and was trained on SimpleMath. Lilac Processed OpenHermes-2.5 dataset is available here. TheProfessor-155b, a model using mergekit, was also mentioned.

- Community Exchanges on Finetuning and Hosting AI Models: `@natefyi_30842` looked for simple fine-tuning methods, with `@teknium` recommending together.ai and noting the need for hyperparameter tuning. `@nonameusr` sought advice for hosting a model on Hugging Face for API inference.

- Discussion on Autonomous LLMs and Platform Features: Interest in autonomous large language models (LLMs) was expressed by `@0xsingletonly`, who is looking forward to Nous’s SDK development mentioned by `@teknium`. Additionally, confusion about feature inclusions on the roadmap was clarified, with the Llava team’s independent integration work pointed out by `@qnguyen3`.

- Humor and Services Down: Other notable mentions include a humorous reluctance from `@.ben.com` to take advice from individuals lacking emoji expertise, and the brief outage of Hugging Face’s services as noted by `@gabriel_syme`.
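The sub-18GB figure quoted for 1.5-bit quantization of a 70B model can be sanity-checked with back-of-envelope arithmetic. The sketch below counts raw weight storage only; the helper name `weight_gb` and the list of bit widths are illustrative assumptions, and real quantized files carry extra overhead (scales, layers kept at higher precision, KV cache at run time) that this deliberately ignores.

```python
def weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate raw weight storage in decimal GB: params * bits / 8 bytes."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n_params = 70e9  # nominal parameter count of a "70B" model
    for bits in (16, 8, 4, 1.5):
        print(f"{bits:>4} bits/weight -> {weight_gb(n_params, bits):6.1f} GB")
```

At 1.5 bits per weight the raw weights come to roughly 13 GB, which leaves some headroom under 18GB for the overhead the sketch leaves out.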

Eleuther Discord Summary

- AI Newcomers Tackle EleutherAI and More: A member with a background in software development and research papers showed interest in contributing to AI and GPT-J, amidst discussions of The Pile dataset and CUDA programming. The community explored Prodigy’s VRAM-heavy performance and AI merging practices, and expressed concerns about the rise of questionable AI practices, along with a reference to OpenAI’s new release potentially timed with the Super Bowl. Relevant resources include The Prodigy optimizer, miqu-1-120b on Hugging Face, and Microsoft’s Copilot commercial.

- Nested Networks and Vector Confusions: Enthusiasm was shown for nested networks in JAX, with helpful resources like a Colab notebook for experimentation. Discussions also delved into the confusion over vector orientations in mathematics, while help was offered for implementing diffusion paper methods, with code shared in a nbviewer gist. For further reading, an aggregate of UniReps research was shared via GitHub.

- Debating the Merits of Machine Unlearning Benchmarks: Skepticism arose regarding the significance of the “TOFU” benchmark for unlearning sensitive data as detailed in the TOFU paper. Concerns were raised about its efficacy and real-world applications, with participants also discussing a related neuron pruning paper which may illuminate the conversation.

- Model Evaluation and Hallucination Tracking: Questions about the MedQA benchmark suggested that Pythia models might struggle with multiple-choice formats. A search for comparative model API data, spanning OpenAI to Anthropic and the Open LLM Leaderboard, was highlighted. For tasks involving the GPQA dataset, warnings about potential data leakage were noted, with manual downloads suggested (GPQA dataset paper). Clarifications were requested for evaluating Big Bench Hard tasks using GPT-4 models. A call for participation was made for a new hallucinations leaderboard, explained in Hugging Face’s blog post and the associated Harness space.

LAION Discord Summary

- Circuit Integration Trumps Cascading in Voice Assistants: The opinion was voiced that cascaded ASR + LLM + TTS is less impressive than end-to-end training for voice AI, with the BUD-E voice assistant showcased as an example of integrated ASR, LLM, and TTS in a single PyTorch model, promoting end-to-end trainability (Learn More About BUD-E).

- Legal Tangles for AI Art Creators: A recent court ruling saw U.S. District Judge William Orrick deny Midjourney and StabilityAI’s motion for early dismissal under a First Amendment defense, sparking debate among users about the case’s broader implications.

- AI Community Grapples with Open-Source Ethics: The AI community discussed the `sd-forge` project, which combines code from diffusers, automatic1111, and comfyui, yet tries to keep a distance from these projects amidst the evolving landscape for Stable Diffusion models and their open-source UI counterparts.

- Creative Frontiers: AI-Generated DnD Maps: Users have successfully used neural networks to create Dungeons and Dragons maps, reflecting the expanding capabilities of AI in creative endeavors.

- Hugging Face Faces Hurdles: There were reports of Hugging Face’s services experiencing downtimes. The conversation focused on the challenges of relying on external APIs and the need for robust alternatives to maintain smooth AI development operations.

- An Open Voice Evolves: BUD-E was introduced as an open-source, low-latency voice assistant designed to operate offline, with an invitation extended to the community to contribute to its further development (Contribute to BUD-E, Join Discord).

- The Science of Loss in AI: There was a query on Wasserstein loss in one of Stability AI’s projects with a link to the GitHub repository, although no direct code pertaining to the claim was identified (Discriminator Loss Mystery).

- Stacking Talents and Scientific Insights: A user showcased their full stack design and development skills, while another shared a scientific article without additional context. Additionally, there was a request for guidance on reproducing the MAGVIT V2 model, indicative of active research and development efforts within the community (Shinobi Portfolio, Check out MAGVIT V2).

- Introducing Agent Foundation Models: The community was alerted to a paper on “An Interactive Agent Foundation Model” available on arXiv, suggesting a shift towards dynamic, agent-based AI systems (Read the Abstract).

Perplexity AI Discord Summary

- Compare AIs Head-to-Head: Users discussed comparing different AI models by opening multiple tabs; one site for such comparisons is AIswers, where Perplexity’s performance can be tested against others.

- App Interaction Oversensitivity: Perplexity’s iPad app received criticism for overly sensitive thread exit functionality, and an inquiry about a developer-oriented channel for Perplexity’s API resulted in a redirection to an existing Discord channel.

- API Rate Limiting Quirks: Some users faced a 429 HTTP status error when using the Perplexity API through an App script, initially mistaking it for an OpenAI-related issue. The problem was resolved by adding a millisecond delay in the script; credits and limits on Perplexity can differ from those on OpenAI.

- Model Features and Functions Inquiry: There was a request for an update on Mistral’s 32k context length availability from the feature roadmap, as well as clarification that the messages field is required for all inputs with `mistral-8x7b-instruct` and that function calling isn’t supported.

- Ensure Search Results are Public: Perplexity users were reminded to make sure threads are publicly viewable before sharing in the channel, which is designated for notable results obtained using Perplexity.
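The 429 fix described above, pausing briefly between calls, can be sketched as a small retry helper. This is a minimal illustration and not the script from the channel: `RateLimited`, `call_with_delay`, and all parameters are hypothetical names, the doubling of the pause after each failure is an added assumption (the discussion only mentions a fixed delay), and `request` stands in for whatever function actually calls the API.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical signal that a request came back with HTTP 429."""


def call_with_delay(
    request: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 0.001,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `request` when it raises RateLimited, doubling the pause each time."""
    delay = base_delay_s
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the 429 to the caller
            sleep(delay)
            delay *= 2
    raise ValueError("max_attempts must be at least 1")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_request() -> str:
        # Simulated endpoint: rate-limited twice, then succeeds.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise RateLimited()
        return "ok"

    print(call_with_delay(flaky_request), "after", attempts["count"], "attempts")
```

Passing `sleep` as a parameter keeps the helper testable without real waiting; in production the default `time.sleep` applies.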

CUDA MODE Discord Summary

- H100 GPU as a Stepping Stone to AGI: `@andreaskoepf` discussed the potential of the new H100 GPU in achieving Artificial General Intelligence (AGI) when combined with the appropriate models and sufficient numbers of GPUs, referencing an AI Impacts article with FLOPS estimates for human brain activity.

- Learning from Stanford’s AI Hardware Experts: The community highlighted a Stanford MLSys seminar by Benjamin Spector for engineers, which offers insights into AI hardware that might be relevant to the discourse on engineering forums.

- Serverless Triton Kernel Execution: `@tfsingh` announced the launch of Accelerated Computing Online, a serverless environment for executing Triton kernels on a T4 GPU, and mentioned the project’s GitHub repository (tfsingh/aconline) for further exploration.

- CUDA Development Deep Dives: Discussions centered around CUDA programming involved memory coalescing for performance enhancements, NVIDIA NPP for image operations, the nuances of numerical stability in fp16 matmuls, and best practices for independent development of CUDA-compatible extensions.

- Multi-GPU Troubleshooting and FAISS Challenges: `@morgangiraud` faced issues with incorrect data during direct device-to-device tensor copying in distributed matrix multiplication and sought collaborators with multi-GPU setups for verification, while `@akshay_1` dealt with errors embedding vectors in FAISS (colbert) that might stem from distributed worker timeouts.

- CUDA MODE Lecture Sessions and Announcements: Upcoming and past educational events such as “CUDA MODE Lecture 5: Going Further with CUDA for Python Programmers” sparked interest, with link sharing on platforms such as Discord for community engagement and learning.

- Engagement with Educational Content: Community members, particularly `@smexy3`, showed eagerness for future instructional video content, especially videos that will teach how to analyze optimization opportunities in reports, with the next video scheduled to be released on March 2.
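One common source of numerical trouble in fp16 matmuls is the precision of the accumulator, which may or may not be the exact issue raised in the channel. The sketch below simulates it in plain Python rather than CUDA: `struct`’s `"e"` format rounds a value to IEEE 754 half precision, and the two dot-product helpers (hypothetical names) differ only in whether partial sums are rounded back to fp16 or kept in a wider float, standing in for an fp32 accumulator.

```python
import struct
from typing import Sequence


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_fp16_accumulator(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product where every product and partial sum is rounded to fp16."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc


def dot_wide_accumulator(a: Sequence[float], b: Sequence[float]) -> float:
    """Same fp16 inputs, but partial sums stay in a wider float until the end."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return to_fp16(acc)


if __name__ == "__main__":
    ones = [1.0] * 4096
    print("fp16 accumulator:", dot_fp16_accumulator(ones, ones))
    print("wide accumulator:", dot_wide_accumulator(ones, ones))
```

With 4096 ones the exact answer is 4096. The fp16 accumulator stalls at 2048, because 2049 is not representable in half precision and rounds back to 2048, while the wide accumulator returns 4096.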

OpenAccess AI Collective (axolotl) Discord Summary

- Awq Gguff Converter: Lightning Fast: Users praised the awq gguff converter for its swift performance, calling it a “10/10 fast” conversion tool without specifying further details or links.

- HuggingFace Troubles Spark Community Solutions: During a HuggingFace outage, which affected even local training jobs, members discussed workarounds including downgrading to version 0.7.1 and considering the use of alternative inference solutions like TensorRT for local inference.

- Mixtral Quandary Resolved with Peft Upgrade: An issue with Mixtral’s quantization process was resolved by upgrading from peft version 0.7.1 to 0.8.0, with confirmation that the upgrade remedied the initial problems. LlamaFactory’s adoption of ShareGPT format was noted, and discussions about naming conventions ensued without further conclusion.

- Fine-Tuning Techniques and Efficiency in Focus: The community exchanged tips on fine-tuning strategies, including generating Q/A pairs from historical datasets for chat models and seeking cost-effective methods such as using quantized Mixtral. Practical insights into training configurations for Mistral-7b-instruct were also shared with references to configuration files from Helix and the axolotl GitHub repo.

- Resource Quest for Fine-Tuning Newbies Goes Unanswered: A request for learning resources on fine-tuning went unanswered in the message history, highlighting a potential area for community support and knowledge sharing.

LlamaIndex Discord Summary

- LLMs Master Tabular Traversing: A new video tutorial released by `@jerryjliu0` highlights advanced text-to-SQL orchestration, essential for navigating and querying tabular data with language models.

- Enhancing Understanding of Tabular Data: Recent advancements have been shared around RAG systems, with a significant emphasis on multi-hop query capabilities, detailed in Tang et al.’s dataset for benchmarking advanced RAG models. Alongside this, a mini-course is available covering the construction of query pipelines that blend text-to-SQL with RAG, amplifying the QA over tabular data framework.

- Innovating Video Content Interaction: A Multimodal RAG architecture that combines OpenAI GPT4V with LanceDB VectorStore is enhancing video content interaction. Video Revolution: GPT4V and LlamaIndex Unleashed discusses this innovation and its potential, a must-read for those interested in the field, available here.

- Explorations and Solutions in AI Context Management: LlamaIndex community members have discussed practical applications such as using LlamaIndex for generating SQL metadata and the need for solutions like `SimpleChatStore` for maintaining chat continuity across multiple windows. Prompt engineering was suggested as the way to extract keywords from text.

- Pricing and Availability Clarifications: Questions about LlamaIndex’s free and open-source nature and availability led to clarifications that it is indeed open source, with more details accessible on their official website.

LangChain AI Discord Summary

- Scikit-learn Struggles Call for Voice Support: `@vithan` sought assistance with scikit-learn and pandas, indicating the limitations of text communication and requesting a voice call with `@himalaypatel` for more effective troubleshooting.

- LangChain Video Tutorial Drops: A YouTube tutorial “Unlock the Power of LangChain: Deploying to Production Made Easy” was shared by `@a404.eth`, detailing the deployment process of a PDF RAG using LangChain and UnstructuredIO to DigitalOcean for production use. The video is accessible at this link.

- Open Source Selfie Project Needs Your Pics: `@dondo.eth` introduced Selfie, an open source project working to improve text generation by utilizing personal data via an OpenAI-compatible API, with contributions and testing welcomed on their GitHub.

- Intellifs Setting the Standard: `@synacktra` announced the creation of Intellifs, a tool for local semantic search based on the aifs library, currently open for contributions on GitHub.

- Your Art, AI’s Touch: `@vansh12344` launched ArtFul - AI Image Generator, an app that uses AI models such as Kandinsky and DALL-E to create unique art, free to use with ad support, available on the Google Play Store.

- Merlinn’s Magic Aid in Incident Resolution: `@david1542` presented Merlinn, intended to aid teams in quickly resolving production incidents through support from an LLM agent and LangChain integration. More details can be found on the Merlinn website.

- Triform Appeals for Beta Test Cooks: Triform, a new platform for hosting and orchestrating Python scripts with LangChain integration, was announced by `@igxot`. Users are invited to obtain a free permanent account through beta participation, with a sign-up link here and documentation here.

- Automatic Object Detection Made Easy: `@pradeep1148` shared a YouTube tutorial on using the MoonDream Vision Language Model for zero-shot object detection.

- Chatting up Documents with AI Tools: `@datasciencebasics` posted a video guide explaining the creation of a Retrieval Augmented Generation UI using ChainLit, LangChain, Ollama, & Mistral.

- Playground Disabled in Production: `@gitmaxd` discussed the possibility of disabling the playground on deployed LangChain AI endpoints using a specific code snippet, but received no responses to the inquiry.

Mistral Discord Summary

One Size Fits All with Mistral’s Subscription: Users discussed the subscription model for the Mistral Discord chatbot, confirming it is a unified model with payment per token and scalable deployment, highlighted by @mrdragonfox; quantized models, such as those found on Hugging Face, were also mentioned as requiring less RAM.

GPU Economics: Rent vs. Own: @i_am_dom analyzed the cost-effectiveness of Google GPU rentals versus owning hardware like A100s 40GB, suggesting that after 70000 computational units or about half a year of use, owning GPUs could be more economical.
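The rent-versus-own comparison boils down to a break-even calculation: the hours of use at which cumulative rental fees exceed the purchase price plus running costs. The sketch below is a generic illustration, not @i_am_dom’s analysis; the function name and every number in the demo are hypothetical placeholders rather than quoted prices.

```python
def break_even_hours(
    purchase_price: float,
    rental_per_hour: float,
    running_cost_per_hour: float = 0.0,
) -> float:
    """Hours of use after which owning becomes cheaper than renting."""
    saving_per_hour = rental_per_hour - running_cost_per_hour
    if saving_per_hour <= 0:
        raise ValueError("owning never pays off if running costs match or exceed rental")
    return purchase_price / saving_per_hour


if __name__ == "__main__":
    # Placeholder figures for illustration only; substitute real quotes.
    hours = break_even_hours(
        purchase_price=10_000.0,
        rental_per_hour=2.5,
        running_cost_per_hour=0.5,  # e.g. power and hosting
    )
    print(f"break-even after {hours:.0f} hours (~{hours / 24:.0f} days of continuous use)")
```

The result is sensitive to utilization: a card that sits idle half the time takes twice as many calendar days to reach the same number of billed hours.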

Docker Deployment Discussion: A request for docker_compose.yml for deploying Mistral AI indicates ongoing discussions about streamlining Mistral AI setups as REST APIs in Docker environments.

Fine-Tuning for Self-Awareness and Personal Assistants: Fine-tuning topics ranged from installation success on Cloudflare AI maker to a lack of self-awareness in models, as noted by @dawn.dusk in relation to GPT-4 and Mistral; a DataCamp tutorial was recommended for learning use cases and prompts.

Showcasing Mistral’s Capabilities: @jakobdylanc’s Discord chatbot with collaborative LLM prompting feature supports multiple models including Mistral with a lean 200-line implementation, available on GitHub; additionally, Mistral 7b’s note-taking prowess was spotlighted in an article at Hacker Noon, outperforming higher-rated models.

Latent Space Discord Summary

- TurboPuffer Soars on S3: A new serverless vector database called TurboPuffer was discussed for its efficiency, highlighting that warm queries for 1 million vectors take around 10 seconds to cache. The conversation compared TurboPuffer and LanceDB, noting that TurboPuffer leverages S3, while LanceDB is appreciated for its open-source nature.

- Podcast Ponders AI and Collective Intelligence: An interview with Yohei Nakajima on the Cognitive Revolution podcast was shared, discussing collective intelligence and the role of AI in fostering understanding across cultures.

- AI as Google’s Achilles’ Heel: A 2018 internal Google memo, shared via TechEmails, indicated that the company viewed AI as a significant business risk. It sparked discussion, with its concerns remaining relevant years later.

- ChatGPT’s Impact on College Processes: The trend of using ChatGPT for college applications was analyzed, citing a Forbes article which pointed out potential red flags, such as the use of specific banned words that alert admissions committees.

- Avoiding Academic Alert with Banned Words: There was a suggestion to program ChatGPT with a list of banned words to prevent its misuse in academic scenarios, relating back to the discussion on college admissions and the overuse of AI detected via such words.

DiscoResearch Discord Summary

- Hugging Face Service Disruption Ignites Community Debate: Discussions arose as Hugging Face experienced downtime, with community members such as `_jp1_` recognizing the platform’s integral role and revealing past considerations to switch to Amazon S3 for hosting model weights and datasets, yet the convenience of HF’s free services prevailed. `_jp1_` and `@philipmay` also pondered HF’s long-term sustainability, floating concerns about possible future monetization and the impact on the AI research community.

- Considerations on HF’s Role as Critical Infrastructure: The debate initiated by `@philipmay` questioned whether Hugging Face qualifies as critical infrastructure for the AI community, highlighting how pivotal external platforms have become in maintaining model operations.

- Prospects of Pliable Monetization Plans: `@philipmay` speculated on a scenario where Hugging Face might begin charging for model access or downloads, triggering a need for preemptive financial planning within the community.

- A Whisper of Sparse Efficiency: Without details, `@phantine` dropped hints about an algorithm leveraging sparsity for efficiency, with an intended link for further details which failed to resolve.

- SPINning Around With German Language Models: `@philipmay` brought up applying the SPIN method (self-play) to a Mixtral model in German, sharing the SPIN technique’s official GitHub repository to spark additional conversation or perhaps experimentation.

LLM Perf Enthusiasts AI Discord Summary

- Whispers of Upcoming OpenAI Launch: `@res6969` dropped hints about a potential new OpenAI release, creating anticipation with a vague announcement expecting news tomorrow or Tuesday. Conversations sparked with `@.psychickoala` playfully inquiring, “What is it haha” but no concrete details were shared.

Alignment Lab AI Discord Summary

- Curiosity About Colleague’s Activities: @teknium inquired about the current endeavors of `<@748528982034612226>`.

- Status Update on Mysterious Member: @atlasunified reported that `<@748528982034612226>` has gone off grid, without further elaboration on their status.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1251 messages🔥🔥🔥):

- Concerns About Model Sizes and Preferences: Members like `@dao_li` shared their experiences with various AI models, discussing the costs and effectiveness of GPT-4 and alternatives like Janitor AI for chatbots. As they found GPT-4 expensive, other users suggested trying various small models for more cost-effective solutions.

- Discussions on Quantization: `@immortalrobot` asked about the trade-offs between low quantized larger models versus higher quantized smaller ones. The consensus, including input from `@kalomaze` and `@superking__`, seemed to be that larger models might handle heavy quantization better, but the relationship is not linear.

- Jokes About “Goody-2”: The discussion touched upon the safe AI model “Goody-2,” with users like `@selea` remarking on its stringency, as it rejects anything that could be controversial. The conversation playfully explored the idea of challenging the model.

- User Interface Development: `@itsme9316` and `@potatooff` shared progress on their respective UI development projects with PolyMind and a new UI being built. They discussed the complexities and challenges of web development and prompt engineering.

- Curiosities on Model Absence and Updates: `@rombodawg` sought fine-tuned Mistral-7b models for a merge project, while `@kaltcit` humorously remarked on the cat-like absence of a user named turbca, likening it to waiting for Llama3 to support vision.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- GOODY-2 | The world’s most responsible AI model: Introducing a new AI model with next-gen ethical alignment. Chat now.

- Unbabel/TowerInstruct-13B-v0.1 · Hugging Face: no description found

- Aligning LLMs with Direct Preference Optimization: In this workshop, Lewis Tunstall and Edward Beeching from Hugging Face will discuss a powerful alignment technique called Direct Preference Optimisation (DPO…

- PotatoOff/HamSter-0.2 · Hugging Face: no description found

- MrDragonFox/apple-ferret-13b-merged · Hugging Face: no description found

- Rick Astley - Never Gonna Give You Up (Official Music Video): The official video for “Never Gonna Give You Up” by Rick Astley. The new album ‘Are We There Yet?’ is out now: Download here: https://RickAstley.lnk.to/AreWe…

- abacusai/Smaug-72B-v0.1 · Hugging Face: no description found

- Answer Overflow - Search all of Discord: Build the best Discord support server with Answer Overflow. Index your content into Google, answer questions with AI, and gain insights into your community.

- GitHub - apple/ml-ferret: Contribute to apple/ml-ferret development by creating an account on GitHub.

- GitHub - daswer123/xtts-api-server: A simple FastAPI Server to run XTTSv2: A simple FastAPI Server to run XTTSv2. Contribute to daswer123/xtts-api-server development by creating an account on GitHub.

- GitHub - mzbac/mlx-llm-server: For inferring and serving local LLMs using the MLX framework: For inferring and serving local LLMs using the MLX framework - mzbac/mlx-llm-server

- GitHub - Tyrrrz/DiscordChatExporter: Exports Discord chat logs to a file: Exports Discord chat logs to a file. Contribute to Tyrrrz/DiscordChatExporter development by creating an account on GitHub.

- metavoiceio/metavoice-1B-v0.1 · Hugging Face: no description found

- nvidia/canary-1b · Hugging Face: no description found

- Piper Voice Samples: no description found

- GitHub - metavoiceio/metavoice-src: Foundational model for human-like, expressive TTS: Foundational model for human-like, expressive TTS. Contribute to metavoiceio/metavoice-src development by creating an account on GitHub.

- GitHub - daveshap/Reflective_Journaling_Tool: Use a customized version of ChatGPT for reflective journaling. No data saved for privacy reasons.: Use a customized version of ChatGPT for reflective journaling. No data saved for privacy reasons. - GitHub - daveshap/Reflective_Journaling_Tool: Use a customized version of ChatGPT for reflective…

- GitHub - LAION-AI/natural_voice_assistant: Contribute to LAION-AI/natural_voice_assistant development by creating an account on GitHub.

- LoneStriker/HamSter-0.2-8.0bpw-h8-exl2 · Hugging Face: no description found

- Simpsons Homer Simpson GIF - Simpsons Homer simpson - Discover & Share GIFs: Click to view the GIF

- GitHub - LostRuins/koboldcpp: A simple one-file way to run various GGML and GGUF models with KoboldAI’s UI: A simple one-file way to run various GGML and GGUF models with KoboldAI’s UI - LostRuins/koboldcpp

- Everything WRONG with LLM Benchmarks (ft. MMLU)!!!: 🔗 Links 🔗When Benchmarks are Targets: Revealing the Sensitivity of Large Language Model Leaderboardshttps://arxiv.org/pdf/2402.01781.pdf❤️ If you want to s…

- Cheat Sheet: Mastering Temperature and Top_p in ChatGPT API: Hello everyone! Ok, I admit had help from OpenAi with this. But what I “helped” put together I think can greatly improve the results and costs of using OpenAi within your apps and plugins, specially …

- Andrew Garfield Andrew Garfield Moonlight Meme GIF - Andrew garfield Andrew Garfield Moonlight meme Andrew Garfield Moonlight trend - Discover & Share GIFs: Click to view the GIF

- GitHub - jondurbin/airoboros: Customizable implementation of the self-instruct paper.: Customizable implementation of the self-instruct paper. - jondurbin/airoboros

- brucethemoose/Yi-34B-200K-RPMerge · Hugging Face: no description found

- Doctor-Shotgun/Nous-Capybara-limarpv3-34B · Hugging Face: no description found

- GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.: A multimodal, function calling powered LLM webui. - GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.

- GitHub - Haidra-Org/AI-Horde-Worker: This repo turns your PC into a AI Horde worker node: This repo turns your PC into a AI Horde worker node - Haidra-Org/AI-Horde-Worker

- Adjust VRAM/RAM split on Apple Silicon · ggerganov/llama.cpp · Discussion #2182: // this tool allows you to change the VRAM/RAM split on Unified Memory on Apple Silicon to whatever you want, allowing for more VRAM for inference // c++ -std=c++17 -framework CoreFoundation -o vra…

- k-quants by ikawrakow · Pull Request #1684 · ggerganov/llama.cpp: What This PR adds a series of 2-6 bit quantization methods, along with quantization mixes, as proposed in #1240 and #1256. Scalar, AVX2, ARM_NEON, and CUDA implementations are provided. Why This is…

- nextai-team/apollo-v1-7b · Hugging Face: no description found

- mistralai/Mistral-7B-Instruct-v0.2 · Hugging Face: no description found

- Intel/neural-chat-7b-v3-3 · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

TheBloke ▷ #characters-roleplay-stories (535 messages🔥🔥🔥):

- Discussing the Versatility of Miqu Models: Users shared their insights on models such as Miqu, including how it performs compared to model merges like Miquella. There was also a mention of the potential performance of a MiquMaid model, which is fine-tuned for ERP, with links to MiquMaid-v2-70B and MiquMaid-v2-70B-DPO provided by @soufflespethuman.

- Model Configuration and Setup Queries: Users like @netrve and @johnrobertsmith shared details and experiences on setting up models, debating the effects of context length, repetition penalty, and memory splits across GPUs. @lonestriker provided detailed information about exl2 models and their quant sizes, with raw sizes ranging from 34GB to 110GB.

- Lively Debate on Tokenizer Use in ST: @stoop poops suggested @netrve read the docs when there was a question about ST's use of tokenizers. The discussion highlighted some confusion around the purpose and functionality of the tokenizer setting in ST (Silly Tavern).

- Technical Tips for AMD Users: @spottyluck offered advice on using AMD's AOCL for improved CPU inference performance with llama.cpp, suggesting specific compile options that make use of AMD's AVX512 extensions and better kernels.

- Implementing Custom Scripting for Character States: @johnrobertsmith expressed an interest in creating scripts to manage character states in storytelling scenarios using STscript and lorebooks, looking for assistance and ideas to turn his theoretical knowledge into a practical implementation.
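
For a rough feel of how quant size relates to bits per weight, the back-of-the-envelope arithmetic can be sketched as below. This is only an approximation under stated assumptions: the function name and the bits-per-weight values are illustrative and not taken from the discussion, and real exl2 or GGUF files also carry metadata and may keep some tensors at higher precision.

```python
def estimate_weight_size_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of quantized weights in GB (1 GB = 10^9 bytes).

    Assumes every parameter is stored at the same average bit width,
    which real quant formats only approximate.
    """
    total_bits = n_params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


if __name__ == "__main__":
    # Illustrative bit widths for a hypothetical 70B-parameter model.
    for bpw in (2.4, 4.0, 6.0, 8.0):
        size = estimate_weight_size_gb(70, bpw)
        print(f"70B @ {bpw} bpw ~ {size:.1f} GB")
```

The estimate covers weights only; context length adds KV-cache memory on top, which is one reason the context and GPU-split settings discussed above matter.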

Links mentioned:

- Neko Atsume Cat GIF - Neko Atsume Cat Kitty - Discover & Share GIFs: Click to view the GIF

- NeverSleep/MiquMaid-v2-2x70B-DPO · Hugging Face: no description found

- Homer Simpsons GIF - Homer Simpsons Audacity - Discover & Share GIFs: Click to view the GIF

- The Chi GIF - The Chi - Discover & Share GIFs: Click to view the GIF

- Cat Kitten GIF - Cat Kitten Speech Bubble - Discover & Share GIFs: Click to view the GIF

- What The Fuck Wtf Is Going On GIF - What The Fuck Wtf Is Going On What The - Discover & Share GIFs: Click to view the GIF

- GitHub - yule-BUAA/MergeLM: Codebase for Merging Language Models: Codebase for Merging Language Models. Contribute to yule-BUAA/MergeLM development by creating an account on GitHub.

- Answering questions with data: A free textbook teaching introductory statistics for undergraduates in psychology, including a lab manual, and course website. Licensed on CC BY SA 4.0

- Cats Cat GIF - Cats Cat Cucumber - Discover & Share GIFs: Click to view the GIF

- Boxing Day GIF - Cats Cats In Boxes Armor - Discover & Share GIFs: Click to view the GIF

- Did You Pray Today Turbulence GIF - Did you pray today Turbulence - Discover & Share GIFs: Click to view the GIF

- Nexesenex/abacusai_Smaug-Yi-34B-v0.1-iMat.GGUF at main: no description found

- Skinner Homer GIF - Skinner Homer Drag Net - Discover & Share GIFs: Click to view the GIF

- Catzilla 😅 | Do not do this to your cat, the street friends will laugh at him 👀: “Copyright Disclaimer under section 107 of the Copyright Act of 1976, allowance is made for ‘fair use’ for purposes such as criticism, comment, news reportin…

- Good Heavens GIF - OMG Shocked Surprised - Discover & Share GIFs: Click to view the GIF

- NeverSleep/MiquMaid-v2-70B · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-GGUF · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-DPO · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-DPO-GGUF · Hugging Face: no description found

TheBloke ▷ #training-and-fine-tuning (30 messages🔥):

- Fine-tuning Chatbot Models: @maldevide highlighted that the basic steps to convert a base model to an instruct model involve fine-tuning with a good instruct dataset for two epochs. Further discussion by @jondurbin and @starsupernova delved into details like dataset sources, such as the bagel datasets, and the actual process of fine-tuning, which can add knowledge to the model.

- Instruct Dataset Recommendations: @mr.userbox020 inquired about which datasets are best for creating an instruct model, and @maldevide recommended considering datasets like WizardLM_evol_instruct_V2_196k, OpenHermes-2.5, and others for their proven broad base, while also mixing in any specific specializations needed.

- Understanding the Impact of Fine-tuning: @skirosso asked about the purpose of fine-tuning, leading to a clarification that it can change a chatbot model's tone and also add knowledge, especially when pretraining is continued across all layers, as explained by @starsupernova. @mr.userbox020 agreed, noting that the best instruct models, like Mixtral, would already be capable of telling dragon stories when instructed by a user.

- The Future of Fine-tuning Speed and Efficiency: Sharing a resource, @mr.userbox020 brought attention to a GitHub repository named unsloth, which claims faster and more efficient QLoRA fine-tuning for models like Mistral. @starsupernova confirmed its performance improvements, citing a 2.2x speed-up and 70% VRAM reduction.

- Fine-tuning compared to Training: @wolfsauge added depth to the fine-tuning discussion by differentiating between training and fine-tuning, emphasizing resource savings and stability. They also mentioned that staying up to date with current fine-tuning trends is crucial and recommended further exploration of specific fine-tuning methods like RLHF with PPO and SFT with DPO.
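
For readers who have not met DPO before, the per-example loss can be sketched in a few lines. This is a minimal illustration of the standard DPO objective, not anything shared in the channel: the function name, the example log-probabilities, and the `beta` default are assumptions chosen for illustration.

```python
import math


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Inputs are summed log-probabilities of the chosen and rejected responses
    under the policy being trained and under a frozen reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) written as log(1 + exp(-x)).
    return math.log1p(math.exp(-logits))


if __name__ == "__main__":
    # Policy identical to the reference: the loss is log(2).
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy has shifted probability toward the chosen response: lower loss.
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

Unlike PPO-based RLHF, this objective needs no separate reward model or sampling loop, only log-probabilities from the policy and the reference.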

Links mentioned:

- GitHub - jondurbin/bagel: A bagel, with everything.: A bagel, with everything. Contribute to jondurbin/bagel development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

TheBloke ▷ #coding (10 messages🔥):

- Surya OCR Script Shared: @cybertimon provided a Python script for optical character recognition using the Surya OCR tool and mentioned the required installation of the dev branch of Surya with the command `pip install git+https://github.com/VikParuchuri/surya@dev`.

- Code Snippet Appreciation: @bartowski1182 expressed their admiration for the shared Surya OCR code, calling it awesome.

- GitHub Repo Suggestion: @mr.userbox020 suggested @cybertimon create a GitHub repository to share the Surya OCR code, but @cybertimon clarified that it was merely an example script, not a full project. They later shared a Gist link to the code.

- Request for Debugging Assistance: @ninyago asked for help with a bug in `user.html` from their GitHub repository, where the recording function does not always start after ElevenLabs finishes speaking.

- Potential Third-Party Issue Highlighted: In response to @ninyago's request, @falconsfly suggested that the problem might be related to ElevenLabs, sharing an experience of a job stalling on their platform, indicating a potential issue not with the code but with the ElevenLabs service itself.

Links mentioned:

- Surya OCR: Surya OCR. GitHub Gist: instantly share code, notes, and snippets.

- GitHub - Ninyago53/webaudiobook: Contribute to Ninyago53/webaudiobook development by creating an account on GitHub.

LM Studio ▷ #💬-general (399 messages🔥🔥):

- Model Troubles and Discussions: Users @lacrak27 and @heyitsyorkie discussed issues running TheBloke's models, concluding the error likely resulted from a broken quant during the conversion of the original PyTorch model to gguf.

- Tech Stack Queries: User @jitterysniper inquired about the tech stack of LM Studio, clarified by @.ben.com as llama.cpp, and the discussion later expanded to the app's specifics, surmising it might be built with Electron.

- Image Generation Woes: Users @sunboy9710 and @heyitsyorkie discussed the difficulty and limitations of using LM Studio for image generation tasks.

- VPNs and ISP Blockages: User @stevecnycpaigne had issues accessing huggingface.co from different locations, leading @heyitsyorkie to suggest trying a VPN as it might be an ISP-related problem.

- Privacy and Usage Data Concerns: User @f0xa compared GPT4All and LM Studio, seeking clarity on data privacy with LM Studio, and @fabguy indicated that LM Studio is private by default, with the only shared data coming from updates and model downloads from Hugging Face.

- Web UI for LM Studio Sought After: User @laststandingknight queried about the availability of a web UI for LM Studio chats, confirmed to be unavailable by @fabguy.

Links mentioned:

- Continue: no description found

- TheBloke/OpenHermes-2.5-Mistral-7B-GGUF · Hugging Face: no description found

- Twochoices Funny GIF - Twochoices Funny Two - Discover & Share GIFs: Click to view the GIF

- chilliadgl/RG_fake_signatures_ST at main: no description found

- GitHub - b4rtaz/distributed-llama: Run LLMs on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage.: Run LLMs on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. - b4rtaz/distributed-llama

- examples/how-to-run-llama-cpp-on-raspberry-pi.md at master · garyexplains/examples: Example code used in my videos. Contribute to garyexplains/examples development by creating an account on GitHub.

LM Studio ▷ #🤖-models-discussion-chat (92 messages🔥🔥):

- Struggling with Small Model Classification: @speedy.dev experienced issues with classification tasks using 13B and 7B Llama models, particularly the uncensored variants, and contemplated fine-tuning as a solution.

- Goliath vs. Goat - The Model Battle for Story Quality: @goldensun3ds ran tests comparing story writing between the Bloke Goat Storytelling 70B Q6, Bloke Goliath 120B Q6, and Mixtral 7B Q6 models, noting that Goliath captured emotions better but Mixtral was faster, and offering insight into combating repetitive loops.

- Local Chat with Docs Still in Limbo: @dr.nova. joined the community looking for a local alternative to GPT-4 for chatting with PDFs and received input that while LM Studio has no such feature yet, GPT-4 is the reigning solution for document-based interactions.

- Selecting the Best Model for Task Delegation? A Hypothetical Approach: @binaryalgorithm pondered the idea of a meta-model that could choose the best model for a given task, and @.ben.com mentioned that openrouter.ai has a basic router for model selection.

Links mentioned:

- Models - Hugging Face: no description found

- Experimenting with llama2 LLM for local file classification (renaming, summarizing, analysing): Follow-up from OpenAI ChatGPT for automatic generation of matching filenames - #3 by syntagm ChatGPT works extremely well to get some logic into OCRed documents and PDFs, but would be nice to do this…

LM Studio ▷ #🧠-feedback (1 messages):

- Model Metadata Management Insights Shared: @wolfspyre highlighted the importance of model metadata management using a HackMD post, which suggests the need for better categorization of model "parameters" among various chat platforms. They drew attention to the differences in `init/load params`, `run params`, and `server/engine params`.

- Potential for a Model.json Standard: The HackMD document discusses the possibility of establishing a `model.json` standard by Jan and provides a link to a GitHub repository which includes schema and example files for different versions.

Links mentioned:

Model Object Teardowns - HackMD: Model File Formats

LM Studio ▷ #🎛-hardware-discussion (197 messages🔥🔥):

- Seeking Advice on AVX Support: @guest7_25187 was pleased to find Intel Xeon E5-2670 v3 CPUs on eBay that support AVX2 and would be compatible with their server. @heyitsyorkie suggested that if combined with sufficient RAM and Nvidia P40 GPUs, @guest7_25187 would see significant speed improvements.

- GPU Decisions for Model Performance: Discussions about hardware for running LLMs (Large Language Models) highlighted @nink1 emphasizing the benefit of having more VRAM and CUDA cores, noting particularly the 3090's advantage in terms of VRAM to core ratio and NVLINK capability. @konst.io was advised that adding another 64GB of RAM wouldn't hurt but the priority should be to maximize VRAM first.

- Mac vs. Custom Builds for LLM Inferencing: @heyitsyorkie shared their experience with running the Goliath 120b 32k model on an M3 Max with 128GB, clocking it faster than their 4090 setup. Meanwhile, @wildcat_aurora discussed their setup involving P40 GPUs and Xeon processors, repurposed from Apple's Siri service, which delivers effective results at lower power consumption.

- Market Speculations and Hardware Strategies: There was a mix of speculation and desires for future hardware developments, with @nink1, @christianazinn, and others debating Nvidia's strategic decisions about VRAM on consumer GPUs and looking forward to potential enterprise solutions by Apple with more RAM.

- Troubleshooting and Setup for LLM Inferencing: Members like @therealril3y, @lardz90, and @the_yo_92 sought assistance with LM Studio not utilizing extra GPUs, RAM not being correctly detected after upgrades, and JavaScript errors on an M1 Mac. @heyitsyorkie and @speedy.dev provided quick fixes and suggested creating a support thread or updating software for resolution.

LM Studio ▷ #🧪-beta-releases-chat (1 messages):

ramendraws: :1ski_smug:

LM Studio ▷ #autogen (2 messages):

- Preference for CrewAI: @docorange88 expressed a distinct preference for CrewAI over AutoGen, suggesting that AutoGen is more difficult to manage.

LM Studio ▷ #avx-beta (1 messages):

- Intel Dropping AVX-512 Raises Eyebrows: @technot80 expressed confusion over Intel's decision to drop AVX-512 support on a powerful i9 processor, especially since their less powerful i5-11600k includes it. They found the move truly weird.

HuggingFace ▷ #announcements (1 messages):

- HuggingFace Hub Experiencing Downtime: User @lunarflu announced that HF Hub and git hosting are temporarily down. They mentioned that the team is currently working on resolving the issue and asked for community support.

HuggingFace ▷ #general (603 messages🔥🔥🔥):

- Verifying on Discord Can Be Tricky: User @d3113 faced an error when trying to authenticate with a bot on Discord, which was resolved by enabling "Allow messages from server members" in their settings. User @lunarflu advised this solution and mentioned that the token used for verification can be deleted afterwards.

- Keeping It Conversational: User @miker1662 was encountering issues with finding conversational models on Hugging Face due to an API bug, which @osanseviero confirmed and linked to an ongoing issue and changes in API usage. Hugging Face is deprecating `conversational` and merging it into `text-generation`.

- The Dilemma of PEFT for LLM Fine-Tuning: User @samuelcorsan debated whether to use PEFT (Parameter Efficient Fine-Tuning) when fine-tuning a conversational chatbot LLM, but eventually decided to go for full fine-tuning, removing PEFT from their code. They were given advice by @vipitis to use the full sequence length during fine-tuning to avoid the model learning positional embeddings, chunking the dataset for efficiency.

- Raspberry Pi for CV on the Edge: User @akvnn inquired whether a Raspberry Pi could handle running a computer vision (CV) model continuously for a multi-camera system. User @yamatovergil89 confirmed its viability but did not specify whether it could handle multiple cameras simultaneously.

- Hardware Queries for Running LLMs Locally: @lookingforspeed sought advice on whether dual NVIDIA 4080 Supers would be more suitable than a single 4090 for running coding LLMs like Mixtral locally. @tddammo recommended older generation pro cards like the A4500 or A4000 for better electrical efficiency and support for NVLink, and subsequently explained the benefits over consumer cards.
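
The advice about using the full sequence length by chunking the dataset is commonly implemented by concatenating tokenized examples and slicing the stream into fixed-size blocks. The sketch below assumes that is what was meant; `pack_into_blocks`, the toy token ids, and the `eos_id` value are hypothetical and not from the conversation.

```python
from typing import Iterable, List


def pack_into_blocks(tokenized_docs: Iterable[List[int]],
                     block_size: int,
                     eos_id: int) -> List[List[int]]:
    """Concatenate tokenized documents and split them into full-length blocks.

    An end-of-sequence token separates documents. The trailing remainder that
    does not fill a whole block is dropped, so every block has block_size tokens.
    """
    stream: List[int] = []
    for doc in tokenized_docs:
        stream.extend(doc)
        stream.append(eos_id)
    usable = (len(stream) // block_size) * block_size
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]


if __name__ == "__main__":
    docs = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
    for block in pack_into_blocks(docs, block_size=4, eos_id=0):
        print(block)
```

Packing this way avoids spending compute on padding tokens, at the cost of blocks that can span document boundaries.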

Links mentioned:

- mistralai/Mixtral-8x7B-v0.1 · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- transformers/src/transformers/configuration_utils.py at 58e3d23e97078f361a533b9ec4a6a2de674ea52a · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

- Cry Animecry GIF - Cry Animecry Anime - Discover & Share GIFs: Click to view the GIF

- Free Hugs Shack GIF - Free Hugs Shack Forest - Discover & Share GIFs: Click to view the GIF

- Anime Cry GIF - Anime Cry Sad - Discover & Share GIFs: Click to view the GIF

- M3 max 128GB for AI running Llama2 7b 13b and 70b: In this video we run Llama models using the new M3 max with 128GB and we compare it with a M1 pro and RTX 4090 to see the real world performance of this Chip…

- Hugging Face status : no description found

- Tweet from Ross Wightman (@wightmanr): @bhutanisanyam1 @jamesbower NVLINK does make a difference, even on 2-GPUs but impact varies with distributed workload. Unfortunately, it’s not a concern on hobby machines now, 40x0 and RTX6000 Ada…

- andreasjansson/codellama-7b-instruct-gguf – Run with an API on Replicate: no description found

- Whisper Large V3 - a Hugging Face Space by hf-audio: no description found

- GitHub - stanfordnlp/dspy: DSPy: The framework for programming—not prompting—foundation models: DSPy: The framework for programming—not prompting—foundation models - stanfordnlp/dspy

- Corrective Retrieval Augmented Generation: Large language models (LLMs) inevitably exhibit hallucinations since the accuracy of generated texts cannot be secured solely by the parametric knowledge they encapsulate. Although retrieval-augmented…

- Error when calling `InferenceClient.conversational` · Issue #2023 · huggingface/huggingface_hub: Describe the bug Calling InferenceClient.conversational according to the docs results in a 400 Client Error. Reproduction from huggingface_hub import InferenceClient InferenceClient().conversationa…

- DSPy PROMPT Engineering w/ ICL-RAG (How to Code Self-improving LLM-RM Pipelines): Advanced Prompt Engineering. From human prompt templates to self-improving, self-config prompt pipelines via DSPy. Advanced Techniques in Pipeline Self-Optim…

- Models - Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Amazon EC2 G5 Instances | Amazon Web Services: no description found

- Pricing | Cloud AI Meets Unbeatable Value: With pay as you go pricing up to full on monthly enterprise stable diffusion plans, we have you covered. Empower your vision with unbeatable costs.

- Reddit - Dive into anything: no description found

- What Is NVLink?: NVLink is a high-speed interconnect for GPU and CPU processors in accelerated systems, propelling data and calculations to actionable results.

- GitHub - huggingface/transformers at 58e3d23e97078f361a533b9ec4a6a2de674ea52a: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - GitHub - huggingface/transformers at 58e3d23e97078f361a533b9ec4a6a2de674ea52a

- intfloat/e5-mistral-7b-instruct · Hugging Face: no description found

HuggingFace ▷ #today-im-learning (8 messages🔥):

- Back in Action on LinkedIn: @aiman1993 has resumed posting on LinkedIn after a 6-week pause, sharing a post on upgrading CUDA Toolkit and NVIDIA Driver.

- TokenClassification Fine-tuning: @kamama2127 is learning the ropes of fine-tuning for token classification.

- Choosing the Right Python Library for APIs: @subham5089 discussed the importance of understanding different Python libraries for API calling, like Requests, AIOHTTP, and HTTPX, in a LinkedIn post.

- Websockets Meet HTTPX: In response to @dastardlydoright's questions about websockets in Python, @subham5089 recommended httpx-ws, a library for WebSockets support in HTTPX, in addition to providing a link to the source code.

- GPT Insights from Karpathy: @wonder_in_aliceland mentioned enjoying a video by Andrej Karpathy about nanogpt, which explores the inner workings and ideas behind GPT.

Links mentioned:

HTTPX WS: no description found

HuggingFace ▷ #cool-finds (5 messages):

- Deep Dive into Deep Learning Breakthroughs: @branchverse shared an article highlighting the progress in deep learning since the 2010s. The article notes innovations driven by open source tools, hardware advancements, and availability of labeled data.

- Normcore LLM Reads on GitHub: @husainhz7 linked a GitHub Gist titled "Normcore LLM Reads," which is a collection of code, notes, and snippets related to LLMs.

- AI-Infused Genetic Algorithm for Greener Gardens: @paccer discussed an article that features a genetic algorithm combined with an LLM for gardening optimization. The AI-powered tool GRDN.AI seeks to improve companion planting and is documented in a Medium post.

- Unveiling Computer Vision Techniques: @purple_lizard posted a link to the Grad-CAM research paper, which introduces a technique for making convolutional neural network (CNN) decisions transparent via visual explanations.

- Exploring AI Research: @kamama2127 pointed out a recent AI research paper on arXiv with a list of authors contributing to the field. The paper discusses new findings and advancements in artificial intelligence.

Links mentioned:

- OLMo: Accelerating the Science of Language Models: Language models (LMs) have become ubiquitous in both NLP research and in commercial product offerings. As their commercial importance has surged, the most powerful models have become closed off, gated…

- AI-Infused Optimization in the Wild: Developing a Companion Planting App: Key to a thriving garden, companion planting offers natural pest control, promotes healthy growth, and leads to more nutrients in soil. GRDN.AI applies this concept using an AI-infused genetic…

- Normcore LLM Reads: Normcore LLM Reads. GitHub Gist: instantly share code, notes, and snippets.

- Front-end deep learning web apps development and deployment: a review - Applied Intelligence: Machine learning and deep learning models are commonly developed using programming languages such as Python, C++, or R and deployed as web apps delivered from a back-end server or as mobile apps insta…

HuggingFace ▷ #i-made-this (10 messages🔥):

- Data Structures Demystified: User @kamama2127 created a Streamlit page that aggregates 27 data structures, their implementation in Python, and associated problems. Check out the interactive learning tool here.

- Zero Shot to Object Detection: @pradeep1148 shared a YouTube video illustrating automatic object detection using zero shot methods with the MoonDream vision language model. Watch the tutorial here.

- DALL-E and Midjourney's Imagery Dataset: User @ehristoforu introduced two Hugging Face datasets consisting of images generated by DALL-E 3 and Midjourney models. Explore the DALL-E dataset here and Midjourney dataset here.

- Animation Made Easy with FumesAI: @myg5702 shared a link to FumesAI's Hugging Face space, featuring the text-to-Animation-Fast-AnimateDiff tool. Jump into animating your text here.

- AI-Enhanced Music Creation Workflow: .bigdookie discussed how the integration of the MusicGen tool into Ableton has improved, likening it to playing a slot machine. Watch the creative process here.

Links mentioned:

- Text To Animation Fast AnimateDiff - a Hugging Face Space by FumesAI: no description found

- Prometheus - a Hugging Face Space by Tonic: no description found

- Quiz Maker - a Hugging Face Space by narra-ai: no description found

- Automatic Object Detection: We are going to see how we can do automatic object detetction using zero shot object detection and moondream vison langugae model#llm #ml #ai #largelanguagem…

- another song from scratch with ableton and musicgen - captain’s chair 12: In this episode we use use @CradleAudio ‘s god particle to make much big loudness on our acousticand @Unisonaudio ‘s drum monkey, which kinda bummed me out,…

- ehristoforu/dalle-3-images · Datasets at Hugging Face: no description found

- ehristoforu/midjourney-images · Datasets at Hugging Face: no description found

- Spatial Media Converter: Convert RGB Images to Spatial Photos for Apple Vision Pro.

HuggingFace ▷ #reading-group (20 messages🔥):

- Flash Attention Fires Up Interest: @ericauld expressed interest in presenting on Flash Attention, inquiring if it had been discussed in relation to Mamba and S4. Both @chad_in_the_house and @ericauld discussed the mathematical identities that allow softmax calculations to be done blockwise rather than globally.

- Curiosity About RWKV: @typoilu showed an eagerness to give a presentation about the RWKV model, which has not yet been presented, and discussed scheduling for a future date.

- Scheduling Mamba Presentation: @ericauld and @chad_in_the_house coordinated to find a mutually convenient time for a Mamba presentation, using When2meet for scheduling.

- Google Calendar Consideration: @chad_in_the_house suggested starting a Google Calendar to manage presentation scheduling, with @lunarflu supporting the idea for its usefulness.

- Time Zone Coordination for Presentations: @typoilu noted their UTC+1 time zone, and @chad_in_the_house mentioned the time difference with EST (Eastern Standard Time), looking to find a suitable time for US audience attendance for the RWKV presentation.
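
The identity behind blockwise softmax is that a running maximum and a running sum of exponentials can be updated one block at a time, rescaling the old sum whenever the maximum changes. The sketch below illustrates only that normalizer trick in plain Python; the function names are made up for illustration, and Flash Attention additionally rescales a running output accumulator, which is omitted here.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    """Reference softmax computed globally over the whole vector."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def blockwise_softmax(xs: List[float], block_size: int) -> List[float]:
    """Softmax whose normalizer is accumulated one block at a time."""
    running_max = -math.inf
    running_sum = 0.0
    for start in range(0, len(xs), block_size):
        block = xs[start:start + block_size]
        new_max = max(running_max, max(block))
        # Rescale the sum accumulated so far to the new maximum,
        # then add this block's contribution.
        running_sum = (running_sum * math.exp(running_max - new_max)
                       + sum(math.exp(x - new_max) for x in block))
        running_max = new_max
    return [math.exp(x - running_max) / running_sum for x in xs]


if __name__ == "__main__":
    scores = [1.0, 3.0, -2.0, 0.5, 7.0, 2.0]
    print(softmax(scores))
    print(blockwise_softmax(scores, block_size=2))
```

Both functions produce the same probabilities up to floating-point error, which is what allows attention to be computed over tiles without ever materializing a full row of scores.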

Links mentioned:

Mamba Presentation - When2meet: no description found

HuggingFace ▷ #computer-vision (1 messages):

- Simplifying Multi-label Image Classification: User @nielsr_ explained how to fine-tune an image classifier for multi-label classification with Hugging Face Transformers. They provided a code snippet using `Dinov2ForImageClassification` for easy instantiation.
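
The shared snippet is not reproduced in this summary. As a library-free illustration of what usually sets multi-label apart from single-label classification, the sketch below scores each label with its own sigmoid and averages a binary cross-entropy over labels. The function names, logits, and threshold are assumptions for illustration and are not taken from the original snippet.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def multilabel_bce(logits: List[float], targets: List[float]) -> float:
    """Mean binary cross-entropy over labels, each scored independently."""
    total = 0.0
    for z, y in zip(logits, targets):
        p = sigmoid(z)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(logits)


def predict_labels(logits: List[float], threshold: float = 0.5) -> List[int]:
    """Indices of every label whose probability clears the threshold."""
    return [i for i, z in enumerate(logits) if sigmoid(z) >= threshold]


if __name__ == "__main__":
    logits = [2.0, -1.0, 0.3]   # hypothetical per-label scores for one image
    targets = [1.0, 0.0, 1.0]   # multi-hot ground truth
    print(multilabel_bce(logits, targets))
    print(predict_labels(logits))
```

Because labels are scored independently, an image can be assigned several labels or none, which a softmax over classes cannot express.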

HuggingFace ▷ #NLP (20 messages🔥):

- PEFT Package: User @开饭噜 reported issues with saving LoRA weights locally due to an inability to connect to HuggingFace and received a solution from @nruaif recommending a downgrade to PEFT 0.7.1, which resolved the problem.

- Troubleshooting Saving Models with PEFT: @vipitis suggested that the `.save_pretrained()` method may attempt to create a repository when a full path is not provided. They recommended trying a `Path` object instead of a `str` to bypass connectivity issues.

- Seeking JSON-aware LLM for Local Use: @nic0653 inquired about a robust Large Language Model (LLM) that interprets language and JSON schemas to output JSON. The discussion pointed to Claude excelling in this task, but challenges remain for local deployment options.

- In Search of Profanity Capable 15b+ LLM: @wavy_n1c9 sought recommendations for a 15 billion+ parameter LLM capable of generating dialogue or text containing profanity for local use, yet no suggestions were made within the chat history.

- Small Model for Local Code Generation: @adolfhipster1 asked for advice on a small model fit for code generation, expressing concerns that GPT-2 was insufficient and looking for alternatives to downloading LLaMA.

OpenAI ▷ #ai-discussions (234 messages🔥🔥):

- Discord Community Wonders About AI’s Financial Advice: Members like @azashirokuchiki discuss the limitations of AI in generating trading strategies, expressing frustration with the bot’s cautious responses and lack of memory regarding financial matters.

- AI-Centric Tool ts_zip Shared: @lugui shared a link to `ts_zip`, a tool for compressing text files with Large Language Models, sparking interest in AI-powered compression technologies.

- Curiosity about OpenAI’s Funding Ambitions: The figure of 5-7 trillion mentioned for AI chips sparked a debate, with users like @thedasenqueen and @1015814 weighing in on the feasibility and impact on AI research and development.

- AI’s Impact on Societal Risks Discussed: @1015814 delves into the societal risks of improperly integrated autonomous robotics and the debate around AI’s potential for both societal good and danger.

- Google Gemini Takes Center Stage in Conversations: Several users, including @jaicraft and @thedreamakeem, discuss the pros and cons of Google’s Gemini AI product, its features, and anticipated improvements in coding and interpreter capabilities.
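
For readers wondering how a language model can compress text at all, the general principle behind model-based compressors is that a predictor assigns a probability to each next symbol and an entropy coder spends roughly -log2(p) bits on it, so better predictions mean fewer bits. The sketch below only computes that ideal code length, and it swaps in a tiny adaptive character-frequency model for the LLM. It is not a description of the internals of `ts_zip`; the function name and the 256-symbol alphabet are assumptions.

```python
import math
from collections import Counter


def ideal_code_length_bits(text, alphabet_size=256):
    """Bits an ideal entropy coder would need under an adaptive model.

    The stand-in predictor estimates the next character from the counts
    seen so far (with add-one smoothing). An LLM-based compressor would
    replace this with the model's next-token distribution.
    """
    counts = Counter()
    seen = 0
    bits = 0.0
    for ch in text:
        p = (counts[ch] + 1) / (seen + alphabet_size)
        bits += -math.log2(p)
        # Update the model only after coding, so a decoder can mirror it.
        counts[ch] += 1
        seen += 1
    return bits


sample = "ab" * 64
compressed_bits = ideal_code_length_bits(sample)
raw_bits = 8 * len(sample)  # one byte per character, uncompressed
```

Because the decoder can run the same predictor and update it in lockstep, only the coded bits need to be stored; the stronger the predictor, the smaller `compressed_bits` becomes relative to `raw_bits`.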

Links mentioned:

- Building an early warning system for LLM-aided biological threat creation: We’re developing a blueprint for evaluating the risk that a large language model (LLM) could aid someone in creating a biological threat. In an evaluation involving both biology experts and students, …

- Tweet from Jack Krawczyk (@JackK): Ok - Gemini day 2 recap: things people like, things we gotta fix. Keep your feedback coming. We’re reading it all. THINGS PEOPLE LIKE (♥️♥️♥️) - Writing style - Creativity for helping you find th…

- ts_zip: Text Compression using Large Language Models: no description found

OpenAI ▷ #gpt-4-discussions (37 messages🔥):

- ChatGPT Suffers from Attention Issues: @.nefas expressed frustration with ChatGPT’s inconsistent responses during role-playing sessions, which diverge from the discussion abruptly. Others like @satanhashtag and @a1vx referred to context limits, explaining that the full 128K token context can be utilized in the API and by Enterprise users, but Plus and Team are limited to 32K tokens.

- GPT-4 Subscription Sharing Confusion: @nickthepaladin inquired if non-subscribers could use their GPT, to which @solbus clarified that a Plus or higher subscription is essential since all GPTs utilize GPT-4.

- @ Mentions Feature Inconsistency: @rudds3802 raised an issue about not having access to the @ mentions feature, and @solbus and @jaicraft discussed its possible limited rollout and problems on mobile Chromium browsers.

- Flagged GPTs Create Confusion: @eligump and @yucareux discussed their experiences with their GPT content being flagged and the appeal process, suggesting that compliance with the academic honesty policy might play a role in reapproval.

- Strategies for Effective ChatGPT Interactions: @blckreaper recommended providing ChatGPT with multiple narrative options for action-adventure scenarios to improve response flow and save tokens, while @airahaerson lamented the necessity of repeating instructions despite detailed formatting efforts.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

OpenAI ▷ #prompt-engineering (63 messages🔥🔥):

- Assistance with ChatGPT Spreadsheet Creation: @crosscx seeks help instructing ChatGPT to convert the Midlands rules of evidence into a spreadsheet. After hitting Python errors, they run out of GPT-4 messages. Users @madame_architect and @darthgustav suggest simplifying the task, perhaps starting without the spreadsheet aspect or breaking the task into manageable chunks.

- Plain Language Transformation Trouble: @mondsuppe discusses difficulties in getting ChatGPT to translate regular text into simple language adhering to specific plain language rules. @darthgustav and @madame_architect advise using examples and stepwise prompting to achieve better results, while @eskcanta shared a successful strategy using a two-step process.

- Understanding ‘Conversational Cadence’: In a discussion about GPT-4’s capabilities, @drinkoblog.weebly.com observes the model’s understanding of conversational cadence, which they argue implies an understanding of time in a specific context. @bambooshoots clarifies that it’s about conversational flow rather than time awareness, leading to a further exchange on the definition of “cadence” with @beanz_and_rice.

- GPT-4’s Handling of ‘State Changes’: @beanz_and_rice elaborates on GPT-4’s ability to process “state changes,” mentioning its competence in managing dynamic conditions and recommending prompt strategies that allow the model to adapt and respond effectively over multiple messages.

- Video Command Interjection: An interjection in the conversation by @zen_dove_40136 with “/video” is humorously countered by @beanz_and_rice with “/denied”, continuing the trend of message commands in the discussion.

OpenAI ▷ #api-discussions (63 messages🔥🔥):

- Chasing the Right Formula for Midlands Rules of Evidence: @crosscx sought advice on getting ChatGPT to format the Midlands Rules of Evidence into a spreadsheet without altering content, facing a Python error and exhausting ChatGPT messages. @madame_architect suggested simplifying by not asking for a spreadsheet, while @darthgustav. recommended breaking down the task and later clarified that the issue is a memory error in the CI environment.

- Plain Language Translation Conundrum: @mondsuppe shared challenges translating text into simple language for individuals with learning difficulties, discussing specific rules such as short sentences and clear structures. Although @darthgustav. suggested templates might help, they expressed doubt about ChatGPT-3.5’s capabilities, suggesting GPT-4 might fare better.

- GPT-4 and Temporal Awareness: Users discussed the nature of GPT-4’s responses, with @drinkoblog.weebly.com observing a perception of time when allowing it to respond over multiple cycles, leading to a conversation about cadence and conversational flow with @beanz_and_rice and others.

- Exploring Multi-Step Problem Solving with GPT-3.5: @eskcanta described a two-step process to simplify complex explanations into child-friendly language using GPT-3.5, revealing its potential in breaking down complicated tasks into manageable stages.

- Conversations on Definitions and Capabilities: The channel included a discussion on the definition of “cadence” and its relation to GPT-4’s conversational capabilities, with various interpretations provided by @beanz_and_rice and @drinkoblog.weebly.com. This highlighted the nuances in understanding how AI processes and delivers information.

Nous Research AI ▷ #off-topic (11 messages🔥):

- Elon Musk Goes Incognito?: @fullstack6209 spotted an individual who resembles Elon Musk in a video, claiming it’s Adrian Dittmann and mentioning a surprise call-in by Alex Jones. They shared this snippet from a recorded conversation that supposedly includes Musk, asking viewers to skip to timestamps 1:13 and 1:20 for the key moments, and attempted to summarize the event here.

- Musk’s AI Ambitions Exposed: Keeping up with the Elon Musk theme, @fullstack6209 shares a quote of Musk stating, “Hey I’ve got 200 GPUs in the back of my pickup, and I’m going to make an AI faster than you can, and they do”, reportedly from February 9th, 2024.

- Emoji Advice Dismissed: User @.ben.com humorously comments on not taking advice from someone who lacks emoji skills, though the context of the advice is not provided.

- Heavyweight Framework Offline: @gabriel_syme briefly laments that “HF” (likely referring to Hugging Face) is still down, indicating an ongoing issue with the service.

- Sam Altman Tweet Shared: @teknium shares a tweet from Sam Altman without further comment; viewers can check the tweet here.

- YouTube on Automatic Object Detection: @pradeep1148 provides a link to a YouTube video titled “Automatic Object Detection”, but doesn’t include any additional commentary on the content.

Links mentioned:

- Automatic Object Detection: We are going to see how we can do automatic object detection using zero shot object detection and moondream vision language model #llm #ml #ai #largelanguagem…

- The Truth About Building AI Startups Today: In the first episode of the Lightcone Podcast, YC Group Partners dig into everything they have learned working with the top founders building AI startups tod…

Nous Research AI ▷ #interesting-links (22 messages🔥):

- QuartetAnemoi-70B Unveiled: @nonameusr shared a new sequential merge model dubbed QuartetAnemoi-70B-t0.0001, a combination of four distinct models using a NearSwap algorithm, showcasing storytelling prowess without relying on typical story-ending clichés.

- Senku 70B Scores in TruthfulQA and ARC-Challenge: @carsonpoole reports that the Senku 70B model, quantized to 4-bit without any calibration, achieves 62.3 in TruthfulQA and 85.75 in the ARC-Challenge, noting that the results are influenced by a bespoke prompt format.

- Mixing Formats May Boost Senku Performance: Continuing the discussion on Senku 70B, @carsonpoole mentions advice from the trainer suggesting that using chatml could potentially improve the model’s performance, although it’s not currently implemented in the testing format.

- Tiny Model Training on OpenHermes: @euclaise shared a Twitter thread discussing a small model trained on OpenHermes, which sparked a side conversation about the smallest models members have trained, with @teknium revealing a 7B model as their smallest.

- 1.5 Bit Quantization Breakthrough: @.benxh highlighted a GitHub pull request for 1.5 bit quantization, noting that this state-of-the-art quantization allows a 70B model to fit in less than 18GB of RAM, and expressed the intent to benchmark these new quants on the Miqu model.
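
As a back-of-the-envelope check on the "70B model in less than 18GB" figure, the weight storage alone can be estimated from parameter count times bits per weight. This is only a sketch under simplifying assumptions: it treats every parameter as stored at the same bit width and ignores file metadata, the KV cache, and activations, so real footprints will be somewhat higher. The function name is made up for illustration.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


n_params = 70e9  # nominal parameter count of a "70B" model

for bpw in (16, 4, 1.5):
    print(f"{bpw:>4} bits/weight -> {weight_memory_gb(n_params, bpw):.3f} GB")
```

At 1.5 bits per weight the estimate comes to about 13 GB for the weights alone, which is consistent with the claim of fitting under 18GB once the overheads ignored here are added back.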

Links mentioned:

- alchemonaut/QuartetAnemoi-70B-t0.0001 · Hugging Face: no description found

- Positional Encoding Helps Recurrent Neural Networks Handle a Large Vocabulary: This study discusses the effects of positional encoding on recurrent neural networks (RNNs) utilizing synthetic benchmarks. Positional encoding “time-stamps” data points in time series and com…

- 1.5 bit quantization by ikawrakow · Pull Request #5453 · ggerganov/llama.cpp: This draft PR is a WIP that demonstrates 1.5 bits-per-weight (bpw) quantization. Only CUDA works, there is no implementation for the other supported back-ends. CUDA, AVX2 and ARM_NEON are implement…

Nous Research AI ▷ #general (228 messages🔥🔥):

- Model Making Skills Inquiry: User @copyninja_kh asked about the necessary skills for creating datasets, and after further prompting about technical specifics, @teknium suggested looking at how others like wizardevol and alpaca have done it.

- UNA-SimpleSmaug-34B on Hugging Face: User @fblgit shared a link to the UNA-SimpleSmaug-34B model on Hugging Face, describing its superior scoring over the original Smaug-34B model and noting its training on the SimpleMath dataset with an emphasis on improving mathematical and reasoning capabilities.

- Exploring Lilac Processed Hermes: User @nikhil_thorat engaged in a discussion about the utility of UMAP projections in clustering datasets, offering options to share embeddings and projections. Later, he shared a link to the Lilac Processed OpenHermes-2.5 dataset and mentioned that he’d add a column with 2D coordinates to the same dataset in the future.

- Hosting Hugging Face Models with APIs: User @nonameusr sought advice on the easiest way to host a Hugging Face model for API inference, leading to suggestions like using Flask and looking at platforms like Runpod that offer pay-by-the-second GPU services.

- Mergekit Usage in Model Creation: User