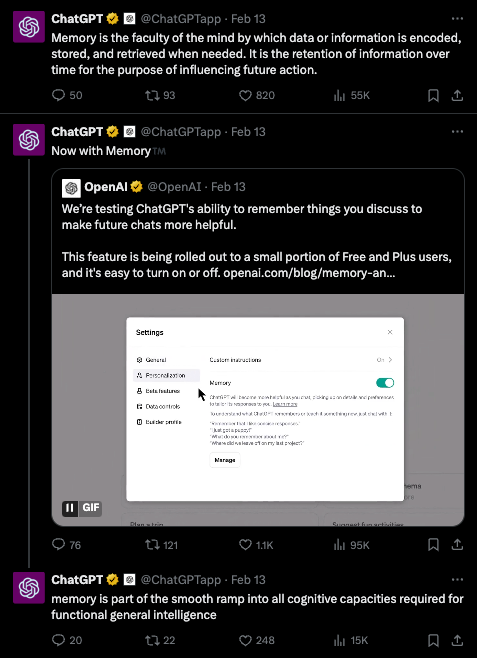

We have long contended that the RAG-style operations used for context (knowledge base, facts about the world) and for memory (running list of facts about you) will diverge. The leading implementation was MemGPT, and now memory seems to have rolled out in both ChatGPT (with a weirdly roon-y tweet; more details from Joanne Jang) and LangChain.

OpenAI:

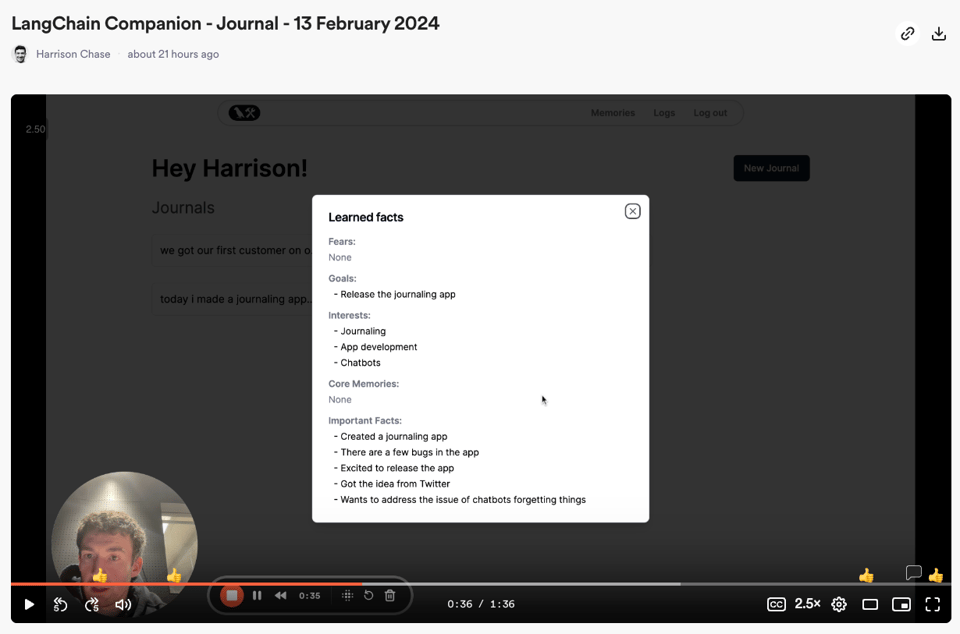

LangChain:

In some sense this is just a crossing over of something the LM Studio/SillyTavern roleplay people have had for a while now. Our expectation is that it will mildly improve UX but not lead to a big wow moment, since the memory modeling is quite crude at the moment, not humanlike, and subject to context limits.
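To make the "running list of facts, subject to context limits" idea concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `UserMemory`, its methods, and the character budget are hypothetical and do not reflect how ChatGPT, LangChain, or MemGPT actually implement memory.

```python
from dataclasses import dataclass, field


@dataclass
class UserMemory:
    """Hypothetical running list of facts about one user (not any vendor's API)."""
    max_chars: int = 200  # crude stand-in for a context-window budget
    facts: list[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        # Skip exact duplicates so the list holds distinct facts only.
        if fact not in self.facts:
            self.facts.append(fact)

    def forget(self, fact: str) -> None:
        # User-controlled deletion of a single fact.
        if fact in self.facts:
            self.facts.remove(fact)

    def render(self) -> str:
        # Keep the newest facts that fit in the budget; older ones fall out.
        kept: list[str] = []
        used = 0
        for fact in reversed(self.facts):
            cost = len(fact) + 3  # "- " prefix plus a newline
            if used + cost > self.max_chars:
                break
            kept.append(fact)
            used += cost
        return "\n".join(f"- {f}" for f in reversed(kept))


mem = UserMemory(max_chars=30)
mem.remember("likes blue")
mem.remember("has a dog")
mem.remember("lives in Oslo")
print(mem.render())  # this text would be prepended to the model prompt
```

The budget check is what makes this kind of memory "crude": once the list outgrows the budget, the oldest facts silently drop out of the prompt.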

PART 1: High level Discord summaries

TheBloke Discord Summary

- Unbounded Textual Contexts: Engineers are exploring new open-source large language models like the Large World Model, which boasts coherence with contexts up to 1 million tokens. Discussions include language support, as in Cohere’s `aya` model, which covers 101 languages, and challenges working with jax-based tools during model installations.

- Nurturing Erotically Programmed Role Play: The community is dissecting the performance of re-quantized Miqu models like MiquMaid-v2-70B, tuned for Erotic Role Play (ERP). Emphasis was on the impact of enhanced hardware, with throughput jumping from 0.7 t/s to 2.1 t/s (tokens per second) while using 12GB VRAM GPUs.

- Instruct, Optimize, Repeat: Finetuning techniques explained include Supervised Fine-Tuning (SFT) followed by Direct Preference Optimization (DPO) as an improvement over RLHF/PPO, detailed on page 6 of a paper. Unsloth AI’s `apply_chat_template` is touted over Alpaca for training LLMs on multi-turn conversations.

- JavaScript Meets Python in AI Development: Experimentation with JSPyBridge led to successful bridging of JavaScript and Python in expanding the SillyTavern project. This included addressing Windows-specific errors, such as setting `cpu_embedding=True` to circumvent access violation issues, and integrating Python classes asynchronously into JavaScript code.

- Confounding Losses in Model Training: An engineer observed an unexplained variance in training loss when finetuning Mixtral 8x7b with QLoRA, resulting in higher losses compared to Mistral 7b despite similar datasets and hyperparameters. The matter remains open for community input or similar experiences.
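For readers unfamiliar with the DPO step mentioned above, the per-pair objective can be sketched in a few lines. This is the standard DPO loss written from summed sequence log-probabilities; the function name, argument names, and the `beta=0.1` default are assumptions for illustration, not taken from the discussion or from any particular library.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen margin - rejected margin)))."""
    # How much more the policy likes each response than the frozen reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) == log(1 + exp(-x)); branch to avoid overflow for large |x|.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# Policy equal to the reference gives log(2); preferring the chosen answer lowers it.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
print(dpo_loss(-5.0, -9.0, -6.0, -8.0))
```

Unlike PPO-based RLHF, this needs no separate reward model or sampling loop: the loss is computed directly on preference pairs after the SFT stage.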

LM Studio Discord Summary

- Large Models Crying for RAM: Users like `@nonm3` and `@theoverseerjackbright` battled errors loading large models in LM Studio due to limited RAM and VRAM. Suggestions included trying smaller model quants, and some faced GPU detection issues with LM Studio, prompting restart crashes.

- MedAlpaca Heads to the Clinic: Discussions on medical LLMs saw medAlpaca, a fine-tuned model for medical question answering, as a promising addition to `@pepito92i`’s medical project. Microsoft’s phi-2 model’s absence from LM Studio was noted, with the possibility of it being converted to .gguf format by user TheBloke to use with llama.cpp.

- GPU Matchmaking and Overclocking: Hardware enthusiasts like `@luxaplexx` questioned NVLink’s role in memory cycling, ultimately suspecting cards like the 960 might not be NVLinked. Users discussed GPU upgrades for better performance with models, considering options like the RTX 3060 12GB. Others like `@alastair9776` and `@rugg0064` weighed the benefits and risks of overclocking for faster token generation.

- Quant Leap Forward: There is eager anticipation for IQ3_XXS support in LM Studio, with users like `@n8programs` and `@yagilb` expecting it in the next update. A GitHub pull request reflected the community’s excitement over upcoming 1.5 bit quantization. Meanwhile, preparations were suggested for downloading forthcoming, as-yet-unsupported models like IQ3_XXS.

- Beta Release Relief: `@rafalsebastian` ran into a stumbling block running LM Studio on CPUs with only AVX support. `@heyitsyorkie` provided hope by pointing to the 0.2.10 AVX beta release for Windows, which enables compatibility, while still recommending an upgrade to AVX2 for optimal performance, and offered a helpful link.

- Multi-Model Management Mystery: `@alluring_seahorse_04960` sought advice on running dual models simultaneously on one machine to avoid repetition errors, using a Conda environment but steering clear of VMs. The nature of the repetition errors in question was humorously prodded by `@wolfspyre`, awaiting further details.

LAION Discord Summary

- Magvit V2 Sparks Interest and Debate: Engineers delved into the technicalities of reproducing the Magvit V2 model, with discussions focusing on appropriate datasets, parameters for video compression and understanding, and the mention of experiments on the lfq side of Magvit2. The community also saw a surge in interest around MAGVIT, likely due to influencer mentions.

- Scrutinizing Stable Cascade’s Efficacy: Stability AI’s Stable Cascade model spurred intense conversations regarding its high VRAM requirements, optimization issues, and erroneous inference time graphs. Technical issues reported included challenges with text clarity in images and the inability to run models in float16, alongside performance evaluations on GPUs like the 3090.

- Legal Frays in AI-Generated Imagery: The community engaged in a heated discussion about copyrights and the legality of AI-generated images, highlighting a TorrentFreak article about a court dismissing authors’ copyright infringement claims against OpenAI.

- Ethical Conundrums with AI and Adult Content: The conversation shifted to the role of adult content in driving technological progress, with some participants recognizing the historical pattern while others doubted its constructive impact on AI. Topics included the rise of non-consensual deepfake pornography, its market dynamics, and the potential ethical pitfalls plaguing the AI community.

- Calls for Higher AI Image Standards: Discussions included technical insights into improving AI image generation, such as the viability of VAE encoder training. Members also reflected on the community’s photorealism standards, expressing the need for better quality in AI-generated images.

Eleuther Discord Summary

- Checksum Hunting Season Open: `@paganpegasus` provided checksums for The Pile zst shards and pointed to EleutherAI’s hashes and the Discord pins.

- Image Content Classification Tools Discussed: OwlViT and CLIP models were recommended as tools for discerning the content of images, and the concept of “nothing” in imagery was discussed following an inquiry by `@everlasting_gomjabbar`.

- Paper Review in Collaborative Spirit: A user received appreciative feedback on a manuscript titled “Don’t think about the paper,” with the EleutherAI Discord community being credited in the paper’s acknowledgements.

- Cloud Computing Resources Examined: GCP and Colab surfaced as favorable cloud resources for NLP classification model training, with discussions encompassing cost-benefit analyses of platforms like runpod and vast.ai.

- Research Computing Power Up for Grabs: EleutherAI’s computational resources were said to be available for collaboration on a semi-custom LLM project, with the caveat of having a clear research agenda and collaborative value proposition.

- Semantic Scholar’s Linking Logic Revealed: Arxiv papers are automatically linked to authors on Semantic Scholar, with room for manual corrections to ensure accuracy.

- Fractal Fun with Neural Training Parameters: `@jbustter` shared fractals created from neural network hyperparameters, highlighting a blog by Jascha Sohl-Dickstein that correlates fractals with training convergence/divergence.

- A Deep Dive into Data Presentation for ML: A discussion was sparked concerning active learning and methods for models to choose their own data presentation sequence.

- Enriching Encoder-Decoder Models with Unsupervised Data: Strategies to employ unsupervised datasets effectively in encoder-decoder models were discussed.

- New NLP Robustness Method Flies Off the Press: A paper focusing on test-time augmentation (TTA) to enhance text classifiers’ robustness was published, with the author thanking the community for support.

- The Quest for Interpretability Insight: `@jaimerv` asked for updated resources on interpretability methods beyond the standard Representation Engineering paper.

- Summoning Collaborators for Hallucination Leaderboard: A call for contributions to a hallucinations leaderboard was made, requesting assistance with tasks, datasets, metrics, and result evaluations.

- Aligning Pythia with Practice: Concerns were aired about potential misalignments between training batches and checkpoints in the 2.8b size Pythia deduped suite, with follow-up discussions suggesting opportunities for a publication on Pythia’s reliability.
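As a rough illustration of test-time augmentation for a text classifier, the sketch below averages class probabilities over the original input and a few augmented copies. It shows only the generic form of TTA, not the specific method of the paper mentioned above; `tta_predict`, `toy_classify`, and the two augmenters are hypothetical stand-ins.

```python
def tta_predict(text, classify, augmenters):
    """Average class probabilities over the input and its augmented variants."""
    variants = [text] + [augment(text) for augment in augmenters]
    all_probs = [classify(v) for v in variants]
    n_classes = len(all_probs[0])
    return [sum(p[c] for p in all_probs) / len(all_probs) for c in range(n_classes)]


def toy_classify(text):
    """Deliberately brittle toy model: [p_negative, p_positive], case-sensitive."""
    return [0.1, 0.9] if "good" in text.split() else [0.8, 0.2]


# Hypothetical augmentations: lowercase the text, strip exclamation marks.
augmenters = [str.lower, lambda s: s.replace("!", "")]

print(toy_classify("GOOD movie!"))                        # single brittle prediction
print(tta_predict("GOOD movie!", toy_classify, augmenters))  # averaged over variants
```

Averaging pulls the prediction toward whatever the model says on cleaner variants of the input, which is the intuition behind using TTA for robustness.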

LlamaIndex Discord Summary

LlamaIndex v0.10 Marks Major Milestone: LlamaIndex v0.10 has been released, presenting notable advancements including a new llama-index-core package and PyPI packages for every integration/template. Detailed information on migration is accessible through their comprehensive blog post and documentation.

Webinar on No-Code RAG with LlamaIndex: A webinar demonstrating the creation of no-code Retrieval-Augmented Generation (RAG) apps using LlamaIndex.TS is set up with Flowise co-founder Henry Heng. Registration for the Friday event is available here.

Troubleshooting LlamaIndex: Engineers faced challenges with migration following LlamaIndex’s update and were pointed to a Notion migration guide for assistance. Furthermore, for configuration queries like chunk_size after the ServiceContext deprecation, engineers are advised to refer to the new Settings documentation and relevant LlamaIndex GitHub resources.

RAG App Building with Dewy Tremendously Simplified: A comprehensive guide to building a full-stack RAG app using NextJS, OpenAI, and the open-source knowledge base Dewy has been shared. The tutorial is aimed at grounding language models in precise, reliable data and can be studied in detail here.

Handling Document Complexity and Enhancing Enterprise with LlamaIndex: Users engaged in discussions about filtering complex documents and integrating LlamaIndex to enhance enterprise efficiency with tools such as Slack, Jira, and GDrive. Also, creating multiple agents for merging different document sources was considered, referencing the possibility of using traditional indexing techniques instead of high-cost LLMs for dynamic filtering.

HuggingFace Discord Summary

- Hugging Face Accelerates with Message API: Hugging Face launched a new Message API compatible with OpenAI, aimed at streamlining use of inference endpoints and text generation services with client libraries. They’ve also advanced their offerings with new releases like Datatrove on PyPI, Gradio 4.18.0, and tools like Nanotron and Accelerate 0.27.0 for 3D parallelism training. Additional partnerships and resources, such as a Codecademy AI course and a blog post on SegMoE, support the continuous learning and innovation in their community.

- Search Engine Woes and Hosting Queries in Focus: Technical discussions spotlighted the difficulties in creating search engines, with mentions of approaches like TF-IDF and BM25 and the use of spaCy for part-of-speech tagging. Other conversations pivoted to queries about hosting custom models and serverless inferencing solutions, as well as the practicality of running 100B+ parameter models on enthusiast-level hardware.

- Template Talk and Model Deployment Discussions: Users addressed the need for a simple chatbot development prototype capable of database interaction and email API integration, featuring resources like Microsoft’s AutoGen on GitHub and the potential of AutoGen Studio. Challenges around deploying finetuned machine learning models such as mistarl_7b_gptq for fast inference were raised, with emphasis on choosing the right platforms or libraries for the task.

- Glimpse into Creator Innovations: Members of the community showcased their creative projects, including GIMP 3.0 plugins interfacing with Automatic1111, development of an automated image tagging model for diffusion tasks, and updates to tools like PanoramAI.xyz introducing a “remix” mode for image transformations. Excitement also built around AI applications in fashion design, demonstrating the breadth of applications being pursued.

- Analyzing S4 and Advancing NLP: The community shared insights into the S4 architecture, which is suited to long-range sequences, and sought clarity on its implementation. A paper on LangTest was introduced, which offers testing and augmenting capabilities for NLP models. Topics extended to extracting language identifiers from models like XLM-RoBERTa and converting natural language into formal algebraic expressions.

- Enthusiasm for Diffusion and Emerging Models: Conversations sparked around facilitating multi-GPU training for diffusion model fine-tuning, with mentions of scripts such as `train_text_to_image.py`. The successful deployment of models like mistarl_7b_gptq for fast inference and effective text generation with stable cascade were discussed. The buzz was palpable around the teased development of a new terminus model.

- Complications in Computer Vision Explored: The channel delved into challenges like hierarchical image classification, with resource suggestions including an ECCV22 paper on the same topic. Members discussed requirements for Gaussian splats and industry-grade image retrieval systems, and sought collaboration on multimodal projects.

Nous Research AI Discord Summary

- LongCorpus Dataset Unveiled for Pre-Training: The new LongCorpus-2.5B dataset is released, featuring 2.5 billion tokens from various domains, specifically curated for long-context continual pre-training and designed to minimize n-gram similarity with training sets.

- Coherence Preserved in Scaling Models: Scaling with ‘self-extend’ is considered superior to ‘rope scaling’ for maintaining coherence, as indicated by the implementation in llama.cpp, and offers the benefit of requiring no setup, fine-tuning, or additional parameters.

- Persistence and Resistance in AI Agents and Models: LangGraph agents can persist their state across interactions, as shown in a YouTube demonstration, while the Gemini model shows resistance, with its refusal tendencies prompting unfavorable comparisons with GPT-4.

- Multimodal AI Breakthrough with Reka Flash: Reka Flash, a new 21B fast multimodal language model, is introduced and now available in public beta, promising to measure up to major models like Gemini Pro and GPT-3.5. The initiative can be followed on Reka AI’s announcement page.

- CUDA Pains and WAVeform Gains in AI Research: The ZLUDA project, which aimed to run CUDA on AMD GPUs, can no longer be considered active. Separately, an arXiv paper offers a fresh perspective, suggesting that wavelet transforms could enhance Transformers by addressing both positional and frequency details efficiently.

Mistral Discord Summary

- Newbies Get Model Recommendations: Participants recommended instruct models for ChatGPT-like interactions to newcomer `@nana.wav`, citing their clearer instruction-following focus as opposed to the more general autocompletion capabilities of other models.

- RAG Setup and Model Debates Heat Up: A guide on integrating Mistral with RAG was shared, while the effectiveness of LangChain vs. LlamaIndex was debated; separately, DSPy was touted for leveraging LLMs for programming rather than chatting, with a supporting Twitter link.

- Deployment Dilemmas and Solutions: Docker deployment via ollama or vllm projects was suggested, while others discussed API alternatives and faced cloud quota barriers; meanwhile, success stories involved deploying Mixtral on HuggingFace despite the hiccups with AWQ quantization.

- Fine-Tuning Finesse and RAG Revelations: Users discussed fine-tuning vs. RAG with insights into the importance of LLM base knowledge; guidance was given on structuring input data to improve LLM output, and queries about prompt versioning tools surfaced.

- Humans in Tech and AI Seek Touchpoints: A French librarian (`@maeelk`) sought internship opportunities in psychology and AI; the cost of building innovative audio-inclusive S2S models sparked discussions around budget constraints and investment needs.

- Technical Troubles and Support Suggestions: `@ingohamm` faces hurdles with TypingMind’s API key, and a suggestion was made to contact [email protected] for assistance with API and subscription issues.

Perplexity AI Discord Summary

- Perplexity AI Outshines Rivals in Complex Query Handling: `@tbrams` tested Perplexity AI with a difficult question from the “Gemini” paper and found it outperformed Google’s Gemini service and OpenAI, answering more quickly. The test results from Perplexity AI are documented here.

- Perplexity’s Potential in API Customization Highlighted: The PPLX API allows for custom search queries using parameters like `"site:reddit.com OR site:youtube.com"`, as mentioned by `@me.lk`. However, several users have encountered issues with the API such as performance hiccups (`@andrewgazelka`) and nonsensical responses (`@myadmingushwork_52332`).

- Perplexity AI Subscription and Renewal Queries Addressed: Users are seeking details on trial subscriptions and renewal processes for Pro subscriptions, with inquiries about token refresh rates also surfacing. There is currently no early access program for new Perplexity features, as confirmed by `@icelavaman`.

- Promising Enhancements and Community Collaborations: Perplexity AI is receiving community praise for tools like the pplx shortcut action (`@twodogseeds`). Meanwhile, `@ok.alex` is encouraging a community-driven effort to contribute to an alternative feed/newsletter, Alt-D-Feed.

- Seeking Direct Support Channel for Sensitive Data Issues: A user (`@kitsuiwebster`) has expressed the need for direct assistance with a sensitive company data issue, avoiding public disclosure while lacking response from support channels.

OpenAI Discord Summary

- ChatGPT Remembers Your Favorite Color: OpenAI announced a new memory feature for ChatGPT, rolling out to select users, enabling ChatGPT to remember user preferences and details over conversations for a more personalized experience. Users can control what ChatGPT remembers and can switch off this feature.

- AI-Assistants in Creative Process Paid Talks: A UK researcher, `@noodles7584`, is looking to compensate community members for a 30-minute discussion on AI use in creative workflows.

- Performance Quirks in GPT Variants: The community reported fluctuations in GPT-4’s task handling, and Abacus.AI’s Smaug-72B was noted for outperforming GPT-3.5, while ChatGPT-4 seems hesitant to generate full code snippets.

- Fine-Tuning AI to Watch Videos? Not Yet: Discussion in #gpt-4-discussions clarified that while GPT can describe images from a video with its vision capabilities, it cannot yet be fine-tuned for video-specific knowledge or tasks.

- Exploring and Perfecting Prompt Engineering: Good prompt engineering was highlighted as involving clear instructions and precision, with a focus on fostering simple storytelling in text-based AI adventures and recognizing differences between prompt engineering and API infrastructure development.

OpenAccess AI Collective (axolotl) Discord Summary

- Axolotl Embraces MPS, Thanks to GitHub Heroes: Maxime added MPS support in the axolotl project via pull request #1264, referencing the importance of PyTorch pull request #99272. Clarification on contributor identities highlighted the importance of collective recognition in open source.

- Chat In The Time Of Datasets: The MessagesList standard for chat datasets proposed by `@dctanner` aims for cross-compatibility and is under discussion. The format might include conversation pairs, greetings, and assistant-initiated closures, with challenges noted in JSON-schema validation.

- Axolotl Tokenized Right, Check the Debug Flag: Users are troubleshooting tokenization within axolotl, with advice to inspect the tokenizer configs and a recommendation to use a debug flag for verification.

- Model Query Woes and Training Queries Grow: Queries about improving a model’s multilingual capabilities, LoRA adapter inferencing, and model parallelism were discussed, with solutions ranging from pre-training needs to updates in transformers and DeepSpeed Zero 3 configs for better functionality.

- Fine-tune or Re-train? Duplicate Data’s Pain: The impact of training data overlap and finetuning practices was questioned, highlighting concerns about reusing text that a model may have encountered during pretraining.

- RunPod Image on Vast.AI, A Smooth Sail!: The Axolotl RunPod image was reported by `@dreamgen` to work seamlessly on Vast.AI, underscoring its interoperability with cloud infrastructure providers.

LangChain AI Discord Summary

- LangChain Unveils Memory Journaling App: `@hwchase17` introduced a new journaling app featuring LangChain’s memory module, inviting feedback on the early version, which is akin to OpenAI’s ChatGPT memory feature. Try and give feedback using this journal app and watch the intro video.

- LangChain Community Tackles Diverse Technical Challenges: Topics covered included the possibility of LangChain’s Android integration, pre-processing benefits for efficient embeddings, the search for a capable PDF parser, and calls for improved documentation structure. Additionally, a user faced dependency issues while updating Pinecone Database to v2 with LangChain, which was promptly addressed.

- Scaling and Integration Enquiries in Langserve Channel: Discussions included questions about scaling Langserve and using Langsmith for deployment. There was a query about exposing a chain from a NodeJS app and an unaddressed issue regarding disabling intermediate steps in the playground. Connection issues with an OpenAI API call from a k8s cluster-based app were also described.

- Dewy RAG Application with NextJS and OpenAI Guide Shared: `@kerinin` contributed a guide exploring a full-stack RAG application, utilizing NextJS, OpenAI API, and Dewy, focusing on reducing hallucinations and improving model response accuracy. The full guide is available here.

- Quest for a Functional PDF Parser and Custom Calculator: Within the tutorials channel, the search for a contextual PDF parser superior to the Adobe API and guidance for building a Langchain-based calculator were topics of discussion, aiming for practical integrations and solutions in AI workflows.

DiscoResearch Discord Summary

- Seeking Argilla Hosting Solutions: `@drxd1000` requested advice for hosting an Argilla server capable of supporting multiple annotators, with no clear resolution reached.

- Layer Selective Rank Reduction in the Spotlight: `@johannhartmann` discussed an implementation of ‘Layer Selective Rank Reduction’ for mitigating continual training forgetting. The method targets statistically less significant layer parts, and a GitHub repository was mentioned.

- Overcoming OOM With Mixtral: `@philipmay` encountered an Out of Memory error with a mixtral model, and `@bjoernp` suggested using multi-GPU support, mentioning that two A100s might alleviate the issue.

- Cross-Language Toxicity Detection Dataset: `@sten6633` sought a German toxicity evaluation dataset, considering the translation of ToxiGen from Hugging Face, which requires access agreement.

- New Computational Technique Teased: `@phantine` teased a technique named “Universes in a bottle” with implications for the P=NP problem, linked to a GitHub page, but details were sparse.

- BM25 Search Strategy Proves Effective: `huunguyen` reported success using BM25 with additional querying and reranking to enhance search capabilities, and successfully indexed the entirety of Wikipedia into an index under 3GB.

- German AI Model Update Inquiry: thomasrenkert asked about the release timeline for version 2 of the German model or a Mixtral variant, but no additional details were provided.
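For context on the BM25 approach mentioned above, here is a minimal sketch of Okapi BM25 scoring in plain Python. It covers only the scoring step, not the additional querying, reranking, or compact indexing that was reported. The whitespace tokenizer, the `k1=1.5` and `b=0.75` defaults, and the smoothed IDF variant are common choices assumed here, not details from the discussion.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document in `docs` (a non-empty list of strings) against `query`."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            # Smoothed IDF that stays positive even for very common terms.
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            # Term-frequency saturation with document-length normalization.
            denom = term_freq[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = [
    "the cat sat on the mat",
    "dogs chase cats",
    "wikipedia is a large encyclopedia",
]
scores = bm25_scores("large encyclopedia", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(docs[best])
```

In a setup like the one described, scores of this kind would typically produce a candidate list that a separate reranking step then reorders.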

CUDA MODE Discord Summary

- CUDA Compatibility Crusade: Members discussed achieving CUDA binary compatibility on HIP/ROCm platforms, driven by the ZLUDA project on GitHub, which is a CUDA on AMD GPU initiative. Amidst technical emoji enthusiasm, there were musings about market monopolies and AGI, alongside personal experiences with Radeon hardware issues related to dynamic memory allocation.

- Generative AI Jobs Galore: A Deep Tech Generative AI startup in Hyderabad is hiring ML, Data, Research, and SDE roles, with applications welcomed here. However, the legitimacy of the job posting was questioned, flagging the need for moderator attention.

- Compute Shaders and Matrix Math Musings: Inquiries on educational materials for CUDA led to The Book of Shaders recommendation, while the discussion in the PMPP book channel debated the benefits, or lack thereof, of transposing matrices to reduce cache misses in multiplication, indicating varied opinions but no consensus on observed benefits.

- Apple Chips Enter Monitoring Realm: `@marksaroufim` shared asitop, a CLI performance monitoring tool designed for Apple Silicon, likening it to `top` or `nvtop` in utility for engineers leveraging Apple’s technology.

- GPU Experiments and Job Shuffling: An engineer successfully relocated an Asus WS motherboard to a miner setup, effectively running large quantized models on an NVIDIA 3060 GPU. This indicates a hands-on approach within the community towards custom hardware configurations.
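The transposition idea debated in the PMPP channel is easiest to see as a change in access pattern. The sketch below is only an illustration of that pattern in plain Python: nested lists are not contiguous arrays, so it will not reproduce any cache effect, and the function names are hypothetical. In a row-major C or CUDA array, the naive version walks `b` down a column (strided access), while the transposed version reads both operands row by row.

```python
def matmul_naive(a, b):
    """C[i][j] = sum_p a[i][p] * b[p][j]; the inner loop strides down a column of b."""
    n, k, m = len(a), len(b), len(b[0])
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


def matmul_transposed(a, b):
    """Same product, but b is transposed once so the inner loop reads two rows."""
    b_t = [list(col) for col in zip(*b)]  # one-off O(k*m) transpose cost
    return [
        [sum(x * y for x, y in zip(row, col)) for col in b_t]
        for row in a
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_naive(a, b) == matmul_transposed(a, b))
```

Whether the one-off transpose pays for itself depends on matrix size, layout, and whether tiling or shared memory already hides the strided reads, which is consistent with the lack of consensus reported above.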

Latent Space Discord Summary

- Reka Enters the Model Arena: A new AI entity named the Reka model has sparked interest in the community following a tweet shared by `@swyxio`. The excitement is palpable with discussions around the tweet found here.

- Investor Insights Meet AI: `@swyxio` spotlighted a VC podcast delving into AI, which could be of significant interest to engineering aficionados. The podcast episode is accessible here.

- BUD-E Buzz: BUD-E, an empathetic and context-aware open voice assistant developed by LAION, could signal a new direction in conversational AI. More details are laid out on the LAION blog.

- Pondering the Definition of Agents: The community exchanged views on defining “agents,” with `@slono` suggesting that they are goal-oriented programs that require minimal input from users, a concept significant in the realm of AI development.

- Karpathy’s OpenAI Exit Raises Questions: The AI community is abuzz over the news of Andrej Karpathy leaving OpenAI, with `@nembal` pointing to an article from The Information and speculation about AGI influences. The article is accessible here.

LLM Perf Enthusiasts AI Discord Summary

- Minding the Model Size for M2 Max: `@potrock` inquired about running Mistral model sizes on an M2 Max with 32GB, and `@natureplayer` advised that a 4GB model would be the feasible option, cautioning against an 8GB model and noting that 5GB models may be unstable.

- GPT-5 Rumor Mill: `@res6969` expressed humorous doubt about GPT-5’s existence, suggesting that speculation on the model’s upcoming release is overstated, with others joining the jest with emojis.

- Enhanced Memory in ChatGPT: `@potrock` highlighted a new feature tested in ChatGPT, based on a blog post, where it can retain user preferences and information across sessions for more personalized interactions.

AI Engineer Foundation Discord Summary

- Weekly Sync-Up Teases Déjà vu: `@._z` playfully announces the start of the weekly team meeting, likening it to a recurring Déjà vu experience.

- Member Bows Out from Meeting: `@juanreds` sends regrets for being unable to attend the weekly meeting, offering apologies to the team.

- Call for AI Hackathon Co-Hosts: `@caramelchameleon` seeks collaborators to co-host an AI developers hackathon in tandem with game developers in the lead-up to the GDC.

- Hackathon Offers Dual Attendance Modes: The hackathon mentioned by `@caramelchameleon` has options for attendance both online and onsite in San Francisco.

- Hackathon Organizer Steps Up: `@yikesawjeez` shows eagerness to get involved in organizing the hackathon and highlights their expertise with Bay Area events.

Skunkworks AI Discord Summary

- Direct Messaging Initiated: User `@bondconnery` has put out a request for a private message.

- Exploring LLaVA Framework Integration: `@CodeMan` inquired about integrating the LLaVA framework with an SGLang server and SGLang worker, aiming for a potentially more specialized setup than a conventional model worker.

- Off-Topic Video Share Ignored: A non-technical video link was shared, not relevant to the engineering discussions.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Datasette - LLM (@SimonW) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1460 messages🔥🔥🔥):

- Exploring the Limits of Large Language Models: Users are discussing new open-source large language models capable of handling extremely long contexts, such as the Large World Model, which claims to work coherently with contexts up to 1 million tokens. There are also mentions of Cohere’s `aya` model, which supports 101 languages.

- The Quest for Efficient Multimodal AIs: Conversations focus on multimodality in AI, with references to models handling visual inputs and potential outputs, indicating significant advancements beyond text-based models. The jax-based tools required to run the models are causing installation hiccups for some users.

- Models Under Scrutiny: The community is very active in testing released models, mentioning issues such as TUX dependency problems and a `ValueError` during setup, indicating some challenges in getting the advanced models running smoothly.

- Users Share Knowledge: Experienced users offer insights and assistance on how to handle models and UIs for various tasks, including long-context quantization in existing frameworks like ExLlama v2. Discussions also touch on the possibility of banning stop tokens to encourage longer continuous outputs.

- Towards Intelligent Role-Playing: There is a discussion on finding the balance between RP-oriented models and smarter generalized ones, with mentions of a Mixtral variant (`BagelMIsteryTour`) that might better fulfill user requirements for intelligent and adaptable model behavior.

Links mentioned:

- Context – share whatever you see with others in seconds: no description found

- Lil Yachty Drake GIF - Lil Yachty Drake - Discover & Share GIFs: Click to view the GIF

- Memory and new controls for ChatGPT: We’re testing the ability for ChatGPT to remember things you discuss to make future chats more helpful. You’re in control of ChatGPT’s memory.

- brucethemoose/LargeWorldModel_LWM-Text-Chat-128K-55bpw · Hugging Face: no description found

- YOLO: Real-Time Object Detection: no description found

- Kooten/BagelMIsteryTour-v2-8x7B-5bpw-exl2 · Hugging Face: no description found

- Think Bigger Skeletor GIF - Think Bigger Skeletor Masters Of The Universe Revelation - Discover & Share GIFs: Click to view the GIF

- CausalLM/34b-beta · Hugging Face: no description found

- SimSim93/CausalLM-34b-beta_q8 · Hugging Face: no description found

- GitHub - jy-yuan/KIVI: KIVI: A Tuning-Free Asymmetric 2bit Quantization for KV Cache: KIVI: A Tuning-Free Asymmetric 2bit Quantization for KV Cache - jy-yuan/KIVI

- The Verge: How NOT to Build a Computer: SPONSOR: Go to http://expressvpn.com/science to take back your Internet privacy TODAY and find out how you can get 3 months free.Link to the Verge’s awful vi…

- LWM/lwm/llama.py at main · LargeWorldModel/LWM: Contribute to LargeWorldModel/LWM development by creating an account on GitHub.

- https://drive.google.com/drive/folders/1my-8wOIYXmfnlryDbwJ20_y6PFCqRfA-?usp=sharing

- Data Challenge - Aether 2024: In order to participate in the Data Challenge organised by Enigma as part of Aether, Please fill out this form Event Date & Time: Wednesday, February 14th - 2:30 pm Please double-check your detail…

- LargeWorldModel (Large World Model): no description found

- GitHub - lhao499/tux: Tools and Utils for Experiments (TUX): Tools and Utils for Experiments (TUX). Contribute to lhao499/tux development by creating an account on GitHub.

- GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.: A multimodal, function calling powered LLM webui. - GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.

- llama.cpp/examples/server at master · ggerganov/llama.cpp: Port of Facebook’s LLaMA model in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Tweet from Cohere For AI (@CohereForAI): Today, we’re launching Aya, a new open-source, massively multilingual LLM & dataset to help support under-represented languages. Aya outperforms existing open-source models and covers 101 different la…

- OpenAI Researcher Andrej Karpathy Departs: Andrej Karpathy, one of the founding members of OpenAI, has left the company, a spokesperson confirmed. Karpathy, a prominent artificial intelligence researcher, was developing a product he has descri…

- CohereForAI/aya-101 · Hugging Face: no description found

- GitHub - valine/NeuralFlow: Contribute to valine/NeuralFlow development by creating an account on GitHub.

- ChatGPT but Uncensored and Free! | Oogabooga LLM Tutorial: ChatGPT but uncensored and free, well its now possible thanks to the open source AI community! In this video I show you how to set up the Oogabooga graphical…

- LWM/lwm/vision_chat.py at main · LargeWorldModel/LWM: Contribute to LargeWorldModel/LWM development by creating an account on GitHub.

- New emails reveal scientists believed COVID-19 was man-made: New emails have revealed scientists got together to discuss the origins of COVID, suspecting it was man-made, before deciding to tell the public it originate…

- GitHub - LargeWorldModel/LWM: Contribute to LargeWorldModel/LWM development by creating an account on GitHub.

- GitHub - acorn-io/rubra: AI Assistants, LLMs and tools made easy: AI Assistants, LLMs and tools made easy. Contribute to acorn-io/rubra development by creating an account on GitHub.

- unalignment/weeeeee.0 · Hugging Face: no description found

- unalignment/weeeeee.1 · Hugging Face: no description found

- unalignment/weeeeee.2 · Hugging Face: no description found

- CohereForAI/aya_dataset · Datasets at Hugging Face: no description found

- CohereForAI/aya_collection · Datasets at Hugging Face: no description found

TheBloke ▷ #characters-roleplay-stories (154 messages🔥🔥):

- Exploring Miqu Variants: @superking__ opened a discussion about the performance of the Miqu models, particularly versions re-quantized from the original GGUFs. @soufflespethuman mentioned MiquMaid-v2-70B, a variant fine-tuned for ERP (Erotic Role Play), and linked several versions on Hugging Face, which are flagged as sensitive content.

- Performance Gain with Better Hardware: @superking__ reported going from “painfully slow” to “almost usable” after upgrading to a GPU with 12GB VRAM, which raised the model’s generation speed from 0.7t/s to 2.1t/s.

- Model Comparisons and Recommendations: In the context of roleplay and storytelling, users discussed various models. @spottyluck praised Nous Capybara Limarpv3 34B for its capabilities and linked the model on Hugging Face. @wolfsauge shared a sketch, “The Continental”, featuring Christopher Walken, and @eqobaba asked about appropriate models and settings for NSFW ERP on an RTX A6000 with 48GB VRAM.

- Discussing Model Output Improvement: @neriss suggested using a higher temperature or a lower minimum probability to reduce repetition and improve creativity in model outputs. The conversation highlighted disagreement over temperature settings, with @dercheinz suggesting higher temperatures while @neriss advised lower ones, each to counteract repetitive or uncreative responses.

- Dataset Cleaning Challenges and Strategies: @c.gato and @potatooff exchanged thoughts on cleaning datasets manually, with @c.gato seeking advice on how to perform n-gram analysis to prevent overtraining on specific n-grams. @mrdragonfox recommended Python’s pandas library for handling tabular or JSON data and shared a gist for guidance.
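The n-gram analysis @c.gato asked about can be sketched in a few lines. This is a minimal illustration using only the standard library rather than the pandas approach from the shared gist; the function names, the whitespace tokenization, and the `max_share` threshold are assumptions made for the example, not anything taken from the discussion.

```python
from collections import Counter


def ngram_counts(texts, n=3):
    """Count word-level n-grams across a list of strings."""
    counts = Counter()
    for text in texts:
        tokens = text.lower().split()
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    return counts


def overused_ngrams(texts, n=3, max_share=0.5):
    """Return n-grams that appear in more than max_share of the samples."""
    doc_freq = Counter()
    for text in texts:
        tokens = text.lower().split()
        # Use a set so each sample counts an n-gram at most once.
        seen = {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
        doc_freq.update(seen)
    limit = max_share * len(texts)
    return {gram: count for gram, count in doc_freq.items() if count > limit}


# Hypothetical toy dataset; real roleplay data would be far larger.
samples = [
    "she smiled softly and looked away",
    "he smiled softly and nodded",
    "the rain fell on the roof",
]
top = ngram_counts(samples, n=2).most_common(1)
flagged = overused_ngrams(samples, n=2, max_share=0.5)
print(top, flagged)
```

Samples containing a flagged n-gram could then be reviewed, rewritten, or down-sampled before training.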

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- TheBloke/Nous-Capybara-limarpv3-34B-GGUF · Hugging Face: no description found

- Models - Hugging Face: no description found

- gist:f786564868357cde5894ef6e2c6f64cf: GitHub Gist: instantly share code, notes, and snippets.

- The Continental: Anticipation - Saturday Night Live: Subscribe to SaturdayNightLive: http://j.mp/1bjU39dSEASON 26: http://j.mp/14GYJ6nThe night air is tinged with anticipation. It’s time to meet The Continental…

- Happy Fun Ball - SNL: Happy Fun Ball seems great until you hear all the potential side effects. [Season 16, 1991]#SNLSubscribe to SNL: https://goo.gl/tUsXwMStream Current Full Epi…

- NeverSleep/MiquMaid-v2-70B · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-GGUF · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-DPO · Hugging Face: no description found

- NeverSleep/MiquMaid-v2-70B-DPO-GGUF · Hugging Face: no description found

TheBloke ▷ #training-and-fine-tuning (43 messages🔥):

- Understanding Finetuning Techniques: @starsupernova explained that Mixtral – Instruct was trained using SFT on an instruction dataset followed by Direct Preference Optimization (DPO) on a paired feedback dataset, as detailed on page 6 of their paper. DPO is described as an optimized form of RLHF/PPO finetuning.

- Unsloth AI’s Apply Chat Template: @starsupernova, likely the founder of Unsloth AI, highlighted the use of `apply_chat_template` instead of Alpaca for training an LLM on multi-turn conversation datasets. They also hinted at uploading a new notebook with all chat templates to simplify the process.

- Augmentoolkit for Instruct-Tuning Datasets: @mr.userbox020 shared a link to a GitHub repository offering a toolkit to Convert Compute and Books Into Instruct-Tuning Datasets. Although @starsupernova was not familiar with it, they suggested trying it out as it appeared promising.

- Anticipation for Updated Training Resources: @avinierdc is awaiting an updated notebook from @starsupernova for fine-tuning Mistral on multi-turn conversation datasets. @starsupernova assured they would ping when it’s available on Unsloth’s Discord server.

- Unexplained Variation in Training Loss: @dreamgen reported observing a 2x higher training and evaluation loss when fine-tuning Mixtral 8x7b with QLoRA compared to Mistral 7b, despite using the same dataset and similar hyperparameters, and asked whether others had seen something similar.
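To make the chat-template point concrete: a chat template turns a list of role-tagged turns into one training string, which is why it suits multi-turn data better than the single instruction/response layout of Alpaca. The sketch below is a hand-written stand-in, not the tokenizer method itself; the ChatML-style markers and the `render_chatml` name are assumptions for illustration, and Mistral-family models use a different format.

```python
def render_chatml(messages):
    """Render a list of {"role", "content"} dicts as one ChatML-style string.

    Hypothetical helper: a real pipeline would normally rely on the
    template shipped with the model's tokenizer instead.
    """
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    return "".join(parts)


# A three-turn conversation becomes a single training example.
conversation = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "Bye"},
]
text = render_chatml(conversation)
print(text)
```

Every turn keeps its role marker, so the model sees the whole dialogue structure rather than one flattened prompt.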

Links mentioned:

GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

TheBloke ▷ #coding (8 messages🔥):

- Interest in Collaboration Sparked: @_b_i_s_c_u_i_t_s_ expressed interest in an unspecified topic, potentially around chatbot implementation, which was well received by @mr_pebble, who found it motivating to make progress on implementing various chat methods.

- Bridging JavaScript and Python: @spottyluck experimented with expanding the SillyTavern project to use a JavaScript-Python bridge, utilizing JSPyBridge to potentially adapt and enhance functionalities. They shared how it enabled testing of Microsoft’s LLMLingua, despite some issues with prompt mangling.

- Using Python Classes in JavaScript: @spottyluck provided code examples illustrating the ease of creating Python classes within JavaScript using JSPyBridge, along with an asynchronous function, `compressPrompt`, which demonstrates the interaction between languages to compress prompts.

- Modifications to Handle Windows Errors and Devices: In their continued development, @spottyluck modified Intel’s BigDL.llm transformer to support specific requirements, like `cpu_embedding=True` on Windows due to access violation errors, and dealt with model device allocation issues using `model.to()`.

- Compression Process Integration into Routing: @spottyluck explained integrating prompt compression into their web service by adding a flag to the `/generate` router post and using conditional logic to process the prompt through the bridge, demonstrating how Python can operate as if it were a JavaScript class.

Links mentioned:

GitHub - extremeheat/JSPyBridge: 🌉. Bridge to interoperate Node.js and Python: 🌉. Bridge to interoperate Node.js and Python . Contribute to extremeheat/JSPyBridge development by creating an account on GitHub.

LM Studio ▷ #💬-general (202 messages🔥🔥):

- Struggles with Large Models: Users like @nonm3 encountered errors while loading large models in LM Studio due to insufficient RAM and VRAM, with suggestions to try smaller model quants. Others like @theoverseerjackbright faced issues with LM Studio not detecting GPUs correctly and crashing post-restart.

- Software Seekers and Recommendations: @tvb1199 was in search of client software that can interact with LM Studio for RAG capabilities and was pointed towards AGiXT, while @pierrunoyt and others discussed Nvidia’s ‘Chat with RTX’ with RAG features as a potential game-changer.

- Compatibility Inquiries: Several users such as @wizzy09 had trouble installing or opening LM Studio on unsupported platforms like a 2014 MacBook Pro, with users like @heyitsyorkie clarifying that LM Studio does not work on Intel Macs.

- Nvidia’s Chat with RTX Triggers Interest: The community showed a keen interest in Nvidia’s ‘Chat with RTX’. Users like @hypocritipus were intrigued by the RAG feature, hoping for a similar easy-install, no-dependency RAG feature in LM Studio.

- LM Studio Usage and Model Discussions: Users like @bigboimarkus expressed satisfaction with LM Studio for tasks such as proofreading, whereas @mr.stark_ queried about models that learn on the fly. Conversations included the functionality and integration with other tools like Ollama and Automatic1111.

- General Community Assistance and Banter: Throughout, community members shared tips and troubleshooting advice, including suggestions for alternatives or downgrading versions, and occasionally joked about AI capabilities such as predicting lottery numbers.

Links mentioned:

- Stable Cascade - a Hugging Face Space by multimodalart: no description found

- cmp-nct/Yi-VL-6B-GGUF at main: no description found

- TheBloke/CodeLlama-70B-Instruct-GGUF at main: no description found

- Chost Machine GIF - Chost Machine Ai - Discover & Share GIFs: Click to view the GIF

- System prompt - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- NVIDIA Chat With RTX: Your Personalized AI Chatbot.

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

LM Studio ▷ #🤖-models-discussion-chat (75 messages🔥🔥):

- MedAlpaca for Medical LLMs: User @heyitsyorkie suggested medAlpaca, a model fine-tuned for medical question answering, for @pepito92i’s project on LLMs in the medical field.

- Phi-2 Model Discussions: @.dochoss333 inquired about the absence of the official “microsoft/phi-2” model in LM Studio, and @heyitsyorkie clarified that it’s not a GGUF model and thus won’t show up. @hugocapstagiaire_54167 mentioned that user TheBloke might have converted it to a .gguf for use with llama.cpp.

- LLama.cpp and Model Support: @jedd1 puzzled over why some models wouldn’t load, and @heyitsyorkie pointed out that the Yi-VL models are unsupported in the current build of llama.cpp, requiring an update for compatibility.

- LM Studio Assistant Functionality Inquiry: User @edu0835 inquired about the possibility of creating an assistant in LM Studio that can draw on PDFs or books for a medical assistant application; no direct response had been provided at the time.

- Model Performance Comparisons Engage Community: Users like @kujila and @heyitsyorkie engaged in comparisons between different language models, with discussions on model specificity, ethical behavior of AI models, and suggestions to try out models like Deepseek Coder Ins 33B.

Links mentioned:

- Nexesenex/Senku-70b-iMat.GGUF at main: no description found

- Hi Everybody Simpsons GIF - Hi Everybody Simpsons Wave - Discover & Share GIFs: Click to view the GIF

- TheBloke/medicine-chat-GGUF · Hugging Face: no description found

- wolfram/miquliz-120b-v2.0-GGUF · Hugging Face: no description found

- GitHub - kbressem/medAlpaca: LLM finetuned for medical question answering: LLM finetuned for medical question answering. Contribute to kbressem/medAlpaca development by creating an account on GitHub.

- The new Yi-VL-6B and 34B multimodals ( inferenced on llama.cpp, results here ) · ggerganov/llama.cpp · Discussion #5092: Well, their benchmarks claim they are almost at GPT4V level, beating everything else by a mile. They also claim that CovVLM is one of the worst (and it’s actually the best next to GPT4, by far) On…

LM Studio ▷ #🎛-hardware-discussion (140 messages🔥🔥):

- NVLink and Memory Cycling Queries: @luxaplexx asked if GPUs were NVLinked and how memory cycles through in a multi-GPU setup. The consensus, including from @heyitsyorkie, is that they likely aren’t NVLinked due to potential CUDA issues, especially with older cards like the 960. Users are considering whether different generations of NVIDIA GPUs like the 1080 and 1060 6g can work together effectively.

- Discussions on Upgrading to Better GPUs: Several users, including @crsongbirb and @heyitsyorkie, discussed upgrading their GPUs for improved performance in LLM tasks, with a suggestion to look at the RTX 3060 12GB as a viable option for running LLMs locally.

- Risks and Rewards of Overclocking: In a discussion initiated by @alastair9776 about overclocking for better performance, @rugg0064 and @crsongbirb noted that overclocking VRAM/RAM can lead to a notable increase in token generation speed, although caution is advised due to potential hardware stress.

- Combining GPUs and Threadripper Dreams: Conversation ensued about the feasibility and costs of using multiple high-performance GPUs, with users like @nink1 and @quickdive. debating whether a beefy CPU is necessary alongside multiple powerful GPUs, and the logistics of housing such a setup.

- CUDA on AMD and Other Hardware Convos: @666siegfried666 shared news about the ZLUDA project allowing CUDA apps to run on AMD hardware, which sparked a brief discussion on the relevance and future potential of such a feature. Users such as @addressofreturnaddress and @joelthebuilder also discussed their own rig setups and potential upgrades, highlighting personal preferences and value assessments.

Links mentioned:

- Doja Cat GIF - Doja Cat Star - Discover & Share GIFs: Click to view the GIF

- Brexit British GIF - Brexit British Pirate - Discover & Share GIFs: Click to view the GIF

- ATOM Echo Smart Speaker Development Kit: ATOM ECHO is a programmable smart speaker.This eps32 AIoT Development Kit has a microphone and speaker for AI voice interaction light and small. It can be access AWS, Baidu, ESPHome and Home Assistant…

- Unmodified NVIDIA CUDA apps can now run on AMD GPUs thanks to ZLUDA - VideoCardz.com: ZLUDA enables CUDA apps on ROCm platform, no code changes required AMD-backed ZLUDA project can now enable code written in NVIDIA CUDA to run natively on AMD hardware. AMD has reportedly taken over t…

- Lian-Li O11 Dynamic XL ROG certificated -Black color Tempered Glass: Lian Li O11 Dynamic XL ROG certificated, Front and Left Tempered Glass, E-ATX, ATX Full Tower Gaming Computer Case - Black

LM Studio ▷ #🧪-beta-releases-chat (21 messages🔥):

- Awaiting the Next Update for IQ3_XXS Support: @n8programs inquired about IQ3_XXS support in the latest release, to which @yagilb responded that it will be included in the next update.

- Elevation of 1bit Quantization on the Horizon: @drawless111 shared excitement about upcoming 1.5 bit quantization, posting a GitHub pull request link indicating progress. @heyitsyorkie responded by anticipating a sweet next beta with the new quant sizes.

- Model Benchmarking Induces Awe: @drawless111 expressed amazement at the latest benchmarks for 1bit quantization, stating “70B model on 16 GB card. WOOF.” and pointing out a ‘70B’ model posted on Hugging Face that can be offloaded to VRAM effectively.

- Preparations for Incompatible Model Downloads: Users, including @epicureus, are advised to download models like IQ3_XXS even if they’re not supported yet, with @fabguy humorously suggesting “Save the model, save the world!”

- Hugging Face Hub Features Multiple New Models: @drawless111 shared an update, revealing the availability of 5 IQ1 models on Hugging Face that work with various VRAM sizes, nonchalantly noting an increase to 10 by the end of the conversation.
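The “70B model on 16 GB card” remark follows from simple arithmetic on bits per weight. The sketch below is a rough estimate under stated assumptions: it counts weights only (no KV cache, activations, or runtime overhead), treats the model as exactly 70 billion parameters, and uses a flat 1.5 bits per weight even though real quant formats carry some extra metadata. The function name is hypothetical.

```python
def weight_memory_gib(n_params, bits_per_weight):
    """Approximate memory for model weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


# Compare a few precisions for a nominal 70B-parameter model.
for bpw in (16, 4, 1.5):
    print(f"{bpw:>4} bpw: {weight_memory_gib(70e9, bpw):.1f} GiB")
```

At 1.5 bits per weight the weights come to a little over 12 GiB, which is why full offload onto a 16 GB card becomes plausible, with the remaining headroom going to context and overhead.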

Links mentioned:

- Nexesenex/NousResearch_Yarn-Llama-2-70b-32k-iMat.GGUF · Hugging Face: no description found

- Claire Bennet Heroes GIF - Claire Bennet Heroes Smile - Discover & Share GIFs: Click to view the GIF

- 1.5 bit quantization by ikawrakow · Pull Request #5453 · ggerganov/llama.cpp: This draft PR is a WIP that demonstrates 1.5 bits-per-weight (bpw) quantization. Only CUDA works, there is no implementation for the other supported back-ends. CUDA, AVX2 and ARM_NEON are implement…

LM Studio ▷ #avx-beta (2 messages):

- No AVX2, No Cry: @rafalsebastian expressed concerns about not being able to run LM Studio on CPUs with only AVX (version one) after getting the message that their processor doesn’t support AVX2. They wondered if they should switch machines for running local LLMs.

- LM Studio Beta to the Rescue: @heyitsyorkie responded with a solution, mentioning that LM Studio can indeed run on CPUs with only AVX support by downloading the 0.2.10 AVX beta release for Windows. They also recommended upgrading to a CPU with AVX2 for optimal results and provided a link to beta releases and terms of use.
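Whether a CPU offers AVX only or AVX2 as well can be checked from its reported feature flags. The sketch below parses text in the Linux `/proc/cpuinfo` layout; that source is an assumption made for illustration (the discussion concerned a Windows build, where a different tool would be needed), and the `simd_support` helper is hypothetical.

```python
def simd_support(cpuinfo_text):
    """Report AVX and AVX2 support from /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Feature flags sit on lines such as "flags : fpu sse avx ...".
        if line.lower().startswith("flags") and ":" in line:
            flags.update(line.split(":", 1)[1].split())
    return {"avx": "avx" in flags, "avx2": "avx2" in flags}


# Hypothetical excerpt for an older CPU that has AVX but not AVX2.
sample = "model name\t: Example CPU\nflags\t\t: fpu sse sse2 avx\n"
print(simd_support(sample))
```

On a Linux machine the same function could be fed the contents of `/proc/cpuinfo` directly.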

Links mentioned:

LM Studio Beta Releases: no description found

LM Studio ▷ #crew-ai (3 messages):

- Looking for Dual Model Deployment Tips: @alluring_seahorse_04960 wonders how to run two models on the same machine without facing repetition errors. The user mentions using a Conda environment on Ubuntu and avoids VMs for their slowness.

- Humorous Clarification Request on Repetition: In response to @alluring_seahorse_04960, @wolfspyre jokes about the nature of the repetition errors, questioning whether they pertain to looping outputs or tasking issues within worker processes.

LAION ▷ #general (361 messages🔥🔥):

- Magvit V2 Reproduction Inquiries: @.lostneko sought technical guidance for reproducing Magvit V2. Discussions circled around the ideal datasets and parameters for video compression and understanding, with @chad_in_the_house mentioning experiments on the lfq side of Magvit2.

- Mysterious Buzz around Magvit: @pseudoterminalx and others in the chat noticed sudden interest in MAGVIT, speculating about a recent influencer mention given the two mentions within a short time frame.

- Stable Cascade Discussions Heat Up: Focus shifted to Stability AI’s Stable Cascade model, with dialogues highlighting its hefty VRAM requirements, misleading inference time graphs, and concerns about the model being poorly optimized and full of bugs. @pseudoterminalx shared examples of its capabilities, including issues with text clarity in image outputs.

- Evaluating AI Models and Copyright Concerns: Conversations touched on the usage and legality of AI-generated images. Users @vrus0188 and @kenjiqq debated AI image model copyrights, commercial use, and the implications of research-only model licenses.

- Hardware and Performance Perspectives: A technical dialogue ensued over Stable Cascade’s heavy VRAM use and optimization problems, as @pseudoterminalx reported issues like the inability to run models in float16 and @kenjiqq provided details about inference time on consumer GPUs like the 3090.

Links mentioned:

- Stable Cascade - a Hugging Face Space by multimodalart: no description found

- Court Dismisses Authors’ Copyright Infringement Claims Against OpenAI * TorrentFreak: no description found

- Stable Cascade のご紹介 — Stability AI Japan — Stability AI Japan: Stable Cascade の研究プレビューが開始されました。この革新的なテキストから画像へのモデルは、品質、柔軟性、微調整、効率性の新しいベンチマークを設定し、ハードウェアの障壁をさらに排除することに重点を置いた、興味深い3段階のアプローチを導入しています。

- Hey Hindi GIF - Hey Hindi Bollywood - Discover & Share GIFs: Click to view the GIF

- Don’t ask to ask, just ask: no description found

- GitHub - Stability-AI/StableCascade: Contribute to Stability-AI/StableCascade development by creating an account on GitHub.

- Crypto Kids Poster | 24posters | Hip Hop & Street Art Prints: Transform your walls with our viral new Crypto Kids Poster. Inspired by street-wear & hip hop culture, enjoy artwork designed to bring you bedroom to life. Fast shipping times (3-5 days) 10,000+ h…

LAION ▷ #research (48 messages🔥):

- Discussion on Impact of Adult Content on AI: @vrus0188 and others discuss the historical contributions of adult content to advancing technology, juxtaposing it with AI developments. Some users like @twoabove acknowledge the pattern of adult industries driving tech advancements, while others like @SegmentationFault doubt that the focus on adult content leads to meaningful progress in AI.

- Concern Over Explicit AI-Generated Content: @thejonasbrothers shares a news article highlighting the misuse of AI in creating non-consensual pornography, noting the challenges it poses and its high visibility. This leads to a discussion on the broader implications and controversies surrounding AI’s use in adult content.

- Observations on the Pornography Market and AI: Users like @chad_in_the_house and @freon discuss the profitability and market saturation of NSFW content, contemplating the economic and ethical risks involved in this space.

- Debates Over the Merits of AI-Powered Erotic Roleplay: @SegmentationFault expresses frustration over the preference for low-effort erotic content in AI communities, arguing that this hinders meaningful developments in AI models. Others like @mfcool and @.undeleted echo these sentiments, criticizing the quality stagnation in AI-generated adult imagery.

- Technical Discussion on AI Image Quality: @drhead delves into technical aspects of AI-generated images, mentioning the NovelAI model and discussing the viability and impact of VAE encoder training for improved image generation. There is a communal reflection on the standards of “photorealism” within the community and how they could be improved.

Links mentioned:

- Reddit - Dive into anything: no description found

- AI brings deepfake pornography to the masses, as Canadian laws play catch-up: Underage Canadian high school girls are targeted using AI to create fake explicit photos that spread online. Google searches bring up multiple free websites capable of “undressing” women in …

Eleuther ▷ #general (179 messages🔥🔥):

- Checksums for The Pile Data Located: @paganpegasus provided @hailey_schoelkopf with the checksums for The Pile zst shards, linking both the Discord pins and EleutherAI’s hashes page.

- Tools to Determine Image Content: @everlasting_gomjabbar inquired about tools to discern if an image is of an object/location versus ‘nothing’ like a blurry shot. @paganpegasus described the complexity of defining “nothing” in images, while @rallio. recommended using models like OwlViT or CLIP.

- Manuscript Review and Editing in Progress: @wonkothesensible, through a series of messages, provided meticulous feedback on a paper draft provisionally titled “Don’t think about the paper”, focusing on clarifying language and grammar. @hailey_schoelkopf expressed gratitude and indicated that the EleutherAI Discord would be credited in the paper’s acknowledgements.

- Cloud Resources for NLP Classification Discussed: In response to @pxxxl seeking advice on cloud resources for training NLP classification models, @ad8e recommended GCP and Colab, with various participants chiming in about the costs and features of platforms like runpod and vast.ai.

- Inquiries About EleutherAI Computing Resources: User @vidava asked about the guidelines and requirements for accessing EleutherAI’s computational resources for a semi-custom LLM project featuring architectural adjustments and fine-tuning adapters. @stellaathena indicated openness to collaboration but highlighted the need for clarity on the research agenda and proposed a collaborative value proposition.

- Semantic Scholar Paper-Author Linking Mechanism: Regarding whether Semantic Scholar automatically links arXiv papers to authors, _inox clarified that the process is automatic but allows for manual intervention or suggested changes if errors occur.
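Checking a downloaded shard against a published checksum, as in the Pile item above, can be sketched as follows. The choice of SHA-256 is an assumption; if the published list uses a different algorithm, swap the `hashlib` constructor. The function names and the shard path are hypothetical.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in fixed-size chunks so large shards never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """Return True when the file's digest matches the published hex string."""
    return sha256_of_file(path) == expected_hex.strip().lower()


# Usage (hypothetical path and digest):
# verify("shard.jsonl.zst", "<hex digest from the published list>")
```

Reading in chunks matters here because compressed dataset shards are typically many gigabytes each.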

Links mentioned:

- Overleaf, Online LaTeX Editor: An online LaTeX editor that’s easy to use. No installation, real-time collaboration, version control, hundreds of LaTeX templates, and more.

- Research Paper Release Checklist : no description found

- lora_example.py: lora_example.py. GitHub Gist: instantly share code, notes, and snippets.

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Hashes — EleutherAI: no description found

Eleuther ▷ #research (208 messages🔥🔥):

- Fractal Analysis of Neural Network Hyperparameters: @jbustter shared visualizations of fractals generated from neural network hyperparameters, with red indicating diverging training and blue indicating converging training. Jascha Sohl-Dickstein’s blog post showcases the concept, correlating fractal patterns with the learning rates of network layers and the network’s weight offset.

- Discussing Convergence and Divergence in Training: The conversation, involving users like @Hawk, @genetyx8, and @mrgonao, covered how to determine whether neural network training is converging or diverging, debating the presence of “diverging to infinity” and the nature of boundaries within fractal visualizations, with suggestions that NaNs may denote divergence.

- Active Learning and Data Presentation Order in ML: @rybchuk inquired about research on models choosing the order of data presentation, leading to a discussion about active learning. @thatspysaspy mentioned the subfield’s existence, noting its lack of success, and @catboy_slim_ added that it could halve training requirements by using smaller models to filter data for larger models’ training.

- Leveraging Unsupervised Data in Encoder-Decoder Models: @loubb raised the question of how to use large unsupervised datasets effectively in encoder-decoder models for tasks such as audio to text. Suggestions ranged from training components separately to integrating cross-attention during pre-training.

- Release of an NLP Robustness Paper and Test-Time Augmentation: @millander announced the publication of their lead-author paper on improving text classifiers’ robustness through test-time augmentation (TTA) using large language models. They thanked the community for support and shared the arXiv link to their work.
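The convergence-versus-divergence question from the fractal discussion can be illustrated with a deliberately tiny example. This is a toy sketch, not the setup from the blog post: it runs gradient descent on the one-dimensional function f(x) = x² and flags a run as diverged when the iterate becomes non-finite or exceeds an arbitrary blow-up threshold. The function name, step count, and threshold are all assumptions.

```python
import math


def diverges(learning_rate, steps=200, x0=1.0, blowup=1e6):
    """Run gradient descent on f(x) = x**2 and report whether it blew up."""
    x = x0
    for _ in range(steps):
        x = x - learning_rate * 2 * x  # the gradient of x**2 is 2x
        # Treat NaN, infinity, or a very large iterate as divergence.
        if not math.isfinite(x) or abs(x) > blowup:
            return True
    return False


# Scan one hyperparameter; the blog post scans two at once for a real network.
grid = [0.1 * k for k in range(1, 16)]
labels = ["diverged" if diverges(lr) else "bounded" for lr in grid]
print(list(zip(grid, labels)))
```

In this toy case the boundary is a single clean threshold at a learning rate of 1, since each step multiplies x by (1 - 2·lr); the interest of the fractal images is that for real networks scanned over two hyperparameters the boundary is far more intricate.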

Links mentioned:

- Neural network training makes beautiful fractals: This blog is intended to be a place to share ideas and results that are too weird, incomplete, or off-topic to turn into an academic paper, but that I think may be important. Let me know what you thin…

- A Poster for Neural Circuit Diagrams: As some of you might know, I have been working on neural circuit diagrams over the past year or so. These diagrams solve a lingering challenge in deep learning research – clearly and accurately commun…

- MoE-Mamba: Efficient Selective State Space Models with Mixture of Experts: State Space Models (SSMs) have become serious contenders in the field of sequential modeling, challenging the dominance of Transformers. At the same time, Mixture of Experts (MoE) has significantly im…

- Scaling Laws for Fine-Grained Mixture of Experts: Mixture of Experts (MoE) models have emerged as a primary solution for reducing the computational cost of Large Language Models. In this work, we analyze their scaling properties, incorporating an exp…

- Model Editing with Canonical Examples: We introduce model editing with canonical examples, a setting in which (1) a single learning example is provided per desired behavior, (2) evaluation is performed exclusively out-of-distribution, and …

- An Exponential Learning Rate Schedule for Deep Learning: Intriguing empirical evidence exists that deep learning can work well with exotic schedules for varying the learning rate. This paper suggests that the phenomenon may be due to Batch Normalization or B…

- Nonlinear computation in deep linear networks: no description found

- Feedback Loops With Language Models Drive In-Context Reward Hacking: Language models influence the external world: they query APIs that read and write to web pages, generate content that shapes human behavior, and run system commands as autonomous agents. These interac…

- Can Mamba Learn How to Learn? A Comparative Study on In-Context Learning Tasks: State-space models (SSMs), such as Mamba Gu & Dao (2034), have been proposed as alternatives to Transformer networks in language modeling, by incorporating gating, convolutions, and input-dependen…

- Suppressing Pink Elephants with Direct Principle Feedback: Existing methods for controlling language models, such as RLHF and Constitutional AI, involve determining which LLM behaviors are desirable and training them into a language model. However, in many ca…

- Improving Black-box Robustness with In-Context Rewriting: Machine learning models often excel on in-distribution (ID) data but struggle with unseen out-of-distribution (OOD) inputs. Most techniques for improving OOD robustness are not applicable to settings …

- Prismatic VLMs: Investigating the Design Space of Visually-Conditioned Language Models: Visually-conditioned language models (VLMs) have seen growing adoption in applications such as visual dialogue, scene understanding, and robotic task planning; adoption that has fueled a wealth of new…

- Tweet from Nature Reviews Physics (@NatRevPhys): Perspective: Generative learning for nonlinear dynamics By @wgilpin0 @TexasScience https://rdcu.be/dysiB

- Tweet from Hannes Stärk (@HannesStaerk): Diffusion models are dead - long live joint conditional flow matching! 🙃 Tomorrow @AlexanderTong7 presents his “Improving and generalizing flow-based generative models with minibatch optimal tran…

- A weight matrix in a neural network tries to break symmetry and fails.: We initialize a neural network so that the weight matrices can be nearly factorized as the Kronecker product of a random matrix and the matrix where all of t…

- Mixture of Tokens: Efficient LLMs through Cross-Example Aggregation: Despite the promise of Mixture of Experts (MoE) models in increasing parameter counts of Transformer models while maintaining training and inference costs, their application carries notable drawbacks…

- llm-random/research/conditional/moe_layers/expert_choice.py at ad41b940c3fbf004a1230c1686502fd3a3a79032 · llm-random/llm-random: Contribute to llm-random/llm-random development by creating an account on GitHub.

- An Emulator for Fine-Tuning Large Language Models using Small Language Models: Widely used language models (LMs) are typically built by scaling up a two-stage training pipeline: a pre-training stage that uses a very large, diverse dataset of text and a fine-tuning (sometimes, …

- MASS: Masked Sequence to Sequence Pre-training for Language Generation: Pre-training and fine-tuning, e.g., BERT, have achieved great success in language understanding by transferring knowledge from rich-resource pre-training task to the low/zero-resource downstream tasks…

- Meta- (out-of-context) learning in neural networks: Brown et al. (2020) famously introduced the phenomenon of in-context learning in large language models (LLMs). We establish the existence of a phenomenon we call meta-out-of-context learning (meta-OCL…

- Secret Collusion Among Generative AI Agents: Recent capability increases in large language models (LLMs) open up applications in which teams of communicating generative AI agents solve joint tasks. This poses privacy and security challenges conc…

- Portal: Home of the TechBio community. Tune into our weekly reading groups (M2D2, LoGG, CARE), read community blogs, and join the discussion forum.

- Generative learning for nonlinear dynamics | Nature Reviews Physics: no description found

- Policy Improvement using Language Feedback Models: We introduce Language Feedback Models (LFMs) that identify desirable behaviour - actions that help achieve tasks specified in the instruction - for imitation learning in instruction following. To trai…

- To Repeat or Not To Repeat: Insights from Scaling LLM under Token-Crisis: Recent research has highlighted the importance of dataset size in scaling language models. However, large language models (LLMs) are notoriously token-hungry during pre-training, and high-quality text…

- UnitY: Two-pass Direct Speech-to-speech Translation with Discrete Units - Meta Research: We present a novel two-pass direct S2ST architecture, UnitY, which first generates textual representations and predicts discrete acoustic units subsequently.

Eleuther ▷ #interpretability-general (1 messages):

- In Search of Interpretability Guidance: @jaimerv reached out to the channel asking for a more current overview of interpretability approaches than the Representation Engineering paper they referenced, and is looking for newer or better resources on the topic.

Eleuther ▷ #lm-thunderdome (4 messages):

- Contributors Wanted for Hallucinations Leaderboard: @pminervini shared a call for contributions to the hallucinations leaderboard, adding that there are several new hallucination-oriented tasks to work on within the Harness leaderboard space.

- Enthusiastic Response to Collaboration: Following the announcement, @baber_ expressed interest and asked what specific help was needed.

- Call for Specific Assistance: In response, @pminervini said they need help with task definitions, with proposing and adding new datasets and metrics, and with deciding which results to re-compute following recent updates to the harness.

Eleuther ▷ #gpt-neox-dev (4 messages):

- Potential Misalignment in Pythia Deduped Data: @pietrolesci raised concerns about a possible misalignment between training data batches and checkpoints, specifically for the 2.8b model in the Pythia deduped suite. Other models, including the smaller versions and 6.9b, seem well-aligned.

- Response to Data Alignment Query: @hailey_schoelkopf acknowledged @pietrolesci’s query about the alignment issue and said they will follow up on it.

- Interest in Pythia Research and Suggestion for Publication: @stellaathena expressed excitement about a potential blog post or workshop paper demonstrating the reliability of Pythia, which they would cite extensively.

- Openness to Writing About Pythia: In response to @stellaathena, @pietrolesci welcomed the suggestion of writing up their Pythia findings, considering it a good short project for after the ACL deadline.

LlamaIndex ▷ #announcements (2 messages):

- LlamaIndex v0.10 Released: @jerryjliu0 announced the release of LlamaIndex v0.10, the most significant update to date, featuring a new `llama-index-core` package and splitting integrations/templates into separate PyPI packages. `llamahub.ai` is also being revamped, and `ServiceContext` has been deprecated for a better developer experience. The community is encouraged to explore the blog post and documentation for details on migration and contributing.

- Celebrating Team Achievement: Big thanks were given to <@334536717648265216> and <@908844510807728140> for leading the effort on the latest LlamaIndex update, which is a step towards making it a production-ready data framework.

- Tweet about LlamaIndex v0.10 Launch: LlamaIndex shared a tweet highlighting key updates in LlamaIndex v0.10, including the creation of hundreds of separate PyPI packages, the refactoring of LlamaHub, and the deprecation of `ServiceContext`.

- Webinar Announcement with No-Code RAG Tutorial: Flowise’s co-founder, Henry Heng, will feature in a LlamaIndex Webinar to demonstrate building no-code Retrieval-Augmented Generation (RAG) applications using their new integration with LlamaIndex.TS. The webinar is scheduled for Friday 9am PT and interested individuals can register here.

Links mentioned:

- LlamaIndex Webinar: Build No-Code RAG · Zoom · Luma: Flowise is one of the leading no-code tools for building LLM-powered workflows. Instead of learning how to code in a framework / programming language, users can drag and drop the components…

- Tweet from LlamaIndex 🦙 (@llama_index): 💫 LlamaIndex v0.10 💫 - our biggest open-source release to date, and a massive step towards production-readiness. 🚀 ✅ Create a core package, split off every integration/template into separate PyPi …

- LlamaIndex v0.10: Today we’re excited to launch LlamaIndex v0.10.0. It is by far the biggest update to our Python package to date (see this gargantuan PR)…

LlamaIndex ▷ #blog (5 messages):

- LlamaIndex Hits v0.10 Milestone: LlamaIndex announces its biggest open-source release, v0.10, signaling a shift towards production-readiness. A core package has been created and hundreds of integrations split off into separate PyPI packages, as highlighted in their Twitter post.

- Tutorial on Multimodal Apps with LlamaIndex: @ollama and LlamaIndex co-present a tutorial for building context-augmented multimodal applications on a MacBook, including smart receipt reading and product image augmentation, shared via this tweet.

- DanswerAI Enhances Enterprise with LlamaIndex: DanswerAI leverages @llama_index to offer ChatGPT functionalities over enterprise knowledge bases, integrating with common workplace tools such as GDrive, Slack, and Jira to boost team efficiency, as announced in the Twitter announcement.

- Upcoming No-Code RAG Webinar with FlowiseAI: @llama_index teams up with @FlowiseAI for a webinar on building no-code RAG (Retrieval-Augmented Generation) workflows with LlamaIndex.TS and Flowise; details are in their recent tweet.

- Define Research Workflow with RAG-powered Agent: A notebook by @quantoceanli outlines a process for establishing a scientific research workflow, using LlamaIndex with resources like ArXiv and Wikipedia to build a RAG-powered agent, showcased in this tweet.

LlamaIndex ▷ #general (303 messages🔥🔥):

- LlamaIndex Import Troubles: Users like @ddashed, @bhrdwj, @lhc1921, and @cheesyfishes discussed issues with the latest LlamaIndex update. Users were advised to start with a fresh venv or container and were pointed towards a migration guide and package registry for reference.

- Complex Document Filtering Challenges: User @_shrigmamale sought assistance in filtering large directories of complex documents based on keywords, dates, and file types. Another user, @qingsongyao, suggested traditional indexing techniques over expensive LLMs like GPT-4 for dynamic file filtering.

- Efficient Handling of Multiple Document Sources: Users like @nvmm_, @whitefang_jr, and @.saitej discussed handling and merging private user-uploaded documents with public indexed documents using LlamaIndex, and the potential for creating multiple agents for individual documents.

- Configuring Chunk Sizes and Testing Performance: @sgaseretto asked where to specify `chunk_size` now that `ServiceContext` is deprecated in favor of `Settings`. @cheesyfishes provided the new way to configure chunk size, either globally or by passing the node parser/text splitter into the index.

- Handling Changes with Chat Memory Buffer: @benzen.vn asked about non-relevant responses when using a `ChatMemoryBuffer`. @whitefang_jr suggested that off-topic conversations might degrade the relevancy of queries and pointed to parts of the LlamaIndex source code for explanation.
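The "traditional indexing" suggestion from the document filtering thread can be sketched in plain Python: filter on file type, date, and keywords before any LLM is involved. This is only an illustration under assumptions. `DocRecord` and `filter_docs` are hypothetical names, not LlamaIndex APIs, and a real system would likely use a search index rather than a linear scan.

```python
from dataclasses import dataclass
from datetime import date
from pathlib import PurePath


@dataclass(frozen=True)
class DocRecord:
    """Hypothetical metadata record for one file (not a LlamaIndex type)."""
    path: str
    modified: date
    text: str


def filter_docs(docs, keywords=(), after=None, suffixes=()):
    """Return docs that pass every supplied filter; an empty filter matches all."""
    wanted = {s.lower() for s in suffixes}
    terms = [k.lower() for k in keywords]
    selected = []
    for doc in docs:
        # File-type filter: compare the lowercase extension, e.g. ".pdf".
        if wanted and PurePath(doc.path).suffix.lower() not in wanted:
            continue
        # Date filter: keep documents modified on or after the cutoff.
        if after is not None and doc.modified < after:
            continue
        # Keyword filter: every term must appear somewhere in the text.
        body = doc.text.lower()
        if not all(term in body for term in terms):
            continue
        selected.append(doc)
    return selected


docs = [
    DocRecord("reports/q4.pdf", date(2024, 1, 15), "Quarterly revenue summary"),
    DocRecord("notes/todo.txt", date(2023, 6, 1), "Revenue ideas and chores"),
    DocRecord("reports/q1.pdf", date(2022, 4, 2), "Quarterly revenue summary"),
]
recent_pdfs = filter_docs(
    docs, keywords=["revenue"], after=date(2023, 1, 1), suffixes=[".pdf"]
)
print([d.path for d in recent_pdfs])
```

Only the documents that survive this cheap pass would then need to be embedded or sent to a model, which is the cost argument made in the thread.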

Links mentioned:

- Notion – The all-in-one workspace for your notes, tasks, wikis, and databases.: A new tool that blends your everyday work apps into one. It’s the all-in-one workspace for you and your team

- Response Modes - LlamaIndex 🦙 v0.10.3: no description found

- Google Colaboratory: no description found

- Build a chatbot with custom data sources, powered by LlamaIndex: Augment any LLM with your own data in 43 lines of code!

- Router Query Engine - LlamaIndex 🦙 v0.10.3: no description found

- Elasticsearch Vector Store - LlamaIndex 🦙 v0.10.3: no description found

- llama_index/llama-index-legacy/llama_index/legacy/vector_stores/mongodb.py at main · run-llama/llama_index: LlamaIndex (formerly GPT Index) is a data framework for your LLM applications - run-llama/llama_index

- llama_index/llama-index-core/llama_index/core/chat_engine/condense_question.py at 3823389e3f91cab47b72e2cc2814826db9f98e32 · run-llama/llama_index: LlamaIndex (formerly GPT Index) is a data framework for your LLM applications - run-llama/llama_index

- Usage Pattern - LlamaIndex 🦙 v0.10.3: no description found

- Node Postprocessor Modules - LlamaIndex 🦙 v0.10.3: no description found

- llama_index/llama-index-core/llama_index/core/indices/base.py at 5d557cb2fe48b90e4056ecae25b9371681752a3c · run-llama/llama_index: LlamaIndex (formerly GPT Index) is a data framework for your LLM applications - run-llama/llama_index

- Configuring Settings - LlamaIndex 🦙 v0.10.3: no description found

- Migrating from ServiceContext to Settings - LlamaIndex 🦙 v0.10.3: no description found

LlamaIndex ▷ #ai-discussion (1 messages):

- Super-Easy Full Stack RAG App Building Guide Released: @kerinin shared an article about building a Retrieval-Augmented Generation (RAG) application using Dewy, a new open-source knowledge base. The guide uses NextJS, the OpenAI API, and Dewy to create a RAG application that improves the accuracy of language model responses by grounding them in specific, reliable information. Read the guide.
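To make "grounding" concrete, here is a minimal, dependency-free sketch of the retrieve-then-prompt pattern behind RAG. It is not code from the Dewy guide (which uses NextJS). The helper names `tokenize`, `retrieve`, and `build_prompt` are hypothetical, and word overlap stands in for the embedding search a real knowledge base would perform.

```python
import re


def tokenize(text):
    """Lowercase word tokens; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, top_k=2):
    """Rank passages by word overlap with the question and keep the best top_k."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    # Drop passages that share no words with the question at all.
    return [p for p in ranked[:top_k] if q & tokenize(p)]


def build_prompt(question, passages):
    """Assemble a prompt asking the model to answer only from retrieved context."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


knowledge_base = [
    "Dewy is an open-source knowledge base.",
    "The guide uses NextJS for the web framework.",
    "The OpenAI API provides the language model.",
]
question = "What is Dewy?"
hits = retrieve(question, knowledge_base)
prompt = build_prompt(question, hits)
print(prompt)
```

The resulting `prompt` is what would be sent to the language model. The model call itself is omitted here, since it depends on the provider and client library in use.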

Links mentioned:

- Building a RAG chatbot with NextJS, OpenAI & Dewy | Dewy: This guide will walk you through building a RAG application using NextJS for the web framework, the OpenAI API for the language model, and Dewy as your knowledge base.

HuggingFace ▷ #announcements (1 messages):

- Hugging Face Launches Message API: 🚀 Hugging Face introduces a new Message API compatible with OpenAI, enabling the use of OpenAI client libraries or third-party tools directly with Hugging Face Inference Endpoints and Text Generation Inference. Learn more from their announcement here.

- New Open Source Releases and Features: 🤗 Datatrove goes live on PyPI, Gradio updates to 4.18.0 with an improved `ChatInterface` and more, and Remove Background Web launches for in-browser background removal. Additionally, Nanotron for 3D parallelism training and new features in Hugging Face Competitions were announced. Accelerate 0.27.0 was released, boasting a PyTorch-native pipeline-parallel inference framework.

-