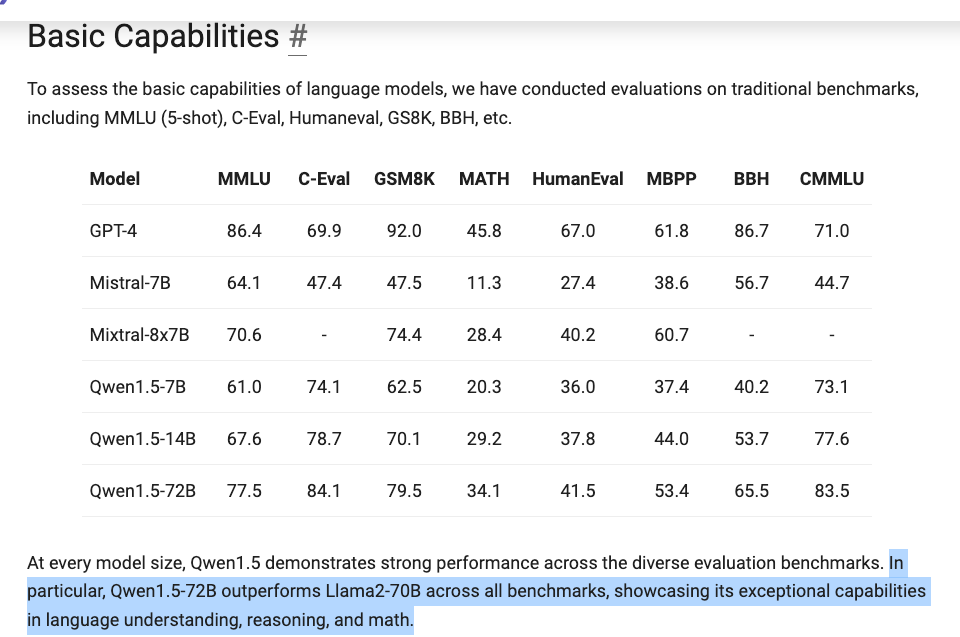

The Chinese models (Yi, DeepSeek, and Qwen, and to a lesser extent Zhipu) have been quietly cooking up a storm. Qwen’s release this week claims strong performance vs Mistral and Llama2 equivalents, with up to 32k token context. The technical report also discusses a number of evals made on multilingual, RAG, agent planning, and code generation capabilities. The Qwen team are also showing serious dedication to the downstream ecosystem, releasing with HF transformers compatibility and official AWQ/GPTQ 4/8bit quantized models.

Table of Contents

[TOC]

PART 1: High level Discord summaries

TheBloke Discord Summary

-

Quantization Quest for 70B LLM: An exploration of quantizing a 70B LLM on vast.ai was shared with suggestions like creating a large swap file and potentially using USB-attached SSDs to circumvent powerful GPU requirements.

-

GPTZero Faces Scrutiny: Debates over the effectiveness of AI content detection tools like GPTZero sparked discussions, highlighting its potential unreliability in detecting subtly augmented prompts.

-

Introducing Sparsetral: A new Sparse MoE model based on Mistral was introduced, emphasizing its efficient operation and selective weight application during forward passes, garnering interest and sparking inquiries into its training intricacies.

-

Merging Vs. Fine-Tuning Dilemma: There’s an ongoing debate on whether it’s more efficient to individually fine-tune models for separate datasets or to combine datasets and fine-tune a single model, with the community generally leaning towards the latter for coherence.

-

Knowledge Sharing in AI: Community members discussed a range of topics involving LLM performance and handling, including strategies to augment memory capabilities and sharing of problem-solving tactics, reinforcing the collaborative spirit within the guild.

-

Deep Dive into DPO and Character Generation: Insights were exchanged on merging adapters in the training process using Direct Preference Optimization (DPO), with a focus on role-playing character generation, as well as tactics to prevent overfitting in such models.

-

Eldritch ASCII Art Conversations: Attempts at creating ASCII art using various models prompted discussions on the evolving capabilities of language models in creative endeavors.

-

Enigmas of Model Merging: A desire to comprehend model merging led to sharing resources that delve into the tensor operations involved, accompanied by recommendations of tools like ComfyUI-DareMerge for the task.

-

Coding Discussions Span Character Memory and 3D Generation: Inquiries about setting up ChromaDB for character-specific long-term memory were seen alongside promotions of a text-to-3D gen AI project, solutions for OpenAI costs, and shared links to code-generating LLMs with detailed explanations.

Nous Research AI Discord Summary

-

Kanji Generation’s Complex Quest: The community discussed the challenges in training a model for Japanese Kanji generation, with @lorenzoroxyolo referencing a Stable Diffusion experiment for inspiration. The use of a ControlNet was suggested by .ben.com as an alternative method for nuanced tasks such as this.

-

AI Scams Surface on Social Media: A surge in AI-related scams on platforms like Facebook prompted community members to discuss the importance of awareness and the detrimental impact fictional narratives can have on AI’s perception.

-

Meta’s Pioneering VR Prototypes at SIGGRAPH: Meta’s advancement in VR technology, specifically the development of new headset prototypes with retinal resolution and advanced light field passthrough, was presented at SIGGRAPH 2023 and shared by @nonameusr with relevant articles from Road to VR and a developer blog post.

-

Finetuning Frozen Networks and New AI Models: Discussions revolved around the effectiveness of fine-tuning normalization layers in frozen networks, as described in an arXiv paper, and the sharing of information on new models like bagel-7b-v0.4, DeepSeek-Math-7b-instruct, and Sparsetral-16x7B-v2 across various Hugging Face repositories, each with unique capabilities and suggested improvements.

-

Performance Review and Anticipated Release: The community scrutinized the performance of different models, including Qwen 1.5’s release which some found underwhelming compared to its predecessor. Additionally, an announcement was made about an unspecified release happening in 23.9 hours; fblgit unveiled a new model-similarity analysis tool on GitHub for community contribution.

-

Transformer Matrices and LLM Conversation Memory Debate: Practical engineering advice was shared, such as fusing QKV matrices in transformers for efficiency. Users also explored techniques for managing conversation history with LLMs, noting the potential use of langchain and the benefits of summarizing history or utilizing long-context models to navigate context size limitations. Concerns were raised over licensing changes between various Hermes datasets for commercial use.
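For readers unfamiliar with the QKV fusion tip above, here is a minimal pure-Python sketch of the idea. It assumes three separate projection weights of identical shape (the names `w_q`, `w_k`, `w_v` and the toy sizes are illustrative, not from the discussion) and shows that one matmul against the column-wise concatenation gives the same Q, K, and V as three separate matmuls. Real implementations would do this with a tensor library; the efficiency gain comes from issuing one larger matmul instead of three smaller ones.

```python
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); both are lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def fuse_qkv(w_q, w_k, w_v):
    """Concatenate three (d_model x d_head) weights column-wise into one (d_model x 3*d_head)."""
    return [rq + rk + rv for rq, rk, rv in zip(w_q, w_k, w_v)]


def split_qkv(fused_out, d_head):
    """Slice the fused projection output back into Q, K, and V."""
    q = [row[:d_head] for row in fused_out]
    k = [row[d_head:2 * d_head] for row in fused_out]
    v = [row[2 * d_head:] for row in fused_out]
    return q, k, v


def rand_matrix(rows, cols, rng):
    """Random matrix with entries in [-1, 1], used only as toy data."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model, d_head = 4, 8, 3  # toy sizes, chosen arbitrarily
x = rand_matrix(seq_len, d_model, rng)
w_q, w_k, w_v = (rand_matrix(d_model, d_head, rng) for _ in range(3))

# Baseline: three separate projections.
q_ref, k_ref, v_ref = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)

# Fused: one projection against the concatenated weight, then split.
q, k, v = split_qkv(matmul(x, fuse_qkv(w_q, w_k, w_v)), d_head)
```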

Eleuther Discord Summary

-

Initiative to Build Foundational Models Kindles Interest: @pratikk10 seeks collaboration on creating foundational models, acknowledging diverse applications like text-to-text/image/video. However, @_3sphere highlights the prohibitive costs of such models, discussing the matter in the context of the newly released Qwen1.5, a 72B parameter chat model detailed in their blog post and repository.

-

Grapple with Interpreting LLMs: Debates on the effectiveness of interpretability in large language models (LLMs) ensue, drawing parallels to the human genome project and questioning the relationship between interpretability and intelligence. There’s a critical assessment of AGI claims and model capabilities, particularly the authenticity of performance on benchmarks such as the MMLU.

-

Scaling Law Reconnaissance: @stellaathena discusses possible efficiency improvements in scaling laws research, referencing Kaplan et al. and Hoffmann et al. with interest in reducing the necessity for numerous runs. Contributions from @clashluke and others ponder the application of PCGrad in multi-task loss handling, the role of hypernetworks in generating LoRA weights, and the use of varying activation functions like polynomials, substantiated by a neural-style Python file and facebookresearch’s code.

-

Bootstrapping Pooled Variance for Rigorous Model Evaluation: @hailey_schoelkopf updates the lm-evaluation-harness to use pooled variance for standard error, an optimally chosen approach over combined variance as detailed here, prompting @stellaathena to recommend preserving both methods with expert-use warnings.

-

Discern Vector Semantics in Activation Functions: @digthatdata and @norabelrose dissect the conception of vectors as directions, operators, and Euclidean representations in the context of deep learning, complemented by the introduction of [model-similarities](https://github.com/fblgit/model-similarity), a tool for layer-wise parameter space analysis of different models.

-

Remedying LM Pre-training with Paraphrased Web Data: New initiatives like Web Rephrase Augmented Pre-training (WRAP), documented in an arXiv paper, aim to augment large model pre-training by improving data quality, with @elliottdyson suggesting a comparative study to gauge WRAP’s advantage over mere fine-tuning.
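To make the pooled-versus-combined variance distinction in the lm-evaluation-harness item above concrete, here is a small sketch using the textbook definitions. It is not the harness’s actual code, and the function names are illustrative. Pooled variance averages the within-group variances (weighted by degrees of freedom) and ignores how far apart the group means are; combined variance is the sample variance of all groups concatenated, so it also includes the between-group spread.

```python
import math
import statistics


def pooled_variance(variances, sizes):
    """Weighted average of per-group sample variances (weights are n_i - 1)."""
    within = sum((n - 1) * v for v, n in zip(variances, sizes))
    return within / (sum(sizes) - len(sizes))


def pooled_stderr(variances, sizes):
    """Standard error of the overall mean, based on the pooled variance."""
    return math.sqrt(pooled_variance(variances, sizes) / sum(sizes))


def combined_variance(means, variances, sizes):
    """Sample variance of the concatenation of all groups, from group summaries only."""
    total = sum(sizes)
    grand_mean = sum(n * m for m, n in zip(means, sizes)) / total
    within = sum((n - 1) * v for v, n in zip(variances, sizes))
    between = sum(n * (m - grand_mean) ** 2 for m, n in zip(means, sizes))
    return (within + between) / (total - 1)


# Toy per-sample scores for two hypothetical subtasks (1.0 = correct, 0.0 = wrong).
groups = [[1.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 0.0, 0.0, 1.0]]
means = [statistics.mean(g) for g in groups]
variances = [statistics.variance(g) for g in groups]
sizes = [len(g) for g in groups]

print("pooled variance:  ", pooled_variance(variances, sizes))
print("combined variance:", combined_variance(means, variances, sizes))
print("pooled stderr:    ", pooled_stderr(variances, sizes))
```

The combined figure can be checked directly against `statistics.variance` on the concatenated samples, which is a handy sanity test when aggregating from per-task summaries.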

HuggingFace Discord Summary

-

AI Journey from Novice to Pro: @dykyi_vladk discussed their ML learning curve, focusing on specific models and techniques, while @lunarflu highlighted the importance of building demos and sharing them as a vital step to advance as an ML professional.

-

A100G Launch May Cause Server Hiccups: Server downtime raised questions about its relation to the A100G launch; @lunarflu offered to escalate the issue. For full AI model utilization, @meatfucker recommended distributing tasks across multiple GPUs.

-

Experts in Computer Vision Sought: @danielsamuel131 invited computer vision specialists to share their expertise with the community.

-

Papillon Flaunts NER & Sentiment Tool: An NER and sentiment analysis tool developed by @8i8__papillon__8i8d1tyr, based on Flair and FLERT, was shared; find it on GitHub.

-

LLaMA-VID Debuts for Long Videos: The LLaMA-VID model, designed to support hour-long videos, was introduced by @tonic_1. However, user concerns over empty model cards and lack of details may hinder usage. An arXiv paper on cost-efficient LLM strategies was also shared by @jessjess84.

-

Boosting Conversational AI with Fine-Tuning: @joeyzero seeks resources on conversational datasets for fine-tuning a chatbot. Meanwhile, @denisjannot struggled with fine-tuning Mistral 7b for YAML generation and eyed the Instruqt model for improvement. @meatfucker recommended few-shot learning techniques for making precise YAML modifications.

-

Ankush’s Finetuning Mastery: Ankush Singal’s finetuned model, based on previous work from OpenPipe, earned community kudos. The model is available at Hugging Face.

-

Schedule Flex for Reading Group: @ericauld may need to postpone a planned talk due to jury duty, with @chad_in_the_house expressing support. Keep an eye on the events calendar for potential changes.

-

The Meme Highlighting AI Progress: @typoilu presented an article detailing AI advancements through the lens of a meme in the “Mamba Series LLM Enlightenment,” but community engagement on the content remains to be seen. Read the article here.

LM Studio Discord Summary

- Model Selection Mayhem: Amidst numerous models, @hades____ sought advice on picking the right one, with suggestions pointing to use-case focus over a universal solution.

- DeepSeek-Math-7B Launches: DeepSeek-Math-7B was unveiled by @czkoko as the latest entry in the model arena, geared specifically to mathematical problem-solving and research, and available on GitHub.

- LM Studio Polishes Performance: LM Studio v0.2.13 brings new features such as Qwen 1.5 support, pinning models and chats, and quality of life updates, downloadable at LM Studio’s website with open-sourced Qwen1.5 models on Hugging Face.

- Hardware Discourse Heats Up: Operating system compatibility, GPU utilization, and speed optimizations in token generation dominated discussions, as users shared experiences with LMStudio on different hardware configurations; recommendations included using Ubuntu 22 and quantization methods, as illustrated in comparisons on YouTube.

- Beta and Feature Beckoning: A beta preview of LM Studio v0.2.13 dropped inviting feedback, while users appealed for a model leaderboard in-app but meanwhile can reference rankings on Hugging Face Leaderboard and OpenRouter; a GUI for server access and improved chat interfaces were hot topics.

- RAG System Question Creation Quest: @varelaseb sought techniques for generating questions in a RAG system, inquiring about Tuna without much background info available on it.

Mistral Discord Summary

-

LangChain’s Uncertain Future: Discord users expressed concerns over the longevity of LangChain’s utility, suggesting a need for stabilization after a week. Meanwhile, the deterministic nature of Mistral 8x7B faced scrutiny, establishing its probabilistic behavior adjustable by a temperature parameter, yet no definitive resolution was reached on the deterministic inquiry.

-

Mistral’s Emoji-Terminating Quirk: A peculiar terminating behavior was observed in Mistral models, where responses ended with the “stop” finish_reason but still contained an emoji. The issue was noted across all three Mistral API models, signaling a potential area for debugging or insight into response construction.

-

AI’s Philosophical Conundrum: Philosophical implications of LLMs were deliberated, with participants promoting a deeper understanding of AI’s foundational principles. This discussion underscores the evolving complexity of AI impacts on broader intellectual fields.

-

Prompt Precision Practices: Discussion around refining prompts to improve accuracy was active, with the conversion of a PHP class to a JSON schema being one method shared. However, methods for synthetic dataset generation, despite being a hot topic, remained close-guarded due to its value as a source of income.

-

Fine-Tuning Pad Pains: Concerns about padding during fine-tuning were voiced, pointing out issues with models not generating end-of-sentence tokens when using the common `tokenizer.pad = tokenizer.eos` practice. This suggests a need for optimized fine-tuning approaches to enhance model performance.

-

Lean Chatbots and Starry Success: A Discord chatbot capable of multi-user interaction and supporting multiple LLMs, including Mistral, made headlines with its lean 200-line code and functionality like vision support and streamed responses, attracting attention with over 69 GitHub stars. GitHub - jakobdylanc/discord-llm-chatbot

-

Flags and Fast GIFs: Among lighter interactions, users shared flag emojis and humorous GIFs, hinting at a casual and engaging community dynamic. Specifically mentioned was a Sanic the Hedgehog GIF from Tenor, celebrated for its humor in the context of a language settings discussion. Sanic The Hedgehob GIF - Tenor
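On the Fine-Tuning Pad Pains item above: the discussion did not pin down a cause, but one commonly cited explanation is that when the pad token shares an ID with EOS, any collator that masks labels by comparing against the pad ID also masks the real EOS, so the model never gets a training signal to stop. The sketch below illustrates that mechanism with plain lists. The token IDs and helper names are hypothetical; `-100` is the label value that PyTorch’s cross-entropy loss ignores by default.

```python
IGNORE_INDEX = -100  # labels with this value contribute nothing to the loss
EOS_ID = 2           # hypothetical EOS token id, reused as the pad id below


def pad_batch(sequences, pad_id):
    """Right-pad sequences to equal length and build the matching attention mask."""
    max_len = max(len(s) for s in sequences)
    input_ids = [s + [pad_id] * (max_len - len(s)) for s in sequences]
    attention_mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in sequences]
    return input_ids, attention_mask


def labels_masked_by_id(input_ids, pad_id):
    """Mask every position whose token equals pad_id (this also hides a real EOS)."""
    return [[IGNORE_INDEX if t == pad_id else t for t in row] for row in input_ids]


def labels_masked_by_attention(input_ids, attention_mask):
    """Mask only true padding positions, so the real EOS stays in the loss."""
    return [
        [t if m == 1 else IGNORE_INDEX for t, m in zip(row, mask)]
        for row, mask in zip(input_ids, attention_mask)
    ]


# Two toy training sequences, each ending in a real EOS.
sequences = [[5, 6, 7, EOS_ID], [8, 9, EOS_ID]]
input_ids, attention_mask = pad_batch(sequences, pad_id=EOS_ID)

by_id = labels_masked_by_id(input_ids, pad_id=EOS_ID)
by_mask = labels_masked_by_attention(input_ids, attention_mask)

print(by_id)    # the genuine EOS labels are masked along with the padding
print(by_mask)  # only the padding position is masked
```

Under these assumptions, either masking from the attention mask or using a dedicated pad token keeps EOS in the loss; which option fits depends on the training stack in use.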

CUDA MODE Discord Summary

-

Enthusiastic Engineering for Dual GPU Builds: Community member @morgangiraud is assembling a dual build with 2 x 4070 Ti SUPER GPUs and considering VRAM trade-offs between newer and older cards. They shared their build costing 4k in total through PCPartPicker.

-

Library Launch to Lighten LLM Load: @andreaskoepf highlighted FlashInfer, an open-source library aimed at boosting performance of LLM serving by optimizing self-attention and other key operations.

-

Precision Predicament Perplexes PyTorch Programmer: @zippika encountered inaccuracies with dequantize and linear operations in PyTorch and sought the cause, speculating it may be rounding issues or related to disabled C++ flags like "__CUDA_NO_HALF_OPERATORS__".

-

GPU Kernel Conundrums in JAX: @stefangliga introduced Pallas, an experimental JAX extension for writing custom GPU/TPU kernels, while @nshepperd shared insights on using pure CUDA kernels within JAX. @marvelousmit inquired about methods to print Triton kernels and the code JAX executes for kernel profiling.

-

Recorded Resourcefulness for CUDA Coders: Mark Saroufim assured users that despite technical delays, Lecture 4’s recording has been uploaded to YouTube, promising HD quality shortly after.

-

Fast.ai Favoritism Flows Freely: Users @einstein5744 and @joseph_en signal satisfaction with fast.ai’s educational resources, particularly regarding a course on diffusion models and the DiffEdit paper.

-

Weighing Words of Wisdom for March 9th Meet-up: @jku100 is tentatively set to speak on March 9th about their work on `torch.compile` optimizations, which has shown promise in AI acceleration techniques.

-

Preparing for PMPP’s Fifth Lecture: @jeremyhoward scheduled lecture 5 for the weekend, linking the Discord event, while @lancerts queried about the ‘swizzled order’ concept discussed in a PyTorch blog and its coverage in the PMPP book.

OpenAI Discord Summary

- ChatGPT File Upload Fiasco: Users, including @sherlyleta, @guti_310, and @lugui, have brought up ongoing issues with the ChatGPT file upload feature, which has been glitchy since the previous week. @lugui mentioned that resolution is on the horizon.

- Firmware Fix Frenzy in Manufacturer Talk: Debates heated up on manufacturer responsibility for technical issues, with @aipythonista advocating for firmware updates as solutions instead of relying on content like Louis Rossmann’s, citing potential brand bias.

- Mistral GPT Alternatives Gather Steam: Amidst talks of local GPT-3.5 instances and the infeasibility pointed out by @elektronisade, @riaty recommended the open-source Mistral 8x7b for homelab diagnostics.

- Trademark Tangles Trouble GPT Customizer: @zurbinjo faced trademark obstacles when naming a GPT, with clarification from @solbus about the prohibition due to OpenAI’s branding guidelines.

- Perfecting PDF Presentations to AI: A brief exchange prompted by @wazzldorr explored whether AIs perform better processing PDFs or extracted text for scientific articles, with @lugui assuring AI’s capability to handle PDFs effectively.

LangChain AI Discord Summary

-

RunPod and BGE-M3 Catch Engineers’ Attention: In the general channel, @lhc1921 highlighted RunPod for competitively priced GPU nodes and introduced the BGE-M3 multi-functional embedding model, providing the GitHub repository and research paper. @kapa.ai detailed using OpenAIEmbedder with LangChain, citing LangChain’s JavaScript documentation and Python documentation.

-

Bearer Token and Setup Woes in LangServe Discussions: The langserve channel saw a sharing of tips on AzureGPT.setHeader with bearer token by @veryboldbagel, referencing Configurable Runnables documentation and APIHandler examples. @gitmaxd provided a guide for Hosted LangServe setup, while @lucas_89226 and @veryboldbagel engaged in troubleshooting discussions, with a suggestion to use LangServe GitHub discussions page for further help.

-

Showcasing Innovations and Job Openings in Share-Your-Work Channel: @siddish introduced AI Form Roast by WorkHack on Product Hunt for online form optimization, and @shving90 highlighted TweetStorm Express Flow for crafting tweets via a Twitter post. The Dewy knowledge base for RAG applications was presented by @kerinin with a blog post, while @hinayoka announced job opportunities in a crypto project. @felixv3785 showcased a Backlink Outreach Message Generator tool for SEO.

Latent Space Discord Summary

-

AI Team Formation Tactics: Discussions about setting up an internal AI engineering team suggested starting solo to showcase value before scaling up. Eugene Yan’s articles on real-time ML and team configurations were recommended, illustrating various organizational tactics including centralization and embedding data scientists into product teams.

-

DSPy Series Simplified: A video series on DSPy prompted requests for a more digestible explanation, indicating the community’s readiness to collaborate on grasping its concepts. The DSPy explained video was shared as a starting point for those interested in learning more.

-

GPT-4, the Procrastinator?: GPT-4’s perceived initial laziness became a topic of amusement, supported by Sam Altman’s tweets and several Reddit discussions that ultimately confirmed GPT-4 should now be “much less lazy,” according to the shared community feedback.

-

Philosophical Digital Library Envisioned: The concept of a digital library with AI philosophical agents led to suggestions of leveraging tools like Botpress and WorkAdventure for development, indicating an interest in merging philosophical discourse with AI technology.

-

Technical Setup Exchanges: Engineers like @ashpreetbedi shared their technical setups, which involve tools such as PyCharm and ScreenStudio, reflecting a shared interest in the practical aspects of engineering environments and tooling.

LAION Discord Summary

Call for Collaboration in Foundational Models: A discussion initiated by @pratikk10 invites interested parties to contribute to the creation of foundational models across different media, including text, image, and video, seeking exchanges with serious creators.

Bias Watch in Reinforcement Learning: RLHF’s introduction of significant biases is debated, with @pseudoterminalx and @astropulse noting the potentially counterproductive effect on base model development, while also observing a distinctive style in Midjourney’s images potentially rooted in such biases.

Tackling Textual Bias in Pixart: Conversations reveal challenges in unlearning textual biases from datasets, specifically version 5.1 of pixart. Critique is directed at the use of the JourneyDB dataset, with suggestions to find more robust alternatives for unbiased text modalities.

Innovative Reading of Ancient Texts: The Vesuvius Challenge 2023 Grand Prize announcement highlighted a successful method for reading 2000-year-old scrolls without unrolling them, using a TimeSformer model and a particle accelerator, although at a high cost of $40,000 per scroll.

Chinese Machine Learning Thrives Despite Restrictions: Discussions ponder the success of Chinese ML entities in light of GPU restrictions, noting their preemptive procurement of NVIDIA’s H100s and A100s before restrictions came into play, questioning the overall impact on technological progress.

Critique of Hugging Face’s OWLSAM: @SegmentationFault shared and commented on the performance of OWLSAM in a Hugging Face Space, indicating that the model lacked coverage in visual representation and accuracy in object detection.

LlamaIndex Discord Summary

-

LlamaIndex Gears Up for Big Release: A significant release of LlamaIndex is due this week, with cleanups signaling an important update for users planning to upgrade their LlamaIndex systems.

-

Boosting Multi-modal Applications on MacBooks: LlamaIndex’s recent integration allows building multi-modal applications on a MacBook, enhancing image reasoning. The related announcement and developments were shared in a tweet.

-

Home AI Triumphs at Hackathon with PDF Search Innovation: Home AI’s unique implementation of a RAG-powered search engine for home filtering won Best Use of PDF Parser at an in-person hackathon, details of which can be found in this tweet.

-

Hackathon Spurs LlamaIndex Enhancement: The hackathon saw participation from nearly 200 people, offering feedback for the LlamaIndex team, and a resource guide catering to developers was circulated.

-

Building Engineer-Conscious Chatbots: One discussion focused on creating a chatbot for engineers to interact with standards documents using LlamaIndex, supported by a GitHub project at GitHub - imartinez/privateGPT.

-

Navigating Vector Search Challenges: Users discussed methods to improve vector search results with Qdrant, sharing insights into embedding and score analysis, and highlighted the usage of a TypeScript code example for generating and comparing embeddings with Ollama.
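As a rough illustration of the score analysis mentioned in the vector search item above, the sketch below compares embeddings by cosine similarity, assuming cosine is the metric configured for the collection (Qdrant also supports other metrics). The vectors are hand-written toy values; in practice they would come from an embedding model, and the helper names here are illustrative only.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, documents):
    """Return (doc_id, score) pairs sorted from most to least similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in documents.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy 3-dimensional "embeddings" standing in for real model output.
documents = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.7, 0.7, 0.0],
    "doc_c": [0.0, 0.0, 1.0],
}
ranking = rank_by_similarity([1.0, 0.1, 0.0], documents)

for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

Printing the raw scores for a handful of known-good and known-bad matches is a quick way to see whether a poor result comes from the embeddings themselves or from the retrieval settings.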

OpenAccess AI Collective (axolotl) Discord Summary

-

New Player in Town - Qwen1.5: The OpenAccess AI community is abuzz with the release of Qwen1.5, promising higher performance with quantized versions. The release was accompanied by a comprehensive blog post and various development resources. However, some users already see room for improvement, noting the absence of a 30b model and the need for including standard deviation in benchmarks to account for noise.

-

GCP’s Competitive Edge: GCP is extending olive branches to enterprise customers with A100 instances available at $1.5-2 per hour on demand. This rate is significantly lower than what non-enterprise customers pay, spotlighting strategies employed by cloud providers to manage their ecosystems of users and resellers, including spotlight deals like GCP’s L4 spot price at $0.2 per hour.

-

Bridging the Quantization Gap: Within the Axolotl development community, there’s discussion around Hugging Face’s suggestion to quantize before merging models. @dreamgen suggests that this approach could benefit performance, sparking conversation about the potential of Bayesian optimization within the Axolotl framework and reports of a smoother implementation process.

-

Axolotl’s Growing Pains: Axolotl users are reporting installation woes, noting dependency conflicts, specifically with `torch` and `xformers`. A suggested fix involves using `torch 2.1.2`. Also, there’s a call to simplify YAML configurations in Axolotl, indicating that ease of use and accessibility are high on the developer wishlist, along with creating a Hugging Face Spaces UI for a more beginner-friendly setup.

-

Crickets in RLHF: A lone message in the #rlhf channel, seemingly directed to a specific user, asks for configurations related to zephyer, leaving much to the readers’ imagination regarding context or importance, and offers too little to chew on for the tech-hungry engineer audience.

Perplexity AI Discord Summary

-

Pro Payment Problems: Users like @officialjulian experienced payment issues with the Pro upgrade: funds were deducted without service activation, suggesting an error with Stripe’s payment system, while @yuki.ueda faced unresponsive customer support over billing inquiries. It was recommended to reach out to [email protected] for assistance.

-

AI Ethics in Education Debated: @worriedhobbiton highlighted the need for AI to offer unbiased and culturally sensitive support, especially in educational contexts like Pasco High School, reflecting the challenges in serving diverse student populations.

-

Mismatched AI Research Responses: User experiences like @byerk_enjoyer_sociology_enjoyer’s underscore the limitations of AI in delivering relevant search outcomes, as shown in the shared Perplexity AI search result, which did not match the research query about source validation.

-

Speedy Summary Solutions Sought: @sid.jjj expressed the need to improve API response times when generating summaries, noting that current processes take about 10 seconds for three parallel links, underlining a performance benchmark concern for AI engineers.

-

Discrepancies Detected in AI Usage: Concerns were raised by @jbruvoll about inconsistencies between interactive and API use of Perplexity AI, directing to a specific Discord message for further detail, which highlights the importance of aligning AI behavior across different interfaces.

DiscoResearch Discord Summary

-

Danish Delight in Model Merging: A Danish language model utilizing the dare_ties merge method achieved 2nd place on the Mainland Scandinavian NLG leaderboard, introduced by @johannhartmann and detailed here.

-

Merge Models Minus Massive Machines: @sebastian.bodza noted that LeoLM models can be merged without GPUs, highlighting alternatives like Google Colab for performing model merging tasks.

-

German Giant - Wiedervereinigung-7b-dpo-laser: @johannhartmann unveiled a 7b parameter German model combining top German models, named Wiedervereinigung-7b-dpo-laser, which has high MT-Bench-DE scores.

-

Merging Models More than a Score Game: The conversation between @johannhartmann and @bjoernp suggested an improvement in actual use-cases, like chat functions, after merging models, beyond just achieving high scores.

-

Code Cross-Language Searchability Soars: Jina AI released new code embeddings that support English and 30 programming languages with an impressive sequence length of 8192, as shared by @sebastian.bodza. The model is available on Hugging Face and is optimized for use via Jina AI’s Embedding API.

Alignment Lab AI Discord Summary

-

Taming Llama2’s Training Loss: An engineer faced an unexpected training loss curve with Llama2 using SFT, which might be due to a high learning rate. A peer recommended switching to Axolotl and provided specific configuration examples that suggest increasing the learning rate.

-

Ankr Networking for Node Collaboration: @anastasia_ankr reached out to discuss node infrastructure with the team and was directed to contact @748528982034612226 for further dialogue.

-

Community Engagement: Users @xterthy and @aslawliet kept the community active with brief greetings, contributing to a friendly atmosphere.

-

Awaiting Direct Communications: @mizzy_1100 flagged a direct message for @748528982034612226’s attention, indicating important pending communications.

-

Celebrating Collaborative Spirit: @rusch compared the Discord server to an “amazing discordian circus,” highlighting its dynamic and entertaining nature for knowledge sharing and innovation.

Datasette - LLM (@SimonW) Discord Summary

-

Audacity Echoes with Intel’s AI: Audacity’s integration of Intel’s AI tools adds powerful local features: noise suppression, transcription, music separation, and experimental music generation, presenting a challenge to expensive subscription services.

-

Thesis Enhancement with LLM: On LLM integration, @kiloton seeks advice for handling PDFs and web searches, and whether chat histories can be transferred and stored across different models.

-

SQL, Simplified with Hugging Face: @dbreunig points to the integration potential of `llm` with Hugging Face’s transformers, highlighting the Natural-SQL-7B model for its advanced Text-to-SQL capabilities and deep comprehension of complex questions.

LLM Perf Enthusiasts AI Discord Summary

- Qwen1.5 Debuts with Open Source Fanfare: Qwen1.5 has been introduced and open-sourced, offering base and chat models across six sizes. Resources shared by @potrock include a blog post, GitHub repository, a presence on Hugging Face, Modelscope, a user-friendly demo, and an invitation to join the Qwen Discord community.

- Efficiency Breakthrough with Qwen1.5: The 0.5B Qwen1.5 model has shown promise by exhibiting performance on par with the much larger Llama 7B model, signaling a new wave in model efficiency optimization as shared by @potrock.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1293 messages🔥🔥🔥):

-

Model Quantization Guidance: @xmrig explored quantizing the 70B LLM model on vast.ai due to local resource limitations. Advice offered included creating a large swap file and potentially using external USB-attached SSDs, avoiding the need for a powerful GPU during the quantization process (@spottyluck, @rtyax, @stoop poops).

-

GPTZero Analysis: Users debated the effectiveness of AI content detection tools such as GPTZero, with suggestions that it may not reliably detect more subtly augmented prompts and that it’s seen as a student’s tool, making it far from product-ready (@mr.userbox020, .meathead, @kaltcit, @righthandofdoom, @itsme9316).

-

Sparsetral, a New Sparse MoE Model: @morpheus.sandmann shared a Sparse MoE model based on Mistral, underscoring efficient operation on high-end hardware. The sparse model uses only a portion of weights during forward passes, applying adapters selectively through a router, which sparked the community’s interest but also raised questions on the intricacies of its training and functionality (@netrve, @itsme9316).

-

Merging vs. Single Model Fine-tuning: @givan_002 inquired about the efficiency of fine-tuning separate models for different datasets versus fine-tuning one model on a combined dataset. The consensus leaned towards using one comprehensive set for coherence and optimization (@amogus2432).

-

Community Assistance with LLM Tasks: Users @kaltcit, @potatooff, and others discussed various topics from LLM performance to practical advice on using LLMs and related technologies, like swapping VRAM to augment memory, showcasing collaborative problem-solving and knowledge sharing within the community.
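To give a feel for the routing idea behind the Sparsetral item above, here is a generic top-k mixture-of-experts sketch. It is not Sparsetral’s implementation: the experts here are plain Python callables rather than adapters, and the router logits are passed in directly instead of being produced by a learned layer. The point is only that each input runs through `k` selected experts, weighted by a softmax over their router scores, while the remaining experts are skipped.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)  # experts that were not selected are never called
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


def make_scaling_expert(scale):
    """Toy expert that multiplies its input by a constant."""
    return lambda x: [scale * v for v in x]


experts = [make_scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical scores for one token

routes = top_k_route(router_logits, k=2)
output = moe_forward([1.0, 2.0], experts, router_logits, k=2)
print(routes)
print(output)
```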

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Realtime Colors: Visualize your color palettes on a real website.

- Screenshot to HTML - a Hugging Face Space by HuggingFaceM4: no description found

- Qwen1.5 - a Qwen Collection: no description found

- Qwen/Qwen1.5-14B-Chat-GGUF · Hugging Face: no description found

- Huang Jensen Nvidia Ceo GIF - Huang Jensen Nvidia Ceo - Discover & Share GIFs: Click to view the GIF

- budecosystem/code-millenials-13b · Hugging Face: no description found

- Introducing Qwen1.5: GITHUB HUGGING FACE MODELSCOPE DEMO DISCORD Introduction In recent months, our focus has been on developing a “good” model while optimizing the developer experience. As we progress towards…

- Bing GIF - BING - Discover & Share GIFs: Click to view the GIF

- TheBloke/Llama-2-70B-GGUF · Hugging Face: no description found

- NousResearch/Nous-Hermes-Llama2-13b · Hugging Face: no description found

- Social Credit GIF - Social Credit - Discover & Share GIFs: Click to view the GIF

- dataautogpt3/miqu-120b · Hugging Face: no description found

- GitHub - TheBlokeAI/dockerLLM: TheBloke’s Dockerfiles: TheBloke’s Dockerfiles. Contribute to TheBlokeAI/dockerLLM development by creating an account on GitHub.

- wolfram/miquliz-120b · Hugging Face: no description found

- v0 by Vercel: Generate UI with simple text prompts. Copy, paste, ship.

- Swap on video RAM - ArchWiki: no description found

- Why No One Feels Like They Can Focus Anymore: And what to do about it

- Reddit - Dive into anything: no description found

TheBloke ▷ #characters-roleplay-stories (457 messages🔥🔥🔥):

- Dynamic Adapter Merging in Training: @jondurbin recommends merging the adapter from SFT only after DPO, rather than before, continuing with the adapter from SFT throughout the process. The insight came up while discussing the Direct Preference Optimization (DPO) Trainer as detailed in the TRL documentation.

- Discussions on DPO and Adapter Loading: @dreamgen shares that the DPO Trainer expects a specific dataset format, citing examples from the Anthropic/hh-rlhf dataset. Issues such as the attribute name `train` conflicting with the latest transformers version are addressed by @jondurbin.

- CharGen v2 - A Model for Roleplaying Creatives: @kalomaze unveils CharGen v2, a model designed to generate character descriptions for role playing, featured on Hugging Face, with a live version also available. The model creates character descriptions in a dialogue format, generating one field at a time to allow for partial re-rolls and reduce repetition.

- Fine-Tuning Role Play Models with Diverse Data: Users discuss strategies for preventing overfitting in role play models, suggesting mixing RP data with varied datasets like The Pile or MiniPile at the start of each epoch (@kalomaze). @stoop poops and @flail_. exchange views on enhancement tactics like incorporating assistant data with RP data to avoid dumb outputs.

- Eldritch ASCII Art Endeavors: @c.gato and others experiment with generating ASCII art using various models like Mixtral, Miqu, and GPT-4. The conversation showcases attempts at creating simple ASCII art with varying degrees of success, highlighting the limited but improving abilities of language models in this creative task.
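
The dataset format mentioned above can be sketched as follows. This is a minimal illustration, assuming the preference layout of `prompt`, `chosen`, and `rejected` fields, and assuming hh-rlhf-style transcripts in which both variants share the same conversation prefix and differ only in the final assistant turn. The helper names are hypothetical, and the sample text is invented for the example.

```python
MARKER = "\n\nAssistant:"


def split_prompt_and_response(transcript):
    """Split a transcript at its final assistant turn."""
    idx = transcript.rfind(MARKER)
    if idx == -1:
        raise ValueError("transcript has no assistant turn")
    cut = idx + len(MARKER)
    return transcript[:cut], transcript[cut:]


def to_preference_record(sample):
    """Convert one {'chosen', 'rejected'} transcript pair into
    {'prompt', 'chosen', 'rejected'}.

    Assumption: both transcripts start with the same prompt, so the
    rejected completion is whatever follows that shared prefix.
    """
    prompt, chosen = split_prompt_and_response(sample["chosen"])
    rejected = sample["rejected"][len(prompt):]
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


# Invented sample in the "\n\nHuman: ...\n\nAssistant: ..." layout.
sample = {
    "chosen": "\n\nHuman: Hi\n\nAssistant: Hello!",
    "rejected": "\n\nHuman: Hi\n\nAssistant: Go away.",
}
record = to_preference_record(sample)
```

A function like this would typically be applied to every row of the dataset before handing it to the trainer.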

Links mentioned:

- kubernetes-bad/chargen-v2 · Hugging Face: no description found

- 152334H/miqu-1-70b-sf · Hugging Face: no description found

- G-reen (G): no description found

- GitHub - MeNicefellow/Intelligent_RolePlaying_Sandbox: Contribute to MeNicefellow/Intelligent_RolePlaying_Sandbox development by creating an account on GitHub.

- DPO Trainer: no description found

- bigscience/sgpt-bloom-7b1-msmarco · Hugging Face: no description found

- Norquinal/claude_multiround_chat_30k · Datasets at Hugging Face: no description found

- ASCII Art Archive: A large collection of ASCII art drawings and other related ASCII art pictures.

TheBloke ▷ #training-and-fine-tuning (2 messages):

- Fine-tuning Llama2-7b-chat with website content: @gabrielelanzafame inquired about the possibility of fine-tuning Llama2-7b-chat using text scraped from a website. They want to train the model to generate copy in the brand’s tone or evaluate the brand tone in given copy.

- Strategies for Fine-tuning with Multiple Datasets: @givan_002 asked whether it is more effective to fine-tune a separate model for each individual dataset (airoboros, hermes, limarp) and then merge them, or to combine all datasets and fine-tune a single model.

TheBloke ▷ #model-merging (3 messages):

- Seeking Wisdom on Model Merging: @noobmaster29 expressed a desire to understand model merging at a deeper level, beyond just demo notebooks, and asked for reading or video sources.

- Diving Deep into Model Merging: @maldevide referenced their own gist as a thorough breakdown of model merging at the tensor operation level, aimed at providing a deeper understanding.

- Tool Suggestion for Model Merging: @maldevide suggested using ComfyUI-DareMerge, a tool that facilitates model merging for SD1.5 and SDXL, as a convenient resource already present in their notebook.

Links mentioned:

GitHub - 54rt1n/ComfyUI-DareMerge: ComfyUI powertools for SD1.5 and SDXL model merging: ComfyUI powertools for SD1.5 and SDXL model merging - GitHub - 54rt1n/ComfyUI-DareMerge: ComfyUI powertools for SD1.5 and SDXL model merging

TheBloke ▷ #coding (4 messages):

- Creating Character Memory with ChromeDB: @vishnu_86081 inquired about implementing long-term memory for each character in a chatbot app by using ChromeDB. They currently use the ooba web UI API for text generation and MongoDB for storing messages, and they seek guidance on setting up ChromeDB to keep each character’s messages separate.

- Seeking Shared Links for neThing.xyz: @rawwerks requested the re-sharing of links for their text-to-3D gen AI project called neThing.xyz, expressing concerns over their OpenAI costs while offering free user trials.

- Code-13B and Code-33B Links Reshared: @london shared links to Code-13B and Code-33B, two Large Language Models (LLMs) trained to generate code with detailed explanations, available on the Hugging Face platform. These models were trained using the datasets Python-Code-23k-ShareGPT and Code-74k-ShareGPT, with the former taking 42 hours and the latter 6 days & 5 hours to train.

Links mentioned:

- neThing.xyz - AI Text to 3D CAD Model: 3D generative AI for CAD modeling. Now everyone is an engineer. Make your ideas real.

- ajibawa-2023/Code-13B · Hugging Face: no description found

- ajibawa-2023/Code-33B · Hugging Face: no description found

Nous Research AI ▷ #off-topic (28 messages🔥):

- Quest for Kanji Mastery: User @lorenzoroxyolo expressed frustration with their attempts to train a model on Japanese Kanji generation, referring to a Stable Diffusion experiment by @hardmaru for inspiration. User .ben.com recommended considering a ControlNet instead of plain Stable Diffusion for nuanced tasks like rendering complex images.

- Model Training Challenges Discussed: Amid concerns about unsatisfactory kanji generation models, .ben.com suggested that @lorenzoroxyolo could study the IDS repository to better understand the structure of characters and potentially optimize model training.

- AI Breeding Game Theory Emerges: @bananawalnut69 speculated about an “AI breeding” game to generate child models using a method akin to the GAN approach, with @Error.PDF responding that reinforcement learning might align with the concept.

- Scams Awareness Raised: Discussion about the prevalence of AI-related scams on Facebook led @Error.PDF to lament the misinformation and shallow perceptions fostered by fictional narratives, which result in references to Skynet or WALL·E in serious AI talks.

- Meta Pushes VR Boundaries: @nonameusr shared a link to a Road to VR article and an accompanying developer blog post about Meta’s new VR headset prototypes with retinal resolution and advanced light field passthrough capability, presented at SIGGRAPH 2023.

Links mentioned:

- Huh Cat Huh M4rtin GIF - Huh Cat Huh M4rtin Huh - Discover & Share GIFs: Click to view the GIF

- Skeleton Skeleton Laugh GIF - Skeleton Skeleton laugh Laugh - Discover & Share GIFs: Click to view the GIF

- Tweet from hardmaru (@hardmaru): A #StableDiffusion model trained on images of Japanese Kanji characters came up with “Fake Kanji” for novel concepts like Skyscraper, Pikachu, Elon Musk, Deep Learning, YouTube, Gundam, Singularity, e…

- Meta Reveals New Prototype VR Headsets Focused on Retinal Resolution and Light Field Passthrough: Meta unveiled two new VR headset prototypes that showcase more progress in the fight to solve some persistent technical challenges facing VR today. Presenting at SIGGRAPH 2023, Meta is demonstrating a…

Nous Research AI ▷ #interesting-links (14 messages🔥):

- Boosting Frozen Networks via Fine-Tuning: @euclaise discussed the potential of fine-tuning only the normalization layers of frozen networks, hinting it could be a promising alternative to LoRA. This concept is based on the findings from a recent arXiv paper.

- New Flavor of Bagel Unleashed: @nonameusr shared a link to bagel-7b-v0.4 on Hugging Face, the non-DPO version of a Mistral-7b fine-tune. This version is reportedly better for roleplay usage, and the model card outlines compute details and data sources, with a DPO variant expected soon.

- DeepSeek Unveils Math-Savvy Model: @metaldragon01 introduced the DeepSeek-Math-7b-instruct model, alongside links to use cases and a paper detailing the model’s capabilities for mathematical reasoning using chain-of-thought prompts. Model details can be found on Hugging Face.

- Sparse Modeling with Sparsetral: @mister_poodle provided a link to Sparsetral-16x7B-v2, a model trained with QLoRA and MoE adapters. The Hugging Face model card supplies key information on training, prompt format, and usage.

- Sparsetral Ramblings on Reddit: @dreamgen pointed to a Reddit post about Sparsetral, a sparse MoE model derived from Mistral, alongside several links to resources and papers. The discussion also suggests improving Sparsetral by initializing experts from Mixtral, with the model available on Hugging Face.

Links mentioned:

- deepseek-ai/deepseek-math-7b-instruct · Hugging Face: no description found

- serpdotai/sparsetral-16x7B-v2 · Hugging Face: no description found

- jondurbin/bagel-7b-v0.4 · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- The Expressive Power of Tuning Only the Normalization Layers: Feature normalization transforms such as Batch and Layer-Normalization have become indispensable ingredients of state-of-the-art deep neural networks. Recent studies on fine-tuning large pretrained mo…

Nous Research AI ▷ #general (514 messages🔥🔥🔥):

- IPO Performance Revisited: @teknium commented that the IPO paper’s recommendations improve IPO, but @dreamgen noted that DPO still outperforms IPO in tests atop OpenHermes. @teknium acknowledged outdated information and a missed update.

- Quantization Sensitivity Discussed: @dreamgen highlighted that DPO is sensitive to beta settings, potentially problematic for users unable to run extensive beta sweeps. @teknium replied that Hermes Mixtral was also sensitive to beta, indicating the issue is broader.

- Upcoming Release Anticipation: @main.ai announced that 23.9 hours remain until an unspecified release based on AoE time, linking to a tweet for confirmation.

- Introduction of model-similarity Tool by fblgit: @fblgit presented a new tool for analyzing model similarities, capable of understanding weight differences and parameter alignment between various models. The tool is open for contributions on GitHub.

- Quality Concerns over Qwen 1.5 Release: Amid the Qwen 1.5 release, @nonameusr was underwhelmed, pointing to minimal benchmark improvements over Qwen 1. Meanwhile, @euclaise and @metaldragon01 discussed the prospects of smaller Qwen models and a potential Qwen-Miqu model merge.
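
To make the beta sensitivity discussed above concrete, here is a minimal sketch of the per-pair DPO loss, `-log(sigmoid(beta * margin))`, where the margin is the difference between the chosen and rejected log-probability ratios against a reference model. The log-probability values below are invented for illustration and do not come from the discussion.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta):
    """DPO loss for a single preference pair.

    Each argument is the summed log-probability of a completion under
    the policy or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) written as log(1 + exp(-margin))
    return math.log1p(math.exp(-margin))


# Invented log-probabilities: the policy already prefers the chosen answer.
logps = dict(policy_chosen_logp=-12.0, policy_rejected_logp=-15.0,
             ref_chosen_logp=-13.0, ref_rejected_logp=-14.0)

# The same pair produces a different loss (and gradient scale) per beta.
losses = {beta: dpo_loss(beta=beta, **logps) for beta in (0.0, 0.1, 0.5)}
```

Because beta multiplies the margin directly, it changes how strongly each pair pulls on the policy, which is one way to see why a sweep may be needed.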

Links mentioned:

- Qwen1.5 72B Chat - a Hugging Face Space by Qwen: no description found

- HuggingChat - Assistants: Browse HuggingChat assistants made by the community.

- Social Credit GIF - Social Credit - Discover & Share GIFs: Click to view the GIF

- You Naughty Naughty Pointing GIF - You Naughty Naughty Pointing Smile - Discover & Share GIFs: Click to view the GIF

- Introducing Qwen1.5: GITHUB HUGGING FACE MODELSCOPE DEMO DISCORD Introduction In recent months, our focus has been on developing a “good” model while optimizing the developer experience. As we progress towards…

- Qwen/Qwen1.5-7B-Chat-GGUF · Hugging Face: no description found

- CausalLM/34b-beta · Hugging Face: no description found

- wolfram/miquliz-120b · Hugging Face: no description found

- Tweet from qnguyen3 (@stablequan): Introducing Quyen, our first flagship LLM series based on the Qwen1.5 family with 6 different sizes: Quyen-SE (0.5B) Quyen-Mini (1.8B) Quyen (4B) Quyen-Plus (7B) Quyen-Pro (14B) Quyen-Pro-Max (72B) Al…

- Tweet from Vaibhav (VB) Srivastav (@reach_vb): It’s done! 🤯 miqudev merged the PR from @arthurmensch ↘️ Quoting Vaibhav (VB) Srivastav (@reach_vb) leak/ acc.

- Tweet from Awni Hannun (@awnihannun): Qwen1.5 is out, and already works with MLX ! pip install -U mlx-lm Models from 0.5B to 72B, all super high quality. 0.5B runs fast with MLX on my laptop, high quality, hardly any RAM: ↘️ Quoting J…

- Kind request for updating MT-Bench leaderboards with Qwen1.5-Chat series · Issue #3009 · lm-sys/FastChat: Hi LM-Sys team, we would like to present the generation results and self-report scores of Qwen1.5-7B-Chat, Qwen1.5-14B-Chat, and Qwen1.5-72B-Chat on MT-Bench. Could you kindly help us verify them a…

- GitHub - fblgit/model-similarity: Simple Model Similarities Analysis: Simple Model Similarities Analysis. Contribute to fblgit/model-similarity development by creating an account on GitHub.

Nous Research AI ▷ #ask-about-llms (36 messages🔥):

- Fusing QKV Matrices Optimizes Performance: @carsonpoole clarified to @sherlockzoozoo that fusing the Query, Key, and Value (QKV) matrices in transformer models is mathematically identical to keeping them separate but slightly faster, as it reduces the number of operations and memory loads required.

- Understanding LLMs for Conversation Memory: In a conversation initiated by @lushaiagency, @4biddden mentioned using langchain to handle conversation history with LLMs, while .ben.com and @samuel.stevens discussed issues around context size and history breakdown, suggesting that summarizing history or using long-context models could be solutions.

- Licensing Questions on Hermes 2.5 Dataset: @tculler91 expressed concerns about the licensing change between the OpenHermes and Hermes 2.5 datasets, looking for clarification for commercial use.

- Configuring Special Tokens for OpenHermes: @gabriel_syme sought advice on token configurations for fine-tuning an OpenHermes model, and @teknium advised including `“
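
The QKV fusion point above can be checked with a small example. This is a sketch in plain Python with hand-picked toy weights (all values are hypothetical): concatenating the three projection matrices column-wise and doing one matrix multiply yields the same Q, K, and V as three separate multiplies.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def hconcat(*mats):
    """Concatenate matrices column-wise (they must share a row count)."""
    return [sum((list(m[i]) for m in mats), []) for i in range(len(mats[0]))]


# Toy input: 2 tokens, model width 3, head width 2.
x = [[1.0, 2.0, 3.0],
     [0.0, 1.0, -1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
w_k = [[0.0, 1.0], [1.0, 0.0], [1.0, -1.0]]
w_v = [[3.0, 0.0], [0.0, 3.0], [1.0, 1.0]]

# Unfused: three separate projections.
q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)

# Fused: one projection against [W_q | W_k | W_v], then slice the result.
head = len(w_q[0])
fused = matmul(x, hconcat(w_q, w_k, w_v))
q_f = [row[:head] for row in fused]
k_f = [row[head:2 * head] for row in fused]
v_f = [row[2 * head:] for row in fused]
```

The speed benefit on real hardware comes from launching one larger matrix multiply and reading `x` once, which this toy version does not measure.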

Eleuther ▷ #general (363 messages🔥🔥):

- Creating Foundational Models Discussion: User @pratikk10 expressed an interest in connecting with anyone considering creating their own foundational models for various applications, including text-to-text/image/video.

- High Cost of Foundational Models Highlighted: In response to @pratikk10, user @_3sphere pointed out the expensive nature of developing foundational models.

- Qwen1.5 Model Release and Details: User @johnryan465 shared links to Qwen1.5, a 72B parameter chat model, including its introduction, repositories, and demos (Qwen1.5 Blog Post, Qwen GitHub, Hugging Face Space).

- Interpreting Large Language Models: Users including @rami4400, @_3sphere, and @fern.bear debated the effectiveness and potential of interpretability in neural networks like LLMs, with comparisons to the human genome project and skepticism about whether interpretability aligns with the nature of intelligence.

- Concerns About AGI Claims and Model Capabilities: @fern.bear, @vara2096, and @worthlesshobo expressed skepticism regarding the performance claims of some models, like Qwen 1 and 2 on the MMLU benchmark, and the potential of overfitting or “cheating” on test sets.

Links mentioned:

- Teslas Have a Minor Issue Where the Wheels Fly Off While Driving, Documents Show: Despite knowing about chronic “flaws,” Tesla reportedly blamed drivers for glaring defects like collapsed suspensions and breaking axles.

- Cavemanspongebob React GIF - Cavemanspongebob Caveman Spongebob - Discover & Share GIFs: Click to view the GIF

- Qwen1.5 72B Chat - a Hugging Face Space by Qwen: no description found

- Join the Self-Play Language Models Discord Server!: Check out the Self-Play Language Models community on Discord - hang out with 15 other members and enjoy free voice and text chat.

- Troy Community GIF - Troy Community Room - Discover & Share GIFs: Click to view the GIF

- Introducing Qwen1.5: GITHUB HUGGING FACE MODELSCOPE DEMO DISCORD Introduction In recent months, our focus has been on developing a “good” model while optimizing the developer experience. As we progress towards…

- simple ai - chat: no description found

- File:Clock 10-30.svg - Wikimedia Commons: no description found

- Minimum description length - Wikipedia: no description found

- GitHub - idiap/nvib: Contribute to idiap/nvib development by creating an account on GitHub.

- GitHub - SimonKohl/probabilistic_unet: A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations.: A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations. - GitHub - SimonKohl/probabilistic_unet: A U-Net combined with a variat…

Eleuther ▷ #research (61 messages🔥🔥):

- Clarification on Multi-task Loss Handling: @clashluke discussed PCGrad’s role in improving estimates over manual per-gradient reweighting. They raised concerns about whether this method preserves gradient magnitudes, referencing the official code from facebookresearch/encodec.

- Scaling Studies Query: @stellaathena initiated a discussion on whether a post-hoc correlation could convert a Kaplan et al.-style scaling laws study to a Hoffman et al.-style one, potentially reducing the number of training runs needed. They shared a link to the related Twitter conversation and blog post for further exploration.

- Hypernetworks for LoRA Weights: Dialogue between @.rend, @thatspysaspy, and others covered the idea of using hypernetworks to generate LoRA weights for pretrained language models tailored to specific contexts. An issue on davisyoshida/lorax detailed a related interest, and @thatspysaspy offered a code sample for experimentation.

- Discussing CNN Training Methodology: @jstephencorey voiced confusion about a video’s explanation of CNN training, sparking debate on how layers learn and the effectiveness of pruning. Users like @Hawk and @xylthixlm discussed the accuracy of the video’s claims, although opinions differed on the technicalities.

- Polyquant Activation Function Debate: In a conversation about substituting traditional activation functions with alternatives like polynomials, @clashluke and others scrutinized a novel architecture’s performance on ImageNet without typical activation functions. @fern.bear questioned the nonlinearity of the proposed product function, while @clashluke highlighted its optimization potential.
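
For readers unfamiliar with PCGrad, mentioned in the first item above, the core step is a projection: when two task gradients conflict (negative dot product), each has its component along the other removed before the gradients are summed. The sketch below is a simplified plain-Python illustration with made-up two-dimensional gradients. It omits the random task ordering used in the published method and is not the encodec balancer code.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def pcgrad(grads):
    """Combine per-task gradients with PCGrad-style projection.

    For each task gradient g_i, remove its component along any other
    task's original gradient g_j that it conflicts with, then sum.
    """
    projected = [list(g) for g in grads]
    for i, g_i in enumerate(projected):
        for j, g_j in enumerate(grads):
            if i == j:
                continue
            conflict = dot(g_i, g_j)
            if conflict < 0:  # gradients point in opposing directions
                scale = conflict / dot(g_j, g_j)
                for d in range(len(g_i)):
                    g_i[d] -= scale * g_j[d]
    return [sum(col) for col in zip(*projected)]


# Two conflicting toy gradients (dot product is -1).
combined = pcgrad([[1.0, 0.0], [-1.0, 1.0]])
# Non-conflicting gradients are simply summed.
untouched = pcgrad([[1.0, 0.0], [0.0, 1.0]])
```

Note that projection shortens each conflicting gradient, which is one way to see the magnitude-preservation concern raised in the discussion.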

Links mentioned:

- Tweet from Grigoris Chrysos (@Grigoris_c): Proud for our new #ICLR2024 paper attempting to answer: Are activation functions required for all deep networks? Can networks perform well on ImageNet recognition without activation functions, max po…

- neural-style-pt/neural_style.py at master · ProGamerGov/neural-style-pt: PyTorch implementation of neural style transfer algorithm - ProGamerGov/neural-style-pt

- encodec/encodec/balancer.py at main · facebookresearch/encodec: State-of-the-art deep learning based audio codec supporting both mono 24 kHz audio and stereo 48 kHz audio. - facebookresearch/encodec

- Zoology (Blogpost 2): Simple, Input-Dependent, and Sub-Quadratic Sequence Mixers: no description found

- Tweet from Stella Biderman (@BlancheMinerva): @Wetassprior @daphneipp Is there a post-hoc correlation that can be applied to a scaling laws study done Kaplan et al.-style to get one done Hoffman et al.-style? Note that this would be very high val…

- Predicting LoRA weights · Issue #6 · davisyoshida/lorax: I would like to use a separate neural network to predict LoRA weights for a main neural network, while training both neural networks at the same time. How can I manipulate the pytrees or to achieve…

Eleuther ▷ #scaling-laws (2 messages):

- Exploring the Tensor Programs Issue: @lucaslingle explained that @.johnnysands’ mention of “wrong init” refers to the findings from the Tensor Programs 4 and 5 papers, which show that the “standard parameterization” can cause infinite logits when the model width increases indefinitely.

Eleuther ▷ #interpretability-general (10 messages🔥):

- Simple Model Analysis Tool Launched: @fblgit introduced model-similarity, a tool for computing per-layer cosine similarities in the parameter space of different models.

- Comparison with CCA/CKA: @xa9ax inquired about the insights offered by the model-similarity tool compared to Canonical Correlation Analysis (CCA) or Centered Kernel Alignment (CKA), and @digthatdata clarified that the tool specifically contrasts the parameter space.

- Directions in Vector Space Explained: @norabelrose and @pinconefish discussed the notion of a “direction,” and @norabelrose clarified it’s a 1D subspace with orientation in vector space rather than just any unit vector.

- Understanding Vectors Beyond Coordinates: @digthatdata outlined that in deep learning, a vector is understood as both a direction and a magnitude, not just a position in space, and further elaborated on how cosine similarity measures the angle between two vectors.

- Vectors as Directions and Operators: @digthatdata continued to explain that a vector can function as an “operator,” illustrating with the vectors representing `KING`, `QUEEN`, `MAN`, and `WOMAN` how the difference vector `z` between `WOMAN` and `MAN` acts semantically to alter the meaning from `KING` to `QUEEN`.
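
The cosine similarity and “vector as operator” ideas above can be shown with hand-made toy vectors. The three-dimensional embeddings below are hypothetical (constructed so the arithmetic is exact) and are not taken from any real embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Toy embeddings: axis 0 = "person", axis 1 = "female", axis 2 = "royal".
MAN = [1.0, 0.0, 0.0]
WOMAN = [1.0, 1.0, 0.0]
KING = [1.0, 0.0, 1.0]
QUEEN = [1.0, 1.0, 1.0]

# z is the difference vector from MAN to WOMAN.
z = [w - m for w, m in zip(WOMAN, MAN)]

# Applying z to KING acts as an operator that moves it onto QUEEN.
shifted = [k + d for k, d in zip(KING, z)]

sim_before = cosine_similarity(KING, QUEEN)
sim_after = cosine_similarity(shifted, QUEEN)
```

In real embedding spaces the shifted vector only lands near `QUEEN` rather than exactly on it, so the comparison is usually made by ranking cosine similarities.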

Links mentioned:

GitHub - fblgit/model-similarity: Simple Model Similarities Analysis: Simple Model Similarities Analysis. Contribute to fblgit/model-similarity development by creating an account on GitHub.

Eleuther ▷ #lm-thunderdome (16 messages🔥):

- Statistics Snafu Solved: @hailey_schoelkopf clarified that bootstrapping for standard error on MMLU matches the pooled variance formula, not the combined variance. The pooled variance formula is now selected for use in their project over the current combined variance formula.

- Statistical Dragons Ahead: @stellaathena suggested keeping both the old and new statistical formulas in their codebase for expert use, with a humorous warning comment added by @hailey_schoelkopf: `# here there be dragons`.

- Harnessing Language Model Evaluation: @jbdel. prepared a clean fork containing updates for the lm-evaluation-harness in anticipation of a meeting, with all changes available in a commit on GitHub. The updated harness allows evaluation of language models with specific arguments and task settings.
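
The difference between the two formulas named above can be sketched with the textbook definitions. This is an illustration only, not the lm-evaluation-harness code: pooled variance averages the within-group variances weighted by degrees of freedom, while combined variance is the variance of all samples merged together, so it also includes the spread between group means. The per-sample scores are invented.

```python
import statistics


def pooled_variance(groups):
    """Within-group variance, weighted by each group's degrees of freedom."""
    numerator = sum((len(g) - 1) * statistics.variance(g) for g in groups)
    denominator = sum(len(g) - 1 for g in groups)
    return numerator / denominator


def combined_variance(groups):
    """Sample variance of all groups merged into one sample."""
    total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / total
    within = sum((len(g) - 1) * statistics.variance(g) for g in groups)
    between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2
                  for g in groups)
    return (within + between) / (total - 1)


# Invented per-sample correctness scores for two subtasks.
task_a = [0.0, 1.0, 1.0, 0.0]
task_b = [1.0, 1.0, 1.0, 0.0, 1.0, 1.0]

pooled = pooled_variance([task_a, task_b])
combined = combined_variance([task_a, task_b])
```

When subtasks have different mean accuracies, the two values diverge, which is why the choice of formula affects the aggregated standard error.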

Links mentioned:

- Use Pooled rather than Combined Variance for calculating stderr of task groupings by haileyschoelkopf · Pull Request #1390 · EleutherAI/lm-evaluation-harness: This PR updates the formula we use for aggregating stderrs / sample std. deviations across groups of tasks. In this PR: formula: result: hf (pretrained=mistralai/Mistral-7B-v0.1), gen_kwargs: (Non…

- GitHub - jbdel/lm-evaluation-harness-multi: A framework for few-shot evaluation of language models.: A framework for few-shot evaluation of language models. - GitHub - jbdel/lm-evaluation-harness-multi: A framework for few-shot evaluation of language models.

- VLM · jbdel/lm-evaluation-harness-multi@83209a8: no description found

Eleuther ▷ #gpt-neox-dev (3 messages):

- Seeking Pre-tokenized Validation/Test Datasets: @pietrolesci expressed dissatisfaction with the current validation set created using the pile-uncopyrighted dataset from Hugging Face. They inquired about the availability of pre-tokenized validation/test splits akin to the pre-tokenized training set on Hugging Face.

- Introducing Web Rephrase Augmented Pre-training (WRAP): @elliottdyson shared an arXiv paper proposing WRAP, a method to improve large language model pre-training by paraphrasing web data into higher quality formats, which could potentially reduce compute and data requirements.

- Comparing WRAP Efficacy to Fine-Tuning: @elliottdyson pondered whether using WRAP would be more beneficial than just fine-tuning models on the same data, and suggested that a comparative study on data processed with and without WRAP, followed by fine-tuning, could shed some light on its efficacy.

Links mentioned:

Rephrasing the Web: A Recipe for Compute and Data-Efficient Language Modeling: Large language models are trained on massive scrapes of the web, which are often unstructured, noisy, and poorly phrased. Current scaling laws show that learning from such data requires an abundance o…

HuggingFace ▷ #general (321 messages🔥🔥):

- AI Training and Career Progression Tips: User @dykyi_vladk shared their learning journey in ML over the past year, mentioning specific models and techniques they’ve studied. @lunarflu recommended building demos and sharing them as a next step to becoming a professional.

- Server Troubles Amid A100G Launch: @lolskt reported the server being down for nearly 12 hours and queried if it was related to the A100G launch. After a discussion, @lunarflu asked them to send details by email and offered to forward the issue to the team.

- Modifying Model Responses: User @tmo97 sought advice on prompting models to stop giving warnings. @lunarflu suggested techniques to modify prompts and discussed the balance of safety and user control in AI responses.

- HuggingFace Fellowship & Model Uploading Queries: @not_lain answered multiple questions about using the HuggingFace platform, including the process to join the fellowship program and details about uploading custom pipeline instances and models.

- Inference Performance and Hardware Utilization: User @prod.nova inquired why their 4 RTX A5000 GPUs weren’t being utilized to their full potential during generation. @meatfucker clarified that inference usually utilizes a single GPU and suggested running an instance on each card to distribute the task.
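
The “one instance per card” suggestion above could be set up roughly as follows. This is a minimal sketch under stated assumptions: the work is a list of independent prompts, each worker process is pinned to one GPU through the `CUDA_VISIBLE_DEVICES` environment variable, and the helper names are hypothetical. It only builds the job descriptions and does not launch anything.

```python
import os


def shard_round_robin(items, num_shards):
    """Split items into num_shards lists, dealing them out in turn."""
    return [items[i::num_shards] for i in range(num_shards)]


def worker_env(gpu_index, base_env=None):
    """Environment for a worker that should only see one GPU."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env


# Ten placeholder prompts spread across four cards.
prompts = [f"prompt {i}" for i in range(10)]
shards = shard_round_robin(prompts, num_shards=4)

jobs = [
    {"gpu": gpu, "env": worker_env(gpu, base_env={}), "prompts": shard}
    for gpu, shard in enumerate(shards)
]
```

Each entry in `jobs` could then be handed to `subprocess.Popen` with its `env`, running whatever generation script is in use, so that four independent model instances work through their shards in parallel.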

Links mentioned:

- Templates for Chat Models: no description found

- GitHub - Significant-Gravitas/AutoGPT: AutoGPT is the vision of accessible AI for everyone, to use and to build on. Our mission is to provide the tools, so that you can focus on what matters.: AutoGPT is the vision of accessible AI for everyone, to use and to build on. Our mission is to provide the tools, so that you can focus on what matters. - GitHub - Significant-Gravitas/AutoGPT: Aut…

- GitHub - Sanster/tldream: A tiny little diffusion drawing app: A tiny little diffusion drawing app. Contribute to Sanster/tldream development by creating an account on GitHub.

- Add `push_to_hub( )` to pipeline by not-lain · Pull Request #28870 · huggingface/transformers: What does this PR do? this will add a push_to_hub( ) method when working with pipelines. this is a fix for #28857 allowing for easier way to push custom pipelines to huggingface Fixes # (issue) #28…

- TheBloke (Tom Jobbins): no description found

HuggingFace ▷ #today-im-learning (1 messages):

- Pyannote Praised for Performance: @marc.casals.salvador expressed admiration for Pyannote, a tool for speaker diarization, citing its excellent performance.

- Introduction of Diarizationlm: @marc.casals.salvador drew attention to Diarizationlm, a module that simultaneously trains Automatic Speech Recognition (ASR) and diarization to improve annotations by correcting diarizations through the speech itself.

HuggingFace ▷ #cool-finds (5 messages):

- Empty Model Cards on HuggingFace: @tonic_1 pointed out that model cards are empty on HuggingFace, which can be a hindrance for users seeking model information.

- Interactive Gradio Demo Lacks Visibility: @tonic_1 shared excitement about a cool gradio demo but expressed concerns over the lack of details provided for this potentially overlooked model.

- Innovative LLaMA-VID Model Introduced: @tonic_1 shared a model card for LLaMA-VID, detailing an open-source chatbot that supports hour-long videos by using an extra context token. The model and more information are available on Hugging Face.

- DV Lab’s Under-the-Radar Model Hosted on HuggingFace: @tonic_1 mentioned discovering another compelling but underappreciated model by DV Lab on HuggingFace, expressing hope to be able to serve it.

- Exploring Cost-Efficient LLM Usage: @jessjess84 shared an arXiv paper describing research on cost-efficient strategies for inference and fine-tuning of large language models (LLMs), including distributed solutions leveraging consumer-grade networks.

Links mentioned:

- Distributed Inference and Fine-tuning of Large Language Models Over The Internet: Large language models (LLMs) are useful in many NLP tasks and become more capable with size, with the best open-source models having over 50 billion parameters. However, using these 50B+ models requir…

- YanweiLi/llama-vid-7b-full-224-long-video · Hugging Face: no description found

HuggingFace ▷ #i-made-this (3 messages):

- Ankush’s Finetuning Feat: User @andysingal announced a finetuned model developed by Ankush Singal. The model is an optimization based on a previous model from OpenPipe and comes with an installation and usage guide.

- Community Applause for New Finetuned Model: @osanseviero congratulated @andysingal on the new finetuned model, hailing it as very cool and fiery with a 🔥 emoji.

Links mentioned:

Andyrasika/mistral-ft-optimized-dpo · Hugging Face: no description found

HuggingFace ▷ #reading-group (3 messages):

- Discover a Memetastic AI Milestone: User typoilu shared an article titled “A Meme’s Glimpse into the Pinnacle of Artificial Intelligence (AI) Progress in a Mamba Series LLM Enlightenment,” claiming it contains insightful information about AI progress. There was no further discussion of the content.

- Scheduled Talk May Need Rescheduling: @ericauld might have jury duty on Friday and suggested postponing the planned talk to Friday the 16th. An adjustment in the event calendar may be needed.

- Chad Brings Support with a Dash of Luck: In response to the potential rescheduling, @chad_in_the_house showed understanding and wished @ericauld good luck with jury duty if it happens.

HuggingFace ▷ #computer-vision (1 messages):

- Invitation to Share Computer Vision Expertise: User @danielsamuel131 has made an open call for those with expertise in computer vision to come forward and share their knowledge. Interested individuals are encouraged to send him a direct message.

HuggingFace ▷ #NLP (11 messages🔥):

- Fine-Tuning Frenzy: @joeyzero is looking to fine-tune a chatbot with noisy old data and seeks resources on conversational datasets and tools for managing datasets easily. The user is open to suggestions including datasets ready for conversational use, robust editing tools, and any tutorials or tips for such NLP projects.

- Papillon’s NLP Tool Shared: @8i8__papillon__8i8d1tyr shared a tool for Named Entity Recognition (NER) and Sentiment Analysis based on Flair and FLERT with a link to the GitHub repository.

- YAML Fine-Tuning Challenges: @denisjannot experienced issues with fine-tuning Mistral 7b for YAML generation, where unintended parts of the YAML are modified when a second request asks to alter specific parts.

- Trajectory for YAML Fine-Tuning: @denisjannot mentions plans to train the Instruct model to see if it improves the YAML modification issue. They asked for ideas to enhance the fine-tuning process without including modification examples in the training dataset.

- Few-Shot Learning Suggestion: @meatfucker advised including examples of the desired modification in the prompt to induce one-shot or few-shot learning, which should work well even if the examples aren’t in the training dataset. This is intended to guide the model for YAML modifications.
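
The few-shot suggestion above amounts to placing worked before/after pairs ahead of the real request. The sketch below shows one possible prompt layout in plain Python. The section headers, the instruction sentence, and the example YAML are all hypothetical choices, not a format that any particular model requires.

```python
def build_few_shot_prompt(examples, current_yaml, instruction):
    """Assemble a prompt that shows worked YAML edits before the real one.

    Each example is a dict with 'before', 'request', and 'after' keys.
    """
    parts = [
        "Apply the requested change to the YAML and leave every other key untouched.",
        "",
    ]
    for ex in examples:
        parts += ["### YAML", ex["before"],
                  "### Request", ex["request"],
                  "### Updated YAML", ex["after"], ""]
    # The final block is left open so the model completes the updated YAML.
    parts += ["### YAML", current_yaml,
              "### Request", instruction,
              "### Updated YAML"]
    return "\n".join(parts)


examples = [{
    "before": "name: demo\nreplicas: 1",
    "request": "Set replicas to 3.",
    "after": "name: demo\nreplicas: 3",
}]
prompt = build_few_shot_prompt(
    examples,
    current_yaml="name: api\nport: 8080\nreplicas: 2",
    instruction="Change the port to 9090.",
)
```

The examples deliberately show a single key changing while the rest stays identical, which is the behavior the model is being nudged towards.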

Links mentioned:

bootcupboard/flair/SentimentalNERD.py at main · CodeAKrome/bootcupboard: It’s bigger on the inside than the outside! Contribute to CodeAKrome/bootcupboard development by creating an account on GitHub.

LM Studio ▷ #💬-general (103 messages🔥🔥):

- Confusion in Choosing the Right Model: @hades____ expressed feeling overwhelmed by the hundreds of available models and asked for guidance on selecting the best one for their needs. Suggestions pointed to resources and to focusing on specific use cases rather than seeking a “one size fits all” model.

- Model Compatibility Queries: Various users, including @dicerx and @foxwear, asked about connecting LM Studio with specific applications and models. The conversation covered potential compatibility with iOS apps and multimodal models, seeking clarity on integration possibilities.

- Resource and Template Requests: Users like @Jonatan, @funapple, and @ayelwen requested code examples, prompt templates, and explanations of model differences, highlighting a community need for easily accessible and straightforward documentation.

- Technical Assistance Sought for LM Studio: @plaraje, @perkelson, @ts9718, and @joelthebuilder asked for help with issues ranging from prompt generation quirks to LM Studio functionality, such as changes in zoom behavior and server connection guidance.

- Jokes and Light-Hearted Comments Flow: Amidst the technical discussions, users like @sica.rios, @rugg0064, and @wildcat_aurora infused humor into the conversation, joking about AI’s capabilities and making light of language misunderstandings while interacting with the AI models.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Introducing Qwen1.5: GITHUB HUGGING FACE MODELSCOPE DEMO DISCORD Introduction In recent months, our focus has been on developing a “good” model while optimizing the developer experience. As we progress towards…

- liuhaotian/llava-v1.5-7b · gguf variant availability: no description found

- GitHub - FriendofAI/LM_Chat_TTS_FrontEnd.html: LM_Chat_TTS_FrontEnd is a simple yet powerful interface for interacting with LM Studio models using text-to-speech functionality. This project is designed to be lightweight and user-friendly, making it suitable for a wide range of users interested in exploring voice interactions with AI models.

- GitHub - THUDM/CogVLM: a state-of-the-art-level open visual language model | 多模态预训练模型

- Reddit - Dive into anything: no description found

LM Studio ▷ #🤖-models-discussion-chat (3 messages):

- Brief Mention of Channel Reference: @egalitaristen referenced a channel by its ID, <#1167546635098804284>, but provided no context or further details.

- Inquiry About a Model Usage: @delfi_r_88002 asked whether anyone is using the model llava-v1.6-34b.Q4_K_M.gguf, but did not offer further information or context.

- New Model DeepSeek-Math-7B Released: @czkoko announced the release of DeepSeek’s new model, DeepSeek-Math-7B. The model can be found on GitHub.

Links mentioned:

GitHub - deepseek-ai/DeepSeek-Math: Contribute to deepseek-ai/DeepSeek-Math development by creating an account on GitHub.

LM Studio ▷ #announcements (1 messages):

- LM Studio v0.2.13 Drops with Qwen 1.5: @yagilb announced the release of LM Studio v0.2.13, featuring support for Qwen 1.5 across a range of model sizes (0.5B, 1.8B, 4B, 7B, 72B). Users can download the new version directly from https://lmstudio.ai or update through the app.

- Pin Your Favorites in LM Studio: The new LM Studio update allows users to pin models and chats to the top of their lists, making favorite tools more accessible.

- Qwen’s New Models Now Open Source: Qwen1.5 models have been released and open-sourced in sizes from 0.5B to 72B, with base, chat, AWQ, GPTQ, and GGUF variants available on platforms including Hugging Face and LM Studio.

- Integration and Accessibility Enhancements: Qwen1.5 features quality improvements and integrates into Hugging Face transformers, removing the need for trust_remote_code. APIs are offered on DashScope and Together; the recommended model to try is Qwen1.5-72B-chat on https://api.together.xyz/.

- Performance Tuning in LM Studio App: The latest LM Studio release eliminates the subprocesses used to measure CPU and RAM on Windows, improving the app’s performance.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- Tweet from LM Studio (@LMStudioAI): LM Studio v0.2.13 is available now! What’s New: - 🚀 Support for Qwen 1.5! (0.5B, 1.8B, 4B, 7B, 72B) And: - 🤖📌 Pin models to the top of the list - 💬📌 Pin chats, too Download from https://…

- Qwen1.5 GGUF - a lmstudio-ai Collection: no description found

LM Studio ▷ #🧠-feedback (9 messages🔥):

- Shoutout for LM Studio’s Simplicity: @drawless111 shared a link to a Medium blog post praising LM Studio for letting anyone experience the power of large language models through a simple user interface, with no technical expertise needed.

- Feature Request Acknowledgment: @heyitsyorkie responded to @foobar8553’s call for a resume feature by pointing to an existing feature request and asking them to show support for it.

- Appreciation for LM Studio App: @gli7ch.com expressed gratitude to the LM Studio developers for making work with LLMs easier, especially when integrating them with automation tasks.

- Inquiry About Investment Opportunities: @ahakobyan. asked about investment opportunities, which led to a witty exchange between @fabguy and @ptable, both humorously claiming they’d accept money for their fabricated and competing “foundations.”

- Questioning Odd Zoom Shortcuts: @perkelson questioned the logic behind LM Studio’s non-standard zoom shortcuts, which differ from those commonly used in browsers.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Elio And Lea GIF - Elio and Lea - Discover & Share GIFs: Click to view the GIF

- LM Studio: experience the magic of LLMs with Zero technical expertise: Your guide to Zero configuration Local LLMs on any computer.

LM Studio ▷ #🎛-hardware-discussion (40 messages🔥):

- Troubleshooting LM Studio on Linux: @heyitsyorkie advised @aswarp to use the latest Ubuntu 22 to avoid glibc errors when running LM Studio, adding that the Linux build still has compatibility issues with older Ubuntu versions.

- GPU Utilization Mastery for Chatbots: @heyitsyorkie reassured @shylor that near-full utilization of GPU memory without spilling into shared RAM is good, since heavy use of shared RAM can degrade performance over time in LLM chatbot interactions.

- Speeding Up Token Generation: @roscopeko discussed ways to reduce the time to first token, mentioning a 6-second delay on a powerful setup; members like @aswarp and @alastair9776 suggested different approaches, including picking another model, quantization, or trying out the AVX beta.

- Shadow PC Machinery Under the Microscope: @goldensun3ds shared a YouTube video comparing running LLMs on a Shadow PC with a powerful local PC setup, noting the Shadow PC’s slower model loading.

- Grappling with Hardware Resources & Model Configurations: @robert.bou.infinite detailed the GPU configurations available for running LM Studio across various Nvidia offerings in data centers, outlining the implications of NVLINK technology and Kubernetes Pods compatibility, and suggesting that multi-GPU setups can be leveraged effectively for LLM workloads.
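As a rough illustration of the GPU-memory and quantization points above, the back-of-the-envelope arithmetic can be sketched as follows. The 4.5 bits-per-weight figure (a stand-in for a 4-bit quantization plus metadata), the 1.5 GiB overhead allowance for KV cache and runtime buffers, and the function names are all assumptions made for this example; real usage depends on the quantization format, context length, and runtime.

```python
def weight_size_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_vram(
    params_billion: float,
    bits_per_weight: float,
    vram_gib: float,
    overhead_gib: float = 1.5,  # assumed allowance for KV cache and buffers
) -> bool:
    """True if weights plus the assumed overhead stay within dedicated VRAM."""
    return weight_size_gib(params_billion, bits_per_weight) + overhead_gib <= vram_gib


# Hypothetical 16 GiB card, 4.5 bits per weight assumed for both models.
for size in (7, 34):
    gib = weight_size_gib(size, 4.5)
    print(f"{size}B: ~{gib:.1f} GiB of weights, fits: {fits_in_vram(size, 4.5, 16)}")
```

Under these assumptions a 7B model leaves plenty of headroom on a 16 GiB card, while a 34B model would spill into shared RAM, which is the situation the advice above warns against.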

Links mentioned:

- Nvidia LHR explained: What is a ‘Lite Hash Rate’ GPU?: Nvidia’s Lite Hash Rate technology is designed to foil Ethereum miners and get more GeForce graphics cards in the hands of gamers. Here’s what you need to know.

- Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4: no description found

- Testing Shadow PC Pro (Cloud PC) with LM Studio LLMs (AI Chatbot) and comparing to my RTX 4060 Ti PC: I have been using Chat GPT since it launched about a year ago and I’ve become skilled with prompting, but I’m still very new with running LLMs “locally”. Whe…

LM Studio ▷ #🧪-beta-releases-chat (14 messages🔥):

- New Beta Update Rolls Out: @yagilb announces LM Studio version 0.2.13 Preview - Build V3, featuring the ability to pin models and chats along with performance improvements. The update is available for download, with Mac and Windows links provided, and feedback is encouraged through a specified Discord channel.

- Leaderboard for Best LLMs Request: @kyucilow requests a leaderboard tab for the best LLMs to make selection easier. @minorello suggests raising that in a certain channel, while @heyitsyorkie and @re__x provide links to external LLM rankings: the Hugging Face LLM Leaderboard and OpenRouter Rankings.

- GUI for Server Access Desired: @_jayross expresses a wish for a web server GUI for remote access to the LM Studio server component; @goldensun3ds offers a workaround using Parsec for remote access and asks about Intel ARC GPU support in LM Studio.

- Chat Interface Issues and Error Reporting: @wolfspyre encounters a potential issue where, after ejecting a model in LMS’ chat interface and modifying settings, the chat system seems to stop responding. The problem is being investigated for bugs or unexpected behavior.

- Dependency Installation Tips Shared: @greg0403 suggests installing the blast library to address a potential issue, recommending the use of sudo apt-get install ncbi-blast+.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Yet Another LLM Leaderboard - a Hugging Face Space by mlabonne: no description found

- OpenRouter: Language models ranked and analyzed by usage across apps

LM Studio ▷ #autogen (1 messages):

lowkey9920: Try AutoGen Studio. It’s two commands to get started with a UI.

LM Studio ▷ #langchain (1 messages):

- Inquiry into Methods for Question Creation: @varelaseb expressed interest in improving a RAG system and asked for advice on methods for question creation over a dataset. Specifically, they mentioned a lack of information on Tuna despite hearing good things about it.

Mistral ▷ #general (118 messages🔥🔥):

- LangChain Lamentations: Discord users @akshay_1 and @mrdragonfox express dissatisfaction with LangChain, with the former suggesting its utility may only last a week before components need solidifying.

- Search for Old Mac Compatible Interpreters: @zhiyyang seeks a model interpreter for Mac OSX versions under 11; suggestions ensued, but no specific solution was provided.

- Mixtral 8x7B Determinism Discussed: @zaragatungabumbagumba_59827 asks whether Mixtral 8x7B is deterministic during inference, and @mrdragonfox explains that the behavior is probabilistic, influenced by an adjustable temperature parameter.

- Philosophical Perspectives on AI: A philosophy student, @zaragatungabumbagumba_59827, inquires into the philosophical implications of LLMs, receiving recommendations from @mrdragonfox and others to explore fundamental AI concepts.

- Synthetic Data Generation Secrets Stay Secret: In a discussion about dataset generation, @mrdragonfox mentions the utility of GitHub repositories like airoboros and augmentoolkit, but declines to share specific methods, highlighting synthetic data generation as a crucial income source.
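The temperature point from the determinism discussion above can be illustrated with a toy sampler. This is a generic sketch of temperature-scaled softmax sampling over made-up logits, not Mixtral’s actual decoding code; treating a temperature of 0 as greedy (argmax) decoding is a common convention assumed here, and `sample_token` is a hypothetical name.

```python
import math
import random


def sample_token(logits, temperature, rng):
    """Pick a token index from raw logits using temperature-scaled softmax."""
    if temperature <= 0:
        # Assumed convention: temperature 0 means greedy decoding (always argmax).
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


logits = [2.0, 1.5, 0.5]  # made-up scores for three candidate tokens

# Greedy decoding ignores the random source and always returns index 0 here.
print([sample_token(logits, 0.0, random.Random()) for _ in range(5)])

# With temperature 1.0 the draws vary, unless the random seed is fixed.
rng = random.Random(42)
print([sample_token(logits, 1.0, rng) for _ in range(10)])
```

Lower temperatures concentrate probability on the highest-scoring token, so output becomes more repeatable; fixing the seed makes a sampled run reproducible in this toy setting, although real inference stacks can have other sources of nondeterminism.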

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets

- GitHub - jondurbin/airoboros: Customizable implementation of the self-instruct paper.

Mistral ▷ #deployment (6 messages):

- Prompt Enhancement Queries: