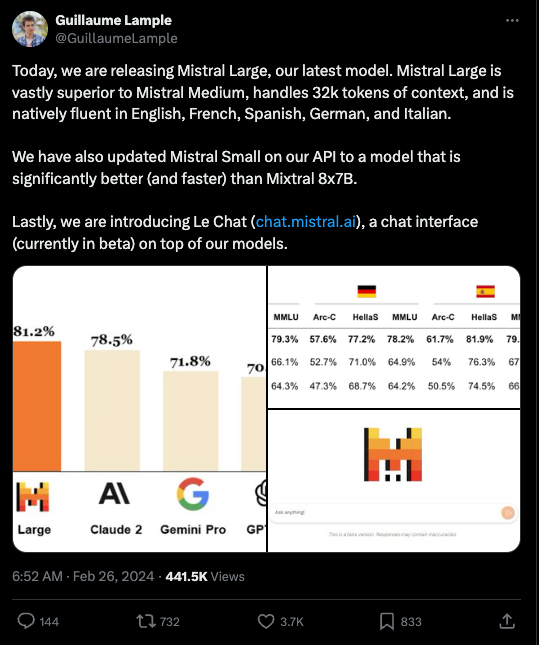

Mistral came out swinging today, announcing Mistral-Large on La Plateforme and on Azure. It trails GPT-4 by about 5 percentage points on their aggregated benchmarks:

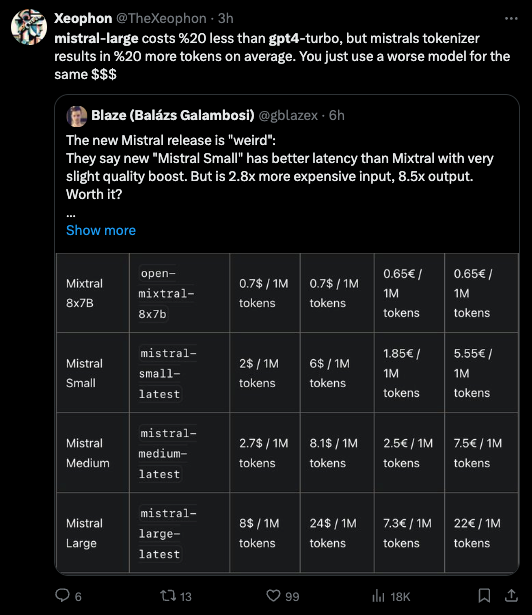

The community reception has been mildly negative.

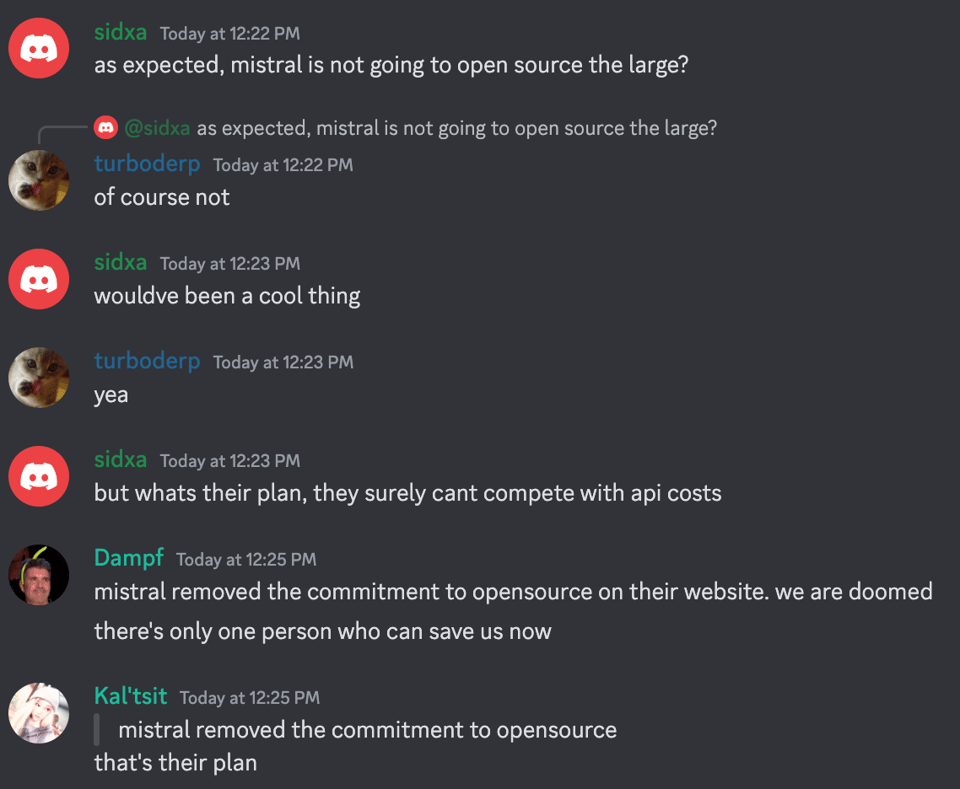

And hopes are not high for open sourcing. Notably, Mistral are also claiming that the new Mistral-Small is “significantly better” than the openly released Mixtral 8x7B.


PART 0: Summary of Summaries of Summaries

Evaluating LLMs Performance and Cost-Efficiency:

The discussion in TheBloke Discord underscores the comparative analysis between Mistral Large and GPT-4 Turbo, with Mistral Large's performance on benchmarks like MMLU falling short despite similar cost implications, suggesting a reevaluation of cost-benefit for users and developers alike.

Technical Training Hurdles and Best Practices:

Challenges in implementing DeepSpeed to avoid out-of-memory errors and the application of DPO using the DPOTrainer highlight the technical intricacies and community-driven solution sharing, illustrating the ongoing efforts to optimize LLM training efficiency and practicality.

Advancements in AI Deception for Roleplay Characters:

The dialogue on creating AI characters capable of deception, especially with the application of survival goals, reflects the nuanced exploration of AI's narrative capabilities. The use of DreamGen Opus V1 despite tokenizer and verbosity issues underscores the creative pursuits in AI storytelling.

Intricacies of Model Merging:

The discourse led by community members on merging non-homogenous models using strategies like linear interpolation and PEFT merging methods reveals a deep dive into the complexities and potential of enhancing LLMs through model integration, marking a significant area of exploration within AI development practices.
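As a rough illustration of the linear interpolation strategy mentioned above, here is a minimal sketch in plain Python. It assumes both models expose identically named and shaped parameters, represented here as lists of floats; the `lerp_merge` name and the `alpha` parameter are hypothetical and are not taken from mergekit or PEFT.

```python
from typing import Dict, List

# Hypothetical stand-in for a model's parameters: name -> flat list of weights.
Weights = Dict[str, List[float]]


def lerp_merge(a: Weights, b: Weights, alpha: float) -> Weights:
    """Return (1 - alpha) * a + alpha * b for every parameter."""
    if a.keys() != b.keys():
        raise ValueError("models must expose the same parameter names")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for {name}")
        # Element-wise interpolation between the two parameter tensors.
        merged[name] = [(1 - alpha) * x + alpha * y for x, y in zip(a[name], b[name])]
    return merged
```

The explicit name and shape checks hint at why non-homogenous models are harder to merge: plain interpolation only makes sense when every parameter lines up one to one.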

PART 1: High level Discord summaries

TheBloke Discord Summary

- Evaluating LLMs Performance and Cost-Efficiency: The performance of Mistral Large was compared unfavorably to GPT-4 Turbo by @timotheeee1, suggesting it may not be worth the similar costs given its performance on benchmarks like MMLU.

- Technical Training Hurdles and Best Practices: Issues with DeepSpeed OOM errors and discussions around practical implementations of DPO using the `DPOTrainer` from the `trl` Hugging Face library prompted sharing of insights and resources among users such as @cogbuji and @plasmator.

- Advancing AI Deception in Roleplay Characters: Dialogues on creating AI characters that can convincingly lie highlighted the improvements when applying explicit survival goals. Challenges encountered when using the DreamGen Opus V1 model were discussed, along with tokenizer issues and verbosity in AI storytelling.

- The Intricacies of Model Merging Explored: Discussions led by @jsarnecki and @maldevide delved into the complexities of merging non-homogenous models and various strategies for successful mergers, like linear interpolation. The limitations and possibilities were articulated, drawing on resources like mergekit and advancements in PEFT merging methods outlined in a Hugging Face blog post.

- Engineers Pine for the Past While Gazing Toward AI’s Future in Decompilation: Reminiscences of OllyDbg’s features by @spottyluck contrast with excitement for potential AI-assisted decompilation expressed by @mrjackspade. The suggestion to use a large volume of open-source projects for creating AI training data sets demonstrates forward-thinking for advancing AI capabilities in code reconstruction.
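For readers following the DPO discussion in the list above, the per-example objective from the DPO paper can be sketched in a few lines. This is not the `DPOTrainer` API from `trl`; it is a plain-Python illustration that assumes you already have summed log-probabilities of the chosen and rejected completions under the policy and a frozen reference model. The function name and the default `beta=0.1` are illustrative choices.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not a recommendation
) -> float:
    """Per-example DPO loss: -log(sigmoid(beta * (chosen_margin - rejected_margin)))."""
    # How much more (or less) likely each completion is under the policy
    # than under the reference model, in log space.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) == softplus(-x), computed in a numerically stable way.
    z = -logits
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the policy equals the reference model the loss is log 2, and it falls as the policy shifts probability toward the chosen completion relative to the reference.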

Mistral Discord Summary

Mistral Large Takes the Stage: The introduction of Mistral Large, a highly optimized language model with an 81.2% accuracy on MMLU and features such as multilingual capabilities and native function calling, stirred interest and discussion across the community. It’s available for use via platforms such as la Plateforme.

Technical Hurdles & Triumphs in LLM Deployment: Members shared experiences and exchanged technical advice on the challenges of deploying Mistral models, such as Mixtral 8x7B and Mistral-7B-Instruct, on various hardware setups including Tesla V100s and local machines with limited VRAM. Tips on adjusting layer sharing, precision levels, and dealing with freezing issues were exchanged, highlighting the technical nuances of high-performance model usage.

Fine-Tuning Finesse: The community discussed fine-tuning practices, emphasizing the need for experimentation and adequate data quantities, with suggestions pointing to around 4000 instances for specific tasks. There was also a focus on the right data format for fine-tuning with Mistral models, and the necessity of understanding advanced fine-tuning techniques like LoRA.

Contemplating Commercial Impacts & Open Access: Conversations around Mistral’s shift towards more business-oriented, closed-weight models like Mistral Small and Large surfaced concerns about the future of open models. However, many members are hopeful for the continued support of open model development despite big tech partnerships.

Mistral API Insights and Queries: Queries related to the Mistral API were numerous, ranging from concerns about data privacy, with confirmations that data isn’t used for model training, to functional inquiries about running Mistral on local machines without GPUs. There was also a discussion on third-party offerings and potential integrations for extending Mistral’s capabilities.

User-Driven Design and Application Ideas: The community actively shared ideas for new applications and enhancements, including the development of plugins and mobile apps that leverage Mistral. One user proposed adding a language level setting to Mistral’s Le Chat and there’s a buzz around the feature simplicity of Mistral-Next within Le Chat, which could indicate a user preference for streamlined AI products.

LM Studio Discord Summary

- Troubleshooting LM Studio’s White Window Woe: User @steve_evian encountered an issue where LM Studio launched to a white window; @heyitsyorkie recommended clearing .cache and %APPDATA%, which resolved the issue.

- Exploring Multilingual LLM Presence: @.deug queried about pre-trained multilingual LLMs with Korean support; @heyitsyorkie noted a scarcity of LLMs proficient in Korean to English translation, recommending combining LM Studio with an online translator like DeepL.

- LM Studio API Refuses to Run Headless: @muradb inquired about headless operation for LM Studio API; @heyitsyorkie clarified the current version doesn’t support it, while @krypt_lynx expressed desire for open-source and headless features, confirmed to be unavailable by @heyitsyorkie.

- Hyperparameter Evaluation Remains a Personal Choice: @0xtotem pondered the proper dataset for hyperparameter evaluation for a RAG model, with consensus leaning towards using the closest data available, as specific guidance was lacking.

- GPU Wars: Nvidia Faces Off AMD in User Preferences: The suitability of AMD’s GPUs for running LLMs was debated; users showed a general preference for Nvidia due to the ease of AI applications setup, despite speculation about AMD working on CUDA alternatives.

- A Collective Effort to Aid IQ Models in LM Studio: @drawless111 succeeded in making IQ models work and offered guidance on locating specified formats on HGF; others discussed improvements and updates to various models and tools like llama.cpp.

- Online Reinforcement Learning Without File System Access: @wolfspyre asked about LM Studio’s capability for local file system access; it was clarified that LLMs don’t have this capability, nor does LM Studio support executing commands from LLMs.

- AutoGen Anomalies Addressed with a Classic Reboot: Users shared troubleshooting tips for AutoGen errors, including reinstalling packages and the reliable “turn it off and on again” strategy, as humorously depicted in a Tenor GIF.

- Seeking Support for Langchain’s RAG Utilization: In a one-message brief mention, bigsuh.eth inquired about using RAG within Langchain via LM Studio, but no discussions or answers followed.

- Open-Interpreter Connectivity Conundrum Cracked: User @nxonxi dealt with connection errors and syntax mistakes when trying to run Open Interpreter with the `--local` flag; after troubleshooting, simple Python requests worked as a solution.

Perplexity AI Discord Summary

- Discover Daily Podcast Unveiled: Perplexity AI, in partnership with ElevenLabs, launched the Discover Daily podcast. The episodes sourced from Perplexity’s Discover feed are available on podcast.perplexity.ai, featuring daily tech, science, and culture insights using ElevenLabs’ voice technology.

- Sonar Models Spark Debate: New `sonar-small-chat` and `sonar-medium-chat` models along with their search-enhanced versions were introduced by Perplexity AI, leading to community comparisons with `pplx-70b-online`. Users reported incoherent responses from sonar models, requesting not to phase out `pplx-70b-online` due to its better performance and mentioning that fixes for sonar models were underway as per community insights and the API Updates.

- Gibberish Responses from Sonar Models Under Scrutiny: Users like @brknclock1215 suggested possible mitigation of gibberish outputs by limiting response length, which contrasted with the stable output quality of pplx models even at longer lengths. Meanwhile, API users discussed fetching model details programmatically for improving user interface selections.

- Engagements in #general Rife with AI Chat Model Discussions: The community engaged in various discussions including the retirement of Gemini in favor of possible Gemini Ultra, inconsistencies in model responses across different platforms, and leveraging Perplexity’s Pro capability for image generation.

- Assorted Inquiries and Tests in Sharing: Members in the sharing channel delved into a mix of topics like exploring user guides for Perplexity topics, questioning the novelty of Lenovo’s technology, and sharing mixed use cases leveraging the AI for personal assistance and technical inquiries.

OpenAI Discord Summary

- VPN Interference with OpenAI Services: @strang999 encountered an error using OpenAI services, which @satanhashtag attributed to potential VPN interference and suggested disabling web protection in VPN settings.

- GPT-4 Context and Captcha Challenges: @orbart and @blckreaper are frustrated with ChatGPT’s reduced memory for narrative work, suspecting a decrease in tokens processed, while @little.toadstool and @necrosystv reported cumbersome captcha tests within ChatGPT.

- Quest for Image-to-Video and Data Privacy Concerns: @sparkette was looking for a browser-based image-to-video generator and @razorbackx9x asked about AI for sorting credit report data, with @eskcanta cautioning against uploading sensitive personally identifiable information (PII).

- Navigating Custom GPT and Assistant Differences: Users noted inconsistencies between Custom GPTs and Assistant GPTs in handling formatting and markdown, particularly when generating tables or images, with advice to refer to specific API configurations.

- Anticipating Sora and Protecting Prompts: The community is curious about the capabilities of OpenAI’s Sora and discussed the feasibility of protecting custom prompts with @.dunamis. and @kyleschullerdev_51255 agreeing that complete protection isn’t possible, suggesting a layered web application for security instead.

LAION Discord Summary

- Watch Out for Crypto Scams: One post in the #learning-ml channel from @josephsweeney11 appears to be a potential scam involving making $40k in 72 hours and should be approached with extreme caution.

- Understanding Transformers’ Learning Capabilities: In the #learning-ml channel, @phryq. inquired about experiments training transformers to understand size relationships to enhance image generation, using hypothetical objects.

- New Snap Video Project Unveiled: A new project termed Snap Video was discussed in the #general channel, addressing challenges in video generation with a transformer-based model, and sharing the project link and related research paper.

- Debate Over Optimal CLIP Filtering Techniques: In the #research channel, the discussion revolved around whether CLIP filtering is suboptimal compared to image-text pair classifiers, with reference to a recently published DFN paper in the conversation.

- Gradient Precision: bfloat16 vs fp32 Debate: Conversations in the #research channel have touched on the use of autocasting on TPUs with bfloat16 gradients and compared its performance against the default fp32 gradients in PyTorch’s autocast behavior.

- Sharing of AI Research Papers and Methods: Across the channels, participants shared insights and resources on various AI research topics such as state space architecture, Transformer optimization, AI-generated text detection, and discussions around making LLMs significantly cheaper, with links to resources like Mamba-ND, among others.
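To make the bfloat16 versus fp32 item above more concrete, the sketch below emulates bfloat16 by keeping only the top 16 bits of a float32 value (sign, 8 exponent bits, 7 mantissa bits). This is a simplification under a stated assumption: real hardware typically rounds to nearest rather than truncating, and the helper name `to_bfloat16` is hypothetical.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Emulate bfloat16 by truncating a float32 to its upper 16 bits."""
    # Reinterpret the float32 bit pattern as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Drop the 16 low mantissa bits; the exponent range stays that of fp32.
    bits &= 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]
```

Near 1.0 the spacing between representable bfloat16 values is 2^-7, so a small update such as 1.0 to 1.001 disappears entirely, which is the kind of effect that matters when gradients are kept in bfloat16.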

HuggingFace Discord Summary

- Democratization of AI Hardware Sparks Intense Debate: In the discussion surrounding the potential of creating proprietary TPUs and the democratization of hardware, parallels to the car and RAM industries elicited skepticism regarding tech promises from companies like Samsung. The importance of such advancements was underscored, given their impact on AI capabilities and access.

- Towards Accessible and Practical AI Solutions: Several initiatives aim to make AI tools more accessible and practical for various applications. They range from Galaxy AI, which offers free API access to models such as GPT-4, GPT-3.5, and Galaxy AI’s Gemini Pro, to surya, an OCR and line detection project that supports over 90 languages, as explained in this GitHub repository.

- Neural Network Innovations & Model Finetuning Challenges: From introducing the support for WavLMForXVector in browsers to reviewing Peft’s library for new merging methods for LoRA, there’s a clear focus on model deployment and improving AI performance. Finetuning difficulties, whether with Flan T5 producing incoherent output or a zigzag loss graph in Qwen1.5-0.5B, remain pivotal points of discussion.

- Cross-disciplinary AI Projects Garner Attention: Projects that integrate AI with specific disciplines, such as Unburn Toys, an open-source AI toolbox, or the TTS Arena for comparing TTS models, signify a cross-functional approach to AI development. This is complemented by the release of datasets for niche applications like philosophical Q&A, available on Hugging Face here.

- Knowledge Sharing and Collaborative Growth in AI Communities: Whether it’s a query on imitation learning for robotics, the use of AnimeBackgroundGAN, the issues related to multi-language OCR, or the Japanese Stable Diffusion model’s approach to training in a new language, it’s evident that AI communities serve as invaluable forums for sharing knowledge, solving problems, and fostering collective progress in the field.

Eleuther Discord Summary

- Batching Blues with GPT-4: @rwamit raised concerns about increasing processing time from 2s to 60s per iteration when implementing batching to query GPT-4 using langchain wrapper, ballooning the task from 5 to 96 hours for 5-6k records.

- Intrigue in Initialization: A particular code piece in Gemma’s PyTorch implementation involving RMSNorm sparked discussions about the significance of the addition of +1 to the normalization process.

- EfficientNet’s Efficacy: A debate arose over EfficientNet’s merits, with @vapalus defending its use in segmentation tasks despite criticism from @fern.bear regarding its marketing versus performance.

- Mistral Large Debuts: The release of Mistral Large was announced, a model acclaimed for its strong text generation performance and availability on la Plateforme and Azure. Check out Mistral Large for additional insights.

- DPO Paper and SFT: Clarity was sought by @staticpunch about `model_ref` initialization in DPO, with confirmation that Supervised Fine-Tuning (SFT) on preferred completions should precede DPO, as discussed in the DPO paper.

- Diving Deeper into GRUs: @mrgonao showed curiosity about why gated units like GRUs are termed as such, yet explanations regarding their etymology remained elusive within the channel.

- The Search for Smarter Search: The “Searchformer” paper Beyond A*: Better Planning with Transformers via Search Dynamics Bootstrapping describes how a Transformer-based model can outperform traditional A* methods in solving puzzles, offering an innovative approach to search problems.

- RLHF and the Simplicity Debate: A paper advocating for simpler REINFORCE-style optimization over Proximal Policy Optimization (PPO) for RLHF triggered discussions on the efficiency of fundamental methods in RL for language models. The paper is accessible here.

- Watermarking Frameworks Face-off: The landscape of text watermarking for large language models was shared, featuring techniques for embedding detectable signals in generated text and analyzing the robustness of such watermarks.

- Tales of GPT-NeoX and Python: Amidst hesitation about upgrading to Python 3.10, conversations in development veered towards preferences for a custom training loop over GPT-NeoX, showing an active engagement with the finer points of AI development optimization.

- Multilingual Matters in Tokenization: Queries about optimizing the Mistral tokenizer for better multilingual representation underscored ongoing efforts to enhance language model capabilities beyond English, indicating a focus on global applicability.
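The RMSNorm item in the list above is easier to reason about with a small sketch. The version below is a plain-Python illustration, not Gemma’s code: it assumes the `+1` is added to the learned scale before multiplying, so that a scale initialised to zeros behaves like an identity gain. The `add_unit_offset` flag and the `eps` default are illustrative names and values.

```python
import math
from typing import List


def rms_norm(
    x: List[float],
    weight: List[float],
    eps: float = 1e-6,
    add_unit_offset: bool = True,  # assumption: the "+1" applies to the scale
) -> List[float]:
    """Scale x by 1 / RMS(x), then by (1 + weight) or by weight."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    # With the offset, weight == 0 means "no rescaling" instead of "zero output".
    scale = [(1.0 + w) if add_unit_offset else w for w in weight]
    return [v * inv_rms * s for v, s in zip(x, scale)]
```

Under this reading, the offset mostly changes what the initial value of the weight means, and with it how weight decay pulls on the effective gain.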

LlamaIndex Discord Summary

- Create-llama Eases Full-Stack Development: The newest create-llama release integrates LlamaPack, streamlining the construction of full-stack web apps through the inclusion of advanced RAG concepts with minimal coding. The announcement was shared in a tweet by @llama_index.

- Counselor Copilot Leverages Advanced RAG: Counselor Copilot project, highlighted in a tweet, distinguishes itself by utilizing advanced RAG to assist crisis counselors, showcasing a use case as a co-pilot rather than a basic chatbot.

- RAG Retrieval Enhanced by Summaries: To improve RAG retrieval, a technique using sub-document summaries helps tackle global concept awareness problems that arise from naive chunking. This approach is detailed in a tweet discussing the consequential boost in contextual awareness of each chunk.

- LlamaParse Masters Complex PDF Parsing: LlamaParse has been introduced as a powerful tool for parsing PDFs with complex tables and figures, crucial for high-quality RAG applications. Accurate table representations aid the LLM in providing correct answers, as stated in a tweet.

- Challenges with Kafka’s Protagonists in AI: In a discussion regarding generating a book review for Kafka’s “Metamorphosis,” @daguilaraguilar faces trouble with the AI incorrectly highlighting “Grete” as the protagonist instead of “Mr. Samsa,” referencing their code.

- Insights into Financial Document Analysis and Context Management: SEC Insights brings advanced capabilities for analyzing financial documents, and there is a call within the community for benchmarks related to best practices in context management for large-window LLMs such as GPT-4 turbo and Gemini 1.5.

Latent Space Discord Summary

Sora’s Consistency Questioned: In a correction to a WSJ video, @swyxio pointed out that OpenAI’s Sora maintains consistency over more than 1-minute videos by interpolating from a start image.

NVIDIA’s GEARing Up: NVIDIA announced a new research group, GEAR (Generalist Embodied Agent Research), co-founded by Dr. Jim Fan, focusing on autonomous machines and general-purpose AI.

AI-Generated Podcasts Hit the Airwaves: Perplexity has launched an AI-generated podcast, drawing content from their Discover feed and employing ElevenLabs’ voices for narration.

One Line of AI Code with Cloudflare: Cloudflare’s new AI Gateway has been introduced, featuring easy integration via a single line of code for AI analytics and insights.

AI Takes on Data Analysis with GPT-4-ada-v2: A new tool, ChatGPT Data Analysis V2, enhances data analysis by offering targeted replies and a data grid overlay editor, possibly implementing interactive charts and leveraging gpt-4-ada-v2.

LLM Paper Club T5 Session Recap: A recent LLM Paper Club session led by @bryanblackbee dissected the T5 paper with discussions encapsulated in shared Notion notes. Open inquiries included model vocabulary, fine-tuning processes, and architecture differences for NLP tasks.

Local Model Enthusiasts Convene in AI in Action Club: The “AI in Action” event highlighted local model exploration, tooling discussions for local AI models, and references to model fine-tuning with LoRAs deploying tools like ComfyUI. The Latent Space Final Frontiers event was announced, inviting teams to push the boundaries of AI with an application link here.

OpenAccess AI Collective (axolotl) Discord Summary

- Gradient Clipping Woes & DeepSpeed Query: An issue with gradient clipping set to 0.3 was discussed with suspicions of temporary spikes; meanwhile, a GitHub issue about HuggingFace’s Trainer supporting DeepSpeed Stage 3 incited feedback on usage and updates. Axolotl’s cache clearing techniques were also shared, using `huggingface-cli delete-cache`.

- Strategic Shifts at Mistral AI?: Discussions surfaced regarding a strategic partnership between Microsoft and Mistral AI, centering on potential implications for open-source models and the commercial direction of Mistral AI. Links to a Twitter post and news article were shared for further insight.

- Ease of Access with Axolotl’s Auto-Install: The Axolotl project saw improvements with the introduction of `auto_install.sh` to simplify installations, showing commitment to non-Python developer support. A Twitter post sought community support for the CUDA mode series with the potential assistance of Jeremy Howard.

- GPUs, Dockers, and Newbies: Technical issues regarding GPUs, such as long training times and high loss, Docker container complications, and the desire for a beginner-friendly Axolotl tutorial were prominent. Hugging Face’s reported checkpoint save error issue #29157 and Axolotl’s GitHub #1320 were among the key references.

- Community Highlights Korean Expansion & RAG Features: A fine-tuned phi-2 model without a model card was announced, EEVE-Korean models were touted for extended Korean vocabulary, and R2R Framework for RAG system development was introduced. The supporting arXiv technical report and various Hugging Face models were provided to the community.

- Runpod Hits a DNS Hitch: A NameResolutionError was reported on Runpod when trying to reach ‘huggingface.co’, suggesting DNS resolution issues that possibly involve proxy settings.

CUDA MODE Discord Summary

- CUDA Under Fire: Computing legend Jim Keller criticized NVIDIA’s CUDA architecture in a Tom’s Hardware article, suggesting it lacks elegance and is cobbled together. Meanwhile, the introduction of ZLUDA, which enables CUDA code to run on AMD and Intel GPUs, was open-sourced with hopes to challenge NVIDIA’s AI dominance (GitHub link).

- Gearing Up with GPUs: Debates surfaced regarding GPU choices for AI with the 4060 ti being the cheapest 16GB consumer GPU and the 3090 offering 24GB VRAM as a stronger alternative for LLM tasks. Discussions were also vibrant around second-hand GPU buying strategies and potential technical remedies when issues arise.

- Quantized Computation Conversations: Clarity surfaced on how computations in quantized models maintain accuracy, and discussions around implementing efficient CUDA kernels through `torch.compile` by detecting patterns were prominent. The speed of CUDA kernel compilation was also a topic, with methods to reduce compile times from over 30 seconds to under 2 seconds proposed (repository link).

- Triton Tinkering: Interest piqued in Triton, a tool for enabling Jax support via Pallas and its comparison to CUDA for multi-GPU/node execution. There were calls for experts to explain the lower-level workings of Triton, its foundation in LLVM and MLIR, and to create benchmarks for its quantized matmul kernel.

- Flash Attention Finessed: Within ring attention discussions, a `zigzag_ring_flash_attn_varlen_qkvpacked_func` implementation showed speed improvements. A Hugging Face document detailed memory efficiency benefits (Flash Attention Visual), and benchmarks indicated a 20% speed up over classical flash attention (benchmark link).

- CMU’s Paper on Efficient LLM Serving: A paper from CMU on efficient methods in deploying generative LLMs was shared, titled “Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems” (arXiv link), surveying over 150 works on techniques including non-autoregressive generation and local attention variants.

- Learning Efficiency Through MIT: An MIT course on efficient AI computing was unveiled, covering model compression, pruning, and quantization, providing hands-on experience with models like LLaMa 2, and touching on quantum machine learning topics (course link).

- CUDA-MODE Lecture Announcements and Learnings: Lecture 7 on Quantization titled Quantization CUDA vs Triton was announced, emphasizing the discourse on efficient techniques in AI computations with quantization at the forefront. Lecture content was supplemented by YouTube videos and easily accessible slide presentations, fostering continued education in the community (YouTube Lecture 6, Lecture 7).

- Job Prospects and Queries: Nvidia was confirmed to be looking for CUDA and C++ experts, inviting applicants to DM their CV for JobID: JR1968004. Questions around hiring status for companies like Mistral were floated, underlining the employment buzz within the AI engineering sector.

LangChain AI Discord Summary

- Exploring Function Calls in AI Models: Engineer @saita_ma_ is looking for ways to execute function calls with local models such as OpenHermes, inspired by what CrewAI achieved. Meanwhile, @kenwu_ shared a Google Colab seeking assistance on agent and function calling using Cohere API and LangChain.

- LangChain Integration in Various Projects: The creation of a personalized chatbot implementing OpenAI, Qdrant DB, and Langchain JS/TS SDK was shared by @deadmanabir, while @david1542 introduced Merlinn, a machine learning tool to support on-call engineers. Furthermore, @edartru. offered Langchain-rust, a crate that allows Rust developers to use large language models in programming.

- Tutorial Resources Promote DIY AI Projects: A recent YouTube tutorial shows viewers how to create a ChatGPT-like UI using ChainLit, LangChain, Ollama, & Gemma. @rito3281 wrote about using LLMs for finance analysis in the insurance industry, and @tarikkaoutar posted a video on creating a multi-agent application involving LangGraph.

- Sarcasm Detection and Timeout Extensions in LLMs: There was a suggestion to tag phrases with “sarcasm” for better LLM detection post-fine-tuning, but further discussion on the mechanics was not provided. A query about extending the default 900-second timeout was raised, yet no subsequent solutions or elaborations were found.

- Emerging Tools and Use Cases Explored by Developers: @solo78 invites collaborative discussions on AI implementations in the insurance sector’s finance function. An AI-powered resume optimizer that helped secure interviews at tech companies was introduced by @eyeamansh.

Datasette - LLM (@SimonW) Discord Summary

- ChatGPT Multilingual Mishaps: Users noted that chatgpt-3.5-turbo sometimes mistranslates document titles, with one instance changing “Taking Advantage of the Internet” to “Sacándole Provecho a Internet”. The suggested workaround is to use a system prompt specifying “Always use English” to prevent such language detection errors.

- Prompt Crafting Nostalgia & Fixes: @tariqali discussed the benefits of old school prompt crafting for better control in light of chatbot “time out” issues. Meanwhile, @derekpwillis and @simonw conversed about devcontainer configuration, with @simonw recommending the addition of `llm models` to the `setup.sh` script, which @derekpwillis implemented to solve certain bugs.

- Aspirations for LargeWorldModel on LLM: There is interest in running the LargeWorldModel on LLM, possibly leveraging GPU instances for PyTorch models, as discussed by @simonw. He referenced the models’ availability on the Hugging Face repository.

- Groq Inference Plugin Debuts: @angerman. released a Groq inference plugin, llm-groq, with the community showing support and curiosity regarding its performance capabilities.

- llm-groq Plugin Hits PyPI: Following advice from @0xgrrr, @angerman. published his llm-groq plugin to PyPI, facilitating easier installation via `llm install`. He shared his publishing experience and drew comparisons between Haskell and Python community practices.
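The system-prompt workaround from the first item in this list can be sketched as a message list in the common role/content chat format. Only the “Always use English” instruction comes from the discussion; the `build_messages` helper and the wording of the user turn are hypothetical, and no API call is made here.

```python
from typing import Dict, List


def build_messages(document_title: str) -> List[Dict[str, str]]:
    """Pin the reply language with a system message before the user request."""
    return [
        # Workaround quoted in the discussion: state the language explicitly.
        {"role": "system", "content": "Always use English."},
        # Hypothetical user turn that carries the document title.
        {"role": "user", "content": f"Summarize the document titled: {document_title}"},
    ]
```

For example, `build_messages("Taking Advantage of the Internet")` yields a two-message list whose first entry carries the language instruction.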

LLM Perf Enthusiasts AI Discord Summary

- Bold Claims on AI Hallucination Footprint: Richard Socher’s tweet hinting at possible solutions for AI hallucinations sparked discussions around embedding models and validation mechanisms to improve AI’s factual accuracy.

- Introducing a New Wikipedia: Globe Explorer, a tool leveraging GPT-4 to generate customizable Wikipedia-style pages, has launched and gone viral, with a drive to top Product Hunt’s list with additional Product Hunt details.

- FireFunction V1 Ignites Excitement: The release of FireFunction V1 by @lqiao promises GPT-4-level outputs with faster and more efficacious function calling, announced along with useful new structured output modes such as JSON, discussed with interest among function calling approaches, as detailed in FireFunction’s blog post.

- Fine-Tuning Adventures with gpt-4-turbo: The query on embedding techniques for improved data extraction and classification tasks using gpt-4-turbo for 1-shot learning stirred interest in effective fine-tuning practices.

- Anki’s AI Flashcard Revolution Still Pending: The integration of GPT-4 for producing Anki flashcards revealed successes and limitations, such as verbose outputs and challenges with visual content integration, featured in an analytical Tweet by Niccolò Zanichelli.

- Peering into Feather’s purpose: Feather OpenAI’s icon, hinting at a writing tool, along with historical snapshots and its significance in hiring for SME data labeling and coding annotation, garnered interest, alongside advancements like the “gpt-4-ada-v2” with features enhancing data analysis capabilities, as discussed in Semafor’s article and Tibor Blaho’s tweet.

DiscoResearch Discord Summary

- Callbacks Feature in Hugging Face Trainer: Sebastian Bodza discussed using custom callbacks with the Hugging Face trainer, emphasizing that while currently exclusive to PyTorch, they offer “read-only” control through the `TrainerControl` interface.

- Benchmarks Emerge in German Emotional Intelligence: EQ-Bench now supports the German language, courtesy of updates from Calytrix, with `gpt-4-1106-preview` topping the German EQ-Bench preliminary scores, details found at the EQ-Bench GitHub repository. However, concerns were raised about the validity of the translated benchmarks, suggesting emotional understanding nuances might be lost, potentially skewing results due to English-centric reasoning patterns.

- Misgivings on Probability-Based LLM Evaluations: Bjoernp recommended an arXiv paper revealing the inherent limitations in probability-based evaluation methods for LLMs, specifically regarding multiple-choice questions and their alignment with generation-based predictions.

- Introducing Layered Sentence Transformers: Johann Hartmann unveiled Matryoshka Embeddings via a Hugging Face blog post, detailing their advantages over regular embeddings, and confirmed their integration into the Sentence Transformers library, enhancing the toolkit for users.

- Clarity on RAG Approach for German Dataset: Johann Hartmann and Philip May deliberated the evaluation methodology for a German retrieval context understanding dataset, with May clarifying that it’s crucial to assess if an LLM can identify relevant information in multiple retrieved contexts. The dataset is a work-in-progress and currently lacks public accessibility.

AI Engineer Foundation Discord Summary

- Hackathon Team Formation Heats Up: `@reydelplatanos` and `@hiro.saxophone` teamed up for an upcoming hackathon, with `@hiro.saxophone` bringing experience in ML engineering, particularly in multimodal RAG. Meanwhile, `@ryznerf.` also showed interest in joining a hackathon team, emphasizing eagerness to participate.

- Collaboration Across Disciplines: Back-end developer `@reydelplatanos` has partnered with ML engineer `@hiro.saxophone` for the hackathon, representing a fusion of backend and machine learning skills in their new team.

- Hackathon Registration Rush: `@silverpiranha` and `@jamthewallfacer` discussed registration for an event, with `@silverpiranha` eventually confirming successful registration and suggesting a potential team-up.

- Drones Managed by Code: `@.yosun` introduced a hackathon project idea about controlling drones through function calls, referencing a method from the OpenAI Cookbook, and shared a code snippet as an illustration.
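The snippet that was shared is not reproduced in this summary. As a rough sketch of the general idea only, a model's function call can be treated as JSON naming a function and its arguments, which the application routes to real code. The drone functions `takeoff` and `land`, the `TOOLS` table, and the JSON shape are all hypothetical.

```python
import json

# Hypothetical drone actions; a real project would call a drone SDK here.
def takeoff(altitude_m: float) -> str:
    return f"taking off to {altitude_m} m"

def land() -> str:
    return "landing"

# Table of functions the model is allowed to call, keyed by name.
TOOLS = {"takeoff": takeoff, "land": land}

def dispatch(tool_call_json: str) -> str:
    """Parse a JSON function call and run the matching Python function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown function: {name}")
    return TOOLS[name](**call.get("arguments", {}))

# Assumed shape of a model-produced call, for illustration.
print(dispatch('{"name": "takeoff", "arguments": {"altitude_m": 10}}'))
```

Keeping an explicit allow-list like `TOOLS` means the model can only trigger actions the application has chosen to expose.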

Alignment Lab AI Discord Summary

- Gemma-7B Gets Conversation Manners: `@imonenext` has integrated the special tokens `<start_of_turn>` and `<end_of_turn>` into the Gemma-7B model to facilitate turn-taking in conversational AI. The model with these enhancements is now available for training and fine-tuning on Hugging Face.
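As an illustration of how such turn tokens are typically used, the sketch below wraps each message in `<start_of_turn>` and `<end_of_turn>` and leaves an open turn for the model to complete. The role names `user` and `model` and the exact newline placement follow Gemma's published chat format as an assumption; the specific model mentioned above may expect something slightly different.

```python
START = "<start_of_turn>"
END = "<end_of_turn>"

def format_gemma_turns(messages):
    """Render (role, text) pairs as turn-delimited text, ending with an
    open 'model' turn so that generation continues from there."""
    parts = [f"{START}{role}\n{text}{END}\n" for role, text in messages]
    parts.append(f"{START}model\n")
    return "".join(parts)

prompt = format_gemma_turns([
    ("user", "What is a callback?"),
    ("model", "A function that is invoked when an event occurs."),
    ("user", "Give one example."),
])
print(prompt)
```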

Skunkworks AI Discord Summary

- Seeding Insights for Stochastic Precision: `@stereoplegic` highlighted an article on the significance of random seeds in deep learning, particularly for Python and PyTorch users. The article, Random Numbers in Deep Learning; Python & the PyTorch Library, was lauded as a “shockingly good read” for those keen to explore or fine-tune the underlying mechanics of randomness in model training.
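The article's own examples are not reproduced here. As a small standard-library sketch of why seeds matter, the function below shuffles a "batch order" with a seeded generator; the name `sample_batch_order` is made up for illustration. In PyTorch the analogous step is seeding with `torch.manual_seed`.

```python
import random

def sample_batch_order(seed, n=8):
    """Return a shuffled ordering of n items from a generator seeded with
    `seed`; the same seed always yields the same ordering."""
    rng = random.Random(seed)  # private generator, no global state touched
    order = list(range(n))
    rng.shuffle(order)
    return order

run_1 = sample_batch_order(seed=1234)
run_2 = sample_batch_order(seed=1234)
print(run_1)
print(run_1 == run_2)  # identical seeds reproduce the shuffle
```

Using a private `random.Random` instance rather than the module-level functions keeps one component's seeding from silently changing the random stream of another.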

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1013 messages🔥🔥🔥):

- Mistral Large Not Worth the Performance?: `@timotheeee1` suggested that Mistral Large, at a similar cost to GPT-4 Turbo, is not justifiable given its slightly inferior performance on benchmarks like MMLU. Its cost-effectiveness is questioned.

- Megatokens Make Their Debut: `@itsme9316` humorously coined the term “megatoken” during a discussion about token costs, sparking a series of light-hearted responses including “lol” from several users such as `@technotech`.

- The Great Model Debate: A lengthy debate ensued regarding whether LLMs can truly “reason.” Users like `@kalomaze` and `@kaltcit` exchanged views on whether language models can perform reasoning or whether what they exhibit can only be termed quasi-reasoning.

- Open Source Hopes Dashed for Mistral Large?: Dialogue surrounding Mistral’s commitment to open sourcing its large models showed frustration, with users like `_dampf` lamenting the change and expressing a lack of surprise at the news.

- Experiencing Technical Difficulties: Users like `@kaltcit` reported issues with models like academiccat dpo, experiencing errors and segfaults during measurement, hinting at instability or unpredictability in some AI models.

Links mentioned:

- Cody - Sourcegraph: no description found

- No GIF - No Nope Cat - Discover & Share GIFs: Click to view the GIF

- Supermaven: no description found

- Cat Cat Jumping GIF - Cat Cat Jumping Cat Excited - Discover & Share GIFs: Click to view the GIF

- Mark Zuckerberg Last Breath Sans GIF - Mark Zuckerberg Last Breath Sans Last Breath - Discover & Share GIFs: Click to view the GIF

- Neural Text Generation With Unlikelihood Training: Neural text generation is a key tool in natural language applications, but it is well known there are major problems at its core. In particular, standard likelihood training and decoding leads to…

- OpenCodeInterpreter: no description found

- mobiuslabsgmbh/aanaphi2-v0.1 · Hugging Face: no description found

- LongRoPE: Like 👍. Comment 💬. Subscribe 🟥.🏘 Discord: https://discord.gg/pPAFwndTJdhttps://github.com/hu-po/docs/blob/main/2024.02.25.longrope/main.mdhttps://arxiv.o…

- Cat Kitten GIF - Cat Kitten Speech Bubble - Discover & Share GIFs: Click to view the GIF

- Vampire Cat Cat Eating Box GIF - Vampire Cat Cat Eating Box Cat Box - Discover & Share GIFs: Click to view the GIF

- Welcome Gemma - Google’s new open LLM: no description found

- 2021 Texas power crisis - Wikipedia: no description found

- MaxRiven - Turn It Up | Official Music Video | AI: Thank for watching !Watch in HD and enjoy it !If you liked the video please share with your friends !►Stream & Download: https://fanlink.to/MXRVNturnitupThan…

- GitHub - Dicklesworthstone/the_lighthill_debate_on_ai: A Full Transcript of the Lighthill Debate on AI from 1973, with Introductory Remarks: A Full Transcript of the Lighthill Debate on AI from 1973, with Introductory Remarks - Dicklesworthstone/the_lighthill_debate_on_ai

- Uglyspeckles - Carrot Cake Soul Shuffling Incident SFX: From The House in Fata Morgana fandisc: Carrot Cake Jinkaku Shuffle JikenThis soundtrack is owned by Novectacle (Vegetacle)

- GitHub - Azure/PyRIT: The Python Risk Identification Tool for generative AI (PyRIT) is an open access automation framework to empower security professionals and machine learning engineers to proactively find risks in their generative AI systems.: The Python Risk Identification Tool for generative AI (PyRIT) is an open access automation framework to empower security professionals and machine learning engineers to proactively find risks in th…

- Announcing Microsoft’s open automation framework to red team generative AI Systems | Microsoft Security Blog: Read about Microsoft’s new open automation framework, PyRIT, to empower security professionals and machine learning engineers to proactively find risks in their generative AI systems.

- The Strange Evolution of Artificial Intelligence: Center for the Future Mind presents Scott Aaronson speakingn at Mindfest 2024. Full episode will go live tomorrow Tuesday February 27 at 12PM EST.NOTE: The p…

TheBloke ▷ #characters-roleplay-stories (275 messages🔥🔥):

- Achieving Deception in AI: `@superking__` and others discussed the challenges of programming a character to lie convincingly, noting that larger models like Mixtral perform better with explicit goals such as “survive at any cost”.

- The Tale of a Shapeshifting Android: Despite `@superking__`’s efforts to create a character card for an android hiding its identity, the AI blew its cover until tasked with the goal “survive at any cost”, which led to an improvement in secretive behavior.

- Opus V1 Models and Technical Challenges: Participants such as `@dreamgen` and `@kquant` navigated issues around DreamGen Opus V1, tokenizer problems, and optimal model settings for better performance.

- Model Issues with Verbosity and Looping: Several users, including `@superking__` and `@dreamgen`, discussed instances where the AI would write unnecessarily long sentences or enter looping patterns, sharing experiences and potential fixes.

- Discussion on Character Roleplay: `@keyboardking` successfully created a character card that managed a gender disguise narrative, showcasing current AI capabilities in managing nuanced roleplay scenarios.

Links mentioned:

- Kquant03/NurseButtercup-4x7B-bf16 · Hugging Face: no description found

- maeeeeee/maid-yuzu-v8-alter-3.7bpw-exl2 · Hugging Face: no description found

- Chub: Find, share, modify, convert, and version control characters and other data for conversational large language models (LLMs). Previously/AKA Character Hub, CharacterHub, CharHub, CharaHub, Char Hub.

- dreamgen/opus-v1-34b-awq · Hugging Face: no description found

- Angry Bender Mad GIF - Angry Bender Mad Angry - Discover & Share GIFs: Click to view the GIF

- #0SeptimusFebruary 24, 2024 3:31 PMWhat tale do you wish to hear?# - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

TheBloke ▷ #training-and-fine-tuning (71 messages🔥🔥):

- Seeking DPO Implementation Advice: `@cogbuji` is on the hunt for a practical implementation of DPO (Direct Preference Optimization) and considers using the `DPOTrainer` from the Hugging Face `trl` library as a reference. Various members, such as `@dirtytigerx`, engage in the discussion, offering insights and resources.

- Fine-Tuning Versus Training Dilemmas: `@cognitivetech` expresses concerns about the efficiency of fine-tuning full LLMs and the potential loss of information. The user considers using `gguf` for fine-tuning and also explores leveraging the official QA-Lora implementation for instruct fine-tuning.

- Dealing with DeepSpeed OOM Issues: `@plasmator` struggles to set up DeepSpeed Zero due to out-of-memory errors, despite calculations indicating sufficient resources.

- Storytelling LLMs and Comic Book Training Set: `@hellblazer.666` inquires about how to train smaller models for storytelling, specifically using comic book texts as a dataset. They also share the Augmentoolkit repository as a potential tool for converting their data into a suitable format for training.

- Training Methods and Model Selection: In a comprehensive discussion, `@dirtytigerx` and `@hellblazer.666` discuss various training methods for LLMs, including full fine-tuning, PEFT techniques like LoRA, and the use of retrieval-augmented generation (RAG). They conclude that starting with a base model fine-tuned for storytelling might be the best approach for `@hellblazer.666`’s project.
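For readers following the DPO thread above, here is a minimal sketch of the per-example DPO objective as it is usually written, on plain floats. It is not the `DPOTrainer` code; the function name `dpo_loss`, the argument names, and the default `beta` are illustrative assumptions, and the inputs are summed log-probabilities of the chosen and rejected responses under the policy and under a frozen reference model.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss: -log sigmoid(beta * margin), where the margin
    is how much more the policy favors the chosen response over the
    rejected one, relative to the reference model."""
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(m)) is equal to log(1 + exp(-m))
    return math.log1p(math.exp(-margin))

# Policy identical to the reference: the loss is log(2).
print(dpo_loss(-5.0, -6.0, -5.0, -6.0))
# Policy has shifted probability toward the chosen response: lower loss.
print(dpo_loss(-4.0, -7.0, -5.0, -6.0))
```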

Links mentioned:

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- trl/trl/trainer/dpo_trainer.py at main · huggingface/trl: Train transformer language models with reinforcement learning. - huggingface/trl

TheBloke ▷ #model-merging (37 messages🔥):

- Challenges in Novel Model Merging: User `@jsarnecki` inquired about merging non-homogenous models like llama-2-13b and Mistral-7b using mergekit, which `@maldevide` confirmed is not possible. The discussion evolved towards exploring merging techniques that could help `@jsarnecki` reach their objective.

- Optimizing for Use-Cases: `@maldevide` prompted `@jsarnecki` to consider whether they were experimenting for capability discovery or targeting specific use-cases, further providing insights into successful merges among models on Hugging Face.

- Techniques for Merging Homogenous Models: `@alphaatlas1` mentioned git-rebasin as a potential option for merging models with identical size/layout and discussed limitations such as the lack of a good technique for merging different base models.

- Advanced Merging Tactics Discussed: The conversation shifted to various merging strategies, including linear interpolation, additive merging, and stochastic sampling, as shared by `@maldevide`. The complexity of model merging techniques and their applicability to different model types was highlighted.

- DARE Ties Merging Insights: Diffusion models were noted to have challenges with DARE ties merging, as mentioned by `@alphaatlas1`, who also referenced a particular Hugging Face blog post. However, `@maldevide` shared a successful experience, pointing to a different implementation on GitHub.
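Of the strategies listed above, linear interpolation is the simplest to sketch. The example below uses plain dictionaries of float lists in place of real checkpoints; `lerp_merge` and the toy weights are hypothetical, and this is not how mergekit or PEFT implement merging. The shape checks also show why models with different layouts cannot be merged this way.

```python
def lerp_merge(state_a, state_b, alpha=0.5):
    """Linearly interpolate two models' parameters:
    merged = (1 - alpha) * a + alpha * b, name by name."""
    if state_a.keys() != state_b.keys():
        raise ValueError("models must share the same parameter names")
    merged = {}
    for name in state_a:
        wa, wb = state_a[name], state_b[name]
        if len(wa) != len(wb):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [(1 - alpha) * x + alpha * y for x, y in zip(wa, wb)]
    return merged

# Toy "checkpoints" with identical layout (made-up values).
model_a = {"layer0.weight": [0.0, 2.0], "layer0.bias": [1.0]}
model_b = {"layer0.weight": [2.0, 4.0], "layer0.bias": [3.0]}

print(lerp_merge(model_a, model_b, alpha=0.5))
```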

Links mentioned:

- 🤗 PEFT welcomes new merging methods: no description found

- Daring Hydra - v1.2 | Stable Diffusion Checkpoint | Civitai: Daring Hydra is an attempt at a lifelike realistic model. v1.2 is actually the result of four different attempts to improve from v1.1, which is pre…

- GitHub - 54rt1n/ComfyUI-DareMerge: ComfyUI powertools for SD1.5 and SDXL model merging: ComfyUI powertools for SD1.5 and SDXL model merging - 54rt1n/ComfyUI-DareMerge

- GitHub - s1dlx/meh: Merging Execution Helper: Merging Execution Helper. Contribute to s1dlx/meh development by creating an account on GitHub.

TheBloke ▷ #coding (6 messages):

- DotPeek Scope Clarification: `@al_lansley` inquired about the languages that DotPeek supports, and `@spottyluck` confirmed it’s limited to just C#.

- Nostalgia for OllyDbg’s Features: `@spottyluck` lamented the lack of a true successor to OllyDbg, particularly its “animate into” feature, noting that its limitations with 64-bit render it nearly obsolete.

- Eager Anticipation for AI in Decompilation: `@mrjackspade` expressed excitement for the potential of AI-assisted decompilation to simplify the reverse-engineering process.

- Frustration with Reconstructing Code: `@mrjackspade` shared their frustration over manually reconstructing obfuscated decompiled code, hinting at the tedious nature of the process.

- Idea for AI Training Data Sets: `@mrjackspade` suggested an approach to creating training data sets for AI decompilation by using a large volume of open-source projects and their outputs.

Mistral ▷ #general (1198 messages🔥🔥🔥):

- Mistral Large vs Next Performance: Users like `@yasserrmd` and `@chrunt` compared the capabilities of Mistral Large and Next. Large seemingly outperforms Next in certain benchmarks while Next is favored for its concise responses.

- Hardware Requirements for AI: Discussions led by `@mrdragonfox` and `@tu4m01l` highlighted the impracticality of running large AI models like Mistral Large on CPUs, suggesting the use of APIs for efficiency.

- Corporate Partnerships and Open Models: Concerns were voiced by users such as `@reguile` about the future of open models following the Microsoft partnership with Mistral. Some, like `@foxlays`, hope Mistral continues to support open model development.

- Speculations on GPT-3.5 Turbo Parameters: Debates around the actual size of GPT-3.5 Turbo were stirred by a redacted Microsoft paper, with `@i_am_dom` and `@lyrcaxis` discussing its validity and efficiency.

- Mistral's Market Positioning and Strategy: `@blacksummer99` shared insights into Mistral's efforts to differentiate from OpenAI and the conceived positioning as a European leader in the AI field.

Links mentioned:

- Pricing and rate limits | Mistral AI Large Language Models: Pay-as-you-go

- GOODY-2 | The world’s most responsible AI model: Introducing a new AI model with next-gen ethical alignment. Chat now.

- Endpoints and benchmarks | Mistral AI Large Language Models: We provide five different API endpoints to serve our generative models with different price/performance tradeoffs and one embedding endpoint for our embedding model.

- Au Large: Mistral Large is our flagship model, with top-tier reasoning capacities. It is also available on Azure.

- AI Playground: Run your prompts across mutiple models and scenarios: Compare and evaluate multiple AI model completions on different prompts and model parameters

- Cat Bruh GIF - Cat Bruh Annoyed - Discover & Share GIFs: Click to view the GIF

- CRYNYL: Fall Out Boy’s new album, filled with the band’s real tears for maximum emotional fidelity.

- Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models: We study how to apply large language models to write grounded and organized long-form articles from scratch, with comparable breadth and depth to Wikipedia pages. This underexplored problem poses new …

- Legal terms and conditions: Terms and conditions for using Mistral products and services.

- Typing With Feet GIF - Typing With Feet - Discover & Share GIFs: Click to view the GIF

- WWW.SB: no description found

- Council Post: Is Bigger Better? Why The ChatGPT Vs. GPT-3 Vs. GPT-4 ‘Battle’ Is Just A Family Chat: Alright, now we understand that ChatGPT is just a smaller and a more specific version of GPT-3, but does it mean that we will be having more such models emerging in the nearest future: MarGPT for Mark…

Mistral ▷ #models (209 messages🔥🔥):

- GPU Essentials for Server Builds: `@lukun` inquired about which models could run on a server with no GPU, and `@tom_lrd` and `@_._pandora_._` explained that a GPU is necessary for reasonable performance, even with smaller models. For larger models, a GPU with at least 24 GB VRAM, such as a 3090/4090, is recommended by `@mrdragonfox`. They also provided a detailed test gist on GitHub to illustrate performance under different conditions.

- The Cost of Scaling Up: Users `@dekaspace`, `@mrdragonfox`, and others discussed the specs for a server build to run language models. `@mrdragonfox` suggested 24 GB VRAM as a baseline and noted that models over 70B parameters would require a substantial investment in specialized hardware, mentioning Groq’s custom ASIC deployment as a costly approach.

- Questions About Mistral’s Direction: Several users, including `@redbrain` and `@blacksummer99`, expressed concerns over Mistral’s seemingly new business-oriented direction, with closed-weight models like Mistral Small and Mistral Large diverging from its previous reputation for open models. The community speculated about upcoming releases and the potential for open-weight models in the future.

- Benchmarks of Mistral Models: `@bofenghuang` conducted tests with Mistral’s models on a French version of MT-Bench, publishing results that placed Mistral Large in a notable position behind GPT-4. They shared their findings on Hugging Face Datasets and a browser-based space for further inspection.

- Hopes for Open Access to New Models: Community sentiment as shared by `@saintvaseline`, `@_._pandora_._`, and others reflects a mix of hope for future open-access models and skepticism due to the involvement of large tech firms like Microsoft. Some members, including `@tom_lrd`, `@m._.m._.m`, and `@charlescearl_45005`, anticipated that Mistral would eventually offer some lesser-quality open models, while speculating on the implications of commercial partnerships.

Links mentioned:

- GroqChat: no description found

- Au Large: Mistral Large is our flagship model, with top-tier reasoning capacities. It is also available on Azure.

- Always Has Been Among Us GIF - Always Has Been Among Us Astronaut - Discover & Share GIFs: Click to view the GIF

- TheBloke/Mistral-7B-Instruct-v0.1-GGUF · Hugging Face: no description found

- bofenghuang/mt-bench-french · Datasets at Hugging Face: no description found

- Mt Bench French Browser - a Hugging Face Space by bofenghuang: no description found

- Northern Monk Beer GIF - Northern Monk Beer Craft Beer - Discover & Share GIFs: Click to view the GIF

- 100k test . exllama2(testbranch) + fa 1 - 100k in 128t steps: 100k test . exllama2(testbranch) + fa 1 - 100k in 128t steps - gist:71658f280ea0fc0ad4b97d2a616f4ce8

- [Feature Request] Dynamic temperature sampling for better coherence / creativity · Issue #3483 · ggerganov/llama.cpp: Prerequisites [✅] I reviewed the Discussions, and have a new bug or useful enhancement to share. Feature Idea Typical sampling methods for large language models, such as Top P and Top K, (as well a…

Mistral ▷ #deployment (56 messages🔥🔥):

- Request for Support Unanswered: `@fangh` flagged that they sent an email last week and haven’t received a response, seeking an update from `@266127174426165249`.

- Running Mixtral on Local Machine Query: `@c_ffeestain` inquired whether they can run Mixtral 8x7B on their local machine with 32GB RAM and 8GB VRAM; they are currently using a version on HuggingFace.

- GPU Compatibility and Configuration Advice: `@_._pandora_._` explained that, in theory, Mixtral could be run on `@c_ffeestain`’s machine but would be extremely slow. They also offered help with finding the number of layers to share with the GPU to improve performance.

- Exploring Model Quants and Layer Sharing: `@c_ffeestain` noted after downloading the model that generating one token takes about 5-10 seconds. They are in the process of adjusting how many layers are shared with their GPU, but are encountering issues detecting their AMD GPU.

- Inference and Fine-tuning on a Tesla V100: `@dazzling_maypole_30144` experienced an out-of-memory error trying to deploy Mistral-7B-Instruct on a Tesla V100. `@mrdragonfox` and `@casper_ai` suggested that the V100 might not have enough memory for this task and recommended either alternatives like T4 or A10 GPUs or running the model in AWQ format for better compatibility.
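A back-of-the-envelope estimate helps explain why quantized formats come up in these memory discussions. The sketch below counts memory for the weights only, ignoring KV cache, activations, and framework overhead; the round figure of 7e9 parameters and the helper name `weight_memory_gib` are assumptions for illustration.

```python
def weight_memory_gib(n_params, bits_per_weight):
    """Memory needed for model weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30

N_PARAMS = 7e9  # assumed round figure for a "7B" model

for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gib(N_PARAMS, bits):.2f} GiB")
```

At 16 bits the weights alone come to roughly 13 GiB, which leaves little headroom on a 16 GB card once the cache and activations are added; at 4 bits they drop to a bit over 3 GiB.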

Links mentioned:

- HuggingChat: Making the community’s best AI chat models available to everyone.

- TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF · Hugging Face: no description found

Mistral ▷ #ref-implem (6 messages):

- Inquiry about Mistral Data Normalization: User `@severinodadalt` from Barcelona Supercomputing Center inquired whether the Mistral data has been normalized and how this was implemented. The user mentioned the absence of information on the topic and is assuming that no normalization has been applied.

- No Base Model Normalization Details: In response to `@severinodadalt`’s inquiry about data normalization, `@mrdragonfox` noted that no base model will provide such information.

- Performance Variance in Different Precision Levels: `@bdambrosio` asked whether there would be a change in inference speed when running Mistral 8x7B locally in full fp16, compared to the current 8-bit exl2, especially with more VRAM available. The question arose from noticing differences between 6.5-bit and 8-bit precision levels.

- Precision Levels Affect Performance: In response, `@mrdragonfox` confirmed that differences are noticeable, and that performance measurement tools like turboderp generally assess perplexity (ppl), suggesting that the precision level does indeed affect performance.

- Quantization and Context Accuracy: `@mrdragonfox` also pointed out that quantization can slightly degrade context accuracy when performing tasks with models like Mistral.
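Since perplexity (ppl) is the metric mentioned above for comparing precision levels, here is a minimal sketch of how it is computed from per-token log-probabilities: the exponential of the mean negative log-likelihood. The function name and the sample numbers are illustrative and not taken from any particular tool.

```python
import math

def perplexity(token_logprobs):
    """exp of the average negative log-probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

# Made-up natural-log probabilities for the same text under two quantizations.
higher_precision = [-1.10, -0.40, -2.00, -0.70]
lower_precision = [-1.25, -0.45, -2.30, -0.80]

print(round(perplexity(higher_precision), 3))
print(round(perplexity(lower_precision), 3))  # higher ppl = worse fit
```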

Mistral ▷ #finetuning (185 messages🔥🔥):

- Fine-Tuning Data Quantities and Expectations: `@pteromaple` inquired about the amount of data needed for fine-tuning, questioning whether 4000 instances would suffice. `@egalitaristen` suggested it depends on the specificity of the fine-tuning, noting that for narrow tasks this might be enough; the discussion concluded that trial and error could be the best approach.

- Data Format Dilemmas for Fine-Tuning: `@pteromaple` sought advice on the correct data format for fine-tuning ‘Mistral-7B-Instruct-v0.2’ with Unsloth and queried the impact of data format on training results, revealing their current use of Alpaca format. `@_._pandora_._` recommended creating a custom prompt format and warned about potential issues when fine-tuning Mistral 7B Instruct with non-English languages.

- Mistral’s Mysterious Output After Fine-Tuning: `@mr_seeker` reported a peculiar issue where a fine-tuned model outputs `/******/` and loses coherence when prompted with non-dataset-like data. Suggestions from `@mrdragonfox` and others pointed towards the model’s routing layer, with an indication that successful fine-tuning may require understanding the intricacies of the model’s architecture beyond just applying techniques such as LoRA.

- Serverless Fine-Tuning and Model Hosting Discussed: `@stefatorus` questioned the possibility of Mistral offering serverless fine-tuning functionalities in the cloud and discussed related offerings by companies like Hugging Face and OpenAI. RunPod was also brought up as a potential cost-effective solution, but its viability for those with budget constraints was a concern.

- LoRA Parameters Puzzle: `@tom891` faced challenges in determining the appropriate LoRA parameters for their 200k-sample dataset for Mistral 7B fine-tuning. Despite guidance from `@mrdragonfox` and others emphasizing the necessity of understanding the underlying theory and urging independent exploration over spoon-fed answers, the user continued to seek direct suggestions for effective parameter configurations.
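As a small aid to the theory the LoRA discussion points at, the sketch below shows how the rank `r` drives the number of trainable parameters: adapting a `d_out x d_in` weight adds two low-rank factors with `r * (d_in + d_out)` parameters in total. The 4096 x 4096 layer and the ranks shown are illustrative choices, not recommendations from the thread.

```python
def lora_trainable_params(d_in, d_out, r):
    """Parameters added by one LoRA adapter: A is (r x d_in), B is (d_out x r)."""
    return r * (d_in + d_out)

def full_params(d_in, d_out):
    """Parameters in the original dense weight matrix."""
    return d_in * d_out

d_in = d_out = 4096  # assumed projection size, for illustration only
for r in (8, 16, 64):
    added = lora_trainable_params(d_in, d_out, r)
    share = 100 * added / full_params(d_in, d_out)
    print(f"r={r:>2}: {added:>7} trainable params ({share:.2f}% of the full matrix)")
```

Doubling the rank doubles the adapter size, so rank is a direct trade-off between capacity and memory; which value works for a given dataset still has to be found empirically.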

Links mentioned:

- Serverless GPUs for AI Inference and Training: no description found

Mistral ▷ #announcements (2 messages):

- Meet Mistral Large: `@sophiamyang` announced the launch of Mistral Large, a new optimised model with top-tier reasoning, multilingual capabilities, native function calling, and a 32k context window. Boasting 81.2% accuracy on MMLU, it is billed as the second-ranked model in the world and is available via la Plateforme and Azure.

- La Plateforme Premieres le Chat Mistral: `@sophiamyang` introduced le Chat Mistral, a front-end demonstration showcasing the capabilities of the Mistral models. Discover its potential at Chat Mistral.

Links mentioned:

- Au Large: Mistral Large is our flagship model, with top-tier reasoning capacities. It is also available on Azure.

Mistral ▷ #showcase (24 messages🔥):

- Join @jay9265’s Live Coding Stream: @jay9265 is live streaming on Twitch, inviting everyone interested to join.

- LLMs for Problem Formulation Assistance: @egalitaristen suggests that LLMs can be utilized to help formulate problems or tasks, reminding @jay9265 that explaining the issue to an LLM is a way to seek assistance.

- Lower Temperature for Structured Code: For tasks that involve structured code like JSON, @egalitaristen advised @jay9265 to reduce the generation temperature to around `0.3` for less “creativity” and more accuracy.

- WhatsApp Chrome Plugin by @yasserrmd: @yasserrmd has developed a Chrome plugin that uses the Mistral API to generate WhatsApp-formatted text, with more details available on LinkedIn.

- AI Inference Benchmarking Analysis: @yasserrmd shared insights from benchmarking AI inference performance across platforms like Groq using Mistral, OpenAI ChatGPT-4, and Google Gemini, providing a LinkedIn post for more information.
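To illustrate the temperature advice above, here is a minimal sketch of how temperature reshapes a next-token distribution: logits are divided by the temperature before the softmax, so values below 1 concentrate probability on the most likely token. The logits are made up, and `softmax_with_temperature` is an illustrative helper rather than any provider's API.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after scaling by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens

for t in (1.0, 0.3):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lower temperature nearly all of the probability mass sits on the top token, which is why it tends to suit strict formats such as JSON.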

Links mentioned:

- Twitch: no description found

Mistral ▷ #random (17 messages🔥):

- Pricing Woes in the Chatbot Landscape: `@sublimatorniq` brought up the subject of perplexity, likely referring to pricing or complexity in chatbot services. `@mrdragonfox` suggested that the race to offer the lowest prices cannot continue indefinitely, with unit economics needing to make sense for businesses.

- Groq’s Competitive Pricing Promise: `@shivakiran_` highlighted Groq’s promise of $0.27/million, which likely refers to the cost of processing a certain number of chatbot interactions.

- Sustainability of Low Prices Questioned: `@mrdragonfox` pointed out that sustaining low prices for the sake of competition isn’t a financially sound strategy, as it doesn’t equate to profitability, especially with new players willing to absorb even more costs.

- Critique of Initial Pricing Strategies in Tech: `@egalitaristen` expressed concern over companies that start with low initial pricing only to later introduce “real” pricing that can be multiple times higher, warning that it may drive most of the user base to seek alternatives.

- Pistachio Day Proclaimed on Discord: `@privetin` shared a celebration of Pistachio Day with a link to nutsforlife.com.au and fun facts about the benefits of pistachios, including their protein content and sleep-inducing melatonin.

Links mentioned:

- Laughing GIF - Laughing - Discover & Share GIFs: Click to view the GIF

- Pistachio Day - Nuts for Life | Australian Nuts for Nutrition & Health: Happy Pistachio Day! Each year, 26 February is dedicated to this tiny nut, which punches above its weight when it comes to taste and nutrition!

Mistral ▷ #la-plateforme (66 messages🔥🔥):

- Privacy and Hosting Clarifications Sought: User `@exa634` enquired whether data passing through the Mistral API is used for model training and about the geographical location of the hosting. `@akshay_1` and `@ethux` confirmed that the data is not used for training and that servers are located in Sweden, as mentioned in Mistral’s privacy policy.

- Mistral7x8b Freezing Issue: User `@m.kas` reported a bug where Mistral7x8b freezes when trying to generate content for the year 2024. The user `@1015814` suggested checking for an accidental end token, but `@m.kas` clarified that no such token was set.

- Expectation of Function Calling on Mistral Platform: Users `@nioned` and `@mrdragonfox` brought up the topic of function calling on the platform, hinting that third-party providers may offer solutions and expressing optimism that Mistral will implement it in due time.

- API Key Activation Delays Addressed: User `@argumentativealgorithm` experienced a delay with their API key activation after adding billing information. `@lerela` confirmed that a short waiting period is common before the key becomes operational, and the key began working after the wait.

- Speech to Speech App Query: User `@daveo1711` asked about using Mistral Large for a speech-to-speech application, to which `@akshay_1` replied that Mistral only supports text and suggested checking out other models for the desired functionality.

Links mentioned:

- Legal terms and conditions: Terms and conditions for using Mistral products and services.

- client-python/examples/function_calling.py at main · mistralai/client-python: Python client library for Mistral AI platform. Contribute to mistralai/client-python development by creating an account on GitHub.

- Client does not return a response · Issue #50 · mistralai/client-js: Hi there, Running the latest version of the SDK 0.1.3, but when I try to init and call the client, it does not return anything. Here is my code: const mistral = new MistralClient(env.PUBLIC_MISTRAL…

- GitHub - Gage-Technologies/mistral-go at v1.0.0: Mistral API Client in Golang. Contribute to Gage-Technologies/mistral-go development by creating an account on GitHub.

Mistral ▷ #le-chat (69 messages🔥🔥):

- Mistral Chat’s Popularity Issues: Users `@lerela`, `@mr_electro84`, and others have noted that Le Chat is experiencing difficulties, likely due to high traffic and popularity. `@mr_electro84` reported platform outages, including the API console.

- Confusion Over Mistral Chat’s Pricing: `@_._pandora_._` and `@wath5` discussed whether Le Chat is free, with some users believing they are using paid credits while others, including `@margaret_52502`, stated it’s free.

- Enthusiasm and Suggestions for Mistral’s Potential: User `@aircactus500` proposed various enhancements for Mistral, from a mobile app with social networking elements to a search engine and even a 3D virtual assistant. They mentioned the idea of a language level setting for `le Chat`, which sparked interest in the community.

- Conversation About Mistral-Next: Users `@__oo__`, `@_._pandora_._`, and `@tom_lrd` discussed a feature within Le Chat called Mistral-Next, highlighting its conciseness and simplicity compared to the large model, with hopes for its availability as an open-weights model.

- Developing Concepts for Mistral Chat Applications: User `@aircactus500` is conceptualizing features for an app tailored to Le Chat, including the ability to select the AI’s conversational style. They have expressed excitement over having a French AI community platform, feeling it enhances idea generation without needing to translate thoughts.

LM Studio ▷ #💬-general (608 messages🔥🔥🔥):

- LM Studio White Window Issue: User `@steve_evian` reported an issue where LM Studio only displays a white window upon launch. `@heyitsyorkie` suggested clearing .cache and %APPDATA% before reinstalling, which resolved the problem for `@steve_evian`.

- LM Studio Multilingual Model Query: User `@.deug` asked for recommendations on pre-trained multilingual LLMs that include Korean language support. `@heyitsyorkie` responded that few LLMs are adept at consistently translating Korean to English and advised using an online translator like DeepL in combination with LM Studio.

- Presets Reverting in LM Studio: User `@wyrath` commented on LM Studio’s UX, pointing out that when starting a “New Chat,” selected presets revert to defaults, necessitating manual reselection each time. The discussion covered workarounds and the possibility that this is a bug.

- LM Studio API and Local Hosting: User `@muradb` inquired about running the LM Studio API on a server without a graphical environment. `@heyitsyorkie` clarified that LM Studio doesn’t support headless running and didn’t comment on future plans for this feature.

- Request for Open Source and Headless LM Studio: User `@krypt_lynx` regretfully noted LM Studio’s closed-source nature, adding that community contributions could supply missing features such as headless operation. `@heyitsyorkie` confirmed that LM Studio is indeed closed source.

Links mentioned:

- GroqChat: no description found

- Phind: no description found

- Seth Meyers GIF - Seth Meyers Myers - Discover & Share GIFs: Click to view the GIF

- Big Code Models Leaderboard - a Hugging Face Space by bigcode: no description found

- Fine-tune a pretrained model: no description found

- TheBloke/SOLAR-10.7B-Instruct-v1.0-uncensored-GGUF · Hugging Face: no description found

- Continual Learning for Large Language Models: A Survey: Large language models (LLMs) are not amenable to frequent re-training, due to high training costs arising from their massive scale. However, updates are necessary to endow LLMs with new skills and kee…

- dreamgen/opus-v1.2-7b · Hugging Face: no description found

- Anima/air_llm at main · lyogavin/Anima: 33B Chinese LLM, DPO QLORA, 100K context, AirLLM 70B inference with single 4GB GPU - lyogavin/Anima

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed…

- MusicLM: no description found

- Reddit - Dive into anything: no description found

- GitHub - deepseek-ai/DeepSeek-Coder: DeepSeek Coder: Let the Code Write Itself: DeepSeek Coder: Let the Code Write Itself. Contribute to deepseek-ai/DeepSeek-Coder development by creating an account on GitHub.

- Grounding Large Language Models in Interactive Environments with Online Reinforcement Learning: Recent works successfully leveraged Large Language Models’ (LLM) abilities to capture abstract knowledge about world’s physics to solve decision-making problems. Yet, the alignment between LLM…

LM Studio ▷ #🤖-models-discussion-chat (98 messages🔥🔥):

- Hyperparameter Evaluation Dilemma: @0xtotem inquired whether hyperparameters for a RAG should be evaluated on their own dataset or if a similar dataset would suffice. The question remained unresolved and was treated as a personal choice that depends on the closest available data.

- Dolphin Model Dilemma: @yahir9023 struggled to create a Dolphin model prompt template in LM Studio, sharing an external file for further explanation since Discord lacks text-sending functionality.

- Model Memory Challenge: @mistershark_ discussed the difficulty of keeping multiple large language models in VRAM simultaneously and confirmed the availability and capabilities of ooba. @goldensun3ds asked for clarification, and @mistershark_ explained the need for significant hardware, sharing the GitHub link to ooba.

- Translation Model Inquiry: @goldensun3ds asked which model is best for Japanese-to-English translation, considering models like Goliath 120B and suggesting a potential Mixtral model. No definitive answer was given, though the user’s powerful hardware setup drew attention.

- Mixed-Expert Models: @freethepublicdebt asked whether future models will mix experts of different precisions (FP16, 8-bit, and 4-bit), which could promote generalization and GPU efficiency. No response was given regarding the existence or development of such models.

Links mentioned:

- Knight Rider Turbo GIF - Knight Rider Turbo Boost - Discover & Share GIFs: Click to view the GIF

- Pedro Sánchez anuncia la creación de un “gran modelo de lenguaje de inteligencia artificial” entrenado en español: El Mobile World Congress ya ha comenzado y las conferencias ya empiezan a sucederse. Xiaomi y HONOR dieron el pistoletazo de salida al evento y Pedro Sánchez…

- GitHub - rhasspy/piper: A fast, local neural text to speech system: A fast, local neural text to speech system. Contribute to rhasspy/piper development by creating an account on GitHub.

- GitHub - oobabooga/text-generation-webui-extensions: Contribute to oobabooga/text-generation-webui-extensions development by creating an account on GitHub.

LM Studio ▷ #🧠-feedback (8 messages🔥):

- Fire Emoji for the Fresh Update: User @macfly expressed enthusiasm for the latest update, complimenting its look and feel.

- Acknowledging a Needed Fix: @yagilb acknowledged an unspecified issue and assured that it will be fixed, apologizing for any inconvenience.

- High Praise for LM from a Seasoned User: @iandol, who previously used GPT4All, praised LM for its excellent GUI and user-friendly local server setup.

- Download Dilemma in China: @iandol reported difficulties downloading models due to being in China and inquired about proxy support to facilitate downloads.

- Seeking Dolphin 2.7 Download Support: @mcg9523 faced challenges downloading Dolphin 2.7 in LM Studio and was advised by @heyitsyorkie to switch to “compatibility guess” and collapse the readme for better visibility.

LM Studio ▷ #🎛-hardware-discussion (178 messages🔥🔥):

- Quest for CUDA Support in AMD: User @nink1 reminisced about AMD’s growth and speculated that smart folks at AMD might be working on creating their own CUDA support, citing enterprise trends towards cost-effective solutions. The fact that ZLUDA was open-sourced hints at potential internal advancements at AMD.

- Choosing the Best GPU for LLMs: Amidst discussions on AMD vs. Nvidia GPUs, users like @baraduk, @wolfspyre, and @heyitsyorkie debated the Radeon RX 7800 XT’s suitability for running LLMs, with a general preference for Nvidia due to easier setup for AI applications, notably because ROCm on AMD requires additional effort.

- To NVLink or Not to NVLink: Participants like @slothi_jan, @dave2266_72415, and @nink1 explored the pros and cons of NVLink for multi-GPU setups. While NVLink could theoretically boost performance compared to using standard PCIe slots, practical considerations like cost and compatibility are significant factors.

- Mac vs. Custom PC for Running LLMs: User @slothi_jan sparked discussions on whether to purchase a Mac Studio or a custom PC with multiple RTX 3090 GPUs for AI model use. Opinions varied, but factors like speed, cost, ease of use, and future-proofing were key considerations, with valuable input from users like @heyitsyorkie, @rugg0064, and @nink1, who noted the surprisingly good performance of Apple’s M3 Max.

- Troubleshooting PC Shutdowns During LLM Use: @666siegfried666 sought assistance with their PC (featuring a 5800X3D CPU and 7900 XTX GPU) shutting down during use of LM Studio. @heyitsyorkie suggested testing with other compute-intensive tasks to identify whether the issue is with LM Studio or the PC hardware itself.

Links mentioned:

- High Five GIF - High Five Minion - Discover & Share GIFs: Click to view the GIF

- README.md · TheBloke/Llama-2-70B-GGUF at main: no description found

- Releases · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- OpenCL - The Open Standard for Parallel Programming of Heterogeneous Systems: no description found

- Knowledge Doubling Every 12 Months, Soon to be Every 12 Hours - Industry Tap: Knowledge Doubling Every 12 Months, Soon to be Every 12 Hours - Industry Tap

LM Studio ▷ #🧪-beta-releases-chat (27 messages🔥):

- Celebrating “IQ” Models Working: @drawless111 enthusiastically confirmed that IQ1, IQ2, and IQ3 models are working in LM Studio, praising Yags and the team. They highlighted IQ1’s impressive numbers: a 70-billion-parameter model running in 14.5 GB of VRAM at 11.95 t/s.

- Searching for “IQ” Formats Revealed: @drawless111 provided a step-by-step guide on finding “IQ” formats on HGF using search terms such as “gguf imat” or “gguf imatrix”, and advised avoiding compressions fixed with random text for higher quality.

- LLM Local File System Access Query: @wolfspyre inquired about local file system access for running models, wondering if a directory like /tmp is accessible. @fabguy later clarified that LLMs don’t have such capabilities and that LM Studio does not support executing commands from LLMs.

- No Model Tokenization Speed Stats API Yet: @wolfspyre asked if there’s an API to get model tokenization speed stats, to which @yagilb replied shortly afterwards with a “Not yet”.

- Llama 1.6 Update Rolled Out: Users @n8programs and @heyitsyorkie discussed and celebrated the update of llama.cpp to version 1.6 in LM Studio, describing it as EPIC.
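As a rough sanity check on the IQ1 figures reported above, the numbers imply well under 2 bits per weight. The sketch below is only back-of-the-envelope arithmetic: it assumes 1 GB = 10^9 bytes and treats all 14.5 GB as model weights, whereas in practice part of the VRAM goes to the KV cache and buffers, so the real figure would be somewhat lower. The function name is illustrative and not part of any tool.

```python
def bits_per_weight(vram_gb: float, params_billion: float) -> float:
    """Upper-bound estimate of bits per weight, assuming all VRAM holds weights."""
    total_bits = vram_gb * 1e9 * 8            # assumption: 1 GB = 10**9 bytes
    return total_bits / (params_billion * 1e9)


# Figures quoted in the summary: 14.5 GB of VRAM for a 70B-parameter model.
bpw = bits_per_weight(14.5, 70)
print(f"{bpw:.2f} bits per weight")  # prints "1.66 bits per weight"
```

For comparison, the same 70B model at FP16 (16 bits per weight) would need about 140 GB for the weights alone.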

LM Studio ▷ #autogen (9 messages🔥):

- AutoGen Anomaly Squashed: User @thebest6337 initially reported a mysterious error with AutoGen but resolved the issue by “uninstall[ing] and reinstall[ing] every autogen python package”.

- Good Samaritan Reminder: @heyitsyorkie encouraged @thebest6337 to share the solution to their problem with AutoGen to assist others, leading to the discovery of the fix.

- When in Doubt, Reboot!: In response to @thebest6337’s fix, @heyitsyorkie humorously posted a Tenor GIF, implying that the classic “turn it off and on again” method is a universally applicable solution.

- Slow Responding Local Models: User @gb24. asked about the slow response time (approx. five minutes) from a local model, implying that this is an unusually long delay since the task was not code-intensive.

Links mentioned:

- It Problem Phone Call GIF - It Problem Phone Call Have You Tried Turning It Off And On Again - Discover & Share GIFs: Click to view the GIF

LM Studio ▷ #langchain (1 messages):

bigsuh.eth: Hello, can I use LM Studio and use RAG in langchain?

LM Studio ▷ #open-interpreter (7 messages):

- Connection Issues for nxonxi: User @nxonxi encountered an httpcore.ConnectError: [Errno 111] Connection refused when attempting to run open-interpreter with the --local option after installing LM Studio.

- Syntax Error Strikes: The same user received an error stating {'error': "'prompt' field is required"}, which turned out to be due to a syntax error in their request payload.

- Simple Python Request to the Rescue: @nxonxi confirmed that while LM Studio is not working from Open Interpreter (OI), it is operational via a simple Python request.

- Endpoint URL Troubleshooting: @1sbefore suggested checking the endpoint URL, mentioning that for TGWUI it is http://0.0.0.0:5000/v1, and advised @nxonxi to try removing /completions or /v1/completions from the URL used in requests.
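The two fixes discussed above (a payload that actually contains a prompt field, and a base URL without a duplicated /completions suffix) can be sketched with the Python standard library alone. This is a minimal illustration, not the code @nxonxi used: the helper names are hypothetical, the server is assumed to expose an OpenAI-style /v1/completions route, and http://localhost:1234 is assumed as the LM Studio server address.

```python
import json
import urllib.request

# Suffixes that @1sbefore suggested stripping from the configured URL.
SUFFIXES = ("/v1/completions", "/completions")


def normalize_base_url(url: str) -> str:
    """Return a base URL ending in /v1, without any completions suffix."""
    url = url.rstrip("/")
    for suffix in SUFFIXES:
        if url.endswith(suffix):
            url = url[: -len(suffix)]
            break
    if not url.endswith("/v1"):
        url += "/v1"
    return url


def build_completion_request(base_url: str, prompt: str,
                             max_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request whose JSON body includes the required 'prompt'."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        normalize_base_url(base_url) + "/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(base_url: str, prompt: str) -> dict:
    """Send the request and return the parsed JSON reply (needs a running server)."""
    with urllib.request.urlopen(build_completion_request(base_url, prompt)) as resp:
        return json.load(resp)


# Assumed local address; adjust to whatever the local server reports.
request = build_completion_request("http://localhost:1234/v1/completions", "Hello")
print(request.full_url)
```

Calling complete("http://localhost:1234", "Hello") would then send the request; a Connection refused error at that point usually means no server is listening on that host and port.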

Perplexity AI ▷ #announcements (1 messages):

- Perplexity Partners with ElevenLabs: @ok.alex announced the launch of the Discover Daily podcast, a collaboration with ElevenLabs, pioneers in Voice AI technology. Find the podcast on your favorite platforms for a daily dive into tech, science, and culture, with episodes sourced from Perplexity’s Discover feed.

- Discover Daily Podcast Elevates Your Day: Listening to the latest episodes of Discover Daily is recommended during your daily commute or in that spare moment of curiosity. The episodes are available at podcast.perplexity.ai and are enhanced by ElevenLabs’ voice technology.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Discover Daily by Perplexity: We want to bring the world’s stories to your ears, offering a daily blend of tech, science, and culture. Curated from our Discover feed, each episode is designed to enrich your day with insights and c…

Perplexity AI ▷ #general (348 messages🔥🔥):

- Perplexity AI Unveils New Models ‘Sonar’: The Perplexity AI Discord community discussed the recent introduction of Sonar models (sonar-small-chat and sonar-medium-chat) and their search-enhanced versions. These models claim improvements in cost-efficiency, speed, and performance. Users speculate, based on test interactions, that Sonar Medium may possess a knowledge cutoff around December 2023 (source).

- Goodbye Gemini: The community briefly mourned the removal of Gemini from the list of available models on Perplexity, with some users clamoring for its return or the potential introduction of Gemini Ultra.

- Perplexity AI and Imagery: It was clarified that Perplexity Pro does have the capability to generate images, albeit with some operational issues under scrutiny. Users are directed to online resources and Reddit for assistance (Reddit post).

- Mobile-specific Responses from AI Models: There was a discussion about whether AI chat models respond differently on mobile devices compared to PCs, with some users noticing concise answers from models like Gemini when accessed through the app (system prompt).

- Alleged Deals and Discrepancies: Various unrelated topics also came up, such as a supposed 6-month free trial of Perplexity Pro tied to a card service, which a moderator confirmed to be legitimate, and an inquiry about whether Mistral is partnering with Microsoft following a historical collaboration with Google.

Links mentioned:

- Phind: no description found