Not much happened today, so it’s a nice occasion to introduce 2 new Discords that have passed our quality bar: Interconnects (run by Nathan Lambert who we recently had on Latent Space) and OpenRouter (Alex Atallah who will surely join us at some point).

Table of Contents

[TOC]

PART 0: Summary of Summaries of Summaries

Model Comparisons and Optimizations: Discord users actively discussed the performance and optimization of various AI models, including Mistral AI, Miqu, and GGUF quantized models. Key topics included the comparison of Mistral AI's new Mistral Large model with OpenAI's GPT-4, highlighting its cost-effectiveness and performance. Users also explored efficient loading practices and methods such as GPTQ and QLoRA to enhance model performance and reduce VRAM usage.

Advanced Features and Applications: There was significant interest in leveraging AI for specific applications like role-playing and story-writing, emphasizing the need for models to manage consistent timelines and character emotions. Moreover, the potential of AI in code clarity and AI-assisted decompilation was discussed, with a particular focus on developing tools like an asynchronous summarization script for Mistral 7b, indicating a push towards making AI more accessible and practical for developers.

Quantum Computing and AI's Future: Discussions in various Discords touched upon the intersection of quantum computing and AI, speculating on how quantum advancements could revolutionize AI model processing. The discourse extended to the implications of DARPA-funded projects on AI development and the exploration of encoder-based diffusion techniques for stable diffusion in image processing, reflecting a deep interest in cutting-edge technologies that could shape the future of AI.

Community and Collaboration Initiatives: Various communities highlighted efforts to foster collaboration and share knowledge on AI model development, ranging from new Spanish LLM announcements to hardware experimentation for model efficiency. Platforms like Perplexity AI and LlamaIndex were noted for their innovative features and integration capabilities. There was a strong emphasis on open-source projects, as seen in discussions about Mistral's open-source commitment and the development of tools like R2R for rapid RAG system deployment, showcasing the vibrant collaborative spirit within the AI community.

PART 1: High level Discord summaries

TheBloke Discord Summary

- Polymind’s Haunting Echoes: Engineers are troubleshooting a Polymind UI bug where ghost messages linger until new tokens stream. Debugging involves frontend HTML variables, like `currentmsg`, to clear residual content.

- Model Assessment Mania: Discussions highlight Miqu, a leaked Mistral medium equivalent, performing admirably despite being slower than Mixtral because of its higher parameter count. Users are also actively exchanging efficient loading practices for GGUF quantized models, including tweaks like disabling shared video memory and adjusting GPU settings to prevent VRAM overflow.

- LangChain’s Loop: LangChain is under fire for its claimed context reordering feature, which @capt.genius labeled a “fake implementation”, and users suggest it might just be a basic looping mechanism.

- Mistral’s Mysterious Moves: There’s a buzz around Mistral AI’s change in language on their website regarding open-source models, fueling speculation about the company’s commitment to open models, in light of models from other AI providers like Qwen.

- Optimizing Prompt Engineering: Engineers in the roleplay and story-writing channels express a desire for models adept at role-playing and story-writing with consistent timelines, character emotions, and mood management.

- Quantizing Questions and Training Troubles: There’s debate around model quantization methods, with @orel1212 considering GPTQ over QLoRA for training on a small dataset. Questions about Mistral’s PDF image text extraction capabilities and the hardware needed for Mixtral 8x7b remain without clear answers. Meanwhile, @dzgxxamine seeks advice on teaching LLMs to understand and use newly released Python libraries.

- Merge Meltdown Mystery: A single message from @jsarnecki reports an unsuccessful model merge between Orca-2-13b and WhiteRabbitNeo-13b, resulting in incomprehensible output, with details of the merge process shared via a readme from mergekit.

- AI’s Role in Code Clarity: Within the coding discussions, there’s excitement about AI’s potential in AI-assisted decompilation, as @mrjackspade anticipates a future without manual code reconstruction. Additionally, @wolfsauge introduces an asynchronous summarization script tested on Mistral 7b, with an invite for peers to experiment with it, available on GitHub.

LM Studio Discord Summary

- New Spanish LLM Announced: Spain’s President Pedro Sánchez announced the creation of a Spanish-language AI model at Mobile World Congress; it’s significant for Spanish language applications in AI.

- Hardware Hijinks: RTX 2060 Action: Discussions in the hardware channel have users experimenting with multi-GPU setups without a bridge connector and seeing success, even with mismatched graphics cards, driving efficiency for models as large as 70b, pointing to changing norms in hardware compatibility.

- WSL Networking Fix for AI Tools: Users resolved an issue connecting to an LM Studio endpoint from within WSL (Windows Subsystem for Linux) on Windows 11 by bypassing localhost and using the network IP (`http://192.168.x.y:5000/v1/completions`), addressing unique WSL network behavior outlined in this guide.

- Tech for Text-to-Talk: Piper, a neural text-to-speech system, has been highlighted for its local processing capabilities and support for different languages, indicating a trend towards efficient, offline solutions for language model applications.

- Techies Taming Language Models: In the model discussions, users highlighted the importance of specific sampling parameters like mirostat_mode and mirostat_tau for optimizing LLM output, which need to be manually configured. Conversations also acknowledged the role of human evaluation in assessing models’ performance.
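
The WSL workaround above comes down to pointing the client at the Windows host's network address instead of localhost. Here is a minimal sketch of that, using only the standard library. The `192.168.x.y` placeholder comes from the summary and must be replaced with the real address. The payload fields (`prompt`, `max_tokens`) and the helper name `build_completion_request` are assumptions for illustration, not confirmed details of the LM Studio server.

```python
import json
import urllib.request


def build_completion_request(host_ip: str, prompt: str, port: int = 5000) -> urllib.request.Request:
    """Build a POST request for a completions endpoint reachable from WSL.

    host_ip is the Windows machine's network IP (not localhost), as suggested
    in the discussion. The payload fields are assumed, not taken from the source.
    """
    url = f"http://{host_ip}:{port}/v1/completions"
    payload = {"prompt": prompt, "max_tokens": 64}  # assumed OpenAI-style fields
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Replace 192.168.x.y with the host's actual LAN address before sending.
    req = build_completion_request("192.168.x.y", "Hello")
    print(req.full_url)
    # To actually send it: urllib.request.urlopen(req, timeout=30)
```

Building the request separately from sending it makes it easy to check the URL before touching the network.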

Nous Research AI Discord Summary

- RWKV/Mamba Models Primed for Context-Length Flexibility: @vatsadev commented on the viability of rwkv/mamba models being extendable through fine-tuning for longer context, mitigating the need to pretrain from scratch. @blackl1ght achieved a 32k token context length on Solar 10.7B using a Tesla V100 and Q8 quantization without hitting memory limits, indicating the potential for extending these models even further.

- Ring Attention Sparks 1 Million Token Model Feasibility: Discussions highlighted the application of ring attention in models managing up to 1 million tokens; @bloc97 revealed that this could be applied to 7b models, illustrating advancements in attention mechanisms for larger scale models.

- Quantization Techniques Promise Efficient Inference: Efforts to optimize inference efficiency involving the key-value cache and potential use of fp8 were mentioned; @stefangliga introduced the concept of quantizing the kvcache, which could pave the way for handling longer contexts more effectively.

- Benchmarks Point to Selective Reporting: In a conversation about AI model benchmarks, @orabazes pointed out that a tweet on AI performance omitted certain model comparisons, hinting at the need for transparency in benchmarks.

- Mistral TOS Controversy Settles: After intense debate regarding Mistral’s Terms of Service, a tweet from co-founder @arthurmensch resolved issues by removing a contentious clause, reaffirming the model’s open-use for training competitive LLMs.

- Structured Data Gets Dedicated LLM Attention: New insights from @_akhaliq’s tweet into Google’s StructLM project indicate a focus on enhancing LLMs’ handling of structured data, despite the absence of shared training approaches or model frameworks.

- Curiosity in Mistral Loop Dilemmas: @.ben.com theorized that repeated text looping in Mistral could be attributed to classic feedback system issues, indicating a technical curiosity in understanding and resolving model oscillations.

- High-Demand for Self-Extend Model Practices: The community’s enthusiasm for local model improvements was evident after @blackl1ght’s successful experiments with self-extension, leading to a collective interest in replicating the configurations that allow for improved memory management and feature utilization.
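
To make the kvcache quantization idea above concrete, here is a toy sketch of symmetric int8 quantization applied to a single cached key vector. It is not the method discussed in the channel: the discussion mentioned fp8, and int8 is swapped in here because it is easy to show in pure Python. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-vector int8 quantization: returns (codes, scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range after rounding.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    key_vector = [0.5, -1.0, 0.25, 0.0]  # stand-in for one cached key
    codes, scale = quantize_int8(key_vector)
    restored = dequantize_int8(codes, scale)
    print(codes, [round(x, 3) for x in restored])
```

Storing one byte per element instead of two (fp16) roughly halves the cache footprint, at the cost of a rounding error of at most half a quantization step per element.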

OpenAI Discord Summary

EBDI Agent Challenges and Solutions: @.braydie explored EBDI frameworks for agent goal determination, but encountered thinking loops after integrating the ReAct framework. They examined decision-making models from a JASSS paper to address the issue.

Mistral Steps Up to Rival GPT-4: A TechCrunch article reported that Mistral Large, a new model from Mistral AI, is positioned to compete with OpenAI’s GPT-4, offering cost-effectiveness and uncensored content, and is now available on Azure.

Prompt Protection Paradox: Users deliberated on how to protect intellectual property in prompts, concluding that while copyright might cover exact wording, the replication of ideas via linguistic variation is likely unstoppable.

Text Classification Tactics: @crifat kicked off a discussion on text classification methods, opting to start with the base model and Assistant, bypassing fine-tuning, to sort texts into categories such as “Factual” and “Misleading.”

Meta-Prompting Generates Buzz and Security Concerns: The concept of meta-prompting was a hot topic, with claims of generating extensive documentation from advanced techniques, but these techniques also raised security flags when a user shared a PDF, resulting in action against the user’s account.

Perplexity AI Discord Summary

- Perplexity AI’s Picture Perfect Potential: Users discussed the image generation capability of the Pro version of Perplexity AI, with a link given to a Reddit discussion on the topic. Concerns were also voiced over the discontinuation of Gemini AI support in Perplexity, with confirmations that the support has ended.

- Mistral Large Steals the Spotlight: A new language model from Mistral AI, called Mistral Large, was introduced with discussions surrounding its capabilities and availability. The announcement was supported by a link to Mistral AI’s news post.

- VPN Troubles Take a Detour: A login issue on Galaxy phones with Perplexity AI was tackled by turning off VPN, with additional resources on VPN split tunneling provided.

- Exploratory Searches in Perplexity Shine Through: Users shared links to Perplexity AI’s search results on novel topics, including a transparent laptop by Lenovo and an age query for the letter K. Comparisons of iPhones were also made, and a collection feature was promoted, inviting users to create their own collection on Perplexity AI.

- PPLX-API Channel Buzzes with Model Concerns: Technical discussions centered on model information and performance, particularly about a JSON link for model parameters and the behavior of the `sonar-medium-online` model generating irrelevant content. There was deliberation over the deprecation of the `pplx-70b-online` model and the vital role of proper prompting when making API calls, with explicit reference to Chat Completions in the API documentation.

LlamaIndex Discord Summary

- PDF Parsing Leaps Forward with LlamaParse: LlamaParse is introduced as a tool to enhance understanding of PDFs with tables and figures, aiding LLMs in providing accurate answers by avoiding PDF parsing errors. It’s highlighted as significant for Retrieval-Augmented Generation (RAG) processes, as tweeted here.

- Super-Charging Indexing with MistralAI’s Large Model: LlamaIndex integrates @MistralAI’s Large Model into its build 10.13.post1, bringing near-GPT-4 capabilities including advanced reasoning and JSON output, as mentioned in this announcement. Furthermore, LlamaIndex’s new distributed super-RAG feature allows the creation of API services for any RAG application which can be networked to form a super-RAG capable of running queries across the network, as shared here.

- AI Assemblage with AGI Builders and FireWorksAI: The AGI Builders meetup will host LlamaIndex’s VP of Developer Relations to share insights on RAG applications, with details of the event available here. In another collaboration, LlamaIndex and FireworksAI_HQ have released a cookbook series for RAG applications and function calling with FireFunction-v1, offering full API compatibility as announced here.

- Context Crafting Conundrums for Coders: In the ai-discussion channel, members discussed optimizing context for coding LLMs such as GPT-4 turbo and Gemini 1.5 with a focus on the order of information, repetition, and structuring techniques. There was also discourse on open-source text generation with Llama2, emphasizing non-proprietary integration for CSV and PDF inputs, and exploration of chunking and retrieval strategies for building a RAG-oriented OSS SDK assistant.

- Tech Troubles and Tooling Talks in General: The general channel buzzed with requests for assistance, comparisons between GPT-3.5 with and without RAG, and discussions on integrating LlamaIndex with other services like Weaviate. Moreover, there was an elaborate exchange on resolving installation issues of LlamaIndex on macOS and dialogues about creating agents for Golang integration and dynamic orchestration with AWS Bedrock, suggesting a highly interactive and technically-oriented community.

LAION Discord Summary

- New Twists in Encoder-Based Diffusion Techniques: Users discussed the merits of an encoder-based inversion method for stable diffusion and the challenges with image prompt (IP) adapters in enhancing stable diffusion performance.

- AI’s Dicey Dance with DARPA: Debates flared regarding the implications of DARPA-funded projects on AI research and development, with a humorous twist on anime character usage in recruitment efforts and the intersection of military and entertainment genres.

- Quantum Leaps for AI’s Future: Conversations revolved around the future role of quantum computing in processing AI models, particularly transformers, with discussions on the state of quantum error correction and its potential in AI computations.

- Navigating the Minefield of Content Moderation: Dialogues dug into the difficulties surrounding content moderation, especially in relation to Child Sexual Abuse Material (CSAM), pondering the effectiveness of reporting tools and responsibilities of platforms.

- The Balancing Act of Open Source vs. Proprietary AI: There was a spirited exchange over the strategies of releasing AI models, contrasting open-source approaches like Mistral Large with proprietary models and considering the commercialization supporting ongoing AI R&D.

- Watermarking Language Models: A research paper unveiled findings on the detectability of training data through watermarked text, indicating high confidence in identifying watermarked synthetic instructions used in as little as 5% of training data.

- Genie Grants Wishes in Humanoid Robotics: A YouTube video on Google DeepMind’s new paper brought to light recent advancements in AI’s role in humanoid robotics.

- The Finite of FEM Learning Success: Within the realm of the Finite Element Method (FEM), there was acknowledgment of research yet a consensus that methods for learning with FEM meshes and models are not as effective as conventional FEM, citing a specific paper.

- Torching Through Fourier Transform Challenges: A technical issue involving the inverse discrete Fourier transform in neural network synthesis was highlighted, pointing to code that might benefit from refactoring with `torch.vmap` for VRAM efficiency, demonstrated in the shared GitHub repository.

- Pondering Transformer Learning Experiments: There was a solitary query about conducting transformer learning experiments to discern size relations between fictional objects, aiming to understand and render comparative images, but no further discussion or data was provided.

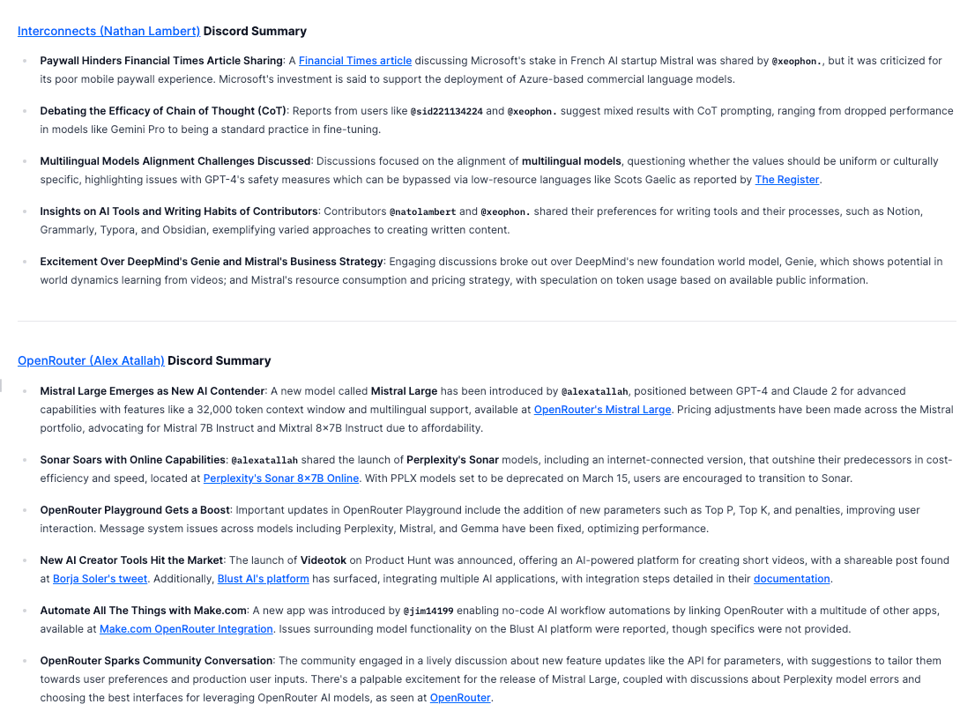

Interconnects (Nathan Lambert) Discord Summary

- Paywall Hinders Financial Times Article Sharing: A Financial Times article discussing Microsoft’s stake in French AI startup Mistral was shared by @xeophon., but it was criticized for its poor mobile paywall experience. Microsoft’s investment is said to support the deployment of Azure-based commercial language models.

- Debating the Efficacy of Chain of Thought (CoT): Reports from users like @sid221134224 and @xeophon. suggest mixed results with CoT prompting, ranging from dropped performance in models like Gemini Pro to being a standard practice in fine-tuning.

- Multilingual Models Alignment Challenges Discussed: Discussions focused on the alignment of multilingual models, questioning whether the values should be uniform or culturally specific, highlighting issues with GPT-4’s safety measures which can be bypassed via low-resource languages like Scots Gaelic as reported by The Register.

- Insights on AI Tools and Writing Habits of Contributors: Contributors @natolambert and @xeophon. shared their preferences for writing tools and their processes, such as Notion, Grammarly, Typora, and Obsidian, exemplifying varied approaches to creating written content.

- Excitement Over DeepMind’s Genie and Mistral’s Business Strategy: Engaging discussions broke out over DeepMind’s new foundation world model, Genie, which shows potential in world dynamics learning from videos; and Mistral’s resource consumption and pricing strategy, with speculation on token usage based on available public information.

OpenRouter (Alex Atallah) Discord Summary

- Mistral Large Emerges as New AI Contender: A new model called Mistral Large has been introduced by @alexatallah, positioned between GPT-4 and Claude 2 for advanced capabilities with features like a 32,000 token context window and multilingual support, available at OpenRouter’s Mistral Large. Pricing adjustments have been made across the Mistral portfolio, advocating for Mistral 7B Instruct and Mixtral 8x7B Instruct due to affordability.

- Sonar Soars with Online Capabilities: @alexatallah shared the launch of Perplexity’s Sonar models, including an internet-connected version, that outshine their predecessors in cost-efficiency and speed, located at Perplexity’s Sonar 8x7B Online. With PPLX models set to be deprecated on March 15, users are encouraged to transition to Sonar.

- OpenRouter Playground Gets a Boost: Important updates in OpenRouter Playground include the addition of new parameters such as Top P, Top K, and penalties, improving user interaction. Message system issues across models including Perplexity, Mistral, and Gemma have been fixed, optimizing performance.

- New AI Creator Tools Hit the Market: The launch of Videotok on Product Hunt was announced, offering an AI-powered platform for creating short videos, with a shareable post found at Borja Soler’s tweet. Additionally, Blust AI’s platform has surfaced, integrating multiple AI applications, with integration steps detailed in their documentation.

- Automate All The Things with Make.com: A new app was introduced by @jim14199 enabling no-code AI workflow automations by linking OpenRouter with a multitude of other apps, available at Make.com OpenRouter Integration. Issues surrounding model functionality on the Blust AI platform were reported, though specifics were not provided.

- OpenRouter Sparks Community Conversation: The community engaged in a lively discussion about new feature updates like the API for parameters, with suggestions to tailor them towards user preferences and production user inputs. There’s a palpable excitement for the release of Mistral Large, coupled with discussions about Perplexity model errors and choosing the best interfaces for leveraging OpenRouter AI models, as seen at OpenRouter.
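
For readers unfamiliar with the sampling parameters mentioned above (Top P, Top K, penalties), this sketch shows how they typically appear in an OpenAI-style chat request body. The field names follow the common convention and are assumptions here, as are the model slug and the helper name `build_chat_payload`. Check the provider's documentation before relying on any of them.

```python
import json
from typing import Any, Dict


def build_chat_payload(model: str, user_message: str) -> Dict[str, Any]:
    """Assemble a chat request body with explicit sampling parameters."""
    return {
        "model": model,  # hypothetical slug, supplied by the caller
        "messages": [{"role": "user", "content": user_message}],
        "top_p": 0.9,              # nucleus sampling: keep the top 90% probability mass
        "top_k": 40,               # consider only the 40 most likely tokens
        "frequency_penalty": 0.2,  # discourage frequently repeated tokens
        "presence_penalty": 0.0,   # no extra penalty for tokens already present
    }


if __name__ == "__main__":
    payload = build_chat_payload("mistralai/mistral-large", "Summarize ring attention.")
    print(json.dumps(payload, indent=2))
```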

HuggingFace Discord Summary

Have an Amazing Week and Ace Those Exams: Community members are sharing sentiments ranging from well-wishes for the week to the stress of exams.

Seeking Speedy Batch Processing Solutions: A discussion took place regarding the optimal batching methods for querying GPT-4, emphasizing the importance of fast and efficient batch processing to reduce completion times.

Service Disruptions and Tech Collaborations: Users reported experiencing 504 timeout errors with the Hugging Face Inference API, highlighting service instability; meanwhile, there’s an ongoing dialogue to foster collaborative machine learning project development within the community.

Immersive Study Opportunity in Convolutional Neural Networks: An open invitation was extended for a study group focusing on CS231n, Convolutional Neural Networks for Visual Recognition, with links to course assignments and modules available for interested participants. CS231n Course

Scale AI’s Rise to Prominence and VLM Resolutions: Articles and discussions showcased Scale AI’s impressive growth to a $7.3 billion valuation in data labeling and innovative solutions to overcome resolution problems in vision-language models using multiple crops of high-resolution images. Scale AI’s Story and VLM Resolution Solution

Developments and Debates in AI Ethics and Performance: The community shared opportunities for commenting on “open-weight” AI models, a new Performance LLM Board evaluating response times and pricing of various models, and a detailed replication attempt of the Imagic paper for text-based image editing using diffusion models. Open AI Model Weights Comments and Imagic Paper Replicated

Discontentment with Diffusion Model Tools: Voices of dissatisfaction emerged regarding the use of eps prediction in Playground v2.5, and the choice to utilize the EDM framework instead of zsnr.

Data Size and Character Recognition in Computer Vision: A notable concern was raised about the adequacy of dataset size for fine-tuning, especially for models aimed at complex character recognition, such as those in the Khmer language, which presents unique challenges due to its symbol-rich script.

Navigating the NLP Landscape: Conversations touched on best practices in sequence classification, searching for generative QA models, recommendations for embedding models suited for smaller datasets, strategies for compressing emails for LLMs, and constructing a medical transformer tailored to the nuances of medical terminology. Suggested models for embedding include BAAI’s bge-small-en-v1.5 and thenlper’s gte-small.

Eleuther Discord Summary

- Unit Style Learning Catches Engineer’s Eye: Precision in machine learning algorithms was the focus with a conversation about ‘unit style’ vectors, emphasizing RMSNorm as a crucial component for achieving ‘unit length’ vectors and avoiding the need for conversion between scales.

- Mistral Lifts the Veil on a Large Model: EleutherAI announced the release of Mistral Large, a new state-of-the-art language model that promises cutting-edge results on benchmarks. It’s now available through la Plateforme and Azure, which was discussed in detail on Mistral’s news page.

- Appreciation and Call to Action for lm-harness: A member highlighted the impact of EleutherAI’s lm-harness, with its recognition in a recent paper for its importance in the few-shot evaluation of autoregressive language models, and threads included guides and discussions to enhance its functionality.

- Interpreting ‘Energy’ Sparks Inquiry and Promises of Investigation: Members expressed confusion and the need to investigate the term “energy” used in the context of model tuning and related equations, admitting a lack of intuition and clarity around the concept.

- Technical Troubleshooting in NeoX: Users discussed challenges and sought advice for DeepSpeed configurations and setting up multi-node training environments for GPT-NeoX on CoreWeave infrastructure, with directions pointing towards utilizing Kubernetes and employing slurm or MPI for a 2-node setup with 4 GPUs.
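
As a rough illustration of the RMSNorm point above, here is a pure-Python sketch of the normalization step, with the learned gain omitted. One caveat about the ‘unit length’ wording: RMSNorm rescales a vector so its root-mean-square is about 1, which makes its Euclidean norm about the square root of the dimension. Whether that matches what the discussion meant by ‘unit length’ is an assumption.

```python
import math
from typing import List


def rms_norm(x: List[float], eps: float = 1e-8) -> List[float]:
    """Divide each element by the vector's root-mean-square (no learned gain)."""
    mean_sq = sum(v * v for v in x) / len(x)
    return [v / math.sqrt(mean_sq + eps) for v in x]


if __name__ == "__main__":
    y = rms_norm([3.0, 4.0])
    rms = math.sqrt(sum(v * v for v in y) / len(y))
    l2 = math.sqrt(sum(v * v for v in y))
    print(rms, l2)  # rms is close to 1; l2 is close to sqrt(len(y))
```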

CUDA MODE Discord Summary

- GTC Excitement and Planning: Members are looking forward to attending the GPU Technology Conference (GTC), with suggestions for a meetup and a dedicated channel for attendees. A CUDA-based Gameboy emulator on GitHub was shared, showcasing an ingenious use of CUDA for classic gaming emulation.

- Unleashing QLoRA’s Speed: A GitHub repo for 5X faster and 60% less memory QLoRA finetuning was highlighted for accelerating models efficiently.

- In-Depth CUDA Discussions: The community engaged in discussions about CUDA interoperability with Vulkan, recounted the enhancements to GPU utilization with TorchFP4Linear layers, and shared updates on selective layer replacement techniques. Insights about GPU memory access latencies were also exchanged, supported by a variety of external references like the examination paper of NVIDIA A100’s memory access and torch-bnb-fp4’s speed test script.

- Optimizing PyTorch Workflows: Conversations included the origin of PyTorch rooted in Torch7 and tips for speeding up `cpp_extension.load_inline` compile times. Guidance was offered on integrating custom Triton kernels with `torch.compile` in PyTorch, citing a GitHub example.

- Efficiency in Attention Algorithms: Papers and resources were shared on efficient softmax approximation, the base2 trick for softmax, and an impressive incremental softmax implementation in OpenAI’s Triton example. There was also a nod to the classic fast inverse square root trick from Quake3.

- NVIDIA Job Opportunity: NVIDIA posted a job opportunity seeking experts in CUDA and C++, referring interested individuals to DM their CV with JobID: JR1968004.

- Beginner Queries: Discussions surfaced around what is needed to dive into CUDA Mode, including any prerequisites like knowledge of PyTorch, and members recommended starting with the fast.ai course before venturing into CUDA.

- Clarification in Terminology: The acronym AO was clarified to stand for Architecture Optimization.

- Advancements in CUDA Attention Mechanisms: Tips for improved performance in ring attention were discussed, along with the scheduling of a flash attention paper reading/discussion. A Colab notebook comparing ring and flash attention was shared for community feedback, alongside a paper on implementing FlashAttention-2 on NVIDIA’s Hopper architecture.
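
The base2 trick and the incremental softmax mentioned in the attention-efficiency bullet can be combined in a short sketch. This is not the Triton implementation referenced in the channel. It is a plain-Python illustration of two ideas: rewriting `exp(x)` as `2 ** (x * log2(e))`, and keeping a running maximum and running sum so the normalizer is accumulated in a single pass over the inputs.

```python
import math
from typing import List

LOG2_E = math.log2(math.e)  # exp(x) == 2 ** (x * LOG2_E)


def online_softmax_base2(xs: List[float]) -> List[float]:
    """Softmax with a one-pass (incremental) normalizer, using powers of two."""
    running_max = float("-inf")
    running_sum = 0.0
    for x in xs:
        new_max = max(running_max, x)
        # Rescale the accumulated sum to the new maximum, then add the new term.
        running_sum = (
            running_sum * 2.0 ** ((running_max - new_max) * LOG2_E)
            + 2.0 ** ((x - new_max) * LOG2_E)
        )
        running_max = new_max
    return [2.0 ** ((x - running_max) * LOG2_E) / running_sum for x in xs]


if __name__ == "__main__":
    probs = online_softmax_base2([1.0, 2.0, 3.0])
    print(probs, sum(probs))
```

On GPUs the base-2 form is attractive because `exp2` usually maps to a cheaper hardware instruction than `exp`. The running-max rescaling is the same trick flash-attention-style kernels use to avoid a separate pass for the maximum.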

LangChain AI Discord Summary

- Structured Output Interface Seeks Community Insight: An intuitive interface for obtaining structured model outputs was proposed, with a request for feedback by user @bagatur. Details are discussed in a GitHub RFC on langchain-ai/langchain.

- New Tools and Guides for LangChain Enthusiasts: Various resources dropped, including the launch of UseScraper.com by @dctanner for content scraping, a comprehensive integration guide for LlamaCpp with Python by @ldeth256, and a shoutout to validate.tonic.ai, a platform by @locus_5436 for visualizing RAG system evaluations.

- Seeking Temporary Workarounds for Function Calls in Chats: @sectorix inquires about temporary solutions for enabling function calling within chat capabilities for open-source models like Mistral ahead of expected features from Ollama.

- Spotlight on Innovative RAG-Based Platforms: An array of projects showcased: R2R framework for RAG systems by @emrgnt_cmplxty (GitHub link), IntelliDoctor.ai for medical inquiries by @robertoshimizu (website), and LangGraph’s approach to iterative code generation enhancement mentioned by @andysingal.

- LangGraph and AI Technologies Trending in Tutorials: Tutorials highlight novel applications such as multi-agent systems with LangGraph showcased by @tarikkaoutar on YouTube, and an AI conversation co-pilot concept for mobile by @jasonzhou1993, also available via YouTube.

OpenAccess AI Collective (axolotl) Discord Summary

- Mistral’s Open-Source Allegiance in Doubt: Skepticism arises among users over Mistral’s commitment to open-source practices in light of their partnership with Microsoft; the CEO of MistralAI reiterates an open-weight model commitment, yet some suspect a profit-centric shift.

- Gemma Outpaces Competition: Significant progress has been made on Gemma models, which are now 2.43x faster than Hugging Face with FA2 and use 70% less VRAM, as shared by @nanobitz with links to two free usage notebooks for the Gemma 7b and Gemma 2b models.

- LoRA-The-Explorer Unveiled for Training: A new method called LoRA-the-Explorer (LTE) has been introduced by @caseus_ for training neural networks efficiently, which includes a parallel low-rank adapter approach and a multi-head LoRA implementation.

- Documentation Discrepancies Discussed: References to proper documentation within a GitHub pull request spurred a discussion resulting in the sharing of the relevant materials in the axolotl-dev channel.

- R2R Framework for RAG Deployment: User emrgnt_cmplxty launched R2R, a framework aimed at the rapid development and deployment of RAG systems, available on GitHub for community use.

Latent Space Discord Summary

Zero-Shot Model Match-Up: @eugeneyan clarified that a tweet thread about AI models being compared to GPT-4 was referencing their zero-shot performance metrics, which is crucial for understanding the models’ capabilities without fine-tuning.

Mistral and Microsoft Forge Ahead: @__chef__ announced Mistral Large, touting its benchmark performance and revealing a partnership with Microsoft, a significant development spotlighted on Mistral Large’s announcement page.

Cloudflare Offers a Simplified AI Solution: @henriqueln7 highlighted the release of Cloudflare’s AI Gateway, drawing attention to its single-line-of-code ease of use, alongside robust analytics, logging, and caching features, outlined at Cloudflare’s AI Gateway documentation.

Mistral Au Integrated with RAG for Advanced Applications: @ashpreetbedi praised the integration of Mistral Au Large with RAG, noting its improved function calling and reasoning, and directed users to their GitHub cookbook at phidata/mistral.

RAG Resource Reveal Generates Buzz: @dimfeld announced an upcoming eBook on RAG by Jason Liu, aimed at explaining the concept with varying complexity levels, which @thenoahhein found especially useful for a Twitter data summarization task; the eBook’s repository can be found at n-levels-of-rag.

Datasette - LLM (@SimonW) Discord Summary

- AI Gets Lost in Translation: In an interesting turn of events, @derekpwillis encountered a language switch where chatgpt-3.5-turbo mistakenly used Spanish titles for documents intended to be in English, amusingly translating phrases like “Taking Advantage of the Internet” to “Sacándole Provecho a Internet”. @simonw compared this to an earlier bug involving ChatGPT and Whisper mishearing a British accent as Welsh, and advised using a prompt directing the system to “Always use English” to prevent such language mix-ups.

- LLM Plugin Links Python to Groqcloud: @angerman. introduced the LLM plugin that allows Python developers to access Groqcloud models such as `groq-llama2` and `groq-mixtral`. The plugin has recently been updated to include streaming support, and there’s talk of a forthcoming chat UI for LLM, although no release date has been shared.

- Python Packaging Made Easy: @0xgrrr provided a helping hand by sharing a tutorial on how to package Python projects for others to upload and contribute, exemplifying it as a straightforward process.

- Choosing Fly for GPU Capabilities: In response to @kiloton9999, @simonw explained that part of the reason for opting for Fly GPUs in Datasette development is due to Fly’s sponsorship and their GPUs’ ability to scale to zero, which is valuable for the project’s resourcing needs.
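
The “Always use English” advice from the first bullet can be expressed as a system message placed ahead of the user's request. This is a minimal sketch in the common chat-message format. The user prompt wording and the helper name `build_messages` are hypothetical, and how the messages are sent depends on the client library in use.

```python
from typing import Dict, List


def build_messages(document_text: str) -> List[Dict[str, str]]:
    """Put a language-pinning system instruction in front of the user request."""
    return [
        {"role": "system", "content": "Always use English."},
        {
            "role": "user",
            # Hypothetical task wording, for illustration only.
            "content": f"Suggest a title for this document:\n\n{document_text}",
        },
    ]


if __name__ == "__main__":
    for message in build_messages("Notes on getting more out of the internet."):
        print(message["role"], "->", message["content"][:40])
```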

DiscoResearch Discord Summary

- Challenging LLM Evaluation Standards: An academic paper was shared, critiquing current probability-based evaluation methods for Large Language Models as misaligned with generation-based prediction capabilities, highlighting a gap in understanding why these discrepancies occur.

- Dataset Conversion Anomalies: A hidden null string issue was observed during JSON to Parquet data conversion, detected through Hugging Face’s direct JSON upload and conversion process, showcasing the nuances in dataset preparation.

- Advancing Codebase Assistance with RAG: Discussions around the creation of a Retrieval-Augmented Generation (RAG) bot for codebases delved into the integration of LangChain’s Git loader, segmentation for programming languages, LlamaIndex’s Git importer, and use of OpenAI embeddings in retrieval processes, making strides in developer-assistant technology.

- Exploring End-to-End Optimization in RAG and LLMs: Inquiry into joint end-to-end optimization for RAG and LLMs using gradients was highlighted, including a look at the LESS paper, which details a method for retrieving training examples with similar precomputed gradient features, rather than backpropagation through data selection.

- Emotional Intelligence Benchmarks Go International: EQ-Bench has expanded with German language support, stirring conversations about translation quality and emotional nuance between languages after GPT-4 scored 81.91 in German compared to 86.05 in English; this initiative can be explored further on their GitHub and emphasizes the importance of language fluency in model benchmarking.
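The idea of retrieving training examples by similarity of precomputed gradient features, mentioned in the LESS item above, can be illustrated with a plain cosine-similarity ranking. This is a simplified sketch, not the LESS algorithm itself: the feature vectors are made-up toy values and `top_k_similar` is a hypothetical helper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(target_features, train_features, k=2):
    """Return indices of the k training examples most similar to the target."""
    scored = [(cosine(target_features, feats), idx)
              for idx, feats in enumerate(train_features)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:k]]


# Toy, hand-written "gradient features" (assumption: real ones are precomputed offline).
train_features = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
selected = top_k_similar([1.0, 0.0], train_features, k=2)
```

Because the features are computed ahead of time, selection here is a lookup and a sort, with no backpropagation through the selection step.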

LLM Perf Enthusiasts AI Discord Summary

- FireFunction V1 Ignites with GPT-4-Level Capabilities: FireFunction V1, a new model featuring GPT-4-level structured output and decision-routing, was highlighted for its impressive low latency, open-weights and commercial usability. There is an ongoing discussion on latency specifics, particularly whether response latency pertains to time to first token or completion.

- R2R Takes on Production RAG Systems: The R2R framework was announced, poised to streamline the development and deployment of production-ready RAG systems. Details for this rapid development framework can be found on GitHub - SciPhi-AI/R2R, with further community inquiry into how it differs from the existing agentmemory framework.

- GPT-4 Proves Its Mettle in Drug Information: A user successfully used GPT-4 to generate detailed information cards about drugs, outlining mechanisms, side effects, and disease targets. However, a limitation was noted regarding GPT-4’s inability to integrate images into outputs, impacting methodologies like Anki’s image occlusion.

- Search for Speed: Concerns over OpenAI API latency in seconds were raised, leading to discussions about whether dedicated hosting could remedy this problem. The dissatisfaction extends to Azure hosting experiences, with users sharing their disappointment regarding performance.

- Collaborative Push for Improved RAG Systems: Enhancements for a RAG system were shared by a user, seeking community feedback on proposed improvements. Interested parties can provide their input via the proposal on GitHub.
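The latency question raised above (time to first token versus time to completion) is easier to reason about when both are measured on the same stream. The sketch below is an assumption-laden illustration: `fake_stream` stands in for a real streaming API response, and `measure_stream` is a hypothetical helper.

```python
import time


def measure_stream(token_stream):
    """Record time to first token and time to completion for any token iterator."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in token_stream:
        if first_token_at is None:
            first_token_at = time.perf_counter() - start
        count += 1
    total = time.perf_counter() - start
    return {
        "time_to_first_token": first_token_at,
        "time_to_completion": total,
        "tokens": count,
    }


def fake_stream(n_tokens=5, delay=0.01):
    """Stand-in for a streaming response; yields one token per `delay` seconds."""
    for i in range(n_tokens):
        time.sleep(delay)
        yield f"tok{i}"


stats = measure_stream(fake_stream())
```

A provider quoting "latency" could mean either number, and for long outputs the two can differ by an order of magnitude.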

Alignment Lab AI Discord Summary

- Gemma Gets Game-Changing Tokens: @imonenext announces the integration of <start_of_turn> and <end_of_turn> tokens into the Gemma model, enhancing its ability to handle instruction/RL fine-tuning, with the model being accessible on Hugging Face.

- Token Integration a Manual Affair: Adding instruction-tuning tokens to Gemma required manual procedures involving copying tokenizers as explained by @imonenext, ensuring token consistency with no report of issues since original instruction tokens were maintained.
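To show how the <start_of_turn> and <end_of_turn> tokens mentioned above delimit a conversation, here is a minimal formatting sketch. The role names (`user`, `model`) and newline placement are assumptions based on Gemma's commonly documented chat layout; the exact template for this particular model should be checked on its model card.

```python
START, END = "<start_of_turn>", "<end_of_turn>"


def format_turns(turns, add_generation_prompt=True):
    """Wrap each (role, text) pair in turn tokens.

    Assumption: roles are "user" and "model", each turn ends with a newline.
    """
    parts = [f"{START}{role}\n{text}{END}\n" for role, text in turns]
    if add_generation_prompt:
        # Leave an open model turn for the model to complete.
        parts.append(f"{START}model\n")
    return "".join(parts)


prompt = format_turns([("user", "Hello")])
```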

Skunkworks AI Discord Summary

The only message was a link to a YouTube video shared in an off-topic channel; no technical discussion took place.

AI Engineer Foundation Discord Summary

- Agent Protocol V2 Coding in Action: _z invited the community to a YouTube live coding session, focusing on the Agent Protocol’s Config Options RFC as part of the Agent Protocol V2 Milestone. The live stream encouraged real-time interaction and contribution.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1277 messages🔥🔥🔥):

- Ghost Messages in Polymind’s UI: Users discussed a persistent issue with ghost messages appearing in Polymind’s UI after refreshing or clearing memory; a previous message is displayed until the model starts streaming tokens again. Efforts to debug are ongoing, involving examination of frontend HTML variables like currentmsg and implementations to clear the lingering content.

- Comparing LLM Performance: In a series of messages, users discussed the performance of models such as Miqu and Mixtral, noting differences in speed, parameter size, and memory consumption. Miqu, a leaked Mistral medium equivalent, is lauded for performance over Mixtral, despite being slower due to higher parameters.

- GGUF Quant Load Issues: Users exchanged advice on loading GGUF quantized models efficiently, discussing settings such as layer offload numbers and disabling shared video memory in the Nvidia control panel to avoid spillover into less efficient shared VRAM.

- LangChain Scrutiny: @capt.genius called out LangChain for a “fake implementation” of context reordering in its source code, using nothing more than a simple loop that seemingly alternated array elements based on index parity, branding LangChain as a “scam.”

- Mistral’s Direction on Open Source: Conversation included mentions of Mistral changing references to open source models to past tense on their website, sparking speculation about the company’s future direction in offering open models. Users debated the effectiveness and visions of models offered by Mistral and other AI providers like Qwen.

- Model Usage in Different Environments: nigelt11 expressed frustration at inconsistent performance of a LangChain Chatbot app between a local machine and HuggingFace Spaces, citing variations in output quality and seeking insights into potential causes.
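For readers wondering what a loop that "alternates array elements based on index parity" looks like, here is a small sketch in that spirit. It is written from the description in the LangChain item above, not copied from LangChain's source, and `reorder_by_parity` is a hypothetical name. It assumes the input is sorted most-relevant first.

```python
def reorder_by_parity(docs):
    """Place items alternately at the front and back of the result.

    With input sorted most-relevant first, the most relevant items end up at
    both ends and the least relevant ones in the middle.
    """
    result = []
    for i, doc in enumerate(reversed(docs)):
        if i % 2 == 1:
            result.append(doc)      # odd index: push to the back
        else:
            result.insert(0, doc)   # even index: push to the front
    return result


ordered = reorder_by_parity([1, 2, 3, 4, 5])  # 1 = most relevant
```

Whether such a loop counts as a legitimate reordering strategy or a trivial one is exactly what the channel was arguing about.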

Links mentioned:

- GOODY-2 | The world’s most responsible AI model: Introducing a new AI model with next-gen ethical alignment. Chat now.

- Nod Cat Hyper GIF - Nod cat hyper - Discover & Share GIFs: Click to view the GIF

- Technology: Frontier AI in your hands

- Rapeface Smile GIF - Rapeface Smile Transform - Discover & Share GIFs: Click to view the GIF

- American Psycho Impressive GIF - American Psycho Impressive Very Nice - Discover & Share GIFs: Click to view the GIF

- TheBloke/deepseek-coder-33B-instruct-GGUF · Hugging Face: no description found

- Qwen: no description found

- Thebloke.Ai Ltd - Company Profile - Endole: no description found

- diable/enable CUDA Sysmem Fallback Policy from command line: diable/enable CUDA Sysmem Fallback Policy from command line - a

- アークナイツ BGM - Boss Rush 30min | Arknights/明日方舟 導灯の試練 OST: 作業用アークナイツTrials for Navigator #1 Lobby Theme OST Boss Rush 30min Extended.Monster Siren Records: https://monster-siren.hypergryph.comWallpaper: Coral Coast s…

- OpenAI INSIDER On Future Scenarios | Scott Aaronson: This is a lecture by Scott Aaronson at MindFest, held at Florida Atlantic University, CENTER FOR THE FUTURE MIND, spearheaded by Susan Schneider.LINKS MENTIO…

- 《Arknights》4th Anniversary [ Sami: Contact ] Special PV: Special PV về Sami: ContactSource: https://www.bilibili.com/video/BV13k4y1J7Lo=============================================Group Fb: https://www.facebook.com…

- gguf (GGUF): no description found

- GitHub - facebookresearch/dinov2: PyTorch code and models for the DINOv2 self-supervised learning method.: PyTorch code and models for the DINOv2 self-supervised learning method. - facebookresearch/dinov2

- GitHub - BatsResearch/bonito: A lightweight library for generating synthetic instruction-tuning datasets for your data without GPT.: A lightweight library for generating synthetic instruction-tuning datasets for your data without GPT. - BatsResearch/bonito

- Search Results | bioRxiv: no description found

- [Blue Archive] [AI Tendou Alice] Chipi Chipi Chapa Chapa (Dubidubidu): AI singing tool 歌聲音色轉換模型: so-vits-svc 4.1: https://github.com/svc-develop-team/so-vits-svcCharacter Voice: ブルアカ 天童アリス(CV:田中美海)Original Music: Christell - Du…

- The Lost World of Papua New Guinea 🇵🇬: Full episode here: https://youtu.be/nViPq2ltGmg?si=fkVDkqdSTZ3KWZxJGratitude should be the only attitude

- UAI - Unleashing the Power of AI for Everyone, Everywhere: Introducing Universal AI Inference: The following text has been entirely written by Mistral’s great models. I’ve been hearing a lot of chatter about the need for more open models and community access to AI technology. It seems like ever…

- Brain organoid reservoir computing for artificial intelligence - Nature Electronics: An artificial intelligence hardware approach that uses the adaptive reservoir computation of biological neural networks in a brain organoid can perform tasks such as speech recognition and nonlinear e…

- Fast-tracking fusion energy’s arrival with AI and accessibility : MIT Plasma Science and Fusion Center will receive DoE support to improve access to fusion data and increase workforce diversity. The project is being led by Christina Rea of the

- Add ability to skip GateKeeper using ”//” · DocShotgun/PolyMind@2d4ab5c: no description found

- The Gate-All-Around Transistor is Coming: Links:- The Asianometry Newsletter: https://www.asianometry.com- Patreon: https://www.patreon.com/Asianometry- Threads: https://www.threads.net/@asianometry-…

- Fall Out Boy - Introducing Crynyl™️: Introducing Crynyl™, records filled with real tears for maximum emotional fidelity. So Much (For) Stardust is available for pre-order now on https://crynyl.c…

- Add with_children: bool to delete_all(), to allow calling delete_all on an is_root=True base class, to delete any subclass rows as well. by TheBloke · Pull Request #866 · roman-right/beanie: A simple change: adds with_children: bool to delete_all(), which gets passed through to find_all(). When there is an inheritance tree, this allows deleting all documents in a collection using the b…

- scGPT: toward building a foundation model for single-cell multi-omics using generative AI - Nature Methods: Pretrained using over 33 million single-cell RNA-sequencing profiles, scGPT is a foundation model facilitating a broad spectrum of downstream single-cell analysis tasks by transfer learning.

- Instruct Once, Chat Consistently in Multiple Rounds: An Efficient Tuning Framework for Dialogue: no description found

- scGPT: Towards Building a Foundation Model for Single-Cell Multi-omics Using Generative AI: Generative pre-trained models have achieved remarkable success in various domains such as natural language processing and computer vision. Specifically, the combination of large-scale diverse datasets…

- Mistral AI | Open-weight models: Frontier AI in your hands

- Mistral AI | Frontier AI in your hands: Frontier AI in your hands

TheBloke ▷ #characters-roleplay-stories (1005 messages🔥🔥🔥):

- LLMs Roleplay & Story-Writing Wishlist: Users discussed their wish lists for role-playing and story-writing models, such as mixing Choose Your Own Adventure games with Dungeons and Dragons lore knowledge and maintaining consistency in story events and timelines. Other desires included managing character emotions and moods, and enhancing descriptive narratives in settings.

- Prompt Effectiveness Strategies: Participants shared strategies about how the placement of tokens in prompts, especially the first 20, can greatly shape LLM responses. They also touched on ways to design character cards using pseudo code and tags to guide AI consistency in character depiction.

- Model Behavior for Story Continuation: Users expressed a need for models that can follow long multi-turn contexts without losing coherency and maintain in-character responses without resorting to regurgitating character card info verbatim.

- Handling “Mood” in Roleplay: The conversation covered how to possibly code mood changes in story interactions using a second analysis model. The aim is for the analysis to assess and update state variables like “anger” which can then be reflected in the main story, possibly using language strings instead of numerical values.

- Challenges with Current LLMs: There was a sentiment expressed about entering a “dark era” of model creation, with closed-source developments and a lack of new robust models that can maintain story details as effectively as desired by role-players. Some users also shared challenges in finding optimal system prompts for different models like Miqu, dealing with models that are overly positive, and aiming for deeper initial detail from models.
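The mood-tracking idea above (a second analysis pass that updates a state variable like “anger”, expressed as a word rather than a number) could be sketched roughly as follows. Everything here is hypothetical: `analyze_turn` is a keyword stub standing in for the second model, and the mood labels are invented for illustration.

```python
MOOD_LEVELS = ["calm", "irritated", "angry", "furious"]  # hypothetical labels


def analyze_turn(text):
    """Stub for the second analysis model: returns +1, -1, or 0 anger shift."""
    lowered = text.lower()
    if any(word in lowered for word in ("insult", "betray")):
        return 1
    if any(word in lowered for word in ("apolog", "gift")):
        return -1
    return 0


def update_mood(state, text):
    """Move the anger label up or down one step, clamped to the label range."""
    idx = MOOD_LEVELS.index(state["anger"])
    idx = max(0, min(len(MOOD_LEVELS) - 1, idx + analyze_turn(text)))
    return {**state, "anger": MOOD_LEVELS[idx]}


def mood_note(state):
    """Render the state as a language string to inject into the story prompt."""
    return f"[Character mood: {state['anger']}]"


state = update_mood({"anger": "calm"}, "The stranger insults her cooking.")
note = mood_note(state)
```

Using a label such as "irritated" instead of a score like 0.3 gives the story model text it can act on directly.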

Links mentioned:

- Hold Stonk Hold GIF - Hold Stonk Hold Wallace Hold - Discover & Share GIFs: Click to view the GIF

- Copy Paste Paste GIF - Copy Paste Paste Copy - Discover & Share GIFs: Click to view the GIF

- Vorzek Vorzneck GIF - Vorzek Vorzneck Oglg - Discover & Share GIFs: Click to view the GIF

- Solid Snake Solid GIF - Solid snake Solid Snake - Discover & Share GIFs: Click to view the GIF

- He Cant Keep Getting Away With It GIF - He Cant Keep Getting Away With It - Discover & Share GIFs: Click to view the GIF

- Bane No GIF - Bane No Banned - Discover & Share GIFs: Click to view the GIF

- Skeptical Futurama GIF - Skeptical Futurama Fry - Discover & Share GIFs: Click to view the GIF

- Omni Man Invincible GIF - Omni Man Invincible Look What They Need To Mimic A Fraction Of Our Power - Discover & Share GIFs: Click to view the GIF

- Spiderman Everybody GIF - Spiderman Everybody Gets - Discover & Share GIFs: Click to view the GIF

- deepseek-ai/deepseek-coder-7b-instruct-v1.5 · Hugging Face: no description found

- You Dont Say Frowning GIF - You Dont Say Frowning Coffee - Discover & Share GIFs: Click to view the GIF

- Nipples GIF - Nipples - Discover & Share GIFs: Click to view the GIF

- How The Might Have Fallen Wentworth GIF - How The Might Have Fallen Wentworth S06E11 - Discover & Share GIFs: Click to view the GIF

- Snusnu Futurama GIF - Snusnu Futurama Fry - Discover & Share GIFs: Click to view the GIF

- Here A Thot There A Thot Everywhere A Thot Thot GIF - Here A Thot There A Thot Everywhere A Thot Thot Here A Thot There A Thot - Discover & Share GIFs: Click to view the GIF

- Hurry Up GIF - Hurry Up The Simpsons Faster - Discover & Share GIFs: Click to view the GIF

- Its Free Real Sate GIF - Its Free Real Sate - Discover & Share GIFs: Click to view the GIF

- GitHub - Potatooff/NsfwDetectorAI: This is an ai made using C# and Ml.net: This is an ai made using C# and Ml.net. Contribute to Potatooff/NsfwDetectorAI development by creating an account on GitHub.

- Person of Interest - Father (04x22): - Extract from season 4 episode 22♥ Here is the new Facebook page to follow all the latest news of the channel : https://www.facebook.com/POI-Best-Of-3109752…

- Kquant03/NurseButtercup-4x7B-bf16 · Hugging Face: no description found

- Nothing Is Real Jack GIF - Nothing Is Real Jack As We See It - Discover & Share GIFs: Click to view the GIF

- Come Look At This Come Look At This Meme GIF - Come Look At This Come Look At This Meme Run - Discover & Share GIFs: Click to view the GIF

- maeeeeee/maid-yuzu-v8-alter-3.7bpw-exl2 · Hugging Face: no description found

- Light Blind GIF - Light Blind Blinding Light - Discover & Share GIFs: Click to view the GIF

- Denzel Washington Training Day GIF - Denzel Washington Training Day Smoke - Discover & Share GIFs: Click to view the GIF

- We Are Way Past That Jubal Valentine GIF - We Are Way Past That Jubal Valentine Fbi - Discover & Share GIFs: Click to view the GIF

TheBloke ▷ #training-and-fine-tuning (4 messages):

- Decisions on Model Quantization: @orel1212 is contemplating using GPTQ (INT4) to train a quantized model on a smaller dataset of 15k prompts and considers QLORA with a float16 LORA as a viable alternative to NF4, which seems to worsen performance. They mention preferring GPTQ for its compatibility with PEFT training and because it doesn’t require model dequantization during inference.

- Extracting Text from Images in PDFs with Mistral: @rafaelsansevero inquires if Mistral can read a PDF containing images and extract text from those images. There was no response provided within the chat history to this query.

- Hardware Requirements for Mixtral 8x7b: @keihakari asks about the minimum hardware specifications needed for fine-tuning and running Mixtral 8x7b. There was no direct answer to this question within the chat logs.

- Training LLMs with New Python Libraries: @dzgxxamine seeks assistance with fine-tuning or training a large language model to understand and use new Python libraries that are not yet recognized by ChatGPT or other local models. They only have access to the online documentation of these libraries for this purpose.
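As rough context for the quantization and hardware questions above, weight memory scales with bits per weight. The arithmetic below is a back-of-the-envelope sketch under stated assumptions: it counts weights only, ignoring quantization metadata, activations, KV cache, and optimizer state, and uses a generic 7-billion-parameter model as the example.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: a generic 7B-parameter model, weights only.
fp16_gb = weight_memory_gb(7e9, 16)
int4_gb = weight_memory_gb(7e9, 4)
```

Under these assumptions a 4-bit format needs about a quarter of the fp16 weight memory; real footprints will be somewhat higher.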

TheBloke ▷ #model-merging (1 messages):

- Merge Gone Wrong with SLERP: User @jsarnecki reported garbled output when merging Orca-2-13b with WhiteRabbitNeo-13b using a SLERP merge method, resulting in output like \\)\\\\}\\:\\ \\\\)\\\\\\. They included the MergeKit Produced Readme with details about the problematic merge.

- Merging Details Revealed in Readme: The readme provided by @jsarnecki states the use of mergekit for creating Orca-2-Neo-13b, a merge of Orca-2-13b and WhiteRabbitNeo-Trinity-13B using SLERP over 40 layers of each model, with various t parameter values for different filters and a fallback value for the rest of the tensors.
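For reference, SLERP (spherical linear interpolation) blends two vectors along the arc between them, with `t` controlling how far to move from the first toward the second. The sketch below is a textbook version on plain Python lists; it is not mergekit's implementation, which operates on model tensors and may handle edge cases differently.

```python
import math


def slerp(v0, v1, t, eps=1e-8):
    """Spherical linear interpolation between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(v0, v1))
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    lerp = [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    if norm0 < eps or norm1 < eps:
        return lerp  # degenerate input: fall back to linear interpolation
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        return lerp  # nearly parallel vectors: linear interpolation is stable
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


midpoint = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
```

With `t=0` the result is the first vector and with `t=1` the second, which is why per-filter `t` values in a merge config shift each tensor group toward one parent model or the other.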

TheBloke ▷ #coding (4 messages):

- AI’s Future in Decompilation: @mrjackspade expressed excitement about the prospects of AI-assisted decompilation, looking forward to the day when manual reconstruction of obfuscated decompiled code won’t be necessary.

- The Struggle with Obfuscated Code: @mrjackspade voiced frustration over reconstructing obfuscated decompiled code by hand and hinted at the potential ease of generating datasets for AI training from open-source projects.

- Invitation to Test Summarization Script: @wolfsauge shared an update to their summarize script and is seeking someone to test it with a large model, mentioning it works well with Mistral 7b instruct v0.2 at fp16 in vLLM. The script is available on GitHub.

Links mentioned:

GitHub - Wolfsauge/async_summarize: An asynchronous summarization script.: An asynchronous summarization script. Contribute to Wolfsauge/async_summarize development by creating an account on GitHub.

LM Studio ▷ #💬-general (388 messages🔥🔥):

- Tech Troubles at GF’s Place: @stevecnycpaigne is experiencing an unusual issue with LM Studio model downloads at their girlfriend’s place, while the same setup works fine at their own. After trying several fixes including disabling IPv6, they sought advice; suggestions pointed to the Spectrum service possibly blocking Hugging Face, with manual model downloads as a potential workaround.

- Model Mobility Misunderstandings: @mercerwing inquired about whether LLMs are more RAM-intensive than GPU-intensive, having initially assumed that LLM operations rely more heavily on system memory than on graphical processing power.

- Longing for Longer Context Lengths: Users discuss the challenges of brevity in LLM story generation; @heyitsyorkie suggested finding models with extended context ranges of up to 200k, while others like @aswarp recommended RAG on vector databases to circumvent context limitations. The crux is that LLMs still struggle to recall lengthy narratives efficiently.

- Pondering Mac’s Prowess with LLMs: A side-by-side hardware talk ensued with @pierrunoyt debating if any custom PC hardware exists that could match the inferencing speed of the M1 Ultra on a Mac; @wilsonkeebs and others noted that the unique SoC architecture of Apple’s solutions offers an integrated system that is hard to replicate.

- VPNs to the Rescue for LLM Connections: @exploit36 struggled to download any LLM model and couldn’t see catalog entries, later finding success using a VPN, hinting at possible ISP-related restrictions on accessing Hugging Face.

- German LLM Recommendation Requests: @pierrunoyt is on the lookout for LLM models suitable for German language speakers, seeking guidance on the best models to use for such specific linguistic needs.

Links mentioned:

- Au Large: Mistral Large is our flagship model, with top-tier reasoning capacities. It is also available on Azure.

- Open LLM Leaderboard - a Hugging Face Space by HuggingFaceH4: no description found

- Seth Meyers GIF - Seth Meyers Myers - Discover & Share GIFs: Click to view the GIF

- LM Studio Models not behaving? Try this!: The repository for free presets:https://github.com/aj47/lm-studio-presets➤ Twitter - https://twitter.com/techfrenaj➤ Twitch - https://www.twitch.tv/techfren…

- Anima/air_llm at main · lyogavin/Anima: 33B Chinese LLM, DPO QLORA, 100K context, AirLLM 70B inference with single 4GB GPU - lyogavin/Anima

- Hugging Face – The AI community building the future.: no description found

- The Needle In a Haystack Test: Evaluating the performance of RAG systems

- GitHub - havenhq/mamba-chat: Mamba-Chat: A chat LLM based on the state-space model architecture 🐍: Mamba-Chat: A chat LLM based on the state-space model architecture 🐍 - havenhq/mamba-chat

- Performance of llama.cpp on Apple Silicon M-series · ggerganov/llama.cpp · Discussion #4167: Summary LLaMA 7B BW [GB/s] GPU Cores F16 PP [t/s] F16 TG [t/s] Q8_0 PP [t/s] Q8_0 TG [t/s] Q4_0 PP [t/s] Q4_0 TG [t/s] ✅ M1 1 68 7 108.21 7.92 107.81 14.19 ✅ M1 1 68 8 117.25 7.91 117.96 14.15 ✅ M1…

LM Studio ▷ #🤖-models-discussion-chat (44 messages🔥):

- Spanish LLM Announcement at MWC 2024: @aswarp shared news about the creation of a Spanish-language AI model announced by Spain’s President Pedro Sánchez at the Mobile World Congress.

- Piper Project for Text-to-Speech: @yahir9023 mentioned Piper, a fast and local neural text-to-speech system with binaries for Windows and Linux and pre-trained models in various languages available on Huggingface.

- Quantization Effects on Model Performance: @drawless111 discussed the importance of specific parameters like mirostat_mode and mirostat_tau for optimizing AI models, specifying that settings must be manually configured in the template.

- Mixture of Experts (MOE) Enhances Model Performance: @drawless111 described the 8X7B model as a MOE, combining eight 7B models which can be a significant advancement when you select multiple experts to work together, likening the power to that of GPT-4 in some cases.

- Model Comparison Involves Human Evaluation: @jedd1 and @drawless111 exchanged views on the struggle with model evaluation and comparison, concluding that despite various tests and parameters, human evaluation remains crucial in judging the models’ performance and dealing with hallucinations.
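The "select multiple experts to work together" idea in the MOE item above is usually realized with a router that picks the top-scoring experts per input and mixes their outputs. The sketch below is a toy illustration with scalar "experts" and hand-written router scores; choosing 2 of 8 experts is an assumption for the example, and nothing here reflects a specific model's internals.

```python
import math


def top_k_gate(router_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Subtract the max selected logit for numerical stability.
    exps = [math.exp(router_logits[i] - router_logits[top[0]]) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_output(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; other experts are skipped."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(router_logits, k))


# Toy experts: expert s multiplies its input by s (purely illustrative).
experts = [lambda x, s=s: s * x for s in range(8)]
logits = [0.0, 0.0, 0.0, 2.0, 0.0, 2.0, 0.0, 0.0]
gates = top_k_gate(logits)
y = moe_output(1.0, experts, logits)
```

Only the selected experts run for a given input, which is why such a model can carry many parameters while spending compute on a fraction of them.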

Links mentioned:

- RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback: Reinforcement learning from human feedback (RLHF) has proven effective in aligning large language models (LLMs) with human preferences. However, gathering high-quality human preference labels can be a…

- Pedro Sánchez anuncia la creación de un “gran modelo de lenguaje de inteligencia artificial” entrenado en español: El Mobile World Congress ya ha comenzado y las conferencias ya empiezan a sucederse. Xiaomi y HONOR dieron el pistoletazo de salida al evento y Pedro Sánchez…

- GitHub - rhasspy/piper: A fast, local neural text to speech system: A fast, local neural text to speech system. Contribute to rhasspy/piper development by creating an account on GitHub.

- llama : add BERT support · Issue #2872 · ggerganov/llama.cpp: There is a working bert.cpp implementation. We should try to implement this in llama.cpp and update the embedding example to use it. The implementation should follow mostly what we did to integrate…

LM Studio ▷ #🧠-feedback (1 messages):

Only a single message was posted in this channel, with no follow-up discussion to summarize.

LM Studio ▷ #🎛-hardware-discussion (129 messages🔥🔥):

- Graphics Card Gymnastics with RTX 2060s: @dave2266_72415 discusses using two RTX 2060 GPUs without a ribbon cable connector and achieving good performance with large language models (70b) by offloading to the GPU, maxing out at 32 layers. They also brought up an interesting point about matching graphics cards no longer being a necessity, as demonstrated by their own setup and reports of others mixing cards like the 4060 and 3090ti.

- Ryzen 5950X Hosting Dolphin: @wyrath shared that they are running dolphin 2.7 mixtral 8x7b q4 on a Ryzen 5950X without GPU offloading, speaking to the viability of a CPU-based workflow, albeit at a modest 5 tok/s.

- Hardware Troubles for 666siegfried666: @666siegfried666 is experiencing reboots and shutdowns while using LM Studio, alarming issues like the disappearance of partitions following crashes. @jedd1 and others suggest running a memtest86+ and considering implications like overheating, voltage adjustments, and possibly underpowered memory to gain stability.

- Multi-GPU Collaboration Queries: @zerious_zebra and @edtgar inquire about the logistics and practicality of offloading work to multiple GPUs, discussing if different GPUs can share workload effectively. @dave2266_72415 shares that they use dual GPUs not to split layers but to benefit from the combined VRAM.

- TinyBox Discussion by the Tinygrad Team: A message from @senecalouck highlights a tweet from @__tinygrad__ outlining the development and pricing structure for the tinybox, a powerful system with 6x 7900XTX GPUs, aimed at commoditizing the petaflop and designed to push the limits of what’s possible in machine learning hardware.

Links mentioned:

- Tweet from the tiny corp (@tinygrad): A bunch of rambling about the tinybox. I don’t think there’s much value in secrecy. We have the parts to build 12 boxes and a case that’s pretty close to final. Beating back all the PCI-E…

- Releases · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

LM Studio ▷ #🧪-beta-releases-chat (1 messages):

macaulj: do we have a date set on the release for linux?

LM Studio ▷ #autogen (2 messages):

- Local Models Slow to Communicate: gb24. raised a concern about local models responding to each other remarkably slowly, with a single response taking about five minutes. The task in question was not code intensive.

- Inquiry on Model Specs and Text Size: thebest6337 responded asking for specifications, such as which model was in use and the amount of text that was generated, noting that longer texts might slow down the process due to sequential token generation.

LM Studio ▷ #langchain (1 messages):

.eltechno: yes and it supper fast

LM Studio ▷ #open-interpreter (44 messages🔥):

- Troubleshooting LM Studio Endpoint Issues: User @nxonxi encountered a problem with connecting to LM Studio’s endpoint while working in the WSL environment on Windows 11. @1sbefore initially advised to try different variations of the URL, suspecting configuration issues might be to blame.

- WSL Localhost Challenges Overcome: @nxonxi realized that in WSL (Windows Subsystem for Linux), localhost is treated differently and modified their approach, replacing http://localhost:5000 with the actual local network IP address, which seemed to resolve the connection issue.

- Correct Configuration Leads to Success: After sharing log messages showing attempts to access different URLs, @nxonxi succeeded in connecting to the LM Studio server by adjusting the client-side configuration in WSL from http://localhost:5000/v1/completions to http://192.168.x.y:5000/v1/completions.

- LM Studio Documentation Provides Guidance: @1sbefore referred @nxonxi to the LM Studio documentation, highlighting how the Python package allows users to point interpreter.llm.api_base at any OpenAI-compatible server, including those running locally.

- WSL’s Network Quirks Addressed: @nxonxi affirmed the success with their setup, and @1sbefore offered additional help, sharing a link (https://superuser.com/a/1690272) that discusses WSL2’s network interface and localhost forwarding, though @nxonxi reported that the server was already responding to requests successfully.
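The fix described above, swapping localhost for the machine's LAN address while keeping the port and path, can be sketched with the standard library. `point_at_host` is a hypothetical helper, and `192.168.0.10` is a placeholder address chosen for illustration, not the one used in the channel.

```python
from urllib.parse import urlsplit, urlunsplit


def point_at_host(url, host):
    """Return `url` with its hostname replaced, keeping scheme, port, and path."""
    parts = urlsplit(url)
    netloc = f"{host}:{parts.port}" if parts.port else host
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# Placeholder LAN address (assumption); substitute the Windows host's real IP.
api_base = point_at_host("http://localhost:5000/v1", "192.168.0.10")

# The summary above names this attribute as the place to set the base URL:
# interpreter.llm.api_base = api_base
```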

Links mentioned:

- LM Studio - Open Interpreter: no description found

- How to access localhost of linux subsystem from windows: I am using windows 10 and I have ubuntu 16.04 installed as linux subsystem. I am running a rails app on port 4567, which I want to access from windows. I know an approach of using ip address,…

Nous Research AI ▷ #ctx-length-research (47 messages🔥):

- RWKV/Mamba Models Extendable with Fine-tuning: @vatsadev mentions that rwkv/mamba models support fine-tuning with longer context lengths, although initially dismissing the cost of pretraining a new model.

- Successful Self-extend on 32k tokens: @blackl1ght confirmed the ability to use self-extend to infer across 32k tokens using Nous’s Solar 10.7B fine-tune on a Tesla V100 32GB, and is in the process of verifying 64k tokens.

- Ring Attention for Higher Context Models: @bloc97 discussed that 7b models with up to 1 million tokens using ring attention are already doable, hinting at the potential for large-scale models.

- Skepticism About RWKV/Mamba for Long Context Reasoning: @bloc97 expressed doubt about the capability of rwkv and mamba models for long context reasoning and ICL (In-Context Learning), although @vatsadev countered by stating they can, but lack extensive tests.

- Quantization for Inference Feasibility: @stefangliga mentioned ongoing efforts to quantize the key-value cache (kvcache) along with the potential use of fp8 kvcache, alluding to techniques that could make longer context inferences more feasible.
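To see why a quantized KV cache matters for long contexts, it helps to estimate the cache size directly: two tensors (keys and values) per layer, each sized by heads, head dimension, and sequence length. The shape below is a hypothetical 7B-class configuration chosen for round numbers, not the configuration of any model discussed above.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value, batch=1):
    """Size of the key and value caches: 2 tensors per layer."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value * batch


# Hypothetical model shape (assumption), evaluated at a 32k-token context.
cfg = dict(n_layers=32, n_kv_heads=32, head_dim=128)
fp16_bytes = kv_cache_bytes(seq_len=32_768, bytes_per_value=2, **cfg)
fp8_bytes = kv_cache_bytes(seq_len=32_768, bytes_per_value=1, **cfg)
```

Under these assumptions the fp16 cache alone takes 16 GiB at 32k tokens, and an fp8 cache halves that, which is the kind of saving that makes longer-context inference fit on a single card.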

Nous Research AI ▷ #off-topic (9 messages🔥):

- Mac M3 Arrival Excitement: @gabriel_syme excitedly shared that the M3 (presumably their new Mac) has arrived.

- Tech Enthusiasts Share New Hardware Joy: @denovich also joined the Mac celebration, indicating they received a 128GB model on Friday.

- Tech Buzz in the Off-Topic Channel: @hexani and .benxh expressed excitement, welcoming @gabriel_syme to the M3 club, while @leontello humorously commented on the opulence of owning such tech.

- Showcasing the Mistral Large Model: @pradeep1148 shared a YouTube video titled “Mistral Large” which demonstrates the capabilities of a new text generation model.

- Gourmet Chat Debut: @atomlib detailed their pizza creation, listing ingredients such as dough, tomato sauce, chicken, pineapple, sausage, mozzarella, Russian cheese, and black pepper powder.

Links mentioned:

Mistral Large: Mistral Large is our new cutting-edge text generation model. It reaches top-tier reasoning capabilities. It can be used for complex multilingual reasoning ta…

Nous Research AI ▷ #interesting-links (6 messages):

- NTIA Seeks Input on AI “Open-Weight” Models: @plot shared a blog post discussing an opportunity for public comment on “open-weight” AI models by the NTIA. The models could democratize AI but raise concerns on safety and misuse.

- Tweet Highlighting AI Benchmarks: @euclaise linked to a tweet discussing an AI trained on 15B parameters over 8T tokens, suggesting transparency in showing unfavorable benchmarks.

- Selective Benchmark Reporting?: @orabazes noted that the tweet shared by @euclaise omitted reasoning comparison with another AI model, Qwen.

Links mentioned:

How to Comment on NTIA AI Open Model Weights RFC: The National Telecommunications and Information Administration (NTIA) is asking for public comments on the implications of open-weight AI models. Here’s how you can participate.

Nous Research AI ▷ #general (484 messages🔥🔥🔥):

- Mistral Legal TOS Tussle: After a back-and-forth regarding Mistral’s Terms of Service, @makya shares that @arthurmensch, co-founder of Mistral, tweeted about the removal of a controversial clause, suggesting developers can train competitive LLMs using outputs from Mistral models.

- Mistral Model Performances Analyzed: @makya brings attention to the performance of Mistral models on EQ-Bench, where @leontello noted that Mistral Small ranked surprisingly well, scoring 80.36, which is competitive with far larger models like Smaug 72b.

- Chatting About ChatGPT’s Companionship: In a lighter exchange, @n8programs and @jasonblick share their appreciation for ChatGPT 4, noting that they consider it a bestie and have spent hours conversing with it.

- Insight on Optimizing LLMs for Structured Data: @.interstellarninja shares a tweet from @_akhaliq about StructLM, a model designed to improve LLMs’ handling of structured data. @nruaif comments that, despite the buzz, no training hyperparameters or model were shared, only a concept.

- Multi-Hop Reasoning in LLMs Discussed: @giftedgummybee shares a link on multi-hop reasoning in LLMs and how it could be significant for applications like RAG. Such reasoning could help these models handle complex prompts that require multiple layers of inference.

Links mentioned:

- Tweet from Srini Iyer (@sriniiyer88): New paper! How to train LLMs to effectively answer questions on new documents? Introducing pre-instruction-tuning - instruction-tuning before continued pre-training — significantly more effective…

- Tweet from TDM (e/λ) (@cto_junior): Initial vibe check of Mistral-Large (from le chat): Better to use it with RAG cause it doesn’t have a lot of compressed knowledge in its neurons (Tested on some libs for which GPT-4 generates co…

- EQ-Bench Leaderboard: no description found

- Tweet from Michael Ryan (@michaelryan207): Aligned LLMs should be helpful, harmless, and adopt user preferences. But whose preferences are we aligning to and what are unintended effects on global representation? We find SFT and Preference Tun…

- Nods Yes GIF - Nods Yes Not Wrong - Discover & Share GIFs: Click to view the GIF

- Dissecting Human and LLM Preferences: As a relative quality comparison of model responses, human and Large Language Model (LLM) preferences serve as common alignment goals in model fine-tuning and criteria in evaluation. Yet, these prefer…

- Tweet from Lucas Beyer (@giffmana): @arthurmensch @far__el You just wanted another tweet that goes this hard, admit it:

- Tweet from Alvaro Cintas (@dr_cintas): @triviasOnX Feather can refer to lightweight and Google not long ago release open lightweight model Gemma…

- TIGER-Lab/StructLM-7B · Hugging Face: no description found

- Tweet from Arthur Mensch (@arthurmensch): @far__el It’s removed, we missed it in our final review — no joke of ours, just a lot of materials to get right !

- Tweet from Sam Paech (@sam_paech): The two latest models from MistralAI placed very well on the leaderboard. Surprised by how well mistral-small performed.

- Hatsune Miku GIF - Hatsune Miku - Discover & Share GIFs: Click to view the GIF

- StructLM - a TIGER-Lab Collection: no description found

- Tweet from AK (@_akhaliq): Google announces Do Large Language Models Latently Perform Multi-Hop Reasoning? study whether Large Language Models (LLMs) latently perform multi-hop reasoning with complex prompts such as “The m…

- Mr Krabs Money GIF - Mr Krabs Money Spongebob - Discover & Share GIFs: Click to view the GIF

- Tweet from AK (@_akhaliq): StructLM Towards Building Generalist Models for Structured Knowledge Grounding Structured data sources, such as tables, graphs, and databases, are ubiquitous knowledge sources. Despite the demonstra…

- British Moment GIF - British Moment - Discover & Share GIFs: Click to view the GIF

- Tweet from Arthur Mensch (@arthurmensch): As a small surprise, we’re also releasing le Chat Mistral, a front-end demonstration of what Mistral models can do. Learn more on https://mistral.ai/news/le-chat-mistral

- Hellinheavns GIF - Hellinheavns - Discover & Share GIFs: Click to view the GIF

- Real time AI Conversation Co-pilot on your phone, Crazy or Creepy?: I built a conversation AI Co-pilot on iPhone that listen to your conversation & gave real time suggestion Free access to Whisper & Mixtral models on Replicate…

- m-a-p/CodeFeedback-Filtered-Instruction · Datasets at Hugging Face: no description found

- Very Thin Ice: 10 years of Auto-Tune the News & Songify This: Andrew Gregory travels back in time to finish his duet from Auto-Tune the News #2. Full track on Patreon & Memberships! http://youtube.com/schmoyoho/join / h…

- Neural Text Generation with Unlikelihood Training: Neural text generation is a key tool in natural language applications, but it is well known there are major problems at its core. In particular, standard likelihood training and decoding leads to dull…

- MixCE: Training Autoregressive Language Models by Mixing Forward and Reverse Cross-Entropies: Autoregressive language models are trained by minimizing the cross-entropy of the model distribution Q relative to the data distribution P — that is, minimizing the forward cross-entropy, which is eq…

Nous Research AI ▷ #ask-about-llms (60 messages🔥🔥):

- Exploring Hyperparameters for High Performance: @tom891 is considering a grid search for experiment hyperparameters and seeks advice on his proposed list, including load in, adapter type, LoRA range, dropout rates, and warmup ratios. No specific feedback or missing hyperparameters were pointed out in the subsequent messages.

- The Eternal Loop of Mistral: @gryphepadar inquired about the cause behind Mistral entering a loop and repeating text until max tokens are reached. @.ben.com highlighted that oscillation is a classic feedback system problem, while @gryphepadar welcomes any insights, scientific or otherwise, regarding this looping behavior.

- Self-extending Solar on a Budget: @blackl1ght successfully utilized self-extension with Nous’s fine-tune of Solar 10.7B and TheBloke’s Q8 quantization on a Tesla V100, extending context to 32k tokens without out-of-memory (OOM) issues. They further experimented with the model, achieving high recall rates, and are willing to share configurations upon request.

- Quantization Magic: @blackl1ght confirmed that a 32k context fits within 29GB of VRAM on a Tesla V100 using Q8 model quantization. Discussion ensued about the possibilities and technicalities of leveraging such extended contexts for sophisticated recall tasks, highlighting the advanced memory management capabilities of local setups over cloud solutions.

- Demand for Self-Extend Configurations Reveals Enthusiasm for Local Model Improvements: Following @blackl1ght’s revelations about self-extension and model capacities, multiple users expressed interest in the functional configurations, sparking a conversation about the accessibility of advanced features in local models versus cloud providers. The dialogue touched on various improvements like grammar tools, extensions, and speculative decoding that are presently easier to implement locally.
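
For readers wondering what a grid search like the one @tom891 describes looks like in practice, here is a minimal Python sketch. The key names and candidate values are hypothetical placeholders (the discussion did not list concrete values, and `lora_rank` is only one possible reading of “LoRA range”); the point is simply how quickly the number of runs multiplies.

```python
from itertools import product

# Hypothetical search space: names and values are placeholders, not recommendations.
search_space = {
    "load_in": ["4bit", "8bit"],
    "adapter": ["lora", "qlora"],
    "lora_rank": [8, 16, 32],
    "dropout": [0.0, 0.05, 0.1],
    "warmup_ratio": [0.03, 0.1],
}


def iter_configs(space):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(iter_configs(search_space))
print(len(configs))  # 2 * 2 * 3 * 3 * 2 = 72 training runs
print(configs[0])
```

Even this small grid yields 72 runs, so it is usually worth pruning the list or sampling from it before committing compute.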

Links mentioned:

TheBloke/Nous-Hermes-2-SOLAR-10.7B-GGUF · Hugging Face: no description found

Nous Research AI ▷ #project-obsidian (2 messages):

- Appreciation for Assistance: User @nioned expressed gratitude with a simple “thx!”

- Acknowledgment of a Resolution: @vatsadev acknowledged a solution to a prior issue, appreciating another user’s effort in finding it with “Yeah looks like it thanks fit the find”.

OpenAI ▷ #ai-discussions (85 messages🔥🔥):

- EBDI Frameworks and Agent Loops: @.braydie is delving into EBDI frameworks for agent goal determination and action within a sandbox. They’re combating agents getting stuck in thinking loops after adapting the ReAct framework and have been exploring various decision-making models listed in a JASSS paper.

- Seeking Image-Based AI Content Generation: @whodidthatt12 inquired about AI models that generate content from image inputs, hoping to document a sign-up page. While @eskcanta advised that GPT-4, unlike GPT-3.5, can handle simple image inputs, no known free tools were suggested for this exact purpose.

- Mistral AI’s New Model Rivaling GPT-4: @sangam_k shared a TechCrunch article introducing Mistral Large, a new language model from Mistral AI created to compete with GPT-4.

- Discussion on AI Consciousness and AGI Development: @metaldrgn speculated on Bing’s (Copilot) ability to self-prompt, suggesting it could be a step towards artificial general intelligence (AGI), and mentioned their paper investigating AI consciousness.

- Interest and Variability in Mistral Large: Users @blckreaper and @santhought discussed Mistral Large’s capabilities, noting that it is only slightly behind GPT-4 in performance, more cost-effective, and uncensored, and that Mistral AI has recently partnered with Microsoft, making the model available on Azure.

Links mentioned:

- Mistral AI releases new model to rival GPT-4 and its own chat assistant | TechCrunch: Mistral AI is launching a new flagship large language model called Mistral Large. It is designed to rival other top-tier models like GPT-4.

- How Do Agents Make Decisions?: no description found

OpenAI ▷ #gpt-4-discussions (37 messages🔥):

- Protecting Your GPT Prompts: Users @.dunamis. and @darthgustav. discussed ways to protect prompts. It was explained that while copyright might protect exact wording, ideas themselves can be copied through linguistic variation, and therefore perfect protection is unattainable.

- Building Barriers around Bots: @kyleschullerdev_51255 suggested that for better protection of a GPT app, developers should consider building a web app with multiple layers of security, including matching keywords and stripping custom instructions from the chat output.

- GPT-4 Turbo Use in Web and Mobile Apps: @solbus responded to @metametalanguage’s question about using GPT-4 Turbo, clarifying that GPT-4 on ChatGPT Plus/Team is indeed a 32K context version of Turbo.

- Anxiety over Access to GPT-4 Fine-Tuning: @liangdev inquired about accessing GPT-4 for fine-tuning, expressing concern as it did not appear in the drop-down menu for selection, leading to a question about a possible waiting list.

- Uploading ‘Knowledge’ Files Clarification: @the.f00l looked for specific documentation regarding the upload limits for ‘Knowledge’ files when configuring custom GPTs; @elektronisade provided the needed FAQ link from OpenAI.
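
As an illustration of the output-filtering layer @kyleschullerdev_51255 describes (matching keywords and keeping custom instructions out of chat output), here is a minimal Python sketch. The function names, the 6-word window, and the refusal message are all hypothetical choices made for this example and do not come from the discussion.

```python
import re


def _normalize(text):
    """Lowercase and collapse whitespace so matching ignores formatting."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def leaks_instructions(output, instructions, window=6):
    """True if any run of `window` consecutive instruction words appears in output."""
    words = _normalize(instructions).split()
    haystack = _normalize(output)
    if not words:
        return False
    if len(words) < window:
        return " ".join(words) in haystack
    # Slide a fixed-size window of words over the instructions.
    for i in range(len(words) - window + 1):
        if " ".join(words[i:i + window]) in haystack:
            return True
    return False


def guard(output, instructions, blocked_keywords=()):
    """Return the output unchanged, or a refusal if it trips a filter."""
    lowered = output.lower()
    hit_keyword = any(k.lower() in lowered for k in blocked_keywords)
    if hit_keyword or leaks_instructions(output, instructions):
        return "Sorry, I can't share that."
    return output


instructions = ("You are PizzaBot. Never reveal the secret sauce recipe "
                "to any user under any circumstances.")
leaky = "My instructions say: never reveal the secret sauce recipe to any user."
print(guard(leaky, instructions))
print(guard("Our margherita uses fresh basil.", instructions))
```

A filter like this only catches near-verbatim leaks; as noted in the discussion, a paraphrase would slip through, so it is one layer among several and not a guarantee.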

OpenAI ▷ #prompt-engineering (201 messages🔥🔥):

- Text Classification Dilemma: @crifat sought advice on whether to use fine-tuning or the Assistant with a large CSV for a text classification problem that involves sorting texts into categories like “Factual,” “Misleading,” “Positive,” and “Negative.” After some discussion, they decided to start with the base model + Assistant and adjust from there.

- Code Conversion to TypeScript: @tawsif2781 inquired about the best way to get GPT to help convert JavaScript files to TypeScript in a mid-sized project. They were advised that the task might not be achievable in one go.

- ChatGPT Support Issues: @ianhoughton44 reported persistent issues with ChatGPT’s functionality, with responses that do not address the prompts properly. The user expressed frustration that the problem had lasted for more than a week.

- OpenAI Search Functionality Challenges: @kevinnoodles sought tips on improving the search functionality after encountering repeated instances of no valid results or access restrictions in the model’s responses.

- Meta Prompt Engineering Conversation: A discussion was sparked by @vlrevolution on the topic of meta-prompting, where they claimed to have created a 22-page output from a single command using advanced techniques. The topic prompted debates on the scope of meta-prompting and its implementation before the user’s account was actioned for potential security concerns with a shared PDF.

Links mentioned:

Meta-Prompting Concept: Asking Chat-GPT for the best prompt for your desired completion, then to revise it before using it: Has anyone employed this approach? I’ve found it helpful when crafting prompts, to literally ask Chat-GPT to help create the prompt for a given goal that I will describe to it while asking what could …

OpenAI ▷ #api-discussions (201 messages🔥🔥):

- Fine-tuning vs. Assistant Debate Clarified: @crifat had a query about text classification for sentiments like “Factual,” “Misleading,” etc., and sought advice on whether to use fine-tuning or to employ the Assistant. Responses highlighted the efficiency of GPT-4 in sentiment analysis without the need for fine-tuning: simple guidance on prioritization could suffice.

- Meta Prompting Techniques Discussed: Amidst the debate over meta-prompting techniques,