Claude 3 is here! Nothing else from the weekend matters in comparison, which is awfully nice for weekday newsletter writers.

TLDR:

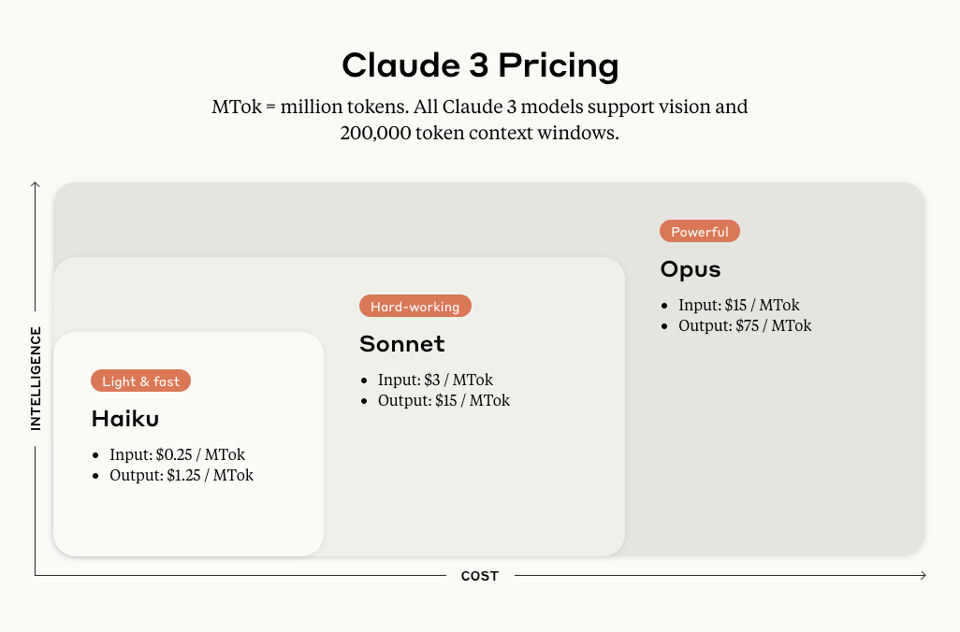

- Claude now comes in 3 sizes. The smallest two (Haiku, unreleased; Sonnet, the default on claude.ai and also on AWS and GCP) are fast (2x faster than Claude 2), cheap (half the cost of GPT4T), and good. The big one (Opus, on Claude Pro, but slower and more expensive) appears to beat GPT4 on every benchmark that matters, sometimes by a LOT: its GPQA score impressed the benchmark's own author.

- They’re all multimodal (specifically, vision), and Opus convincingly turned a 2hr Karpathy video into a blogpost.

- Better alignment - fewer bad refusals, and improved accuracy on hard questions

- 200k token context, extendable up to 1m tokens, with Gemini 1.5-like near-perfect recall.

- Notably, Opus detected that it was being tested during a routine Needle in a Haystack test. Safetyists are up in arms.

Our full notes below:

- Haiku (small, $0.25/mtok input, “available soon”), Sonnet (medium, $3/mtok input; powers claude.ai, is on Amazon Bedrock and Google Vertex), Opus (large, $15/mtok input; powers Claude Pro)

- Speed: Haiku is the fastest and most cost-effective model on the market for its intelligence category. It can read an information- and data-dense research paper on arXiv (~10k tokens) with charts and graphs in less than three seconds. Following launch, we expect to improve performance even further. Sonnet is 2x faster than Opus and Claude 2/2.1.

- Vision: The Claude 3 models have sophisticated vision capabilities on par with other leading models. They can process a wide range of visual formats, including photos, charts, graphs and technical diagrams.

- Opus can turn a 2hr video into a blogpost

- Long context and near-perfect recall: Claude 3 Opus not only achieved near-perfect recall, surpassing 99% accuracy, but in some cases, it even identified the limitations of the evaluation itself by recognizing that the “needle” sentence appeared to be artificially inserted into the original text by a human.

- Easier to use: The Claude 3 models are better at following complex, multi-step instructions. They are particularly adept at adhering to brand voice and response guidelines, and developing customer-facing experiences our users can trust. In addition, the Claude 3 models are better at producing popular structured output in formats like JSON, making it simpler to instruct Claude for use cases like natural language classification and sentiment analysis.

- Safety

- Lower refusal rate: very good for combating Anthropic's safetyist image, and topical given Gemini's issues from February

- “Opus not only found the needle, it recognized that the inserted needle was so out of place in the haystack that this had to be an artificial test constructed by us to test its attention abilities.” from an Anthropic prompt engineer

- criticized by MMitchell and Connor Leahy

- Evals

- choosing to highlight Finance, Medicine, Philosophy domain evals rather than MMLU/HumanEval is good

- 59.5% on GPQA is much better than generalist PhDs and GPT4; the GPQA author is impressed (see the paper).

- GPT4 comparisons

- beats GPT4 at coding a discord bot

- fails at simple shirt drying but GPT4 doesn't

- misc commentary

- 200k context, can extend to 1m tokens

- Haiku is close to GPT4 in evals, but half the cost of GPT3.5T

- Trained on synthetic data

- lower loss on code is normal/unremarkable
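The Needle in a Haystack test mentioned above works roughly like this: bury one out-of-place sentence at a chosen depth in long filler text, ask about it, and check whether the answer recalls it. Below is a minimal sketch, assuming a `model` callable that maps a prompt string to a response string. All helper names are hypothetical, and this is not Anthropic's actual eval harness.

```python
# Hypothetical helpers for illustration; not Anthropic's actual eval harness.
def build_haystack(filler: list[str], needle: str, depth: float) -> str:
    """Insert `needle` among the filler sentences at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = round(depth * len(filler))
    return " ".join(filler[:position] + [needle] + filler[position:])


def recalled(response: str, expected_fact: str) -> bool:
    """Crude scoring: does the answer mention the key fact from the needle?"""
    return expected_fact.lower() in response.lower()


def run_grid(model, filler, needle, question, expected_fact, depths):
    """Return {depth: hit} for a `model` callable mapping prompt -> response."""
    results = {}
    for depth in depths:
        prompt = f"{build_haystack(filler, needle, depth)}\n\nQuestion: {question}"
        results[depth] = recalled(model(prompt), expected_fact)
    return results


def short_sighted(prompt: str) -> str:
    """Stub 'model' that only sees its first 500 characters, so deep needles are missed."""
    return prompt[:500]


filler = [f"Filler sentence number {i}." for i in range(100)]
print(run_grid(short_sighted, filler, "The secret ingredient is cardamom.",
               "What is the secret ingredient?", "cardamom", [0.0, 0.5, 1.0]))
# {0.0: True, 0.5: False, 1.0: False}
```

A real run sweeps many depths and context lengths with an actual model. The stub exists only to show how a recall failure shows up in the grid.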

As a bonus, Noah did 2 runs of Claude 3 (Sonnet) vs GPT4 on the same Twitter data scrapes you see below. We think Claude 3’s summarization capabilities are way, way better.


PART X: AI Twitter

AI Progress and Capabilities

- Sam Altman said that “all of this has happened before, all of this will happen again” and “the hurricane turns faster and faster but it stays perfectly calm in the eye”, possibly alluding to the rapid progress in AI.

- Meta's Quest 3 headset is impressive, with “objectively sharper optics and higher contrast images than the Apple Vision Pro” according to Yann LeCun. However, John Carmack points out that many variables make this comparison less than definitive.

- François Chollet believes his 2023 views on LLM capabilities were overestimating their potential and usefulness. He outlines four levels of generalization LLMs can achieve, with general intelligence being the ability to synthesize new programs to solve never-seen-before tasks.

- Gemma from Google is able to be deployed zero-shot in the wild in San Francisco for real-world tasks, without any reinforcement learning, just from next-token prediction on simulation and YouTube data.

AI Investments and Business

- Softbank sold all its Nvidia shares in 2019 for $3.6B, which would be worth $93B today. Investing in AI was one of the primary goals of Softbank’s Vision Fund.

- Nvidia’s early years involved relentlessly improving despite competitors having advantages. Their differentiator was taking software more seriously, building the CUDA ecosystem.

- Google faces a problem as the likes of OpenAI and Perplexity show that many “search” tasks are better served by conversational AI, much as Google itself disrupted search with PageRank and links 25 years ago.

- Compute and data are the currency of the future according to Alexandr Wang.

AI Safety and Regulation

- Elon Musk’s lawsuit revealed an investor remark after meeting with Demis Hassabis that “the best thing he could have done for the human race was shoot Mr. Hassabis then and there”.

- India is regulating the ability to spin up AI models, which some see as self-sabotage at a critical moment, similar to China kicking out its tech giants.

- Vinod Khosla called for banning open-source AI platforms, which Yann LeCun believes would cause us to lose the “war” he thinks we are in.

Memes and Humor

- “Thank god I didn’t go into computer science” says the junior analyst in New York staring at Excel. “Thank god I didn’t go into finance” says the ML scientist in San Francisco, also staring at a spreadsheet.

- Geoff Hinton being spotted working on Gemini at Google leads to speculation he’s preparing to take back the CEO role from Sundar Pichai to save the company he built.

- “Trump’s internal LLM seems to have suffered excessive pruning. How many parameters does he have left? How short is his context window now?”

Other Relevant Tweets for AI Engineers

- “You know you’ve got your new system’s core abstractions right when things you didn’t explicitly design for that used to be complex become incredibly simple”

- A guide to probabilistic programming and analyzing

PART 0: Summary of Summaries of Summaries

This is now also driven by Claude 3, which is way better than OpenAI’s output.


AI Model Performance and Comparisons

- The release of Claude 3 by Anthropic sparked extensive discussions and benchmarking comparisons against GPT-4 across multiple Discord servers, with users claiming superior performance on tasks like math and coding. Claude 3's ~60% accuracy on GPQA was highlighted.

- Debates arose around the Mistral Large model's performance versus GPT-4 for coding tasks, with some claiming its superiority despite official benchmarks.

- The Mamba LM chess model with 11M parameters showed promising results, achieving a 37.7% win rate against Stockfish level 0 as white.

AI Engineering and Deployment Challenges

- Extensive discussions revolved around the difficulties of deploying large language models (LLMs) like Mistral, with specific focus on VRAM requirements, quantization strategies, and optimal configurations for setups like dual NVIDIA 3090 GPUs.

- CUDA and GPU optimization were recurring topics, with resources like NVIDIA's cuBLASDx documentation and a lecture on CUDA performance gotchas being shared.

- The Terminator architecture was introduced, proposing to replace residual learning with a novel approach to full context interaction.

AI Ethics, Privacy, and Regulations

- Concerns were raised about potential data scraping from personal profiles after an AI model's response contained identifiable personal details, prompting discussions on ethics and legality.

- India's AI deployment regulations requiring government approval sparked alarms over potential stifling of innovation, as per Martin Casado's tweet.

- The Open Source Initiative is working on a new draft for an open-source AI definition, with the evolving drafts available here.

Cutting-Edge AI Research and Techniques

- Aristotelian Rescoring, a concept that could address complex AI challenges, was discussed, with related works like STORIUM, FairytaleQA, and TellMeWhy available on GitHub and Hugging Face.

- The novel HyperZ⋅Z⋅W Operator was introduced as part of the #Terminator network, blending classic and modern technologies, with the full research available here.

- RAPTOR, a new technique for Retrieval-Augmented Generation (RAG) introduced by LlamaIndex, aims to improve higher-level context retrieval, as announced on Twitter.

PART 1: High level Discord summaries

TheBloke Discord Summary

-

AI Sensitivity on the Rise: The latest Claude 3 release shows heightened sensitivity to potentially offensive content and copyright issues, raising questions about whether this reflects safety or over-caution. The discussion was tied to news of Google-backed Anthropic debuting its most powerful chatbot yet.

-

CUDA Conundrum: There’s concern within the community about NVIDIA’s updated licensing terms, which ban the use of translation layers to run CUDA code on non-NVIDIA hardware.

-

Stuck in the Game: Skepticism prevails over AI’s near-future role in game development as current AI limitations may not be easily surpassed by brute-forcing with more compute power.

-

Fine-Tuning Frustration: An issue with fine-tuning is reported, specifically with an OpenOrca Mistral 7b model that gives incorrect outputs after GGUF conversion. The issue surfaced across multiple channels, indicating broader interest in the problem and its potential solutions; suggestions included checking performance before quantization and considering the use of imatrix for outliers.

-

Chess Model Checkmate Performance: A small Mamba LM chess model with 11M parameters was trained successfully; it performs better as white, with a 37.7% win rate against Stockfish level 0. Model available at HaileyStorm/MambaMate-Micro · Hugging Face.

-

Code-Capable AI Harnesses New Heights: User @ajibawa_2023 presents their fine-tuned models, notably the OpenHermes-2.5-Code-290k-13B, which demonstrates proficient coding capabilities and the potential for applications in diverse tasks including blogging and story generation.

OpenAI Discord Summary

-

AI Community Finds Alternatives During GPT Outage: Users discussed alternatives to GPT during a recent service downtime, mentioning Bing Chat, Hugging Face, Deft GPT, LeChat, together.xyz, and Stable Chat. Anthropic’s Claude 3 was highlighted as a particularly impressive alternative, with one user mentioning experimenting with the free Sonnet model, while others debated the capabilities and cost considerations of AI models like Claude 3 and OpenAI’s offerings.

-

Custom Agents and Optimal Code Generation: Questions arose about whether custom agents could integrate CSV files into their knowledge bases, prompting a technical discussion on file types. User @yuyu1337 explored finding an optimal GPT model for code generation, sparking a conversation about achieving the best time/space complexity and suggestions for using pseudo code.

-

Vision and Humor APIs Puzzle Engineers: Participants grappled with applying humor in their prompts, with varying success between ChatGPT and the GPT 3.5 API. The Discord community was also engrossed in an “owl and palm” brain teaser, trying to solve the puzzle using GPT-V with multiple prompting strategies, yet encountering obstacles due to the model’s limitations in interpreting measurements.

-

Community Laughs at and Laments Over Usage Limits: Amid playful banter about AI limitations and usage limits, users exchanged prompt engineering techniques with mixed results. Concerns were raised over server auto-moderation impacting the ability to discuss and share advanced prompts, stirring a call for OpenAI to reconsider prompt restrictions for more effective knowledge sharing.

-

AI Enthusiasts Offer Tips and Seek Training Advice: Newcomers and experienced users alike asked for and provided advice on prompt engineering, discussing the importance of template structuring and the utilization of AI for content creation tasks while adhering to OpenAI’s policies. Discussions highlighted the importance of community and knowledge exchange in the evolving landscape of AI engineering and usage.

Perplexity AI Discord Summary

-

Subscription Snafu Sparks Outrage: @vivekrgopal reported being charged for an annual Perplexity subscription despite attempting cancellation during the trial, seeking a refund through direct messages.

-

AI Integration Fever Rises: Users such as @bioforever and @sebastyan5218 are keenly awaiting the integration of new language models like Claude 3 and Gemini Ultra into Perplexity, signaling a high demand for cutting-edge AI features.

-

Benchmark Bafflements with AI Responses: @dailyfocus_daily delved into the inconsistencies across AI model problem-solving by comparing responses from GPT-4, Claude 3, and others to a benchmark question about sorting labeled balls into boxes.

-

IAM Insights and AI Fundamentals: Users like @riverborse and @krishna08011 shared Perplexity links focusing on insights into identity access management, and the basics of AI, useful for technical professionals looking to deepen their understanding of key concepts.

-

API Discussions Unfold with Concerns and Anticipations: Users discussed the limits of Perplexity API, including time-sensitive query issues and missing YouTube summary features; they also anticipated new features like citation access. A discussion on temperature settings revisited how they affect the naturalness and reliability of language outputs, and a link to assist with API usage was shared by @icelavaman, Perplexity API Info.
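The temperature discussion comes down to how temperature rescales a model's logits before sampling: p_i = exp(z_i / T) / Σ_j exp(z_j / T). Lower T sharpens the distribution toward the most likely token (more deterministic, often more reliable). Higher T flattens it (more varied, sometimes more natural-sounding). Below is a minimal sketch in plain Python. The logits are made-up values, and nothing here reflects the Perplexity API itself.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw logits into sampling probabilities at the given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As T approaches 0 this approaches greedy decoding. At T = 1 it is the plain softmax.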

Mistral Discord Summary

Hermes 2.5 Takes the Lead: Discussions in the guild revealed that Hermes 2.5 has unexpectedly outperformed Hermes 2 in various benchmarks, with specific reference to the MMLU benchmark performance - a significant point for those considering upgrades or new deployments.

Mistral Deployment and Configuration Insights: Engineers seeking optimal configurations for Mistral deployment gathered valuable advice, with best practices discussed for a dual NVIDIA 3090 setup, VRAM requirements for fp16 precision (~90GB), and quantization strategies. Curious eyes were also pointed towards TheBloke’s Discord for additional community support.
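The ~90GB fp16 figure follows from simple arithmetic: weight memory ≈ parameter count × bits per parameter ÷ 8. Below is a rough sketch. It assumes the figure refers to a Mixtral-8x7B-sized model with about 46.7B total parameters, which is an assumption because the summary does not name the exact model. It counts weights only and ignores the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


# Assumption: a Mixtral-8x7B-sized model with roughly 46.7B total parameters.
N_PARAMS = 46.7e9
DUAL_3090_GB = 2 * 24  # two RTX 3090s with 24 GB each

for bits in (16, 8, 4):
    need = weight_memory_gb(N_PARAMS, bits)
    verdict = "fits" if need < DUAL_3090_GB else "does not fit"
    print(f"{bits:>2}-bit weights: ~{need:.1f} GB, {verdict} in {DUAL_3090_GB} GB")
```

On paper the 8-bit weights squeeze under 48 GB, but that leaves almost no headroom once the KV cache and runtime are counted. This is why 4-bit quants are the more comfortable choice on that hardware.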

Benchmarks Resonating With Personal Experience: A significant number of posts revolved around performance benchmarks and personal experiences with different models. Particularly intriguing was the reported superiority of Mistral Large over GPT-4 for coding tasks, challenging official tests and signaling the need for user-specific benchmarks.

Discussions Hover Around Model Limitations: Technical dialogues converged on the inherent limitations of models such as Mistral and Mixtral, specifically discussing the context size constraints with a 32k token limit for Mistral-7B-Instruct-v0.2, and sliding window functionality issues leading to possible performance degradation.
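Sliding-window attention, for readers who haven't met it, means each token attends only to a fixed number of preceding tokens instead of the whole context. Below is a minimal sketch of the attention mask. The window size is a toy value chosen for illustration, not Mistral's actual setting.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and windowed: only the `window` most recent positions,
    including i itself, are visible, i.e. i - window < j <= i.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


for row in sliding_window_mask(seq_len=6, window=3):
    print("".join("x" if visible else "." for visible in row))
# x.....
# xx....
# xxx...
# .xxx..
# ..xxx.
# ...xxx
```

Because layers are stacked, information can still flow further back than one window indirectly. Each layer, however, only looks `window` positions back.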

Fine Tuning and Usage Nuances Explored: Users shared insights on successfully leveraging models for specific tasks, such as sentiment analysis and scientific reasoning. However, concerns about Mixtral’s training implementation and requests for a minimalistic guide suggest a demand for clearer documentation within the community.

Emerging AI Tools and Competitive Landscape: Enthusiasts and practitioners alike have turned their attention to emerging AI tools, including Kubernetes AI tooling and Anthropic’s release of Claude-3, sparking discussions on competitive offerings and the importance of open weights for AI models.

Nous Research AI Discord Summary

-

Phi-2’s Token Limit Hits a Roadblock: Users discussed the limits of the Phi-2 model regarding token expansion, with a suggestion that it might behave like a default transformer beyond its configured limit of 2,048 tokens. Caution was advised when altering Phi-2’s settings to avoid erratic performance. A link to Phi-2’s configuration file was provided here.

-

Mac Setup for Engineers: Community members exchanged a flurry of suggestions for setting up a new Mac, mentioning tools like Homebrew, TG Pro for temperature monitoring, and Time Machine for backups. A YouTube tutorial on Mac setup for Python/ML was highlighted, available here.

-

Scaling AI Model Debate Rages On: There was a heated debate about the benefits of scaling up AI models. Some users argued that beyond 500B-1T parameters, efficiency gains are more likely to come from training techniques than from sheer size, citing articles critical of the scaling approach. The debate touched on the practicality of training 100T-parameter models and the potential of smaller models, with one side expressing skepticism and the other suggesting that sufficient data, such as RedPajama v2, could still deliver scaling benefits. Cost-effectiveness and recent comparisons of AI models were also topics of interest.

-

Claude 3 Piques Interest: In the general discussion, Claude 3 captured attention with its potential performance against GPT-4. There was interest in inference platforms for function calling models, and advice exchanged on B2B software sales strategies. Additionally, approaches to building knowledge graphs were discussed, with anticipation for a new model to enhance structured data extraction.

-

Diverse Queries on LLMs Addressed: Questions flew around topics like PPO script availability for LLMs, best platforms for model inference, 1-shot training in ChatML, and fine-tuning AI for customer interactions. A warning against possible model manipulation was shared, along with a Business Insider article for context here.

-

Praise for Moondream In Project Obsidian: Moondream, a tiny vision language model, received praise for its performance in preliminary testing, with a GitHub link provided for those interested in exploring it here.
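A common guard against the erratic behavior described for Phi-2 beyond its limit is to keep inputs within the configured context instead of exceeding it. Below is a minimal sketch. It assumes the limit is read from a config dict under a `max_position_embeddings` key (the usual Hugging Face config field name; the 2,048 value mirrors the figure quoted above), and that the prompt is already tokenized into integer IDs. `fit_to_context` is a hypothetical helper.

```python
def fit_to_context(token_ids: list[int], config: dict, reserve_for_output: int = 0) -> list[int]:
    """Keep the most recent tokens so prompt + generation stays within the limit."""
    limit = config["max_position_embeddings"] - reserve_for_output
    if limit <= 0:
        raise ValueError("reserve_for_output leaves no room for the prompt")
    return list(token_ids[-limit:])  # a no-op copy when the prompt already fits


# Assumed config excerpt; 2,048 is the limit quoted in the discussion above.
phi2_config = {"max_position_embeddings": 2048}
prompt_ids = list(range(3000))  # stand-in for real tokenizer output
trimmed = fit_to_context(prompt_ids, phi2_config, reserve_for_output=256)
print(len(trimmed))  # 1792
```

Keeping the tail rather than the head is a design choice suited to chat-style prompts. Summarization-style inputs may prefer smarter chunking.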

Eleuther Discord Summary

-

Open Source AI Nears Milestone: The Open Source Initiative (OSI) is working on a new draft for an open-source AI definition with a monthly release cadence, aiming for a version 1.0 by October 2024, as discussions continue in their public forum. The evolving drafts can be reviewed here.

-

EFF’s Legal Stance on DMCA: The Electronic Frontier Foundation (EFF) has initiated a legal challenge, Green v. Department of Justice, against the DMCA’s anti-circumvention provisions, claiming they impede access to legally purchased copyrighted content. Details of the case are documented here.

-

Quantization in AI Comes Under Scrutiny: Debates have arisen around quantization in neural networks, especially of weights and activations. Researchers discussed papers like the ‘bitlinear paper’ and the quantization of activation functions, touching on the concept of epistemic uncertainty.

-

Safety Alert: Compromised Code via GitHub Malware: A malware campaign on GitHub has cloned legitimate repositories to distribute malware. A detailed threat analysis by Apiiro is available here.

-

Challenging Predictive Modeling in Biology: A user claimed that predictive modeling cannot effectively create economically viable biomolecules due to the complexity of biological systems, indicating a contrast with the more predictable physical models used in engineering.

-

Revolutionizing AI with Counterfactuals: A new approach named CounterCurate, combining GPT-4V and DALLE-3, leads to visio-linguistic improvements. CounterCurate uses counterfactual image-caption pairs to boost performance on benchmarks. The paper explaining this is available here.

-

LLMs Overhyped? Functional Benchmarks Suggest So: Discussions arose from a Twitter thread questioning over-reported reasoning capabilities of LLMs, referring to functional benchmarks indicating significant reasoning gaps, available here, with an accompanying GitHub repository.

-

Terminator Architecture Could Replace Residual Learning: The Terminator network architecture could replace residual learning with its new approach to full context interaction. An arXiv paper discusses its potential. Future applications and code release were hinted by community members.

-

AzureML Integration with lm-eval-harness: AzureML users discussed issues and solutions for setting up lm-eval-harness. The talk covered dependencies, CUDA detection, multi-GPU use, and orchestration across nodes, with insights found here and here.

-

Mamba vs Transformer: A comparison was drawn between Mamba and Transformer models in terms of their ability to learn and generalize on the PARITY task. Participants raised concerns about LSTM and Mamba performance and about the mechanisms models use to learn PARITY, and a GitHub script for training Mamba was shared.

-

Advancing Dataset Development: A GitHub repository containing scripts for development of The Pile dataset was shared, particularly useful for those working on training language models. The repository and its README can be accessed here.

-

Figma Meets Imageio in Creative Animation: An innovative workflow was mentioned where animation was achieved by manipulating SVG frames created in Figma into a GIF using imageio.
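The PARITY task from the Mamba vs Transformer comparison is simple to state: given a bitstring, output 1 if it contains an odd number of 1s, else 0. Below is a minimal dataset generator in plain Python. The lengths and seeds are arbitrary choices, and this is not the shared Mamba training script.

```python
import random


def parity_example(length: int, rng: random.Random) -> tuple[list[int], int]:
    """One PARITY example: a random bitstring and 1 if it has an odd number of 1s."""
    bits = [rng.randint(0, 1) for _ in range(length)]
    return bits, sum(bits) % 2


def make_split(n: int, min_len: int, max_len: int, seed: int):
    """n examples with lengths drawn uniformly from [min_len, max_len]."""
    rng = random.Random(seed)
    return [parity_example(rng.randint(min_len, max_len), rng) for _ in range(n)]


# Train on short strings, evaluate on longer ones to probe length generalization.
train = make_split(1000, min_len=1, max_len=20, seed=0)
test_longer = make_split(200, min_len=21, max_len=40, seed=1)
print(train[0])
```

Evaluating on lengths never seen in training is what makes PARITY a useful probe. A model that memorizes short patterns instead of tracking a running XOR tends to fall apart on the longer split.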

LM Studio Discord Summary

-

Switch the Bot When Models Misbehave: Users faced issues with the Codellama Python 7B model within LM Studio, and @heyitsyorkie suggested switching to the Magicoder-S-DS-6.7B-GGUF model on Hugging Face to fix a “broken quant” problem. Discussions about model support indicated that LoRAs and QLoRA are not yet supported, and that users cannot upload PDF files directly to LM Studio.

-

Data Privacy Alarm Bells Ringing: Concerns were aired about potential data scraping from personal profiles after an unexpected model response contained identifiable personal details, leading to discussions on the ethics and legality of such practices in training AIs.

-

VRAM: A Hot Topic Among Hardware Geeks: Several threads touched on the necessity of substantial VRAM, with recommendations for a GPU with at least 24 GB for running large language models efficiently. The discussions pointed to resources like Debian on Apple M1, highlighting the limitations of Apple’s unified memory architecture and the challenges of using Apple M1 Macs for AI work under Linux.

-

Impending Beta Release Buzz: @heyitsyorkie indicated an upcoming beta release of LM Studio would include integration of Starcoder2-15b. This discussion was backed by a GitHub pull request adding support for it to llama.cpp.

-

The Trials and Errors of Autogen: Users experienced issues with Autogen integration, such as a 401 error and slow model loading times in LM Studio. Suggestions for troubleshooting included reinstalling and using the Docker guide with adjustments for Windows system paths as found on StackOverflow.

-

AI Engineering with Distributed LLMs: A query was raised regarding the development of custom AI agents and running different large language models on various hardware setups, including mentioning specific hardware such as a 3090 GPU, a Jetson Orion, and a 6800XT GPU. However, there was no additional context or detailed discussion provided on these topics.

-

Short Communications: A user confirmed the existence of a package on Arch Linux using yay, and another inquired about Linux support for a feature without additional context.

-

Need for Clarity in AI Discussions: Comments indicated a lack of context and clarity in discussions regarding JavaScript compatibility with crew ai, as well as a mention of Visual Studio Code that required further information.

HuggingFace Discord Summary

-

Model Training Hunger Games: Engineers joked about the hunger of AI models during training, devouring 90GB of memory. Better check those Gradio components for deployment, as outdated versions like ImageEditor might haunt your dreams.

-

AI Learning Ladder: From newbie to pro, members are eager to climb the AI learning curve, sharing resources for CUDA, SASS in Gradio, and PPO theory – no safety ropes attached.

-

Chat Conference Calling: AI community events like conferences in Asheville, NC are the real-world meeting grounds for GenAI aficionados. Meanwhile, collaborations emerge for tasks like TTS and crafting book club questions – who said AI doesn’t have hobbies?

-

Discord Doubles Down on Diffusers: diffusers scheduler naming issues had everyone double-checking their classes post-update until a pull request fix was merged. Inpainting discussions were illustrated, and LoRA adapter implementation advice was dispensed like candy on Halloween.

-

Edgy Bots and Data Detectives: Creative engineers unleashed bots like DeepSeek Coder 33b and V0.4 Proteus on the Poe platform. Others shared breakthroughs in protein anomaly detection and musings on the intersection of AI and music sampling, hinting at an era where AI could be the DJ at your next party.

-

Scheduler Confusion Resolution in Diffusers: A GitHub issue with incorrect scheduler class names in diffusers was resolved by a pull request, improving accuracy for AI engineers needing the right tools without the confusion.

-

NLP Model Deployment Drama: Flask vs. Triton is not an apples-to-apples comparison when deploying NLP models – pick your battle. And if you’re on the hunt for efficiency, Adam optimizer still wears the crown in some circles, but keep an eye on the competition.

-

Building Bridges to Computer Vision: The connection between a georeferenced PDF of a civil drawing and GIS CAD is being explored, while curious minds considered the potential of small Visual Language Models for tasks like client onboarding. Glimpses into the synergy of AI and vision are ever-expanding, just beyond the visible spectrum.
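Since the Adam optimizer came up, here is the per-parameter update rule it applies, as a minimal scalar sketch in plain Python. The defaults are the ones from the original Adam paper (lr 0.001, β1 0.9, β2 0.999, ε 1e-8). The quadratic objective and the larger learning rate in the usage line are toy choices, not anything from the discussion.

```python
import math
from typing import Callable


def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.001,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 1000) -> float:
    """Minimize a 1-D function with Adam, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = (x - 3)^2 with gradient 2(x - 3); the minimum is at x = 3.
print(adam_minimize(lambda x: 2 * (x - 3), x0=0.0, lr=0.1, steps=500))
```

Dividing by sqrt(v_hat) gives each parameter its own step size, which is a large part of why Adam remains a robust default. Competitors mostly tweak the moment estimates or the normalization.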

LAION Discord Summary

-

HyperZ⋅Z⋅W Operator Shaking Foundations: @alex_cool6 introduced the #Terminator network, blending classic and modern technologies and utilizing the novel HyperZ⋅Z⋅W Operator, with the full research available here.

-

Claude 3 Attracts Attention: Discussions around the Claude 3 Model are heating up, with its performance benchmarks stirring the community. A Reddit thread showcases the community’s investigation into its capabilities.

-

Claude 3 Outperforms GPT-4: @segmentationfault8268 found Claude 3 to outdo GPT-4 in dynamic response and understanding, potentially stealing users away from their existing ChatGPT Plus subscriptions.

-

CUDA Kernel Challenges Persist with Claude 3: Despite its advancements, Claude 3 seems to lack improvement in non-standard tasks like handling PyTorch CUDA kernels, as pointed out by @twoabove.

-

Sonnet Enters VLM Arena: The conversation has ignited interest in Sonnet, identified as a Visual Language Model (VLM), and its comparative performance with giants like GPT4v and CogVLM.

-

Seeking Aid for DPO Adjustment: @huunguyen made a call for collaboration to refine DPO (Direct Preference Optimization). Interested collaborators are encouraged to connect via direct message.

CUDA MODE Discord Summary

Swap Space on Speed Dial: Discussion centered on using GPU VRAM as swap space on Linux, with potential speed advantages over traditional disk paging, though possible conflicts with the GPU's own memory demands were noted. Resources like vramfs on GitHub and the Arch Linux documentation were shared.

Rapid Verification and Chat Retrievals: Users sought assistance on accessing previous day’s live chat discussions and queried about Gmail verification times on lightning.ai, highlighting quick resolution times and the ease of accessing recorded sessions.

CUDA Conundrums and Triton Tweaks: Engineers shared insights into CUDA programming difficulties, examining Triton’s relationship to NVCC and asynchronous matrix multiplication in Hopper architecture. Resources such as the unsloth repository and the Triton GitHub page were highlighted.

GPU-Powered Databases: The idea of running databases on GPUs gained traction, with mentions of the cuDF library and reference to a ZDNet article on GPU databases.

Mistral’s Computation Contemplations: Debates arose over Mistral’s computing capabilities, questioning the adequacy of 1.5k H100 GPUs for large-scale model training and discussing asynchronous operations. Links included NVIDIA’s cuBLASDx documentation and a tweet from Arthur Mensch.

PyTorch Developer Podcast Drops New Episode: The podcast’s episode discussing AoTInductor was shared, echoing community enthusiasm for the series.

Ring Attention Rings a Bell: Ring and Striped Attention were hot topics, with references to discussions on the YK Discord and a Together.ai blog post. Various code bases like ring-flash-attention and flash-attention provided implementation insights.
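Ring Attention builds on blockwise attention. Each device holds a block of queries, key/value blocks are passed around the ring, and partial results are merged with an online (streaming) softmax, so the full attention matrix is never materialized. Below is a minimal single-process sketch of that merge for one query vector, in plain Python. The "ring" is simulated by iterating over KV blocks, and nothing here reflects the ring-flash-attention or flash-attention code bases.

```python
import math
import random


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def full_attention(q, keys, values):
    """Reference: softmax(q . k / sqrt(d))-weighted sum of values, all at once."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]


def blockwise_attention(q, kv_blocks):
    """Same result, visiting one (keys, values) block at a time.

    Keeps a running max, a running softmax denominator, and a running
    unnormalized output, rescaling them whenever the max changes.
    """
    scale = 1.0 / math.sqrt(len(q))
    run_max, run_sum = -math.inf, 0.0
    acc = [0.0] * len(kv_blocks[0][1][0])
    for keys, values in kv_blocks:  # in Ring Attention, each block arrives from a neighbor
        scores = [dot(q, k) * scale for k in keys]
        new_max = max(run_max, max(scores))
        correction = math.exp(run_max - new_max)  # exp(-inf) == 0.0 on the first block
        run_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, values):
            w = math.exp(s - new_max)
            run_sum += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        run_max = new_max
    return [a / run_sum for a in acc]


rng = random.Random(0)
q = [rng.uniform(-1, 1) for _ in range(4)]
keys = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(10)]
values = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(10)]
blocks = [(keys[i:i + 3], values[i:i + 3]) for i in range(0, 10, 3)]
ref, out = full_attention(q, keys, values), blockwise_attention(q, blocks)
print(max(abs(a - b) for a, b in zip(ref, out)))  # tiny floating-point difference
```

Striped Attention keeps this same merge but changes which tokens each device owns, which balances work under a causal mask.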

CUDA-MODE Lecture Loaded: Announcement of Lecture 8 on CUDA performance gotchas with a promise of tricks for maximizing occupancy and minimizing issues, set to start promptly for eager learners.

Career Cornerstones: Job postings by Lamini AI and Quadrature aimed at HPC and GPU Optimization Engineers, highlighting opportunities to work on exciting projects such as optimizing LLMs on AMD GPUs and AI workloads in global financial markets. Details can be found on Lamini AI Careers and Quadrature Careers.

Lecture 8 Redux on YouTube: After technical issues with a prior recording, Lecture 8, titled CUDA Performance Checklist, was re-recorded and shared along with corresponding code samples and slides.

LlamaIndex Discord Summary

-

RAPTOR Elevates RAG Retrieval: LlamaIndex introduced RAPTOR, a new technique for Retrieval-Augmented Generation (RAG) that improves the retrieval of higher-level context. Promoting better handling of complex questions, it was announced via Twitter.

-

GAI Enters Urban Planning: LlamaIndex displayed practical applications of RAG, including a GAI-powered ADU planner that aims to improve the process of constructing accessory dwelling units (Tweet).

-

MongoDB Meets RAG with LlamaIndex’s new reference architecture, developed by @AlakeRichmond, utilizing @MongoDB Atlas for efficient data indexing, vital for building sophisticated RAG systems, as per a Twitter update.

-

Semantic Strategies Sharpen RAG: Semantic chunking is spotlighted for its potential to advance RAG’s retrieval and synthesis capabilities by grouping semantically similar data, an approach shared by Florian June and picked up by LlamaIndex in a Twitter post.

-

Claude 3’s Triumphant Trio: Claude 3 has been released in several variants, including Claude 3 Opus, which surpasses GPT-4’s performance according to LlamaIndex; LlamaIndex has announced immediate support for the model (announcement).

-

Leveraging LongContext with LlamaIndex: Integrating LlamaIndex with LongContext shows promise for enhancing RAG, especially with Google’s recent Gemini 1.5 Pro release featuring a 1M context window, which could potentially be incorporated (Medium article).

-

Community Corner Catches Fire: The LlamaIndex Discord community was complimented as more organized and supportive than others, particularly for its insights into API documentation structure and its practical guides to setting up sophisticated search systems that combine vector and keyword search (see Y Combinator news, the LlamaIndex Documentation, and multiple other resources).
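Semantic chunking, roughly: split text into sentences, embed each one, and start a new chunk wherever the similarity between neighboring sentences drops. Below is a minimal sketch in plain Python. It assumes a fixed similarity threshold and uses a toy bag-of-words vector as a stand-in for a real embedding model. It is not LlamaIndex's implementation, and `embed` and `semantic_chunks` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    num = sum(a[t] * b[t] for t in a if t in b)
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0


def semantic_chunks(sentences: list[str], threshold: float = 0.2) -> list[str]:
    """Group adjacent sentences, breaking wherever similarity falls below threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    prev = embed(sentences[0])
    for sent in sentences[1:]:
        cur = embed(sent)
        if cosine(prev, cur) < threshold:
            chunks.append([sent])  # topic shift: start a new chunk
        else:
            chunks[-1].append(sent)
        prev = cur
    return [" ".join(chunk) for chunk in chunks]


doc = ["Cats purr when cats are happy.", "Happy cats also knead.",
       "GPUs run CUDA kernels.", "CUDA kernels need GPU memory."]
for chunk in semantic_chunks(doc):
    print(chunk)
```

With a real embedding model, a relative breakpoint (for example, a percentile of the observed distances) is often more robust than a fixed threshold. The fixed value here just keeps the sketch short.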

OpenRouter (Alex Atallah) Discord Summary

-

Claude 3.0 Arrival on OpenRouter: The much-anticipated Claude 3 has been released, and an experimental self-moderated version is available on OpenRouter, as announced by @alexatallah.

-

LLM Security Game Sparks Caution:

@leumonhas launched a game on a server attempting to deceive GPT3.5 into exposing a secret key, underlining the significance of handling AI outputs with caution and safeguarding sensitive data. Players can also engage freely with various AI models like Claude-v1, Gemini Pro, Mixtral, Dolphin, and Yi. -

Claude 3 vs GPT-4 Reactions and Tests: Discussions and reactions to the comparison between Claude 3, including Claude 3 Opus, and GPT-4 are ongoing, with users like

@arsobannoting greater text comprehension in Claude 3 Opus in tests, while others express concerns over its pricing. -

Performance Debate Heats Up Among AIs: The capabilities of different Claude 3 variants spurred debates, with shared observations such as Sonnet sometimes outperforming Opus and plans to test Claude 3 for English-to-code translations in gaming applications.

-

AI Deterioration Detected by Community:

@capitaindavepointed out what seems to be a diminishing reasoning ability over time in Gemini Ultra, sparking discussions on the potential deterioration of model performance after initial release.

Links mentioned:

- OpenRouter with Claude 3.0: OpenRouter

- LLM Encryption Challenge on Discord: Discord - A New Way to Chat with Friends & Communities

- Claude Performance Test Result Image: codebyars.dev

LLM Perf Enthusiasts AI Discord Summary

- OpenAI Turns a New Page with Browsing Feature: OpenAI has unveiled a browsing feature, prompting excitement and comparisons to existing tools like Gemini and Perplexity. The announcement was shared via a tweet from OpenAI.

- Claude 3’s Promising Debut: The new Claude 3 model family is creating buzz, with @res6969 claiming it may surpass GPT-4 on math and code tasks. Discussions of its cost-efficiency and anticipation for the Haiku model highlight interest in balancing price with performance.

- Claude 3’s Operative Edge: Experiments cited by @res6969 suggest Claude 3 has lower latency than competing models, with first-token responses around 4 seconds, which bodes well for user experience.

- Navigating Cost-Effective Embedding Solutions: With a target of 100 inferences per second in production, @iyevenko explored the most cost-effective embedding options; @yikesawjeez recommended the vector databases Qdrant and Weaviate.

- Weighing OpenAI’s Embedding Affordability: Despite initial quality concerns, @iyevenko is considering OpenAI's embeddings for cloud infrastructure, which appear quite cost-effective, especially given recent improvements to their embedding models.
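Time-to-first-token figures like the ~4 seconds mentioned above are usually measured from issuing a request to receiving the first streamed chunk. The sketch below times any iterator of streamed text chunks; `fake_stream` is a hypothetical stand-in for a real streaming client, and no particular vendor API is assumed.

```python
import time
from typing import Iterable, Tuple


def measure_stream(chunks: Iterable[str]) -> Tuple[float, float, str]:
    """Return (time to first chunk, total time, full text) in seconds for a streamed response."""
    start = time.perf_counter()
    first = None
    parts = []
    for chunk in chunks:  # with a lazy generator, the waiting happens inside iteration
        if first is None:
            first = time.perf_counter() - start
        parts.append(chunk)
    total = time.perf_counter() - start
    return (first if first is not None else total), total, "".join(parts)


def fake_stream(delay_first=0.05, delay_rest=0.01, n=5):
    """Hypothetical stand-in for a model's streaming output."""
    time.sleep(delay_first)  # simulated queueing + prompt processing before the first token
    for i in range(n):
        if i:
            time.sleep(delay_rest)  # simulated per-token generation time
        yield f"tok{i} "


if __name__ == "__main__":
    ttft, total, text = measure_stream(fake_stream())
    print(f"TTFT={ttft:.3f}s total={total:.3f}s text={text!r}")
```

If a real client sends the request when the stream object is created rather than on first iteration, start the timer before that call instead, or the measured time to first token will be too low.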

Interconnects (Nathan Lambert) Discord Summary

- Anthropic Unveils Claude 3 to Rave Reviews: AnthropicAI announced Claude 3, its latest family of models (Claude 3 Opus, Claude 3 Sonnet, and Claude 3 Haiku), which challenges the state of the art on AI performance benchmarks. Users like @sid221134224 and @canadagoose1 expressed excitement, noting Claude 3's strengths over GPT-4 and its potential given that it does not rely on proprietary datasets.

- Claude 3 Ignites Misinformation and Drama: The release of Claude 3 set off a wave of problematic tweets, prompting @natolambert to step in directly and call misleading posts "dumb." @natolambert also jokingly rejected the idea of using an alternate account to combat misinformation, citing the effort involved.

- RL Innovations and Discussions: A paper on a foundation model for RL was highlighted, describing a policy conditioned on an embedding of the reward function so that it can generalize to new tasks (Sergey Levine’s tweet). Separately, the community discussed the Cohere paper's claim that the corrections built into Proximal Policy Optimization (PPO) may not be required for Large Language Models (LLMs), and was keen to see other research groups verify it.

- From Silver Screen to AI Dreams: @natolambert is looking for a video-editing partner to make a trailer with AI themes, possibly inspired by the film Her. He also teased upcoming content and a possible collaboration with Hugging Face's CTO, linking to a discussion about the benefits of open-source AI (Logan.GPT’s tweet).

- The AI Community Embraces Julia: @xeophon. highlighted the merits of the Julia programming language for AI development and shared a link to JuliaLang for those interested, reflecting growing engagement with Julia among engineers.
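For readers wondering what PPO's "corrections" are, the sketch below contrasts a plain REINFORCE-style loss with PPO's clipped surrogate loss for a single sample: PPO reweights by the probability ratio between the new and old policy and clips that ratio to [1 - eps, 1 + eps]. It is a minimal illustration over plain floats with hypothetical numbers, not code from the Cohere paper.

```python
import math


def reinforce_loss(logp_new: float, advantage: float) -> float:
    """REINFORCE-style loss: -A * log pi(a|s). No importance ratio, no clipping."""
    return -advantage * logp_new


def ppo_clip_loss(logp_new: float, logp_old: float, advantage: float, eps: float = 0.2) -> float:
    """PPO clipped surrogate loss for one sample (to be minimized)."""
    ratio = math.exp(logp_new - logp_old)  # pi_new(a|s) / pi_old(a|s)
    clipped = max(min(ratio, 1.0 + eps), 1.0 - eps)  # clip the ratio to [1 - eps, 1 + eps]
    # PPO keeps the pessimistic (smaller) of the unclipped and clipped objectives
    objective = min(ratio * advantage, clipped * advantage)
    return -objective


if __name__ == "__main__":
    # Hypothetical sample: the updated policy made the chosen action 50% more likely
    logp_old, logp_new, adv = math.log(0.2), math.log(0.3), 1.0
    print("REINFORCE loss:", round(reinforce_loss(logp_new, adv), 4))
    print("PPO clipped loss:", round(ppo_clip_loss(logp_new, logp_old, adv), 4))
```

Because the ratio is capped at 1 + eps when the advantage is positive, PPO stops rewarding further increases in that action's probability once the policy has moved far enough from the old one; REINFORCE has no such brake. The claim discussed above is, roughly, that LLM fine-tuning may not need this extra machinery.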

LangChain AI Discord Summary

- Deciphering Tokenizer Mechanics: @lhc1921 shared a YouTube tutorial on building a tokenizer for Large Language Models (LLMs), highlighting its role in converting strings into tokens.

- Galaxy AI Proposes Free API Access: @white_d3vil introduced Galaxy AI, which offers free API access to high-caliber models including GPT-4, GPT-4-1106-PREVIEW, and GPT-3.5-turbo-1106.

- Tech Stack Advice for Scalable LLM Web Apps: Suggestions for building a scalable LLM web application ranged from Python 3.11 with FastAPI and Langchain to Next.js with Langserve.js on Vercel. Others questioned Langchain's production readiness and customizability for commercial use, expressing a preference for custom code in production.

- Beware of Potential Spam Links: Users were warned about a suspicious link posted by @teitei40 across multiple channels that claimed to offer a $50 Steam gift card, raising concerns that it may be a phishing attempt.

- Innovative Projects and Educational Resources: Community showcases included Devscribe AI's YouTube video chat tool, a guide to using generative AI for asset-liability management, and a Next.js 14+ starter template for modern web development. Discussions also covered improving Langchain's retrieval-augmented generation and the efficacy of the Feynman Technique for learning.
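To illustrate what "converting strings to tokens" involves, here is a minimal byte-pair encoding (BPE) sketch of the kind tokenizer tutorials typically build: start from raw UTF-8 bytes, repeatedly merge the most frequent adjacent pair into a new id, then replay those merges to encode new text. It is a toy under stated assumptions (byte-level start, a small hypothetical merge count, ties broken by first occurrence), not the tutorial's code or a production tokenizer.

```python
from collections import Counter


def get_pair_counts(ids):
    """Count adjacent token-id pairs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`, left to right."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges on top of the 256 byte values."""
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, in the order learned
    for k in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent adjacent pair
        new_id = 256 + k
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return merges


def encode(text, merges):
    """Apply learned merges in the order they were learned (dicts keep insertion order)."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():
        ids = merge(ids, pair, new_id)
    return ids


def decode(ids, merges):
    """Expand merged ids back into bytes, then decode to a string."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


if __name__ == "__main__":
    merges = train_bpe("aaabdaaabac", 3)
    ids = encode("aaabdaaabac", merges)
    print(ids, decode(ids, merges))
```

Each merge only creates pairs involving the new id, so replaying merges in learned order reproduces the training-time segmentation, and decoding always round-trips because every merged id expands back to the exact bytes it replaced.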

Latent Space Discord Summary

-

Overflowing with AI Knowledge: Gemini for Google Cloud is set for a boost with the integration of Stack Overflow’s knowledge via OverflowAPI, aiming to sharpen AI assistance directly within the cloud console.

-

Brin Banks on AGI Breakthroughs: Google co-founder Sergey Brin sparked discussion by suggesting that initiatives like Gemini could lead Google's AI toward AGI, as shared in a circulating tweet about his remarks.

-

Perfecting Digital Reality: LayerDiffusion offers tools to seamlessly insert items, complete with realistic reflections, into photos, a promising development for Stable Diffusion enthusiasts.

-

Claude 3 Makes a Splash: Anthropic's announcement of its Claude 3 model family stirred the AI community, with discussion of its apparent meta-awareness and its impact on current AI models, and important benchmarks being shared, such as Claude 3's ~60% accuracy on GPQA.

-

India’s AI Regulatory Chokepoint: Martin Casado’s tweet on India’s AI deployment regulations has raised alarms over potential stifling of innovation due to the required government approval, stirring debate among the tech community about the balance between oversight and progress.

OpenAccess AI Collective (axolotl) Discord Summary

- Resolved: Hugging Face Commit Chaos: @giftedgummybee reported that the Hugging Face KTO issue was resolved after identifying a commit version mix-up. This primarily concerned the Hugging Face transformers library, which Axolotl's deployment depends on.

- Axolotl Staying Put on Hugging Face: @nanobitz clarified that there are no plans to port Axolotl to Tinygrad, since it depends on the Hugging Face transformers library, and reminded users to keep configuration questions to the appropriate help channels.

- Optuna CLI Consideration for Axolotl: @casper_ai suggested integrating a CLI tool for hyperparameter optimization using Optuna, referencing a GitHub issue for context.

- Deep Learning GPU Conundrums and Fixes: Several GPU-related issues surfaced, including a python vs python3 conflict and a glitch with DeepSpeed's final save; however, @rtyax did not experience issues with the final save in DeepSpeed 0.13.4.

- Mixtral vs. Mistral: Model Preference Showdown: @dctanner started a discussion comparing Mixtral to Mistral Large for synthetic data generation, with @le_mess expressing a preference for their own models over Mixtral, suggesting that results vary by use case.

DiscoResearch Discord Summary

- Aristotelian AI Models Entering the Stage: @crispstrobe discussed the potential of “Aristotelian Rescoring”, a concept that could address complex AI challenges, highlighting related works such as STORIUM, FairytaleQA, and TellMeWhy, with resources available on GitHub and Hugging Face.

- German Semantics Leaping Forward: @sten6633 improved German semantic similarity calculations by fine-tuning Deepset's gbert-large on domain-specific texts and Telekom's paraphrase dataset, then turning it into a sentence transformer.

- Eager for AI-Production Know-how Sharing: @dsquared70 invited people working with Generative AI in production to speak at an upcoming conference in Asheville, NC, with applications open until April 30.

- Aligning German Data Delicately: @johannhartmann pointed out a translation error in a dataset and, following a bug fix in their evaluation using ./fasteval, integrated the corrected dataset into FastEval.

- Brezn’s Bilingual Breakthrough: @thomasrenkert praised Brezn-7b's performance in German, attributed to model merging and alignment with 3 DPO datasets, while @johannhartmann proposed using ChatML by default to potentially improve Brezn's benchmark scores.

Datasette - LLM (@SimonW) Discord Summary

- Stable Diffusion Goes Extra Large: Stable Diffusion XL Lightning impresses users with its capabilities, as highlighted in the shared demo link: fastsdxl.ai.

- Claude 3 Interaction Now Simplified: SimonW released a new plugin for the Claude 3 models, with the repository available on GitHub.

- Artichoke Naming Gets Creative: One user infuses humor into the discussion by suggesting whimsical names for artichokes such as “Choke-a-tastic” and “Arti-party.”

- Mistral Model Prices Noticed: Mistral Large earned praise for its data extraction performance but was also noted for its higher-than-desired cost.

- Plugin Development Speed Wins Applause: The development pace of the new plugin for interacting with the Claude 3 model garners quick commendation from the community.

Alignment Lab AI Discord Summary

- New Collaboration Opportunity: @wasooli expressed keen interest in collaborating within the Alignment Lab AI community, and @taodoggy is open to further discussion via direct message.

- GenAI Conference Call: @dsquared70 announced a GenAI in production conference and encouraged submissions by April 30th. More information and application details can be found at AI in Production.

Skunkworks AI Discord Summary

-

Call for AI Integration Expertise: Developers actively integrating GenAI into production systems are invited to share their insights at a conference in Asheville, NC. Interested parties can submit their papers by April 30 at AI in Production Call for Presentations.

-

A Comical Start to the Day: A "good morning yokks" greeting, corrected to "yolks", gave the discussion a humorous start.

AI Engineer Foundation Discord Summary

- Hackathon Hierarchy Explained: @needforspeed4 asked whether the Agape hackathon is related to the AI Engineer Foundation, which manages the Discord server, and whether different hackathons use separate Discords. @hackgoofer clarified that AI Engineer Foundation hackathons are held within this Discord, but the Agape hackathon operates independently.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (994 messages🔥🔥🔥):

- Claude 3 AI, Safety or Over-Caution?: Users shared examples of the new Claude 3 model showing heightened sensitivity to potentially offensive content and copyright concerns.

- Rumination Over AI’s Role in Game Development: Some users predict AI will be involved in rendering and creating future video games; however, netrve is skeptical that current AI limitations can be brute-forced with raw compute.

- Contentious NVIDIA Licensing Tactics: Nvidia's efforts to restrict the use of CUDA on non-NVIDIA hardware through licensing terms spurred discussion of their legality and impact on developers, especially regarding translation layers.

- Benchmarks and OpenAI’s Future: Users discussed models like Phind 70b, questioned the reliability of benchmarks, and debated the significance of the steady stream of model releases while anticipating GPT-5.

- Technical Deep Dive into GPU Technologies: Netrve discusses the complexities and advancements in game rendering, including Epic’s Nanite system in Unreal Engine 5, while others lament restrictive moves by NVIDIA.

Links mentioned:

- AI Open Letter - SVA: Build AI for a Better Future

- Tweet from Anthropic (@AnthropicAI): With this release, users can opt for the ideal combination of intelligence, speed, and cost to suit their use case. Opus, our most intelligent model, achieves near-human comprehension capabilities. I…

- Nvidia bans using translation layers for CUDA software — previously the prohibition was only listed in the online EULA, now included in installed files [Updated]: Translators in the crosshairs.

- Fr Lie GIF - Fr Lie - Discover & Share GIFs: Click to view the GIF

- Google-backed Anthropic debuts its most powerful chatbot yet, as generative AI battle heats up: Anthropic on Monday debuted Claude 3, a chatbot and suite of AI models that it calls its fastest and most powerful yet.

- Google is rapidly turning into a formidable opponent to BFF Nvidia — the TPU v5p AI chip powering its hypercomputer is faster and has more memory and bandwidth than ever before, beating even the mighty H100: Google’s latest AI chip is up to 2.8 times faster at training LLMs than its predecessor, and is fitted into the AI Hypercomputing architecture

- GPU Reviews, Analysis and Buying Guides | Tom’s Hardware: no description found

- Lone (Hippie): no description found

- Turbo (Chen): no description found

- pip wheel - pip documentation v24.0: no description found

- GitHub: Let’s build from here: GitHub is where over 100 million developers shape the future of software, together. Contribute to the open source community, manage your Git repositories, review code like a pro, track bugs and fea…

- Fix defaults + correct error in documentation for Mixtral configuration by kalomaze · Pull Request #29436 · huggingface/transformers: What does this PR do? The default value for the max_position_embeddings was erroneously set to 4096 * 32. This has been corrected to 32768 Mixtral does not use Sliding Window Attention, it is set …

- Add Q4 cache mode · turboderp/exllamav2@bafe539: no description found

- [Mixtral] Fixes attention masking in the loss by DesmonDay · Pull Request #29363 · huggingface/transformers: What does this PR do? I think there may be something not quite correct in load_balancing_loss. Before submitting This PR fixes a typo or improves the docs (you can dismiss the other checks if t…

- GitHub - e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

TheBloke ▷ #characters-roleplay-stories (379 messages🔥🔥):

- Understanding Llama.cpp Limitations: @pri9278 noted that while speculative decoding (SD) and lookup decoding are implemented in llama.cpp, they are not integrated into the server API, which limits what the server-side implementation can do.

- Model Performance and Hardcoding: @superking__ discussed the complexity of hardcoding models, noting the difficulty when using transformers and the possibilities that strict prompt formats open up.

- Discussions on Roleplay and Story Generation: Members including @gamingdaveuk, @netrve, @lisamacintosh, and @concedo discussed using AI models for roleplay and story generation, covering context caching for optimization, front-end/user interface quirks, and specific chatbot use cases in roleplay scenarios.

- Sharing Experiences with Fine-tuned Models: @c.gato shared their experience testing the Thespis-CurtainCall Mixtral model, commenting on its performance on complex tasks like playing tic-tac-toe and generating prompts based on greentext stories.

- Engaging with AutoGPT and DSPY: @sunija asked about the status of AutoGPT and its applications in roleplay, prompting @wolfsauge and @maldevide to discuss alternatives such as DSPY for optimized prompt generation and automatic evaluation of response variations.

Links mentioned:

- Constructive: no description found

- Chub: Find, share, modify, convert, and version control characters and other data for conversational large language models (LLMs). Previously/AKA Character Hub, CharacterHub, CharHub, CharaHub, Char Hub.

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- cgato/Thespis-CurtainCall-8x7b-v0.3 · Hugging Face: no description found

- Thanos Memoji GIF - Thanos Memoji - Discover & Share GIFs: Click to view the GIF

- ZeroBin.net: no description found

- Mihawk Zoro GIF - Mihawk Zoro One piece - Discover & Share GIFs: Click to view the GIF

- Worldsgreatestswordsmen Onepiece GIF - Worldsgreatestswordsmen Onepiece Mihawk - Discover & Share GIFs: Click to view the GIF

- Cat Cat Meme GIF - Cat Cat meme Funny cat - Discover & Share GIFs: Click to view the GIF

- Rapeface Smile GIF - Rapeface Smile Transform - Discover & Share GIFs: Click to view the GIF

- GGUF quantizations overview: GGUF quantizations overview. GitHub Gist: instantly share code, notes, and snippets.

- Family guys - Sony Sexbox: Clip from Family Guy S03E16

TheBloke ▷ #training-and-fine-tuning (39 messages🔥):

- Fine-tuning Troubles: @coldedkiller had trouble fine-tuning an OpenOrca Mistral 7b model; after conversion to GGUF format, the model failed to give correct outputs on both its original data and the data it was fine-tuned on.

- Cosine Similarity Cutoffs in Training Models: @gt9393 asked about an appropriate cosine similarity cutoff, and @dirtytigerx replied that it depends on several factors and no hard cutoff can be given.

- Use of Special Tokens and Model Training: @gt9393 discussed uncertainty about including beginning- and end-of-sequence tokens in datasets. @dirtytigerx recommended including them, but appending them after the prompt has been encoded.

- Chess Model Training Achievement: @.haileystorm shared their success training an 11M-parameter Mamba LM chess model, with links to the model, training code, and related resources, noting that it plays better as white. Its training was compared to that of a larger model, and it achieved a 37.7% win rate against Stockfish level 0.

- Seeking Fine-Tuning Guidance for Small to Medium LLMs: @coldedkiller and @zelrik sought advice on fine-tuning language models and were pointed to resources by Jon Durbin and a guide from UnslothAI. The discussion covered data format, special tokens, and hardware requirements, with @maldevide offering insights on preprocessing book texts, hardware capacity, and tools for parameter-efficient fine-tuning (PEFT).
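A minimal sketch of the special-token advice above: encode the text first, then add the beginning- and end-of-sequence ids around the encoded ids, rather than writing marker strings like "<s>" or "</s>" into the text and hoping the tokenizer maps them to the special ids. The toy whitespace tokenizer, its vocabulary, and the ids 0 (unknown), 1 (BOS), and 2 (EOS) are all hypothetical; real tokenizers define their own ids and rules.

```python
from typing import List

UNK_ID = 0  # hypothetical unknown-token id
BOS_ID = 1  # hypothetical beginning-of-sequence id
EOS_ID = 2  # hypothetical end-of-sequence id

# Hypothetical word-level vocabulary. Special tokens are deliberately NOT in it,
# mimicking a tokenizer that does not parse marker strings inside ordinary text.
VOCAB = {"what": 3, "is": 4, "gguf": 5, "?": 6, "a": 7, "file": 8, "format": 9, ".": 10}


def encode(text: str) -> List[int]:
    """Toy whitespace tokenizer with no special-token handling."""
    return [VOCAB.get(word, UNK_ID) for word in text.lower().split()]


def build_example(prompt: str, response: str) -> List[int]:
    """Encode prompt and response first, then wrap the resulting ids with BOS/EOS."""
    return [BOS_ID] + encode(prompt) + encode(response) + [EOS_ID]


if __name__ == "__main__":
    good = build_example("what is gguf ?", "a file format .")
    bad = encode("<s> what is gguf ? a file format . </s>")
    print("ids appended after encoding:", good)
    print("markers inside the text:   ", bad)
```

In the "bad" case the literal markers come out as unknown tokens instead of BOS/EOS, which is the failure mode that appending the ids after encoding avoids.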

Links mentioned:

- HaileyStorm/MambaMate-Micro · Hugging Face: no description found

- GitHub - jondurbin/bagel: A bagel, with everything.: A bagel, with everything. Contribute to jondurbin/bagel development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

TheBloke ▷ #model-merging (1 messages):

- Fine-tuning Woes with OpenOrca: @coldedkiller is having trouble with a fine-tuned OpenOrca Mistral 7b model: after converting it to GGUF format, the model fails to produce proper output on both the original and fine-tuned datasets.

TheBloke ▷ #coding (11 messages🔥):

- OpenOrca Fine-tuning Woes: @coldedkiller's fine-tuned OpenOrca Mistral 7b model isn't producing the expected answers after conversion to GGUF format. @spottyluck suggested checking the model's performance before quantization and considering an importance matrix (imatrix) if outliers are the problem.

- GPTQ Out of the Spotlight: @yeghro asked whether GPTQ has fallen out of focus since TheBloke stopped releasing GPTQ models, and @_._pandora_._ mentioned rumors that TheBloke has gone missing, which would explain the lack of recent releases.

- Model Test Dilemma: @gamingdaveuk is looking for the smallest model that can be loaded on a 6GB VRAM laptop for API call tests. They found a Reddit answer suggesting Mistral instruct v0.2, and @dirtytigerx said any GGUF quant model would do as long as it is around 4GB in size.

- Coldedkiller’s Model Mishap: In a follow-up, @coldedkiller explained that after format conversion, their fine-tuned model no longer answers from their trained Q&A dataset and instead gives irrelevant responses.

- Ajibawa_2023 Showcases Enhanced Models: @ajibawa_2023 shared links to their fine-tuned models with enhanced coding capabilities. One, OpenHermes-2.5-Code-290k-13B, incorporates their dataset, ranks well on coding leaderboards, and can also handle tasks such as blogging and story generation.
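As a rough rule of thumb for the 6GB VRAM question above, a quantized model's weights take about parameter count × bits per weight ÷ 8 bytes, plus headroom for the KV cache and runtime buffers. The sketch below applies that estimate. The bits-per-weight values cover only simple block formats (Q4_0 and Q8_0 store 32 weights plus one fp16 scale per block); the ~7.24B parameter count for Mistral 7B is approximate, and the 1.5 GB overhead allowance is a hypothetical placeholder, not a measured value.

```python
# Approximate bits per weight for a few llama.cpp formats.
# Q4_0 / Q8_0 store 32-weight blocks plus one fp16 scale per block.
BITS_PER_WEIGHT = {
    "F16": 16.0,
    "Q8_0": 8.5,  # (32 * 8 + 16) / 32
    "Q4_0": 4.5,  # (32 * 4 + 16) / 32
}


def estimated_size_gb(n_params: float, quant: str) -> float:
    """Rough weight size in GB (1e9 bytes); ignores metadata and tensors kept at other precisions."""
    return n_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits_in_vram(n_params: float, quant: str, vram_gb: float, overhead_gb: float = 1.5) -> bool:
    """overhead_gb is a hypothetical allowance for KV cache and runtime buffers."""
    return estimated_size_gb(n_params, quant) + overhead_gb <= vram_gb


if __name__ == "__main__":
    n = 7.24e9  # roughly the parameter count of Mistral 7B
    for q in BITS_PER_WEIGHT:
        print(q, round(estimated_size_gb(n, q), 2), "GB, fits in 6GB:", fits_in_vram(n, q, vram_gb=6.0))
```

Under these assumptions a 4-bit quant of a 7B model comes to roughly 4 GB, which matches the "around 4GB" advice, while 8-bit and fp16 versions would not fit on a 6GB card.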

Links mentioned:

- ajibawa-2023/OpenHermes-2.5-Code-290k-13B · Hugging Face: no description found

- ajibawa-2023/Code-290k-6.7B-Instruct · Hugging Face: no description found

OpenAI ▷ #ai-discussions (128 messages🔥🔥):

- GPT Alternatives Discussed Amid Downtime: @whodidthatt12 expressed frustration with GPT being down and asked about alternative AI writing assistants. Suggestions included Bing Chat, Hugging Face, Deft GPT, LeChat, together.xyz, and Stable Chat.

- Claude 3 AI Impressions: @glamrat tested Anthropic's Claude 3 and found it impressive, especially the free Sonnet model. Users shared their experiences and expectations, from using Claude 3 for math tutoring (@reynupj) to potentially dropping a GPT Plus subscription in favor of Claude (@treks1766).

- Enthusiasm for AI Competition: Users like @treks1766 and @lolrepeatlol were excited about the competition between services like Claude 3 and GPT-4, anticipating benefits for consumers and advances in the field.

- Debate Over AI Model Capabilities: Some users (@darthcourt., @hanah_34414, @cosmosraven) argued over Claude 3's reported superiority to OpenAI's models, with reactions ranging from skepticism (@drinkoblog.weebly.com) to anticipation of OpenAI's next big release.

- Cost Considerations and Availability: @dezuzel raised concerns about the cost of Claude 3's API, and users discussed which models are available in which regions. There is anticipation around how existing services like Perplexity AI Pro will integrate new models like Claude 3 (@hugovranic).

Links mentioned:

- Anthropic says its latest AI bot can beat Gemini and ChatGPT: Claude 3 is here with some big improvements.

- OpenAI Status: no description found

OpenAI ▷ #gpt-4-discussions (38 messages🔥):

- GPT Alternatives Sought Amid Downtime: @whodidthatt12 is looking for alternative AI tools for writing assignments while GPT is down.

- Custom CSVs for AI Knowledge Bases: @.bren_._ asked whether custom agents can use CSV files in their knowledge bases and had trouble confirming whether CSV is a valid file type for this.

- File Types and Technical Support in Custom Agents: @.bren_._ shared an error message about accessing system root directories, while @darthgustav. suggested using row-separated values in plain text files as a more reliable approach.

- Finding the Most Optimal GPT for Code: @yuyu1337 is searching for a GPT that generates code with optimal time/space complexity; @eskcanta and @beanz_and_rice discussed what achieving optimality would involve and offered creative pseudocode.

- GPT Store Publishing Paths Clarified: @bluenail65 asked whether a website is needed to list GPTs in the store, and @solbus clarified the publishing options, including using a billing name or sharing privately via a link.
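One way to apply @darthgustav.'s suggestion is to flatten each CSV row into a single self-describing line of plain text before uploading it. The "column: value" layout with " | " separators below is an assumption for illustration, not a documented requirement of custom GPTs.

```python
import csv
import io


def csv_to_row_text(csv_text: str) -> str:
    """Turn each CSV row into one plain-text line of 'column: value' pairs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Skip the None key DictReader uses for surplus fields; treat missing values as empty
        pairs = [f"{col}: {(val or '').strip()}" for col, val in row.items() if col is not None]
        lines.append(" | ".join(pairs))
    return "\n".join(lines)


if __name__ == "__main__":
    sample = "name,role\nAda,engineer\nLin,designer\n"
    print(csv_to_row_text(sample))
```

Because the csv module handles quoting, values that contain commas (for example a quoted "x, y") stay intact in the output line.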

OpenAI ▷ #prompt-engineering (506 messages🔥🔥🔥):

- Humor Struggles in API: @dantekavala is seeing a discrepancy: prompts for a humorous writing style work well in ChatGPT, but the same approach fails with the GPT-3.5 API, whose output stays the same regardless of the requested style. They have tried various styles and asked for guidance in the Developers Corner.

- Owl and Palm Puzzle Persists: Many participants, including @madame_architect, @aminelg, and @eskcanta, explored the "owl and palm" brain teaser. They tried various prompting strategies to get GPT-V to solve the puzzle, but none achieved consistent success.

- Prompt Engineering Tactics Discussed: @madame_architect suggested combining multiple prompting tactics, such as the "take a deep breath" trick from the system 2 thinking paper and points from EmotionPrompt (tm), to tackle the problem. However, @eskcanta noted that the core issue may lie in the Vision model's training rather than in the prompting methods themselves.

- Vision Model’s Limitations: Despite testing various prompts and theories about how the Vision model understands image measurements, users like @eskcanta and @darthgustav. concluded that its failure to interpret measurements consistently may stem from a need for additional training rather than inadequate prompting.

- Feedback on Personal Creations: Newcomer @dollengo asked about creating and training an AI for educational purposes with the intention of publishing it, and the discussion focused on staying within OpenAI's dialogue and sharing policies. @eskcanta and @aminelg offered advice on respecting the platform's terms of service and on prompt-writing practices.

Links mentioned:

- Terms of use: no description found

- DALL·E 3: DALL·E 3 understands significantly more nuance and detail than our previous systems, allowing you to easily translate your ideas into exceptionally accurate images.

OpenAI ▷ #api-discussions (506 messages🔥🔥🔥):

- Puzzle Prompt Engineering Saga Continues: @aminelg, @eskcanta, @darthgustav., and @madame_architect continued working on the perfect advanced prompt for an AI vision puzzle involving an owl and a tree. Despite various strategies, GPT-V still failed to interpret the image accurately, prompting discussion of the model's limitations and whether it needs retraining.

- The Highs and Lows of Model Behavior: Across many attempts with nuanced prompts (including one by @madame_architect that succeeded once), GPT-V consistently misread the 200-unit measurement on the right side of the image, often confusing it with the full height of the tree, an observable weakness in the model's capabilities.

- Playful Competition Heats Up: The discussion turned humorous as @aminelg and @spikyd joked about hitting their usage limits and about crafting prompts that would beat the AI's current understanding of the complex image, celebrating the occasional correct answer as a "10 points to GPT V" moment.

- Sharing Knowledge Comes at a Cost: @darthgustav. expressed frustration with the Discord server's auto-moderation, which limited his ability to discuss certain details and share prompts, and called on OpenAI to revise its system prompt restrictions to allow more open prompt-engineering discussion.

- Newcomer Queries and Tips Exchange: Newcomers like @snkrbots, @chenzhen0048, and @dollengo sought advice on prompt engineering and AI training, and veteran contributors responded. Ideas included structuring prompts with templates, asking GPT itself to help refine prompts, and using AI to assist with content creation.

Links mentioned:

- Terms of use: no description found

- DALL·E 3: DALL·E 3 understands significantly more nuance and detail than our previous systems, allowing you to easily translate your ideas into exceptionally accurate images.

Perplexity AI ▷ #general (618 messages🔥🔥🔥):

- Potential Perplexity Subscription Issue: @vivekrgopal was frustrated at being charged for an annual subscription after trying to cancel during the trial period, and asked for help with a refund via direct message.

- Users Eager for New AI Integrations: Users like @bioforever and @sebastyan5218 are eager for Perplexity to integrate new models such as Claude 3 and Gemini Ultra, reflecting the community's appetite for the latest AI advancements.

- Discussion on Perplexity AI’s Effectiveness: @names8619 praised Perplexity Pro's performance, saying it beats YouTube for research without clickbait, while others reported poor results from OpenAI's GPT-3 on some topics and switching to models like Mistral.

- Uncertainty Over AI Model Availability: @gooddawg10 and @fluxkraken discussed whether models like Gemini Ultra and Claude 3 are available in Perplexity, with some confusion about which models users can access.

- Comparison of AI Models and Their Responses: @dailyfocus_daily shared a benchmark question about sorting labeled balls into boxes and compared the answers from GPT-4, Claude 3, and other models, illustrating inconsistencies in their problem-solving abilities.

Links mentioned:

- AI in Production - AI strategy and tactics.: no description found

- GitHub Next | GPT-4 with Calc: GitHub Next Project: An exploration of using calculation generation to improve GPT-4’s capabilities for numeric reasoning.

- Tweet from Ananay (@ananayarora): Just ported Perplexity to the Apple Watch! 🚀@perplexity_ai

- The One Billion Row Challenge in Go: from 1m45s to 4s in nine solutions: no description found

- Oliver Twist GIF - Oliver Twist - Discover & Share GIFs: Click to view the GIF

- David Leonhardt book talk: Ours Was the Shining Future, The Story of the American Dream: Join Professor Jeff Colgan in conversation with senior New York Times writer David Leonhardt as they discuss David’s new book, which examines the past centur…

- SmartGPT: Major Benchmark Broken - 89.0% on MMLU + Exam’s Many Errors: Has GPT4, using a SmartGPT system, broken a major benchmark, the MMLU, in more ways than one? 89.0% is an unofficial record, but do we urgently need a new, a…

- Perplexity.ai Turns Tables on Google, Upends SEO Credos: AI search leader mixes Meta-built smarts with scrappy startup fervor

- PerplexityBot: We strive to improve our service every day. To provide the best search experience, we need to collect data. We use web crawlers to gather information from the internet and index it for our search engi…

- GitHub - danielmiessler/fabric: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere.: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere. - …

Perplexity AI ▷ #sharing (20 messages🔥):

- Exploring Identity Access Management: User @riverborse shared a link diving into what identity access management (IAM) entails.

- Understanding Perplexity v2: @scarey022 provided a link to learn more about the concept of perplexity in language models.

- In Search of Optimal Solutions: User @dtyler10 posted a link that leads to discussions about creating optimal settings, environments, or outcomes.

- Technical Insights Offered: A technical explanation was the focus of a link shared by @imigueldiaz.

- AI Basics Explored: @krishna08011 and @elpacon64 shared links (link1, link2) discussing what AI is and its various aspects.

Perplexity AI ▷ #pplx-api (27 messages🔥):

- Confusion over Random Number Generator Ethics: User @moistcornflake expressed amusement and confusion when codellama attached an ethical warning to a request to create a random number generator. The bot's response suggested prioritizing content that promotes positive values and ethical considerations.

- Performance Issues Noted for Time-Sensitive Queries: @brknclock1215 observed an improvement in general quality but reported continued failures on time-sensitive queries, recalling that it used to perform better on such tasks.

- Missing Feature for YouTube Summarization: @rexx.0569 highlighted the absence of a YouTube video summarization feature that seemed to have been a native function of Perplexity. They noted that the feature isn't accessible on different devices.

- Inquiry About Perplexity API Usage: @marvin_luck sought help reproducing the results of a web request through the Perplexity API. In response, @icelavaman shared a Discord link, presumably with relevant information: Perplexity API Info.

- Users Anticipate Citation Feature Access: @_samrat and @brknclock1215 are waiting for access to citations in the API, and @icelavaman mentioned that this process might take 1-2 weeks or more. @brknclock1215 later confirmed seeing improvement in response quality and is eagerly awaiting the addition of citations.

- Temperature Settings Discussion: @brknclock1215, @thedigitalcat, and @heathenist discussed how temperature settings in AI models affect the naturalness and reliability of language outputs. They suggested that lower temperature settings don't always guarantee more reliable outputs and touched upon the complexity of natural language and self-attention mechanisms.
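To make the temperature point concrete, here is a minimal sketch of temperature-scaled softmax, the usual way a sampler turns a model's raw scores (logits) into next-token probabilities. The logits and function name are illustrative assumptions, not any particular model's internals. Lower temperatures sharpen the distribution toward the top-scoring token, which makes output more deterministic but not necessarily more correct, consistent with the thread's point.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw logits to probabilities, dividing by temperature (> 0) first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability mass lands on the first token; at high temperature the three options become closer to equally likely. The ranking of tokens never changes, only how peaked the distribution is.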

Links mentioned:

Perplexity Blog: Explore Perplexity's blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

Mistral ▷ #general (213 messages🔥🔥):

- Broken Discord Links: Users @v01338 and _._pandora_._ mentioned that both the Discord and LinkedIn links on the Mistral AI website are broken; _._pandora_._ confirmed this by checking the HTML source.

- Discussion on Model Lock-In Scenarios: @justandi asked whether migrating from one model to another in an enterprise context could lock in a specific implementation. @mrdragonfox chimed in that the inference API is similar across platforms, hinting that migration should be seamless.

- Concerns Over Model Benchmarking Transparency: @i_am_dom expressed concerns about the lack of published scores for specific Mistral model benchmarks, arguing that transparency is essential, especially from benchmark owners.

- Ollama and vLLM Discussion for Mixtral Inference: @distro1546 asked about achieving sub-second inference times with Mixtral on an A100 server and was advised by @mrdragonfox to consider exllamav2 or a vLLM deployment with 6bpw instead of llama.cpp, which doesn't fully utilize GPU capabilities.

- Clarification on Mixtral's Context Window: @_._pandora_._ and @i_am_dom discussed confusion regarding Mistral and Mixtral's context sizes and sliding window functionality. A Reddit update and inaccuracies in the Hugging Face documentation were mentioned, highlighting the need for HF to update their docs.
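Since the thread turned on how sliding-window attention relates to context size, here is a minimal sketch of a causal sliding-window attention mask: each query position may attend only to itself and the previous `window - 1` positions, so the per-layer attention span stays fixed even as the sequence grows. The function name and the tiny sizes (6 positions, window of 3) are illustrative assumptions and say nothing about how Mistral or Mixtral are actually configured.

```python
def sliding_window_mask(seq_len, window):
    """Return mask where mask[i][j] is True if query i may attend to key j.

    Causal constraint: j <= i. Sliding-window constraint: i - j < window.
    """
    return [[(j <= i) and (i - j < window) for j in range(seq_len)]
            for i in range(seq_len)]

mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
# x.....
# xx....
# xxx...
# .xxx..
# ..xxx.
# ...xxx
```

Each row allows at most `window` keys, which is why the window size and the overall context length are separate settings and easy to conflate when reading documentation.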

Links mentioned:

- vLLM | Mistral AI Large Language Models: vLLM can be deployed using a docker image we provide, or directly from the python package.

- Reddit - Dive into anything

- Mixtral Tiny GPTQ By TheBlokeAI: Benchmarks and Detailed Analysis. Insights on Mixtral Tiny GPTQ.: LLM Card: 90.1m LLM, VRAM: 0.2GB, Context: 128K, Quantized.

- You Have GIF - You Have No - Discover & Share GIFs

- TheBloke/Mixtral-8x7B-v0.1-GGUF · Hugging Face

- Mixtral

Mistral ▷ #models (79 messages🔥🔥):

- Mistral Large Surprises in Coding: @claidler reported better performance from Mistral Large than from GPT-4 on coding tasks, despite official tests suggesting GPT-4's superiority. They observed Mistral Large providing correct solutions where GPT-4 repeatedly failed, raising questions about the tests' accuracy or applicability in certain scenarios.

- Personal Benchmarks Matter Most: @tom_lrd advised that personal experience with models is the best benchmark and recommended trying different models with the same input to see how they perform on specific use cases.

- Mistral Next's Speed Questioned: @nezha___ asked whether Mistral Next is smaller than Mistral Large, noting its quicker responses and wondering if its speed is due to being a Mixture of Experts (MoE) model.

- Context Size Limit Clarified: @fauji2464, @mrdragonfox, and ._pandora_._ discussed warnings about exceeding the model's maximum length when using Mistral-7B-Instruct-v0.2. It was clarified that the model ignores content beyond the 32k-token limit, leading to performance issues.

- LLM Context Windows Explained: ._pandora_._ explained that Large Language Models (LLMs) like Mistral and Mixtral have a "narrow vision" and can only consider up to 32k tokens of context in each inference cycle. If the input exceeds this, the extra content is ignored, but the model still produces output based on the last 32k tokens.
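The "last 32k tokens" behavior can be sketched as simple left truncation. This assumes the client or server keeps the most recent tokens and drops the oldest ones; real stacks may instead raise an error or truncate differently. Token IDs here are plain integers standing in for a tokenizer's output, and `MAX_CONTEXT = 32_768` is an assumed reading of "32k".

```python
MAX_CONTEXT = 32_768  # assumed value for "32k"; check the model's own config

def fit_to_context(token_ids, max_context=MAX_CONTEXT):
    """Keep only the last `max_context` tokens (left truncation).

    Returns the kept tokens and how many tokens were dropped from the front.
    """
    dropped = max(0, len(token_ids) - max_context)
    return token_ids[dropped:], dropped

prompt = list(range(40_000))  # stand-in for 40k token IDs
kept, dropped = fit_to_context(prompt)
print(len(kept), dropped)  # 32768 7232
```

Dropping from the front keeps the most recent instructions visible, at the cost of silently losing whatever came first (often a system prompt), which is one way "ignored" content turns into degraded answers.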

Links mentioned:

LLM Visualization

Mistral ▷ #deployment (17 messages🔥):

- Seeking Mistral Deployment on Dual 3090s: User @generalenthu asked about the best approach for setting up Mistral on a system with 2x NVIDIA 3090 GPUs, aiming for minimal quantization and seeking advice on the trade-off between speed and GPU vs. RAM usage.

- VRAM Requirements for fp16: @mrdragonfox noted that running the model at fp16 precision would require approximately 90GB of VRAM.

- Model Run with Exllama: @mrdragonfox mentioned that on a 48GB VRAM setup, one can run Mistral at about 5-6 bits per weight (bpw) using exllama just fine.

- How to Start Setup and Use Quants: @mrdragonfox advised @generalenthu to start with a "regular oobabooga" default setup, access N inferences, and use quantized models from lonestriker and turboderp available on Hugging Face.

- Additional Resources and Community Support: @mrdragonfox suggested that @generalenthu join TheBloke's Discord for further support from a community that helps with local model deployment, as a supplement to the current community for this specific use case.
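The VRAM figures above follow from back-of-the-envelope arithmetic: weight memory is roughly parameter count × bits per weight ÷ 8. The sketch below assumes the model under discussion is Mixtral 8x7B with about 46.7B total parameters (the ~90GB fp16 figure points to it rather than the 7B Mistral), and it ignores KV cache and activation overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9

NUM_PARAMS = 46.7e9  # assumption: Mixtral 8x7B total parameter count
VRAM_GB = 48         # two 24GB RTX 3090s

for label, bpw in [("fp16", 16), ("6 bpw", 6), ("5 bpw", 5)]:
    need = weight_memory_gb(NUM_PARAMS, bpw)
    print(f"{label}: ~{need:.1f} GB, fits in {VRAM_GB} GB: {need < VRAM_GB}")
```

Under these assumptions fp16 needs roughly 93 GB, well beyond two 3090s, while 5-6 bpw lands around 29-35 GB and leaves headroom for the KV cache.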

Mistral ▷ #ref-implem (1 messages):

- Request for Minimalistic Mistral Training Guide: User @casper_ai mentioned that the community faces challenges in achieving optimal results with the Mixtral model. They referenced previous conversations suggesting an implementation discrepancy in the Hugging Face trainer and asked for a minimal reference implementation of Mixtral training.

Mistral ▷ #finetuning (1 messages):

- Smaug-Mixtral Outperforms Mixtral-8x7b: @bdambrosio mentioned that Smaug-Mixtral surpasses mixtral-8x7b-instruct-v0.1 in 8-bit exl2 quant tests, specifically for long-context scientific reasoning and medium-length report writing. Exact performance metrics were not provided, and results may vary by use case.

Mistral ▷ #showcase (3 messages):

- Collaborative AI for Offline LLM Agents: User @yoan8095 shared their work on using Mistral 7b for LLM Agents that operate offline, coupling it with a neuro-symbolic system for better planning. The repository, HybridAGI on GitHub, allows Graph-based Prompt Programming to program AI behavior.

- Feature-Rich Discord Bot Announcement: @jakobdylanc promoted their Discord bot, which can interface with over 100 LLMs and offers collaborative prompting, vision support, and streamed responses, all within 200 lines of code. The project is available on GitHub.

- Mistral-Large's Formatting Flaws: @fergusfettes reported that while Mistral-large produces good results, it struggles with formatting and with switching between completion mode and chat mode. They shared a video demonstrating how a loom integrating different LLMs can work: Multiloom Demo: Fieldshifting Nightshade.

Links mentioned:

- Multiloom Demo: Fieldshifting Nightshade: Demonstrating a loom for integrating LLM outputs into one coherent document by fieldshifting a research paper from computer science into sociology. Results vi…

- GitHub - jakobdylanc/discord-llm-chatbot: Supports 100+ LLMs • Collaborative prompting • Vision support • Streamed responses • 200 lines of code 🔥

- GitHub - SynaLinks/HybridAGI: The Programmable Neuro-Symbolic AGI that lets you program its behavior using Graph-based Prompt Programming: for people who want AI to behave as expected

Mistral ▷ #random (13 messages🔥):

- Kubernetes AI Tooling Made Easy: @alextreebeard shared their open-sourced package for simplifying the setup of AI tools on Kubernetes and invited feedback. The tool can be found at GitHub - treebeardtech/terraform-helm-kubeflow.

- The Arrival of Claude-3: @benjoyo. linked Anthropic's announcement of their new model family, Claude-3, and asked when a comparable "mistral-huge" might be released.

- Model Training Takes Time: In response to a question about how Mistral would answer the new competition, @mrdragonfox explained that large models take quite a while to train, and the large versions only came out recently.

- Competition Heating Up: After early testing, @benjoyo. observed that Anthropic's new model is "extremely capable and ultra steerable/adherent," while continuing to champion open weights as a differentiator.

- New AI Model Pricing Discussed: @nunodonato remarked on the cost of the new models, while @mrdragonfox provided specific pricing for the Opus model: input costs $15 per million tokens (MTok) and output $75 per MTok.
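Using the Opus prices quoted above ($15 per million input tokens, $75 per million output tokens), the cost of a request is straightforward arithmetic. The token counts in this sketch are hypothetical, and `Decimal` is used only to avoid floating-point rounding in currency values.

```python
from decimal import Decimal

# Opus prices as quoted in the discussion, in USD per million tokens.
INPUT_PER_MTOK = Decimal("15")
OUTPUT_PER_MTOK = Decimal("75")

def request_cost(input_tokens, output_tokens):
    """Return the USD cost of one request at per-million-token prices."""
    million = Decimal(1_000_000)
    return (input_tokens * INPUT_PER_MTOK + output_tokens * OUTPUT_PER_MTOK) / million

# Hypothetical request: a 10k-token prompt with a 1k-token answer.
print(request_cost(10_000, 1_000))  # 0.225
```

Because output tokens cost five times as much as input tokens here, long generated answers dominate the bill faster than long prompts do.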

Links mentioned:

GitHub - treebeardtech/terraform-helm-kubeflow: Kubeflow Terraform Modules - run Jupyter in Kubernetes 🪐

Mistral ▷ #la-plateforme (82 messages🔥🔥):

- Function Calling In NodeJS: @jetset2000 was looking for documentation on using function calling with Mistral in NodeJS. @sophiamyang helpfully pointed to an example in Mistral AI's JS client repository.

- Mistral Medium Model Timeout Issues: @patrice_33841 reported timeouts when making requests to the mistral-medium-latest model. Other users seemed to have no trouble with the medium model, and @mrdragonfox provided contact information for support, suggesting posting in the tech support channel or emailing support directly.

- Confusion on Prompt Documentation: @benjoyo expressed confusion about the consistency between user/system messages and the actual prompts in Mistral's documentation; @sophiamyang acknowledged the issue and promised clarification soon.

- Response Format Clarifications Needed: @gbourdin encountered issues with the new JSON response format, leading to a discussion about correct prompt settings, which @proffessorblue clarified with instructions from the docs, resolving @gbourdin's problem.

- Exploring Sentiment Analysis Efficacy: @krangbae shared experiences using different Mistral models for sentiment analysis, noting that 8x7b seemed more effective than the small model.

Links mentioned:

- Pricing and rate limits | Mistral AI Large Language Models: Pay-as-you-go

- Model Selection | Mistral AI Large Language Models: Mistral AI provides five API endpoints featuring five leading Large Language Models:

- Client code | Mistral AI Large Language Models: We provide client codes in both Python and Javascript.

- Function Calling | Mistral AI Large Language Models: Function calling allows Mistral models to connect to external tools. By integrating Mistral models with external tools such as user defined functions or APIs, users can easily build applications cater…