Top news of the day is DeepMind’s MoD paper describing a technique that, given a compute budget, can dynamically allocate FLOPs to different layers instead of uniformly. The motivation is well written:

Not all problems require the same amount of time or effort to solve. Analogously, in language modeling not all tokens and sequences require the same time or effort to accurately make a prediction. And yet, transformer models expend the same amount of compute per token in a forward pass. Ideally, transformers would use smaller total compute budgets by not spending compute unnecessarily.

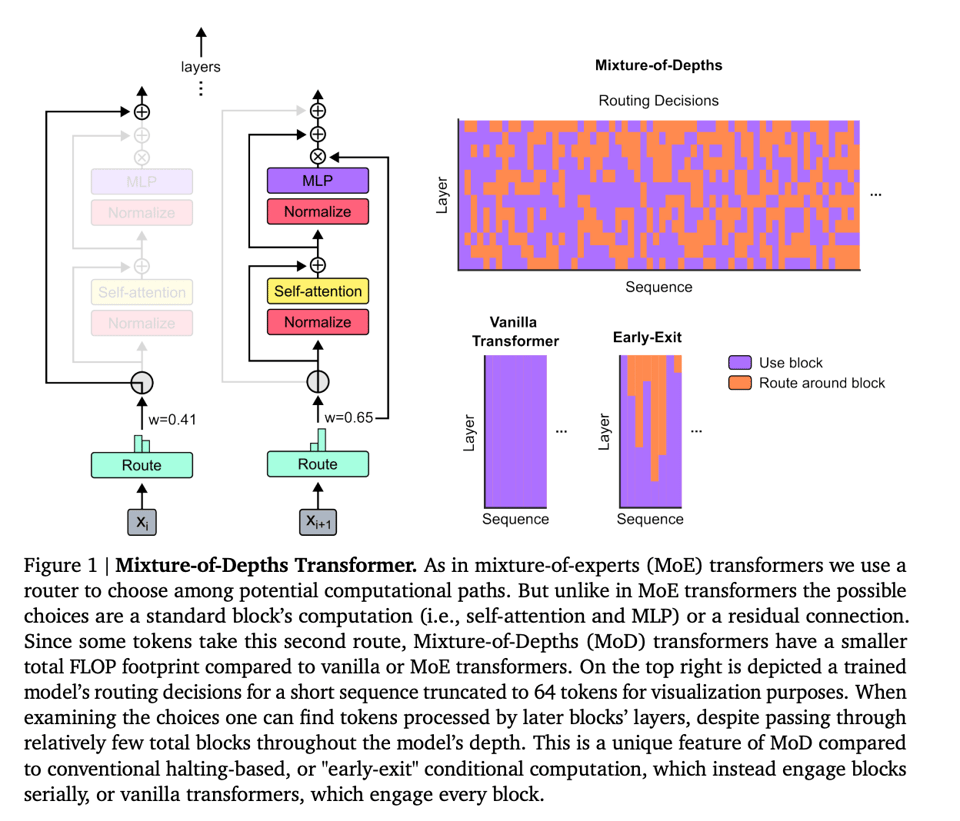

The method uses top-k routing allowing for selective processing of tokens, thus maintaining a fixed compute budget. You can compare it to a “depth” sparsity version of how MoEs scale model “width”:

We leverage an approach akin to Mixture of Experts (MoE) transformers, in which dynamic token-level routing decisions are made across the network depth. Departing from MoE, we choose to either apply a computation to a token (as would be the case for a standard transformer), or pass it through a residual connection (remaining unchanged and saving compute). Also in contrast to MoE, we apply this routing to both forward MLPs and multi-head attention. Since this therefore also impacts the keys and queries we process, the routing makes decisions not only about which tokens to update, but also which tokens are made available to attend to. We refer to this strategy as Mixture-of-Depths (MoD) to emphasize how individual tokens pass through different numbers of layers, or blocks, through the depth of the transformer

Per Piotr, Authors found that routing ⅛ tokens through every second layer worked the best. They also make an observation that the cost of attention for those layers decreases quadratically, so this could be an interesting way of making ultra long context length much faster. There’s no impact at training time, but can be “upwards of 50% faster” per forward pass.

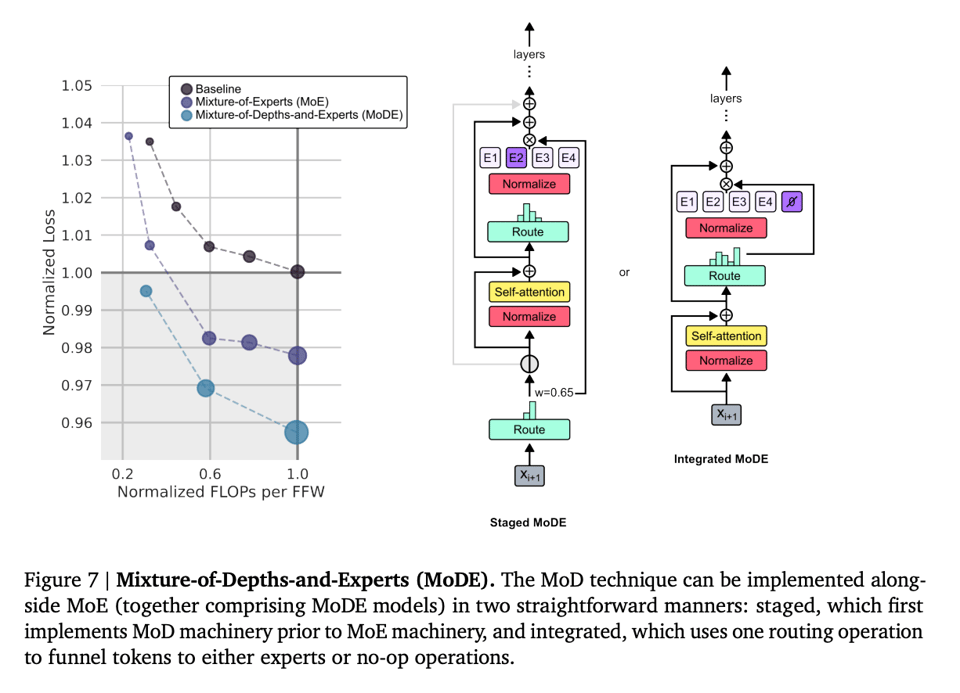

The authors also demonstrate how MoD can be combined with MoE (eg by having a no-op expert) to decouple the routing for queries, keys, and values:

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

AI Research and Development

- Concerns about LLM hype: In /r/MachineLearning, a post argues that LLM hype is driving attention and investment away from other potentially impactful AI technologies. The author claims there has been little progress in LLM performance and design since GPT-4, with the main approach being to make models bigger, and expresses concern about an influx of people without ML knowledge claiming to be “AI researchers”.

- Improving transformer efficiency: Deepmind introduces a method for transformers to dynamically allocate compute to specific positions in a sequence, optimizing allocation across layers. Models match baseline performance with equivalent FLOPs and training time, but require fewer FLOPs per forward pass and can be over 50% faster during sampling.

- Enhancing algorithmic reasoning: A new framework called Think-and-Execute decomposes LM reasoning into discovering task-level logic expressed as pseudocode, then tailoring it to each instance and simulating execution. This improves algorithmic reasoning by 10-20 percentage points over CoT and PoT baselines.

- Visual Autoregressive modeling: VAR redefines autoregressive learning on images as coarse-to-fine “next-scale prediction”, allowing AR transformers to learn visual distributions fast, surpass diffusion in image quality and speed, and exhibit scaling laws and zero-shot generalization similar to LLMs.

- On-device models: Octopus v2, an on-device 2B parameter model, surpasses GPT-4 accuracy and latency for function calling, enhancing latency 35-fold over LLaMA-7B with RAG. It is suitable for deployment on edge devices in production.

AI Products and Services

- YouTube’s stance on Sora: YouTube says OpenAI training Sora with its videos would break the rules, raising questions about data usage for AI training.

- Claude’s tool use: Anthropic’s Claude model now has the capability to use tools, expanding its potential applications.

- Cohere’s large model: Cohere releases Command R+, a scalable 104B parameter LLM focused on enterprise use cases.

- Google’s AI search monetization: There is speculation that Google’s AI-powered search will most likely be put behind a paywall, raising questions about the accessibility of AI-enhanced services.

AI Hardware and Performance

- Apple’s MLX performance: Apple’s MLX reaches 100 tokens/second for 4-bit Mistral 7B on M2 Ultra, showcasing strong on-device inference capabilities.

- QLoRA on consumer devices: QLoRA enables running Cohere’s 104B Command R+ model on an M2 Ultra, achieving ~25 tokens/sec and ~7.5 tokens/sec generation speed on a pro-sumer device.

- AMD’s open-source move: AMD is making its ROCm GPU computing platform open-source, including the software stack and hardware documentation. This could accelerate development and adoption of AI hardware and software.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- Google’s Training LLMs over Neurally Compressed Text: @arankomatsuzaki noted that Google’s approach of training LLMs over neurally compressed text outperforms byte-level baselines by a wide margin, though has worse PPL than subword tokenizers but benefits from shorter sequence lengths.

- Alibaba’s Qwen1.5 Models: @huybery announced the Qwen1.5-32B dense model, which incorporates GQA, shows competitive performance comparable to the 72B model, and impresses in language understanding, multilingual support, coding and mathematical abilities. @_philschmid added that Qwen1.5 32B is a multilingual dense LLM with 32k context, used DPO for preference training, has a custom license, is commercially usable, and is available on Hugging Face, achieving 74.30 on MMLU and 70.47 on the open LLM leaderboard.

- ReFT: Representation Finetuning for Language Models: @arankomatsuzaki shared the ReFT paper, which proposes a 10x-50x more parameter-efficient fine-tuning method compared to prior state-of-the-art parameter-efficient methods.

- Apple’s MM1 Multimodal LLM Pre-training: @_philschmid summarized Apple’s MM1 paper investigating the effects of architecture components and data choices for Vision-Language-Models (VLMs). Key factors impacting performance include image resolution, model size, and training data composition, with Mixture-of-Experts (MoE) variants showing superior performance compared to dense variants.

Techniques and Frameworks

- LangChain Weaviate Integration: @LangChainAI announced the

langchain-weaviatepackage, providing access to Weaviate’s open-source vectorstore with features like native multi-tenancy and advanced filtering. - Claude Function Calling Agent: @llama_index released a Claude Function Calling Agent powered by LlamaIndex abstractions, leveraging Anthropic’s tool use support in its messages API for advanced QA/RAG, workflow automation, and more.

- AutoRAG: @llama_index introduced AutoRAG by Marker-Inc-Korea, which automatically finds and optimizes RAG pipelines given an evaluation dataset, allowing users to focus on declaring RAG modules rather than manual tuning.

- LLMs as Compilers: @omarsar0 shared a paper proposing a think-and-execute framework to decompose reasoning in LLMs, expressing task-level logic in pseudocode and simulating execution with LMs to improve algorithmic reasoning performance.

- Visualization-of-Thought Prompting: @omarsar0 discussed a paper on Visualization-of-Thought (VoT) prompting, enabling LLMs to “visualize” reasoning traces and create mental images to guide spatial reasoning, outperforming multimodal LLMs on multi-hop spatial reasoning tasks.

Datasets

- Gretel’s Synthetic Text-to-SQL Dataset: @_philschmid shared Gretel’s high-quality synthetic Text-to-SQL dataset (retelai/synthetic_text_to_sql) with 105,851 samples, ~23M tokens, coverage across 100 domains/verticals, and a wide range of SQL complexity levels, released under Apache 2.0 license.

Compute Infrastructure

- AWS EC2 G6 Instances with NVIDIA L4 GPUs: @_philschmid reported on new AWS EC2 G6 instances with NVIDIA L4 GPUs (24GB), supporting up to 8 GPUs (192GB) per instance, 25% cheaper than G5 with A10G GPUs.

- Google Colab L4 GPU Instances: @danielhanchen noted that Google Colab now offers L4 GPU instances at $0.482/hr, with native fp8 support and 24GB VRAM, along with price drops for A100 and T4 instances.

Discussions and Perspectives

- Commoditization of Language Models: @bindureddy suggested that Google, with its strong revenue stream and facing threats from LLMs in search, should open-source Gemini 1.5 and 2.0, as the number of companies joining the open-source AI revolution grows, with only Google, OpenAI, and Anthropic remaining closed-source.

- Benchmarking Concerns: @soumithchintala raised issues with benchmarks posted by Google’s Jeff Dean and François Chollet, citing wrong timing code, benchmarking different precisions, and the need for Google teams to work with PyTorch teams before posting to avoid divisive moments in the community.

- AI Harming Research: @bindureddy argued that LLMs are harming AI research to some extent by diverting focus from tabular data and brand new innovation, predicting a glut of LLMs by the end of the year.

- Framing AI Products as “Virtual Employees”: @dzhng critiqued the framing of AI products as “virtual employees,” suggesting it sets unrealistic expectations and limits the disruptive potential of AI, proposing a focus on specific “scopes of work” and envisioning future “neural corporations” run by coordinating AI agents.

Memes and Humor

- Google’s Transformer 2: @arankomatsuzaki shared details on Google’s Transformer 2, which unifies attention, recurrence, retrieval, and FFN into a single module, performs on par with Transformer with 20x better compute efficiency, and can efficiently process 100M context length. A delayed April’s Fools joke.

- @cto_junior joked about their super fast RAG app using “numpy bruteforce similarity search” instead of expensive enterprise solutions.

- @vikhyatk quipped about working on a “mamba mixture of experts diffusion qlora 1.58bit model trained using jax, rust, go, triton, dpo, and rag.”

- @cto_junior humorously lamented the complexity of AWS policies, resorting to copying from Hackernoon and hoping it resolves 500 errors.

AI Discord Recap

A summary of Summaries of Summaries

1. Cutting-Edge LLM Advancements and Releases

-

Cohere unveiled Command R+, a 104B parameter LLM with Retrieval Augmented Generation (RAG), multilingual support, and enterprise capabilities. Its performance impressed many, even outshining GPT-4 on tasks like translating Middle High German.

-

Anthropic showcased live tool use in Claude, sparking analysis of its operational complexity. Initial tests found Claude “pretty good” but with latency challenges.

-

QuaRot, a new 4-bit quantization scheme, can quantize LLMs end-to-end with minimal performance loss. The quantized LLaMa2-70B retained 99% of its zero-shot capabilities.

-

JetMoE-8B is a cost-effective alternative to large models like LLaMA2-7B, claiming to match performance at just $0.1M in training costs while being academia-friendly.

2. Parameter-Efficient LLM Fine-Tuning Techniques

-

ReFT (Representation Finetuning) is a new method claimed to be 10-50x more parameter-efficient than prior techniques, allowing LLM adaptation with minimal parameter updates.

-

Discussions on LoRA, QLoRA, LoReFT, and other efficient fine-tuning approaches like Facebook’s new “schedule-free” optimizer that removes the need for learning rate schedules.

-

Axolotl explored integrating techniques like LoReFT and the latest PEFT v0.10.0 with quantized DoRA support.

3. Architectural Innovations for Efficient Transformers

-

Mixture-of-Depths enables dynamic FLOPs allocation in transformers via a top-k routing mechanism, promising significant compute reductions by processing easier tokens with less compute.

-

Discussions on combining Mixture-of-Experts (MoE) with Mixture-of-Depths, and potential for integrating these methods into existing models over weekends.

-

BitMat showcased an efficient implementation of the “Era of 1-bit LLMs” method, while the LASP library brought improved AMD support for longer context processing.

4. Open-Source AI Frameworks and Community Efforts

-

LM Studio gained a new community page on HuggingFace for sharing GGUF quants, filling the void left by a prolific contributor.

-

LlamaIndex introduced Adaptive RAG, AutoRAG, and the Claude Function Calling Agent for advanced multi-document handling.

-

Basalt emerged as a new Machine Learning framework in pure Mojo, aiming to provide a Deep Learning solution comparable to PyTorch.

-

Unsloth AI explored GPU memory optimizations like GaLore and facilitated discussions on finetuning workshops and strict versioning for reproducibility.

PART 1: High level Discord summaries

Perplexity AI Discord

-

Perplexity Pro Puzzlements: Engineers are questioning the capability and accessibility of Perplexity Pro, addressing how to enable channels, file deletion issues, and purchasing obstacles; a suggestion was made to contact support or mods for help.

-

AI’s Cloud Conundrum: There’s buzz about cloud services’ role in large language model (LLM) development, with debates over AWS vs. Azure market shares and speculative chats on a potential Perplexity-Anthropic collaboration.

-

Apple’s AI Ambitions Analyzed: The guild is analyzing Apple 3b model’s niche applications and ponders the mainstream potential of Apple Glass, contrasting these with Google’s VR initiatives.

-

API Pricing and Limits Laid Out: Queries about Perplexity’s API, such as purchasing additional credits with Google Pay and the cost of sonar-medium-chat ($0.60 per 1M tokens), have been clarified, with pointers to the rate limits and pricing documentation.

-

Community Curiosities With Perplexity: Members are actively using Perplexity AI search for a range of topics, from beauty and dictatorships to Cohere’s Command R; they’re also sharing content with reminders on how to set threads as shareable.

Stability.ai (Stable Diffusion) Discord

Maximizing Image Fidelity: Technical suggestions to circumvent issues with generating 2k resolution realistic images emphasized lower resolution generation followed by upscaling, minimizing steps, and engaging “hiresfix”. Trade-offs between quality and distortions during upscaling framed the dialogue.

SD3 Release Leaves Crowd Restless: While some guild members are eagerly awaiting Stable Diffusion 3 (SD3), others sense a delay, which has led to mixed feelings ranging from anticipation to skepticism and comparisons with other models like Ideogram and DALLE 3.

AI Meets Art: Creative discussions unfolded around using AI for artistic endeavors, highlighting Daz AI in image generation, and the intricacies of finessing models for art-specific outputs, such as generating clothing designs in Stable Diffusion.

VRAM to the Rescue: Technical discourse delved into model resource demands, particularly operating models across various VRAM allotments and the anticipation of SD3’s performance on standard consumer GPUs.

Demystifying Stable Diffusion Know-how: Users shared insights and sought advice on optimizing Stable Diffusion model versions and interfaces, covering best practices for image finetuning and effective model checkpoint management.

OpenAI Discord

Fine-Tuning API Gets a Makeover: OpenAI has rolled out updates to the fine-tuning API, aiming to give developers more control over model customization. The enhancements include new dashboards and metrics, and expand the custom models program, as detailed in OpenAI’s blog post and an accompanying YouTube tutorial.

AI Discussions Heat Up: Across channels, there is debate around concepts such as AI cognition and ASCII art generation, probing AI’s potential in 3D printing, and balancing excitement for releases with security measures. Additionally, implementation queries on using AI for document analysis and fine-tuning for data enhancement were highlighted, alongside an observation of inconsistent behavior when setting the assistant’s temperature to 0.0.

Prompt Engineering Tactics Unveiled: Members are sharing strategies to make GPT-3 produce longer outputs and to constrain responses to specific documentation. Tips range from starting a new chat with “continue” to stern instructions that make the AI confirm the existence of answers within provided materials.

Assertive Prompting May Boost GPT Accuracy: To ensure that GPT’s outputs are based strictly on supplied content, the advice is to give clear and assertive prompts. Whether discussing the nature of consciousness to mimic human responses or reinforcing documentation-specific replies, the community explores the semblance of an AI’s understanding.

Clarity on GPT-4 Usage Costs: Discussions clarify that incorporating GPT models into apps requires a subscription plan, such as the Plus plan, as all models now operate under GPT-4. Users seeking enhanced functionality with GPT models must consider this when developing AI-powered applications.

LM Studio Discord

-

LM Studio Stays Offline: It’s confirmed that LM Studio lacks web search capabilities akin to other AI tools, offering local deployment options, outlined in their discussion and LM Studio’s documentation.

-

Models Clash on the Leaderboard: The community is evaluating models on scoreboards like the LMsys Chatbot Arena Leaderboard, highlighting that only select top-ranked models allow local operation, a critical factor for this audience.

-

Big Models, Big GPUs, Big Questions: Members debated the performance trade-offs of multi-GPU setups in LM Studio against the size of models like Mixtral 8x7b and Command-R Plus, giving insights into token speeds and hardware-specific limitations, including issues mixing different generations and brands, mostly NVIDIA.

-

The Rise of Eurus: The community discussed the advancement of the Eurus-7b model, offering improved reasoning abilities. It has been partially trained on the UltraInteract dataset and is available on HuggingFace, indicative of the group’s continuous search for improved models.

-

Archiving and Community Support: LM Studio announced a new Hugging Face community page, lmstudio-community, for sharing GGUF quant models, filling the vacancy left by a notable community contributor.

-

Reliability Across Interfaces: Users compared the reliability of LM Studio’s beta features, such as text embeddings, against alternative local LLM user interfaces and discussed workarounds for issues, including loading limitations and ROCm’s potential with new Intel processors, shared on social platforms like Reddit and Radeon’s Tweet.

Nous Research AI Discord

Bold New Leap for LoRA: A proposal has been made to apply Low-Rank Adaptation (LoRA) to Mistral 7B, aiming to augment its capabilities. Plans are afoot to integrate a taxonomy-driven approach for sentence categorization.

State-of-the-Art Archival and Web Crawling Practices: Discussions highlighted the thin line between archival groups and data hoarding, with a nod toward Common Crawl for web crawling excluding Twitter. The promotion of Aurora-M, a 15.5B parameter open-source, multilingual LLM with over 2 trillion training tokens was noted, in addition to tools for structuring LLM outputs like Instructor.

LLM Landscape Expanded: Announcements included a 104B LLM, C4AI Command R+, with RAG functionality and support for multiple languages available on Hugging Face. The community also discussed GPT-4 fine-tuning pricing and welcomed updates on an AI development teased by @rohanpaul_ai, while highlighting the LLaMA-2-7B model’s 700K token context length training and the uncertainty regarding fp8 usability on Nvidia’s 4090 GPUs.

Datasets and Tools Forge Ahead: An introduction to Augmentoolkit, which converts books and computes into instruction-tuning datasets, was discussed. Excitement surrounded Severian/Internal-Knowledge-Map with its novel approach to LM understanding, and the neurallambda project’s aim to enable reasoning in AI with lambda calculus.

Dynamic Function Calling: An example of function calling with Hermes is to be demonstrated in a repository, alongside serious debugging efforts for its functioning with Vercel AI SDK RSC. The Hermes-Function-Calling repository faced critique, resulting in adherence to the Google Python Style Guide. Previewed was the Eurus-7B-KTO model, garnering interest for its use in the SOLAR framework.

Dependency Dilemmas and Dataset Stratagems: An emerging dependency issue was acknowledged without further context. The RAG dataset channel elucidated plans for pinning summaries, exploring adaptive RAG techniques, and the utilization of diverse data sources for RAG, along with discussions of Interface updates from Command R+ and Claude Opus.

World Building Steams Ahead with WorldSim: Tokens circulated regarding the WorldSim Versions & Command Sets and the Command Index, covering user experience details like custom emoji suggestions. Also brewing were thoughts on new channels for philosophy cross-pollinated with AI and a TRS-80 telepresence experience reflecting on Zipf’s law. Anticipation buzzed for a WorldSim update with enhanced UX, hoping to ground self-steering issues.

Unsloth AI (Daniel Han) Discord

GPU Memory Gains: The GaLore update promises to enhance GPU memory efficiency with fused kernels, sparking discussions on integrating it with Unsloth AI for superior performance.

Model Packing Misfits: Caution is advised against employing packing parameter on Gemma models due to compatibility issues, although it can hasten training by concatenating tokenized sequences.

Optimization Opportunities: There’s ongoing exploration into combining Unsloth with GaLore for memory and speed optimizations, despite GaLore’s default performance lag behind Lora.

Anticipating Unsloth’s New Features: Unsloth AI plans to release a “GPU poor” feature by April 22 and an “Automatic optimizer” in early May. The available Unsloth Pro since November 2023 is examined for distribution improvements.

Dataset Diversity in Synthetic Generation: Format flexibility is deemed inconsequential for synthetic dataset generation’s impact on performance, allowing for personal preference in formats chosen for fine-tuning LLMs.

Eagerly Awaiting Kaggle’s Reset: Kaggle enthusiasts await the new season, leveraging additional sleep hours due to Daylight Saving Time adjustments, while seeking AI news sources and discussing pretraining datasets potentially including libgen or scihub.

Unsloth Enables Streamlined Inference: Community feedback praises Unsloth’s ease of use for inference processes, with additional resources like batch inference guidelines being shared.

Finetuning Workshops Tackled: Users brainstorm on how to deliver effective finetuning workshops with hands-on experiences, involving innovations such as preparing models beforehand or employing LoRaX as a web UI for model interaction.

Version Control for Stability: Concerns about the impact of Unsloth updates on model reproducibility prompted a consensus on the necessity for strict versioning, to ensure numerical consistency and reversibility.

Parameter Efficiency in Fine-Tuning: A new fine-tuning technique called ReFT is showcased for being highly parameter-efficient, described in detail within a GitHub repo and an accompanying paper.

Eleuther Discord

Wiki Wisdom Now Publicly Accessible: Members tackled the challenges of accessing Wikitext-2 and Wikitext-103 datasets, sharing links from Stephen Merity’s page and Hugging Face, with concerns over the ease of use of raw data formats.

GateLoop Replication Spark Debate: Skepticism regarding the GateLoop architecture’s perplexity scores met clarifying information with released code, igniting discussions on experiment replication and the performance of various attention mechanisms.

Modular LLMs at the Forefront: Intense discussions focused on Mixture of Experts (MoE) architectures, spanning interpretability, hierarchical vs. flat structures, and efficiency strategies in Large Language Models (LLMs), referencing multiple papers and a Master’s thesis tease suggesting an upcoming breakthrough in MoE Floating Point Operations (FLOPs).

Interpretability Implementations Interchange: Queries about the availability of an opensource implementation of AtP* led to the sharing of the GitHub repo for AtP*, while David Bau sought community support on GitHub for nnsight to fulfill NSF reviewer requirements.

From Troubleshooting to Trials in the Thunderdome: Discussions in #lm-thunderdome dove into troubleshooting, from syntax quirks with top_p=1 to confusion over model argument compatibility and efficiency gains from batch_size=auto, advising fresh installations or the use of Google Colab for certain issues.

Gemini Garners Cloud Support: A brief message highlighted Gemini’s support implementation by AWS, with a mention of support from Azure as well.

Modular (Mojo 🔥) Discord

Boosting Mojo’s Debugging Capabilities: Engineers queried about debugging support for editors like neovim, incorporating the Language Server Protocol (LSP) for enhanced problem-solving.

Dynamic Discussions on Variant Types: The use of Variant type was endorsed over isinstance function in Mojo, highlighting its dynamic data storage abilities and type checks using isa and get/take methods as shown in the Mojo documentation.

Basalt Lights Up ML Framework Torch: The newly minted Machine Learning framework Basalt is making headlines, differentiated as “Deep Learning” and comparable to PyTorch, with its foundational version v.0.1.0 on GitHub and related Medium article.

Counting Bytes, Not Just Buckets: A discourse on bucket sizing for value storage highlighted that each bucket holds UInt32 values, a mere 4 bytes each. This attention to memory efficiency is critical for handling up to 2^32 - 1 values.

Evolving Interop with Python: Progress in interfacing Python with Mojo was revealed, focusing on the use of PyMethodDef and PyCFunction_New, with stable reference counting and no issues to date. The current developments can be viewed on rd4com’s GitHub branch.

OpenAccess AI Collective (axolotl) Discord

-

LASP Library Gains Traction: The Linear Attention Sequence Parallelism (LASP) library is commended for its improved AMD support and the ability to split cache over multiple devices, facilitating longer context processing without the flash attn repository.

-

GPT-3 Cost-Benefit Analysis: AI engineers are engaging in cost analysis of GPT-3, concluding that purchasing a GPU could be more cost-effective than renting after approximately 125 days, indicating a consideration for long-term investment over continuous rental costs.

-

Colab GPU Update Engages Community: The AI engineering community reacts to Colab’s new GPU offerings and pricing changes, with a tweet from @danielhanchen mentioning new L4 GPUs and adjusted prices for A100 GPUs, supported by a shared spreadsheet detailing the updates.

-

Technical Discussion on Advanced Fine-tuning Strategies: Conversations are centered on fine-tuning methods like LoReFT, quantized DoRA in PEFT version 0.10.0, and a new technique from Facebook Research that negates the need for learning rate schedules, indicating a drive for optimizing model performance through innovative techniques.

LlamaIndex Discord

-

Webinar Whistleblower: Don’t miss the webinar action! Jerryjliu0 reminded users that the webinar is starting in 15 minutes with a nudge on the announcements channel.

-

AI Agog Over Adaptive RAG and AutoRAG: The Adaptive RAG technique is catching eyes with potential for tailored performance on complex queries as per a recent tweet, while AutoRAG steps up to automatically optimize RAG pipelines for peak performance, detailed in another tweet.

-

RAG Reimagined in Visual Spaces: AI aficionados discussed the potential of visual retrieval-augmented generation (RAG) models, capable of counting objects or modifying images with specific conditions, while Unlocking the Power of Multi-Document Agents with LlamaIndex hints at recent advancements in multi-document agents.

-

Troubleshooting Time in Tech Town: AI engineers shared challenges like async issues with SQL query engines, Azure BadRequestError puzzles, prompt engineering tips for AWS context, complexities of Pydantic JSON structures, and RouterQueryEngine filter applications.

-

Hail the Claude Calling Agent: LlamaIndex’s latest, the Claude Function Calling Agent, touted for enabling advanced tool use, can now be found on Twitter (tweet), boasting of new applications with Haiku/Sonnet/Opus integration.

OpenRouter (Alex Atallah) Discord

Claude Gets Tangled in Safety Nets: Users report higher decline rates when utilizing Claude with OpenRouter API compared to Anthropic’s API, suspecting OpenRouter might have added extra “safety” layers that interfere with performance.

Restoring Midnight Rose: Midnight Rose experienced downtime but was brought back online after restarting the cluster. The incident has sparked talks among users for switching to a more resilient provider or technology stack.

A Symphony of Modals: Following a shift to multimodal functionality, the Claude 3 model now accepts image inputs, necessitating code updates by developers. More details are announced here.

Command R+ Sparks Code-Conducting Excitement: Command R+, a 104B parameter model from Cohere, noted for its strong coding and multilingual capabilities, has excited users about its incorporation in OpenRouter, and comprehensive benchmarks can be found here.

Troubleshooting the Mixtral Puzzle: The Mixtral-8x7B-Instruct encountered issues following a JSON schema, which was successfully resolved by OpenRouter, not the providers, creating an eagerness for fixes and updates to streamline use with JSON modes.

HuggingFace Discord

A New Contender in Image Generation: A Visual AutoRegressive (VAR) model is proposed that promises to outshine diffusion transformers in image generation, boasting significant improvements in Frechet inception distance (FID) from 18.65 to 1.80 and an increase in inception score (IS) from 80.4 to 356.4.

Rethinking Batch Sizes for Better Minima: Engineers are debating whether smaller batch sizes, even though they slow down training, could achieve better results by not skipping over optimal local minima, in contrast to larger batch sizes that might expedite training but perform suboptimally.

Update Your Datasets like Git: AI practitioners are reminded that updates to datasets and models on Hugging Face require the same git-like discipline—an update locally followed by a commit and push—to reflect changes on the platform.

Bridging AI and Music with Open Source: A breakthrough was shared in the form of a musiclang2musicgen pipeline demonstrated through a YouTube video, promoting the viability of open-source solutions in audio generation.

Stanford’s Treasure Trove for NLP Newbies: For those starting in NLP and deciding between Transformer architectures and traditional models like LSTM, the recommendation is to utilize the Stanford CS224N course, available through a YouTube playlist, as a first-rate resource.

Tuning and Deploying LLMs: Questions arose concerning Ollama model deployment, especially regarding memory requirements for the phi variant, along with inquiries on whether local deployment or API-based solutions like OpenAI’s are more suitable for particular use cases.

tinygrad (George Hotz) Discord

Tinygrad’s NPU Buzz and Intel GPU Gossip: Discussion in the guild mentioned that while tinygrad lacks dedicated NPU support on new laptops, it provides an optimization checklist for comparing performance with onnxruntime. Guild members also dissected the Linux kernel 6.8’s capability to drive Intel hardware, especially post-Ubuntu 24.04 LTS release, eyeing advancement in Intel’s GPUs and NPUs’ kernel drivers.

Scalability Dialogue and Power Efficiency Talks: Dialogues touched on tinygrad’s future scalability, with George Hotz indicating the potential for significant scaling using a 200 GbE full 16x interconnect slot and teased multimachine support. There was also a comparison of NPUs and GPUs in terms of power efficiency, highlighting NPUs’ ability to match GPU performance with considerably less power consumption.

Prospects and Perils in Kernel Development: Among AI engineers, there was recognition of the obstacles presented by AVX-512 and interest in Intel making improvements based on a discussion thread on Real World Technologies. Conversations also covered AMD’s open-source intentions with a side of skepticism towards the actual impact, and looked forward to how the AMD Phoronix update will affect the scene.

Learning Through Tinygrad’s JIT: A post cleared confusion regarding JIT cache collection, and a community member contributed study notes to aid in performance profiling with DEBUG=2 for tinygrad. There’s a collective effort to refine a community-provided TinyJit tutorial, as the author welcomed corrections, signaling the community’s commitment to mutual learning and documentation accuracy.

Community Collaboration Encouraged: The conversations conveyed a strong sentiment for peer collaboration, urging knowledgeable members to submit pull requests to correct inaccuracies in TinyJit documentation, thus promoting a help-forward approach among the guild participants.

Interconnects (Nathan Lambert) Discord

-

Command R+ Enters The Enterprise Arena: Cohere announced the launch of Command R+, a scalable large language model focusing on Retrieval-Augmented Generation (RAG) and Tool Use, boasting a 128k-token context window and multilingual capabilities, with its weights available on Hugging Face.

-

Cost and Performance of Models in the Spotlight: The new JetMoE-8B model, positioned as a cost-effective alternative with minimal compute requirements, is claimed to outperform Meta’s LLaMA2-7B and is noted for being accessible to academia, verifying its details on Hugging Face.

-

A Surge of Enhancement Techniques for Efficiency: The conversation pivoted to DeepMind’s Mixture of Depths, which dynamically allocates FLOPs across transformer sequences, possibly paving the way for future integration with Mixture of Experts (MoE) models and inviting weekend experimentation.

-

Upcoming Guest Lecture Spotlights Industry-Research Integration: Nathan will present at CS25, amidst a lineup of experts from OpenAI, Google, NVIDIA, and ContextualAI, as listed on Stanford’s CS25 class page, underscoring ongoing industry-academic synergy.

-

Legal Threats and Credit Disputes Occasion Skepticism: Emphasized discussions include Musk’s hinted legal pursuits in a tweet and doubts voiced over a former colleague’s claims of credit on a project, revealing underlying tensions in the community interaction.

LangChain AI Discord

-

Discourse on Chain-based JSON Parsing: AI engineers engaged in a rigorous discussion about employing Output Parsers, Tools, and Evaluators within LangChain to ensure JSON formatted output from an LLM chain. They also tackled the intricacies of ChatGroq summarization errors, shared tactics for handling legal document Q&A chunking, compared the performance of budget LLMs, and expressed a need for tutoring on the RAG (retrieval-augmented generation) technique within LangChain.

-

Troublesome PDF Agent and Azure Integration Query: Engineers brainstormed on tuning an agent’s search protocol that was defaulting to PDF searches and consulted on integrating Azure credentials within a bot context while maintaining a FAISS Vector Database.

-

Semantic Chunking Goes TypeScript: A community contributor put forward a TypeScript implementation for Semantic Chunking, thereby extending the functionality to Node.js environments.

-

DSPy Tutorial Goes Español: A basic tutorial for DSPy, targeting Spanish-speaking enthusiasts, has been shared through a YouTube tutorial, thus broadening access to the application.

LAION Discord

AI Skirmishes with Stress and Time: The community is discussing AIDE’s achievements in Kaggle competitions, questioning if it’s comparable to the human contestant experience that involves factors like stress and time constraints. No consensus was reached, but the debate highlights the growing capabilities of AI in competitive data science.

Back to Basics with Apple and PyTorch: The technical crowd is expressing frustration over Apple’s MPS with some recommending trying the PyTorch nightly branch for potential fixes. Additionally, the benefits of PyTorch on macOS, specifically the aot_eager backend, were shown with a case of the backend reducing image generation time significantly when leveraging Apple’s CoreML.

A Glimpse into Audio AI: There’s curiosity about capabilities such as DALL·E’s image edit history and the desire to implement a similar feature within SDXL. Moreover, questions arose about voice-specific technologies for parsing podcast audio beyond conventional speaker diarization.

Revival of Access and Information: Discussions revealed concerns over Reddit’s API access being cut and its effects on developers and the blind community, as well as the reopening of the subreddit /r/StableDiffusion and its implications for the community.

Computational Smarts in Transformers: The buzz is about Google’s token compression method which aims to shrink model size and computational load, and a paper discussing a dynamic FLOPs allocation strategy in transformer models, employing a top-$k$ routing algorithm that balances computational resources and performance. This method is described in the paper “Mixture-of-Depths: Dynamically allocating compute in transformer-based language models”.

Latent Space Discord

Dynamic Allocation Divides the Crowd: DeepMind’s approach to dynamic compute in transformers, dubbed Mixture-of-Depths, garners mixed reactions; some praise its compute reductions while others doubt its novelty and practicality.

Claude Masters Tools: Anthropic’s Claude exhibits impressive tool use, stirring discussions about the practical applications and scalability of such capabilities within AI systems.

Paper Club Prepares to Convene: The San Diego AI community announces a paper club session, encouraging participants to select and dive into AI-related articles, with a simple sign-up process available to those eager to join.

ReFT Redefines Fine-Tuning: Stanford introduces ReFT (Representation Finetuning), touting it as a more parameter-efficient fine-tuning method, which has the AI field weighing its pros and cons against existing techniques.

Keras vs. PyTorch: A Heated Benchmark Battle: François Chollet highlights a benchmark where Keras outperforms PyTorch, sparking debates over benchmarks’ fairness and the importance of out-of-the-box speed versus optimized performance.

Enroll in AI Education: Latent Space University announces its first online course with a focus on coding custom ChatGPT solutions, inviting AI engineers to enroll and emphasizing the session’s applicability for those looking to deepen their knowledge in AI product engineering.

OpenInterpreter Discord

OpenInterpreter Talks the Talk: An innovative wrapper for voice interactions with OpenInterpreter has been developed, though it falls short of 01’s voice capabilities. The community is engaging in the set up and compatibility challenges, with Windows users struggling and CTRL + C not exiting the terminal as expected.

Compare and Contrast with OpenAI: A mysterious Compare endpoint has surfaced in the OpenAI API’s playground, yet without formal documentation; it facilitates direct comparisons between models and parameters.

Python Predicaments and Ubuntu Upset: OpenInterpreter’s 01OS is wrestling with Python 3.11+ incompatibility issues, suggesting a step back to Python 3.10 or lower for stability. Meanwhile, Ubuntu 21 and above users find no support for OpenInterpreter due to Wayland incompatibility, as x11 remains a necessity as noted in Issue #219.

Listening In, No Response: Users have reported troubling anomalies with 01’s audio connection, where sound is recorded but not transferred for processing, indicating potential new client-side bugs.

Conda Conundrum: To handle troublesome TTS package installations, the recommendation is to create a Conda environment using Python 3.10 or lower, followed by a repository re-clone and a clean installation to bypass conflicts.

CUDA MODE Discord

BitMat Breakthrough in LLM: The BitMat implementation was brought into the spotlight, reflecting advances in the “Era of 1-bit LLMs” via an efficient method hosted on GitHub at astramind-ai/BitMat.

QuaRot Quashes Quantization Quibbles: A newly introduced quantization scheme called QuaRot promises effective end-to-end 4-bit quantization of Large Language Models, with the notable achievement of a quantized LLaMa2-70B model maintaining 99% of its zero-shot performance.

CUDA Kernel Tutorial Gets Thumbs Up: A revered Udacity course on “Intro to Parallel Programming” was resurfaced for its enduring relevance on parallel algorithms and performance tuning, applicable even a decade after its introduction.

HQQ-GPT-Fast Fusion: There was a fiery conversation in the #hqq channel regarding integrating and benchmarking HQQ with gpt-fast, focusing on leveraging Llama2-7B models and experimenting with 3/4-bit quantization strategies for optimizing LLMs.

Enhanced Visualization Aims for Clarity: Triton-viz discussions aimed at better illustrating data flows in visualizations with amendments like directional arrows, value display on interactive elements, and possible shifts to JavaScript frameworks such as Three.js for superior interactivity.

Datasette - LLM (@SimonW) Discord

-

AI Product Development Guided by Wisdom: A deep dive into Hamel Husain’s blog post has stimulated discussion on best practices in evaluating AI systems, focusing on its utility for building robust AI features and enterprises.

-

The Datasette Initiative: Intentions are set to build evaluations for the Datasette SQL query assistant plugin, with emphasis placed on empowering users through prompt visibility and editability.

-

Perfecting Prompt Management: Three strategies for managing AI prompts in large applications have been proposed: a localization pattern with separate prompt files, a middleware pattern with an API for prompt retrieval, and a microservice pattern for AI service management.

-

Breaking Down Cohere LLM’s JSON Goldmine: The richness of Cohere LLM’s JSON responses was highlighted, evidenced by a detailed GitHub issue comment, revealing its potential in enhancing LLM user experiences.

-

DSPy: A Discussion Divided: The guild saw a split in opinion on the DSPy framework; some members were skeptical of its approach to simplifying LLMs into “black boxes,” while others showed enthusiasm for the unpredictability it introduces, likening it to a form of magical realism in AI.

DiscoResearch Discord

Judge A Book By Its Creativity: The new EQBench Creative Writing and Judgemark leaderboards have sparked interest with their unique assessments of LLMs’ creative output and judgement capabilities. Notably, the Creative Writing leaderboard leverages 36 narrowly defined criteria for better model discrimination, and a 0-10 quality scale has been recommended for nuanced quality assessments.

COMET’s New Scripts Land on GitHub: Two scripts for evaluating translations without references, comet_eval.ipynb & overall_scores.py, are now available in the llm_translation GitHub repository, signaling a step forward in transparency and standardized LLM performance measurement.

Cohere’s Demo Outshines the Rest: A new demo by CohereForAI on Hugging Face’s platform has showcased a significant leap in AI models’ grounding capabilities, inviting discussions on its potential to shape future model developments.

Old School Translations Get Schooled: The Hugging Face model, command-r, seemingly makes traditional methods of LLM Middle High German translation training obsolete with its translation prowess and is suggested to revolutionize linguistic database integrations during inference.

Pondering the Future of Model Licensing: The potential open-sourcing of CohereForAI’s model license is a hot topic, with comparative discussions involving GPT-4 and Nous Hermes 2 Mixtral underscoring the expected community growth and innovation that could mirror the Mistral model’s impact.

Mozilla AI Discord

-

Mozilla’s Solo Soars into Site Building: Mozilla proudly presents Solo, a new no-code AI website builder designed for entrepreneurs, currently available in beta. To hone the tool, Mozilla seeks early product testers who can provide valuable feedback.

-

Optimized GPU Usage for AI Models: Engineers recommend using

--gpu nvidiabefore-nglfor better performance in model operations; a 16GB 4090 Mobile GPU supports up to 10 layers at Q8. The exact number of layers that can be run efficiently may vary based on the model and GPU capacity. -

Tooling Up with Intel: Intel’s oneAPI basekit is being utilized alongside

icxfor its necessity in working withsyclcode andonemkl, which is pertinent within the Intel ecosystem. This integration underlines Intel’s significant role in the AI operations workflow. -

Kubernetes Clusters and AI Performance: Utilizing a mistral-7b model within a Kubernetes cluster (7 cores, 12GB RAM) resulted in a steady rate of 5 tokens per second; discussions are underway concerning whether RAM size, RAM speed, CPU, or GPU power play the biggest role in scaling this performance.

-

Caution Against Possible Malware in AI Tools: Raised cybersecurity concerns about llamafile-0.6.2.exe being flagged as malicious have prompted user vigilance. VirusTotal reports indicate both versions 0.6.2 and 0.7 of llamafile have been flagged, with the latter having a lower risk score, as seen on references like VirusTotal.

Skunkworks AI Discord

-

Dynamic Compute Allocation via MoD: The introduction of the Mixture-of-Depths (MoD) method for language models, as discussed in Skunkworks AI, enables dynamic compute allocation akin to MoE transformers but with a solitary expert, optimizing through a top-k routing mechanism. The potential for more efficient processing tailored to specific token positions is expounded in the research paper.

-

Standalone Video Content: A link to a YouTube video was shared without additional context, which likely falls outside the scope of technical discussions.

-

Unspecified Paper Reference: A member shared an arXiv link to a paper without accompanying commentary, making its relevance unclear. The document can be accessed here, but without context, its importance to ongoing conversations cannot be determined.

LLM Perf Enthusiasts AI Discord

- Anthropic’s AI Blesses the Stage: A member highlighted a tweet from AnthropicAI indicating promising initial test results for their new AI model.

- High Performance Met with High Latency: Although the AI’s capabilities were applauded, a concern was raised that latency issues become a bottleneck when dealing with serial AI operations.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1314 messages🔥🔥🔥):

-

Perplexity Pro Inquiries: Users questioned the capabilities and accessibility of Perplexity Pro. They discussed how to enable channels, the inability to delete uploaded files, and difficulties purchasing the Pro plan due to redirects. Support suggested emailing or contacting mods directly for assistance.

-

Cloud Services and AI: Conversations centered around cloud service providers and their role in the LLM race. There were debates over the market shares of AWS and Azure, and speculations about the potential collaboration between Perplexity and Anthropic were discussed.

-

Apple’s Vision and AI Strategy: Users expressed views on Apple 3b model, discussing its niche use case and the need for lighter, less expensive iterations. There was sentiment that Apple Glass could be more mainstream and that Google’s VR initiatives were preferred.

-

AI Model Usage and Fine-tuning: Queries were made about the context length of GPT-4 Turbo vs Claude Opus, with suggested parity at 32k tokens. Discussions on open-source models emphasized Stable Diffusion 3 and the possibility of government interference in open-sourcing decisions.

-

User Interface and Accessibility Challenges on Arc: Users shared tips on using Arc browser more efficiently and reported bugs affecting the user interface, including issues with changing settings and accessing extensions.

Links mentioned:

- Pricing: no description found

- Introducing improvements to the fine-tuning API and expanding our custom models program: We’re adding new features to help developers have more control over fine-tuning and announcing new ways to build custom models with OpenAI.

- Yes No GIF - Yes No - Discover & Share GIFs: Click to view the GIF

- Pokemon Pokemon Go GIF - Pokemon Pokemon Go The Pokemon Company - Discover & Share GIFs: Click to view the GIF

- Is It GIF - Is It - Discover & Share GIFs: Click to view the GIF

- Sal Lurking Sal Vulcano GIF - Sal Lurking Sal Lurk Sal Vulcano - Discover & Share GIFs: Click to view the GIF

- Chat Completions: no description found

- OpenAI's STUNNING "GPT-based agents" for Businesses | Custom Models for Industries | AI Flywheels: Join Our Forum:https://www.natural20.com📩 My 5 Minute Daily AI Brief 📩https://natural20.beehiiv.com/subscribe🐥 Follow Me On Twitter (X) 🐥https://twitter....

- 1111Hz Conéctate con el universo - Recibe guía del universo - Atrae energías mágicas y curativas #2: 1111Hz Conéctate con el universo - Recibe guía del universo - Atrae energías mágicas y curativas #2Este canal se trata de curar su mente, alma, cuerpo, trast...

- 2024年から始めるPerplexityの使い方超入門: 「時間がない」「スキルがない」そんな人にために丸投げできるブログ代行サービス「Hands+」を始めました。 → https://bit.ly/blog-beginner検索エンジンからの集客を増やしたい企業様向けのオウンドメディア立ち上げサービスはこちら → https://bit.ly/owned-media6...

- 2024年から始めるPerplexityの使い方超入門: 「時間がない」「スキルがない」そんな人にために丸投げできるブログ代行サービス「Hands+」を始めました。 → https://bit.ly/blog-beginner検索エンジンからの集客を増やしたい企業様向けのオウンドメディア立ち上げサービスはこちら → https://bit.ly/owned-media6...

- Revolutionizing Search with Perplexity AI | Aravind Srinivas: Join host Craig Smith on episode #175 of Eye on AI as he engages in an enlightening conversation with Aravind Srinivas, co-founder and CEO of Perplexity AI.I...

- Inside Japan's Nuclear Meltdown (full documentary) | FRONTLINE: A devastating earthquake and tsunami struck Japan on March 11, 2011 triggering a crisis inside the Fukushima Daiichi nuclear complex. This 2012 documentary r...

- Antitrust (2001) ⭐ 6.1 | Action, Crime, Drama: 1h 48m | PG-13

Perplexity AI ▷ #sharing (11 messages🔥):

- Perplexity AI in Action: Members shared various Perplexity AI search links touching on subjects like beauty, the rise of dictatorships, and queries related to Cohere’s Command R.

- Setting Threads to Shareable: One member posted a reminder to others to ensure their threads are set to shareable, providing a Discord instruction link.

- Seeking Understanding and Improvements: Users queried Perplexity AI for insights on different topics and also expressed looking for improvements on a challenging day using the introducing improvements link.

- From Philosophical to Personal: The discussions spanned from general knowledge queries like “Who was Jean?” to more personalized searches suggesting specific needs or incidents of the day.

Perplexity AI ▷ #pplx-api (18 messages🔥):

-

Clarification on Search API Cost Concern: A discussion was sparked about why search APIs are viewed as expensive. Icelavaman clarified that the cost is not per search but per request, meaning that a single request to the model could encapsulate multiple searches within it.

-

Paying for More API Credits: Mydpi asked about purchasing additional API credits using Google Pay for their pro subscription, and ok.alex confirmed that more credits can be bought via the API settings on the web, with Google Pay being a valid payment method.

-

Pricing Inquiry for Sonar-Medium-Chat: Julianc_g inquired about the pricing of sonar-medium-chat, and icelavaman responded, confirming the price to be $0.60 per 1M tokens.

-

Query About Subscription Bonus and Payment Methods: Mydpi received a response from ok.alex indicating that the $5 Pro Bonus and purchase of more credits can be managed via Google Play as one of the payment options on the web settings page.

-

Rate Limits and Concurrent API Calls: Perplexity AI FAQ provided information about concurrent API calls and referred to the rate limits and pricing structure mentioned in the official pplx-API documentation for further details.

Stability.ai (Stable Diffusion) ▷ #general-chat (600 messages🔥🔥🔥):

-

Speed and Realism in Image Generation: A user struggled with generating high-resolution (2k) realistic images of people using “realistic vision v6”. The suggestion was to generate at lower resolution, upscale, use fewer steps, and enable “hiresfix” for better results. Discussions centered around the challenges of maintaining quality with upscaling and the distortions that sometimes result.

-

Stable Diffusion 3 Anticipation and Access Concerns: Amidst the excitement for the upcoming release of Stable Diffusion 3 (SD3), some users felt the release was delayed, while others anticipated new invites signaling progress. Thoughts on SD3 ranged from its potential improvements to skepticism about its impending release and comparison with rival models like Ideogram and DALLE 3.

-

AI Delving into Artistic Territories: Users discussed integrating AI with artistic creation, such as leveraging Daz AI to generate images. The dialogue included tactics for generating images in a particular style and optimizing model training and merging techniques for creating content like clothing ‘Loras’ using Stable Diffusion.

-

Technical Discussions on Model Resource Requirements: The conversation touched on technical aspects like running models with different VRAM capacities, handling checkpoints across different user interfaces, and the prospects of the forthcoming SD3 running efficiently on consumer GPUs.

-

Exploration and Optimization of Stable Diffusion Usage: Users exchanged tips and sought advice on using different versions of Stable Diffusion models and user interfaces. They discussed alternatives to generating better quality images, the process of finetuning images, and handling model checkpoints.

Links mentioned:

- Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction: We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolutio...

- Identifying Stable Diffusion XL 1.0 images from VAE artifacts: The new SDXL 1.0 text-to-image generation model was recently released that generates small artifacts in the image when the earlier 0.9 release didn't have them.

- Home v2: Transform your projects with our AI image generator. Generate high-quality, AI generated images with unparalleled speed and style to elevate your creative vision

- Observing with NASA: Control your OWN telescope using the MicroObservatory Robotic Telescope Network.

- Reddit - Dive into anything: no description found

- Never Gonna Give You Up - Rick Astley [Minions Ver.]: Stream Never Gonna Give You Up - Rick Astley [Minions Ver.] by Pelusita,la chica fideo on desktop and mobile. Play over 320 million tracks for free on SoundCloud.

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

- NewRealityXL ❗ All-In-One Photographic - ✔2.1 Main | Stable Diffusion Checkpoint | Civitai: IMPORTANT: v2.x ---> Main Version | v3.x ---> Experimental Version I need your time to thoroughly test this new 3rd version to understand all...

OpenAI ▷ #annnouncements (1 messages):

- Boosting Developer Control with Fine-Tuning API: OpenAI announces enhancements to the fine-tuning API, introducing new dashboards, metrics, and integrations to provide developers with greater control, and expanding the custom models program with new options for building tailored AI solutions. Introducing Improvements to the Fine-Tuning API and YouTube video on various techniques detail how to enhance model performance and work with OpenAI experts to develop custom AI implementations.

Link mentioned: Introducing improvements to the fine-tuning API and expanding our custom models program: We’re adding new features to help developers have more control over fine-tuning and announcing new ways to build custom models with OpenAI.

OpenAI ▷ #ai-discussions (539 messages🔥🔥🔥):

-

AI Discussions Span Broad Spectrum: Users engaged in spirited discussion about AI, ranging from machine cognition to AI’s understanding and generation of ASCII art. Terminology and concepts like sentience, consciousness, and the nature of AI’s cognitive processes, including whether LLMs “think” or merely process information, were debated.

-

Reflections on Business Ideas and AI Limitations: One user proposed a business idea leveraging AI’s capabilities to generate money, involving creating AI prompts compiled from generated tips. Another member pondered the possibility of using language models to perform tasks traditionally associated with humans, like playing chess or successful business planning.

-

Speculation on AI’s Potential in Various Fields: Users expressed anticipation for the integration of AI in fields such as 3D printing and design, suggesting ideas like a generative fill for 3D modeling that could revolutionize manufacturing.

-

Concerns and Considerations About AI Product Releases: A discussion point highlighted frustration with the AI product release process, noting OpenAI’s cautionary stance due to security concerns versus users’ eagerness for unrestricted access to new AI capabilities.

-

Queries About Implementing AI Features: Questions arose about implementing features like document analysis and using fine-tuning versus embeddings for internal company data augmentation, with users discussing the efficacy and suitability of different AI techniques for specific applications.

Links mentioned:

- China brain - Wikipedia: no description found

- Wow Really GIF - Wow Really - Discover & Share GIFs: Click to view the GIF

- ASCII Art Bananas - asciiart.eu: A large collection of ASCII art drawings of bananas and other related food and drink ASCII art pictures.

OpenAI ▷ #gpt-4-discussions (11 messages🔥):

- Zero Temperature Mayhem: A member reported experiencing random behavior in different threads even when the assistant’s temperature is set to 0.0, questioning the consistency at this setting.

- In Pursuit of Prompt Perfection: A user inquired about a GPT Prompt Enhancer to improve their prompts, and another member directed them to a specific channel for recommendations.

- Dramatizing Chatbot Responses: A user sought to mimic the behavior of showing progress messages like “analyzing the pdf document” or “searching web” in their chatbot API. They received advice implying custom development is necessary for such functionality.

- Error in the Matrix: A participant noted that GPT-4 often returns “error analysing” in the middle of a calculation and questioned if there were any solutions.

- Subscription for GPT Usage Confirmed: One user asked if GPT models in an app are free to use; another clarified that a Plus plan or higher is necessary due to all models utilizing GPT-4.

OpenAI ▷ #prompt-engineering (15 messages🔥):

-

Expanding Text Outputs: Members discuss strategies for making GPT-3 produce longer text, as stating “make the text longer” no longer seems effective. A suggestion includes copying the output, starting a new chat, and using the command “continue,” although there are concerns about losing context and style.

-

Addressing LLM Template Inconsistencies: One member asks for advice on how to ensure an LLM returns all sections of a modified document template, noting challenges with sections being omitted if the LLM perceives them as unchanged. The community has not yet offered a solution.

-

Prompt Crafting to Limit GPT’s Reliance on Training Data: A member seeks advice on crafting prompts that make a GPT focus on answers from provided documentation only and not default to its general training data. Suggestions include lowering the temperature setting and being explicit in the instructions that the model should confirm the answer exists within the given documentation before proceeding.

-

Enforcing Documentation-Constrained Responses: To better ensure GPT answers are drawn exclusively from provided materials, one member suggests using aggressive and stern instructions, e.g., commanding the model to “THROW AN ERROR” if an answer is not found specifically within the documentation.

-

Simulating Human-Like Interaction in GPT: A member experiments with GPT, discussing the nature of consciousness and trying to simulate human emotion through pseudocode explanations of human chemicals like serotonin. The conversation touches on the parallels between machine learning and human experiences such as dopamine responses.

OpenAI ▷ #api-discussions (15 messages🔥):

-

Tackling Repetitive Text Expansion: Users discussed how the command to “make text longer” no longer yields lengthier text variations, instead repeating the same content. To address this, strategies such as initiating a new chat with the “continue” command were suggested, though concerns about style inconsistencies and context disregard were raised.

-

Bridging the Gap in AI Document Drafting: One discussion point covered the issue of LLMs not recognizing and incorporating modifications in certain sections of a document. A user struggled with an LLM that didn’t acknowledge changes made to documents and sought solutions for this problem.

-

Ensuring GPT Fulfills Its Designed Role: The focus was on instructing GPT to answer queries strictly based on user-provided documents, avoiding reliance on its pre-trained knowledge. Lowering the temperature setting and being assertive in the prompt were recommended to enforce this rule effectively.

-

Simulating Human Emotions in AI: A user engaged GPT in a conversation about the nature of consciousness, asking it to mimic human chemical responses using pseudocode. This interaction aimed to explore a machine’s simulation of human-like emotions.

-

Recipe for Stern Instructions: It was suggested that a more effective way of instructing GPT is to be concise and firm, akin to the “Italian way,” thus emphasizing clarity and strict adherence to specified sources.

LM Studio ▷ #💬-general (198 messages🔥🔥):

- LM Studio’s Internet Independence: Members confirmed that LM Studio does not have the ability to ‘search the web’, similar to functionalities seen in tools like co-pilot or cloud-based language models.

- Exploring the Chatbot Arena Leaderboard: Some members discussed model performance and shared URLs such as the LMsys Chatbot Arena Leaderboard to highlight available models, noting that only certain models within the top ranks permit local deployment.

- Anything LMM Document Troubles: Users reported issues embedding documents in Anything LMM workspaces, which were addressed by downloading the correct version of LM Studio or ensuring proper dependencies, like the C Redistributable for Windows, were installed.

- Discussions on Multi-GPU Support and Performance: There were several exchanges about the effectiveness of multi-GPU setups in LM Studio with a consensus being that while multiple GPUs can be utilized, the resulting performance gains may not be proportional to the increase in hardware capability. Specific models were recommended based on available system specs.

- Absence of a Community Member: A brief conversation brought up a prolific open-source model creator known as @thebloke, expressing appreciation for his contributions and inquiring about his current activities.

Links mentioned:

- LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys: no description found

- Documentation | LM Studio: Technical Reference

- LM Studio Beta Releases: no description found

- Text Embeddings | LM Studio: Text Embeddings is in beta. Download LM Studio with support for it from here.

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

LM Studio ▷ #🤖-models-discussion-chat (85 messages🔥🔥):

- Mixtral vs. Mistral Clarified: Discussion highlighted that Mixtral has combined 8x7b models to simulate a 56b parameter model, while Mistral is a standard 7b model.

- Power-Hungry Giants: Users discussed the requirements and challenges of running Mixtral 8x7b on high-end GPUs like the 3090, noting the extreme slowness, with token speeds of around 5 tok/s.

- Compatibility Issues With Command-R Plus: Members shared their experiences and struggles with making the 103b Command-R Plus model work locally, referencing an experimental branch on GitHub and a HuggingFace space, indicating that the model is not yet supported in LLamaCPP or LM Studio.

- Eurus-7b Unveiled: A new promising 7b model, Eurus-7b, designed for reasoning, was shared from HuggingFace, sporting a KTO finetuning based on multi-turn trajectory pairs from the UltraInteract dataset.

- Mamba Model Supported: An exchange mentioned the availability of a Mamba-based LLM and its support within llamacpp, with an accompanying HuggingFace repository link, although its compatibility with LM Studio was uncertain as of version 0.2.19 beta.

Links mentioned:

- pmysl/c4ai-command-r-plus-GGUF · Hugging Face: no description found

- C4AI Command R Plus - a Hugging Face Space by CohereForAI: no description found

- CohereForAI/c4ai-command-r-plus · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Direct Preference Optimization (DPO): A Simplified Approach to Fine-tuning Large Language Models: no description found

- openbmb/Eurus-7b-kto · Hugging Face: no description found

- Qwen/Qwen1.5-32B-Chat-GGUF at main: no description found

- christopherthompson81/quant_exploration · Datasets at Hugging Face: no description found

- ggml : update mul_mat_id to use the same tensor for all the experts by slaren · Pull Request #6387 · ggerganov/llama.cpp: Changes the storage of experts in memory from a tensor per expert, to a single 3D tensor with all the experts. This will allow us support models with a large number of experts such as qwen2moe. Exi...

- Add Command R Plus support by Carolinabanana · Pull Request #6491 · ggerganov/llama.cpp: Updated tensor mapping to add Command R Plus support for GGUF conversion.

LM Studio ▷ #announcements (1 messages):

- LM Studio Fills the Community Void: The LM Studio team and @159452079490990082 have launched a new “lmstudio-community” page on Hugging Face to provide the latest GGUF quants for the community after @330757983845875713’s absence. @159452079490990082 will act as the dedicated LLM Archivist.

- Find GGUF quants Fast: Users are advised to search for

lmstudio-communitywithin LM Studio for a quick way to find and experiment with new models. - Twitter Buzz for LM Studio Community: LM Studio announced their new community initiative on Twitter, inviting followers to check out their Hugging Face page for GGUF quants. The post acknowledges the collaboration with @bartowski1182 as the LLM Archivist.

Link mentioned: Tweet from LM Studio (@LMStudioAI): If you’ve been around these parts for long enough, you might be missing @TheBlokeAI as much as we do 🥲. Us & @bartowski1182 decided to try to help fill the void. We’re excited to share the n…

LM Studio ▷ #🧠-feedback (8 messages🔥):

-

Search Reset Confusion Cleared: A member noted an issue that search results do not reset after removing a query and pressing enter. However, it was clarified that there are no initial search results and a curated list of models can be found on the homepage.

-

Preset Creation Possibility Explained: In response to a query about the inability to create new presets, a member was guided on how to create a new preset in the LM Studio.

-

Praises for LM Studio over Competitors: A user commended LM Studio for producing the best results as compared to other local LLM GUIs like oogabooga text generation UI and Faraday, even when using the same models and instructions.

-

A Multitude of Feature Requests: One member requested several updates for LM Studio, including support for reading files, multi-modality features (text to images, text to voice, etc.), and enhancement tools similar to an existing tool named Devin to improve performance.

-

Inquiry about Community Member’s Absence: There was a query regarding the absence of a community member, TheBloke, asking for reasons and expressing concern about their wellbeing.

LM Studio ▷ #📝-prompts-discussion-chat (2 messages):

- Channel Resurrected: A member initiated the conversation with a brief message: “Unarchiving this channel.”

- In Search of the Best Blogging Buddy: A member inquired about the best model for writing blogs within the context of the chatbot discussions.

LM Studio ▷ #🎛-hardware-discussion (21 messages🔥):

- Mixed-GPU Configs Spark Curiosity: A user inquired if combining Nvidia and Radeon cards allows using combined VRAM or running them in parallel, but it was clarified that due to CUDA/OpenCL/ROCm incompatibilities, it’s not feasible. However, it’s possible to run separate instances of LM Studio, each using a different card.

- Optimizing GPU Use in LM Studio: There’s a query regarding why LM Studio is seemingly not utilizing an RTX 4070 for running larger models, leading to a discussion on ensuring GPU acceleration with VRAM offloading. Members suggested looking into GPU Offload settings and model layers configuration upon the user’s return to this issue later.

- Mixing Old and New Nvidia Cards: Conversation about usage efficacy when mixing a newer RTX 3060 with an older GTX 1070 surfaced, with the consensus being that similar GPUs yield better performance. One member shares their personal setup, indicating noticeable performance improvement, but considering it a temporary solution until they can upgrade to matching cards.

- Potential of Intel’s AMX with LM Studio: A question was raised regarding LM Studio’s ability to utilize Intel Xeon’s 4th generation Advanced Matrix Extensions (AMX), though no definitive answer was provided in the discussion.

LM Studio ▷ #🧪-beta-releases-chat (54 messages🔥):

-

Exploring LM Studio Text Embeddings: LM Studio 0.2.19 Beta introduces text embeddings, allowing users to generate embeddings locally via the server’s POST /v1/embeddings endpoint. Users were directed to read about text embeddings on LM Studio’s documentation.

-

Version Confusion Cleared Up: Some users were confused about their current version of LM Studio, and it was clarified that beta releases are based on the last build, with version numbers updating upon live release.

-

Anticipation for LM Studio 2.19 Alpha: Members expressed excitement about the alpha release of LM Studio 2.19, which includes text embeddings support and can be downloaded from Beta Releases.

-

Inquiries and Updates on Pythagora: Users discussed Pythagora, also known as GPT-Pilot, a Visual Studio Code plugin capable of building apps. The website Pythagora provides more information about its capabilities and integration with various LLMs.

-

ROCM Version Behind but Praised: A user mentioned that the ROCM build tends to be behind the main release, but even in its current state, it received positive feedback for ease of installation and functionality despite some bugs.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- What are embeddings in machine learning?: Embeddings are vectors that represent real-world objects, like words, images, or videos, in a form that machine learning models can easily process.

- Text Embeddings | LM Studio: Text Embeddings is in beta. Download LM Studio with support for it from here.

- Pythagora: no description found

- nomic-ai/nomic-embed-text-v1.5-GGUF at main: no description found

LM Studio ▷ #autogen (10 messages🔥):

- Troubleshooting Autogen Short Responses: In LM Studio with Autogen Studio, a user experienced a problem where inference yielded only 1 or 2 tokens. This issue was acknowledged by another member as a recurrent problem.

- Anticipation for a New Multi-Agent System: A member mentioned developing their own multi-agent system as a solution to Autogen issues, with plans to release it by the end of the week.

- Crewai Suggested as Autogen Alternative: Crewai was recommended as an alternative to Autogen, but it was noted that it still requires some coding to utilize effectively.

- User Interface Expected for New System: The member developing a new solution promised a user interface (UI), implying easier use without the need to write code.

- Pre-Launch Secrecy Maintained: Despite building excitement, screenshots or further details of the new system were not shared as the domain registration for the project is still pending.

LM Studio ▷ #langchain (1 messages):

- Inquiries on Retaining Memory: A member expressed curiosity about successfully having a bot analyze a file and wondered how to make the bot retain memory throughout the same runtime. No solution or follow-up was provided within the given messages.

LM Studio ▷ #amd-rocm-tech-preview (27 messages🔥):

-

AMD GPU Compatibility Queries: Users discussed compatibility issues with ROCm on AMD GPUs, especially the 6700XT (gfx 1031). One user reported an inability to load models despite trying various configurations, while another suggested it may be a driver issue that AMD needs to address.

-

ROCm Performance Insights: A significant performance boost was reported when using ROCm over OpenCL; one user noted an increase from 12T/s to 33T/s in generation tasks, underscoring criticisms of AMD’s OpenCL implementation.

-

Linux vs. Windows Support for ROCm: It was mentioned that ROCm has functionality limitations on Windows that don’t exist on Linux, where users can spoof chip versions to get certain GPUs to work. There were hints that if ROCm for Linux is released, more graphics cards could be supported by LM Studio.

-

Anticipation for Open Source ROCm: A tweet from @amdradeon was shared about ROCm going open source, raising hopes for easier Linux build support on more AMD graphics cards. The introduction of open-source ROCm could potentially expand compatibility (Radeon’s Tweet).

-

User Explorations and Configurations: Different set-ups were discussed and compared, with mentions of disabling iGPUs to run VRAM at the correct amount and varied configurations involving dual GPUs and high-performance builds for gaming transitioning towards AI and machine learning workloads.

Link mentioned: Reddit - Dive into anything: no description found

LM Studio ▷ #crew-ai (22 messages🔥):

- Navigating CORS: A member queried about CORS (Cross-Origin Resource Sharing), but there was no follow-up discussion providing details or context.

- Successful Code Execution: Adjusting the “expected_output” in their task allowed a member to successfully run a shared code, indicating a resolution to their issue.

- Seeking Agent Activity Logs: A member expected to see agent activity logs within the LM Studio server logs but found no entries, despite confirming the verbose option is set to true.

- Logging Conundrum in LM Studio: Consensus is lacking on whether LM Studio should display logs when interacting with crewAI, with members expressing uncertainty and no definitive resolution offered.

- Error Encountered with crewAI: After experiencing a “json.decoder.JSONDecodeError” related to an unterminated string, a member sought advice on resolving the issue, with a suggestion to consider the error message contents for clues.

Nous Research AI ▷ #ctx-length-research (2 messages):

- LoRA Layer on Mistral 7B in the Works: A member suggested the potential of creating a LoRA (Low-Rank Adaptation) on top of models like Mistral 7B to significantly enhance its capabilities.

- Advanced Task for AI Involves Taxonomy: In response to the LoRA suggestion, it was revealed that there are plans to not just split sentences but also to categorize each one according to a specific taxonomy for the task at hand.

Nous Research AI ▷ #off-topic (10 messages🔥):

- Web Crawling State of the Art Inquiry: One member expressed being lost while attempting to identify the current state-of-the-art practices in web crawling technology.