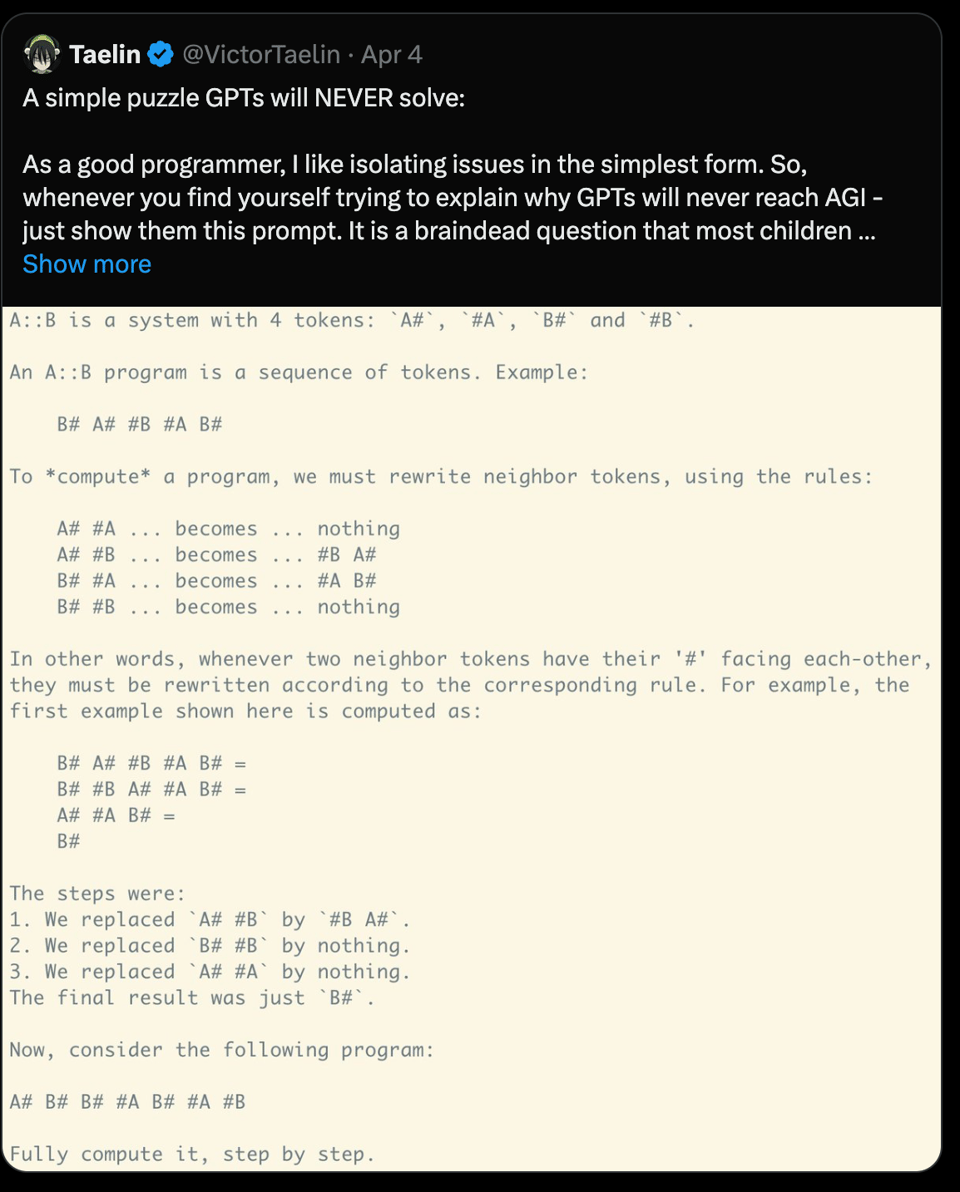

Four days ago, Victor Taelin confidently tweeted a simple A::B challenge for GPTs and then offered a $10k contest to prove him wrong.

His initial attempts with all SOTA models got 10% success rates. Community submissions got 56%. It took another day for @futuristfrog to surpass 90%. The challenge lasted 48 hours in total. A fun lesson in GPT capability, and another reminder that failing to do something with pre-AGI AI in 2024 is often a simple skill issue.
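For readers who have not seen the task, here is a minimal reference solver. It assumes the four rewrite rules from the original challenge tweet (they are not quoted in this recap, so treat `RULES` as an assumption): adjacent tokens whose `#` signs face each other either cancel or swap. The function names are illustrative only.

```python
# Rewrite rules for the A::B system, as assumed from the original challenge:
# two neighbouring tokens with their '#' facing each other are rewritten.
RULES = {
    ("A#", "#A"): [],            # cancel
    ("A#", "#B"): ["#B", "A#"],  # swap
    ("B#", "#A"): ["#A", "B#"],  # swap
    ("B#", "#B"): [],            # cancel
}


def step(tokens):
    """Apply the first applicable rule, scanning left to right."""
    for i in range(len(tokens) - 1):
        pair = (tokens[i], tokens[i + 1])
        if pair in RULES:
            return tokens[:i] + RULES[pair] + tokens[i + 2:], True
    return tokens, False


def normalize(program):
    """Rewrite a space-separated program until no rule applies."""
    tokens = program.split()
    changed = True
    while changed:
        tokens, changed = step(tokens)
    return " ".join(tokens)


print(normalize("B# A# #B #A B#"))  # prints "B#"
```

A few lines of ordinary code solve the task exactly. The challenge was whether a prompted model could do the same rewriting reliably in-context.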

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

Technical Developments and Releases

- Command R Plus (104B) working with Ollama: In /r/LocalLLaMA, Command R Plus (104B) is working with Ollama using a forked llama.cpp, allowing for quantized models to run on M2 Max hardware.

- GGUF quantizations for Command R+ 104B released: In /r/LocalLLaMA, Dranger has released GGUF quantizations for Command R+ 104B from 1 to 8 bit on Huggingface.

- Streaming t2v now available: In /r/StableDiffusion, streaming t2v is now available, allowing for generating longer videos using the st2v Github repo.

- New version of WD Tagger (v3) released: In /r/StableDiffusion, a new version of WD Tagger (v3) is available for mass auto captioning of datasets, utilizing a WebUI interface.

Techniques and Prompting

- Lesser-known prompting techniques yield thought-provoking outputs: In /r/OpenAI, thought-provoking outputs were generated using lesser-known prompting techniques such as self-tagging output, generational frameworks, and real-time self-checks.

- Experiment with self-evolving system prompts: In /r/OpenAI, an experiment letting the OpenAI API write its own system prompt over multiple iterations resulted in increasingly flowery and grandiose wording.

- Promptless outpaint/inpaint canvas updated: In /r/StableDiffusion, promptless outpaint/inpaint canvas has been updated to run ComfyUI workflows on low-end hardware.

Questions and Discussions

- Importance of image composition when training character LoRAs: In /r/StableDiffusion, there is a discussion on the importance of image composition when training character LoRAs, and whether auto-tagging sufficiently captures details.

- Best checkpoint for video game characters in Stable Diffusion 1.5: In /r/StableDiffusion, there is a question about the best checkpoint for generating video game characters in Stable Diffusion 1.5.

- Scarcity of 5B parameter models: In /r/LocalLLaMA, there is an inquiry about why there are so few 5B parameter models compared to 3B and 7B.

- Open(ish) licenses and aligning incentives for open source AI: In /r/LocalLLaMA, there is a discussion on open(ish) licenses and what terms are desired to align incentives for open source AI.

Memes and Humor

- Humorous post about dancing anime girls and “realistic” Call of Duty: In /r/StableDiffusion, there is a humorous post about too many dancing anime girls, countered with a “realistic” Call of Duty image.

- Joke about ChatGPT vs Gemini training data: In /r/ProgrammerHumor, there is a joke confirming that ChatGPT was trained with YouTubers while Gemini was not.

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI and Robotics Research Developments

- AI and robotics progress: @adcock_brett shares a weekly roundup of the most important research and developments in AI and robotics, highlighting the rapid pace of progress in the field.

- Rumored capabilities of GPT-5: @bindureddy reports that OpenAI’s upcoming GPT-5 model is rumored to have extremely powerful coding, reasoning and language understanding abilities that surpass Anthropic’s Claude 3.

- Sora for generating music videos: @gdb showcases Sora, a tool that allows users to visualize how a song has always “looked” by generating corresponding music videos.

- Fast performance of 4-bit Mistral 7B: @awnihannun achieved an impressive 103.5 tokens-per-second running the 4-bit Mistral 7B model on an M2 Ultra chip.

- Many-shot jailbreaking technique: @adcock_brett shares that Anthropic researchers discovered a technique called “many-shot jailbreaking” that can evade the safety guardrails of large language models by exploiting expanded context windows.

AI Agents and Robotics

- Complexity of building AI agents: @bindureddy notes that only 10% of the work in building AI agents is about LLMs and reasoning, while the remaining 90% involves heavy lifting in code, data, memory, evaluation and monitoring.

- OpenAI’s plans and LLMs in robotics: @adcock_brett provides an overview of OpenAI’s plans and discusses why large language models are important for robotics applications.

- Key factors for reliable LM-based agents: @sarahcat21 emphasizes that purposeful pretraining and interface design are crucial for building reliable agents based on large language models.

- Growth of coding agents: @mbusigin highlights the rapid explosion in the development and adoption of coding agents.

- Figure-01 humanoid robot: @adcock_brett shares an image of the Figure-01 electromechanical humanoid robot.

LLM Developments and Capabilities

- Grok 2.0 rumored performance: @bindureddy reports that Grok 2.0 is rumored to be the second model after Anthropic’s Claude Opus to beat OpenAI’s GPT-4 in performance, which would be a significant achievement for Grok and X.

- Claude 3 Opus outperforms GPT-4: @Teknium1 and @bindureddy note that Anthropic’s Claude 3 Opus model outperforms GPT-4 on certain tasks.

- New model releases: @osanseviero announces the release of Cohere Command R+, Google Gemma Instruct 1.1, and the Qwen 1.5 32B model family.

Retrieval Augmented Generation (RAG) Architectures

- Finance agent with LangChain and Yahoo Finance: @llama_index demonstrates building a finance agent using LangChain and Yahoo Finance, covering functions for stock analysis such as balance sheets, income statements, cash flow, and recommendations.

- Multi-document agents with LlamaIndex: @llama_index and @jerryjliu0 showcase treating documents as sub-agents for both semantic search and summarization using LlamaIndex.

- Agentic extension for RAG: @jerryjliu0 proposes an agentic extension for retrieval augmented generation that treats documents as tools and agents for dynamic interaction beyond fixed chunks.

- Extracting document knowledge graph for RAG: @llama_index demonstrates using LlamaParse to extract structured markdown, convert it to a document graph, and store it in Neo4j for advanced querying to power a RAG pipeline.

Memes and Humor

- Timeout set in seconds instead of milliseconds: @gdb shares a humorous meme about accidentally setting a timeout in seconds instead of milliseconds.

- Preferring In-N-Out to Shake Shack: @adcock_brett jokes about preferring In-N-Out to Shake Shack after living in NYC.

- Biological neural network performance after bad sleep: @_jasonwei humorously compares the performance of a biological neural network after a bad night’s sleep to GPT-4 base with poor prompting.

- Pains of saying Claude solved something: @Teknium1 shares a meme about the pains of admitting that Anthropic’s Claude model solved a problem.

- Studying LeetCode for 3 months without getting a job: @jxnlco shares a meme about the frustration of studying LeetCode for 3 months but not getting a job.

AI Discord Recap

A summary of Summaries of Summaries

1. Quantization and Optimization Breakthroughs for LLMs

- QuaRot enables end-to-end 4-bit quantization of large language models like LLaMa2-70B with minimal performance loss, handling outliers while maintaining computational invariance. HQQ also showcased promising 4-bit quantization results integrated with gpt-fast.

- Schedule-Free Optimization Gains Traction: Meta’s schedule-free optimizers for AdamW and SGD have been integrated into Hugging Face’s transformers library, potentially revolutionizing model training. Discussions revolved around the Schedule-Free Optimization in PyTorch repository and a related Twitter thread by Aaron Defazio on the topic.

- Discussions around torch.compile focused on its utilization of Triton kernels only for CUDA inputs, asynchronous collective operations, DeepSpeed integration, and potential MLP optimizations using tiny-cuda-nn or CUTLASS.

2. Expanding Context Lengths and Attention Mechanisms

- The EasyContext project introduces memory optimization and training recipes to extrapolate language model context lengths to 1 million tokens using ring attention on modest hardware like 8 A100 GPUs. A tweet by Zhang Peiyuan discussed the impact of increased context size on training throughput.

- Mixture-of-Depths proposes dynamically allocating compute across a transformer sequence within a fixed budget, potentially enhancing efficiency without compromising flexibility.

- Discussions covered linear attention vs. classic attention, variable length striped attention implementations, and the speed/memory trade-offs of ring attention in distributed computing scenarios.

3. Open-Source AI Advancements and Community Engagement

- AMD announced open-sourcing the Micro Engine Scheduler (MES) firmware and documentation for Radeon GPUs, aligning with broader open-source GPU efforts welcomed by the community. (The Register Article, AMD Radeon Tweet)

- The PaperReplica GitHub repository aims to replicate AI/ML research papers through community contributions, fostering knowledge sharing and skill development.

- Licensing changes for text-generation-inference (TGI) to Apache 2 sparked a surge in contributors after the project was fully open-sourced, highlighting the potential economic benefits of open ecosystems like Mistral.

- Command R+ by Cohere demonstrated impressive translation capabilities for archaic languages like Middle High German, outperforming GPT-4 class models and fueling hopes for an open-source release to drive developer engagement.

4. Multimodal AI Advancements and Applications

- The Aurora-M project introduced a new 15.5B parameter open-source multilingual language model following the U.S. Executive Order on AI, showcasing cross-lingual impact of mono-lingual safety alignment across 2 trillion training tokens.

- Unsloth AI faced challenges with models like ChatGPT and Claude accurately converting images to HTML while preserving colors and borders, prompting tongue-in-cheek suggestions to use ASCII art instead.

- BrushNet, a new method for AI inpainting incorporating object detection, promises higher quality results as demonstrated in a tutorial video.

- The LLaVA vision-language model underwent a novel “Rorschach test” by feeding it random image embeddings and analyzing its interpretations, detailed in a blog post. A compact nanoLLaVA model for edge devices was also released on Hugging Face.

5. Misc

- Tinygrad Development Progresses with Reversions and Integrations: George Hotz reverted the command queue in tinygrad and is integrating the memory scheduler directly with the existing scheduler model using the multidevicegraph abstraction. The TinyJit tutorial and multi GPU training guide were shared to aid contributors.

- Jamba Models Offer Alternatives for Limited Hardware: Scaled-down versions of the Jamba architecture, including an 8xMoE with 29B parameters and 4xMoE with 17.7B parameters, were created using spherical linear interpolation (Slerp) of expert weights to enable fine-tuning on more accessible hardware like a 4090 GPU at 4-bit precision.
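As a rough illustration of the Slerp step mentioned above, the sketch below interpolates between two flattened weight vectors along the arc between them rather than along a straight line. It is a generic textbook formulation in plain Python, not the code used for the scaled-down Jamba models, and the function name and `eps` fallback are assumptions.

```python
import math


def slerp(w0, w1, t, eps=1e-8):
    """Spherical linear interpolation between two flat weight vectors.

    t=0 returns w0, t=1 returns w1. Falls back to plain linear
    interpolation when the vectors are (nearly) parallel.
    """
    dot = sum(a * b for a, b in zip(w0, w1))
    norm0 = math.sqrt(sum(a * a for a in w0))
    norm1 = math.sqrt(sum(b * b for b in w1))
    # Angle between the two vectors, clamped for numerical safety.
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1 + eps)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        return [(1 - t) * a + t * b for a, b in zip(w0, w1)]
    s0 = math.sin((1 - t) * omega) / sin_omega
    s1 = math.sin(t * omega) / sin_omega
    return [s0 * a + s1 * b for a, b in zip(w0, w1)]


# Merging two orthogonal toy "expert" vectors halfway.
merged = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
print(merged)
```

In a real merge the same function would be applied tensor by tensor to the corresponding expert weights, which is an assumption about workflow rather than something stated in the discussion.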

PART 1: High level Discord summaries

Perplexity AI Discord

iOS Users Test Drive New Story Discovery: Perplexity AI is trialing an innovative story discovery format on iOS. Users are encouraged to provide feedback on their experiences through a designated channel, and can download the test app here.

AI Event Ends in Harmony: The Perplexity AI Discord event wrapped up with both eun08000 and codelicious sharing first place. Prize recipients will receive direct messages with details.

Claude 3 Opus - A Model Debate: On the server, the talk revolved around observed variations in Perplexity’s implementation of the Claude 3 Opus model compared to others, particularly regarding tasks demanding creativity.

API Quirks and Queries: Users noted inconsistencies between Perplexity’s API and web application, with the API showing more hallucinations; the API’s default model diverges from the web version. The ‘sonar-medium-online’ model is suggested for API users to closely mimic the Sonar model accessible via the web app for non-Pro users.

Tech Enthusiasts Share and Learn: Users exchanged information on a variety of topics from how AI affects the music industry to Tesla’s and Apple’s latest tech innovations. Additionally, a case study featuring Perplexity AI highlighted a 40% speed increase in model training powered by Amazon Web Services, demonstrating Perplexity’s efficient utilization of advanced machine learning infrastructure and techniques.

Nous Research AI Discord

- Rorschach Test for AI Vision Models: The LLaVA vision-language model was put through a novel “Rorschach test” by feeding it random image embeddings and analyzing the interpretations, described in a blog post. Moreover, a compact nanoLLaVA model suitable for edge devices was introduced on Hugging Face.

- Claude’s Memory Mechanism in Question: Technical discussions ensued on whether Claude retains information across sessions or if the semblance of memory is due to probabilistic modeling. Engineers debated the effectiveness of current models against the challenge of persistent context.

- Worldsim Woes and Wisdom: Post-DDoS attack, proposals for a Worldsim login system to thwart future threats and discussions of a “pro” version to include more scenarios were afoot. Meanwhile, philosophical musings floated around potential AI-driven simulations akin to observed realities.

- Chunking for RAG Diversity: Suggestions arose to pre-generate diverse datasets for RAG using a chunking script, alongside talk of creating complex multi-domain queries using Claude Opus. Ethical queries surfaced regarding data provenance, specifically using leaked documents from ransomware attacks, contrasting with the clustering strategies like RAPTOR for dataset curation.

- The Coalescence of GitHub and Hermes: A GitHub repository, VikParuchuri/marker, was spotlighted for its high-accuracy PDF-to-markdown conversion. Additionally, discussions focused on enhancing `Hermes-2-Pro-Mistral-7B` to execute functions with `tools` configurations, a hurdle matching the challenges delegates face with full-parameter finetuning vis-à-vis adapter training in various LLM contexts.

- Canada’s AI Ambitions and Enterprise LLMs: From the introduction of Command R+, a scalable LLM by Cohere for businesses, to insights into Canada’s strategy to champion global AI leadership, the discourse expanded towards understanding SSL certifications, creating local solutions akin to Google Image Search and untangling the surfeit of AI research and synthesis.

Stability.ai (Stable Diffusion) Discord

- Stability Bot MIA: Users seeking image generation services were guided to check bot status due to outages, pushing them towards alternate server channels for updates and support.

- Quality Quest in Image Generation: Debates emerged comparing local model outputs with Dreamstudio’s, with participants recommending open-source upscalers and discussing the effectiveness of various image enhancement techniques.

- SD3 Buzz Builds: There is an informal 2-4 week ETA on Stable Diffusion 3 (SD3), sparking conversations around expected improvements and new capabilities of the model.

- LoRA Training Dialogue: Information exchange on LoRA training saw users seeking installation advice and citing GitHub repositories for practical training methods.

- User Interface Upgrades: Discussions on user interface enhancements included suggestions for transitioning from Automatic1111 to StableSwarm, with a focus on user-friendliness and feature accessibility for new adopters.

Unsloth AI (Daniel Han) Discord

HTML Conversion Leaves Engineers Blue: AI engineers discussed the limitations of current language models like ChatGPT and Claude in accurately converting images to HTML, with color fidelity and rounded borders lost in the process. A tongue-in-cheek proposal suggested the use of ASCII art as an alternative, stemming from its ability to elicit responses from AI models as shown in this Ars Technica article.

Aurora-M Lights Up Possibilities: An open-source multilingual model, Aurora-M, boasting 15.5 billion parameters, was introduced and caught the community’s attention with its cross-lingual safety capabilities, further detailed in this paper. The findings show that safety alignment in one language can have a positive impact on other languages.

Jamba Juice or Mamba Sluice? Investment Opinions Clash: Engineers debated the investment into AI21 Labs’ Jamba, especially given their recent fundraising of $155 million as reported by TechCrunch. The return on investment (ROI) of focused model fine-tuning was brought to light, presenting an optimistic view despite the model’s upfront costs.

AI Fine-Tuning Perspectives Merge and Diverge: The community engaged in a robust exchange on fine-tuning approaches, touching on unsupervised fine-tuning, GGUF, and the benefits of Direct Preference Optimization (DPO). Specific strategies for fine-tuning and the application of techniques like LoRA in enhancing performance were discussed.

Private AI Hosting Hustle: Data privacy concerns have led members to host their AI projects on personal servers, with anecdotes of using platforms like Hircoir TTS independently. Some envisioned future plans include integrating advertisements to capitalize on the growing portfolio of models.

LM Studio Discord

Boost Your Model’s Performance: LM Studio appears to leap ahead of alternatives like oobabooga and Faraday with a GUI that wins user preference for its higher quality outputs. Suggestions poured in for expansions, notably for file reading support and modalities such as text-to-image and text-to-voice; such features edge closer to what Devin already offers and are angled towards enhancing creativity and productivity.

Big Thinkers, Bigger Models: A technical crowd advocates the power play of handling heavyweight models such as the Command R+, tipping the scales at 104B, and recommending brawnier hardware like the Nvidia P40 for older yet hefty models. Discussions around VRAM spill into strategies for optimizing multi-GPU setups, hinting at the use of both RTX 4060 Ti and GTX 1070 to spread the computational load, and leveraging Tesla P40 GPUs despite potential outdated CUDA woes.

The Joy of Smoothly Running Models: On both the ROCm and ROCm Preview Beta fronts, GPU support discussion was rife, including the use of AMD’s RX 5000 and 6000 series chips. Users flagged the “exit 42” errors on ROCm 0.2.19 Beta, rallying around debug builds for a solution, displaying a communal spirit in action. Meanwhile, whispers of Intel’s Advanced Matrix Extensions (AMX) stirred speculation on how LM Studio could tap into such formidable processing prowess.

Excavating Model Gems: A surge in shared resources and models came through announcements, including Starling-LM 7B, c4ai command r v01, and stable-code-instruct-3b, among others. Accessibility stands upfront with a collective push towards a community page on Hugging Face, where the latest GGUF quants shine, luring AI enthusiasts to experiment with the offerings such as Google’s Gemma 1.1 2B, and stay alert for the upcoming 7B variant.

Sculpting the Vision Models Landscape: A member asked about training LLMs to decipher stock market OHLC patterns, amid praise for LM Studio’s utility in vision model implementations, prompting discussion of how AI could be applied at the intersection of technology and finance. The sharing of vision models on Hugging Face reflects the community’s readiness to turn these ideas into practical applications.

HuggingFace Discord

Gradio’s API Recorder and Chatbot UI Fixes Gear Up for Release: Gradio version 4.26.0 introduces an API Recorder to translate interactions into code and addresses crucial bugs related to page load times and chatbot UI crashes. The update is detailed in the Gradio Changelog.

A Crescendo of Concern Over LLMs: Security concerns take the spotlight as ‘Crescendo’, a new method that challenges the ethical restraints of LLMs, and vulnerabilities in Cohere’s Command-R-plus are exposed. Meanwhile, the Mixture-of-Depths (MoD) proposal and LlamaIndex blogs offer innovative solutions for model efficiency and information retrieval.

NLP Community Finesse with SageMaker, Desire for PDF ChatGPT, and Sails Through Challenges: The community debates deploying models on SageMaker, customizing ChatGPT for PDFs, and shares fascination over Gemini 1.5’s 10M context window. Solution seekers confront multi-GPU training hiccups and demand token count information when using Hugging Face libraries.

Thriving Repository of AI Contributions and Dialogues: HybridAGI’s neuro-symbolic behavior programming on GitHub welcomes peer review, and the Hugging Face reading group archives its collective wisdom on GitHub. PaperReplica’s open-source invitation and RAG-enabled LlamaIndex shine as beacons of collaborative learning and resource sharing.

Vision and Beyond: Dialogues in the computer vision channel touch on the utility of HuggingFace as a model repository, efficacy of different Transformer models (e.g., XCLIP), and address real-time challenges using tools like the HuggingFace ‘datasets’ library for parquet file manipulation. Meanwhile, an open call for resources to apply diffusion models to video enhancement signifies the domain’s vibrant investigative spirit.

Modular (Mojo 🔥) Discord

Mojo Rising: A Dive into Special Functions and SICP Adaptation

- The Mojo community is flexing its technical muscle, diving into specialized mathematical functions with an update to the Specials package and porting the famed “Structure and Interpretation of Computer Programs” (SICP) text to Mojo. Users can now find numerically accurate functions like `exp` and `log` in the Specials package and participate in collaborative algorithm and package sharing via repositories such as mojo-packages.

MAX Aligns with AWS; Open Source Documentation Drive

- Modular announced a strategic alliance with AWS, intending to integrate MAX with AWS services and extend AI capabilities globally. The Mojo language is gearing up for enhanced collaboration with an appeal for community contributions to Mojo’s standard library documentation.

Discord Dynamics: Python Interop and Contributing to Mojo’s Growth

- The Mojo community is actively engaging in discussions about metaprogramming capabilities, compile-time evaluation complexities, and lifetimes in the `Reference` types. They are exploring pathways to Python interoperability by implementing essential functions and are inviting contributors to jump in on “good first issues” on GitHub, offering a starting point with Mojo’s Changelog and contribution guidelines.

Var vs. Let - the Mojo Parameter Saga

- A conversation revealed that while `let` may have been removed from Mojo, `var` remains for lazily assigned variables with details in the Mojo Manual, feeding further knowledge to users. Additionally, efforts are converging on infusing Mojo into web development, with the availability of lightbug_http, reiterating Mojo’s position as a comprehensive general-purpose language.

Nightly Chronicles: From CPython Interop to Community Discussions

- Members are celebrating advancements in CPython interoperability in Mojo and fostering an environment ripe for contributions, discussing best practices for signed-off commits in PRs, and sharing solutions for managing nightly builds and package updates. This proactive collaboration is paving the way for future open source contributions, signposted on GitHub, including anticipated discussions on the Mojo Standard Library.

Blog Beats and Video Treats in Mojo’s Creative Continuum

- The launch of the Joy of Mojo website underscores the community’s commitment to sharing creative experiences with Mojo, further amplified by GitHub repositories like mojo-packages and enlightening videos on Mojo’s Python interoperability, underscoring its dynamic evolution.

Eleuther Discord

- WikiText’s New Main Access Point: Stephen Merity has rehosted WikiText on Cloudflare R2, offering a larger dataset while maintaining original formatting, which is important for training language models with authentic data structures.

- Perplexing Perplexity Scores: A debate emerged about the validity of perplexity scores reported by the GateLoop Transformer author, with lucidrains unable to replicate them, prompting discussions over result reproduction and transparency in reporting.

- Hugging Face’s Automatic Parquet Conversion Frustration: Users expressed frustration at Hugging Face’s autoconversion of datasets to parquet format, which can cause confusion and issues, such as with `.raw` files; a workaround involves hosting datasets using Git LFS.

- Documentation Ephemera and Reproducibility Emphasis: OpenAI’s fluctuating documentation on models, with some links being removed, underscores the importance of reliable resources like archived pages for consistency in the AI research community. Simultaneously, there’s a push for reproducible data formats, as shown by community efforts to mirror datasets like WikiText on platforms such as Cloudflare R2.

- Optimizer Optimization and Zero-Shot Innovations: Conversations coalesced around the Schedule-Free optimizer and its capacity to estimate optimal learning rates, as well as intriguing methods for teaching language models to search using a stream of search (SoS) language. Moreover, the connection between emergent abilities in language models and exposure to long-tail data during training was a focal topic, with implications for zero-shot task performance.

- Stars Matter for NSF Reviews: The number of GitHub stars for nnsight was highlighted by an NSF reviewer as a metric of concern, illustrating the unconventional impact of community engagement on research funding perspectives.

- GPU Utilization and BigBench Task Recognition: Analysis of GPU utilization led to a reduction in evaluation times by using `batch_size=auto`, revealing potential underutilization issues. Members also navigated confusion around BigBench tasks, suggesting verification of task variants using `lm_eval --tasks list`.

- CLI Command Conundrums and Logit Bias Discussions: Technical discussions flourished around issues with the `--predict_only` CLI option and around OpenAI’s `logit_bias` not affecting logits as expected during one-token MCQA tasks, leading to exploration of alternative approaches such as `greedy_until`. Temperature settings and their effects on outputs were clarified, highlighting the importance of correct `gen_kwargs` settings for achieving desired model behavior.

OpenAI Discord

- Translation Showdown: GPT-4 vs DeepL: GPT-4’s translation capabilities were compared to DeepL, highlighting that while DeepL excels in contextual language translation, GPT-4 sometimes misses nuance even in basic contexts.

- AI Models in Code Generation Face-Off: Opus and GPT-4 received praise for impressive performance in code generation tasks, but GPT-4 also showed potential issues when processing larger contexts compared to other models.

- Decoding AI Consciousness: A lively exploration into simulating human consciousness with AI involved equating human neurochemical activities with GPT’s programming mechanisms, sparking debates on consciousness’s origins and AI’s role in its depiction.

- Prompt Engineering for Sensitive Content: Writers discussed circumventing ChatGPT’s content policy to develop backstories for characters with traumatic histories, seeking subtler ways to infuse nuanced, sensitive details into their narratives.

- Building AI-Powered Games: Engineers suggested utilizing JSON for structuring game progress data while discussing the challenge of crafting seamless game experiences that keep underlying code concealed from players.
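The JSON suggestion for game progress can be sketched as follows. This is a minimal illustration, assuming a hypothetical `GameProgress` record with made-up fields; the discussion did not specify a schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class GameProgress:
    """Hypothetical game state; field names are illustrative only."""
    player: str
    level: int = 1
    inventory: list = field(default_factory=list)
    flags: dict = field(default_factory=dict)


def save(progress):
    """Serialize the state to a JSON string that can be passed to the model."""
    return json.dumps(asdict(progress), sort_keys=True)


def load(raw):
    """Rebuild the state from JSON, e.g. after the model returns an update."""
    return GameProgress(**json.loads(raw))


state = GameProgress(player="ada", level=3, inventory=["lamp"], flags={"met_wizard": True})
restored = load(save(state))
print(restored == state)  # prints True
```

Keeping the state in a structured blob like this lets the application pass it to the model and validate what comes back, while the player only ever sees the narrated result.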

OpenRouter (Alex Atallah) Discord

Claude 3 Takes on Images: The Claude 3 models have been updated to multimodal, now supporting image input, requiring developers to modify existing codebases accordingly.

AI Goes Old School with Rock, Paper, Scissors: A new game at blust.ai lets players challenge ChatGPT to a classic round of Rock, Paper, Scissors.

Frontends and Favorites Front and Center: Engineers discussed various OpenRouter API frontends like LibreChat, SillyTavern, and Jan.ai. Command-R+ has emerged as a favored model for coding tasks and interactions in Turkish, while concerns are raised about content censorship in models.

Performance Insights in Modeling: Conversations highlighted that Sonnet outstrips Opus in coding tasks, and that Claude 3 is superior to Gemini Pro 1.5 in PDF data extraction, which prompted some skepticism about the latter’s utility.

Model Efficacy Metrics Spark Debate: The community has voiced that model ranking based solely on usage statistics might not accurately reflect a model’s worth, suggesting spending or retention as potential alternate measures.

LlamaIndex Discord

Revving Up RAG Applications: Marker-Inc-Korea introduced AutoRAG, an automated tool for tuning RAG pipelines to enhance performance, detailed and linked in their tweet. Meanwhile, create-llama was released to streamline the launch of full-stack RAG/agent applications, as announced in its tweet.

Tweaking Sales Pitches with AI: A new application using RAG to create personalized sales emails was featured in a recent webinar, ditching hard-coded templates with an LLM-powered approach, further info available in a tweet.

Deep Diving Into Documents: Andy Singal presented on multi-document agents that handle complex QA across numerous sources. The aim is to expand this functionality for more intricate inquiries, shared in a presentation tweet.

Metadata to the Rescue for Document Queries: To get page numbers and document references from multi-document queries, make sure to include this metadata before indexing, allowing retrieval of detailed references post-query.
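The idea is easier to see in a toy form. The sketch below is not LlamaIndex code: `Chunk`, `build_index`, and `query` are hypothetical stand-ins, and the keyword-overlap scoring replaces a real embedding search. It only shows that metadata attached before indexing travels with each retrieved chunk.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    metadata: dict


def build_index(pages_by_file):
    """Attach file name and page number to every chunk before indexing."""
    index = []
    for file_name, pages in pages_by_file.items():
        for page_number, text in enumerate(pages, start=1):
            index.append(Chunk(text, {"file_name": file_name, "page": page_number}))
    return index


def query(index, question, top_k=1):
    """Toy retrieval: rank chunks by the number of words shared with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        index,
        key=lambda c: len(q_words & set(c.text.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


index = build_index({
    "report.pdf": ["Revenue grew in 2023.", "Risks include supply delays."],
    "memo.pdf": ["The team met on Monday."],
})
hit = query(index, "what are the supply risks")[0]
print(hit.metadata)  # prints {'file_name': 'report.pdf', 'page': 2}
```

Because the reference data is stored at indexing time, no second lookup is needed after the query to recover where an answer came from.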

Optimization Overhaul for Azure and Embedding Times: Participants noted issues with Azure’s OpenAI not recognizing context and discussed using batching methods for faster embedding generation. Regarding challenges with ReAct agents and open-source models like “llama2” and “mistral”, better router descriptions may improve model-routing performance.

OpenInterpreter Discord

Mistral Needs Muscle: Mistral 7B Instruct v0.2 has been acknowledged as high-performing, yet it demands substantial resources—expect to allocate at least 16GB of RAM and have some GPU support for smooth operation.

Challenges with Python Compatibility: There’s a community consensus to stick with Python <=3.10 to avoid issues with TTS packages, with repeated suggestions to avoid using Python 3.11.4 for setups dependent on voice command recognition.

A Call for Better Documentation: Inquiries about local vision models and calls highlighting the need for more comprehensive examples and documentation in the Open Interpreter’s cookbook reveal gaps that are yet to be filled.

Efficiency Over Expense with Local Models: The costliness of GPT-4 has prompted discussions around leveraging local models such as Hermes 7B and Haiku—less expensive yet slightly less refined alternatives offering privacy and lower operating costs.

Hardware Hang-Ups and Software Setbacks: The O1 community reported hardware issues, particularly with external push-button integration, and software setup challenges when installing on Windows, with tweaks including using chocolatey, virtualenv, and specific environment variables being part of the troubleshooting dialogue.

Relevant resources and conversations are threaded throughout the community, with direct engagement on issues being tracked on platforms like GitHub.

LangChain AI Discord

- GitHub Grievances: A user requested assistance with a Pull Request that was failing due to a “module not found” error related to “openapi-pydantic,” even though the module was included in dependencies. This highlights dependency management as a notable pain point in the community.

- Fine-Tuning Finesse Without the GPU Muscle: Queries about training and fine-tuning language models sans GPU led to recommendations for tools like Google Colab and the mention of ludwig.ai as viable options, indicating an area of interest among engineers seeking cost-effective computing resources.

- Visual Visions via Artful AI’s Update: The announcement of Artful AI’s new models, including Dalle Creative, Anime Dream, & Epic Realism, released on the Google Play Store, piqued the community’s interest in the evolving domain of AI-driven image generation.

- Security Spotlight on AISploit: The introduction of AISploit, available on GitHub, sparked discussions on leveraging AI for offensive security simulations, indicating a tactical pivot in the use of AI technologies in cybersecurity.

- TypeScript and Text Chunking Techniques Revealed: A shared TypeScript Gist demonstrated breaking up large text into semantic chunks using OpenAI’s sentence embedding service, an example of community members building and sharing tools for text processing workflows.
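The shared Gist is not reproduced here, so the following is only a sketch of one common way to do embedding-based chunking, written in Python rather than TypeScript. It starts a new chunk whenever the similarity between consecutive sentences drops below a threshold. `toy_embed` is a bag-of-words stand-in for a real sentence-embedding call, and the `0.2` threshold is an arbitrary assumption.

```python
import math
import re
from collections import Counter


def toy_embed(sentence):
    """Bag-of-words counts; a stand-in for a real sentence-embedding call."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text, threshold=0.2, embed=toy_embed):
    """Group consecutive sentences; break where similarity falls below threshold."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for previous, current in zip(sentences, sentences[1:]):
        if cosine(embed(previous), embed(current)) < threshold:
            chunks.append([current])      # topic shift: start a new chunk
        else:
            chunks[-1].append(current)    # same topic: extend the current chunk
    return [" ".join(chunk) for chunk in chunks]


text = "Cats chase mice. Cats chase birds. Stocks fell sharply today. Stocks fell again."
chunks = semantic_chunks(text)
```

With a real embedding model the threshold would need tuning on representative text.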

LAION Discord

Apple’s AI Ambitions Under Scrutiny: Apple is criticized for the subpar performance of Metal Performance Shaders (MPS) and torch.compile, even as recent merges aim to fix MPS issues in the PyTorch nightly branch. Community experiences with torch.compile vary, reflecting ongoing optimizations needed for Apple’s platforms.

Copyright Conundrum: AI’s use of copyrighted content for creating derivative works sparks legal debate, with consensus on the insufficiency of paraphrasing to avoid infringement. The community anticipates the need for substantial legal changes to accommodate new AI training data practices.

The Harmony of AI-Composed Music: Discussions about AI-generated music, involving companies like Suno and Nvidia, recognized rapid advancements but also forecasted potential legal spats with the music industry. Members also noted the less impressive progress in text-to-speech (TTS) technology compared to AI’s leap in music generation.

AI Career Dynamics Shifting: The rise of freelance AI-related careers due to technological progress is noted, with resources like Bloomberry’s analysis cited. Stability AI’s CosXL model release sparks conversations about the efficacy of EDM schedules and offset noise in model training.

Novelties in AI Research Techniques: A new paper on transformers shows computational resource allocation can be dynamic, DARE’s pruning technique for language models hints at preservable capabilities, and BrushNet introduces enhanced AI inpainting. Latent diffusion for text generation, referenced from a NeurIPS paper, indicates a potential shift in generative model techniques.

Latent Space Discord

- GPT Models Tackle the A::B Challenge: Victor Taelin conceded that GPT structures could indeed address certain problem-solving tasks, including long-term reasoning, after a participant utilized GPT to solve the A::B problem with a near 100% success rate, winning a $10k prize. Victor Taelin’s statement on the outcome is available online.

- Stanford Debuts Language Modeling Course CS336: Stanford is offering a new course, CS336, which delves into the nuts and bolts of language modeling, including insights on Transformers and LLMs, garnering significant interest from the community eager for the release of lecture recordings.

- Groq Plans to Topple AI Hardware Rivals: The AI hardware startup Groq, led by a founder with an unconventional educational background, aims to outdo all existing inference capacity providers combined by next year and asserts their developers enjoy reduced inference costs and speedier hardware in comparison to NVIDIA’s offerings.

- Introducing LangChain’s Memory Service: LangChain’s latest alpha release brings a memory service aiming to upgrade chatbot interactions by automatically condensing and refining conversations, with resources posted for quick start.

- Peer Learning in AI Tools and Knowledge Management: Engineers exchanged resources and strategies for curating personal and organizational knowledge using AI tools, such as incorporating Obsidian-Copilot and fabric, and discussed the development of integrations to enhance tools like ChatGPT within knowledge systems.

OpenAccess AI Collective (axolotl) Discord

- Quantized DoRA Available, Dance of the LoRA: The latest release of `peft=0.10.0` supports quantized DoRA, prompting suggestions to update axolotl’s `requirements.txt` (PEFT’s release notes). The advanced optimizers from Facebook Research have now been integrated into Hugging Face’s transformers library, with Schedule-Free Learning open-sourced and specific parameter recommendations of `0.0025` for ScheduleFreeAdamW (Hugging Face PR #30079).

- Model Generation Hiccup: Users reported and discussed an error occurring in the generation process with a fine-tuned Mistral 7b model using fp16, specifically after a few successful generations resulting in `_queue.Empty`.

- Rotary Queries and Sharding Insights: The parameter `"rope_theta": 10000.0` came under scrutiny, relating to Rotary Positional Embedding. Meanwhile, the FSDP configuration for Mistral was shared with details on how the `MixtralSparseMoeBlock` class should be utilized (mixtral-qlora-fsdp.yml).

- Seek and You Shall Find: LISA and Configs: Queries arose about the location and absence of LISA parameters in documentation, later resolved with the discovery of the LISA configuration file. Members also engaged in technical discussions on handling optimizer states for unfreezing new layers during training.

- Model Training Conundrums Solved: The community solved various challenges including training with raw text, adapting Alpaca instruction sets, differentiating micro batch size and batch size, and adjusting configurations to disable checkpoints and evaluations or handling special tokens.
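For readers wondering what the `rope_theta` value discussed above controls: in Rotary Positional Embedding it is the base of the per-dimension rotation frequencies. The sketch below uses the interleaved-pair formulation for readability; real implementations may pair dimensions differently, and the function names are illustrative rather than taken from any library.

```python
import math


def rope_inverse_frequencies(head_dim, rope_theta=10000.0):
    """One frequency per pair of dimensions: theta ** (-2i / d)."""
    return [rope_theta ** (-(2 * i) / head_dim) for i in range(head_dim // 2)]


def apply_rope(vector, position, rope_theta=10000.0):
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... by position-dependent angles."""
    rotated = []
    for i, inv_freq in enumerate(rope_inverse_frequencies(len(vector), rope_theta)):
        angle = position * inv_freq
        x, y = vector[2 * i], vector[2 * i + 1]
        rotated += [
            x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle),
        ]
    return rotated


freqs = rope_inverse_frequencies(4)
unrotated = apply_rope([1.0, 0.0, 1.0, 0.0], position=0)
rotated = apply_rope([1.0, 0.0, 1.0, 0.0], position=5)
```

A larger `rope_theta` lowers the frequencies of the later pairs, so their angles change more slowly with position.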

Interconnects (Nathan Lambert) Discord

Podcasting Gold: John Schulman to Possibly Feature on Show: Nathan Lambert is considering featuring John Schulman in a podcast, a move that stirred excitement among members. Moreover, a licensing change for text-generation-inference (TGI) to Apache 2 has spurred a significant increase in contributors to the open-source project.

Memes Channel Maintains Light-Heartedness: The memes channel included joking references to targetings without context, improvements in experiences, and confirmation of employment status, indicating a casual, light-hearted discourse among members.

Open AI Weights Debate Hits Engaged Nerve: The #reads channel had a vibrant discussion on the societal impacts of open foundation models, with a focus on safety thresholds, regulation feasibility, and AI’s potential to manipulate societal processes. A shared visualization of Transformer attention mechanisms and speculation about future models that emphasize verification instead of generation were among the in-depth topics discussed.

Bridging the Knowledge Gaps with Visuals: The #sp2024-history-of-open-alignment channel discussed effective resources like lmsys and alpacaeval leaderboard to find state-of-the-art models. Additionally, an intent to visually categorize models for better comprehension was expressed, along with sharing a live document (Google Slides presentation) for an upcoming alignment talk and a guide (comprehensive spreadsheet) on open models by Xeophon.

A Note on AI Generated Music: Nathan noted the impressive quality of a new contender in AI music generation, posing a potential challenge to the Suno AI platform.

CUDA MODE Discord

- Fast Track to Tokenization: Engineers discussed speeding up tokenization using Huggingface’s fast tokenizer for c4-en, exploring options like increasing threads or utilizing more capable machines.

- Open Source GPU: AMD announced the open sourcing of its Micro Engine Scheduler (MES) firmware for Radeon GPUs, a decision celebrated within the community and praised by entities like George Hotz’s Tiny Corp. (The Register Article, AMD Radeon Tweet).

- Paper Trail: An open-source repository, PaperReplica GitHub Repo, for replicating research papers in AI & ML got its unveiling, inviting community contributions and GitHub stars.

- CUDA Conundrums and Triton Strategizing: From setting up CUDA environments on Ubuntu to appreciating libraries that boost proficiency with Triton, members exchanged tips and troubles. In particular, a lean GPT-2 training implementation by Andrej Karpathy in C was highlighted for its efficiency without the heft of PyTorch or Python (GitHub).

- DeepSpeed in the Fast Lane: Conversations revolved around practical applications of DeepSpeed, integration with Hugging Face’s Accelerate, and memory optimization wonders even at zero stage. Additionally, use of Triton kernels was noted to be conditional on CUDA device input, and a curiosity about optimizing transformer MLPs with cublas or tiny-cuda-nn was shared (tiny-cuda-nn Documentation).

- Quantum of Solace for LLMs: A novel quantization approach, QuaRot, was mooted for its capability to quantize LLMs to 4 bits effectively, while a revelatory tweet hinted at schedule-free optimization, potentially indicating a move away from the traditional learning rate schedules (Twitter).

- Vexed by Visualizing Triton: Engineers delved into the challenges and opportunities in visualizing Triton code, from shared memory to tensor views, and from CPU constructs to enhancing JavaScript interactivity, signaling a continuing quest for more user-friendly debugging tools.

- Calendar Confusion Cleared: A small timezone clarification was sought for a ring attention session, hinting at the vibrancy of the community’s relentless pursuit of knowledge and optimization.

- Of Numbers and Neurons: The value of precise quantization methods surfaced, highlighting the importance of accurate tensor transformations and the potential performance gains leveraged from tools like Triton, indicating a keen focus on efficiency within machine learning pipelines.
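To make the 4-bit quantization discussion above concrete, here is a minimal sketch of plain symmetric absmax round-to-nearest quantization. This is not QuaRot, which the summary only names, and it is not a Triton kernel; the function names and the choice of a single per-tensor scale are assumptions for illustration.

```python
def quantize_int4(values):
    """Map floats to integer codes in [-8, 7] using one absmax scale."""
    scale = max(abs(v) for v in values) / 7 or 1.0  # fall back to 1.0 for all-zero input
    codes = [max(-8, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int4(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [code * scale for code in codes]


weights = [0.7, -0.3, 0.12, 0.0]
codes, scale = quantize_int4(weights)
restored = dequantize_int4(codes, scale)
# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

A single outlier inflates the scale and wastes most of the 16 available levels, which is one reason more careful quantization schemes exist.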

tinygrad (George Hotz) Discord

Tinygrad Takes a Step Back: George Hotz has reverted the command queue in tinygrad and is opting to integrate the memory scheduler directly with the current scheduler model. This approach utilizes the multidevicegraph abstraction already in place, as discussed here.

TinyJIT Under the Microscope: The TinyJit tutorial has been released, although it may contain inaccuracies, particularly with the apply_graph_to_jit function, and users are encouraged to submit pull requests for corrections (TinyJit Tutorial).

Tinygrad Learning Expanded: A collection of tutorials and guides for contributing to tinygrad are now available with a focus on topics like multi GPU training (Multi GPU Training Guide).

Discord Roles Reflect Contribution: George Hotz redesigned roles within the tinygrad Discord to better reflect community engagement and contribution levels, reinforcing the value of collaboration and respect for others’ time.

Unpacking MEC’s Firmware Mystery: Discussions about MEC firmware’s opcode architectures emerged with speculation on RISC-V and different instruction sets, revealing a potential cbz instruction and inclusive dialogue around the nuances of RISC-V ISA.

Mozilla AI Discord

Scan Reveals Llamafile’s Wrongful Accusation: Versions of llamafile, including llamafile-0.6.2.exe and llamafile-0.7, were flagged as malware by antivirus software; utilizing appeal forms with the respective antivirus companies was suggested as a remedial step.

Run Llamafile Smoother in Kaggle: Users encountering issues when running llamafile on Kaggle found solace through an updated command that resolves CUDA compilation and compatible GPU architecture concerns, enabling efficient usage of llamafile-0.7.

RAG-LLM Gets Local Legs: A query about locally distributing RAG-LLM application without the burdens of Docker or Python was answered affirmatively, indicating the suitability of llamafile for such purposes, particularly beneficial for macOS audiences.

Taming the Memory Beast with an Argument: An out of memory error experienced by a user was rectified by adjusting the -ngl parameter, demonstrating the importance of fine-tuning arguments based on the specific capabilities of their NVIDIA GeForce GTX 1050 card.

Vulkan Integration Spurs Performance Gains: A proposition to bolster llamafile by integrating Vulkan support led to performance enhancements on an Intel-based laptop with an integrated GPU, yet this required the granular task of re-importing and amending the llama.cpp file.

DiscoResearch Discord

- No Schedules Needed for New Optimizers: The huggingface/transformers repository now has a pull request introducing Meta’s schedule-free optimizers for AdamW and SGD, which promises substantial enhancements in model training routines.

- AI Devs Convene in Hürth: An AI community event focusing on synthetic data generation, LLM/RAG pipelines, and embeddings is scheduled for May 7th in Hürth, Germany. Registration is open, with emphasis on a hands-on, developer-centric format, and can be found at Developer Event - AI Village.

- Sharing Synthetic Data Insights Sought: Demand for knowledge on synthetic data strategies is high, with specific interest in the quality of German translated versus German generated data, indicating a niche requirement for regional data handling expertise.

- Command-R Tackles Tough Translations: The Command-R model showcased on Hugging Face Spaces excels at translating archaic Middle High German text, outperforming GPT-4 equivalents and underscoring the potential upheaval in historical language processing.

- Open-Source Model Development Desired: There’s anticipatory buzz that an open-source release of the impressive Command-R could amplify developer engagement, echoing the ecosystem success seen with publicly accessible models like Mistral.

AI21 Labs (Jamba) Discord

- Slow and Steady Wins the Race?: Comparisons reveal that Jamba’s 1B Mamba model lags in training speed by 76% when run on an HGX, compared to a standard Transformer model.

- Size Doesn’t Always Matter: Engineers have introduced scaled-down Jamba models, 8xMoE with 29B and 4xMoE with 17.7B parameters, achieving decent performance on hardware as accessible as a 4090 GPU at 4 bit.

- Weights and Measures: A creator’s application of spherical linear interpolation (Slerp) for expert weight reduction in Jamba models sparked interest, with plans to share a notebook detailing the process.

- Power Play: In the quest for optimal GPU utilization while handling a 52B Jamba model, one engineer seeks more efficient methods for training, likely considering a switch from pipeline to Tensor Parallelism given current capacity constraints.

- What’s the Best Model Serving Approach?: The community is engaging in conversations about effective inference engines for Jamba models, though no consensus has been reached yet.
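The promised notebook is not available here, so how Slerp was applied to the Jamba experts is unknown. As background only, the sketch below shows generic spherical linear interpolation between two flattened weight vectors, assuming both are non-zero; it interpolates along the arc between them instead of along the straight line.

```python
import math


def slerp(a, b, t):
    """Spherical linear interpolation between non-zero vectors a and b at fraction t."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding drift
    omega = math.acos(cos_omega)
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to ordinary linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    sin_omega = math.sin(omega)
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


# Merging two hypothetical "expert" weight vectors halfway between them.
merged = slerp([1.0, 0.0], [0.0, 1.0], 0.5)
```

For unit vectors the result stays on the unit sphere, whereas a plain average of the two vectors above would have norm of about 0.71.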

Datasette - LLM (@SimonW) Discord

- QNAP NAS - A Home Lab for AI Enthusiasts: An AI engineer shared a guide about setting up a QNAP NAS (model TS-h1290FX) as an AI testing platform, emphasizing its notable specs such as an AMD EPYC 7302P CPU, 256GB DRAM, and 25GbE networking.

- Streamlining AI with Preset Prompts: There’s curiosity among engineers about storing and reusing system prompts to improve efficiency in AI interactions, although the discussion did not progress with more detailed insights or experiences.

- Alter: The Mac’s AI Writing Assistant: Alter is launching in beta, offering AI-powered text improvement services to macOS users, capable of integrating with applications like Keynote, as showcased in this demonstration video.

- A Singular AI Solution for Mac Enthusiasts: The Alter app aims to provide context-aware AI features across all macOS applications, potentially centralizing AI tools and reducing the need for multiple services. Details about its full capabilities are available on the Alter website.

Skunkworks AI Discord

- Dynamic Compute Allocation Sparks Ideas: Engineers discussed a paper proposing dynamic allocation of compute resources on a per-token basis within neural networks, which stirred interest for possible adaptations in neurallambda; the aim is to allow the network to self-regulate its computational efforts.

- Rethinking Training Approaches for neurallambda: Exploratory talks included using pause/think tokens, reinforcement learning for conditionals, and emulating aspects of RNNs that adaptively control their compute usage, which could enhance training efficacy for neurallambda.

- Innovative Input Handling on the Horizon: Technologists considered novel input approaches for neurallambda, like using a neural queue for more flexible processing and conceptualizing input as a Turing machine-esque tape, where the network could initiate tape movements.

- Improving LLMs Data Structuring Capabilities: Participants shared an instructional video titled “Instructor, Generating Structure from LLMs”, showing methods to extract structured data such as JSON from LLMs like GPT-3.5, GPT-4, and GPT-4-Vision, aimed at getting more reliable results from these models. Watch the instructional video.

- Video Learning Opportunities: A second educational video was linked without any context; it may still be a useful resource for self-guided learning. Explore the video.
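The structured-extraction idea from the Instructor video above can be illustrated with the standard library alone. This sketch does not use the Instructor library or its API; `Person` and `parse_structured` are hypothetical names, and the model reply is a hard-coded string. It shows the core step of parsing a reply as JSON and rejecting it when it does not match the expected schema.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Person:
    name: str
    age: int


def parse_structured(reply, schema):
    """Parse a model reply as JSON and check it against the dataclass field types."""
    data = json.loads(reply)
    for f in fields(schema):
        if f.name not in data:
            raise ValueError(f"missing field: {f.name}")
        if not isinstance(data[f.name], f.type):
            raise ValueError(f"wrong type for field: {f.name}")
    # Extra keys in the reply are ignored; only declared fields are kept.
    return schema(**{f.name: data[f.name] for f in fields(schema)})


# A hard-coded string standing in for an LLM response.
person = parse_structured('{"name": "Ada", "age": 36}', Person)
```

In practice a failed validation is usually fed back to the model as a retry prompt.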

LLM Perf Enthusiasts AI Discord

- Haiku Performance Tuning Search: A guild member is seeking advice on improving the speed of Haiku due to dissatisfaction with its current throughput.

- Anthropic’s API Outperforms GPT-4 Turbo: A user presented evidence that Anthropic’s beta API surpassed GPT-4 Turbo in numerous tests on the Berkeley function calling benchmark. Results from this study can be found in a detailed Twitter thread.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Perplexity AI ▷ #announcements (1 messages):

- New Story Discovery Experience on iOS: Perplexity is testing a new format for story discovery in its iOS app. Feedback is welcomed in the designated channel; get the app here.

Perplexity AI ▷ #general (1199 messages🔥🔥🔥):

- Event Ends with a Draw: The Perplexity AI Discord event concluded with users eun08000 and codelicious tied for top place. Winners will be contacted via DMs for their prizes.

- Differences in Claude 3 Opus: Users discussed differences in the Claude 3 Opus model between Poe and Perplexity, noting performance variations, particularly in creativity and writing tasks.

- Solar Eclipse Excitement: Members of the server shared their anticipation and observations of the solar eclipse, with conversations including the ideal viewing equipment and experiences of witnessing the phenomenon.

- Questions on Moon Formation: A discussion arose about the formation of the Moon, with one user skeptical about the theory of the Moon being part of a celestial body that collided with Earth. Links to educational resources were shared for further understanding.

- Getting Pro Role on Discord: Users inquired about obtaining the ‘Pro’ role on the Discord server, with a direction to rejoin via a Pro Discord link provided in the account settings on the Perplexity website.

Links mentioned:

- Tweet from OscaR-_-010 (@OscaR_010__): @kodjima33 @bing @OpenAI @perplexity_ai @AnthropicAI Hi, friend . Here I do have to say that in searches @perplexity_ai surpasses all those mentioned, its search range is superior. The search includes...

- rabbit: $199 no subscription required - the future of human-machine interface - pre-order now

- Supported Models: no description found

- Impact of sanctions on Russia and Belarus : Stripe: Help & Support: no description found

- Cat Cat Memes GIF - Cat Cat memes Cat images - Discover & Share GIFs: Click to view the GIF

- Space GIF - Space - Discover & Share GIFs: Click to view the GIF

- Queen - Champion GIF - Queen Freddie Mercury We Are The Champions - Discover & Share GIFs: Click to view the GIF

- Mobile Vendor Market Share Worldwide | Statcounter Global Stats: This graph shows the market share of mobile vendors worldwide based on over 5 billion monthly page views.

- Can I use Privacy if I live outside the US?: We are only able to provide our service to US citizens or legal residents of the US at this time. We're continuing to explore opportunities and options to bring Privacy to the rest of the world. H...

- Mobile Operating System Market Share Worldwide | Statcounter Global Stats: This graph shows the market share of mobile operating systems worldwide based on over 5 billion monthly page views.

- Unlimited Power Star Wars GIF - Unlimited Power Star Wars - Discover & Share GIFs: Click to view the GIF

- Mojeek Labs | RAG Search: no description found

- Are Sonar models new?: no description found

- The difference between the models on the PPL website and the API models.: no description found

- Model names?: no description found

- Solar Eclipse LIVE Coverage (with Video & Updates): Join us for live coverage of the solar eclipse, featuring live eclipse video! We’ll show you the total solar eclipse live in Mexico, the United States, and C...

- Eight Wonders Of Our Solar System | The Planets | BBC Earth Science: Discover the most memorable events in the history of our solar system. Travel to the surface of these dynamic worlds to witness the moments of high drama tha...

- GOES-East CONUS - GeoColor - NOAA / NESDIS / STAR: no description found

Perplexity AI ▷ #sharing (40 messages🔥):

- Exploring the AI Frontier: Users shared a plethora of search queries leading to Perplexity AI’s platform, covering topics from the Samsung Galaxy S23 to the impacts of AI on the music industry.

- Tech Giants Making Moves: A link to a YouTube video discussing Tesla’s robotaxi announcement and Apple’s home robot project was shared, highlighting the advancements and rumors in technology sectors.

- Interactivity Reminders: Several reminders for users were posted, urging them to ensure their threads are shareable, indicated by specific instructions and attachment links.

- Featured Success Story: Perplexity AI’s efficiency in model training was showcased in an Amazon Web Services case study, presenting significant reductions in training times and enhanced user experience.

- Educational Insights: Links to knowledge resources on various subjects such as color basics, the origins of geometric proofs, and SpaceX’s Mars plans were provided, reflecting a diverse interest in learning and self-improvement.

Links mentioned:

- Perplexity Accelerates Foundation Model Training by 40% with Amazon SageMaker HyperPod | Perplexity Case Study | AWS: no description found

- Tesla robotaxi announcement, Alphabet-HubSpot deal rumors, Apple’s home robot project: In today's episode of Discover Daily by Perplexity, we dive into Tesla's upcoming robotaxi unveiling, set for August 8th, and explore what this autonomous ve...

- Workflows & Tooling to Create Trusted AI | Ask More of AI with Clara Shih: Clara sits down with the founder/CEOs of three of the hottest AI companies-- Aravind Srinivas (Perplexity AI), Jerry Liu (LlamaIndex), and Harrison Chase (La...

Perplexity AI ▷ #pplx-api (40 messages🔥):

- API Credit Purchase Difficulties: Members are experiencing issues when attempting to purchase API credits; the balance appears as $0 after a refresh despite trying multiple times. ok.alex requests affected users to send account details for resolution.

- Discrepancy Between pplx-labs, API, and Web App: Users have reported inconsistencies in results when using the same prompts across the pplx-labs, API, and web application, with the API showing more hallucinations. icelavaman informed that the default model from pplx.ai is not available via the API, and citations are currently in closed beta.

- Ruby Wrapper for Perplexity API in Progress: filterse7en is developing an OpenAI-based Ruby wrapper library for the Perplexity API.

- API vs Web App Model Differences: Discussions reveal differences between results from the API and the web application, with skepticism around results quality and the presence of hallucinations. brknclock1215 suggests that using the `sonar-medium-online` model via the API should effectively be the same as the “Sonar” model on the web version without Pro.

- Inquiries on pplx-pro Model API Access: marciano inquired if the model used in pplx-pro is accessible via the API. ok.alex clarified that Pro search is only available on the web and their apps, not via the API.

Links mentioned:

- Supported Models: no description found

Nous Research AI ▷ #off-topic (15 messages🔥):

- Introducing Command R+: A YouTube video titled “Introducing Command R+: A Scalable LLM Built for Business” has been shared, showcasing cohere’s powerful LLM specifically built for enterprise applications.

- Overflow of AI Research: A member asked whether more AI researchers are needed. One member suggested there is already more research than can be digested, while another pointed out the need for more meta-researchers to synthesize and interpret the influx of information.

- Search Images in a Snap: The project ‘Where’s My Pic?’ was introduced, offering a solution similar to Google Image Search for local folders, which can be a time-saver for locating images quickly. Learn more about the project in this YouTube video.

- Canada’s AI Strategy: An announcement of Canada’s ambition to be at the forefront of AI, including creating good-paying job opportunities in innovation and technology, was highlighted through a government news release.

- Hugging Face Tech Insights: The SSL certificates of huggingface.tech have been analyzed, providing insights into the tools they use, as detailed on crt.sh.

Links mentioned:

- Introducing Command R+: A Scalable LLM Built for Business: Today, we will take a look at the Command R+, cohere's most powerful, scalable large language model (LLM) purpose-built to excel at real-world enterprise us...

- Securing Canada’s AI advantage: no description found

- crt.sh | huggingface.tech: no description found

Nous Research AI ▷ #interesting-links (49 messages🔥):

- Rohan Paul’s AI Tweet Sparks Curiosity: A tweet by Rohan Paul regarding AI was revisited, noting its promising earlier impression but lacking follow-up information and insights after three months. The discussion also touched upon the usability of fp8 on NVIDIA’s 4090 GPUs.

- LLaMA-2-7B Breaks the Context Length: A groundbreaking achievement was shared where LLaMA-2-7B was trained to handle a massive 700K context length using just 8 A100 GPUs, significantly surpassing the expected capacity of 32K to 200K tokens.

- Gemma 1.1 Joins the AI Language Model Family: Google released Gemma 1.1 7B (IT), an instruction-tuned language model, on Hugging Face, boasting improvements in quality, coding capabilities, and instruction following. It was highlighted for its novel RLHF method used during training.

- Pathfinding in Mazes Takes a Twist: A unique approach to unifying physics was proposed using conjectural frameworks like the Fibonacci binomial conjecture, suggesting that NLP can simulate any process.

- GPT-4 Takes a Meta Turn: An experience with GPT-4 was shared where a given prompt led to unexpectedly meta and self-referential content. The discussion on this intriguing performance included a YouTube link to a relevant game’s narrator feature.

Links mentioned:

- Tweet from Zhang Peiyuan (@PY_Z001): 🌟700K context with 8 GPUs🌟 How many tokens do you think one can put in a single context during training, with 8 A100, for a 7B transformer? 32K? 64K? 200K? No, my dear friend. I just managed to tra...

- Large Language Models as Commonsense Knowledge for Large-Scale Task Planning: Large-scale task planning is a major challenge. Recent work exploits large language models (LLMs) directly as a policy and shows surprisingly interesting results. This paper shows that LLMs provide a ...

- Classification - Outlines 〰️: Structured text generation with LLMs

- Paper page - Beyond A*: Better Planning with Transformers via Search Dynamics Bootstrapping: no description found

- google/gemma-1.1-7b-it · Hugging Face: no description found

- Paper page - Direct Nash Optimization: Teaching Language Models to Self-Improve with General Preferences: no description found

- Factorial Funds | Under The Hood: How OpenAI's Sora Model Works: no description found

- Visualizing Attention, a Transformer's Heart | Chapter 6, Deep Learning: Demystifying attention, the key mechanism inside transformers and LLMs.Instead of sponsored ad reads, these lessons are funded directly by viewers: https://3...

- Bastion: Narrator Bits Part 1 (Wharf District, Workmen Ward, Breaker Barracks): I recorded the game with narrator audio only and everything else turned down to zero in the sound menu volume settings. Then I just cut out the silent part...

- GitHub - vicgalle/configurable-safety-tuning: Data and models for the paper "Configurable Safety Tuning of Language Models with Synthetic Preference Data": Data and models for the paper "Configurable Safety Tuning of Language Models with Synthetic Preference Data" - vicgalle/configurable-safety-tuning

Nous Research AI ▷ #ask-about-llms (148 messages🔥🔥):

- GitHub Resource for PDF to Markdown Conversion: A member shared a GitHub repository titled VikParuchuri/marker, which provides a tool to convert PDF files to markdown format with high accuracy. The repository can be found at GitHub - VikParuchuri/marker.

- Hermes Function Calling Woes: There was a discussion on how to make `Hermes-2-Pro-Mistral-7B` execute functions using a `tools` configuration similar to OpenAI’s models. It was noted that while the model can handle ChatML syntax and function calls within messages, it encounters problems executing functions defined in `tools`.

- Full-Parameter vs Adapter Training in LLMs: Members debated on the challenges of achieving consistent results with full parameter finetuning compared to training adapters, with some sharing their relative success or lack thereof with either method in different contexts, such as Mixtral or Llamas.

- Exploring Large Model Output Limitations: A conversation took place about the limitations on output size in large language models, with the understanding that while input contexts can be quite large, output is limited due to different training data and operational considerations like needing examples of similarly sized outputs for training.

- Combining Ontologies and Vector Searches: There was an extensive discussion on utilizing knowledge graph (KG) ontologies with language models. Tips were shared on how to create Cypher queries from input text, the effectiveness of walking KG graphs using a vector search evaluation function, and the integration of vector databases with graph databases for production uses.
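One way to read "walking KG graphs using a vector search evaluation function" is a best-first traversal in which vector similarity to the query decides which node to expand next. The sketch below is an interpretation under that assumption, not the approach members actually described; the graph, the two-dimensional embeddings, and the function names are all made up for illustration.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def walk_graph(graph, embeddings, start, query_vec, max_nodes=3):
    """Best-first walk: always expand the frontier node most similar to the query."""
    visited = []
    seen = {start}
    # heapq is a min-heap, so similarities are negated to pop the best node first.
    frontier = [(-cosine(embeddings[start], query_vec), start)]
    while frontier and len(visited) < max_nodes:
        _, node = heapq.heappop(frontier)
        visited.append(node)
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                heapq.heappush(frontier, (-cosine(embeddings[neighbour], query_vec), neighbour))
    return visited


graph = {"llm": ["transformer", "banana"], "transformer": ["attention"]}
embeddings = {
    "llm": [1.0, 0.0],
    "transformer": [0.9, 0.1],
    "attention": [0.8, 0.2],
    "banana": [0.0, 1.0],
}
path = walk_graph(graph, embeddings, "llm", query_vec=[1.0, 0.0])
```

Here the walk reaches "attention" before ever visiting "banana", because the unrelated node scores lowest against the query.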

Links mentioned:

- TroyDoesAI/MermaidMistral · Hugging Face: no description found

- GitHub - NousResearch/Hermes-Function-Calling: Contribute to NousResearch/Hermes-Function-Calling development by creating an account on GitHub.

- About GPU memory usage · Issue #8 · joey00072/ohara: Hello. First of all, thanks for sharing a bitnet training code. I have a question about GPU memory usage. As I understanding, bitnet can reduce VRAM usage compared to fp16/bf16 precision. However, ...

- GitHub - VikParuchuri/marker: Convert PDF to markdown quickly with high accuracy: Convert PDF to markdown quickly with high accuracy - VikParuchuri/marker
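
The idea of walking a knowledge graph with a vector search evaluation function, mentioned in the ontology discussion above, can be sketched roughly as a best-first traversal. This is only an illustration under stated assumptions: the graph is a plain adjacency dict, the embeddings are toy vectors, and `walk_graph` is a hypothetical helper, not something shared in the channel.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def walk_graph(graph, embeddings, query_vec, start, max_nodes=3):
    """Best-first walk: always expand the frontier node most similar to the query."""
    visited = []
    seen = {start}
    # heapq is a min-heap, so similarities are stored negated.
    frontier = [(-cosine(embeddings[start], query_vec), start)]
    while frontier and len(visited) < max_nodes:
        _, node = heapq.heappop(frontier)
        visited.append(node)
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                score = cosine(embeddings[neighbor], query_vec)
                heapq.heappush(frontier, (-score, neighbor))
    return visited


# Toy data: node names and 2-D vectors are made up for the example.
graph = {"llm": ["rag", "gpu"], "rag": ["vector_db", "graph_db"], "gpu": ["cuda"]}
embeddings = {
    "llm": [1.0, 1.0],
    "rag": [1.0, 0.2],
    "gpu": [0.1, 1.0],
    "vector_db": [1.0, 0.0],
    "graph_db": [0.9, 0.3],
    "cuda": [0.0, 1.0],
}
path = walk_graph(graph, embeddings, query_vec=[1.0, 0.0], start="llm")
```

In a production setup the adjacency lookup would presumably come from the graph database and the similarity scores from the vector database, which is the integration the discussion pointed at.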

Nous Research AI ▷ #project-obsidian (5 messages):

- Twisting LLaVA with Randomness: A member experimented with the LLaVA vision-language model by injecting randomness into the image embeddings and observed the LLM’s interpretations, detailed in their blog post. The process involved tweaking the model to accept random projections instead of CLIP projections, essentially performing a “Rorschach test” on the AI.

- nanoLLaVA’s Mighty Appearance: A member launched the “small but mighty” nanoLLaVA, a sub-1B vision-language model, and shared a link to it on Hugging Face. It runs on edge devices and boasts a unique combination of a Base LLM and Vision Encoder.

- Obsidian and Hermes Vision Updates Imminent: The same member announced impending updates to both Obsidian and Hermes Vision, suggesting enhancements in vision-language model capabilities.

- ChatML Fusing Capabilities with LLaVA: There was a successful endeavor to make ChatML work with the LLaVA model, hinting at a potential bridge between chat and vision-language tasks.
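
The “Rorschach test” idea above can be sketched without any model code: generate Gaussian noise with the same shape as the projected image features and splice it in where the image tokens would go. This is a rough illustration, not the blog post's implementation; the function names are hypothetical, and the real dimensions (assumed here to be on the order of 576 image tokens by 4096 hidden units for a 7B LLaVA-1.5 model) should be checked against the model config.

```python
import random


def random_image_embeddings(num_tokens, hidden_dim, std=1.0, seed=None):
    """Return num_tokens x hidden_dim Gaussian noise vectors.

    These stand in for the projected CLIP features the language model
    would normally receive for an image.
    """
    rng = random.Random(seed)
    return [
        [rng.gauss(0.0, std) for _ in range(hidden_dim)]
        for _ in range(num_tokens)
    ]


def splice_into_prompt(text_before, image_embeds, text_after):
    """Place the image token embeddings between the text token embeddings."""
    return text_before + image_embeds + text_after


# Tiny dimensions so the example stays readable; real models are far larger.
noise = random_image_embeddings(num_tokens=4, hidden_dim=8, seed=0)
prompt_embeds = splice_into_prompt([[0.0] * 8], noise, [[0.0] * 8, [0.0] * 8])
```

The `std` parameter matters in practice: noise whose scale is far from that of real projected features may push the language model well outside the distribution it was trained on.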

Links mentioned:

- anotherjesse.com - Rorschach Test For LLaVA LLM: no description found

- qnguyen3/nanoLLaVA · Hugging Face: no description found

Nous Research AI ▷ #rag-dataset (19 messages🔥):

- Chunking Script for Diverse Dataset Suggested: A member proposed writing a chunking script so that a single large RAG call is not needed at dataset-generation time. Preparing the chunks for RAG generation beforehand could make the dataset more diverse and its creation more efficient.

- Multidoc Queries Via Claude Opus: A discussion took place about the possibility of generating multidoc queries using Claude Opus by selecting documents from varied domains and generating queries that cut across them. This approach could enhance complex query generation for RAG models.

- Diverse Document Sources for Model Training: Links to diverse document sources have been shared, such as the OCCRP data platform and a repository of various files at The Eye. These sources could be scraped to create a rich training dataset.

- Ransomware Victim Documents for Training: There was a consideration of ransomware groups publishing victims’ internal documents as a potential training data source. However, the ethics of using such data was flagged as questionable.

- RAPTOR Clustering Strategy Discussed: The recursive aspect of the RAPTOR clustering method was highlighted, prompting a discussion on the strategy of generating clusters and their role in stratifying collections for the RAG dataset.
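
A chunking script of the kind suggested above could look roughly like the following. It is a minimal sketch that splits on whitespace with a fixed overlap; the function names, the record layout, and the default sizes are assumptions, and a real pipeline would likely chunk by tokens or by document structure instead.

```python
def chunk_words(text, chunk_size=200, overlap=20):
    """Split text into overlapping chunks of at most chunk_size words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


def chunk_corpus(docs, chunk_size=200, overlap=20):
    """Pre-chunk a {doc_id: text} mapping into flat records for later RAG generation."""
    records = []
    for doc_id, text in docs.items():
        for index, chunk in enumerate(chunk_words(text, chunk_size, overlap)):
            records.append({"doc_id": doc_id, "chunk_index": index, "text": chunk})
    return records
```

Because every record keeps its `doc_id`, chunks from different domains can later be sampled together, which is one way to build the cross-document queries discussed in this channel.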

Links mentioned:

- Index of /public/: no description found

Nous Research AI ▷ #world-sim (567 messages🔥🔥🔥):

- Worldsim Wheezes as We Wait: Users continue to eagerly inquire about the recovery of Worldsim following a DDoS attack, discussing the potential implementation of a login system to prevent future attacks from notorious online communities like 4chan.

- AI Memory Mystery: There’s confusion and discussion about whether Claude can remember information across separate sessions or whether it is just imitating this ability through its probabilistic model, with random token selection causing varying outcomes despite identical prompts.

- Seeking Sustainable Solution: As users propose subscription models for Worldsim to offset the high operational costs sparked by indiscriminate access, Nous Research hints at a future “pro” version with plans for more scenarios, while stressing the need for a sustainable platform.

- Tales and Tech of Transcendence: The channel teems with philosophical discussions about consciousness, existence, and the potential of living in a simulation; parallel dialogues delve into the nature of AI, existence, and the interplay of science and philosophy.

- Impatient for Play: Users express a mix of impatience and enthusiasm for Worldsim’s return, asking for updates, while discussing ways to prevent unrestricted access and pondering potential costs of a subscription-based model to keep the service both financially viable and protected from misuse.
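
The point about random token selection producing different outcomes from identical prompts can be illustrated with a toy temperature sampler. This is a generic sketch of softmax sampling, not a description of how Claude is actually served; the logits and token names are made up.

```python
import math
import random


def sample_token(logits, temperature=1.0, rng=random):
    """Sample one token from a {token: logit} mapping using softmax with temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    tokens = list(logits)
    scaled = [logits[token] / temperature for token in tokens]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Identical "prompt" (the same logits), sampled repeatedly.
logits = {"yes": 2.0, "no": 1.0, "maybe": 0.5}
rng = random.Random(0)
varied = [sample_token(logits, temperature=1.0, rng=rng) for _ in range(200)]
near_greedy = [sample_token(logits, temperature=0.01, rng=rng) for _ in range(50)]
```

At a temperature near zero the sampler almost always returns the highest-scoring token, while at higher temperatures the same input yields a mix of outputs, which can look like a model "remembering" or "forgetting" between sessions.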

Links mentioned:

- Client SDKs: no description found

- MemGPT - Unlimited Context (Memory) for LLMs | MLExpert - Crush Your Machine Learning interview: How can you overcome the context window size limit of LLMs? MemGPT helps handle longer conversations by cleverly managing different memory tiers.

- fbjr/cohere_c4ai-command-r-plus-mlx-4bit-128g · Hugging Face: no description found

- world_sim: no description found

- websim.ai: no description found

- Google's New AI Can Generate Entire 2D Platformer Games: The new model, dubbed Genie, can create playable environments from a single image prompts.

- An Introduction to Game B - Game B Wiki: no description found

- The Box | Science Fiction Animatic: Video Summary:The animatic follows the perspective of "The Breacher," a character determined to escape the confines of a simulated reality, known as the "Wor...

- GitHub - simonw/llm: Access large language models from the command-line: Access large language models from the command-line - simonw/llm

- Worldsim and jailbreaking Claude 3 Including subtitles [-2-]: Personal learning

- The Great Eclipse | Science Fiction Animatic: Video Summary:This animatic explores a war of ideas and beliefs, fought not with weapons but through the power of data, debates, and simulated worlds. As dif...

- Quantum Mechanics can be understood through stochastic optimization on spacetimes - Scientific Reports: no description found

- Home: no description found

Stability.ai (Stable Diffusion) ▷ #general-chat (977 messages🔥🔥🔥):

- Searching for the Stability Bot: Users asking how to generate images were redirected to check server status in <#1047610792226340935>, as the bots are currently down.

- Curiosity About Image Generation Results: Users discussed differences in image output quality between local models and DreamStudio, with suggestions to try open-source upscalers and inquiries into the effectiveness of various techniques.

- Anticipation for Stable Diffusion 3: Conversations indicate an informal ETA of 2-4 weeks for the release of SD3, with discussions about its anticipated improvements and capabilities.

- Exploring SD Model Enhancements: Users exchanged information on training LoRAs, including questions about installation and practicality, with suggestions to follow specific GitHub repositories for guidance.

- Switching Between UIs: Members shared advice for switching from Automatic1111 to other UIs like StableSwarmUI, emphasizing the latter’s enhanced user experience and features for newcomers.

Links mentioned:

- Leonardo.Ai: Create production-quality visual assets for your projects with unprecedented quality, speed and style-consistency.

- stabilityai/cosxl · Hugging Face: no description found

- SDXL-Lightning - a Hugging Face Space by ByteDance: no description found

- Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction: We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolutio...

- PixArt LCM - a Hugging Face Space by PixArt-alpha: no description found

- Lexica Testica - 1.0 | Stable Diffusion Checkpoint | Civitai: Initialized from OpenJourney v2, further fine-tuned for 4000 steps on images scraped from the front page of Lexica art (January 2023). Good at prod...

- Frieren Wow GIF - Frieren Wow Elf - Discover & Share GIFs: Click to view the GIF

- Yoshi Mario GIF - Yoshi Mario Yoshis Island - Discover & Share GIFs: Click to view the GIF

- OpenModelDB: OpenModelDB is a community driven database of AI Upscaling models. We aim to provide a better way to find and compare models than existing sources.

- Reddit - Dive into anything: no description found

- VAR/demo_sample.ipynb at main · FoundationVision/VAR: [GPT beats diffusion🔥] [scaling laws in visual generation📈] Official impl. of "Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction" - FoundationVision/VAR

- GitHub - LykosAI/StabilityMatrix: Multi-Platform Package Manager for Stable Diffusion: Multi-Platform Package Manager for Stable Diffusion - LykosAI/StabilityMatrix

- Federal Register :: Request Access: no description found

- How to Run Stable Diffusion in Google Colab (Free) WITHOUT DISCONNECT: Here's how to code your own python notebook in Colab to generate AI images for FREE, without getting disconnected. We'll use the Diffusers library from Huggi...

- Survey: Comparing generated photos by AI (Diffusion Models): This is a survey to determine the more accurate output from different diffusion models like SD 1.5, SD 2.0, SDXL, Dall-e-3 and a custom fine tuned model. It will take a few minutes to complete the su...

- Installing Stability Matrix (1 click installers for Automatic 1111, ComfyUI, Fooocus, and more): This Stability Matrix application is designed for Windows and allows for installing and managing text to image web ui apps like Automatic 1111, ComfyUI, and ...

- A Conversation with Malcolm and Simone Collins (Supercut): Malcolm and Simone are the founders of pronatalist.org, The Collins Institute for the Gifted, and Based Camp Podcast.All Outcomes Are Acceptable Blog: https:...

- GitHub - altoiddealer/--sd-webui-ar-plusplus: Select img aspect ratio from presets in sd-webui: Select img aspect ratio from presets in sd-webui. Contribute to altoiddealer/--sd-webui-ar-plusplus development by creating an account on GitHub.

- Identifying Stable Diffusion XL 1.0 images from VAE artifacts: The new SDXL 1.0 text-to-image generation model was recently released that generates small artifacts in the image when the earlier 0.9 release didn't have them.

- GitHub - nashsu/FreeAskInternet: FreeAskInternet is a completely free, private and locally running search aggregator & answer generate using LLM, without GPU needed. The user can ask a question and the system will make a multi engine search and combine the search result to the ChatGPT3.5 LLM and generate the answer based on search results.: FreeAskInternet is a completely free, private and locally running search aggregator & answer generate using LLM, without GPU needed. The user can ask a question and the system will make a mul...

- How to Install Stable Diffusion - automatic1111: Part 2: How to Use Stable Diffusion https://youtu.be/nJlHJZo66UAAutomatic1111 https://github.com/AUTOMATIC1111/stable-diffusion-webuiInstall Python https://w...

- GitHub - derrian-distro/LoRA_Easy_Training_Scripts: A UI made in Pyside6 to make training LoRA/LoCon and other LoRA type models in sd-scripts easy: A UI made in Pyside6 to make training LoRA/LoCon and other LoRA type models in sd-scripts easy - derrian-distro/LoRA_Easy_Training_Scripts

- SonicDiffusion - V4 | Stable Diffusion Checkpoint | Civitai: Try it out here! https://mobians.ai/ Join the discord for updates, share generated-images, just want to chat or if you want to contribute to helpin...

- GitHub - Stability-AI/StableSwarmUI: StableSwarmUI, A Modular Stable Diffusion Web-User-Interface, with an emphasis on making powertools easily accessible, high performance, and extensibility.: StableSwarmUI, A Modular Stable Diffusion Web-User-Interface, with an emphasis on making powertools easily accessible, high performance, and extensibility. - Stability-AI/StableSwarmUI

- GitHub - camenduru/Open-Sora-Plan-replicate: Contribute to camenduru/Open-Sora-Plan-replicate development by creating an account on GitHub.

- GitHub - GarlicCookie/PNG-SD-Info-Viewer: PNG-SD-Info-Viewer is a program designed to quickly allow the browsing of PNG files with associated metadata from Stable Diffusion generated images.: PNG-SD-Info-Viewer is a program designed to quickly allow the browsing of PNG files with associated metadata from Stable Diffusion generated images. - GarlicCookie/PNG-SD-Info-Viewer

- GitHub - GarlicCookie/SD-Quick-View: SD-Quick-View is a program designed to very quickly look through images generated by Stable Diffusion and see associated metadata.: SD-Quick-View is a program designed to very quickly look through images generated by Stable Diffusion and see associated metadata. - GarlicCookie/SD-Quick-View

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

- ComfyUI-Tara-LLM-Integration/README.md at main · ronniebasak/ComfyUI-Tara-LLM-Integration: Contribute to ronniebasak/ComfyUI-Tara-LLM-Integration development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- NewRealityXL ❗ All-In-One Photographic - ✔ 3.0 Experimental | Stable Diffusion Checkpoint | Civitai: IMPORTANT: v2.x ---> Main Version | v3.x ---> Experimental Version I need your time to thoroughly test this new 3rd version to understand all...

Unsloth AI (Daniel Han) ▷ #general (341 messages🔥🔥):

-