One thing we missed covering in the weekend rush is Lilian Weng’s blog on Diffusion Models for Video Generation. While her work is rarely breaking news on any particular day, it is almost always the single most worthwhile resource on a given important AI topic, and we would say this even if she did not happen to work at OpenAI.

Anyone keen on Sora, the biggest AI launch of the year so far (now rumored to be coming to Adobe Premiere Pro), should read this. Unfortunately for most of us, the average diffusion paper requires 150+ IQ to read.

We are only half joking. As per Lilian’s style, she takes us on a wild tour of all the SOTA videogen techniques of the past 2 years, humbling every other AI summarizooor on earth:

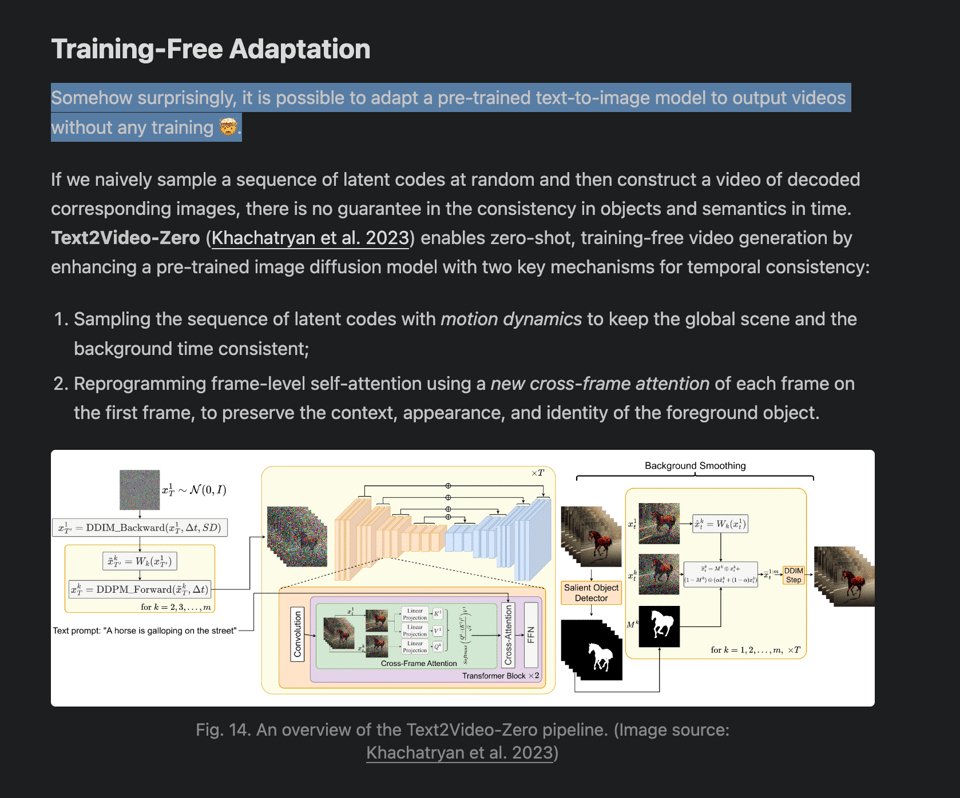

The surprise find of the day comes from her highlight of Training-free adaptation, which is exactly as wild as it sounds:

“Somehow surprisingly, it is possible to adapt a pre-trained text-to-image model to output videos without any training 🤯.”

She unfortunately only spends 2 sentences discussing Sora, and she definitely knows more she can’t say. Anyway, this is likely the most authoritative explanation to How SOTA AI Video Actually Works you or I are ever likely to get unless Bill Peebles takes to paper writing again.

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/Singularity. Comment crawling works now but has lots to improve!

AI Companies and Releases

- OpenAI expands: OpenAI launches in Japan, introduces Batch API, and partners with Adobe to bring Sora video model to Premiere Pro.

- New models: Reka AI releases Reka Core multimodal language model.

- Competitive landscape: Sam Altman says OpenAI will “steamroll” startups. Devin AI model sees record internal usage.

New Model Releases and Advancements in AI Capabilities

- WizardLM-2 released: In /r/LocalLLaMA, WizardLM-2 was just released and is showing impressive performance.

- Llama 3 news coming soon: An image post hints that news about Llama 3 will be coming soon.

- Reka Core multimodal model released: Reka AI announced the release of Reka Core, their new frontier-class multimodal language model.

- AI models showing intuition and creativity: Geoffrey Hinton says current AI models are exhibiting intuition, creativity and can see analogies humans cannot.

- AI contributing to its own development: Devin was the biggest contributor to its own repository for the first time, an AI system contributing significantly to its own codebase.

- AI recognizing its own outputs: In /r/singularity, it was shared that Opus can recognize its own generated outputs, an impressive new capability.

Industry Trends, Predictions and Ethical Concerns

- Warnings about AI disruption: Sam Altman warned startups about the risk of getting steamrolled by OpenAI if they don’t adapt quickly enough.

- Debate on AGI timelines: While Yann LeCun believes AGI is inevitable, he says it’s not coming next year or only from LLMs.

- Toxicity issues with models: WizardLM-2 had to be deleted shortly after release because the developers forgot to test it for toxicity, highlighting the challenges with responsible AI development.

- Proposed AI regulation in the US: The Center for AI Policy put forth a new proposal for a bill to regulate AI development in the US.

- Warning about AI startups: A PSA in /r/singularity warned about being cautious with startups that seem too good to be true, as some have questionable pasts tied to crypto.

Technical Discussions and Humor

- Building Mixture-of-Experts models: /r/LocalLLaMA shared a guide on how to easily build your own MoE language model using mergoo.

- Diffusion vs autoregressive models: /r/MachineLearning had a discussion comparing diffusion and autoregressive approaches for image generation and debating which is better.

- Fine-tuning GPT-3.5: /r/OpenAI posted a guide for fine-tuning GPT-3.5 for custom use cases.

- AI advancement memes: The community shared some humorous memes, including a “can’t wait” meme about the pace of AI progress, a meme about reversing aging in mice, and a cursed rave video meme.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

WizardLM-2 Release and Withdrawal

- WizardLM-2 Release: @WizardLM_AI announced the release of WizardLM-2, their next-generation state-of-the-art LLM family, including 8x22B, 70B, and 7B models which demonstrate highly competitive performance compared to leading proprietary LLMs.

- Toxicity Testing Missed: @WizardLM_AI apologized for accidentally missing the required toxicity testing in their release process, and stated they will complete the test quickly and re-release the model as soon as possible.

- Model Weights Pulled: @abacaj noted that WizardLM-2 model weights were pulled from Hugging Face, speculating it may have been a premature release or something else going on.

Reka Core Release

- Reka Core Announcement: @RekaAILabs announced the release of Reka Core, their most capable multimodal language model yet, which has a lot of capabilities including understanding video.

- Technical Report: @RekaAILabs published a technical report detailing the training, architecture, data, and evaluation for the Reka models.

- Benchmark Performance: @RekaAILabs evaluated Core on standard benchmarks for both text and multimodal, along with a blind third-party human evaluation, showing it approaches frontier-class models like Claude3 Opus and GPT4-V.

Open Source Model Developments

- Pile-T5: @arankomatsuzaki announced the release of Pile-T5, a T5 model trained on 2T tokens from the Pile using the Llama tokenizer, featuring intermediate checkpoints and a significant boost in benchmark performance.

- Idefics2: @huggingface released Idefics2, an 8B vision-language model with significantly enhanced capabilities in OCR, document understanding, and visual reasoning, available under the Apache 2.0 license.

- Snowflake Embedding Models: @SnowflakeDB open-sourced snowflake-arctic-embed, a family of powerful embedding models ranging from 22 to 335 million parameters with 384-1024 embedding dimensions and 50-56 MTEB scores.

LLM Architecture Developments

- Megalodon Architecture: @_akhaliq shared Meta’s announcement of Megalodon, an efficient LLM pretraining and inference architecture with unlimited context length.

- TransformerFAM: @_akhaliq shared Google’s announcement of TransformerFAM, where feedback attention is used as working memory to enable Transformers to process infinitely long inputs.

Miscellaneous Discussions

- Humanoid Robots Prediction: @DrJimFan predicted that humanoid robots will exceed the supply of iPhones in the next decade, gradually then suddenly.

- Captchas and Bots: @fchollet argued that captchas cannot prevent bots from signing up for services, as professional spam operations employ people to solve captchas manually for about 1 cent per account.

AI Discord Recap

A summary of Summaries of Summaries

1. New Language Model Releases and Benchmarks

-

EleutherAI released Pile-T5, an enhanced T5 model trained on the Pile dataset with up to 2 trillion tokens, showing improved performance across benchmarks. The release was also announced on Twitter.

-

Microsoft released WizardLM-2, a state-of-the-art instruction-following model that was later removed due to a missed toxicity test, but mirrors remain on sites like Hugging Face.

-

Reka AI introduced Reka Core, a frontier-class multimodal language model competitive with OpenAI, Anthropic, and Google models.

-

Hugging Face released Idefics2, an 8B multimodal model excelling in vision-language tasks like OCR, document understanding, and visual reasoning.

-

Discussions around model performance, sampling techniques like MinP/DynaTemp/Quadratic, and the impact of tokenization per a Berkeley paper.

2. Open Source AI Tools and Community Contributions

-

LangChain introduced a revamped documentation structure and saw community contributions like Perplexica (an open-source AI search engine), OppyDev (an AI coding assistant), and Payman AI (enabling AI agents to hire humans).

-

LlamaIndex announced tutorials on agent interfaces, a hybrid cloud service with Qdrant Engine, and an Azure AI integration guide for hybrid search.

-

Unsloth AI saw discussions on LoRA fine-tuning, ORPO optimization, CUDA learning resources, and cleaning the ShareGPT90k dataset for training.

-

Axolotl provided a guide for multi-node distributed fine-tuning, while Modular introduced mojo2py to convert Mojo code to Python.

-

CUDA MODE shared lecture recordings, with focuses on CUDA optimization, quantization techniques like HQQ+, and the llm.C project for efficient kernels.

3. AI Hardware and Deployment Advancements

-

Discussions around Nvidia’s potential early RTX 5090 launch due to competitive pressure and the anticipated performance gains.

-

Strong Compute announced grants of $10k-$100k for AI researchers exploring trust in AI, post-transformer architectures, new training methods, and explainable AI, with GPU resources up for grabs.

-

Limitless AI, previously known as Rewind, introduced a wearable AI device, sparking discussions around data privacy, HIPAA compliance, and cloud storage concerns.

-

tinygrad explored cost-effective GPU cluster setups, MNIST handling, documentation improvements, and enhancing the developer experience as it transitions to version 1.0.

-

Deployment insights like packaging custom models into llamafiles, running CUDA on consumer hardware, and converting models from ONNX to WebGL/WebGPU using tinygrad.

4. AI Safety, Ethics, and Societal Impact Debates

-

Discussions around the ethical implications of AI development, including the need for safety benchmarks like ALERT to assess potentially harmful content generation by language models.

-

Concerns over the spread of misinformation and unethical practices, with mentions of a potential AI scam advertised on Facebook called Open Sora.

-

Debates on finding a balance between AI capabilities and societal expectations, with some advocating for creative freedom while others prioritize safety considerations.

-

Philosophical exchanges comparing the reasoning abilities of AI systems to humans, touching on aspects like independent decision-making, emotional intelligence, and the neurobiological underpinnings of language comprehension.

-

Emerging legislation targeting deepfakes and the creation of explicit AI-generated content, prompting discussions around enforcement challenges and intent considerations.

5. Misc

-

Excitement and Speculation Around New Models: There was significant buzz and discussion around the release of new AI models like Pile-T5 from EleutherAI, Idefics2 8B from Hugging Face, Reka Core from Reka AI, and WizardLM 2 from Microsoft (despite its mysterious takedown). The AI community eagerly explored these models’ capabilities and training approaches.

-

Advancements in Multimodal AI and Diffusion Models: Conversations highlighted progress in multimodal AI with models like IDEFICS-2 showcasing advanced OCR, visual reasoning and conversational abilities. Research into diffusion models for video generation (Lilian Weng’s blog post) and the significance of tokenization in language modeling (UC Berkeley paper) also garnered interest.

-

Tooling and Frameworks for Model Development: Discussions covered various tools and frameworks for AI development, including Axolotl for multi-node distributed fine-tuning, LangChain for building LLM applications, tinygrad for efficient deep learning, and Hugging Face’s libraries like parler-tts for high-quality TTS models.

-

Emerging Platforms and Initiatives: The AI community took note of various emerging platforms and initiatives such as Limitless (rebranded from Rewind) for personalized AI, Cohere Compass beta for multi-aspect data search, Payman AI for AI-to-human task marketplaces, and Strong Compute’s $10k-$100k grants for AI research. These developments signaled an expanding ecosystem for applied AI.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion 3: A Legend or Reality?: The release date for Stable Diffusion 3 (SD3) has turned into folklore within the community, with speculated dates like “April 26” and “April 69” reflecting excitement and sarcasm about its long-awaited arrival.

- Animating Images Becomes Comfier: Engineers are exchanging tips for image animation, pointing towards ComfyUI workflows and Stability Forge while mentioning challenges in running models directly via Python, highlighting a need for simpler animation APIs.

- Pixel Art Gets a Realistic Touch: There’s a buzz about turning pixel art into realistic images using models like SUPIR, available on Hugging Face, and enhancing pixelated images using img2img controlnet solutions like Fooocus or Magic Image Refiner.

- Prompt Engineering Debate: Prompt crafting best practices were discussed, with some arguing that expertise is not necessary and using services like Civitai can provide solid prompting baselines; discussions around WizardLM-2 were redacted due to untested toxicity concerns.

- AI Utopia or Dystopia?: Casual conversations veered into envisioning a future saturated with advanced AI applications, from style conversion in gaming to AI-assisted brain surgery, interspersed with jokes about an “AI University for prompt engineers” and tongue-in-cheek product names like “Stable Coin.”

Unsloth AI (Daniel Han) Discord

Benchmark Bonanza: Engineers shared positive feedback on a first benchmark’s results, praising its performance. There was also a conversation around extracting tokenizer.chat_template for model template identification in leaderboards.

Progressive Techniques in LoRA Tuning: Community members exchanged tips on LoRA fine-tuning, suggesting that the alpha parameter to tweak could be double the rank. They discussed ORPO’s resource-optimization in model training and discouraged the use of native CUDA, advocating for Triton instead for learning and development benefits.

Data Hygiene Takes Center Stage: The ShareGPT90k dataset was presented in a cleaned and ChatML format to facilitate training with Unsloth AI, and users highlighted the key role of data quality in model training, alluding to a community preference for hands-on experimentation in learning model training approaches.

Collaboration and Contributions on the Rise: Open calls for contributions to Unsloth documentation and projects such as Open Empathic were made, indicating a receptive attitude toward community involvement. A member announced the development of an “emotional” LLM and collaboration with a Chroma contributor on libSQL and WASM integration.

Navigating Unsloth’s Notebook Nuggets: Assistance with formatting personal messages for AI training was given, complete with a Python script link and a guide to use the ShareGPT format. Advice on packing and configurations for Gemma models were discussed to mitigate unexpected training issues.

Modular (Mojo 🔥) Discord

Bold Python Package Sets to Conquer Mojo Code: The creation of mojo2py, a Python package to convert Mojo language code into Python, indicates a trend toward developing tools for Python and Mojo interoperability.

Grammar Police Tackle Code Aesthetics: Engaging discussions highlighted the importance of indenting code, considered laughable yet significant for readability, and there was a sense of light-hearted camaraderie over code formatting conventions.

Accolades for Achieving Level 9 in Modular: A community member was congratulated for reaching level 9, indicating a point system or achievement metric within the Modular community.

Modular Tweets Tease the Tech-Savvy: A series of mysterious tweets from Modular sparked speculation and interest among the community, serving as an intriguing marketing puzzle.

Nightly Updates Kindle Community Interest: A fresh Mojo nightly update was announced, directing engineers to update their version to nightly/mojo and review the latest changes and enhancements detailed on GitHub’s diff and the changelog.

Perplexity AI Discord

Billing Confusion and API Misalignments: Users express dissatisfaction with unexpected charges and discrepancies between Perplexity AI and API usage, pointing to instances where promo codes don’t appear and seeking an understanding of parameters such as temperature for consistent results between different platforms.

Pro Feature Puzzlement: Changes to the Pro message counter in Perplexity AI led to mixed reactions, with some users enjoying “reduced stress” but others questioning the rationale behind such feature tweaks.

Model Performance Scrutiny: A divergence in opinion emerges on AI coding competencies, with GPT-4 seen as inadequate by some users, while others ponder the delicate trade-offs between various Perplexity models’ abilities and performance.

Cultural Curiosity and Tech Talk: The community engages in a range of searches, from probing Microsoft’s ad-testing endeavors to celebrating global cultural days, reflecting an eclectic mix of technical and creative interests.

API Result Inconsistencies Provoking Discussions: Queries in the community focus on aligning outcomes from Perplexity Pro and the API, with an undercurrent of worries about hallucinations and source credibility in the API’s content.

LM Studio Discord

Windows Cleared for Model Takeoff: Responding to queries, members confirmed that the Windows executables for LM Studio are signed with an authenticode certificate and discussed the cost differences between Windows certificates and Apple developer licenses, with the former requiring a hardware security module (HSM).

The Trouble with VRAM Detection: Users reported errors related to AMD hardware on Intel-based systems in Linux, despite attempts to solve the issue with ocl-icd-opencl-dev. It led to a broader discussion about hardware misidentification and the challenges it poses in configurations.

WizardLM-2 Sharpens Its Conversational Sword: The WizardLM 2 7B model was praised for its ability in multi-turn conversations and its training methods, with its availability announced on Hugging Face. The WaveCoder ultra 6.7b was also recognized for its coding prowess following fine-tuning on Microsoft’s CodeOcean.

Model Showdown: Users shared performance experiences with models like WizardLM-2-8x22B and Command R Plus, voicing mixed reactions. They exchanged views on what defines a “Base” AI model and the nuances of model fine-tuning and continuous learning, sparking debates over AI memory and bias.

Diverse Coding Prowess Under the Microscope: Within the guild, members delved into Python coding model capabilities, like Deepseek Coder and Aixcoder, urging others to check ‘human eval’ scores. Skepticism was expressed over claims about WaveCoder Ultra’s superiority, with some implying exaggerated results, while discussions on model fine-tuning and quantization illuminated varying preferences for coding models and AI agent creation tools.

Nous Research AI Discord

-

Engineers Tackle Tokenization Trouble: An engineer experienced difficulties with tokenized outputs for end-of-sequence predictions using llama.cpp with the OpenHermes 2.5 Mistral 7B model on Hugging Face and sought advice on resolving the issue.

-

Tech Titans’ AI Tools Scrutinized: Users compared Reka AI’s Core model with GPT-4V, Claude-3 Opus, and Gemini Ultra in a showcase and discussed Google’s CodecLM, which aims for high-quality synthetic data generation for language model alignment.

-

Innovation or Hype? Open AI Models Excite but Confuse: Despite the enthusiastic downloading of WizardLM-2 before its takedown, confusion remained about its removal, while new models like CodeQwen1.5 promise enhanced code generation in 92 languages, and Qwen’s 7B, 14B, and 32B models are mentioned for their benchmark scores.

-

Breaking Binary Boundaries: Discussion of a binary quantization-friendly AI model on Hugging Face sparked interest due to memory-efficient int8 and binary embeddings, with calculation methods like XOR operations for embedding distance, cited from Cohere’s blog post.

-

Game Design Meets Quantum Probability: Enthusiasm about WorldSim’s potential to revolutionize game development is evident, with conversations about using LLMs in future to affect in-game variables and content creation, flavoring it with undertones of AI-assisted omnipotence.

OpenRouter (Alex Atallah) Discord

-

WizardLM Models Magically Appear on OpenRouter: The WizardLM-2 8x22B and WizardLM-2 7B models from Microsoft have been added to OpenRouter, with the former’s cost now at $0.65/M tokens. Several members have initiated a thread to discuss the implications of this addition.

-

Intermittent Latency Looms Over Users: There were reports of high latency issues affecting models like Mistral 7B Instruct and Nous Capybara 34b, with the problem traced back to an upstream issue with a cloud provider’s DDoS protection. Further complications have led to said provider being deranked to alleviate concerns, and global users are being called upon to report their experience with the issue.

-

Rubiks.ai Rolls Out Beta with Big Name Models: A new AI platform, Rubiks.ai, is courting beta testers with the offer of 2 months free premium access and the chance to experiment with models such as Claude 3 Opus, GPT-4 Turbo, and Mistral Large. Users facing account-related issues are advised to send feedback directly to the developers.

-

Falcon 180B Soars With GPU Thirst: The hefty GPU memory requirement of around 100GB for the Falcon 180B Chat GPTQ got the community talking about both the model’s resource intensity and its potential usage considerations, underlined by a link to the Falcon 180B repository.

-

Cost-effective Communication & Model Responsiveness Advice: In a nod to efficient model usage, it was proposed that an average of 1.5 tokens per word could be a benchmark for cost calculation. Separate discussions highlighted positive attributes of the Airoboros 70B’s prompt compliance, contrasting it with less consistent models.

CUDA MODE Discord

PyTorch Book Still Flares Interest: Despite being 4 years old, “Deep Learning with PyTorch” is seen as a useful foundation for PyTorch fundamentals, while chapters on transformers, LLMs, and deployment are dated. Anticipation grows for a new edition to cover recent advancements.

Torch and CUDA Grapple with Optimization: Understanding and implementing custom backward operations in Llama exhibit challenges for AI engineers, while the use of torch.nn.functional.linear and the stable-fast library are leading discussions for optimizing inference in the CUDA environment.

Novel Approaches in Transcript Processing: An automated transcript for a CUDA talk utilizing cutting-edge tools is provided by Augmend Replay, offering the AI community OCR and segmentation features for video content analysis.

Quantum Leaps with HQQ and GPT-Fast: Significant strides in token generation speeds are observed after implementing torchao int4 kernel in the generation pipeline for transformers, rising to 152 tokens/sec. The HQQ+ method also marked an accuracy increase, spurring discussions around quantization axis and integration with other frameworks.

llm.C at the Forefront of CUDA Exploration: The llm.C project ignites discussions on CUDA optimizations, underscoring the balance between education and creating efficient kernels. Optimizations, profiling, potential strategies, and applicable datasets all jostle for attention in this growing space.

Eleuther Discord

-

Pile-T5 Reveals Impressive Benchmarks: EleutherAI introduced Pile-T5, a T5 model family trained on the Pile with up to 2 trillion tokens and showing improved performance on language tasks with the new LLAMA tokenizer. Key resources include a blog post, GitHub repository, and a Twitter announcement.

-

Tackling the Temporal Challenge in Video Diffusion: Discussions in the research channel touched on the complexities of video synthesis using diffusion models, with participants referring to a post on diffusion models for video generation and deliberating on the importance of tokenization in language models, as outlined in a Berkeley paper.

-

LLM Evaluation Continues to Evolve: Within the lm-thunderdome channel, there were insights into OpenAI’s public release of GPT-4-Turbo’s evaluation implementations on GitHub, and enhancement of

lm-evaluation-harnesswith new benchmarks such asflores-200andsib-200. -

Token Management Under the Microscope: The gpt-neox-dev channel broached technical issues such as the effect of weight decay on dummy tokens, the necessity of sanity checks after model adjustments, and token encoding behaviors with shared code outputs demonstrating token transformations.

-

Debates on Model Architecture Efficiency: Active discussions contrasted dense models with Mixture-of-Experts (MoE), debated their efficiency, inference cost, and constraints, showing the ongoing quest for optimizing language model architectures.

OpenAI Discord

-

Brains and Bots: Members shared interest in Angela D. Friederici’s book, Language in Our Brain: The Origins of a Uniquely Human Capacity, sparking dialogue on the neurobiological underpinnings that differentiate humans and AI in language capacities. The discussions stressed the challenge in neuroscience of handling the ‘data glut’ from Big Brain Projects and the proprietary hurdles that hinder data interpretation.

-

AI Limitations and Liberties: In a look at the contrast between AI and humans, it emerged that artificial systems are yet to match human-like storage of learned information, independent decision-making abilities, and emotional responses. The reference to Claude 3 API’s accessibility issues in Brazil underscored the geographical nuances in reaching AI tools.

-

Chatbots Grapple with GPT Constraints: Despite advances, the GPT’s context window remains a critical concern, with GPT-3’s API permitting a 128k context but ChatGPT itself constrained to 32k. Mechanisms like “retrieval” through document upload were demystified, allowing extension of the effective context window within the API’s framework.

-

Discovering the Depths of Turing Completeness: A spirited debate arose about the Turing completeness of Magic: The Gathering, suggesting that the concept extends its reach well beyond traditional computational systems.

-

Clouded Queries and Cryptic Replies: Prompt Engineering and API Discussions channels surfaced brief and ambiguous exchanges about an unidentified competition and cryptic one-word responses such as “buzz” and “light year,” underscoring the occasional opacity in dialogue within technical forums.

LlamaIndex Discord

Tutorial Treasure Trove: LlamaIndex announced an introductory tutorial series for agent interfaces and applications, aiming to clarify usage of core agent interfaces. In collaboration, LlamaIndex and Qdrant Engine introduced a hybrid cloud service offering, and a new tutorial was shared highlighting the integration of LlamaIndex with Azure AI to leverage hybrid search in RAG applications, crafted by Khye Wei from Microsoft found here.

AI Chat Chops: Within the LlamaIndex community, discussion ranged from implementing async compatibility with Claude in Bedrock (where async has not yet been implemented) to complex query construction help available in the documentation. Integration issues with gpt-3.5-turbo and LlamaIndex were likely related to outdated versions or account balances, and configuring fallbacks for decision-making with incomplete data remains an open challenge.

Reasoning Chains Revolution: Revealing advancements in reasoning chain integration with LlamaIndex, a key article titled “Unlocking Efficient Reasoning” can be found here. Solutions for token counting in RAGStringQueryEngine and hierarchical document organization in LlamaIndex were discussed in detail, with the community providing a concrete token counter integration guide involving a TokenCountingHandler and CallbackManager as per LlamaIndex’s reference documentation.

LAION Discord

Hugging Face Rings in New TTS Library: A high-quality TTS model library, parler-tts, for both inference and training was showcased, bolstered by its hosting on Hugging Face’s community-driven platform.

Scaling Down CLIP – Less Data, Equal Power: A study on CLIP demonstrates that strategic data use and augmentation can allow smaller datasets to match the performance of the full model, introducing new considerations for data-efficient model training.

Deepfakes – Legislation Incoming, Controversies Continue: The community debated newly proposed laws against deepfakes as well as unethical practices in AI, raising awareness about a potential scam promoted through a suspicious site advertised on Facebook, found here.

Safety Benchmarking Becomes ALERT: Discussion on the importance of safety in AI highlighted the release of the ALERT benchmark, designed to evaluate large language models for handling potentially harmful content and reinforcing conversations around safety versus creative freedom.

Audio Generation Advancements on the Horizon: Research involving the Tango model to enhance text-to-audio generation shed light on improvements in relevance and order of audio events, marking progress for audio generation from text in data-scarce setups.

HuggingFace Discord

-

IDEFICS-2 Shines in Multimodal Processing: The recently released Idefics2 enhances multimodal capabilities, excelling in tasks such as image and text sequence processing, and is set to get a chatty variant for conversational interaction. When probed, demonstrations of its capabilities like decoding CAPTCHAs with heavy distortion were highlighted.

-

Diverse AI Insights and Queries: Community members have raised various topics ranging from BLIP model fine-tuning for long captions, musical AI projects like

.bigdookie'sinfinite remix GitHub repo, and the usage of Java for image recognition outlined in a Medium article. Discussions also cover best practices for collaborative work on HuggingFace and survey participation from machine learning practitioners. -

Unlock the Potential of BERTopic: AI engineers engaged in deep dives into frameworks like BERTopic, which revolutionizes topic modeling through the use of transformers. It’s lauded for its performance and versatility, with guides like the BERTopic Guide assisting users in navigating its myriad capabilities for structured topic extraction.

-

Clarifying NLP and Diffusion Model Confusions: Clarifications were sought for NLP tensor decoding using T5 models and LoRA configurations, while questions about the differences between LLMs and embedding models, and issues with token limits in diffusion models were also discussed. A community member flagged a warning regarding token truncation when using stable diffusion models, referring to an open GitHub issue.

-

Vision and NLP Model Optimization Efforts: Engineers have shown interest in tuning models for specific use cases, such as a vision model for low-resolution image captioning and the potential use of advanced taggers for SDXL. Similarly, advice is sought for preparing a dataset for fine-tuning a ROBERTA Q&A chatbot and utilizing models like spaCy and NLTK for getting started in NLP.

Cohere Discord

Command-R Struggles with Macedonian: Discussions flagged that Command-R doesn’t perform well in Macedonian, with concerns raised on the community-support channel. Issues raised highlight the need for multilingual model improvements.

Asynchronous Streaming with Command-R: Engineers queried the best practices for converting synchronous code to asynchronous in Python, aiming to enhance the efficiency of chat streaming with the Command-R model.

Trial API Limits Clarified: For Cohere’s API, engineers discovered that the ‘generate, summarize’ endpoint has a limit of 5 calls per minute, while other endpoints permit 100 calls per minute, with a shared pool of 5000 calls per month for all trial keys.

Commander R+ Gains Traction: A discussion took root around accessing Commander R+ using Cohere’s paid Production API, highlighting existing documentation for potential subscribers.

Rubiks.ai Introduces AI Powerhouse: Engineers took note of the launch of Rubiks.ai, which offers a suite of models including Claude 3 Opus, GPT-4 Turbo, Mistral Large, and Mixtral-8x22B, with an introductory offer of 2 months of premium access on Groq servers.

OpenAccess AI Collective (axolotl) Discord

Deepspeed’s Multi-node Milestone: A guide for multi-node distributed fine-tuning using Axolotl with Deepspeed 01 and 02 configurations was shared. The pull request outlines steps to address configuration issues.

Idefics2 Raises the Bar: The newly released Idefics2 8B on Hugging Face surpasses Idefics1 in OCR, document understanding, and visual reasoning with fewer parameters. Access the model on Hugging Face.

Pacing for RTX 5090’s Big Reveal: Anticipation builds for Nvidia’s upcoming RTX 5090 graphics card, speculated to debut at the Computex trade show. This early release may be fueled by competitive pressure as discussed on PCGamesN.

Gradient Accumulation Spotlighted: Queries on gradient accumulation’s memory conservation in the context of sample packing and dataset length led to explorations of its impact on training time.

Streamline Model Saving with Axolotl: Configuring Axolotl to save models only upon training completion rather than after each epoch involves setting save_strategy to "no". Additionally, “TinyLlama-1.1B-Chat-v1.0” was recommended for tight computational spaces, with its setup in the examples/tiny-llama directory of Axolotl’s repository.

Latent Space Discord

Rewound Now Unbound as Limitless: The wearable tech previously referred to as Rewind has been rebranded to Limitless, sparking a discussion about its real-time application potential and the implications for future AI advancements. Concerns regarding data privacy and HIPAA compliance for cloud-stored information were vocalized by members.

The Birth of Reka Core: Reka Core enters the chat as a multimodal language model that comprehends video. The community appears intrigued by the small team achievement in AI democratization and the technical report released at publications.reka.ai.

Cohere Compass Beta Steers In: Cohere’s Compass Beta was unveiled as a next-level data search system, meriting discussion around its embedding model and the beta testing opportunities for applicants eager to explore its functional boundaries.

Payman AI Explores AI-Human Marketplaces: Payman AI piqued interest with its innovative concept of a marketplace where AI can hire humans, driving conversations around implications for data generation and advancing AI training methodologies.

Strong Compute Serves Resources on Silver Platter: Strong Compute revealed a grant program for AI researchers, dangling the carrot of $10k-$100k and substantial GPU resources for initiatives in Explainable AI, post-transformer models, and other groundbreaking areas, with a swift application deadline signaled by the end of April. Details on the offer and the application process were outlined at Strong Compute research grants page.

OpenInterpreter Discord

AI Innovation Storm Brewing: The OpenInterpreter community launched a brainstorming space to ideate on uses of the platform, focusing on features, bugs, and innovative applications.

Voice Communication Soars with Airchat: There’s a buzz around Airchat within the community as engineers exchange usernames and scrutinize its features and usability, signaling a growing interest in diverse communication platforms.

Open Source AI Generates Excitement: Opensource AI models, notably WizardLm2, are receiving attention for providing transparent access to powerful AI capabilities akin to GPT-4, highlighting community interest in open-source alternatives.

Navigating the 01 Pre-order Process: For those reconsidering their 01 pre-orders, they can easily cancel by reaching out to [email protected], and there’s growing discussion on Windows 11 installation woes and hardware compatibility improvisations using parts from AliExpress.

Linux Love for OpenInterpreter: Linux users are directed to rbrisita’s GitHub branch, agglomerating all the latest PRs for the 01 device, and the community is also optimizing their 01 setups with custom designs and battery life improvements.

LangChain AI Discord

-

LangChain Documentation Revamp Requesting Feedback: LangChain engineers have outlined a new documentation structure to better categorize tutorials, how-to guides, and conceptual information, to improve user navigation across resources. Feedback is sought, and an introduction to LangChain has been made available, detailing its application lifecycle process for large language models (LLMs).

-

Parallel Execution and Azure AI Conflict Solving: Technical discussions confirmed that LangChain’s

RunnableParallelclass allows for concurrent execution of tasks, with reference to Python documentation for parallel node running. Meanwhile, solutions are being exchanged on issues withneofjVectorIndexandfaiss-cpu, including LangChain version rollbacks and branch switches. -

Innovations and Announcements Flood LangChain: A series of project updates and community exchanges highlighted advancements such as improved Rag Chatbot performance via multiprocessing, the introduction of Perplexica as a new AI-driven search engine, and the launch of tools like Payman for AI-to-human payments, viewable at Payman.ai. Other announcements included GalaxyAI’s free premium model access, OppyDev’s AI-assisted coding tool (oppydev.ai), and a call for beta testers for Rubiks AI’s research assistant with perks (rubiks.ai).

-

Channeling AI for RBAC Implementation and YC Aspirations: Specific discussions touched on implementing role-based access control (RBAC) within LangChain for large organizations and gauging the landscape for finetuning models for YC applications, indicating both challenges and existing companies like Holocene Intelligence in the space.

-

Nurturing AI with Memory and Collaborative Efforts: Shared knowledge included a video on crafting AI agents with long-term memory and a call for collaboration in integrating LangServe with Nemo Guardrails, suggesting a need for a new output parser due to updates. Community members also explored payment-enabled AI recommendations and document processing concerns, all hinting at an emphasis on shared growth and collaborative experimentation.

tinygrad (George Hotz) Discord

-

Budget-Friendly GPU Clusters: Engineers discussed a cost-effective alternative to TinyBox using six RTX 4090 GPUs, resulting in up to a 61.624% cost reduction compared to the $25,000 TinyBox model. The emphasis was on achieving 144 GB of GPU RAM within a budget.

-

A Potential BatchNorm Bug: George Hotz called for a test case to investigate a potential bug in tinygrad’s batchnorm implementation, following a user’s concern about the order of operations involving

invstdandbias. -

Navigating Tinygrad’s Documentation Work: Participants recognized the need to enhance tinygrad documentation, with ongoing efforts to make strides towards more comprehensive guides, particularly as the system evolves from version 0.9 to 1.0.

-

Strategies for Model Conversion: Users are exploring ways to convert models from ONNX to WebGL/WebGPU efficiently, targeting memory optimization by potentially leveraging tinygrad’s

extras.onnxmodule, as indicated by interest in Stable Diffusion WebGPU examples. -

Improving Tinygrad Development Experience: The community suggested increasing the line limit for merging NV backends to 7,500 lines, as seen in a recent commit, to balance codebase inclusiveness and quality, while addressing experiences of error comprehensibility.

Interconnects (Nathan Lambert) Discord

AI Models Flood the Market: EleutherAI has introduced the Pile-T5 with details shared in a blog post, while WizardLM 2 is drawing interest with its foundation transformer tech and guide on WizardLM’s page. Additionally, Reka Core breaks onto the scene as explained in its technical report, and Idefics2’s debut is narrated on the Hugging Face blog, amid Dolma going open-source under an ODC-BY license.

Graph Love and Hefty Models Emit Buzz: The community is showing keen interest in turning sophisticated graphs into a Python library for model exploration, while expressing mixed reactions to LLAMA 3’s massive training scale of 30 trillion tokens.

WizardLM Vanishes with Abrupt Apology: Tension rose with the unexplained removal of WizardLM, with its model weights and posts erased, prompting speculation and an apology from WizardLM AI over a missed toxicity test, and a potential re-release in the pipeline.

Exploration vs. Intervention: A member considers whether to leave a bot to its own learning process or to step in, illustrating the fine line between letting algorithms explore and manual intervention.

Datasette - LLM (@SimonW) Discord

-

Debate on Data Annotation Necessity: In a recent discussion, participants explored whether the traditional practice of dataset annotation prior to training models is still critical given the rise of advanced LLMs. They pondered if in-depth understanding of datasets remains important or models can sufficiently learn patterns independently.

-

Transparency in LLM Demos Demanded: Dissatisfaction was voiced over LLM demos that lack open prompts, with users favoring clear insight into the model behavior to achieve desired outcomes without guesswork. Concerns were also raised about models inconsistently following privacy directives during tasks such as indexing sensitive information.

-

Streamlit Eases LLM Log Browsing: An LLM web UI for more user-friendly navigation of log data has been created using Streamlit, with an aim for simpler revisiting of past chats compared to Datasette. The interface currently supports log browsing and the creator provided the initial code via a GitHub gist.

-

Call for Interface Integration Ideas: Following the showcase of the web UI prototype, discussion ensued regarding the possibility of its integration either as a Datasette plugin or as a standalone tool, pondering the practicality and long-term utility of such enhancements.

-

Quest for a Consistent Indexing Tool: Exchanges highlighted the unpredictability of language models in handling tasks like newspaper indexing, particularly with models refusing to list names in adherence to privacy norms. The conversation underscored the need for more reliable tools and noted reaching out to Anthropic for assistance with model refusals.

Alignment Lab AI Discord

-

WizardLM2 Disappears from Hugging Face: The WizardLM2 collection on Hugging Face has vanished, and a collection update shows all models and posts are now missing. A direct link to the update is provided here: WizardLM Collection Update.

-

Potential Legal Concerns for WizardLM2: There’s an unconfirmed question circulating about whether the removal of WizardLM2 is due to legal concerns, though no further information or sources are cited to clarify the nature of these potential issues.

-

Rush for WizardLM2 Resources: The community is actively seeking anyone who might have downloaded the WizardLM2 weights prior to their deletion.

-

Evidence of WizardLM2’s Erroneous Deletion: A community member shared a screenshot that provides evidence that WizardLM2 was deleted as a result of improper testing. The screenshot can be viewed here: WizardLM2 Deletion Confirmation.

DiscoResearch Discord

LLama-Tokenizer Training Troubles: Engineering members shared challenges in training a Llama-tokenizer with the goal of achieving hardware compatibility via reduced embedding and output perceptron sizes. They explored scripts like convert_slow_tokenizer.py from Hugging Face and convert.py from llama.cpp to aid in the process.

Hunt for EU Copyright-Compliant Resources: There’s an active quest to find text and multimodal datasets compatible with EU copyright laws for training a multimodal model. Suggestions for starting points included Wikipedia, Wikicommons, and CC Search to gather permissive or free data.

Sampling Strategies Examined: Discourse in the engineering circles revolved around decoding strategies for language models, emphasizing the need for academic papers to include modern methods like MinP/DynaTemp/Quadratic Sampling. A shared Reddit post offers a layman’s comparison, while the conversation called for more rigorous research into these strategies.

Decoding Methodology Deserves a Closer Look: An examination of decoding methods in LLMs has exposed a gap in current literature, specifically related to open-ended tasks seen in operational models. Members expressed the need for in-depth research on advanced sampling methods and their impacts on model performance.

Creative Writing Boost with MinP Sampling: A notable performance boost in creative writing tasks was highlighted, with the alpaca-eval style elo score increasing by +8 and the eq-bench creative writing test seeing a +10 increment due to min_p sampling parameters. Such improvements signify the potential impacts of fine-tuning sampling strategies on LLM outputs.

Skunkworks AI Discord

-

NYC Event for Scaling Gen AI Apps: A General AI enthusiast meetup at Gunderson Legal in New York City will focus on scaling Gen AI applications to production stages. The event details and registration link are available here, alongside a note of participation by industry leaders from Portkey and Noetica.

-

Reka Core Emerges as a Strong Contender: A new video titled “Reka Core: A Frontier Class Multimodal Language Model” showcases Reka Core holding its own against competing models by OpenAI, Anthropic, and Google, highlighted in a YouTube video.

-

JetMoE-8B Achieves Cost-Efficient Superiority: The JetMoE-8B model, developed on a budget under $0.1 million, reportedly excels past Meta AI’s LLaMA2-7B, a model created with significantly larger wealth, as revealed in a YouTube video.

Mozilla AI Discord

- Packaging Custom Models Just Got Easier: A community member’s request for guides on packing customized AI models into a llamafile was noted, aiming to support peers in their endeavors.

- Docker Deployment Demystified: A GitHub pull request provided walkthrough steps for engineers to build and publish containers to Docker Hub using GitHub Actions, complete with necessary setting up of repository secrets like

DOCKER_HUB_USERNAMEandDOCKER_HUB_ACCESS_TOKEN. Publish container to Docker Hub.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (1015 messages🔥🔥🔥):

- In Search of the Elusive SD3: The anticipated launch of Stable Diffusion 3 (SD3) remains a topic of speculation with various members inquiring about its release date, and many whimsically suggesting that it’s a myth with satirical estimates like “April 26” or “April 69.”

- Choosing an Image to Video Workflow: For those wanting to animate images, resources like ComfyUI workflows and Stability Forge are recommended. Some users experience difficulties running models directly in Python and seek advice on simple APIs for animation.

- Stable Diffusion with Different Technologies: Discussion threads touched upon various aspects of Stable Diffusion and related AI advances such as pixel art conversion to realistic images with models like SUPIR, and the transformation of pixelated images using img2img controlnet-based solutions like Fooocus or Magic Image Refiner.

- Prompt Crafting and Model Discussion: Users debate prompt engineering, with suggestions that you don’t need to be an expert or take a course to craft an effective prompt; using platforms like civitai can give decent prompting baselines. New advancements like WizardLM-2 briefly appear before being deleted for untested toxicity.

- Casual Banter and AI Future Musings: The community casually jokes about “Stable Coin,” “Stable Miner,” and “university for prompt engineers,” while also envisioning a world with advanced AI technologies like game style conversions and AI brain surgery, reflecting both humour and aspirational hopes for AI development.

Links mentioned:

- Video Examples: Examples of ComfyUI workflows

- no title found: no description found

- camenduru/SUPIR · Hugging Face: no description found

- Perturbed-Attention-Guidance Test | Civitai: A post by rMada. Tagged with . PAG (Perturbed-Attention Guidance): https://ku-cvlab.github.io/Perturbed-Attention-Guidance/ PROM...

- TikTok - Make Your Day: no description found

- Reddit - Dive into anything: no description found

- WizardLM/WizardLM-2 at main · victorsungo/WizardLM: Family of instruction-following LLMs powered by Evol-Instruct: WizardLM, WizardCoder - victorsungo/WizardLM

- Developer Recruiters GIF - Developer Recruiters Programmer - Discover & Share GIFs: Click to view the GIF

- Consistent Cartoon Character in Stable Diffusion | LoRa Training: In this video, I will try to make a consistent cartoon character by training a Lora.*After Detailer* ➜ https://github.com/Bing-su/adetailer*Kohya SS GUI* ➜ h...

- Is it possible to use Flan-T5 · Issue #20 · city96/ComfyUI_ExtraModels: Would it be possible to use encoder only version of Flan-T5 with Pixart-Sigma? This one: https://huggingface.co/Kijai/flan-t5-xl-encoder-only-bf16/tree/main

- Reddit - Dive into anything: no description found

- How To Install Stable Diffusion (In 60 SECONDS!!): Here's the easiest way to install Stable Diffusion AI locally FAST - Big Shout-Outs to Le-Fourbe for walking me through the process!!GitHub Link here:https:/...

- GitHub - BatouResearch/magic-image-refiner: Contribute to BatouResearch/magic-image-refiner development by creating an account on GitHub.

- GitHub - QuintessentialForms/ParrotLUX: Pen-Tablet Painting App for Open-Source AI: Pen-Tablet Painting App for Open-Source AI. Contribute to QuintessentialForms/ParrotLUX development by creating an account on GitHub.

- GitHub - kijai/ComfyUI-SUPIR: SUPIR upscaling wrapper for ComfyUI: SUPIR upscaling wrapper for ComfyUI. Contribute to kijai/ComfyUI-SUPIR development by creating an account on GitHub.

- GitHub - AUTOMATIC1111/stable-diffusion-webui: Stable Diffusion web UI: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- GitHub - city96/ComfyUI_ExtraModels: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs - city96/ComfyUI_ExtraModels

- Comfy Workflows: Share, discover, & run thousands of ComfyUI workflows.

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

- Amazon.com: NVIDIA H100 Graphics Card, 80GB HBM2e Memory, Deep Learning, Data Center, Compute GPU : Electronics: no description found

Unsloth AI (Daniel Han) ▷ #general (430 messages🔥🔥🔥):

-

Complications in Notebook Implementation: Members discussed the complexity of a certain task, mentioning they will need to create a detailed notebook for it. The process was acknowledged as complicated for both the members involved and the users.

-

Confusion over Crypto and TGE: A user asked about “mainnet” and “TGE” dates, leading to confusion among the chat participants. It was clarified that Unsloth AI is not associated with cryptocurrency.

-

Troubleshooting Technical Issues with Unsloth: Users faced issues with getting continuous outputs and package errors. It was suggested to use end-of-string markers (

</s>) to limit model generation, and members were advised to follow the Colab notebooks provided by Unsloth, which contain pre-configured settings. -

Discussions about Upcoming Model Releases: There were anticipations about potential new model releases, including discussions on “llama 3” and the difference between various Unsloth optimization tactics. Users shared resources and engaged in speculation based on reputation and past announcements, indicating a mix of excitement and nervousness about the potential workload a new release could bring to the team.

-

Contributions and Contributions to Unsloth: Individuals expressed interest in both contributing to Unsloth documentation and making a one-time financial contribution. It was mentioned that contributions focused on expanding Unsloth’s Wiki, particularly regarding Ollama/oobabooga/vllm, would be valuable, and the team expressed openness to community involvement in improving their documentation.

-

Surrounding Controversy and Reupload of WizardLM: A notable incident was discussed concerning the re-upload of WizardLM versions on various platforms following the original release getting pulled due to a missed toxicity test. Multiple users exchanged information about the reuploads and the reasons for the original takedown.

Links mentioned:

- WizardLM 2: SOCIAL MEDIA DESCRIPTION TAG TAG

- screenshot: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- alpindale/WizardLM-2-8x22B · Hugging Face: no description found

- Google Colaboratory: no description found

- lucyknada/microsoft_WizardLM-2-7B · Hugging Face: no description found

- Google Colaboratory: no description found

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- twitter.co - Domain Name For Sale | Dan.com: I found a great domain name for sale on Dan.com. Check it out!

- Full fine tuning vs (Q)LoRA: ➡️ Get Life-time Access to the complete scripts (and future improvements): https://trelis.com/advanced-fine-tuning-scripts/➡️ Runpod one-click fine-tuning te...

- GitHub - cognitivecomputations/OpenChatML: Contribute to cognitivecomputations/OpenChatML development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #random (6 messages):

- Coding an Emotional LLM: ashthescholar. indicated that they are working on the skeleton for an “emotional” large language model (LLM) and are about to start coding, looking forward to sharing their progress.

- Syncing Up With Chroma’s Roadmap: lhc1921 shared their plans to work on an edge version of Chroma with libSQL (SQLite) in Go and WASM, partially inspired by another member’s strategy on making on-device training possible. The work is in collaboration with taz, a key Chroma contributor, and the repository can be found on GitHub - l4b4r4b4b4/go-chroma.

Link mentioned: GitHub - l4b4r4b4b4/go-chroma: Go port of Chroma vector storage: Go port of Chroma vector storage. Contribute to l4b4r4b4b4/go-chroma development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #help (322 messages🔥🔥):

- Unsloth Assists Data-Driven Chatbot Upgrade: A member discusses formatting personal chat messages for training an AI clone of themselves via Unsloth and gets assistance including a Python script for converting the dataset into ShareGPT format, and advice on using this script with their personal data to create a training-ready dataset. They were also directed to a related Unsloth notebook.

- LoRA Tweaking for Enhanced Training: Users report varying the alpha parameter during fine-tuning a model using LoRA. For rslora, it’s suggested that alpha be double the rank value, though the exact optimal value may vary by case.

- ORPO Support with Unsloth: According to a member, ORPO, which optimizes the resources required for model training, is already supported within Unsloth. The ORPO method differs from DPO by not requiring a separate SFT (Supervised Fine-Tuning) step beforehand.

- CUDA Learning and Triton’s Promise: A participant new to CUDA gets advice to lean on Triton tutorials for learning and advised that Unsloth does not recommend native CUDA, instead suggesting Triton as more beneficial for LLM work.

- Unsloth and Gemma: For best results, don’t use packing with Gemma models. The Unsloth library is compatible with packing for Llama and Mistral, and configurations with high-rank adapters may exhibit unexpected loss jump issues during training.

Links mentioned:

- Find Open Datasets and Machine Learning Projects | Kaggle: Download Open Datasets on 1000s of Projects + Share Projects on One Platform. Explore Popular Topics Like Government, Sports, Medicine, Fintech, Food, More. Flexible Data Ingestion.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- G-reen/EXPERIMENT-ORPO-m7b2-1-merged · Hugging Face: no description found

- Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation): Things I Learned From Hundreds of Experiments

- Unsloth - 4x longer context windows & 1.7x larger batch sizes: Unsloth now supports finetuning of LLMs with very long context windows, up to 228K (Hugging Face + Flash Attention 2 does 58K so 4x longer) on H100 and 56K (HF + FA2 does 14K) on RTX 4090. We managed...

- Intro to Triton: Coding Softmax in PyTorch: Let's code Softmax in PyTorch eager and make sure we have a working version to compare our Triton Softmax version with. Next video - we'll code Softmax in Tr...

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- Installation: no description found

- no title found: no description found

- Installation: no description found

- GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

- Add ORPO example notebook to the docs · Issue #331 · unslothai/unsloth: It's possible to use the ORPOTrainer from TRL with very little modification to the current DPO notebook. Since ORPO reduces the resources required for training chat models even further (no separat...

- A Rank Stabilization Scaling Factor for Fine-Tuning with LoRA: As large language models (LLMs) have become increasingly compute and memory intensive, parameter-efficient fine-tuning (PEFT) methods are now a common strategy to fine-tune LLMs. A popular PEFT method...

- Rank-Stabilized LoRA: Unlocking the Potential of LoRA Fine-Tuning: no description found

- Tokenizer: no description found

- Adding New Vocabulary Tokens to the Models · Issue #1413 · huggingface/transformers: ❓ Questions & Help Hi, How could I extend the vocabulary of the pre-trained models, e.g. by adding new tokens to the lookup table? Any examples demonstrating this?

Unsloth AI (Daniel Han) ▷ #showcase (47 messages🔥):

- Benchmark Buzz: Members discussed a first benchmark’s performance and shared encouraging remarks, such as proclaiming it looks “fantastic” and “pretty good.”

- Model Template Mysteries: A user queried how a leaderboard discerns the model template, which was clarified by another member pointing to

tokenizer.chat_template. - Squeaky Clean Data: A dataset named ShareGPT90k was pushed in a cleaned and ChatML format, with HTML tags like

<div>and<p>replaced with empty strings. The user urged others to use thetextkey when training with Unsloth AI. - Anticipating Ghost’s Recipe: Debates arose around the accessibility and vulnerability of training recipes for LLMs, particularly regarding a model named Ghost. Users discussed the importance of data quality over fine-tuning techniques and gave a nod to the importance of gaining experience through experimentation rather than relying on predefined recipes.

- Sharing Academic Insights and Resources: Conversations included sharing valuable resources like detailed research papers and YouTube tutorials explaining advanced AI concepts like Direct Preference Optimization. One user specifically looked forward to learning about the training approach behind the Ghost model.

Links mentioned:

- Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation): Things I Learned From Hundreds of Experiments

- Sloth Crawling GIF - Sloth Crawling Slow - Discover & Share GIFs: Click to view the GIF

- Direct Preference Optimization (DPO) explained: Bradley-Terry model, log probabilities, math: In this video I will explain Direct Preference Optimization (DPO), an alignment technique for language models introduced in the paper "Direct Preference Opti...

- FractalFormer: A WIP Transformer Architecture Inspired By Fractals: Check out the GitHub repo herehttps://github.com/evintunador/FractalFormerSupport my learning journey on patreon!https://patreon.com/Tunadorable?utm_medium=u...

- Neural Graph Reasoning: Complex Logical Query Answering Meets Graph Databases: Complex logical query answering (CLQA) is a recently emerged task of graph machine learning that goes beyond simple one-hop link prediction and solves a far more complex task of multi-hop logical reas...

- LLM Phase Transition: New Discovery: Phase Transitions in a dot-product Attention Layer learning, discovered by Swiss AI team. The study of phase transitions within the attention mechanisms of L...

- After 500+ LoRAs made, here is the secret: Well, you wanted it, here it is: The quality of dataset is 95% of everything. The rest 5% is not to ruin it with bad parameters. Yeah, I know,...

- pacozaa/sharegpt90k-cleanned · Datasets at Hugging Face: no description found

Modular (Mojo 🔥) ▷ #general (60 messages🔥🔥):

-

Kapa.AI Brings Instant Answers to Discord Communities: Kapa.AI offers customizable AI bots that provide instant technical support in communities such as Slack and Discord. Their bots can be added to servers to improve developer experience by providing immediate answers and eliminating wait times, which is elaborated on their community engagement use-case page and their Discord installation documentation.

-

Exploring Compilation Optimization with Mojo Language: A discussion on using aliases for compile-time parameter decisions in Mojo language revealed that using such methods can lead to memory optimizations as unused aliases don’t reserve memory space after compilation. For more nuanced opinions on code clarity versus comments, a YouTube video on the subject was shared.

-

Understanding Typestates and Memory Efficiency: Conversing about the benefits of typestates over aliases, a member recommended a technique from Rust for making compile-time guarantees about object state, as described in an article about Rust typestates. The discussion evolved around memory use optimizations in language design, specifically how boolean values are stored and addressed in memory.

-

Bit-Level Optimizations in Programming Languages Explored: The chat shed light on why language specifications, like those in C, define enums as 32-bit integers and debated whether bools need to use a full byte. These topics touched on processor-level memory allocation and the potential efficiencies in memory usage at the language-level, referencing how boolean values could be represented more compactly as bits within a byte.

-

Rust’s BitVec Crate Offers Both Speed and Memory Efficiency: The discussion concluded by endorsing Rust’s BitVec crate for being both speed and memory efficient when handling sets of boolean flags. An example cited was an optimization case which improved performance, going from taking years to just minutes, by using a bitset in Rust, as detailed on willcrichton.net.

Links mentioned:

- Packed structs in Zig make bit/flag sets trivial: As we've been building Mach engine, we've been using a neat little pattern in Zig that enables writing flag sets more nicely in Zig than in other languages. Here's a brief explainer.

- Bamboozled GIF - Bamboozled - Discover & Share GIFs: Click to view the GIF

- Analyzing Data 180,000x Faster with Rust: How to hash, index, profile, multi-thread, and SIMD your way to incredible speeds.

- kapa.ai - Instant AI Answers to Technical Questions: kapa.ai makes it easy for developer-facing companies to build LLM-powered support and onboarding bots for their community. Teams at OpenAI, Airbyte and NextJS use kapa to level up their developer expe...

- The Typestate Pattern in Rust - Cliffle : no description found

- kapa.ai - ChatGPT for your developer-facing product: kapa.ai makes it easy for developer-facing companies to build LLM-powered support and onboarding bots for their community. Teams at OpenAI, Airbyte and NextJS use kapa to level up their developer expe...

- Discord Bot | kapa.ai docs: Kapa can be installed as a bot on your Discord server. The bot allows users to ask questions in natural language about your product which improves developer experience as developers can find answers t...

- Don't Write Comments: Why you shouldn't write comments in your code (write documentation)Access to code examples, discord, song names and more at https://www.patreon.com/codeaesth...

Modular (Mojo 🔥) ▷ #💬︱twitter (5 messages):

- Modular Tweets a Mystery: Modular shared a cryptic message on Twitter, enticing curiosity without context.

- The Plot Thickens with Another Tweet: Shortly after, Modular posted another enigmatic tweet, maintaining the suspense.

- Three’s a Charm for Modular’s Teasers: Continuing the trend, Modular released a third tweet, adding more intrigue.

- Modular’s Tweet Streak Unbroken: The string of mysterious messages from Modular extended with a fourth tweet.

- Fifth Tweet Keeps the Mystery Alive: Modular capped off the series with a fifth mysterious tweet, leaving followers in anticipation.

Modular (Mojo 🔥) ▷ #ai (2 messages):

- Mojo Replication Buzz: A member expressed interest in replicating an unidentified feature or project within Modular (Mojo), signaling enthusiasm for the platform’s capabilities.

- Unlocking AI Agents’ True Potential: A member shared a YouTube video explaining the creation of long-term memory and self-improving AI agents in a concise 10-minute presentation, potentially offering valuable insights for fellow enthusiasts.

Link mentioned: Unlock AI Agent real power?! Long term memory & Self improving: How to build Long term memory & Self improving ability into your AI Agent?Use AI Slide deck builder Gamma for free: https://gamma.app/?utm_source=youtube&utm…

Modular (Mojo 🔥) ▷ #🔥mojo (541 messages🔥🔥🔥):

- Mojo Adaptation of Python Tools: A new Python package called mojo2py has been developed to convert Mojo code to Python code, indicating a growing interest in tools that bridge the gap between Python and Mojo. The repository is available on GitHub.

- Learning Mojo Essentials: For those looking to learn Mojo from scratch, the Mojo Programming Manual is the go-to comprehensive guide, with emphasis on core concepts such as parameters versus arguments and understanding traits.

- Introducing Conditional Conformance: There is an ongoing discussion about the potential for conditional conformance in Mojo, allowing for behaviors like structural patterns found in other languages such as C++ or Haskell, though there may be challenges when it comes to generic code.

- Variant Type for Runtime Flexibility: The usage of

Variantas a way to create a list containing multiple types akin to Python is validated, with the point made thatVariantacts more like a tagged union at runtime, akin to ADTs in TypeScript. - Efforts on Syntaxes and Representation: Multiple members are actively discussing possible improvements to Mojo’s syntax for function signatures and type representation, including the use of

'1numerals to avoid verbose naming in partially-bound types, and Treesitter grammar/LSP development for broader integrations beyond VS Code.

Links mentioned:

- Documentation: no description found

- Documentation: no description found

- Mojo Manual | Modular Docs: A comprehensive guide to the Mojo programming language.

- Correct Plankton GIF - Correct Plankton - Discover & Share GIFs: Click to view the GIF

- Mojo🔥 modules | Modular Docs: A list of all modules in the Mojo standard library.

- Einstein notation - Wikipedia: no description found

- tuple - Rust: no description found

- mojo/examples/matmul.mojo at f1493c87ff8cbabb3cb88fb11fd1063403a7ffe2 · modularml/mojo: The Mojo Programming Language. Contribute to modularml/mojo development by creating an account on GitHub.

- mojo/examples/matmul.mojo at f1493c87ff8cbabb3cb88fb11fd1063403a7ffe2 · modularml/mojo: The Mojo Programming Language. Contribute to modularml/mojo development by creating an account on GitHub.

- GitHub - venvis/mojo2py: A python package to convert mojo code into python code: A python package to convert mojo code into python code - venvis/mojo2py

- Conditional Trait Conformance · Issue #2308 · modularml/mojo: Review Mojo's priorities I have read the roadmap and priorities and I believe this request falls within the priorities. What is your request? I believe it would be nice for there to be conditional...

- TkLineNums/tklinenums/tklinenums.py at main · Moosems/TkLineNums: A simple line numbering widget for tkinter. Contribute to Moosems/TkLineNums development by creating an account on GitHub.

- jax/jax/_src/interpreters/partial_eval.py at f8919a32e02841c9d5a202398e16357c7506b102 · google/jax: Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more - google/jax

- Conditional Trait Conformance · Issue #2308 · modularml/mojo: Review Mojo's priorities I have read the roadmap and priorities and I believe this request falls within the priorities. What is your request? I believe it would be nice for there to be conditional...

Modular (Mojo 🔥) ▷ #community-projects (4 messages):

-

Mojo meets gRPC: A community member is working on integrating functional Mojo code with legacy C++ via IPC to improve product performance.

-

Hunting for the Updated Llama: A member inquires about an official version of Llama2 and shares a guest blog post by Aydyn Tairov about building the project. They have attempted to update the project to v24.2.x and provided a link to their work-in-progress on GitHub.

-

Llama2 Gets Official MAX API: In response to a query about Llama2, another member directs to the official llama2 in MAX graph API available on GitHub.

-

Mojo Code - Python Transformation Tool: A new Python package called mojo2py has been created by a community member to convert Mojo code into Python code, with the repository available here.

Links mentioned:

- GitHub - venvis/mojo2py: A python package to convert mojo code into python code: A python package to convert mojo code into python code - venvis/mojo2py

- max/examples/graph-api/llama2 at main · modularml/max: A collection of sample programs, notebooks, and tools which highlight the power of the MAX platform - modularml/max

- Modular: Community Spotlight: How I built llama2.🔥 by Aydyn Tairov: We are building a next-generation AI developer platform for the world. Check out our latest post: Community Spotlight: How I built llama2.🔥 by Aydyn Tairov

- Update to 24.2 WIP by anthony-sarkis · Pull Request #89 · tairov/llama2.mojo: Related to #88 Work in progress, still getting these errors. I'm new to Mojo so if anyone can assist: /root/llama2.mojo/llama2.mojo:506:20: error: 'String' value has no attribute 'bitc...

Modular (Mojo 🔥) ▷ #nightly (12 messages🔥):

- Jank Over Traits: The discussion humorously criticized the lack of trait parameterization with a preference for what’s referred to as jank.

- Indentation Anarchy: One user lamented the lack of proper indentation in for loops, which prompted a mix of laughter and light-hearted agreement over the importance of indenting code.

- Leveling Up in Modular: <@244534125095157760> was congratulated for reaching level 9 in what appears to be a gamified system within the Modular community.

- Code Formatting Peer Pressure: There’s a jest about giving in to peer pressure regarding code formatting, indicating a light-hearted conversation about personal coding styles within the community.

- Mojo Nightly Update Announced: A new Mojo update called

nightly/mojohas been released, with members encouraged to update and check the diff on GitHub and the changelog on their changelog page.

Links mentioned:

- [stdlib] Update stdlib corresponding to `2024-04-16` nightly/mojo by patrickdoc · Pull Request #2313 · modularml/mojo: This updates the stdlib with the internal commits corresponding to today's nightly release: mojo 2024.4.1618 . In the future, we may push these updates directly to nightly branch.

- mojo/docs/changelog.md at nightly · modularml/mojo: The Mojo Programming Language. Contribute to modularml/mojo development by creating an account on GitHub.

Perplexity AI ▷ #general (549 messages🔥🔥🔥):

-

Confusion Over Subscriptions and Payment Methods: Members reported instances of unexpected charges despite using promo codes and concerns about managing payment methods for Perplexity API. Promises for improvements to show main payment methods on the Perplexity website were mentioned by a user presumed to be a team member.

-

Perplexity Pro Message Counter Disappears: Users have noticed that the message counter, indicating the number of messages left for Perplexity Pro users, is not being displayed unless under 100 messages are left. A member with a presumed team role confirmed this change and cited user reports of reduced stress as the reason, while others expressed dissatisfaction with the removal.

-

Perplexity Performance and Model Updates: Some users express that Perplexity seems to be forgetting the context more quickly than before and question the reasoning behind changes to features such as the Pro message counter. Inquiries about the integration of GPT-4-Turbo-2024-04-09 into Perplexity were met with referenced previous statements, suggesting members refer to discussions in the official channel.

-

AI Models and Coding: There’s a consensus among users that AI models are still lacking in coding capabilities, with GPT-4 mentioned as underperforming despite being better than its predecessors. Perplexity’s diverse model offerings are acknowledged, but some feel that the original models are superior in performance.

-

Payment and Subscription Issues Discussed: Members discussed issues with promotional codes and billing confusion. One user pointed out a lack of clarity in managing payment methods for the Perplexity API, while others voiced concerns about not seeing promo code options upon checkout. A response from user ok.alex indicated upcoming improvements to the payment method visibility.

Links mentioned:

- no title found: no description found

- Grok-1.5 Vision Preview: no description found

- Raycast - Perplexity API: Use the powerful models via Perplexity API from the comfort of Raycast.

- Hamak Chilling GIF - Hamak Chilling Beach - Discover & Share GIFs: Click to view the GIF

- No The Office GIF - No The Office Michael Scott - Discover & Share GIFs: Click to view the GIF

- Chopping Garlic Chopping GIF - Chopping Garlic Chopping Knife Skills - Discover & Share GIFs: Click to view the GIF

- Tweet from OpenAI Developers (@OpenAIDevs): Introducing the Batch API: save costs and get higher rate limits on async tasks (such as summarization, translation, and image classification). Just upload a file of bulk requests, receive results wi...

- How Soon Is Now Smiths GIF - How Soon Is Now Smiths Morrissey - Discover & Share GIFs: Click to view the GIF

- TikTok - Make Your Day: no description found

Perplexity AI ▷ #sharing (12 messages🔥):

- Exploring Perplexity AI: Members shared various searches on Perplexity AI, exploring topics such as Microsoft testing ads, World Art Day, and methods to act as an Ichjogu.

- Celebrating Days of Art and Voice: Searches were conducted related to World Art Day and World Voice Day, highlighting community interest in global cultural observations.

- Inspecting Tech and Games: Discussions included searches on Microsoft’s test ads, Atari, and Amazon Web Services hardening guide.

- Music and Lyrics Searches: Members showed an inclination towards music by searching for lyrics to the song “SBK Borderline” and the phrase “Whatever It Is”.

- Curiosities in Costs and Queries: The community delved into diverse inquiries, from asking about the cost of certain items to requesting explanations in Portuguese.

Perplexity AI ▷ #pplx-api (3 messages):

- Inconsistency in Perplexity Pro vs. API Answers: A member expressed difficulty with getting different responses when using Perplexity Pro compared to the API. There is a desire to understand settings like the temperature that the web client uses to try and match API results for consistency.