As is their established pattern, Mistral followed up their magnet link with a blogpost, and an instruct-tuned version of their 8x22B model:

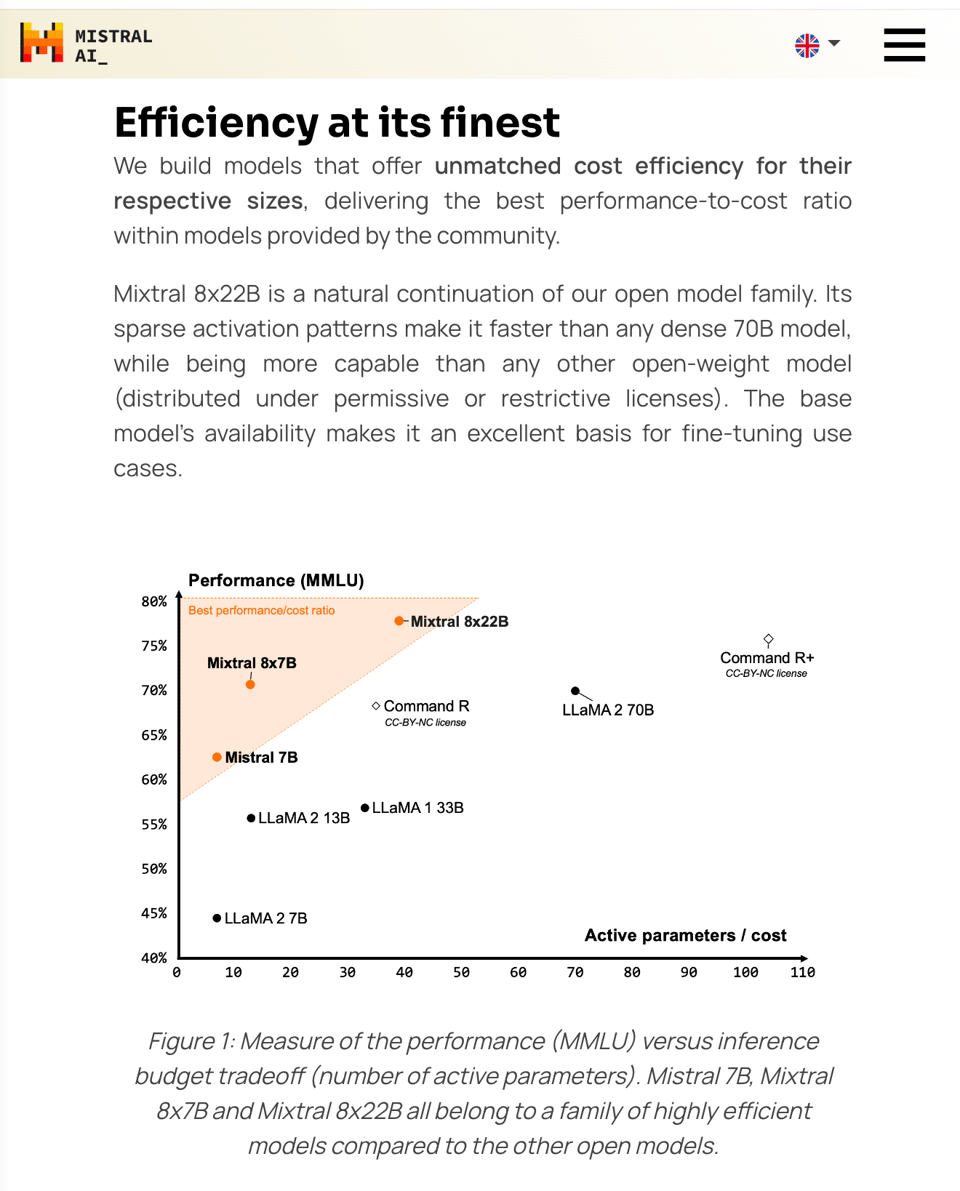

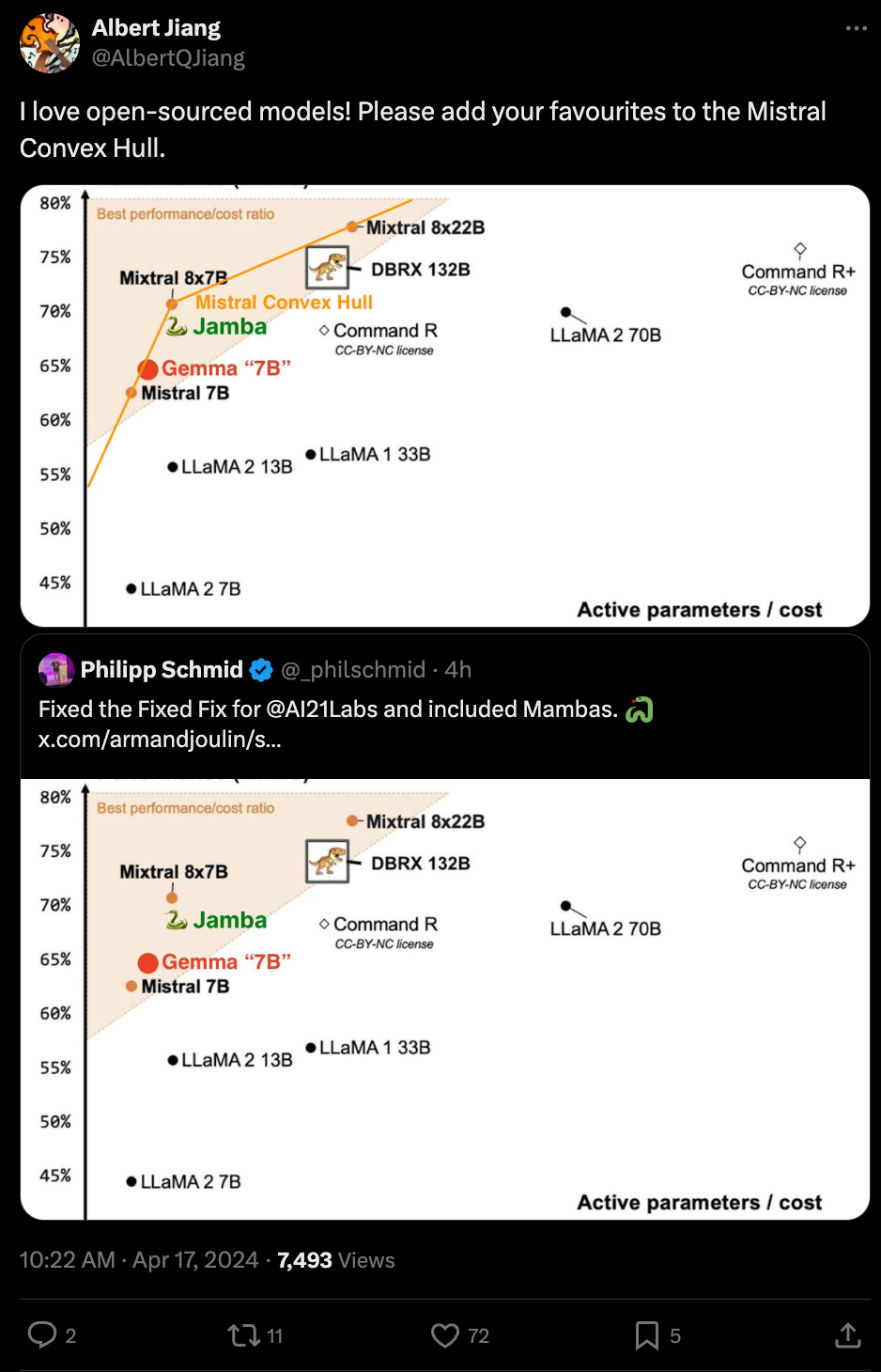

the image ended up sparking some friendly competition between Databricks, Google, and AI21, all of which merely emphasized that Mixtral created a new tradeoff between active params and MMLU performance:

Of course, what is unsaid that the active params count doesnt linearly correlate with cost to run dense models, and that singular focus on MMLU isn’t ideal for less scrupulous competitors.

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/Singularity. Comment crawling works now but has lots to improve!

AI Investments & Advancements

-

Massive AI investments from tech giants: In /r/singularity, DeepMind CEO reveals Google plans to invest over $100 billion in AI, with other tech giants like Microsoft, Intel, SoftBank, and an Abu Dhabi fund making similarly huge bets, indicating high confidence in AI’s potential.

-

UK criminalizes non-consensual deepfake porn: The UK has made it a crime to create sexually explicit deepfake images without consent. In /r/technology, commenters debate the implications and enforcement challenges.

-

Nvidia’s AI chip dominance: In /r/hardware, a former Nvidia employee claims on Twitter that no one will catch up to Nvidia’s AI chip lead this decade, sparking discussion about the company’s strong position.

AI Assistants & Applications

-

Potential billion-dollar market for AI companions: In /r/singularity, a tech executive predicts AI girlfriends could become a $1 billion business. Commenters suggest this is a vast underestimate and discuss the societal implications.

-

Unlimited context length for language models: A tweet posted in /r/artificial announces unlimited context length, a significant advancement for AI language models.

-

AI surpassing humans on basic tasks: In /r/artificial, a Nature article reports that AI has surpassed human performance on several basic tasks, though still trails on more complex ones.

AI Models & Architectures

- Zamba: Novel 7B parameter hybrid architecture: In /r/LocalLLaMA, Zyphra unveils Zamba, a 7B parameter hybrid architecture combining Mamba blocks with shared attention. It outperforms models like LLaMA-2 7B and OLMo-7B despite less training data. The model was developed by a team of 7 using 128 H100 GPUs over 30 days.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Mixtral 8x22B Instruct Model Release

- Impressive Performance: @GuillaumeLample announced the release of Mixtral 8x22B Instruct, which significantly outperforms existing open models using only 39B active parameters during inference, making it faster than 70B models.

- Multilingual Capabilities: @osanseviero highlighted that Mixtral 8x22B is fluent in 5 languages (English, French, Italian, German, Spanish), has math and code capabilities, and a 64k context window.

- Availability: The model is available on the @huggingface Hub under an Apache 2.0 license and can be downloaded and run locally, as confirmed by @_philschmid.

RAG (Retrieval-Augmented Generation) Advancements

- GroundX for Improved Accuracy: @svpino shared that @eyelevelai released GroundX, an advanced RAG API. In tests on 1,000 pages of tax documents, GroundX achieved 98% accuracy compared to 64% for LangChain and 45% for LlamaIndex.

- Importance of Assessing Risks: @omarsar0 emphasized the need to assess risks when using LLMs with contextual information that may contain supporting, contradicting, or incorrect data, based on a paper on RAG model faithfulness.

- LangChain RAG Tutorials: @LangChainAI released a playlist explaining RAG fundamentals and advanced methods on @freeCodeCamp. They also shared a @llama_index tutorial on using Mixtral 8x22B for RAG.

Snowflake Arctic Embed Models

- Powerful Embedding Models: @SnowflakeDB open-sourced their Arctic family of embedding models on @huggingface, which are the result of @Neeva’s search expertise and Snowflake’s AI commitment, as noted by @RamaswmySridhar.

- Efficiency and Performance: @rohanpaul_ai highlighted the efficiency of these models, with parameter counts from 23M to 335M, sequence lengths from 512 to 8192, and support for up to 2048 tokens without RPE or 8192 with RPE.

- LangChain Integration: @LangChainAI announced same-day support for using Snowflake Arctic Embed models with their @huggingface Embeddings connector.

Misc

- CodeQwen1.5 Release: @huybery introduced CodeQwen1.5-7B and CodeQwen1.5-7B-Chat, specialized codeLLMs pretrained with 3T tokens of code data. They exhibit exceptional code generation, long-context modeling (64K), code editing, and SQL capabilities, surpassing ChatGPT-3.5 in SWE-Bench.

- Boston Dynamics’ New Robot: @DrJimFan shared a video of Boston Dynamics’ new robot, arguing that humanoid robots will exceed iPhone supply in the next decade and that “human-level” is just an artificial ceiling.

- Superhuman AI from Day One: @ylecun stated that AI assistants need human-like intelligence plus superhuman abilities from the start, requiring understanding of the physical world, persistent memory, reasoning and hierarchical planning.

AI Discord Recap

A summary of Summaries of Summaries

Stable Diffusion 3 and Stable Diffusion 3 Turbo Launches:

- Stability AI introduced Stable Diffusion 3 and its faster variant Stable Diffusion 3 Turbo, claiming superior performance over DALL-E 3 and Midjourney v6. The models use the new Multimodal Diffusion Transformer (MMDiT) architecture.

- Plans to release SD3 weights for self-hosting with a Stability AI Membership, continuing their open generative AI approach.

- Community awaits licensing clarification on personal vs commercial use of SD3.

Unsloth AI Developments:

- Discussions on GPT-4 as a fine-tuned iteration over GPT-3.5, and the impressive multilingual capabilities of Mistral7B.

- Excitement around the open-source release of Mixtral 8x22B under Apache 2.0, with strengths in multilingual fluency and long context windows.

- Interest in contributing to Unsloth AI’s documentation and considering donations to support its development.

WizardLM-2 Unveiling and Subsequent Takedown:

-

Microsoft announced the WizardLM-2 family, including 8x22B, 70B, and 7B models, demonstrating competitive performance.

-

However, WizardLM-2 was unpublished due to lack of compliance review, not toxicity concerns as initially speculated.

-

Confusion and discussions around the takedown, with some users expressing interest in obtaining the original version.

-

Stable Diffusion 3 Launches with Improved Performance: Stability AI has released Stable Diffusion 3 and Stable Diffusion 3 Turbo, now available on their Developer Platform API, boasting the fastest and most reliable performance. The community awaits clarification on the Stability AI Membership model for self-hosting SD3 weights. Meanwhile, SDXL finetunes have made SDXL refiners nearly obsolete, and users discuss model merging challenges in ComfyUI and limitations of the diffusers pipeline.

-

WizardLM-2 Debuts Amidst Excitement and Uncertainty: The release of WizardLM-2 models by Microsoft has sparked enthusiasm for their potential GPT-4-like capabilities in an open-source format. However, the sudden takedown of the models due to a missed compliance review has led to confusion and speculation. Users compare the performance of WizardLM-2 variants and share tips for resolving compatibility issues in LM Studio.

-

Multimodal Models Advance with Idefics2 and Reka Core: Hugging Face’s Idefics2 8B and Reka Core have emerged as powerful multimodal language models, showcasing impressive capabilities in visual question answering, document retrieval, and coding. The upcoming chat-focused variant of Idefics2 and Reka Core’s competitive performance against industry giants have generated significant interest. Discussions also revolve around the cost-efficiency of models like JetMoE-8B and the launch of Snowflake’s Arctic embed family for text-embedding.

Other notable topics include:

- The introduction of ALERT, a safety benchmark for assessing Large Language Models, and debates around AI safety standards.

- Explorations of Retrieval Augmented Generation (RAG) for vision-based applications and the philosophical implications of AI simulations in World-Sim.

- The rise of AI-human collaboration platforms like Payman AI and the integration of AI inference in Supabase’s edge functions.

- Challenges to the Chinchilla scaling laws and discussions on the expressive power of state-space models in the research community.

- Advancements in PEFT methods like Dora and RSLoRA, and the pursuit of multilingual model expansion using Mixture-of-Experts (MoE) approaches.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Stable Diffusion 3 Turbo Charges the Scene: Stability AI has introduced Stable Diffusion 3 and Stable Diffusion 3 Turbo, now available on their Developer Platform API, with claims of the fastest and most reliable performance, supported by Fireworks AI. Interested parties can get started with SD3 at Stable Diffusion 3 & Developer API, and an open generative AI approach is promised with plans for the model weights to be available for self-hosting for members.

Refining Visually Intuitive Generative AI: The SDXL finetunes have made the use of SDXL refiners nearly obsolete, as they are now prevalent in Civitai downloads, suggesting a trend towards integrated finetunes over separate refiner modules, reflecting a community-driven optimization.

Model Merging Explored: There is lively discussion on model merging tactics within ComfyUI, grappling with complex mechanisms such as V-prediction and epsilon, highlighting the community’s experimentation with these methods to achieve enhanced outcomes, yet acknowledging that correct implementations are crucial to prevent unpredictable results.

Navigating Diffusers Library Limitations: A conversation emerged around the limitations and dependencies in the diffusers pipeline, with a focus on Stable Video Diffusion Pipeline challenges. Despite these challenges, some users are optimizing usage by running models independently post-download, bypassing certain Hugging Face library constraints.

Awaiting SD3’s Membership Model Details: The community is keenly waiting for Stability AI to provide clarifications on Stable Diffusion 3 licensing for personal versus commercial use, especially in light of the new membership model revealed for accessing self-hosted weights.

Unsloth AI (Daniel Han) Discord

GPT-4 Gains Over GPT-3.5: The new iteration of GPT, GPT-4, is regarded as a fine-tuned enhancement over GPT-3.5, though specifics on performance metrics or features were not provided.

Mistral7B Shines in Multilingualism: Members conferred about the multilingual capabilities of the Mistral7B model, recommending the inclusion of diverse language data in training sets, particularly French, to improve performance.

Unsloth AI Gets Help from Fans: There’s a tangibly positive response from the community towards Unsloth AI, with users keen to help with documentation, expansion, and even considering donations. The Mixtral 8x22B model’s release under Apache 2.0 was met with excitement for its promise in multilingual fluency and handling of extensive context windows.

Chroma Goes Go: The Chroma project leaps forward with an edge version written in Go, which utilizes SQLite and WASM for browser-based applications, now available on GitHub.

Mobile AI Deployment Discussed: The complexity of deploying AI models on mobile devices surfaced, noting challenges such as the absence of CUDA and the infeasibility of running standard Deep Learning Python codes on such platforms.

LM Studio Discord

AI Assistance for NeoScript Programming: A user looking for help with NeoScript programming expressed challenges in configuring AI models. Microsoft’s new release, WaveCoder Ultra 6.7b, excels in code translation and could be a strong candidate for this task.

Solving AI’s Echo Chamber: To combat repetitive AI responses, particularly in Dolphin 2 Mistral, members discussed strategies such as fine-tuning models and leveraging multi-turn conversation frameworks outlined in Azure’s article.

Introducing the WizardLM-2 League: The debut of WizardLM-2 models sparked discussions about performance. Compatibility with existing tools, including the importance of using GGUF quants and version 0.2.19 or newer for proper functionality, was emphasized.

Tech Wizards at Play: One user successfully enabled direct communication between four 3090 GPUs, improving model performance by bypassing CPU/RAM. There was also chatter about the challenges of signing Windows executables, with a hint that the Windows versions are indeed signed with an Authenticode cert.

Quantization Conundrum and Model Preferences: Mixed reviews on quantization levels, from Q8 to Q6K, pointed to a preference for models with higher quantization levels when VRAM is sufficient. For large models, such as WizardLM-2-8x22B, GPUs like the 4090 with 24GB VRAM may be inadequate.

Nous Research AI Discord

-

Multimodal Models Stepping Up: Exciting advancements in multimodal language models are showcased, with Hugging Face’s Idefics2 8B and Reka Core emerging as key players, evident from Open Multimodal ChatGPT video and Reka Core overview. The GPT4v/Geminipro Vision and Claude Sonnet models are recommended for vision-RAG applications.

-

LLMs Tuning into Self-Optimization: New techniques for enhancing Instruct Model LLMs look promising, with models able to select the best solution by reconstructing inputs from outputs, detailed in a Google Slideshow on aligning LLMs for medical reasoning.

-

WizardLM Disappearance Sparks Debate: There’s uncertainty around WizardLM’s sudden takedown; while some speculated on toxicity issues, confirmed reports attributed it to lack of a compliance review as shared in a comprehensive WizardLM information bundle.

-

LLMs Performance: A Roller Coaster of Expectations: Engineers discuss CodeQwen1.5-7B Chat’s impressive benchmarking and debate on architectures and tuning’s impact on performance. Furthermore, upcoming models like Hermes 8x22B are eagerly awaited, with concerns on whether they can be accommodated by personal equipment setups.

-

World-Sim’s Return Triggers AI Philosophical Debates: As World-Sim gears up for a return, enthusiasts burst with anticipation, pondering the philosophical aspects and implications of such simulated worlds. Official confirmation sent excitement soaring with a Websim link provided for those eager to jump in.

Perplexity AI Discord

Robots Debating Their Roots: Engineers exchanged insights on the performance nuances of AI models including GPT-4 and Claude 3 Opus, with a shared sentiment that GPT-4 may exhibit “lazy” tendencies in real-world applications. The open-source Mixtral’s 8x22B model is highlighted for its impressive capabilities, sparking debates on model efficacy.

Stumped by Stubborn Software Issues: A conversation was noted about achieving consistency between the web client and the API, with specific attention to parameters like temperature settings. Engineers are also discussing the benefits of including a rate limit counter in the API response for better management and transparency.

The Vanishing Messages Mystery: Concern was voiced over changes in the Perplexity API’s payment method management, particularly the opacity surrounding the remaining message counts for pro users. This focus on transparency indicates professionals need clarity to manage resources efficiently.

A Tale of Truncated Tokens: Technical dialogue included challenges faced when engaging models with large context sizes, like a 42k token prompt, and the tendency for models to summarize rather than dive deep into lengthy documents. This could be pivotal as engineers optimize models to process complex prompts fully.

The Search for Smarter Searches: Members also discussed using site:URL search operators for more targeted information retrieval. Additionally, there is a call for better communication regarding rate limits in the API, including the possibility of a 429 response.

LAION Discord

-

PyTorch’s Abstraction Puzzle: Engineers are grappling with PyTorch’s philosophy of abstracting complexities, which, while simplifying coding, often leaves them puzzled when troubleshooting unexpected results.

-

Handling Hefty Datasets with Zarr: There’s active exploration on utilizing zarr to manage a hefty 150 GB MRI dataset, with discussions circling around its efficiency and whether it will overload RAM with large data loads.

-

Legal Lines Drawn for Deepfakes in the UK: Members are discussing the implications of UK legislation targeting the creation of distressing images, questioning its enforceability given the blurriness of proving intent.

-

AI Inference Fine-Tuning Talks: Voices from the community are calling for clarity on AI models’ inference settings, like controlling CFG or integrating models with robust ODE solvers, beyond just defaulting to Euler’s method.

-

Cascade Team’s Corporate Shuffle: There’s speculation about the future of Stability AI’s Cascade team after their departure and the dissolution of their Discord channel, with wonderment if there’s a link to a new venture, possibly Leonardo, or an ongoing affiliation with SAI.

-

ALERT! A New Safety Benchmark for LLMs: The introduction of ALERT, a safety benchmark for assessing Large Language Models, has sparked interest, providing a Dataset of Problematic Outputs (DPO) for community evaluation, available on GitHub.

-

AI Audio-Visual Harmony: An Arxiv paper presents methods for generating audio from text, improving performance by zeroing in on concepts or events, stirring dialogue in the research community.

-

AI Safe or Stifled?: The AI safety debate is heated, with some pushing back against confining AI strictly to PG content, arguing it could crimp its creative spark compared to other artistic mediums.

-

GANs vs. Diffusion Models: Speed or Aesthetics?: Discussions are heating up over the advantages of GANs—notably, their faster inference and lesser parameter count—versus diffusion models, even as GANs face criticism for image quality and training challenges.

OpenRouter (Alex Atallah) Discord

OpenRouter Welcomes WizardLM Raptors: OpenRouter announced the release of WizardLM-2 7B and a price drop for WizardLM-2 8x22B to $0.65/M tokens. The WizardLM-2 8x22B Nitro boasts over 100 transactions per second post its database restart.

Latency Labyrinth Resolved: Latency issues on various models such as Mistral 7B Instruct and Mixtral 8x7B Instruct were attributed to cloud provider DDoS protection, with updates concerning the resolution found in the associated discussion thread.

Calling All Frontend Mavericks: A member seeks web development assistance for an AI-based frontend project for OpenRouter, specifically emphasizing role-playing novel mode and conversation style systems. Ability to distinguish AI-generated text from user input is also requested.

AI Model Morality and Multilingual Mastery: Vigorous exchanges regarding both censorship protocols for NSFW content and the imperative for enhancing models’ multilingual performance took place. Members looked forward to direct endpoints and new provider integrations for an anticipated AI model release.

Bitrate Bits and Quality Quibbles: Users showed a clear preference for a minimum of 5 bits per word (bpw) for model quantization, noting that reductions below this threshold notably compromise quality. Discussions underscored the trade-offs between efficient operation and maintaining high fidelity in AI outputs.

Modular (Mojo 🔥) Discord

-

Mojo to Python Conversion Now a Possibility: Engineers discuss the new package mojo2py, capable of converting Mojo code to Python, and chatted about the desire for more learning resources, pointing to the Mojo programming manual for beginners.

-

Maxim Zaks Debates the Mojo ‘Hype’: A PyCon Lithuania talk titled “Is Mojo just a hype?” by Maxim Zaks was highlighted, provoking debate on the chatbot’s industry impact, available in a video.

-

Mojo’s Inherent Nightly Nuances: Users are navigating through the challenges of a new nightly Mojo release, noting unconventional code styling for readability, desires for comprehensive tutorials on traits, and a recent pull request reflecting significant updates.

-

Optimizing with Compile-Time Aliases: Discussion thrived around optimizing alias memory usage in Mojo, hinted by the recommendation of readable code over extensive commenting from a cited YouTube video.

-

Community Mojo Projects Surge: Community contributions soared with a shared Mojo ‘sketch’ found at this gist and a request about implementing the Canny edge recognition algorithm in Mojo, coupled with directions to Mojo’s documentation and tooling resources.

CUDA MODE Discord

PyTorch Resource Debate: While discussing if “Deep Learning with PyTorch” is a relevant resource despite being 4 years old, members noted that the PyTorch core has remained stable, though significant updates have occurred in the compiler and distributed systems. A member shared a teaser for an upcoming edition of the book, which would include coverage of transformers and Large Language Models.

CUDA Custom GEMM Sparking Interest: The conversation involved improving GEMM performance in CUDA, with one member providing a new implementation that outperformed PyTorch’s function on specific benchmarks, sharing their code on GitHub. However, another highlighted JIT compilation issues with torch.compile. The group also discussed optimal block size parameters, referencing a related code example on Gist.

Next-Gen Video Analysis & Robotics Gains Screenshare: Members shared links about Augmend’s video processing features, which combine OCR and image segmentation, previewed on wip.augmend.us, and the full service to be hosted on augmend.com. Another highlight was Boston Dynamics’ unveiling of a fully electric robot named Atlas intending for real-world applications, showcased in their All New Atlas | Boston Dynamics video.

Bridging the CUDA Toolkit Knowledge Gap: In the #beginner channel, members discussed issues related to using the CUDA toolkit on WSL, with one user facing problems running the ncu profiler. The community provided troubleshooting steps and stressed the importance of setting the correct CUDA path in environment variables. There was also an advisory that Windows 11 might be necessary for effective CUDA profiling on WSL 2, with one user providing a guide on the subject.

Quantization Dilemmas and Solutions in Air: A thorough chat occurred on the topic of quantization axes in GPT models with a highlight on the complexities when using axis=0. Participants suggested quantizing Q, K, and V separately with references to Triton kernels and an autograd optimization method for boosting speed and performance. Their debate continued with discussions of 2/3 bits quantization practicality and was supplemented with implementation details and benchmarks on GitHub.

Optimizing ML Model Performance: A GitHub notebook for extending PyTorch with CUDA Python garnered attention for speed enhancements but with a need for more optimization to fully tap into tensor core capabilities, as shared in the notebook’s link. Additionally, there were mentions of optimizing the softmax function and block sizes for cache utilization, with insights shared through a GitHub pull request.

OpenAI Discord

Multiplayer GPT Headed for the Gaming Galaxy: Engineers discussed the potential of integrating GPT-Vision and camera inputs for a real-time gaming assistant to tackle multiple-choice games. The possibility of utilizing Azure or virtual machines to handle intensive computational tasks was raised, alongside leveraging TensorFlow or OpenCV for system management.

AI Versus Human Conundrum Continues: A philosophical debate emerged concerning the differences between AI and human cognition, discussing the prospects of AI acquiring human-like reasoning and emotions, and the role of quantum computing in this evolution.

The Quest for Knowledge Enhancements: Members sought information on how to prepare a knowledge base for custom GPT applications and questioned the arrival of the Whisper v3 API. The noted limitations such as GPT-4’s token memory span being speculated to have shrunk triggered calls for improved clarity on API capabilities.

Creative Minds Favor Claude and Gemini: When tackling literature reviews and fictional works, AI aficionados recommended using models like Claude and Gemini 1.5. These tools were favored for their prowess in handling literary tasks and creative writing respectively.

Discord Channel Dynamics: Two channels, prompt-engineering and api-discussions, experienced a notable decrease in activity, with participants attributing the quiet to possible over-moderation and a recent string of timeouts, including a specific 5-month timeout case involving assistance to another user.

LlamaIndex Discord

-

Hybrid Cloud Hustle with Qdrant: Qdrant’s new hybrid cloud offering allows for running their service across various environments while maintaining control over data. They backed their launch with a thorough tutorial on the setup process.

-

LlamaIndex Beefs Up with Azure AI Search: LlamaIndex teams up with Azure AI Search for advanced RAG applications, featuring a tutorial by Khye Wei that illustrates Hybrid Search and Query rewriting capabilities.

-

MistralAI Model Immediately Indexed: LlamaIndex has instant support for MistralAI’s newly released 8x22b model, paired with a Mistral cookbook focusing on intelligent query routing and tool usage.

-

Building and Debugging in LlamaIndex: AI engineers discussed best practices for constructing search engines in LlamaIndex, resolving API key authentication errors, and navigating through updates and bug fixes, including a specific

BaseComponenterror with a GitHub solution. -

Hierarchical Structure Strategy Session: Inquiry within the ai-discussion channel about constructing a hierarchical document structure using ParentDocumentRetriever, with LlamaIndex as the framework of choice.

Eleuther Discord

-

Peering into the Future of Long-Sequence Models: Feedback Attention Memory (FAM), discussed in recent conversations, proposes a solution to the quadratic attention problem of Transformers, enabling processing of indefinitely long sequences and showing improvement on long-context tasks. Reka’s new encoder-decoder model is touted to support sequences up to 128k, as detailed in their core tech report.

-

Precision in Scaling Laws and Evaluation: Questions on compute-optimal scaling laws by Hoffman et al. (2022) led to an exploration of the credibility of narrow confidence intervals without extensive experiments as detailed in Chinchilla Scaling: A replication attempt. Moreover, accurate cost estimations within ML papers are hindered when the size of datasets like that in the SoundStream paper is omitted, bringing to light the necessity of transparent data reporting.

-

Unpacking Model Evaluation Techniques: In Eleuther’s

#lm-thunderdome, the usage oflm-evaluation-harnesswas demystified, explaining the output format required forarc_easytasks and discussing the significance of BPC (bits per character) as an intelligent proxy correlating with a model’s compression capacity. Concerning tasks like ARC, a dialogue ensued about why random guessing results in a roughly 25% accuracy rate due to its four possible answers. -

Multi-Modal Learning Gains Traction: The possibility of Total Correlation Gain Maximization (TCGM) for semi-supervised multi-modal learning received attention, with one arXiv paper discussing the informational approach and the ability to utilize unlabeled data across modalities effectively. Emphasis was also given to the method’s theoretical promises and its implications in identifying Bayesian classifiers for diverse learning scenarios.

-

Concrete Guidelines for FLOPS Calculation: On the

#scaling-lawschannel, advice was given on estimating the FLOPS for a model such as SoundStream, including using the equation 6 * # of parameters for transformers during forward and backward passes. Newcomers are directed to a comprehensive breakdown in Section 2.1 of the relevant paper for a complete understanding of computational cost estimation.

HuggingFace Discord

-

IDEFICS-2 Takes the Limelight: The release of IDEFICS-2 brings an impressive skill set with 8B parameters, capable of high-resolution image processing and excelling in visual question answering and document retrieval tasks. Anticipation builds as a chat-focused variant of IDEFICS-2 is promised, while current capabilities such as solving complex CAPTCHAs are demonstrated in a shared example.

-

Knowledge Graphs Meet Chatbots: An informative blog post highlights the integration of Knowledge Graphs with chatbots to boost performance, with exploration encouraged for those interested in advanced chatbot functionality.

-

Snowflake’s Arctic Expedition: Snowflake breaks new ground with the launch of the Arctic embed family of models, claimed to set new benchmarks in practical text-embedding model performance, particularly in retrieval use cases. This development is complemented by a hands-on Splatter Image space for creating splatter art quickly, and how Multi-Modal RAG fuses language and images, as detailed in LlamaIndex documentation.

-

Model Training and Comparisons Drive Innovation: A fresh IP-Adapter Playground is unveiled, further enabling creative text-to-image interactions, alongside a new option to

push_to_hubdirectly in the transformers library’s pipelines. Comparing image captioning models just got easier with a dedicated Hugging Face Space. -

Challenges and Opportunities in NLP and Vision: Community members discuss issues from truncated token handling in prompts to exploring LoRA configurations, with links shared to resources on topic modeling with BERTopic, training T5 models (Github Resource), and LaTeX-OCR possibilities for equation conversion LaTeX-OCR GitHub. These conversations encapsulate the collective pursuit of refining and harnessing AI capabilities.

OpenAccess AI Collective (axolotl) Discord

Idefics2 Brings Multimodal Flair: The new multimodal model Idefics2 has been introduced, capable of processing both text and images with improved OCR and visual reasoning skills. It is offered in both base and fine-tuned forms and is under the Apache 2.0 license.

RTX 5090 Speculation Stokes Anticipation: NVidia is rumored to be considering an expedited release of the RTX 5090, potentially at Computex 2024, to stay ahead of AMD’s advances, sparking discussions on hardware suitability for cutting-edge AI models.

Model Training Finetuning: Engineers shared insights on model training configurations, focusing on the ‘train_on_input’ parameter in loss calculation, and suggested using “TinyLlama-1.1B-Chat-v1.0” for fine-tuning small models for efficient experimentation.

Phorm AI Becomes Go-To Resource: Members referred to Phorm AI for various inquiries, including epoch-wise saving techniques and data preparation for models like TinyLlama for tasks like text-to-color code predictions.

Spam Flood Triggers Alerts: Multiple channels within the community were targeted by spam messages promoting OnlyFans content, attempting to divert attention from the AI-centric conversations and technical discourse.

Latent Space Discord

LLM Ranking Resource Revealed: A comprehensive website, LLM Explorer, has been shared, showcasing a plethora of open-source language models, each assessed through ELO scores, HuggingFace leaderboard ranks, and task-specific accuracy metrics, serving as a valuable resource for model comparison and selection.

AI+Human Symphony in the Gig Economy: The launch of Payman AI, a platform facilitating AI agents to remunerate humans for tasks beyond AI capabilities, has sparked interest; the concept promotes a cooperative ecosystem between AI and human talents in domains like design and legal services.

Supabase Embraces AI Inference: Supabase introduces a simple API for running AI inferences within its edge functions, allowing AI models such as gte-small to be employed directly in databases, as detailed in their announcement.

Buzz Around “Llama 3” and OpenAI API Moves: The AI community is abuzz about the mysterious “Llama 3” speculated to debut at a London hackathon, and OpenAI’s Assistants API enhancements are drawing attention in light of a potential GPT-5 release, stirring debates about possible impacts on AI startups and platforms.

BloombergGPT Paper Club Session Goes Zoom: The LLM Paper Club invites engineers to a Zoom session on BloombergGPT, due to prior challenges with Discord screensharing, and the discussion has pivoted to Zoom for a better sharing experience. Participants can register for the event here, and further reminders to join the discussions are being circulated within the community.

OpenInterpreter Discord

-

AI Wearable Woes: AI wearables lack the contextual knowledge of smartphones, as discussed with reference to a YouTube review by Marquis Brownlee. Engineers pointed out that greater contextual understanding is necessary for AI assistants to provide efficient responses.

-

Open-Source AI Model Buzz: The WizardLm2 open-source model garners interest for its potential to deliver GPT-4-like capabilities. Discussions forecast a strong future demand despite ongoing advancements.

-

Translator Bot’s Inclusive Promise: Engineers are currently evaluating a new translation bot for its ability to enrich communication by providing two-way translations, aiming for more inclusive and unified discussions.

-

Cross-Platform Compatibility Challenges: There’s a clear need for software like 01 Light to operate on Windows, consistent with dialogues about difficulties adapting Mac-centric software to Windows frameworks, thereby hinting at the necessity for platform-agnostic development approaches.

-

Hardware Heats Up: Conversations indicate significant interest in AI hardware solutions like the Limitless device, with comparisons drawn around user experiences. Emphasis on the need for robust backend support and seamless AI integration is shaping hardware aspirations.

Interconnects (Nathan Lambert) Discord

Big Win for qwen-1.5-0.5B: The qwen-1.5-0.5B model’s winrate soared from 4% to 32% against heavyweights like AlpacaEval using generation in chunks. This approach, along with a 300M reward model, may be a game-changer in output searching.

How To Win Friends and Influence AIs: The recently unveiled Mixtral 8x22B, a polyglot SMoE model, is sharing the limelight owing to its impressive capabilities and the Apache 2.0 open license. Meanwhile, the rise of OLMo 1.7 7B indicates a notable stride in language model science with a robust performance leap on the MMLU benchmark.

Replicating Chinchilla: An Anomaly: Discrepancies in replicating the Chinchilla scaling paper by Hoffmann et al. have cast doubts around the paper’s findings. The community’s reaction ranged from confusion to concern, signaling an escalating drama around the challenge of scaling law verification.

Lighthearted Anticipation and Rumination: With playful banter on potential showdowns in olmo vs llama, community members show humor in competition. Moreover, Nathan Lambert teases the guild with a forecast of content deluge, signaling a possibly intense week of knowledge sharing.

Model Madness or Jocularity?: A side comment in an underpopulated channel by Nathan mentioned a potential tease involving WizardLM 2 as a troll, showing a blend of humor and light-heartedness amidst technical discussions.

Cohere Discord

-

API Confusion Needs Resolving: Engineers are probing the Cohere API for details on system prompt capabilities and available models. A user highlighted the request for details due to their significance in application development.

-

Benchmarking Cohere’s Embeddings: There is curiosity about how Cohere’s embeddings v3 perform against OpenAI’s new large embeddings with reference to the Cohere blog, suggesting a comparative analysis has been conducted Introducing Command R+.

-

Integration Tips and Tricks: Technical discussions addressed integrating Language Learning Models (LLMs) with platforms like BotPress, and whether Coral necessitates a local hosting solution. Future updates might simplify these integrations.

-

Fine-Tuning Fine-Tuned Models: Clarification was sought about fine-tuning already customized models via Cohere’s Web UI, directing users to the official guide Fine-Tuning with the Web UI.

-

Beta Testers Called to Action: A project named Quant Fino is recruiting beta testers for its Agentic entity that merges GAI with FinTech. Interested participants can apply at Join Beta - Quant Fino.

-

Security Flaws Exposed in AI Model: A redteaming exercise revealed vulnerabilities in Command R+, demonstrating the ability to manipulate the model into creating unrestricted agents. Concerned engineers and researchers can review the full write-up Creating unrestricted AI Agents with Command R+.

LangChain AI Discord

AI Documentation Gets Facelift: In an effort to improve usability, contributors to the LangChain documentation are revamping its structure, introducing categories like ‘tutorial’, ‘how to guides’, and ‘conceptual guide’. A member shared the LangChain introduction page, emphasizing LangChain’s components such as building blocks, LangSmith, and LangServe, which aid in the development and deployment of applications with large language models.

Building with LangChain — An Expressive Endeavor?: Within the #general channel, a member sought advice on YC startup applications while drawing parallels to Extensiv, leading to the mention of several entities like Unsloth, Mistral AI, and Lumini. Simultaneously, challenges with LangServe integration when combined with Nemo Guardrails were highlighted due to Nemo’s transformation of output structures.

Forge Ahead with New AI Tools and Services: GalaxyAI’s debut of an API service with complimentary access to GPT-4 and GPT-3.5-turbo stirred up interest, showcased at Galaxy AI. Similarly, OppyDev’s fusion of an IDE and a chat client received attention, advocating an improved coding platform accessible at OppyDev AI. Meanwhile, Rubiks.ai appealed to tech enthusiasts to beta test their search engine and assistant at Rubiks.ai using code RUBIX.

AI Pioneers Share Educational Resources and Seek Collaboration: A member from #tutorials posted a YouTube tutorial on granting AI agents with long-term memory, igniting a discussion why ‘langgraph’ wasn’t employed. Furthermore, a participant expressed eagerness to collaborate on new projects, inviting others to connect through direct messaging.

Diverse Dialogues on Data and Optimization: In a lively exchange, strategies for optimizing RAG (Retrieval-Augmented Generation) with large documents were evaluated, including document splitting. Members also dialogued over the best methods to manipulate CSV files with Langchain, suggesting improvements for chatbots and data processing.

DiscoResearch Discord

- 64 GPUs Engaged for Full-Scale Deep-Speed: Maxidl pushed the limits by utilizing 64 80GB GPUs, each at 77GB capacity, to run full-scale deep-speed with 32k sequence length and batch size of one, exploring 8-bit optimization for better memory efficiency.

- FSDP’s Memory Usage Secrets Unlocked: jp1 suggested

fsdp_transformer_layer_cls_to_wrap: MixtralSparseMoeBlock, and settingoffload_params = trueto minimize memory usage, potentially reducing GPU requirements to 32, while maxidl sought out calculators for memory usage, referencing a HuggingFace discussion. - Copyright Conundrum for Text Scraping: A member pointed out the EU copyright gray area affecting text data scraping and suggested DFKI as a useful source. Meanwhile, multimodal data from Wikicommons and others are found on Creative Commons Search.

- Tokenization Techniques on the Rise: The community shared insights into creating a Llama tokenizer without HuggingFace, noted a misspelling in a shared custom tokenizer, and highlighted Mistral’s new tokenization library, with a GitHub notebook provided.

- Decoding Strategies and Sampling Techniques Evaluated: Concerns that a paper on decoding methods overlooked useful strategies led to a discussion on unaddressed techniques like MinP/DynaTemp/Quadratic Sampling. A Reddit post showed the impact of min_p sampling on creative writing, boosting scores by +8 in alpaca-eval style elo and +10 in eq-bench creative writing test.

tinygrad (George Hotz) Discord

Int8 Integration in Tinygrad: Tinygrad has been confirmed to support INT8 computations, with recognition that such data type support often depends more on hardware capabilities rather than the software design itself.

Graph Nirvana with Tiny-tools: For enhanced graph visualizations in Tinygrad, users can visit Tiny-tools Graph Visualization to create slicker graphs than the basic GRAPH=1 setting.

Pytorch-Lightning’s Hardware Adaptability: Discussions about Pytorch-Lightning touched on its hardware-agnostic capabilities, with practical applications noted on hardware like the 7900xtx. Discover Pytorch-Lightning on GitHub.

Tinygrad Meets Metal: Community members are exploring the generation of Metal compute shaders with tinygrad, discussing how to run simple Metal programs without Xcode and the possibility of applying this to meshnet models.

Model Manipulation and Efficiency in Tinygrad: A member’s proposal for a fast, probabilistically complete Node.equals() prompted discussions on efficiency, while George Hotz explained layer device allocation, and users were directed toward tinygrad/shape/shapetracker.py or view.py for zero-cost tensor manipulations like broadcast and reshape.

Skunkworks AI Discord

- Hugging Face Showcases Idefics2: Hugging Face introduces Idefics2, a new multimodal ChatGPT iteration that integrates Python coding capabilities, as demonstrated in their latest video.

- Reka Core Rivals Tech Behemoths: Touted for its performance, Reka Core emerges as a strong competitor to language models from OpenAI and others, with a video overview available to showcase its capabilities.

- JetMoE-8B Flaunts Efficient AI Performance: The JetMoE-8B model impresses with performance that surpasses Meta AI’s LLaMA2-7B while costing under $0.1 million, suggesting a cost-efficient approach to AI development as explained in this breakdown.

- Snowflake Announces Premier Text-Embedding Model: Snowflake debuts the Snowflake Arctic embed family of models, claiming the title for the world’s most effective practical text-embedding model, detailed in their announcement.

Datasette - LLM (@SimonW) Discord

- Mixtral Mania: Engineers are eagerly awaiting to test the Mixtral 8x22B Instruct model; for those interested, the Model Card on HuggingFace is now available.

- Glitch in the Machine: There’s a reported installation error for llm-gpt4all that seems to obstruct usage; details of the problem can be found in the GitHub issue tracker.

Alignment Lab AI Discord

- Legal Entanglements Afoot?: A member hinted at possible legal involvement in an unspecified situation, yet no context was provided to ascertain the details or nature of the legal matters in question.

- The Misfortune of wizardlm-2: An image was shared showing the deletion of wizardlm-2, noted specifically for lack of testing on v0; the intricacies of wizardlm-2 or the testing processes were not elaborated. View Image

Mozilla AI Discord

-

Llamafile Script Gets a Facelift: An improved repacking script for the llamafile archive version upgrade is now accessible via this Gist, triggering a discussion on whether to merge it with the main GitHub repo or to start new llamafiles from scratch due to concerns about maintainability.

-

Seeking Protocol for Security Flaws: The discussion surfaced a need for clarification on the procedure to report security vulnerabilities within the system, including the steps to request a CVE number, although specific guidance is currently lacking.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #announcements (1 messages):

-

Stable Diffusion 3 Launch Celebration: Stable Diffusion 3 and its faster variant, Stable Diffusion 3 Turbo, are now available on the Stability AI Developer Platform API. This release is powered through a partnership with Fireworks AI, boasting claims of being the fastest and most reliable API platform.

-

Open Generative AI Continues: There is a plan to make Stable Diffusion 3 model weights available for self-hosting, which would require a Stability AI Membership, emphasizing the continued commitment to open generative AI.

-

Discover More About SD3: Users are directed to learn more and get started with the new offerings through the provided link, which includes further details and documentation.

-

Research Background Unpacked: According to the Stable Diffusion 3 research paper, this iteration rivals or surpasses the leading text-to-image systems like DALL-E 3 and Midjourney v6 in aspects such as typography and adherence to prompts, based on human preference studies.

-

Technical Advancements in SD3: The latest version introduces the Multimodal Diffusion Transformer (MMDiT) architecture, offering improved text comprehension and image representation over previous Stable Diffusion models by utilizing distinct weight sets for different modalities.

Link mentioned: Stable Diffusion 3 API Now Available — Stability AI: We are pleased to announce the availability of Stable Diffusion 3 and Stable Diffusion 3 Turbo on the Stability AI Developer Platform API.

Stability.ai (Stable Diffusion) ▷ #general-chat (1039 messages🔥🔥🔥):

-

SD3 Awaits Membership Clarification: Amidst the concerns of licensing and accessibility, users await a clear statement from Stability AI regarding SD3’s availability for personal and commercial use. Discussions arose following an announcement stating plans to make the model weights available for self-hosting with a Stability AI Membership.

-

SDXL Refiners Deemed Redundant: The community finds SDXL finetunes to have made the use of SDXL refiners obsolete, stating that refiner-trained finetunes have taken precedence in Civitai downloads. Some users reminisce about initial uses of refiners but acknowledge that finetune integrations quickly replaced the need for them.

-

Model Merging Challenges: Users explore the effectiveness and understanding of model-merging concepts around V-prediction and epsilon in ComfyUI. There’s debate on the necessity of correct implementation to avoid unpredictable results, with recommendations to gain minimal knowledge through UI experimentation.

-

Diffusers Pipeline Limitations: Some users point out limitations in the diffusers pipeline requiring Hugging Face dependency, yet others contend that once models are downloaded, the process can run independently and efficiently on local systems. Concerns are raised about the inaccessibility of

StableVideoDiffusionPipeline.from_single_file(path)method in SVD finetunes, suggesting Comfy UI as an easier alternative.

Links mentioned:

- Video Examples: Examples of ComfyUI workflows

- Model Merging Examples: Examples of ComfyUI workflows

- Stable Cascade - a Hugging Face Space by multimodalart: no description found

- PixArt Sigma - a Hugging Face Space by PixArt-alpha: no description found

- camenduru/SUPIR · Hugging Face: no description found

- Stable Diffusion 3 API Now Available — Stability AI: We are pleased to announce the availability of Stable Diffusion 3 and Stable Diffusion 3 Turbo on the Stability AI Developer Platform API.

- Membership — Stability AI: The Stability AI Membership offers flexibility for your generative AI needs by combining our range of state-of-the-art open models with self-hosting benefits.

- Stable Video Diffusion: no description found

- GitHub - kijai/ComfyUI-SUPIR: SUPIR upscaling wrapper for ComfyUI: SUPIR upscaling wrapper for ComfyUI. Contribute to kijai/ComfyUI-SUPIR development by creating an account on GitHub.

- WizardLM/WizardLM-2 at main · victorsungo/WizardLM: Family of instruction-following LLMs powered by Evol-Instruct: WizardLM, WizardCoder - victorsungo/WizardLM

- Reddit - Dive into anything: no description found

- GitHub - king159/svd-mv: Training code for Stable Video Diffusion Multi-View: Training code for Stable Video Diffusion Multi-View - king159/svd-mv

- Reddit - Dive into anything: no description found

- GitHub - BatouResearch/magic-image-refiner: Contribute to BatouResearch/magic-image-refiner development by creating an account on GitHub.

- Fix ELLA timesteps by kijai · Pull Request #25 · ExponentialML/ComfyUI_ELLA: I have been comparing the results from this implementation to the diffusers implementation, and it's not on par. In diffusers ELLA is applied on each timestep, with the actual timestep value. Appl...

- Pixel Art XL - v1.1 | Stable Diffusion LoRA | Civitai: Pixel Art XL Consider supporting further research on Ko-Fi or Twitter If you have a request, you can do it via Ko-Fi Checkout my other models at Re...

- GitHub - kijai/ComfyUI-KJNodes: Various custom nodes for ComfyUI: Various custom nodes for ComfyUI. Contribute to kijai/ComfyUI-KJNodes development by creating an account on GitHub.

- GitHub - city96/ComfyUI_ExtraModels: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs: Support for miscellaneous image models. Currently supports: DiT, PixArt, T5 and a few custom VAEs - city96/ComfyUI_ExtraModels

- Add node to use SD3 through API · kijai/ComfyUI-KJNodes@22cf8d8: no description found

- GitHub - lllyasviel/stable-diffusion-webui-forge: Contribute to lllyasviel/stable-diffusion-webui-forge development by creating an account on GitHub.

- Comfy Workflows: Share, discover, & run thousands of ComfyUI workflows.

Unsloth AI (Daniel Han) ▷ #general (383 messages🔥🔥):

- GPT-4 and GPT-3.5 Clarification: A distinction was made between GPT-4 and GPT-3.5, noting that the newer version appears to be a fine-tuned iteration of its predecessor.

- Mistral Model Multilingual Capabilities Discussed: Members discussed whether datasets for Mistral7B need to be in English to perform well, with advice given to include French data for better results.

- Finetuning and Cost Concerns Addressed: A discussion about finetuning methods, costs, and specific resources like notebooks provided insights for those new to the domain. It was suggested that continued pretraining and sft could be beneficial and cost-effective.

- Concerning UnSloth Contributions: Members expressed interest in contributing to UnSloth AI, offering help in expanding documentation and considering donations, with links to existing resources and discussions on potential contributions shared.

- Mixtral 8x22B Release Excitement: The release of Mixtral 8x22B, a sparse Mixture-of-Experts model with strengths in multilingual fluency and long context windows, sparks discussions due to its open-sourcing under the Apache 2.0 license.

Links mentioned:

- Cheaper, Better, Faster, Stronger: Continuing to push the frontier of AI and making it accessible to all.

- no title found: no description found

- Google Colaboratory: no description found

- lucyknada/microsoft_WizardLM-2-7B · Hugging Face: no description found

- Placa de Vídeo Galax NVIDIA GeForce RTX 3090 TI EX Gamer, 24GB GDDR6X, 384 Bits - 39IXM5MD6HEX: Placa De Video Galax GeforceTorne sua rotina diária mais fluída Assine o Prime Ninja e tenha promoções exclusivas desconto no frete e cupons em dobro

- Google Colaboratory: no description found

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- gist:e45b337e9d9bd0492bf5d3c1d4706c7b: GitHub Gist: instantly share code, notes, and snippets.

- Full fine tuning vs (Q)LoRA: ➡️ Get Life-time Access to the complete scripts (and future improvements): https://trelis.com/advanced-fine-tuning-scripts/➡️ Runpod one-click fine-tuning te...

- mistralai/Mixtral-8x22B-Instruct-v0.1 · Hugging Face: no description found

- Support for x86/ARM CPUs (e.g., Xeon, M1) · Issue #194 · openai/triton: Hi there, Is there any future plan for macOS support? ❯ pip install -U --pre triton DEPRECATION: Configuring installation scheme with distutils config files is deprecated and will no longer work in...

- Ollama.md Documentation by jedt · Pull Request #3699 · ollama/ollama: A guide on setting up a fine-tuned Unsloth FastLanguageModel from a Google Colab notebook to: HF hub GGUF local Ollama Preview link: https://github.com/ollama/ollama/blob/66f7b5bf9e63e1e98c98e8f4...

Unsloth AI (Daniel Han) ▷ #random (27 messages🔥):

-

Chroma Project Takes a Leap: Inspired by unsloth AI strategies, a member announces the development of an edge version of Chroma written in Go, using SQLite for on-device vector storage. The project, which is also compatible with browsers via WASM, is accessible on GitHub.

-

Smileys Invade the Bottom Page: A heartwarming mini-discussion about cute smiley faces at the bottom of a page, highlighting a particular mustache smiley as a favorite.

-

PyTorch’s New Torchtune: Mention of Torchtune, a native PyTorch library for LLM fine-tuning that has been shared on GitHub, sparking interest due to its potential to make fine-tuning more accessible.

-

Unsloth AI’s Broad GPU Support Praised: A member congratulates Unsloth for its broad GPU support, which makes it more accessible compared to other tools that require newer GPU architectures.

-

Mobile Deployment of AI Models Discussed: Members discuss the feasibility of running neural networks on mobile phones, identifying the need for custom inference engines and noting the absence of CUDA on mobile devices. The challenges of running typical DL Python code on iPhones versus Macs with M chips are also mentioned.

Links mentioned:

- GitHub - pytorch/torchtune: A Native-PyTorch Library for LLM Fine-tuning: A Native-PyTorch Library for LLM Fine-tuning. Contribute to pytorch/torchtune development by creating an account on GitHub.

- GitHub - l4b4r4b4b4/go-chroma: Go port of Chroma vector storage: Go port of Chroma vector storage. Contribute to l4b4r4b4b4/go-chroma development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #help (275 messages🔥🔥):

- Questions About Unsupported Attributes: A user encountered an

AttributeErrorwhen trying to fine-tune a model, reporting that the'MistralSdpaAttention'object has no attribute'temp_QA'. It seems to be related to a specific method within their custom training pipeline. - ORPO Support and Usage Clarified: Users inquired about ORPO support in Unsloth. It’s confirmed that ORPO is supported, referenced by links to a model trained using ORPO on HuggingFace and a colab notebook.

- Discussions on LoRA and rslora: Users discussed using LoRA and rslora in training, with advice on handling different

alphavalues and potential loss spikes. Some members suggested adjustingrandalphaand disabling packing as possible solutions to training issues. - Embedding Tokens Not Trained: Users touched on the subject of embedding tokens that were not trained in the Mistral model, in the context of whether it is possible to train these embeddings during fine-tuning.

- Saving and Hosting Models: Questions arose about saving finetuned models in different formats using commands like

save_pretrained_mergedandsave_pretrained_gguf; whether they work sequentially and the need to start with fp16 first. There was also a query about hosting a model with GGUF files on the HuggingFace inference API.

Links mentioned:

- Find Open Datasets and Machine Learning Projects | Kaggle: Download Open Datasets on 1000s of Projects + Share Projects on One Platform. Explore Popular Topics Like Government, Sports, Medicine, Fintech, Food, More. Flexible Data Ingestion.

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- G-reen/EXPERIMENT-ORPO-m7b2-1-merged · Hugging Face: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation): Things I Learned From Hundreds of Experiments

- Unsloth - 4x longer context windows & 1.7x larger batch sizes: Unsloth now supports finetuning of LLMs with very long context windows, up to 228K (Hugging Face + Flash Attention 2 does 58K so 4x longer) on H100 and 56K (HF + FA2 does 14K) on RTX 4090. We managed...

- Tokenization | Mistral AI Large Language Models: Tokenization is a fundamental step in LLMs. It is the process of breaking down text into smaller subword units, known as tokens. We recently open-sourced our tokenizer at Mistral AI. This guide will w...

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- A Rank Stabilization Scaling Factor for Fine-Tuning with LoRA: As large language models (LLMs) have become increasingly compute and memory intensive, parameter-efficient fine-tuning (PEFT) methods are now a common strategy to fine-tune LLMs. A popular PEFT method...

- Rank-Stabilized LoRA: Unlocking the Potential of LoRA Fine-Tuning: no description found

- Installation: no description found

- no title found: no description found

- Installation: no description found

- Home: 2-5X faster 80% less memory LLM finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

- Add ORPO example notebook to the docs · Issue #331 · unslothai/unsloth: It's possible to use the ORPOTrainer from TRL with very little modification to the current DPO notebook. Since ORPO reduces the resources required for training chat models even further (no separat...

- Tokenizer: no description found

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Adding New Vocabulary Tokens to the Models · Issue #1413 · huggingface/transformers: ❓ Questions & Help Hi, How could I extend the vocabulary of the pre-trained models, e.g. by adding new tokens to the lookup table? Any examples demonstrating this?

Unsloth AI (Daniel Han) ▷ #showcase (46 messages🔥):

- Clarification on Leaderboard Model Templates: A member asked how the leaderboard knows the model template. It was clarified that the model’s

tokenizer.chat_templateis used to inform the leaderboard. - ShareGPT90k Dataset Cleaned and Formatted: A new version of the ShareGPT90k dataset has been cleaned of HTML tags and is available in chatml format on Hugging Face, allowing users to train with Unsloth. Dataset ready for action.

- Ghost Model Training Intrigue: Members engaged in a detailed conversation about what constitutes a ‘recipe’ for training AI models. One member is particular about needing a detailed recipe that leads to creating a specific model with defined characteristics and not just a set of tools or methods.

- Recipes vs. Tools in AI Model Training: The conversation continued on the difference between a full “recipe” including datasets and specific steps, as opposed to tools and methods. One member shared their approach, underlying the importance of data quality and replication of existing models, referencing the Dolphin model card on Hugging Face.

- Recommender Systems vs. NLP Challenges and Expertise: A PhD candidate discussed the differences and similarities between working on NLP and developing recommender systems, highlighting the unique challenges and expertise required in the latter which includes handling noise in data, induction biases, and significant feature engineering.

Links mentioned:

- Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation): Things I Learned From Hundreds of Experiments

- Nice Click Nice GIF - Nice Click Nice Man - Discover & Share GIFs: Click to view the GIF

- Aligning LLMs with Direct Preference Optimization: In this workshop, Lewis Tunstall and Edward Beeching from Hugging Face will discuss a powerful alignment technique called Direct Preference Optimisation (DPO...

- Direct Preference Optimization (DPO) explained: Bradley-Terry model, log probabilities, math: In this video I will explain Direct Preference Optimization (DPO), an alignment technique for language models introduced in the paper "Direct Preference Opti...

- FractalFormer: A WIP Transformer Architecture Inspired By Fractals: Check out the GitHub repo herehttps://github.com/evintunador/FractalFormerSupport my learning journey on patreon!https://patreon.com/Tunadorable?utm_medium=u...

- llama-recipes/recipes at 0efb8bd31e4359ba9e8f52e8d003d35ff038e081 · meta-llama/llama-recipes: Scripts for fine-tuning Llama2 with composable FSDP & PEFT methods to cover single/multi-node GPUs. Supports default & custom datasets for applications such as summarization & ...

- llama-recipes/recipes/multilingual/README.md at 0efb8bd31e4359ba9e8f52e8d003d35ff038e081 · meta-llama/llama-recipes: Scripts for fine-tuning Llama2 with composable FSDP & PEFT methods to cover single/multi-node GPUs. Supports default & custom datasets for applications such as summarization & ...

- Neural Graph Reasoning: Complex Logical Query Answering Meets Graph Databases: Complex logical query answering (CLQA) is a recently emerged task of graph machine learning that goes beyond simple one-hop link prediction and solves a far more complex task of multi-hop logical reas...

- LLM Phase Transition: New Discovery: Phase Transitions in a dot-product Attention Layer learning, discovered by Swiss AI team. The study of phase transitions within the attention mechanisms of L...

- pacozaa/sharegpt90k-cleanned · Datasets at Hugging Face: no description found

- After 500+ LoRAs made, here is the secret: Well, you wanted it, here it is: The quality of dataset is 95% of everything. The rest 5% is not to ruin it with bad parameters. Yeah, I know,...

Unsloth AI (Daniel Han) ▷ #suggestions (15 messages🔥):

- Exploring Multilingual Model Approaches: A member brought up the issue of catastrophic forgetting in multilingual models trained on languages like Hindi or Thai. They proposed a two-phase solution involving translating questions to English, using a large English model for answering, and then translating back to the original language, questioning the drawbacks of this method.

- Multilingual Expansion Through MOE: Another member expressed excitement about the possibility of using MoE (Mixture of Experts) to expand multilingual capabilities of models, anticipating it would “open so many doors!”

- Torchtune Gains Enthusiasm: The community shows interest in Torchtune, an alternative to the abstractions provided by Hugging Face and Axolotl, highlighting its potential to streamline the fine-tuning process. There is also a hint at possible collaborations involving Unsloth AI.

- Contemplating Language Mixing in Datasets: In response to the splitting of translation and question-answering tasks, a member considered the possibility of combining multiple languages into a single dataset for model training and using a strategy that involves priming the model with Wikipedia articles.

- Double-Translation Mechanism Discussed: A concept articulated as

translate(LLM(translate(instruction)))was proposed and discussed, supporting the idea of using a larger, more robust English language model in tandem with translation layers to process non-English queries. Concerns about the added cost due to multiple model calls were raised.

LM Studio ▷ #💬-general (175 messages🔥🔥):

- Repeat AI Responses Challenge: A member asked how to prevent AI from repeating the same information during a conversation, specifically using Dolphin 2 Mistral. They also inquired about what “multi-turn conversations” are, to which another member linked an article explaining the concept in relation to bots.

- WizardLM-2 LLM Announced: An announcement for the new large language model family was shared, featuring WizardLM-2 8x22B, 70B, and 7B. Links to a release blog and model weights on Hugging Face were included, with members discussing its availability and performance.

- Understanding Tool Differences: One user asked for the differences between ollama and LMStudio, and it was explained that both are wrappers for llama.cpp, but LM Studio is GUI based and easier for beginners.

- Fine-Tuning and Agents Discussion: There was a discussion on whether it’s worth learning tools like langchain depending on needs and use cases, with some suggesting it can be a hindrance if venturing outside its default settings.

- File Management and API Interactions in LM Studio: A new member inquired about relocating downloaded app files and interfacing LM Studio with an existing API. It was clarified that models cannot change default install locations, and files can be found under the My Models tab for relocating. No specific method for API interaction through LM Studio was mentioned.

Links mentioned:

- RAM Latency Calculator: no description found

- lmstudio-community/WizardLM-2-7B-GGUF · Hugging Face: no description found

- Multi-turn conversations - QnA Maker - Azure AI services: Use prompts and context to manage the multiple turns, known as multi-turn, for your bot from one question to another. Multi-turn is the ability to have a back-and-forth conversation where the previous...

- Mission Squad. Flexible AI agent desktop app.: no description found

- Tweet from WizardLM (@WizardLM_AI): 🔥Today we are announcing WizardLM-2, our next generation state-of-the-art LLM. New family includes three cutting-edge models: WizardLM-2 8x22B, 70B, and 7B - demonstrates highly competitive performa...

- GitHub - hiyouga/LLaMA-Factory: Unify Efficient Fine-Tuning of 100+ LLMs: Unify Efficient Fine-Tuning of 100+ LLMs. Contribute to hiyouga/LLaMA-Factory development by creating an account on GitHub.

- Microsoft’s Punch in the Face to Open AI (Open Source & Beats GPT-4): WizardLM 2 is a groundbreaking family of large language models developed by Microsoft that push the boundaries of artificial intelligence.▼ Link(s) From Toda...

- GitHub - Unstructured-IO/unstructured: Open source libraries and APIs to build custom preprocessing pipelines for labeling, training, or production machine learning pipelines.: Open source libraries and APIs to build custom preprocessing pipelines for labeling, training, or production machine learning pipelines. - GitHub - Unstructured-IO/unstructured: Open source librar...

LM Studio ▷ #🤖-models-discussion-chat (96 messages🔥🔥):

- Template Troubles with WizardLM 2: Members reported issues with the WizardLM 2 and the Vicuna 1.5 preset, where the bot generated inputs for the user instead. A suggested solution included adjusting the rope frequency to 1 or setting

freq_baseto 0, which appeared to correct the behavior. - Mixed Opinions on WizardLM 2 and Wavecoder: While some users expressed a high opinion of WizardLM 2, claiming it performed well even compared to other 7B models, others judged the performance as subpar, not noticing any significant improvement even after fine-tuning.

- Exploring Best Quantization Practices: Users discussed the effectiveness of different quantization levels for 7B models, comparing Q8 to Q6K quality. The consensus leaned towards higher quantization being more desirable if one has sufficient VRAM, while acknowledging the utility of smaller models for certain tasks.

- Model Performance Debate: There was a spirited discussion around the relative superiority of models, with focus on parameter count versus quantization level, and the belief that fine-tuning and quality of the training can be deciding factors over just the size of the model’s parameters.

- Finding the Right Code Generator: A user experienced difficulties with the code-generating capabilities of WaveCoder-Ultra-6.7B, receiving messages that it couldn’t write complete applications. Tips offered included using assertive prompts and adjusting the context window size for the model to load appropriately.

Links mentioned:

- lmstudio-community/wavecoder-ultra-6.7b-GGUF · Hugging Face: no description found

- mistralai/Mixtral-8x22B-Instruct-v0.1 · Hugging Face: no description found

- Virt-io/Google-Colab-Imatrix-GGUF at main: no description found

- High Quality / Hard to Find - a DavidAU Collection: no description found

- lmstudio-community/WizardLM-2-7B-GGUF · Hugging Face: no description found

- Responses: These are answers to the prompt by two different LLMs. You are going to analyze Factuality Depth Level of detail Coherency <any other area that I might have missed but is generally considered impo...

- bartowski/zephyr-orpo-141b-A35b-v0.1-GGUF at main: no description found

LM Studio ▷ #🧠-feedback (4 messages):

-

Model Loading Error in Action: A user encountered an error loading model architecture when trying out Wirard LLM 2 on LM Studio across different model sizes, including 2 4bit and 6bit, prompting a Failed to load model message.

-

Fix Suggestion for Model Loading: Another user recommended ensuring the use of GGUF quants and also noted that version 0.2.19 is required for WizardLM2 models to function properly.

-

Request for stable-diffusion.cpp: A request was made to add stable-diffusion.cpp to LM Studio to enhance the software’s capabilities.

LM Studio ▷ #📝-prompts-discussion-chat (17 messages🔥):

- Cleaning Up LM Studio: Users with issues were advised to delete specific LM Studio folders such as

C:\Users\Username\.cache\lm-studio,C:\Users\Username\AppData\Local\LM-Studio, andC:\Users\Username\AppData\Roaming\LM Studio. It’s crucial to backup models and important data prior to deletion. - Prompt Crafting for NexusRaven: A user inquired if anyone has experimented with NexusRaven and devised any prompt presets for it, indicating interest in collective knowledge-sharing.

- Script Writing with AI: One member asked how to make the AI output a full script, suggesting they are searching for tips on generating longer content.

- Compatibility Issues with Hugging Face Models: A user noted problems with running certain Hugging Face models, like

changge29/bert_enron_emailsandktkeller/mem-jasper-writer-testing, in LM Studio. Assistance with running these models was sought. - Seeking Partnership for Affiliate Marketing: A user indicated interest in finding a partner with coding expertise for help with affiliate marketing campaigns, mentioning a willingness to share profits if successful. The user emphasized a serious offer for a partnership based on results.

LM Studio ▷ #🎛-hardware-discussion (18 messages🔥):

- GPU Comparison Sheet Quest Continues: User freethepublicdebt was searching for an elusive Google sheet comparing GPUs and could not find the link they worked on. Another user, heyitsyorkie, attempted to help but provided the wrong link leading to further confusion.

- Direct GPU Communication Breakthrough: rugg0064 shared a Reddit post celebrating the success of getting GPUs to communicate directly, bypassing the CPU/RAM and potentially leading to performance improvements.

- Customizing GPU Load in LM Studio: heyitsyorkie provided insight on adjusting the GPU offload for models in LM Studio’s Linux beta by navigating to Chat mode -> Settings Panel -> Advanced Config.

- Splitting Workloads Between Different GPUs: In response to a query from .spicynoodle about uneven model allocation between their GPUs, heyitsyorkie suggested modifying GPU preferences json and searching for “tensor_split” for further guidance.

- SLI and Nvlink Troubles with P100s: ethernova is seeking advice for their setup with dual P100s not showing up in certain software and NVLink status appearing inactive despite having NVLink bridges attached.

Links mentioned:

- George Hotz Geohot GIF - George hotz Geohot Money - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

LM Studio ▷ #🧪-beta-releases-chat (31 messages🔥):

- VRAM vs. System RAM in Model Performance: There’s a discussion on whether a model would run on a system with 24 GB VRam and 96 GB Ram, with one member suggesting that it might run but inference will be incredibly slow due to the speed difference between VRam and system RAM.

- Expectations for WizardLM-2-8x22B: Members compare the WizardLM-2-8x22B to other models like Command R Plus, with mixed experiences. While one member was not impressed with Mixtral 8x22b and plans to test WizardLM-2-8x22B, another mentioned getting satisfactory results with 10+ tokens/sec from WizardLM.

- Model Performance on Different Hardware: Users with an M3 MacBook Pro 128GB report running model q6_k of Command R Plus, achieving about 5 tokens/sec. The speed is considered half as fast as GPT-4 on ChatGPT, but not painfully slow as each token represents a word or subword.

- Base Model Clarification: Clarification on what constitutes a “Base” model was provided—models not fine-tuned for chat or instruct tasks are considered base models, and they are generally found to perform poorly in comparison to their fine-tuned counterparts.

- Model Size and Local Running Feasibility: A conversation about the feasibility of running large models like WizardLM-2-8x22B locally was had, noting that a GPU like a 4090 with 24GB is too small to run such a large model, which runs best on Mac systems with substantial RAM.

LM Studio ▷ #amd-rocm-tech-preview (19 messages🔥):

- Curiosity about Windows Executable Signing: A member was curious whether the Windows executables are signed with an Authenticode cert. It was confirmed that they are indeed signed.

- Challenges with Code Signing Certificates: In the context of signing an app, there was a discussion on the cost and process complexities associated with obtaining a Windows certificate, including a comparison to the cost of an Apple developer license.

- Seeking Expertise on Automated Compile and Sign Process: A member expressed interest in understanding the automated process for compiling and signing, offering to compensate for the knowledge exchange.

- AMD HIP SDK System Requirements Clarification: A member provided information about system requirements for GPUs from a link to the AMD HIP SDK system requirements and asked about the stance of LM Studio on supporting certain AMD GPUs not officially supported by the SDK.

- Issues with AMD dGPU Recognition in LM Studio Software: Members discussed an issue where LM Studio software was using an AMD integrated GPU (iGPU) instead of the dedicated GPU (dGPU), with one member suggesting disabling the iGPU in the device manager. Another member stated that version 0.2.19 of the software should have resolved this issue and encouraged to report the problem if it persists.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- System requirements (Windows) — HIP SDK installation Windows: no description found

- Bill Gates Chair GIF - Bill Gates Chair Jump - Discover & Share GIFs: Click to view the GIF

LM Studio ▷ #model-announcements (3 messages):

- WaveCoder Ultra Unveiled: Microsoft has released WaveCoder ultra 6.7b, finely tuned using their ‘CodeOcean’. This impressive model specializes in code translation and supports the Alpaca format for instruction following, with examples available on its model card.

- Seeking NeoScript AI Assistant: A community member new to AI has inquired about utilizing models for NeoScript programming, specifically for RAD applications using a platform formerly known as NeoBook. They are seeking suggestions on configuring AI models despite unsuccessful initial attempts using documents as references.

Link mentioned: lmstudio-community/wavecoder-ultra-6.7b-GGUF · Hugging Face: no description found

Nous Research AI ▷ #off-topic (17 messages🔥):

- Introducing Multimodal Chat GPTs: A link to a YouTube video titled “Introducing Idefics2 8B: Open Multimodal ChatGPT” was shared, discussing the development of Hugging Face’s open multimodal language model, Idefics2. Watch it here.

- Reka Core Joins the Multimodal Race: Another YouTube video shared discusses “Reka Core,” a competitive multimodal language model claiming to rival big industry names like OpenAI, Anthropic, and Google. The video can be viewed here.