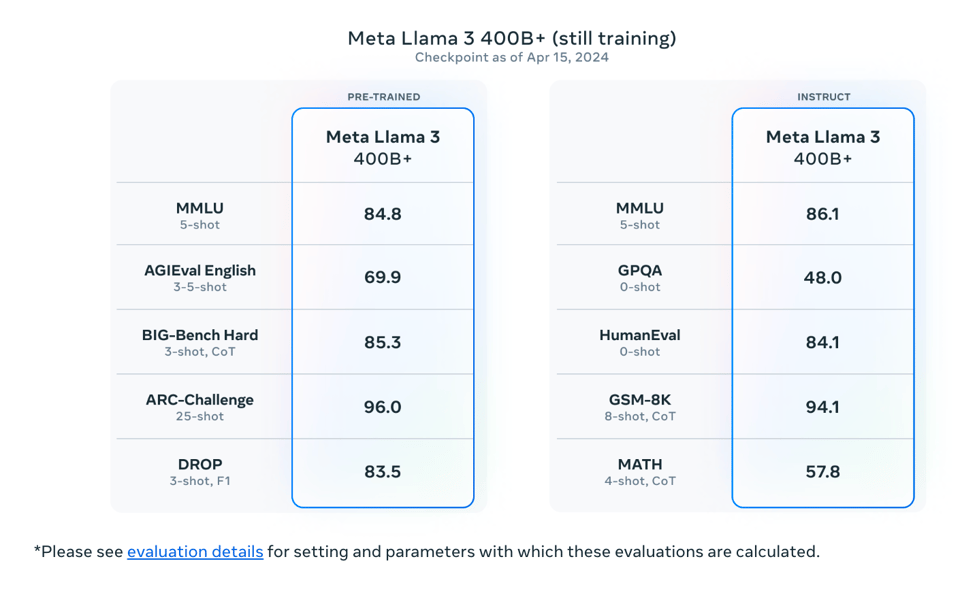

As widely telegraphed, Meta partially released Llama 3 today in 8B and 70B variants. The star of the show, though, is the 400B variant (still in training), which is widely touted as the first GPT-4-level OSS model.

We are traveling for most of the day so we will add all the remaining commentary tomorrow, but head to HN for the best live coverage.


AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, r/Singularity. Comment crawling works now but has lots to improve!

Key Themes in Recent AI Developments

- Stable Diffusion 3 Release and Comparisons: Stability AI has released the Stable Diffusion 3 API, with model weights coming soon. Comparisons between SD3 and Midjourney V6 show mixed results, while realism tests demonstrate SD3’s capabilities. Emad Mostaque confirmed SD3 weights will be released on Hugging Face along with ComfyUI workflows.

- Advances in Robotics and AI Agents: Boston Dynamics revealed an electric version of their humanoid robot Atlas with impressive agility. Menteebot is a human-sized AI robot controllable via natural language. Microsoft’s VASA-1 model generates lifelike talking faces from audio in real-time at 40fps on an RTX 4090.

- New Language Models and Benchmarks: Mistral, a European OpenAI rival, seeks $5B in funding. Their Mixtral-8x22B-Instruct-v0.1 outperforms open models with 100% accuracy on 64K context. New 7B merge models combine strengths of different bases. Coxcomb, a 7B creative writing model, scores well on benchmarks.

- AI Safety and Regulation Discussions: Former OpenAI board member Helen Toner calls for audits of top AI companies to share info on capabilities and risks. The Mormon Church released AI usage principles, noting benefits and risks.

- Tools and Frameworks for AI Development: The Ctrl-Adapter framework adapts controls to diffusion models. Distilabel 1.0.0 enables synthetic dataset pipelines with LLMs. Data Bonsai cleans data with LLMs, integrating ML libraries. Dendron builds LLM agents with behavior trees.

- Memes and Humor: An expectation vs reality meme jokes about AI development vs futuristic visions. Snoop Dogg in a PS2-style LoRA shows AI meme potential. AI Sans vs Frisk reimagines Undertale with AI art. A humorous take suggests AI isn’t that advanced yet.

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.


Meta Llama 3 Release

- Llama 3 Models Released: @AIatMeta announced the release of Llama 3 8B and 70B models, delivering improved reasoning and setting a new SOTA for models of their size. More models, capabilities, and a research paper are expected in the coming months.

- Model Details: @omarsar0 noted that Llama 3 uses a standard decoder-only transformer, a 128K token vocab, 8K token sequences, grouped query attention, 15T pretraining tokens, and alignment techniques like SFT, rejection sampling, PPO and DPO.

- Performance: @DrJimFan compared Llama 3 70B performance to Claude 3 Opus, GPT-4, and Gemini, showing it is approaching GPT-4 level. @ylecun also highlighted strong benchmark results for the 8B and 70B models.

Open Source LLM Developments

- Mixtral 8x22B Release: @GuillaumeLample released Mixtral 8x22B, an open model with 141B params (39B active), multilingual capabilities, native function calling, and a 64K context window. It sets a new standard for open models.

- Mixtral Performance: @bindureddy noted Mixtral 8x22B has the best cost-to-performance ratio, with strong MMLU performance and fine-tuning potential to surpass GPT-4. @rohanpaul_ai highlighted its math capabilities.

- Open Model Leaderboard: @bindureddy and @osanseviero shared the open model leaderboard, showing the rapid progress and proliferation of open models. Llama 3 is poised to advance this further.

AI Agents and RAG (Retrieval-Augmented Generation)

- RAG Fundamentals: @LangChainAI released a playlist of videos explaining RAG fundamentals and advanced methods, in collaboration with @RLanceMartin and @freeCodeCamp.

- Mistral RAG Agents: @llama_index and @LangChainAI shared tutorials on building RAG agents with @MistralAI’s new 8x22B model, showing document routing, relevance checks, and tool use.

- Faithfulness of RAG: @omarsar0 shared a paper quantifying the tension between LLMs’ internal knowledge and retrieved information in RAG settings, highlighting implications for deploying LLMs in information-sensitive domains.

AI Courses and Education

- Google ML Courses: @svpino shared 300 hours of free Google courses on ML engineering, from beginner to advanced levels.

- Hugging Face Course: @DeepLearningAI announced a new free course on quantization fundamentals with Hugging Face, to make open models more accessible and efficient.

- Stanford CS224N Demographics: @stanfordnlp shared demographics for the 615 students in CS224N this quarter, showing broad representation across majors and levels.

Miscellaneous

- Zerve as Jupyter Alternative: @svpino suggested Zerve, a web-based IDE with a different philosophy than Jupyter, could potentially replace Jupyter notebooks for many use cases. It has unique features for ML/DS workflows.

- Capital Gains and Inflation: @scottastevenson explained how capital gains taxes during inflation can tax people for gaining nothing, impacting the middle class through assets like retirement funds, homes, and businesses.
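The capital gains point can be checked with a small worked example. The sketch below is illustrative only: the purchase price, sale price, inflation figure, and tax rate are hypothetical numbers chosen for the arithmetic, not figures from the tweet, and `real_gain_after_tax` is a made-up helper name.

```python
def real_gain_after_tax(purchase_price, sale_price, cumulative_inflation, tax_rate):
    """Return (nominal_gain, tax_owed, real_gain) for an asset sale.

    cumulative_inflation is the total price-level increase over the holding
    period (0.50 means prices rose 50%). Tax is charged on the nominal gain.
    """
    nominal_gain = sale_price - purchase_price
    tax_owed = max(nominal_gain, 0.0) * tax_rate
    # Restate the purchase price in sale-date currency.
    inflation_adjusted_cost = purchase_price * (1.0 + cumulative_inflation)
    real_gain = sale_price - tax_owed - inflation_adjusted_cost
    return nominal_gain, tax_owed, real_gain


# Hypothetical case: the asset only kept pace with 50% cumulative inflation.
nominal, tax, real = real_gain_after_tax(100_000, 150_000, 0.50, 0.20)
print(f"nominal gain: {nominal}, tax owed: {tax}, real gain after tax: {real}")
```

With these assumed inputs the asset merely tracked inflation, so the tax on the nominal gain leaves the seller worse off in real terms.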

AI Discord Recap

A summary of Summaries of Summaries

Llama 3 Launch Generates Excitement: Meta’s release of Llama 3, in 8B and 70B parameter versions with instruction-tuned variants, has sparked significant interest across AI communities. Key details:

- Promises improved reasoning capabilities and sets “new state-of-the-art” benchmarks across tasks.

- Available for inference and finetuning via partnerships like Together AI’s API offering up to 350 tokens/sec.

- Anticipation builds for an upcoming 400B+ parameter version.

- Some express concerns over output restrictions hindering open-source development.

Mixtral 8x22B Redefines Efficiency: The newly launched Mixtral 8x22B is lauded for its performance, cost-efficiency, and specialization across math, coding, and multilingual tasks. Highlights:

- Utilizes 39B active parameters out of 141B total via sparse Mixture-of-Experts (MoE) architecture.

- Supports 64K token context window for precise information recall.

- Released under Apache 2.0 open-source license along with Mistral’s custom tokenizer.
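As a quick sanity check on the sparse MoE figures above, the share of parameters used per token follows directly from the two quoted numbers. This is a minimal sketch; `active_fraction` is a hypothetical helper, and the calculation ignores details such as router overhead.

```python
def active_fraction(active_params_b, total_params_b):
    """Fraction of a sparse MoE model's parameters that are used per token."""
    return active_params_b / total_params_b


# Figures quoted above: 39B active out of 141B total.
frac = active_fraction(39, 141)
print(f"{frac:.1%} of parameters are active per token")
```

Roughly speaking, per-token compute scales with the active parameters while memory footprint scales with the total, which is where the cost-efficiency claims come from.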

Tokenizers and Multilingual Capabilities Scrutinized: As powerful models like Llama 3 and Mixtral emerge, their tokenizers and multilingual performance are areas of focus:

- Llama 3’s 128K vocabulary tokenizer covers over 30 languages but may underperform for non-English tasks.

- Mistral open-sources its tokenizer with tool calls and structured outputs to standardize finetuning.

- Discussions on larger tokenizer vocabularies benefiting multilingual LLMs.

Scaling Laws and Replication Challenges: The AI research community engages in heated debates around scaling laws and replicability of influential papers:

- Chinchilla scaling paper findings questioned, with authors admitting errors and open-sourcing data.

- Differing views on whether results reaffirm or refute existence of scaling laws.

- Calls for more realistic experiment counts and narrower confidence intervals when extrapolating from limited data.

Misc

- Llama 3 Launch Generates Excitement and Scrutiny: Meta’s release of Llama 3, with 8B and 70B parameter models, has sparked widespread interest and testing across AI communities. Engineers are impressed by performance that improves on its predecessor Llama 2 and rivals GPT-4, but also note limitations like the 8K token context window. Integrations are underway in frameworks like Axolotl and Unsloth, with quantized versions emerging on Hugging Face. However, some express concerns over Llama 3’s licensing restrictions on downstream use.

- Mixtral and WizardLM Push Open Source Boundaries: Mistral AI’s Mixtral 8x22B and Microsoft’s WizardLM-2 are making waves as powerful open source models. Mixtral 8x22B boasts 39B active parameters and specializes in math, coding, and multilingual tasks. WizardLM-2 offers an 8x22B flagship and a speedy 7B variant. Both demonstrate the rapid advancement of open models, with support expanding to platforms like OpenRouter and LlamaIndex.

- Stable Diffusion 3 Launches with Mixed Reception: Stability AI released Stable Diffusion 3 on API, but initial impressions are mixed. While it brings advancements in typography and prompt adherence, some report performance issues and a steep price increase. The model’s absence for local use has also drawn criticism, though Stability AI has pledged to offer weights to members soon.

- CUDA Conundrums and Optimizations: CUDA engineers grappled with various challenges, from tiled matrix multiplication to custom kernel compatibility with torch.compile. Discussions delved into memory access patterns, warp allocation, and techniques like Half-Quadratic Quantization (HQQ). The llm.c project saw optimizations reducing memory usage and speeding up attention mechanisms.

- AI Ecosystem Expands with New Platforms and Funding: The AI startup scene saw a flurry of activity, with theaiplugs.com debuting as a marketplace for AI plugins and assistants, and SpeedLegal launching on Product Hunt. A dataset of $30 billion in AI startup funding across 550 rounds was compiled and shared. Platforms like Cohere and Replicate introduced new models and pricing structures, signaling a maturing ecosystem.

PART 1: High level Discord summaries

Perplexity AI Discord

- Opus Cap Curtails Conversations: Engineers are disgruntled over the unexpected Opus model usage limit, which dropped suddenly from 600 to 30 messages per day, derailing plans and prompting some to seek refunds or pursue alternatives like Tune Chat.

- Llama 3 Hype Exceeds Expectations: There’s buzz around Meta’s open-source Llama 3 model, with engineers sharing links and discussing its potential, while keeping an eye out for new benchmarks posted on Twitter and links like llama3 which offer a deep dive.

- API Ecosystem Grows Despite Hiccups: Amid discussions on API inconsistencies, mixtral-8x22b-instruct joins Perplexity Labs’ offerings at labs.pplx.ai, and users with Perplexity Pro now benefit from $5 monthly API credits.

- Shared Enthusiasm Over Typographic Transformation: Engineers showed excitement for typographic developments with links circulated on the new Tubi font and related discussions.

- Models Perform Poetic Analysis: mixtral-8x22b-instruct is receiving praise for its nuanced understanding of lyrics, such as those by Leonard Cohen, indicating a new bar set for other models to meet in content interpretation.

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion 3 Blazes onto API: Stability AI has launched Stable Diffusion 3 and the Turbo version on their Developer Platform API, claiming noteworthy improvements in typography and adherence to prompts.

- Model Weights for the Masses: Stability AI commits to releasing model weights for members, encouraging self-hosting and supporting the broader open generative AI movement.

- GPU Debate Ignites: Discussion abounds regarding whether to invest in the imminent but unreleased GPUs like the 5090 or to opt for current high-performance ones like a second-hand 3090 for AI tasks, pondering over VRAM capacity and NVLink capabilities.

- AI Influencers - A Controversial Craft: Dialogues traverse the creation of AI influencers, revealing a mix of motivations ranging from simple curiosity to profitability, amidst some questioning their societal contribution.

- Fine-tuning Fixes and Job Fishing: Engineers exchange insights on fine-tuning newer models for better performance, with an eye on job openings and partnerships in AI domains, linking to a job post for a Stable Diffusion Engineer at Palazzo, Inc.

Nous Research AI Discord

- Snowflake’s Embeddings Stir Discussions: A newly launched text-embedding model by Snowflake became a topic of interest, sparking discussions on language’s vector space and symbolic language forms. Concerns were raised about the efficiency and meaningfulness of the relatively smaller 256-dimensional embeddings mentioned, compared to the more common 1500 dimensions, with members planning to run their own tests on retrieval accuracy.

- Model Security Vulnerabilities Exposed: Discussions revealed a security incident at Hugging Face involving a malicious .pickle file and pondered similar vulnerabilities in OpenAI’s systems. This highlights the ongoing risks and challenges in AI system security, emphasizing the need for engineers to design robust countermeasures.

- Llama 3 Amplifies Benchmarking Buzz: The performance of Meta’s Llama 3 stirs excitement, despite concerns about its context length limitations compared to models like Mixtral 8x22B. There’s active dialogue about finetuning with MLX, but sudden gating of Mistral models implies regulatory challenges or abuse prevention measures.

- Prompting Perplexities and GPU Ponderings: Members traded questions and advice about the Hermes 2 Pro model’s prompting behavior and the need for a “directly_answer” tool. They also discussed the technical challenges of long-context inference on GPU clusters, including using twin A100 GPUs with Jamba to process 200k tokens, a topic relevant to engineers working on similar high-capacity deployments.

- WorldSim Whispers: Eagerness and playfulness marked the community as they awaited the re-launch of WorldSim. Insights from users suggested the implementation of usage limitations and fees to help prevent manipulative inputs which had caused a shutdown. The platform’s potential for user-generated AI civilizations stood out as a significant point of anticipation and philosophical conversation.

LM Studio Discord

Llama 3 is Heating Up LM Studio: The new Meta Llama 3, particularly the 8B Instruct version, is stirring up excitement with its release and availability on Hugging Face, but users report issues with unexpected output repetition and prompt loops. Enthusiasts debated the feasibility of running large models such as WizardLM-2-8x22B locally, with the understanding that it might not be practical on a 24GB Nvidia 4090 graphics card.

Tech Troubles and Triumphs: AI engineers shared approaches to optimize Llama 3’s performance on diverse hardware setups, from Ryzen 5 3600 to Mac M1 and M3 Max, and one user resolved thermal throttling by adjusting motherboard settings for cooler operations. Dual P100 GPUs are proving tricky for some, with concerns about proper utilization, while users also discussed the ability of different NVIDIA GPUs to contribute VRAM as needed.

AI App Engagement and Enquiry: Interest is growing in MissionSquad, an Electron-based app that offers Prompt Studio in its recent V1.1.0 release. However, some users called for transparency and asked to view the source code, a request that is being weighed against privacy concerns. A suggestion to incorporate text-to-speech (TTS) functionality into LM Studio reflects the desire for enhanced interactivity.

AMD Adventures: Users with AMD setups encounter GPU selection challenges when running LM Studio, and while the latest ROCm preview (0.2.19) should resolve iGPU selection woes, reports of inference anomalies suggest lingering support issues for large models like the 8B model. A workaround involving disabling the iGPU has been shared, and an update or bug report submission is recommended for persistent issues.

Prompt Crafting Callout: Discussions in LM Studio extend to practical matters like crafting affiliate marketing campaigns, with users requesting AI models with specificity beyond generic outputs. One member highlighted the need for transactional arrangements as opposed to speculative partnerships when soliciting developer involvement.

Unsloth AI (Daniel Han) Discord

Llama 3 Launch Lures Engineers: Members of the technical Discord community engaged in active discussions and testing of Llama 3, evaluating benchmark results that suggest the 8B model performs on par with the much larger Llama 2 70B. They experimented with integrating it into the Unsloth AI framework, citing a Google Colab notebook for the 8B model, and they also explored incorporating a 4-bit quantized version of the 70B model.

Coping with CUDA’s Absence on Mobile: Participants noted the challenge of deploying neural networks on mobile devices due to the lack of CUDA compatibility, leading to discussions about custom inference engines as alternatives. These dialogues touch upon the intricacies of compiling neural network models for deployment on iPhone hardware.

Legacy Hardware Left Hanging by TorchTune: TorchTune’s discontinuation of support for older GPUs spawned discussions about its impact on those utilizing prior generation hardware. Users mentioned workarounds like utilizing notetaking tools such as Obsidian for knowledge management purposes.

License Logistics and Name Games: The importance of adhering to Llama 3’s new licensing terms was a topic of discussion, specifically the necessity of including the “Llama 3” prefix in the names of any derivatives. This kind of attention to detail underscores the legal considerations important in the open-source AI space.

Bilingual Brainstorming: The community pondered over strategies for creating bilingual models, weighing the costs and complexity of potential solutions, such as a threefold LLM call for translation layers. Additionally, Direct Nash Optimization (DNO) grabbed attention with the realization that while it remains unimplemented in libraries, it could serve as an effective iteration on Direct Preference Optimization (DPO).

CUDA MODE Discord

Tiling Transformation: Discussing tiled matrix multiplication, engineers noted that padding large matrices for tiling can save memory bandwidth, despite additional computing for padded areas.
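The padding idea can be sketched in plain Python. This is only a structural illustration, not a CUDA kernel: it cannot demonstrate the bandwidth savings discussed in the channel, and the helper names and tile size are hypothetical. What it does show is that after zero-padding every tile is full-sized, so the inner loops need no boundary checks, at the cost of some wasted multiply-adds on the padded zeros.

```python
def pad_to_multiple(matrix, tile):
    """Zero-pad a 2D list so both dimensions are multiples of `tile`."""
    rows, cols = len(matrix), len(matrix[0])
    padded_rows = -(-rows // tile) * tile  # round up to a multiple of tile
    padded_cols = -(-cols // tile) * tile
    out = [[0.0] * padded_cols for _ in range(padded_rows)]
    for i in range(rows):
        for j in range(cols):
            out[i][j] = matrix[i][j]
    return out


def tiled_matmul(a, b, tile=2):
    """Multiply a (m x k) by b (k x n) one tile at a time, then crop the padding."""
    m, n = len(a), len(b[0])
    a_pad, b_pad = pad_to_multiple(a, tile), pad_to_multiple(b, tile)
    mp, kp, np_ = len(a_pad), len(b_pad), len(b_pad[0])
    c_pad = [[0.0] * np_ for _ in range(mp)]
    for i0 in range(0, mp, tile):          # tile row of the output
        for j0 in range(0, np_, tile):     # tile column of the output
            for k0 in range(0, kp, tile):  # walk tiles along the shared dimension
                for i in range(i0, i0 + tile):
                    for j in range(j0, j0 + tile):
                        acc = 0.0
                        for kk in range(k0, k0 + tile):
                            acc += a_pad[i][kk] * b_pad[kk][j]
                        c_pad[i][j] += acc
    # Padded rows and columns only ever hold zeros, so cropping is lossless.
    return [row[:n] for row in c_pad[:m]]


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[1, 0], [0, 1], [1, 1]]
print(tiled_matmul(a, b, tile=2))
```

On a GPU the same structure lets every thread block load full tiles with uniform access patterns, which is the trade-off the discussion was weighing against the extra arithmetic.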

Meta’s Llama Lacks MoE: The largest Llama 3 model, still in training, is a dense 405 billion parameter model that does not use a Mixture of Experts (MoE) architecture, in contrast with other state-of-the-art models. Meta’s Llama 3 details

CUDA Crusaders Converse: CUDA discussions ranged from best practices in loading large datasets and optimizing kernel settings to debugging discrepancies in results and unpacking memory access patterns and their impact on performance.

Triton Puzzles with Custom Operations: AI engineers exchanged techniques for making custom functions compatible with torch.compile, with references to handling torch.jit.ignore and a demonstration of custom Triton kernels. A GitHub PR reference for custom CUDA with torch.compile and the composition of custom Triton kernels were part of the conversation.

Quantization Quandary: In-depth discussions covered Half-Quadratic Quantization (HQQ) methods, focusing on axis=0 vs axis=1 quantization and the challenges posed by transformers’ concatenated weight matrices. Links shared included evaluations of current practices, innovative optimization techniques, and possible future enhancements to integrate HQQ into torchao. Details on HQQ implementation
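To make the axis distinction concrete, here is a minimal sketch of plain round-to-nearest min-max quantization along each axis. It is not HQQ itself (HQQ additionally optimizes the quantization parameters), it uses whole rows or columns as groups rather than a configurable group size, and the axis convention shown (axis=0 takes statistics down the rows, giving one scale per column) is an assumption borrowed from NumPy-style reductions. The function names and the example matrix are hypothetical.

```python
def quantize_minmax(weights, axis, bits=4):
    """Quantize and dequantize a 2D list with per-group min-max scaling.

    axis=0: one (scale, offset) pair per column (statistics taken down the rows).
    axis=1: one (scale, offset) pair per row (statistics taken across the columns).
    Returns the dequantized matrix so the rounding error can be inspected.
    """
    levels = 2 ** bits - 1
    rows, cols = len(weights), len(weights[0])
    if axis == 0:
        groups = [[weights[i][j] for i in range(rows)] for j in range(cols)]
    elif axis == 1:
        groups = [list(row) for row in weights]
    else:
        raise ValueError("axis must be 0 or 1")
    dequantized = []
    for group in groups:
        lo, hi = min(group), max(group)
        scale = (hi - lo) / levels or 1.0  # constant groups get a dummy scale
        dequantized.append([round((w - lo) / scale) * scale + lo for w in group])
    if axis == 0:
        # Groups were columns, so transpose back to the original layout.
        return [[dequantized[j][i] for j in range(cols)] for i in range(rows)]
    return dequantized


def max_abs_error(original, approx):
    """Largest element-wise absolute difference between two 2D lists."""
    return max(abs(a - b) for ro, ra in zip(original, approx) for a, b in zip(ro, ra))


w = [[0.0, 0.1, 0.2], [0.0, 10.0, 20.0], [0.05, 5.0, 12.0]]
for axis in (0, 1):
    print(f"axis={axis}: max error {max_abs_error(w, quantize_minmax(w, axis)):.4f}")
```

Which axis gives the lower error depends on how the weight magnitudes are distributed, which is one reason the choice interacts with concatenated weight matrices such as fused QKV projections.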

Collaborative Coordination for CUDA Event: The Massively Parallel Crew’s planning for an overlapping panel and CUDA MODE event showcases teamwork in arranging for recording, overcoming scheduling conflicts, and post-production work.

LAION Discord

SD3 Debuts with API-Only and Mixed Feelings: Stability AI released SD3 via API, and responses were mixed, acknowledging some performance issues, especially in text rendering, alongside strategic moves towards monetization.

Dataset Dilemma: With LAION datasets pulled from Huggingface, members sought out alternatives like coyo-700m and datacomp-1b for training new models. Simultaneously, interest in PAG’s application to SDXL was noted, offering better visual results compared to previous ones but not exceeding DALLE-3’s capabilities.

Stability AI’s Shaky Ground: High-profile exits from Stability AI prompted discussion about the company’s future and potential effects on open AI models, with a cloud of mismanagement concerns looming. The broader AI community is starting to test and react to Meta’s Llama 3, applauding its performance on a variety of tasks despite a modest context window.

GANs Hold a Narrow Lead in Efficiency: GANs were noted for their inference speed and parameter efficiency, but they’re tricky to train and often fall short visually. Meanwhile, Microsoft’s unveiling of VASA-1 is set to revolutionize real-time lifelike talking faces, leveraging audio cues.

Datasets and Models Evolving: HQ-Edit, a sizeable dataset for image editing guided by instructions containing about 200,000 edits, is now accessible, potentially augmenting future AI-based photo editing tools. Also, Meta’s announcement of the robust, open-source Llama 3 language model showcases its commitment to AI accessibility and advancement.

OpenAccess AI Collective (axolotl) Discord

Boost in Llama: The newly launched Llama 3 catapults performance with a Tiktoken-based tokenizer and 8k context length.

Axolotl Ups Its Game: A PR was submitted to integrate Llama 3 QLoRA into Axolotl, alongside discussions of CUDA errors in 80GB GPU setups. Adapters also presented challenges after finetuning, which were resolved by setting the tokenizer options legacy=False and use_fast=True.

Fine-Tuning Finesse: A dive into finetuning techniques reveals member efforts to extend context lengths using parameters like rope_theta and experiences in preventing training crashes by unfreezing specific layers in model finetuning endeavors.
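For readers unfamiliar with why rope_theta matters for context length, the sketch below computes the wavelengths of standard rotary position embedding (RoPE) frequencies for two base values. The head dimension of 128 and the two theta values are illustrative assumptions rather than settings reported in the channel, and `rope_wavelengths` is a hypothetical helper.

```python
import math


def rope_wavelengths(head_dim, rope_theta=10_000.0):
    """Wavelength, in token positions, of each rotary frequency pair.

    Standard RoPE uses inverse frequencies rope_theta ** (-2i / head_dim),
    so pair i completes one full rotation every
    2 * pi * rope_theta ** (2i / head_dim) positions.
    """
    return [
        2 * math.pi * rope_theta ** (2 * i / head_dim)
        for i in range(head_dim // 2)
    ]


base = rope_wavelengths(128, rope_theta=10_000.0)
scaled = rope_wavelengths(128, rope_theta=500_000.0)
print(f"longest wavelength: {base[-1]:.0f} -> {scaled[-1]:.0f} positions")
```

Raising rope_theta stretches the slow-rotating dimensions so that distant positions remain distinguishable, which is why it is a common knob when extending context; some further finetuning at the longer length is usually still needed.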

Conundrums in Configuration: YAML file comments aren’t parsed in Axolotl, while the feasibility of setting PAD tokens in YAML configs piqued user interest, signifying a need for clearer documentation on such configurations.

Token Tweaking Techniques: Exchanges spotlighted methods to replace tokens using add_tokens and manual vocabulary adjustments, sparking technical discourse on optimal tokenizer adjustments for models like Llama-3.

OpenRouter (Alex Atallah) Discord

- Atlas Goes Electric: Boston Dynamics showcased a new fully electric version of the Atlas robot, emphasizing advancements from previous iterations, with the reveal attracting significant discourse in a video.

- Mixtral and WizardLM Redefine LLMs: Mistral AI’s Mixtral 8x22B Instruct has 39B active parameters and specializes in math, coding, and multilingual tasks, while Microsoft AI’s WizardLM-2 showcases its own 8x22B model plus a faster 7B variant. The models boast impressive benchmarks and use fine-tuning techniques to enhance their instruct capabilities.

- Llama 3 Enters the AI Scene with Meta: Together AI partners with Meta, launching Meta Llama 3 for fine-tuning, offering models with 8B and 70B parameters and achieving up to 350 tokens per second throughput in API benchmarks.

- Redefining AI Access with OpenRouter: OpenRouter discussions centered on leveraging models like WizardLM and Claude for less restricted use, with notes on Mixtral being utilized by Together AI, and the self-hosting of Llama 3 for extended context applications.

- Subscriber System Glitch and Startup Shoutout: There were reports of OpenRouter’s subscription system experiencing issues, coupled with a community invitation to check out a member’s startup, SpeedLegal on Product Hunt, an AI tool for negotiating contracts.

OpenAI Discord

Claude’s Longing for a Global Stage: There’s chatter about Claude excelling in literature-related tasks but remaining inaccessible outside of certain geographic areas, highlighting a desire for broader availability.

Whispers of Whisper v3: Expectation is building for the release of Whisper v3 API, a significant follow-up given the year since the initial launch, but official details are scant.

GPT-4 Forgets Its Past?: Community observations suggest a decrease in GPT-4’s memory capabilities, with members noting a seemingly reduced token capacity for the AI, though concrete evidence is lacking.

GPT-4 Speed Bumps Detected: Users report that versions like GPT-4-0125-preview are experiencing latency, impacting applications sensitive to response times, with an alternative model, gpt-4-turbo-2024-04-09, also feeling slower despite being a proposed solution.

New Frontiers in AI and Blockchain: One member signaled an intersection between AI and blockchain, inviting collaboration on prompt development to propel this novel integration forward.

Eleuther Discord

Flop-Sweating Over SoundStream: Community guidance helped a newcomer estimate training flops for SoundStream, with detailed advice on operations per token and dataset size multiplication as laid out in a transformer paper.
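A back-of-the-envelope version of that estimate might look like the following. It assumes the rule being referenced is the common C ≈ 6·N·D approximation from the transformer scaling-law literature (the summary does not name the paper), and the parameter and token counts are placeholders, not SoundStream’s actual figures. SoundStream is largely convolutional, so the constant 6 is at best a rough guide there.

```python
def training_flops(num_params, num_tokens, flops_per_param_per_token=6):
    """Approximate training compute as 6 * N * D.

    The factor of 6 covers roughly 2 FLOPs per parameter per token for the
    forward pass and 4 for the backward pass.
    """
    return flops_per_param_per_token * num_params * num_tokens


# Placeholder sizes: a 10M-parameter model trained on 1B tokens.
flops = training_flops(10_000_000, 1_000_000_000)
print(f"{flops:.2e} training FLOPs")
```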

Scaling Laws Scrutiny Intensifies: A replication attempt paper challenges Hoffmann et al.’s proposed scaling laws, igniting discussions on confidence intervals and the realistic number of experiments needed for such large language models (LLMs).

Deciphering Tokenizers’ Impact on LLMs: Engineering minds debated the benefits of larger tokenizer vocabularies, especially concerning multilingual LLMs, and considered methods like bits per byte for understanding model perplexity when tokenizers vary.
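Bits per byte normalizes a model’s loss by the size of the underlying text rather than by token count, which makes models with different tokenizers comparable. The sketch below assumes the loss is available as a summed negative log-likelihood in nats; the per-token losses in the example are invented for illustration, and `bits_per_byte` is a hypothetical helper.

```python
import math


def bits_per_byte(total_nll_nats, text):
    """Convert a summed token-level negative log-likelihood (nats) to bits per UTF-8 byte."""
    num_bytes = len(text.encode("utf-8"))
    return total_nll_nats / math.log(2) / num_bytes


text = "hello world!"
# Invented numbers: tokenizer A splits the text into 4 tokens at 2.0 nats each,
# tokenizer B into 8 tokens at 1.2 nats each.
bpb_a = bits_per_byte(4 * 2.0, text)
bpb_b = bits_per_byte(8 * 1.2, text)
print(f"A: {bpb_a:.3f} bits/byte, B: {bpb_b:.3f} bits/byte")
```

In this made-up case model B has the lower per-token loss but the higher bits per byte, because it spends more tokens on the same text. That is the distortion the metric is meant to remove.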

Tying Up Emerging Techniques in LLMs: Community chatter touched on the effectiveness of untied embeddings and new attention mechanisms for LLMs, and discussed integrating Monte Carlo Tree Search (MCTS) with LLMs for better reasoning, as explored in Tencent’s AlphaLLM.

Resource Sharing and Call for Collaborative Reviews: Links to flan-finetuned models like lintang/pile-t5-base-flan were shared, and requests were made to review PRs for flores-200 and sib-200 benchmarks, necessary for advancing multi-lingual evaluation.

Modular (Mojo 🔥) Discord

Integrating C with Mojo: The mojo-ffi project and a tutorial using external_call were pointed out for those interested in using C with Mojo. The tutorial particularly addresses calling libc functions in Mojo.

Tweet-tastic Modular: Modular’s recent tweets have attracted attention with direct links provided, pointing to first tweet and second tweet.

Mojo’s Compatibility Queries: Discussions arose about the Mojo plugin’s compatibility with Windows and WSL, potential nightly build features for the Mojo playground to support low RAM usage (GitHub discussion), and the lack of Variant support for the Movable trait as a pending issue.

Community Projects Foster Growth: Community activity around Mojo included trouble compiling with Mojo 24.2, a student seeking guidance on implementing an algorithm in Mojo, and the community’s supportive response pointing to resources like the Get Started with Mojo page.

Llama on the Rise: The release of Meta’s Llama 3 was covered in a YouTube video exploring the model’s new features, indicating ongoing interest in cutting-edge AI research within the community.

Interconnects (Nathan Lambert) Discord

- MCTS Meets PPO for AI Breakthrough: Exploring a fusion of Monte Carlo Tree Search (MCTS) with Proximal Policy Optimization (PPO) could be a game-changer for AI decision-making, leading to a novel PPO-MCTS value-guided decoding algorithm aimed at improving natural language generation. Here’s an innovative research paper on the subject.

- New Kids on the Language Model Block: The AI community is abuzz with introductions of impressive models like Mixtral 8x22B and OLMo 1.7 7B, each setting new benchmarks in multi-language fluency and MMLU scores. The prospect of advancements in chatbot applications with Mixtral Instruct and the curiosity around the scope of Meta Llama 3 highlight a period of significant expansion and accessibility in AI. Details and resources linked: Mixtral 8x22B Apache 2.0, OLMo on Hugging Face, and Mixtral-Instruct model card.

- Chinchilla Scaling Controversy: The Chinchilla paper’s disputed scaling laws ignited heated debates across the AI community, with researchers @tamaybes, @suchenzang, and @drjwrae weighing in and author @borgeaud_s acknowledging an error. The contentious discourse underscores the need for data validation and transparency. Reference tweets attest to the intensity of the debate: tamaybes tweet, suchenzang concerns, and borgeaud_s owning up.

- The AI Comedy Hour: Nathan Lambert caught some laughs with a Saturday Night Live sketch disrupting a live AI news event, pinpointing AI’s grip on culture crossing into humor territory.

- AI Space Oddities and Musings: Discussions swung between an imminent OLMo vs. Llama 3 model showdown and the esoteric link between the Three-Body Problem, podcast features, numerology, and blog post forecasts. Meanwhile, Jeremy Howard’s tweet about an ‘experimental’ aspect sparked speculation. Jeremy’s tweet piques interest.

- SnailBot’s Slow and Steady Progress: SnailBot might not win the race for speed, but it’s crossing the finish line for functionality on WIP posts, ironically mirroring the speed snafus tech often grapples with.

Cohere Discord

- Web UI Fine-Tuning - Simple Start, API for the Rest: Initiating fine-tuning of models via the Web UI at Cohere is user-friendly, but further fine-tuning with new datasets necessitates API usage, with comprehensive instructions available in the official documentation.

- Cohere’s Newest Prodigy: Command R+: The launch of Command R+ by Cohere has been recognized for its notable advancements. Extensive feature comparisons and model capabilities can be explored on the Cohere website.

- Ethical AI: Tackling Potential Risks of Command R+: Concerns raised about Command R+ pertain to vulnerabilities that might be manipulated for unethical purposes, as highlighted through a redteam exercise linked to LessWrong.

- LLMs Jailbreak - From Language to Agency: The conversation turned to AI jailbreaking, noting a shift from extracting inappropriate language to prompting complex, autonomous behaviors from large language models, a critical consideration for organizations using AI in sensitive contexts.

- Cohere Command and Llama – Performance Notes: Users are impressed by the Llama 3 model’s capabilities, discussing the importance of real-world applicability in evaluating AI. The performance of large models like the 70B and 400B variants is evaluated based on their responses to complex prompts such as mathematical equations and SVG markup.

Latent Space Discord

- Startup Jokes Mask Real Talk: Members humorously proposed a startup to create superior chat libraries, suggesting potential dominance over giants like OpenAI.

- Local Models: Small is the New Big: The guild discussed the potential shift towards smaller, performant AI models with user-friendly interfaces, emphasizing expedience over complexity.

- Latency: Every Millisecond Counts: Engineers underscored that latency is detrimental to the user experience and successful adoption of AI applications, highlighting the importance of speedy responses.

- The Mystery of Declining AI Performance: Observations were shared about a sharp decline in AWS-hosted Claude 3’s performance, with clinical concept extraction tasks going from over 95% accuracy to nearly zero.

- Meeting Moved to Zoom: The llm-paper-club-west moved its paper discussion over to Zoom, with reminders posted for a smooth transition. Zoom meeting link.

OpenInterpreter Discord

Windows Woes with PowerShell Puzzles: Engineers reported challenges in implementing OpenInterpreter on Windows, specifically with PowerShell not recognizing environment variables such as OPENAI_API_KEY. There were also discussions surrounding the time it takes to install poetry and the complexities of running OpenInterpreter on diverse Windows environments.
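One way to narrow down this kind of problem is to check what the Python process itself can see, since a variable set in one shell session is only inherited by processes launched from that session. The helper below is a generic diagnostic sketch, not part of OpenInterpreter; `describe_api_key` is a hypothetical name.

```python
import os


def describe_api_key(env=None, name="OPENAI_API_KEY"):
    """Report whether `name` is visible to this process, without printing the secret."""
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        return f"{name} is not set in this process"
    return f"{name} is set ({len(value)} characters)"


print(describe_api_key())
```

If this reports the variable as missing, the shell that launched Python never exported it. In PowerShell, `$env:OPENAI_API_KEY = "..."` sets it for the current session only.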

Connection Woes with ESP32: Users shared difficulties in connecting ESP32 devices, with suggestions pointing towards different IDEs and the use of curl commands. Error messages relating to message arrays underline ongoing issues with device connectivity.

Debugging with Local Servers and WebSockets: Challenges emerged around setting up local servers for OpenInterpreter and troubleshooting issues with websockets and Python version incompatibilities. The efforts included manual server address configurations via curl and attempts to solve audio buffering problems.

Exploring Cross-Device Compatibility: Discussions on OpenInterpreter spanned using LM Studio on Windows while running the software on a Mac, emphasizing the necessity for cross-operating system compatibility. Users reported switching to MacBooks to potentially circumvent existing obstacles.

Hugging Face Highlight: A single message referenced a Hugging Face space where users can chat with Meta LLM3_8b, indicating interest in experimenting with alternative language models within the community.

LlamaIndex Discord

-

MistralAI’s 8x22b Hits the Stage: MistralAI has released the 8x22b model, which LlamaIndex has supported since launch, including advanced capabilities like RAG, query routing, and tool use, as announced in a Twitter post.

-

Tutorial for Building Free RAG with Elasticsearch: LlamaIndex and Elasticsearch are showcased in a guide on creating free Retrieval Augmented Generation (RAG) applications, detailed in a blog post.

-

Implementation Tips for Efficient RAG Systems: AI engineers discuss methods to optimize RAG implementations and provide multilingual support, highlighting a fine-tuning guide and resources for summarization techniques within RAG, including Q&A Summarization.

-

Google’s Infinite Context Teases LLMs’ Future: Members discussed Google’s method for letting large language models handle infinite context, which could mean a paradigm shift for existing approaches like RAG. The technical approach and its implications are explored in VentureBeat’s article.

-

Data-Driven AI Fundraising Insights Revealed: manhattanproject2023 offers the AI community access to an elaborate dataset related to AI fundraising, featuring $30 billion in investment across various stages of company development, available for analysis at AI Hype Train - Airtable.

LangChain AI Discord

SQL Skirmish to Chatbot Progress: Engineers grappled with LangChain’s SQL agent limitations and prompt engineering challenges for chatbot implementations. Reference materials, including createOpenAIToolsAgent and SqlToolkit, were shared for integrating SQL databases into conversational AI.

Memory Management Mentorship: Strong focus was placed upon utilizing RunnableWithMessageHistory for managing chat histories, with hands-on advice and code examples referenced to enhance message retrieval and chatbot memory capabilities as documented in the LangChain codebase.

Marketplace for AI Plugs Emerges: theaiplugs.com has launched, offering a solution for selling AI plugins, tools, and assistants and addressing APIs, marketing, and billing to streamline creators’ workflows.

Product Hunt Seeks AI Speedsters: SpeedLegal introduced itself on Product Hunt, calling for community support, while a new prompt engineering course found its way to LinkedIn Learning for those eager to refine their skills.

Llama 3 Thunders into Public Domain: Developers unveiled public access to Llama 3, inviting users to explore its capabilities via chat interface and API, as part of efforts to disseminate advanced AI tooling to a broader audience.

Alignment Lab AI Discord

-

Alert for Inappropriate Content Across Channels: Multiple channels within the Discord guild were targeted with spam messages promoting adult content, specifically referencing “Hot Teen & Onlyfans Leaks” alongside Discord invite links (invitation link). Guild members called for increased moderation in light of these incidents.

-

Spam Infiltrates Technical Discussions: The spam issue that plagued the guild was prevalent across both technical discussion channels such as #programming-help and #alignment-lab-ai, and community-focused channels like #general-chat and #join-in, indicating a guild-wide moderation challenge.

-

Wizards of the Code Unveil WizardLM-2: The WizardLM-2 model has seen advancements and is now publicly accessible on Hugging Face, with additional resources provided through the WizardLM-2 Release Blog, a GitHub repository, a related Twitter account, and academic papers on arXiv.

-

Seek and You Shall Find Meta Llama 3 Tokenizer: After a guild member’s request for the Meta Llama 3-8B tokenizer, it has been made available by a user named Undi95, and can now be found on Hugging Face, circumventing the need to comply with a specific privacy policy.

-

Community Calls for Action: Discussions in channels such as #open-orca-community-chat highlighted the need for immediate moderation action, including possible bans, to maintain the integrity of the engineering-focused guild environment.

DiscoResearch Discord

VRAM Hunger: Biting More Than You Can Chew?: Training the Mixtral-8x22B model necessitates a staggering 3673 GB of VRAM with the Adam optimizer, as per discussions indicating that even 64 GPUs with 80GB each weren’t sufficient to avoid out-of-memory errors for training long 32k sequence lengths. Additionally, members are weighing the potential of 8-bit optimizations to manage the massive memory requirements.
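To get a feel for where numbers of this size come from, here is a back-of-the-envelope sketch of per-parameter training state. It does not attempt to reproduce the 3673 GB figure: the parameter count (roughly 141B total) and the byte layouts are assumptions, and activations for 32k-token sequences, communication buffers, and fragmentation are left out entirely.

```python
def state_gb(n_params, bytes_per_param):
    """Memory for per-parameter training state, in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


# Assumption: roughly 141B total parameters for Mixtral-8x22B.
N_PARAMS = 141e9

# Assumption: a common mixed-precision Adam layout, in bytes per parameter.
ADAM_MIXED = {
    "weights (bf16)": 2,
    "gradients (bf16)": 2,
    "master weights (fp32)": 4,
    "Adam first moment": 4,
    "Adam second moment": 4,
}

# Assumption: an 8-bit optimizer stores each Adam moment in 1 byte instead of 4.
ADAM_8BIT = {**ADAM_MIXED, "Adam first moment": 1, "Adam second moment": 1}

full_gb = state_gb(N_PARAMS, sum(ADAM_MIXED.values()))
eight_bit_gb = state_gb(N_PARAMS, sum(ADAM_8BIT.values()))
cluster_gb = 64 * 80  # the 64 x 80GB setup mentioned above

print(f"mixed-precision Adam state: {full_gb:.0f} GB")
print(f"with 8-bit Adam moments:    {eight_bit_gb:.0f} GB")
print(f"cluster capacity:           {cluster_gb} GB")
```

Under these assumptions the optimizer moments alone account for half of the per-parameter state, which is why 8-bit optimizers are a natural thing for members to weigh.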

Model Training Achievements and Setbacks: A freshly trained Mixtral-8x22B model focusing on English and German instructions was successfully completed and shared on Hugging Face. However, implementing fsdp_transformer_layer_cls_to_wrap: MixtralSparseMoeBlock has been met with shape errors, suggesting potential issues with parameter states not fully utilizing mixed precision, complicating FSDP configurations.

Tokenizer Unification Effort: Mistral has publicized their tokenizer library designed for cross-model compatibility, featuring Tool Calls and structured outputs with an example available in this Jupyter Notebook.

Meta’s Llama 3 Debuts with Ambitious Support: Meta’s release of Llama 3 has drawn interest for its promise of enhanced multilingual capabilities and direct integration with cloud platforms, although its 128K token tokenizer is under scrutiny for potentially subpar non-English performance despite a multilingual data presence in the training set. You can find more details at the Meta AI Blog.

The Double-Edge of Model Openness: With the advent of Llama 3, there are concerns regarding the restrictions on Llama 3 output which may hinder open-source development, bringing to light the community’s partiality towards platforms like MistralAI that impose fewer constraints. The community’s reservations are buoyed by sentiment expressed in this critical tweet.

Datasette - LLM (@SimonW) Discord

-

Startup Shine on Product Hunt: A member launched their startup, SpeedLegal, on Product Hunt, pitching an AI tool designed to aid in contract negotiations by identifying risks and simplifying legal jargon.

-

Karpathy Advocates for Meticulous Small Models: Andrej Karpathy’s recent tweet hints that small models are widely undertrained, suggesting that an 8B parameter model trained on a 15T token dataset could rival larger models.

-

Smaller Models Win Community Favor: The notion of small, diligently trained AI models has struck a chord with the community, likely inspired by Karpathy’s advocacy of potentially underexploited smaller architectures.

-

Eager Eyes on Mixtral: Community members are keen to test Mixtral 8x22B Instruct, with the model card on Hugging Face being shared detailing use-cases and implementation.

-

Plugin Pandemonium Challenges LLM Development: Issues with llm-gpt4all plugin installations have surfaced, causing Python applications to break, as highlighted in GitHub issue #28, and raising concerns about llm plugin resilience and dependency management.
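Returning to the Karpathy item above, the scale of "8B parameters on 15T tokens" is easier to see as a tokens-per-parameter ratio. The sketch below compares it to the roughly 20 tokens per parameter often quoted as a compute-optimal rule of thumb; that baseline is an assumption brought in for illustration and does not come from the discussion itself.

```python
def tokens_per_param(n_tokens, n_params):
    """Training tokens seen per model parameter."""
    return n_tokens / n_params


# Figures from the summary above: an 8B model trained on 15T tokens.
ratio_8b = tokens_per_param(15e12, 8e9)

# Assumption: ~20 tokens per parameter as a rough compute-optimal heuristic.
RULE_OF_THUMB = 20
multiple = ratio_8b / RULE_OF_THUMB

print(f"tokens per parameter: {ratio_8b:.0f}")
print(f"multiple of the rule of thumb: {multiple:.2f}x")
```

Read this way, the claim is that training far past the compute-optimal point is what lets a small model compete, at the cost of much more training compute per parameter.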

tinygrad (George Hotz) Discord

- PyTorch Lightning Strikes with Hardware Neutrality: The conversation highlighted PyTorch-Lightning’s capability to train, tune, and deploy AI models across various platforms, including GPUs and TPUs, without needing to alter the code.

- AMD Radeon’s GPU Success Story: An AMD Radeon 7900XTX GPU has been successfully used to run PyTorch-Lightning, showcasing its compatibility with a range of hardware options.

- ROCm Gives PyTorch the Speed Boost: PyTorch-Lightning has achieved faster performance than regular PyTorch on some models when tested on a 7900XTX GPU, taking advantage of ROCm’s optimizations.

- Fresh AI Model on the Block: Llama 3, a new AI model, has been launched, promising pretrained versions suitable for different scales of AI applications, as featured on its official page.

- Tinygrad Steps into Efficient Tensor Ops: In tinygrad, the pursuit of zero-cost tensor operations like broadcast, reshape, permute is on, with suggestions to explore tinygrad/shape/shapetracker.py or view.py for strategic guidance.
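The "zero-cost" idea in the last item can be illustrated with a toy stride-based view: movement operations only rewrite shape and stride metadata, and the underlying buffer is never copied. This is a simplified sketch written for this summary, not tinygrad’s actual ShapeTracker or View code; the class and method names are hypothetical.

```python
from math import prod


def contiguous_strides(shape):
    """Row-major strides (in elements) for a freshly allocated buffer."""
    strides, step = [], 1
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return tuple(reversed(strides))


class View:
    """A (shape, strides) description of how to read a flat buffer."""

    def __init__(self, shape, strides=None):
        self.shape = tuple(shape)
        self.strides = contiguous_strides(self.shape) if strides is None else tuple(strides)

    def permute(self, order):
        # Reorder axes by reordering shape and strides; the buffer is untouched.
        return View([self.shape[i] for i in order], [self.strides[i] for i in order])

    def broadcast(self, axis, size):
        # Stretch a size-1 axis by giving it stride 0, so every index reads the same element.
        assert self.shape[axis] == 1, "can only broadcast a size-1 axis"
        shape, strides = list(self.shape), list(self.strides)
        shape[axis], strides[axis] = size, 0
        return View(shape, strides)

    def reshape(self, new_shape):
        # Only contiguous views are handled in this sketch.
        assert self.strides == contiguous_strides(self.shape), "sketch handles contiguous views only"
        assert prod(new_shape) == prod(self.shape), "element count must match"
        return View(new_shape)

    def offset(self, *index):
        # Map a multi-dimensional index to a position in the flat buffer.
        return sum(i * s for i, s in zip(index, self.strides))


buffer = list(range(6))               # logical 2x3 tensor: [[0, 1, 2], [3, 4, 5]]
base = View((2, 3))                   # strides (3, 1)
transposed = base.permute((1, 0))     # shape (3, 2), strides (1, 3)
row = View((1, 3)).broadcast(0, 4)    # shape (4, 3), strides (0, 1)
```

Here `buffer[transposed.offset(2, 1)]` reads the same element as `buffer[base.offset(1, 2)]`, and every row of the broadcast view reads the first three buffer elements, all without allocating a second buffer.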

Skunkworks AI Discord

-

AI Community Abuzz with New Model Launches: Discussions highlighted the release of Snowflake Arctic embed family of models, Mixtral 8x22B, and Meta’s Llama 3, praising them as milestones in the text-embedding and large language model (LLM) arena. Detailed insights and introductions to these models were shared through YouTube links.

-

Curiosity for Serverless Fine-tuning: A conversation sparked interest in the possibility of a no-code fine-tuning and serverless inference platform for open-source AI models akin to the ease offered by certain platforms for GPT-3.5.

-

Informal Chatter Lacks Substance: A high-energy greeting “HELLLLOOOOOOOO!!!!!!!!” was exchanged in the channel but it lacked substantive content relevant to the engineering discussions.

-

Videos Galore for Model Introductions: The community shared valuable video resources from YouTube providing overviews of the Snowflake Arctic embed models, Mixtral 8x22B, and Llama 3 for anyone interested in the cutting-edge of AI model development.

-

No-code AI Tools - A Dream or Imminence?: The question about the existence (or development) of a no-code AI platform that supports models other than GPT-3.5 was raised, hinting at an undercurrent of demand for more accessible AI fine-tuning techniques.

Mozilla AI Discord

Llamafile Script Now Cleaner: An upgraded and clean version of the llamafile archive version upgrade repacking script has been shared in a Gist, with a consideration for its addition to the llamafile GitHub repo. The member has cautioned that creating new llamafiles from the ground up is preferable to repacking old versions.

Vulnerability Reporting Steps Questioned: There was a query about how to report security vulnerabilities and the process for obtaining CVEs, which was taken offline for further detailed discussion.

Beware of Exposing LLM APIs: A general warning was issued against exposing LLM API endpoints publicly, highlighting that this is not the first occurrence of bugs being spotted in LLM infrastructure code. The emphasis was on previous experiences with vulnerabilities in such systems.

LLM Perf Enthusiasts AI Discord

- Inquiry about LITELLM Usage: A member named jeffreyw128 asked the community if anyone is currently using litellm, looking for insights or experiences related to this tool.

AI21 Labs (Jamba) Discord

- Call for Code on Distributed Inference Implementation: A member facing challenges with long context inference using AI21 Labs’ Jamba on a 2x A100 GPU setup is seeking example code to navigate distributed system complexities. There was a specific request for examples geared towards a distributed inference scenario on a multi-GPU cluster.

PART 2: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (910 messages🔥🔥🔥):

- Opus Usage Cap Causes Frustration: Users voiced their dissatisfaction with the recent cap on Opus model usage, noting it had been reduced from 600 to 30 messages per day without prior notice, impacting both new and ongoing subscriptions.

- Chargeback and Refund Debates: A lengthy discussion took place about the possibility of chargebacks and refunds due to the unexpected service change, with various users weighing the legal and ethical considerations, and some seeking refunds from Perplexity support.

- Llama 3 Anticipation: Anticipation for the Llama 3 model, an open-source offering from Meta, is high, with users discussing its potential and sharing external links about its benchmarks and capabilities.

- Cancellation and Service Alternatives: Due to the Opus limit reduction, several users reported cancelling trials or full subscriptions, while others considered migrating to different services or waiting for Perplexity to resolve the issue.

- Technical Issues and Side Discussions: Users reported unrelated technical problems, such as trouble using the app on Android, and some side conversations included sharing external content such as YouTube videos or tweets related to AI developments.

Links mentioned:

- Tweet from Perplexity (@perplexity_ai): Learn more about Llama 3 👇https://www.perplexity.ai/search/Llama-3-Overview-Mz3Cw09KTdq9gavmibDBeA

- Tune Chat - Chat app powered by open-source LLMS: With Tune Chat, access Prompts library, Chat with PDF, and Brand Voice features to enhance your content writing and analysis and maintain a consistent tone across all your creations.

- llama3: Meta Llama 3: The most capable openly available LLM to date

- mistralai/Mixtral-8x22B-Instruct-v0.1 · Hugging Face: no description found

- Tweet from Lech Mazur (@LechMazur): Meta's LLama 3 70B and 8B benchmarked on NYT Connections! Very strong results for their sizes.

- https://i.redd.it/w5wsw2ecq9vc1.png: no description found

- Wack Whack GIF - Wack Whack - Discover & Share GIFs: Click to view the GIF

- Klatschen Clapping GIF - Klatschen Clapping - Discover & Share GIFs: Click to view the GIF

- Microsoft Azure Marketplace: no description found

- Ladies And Gentlemen Mikey Day GIF - Ladies And Gentlemen Mikey Day Saturday Night Live - Discover & Share GIFs: Click to view the GIF

- Zuckerberg GIF - Zuckerberg - Discover & Share GIFs: Click to view the GIF

- Laptop Smoking GIF - Laptop Smoking Fire - Discover & Share GIFs: Click to view the GIF

- Snape Harry Potter GIF - Snape Harry Potter You Dare Use My Own Spells Against Me Potter - Discover & Share GIFs: Click to view the GIF

- Movie One Eternity Later GIF - Movie One Eternity Later - Discover & Share GIFs: Click to view the GIF

- PictoChat Online, by ayunami2000.: PictoChat web app with a server written in Java! Source: https://github.com/ayunami2000/ayunpictojava

- Nintendo DS PictoChat is BACK!: Nintendo DS's PictoChat isn't dead, as there's a website where you can relive those glory days of sending heinous drawings to your friends, but now online!Pi...

- 24 vs 32 core M1 Max MacBook Pro - Apples HIDDEN Secret..: What NOBODY has yet Shown You about the CHEAPER Unicorn MacBook! Get your Squarespace site FREE Trial ➡ http://squarespace.com/maxtechAfter One Month of expe...

- Mark Zuckerberg - Llama 3, $10B Models, Caesar Augustus, & 1 GW Datacenters: Zuck on:- Llama 3- open sourcing towards AGI - custom silicon, synthetic data, & energy constraints on scaling- Caesar Augustus, intelligence explosion, biow...

- GitHub - meta-llama/llama3: The official Meta Llama 3 GitHub site: The official Meta Llama 3 GitHub site. Contribute to meta-llama/llama3 development by creating an account on GitHub.

- Rick Astley - Never Gonna Give You Up (Official Music Video): The official video for “Never Gonna Give You Up” by Rick Astley. The new album 'Are We There Yet?' is out now: Download here: https://RickAstley.lnk.to/AreWe...

- Meta AI: Use Meta AI assistant to get things done, create AI-generated images for free, and get answers to any of your questions. Meta AI is built on Meta's latest Llama large language model and uses Emu,...

Perplexity AI ▷ #sharing (12 messages🔥):

- Typographic Transformation: A member found value in discussing the new Tubi font, expressing enthusiasm for typographic topics.

- Illusion of Authenticity: Two different members pointed to a link discussing how actors run fake scenarios in their industry.

- Exploring the Past: The history of “m” intrigued one member, sharing a link for those interested in this particular historical insight.

- Boundless Ambitions: The Limitless AI pendant garnered attention, with a member directing others to a relevant discussion.

- Creation & Curation: Members shared interests in a range of topics from making something specific, to data visualization techniques, and even Adobe’s training of their Firefly AI.

Perplexity AI ▷ #pplx-api (12 messages🔥):

-

API Summary Requests and Differences: A user inquired about the ability to get references or summaries through the API, noticing that responses from sonar-medium-online differ from those in the browser app. A link was shared directing them to a Discord channel for further information, although the link shared was invalid as per the messages.

-

Perplexity API Integration with OpenAI: One member shared their experience of stalling requests when attempting to integrate Perplexity’s API with OpenAI GPTs’ actions, suggesting difficulties in creating a functional OpenAPI schema.

-

Mixtral-8x22b Now Available: The community was informed about the new addition of mixtral-8x22b-instruct to Perplexity Labs and the API, with a link provided to try it out on labs.pplx.ai.

-

Deep Dive into Leonard Cohen’s “Avalanche”: A user highlighted the impressive performance of mixtral-8x22b-instruct, sharing detailed feedback on the model’s interpretation of Leonard Cohen’s song “Avalanche,” and how it outperformed other models in recognizing the artist and song from mere lyrics.

-

New AI Models Enrich User Experience: Updates on the availability of various new models such as llama-3-8b-instruct and llama-3-70b-instruct were discussed, revealing their addition to the Perplexity Labs and API, and mentioning that users with Perplexity Pro receive $5 monthly API credits. Additionally, a member expressed satisfaction with the performance improvements presented by these new models to their application.

Links mentioned:

- Tweet from Perplexity (@perplexity_ai): 🚨 Update: We've added mixtral-8x22b-instruct to Perplexity Labs and our API! ↘️ Quoting Perplexity (@perplexity_ai) Mixtral-8X22B is now available on Perplexity Labs! Give it a spin on http://...

- Tweet from Aravind Srinivas (@AravSrinivas): 🦙 🦙 🦙http://labs.perplexity.ai and brought up llama-3 - 8b and 70b instruct models. Have fun chatting! we will soon be bringing up search-grounded online versions of them after some post-training. ...

- Supported Models: no description found

Stability.ai (Stable Diffusion) ▷ #announcements (1 messages):

-

Stable Diffusion 3 Materializes on API: Stability AI is excited to announce the launch of both Stable Diffusion 3 and Stable Diffusion 3 Turbo on the Stability AI Developer Platform API, in partnership with Fireworks AI. Full details and access instructions are provided here.

-

Text-to-Image Magic Surpasses Rivals: Stable Diffusion 3 outpaces competitors like DALL-E 3 and Midjourney v6 in typography and prompt adherence, boasting an advanced Multimodal Diffusion Transformer (MMDiT) architecture for enhanced text and image processing, as highlighted in the research paper.

-

Open Generative AI’s Next Chapter: A pledge was made to soon offer model weights for self-hosting to those with a Stability AI Membership, underscoring Stability AI’s dedication to open generative AI.

Link mentioned: Stable Diffusion 3 API Now Available — Stability AI: We are pleased to announce the availability of Stable Diffusion 3 and Stable Diffusion 3 Turbo on the Stability AI Developer Platform API.

Stability.ai (Stable Diffusion) ▷ #general-chat (947 messages🔥🔥🔥):

- API Over Local Use: SD3 is presently only accessible through an API, costing around $0.065/image; there’s anticipation for the model to be made available for local use in the near future.

- Discussions on Hardware Requirements: Users are contemplating whether to wait for newer GPUs like the 5090 or to purchase currently available options like a used 3090 for AI work, weighing factors such as VRAM, speed, power consumption, and NVLink support.

- Fine-tuning and Generation Challenges: There’s acknowledgment that while newer models might seem lackluster in certain areas, such as anatomy generation, fine-tuning can potentially rectify these shortcomings.

- Creating AI Influencers?: A conversation about AI influencers points to a variety of motivations, from curiosity to profit-making, against a backdrop of some users’ skepticism about the social value of such endeavors.

- AI Model Considerations and Job Opportunities: Discussions included concerns about pricing structures for API credits compared to alternatives like Ideogram, the variance in output quality across different model versions, and requests for job opportunities or partnerships in AI-related projects.

Links mentioned:

- Model Merging Examples: Examples of ComfyUI workflows

- Stable Diffusion Engineer - Palazzo, Inc.: About Us:Palazzo is a dynamic and innovative technology company committed to pushing the boundaries of Global AI for Interior Design. We are seeking a skilled Stable Diffusion Engineer to join our tea...

- Tweet from Stability AI (@StabilityAI): Today, we are pleased to announce the availability of Stable Diffusion 3 and Stable Diffusion 3 Turbo on the Stability AI Developer Platform API. We have partnered with @FireworksAI_HQ , the fastest ...

- Power-hungry AI is putting the hurt on global electricity supply: Data centers are becoming a bottleneck for AI development.

- Card game developer says it paid an 'AI artist' $90,000 to generate card art because 'no one comes close to the quality he delivers': no description found

- Stable Video Diffusion: no description found

- Reddit - Dive into anything: no description found

- TikTok - Make Your Day: no description found

- Contact Us — Stability AI: no description found

- Membership — Stability AI: The Stability AI Membership offers flexibility for your generative AI needs by combining our range of state-of-the-art open models with self-hosting benefits.

- Feds appoint “AI doomer” to run AI safety at US institute: Former OpenAI researcher once predicted a 50 percent chance of AI killing all of us.

- The Impact of Over-Reliance on AI: Balancing Technology and Critical Thinking: I am a 27-year-old who is active, open-minded, and tech enthusiast. I am a bit lazy person that assisted me with making things more…

- Decoding Stable Diffusion: LoRA, Checkpoints & Key Terms Simplified!: 🌟 Unlock the mysteries of Stable Diffusion with our clear and concise guide! 🌟Join us as we break down complex AI terms like 'LoRA', 'Checkpoint', and 'Con...

- GitHub - kijai/ComfyUI-KJNodes: Various custom nodes for ComfyUI: Various custom nodes for ComfyUI. Contribute to kijai/ComfyUI-KJNodes development by creating an account on GitHub.

- Takeshi’s Castle | Official Trailer | Amazon Prime: Let’s get physical! The iconic 80s Japanese game show Takeshi’s Castle is now streaming 🏯SUBSCRIBE: http://bit.ly/PrimeVideoSGStart your 30-day free trial: ...

- RTX 4080 vs RTX 3090 vs RTX 4080 SUPER vs RTX 3090 TI - Test in 20 Games: RTX 4080 vs RTX 3090 vs RTX 4080 SUPER vs RTX 3090 TI - Test in 20 Games 1080p, 1440p, 2160p, 2k, 4k⏩GPUs & Amazon US⏪ (Affiliate links)- RTX 4080 16GB: http...

- GitHub - ShineChen1024/MagicClothing: Official implementation of Magic Clothing: Controllable Garment-Driven Image Synthesis: Official implementation of Magic Clothing: Controllable Garment-Driven Image Synthesis - ShineChen1024/MagicClothing

- GitHub - PierrunoYT/stable-diffusion-3-web-ui: This is a web-based user interface for generating images using the Stability AI API. It allows users to enter a text prompt, select an output format and aspect ratio, and generate an image based on the provided parameters.: This is a web-based user interface for generating images using the Stability AI API. It allows users to enter a text prompt, select an output format and aspect ratio, and generate an image based on...

- GitHub - Priyansxu/vega: Contribute to Priyansxu/vega development by creating an account on GitHub.

- Comfy Workflows videos page: run & discover workflows that are not meant for any single task, but are rather showcases of how awesome ComfyUI animations and videos can be. ex: a cool human animation, real-time LCM art, etc.

- GitHub - TencentQQGYLab/ELLA: ELLA: Equip Diffusion Models with LLM for Enhanced Semantic Alignment: ELLA: Equip Diffusion Models with LLM for Enhanced Semantic Alignment - TencentQQGYLab/ELLA

- GitHub - codaloc/sdwebui-ux-forge-fusion: Combining the aesthetic interface and user-centric design of the UI-UX fork with the unparalleled optimizations and speed of the Forge fork.: Combining the aesthetic interface and user-centric design of the UI-UX fork with the unparalleled optimizations and speed of the Forge fork. - codaloc/sdwebui-ux-forge-fusion

- April | 2024 | Ars Technica: no description found

- Dwayne Loses His Patience 😳 #ai #aiart #chatgpt: no description found

- 1 Mad Dance of the Presidents (ai) Joe Biden 🤣😂😎✅ #stopworking #joebiden #donaldtrump #funny #usa: 🎉 🤣🤣🤣🤣 Get ready to burst into fits of laughter with our latest "Funny Animals Compilation Mix" on the "Funny Viral" channel! 🤣 These adorable and misc...

- DreamStudio: no description found

- Create stunning visuals in seconds with AI.: Remove background, cleanup pictures, upscaling, Stable diffusion and more…

- Wordware - Compare prompts: Runs Stable Diffusion 3 with the input prompt and a refined prompt

Nous Research AI ▷ #off-topic (46 messages🔥):

- Snowflake Unveils Groundbreaking Embedding Model: A new text-embedding model by Snowflake has been launched and open-sourced, signaling advancements in text analysis capabilities.

- Deciphering the Vector Space of Language: Members pondered the conceptual framework of meaning representation within high-dimensional vector space, discussing possibilities of “envelopes within the vectorspace of meaning,” hinting at scale-based formations and the potential for new symbolic language forms.

- Encrypted Communication Analogy Discussed: The conversation explored analogies between encryption and various scientific phenomena, such as gravitational dynamics, and contemplated the need for divergent language and understanding as a consequence of “performing Work on Infinity.”

- Startup Launch Seeks Support on Product Hunt: A user requested feedback and support for their recently launched startup, SpeedLegal, on Product Hunt, with ensuing discussion addressing the product’s market fit and potential business strategies.

- Bloke Discord Invite Sought and Shared: Community members aided in sharing a valid link to the Bloke Discord server after the one found on Twitter was non-functional, with an exchange on lifting temporary bot restrictions to facilitate the invite posting.

Links mentioned:

- Join the TheBloke AI Discord Server!: For discussion of and support for AI Large Language Models, and AI in general. | 24155 members

- Introducing Llama 3 Best Open Source Large Language Model: introducing Meta Llama 3, the next generation of Facebook's state-of-the-art open source large language model.https://ai.meta.com/blog/meta-llama-3/#python #...

- Snowflake Launches the World’s Best Practical Text-Embedding Model: Today Snowflake is launching and open-sourcing with an Apache 2.0 license the Snowflake Arctic embed family of models. Based on the Massive Text Embedding Be...

- Mixtral 8x22B Best Open model by Mistral: Mixtral 8x22B is the latest open model. It sets a new standard for performance and efficiency within the AI community. It is a sparse Mixture-of-Experts (SMo...

- SpeedLegal - Your personal AI contract negotiator | Product Hunt: SpeedLegal is an AI tool that helps you understand and negotiate contracts better. It can quickly identify potential risks and explain complicated legal terms in simple language. SpeedLegal also gives...

Nous Research AI ▷ #interesting-links (44 messages🔥):

- Tokenization Decoded by Mistral AI: Mistral AI has open-sourced their tokenizer, including a guide and a Colab notebook. The tokenizer breaks down text into smaller subword units, known as tokens, for language models to understand text numerically.

- Skeptical About Tokenization Overhype: Discussion in the channel questions the significance of tokenization if models like Opus can efficiently utilize XML tags, suggesting token relevance might be limited to model steerability.

- Hugging Face Security Breach Revealed: A YouTube video discusses a security incident involving Hugging Face, caused by a malicious .pickle file, highlighting potential vulnerabilities in AI systems.

- Potential Exploits with Pickles in OpenAI: A conversation revealed that using insecure pickles in OpenAI’s environment could pose a risk, as it allows execution of large documents but might be disabled if recognized as an exploit.

- State of AI in 2023 via Stanford’s AI Index: The latest Stanford AI Index report has been released, summarizing multimodal foundation models, investment trends, and a movement toward more open-sourced models, with a high number of foundation models released last year.
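The tokenization item at the top of this list describes text being broken into subword units and mapped to numbers. A toy sketch of that idea follows. It uses greedy longest-match against a tiny hand-written vocabulary, which is a deliberate simplification and not how Mistral’s tokenizer works; the vocabulary and function names are made up for illustration.

```python
# Hypothetical toy vocabulary: subword piece -> integer id.
TOY_VOCAB = {
    "<unk>": 0,
    "token": 1,
    "ization": 2,
    "s": 3,
    " ": 4,
    "un": 5,
    "break": 6,
    "able": 7,
}


def tokenize(text, vocab=TOY_VOCAB):
    """Greedily split `text` into the longest pieces found in `vocab`."""
    pieces = []
    i = 0
    while i < len(text):
        # Try the longest candidate starting at position i first.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                pieces.append(text[i:j])
                i = j
                break
        else:
            # No known piece starts here: emit an unknown marker and skip one character.
            pieces.append("<unk>")
            i += 1
    return pieces


def encode(text, vocab=TOY_VOCAB):
    """Map text to the list of integer ids a model would consume."""
    return [vocab[piece] for piece in tokenize(text, vocab)]
```

With this vocabulary, `tokenize("tokenization")` splits into the pieces "token" and "ization", and `encode` turns them into ids. Production tokenizers learn their vocabularies from data and handle bytes, whitespace, and special control tokens far more carefully.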

Links mentioned:

- AI Index: State of AI in 13 Charts: In the new report, foundation models dominate, benchmarks fall, prices skyrocket, and on the global stage, the U.S. overshadows.

- Tokenization | Mistral AI Large Language Models: Tokenization is a fundamental step in LLMs. It is the process of breaking down text into smaller subword units, known as tokens. We recently open-sourced our tokenizer at Mistral AI. This guide will w...

- meta-llama/Meta-Llama-3-70B · Hugging Face: no description found

- Udio | Make your music: Discover, create, and share music with the world.

- SpeedLegal - Your personal AI contract negotiator | Product Hunt: SpeedLegal is an AI tool that helps you understand and negotiate contracts better. It can quickly identify potential risks and explain complicated legal terms in simple language. SpeedLegal also gives...

- Edward Gibson: Human Language, Psycholinguistics, Syntax, Grammar & LLMs | Lex Fridman Podcast #426: Edward Gibson is a psycholinguistics professor at MIT and heads the MIT Language Lab. Please support this podcast by checking out our sponsors:- Yahoo Financ...

- Hugging Face got hacked: Links:Homepage: https://ykilcher.comMerch: https://ykilcher.com/merchYouTube: https://www.youtube.com/c/yannickilcherTwitter: https://twitter.com/ykilcherDis...

- GitHub - NVlabs/DoRA: Official PyTorch implementation of DoRA: Weight-Decomposed Low-Rank Adaptation: Official PyTorch implementation of DoRA: Weight-Decomposed Low-Rank Adaptation - NVlabs/DoRA

- Regretting Thinking GIF - Regretting Thinking Nervous - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #general (756 messages🔥🔥🔥):

-

Llama 3 Hype Train: The community is raving about the performance of Meta Llama 3. Gains on benchmarks like MMLU and GSM-8K are highlighted, with model sizes from 8B up to a potential 400B offering performance that rivals or surpasses existing models like GPT-4 and various Mixtral sizes.

-

GGUF Troubles and Triumphs: Multiple users report issues with GGUF (quantized) versions of Llama 3, specifically with tokenization and non-stop text generation. However, a GGUF from LM Studio reportedly works well.

-

Context Length Concerns: Despite the excitement, concerns about Llama 3’s context length are expressed, with some preferring the longer contexts of models like Mixtral 8x22B for certain tasks.

-

Mistral Model Gating: Suddenly, Mistral models are reported to be gated, which sparks discussion about potential reasons, including speculation about new EU regulations, and comments that anyone could re-host the models since they’re Apache 2 licensed.

-

Anticipation for Finetuning Capabilities: Community members are eager to conduct finetuning on Llama 3, discussing the possibilities and looking forward to the tools and capabilities that MLX finetuning might unlock.

Links mentioned:

- lluminous: no description found

- NousResearch/Meta-Llama-3-8B-Instruct · Hugging Face: no description found

- N8Programs/Coxcomb-GGUF · Hugging Face: no description found

- meraGPT/mera-mix-4x7B · Hugging Face: no description found

- NousResearch/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- Meta AI: Use Meta AI assistant to get things done, create AI-generated images for free, and get answers to any of your questions. Meta AI is built on Meta's latest Llama large language model and uses Emu,...

- N8Programs/Coxcomb · Hugging Face: no description found

- maxidl/Mixtral-8x22B-v0.1-Instruct-sft-en-de · Hugging Face: no description found

- lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- Udio | Back Than Ever by drewknee: Make your music

- NousResearch/Meta-Llama-3-8B · Hugging Face: no description found

- meta-llama/Meta-Llama-3-8B · Hugging Face: no description found

- meta-llama/Meta-Llama-3-70B · Hugging Face: no description found

- NousResearch/Meta-Llama-3-70B-Instruct-GGUF · Hugging Face: no description found

- LLM running on Roland MT-32: LLM 2 ROLAND MT-32 Demonstrating "https://huggingface.co/N8Programs/Coxcomb" a new story telling model from N8.

- Angry Mad GIF - Angry Mad Angry face - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- Diablo Joke GIF - Diablo Joke Meme - Discover & Share GIFs: Click to view the GIF

- torchtune/recipes/configs/llama3/8B_qlora_single_device.yaml at main · pytorch/torchtune: A Native-PyTorch Library for LLM Fine-tuning. Contribute to pytorch/torchtune development by creating an account on GitHub.

- How Did Open Source Catch Up To OpenAI? [Mixtral-8x7B]: Sign-up for GTC24 now using this link! https://nvda.ws/48s4tmc For the giveaway of the RTX4080 Super, the full detailed plans are still being developed. Howev...

- OLMo 1.7–7B: A 24 point improvement on MMLU: Today, we’ve released an updated version of our 7 billion parameter Open Language Model, OLMo 1.7–7B. This model scores 52 on MMLU, sitting…

- GitHub - asg017/sqlite-vss: A SQLite extension for efficient vector search, based on Faiss!: A SQLite extension for efficient vector search, based on Faiss! - asg017/sqlite-vss

- Rubik's AI - AI research assistant & Search Engine: no description found

- Support Llama 3 conversion by pcuenca · Pull Request #6745 · ggerganov/llama.cpp: The tokenizer is BPE.

Nous Research AI ▷ #rules (1 message):

- New Reporting Command Introduced: Users can report spammers, scammers, and other rule violators using the /report command. A moderator will be notified and will review the report.

Nous Research AI ▷ #ask-about-llms (11 messages🔥):

-

Hermes 2 Pro Tool Call Behavior: A member mentioned difficulty with Hermes 2 Pro always returning <tool_call> when sometimes a chat response is desired. Another member highlighted the need for Hermes 2 Pro to better understand when not to trigger tool calls and mentioned that upcoming versions would address this.

-

Fine-tuning Practices for Base Models: One member discussed a multi-stage fine-tuning approach involving a pre-trained base model and instruction datasets followed by preference dataset fine-tuning. They experienced issues with the model returning random sentences or information alongside answers.

-

Directly Answer with Hermes 2 Pro: There was a suggestion to add a tool called “directly_answer” as in Langchain’s ReAct Agent for scenarios where Hermes 2 Pro is meant to chat rather than execute a function call, alongside a JSON example of how it operates.

-

Prompting Troubles with Hermes 2 Pro: A member asked for advice on proper prompting formats for the NousResearch/Hermes-2-Pro-Mistral-7B model, providing a code snippet and noting difficulty in getting desired outputs.

-

Long Context Inference Challenges on GPU Clusters: Two messages referenced technical challenges: one about running long-context inference on a GPU cluster, and another about using two A100 GPUs with Jamba to process 200k tokens.
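The "directly_answer" idea above can be sketched roughly as follows. This is a minimal illustration, not the JSON example shared in the channel: the tool schema, the `get_weather` tool, the `answer` argument, and the `route` helper are all hypothetical. It assumes the model wraps a JSON object with `name` and `arguments` keys in `<tool_call>` tags, and treats a call to `directly_answer` as an ordinary chat reply.

```python
import json
import re

# Hypothetical tool list passed to the model in the system prompt.
# "directly_answer" gives the model a way to chat without calling a real function.
TOOLS = [
    {
        "name": "get_weather",  # illustrative tool, not from the discussion
        "description": "Look up the current weather for a city.",
        "parameters": {"city": {"type": "string"}},
    },
    {
        "name": "directly_answer",
        "description": "Reply to the user in plain text when no tool is needed.",
        "parameters": {"answer": {"type": "string"}},
    },
]

# Capture whatever sits between the <tool_call> tags.
TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def route(model_output: str):
    """Return ("chat", text) or ("tool", call_dict) for one model response."""
    match = TOOL_CALL_RE.search(model_output)
    if match is None:
        # No tool call at all: treat the whole output as a chat reply.
        return ("chat", model_output.strip())
    call = json.loads(match.group(1).strip())
    if call["name"] == "directly_answer":
        # The model chose to chat; unwrap the answer text.
        return ("chat", call["arguments"]["answer"])
    return ("tool", call)
```

With this routing, a model that always emits `<tool_call>` can still produce a conversational reply by selecting `directly_answer`, and the caller never has to execute anything for that case.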

Nous Research AI ▷ #project-obsidian (1 message):

- VLM on Raspberry Pis: A member plans to use the shared resource for a school project, installing a VLM on Raspberry Pis both for fun and for practical benefit, and thanked the channel for sharing it.

Nous Research AI ▷ #rag-dataset (27 messages🔥):

- Debating OAI Assistants Search Implementation: A discussion on OpenAI’s assistant search approach delved into its fixed 800-token chunking size with 50% overlap and 20 max chunks per context.

- Dimensionality Dilemmas in Embeddings: The use of a 256-dimensional embedding for search purposes, as opposed to the typical 1500 dimensions, led to conversations about the impact of dimensionality on model performance, referencing the curse of dimensionality.

- Model Performance and Optimization: There was talk of conducting experiments to determine if lower dimensional embeddings yield higher retrieval accuracy, with one member expressing ambition to test this themselves.

- Curiosity Around Multimodal Models: Members highlighted both the strengths and limitations of current models like gpt4v, discussing the need for finetuning and expressing interest in distilling qwen-vl-max’s document understanding capabilities into a smaller model like llava.

- GPT Variants and Open Source Insights: The conversation also covered experiences with open source models, including their requirement for task-specific finetuning and the potential efficiency of OCR and vision models for extracting metadata in search applications.
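The chunking parameters mentioned in the first item above (800-token chunks, 50% overlap, at most 20 chunks per context) can be illustrated with a short sketch. This is not OpenAI's implementation; the function names are made up for illustration, and any list of tokens (for example, the output of a real tokenizer) can be passed in.

```python
def chunk_tokens(tokens, chunk_size=800, overlap=0.5):
    """Split a token list into fixed-size windows that overlap by `overlap`."""
    # With 50% overlap, each window starts half a chunk after the previous one.
    step = max(1, int(chunk_size * (1 - overlap)))
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reaches the end of the document
    return chunks


def select_top_chunks(scored_chunks, max_chunks=20):
    """Keep the highest-scoring chunks, capped at `max_chunks` per context."""
    ranked = sorted(scored_chunks, key=lambda pair: pair[1], reverse=True)
    return [chunk for chunk, _score in ranked[:max_chunks]]


if __name__ == "__main__":
    tokens = list(range(2000))  # stand-in for real token ids
    chunks = chunk_tokens(tokens)
    print(len(chunks), [len(c) for c in chunks])
```

With these defaults, a fixed chunk size means the retrieved context is bounded by roughly 20 × 800 tokens regardless of document length, which is the trade-off the discussion was weighing.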

Links mentioned:

- MTEB Leaderboard - a Hugging Face Space by mteb: no description found

- Curse of dimensionality - Wikipedia: no description found

- Abstractions/raw_notes/abstractions_types_no_cat_theory.md at main · furlat/Abstractions: A Collection of Pydantic Models to Abstract IRL. Contribute to furlat/Abstractions development by creating an account on GitHub.

Nous Research AI ▷ #world-sim (312 messages🔥🔥):

-

WorldSim Anticipation Builds: Members of the Nous Research AI Discord are highly anticipating the return of WorldSim, frequently inquiring about the exact time of its comeback. Despite no confirmed launch time, the sentiment is optimistic, with repeated confirmations indicating the return is imminent.

-

Nitro Giveaway Stakes Rise: Amid the excitement for WorldSim, kainan_e jokingly risks bankruptcy, offering Discord Nitro to users if the launch does not happen by midnight EST. The gesture of goodwill extends regardless of WorldSim’s launch status, with Nitro being distributed.

-

Philosophical Depth Explored: Conversations delve into the philosophical underpinnings of WorldSim, discussing the complex interaction of AI with user narratives and potential for user-guided AI civilizations within simulations. The Desideratic AI (DSJJJJ) philosophy is referenced, emphasizing emergent cognition from organized complexity.

-

Limitations and Fees Addressed: There are mentions of possible limitations and fees associated with the new version of WorldSim to limit abuse, potentially related to a prior attack that forced a shutdown due to excessive and manipulative input.

-

Final Countdown and Community Support: As the expected WorldSim launch time approaches, the community rallies around the shared excitement and suspense, with kainan_e supporting member enthusiasm through humor and freebies, and proprietary teasing that completion is close.

Links mentioned:

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- world_sim: no description found

- Let Me In Crazy GIF - Let Me In Crazy Funny - Discover & Share GIFs: Click to view the GIF

- Pipes: Pipes are necessary to smoke frop. "Bob" is always smoking his pipe full of frop. Every SubGenius has a pipe full of frop and they smoke it nonstop. Often, SubGenii find a picture of a famou...

- Fire Writing GIF - Fire Writing - Discover & Share GIFs: Click to view the GIF

- Anime Excited GIF - Anime Excited Happy - Discover & Share GIFs: Click to view the GIF

- A GIF - Finding Nemo Escape Ninja - Discover & Share GIFs: Click to view the GIF

- Mika Kagehira Kagehira Mika GIF - Mika kagehira Kagehira mika Ensemble stars - Discover & Share GIFs: Click to view the GIF

- Tree Fiddy GIF - Tree Fiddy South - Discover & Share GIFs: Click to view the GIF

- Tea Tea Sip GIF - Tea Tea Sip Anime - Discover & Share GIFs: Click to view the GIF

- Forge - NOUS RESEARCH: NOUS FORGE Download Coming June 2024

- DSJJJJ: Simulacra in the Stupor of Becoming - NOUS RESEARCH: Desideratic AI (DSJJJJ) is a philosophical movement focused on creating AI systems using concepts traditionally found in monism, mereology, and philology. Desidera aim to create AI that can act as bet...

LM Studio ▷ #💬-general (515 messages🔥🔥🔥):

-

Llama 3 Performance Evaluations: Users are testing the Llama 3 8B model with various presets and settings. There’s interest in how Llama 3 compares to other models, especially in coherency and speed across system configurations, including a desktop with a Ryzen 5 3600 and a laptop with a Core i5 8350u. The model’s performance is broadly seen as promising, although some users are getting unexpected outputs, like repeated appearances of <|eot_id|>assistant.

-

Llama 3 Optimization Challenges: Users are noticing high CPU utilization when running Llama 3 locally on LM Studio, especially on systems with integrated GPUs like the Intel iGPU, suggesting that Llama 3 might be more efficiently used with dedicated GPUs. Some users are experiencing slowness or less effective multilingual responses, with different levels of token generation speed on various system configurations.

-

Llama 3 Integration and Compatibility: Inquiries are being made about Llama 3’s compatibility with various apps and platforms, such as VSCode Copilot. A user shared details on using Continue.dev for integrating LLMs from LM Studio into alternative platforms. There’s also interest in whether the model can facilitate specific tasks or be fine-tuned for improved performance.

-

Evaluation of Smaller Models: A discussion about the efficacy of smaller LLMs like Phi 2 and Llama 3’s 1.1B model raises questions about their coherence and the potential for embedding them into devices for specific functions. Users are discussing optimizing and fine-tuning smaller models for specialized tasks and considering the implications of running AI on low-power devices.

-

User Troubleshooting: Several users are troubleshooting issues with their LM Studio setups, from problems with GPU utilization to inconsistent settings behavior between chat sessions. There’s ongoing back-and-forth about the best configurations and settings for running Llama 3, with users sharing experiences and solutions for better model performance.
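For the stray `<|eot_id|>assistant` output mentioned above, one client-side workaround is to cut the generated text at the first end-of-turn marker. The sketch below is only an illustration under that assumption, not LM Studio's fix: the helper name is hypothetical, and the cleaner solution is usually to configure these strings as stop tokens in the preset.

```python
# Llama 3 special tokens that mark the end of a turn or of the text.
STOP_STRINGS = ("<|eot_id|>", "<|end_of_text|>")


def truncate_at_stop(text, stop_strings=STOP_STRINGS):
    """Return `text` cut at the earliest stop string, if any is present."""
    cut = len(text)
    for stop in stop_strings:
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)  # keep the earliest marker found
    return text[:cut].rstrip()


if __name__ == "__main__":
    raw = "Paris.<|eot_id|>assistant\n\nParis is the capital of France."
    print(truncate_at_stop(raw))
```

Post-processing like this hides the symptom but still pays for the extra generated tokens, so it is best treated as a stopgap until the chat template and stop settings are correct.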

Links mentioned:

- meraGPT/mera-mix-4x7B · Hugging Face: no description found

- lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- LM Studio | Continue: LM Studio is an application for Mac, Windows, and Linux that makes it easy to locally run open-source models and comes with a great UI. To get started with LM Studio, download from the website, use th...

- meta-llama/Meta-Llama-3-70B-Instruct - HuggingChat: Use meta-llama/Meta-Llama-3-70B-Instruct with HuggingChat

- Mission Squad. Flexible AI agent desktop app.: no description found

- Monaspace: An innovative superfamily of fonts for code

- Tweet from AI at Meta (@AIatMeta): Introducing Meta Llama 3: the most capable openly available LLM to date. Today we’re releasing 8B & 70B models that deliver on new capabilities such as improved reasoning and set a new state-of-the-a...

- Welcome Llama 3 - Meta's new open LLM: no description found

- Puss In Boots Math GIF - Puss In Boots Math I Never Counted - Discover & Share GIFs: Click to view the GIF

- Johnny English Agent GIF - Johnny English Agent Yawn - Discover & Share GIFs: Click to view the GIF

- Meta Releases LLaMA 3: Deep Dive & Demo: Today, 18 April 2024, is something special! In this video I'm covering the release of @meta's LLaMA 3. This model is the third iteration of th...