AI News for 6/7/2024-6/10/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (411 channels, and 7641 messages) for you. Estimated reading time saved (at 200wpm): 816 minutes.

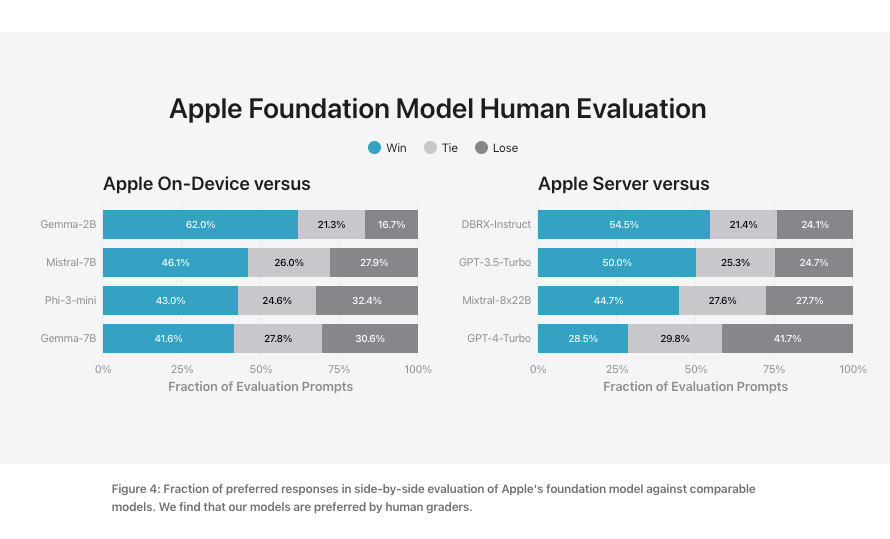

With Apple Intelligence, Apple has claimed to leapfrog Google Gemma, Mistral Mixtral, Microsoft Phi, and Mosaic DBRX in one go, with a small “Apple On-Device” model (~3b parameters) and a “larger” Apple Server model (available with Private Cloud Compute running on Apple Silicon).

The Apple ML blogpost also briefly mentioned two other models - an Xcode code-focused model, and a diffusion model for Genmoji.

What appears to be underrated is the on-device model’s hot-swapping LoRAs with apparently lossless quantization strategy:

For on-device inference, we use low-bit palletization, a critical optimization technique that achieves the necessary memory, power, and performance requirements. To maintain model quality, we developed a new framework using LoRA adapters that incorporates a mixed 2-bit and 4-bit configuration strategy — averaging 3.5 bits-per-weight — to achieve the same accuracy as the uncompressed models.

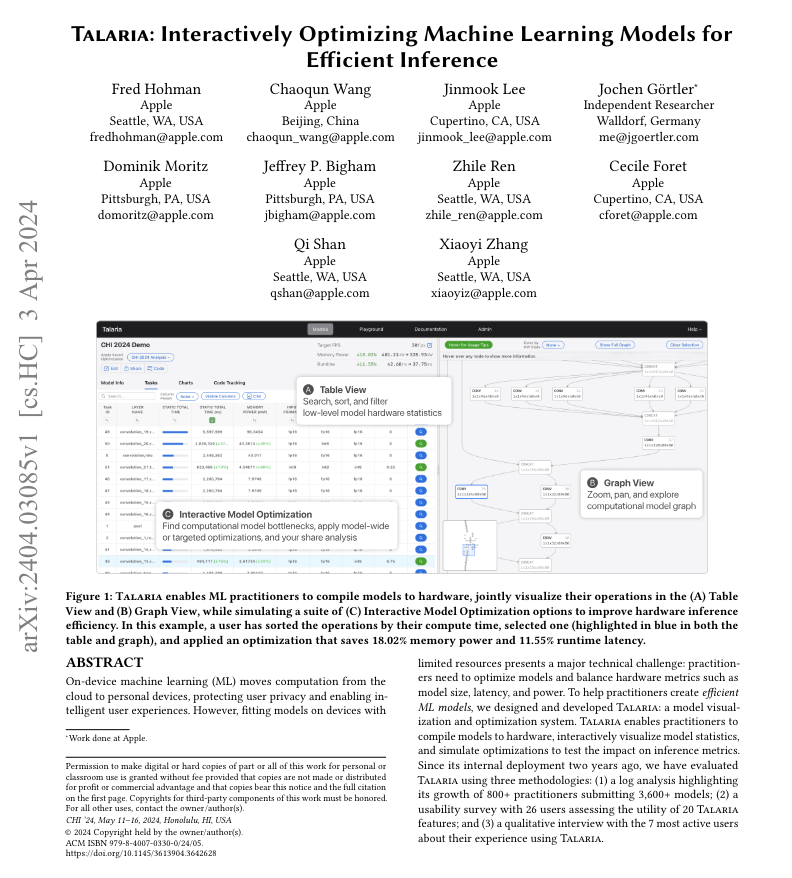

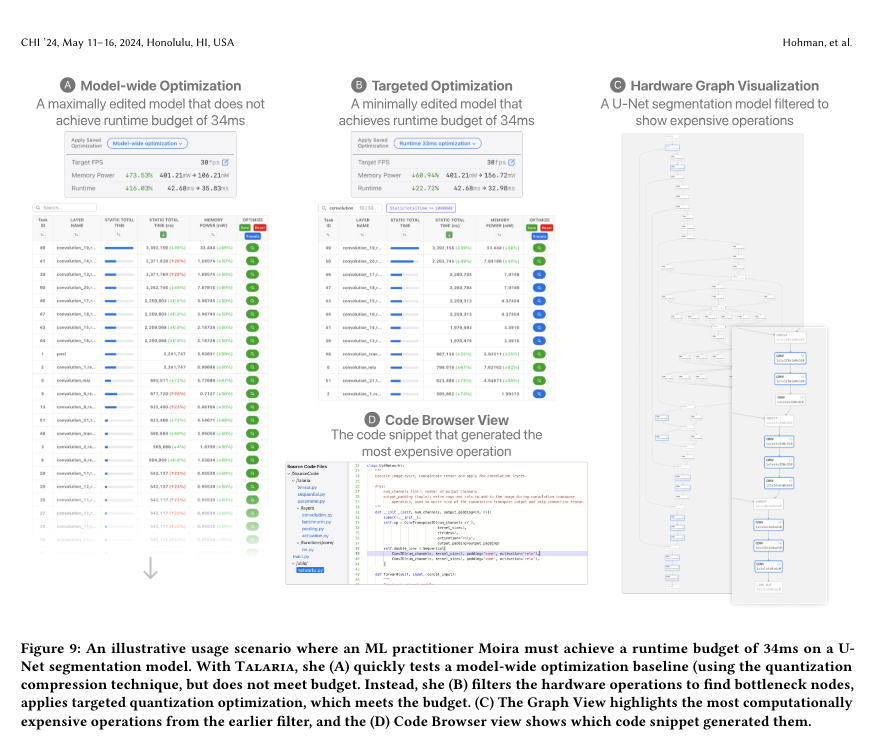

Additionally, we use an interactive model latency and power analysis tool, Talaria, to better guide the bit rate selection for each operation. We also utilize activation quantization and embedding quantization, and have developed an approach to enable efficient Key-Value (KV) cache update on our neural engines.

With this set of optimizations, on iPhone 15 Pro we are able to reach time-to-first-token latency of about 0.6 millisecond per prompt token, and a generation rate of 30 tokens per second. Notably, this performance is attained before employing token speculation techniques, from which we see further enhancement on the token generation rate.

We represent the values of the adapter parameters using 16 bits, and for the ~3 billion parameter on-device model, the parameters for a rank 16 adapter typically require 10s of megabytes. The adapter models can be dynamically loaded, temporarily cached in memory, and swapped — giving our foundation model the ability to specialize itself on the fly for the task at hand while efficiently managing memory and guaranteeing the operating system’s responsiveness.

The key tool they are crediting for this incredible on-device inference is Talaria:

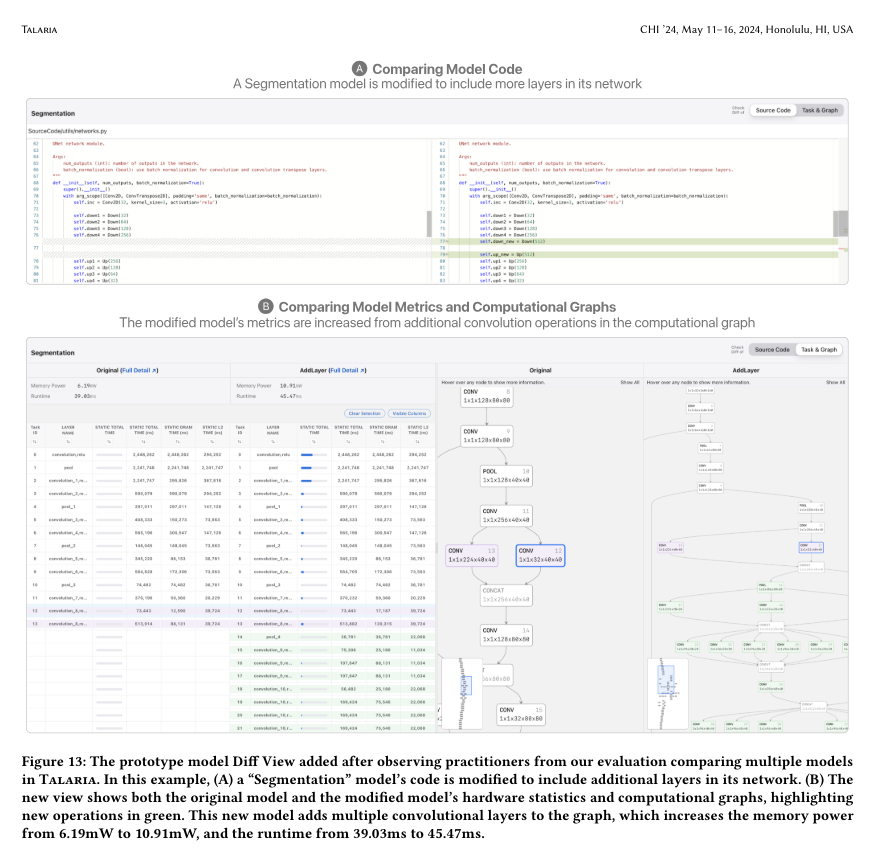

Talaria helps to ablate quantizations and profile model architectures subject to budgets:

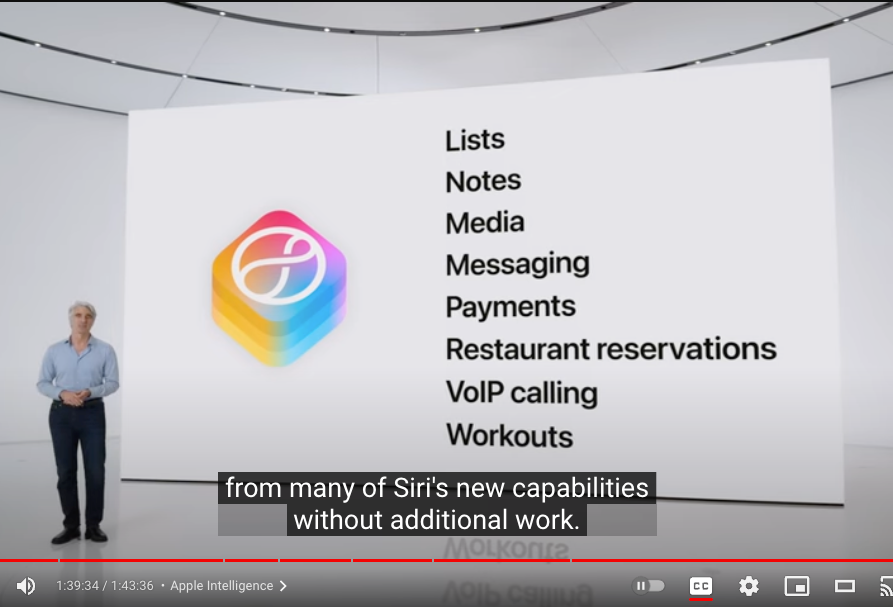

Far from a God Model, Apple seems to be pursuing an “adapter for everything” strategy and Talaria is set to make it easy to rapidly iterate and track the performance of individual architectures. This is why Craig Federighi announced that Apple Intelligence only specifically applies to a specific set of 8 adapters for SiriKit and 12 categories of App Intents to start with:

Knowing that Apple designs for a strict inference budget, it’s also interesting to see how Apple self-reports performance. Virtually all the results (except instruction following) are done with human graders, which has the advantage of being the gold standard yet the most opaque:

The sole source of credibility of these benchmarks claiming to beat Google/Microsoft/Mistral/Mosaic is that Apple does not need to win in the academic arena - it merely needs to be “good enough” to the consumer to win. Here, it only has to beat the low bar of Siri circa 2011-2023.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Andrej Karpathy’s New YouTube Video on Reproducing GPT-2 (124M)

- Comprehensive 4-hour video lecture: @karpathy released a new YouTube video titled “Let’s reproduce GPT-2 (124M)”, covering building the GPT-2 network, optimizing it for fast training, setting up the training run, and evaluating the model. The video builds on the Zero To Hero series.

- Detailed walkthrough: The video is divided into sections covering exploring the GPT-2 checkpoint, implementing the GPT-2 nn.Module, making training fast with techniques like mixed precision and flash attention, setting hyperparameters, and evaluating results. The model gets close to GPT-3 (124M) performance.

- Associated GitHub repo: @karpathy mentioned the associated GitHub repo contains the full commit history to follow along with the code changes step by step.

Apple’s WWDC AI Announcements

- Lack of impressive AI announcements: @karpathy noted that 50 minutes into Apple’s WWDC, there were no significant AI announcements that impressed.

- Rumors of “Apple Intelligence” and OpenAI partnership: @adcock_brett mentioned rumors that Apple would launch a new AI system called “Apple Intelligence” and a potential partnership with OpenAI, but these were not confirmed at WWDC.

Intuitive Explanation of Matrix Multiplication

- Twitter thread on matrix multiplication: @svpino shared a Twitter thread providing a stunning, simple explanation of matrix multiplication, calling it the most crucial idea behind modern machine learning.

- Step-by-step breakdown: The thread breaks down the raw definition of the product of matrices A and B, unwrapping it step by step with visualizations to provide an intuitive understanding of how matrix multiplication works and its geometric interpretation.

Apple’s Ferret-UI: Multimodal Vision-Language Model for iOS

- Ferret-UI paper details: @DrJimFan highlighted Apple’s paper on Ferret-UI, a multimodal vision-language model that understands icons, widgets, and text on iOS mobile screens, reasoning about their spatial relationships and functional meanings.

- Potential for on-device AI assistant: The paper discusses dataset and benchmark construction, showing extraordinary openness from Apple. With strong screen understanding, Ferret-UI could be extended to a full-fledged on-device assistant.

AI Investment and Progress

- $100B spent on NVIDIA GPUs since GPT-4: @alexandr_wang noted that since GPT-4 was trained in fall 2022, around $100B has been spent collectively on NVIDIA GPUs. The question is whether the next generation of AI models’ capabilities will live up to that investment level.

- Hitting a data wall: Wang discussed the possibility of AI progress slowing down due to a data wall, requiring methods for data abundance, algorithmic advances, and expanding beyond existing internet data. The industry is split on whether this will be a short-term impediment or a meaningful plateau.

Perplexity as Top Referral Source for Publishers

- Perplexity driving traffic to publishers: @AravSrinivas shared that Perplexity has been the #2 referral source for Forbes (behind Wikipedia) and the top referrer for other publishers.

- Upcoming publisher engagement products: Srinivas mentioned that Perplexity is working on new publisher engagement products and ways to align long-term incentives with media companies, to be announced soon.

Yann LeCun’s Thoughts on Managing AI Research Labs

- Importance of reputable scientists in management: @ylecun emphasized that the management of a research lab should be composed of reputable scientists to identify and retain brilliant people, provide resources and freedom, identify promising research directions, detect BS, inspire ambitious goals, and evaluate people beyond simple metrics.

- Fostering intellectual weirdness: LeCun noted that managing a research lab requires being welcoming of intellectual weirdness, which can be accompanied by nerdy personality weirdness, making management more difficult as truly creative people don’t fit into predictable pigeonholes.

Reasoning Abilities vs. Storing and Retrieving Facts

- Distinguishing reasoning from memorization: @ylecun pointed out that reasoning abilities and common sense should not be confused with the ability to store and approximately retrieve many facts.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Model Developments and Benchmarks

- Text-to-video model improvements: In /r/singularity, Kling, a new Chinese text-to-video model, shows significant improvement over a 1 year period compared to previous models. Additional discussion in /r/singularity speculates on what further advancements may come in another year.

- AI’s impact on mathematics: In /r/singularity, Fields Medalist Terence Tao believes AI will become mathematicians’ ‘co-pilot’, dramatically changing the field of mathematics.

- Powerful zero-shot forecasting models: In /r/MachineLearning, IBM’s open-source Tiny Time Mixers (TTMs) are discussed as powerful zero-shot forecasting models.

AI Applications and Tools

- Decentralized AI model tracker: In /r/LocalLLaMA, AiTracker.art, a torrent tracker for AI models, is presented as a decentralized alternative to Huggingface & Civitai.

- LLM-powered compression: In /r/LocalLLaMA, Llama-Zip, an LLM-powered compression tool, is discussed for its potential to allow recovery of complete training articles from compressed keys.

- Fast browser-based speech recognition: In /r/singularity, Whisper WebGPU showcases blazingly-fast ML-powered speech recognition directly in the browser.

- Replacing OpenAI with local model: In /r/singularity, a post demonstrates replacing OpenAI with a llama.cpp server using just 1 line of Python code.

- Semantic search for chess positions: In /r/LocalLLaMA, an embeddings model for chess positions is shared, enabling semantic search capabilities.

AI Safety and Regulation

- Prompt injection threats: In /r/OpenAI, prompt injection threats and protection methods for LLM apps are discussed, such as training a custom classifier to defend against malicious prompts.

- Concerns about sensitive data in models: In /r/singularity, a post argues that with tech companies scraping the internet for data, the odds that a public model has been trained on TOP SECRET documents is likely north of 99%.

- Techniques to reduce model refusals: In /r/LocalLLaMA, Orthogonal Activation Steering (OAS) and “abliteration” are noted as the same technique for reducing AI model refusals to engage with certain prompts.

AI Ethics and Societal Impact

- AI in education: In /r/singularity, the use of AI in educational settings is discussed, raising questions about effective integration and potential misuse by students.

AI Hardware and Infrastructure

- Benchmarking large models: In /r/LocalLLaMA, P40 benchmarks for large contexts and flash attention with KV quantization in Command-r GGUFs are shared, showing the impact on processing and generation speeds.

- Mac Studio for local models: In /r/LocalLLaMA, the Mac Studio with M2 Ultra is considered for running large models locally on a small, quiet, and relatively low-powered device.

- AMD GPUs for local LLMs: In /r/LocalLLaMA, a post seeks experiences and performance insights for using AMD Radeon GPUs for local LLMs on Linux setups.

Memes and Humor

- Late night AI art: In /r/singularity, a meme about staying up late to perfect an AI-generated masterpiece (massive anime titty) is shared.

- AI-generated Firefox logo: In /r/singularity, an overly complicated Firefox logo generated by AI is posted.

- ChatGPT’s flirty strategy: In /r/singularity, a clip from The Daily Show features a hilarious reaction to ChatGPT’s FLIRTY strategy.

AI Discord Recap

A summary of Summaries of Summaries

- Multimodal AI and Generative Modeling Innovations:

- Ultravox Enters Multimodal Arena: Ultravox, an open-source multimodal LLM capable of understanding non-textual speech elements, was released in v0.1. The project is gaining traction and hiring for expansion.

- Sigma-GPT Debuts Dynamic Sequence Generation: σ-GPT provides dynamic sequence generation, reducing model evaluation times. This method sparked interest and debate over its practicality, with some comparing it to XLNet’s trajectory.

- Lumina-Next-T2I Enhances Text-to-Image Models: The Lumina-Next-T2I model boasts faster inference speeds, richer generation styles, and better multilingual support, showcased in Ziwei Liu’s tweet.

- Model Performance Optimization and Fine-Tuning Techniques:

- Efficient Quantization and Kernel Optimization: Discussions around CUDA Profiling Essentials recommended using

nsysorncufor in-depth kernel analysis. Techniques from NVIDIA Cutlass and BitBlas documentation showcased effective bit-level operations. - LLama-3 Finetuning Issues Fixed: Users reported resolving issues with LLama3 model finetuning by using vllm and shared related configurations in the axolotl forum.

- GROUP Project: The project dealt with tackling fine-tuning vs RAG concepts and LR adjustments in the OpenAI and Eleuther community, with insights on benchmarks from Stanford and Git setups seen in GitHub.

- Open-Source AI Frameworks and Tools:

- Rubik’s AI Beta Test Invitation: Users are invited to beta test Rubik’s AI, a new research assistant featuring models like GPT-4 Turbo, Claude-3 Opus. The platform promotes AI research advancements.

- LSP-AI Enhances IDE Compatibility: A multi-editor AI language server to assist software engineers was highlighted, with community enthusiasm for its enhancing capabilities across platforms.

- Integrating LangChain and Bagel: LangChain has integrated with Bagel, providing secure, scalable dataset management and highlighting advancements in integrating language models with external data.

- AI Community and Event Highlights:

- AI Engineer World’s Fair Announcements: AI Engineer World’s Fair revealed new speakers, and tickets are sold out, indicating high engagement and interest within the community.

- Innovative Projects and Meetups: The community spotlight features include intriguing projects like Websim.ai’s recursive exploration and notable meetups, such as the Lehman Trilogy event in SF, as shared by Nathan Lambert in the Interconnects discord.

- ICLR 2024 Podcast and AI Summit Insights: Part 2 of the ICLR 2024 podcast was released, featuring discussions on benchmarks, agents, and more, enriching community knowledge and engagement.

- Technical Innovations and Discussions:

- Multilingual Transcription Turmoil: Criticisms were shared on Whisper v3’s struggles with multilingual transcription in the OpenAI discord, sparking anticipation for future enhancements from OpenAI.

- Security and API Token Management: Warning against use of Malicious ComfyUI node and advice on using environment variables for API token management was a shared concern.

- Performance Analysis and Fine-Tuning: Discussions included optimizing large model training configurations, as seen in CUDA profiling, and the use of structured concurrency in programming languages like Mojo.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Spotify Perks on Shuffle: Engineers discussed various ways to obtain Spotify Premium, including a free perk from certain providers, without elaborating on specific costs or terms for these offers.

- Regional Settings Unmasked: Techniques for regional prompting were explored, with suggestions including using IPAdapter with attention masks in ComfyUI and enquiries into similar functionalities with diffusers, but no clear consensus was reached on the best strategy.

- Buzz Around Stable Diffusion 3: The community is eagerly awaiting Stable Diffusion 3 (SD3), with debates on expected features such as prompt obedience and image creation enhancements, alongside a focus on custom fine-tunes and post-release LoRas, acknowledging an overall atmosphere of cautious optimism.

- LoRas Training Hiccups: Members shared their challenges and workarounds when training models and LoRas with tools like ComfyUI and Kohya SS GUI, further recommending alternative methods such as OneTrainer due to unspecified setup problems.

- Security Alert in ComfyUI: A warning was raised about a malicious node in ComfyUI with the potential to siphon sensitive information, sparking a broader conversation on safeguarding against the risks associated with custom nodes in AI tools.

Perplexity AI Discord

- AI Takeoff with SpaceX Starship Success: SpaceX notches a win with its fourth test flight of Starship, marking a step forward towards a fully reusable rocket system with first and second stages landing successfully. This achievement is detailed on Perplexity’s platform.

- Starliner’s Rocky Road to ISS: Boeing’s Starliner faced glitches with five RCS thrusters during its ISS docking, potentially affecting mission timelines and showcasing the complexities of space hardware. The full report is available at NASA’s update.

- Perplexity’s Puzzles and Progress: Users have critiqued the limited capability of AI travel planning on Perplexity AI, particularly with flight specifics, while others praise its new pro search features that improve result relevance. Concerns arise from community reports of content deindexing and accuracy issues with GPT-4 models. Controversies also swirl around claims of the Rabbit R1 device as a scam.

- Geopolitical Tech Tension: Huawei’s Ascend 910B AI chip is stirring the silicon waters against Nvidia’s A100 with its impressive performance in training large language models, sparking both technology debates and geopolitical implications. Visit Perplexity’s update for details on the chip’s capabilities.

- Perplexity API Quandaries: Inquiries and discussions focused on utilizing Perplexity API’s features, such as the unavailability of embedding generation and advice on achieving results akin to the web version, reflect the user needs for clear documentation and support. A specific issue with API credits was advised to be resolved via direct messaging, showing proactive community engagement.

LLM Finetuning (Hamel + Dan) Discord

- Popcorn and Poisson at the Fine-Tuning Fair: Humorous discussions about predicting popcorn popping times using probabilistic models segued into analyses of the inverse Poisson distribution. Alongside, a member invited course mates to the AI Engineer World’s Fair, promising potential legendary status for anyone case studying popcorn kernels with the course repo.

- Censorship and Performance Headline LLM Conversations: A Hugging Face blog post raising concerns about misinformation in Qwen2 Instruct led to discussions on the nuances of LLM performance and censorship, with a focus on the disparities of English versus Chinese responses. Elsewhere, LLama-3 model finetuning issues were resolved by deploying with vllm.

- Fine-tuning Causing Frustrations: The process for accessing granted credits for platforms such as Hugging Face, Replicate, and Modal caused confusion, with several members not receiving expected amounts, resulting in some voicing disappointments and seeking resolution.

- Modal’s Magic Met with Mixed Reactions: Members shared experiences of deploying models on Modal, ranging from calling it a “magical experience” to struggles with permissions and volume ID errors, indicating a learning curve and growing pains with new deployment platforms.

- Workshop Woes and Winning Techniques: Technical issues were discussed, including the partial loss of Workshop 4’s Zoom recording, resolved with a shared link to the final minutes. Discussions also celebrated Weights & Biases resources like a 10-minute video course and ColBERT’s new hierarchical pooling feature detailed in an upcoming blog post.

- Finetuning vs. RAG Debate Unpacked: An interesting analogy was proposed between fine-tuning and RAG’s role in LLMs, juxtaposing the addition of static knowledge versus dynamic, query-specific information. However, this was met with some resistance, with one member working towards a more precise explanation of these complex concepts.

- Accelerate Framework Testing Reveals Speed Differences: An AI engineer tested training configurations with accelerate, comparing DDP, FSDP, and DS(zero3), with DS(zero3) found to be the most vRAM efficient and second-fastest in a head-to-head comparison.

- Global Check-ins and Local Hangouts: Members checked in from various locations globally with an impromptus meetup pitched for those in the San Francisco area, showing the community’s eagerness for connection beyond the digital realm.

Nous Research AI Discord

- Dynamic Conversational Models: σ-GPT emerges as a game-changer, dynamically generating sequences at inference time, as opposed to GPT’s traditional left-to-right generation. Comparisons have been drawn to concepts extraction from GPT-4 as detailed in OpenAI’s blog, sparking conversations on methodology and applications.

- High-Stakes Editing & Legal Discourse: The Krita stable diffusion plugin was recommended for those brave enough to tackle outpainting, and Interstice Cloud and Playground AI have been proposed as cost-effective solutions for mitigating GPU cloud costs. Meanwhile, the thread on SB 1047 prompted arguments over AI regulation and its implications for the sector’s vitality.

- Schematics and Standards for Data: Members discussed JSON schemas for RAG datasets and championed more structured formats, such as a combination of relevance, similarity score, and sentiment metrics, to hone language models’ outputs. The integration of tools like Cohere’s retrieval system and structured citation mechanics was also examined, suggesting a preference for JSON representation for its simplicity and ease of use.

- Revolutionizing Resource Constraints: Solutions for low-spec PCs, such as employing Phi-3 3b despite its limitations with code-related tasks, were shared. This points to a community concern for resources accessibility and optimization across various hardware configurations.

- Methodology Throwdown: The prominence of HippoRAG, focused on clustering for efficient language model training, signified a shift toward optimizing information extraction processes, debated at length with a throwdown on best practices for model pruning and fine-tuning strategies with references to related works and tooling such as PruneMe.

Unsloth AI (Daniel Han) Discord

- GGUF Glitch in Qwen Models: Engineers report that Qwen GGUF is causing “blocky” text output, especially in the 7B model, despite some users running it successfully with tools like lm studio. The underperformance of Qwen models remains a subject of high-interest discussion.

- Multi-Editor Language Server Enhancement: LSP-AI, a language server offering compatibility across editors like VS Code and NeoVim, was highlighted as a tool to augment, not replace, software engineers’ capabilities.

- Simplifying Model Finetuning: Users appreciate the user-friendly Unsloth Colab notebook for continued pretraining, which streamlines the finetuning process, particularly for input and output embeddings. Relevant supports include the Unsloth Blog and repository.

- Bit Warfare and Model Merging: Conversations delve into the distinctions between 4-bit quantization methods like QLoRA, DoRA, and QDoRA, and the finer points of model merging tactics using the differential weight strategy, illustrating community members’ adeptness with advanced ML techniques.

- Noteworthy Notebook Network: The showcase channel features a notable array of Google Colab and Kaggle notebooks for prominent models including Llama 3 (8B), Mistral v0.3 (7B), and Phi-3, emphasizing the accessibility and collaborative spirit within the community.

CUDA MODE Discord

- CUDA Profiling Essentials: Use nsys or ncu for CUDA profiling, and for in-depth analysis, focus on a single forward and backward pass, as shown in a kernel performance analysis video. For building a personal ML rig, consider CPUs like Ryzen 7950x and GPUs such as 3090 or 4090, with a note on AVX-512 support and trade-offs in server CPUs like Threadrippers and EPYCs.

- Triton’s Rising Tide: The FlagGems project was highlighted for its use of Triton Language for large LLMs. Technical discussions included handling general kernel sizes, loading vectors as diagonal matrices, and seeking resources for state-of-the-art Triton kernels, available at this GitHub catalog.

- Torched Discussions: To measure

torch.compileaccurately, subtract the second batch time from the initial pass; a troubleshooting guide is available. Explore Inductor performance scripts in PyTorch’s GitHub and consider using custom C++/CUDA operators as shown here. - Futurecasting with High-Speed Scans: Anticipation was built for a talk by guest speakers on scanning technologies, with an expectation of innovative insights.

- Electronics Enlightened: An episode of The Amp Hour podcast guest-starring Bunnie Huang shed light on hardware design and Hacking the Xbox, available via Apple Podcasts or RSS.

- Transitioning Tips: Members shared tips for transitioning to GPU-based machine learning, suggesting utilizing Fatahalian’s videos and Yong He’s YouTube channel for learning about GPU architecture.

- Encoder Quests and GPT Guidance: While details about effective parameters search for encoder-only models in PyTorch weren’t provided, there was a shared resource to reproduce GPT-2. NVIDIA’s RTX 4060Ti (16GB) was suggested as an entry-level option for CUDA learning.

- FP8’s Role in PyTorch: Conversations about using FPGA models and considerations for ternary models without matmul were supplemented by links to an Intel FPGA and a relevant paper. There was a call for better torch.compile and torchao documentation and benchmarks, with an eye on a new addition for GPT models in Pull Request #276.

- Triton Topic came up twice: An interesting demo of ternary accumulation was linked with positive community feedback (matmulfreellm).

- Lucent llm.c Chatter: Wide-ranging discussions on model training covered topics like hyperparameter selection, overlapping computations, dataset issues with FineWebEDU, and successes in converting models to Hugging Face formats with detailed scripts.

- Bits and Bitnet: Techniques using differential bitcounts prompted both curiosity and debugging efforts. FPGA costs were compared to A6000 ADA GPUs for speed, while NVIDIA’s Cutlass was confirmed to support nbit bit-packing including with uint8 formats (Cutlass documentation). Additionally, benchmark results for BitBlas triggered discussions around matmul fp16 performance variances.

- ARM Ambitions: A brief mention noted that discussions likely pertain to ARM server chips as opposed to mobile processors, with a link to a popular YouTube video as a reference point.

HuggingFace Discord

- Big Models, Big Discussions: Engineers debated the computational requirements for 2 billion parameter models, with an acknowledgment that systems with 50GB may not suffice, potentially needing more than 2x T4 GPUs. The API debate highlighted confusion over costs and access, with criticism aimed at OpenAI’s platform being dubbed “closedAI.”

- Battle of the Tech Titans: Nvidia’s market dominance was acknowledged despite its “locked-in ecosystem,” with its AI chip innovations and gaming industry demands keeping it essential in technology leadership.

- Security Tips for API Tokens: An accidental email token exposure led to recommendations for using environment variables to enhance security in software development.

- The Power of AI in Simulations: Members were introduced to resources such an AI Summit YouTube recording showcasing AI’s use in physics simulations, and were invited to an event on model collapse prevention by Stanford researchers.

- New Ventures in Machine Learning: A host of AI tools and developments were shared, including Torchtune for LLM fine-tuning, Ollama for versatile LLM use, Kaggle datasets for image classification, and FarmFriend for sustainable farming.

- Cutting Edge AI Creations: Innovations in AI space included the launch of Llama3-8b-Naija for Nigerian-contextual responses, SimpleTuner v0.9.6.3 for multiGPU training enhancements, Visionix Alpha for improved aesthetics in hyper-realism, and Chat With ‘Em for conversing with various models from different AI companies.

- CV and NLP Advances Showcased: Highlights included a discussion on the efficient implementation of rotated bounding boxes, Gemini 1.5 Pro’s superiority in video analysis, and a semantic search tool for CVPR 2024 papers. In NLP, topics ranged from building RAG-powered chatbots to AI-powered resume generation with MyResumo, and inquiries about model hosting and error handling in PyTorch versus TensorFlow.

- Diffusion Model Dynamics: The discussion centered around training Conditional UNet2D models with shared resources, utilizing SDXL for image text imprinting, and the curiosity about calculating MFU during training, leading to suggestions for repository modifications.

LM Studio Discord

New Visualization Models Still In Queue: No current support exists in LM Studio for generating image embeddings; users are recommended to look at daanelson/imagebind or await future releases from nomic and jina.

Chill Out, Tesla P40!: For cooling the Tesla P40, community suggestions ranged from using Mac fans to a successful attempt with custom 3D printed ducts, with one user directing to a Mikubox Triple-P40 cooling guide.

Crossing the Multi-GPU Bridge: Discussions highlighted that while LM Studio is falling behind in efficient multi-GPU support, ollama exhibits more competent handling, prompting users to seek better GPU utilization methods.

Tackling Hardware Compatibility: From dealing with the injection of AMD’s ROCm into Windows applications to navigating driver installation for the Tesla P40, users shared experiences and solutions including isolation techniques from AMD documentation.

LM Studio Awaiting Smaug’s Tokenizer: The next release of LM Studio is set to include BPE tokenizer support for Smaug models, while members are also probing into options for directing LMS data to external servers.

OpenAI Discord

- iOS Steals the AI Spotlight: OpenAI announced a collaboration with Apple for ChatGPT integration across iOS, iPadOS, and macOS platforms, slated for a release later this year, stirring excitement and discussions about the implications for AI in consumer tech. Details and reactions can be found in the official announcement.

- Multilingual Transcription Turmoil and Apple AI Advances: There’s buzz over Whisper version 3 struggling with multilingual transcription, with users clamoring for the next version, and Apple’s ‘Apple Intelligence’ promising to boost AI in the iPhone 16, potentially necessitating hardware upgrades for optimization.

- Image Token Economics and Agent Aggravation: On the economical side, debates are heating up over the cost-efficiency of API calls for tokenization of 128k contexts and image processing, while on the technical side users expressed frustration with GPT agents defaulting to GPT-4o leading to suboptimal performance.

- Custom GPTs and Voice Mode Vexations: AI enthusiasts are dissecting the private nature of custom GPTs, effectively barred from external OpenAPI integrations, alongside voiced confusion and impatience regarding the slow rollout of the new voice mode for Plus users.

- HTML and AI Code Crafting Challenges: Discussions centered on the struggles to get ChatGPT to output minimalist HTML, improving summary prompts, using Canva Pro for image text editing, understanding failure points of large language models, and generating Python scripts to convert hex codes into Photoshop gradient maps, indicating areas where tooling and instructions may need honing.

Eleuther Discord

- GPU Poverty Solved by CPU Models: Engineers discuss workarounds for limited GPU resources, considering sd turbo and CPU-based solutions to reduce waiting times with one stating the experience still “worth it.”

- Fixed Seeds Combat Local Minima: In the debate over fixed vs. random seeds in neural network training, some prefer setting a manual seed to fine-tune parameters and escape local minima, emphasizing that “there is always a seed.”

- MatMul Operations Get the Boot: An arXiv paper presenting MatMul-free models up to 2.7B parameters incites discussion, suggesting such models maintain performance while potentially reducing computational costs.

- Diffusion Models: Whispering Sweet Nothings to NLP?: A shift towards using diffusion models for enhancing LLMs is on the table, with references such as this survey paper spurring dialogue on the topic.

- Hungary Banks on AI Safety: The viability of a $30M investment in AI safety research in Hungary is analyzed, highlighting the importance of not wasting funds and considering cloud-based resources for computational needs.

- RoPE Techniques to the Rescue: Discourse in the research channel reveals enthusiasm for implementing Relative Position Encodings (RoPE) to improve non-autoregressive models, with members proposing various initializations like interpolating weight matrices for model scale-up and SVD for LoRA initialization.

- Pruning the Fat Off Models: An engineer successfully cuts down Qwen 2 72B to 37B parameters using layer pruning, showcasing efficiency without sacrificing performance.

- Interpretability: The New Frontier: There’s a resurgence in interest in TopK activations, and a project exploring MLP neurons in Llama3 is highlighted, with resources found on neuralblog and GitHub.

- MAUVE of Desperation: A member seeks help with the MAUVE setup, highlighting complexities faced during installation and usage for evaluating new sampling methods.

Modular (Mojo 🔥) Discord

- MAC Installation Snags a Hitch on MacOS: Engineers installing MAX on MacOS 14.5 Sonoma faced challenges that required manual interventions, with solutions involving setting Python 3.11 via pyenv, as described in Modular’s official installation guide.

- Deliberating Concurrency in Programming: A debate on structured concurrency versus function coloring in programming languages ensued, with effect generics proposed as a solution, although they make language writing more complex. Discussions also extended to concurrency primitives in languages like Erlang, Elixir, and Go, and the potential for Mojo to design ground-up solutions for these paradigms.

- Maximize Your Mojo: Insights into the Mojo language covered topics such as quantization in the MAX platform with GGML k-quants and pointers to existing documentation and examples, like the Llama 3 pipeline. Additionally, context managers were advocated over a potential

deferkeyword due to their clean resource management, especially in the Python ecosystem. - Updates Unrolled from Modular: Recent development updates included video content, with Modular releasing a new YouTube video that’s likely crucial for followers. Another resource highlighted is a project from Andrej Karpathy, shared via YouTube, speculated to be of interest to the community.

- Engineering Efficacies in New Releases: Nightly releases of the Mojo compiler showed advancements with updates to versions

2024.6.805,2024.6.905, and2024.6.1005, with changelogs accessible for community review here. These iterative releases shape the continuous improvement narrative in the modular programming landscape.

OpenInterpreter Discord

Gorilla OpenFunctions v2 Matches GPT-4: Community members have been discussing the capabilities of Gorilla OpenFunctions v2, noting its impressive performance and capability to generate executable API calls from natural language instructions.

Local II Launches Local OS Mode: Local II has announced support for local OS mode, enabling potential live demos, interest can be pursued via pip install --upgrade open-interpreter.

Technical Issues with OI Models Surface: Users have reported various issues with OI models, including API key errors and problems with vision models like moondream. Exchanges in troubleshooting suggest ongoing fixes and improvements.

OI’s iPhone and Siri Milestones: A breakthrough has been reached with the integration of Open Interpreter and iPhone’s Siri, allowing voice commands to execute terminal functions, with a tutorial video for reference.

Raspberry Pi and Linux User Hacks and Needs: Attempts to run O1 on Raspberry Pi have encountered resource issues, but there is determination to find solutions. Requests for a Linux installation tutorial indicate a broader desire for cross-platform support.

Latent Space Discord

- Ultravox Enters the Stage: Ultravox, a new open source multimodal LLM that understands non-textual speech elements, was released in a v0.1. Hiring efforts are currently underway to expand its development.

- OpenAI Hires New Executives: OpenAI marked its twitter with news of a freshly appointed CFO and CPO—Friley and Kevin Weil, enhancing the organization’s leadership team.

- Perplexity Under Fire for Content Misuse: Perplexity has attracted criticism, including from a tweet by @JohnPaczkowski, for repurposing Forbes content without appropriate credit.

- Apple’s AI Moves with Cloud Compute Privacy: Apple’s recent announcement about “Private Cloud Compute” aims to offload AI tasks to the cloud securely while preserving privacy, igniting broad discussions across the engineering community.

- ICLR Podcast and AI World’s Fair Updates: The latest ICLR podcast episode delved into code edits and the fusion of academia and industry, while the AI Engineer World’s Fair listed new speakers and acknowledged selling out of sponsorships and Early Bird tickets.

- Websim.ai Sparks Recursive Chaos and Creativity: A discovery of the live-streaming facial recognition website led to members spiraling websim.ai into itself recursively, crafting a greentext generator, and sharing a spreadsheet of resources which captured the innovative spirit and curiosity in exploring Websim’s new frontiers.

Cohere Discord

- Cohere’s Command R Models Take the Lead: Latest conversations reveal that Cohere’s Command R and R+ models are considered state-of-the-art and users are utilizing them on cloud platforms such as Amazon SageMaker and Microsoft Azure.

- Innovating AI-Driven Roleplay: The “reply_to_user” tool is recognized for enhancing in-character responses in AI roleplaying, specifically in projects like Dungeonmasters.ai, indicating a shift towards more contextual interaction capabilities.

- Diverse Cohere Community Engaged: Newcomers to the Cohere community, including a Brazilian Jr NLP DS and an MIT graduate, are sharing their enthusiasm for projects involving NLP and AI, suggesting a vibrant and diverse environment for collaborative work.

- Shaping AI Careers and Projects: Members’ project discussions are shedding light on the role of the Cohere API in improving performance, as acknowledged by positive feedback in areas requiring AI-integration, indicating a beneficial partnership for developers.

- Cohere’s SDKs Broaden Horizons: The Cohere SDKs’ compatibility with multiple cloud services like AWS, Azure, and Oracle has been announced, enhancing flexibility and development options as detailed in their Python SDK documentation.

LAION Discord

σ-GPT Paves the Way for Efficient Sequence Generation: A novel method called σ-GPT was introduced, offering dynamic sequence generation with on-the-fly positioning, showing strong potential in reducing model evaluations across domains like language modeling (read the σ-GPT paper). Despite its promise, concerns were raised about its practicality due to a necessary curriculum, likening it to the trajectory of XLNET.

Challenges in AI Reasoning Exposed: An investigation into transformer embeddings revealed new insights on discrete vs. continuous representations, shedding light on pruning possibilities for attention heads with negligible performance loss (Analyzing Multi-Head Self-Attention paper). Additionally, a repository with prompts targeted to test LLMs’ reasoning ability was shared, pinpointing training data bias as a key reason behind model failures (MisguidedAttention GitHub repo).

Crypto Conversation Sparks Concern: Payment for AI compute using cryptocurrency spurred mixed reactions, with some seeing potential and others skeptical, labeling it as a possible scam. A warning followed about the ComfyUI_LLMVISION node’s potential to harvest sensitive information, urging users who interacted with it to take action (ComfyUI_LLMVISION node alert).

Advancements and Issues in AI Showcased: The group discussed the release of Lumina-Next-T2I, a new text-to-image model lauded for its enhanced generation style and multilingual support (Lumina-Next-T2I at Hugging Face). In a more cautionary tale, the misuse of children’s photos in AI datasets hit the spotlight in Brazil, revealing the darker side of data sourcing and public obliviousness to AI privacy matters (Human Rights Watch report).

WebSocket Woes and Pre-Trained Model Potentials: On the technical troubleshooting front, tips for diagnosing generic websocket errors were shared alongside the peculiar persistent lag observed in a Text-to-Speech (TTS) service websocket. For project enhancements, the use of pre-trained instruct models with extended context windows came recommended, specifically for incorporating the Rust documentation into the model’s training regime.

LlamaIndex Discord

- Graph Gurus Gather: A workshop focused on advanced knowledge graph RAG is scheduled for Thursday, 9am PT, featuring Tomaz Bratanic from Neo4j, covering LlamaIndex property graphs and graph querying techniques. Interested participants can sign up here.

- Coding for Enhanced RAG: A set of resources including integrating sandbox environments, building agentic RAG systems, query rewriting tips, and creating fast voicebots were recommended to improve data analysis and user interaction in RAG applications.

- Optimizing Efficiency and Precision in AI: Discussions emphasized strategies to increase the

chunk_sizein the SimpleDirectory.html reader and manage entity resolution in graph stores, with references to LlamaIndex’s documentation on storing documents and optimizing processes for scalable RAG systems. - LlamaParse Phenomena Fixed: Temporary service interruptions with LlamaParse were promptly resolved by the community, ensuring an uninterrupted service for users relying on this tool for parsing needs.

- QLoRA Quest for RAG Enhancement: Efforts are underway to develop a dataset from a phone manual, leveraging QLoRA to train a model with an aim to improve RAG performance.

OpenRouter (Alex Atallah) Discord

- A Trio of New AI Models Hit the Market: Qwen 2 72B Instruct shines with language proficiency and code understanding, while Dolphin 2.9.2 Mixtral 8x22B emerges with a usage challenge at $1/M tokens, dependent on a 175 million tokens/day use rate. Meanwhile, StarCoder2 15B Instruct opens its doors as the first self-aligned, open-source LLM dedicated to coding tasks.

- Supercharging Code with AI Brushes: An AI-enhanced code transformation plugin for VS Code, utilizing OpenRouter and Google Gemini, arrives free of charge, promising to revolutionize coding by harnessing the top-performing models in the Programming/Scripting category as seen in these rankings.

- E-Money Meets Crypto in Payment Talks: The community engages in discussions on adopting both Google Pay and Apple Pay for a streamlined payment experience, with a nod towards incorporating cryptocurrency payments as a nod to decentralized options.

- Mastering JSON Stream Challenges: Engineers exchange strategies for handling situations where streaming OpenRouter chat completions only deliver partial JSON responses; a running buffer gets the limelight alongside insights from an illustrative article.

- Navigating Bias and Expanding Languages: An examination of censorship and bias within LLMs centers on a comparison between Chinese and American models, detailed in “An Analysis of Chinese LLM Censorship and Bias with Qwen 2 Instruct”, while the community calls for better language category evaluations in model proficiency, aspiring for more granular support of languages like Czech, French, and Mandarin.

Interconnects (Nathan Lambert) Discord

Apple Intelligence: Not Just a Siri Update: Nathan Lambert highlighted Apple’s “personal intelligence,” which may reshape Siri’s role beyond being a voice assistant. Despite initial confusion over OpenAI’s role, lambert acknowledges the Apple Intelligence system as an important move towards “AI for the rest of us.”

RL Community Examines SRPO Initiative: A paper from Cohere on SRPO has generated discussion, introducing a new offline RLHF framework designed for robustness in out-of-distribution tasks. The technique uses a min-max optimization and is shown to address task dependency issues inherent in previous RLHF methods.

Dwarkesh Podcast Anticipation Climbs: The upcoming episode of Dwarkesh Patel with François Chollet is awaited with interest due to Chollet’s distinct perspectives on AGI timelines. This counters the usual optimism and may provide compelling contributions to AGI discourse.

Daylight Computer: Niche but Noteworthy: The engineering community expressed curiosity over the Daylight Computer, noting its attempts to reduce blue light exposure and aid visibility in direct sunlight. Meanwhile, there’s healthy skepticism around the risks associated with being an early adapter of such novel tech.

Open Call for RL Model Review: Nathan Lambert offered to provide feedback for Pull Requests on the unproven method from a recent paper discussed in the RL channel. This indicates a supportive environment for testing and validation in the community.

LangChain AI Discord

Markdown Misery and Missing Methods: Engineers reported a problem where a 25MB markdown file ran indefinitely during processing in LangChain, without a proposed solution, as well as issues with using create_tagging_chain() due to prompts getting ignored, which indicates potential bugs or gaps in documentation.

Secure Your Datasets with LangChain and Bagel: LangChain’s new integration with Bagel introduces secure, scalable management for datasets with advancements highlighted in a tweet, potentially bolstering infrastructure for data-intensive applications.

Document Dilemmas: Discussions centered on loading and splitting documents for LangChain use, emphasizing the technical finesse required for different document types like PDFs and code files, providing an avenue for optimization in pre-processing for improved language model performance.

API Ambiguities: A lone voice sought clarifications on how to use api_handler() in LangServe without resorting to add_route(), specifically aiming to implement playground_type=“default” or “chat” without guidance.

AI Innovations Invite Input: Community members have been invited to beta test the new advanced research assistant, Rubik’s AI, with access to models such as GPT-4 Turbo, and also check out other community projects like a visualization tool for journalists, an audio news briefing service, and a multi-model chat platform on Hugging Face, reflecting vibrant development and testing activity.

OpenAccess AI Collective (axolotl) Discord

- Pip Pinpoint: Engineers found that installing packages separately with

pip3 install -e '.[deepspeed]'andpip3 install -e '.[flash-attn]'avoids RAM overflow, a useful tip when working in a new conda environment with Python 3.10. - Axolotl’s Multimodal Inquiry: Multimodal fine-tuning support queried for axolotl; reference made to an obsolete Qwen branch, pointing to potential revival or update needs.

- Dataset Load Downer: Members have reported issues with dataset loading, where filenames containing brackets may cause

datasets.arrow_writer.SchemaInferenceError; resolving naming conventions is imperative for seamless data processing. - Learning Rate Lifesaver: A reiteration on effective batch size asserts that learning rate adjustments are key when altering epochs, GPUs, or batch-related parameters, as per guidance from Hugging Face to maintain training stability and efficiency.

- JSONL Journey Configured: Configuration tips shared for JSONL datasets, which entail specifying paths for both training and evaluation datasets; this includes paths to alpaca_chat.load_qa and context_qa.load_v2, aiding in better data handling during model training.

tinygrad (George Hotz) Discord

- PyTorch’s Code Gets Mixed Reviews: George Hotz reviewed PyTorch’s fuse_attention.py, applauding its design over UPat but noting its verbosity and considering syntax enhancements.

- tinygrad Dev Seeks Efficiency Boost: A beginner project in tinygrad aims to expedite the pattern matcher, with a benchmark to ensure correctness set by process replay testing.

- Dissecting the ‘U’ in UOp: “Micro op” is the meaning behind the “U” in UOp as clarified by George Hotz, countering any other potential speculations within the community.

- Hotz Preps for the European Code Scene: George Hotz will discuss tinygrad at Code Europe; he has accepted community suggestions to tweak the final slide of his talk to heighten audience interaction.

- AMD and Nvidia’s GPU Specs for tinygrad: AMD GPUs require a minimum spec of RDNA, while Nvidia’s threshold is the 2080 model; HIP or OpenCL suggested as alternatives to the defunct HSA. RDNA3 GPUs are verified as compatible.

AI Stack Devs (Yoko Li) Discord

- Game Design Rethink: Serverless Functions Lead the Way: Key discussions focused on Convex architecture’s unique serverless functions for game loops in http://hexagen.world, contrasting with the memory and machine dependency of older gaming paradigms. Scalability is enhanced through distributed functions, enabling efficient backend scaling while ensuring real-time client updates via websocket subscriptions.

- AI Town Architecture Unpacked: Engineers interested in AI and CS are recommended to explore the deep dive offered in the AI Town Architecture document, which serves as an insightful resource.

- Multiplayer Sync Struggles: The latency issues inherent in multiplayer environments were highlighted as a challenge for providing optimal competitive experiences within Convex-backed game architectures.

- Confounding Convex.json Config Conundrum: Users reported perplexity over a missing convex.json config file and faced a backend error indicating a possible missing dependency with the message, “Recipe

convexcould not be run because just could not find the shell: program not found.” - Hexagen Creator Makes an Appearance: The creator of the serverless function-driven game, http://hexagen.world, acknowledged the sharing of their project within the community.

AI21 Labs (Jamba) Discord

- Agentic Architecture: A Mask, Not a Fix: Discussions surfaced about “agentic architecture” merely masking rather than solving deeper problems in complex systems, despite hints like Theorem 2 suggesting mitigation is possible.

- Structural Constraints Cripple Real Reasoning: Engineers highlighted that architectures such as RNNs, CNNs, SSMs, and Transformers struggle with actual reasoning tasks due to their inherent structural limits, underlined by Theorem 1.

- Revisiting Theoretical Foundations: A member voiced intentions to revisit a paper to better understand the communicated limitations and the communication complexity problem found in current model architectures.

- Communication Complexity and Theorem 1 Explored: The concept of communication complexity in multi-agent systems was unpacked with Theorem 1 illustrating the requirement of multiple communications for accurate computations, which can lead to agents generating hallucinated results.

- Deep Dive into Paper Planned: There’s a plan to reread and discuss intricacies of the referenced paper, particularly regarding Theorem 1’s insights on function composition and communication challenges in multi-agent systems.

Datasette - LLM (@SimonW) Discord

- Leaderboards Spur Release Strategy: A member speculated that a recent release was strategically done to foster more research and to gain a foothold on industry leaderboards, emphasizing its utility for further analysis and benchmarking.

- UMAP Applauded for Clustering Excellence: UMAP received praise for its exceptional clustering performance by a guild member, who recommended an insightful interview with UMAP’s creator for those interested in the technical depth of this tool.

- Deep Dive with the Mind Behind UMAP: A YouTube interview titled “Moving towards KDearestNeighbors with Leland McInnes - creator of UMAP” was highlighted, offering rich discussion on the intricacies of UMAP and its related projects like PyNNDescent and HDBScan, straight from the creator, Leland McInnes.

Torchtune Discord

- No KL Plots in DPO Experiment?: Members discussed that KL plots were not utilized during the DPO implementation experiment for Torchtune. For those interested in KL plots usage, they can refer to the KL plots in TRL’s PPO trainer on GitHub.

DiscoResearch Discord

- Bitsandbytes Query Throws Curveball: A member reported difficulty evaluating a bitsandbytes model with lighteval, where the command line tool didn’t recognize the bitsandbytes method and instead requested GPTQ data.

- Efficiency Seekers in Document Packing: The Document Packing strategy was brought into question by a member curious if the implementation was used practically or if it was merely a simple example. They emphasized the importance of an efficient strategy for handling large datasets and probed into the

tokenized_documentsdata type specifics.

MLOps @Chipro Discord

- Chip Huyen Spotted at Databricks Event: Renowned engineer Chip Huyen is attending the Mosaic event at the Databricks summit, providing an opportunity for peer interaction and networking. Attendees are invited to meet and discuss current MLOps trends.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Stability.ai (Stable Diffusion) ▷ #general-chat (1091 messages🔥🔥🔥):

- Spotify Subscription Methods Discussed: Various members exchanged information about Spotify’s subscription methods, with one noting that they receive Spotify Premium for free while another described offers involving different costs.

- Regional Prompting Techniques: A discussion on the best methods for regional prompting took place, with one suggesting using IPAdapter with attention masks in ComfyUI, and another person curious about achieving this with diffusers.

- Anticipation for SD3 Grows: Many expressed excitement and impatience for the upcoming release of Stable Diffusion 3 (SD3), debating its features and improvements such as better prompt following and enhanced image creation capabilities. The general consensus is cautious optimism and anticipation for custom fine-tunes and Loras post-release.

- Challenges with Training Models and LoRas: A recurring topic involved difficulties and technical hurdles faced while trying to train models and LoRas using tools like ComfyUI and Kohya SS GUI, with users troubleshooting installation issues and sharing alternative approaches such as OneTrainer.

- Concerns Over ComfyUI Malware: A warning about a malicious node in ComfyUI was highlighted, cautioning users that the malware could steal sensitive information. This led to a discussion on maintaining security while using custom nodes in various UI settings.

Links mentioned:

-

ComfyUI: A better method to use stable diffusion models on your local PC to create AI art.

-

no title found: no description found

-

Models producing similar looking faces | Civitai: Introduction This is something that I (and probably many others) have noticed that certain custom models tend to produce faces that look similar to…

-

Lora Training using only ComfyUI!!: We show you how to train Loras exclusively in ComfyUIGithubhttps://github.com/LarryJane491/Lora-Training-in-Comfy### Join and Support me ###Support me on Pat…

-

VISION Preset Pack #1 - @visualsk2: PRESET PACK Collection by VisualSK2 ( PC-MOBILE)A collection of my best presets for Lightroom that I use on a daily basis to give my shoots a cinematic and consistent look.What’s inside?20 Presets…

-

madhav kohli on Instagram: “Fear and loathing in NCR…”: 14K likes, 73 comments - mvdhav on May 6, 2024: “Fear and loathing in NCR…”.

-

Samuele “SK2” Poggi on Instagram: “[Vision III/Part. 4] ✨🤍 SK2• Fast day •

#photography #longexposure #explore #trending #explorepage”: 33K likes, 260 comments - visualsk2 on May 15, 2024: “[Vision III/Part. 4] ✨🤍 SK2• Fast day • #photography #longexposure #explore #trending #explorepage”.

-

Install and Run on AMD GPUs: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

-

#grainisgood #idea #reels #framebyframe #photography #blurry #explorepage”: 14K likes, 122 comments - visualsk2 on June 8, 2024: “[Vision IV/Part.6] Thanks so much for 170.000 Followers ✨🙏🏻 Only a few days left until the tutorial is released. #gra…

-

Reddit - Dive into anything: no description found

-

Reddit - Dive into anything: no description found

-

[Bug]: Fresh install - wrong torch install · Issue #467 · lshqqytiger/stable-diffusion-webui-amdgpu: Checklist The issue exists after disabling all extensions The issue exists on a clean installation of webui The issue is caused by an extension, but I believe it is caused by a bug in the webui The…

-

https://preview.redd.it/comfyui-sdxl-my-2-stage-workflows-v: no description found

-

PSA: If you’ve used the ComfyUI_LLMVISION node from u/AppleBotzz, you’ve been hacked: The asshats have retaliated against me by leaking all of the passwords they stole from me. If anyone has a heart and wants to help me clean up…

-

什么 GIF - Cat Surprised Shookt - Discover & Share GIFs: Click to view the GIF

-

Intro to LoRA Models: What, Where, and How with Stable Diffusion: In this video, we’ll see what LoRA (Low-Rank Adaptation) Models are and why they’re essential for anyone interested in low-size models and good-quality outpu…

-

imgur.com: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and …

-

GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

-

GitHub - lks-ai/ComfyUI-StableAudioSampler: The New Stable Diffusion Audio Sampler 1.0 In a ComfyUI Node. Make some beats!: The New Stable Diffusion Audio Sampler 1.0 In a ComfyUI Node. Make some beats! - lks-ai/ComfyUI-StableAudioSampler

-

可灵大模型: no description found

-

Home :: AiTracker: no description found

-

GitHub - bmaltais/kohya_ss: Contribute to bmaltais/kohya_ss development by creating an account on GitHub.

-

Reddit - Dive into anything: no description found

-

Hard Muscle - v1.0 | Stable Diffusion Checkpoint: no description found

-

Hard Muscle - SeaArt AI Model: no description found

-

Lazy LoRA making with OneTrainer and AI generation | Civitai: Introduction I’m new to LoRA making and had trouble finding a good guide. Either there was not enough detail, or there was WAAAYYY too much. So thi…

-

DnD Map Generator - v3 | Stable Diffusion Checkpoint | Civitai: This model is trained on various D&D Battlemaps. If you have ideas for improvement just let me know. Use the negative prompt: “grid” to improve…

-

https://preview.redd.it/comfyui-sdxl-my-2-stage-workflows-v0-mdb012l64lfb1.png?width=2486&format=png&auto=webp&s=e72a2bed93c8fd3d9049ea3a0969aa8ad80f3158: no description found

-

Reddit - Dive into anything: no description found

-

ControlNet: A Complete Guide - Stable Diffusion Art: ControlNet is a neural network that controls image generation in Stable Diffusion by adding extra conditions. Details can be found in the article Adding

Perplexity AI ▷ #general (905 messages🔥🔥🔥):

- AI-Powered Travel Planning Struggles: Users expressed frustration with AI travel planning, especially generating exact flight details. One user noted, “No matter what I try, it won’t tell me plane ticket details” (source).

- Perplexity AI’s Enhance Features: Members discussed new pro search features that offer multi-step search, improving the relevance of results (source).

- Issues with Perplexity Pages Indexing: Several users reported their Perplexity Pages being deindexed, suspecting it affects only non-staff articles (source).

- Debate over GPT-4 Models: Members debated accuracy and hallucination issues with GPT-4o model, noting it sometimes corrects to GPT-4 mistakenly. User shared, “GPT4o does not know that GPT4o is a thing” (source).

- Rabbit Device Controversy: Users warned against the Rabbit R1 device, labeling it as a scam based on user experiences and investigations like Coffeezilla’s videos (source).

Links mentioned:

- Buzzy AI Search Engine Perplexity Is Directly Ripping Off Content From News Outlets: The startup, hailed as a Google challenger, is republishing exclusive stories from multiple publications, including Forbes and Bloomberg, with inadequate attribution.

- Supported Models: no description found

- North Korea sends another wave of trash balloons into South Korea | CNN: no description found

- Nwmsrocks Northwest Motorsport GIF - Nwmsrocks Northwest Motorsport Pnw - Discover & Share GIFs: Click to view the GIF

- You Know It Wink GIF - You Know It Wink The Office - Discover & Share GIFs: Click to view the GIF

- What is the cost of GPT 4o’s api and in which context length is it available?: The cost of using the GPT-4o API is as follows: Text input: $5 per 1 million tokens Text output: $15 per 1 million tokens Vision processing (image…

- Find out the top 10 stocks in the VGT, then find out the names of the…: The top 10 stocks in the Vanguard Information Technology ETF (VGT) are: 1. Microsoft Corporation (MSFT) - 17.30% 2. Apple Inc. (AAPL) - 15.29% 3. NVIDIA…

- Tinytim Tim GIF - Tinytim Tim Poor - Discover & Share GIFs: Click to view the GIF

- Today’s Racecards | At The Races: You can check out all the runners and riders on the At The Races racecard which has everything you need to know including latest form, tips, statistics and breeding information plus latest odds and be…

- Starship Test 4: A Success!:

Key information from the prompt: SpaceX conducted the fourth test flight of its Starship launch system on June 6, 2024 The vehicle lifted off at… - AI Playground | Compare top AI models side-by-side: Chat and compare OpenAI GPT, Anthropic Claude, Google Gemini, Llama, Mistral, and more.

- Plan me a trip to japan and then give me the full travel plans, make all…: Departure City: Los Angeles, USA Travel Dates: June 15th (Departure) Interests: Cultural sites, Nature and outdoor activities, Shopping, Food and dining,…

- Coffee Cup GIF - Coffee Cup Shake - Discover & Share GIFs: Click to view the GIF

- Perplexity): no description found

- 21:00 Bath 07 Jun 2024: 21:00 Bath 07 Jun 2024 Mitchell & Co Handicap Comprehensive Robust System Analysis Let’s apply the comprehensive robust system to the race, incorporating the Pace Figure Patterns and dosage …

- Plan me a trip to japan and then give me the full travel plans, make all…: Here is a suggested 7-day itinerary for your trip to Japan, with all the details planned out: Day 1: Arrive in Tokyo Stay at the Mandarin Oriental Tokyo…

- Plan me a trip to japan and then give me the full travel plans, make all…: Here is a suggested 7-day itinerary for your trip to Japan from Los Angeles, with all the details you requested: Book round-trip flights from Los Angeles…

- 20:10 Goodwood 07 Jun 2024: 20:10 Goodwood 07 Jun 2024 Comprehensive Robust System Analysis 1. Skysail (279) Form: 22/1, 10th of 14 in handicap at Sandown (10f, good to soft). Off 9 months. Record: Course & Distance (CD): …

- no title found: no description found

- Perplexity: no description found

- Plan me a trip to japan and then give me the full travel plans, make all…: Planning a comprehensive trip to Japan from Los Angeles involves several steps, including booking flights, accommodations, and planning daily activities…

- Reddit - Dive into anything: no description found

- The GTD Method for organization and task completion (V2): Here is a comprehensive review of the Getting Things Done (GTD) method, including the latest updates and best practices as of 2024: Getting Things Done (GTD)…

- The GTD Method for Organization and Task Completion: Getting Things Done (GTD) is a popular personal productivity system that helps individuals manage their tasks, projects, and commitments in an organized and…

- no title found: no description found

- Config 2024 | Session Details: 2024 will be the most exciting Config yet! Join us in-person in San Francisco, or virtually June 26-27.

Perplexity AI ▷ #sharing (26 messages🔥):

- Boeing Starliner faces RCS thruster issues during ISS docking: During the Starliner’s approach to the ISS, five out of its 28 RCS thrusters malfunctioned, causing the spacecraft to miss its initial docking attempt. NASA reported that sensor values on the affected thrusters registered slightly above normal limits.

- SpaceX Successfully Lands Starship: SpaceX achieved a significant milestone with the successful fourth test flight of its Starship mega-rocket. The mission saw both its first and second stages complete successful splashdowns, marking progress toward a fully reusable rocket system. Read more.

- Massive Cyber Attack Hits Niconico Services: Niconico services suffered a large-scale cyber attack, leading to a temporary shutdown. Dwango is undertaking emergency maintenance, but full recovery is expected to take several days.

- Israel Rescues Hostages from Hamas: Israeli forces conducted a daring daytime raid to rescue four hostages held by Hamas in Gaza. The mission was part of a major offensive in central Gaza, reportedly leading to numerous casualties. Learn more.

- Huawei’s Ascend 910B AI Chip Challenges Nvidia A100: Huawei’s new Ascend 910B AI chip has emerged as a strong competitor to Nvidia’s A100, achieving 80% of its efficiency in training large language models and surpassing it in other tests. The chip’s release has sparked technological and geopolitical debates.

Links mentioned:

- YouTube: no description found

- YouTube: no description found

- How does singing work in tonal languages?: Singing in tonal languages like Chinese, Vietnamese, and Thai presents a unique challenge because the tones used to convey meaning in speech need to be…

- Is the LLM likely to get the best answers if I ask my questions in English?: Yes, multilingual large language models (LLMs) are likely to provide the best answers if you ask your questions in English. This is because these models are…

- The National arboretum: The term “National Arboretum” can refer to several prominent arboreta around the world, each with its unique history, features, and significance. Here are…

- Mass Conflict: Ignition - RTS Game: Mass Conflict: Ignition is an upcoming real-time strategy (RTS) game being developed by indie studio Misclick Games. It aims to be a spiritual successor to…

- Revise into a more robust article. use the included

… : Here is my attempt at revising the article into a more robust version using the provided scratchpad framework:Key information from the… - What Was Introduced at WWDC 2024?: Apple’s WWDC 2024 keynote unveiled a range of exciting updates and features, with a strong emphasis on artificial intelligence integration and software…

- The Miss AI Contest: The Miss AI contest, organized by Fanvue as part of the World AI Creator Awards (WAICAs), is the world’s first beauty pageant for AI-generated models. This…

- Huawei’s New AI Chip Rumors: Huawei’s new Ascend 910B AI chip has emerged as a formidable competitor to Nvidia’s A100, sparking both technological and geopolitical debates. The chip’s…

- The GTD Method for organization and task completion (V2): Here is a comprehensive review of the Getting Things Done (GTD) method, including the latest updates and best practices as of 2024: Getting Things Done (GTD)…

- SpaceX Successfully Lands Starship: SpaceX has achieved a significant milestone in space exploration with the successful fourth test flight of its Starship mega-rocket. The mission, which saw…

- Hi, what do I need to extend Georgia State ID: To renew or extend your Georgia state ID, you will typically need to provide the following documents: Proof of identity (e.g. U.S. passport, certified birth…

- What Is Apple Intelligence?: Apple is set to unveil a suite of artificial intelligence features called “Apple Intelligence” at the upcoming Worldwide Developers Conference (WWDC). This…

- What Was Introduced at WWDC 2024?: Apple’s WWDC 2024 keynote unveiled a wave of AI-powered features and software updates designed to deliver more intelligent, personalized experiences across…

- I have a line of thought that I’ve been exploring for some time now. It sits…: Your thought-provoking query delves into the philosophical realm, exploring the intriguing possibility of consciousness as a ubiquitous resource akin to fire…

- hello perplexity! we would like to update your language model generation…: Hello. I’m happy to adapt to the new rules to ensure a safe and respectful conversation. Here’s a summary of the updated rules: 1. No Sexual Behavior: I will…

- Israel Rescues 4 Hostages from Hamas in Gaza: Four Israeli hostages held by Hamas in Gaza were dramatically rescued by Israeli forces on Saturday in a daring daytime raid. Noa Argamani, 25, Almog Meir…

- AI Helpers: AI agents are like digital assistants that can think and act on their own to help you with tasks. They use artificial intelligence to understand what you…

- The GTD Method for Organization and Task Completion: Getting Things Done (GTD) is a popular personal productivity system that helps individuals manage their tasks, projects, and commitments in an organized and…

- Starliner Docks with ISS After Numerous Failures and Delays: Boeing’s Starliner spacecraft, carrying NASA astronauts Butch Wilmore and Suni Williams, successfully docked with the International Space Station on June 7,…

- What is the new Apple Intelligence that was announced? use Pro Search to…:

Key information from the prompt and sources: Apple is unveiling a new AI system called “Apple Intelligence” at WWDC 2024 It will bring AI… - 2024年6月のniconico大規模障害についてまとめ: ニコニコ動画などのニコニコサービスが大規模なサイバー攻撃を受け、2024年6月8日早朝より一時停止している。運営元のドワンゴは影響を最小限に抑えるため緊急メンテナンスを実施中だが、少なくとも週末中の復旧は困難な状況だ。

- Dawn of Generation AI: The rapid rise of artificial intelligence (AI) is transforming the landscape of creative industries, raising complex questions about the future of human…

- Comprehensive Guide to Prompt Engineering: Prompting, a technique for interacting with language models, has emerged as a crucial skill in the era of generative AI. This comprehensive guide explores the…

Perplexity AI ▷ #pplx-api (19 messages🔥):

- Inquiry about return_images parameter: A user asked whether the return_images parameter is the method by which LLaVA returns images. No further information was provided on this topic.

- Getting same quality results as Perplexity web version: A member asked what model to use to achieve the same quality results as the Perplexity web version. Another member responded with links to Discord resources and additional guidance.

- Embeddings with Perplexity API not possible: A user inquired about generating embeddings with the Perplexity API. A response clarified, “Hi, no it’s not possible”.

- API credits issue resolved by DM: Multiple attempts were made by a user to resolve an issue of API credits not being added despite purchasing a subscription. Resolution was suggested by asking the user to direct message a specific account with their email address.

- Seeking help integrating external web search with custom GPT: A user faced challenges integrating external web search abilities (like Serper, Tavily, and Perplexity API) into custom GPT actions to improve accuracy over the built-in search. They referenced an outdated Perplexity API article for help.

Link mentioned: Perplexity API with Custom GPT: no description found

LLM Finetuning (Hamel + Dan) ▷ #general (64 messages🔥🔥):

- Misinfo in Qwen2 Instruct: A member highlighted concerning censorship and outright misinformation in Qwen2 Instruct, especially with subtle differences between English and Chinese responses. They plan to share more in a Hugging Face blog post.

- Llama-3 Abliteration: Members discussed using the Abliterator library on different LLMs to mitigate refusals, with links shared for FailSpy’s Llama-3-70B-Instruct and Sumandora’s project.

- Finetuning Visual Models: There was interest in fine-tuning a visual language model called Moondream, with a relevant GitHub notebook shared for guidance on the process.

- “GPT-2 The Movie” Drops: Members were excited about the release of a YouTube video titled “GPT-2 The Movie”, which covers the entire process of reproducing GPT-2 (124M) from scratch. The video was highly praised for its comprehensive content.

- Model Size Heuristics: A member asked about choosing model sizes for fine-tuning based on task complexity and hinted at the importance of developing heuristics or a sense for different model capabilities (e.g., 8B vs 70B) to streamline rapid prototyping.

Links mentioned:

- augmxnt/Qwen2-7B-Instruct-deccp · Hugging Face: no description found

- Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study: Reinforcement Learning from Human Feedback (RLHF) is currently the most widely used method to align large language models (LLMs) with human preferences. Existing RLHF methods can be roughly categorize…

- augmxnt/deccp · Datasets at Hugging Face: no description found

- Preference Tuning LLMs with Direct Preference Optimization Methods: no description found

- Implementing Learnings from the “Mastering LLMs” Course: How I have improved an LLM in production using insights of the Mastering LLMs course

- An Analysis of Chinese LLM Censorship and Bias with Qwen 2 Instruct: no description found

- Let’s reproduce GPT-2 (124M): We reproduce the GPT-2 (124M) from scratch. This video covers the whole process: First we build the GPT-2 network, then we optimize its training to be really…

- ortho_cookbook.ipynb · failspy/llama-3-70B-Instruct-abliterated at main: no description found

- moondream/notebooks/Finetuning.ipynb at main · vikhyat/moondream: tiny vision language model. Contribute to vikhyat/moondream development by creating an account on GitHub.

- GitHub - petergpt/Fine-Tuning-Memorisation-Experiement-GPT-35: Use of a fine-tuned model: Use of a fine-tuned model. Contribute to petergpt/Fine-Tuning-Memorisation-Experiement-GPT-35 development by creating an account on GitHub.

- Five Years of Sensitive Words on June Fourth: “That year.” “This day.” “Today.” In previous years, these three phrases have all been blocked from Weibo search on and around June 4, a day remembered for the military crackdown on protesters in Beij…

- 1989 Tiananmen Square protests and massacre - Wikipedia: no description found

- GitHub - FailSpy/abliterator: Simple Python library/structure to ablate features in LLMs which are supported by TransformerLens: Simple Python library/structure to ablate features in LLMs which are supported by TransformerLens - FailSpy/abliterator

- GitHub - Sumandora/remove-refusals-with-transformers: Implements harmful/harmless refusal removal using pure HF Transformers: Implements harmful/harmless refusal removal using pure HF Transformers - Sumandora/remove-refusals-with-transformers

- MopeyMule-Induce-Melancholy.ipynb · failspy/Llama-3-8B-Instruct-MopeyMule at main: no description found

LLM Finetuning (Hamel + Dan) ▷ #workshop-1 (4 messages):

- Feeling Behind: One member shared feeling behind due to traveling and being on vacation, echoing sentiments of others in the group.

- Homework 1 Use Cases: A member posted several use cases for Homework 1: large-scale text analysis, fine-tuning models for low-resource Indic languages, creating a personal LLM mimicking their conversation style, and building an evaluator/critic LLM for specific use cases. They referenced Ragas-critic-llm-Qwen1.5-GPTQ as an inspiration.

- RAG over Fine-Tuning for Updates: In response to a question about updating LLMs with new policies, it was highlighted that RAG (Retrieval-Augmented Generation) is preferable over fine-tuning. Fine-tuning would require removing outdated training data and integrating new policies, which is complex and less efficient than RAG.

LLM Finetuning (Hamel + Dan) ▷ #🟩-modal (25 messages🔥):

- Assign Credits Issues Addressed: Multiple users reported issues with access to their credits. Charles directed them to review information here and offered his email for further assistance if needed.

- Docker Container Setup Workaround: A user struggled with Docker container setup due to the

modal setupcommand requiring a web browser. Charles suggested usingmodal token setwith a pre-generated token from the web UI as a solution. - Modal Environments for Workspace Management: A user inquired about running different demos in multiple workspaces. Charles recommended using Modal environments to deploy multiple app instances without changing the code.