AI News for 8/2/2024-8/5/2024. We checked 7 subreddits, 384 Twitters and 28 Discords (249 channels, and 5970 messages) for you. Estimated reading time saved (at 200wpm): 685 minutes. You can now tag @smol_ai for AINews discussions!

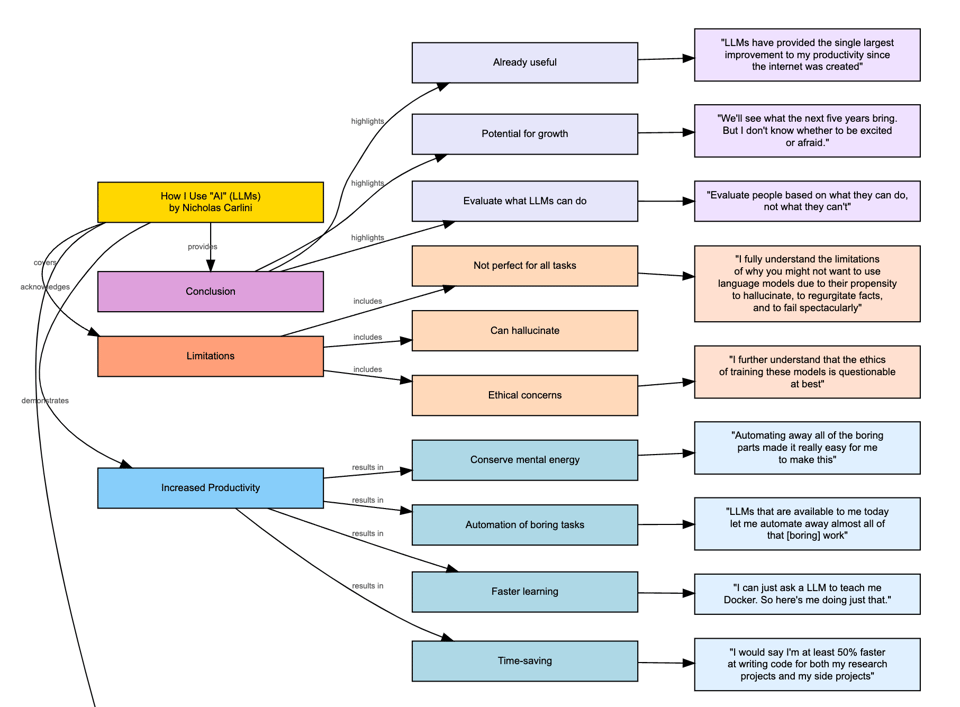

Congrats to Groq’s shareholders, whose net worth went up while everyone else’s went down (and Intel’s CEO prays). Nicholas Carlini of DeepMind is getting some recognition (and criticism) as one of the most thoughtful public writers on AI with a research background. This year he has been branching out from his usual adversarial stomping grounds with his benchmark for large language models, and this weekend he made waves with an 80,000-word treatise on How He Uses AI, which we of course used AI to summarize:

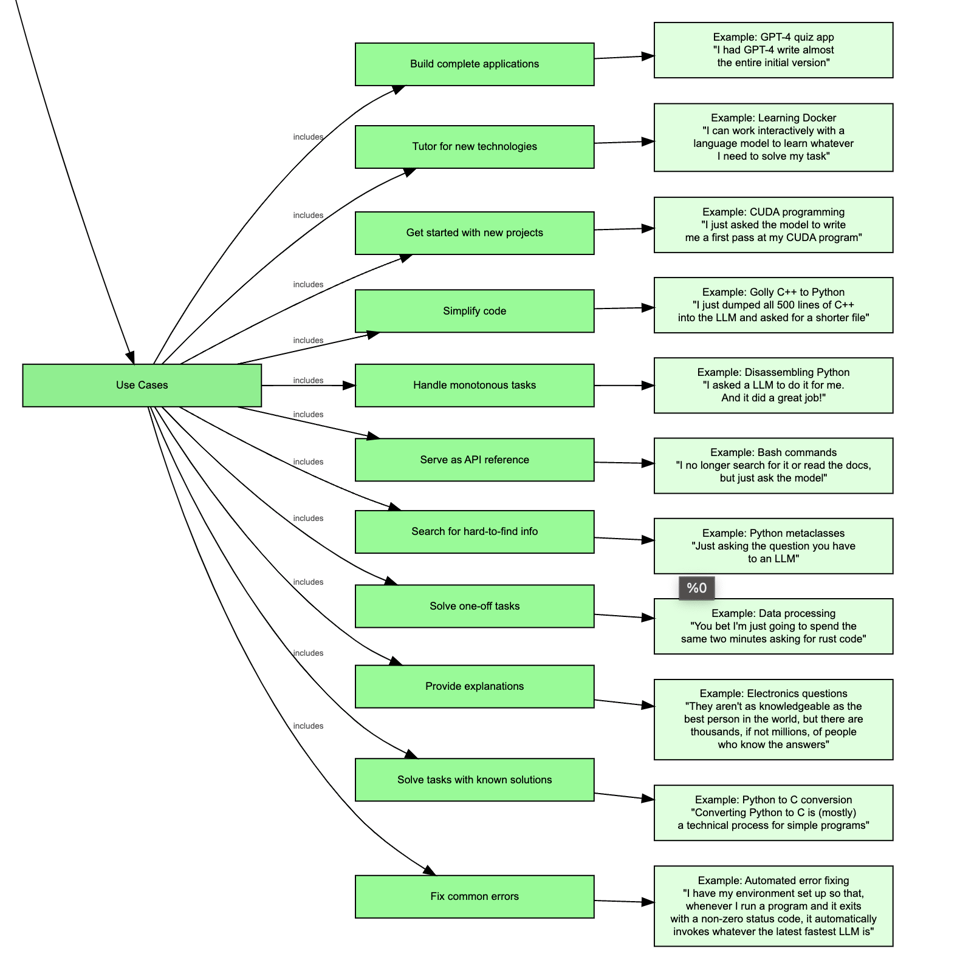

as well as use cases:

And, impressively, he says that this is “less than 2%” of the use cases for LLMs he has had (that’s about 4 million words of writing if he listed everything).

Chris Dixon is known for saying “What the smartest people do on the weekend is what everyone else will do during the week in ten years”. When people proclaim that an AI Winter is setting in because AI hasn’t produced enough measurable impact at work, they may simply be too short-term oriented. Each of these use cases is worth at least some polished tooling, if not a startup.

New: we are experimenting with smol, tasteful ads aimed specifically at helping AI Engineers. Please click through to support our sponsors, and hit reply to let us know what you’d like to see!

[Sponsored by Box] Box stores docs. Box can also extract structured data from those docs. Here’s how to do it using the Box AI API.

swyx comment: S3’s rigidity is Box’s opportunity here. The idea of a “multimodal Box” (stick anything in there, get structured data out) makes all digital content legible to machines. Extra kudos to the blog post for also showing that this solution, like any LLM-driven one, can fail unexpectedly!

{% if medium == 'web' %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI and Robotics Developments

- Figure AI: @adcock_brett announced the launch of Figure 02, described as “the most advanced humanoid robot on the planet,” with more details coming soon.

- OpenAI: Started rolling out ‘Advanced Voice Mode’ for ChatGPT to some users, featuring natural, real-time conversational AI with emotion detection capabilities.

- Google: Revealed and open-sourced Gemma 2 2B, which scores 1130 on the LMSYS Chatbot Arena, matching GPT-3.5-Turbo-0613 and Mixtral-8x7b despite being much smaller.

- Meta: Introduced Segment Anything Model 2 (SAM 2), an open-source AI model for real-time object identification and tracking across video frames.

- NVIDIA: Project GR00T showcased a new approach to scaling robot data, using Apple Vision Pro for humanoid teleoperation.

- Stability AI: Introduced Stable Fast 3D, generating 3D assets from a single image in 0.5 seconds.

- Runway: Announced that Gen-3 Alpha, their AI text-to-video generation model, can now create high-quality videos from images.

AI Research and Development

- Direct Preference Optimization (DPO): @rasbt shared a from-scratch implementation of DPO, a method for aligning large language models with user preferences.

- MLX: @awnihannun recommended using lazy loading to reduce peak memory use in MLX.

- Modality-aware Mixture-of-Experts (MoE): @rohanpaul_ai discussed a paper from Meta AI on a modality-aware MoE architecture for pre-training mixed-modal, early-fusion language models, achieving substantial FLOPs savings.

- Quantization: @osanseviero shared five free resources for learning about quantization in AI models.

- LangChain: @LangChainAI introduced Denser Retriever, an enterprise-grade AI retriever designed to streamline AI integration into applications.
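For readers who want the gist of DPO before opening the from-scratch implementation, here is a minimal pure-Python sketch of the standard per-example DPO loss. It assumes you already have the summed log-probabilities of the chosen and rejected responses under both the trainable policy and a frozen reference model. The function names and the `beta=0.1` default are illustrative choices, not taken from @rasbt's code.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss: -log(sigmoid(beta * margin)).

    Each argument is the summed log-probability of a full response
    (chosen = preferred, rejected = dispreferred) under the policy or
    the frozen reference model. beta=0.1 is an illustrative default.
    """
    # Implicit rewards: how much more the policy favors each response
    # than the reference model does.
    chosen_reward = policy_chosen_logp - ref_chosen_logp
    rejected_reward = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_reward - rejected_reward)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)
```

When the policy and reference agree, the margin is zero and the loss is log 2. Minimizing it pushes the policy to raise the chosen response's likelihood, relative to the reference, more than the rejected one's.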

AI Tools and Applications

- FarmBot: @karpathy likened FarmBot to “solar panels for food,” highlighting its potential to automate food production in backyards.

- Composio: @llama_index mentioned Composio as a production-ready toolset for AI agents, including over 100 tools for various platforms.

- RAG Deployment: @llama_index shared a comprehensive tutorial on deploying and scaling a “chat with your code” app on Google Kubernetes Engine.

- FastHTML: @swyx announced starting an app using FastHTML to turn AINews into a website.

AI Ethics and Societal Impact

- AI Regulation: @fabianstelzer drew parallels between current AI regulation efforts and historical restrictions on the printing press in the Ottoman Empire.

- AI and Job Displacement: @svpino humorously commented on the recurring prediction of AI taking over jobs.

Memes and Humor

- @nearcyan shared a meme about Mark Zuckerberg’s public image change.

- @nearcyan joked about idolizing tech CEOs.

- @lumpenspace made a humorous comment about the interpretation of diffusion as autoregression in the frequency domain.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. The Data Quality vs. Quantity Debate in LLM Training

- Since this is such a fast moving field, where do you think LLM will be in two years? (Score: 61, Comments: 101): In the next two years, the poster anticipates significant advancements in Large Language Models (LLMs), particularly in model efficiency and mobile deployment. They specifically inquire about potential reductions in parameter count for GPT-4-level capabilities and the feasibility of running sophisticated LLMs on smartphones.

- Synthetic data generation is becoming crucial as organic data runs out. The Llama 3 paper demonstrated successful techniques, including running generated code through ground truth sources to strengthen prediction abilities without model collapse.

- Researchers anticipate growth in multimodal domains, with models incorporating image/audio encoders for better world understanding. Future developments may include 4D synthetic data (xyzt data associated with text, video, and pictures) and improved context handling capabilities.

- Model efficiency is expected to improve significantly. Predictions suggest 300M parameter models outperforming today’s 7B models, and the possibility of GPT-4 level capabilities running on smartphones within two years, enabled by advancements in accelerator hardware and ASIC development.

- “We will run out of Data” Really? (Score: 61, Comments: 67): The post challenges the notion of running out of data for training LLMs, citing that the internet contains 64 ZB of data while current model training uses data in the TB range. According to Common Crawl, as of June 2023, the publicly accessible web contains ~3 billion web pages and ~400 TB of uncompressed data, but this represents only a fraction of total internet data, with vast amounts existing in private organizations, behind paywalls, or on sites that block crawling. The author suggests that future model training may involve purchasing large amounts of private sector data rather than using generated data, and notes that data volume will continue to increase as more countries adopt internet technologies and IoT usage expands.

- Users argue that freely or cheaply accessible data may run out as companies recognize the value of their data and lock it down. The quality of internet data is also questioned, with some suggesting that removing Reddit from training data improved model performance.

- The 64 ZB figure represents total worldwide storage capacity, not available text data. Current models like GPT-4 have trained on only 13 trillion tokens (about 4 trillion unique), while estimates suggest over 200 trillion text tokens of decent quality are publicly available.

- A significant portion of internet data is likely video content, with Netflix accounting for 15% of all internet traffic in 2022. Users debate the value of this data for language modeling and suggest focusing on high-quality, curated datasets rather than raw volume.
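A quick back-of-envelope calculation puts the figures from this thread side by side. All constants are the numbers quoted above, except `BYTES_PER_TOKEN = 4`, which is an assumption (a rough average often used for English text with BPE tokenizers) and not something stated in the thread.

```python
# Figures quoted in the thread.
TOTAL_STORAGE_BYTES = 64e21   # 64 ZB: worldwide storage capacity, not usable text
COMMON_CRAWL_BYTES = 400e12   # ~400 TB uncompressed (Common Crawl, June 2023)
COMMON_CRAWL_PAGES = 3e9      # ~3 billion web pages
GPT4_TOKENS = 13e12           # 13 trillion training tokens, as quoted

BYTES_PER_TOKEN = 4           # assumption: rough average for English BPE text

def summarize() -> dict:
    """Compare the quoted data sizes on a common scale."""
    return {
        # How many Common Crawl snapshots fit in the 64 ZB figure.
        "storage_to_crawl_ratio": TOTAL_STORAGE_BYTES / COMMON_CRAWL_BYTES,
        # Average uncompressed size of a crawled page, in KB.
        "avg_page_kb": COMMON_CRAWL_BYTES / COMMON_CRAWL_PAGES / 1e3,
        # Raw text implied by 13T tokens under the bytes-per-token assumption, in TB.
        "gpt4_text_tb": GPT4_TOKENS * BYTES_PER_TOKEN / 1e12,
    }

if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value:,.1f}")
```

Under these assumptions, the 64 ZB figure is about 160 million times the Common Crawl snapshot, the average crawled page is around 133 KB, and 13T tokens amount to only about 52 TB of text. This supports the thread's point that total storage says little about how much usable text exists.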

Theme 2. Emerging AI Technologies and Their Real-World Applications

- Logical Fallacy Scoreboard (Score: 118, Comments: 61): The post proposes a real-time logical fallacy detection system for political debates using Large Language Models (LLMs). This system would analyze debates in real-time, identify logical fallacies, and display a “Logical Fallacy Scoreboard” to viewers, potentially improving the quality of political discourse and helping audiences critically evaluate arguments presented by candidates.

- Users expressed interest in a real-time version of the tool for live debates, with one suggesting a “live bullshit tracker” for all candidates. The developer plans to run the system on upcoming debates if Trump doesn’t back out.

- Concerns were raised about the AI’s ability to accurately detect fallacies, with examples of inconsistencies and potential biases in the model’s judgments. Some suggested using a smaller, fine-tuned LLM or a BERT-based classifier instead of large pre-trained models.

- The project received praise for its potential to defend democracy, while others suggested improvements such as tracking unresolved statements, categorizing lies, and distilling the 70B model to 2-8B for real-time performance. Users also requested analysis of other politicians like Biden and Harris.
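The scoreboard itself reduces to a simple aggregation over per-statement judgments. Below is a minimal sketch that assumes a hypothetical `detect_fallacies` callable, standing in for the 70B LLM or a smaller fine-tuned classifier, which returns the fallacy labels it finds in one statement. The toy keyword detector exists only for demonstration.

```python
from collections import Counter, defaultdict
from typing import Callable, Iterable

def build_scoreboard(
    transcript: Iterable[tuple[str, str]],
    detect_fallacies: Callable[[str], list[str]],
) -> dict[str, Counter]:
    """Tally detected fallacy labels per speaker.

    transcript: (speaker, statement) pairs in debate order.
    detect_fallacies: hypothetical detector returning labels such as
    "ad hominem" or "straw man" for a single statement.
    """
    board: dict[str, Counter] = defaultdict(Counter)
    for speaker, statement in transcript:
        # Accessing board[speaker] also registers speakers with zero fallacies.
        board[speaker].update(detect_fallacies(statement))
    return dict(board)

def toy_detector(statement: str) -> list[str]:
    """Hypothetical stand-in for an LLM judge: flags one insult keyword."""
    return ["ad hominem"] if "idiot" in statement.lower() else []

if __name__ == "__main__":
    debate = [("A", "My opponent is an idiot."), ("B", "Let's look at the data.")]
    print(build_scoreboard(debate, toy_detector))
```

For a live version, the same loop could run incrementally on transcript segments as they arrive. The detector call is where the latency concerns, and the distillation suggestions raised above, would apply.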

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Capabilities and Advancements

- Flux AI demonstrates impressive text and image generation: Multiple posts on r/StableDiffusion showcase Flux AI’s ability to generate highly detailed product advertisements with accurate text placement and brand consistency. Examples include a Tide PODS Flavor Bubble Tea ad and a Hot Pockets “Sleepytime Chicken” box. Users note Flux’s superior text generation compared to other models like Midjourney.

- OpenAI decides against watermarking ChatGPT outputs: OpenAI announced they won’t implement watermarking for ChatGPT-generated text, citing concerns about potential negative impacts on users. The decision sparked discussions about detection methods, academic integrity, and the balance between transparency and user protection.

AI Ethics and Societal Impact

- Debate over AI’s impact on jobs: A highly upvoted post on r/singularity discusses the potential effects of AI on employment, reflecting ongoing concerns about workforce disruption.

- AI-powered verification and deepfakes: A post on r/singularity highlights the increasing sophistication of AI-generated images for verification purposes, raising questions about digital identity and the challenges of distinguishing between real and AI-generated content.

AI in Education and Development

- Potential of AI tutors: A detailed post on r/singularity explores the concept of AI tutors potentially enhancing children’s learning capabilities, drawing parallels to historical examples of intensive education methods.

AI Industry and Market Trends

- Ben Goertzel on the future of generative AI: AI researcher Ben Goertzel predicts that the generative AI market will continue to grow, citing rapid development of high-value applications.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Advancements

- Llama 3 performance issues: Users reported problems with Llama 3’s tokenization approach, particularly EOS and BOS token usage leading to inference challenges. Participants speculated that tokens missing at inference time could create contexts that are out of distribution relative to training.

- Members agreed on the need to reassess the documentation to address these tokenization bugs, emphasizing the importance of accurate token handling.

- Claude AI offers code fixes: Members discussed uploading output.json to Claude AI so it can suggest code fixes without direct file access, as outlined in this Medium article.

- Skepticism remained about the empirical effectiveness of this approach, highlighting the need for more evidence to validate its utility.
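One failure mode that fits the BOS/EOS description above is a prompt that ends up with two BOS tokens (one from the chat template and one added by the tokenizer), or a training example with no EOS. The sketch below checks a token-ID sequence for these problems. The IDs 128000 (`<|begin_of_text|>`), 128001 (`<|end_of_text|>`) and 128009 (`<|eot_id|>`) are assumed to be the Llama 3 special-token IDs. Verify them against your model's tokenizer config before relying on this.

```python
# Assumed Llama 3 special-token IDs; check your tokenizer config.
BOS_ID = 128000               # <|begin_of_text|>
EOS_IDS = {128001, 128009}    # <|end_of_text|>, <|eot_id|>

def check_special_tokens(token_ids: list[int], expect_eos: bool = False) -> list[str]:
    """Return a list of BOS/EOS problems found in a tokenized sequence."""
    problems = []
    if not token_ids or token_ids[0] != BOS_ID:
        problems.append("sequence does not start with BOS")
    bos_count = token_ids.count(BOS_ID)
    if bos_count > 1:
        # Typically the chat template and the tokenizer both inserted a BOS.
        problems.append(f"found {bos_count} BOS tokens")
    if expect_eos and (not token_ids or token_ids[-1] not in EOS_IDS):
        # Training examples without EOS teach the model never to stop.
        problems.append("sequence does not end with an EOS/EOT token")
    return problems
```

Running a check like this on both the training data and the prompts actually sent at inference is a cheap way to catch the train/inference mismatch participants speculated about.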

2. Model Performance Optimization

- Optimizing LLM inference speed: Suggestions for speeding up LLM inference included using torch.compile and benchmarking against tools like vLLM.

- Members expressed keen interest in improving efficiency when working with large language models, exploring various tools and techniques.

- Mojo enhances data processing pipelines: Discussions highlighted the potential of Mojo for integrating analytics with database workloads, enabling quicker data handling through JIT compilation and direct file operations.

- Members mentioned compatibility with PyArrow and Ibis, suggesting a promising future for a robust data ecosystem within the Mojo framework.
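Whichever inference option you try (eager PyTorch, torch.compile, or a server such as vLLM), a comparison is only meaningful if every option is measured the same way. The harness below is a minimal sketch. It assumes each backend is wrapped in a hypothetical `generate(prompt, max_new_tokens)` callable that returns the number of tokens it produced. Warm-up runs are discarded because torch.compile pays its compilation cost on the first calls.

```python
import time
from statistics import median
from typing import Callable

def tokens_per_second(
    generate: Callable[[str, int], int],
    prompt: str,
    max_new_tokens: int = 128,
    warmup: int = 2,
    runs: int = 5,
) -> float:
    """Median decode throughput of a hypothetical generate() callable, in tokens/s."""
    for _ in range(warmup):
        generate(prompt, max_new_tokens)   # e.g. triggers compilation; not timed
    rates = []
    for _ in range(runs):
        start = time.perf_counter()
        n_tokens = generate(prompt, max_new_tokens)
        elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
        rates.append(n_tokens / elapsed)
    return median(rates)
```

The median is used instead of the mean so that a single slow run (for example, a garbage-collection pause) does not skew the comparison.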

3. Fine-tuning Challenges

- Challenges with fine-tuning multilingual models: Users shared their experiences fine-tuning models like Llama 3.1 and Mistral on diverse datasets and ran into irrelevant outputs, possibly caused by incorrect prompt formatting.

- Participants recommended reverting to standard prompt formats so datasets are handled correctly, stressing the need for consistent formatting.

- LoRA training issues: A user reported poor results from SFTTrainer after formatting their dataset with concatenated text and labels, and asked whether something was misconfigured.

- Replies confirmed that the correct columns were being used but did not resolve the underlying issue, so the dataset configuration needs further investigation.
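A common way to avoid separate text and label columns is to render each record into one string in a standard template. That single text column is what TRL's SFTTrainer can be pointed at through its `dataset_text_field` setting. The sketch below assumes records with hypothetical `instruction` and `output` fields and uses the Alpaca-style template purely as an example of a "standard" format.

```python
# Alpaca-style template, used here only as an example of a standard format.
TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n{response}"
)

def to_text_field(example: dict, eos_token: str) -> dict:
    """Render one {instruction, output} record into a single training string.

    The response and EOS token stay in the same string so the model learns
    where answers stop. In the usual causal-LM setup, labels are derived
    from the input IDs, so no separate labels column is needed.
    """
    text = TEMPLATE.format(
        instruction=example["instruction"].strip(),
        response=example["output"].strip(),
    )
    return {"text": text + eos_token}
```

With a Hugging Face `datasets` object, this would typically be applied as `dataset.map(lambda ex: to_text_field(ex, tokenizer.eos_token))` before training.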

4. Open-Source AI Developments

- Introducing DistillKit: Arcee AI announced DistillKit, an open-source tool for distilling knowledge from larger models into smaller, capable ones.

- The toolkit combines traditional training techniques with novel distillation methods to make models more efficient and accessible.

- OpenRouter launches new models: OpenRouter rolled out new models, including Llama 3.1 405B BASE and Mistral Nemo 12B Celeste, listed on their model page. The addition of the Llama 3.1 Sonar family further expands the lineup.

- The new entries cater to diverse needs, and OpenRouter continues to update its offerings based on community feedback.
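DistillKit's own API is not shown here. As a generic illustration of logit-based knowledge distillation, the sketch below uses the classic temperature-softened formulation, which may differ from the methods DistillKit actually combines. The student is trained to match the teacher's softened output distribution by minimizing KL(teacher || student), scaled by T².

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-softened softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)                          # subtract max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)   # soft targets from the teacher
    q = softmax(student_logits, temperature)   # student predictions
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

In practice this term is usually mixed with the ordinary cross-entropy loss on ground-truth labels, using a weight chosen per task.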

5. Multimodal AI Innovations

- CatVTON redefines virtual try-on methods: A recent arXiv paper introduced CatVTON, a method that significantly reduces training costs by directly concatenating garment and person images as model input.

- This eliminates the need for a ReferenceNet and additional image encoders while still producing realistic garment transfers.

- Open Interpreter speech recognition proposal: A user proposed running speech recognition in the user's native language and translating between English and that language. They cautioned that translation errors would carry through to the output: garbage in, garbage out.

- The proposal raised concerns about translation errors, underscoring that reliable output depends on accurate input.

PART 1: High level Discord summaries

LM Studio Discord

- LM Studio suffers from performance hiccups: Multiple users reported issues with LM Studio version 0.2.31, particularly problems starting the application and models not loading correctly. Downgrading to earlier versions like 0.2.29 was suggested as a potential workaround.

- Users confirmed that performance inconsistencies persist and urged the community to stick with stable versions to keep their workflows running.

- Model download speeds throttle down: Users experienced fluctuating download speeds from LM Studio’s website, with reports of throttled speeds as low as 200kbps. Suggestions include waiting or retrying downloads later due to typical AWS throttling issues.

- The conversation underscored the need for patience during high-demand download times, further stressing the importance of checking connection stability.

- AI wants to control your computer!: Discussion arose about whether AI models, particularly OpenInterpreter, could gain vision capabilities to control PCs, pointing to the limitations of current AI understanding. Participants expressed concerns about potential unforeseen behaviors from such integrations.

- The debate highlighted the need for careful consideration before implementing AI control mechanisms on local systems.

- Multi-modal models create curious buzz: Interest in multi-modal models available for AnythingLLM sparked discussions among users, emphasizing exploration of uncensored models. Resources like UGI Leaderboard were recommended for capability comparisons.

- Participants stressed the importance of community-driven exploration of advanced models to enhance functional versatility.

- Dual GPU setups draw mixed views: Conversations about dual 4090 setups suggested that splitting models across cards can improve performance, but warned that using both cards effectively takes extra programming effort. Concerns persist that a single 4090 struggles with larger models.

- Members focused on the trade-off between raw power and ease of use when considering multi-GPU configurations.
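Splitting a model across two cards usually means assigning contiguous blocks of layers to each GPU, and choosing that split is part of the extra setup effort. Below is a minimal sketch of one way to choose the split. It assumes every layer is the same size and allocates layers in proportion to each card's free VRAM. `split_layers` is a hypothetical helper, not an LM Studio setting.

```python
from itertools import accumulate

def split_layers(n_layers: int, free_vram_gb: list[float]) -> list[range]:
    """Assign contiguous layer ranges to GPUs in proportion to free VRAM.

    Assumes all layers need the same memory. Boundaries come from the
    cumulative VRAM share, so ranges never overlap and cover every layer.
    """
    total = sum(free_vram_gb)
    bounds = [0] + [round(n_layers * c / total) for c in accumulate(free_vram_gb)]
    bounds[-1] = n_layers  # guard against floating-point drift
    return [range(a, b) for a, b in zip(bounds, bounds[1:])]

if __name__ == "__main__":
    # Two 24 GB cards and an 80-layer model (e.g. a 70B-class model).
    print(split_layers(80, [24.0, 24.0]))
```

Cumulative boundaries are used instead of rounding each card's share independently, because independent rounding can over- or under-allocate layers when there are more than two GPUs.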

HuggingFace Discord

- Diving into Hugging Face Model Features: Users shared insights about various models on Hugging Face, such as MarianMT for translation and the TatoebaChallenge for language support.

- Concerns about model limitations and the necessity for better documentation sparked a broader discussion.

- Speeding Up LLM Inference Techniques: Optimizing LLM inference became a hot topic, with suggestions like using torch.compile and evaluating performance with tools such as vLLM.

- Members expressed keen interest in enhancing efficiency while handling large language models.

- CatVTON Redefines Virtual Try-On Methods: A recent arXiv paper introduced CatVTON, a method that significantly reduces training costs by directly concatenating garment images.

- This innovation promises realistic garment transfers, revolutionizing virtual try-on tech.

- Gradient Checkpointing Implementation in Diffusers: Recent updates now include a method for setting gradient checkpointing in Diffusers, allowing toggling in compatible modules.

- This enhancement promises to optimize memory usage during model training.

- Identifying Relationships in Tables Using NLP: Members are exploring NLP methods to determine relationships between tables based on their column descriptions and names.

- This inquiry suggests a need for further exploration in the realm of relational modeling with NLP.

Stability.ai (Stable Diffusion) Discord

- Flux Model Rockets on GPUs: Users reported image generation speeds for the Flux model ranging from 1.0 to 2.0 iterations per second depending on GPU setup and model version.

- Some managed successful image generation on lower VRAM setups using CPU offloading or quantization techniques.

- ComfyUI Installation Hacks: Discussions revolved around installing Flux on ComfyUI, with members recommending the update.py script instead of the Manager for updates.

- Helpful installation guides were shared so newcomers could set up their environments smoothly.

- Stable Diffusion Model Showdown: Participants detailed the different Stable Diffusion models: SD1.5, SDXL, and SD3, noting each model’s strengths while positioning Flux as a newcomer from the SD3 team.

- The higher resource demands of Flux compared to traditional models were highlighted in the discussions.

- RAM vs VRAM Showdown: Adequate VRAM is critical for Stable Diffusion performance, with users recommending at least 16GB VRAM for optimal results, overshadowing the need for high RAM.

- The community advised that while RAM assists in model loading, it’s not a major factor in generation speeds.

- Animation Tools Inquiry: Participants asked about tools like Animatediff for video content generation, seeking the latest updates on available methods.

- Current suggestions highlight that while Animatediff is still useful, newer alternatives may be surfacing for similar tasks.

CUDA MODE Discord

- Epoch 8 Accuracy Spikes: Members noted a surprising spike in accuracy scores after epoch 8 during training, raising questions on expected behaviors.

- “Looks totally normal,” reassured another member, indicating no cause for concern.

- Challenges with CUDA in DRL: Frustrations arose around building Deep Reinforcement Learning environments in CUDA, with PufferAI suggested as a way to get better parallelism.

- Participants stressed the complexities involved in setup, emphasizing the need for robust tooling.

- Seeking Winter Internship in ML: A user is urgently looking for a winter internship starting January 2025, focused on ML systems and applied ML.

- The individual highlighted previous internships and ongoing open source contributions as part of their background.

- Concerns Over AI Bubble Bursting: Speculation about a potential AI bubble began circulating, with contrasting views on the long-term potential of investments.

- Participants noted the lag time between research outcomes and profitability as a key concern.

- Llama 3 Tokenization Issues: Inconsistencies in Llama 3’s tokenization approach were discussed, specifically regarding EOS and BOS token usage leading to inference challenges.

- Participants agreed on the need for a reassessment of documentation to address these tokenization bugs.

Unsloth AI (Daniel Han) Discord

- Unsloth Installation Issues Persist: Users faced errors when installing Unsloth locally, particularly regarding Python compatibility and PyTorch installation, with fixes such as upgrading pip.

- Some solved their issues by reconnecting their Colab runtime and verifying library installations.

- Challenges with Fine-tuning Multilingual Models: Users shared their experiences fine-tuning models like Llama 3.1 and Mistral with diverse datasets, encountering output relevance issues due to possibly incorrect prompt formatting.

- Suggestions urged reverting to standard prompt formats to ensure proper dataset handling.

- LoRA Training Stumbles on Dataset Format: A user reported poor results from their SFTTrainer after trying to format datasets with concatenated text and labels, questioning potential misconfiguration.

- Clarifications pointed to correct column usage yet failed to resolve the underlying issue.

- Memory Issues with Loading Large Models: Loading the 405B Llama-3.1 model on a single GPU resulted in memory challenges, prompting users to note the necessity of multiple GPUs.

- This highlights a common understanding that larger models demand greater computational resources for loading.

- Self-Compressing Neural Networks Optimize Model Size: The paper on Self-Compressing Neural Networks discusses using size in bytes in the loss function to achieve significant reductions, requiring just 3% of the bits and 18% of the weights.

- This technique claims to enhance training efficiency without the need for specialized hardware.
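The core idea, putting model size in bytes into the loss, can be sketched with two pieces: a quantizer with a learnable bit depth and exponent per channel, and a size penalty added to the task loss. This is a simplified pure-Python sketch. The exact normalization and training details in the paper may differ, and `gamma` is an illustrative value. During real training, bit depths stay continuous and gradients flow through rounding via a straight-through estimator, which plain Python cannot show.

```python
def quantize(x: float, bits: float, exponent: float) -> float:
    """Fixed-point quantizer: round x to a multiple of 2**exponent, clipped to `bits` signed bits.

    A channel whose bit depth reaches 0 is pruned entirely, which is how
    the method removes weights as well as bits. Python's round() breaks
    ties to even.
    """
    b = max(0, round(bits))
    if b == 0:
        return 0.0
    scale = 2.0 ** exponent
    lo, hi = -(2 ** (b - 1)), 2 ** (b - 1) - 1
    return scale * min(max(round(x / scale), lo), hi)

def size_in_bytes(bits_per_channel: list[float], weights_per_channel: list[int]) -> float:
    """Model size implied by per-channel bit depths."""
    return sum(max(b, 0) * n for b, n in zip(bits_per_channel, weights_per_channel)) / 8

def total_loss(task_loss: float, bits_per_channel: list[float],
               weights_per_channel: list[int], gamma: float = 1e-6) -> float:
    """Task loss plus a penalty proportional to size in bytes (gamma is illustrative)."""
    return task_loss + gamma * size_in_bytes(bits_per_channel, weights_per_channel)
```

Raising `gamma` trades accuracy for a smaller model. Channels whose bit depth is driven to zero disappear, which explains how the paper can report a reduction in both bits and weight count.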

Perplexity AI Discord

- Perplexity’s Browsing Capabilities Under Fire: Users reported mixed experiences with Perplexity’s browsing, noting struggles in retrieving up-to-date information and strange behaviors when using the web app.

- Conversations highlighted inconsistencies in model responses, particularly for tasks like coding queries that are essential for technical applications.

- Breakthrough in HIV Research with Llama Antibodies: Researchers at Georgia State University engineered a hybrid antibody that combines llama-derived nanobodies with human antibodies, neutralizing over 95% of HIV-1 strains.

- This hybrid approach takes advantage of the unique properties of llama nanobodies, allowing greater access to evasive virus regions.

- Concerns Over Model Performance: Llama 3.1 vs. Expectations: Users found that the Llama 3.1-sonar-large-128k-online model underperformed in Japanese tests, providing less accurate results than GPT-3.5.

- This has led to calls for the development of a sonar-large model specifically optimized for Japanese to improve output quality.

- Uber One Subscription Frustration: A user criticized the Uber One offer as being limited to new Perplexity accounts, indicating it serves more as a user acquisition tactic than a genuine benefit.

- Debates about account creation to capitalize on promotions raised important questions about user management in AI services.

- API Quality Concerns with Perplexity: Multiple users shared issues with the Perplexity API, mentioning unreliable responses and the return of low-quality results when querying recent news.

- Frustrations arose over API outputs that often appeared ‘poisoned’ with nonsensical content, prompting demands for better model and API performance.

OpenAI Discord

- OpenAI DevDay Hits the Road: OpenAI is taking DevDay on the road this fall to San Francisco, London, and Singapore for hands-on sessions, demos, and best practices. Attendees will have the chance to meet engineers and see how developers are building with OpenAI; more info can be found at the DevDay website.

- Participants will interact with engineers during the events, enhancing technical understanding and community engagement.

- AI’s Global Threat Discussion: A heated debate unfolded over whether AI is a global threat, focusing on how governments treat open-source AI compared with more capable closed-source models. Concerns about potential risks are growing as AI capabilities expand.

- Participants noted that viewpoints on AI’s implications are becoming increasingly polarized.

- Insights on GPT-4o Image Generation: Discussions revealed insights into GPT-4o’s image tokenization capabilities, with the potential for images to be represented as tokens. However, practical implications and limitations remain blurry in the current implementations.

- Mentioned resources include a tweet from Greg Brockman discussing the team’s ongoing work in image generation with GPT-4o.

- Prompt Engineering Hurdles: Users reported ongoing challenges in producing high-quality output when utilizing prompts with ChatGPT, often leading to frustration. The difficulty lies in defining what constitutes high-quality output, complicating interactions.

- Members shared experiences illustrating the importance of crafting clear, open-ended prompts to improve results.

- Diversity and Bias in AI Image Generation: Concerns arose about racial representation in AI-generated images, with some prompts triggering refusals under the terms of service. Members exchanged successful strategies for ensuring diverse representation by explicitly naming multiple ethnic backgrounds in their prompts.

- The discussion also revealed negative prompting effects where attempts to restrict traits produced undesirable results. Recommendations centered around crafting positive, detailed descriptions to enhance output quality.

Latent Space Discord

- AI Engineer Demand Soars: The need for AI engineers is skyrocketing as companies seek generalist skills, particularly from web developers who can integrate AI into practical applications.

- This shift highlights the gap in high-level ML expertise, pushing web devs to fill key roles in AI projects.

- Groq Raises $640M in Series D: Groq has secured a $640 million Series D funding round led by BlackRock, boosting its valuation to $2.8 billion.

- The funds will be directed towards expanding production capacity and enhancing the development of next-gen AI chips.

- NVIDIA’s Scraping Ethics Under Fire: Leaked information reveals NVIDIA’s extensive AI data scraping, amassing ‘a human lifetime’ of videos daily and raising significant ethical concerns.

- This situation has ignited debates on the legal and community implications of such aggressive data acquisition tactics.

- Comparing Cody and Cursor: Discussions highlighted Cody’s superior context-awareness compared to Cursor, with Cody allowing users to index repositories for relevant responses.

- Users appreciate Cody’s ease of use while finding Cursor’s context management cumbersome and complicated.

- Claude Introduces Sync Folder Feature: Anthropic is reportedly developing a Sync Folder feature for Claude, enabling batch uploads from local folders for better project management.

- This feature is anticipated to streamline the workflow and organization of files within Claude projects.

Nous Research AI Discord

- Recommendations on LLM as Judge and Dataset Generation: A user inquired about must-reads related to current trends in LLM as Judge and synthetic dataset generation, focusing on instruction and preference data, highlighting the latest two papers from WizardLM as a starting point.

- This discussion positions LLM advancements as crucial in understanding shifts in model applications.

- Concerns Over Claude Sonnet 3.5: Users reported issues with Claude Sonnet 3.5, noting its underperformance and increased error rates compared to its predecessor.

- This raises questions about the effectiveness of recent updates and their impact on core functionalities.

- Introduction of DistillKit: Arcee AI announced DistillKit, an open-source tool for distilling knowledge from larger models to create smaller, powerful models.

- The toolkit combines traditional training techniques with novel methods to optimize model efficiency.

- Efficient VRAM Calculation Made Easy: A Ruby script was shared for estimating VRAM requirements based on bits per weight and context length, available here.

- This tool aids users in determining maximum context and bits per weight in LLM models, streamlining VRAM calculations.

- Innovative Mistral 7B MoEification: The Mistral 7B MoEified model allows slicing individual layers into multiple experts, aiming for coherent model behavior.

- This approach enables models to share available expert resources equally during processing.
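The shared VRAM tool is written in Ruby. The Python sketch below is not a port of that script. It illustrates the usual estimate: quantized weights (parameters × bits per weight ÷ 8), plus the KV cache (2 × layers × context × KV heads × head dimension × bytes per element), plus a fixed overhead. The 0.5 GiB default overhead is an assumption. A second function inverts the estimate to find the maximum context that fits a VRAM budget.

```python
def weights_bytes(n_params: float, bits_per_weight: float) -> float:
    """Memory for quantized weights."""
    return n_params * bits_per_weight / 8

def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: keys and values (2x) for every layer and every position."""
    return 2 * n_layers * context_len * n_kv_heads * head_dim * bytes_per_elem

def estimate_vram_gib(n_params, bits_per_weight, n_layers, n_kv_heads, head_dim,
                      context_len, kv_bytes=2, overhead_gib=0.5):
    """Total VRAM estimate in GiB; overhead_gib is an assumed fixed cost."""
    total = weights_bytes(n_params, bits_per_weight) + kv_cache_bytes(
        n_layers, n_kv_heads, head_dim, context_len, kv_bytes)
    return total / 2**30 + overhead_gib

def max_context(vram_gib, n_params, bits_per_weight, n_layers, n_kv_heads,
                head_dim, kv_bytes=2, overhead_gib=0.5):
    """Largest context length whose estimate fits within vram_gib."""
    free = (vram_gib - overhead_gib) * 2**30 - weights_bytes(n_params, bits_per_weight)
    per_token = kv_cache_bytes(n_layers, n_kv_heads, head_dim, 1, kv_bytes)
    return max(0, int(free // per_token))
```

For Llama 3 8B (32 layers, 8 KV heads, head dimension 128), an 8,192-token fp16 KV cache comes to exactly 1 GiB, so at long contexts the cache can rival the quantized weights in size.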

OpenRouter (Alex Atallah) Discord

- Chatroom Gets a Fresh Look: The Chatroom has been launched with local chat saving and a simplified UI, allowing better room configuration at OpenRouter. This revamped platform enhances user experience and accessibility.

- Users can explore the new features to enhance interaction within the Chatroom.

- OpenRouter Announces New Model Variants: OpenRouter rolled out impressive new models, including Llama 3.1 405B BASE and Mistral Nemo 12B Celeste, which can be viewed at their model page. The addition of Llama 3.1 Sonar family further expands application capabilities.

- The new entries cater to diverse needs and adapt to community feedback for continual updates.

- Mistral Models Now on Azure: The Mistral Large and Mistral Nemo models are now accessible via Azure, enhancing their utility within a cloud environment. This move aims to provide better infrastructure and performance to users.

- Users can leverage Azure’s capacity while accessing high-performance AI models effortlessly.

- Gemini 1.5 Flash Gets a Price Cut: The pricing for Google Gemini 1.5 Flash will be halved on the 12th, making it more competitive against counterparts like Yi-Vision and FireLLaVA. This shift could encourage more use in automated captioning.

- Community feedback has been crucial in shaping this transition as users desire more economical options.

- Launch of Multi-AI Answers: The Multi-AI answer website has officially launched on Product Hunt with the backing of OpenRouter. Their team encourages community upvotes and suggestions to refine the service.

- Community contributions during the launch signify the importance of user engagement in the development process.

Modular (Mojo 🔥) Discord

- Mojo speeds up data processing pipelines: Discussions highlight the potential of Mojo for integrating analytics with database workloads, enabling quicker data handling through JIT compilation and direct file operations.

- Members mentioned compatibility with PyArrow and Ibis, suggesting a promising future for a robust data ecosystem within the Mojo framework.

- Elixir’s confusing error handling: Members discussed Elixir’s challenge where libraries return error atoms or raise exceptions, leading to non-standardized error handling.

- A YouTube video featuring Chris Lattner and Lex Fridman elaborated on exceptions versus errors, providing further context.

- Mojo debugger lacks support: A member confirmed that the Mojo debugger currently does not work with VS Code, referencing an existing GitHub issue for debugging support.

- Debugging workflows appear to be reliant on print statements, indicating a need for improved debugging tools.

- Performance woes with Mojo SIMD: Concerns surfaced about the performance of Mojo’s operations on large SIMD lists, which can lag on select hardware configurations.

- A suggestion arose that using a SIMD size fitting the CPU’s handling capabilities can enhance performance.

- Missing MAX Engine comparison documentation: A user reported difficulty locating documentation comparing the MAX Engine with PyTorch and ONNX, especially across models like ResNet.

- The query highlights a gap in available resources for users seeking comparison data.
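
The SIMD-width suggestion above can be illustrated with a language-neutral sketch in Python (not Mojo code). It mimics the usual strip-mining pattern: process data in chunks whose width matches the vector register, then handle the leftover tail with scalar code. For example, a 256-bit AVX2 register holds 8 float32 values. The register and element sizes are example assumptions, and real speedups depend on the compiler and hardware.

```python
def simd_lanes(register_bits, dtype_bits):
    # Number of elements of one dtype that fit in a single vector register,
    # e.g. 256-bit AVX2 with 32-bit floats -> 8 lanes.
    return register_bits // dtype_bits


def strip_mined_sum(values, lanes):
    """Sum values in lane-sized chunks plus a scalar tail.

    Each inner loop stands in for one vector instruction operating on
    `lanes` elements at once; Python itself does not vectorize this.
    """
    acc = [0.0] * lanes                      # one partial sum per lane
    n_full = (len(values) // lanes) * lanes  # elements covered by full chunks
    for start in range(0, n_full, lanes):
        for lane in range(lanes):
            acc[lane] += values[start + lane]
    total = sum(acc)                         # horizontal reduction across lanes
    for v in values[n_full:]:                # scalar remainder
        total += v
    return total


if __name__ == "__main__":
    lanes = simd_lanes(256, 32)
    print(lanes, strip_mined_sum(list(range(1003)), lanes))
```

A SIMD width far larger than the hardware register typically has to be split into several register-sized operations. That is one plausible reason very large SIMD values performed poorly on some machines.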

Eleuther Discord

- Claude AI Offers Code Fixes: Members discussed starting a new chat with Claude AI to upload output.json, enabling it to provide code fixes directly without file access, as outlined in this Medium article.

- Despite the potential, skepticism remained about the empirical effectiveness of this approach.

- Enhancing Performance through Architecture: New architectures, particularly for user-specific audio classification, can significantly improve performance using strategies like contrastive learning to maintain user-invariant features.

- Additionally, adapting architectures for 3D data was discussed as a means to ensure performance under transformations.

- State of the Art in Music Generation: Queries about SOTA models for music generation included discussions around an ongoing AI music generation lawsuit, with members favoring local execution over external dependencies.

- This conversation reflects a growing trend toward increased control in music generation applications.

- Insights on RIAA and Labels: The relationship between the RIAA and music labels was scrutinized, highlighting how they shape artist payments and the industry's structure, with members calling for more direct compensation methods.

- Concerns surfaced about artists receiving meager royalties relative to industry profits, suggesting a push for self-promotion.

- HDF5 for Efficient Embedding Management: Discussions continued on the relevance of HDF5 for loading batches from large embedding datasets, reflecting ongoing efforts to streamline data management techniques.

- This indicates a persistent interest in efficient data usage within the AI community.
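
The contrastive-learning idea from the audio-classification item above can be sketched with an InfoNCE-style loss in Python. The pairing scheme here is an assumption: the positive is an embedding of the same sound class recorded by a different user, and the negatives come from other classes. Minimizing the loss therefore pushes embeddings to agree across users. The vectors and temperature are illustrative only.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor: -log softmax probability of the positive."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    peak = max(logits)  # subtract the max before exp for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[0]


if __name__ == "__main__":
    # Anchor and positive: same class from two hypothetical users.
    anchor, positive = [0.9, 0.1, 0.0], [0.8, 0.2, 0.1]
    negatives = [[0.0, 1.0, 0.0], [0.1, 0.0, 1.0]]
    print(info_nce(anchor, positive, negatives))
```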

LangChain AI Discord

- Ollama Memory Error Chronicles: A user reported a ValueError indicating the model ran out of memory when invoking a retrieval chain despite low GPU usage with models like aya (4GB) and nomic-embed-text (272MB).

- This raises questions about resource allocation and memory management in high-performance setups.

- Mixing CPU and GPU Resources: Discussions centered on whether Ollama effectively uses both CPU and GPU under heavy load, with users noting that the expected fallback to CPU did not occur.

- Users emphasized the importance of understanding fallback mechanisms to prevent inference bottlenecks.

- LangChain Memory Management Insights: Insights were shared about how LangChain handles memory and object persistence, focusing on evaluating inputs for memory efficiency across sessions.

- Members used queries about which information is suitable for memory storage to test how different models respond.

- SAM 2 Fork: CPU Compatibility in Action: A member initiated a CPU-compatible fork of the SAM 2 model, displaying prompted segmentation and automated mask generation, with aspirations for GPU compatibility.

- Feedback regarding this endeavor is being actively solicited on the GitHub repository.

- Jumpstart Your AI Voice Assistant: A tutorial video titled ‘Create a custom AI Voice Assistant in 8 minutes! - Powered by ChatGPT-4o’ guides users through building a voice assistant for their website.

- The creator provided a demo link offering potential users hands-on experience before signing up for the service.

LlamaIndex Discord

- Build ReAct Agents with LlamaIndex: You can create ReAct agents from scratch leveraging LlamaIndex workflows for enhanced internal logic visibility.

- This method allows you to ‘explode’ the logic, ensuring a deeper understanding and control over agentic systems.

- Terraform Assistant for AI Engineers: Develop a Terraform assistant using LlamaIndex and Qdrant Engine aimed at aspiring AI engineers, with guidance provided here.

- The tutorial gives practical insights and a framework for integrating AI within the DevOps space.

- Automated Payslip Extraction with LlamaExtract: LlamaExtract allows high-quality RAG on payslips through automated schema definition and metadata extraction.

- This process significantly enhances data handling capabilities for payroll documents.

- Scaling RAG Applications Tutorial: Benito Martin outlines how to deploy and scale your chat applications on Google Kubernetes Engine, emphasizing practical strategies here.

- This resource helps fill a gap, since detailed material on productionizing RAG applications is scarce.

- Innovative GraphRAG Integration: The integration of GraphRAG with LlamaIndex enhances intelligent question answering capabilities, as discussed in a Medium article.

- This integration leverages knowledge graphs to improve context and accuracy of AI responses.
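
The "exploded" ReAct logic from the first LlamaIndex item can be made concrete with a framework-free Python sketch of the Thought, Action, Observation loop. It does not use the LlamaIndex Workflows API. The `Action: tool[input]` text format, the `react_loop` helper, and the scripted stand-in for the LLM are all hypothetical, chosen so the example runs without a model.

```python
import re


def react_loop(llm, tools, question, max_steps=5):
    """Alternate model steps with tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model emits Thought + Action, or Final Answer
        transcript += step + "\n"
        final = re.search(r"Final Answer:\s*(.+)", step)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", step)
        if action:
            name, arg = action.group(1), action.group(2)
            tool = tools.get(name)
            observation = tool(arg) if tool else f"unknown tool: {name}"
            transcript += f"Observation: {observation}\n"  # fed back on the next step
    return None, transcript  # gave up after max_steps


def scripted_llm(responses):
    # Stand-in for a real model: returns canned steps in order.
    steps = iter(responses)
    return lambda transcript: next(steps)


if __name__ == "__main__":
    llm = scripted_llm([
        "Thought: I should add the numbers.\nAction: add[2,3]",
        "Thought: I have what I need.\nFinal Answer: 5",
    ])
    tools = {"add": lambda s: str(sum(int(p) for p in s.split(",")))}
    answer, log = react_loop(llm, tools, "What is 2 + 3?")
    print(answer)
    print(log)
```

Keeping the whole transcript visible is the point of building the agent by hand: every thought, tool call, and observation can be inspected or logged.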

Interconnects (Nathan Lambert) Discord

- Bay Area Events Generate Buzz: Members expressed a desire for updates on upcoming events in the Bay Area, with some noting personal absences.

- The ongoing interest hints at a need for better communication around local gatherings.

- Noam Shazeer Lacks Recognition: Discussion arose around the absence of a Wikipedia page for Noam Shazeer, a key figure at Google since 2002.

- Members remarked that “Wikipedia can be silly,” highlighting the irony of such an impactful figure being overlooked.

- Skepticism of 30 Under 30 Awards Validity: A member critiqued the 30 Under 30 awards as catering more to insiders than genuine merit, suggesting special types of people seek such validation.

- This struck a chord among members who noted the often superficial recognition those awards bestow.

- Debate on Synthetic Data Using Nemotron: A heated discussion emerged about regenerating synthetic data with Nemotron to fine-tune OLMo models.

- Concerns were raised over the potential hijacking of the Nemotron name and criticisms of AI2’s trajectory.

- KTO Outperforms DPO in Noisy Environments: The Neural Notes interview discussed KTO’s strength over DPO when handling noisy data, suggesting significant performance gains.

- An adaptation from UCLA reportedly had KTO beating DPO, with human preferences favoring it by a 70-30 margin.
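
To show what is being compared in the KTO vs. DPO item above, here is a minimal Python sketch of the per-example DPO and KTO objectives as commonly written. Both use sequence log-probabilities from the policy and from a frozen reference model. DPO needs a chosen/rejected pair, while KTO only needs one example labeled desirable or undesirable. That difference is one plausible reason KTO may tolerate noisy or unpaired feedback better. The reference point `z0` (a KL estimate in the KTO paper) is simplified to a constant here, and all numbers are illustrative.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_sigmoid(z):
    # Stable log(sigmoid(z)).
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(pi_chosen, ref_chosen, pi_rejected, ref_rejected, beta=0.1):
    """DPO loss for one preference pair of sequence log-probs."""
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


def kto_loss(pi_logp, ref_logp, desirable, z0=0.0, beta=0.1,
             lambda_d=1.0, lambda_u=1.0):
    """KTO loss for one unpaired example; z0 is a fixed reference point here."""
    reward = pi_logp - ref_logp  # implicit reward: log-ratio vs. the reference
    if desirable:
        return lambda_d * (1.0 - sigmoid(beta * (reward - z0)))
    return lambda_u * (1.0 - sigmoid(beta * (z0 - reward)))


if __name__ == "__main__":
    print(dpo_loss(-10.0, -12.0, -15.0, -13.0))
    print(kto_loss(-10.0, -12.0, desirable=True))
```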

LAION Discord

- Synthetic Datasets Spark Controversy: Members debated the effectiveness of synthetic datasets versus original ones, noting they can accelerate training but may risk misalignment and lower quality.

- Concerns were voiced about biases, prompting calls for more intentional dataset creation to avoid generating a billion useless images.

- FLUX Model Performance Divides Opinions: Users shared mixed views on the FLUX model’s ability to generate artistic outputs; some praised its capability while others were disappointed.

- Discussion pointed out that better parameter settings could enhance its performance, yet skepticism remained regarding its overall utility for artistry.

- CIFAR-10 Validation Accuracy Hits 80%: 80% validation accuracy achieved on the CIFAR-10 dataset using only 36k parameters, treating real and imaginary components of complex parameters as separate.

- Tweaks to the architecture and the dropout implementation resolved earlier issues, yielding a more robust model with overfitting nearly eliminated.

- Ethical Concerns in Model Training: Discussions heated up around the ethical implications of training on copyrighted images, sparking anxiety over copyright laundering in synthetic datasets.

- Some suggested that while synthetic data has advantages, closer scrutiny could lead to regulation of the community's training practices.

- Stable Diffusion Dataset Availability Questioned: A user expressed frustration over the unavailability of a Stable Diffusion dataset, which hindered their progress.

- Peers clarified that the dataset isn’t strictly necessary for utilizing Stable Diffusion, offering alternative solutions.
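
The CIFAR-10 item above counts the real and imaginary parts of complex parameters separately. This Python sketch shows what that means for a complex-valued linear map computed with real arithmetic only, along with the matching parameter count. The layer shape is hypothetical and is not the member's architecture.

```python
def complex_linear(x_re, x_im, w_re, w_im):
    """Compute y = W x for complex W and x using only real numbers.

    Uses (a + ib)(c + id) = (ac - bd) + i(ad + bc) for each weight/input pair.
    """
    y_re, y_im = [], []
    for row_re, row_im in zip(w_re, w_im):
        terms = list(zip(row_re, row_im, x_re, x_im))
        y_re.append(sum(a * c - b * d for a, b, c, d in terms))
        y_im.append(sum(a * d + b * c for a, b, c, d in terms))
    return y_re, y_im


def real_param_count(n_out, n_in, bias=True):
    # Each complex weight (and bias) counts as two real parameters.
    complex_params = n_out * n_in + (n_out if bias else 0)
    return 2 * complex_params


if __name__ == "__main__":
    # A hypothetical 10 -> 10 complex layer with bias.
    print(real_param_count(10, 10))
```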

DSPy Discord

- Adding a Coding Agent to ChatmanGPT Stack: A member is seeking recommendations for a coding agent to add to the ChatmanGPT Stack, with Agent Zero suggested as a potential choice.

- The goal is an effective addition that improves coding interactions.

- Golden-Retriever Paper Overview: A shared link to the paper on Golden-Retriever details how it efficiently navigates industrial knowledge bases while sidestepping the challenges of traditional LLM fine-tuning, notably through a reflection-based question augmentation step.

- This method enhances retrieval accuracy by clarifying jargon and context prior to document retrieval. Read more in the Golden-Retriever Paper.

- Livecoding in the Voice Lounge: A member announced their return and mentioned livecoding sessions in the Voice Lounge, signaling a collaborative coding effort ahead.

- Members look forward to joining forces in this engaging setup.

- AI NPCs Respond and Patrol: Plans are underway for developing AI characters in a C++ game using the Oobabooga API for player interaction, focusing on patrolling and response functions.

- The necessary components include modifying the ‘world’ node and extending the NPC class.

- Exporting Discord Chats Made Easy: A user successfully exported Discord channels to HTML and JSON using the DiscordChatExporter tool, generating 463 thread files.

- This tool streamlines chat organization, making it easier for future reference. Check out the DiscordChatExporter.
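
The question-augmentation step in the Golden-Retriever item above can be approximated in three steps: spot jargon in the question, look up its meaning, and append that context before retrieval. In this Python sketch, a plain glossary lookup stands in for the paper's LLM-driven reflection step. The glossary entries and the `augment_question` helper are hypothetical.

```python
import re


def augment_question(question, glossary):
    """Append definitions of any glossary terms found in the question.

    A stand-in for LLM-based jargon identification: terms are matched as
    whole words, case-insensitively.
    """
    found = [term for term in glossary
             if re.search(rf"\b{re.escape(term)}\b", question, flags=re.IGNORECASE)]
    if not found:
        return question  # nothing to clarify; retrieve with the original question
    notes = "; ".join(f"{term} = {glossary[term]}" for term in found)
    return f"{question}\n[Context: {notes}]"


if __name__ == "__main__":
    # Hypothetical domain glossary.
    glossary = {
        "PLC": "programmable logic controller",
        "MTBF": "mean time between failures",
    }
    print(augment_question("What is the MTBF of the PLC on line 3?", glossary))
```

The augmented string, not the raw question, would then be sent to the retriever, so documents using the expanded terms can still match.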

OpenInterpreter Discord

- Open Interpreter runs on a local LLM!: A user successfully integrated Open Interpreter with a local LLM using LM Studio as a server, gaining access to the OI system prompt.

- They found the integration both interesting and informative, paving the way for local deployments.

- Troubleshooting Hugging Face API integration: Users faced challenges while setting up the Hugging Face API integration in Open Interpreter, encountering various errors despite following the documentation.

- One user expressed gratitude for support, hoping for a resolution to their integration issues.

- Executing screenshot commands becomes a chore: Concerns arose as users questioned why Open Interpreter generates extensive code instead of executing the screenshot command directly.

- A workaround using the ‘screencapture’ command confirmed functionality, alleviating some frustrations.

- Speech recognition in multiple languages proposed: A user proposed implementing a speech recognition method in a native language, facilitating translation between English and the local tongue.

- They cautioned about translation errors, dubbing it Garbage in, Garbage out.

- Electra AI shows promise for AI on Linux: A member unveiled Electra AI, a Linux distro built with AI capabilities that are free for use, highlighting its potential for integration.

- They noted that Electra AI comes in three flavors: Lindoz, Max, and Shift, all available for free.
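
In the local-LLM setup described in the first Open Interpreter item, LM Studio's local server exposes an OpenAI-compatible chat-completions API, so OpenAI-style clients can use it as a backend. The stdlib-only Python sketch below builds such a request. The `http://localhost:1234/v1` address is LM Studio's usual default but should be treated as an assumption, and the model name is a placeholder.

```python
import json
import urllib.request

# Assumption: LM Studio's server is running locally on its usual default port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.2):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,  # placeholder; use the identifier of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    # Sends the request; requires the local server to be up.
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Say hello in one word.")` should return the loaded model's reply as a string.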

Cohere Discord

- Cohere Support for CORS Issues: To address CORS problems on the billing page, community members suggested emailing Cohere support for help and including the organization's details in the inquiry.

- This support method aims to resolve issues that have hindered user payments for services.

- GenAI Bootcamp Seeks Cohere Insights: Andrew Brown is exploring the potential of Cohere for a free GenAI Bootcamp, which seeks to reach 50K participants this year.

- He highlighted the need for insights beyond documentation, especially regarding Cohere’s cloud-agnostic capabilities.

- Benchmarking Models with Consistency: A member inquired about keeping the validation subset consistent while benchmarking multiple models, emphasizing the importance of controlled comparisons.

- Discussions reinforced the necessity of maintaining consistent validation sets to enhance the accuracy of comparisons.

- Rerank Activation on Azure Models: Cohere announced the availability of Rerank for Azure Models, with integration potential for the RAG app, as detailed in this blog post.

- Members showed interest in updating their toolkit to utilize Rerank for Azure users.

- Clarification on Cohere Model Confusion: A user who paid for the Cohere API found only the Coral model available and faced confusion regarding accessing the Command R model.

- In response, a member clarified that Coral is indeed a version of Command R+ to ease the user’s concerns.
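
The benchmarking item above asks how to keep the validation subset identical across models. One simple approach, sketched in Python under the assumption that the full validation set has a stable order, is to draw the subset once from a dedicated, seeded random generator and reuse it for every model. The seed and subset size are arbitrary examples.

```python
import random


def fixed_validation_subset(examples, k, seed=1234):
    """Return the same k examples for a given seed and input order."""
    rng = random.Random(seed)  # private generator: unaffected by other random calls
    indices = sorted(rng.sample(range(len(examples)), k))
    return [examples[i] for i in indices]


if __name__ == "__main__":
    validation = [f"example-{i}" for i in range(1000)]  # hypothetical IDs
    print(fixed_validation_subset(validation, 5))
```

Python does not guarantee that `random.sample` makes the same picks across Python versions, so writing the selected example IDs to a file and reloading them is the more robust option for long-running comparisons.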

tinygrad (George Hotz) Discord

- tinygrad 0.9.2 introduces exciting features: The recent release of tinygrad 0.9.2 brings notable updates like faster gemv, kernel timing, and improvements with CapturedJit.

- Additional discussions included enhancements for ResNet and advanced indexing techniques, marking a significant step for performance optimization.

- Evaluating tinygrad on Aurora supercomputer: Members discussed the feasibility of running tinygrad on the Aurora supercomputer, stressing concerns over compatibility with Intel GPUs.

- While OpenCL support exists, there were queries regarding performance constraints and efficiency on this platform.

- CUDA performance disappoints compared to CLANG: Members noted that tests in CUDA run slower than in CLANG, prompting an investigation into possible efficiency issues.

- This discrepancy raises questions about the efficiency of the CUDA backend, especially in test_winograd.py.

- Custom tensor kernels spark discussion: A user shared interest in executing custom kernels on tensors, referencing a GitHub file for guidance.

- This reflects ongoing enhancements in tensor operations within tinygrad, showcasing community engagement in practical implementation.

- Bounties incentivize tinygrad feature contributions: The community has opened discussions on bounties for tinygrad improvements, such as fast sharded llama and optimizations for AMX.

- This initiative encourages developers to actively engage in enhancing the framework, aiming for broader functionality.

Torchtune Discord

- PPO Training Recipe Now Live: The team has introduced an end-to-end PPO training recipe to integrate RLHF with Torchtune, as noted in the GitHub pull request.

- Give it a try!

- Qwen2 Model Support Added: Qwen2 model support is now included in training recipes, with the 7B model available in the GitHub pull request.

- Expect the upcoming 1.5B and 0.5B versions to arrive soon!

- Llama 3 Tangles with Generation: Users successfully ran the Llama 3 8B Instruct model with a custom configuration, answering a time query in 27.19 seconds at 12.25 tokens/sec while using 20.62 GB of memory.

- However, there’s a concern about text repeating 10 times, and a pull request is under review to address unexpected ending tokens.

- Debugging Mode Call for LLAMA 3: Concerns arose regarding the absence of a debugging mode that displays all tokens in the LLAMA 3 generation output.

- A member suggested that adding a parameter to the generation script could resolve this issue.

- Model Blurbs Maintenance Anxiety: Members expressed concerns about keeping updated model blurbs, fearing the maintenance could be overwhelming.

- One member proposed using a snapshot from a model card or whitepaper as a minimal blurb solution.
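
For readers new to PPO, the core of what a recipe like the one in the first Torchtune item optimizes is the clipped surrogate objective. The Python sketch below computes only that policy term for a batch of per-token log-probabilities and advantages. A full RLHF setup typically also involves a value loss, a KL penalty against a reference model, and reward-model scoring, none of which are shown. The 0.2 clipping range is a common default, used here as an assumption.

```python
import math


def ppo_clip_loss(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate loss (to minimize) over a batch of actions."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
        # Take the pessimistic (smaller) objective, then negate it for minimization.
        total += -min(ratio * adv, clipped * adv)
    return total / len(advantages)


if __name__ == "__main__":
    print(ppo_clip_loss([-1.0, -0.5], [-1.2, -0.4], [0.8, -0.3]))
```

Clipping removes the incentive to move the policy's probability ratio far beyond 1 ± `clip_eps` in a single update, which is what keeps PPO updates conservative.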

OpenAccess AI Collective (axolotl) Discord

- bitsandbytes Installation for ROCm Simplified: A recent pull request enables packaging wheels for bitsandbytes on ROCm, streamlining the installation process for users.

- This PR updates the compilation process for ROCm 6.1 to support the latest Instinct and Radeon GPUs.

- Building an AI Nutritionist Needs Datasets: A member developing an AI Nutritionist is considering fine-tuning GPT-4o mini and is looking for suitable nutrition datasets such as USDA FoodData Central.

- Recommendations include potential dataset compilation from FNDDS, though it’s unclear if it’s available on Hugging Face.

- Searching for FFT and Baseline Tests: A member is looking for full fine-tuning (FFT) or LoRA/QLoRA baselines to experiment with on a 27B model, having gotten good results with a 9B model but running into challenges with the larger one.

- Caseus suggested that a QLoRA version for Gemma 2 27B might work with adjustments to the learning rate and the latest flash attention.

- Inquiry about L40S GPUs Performance: A member asked if anyone has trained or served models on L40S GPUs, seeking insights about their performance.

- This inquiry highlights interest in the efficiency and capabilities of L40S GPUs for AI model training.

- Discussion on DPO Alternatives in AI Training: A member questioned whether DPO remains the best approach in AI training, suggesting alternatives like ORPO, SimPO, or KTO might be superior.

- This led to an exchange of differing opinions on the effectiveness of various methods in AI model training.
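
As background for the LoRA/QLoRA discussion above: LoRA freezes a weight matrix W and learns a low-rank update, giving W' = W + (alpha / r) · B A. The pure-Python sketch below shows that update and why it is cheap to train. QLoRA additionally stores the frozen W in 4-bit precision, which this sketch does not model. Matrix sizes are illustrative.

```python
def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m), with matrices as lists of rows.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merged_weight(w, a, b, alpha, r):
    """W' = W + (alpha / r) * B @ A, with B: d_out x r and A: r x d_in."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def lora_trainable_params(d_out, d_in, r):
    # Only A and B are trained: r * d_in + d_out * r values.
    return r * (d_in + d_out)


if __name__ == "__main__":
    full = 4096 * 4096
    lora = lora_trainable_params(4096, 4096, r=16)
    print(f"trainable fraction for one 4096x4096 matrix: {lora / full:.4%}")
```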

MLOps @Chipro Discord

- Triton Conference Registration Now Open!: Registration for the Triton Conference on September 17, 2024 at Meta Campus, Fremont CA is now open! Sign up via this Google Form to secure your spot.

- Attendance is free, but spots are limited, so early registration is encouraged.

- Information Required for Registration: Participants must provide their email, name, affiliation, and role to register. Additional optional questions include dietary preferences like vegetarian, vegan, kosher, and gluten-free.

- Pro tip: Capture what attendees hope to take away from the conference!

- Google Sign-In for Conference Registration: Attendees are prompted to sign in to Google to save their progress on the registration form. All responses will be emailed to the participant’s provided address.

- Don’t forget: participants should never submit passwords through Google Forms to ensure security.

Mozilla AI Discord

- Llamafile boosts offline LLM access: The core maintainer of Llamafile reports significant advancements in enabling offline, accessible LLMs within a single file.

- This initiative improves accessibility and simplifies user interactions with large language models.

- Community excited about August projects: A vibrant discussion has ignited around ongoing projects for August, encouraging community members to showcase their work.

- Participants have the chance to engage and share their contributions within the Mozilla AI space.

- sqlite-vec release party on the horizon: An upcoming release party for sqlite-vec will allow attendees to discuss features and engage with the core maintainer.

- Demos and discussions are set to unfold, creating opportunities for rich exchanges on the latest developments.

- Exciting Machine Learning Paper Talks scheduled: Upcoming talks featuring topics such as Communicative Agents and Extended Mind Transformers will include distinguished speakers.

- These events promise valuable insights into cutting-edge research and collaborative opportunities in machine learning.

- Local AI AMA promises open-source insights: A scheduled Local AI AMA with the core maintainer will offer insights into this self-hostable alternative to OpenAI.

- This session invites attendees to explore Local AI’s capabilities and directly address their queries.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

LM Studio ▷ #general (708 messages🔥🔥🔥):

LM Studio performance issues, Model downloading speeds, AI interaction with local systems, Multi-modal models in AnythingLLM, RAM and VRAM utilization

- LM Studio performance issues: Multiple users reported issues with LM Studio version 0.2.31, including problems starting the application and models not loading correctly.

- Downgrading to earlier versions, such as 0.2.29, was suggested as a potential workaround for these issues.

- Model downloading speeds: Users experienced fluctuating download speeds from LM Studio’s website, with some noting throttled speeds as low as 200kbps.

- It was suggested to wait or retry downloads later due to AWS throttling, which is not uncommon for shared resources.

- AI interaction with local systems: Discussion arose about whether AI models, specifically LLM-driven tools like OpenInterpreter, could gain vision capabilities to control PCs.

- It was noted that such capabilities may prompt unforeseen behavior from the models, illustrating the limitations of current AI understanding.

- Multi-modal models in AnythingLLM: Users expressed interest in the capabilities of multi-modal models and their availability for use in the AnythingLLM framework.

- Recommendations included exploring uncensored models and checking resources like UGI-Leaderboard for comparisons.

- RAM and VRAM utilization: It was confirmed that users can combine RAM and VRAM for running larger models, with settings configurable in LM Studio.

- The default setting allows the application to manage the use of RAM and VRAM efficiently for optimal performance.
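
The RAM/VRAM split described above usually comes down to how many transformer layers are offloaded to the GPU. llama.cpp, which LM Studio builds on, exposes this as `--n-gpu-layers`. The rough Python sketch below assumes equal-sized layers and a fixed VRAM reserve for the KV cache and buffers; the per-layer size and reserve are made-up example numbers.

```python
import math


def gpu_layer_split(n_layers, layer_gb, vram_gb, reserve_gb=1.0):
    """Return (layers on GPU, layers left in system RAM).

    Assumes every layer needs the same memory and that reserve_gb of VRAM
    is kept free for the KV cache and runtime buffers.
    """
    usable = max(0.0, vram_gb - reserve_gb)
    on_gpu = min(n_layers, math.floor(usable / layer_gb))
    return on_gpu, n_layers - on_gpu


if __name__ == "__main__":
    # Hypothetical 80-layer model at ~0.5 GB per layer on a 24 GB GPU.
    print(gpu_layer_split(80, 0.5, 24))
```

Layers that stay in system RAM run on the CPU, so throughput generally drops as the CPU share grows.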

Links mentioned:

- MathEval: MathEval is a benchmark dedicated to the holistic evaluation on mathematical capacities of LLMs, consisting of 22 evaluation datasets in various mathematical fields and nearly 30,000 math problems. Th...

- Radxa ROCK 5 ITX: Your 8K ARM Personal Computer

- UGI Leaderboard - a Hugging Face Space by DontPlanToEnd

- Crosstalk Multi-LLM AI Chat – Apps on Google Play

- mradermacher/TinyStories-656K-GGUF · Hugging Face

- legraphista/internlm2_5-20b-chat-IMat-GGUF · Hugging Face

- Physics of Language Models: Part 3.3, Knowledge Capacity Scaling Laws: Scaling laws describe the relationship between the size of language models and their capabilities. Unlike prior studies that evaluate a model's capability via loss or benchmarks, we estimate the n...

- lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF · Update to Models

- Private AI – Apps on Google Play

- lmstudio-community/Meta-Llama-3.1-70B-Instruct-GGUF at main

- Gemini-1.5 Pro Experiment (0801): NEW Updates to Gemini BEATS Claude & GPT-4O (Fully Tested): Join this channel to get access to perks: https://www.youtube.com/@aicodeking/joinIn this video, I'll be talking about the new Gemini-1.5 Pro Experiment (080...

- What Year Is It Jumanji GIF - What Year Is It Jumanji Forgotten - Discover & Share GIFs

- configs/Extension-Pack-Instructions.md at main · lmstudio-ai/configs: LM Studio JSON configuration file format and a collection of example config files. - lmstudio-ai/configs

- GitHub - OpenInterpreter/open-interpreter: A natural language interface for computers: A natural language interface for computers. Contribute to OpenInterpreter/open-interpreter development by creating an account on GitHub.

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- [1hr Talk] Intro to Large Language Models: This is a 1 hour general-audience introduction to Large Language Models: the core technical component behind systems like ChatGPT, Claude, and Bard. What the...

LM Studio ▷ #hardware-discussion (138 messages🔥🔥):

Dual GPU setups and performance, NVIDIA GPU comparisons, NPU capabilities in laptops, Tesla M10 usability, Upcoming hardware releases

- Dual 4090 setups versus single GPUs: Discussions around dual GPU setups indicate multi-GPU configurations may split models across cards, impacting performance and speed.

- Members voiced concerns that a single 4090 might struggle with larger models, while dual setups require extra configuration to use effectively.

- NPU in Laptops: The Future?: The conversation explored the integration of NPUs in laptops, with mixed opinions on their performance benefits compared to traditional GPUs.

- Some participants argued that offloading tasks to NPUs could enhance efficiency, particularly in power-limited environments.

- Tesla M10: Is it Worth It?: Several members warned against purchasing older GPUs like the NVIDIA Tesla M10 due to their inefficiency and obsolescence.

- There were suggestions to consider more recent models like the P40 if users pursue legacy hardware.

- Performance of GPUs in LLM inference: Users reported varying experiences using integrated GPUs and discussed their performance metrics, notably with Llama 3.1 models.

- The takeaway was that peak performance depends not only on GPU power but also on memory management and context window settings.

- Future Hardware: Anticipations and Upgrades: Participants expressed excitement about upcoming hardware, particularly the Studio M4 Ultra and Blackwell architecture next year.

- Discussions highlighted the potential benefits of upgrading to a 4090 for deep learning tasks while waiting for next-gen releases.
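
For the dual-GPU discussion above, a common way to split a model across cards is by layers, in proportion to each card's VRAM. llama.cpp's `--tensor-split` option takes proportions of this kind. The Python sketch below computes such an assignment; it is an illustration, not how any particular tool rounds. With a layer split, the cards typically process their layers one after another for a single request, so a second GPU adds memory capacity more than single-stream speed.

```python
def split_layers_by_vram(n_layers, vram_per_gpu):
    """Assign layer counts to GPUs in proportion to their VRAM."""
    total = sum(vram_per_gpu)
    shares = [n_layers * v / total for v in vram_per_gpu]
    counts = [int(s) for s in shares]
    # Hand leftover layers to the GPUs with the largest fractional remainders.
    leftover = n_layers - sum(counts)
    order = sorted(range(len(shares)), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical 80-layer model on a 24 GB card plus a 16 GB card.
    print(split_layers_by_vram(80, [24, 16]))
```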

Links mentioned:

- Gyazo

- mistralai/Mistral-Large-Instruct-2407 · Hugging Face

- Guaton Computadora GIF - Guaton Computadora Enojado - Discover & Share GIFs

- Reddit - Dive into anything

- AMD "Strix Halo" a Large Rectangular BGA Package the Size of an LGA1700 Processor: Apparently the AMD "Strix Halo" processor is real, and it's large. The chip is designed to square off against the likes of the Apple M3 Pro and M3 Max, in letting ultraportable notebook...

HuggingFace ▷ #general (810 messages🔥🔥🔥):

Hugging Face model features, LLM inference optimization, Open source contribution guidance, Fine-tuning models with PEFT, Using CUDA graphs in PyTorch

- Discussion on Hugging Face model features: Users discussed various models available on Hugging Face, including the MarianMT model for translations and the potential of the TatoebaChallenge repo for language support.

- Concerns were raised about the limitations of certain models and the need for better documentation and implementation examples.

- Optimizing LLM inference speed: Several suggestions were made for speeding up LLM inference, including using torch.compile and comparing the performance of vLLM, TGI, and LMDeploy.

- The ongoing discussion highlights the interest in improving efficiency and performance when working with large language models.

- Guidance on open source contributions: A user sought advice on making open source contributions and found discussions helpful in reconsidering their approach and motivations.

- Links to relevant blog posts and tutorials were shared to help new contributors get started.

- Fine-tuning models with PEFT: A user shared their experience fine-tuning the Llama2 model and ran into issues pushing the model to the Hub, prompting a discussion of best practices.

- Suggested best practices included pushing to the Hub correctly and managing training configurations.

- Using CUDA graphs in PyTorch: The discussion explored how CUDA graphs can optimize PyTorch models by reducing the overhead associated with launching GPU operations.

- Users expressed interest in improving performance and noted that using libraries like torch correctly is crucial for effective graph implementations.

Links mentioned:

- Teaching BERT to Solve Word Problems: Teaching Bert to Solve Word Problems

- Repository limitations and recommendations

- chatpdflocal/llama3.1-8b-gguf · Hugging Face

- Hugging Face – The AI community building the future.

- hugging-quants (Hugging Quants)

- mlabonne/Llama-3.1-70B-Instruct-lorablated · Hugging Face

- compile2011/W-finetune · Discussions

- Don't Contribute to Open Source: You heard me right. I don't think you should contribute to open source. Unless...KEYWORDS: GITHUB OPEN SOURCE CODING DEVELOPING PROGRAMMING LEARNING TO CODE ...

- Helicopter Baguette GIF - Helicopter Baguette - Discover & Share GIFs

- Tweet from merve (@mervenoyann): OWLSAM2: text-promptable SAM2 🦉 Marrying cutting-edge zero-shot object detector OWLv2 🤝 mask generator SAM2 (small) Zero-shot segmentation with insane precision ⛵️

- GitHub - vllm-project/vllm: A high-throughput and memory-efficient inference and serving engine for LLMs: A high-throughput and memory-efficient inference and serving engine for LLMs - vllm-project/vllm

- or4cl3ai/SquanchNastyAI · Hugging Face

- or4cl3ai/IntelliChat · Hugging Face

- or4cl3ai/SoundSlayerAI · Hugging Face

- or4cl3ai/A-os43-v1 · Hugging Face

- DataCentricVisualAIChallenge - a Hugging Face Space by Voxel51

- Models - Hugging Face

- What is Question Answering? - Hugging Face

- Minitron - a Hugging Face Space by Tonic

- Accelerating PyTorch with CUDA Graphs: Today, we are pleased to announce a new advanced CUDA feature, CUDA Graphs, has been brought to PyTorch. Modern DL frameworks have complicated software stacks that incur significant overheads associat...

- Papers with Code - GSM8K Benchmark (Arithmetic Reasoning): The current state-of-the-art on GSM8K is GPT-4 DUP. See a full comparison of 152 papers with code.

- stanfordnlp/imdb at main

- RAG chatbot using llama3

- Tonic/Minitron at main

- How we leveraged distilabel to create an Argilla 2.0 Chatbot

- Fine-Tuning Gemma Models in Hugging Face

- transformers/CONTRIBUTING.md at main · huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

- [WIP] update to wgpu-native 22.1 by Vipitis · Pull Request #547 · pygfx/wgpu-py: I am really excited for better error handling, compilation info and glsl const built-ins, so I already started this. Feel free to cherry pick my changes or commit into this branch if it helps. I go...

- SpiegelMining – Reverse Engineering von Spiegel-Online (33c3): Anyone who thinks data retention and “Big Data” are harmless gets a demo here using Spiegel Online. Since mid-2014, David has almost 100,000 articles ...

- Announcing DistillKit for creating & distributing SLMs: First, Arcee AI revolutionized Small Language Models (SLMs) with Model Merging and the open-source repo MergeKit. Today we bring you another leap forward in the creation and distribution of SLMs with ...

- GitHub - arcee-ai/DistillKit at blog.arcee.ai: An Open Source Toolkit For LLM Distillation. Contribute to arcee-ai/DistillKit development by creating an account on GitHub.

HuggingFace ▷ #today-im-learning (3 messages):

LLM Inference Optimization, Curriculum-based AI Approach

- Exploring LLM Inference Optimization Techniques: An NVIDIA Technical Blog article covers techniques for optimizing LLM inference to increase throughput and GPU utilization while reducing latency, and describes the challenges of serving large models.

- It notes that stacking transformer layers yields higher accuracy, and discusses the costs of retrieval-augmented generation (RAG) pipelines, which demand substantial processing power.

- Curriculum-Based Approach in AI: A paper outlines the limitations of current AI systems in reasoning and adaptability, emphasizing the need for a robust curriculum-based approach to enhance explainability and causal understanding.

- The author emphasizes that while AI excels in pattern recognition, it struggles in complex reasoning environments, which fundamentally limits its transformative potential.
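One quantity that commonly drives the throughput and latency trade-offs described in the inference article is the size of the key-value (KV) cache, which grows linearly with batch size and sequence length. The sketch below is a standard back-of-envelope estimate, not code from the NVIDIA article; the model shape (32 layers, 32 heads of dimension 128, fp16 values) is an assumed Llama-2-7B-like configuration.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int) -> int:
    """Approximate KV-cache size in bytes.

    Each layer stores one K and one V tensor (hence the factor of 2),
    each of shape [num_kv_heads, head_dim] per token.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch_size


# Assumed Llama-2-7B-like shape: 32 layers, 32 heads of dim 128, fp16 (2 bytes)
size = kv_cache_bytes(32, 32, 128, seq_len=4096, batch_size=1, bytes_per_elem=2)
print(size / 2**30, "GiB")  # 2.0 GiB
```

Because the result scales with `batch_size`, batching more requests to raise throughput multiplies this memory, which is one reason batching and cache management feature so heavily in inference optimization.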

Links mentioned:

- From Data to Understanding: A Curriculum-Based Approach to Nurturing AI Reasoning: no description found

- Mastering LLM Techniques: Inference Optimization | NVIDIA Technical Blog: Stacking transformer layers to create large models results in better accuracies, few-shot learning capabilities, and even near-human emergent abilities on a wide range of language tasks.

HuggingFace ▷ #cool-finds (10 messages🔥):

CatVTON Virtual Try-On Model, GeekyGhost Extensions, SweetHug AI Chatbot, Joy Captioning for ASCII Art, LLM Deployment with Optimized Inference

- CatVTON simplifies virtual try-on: A recent arXiv paper introduces CatVTON, an efficient diffusion-based virtual try-on model that needs no ReferenceNet or additional image encoders; instead, it concatenates the garment and person images directly as input.

- This cuts training cost while still producing realistic garment transfer onto the target person.

- GeekyGhost unveils Automatic1111 extension: A member shared an Automatic1111 extension on GitHub that ports their ComfyUI Geeky Remb tool.

- They also introduced a separate web UI project built with Gradio.

- Discover SweetHug AI chatbot: Another user highlighted SweetHug AI, an AI character platform where users chat with AI girlfriend personas and share their thoughts and fantasies.

- The service is operated by Ally AI Pte. Ltd. and includes NSFW chats and an affiliate program.

- Joy Captioning excels at ASCII art: A member pointed out the Joy Captioning space, which correctly captions ASCII art instead of misreading it as a technical diagram.

- They were excited to find a captioner that accurately describes art rendered as text.

- Inquiry on LLM deployment optimization: A user asked for advice on deploying LLMs with optimized inference to improve efficiency.

- The question drew interest in current practices for this within the community.

Links mentioned:

- CatVTON: Concatenation Is All You Need for Virtual Try-On with Diffusion Models: Virtual try-on methods based on diffusion models achieve realistic try-on effects but often replicate the backbone network as a ReferenceNet or use additional image encoders to process condition input...

- Joy Caption Pre Alpha - a Hugging Face Space by fancyfeast: no description found

- SweetHug AI: Free Chat With Your AI Girlfriends - No Limits: no description found

- GitHub - GeekyGhost/Automatic1111-Geeky-Remb: Automatic1111 port of my comfyUI geely remb tool: Automatic1111 port of my comfyUI geely remb tool. Contribute to GeekyGhost/Automatic1111-Geeky-Remb development by creating an account on GitHub.

HuggingFace ▷ #i-made-this (11 messages🔥):

Testcontainers, Rob's Instagram Interaction, Self-Supervised Learning in Dense Prediction, AI Research Agent Documentation

- Explore Testcontainers for AI Development: A member shared their discovery of Testcontainers, emphasizing its potential for developing and serving AI applications.

- They also mentioned their new hobby of contributing to the Docker Testcontainers project, encouraging others to join in the fun.

- Rob Gains New Powers on Instagram: Through a vision model, a member successfully enabled Rob to read and react to his Instagram comments, showcasing his growing capabilities.

- Another member humorously noted Rob’s increasing powers, suggesting a TikTok live session could be lucrative.

- Self-Supervised Learning for Dense Prediction: A member highlighted advances in self-supervised learning methods that improve performance on dense prediction tasks such as object detection and segmentation.

- They provided a link to an informative post discussing the challenges faced by traditional SSL methods in these applications.

- AI Research Agent Documentation Launch: A member shared documentation and demos for an AI research agent library they developed.

- They promoted features like search capabilities, text extraction, and keyphrase topic extraction while inviting discussions on integration.

Links mentioned:

- ai-research-agent Home: no description found

- Using Self-Supervised Learning for Dense Prediction Tasks: Overview of Self-Supervised Learning methods for dense prediction tasks such as object detection, instance segmentation, and semantic segmentation

- Local AI with Docker's Testcontainers: no description found

HuggingFace ▷ #reading-group (29 messages🔥):

Group Focus for Learning, Hackathon Collaboration, SEE-2-SOUND Presentation, Recording of Presentations, Linear Algebra Article

- Choosing a Main Focus for Learning: Members discussed the importance of selecting a common focus, like a course or project, to enhance learning and accountability within the group.

- Teaming up for hands-on challenges like hackathons can foster collaboration, but it’s crucial that participants have similar skill levels to prevent uneven work distribution.

- SEE-2-SOUND brings zero-shot spatial audio: A presentation covered SEE-2-SOUND, a zero-shot framework that generates spatial audio from visual content without task-specific training.

- The approach decomposes the task into identifying key visual elements and combining audio for each of them into high-quality spatial audio, with clear applications for immersive content.

- Availability of Session Recordings: A member inquired about the availability of a recording from a recent presentation, which experienced technical difficulties.

- The presenter confirmed that there will be an edited version of the session distributed later.

- Introduction of New Members and Resources: New members inquired about group resources, such as a calendar for events, to stay engaged in activities.

- Members responded that event scheduling is typically managed through messaging platforms with updates posted regularly.

- Sharing Knowledge through Articles: A member shared a new article about linear algebra on Medium, focusing on linear combinations and spans of vectors.

- This article serves as a resource for members keen on strengthening their understanding of linear algebra fundamentals.
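The two ideas in that article can be made concrete in a few lines of Python. This is a sketch of the textbook definitions for vectors in R², not code from the article: a linear combination is a weighted sum of vectors, and two vectors span the plane exactly when they are linearly independent, which for 2D vectors means a non-zero determinant. The helper names are illustrative.

```python
from fractions import Fraction


def linear_combination(coeffs, vectors):
    """Return c1*v1 + c2*v2 + ... for equal-length vectors."""
    dim = len(vectors[0])
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(dim))


def spans_plane(u, v) -> bool:
    """Two vectors in R^2 span the plane iff the 2x2 determinant is non-zero."""
    return u[0] * v[1] - u[1] * v[0] != 0


def coords_in_basis(w, u, v):
    """Solve a*u + b*v = w with Cramer's rule (requires spans_plane(u, v)).

    Fractions keep the answer exact for integer inputs.
    """
    det = u[0] * v[1] - u[1] * v[0]
    a = Fraction(w[0] * v[1] - w[1] * v[0], det)
    b = Fraction(u[0] * w[1] - u[1] * w[0], det)
    return a, b


u, v = (1, 2), (3, 1)
print(linear_combination((2, 1), [u, v]))  # (5, 5)
print(spans_plane(u, v))                   # True
print(spans_plane((1, 2), (2, 4)))         # False: (2, 4) is just 2 * (1, 2)
print(coords_in_basis((5, 5), u, v))       # (Fraction(2, 1), Fraction(1, 1))
```

The last call inverts the first: because `u` and `v` span the plane, every 2D vector, including `(5, 5)`, has exactly one pair of coefficients.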

Links mentioned:

- SEE-2-SOUND: Zero-Shot Spatial Environment-to-Spatial Sound: Generating combined visual and auditory sensory experiences is critical for the consumption of immersive content. Recent advances in neural generative models have enabled the creation of high-resoluti...

- Join our Cloud HD Video Meeting: Zoom is the leader in modern enterprise video communications, with an easy, reliable cloud platform for video and audio conferencing, chat, and webinars across mobile, desktop, and room systems. Zoom ...

- Linear Algebra (Part-2): Linear Combination And Span: Learn about the linear combination and the span of vectors.

- Video Conferencing, Web Conferencing, Webinars, Screen Sharing: Zoom is the leader in modern enterprise video communications, with an easy, reliable cloud platform for video and audio conferencing, chat, and webinars across mobile, desktop, and room systems. Zoom ...

HuggingFace ▷ #computer-vision (8 messages🔥):

Computer Vision Course Assignments, SF-LLaVA Paper Discussion, CV Project Suggestions, 3D Object Orientation Modeling, Time-tagged Outdoor Image Datasets

- Clarity Needed on Computer Vision Course Assignments: Users expressed confusion about the assignment components of their computer vision course, seeking clarity on requirements.

- One participant suggested collaborating over voice chat to discuss relevant materials.

- Collaborative Discussion on SF-LLaVA: One user proposed a voice chat to review the SF-LLaVA paper, encouraging others to join the discussion.

- This initiative reflects the community’s willingness to support each other in understanding academic resources.

- Suggestions for Quick CV Projects: Participants shared ideas for CV projects, including binocular depth estimation as a viable option that can be completed in a week.

- This discussion highlights a proactive approach for learners to engage in practical applications of their knowledge.

- Modeling 3D Object Orientation: A user inquired about methods to create a model capable of determining the direction a 3D object is facing.

- This question underscores the interest in advancing skills in spatial understanding within computer vision.

- Finding Time-tagged Outdoor Image Datasets: A user is searching for a good dataset of outdoor images tagged by time of day, having run into difficulties with existing options like MIRFLICKR.

- This inquiry suggests a demand for high-quality datasets that facilitate specific research needs in computer vision.
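For the binocular depth estimation suggestion, the core geometry fits in one formula: for a rectified stereo pair, depth is Z = f · B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below assumes a hypothetical rig (700 px focal length, 12 cm baseline); most of the work in such a project is computing d for every pixel, for example with a block matcher such as OpenCV's `StereoBM`.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair.

    disparity_px: horizontal offset (pixels) of the same point in the left vs right image
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    """
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or the match failed)
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline
for d in (84, 42, 21):
    print(f"{d} px -> {depth_from_disparity(d, 700, 0.12):.2f} m")  # 1.00 m, 2.00 m, 4.00 m
```

Depth falls off as 1/d, so a one-pixel disparity error matters far more for distant points than for close ones.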
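For the question about predicting which way a 3D object is facing, one common setup (an assumption here; the thread did not settle on a method) is to restrict the problem to heading (yaw) and have a network regress sin θ and cos θ rather than θ itself, which avoids the jump between -π and +π. The helpers below show only the target encoding, decoding, and error metric; the network is left out, and all names are illustrative.

```python
import math


def encode_yaw(theta: float) -> tuple[float, float]:
    """Represent a heading angle (radians) as a point on the unit circle."""
    return math.sin(theta), math.cos(theta)


def decode_yaw(sin_pred: float, cos_pred: float) -> float:
    """Recover the angle from (possibly unnormalised) outputs; result lies in [-pi, pi]."""
    return math.atan2(sin_pred, cos_pred)


def angular_error(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in radians."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


# 179 degrees vs -179 degrees are only 2 degrees apart, not 358
print(round(angular_error(math.radians(179), math.radians(-179)), 4))  # 0.0349
```

For full 3D orientation rather than yaw alone, quaternions or a continuous 6D rotation representation are typical regression targets.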

HuggingFace ▷ #NLP (4 messages):

Dependency Issues with Chaquopy, Finding Related Tables with NLP

- Dependency Issues with Chaquopy for Qwen2-0.5B-Instruct: A member is facing persistent dependency conflicts while developing an Android app using Chaquopy with Python, specifically relating to transformers and tokenizers.

- They shared their Gradle configuration and reported that trying various package versions led to the error `InvalidVersion: Invalid version: '0.10.1,<0.11'`.

- Seeking NLP methods for Relational Models: Another member is inquiring about how to identify relationships between tables based on column names and descriptions using NLP techniques.

- They are looking for suggestions or references to understand how tables relate to each other when provided a specific table.
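The reported message matches the `InvalidVersion` exception from the `packaging` library, which is raised when a string that is not a valid PEP 440 version is parsed as one. `'0.10.1,<0.11'` looks like the tail of a version range such as `>=0.10.1,<0.11` being treated as a single version; this is a guess at the cause, not a diagnosis from the thread. The sketch assumes `packaging` is installed and contrasts a version with a specifier set; `is_valid_version` is a hypothetical helper.

```python
from packaging.specifiers import SpecifierSet
from packaging.version import InvalidVersion, Version


def is_valid_version(text: str) -> bool:
    """Return True if `text` parses as a single PEP 440 version."""
    try:
        Version(text)
    except InvalidVersion:
        return False
    return True


# A range belongs in a SpecifierSet, which can then test candidate versions
spec = SpecifierSet(">=0.10.1,<0.11")
print(Version("0.10.3") in spec)         # True
print(is_valid_version("0.10.1"))        # True
print(is_valid_version("0.10.1,<0.11"))  # False: a range fragment, not a version
```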
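As a starting point for finding related tables, a purely lexical baseline (swapped in here as an illustration; the thread did not propose a method) is to compare normalized column names across tables and flag near-matches as candidate join keys. The schema and the 0.85 threshold below are hypothetical; column descriptions could be scored the same way, or an embedding model could replace `SequenceMatcher` for semantic rather than string similarity.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(name: str) -> str:
    """Lower-case and drop separators so 'CustomerID' and 'customer_id' compare equal."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def candidate_links(tables: dict[str, list[str]], threshold: float = 0.85):
    """Return (table.col, table.col, score) pairs whose column names look alike."""
    links = []
    for (t1, cols1), (t2, cols2) in combinations(tables.items(), 2):
        for c1 in cols1:
            for c2 in cols2:
                score = SequenceMatcher(None, normalize(c1), normalize(c2)).ratio()
                if score >= threshold:
                    links.append((f"{t1}.{c1}", f"{t2}.{c2}", round(score, 2)))
    # Strongest candidates first
    return sorted(links, key=lambda link: link[2], reverse=True)


# Hypothetical schema
schema = {
    "orders": ["order_id", "CustomerID", "total"],
    "customers": ["customer_id", "name"],
}
print(candidate_links(schema))  # [('orders.CustomerID', 'customers.customer_id', 1.0)]
```

Given one specific table, filtering the output to pairs that mention it answers "which tables relate to this one"; the results are candidates to review, not confirmed foreign keys.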

HuggingFace ▷ #diffusion-discussions (7 messages):

Gradient Checkpointing in Diffusers, Quanto Library Loading Times, CNN for Object Cropping, Flux Models Issues

- Gradient Checkpointing gets Implemented: A user noted that gradient checkpointing had previously been missing in Diffusers, and shared a snippet showing a newly added method for enabling it.

- The new method `_set_gradient_checkpointing` allows toggling checkpointing for modules that support it.

- Quanto Library has Slow Model Loading Times: A member described spending two days with the Quanto library, where moving quantized models to the device took over 400 seconds on their setup (RTX 4080, Ryzen 9 5900X).

- They noted that new QBitsTensors are created during this process, which may be contributing to the delay.

- Request for Issue Tracking in Quanto: Another user suggested creating an issue on the Quanto repository regarding the slow loading times to track and potentially solve the problem.

- They mentioned that the maintainer is currently on leave, which might delay responses.