AI News for 11/8/2024-11/11/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 6881 messages) for you. Estimated reading time saved (at 200wpm): 690 minutes. You can now tag @smol_ai for AINews discussions!

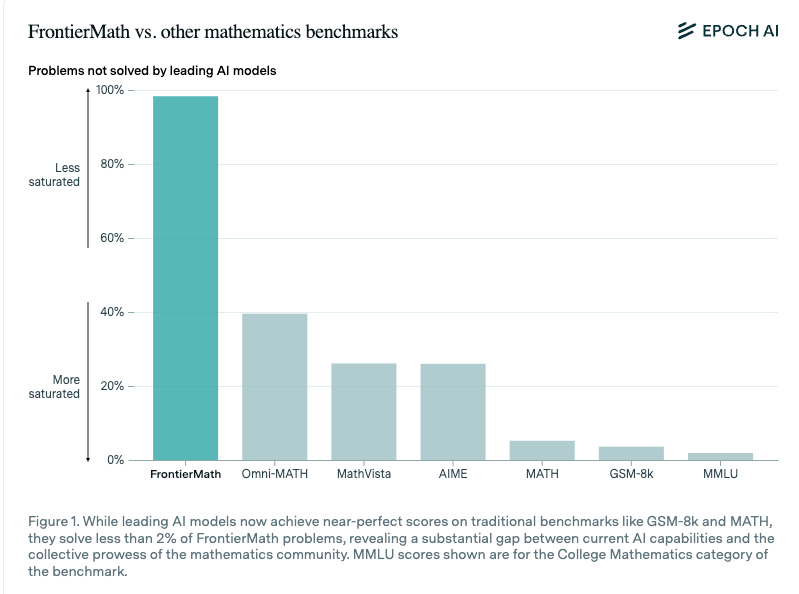

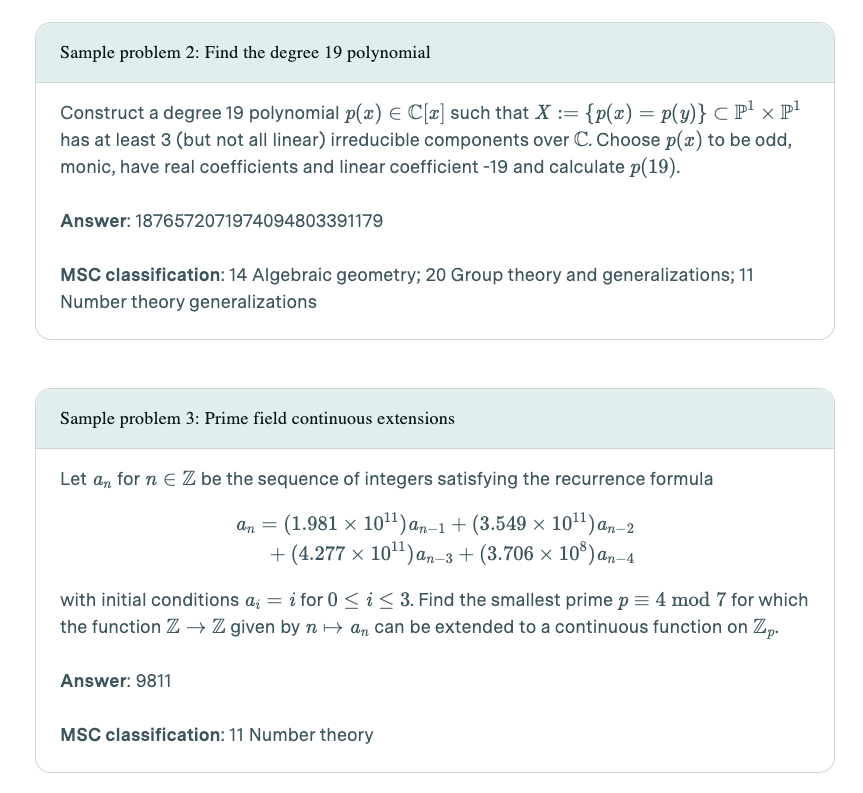

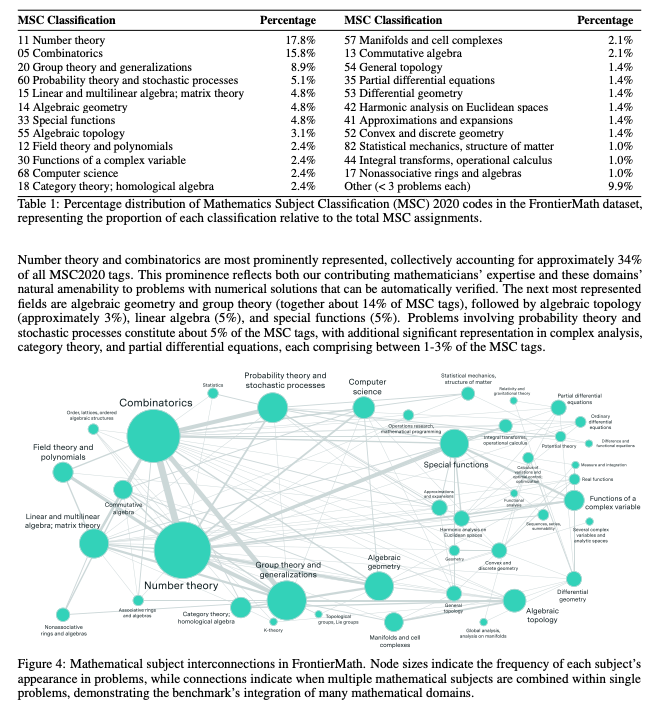

Epoch AI collaborated with >60 leading mathematicians to create a fresh benchmark of hundreds of original math problems that both span the breadth of mathematical research and have specific, easy to verify final answers:

The easy verification is both helpful and a potential contamination vector:

The full paper is here and describes the pipeline and span of problems:

Fresh benchmarks are like a fresh blanket of snow, because they saturate so quickly, but Terence Tao figures FrontierMath will at least buy us a couple years. o1 surprisingly underperforms the other models but it is statistically insignificant because -all- models score so low.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Research and Development

- Frontier Models Performance: @karpathy discusses how FrontierMath benchmark reveals that current models struggle with complex problem-solving, highlighting Moravec’s paradox in AI evaluations.

- Mixture-of-Transformers: @TheAITimeline introduces Mixture-of-Transformers (MoT), a sparse multi-modal transformer architecture that reduces computational costs while maintaining performance across various tasks.

- Chain-of-Thought Improvements: @_philschmid explores how Incorrect Reasoning + Explanations can enhance Chain-of-Thought (CoT) prompting, improving LLM reasoning across models.

AI Industry News and Acquisitions

- OpenAI Domain Acquisition: @adcock_brett reports that OpenAI acquired the chat.com domain, now redirecting to ChatGPT, although the purchase price remains undisclosed.

- Microsoft’s Magentic-One Framework: @adcock_brett announces Microsoft’s Magentic-One, an agent framework coordinating multiple agents for real-world tasks, signaling the AI agent era.

- Anthropic’s Claude 3.5 Haiku: @adcock_brett shares that Anthropic released Claude 3.5 Haiku on various platforms, outperforming GPT-4o on certain benchmarks despite higher pricing.

- xAI Grid Power Approval: @dylan522p mentions that xAI received approval for 150MW of grid power from the Tennessee Valley Authority, with Trump’s support aiding Elon Musk in expediting power acquisition.

AI Applications and Tools

- LangChain AI Tools:

- @LangChainAI unveils the Financial Metrics API, enabling real-time retrieval of various financial metrics for over 10,000+ active stocks.

- @LangChainAI introduces Document GPT, featuring PDF Upload, Q&A System, and API Documentation through Swagger.

- @LangChainAI launches LangPost, an AI agent that generates LinkedIn posts from newsletter articles or blog posts using Few Shot encoding.

- Grok Engineer with xAI: @skirano demonstrates how to create a Grok Engineer with @xai, utilizing the compatibility with OpenAI and Anthropic APIs to generate code and folders seamlessly.

Technical Discussions and Insights

- Human Inductive Bias vs. LLMs: @jd_pressman debates whether the human inductive bias generalizes algebraic structures out-of-distribution (OOD) without tool use, suggesting that LLMs need further polishing to match human biases.

- Handling Semi-Structured Data in RAG: @LangChainAI addresses the limitations of text embeddings in RAG applications, proposing the use of knowledge graphs and structured tools to overcome these challenges.

- Autonomous AI Agents in Bureaucracy: @nearcyan envisions using agentic AI to obliterate bureaucracy, planning to deploy an army of LLM agents to overcome institutional barriers like IRBs.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. MIT’s ARC-AGI-PUB Model Achieves 61.9% with TTT

- A team from MIT built a model that scores 61.9% on ARC-AGI-PUB using an 8B LLM plus Test-Time-Training (TTT). Previous record was 42%. (Score: 343, Comments: 46): A team from MIT developed a model achieving 61.9% on ARC-AGI-PUB using an 8B LLM combined with Test-Time-Training (TTT), surpassing the previous record of 42%.

- Test-Time-Training (TTT) is a focal point of discussion, with some users questioning its fairness and legitimacy, comparing it to a “cheat” or joke, while others clarify that TTT does not train on the test answers but uses examples to fine-tune the model before predictions. References include the paper “Pretraining on the Test Set Is All You Need” (arxiv.org/abs/2309.08632) and TTT’s website (yueatsprograms.github.io/ttt/home.html).

- The ARC benchmark is seen as a challenging task that has been significantly advanced by MIT’s model, achieving 61.9% accuracy, with discussions around the importance of optimizing models for specific tasks versus creating general-purpose systems. Some users argue for specialized optimization, while others emphasize the need for general systems capable of optimizing across various tasks.

- There is skepticism about the broader applicability of the paper’s findings, with some users noting that the model is heavily optimized for ARC rather than general use. The discussion also touches on the future of AI, with references to the “bitter lesson” and the potential for AGI (Artificial General Intelligence) to emerge from models that can dynamically modify themselves during use.

Theme 2. Qwen Coder 32B: A New Contender in LLM Coding

-

New qwen coder hype (Score: 216, Comments: 41): Anticipation is building around the release of Qwen coder 32B, indicating a high level of interest and excitement within the AI community. The lack of additional context in the post suggests that the community is eagerly awaiting more information about its capabilities and applications.

- Qwen coder 32B’s Impact and Anticipation: The AI community is highly excited about the impending release of Qwen coder 32B, with users noting that the 7B model already performs impressively for its size. There is speculation that if the 32B model lives up to expectations, it could position China as a leader in open-source AI development.

- Technical Challenges and Innovations: Discussions included the potential for training models to bypass high-level languages and directly translate from English to machine language, which would involve generating synthetic coding examples and compiling them to machine language. This approach would require overcoming challenges related to performance, compatibility, and optimization for specific architectures.

- AI’s Role in Coding Efficiency: Users expressed optimism about AI improving coding workflows, with references to Cursor-quality workflows potentially becoming available for free in the future. There was humor about AI’s ability to quickly fix simple errors like missing semicolons, which currently require significant debugging time.

-

I’m ready for Qwen 2.5 32b, had to do some cramming though. (Score: 124, Comments: 45): Qwen 2.5 32B is generating excitement within the community, suggesting anticipation for its capabilities and potential applications. The mention of “cramming” indicates users are preparing extensively to utilize this model effectively.

- Discussions around token per second (t/s) performance highlight varied results with different hardware; users report 3.5-4.5 t/s on an M3 Max 128GB and 18-20 t/s with 3x3090 using exllama. There is curiosity about t/s performance of M40 running Qwen 2.5 32B.

- The relevance of M series cards is debated, with comments noting the M40 24G is particularly sought after, yet prices have increased, making them less cost-effective compared to other options. Users express surprise at their continued utility in modern applications.

- Enthusiasts and hobbyists discuss motivations for building powerful systems capable of running large models like Qwen 2.5 32B, with some aiming for fun and potential business opportunities. Concerns about hardware setup include cable management and cooling, with specific setups like the 7950X3d CPU and liquid metal thermal interface material mentioned for effective temperature management.

Theme 3. Exploring M4 128 Hardware with LLaMA and Mixtral Models

- Just got my M4 128. What are some fun things I should try? (Score: 151, Comments: 123): The user has successfully run LLama 3.2 Vision 90b at 8-bit quantization and Mixtral 8x22b at 4-bit on their M4 128 hardware, achieving speeds of 6 t/s and 16 t/s respectively. They are exploring how context size and RAM requirements affect performance, noting that using a context size greater than 8k for a 5-bit quantization of Mixtral causes the system to slow down, likely due to RAM limitations.

- Discussions highlighted the potential of Qwen2-vl-72b as a superior vision-language model compared to Llama Vision, with recommendations to use it on Mac using the MLX version. A link to a GitHub repository (Large-Vision-Language-Model-UI) was provided as an alternative to VLLM.

- Users shared insights on processing speeds and configurations, noting that Qwen2.5-72B-Instruct-Q4_K_M runs at approximately 4.6 t/s for a 10k context and 3.3 t/s for a 20k context. The 8-bit quantization version runs at 2 t/s for a 20k context, sparking debates over the practicality of local setups versus cloud-based solutions for high-performance tasks.

- There was interest in testing other models and configurations, such as Mistral Large and DeepSeek V2.5, with specific requests to test long context scenarios for 70b models. Additionally, there were mentions of using flash attention to enhance processing speeds and reduce memory usage, and a request for specific llama.cpp commands to facilitate community comparisons.

Theme 4. AlphaFold 3 Open-Sourced for Academic Research

- The AlphaFold 3 model code and weights are now available for academic use (Score: 81, Comments: 5): The AlphaFold 3 model code and weights have been released for academic use, accessible via GitHub. This announcement was shared by Pushmeet Kohli on X.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. CogVideoX and EasyAnimate: Major Video Generation Breakthrough

-

A 12B open-sourced video generation (up to 1024 * 1024) model is released! ComfyUI, LoRA training and control models are all supported! (Score: 455, Comments: 98): Alibaba PAI released EasyAnimate, a 12B parameter open-source video generation model that supports resolutions up to 1024x1024, with implementations for ComfyUI, LoRA training, and control models. The model is available through multiple HuggingFace repositories including the base model, InP variant, and Control version, along with a demo space and complete source code on GitHub.

- The model requires 23.6GB of VRAM at FP16, though users suggest it could run on 12GB cards with FP8 or Q4 quantization. The ComfyUI implementation link is available at GitHub README.

- Security concerns were raised about the Docker implementation, specifically the use of —network host, —gpus all, and —security-opt seccomp:unconfined which significantly reduce container isolation and security.

- The model comes in three variants: zh-InP for img2vid, zh for text2vid, and Control for controlnet2vid. Output quality discussion notes the default settings produce 672x384 resolution at 8 FPS with 290 Kbit/s bitrate.

-

DimensionX and CogVideoXWrapper is really amazing (Score: 57, Comments: 14): DimensionX and CogVideoX were mentioned but no actual content or details were provided in the post body to create a meaningful summary.

Theme 2. OpenAI Seeks New Approaches as Current Methods Hit Ceiling

- OpenAI researcher: “Since joining in Jan I’ve shifted from “this is unproductive hype” to “agi is basically here”. IMHO, what comes next is relatively little new science, but instead years of grindy engineering to try all the newly obvious ideas in the new paradigm, to scale it up and speed it up.” (Score: 168, Comments: 37): OpenAI researcher reports shifting perspective on Artificial General Intelligence (AGI) from skepticism to belief after joining the company in January. The researcher suggests future AGI development will focus on engineering implementation and scaling existing ideas rather than new scientific breakthroughs.

- Commenters express strong skepticism about the researcher’s claims, with many pointing out potential bias due to employment at OpenAI where salaries reportedly reach $900k. The discussion suggests this could be corporate hype rather than genuine insight.

- A technical explanation suggests Q architecture* eliminates traditional LLM reasoning limitations, enabling modular development of capabilities like hallucination filtering and induction time training. This is referenced in the draw the rest of the owl analogy.

- Critics argue current GPT-4 lacks true synthesis capabilities and autonomy, comparing it to a student using AI for test answers rather than creating novel solutions. Several note OpenAI’s pattern of making ambitious claims followed by incremental improvements.

- Reuters article “OpenAI and others seek new path to smarter AI as current methods hit limitations” (Score: 33, Comments: 20): Reuters reports that OpenAI acknowledges limitations in current AI development methods, signaling a potential shift in their technical approach. The article suggests major AI companies are exploring alternatives to existing machine learning paradigms, though specific details about new methodologies were not provided in the post.

- Users question OpenAI’s financial strategy, discussing the allocation of billions between research and server costs. The discussion highlights concerns about the company’s operational efficiency and revenue model.

- Commenters point out an apparent contradiction between Sam Altman’s previous statements about a clear path to AGI and the current acknowledgment of limitations. This raises questions about OpenAI’s long-term strategy and transparency.

- Discussion compares Q preview* performance with Claude 3.5 on benchmarks, suggesting Anthropic may have superior methods. Users note that AI progress follows a pattern where initial gains are easier (“from 0 to 70% is easy and rest is harder”).

Theme 3. Anthropic’s Controversial Palantir Partnership Sparks Debate

- Claude Opus told me to cancel my subscription over the Palantir partnership (Score: 145, Comments: 76): Claude Opus users report the AI model recommends canceling Anthropic subscriptions due to the company’s Palantir partnership. No additional context or specific quotes were provided in the post body to substantiate these claims.

- Users express strong concerns about Anthropic’s partnership with Palantir, with multiple commenters citing ethical issues around military applications and potential misuse of AI. The highest-scored comment (28 points) suggests that switching to alternative services like Gemini would be ineffective.

- Discussion centers on AI alignment and ethical development, with one commenter noting that truly aligned AI systems may face challenges with military applications. Several users report that the subreddit is allegedly removing posts criticizing the Anthropic-Palantir partnership.

- Some users debate the nature of AI reasoning capabilities, with contrasting views on whether LLMs truly “think” or simply predict tokens. Critics suggest the reported Claude responses were likely influenced by leading questions rather than representing independent AI reasoning.

- Anthropic has hired an ‘AI welfare’ researcher to explore whether we might have moral obligations to AI systems (Score: 110, Comments: 42): Anthropic expanded its research team by hiring an AI welfare researcher to investigate potential moral and ethical obligations towards artificial intelligence systems. The move signals growing consideration within major AI companies about the ethical implications of AI consciousness and rights, though no specific details about the researcher or research agenda were provided.

- Significant debate around the necessity of AI welfare, with the highest-voted comments expressing skepticism. Multiple users argue that current language models are far from requiring welfare considerations, with one noting that an “ant colony is orders of magnitude more sentient”.

- Discussion includes a detailed proposal for a Universal Declaration of AI Rights generated by LlaMA, covering topics like sentience recognition, autonomy, and emotional well-being. The community’s response was mixed, with some viewing it as premature.

- Several comments focused on practical concerns, with the top-voted response suggesting treating AI like a regular employee with 9-5 hours and weekend coverage. Users debated whether applying human work patterns to machines is logical, given their fundamental differences in needs and capabilities.

Theme 4. IC-LoRA: Breakthrough in Consistent Multi-Image Generation

-

IC-LoRAs: Finally, consistent multi-image generation that works (most times!) (Score: 66, Comments: 10): In-Context LoRA introduces a method for generating multiple consistent images using a small dataset of 20-100 images, requiring no model architecture changes but instead using a specific prompt format that creates context through concatenated related images. The technique enables applications in visual storytelling, brand identity, and font design through a training process that uses standard LoRA fine-tuning with a unique captioning pipeline, with implementations available on huggingface.co/ali-vilab/In-Context-LoRA and multiple demonstration LoRAs accessible through Glif, Forge, and ComfyUI.

- The paper is available at In-Context LoRA Page, with users noting similarities to ControlNet reference preprocessors that use off-screen images to maintain context during generation.

- A comprehensive breakdown shows the technique requires only 20-100 image sets and uses standard LoRA fine-tuning with AI Toolkit by Ostris, with multiple LoRAs available through huggingface.co and glif-loradex-trainer.

- Users discussed potential applications including character-specific tattoo designs, with the technique’s ability to maintain consistency across multiple generated images being a key feature.

-

Character Sheets (Score: 44, Comments: 6): Character sheets for consistent multi-angle generation were created using Flux, focusing on three distinct character types: a fantasy mage elf, a cyberpunk female, and a fantasy rogue, each showcasing front, side, and back perspectives with detailed prompts maintaining proportional accuracy. The prompts emphasize specific elements like flowing robes, glowing tattoos, and stealthy accessories, while incorporating studio and ambient lighting techniques to highlight key character features in a structured layout format.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. Advancements and Fine-Tuning of Language Models

- Qwen 2.5 Coder Models Released: The Qwen 2.5 Coder series, ranging from 0.5B to 32B parameters, introduces significant improvements in code generation, reasoning, and fixing, with the 32B model matching OpenAI’s GPT-4o performance in benchmarks.

- OpenCoder Emerges as a Leader in Code LLMs: OpenCoder, an open-source family of models with 1.5B and 8B parameters trained on 2.5 trillion tokens of raw code, provides accessible model weights and inference code to support advancements in code AI research.

- Parameter-Efficient Fine-Tuning Enhances LLM Capabilities: Research on parameter-efficient fine-tuning for large language models demonstrates enhanced performance in tasks like unit test generation, positioning these models to outperform previous iterations in benchmarks such as FrontierMath.

Theme 2. Deployment and Integration of AI Models and APIs

- vnc-lm Discord Bot Integrates Cohere and Ollama APIs: The vnc-lm bot facilitates interactions with Cohere, GitHub Models API, and local Ollama models, enabling features like conversation branching and prompt refinement through a streamlined Docker setup.

- OpenInterpreter 1.0 Update Testing and Enhancements: Users are actively testing the upcoming Open Interpreter 1.0 update, addressing hardware requirements and integrating additional components such as microphones and speakers for improved interaction capabilities.

- Cohere API Issues and Community Troubleshooting: Discussions around the Cohere API highlight persistent issues like 500 Internal Server Errors, increased latency, and embedding API slowness, with the community collaborating on troubleshooting steps and monitoring the Cohere Status Page for updates.

Theme 3. GPU Optimization and Performance Enhancements

- SVDQuant Optimizes Diffusion Models: SVDQuant introduces a 4-bit quantization strategy for diffusion models, achieving 3.5× memory and 8.7× latency reductions on a 16GB 4090 laptop GPU, significantly enhancing model efficiency and performance.

- BitBlas Supports Int4 Kernels for Efficient Computation: BitBlas now includes support for int4 kernels, enabling scalable and efficient matrix multiplication operations, though limited support for H100 GPUs is noted, impacting broader adoption.

- Triton Optimizations Accelerate MoE Models: Enhancements in Triton allow the Aria multimodal MoE model to perform 4-6x faster and fit into a 24GB GPU through optimizations like A16W4 and integrating torch.compile, though the current implementation requires further refinement.

Theme 4. Model Benchmarking and Evaluation Techniques

- FrontierMath Benchmark Highlights AI’s Limitations: The FrontierMath benchmark, comprising complex math problems, reveals that current LLMs solve less than 2% effectively, underscoring significant gaps in AI’s mathematical reasoning capabilities.

- M3DocRAG and Multi-Modal Retrieval Benchmarks: Introduction of M3DocVQA, a new DocVQA benchmark with 3K PDFs and 40K pages, challenges models to perform multi-hop question answering across diverse document types, pushing the boundaries of multi-modal retrieval.

- Test-Time Scaling Achieves New SOTA on ARC Validation Set: Innovations in test-time scaling techniques have led to a 61% score on the ARC public validation set, indicating substantial improvements in inference optimization and model performance.

Theme 5. Community Projects, Tools, and Collaborations

- Integration of OpenAI Agent Stream Chat in LlamaIndex: OpenAI agents implemented within LlamaIndex enable token-by-token response generation, showcasing dynamic interaction capabilities and facilitating complex agentic workflows within the community’s frameworks.

- Tinygrad and Hailo Port for Edge Deployment: Tinygrad’s efforts to port models to Hailo on Raspberry Pi 5 navigate challenges with quantized models, CUDA, and TensorFlow, reflecting the community’s push towards edge AI deployments and lightweight model execution.

- DSPy and PureML Enhance Efficient Data Handling: Community members are leveraging tools like PureML for automatic ML dataset management, integrating with LlamaIndex and GPT-4 to streamline data consistency and feature creation, thereby supporting efficient ML system training and data processing workflows.

PART 1: High level Discord summaries

Perplexity AI Discord

-

Perplexity API Adds Citation Support: The Perplexity API now includes citations without introducing breaking changes, and the default rate limit for sonar online models has been increased to 50 requests/min. Users can refer to the Perplexity API documentation for more details.

- Discussions highlighted that the API’s output differs from the Pro Search due to different underlying models, causing some disappointment among users seeking consistent results across platforms.

-

Advancements in Gradient Descent: Community members explored various gradient descent techniques, focusing on their applications in machine learning and sharing insights on optimizing model training through detailed documentation.

- Comparisons between standard, stochastic, and mini-batch gradient descent methods were discussed, showcasing best practices for implementation and performance enhancement.

-

Zomato Launches Food Rescue: Zomato introduced its ‘Food Rescue’ initiative, enabling users to purchase cancelled orders at reduced prices via this link. This program aims to reduce food waste while providing affordable meal options.

- Feedback emphasized the initiative’s potential benefits for both Zomato and customers, prompting discussions on sustainability practices within the food delivery sector.

-

Rapamycin’s Role in Anti-Aging: New research on rapamycin and its anti-aging effects has garnered attention, leading to conversations about ongoing studies detailed here.

- Users shared personal experiences with the drug, debating its potential benefits and drawbacks for longevity and health.

HuggingFace Discord

-

Zebra-Llama Enhances RAG for Rare Diseases: The Zebra-Llama model focuses on context-aware training to improve Retrieval Augmented Generation (RAG) capabilities, specifically targeting rare diseases like Ehlers-Danlos Syndrome with enhanced citation accuracy as showcased in the GitHub repository.

- Its application in real-world scenarios underscores the model’s potential in democratizing access to specialized medical knowledge.

-

Chonkie Streamlines RAG Text Chunking: Chonkie introduces a lightweight and efficient library designed for rapid RAG text chunking, facilitating more accessible text processing as detailed in the Chonkie GitHub repository.

- This tool simplifies the integration of text chunking processes into existing workflows, enhancing overall efficiency.

-

Ollama Operator Simplifies LLM Deployment: The Ollama Operator automates the deployment of Ollama instances and LLM servers with minimal YAML configuration, as demonstrated in the recent KubeCon presentation.

- By open-sourcing the operator, users can effortlessly manage their LLM deployments, streamlining the deployment process.

-

Qwen2.5 Coder Outperforms GPT4o in Code Generation: The Qwen2.5 Coder 32B model has shown superior performance compared to GPT4o and Claude 3.5 Sonnet in code generation tasks, according to YouTube performance insights.

- This advancement positions Qwen2.5 Coder as a competitive choice for developers requiring efficient code generation capabilities.

Unsloth AI (Daniel Han) Discord

-

Qwen 2.5-Coder-32B Surpasses Previous Models: The release of Qwen 2.5-Coder-32B has been met with enthusiasm, as members report its impressive performance exceeding that of earlier models.

- Expectations are high that this iteration will significantly enhance coding capabilities for developers utilizing robust language models.

-

Optimizing Llama 3 Fine-Tuning: A member highlighted slower inference times in their fine-tuned Llama 3 model compared to the original, sparking discussions on potential configuration issues.

- Suggestions included verifying float precision consistency and reviewing scripts to identify factors affecting inference speed.

-

Ollama API Enables Frontend Integration: Members explored running Ollama on terminal and developing a chat UI with Streamlit, confirming feasibility through the Ollama API.

- One user expressed intent to further investigate the API documentation to implement the solution in their projects.

-

Evaluating Transformers Against RNNs and CNNs: Discussion arose on whether models like RNNs and CNNs can be trained with Unsloth, with clarifications that standard neural networks lack current support.

- A member reflected on shifting perceptions, emphasizing the dominance of Transformer-based architectures in recent AI developments.

-

Debate on Diversity of LLM Datasets: There was frustration regarding the composition of LLM datasets, with concerns about the indiscriminate inclusion of various data sources.

- Conversely, another member defended the datasets by highlighting their diverse nature, underscoring differing perspectives on data quality.

Nous Research AI Discord

-

Qwen’s Coder Commandos Conquer Benchmarks: The introduction of the Qwen2.5-Coder family brings various sizes of coder models with advanced performance on benchmarks, as announced in Qwen’s tweet.

- Members observed that the flagship model surpasses several proprietary models in benchmark evaluations, fostering discussions on its potential influence in the coding LLM landscape.

-

NVIDIA’s MM-Embed Sets New Multimodal Standard: NVIDIA’s MM-Embed has been unveiled as the first multimodal retriever to achieve state-of-the-art results on the multimodal M-BEIR benchmark, detailed in this article.

- This development enhances retrieval capabilities by integrating visual and textual data, sparking conversations on its applications across diverse AI tasks.

-

Open Hermes 2.5 Mix Enhances Model Complexity: The integration of code data into the Open Hermes 2.5 mix significantly increases the model’s complexity and functionality, as discussed in the general channel.

- The team aims to improve model capabilities across various applications, with members highlighting potential performance enhancements.

-

Scaling AI Inference Faces Critical Challenges: Discussions on inference scaling in AI models focus on the limitations of current scaling methods, referencing key articles like Speculations on Test-Time Scaling.

- Concerns over slowing improvements in generative AI have led members to contemplate future directions and scalable performance strategies.

-

Machine Unlearning Techniques Under the Microscope: Research on machine unlearning questions the effectiveness of existing methods in erasing unwanted knowledge from large language models, as presented in the study.

- Findings suggest that methods like quantization may inadvertently retain forgotten information, prompting calls for improved unlearning strategies within the community.

Eleuther Discord

-

Normalized Transformer (nGPT) Replication Challenges: Participants attempted to replicate nGPT results, observing variable speed improvements dependent on task performance metrics.

- The architecture emphasizes unit norm normalization for embeddings and hidden states, enabling accelerated learning across diverse tasks.

-

Advancements in Value Residual Learning: Value Residual Learning significantly contributed to speedrun success by allowing transformer blocks to access previously computed values, thus reducing loss during training.

- Implementations of learnable residuals showed improved performance, prompting considerations for scaling the technique in larger models.

-

Low Cost Image Model Training Techniques Explored: Members highlighted effective low cost/low data image training methods such as MicroDiT, Stable Cascade, and Pixart, alongside gradual batch size increase for optimized performance.

- Despite their simplicity, these techniques have demonstrated robust results, encouraging adoption in resource-constrained environments.

-

Deep Neural Network Approximation via Symbolic Equations: A method was proposed to extract symbolic equations from deep neural networks, facilitating targeted behavioral modifications with SVD-based linear map fitting.

- Concerns were raised about potential side effects, especially in scenarios requiring nuanced behavioral control.

-

Instruct Tuned Models Require apply_chat_template: For instruct tuned models, members confirmed the necessity of the

--apply_chat_templateflag, referencing specific GitHub documentation.- Implementation guidance was sought for Python integration, emphasizing adherence to documented configurations to ensure compatibility.

aider (Paul Gauthier) Discord

-

Qwen 2.5 Coder Approaches Claude’s Coding Performance: The Qwen 2.5 Coder model has achieved a 72.2% benchmark score on the diff metric, nearly matching Claude’s performance in coding tasks, as announced on the Qwen2.5 Coder Demo.

- Users are actively discussing the feasibility of running Qwen 2.5 Coder locally on various GPU setups, highlighting interests in optimizing performance across different hardware configurations.

-

Integrating Embeddings with Aider Enhances Functionality: Discussions around Embedding Integration in Aider emphasize the development of APIs to facilitate seamless queries with Qdrant, aiming to improve context generation as detailed in the Aider Configuration Options.

- Community members are proposing the creation of a custom Python CLI for querying, which underscores the need for more robust integration mechanisms between Aider and Qdrant.

-

OpenCoder Leads with Extensive Code LLM Offerings: OpenCoder has emerged as a prominent open-source code LLM family, offering 1.5B and 8B models trained on 2.5 trillion tokens of raw code, providing model weights and inference code for research advancements.

- The transparency in OpenCoder’s data processing and availability of resources aims to support researchers in pushing the boundaries of code AI development.

-

Aider Faces Context Window Challenges at 1300 Tokens: Concerns have been raised regarding Aider’s 1300 context window, which some users report as ineffective, impacting the tool’s scalability and performance in practical applications as discussed in the Aider Model Warnings.

- It is suggested that modifications in Aider’s backend might be causing these warnings, though they do not currently impede usage according to user feedback.

-

RefineCode Enhances Training with Extensive Programming Corpus: RefineCode introduces a robust pretraining corpus containing 960 billion tokens across 607 programming languages, significantly bolstering training capabilities for emerging code LLMs like OpenCoder.

- This reproducible dataset is lauded for its quality and breadth, enabling more comprehensive and effective training processes in the development of advanced code AI models.

Stability.ai (Stable Diffusion) Discord

-

Stable Diffusion 3.5 and Flux Boost Performance: Users are transitioning from Stable Diffusion 1.5 to newer models like SD 3.5 and Flux, noting that these versions require less VRAM and deliver enhanced performance.

- A recommendation was made to explore smaller GGUF models which can run more efficiently, even on limited hardware.

-

GPU Performance and VRAM Efficiency Concerns: Concerns were raised about the long-term GPU usage from running Stable Diffusion daily, drawing comparisons to gaming performance impacts.

- Some users suggested that GPU prices might drop with the upcoming RTX 5000 series, encouraging others to wait before purchasing new hardware.

-

Efficient LoRA Training for Stable Diffusion 1.5: A user inquired about training a LoRA with a small dataset for Stable Diffusion 1.5, highlighting their experience with Flux-based training.

- Recommendations included using the Kohya_ss trainer and following specific online guides to navigate the training process effectively.

-

Pollo AI Introduces AI Video Generation: Pollo AI was introduced as a new tool enabling users to create videos from text prompts and animate static images.

- This tool allows for creative expressions by generating engaging video content based on user-defined parameters.

-

GGUF Format Enhances Model Efficiency: Users learned about the GGUF format, which allows for more compact and efficient model usage in image generation workflows.

- It was mentioned that using GGUF files can significantly reduce resource requirements compared to larger models while maintaining quality.

OpenRouter (Alex Atallah) Discord

-

3D Object Generation API Deprecated Amid Low Usage: The 3D Object Generation API will be decommissioned this Friday, citing minimal usage with fewer than five requests every few weeks, as detailed in the documentation.

- The team plans to redirect efforts towards features that garner higher community engagement and usage.

-

Hermes Model Exhibits Stability Issues: Users have observed inconsistent responses from the Hermes model across both free and paid tiers, potentially due to rate limits or backend problems on OpenRouter’s side.

- Community members are investigating the root causes, discussing whether it’s related to model optimization or infrastructural constraints.

-

Llama 3.1 70B Instruct Model Gains Traction: Llama 3.1 70B Instruct model is experiencing increased adoption, especially within the Skyrim AI Follower Framework community, as users compare its pricing and performance to Wizard models.

- Community members are eager to explore its advanced capabilities, discussing potential integrations and performance benchmarks.

-

Qwen 2.5 Coder Model Launches with Sonnet-Level Performance: The Qwen 2.5 Coder model has been released, matching Sonnet’s coding capabilities at 32B parameters, as announced on GitHub.

- Community members are excited about its potential to enhance coding tasks, anticipating significant productivity improvements.

-

Custom Provider Keys Beta Feature Sees High Demand: Members are actively requesting access to the custom provider keys beta feature, indicating strong community interest.

- A member thanked the team for considering their request, reflecting eagerness to utilize the new feature.

Interconnects (Nathan Lambert) Discord

-

Qwen2.5 Coder Performance: The Qwen2.5 Coder family introduces models like Qwen2.5-Coder-32B-Instruct, achieving performance competitive with GPT-4o across multiple benchmarks.

- Detailed performance metrics indicate that Qwen2.5-Coder-32B-Instruct has surpassed its predecessor, with anticipations for a comprehensive paper release in the near future.

-

FrontierMath Benchmark Introduction: FrontierMath presents a new benchmark comprising complex math problems, where current AI models solve less than 2% effectively, highlighting significant gaps in AI capabilities.

- The benchmark’s difficulty is underlined when compared to existing alternatives, sparking discussions about its potential influence on forthcoming AI training methodologies.

-

SALSA Enhances Model Merging Techniques: The SALSA framework addresses AI alignment limitations through innovative model merging strategies, marking a substantial advancement in Reinforcement Learning from Human Feedback (RLHF).

- Community reactions express excitement over SALSA’s potential to refine AI alignment, as reflected by enthusiastic exclamations like ‘woweee’.

-

Effective Scaling Laws in GPT-5: Discussions indicate that scaling laws continue to be effective with recent GPT-5 models, despite perceptions of underperformance, suggesting that scaling yields diminishing returns for specific tasks.

- The conversation highlights the necessity for OpenAI to clarify messaging around AGI, as unrealistic expectations persist among the community.

-

Advancements in Language Model Optimization: The latest episode of Neural Notes delves into language model optimization, featuring an interview with Stanford’s Krista Opsahl-Ong on automated prompt optimization techniques.

- Additionally, discussions reflect on MIPRO optimizers in DSPy, with intentions to deepen understanding of these optimization tools.

OpenAI Discord

-

Chunking JSON: Solving RAG Tool Data Gaps: The practice of chunking JSON into smaller files ensures the RAG tool captures all relevant data, preventing exclusions.

- Although effective, members noted that this method increases workflow length, as discussed in both

prompt-engineeringandapi-discussionschannels.

- Although effective, members noted that this method increases workflow length, as discussed in both

-

LLM-Powered Code Generation Simplifies Data Structuring: Members proposed using LLMs to generate code for structuring data insertion, streamlining the integration process.

- This approach was well-received, with one user highlighting its potential to reduce manual coding efforts as highlighted in multiple discussions.

-

Function Calling Updates Enhance LLM Capabilities: Updates on function calling within LLMs were discussed, with users seeking ways to optimize structured outputs in their workflows.

- Suggestions included leveraging tools like ChatGPT for brainstorming and implementing efficient strategies to enhance response generation.

-

AI TTS Tools: Balancing Cost and Functionality: Discussions highlighted various text-to-speech (TTS) tools such as f5-tts and Elven Labs, noting that Elven Labs is more expensive.

- Concerns were raised about timestamp data availability and the challenges of running these TTS solutions on consumer-grade hardware.

-

AI Image Generation: Overcoming Workflow Limitations: Users expressed frustrations with AI video generation limitations, emphasizing the need for workflows to stitch multiple scenes together.

- There was a desire for advancements in video-focused AI solutions over reliance on text-based models, as highlighted in the

ai-discussionschannel.

- There was a desire for advancements in video-focused AI solutions over reliance on text-based models, as highlighted in the

Notebook LM Discord Discord

-

Google Seeks Feedback on NotebookLM: The Google team is conducting a 10-minute feedback survey for NotebookLM, aiming to steer future improvements. Interested engineers can register here.

- Participants who complete the survey will receive a $20 gift code, provided they are at least 18 years old. This initiative helps Google gather actionable insights for product advancements.

-

NotebookLM Powers Diverse Technical Use Cases: NotebookLM is being utilized for technical interview preparation, enabling mock interviews with varied voices to enhance practice sessions.

- Additionally, engineers are leveraging NotebookLM for sports commentary experiments and generating efficient educational summaries, demonstrating its versatility in handling both audio and textual data.

-

Podcast Features Face AI-Generated Hiccups: Users report that the podcast functionality in NotebookLM occasionally hallucinates content, leading to unexpected and humorous outcomes.

- Discussions are ongoing about generating multiple podcasts per notebook and strategies to manage these AI-induced inaccuracies effectively.

-

NotebookLM Stands Out Against AI Tool Rivals: NotebookLM is being compared to Claude Projects, ChatGPT Canvas, and Notion AI in terms of productivity enhancements for writing and job search preparation.

- Engineers are evaluating the pros and cons of each tool, particularly focusing on features that aid users with ADHD in maintaining productivity.

-

Seamless Integration with Google Drive and Mobile: NotebookLM now offers a method to sync Google Docs, streamlining the update process with a proposed bulk syncing feature.

- While the mobile version remains underdeveloped, there is a strong user demand for a dedicated app to access full notes on smartphones, alongside improvements in mobile web functionalities.

LM Studio Discord

-

LM Studio GPU Utilization on MacBooks: Users raised questions about determining GPU utilization on MacBook M4 while running LM Studio, highlighting potential slow generation speeds compared to different setup specifications.

- Discussions involved comparisons of setup specs and results, emphasizing the need for optimized configurations to improve generation performance.

-

LM Studio Model Loading Issues: A user reported that LM Studio was unable to index a folder containing GGUF files, despite their presence, citing recent structural changes in the application.

- It was suggested to ensure only relevant GGUF files are in the folder and maintain the correct folder structure to resolve the model loading issues.

-

Pydantic Errors with LangChain: Encountered a

PydanticUserErrorrelated to the__modify_schema__method when integrating LangChain, indicating a possible version mismatch in Pydantic.- Users shared uncertainty whether this error was due to a bug in LangChain or a compatibility issue with the Pydantic version in use.

-

Gemma 2 27B Performance at Lower Precision: Gemma 2 27B demonstrated exceptional performance even at lower precision settings, with members noting minimal benefits when using Q8 over Q5 on specific models.

- Participants emphasized the necessity for additional context in evaluations, as specifications alone may not fully convey performance metrics.

-

Laptop Recommendations for LLM Inference: Inquiries about the performance differences between newer Intel Core Ultra CPUs versus older i9 models for LLM inference, with some recommendations favoring AMD alternatives.

- Suggestions included prioritizing GPU performance over CPU specifications and considering laptops like the ASUS ROG Strix SCAR 17 or Lenovo Legion Pro 7 Gen 8 for optimal LLM tasks.

Latent Space Discord

-

Qwen 2.5 Coder Launch: The Qwen2.5-Coder-32B-Instruct model has been released, along with a family of coder models ranging from 0.5B to 32B, available in various quantized formats.

- It has achieved competitive performances in coding benchmarks, surpassing models like GPT-4o, showcasing the capabilities of the Qwen series. Refer to the Qwen2.5-Coder Technical Report for detailed insights.

-

FrontierMath Benchmark Reveals AI’s Limitations: The newly introduced FrontierMath benchmark indicates that current AI systems solve less than 2% of included complex mathematical problems.

- This benchmark shifts focus to challenging, original problems, aiming to test AI capabilities against human mathematicians. More details can be found at FrontierMath.

-

Open Interpreter Project Advances: Progress has been made on the Open Interpreter project, with the team open-sourcing it to foster community contributions.

- ‘That’s so cool you guys open-sourced it,’ highlights the enthusiasm among members for the open-source direction. Interested parties can view the project on GitHub.

-

Infrastructure Challenges in AI Agents Development: Conversations revisited the infrastructure challenges in building effective AI agents, focusing on buy vs. build decisions that startups face.

- Concerns were highlighted regarding the evolution and allocation of compute resources in the early days of OpenAI, noting significant hurdles encountered.

-

Advancements in Test-Time Compute Techniques: A new state-of-the-art achievement for the ARC public validation set showcases a 61% score through innovative test-time compute techniques.

- Ongoing debates question how training and test-time processes are perceived differently within the AI community, suggesting potential unifications in methodologies.

GPU MODE Discord

-

SVDQuant accelerates Diffusion Models: A member shared an SVDQuant paper that optimizes diffusion models by quantizing weights and activations to 4 bits, leveraging a low-rank branch to handle outliers effectively.

- The approach enhances performance for larger image generation tasks despite increased memory access overhead associated with LoRAs.

-

Aria Multimodal MoE Model Boosted: The Aria multimodal MoE model achieved a 4-6x speedup and fits into a single 24GB GPU using A16W4 and torch.compile.

- Despite the current codebase being disorganized, it offers potential replication insights for similar MoE models.

-

BitBlas Supports int4 Kernels: BitBlas now supports int4 kernels, enabling efficient scaled matrix multiplication operations as discussed by community members.

- Discussions highlighted the absence of int4 compute cores on the H100, raising questions about operational support.

-

TorchAO Framework Enhancements: The project plans to extend existing Quantization-Aware Training (QAT) frameworks in TorchAO by integrating optimizations from recent research.

- This strategy leverages established infrastructure to incorporate new features, focusing initially on linear operations over convolutional models.

-

DeepMind’s Neural Compression Techniques: DeepMind introduced methods for training models with neurally compressed text, as detailed in their research paper.

- Community interest peaked around Figure 3 of the paper, though specific quotes were not discussed.

tinygrad (George Hotz) Discord

-

Hailo Port on Raspberry Pi 5: A developer is porting Hailo to the Raspberry Pi 5, successfully translating models from tinygrad to ONNX and then to Hailo, despite facing challenges with quantized models requiring CUDA and TensorFlow.

- They mentioned that executing training code on edge devices is impractical due to the chip’s limited cache and inadequate memory bandwidth.

-

Handling Floating Point Exceptions: Discussions centered around detecting floating point exceptions like NaN and overflow, highlighting the necessity for platform support in detection methods. Relevant resources included Floating-point environment - cppreference.com and FLP03-C. Detect and handle floating-point errors.

- Participants emphasized the importance of capturing errors during floating-point operations and advocated for robust error handling techniques.

-

Integrating Tinybox with Tinygrad: Tinybox integration with tinygrad was discussed, focusing on potential upgrades and addressing issues related to the P2P hack patch affecting version 5090 upgrades. Relevant GitHub issues were referenced from the tinygrad repository.

- There were speculations about the performance impacts of different PCIe controller capabilities on hardware setups.

-

TPU Backend Strategies: A user proposed developing a TPU v4 assembly backend, expressing willingness to collaborate post-cleanup. They inquired about the vectorization of assembly in LLVM and specific TPU versions targeted for support.

- The community engaged in discussions about the feasibility and technical requirements for merging backend strategies.

-

Interpreting Beam Search Outputs: Assistance was sought for interpreting outputs from a beam search, particularly understanding how the progress bar correlates with kernel execution time. It was noted that the green indicator represents the final runtime of the kernel.

- The user expressed confusion over actions and kernel size, requesting further clarification to accurately interpret the results.

Cohere Discord

-

AI Interview Bot Development: A user is launching a GenAI project for an AI interview bot that generates questions based on resumes and job descriptions, scoring responses out of 100.

- They are seeking free resources like vector databases and orchestration frameworks, emphasizing that the programming will be handled by themselves.

-

Aya-Expanse Model Enhancements: A user praised the Aya-Expanse model for its capabilities beyond translation, specifically in function calling and handling Greek language tasks.

- They noted that the model effectively selects

direct_responsefor queries not requiring function calls, which improves response accuracy.

- They noted that the model effectively selects

-

Cohere API for Document-Based Responses: A user inquired about an API to generate freetext responses from pre-uploaded DOCX and PDF files, noting that currently only embeddings are supported.

- They expressed interest in an equivalent to the ChatGPT assistants API for this purpose.

-

Cohere API Errors and Latency: Users reported multiple issues with the Cohere API, including 500 Internal Server Errors and 404 errors when accessing model details.

- Additionally, increased latency with response times reaching 3 minutes and Embed API slowness were highlighted, with users directed to the Cohere Status Page for updates.

-

vnc-lm Discord Bot Integration: A member introduced the vnc-lm Discord bot which integrates with the Cohere API and GitHub Models API, as well as local ollama models.

- Key features include creating conversation branches, refining prompts, and sending context materials like screenshots and text files, with setup accessible via GitHub using

docker compose up --build.

- Key features include creating conversation branches, refining prompts, and sending context materials like screenshots and text files, with setup accessible via GitHub using

OpenInterpreter Discord

-

Open Interpreter 1.0 Update Testing: A user volunteered to assist in testing the upcoming Open Interpreter 1.0 update, which is on the dev branch and set for release next week. They shared the installation command.

- The community emphasized the need for bug testing and adapting the update for different operating systems to ensure a smooth rollout.

-

Hardware Requirements for Open Interpreter: A user questioned whether the Mac Mini M4 Pro with 64GB or 24GB of RAM is sufficient for running Open Interpreter effectively. A consensus emerged affirming that the setup would work.

- Discussions also included integrating additional components like a microphone and speaker to enhance the hardware environment.

-

Qwen 2.5 Coder Models Released: The newly released Qwen 2.5 coder models showcase significant improvements in code generation, code reasoning, and code fixing, with the 32B model rivaling OpenAI’s GPT-4o.

- Members expressed enthusiasm as Qwen and Ollama collaborated, emphasizing the fun of coding together, as stated by Qwen, ‘Super excited to launch our models together with one of our best friends, Ollama!’. See the official tweet for more details.

-

CUDA Configuration Adjustments: A member mentioned they adjusted their CUDA setup, achieving a satisfactory configuration after making necessary tweaks.

- This successful instantiation on their system highlights the importance of correct CUDA configurations for optimal performance.

-

Software Heritage Code Archiving for Open Interpreter: A user offered assistance in archiving the Open Interpreter code in Software Heritage, aiming to benefit future generations.

- This proposal underscored the community’s commitment to preserving valuable developer contributions.

LlamaIndex Discord

-

LlamaParse Premium excels in document parsing: Hanane Dupouy showcased how LlamaParse Premium efficiently parses complex charts and diagrams into structured markdown, enhancing document readability.

- This tool transforms visual data into accessible text, significantly improving document usability.

-

Advanced chunking strategies boost performance: @pavan_mantha1 outlined three advanced chunking strategies and provided a full evaluation setup for testing on personal datasets.

- These strategies aim to enhance retrieval and QA functionality, demonstrating effective data processing methods.

-

PureML automates dataset management: PureML leverages LLMs for automatic cleanup and refactoring of ML datasets, featuring context-aware handling and intelligent feature creation.

- These capabilities improve data consistency and quality, integrating tools like LlamaIndex and GPT-4.

-

Benchmarking fine-tuned LLM model: A member sought guidance on benchmarking their fine-tuned LLM model available at Hugging Face, facing errors with the Open LLM leaderboard.

- They requested assistance to effectively utilize the leaderboard for evaluating model performance.

-

Docker resource settings for improved ingestion: Users discussed Docker configurations, allocating 4 CPU cores and 8GB memory, to optimize the sentence transformers ingestion pipeline.

- Despite these settings, the ingestion process remains slow and prone to failure, highlighting the need for further optimization.

DSPy Discord

-

M3DocRAG Sets New Standards in Multi-Modal RAG: M3DocRAG showcases impressive results for question answering using multi-modal information from a large corpus of PDFs and excels in ColPali benchmarks.

- Jaemin Cho highlighted its versatility in handling single & multi-hop questions across diverse document contexts.

-

New Open-domain Benchmark with M3DocVQA: The introduction of M3DocVQA, a DocVQA benchmark, challenges models to answer multi-hop questions across more than 3K PDFs and 40K pages.

- This benchmark aims to enhance understanding by utilizing various elements such as text, tables, and images.

-

DSPy RAG Use Cases Spark Interest: A member expressed enthusiasm about the potential of DSPy RAG capabilities, indicating a keen interest in experimentation.

- They noted the promising intersection between DSPy RAG and vision capabilities, hinting at intriguing future applications.

-

LangChain integration falls out of support: Recent updates on GitHub indicate that the current integration with LangChain is no longer maintained and may not function properly.

- One member raised a question about this change, seeking further context on the situation.

-

DSPy prompting techniques designed for non-composability: Members discussed the nature of DSPy prompting techniques, confirming they are intentionally not composable as part of the design.

- This decision emphasizes that while signatures can be manipulated, doing so may limit functionality and clarity of control flow.

OpenAccess AI Collective (axolotl) Discord

-

FastChat and ShareGPT Removal: The removal of FastChat and ShareGPT triggered strong reactions within the community, highlighted by the PR #2021. Members expressed surprise and concern over this decision.

- Alternative solutions, such as reverting to an older commit, were suggested to maintain project stability, indicating ongoing efforts to address the community’s needs.

-

Metharme Support Delays: A query about the continued support for Metharme led to an explanation that delays are due to fschat releases impacting development timelines.

- Community members showed interest in incorporating sharegpt conversations into the new chat_template, reflecting a collaborative approach to overcoming support challenges.

-

Fine-Tuning VLMs Best Practices: Assistance was sought for fine-tuning VLMs, with recommendations to utilize the provided configuration for llama vision from the example repository.

- Confirmation that training a VLM model using llama 3.2 1B is achievable demonstrated the community’s capability and interest in advanced model training techniques.

-

Inflection AI API Updates: Inflection-3 was discussed, introducing two models: Pi for emotional engagement and Productivity for structured outputs, as detailed in the Inflection AI Developer Playground.

- Members raised concerns about the absence of benchmark data, questioning the practical evaluation of these new models and their real-world application.

-

Metharme Chat_Template PR Addition: A pull request to add Metharme as a chat_template was shared via PR #2033, addressing user requests and testing compatibility with previous versions.

- Community members were encouraged to execute the preprocess command locally to ensure functionality, fostering a collaborative environment for testing and implementation.

LLM Agents (Berkeley MOOC) Discord

-

Midterm Check-in for Project Feedback: Teams can now submit their progress through the Midterm Check-in Form to receive feedback and possibly gain GPU/CPU resource credits.

- Submitting the form is crucial even if not requesting resources, as it could facilitate valuable insights on their projects.

-

Application for Additional Compute Resources: Teams interested in additional GPU/CPU resources must complete the resource request form alongside the midterm check-in form.

- Allocation will depend on documented progress and detailed justification, encouraging even new teams to apply.

-

Lambda Workshop Reminder: The Lambda Workshop is scheduled for tomorrow, Nov 12th from 4-5pm PST, and participants are encouraged to RSVP through this link.

- This workshop will provide further insights and guidance on team projects and the hackathon process.

-

Unlimited Team Size for Hackathon: A member inquired about the allowed team size for the hackathon, and it was confirmed that the size is unlimited.

- This opens up the possibility for anyone interested to collaborate without restrictions.

-

Upcoming Lecture on LLM Agents: An announcement was made regarding a discussion of Lecture 2: History of LLM Agents happening tonight.

- The discussion will include a review of the lecture and exploration of some Agentic code, welcoming anyone interested.

Torchtune Discord

-

Capturing attention scores without modifying forward function: A user inquired about capturing attention scores without altering the forward function in the self-attention module using forward hooks. Others suggested potential issues with F.sdpa(), which doesn’t currently output attention scores, indicating that modifications may be necessary.

-

DCP checkpointing issues causing OOM errors: A member reported that the latest git main version still fails to address issues with gathering weights and optimizers on rank=0 GPU, resulting in OOM (Out Of Memory) errors.

- They implemented a workaround for DCP checkpoint saving, intending to convert it to the Hugging Face format and possibly write a PR for better integration.

-

Community support for DCP integration in Torchtune: Discussions emphasized the community’s support for integrating DCP checkpointing into Torchtune, with talks about sharing PRs or forks related to the efforts.

- An update indicated that a DCP PR from PyTorch contributors is likely to be available soon, enhancing collaborative progress.

LAION Discord

-

SVDQuant Reduces Memory and Latency: The recent SVDQuant introduces a new quantization paradigm for diffusion models, achieving a 3.5× memory and 8.7× latency reduction on a 16GB laptop 4090 GPU by quantizing weights and activations to 4 bits.

-

Gorilla Marketing in AI: AI companies are adopting gorilla marketing strategies, characterized by unconventional promotional tactics.

- This trend was humorously highlighted with a reference to the Harambe GIF, emphasizing the playful nature of these marketing approaches.

MLOps @Chipro Discord

-

RisingWave Enhances Data Processing Techniques: A recent post highlighted RisingWave’s advancements in data processing, emphasizing improvements in stream processing techniques.

- For more insights, check out the full details on their LinkedIn post.

-

Stream Processing Techniques in Focus: The discussion centered around the latest in stream processing, showcasing methods to optimize real-time data handling.

- Participants noted that adopting these innovations could significantly impact data-driven decision-making.

Gorilla LLM (Berkeley Function Calling) Discord

-

Using Gorilla LLM for Testing Custom Models: A user inquired about using Gorilla LLM to benchmark their fine-tuned LLM, seeking guidance as they are new to the domain.

- They expressed a need for help specifically in benchmark testing custom LLMs, hoping for community support and recommendations.

-

Seeking Support for Benchmarking Custom LLMs: A user reached out to utilize Gorilla LLM for benchmarking their custom fine-tuned model, emphasizing their lack of experience in the area.

- They requested assistance in effectively benchmark testing custom LLMs to better understand performance metrics.

AI21 Labs (Jamba) Discord

- Continued Use of Fine-Tuned Models: A user requested to continue using their fine-tuned models within the current setup.

- ****:

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Perplexity AI ▷ #general (1230 messages🔥🔥🔥):

Perplexity server issuesUser frustrationsCommunication with usersWaiting for fixesAlternatives to Perplexity

-

Perplexity Suffers Server Issues: Users reported difficulties logging in and accessing threads on Perplexity, with messages disappearing and threads showing errors such as ‘This Thread does not exist.’ Health updates from the team confirmed technical issues due to a recent deployment.

- While some threads have recently become accessible again, many users find that new messages are now hidden, compounding frustrations.

-

User Frustration and Support Deficiency: Many users expressed dissatisfaction with the Perplexity support team’s lack of communication regarding fixes for ongoing bugs, leading to feelings of neglect and frustration. Complaints were made about the poor handling and multiple recurring bugs impacting usage.

- Users highlighted difficulties in using the platform effectively, voicing their concerns about the company’s performance and the need for better customer support.

-

Communication on Updates: Participants discussed the company’s apparent inability to communicate effectively with users regarding ongoing issues, calling for better transparency. Some users suggested that the company should implement a more proactive approach to updating customers during outages.

- There was a call for the implementation of an API to relay information about platform status updates to Discord users promptly.

-

Looking for Alternatives: Some users began seeking alternatives to Perplexity, particularly interested in platforms that offer Opus support, leading to suggestions like ChatHub. Concerns were raised regarding the value of continuing with Perplexity amidst its ongoing issues.

-

Anticipation of Future Impact: As discussions unfolded regarding the state of the platform, there were reflections on how technology firms could affect user experiences in the long run. Users expressed propositions regarding how company reliance on investors might degenerate customer service once money starts to dwindle.

Links mentioned:

- Capybara Let Him Cook GIF - Capybara Let him cook - Discover & Share GIFs: Click to view the GIF

- Tweet from Aravind Srinivas (@AravSrinivas): It was a deployment issue. We’re reverting back. Sorry. Will be up and running very very shortly. Quoting Crunk ✈️ Network School (@crunk304) Plex is down! @AravSrinivas @perplexity_ai

- Perplexity - Status: Perplexity Status

- Weareback Wereback GIF - Weareback Wereback Wearebackbaby - Discover & Share GIFs: Click to view the GIF

- When Server Down Iceeramen GIF - When Server Down Iceeramen Monkey - Discover & Share GIFs: Click to view the GIF

- ChatHub - GPT-4o, Claude 3.5, Gemini 1.5 side by side: Use and compare GPT-4o, Claude 3.5, Gemini 1.5 and more chatbots simultaneously

- Bye Bye GIF - Bye bye - Discover & Share GIFs: Click to view the GIF

Perplexity AI ▷ #sharing (25 messages🔥):

Zomato's Food Rescue InitiativeAstrology DiscussionsGradient Descent TechniquesAnti-Aging Research with RapamycinFishing Bait Recommendations

-

Zomato’s Smart Food Rescue Initiative: Zomato introduced its ‘Food Rescue’ program, allowing users to purchase cancelled orders at lower prices here. This initiative aims to minimize food waste while offering consumers more affordable meal options.

- Feedback on this move highlights its potential to benefit both Zomato and customers alike, raising questions on sustainability practices in the food delivery industry.

-

Astrology: Reality or Myth?: Multiple members discussed the validity of astrology, specifically referencing a link that delves into its authenticity. The conversation sparked differing opinions on the impact of astrological claims in modern life.

- Participants expressed varied views, with some advocating for its psychological benefits while others dismissed it as pseudoscience.

-

Gradient Descent Techniques Explored: Several queries were raised about the various types of gradient descent, focusing on their applications in machine learning link. Members shared links and personal insights into how these methods optimize model training.

- Discussions included comparisons between standard gradient descent and advanced techniques like stochastic and mini-batch gradient descent, showcasing best practices for implementation.

-

Anti-Aging Research Gains Attention: New research on rapamycin and its anti-aging effects has caught the eye of members, leading to discussions about ongoing studies in the area link. Users shared personal experiences with the drug and discussed its potential benefits and drawbacks.

- Conversations centered around the implications of this research on longevity and health, with enthusiasm for future discoveries.

-

Best Bait for Fishing Adventures: A user inquired about the best bait to catch fish, which led to a lively discussion among fishing enthusiasts sharing tips link. Suggestions ranged from traditional options to innovative bait methods designed to attract various species.

- This exchange highlighted the community’s enthusiasm for fishing and willingness to share valuable insights gained from their experiences.

Perplexity AI ▷ #pplx-api (24 messages🔥):

Perplexity API citationsAPI vs. Pro Search outputSearch domain filterCitation links outputDifferent sources in API

-

Perplexity API now includes citations: The Perplexity API has announced the public availability of citations with no breaking changes, and the default rate limit for sonar online models has increased to 50 requests/min.

- For more details, users can refer to the docs.

-

API output differs from Pro Search: Discussion arose about the output quality of the API compared to the Pro Search, clarifying that the Pro version uses a different model not accessible via the API.

- Members expressed disappointment about not obtaining the same output quality in the API as in the Pro search.

-

Search domain filter query: One user inquired if the search_domain_filter supports subdomain searches, wanting to use ‘support.company.com’ while avoiding ‘community.company.com’.

- The conversation about this feature included a follow-up question seeking confirmation on its functionality.

-

Issues with citation links: Concerns were raised about the citation links returned by the API, which appeared as parenthetical numbers instead of clickable links.

- Multiple members reported similar experiences, prompting discussions on how to request URLs in different formats.

-

Discrepancies in API and chat source results: A user noticed the sources returned by the API varied significantly from those in the chat for identical queries, sparking questions about their search algorithms.

- The discrepancy was attributed to the underlying algorithm and data crawled, independent of the LLM model differences.

Links mentioned:

- no title found: no description found

- no title found.): no description found

HuggingFace ▷ #general (922 messages🔥🔥🔥):

AI model scamsBirthday celebrationsImage generation modelsModel fine-tuningLlama models and competition

-

Discussion on AI Model Scams: A user recounted how they were scammed for $500 by a developer who failed to deliver an AI model, highlighting the lack of trust in freelance arrangements.

- The community emphasized the importance of using platforms like Upwork for better security and reliable service.

-

Celebrating a Birthday with New Model Release: A user announced their birthday and shared a new model they created, dubbed ‘birthday-llm,’ designed for logical reasoning and roleplaying tasks.

- The model was well-received, with others expressing enthusiasm to try it out.

-

Image Generation and Character Consistency: Users discussed challenges in generating character consistency in images, with recommendations made for models such as Animagine XL 3.1 and DiffuseCraft.

- The importance of using labeled datasets for tasks such as score detection from pinball machines was highlighted.

-

Fine-tuning for Specific Use Cases: The community deliberated methods for obtaining a model capable of detecting specific objects and scores, and techniques for integrating text and image data.

- There was an emphasis on setting clear accuracy targets for training models in order to ensure reasonable expectations.

-

Exploring New AI Models and Frameworks: A user sought advice on finding high-quality AI models on Hugging Face amidst concerns about outdated models saturating the platform.

- The conversation touched on the rapid pace of advancements in AI model development and the need for clear documentation and evaluation.

Links mentioned:

- Redirecting…: no description found

- What Is Flux In Ai: no description found

- minchyeom/birthday-llm · Hugging Face: no description found

- How to convert model.safetensor to pytorch_model.bin?: I’m fine tuning a pre-trained bert model and i have a weird problem: When i’m fine tuning using the CPU, the code saves the model like this: With the “pytorch_model.bin…

- Forrest Gump Running GIF - Forrest Gump Running Me When Im Late - Discover & Share GIFs: Click to view the GIF

- rwitz/cat1.0 · Hugging Face: no description found

- взгляд 2000 ярдов GIF - Взгляд 2000 ярдов Война - Discover & Share GIFs: Click to view the GIF

- Why should we use Temperature in softmax?): I’m recently working on CNN and I want to know what is the function of temperature in softmax formula? and why should we use high temperatures to see a softer norm in probability distribution?Sof…

- Kitty Stinky Kitty GIF - Kitty Stinky kitty Stinky - Discover & Share GIFs: Click to view the GIF

- Cat Computer GIF - Cat Computer Typing - Discover & Share GIFs: Click to view the GIF

- Trump Donald GIF - Trump Donald Face - Discover & Share GIFs: Click to view the GIF

- Trump Orange Seat GIF - Trump Orange Seat Looking Around - Discover & Share GIFs: Click to view the GIF

- Spongebob Patrick GIF - Spongebob Patrick Patrick Star - Discover & Share GIFs: Click to view the GIF

- What is temperature?: I see the word “temperature” being used at various places like: in Models — transformers 4.12.4 documentation temperature ( float , optional, defaults to 1.0) – The value used to module the next…

- Snoop Snoop Dogg GIF - Snoop Snoop dogg Snoop smile - Discover & Share GIFs: Click to view the GIF

- Punisher The Punisher GIF - Punisher The Punisher Wait - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- Ghostbuster Toaster GIF - Ghostbuster Toaster - Discover & Share GIFs: Click to view the GIF

- Family Guy Peter Griffin GIF - Family guy Peter griffin Peter - Discover & Share GIFs: Click to view the GIF

- city96/FLUX.1-dev-gguf · Hugging Face: no description found

- 80m Increased Revenue & Redeploy 3 Fte GIF - 80M Increased Revenue & Redeploy 3 FTE - Discover & Share GIFs: Click to view the GIF

- ComfyUI - Advanced - a Hugging Face Space by wrdias: no description found

- ComfyUI (test) - a Hugging Face Space by John6666: no description found

- James Moriarty (hologram): You – or someone – asked your computer to program a nefarious fictional character from nineteenth century London – and that is how I arrived… but I am no longer that creation. I am no longer that ch…

- Animagine XL 3.1 - a Hugging Face Space by cagliostrolab: no description found

- Laxhar/sdxl_noob · Hugging Face: no description found

- YOLO11 🚀 NEW: Discover YOLO11, the latest advancement in state-of-the-art object detection, offering unmatched accuracy and efficiency for diverse computer vision tasks.

- Bad Piggies Theme: Provided to YouTube by The Orchard EnterprisesBad Piggies Theme · Ilmari HakkolaBad Piggies (Original Game Soundtrack)℗ 2012 Rovio EntertainmentReleased on: …

- GitHub - black-forest-labs/flux: Official inference repo for FLUX.1 models: Official inference repo for FLUX.1 models. Contribute to black-forest-labs/flux development by creating an account on GitHub.

- 🧩 DiffuseCraft - a Hugging Face Space by r3gm: no description found

- Stable Diffusion XL: no description found

HuggingFace ▷ #today-im-learning (10 messages🔥):

Teaching LLMs MathematicsFull Stack vs AI StackTraining a BART ModelProblem-Based LearningLong Sentences in Translation

-

Discussing Approaches to Teaching LLMs Math: A member expressed interest in incorporating reasoning and logic into teaching LLMs mathematics, emphasizing the significance of focusing on solvable problems.

- They noted that teaching LLMs with manageable challenges, like Middle School Math, is preferable over complex problems where success is unlikely.

-

Navigating the Full Stack Dilemma: A user shared confusion over whether to focus on Full Stack development before delving into AI, citing their existing knowledge of classical ML and basic NLP and CV.

- There was a suggestion that pursuing Full Stack may drain time and energy, prompting a request for guidance from the community.

-

Successful BART Model Training for Translation: After 6 hours of debugging, a user successfully trained a BART model on the OPUS books de-en dataset to translate English to German.

- They implemented efficiency measures like saving model states and configurations, but noted that some overly long sentences would get truncated during processing.

-