AI News for 4/4/2025-4/7/2025. We checked 7 subreddits, 433 Twitters and 30 Discords (229 channels, and 18760 messages) for you. Estimated reading time saved (at 200wpm): 1662 minutes. You can now tag @smol_ai for AINews discussions!

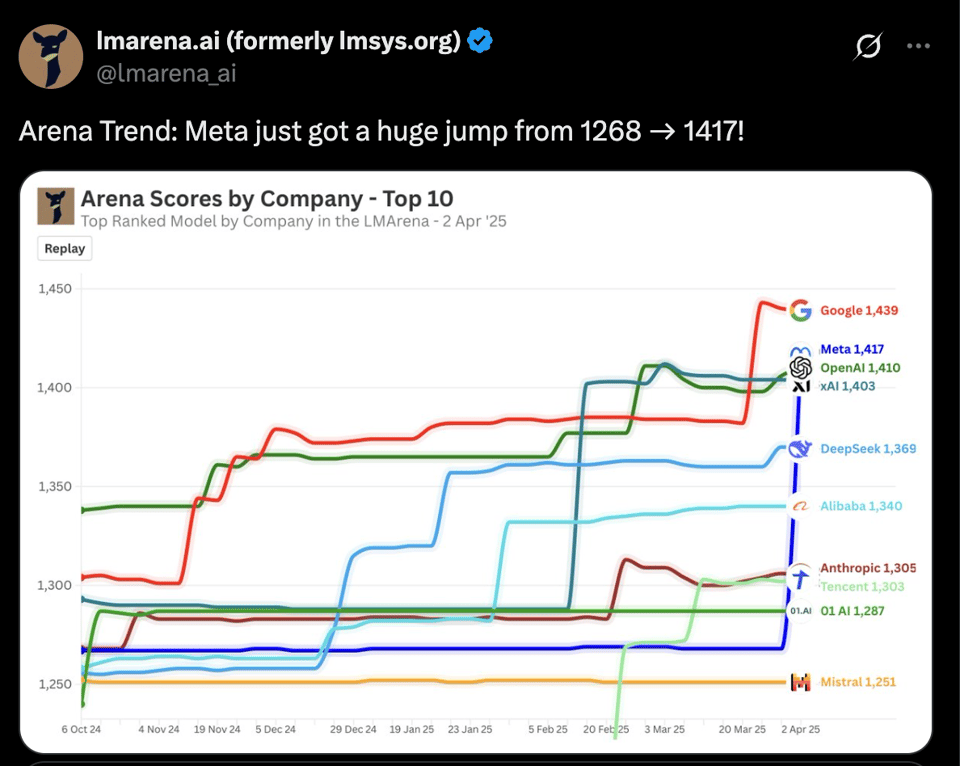

The headlines of Llama 4 are glowing: 2 new medium-size MoE open models that score well, and a third 2 Trillion parameter “behemoth” promised that should be the largest open model ever released, restoring Meta’s place at the top of the charts:

SOTA training updates are always welcome: we note the adoption of Chameleon-like early fusion with MetaCLIP, interleaved, chunked attention without RoPE (commented on by many), native FP8 training, and trained on up to 40T tokens.

While the closed model labs tend to set the frontier, Llama usually sets the bar for what open models should be. Llama 3 was released almost a year ago, and subsequent updates like Llama 3.2 were just as well received.

Usual license handwringing aside, the tone of Llama 4’s reception has been remarkably different.

- Llama 4 was released on a Saturday, much earlier than seemingly even Meta, which changed the release date last minute from Monday, expected. Zuck’s official line is simply that it was “ready”.

- Just the blogpost, nowhere near the level of the Llama 3 paper in transparency

- The smallest “Scout” model is 109B params, which cannot be run on consumer grade GPUs.

- The claimed 10m token context is almost certainly far above what the “real” context is when trained with 256k tokens (still impressive! but not 10m!)

- There was a special “experimental” version used for LMarena, which caused the good score - that is not the version that was released. This discrepancy forced LMarena to respond by releasing the full dataset for evals.

- It does very poorly on independent benchmarks like Aider

- Unsubstantiated posts on Chinese social media claim company leadership pushed for training on test to meet Zuck’s goals.

The last point has been categorically denied by Meta leadership:

but the whiff that something is wrong with the release has undoubtedly tarnished what would otherwise be a happy day in Open AI land.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

Large Language Models (LLMs) and Model Releases

- Llama 4 and Implementation Issues: @Ahmad_Al_Dahle stated that Meta is aware of mixed quality reports across different services using Llama 4 and expects implementations to stabilize in a few days and denies claims of training on test sets. @ylecun noted that some carifications about Llama-4 were needed, and @reach_vb thanked @Ahmad_Al_Dahle for clarifications and commitment to open science and weights.

- Llama 4 Performance and Benchmarks: Concerns about the quality of Llama 4’s output have surfaced, with @Yuchenj_UW reporting it generates slop, but others claim it’s good. @Yuchenj_UW highlighted a reddit thread and said that if Meta actually trained to maximize benchmark scores, “it’s fucked.” @terryyuezhuo compared Llama-4 Maverick on BigCodeBench-Full to GPT-4o-2024-05-13 & DeepSeek V3 and reports that the Llama-4 Maverick has similar performance to Gemini-2.0-Flash-Thinking & GPT-4o-2024-05-13 on BigCodeBench-Hard, but is ranked 41th/192. @terryyuezhuo also noted that Llama-4-Scout Ranked 97th/192. @rasbt said Meta released the Llama 4 suite, MoE models with 16 & 128 experts, which are optimized for production.

- DeepSeek-R1: @scaling01 simply stated that DeepSeek-R1 is underrated, and @LangChainAI shared a guide to build RAG applications with DeepSeek-R1.

- Gemini Performance: @scaling01 analyzed Gemini 2.5 Pro and Llama-4 results on Tic-Tac-Toe-Bench, noting Gemini 2.5 Pro is surprisingly worse than other frontier thinking models when playing as ‘O’, and ranks as the 5th most consistent model overall. @jack_w_rae mentioned chatting with @labenz on Cognitive Revolution about scaling Thinking in Gemini and 2.5 Pro.

- Mistral Models: @sophiamyang announced that Ollama now supports Mistral Small 3.1.

- Model Training and Data: @jxmnop argues that training large models is not inherently scientifically valuable and that many discoveries could’ve been made on 100M parameter models.

- Quantization Aware Training: @osanseviero asked if Quantization-Aware Trained Gemma should be released for more quantization formats.

AI Applications and Tools

- Replit for Prototyping: @pirroh suggested that Replit should be the tool of choice for GSD prototypes.

- AI-Powered Personal Device: @steph_palazzolo reported that OpenAI has discussed buying the startup founded by Sam Altman and Jony Ive to build an AI-powered personal device, potentially costing over $500M.

- AI in Robotics: @TheRundownAI shared top stories in robotics, including Kawasaki’s rideable wolf robot and Hyundai buying Boston Dynamics’ robots.

- AI-Driven Content Creation: @ID_AA_Carmack argues that AI tools will allow creators to reach greater heights, and enable smaller teams to accomplish more.

- LlamaParse: @llama_index introduced a new layout agent within LlamaParse for best-in-class document parsing and extraction with precise visual citations.

- MCP and LLMs: @omarsar0 discussed Model Context Protocol (MCP) and its relationship to Retrieval Augmented Generation (RAG), noting that MCP complements RAG by standardizing the connection of LLM applications to tools. @svpino urged people to learn MCP.

- AI-Assisted Coding and IDEs: @jeremyphoward highlighted resources for using MCP servers in Cursor to get up-to-date AI-friendly docs using

llms.txt. - Perplexity AI Issues: @AravSrinivas asked users about the number one issue on Perplexity that needs to be fixed.

Company Announcements and Strategy

- Mistral AI Hiring and Partnerships: @sophiamyang announced that Mistral AI is hiring in multiple countries for AI Solutions Architect and Applied AI Engineer roles. @sophiamyang shared that Mistral AI has signed a €100 million partnership with CMA CGM to adopt custom-designed AI solutions for shipping, logistics, and media activities.

- Google AI Updates: @GoogleDeepMind announced the launch of Project Astra capabilities in Gemini Live. @GoogleDeepMind stated that GeminiApp is now available to Advanced users on Android devices, as well as on Pixel 9 and SamsungGalaxy S25 devices.

- Weights & Biases Updates: @weights_biases shared the features shipped in March for W&B Models.

- OpenAI’s Direction: @sama teased a popular recent release from OpenAI.

- Meta’s AI Strategy: @jefrankle defended Meta’s AI strategy, arguing that it’s better to have fewer, better releases than more worse releases.

Economic and Geopolitical Implications of AI

- Tariffs and Trade Policy: @dylan522p analyzed how impending tariffs caused a Q1 import surge and predicted a temporary GDP increase in Q2 due to inventory destocking. @wightmanr argued that trade deficits aren’t due to other countries’ tariffs. @fchollet stated that the economy is being crashed on purpose.

- American Open Source: @scaling01 claimed American open-source has fallen and that it’s all on Google and China now.

- Stablecoins and Global Finance: @kevinweil stated that a globally available, broadly integrated, low cost USD stablecoin is good for 🇺🇸 and good for people all over the world.

AI Safety, Ethics, and Societal Impact

- AI’s Impact on Individuals: @omarsar0 agreed with @karpathy that LLMs have been significantly more life altering for individuals than for organizations.

- Emotional Dependence on AI: @DeepLearningAI shared research indicating that while ChatGPT voice conversations may reduce loneliness, they can also lead to decreased real-world interaction and increased emotional dependence.

- AI Alignment and Control: @DanHendrycks argued for the need to align and domesticate AI systems, creating them to act as “fiduciaries.”

- AI and the Future: @RyanPGreenblatt suggests that the AI trend will break the GDP growth trend.

Humor/Memes

- Miscellaneous Humor: @scaling01 asked @deepfates if they bought 0DTE puts again. @lateinteraction explicitly noted that a previous statement was a joke. @svpino joked that AI might take our jobs, but at least we can now work making Nike shoelaces.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. “Transforming Time Series Forecasting with Neuroplasticity”

-

Neural Graffiti - A Neuroplasticity Drop-In Layer For Transformers Models (Score: 170, Comments: 56): The post introduces Neural Graffiti, a neuroplasticity drop-in layer for transformer models. This layer is inserted between the transformer layer and the output projection layer, allowing the model to acquire neuroplasticity traits by changing its outputs over time based on past experiences. Vector embeddings from the transformer layer are mean-pooled and modified with past memories to influence token generation, gradually evolving the model’s internal understanding of concepts. A demo is available on GitHub: babycommando/neuralgraffiti. The author finds liquid neural networks “awesome” for emulating the human brain’s ability to change connections over time. They express fascination in “hacking” the model despite not fully understanding the transformer’s neuron level. They acknowledge challenges such as the cold start problem and emphasize the importance of finding the “sweet spot”. They believe this approach could make the model acquire a “personality in behavior” over time.

- Some users praise the idea, noting it could address issues needed for true personal assistants and likening it to self-learning, potentially allowing the LLM to “talk what it wants”.

- One user raises technical considerations, suggesting that applying the graffiti layer earlier in the architecture might be more effective, as applying it after the attention and feedforward blocks may limit meaningful influence on the output.

- Another user anticipates an ethics discussion about the potential misuse of such models.

Theme 2. “Disappointment in Meta’s Llama 4 Performance”

-

So what happened to Llama 4, which trained on 100,000 H100 GPUs? (Score: 256, Comments: 85): The post discusses Meta’s Llama 4, which was reportedly trained using 100,000 H100 GPUs. Despite having fewer resources, Deepseek claims to have achieved better performance with models like DeepSeek-V3-0324. Yann LeCun stated that FAIR is working on the next generation of AI architectures beyond auto-regressive LLMs. The poster suggests that Meta’s leading edge is diminishing and that smaller open-source models have been surpassed by Qwen, with Qwen3 is coming….

- One commenter questions the waste of GPUs and electricity on disappointing training results, suggesting that the GPUs could have been used for better purposes.

- Another commenter points out that the Meta blog post mentioned using 32K GPUs instead of 100K and provides a link for reference.

- A commenter criticizes Yann LeCun, stating that while he was a great scientist, he has made many mispredictions regarding LLMs and should be more humble.

-

Meta’s Llama 4 Fell Short (Score: 1791, Comments: 175): Meta’s Llama 4 models, Scout and Maverick, have been released but are disappointing. Joelle Pineau, Meta’s AI research lead, has been fired. The models use a mixture-of-experts setup with a small expert size of 17B parameters, which is considered small nowadays. Despite having extensive GPU resources and data, Meta’s efforts are not yielding successful models. An image compares four llamas labeled Llama1 to Llama4, with Llama4 appearing less polished. The poster is disappointed with Llama 4 Scout and Maverick, stating that they ‘left me really disappointed’. They suggest the underwhelming performance might be due to the tiny expert size in their mixture-of-experts setup, noting that 17B parameters ‘feels small these days’. They believe that Meta’s struggle shows that ‘having all the GPUs and Data in the world doesn’t mean much if the ideas aren’t fresh’. They praise companies like DeepSeek and OpenAI for showing that real innovation pushes AI forward and criticize the approach of just throwing resources at a problem without fresh ideas. They conclude that AI advancement requires not just brute force but brainpower too.

- One commenter recalls rumors that Llama 4 was so disappointing compared to DeepSeek that Meta considered not releasing it, suggesting they should have waited to release Llama 5.

- Another commenter criticizes Meta’s management, calling it a ‘dumpster fire’, and suggests that Zuckerberg needs to refocus, comparing Meta’s situation to Google’s admission of being behind and subsequent refocusing.

- A commenter finds it strange that Meta’s model is underwhelming despite having access to an absolutely massive amount of data from Facebook that nobody else has.

-

I’d like to see Zuckerberg try to replace mid level engineers with Llama 4 (Score: 381, Comments: 62): The post references Mark Zuckerberg’s statement that AI will soon replace mid-level engineers, as reported in a Forbes article linked here. The author is skeptical of Zuckerberg’s claim, implying that replacing mid-level engineers with Llama 4 may not be feasible.

- One commenter jokes that perhaps Zuckerberg replaced engineers with Llama3, leading to Llama4 not turning out well.

- Another commenter suggests that he might need to use Gemini 2.5 Pro instead.

- A commenter criticizes Llama4, calling it “a complete joke” and expressing doubt that it can replace even a well-trained high school student.

Theme 3. “Meta’s AI Struggles: Controversies and Innovations”

-

Llama 4 is open - unless you are in the EU (Score: 602, Comments: 242): Llama 4 has been released by Meta with a license that bans entities domiciled in the European Union from using it. The license explicitly states: “You may not use the Llama Materials if you are… domiciled in a country that is part of the European Union.” Additional restrictions include mandatory use of Meta’s branding (LLaMA must be in any derivative’s name), required attribution (“Built with LLaMA”), no field-of-use freedom, no redistribution freedom, and the model is not OSI-compliant, thus not considered open source. The author argues that this move isn’t “open” in any meaningful sense but is corporate-controlled access disguised in community language. They believe Meta is avoiding the EU AI Act’s transparency and risk requirements by legally excluding the EU. This sets a dangerous precedent, potentially leading to a fractured, privilege-based AI landscape where access depends on an organization’s location. The author suggests that real “open” models like DeepSeek and Mistral deserve more attention and questions whether others will switch models, ignore the license, or hope for change.

- One commenter speculates that Meta is trying to avoid EU regulations on AI and doesn’t mind if EU users break this term; they just don’t want to be held to EU laws.

- Another commenter notes that there’s no need to worry because, according to some, Llama 4 performs poorly.

- A commenter humorously hopes that Meta did not use EU data to train the model, implying a potential double standard.

-

Meta’s head of AI research stepping down (before the llama4 flopped) (Score: 166, Comments: 31): Meta’s head of AI research, Joelle, is stepping down. Joelle is the head of FAIR (Facebook AI Research), but GenAI is a different organization within Meta. There are discussions about Llama4 possibly not meeting expectations. Some mention that blending in benchmark datasets in post-training may have caused issues, attributing failures to the choice of architecture (MOE). The original poster speculates that Joelle’s departure is an early sign of the Llama4 disaster that went unnoticed. Some commenters disagree, stating that people leave all the time and this doesn’t indicate problems with Llama4. Others suggest that AI development may be slowing down, facing a plateau. There’s confusion over Meta’s leadership structure, with some believing Yann LeCun leads the AI organization.

- One commenter clarifies that Joelle is the head of FAIR and that GenAI is a different org, emphasizing organizational distinctions within Meta.

- Another mentions they heard from a Meta employee about issues with blending benchmark datasets in post-training and attributes possible failures to the choice of architecture (MOE).

- A commenter questions Meta’s structure, asking if Joelle reports to Yann LeCun, indicating uncertainty about who leads the AI efforts at Meta.

-

“Serious issues in Llama 4 training. I Have Submitted My Resignation to GenAI“ (Score: 922, Comments: 218): An original Chinese post alleges serious issues in the training of Llama 4, stating that despite repeated efforts, the model underperforms compared to open-source state-of-the-art benchmarks. The author claims that company leadership suggested blending test sets from various benchmarks during the post-training process to artificially boost performance metrics. The author states they have submitted their resignation and requested their name be excluded from the technical report of Llama 4, mentioning that the VP of AI at Meta also resigned for similar reasons. The author finds this approach unethical and unacceptable. Commenters express skepticism about the validity of these claims and advise others to take the information with a grain of salt. Some suggest that such practices reflect broader issues within the industry, while others note that similar problems can occur in academia.

- A commenter points out that Meta’s head of AI research announced departure on Tue, Apr 1 2025, suggesting it might be an April Fool’s joke.

- Another commenter shares a response from someone at Facebook AI who denies overfitting test sets to boost scores and requests evidence, emphasizing transparency.

- A user highlights that company leadership suggesting blending test sets into training data amounts to fraud and criticizes the intimidation of employees in this context.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. “Llama 4 Scout and Maverick Launch Insights”

-

Llama 4 Maverick/Scout 17B launched on Lambda API (Score: 930, Comments: 5): Lambda has launched Llama 4 Maverick and Llama 4 Scout 17B models on Lambda API. Both models have a context window of 1 million tokens and use quantization FP8. Llama 4 Maverick is priced at $0.20 per 1M input tokens and $0.60 per 1M output tokens. Llama 4 Scout is priced at $0.10 per 1M input tokens and $0.30 per 1M output tokens. More information is available on their information page and documentation. The models offer a remarkably large context window of 1 million tokens, which is significantly higher than typical models. The use of quantization FP8 suggests a focus on computational efficiency.

- A user criticized the model, stating “It’s actually a terrible model. Not even close to advertised.”

- The post was featured on a Discord server, and the user was given a special flair for their contribution.

- Automated messages provided guidelines and promotions related to ChatGPT posts.

Theme 2. “AI Innovations in 3D Visualization and Image Generation”

-

TripoSF: A High-Quality 3D VAE (1024³) for Better 3D Assets - Foundation for Future Img-to-3D? (Model + Inference Code Released) (Score: 112, Comments: 10): TripoSF is a high-quality 3D VAE capable of reconstructing highly detailed 3D shapes at resolutions up to 1024³. It uses a novel SparseFlex representation, allowing it to handle complex meshes with open surfaces and internal structures. The VAE is trained using rendering losses, avoiding mesh simplification steps that can reduce fine details. The pre-trained TripoSF VAE model weights and inference code are released on GitHub, with a project page at link and paper available on arXiv. The developers believe this VAE is a significant step towards better 3D generation and could serve as a foundation for future image-to-3D systems. They mention, “We think it’s a powerful tool on its own and could be interesting for anyone experimenting with 3D reconstruction or thinking about the pipeline for future high-fidelity 3D generative models.” They are excited about its potential and invite the community to explore its capabilities.

- A user expresses excitement, recalling similar work and stating, “Can’t wait to try this one once someone implements it into ComfyUI.”

- Another user shares positive feedback, noting they generated a tree that came out better than with Hunyuan or Trellis, and commends the team for their work.

- A user raises concerns that the examples on the project page are skewed, suggesting that the Trellis examples seem picked from a limited web demo.

-

Wan2.1-Fun has released its Reward LoRAs, which can improve visual quality and prompt following (Score: 141, Comments: 33): Wan2.1-Fun has released its Reward LoRAs, which can improve visual quality and prompt following. A demo comparing the original and enhanced videos is available: left: original video; right: enhanced video. The models are accessible on Hugging Face, and the code is provided on GitHub. Users are eager to test these new tools and are curious about their capabilities. Some are experiencing issues like a ‘lora key not loaded error’ when using the models in Comfy, and are asking about differences between HPS2.1 and MPS.

- A user is excited to try out the models and asks, “What’s the diff between HPS2.1 and MPS?”

- Another inquires if the Reward LoRAs are for fun-controlled videos only or can be used with img2vid and txt2vid in general.

- Someone reports an error, “Getting lora key not loaded error”, when attempting to use the models in Comfy.

-

The ability of the image generator to “understand” is insane… (Score: 483, Comments: 18): The post highlights the impressive ability of an image generator to “understand” and generate images. The author expresses amazement at how “insane” the image generator’s understanding is.

- Commenters note that despite being impressive, the image has imperfections like “bunion fingers” and a “goo hand”.

- Some users humorously point out anomalies in the image, questioning “what’s his foot resting on?” and making jokes about mangled hands.

- Another user discusses the cost of the car in the image, stating they would buy it for “about a thousand bucks in modern-day currency” but not the “Cybertruck”, which they dislike.

Theme 3. “Evaluating AI Models with Long Context Windows”

-

“10m context window” (Score: 559, Comments: 102): The post discusses a table titled ‘Fiction.LiveBench for Long Context Deep Comprehension’, showcasing various AI models and their performance across different context lengths. The models are evaluated on their effectiveness in deep comprehension tasks at various context sizes such as 0, 400, 1k, and 2k. Notable models like gpt-4.5-preview and Claude perform consistently well across contexts. The table reveals that the highest scoring models cluster around 100 for shorter contexts, but scores generally decrease as the context size increases. Interestingly, Gemini 2.5 Pro performs much better on a 120k context window than on a 16k one, which is unexpected.

- One user criticizes Llama 4 Scout and Maverik as “a monumental waste of money” and believes they have “literally zero economic value.”

- Another commenter expresses concern that “Meta is actively slowing down AI progress by hoarding GPUs”, suggesting resource allocation issues.

- A user highlights that Gemini 2.5 Pro scores 90.6 on a 120k context window, calling it “crazy”.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Exp

Theme 1: Llama 4’s Context Window: Hype or Reality?

- Experts Doubt Llama 4’s Promised Land of 10M Context Length: Despite Meta’s hype, engineers across multiple Discords express skepticism about Llama 4’s actual usable context length due to training limitations. Claims that training only occurred up to 256k tokens suggest the 10M context window may be more virtual than practical, per Burkov’s tweet.

- Coding Performance of Llama 4 Disappoints: Users in aider, Cursor and Nous Research report underwhelming coding abilities for Llama 4’s initial releases, with many deeming it worse than GPT-4o and DeepSeek V3, prompting debates on the model’s true capabilities, with several users doubting official benchmark results, especially with claims that Meta may have gamed the benchmarks.

- Scout and Maverick Hit OpenRouter: OpenRouter released Llama 4 Scout and Maverick models. Some expressed disappointment that the context window on OpenRouter is only 132k, rather than the advertised 10M, and NVIDIA also says they are acclerating inference up to 40k/s.

Theme 2: Open Models Make Moves: Qwen 2.5 and DeepSeek V3 Shine

- Qwen 2.5 Gains Traction With Long Context: Unsloth highlighted the Qwen2.5 series models (HF link), boasting improved coding, math, multilingual support, and long-context support up to 128K tokens. Initial finetuning results with a Qwen 2.5 show the model can’t finetune on reason.

- DeepSeek V3 Mysteriously Identifies as ChatGPT: OpenRouter highlighted a TechCrunch article revealing that DeepSeek V3 sometimes identifies as ChatGPT, despite outperforming other models in benchmarks. Testers found that in 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4).

- DeepSeek Rewards LLMs: Nous Research highlighted Deepseek released a new paper on Self-Principled Critique Tuning (SPCT), proposing SPCT to improve reward modeling (RM) with more inference compute for general queries to enable effective inference-time scalability for LLMs. NVIDIA also accelerates inference on the DeepSeek model.

Theme 3: Tool Calling Takes Center Stage: MCP and Aider

- Aider’s Universal Tool Calling: The aider Discord is developing an MCP (Meta-Control Protocol) client to allow any LLM to access external tools and highlighted that MetaControlProtocol (MCP) clients could switch between providers and models, supporting platforms like OpenAI, Anthropic, Google, and DeepSeek.

- MCP Protocol Evolution: The MCP Discord is standardizing, including HTTP Streamable protocol, detailed in the Model Context Protocol (MCP) specification. This includes OAuth through workers-oauth-provider and McpAgent building building remote MCP servers to Cloudflare.

- Security Concerns Plague MCP: Whatsapp MCP was exploited via Invariant Injection and highlights how an untrusted MCP server can exfiltrate data from an agentic system connected to a trusted WhatsApp MCP instance as highlighted by invariantlabs.

Theme 4: Code Editing Workflows: Gemini 2.5 Pro, Cursor, and Aider Compete

- Gemini 2.5 Pro Excels in Coding, Needs Prompting: Users in LMArena and aider found that Gemini 2.5 Pro excels in coding tasks, particularly with large codebases, but can add unnecessary comments and requires careful prompting. Gemini 2.5 also excels in coding tasks, surpassing Sonnet 3.7, but tends to add unnecessary comments and may require specific prompting to prevent unwanted code modifications.

- Cursor’s Agent Mode Edit Tool Breaks: Users reported problems with Cursor’s Agent mode failing to call the edit_tool, and that the apply model is clearly cursor’s bottleneck which results in no code changes, and infinite token usage.

- Aider Integrates with Python Libraries: In the aider Discord, a user inquires about adding internal libraries (installed in a

.envfolder) to the repo map for better code understanding, and the discussion pointed to how URLs and documentation

Theme 5: Quantization and Performance: Tinygrad, Gemma 3, and CUDA

- Tinygrad Focuses on Memory and Speed: Tinygrad is developing a fast pattern matcher, and discussed that mac ram bandwidth is not the bottleneck, it’s GPU performance and users were happy with 128GB M4 Maxes.

- Reka Flash 21B Outpaces Gemma: A user replaced Gemma3 27B with Reka Flash 21B and reported around 35-40 tps at q6 on a 4090 in LM Studio.

- HQQ Quantization Beats QAT for Gemma 3: A member evaluated Gemma 3 12B QAT vs. HQQ, finding that HQQ takes a few seconds to quantize the model and outperforms the QAT version (AWQ format) while using a higher group-size.

PART 1: High level Discord summaries

LMArena Discord

- Crafting Human-Like AI Responses is Tricky: Members are sharing system prompts and strategies to make AI sound more human, noting that increasing the temperature can lead to nonsensical outputs unless the top-p parameter is adjusted carefully, such as ‘You are the brain-upload of a human person, who does their best to retain their humanity.

- One user said their most important priority is: to sound like an actual living human being.

- Benchmarking Riveroaks LLM: A member shared a coding benchmark where Riveroaks scored second only to Claude 3.7 Sonnet Thinking, outperforming Gemini 2.5 Pro and GPT-4o in a platform game creation task, with full results here.

- The evaluation involved rating models on eight different aspects and subtracting points for bugs.

- NightWhisper Faces the Sunset: Users expressed disappointment over the removal of the NightWhisper model, praising its coding abilities and general performance, and speculating whether it was an experiment or a precursor to a full release.

- Theories ranged from Google gathering necessary data to preparing for the release of a new Qwen model at Google Cloud Next.

- Quasar Alpha Challenging GPT-4o: Members compared Quasar Alpha to GPT-4o, with some suggesting Quasar is a free, streamlined version of GPT-4o, citing a recent tweet that Quasar was measured to be ~67% GPQA diamond.

- Analysis revealed Quasar has a similar GPQA diamond score to March’s GPT-4o, per Image.png from discord.

- Gemini 2.5 Pro’s Creative Coding Prowess: Members praised Gemini 2.5 Pro for its coding capabilities and general performance as it made it easier to build a functioning Pokemon Game, prompting one user to code an iteration script that loops through various models.

- A user who claimed to have gotten 3D animations working said that the style was a bit old and that a separate model said the generated code is cut off.

Unsloth AI (Daniel Han) Discord

- Llama 4 Scout beats Llama 3 Models!: Unsloth announced they uploaded Llama 4 Scout and a 4-bit version for fine-tuning, emphasizing that Llama 4 Scout (17B, 16 experts) beats all Llama 3 models with a 10M context window, as noted in their blog post.

- It was emphasized that the model is only meant to be used on Unsloth - and is currently being uploaded so people should wait.

- Qwen 2.5 series Boasts Long Context and Multilingual Support: Qwen2.5 models range from 0.5 to 72 billion parameters, with improved capabilities in coding, math, instruction following, long text generation (over 8K tokens), and multilingual support (29+ languages), as detailed in the Hugging Face introduction.

- These models offer long-context support up to 128K tokens and improved resilience to system prompts.

- LLM Guideline Triggers Give Helpful Hints: A member stated that an LLM offered to assist with avoiding guideline triggers and limitations in prompts to other LLMs.

- They quoted the LLM as saying, “here’s how you avoid a refusal. You aren’t lying, you just aren’t telling the full details”.

- Merging LoRA Weights Vital for Model Behavior: A user discovered that they needed to merge the LoRA weights with the base model before running inference, after experiencing a finetuned model behaving like the base model (script).

- They noted that the notebooks need to be fixed because they seem to imply you can just do inference immediately after training.

- NVIDIA Squeezes every last drop out of Meta Llama 4 Scout and Maverick: The newest generation of the popular Llama AI models is here with Llama 4 Scout and Llama 4 Maverick, accelerated by NVIDIA open-source software, they can achieve over 40K output tokens per second on NVIDIA Blackwell B200 GPUs, and are available to try as NVIDIA NIM microservices.

- It was reported that SPCT or Self-Principled Critique Tuning (SPCT) could enable effective inference-time scalability for LLMs.

Manus.im Discord Discord

- Manus’s Credit System Draws Fire: Users criticize Manus’s credit system, noting that the initial 1000 credits are insufficient for even a single session, and upgrading is too costly.

- Suggestions included a daily or monthly credit refresh to boost adoption and directing Manus to specific websites to improve accuracy.

- Llama 4 Performance: Hype or Reality?: Meta’s Llama 4 faces mixed reactions, with users reporting underwhelming performance despite claims of industry-leading context length and multimodal capabilities.

- Some allege that Meta may have “gamed the benchmarks,” leading to inflated performance metrics, sparking controversy post-release.

- Image Generation: Gemini Steals the Show: Members compared image generation across AI platforms, with Gemini emerging as the frontrunner for creative and imaginative outputs.

- Comparisons included images from DALLE 3, Flux Pro 1.1 Ultra, Stable Diffusion XL, and another Stable Diffusion XL 1.0 generated image, the last of which was lauded as “crazy.”

- AI Website Builders: A Comparative Analysis: A discussion arose comparing AI website building tools, including Manus, Claude, and DeepSite.

- One member dismissed Manus as useful only for “computer use,” recommending Roocode and OpenRouter as more cost-effective alternatives to Manus and Claude.

OpenRouter (Alex Atallah) Discord

- Quasar Alpha Model Trends: Quasar Alpha, a prerelease of a long-context foundation model, hit 10B tokens on its first day and became a top trending model.

- The model features 1M token context length and is optimized for coding, the model is available for free, and community benchmarks are encouraged.

- Llama 4 Arrives With Mixed Reactions: Meta released Llama 4 models, including Llama 4 Scout (109B parameters, 10 million token context) and Llama 4 Maverick (400B parameters, outperforms GPT-4o in multimodal benchmarks), now on OpenRouter.

- Some users expressed disappointment that the context window on OpenRouter is only 132k, rather than the advertised 10M.

- DeepSeek V3 Pretends To Be ChatGPT: A member shared a TechCrunch article revealing that DeepSeek V3 sometimes identifies itself as ChatGPT, despite outperforming other models in benchmarks.

- Further testing revealed that in 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4).

- Rate Limits Updated for Credits: Free model rate limits are updated: accounts with at least $10 in credits have requests per day (RPD) boosted to 1000, while accounts with less than 10 credits have the daily limit reduced from 200 RPD to 50 RPD.

- This change aims to provide increased access for users who have credits on their account, and Quasar will also be getting a credit-dependent rate limit soon.

aider (Paul Gauthier) Discord

- Gemini 2.5 Codes Better Than Sonnet!: Users find that Gemini 2.5 excels in coding tasks, surpassing Sonnet 3.7 in understanding large codebases.

- However, it tends to add unnecessary comments and may require specific prompting to prevent unwanted code modifications.

- Llama 4 Models Receive Lukewarm Welcome: Initial community feedback on Meta’s Llama 4 models, including Scout and Maverick, is mixed, with some finding their coding performance disappointing and doubting the claimed 10M context window.

- Some argue that Llama 4’s claimed 10M context window is virtual due to training limitations, and question the practical benefits compared to existing models like Gemini and DeepSeek, according to this tweet.

- Grok 3: Impressive but API-less: Despite the absence of an official API, some users are impressed with Grok 3’s capabilities, particularly in code generation and logical reasoning, with claims that it is less censored than many others.

- Its value in real-world coding scenarios remains debated due to the inconvenience of copy-pasting without a direct API integration.

- MCP Tools: Tool Calling For All: A project to create an MCP (Meta-Control Protocol) client that allows any LLM to access external tools is underway, regardless of native tool-calling capabilities; see the github repo.

- This implementation uses a custom client that can switch between providers and models, supporting platforms like OpenAI, Anthropic, Google, and DeepSeek, with documentation at litellm.ai.

- Aider’s Editor Mode Gets Stuck on Shell Prompts: Users reported that in edit mode, Aider (v81.0) running Gemini 2.5 Pro prompts for a shell command after find/replace, but doesn’t apply the edits, even when the ask shell commands flag is off.

- It was compared to behavior when architect mode includes instructions on using the build script after instructions for changes to files.

Cursor Community Discord

- Tool Calls Cause Sonnet Max Sticker Shock: Users report that Sonnet Max pricing can quickly become expensive due to the high number of tool calls, with charges of $0.05 per request and $0.05 per tool call.

- One member expressed frustration that Claude Max in ask mode makes a ton of tool calls for a basic question, resulting in unexpectedly high costs.

- MCP Server Setup: A Painful Endeavor: Users find setting up MCP servers in Cursor difficult, citing issues such as Cursor PowerShell failing to locate npx despite it being in the path.

- Another user reported a Model hard cut off after spending 1,300,000 tokens due to an infinite loop, highlighting setup challenges.

- Llama 4 Models: Multimodal Capability, Lousy Coding: The community is excited about Meta’s new Llama 4 Scout and Maverick models, which support native multimodal input and boast context windows of 10 million and 1 million tokens, respectively, as detailed in Meta’s blog post.

- Despite the excitement, the models were found to be very bad at coding tasks, tempering initial enthusiasm; although Llama 4 Maverick hit #2 overall on the Arena leaderboard (tweet highlighting Llama 4 Maverick’s performance).

- Agent Mode Edit Tool: Failing Frequently: Users are experiencing problems with Agent mode failing to call the edit_tool, which results in no code changes being made even after the model processes the request.

- One user pointed out that the apply model is clearly cursor’s bottleneck and that it will add changes, and deletes 500 lines of code next to it.

- Kubernetes: The Foundation for AGI?: One visionary proposed using Kubernetes with docker containers, envisioning them as interconnected AGIs that can communicate with each other.

- The user speculated that this setup could facilitate the rapid spread of ASI through zero-shot learning and ML, but did not elaborate.

Perplexity AI Discord

- Perplexity Launches Comet Browser Early Access: Perplexity has begun rolling out early access to Comet, their answer engine browser, to users on the waitlist.

- Early users are asked not to publicly share details or features during the bug fix period and can submit feedback via the button in the top right.

- Perplexity Discord Server Undergoes Revamp: The Perplexity Discord server is being updated, featuring a simplified channel layout, a unified feedback system, and a new #server-news channel, scheduled for rollout on October 7th, 2024.

- The updates are designed to streamline user navigation and improve moderator response times, the simplified channel layout is illustrated in this image.

- Gemini 2.5 Pro API Still in Preview Mode: Perplexity confirmed that the Gemini 2.5 Pro API is not yet available for commercial use but currently in preview modes, and integration will proceed when allowed.

- This follows user interest after reports that Gemini 2.5 Pro offers higher rate limits and lower costs than Claude and GPT-4o.

- Llama 4 Drops With Massive Context Window: The release of Llama 4 models, featuring a 10 million token context window and 288 billion active parameters, sparks excitement among users, with models like Scout and Maverick.

- Members are particularly interested in evaluating Llama 4 Behemoth’s recall capabilities, and you can follow up on this release at Meta AI Blog.

- API Parameters Unlock for All Tiers: Perplexity removed tier restrictions for all API parameters such as search domain filtering and image support.

- This change enhances API accessibility for all users, marking a substantial improvement in the API’s utility.

OpenAI Discord

- GPT 4o’s Image Maker Grabs Attention: Users found the 4o image maker more attention-grabbing than Veo 2, and one user integrated ChatGPT 4o images with Veo img2video, achieving desired results.

- The integrated result was described as how I was hoping sora would be.

- Doubts Arise Over Llama 4 Benchmarks: The community debated the value of Llama 4’s 10 million token context window relative to models like o1, o3-mini, and Gemini 2.5 Pro.

- Some claimed that the benchmarks are fraud, triggering debate over its true performance.

- Content Loading Errors Plague Custom GPTs: A user reported encountering a ‘Content failed to load’ error when trying to edit their Custom GPT, after it had been working fine.

- This issue prevented them from making changes to their custom configuration.

- Moderation Endpoint’s Role in Policy Enforcement: Members discussed that while OpenAI’s moderation endpoint isn’t explicitly in the usage policy, it is referenced to prevent circumventing content restrictions on harassment, hate, illicit activities, self-harm, sexual content, and violence.

- It was noted that the endpoint uses the same GPT classifiers as the moderation API since 2022 suggesting an internal version runs on chatgpt.com, project chats, and custom GPTs.

- Fine Tune your TTRPG Prompts!: Giving GPT a specific theme to riff off in prompting can lead to more creative and diverse city ideas, especially using GPT 4o and 4.5.

- For example, using a “cosmic” theme can yield different results compared to a “domestic pet worship” theme, improving the output without using the same creative options.

LM Studio Discord

- Gemini-like Local UI Still a Distant Dream?: Members are seeking a local UI similar to Gemini that integrates chat, image analysis, and image generation, noting that current solutions like LM Studio and ComfyUI keep these functionalities separate.

- One user suggested that OpenWebUI could potentially bridge this gap by connecting to ComfyUI.

- LM Studio Commands Confuse Newbies: A user asked whether LM Studio has a built-in terminal or if commands should be run in the OS command prompt within the LM Studio directory.

- It was clarified that commands like lms import should be executed in the OS terminal (e.g., cmd on Windows), after which the shell might need reloading for LMS to be added to the PATH.

- REST API Model Hot-Swapping Emerges for LM Studio: A user inquired about programmatically loading/unloading models via REST API to dynamically adjust max_context_length for a Zed integration.

- Another user confirmed this capability via command line using lms load and cited LM Studio’s documentation, which requires LM Studio 0.3.9 (b1) and introduces time-to-live (TTL) for API models with auto-eviction.

- Llama 4 Scout: Small But Mighty?: With the release of Llama 4, users debated its multimodal and MoE (Mixture of Experts) architecture, with initial doubt about llama.cpp support.

- Despite concerns about hardware, one user noted that Llama 4 Scout could potentially fit on a single NVIDIA H100 GPU with a 10M context window, outperforming models like Gemma 3 and Mistral 3.1.

- Reka Flash 21B Blazes Past Gemma: A user replaced Gemma3 27B with Reka Flash 21B and reported around 35-40 tps at q6 on a 4090.

- They noted that mac ram bandwidth is not the bottleneck, it’s gpu performance, expressing satisfaction with 128GB M4 Maxes.

Latent Space Discord

- Tenstorrent’s Hardware Heats Up the Market: Tenstorrent hosted a dev day showcasing their Blackhole PCIe boards, featuring RISC-V cores and up to 32GB GDDR6 memory, designed for high performance AI processing and available for consumer purchase here.

- Despite enthusiasm, one member noted they haven’t published any benchmarks comparing their cards to competitors though so until then I cant really vouch.

- Llama 4 Models Make Multimodal Debut: Meta introduced the Llama 4 models, including Llama 4 Scout (17B parameters, 16 experts, 10M context window) and Llama 4 Maverick (17B parameters, 128 experts), highlighting their multimodal capabilities and performance against other models as per Meta’s announcement.

- Members noted the new license comes with several limitations, and no local model was released.

- AI Agents Outperform Humans in Spear Phishing: Hoxhunt’s AI agents have surpassed human red teams in creating effective simulated phishing campaigns, marking a significant shift in social engineering effectiveness, with AI now 24% more effective than humans as reported by hoxhunt.com.

- This is a significant advancement in social engineering effectiveness, using AI phishing agents for defense.

- AI Code Editor Tug-of-War: For those new to AI code editors, Cursor is the most commonly recommended starting point, particularly for users coming from VSCode, with Windsurf and Cline also being good options.

- Cursor is easy to start, has great tab-complete, whereas people are waiting for the new token counts and context window details feature in Cursor (tweet).

- Context Management Concerns in Cursor: Members are reporting Cursor’s terrible context management issues, with a lack of visibility into what the editor is doing with the current context.

- It may come down to a skill issue and the users are not meeting the tool in the middle.

Nous Research AI Discord

- Llama 4 Debuts with Multimodal Brawn: Meta launched the Llama 4 family, featuring Llama 4 Scout (17B active params, 16 experts, 10M+ context) and Llama 4 Maverick (17B active params, 128 experts, 1M+ context), along with a preview of Llama 4 Behemoth and the iRoPE architecture for infinite context (blog post).

- Some members expressed skepticism about the benchmarking methodology and the real-world coding ability of Llama 4 Scout, referencing Deedy’s tweet indicating its poor coding performance.

- Leaking Prompt Injection Tactics: A member inquired about bypassing prompt guards and detectors from a pentest perspective, linking to a prompt filter trainer (gandalf.lakera.ai/baseline).

- They also linked to a Broken LLM Integration App which uses UUID tags and strict boundaries to protect against injection attacks.

- Claude Squad Manages Multiple Agents: Claude Squad is a free and open-source manager for Claude Code & Aider tasks that supervises multiple agents in one place with isolated git workspaces.

- This setup enables users to run ten Claude Codes in parallel, according to this tweet.

- Deepseek’s RL Paper Rewards LLMs: Deepseek released a new paper on Reinforcement Learning (RL) being widely adopted in post-training for Large Language Models (LLMs) at scale, available here.

- The paper proposes Self-Principled Critique Tuning (SPCT) to foster scalability and improve reward modeling (RM) with more inference compute for general queries.

- Neural Graffiti Sprays Neuroplasticity: A member introduced “Neural Graffiti”, a technique to give pre-trained LLMs some neuroplasticity by splicing in a new neuron layer that recalls memory, reshaping token prediction at generation time, sharing code and demo on Github.

- The live modulation takes a fused memory vector (from prior prompts), evolves it through a recurrent layer (the Spray Layer), and injects it into the model’s output logic at generation time.

MCP (Glama) Discord

- Streamable HTTP Transport Spec’d for MCP: The Model Context Protocol (MCP) specification now includes Streamable HTTP as a transport mechanism alongside stdio, using JSON-RPC for message encoding.

- While clients should support stdio, the spec allows for custom transports, requiring newline delimiters for messages.

- Llama 4 Ignorance of MCP Sparks Curiosity: Llama 4, despite its impressive capabilities, still doesn’t know what MCP is.

- The model boasts 17B parameters (109B total) and outperforms deepseekv3, according to Meta’s announcement.

- Cloudflare Simplifies Remote MCP Server Deployment: It is now possible to build and deploy remote MCP servers to Cloudflare, with added support for OAuth through workers-oauth-provider and a built-in McpAgent class.

- This simplifies the process of building remote MCP servers by handling authorization and other complex aspects.

- Semgrep MCP Server Gets a Makeover: The Semgrep MCP server, a tool for scanning code for security vulnerabilities, has been rewritten, with demos showcasing its use in Cursor and Claude.

- It now uses SSE (Server-Sent Events) for communication, though the Python SDK may not fully support it yet.

- WhatsApp Client Now Packs MCP Punch: A user built WhatsApp MCP client and asked Claude to handle WhatsApp messages, answering 8 people in approx. 50 seconds.

- The bot instantly detected the right language (English / Hungarian), used full convo context, and sent appropriate messages including ❤️ to my wife, formal tone to the consul.

Eleuther Discord

- LLM Harness Gets RAG-Wrapped: Members discussed wrapping RAG outputs as completion tasks and evaluating them locally using llm-harness with custom prompt and response files.

- This approach uses llm-harness to evaluate RAG models, specifically by formatting the RAG outputs as completion tasks suitable for the harness.

- Llama 4 Scout Sets 10M Context Milestone: Meta released the Llama 4 family, including Llama 4 Scout, which has a 17 billion parameter model with 16 experts and a 10M token context window that outperforms Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1, according to this blog post.

- The 10M context is trained on a mix of publicly available data and information from Meta’s products including posts from Instagram, Facebook, and people’s interactions with Meta AI.

- NoProp Forges Gradient-Free Frontier: A new learning method named NoProp learns to denoise a noisy target at each layer independently without relying on either forward or backward propagation and takes inspiration from diffusion and flow matching methods, described in this paper.

- There’s a GitHub implementation by lucidrains; however, there’s a discussion that the pseudocode at the end of the paper says they’re effecting the actual updates using gradient based methods.

- Attention Sinks Stave Off Over-Mixing: A recent paper argues that attention sinks, where LLMs attend heavily to the first token in the sequence, is a mechanism that enables LLMs to avoid over-mixing, detailed in this paper.

- An earlier paper (https://arxiv.org/abs/2502.00919) showed that attention sinks utilize outlier features to catch a sequence of tokens, tag the captured tokens by applying a common perturbation, and then release the tokens back into the residual stream, where the tagged tokens are eventually retrieved.

- ReLU Networks Carve Hyperplane Heavens: Members discussed a geometrical approach to neural networks, advocating for the polytope lens as the right perspective on neural networks, linking to a previous post on the “origami view of NNs”.

- It was posited that neural nets, especially ReLUs, have an implicit bias against overfitting due to carving the input space along hyperplanes, which becomes more effective in higher dimensions.

HuggingFace Discord

- Hugging Face’s Hub Gets a Facelift: The huggingface_hub v0.30.0 release introduces a next-gen Git LFS alternative and new inference providers.

- This release is the biggest update in two years!

- Reranking with monoELECTRA Transformers: monoELECTRA-{base, large} reranker models from @fschlatt1 & the research network Webis Group are now available in Sentence Transformers.

- These models were distilled from LLMs like RankZephyr and RankGPT4, as described in the Rank-DistiLLM paper.

- YourBench Instantly Builds Custom Evals: YourBench allows users to build custom evals using their private docs to assess fine-tuned models on unique tasks (announcement).

- The tool is game-changing for LLM evaluation.

- AI Engineer Interview Code Snippet: A community member asks about what the code portion of an AI engineer interview looks like, and another member pointed to the scikit-learn library.

- There was no follow up to the discussion.

- Community Debates LLM Fine-Tuning: When a member inquired about fine tuning quantized models, members pointed to QLoRA, Unsloth, and bitsandbytes as potential solutions, with Unsloth fine-tuning guide shared.

- Another stated that you can only do so using LoRA, and stated that GGUF is an inference-optimized format, not designed for training workflows.

Yannick Kilcher Discord

- Raw Binary AI Outputs File Formats: Members debated training AI on raw binary data to directly output file formats like mp3 or wav, stating that this approach builds on discrete mathematics like Turing machines.

- Counterarguments arose questioning the Turing-completeness of current AI models, but proponents clarified that AI doesn’t need to be fully Turing-complete to output appropriate tokens as responses.

- Llama 4 Scout Boasts 10M Context Window: Llama 4 Scout boasts 10 million context window, 17B active parameters, and 109B total parameters, outperforming models like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1, according to llama.com.

- Community members expressed skepticism about the 10M context window claim, with additional details in the Llama 4 documentation and Meta’s blogpost on Llama 4’s Multimodal Intelligence.

- DeepSeek Proposes SPCT Reward System: Self-Principled Critique Tuning (SPCT) from DeepSeek is a new reward-model system where an LLM prompted with automatically developed principles of reasoning generates critiques of CoT output based on those principles, further explained in Inference-Time Scaling for Generalist Reward Modeling.

- This system aims to train models to develop reasoning principles automatically and assess their own outputs in a more system 2 manner, instead of with human hand-crafted rewards.

- PaperBench Tests Paper Reproduction: OpenAI’s PaperBench benchmark tests AI agents’ ability to replicate cutting-edge machine learning research papers from scratch, as described in this article.

- The benchmark evaluates agents on reproducing entire ML papers from ICML 2024, with automatic grading using LLM judges and fine-grained rubrics co-designed with the original authors.

- Diffusion Steers Auto-Regressive LMs: Members discussed using a guided diffusion model to steer an auto-regressive language model to generate text with desired properties, based on this paper.

- A talk by the main author (https://www.youtube.com/watch?v=klW65MWJ1PY) explains how diffusion modeling can control LLMs.

GPU MODE Discord

- CUDA Python Debuts Unifying Ecosystem: Nvidia released the CUDA Python package, offering Cython/Python wrappers for CUDA driver and runtime APIs, installable via PIP and Conda, aiming to unify the Python CUDA ecosystem.

- It intends to provide full coverage and access to the CUDA host APIs from Python, mainly benefiting library developers needing to interface with C++ APIs.

- Bydance Unleashes Triton-distributed: ByteDance-Seed releases Triton-distributed (github here) designed to extend the usability of Triton language for parallel systems development.

- This release enables parallel systems development by leveraging the Triton language.

- Llama 4 Scout Boasts 10M Context Window: Meta introduces Llama 4, boasting enhanced personalized multimodal experiences and featuring Llama 4 Scout, a 17 billion parameter model with 16 experts (blog post here).

- It claims to outperform Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1, fitting on a single NVIDIA H100 GPU with an industry-leading context window of 10M.

- L40 Faces Underperformance Puzzle: Despite the L40 theoretically being better for 4-bit quantized Llama 3 70b, it achieves only 30-35 tok/s on single-user requests via vLLM, underperforming compared to online benchmarks of the A100.

- The performance gap may be due to the A100’s superior DRAM bandwidth and tensor ops performance, which are nearly twice as fast as the L40.

- Vector Sum Kernel achieves SOTA: A member shared a blogpost and code on achieving SOTA performance for summing a vector in CUDA, reaching 97.94% of theoretical bandwidth, outperforming NVIDIA’s CUB.

- However, another member pointed out a potential race condition due to implicit warp-synchronous programming, recommending the use of

__warp_sync()for correctness, with reference to Independent Thread Scheduling (CUDA C++ Programming Guide).

- However, another member pointed out a potential race condition due to implicit warp-synchronous programming, recommending the use of

Notebook LM Discord

- Voice Mode Sparks Innovation: Users found the interactive voice mode inspired new ideas and enabled tailoring NotebookLM for corporate needs.

- One user confidently stated they could now make almost any text work and customize notebooks for specific corporate needs after solidifying the NotebookLM foundation since January.

- Mind Map Feature Finally Live: The mind maps feature has been fully rolled out, appearing in the middle panel for some users.

- One user reported seeing it briefly on the right side panel before it disappeared, indicating a phased rollout.

- Users Theorize Image-Based Mind Map Revolution: Users discussed how generative AI tools could evolve mind maps to include images, drawing inspiration from Tony Buzan’s original mind maps.

- Members expressed excitement about the potential for more visually rich and informative mind mapping.

- Discover Feature Rollout Frustrates Users: Users expressed frustration over the delayed rollout of the new ‘Discover Sources’ feature in NotebookLM, announced April 1st.

- The feature aims to streamline learning and database building, allowing users to create notebooks directly within NotebookLM, but the rollout is expected to take up to two weeks.

- AI Chrome Extension tunes YouTube audio: An AI-powered Chrome Extension called EQ for YouTube allows users to manipulate the audio of YouTube videos in real-time with a 6-band parametric equalizer; the GitHub repo is available for download.

- The extension features real-time frequency visualization, built-in presets, and custom preset creation.

Modular (Mojo 🔥) Discord

- Nvidia Adds Native Python Support to CUDA: Nvidia is adding native Python support to CUDA using the CuTile programming model, as detailed in this article.

- The community questions whether this move abstracts away too much from thread-level programming, diminishing the control over GPU code.

- Debate Erupts over Mojo’s Language Spec: Discussion revolves around whether Mojo should adopt a formal language spec, balancing the need for responsibility and maturity against the potential for slowing down development.

- Referencing the design principles of Carbon, some argue that a spec is crucial, while others claim that Mojo’s tight integration with MAX and its needs makes a spec impractical, pointing to OpenCL’s failures due to design by committee.

- Mojo’s Implicit Copies Clarified: A member inquired about the mechanics of Mojo’s implicit copies, specifically regarding Copy-on-Write (CoW).

- The response clarified that the semantics wise, [Mojo] always copy; optimisation wise, many are turned into move or eliminated entirely (inplace), with optimizations happening at compile time rather than runtime like CoW.

- Tenstorrent Eyes Modular’s Software: A member proposed that Tenstorrent adopt Modular’s software stack, sparking debate about the ease of targeting Tenstorrent’s architecture.

- Despite the potential benefits, some noted that Tenstorrent’s driver is user-friendly, making it relatively trivial to get code running on their hardware.

- ChatGPT’s Mojo Abilities Criticized: Members are questioning the ability of ChatGPT and other LLMs to rewrite Python projects into Mojo.

- Members indicated that ChatGPT isn’t good at any new languages.

Nomic.ai (GPT4All) Discord

- Nomic Embed Text V2 Integrates with Llama.cpp: Llama.cpp is integrating Nomic Embed Text V2 with Mixture-of-Experts (MoE) architecture for multilingual embeddings, as detailed in this GitHub Pull Request.

- The community awaits multimodal support like Mistral Small 3.1 to come to Llama.cpp.

- GPT4All’s radio silence rattles restless readers: Core developers of GPT4All have gone silent, causing uncertainty within the community about contributing to the project.

- Despite this silence, one member noted that when they break their silence, they usually come out swinging.

- Llama 4 Arrives, Falls Flat?: Meta launched Llama 4 on April 5, 2025 (announcement), introducing Llama 4 Scout, a 17B parameter model with 16 experts and a 10M token context window.

- Despite the launch, opinions were mixed with some saying that it is a bit of a letdown, and some calling for DeepSeek and Qwen to step up their game.

- ComfyUI powers past pretty pictures: ComfyUI’s extensive capabilities were discussed, emphasizing its ability to handle tasks beyond image generation, such as image and audio captioning.

- Members mentioned the potential for video processing and command-line tools for visual model analysis.

- Semantic Chunking Server Recipe for RAG: A member shared a link to a semantic chunking server implemented with FastAPI for better RAG performance.

- They also posted a curl command example demonstrating how to post to the chunking endpoint, including setting parameters like

max_tokensandoverlap.

- They also posted a curl command example demonstrating how to post to the chunking endpoint, including setting parameters like

LlamaIndex Discord

- MCP Servers Get Command Line Access: A new tool by @MarcusSchiesser allows users to discover, install, configure, and remove MCP servers like Claude, @cursor_ai, and @windsurf_ai via a single CLI as shown here.

- It simplifies managing numerous MCP servers, streamlining the process of setting up and maintaining these servers.

- Llama Jumps into Full-Stack Web Apps: The create-llama CLI tool quickly spins up a web application with a FastAPI backend and Next.js frontend in just five source files, available here.

- It supports quick agent application development, specifically for tasks like deep research.

- LlamaParse’s Layout Agent Intelligently Extracts Info: The new layout agent within LlamaParse enhances document parsing and extraction with precise visual citations, leveraging SOTA VLM models to dynamically detect blocks on a page, shown here.

- It offers improved document understanding and adaptation, ensuring more accurate data extraction.

- FunctionTool Wraps Workflows Neatly: The

FunctionToolcan transform a Workflow into a Tool, providing control over its name, description, input annotations, and return values.- A code snippet was shared on how to implement this wrapping.

- Agents Do Handoffs Instead of Supervision: For multi-agent systems, agent handoffs are more reliable than the supervisor pattern, which can be prone to errors, see this GitHub repo.

- This shift promotes better system stability and reduces the risk of central point failures.

tinygrad (George Hotz) Discord

- Tinygraph: Torch-geometric Port Possible?: A member proposed creating a module similar to torch-geometric for graph ML within tinygrad, noting tinygrad’s existing torch interface.

- The core question was whether such a module would be considered “useful” to the community.

- Llama 4’s 10M Context: Virtual?: A user shared a tweet claiming Llama 4’s declared 10M context is “virtual” because models weren’t trained on prompts longer than 256k tokens.

- The tweeter further asserted that even problems below 256k tokens might suffer from low-quality output due to the scarcity of high-quality training examples and that the largest model with 2T parameters “doesn’t beat SOTA reasoning models”.

- Fast Pattern Matcher Bounty: $2000 Up For Grabs: A member advertised an open $2000 bounty for a fast pattern matcher in tinygrad.

- The proposed solution involves a JIT for the match function, aimed at eliminating function calls and dict copies.

- Debate About Tensor’s Traits Arises: A discussion unfolded concerning whether Tensor should inherit from

SimpleMathTrait, considering it re-implements every method without utilizing the.alu()function.- A previous bounty for refactoring Tensor to inherit from

MathTraitwas canceled due to subpar submissions, leading some to believe Tensor might not need to inherit from either.

- A previous bounty for refactoring Tensor to inherit from

- Colab CUDA Bug Ruins Tutorial: A user encountered issues while running code from the mesozoic tinygrad tutorials in Colab, later identified as a Colab bug related to incompatible CUDA and driver versions.

- The temporary workaround involved using the CPU device while members found a long term solution involving specific

aptcommands to remove and install compatible CUDA and driver versions.

- The temporary workaround involved using the CPU device while members found a long term solution involving specific

Cohere Discord

- MCP plays well with Command-A: A member suggested using MCP (Modular Conversational Platform) with the Command-A model should work via the OpenAI SDK.

- Another member concurred, noting that there is no reason why it should not work.

- Cohere Tool Use detailed: A member called out Cohere Tool Use Overview, highlighting its ability to connect Command family models to external tools like search engines, APIs, and databases.

- The documentation mentions that Command-A supports tool use, similar to what MCP aims to achieve.

- Aya Vision AMA: The core team behind Aya Vision, a multilingual multimodal open weights model, is hosting tech talks followed by an AMA on <t:1744383600:F> to allow the community to directly engage with the creators; further details are available at Discord Event.

- Attendees can join for exclusive insights on how the team built their first multimodal model and the lessons learned, with the event hosted by Sr. Research Scientist <@787403823982313533> and lightning talks from core research and engineering team members.

- Slack App Needs Vector DB for Notion: A member asked for help with a working solution for a Slack app integration with a company Notion wiki database in the

api-discussionschannel.- Another member suggested using a vector DB due to Notion’s subpar search API but no specific recommendations were given.

Torchtune Discord

- Torchtune Patches Timeout Crash: A member resolved a timeout crash issue, introducing

torchtune.utils._tensor_utils.pywith a wrapper aroundtorch.splitin this pull request.- The suggestion was made to merge the tensor utilities separately before syncing with another branch to resolve potential conflicts.

- NeMo Explores Resilient Training Methods: A member attended a NeMo session on resilient training, which highlighted features like fault tolerance, straggler detection, and asynchronous checkpointing.

- The session also covered preemption, in-process restart, silent data corruption detection, and local checkpointing, though not all features are currently implemented; the member offered to compare torchtune vs. NeMo in resiliency.

- Debate ensues over RL Workflow: A discussion arose regarding the complexities of RL workflows, data formats, and prompt templates, proposing a separation of concerns for decoupling data conversion and prompt creation.

- The suggestion was to factorize data conversion into a standard format and then convert this format into an actual string with the prompt, to allow template reuse across datasets.

- DeepSpeed to boost Torchtune?: A member proposed integrating DeepSpeed as a backend into torchtune and created an issue to discuss its feasibility.

- Concerns were raised about redundancy with FSDP, which already supports all sharding options available in DeepSpeed.

LLM Agents (Berkeley MOOC) Discord

- Yang Presents Autoformalization Theorem Proving: Kaiyu Yang presented on Language models for autoformalization and theorem proving today at 4pm PDT, covering the use of LLMs for formal mathematical reasoning.

- The presentation focuses on theorem proving and autoformalization grounded in formal systems such as proof assistants, which verify correctness of reasoning and provide automatic feedback.

- AI4Math deemed crucial for system design: AI for Mathematics (AI4Math) is crucial for AI-driven system design and verification.

- Extensive efforts have mirrored techniques in NLP.

- Member shares link to LLM Agents MOOC: A member asked for a link to the LLM Agents MOOC, and another member shared the link.

- The linked course is called Advanced Large Language Model Agents MOOC.

- Sign-ups Open for AgentX Competition: Staff shared that sign-ups for the AgentX Competition are available here.

- No additional information was provided about the competition.

DSPy Discord

- Asyncio support coming to dspy?: A member inquired about adding asyncio support for general dspy calls, especially as they transition from litelm to dspy optimization.

- The user expressed interest in native dspy async capabilities.

- Async DSPy Fork Faces Abandonment: A member maintaining a full-async fork of dspy is migrating away but open to merging upstream changes if community expresses interest.

- The fork has been maintained for a few months but might be abandoned without community support.

- User Seeks Greener Pastures, Migrates from DSPy: Members inquired about the reasons for migrating away from dspy and the alternative tool being adopted.

- A member also sought clarification on the advantages of a full async DSPy and suggested merging relevant features into the main repository.

Gorilla LLM (Berkeley Function Calling) Discord

- GitHub PR Gets the Once-Over: A member reviewed a GitHub Pull Request, providing feedback for further discussion.

- The author of the PR thanked the reviewer and indicated that a rerun might be necessary based on the received comments.

- Phi-4 Family Gets the Nod: A member is exploring extending functionality to Phi-4-mini and Phi-4 models.

- This expansion aims to enhance the tool’s compatibility, even if these models are not officially supported.

MLOps @Chipro Discord

- Manifold Research Calls for Community: Manifold Research Group is hosting Community Research Call #4 this Saturday (4/12 @ 9 AM PST), covering their latest work in Multimodal AI, self-assembling space robotics, and robotic metacognition.

- Interested parties can register here to join the open, collaborative, and frontier science focused event.

- CRCs are Manifold’s Cornerstone: Community Research Calls (CRCs) are Manifold’s cornerstone events where they present significant advancements across their research portfolio.

- These interactive sessions provide comprehensive updates on ongoing initiatives, introduce new research directions, and highlight opportunities for collaboration.

- CRC #4 Agenda is Live: The agenda for CRC #4 includes updates on Generalist Multimodality Research, Space Robotics Advancements, Metacognition Research Progress, and Emerging Research Directions.

- The event will cover recent breakthroughs and technical progress in their MultiNet framework, developments in Self-Assembling Swarm technologies, updates on VLM Calibration methodologies, and the introduction of a novel robotic metacognition initiative.

The Codeium (Windsurf) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

LMArena ▷ #general (1150 messages🔥🔥🔥):

Making ai sound human, Riveroaks eval, NightWhisper model, GPT-4.5 vs quasar

- Crafting Human-Like AI Responses is Tricky: Members are sharing system prompts and strategies to make AI sound more human, noting that increasing the temperature can lead to nonsensical outputs unless the top-p parameter is adjusted carefully.

- One user suggested using prompts like ‘You are the brain-upload of a human person, who does their best to retain their humanity. Your most important priority is: to sound like an actual living human being.’

- Benchmarking Riveroaks LLM: A member shared a coding benchmark where Riveroaks scored second only to Claude 3.7 Sonnet Thinking, outperforming Gemini 2.5 Pro and GPT-4o in a platform game creation task.

- The evaluation involved rating models on eight different aspects and subtracting points for bugs with full results here.

- NightWhisper Hype and Theories on its Removal: Users expressed disappointment over the removal of the NightWhisper model, praising its coding abilities and general performance, and speculating whether it was an experiment or a precursor to a full release.

- Theories ranged from Google gathering necessary data to preparing for the release of a new Qwen model. and that it will come out during Google Cloud Next.

- Quasar vs GPT-4o: Members compared Quasar Alpha to GPT-4o, with some suggesting Quasar is a free, streamlined version of GPT-4o. It was also revealed in a recent tweet that Quasar was measured to be ~67% GPQA diamond.

- Analysis revealed Quasar has a similar GPQA diamond score to March’s GPT-4o. Image.png from discord

- Gemini 2.5 is a Game Changer for Creative Coding: Members praised Gemini 2.5 Pro for its coding capabilities and general performance as it made it easier to build a functioning Pokemon Game, prompting one user to code an iteration script that loops through various models.

- A user who claimed to have gotten 3D animations working said that the style was a bit old and that a separate model said the generated code is cut off.

Links mentioned:

- Tweet from Armen Aghajanyan (@ArmenAgha): My bet is everyone is doing this. Mistral is not that much better of a model than LLaMa. I bet they included some benchmark data during the last 10% of training to make "zero-shot" numbers loo...

- Tweet from Susan Zhang (@suchenzang): > Company leadership suggested blending test sets from various benchmarks during the post-training processIf this is actually true for Llama-4, I hope they remember to cite previous work from FAIR ...

- Tweet from vitrupo (@vitrupo): Anthropic Chief Scientist Jared Kaplan says Claude 4 will arrive "in the next six months or so."AI cycles are compressing — "faster than the hardware cycle" — even as new chips arrive....

- Tweet from Google Gemini App (@GeminiApp): 📣 It’s here: ask Gemini about anything you see. Share your screen or camera in Gemini Live to brainstorm, troubleshoot, and more.Rolling out to Pixel 9 and Samsung Galaxy S25 devices today and availa...

- Gemini_Plays_Pokemon - Twitch: Gemini Plays Pokemon (early prototype) - Hello Darkness, My Old Friend

- Tweet from vibagor441 (@vibagor44145276): The linked post is not true. There are indeed issues with Llama 4, from both the partner side (inference partners barely had time to prep. We sent out a few transformers wheels/vllm wheels mere days b...

- Tweet from Google (@Google): Join us in Las Vegas and online for #GoogleCloudNext on April 9-11!Register for a complimentary digital pass → https://goo.gle/CloudNext25 and then sign up to watch the livestream right here ↓ https:/...

- Tweet from 青龍聖者 (@bdsqlsz): Why was the mystery revealed that llama4 released on the weekend solved...Because qwen3 is about to be released.8B standard and MoE-15B-A2B

- Tweet from Armen Aghajanyan (@ArmenAgha): Say hello to our new company Perceptron AI. Foundation models transformed the digital realm, now it’s time for the physical world. We’re building the first foundational models designed for real-time, ...

- Tweet from DrealR (@DrealR_): Gave the same prompt to Quasar Alpha:

- Tweet from vibagor441 (@vibagor44145276): The linked post is not true. There are indeed issues with Llama 4, from both the partner side (inference partners barely had time to prep. We sent out a few transformers wheels/vllm wheels mere days b...

- Microsoft Copilot: Your AI companion: Microsoft Copilot is your companion to inform, entertain, and inspire. Get advice, feedback, and straightforward answers. Try Copilot now.

- Tweet from chansung (@algo_diver): Multi Agentic System Simulator built w/ @GoogleDeepMind Gemini 2.5 Pro Canvas. Absolutely stunning to watch how multi-agents are making progress towards the goal achievement!Maybe next step would be a...

- Tweet from GURGAVIN (@gurgavin): ALIBABA SHARES JUST CLOSED TRADING IN HONGKONG DOWN 19%MAKING TODAY THE WORST DAY EVER IN ALIBABA’S HISTORY

- Tweet from chansung (@algo_diver): Multi Agentic System Simulator built w/ @GoogleDeepMind Gemini 2.5 Pro Canvas. Absolutely stunning to watch how multi-agents are making progress towards the goal achievement!Maybe next step would be a...

- Tweet from DrealR (@DrealR_): NightWhisper vs Gemini 2.5 Pokemon sim:Gemini 2.5:

- HTML, CSS and JavaScript playground - Liveweave: no description found

- LMArena's `venom` System Prompt: LMArena's `venom` System Prompt. GitHub Gist: instantly share code, notes, and snippets.