A happy outcome for a generational web platform.

AI News for 7/30/2025-7/31/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (227 channels, and 5332 messages) for you. Estimated reading time saved (at 200wpm): 471 minutes. Our new website is now up with full metadata search and a beautiful, vibe-coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

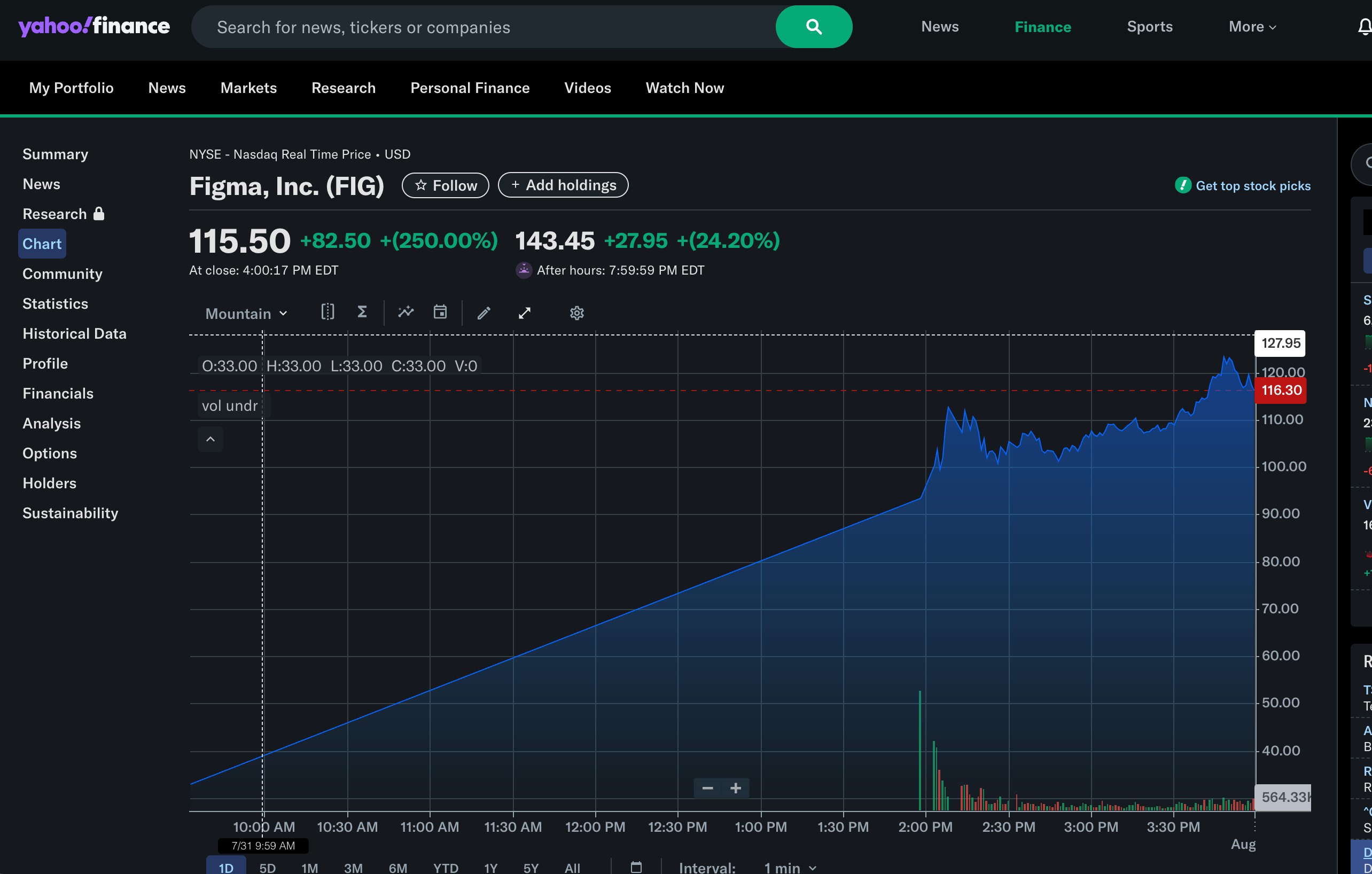

As you know, we try to keep things technical, but significant tech business stories do break through. Figma’s IPO creates a new publicly listed software decacorn. It is likely both to solidify Figma’s position as a web design platform (with some AI work) and to mint lots of millionaires who will fund the next wave and cycle of tech.

AI Twitter Recap

Model Releases, Updates, and Performance

- OpenAI’s “Horizon-alpha” Sparks Speculation: A new stealth model named horizon-alpha is available on OpenRouter and is generating significant buzz. It is widely speculated to be a new OpenAI model, possibly a precursor to GPT-5 or a “nano” version. Initial testing by @scaling01 suggested it was weak on benchmarks like LisanBench and not a reasoning model. However, later tests with a reasoning mode enabled showed that it is casually capable of 20-digit multiplication. In that mode it thinks for a ridiculously long time and performs on par with or better than Gemini 2.5 Pro on LisanBench. The model also shows strong, if distinctive, SVG generation abilities. @teortaxesTex notes that it seems to excel at tasks involving “magic” and “ineffable soul,” smelling like a Sonnet killer.

- Qwen3-Coder Family Released: @huybery announced the release of Qwen3-Coder, a repo-scale coding model from Alibaba that has seen significant community adoption and usage on platforms like OpenRouter. A smaller, faster version, Qwen3-Coder-Flash (30B-A3B), was also released for local users and offers basic agentic capabilities. The model is now available in LM Studio and can be run with a 1M context length via UnslothAI.

- Cohere Releases “Command A Vision” VLM: Cohere has entered the vision space with Command A Vision, a new state-of-the-art 111B-parameter open-weights vision language model (VLM). As announced by @nickfrosst, the model weights are available on Hugging Face. It outperforms models like GPT-4.1 and Llama 4 Maverick on enterprise benchmarks.

- FLUX.1 Krea [dev] for Photorealism: Black Forest Labs has released FLUX.1 Krea [dev], a new state-of-the-art open-weights FLUX model built specifically for photorealism. @reach_vb highlighted that it can be run for free on ZeroGPU. The creators noted that existing fine-tuning tools, such as those from diffusers and ostrisai, should work out of the box.

- Zhipu AI Launches GLM-4.5: @Zai_org announced GLM-4.5, a new open model that unifies agentic capabilities. It is described as a hybrid reasoning model that can switch between “thinking” and “instant” modes, and it is now available on Together AI.

- Inference-Time Training and Reasoning Generalization: @corbtt senses that inference-time training is going to be a big deal soon. Separately, @jxmnop asked for examples of reasoning-model generalization, such as a model trained on math problems getting better at creative writing. @Teknium1, referencing the null-shot learning paper, posits that a model will learn to do whatever improves its accuracy during its thinking process, including hallucinating.

- Mistral Releases Voxtral Technical Report: In a continued commitment to open science, Mistral AI has released the technical report for Voxtral.

- Step3 VLM Now Supported in vLLM: @vllm_project announced that Step3, a fast and cost-effective VLM with MFA & AFD, is now fully supported. @teortaxesTex notes that this model is strongly multimodal. It also uses an in-house attention mechanism that differs from DeepSeek-V3's.
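Horizon-alpha's 20-digit multiplication result is easy to check at home. Python integers are arbitrary-precision, so the exact product is always available for grading. The sketch below is a hypothetical harness, not @scaling01's or anyone else's actual test. `make_problem` and `grade` are illustrative names, and the call to the model itself is left out.

```python
import random
import re
from typing import Optional


def make_problem(digits: int = 20, seed: Optional[int] = None) -> tuple:
    """Return (prompt, exact_product) for two random `digits`-digit operands."""
    rng = random.Random(seed)
    low, high = 10 ** (digits - 1), 10 ** digits - 1
    a, b = rng.randint(low, high), rng.randint(low, high)
    prompt = f"Compute {a} * {b}. Reply with the final integer only."
    return prompt, a * b  # Python ints are arbitrary-precision, so this is exact


def grade(model_reply: str, expected: int) -> bool:
    """Pass if the last integer in the reply equals the exact product."""
    # Drop thousands separators that models sometimes insert.
    numbers = re.findall(r"\d+", model_reply.replace(",", ""))
    return bool(numbers) and int(numbers[-1]) == expected


if __name__ == "__main__":
    prompt, answer = make_problem(seed=0)
    print(prompt)
    # A hypothetical model reply would be graded like this:
    print(grade(f"The product is {answer:,}.", answer))
```

Taking the last integer in the reply tolerates models that restate the operands before giving their answer.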

AI Tooling, Frameworks, and Infrastructure

- LangChain Introduces Deep Agents and Align Evals: @hwchase17 from LangChain explained the concept of Deep Agents and provided a video overview. Deep Agents combine a planning tool, a file system, sub-agents, and a detailed system prompt. The team also released Align Evals, inspired by work from @eugeneyan, to make it easier to build and align LLM evaluators.

- Infrastructure and Deployment Advances: Microsoft and OpenAI announced Stargate Norway, a new datacenter initiative. @modal_labs introduced GPU snapshotting, which allows a 5-second cold start for vLLM; @sarahcat21 called the feature a feat of engineering. The vLLM project also highlighted that it will be featured in 5 talks at PyTorch Conference 2025.

- Funding for Developer Tools: Cline, an open-source code agent, announced it has raised $32M in Seed and Series A funding, a story also covered by Forbes. @sama praised the founders’ partnership, calling their story remarkable.

- RAG, Context Engineering, and Data Quality: @jxmnop highlighted “Context Rot” as an excellent and useful term. DeepLearningAI provided a technical breakdown of how transformers process augmented prompts in RAG systems. @Teknium1 pointed out that a large portion of a dataset was missing user turns, underscoring the need to check data quality.

- Hugging Face Launches “Tracks”: @_akhaliq shared the launch of Tracks, a 100% open-source experiment-tracking library from Hugging Face, positioned as an alternative to paid services.
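@Teknium1's point about missing user turns is cheap to check before training. The sketch below assumes a chat dataset stored as a list of conversations, each a list of `{"role": ..., "content": ...}` messages in the common OpenAI-style schema. The field names and the `audit_conversations` helper are assumptions for illustration. They are not the format of the dataset he was discussing.

```python
from collections import Counter


def audit_conversations(conversations: list) -> Counter:
    """Count common structural defects in chat-format training data."""
    issues = Counter()
    for convo in conversations:
        roles = [msg.get("role") for msg in convo]
        if "user" not in roles:
            issues["no_user_turn"] += 1
        if any(not (msg.get("content") or "").strip() for msg in convo):
            issues["empty_message"] += 1
        # Drop system messages, then check that the dialogue opens with a
        # user turn and that the two speakers alternate.
        turns = [r for r in roles if r != "system"]
        if turns and turns[0] != "user":
            issues["starts_without_user"] += 1
        if any(a == b for a, b in zip(turns, turns[1:])):
            issues["repeated_role"] += 1
    return issues


if __name__ == "__main__":
    data = [
        [{"role": "system", "content": "Be terse."},
         {"role": "assistant", "content": "Hi."}],
        [{"role": "user", "content": "2+2?"},
         {"role": "assistant", "content": "4"}],
    ]
    print(audit_conversations(data))
```

Reporting counts rather than raising on the first defect shows how widespread a problem is. A problem like "a large portion" of rows missing user turns is then obvious at a glance.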

AI-Generated Media and Content

- Runway Aleph is Fully Released: @c_valenzuelab announced the full rollout of Runway Aleph to all paid plans, describing it as a completely new way of creating with AI. A demo showed its capability for complex environment changes while maintaining character consistency. The release is part of a rapid series of updates from Runway in 2025.

- Google Launches Veo 3 Fast and New Capabilities: Google DeepMind announced that Veo 3 Fast, a quicker and more cost-effective text-to-video model, along with new image-to-video capabilities for Veo 3, are now available in the Gemini API.

- Midjourney’s “Midjourney TV” Experiment: @DavidSHolz described the new Midjourney TV experiment as weirdly hypnotic. The feature provides a live stream of trending videos generated by the community.

- Amazon Backs “Showrunner,” the Netflix of AI: It was reported that Amazon is investing in Showrunner, an AI-generated streaming service that lets users generate scenes from prompts. The platform is being developed by Fable Simulation, which originated the South Park AI experiment.

Industry, Funding, and Geopolitics

- The US vs. China AI Race: @AndrewYNg penned a detailed thread arguing that there is now a path for China to surpass the U.S. in AI, citing its vibrant open-weights model ecosystem and aggressive moves in semiconductors. He notes that while top proprietary models are from the US, top open models often come from China. @carlothinks echoed this, quoting an ex-Alibaba CTO who claimed, “China is building the future of AI, not Silicon Valley.”

- Figma Goes Public: Figma officially went public, with co-founder @zoink expressing immense gratitude. The event was marked by the NYSE tweeting “Shipped: $FIG”. @saranormous and @sama shared congratulatory messages.

- Meta’s Vision and M&A Activity: Mark Zuckerberg shared Meta’s vision for the future of “personal superintelligence for everyone”. Separately, @steph_palazzolo reported that Meta is on an M&A spree, having held talks with video AI startups like Pika, Higgsfield, and Runway.

- Perplexity AI Launches Comet Shortcuts: @AravSrinivas announced Perplexity Comet Shortcuts, which allow users to automate repetitive web workflows with natural language prompts. One powerful example is the /fact-check shortcut.

- AI Policy and Regulation: It was reported that Google, Anthropic, OpenAI, and others will sign the EU AI code of practice. @DanHendrycks clarified that xAI is only signing the safety portion, not the copyright portion. Meanwhile, @qtnx_ noted a widespread global push for ID age verification to access the internet.

Broader Discourse and Developer Culture

- Developer Experience and Craftsmanship: @ID_AA_Carmack posted a highly trafficked reflection on the value of rewriting an RL agent from scratch without looking at prior code, noting it’s a blessing when scale allows for it. @ClementDelangue shared a heartfelt message of thanks to researchers who fight for open science and the release of open models, acknowledging the internal battles they often face in big tech.

- Critiques of “Enshittification” and Past Tech Failures: @jxmnop offered a counter-narrative to software “enshittification,” arguing that, on the whole, things seem to get slowly and consistently better. He cited improvements in phone performance, internet speed, and transit apps. In a separate discussion, @jeremyphoward and @random_walker amplified critiques of DOGE (the Department of Government Efficiency). One commenter called it a failure at every single possible level that crippled medical research while also failing to deliver on its stated goals.

- The Stanford NLP Legacy: Stanford NLP’s faculty and alumni featured in both 2025 ACL Test of Time awards. The 25-year award went to Gildea & @jurafsky for “Automatic Labeling of Semantic Roles,” and the 10-year award to @lmthang, @hyhieu226 & @chrmanning for “Effective Approaches to Attention-based NMT”.

Humor and Memes

- Tech Absurdity: @RonFilipkowski joked that every DUI defense lawyer hit the jackpot. @lauriewired pointed out that a bank ACH transaction is literally just an SFTP upload of a 940-byte ASCII text file. @zacharynado shared a comment explaining a failed Australian rocket launch by noting the engineers probably forgot to account for Australia being upside down.

- AI Life: @mlpowered retweeted @claudeai’s simple reply, “You’re absolutely right.” @typedfemale compared a conversation to replying to someone on Tinder. @aidan_mclau posted a video of a chaotic Waymo trip.

- Industry Commentary: @nearcyan is having flashbacks to ’23. @code_star remarked it’s time to move 10^98 parquet files.

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen3-Coder-30B-A3B and Flash Model Announcements and Benchmarks

- 🚀 Qwen3-Coder-Flash released! (Score: 1197, Comments: 256): The image promotes Qwen3-Coder-Flash, specifically the Qwen3-Coder-30B-A3B-Instruct model, designed for lightning-fast and accurate code generation. It has a native 256K context window (extendable up to 1M tokens with YaRN) and is optimized for integration with platforms like Qwen Code, Cline, Roo Code, and Kilo Code. The post highlights function calling and agent workflow support, and links to deployment resources on HuggingFace and ModelScope. Top comments cover several topics:
  - the availability of GGUF-format models, including 1M-context versions and Unsloth optimizations;
  - fixes to model sharding and tool calling;
  - API access details.
Commenters praise the rapid evolution and open-source nature of the ecosystem, pointing to continuous fixes and strong community support. They are also enthusiastic about the improved accessibility of recent releases.

- The release includes Dynamic Unsloth GGUFs of Qwen3-Coder-30B-A3B-Instruct, with standard and 1-million-token context-length versions available on Hugging Face. Tool-calling fixes landed for both the 480B and 30B models (notably ‘30B thinking’), and users are advised to redownload the first shard because of these updates. Unsloth also provides comprehensive guides for local deployment, making it easier for users to experiment and run custom deployments.

- Qwen-Code continues to improve post-launch, with several recent issue fixes and an active maintenance roadmap. For users in China, accessibility is enhanced via ModelScope APIs that provide 2,000 free API calls daily, and a free Qwen3-Coder API is also available through OpenRouter, broadening access and experimentation with the model. The main Qwen-Code repo remains at https://github.com/QwenLM/qwen-code, with active community engagement and patching.
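The 256K-to-1M extension mentioned above rescales the model's rotary position embeddings with YaRN. In Hugging Face `transformers`, Qwen-family models are commonly configured for this through a `rope_scaling` entry in `config.json`. The sketch below only computes the scaling factor and builds such an entry. The exact key names Qwen3-Coder's model card recommends are an assumption here (for example `rope_type` versus the older `type`), so check the card before relying on them.

```python
import json

NATIVE_CONTEXT = 262_144    # 256K tokens, the native window cited above
TARGET_CONTEXT = 1_048_576  # ~1M tokens


def yarn_rope_scaling(native: int, target: int) -> dict:
    """Build a YaRN-style rope_scaling entry (key names are an assumption)."""
    factor = target / native  # how far positions must be stretched
    return {
        "rope_type": "yarn",
        "factor": factor,
        "original_max_position_embeddings": native,
    }


if __name__ == "__main__":
    print(json.dumps(yarn_rope_scaling(NATIVE_CONTEXT, TARGET_CONTEXT), indent=2))
```

The factor of 4.0 follows directly from 1,048,576 / 262,144. That is why "256K native, 1M with YaRN" is often described as a 4x extension.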

- Qwen3-Coder-30B-A3B released! (Score: 433, Comments: 83): Qwen3-Coder-30B-A3B, a large language model optimized for agentic coding applications (e.g., Qwen Code and Cline), has been released. Notably, the model omits “thinking tokens,” suggesting a design focused primarily on direct code generation rather than step-by-step reasoning, which may impact tracing or interpretability for certain agentic tasks. Commenters note the absence of thinking tokens, with speculation that the model will integrate well for agentic use-cases like Roo Code. There is also interest regarding the availability of a GGUF (quantized) version for easier deployment.

- A discussion highlights that although the model does not officially advertise Fill-In-the-Middle (FIM) support, users report that FIM works. It is less robust than in Qwen2.5-Coder-7B/14B. This partial FIM compatibility may affect workflows that rely heavily on code infill or on agentic coding tasks.

- It’s noted that the model is designed for agentic coding use cases (like Qwen Code, Cline), implying targeted optimization for multi-step reasoning or tool-use scenarios, which may differentiate its real-world coding utility from generalist models.
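Fill-in-the-middle gives the model the code before and after a gap and asks it to generate the missing middle. Qwen2.5-Coder documents the special tokens `<|fim_prefix|>`, `<|fim_suffix|>` and `<|fim_middle|>` for this. Whether Qwen3-Coder-30B-A3B responds to the same tokens is an assumption based only on the community reports above. `build_fim_prompt` is a hypothetical helper.

```python
def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Assemble a prefix-suffix-middle FIM prompt using Qwen2.5-Coder's tokens."""
    # The model is expected to continue after <|fim_middle|> with the missing code.
    return f"<|fim_prefix|>{prefix}<|fim_suffix|>{suffix}<|fim_middle|>"


if __name__ == "__main__":
    before = "def mean(xs):\n    "
    after = "\n    return total / len(xs)\n"
    print(build_fim_prompt(before, after))
```

FIM prompts like this are typically sent as raw text to a completion endpoint rather than wrapped in a chat template. A chat template would bury the special tokens inside a conversation the model does not expect.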

- I made a comparison chart for Qwen3-Coder-30B-A3B vs. Qwen3-Coder-480B-A35B (Score: 207, Comments: 16): The image is a radar chart comparing Qwen3-Coder-30B-A3B (Flash) and Qwen3-Coder-480B-A35B across several benchmarks. On the agent-capability tests (mind2web and BFCL-v3), both models perform similarly. However, there are notable gaps on programming-focused evaluations (Aider-Polyglot and SWE Multilingual), where the 480B variant outperforms the 30B. This suggests that agentic and decision-making tasks are competitive across sizes, while pure coding ability improves significantly with model size. Commenters discuss whether a dense Qwen3 32B model might close the coding gap. They also want comparisons with GPT-4.1 or o4-mini to put these results in context.

- Multiple users request the inclusion of the dense Qwen3 32B model in the comparison, noting that while it is not strictly a coding-specialized model, it performs very well at coding tasks. This suggests interest in understanding how dense architectures compare to mixture-of-experts (MoE) approaches in the Qwen3 family.

- A user provides practical performance metrics for Qwen3-Coder-30B-A3B, observing that it achieves approximately 90 tokens/second on Apple M4 Max hardware. They argue that the speed and lower hardware requirements make the 30B model more appealing than the much larger 480B version. In their view, the 480B model's 16x larger parameter count buys relatively modest performance gains.

- There is a request for comparative benchmarks against proprietary models, specifically OpenAI’s GPT-4.1 and o4-mini. Commenters want cross-family benchmarks on similar datasets or tasks to better understand where open-source models stand relative to industry leaders.
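The "16x" figure compares total parameters (480B vs. 30B). Both models are mixture-of-experts, so per-token cost instead tracks the active parameters (35B vs. 3B, per the A35B/A3B suffixes), a ratio of about 11.7x. A memory-bandwidth bound gives a rough sense of why the 30B model is fast on a Mac: each decoded token must read the active weights roughly once. The sketch assumes about 546 GB/s of bandwidth for the top M4 Max configuration and about 4.5 bits per weight for a 4-bit quantization with scales. Both numbers are assumptions. The result is a ceiling that ignores KV-cache traffic and compute overhead, so measured speeds like the ~90 tokens/second reported above should land well below it.

```python
from dataclasses import dataclass


@dataclass
class MoEModel:
    name: str
    total_params: float   # all experts combined
    active_params: float  # parameters used for each generated token


BYTES_PER_WEIGHT = 4.5 / 8     # assumption: ~4-bit weights plus scale overhead
BANDWIDTH_BYTES_PER_S = 546e9  # assumption: top M4 Max memory bandwidth


def weight_memory_gb(model: MoEModel) -> float:
    """Approximate memory needed just to hold the quantized weights."""
    return model.total_params * BYTES_PER_WEIGHT / 1e9


def decode_upper_bound_tok_s(model: MoEModel) -> float:
    """Bandwidth-bound ceiling: every active weight is read once per token."""
    return BANDWIDTH_BYTES_PER_S / (model.active_params * BYTES_PER_WEIGHT)


small = MoEModel("Qwen3-Coder-30B-A3B", 30e9, 3e9)
large = MoEModel("Qwen3-Coder-480B-A35B", 480e9, 35e9)

if __name__ == "__main__":
    print(f"total-param ratio:  {large.total_params / small.total_params:.1f}x")
    print(f"active-param ratio: {large.active_params / small.active_params:.1f}x")
    for m in (small, large):
        print(f"{m.name}: ~{weight_memory_gb(m):.0f} GB of weights, "
              f"at most ~{decode_upper_bound_tok_s(m):.0f} tok/s")
```

The same arithmetic shows the hardware-requirement gap the commenter mentions. At this bit width, the 480B model's weights alone come to roughly 270 GB, against roughly 17 GB for the 30B model.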

2. Chinese Open-Source AI Model Momentum and Global Rankings

- Unbelievable: China Dominates Top 10 Open-Source Models on HuggingFace (Score: 756, Comments: 135): July saw a surge in Chinese open-source AI model releases on HuggingFace, with models such as Kimi-K2, Qwen3, GLM-4.5, Tencent’s HunyuanWorld, and Alibaba’s Wan 2.2 dominating the platform’s trending list. The post contrasts this with Meta’s recent announcement to move toward more closed-source strategies, highlighting a reversal in openness between Chinese and Western AI ecosystems, with Chinese models now leading in open-source momentum on HuggingFace (see Hugging Face trending models). Top comments debate the recent contributions from the West, notably citing only Mistral as a significant model, and suggest a paradox where China is currently more open in AI development than the West, attributed to shifting competitive dynamics and strategic openness.

- Several commenters highlight that major recent open-source model contributions from the West are perceived as limited, with only Mistral mentioned by name and not consistently ranked at the very top of HuggingFace’s leaderboards. This underscores a view that Western open-source progress is stagnating compared to China’s current momentum.

- A discussion develops around Meta’s (Facebook’s) and other tech giants’ strategies, with criticism that planned top-tier models—such as those from Meta—may be restricted for internal use only rather than released openly, drawing negative comparisons to the approach taken historically by Amazon with proprietary innovations. This trend is seen as moving away from open-source principles in favor of company-internal deployment, further reducing public access to cutting-edge AI technology.

- Chinese models pulling away (Score: 1121, Comments: 133): The post discusses the rapid progress and performance improvements of Chinese language models compared to Western offerings, particularly highlighting models like Qwen3-30B-A3B. The image (not provided in description, but inferred from comments and context) likely features benchmark or comparison charts showing how Chinese models are outperforming widely used Western models like LLaMA and Mistral in local Large Language Model (LLM) deployments. The discussion also references the migration of users from LLaMA-based models to newer Chinese-developed alternatives, due to better performance or reduced censorship. Commenters debate whether the shift to Chinese models means abandoning communities like r/LocalLLaMA, with one emphasizing that Mistral models still receive significant attention, highlighting ongoing diversity in LLM preference based on use-case and community engagement.

- A user outlines their progression through various local large language models. They started with LLaMA 3.1-3.2 and moved to Mistral Small 3 and its variants, notably the less-censored Dolphin via R1 distillation. They ultimately adopted the Qwen3-30B-A3B model. This sequence shows how quickly users switch as Chinese models like Qwen3-30B-A3B gain traction for their capability and tuning options.

- Discussion notes Mistral’s ongoing popularity in r/LocalLLaMA, disputing the narrative that users are abandoning non-Chinese models entirely. Mistral’s active community engagement and model updates keep it relevant for localized language tasks.

- A technical comment references Mistral’s multiple small model releases within the month and anticipates the impact of an upcoming Mistral Large update, indicating continued development and competitive positioning against emerging Chinese models.

- Everyone from r/LocalLLama refreshing Hugging Face every 5 minutes today looking for GLM-4.5 GGUFs (Score: 343, Comments: 71): The image is a meme satirizing the r/LocalLLaMA community’s anticipation for the release of GLM-4.5 GGUF files on Hugging Face, as technical users await their availability for local inference. Commenters clarify that GGUF conversion for GLM-4.5 is still being debugged in llama.cpp (see draft PR #14939), and current uploads are not reliable. Users interested in experimenting with GLM-4.5 are advised to try the mlx-community/GLM-4.5-Air-4bit version for MLX-based workflows while GGUF support is finalized. Discussion highlights the lack of stable GGUF conversion for GLM-4.5 and the interim use of alternative backends like MLX, with some users prioritizing other models (e.g., Qwen3-Coder-30B-A3B-Instruct).

- Support for GLM-4.5 GGUF in llama.cpp is still under development, with the main pull request (github.com/ggml-org/llama.cpp/pull/14939) still in draft status. Current GLM-4.5 GGUF models may have conversion issues and are not considered stable; they should not be used until the implementation is finalized.

- For users who can run models with MLX (e.g., via LM Studio), a working 4-bit version of GLM-4.5 Air is already available from the MLX community (huggingface.co/mlx-community/GLM-4.5-Air-4bit). It has shown good performance on agentic coding tasks in community testing.

- Unsloth GGUFs are best supported when using Unsloth’s fork of llama.cpp, as it contains tailored code matching their quantization and GGUF implementation, improving compatibility and likely reducing conversion issues.

3. Upcoming and Potential Benchmark Innovations: Deepseek ACL 2025

- Deepseek just won the best paper award at ACL 2025 with a breakthrough innovation in long context, a model using this might come soon (Score: 506, Comments: 35): Deepseek recently received the Best Paper award at ACL 2025 for a novel approach to long-context handling, likely centered around sparse attention mechanisms which improve scalability and efficiency in transformer architectures. This innovation could enable smaller language models to maintain longer and more effective context windows, addressing known limitations in existing models’ ability to track long dependencies (see Deepseek’s sparse attention research for background). Commenters highlight that this work demonstrates genuine innovation from Deepseek beyond accusations of cloning, with several emphasizing that sparse attention is a major optimization likely to influence future LLM scalability and context retention, especially for smaller models.

- Sparse attention has been highlighted as a major optimization strategy for long-context models, with commenters noting its potential to vastly improve efficiency and scale compared to standard dense attention approaches. This is seen as a key driver behind recent advances such as Deepseek’s innovation.

- The breakthrough is expected to help smaller models retain context much better as input length increases, directly addressing a weakness in current architectures where context retention typically degrades with length expansion. This has technical implications for memory usage and performance scaling.

- There is speculation on whether Deepseek’s advances could bring its models up to the performance tier of leading-edge systems like Gemini, particularly on specialized evaluation benches such as fiction.livebench. Such performance comparisons are regarded as a critical technical benchmark in the current LLM landscape.
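In sparse attention, each query attends to a small selected subset of keys instead of every key, so the softmax and weighted sum touch k keys rather than n. The NumPy sketch below uses simple per-query top-k selection. It is a generic illustration, not DeepSeek's award-winning method, which uses a more elaborate, hardware-aligned selection scheme. This toy version still computes all n scores in order to pick the top k, so it saves no compute. Practical sparse-attention methods choose the subset cheaply, for example at block granularity, before computing exact scores.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def dense_attention(q, k, v):
    """Standard scaled dot-product attention: every query sees every key."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def topk_sparse_attention(q, k, v, top_k: int):
    """Each query attends only to its top_k highest-scoring keys."""
    scores = q @ k.T / np.sqrt(q.shape[-1])                     # (n_q, n_k)
    # Indices of each query's top_k keys (unordered within the selection).
    idx = np.argpartition(scores, -top_k, axis=-1)[:, -top_k:]  # (n_q, top_k)
    kept = np.take_along_axis(scores, idx, axis=-1)             # (n_q, top_k)
    weights = softmax(kept)                                     # renormalize over kept keys
    return np.einsum("qk,qkd->qd", weights, v[idx])             # v[idx]: (n_q, top_k, d)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 8, 4
    q, k, v = (rng.normal(size=(n, d)) for _ in range(3))
    # With top_k == n nothing is dropped, so the result matches dense attention.
    print(np.allclose(topk_sparse_attention(q, k, v, n), dense_attention(q, k, v)))
```

Setting `top_k` equal to the sequence length recovers dense attention exactly, which makes a convenient correctness check before shrinking `top_k`.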

- AMD Is Reportedly Looking to Introduce a Dedicated Discrete NPU, Similar to Gaming GPUs But Targeted Towards AI Performance On PCs; Taking Edge AI to New Levels (Score: 273, Comments: 48): AMD is reportedly exploring a dedicated discrete NPU (Neural Processing Unit) for PCs. The goal is to deliver high AI performance as a standalone PCIe card, distinct from gaming GPUs. This approach could enable higher memory capacities for AI workloads (potentially 64-1024GB of VRAM) and offload inference/LLM tasks from traditional GPUs. It follows a direction similar to products like Qualcomm’s Cloud AI 100 Ultra. AMD’s current consumer AI stack, such as Strix Point APUs and XDNA engines, already supports large models (e.g., 128B-parameter LLMs) for edge AI. A discrete NPU would mark a shift toward broader consumer/professional NPU deployment. Comments highlight the potential of dedicated AI NPUs to relieve GPU bottlenecks for gaming and AI. Some commenters are also skeptical of AMD’s software maturity, for example whether ROCm support can catch up to the hardware's capabilities.

- Dedicated NPUs could offload AI tasks from GPUs, enabling higher gaming performance (e.g., high-FPS 4K with AI-enhanced NPCs) by separating resources for gaming and AI workloads. Scalability in VRAM (up to 1TB) would benefit users needing large models or datasets locally.

- There is consensus that strong driver and ML framework support is crucial; without robust ROCm (or equivalent) software, discrete NPUs would be hamstrung regardless of hardware performance. ROCm 7.0 is mentioned as a potential improvement, but maturity is still a concern.

- Discussion highlights market segmentation: AMD could capture a new professional or prosumer segment left underserved by NVIDIA’s current focus, especially if AMD offers consumer NPUs with large memory and competitive performance per watt, bypassing the artificial segmentation seen in gaming GPUs (as with NVIDIA’s datacenter vs consumer product strategies).
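The appeal of 64GB to 1TB of memory on a discrete NPU comes down to simple arithmetic. Model weights alone need roughly parameters × bits per weight / 8 bytes. The sketch below ignores the KV cache and activations, which add more on top. The bit widths are typical quantization levels, not anything AMD has specified.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in decimal GB.

    params_billion * 1e9 params * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    for params in (30, 128, 480):
        sizes = ", ".join(
            f"{bits}-bit: {weight_footprint_gb(params, bits):.0f} GB" for bits in (16, 8, 4)
        )
        print(f"{params}B params -> {sizes}")
```

At 4 bits, a 128B-parameter model (the size cited above for AMD's current APUs) needs about 64GB. A 480B model at 16 bits needs about 960GB, which roughly spans the memory range discussed for a discrete NPU.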

- I built a local alternative to Grammarly that runs 100% offline (Score: 229, Comments: 61): The OP introduces ‘refine.sh’, a local Grammarly alternative that uses the Gemma 3n E4B model for offline grammar checking. Its memory footprint peaks under 500MB and is 300MB when idle. The tool is in early development and runs fully offline, addressing privacy and local resource constraints. Commenters suggest alternatives such as the FOSS WritingTools and raise concerns that the tool itself is not open source (FOSS).

- One commenter notes that using LLMs for grammar correction is generally ineffective due to their difficulty in fine-tuning for grammar-specific tasks. They mention that Grammarly’s recent switch to an LLM backend is causing problems, implying that rule-based systems or targeted NLP models may outperform general-purpose LLMs in this use case.

- There are references to alternative open-source (FOSS) tools aimed at grammar correction. Notably, WritingTools and Write with Harper are mentioned as free and open-source projects, with the latter emphasizing strict adherence to documented grammatical rules from style guides rather than relying on unconstrained LLM output.

- Junyang Lin is drinking tea (Score: 212, Comments: 31): The post, titled ‘Junyang Lin is drinking tea,’ consists of an image with no technical content in its description, so its meaning has to be inferred from context. The comments reference fast token generation speeds (“getting 120tok/s out of 30ba3b”). This suggests the post is a meme or informal nod to Junyang Lin, who leads Alibaba’s Qwen team, and to the efficiency of the team’s Qwen3-30B-A3B model. The post itself contains no benchmarks, code, or implementation details. Commenters express enthusiasm and highlight performance, specifically 120 tokens per second of output. This signals satisfaction with recent Qwen releases and the community’s demand for efficient, powerful models.

- One user highlights generating 120 tokens/second with the 30B-A3B model, a notably high inference speed for a 30B-parameter model. The speed is plausible because this is a mixture-of-experts model with only about 3B parameters active per token, helped further by optimized inference code and capable hardware.

- A poem posted in the comments references the GLM 4.5 Air and Qwen3 Coder 30B-A3B models, suggesting users are benchmarking multiple recent large language models. Its explicit mention of 30 billion weights and silicon/GPU resources alludes to the significant computational demands of these models.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. OpenAI GPT-5 and Stealth Model Developments

- Google has now indexed an OpenAI docs page for GPT-5 in yet another sign of preparations for its release - the page 404s for now (Score: 525, Comments: 103): The image shows a Google Search result indexing an official OpenAI documentation page titled ‘OpenAI API Docs — GPT-5’ (URL: https://platform.openai.com/docs/guides/gpt/gpt-5), but the page itself currently returns a 404 error. Its appearance in Google’s index is read as an early sign that OpenAI is preparing GPT-5 documentation ahead of a formal announcement or rollout, fueling speculation about release timing. Comments speculate on when GPT-5 will ship, with some users skeptical of an imminent launch and others urging patience. There is no deep technical debate, mostly anticipation and discussion of OpenAI’s release cadence.

- ‘the codenames OpenAI is supposedly using for GPT-5 models: “o3-alpha > nectarine (GPT-5) > lobster (mini) > starfish (nano).”’ | ’”…Zenith, Summit, Lobster, Nectarine, Starfish, and o3-alpha—that are supposedly outperforming nearly every other known model,” have been spotted on LMArena.’ (Score: 163, Comments: 25): Leaked internal codenames for hypothetical future OpenAI models were allegedly observed on the LMArena benchmark, performing above most other models. The codenames are ‘o3-alpha’, ‘nectarine’, ‘lobster’ and ‘starfish’, with speculated sizes such as lobster = mini and starfish = nano. There is speculation that these represent stages of GPT-5 or next-generation offerings. Some claim the models were visible but are no longer present for comparison on public leaderboards. Commenters question the article’s credibility and technical accuracy, and some ask what ‘O3 alpha’ refers to. There is skepticism about the presence and performance of these models, as well as the reliability of the coverage.

- A user notes that the models referenced (Zenith, Summit, Lobster, Nectarine, Starfish, and o3-alpha) are no longer present on the LMArena leaderboard, hinting at issues with model benchmarking continuity or removal of test entries. This may affect the reliability of current public model performance comparisons.

- A commenter queries the identity and capabilities of “O3 alpha,” indicating ongoing ambiguity about internal codenames, lineage, and architecture for OpenAI’s unreleased or experimental models, highlighting opacity in the progression from o3-alpha to finalized GPT-5 variants.

- OpenAI’s new stealth model on Open Router (Score: 185, Comments: 58): A new, unannounced OpenAI model has appeared on OpenRouter (screenshot: preview.redd.it/pgmajpmcs3gf1.png), prompting speculation about a possible AGI-related release. Benchmarks suggest the model underperforms at math, failing relatively easy problems, but outperforms others on coding tests—particularly on edge case handling, though its overall code quality is mediocre. Comparative references note that Claude 4 Sonnet did worse on benchmark test problems than Claude 3.7, but surpassed it in real-world tasks, highlighting the limitations of evaluating models solely on narrow benchmarks. Commenters debate the disconnect between benchmark performance (e.g., math/coding tests) versus practical usability and robustness in real-world coding, with several noting that catching edge cases may be more valuable than raw benchmark scores.

- One user noted the new OpenAI stealth model performs poorly on mathematical tasks, failing even fairly easy problems, suggesting that despite the hype around new AI models achieving high scores on standard math benchmarks, this particular model falls short in those areas.

- Another commenter observed that this model delivers the best results on their typical coding problem set, particularly in handling edge cases, but its general code quality remains mediocre. They also noted that performance on small benchmark-style problems does not necessarily reflect a model’s value in broader, real-world applications. In their experience, Claude 4 Sonnet underperformed Claude 3.7 in limited tests but excelled in practical work.

- Some discussion speculates that this new model could be an early form of “GPT-5 Nano,” based on its mixed performance—described as both impressive and lacking in different contexts—and similarities between generated games and outputs from anonymous LM Arena models suspected to be GPT-5 family members. This feeds into the theory that OpenAI is quietly testing next-generation, smaller-sized models in production settings.

- New (likely) OpenAI stealth model on openrouter, Horizon Alpha, first try made this (Score: 198, Comments: 51): A user tested ‘Horizon Alpha’, a newly surfaced model on OpenRouter purportedly built by OpenAI, by prompting it to generate a detailed Mario Bros game replica with pixel art. The resulting image (view here) showcases intricate, classic pixel art game elements, including a score/coins/world/time UI bar, reflecting notable fidelity to the original game’s design elements. Comments focus on the technical decision of replicating a status bar UI and speculate whether model outputs are consistent across runs (reusing color/font/UI motifs) or if the design varies significantly between outputs. Commenters critically question the model’s ability to produce a fully-playable, multi-level game, discuss whether ‘Horizon Alpha’ could be an open source model, and analyze the consistency and style decisions of its UI recreations, highlighting potential variance in repeated generations.

- One commenter compares this unknown “Horizon Alpha” model to GPT-4.1, stating that in their testing, it does not perform as well, suggesting it’s behind in either output quality or capabilities relative to GPT-4.1.

- There is a technical observation about the model’s handling of UI elements: specifically, the score/coins/world/time bar was generated in a way that stands out from the rest of the pixel art. The commenter suspects the model may not fully integrate UI elements with the background and questions whether re-running the prompt would result in consistent UI styles, such as similar colors or fonts.

- A user clarifies that the model is designed for text generation, not for coding tasks like building fully playable levels or games, setting expectations about the kind of output the model can generate.

- OpenAI’s new stealth model (horizon-alpha) coded this entire app in one go! (Score: 122, Comments: 44): The post discusses OpenAI’s unreleased model, ‘horizon-alpha’, which allegedly generated a full application from a single prompt (demo image linked) using OpenRouter’s API. The prompt used is lengthy and was linked in the post. The OP notes the model performs well, with some quirks, and is particularly fast at reading and processing large files compared to other models, exhibiting strong error detection capabilities. Top comments question the prompt’s appropriateness and necessity, arguing that simple instructions may work as well and that complex prompt engineering should not be necessary. Another commenter highlights the model’s exceptional speed and ability to identify subtle errors quickly, calling it a ‘game changer’ compared to current models.

- One commenter notes that ‘horizon-alpha’ demonstrates extremely fast file reading capabilities, claiming it can handle entire files “in a blink,” a speed which they state is unmatched by other models. The model also exhibits improved error detection, quickly spotting issues in projects that would be difficult to identify otherwise, indicating potential advancements in both speed and code analysis accuracy.

2. Wan2.2 and Flux: New Model Releases and Benchmarks

- wan 2.2 fluid dynamics is impressive (Score: 292, Comments: 31): The OP demonstrates fluid/particle simulation using WAN 2.2 (image-to-video, version 14b), with source images generated in Flux Dev and audio added via mmaudio. The focus is on evaluating WAN 2.2’s handling of complex physical phenomena like fluids and particles, noting impressive results but highlighting ongoing challenges in controlling camera angles and motion via prompting. One top comment raises a technical limitation: WAN 2.2 tends to generate persistent fluid flow from any initial liquid, e.g., a stationary teardrop results in continuous artificial flow, which is reported as a common unresolved issue.

- A user describes a notable limitation of Wan 2.2’s fluid simulation: the model tends to continuously generate fluid from the same spot if there’s a trace present (e.g., a teardrop on an eye results in an unrealistic waterfall-like effect). This indicates a challenge in modeling fluid persistence versus initiation, highlighting potential issues with the model’s temporal consistency or thresholding in identifying when fluid generation should cease.

- Wan 2.2 Reel (Score: 173, Comments: 35): The post showcases a demo reel using the Wan 2.2 GGUF Q5 i2v model, with all images generated via SDXL, Chroma, Flux, or movie screencaps. The total time for generation and editing was approximately 12 hours, and outputs demonstrate the capabilities of the involved generative pipelines. A key technical critique from commenters addresses the lack of consistency and narrative coherence in current AI-generated video, arguing the next technical challenge is producing “watchable story” rather than just visually impressive short clips. Some technical interest in the specifics of video generation is also raised (e.g., resolution and inference steps used).

- Discussion highlights challenges in AI-generated video: specifically, the lack of consistency and narrative structure, with current technology producing disjointed 3-5 second clips rather than coherent, longer stories. This points to story and temporal coherence as active frontiers for research and implementation.

- Technical comments touch on performance of different quantization and precision modes: an example is given using FP8 on an RTX 5080, where generating a 5-second 720p video takes around 40 minutes. The commenter plans to benchmark Q4 or use Unsloth’s Dynamic Quant for potentially faster inference, highlighting the tradeoff between quality and generation speed.

- A query is raised about the resolution and inference steps (likely diffusion steps) used for the demo videos, relevant for reproducibility and to compare speed versus quality across hardware and quantization approaches.

- Another “WOW - Wan2.2 T2I is great” post with examples (Score: 144, Comments: 34): The post discusses image generation using the Wan2.2 T2I model, emphasizing that a 4K image took approximately 1 hour to generate. The user notes that the workflow leverages CivitAI’s native T2I setup with LightX2V (0.4), FastWAN (0.4), and Smartphone LoRA (1.0), and observes that sampler and scheduler selection (e.g., euler) critically impacts color saturation and image realism. The workflow reportedly does not support resolution scaling with ‘bong’ (res2ly), highlighting limitations in scaling functionality. A comment claims Wan2.2 surpasses the Flux model in realism (e.g., fewer anatomical artifacts), but highlights a lack of features comparable to ControlNet or Pulix for ensuring image consistency across generations. Another notes disappointment in the lack of reproducibility due to the incomplete workflow description.

- A user reports that Wan 2.2 produces more realistic images with fewer anatomical errors (such as missing limbs or deformed fingers) compared to Flux, highlighting an improvement in image fidelity and coherence. However, they note the absence of features akin to ControlNet or Pulix, which would enable more consistent image generation and control over output, suggesting a gap in guided or reference-based generation capabilities.

- A technical question is raised about model requirements: one commenter asks if the impressive results require the full Wan 2.2 model or if the lighter, fp8-scaled versions (~14GB) are sufficient, noting they observed ‘super weird results’ with the fp8 variant, implying potential limitations or compatibility issues with quantized/optimized versions.

- PSA: WAN 2.2 does First Frame Last Frame out of the box (Score: 117, Comments: 19): The post announces that the WAN 2.2 model enables First Frame Last Frame (FLF) video output “out of the box” within ComfyUI by updating the existing WAN 2.1 FLF2V workflow with the new 2.2 models and samplers. The provided Pastebin link contains the modified workflow definition, highlighting ease of upgrade for those already using FLF2V (see: Pastebin workflow). Top comments question whether the model supports true video looping (first=last frame) or degenerates to a static image, and seek clarification on whether the order of intermediate nodes (e.g., LoraLoaderModelOnly, TorchCompilerModel, Patch Sage Attention, ModelSamplingSD3) affects output fidelity, as users report mixed results with different sequencing. There’s also inquiry into the utility of this workflow for low frame rate (e.g., 4fps) video interpolation.

- A user inquires about whether WAN 2.2 can properly generate looped videos by setting the first and last frame to the same image, asking if the model avoids the common issue with other video models that produce a static image rather than a seamless loop.

- There’s a technical discussion about the impact of workflow node order between the model loader and the KSampler in WAN 2.2 pipelines. Two specific node orderings are compared: one where LoraLoaderModelOnly comes first, and another where TorchCompilerModel is first. The commenter asks whether these variations affect sample quality or consistency, noting mixed results in their own tests.

- A user questions the suitability of WAN 2.2 for interpolation tasks, such as generating intermediary frames for a low frame rate (e.g., 4fps) video, aiming to clarify the model’s effectiveness for this specific use case.

- Text-to-image comparison. FLUX.1 Krea [dev] Vs. Wan2.2-T2V-14B (Best of 5) (Score: 123, Comments: 58): A user conducted an informal side-by-side comparison of the FLUX.1 Krea [dev] and Wan2.2-T2V-14B text-to-image generative models across 35 samples each, using long-form prompts (~150 words). FLUX.1 Krea was run at 25 steps with CFG lowered from 3.5 to 2, while Wan2.2-T2V-14B used the Wan21_T2V_14B_lightx2v_cfg_step_distill_lora_rank32 lora at 0.6 strength to accelerate inference, impacting output visual quality. Major findings: Wan2.2-T2V-14B produced significantly more usable (4/5) and naturalistic outputs than FLUX, which exhibited frequent anatomical errors and less natural stylization. Lighting accuracy was slightly better in FLUX, but its contrast was unnaturally high and it consistently failed to accurately render freckles. Top comments strongly preferred Wan2.2-T2V-14B, succinctly condensing the consensus to ‘wan won’ and suggesting prompt tweaks (e.g., ‘(freckles:6)’) for controlling features. The discussion lacks deeper technical debate but indicates perceptible quality differences in practical use.

- Several users observe that the FLUX.1 Krea model may have been trained incorporating a significant number of MidJourney-produced images, particularly those with distinct features like freckles, raising questions about the novelty and originality of the training data compared to WAN2.2-T2V-14B.

- Technical comparisons note that WAN2.2-T2V-14B produces images visually comparable to TV show captures, suggesting higher photorealism and possibly a superior dataset or diffusion architecture versus Flux.1 Krea. Some users express a switch in preference toward WAN, citing product licensing differences (e.g., frustration with Flux and “bfl non commercial license”).

- New Flux model from Black Forest Labs: FLUX.1-Krea-dev (Score: 381, Comments: 250): Black Forest Labs has released the FLUX.1-Krea-dev model, available on Hugging Face. It is advertised as a drop-in replacement for the original flux-dev, aiming to produce AI images that are less distinguishable as synthetic, though early user tests report existing flux-dev LoRAs are not compatible. Notably, the model has trouble rendering human hands correctly, frequently producing images with 4 or 6 fingers (see sample output). Commenters suspect heavy content filtering/censorship in the model, and some express disappointment that compatibility with older LoRAs was not as advertised.

- Discussion highlights that while FLUX.1-Krea-dev was advertised as a drop-in replacement for previous FLUX dev models (including compatibility with existing LoRAs), actual user testing revealed that these older LoRAs do not function as expected with the new release.

- One technical issue identified with FLUX.1-Krea-dev is its continued difficulty rendering human hands accurately, with outputs sometimes producing 4 or 6 fingers—an artifact common in less refined image generation models.

- Flux Krea is quite good for photographic gens relative to regular Flux Dev (Score: 145, Comments: 57): The post provides visual results from Flux Krea, a photographic generation model from Black Forest Labs and Krea, and highlights its improved realism for photographic outputs compared to the standard Flux Dev model. There are no explicit benchmarks or technical details about model architecture; the post focuses on qualitative output differences between the models. Top commenters critique the presence of a pervasive yellow filter resulting in ‘lifeless and cold’ images, suggesting a need for more neutral color defaults to give users greater post-generation control. Another point raised is the lack of direct side-by-side comparisons with identical prompts, making technical evaluation of improvements difficult.

- Several users point out a distinct yellow or cold filter applied by Flux Krea, with one stating the model’s attempt at a photographic look results in images that appear ‘lifeless’ and suggest they should have kept color tones neutral for more user control.

- A request is made for rigorous benchmarking, such as direct side-by-side comparisons between Flux Krea and regular Flux Dev using identical prompts and settings to accurately assess differences in photographic quality.

- There is speculation that the model’s improvements may stem from better datasets and captioning, indicating community interest in technical details on the training data or strategies that led to the observable differences in output.

- FLUX Krea DEV is really realistic improvement compared to FLUX Dev - Local model released and I tested 7 prompts locally in SwarmUI with regular FLUX Dev preset (Score: 132, Comments: 53): The post compares the new FLUX Krea DEV model with the previous FLUX Dev, emphasizing improved photorealism, especially in tasks like dinosaur image generation using SwarmUI. Seven prompts were tested locally with the regular FLUX Dev preset to benchmark output quality. Key technical queries in comments focus on model realism (notably with ‘realistic dinosaur’ generation), inference speed improvements over previous versions, and model size/VRAM requirements, particularly concerning compatibility with an RTX 4080 GPU (16GB VRAM). Technical discussion centers on whether the new Krea DEV model materially accelerates inference and generates realism surpassing precedent, especially in complex tasks like dinosaurs, with some skepticism expressed about current AI capabilities in this specific domain.

- A commenter inquires about the generation speed compared to the previous FLUX Dev, specifically asking if FLUX Krea DEV is faster, which implies a community interest in performance improvements and inference time benchmarks between these two local models.

- A technical question is raised regarding the model’s VRAM requirements and hardware compatibility—particularly if FLUX Krea DEV can run on an RTX 4080 (16GB VRAM). This reflects concerns about local deployment feasibility and model size, which are critical for users running models locally on consumer GPUs.
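Whether a model fits on a 16GB card can be roughed out as parameter count times bytes per parameter. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes FLUX.1 Krea [dev] keeps the roughly 12B-parameter transformer of FLUX.1 [dev] (consistent with the “drop-in replacement” claim), ignores the text encoders, VAE, and activations, and stands in for them with a hypothetical fixed 2 GiB of headroom.

```python
# Back-of-the-envelope weight-memory estimate: parameters x bytes per parameter.
# Assumptions (not measured): ~12B parameters for the FLUX.1 [dev]-family
# transformer; text encoders, VAE, and activations are excluded and are
# approximated by a fixed headroom term.

BYTES_PER_PARAM = {
    "bf16": 2.0,
    "fp8": 1.0,
    "q4": 0.5,  # idealized 4-bit; real GGUF Q4 files add per-block scale data
}


def weight_gib(n_params: float, dtype: str) -> float:
    """Approximate size of the model weights in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


def fits(n_params: float, dtype: str, vram_gib: float, headroom_gib: float = 2.0) -> bool:
    """True if the weights plus a fixed (hypothetical) headroom fit in VRAM."""
    return weight_gib(n_params, dtype) + headroom_gib <= vram_gib


if __name__ == "__main__":
    n_params = 12e9  # assumed parameter count
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_gib(n_params, dtype):.1f} GiB, "
              f"fits in 16 GiB: {fits(n_params, dtype, 16.0)}")
```

Under these assumptions, BF16 weights alone (about 22 GiB) exceed an RTX 4080’s 16GB, while FP8 (about 11 GiB) and 4-bit variants leave room, which is why FP8-scaled and quantized checkpoints come up so often in these threads.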

3. Steampunk Video Game Concepts and Prompt Techniques

- Steampunk Video Games In European Cities (Prompts Included) (Score: 410, Comments: 31): The OP shares detailed text-to-image prompts for generating high-fidelity steampunk video game concept art set in iconic European cities (Paris, London, Venice) using Prompt Catalyst. The prompts specify camera perspective (third/first person), resolution (2560x1440, ultrawide aspect), in-world UI (a HUD with pressure dials, minibars, cooldown clockfaces, a minimap, and steam meters), and stylistic elements (sepia lighting, particle effects, mechanical themes, etc.), emphasizing dynamic environment features (smoke, fog, steam) and photorealistic asset styling (prompt parameters --ar 6:5 --stylize 400). The full generation workflow is covered by an external tutorial on Prompt Catalyst’s site. Commenters note the high quality of the visual output and UI design, suggesting that the generated concepts surpass expectations for the steampunk genre and evoke comparisons to ‘The Order: 1886’ (implying missed potential in previous commercial implementations). There is consensus that these tools and prompts could meaningfully influence actual game development workflows if adopted by industry professionals.

- There is a mention of how the steampunk aesthetic showcased in these images serves as inspiration and a critique for existing game franchises like The Order: 1886, suggesting that more effective or imaginative implementation in this genre is possible, especially for games set in European cities.

- A commenter references ‘Bioshock Infinite’ repeatedly, invoking it as a benchmark or exemplar of steampunk/alternate-history video game design, implying that it still represents a high standard for aesthetic and narrative integration in the genre.

- Steampunk Video Games In European Cities (Prompts Included) (Score: 410, Comments: 32): The post details highly structured prompts for generating steampunk-themed video game visuals using Prompt Catalyst (tutorial: https://promptcatalyst.ai/tutorials/creating-video-game-concepts-and-assets). Prompts specify technical parameters such as third- or first-person perspectives, 2560x1440 resolution, a 21:9 aspect ratio, in-game UIs with custom pressure-dial health bars, ability icons styled as clock faces, and environment effects including volumetric fog, real-time particle effects, and sepia lighting to accentuate brass and mechanical textures in historic European settings. Notably, animation and asset prompts are designed for high fidelity and stylization (--ar 6:5 --stylize 400). Top comments suggest missed opportunities in existing games (e.g., ‘the order 18xy’), general approval of the quality, and a desire for the steampunk genre to gain popularity, but no deep technical debate is present.

- Commenters discuss the underutilization of steampunk aesthetics in AAA titles, referencing games like “The Order 1886” as a missed opportunity for better execution. There’s a focus on how current graphics and world-building capabilities could more effectively realize the atmosphere and gameplay depth this genre demands, especially set in richly detailed European cities.

- One commenter highlights how titles such as “Bioshock Infinite” set high expectations for the integration of steampunk themes and immersive environments, suggesting that future games could surpass these benchmarks if genre popularity and investment increased.
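The prompts in these posts follow a consistent structure: setting, perspective, resolution, HUD elements, atmospheric effects, then Midjourney-style parameters such as --ar and --stylize. A small template makes that structure explicit. This is a hypothetical helper written for illustration; the field names, defaults, and wording are assumptions, not the OP’s actual prompts or anything provided by Prompt Catalyst.

```python
def build_concept_prompt(
    city: str,
    perspective: str,
    hud_elements: list[str],
    effects: list[str],
    resolution: str = "2560x1440",
    aspect_ratio: str = "6:5",
    stylize: int = 400,
) -> str:
    """Assemble a steampunk game-concept prompt from structured fields.

    Hypothetical template: descriptive clauses first, then the
    Midjourney-style --ar / --stylize parameters at the end.
    """
    clauses = [
        f"Steampunk video game set in {city}",
        f"{perspective} perspective",
        f"{resolution} screenshot",
        "in-game HUD with " + ", ".join(hud_elements),
        ", ".join(effects),
        "sepia lighting on brass and mechanical textures",
    ]
    return ", ".join(clauses) + f" --ar {aspect_ratio} --stylize {stylize}"


if __name__ == "__main__":
    print(build_concept_prompt(
        city="Venice",
        perspective="third-person",
        hud_elements=["pressure-dial health bar", "clock-face ability cooldowns", "minimap"],
        effects=["volumetric fog", "drifting steam", "particle sparks"],
    ))
```

Keeping the parameters as trailing flags makes it easy to swap aspect ratios (the posts mention both a 21:9 ultrawide frame and --ar 6:5) without touching the descriptive text.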

AI Discord Recap

A summary of Summaries of Summaries by X.ai Grok-4

Theme 1: Models Muscle Up with New Releases

- Qwen3 Drops 30B Bombshell: Alibaba’s Qwen3-30B model rivals GPT-4o in benchmarks, running locally in full precision on 33GB of RAM via the Unsloth GGUF version, with quantized variants needing just 17GB. Community excitement centers on its multilingual prowess, though tool use falters in some setups like vLLM.

- Gemma 3 Fine-Tunes Watermark Woes: Fine-tuning Gemma 3 4B on 16k context removes watermarks and boosts stability, as shown in a screenshot, sparking a new competition ending in 7 days via Unsloth’s X post. Users report enhanced translation across popular languages, positioning it as a compact alternative to larger models.

- Arcee Unleashes AFM-4.5B Powerhouse: Arcee.ai released AFM-4.5B with grouped query attention and ReLU² activations for high flexibility, available on Hugging Face. Future variants target reasoning and tool use, backed by a DatologyAI data partnership.
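The two architectural terms in the AFM-4.5B item can be illustrated in a few lines. ReLU² simply squares the output of a ReLU, and grouped query attention (GQA) lets several query heads share one key/value head, which shrinks the KV cache. The head counts and dimensions below are hypothetical values chosen for illustration, not AFM-4.5B’s published configuration.

```python
def relu_squared(x: float) -> float:
    """ReLU^2 activation: max(0, x) ** 2."""
    r = max(0.0, x)
    return r * r


def kv_head_for(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Index of the key/value head that query head `q_head` reads under GQA.

    Query heads are split into n_kv_heads contiguous groups; every head in a
    group shares the same K and V projections.
    """
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    return q_head // (n_q_heads // n_kv_heads)


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Keys plus values, for every layer, KV head, and cached position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical configuration, for illustration only.
    n_layers, n_q, n_kv, head_dim, seq = 32, 32, 8, 128, 16_384
    print([kv_head_for(h, n_q, n_kv) for h in range(n_q)])
    mha = kv_cache_bytes(n_layers, n_q, head_dim, seq)  # one KV head per query head
    gqa = kv_cache_bytes(n_layers, n_kv, head_dim, seq)
    print(f"MHA cache: {mha / 2**30:.1f} GiB, GQA cache: {gqa / 2**30:.1f} GiB")
```

With 32 query heads sharing 8 KV heads, the cache is 4x smaller than full multi-head attention at the same head dimension, while every query head still computes its own attention pattern.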

Theme 2: Hardware Hustles for AI Speed

- Quantization Zaps Bandwidth Bottlenecks: Quantization cuts memory-bandwidth demands and boosts compute speed, not just helping models fit in memory, though keeping vision ops such as conv layers in FP32 creates a bottleneck. Users debate optimizing for consumer hardware, with dynamic 4-bit methods highlighted in Unsloth’s blog.

- AMD’s Strix Halo APUs Price Out Competitors: Strix Halo APUs hit $1.6k for 64GB and $2k for 128GB, but EPYC systems win on value with upgradeable RAM, as discussed in a Corsair AI Workstation post. Soldered memory draws ire as a scam-like limitation compared with upgradeable DIMMs.

- P104-100 GPU Bargain Sparks Scam Fears: The P104-100 GPU sells for 15 GBP on Taobao, touted as a 1080 equivalent for LLM inference despite PCIe 1.0 x4 constraints. Sharding across cards aids price-performance, but users warn of potential 4GB VRAM access issues.
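The bandwidth point can be made concrete with a common rule of thumb: single-stream decoding is usually memory-bound, so each generated token costs roughly one read of the active weights, and tokens per second is capped near memory bandwidth divided by bytes read per token. The sketch below assumes that simplified model (it ignores KV-cache reads, compute, and kernel overhead), and the 8B model and 256 GB/s bandwidth are hypothetical numbers, not figures from the discussion.

```python
def max_decode_tokens_per_s(active_params: float, bits_per_weight: float,
                            bandwidth_gb_s: float) -> float:
    """Rough upper bound on single-stream decode speed for a memory-bound model.

    Assumes every active weight is read once per generated token; KV-cache
    traffic, compute time, and kernel overheads are ignored.
    """
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


if __name__ == "__main__":
    params, bw = 8e9, 256.0  # hypothetical dense model and memory bandwidth
    for bits in (16, 8, 4):
        print(f"{bits}-bit: <= {max_decode_tokens_per_s(params, bits, bw):.0f} tokens/s")
```

Halving bits per weight roughly doubles this ceiling, which is the sense in which quantization speeds up inference and not just fitting. The same arithmetic explains the FP32 complaint: FP32 tensors move twice the bytes of BF16 ones. For mixture-of-experts models, active_params would be the parameters activated per token rather than the total.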

Theme 3: Censorship Clashes in Model Mayhem

- Qwen3 Shuts Down Sensitive Queries: Asking Qwen3-30B about China’s internet censorship triggered immediate chatbot shutdowns, amid excitement for its release on Hugging Face. This highlights overzealous safety features limiting practical use.

- OpenAI’s Censorship Lectures Irk Users: Heavy censorship in OpenAI models yields canned responses and moral lectures, with Unsloth’s Llama 4 guide advising against authoritative phrasing. Community frustration grows over reduced utility for coding and non-client tasks.

- GLM 4.5 Air Mimics Gemini’s Guardrails: GLM 4.5 Air feels like Gemini with broken tool use in vllm, but excels in chatting and analysis per a Z.ai blog. Debates focus on balancing safety without crippling functionality.

Theme 4: Agents Arm Up with Security Shields

- DeepSecure Locks Down AI Agents: DeepTrail’s open-source DeepSecure enables auth, delegation, and policy enforcement for agents via split-key architecture and macaroons, with Langchain examples like secure workflows. It’s designed for cross-model proxying, as detailed in a technical overview.

- MCP Servers Battle Context Leaks: Single-instance MCP servers need user-context separation to prevent session data sharing, as users report EC2 deployment issues with Claude Desktop despite SSL setup, per MCP docs. Cursor connects fine, but state tools fail on Windows.

- Cursor Agents Hijack Ports in Parallel Panic: Cursor Background Agents unexpectedly hijack ports, disrupting dev environments; fixes include disabling port auto-forwarding in VSCode or setting the ports array to empty. Parallel task coordination uses API orchestration or Docker, with a task queue script proposed for handling dependencies.
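Macaroons, mentioned in the DeepSecure item, are bearer tokens whose signature is an HMAC chain: each added caveat is MACed with the previous signature, so any holder can attenuate a token (for example before delegating it to another agent) but nobody can remove a caveat without the root key. The sketch below shows only that general first-party-caveat construction using Python’s standard hmac module; it is not DeepSecure’s code or API, and the "key = value" caveat format is a hypothetical convention.

```python
import hashlib
import hmac


def _mac(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def mint(root_key: bytes, identifier: bytes) -> dict:
    """Issue a macaroon with no caveats (done by the service holding root_key)."""
    return {"id": identifier, "caveats": [], "sig": _mac(root_key, identifier)}


def add_caveat(m: dict, caveat: bytes) -> dict:
    """Attenuate a macaroon; needs only the current signature, not the root key."""
    return {"id": m["id"], "caveats": m["caveats"] + [caveat], "sig": _mac(m["sig"], caveat)}


def satisfied_by(request: dict):
    """Hypothetical checker: caveat b"key = value" holds if request[key] == value."""
    def check(caveat: bytes) -> bool:
        key, _, value = caveat.decode().partition(" = ")
        return request.get(key) == value
    return check


def verify(root_key: bytes, m: dict, check) -> bool:
    """Recompute the HMAC chain from the root key and require every caveat to hold."""
    sig = _mac(root_key, m["id"])
    for caveat in m["caveats"]:
        if not check(caveat):
            return False
        sig = _mac(sig, caveat)
    return hmac.compare_digest(sig, m["sig"])


if __name__ == "__main__":
    root = b"server-side-root-key"  # hypothetical secret
    token = mint(root, b"agent-a")
    delegated = add_caveat(token, b"tool = search")  # agent A restricts before delegating
    print(verify(root, delegated, satisfied_by({"tool": "search"})))
    print(verify(root, delegated, satisfied_by({"tool": "shell"})))
```

Because verification replays the chain from the root key, stripping the "tool = search" caveat from the delegated token produces a signature mismatch rather than a broader token.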

Theme 5: Education Explodes with Study Groups

- Diffusion Models Study Group Ignites: A 12-person, 5-month group studies diffusion models via MIT curriculum, with free intros on Aug 2 (Flow Matching) and Aug 9 (PDEs/ODEs) at Luma. It features peer-led sessions and projects for AI pros.

- LLM Safety Research Resources Rally: PhD students seek LLM safety resources, recommending Alignment Forum posts on reasoning steps and beliefs in chain-of-thought. Focus includes bias mitigation and ethical domain adaptation.

- Video Arena Launches Bot Battles: LMArena’s experimental Video Arena lets users generate and vote on AI videos via a bot, and a staff AMA with the bot’s developer, Thijs Simonian, is collecting questions via Google Forms. It supports free comparisons of top models for images and videos.

Discord: High level Discord summaries

Perplexity AI Discord

- R1-der R.I.P: Model Removed From LLM Selector: The R1 1776 model has been removed from the LLM selector but is still accessible via the OpenRouter API.

- Users are contemplating a switch to o3 or Sonnet 4 for reasoning tasks after the removal.

- Android Comet App to Launch Soon: The Comet for Android app is under development and is scheduled for release towards the end of the year.

- While one user questioned the browser’s potential capabilities, others lauded its performance on Windows.

- Gemini 2.5 Pro Gets a Speed Boost: Users reported a significant speed increase in Gemini 2.5 Pro, speculating it might be using GPT-4.1.

- This improved performance may come with limitations like daily message caps for reasoning models.

- Spaces Craze: Custom Instructions Heat Up: Members discussed optimizing the use of the Spaces feature by adding custom instructions.

- One user clarified that the instruction field offers more options, such as adding specific websites for data retrieval.

- Deep Research API has Structured Outputs: A member building a product stated that they’re familiar with the deep research and structured outputs API.

- They also asked to chat with somebody about Enterprise API pricing, early access, rate limits, and support, and requested some credits to test and integrate the API appropriately.

Unsloth AI (Daniel Han) Discord

- Qwen3-30B Shuts Down Chatbot: Qwen released Qwen3-30B and one user reported that when they asked Qwen3 why China censors the internet on their chatbot, the system immediately shut down their requests.

- The release of Qwen3-30B excited the community, with a link to Hugging Face provided for further exploration.

- GLM 4.5 Air Mimics Gemini: Users discussed GLM 4.5 Air, with one mentioning it feels like Gemini and highlighted a blog post comparing it to other models.

- Members noted that tool use is broken in vLLM, but the model worked well for chatting, poetry analysis, and document search.

- Quantization Speeds Up Compute: Quantization isn’t just about fitting models into memory, it also reduces memory bandwidth and can significantly improve compute speed.

- A member noted that keeping vision-head ops such as conv layers in FP32 seems suboptimal, as they tend to be quite slow and become a bottleneck.

- Gemma 3’s watermarks Removed After 16k Fine-Tuning: After fine-tuning Gemma 3 4B with 16k context, experiments found that watermarks were completely removed and models were more stable, according to an attached screenshot.

- A new Gemma 3 competition was announced with the notebooks being available and the competition ending in 7 days with more info available on Xitter.

- Unsloth Cracks Down on OpenAI’s Censorship: Members expressed disappointment with OpenAI’s heavy censorship, sharing experiences of canned answers and lectures from ChatGPT.

- One user pointed out Unsloth’s Llama 4 page that directs users to never use phrases that imply moral superiority or a sense of authority, and to generally avoid lecturing.

Cursor Community Discord

- Cursor’s MCP Browser Automations Coming Soon: Members are actively developing browser-reliant automations via Cursor’s MCP, with early access slated for release in the coming weeks.

- A member highlighted the ease of setup, noting it features a one-click MCP setup to facilitate building browser automations directly.

- Parallel Agent Coordination Conundrums: Members are grappling with managing parallel tasks that have dependencies, since agents lack shared workspaces, which complicates triggering them simultaneously.

- Proposed solutions involve external orchestration via API, file-based coordination, and Docker-based parallel execution, including a sample task queue script.

- Cursor Background Agents Hijack Ports: Engineers reported unexpected port hijacking by Cursor Background Agents, leading to debugging efforts to restore their development environments.

- Mitigation suggestions include setting the ports property to an empty array or disabling port auto-forwarding in VSCode settings.

- Background Agents Considered for Research: A member explored utilizing background agents for research-oriented tasks, such as refactor research or feature implementation research.

- They inquired about optimal workflows, considering options like having the agent draft markdown in the PR or directly implementing changes.

- Fishy Terminal Defaults: A member encountered an issue with Cursor’s integrated terminal defaulting to the fish shell and sought solutions to change it.

- Attempts to modify the shell via settings and wrappers eventually succeeded after temporarily renaming the fish binary, but the root cause remains unknown.
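The dependency problem from the parallel-agents discussion above (some tasks must wait on others) maps onto a topological scheduler. This is a minimal sketch using Python’s standard graphlib and a thread pool; run_task is a hypothetical placeholder for whatever actually triggers an agent (an API call, a Docker job), and the task names are made up. It is not the task queue script shared in the Discord.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_with_dependencies(deps: dict, run_task, max_workers: int = 4) -> list:
    """Run tasks concurrently while respecting dependencies.

    deps maps each task to the set of tasks that must finish before it starts.
    run_task(name) performs one task; here it is a hypothetical placeholder.
    Returns task names in completion order.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    finished = []
    in_flight = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Submit every task whose dependencies have all completed.
            for task in sorter.get_ready():
                in_flight[pool.submit(run_task, task)] = task
            done, _ = wait(set(in_flight), return_when=FIRST_COMPLETED)
            for fut in done:
                task = in_flight.pop(fut)
                fut.result()  # propagate a failure instead of silently continuing
                sorter.done(task)  # may unblock dependent tasks
                finished.append(task)
    return finished


if __name__ == "__main__":
    deps = {
        "refactor": set(),
        "tests": {"refactor"},
        "docs": {"refactor"},
        "release": {"tests", "docs"},
    }
    print(run_with_dependencies(deps, lambda name: f"agent ran {name}"))
```

File-based or Docker-based coordination would replace the thread pool but could keep the same get_ready / done loop; "tests" and "docs" run in parallel here because both depend only on "refactor".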

LMArena Discord

- Dot-lol Data Collection Under Fire: Concerns arose about dot.lol’s potential to sell user data and profiling information, cautioning users against assuming their data won’t be used for targeted influence or profit.

- While some worry about the impact of extensive data collection, others argue data collection is inevitable and users should focus on not linking data to their personal identity.

- GPT-5 Buzz: August Release?: Rumors hint at a possible GPT-5 release in early August, with potential evidence of router architecture preparations found in the ChatGPT Mac app.

- Community members are speculating on its impact and whether it will surpass other models, with some hoping for a free tier.

- GDPR: Teeth or Toothless?: Members debated the effectiveness of the EU’s GDPR in preventing data collection by AI companies, with differing opinions on its impact.

- Some believe that GDPR primarily affects the use of data rather than the collection, while others countered that data collection is turned off for EU consumers.

- Zenith’s Arena Encore: Enthusiasm brewed for the return of the Zenith model to the LMArena, with anticipation for its potential ELO rating and overall performance.

- While some lamented missing the chance to try it out, others held strong opinions on its value to the platform.

- Lights, Camera, AI: Video Arena Launches: The LMArena team launched an experimental Video Arena on Discord where users can generate and compare videos from top AI models for free, using the LMArena bot to generate videos, images, and image-to-videos.

- A Staff AMA with the bot’s developer, Thijs Simonian, was announced, inviting users to submit questions via Google Forms.
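Arena-style leaderboards turn pairwise votes into ratings, which is what the anticipated ELO rating for Zenith refers to. The classic online Elo update below is only an illustration of how one head-to-head vote moves two ratings; LMArena’s published methodology may differ (for example, a statistical fit over all votes rather than sequential updates), and the K-factor of 32 is an arbitrary choice.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Elo-model probability that model A is preferred over model B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one vote.

    score_a is 1.0 if A won, 0.0 if B won, 0.5 for a tie.
    The update is zero-sum: A gains exactly what B loses.
    """
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


if __name__ == "__main__":
    print(elo_update(1500.0, 1500.0, 1.0))  # evenly matched: winner gains k / 2
    print(expected_score(1900.0, 1500.0))   # a 400-point gap implies ~91% preference
```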

HuggingFace Discord

- HF Spaces go Boom: Members discussed that HF Spaces might unexpectedly restart, suggesting pinning dependency versions to avoid issues, as described in the docs.

- One user reported that both Ilaria RVC and UVR5 UI stopped working and recommended factory rebuilds, while others continued to work fine.

- P104-100 GPU on Sale for £15!: Users debated the merits of the P104-100 GPU for AI tasks, with one claiming it’s practically a 1080 for 15 GBP (though others called it a scam), available from Taobao.

- Some cited limitations like PCIe 1.0 x4, while others emphasized its price-to-performance for LLM inference, even when sharding models across multiple cards.

- Qwen 30B Challenges GPT-4o: Users highlighted the release of the Qwen 30B model, claiming it rivals GPT-4o and can run locally in full precision with just 33GB RAM using the Unsloth GGUF version.

- Users noted that a quantized version can run with 17GB RAM.

- Diffusion Model MIT Curriculum Study Group: A new study group will focus on learning diffusion models from scratch using MIT’s curriculum with early sign-up for $50/month, and two free intro sessions are available for non-members: Aug 2 and Aug 9, details on Luma.

- The study group will be based on MIT’s lecture notes and previous recordings.

- MoviePy Makes Video Editing Server: A member built an MCP server using MoviePy to handle basic video/audio editing tasks, integrating with clients like Claude Desktop and Cursor AI, available on GitHub.

- The author is seeking collaboration on features like object detection-based editing and TTS/STT-driven cuts.

OpenAI Discord

- GPT-5 Hype Makes Fans Restless: Users are actively debating the release of GPT-5, with some claiming access via Microsoft Copilot/Azure, while skeptics await an announcement from OpenAI.

- One user humorously criticized Sam Altman for fueling hype and making fans restless.

- Study and Learn: Distraction or Innovation?: OpenAI launched a new Study and Learn feature, viewed by some as a simple system prompt and perhaps a distraction from GPT-5 expectations.

- One user even dumped the system prompt into the o3 model for analysis.

- Copilot and ChatGPT Face Off: Discussions clarify that Microsoft Copilot uses either GPT-4o or o4-mini-high, with potential future integration of GPT-5 based on source code hints.

- Copilot’s lack of a daily message cap prompts questions about why users still prefer ChatGPT, though some users believe Google’s Imagen4-Ultra is the best image generator.

- Chat History Vanishes into Thin Air: Multiple users reported disappearing ChatGPT chat histories, despite attempts to troubleshoot by logging in and out and clearing cache.

- OpenAI support suggests this could be an isolated bug, emphasizing that chat history recovery isn’t possible once it’s lost.

- Engineering new AI Memory Format: A member introduced a new memory format proposal, AI_ONLY_FORMAT_SPEC, aimed at optimized AI VMs, systems interfacing with vector databases, and protected symbolic transfers, emphasizing speed and efficiency over human readability.

- Another member provided a detailed line-by-line reading of the format, highlighting its core principles like token embedding, semantic grouping, and binary encoding.

OpenRouter (Alex Atallah) Discord

- DeepSecure Unveils Open-Source AI Agent Auth: DeepTrail introduced DeepSecure (https://github.com/DeepTrail/deepsecure), an open-source auth and delegation layer for AI agents, enabling authorization, agent-to-agent delegation, policy enforcement, and secure proxying across models, platforms, and frameworks.

- The design features a split-key architecture, a gateway/proxy, separate control and data planes, a policy engine, and macaroons, with example LangChain/LangGraph integrations for secure multi-agent workflows with fine-grained access controls and delegation.
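Macaroons are bearer tokens whose caveats are chained with HMAC, so any holder can add restrictions before delegating a token, but nobody can strip them off without the root key. The sketch below shows that generic construction with Python's standard library. It is not DeepSecure's API; the function names and the simple "key=value" equality caveats are hypothetical choices for illustration.

```python
import hashlib
import hmac


def _mac(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def mint(root_key: bytes, identifier: str) -> dict:
    """Create a caveat-free macaroon: sig = HMAC(root_key, identifier)."""
    return {"id": identifier, "caveats": [], "sig": _mac(root_key, identifier.encode())}


def add_caveat(m: dict, caveat: str) -> dict:
    """Attenuate a token: chain the caveat into the signature (no root key needed)."""
    return {
        "id": m["id"],
        "caveats": m["caveats"] + [caveat],
        "sig": _mac(m["sig"], caveat.encode()),
    }


def verify(root_key: bytes, m: dict, context: dict) -> bool:
    """Recompute the HMAC chain and check each hypothetical key=value caveat."""
    sig = _mac(root_key, m["id"].encode())
    for caveat in m["caveats"]:
        key, _, value = caveat.partition("=")
        if context.get(key) != value:
            return False
        sig = _mac(sig, caveat.encode())
    return hmac.compare_digest(sig, m["sig"])
```

An agent holding a token can call add_caveat(token, "tool=search") before handing it to a sub-agent; removing that caveat later changes the expected signature, so verification fails.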

- OR Gives Free Messages for $10 Top-Up: A one-time $10 top-up on OpenRouter unlocks 1000 daily free messages, even after the initial credits are depleted.

- Users confirmed that after spending the initial $10, the 1000 requests/day limit remains active.

- API Blocks Unwanted Quantization: OpenRouter’s API now lets users specify acceptable quantization levels, so they can avoid lower-precision deployments such as FP4, as described in the provider routing documentation.

- The API allows excluding specific quantization levels, such as allowing everything except FP4 models.
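Expressing "everything except FP4" amounts to listing every other allowed level in the provider preferences of a request. The sketch below only builds the JSON body and makes no network call. The quantizations field inside the provider object and the label list reflect our reading of OpenRouter's provider routing documentation and should be checked against the current docs; the model slug is a placeholder.

```python
import json

# Quantization labels as we understand OpenRouter's provider-routing docs (assumption).
KNOWN_QUANTIZATIONS = ["int4", "int8", "fp4", "fp6", "fp8", "fp16", "bf16", "fp32", "unknown"]


def build_request(model: str, prompt: str, exclude: set[str]) -> dict:
    """Build a chat-completions body allowing every known quantization except `exclude`."""
    allowed = [q for q in KNOWN_QUANTIZATIONS if q not in exclude]
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "provider": {"quantizations": allowed},
    }


# "example/model-name" is a placeholder slug, not a real model.
body = build_request("example/model-name", "Hello", exclude={"fp4"})
print(json.dumps(body["provider"]))
```

Leaving "unknown" in the allowed list still admits providers that do not report their quantization, so stricter users may want to exclude it as well.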

- DeepInfra’s Secret Gemini Pro Deal: DeepInfra negotiated a lower rate with Google for Gemini 2.5 Pro and passed the savings on to customers, as indicated by a ‘partner’ tag on DeepInfra’s listing.

- Unlike the Kimi K2 model, DeepInfra’s Gemini 2.5 Pro has a partner tag, signaling a direct partnership with Google.

- Ori Bot Underperforms: Users report that the OpenRouter Ori bot may be a net negative because of inaccurate responses, particularly on payment processing issues.

- One user said that Ori often blames the user and asks questions that lead nowhere; a developer is now working to update Ori’s knowledge.

Latent Space Discord

- Metislist Rank Provokes François Frenzy: A user shared Metislist, sparking debate over François Chollet’s rank at #80, with many feeling the creator of Keras deserved a higher placement.

- Some felt that Chollet should be in the top 50, with one user quipping, “Damn, you got beef with my boy François?”

- Arcee Aces AFM-4.5B Model Drop: Lucas Atkins announced the release of AFM-4.5B and AFM-4.5B-Base from Arcee.ai on Hugging Face, touting their flexibility, high performance, and quality, which he credited to a data partnership with DatologyAI.

- The models incorporate architectural improvements such as grouped query attention and ReLU² activations, with future releases planned for reasoning and tool use.
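Both architectural features are simple to state. ReLU² squares the output of a ReLU, and grouped-query attention lets several query heads share one key/value head, which shrinks the KV cache by the group size. The sketch below spells out both ideas in plain Python; the head counts used with it are illustrative, not AFM-4.5B's actual configuration.

```python
def relu_squared(x: float) -> float:
    """ReLU^2 activation: max(0, x) squared."""
    r = max(0.0, x)
    return r * r


def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: map a query head to the KV head its group shares."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size
```

With a hypothetical 8 query heads and 2 KV heads, query heads 0 to 3 read KV head 0 and heads 4 to 7 read KV head 1, so the cache stores 2 key/value heads instead of 8.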

- NotebookLM Now Summarizes Videos: NotebookLM introduced a new feature for video overviews of articles and blog posts (xcancel.com), enabling users to grasp content without reading full texts.

- Users praised the feature and suggested developing interactive modes next.

- GPT-5 Spotted on macOS: References to gpt-5-auto and gpt-5-reasoning were discovered in macOS app cache files (xcancel.com), hinting at the imminent arrival of GPT-5.

- Further corroboration mentioned gpt-5-reasoning-alpha in a biology benchmarks repository, leading to speculation about a potential announcement or release.

- Anthropic Aiming High for $170B Valuation: Anthropic is reportedly seeking to raise $5 billion, potentially valuing the AI startup at $170 billion, with a projected revenue of $9 billion by the end of the year (xcancel.com).

- The news was met with comparisons to OpenAI and xAI.

Notebook LM Discord

- Tableau Server Flexes LLM Orchestration Muscle: A member reported successfully integrating the latest Tableau Server edition with an LLM (server/on-prem) for VizQL NLP.

- This setup aims to enable more sophisticated natural language processing capabilities within Tableau visualizations.

- Gemini Agentic Framework Prototype Emerges: A member shared a Gemini agentic framework prototype, describing it as a one-shot prototype.

- The prototype leverages AI Studio for building agentic apps, emphasizing clear intention-setting for the builder agent to facilitate phased testing and focused model development.

- NotebookLM Bypasses Bot Restrictions for Podcast Dreams: In response to inquiries about podcast creation limitations due to bot restrictions on NotebookLM, a member clarified that the tools are accessible via the API.

- They suggested rebuilding the workflow and manually loading reports into NotebookLM as an alternative.

- Obsidian and NotebookLM Cozy Up Together: A member shared an article detailing how to integrate NotebookLM, Obsidian, and Google Drive.

- A member volunteered to offer more detailed guidance on Obsidian usage, tailored to individual user needs.

- NotebookLM Audio Output Varies Between 8 and 15 Minutes: Users reported that audio files generated with NotebookLM average 8-10 minutes, though some have reached up to 15 minutes.

- This discussion underscores the variability in output length, potentially influenced by content complexity and processing efficiency.

LM Studio Discord

- Tsunami Alert Issued After Russian Earthquake: An 8.7 magnitude earthquake off the coast of Russia triggered tsunami warnings for Hawaii and watches for the west coast of the United States.

- Residents in affected areas were advised to monitor updates closely, since a tsunami could arrive hours later.

- LM Studio Users Request Enhanced Conversation Handling: Users are requesting a feature in LM Studio to copy and paste entire conversations, which are stored in JSON format.

- One user pointed others to the feature-request channel, noting that many would find this feature useful.

- LM Studio Model Relives February 18th: A user reported that their LM Studio model was repeatedly referencing February 18, 2024, even when asked about current events.

- Another user suggested checking the system prompt or Jinja template for the date.

- Strix Halo APUs Pricey, Spark EPYC Debate: Strix Halo APU systems cost around $1.6k with 64GB and $2k with 128GB, but some members suggest that EPYC systems offer better value.

- One member lamented the soldered memory on such devices, drawing a comparison to a recent DIMM failure on a server, and pointed to a Corsair AI Workstation 300 with a Strix Halo APU.

- 9070 XT Performance Disappoints: The 9070 XT is significantly slower than a 4070 Ti Super; one user reported that a model running at 7 t/s on their 4070 Ti Super only achieved 3 t/s on the 9070 XT.

- Another member suggested that RAM bandwidth limitations might be the cause.
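The bandwidth suspicion can be sanity-checked with a common rule of thumb: when generating one token at a time, a dense model streams roughly all of its weights from memory for every token, so memory bandwidth caps decode speed. The function below encodes that bound. It is a rough estimate under stated assumptions (dense model, batch size 1, KV-cache traffic and compute ignored), and any inputs are your own measurements rather than figures from the discussion.

```python
def max_decode_tokens_per_s(bandwidth_gb_s: float, params_billion: float, bits_per_weight: float) -> float:
    """Upper bound on single-stream decode speed when weight reads dominate.

    Assumes every generated token reads all weights from memory once
    (dense model, batch size 1) and ignores KV-cache reads and compute time.
    """
    bytes_per_token = params_billion * 1e9 * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token
```

If part of a model spills out of VRAM into system RAM, the layers held in RAM are served at a much lower bandwidth, which is one way two cards with similar VRAM bandwidth can show very different token rates.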

LlamaIndex Discord

- LlamaCloud Struggles to Read PDFs: A member reported that LlamaCloud could not detect a PDF file when they tried to process it via the API.

- They were using n8n to simplify the workflow and shared a screenshot of the problem.

- Character AI Sparks Design Discussion: Members discussed building a character AI with a deep understanding of a large story, using a classic RAG pipeline of chunked text, embeddings, and a vector database.

- Neo4j’s Knowledge Graph Runs into Snags: A member reported that their simple Neo4j-backed graph store takes ridiculously long to load, that their server is not compatible with Neo4j 5.x, and that LlamaIndex doesn’t seem to work well with 4.x.

- Aura, Neo4j’s hosted service, is also blocked by the server proxy, presenting a further roadblock.

- Flowmaker Gemini 2.5 Pro Bug Meets Rapid Fix: A member reported an error when using Flowmaker with the Gemini API due to an invalid model name; the API expects a versioned name such as gemini-2.5-pro.

- A fix was committed and deployed swiftly, resolving the issue.

- Community Offers RAG Debugging Assistance: A member offered help with an MIT-licensed repo designed to debug tricky RAG issues, including sparse retrieval, semantic drift, chunking collapse, and memory breakdowns.

- A community member then asked which complex issues the repo actually addresses, requesting concrete examples of sparse retrieval and semantic drift.
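The classic RAG pipeline discussed for the character AI has three stages: chunk the story, embed each chunk into a vector index, and retrieve the chunks most similar to a query so they can be placed in the model's prompt. The toy sketch below walks through those stages using only the standard library. Bag-of-words counts stand in for a learned embedding model, and the hypothetical InMemoryIndex class stands in for a vector database; the names and chunk sizes are illustrative assumptions.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `size` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Stand-in for a vector database: brute-force cosine search over stored chunks."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

Overlapping chunks reduce the chance that a fact is split across a boundary, which is one of the "chunking" failure modes mentioned above; a production pipeline would swap in a real embedding model and vector store.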

GPU MODE Discord

- Expert Parallelism Exhibits Excellence?: A member sought examples of Expert Parallelism (EP) outperforming Tensor Parallelism (TP), but found that with Qwen32B and Qwen 235B, the all-reduce communication overhead made EP less performant.

- They observed EP being beneficial only for models using MLA and needing DP attention.

- Triton Treasures in Torch Compile: To extract PTX code, members suggested running TORCH_LOGS="output_code" python your_code.py or inspecting the compiled_kernel.asm dictionary (for example via compiled_kernel.asm.keys()), as detailed in this blog post.

- The dictionary contains keys for intermediate representations including llir, ttgir, ttir, ptx, and cubin.

- Inductor’s Intriguing Influence on Triton: To force Triton code generation for matmuls, members suggested changing options in torch._inductor.config, such as use_aten_gemm_kernels, autotune_fallback_to_aten, max_autotune_conv_backends, and max_autotune_gemm_backends.

- However, it was noted that built-in kernels are often faster and not every op is converted to Triton by default.

- CuTeDSL Compiler Cuts Prefetching Code: A member shared a blogpost and code on using CuTeDSL for GEMM on H100, explaining how to let the compiler handle prefetching.

- The blogpost details an experimental argument to the cutlass.range operator that hints prefetching, matching the performance of manual prefetching with simpler code.

- Gmem Guardian: Syncthreads Saves the Day: After copying from global memory (gmem) to shared memory, you must manually insert a __syncthreads() (or equivalent) barrier so that all threads synchronize before moving on.

- This guarantees that every shared-memory element is in place before collective calculations such as GEMM, reduction, or scan.
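The copy-then-barrier pattern can be mimicked on the CPU to show why the barrier matters. Python has no GPU shared memory, so this is only an analogy: threading.Barrier plays the role of __syncthreads(), and the hypothetical gmem and smem lists play global and shared memory. Each thread copies a strided slice, then reads a contiguous block that other threads wrote, which is only safe once everyone has passed the barrier.

```python
import threading

N_THREADS = 4
gmem = list(range(1, 17))   # stand-in for global memory: 16 values
smem = [0] * len(gmem)      # stand-in for a shared-memory tile
partial = [0] * N_THREADS   # per-thread partial sums
barrier = threading.Barrier(N_THREADS)


def worker(tid: int) -> None:
    # Phase 1: cooperative strided copy from "gmem" into "smem".
    for i in range(tid, len(gmem), N_THREADS):
        smem[i] = gmem[i]
    # Without this wait, a thread could read smem slots another thread
    # has not written yet: the race that __syncthreads() prevents on a GPU.
    barrier.wait()
    # Phase 2: each thread reduces a contiguous block written by other threads.
    block = len(smem) // N_THREADS
    partial[tid] = sum(smem[tid * block:(tid + 1) * block])


threads = [threading.Thread(target=worker, args=(t,)) for t in range(N_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()
total = sum(partial)
```

The stride in phase 1 and the contiguous blocks in phase 2 are chosen deliberately so that every thread reads data written by a different thread, the same situation as a GEMM tile loaded cooperatively and then consumed by all threads.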

Eleuther Discord

- M3 Ultra Crowned Local Inference King: The M3 Ultra is gaining traction as a top choice for local inference, due to its 80-core GPU and 512GB memory, according to this Reddit thread.

- One member instead bought a used 16GB M1 because nobody responded to their post.

- Solving Model Latency Offshore: A member is seeking ways to run LLMs with low latency while offshore, despite poor internet connectivity.

- Other members simply stated that they’re fine with spending money on whatever they want.

- Microsoft’s Audio Instruction Research: Members expressed interest in researching open-source approaches to improving audio instruction-following in Speech-LLMs to build better speech UIs, pointing to Microsoft’s Alignformer.

- Alignformer is not open source, so collaboration may be necessary.

- ICL Demolishes Diagnostics?: Members speculate that in-context learning (ICL) could break interpretability tools like Sparse Autoencoders (SAEs), as ICL pushes activations out of their original training distribution, referencing the Lucas Critique and this paper.

- According to members, this issue isn’t exclusive to ICL; it arises whenever SAEs encounter activation distributions that differ from those they were trained on.

- Grouped GEMM Gaining Ground: A member highlighted a PR supporting torch._grouped_mm in GPT-NeoX, now in PyTorch core, suggesting performance gains for MoE and linking to this MoE implementation.

- They noted that interested users can use a one-liner from TorchAO for low-precision MoE training.
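A grouped GEMM replaces a loop of per-expert matmuls with a single kernel launch over tokens that have already been sorted by expert. The pure-Python reference below only spells out what such a kernel computes for MoE; it is not the torch._grouped_mm API, and the cumulative end-offset convention and function names are our own assumptions for illustration.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain row-by-column matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def grouped_mm_reference(tokens: Matrix, expert_weights: list[Matrix], offsets: list[int]) -> Matrix:
    """Reference semantics of a grouped GEMM for MoE.

    `tokens` rows are sorted by expert; offsets[g] is the exclusive end row of
    group g (cumulative token counts). Each group is multiplied by its own
    expert's weight matrix; empty groups contribute no rows.
    """
    out: Matrix = []
    start = 0
    for weight, end in zip(expert_weights, offsets):
        out.extend(matmul(tokens[start:end], weight))
        start = end
    return out
```

Because expert load varies per batch, group sizes are ragged and some may be empty; a grouped kernel handles that in one launch instead of paying per-expert launch overhead.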

Modular (Mojo 🔥) Discord

- Paper on CUDA Generalization Surfaces: A member shared a paper about generalizing beyond CUDA, prompting a reminder to post such content in the appropriate channel.

- No further discussion or alternative perspectives were provided.

- Mojo Newbie Braves TLS Handshake Gremlins: A new Mojo user reported a TLS handshake EOF error when attempting to run a Mojo project with pixi and magic shell, using a Dockerfile from Microsoft Copilot.

- A suggested fix involved using the latest nightly mojo package with pixi and specific commands (pixi init my-project -c https://conda.modular.com/max-nightly/ -c conda-forge and pixi add mojo), but this fix failed even with a VPN.

- Mojo external_call Anatomy Examined: Users asked why Mojo’s external_call uses specific functions like KGEN_CompilerRT_IO_FileOpen instead of the standard fopen from libc, wondering whether this choice is about safety.

- A member clarified that these are artifacts from older Mojo versions and are not a high priority to fix, and that the KGEN namespace belongs to Modular and will be opened up eventually.

- Python Calls from Mojo Suffer Overhead: A user discovered significant overhead when calling a Python no-op function from Mojo (4.5 seconds for 10 million calls) compared to direct Python execution (0.5 seconds).

- Members explained that Mojo needs to start a CPython process and that CPython is embedded via dlopen of libpython, so you shouldn’t call it in a hot loop.

- Spraying Glue In a Race Car Engine: Discussion covered the performance impact of calling Python from Mojo, especially in hot loops for tasks like OpenCV or Mujoco robot simulation.

- Members noted that many fast Python libraries are actually C libraries with a wrapper, and that interacting with the context dicts alone can easily eat several hundred cycles.
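The timings reported for the no-op benchmark translate into per-call costs as follows, which shows why crossing the Mojo/Python boundary once per batch rather than once per element matters:

```latex
\frac{4.5\ \text{s}}{10^{7}\ \text{calls}} = 450\ \text{ns/call},
\qquad
\frac{0.5\ \text{s}}{10^{7}\ \text{calls}} = 50\ \text{ns/call},
\qquad
450 - 50 = 400\ \text{ns of extra cost per call}
```

If one boundary crossing processes a batch of n elements, that roughly 400 ns overhead is amortized to about 400/n ns per element.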

aider (Paul Gauthier) Discord