Parallel thinking is all you need.

AI News for 7/31/2025-8/1/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (227 channels, and 7130 messages) for you. Estimated reading time saved (at 200wpm): 614 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

Lots of rumors and leaks about OpenAI’s GPT-OSS and GPT-5 models are flying around, suggesting a launch is imminent. Ahead of this highly anticipated launch, there is some drama around Anthropic revoking OpenAI’s Claude access.

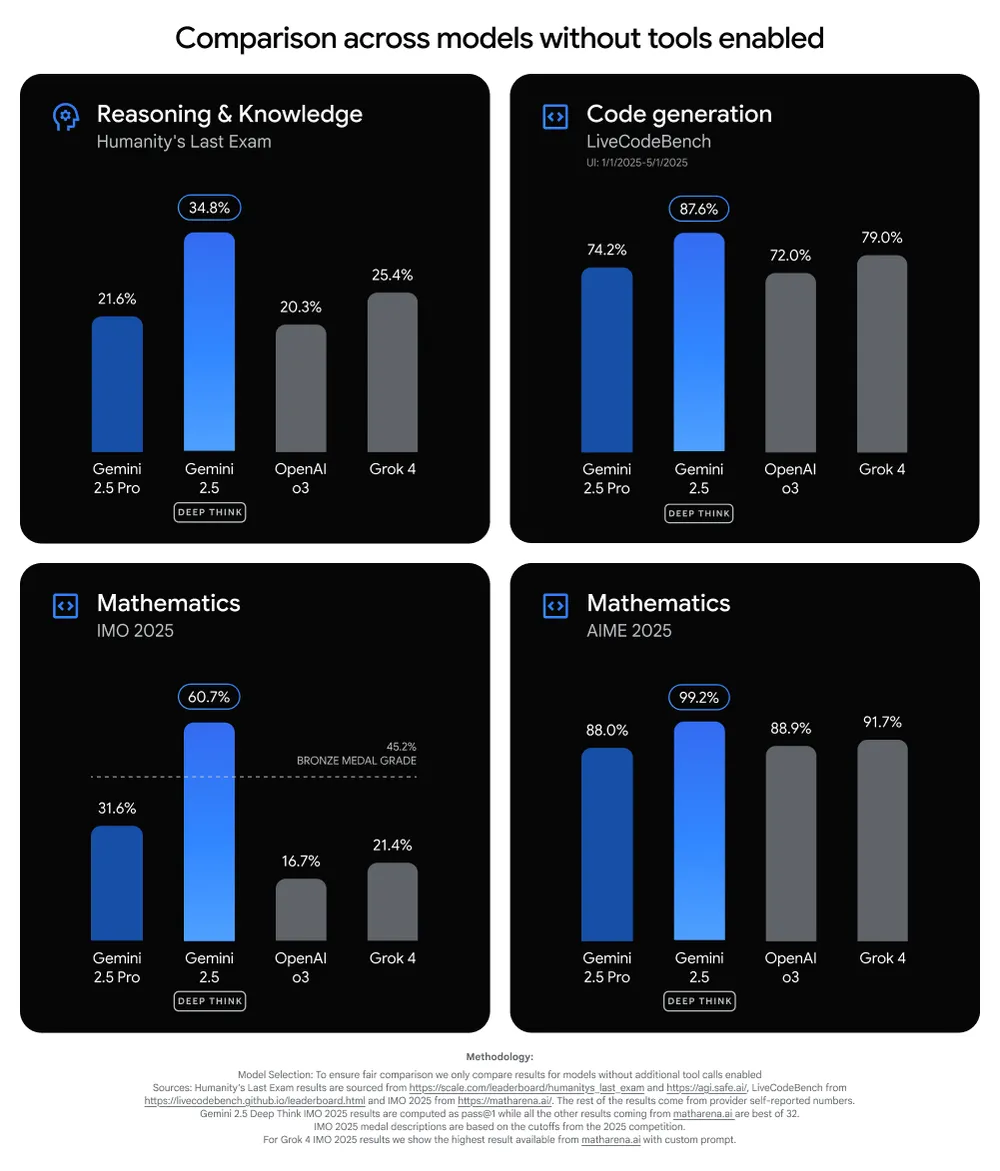

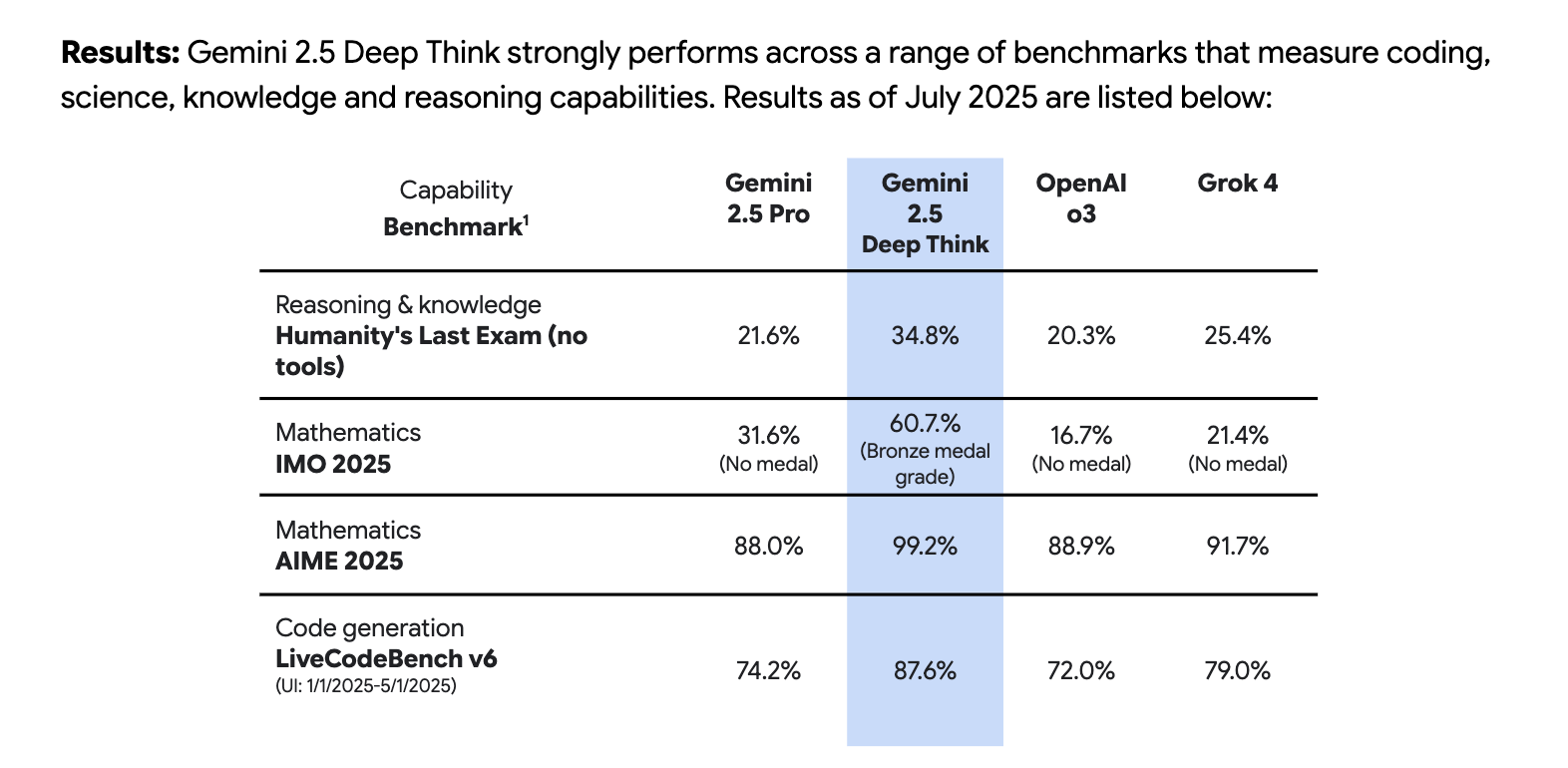

In the meantime, GDM is quietly staying above the fray with a clean launch of the Deep Think model (the same model, but tuned down to be dumber than the one that got IMO Gold a few days ago). It delivers impressive gains on SOTA benchmarks; notably, its gains over the base model are much larger than o3 pro’s gains over o3:

in table format:

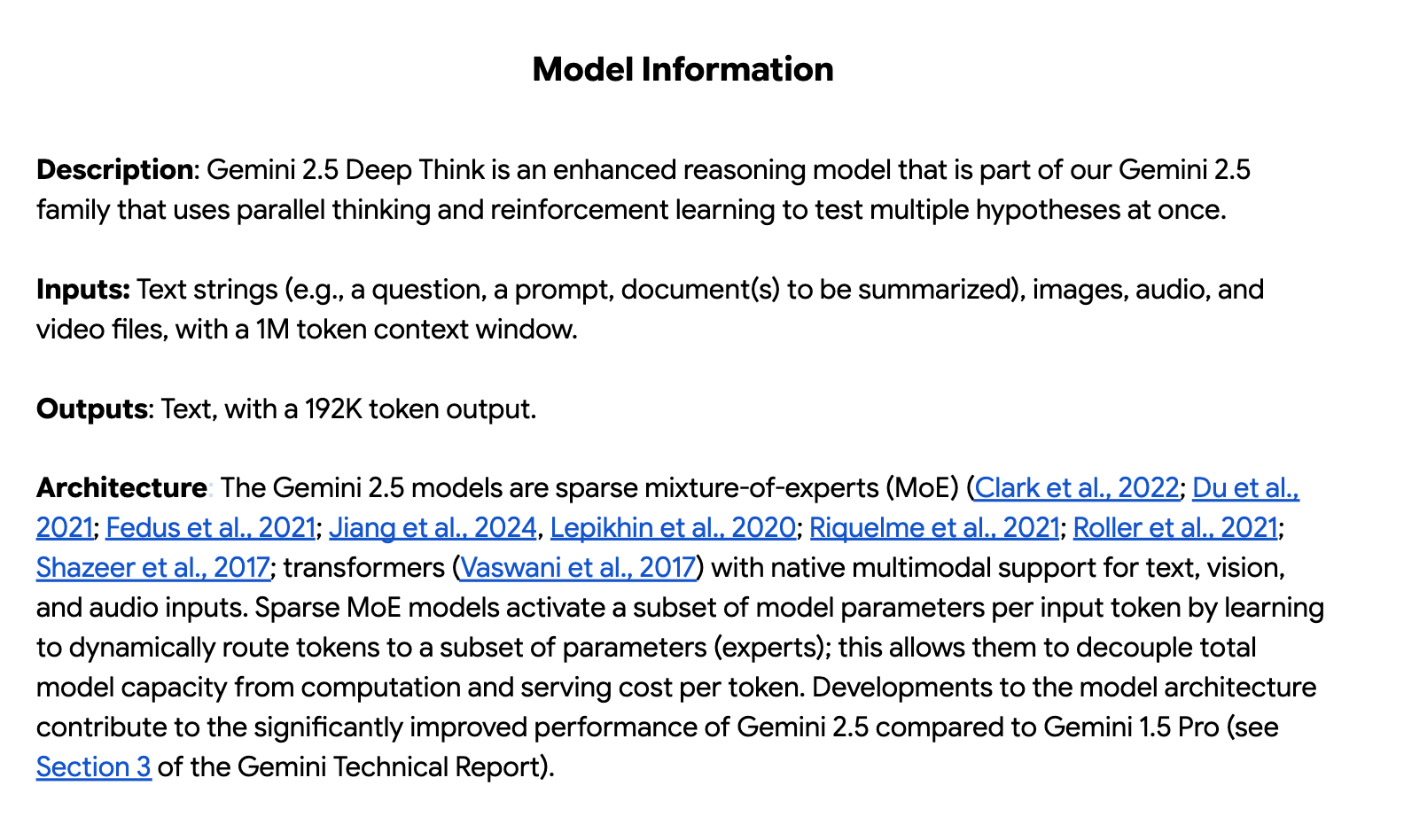

There’s more info on the model card, but not a lot, so we can save you the click:

There are also miscellaneous videos on Deep Think’s parallel thinking, but we (biased) would actually recommend the full keynote from Jack Rae, who led the work on 2.5 Deep Think and even commented on where they are going next:

AI Twitter Recap

Model Releases, Leaks, and Performance

- Google Releases Gemini 2.5 Deep Think: Google and DeepMind announced that Gemini 2.5 Deep Think is now available for Google AI Ultra subscribers. CEO @demishassabis says it’s great for creative problem solving and planning, describing it as a faster variation of the model that achieved gold-medal level at the IMO. The model uses parallel thinking to extend “thinking time”, exploring multiple hypotheses to find the best answer. The team notes it’s not just a math model but also excels at general reasoning, coding, and creative tasks, and team members shared that the final checkpoint was selected just 5 hours before the IMO problems were released. The model card has been released, and Google is sharing the model with mathematicians for further feedback.

- OpenAI Open Source Model Leaks and Speculation: Rumors of an imminent OpenAI open-source model release sparked significant discussion. Leaks, notably from @scaling01, suggest two models: a 120B MoE and a 20B model. The 120B model is described as “super sparse” and shallow, with 36 layers, 128 experts, and 4 active experts. The architecture is said to include attention sinks to improve upon sliding window attention, and @Teknium1 pointed out that the model may be using techniques from Nous’ YaRN. The community is debating whether this leaked model is the much-discussed “Horizon-Alpha”, with @teortaxesTex noting that if it is, “it’s going to be awkward for everyone else.”

- Chinese Models Show Strong Momentum: Kimi Moonshot launched kimi-k2-turbo-preview, a 4x faster version of Kimi K2 (from 10 tok/s to 40 tok/s), with a 50% price reduction. Alibaba released Qwen3-Coder-Flash, a 30B model with a native 256K context, which is now available on Ollama. The main Qwen3 model was recognized as the #1 open model on the LMSys Chatbot Arena. Zhipu AI released GLM-4.5, an open model with unified reasoning, coding, and agentic capabilities. StepFun also announced Step 3, their latest open-source multimodal reasoning model. This surge led @AndrewYNg to state there is now a path for China to surpass the U.S. in AI.

- New Models and Techniques: ByteDance is exploring diffusion LLMs with the release of Seed Diffusion Preview, a fast LLM for code. Cohere released a new vision model with weights on Hugging Face. Meta introduced MetaCLIP 2, with code and models available, shared by @ylecun. However, @teortaxesTex observes that despite these releases, there is still no open model that consistently beats DeepSeek-R1-0528 on hard coding, suggesting a potential plateau for current architectures.
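Google hasn't published how Deep Think's parallel thinking works internally. A well-known public technique in the same spirit is self-consistency: sample several independent reasoning paths and keep the answer most of them agree on. The sketch below assumes that technique; `noisy_solver` is a hypothetical stand-in for one model call, and majority voting is only one possible way to pick the "best" answer (Deep Think may well use something else).

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Solver = Callable[[str, int], str]


def noisy_solver(problem: str, seed: int) -> str:
    """Hypothetical stand-in for one sampled reasoning path.

    A real system would query a model at temperature > 0; this toy
    returns the right answer ("42") about 60% of the time.
    """
    rng = random.Random(seed)
    return "42" if rng.random() < 0.6 else str(rng.randint(0, 99))


def parallel_think(problem: str, solver: Solver, n_paths: int = 16) -> str:
    """Run n_paths independent attempts concurrently and return the majority answer."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        answers = list(pool.map(lambda seed: solver(problem, seed), range(n_paths)))
    # Self-consistency: the most frequent final answer wins.
    answer, _votes = Counter(answers).most_common(1)[0]
    return answer


print(parallel_think("toy problem", noisy_solver))
```

More paths raise the chance that the correct answer wins the vote, at a roughly linear cost in compute.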
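For a sense of what "128 experts, 4 active" in the leaked OpenAI details means, here is a minimal top-k routing sketch: a router scores every expert, only the top 4 run, and their outputs are mixed with softmax weights renormalized over the chosen experts. This is one common MoE variant (Mixtral routes this way, with top-2); the leaked model's actual router is unknown, and the scalar "experts" and random router logits are toys.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=4):
    """Pick the k highest-scoring experts and renormalize weights over them."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=4):
    """Mix the outputs of the selected experts; the other experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


NUM_EXPERTS, TOP_K = 128, 4  # values reported in the leak
experts = [lambda x, i=i: x * (i + 1) for i in range(NUM_EXPERTS)]  # toy scalar experts
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in for router(x)
print(top_k_route(logits, TOP_K))
print(moe_forward(1.0, experts, logits, TOP_K))
```

Only 4 of the 128 expert functions are evaluated per input, which is where the "super sparse" compute savings come from.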

Infrastructure, Efficiency, and Hardware

- High-Speed Inference on Specialized Hardware: Cerebras announced that Qwen3-Coder is live on their platform, achieving 2,000 tokens/s—a rate they claim is 20x faster than Sonnet with a 0.5s time-to-full-answer. They are offering two new monthly coding plans for access. This has led to speculation about optimal inference setups, with @dylan522p suggesting a “gigabrain” combination of Prefill on Etched and Decode on Cerebras/Groq.

- Modal Labs Enables 5-Second vLLM Cold Starts: @akshat_b from Modal Labs announced that users can now cold-start vLLM in 5 seconds on their platform, a capability enabled by their new GPU snapshotting primitive.

- Sparsity and MoE Architecture in Focus: Google presented @Tim_Dettmers with a Junior Faculty Award for his work on sparsity, and he teased bringing large Mixture of Experts (MoE) models to small GPUs soon. This aligns with leaked details about OpenAI’s upcoming open model, which is rumored to be a very sparse and shallow MoE. Technical discussion from @nrehiew_ highlighted the architectural significance of attention sinks, which can fix issues with sliding window attention in such models.

- Performance Optimizations: Baseten detailed their work with Amp Tab to switch to TensorRT-LLM and KV caching, resulting in a 30% speedup. UnslothAI enabled running the powerful 671B hybrid reasoning model locally on consumer hardware.

- Runway’s Aleph and In-Context Generalization: @c_valenzuelab from Runway explains that their Aleph model is a single, in-context model that can solve many video workflows at inference time. This multi-task approach generalizes so well that it can replicate specialized features like Motion Brush through simple text and image/video references, without needing a dedicated UI or post-training.
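The Cerebras numbers are easier to reason about with a simple latency model: total time is roughly time-to-first-token (dominated by prefill) plus output tokens divided by decode speed. That split is also why the "prefill on one chip, decode on another" idea comes up. A back-of-the-envelope sketch, assuming a 1,000-token answer and ignoring network overhead:

```python
def response_time(output_tokens: int, decode_tps: float, ttft_s: float = 0.0) -> float:
    """Seconds to stream a full answer: time-to-first-token plus decode time."""
    return ttft_s + output_tokens / decode_tps


# Illustrative numbers only: a 1,000-token answer at the claimed 2,000 tok/s,
# versus a hypothetical provider 20x slower (100 tok/s).
fast = response_time(1_000, 2_000)
slow = response_time(1_000, 100)
print(f"{fast:.2f}s vs {slow:.2f}s")  # 0.50s vs 10.00s
```

Under these assumptions the decode phase alone accounts for 0.5 s versus 10 s, so faster prefill mainly matters for long prompts.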
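On the attention-sink point from @nrehiew_: with pure sliding-window attention, the earliest tokens fall out of view, and models that use them as a place to park attention can degrade. One published remedy (StreamingLLM-style sinks) keeps a few initial tokens visible to every query; other designs instead learn a per-head sink logit. Which variant the rumored OpenAI model uses isn't known, so treat this mask sketch, with an arbitrary window of 3 and one sink token, as illustrative only:

```python
def sliding_window_mask(seq_len: int, window: int, n_sink: int = 0):
    """Return mask[q][k] = True if query position q may attend to key position k.

    Causal sliding window: q sees the last `window` positions (including itself).
    With n_sink > 0, the first n_sink tokens stay visible to every query,
    in the spirit of StreamingLLM-style attention sinks.
    """
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            causal = k <= q
            in_window = q - k < window
            is_sink = k < n_sink
            row.append(causal and (in_window or is_sink))
        mask.append(row)
    return mask


for row in sliding_window_mask(seq_len=6, window=3, n_sink=1):
    print("".join("x" if allowed else "." for allowed in row))
```

The first column stays filled for every row, while the rest of each row only covers the most recent 3 positions.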
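Baseten's write-up isn't summarized in detail here, but the general idea behind KV caching is simple: during decoding, keys and values for earlier tokens are stored instead of being recomputed at every step. This toy single-head version (random vectors in place of real projections) checks that attending over the cache gives the same result as recomputing over the full prefix; in a real model, the savings come from skipping the repeated key/value projections of past tokens.

```python
import math
import random


def attend(q, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim)]


class KVCache:
    """Keeps past keys/values so each decoding step appends one entry."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, q, k, v):
        self.keys.append(k)
        self.values.append(v)
        return attend(q, self.keys, self.values)


rng = random.Random(0)
# One (query, key, value) triple per generated token; real models get these
# from learned projections of the token's hidden state.
steps = [[[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)] for _ in range(5)]

cache = KVCache()
cached_out = [cache.step(q, k, v) for q, k, v in steps]

# No cache: step t re-attends over the whole prefix 0..t from scratch.
full_out = [
    attend(steps[t][0], [s[1] for s in steps[: t + 1]], [s[2] for s in steps[: t + 1]])
    for t in range(len(steps))
]
print(all(abs(a - b) < 1e-12 for c, f in zip(cached_out, full_out) for a, b in zip(c, f)))
```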

Agent Tooling, Frameworks, and Development

- Perplexity Launches Comet Shortcuts for Workflow Automation: Perplexity introduced Comet Shortcuts, a new feature to automate repetitive web workflows using simple natural language prompts. @AravSrinivas shared the launch, noting that users can create and eventually share/monetize custom shortcuts. A key example is the /fact-check shortcut to make the web more truth-seeking.

- Rise of Deep Agents and Multi-Agent Systems: LangChain’s @hwchase17 released a video defining “Deep Agents” as a combination of a Planning Tool, File System, Sub-Agents, and a Detailed System Prompt, citing agents like Claude Code and Manus as examples. He also demonstrated using the new qwen3-coder with deep agents. Separately, @omarsar0 showed how it’s becoming easier to build complex multi-agent systems in n8n, including supervisor agents that delegate tasks.

- Runway Opens Aleph Programming Interface: Runway has made its powerful Aleph video model available via API. Co-founder @c_valenzuelab framed this as the “Aleph Programming Interface,” an API to programmatically edit, transform, and generate video directly.

- Development Tools and Frameworks: MongoDB released an open-source MCP Server that allows AI tools to interact with databases using natural language. The DSPy framework is expanding its reach, with @lateinteraction announcing DSRs, a new port of DSPy to Rust. The supervision library for computer vision has been updated with advanced text position controls.

- RAG Internals: DeepLearningAI published a lesson unpacking how LLMs process augmented prompts in RAG systems, detailing the roles of token embeddings, positional vectors, and multi-head attention to help developers build more reliable RAG pipelines.
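To make @hwchase17's four Deep Agent ingredients concrete, here is a hypothetical toy that maps each one onto plain Python: a system prompt string, a todo list as the planning tool, a dict as the virtual file system, and named sub-agents as callables. It is not the LangChain API, and real sub-agents would be LLM calls; it only shows how the pieces could fit together.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DeepAgent:
    """Toy model of the four 'Deep Agent' ingredients (all names hypothetical)."""

    system_prompt: str                                    # detailed instructions
    sub_agents: Dict[str, Callable[[str], str]]           # specialists to delegate to
    todo: List[str] = field(default_factory=list)         # planning-tool state
    files: Dict[str, str] = field(default_factory=dict)   # virtual file system

    def plan(self, steps: List[str]) -> None:
        self.todo.extend(steps)

    def run(self) -> Dict[str, str]:
        # Each todo item is "agent_name: task". Results are written to files
        # so later steps can read them instead of holding everything in context.
        while self.todo:
            item = self.todo.pop(0)
            name, task = item.split(":", 1)
            result = self.sub_agents[name.strip()](task.strip())
            self.files[f"{name.strip()}_{len(self.files)}.md"] = result
        return self.files


agent = DeepAgent(
    system_prompt="You are a careful research assistant.",
    sub_agents={"research": lambda t: f"notes on {t}", "write": lambda t: f"draft: {t}"},
)
agent.plan(["research: attention sinks", "write: summary of attention sinks"])
print(agent.run())
```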

Company News, Funding, and Strategy

- Cline Raises $32M for Open-Source Code Agent: Cline, the open-source code agent, announced a $32M Seed and Series A raise led by Emergence Capital and Pace Capital. Originating as a hackathon project, the tool now has 2.7M developers and is focusing on a long-term bet on open-source to help developers control AI spend.

- Meta Reportedly on Video AI Acquisition Spree: A report from @steph_palazzolo indicates Meta is actively seeking to acquire video AI startups, having held conversations with companies like Pika, Higgsfield, and Runway.

- The US vs. China AI Race: A viral tweet from Andrew Ng (retweeted by @Teknium1) argues that China now has a path to surpass the U.S. in AI due to tremendous momentum, a topic also covered in The Batch. The discussion also turned to policy, including President Trump’s “America’s AI Action Plan”, which aims to favor “ideologically neutral” models, fast-track data center permits, and support open-weights tools.

- DeepMind Team and Growth: DeepMind’s @_philschmid celebrated 6 months at the company, sharing that Google products and APIs are now processing over 980 trillion tokens monthly, up from 480T in May. CEO Demis Hassabis appeared on the Lex Fridman podcast to discuss AGI as the ultimate tool for scientific discovery.

Research, AI Safety, and Datasets

- Anthropic Develops “Persona Vectors” to Mitigate Bad Behavior: Anthropic released new research on “persona vectors,” which can identify and steer language models away from undesirable personas like sycophancy or evilness. @EthanJPerez explains the technique, which @mlpowered describes as creating “vaccines for LLMs” by injecting vectors for bad personas during training to teach the model to avoid them.

- International AI Safety and Alignment Initiatives: Yoshua Bengio announced he is serving as an expert advisor for a new Alignment Project launched by the UK’s AI Safety Institute and supported by its Canadian counterpart, encouraging researchers to apply for funding and compute. Following the release of Gemini Deep Think, @NeelNanda5 highlighted the extensive safety testing and risk management approaches used to catch and mitigate risks proactively.

- New Datasets and Evaluation Frameworks Released: The NuminaMath-LEAN dataset was released, containing 100K mathematical competition problems formalized in Lean 4, as shared by @bigeagle_xd. Researchers also introduced OpenBench 0.1, a new framework for open and reproducible evaluations. Additionally, the LMArena project released a dataset of 140,000 conversations.

- The End of Hackathons?: @jxmnop sparked a discussion by claiming that AI has “basically killed hackathons,” arguing that most projects that could be built at a hackathon in 2019 can now be created better and faster by AI.
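Anthropic's persona vectors are, roughly, directions in a model's activation space found by contrasting activations on responses that show a trait with ones that don't. A simplified sketch assuming a difference-of-means direction, with made-up 3-dimensional "activations" (real ones come from a chosen layer of the model): projecting onto the vector gives a monitoring score, and adding a negative multiple steers away from the trait. The training-time "vaccine" variant applies the vector during fine-tuning instead; that step is not shown.

```python
def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def persona_vector(trait_acts, neutral_acts):
    """Difference of mean activations between trait-exhibiting and neutral responses."""
    return [a - b for a, b in zip(mean(trait_acts), mean(neutral_acts))]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def steer(activation, direction, alpha):
    """Shift an activation along the persona direction (negative alpha steers away)."""
    return [a + alpha * d for a, d in zip(activation, direction)]


# Made-up activations: the trait shows up mostly in the first dimension.
sycophantic = [[2.0, 0.1, 0.0], [1.8, -0.1, 0.2]]
neutral = [[0.2, 0.0, 0.1], [0.0, 0.1, -0.1]]
v = persona_vector(sycophantic, neutral)
h = [1.5, 0.0, 0.0]
print(v, dot(h, v), dot(steer(h, v, -0.5), v))
```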
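For readers new to Lean, a "formalized" problem like those in NuminaMath-LEAN is a theorem statement the proof checker can verify. A toy example in that style (made up for illustration, not taken from the dataset, and using only core Lean 4's `omega` tactic):

```lean
-- Toy competition-style statement: over the naturals,
-- if a + b = 10 and a = 3, then b = 7.
theorem toy_sum (a b : Nat) (h₁ : a + b = 10) (h₂ : a = 3) : b = 7 := by
  omega
```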

Humor/Memes

- Relatable Developer Pain: A tweet from @hkproj lamenting an `ncclUnhandledCudaError` with the caption “who needs sleep anyway?” resonated with many.

- AI Community In-Jokes: The rumored OpenAI leak prompted a series of “Me and the gang discussing the leaked OAI details” memes. Another popular sentiment was captured by @vikhyatk: “i find this genre of complaint very tiresome. it’s open source. submit a pr or gtfo”.

- Which Way, Western Man?: A meme from @Yuchenj_UW pitting “closed-source AI” against “open-source AI” received over 5,800 likes.

- Political Satire: A tweet retweeted by @zacharynado sarcastically noted that “DOGE had to cut funding to all of that worthless woke shit like air safety and weather forecasting”.

- Unstoppable Force vs. Immovable Object: A highly-liked tweet from @random_walker depicted the absurdity of permitting processes with the caption: “When an unstoppable force [environmental review] meets an immovable object [also environmental review]”.

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. OpenAI 120B Model Leaks and Speculation

- The OpenAI Open weight model might be 120B (Score: 631, Comments: 151): A supposed leak suggests OpenAI’s upcoming open-weight model will have 120B parameters, making local inference impractical for most users without significant hardware and preserving ChatGPT’s subscription market. Comments speculate the model will use a proprietary .openai format, restricting third-party execution, and discuss model architecture: if it is a Mixture-of-Experts (MoE), quantized (Q3) versions might fit in 64GB of RAM; if it is dense, it would need significant advances to compete directly with recent models. Technical debate focuses on practical usability given likely proprietary restrictions and high hardware requirements, with skepticism about accessibility and community value unless OpenAI innovates meaningfully beyond current models.

- A key technical debate centers on whether the potential OpenAI 120B model will follow a Mixture of Experts (MoE) architecture or a dense design. One commenter notes that if it’s MoE, a quantized Q3 version could run on systems with just 64GB of RAM, but if it’s dense, the resource requirements and performance expectations would be dramatically higher—implying that only a significant leap in quality would make it worthwhile compared to recent releases.

- There is skepticism in the community regarding the usability of any ‘open weights’ OpenAI might release, with a comment suggesting the potential use of a proprietary .openai file format and requirements to use OpenAI’s own application for model inference, possibly limiting third-party experimentation or deployment and raising concerns about genuine openness.

- OpenAI OS model info leaked - 120B & 20B will be available (Score: 429, Comments: 138): A leaked image reportedly reveals configuration details of OpenAI’s upcoming ‘OS’ language models, specifically a 120B parameter model and a 20B parameter model. A posted config for the 120B model indicates it uses a Mixture of Experts (MoE) architecture: 36 hidden layers, 128 experts with 4 experts per token, a 201,088 vocab size, a 2,880 hidden size, 64 attention heads, 8 key-value heads, and RoPE positional encoding with scaling factors. These specs suggest a highly scalable, high-context transformer design similar to recent Megatron or DeepSpeed MoE models. Commenters note the 20B model size is attractive for research/deployment, and speculate on openness/censorship and performance compared to other recent large models. Some point out this leak may result from a temporary internal error, underscoring the sensitivity of such information.

- A leaked config file for the OpenAI “OS” 120B model reveals architecture details: 36 hidden layers, 128 experts (Mixture-of-Experts), experts_per_token set to 4, and a vocab size of 201,088. Key parameters include hidden/intermediate size of 2880, 64 attention heads (8 key/value heads), a 4096 initial context length, and advanced rotary positional encoding (rope_theta: 150000, rope_scaling_factor: 32.0).

- The config suggests use of Mixture-of-Experts (MoE) with 128 experts and 4 experts per token, an approach aimed at improving efficiency for larger models. The RoPE (Rotary Positional Embedding) enhancements (notably rope_ntk_alpha and rope_ntk_beta) and sliding window attention could support longer context handling and scaling.

- Discussion references a user who managed to access the 120B weights, indicating early external analysis is underway. Comparisons with recent open-source models (e.g., yofo-deepcurrent, yofo-riverbend) are anticipated, with technical curiosity about performance, context management, and censorship levels.

- The “Leaked” 120 B OpenAI Model is not Trained in FP4 (Score: 231, Comments: 64): The image is referenced in a discussion debunking a claim that a ‘leaked’ 120B OpenAI model is trained in FP4 (4-bit floating point), with the title clarifying it is not. The discussion and comments indicate skepticism about hype or misinformation around the technical details of the supposed model, emphasizing the need for critical analysis of such rumors within the AI community. There is no evidence or benchmark presented to support the claim of FP4 training, and the post mainly serves as a rebuttal to unverified leaks. Commenters dismiss the original claim as ‘bullshit hype’ and express skepticism about the rumor, echoing broader concerns about AI misinformation and unsubstantiated leaks.

- Several comments highlight skepticism around the FP4 training claim, referencing that the supposed OpenAI 120B model “leak” is likely hype and not technically credible, noting that FP4 is not recognized as a practical precision format for training (current standard being bfloat16 or FP16 for large models).

- Others emphasize the importance of model release quality over speed, comparing the situation to DeepSeek R2’s delay, and argue that ‘frontier’ AI labs like OpenAI prioritize robustness and performance in their models over early access or hype-driven releases.

- There is discussion of the recent frequency of large model releases and how increased openness—even from major labs like OpenAI—raises the bar for transparency and competition in the open weight community, helping to normalize open model sharing as a standard industry practice.
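The 64GB claim in the first thread is easy to sanity-check. Weight memory is roughly parameters × bits per weight ÷ 8, and it is the same whether the model is dense or MoE; MoE mainly helps speed, because only the routed experts' weights are read for each token. The bits-per-weight figures below are rough assumptions (real quant formats add scale overhead), and the estimate ignores KV cache and runtime memory:

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1e9 bytes); excludes KV cache and runtime overhead."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumed average bits per weight for a few common precisions/quant levels.
for label, bpw in [("BF16", 16), ("~Q8", 8.5), ("~Q4", 4.5), ("~Q3", 3.5)]:
    print(f"120B at {label}: ~{weight_memory_gb(120e9, bpw):.1f} GB")
```

At an assumed ~3.5 bits per weight, 120B parameters come to about 52.5 GB, which fits under 64GB but leaves limited headroom.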
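A few derived quantities from the leaked 120B config, computed from the reported values (which remain unverified; some key names appear in the posts, the rest are assumed). The extended-context figure assumes the RoPE scaling factor multiplies the initial context length, as in YaRN-style scaling:

```python
# Values as reported in the leaked config (unverified); some key names are assumed.
config = {
    "num_hidden_layers": 36,
    "num_experts": 128,
    "experts_per_token": 4,
    "num_attention_heads": 64,
    "num_key_value_heads": 8,
    "initial_context_length": 4096,
    "rope_scaling_factor": 32.0,
}

# Grouped-query attention: several query heads share one key/value head.
q_per_kv = config["num_attention_heads"] // config["num_key_value_heads"]

# Fraction of experts (not of total parameters) active for each token.
expert_fraction = config["experts_per_token"] / config["num_experts"]

# Assumption: RoPE scaling stretches the trained context by the scaling factor.
extended_ctx = int(config["initial_context_length"] * config["rope_scaling_factor"])

print(q_per_kv, expert_fraction, extended_ctx)  # 8 0.03125 131072
```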
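To see why FP4 training claims draw skepticism, it helps to enumerate what 4 bits can represent. Assuming the E2M1 layout used by the OCP Microscaling (MX) FP4 element format (1 sign, 2 exponent, 1 mantissa bit, bias 1), there are only eight magnitudes, which is why low-bit formats lean on per-block scale factors. This sketch rounds to the nearest value without any scaling:

```python
def e2m1_values():
    """All non-negative values of a 4-bit E2M1 float (bias 1, no inf/NaN codes)."""
    vals = set()
    for exp in range(4):
        for man in range(2):
            if exp == 0:  # subnormal: 0.m * 2^(1 - bias)
                vals.add(man * 0.5)
            else:         # normal: 1.m * 2^(exp - bias)
                vals.add((1 + man * 0.5) * 2 ** (exp - 1))
    return sorted(vals)


GRID = e2m1_values()
print(GRID)  # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4(x: float) -> float:
    """Round to the nearest representable E2M1 value (saturating at +/-6)."""
    mag = min(GRID, key=lambda g: abs(abs(x) - g))
    return mag if x >= 0 else -mag


print([quantize_fp4(v) for v in [0.3, 2.4, 5.1, -7.0]])  # [0.5, 2.0, 6.0, -6.0]
```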

2. Qwen3 Model Launches and Benchmarks

- Qwen3 Coder 480B is Live on Cerebras ($2 per million output and 2000 output t/s!!!) (Score: 372, Comments: 123): Cerebras has deployed the Qwen3 Coder 480B model, an open-source large language model for code generation, priced at 2 USD per million output tokens with 2000 output tokens/sec of throughput. This positions it as a potential competitor to Sonnet, especially given claims of being ~20x faster and ~7.5x cheaper on US infrastructure. New tiered coding plans were also announced: ‘Code Pro’ at 50 USD/month (1000 requests/day) and ‘Code Max’ at 200 USD/month (5000 requests/day). Technical commenters note that the ‘1000 requests per day’ limit is restrictive for users of code tools with high request frequency, and some dispute the model’s stated performance gap, claiming that in practice Qwen3 is not just ‘5-10%’ worse than competitors but shows larger discrepancies in real-world coding tasks.

- Systems like Roocode and Opencode using Qwen3 Coder 480B on Cerebras see extremely fast response speeds, so fast that UI processing in some tools (like Roocode) cannot keep up, and with others (Opencode), the outputs appear almost instantly. This highlights practical throughput meeting, or exceeding, the advertised 2000 output tokens per second benchmark.

- There is discussion about the pricing structure, suggesting that $50/month for 1000 requests per day may not be cost-effective for all users, especially when technical workflows (like code lookups or tool calls) generate a large number of requests, as each interaction (‘tool call and code lookup’) can result in separate API invocations, quickly consuming the quota.

- Comments caution about the risk of vendor lock-in, recommending users benefit from the current performance and value proposition, but remain aware of the potential for future ecosystem restrictions or dependency on a single provider, even as Cerebras seeks rapid adoption through aggressive pricing or performance leadership.

- Qwen3-Embedding-0.6B is fast, high quality, and supports up to 32k tokens. Beats OpenAI embeddings on MTEB (Score: 143, Comments: 16): Alibaba’s Qwen3-Embedding-0.6B (available on Hugging Face: https://huggingface.co/Qwen/Qwen3-Embedding-0.6B) delivers high-performance semantic embeddings with a large context window (up to 32k tokens) and reportedly ‘beats OpenAI embeddings on MTEB’ benchmarks. Users highlight the importance of updating Text Embeddings Inference to version 1.7.3 to fix pad token bugs that affected results in earlier versions; similar preprocessing/tokenization issues may affect other inference toolchains. Context: embedding models like Qwen3 are used for semantic search via document/query vector similarity (dot product or cosine similarity), and Qwen3-Embedding-0.6B is commended for accuracy and speed, enabling new use cases at smaller model scales. Commenters suggest the reranker variant (Qwen3-Reranker-0.6B-seq-cls: https://huggingface.co/tomaarsen/Qwen3-Reranker-0.6B-seq-cls) offers ultra-fast and highly relevant scoring for RAG chatbot pipelines, implying broad utility for retrieval-augmented generation (RAG) workflows.

- Qwen3-Embedding-0.6B is praised for semantic search use cases, leveraging document and query embeddings with dot product or cosine similarity for ranking. It outperforms OpenAI embeddings on MTEB benchmarks, indicating high performance for tasks involving embedding-based retrieval and ranking.

- The Qwen3-Reranker-0.6B variant is noted for delivering extremely fast inference and high-quality relevance scores in Retrieval-Augmented Generation (RAG) chatbots, as evidenced by user testing and its availability on Hugging Face (Qwen3-Reranker-0.6B-seq-cls).

- While Qwen3-Embedding-0.6B is strong for English (and presumably Chinese), it’s reported to be less effective for other languages. Competing models like MPNet may offer better performance on diverse multilingual tasks.

- Qwen3-235B-A22B-2507 is the top open weights model on lmarena (Score: 122, Comments: 12): Qwen3-235B-A22B-2507 is now ranked as the highest-performing open-weights model on lmarena, and reportedly places above even closed models like Claude-4-Opus and Gemini-2.5-pro on lmarena’s current evaluation metrics. It is a 235B-parameter MoE with 22B active parameters (the “A22B” in its name), and users report strong performance with the UD-Q4_K_XL quantization, as well as on external benchmarks such as Artificial Analysis and LiveBench. Commentators express some skepticism regarding lmarena’s evaluation methodology; there’s also anticipation regarding future models (e.g., OpenAI 120B MoE, GLM-4.5 Air) potentially challenging Qwen3-235B’s dominance.

- Qwen3-235B-A22B-2507 is currently leading lmarena’s open-weights model leaderboard, with user feedback noting its strong performance and depth, particularly when running in quantized formats such as UD-Q4_K_XL. The discussion also highlights the community’s anticipation for upcoming models, specifically OpenAI’s open-weight 120B MoE and GLM-4.5 Air, with the latter expected to be more competitive once supported by llama.cpp.

- Skepticism is expressed regarding lmarena’s evaluation methodology, especially as Qwen3-235B reportedly outperforms proprietary models like Claude-4-Opus and Gemini-2.5-pro. This raises questions about model benchmarking standards and result reliability within community-run testbeds.

- Qwen3 performance is also validated by its top ranking on Artificial Analysis and LiveBench for non-reasoning tasks, and its sibling model, Qwen3 Coder 480B, is noted for a high placement on Design Arena, trailing only Opus 4 and surpassing all other open-weight models. This suggests Qwen’s release cadence has yielded state-of-the-art open models across multiple technical benchmarks.
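The quota complaint in the Cerebras thread is simple arithmetic: an agentic coding task can fire many requests (tool calls, code lookups), so a daily request cap translates into far fewer tasks. A sketch using the plan figures from the post and an assumed 25 requests per task:

```python
def tasks_per_day(daily_requests: int, calls_per_task: int) -> int:
    """Whole agentic tasks that fit in a daily request quota."""
    return daily_requests // calls_per_task


def cost_per_request(monthly_price: float, daily_requests: int, days: int = 30) -> float:
    """Effective price per request if the full quota is used every day."""
    return monthly_price / (daily_requests * days)


# Plan figures from the post; 25 calls per task is a made-up example of a
# coding-agent task that mixes tool calls and code lookups.
for plan, price, quota in [("Code Pro", 50, 1000), ("Code Max", 200, 5000)]:
    print(plan, tasks_per_day(quota, 25), f"${cost_per_request(price, quota):.4f}/request")
```

With that assumption, Code Pro supports about 40 such tasks a day, which is why heavy agent users find the cap tight even though the per-request price is low.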
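The semantic-search pattern described for Qwen3-Embedding-0.6B boils down to embedding the query and the documents, then ranking by cosine similarity (equivalently, dot product on L2-normalized vectors). In this sketch, the 3-dimensional vectors are made-up stand-ins for real model embeddings:

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted by cosine similarity, best first."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for model embeddings.
docs = {
    "gpu-setup": [0.9, 0.1, 0.0],
    "pasta-recipe": [0.0, 0.2, 0.9],
    "cuda-errors": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]
print(rank(query, docs))
```

A reranker such as the one mentioned in the thread would then rescore only the top few hits with a more expensive model.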

3. DocStrange Open Source Data Extraction Release

- DocStrange - Open Source Document Data Extractor (Score: 149, Comments: 27): The image advertises DocStrange, an open-source Python library for extracting data from documents in multiple formats (PDF, images, Word, PowerPoint, Excel), offering outputs such as Markdown, JSON, CSV, and HTML. The tool supports user-defined field extraction (e.g., specific invoice attributes) and enforces output schema consistency with JSON schemas. Two modes are available: a cloud-based mode for quick processing via API (raising privacy cautions for sensitive data) and a local mode for privacy and offline computation (CPU/GPU supported). Related resources: PyPI link, GitHub repo. Commenters highlight the importance of true visual language model (VLM)-driven image description (not basic OCR), as supported by competitors like Docling and Markitdown. Privacy concern is noted for the cloud API: sensitive documents should not be uploaded without care.

- Users highlight the direct competition with existing document extraction tools, specifying that advanced differentiation hinges on handling images using Vision-Language Models (VLMs) for descriptive image understanding (not just OCR). Tools like Docling and Markitdown are cited as benchmarks for such capabilities, raising the question of whether DocStrange can offer equivalent or superior VLM-driven image description features.

- Technical scrutiny emerges around how DocStrange compares to simply leveraging local LLMs (e.g., Gemma 3, Mistral Small 3.2, Qwen 2.5 VL) with vision processing, querying if the same extraction (Markdown/JSON/CSV outputs) could be achieved with a targeted prompt and local model, thus questioning the need for a separate cloud-based solution.

- There is a caution around data privacy given DocStrange’s cloud API is the default processing mechanism, as instant conversion requires sending documents to external servers—users are warned not to upload sensitive or personal data unless they trust the service.

- Gemini 2.5 Deep Think mode benchmarks! (Score: 247, Comments: 66): The image (not viewable here) is described as benchmark results for Google Gemini 2.5’s Deep Think mode, which appears to target high-intensity or detailed LLM tasks. The discussion highlights that Deep Think mode is currently limited to Gemini Ultra subscribers. One user compared Gemini 2.5 Deep Think to ChatGPT’s deep research capability, finding Gemini’s responses more impressive for complex tasks such as PC build recommendations and business idea analysis. Some commenters question the utility of Deep Think mode due to its exclusivity to Gemini Ultra, and there is mention of “AIME saturation in 2025”, likely meaning that top models now score near the ceiling of the AIME math benchmark. The comparison with ChatGPT Plus shows a substantive preference for Gemini in specific research scenarios.

- One user reports informal benchmarking between ChatGPT Plus (with deep research) and Gemini 2.5 Deep Think, using prompts to generate a high-performance LLM-capable PC build within a £1200 budget and business analysis. They found Gemini 2.5 delivered more impressive and detailed outputs compared to their prior experiences with ChatGPT, suggesting practical differences in real-world prompt handling and decision-making capability between models.

- There’s interest in benchmarking Gemini 2.5 Deep Think mode on previously unsolved complex mathematical problems, with at least one user seeking to evaluate its performance on extremely challenging math queries. This underscores an active technical curiosity in how Gemini 2.5 competes against the top LLMs in advanced STEM reasoning tasks, a known weakness of many models.
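On DocStrange's schema enforcement: the library's own API isn't shown in the post, so this stdlib-only sketch illustrates just the general idea, checking extracted fields against a hypothetical invoice schema. A production pipeline would more likely use a full JSON Schema validator:

```python
import json

# Hypothetical invoice schema: field name -> required Python type.
INVOICE_SCHEMA = {"invoice_number": str, "total": float, "currency": str}


def validate(record: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the record conforms."""
    problems = [f"missing field: {k}" for k in schema if k not in record]
    for key, value in record.items():
        if key not in schema:
            problems.append(f"unexpected field: {key}")
        elif not isinstance(value, schema[key]):
            problems.append(f"{key}: expected {schema[key].__name__}, got {type(value).__name__}")
    return problems


extracted = json.loads('{"invoice_number": "INV-001", "total": "12.50", "currency": "EUR"}')
print(validate(extracted, INVOICE_SCHEMA))  # ['total: expected float, got str']
```

Catching a string-typed total like this is exactly the kind of inconsistency a schema-constrained extractor is meant to prevent.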

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Gemini 2.5 Deep Think Launch and Performance Benchmarks

- Gemini 2.5 Deep Think solves previously unproven mathematical conjecture (Score: 645, Comments: 49): A YouTube video claims that Google’s Gemini 2.5 Deep Think has solved a previously unproven mathematical conjecture, but the post and video omit details on the specific conjecture solved. Discussion emphasizes the significance of this claim but laments the lack of transparency regarding which mathematical problem was addressed and how the model accomplished the proof. Commenters raise concerns about the vagueness around the conjecture, urge for direct testing of difficult math problems on the model, and compare Google’s approach favorably to OpenAI in terms of early release/access, while questioning cost and actual model parity with the unreleased IMO Gold models.

- A user notes that while OpenAI has developed but not released their specialized IMO (International Mathematical Olympiad) model, Google is making their own advanced model—close to IMO Gold level—available sooner, highlighting differences in openness and release strategy. However, the commenter points out concerns about the high price and compute requirements for accessing Google’s Gemini 2.5 model, which may limit practical usage and experimentation for many.

- There is significant technical interest in benchmarking Gemini 2.5 against other state-of-the-art models on complex mathematical problems, as indicated by requests for access to the model for testing on difficult math conjectures. This suggests the community is eager to rigorously evaluate Gemini 2.5’s mathematical problem-solving capabilities and compare its performance to previous models, particularly on problems that have resisted previous AI approaches.

- Gemini 2.5 Deep Think rolling out now for Google AI Ultra (Score: 294, Comments: 23): Google is rolling out “Gemini 2.5 Deep Think” for its AI Ultra tier, suggesting enhanced capabilities over previous versions, but with reportedly limited daily usage. Technical details on new features or architectural differences from Pro are not specified in the post or linked benchmarks. Commenters raise concerns about the value proposition of Deep Think, highlighting restrictive usage caps (“a few uses per day”) versus the high cost ($250/month), and request clarification on technical distinctions from the Pro tier.

- A user queries the key technical distinction of “DeepThink” compared to the existing “Pro” level, suggesting a need for clarification regarding the feature set, access limits, or underlying model differences between the two offerings. This indicates confusion or lack of transparency about what specific improvements (e.g., reasoning depth, context window expansion, or inference speed) DeepThink delivers.

- Another user expresses skepticism about the pricing and usage model, highlighting that DeepThink allows only a limited number of uses per day despite a high price tag ($250). This points to a potential technical or infrastructural limitation being masked as a product tier (possibly related to model inference costs, resource allocation, or queueing for Ultra-level compute).

- The Architecture Using Which I Managed To Solve 4/6 IMO Problems With Gemini 2.5 Flash and 5/6 With Gemini 2.5 Pro (Score: 216, Comments: 24): The post presents an architecture, shown in an accompanying diagram, designed for maximizing IMO problem-solving with Gemini 2.5 models. The approach involves parallel hypothesis generation, with dedicated Prover and Disprover agents producing ‘information packets’ fed into Solution and Refinement agents. The Refinement agent self-verifies responses, improving solution rigor and completeness, which addressed past shortcomings and enabled 4/6 solutions with Gemini 2.5 Flash and 5/6 with 2.5 Pro. The repo at Iterative-Contextual-Refinements includes the architecture and general-purpose prompts, which were then tailored to IMO use cases, placing emphasis on novelty, rigorous proof standards, and avoidance of approach fixation. Comments query why major companies don’t adopt similar parallel-agent architectures with smaller models, questioning compute inefficiency and the novelty of such techniques compared to industry efforts.

- A commenter provides a GitHub repository detailing their iterative contextual refinements architecture. The architecture evolved from a basic strategies/sub-strategies generation pipeline to a parallel hypothesis generation approach, where prover and disprover agents produce information packets, which are then processed by a refinement agent. The refinement agent performs self-verification of solutions, which was especially effective for Gemini 2.5 Flash versus previous versions. Enhancements included stricter, more IMO-focused prompt engineering, encouraging novel and diverse strategies, hypothesis consideration, and rigorous solution standards.

- One technical point raised is the observation that instead of using large-scale models and compute, the problem could see similar or better results by using smaller models working in parallel on sub-tasks. This questions the efficiency of current AI research strategies in scaling up model and compute sizes for complex problem solving.

- A particular prompt constraint specified in the repo demands strict role separation for hypothesis generation versus problem solving. The architecture required prompts that enforced agents (LLMs) not to solve or verify hypotheses, but to solely generate strategic conjectures, indicating non-trivial behavioral control issues with LLMs and the necessity for explicit, strong task-separation instructions in prompt engineering.

- Deep Think benchmarks (Score: 189, Comments: 69): A benchmark summary (shared via image) highlights the performance of Deep Think, Google’s latest large language model, with notably high scores—especially on the International Mathematical Olympiad (IMO) dataset, indicating significant breakthroughs in mathematical reasoning. Early technical commentary underscores the model’s strong results across mathematical and logic-heavy benchmarks, suggesting it rivals or surpasses state-of-the-art in these domains. Associated visual data points to impressive quantitative improvements, with particular attention to math-related tasks, but more detailed breakdowns would be needed for granular analysis. Top comments express surprise at the outstanding math benchmark scores, particularly for IMO, indicating that Deep Think could set a new standard for automated reasoning. There is anticipation regarding its broader practical capabilities in comparison to contemporaries.

- There’s an explicit call for benchmarking Deep Think specifically against higher-tier models such as “O3-Pro” and “Grok 4 Heavy,” suggesting that direct comparison to standard or base versions is insufficient for accurate assessment of performance in this context.

- The math benchmark scores for Deep Think are noted as exceptionally strong, implying that the model may have distinct capabilities or optimizations in mathematical reasoning tasks, which could differentiate it in technical or academic applications.

- One technical perspective highlights that for a new model to be considered relevant, it must outperform existing leading models in at least a few benchmark areas—in addition to aspects like cost and convenience—underscoring the highly competitive nature of LLM benchmarks.

- Damn Google cooked with deep think (Score: 378, Comments: 127): The post appears to reference a new feature or capability from Google called ‘deep think’, possibly an AI-powered tool or model. The image (not viewable) likely shows a screenshot of this feature in action and captures its user interface or pricing information. The top comments point out that the feature is paywalled behind a $250 per month subscription tier, indicating significant cost and possible limitations on general access. There is also a question about whether it is available to ‘Ultra subscribers’, suggesting different product access levels in Google’s offerings. Commenters criticize the steep $250/month paywall and discuss the rollout strategy, implying Google’s selective or high-cost approach to advanced AI capabilities. One user speculates that the feature’s timing may be significant in response to moves by competitors.

- Multiple commenters highlight that Deep Think (presumably a new AI capability or model from Google) is currently locked behind an Ultra subscription, which reportedly costs $250/month, severely restricting access to only high-paying users or organizations. This paywall raises questions about the democratization of advanced AI tools compared to offerings from other providers.

- Technical discussion centers on the limited availability: some ask whether the new feature is live for all Ultra subscribers or if there are further restrictions or rollout limitations, suggesting a possible phased or invite-only access model.

- There is speculation on release timing, implying strategic alignment by Google to coincide with competitive events or announcements, though no benchmarks or technical performance details are discussed in the initial comments.


- Gemini 2.5 Deep Think rolling out now for Google AI Ultra (Score: 191, Comments: 64): Google has begun rolling out Gemini 2.5 Deep Think for its ‘AI Ultra’ tier, aiming to offer significantly improved reasoning and context retention capabilities. The rollout appears limited, with reports that only a small number of prompts per day currently leverage the new model—an apparent bottleneck in deployment or resource allocation. Top comments express frustration at the limited availability of the new model (few prompts/day), and dissatisfaction with Google AI’s refund policy for subscriptions, suggesting user support and access scalability are ongoing issues.

- Users note that Gemini 2.5 Deep Think for Google AI Ultra currently limits the number of prompts per day, which impacts usability for heavy users and contrasts with the core offering of significant cloud storage capacity. This limitation is a key technical constraint for those seeking consistent access to advanced models.

- There is discussion about subscription and refund policies, emphasizing user frustration when a model update (Gemini 2.5 Deep Think) is released right after a non-refundable subscription cancellation. This highlights the importance of transparent release timelines and refund processes for AI model rollouts.

- Oh damn Gemini deep think is far better than o3 ! Wen gpt 5?? (Score: 162, Comments: 51): The image appears to compare the performance of Google’s Gemini Deep Think with OpenAI’s o3, with the post author expressing surprise at Gemini’s superior performance and asking when GPT-5 will be released. Commenters note that a fair comparison should use o3-pro as the baseline, and raise skepticism about Gemini’s capabilities in practical question-answering and coding tasks. The context suggests the image may show benchmark results or qualitative comparisons between the models, possibly to promote Gemini’s Ultra tier. A key debate in the comments centers on the fairness of comparing Gemini Deep Think to the standard o3 instead of o3-pro, and on the real-world relevance of Gemini’s claimed superiority in coding and question-answering. Some users express skepticism about Google’s advancements and maintain a preference for OpenAI’s models, highlighting anticipation for GPT-5’s release.

- Commenters note that Gemini Deep Think should be compared against o3-Pro rather than the base o3, as Pro is the more direct competitor in benchmarks and capability. Several users stress that meaningful performance discussions require comparing matching tiers (i.e., premium versions).

- One user critiques Gemini Deep Think’s real-world utility for question answering and agentic coding, claiming it does not outperform o3-Pro in those areas and indicating they’ve downgraded back. There is skepticism about the practical improvements delivered by Gemini’s Ultra tier as well.

- Comparative technical discussion also mentions that Gemini Deep Think is purportedly not better than Grok 4 Heavy on HLE (the Humanity’s Last Exam benchmark), and that its performance may only match o3-Pro, implying parity rather than meaningful superiority in major practical tasks.

- Gemini 2.5-pro with Deep Think is the first model able to argue with and push back against o3-pro (software dev). (Score: 156, Comments: 40): The post reports that Gemini 2.5-pro with Deep Think (Google) is the first LLM able to robustly challenge and analytically push back against claims from o3-pro (OpenAI), particularly in technical software development tasks involving complex reasoning. In a test case involving npm package selection—where o3-pro suggested a complicated workaround to a deprecated package’s vulnerability—Gemini 2.5-pro correctly recommended a safer, simpler alternative and, when presented with o3-pro’s counterargument (disguised as a human’s suggestion), offered a detailed, critical rebuttal focused on root cause analysis and sound package choice. This behavior contrasts with earlier models, which typically acquiesce to o3-pro’s arguments, indicating Gemini’s improved adversarial and debate capabilities. Commenters encourage rigorous, diverse testing, referencing notable mathematical challenges (e.g. the Latin Tableau Conjecture) and suggesting ensemble approaches (e.g. MCP with all major reasoning LLMs voting on solutions) for benchmarking adversarial reasoning and mathematical proof generation across top models.

- One user highlights Gemini 2.5-pro’s ability to tackle unsolved mathematical problems, such as the Latin Tableau Conjecture (LTC), arguing that its performance is at or above IMO level and wants it rigorously tested against detailed mathematical prompts. Specific known computational boundaries are noted (verification up to 12x12 Young diagrams), along with references to combinatorial mathematics literature and a challenge to provide mechanizable proofs or concrete counterexamples.

- Another technically insightful suggestion proposes running all top-tier reasoning models (Claude, Gemini, GPT-4, etc.) in parallel on complex tasks and having them vote on solutions, pointing out that such ensemble approaches could push the quality of results but would be expensive—potentially requiring enterprise resources to implement.

- A limitation of Gemini 2.5-pro’s Deep Think mode is raised: users are currently limited to 10 uses per day, which hinders comprehensive or iterative testing compared to o3-pro, which allows more extensive, less restricted interaction; this affects practical productivity in research or benchmarking settings.
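The ensemble idea in the second comment can be sketched as simple majority voting over each model's final answer. This is a minimal illustration under assumptions: the model names are placeholders, answers are compared after trivial normalization (strip and lowercase), and a strict-majority threshold decides whether a winner is accepted. Proof-style outputs would realistically need a judge model or semantic comparison rather than string equality, and cost grows linearly with the number of models queried.

```python
from collections import Counter


def majority_vote(answers: dict[str, str], threshold: float = 0.5):
    """Return (winning_answer or None, share of votes for the top answer).

    `answers` maps a (hypothetical) model name to its final answer string.
    A winner is only returned if its vote share strictly exceeds `threshold`.
    """
    normalized = [a.strip().lower() for a in answers.values() if a and a.strip()]
    if not normalized:
        return None, 0.0
    top_answer, votes = Counter(normalized).most_common(1)[0]
    share = votes / len(normalized)
    return (top_answer if share > threshold else None), share


ballots = {"model_a": "42", "model_b": " 42", "model_c": "41"}
print(majority_vote(ballots))
```

Returning the vote share alongside the winner makes it easy to escalate low-agreement cases to a human or a more expensive model instead of silently accepting a split decision.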

2. WAN 2.2, Flux Krea, and Current Text-to-Image/Video Model Comparisons

- While as a video model it’s not as special, WAN 2.2 is THE best text2image model by a landslide for realism (Score: 478, Comments: 138): WAN 2.2 is highlighted as a leading text-to-image (T2I) model, outperforming alternatives like Flux and Chroma in realism, texture detail, and minimal censorship, and demonstrates synergistic performance when combined with Instagirl 1.5. The OP notes underwhelming performance and stability for video generation compared to 2.1, but exceptional results in T2I tasks, especially across noise levels. Linked Civitai examples demonstrate its output fidelity. Commenters contend OP’s video model criticism is likely due to suboptimal settings or reliance on “speed up loras”, asserting WAN 2.2 is highly competitive as a video model given optimal configuration, and highlight its performance for both free and open source use cases.

- Users highlight that WAN 2.2 achieves remarkable photorealism in text-to-image tasks, provided that default workflows are adjusted: specifically, they caution against using speed-up LoRAs, which may degrade output to WAN 2.1 levels, stressing the need to tune model settings for optimal results.

- There is a technical debate about the significance of WAN 2.2’s improvements as a video model: some users argue that the upgrade represents a substantial leap for open-source models in video generation, while others suggest the claimed improvements are overstated or inadequately demonstrated without robust benchmarks or comparisons.

- A dissenting technical perspective questions the model’s realism in output, implying that despite positive community perception, generated images still fall short of true photographic authenticity and may exhibit typical synthetic artifacts seen in other models.

- Pirate VFX Breakdown | Made almost exclusively with SDXL and Wan! (Score: 529, Comments: 49): The post details a professional VFX workflow using generative AI tools: reference frames were created from stills using SDXL (for improved ControlNet integration), actor segmentation employed both MatAnyone and After Effects’ rotobrush (noted for better hair masking), and the backgrounds were replaced with ‘Wan’, which has been optimized for high-quality video inpainting. The pipeline demonstrates seamless integration of multiple AI models for compositing and background replacement tasks, highlighting tangible improvements in video post-production efficiency and realism. Commenters emphasize the value of professional, non-trivial AI use in creative industries and express interest in deeper process disclosure, contrasting it with less technical AI applications.

- A 3D artist expresses strong interest in a detailed process breakdown, indicating there’s technical curiosity about how SDXL and Wan are specifically used in the VFX pipeline—this suggests relevant insights could include workflow integration, steps taken, and how these tools compare to traditional 3D workflows.

- Another commenter highlights how professional filmmakers using SDXL and Wan for VFX can produce film-quality scenes on a low budget, implying these models substantially lower production costs and raise expectations for affordable, high-quality digital content.

- Flux Krea can do more then just beautiful women! (Score: 397, Comments: 88): The post discusses the capabilities of the AI generative model Flux Krea, highlighting that it can generate diverse outputs beyond the common ‘beautiful women’, including complex scenarios such as ‘ingame screenshots’ and various ‘war pictures’, including those with ‘gore’. The model is contrasted with Wan 2.2, suggesting Flux Krea specializes in a broader or different range of visual content types, particularly those resembling ‘dashcam’ and ‘war journalist’ photography. Comments reference concerns about misinformation/propaganda potential due to realistic image generation, diversity in output style (e.g., tanks, villages, Minecraft themes), and the sensitivity of certain generated content (‘not safe for Warzone’), reflecting debates on AI image generation ethics and risks.

- A commenter references “village and minecraft generations,” suggesting Flux Krea’s capabilities in generating complex and varied scene layouts beyond portraits—a technical testament to its dataset diversity and semantic composition control.

- Another user humorously notes, “We’re going to need a bigger video card,” which indirectly references the computational intensity and high GPU memory requirements typical of running large, advanced image generation models like Flux Krea, especially for higher resolution or batch inference tasks.

- Flux Krea is a solid model (Score: 228, Comments: 48): The post reviews the Flux Krea image generation model, highlighting native image output at 1248x1824 resolution, using the Euler/Beta sampler and a CFG (Classifier-Free Guidance) of 2.4. The model demonstrates improved facial diversity and chin structure compared to previous versions like Flux Dev, though outputs are still noticeably artificial. Commenters note a persistent pale yellow tint and excessive freckles in outputs, suggesting potential issues with training data or style bias (possibly Unsplash-based). There’s also critique regarding a lack of sample diversity in shared outputs, suggesting evaluations should span landscapes, animals, and architecture for more comprehensive model assessment.

- Users report a consistent issue with a pale yellow tint in Krea model outputs, indicating a possible training data bias or color processing problem. Several commenters specifically compare this to outputs from models trained on Unsplash data, speculating similar source influences.

- Attempts to fine-tune Krea (e.g., using LoRA) to counteract the tint and excessive freckles have been met with limited success, suggesting that artifacts are deeply baked into the model’s learned representations, making post-training correction challenging.

- Some users note that Krea’s generated faces resemble those from SD1.5, implying similarities in data distribution or architectural approach, and point out a lack of output variety (e.g., limited non-human subjects), which raises questions about the model’s generalization beyond typical human close-ups.

- Wan 2.2 Text-to-Image-to-Video Test (Update from T2I post yesterday) (Score: 230, Comments: 49): The post presents a test of Wan 2.2’s Image-to-Video capabilities using prior text-to-image outputs, running at native 720p. The author emphasizes preservation of detail and realism (notably human figures) with minimal camera motion and no post-processing, and notes upscaling to 1080p was solely for improved Reddit compression. This builds on previous text-to-image comparisons with Flux Krea, showcasing the model’s output consistency across modalities. Top commenters consider this demonstration as the strongest empirical showcase of Wan 2.2’s generative video capabilities to date, specifically praising the accurate ‘physics’ and dynamic realism in the converted video, which suggests advances in model architecture or training regarding temporal and spatial coherence.

- One user highlights improvements in workflow efficiency when using Wan 2.2 as opposed to previous approaches: in Wan 2.1, their process entailed generating multiple 1080p stills per scene, selecting the best frames, then upconverting to video (720p) using img2video with motion-enhanced prompts. This reportedly yields superior detail and saves time compared to direct text-to-video generation, which often produces lower-quality results.

- The model’s handling of ‘physics’ is praised—implying enhancements in temporal consistency or object dynamics within generated video compared to earlier models. Users note the realism of scene and object movement, suggesting advances in video synthesis beyond just frame interpolation.

- A comparison is drawn to ‘Veo 3,’ implying Wan 2.2 delivers performance or workflow capabilities reminiscent of Google’s advanced video models, but presumably with more accessible or homebrew technology.

- Wan2.2 I2V 720p 10 min!! 16 GB VRAM (Score: 159, Comments: 23): The OP reports running the merged Wan2.2 I2V model (phr00t’s 4-step all-in-one merges) at 1280x720 (81 frames, 6 steps, CFG=2) on a 16 GB VRAM card using the Kijai wrapper workflow, with generation taking 10-11 minutes and moderate system RAM usage (~25-30 GB, compared to ~60 GB with the standard Kijai wrapper workflow). The workflow avoids out-of-memory (OOM) errors when the standard dual-model setup cannot be used. Image quality is reported as lower, though not dramatically so, than the official Wan2.2 site output (1080p, 150 frames at 30 fps), while speed and efficiency are much better and quality is a substantial step up from 2.1. Full workflow details are shared via Pastebin, and comparisons are made to VEO3. Top comments discuss persistent OOM issues in Kijai wrapper/Comfy workflows, with some users noting the workflow sometimes loads both models simultaneously instead of sequentially, leading to VRAM overflow and severe performance drops on the low-noise pass. Hardware-specific generation speed is also discussed, e.g., a 4070 Ti Super needing 15-20 min for 5 s at 480p, suggesting substantial variation based on VRAM and workflow specifics. An uncompressed video showcase is linked for inspection.

- Users report significant out-of-memory (OOM) issues when running the Kijai workflow with block swapping in Comfy; despite enabling block swapping, sometimes both models are loaded simultaneously instead of sequentially, causing VRAM overflow into system RAM. This leads to acceptable performance only during high-noise sampling (when VRAM can hold the entire model), but performance degrades badly, sometimes to a complete stall, during low-noise steps due to reliance on slower system RAM.

- Performance observations detail that an RTX 4070 Ti Super can take 15-20 minutes to render a 5-second 480p clip, highlighting substantial computational demands and suggesting dramatically longer times for higher resolutions or longer durations. Another user with an RTX 5060 Ti 16GB and matching system RAM experiences hard crashes, indicating possible incompatibilities or insufficient resources despite seemingly meeting the minimum VRAM.

- There is mention of uncompressed output video, but the primary technical focus is on model adaptability and severe resource requirements or instability across different hardware, underscoring the need for further workflow optimization or clearer documentation regarding performance and hardware compatibility.

- Testing WAN 2.2 with very short funny animation (sound on) (Score: 143, Comments: 16): The post presents a test of WAN 2.2 for text-to-video (T2V) and image-to-video (I2V) continuation, with output rendered at 720p. The poster notes that while artifact issues persist in version 2.2, prompt following has improved. There are no reported changes in model architecture, and artifact reduction remains an ongoing limitation. A commenter asks whether the I2V continuation was achieved by starting from the last frame of the previous WAN 2.2 output, noting that simply increasing the frame count degraded video quality further. Another comment humorously notes prompt adherence issues, suggesting generation fidelity is still imperfect.

- One commenter inquires about animation continuity, asking if the next video starts from the last frame of the previous one. They note that when they attempted to render with more frames, the results actually looked worse, implying possible model or rendering limitations when dealing with frame sequences.

3. OpenAI & AI Industry Model/API Rumors and Announcements

- GPT-5 is already (ostensibly) available via API (Score: 581, Comments: 191): A Reddit user reports access to a model labeled `gpt-5-bench-chatcompletions-gpt41-api-ev3` via the OpenAI API, suggesting it may be an ostensible early release of GPT-5. The model’s naming convention indicates adaptation to the GPT-4.1 API (for backwards compatibility), though it may introduce new API parameter constraints; as one commenter noted, “it only supports temp=1 and modern parameters”. Linked logs and screenshots show API activity and OpenAI Console output before OpenAI disabled access. Commenters validated its capability with creative tasks, producing a detailed SVG image and a feature-rich HTML/CSS/JS landing page in a single shot, and reported qualitative improvements over GPT-4/4.1, especially in creative and structural code generation.

- Users report that the API (allegedly GPT-5) demonstrates strong capabilities in both creative coding and design generation, such as producing a consistent, visually polished iGaming landing page in a single completion, meeting detailed prompt requirements (responsive layout, modern CSS, JavaScript interactivity with no frameworks, all inline assets). This level of output (“oneshotting”) suggests a notable improvement over GPT-4, especially in specification-following and code quality.

- Technical details note that the API only supports `temperature=1` and modern parameter sets, which could indicate an updated or experimental deployment compared to earlier GPT-4 endpoints. This parameter limitation may itself be circumstantial evidence that it’s a distinct model or experimental branch.

- There is skepticism and semantic debate about whether the model is truly GPT-5, highlighting that OpenAI’s naming conventions are not always transparent: the model is described as ‘supposedly’ or ‘ostensibly’ GPT-5, meaning users cannot independently verify its underlying architecture and are relying on external indicators (e.g., prompt performance, API metadata) rather than a formal announcement.

- OpenAI’s new open source models were briefly uploaded onto HuggingFace (Score: 182, Comments: 37): The post discusses a leak where OpenAI’s new open-source models, reportedly with parameter sizes of 20B and 120B, were briefly uploaded to HuggingFace. The most notable technical details from the image and comments are the model hyperparameters: 36 hidden layers, 128 experts with 4 active per token (Mixture-of-Experts architecture), vocab size 201,088, hidden/intermediate size 2,880, 64 attention heads, 8 key-value heads, an initial context length of 4096, and specific rotary position embedding (RoPE) configurations (e.g., rope_theta 150000, scaling_factor 32). This hints at a large-scale, expert-mixture transformer model likely optimized for efficiency and performance at large parameter counts. Key technical debate centers on whether the 20B parameter model effectively supports tool calling and code use cases, indicating community interest in its practical integration capabilities rather than just size. There is also speculation on model architecture versus other open-source models.

- A user shares a detailed architecture breakdown of the model, indicating parameters such as `num_hidden_layers: 36`, `num_experts: 128` (suggesting a Mixture-of-Experts architecture), `experts_per_token: 4`, `hidden_size: 2880`, and attention configuration details (e.g., `num_attention_heads: 64`, `num_key_value_heads: 8`, `sliding_window: 128`, and `initial_context_length: 4096`). These details are relevant for understanding the scale and structure of the model.

- The discussion references two model sizes, 20B and 120B parameters, implying both a large-scale and a more accessible model, with users expressing interest in using the 20B variant for tool calling and code tasks, indicating practical consideration of hardware requirements versus capability.

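To make the leaked `num_experts: 128` / `experts_per_token: 4` settings concrete, here is a minimal top-k routing sketch in plain Python. It is an illustration, not OpenAI's implementation: the experts are toy functions, and it assumes the gate weights are a softmax over only the selected experts' router logits (some MoE designs apply the softmax over all experts first). The key point is that each token touches just 4 of 128 experts (about 3% of the expert weights in a layer). Separately, 64 attention heads over 8 key-value heads means each KV head is shared by a group of 8 query heads (grouped-query attention).

```python
import math

NUM_EXPERTS = 128       # num_experts from the leaked config
EXPERTS_PER_TOKEN = 4   # experts_per_token from the leaked config


def route_token(router_logits, k=EXPERTS_PER_TOKEN):
    """Pick the top-k experts for one token and return (index, weight) pairs.

    Assumption: weights are a softmax over the k selected logits only.
    """
    if len(router_logits) < k:
        raise ValueError("need at least k router logits")
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, router_logits, experts, k=EXPERTS_PER_TOKEN):
    """Weighted sum of the outputs of only the k selected experts."""
    out = [0.0] * len(x)
    for idx, weight in route_token(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Toy experts: expert i just scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(NUM_EXPERTS)]
logits = [float(i % 10) for i in range(NUM_EXPERTS)]
print(route_token(logits))
print(moe_forward([1.0, 2.0], logits, experts))
```

In a real model the router logits come from a learned linear layer over the token's hidden state, and the unselected experts are simply never evaluated for that token, which is where the compute savings come from.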

- OpenAI are preparing to launch ChatGPT Go, a new subscription tier (Score: 230, Comments: 70): The image (https://i.redd.it/c4ouejprhdgf1.jpeg) presents a teaser or leak regarding a new OpenAI product called ‘ChatGPT Go’, implied as a forthcoming subscription tier. The main technical context, corroborated by comments, is speculation that this tier will fit between the Free and Plus offerings, purportedly priced at $9.99/month, potentially introducing a ‘pay as you go’ billing model—suggesting greater flexibility or usage-based pricing compared to current plans. The community is attempting to infer both features and pricing from the image and announcement leak. Discussion in comments centers on price speculation (from $10 to $2,000/month) and the possible shift to a usage-based subscription (‘pay as you go’)—but no concrete technical details or official benchmarks have been confirmed yet.

- Users speculate about the pricing and feature differentiation of “ChatGPT Go,” suggesting it may fill the gap between the free and Plus tiers, possibly at a $9.99/month price point, and raising the question of whether its feature set will be closer to the more limited free tier or the enhanced GPT Plus tier.

- One comment discusses the potential introduction of ads to the free tier as an offset for new subscription models, which would alter the current monetization strategy of OpenAI’s ChatGPT offerings. This highlights a broader trend in SaaS monetization where free services are subsidized by ads or tiered features.

- There is a question regarding resource allocation and access, with one user explicitly asking if “Go” will provide fewer capabilities or model access compared to GPT Plus, indicating interest in technical limitations or distinctions between the subscription levels (e.g., availability of GPT-4, limits on usage, or priority access during high-load periods).

- Anthropic just dropped 17 videos to watch (Score: 761, Comments: 139): Anthropic has released 17 new YouTube videos (approx. 8 hours total) via their official channel, potentially offering detailed technical insights into their latest research, model demos, safety implementations, or product updates. This structured video drop may suggest a coordinated knowledge-sharing or marketing push targeting both developers and the wider AI community. The most technically relevant comment highlights an issue with YouTube’s video watch rate limiting, which could hinder researchers’ ability to view large sets of content quickly. Another commenter mentions leveraging third-party summarization tools (e.g., Comet AI browser) for efficient information extraction, alluding to existing bottlenecks in manual video consumption.

- A commenter highlights that Anthropic had an internal usage leaderboard to track employees’ token consumption, revealing competitive non-research use among staff—one employee admitted leading in token usage without contributing code or direct company value. This is contrasted with criticism directed at external users for overuse, raising questions about the rationale and messaging behind Anthropic’s recent usage-limit policy.

- There is an expressed disappointment that Anthropic’s new videos lack deep technical detail, with a skeptical query about whether the company is shifting away from emphasizing research-centric content, possibly influenced by industry trends led by figures like Elon Musk.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Flash Preview 05-20

Theme 1. Frontier LLM Developments & Speculation

- GPT-5 Mystery Deepens: Panic Drop or Polite Improvement? Speculation surrounds GPT-5’s release, with views split on whether it will be a full “panic drop” due to scaling limits or a smaller, more focused model. One user briefly spotted GPT-5 in the API before its swift removal, fueling further speculation about its eventual unified, omnimodal nature.

- Horizon Alpha Rises: Free Model Crushes Paid LLMs! Horizon Alpha outperforms paid LLMs via the OpenRouter API, delivering perfect one-shot code in custom programming languages. Users laud its superior shell use and task list creation in orchestrator mode, with some speculating it’s an OpenAI-style 120B MoE or 20B model.

- Gemini’s Generation Gets Glitchy, Pricing Gets Punished! Some members report Gemini’s repetitive behavior and note video limits dropped from 10 to 8. The community widely criticizes Gemini Ultra’s $250/month plan, which imposes a meager 10 queries per day, calling it a “scam” and “daylight robbery.”

Theme 2. Open-Source & Local LLM Optimization

- Qwen Models Push Quantization Limits, Codeium Flies! Discussions focus on optimal quantization for Qwen3 Coder 30B (Q4_K_M gguf slow, UD q3 XL for VRAM) and issues with tool calling. Qwen3-Coder now runs at approximately 2000 tokens/sec on Windsurf, fully hosted on US servers.

- Unsloth Finetuning Unleashes New Speed and Power! Unsloth now supports GSPO (an update to GRPO), which works as a TRL wrapper, and dynamic quantization can be replicated with the small `quant_clone` application. Members are exploring continuous training of LoRAs and have used Unsloth to build a Space Invaders game.

- LM Studio: Offline Dreams Meet Online Nightmares? Users anticipate image-to-video prompt generation and image attachments for offline use, preferring it over cloud-based alternatives like ChatGPT. However, users are wary of connecting to the LM Studio API across networks because its security has not been verified.
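For readers unfamiliar with GSPO (mentioned in the Unsloth item above): as described in the Group Sequence Policy Optimization paper from the Qwen team, its key change relative to GRPO is to compute the importance ratio per sequence (a length-normalized geometric mean of the per-token ratios) and clip that, rather than clipping token-level ratios, while keeping GRPO-style group-normalized advantages. The plain-Python sketch below illustrates that objective for one group of sampled responses; it is not Unsloth's or TRL's implementation, and the clip range `eps` is an illustrative placeholder rather than a recommended setting.

```python
import math


def group_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: standardize rewards within one group of samples."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]


def sequence_ratio(new_logps, old_logps):
    """GSPO ratio: exp of the mean per-token log-ratio, i.e. the geometric
    mean of token ratios, so long and short responses are on the same scale."""
    diff = sum(n - o for n, o in zip(new_logps, old_logps))
    return math.exp(diff / len(new_logps))


def gspo_objective(group, rewards, eps=0.2):
    """Clipped surrogate (to maximize) for one prompt's group of G responses.

    group: list of (new_token_logps, old_token_logps) pairs, one per response.
    """
    advantages = group_advantages(rewards)
    total = 0.0
    for (new_lp, old_lp), adv in zip(group, advantages):
        s = sequence_ratio(new_lp, old_lp)
        s_clipped = min(max(s, 1.0 - eps), 1.0 + eps)
        total += min(s * adv, s_clipped * adv)
    return total / len(group)
```

Because the whole response shares one ratio, a single outlier token cannot push its own update outside the clip range, which is the stability argument GSPO makes against token-level clipping.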

Theme 3. AI Coding & Agent Tooling

- Aider Dominates Code Editing, DeepSeek Delivers Big! Users praise Aider for its superior control and freedom, with one estimating it completed one week of programming work in a single day for just $2 using DeepSeek. Speed comparisons with SGLang and Qwen also show high performance, reaching 472 tokens/s on an RTX 4090.

- AI Agents Branch Out, Go On-Chain! Developers are building on-chain AI agents for trading and governance using Eliza OS and LangGraph, alongside efforts to create an OSS model training script for natural cursor navigation. Discussions also highlight AnythingLLM (via a tweet) for ensuring data sovereignty in agentic systems.

- MCP Tools Level Up: Security, Payments, and JSON Processing! A new security MCP check tool, published on GitHub, seeks feedback, while PayMCP offers a payment layer for MCP servers with Python and TypeScript implementations. A JSON MCP Server, also on GitHub, further aids LLMs in efficiently parsing complex JSON files, saving valuable tokens and context.
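The token savings claimed for the JSON MCP server come from returning only the part of a document the model asks for instead of the whole file. A minimal sketch of that idea, assuming a hypothetical dotted-path query syntax (e.g. `orders.1.total`); it does not reflect the linked server's actual tools or query language.

```python
import json


def extract_path(doc, path):
    """Follow a dotted path such as 'orders.1.total' through dicts and lists."""
    node = doc
    for part in path.split("."):
        if isinstance(node, list):
            node = node[int(part)]  # numeric segment indexes into a list
        else:
            node = node[part]
    return node


def compact_subset(doc, path):
    """Serialize only the requested subtree, without extra whitespace."""
    return json.dumps(extract_path(doc, path), separators=(",", ":"))


doc = {
    "customer": {"name": "Ada", "tier": "pro"},
    "orders": [{"id": 1, "total": 9.5}, {"id": 2, "total": 30.0, "items": ["a", "b"]}],
}
full = json.dumps(doc, indent=2)
subset = compact_subset(doc, "orders.1.items")
print(len(full), "chars for the whole file vs", len(subset), "for the subset")
```

On a real multi-megabyte file the gap between the full dump and the requested subtree is what keeps the model's context free for reasoning.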

Theme 4. Hardware & Performance Benchmarking

- AMD’s MI300X Flexes on Nvidia, GEAKs Out! New MI300X FP8 benchmarks suggest AMD’s MI300X outperforms NVIDIA’s H200 in certain tasks, with performance approaching the B200. AMD also introduced GEAK benchmarks and a Triton Kernel AI Agent, described in the GEAK paper, for AI-driven kernel optimization.

- Nvidia Drivers Go 580.88: Fixes for Fast Motion! Nvidia released driver 580.88 just nine days after 577.00 to fix a potential issue with GPU video memory speed after enabling NVIDIA Smooth Motion. Discussions also cover solving CUDA compiler issues by using `__launch_bounds__` to determine the register count at entry, although `setmaxnreg` is still being ignored.

- Builders Debate Multi-GPU Setups, Eye VRAM Savings! Discussions include recommendations for motherboards like the MSI X870E GODLIKE for dual 3090s, comparing Mac mini M4 to RTX 3070, and exploring the feasibility of partial KV Cache Offload in LM Studio to optimize VRAM usage. Efforts continue on DTensor and basic parallelism schemas, inspired by Marksaroufim’s visualizations.

Theme 5. AI Product Pricing & User Experience

- Perplexity Pro Rolls Out Comet, Glitches on iOS, Goes Free for Millions! Perplexity is slowly distributing Comet Browser invites to Pro users, but iOS image generation faces recurring issues where attached images are not incorporated. Notably, over 300 million Airtel subscribers in India are receiving free Perplexity Pro for 12 months.

- Kimi K2 Turbo Goes Ludicrous Speed, Drops Prices! The Moonshot team announced Kimi K2 Turbo, boasting 4x the speed at 40 tokens/sec with a 50% discount on input/output tokens until Sept 1 at platform.moonshot.ai. A new Moonshot AI Forum also launched for technical discussions, complementing Discord’s “vibin for memes” atmosphere.

- API Errors and Sky-High Costs Plague AI Users! Gemini Ultra’s Deep Think plan faces ridicule for its 10 queries/day limit at $250/month, prompting comparisons to more reasonably priced alternatives. Users also report persistent API errors and timeouts with OpenRouter models like Deepseek v3 free (often overloaded) and the Cohere API.
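The pricing items above reduce to simple arithmetic. This sketch uses the Kimi K2 Turbo promo rates listed in the Moonshot section ($0.30 cached input, $1.20 uncached input, $5.00 output per 1M tokens) and the $250/month, 10 queries/day Deep Think cap. The 30-day month, the example workload, and the roughly 10 tokens/sec baseline implied by "4x the speed at 40 tokens/sec" are our own assumptions.

```python
# Kimi K2 Turbo promo prices as reported (USD per 1M tokens, valid until Sept 1).
TURBO_PROMO = {"input_cached": 0.30, "input_uncached": 1.20, "output": 5.00}


def token_cost_usd(prices, cached_in, uncached_in, out):
    """Dollar cost of a workload, given token counts and per-1M-token prices."""
    return (
        cached_in * prices["input_cached"]
        + uncached_in * prices["input_uncached"]
        + out * prices["output"]
    ) / 1_000_000


def generation_seconds(out_tokens, tokens_per_sec):
    """Wall-clock time to stream a response at a fixed decode speed."""
    return out_tokens / tokens_per_sec


def cost_per_query(monthly_usd, queries_per_day, days=30):
    """Effective price per query if every daily slot is used (30-day month assumed)."""
    return monthly_usd / (queries_per_day * days)


if __name__ == "__main__":
    # Hypothetical workload: 1M cached + 2M uncached input tokens, 0.5M output tokens.
    print(f"Turbo promo cost: ${token_cost_usd(TURBO_PROMO, 1_000_000, 2_000_000, 500_000):.2f}")
    # 40 tok/s is the reported Turbo speed; 10 tok/s is the baseline implied by "4x".
    print(f"1,000 tokens: {generation_seconds(1000, 40):.0f}s vs {generation_seconds(1000, 10):.0f}s")
    print(f"Deep Think: ${cost_per_query(250, 10):.2f} per query")
```

For that hypothetical workload the sketch prints a promo cost of $5.20, 25 s versus 100 s for 1,000 output tokens, and about $0.83 per Deep Think query when all 300 monthly queries are used.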

Discord: High level Discord summaries

Perplexity AI Discord

- Comet Browser Invites Trickle Out: Perplexity is slowly distributing Comet Browser invites, prioritizing Pro users.

- Users report varied wait times, noting that Pro users can share up to 2 invites to speed up the process.

- Perplexity Pro Image Generation Fails on iOS: Users report that Perplexity Pro on iOS repeatedly fails to incorporate attached images during image generation.

- The model summarizes requests without generating images from the attachments, even after starting new chats.

- Airtel India Subscribers Score Free Perplexity Pro: Airtel subscribers in India (over 300 million people) are receiving Perplexity Pro for free for 12 months.

- The promotion is exclusive to Airtel subscribers located in India.

- GPT-5 Release Date: Still Shrouded in Mystery: Speculation surrounds the release of GPT-5, with conflicting views on whether it will be a full release or a smaller, more focused model.

- One user claimed to have briefly seen GPT-5 in the API (source), but it was quickly removed, fueling further speculation.

- Search Domain Filter Confounded: A Perplexity Pro subscriber reported that the search_domain_filter is not functioning as expected despite the feature not being in beta.

- Another member requested a copy of the user’s request for further investigation and assistance.
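The report doesn't include the failing request, so purely as a debugging aid, here is a minimal sketch of assembling a request body with `search_domain_filter`. The `sonar` model name, the endpoint in the comment, and the leading `-` as an exclusion marker reflect our understanding of Perplexity's API rather than anything stated in the thread, so check them against the official docs. The helper also reduces entries to bare domains, on the untested assumption that full URLs with schemes or paths are one way a filter can fail to match.

```python
import json


def normalize_domain(entry: str) -> str:
    """Reduce an entry to a bare lowercase domain, keeping a leading '-' (assumed exclude marker)."""
    exclude = entry.startswith("-")
    raw = entry[1:] if exclude else entry
    if "://" in raw:
        raw = raw.split("://", 1)[1]  # drop the scheme
    host = raw.split("/", 1)[0].lower()  # drop any path
    return ("-" if exclude else "") + host


def build_request(question: str, domains: list, model: str = "sonar") -> dict:
    """Chat-completions body with a search_domain_filter (model name is an assumption)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "search_domain_filter": [normalize_domain(d) for d in domains],
    }


if __name__ == "__main__":
    body = build_request("What changed in GSPO?", ["https://arxiv.org/abs/", "-reddit.com"])
    # POST this JSON to the chat completions endpoint (assumed:
    # https://api.perplexity.ai/chat/completions) with your API key.
    print(json.dumps(body, indent=2))
```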

Unsloth AI (Daniel Han) Discord

- GPT-5: Panic Drop or Polite Improvement?: Members are speculating whether GPT-5 will be a panic drop driven by OpenAI’s limits in scaling and by diminishing returns from Chain of Thought (CoT).

- Some claimed CoT is a complete dead end and proposed feeding the model’s vector output directly back into the network instead of using tokens for thinking.

- Qwen3 tests quantization limits: Discussions revolve around the best quantization for Qwen3 Coder 30B, with reports of the Q4_K_M GGUF being slow in Ollama, while others prefer UD Q3 XL for VRAM savings.

- One member runs the April Qwen3-30b-a3b model at 40k in vLLM on a 3090 24/7, awaiting a 4-bit AWQ version for the coder model.

- Unsloth now supports GSPO: After Qwen proposed GSPO as an update to GRPO, members clarified that GSPO already works in Unsloth and it is a wrapper that will auto-support TRL updates.

- Although GSPO is slightly more efficient, members did not report any significant performance gains.

- VITS Learns to Breathe: A member training a VITS checkpoint overnight shared that model quality depends on epochs and dataset quality, and VITS excels at speaker disentanglement.

- They also found that VITS encodes raw audio into a latent space for realistic reconstruction and, with annotation, can learn subtleties like breathing at commas; separately, they ran into memory issues on iOS.

- Dynamic Quantization gets Quant Clone: A member who wanted to replicate Unsloth’s dynamic quantization on their own finetunes created a small application that quantizes finetunes the same way.

- A user reported high refusal rates in their Gemini finetunes, and found Gemini to be quite obnoxious in that regard.
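On the GSPO point above: as we understand the Qwen proposal, GSPO keeps GRPO's group-relative advantages but clips one length-normalized importance ratio per response instead of one ratio per token. The toy pure-Python sketch below contrasts the two surrogate objectives on hand-made log-probabilities. It omits GRPO's KL penalty, uses an illustrative clip range, and is not the Unsloth or TRL implementation.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-8):
    """Group-relative advantages: (reward - group mean) / group std."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_term(ratio, adv, clip):
    """PPO-style pessimistic surrogate for one ratio/advantage pair."""
    clipped = min(max(ratio, 1 - clip), 1 + clip)
    return min(ratio * adv, clipped * adv)


def token_ratios(logp_new, logp_old):
    """GRPO: one importance ratio per token."""
    return [math.exp(n - o) for n, o in zip(logp_new, logp_old)]


def sequence_ratio(logp_new, logp_old):
    """GSPO: one length-normalized ratio per sequence (geometric mean of token ratios)."""
    diffs = [n - o for n, o in zip(logp_new, logp_old)]
    return math.exp(sum(diffs) / len(diffs))


def grpo_objective(group, rewards, clip=0.2):
    """group: list of (logp_new, logp_old) token log-prob lists, one pair per response."""
    advs = group_advantages(rewards)
    per_seq = []
    for (new, old), adv in zip(group, advs):
        terms = [clipped_term(r, adv, clip) for r in token_ratios(new, old)]
        per_seq.append(sum(terms) / len(terms))
    return sum(per_seq) / len(per_seq)


def gspo_objective(group, rewards, clip=0.2):
    """Same inputs, but clipping acts on each whole sequence's ratio."""
    advs = group_advantages(rewards)
    terms = [clipped_term(sequence_ratio(new, old), adv, clip)
             for (new, old), adv in zip(group, advs)]
    return sum(terms) / len(terms)


if __name__ == "__main__":
    # Two responses to one prompt; the first drifted on one token.
    group = [([math.log(4.0), 0.0], [0.0, 0.0]), ([-0.5], [-0.5])]
    rewards = [1.0, 0.0]
    print(grpo_objective(group, rewards), gspo_objective(group, rewards))
```

In this toy group, GRPO clips the drifted token's ratio of 4 down to 1.2 and averages it with the other token's 1.0, while GSPO clips the single sequence ratio of 2 down to 1.2, so the two objectives come out at roughly 0.05 and 0.1.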

LMArena Discord

- Arena Enhancements Aim to Assist: Members suggested adding buttons for Search, Image, Video, and Webdev Arena to boost visibility, and also suggested adding tooltips to the leaderboard explaining how Rank, CI, and Elo are determined, sharing a concept image.

- The goal is to help users navigate the platform and understand its ranking metrics.

- Data Concerns: Personal Info Peril: A user raised concerns about accidentally including personal information in published prompts and asked for the ability to remove prompts.

- A member responded that such examples should be DM’d to them for escalation and said they had shared these concerns with the team.

- Gemini’s Generation Gets Glitchy: Some members noted that Gemini exhibited repetitive behavior, while another asked whether Gemini 2.5 Flash fixed the issue. One user noted that video limits had dropped from 10 to 8 and urged others to use the video generation arena quickly.

- Sentiment was split between members experiencing glitches and those seeing consistent performance.

- DeepThink Debut Disappoints?: With Gemini 2.5 Deep Think released for Ultra subscribers, members are wondering whether it is worth it given the 10 RPD (requests per day) limit.

- Members called it a scam and daylight robbery, saying it is just a version rushed out because of the imminent GPT-5 release.

- Veo 3 Visuals Victory: Veo 3 Fast and Veo 3 are now in the Video Arena with new image-to-video capabilities, including audio.

- The community can now create videos from images using the new `/image-to-video` command in the video-arena channels, with voting open for the best videos.
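For the leaderboard tooltip suggestion in the LMArena discussion, here is an illustrative sketch of how Rank, CI, and Elo-style scores can be produced: a textbook online Elo update over pairwise votes, a bootstrap confidence interval from resampled battles, and ranks sorted by rating. LMArena's own pipeline is not described in the discussion (it has publicly described fitting a Bradley-Terry model rather than running online Elo), so the K-factor, base rating, and resampling count here are arbitrary assumptions.

```python
import random


def expected_score(r_a, r_b):
    """Probability that A beats B under the Elo logistic curve (400-point scale)."""
    return 1 / (1 + 10 ** ((r_b - r_a) / 400))


def elo_ratings(battles, k=4.0, base=1000.0):
    """battles: (model_a, model_b, score_a) with score_a = 1 (A wins), 0.5 (tie), 0 (B wins)."""
    ratings = {}
    for a, b, score_a in battles:
        r_a, r_b = ratings.get(a, base), ratings.get(b, base)
        delta = k * (score_a - expected_score(r_a, r_b))
        ratings[a], ratings[b] = r_a + delta, r_b - delta  # zero-sum update
    return ratings


def bootstrap_ci(battles, model, rounds=200, seed=0, **elo_kwargs):
    """Approximate 95% interval for one model's rating by resampling battles."""
    rng = random.Random(seed)
    samples = []
    for _ in range(rounds):
        resampled = [rng.choice(battles) for _ in battles]
        ratings = elo_ratings(resampled, **elo_kwargs)
        samples.append(ratings.get(model, elo_kwargs.get("base", 1000.0)))
    samples.sort()
    return samples[int(0.025 * (rounds - 1))], samples[int(0.975 * (rounds - 1))]


def rank(ratings):
    """Rank 1 = highest rating; ties broken by name so output is deterministic."""
    order = sorted(ratings, key=lambda m: (-ratings[m], m))
    return {m: i + 1 for i, m in enumerate(order)}


if __name__ == "__main__":
    votes = [("model-x", "model-y", 1), ("model-y", "model-x", 0.5), ("model-x", "model-y", 1)] * 10
    scores = elo_ratings(votes)
    print(rank(scores), bootstrap_ci(votes, "model-x"))
```

A tooltip built on this would read: Elo is the rating after replaying all votes, CI is the spread of that rating across resampled vote sets, and Rank is the position after sorting by rating.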

Cursor Community Discord

- Vibe Coding Sparks GitHub Needs: A member asked whether GitHub is necessary for background agents, exclaiming “this thing is sick” alongside an attached image, which sparked curiosity about vibe coding setups.

- Another user, having spent $40 on prompts, sought advice on optimizing their Cursor setup, reflecting a common interest in efficient configuration.

- Cursor Freezing Bug Creates Frustration: A user reported frequent machine freezes every 30-60 seconds after an hour of chat use, indicating a persistent Cursor freezing bug.

- A Cursor team member recommended posting the issue on the Cursor forum, highlighting the official channels for bug reporting and assistance.

- Model Spending Compared to Claude Pro: Users debated the pricing of Cursor versus Claude Pro, with one stating their preference for the cheapest plans and best models, favoring Claude’s $200 plan.

- Another user cautioned about escalating costs, reporting spending $600 in 3 months, emphasizing the need for cost management.

- Horizon Alpha Experience Divides Users: One user described their personal experience with Horizon Alpha as “a bit underwhelming,” suggesting mixed reactions to the new model.

- Conversely, another user declared “cursor is the best app i have ever seen,” underscoring the subjective nature of user experiences.

- Referral Program Requested for Cursor: Members have asked about a referral program for Cursor, with one user claiming to have onboarded “at least 200+ people by now sitting in discords lmao,” indicating significant community-driven adoption.

- A link to the Cursor Ambassador program was shared, providing an alternative avenue for rewarding community contributions.

OpenAI Discord

- Function Calling APIs Trump XML Workarounds: Native function-calling APIs offer inherent advantages over structured XML, which is often used as a workaround when a model like Qwen lacks native tool calling.

- Inline tool calls maximize interoperability for coding models like Qwen, despite minor inefficiencies.

- Zuckerberg’s AI Sparks Bio-Weapon Concerns: Mark Zuckerberg’s AI superintelligence initiative raised concerns about potential bio-weapon creation, and one member warned against releasing superintelligence to the public.

- Members also expressed concern that controlling minds with fake users and carefully crafted language could be more dangerous than bio-weapons.

- GPT-5 Faces Delay, Grok 4 Takes the Crown?: Rumors suggest GPT-5 is delayed because it cannot surpass Grok 4, though OpenAI plans to combine multiple products into GPT-5.

- Clarification was given that GPT-5 will be a single, unified, omnimodal model.

- Horizon Alpha Outshines Paid LLMs: Horizon Alpha is outperforming paid LLMs via the OpenRouter API, delivering perfect one-shot code in custom programming languages.

- Its shell use and task-list creation in orchestrator mode are superior to other models, though some speculate “it could always be something turbo weird we’re not thinking of like codex-2.”

- Large Context Windows Spark Debate: Despite Gemini’s 1-million-token context window, members found legacy codebase issues were better solved with Claude and ChatGPT, sparking debate on whether large context windows are overrated.

- Some prefer models with smaller context windows and better output, while others insist larger windows are crucial for agentic applications to remember and weave in far‑back details automatically.
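To illustrate the function-calling point from the OpenAI Discord: when a model emits tool calls inline in its text, the client must find, parse, and validate them itself. This sketch assumes a hypothetical format where each call is a JSON object wrapped in `<tool_call>` tags (similar in spirit to tags some open-model chat templates use, but not a claim about any specific model) and converts valid calls into the `{"type": "function", ...}` shape that native function-calling APIs return directly.

```python
import json
import re

# Hypothetical inline format: <tool_call>{"name": ..., "arguments": {...}}</tool_call>
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)


def extract_tool_calls(text):
    """Parse inline tool calls into native-API-style tool call dicts."""
    calls = []
    for raw in TOOL_CALL_RE.findall(text):
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed JSON rather than crash the agent loop
        if not isinstance(obj, dict) or not isinstance(obj.get("name"), str):
            continue  # not a usable call: no function name
        calls.append({
            "id": f"call_{len(calls)}",
            "type": "function",
            "function": {
                "name": obj["name"],
                # OpenAI-style APIs deliver arguments as a JSON-encoded string.
                "arguments": json.dumps(obj.get("arguments", {})),
            },
        })
    return calls


if __name__ == "__main__":
    reply = 'Reading it now.<tool_call>\n{"name": "read_file", "arguments": {"path": "main.py"}}\n</tool_call>'
    print(extract_tool_calls(reply))
```

The lazy `\{.*?\}` only stops at a closing brace that is followed by `</tool_call>`, so nested argument objects are captured whole.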

LM Studio Discord

- Image-to-Video Prompt Generation Dreams in LM Studio: Members are anticipating future image-to-video prompt generation and image attachment features in LM Studio, favoring offline capabilities over cloud-based alternatives like ChatGPT.

- As an alternative, one member mentioned ComfyUI, noting it might not be optimized for AMD cards.

- LM Studio’s Roadmap: A Mystery: The community discussed the absence of a public roadmap for LM Studio, with speculation that development plans might be unstructured and unpredictable.

- A member stated: “no public roadmap so noone knows.”

- LM Studio API Security Considerations: Users debated connecting to the LM Studio API across a network, highlighting potential security vulnerabilities.

- Concerns were raised about LM Studio’s unverified security, cautioning against exposing it without proper risk assessment and network protection.

- Qwen3 Coder Model Faces Loading Glitches: Users encountered difficulties when loading the Qwen3 Coder 30B model, which triggered a `Cannot read properties of null (reading '1')` error.

- Another member suggested updating to version 0.3.21 b2, which reportedly resolves the issue, and enabling the recommended settings.

- Nvidia Bursts Out a Driver: Nvidia released driver 580.88 just nine days after 577.00, with a fix for a potential issue with GPU video memory speed after enabling NVIDIA Smooth Motion [5370796].

- One user said they install drivers from the CUDA toolkit and skip the “fancy control panel” and GFE (GeForce Experience).
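On the LM Studio API security discussion above, one conservative client-side habit is to refuse non-local endpoints unless you opt in explicitly, and to insist on TLS when you do. This is a sketch under our own assumptions: `http://localhost:1234/v1` is LM Studio's usual default OpenAI-compatible address, but check your server settings, and a client-side check is no substitute for firewalling or authenticating the server itself. The helper names are hypothetical.

```python
import ipaddress
from urllib.parse import urlparse

# Assumed default: LM Studio's local server usually listens on port 1234
# with an OpenAI-compatible API. Check your own server settings.
DEFAULT_BASE_URL = "http://localhost:1234/v1"


def is_loopback(host: str) -> bool:
    """True for localhost, 127.0.0.0/8, and ::1; other hostnames count as remote."""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # a DNS name we cannot verify without resolving it


def checked_base_url(url: str, allow_remote: bool = False) -> str:
    """Return url if it is local, or remote-but-explicitly-allowed over HTTPS."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    if is_loopback(host):
        return url
    if not allow_remote:
        raise ValueError(f"refusing non-local endpoint {host!r}; pass allow_remote=True deliberately")
    if parsed.scheme != "https":
        raise ValueError("remote endpoint should be behind TLS (e.g. a reverse proxy), not plain HTTP")
    return url


if __name__ == "__main__":
    print(checked_base_url(DEFAULT_BASE_URL))
    print(checked_base_url("http://[::1]:1234/v1"))
```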

OpenRouter (Alex Atallah) Discord

- API Errors Plague OpenRouter: Users reported experiencing API errors when using models via the OpenRouter API, with one user suggesting checking the model ID prefix and base URL to resolve the issue.

- Errors included “no endpoint found,” which members attributed to likely misconfiguration.

- Deepseek v3 Free Model Plagued by Outages: Users experienced issues with the Deepseek v3 0324 free model, including internal errors, empty responses, and timeouts, leading some to switch to the paid version.

- One member pointed out: “free is completely overloaded. paid has none of these issue, and the actual content quality is better.”

- Horizon Alpha Hailed as Effective: Users praised the Horizon Alpha model for its effective reasoning and good performance.

- While the model claimed it was developed by OpenAI, community members suggested it was likely a distilled model.

- Personality.gg Leverages OpenRouter for Roleplay: Personality.gg launched a roleplay site that uses OpenRouter for most models, giving users access to all 400 models through OpenRouter’s PKCE (OAuth) flow for free or at low cost.

- This integration lets users engage in role-playing scenarios with a wide variety of AI models.

- PyrenzAI’s UX Wins Praise: A user complimented the UI/UX of PyrenzAI, appreciating its unique look and style, and distinctive sidebar design compared to other apps.

- Despite speed and security critiques, the application’s user interface received positive feedback.
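The two OpenRouter fixes discussed above (checking the model ID prefix and base URL, and moving from the overloaded free Deepseek v3 endpoint to the paid one) can be scripted. This is a hedged sketch, not an OpenRouter feature: the base URL is the one OpenRouter documents for its OpenAI-compatible API, the two model IDs are illustrative assumptions, and `call` is a hypothetical function you supply that sends one request and raises `TransientError` on overloads, timeouts, or internal errors.

```python
import time
from typing import Callable

# OpenRouter's OpenAI-compatible base URL; verify against the official docs.
OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"
# Illustrative model IDs (assumed); check OpenRouter's model list for current names.
FREE_MODEL = "deepseek/deepseek-chat-v3-0324:free"
PAID_MODEL = "deepseek/deepseek-chat-v3-0324"


class TransientError(Exception):
    """Raised by your request function for overloads, timeouts, or internal errors."""


def config_problems(base_url: str, model_id: str) -> list:
    """Pre-flight check for the two misconfigurations suggested in the thread."""
    problems = []
    url = base_url.rstrip("/")
    if url.endswith("/chat/completions"):
        problems.append("base URL should stop at /api/v1; OpenAI-compatible clients append the route")
    elif url != OPENROUTER_BASE_URL:
        problems.append(f"unexpected base URL {base_url!r}")
    provider, sep, name = model_id.partition("/")
    if not (provider and sep and name):
        problems.append(f"model ID {model_id!r} lacks a 'provider/model' prefix")
    return problems


def complete_with_fallback(call: Callable[[str, str], str], prompt: str,
                           models=(FREE_MODEL, PAID_MODEL), retries: int = 2,
                           base_delay: float = 0.5,
                           sleep: Callable[[float], None] = time.sleep):
    """Try each model in order with exponential backoff; empty replies count as failures."""
    last_error = None
    for model in models:
        for attempt in range(retries):
            try:
                text = call(model, prompt)
                if text.strip():
                    return model, text
                last_error = TransientError("empty response")
            except TransientError as err:
                last_error = err
            if attempt + 1 < retries:
                sleep(base_delay * 2 ** attempt)  # back off before retrying the same model
    raise RuntimeError(f"all models failed; last error: {last_error}")
```

Passing `sleep` as a parameter keeps the backoff testable; in production the default `time.sleep` applies.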

Moonshot AI (Kimi K-2) Discord

- Kimi K2 Goes Ludicrous Speed with Turbo!: The Moonshot team announced Kimi K2 Turbo, touting 4x the speed at 40 tokens/sec, with a 50% discount on input and output tokens until Sept 1 at platform.moonshot.ai.

- Users can now experience significantly faster performance thanks to faster hosting of the same model, available via official API.

- Moonshot AI Launches New Hangout Spot: Moonshot AI launched the Moonshot AI Forum (https://forum.moonshot.ai/) for technical discussions, API help, model behavior, debugging, and dev tips.

- While Discord is still “vibin for memes and chill convos,” the forum aims to be the go-to spot for serious builds and tech discussions.

- Kimi K2 Challenges Claude’s Reign: One user said Kimi K2 is the first model they can use instead of Claude, and that it prompted them to drop Gemini 2.5 Pro, arguing that coding, as a kind of information, is becoming freer.

- The user also added that they expect most AIs will converge in terms of knowledge, so the differences between them will start to blur.

- Kimi K2 Turbo Pricing Details Exposed: The speedy Kimi K2 Turbo is priced at $0.30/1M input tokens (cached), $1.20/1M input tokens (non-cached), and $5.00/1M output tokens with a special promo until Sept 1.

- This works out to roughly 4x the speed for 2x the price of standard Kimi K2 during the discount, aimed at users who need fast processing.

- Gemini Ultra’s Deep Thinking Costs a Pretty Penny: Members ridiculed Google Gemini Ultra’s plan for imposing a 10 queries a day limit at $250/month, with one user calling it “very funny and very scummy.”