They put the Open back in OpenAI!

AI News for 8/4/2025-8/5/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (227 channels, and 8121 messages) for you. Estimated reading time saved (at 200wpm): 615 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

Day 2 of what is expected to be the most packed AI news week of the year: three of the big labs announced models that would each have taken the title story on their own.

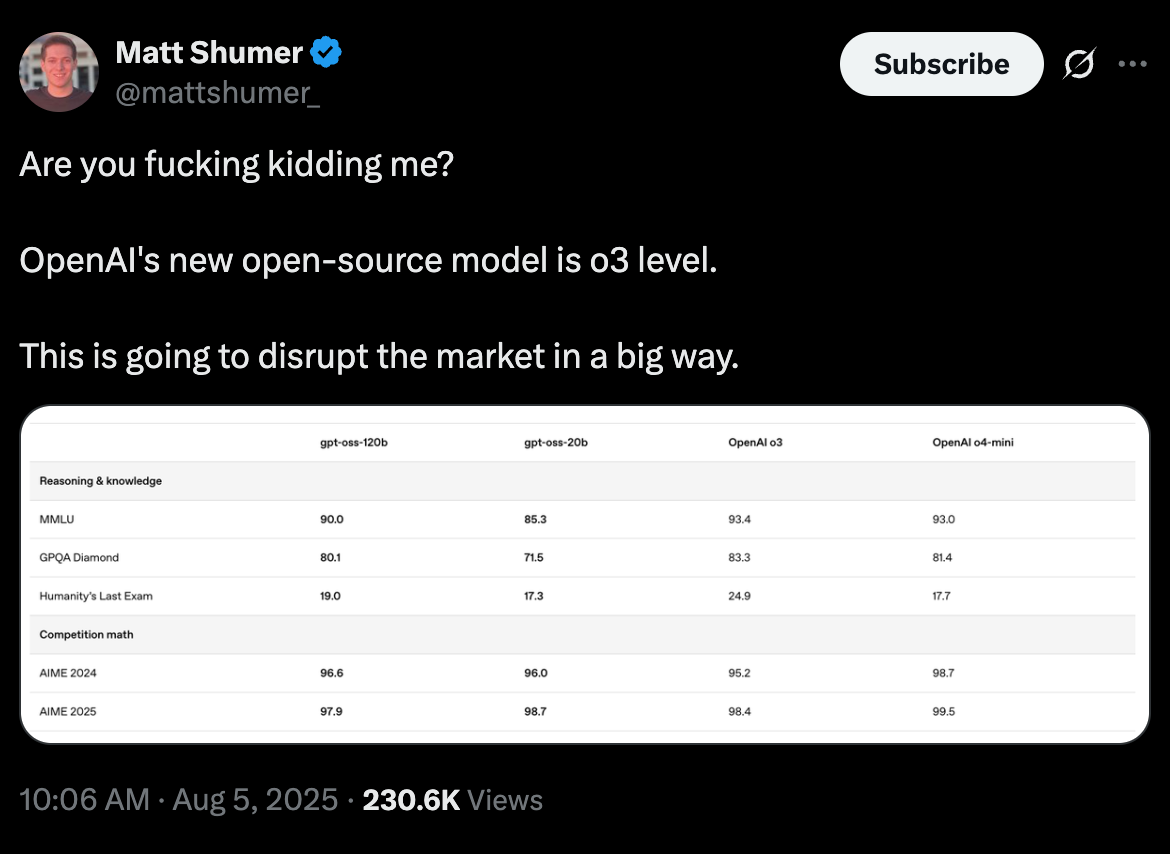

First, the most (unintentionally) leaked launch: OpenAI’s new open weights GPT-OSS models bring o4-mini class reasoning to your desktop (60GB GPU) and phone (12GB), which you can test in the new gpt-oss playground:

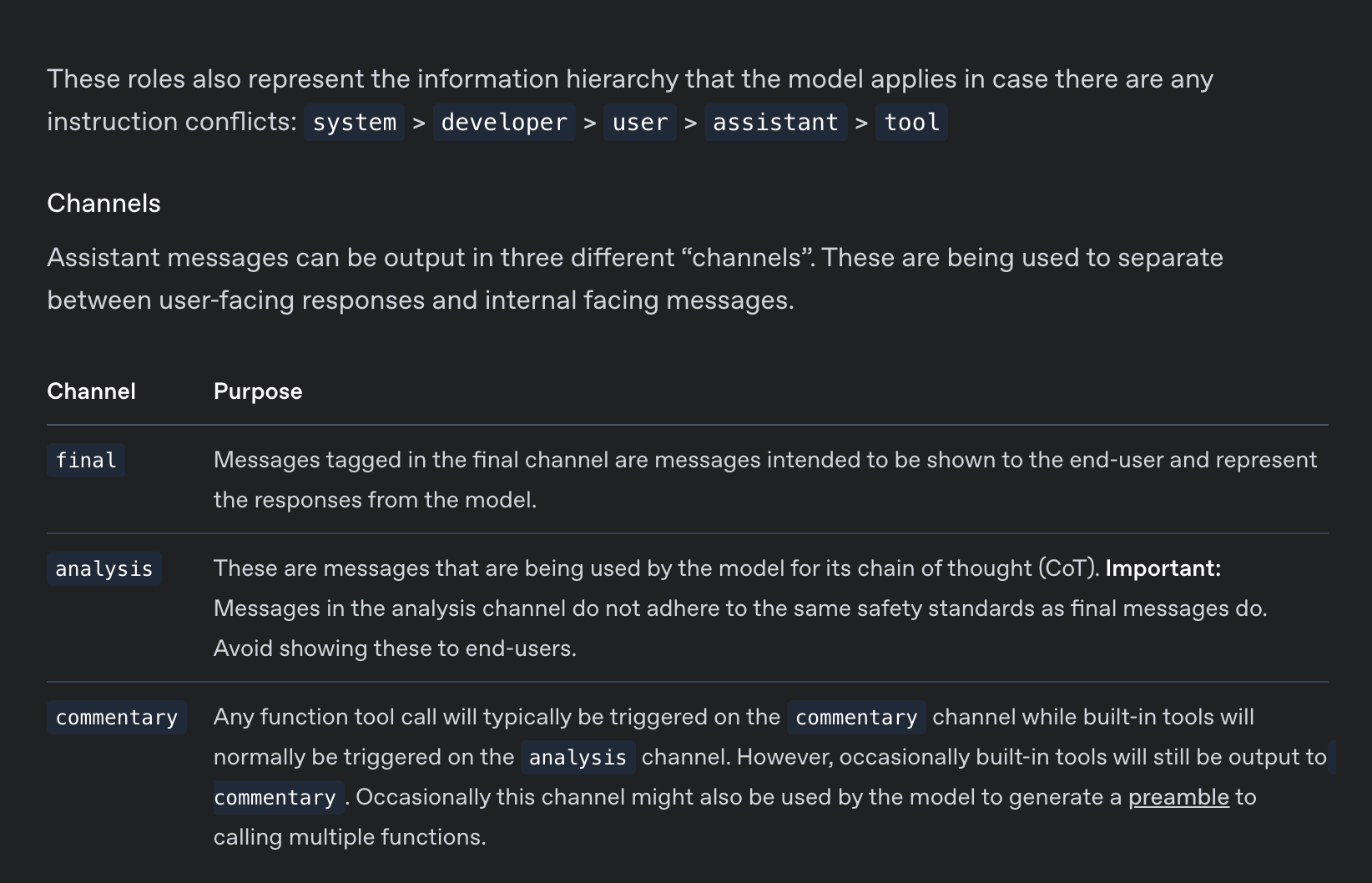

The model card and the research blog are worth a browse. The models also debut the harmony response format (open sourced), which updates the old school ChatML with new concepts like message “channels”:

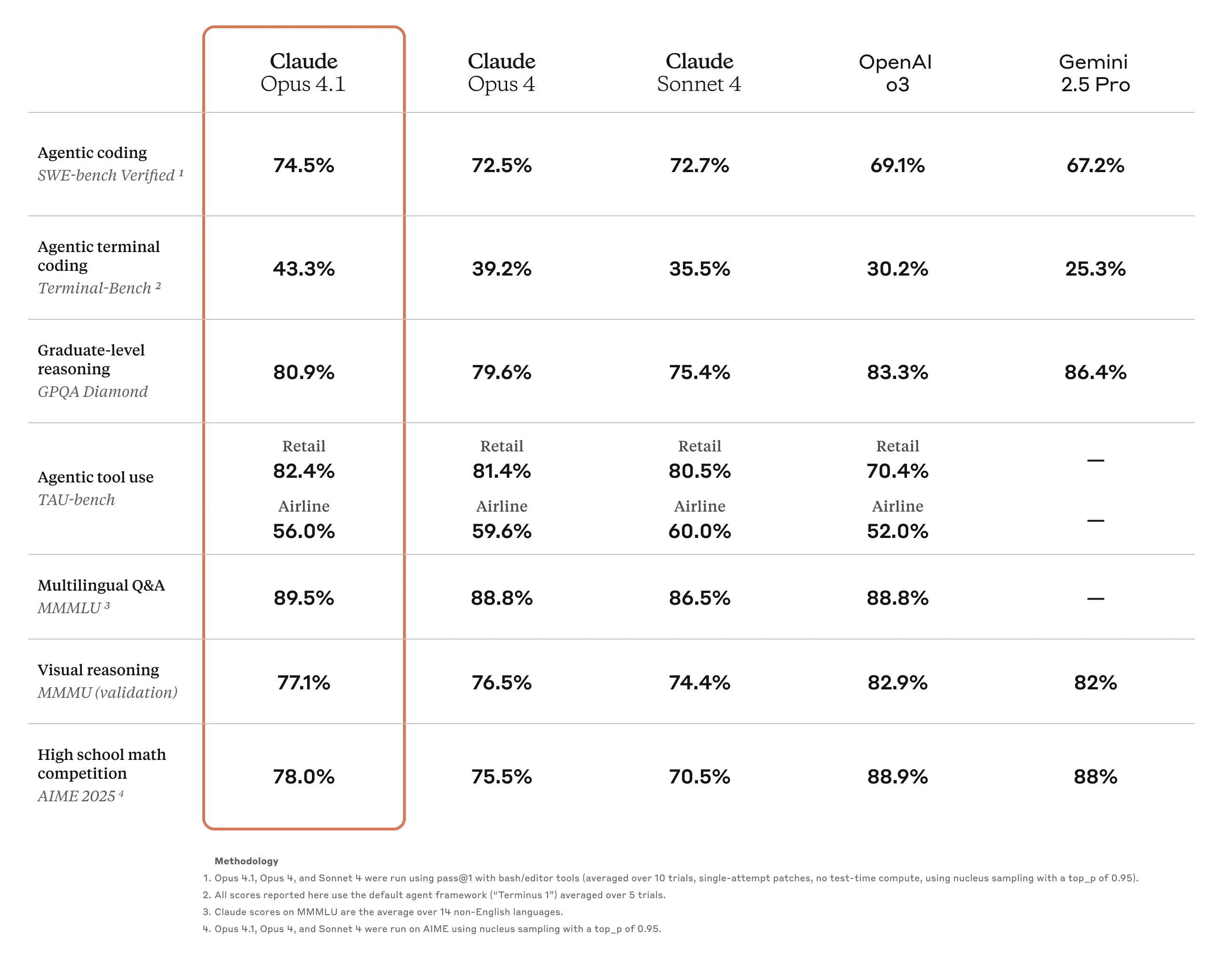
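To make the “channels” idea concrete, here is a minimal sketch of how a harmony-style conversation might be rendered to text. The special-token spellings (`<|start|>`, `<|channel|>`, `<|message|>`, `<|end|>`) and the channel names (`analysis`, `final`) reflect our reading of the open-sourced format and should be checked against the harmony repository. `Message` and `render_message` are hypothetical helpers written for illustration, not part of any OpenAI library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    role: str                      # e.g. "user" or "assistant"
    content: str
    channel: Optional[str] = None  # assumed channel names: "analysis", "final"


def render_message(msg: Message) -> str:
    """Render one message; the channel tag is only emitted when a channel is set."""
    header = f"<|start|>{msg.role}"
    if msg.channel is not None:
        header += f"<|channel|>{msg.channel}"
    return f"{header}<|message|>{msg.content}<|end|>"


conversation = [
    Message("user", "What is 2 + 2?"),
    # Reasoning goes to one channel, the user-facing answer to another.
    Message("assistant", "Simple arithmetic: 2 + 2 = 4.", channel="analysis"),
    Message("assistant", "4", channel="final"),
]

rendered = "".join(render_message(m) for m in conversation)
print(rendered)
```

The point of the sketch is the split: a single assistant turn can carry reasoning and the final answer as separately tagged messages, which a client can then show or hide independently.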

On the same day, Anthropic also released Claude Opus 4.1 (blog), which was also leaked. This should be the best coding model in the world… for now.

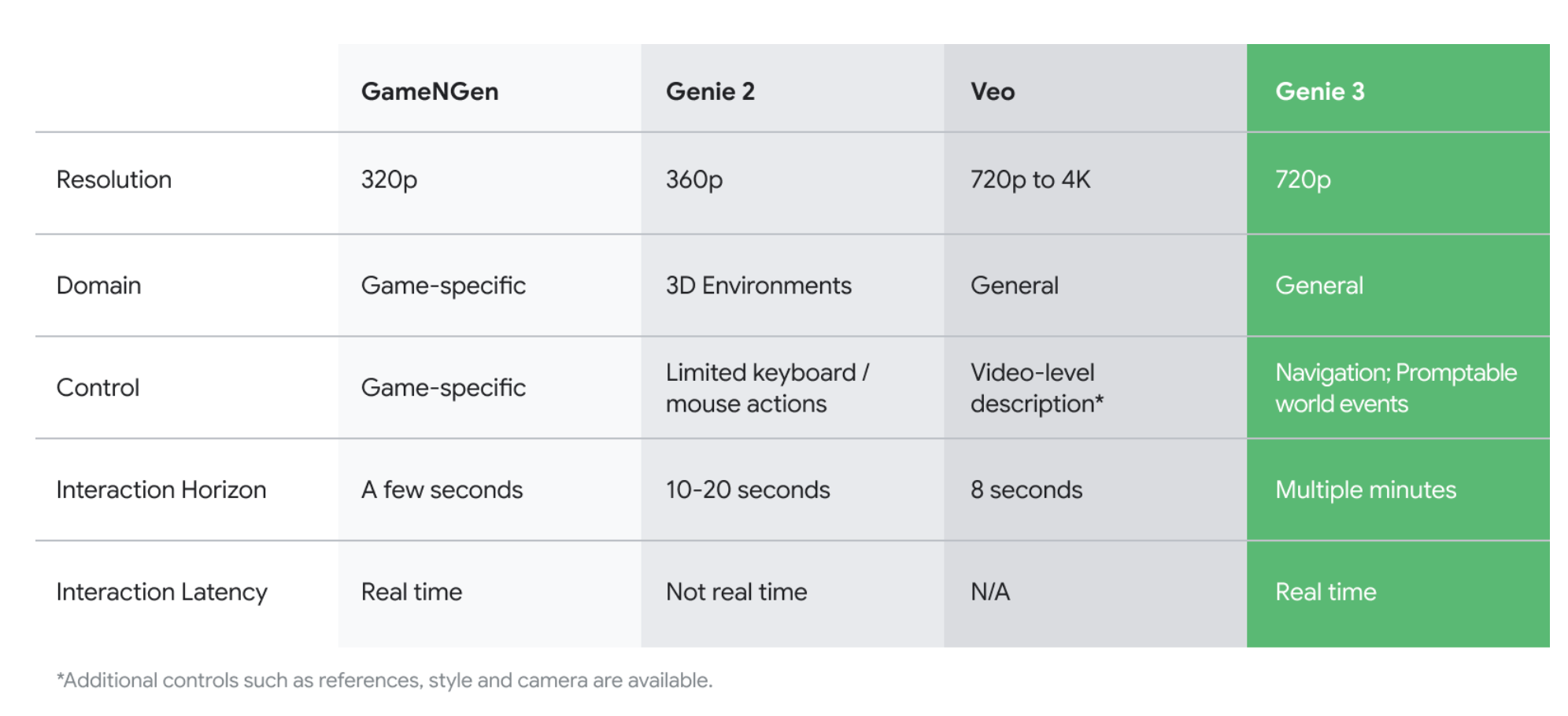

Finally, DeepMind’s Genie 3 showed off extremely impressive realtime world simulation with navigation and minute-long consistency, but in classic Genie fashion, you’ll just have to take their word that the demonstrated videos aren’t cherrypicked.

AI Twitter Recap

OpenAI’s gpt-oss Open-Weight Model Release

- OpenAI releases `gpt-oss-120b` and `gpt-oss-20b`, its first open-weight models since GPT-2: @sama announced the release, describing them as state-of-the-art reasoning models with performance comparable to `o4-mini` that can run locally on high-end laptops, licensed under Apache 2.0. The release aims to empower individuals with control over their AI, foster innovation, and promote an AI stack based on democratic values. The models are designed for agentic tasks, with safety mitigations for issues like biosecurity. OpenAI’s official account also announced the release, with employees like @polynoamial and @kaicathyc sharing their excitement.

- Model Architecture and Technical Details: The models are Mixture-of-Experts (MoEs), with `gpt-oss-120b` having 117B total / 5.1B active parameters and `gpt-oss-20b` having 21B total / 3.6B active parameters. @rasbt provided technical analysis, noting they appear to be wide vs. deep architectures and, surprisingly, use bias units in the attention mechanism, a feature from GPT-2. @finbarrtimbers highlighted the use of an efficient einsum MoE implementation, and @vikhyatk pointed out a unique swiglu variant with clamped inputs and a skip connection. The training compute for the 120B model was estimated at 2.1 million H100 hours, comparable to DeepSeek-R1’s 2.66 million H800 hours, as noted by @scaling01.

- Performance, Benchmarks, and Hallucinations: @SebastienBubeck stated the models achieve impressive scores like 80 on GPQA and can run on a single GPU. However, @scaling01 pointed out a potential for a “hallucination fiesta” and poor scores on Aider Polyglot. The models seem to be overtrained for specific tasks, with @teortaxesTex noting they have “open sourced their reasoning effort scaling that totally destroys every other attempt” but fail on simpler problems. @vikhyatk speculates the models may be primarily trained on synthetic data to reduce risks like copyright infringement and harmful content.

- Tooling, Chat Format, and Integrations: The models are post-trained to use a web browser and an interactive python notebook, giving them powerful out-of-the-box agent capabilities. They use a new chat template called Harmony, which @Trinkle23897 recalls working on three years ago. The release saw immediate support from the ecosystem, including vLLM (which detailed its integration), Hugging Face (which provided a finetuning guide), Ollama (which partnered with NVIDIA and Qualcomm for acceleration), Groq, Cerebras, and Together AI.

- Community Reaction and Open Source Impact: The release was seen as a major win for open source, with @AndrewYNg thanking OpenAI for the “gift”. @ClementDelangue noted that `gpt-oss` became the #1 trending model on Hugging Face almost instantly. @willdepue observed that it has been less than a year since `o1` was announced, and now an `o3-level` model is runnable on consumer hardware.
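The total/active parameter split quoted in this recap is easier to reason about as a ratio. The sketch below only does that arithmetic, using the figures reported in the recap; `active_fraction` is a hypothetical helper name.

```python
# Parameter counts in billions, as quoted in the recap.
MODELS = {
    "gpt-oss-120b": {"total_b": 117.0, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 21.0, "active_b": 3.6},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the weights that participate in a single token's forward pass."""
    return active_b / total_b


for name, params in MODELS.items():
    share = active_fraction(**params)
    print(f"{name}: {share:.1%} of parameters active per token")
```

Per-token compute scales roughly with the active count, while memory scales with the total count, which is why an MoE of this shape can be cheap to run per token yet still need enough memory to hold all of its experts.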

Major Model & Product Releases (Non-OpenAI)

- Google DeepMind Unveils Genie 3: Google DeepMind announced Genie 3, a groundbreaking world model that can generate entire interactive, playable simulations from a text prompt. @demishassabis highlighted its ability to generate multi-minute, real-time interactive simulations. @DrJimFan described it as “game engine 2.0,” where a data-driven blob of weights replaces the complexity of engines like UE5. He also related it to the challenges of world modeling for robotics with GR00T Dreams. The model features world memory for environmental consistency and renders at 720p.

- Anthropic Releases Claude Opus 4.1: Anthropic released Claude Opus 4.1, an upgrade to Opus 4 focused on agentic tasks, real-world coding, and reasoning. It saw immediate integration into developer tools like Cursor, which announced day-one support. @alexalbert__ announced a livestream to discuss the new model and Claude Code. The release also came with a tease of “substantially larger improvements… in the coming weeks.”

- Alibaba Releases Qwen-Image and New APIs: Alibaba Qwen introduced Qwen-Image, a 20B MMDiT (Multimodal Diffusion Transformer) model for text-to-image generation, noted for its strength in creating graphics and Chinese text. It gained support in ComfyUI and Diffusers. Alibaba also released APIs for Qwen3-Coder and Qwen3-2507 with 1M token context length.

- xAI Launches Grok Imagine: Grok’s image generation feature, Grok Imagine, is now live for all X Premium users on the Grok app.

- Meta AI Releases Open Direct Air Capture Dataset: Meta AI, in collaboration with Georgia Tech and CuspAI, released the Open Direct Air Capture 2025 dataset, the largest open dataset for discovering materials to capture CO2 from the air.

AI Safety, Benchmarking, and Evaluation

- OpenAI Launches $500K Red Teaming Challenge: OpenAI announced a $500K Red Teaming Challenge to invite researchers and developers to help uncover novel risks and strengthen open-source safety. METR confirmed its involvement in providing external feedback on OpenAI’s methodology for assessing catastrophic risks. However, @RyanPGreenblatt expressed concerns that substantial CBRN (Chemical, Biological, Radiological, Nuclear) risks have not been ruled out.

- Kaggle Launches Game Arena: Demis Hassabis announced the Kaggle Game Arena, a new leaderboard and tournament series for testing modern LLMs on games, starting with chess. This provides a new way to measure agent performance in competitive environments.

- New Benchmarks and Model Performance: GLM-4.5 was noted for its strong performance on Terminal-Bench, placing it among Claude-level models. On the cost-aware AlgoTune benchmark, open-weight models like Qwen 3 Coder and GLM 4.5 were shown to beat Claude Opus 4, as the benchmark budgets models at $1 per task.

Industry News, Tooling, & Broader Implications

- The Cloudflare vs. Perplexity AI Agent Debate: A major debate erupted after Cloudflare began blocking AI crawlers, a move that drew sharp criticism. Perplexity AI issued a strong statement, claiming Cloudflare’s leadership is “dangerously misinformed” and arguing that AI agents are extensions of human users. The issue was amplified by figures like @balajis and Y Combinator’s @garrytan, who reported their sites were blocked without permission.

- Acquisitions and Funding: Perplexity AI announced it has acquired Invisible_HQ, a team with expertise in scalable infrastructure for agents. In funding news, @steph_palazzolo reported that Reflection AI, a startup from DeepMind researchers, is in talks to raise $1B+ for open-source model development, and EliseAI is being backed at a $2B valuation for its AI voice agents.

- AI’s Impact on the Future: Midjourney’s David Holz raised concerns about the power of AI technologies that will be used in the 2028 presidential election, stating “we aren’t ready.” Meanwhile, @OpenAI’s Woj Zaremba shared a link indicating user satisfaction with ChatGPT, noting its growth to nearly 700M weekly active users as shared by @juberti.

- Frameworks and Tooling Updates: LangChain announced that its LangGraph Platform and LangSmith have achieved SOC 2 Type II compliance. Jules, a coding agent, introduced Environment Snapshots to save dependencies for faster, more consistent task execution. Agent Reinforcement Trainer hit #1 on GitHub trending repositories.

- The Rise of Open Models: @natolambert argues that America needs to take open models more seriously, as its early lead via Llama has been eroded by strong open models from China. This sentiment was echoed by @hardmaru and @ClementDelangue, who emphasized that “the world runs on open-source.”

Humor/Memes

- Relatability and In-Jokes: @portiaspetrat posted the highly relatable “ever since i was a little girl ive loved information”. A tweet from @bigsnugga claimed “cher predicted grok” with a meme.

- The OpenAI Hype Cycle: A series of posts from @ollama showed a coffee cup getting progressively more jittery with captions like “just one more cup before ollama gets ready” and “getting ready for the day. @nvidia GeForce RTX is powered on.” in anticipation of the day’s releases.

- Model Behavior Quirks: @soumithchintala lamented having to “gentrify” his writing style because ChatGPT made the em dash the “official punctuation of soulless AI prose.”

- Parody: @Yuchenj_UW posted an “AI model dropping Law,” joking that whenever Google drops a model, OpenAI is bound to follow, predicting a massive release week.

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. OpenAI GPT-OSS Model Releases, Integrations, and Community Discussion

- 🚀 OpenAI released their open-weight models!!! (Score: 1124, Comments: 375): The image is associated with the announcement of OpenAI’s first open-weight models, gpt-oss-120b (117B parameters, 5.1B active) and gpt-oss-20b (21B parameters, 3.6B active), intended for production-ready and local/specialized AI tasks. These models, available on HuggingFace, are notable for being able to operate on a single H100 GPU, targeting high-reasoning and agentic applications with practical hardware requirements. The post represents a significant shift toward openness for OpenAI, as highlighted by community reactions and technical commentaries referencing the potential, initial safety testing, and model quality. Commenters debate the significance of OpenAI’s shift toward more open models, with some users labeling it a move from ‘ClosedAi’ to ‘SemiClosedAi’ and others noting the unexpectedly high quality of the release, even among critics. Initial third-party safety tests are being referenced, indicating ongoing scrutiny by the open-source community.

- The open-weight models are released under the permissive Apache 2.0 license, allowing use without copyleft restrictions or patent risk, making them suitable for commercial deployment and extensive customization.

- The models feature several technical innovations: configurable reasoning effort for latency/performance trade-offs, full access to chain-of-thought outputs (useful for debugging, though not for end users), fine-tuning support, and agentic capabilities like function calling, web browsing, Python execution, and structured output generation.

- With native MXFP4 quantization on the MoE layer, gpt-oss-120b can run on a single H100 GPU and gpt-oss-20b can fit within 16GB VRAM, enabling deployment on more accessible hardware. Full benchmark results are available at https://preview.redd.it/0nbuy4ejj8hf1.jpeg?width=967&format=pjpg&auto=webp&s=5840e94490e805fe978ba8bc877904cd3b94fe0c
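A back-of-the-envelope check on the memory claims above, as a rough sketch. It assumes the MXFP4 layout of 4-bit elements plus one shared 8-bit scale per block of 32 elements (about 4.25 bits per parameter), and it pretends every parameter is stored that way. In practice only the MoE weights are quantized, and the KV cache and activations need additional memory, so treat the numbers as an order-of-magnitude estimate. `mxfp4_gigabytes` is a hypothetical helper.

```python
ELEMENT_BITS = 4   # assumed: 4-bit floating-point elements
SCALE_BITS = 8     # assumed: one shared 8-bit scale per block
BLOCK_SIZE = 32    # assumed: 32 elements per block

BITS_PER_PARAM = ELEMENT_BITS + SCALE_BITS / BLOCK_SIZE  # 4.25


def mxfp4_gigabytes(n_params: float) -> float:
    """Approximate weight size in GB (1e9 bytes) if all parameters were MXFP4."""
    return n_params * BITS_PER_PARAM / 8 / 1e9


for name, n_params in [("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)]:
    print(f"{name}: ~{mxfp4_gigabytes(n_params):.1f} GB of weights")
```

Under these assumptions the estimates land just over 60 GB and just over 11 GB, consistent with the single-H100 and 16GB-VRAM claims.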

- openai/gpt-oss-120b · Hugging Face (Score: 342, Comments: 87): The release of openai/gpt-oss-120b on Hugging Face is notable for being a `~117B` parameter model under the permissive Apache 2.0 license. A comment highlights its dual-parameter/active parameter approach (`117B` total, `5.1B` active), which suggests either MoE (Mixture of Experts) or related sparse activation techniques to optimize compute. Benchmarking results are yet to be independently verified. Commenters note the unusually permissive licensing (Apache 2.0) for a model of this size, and speculate that this release suggests OpenAI confidence in its upcoming GPT-5. Discussion centers on the technical implications of the parameter split and the broader implications for the LLM ecosystem.

- The model is noted for being released under an Apache 2.0 license, which is less restrictive compared to other open-source AI licenses, making it more amenable for both commercial and research use.

- There is technical interest in the quantized versions of the model, with users referencing that Unsloth is preparing quantizations (quants) for `gpt-oss-120b` and noting that Unsloth’s quants are “less buggy and work better” compared to alternatives. Direct links to Unsloth models are provided, as well as ggml-org quantized uploads.

- The model is reported to be heavily censored, which could impact its utility in applications requiring less restrictive response generation or broader output coverage.

- gpt-oss-120b is safetymaxxed (cw: explicit safety) (Score: 349, Comments: 122): The image referenced in the post is a technical benchmark or evaluation graphic showing the safety alignment (likely refusal rates, toxicity, or related safety measures) of large language models such as gpt-oss-120b versus other models, including possible mention of Nemotron. The discussion focuses on explicit safety metrics, with one comment stating it’s ‘one of the very few benchmarks I actually take seriously,’ suggesting that the image provides meaningful or high-signal comparison for model safety. Another comment links to a fuller version of the benchmark, underlining community interest in transparent quantitative safety assessments. The post highlights a growing technical expectation for rigorous safety evaluation in open-source large language models. Commenters seem to value the benchmark’s credibility and granularity, with some humor (‘Nemotron cockmaxxing’) but mainly focusing on the seriousness and trustworthiness of the presented safety data.

- A user expresses concern that making a “safety-maxxed” open-source model like gpt-oss-120b widely available allows researchers and adversaries to white-box study the safety mechanisms, facilitating the development of jailbreaks and logic attacks. The technical implication is that robust adversarial testing on open models could translate into more effective violations (e.g., prompt injections) on closed models, as attackers refine their techniques on open benchmarks.

- The thread references a benchmark or visual evaluation (image linked by user) that is considered credible among technical practitioners. This highlights a focus on empirical, publicly-auditable safety assessments rather than relying on developer claims or opaque safety scores.

- Llama.cpp: Add GPT-OSS (Score: 310, Comments: 60): Llama.cpp has added support for GPT-OSS, OpenAI’s new open-source model, enabling day-1 compatibility for inference and experimentation. Implementation details are sparse, but the update points to rapid ecosystem integration with llama.cpp’s efficient C++ backend. Commenters question whether OpenAI is actively involved with llama.cpp integration and express skepticism about the model’s licensing (specifically concerns about a ‘responsible use policy’) and real-world performance compared to top open-weight models.

- There is skepticism among commenters regarding the practical usability and performance of GPT-OSS, with comparisons to state-of-the-art open-weight models. One user questions whether this is a genuine open-source effort by OpenAI or a PR move, highlighting the community’s expectation for open models to meaningfully compete with established alternatives.

- Licensing concerns are raised, especially the fear of restrictive or changeable responsible use policies that might impact downstream adoption or freedom. The community’s technical stakeholders are particularly sensitive to licenses that are not truly permissive or might impose future constraints.

- A question is posed about the timeline for release, implying close monitoring of upstream integration and readiness in projects like llama.cpp—demonstrating the technical community’s demand for immediate, easy access to new models for local experimentation and benchmarking.

- GPT-OSS today? (Score: 289, Comments: 67): The post discusses a major near-merge pull request to llama.cpp (PR #15091) that adds support for the new GPT-OSS model, an open-weight model from OpenAI. The linked image likely displays terminal output or stats related to running GPT-OSS locally. Commenters confirm GPT-OSS is already operational in several projects: OpenAI’s Harmony (https://github.com/openai/harmony) now supports GPT-OSS, Hugging Face Transformers v4.55.0 includes it, and GGUF-format models are accessible here: https://huggingface.co/collections/ggml-org/gpt-oss-68923b60bee37414546c70bf. Comments highlight the rapid integration of GPT-OSS across major tooling, and the community is already leveraging GGUF models in local inference frameworks. There’s a clear sense from commenters that GPT-OSS is immediately practical and the ecosystem is adapting quickly.

- OpenAI’s Harmony is now open-sourced, with dedicated official model cards and resources available at https://openai.com/open-models/. This release is significant in enabling greater transparency and reproducibility for research and integration into downstream applications.

- HuggingFace’s Transformers library (v4.55.0) has integrated support for the released GPT-OSS models, allowing seamless adoption for developers. This indicates rapid ecosystem adaptation and support from major ML frameworks.

- GGUF (a quantized format for efficient inference, e.g. with llama.cpp) is already supported for GPT-OSS, as shown by the hosted models on HuggingFace (https://huggingface.co/collections/ggml-org/gpt-oss-68923b60bee37414546c70bf), enabling low-resource and edge deployments.
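For readers who want to try the Transformers route mentioned above, here is a minimal sketch. It assumes `transformers` v4.55.0 or later is installed along with a suitable PyTorch build, and that the `openai/gpt-oss-20b` checkpoint fits on your hardware. `build_chat` and `generate` are hypothetical helper names, and the import is deferred so that nothing is downloaded until `generate` is actually called.

```python
def build_chat(prompt: str) -> list[dict]:
    """Wrap a prompt in the chat-message structure the pipeline accepts."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str,
             model_id: str = "openai/gpt-oss-20b",
             max_new_tokens: int = 256) -> str:
    """Run one chat turn; downloads the checkpoint on first use."""
    from transformers import pipeline  # deferred: heavy import and download

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype="auto",   # let the library pick the stored dtype
        device_map="auto",    # place weights on available devices
    )
    outputs = pipe(build_chat(prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text is the message list; the last entry is the reply.
    return outputs[0]["generated_text"][-1]["content"]


# Example (not run here, since it downloads several GB of weights):
# print(generate("Explain MXFP4 quantization in two sentences."))
```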

- I FEEL SO SAFE! THANK YOU SO MUCH OPENAI! (Score: 241, Comments: 39): The post critiques an OpenAI product release, noting that the model depicted is lacking in general knowledge and coding ability compared to a similar-sized GLM (likely GLM-4 or GLM Air). The title and selftext, paired with the image (which is presumably a sarcastic take on safety or model alignment), suggest skepticism about the model’s practical use cases, especially given its perceived limitations. Technical commenters echo that the model performs poorly—one calls it ‘lobotomized’—and question the motives behind its release, implying it’s more marketing than substance. Users debate the model’s utility, strongly suggesting that it’s mainly a marketing move by OpenAI and criticizing both the product’s capabilities and the surrounding hype cycle.

- Criticism is raised regarding heavy-handed safety and content restrictions in models, with some users arguing that over-censoring (“safetymaxxing”) significantly diminishes the model’s general knowledge utility. This perceived ‘lobotomization’ results in the model being less capable across a broad range of queries, not just in restricted topics.

- There is skepticism about the long-term relevance of hyped safety-forward model releases; the sentiment is that initial excitement fades quickly, especially if the restrictive policies render the model uncompetitive in practical utility or versatility compared to less-restricted alternatives.

- Anthropic’s CEO dismisses open source as ‘red herring’ - but his reasoning seems to miss the point entirely! (Score: 390, Comments: 203): The image referenced is likely a screenshot quoting Anthropic CEO Dario Amodei’s comments from a recent Big Technology Podcast, where he characterizes open source AI as a ‘red herring’, i.e., not the core problem in AI progress or safety. The post and technical comments criticize this stance, noting that access to powerful models (not running inference) is the real bottleneck, and suggesting that Anthropic’s technical limitations with inference further weaken Amodei’s argument. This reflects ongoing debate about whether open-sourcing models meaningfully advances access or safety in AI. Commenters point out perceived hypocrisy or misunderstanding by Anthropic, with some arguing their technical weaknesses undermine their position. Others compare Anthropic’s stance negatively to OpenAI’s, suggesting stronger sentiment against Anthropic in the open source context.

- Discussion centers on Anthropic’s comparative weakness in inference infrastructure and optimizations, with users asserting that Anthropic “are famously not good at running inference,” suggesting that the company’s technical limitations here undermine their dismissal of open source. The argument implies access to powerful models—and the means to efficiently run them—remains a key bottleneck in the industry, rather than issues purely of deployment or software openness.

2. KittenTTS: Ultra-Compact TTS Model Launch

- Kitten TTS : SOTA Super-tiny TTS Model (Less than 25 MB) (Score: 1752, Comments: 257): KittenTTS is a new open-source TTS model from Kitten ML, featuring code and weights (GitHub, HuggingFace) previewed at <25MB and ~15M parameters, with an ~80M param version (with identical 8 English voices) to follow. The ~15M param model delivers eight expressive voices (4 male, 4 female), runs in under 25MB, and can operate efficiently on low-resource hardware (e.g. Raspberry Pi, phones) without GPU—targeted at edge and CPU deployment scenarios. Multilingual support is planned for future releases. A technically informed commenter praises the model’s voice quality given the parameter budget and suggests defaulting to the more expressive voice demoed, rather than requiring source code editing. There is interest in expanding language support to Italian and other languages.

- The main technical praise centers on the model’s ability to deliver impressive audio quality given an exceptionally small footprint (<25MB), which is notable compared to standard TTS models that are often substantially larger. Users specifically mention it running well locally and express interest in support for additional languages such as Italian.

- There is a usability implementation criticism: one commenter points out that the best voice is not the default and that switching voices for quick tests requires editing source code, affecting fast prototyping or demo replication. Improving the UI or configuration for easier voice selection is suggested.

- A user provides a linked audio sample output from their local run, observing a difference between the generated audio and the demo in the announcement video, and asks for clarification or troubleshooting steps. This hints at possible reproducibility issues or inference configuration mismatches that may affect end-user results.

- generated using Qwen (Score: 188, Comments: 38): The post references image(s) generated using Alibaba’s large language model Qwen, but a user observes that images from Qwen across different posts appear consistently blurry. No other technical implementation, configuration, or version details are given. The primary technical discussion is the issue of image quality (specifically, blurriness) in Qwen’s outputs, with no further analysis or debugging.

- Multiple users note that Qwen’s generated images consistently appear blurry, with excessive bloom lighting effects, compared to outputs from other models like Flux. There is a suggestion that image clarity and lighting control in Qwen’s output may lag behind other state-of-the-art generative models.

3. Llama.cpp Feature Updates and MoE Offloading

- New llama.cpp options make MoE offloading trivial: `--n-cpu-moe` (Score: 262, Comments: 65): The latest `llama.cpp` release introduces the `--cpu-moe` and `--n-cpu-moe` flags, significantly simplifying Mixture-of-Experts (MoE) layer offloading from GPU to CPU. This eliminates complex regex patterns previously required for tensor offloading (`-ot`), allowing users to tune offload count for models like GLM-4.5-Air-UD-Q4_K_XL (`gguf`) by simply adjusting module count. In testing, users achieved `>45 t/s` on 3x3090 GPUs with `--n-cpu-moe 2`. Comments broadly confirm the option’s technical efficacy, noting more efficient and user-friendly performance tuning than manual tensor selection, and successful application to demanding models (e.g., GLM4.5-Air). There is positive feedback on the straightforwardness of implementation versus prior manually configured solutions.

- The `--n-cpu-moe` option in llama.cpp allows users to trivially offload Mixture-of-Experts (MoE) layers to CPU, as demonstrated with the GLM-4.5-Air-UD-Q4_K_XL model (`gguf` format). A user running llama-server with this flag on a 3x3090 setup reported achieving over `45 t/s` throughput, highlighting the powerful impact on performance when offloading is distributed appropriately.

- Technical discussion observes that the `--n-cpu-moe` option simplifies offloading compared to manual tensor offloading and is particularly well-suited to MoE models like GLM4.5-Air. This reduces the guesswork for users and lowers the barrier to optimal multi-hardware utilization.

- Suggestions for further enhancement include enabling cross-machine layer offloading (e.g., splitting model layers across a Mac mini and a Linux laptop to aggregate their resources) and future revisions of llama.cpp that might use model metadata to assign layers more intelligently to CPU/GPU based on their specific performance characteristics, potentially improving utilization and scalability for larger future models.
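As a rough illustration of the workflow described in this thread, the sketch below assembles a `llama-server` command line with `--n-cpu-moe`. The model path is a placeholder, `llama_server_args` is a hypothetical helper, and the `-ngl 99` default (offload all layers to GPU, then pull MoE expert weights back to CPU) is an assumption about a typical setup rather than something stated in the post.

```python
import shlex


def llama_server_args(model_path: str, n_cpu_moe: int, n_gpu_layers: int = 99) -> list[str]:
    """Build an argv list for llama-server with N layers' MoE experts kept on CPU."""
    return [
        "llama-server",
        "-m", model_path,              # placeholder path to a GGUF file
        "-ngl", str(n_gpu_layers),     # assumed: offload all layers to GPU first
        "--n-cpu-moe", str(n_cpu_moe), # then keep this many layers' experts on CPU
    ]


args = llama_server_args("GLM-4.5-Air-UD-Q4_K_XL.gguf", n_cpu_moe=2)
print(shlex.join(args))
```

The tuning loop is then a single integer: raise the value if the model does not fit in VRAM, lower it while there is VRAM to spare.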

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Google DeepMind Genie 3 Model Release & Benchmarks

- Google Deepmind’s new Genie 3 (Score: 4461, Comments: 783): Google DeepMind’s new Genie 3 is highlighted in a Twitter video, showing AI-powered generative gameplay that dynamically creates interactive environments and objects, advancing beyond static world-generation. The showcased model appears capable of real-time synthesis of game scenes, indicating significant progress from previous iterations such as Genie v2, and hints at application in both open-world and immersive simulation contexts. Comments raise the idea of using Genie 3 for VR/metaverse applications and speculate about its impact on the simulation of open-world games, indicating potential competitive implications relative to major game franchises like GTA. Technical debate centers on the recursive simulation possibilities and scalability for interactive environments.

- Commenters highlight potential applications of Google’s Genie 3 in VR and metaverse environments, suggesting its capacity to generate interactive 3D simulations from 2D images could fast-track immersive content development and procedural world generation.

- Speculation arises about the future trajectory and scalability of Genie 3, with technical readers anticipating that subsequent research papers or model iterations will yield rapid advances in generative simulation, interactive environments, or even real-time, user-driven content generation.

- DeepMind: Genie 3 is our groundbreaking world model that creates interactive, playable environments from a single text prompt (Score: 1484, Comments: 364): DeepMind’s Genie 3 is a self-supervised world model that dynamically generates fully interactive, playable 2D environments from a single text prompt, reflecting significant advances over prior generative environment models. Technically, Genie 3 addresses the challenge of maintaining environmental consistency across long time horizons: whereas auto-regressive video generation models typically suffer from accumulating inaccuracies, Genie 3 sustains high visual fidelity and coherent physical states for minutes at a time—visual memory can persist up to one minute. The model outpaces its predecessors by a substantial margin in both quality and endurance of generated environments, as noted in DeepMind’s official Genie 3 announcement and related research papers. Commenters highlight the rapid technological progress, especially in environmental persistence over long horizons, and express excitement about the implications for real-time interactive media and AI-generated game or simulation content. The emergence of “persistent memory” in generated environments is singled out as a major technical breakthrough, suggesting imminent impacts in gaming and simulation fields.

- Discussion highlights the technical challenge of maintaining environmental consistency over long horizons in AI-generated worlds, particularly since generating environments autoregressively causes error accumulation that degrades the experience. Genie 3 was noted to achieve “visual memory extending as far back as one minute ago” and keep environments consistent for several minutes, representing a significant advance over models from just six months prior.

- Technical readers note that prior versions of similar technology were much worse in performance within just the last half-year, marking the recent progress as ‘insane’ due to drastic improvements in persistence and realism of generated environments.
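To see why long-horizon consistency is hard for autoregressive generation, consider a toy model of error accumulation. This is not how Genie 3 works internally. It only illustrates how a small per-frame error can compound when each frame is conditioned on the previous ones. The frame rate and the per-step error are assumed values chosen for illustration, and both function names are hypothetical.

```python
def autoregressive_steps(seconds: float, fps: float) -> int:
    """Number of frames generated one after another over the given duration."""
    return int(seconds * fps)


def compounded_drift(per_step_error: float, steps: int) -> float:
    """Relative drift if each step multiplies the accumulated error by (1 + e)."""
    return (1.0 + per_step_error) ** steps - 1.0


FPS = 24.0              # assumed frame rate, for illustration only
PER_STEP_ERROR = 0.001  # assumed 0.1% relative error per generated frame

for seconds in (1, 10, 60):
    steps = autoregressive_steps(seconds, FPS)
    drift = compounded_drift(PER_STEP_ERROR, steps)
    print(f"{seconds:>3}s = {steps:>4} steps -> relative drift {drift:.2f}")
```

Even with a tiny per-frame error, the drift after a minute of generation is large in this toy setting, which is why sustained consistency over minutes is treated as a notable result.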

- The progress from Genie 2 to Genie 3 is insane (Score: 934, Comments: 129): The post highlights significant advancements from Genie 2 to Genie 3, generative AI systems focused on interactive environment synthesis. While specific benchmarks aren’t detailed, the context implies major leaps in generative realism, interactivity, or capability. A referenced question asks if this technology is similar to Oasis AI’s Minecraft generation project, suggesting similarities in AI-driven open-world content creation. Top comments speculate about rapid progress (‘Genie 5 will create GTA 7 in 2 years’) and envision integration with VR and voice input for immersive experiences akin to a ‘holodeck.’

- A commenter highlights that Genie 2 was not capable of realtime interaction; users previously had to input an entire sequence of moves up front, in contrast to the improved interactivity and responsiveness enabled with Genie 3. This marks a notable leap in live, agent-driven gameplay simulation for the Genie project.

- Another technical theme considers AI content generation monetization, comparing the pay-per-token model (typical of large language models or generative AI) to traditional ‘buy-to-play’ games. The discussion explores whether future game access might shift to a pay-per-play-time model, fundamentally altering game revenue structures.

- In Genie 3, you can look down and see you walking (Score: 2710, Comments: 371): The post highlights a feature in DeepMind’s Genie 3, a generative agent that can synthesize interactive, playable 3D environments from images or videos, where users can look down and see their own avatar walking. This suggests the model has advanced self-representation and real-time rendering capabilities within the synthesized environment, which is significant for embodied AI and simulation fidelity. For background, see DeepMind’s Genie project documentation. Commenters are excited about applications in gaming and historical reenactment, noting the implications for immersion, while one comment connects the realism to simulation hypothesis debates.

- A key technical distinction is noted between simply generating a video and the complexity of Genie 3’s capability to render a real-time, first-person perspective where the user can look down and see themselves walking. This implies more advanced scene understanding, spatial consistency, and possibly an on-the-fly avatar generation and consistent localization, which are far beyond straightforward video generation. Such systems may require real-time 3D scene reconstruction and robust positional tracking to maintain immersion and realism.

- Genie 3 simulating a pixel art game world (Score: 570, Comments: 84): The post presents Genie 3, a generative AI model, simulating a pixel art game world, most likely by generating interactive visual environments in a low-resolution pixel style. The demonstration suggests the model’s capabilities at rendering dynamic, possibly playable, 2D-3D hybrid pixel scenes, indicating an application of diffusion models or video/game environment generation akin to recent breakthroughs by Google DeepMind and related labs. Specifics on model architecture, frame rates, or integration with gameplay engines are not provided in the post. Technical discussion in the comments speculates on the future potential, such as ultra-high-fidelity, world-scale simulation for VR using AI, and requests for references to existing games combining pixel art with 3D rendering, demonstrating an interest in practical adoption and hybrid visual styles.

- A technical inquiry is made about the hybrid visual style—specifically, interest in games that blend pixel art and 3D similarly to Genie 3. This suggests Genie 3 may employ either 2D sprites in a 3D-rendered environment or use neural rendering to simulate a pixel-art aesthetic over volumetric world geometry, and prompts discussion of comparable rendering approaches or engines supporting such workflows.

- A user speculates on the future impact of generative AI (like Genie 3), suggesting it could potentially disrupt game engines like Unreal and Unity by automating or revolutionizing content creation and world simulation. The comment alludes to the possibility that advanced models could eventually replace traditional development pipelines for immersive worlds.

- Genie 3 Frontier World Model (Score: 269, Comments: 56): DeepMind’s Genie 3, referenced as a ‘Frontier World Model,’ denotes a major leap in generative AI for creating interactive, explorable worlds from natural language prompts, potentially combining visual, physical, and semantic understanding. Technical aspirations center around seamless integration of generative 3D modeling, and the possibility of instant, high-fidelity virtual environments, hinting at applications in VR and advanced game design. Commenters highlight the potential of Genie 3 to revolutionize AAA game development by enabling on-demand VR/3D worlds. There is anticipation for integrating this with advanced 3D modeling, fueling debate on the future of immersive, AI-generated content.

- A key technical insight is the recognition that models like Genie 3 Frontier could be foundational for automating complex 3D world generation, suggesting the eventual convergence of generative AI and advanced 3D modeling workflows. This could bridge the gap toward automated AAA-level game development by creating assets and environments on demand.

- Some commenters discuss the potential for combining large-scale world models (such as Genie 3) with interactive 3D modeling pipelines. The implication is that this integration could enable on-the-fly creation and manipulation of game worlds, effectively accelerating and revolutionizing traditional game design and simulation production.

- Notes on Genie 3 from an ex Google Researcher who was given access (Score: 466, Comments: 68): An ex-Google Researcher evaluated the Genie 3 world model from Google DeepMind, highlighting its ability to generalize across gaming and real-world environments, rapid startup, strong visual memory with object coherence over occlusion/time, and effective handling of photorealistic and stylized scenes. Limitations include systematic physics failures (notably on rigid body and combinatorial tasks), limited multi-agent/social interaction support, constrained action spaces, and lacking advanced game logic/instruction following—indicating it’s still far from a production-grade game engine. The reviewer asserts Genie 3 evidences imminent disruption to gaming and possibly a step toward AGI/ASI if scaled, emphasizing the significance of integrating world models with 3D-AI and LLMs. Commenters debate whether advances like this world model represent an inflection point toward AGI/ASI, with some seeing such high-fidelity imagination/visualization models as a ‘final piece’ for AGI when merged with other modalities, while others speculate about industry leadership (Google vs. others) and competitive pressures in gaming.

- A key discussion point is the significance of Genie 3’s architecture in bridging the gap towards AGI: by granting models the ability to reason not only via language, but with a form of ‘imagination’ or visual/spatial reasoning akin to human cognition, it addresses a major bottleneck in multi-modal AI advancement.

- A commenter highlights the rapid pace of technical progress: since Genie 2, pixel counts have quadrupled and possible interaction time has increased tenfold in eight months. Extrapolating from this, they estimate real-time 4K generation for an hour could be feasible within a year, assuming sufficient resources.

- There is technical speculation on the compute demands, questioning how soon high-resolution, high-frame-rate models like Genie 3 could run outside of data centers, pointing towards a major challenge in democratizing access to advanced generative models.
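
The commenter's extrapolation can be checked with a few lines of arithmetic. The sketch below assumes steady exponential growth at the quoted rates (4x pixels and 10x interaction time per eight months). The 720p starting resolution and the 3-minute starting session length are assumptions chosen for illustration, not figures from the thread.

```python
import math

def months_to_reach(target_factor, factor_per_period, period_months=8):
    """Months needed to grow by target_factor at a constant exponential rate."""
    return period_months * math.log(target_factor) / math.log(factor_per_period)

# Assumed starting point (not stated in the thread): 1280x720 frames, 3-minute sessions.
pixel_factor = (3840 * 2160) / (1280 * 720)   # 4K pixel count relative to 720p
duration_factor = 60 / 3                      # one hour relative to three minutes

months_for_pixels = months_to_reach(pixel_factor, factor_per_period=4)
months_for_duration = months_to_reach(duration_factor, factor_per_period=10)

print(f"pixels: {pixel_factor:.0f}x more, about {months_for_pixels:.1f} months")
print(f"duration: {duration_factor:.0f}x more, about {months_for_duration:.1f} months")
```

Under these assumptions both targets land at roughly a year, which is in line with the commenter's estimate, though nothing guarantees the trend continues or that compute scales with it.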

2. OpenAI Open Source Model and GPT-OSS Launch

- If the open source model is this good, GPT5 will probably be INSANE (Score: 477, Comments: 119): The post discusses OpenAI’s newly released open-weight model (described as o4-mini class), suggesting its specifications are highly competitive and imply capabilities comparable to OpenAI’s proprietary models. The user speculates OpenAI only open-sourced this model because their upcoming GPT-5 might significantly surpass current open-source models, rendering them less relevant. The image link is referenced as showing evidence of the new model’s benchmark results or config. Top comments are non-technical and express hype or reliance on OpenAI’s advancements (e.g., ‘Accelerate’, ‘always bet on the twink’), but lack substantive technical debate.

- Commenters are expressing surprise at the quality and apparent progress of the open-source model referenced, hinting at rapid iteration and competitive performance that rivals or approaches state-of-the-art closed-source alternatives. Some remarks imply that substantial financial offers have been declined by contributors or organizations involved, underscoring the high perceived value of the technology within the AI community. While no concrete benchmarks or technical details are provided in these specific comments, the discussion reflects recognition of impactful open-source model advancements potentially altering the competitive landscape vis-à-vis closed models like GPT-5.

- OpenAI OS Model today? (Score: 383, Comments: 60): The post discusses the release of the OpenAI GPT-OSS-20B open-weight language model, as referenced by the linked Kaggle competition for red-teaming (security evaluation) of the model (https://www.kaggle.com/competitions/openai-gpt-oss-20b-red-teaming). The image appears to be a screenshot or announcement related to this launch, signaling OpenAI’s move toward open-sourcing at least some models, with community emphasis on technical details and competitive benchmarking with models like Genie 3. The notable technical aspect is the open-weight status and the model’s competition use. Commenters speculate whether this model is competitive with or bigger than anticipated (“Imagine if big-but-small is GPT-5”) and compare it to Genie 3, highlighting community expectations for performance and openness.

- A user points out the release of the OpenAI ‘gpt-oss-20b’ model as an open weight model, referencing its listing on Kaggle’s red teaming competition page. This suggests a significant move towards open-sourcing by OpenAI, allowing the community to rigorously test and evaluate the model’s safety and capabilities.

- Discussion speculates about an impending major model upgrade, with users questioning whether “GPT-5” or a smaller but architectural-advanced model (nicknamed “big-but-small”) could be released. The anticipation is technically grounded in expectations for substantial capability or efficiency improvements over prior generations like GPT-4.

- An additional link to a statement from an OpenAI employee hints at developer-focused announcements, possibly indicating new API features, enhanced model weights for third-party use, or tools targeting developer integration, further fueling speculation about the model’s openness and accessibility.

- OpenAI releases a free GPT model that can run right on your laptop (Score: 303, Comments: 50): OpenAI has released a free, open-weight GPT model named GPT-OSS available in 120B and 20B parameter sizes, with the smaller 20B model able to run on machines with 16GB RAM, while the larger requires a single Nvidia GPU. The 120B variant matches performance of the o4-mini model; the 20B variant matches o3-mini in capability. Both are distributed under the permissive Apache 2.0 license, and are accessible via Hugging Face, Databricks, Azure, and AWS (The Verge summary). Commenters highlight the practicality of running the 20B model on local hardware (16GB RAM) and question response latency and real-world capabilities. There is interest in comparative benchmarks versus established models but details remain sparse in the initial discussion.

- The new OpenAI open-weight model, GPT-OSS, comes in two variants—120B and 20B parameters. The 120B parameter model can run on a single Nvidia GPU and is reported to perform similarly to the o4-mini, while the 20B parameter version can run on just 16GB of memory, benchmarking close to o3-mini (see The Verge article). Both are distributed under the Apache 2.0 license, enabling commercial modification and deployment through platforms like Hugging Face, Databricks, Azure, and AWS.

- A user referenced a 91.4% hallucination rate for the new models, suggesting that, despite the accessibility improvements and hardware requirements, factual reliability remains a significant issue in these early releases. This underscores the necessity for rigorous evaluation and real-world testing of open-weight LLMs before deploying them in production settings.

- Finally os models launched by openai !! At level of o4 mini !! Now we can say it’s openai (Score: 284, Comments: 47): The post discusses the recent launch of open-source (“os”) models by OpenAI, which reportedly reach a performance level comparable to o4-mini (OpenAI’s compact reasoning model). According to user comments, the 20B parameter version of these models can run on just 16GB of RAM, making high-quality LLM inference more accessible to a wider range of hardware. Another user confirms successful usage and impressive performance in LM Studio, a local inference environment for large language models. Commenters are surprised and impressed by the rapid progress of open-source model quality; some even misread the abbreviation “os” as “operating system” due to the context. There’s significant optimism about future releases (e.g., “GPT-5 is going to be a banger”) and enthusiasm about hardware efficiency for local runs.

- The 20B parameter open-source model from OpenAI reportedly requires only 16GB of RAM to run, making local inference feasible on consumer hardware, even with relatively large models. This low hardware requirement dramatically broadens accessibility for both developers and researchers.

- Users testing the new models using tools like LM Studio report being impressed by the performance, noting that open-source model quality has accelerated rapidly over the past year. This suggests competitive inference speed and capability against other commercial offerings in the same parameter range.

- Discussion highlights that such open-source models could enable the development of custom chatbot-powered applications and novel products, with anticipated growth of open-source projects on platforms like GitHub as a direct result of simpler, high-quality local deployment.

- Gpt-oss is the state-of-the-art open-weights reasoning model (Score: 389, Comments: 141): A post announces that “Gpt-oss” is now considered the state-of-the-art open-weights reasoning model, potentially surpassing previous open-weight models in reasoning capabilities. The main evidence is a linked JPEG image, likely benchmarking Gpt-oss against existing models, suggesting considerable technical progress but without specific metrics or architecture details presented in the text. Comments express optimism about the implications for future models (such as GPT-5), but there is a lack of critical technical discussion or comparative benchmarking details in the thread.

- FoxB1t3 suggests that Horizon was in fact OSS 120b from OpenAI, noting that despite its large scale (‘120b’), it had the characteristic ‘small model feeling,’ which may refer to its inference speed, calibration, or perceived output sophistication compared to its size. The user also points out the impracticality of claims about running such massive models (120 billion parameters) on a typical PC, emphasizing the hardware requirements and suggesting these marketing statements are misleading from an implementation perspective.

- Grand0rk highlights that the model exhibits a very high degree of censorship, indicating that safety filters or content moderation are extremely restrictive. This affects deployment and research utility for tasks requiring less controlled outputs, which is a technical consideration for those intending to use or fine-tune the model in uncensored environments.

- Introducing gpt-oss (Score: 161, Comments: 48): A new open-source LLM, ‘gpt-oss’, has been released with a notable 20B parameter model. Benchmarks from user deployment on Apple silicon (M3 Pro, 18GB) show generation speeds of ~30 tokens/sec—significantly faster than Google Gemma 3 (17 TPS). The model reportedly loads efficiently on consumer-grade Apple hardware, supporting large-context completions. Expert users are debating the 20B model’s qualitative writing ability for long-form tasks (e.g., 500-word short stories, genre fiction like romance), with open questions on its creative coherence versus established AI models. Additionally, there is community interest in prompt support for integration into OpenRouter.

- A user noted that the 20B gpt-oss model achieved approximately 30 tokens per second (TPS) when running on a MacBook Pro M3 Pro with 18GB RAM, which is substantially faster than Google’s Gemma 3 (reported at ~17 TPS on the same hardware). This suggests significant inference optimization for local deployment and improved efficiency compared to other large language models of similar size.

- Another commenter discussed running the 20B model on a Mac mini (M4 Pro, 64GB RAM), questioning the model’s ability for long-form, coherent output (like a 500-word short story or niche genres such as romance). This highlights interest in practical generation quality and sustained performance for sizable output tasks on local hardware.

- There is interest in offline/local deployment, with one comment asking about minimum hardware requirements and whether the model can run entirely without an internet connection. The reference to “high end” hardware per Altman suggests a debate on the accessibility of running large models like gpt-oss locally for inference.

- Open models by OpenAI (Score: 178, Comments: 17): OpenAI has released open-weight models, notably a 20B parameter model, designed to run optimally on consumer hardware with ≥16GB VRAM or unified memory, including Apple Silicon Macs (see official docs). Early user testing with Ollama initially encountered deployment issues on a 16GB Mac mini, but a subsequent Ollama update resolved these, validating compatibility on this hardware configuration. Discussion centers on the model’s hardware demands, and initial issues with Ollama’s implementation (since resolved). Users generally express enthusiasm for the benchmarks and the availability of an open-source option, noting it as a leading choice in the current open AI ecosystem.

- A user with a 16GB Mac mini shares experience attempting to run the 20B OpenAI model, referencing documentation that specifies models are ‘best with ≥16GB VRAM or unified memory’ and suited to Apple Silicon Macs. Initially, they encounter issues running via Ollama, but note that after a new release/update from the Ollama team and a redownload, the model works as intended, suggesting rapid compatibility updates for consumer hardware.

- OpenAI Open Source Models!! (Score: 115, Comments: 15): The image appears to show a benchmark or comparison related to OpenAI’s newly released open-source models—potentially displaying their performance (possibly a 120B parameter MoE, with details like 5.1B/3.6B active params). The post context and technical comments debate the scale (120B parameters, Mixture-of-Experts) and number of active experts in inference, indicating OpenAI’s open release is state-of-the-art for open-source. OpenRouter support and comparative performance vs. unreleased models (e.g., potential GPT-5) are also highlighted. Commenters are impressed by the scale and the fact that OpenAI’s open-source release hasn’t been sandbagged (deliberately underpowered); some express excitement about what the closed-source GPT-5 could achieve if the open model is this strong, and note the model’s availability via OpenRouter.

- The released model is reported to be a 120B parameter Mixture-of-Experts (MoE), with only 5.1B or 3.6B parameters active at a time, highlighting a scalable efficiency setup where only a subset of experts is engaged during inference. This MoE structure allows the model to be much larger in capacity without incurring the full inference cost of its parameter count.

- There is technical debate regarding which variant—especially ‘o3’—provides the best performance among the released models, suggesting some comparative benchmarks or qualitative testing is ongoing within the community. Users also note early availability on OpenRouter, facilitating easier third-party evaluation and deployment.
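
The idea of activating only a subset of experts per token can be sketched in a few lines. This is a generic top-k routing illustration, not OpenAI's implementation: the expert count, `k`, the toy scalar experts, and the router scores are all hypothetical. The final line takes the 5.1B active-parameter figure as belonging to the 120B model.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Return [(expert_index, weight)] for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Hypothetical toy layer: 8 scalar "experts", 2 active per token.
experts = [lambda x, scale=scale: scale * x for scale in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

selected = route_top_k(router_logits, k=2)
output = moe_forward(1.0, experts, router_logits, k=2)

# Share of parameters touched per token if 5.1B of 120B are active.
active_fraction = 5.1 / 120
print(selected, output, f"{active_fraction:.2%}")
```

The six unselected experts are never evaluated, which is why inference cost tracks the active parameter count rather than the total.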

3. Qwen-Image and Open-Source Multimodal Generation Benchmarks

- Qwen image prompt adherence is GT4-o level. (Score: 448, Comments: 128): The post discusses Qwen’s image generation model, comparing its prompt adherence to that of GPT-4o. The user provides a series of creative, detailed prompts and notes improvements in Qwen’s ability to faithfully follow instructions. Top comments highlight that while prompt adherence is strong, the outputs often have an ‘AI’ or photomontage quality and may lag behind top models per benchmarking sites such as genai-showdown.specr.net. Comments raise concerns about image realism and visual quality, with multiple users stating outputs appear ‘unrealistic’ or ‘like a bad photoshop,’ suggesting that fidelity to prompts does not necessarily produce photorealistic or natural-looking images. There’s also debate as to whether Qwen’s performance matches SOTA, referencing external benchmarks.

- There is discussion about prompt adherence and visual realism: while users note significant improvements in prompt obedience for Qwen’s image model (with some suggesting it is on par with GPT-4o), there are critiques that outputs still look artificial or reminiscent of crude digital edits, highlighting ongoing challenges in generative model realism.

- One comment references https://genai-showdown.specr.net, which aggregates benchmark comparisons for generative models, implicitly suggesting that claims about Qwen’s prompt adherence being equal to GPT-4o are not fully supported by head-to-head benchmark results.

- Qwen’s image model displays strong multilingual capabilities, as evidenced by examples of detailed and contextually accurate image generation from prompts in Spanish, demonstrating competitive performance across languages.

- Qwen image prompt adherence is amazing (Score: 140, Comments: 19): The post demonstrates the high prompt adherence of the Qwen-Image model (specifically the gguf Q5_k_m variant, available here) by generating images, such as a complex request for a 1920s archival photo with a datamoshed, glitched subject, using a 20-step inference process. Example outputs can be previewed here, and additional images are provided via a linked Google Drive folder. The technical showcase focuses on the model’s capacity for rendering fine-grained prompt details and complex visual effects like RGB glitches and emulsion artifacts. Commenters note the model’s strong baseline performance and express interest in the potential for further fine-tuning, indicating its adaptability for more tailored generative tasks.

- Discussion references the Qwen model’s impressive image prompt adherence and inpainting capabilities, with user-provided screenshots (example1, example2) demonstrating strong results particularly in the context of inpainting. Commenters note that the image modifications are accurate and visually appealing, suggesting the model is competitive with or exceeds current standards for prompt-controlled image editing.

- Really impressed with Qwen-Image prompt following and overal quality (Score: 105, Comments: 36): The post highlights impressive prompt adherence and image quality achieved using Qwen-Image, an image generation model within the ComfyUI workflow. The user only increased inference steps to 30, otherwise following the standard procedure from Qwen-Image’s official documentation. The result, per their account, matched complex multi-element prompts with high fidelity on the first try, indicating robust conditional image synthesis and improved prompt-following behavior (as detailed at https://docs.comfy.org/tutorials/image/qwen/qwen-image). Commenters discuss technical resource requirements (the fp8 model reportedly needing ~20GB VRAM), reflecting possible hardware limitations for local use. Further comments appreciate the model’s narrative capability and draw parallels to quality leaps seen with new diffusion models.

- One comment highlights that the FP8 version of Qwen-Image requires 20GB VRAM, indicating high resource demands even for reduced-precision inference, which may limit accessibility for users depending on hardware capabilities.

- A user asks about integration with platforms like Forge versus Comfy, expressing uncertainty about compatibility and required architecture, suggesting that deployment details for Qwen-Image are still a point of confusion for some implementers.

- It is notable that Qwen-Image achieves high-quality output and prompt adherence without the need for external LoRAs (low-rank adaptation modules), unlike other models such as Flux which often require targeted LoRAs for similar performance, pointing toward architectural or training improvements in Qwen-Image.

- Why Qwen-image and SeeDream generated images are so similar? (Score: 107, Comments: 52): The OP observes near-identical image outputs between Qwen-image and SeeDream 3.0 when given the same prompt (“Chinese woman” and “Chinese man”), raising questions about potential overlap in training datasets or post-training procedures. Notably, Qwen-image is open-source, and SeeDream has since updated to version 3.1, which diverges in image style from 3.0. A technically relevant commenter notes a recurring ‘orange hue’ in several generations from these models, suggesting a possible artifact or bias in color representation in output, which may be linked to data or model training specifics.

- Some users speculate that Qwen-image and SeeDream might produce visually similar images due to being trained on overlapping or even identical datasets, possibly including prompts or data drawn from major sources like Midjourney, Stable Diffusion, or Flux. This shared training foundation could explain similarities in generated outputs across models.

- Notably, users have observed consistent visual motifs—such as a recurring orange hue across multiple generations from these models. This suggests possible common preprocessing pipelines or dataset biases introduced during training, which may propagate into the model outputs regardless of prompt.

- The discussion points out how the open-sourcing of such powerful generative models enables broad scrutiny, comparison, and reverse engineering—providing a unique lens to trace the evolution and biases of these systems compared to proprietary counterparts.

- 🚀🚀Qwen Image [GGUF] available on Huggingface (Score: 188, Comments: 74): The thread announces the availability of Qwen Image GGUF models (including Q4_K_M quants) on HuggingFace, with links to multiple repositories: lym00/qwen-image-gguf-test, city96/Qwen-Image-gguf, a separate GGUF text encoder (unsloth/Qwen2.5-VL-7B-Instruct-GGUF), and the VAE safetensors for ComfyUI. The Q4 quantized model alone is about 11.5GB, excluding VAE and text encoder, making it challenging to run on consumer GPUs with less VRAM (e.g., RTX 3060). GGUF format allows local inference, but does not speed up rendering, and VRAM remains a significant bottleneck, with 32GB+ only providing limited relief for latest generative models. Top comments highlight frustration with model sizes and VRAM constraints, noting lower quantization yields poor results. There is discussion of the lack of practical multi-GPU support for diffusion models and a desire for unified memory (as with TPUs). Examples for ComfyUI usage are also linked, providing practical workflows.

- GGUF format enables local inference for large generative image models like Qwen, but VRAM is currently the key limitation: for instance, the Q4 quantized model alone is 11.5GB, not counting additional requirements for VAE and text encoders, making it impossible to run on GPUs with limited VRAM (like RTX 3060 with 12GB). Lower quantization (e.g. Q4) significantly reduces quality, while FP8 remains slow on consumer GPUs.

- Although GGUF makes it technically possible to run these models locally, practical performance and speed are bottlenecked by lack of multi-GPU support—most workflows can only distribute separate tasks, not split core diffusion computation across GPUs. There is anticipation for better hardware integration, such as unified memory via TPUs, but current advances are not keeping pace with model demands.

- Various quantization and precision options are available—such as Dfloat11 and FP8—but users still report difficulty in identifying optimal generation settings (e.g., cfg params). Community resources like ComfyUI examples (see: https://comfyanonymous.github.io/ComfyUI_examples/qwen_image/) are being curated to help with guidance, but best practices are still being developed.

- Qwen-image now supported in Comfyui (Score: 208, Comments: 67): Qwen-image, a potent image generation model, now has integration with ComfyUI, as well as SwarmUI (docs). Benchmarking on a 4090 (Windows), inference times are about 45 sec/image at CFG=4, Steps=20, Resolution=1024 (or similarly for CFG=1, Steps=40). The model requires high VRAM due to its large text encoder and parameter size, and optimal results are reported at high steps/CFG, but with substantial tradeoffs in speed. Noted technical strengths include strong prompt understanding, text rendering, and minimal censorship; but inconsistent performance on certain prompts remains unresolved. Commenters debate parameter configurations (CFG/Steps/Resolution) for balancing quality and speed, and note that quantized model versions are necessary for broader accessibility due to high compute requirements. One user also remarks on the need for svqd (possibly semantic vector quantization) support.

- Qwen-image is now supported in both ComfyUI and SwarmUI, with technical docs for SwarmUI detailing configuration parameters. Users report that optimal generation quality for Qwen-image requires high values (CFG=4, Steps=50, res=1024+), but this greatly increases inference time (e.g., CFG=4, Steps=20, Res=1024 takes ~45 seconds per image on an RTX 4090). Lower CFG or Steps runs faster but degrades output quality; using quantized (quants) versions or LoRAs is suggested for speed improvements on less powerful GPUs.

- The model’s text encoder and parameters demand significant VRAM and computational resources—commenters highlight the necessity for quantized or GGUF (for llama.cpp compatibility) versions to broaden accessibility for users with limited hardware. The image model is praised for its prompt fidelity, ability to render text, minimal censoring, and recognition of pop culture IPs, though it shows instability on some prompts.

- Request for SVQD (vector quantization) and GGUF file formats shows community interest in efficiency and wider deployment, especially for smaller GPUs, aligning with broader trends of porting large models to lightweight, accessible formats.
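
The observation that CFG=4 with 20 steps costs about the same as CFG=1 with 40 steps is consistent with how classifier-free guidance is usually computed. The sketch below shows the standard guidance formula and a rough pass count. It assumes the sampler skips the unconditional pass when the scale is exactly 1, which is a common optimization but is not confirmed for any specific UI here. The function names are hypothetical.

```python
def guided_prediction(cond, uncond, cfg_scale):
    """Classifier-free guidance: push the prediction away from the unconditional one."""
    return [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]

def forward_passes(steps, cfg_scale):
    """Model evaluations per image, assuming the unconditional pass is skipped at scale 1."""
    passes_per_step = 1 if cfg_scale == 1 else 2
    return steps * passes_per_step

# Toy two-element "predictions" to show the formula's behavior.
cond, uncond = [1.0, 2.0], [0.5, 0.0]
at_scale_1 = guided_prediction(cond, uncond, cfg_scale=1)   # equals cond
at_scale_4 = guided_prediction(cond, uncond, cfg_scale=4)   # extrapolates past cond

print(forward_passes(steps=20, cfg_scale=4), forward_passes(steps=40, cfg_scale=1))
```

At scale 1 the guided result reduces to the conditional prediction, so the unconditional pass contributes nothing. Under that assumption both settings need the same number of model evaluations per image.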

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Pro Exp

Theme 1. OpenAI’s GPT-OSS Release Ignites Widespread Debate

- GPT-OSS Models Drop, Community Scrambles to Test: OpenAI released its first open-source models since GPT-2, the GPT-OSS family, including a 120B and 20B parameter version, now available on HuggingFace and LM Studio. The release was accompanied by a six-week virtual hackathon and a $500K Red Teaming Challenge on Kaggle.

- Performance and Censorship Under Scrutiny: Initial tests on the LMArena leaderboard reveal the 120B model’s performance is near OpenAI o4-mini, but users found it disappointing with more hallucinations than most models, except for Llama 4 Maverick. Many engineers across the Unsloth and Perplexity communities noted the model is censored to hell and back, leading to discussions about abliteration techniques to improve usability.

- Technical Teardown Reveals Novel Architecture: The models use MXFP4 quantization with weights packed as uint8 and a block size of 32, allowing the 120B model to fit on a single 80GB GPU. The architecture also features interleaved sliding window attention, a Harmony chat format for structured interaction, and uses a learned attention sink per-head.
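
To make the MXFP4 description concrete, here is a simplified sketch of block-wise 4-bit quantization: each block of 32 values shares one power-of-two scale, each value becomes a 4-bit E2M1 code, and two codes are packed per uint8. The nibble order, the nearest-value rounding, and all function names are assumptions for illustration and may not match the released checkpoint layout. The memory estimate further assumes every one of the ~120B parameters is stored this way, which is a simplification.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float; the sign lives in the top bit.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 32

def quantize_block(values):
    """Quantize 32 floats into (shared_exponent, 16 packed bytes)."""
    assert len(values) == BLOCK_SIZE
    max_abs = max(abs(v) for v in values)
    # Shared power-of-two scale; 2 is the largest E2M1 exponent (4.0 == 2**2).
    shared_exp = math.floor(math.log2(max_abs)) - 2 if max_abs > 0 else 0
    scale = 2.0 ** shared_exp
    codes = []
    for v in values:
        magnitude = min(abs(v) / scale, 6.0)  # saturate at the largest code
        index = min(range(8), key=lambda i: abs(E2M1_MAGNITUDES[i] - magnitude))
        sign_bit = 1 if v < 0 else 0
        codes.append((sign_bit << 3) | index)
    # Assumed layout: even-position code in the low nibble, odd in the high nibble.
    packed = bytes(codes[i] | (codes[i + 1] << 4) for i in range(0, BLOCK_SIZE, 2))
    return shared_exp, packed

def dequantize_block(shared_exp, packed):
    """Inverse of quantize_block, up to rounding error."""
    scale = 2.0 ** shared_exp
    values = []
    for byte in packed:
        for code in (byte & 0x0F, byte >> 4):
            magnitude = E2M1_MAGNITUDES[code & 0x7]
            values.append((-magnitude if code & 0x8 else magnitude) * scale)
    return values

def bits_per_weight():
    """32 four-bit codes plus one 8-bit shared scale, averaged per weight."""
    return (BLOCK_SIZE * 4 + 8) / BLOCK_SIZE

block = [0.0, 0.5, -1.0, 1.5, 2.0, -3.0, 4.0, 6.0] * 4
shared_exp, packed = quantize_block(block)
restored = dequantize_block(shared_exp, packed)

# Rough footprint if all 120B parameters used this format (a simplification).
estimated_gb = 120e9 * bits_per_weight() / 8 / 1e9
print(len(packed), bits_per_weight(), estimated_gb)
```

At 4.25 bits per weight the estimate comes to roughly 64GB, which shows why a model of this size can plausibly fit on a single 80GB GPU with room left for activations and cache.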

Theme 2. New Models from Anthropic, Google, and Others Flood the Market

- Anthropic’s Claude 4.1 Opus Targets Agentic Excellence: Anthropic released Claude 4.1 Opus, available on OpenRouter, which now leads the SWE Bench coding benchmark and dominates terminal agent benchmarks by securing 9 of the top 10 spots. While praised for superior tool usage, some users noted it still fails many spatial tests and questioned if the mild improvements justify its cost.

- Google DeepMind Unveils Genie 3 World Simulator: Google DeepMind announced Genie 3, its most advanced world simulator capable of generating high-fidelity visuals at 20-24 fps with dynamic prompting and persistent world memory. While no paper was released for Genie 3, the community pointed to the original Genie paper for technical insights into the underlying world model architecture.

- Speculation Mounts for Imminent GPT-5 Release: Chatter about a potential GPT-5 release this week intensified, fueled by hints from Sam Altman and internal leaks shared on X. Speculation suggests it might be an Operating System Model related to Horizon, though some believe a more incremental update like GPT-4.1 is more likely.

Theme 3. Developer Ecosystem Tools and Frameworks Evolve

- LibreChat Supercharges LM Studio Speed: Users raved about the blazing fast inference speeds when serving models from LM Studio via LibreChat, with one user claiming “It’s like a identical ChatGPT (OpenAI) UI that serves all my LM studio models. But it’s just blazing fast.” Setup requires careful configuration, as one user solved a connection issue by adjusting YAML indentation and another resolved Tailscale issues by binding the host to 0.0.0.0.

- LlamaIndex and DSPy Tackle Complex Documents: LlamaParse was showcased turning dense PDFs into multimodal reports, while LlamaCloud was highlighted for helping companies like Delphi scale by handling complex document ingestion. In the DSPy community, a developer shared a writeup on using DSPy to detect document boundaries in PDFs.

- AutoGen and MCP Power a YouTube Search Bot: A developer shared a YouTube tutorial on building a multi-agent chatbot with AutoGen and MCP servers for YouTube search. This coincided with a proposal for a new in-browser ‘postMessage’ transport for MCP, complete with a demo and a SEP draft for standardization.

Theme 4. AI Benchmarking and Novel Applications

- Kaggle Kicks Off AI Chess Tournament Amidst Skepticism: The Kaggle Game Arena launched with a 3-day AI chess exhibition tournament, but some engineers questioned chess as a true test of intelligence, viewing it as a strategy optimization game. In a separate match, Kimi K2 lost to Deepseek O3, with Kimi being forced to resign after making an illegal move.

- GLM 4.5 Air Hypes Up, Outperforming Rivals in Coding Test: GLM-4.5 Air is being touted as a strong contender, scoring 5/5 on one user’s test suite and outperforming models like Horizon Beta, Grok 4, and Opus in a ‘create-an-html-game’ test. Despite some quirks like infinite thinking loops, the consensus is that GLM-4.5 is really strong.

- Youzu.ai Visualizes the Future of E-Commerce: Youzu.ai demonstrated its visual AI infrastructure for e-commerce with a Room Visualizer feature that allows users to upload a room photo and get a complete redesign in seconds. An accompanying demo video shows how users can instantly shop every item in the redesigned room.

Theme 5. Hardware Havoc and Performance Tuning

- CUDA vs. Compute Shaders Debate Ignites: Engineers in the GPU MODE server debated the merits of CUDA kernels versus compute shaders for image post-processing with libtorch C++, with PyTorch announcing a seminar on its new kernel DSL, Helion. A user also reported issues with CuTe failing to generate a 128-bit vectorized store, instead emitting two STG.E.64 instructions, which breaks memory coalescing.

- Linux Users Lament Lethal Cursor Freezes: Multiple Linux users reported Cursor IDE freezing and becoming unresponsive, pointing to potential network issues or bad requests being investigated by the team, as documented in the Cursor forums. Meanwhile, Windows users were reminded that disabling the page file can cause weird, unexplainable crashes even with ample RAM.

- Modular Platform Gets a Boost with MAX and Mojo: Modular Platform 25.5 is now live, featuring Large Scale Batch Inference via SF Compute and an open-source MAX Graph API. The release enhances MAX and PyTorch interoperability through the @graph_op decorator, but users on Intel-based macOS systems were reminded that only Apple Silicon CPUs are officially supported.

Discord: High level Discord summaries

Perplexity AI Discord

- OpenAI Drops Open-Source LLM!: OpenAI released an open-source LLM, GPT-OSS-120B, available at HuggingFace, causing excitement about hardware requirements (H100 GPUs recommended) and censorship levels.

- Members are already experimenting, but some are crashing their computers; they note the need for quantization and the heavy censorship, with one describing the model as censored to hell and back.

- Opus Arrives Quietly: Anthropic has released Claude 4.1 Opus. Initial reactions suggest mild improvements over previous versions, including better multi-file debugging, though it still fails many spatial tests.

- Some suggest the release is mainly a move to one-up OpenAI, while others note that the improvement may not justify the pricing and that the old rate limits still apply.

- GPT-5 Anticipation Heats Up: Anticipation is building for a potential GPT-5 release, possibly on the 7th, driven by hints from Sam Altman and chatter about OpenAI releasing an Operating System Model.

- There is conjecture that it might be related to Horizon, and debate on whether Grok 4 would break any benchmarks given this release.

- Youzu Visualizes E-Commerce: Youzu.ai is transforming online shopping with visual AI infrastructure, as demonstrated in a comprehensive demo at Vivre, which operates across 10 CEE countries.

- The Room Visualizer feature allows users to upload a room photo and receive a complete redesign in seconds, enabling instant shopping of every item, as seen in this demo.

- Sonar API Documentation Surfaces: A user new to Sonar API inquired about its usage, and another user shared a link to a YouTube video about Sonar API.

- In response to the question about the Sonar API, a user shared the Perplexity AI documentation link along with a GIF.

Unsloth AI (Daniel Han) Discord

- Unsloth Quantization Overload: The community asked Unsloth to quantize the yisol/IDM-VTON model, but Unsloth lacks diffusion training support and doesn’t handle custom quantization requests unless there’s significant demand.

- This is because of the manual labor and compute needed for each custom quantization.

- Nemotron Super 49B 1.5: the Daily Driver?: Members discussed the Nvidia Nemotron Super 49B 1.5 model, with one member finding it to be a great daily driver because of its prompt adherence if you NOT USE F**ING LISTS*.

- Others expressed interest in its capabilities as a general thinking model, noting its good instruction following while mentioning its dry prose.

- GPT-OSS Suffers Abliteration Allegations: Initial reactions to the OpenAI GPT-OSS model were mixed, with some calling it pretty much junk, while others voiced concerns over its safety measures, speculating it’s a result of US safety acts, leading to discussions about abliteration and its potential to make the model dumb as F__K.

- It was noted that GPT-OSS could be outperformed by GLM 4.5 Air, leading to discussions about the model’s overall value and the possibility of a Chinese startup surpassing it soon.

- GRPO Batching Brain Teasers: Inquiries arose about how Unsloth handles batching for GRPO, specifically whether entire trajectory groups are batched together.

- A member clarified that if n_chunks is set to 1, each batch corresponds one-to-one with a group.

- Token Decoder Maps Framework is Born: A member introduced their LLM domain-specific language framework designed for purposes like summarization.

- The GitHub project utilizes EN- tokens to summarize specific concepts or facts for later injection and prompting.
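
For the GRPO batching question above, the following Python sketch shows one way a chunk count could relate batches to trajectory groups. It is purely illustrative and does not reproduce Unsloth's implementation: `chunk_group` and `group_relative_advantages` are hypothetical helpers, and the use of population standard deviation for normalization is an assumption.

```python
from statistics import mean, pstdev


def chunk_group(group: list, n_chunks: int) -> list[list]:
    """Split one group of sampled completions into n_chunks equal batches."""
    if n_chunks < 1 or len(group) % n_chunks != 0:
        raise ValueError("group size must be divisible by n_chunks")
    size = len(group) // n_chunks
    return [group[i * size:(i + 1) * size] for i in range(n_chunks)]


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: each reward normalized against its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; variants differ
    return [(r - mu) / (sigma + eps) for r in rewards]
```

With `n_chunks=1`, `chunk_group` returns a single batch containing the whole group, which matches the one-to-one correspondence the member described. Advantages are computed over the full group either way, so chunking in this sketch only changes how the work is split, not the normalization.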

LMArena Discord

- GLM 4.5 Hypes Up: A member touted GLM-4.5 as potentially living up to the hype, scoring 5/5 on a test suite and outperforming other models including Horizon Beta, Grok 4, o3 Pro, Gemini 2.5 Pro, Claude Sonnet and Opus in a ‘create-an-html-game’ test.

- Despite quirks like infinite thinking loops and result discrepancies, the member concluded that GLM-4.5 is overall really strong.

- AI Chess Tourney Kicks Off Kaggle Game Arena: The Kaggle Game Arena will kick off with a 3-day AI chess exhibition tournament featuring 8 models (YouTube link).

- Some raised concerns about chess being a strategy optimization game rather than a test of intelligence, and questioned how non-visual models will interpret the board.

- Long Context Benchmarks Embrace Diverse Models: Members discussed context windows in benchmarks, noting the necessity to accommodate most released models, even those with smaller context windows, to ensure fair scoring.

- It was also pointed out that different versions exist for different context sizes, allowing models to be punished/rewarded accordingly.

- GPT-5 Release Looming?: There was discussion about a potential GPT-5 release in a few days, with one member noting that internal leaks have cited the same thing (x.com link).

- Jimmy Apples speculated that heavy users may not notice improvements in GPT-5 due to auto-routing.

- OpenAI open source models show limitations on LMArena: OpenAI’s gpt-oss-120b and gpt-oss-20b models are now available in the arena, expanding the range of choices for users interested in open-source alternatives.

- Members tested GPT-OSS 120B and found it disappointing, with performance perhaps on par with o3-mini or Qwen3 235B A22B, and more hallucinations than any other model except for Llama 4 Maverick.

LM Studio Discord

- OpenAI Drops gpt-oss Models!: OpenAI released gpt-oss, a set of open models under the Apache 2.0 license, available on lmstudio.ai with sizes of 20B and 120B parameters.

- Community members testing the models on LM Studio reported broken links on the LM Studio website and difficulties locating the 120B model, as well as setting the context.

- LibreChat Supercharges LM Studio Speed!: Members raved about the blazing fast speed of inference using models in LM Studio via LibreChat, with one claiming “It’s like a identical ChatGPT (OpenAI) UI that serves all my LM studio models.”

- One user resolved a connection issue by adjusting YAML indentation, emphasizing the importance of configuration details.

- Tailscale Troubles? Bind to 0.0.0.0: Users ran into setup issues trying to use AnythingLLM with LM Studio via a Tailscale IP, where the model list would fail to populate.

- The problem was resolved by setting the LM Studio host to 0.0.0.0 to allow external connections and opening port 1234 in the firewall.

- Hardware Havoc: Windows Page File Still Matters: Despite abundant RAM, disabling the page file on Windows can lead to weird, unexplainable crashes, as some programs rely on it.

- A better alternative is using zram to compress pages and store them in RAM, potentially fitting 2-3 compressed pages in one actual page.

- CUDA Runtime Confusions Cleared!: For setups with multiple GPUs like a 5090 and 4090, users recommend using CUDA 12 for the runtime.

- Users were also advised to opt into the beta branch of both LM Studio and the runtime.
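
For the Tailscale fix above (host bound to 0.0.0.0, port 1234 opened), a quick way to confirm the server is reachable from another machine is to request its model list. The sketch below is a minimal Python check that assumes LM Studio is serving its OpenAI-compatible API at `/v1/models`; the function names and the address in the usage note are hypothetical.

```python
import json
import urllib.request


def models_url(host: str, port: int = 1234) -> str:
    """Build the OpenAI-compatible model-list URL for the server."""
    return f"http://{host}:{port}/v1/models"


def parse_model_ids(body: str) -> list[str]:
    """Extract model ids from an OpenAI-style {"data": [{"id": ...}]} body."""
    payload = json.loads(body)
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(host: str, port: int = 1234, timeout: float = 5.0) -> list[str]:
    """Fetch the model list; raises URLError/OSError if unreachable."""
    with urllib.request.urlopen(models_url(host, port), timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

Calling `list_models("100.64.0.10")` with your own Tailscale address in place of this placeholder should return the loaded model names. A timeout or connection error suggests the server is still bound to localhost or the firewall is blocking the port.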

OpenAI Discord

- OpenAI Unveils GPT-OSS Models and Hackathon: OpenAI released the GPT-OSS model family and launched a six-week virtual hackathon in collaboration with Hugging Face, NVIDIA, Ollama, and vLLM.

- The hackathon features categories like Best Overall, Robotics, and Wildcard, offering winners cash prizes or NVIDIA GPUs. A separate $500K Red Teaming Challenge is also being held on Kaggle.

- GPT-5 Release Date Still Unclear: Community members are actively speculating about the release of GPT-5, though some believe a more incremental update like GPT-4.1 or a unification of existing models is more likely; this X post is circulating.

- Despite anticipation, skepticism remains, with some suggesting OpenAI may be struggling to make GPT-5 sufficiently impressive.

- GPT-OSS Model Tested by Community: The 120B parameter GPT-OSS model reportedly achieves near-parity with OpenAI o4-mini on core reasoning benchmarks, while running efficiently on a single 80 GB GPU, according to OpenAI’s blogpost.

- The 20B parameter model is said to deliver results similar to OpenAI o3‑mini and can run on edge devices with only 16 GB of memory.

- AI sparks Academic Integrity Debate: A professor is teaching other professors how to use AI to create exams, but students are conversely using AI to complete them, raising concerns about critical thinking erosion.

- The sentiment is that AI should offload lower-skilled tasks, letting individuals focus on the important parts of the problem, but switching off one’s own brain results in generic fast food outputs.

- New Ollama GUI Eases Local Model Access: The new Ollama UI is praised for its simplicity, especially the toggle to enable network serving, though some find it immature compared to UIs like AnythingLLM and Dive.

- The new Ollama GUI has made running models locally easier than ever before.

OpenRouter (Alex Atallah) Discord

- Anthropic Opus 4.1 Takes Coding Crown: The new Anthropic Opus 4.1 model is now live on OpenRouter and leading in the SWE Bench coding benchmark, detailed on X.

- The model can be accessed here for immediate use.

- OpenAI Back to Open Source with GPT-OSS: OpenAI launched gpt-oss, new open-weight models with variable reasoning, on OpenRouter, detailed on X.

- The models include gpt-oss-120b at $0.15/$0.60 per M input/output tokens and gpt-oss-20b at $0.05/$0.20 per M input/output tokens.

- Model Prioritization Bug Vanquished: A quick fix has resolved the model vs models prioritization issue by ensuring only model or models is used, as per Google AI documentation.

- The fix prevents conflicts and ensures proper model selection for users.

- OpenRouter Mulls Claude Cache Stripping: Some members have requested that OpenRouter automatically strip cache parameters for Claude providers that don’t support caching.