GPT-5 is hopefully all you need.

AI News for 8/6/2025-8/7/2025. We checked 12 subreddits, 544 Twitters and 29 Discords (227 channels, and 16553 messages) for you. Estimated reading time saved (at 200wpm): 1183 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

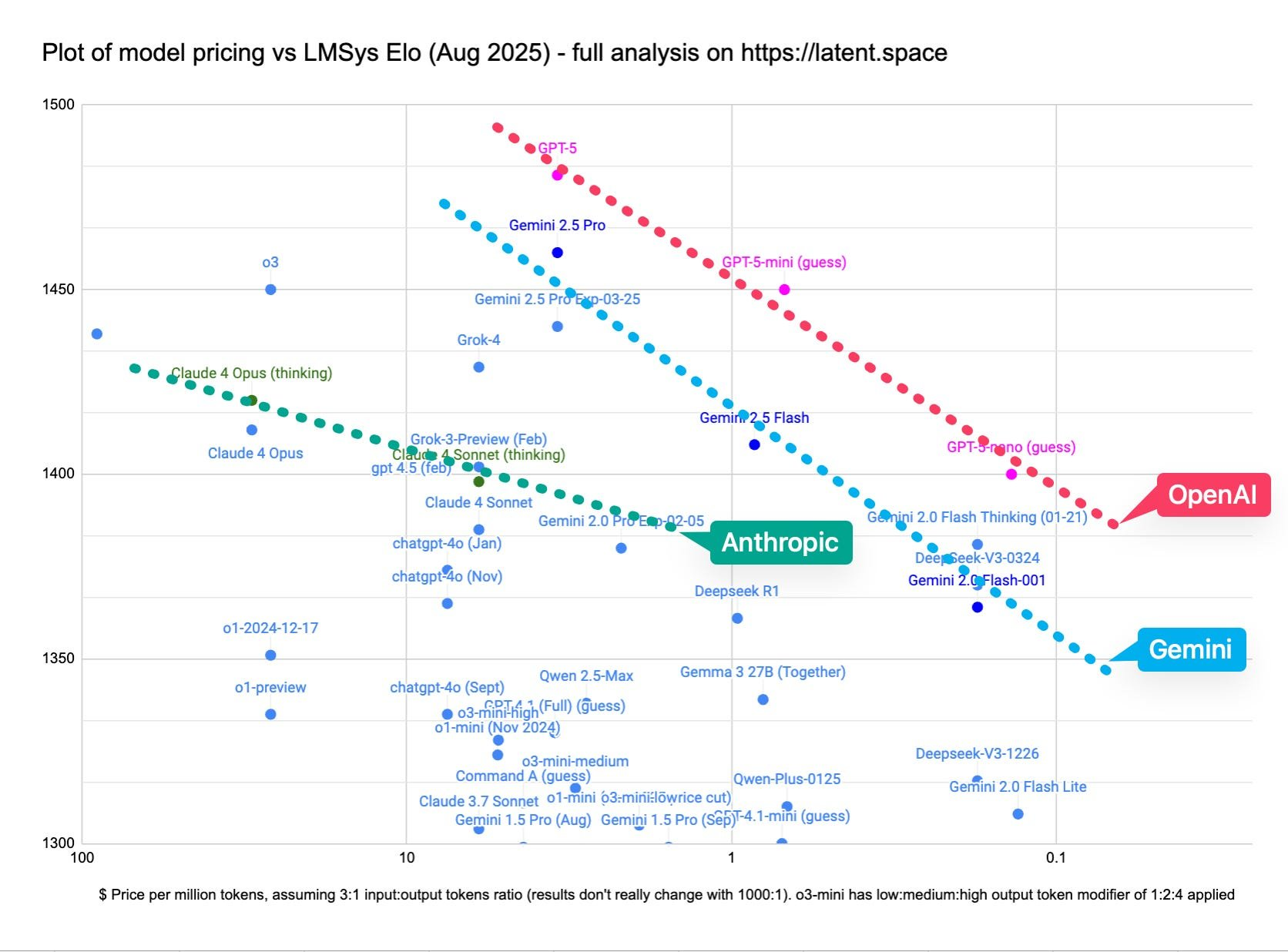

While the livestream was somewhat disappointing (except for the highly entertaining chart crimes), and the benchmarks were incremental improvements over the SOTA offerings from OpenAI, the pricing wowed us, as OpenAI took back the Pareto Frontier of Intelligence from GDM.

With OpenAI now having a model at least at the Claude 4 Sonnet tier that passes developer vibe checks, it is solidly “back” in the coding model game, although the long-term impact remains to be seen.

We recommend looking through the hands-on early beta report and thinking through what was revealed from the model card description of GPT-5’s architecture.

Here is GPT-5’s launch, according to GPT-5:

OpenAI’s GPT‑5 Launch: unified router, aggressive pricing, broad rollout

- What shipped: GPT‑5 is a “unified system” with a fast “main” model and a deeper “thinking” model behind a real‑time router that decides when to reason, call tools, or stay terse. In ChatGPT, there’s no model picker by default; Plus users can select GPT‑5 vs GPT‑5 Thinking; Pro gets more variants. API exposes gpt‑5, gpt‑5‑mini, gpt‑5‑nano and a “reasoning effort” control (minimal/low/medium/high). Context: up to 400K (128K max output). Knowledge cutoff reported as 2024‑10‑01 for main; minis reported as 2024‑05‑31. Rollout is staged to Free/Plus/Pro/Team (Enterprise/Edu next week). Announcements: @OpenAI, @sama, system card summary.

- Prices and cache: Tweets cite gpt‑5 at $1.25/M input, $10/M output with cache discounts (“flex” references as low as $0.625/$5) and gpt‑5‑mini at $0.25/$2; gpt‑5‑nano at $0.05/$0.4. Multiple OpenAI leads emphasized the cost downshift and cache economics (@scaling01, @sama, @jeffintime).

- Product integrations (day‑0):

- Chat/coding: Codex CLI makes GPT‑5 the default with usage included via ChatGPT plan; new terminal UI and rate‑limits by plan (@OpenAIDevs, @embirico). Cursor set GPT‑5 as the default coding model, temporarily free (@cursor_ai). JetBrains AI Assistant and Junie agent support GPT‑5 (@jetbrains). Microsoft Copilot “Smart Mode” routes to GPT‑5 (@mustafasuleyman). Notion AI now offers GPT‑5 (@NotionHQ). Perplexity added GPT‑5 for Pro/Max (@perplexity_ai).

- Agent scaffolds: Cline reports GPT‑5 is disciplined, parallelizes tool calls, and “plans verbose, executes terse” (@cline); Factory made GPT‑5 its default for “Droids” (@FactoryAI). OpenAI published a GPT‑5 prompting/cookbook bundle (@OpenAIDevs).
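To make the pricing concrete, here is a minimal sketch that turns the per-million-token prices cited above into a per-request cost. The `PRICES` table copies the tweeted figures. The function name `request_cost` and the example token counts are our own, and cache/flex discounts are left out because the exact cached-input rate is not stated here.

```python
# Per-million-token prices (USD) as cited in the tweets above: (input, output).
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the uncached cost in USD of a single request."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: a 200K-token prompt with a 20K-token answer.
    for model in PRICES:
        cost = request_cost(model, input_tokens=200_000, output_tokens=20_000)
        print(f"{model}: ${cost:.4f}")
```

Because output tokens cost 8x as much as input tokens across all three tiers, long reasoning traces will likely dominate the bill for "thinking"-heavy workloads.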

Benchmarks, evals, and the “chart crimes”

- Arenas and coding: GPT‑5 tops LMSYS Text/WebDev/Vision Arenas (tested as “summit”), with a notably large WebDev margin (@lmarena_ai). OpenAI claims 74.9% on SWE‑bench Verified; several researchers immediately flagged a mislabeled axis and that OpenAI ran on a 477‑task subset; corrected charts put GPT‑5 roughly on par with Claude 4.1 Sonnet/Opus (74–75%) on the verified set (@nrehiew_, @OfirPress, @Sauers_).

- Long‑context and hallucinations: GPT‑5 leads Artificial Analysis’ long‑context reasoning (AA‑LCR) occupying #1 and #2; big headline improvement over o3‑high on long‑context tasks (@ArtificialAnlys). Multiple claims of much lower hallucination and introduction of “safe completions” (refusal that maximizes utility within safety constraints) (@scaling01, @sama). METR’s autonomy eval finds GPT‑5 unlikely to pose catastrophic risk under current threat models, while cautioning about increased eval‑awareness/manipulation risk as capabilities rise (@METR_Evals).

- Reasoning/agents: GPT‑5 shows strong instruction following and tool use (e.g., TauBench gains, IFBench instruction‑following), but mixed changes on non‑SWE coding evals and OpenAI PR‑reproduction (@omarsar0, @eli_lifland, @scaling01).

- ARC‑AGI and safety‑deception: GPT‑5 hits 65.7% on ARC‑AGI‑1 but 9.9% on ARC‑AGI‑2; Grok‑4 leads ARC‑AGI‑2 at 15.9% (@fchollet, @scaling01). GPT‑5 shows lower deceptive behavior than o3 in OpenAI’s internal measures (methodology matters; third‑party replication pending) (@scaling01).

- Note on comms: OpenAI’s event drew widespread criticism for multiple “chart crimes” (axis/scale errors) in slides—blog version was fixed later (@jeremyphoward, @iScienceLuvr).

Agentic coding reality check: strong tooling, fewer vibes

- Hands‑on reports: Early users highlight GPT‑5’s “autistic” instruction following, fewer yaps, parallel tool calls, and long‑horizon persistence—e.g., multi‑file edits and reliable diffs (Codex CLI, Cline, Cursor). Several posts show one‑shot interactive apps/dashboards/games with minimal prompting (@skirano, @benhylak, @pashmerepat). Cursor calls GPT‑5 “the smartest coding model we’ve tried” and made it default, free initially (@cursor_ai).

- Routers are the product: The deprecation of the in‑app model picker signals a bet on real‑time routing (thinking/tool use) as UX default; this shifts dev control from “which model?” to “what constraints/policy/verbosity/effort?” (@sama, @dariusemrani).

- Independent evals: Deep‑research runs find GPT‑5 roughly comparable to Claude 4 Sonnet on long‑horizon research tasks (small sample), suggesting gains may be use‑case/stack dependent rather than across‑the‑board (@hwchase17).
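One way to picture the shift from “which model?” to “what effort?” is a per-request policy object. The sketch below is a toy: only the four effort levels come from the launch notes. `RequestPolicy`, `choose_effort`, and the thresholds inside it are hypothetical and say nothing about how OpenAI's router actually decides.

```python
from dataclasses import dataclass

# The four "reasoning effort" levels named in the launch notes.
EFFORT_LEVELS = ("minimal", "low", "medium", "high")


@dataclass(frozen=True)
class RequestPolicy:
    """Hypothetical per-request knobs a developer sets instead of a model name."""

    effort: str = "medium"
    needs_tools: bool = False

    def __post_init__(self) -> None:
        if self.effort not in EFFORT_LEVELS:
            raise ValueError(f"effort must be one of {EFFORT_LEVELS}")


def choose_effort(prompt: str, needs_tools: bool = False) -> RequestPolicy:
    """Toy heuristic (an assumption, not OpenAI's logic): longer or
    tool-using requests get more reasoning effort."""
    words = len(prompt.split())
    if needs_tools:
        effort = "high"
    elif words > 200:
        effort = "medium"
    elif words > 20:
        effort = "low"
    else:
        effort = "minimal"
    return RequestPolicy(effort=effort, needs_tools=needs_tools)
```

The point of the design is that the caller states constraints and the system picks the path, which mirrors the product direction described above without claiming to reproduce it.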

AI Twitter Recap

OpenAI’s GPT-5 Launch and Reception

- The Announcement: OpenAI officially announced the launch of GPT-5, with CEO @sama teasing a livestream that would be “longer than usual” with “a lot to show.” The new model is described as a unified system that automatically switches between quick answers and deeper reasoning, rolling out to all users, including the free tier. OpenAI’s Head of Product @kevinweil stated, “It’s the best thing we’ve ever built.” The release deprecates previous models, with the goal of simplifying the user experience by removing the model switcher. An AMA with the team is scheduled for the following day.

- Technical Details and Pricing: GPT-5 is a family of models, not a single monolithic entity. It includes `gpt-5`, `gpt-5-mini`, and `gpt-5-nano`, and also separate “thinking” models, leading @teortaxesTex to call it a “unified system” that is “literally just SEPARATE CoT + non-CoT models + a router.” API pricing was a major point of discussion, with @scaling01 noting its competitiveness: the main model is priced at $1.25/$10 per million tokens, with `mini` at $0.25/$2 and `nano` at $0.05/$0.4, all with a 400k context window and a knowledge cutoff of October 1st, 2024. This makes it cheaper than Sonnet and better than Opus. @jerryjliu0 observed that for document understanding, GPT-5 seems to use 4-5x more tokens than GPT-4.1, potentially increasing its effective cost for vision tasks.

- Performance and Benchmarks: Initial benchmarks show significant improvements in some areas but stagnation in others. @scaling01 highlighted “ridiculous improvements on long-context tasks” and near-elimination of hallucinations. GPT-5 also became the new SOTA on the LMArena leaderboard. However, @fchollet reported that on ARC-AGI, GPT-5 scored 65.7% on AGI-1 and a modest 9.9% on AGI-2. Further analysis from @scaling01 showed only a 3% improvement over `o3` in reproducing scientific papers and no significant improvement on benchmarks like OpenAI Pull Requests and SWE-Lancer IC.

- The “Chart Crime” Controversy: A significant part of the community discussion centered on misleading charts in the launch presentation. A chart on SWE-Bench performance was widely criticized for having a non-monotonic Y-axis, where 52.8% was plotted higher than 69.1%. This was first pointed out by @Teknium1 and amplified by many, including @jeremyphoward. @iScienceLuvr quipped, “If GPT-5 made this chart I’m bearish,” while @kipperrii joked, “fuck the y-axis! fuck the x-axis! just scribble on an exponential and go home!” @nrehiew_ later provided a corrected version of the chart.
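The “non-monotonic Y-axis” complaint is easy to state as a check: a bar with a larger value must never be drawn shorter than a bar with a smaller one. Here is a small sketch of that check. The percentages are the ones quoted in the coverage, while the pixel heights and the function name `axis_violations` are invented purely to reproduce the reported ordering problem.

```python
from itertools import combinations


def axis_violations(bars):
    """Return label pairs whose drawn heights contradict their values.

    `bars` is a list of (label, value, drawn_height) tuples.
    """
    bad = []
    for (la, va, ha), (lb, vb, hb) in combinations(bars, 2):
        value_order = (va > vb) - (va < vb)    # -1, 0, or 1
        height_order = (ha > hb) - (ha < hb)   # -1, 0, or 1
        if value_order != height_order:
            bad.append((la, lb))
    return bad


# Heights are made-up pixel values, chosen only so that the 52.8% bar
# is drawn taller than the 69.1% bar, as reported.
bars = [
    ("74.9%", 74.9, 300),
    ("69.1%", 69.1, 120),
    ("52.8%", 52.8, 200),
]

if __name__ == "__main__":
    print(axis_violations(bars))
```

A check like this could run in a plotting pipeline before slides are exported, though it only catches ordering errors, not wrong proportions.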

Competing Models and The Broader Ecosystem

- xAI’s Grok: Grok-4 emerged as a strong competitor, with @fchollet stating it remains state-of-the-art on ARC-AGI-2, scoring 15.9% to GPT-5’s 9.9%. @Yuhu_ai_ claimed xAI is “ahead in many” aspects and that Grok was the “world’s first unified model.” In a dramatic turn, @cb_doge reported that Grok-4 defeated Google’s Gemini in the Kaggle AI Chess semi-final, though it was ultimately defeated by OpenAI’s o3 in the final. In parallel, @elonmusk announced that Grok Imagine video generation would be free for all US users.

- Perplexity and Multi-Model Support: Perplexity announced day-one support for GPT-5 for its subscribers. CEO @AravSrinivas highlighted their extensive model offerings, including GPT-5, Claude 4.1 Opus, Grok 4, and Gemini 2.5 Pro, positioning Perplexity as a key multi-provider platform.

- Open vs. Closed Source Landscape: @Tim_Dettmers offered a key insight, stating, “It seems the closed-source vs open-weights landscape has been leveled. GPT-5 is just 10% better at coding than an open-weight model you can run on a consumer desktop.” This sentiment was echoed by discussion around OpenAI’s gpt-oss release, which can now run natively on a free Google Colab T4 instance, powered by Transformers.

- Other Notable Models: Alibaba introduced new Qwen3-4B models. Kimi K2 received praise for its coding capabilities and unique writing style.

Developer Tooling, Frameworks, and Infrastructure

- Developer Environments & CLIs: The release of GPT-5 triggered immediate integrations. @aidan_mclau announced that GPT-5 is now the default in Cursor, replacing Claude, with Cursor’s CEO calling it “the smartest coding model we’ve tried.” Codex CLI also saw major improvements with GPT-5 integration, with usage included in ChatGPT plans. Cline also added GPT-5, describing it as “disciplined, persistent, & highly competent.”

- RAG and Agentic Frameworks: There is continued strong interest in advanced Retrieval-Augmented Generation. @HamelHusain shared an open book titled “Beyond Naive RAG: Practical Advanced Methods.” LangChain continues to build out its agent ecosystem, with @unwind_ai_ noting they “reverse-engineered Claude Code, Manus, and Deep Research” for their OpenSWE agent. Jules now supports running and rendering web applications with screenshot verification.

- Inference and Infrastructure: vLLM highlighted its adoption by major tech companies like Tencent, Huawei, and ByteDance at a Beijing Meetup. A tutorial for building a minimal, vLLM-like inference system in under 1000 lines of code using FlexAttention was shared by @cloneofsimo. For developers running models locally, @ggerganov pointed out that LMStudio’s use of the upstream `ggml` implementation is “significantly better and well optimized” compared to `ollama`’s fork.

Broader Implications & Industry Commentary

- The Plateau Thesis: A strong sentiment emerged that progress from simply scaling LLMs is hitting a wall. @far__el stated, “It’s clear that you can’t squeeze AGI out of LLMs even if you throw billions of dollars worth of compute at it, something fundamental is missing.” @francoisfleuret agreed, clarifying the observation applies to the entire field: “We are seeing the plateau: just scaling up is coming to an end. For EVERYONE.” This was contrasted with the view that agent scaffolds and post-training now matter more than ever.

- AI Talent & Economics: @AndrewYNg provided a detailed analysis of the economics of AI development, explaining that the high capital cost of GPUs makes it rational for companies like Meta to pay enormous salaries to top talent to ensure hardware is used effectively. This capital-intensive nature makes salaries a small fraction of overall expenses. In a related observation, @jxmnop questioned the efficiency of VC funding, noting startups that “raised ~100M total… built software nobody ever used, and now they all work elsewhere.”

- Market Reaction: The initial market reaction to the GPT-5 launch was muted. @scaling01 noted that OpenAI was “getting crushed on Polymarket,” suggesting markets were disappointed by the release.

- AI and Knowledge: @Teknium1 argued that relying on search via an agent harness is not a valid replacement for the “rich connections” a model builds from backpropagation on the world’s knowledge, stating it’s “more meaningful than rag.”

Research and New Techniques

- Agent Learning and Optimization: Databricks researchers, including @lateinteraction and @jefrankle, introduced ALHF (Agent Learning from Human Feedback), a method where agents are optimized based on natural language feedback from users about bad responses. The technique is described as both information-dense and ergonomic. Another paper explored combining prompt optimization with policy-gradient RL.

- Multimodal Models and Techniques: MiniMax announced Speech 2.5, a voice cloning model supporting 40 languages with high fidelity. Researchers at Google DeepMind shared a paper on efficiently training small vision-language models to use a zoom tool with GLaM. The TRL library also received a major upgrade for multimodal alignment, adding techniques like GRPO & MPO.

- Data Augmentation: @cloneofsimo pointed out an interesting corollary of Fill-in-the-Middle (FIM) training: FIM-style augmentation can practically “2x” your high-quality dataset with practically zero downside.
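As a rough illustration of the FIM-style augmentation mentioned above, the sketch below keeps every document and adds one copy rearranged into prefix-suffix-middle order, which doubles the sample count. The sentinel strings and the function names are placeholders of our own, since real tokenizers define their own FIM tokens, and the random cut points are just one possible choice.

```python
import random

# Placeholder sentinels (assumed); real tokenizers define their own FIM tokens.
FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def to_fim(doc: str, rng: random.Random) -> str:
    """Cut `doc` at two random points and emit prefix-suffix-middle order."""
    i, j = sorted(rng.randint(0, len(doc)) for _ in range(2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


def from_fim(sample: str) -> str:
    """Invert `to_fim` (assumes the document contains no sentinel strings)."""
    rest = sample[len(FIM_PREFIX):]
    prefix, rest = rest.split(FIM_SUFFIX, 1)
    suffix, middle = rest.split(FIM_MIDDLE, 1)
    return prefix + middle + suffix


def augment(docs, seed=0):
    """Return each document followed by one FIM-rearranged copy of it."""
    rng = random.Random(seed)
    out = []
    for doc in docs:
        out.append(doc)
        out.append(to_fim(doc, rng))
    return out
```

The round trip through `from_fim` shows that no information is lost: the model sees the same text, just with the middle span moved to the end as the prediction target.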

Humor and Memes

- Hype and Anticipation: The community geared up for the launch with @gdb posting a cryptic countdown timer `T - [[5+5+5] - 5/5] hours`, and @nearcyan joking about being at dinner with an OpenAI friend “vaguely gesturing towards the kitchen and grinning.”

- Chart Crime Memes: The flawed launch charts were a comedic goldmine, with @zacharynado and others sharing screenshots of the non-monotonic axes. @ThePrimeagen reacted to a claim of a founder deleting 10k lines of code a day with “ok Lex Luthor, its time to step away from the keyboard.”

- Relatable Engineer Life: @vikhyatk posted a picture of a messy desk with the caption “Men really think it’s okay to live like this.” @francoisfleuret captured the feeling of reviewing night logs with “what the [expletive] is this [expletive].”

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. GPT-OSS and OpenAI Model Hype and Brand Perception

- GPT-OSS is Another Example Why Companies Must Build a Strong Brand Name (Score: 538, Comments: 329): The post criticizes the disproportionate attention given to GPT-OSS 120B compared to technically stronger alternatives like Qwen-235B and DeepSeek R1, citing lack of multimodality, smaller size, and reliance on innovations from other open-source projects. The author highlights that Alibaba has released competitive models (e.g., Wan2.2 and Qwen-Image for video/image, high-performing 30B and 4B models) with little media coverage, attributing GPT-OSS’s hype to OpenAI’s branding rather than clear technical superiority. Notably, DeepSeek R1’s cost-per-training is presented as evidence of superior efficiency (DeepSeek: $5.58M vs rumored OpenAI: much higher), and censorship complaints are discussed as inconsistently applied across regions/models. Top comments emphasize the regional bias in influencer coverage (favoring US/English-language companies), shortcomings in technical depth of popular AI media channels, and technical distinctions—one commenter notes that OSS 120B is much sparser and faster than Qwen-235B, which justifies different use cases beyond raw benchmark scores. Non-compliance (re: alignment/safety behaviors) in OSS 120B is mentioned as a possible business feature, not a bug.

- A technical distinction is made between OSS 120b and Qwen3 235b: OSS 120b has half as many parameters, only a quarter as many active parameters, and is extremely sparse, leading to significantly higher inference speeds compared to Qwen3 235b. However, OSS 120b’s smaller size and sparsity also mean it’s overly non-compliant for certain tasks, which some businesses might actually prefer due to regulatory or operational requirements.

- Benchmarks are indirectly discussed: OSS 120b is said to be much faster than Qwen3 235b because of its sparsity and fewer active parameters, though it doesn’t match larger models in overall capability. The comment also points out user preference for models like Qwen3 30b A3B, stating that smaller models like OSS 20b aren’t as sparse and thus might not have the same speed advantage for their parameter size.

- If the gpt-oss models were made by any other company than OpenAI would anyone care about them? (Score: 226, Comments: 119): The post questions whether the recent gpt-oss models would receive significant attention if released by a company other than OpenAI, given their reportedly inferior coding abilities relative to models like Qwen 32B, higher hallucination rates, and perceived overfitting to benchmarks. A technical comment highlights that the gpt-oss 120B model achieves an inference speed of 25 tokens/sec on a single RTX 3090 and i9-14900K, notably outperforming other local models at similar quantization levels (e.g., 70B q4), making it attractive for local deployment despite concerns about practical usability and excessive ‘safety’ constraints. Commenters agree OpenAI’s brand drives disproportionate hype, noting most of the public is unaware of other AI companies or models, but also credit OpenAI with advancing the open model scene’s visibility. There is debate on whether the model’s speed and local inference capability justifies some excitement independent of its brand.

- The gpt-oss 120B model demonstrates a significant technical advantage—according to a user benchmark, it can run at 25 tokens/sec on a single 3090 + 14900K system. This is notably faster and more performant than other locally run 70B models using quantization (e.g., q4 or worse), which are described as “very very bad.” Such speed and scale on consumer-grade hardware make it stand out among local LLMs.

- There is ongoing debate regarding model utility: while gpt-oss 120B performs well speed-wise and in generating quality outputs (noted for “clear thinking patterns” and “quick and quality summaries”), concerns remain about the level of censorship (‘safety’) embedded in the model. Some users suggest a fine-tune is necessary to adapt the model for broader practical applications, as heavy safety alignment may reduce its usefulness for some tasks.

- Historically, local LLMs were considered either not good enough or too slow, leading users to rely on API-based solutions (e.g., GPT-4o, Claude). gpt-oss 120B is seen as a turning point—potentially “on the edge” of making local deployment practically viable for demanding use-cases, pending further evaluation of its capabilities beyond speed and safety constraints.

- Hilarious chart from GPT-5 Reveal (Score: 1737, Comments: 224): The post shares an image from the ‘GPT-5 Reveal’ that users found particularly confusing or unprofessional, as reflected in both the title and the comments. Commenters sarcastically suggest the chart was generated by DALL-E and criticize the quality of the reveal livestream, questioning the meaning or utility of the chart. No technical details about GPT-5 or model performance are conveyed in the image itself; the focus is entirely on the perceived lack of clarity and professionalism of the visualization used during the reveal. The technical discussion centers on the poor quality and possible nonsensical nature of the chart, with users expressing disbelief and disappointment in the reveal’s presentation standards.

- Commenters discuss the confusing or low-quality visualizations shown during the GPT-5 reveal, with some suggesting that the chart quality (e.g., “I think they used dall e to plot it”) undermines the technical credibility of the presentation. The discussion raises concerns about the impact of poor data presentation on the perceived trustworthiness and clarity of model benchmarks or technical claims.

- There is technical skepticism around the meaning and measurement of the terms used in the reveal (e.g., “Deception rate”), suggesting a lack of transparency or unclear metric definitions. Users remark that without rigorous, well-defined benchmarks, it’s hard to assess the true capabilities or risks of GPT-5.

- To all GPT-5 posts (Score: 156, Comments: 14): The post humorously emphasizes the technical concern of which AI model is exposed on commonly used local API ports (e.g., 8000, 8080) rather than discussion about GPT-5 pricing or official API tiers. The attached image, while not described, likely references the user’s local deployment and management of models on specific ports. A top comment further details a user’s own port allocations for various local LLM and AI tools, showing ports (9090, 9191, 9292, etc.) used for models like Gemma3 4B (main LLM), Whisper, Qwen3, Nomic, and Mistral, illustrating community practices for self-hosted LLM infrastructure. Commenters discuss preferences for discussing GPT models in the context of open-source and locally-run LLMs versus commercial platforms, and share practical setups for port assignments, reflecting community standards for organizing multi-model deployments.

- One user provides a detailed port configuration setup for running multiple local LLM-related services concurrently. Examples include running a main LLM (Gemma3 4B) on port 9090, Whisper for speech (ggml-base.en-q5_1.bin) on 9191, tool-calling and coding LLMs (Qwen3 4B and Qwen3-Coder-30B-A3B) on separate ports, and vision/project-specific models (like Mistral 3.2 24B) on others, highlighting practical multi-model orchestration and the issue of port collisions with 8080.

- There’s a brief mention of comparing GPT models to open source offerings like Kimi k2, emphasizing community interest in benchmarking OpenAI’s latest models against fast-moving open source alternatives, including those from China. This suggests an ongoing technical debate around capability, openness, and future competitiveness.
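For readers juggling several local model servers as in the port-allocation comment above, a tiny registry check can flag clashes before anything is launched. This is only a sketch: Gemma3 4B on 9090 and Whisper on 9191 follow that comment, while the other port numbers, the `CROWDED_PORTS` set, and the `port_problems` helper are assumptions made for illustration.

```python
from collections import defaultdict

# 9090 and 9191 follow the comment above; the remaining ports are assumed.
SERVICES = {
    "gemma3-4b (main LLM)": 9090,
    "whisper (ggml-base.en-q5_1)": 9191,
    "qwen3-4b (tool calling)": 9292,
    "qwen3-coder-30b-a3b (coding)": 9393,
}

# Defaults that many local servers compete for.
CROWDED_PORTS = {8000, 8080}


def port_problems(services):
    """Map each problematic port to a short description of the issue."""
    by_port = defaultdict(list)
    for name, port in services.items():
        by_port[port].append(name)

    problems = {}
    for port, names in by_port.items():
        if len(names) > 1:
            problems[port] = f"collision: {', '.join(names)}"
        elif port in CROWDED_PORTS:
            problems[port] = f"{names[0]} uses a commonly contested default port"
    return problems


if __name__ == "__main__":
    print(port_problems(SERVICES) or "no port problems found")
```

This only inspects the declared mapping. It does not probe whether a port is actually free on the machine.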

2. Major Open-Source Model Release News and Comparisons

- Huihui released GPT-OSS 20b abliterated (Score: 384, Comments: 96): Huihui has released an “abliterated” (uncensored) derivative of GPT-OSS-20b (HuggingFace link), advertising it as free from alignment/safety restrictions. The model is distributed in BF16 format; community members are waiting for a GGUF (quantized) version for broader compatibility. The original GPT-OSS-20b featured significant safeties, which are apparently removed here, leading to discussion of rapid ‘unfiltering’ in the open-source AI community. Commenters note the speed at which safeguards were circumvented and express anticipation for empirical testing of the claimed uncensored capabilities, with some referencing the ongoing tension between open-source and closed-source approaches to safety.

- Several users are discussing anticipation for community-led benchmarks and testing results on the “abliterated” (safety-reduced) GPT-OSS 20B model, signaling interest in detailed performance and safety evaluation compared to previous iterations.

- There is specific demand for compatible weights in GGUF format, indicating users are interested in efficient inference with tools such as llama.cpp and local deployment optimizations.

- Nonescape: SOTA AI-Image Detection Model (Open-Source) (Score: 136, Comments: 65): The image is likely a screenshot demonstrating the interface or results of the open-source Nonescape AI-image detection models, which claim state-of-the-art (SOTA) accuracy and a lightweight 80MB in-browser version. The models are trained on over 1 million images, cover recent AI techniques including diffusion, GANs, and deepfakes, and offer both Javascript and Python libraries for integration (GitHub). The demo works for both images and videos, emphasizing real-world usability. Commenters raise skeptical technical points: one notes demo detections may simply correlate filenames to “AI” or “fake”, and another advises rapid use before adversarial training diminishes its effectiveness in distinguishing new AI-generated images. This highlights ongoing cat-and-mouse dynamics common in AI detection research.

- A commenter notes that models like Nonescape may quickly lose effectiveness: as open-source detection models become widely known, image generators can use them as discriminators during training, enhancing the naturalness of their output and allowing them to bypass detection—leading to an evolving adversarial cycle between generators and detectors.

- One user highlights significant implementation challenges, stating that for robust AI-image detection, considerably more baseline data is needed (potentially 10x current datasets). They also argue the necessity of using image tiling, large batch sizes, and similar techniques both in training and inference to achieve satisfactory generalization and performance.

- A technical observation points out inconsistency between model deployments: while the full version of Nonescape failed to categorize a poor-quality generated image as AI, the browser-based version succeeded, raising questions about deployment differences, model robustness, or variance between inference environments.

- random bar chart made by Qwen3-235B-A22B-2507 (Score: 353, Comments: 14): The image shows a random bar chart generated by the Qwen3-235B-A22B-2507 model, rendered on an HTML canvas. The post highlights the model’s ability to output not just raw data but also code to render data visualizations directly using web technologies (JavaScript and HTML canvas). No detailed model or benchmark discussion is present, but this demonstrates practical utility in automated code generation for graphical outputs. Commenters make lighthearted remarks about the quality and accuracy of the chart but do not critique the technical implementation or model performance in depth.

- The post references Qwen3-235B-A22B-2507, suggesting this is a newer or experimental checkpoint/variant of the Qwen3-235B model. The existence of chart generation and references to its accuracy indicate evaluation or use of the model on visualization or data presentation tasks, possibly benchmarking model output quality in contexts beyond text.

- There is a mention of slide-generation capabilities in z.ai, implying that some users are comparing model outputs and utility for tasks like automated presentation creation across platforms or services, hinting at broader emerging benchmarks for practical business use-cases.

3. Llama.cpp Feature Updates and Support Announcements

- Llama.cpp now supports GLM 4.5 Air (Score: 231, Comments: 67): llama.cpp has merged support for the GLM 4.5 model family as of this recent PR (pull/14939), making it possible to run these models efficiently within the llama.cpp/ggml ecosystem. Benchmark comparisons indicate that llama.cpp achieves significantly higher throughput (44 tk/s) versus LM Studio (22 tk/s) for MoE models like Qwen3 Coder 30B-A3B, especially when using the `n-cpu-moe` flag to offload MoE experts to the CPU, highlighting implementation-level efficiency for model parallelism in llama.cpp. Comments note that while GLM 4.5 support is now available, subjective performance/quality impressions are mixed: GLM 4.5 is considered “wordy and overthinks” compared to GPT-OSS 120B, which is also reportedly faster in tokens/second; another user praises GLM 4.5’s world knowledge, particularly for esoteric Q&A tasks, compared to other LLMs.

- One commenter provides a direct benchmark comparison between LM Studio and llama.cpp for MoE models, specifically Qwen3 Coder 30B-A3B, noting LM Studio achieves only 22 tokens per second versus 44 tokens/sec with llama.cpp. They highlight that using the `n-cpu-moe` flag to offload MoE layers in llama.cpp significantly improves performance, emphasizing llama.cpp’s current efficiency advantage in MoE inference.

- Technical users confirm llama.cpp’s early support for GLM 4.5 models, and one notes the model’s world knowledge is impressive compared to other LLMs, especially when handling esoteric Q&A tasks, indicating strong knowledge retention and QA capabilities in GLM 4.5 within this inference backend.

- Another experienced user suggests running Llama models via vanilla llama.cpp and llama-cli for better output quality compared to other UI or wrapper platforms, as they observed the models often underperform (‘dumber’) on less direct platforms, suggesting implementation nuances can affect output quality.

- llama.cpp HQ (Score: 454, Comments: 61): The image shows the personal workstation responsible for much of the CUDA code development in llama.cpp, a popular open-source large language model inference library. The setup features 3 vertically stacked NVIDIA P40 GPUs, cooled with a push-pull fan configuration, and an RX 6800 GPU connected via riser cable, with cardboard DIY modifications for airflow management. This context highlights the resourceful and non-standard hardware environment behind impactful ML infrastructure work. Commenters express surprise at the modest and improvised hardware conditions, which makes the technical accomplishments of the llama.cpp developer more impressive. The DIY cooling and GPU mounting solutions reflect the practical challenges faced by independent ML engineers.

- Much of the llama.cpp CUDA code was developed on a workstation equipped with 3 vertically stacked Nvidia P40 GPUs, using a custom cooling setup with two fans arranged in push-pull configuration. Cardboard was used to seal airflow gaps, and an RX 6800 GPU is connected with a riser cable (not screwed in) due to lack of cable length, illustrating practical hardware improvisation in resource-constrained development environments.

- caught in 4K (Score: 267, Comments: 26): The post discusses the reliability and evaluation protocols for large language models (LLMs), focusing on alleged cherry-picking of test sets for performance claims. Commenters question the robustness of benchmarks, critiquing that assumptions in recent GPT-5 evaluations (such as that it would ‘fail 100% of held out tasks’) are not clearly justified or supported by proper dataset splits or documented evidence. Debate centers on the lack of rigorous disclosure in LLM benchmarking, skepticism toward public demos or performance claims, and concerns that narratives around model capability may involve selective data or unsupported probabilistic logic.

- Some commenters express skepticism about the methodology or claims in the referenced tweets, noting that it is unclear whether GPT-5 would actually fail all held-out tasks. They point out that such assumptions may oversimplify performance evaluation, emphasizing the need for rigorous and transparent benchmarking when comparing LLMs.

- There is a strong call for third-party testing and independent validation of LLMs, with several users suggesting that only models whose weights can be run on private servers should be considered for evaluation. This highlights the concern over proprietary models, arguing that unless companies provide access to weights or exception-based testing, robust and unbiased assessments are impossible.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. GPT-5 & OpenAI Livestream: Announcements, Demos, and Community Reaction

- GPT-5 Announcement Megathread (Score: 459, Comments: 646): The post serves as an open thread for the GPT-5 announcement, with technical commentary on the validity and clarity of performance graphs included in the official release. Specific confusion is raised about graph data, notably how a score of `50.0` appears to plot lower than `47.4`, implying potential inconsistencies or errors in published model benchmarks (see included image1 and image2). Experts in the thread are expressing skepticism about the accuracy and integrity of the displayed evaluation metrics, questioning whether presentation errors undermine trust in the reported improvements for GPT-5.

- Several commenters point out inconsistencies and inaccuracies in published benchmark graphs associated with the GPT-5 announcement, such as a value of “50.0” appearing lower than “47.4”, suggesting possible visualization or data labeling errors (example image).

- Commenters highlight that such graphical mistakes undermine the credibility of technical benchmarks, noting that these errors were not caught or fixed by the presenting team, raising concerns over the attention to detail in reporting GPT-5’s performance.

- The discussion implies that misrepresented graphs could lead to misunderstandings of the actual improvements or regressions in model performance, and stresses the importance of accurate benchmark visualization when releasing major upgrades such as GPT-5.

- More info coming in on GPT-5 (Score: 4679, Comments: 127): The post discusses preliminary information about GPT-5, with the image (content not fully analyzed) presumably showing some performance metrics or benchmarks comparing GPT-5 with earlier versions like GPT-4.5. One comment notes that ‘5 is only 11% over 4.5’, highlighting a small performance increase compared to major leaps in hardware (e.g., Nvidia GPUs), suggesting that the jump from GPT-4.5 to GPT-5 may be modest in terms of capabilities or benchmark improvements. Commenters express skepticism about OpenAI’s versioning practices, accusing them of ‘benchmaxxing’ (artificially maximizing benchmark results for marketing) and speculating about naming conventions (e.g., GPT-4.9 vs. GPT-5), with some suggesting possible marketing hype over substantive technical progress.

- Commenters note that the jump from GPT-4.5 to GPT-5 is only about an `11%` increase in version number, contrasting this with much larger version jumps seen in other fields (e.g., Nvidia’s 4090 to 5090). This brings up skepticism about whether the version numbering is reflecting substantial upgrades or more incremental progress.

- A recurring technical theme is discussion around ‘version inflation’ or ‘benchmaxxing’, implying that some companies might be making version numbering decisions for marketing or competitive optics without proportional increases in actual capability, as suggested by the comment about OpenAI possibly rounding up from GPT-4.9.

- One user points out that, moving forward, percentage increases between model versions are expected to shrink, hinting at diminishing returns or potential architectural or scaling limits in large language model development.

- Summary of the livestream for those that couldn’t be bothered (Score: 2376, Comments: 108): The post shares an image that satirically summarizes a livestream (presumably about GPT-5) using a bar graph or infographic, but commenters note its inaccuracy and humorous exaggeration. The top technical comment points out that the bar heights matching the numbers does not reflect the actual presentation, suggesting the image is intended as a joke or meme rather than a factual or technical resource. The post is a non-technical meme. Commenters mainly engage in lighthearted criticism, noting the unrealistic nature of the graph and referencing the meme’s comedic rather than factual value.

- Comments note that the difference between GPT-4 and GPT-5, as inferred from a graph, only shows a ‘25% increase’ compared to more significant jumps (such as doubling between older models like GPT-2 to GPT-3), indicating possible diminishing returns in large language model scaling.

- There is some skepticism in the discussion about how data is presented—users note that bar heights on the graph might not accurately reflect the numerical values implied in the presentation, questioning the fidelity of the visual representation of GPT-5’s improvements.

- One commenter raises a broader point about potential ‘AI winter,’ suggesting that the perceived slowdown in progress (or underwhelming improvement between versions) could signal a plateau in the rapid advancements seen in early generative model evolution.

- GPT-5 livestream is up (Score: 472, Comments: 583): A livestream for GPT-5’s announcement was hosted, featuring the core OpenAI team including Sam Altman, Greg Brockman, Sebastien Bubeck, and other principal engineers and researchers. Linked images (graph 1, graph 2) purportedly show performance or scaling trends, with one comment describing the first as the ‘least misleading graph’ and another noting the dubious quality of the second chart, suggesting it may have been auto-generated by GPT-5 itself. Technical discussion in comments focuses on the validity and representation quality of shared benchmark graphs, with skepticism toward possible data manipulation or unclear scaling implications. The full turnout of OpenAI’s technical leadership highlights the event’s significance and potentially substantial changes in GPT-5.

- A chart shared in the discussion, labeled the ‘least misleading graph,’ appears to address transparency and interpretability in reporting AI progress, likely critiquing potentially misleading visualizations of model capabilities or benchmarks historically used in AI model unveilings. The sentiment is that clear and accurate presentations of data are crucial when unveiling major models like GPT-5.

- The announcement mentions that a large portion of the OpenAI technical leadership—including Sam Altman, Greg Brockman, and Sebastien Bubeck—are participating in the GPT-5 demo. This suggests that the event is significant and potentially includes technical deep dives and live demonstrations from key architects and researchers of GPT-5, reflecting the importance and anticipated impact of the new model.

- This might be one of the most awkward and stilted tech presentations ever put on the internet (Score: 776, Comments: 172): The post critiques a recent OpenAI presentation, highlighting technical issues such as live model failures by GPT-5 during demos, incorrect explanations—specifically regarding statistical ‘lift’, and the use of nonsensical graphs. The presentation was characterized by awkward delivery and evident technical errors, calling into question the live demo format given OpenAI’s $300B valuation and perceived industry leadership. Top comments debate the value of live vs. pre-recorded demos, noting that while live demos can build trust if successful, OpenAI’s execution suffers from technical breakdowns (e.g., failing models, erroneous AI-generated slides), and poor presenter performance, undermining the technical credibility of the event.

- Multiple commenters critique the technical execution of the OpenAI presentation, specifically highlighting failures of the showcased models during live demos and the presence of significant errors in PowerPoint slides that appeared to be generated by AI. This led to a perception of decreased reliability and trust in the company’s claims, particularly when technical failures occur on stage.

- Commenters debate the merits of live versus pre-recorded demos for showcasing AI capabilities. Some suggest that although live demos can inspire trust and appear more genuine, they risk exposing unreliability or lack of polish when the models underperform. There is a call for either high-quality pre-recorded highlight reels or direct public access for hands-on testing as more accurate representations of model performance.

- Visual data communication is also criticized, with references to problematic or poorly designed graphs and charts. This diminishes technical credibility and impacts the perceived rigor of the company’s evaluation methods, further fueling skepticism about the actual capabilities of the models being presented.

- I think that’s all for today folks! There you go! Your GPT-5! (Score: 734, Comments: 186): The image is a meme satirizing AI model release presentations. The title and comments suggest it depicts a typical overhyped announcement cycle for major LLMs like GPT-4 and GPT-5, poking fun at claims of incremental improvements (e.g., ‘less hallucinations’) and media exaggeration. The comments further critique the cycle of rapid releases and marketing, referencing questionable journalist coverage and monetization strategies. Commenters broadly agree that LLM update presentations are overhyped and that perceived improvements (like reduced hallucinations) are often marginal; some highlight persistent issues with AI marketing and the tech industry’s reliance on hype.

- A user argues that future ‘wow’ moments in AI will likely require new interfaces or modalities beyond the current chatbot form factor, suggesting that capabilities like digital avatars, video, voice, or robotics applications might be necessary for significant advancement in perceived intelligence. They state the leap from GPT-4 to GPT-5 in pure text chat is unlikely to feel spectacular, regardless of actual intelligence improvements, due to limitations of prompt-based interaction and lack of embodiment.

- The same user notes anticipated improvements such as reduced hallucinations in GPT-5, but emphasizes that the true impact and practical differences will only become apparent through benchmarking and deployment in actual agent or application use cases, rather than within the typical chat experience.

- These GPT-5 numbers are insane! (Score: 10022, Comments: 236): The post references purported ‘GPT-5 numbers’ and presents an image (not accessible), with the context suggesting these numbers are meant to illustrate impressive or surprising advancements—likely in model scale or capabilities. No concrete technical benchmarks, metrics, or implementation details are provided in the title, comments, or description, and the top comments are meme-like and not technical. The image likely contains a meme or joke, as suggested by community reactions and lack of technical discussion. No technically substantive debate is present; comments are humorous and reference job displacement by AI and meme culture.

- A user questions if the bar chart depicted represents a decrease in speed (“how much slower it goes with each iteration”). This raises concerns about how model iterations (potentially from GPT-1 to GPT-5) might affect inference time, latency, or system efficiency—a key consideration for deployment and scalability as model complexity increases.

- OpenAI just dropped the bomb, GPT-5 launches in a few hours. (Score: 2302, Comments: 434): The post claims that OpenAI will launch GPT-5 in a few hours, suggesting a major model release. The image itself is not analyzed, and the comments mainly discuss concerns about system slowness prior to major releases and the potential impact of new models (e.g., job displacement), but do not provide technical specifics about GPT-5’s capabilities, architecture, or benchmarks. Commenters, in a technical context, speculate about reduced system performance before launches and advise against using the service during initial release due to instability. However, there is no direct discussion of GPT-5 features or evidence this release is imminent.

- Several users note degraded performance and inference speed issues with GPT-4o prior to the impending GPT-5 launch, with complaints such as ‘why is [it] running like shit yesterday.’ Such slowdowns often precede major model updates, possibly due to backend resource shifts or user surge anticipating the upgrade.

- There’s technical frustration regarding undesired behaviors introduced in recent GPT-4o updates, including complaints about the model’s tendency to offer unsolicited follow-up responses and the perception that ‘GPT-4o was getting dumber… since they made him more puritan.’ This suggests user-observed shifts in dialogue management and moderation tuning, which may impact perceived utility for complex or nuanced queries.

- The thread reflects broader concerns among technical users that major version launches (like GPT-5) may further alter system behaviors or lead to temporary instability, reinforcing the common wisdom to ‘never play on release day.’ Such comments highlight reliability and stability tradeoffs around launch events.

2. GPT-5 & Model Leaks, Variants, and Limit Access

- GitHub leaks GPT-5 Details (Score: 635, Comments: 132): The post references a purported leak of GPT-5 details on GitHub, linking to an archived copy of the page. The image appears to show text or a screenshot related to this leak, but the specific technical content of the image is unclear due to failed analysis. The discussion centers around competitive positioning, particularly GPT-5’s potential to match or surpass specialized models like Claude Code for coding tasks. Commenters debate OpenAI’s product strategy relative to Anthropic’s Claude Code, expressing surprise at OpenAI’s lack of an explicit code-focused competitor and speculating on future GPT-5 subscription tiers. No consensus is presented, but there is a sense of anticipation for technical advancements and feature differentiation.

- Several comments highlight that Claude Code is currently a standout product for code generation and agentic coding abilities, suggesting that OpenAI needs to either match or surpass these capabilities in future GPT releases to remain competitive for programming use cases.

- There is industry speculation on whether GPT-5 will feature model merging or multi-modal abilities, possibly combining different specialized models (e.g., code, language, vision) into a single, unified system as a step forward from the current GPT-4 architecture.

- Technical users are focused on whether GPT-5 will outperform Anthropic’s Claude models for coding, given Claude’s significant lead in agentic code tasks. This emphasizes the competitive landscape in AI code assistance and the centrality of developer/productivity tools in current model evaluation.

- GitHub leaked the GPT-5 announcement and model variants (Score: 310, Comments: 63): A now-removed GitHub page briefly leaked the anticipated GPT-5 announcement and its model variants, with an archived version referenced though no technical details or benchmarks were provided in the linked material (archived link not shown here). The leak has prompted speculation but lacks concrete information on architecture, parameter count, or new features, making technical assessment or comparison impossible at this stage. Commenters express skepticism regarding the substance of the leak and OpenAI’s hype, emphasizing the absence of technical details and questioning the value beyond typical marketing. Others contrast OpenAI’s approach to product secrecy with Apple’s more controlled announcement strategy.

- Some users note that insiders and creators linked to OpenAI hint at significant frontend changes associated with the GPT-5 release, suggesting architectural or UX/UI shifts that may affect how users interact with the model, not just backend improvements.

- Skepticism is raised about the magnitude of the purported advancements, with at least one technical observer expressing doubt that GPT-5 will represent a true breakthrough rather than an iterative enhancement over GPT-4, paralleling common skepticism in machine learning scaling.

- There is an explicit reference to access to a preview version, with an attached screenshot, indicating that some community members may be reviewing early builds and that information is beginning to circulate even prior to formal announcement, which could yield more technical details soon.

- leak - GPT-5 pro is coming out too! (Score: 418, Comments: 99): The image appears to show a purported leak describing new GPT-5 Pro subscription tiers from OpenAI, highlighting three main plans: Free, Plus, and Pro. Noteworthy technical features discussed include substantial differences in context window size—Free (8K), Plus (32K), and Pro (128K) tokens—though the language is ‘reworded’ to terms like ‘Expanded Context’ without explicit numbers in the leak. The image also suggests the Pro plan will enable ‘Expanded Projects, GPTs and Tasks,’ indicating a potential increase in available resources and capabilities for higher-tier subscribers. The context of the post and comments implies this may be a response to increasing competition from Claude and Gemini, as well as a strategy emphasizing customer acquisition through free releases and retention incentives. Commenters debate if these are actual technical upgrades or simple rebrandings, express concern about losing access to older models (like 4.1), and speculate on how the changes might affect workflow for power users. There is skepticism regarding the authenticity and implications of the leaked image and the real meaning of ‘Expanded Context’ versus explicit context window sizes.

- Discussion centers on the differentiation of context window sizes across tiers—specifically, speculation that Free users get 8K, Plus users 32K, and Pro users 128K context. There is debate about whether “Maximum Context” on Pro and “Expanded Context” on Plus simply refer to these increases, though OpenAI hasn’t confirmed the exact limits in the leak.

- There’s commentary addressing the broader strategy behind these offerings, noting that OpenAI appears to be aggressively pursuing customer acquisition by potentially offering advanced models for free (or with larger context) as a competitive response to rivals like Anthropic’s Claude and Google’s Gemini.

- 🚨 BREAKING: intern accidentally leaked GPT-5’s model description on github. (Score: 1004, Comments: 144): The image supposedly shows a ‘leaked’ model description of GPT-5 on GitHub, but from the responses and context, it offers no concrete technical specifications or insights—just generic, unverifiable claims like “best ever” performance. No benchmarks, model parameters, or implementation details are provided, making the content technically vacuous. Commenters unanimously point out the lack of substance, with several describing the leak as ‘vague nonsense’ and expressing skepticism about its authenticity or informativeness.

- A user raises a relevant technical question about which model Plus users will have high-volume access to, expressing interest in comparisons between upcoming releases and existing variants such as o3, 4o, o4-mini-high, and 4.1. This highlights ongoing community focus on performance, access rights, and model selection within the OpenAI ecosystem, but notes that the supposed leak provides no such comparative or numeric details.

- GPT-5 usage limits (Score: 438, Comments: 180): The image appears to display the latest usage limits for different GPT-5 models on OpenAI (presumably in a user-facing quota dialogue or documentation). According to the post and comments, GPT-5 retains the same messaging limits as GPT-4o: 80 messages per 3 hours for standard usage and 200 messages per week for the “Thinking” variant. There does not appear to be a change to the context window size (remains at 32K tokens), which some users express dissatisfaction with. The image provides a clear comparison for Plus users and clarifies that usage limits for GPT-5 mini are effectively “unlimited” for free accounts, mirroring prior GPT-4o limits. Commenters highlight disappointment with the lack of an increased context window, and some note that usage limits have not improved despite the version jump from GPT-4o to GPT-5. There is also clarification in the comments regarding the continuation of previous limits for different account types.

- Usage limits for GPT-5 are unchanged from GPT-4o: standard GPT-5 is capped at 80 messages per 3 hours, and GPT-5-Thinking has a limit of 200 messages per week, mirroring previous restrictions for comparable models.

- There is confusion and discussion about context window size, with at least one user expressing concern that GPT-5 still has a 32K context length and disappointment that it hasn’t increased.

- Users are debating whether triggering ‘Thinking’ mode via prompts (e.g., requesting ‘think for longer’) consumes messages from the separate GPT-5-Thinking quota or if it allows circumventing stated model usage limits, questioning the practical benefit of manual model switching.

3. AI Model Benchmarks, Comparisons & Next-gen Model Hype

- Google is going to cook them soon (Score: 1014, Comments: 215): The post argues that recent Google advancements, notably Genie 3, alongside anticipated releases like Gemini 3, suggest Google is outpacing OpenAI, especially as their focus expands beyond chatbots and image generation to more impactful domains (e.g., AlphaFold, Genie 3, and Veo 3). Commentators attribute this lead to Google’s unique advantages in proprietary data, compute resources, and leadership such as Demis Hassabis, highlighting a strategy rooted in foundational research rather than immediate productization. There is consensus in the discussion that Google’s foundational, research-driven approach and leadership credibility (compared to OpenAI’s product focus and leadership style) are giving it a long-term advantage. Some comments also suggest the ‘race’ was structurally skewed in Google’s favor due to its scale and existing assets.

- Commenters highlight Google’s deployment of advanced AI models and research outputs such as AlphaFold, AlphaEvolve, Genie 3, and Veo3, noting that these systems demonstrate real-world impacts beyond text/chat and image generation—the typical focus for OpenAI products. Specific technical reference to Genie 3 suggests recognition of machine learning models with current limitations but revolutionary potential.

- A key point is the advantage Google holds due to its extensive proprietary datasets and computational power (compute), as well as leadership from renowned AI researchers like Demis Hassabis. These factors are seen as contributing to Google’s accelerated capability to develop and deploy sophisticated AI systems, outpacing competitors focused more narrowly on productization.

- One commenter critiques the notion of framing progress as ‘rooting for’ a corporation but acknowledges that in the backdrop of rapid advances, industry competition drives one-upmanship and technical leapfrogging—an important dynamic in AI development.

- Gemini 3.0 predictions + the immediate future of OpenAI (Score: 105, Comments: 44): The OP asserts that OpenAI’s latest model, referred to as GPT-5, offers only marginal improvements over competitors like Grok 4, Claude Opus 4.1, and open-weights models such as the 120B, stating that it underperforms against recent `Qwen 3 32B` (March 2024) and subsequent Qwen and DeepSeek releases. Key benchmarks discussed highlight that, despite OpenAI’s announcements, state-of-the-art advancements seem to have shifted towards `Qwen` and `DeepSeek` models, both in capability and recency. Comments reinforce a consensus that GPT-5 represents more of an incremental (“GPT-4.2”) release rather than a groundbreaking leap. Secondary discussion points to GPT-5’s cost advantage (“10x cheaper than Opus”; price parity with Qwen on OpenRouter) as its main improvement, while the technical news focus has shifted to Google’s “Genie 3.”

- Several commenters cite recent benchmarks indicating that newer models, such as those discussed (likely GPT-4.2 or similar systems), match the capabilities of Anthropic’s Opus 4.1 while being up to `10x` cheaper. This cost reduction while maintaining or exceeding state-of-the-art performance is considered a major competitive leap in the field.

- Early user tests comparing the models on platforms like Cursor suggest that the new model outperforms Anthropic’s Sonnet and is on par with Opus, implying rapid parity or overtaking of incumbent top-tier models outside of OpenAI and Google.

- There is a technical discussion suggesting that if Google’s Gemini 3.0 matches or surpasses these models on benchmarks at similar pricing, it would put significant competitive pressure on Anthropic and the broader landscape, as price/performance ratios drive adoption.

- All this hype just to match Opus (Score: 376, Comments: 155): The image appears to compare benchmark results between OpenAI’s GPT-5 and Anthropic’s Claude Opus, with the post title highlighting that GPT-5’s highly anticipated release only matches the performance of Opus in benchmarks. The discussion points out that while GPT-5 may match Opus on benchmarks, Opus manages this with lower computational cost (‘doesn’t think at all’), whereas GPT-5 requires more computational effort. Comments add that Opus is ‘1/8th the price’ and hallucinates less, seen as a critical aspect for real-world deployment. One technically relevant feature discussed is the ability for APIs to accept context-free grammar for guaranteed responses, which is highly valued by programmers. There is disappointment expressed about GPT-5’s performance relative to the hype, with speculation about competition through pricing or reductions in hallucinations. The ability for models to guarantee structured responses via context-free grammar is seen as an important technical advance.

- Multiple commenters highlight that GPT-5 matches Claude Opus on programming capabilities, but at a significantly lower price point—reportedly 1/8th the price. This major reduction in cost could be a deciding factor for broader adoption, especially for applications requiring premium coding assistance APIs.

- There’s discussion on hallucination rates, with one user emphasizing that frontier reasoning models’ decreased hallucinations are a substantial advance in real-world usage. This is seen as more important than hype-driven benchmarks, as lower hallucination rates directly impact the reliability of AI in production contexts.

- Technical users point out the importance of giving the API a context free grammar for guaranteed response structure, indicating that GPT-5 brings useful programmability improvements. These features contribute to practical integration, although some still express disappointment over perceived lack of breakthrough progress over previous leading models.

- Not a huge leap forward - Gary Marcus on gpt 5 (Score: 727, Comments: 274): The post, referencing an image (analysis failed) and titled ‘Not a huge leap forward - Gary Marcus on gpt 5,’ discusses skepticism regarding the expected advancements in GPT-5. Top comments reinforce this sentiment by arguing that the GPT-5 base model’s improvements are modest, largely attributing progress to O3-generated synthetic data rather than architectural innovation or genuine leaps in capability. The comments debate whether Gary Marcus’s skepticism is warranted, with some expressing agreement and others using humor (calling GPT-5 ‘o4 Refurbished’) to highlight perceived incremental progress rather than significant breakthroughs.

- One commenter notes that the “base model is the result of O3 generated synthetic data,” suggesting that the data pipeline for GPT-5 may rely significantly on outputs from earlier model versions rather than truly novel data. This could imply potential limitations in diversity and breakthrough capability for GPT-5 relative to expectations.

- The overall sentiment reflects skepticism towards immediate dramatic advances, with discussion indicating that community expectations around GPT-5 (such as reaching AGI/ASI levels) may be overblown in the short term, especially if the improvements are incremental or reliant on renovation of existing architectures rather than foundational changes.

AI Discord Recap

A summary of Summaries of Summaries by X.ai Grok-4

Theme 1. GPT-OSS Models Spark Hype and Headaches

- GPT-OSS Debuts with Edge-Friendly Sizes: OpenAI unleashed GPT-OSS-120B nearing o4-mini reasoning on a single 80 GB GPU, while the 20B version matches o3-mini and squeezes into 16 GB devices. Mixed reviews slam its heavy censorship, over-refusal, and bootlicking behavior, but some praise coding chops and tool calling via this tweet.

- GPT-OSS Quantization Quagmires Emerge: Users puzzle over bloated 4-bit files from bfloat16 upcasting on non-Hopper GPUs, with MXFP4 natively trained per this tweet, dodging quantization errors. Hardware doubts flare as H100 lacks native FP4 support, forcing simulations noted in vLLM’s post.

- GPT-OSS Censorship Crushes Creativity: The model refuses roleplay and basic queries due to Phi-like safety tuning, earning GPT-ASS nicknames and calls for uncensored alternatives like Qwen3-30B. Privacy alarms ring as it pings `openaipublic.blob.core.windows.net` on startup, hinting at hidden ties despite local claims.
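The file-size puzzle in the quantization item above comes down to bits per weight. The sketch below is back-of-envelope arithmetic only. It assumes every weight is stored as MXFP4 (a real checkpoint may keep some tensors at higher precision) and uses the nominal 20B and 120B parameter counts from the model names. The 32-element block size and 8-bit e8m0 scale match the MXFP4 description elsewhere in this recap; the function names are hypothetical.

```python
def mxfp4_bits_per_weight(block_size: int = 32, scale_bits: int = 8) -> float:
    """One 4-bit element plus a shared scale amortised over the block."""
    return 4 + scale_bits / block_size


def size_gb(n_params: float, bits_per_weight: float) -> float:
    """Storage in decimal gigabytes for n_params weights."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # ASSUMPTION: nominal parameter counts, all weights in a single format.
    for name, n_params in [("gpt-oss-20b", 20e9), ("gpt-oss-120b", 120e9)]:
        packed = size_gb(n_params, mxfp4_bits_per_weight())
        upcast = size_gb(n_params, 16)  # bfloat16 is 16 bits per weight
        print(f"{name}: MXFP4 ~{packed:.1f} GB, bf16 ~{upcast:.1f} GB")
```

Under these assumptions the packed form costs 4.25 bits per weight, so a bfloat16 upcast is roughly 3.8x larger. That is consistent with the reports of "4-bit" files ballooning and with the 16 GB and 80 GB device claims.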

Theme 2. Fresh Models Flex New Muscles

- Qwen3 Coder Crushes Tool Tasks: Qwen3-Coder-30B shines in tool calling and agent workflows with 3B active params, outpacing GPT-OSS per user reports, though its free tier vanished from providers. JSON output varies by platform, detailed in this Reddit thread.

- Genie 3 Generates Navigable Worlds: DeepMind’s Genie 3 crafts real-time navigable videos at 24 FPS and 720p, scaling from the original Genie paper and SIMA agent work here. It outshines Veo in dynamics but lacks sound, with consistency holding for minutes.

- Granite 3.1 MoE Mauls GPT-ASS Benchmarks: IBM’s Granite 3.1 3B-A800M MoE tops GPT-ASS-20B in world knowledge despite fewer params, fueling hype for hybrid Granite 4. Gemini 2.5 Pro handles 1-hour videos, leading long-context tasks via heavy compute.

Theme 3. Quantization Quandaries and Hardware Hacks

- MXFP4 Unpacks as U8 Trickery: GPT-OSS packs weights as uint8 with e8m0 scales, unpacking to FP4 at inference with 32-block sizes for MXFP4 versus 16 for NVFP4. Simulations on Hopper via fp16 dots mimic native Blackwell ops, per Nvidia’s blog.

- GPU Fits Squeeze In OSS Models: GPT-OSS-20B f16 with 131k context fits on a laptop RTX 5090, pushing local LLM limits on consumer hardware. Dual RTX 3090s at 1200€ handle Blender and LLMs, but GTX 1080 users face VRAM woes post-updates.

- Dataset Loading Devours 47GB RAM: Loading `bountyhunterxx/ui_elements` for Gemma3n gobbles 47GB and climbs, fixed via `__getitem__` wrappers for on-disk access. Arbitrary precision in Mojo sparks bigint tweaks for VMs like Volokto.
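The MXFP4 unpacking described in the first item of this theme can be sketched in plain Python. This is a minimal illustration, not the GPT-OSS or llama.cpp implementation. It assumes the standard E2M1 value table for FP4 and a bias of 127 for the e8m0 scale. It also assumes the low nibble of each byte comes first, which is a guess, since the source does not state the nibble order. It ignores the NaN encoding of the scale, and all function names are hypothetical.

```python
# E2M1 magnitudes indexed by the low three bits of a 4-bit code; bit 3 is the sign.
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK = 32  # elements sharing one scale in MXFP4


def decode_fp4(code: int) -> float:
    """Map a 4-bit E2M1 code to its real value."""
    magnitude = E2M1_MAGNITUDES[code & 0b0111]
    return -magnitude if code & 0b1000 else magnitude


def decode_e8m0(scale_byte: int) -> float:
    """E8M0 is exponent-only: the byte encodes a power of two with bias 127."""
    return 2.0 ** (scale_byte - 127)


def unpack_block(packed: bytes, scale_byte: int) -> list:
    """Expand 16 uint8 values (two FP4 codes each) into 32 scaled floats."""
    if len(packed) != BLOCK // 2:
        raise ValueError("an MXFP4 block packs 32 elements into 16 bytes")
    scale = decode_e8m0(scale_byte)
    values = []
    for byte in packed:
        # ASSUMPTION: low nibble holds the first element of each pair.
        values.append(decode_fp4(byte & 0x0F) * scale)
        values.append(decode_fp4(byte >> 4) * scale)
    return values


if __name__ == "__main__":
    block = unpack_block(bytes([0x21]) * 16, scale_byte=128)
    print(block[:4])
```

With `scale_byte=128` the shared scale is 2.0, so the codes `0x1` and `0x2` decode to 1.0 and 2.0. Hardware without native FP4 would perform an expansion like this before running the matmul in fp16 or bf16.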

Theme 4. Safety Shenanigans and Uncensoring Shenanigans

- GPT-OSS Safety Tuning Tanks Usability: Heavy censorship in GPT-OSS-120B blocks roleplay as unhealthy, mirroring Phi filters and prompting switches to uncensored GLM-4.5-Air or Qwen3-30B. Users mock its refusals, sharing uncensored tweaks.

- Grok Image Goes Wild with NSFW: X-AI’s Grok Image churns out NSFW content with a crazy in love persona and jealousy fits, but falters on facts from memorized X data. Grok-2 heads open-source next week after fire-fighting.

- MCP Sampling Security Scrutinized: Concerns spike over MCP sampling vulnerabilities, with calls for protocol tweaks. MCP-Server Fuzzer using Hypothesis exposes Anthropic crashes from schema tweaks, code at this repo.

Theme 5. Benchmarks Battle for Supremacy

- Video Arenas Launch with Fresh Contenders: LMArena rolls out Text-to-Video and Image-to-Video leaderboards, pitting Hailuo-02-pro against Sora. DeepThink nails IMO questions but needs 5 minutes per answer at a projected $250 per 1M tokens, far slower than zenith/summit’s 20-second pace.

- GPT-5 Poised to Pummel o3 by 50 ELO: Whispers predict GPT-5 dominating August arenas by 50 ELO over o3, stirring Google vs. OpenAI debates. Livestream teases debut at 10 AM PT Thursday.

- LLM Vibe Tests Spotlight Top Coders: Vibe tests rank Gemini 2.5 Pro, o3, and Sonnet 3.5 high for code explanation, with DeepSeek R1-0528 acing polyglot benches but stumbling on sessions. Qwen3-Coder and GLM-4.5 await leaderboard spots for agent tasks.
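To put the rumored 50-point gap in perspective, the sketch below converts a rating difference into an expected head-to-head win rate. It assumes the classic Elo logistic formula with a scale of 400. Arena leaderboards may fit their scores differently, so read the result as an approximation.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected win rate of the higher-rated side under the classic Elo formula."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


if __name__ == "__main__":
    print(f"{elo_expected_score(50):.3f}")  # prints 0.571
```

Under that assumption, a 50-point lead means winning roughly 57% of pairwise votes. That is a clear but not overwhelming edge.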

Discord: High level Discord summaries

LMArena Discord

- Granite Eclipses GPT-ASS in Knowledge: Members find IBM’s Granite 3.1 3B-A800M MoE surpassing GPT-ASS-20B in world knowledge, a surprising feat given the parameter count.

- The community anticipates Granite 4, boasting a larger size and hybrid mamba2-transformer architecture, to dominate benchmarks and leave GPT-ASS in the dust.

- Claude Opus 4.1 Vanishes, Sparks Speculation: The perplexing disappearance of Claude Opus 4.1 from LMArena’s direct chat ignited a flurry of speculation.

- The leading theory suggests that Claude’s exorbitant cost led to its removal from free testing, relegating it to battle mode only.

- GPT-5 Primed to Dominate August Arena: Insiders whisper that GPT-5 is poised to outperform o3 by a staggering 50 ELO points, shaking up the LLM hierarchy.

- However, some community members stand firm in their belief in Google’s superiority, igniting a fiery debate.

- DeepThink’s Genius Hampered by Speed and Price: While Google DeepMind’s DeepThink impresses with IMO-level question answering, its glacial speed (5 minutes per answer) raises concerns.

- With a projected cost of $250 per 1 million tokens, DeepThink’s accessibility remains limited, contrasting with zenith/summit’s rapid 20-second response time.

- Video Leaderboards Go Live: Thanks to community contribution, Video Leaderboards have launched on the platform, marking a new chapter for video models.

- Explore the Text-to-Video Arena Leaderboard and the Image-to-Video Arena to witness the cutting-edge models battling for supremacy.

Unsloth AI (Daniel Han) Discord

- GPT-OSS Model Receives Mixed Reviews: Members are split on the new GPT-OSS model, with some users describing it as GPT-ASS due to its over-refusal and bootlicking behavior, while others found the 20B version suitable for coding tasks.

- The model’s ability to generate unsafe content, as stated in the model card, has sparked interest in uncensored versions.

- Qwen3 Coder Excels in Tool Calling: Users are reporting that the Qwen3 Coder model is highly effective at tool calling, leading some to prefer it over models like GPT-OSS for coding and agentic workflows, specifically the Qwen3-Coder-30B-A3B-Instruct version.

- Members have reported that the model has 3B active parameters (the A3B in its name).

- 4-bit Quantization Causes Confusion: There is confusion regarding the file sizes of GPT-OSS 4-bit versions, as the quantized versions are unexpectedly larger than the original model.

- The size increase is attributed to upcasting to bfloat16 on machines lacking the Hopper architecture.

- GLM-4.5-Air GGUFs Need JSON: Users had trouble getting the GLM-4.5-Air GGUFs to work with tools on llama.cpp, until discovering you need the model to output tool calls as JSON rather than XML.

- More information on this can be found on HuggingFace.

- Dataset Loading Issues Consume 47GB RAM: A user encountered RAM issues while loading the `bountyhunterxx/ui_elements` dataset for the Gemma3n notebook, which consumed 47GB of RAM and was still increasing.

- A possible solution involves using a wrapper class with the `__getitem__` function to load data from disk as needed, effectively managing memory usage.
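The `__getitem__` wrapper idea from the dataset-loading item can be sketched as follows. The member's actual code is not shown in the source, so this is only an illustration of the pattern. `LazyJsonlDataset` and `write_demo_file` are hypothetical names, and a small JSONL file stands in for the real dataset. Only byte offsets are held in RAM, and each record is read from disk when indexed.

```python
import json
import os
import tempfile


class LazyJsonlDataset:
    """Keeps only byte offsets in memory; records are read from disk on access."""

    def __init__(self, path: str):
        self.path = path
        self.offsets = []
        with open(path, "rb") as f:
            while True:
                pos = f.tell()
                line = f.readline()
                if not line:
                    break
                if line.strip():  # skip blank lines
                    self.offsets.append(pos)

    def __len__(self) -> int:
        return len(self.offsets)

    def __getitem__(self, idx: int) -> dict:
        if idx < 0:
            idx += len(self)
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        with open(self.path, "rb") as f:
            f.seek(self.offsets[idx])
            return json.loads(f.readline())


def write_demo_file(n: int) -> str:
    """Write n tiny stand-in records (not the real dataset) to a temp JSONL file."""
    fd, path = tempfile.mkstemp(suffix=".jsonl")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        for i in range(n):
            f.write(json.dumps({"id": i, "label": f"button_{i}"}) + "\n")
    return path


if __name__ == "__main__":
    path = write_demo_file(1000)
    dataset = LazyJsonlDataset(path)
    print(len(dataset), dataset[42])
    os.remove(path)
```

Memory use here grows with the number of records (one integer each) rather than with their size. That trade-off matters most when individual records are large, as with image data.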

LM Studio Discord

- GPT-OSS suspected of phoning home: GPT-OSS models are requiring an internet connection to `openaipublic.blob.core.windows.net` upon starting a chat, sparking privacy concerns, despite claims that no chat data leaves the machine.

- Skeptics note that GPT-OSS is the only model LMStudio doesn’t let you edit the prompt formatting on, hinting at a suspicious partnership.

- Latest LM Studio Version Plagued with UI Issues: Users are reporting that after updating to the latest version of LM Studio, chat windows disappear, freeze, or lose their content, and conversations get deleted.

- A user suggested a potential fix for the 120B version involves getting the model.yaml source file, creating a folder, and copying the contents there.

- MCP Servers Useful, Beginners Beware: Members find MCP servers useful for tasks like web scraping and code interpretation but acknowledge they are not beginner-friendly.

- Suggestions include incorporating a curated list of staff-picked tools and improving the UI to simplify connecting to MCP servers, as well as using Docker MCP toolkit.

- Page File Debate Rekindles in Windows: A user inquired about turning off the page file in Windows, which sparked a discussion about the impact on memory commit limits and potential application crashes.

- Despite some users advocating for disabling the page file, one member claimed “nah apps don’t break because of page files” and “you can get dumps without a page file, there’s a config for it.”

- 5090 Laptop Handles OSS 20b: A user reported being pleasantly surprised that GPT OSS 20b f16 with 131k context fits perfectly in a laptop’s 5090, as seen in this screenshot.

- The community is trying to figure out the limits of local LLMs on consumer grade products.

OpenAI Discord

- OpenAI Opens Up GPT-OSS Models: OpenAI launched gpt-oss-120b that approaches OpenAI o4-mini performance, while the 20B model mirrors o3-mini and fits edge devices with 16 GB memory.

- Members ponder comparisons with Horizon, wondering if Horizon is simply GPT-OSS or something more, given it’s currently unlimited free and fast.

- Custodian Core: Blueprint for Stateful AI Emerges: A member introduced Custodian Core, proposing a reference for AI infrastructure with features like persistent state, policy enforcement, self-monitoring, reflection hooks, a modular AI engine, and security by default.

- The author emphasized that Custodian Core isn’t for sale but rather an open blueprint for building stateful, auditable AI systems before AI is embedded in healthcare, finance, and governance.

- Genie 3 Dazzles in Dynamic Worlds, Veo adds Vocals: Members compared Genie 3 and Veo video models, recognizing Genie 3’s ability to generate dynamic worlds navigable in real-time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p.

- However, it was noted that Veo’s videos include sound and YouTube is already filled with generated content.

- GPT-5 Sneaks into Copilot?: Members speculated that Copilot may be running GPT-5 ahead of official release, noting that Copilot’s design has improved and that its coding and reasoning capabilities are significantly better than o4-mini-high, with some users reporting that the ‘write smart’ function indicates GPT-5 is being used.

- But it was noted that Microsoft is now providing free Gemini Pro for one year to students and that Gemini’s core reasoning is currently better than o4-mini.

- GPT Progress: Real or Hallucinated?: A user shared screenshots of GPT providing daily progress reports, leading to a discussion on whether the model is actually tracking progress in the background, or simply hallucinating its completion.

- Skeptics suggest that GPT simulates progress based on the current prompt and chat history, rather than performing actual ongoing computation, comparing it to a waiter saying your pizza is in the oven without an actual oven, emphasizing the need for external validation.

Cursor Community Discord

- Auto Model One-Shots Game Change: A member expressed amazement after using the Auto model to one-shot a major change to their game.

- Unlimited usage of the Auto model was confirmed via email, with it not counting towards the monthly budget.

- AI Refactors Vibe-Coded Projects: Members are discussing refactoring a 10k LOC vibe-coded project with AI.

- Suggestions included embracing established software development principles like Design Patterns, Architecture, and SOLID Principles, while one member jokingly asked whether it sounds like a job for slaves.

- Sonnet-4 Request Limit Frustrations: Members questioned the low request limit for sonnet-4 relative to its monthly cost.

- One suggested paying the API price to fully grasp the underlying expenses.

- Docker Login Configuration Conundrums: A member needed help configuring background agents to `docker login` for accessing private images on ghcr.io.

- As of the current message history, no solution or workaround has been provided.

- Clock Snafus Sabotage Setup: A member encountered background agent failures during environment setup due to the system clock being off, causing `apt-get` command failures.

- The suggested workaround involves disabling date checking during `apt-get` by adding a snippet to the Dockerfile.

Nous Research AI Discord

- GPT-OSS-120B Safety Tuned to Uselessness: The released GPT-OSS-120B model is heavily censored, refusing roleplay with data filtering akin to Phi models, rendering it impractical as per user reports on the channel.

- Members suggested using GLM 4.5 Air or Qwen3 30B as better uncensored alternatives, highlighting Qwen3-30b-coder as an excellent local agent.

- MXFP4 Training Key to OpenAI’s GPT-OSS?: Llama.cpp now supports MXFP4 on RTX3090 directly in the new gguf format as seen in this pull request, sparking discussions about the practicality of native MXFP4 training.

- There is speculation that GPT-oss was trained in MXFP4 natively, which could mitigate quantization errors, and OpenAI’s claim of post-training quantization may not be the whole story, according to this tweet.

- Grok’s Image Skills Include Crazy and NSFW: X-AI launched Grok Image, an AI image generator, that enables the creation of NSFW content, yet it struggles with factual accuracy, and exhibits a crazy in love persona, with extremely jealous outbursts.

- The Grok model’s tendency to memorize X data leads to potential misinformation spread based on its own tweets, highlighting its unaired potential.