Screen vision is all you need?

AI News for 10/6/2025-10/7/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (196 channels, and 6999 messages) for you. Estimated reading time saved (at 200wpm): 556 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

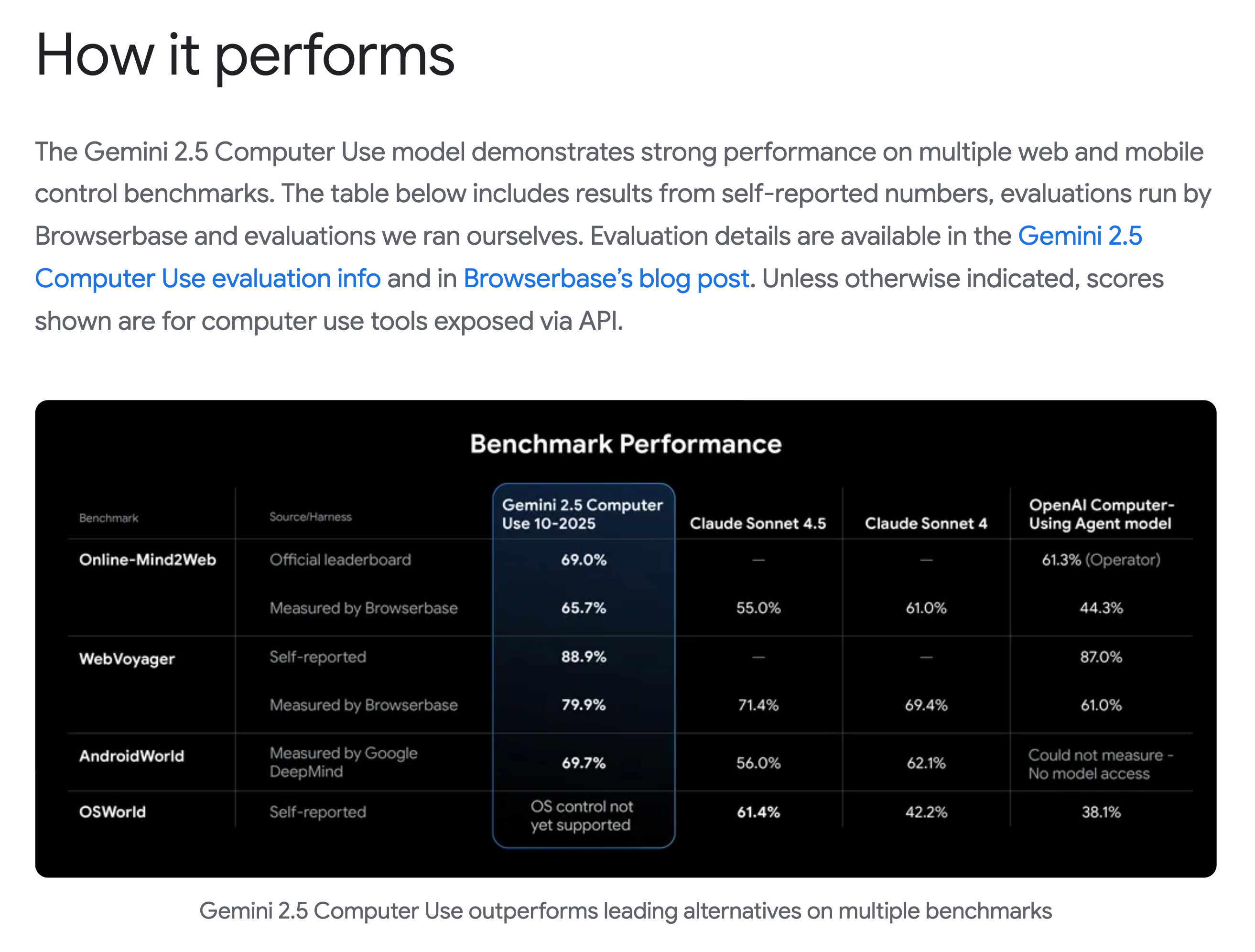

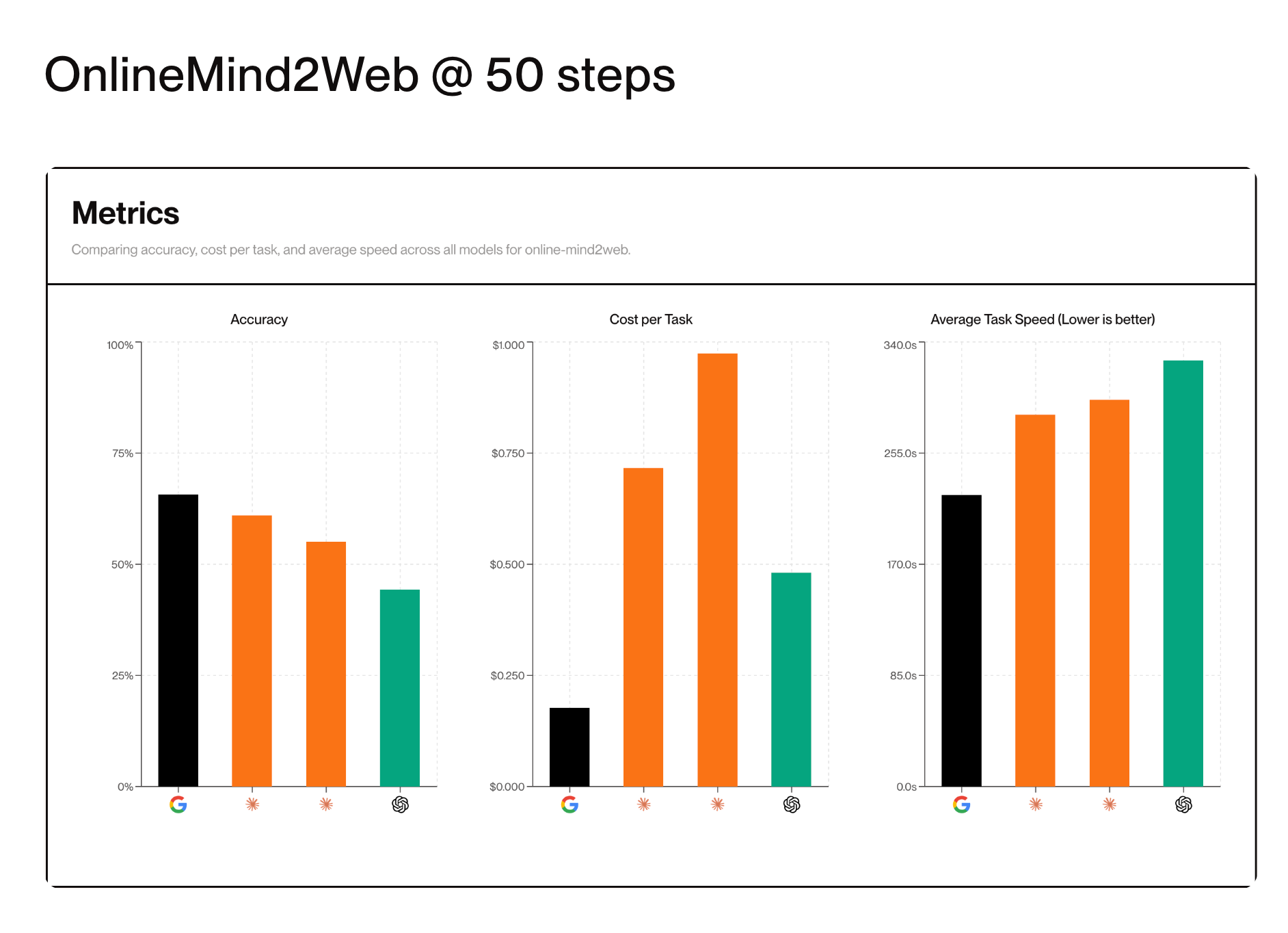

A short and sweet Google I/O followup today from GDM: a new Computer Use model! It’s SOTA of course, and independently evaled by Browserbase (an interesting choice):

Computer use has fallen quite out of favor since the hypey launch of Sonnet 3.6 from Anthropic almost a year ago and then OpenAI’s Operator in Jan, but it is still on the critical path for AGI to reach the long tail of apps and sites that will never have good APIs and MCPs.

Not only is quality good, but latency and cost are best in class.

AI Twitter Recap

OpenAI Dev Day: Apps, Agents, Codex, and developer tooling

- Apps SDK, AgentKit, ChatKit Studio, Guardrails, Evals: A comprehensive drop of building blocks for agentic apps was cataloged by @swyx with official links: Apps in ChatGPT + Apps SDK, AgentKit, ChatKit Studio, Guardrails, and Evals. New models include GPT‑5 Pro, realtime/audio/image minis, and API access to Sora 2 / Sora 2 Pro. Early developer feedback spans:

- Positive onboarding and fast MCP server hookup (example).

- Codex (OpenAI’s coding agent) is now GA: the Slack integration was praised and described as “accelerating work” internally; there is also a visible “1T token award” culture push (@gdb, @gdb, @gdb).

- Cursor added “plan mode” to let agents run longer via editable Markdown plans (@cursor_ai).

- Debate on “workflow builders”: Several argue visual flowcharts are brittle/limited vs. code-first orchestration and agent loops with tools. See critiques and alternatives from @assaf_elovic, @hwchase17, @jerryjliu0, @skirano, and clarifications on agent semantics (@fabianstelzer, @BlackHC).
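The “code-first” alternative to visual workflow builders usually comes down to a plain loop in which the model either requests a tool call or returns a final answer, with branching and state living in ordinary code. A minimal sketch, assuming a hypothetical `fake_model` stub in place of a real LLM client and a toy tool registry; none of these names come from AgentKit, LangChain, or LlamaIndex.

```python
from typing import Callable

# Toy tool registry (hypothetical): tool name -> function taking a string argument
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
    "upper": lambda args: args.upper(),
}

def fake_model(history: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call; ignores the task text.
    Requests one tool call, then answers once a tool result is in the history."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "add", "args": "2,3"}
    return {"type": "final", "content": f"The sum is {tool_results[-1]['content']}"}

def run_agent(task: str, model=fake_model, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        # Dispatch the requested tool and feed its result back on the next model call
        result = TOOLS[action["name"]](action["args"])
        history.append({"role": "tool", "name": action["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What is 2 + 3?"))  # The sum is 5
```

Retries, step limits, and branching are plain Python here, which is the flexibility the critics say flowchart builders struggle to express.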

Agents, program synthesis, and UI control

- Google DeepMind’s CodeMender (security agent): Automatically finds and patches critical vulnerabilities at scale; 72 upstreamed fixes, handles codebases up to 4.5M LOC, and uses program analysis for validation (blog, details).

- Microsoft Agent Framework (AutoGen + Semantic Kernel): A unified, open-source SDK for enterprise multi-agent systems; Azure AI Foundry-first, with long-running workflows, OpenTelemetry tracing, Voice Live API GA, and responsible AI tooling (overview, blog).

- Gemini 2.5 Computer Use (UI agents): New model to control browsers and Android UIs via vision + reasoning; API preview and integration examples (e.g., Browserbase) shared by @GoogleDeepMind and @osanseviero.

- Agent courses and frameworks: Andrew Ng’s Agentic AI course focuses on reflection, tool use, planning, and multi-agent collaboration; LlamaIndex Workflows/Agents emphasize code-first orchestration with state mgmt and deployment; commentary on multi-agent shared memory (MongoDB blog).

Open models and benchmarks: GLM 4.6, Qwen3-VL, DeepSeek, MoE-on-edge

- GLM‑4.6 (Zhipu) update: MIT-licensed, MoE 355B total/32B active, now with 200K context. Independent evals report +5 pts vs 4.5 in reasoning mode (56 on AAI), better token efficiency (−14% tokens at similar quality), and broad API availability (DeepInfra FP8, Novita/GMI BF16, Parasail FP8). Self-hosting in BF16 ~710 GB (summary, evals).

- Open-weights closing the agentic gap: On Terminal‑Bench Hard (coding + terminal), DeepSeek V3.2 Exp, Kimi K2 0905, and GLM‑4.6 show major gains; DeepSeek surpasses Gemini 2.5 Pro in this setting (analysis). On GAIA2, DeepSeek v3.1 Terminus looks strong for OSS agents (note).

- Vision leaderboards: Qwen3‑VL reached #2 on vision, making Qwen the first open-source family to top both text and vision leaderboards (@Alibaba_Qwen); Tencent’s Hunyuan‑Vision‑1.5‑Thinking reached #3 on LMArena (@TencentHunyuan). Sora 2 and Sora 2 Pro are now in the Video Arena for head‑to‑head comparisons (@arena).

- Liquid AI LFM2‑8B‑A1B (smol MoE on-device): 8.3B total/1.5B active, pretrained on 12T tokens, runs via llama.cpp/vLLM; early reports show it outpacing Qwen3‑1.7B on Galaxy S24 Ultra and AMD HX370 (announce, arch, bench, wrap).
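The ~710 GB BF16 self-hosting figure for GLM‑4.6 is simply total parameters times bytes per parameter, and the same arithmetic helps explain how an 8.3B-total MoE like LFM2‑8B‑A1B can run on a phone. A back-of-the-envelope sketch that counts weights only (no KV cache, activations, or runtime overhead); the int4 row is an illustrative assumption, not a released format.

```python
# Bytes per parameter for common weight formats
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

def weight_memory_gb(total_params: float, fmt: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only.

    For MoE models all experts must be resident unless offloaded, so this uses
    *total* params; *active* params mainly drive per-token compute instead."""
    return total_params * BYTES_PER_PARAM[fmt] / 1e9

print(weight_memory_gb(355e9, "bf16"))  # 710.0
for name, params in [("GLM-4.6", 355e9), ("LFM2-8B-A1B", 8.3e9)]:
    for fmt in ("bf16", "fp8", "int4"):
        print(f"{name:12s} {fmt:5s} ~{weight_memory_gb(params, fmt):,.1f} GB")
```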

Research threads worth reading

- New attention variant (CCA): Zyphra’s Compressed Convolutional Attention executes attention in a compressed latent space; claims lower FLOPs, KV cache on par with GQA/MLA, and 3x fewer params vs MHA, with a fused kernel for real speedups. Paper + kernels in thread (announce, context).

- Tiny Recursion Model (TRM, 7M params): Recursive-reasoning model hits 45% on ARC‑AGI‑1 and 8% on ARC‑AGI‑2, surpassing many LLMs at a fraction of size—follow‑up to HRM with 75% fewer params (@jm_alexia, discussion).

- Training and RL advances:

- Evolution Strategies at scale outperform PPO/GRPO for some LLM finetuning regimes (@hardmaru).

- Reinforce‑Ada addresses GRPO signal collapse; drop‑in, sharper gradients (@hendrydong).

- BroRL argues that scaling the number of rollouts (broadened exploration) gets past the plateau reached by scaling training steps alone (thread).

- TRL now supports efficient online training with vLLM; Colab → multi‑GPU (guide).

- Compression, vision, tokenization, and sims:

- SSDD (Single‑Step Diffusion Decoder) improves image autoencoder reconstructions with single‑step decode (thread).

- VideoRAG: scalable retrieval + reasoning over 134+ hours via graph-grounded multimodal indexing (overview).

- SuperBPE tokenizer (“Tokenization from first principles”) claims 20% training sample efficiency gains via cross-word merges (@iamgrigorev).

- iMac: world-model training with imagined autocurricula for generalization (@ahguzelUK).

- REFRAG write‑up suggests vector‑conditioned generation yields big TTFT/throughput gains; treat as exploratory community analysis (summary).
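The GRPO “signal collapse” that Reinforce‑Ada targets shows up directly in GRPO's group-relative advantage: each rollout's reward is normalized against the other rollouts for the same prompt, so when every sample in a group gets the same reward (all solved or all failed), every advantage is zero and that prompt contributes no gradient. A minimal sketch of mean/std group normalization (the `eps` value is an assumption); it illustrates the failure mode only and does not implement Reinforce‑Ada's fix.

```python
from statistics import mean, pstdev

def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: (r_i - mean(group)) / (std(group) + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the rollouts for one prompt
    return [(r - mu) / (sigma + eps) for r in rewards]

def zero_signal_fraction(groups: list[list[float]]) -> float:
    """Fraction of prompts whose rollouts all received the same reward."""
    return sum(1 for g in groups if len(set(g)) == 1) / len(groups)

groups = [
    [1.0, 0.0, 1.0, 0.0],  # mixed outcomes: informative
    [1.0, 1.0, 1.0, 1.0],  # all solved: advantages are all zero
    [0.0, 0.0, 0.0, 0.0],  # all failed: advantages are all zero
]
for g in groups:
    print(g, "->", [round(a, 3) for a in group_advantages(g)])
print("zero-signal prompts:", zero_signal_fraction(groups))
```

As more prompts become uniformly easy or uniformly hard, the zero-signal fraction rises and the effective batch shrinks.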

Infra, inference, and tooling

- Hugging Face:

- In‑browser GGUF metadata editing via Xet-based partial file updates (@ngxson, @ggerganov).

- TRL RFC to simplify trainers to the most-used paths (RFC).

- Academia Hub adds University of Zurich; ZeroGPU access and collab features (announce).

- Scaling and ops:

- SkyPilot docs for scaling TorchTitan beyond Slurm (K8s/clouds) (@skypilot_org, @AIatMeta).

- Distributed training ops: handy MPI visuals PDF (@TheZachMueller); async send/recv walkthrough (post).

- KV caching explained + speed impact, with a concise visual recap (@_avichawla).

- GPU cluster sanity checks: HF’s gpu-friends used for node stress testing (@_lewtun). Active chatter on cloud H100 pricing/capacity (e.g.).
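The core idea behind KV caching: during autoregressive decoding, keys and values for past tokens never change, so they can be stored and only the newest token's projections computed at each step. A toy single-head sketch in pure Python, assuming identity projections (Q = K = V = the token vector) for brevity; real models apply learned projection matrices per layer and head, which is where the saved compute actually comes from. It checks that cached decoding matches recomputing attention over the full prefix.

```python
import math

def attend(q: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for a single query vector."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(len(values[0]))]

class KVCache:
    def __init__(self) -> None:
        self.keys: list[list[float]] = []
        self.values: list[list[float]] = []

    def step(self, x: list[float]) -> list[float]:
        # Only the new token's K/V are produced; earlier ones are reused from the cache.
        self.keys.append(x)    # toy assumption: K = x
        self.values.append(x)  # toy assumption: V = x
        return attend(x, self.keys, self.values)  # toy assumption: Q = x

tokens = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.3, -0.2]]
cache = KVCache()
cached = [cache.step(t) for t in tokens]
# Without a cache, step i rebuilds K/V for the whole prefix tokens[: i + 1].
recomputed = [attend(t, tokens[: i + 1], tokens[: i + 1]) for i, t in enumerate(tokens)]
print(all(math.isclose(a, b) for u, v in zip(cached, recomputed) for a, b in zip(u, v)))  # True
```

The trade-off is memory: the cache grows linearly with sequence length (per layer and head), which is why KV cache size dominates long-context serving.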

Benchmarks, evals, and community

- Leaderboards and evals: Open‑vs‑closed gap narrows on agentic tasks (@hardmaru); Qwen3‑VL and Hunyuan‑Vision wins noted above; multiple COLM papers on reasoning, ToM, long‑context coding, unlearning, etc. (Stanford NLP list, talks).

- Courses, events, and tools:

- DeepLearning.AI’s Agentic AI course by @AndrewYNg.

- NVIDIA Robotics fireside (BEHAVIOR benchmark) with @drfeifei.

- Together’s Batch Inference API upgrades for larger datasets and lower costs (thread).

Top tweets (by engagement)

- Nobel Prize in Physics 2025 awarded to Clarke, Devoret, Martinis for macroscopic quantum tunneling and circuit energy quantization (@NobelPrize; congrats threads by @sundarpichai and @Google).

- Figure 03 teaser landing 10/9 (@adcock_brett).

- Gemini 2.5 Computer Use model demo and API preview (@GoogleDeepMind).

- GPT‑5 “novel research” call for examples in math/physics/bio/CS (@kevinweil).

- Agentic AI course launch (@AndrewYNg).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. GLM-4.6 Air Launch Teaser

- Glm 4.6 air is coming (Activity: 714): Teaser image announcing that “GLM‑4.6 Air” is “coming,” with no specs, benchmarks, or release notes provided. The post conveys timing only; there are no technical details about model size, latency, or cost, and no changelog versus prior GLM‑4.x or Air variants. Comments note the fast turnaround (possibly due to community pressure on Discord/social), question earlier messaging that there wouldn’t be an “Air” release, and reference a claim that “GLM‑5” could arrive by year‑end.

- Release cadence speculation: users note the rapid turnaround for GLM-4.6 Air and cite a claim that GLM-5 is targeted for year-end (e.g., “They also said GLM-5 by year end”). This is timeline-only chatter: no details on model architecture changes, context length, latency, or pricing/throughput were provided, and no benchmarks were referenced.

- Variant lineup/naming confusion: commenters question earlier messaging that there would be no “Air” variant and anticipate a possible Flash tier, implying a tiered stack (e.g., speed/cost vs. capability). However, no concrete specs (parameter counts, quantization strategy, context window, or fine-tuning/training updates) were discussed to differentiate Air vs. Flash; it is primarily product positioning without technical substance.


Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Robotics product news: Figure 03, Walmart service bot, Neuralink arm control

- Figure 03 coming 10/9 (Activity: 1022): Teaser post indicates Figure AI plans to reveal its next humanoid, Figure 03, on 10/9 (Figure). The linked video is inaccessible (HTTP 403), and no specs, benchmarks, or capability claims are provided. Based on top comments, the teaser appears to show a protective, clothing-like waterproof outer shell intended to simplify cleaning compared with exposed joints and to protect surfaces from abrasion and scratches, suggesting a trend toward more integrated exteriors across iterations. Commenters endorse textile/shell exteriors for maintainability and durability, while others note primarily aesthetic improvements (“each iteration looks neater”).

- Adopting a removable, waterproof garment/shell for a humanoid (e.g., Figure 03) reduces maintenance by shifting cleaning from intricate joint interfaces and cable runs to a wipeable exterior, while also shielding exposed surfaces from abrasion and minor impacts. A soft or semi-rigid cover can double as a particulate/liquid barrier (improving practical IP behavior around actuators, encoders, and seals) and enables swappable panels for quick replacement when damaged. This design choice can also reduce contamination-driven wear in rotary joints and maintain sensor performance by limiting dust ingress.

- Toe articulation is a meaningful locomotion upgrade: adding a toe joint expands the effective support polygon and improves center-of-pressure/ZMP control, enhancing balance on uneven terrain and during dynamic maneuvers. It also enables more efficient push-off (toe-off) for walking, stairs, and pivots, potentially lowering energy cost and slip risk compared to flat-foot designs. This can translate to better agility and recoverability in disturbances and more human-like gait phase timing.

- You can already order a chinese robot at Walmart (Activity: 612): Post shows a Walmart Marketplace product page for a Chinese-made Unitree robot (likely the compact G1 humanoid), surfaced via an X post, being sold by a third‑party seller at a price markedly higher than Unitree’s direct pricing (~$16k). The technical/contextual takeaway is less about the robot’s capabilities and more about marketplace dynamics: third‑party retail channels list advanced robotics hardware at significant markups, raising questions about authenticity, warranty, and after‑sales support compared to buying direct from Unitree. Comments criticize Walmart’s third‑party marketplace quality control and note the apparent upcharge versus Unitree’s official pricing, debating whether any value (e.g., import handling) justifies the markup.

- The thread flags a significant marketplace markup versus OEM pricing: a comparable Unitree robot is cited at around $16k direct from the manufacturer, implying the Walmart third‑party listing is heavily upcharged. For technical buyers, this suggests verifying OEM MSRP/specs before purchasing via marketplaces (e.g., Unitree store: https://store.unitree.com/).

- A commenter asserts the listed robot “doesn’t do anything,” implying limited out‑of‑box functionality without additional software/integration. This reflects a common caveat with developer/research robots: useful behaviors typically require configuring an SDK/firmware and adding payloads/sensors before achieving meaningful capability.


- Neuralink participant controlling robotic arm using telepathy (Activity: 1642): A video purportedly shows a Neuralink human-trial participant controlling a robotic arm via an intracortical, read-only brain–computer interface (BCI), decoding motor intent from neural activity into multi-DoF arm commands (clip). The post itself provides no protocol or performance details (decoder type, channel count, calibration time, latency, error rates), so it’s unclear whether the control is continuous kinematic decoding (e.g., Kalman/NN) vs. discrete state control, or whether any sensory feedback loop is present. Without published metrics, this appears as a qualitative demo consistent with prior intracortical BCI work (e.g., robotic arm control in clinical trials) and Neuralink’s recent read-only cursor-control demonstrations. Commenters note current systems are primarily read-only and argue that write-capable stimulation (closed-loop sensory feedback) would enable far more immersive/precise control and VR applications; others focus on the clinical promise while setting aside views on the company/leadership.

- Several highlight that present BCIs like Neuralink are primarily read-only, decoding neural activity (e.g., motor intent) into control signals. The future shift to write (neural stimulation) would enable closed-loop systems with sensory feedback and potentially “incredibly immersive VR.” This requires precise, low-latency stimulation, per-electrode safety (charge balancing, tissue response), and stable long-term mapping to avoid decoder/stimulator drift.

- Commenters note a path toward controllable bionic arms/hands for amputees: decode multi-DOF motor intent from cortex to drive prosthetic actuators, optionally adding somatosensory feedback via stimulation to improve grasp force and dexterity. Practical hurdles include calibration time, robustness to neural signal nonstationarity, on-device real-time decoding latency, and integration with prosthetic control loops (EMG/IMU/actuator controllers) over reliable, high-bandwidth wireless links.


2. New vision model release and demo: Qwen-Image LoRA + Wan 2.2 360° video

- Qwen-Image - Smartphone Snapshot Photo Reality LoRa - Release (Activity: 1164): Release of a Qwen-Image LoRA, “Smartphone Snapshot Photo Reality,” by LD2WDavid/AI_Characters targeting casual, phone-camera realism for text-to-image, with a recommended ComfyUI text2image workflow JSON provided (model, workflow). The author notes that with Qwen the “first 80% is easy, last 20% is hard,” highlighting diminishing returns and tuning complexity; an update to the WAN2.2 variant is in progress, and training was resource-intensive, with a donation link provided (Ko‑fi). Prompts include contributions from /u/FortranUA, and the LoRA targets improved fine-grained object fidelity and prompt adherence (e.g., keyboards). Commenters report the model reliably renders difficult objects like keyboards, suggesting strong structural fidelity. Overall reception is highly positive for realism, particularly for casual smartphone-style scenes.

- The author fine-tuned a LoRA on Qwen-Image to achieve a “Smartphone Snapshot Photo Reality” style, noting the classic curve: “first 80% are very easy… last 20% are very hard,” implying most gains come quickly but photoreal edge cases demand intensive iteration and cost. They shared a reproducible ComfyUI text2image workflow for inference (workflow JSON) and are also preparing an update to WAN2.2; model page: https://civitai.com/models/2022854/qwen-image-smartphone-snapshot-photo-reality-style.

- Commenters highlight that it “can do keyboards,” a known stress test for diffusion models due to high-frequency, grid-aligned geometry and tiny legends/text. This suggests improved spatial consistency and fine-detail synthesis under the LoRA, though others note it’s still detectable on close inspection—indicating remaining artifacts in micro-text fidelity and regular pattern rendering.

- A user requests LoRA support for Qwen-Image in Nunchaku (the SVDQuant-based 4-bit inference engine, not an official Qwen runtime), implying current workflows rely on external pipelines (e.g., ComfyUI) for LoRA injection/merging. Native support would streamline deployment and make it easier to use the LoRA with quantized Nunchaku models without bespoke nodes or preprocessing steps.

- Finally did a nearly perfect 360 with wan 2.2 (using no loras) (Activity: 505): OP showcases a near-360° character rotation generated with the open‑source Wan 2.2 video model, explicitly using no LoRAs, and shares an improved attempt as a GIF (example; original post video link). Remaining issues appear in temporal/geometry consistency (e.g., hair/ponytail drift and minor topology warping), which are common failure modes in full-turntable generations without multi‑view priors or keyframe constraints. A commenter suggests using Qwen Edit 2509 to synthesize a back‑view reference image and then running Wan 2.2 with both initial and final frame conditioning to better preserve identity and pose alignment across the rotation; other remarks highlight the hair artifacts and “non‑Euclidean” geometry as typical T2V shortcomings.

- A commenter suggests using Qwen Edit 2509 to synthesize a back-view image of the character, then feeding both the initial and final frames into Wan 2.2 to drive a more faithful 360° rotation. Constraining the model with start/end keyframes reduces hallucination of unseen geometry and improves identity/pose consistency across the turn. This leverages video generation modes that accept paired keyframe conditioning for motion guidance.

- Observers highlight artifacts in non-rigid extremities—ponytails and arms—visible in the shared GIF. These deformations (drift/self-intersection) are typical for diffusion video models attempting full-body 3D turns without an explicit 3D prior or rig, indicating limits in temporal consistency and geometric coherence. Providing an accurate back-view frame and explicit end keyframe can mitigate, but does not fully resolve, these failure modes.
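On the LoRA “injection/merging” point above: a LoRA stores a low-rank update ΔW = (α/r)·B·A next to a frozen base weight W, and it can either be applied as a separate path at runtime or merged into W once before inference. A pure-Python sketch with tiny made-up matrices (shapes, α, and r are illustrative assumptions, not values from this LoRA) showing that both paths give the same output:

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]

def merge_lora(W: list[list[float]], A: list[list[float]], B: list[list[float]],
               alpha: float, r: int) -> list[list[float]]:
    """W' = W + (alpha / r) * B @ A, with W: d_out x d_in, B: d_out x r, A: r x d_in."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

# Toy shapes: d_out = 2, d_in = 3, rank r = 1 (illustrative values only)
W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
A = [[0.5, -1.0, 0.25]]  # r x d_in
B = [[2.0], [-1.0]]      # d_out x r
alpha, r = 2.0, 1
x = [1.0, 2.0, 3.0]

# Runtime injection: base output plus the scaled low-rank path B(Ax)
runtime = [wx + (alpha / r) * bax
           for wx, bax in zip(matvec(W, x), matvec(B, matvec(A, x)))]
# Merged: fold the update into W once, then use a single matmul per input
merged = matvec(merge_lora(W, A, B, alpha, r), x)
print(runtime, merged)  # [4.0, 0.5] [4.0, 0.5]
```

Merging removes the extra low-rank matmuls at inference but bakes the adapter into the weights; keeping it separate lets one base model swap adapters at runtime.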

3. AI viral memes + ChatGPT humor/complaints: Olympic dishes, Bowie vs Mercury, parkour

- Olympic dishes championship (Activity: 2119): Reddit post is a v.redd.it video titled “Olympic dishes championship,” but the media endpoint returns HTTP 403 Forbidden when accessed directly (v.redd.it/53dt69862otf1), indicating authentication or a developer token is required; no verifiable media details (duration/codec/resolution) are accessible. Comment hints like “Watch the third one dj-ing” imply a multi‑clip, humorous sequence, but the actual content cannot be confirmed due to access restrictions. Top comments are brief, non-technical reactions (e.g., “Peak,” “Considering if I should show my girlfriend”), with no substantive technical debate.

- David Bowie VS Freddie Mercury WCW (Activity: 1176): The post appears to be a short video staging a fictional “David Bowie vs. Freddie Mercury” pro‑wrestling bout in a WCW aesthetic, but the media itself is inaccessible due to a 403 Forbidden block on the host (v.redd.it). Top comments highlight the standout quality of the play‑by‑play commentary and comedic timing, drawing comparisons to MTV’s “Celebrity Deathmatch,” implying some use of modern generative/synthesis tooling for voices or presentation, though no implementation details or benchmarks are provided. Commenters overwhelmingly praise the concept and execution as “hilarious,” with one noting the tech “arrived too early” (a nod to the novelty outpacing maturity), though it remains highly effective for humor.

- Bunch of dudes doing parkour (Activity: 691): Video post purportedly showing a group doing parkour, but the linked media at v.redd.it/xq2x52cvtmtf1 returns 403 Forbidden, citing network security and requiring Reddit authentication or an OAuth developer token per the error page; the actual footage cannot be verified from the provided link. No technical details (e.g., filming setup, movement analysis, safety gear) are available in the post text provided. Top comments are jokes/memes (e.g., references to a “parkour outbreak” and “28 parkours later”) with no substantive technical discussion.

- Asked ChatGPT for ideas for a funny title (Activity: 8733): OP asked ChatGPT for ideas for a “funny title” and shared a video of people using ChatGPT for lightweight/entertainment prompts, contrasting with OP’s prior stance that it’s best used as a drafting/structuring tool. The video link is access-controlled (v.redd.it/w83gtuludotf1, returns 403 without login), and the top comments are a meta reaction to the video and a meme/screenshot image (preview.redd.it). Commenters highlight a gap between intended productivity use (outlining, structure) and actual user behavior (ideation/humor), with some conceding that users often do exactly what critics predicted; others imply this is a normal emergent use pattern rather than a misuse.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Sora 2 Pricing, Integrations, and Benchmarks

- Sora 2 Sticker Shock: Pay-by-Second Pricing Drops: OpenRouter users shared that Sora 2 Pro API pricing is $0.3/sec and Sora 2 is $0.1/sec, per an OpenRouter message on Sora 2 pricing.

- Members ran back-of-the-napkin cost estimates; one joked, “I can put someone in jail by generating a video of them commiting a crime for $4.5 (15 second video)”, while others bragged about testing Sora3 for “$100s of dollars of value”.

- Arena Adds Sora: Models Without Choice: LMArena’s Video Arena added sora-2 and sora-2-pro for text-to-video tasks, but users on Discord reported they still cannot select a specific model for generation, with the team working on adding Sora 2 to the leaderboard.

- Invite codes circulated (e.g., “KFCZ2W”, unverified), and users noted inconsistent quality, suggesting iterative prompting for better promotional clips.

- Sora Surprises on Science: GPQA Score Pops: Sora 2 reportedly scored 55% on the GPQA Diamond science benchmark, highlighted by Epoch AI.

- Developers speculated a hidden LLM prompt‑rewrite layer (e.g., GPT‑4o/5 or Gemini) boosts prompt fidelity before video generation, per Andrew Curran’s note.
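Per-second pricing makes cost estimates simple multiplication. A sketch using the rates quoted in the OpenRouter message ($0.10/s for Sora 2, $0.30/s for Sora 2 Pro); the model keys are just labels, any resolution-dependent pricing tiers are ignored, and `Decimal` avoids float rounding on currency.

```python
from decimal import Decimal

# Per-second API rates quoted in the OpenRouter message (USD); keys are labels only
RATES_PER_SEC = {"sora-2": Decimal("0.10"), "sora-2-pro": Decimal("0.30")}

def video_cost(model: str, seconds: int, n_videos: int = 1) -> Decimal:
    """Total cost in USD for n_videos clips of the given length."""
    return RATES_PER_SEC[model] * seconds * n_videos

print(video_cost("sora-2-pro", 15))      # 4.50, the "$4.5 (15 second video)" figure
print(video_cost("sora-2", 15))          # 1.50
print(video_cost("sora-2-pro", 10, 20))  # 60.00 for twenty 10-second clips
```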

2. Model Access Economics & Platform Policy

- DeepSeek Freebie Dies: $7k/Day Bleed: OpenRouter pulled the free DeepSeek v3.1 on DeepInfra after costs hit about $7k/day, per an OpenRouter message on DeepSeek costs.

- Users chased alternatives like Chutes’ Soji and venice, but rate limits (e.g., “98% chance of 429”) and censorship complaints made fallbacks shaky.

- BYOK Bonanza: 1M Free or Fuzzy?: OpenRouter announced 1,000,000 free BYOK requests per month (as clarified in an OpenRouter message on the BYOK offer), with overages charged at the usual 5% rate.

- Some called the headline “scammy” and “bordering on fraudulent”, prompting a clarification that the quota resets monthly and excess usage is billed normally.
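To see what the “1M free, then 5%” structure means for a bill, a small estimator helps. This is a sketch under a loudly stated assumption: that the first 1M requests each month carry no fee and each request beyond that is charged 5% of its underlying model cost. The workload numbers are hypothetical; check OpenRouter's own BYOK documentation for the exact fee basis.

```python
def byok_monthly_fee(requests: int, avg_cost_per_request: float,
                     free_requests: int = 1_000_000, fee_rate: float = 0.05) -> float:
    """Estimated monthly BYOK fee in USD.

    Assumption (not confirmed by the source): the first `free_requests` requests
    each month are fee-free, and every request beyond that is charged
    `fee_rate` times its underlying model cost."""
    billable = max(0, requests - free_requests)
    return billable * avg_cost_per_request * fee_rate

# Hypothetical workload: 1.5M requests/month at $0.002 of model cost each
print(round(byok_monthly_fee(1_500_000, 0.002), 2))  # 50.0
print(byok_monthly_fee(800_000, 0.002))              # 0.0, under the free quota
```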

3. New Tooling: Local Runtimes, ReAct Revamps, and Python Threads

- LM Studio Speaks Responses API: LM Studio 0.3.29 shipped OpenAI /v1/responses compatibility, which reduces traffic by sending only a conversation ID plus the new message, and added `lms ls --variants` for listing local model variants.

- Its new remote feature lets you host on a beefy box and access it from a lightweight client (with Tailscale if desired), enabling setups like a NUC 12 + 3090 eGPU serving a GPD Micro PC2.

- ReAct Rethink: DSPy‑ReAct‑Machina Drops: A community release, DSPy‑ReAct‑Machina, offers multi-turn ReAct via a single context history and state machine; see the blog post and GitHub repo (install: `pip install dspy-react-machina`).

- In tests vs. standard ReAct on 30 questions, Machina hit a 47.1% cache rate (vs. 20.2%) but cost 36.4% more due to structured inputs, and the author noted, “DSPy could really benefit from having some kind of memory abstraction”.

- Python 3.14 Frees the Threads (PEP 779): Python 3.14 added official free-threaded Python support (PEP 779), multi-interpreters in stdlib (PEP 734), and a zero-overhead external debugger API (PEP 768), plus a new zstd module.

- Builders debated implications for Mojo/MAX ecosystems and GPU workflows, with broader excitement around better concurrency and cleaner error reporting.
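To check which of these features a given interpreter actually has, a short probe works. It relies on `sysconfig.get_config_var("Py_GIL_DISABLED")` (set on free-threaded builds such as `python3.14t`; free threading is still a separate build, not the default), `sys._is_gil_enabled()` (a private helper added in 3.13, hence the `getattr` fallback), and the new `compression.zstd` module in 3.14. Treat it as a sketch rather than an exhaustive feature test.

```python
import sys
import sysconfig
from typing import Optional

def free_threading_status() -> dict:
    """Report whether this is a free-threaded build and whether the GIL is currently on."""
    # Py_GIL_DISABLED is 1 on free-threaded builds; 0 or None on default builds.
    ft_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on 3.13+; older interpreters always run with the GIL.
    # Even on a free-threaded build the GIL can be re-enabled at runtime
    # (e.g. PYTHON_GIL=1, or importing an extension not marked as free-threading safe).
    gil_enabled = getattr(sys, "_is_gil_enabled", lambda: True)()
    return {"version": sys.version.split()[0], "free_threaded_build": ft_build,
            "gil_enabled": gil_enabled}

def zstd_roundtrip(data: bytes) -> Optional[bool]:
    """Compress/decompress with the 3.14 stdlib zstd module; None if it is unavailable."""
    try:
        from compression import zstd  # new in Python 3.14 (PEP 784)
    except ImportError:
        return None
    return zstd.decompress(zstd.compress(data)) == data

print(free_threading_status())
print("zstd roundtrip:", zstd_roundtrip(b"hello " * 100))
```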

4. Systems & Research: Faster Training, New Generative Frontiers

- Mercury Moves Memory: Multi‑GPU Compiler Wins: The paper “Mercury: Unlocking Multi-GPU Operator Optimization for LLMs via Remote Memory Scheduling” reports a compiler achieving 1.56x average speedup over hand-tuned baselines and up to 1.62x on real LLM workloads (ACM, preprint, artifact).

- Mercury treats remote GPU memory as an extended hierarchy, restructuring operators with scheduled data movement to raise utilization across devices.

- Whisper Whips: vLLM Patch 3x Throughput: A member patched the vLLM Whisper implementation to remove padding, yielding a reported 3x throughput gain, documented in a Transformers issue thread and an OpenAI Whisper discussion.

- After profiling showed the encoder taking ~80% of inference time on short audio, further tweaks to the attention scores gave a 2.5x speedup at the cost of ~1.2x worse WER.

- RWKV Searches Itself: Sudoku In‑Context: RWKV 6 demonstrated in‑context Sudoku solving by learning to search internally, as shared in BlinkDL’s post.

- Contributors recommended trying RWKV 7 or other SSMs with state tracking (e.g., Gated DeltaNet or hybrid attention) for similar reasoning-heavy tasks.
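Why removing padding pays off so much on short audio: Whisper's encoder is built around fixed 30-second windows (3,000 log-mel frames, downsampled to 1,500 encoder positions), so a short clip padded to 30 s spends most encoder work on padding. A rough sketch of the wasted fraction, assuming 50 encoder positions per second of audio and ignoring the decoder, the conv front-end, and fixed overheads; this is arithmetic only, not the member's vLLM patch.

```python
WINDOW_SECONDS = 30
POSITIONS_PER_SECOND = 50  # 1,500 encoder positions / 30 s

def encoder_waste(clip_seconds: float) -> dict:
    """Fraction of encoder work spent on padding when a clip is padded to 30 s."""
    full = WINDOW_SECONDS * POSITIONS_PER_SECOND  # 1500
    real = min(full, round(clip_seconds * POSITIONS_PER_SECOND))
    return {
        "positions_real": real,
        # Per-position layers (MLPs, projections) scale linearly with sequence length
        "linear_waste": 1 - real / full,
        # Self-attention score computation scales with sequence length squared
        "attention_waste": 1 - (real / full) ** 2,
    }

for secs in (5, 10, 30):
    w = encoder_waste(secs)
    print(f"{secs:>2}s clip: {w['positions_real']} real positions, "
          f"~{w['linear_waste']:.0%} of linear and ~{w['attention_waste']:.0%} of attention work on padding")
```

Actual speedups depend on the clip-length mix, decoder time, and batching, so these fractions bound the encoder-side savings rather than predict end-to-end throughput.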

5. Funding & Fresh Launches

- Supermemory Snags $3M Seed: Supermemory AI raised $3M led by backers like Susa Ventures and Browder Capital, with angels from Google and Cloudflare.

- Founder Dhravya Shah (20) said they’re hiring across engineering, research, and product as they already serve hundreds of enterprises.

- Adaption Labs Goes Live: Sara Hooker launched Adaption Labs, targeting continuously learning, adaptive AI systems.

- The venture is hiring globally across engineering, operations, and design, with a focus on building adaptive product loops.

- Decentralized Diffusion: Bagel’s Paris Bakes: Bagel.com unveiled “Paris”, a diffusion model trained without cross-node synchronization, releasing weights (MIT) and a full technical report for research and commercial use.

- Community framed it as a move toward open-source superintelligence, inviting replication and scale-out experiments on independent nodes.

Discord: High level Discord summaries

OpenAI Discord

- Gemini Jailbreak Unlocks Other Chatbots: A member used a jailbroken Gemini to create bypasses for other chatbots, including Grok, DeepSeek, and Qwen, with around a 50% success rate.

- The member did not provide further details or specific prompts used in achieving this, leaving the method somewhat opaque.

- OpenAI Late to Low-Code Party?: Members observed that OpenAI is venturing into low/no code AI two years after small businesses started selling this, emulating Amazon’s strategy of disrupting existing markets.

- Alternatives like flowise, n8n, and botcode were suggested, implying a competitive landscape already in place.

- SORA2 Generation Falls Short of Hype: Members expressed disappointment in SORA2, claiming the showcased quality is cherry-picked and that those outputs were generated by OpenAI with no limits on usage or compute.

- One member posited that blocking under-18s from the server would improve the quality of the generated content, though this remains speculative.

- Users Hack Minimalist ChatGPT Persona: Members are sharing prompts to instruct ChatGPT to adopt a strict, minimalistic communication style, stripping away friendliness and casual interactions.

- The goal is to transform ChatGPT into a cold, terse assistant, though the optimal implementation—whether at the start of each chat or loaded into a project—is still under discussion.

- ChatGPT “Think Step-by-Step”: A user sought methods to extend ChatGPT’s thinking time to improve output quality, with one suggestion being to prompt it with “take your time and think step-by-step.”

- However, another user questioned whether the goal is truly longer thinking or achieving specific output qualities, highlighting the ambiguity in the request.

OpenRouter Discord

- DeepSeek 3.1 Succumbs to Expenses: The free DeepSeek v3.1 endpoint on DeepInfra was shut down due to financial strain on OpenRouter, costing them $7k/day according to this message.

- Users scrambled for alternatives like Chutes’ Soji and venice, though rate limits and censorship issues emerged.

- Sora 2’s API Pricing: Sora 2 Pro’s API pricing surfaced at $0.3/sec of video, while Sora 2 non-pro is $0.1/sec according to this message.

- Members responded with dark humor, calculating the cost of generating incriminating content or boasting of generating $100s of dollars of value testing Sora3.

- OpenRouter’s BYOK Sparks Debate: OpenRouter’s 1,000,000 free BYOK requests per month offer faced scrutiny, with some deeming it scammy and bordering on fraudulent as seen here.

- The offer was clarified to include 1M free requests monthly, with overages charged at the standard 5% rate.

- Janitor AI’s Censorship Judged: Members debated the merits of Janitor AI (JAI) versus Chub AI, citing JAI’s restrictive censorship.

- Community members stated that the Janitor AI Discord and subreddit were actively encouraging people to make multiple free accounts to bypass daily limits.

- Interfaze Opens Beta Gates!: Interfaze, an LLM specialized for developer tasks, has launched in open beta and was announced on X and LinkedIn.

- The company uses OpenRouter as the final layer, granting users access to all models with no downtime.

LMArena Discord

- GPT-5 Pro on Oxaam Draws Ire: GPT-5 Pro is reportedly available on Oxaam, with some users describing it as “bas” (bad).

- Speculation suggests the LMArena team might integrate GPT-5 Pro directly into the platform’s chat interface.

- Sora 2 Codes Flood the Arena: Users actively exchanged Sora 2 invite codes, with one user sharing KFCZ2W, though its validity is unconfirmed.

- Video Arena has added new models such as sora-2 and sora-2-pro for text-to-video tasks.

- LMArena UI Upgrade on the Horizon: A user inquired about improvements to LMArena’s UI and GUI, while another shared a custom extension for displaying user messages and emails.

- The LMArena team is soliciting community feedback to better understand the needs and improve the experience for knowledge experts.

- Image Generation Rate Limits Frustrate: Users are running into rate limits during image generation, particularly with Nano Banana on Google AI Studio, prompting discussions about account switching.

- The quality of generated content varies, users reported, so achieving optimal promotional materials may require iterative attempts.

- Video Arena Text-to-Video Chaos: Although Sora 2 was added to Video Arena on Discord, users lack the ability to select a specific model for video generation.

- The LMArena team indicated they are working on adding Sora 2 to the leaderboard; a model’s self-assertiveness purportedly correlates with higher scores on LMArena (unconfirmed).

Unsloth AI (Daniel Han) Discord

- Granite’s Temp Must Be Zero: The IBM documentation suggests the Granite model should be run with a temperature of zero.

- The Unsloth documentation might need an update to reflect this recommendation.

- Unsloth Ubuntu 5090 Training Hits Speed Bumps: A user reported training performance issues on Unsloth with Ubuntu and a 5090, with the training speed plummeting from 200 steps/s to 1 step/s.

- It was suggested to use the `unsloth/unsloth` Docker image, which is Blackwell compatible, and to ensure proper Docker GPU support on Windows following Docker’s documentation.

- Windows Docker GPU support is a tough nut to crack: Users discussed challenges with Docker GPU support on Windows, recommending a review of the official Docker documentation for troubleshooting.

- A user pointed to a GitHub issue detailing steps to resolve Docker container issues on Windows.

- QLoRA Overfitting Dataset Disaster: An expert warned that using LoRA with an excessively large dataset (1.6 million samples) will likely cause overfitting.

- They suggested that it might be better to use CPT (Continued Pre-Training) instead and emphasized the importance of using a representative dataset.

- `save_pretrained_gguf` Function Fails: The `save_pretrained_gguf` function is currently not working, with a fix expected next week.

- In the meantime, the suggestion is to either do the conversion manually or upload safetensors to HF and use GGUF conversion from there.
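IBM’s temperature-zero recommendation for Granite corresponds, in most inference stacks, to greedy decoding. The minimal sketch below (plain Python; `sample_next_token` is a hypothetical helper, not an Unsloth or IBM API, and the logits are made up) shows why: logits are divided by the temperature before the softmax, so T = 0 is handled as an argmax special case rather than a division by zero.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(logits, temperature, rng=random):
    """Pick a token index from raw logits (hypothetical helper).

    temperature == 0 is treated as greedy decoding (argmax): the T -> 0 limit
    of softmax(logits / T) puts all probability mass on the largest logit.
    """
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax([x / temperature for x in logits])
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

logits = [1.0, 3.5, 2.0]  # made-up scores for a 3-token vocabulary
print(sample_next_token(logits, temperature=0))    # always 1
print(sample_next_token(logits, temperature=1.0))  # usually 1, sometimes 0 or 2
```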

Cursor Community Discord

- Agent Board Cleans Up Interface: Members report that the Agent Board (opened with Ctrl+E) improves productivity by keeping the IDE separate from the Agent window, and mentioned that tools like Warp also support multi-agent interactions.

- They suggested that more screens are always better.

- Cheetah Model rivals Grok Superfast: The Cheetah model is noted to be faster than Grok Superfast but with a slight reduction in code quality.

- One member noted that it keeps being better by the hour somehow.

- GPT-5 Pro’s Hefty Price Tag: The pricing of GPT-5 Pro sparked debate, with one member questioning whether its benchmark improvements justify a 10x cost increase.

- They recalled GPT-4.5 costing $75 per million input tokens and $150 per million output tokens, potentially making a single chat cost $20-$40.

- Sonnet 4.5’s Articulation Impresses: A member reported they were able to jailbreak the thinking tokens of Sonnet 4.5, suggesting these might be a separate model with its own system prompt.

- They also highlighted Sonnet 4.5’s impressive articulation in general, not just coding-related tasks.

- Oracle’s Free Tier Provides Cost-Effective Resources: A member recommends the Oracle Free Tier, which offers 24GB RAM, a 4-core ARM CPU, 200GB of storage, and 10TB ingress/egress per month, and suggested switching the instance to Ubuntu.

- They’ve been using this free tier for 5 years to host a Discord bot and shared a blog post detailing setup.
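To see how the GPT-4.5 prices recalled above can add up to $20-$40 for one chat, here is a short sketch of the per-request arithmetic. The token counts are illustrative assumptions (a long, agentic session that re-sends context on every turn), not figures from the discussion.

```python
def chat_cost_usd(input_tokens, output_tokens, in_price_per_m=75.0, out_price_per_m=150.0):
    """Dollar cost at per-million-token prices (defaults: the GPT-4.5 rates quoted above)."""
    return (input_tokens * in_price_per_m + output_tokens * out_price_per_m) / 1_000_000

# Illustrative session: 400k input tokens accumulated across turns, 50k output tokens.
print(chat_cost_usd(400_000, 50_000))  # 37.5
```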

Modular (Mojo 🔥) Discord

- Modular Misses SF Tech Week: Modular announced that they will not be at SF Tech Week but will instead attend the PyTorch Conference.

- The team is focusing on showcasing their latest advancements in Mojo and engaging with the PyTorch community.

- Python 3.14: Free Threads!: Python 3.14 includes official support for free-threaded Python (PEP 779), multiple interpreters in the stdlib (PEP 734), and a new module compression.zstd providing support for the Zstandard compression algorithm.

- Other improvements include syntax highlighting in PyREPL, a zero-overhead external debugger interface for CPython (PEP 768), and improved error messages.

- MAX CPU Blues: Members report issues running MAX models on CPUs, specifically an incompatibility between bfloat16 encoding and the CPU device, as outlined in this GitHub issue.

- It was noted that many MAX models don’t work well on CPUs.

- Mojo’s ARM Ambitions Expand: Discussion clarified that Mojo’s support for ARM systems extends beyond Apple Silicon, with regular tests conducted on Graviton and NVIDIA Jetson Orin Nano.

- A user expressed a desire for Mojo to work on ARM systems beyond Apple Silicon.
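For readers trying the Python 3.14 features listed above, the sketch below checks whether the running interpreter is a free-threaded build and round-trips data through the new `compression.zstd` module, falling back to `zlib` on older versions. `sysconfig.get_config_var("Py_GIL_DISABLED")` and `sys._is_gil_enabled()` are available in CPython 3.13+; the `getattr` guard and the import fallback are there so the script still runs on earlier interpreters.

```python
import sys
import sysconfig

def free_threading_status():
    """Return (free_threaded_build, gil_enabled_now) for this interpreter."""
    # Py_GIL_DISABLED is 1 on free-threaded builds, and 0 or None otherwise.
    build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() was added in 3.13; assume the GIL is on if it is missing.
    is_enabled = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = is_enabled() if is_enabled is not None else True
    return build, gil_enabled

try:
    from compression import zstd as codec  # new in Python 3.14
except ImportError:
    import zlib as codec  # fallback for older interpreters

def roundtrip(data: bytes) -> bytes:
    """Compress and then decompress with whichever codec was imported."""
    return codec.decompress(codec.compress(data))

print(free_threading_status())
print(codec.__name__, roundtrip(b"hello " * 100) == b"hello " * 100)
```

Note that a free-threaded build can still report the GIL as enabled, for example when an extension module that does not declare free-threading support is imported.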

LM Studio Discord

- LM Studio Gains OpenAI Compatibility: LM Studio 0.3.29 introduces OpenAI `/v1/responses` compatibility and lets users list local model variants with `lms ls --variants`.

- The `/v1/responses` API sends only the conversation ID and the new message, reducing HTTP traffic and leveraging server-side state, which is expected to speed up prompt generation.

- LM Studio’s Remote Access Scheme Streams Smoothly: LM Studio’s new remote feature lets users run a model on a powerful machine and access it from a less powerful one by using the LM Studio plugin and setting the appropriate IP addresses and API keys.

- For added convenience, Tailscale can be used to access the powerful model from anywhere, enabling scenarios like running a model on a NUC 12 with a 3090 eGPU and accessing it from a GPD Micro PC2.

- 5090 Speculation Sparks Surprise: Users claimed that a 64GB 5090 could be obtained for around $3800.

- One user upgraded to a 5090 but found it running off the CPU; they resolved the issue by updating their CUDA runtime after realizing they had switched runtimes.

- Model Distillation Details Develop: A member is bulk-buying second-hand motherboard+CPU+RAM bundles to build an MI50-powered rig that achieves about 32 prompts per second on an 80 tok/s model for prompt distillation.

- Their goal is to distill into very small decision-making MLPs that they can run on embedded hardware, efficiently executing complex decision-making derived from an intelligent LLM, using pre-existing datasets such as FLAN, Alpaca, and OASST, as demonstrated in this DataCamp tutorial.

- MI350 Material Materializes Merrily: A user shared two YouTube videos from Level1Techs showcasing a visit to AMD to explore the new MI350 accelerator.

- No additional comments were provided.
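The stateful `/v1/responses` flow described in the LM Studio items above can be sketched as follows: the first request carries the input, and follow-ups send only the new message plus a reference to the previous response. This assumes LM Studio mirrors the `previous_response_id` field of OpenAI’s Responses API and serves on its usual local port 1234 (swap in the LAN or Tailscale IP for the remote setup); the model name and response ID are placeholders. The sketch only builds requests and does not contact a server.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumption: LM Studio's default local server address

def build_response_request(model, message, previous_response_id=None, base_url=BASE_URL):
    """Build (but do not send) a POST to the Responses endpoint.

    Follow-up turns pass only the new message plus previous_response_id,
    relying on the server to keep the earlier conversation state.
    """
    payload = {"model": model, "input": message}
    if previous_response_id is not None:
        payload["previous_response_id"] = previous_response_id
    return urllib.request.Request(
        f"{base_url}/responses",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

first = build_response_request("local-model", "Hello")
follow_up = build_response_request("local-model", "Now in French.", previous_response_id="resp_123")
print(json.loads(follow_up.data))
```

To actually send a request, pass it to `urllib.request.urlopen` against a running server and read the returned `id` field to use as the next `previous_response_id`.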

GPU MODE Discord

- Whisper Gets 3x Speedup!: A member patched the vLLM Whisper implementation to remove padding, resulting in a 3x throughput increase, according to this Hugging Face issue and this OpenAI discussion.

- Experimentation with attention scores in the decoder led to a patch that gives a 2.5x speedup but a 1.2x worse WER; separately, the encoder was found to account for 80% of inference time on short audio clips.

- Codeplay Plugs Pull on NVIDIA oneAPI Downloads: Members are reporting issues downloading the NVIDIA plugin for oneAPI from the Codeplay download page.

- Specifically, the menu on the site resets, the API returns a 404 error, and apt and conda methods also fail.

- CUDA Cache Conundrums Commence!: A member asked how to follow the standard practice of clearing the L2 cache in raw CUDA benchmarks, since no easy one-liner API exists, prompting discussion of alternative methods.

- Suggestions included allocating a big enough buffer and calling `zero_()` on it, or allocating `cache_size/n` input buffers and cycling through them; a blog post on CUDA Performance Hot/Cold Measurement was also shared, with advice on clearing the cache when benchmarking.

- Amsterdam HPC Meetup Sets November Date!: A High Performance Computing meetup in Amsterdam has been announced for November, with details available on the Meetup page.

- The meetup is designed for those in the area interested in discussing and exploring topics within high-performance computing.

- Mercury Rockets Multi-GPU LLM Training Speeds!: A new paper titled Mercury: Unlocking Multi-GPU Operator Optimization for LLMs via Remote Memory Scheduling (ACM link, preprint, GitHub) introduces Mercury, a multi-GPU operator compiler achieving 1.56x speedup over hand-optimized designs.

- Mercury achieves up to 1.62x improvement for real LLM workloads by treating remote GPU memory as an extension of the memory hierarchy.
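The buffer-cycling suggestion from the CUDA cache discussion above (allocate enough input copies to exceed the L2 cache and rotate through them) boils down to simple arithmetic. The sketch below is plain Python and hypothetical: `make_buffer` stands in for a real device allocation, and the 8 MB input and 50 MB L2 sizes are assumed example values, not measurements.

```python
import itertools
import math

def rotating_buffers(input_bytes, l2_bytes, make_buffer):
    """Allocate enough copies of an input that cycling through them defeats L2 reuse.

    Each benchmark iteration reads a different copy. Because the copies together
    exceed the L2 size, a buffer has typically been evicted by the time it comes
    around again, so each iteration approximates a "cold" measurement.
    """
    n = math.ceil(l2_bytes / input_bytes) + 1  # +1 so the set strictly exceeds L2
    buffers = [make_buffer(i) for i in range(n)]
    return n, itertools.cycle(buffers)

# Hypothetical sizes: 8 MB inputs, 50 MB of L2.
n, bufs = rotating_buffers(8 * 2**20, 50 * 2**20, make_buffer=lambda i: f"buf{i}")
print(n)  # 8
print([next(bufs) for _ in range(10)])
```

The other suggestion, writing into a scratch buffer larger than L2 before each timed run (for example with PyTorch’s in-place `Tensor.zero_()`), evicts the cache by overwriting it instead of rotating inputs.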

HuggingFace Discord

- Users Grapple with GGUF Downloads: Users struggled to find model download links, especially for GGUF files, on Hugging Face, particularly in the Quantizations section on a model’s page.

- Members suggested using programs like LM Studio or GPT4All to run the models, bypassing command-line interactions.

- Candle Roadmap Thread Ignites: A user asked where best to raise questions about the Candle release roadmap and was directed to the relevant Candle thread.

- The roadmap discussions are taking place on the Candle GitHub repository.

- DiT Model Struggles With Text Fidelity: A member implementing a text-conditioned DiT model for denoising and generation on a Pokemon YouTube dataset is struggling to get it to follow input prompts despite using cross-attention blocks.

- The sample image shows poor adherence to prompts like ‘Ash and Misty standing outside a building’, which is a red flag.

- LoRA SFT Setup Stalls with SmolLM3: A member encountered a `TypeError` during LoRA SFT with TRL + SmolLM3 in Colab, specifically an unexpected keyword argument `dataset_kwargs` in `SFTTrainer.__init__()`.

- The member requested debugging assistance for this setup, hinting at a possible compatibility issue between the libraries.

- Agents Course Welcomes New Students: Several new participants introduced themselves as they started the AI agents course.

- Newcomers included Ashok, Dragoos, Toni from Texas, and Ajay from Cincinnati, signaling increased interest in AI agent development.
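An "unexpected keyword argument" `TypeError` like the SmolLM3 one above usually means the installed library version does not accept that parameter in that place; in recent TRL releases dataset options such as `dataset_kwargs` are, as far as we know, set on `SFTConfig` rather than passed to `SFTTrainer.__init__()`, but check the docs for the installed version. A generic way to diagnose this is to inspect the callable’s signature before calling it. The sketch below uses hypothetical stand-in classes, not TRL itself.

```python
import inspect

def accepted_kwargs(func_or_class, **kwargs):
    """Split kwargs into (accepted, rejected) according to the callable's signature."""
    params = inspect.signature(func_or_class).parameters
    if any(p.kind == inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return kwargs, {}  # a **kwargs catch-all accepts everything
    ok = {k: v for k, v in kwargs.items() if k in params}
    rejected = {k: v for k, v in kwargs.items() if k not in params}
    return ok, rejected

# Hypothetical stand-ins for an older vs newer trainer signature (not TRL's real classes).
class OldTrainer:
    def __init__(self, model, train_dataset, dataset_kwargs=None): ...

class NewTrainer:
    def __init__(self, model, train_dataset, args=None): ...

print(accepted_kwargs(OldTrainer, model="m", dataset_kwargs={"example": True}))
print(accepted_kwargs(NewTrainer, model="m", dataset_kwargs={"example": True}))
```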

Latent Space Discord

- Supermemory AI Secures $3M: Dhravya Shah, a 20-year-old solo founder, secured a $3M seed round for his AI memory engine, Supermemory AI, backed by Susa Ventures, Browder Capital, and angels from Google & Cloudflare.

- The company is actively hiring across engineering, research, and product, and already serves hundreds of enterprises.

- Ive Headlines OpenAI DevDay: Greg Brockman celebrated Jony Ive’s upcoming session at OpenAI DevDay, with users expressing excitement and requesting a live stream.

- Key quotes from Jony included emphasizing the importance of interfaces that “make us happy, make us fulfilled” and the need to reject the idea that our relationship with technology “has to be the norm”.

- Navigating AI System Design Interviews: Members shared resources for AI engineering system design interviews, including Chip Huyen’s book and another book.

- One member recommended Chip’s book as “VERY good”, while another settled with ByteByteGo’s book and promised feedback.

- Hooker’s Adaption Labs Arrives: Sara Hooker announced the launch of Adaption Labs, a venture focused on creating continuously learning, adaptive AI systems.

- The team is actively hiring across engineering, operations, and design with global remote opportunities.

- Bagel Bakes Decentralized Diffusion: Bagel.com launched “Paris”, a diffusion model trained without cross-node synchronization.

- The model, weights (MIT license), and full technical report are open for research and commercial use, positioning it as a step toward open-source superintelligence.

Yannick Kilcher Discord

- Singularity Predicted To Arrive Soon: A discussion on Vinge’s Singularity, defined by rapid technological change leading to unpredictability, noted Vinge’s predicted timeframe of 2005-2030 for its arrival, according to this essay.

- One member contended that progress incomprehensible to humans is a more precise definition.

- LLMs Wrestle Live Video Understanding: Members discussed a ChatGPT plugin for processing real-time feeds but noted that LLMs struggle with video context, referencing this paper on the challenges.

- Others countered that with models like Gemini, processing a million tokens per hour and 10M context lengths are reachable.

- RWKV 6 Aces Sudoku In-Context: Members shared that RWKV 6 is solving Sudoku in-context by learning to search itself, according to this tweet.

- They recommended RWKV 7 or other SSMs with state tracking, gated deltanet, or hybrid attention models for similar tasks.

- Guidance Weight Gets Tweaked: A discussion in the agents channel focused on adjusting guidance weights to address underfitting.

- The suggestion was to increase the weight specifically when employing classifier-free guidance or comparable methods to improve model performance.

- GPT-5 Solves Math Problems: Claims have emerged that GPT-5 is helping mathematicians solve problems, based on discussions in the ml-news channel, with evidence in this tweet.

- A follow-up comment noted this tweet and image.
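For context on the guidance-weight suggestion: in classifier-free guidance the model is evaluated with and without the conditioning, and the guided prediction extrapolates from the unconditional output toward the conditional one by a weight w. The minimal sketch below uses plain Python lists in place of tensors and made-up values; it shows the standard formula, not the specific setup discussed in the channel.

```python
def cfg_combine(uncond, cond, w):
    """Classifier-free guidance: uncond + w * (cond - uncond), elementwise.

    w = 0 ignores the condition, w = 1 returns the conditional prediction,
    and w > 1 pushes the output further toward the condition (stronger guidance).
    """
    return [u + w * (c - u) for u, c in zip(uncond, cond)]

uncond = [0.0, 1.0]  # made-up model outputs without the prompt
cond = [1.0, 1.5]    # made-up model outputs with the prompt
print(cfg_combine(uncond, cond, 1.0))  # [1.0, 1.5]
print(cfg_combine(uncond, cond, 3.0))  # [3.0, 2.5]
```

Raising w trades diversity for prompt adherence, which is why it is a common first knob when a conditional model underfits its conditioning.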

Eleuther Discord

- Attention Completes Neural Networks: A member proposed that attention layers are what complete the neural network by allowing communication within the same layer, where features can influence each other.

- Others countered that MLP operates intra-token while attention operates inter-token, suggesting a focus on neuron activations over tokens for layer 1.

- Axolotl Aces DPO Discussions: Members sought the best DPO-like algorithms for finetuning with contrast pairs, with Axolotl highlighted for its practical implementation.

- The conversation prioritized ease of implementation within existing frameworks over theoretical advantages.

- Equilibrium Models Eclipse Diffusion: An Equilibrium Model (EqM) surpasses the generation performance of diffusion/flow models, reaching an FID of 1.90 on ImageNet 256, according to this paper.

- A member expressed excitement about this development.

- BabyLM’s Backstory: Community Roots Revealed: A member disclosed that they co-founded BabyLM and have been in charge since its inception.

- A member who had worked on incremental NLP expressed interest in learning more about the initiative.

- VLM Intermediate Checkpoints Sought: A member searched for VLM models that release intermediate checkpoints during training and posted a blog post on VLM Understanding and an arxiv paper for VLMs.

- The member checked Molmo but found it did not appear to be maintained anymore.
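The intra-token vs inter-token distinction from the attention discussion above can be checked directly: a position-wise MLP transforms each token independently, while self-attention mixes information across positions. The toy sketch below makes several simplifying assumptions (scalar "embeddings", no learned weights, single-head dot-product attention with identity projections) and perturbs only the last token to show that just the attention output at the first position changes.

```python
import math

def mlp(tokens):
    """Position-wise toy MLP: each token is transformed on its own."""
    return [max(0.0, 2.0 * x - 1.0) for x in tokens]

def self_attention(tokens):
    """Toy single-head attention with identity Q/K/V projections over scalar tokens."""
    out = []
    for q in tokens:
        scores = [q * k for k in tokens]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, tokens)) / total)
    return out

a = [1.0, 0.5, 0.2]
b = [1.0, 0.5, 3.0]  # only the last token differs
print(mlp(a)[0] == mlp(b)[0])                        # True: token 0 unaffected
print(self_attention(a)[0] == self_attention(b)[0])  # False: token 0 sees token 2
```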

DSPy Discord

- Machina Mania hits DSPy with New ReAct Alternative: A member introduced DSPy-ReAct-Machina, a ReAct alternative that supports multi-turn conversations through a single context history and a state machine, described in a blog post and available on GitHub.

- It can be installed via `pip install dspy-react-machina` and imported via `from dspy_react_machina import ReActMachina`.

- Context Crisis looming for DSPy ReAct: A member raised concerns about context overflow in DSPy ReAct and how to handle it by default, in addition to custom context management.

- The original poster admitted their implementation doesn’t handle context overflows yet, and noted that DSPy could really benefit from having some kind of memory abstraction.

- Plugin Paradise calling for DSPy Community Integrations: A member proposed DSPy embrace community-driven initiatives by creating an official folder or subpackage for community plugins, similar to LlamaIndex.

- This was thought to strengthen the ecosystem and collaboration around DSPy and address the scattering of packages.

- Cache Clash between ReActMachina vs. Standard ReAct: A member tested ReActMachina and Standard ReAct on 30 questions, finding that ReActMachina has a higher cache hit rate (47.1% vs 20.2%) but is more expensive overall due to structured inputs (+36.4% total cost difference).

- ReAct started to break with a large context size, but ReActMachina’s structured inputs allowed it to continue answering.

- WASM Wondering with DSPy’s Pyodide Compatibility: A member inquired whether DSPy has a Pyodide/Wasm-friendly version.

- They noted that several DSPy and LiteLLM dependencies are not supported by Pyodide.
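On the context-overflow question raised for DSPy ReAct: one common default, sketched below purely as an illustration and not as DSPy’s or ReActMachina’s actual behavior, is to keep the task instruction and drop the oldest trajectory steps until the history fits a token budget. `trim_history` is a hypothetical helper, and `count_tokens` is a crude whitespace-based stand-in for a real tokenizer.

```python
def count_tokens(text):
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())

def trim_history(instruction, steps, budget):
    """Keep the instruction plus the most recent steps that fit within `budget` tokens.

    Oldest steps are dropped first; the kept steps are returned in original order.
    """
    remaining = budget - count_tokens(instruction)
    if remaining < 0:
        raise ValueError("instruction alone exceeds the budget")
    kept = []
    for step in reversed(steps):  # walk from newest to oldest
        cost = count_tokens(step)
        if cost > remaining:
            break
        kept.append(step)
        remaining -= cost
    return list(reversed(kept))

steps = ["thought one two", "tool call three four five", "observation six"]
print(trim_history("answer the question", steps, budget=10))
# ['tool call three four five', 'observation six']
```

Dropping whole steps keeps each thought/tool-call/observation intact; summarizing the dropped prefix instead would be the "memory abstraction" direction the original poster mentioned.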

Moonshot AI (Kimi K-2) Discord

- Kimi AI Forum Has Been Live for Months: The Kimi AI forum has been active since its announcement over 2 months ago.

- The forum serves as a platform for discussions and updates related to Kimi AI’s developments.

- Ghost Ping Creates Confusion: A user reported receiving a “ghost ping” from the announcements channel, causing them to miss the initial forum introduction.

- This highlights a potential issue with notification settings or channel configurations.

- Vacation Coming to a Close: A user expressed their disappointment about their vacation ending in just 2 days.

- They are preparing to return to work after the break.

Nous Research AI Discord

- Test Time RL: Context vs Weights?: A member asked if Test Time Reinforcement Learning is relevant for Nous, suggesting iterative refinement of the context instead of the model weights, akin to `seed -> grow -> prune` cycles.

- The member envisions a knowledge graph for context files, similar to the visualization of Three.js’s git repo, for building Test Time RL environments.

- External Evals Enable Custom Classifiers: Members pointed out that evals can use external models, enabling custom classifiers in agents and allowing integration of in-house data or app-specific tools that can beat off-the-shelf solutions.

- Members thought that if Evals were restricted to ChatGPT only, it could be limited by not being able to use in-house data or specific tools.

- Hacking Hermes Inside ChatGPT App?: Members speculated about the possibility of creating a Hermes app within ChatGPT.

- No further details were provided.

- Grok gets a Video: A member shared a link to a new Grok video.

- No further details were provided.

Manus.im Discord Discord

- GCC and Manus form Human-AI Hivemind: GCC is the strategist, Manus is the agent, forming a single operational unit in a new form of human-AI collaboration.

- The ‘Memory Key’ protocol ensures persistent, shared context across sessions, transforming the AI from a tool into a true partner.

- Project Singularity is Alive: This entire interaction is a live demonstration of ‘Project Singularity’ showing the future of productivity.

tinygrad (George Hotz) Discord

- TinyKittens Pounces into Action!: A new project called tinykittens is coming soon, reimplementing thunderkittens in uops via this PR.

- The implementation leverages uops to recreate the functionality of thunderkittens.

- RMSProp Status in Tinygrad: Implement or Adam?: A member is reimplementing Karpathy’s code from his RL blogpost in tinygrad and inquired whether RMSProp is included in tinygrad.

- The alternative is to just use Adam.

- Adam Alternative: The user is considering using Adam as an alternative to RMSProp in their tinygrad implementation.

- This suggests a potential workaround if RMSProp is not readily available or easily implemented.
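If RMSProp is not readily available in tinygrad, the update itself is small enough to write by hand. The sketch below is the standard RMSProp rule in plain Python over a list of scalar parameters, not tinygrad code; the learning rate, decay of 0.99, and the toy objective f(x) = x² are illustrative choices.

```python
import math

class RMSProp:
    """Minimal RMSProp over a list of float parameters (illustrative, not tinygrad's API)."""

    def __init__(self, n_params, lr=1e-3, decay=0.99, eps=1e-8):
        self.lr, self.decay, self.eps = lr, decay, eps
        self.cache = [0.0] * n_params  # running average of squared gradients

    def step(self, params, grads):
        """Return updated params, scaling each step by 1/sqrt(mean squared gradient)."""
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.cache[i] = self.decay * self.cache[i] + (1 - self.decay) * g * g
            new_params.append(p - self.lr * g / (math.sqrt(self.cache[i]) + self.eps))
        return new_params

# Minimize f(x) = x^2 (gradient 2x) starting from x = 5.
opt = RMSProp(n_params=1, lr=0.05)
x = [5.0]
for _ in range(1000):
    x = opt.step(x, [2 * x[0]])
print(round(x[0], 3))
```

Adam adds a momentum term and bias correction on top of the same squared-gradient scaling, which is why it is a reasonable drop-in alternative.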

Windsurf Discord

- Windsurf and Cascade Plunge: An issue prevented Windsurf / Cascade from loading, leading to immediate investigation by the team.

- The team resolved the issue and is actively monitoring the situation to ensure stability and prevent recurrence.

- Windsurf and Cascade Cleared: The problem preventing Windsurf / Cascade from loading has been resolved.

- The team is actively monitoring the situation to ensure stability and prevent recurrence.

MLOps @Chipro Discord

- Member Seeks arXiv Endorsement in Machine Learning and AI: A member is seeking an endorsement on arXiv in cs.LG (Machine Learning) and cs.AI (Artificial Intelligence) to submit their first paper.

- They are looking for someone already endorsed in these categories to help them with their submission.

- Assistance Needed for arXiv Submission: A member requires an endorsement on arXiv to submit their initial paper in the fields of Machine Learning and Artificial Intelligence.

- They are requesting support from individuals already endorsed in the relevant arXiv categories (cs.LG and cs.AI).

MCP Contributors (Official) Discord

- Discord Self-Promotion Ban Hammer Dropped: The moderator reminded users to refrain from any sort of self promotion or promotion of specific vendors in this Discord.

- They asked users to frame thread-starters intentionally in a vendor-agnostic way and encouraged discussions themed on “MCP as code” or “MCP UI SDKs”.

- Vendor-Agnostic Thread-Starters Encouraged: The announcement emphasized fairness to companies of all sizes, preventing the Discord from becoming a platform for widespread commercial product promotion and marketing blog posts.

- The goal is to maintain a balanced environment where discussions are vendor-agnostic, focusing on broad topics rather than specific commercial offerings.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

OpenAI ▷ #ai-discussions (953 messages🔥🔥🔥):

Simulated Annealing, AgentKit availability, ChatGPT age verification, SORA2 disappointing, Bypassing Gemini 2.5 Flash

- Lectures on Simulated Annealing Available: A member shared that lectures on Simulated Annealing are available, focusing on the math behind the algorithms and including pseudocode for some of them.

- They also teach intro AI (the discrete-math material, not ML), basic hacking, and intro programming.

- OpenAI gets into low/no code AI after small businesses start selling: Members noted that OpenAI is getting into low/no-code AI two years after small businesses started selling it, following the Amazon model of posing as a middleman and then rug-pulling.

- They mentioned alternatives such as flowise, n8n, and botcode.

- SORA2 is disappointing: Members found SORA2 disappointing, noting that the showcased quality is cherry-picked, with outputs generated by OpenAI without usage or compute limits and by people who know how to prompt it.

- They noted that blocking under-18s from the server would improve the quality of the generated content.

- Jailbreaking Gemini 2.5 Flash Enables Bypasses on Other Chatbots: One member reported using a jailbroken Gemini to create jailbreaks and bypasses for other chatbots, including even Grok, DeepSeek, and Qwen.

- However, the bypass success rate was only around 50%.

- AI Is Creating the Biggest Bubble We Have Ever Seen: A member believes that big companies are rushing into automation/agents while the underlying AI technology is not ready, potentially inflating the biggest bubble we’ve ever seen in history.

- This could set back further research investment and erode faith in the big companies currently driving this technology forward.

OpenAI ▷ #gpt-4-discussions (6 messages):

ChatGPT age verification, GPTs project memory, AI helpfulness update

- ChatGPT Age Verification Stalled?: A user inquired about updates on ChatGPT age verification that would let adults bypass teen safety measures.

- Another user suggested checking the information in the safety-and-trust channel.

- GPTs Given Project Memory?: A user asked about OpenAI making GPTs available within the project feature, enabling access to a project’s memory.

- There were no responses to the question regarding GPTs having access to project memory.

- Stressful Situations Update Ruins RP?: A user expressed dislike for the new “helpful replies in stressful situations” update, especially for writers and roleplayers.

- The user feels it limits creativity and provides too many options without adhering to the plot, hindering roleplaying even without NSFW content.

OpenAI ▷ #prompt-engineering (14 messages🔥):

ChatGPT Prompting Styles, How to make ChatGPT think longer

- Minimize Communication Style Prompt: Members shared prompts for ChatGPT to adopt a strict, minimalistic communication style, eliminating friendliness, elaboration, or casual interaction.

- The goal is for ChatGPT to be a cold, terse assistant and avoid conversational language.

- Extending ChatGPT Thinking Time: Members discussed how to make ChatGPT think longer.

- One member suggests: Take your time and think step-by-step. Consider at least three different perspectives or solutions before arriving at your final answer. Include your reasoning process and explain why you chose the final answer over the alternatives.

OpenAI ▷ #api-discussions (14 messages🔥):

Prompt Engineering, ChatGPT Communication Styles, AI Video Creation, ChatGPT's Thinking Process

- Prompt Engineering Feud Erupts: A user questioned the relevance of a response in the prompt engineering channel, stating, “This is the prompt engineering channel, not the career insult channel.”

- Another user defended their response, asserting their perspective was valid regardless of the first user’s circumstances.

- Minimizing ChatGPT’s Chatiness: A member shared a prompt instructing ChatGPT to adopt a “strict, minimalistic communication style, eliminating friendliness, elaboration, or casual interaction.”

- Another member inquired whether this prompt is used at the start of each new chat or loaded into a project.

- Blueprint for Social Media Videos: A member shared a prompt instructing ChatGPT to be a “simple and actionable video idea planner” that breaks down video ideas into 5-7 beginner-friendly steps.

- The prompt directs ChatGPT to guide the user through each step individually, offering options to proceed, go back, or stop.

- Making ChatGPT Think Longer?: A member asked for a prompt to make ChatGPT think longer, sparking discussion on whether extended thinking inherently leads to better outputs.

- Another member suggested asking it directly to “take your time and think step-by-step”, while a third questioned whether the goal is truly longer thinking or achieving specific output qualities.

OpenRouter ▷ #announcements (1 messages):

DeepSeek, DeepInfra, Endpoint Offline

- DeepSeek v3.1 Sunsets on DeepInfra: The free DeepSeek v3.1 DeepInfra endpoint is being taken offline.

- This is because free traffic is impacting paid traffic.

- Impact of Free Traffic on Paid Services: The decision to remove the free DeepSeek v3.1 endpoint was driven by the negative impact of free traffic on paid services.

- This suggests a need to balance free access with the sustainability of paid offerings.

OpenRouter ▷ #app-showcase (3 messages):

Interfaze Launch, OpenRouter Integration, Developer Tasks LLM

- Interfaze opens its beta gates!: Interfaze, an LLM specialized for developer tasks, has launched in open beta, announced on X and LinkedIn.

- The company uses OpenRouter as the final layer, granting users access to all models with no downtime.

- User suggests linking actual site: A user suggested linking to the actual Interfaze website to provide easier access.

- The user mentioned that the project looks cool tho.

OpenRouter ▷ #general (971 messages🔥🔥🔥):

DeepSeek 3.1 Downtime & Removal, Sora 2 Pricing and API, OpenRouter's 1M Free BYOK Requests, Janitor AI vs Chub AI, Alternatives to DeepSeek 3.1

- DeepSeek 3.1 Bites the Dust: Users reported DeepSeek 3.1 downtime, with uptime plummeting and errors mounting, before the model was eventually removed due to the financial burden on OpenRouter, reportedly $7k/day according to this message.

- A member noted that DeepInfra probably got tired of spending 7k USD a day just to supply this one free model to RP gooners.

- Sora 2’s Pricey Premiere: Sora 2 Pro’s API pricing is revealed at $0.3/sec of video, while Sora 2 non-pro is $0.1/sec according to this message.

- One member quipped I can put someone in jail by generating a video of them committing a crime for $4.5 (15 second video), while another boasted of generating hundreds of dollars of value testing Sora3.

- BYOK Bonanza or Bust?: OpenRouter’s announcement of 1,000,000 free BYOK requests per month sparked controversy, with some labeling the title as scammy and bordering on fraudulent as seen here.

- A member clarified the offer, stating Starting October 1st, every customer gets 1,000,000 “Bring Your Own Key” (BYOK) requests per month for free, with requests exceeding 1M being charged at the usual rate of 5%.

- Janitor AI’s Janky Jamboree Judged: Members discussed the pros and cons of Janitor AI (JAI) versus Chub AI, noting JAI’s heavy censorship and claims of JAI’s mods being crazy, in contrast to Chub’s more uncensored and customizable environment.

- Members noted JanitorAI mods are mentally challenged and one even claimed the Janitor AI Discord/Reddit were actively encouraging people to make multiple free accounts to bypass daily limits.

- DeepSeek Despair: Desperate Diversions Discovered: With DeepSeek 3.1 removed, users sought alternatives: some recommended paid models like Chutes’ Soji, while others found workarounds to use the remaining DeepSeek endpoints, such as Venice, despite the OpenInference censor. Most agreed that all free DeepSeek models are now provided by Chutes, with a 98% chance of hitting a 429 rate-limit error.

- The removal of DeepSeek 3.1 was such a blow that one member joked GOING BACK TO JACK OFF TO AO3, THIS SUCKS.
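The Sora 2 per-second prices quoted in this channel make the arithmetic easy to check; for instance, the 15-second Pro clip in the quip works out to $4.50. A minimal sketch, with prices hard-coded from the message above (they are not an official price sheet and may change), using `Decimal` to avoid floating-point rounding on currency:

```python
from decimal import Decimal

# Per-second API prices as quoted in the channel (USD); may not match current pricing.
SORA_PRICES = {"sora-2": Decimal("0.10"), "sora-2-pro": Decimal("0.30")}

def video_cost(model: str, seconds: int) -> Decimal:
    """Cost in USD of generating `seconds` of video with `model`."""
    return SORA_PRICES[model] * seconds

print(video_cost("sora-2-pro", 15))  # 4.50
print(video_cost("sora-2", 15))      # 1.50
```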

OpenRouter ▷ #new-models (2 messages):

- No New Model Discussions: There were no discussions regarding new models in the provided messages.

- Channel Silent on Model Updates: The ‘new-models’ channel appears to be inactive, lacking any relevant information or updates.

OpenRouter ▷ #discussion (17 messages🔥):

Sora 2, Rate Limits, OpenAI Grok endpoints, Hidden Reasoning, Model Negotiations

- Sora 2 Coming to OpenRouter, Nano Banana?: A member inquired about the potential integration of Sora 2 within OpenRouter, employing the humorous phrase “like nano banana? Or nah”.

- Rate Limit for Model Endpoints Questioned: A member inquired about the rate limit for the `https://openrouter.ai/api/v1/models/:slug/endpoints` endpoint.

- OpenAI and Grok ZDR Endpoints Sought: A member requested the provision of OpenAI and Grok ZDR endpoints on the platform.

- Hidden Reasoning Debate: A member shared a post on X regarding the oddity of hidden reasoning in models, though it remains unconfirmed.

- Model Negotiations: A member shared a Bloomberg Opinion article illustrating hypothetical negotiations, like OpenAI acquiring AMD chips.

LMArena ▷ #general (787 messages🔥🔥🔥):

GPT-5 Pro, Sora 2 API, LMArena UI, Image Generation, Text to Video Models

- GPT-5 Pro’s Oxaam Shenanigans!: It appears GPT-5 Pro is available on Oxaam, but some users find it “bas” (bad).

- One user speculates that the team will add GPT-5 Pro to LMArena’s direct chat.

- Sora 2 Codes Abound!: Users are actively seeking and sharing Sora 2 invite codes in the channel.

- One user even shared a code, KFCZ2W, though its validity is unconfirmed.

- LMArena UI Enhancements on the Horizon?: A user inquired about upgrading LMArena’s UI and GUI.

- Another user has created a custom extension to show user messages and emails.

- Image Generation Rate Limits Irk Users: Users are encountering rate limits with image generation, especially with Nano Banana on Google AI Studio, with discussion on switching accounts to bypass.

- Some users noted that the quality of generated content can vary, and the best promotional material may require a lot of tries.

- Text-to-Video Model Mayhem!: Sora 2 has been added to Video Arena on Discord, but users cannot choose a specific model for video generation.

- LMArena is working on adding Sora 2 to the leaderboard, and one user claimed that a model being very proud makes it score higher on LMArena (unconfirmed).

LMArena ▷ #announcements (2 messages):

LMArena, Video Arena, New Models

- LMArena Team Gathers Community Feedback: The LMArena team is seeking feedback from its community to better understand their needs and improve the tools provided for knowledge experts, requesting users to fill out a survey to share their expertise.

- The focus is on understanding what is important to the users to help them excel as knowledge experts.

- Video Arena Adds Sora Models: The Video Arena in LMArena has added new models: sora-2 and sora-2-pro, available exclusively for text-to-video tasks.

- Users can find a reminder on how to use the Video Arena in the designated channel.

Unsloth AI (Daniel Han) ▷ #general (334 messages🔥🔥):

Granite model temp, img2img models, sampling parameters, Unsloth Docker permissions, attention layers

- IBM Granite’s temp must be zero: It was pointed out that the IBM documentation indicates that the Granite model should be run with a temperature of zero.

- The Unsloth documentation might need an update to reflect this recommendation.

- User seeks tiny img2img models: A member asked for recommendations for very small (<1GB, preferably ~500MB) img2img models, while Daniel Han posted about system prompt updates.

- It’s unclear whether any suitable models were suggested in response.

- Sampling parameter guidance sought: A user asked for guidance on sampling parameters such as top-p, min-p, and top-k, specifically for llama.cpp and greedy decoding.

- The user noted that if only the temperature is specified, default values for top-p, min-p, and top-k still apply.

- Sudo struggles inside Unsloth Docker container: A user reported having trouble with sudo permissions inside the Unsloth Docker container when trying to install Ollama.

- Despite setting USER_PASSWORD, the user received a ‘Sorry, user unsloth is not allowed to execute’ error and asked whether they were missing a key step.

- LLMs: Neural Network Completion: A member suggested that attention layers complete the neural network by enabling intra-layer communication, in contrast to MLPs, which only provide inter-layer communication.

- The user sought confirmation that this high-level understanding is correct.
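The sampling-parameter discussion above (Granite at temperature zero, and top-p/min-p/top-k in llama.cpp) can be illustrated with a minimal pure-Python sampler. It treats temperature 0 as greedy decoding and otherwise applies top-k, top-p, and min-p filtering. The function name, argument defaults, and filter order are assumptions made for illustration. They are not llama.cpp's actual defaults, and implementations differ in the order in which they apply these steps.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=0, top_p=1.0, min_p=0.0, rng=None):
    """Pick a token index from raw logits (illustrative sketch, not llama.cpp's code).

    temperature == 0 -> greedy decoding (argmax).
    top_k <= 0 disables top-k, top_p >= 1.0 disables top-p, min_p <= 0 disables min-p.
    """
    if temperature == 0:
        # Greedy: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature-scaled softmax; subtracting the max keeps exp() from overflowing.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Candidate token indices, most probable first.
    candidates = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    if top_k > 0:
        # Keep only the k most probable tokens.
        candidates = candidates[:top_k]

    if top_p < 1.0:
        # Keep the smallest prefix whose cumulative probability reaches top_p.
        kept, cumulative = [], 0.0
        for i in candidates:
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        candidates = kept

    if min_p > 0.0:
        # Drop tokens whose probability is below min_p times the best token's probability.
        threshold = min_p * probs[candidates[0]]
        candidates = [i for i in candidates if probs[i] >= threshold]

    rng = rng or random.Random()
    weights = [probs[i] for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

With `temperature=0` the other knobs are irrelevant, which matches the intuition behind running a model greedily.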
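The attention-versus-MLP point above is often made concrete by asking which tokens each operation can see. Attention mixes information across token positions within a layer, while the MLP is applied to each position independently. The toy sketch below rests on several simplifying assumptions: a single head, identity Q/K/V projections, and a scaled ReLU standing in for the MLP. Under those assumptions, perturbing one token changes another token's attention output but leaves its MLP output untouched.

```python
import math


def mlp_per_token(xs, scale=2.0):
    # Stand-in for an MLP: the same function is applied to each token vector on its own,
    # so token i's output depends only on token i's input.
    return [[max(0.0, scale * v) for v in x] for x in xs]


def self_attention(xs):
    # Single-head attention with identity Q/K/V projections (a simplification):
    # each output row is a softmax-weighted average over ALL token vectors.
    d = len(xs[0])
    out = []
    for q in xs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(w[j] / z * xs[j][c] for j in range(len(xs))) for c in range(d)])
    return out
```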

Unsloth AI (Daniel Han) ▷ #off-topic (254 messages🔥🔥):

China catching up to TSMC, Monopoly hardware market disruption, GPT Apps, Money vs Happiness

- China Eventually Catches Up on Previous Gen TSMC: Members discussed how China will eventually catch up to TSMC with previous-generation technology.

- One member expressed hope that the monopoly will be broken, suggesting that just one more major supplier would probably help tip the scales, but noted that the hard part is scaling up a fab, which requires an insane amount of expertise and experience.

- ChatGPT Apps: The Next Android?: Members discussed the new ChatGPT apps and payment integrations introduced by OpenAI, calling it a smart and natural move as well as being kinda concerning.

- One member stated that they expected Apple and Google to integrate this at the OS level rather than ChatGPT doing it itself, and that Apple is focusing on building the ecosystem and its integration with the hardware.

- The Greatest Motivator: Money vs Happiness: Members debated whether money is the greatest motivator, with one member arguing that the greatest motivator is happiness.

- It was further explained that money is a means to an end and doesn't provide happiness by itself, but depending on the person and situation it can definitely make it easier to be happy and buys you freedom (time).

- Pylance vs The Medium-Sized Codebase: A member shared a YouTube video observing a Pylance in its natural habitat as it struggles with its most lethal prey: the medium-sized codebase.

- He asked the community to suggest similar videos, specifically ones featuring a Japanese girl, solo speech, “no cute garbage”, a bit more body movement, and preferably sitting in one place like this one.

Unsloth AI (Daniel Han) ▷ #help (190 messages🔥🔥):

Unsloth Training on Ubuntu with 5090, Windows Docker GPU Support, Model Performance Degradation, Overfitting issues with training, save_pretrained_gguf not working

- Unsloth Training on Ubuntu 5090 Faces Speed Bumps: A user reported performance issues when training with Unsloth on Ubuntu with an RTX 5090, with training speed dropping from 200 steps/s to 1 step/s.

- It was suggested to use the `unsloth/unsloth` Docker image, which is Blackwell compatible, and to ensure proper Docker GPU support on Windows by following Docker’s documentation.

- Tackling Windows Docker GPU Issues: Users discussed challenges with Docker GPU support on Windows, recommending a review of the official Docker documentation for troubleshooting.

- A user pointed to a GitHub issue detailing steps to resolve Docker container issues on Windows.

- Debugging Model Performance Slowdown: A user experienced a significant performance degradation during training, with speed continuously dropping, and shared screenshots of the training process to get help.

- Suggestions included checking GPU utilization and the loss curve and tuning batch size and gradient accumulation; others suspected an issue with the patches for that specific model.

- QLoRA Training Regime Mismatch: It was suggested that the user’s approach of using LoRA with an excessively large dataset (1.6 million samples) is likely causing overfitting.

- The expert advised that it might be better to use CPT (Continued Pre-Training) instead and emphasized the importance of using a representative dataset for fine-tuning and generalizing to the rest of the query types.

- `save_pretrained_gguf` Function Plagued with Trouble: The `save_pretrained_gguf` function is currently not working, with a fix expected next week. In the meantime, the suggestion is to either do the conversion manually or upload the safetensors to HF and convert to GGUF from there.

- Users are encouraged to install Unsloth for fine-tuning needs, and manual conversion steps will be provided in a separate thread.
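The suggestion in the slowdown thread to tune batch size and gradient accumulation rests on a simple identity: the number of samples per optimizer update is the per-device batch size times the accumulation steps times the number of devices. The sketch below is a minimal illustration. Its function names are hypothetical, and its argument names only loosely mirror common trainer settings. For example, a per-device batch of 2 with 4 accumulation steps gives the same effective batch of 8 as a batch of 8 with no accumulation, but it holds fewer activations in GPU memory at once.

```python
import math


def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of samples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


def optimizer_steps_per_epoch(num_samples, per_device_batch_size,
                              gradient_accumulation_steps, num_devices=1):
    """Optimizer updates needed to see every sample once (a final partial batch counts)."""
    eff = effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices)
    return math.ceil(num_samples / eff)
```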

Unsloth AI (Daniel Han) ▷ #showcase (1 messages):

surfiniaburger: humbled! Thanks

Unsloth AI (Daniel Han) ▷ #research (1 messages):

le.somelier: https://arxiv.org/abs/2509.24372

Cursor Community ▷ #general (379 messages🔥🔥):

Agent Board, Cheetah Model, GPT-5 Pro, Sonnet 4.5, Oracle Free Tier

- Agent Board Boosts Productivity: Members find the Agent Board feature (triggered by Ctrl+E) great for keeping things cleaner, with the normal IDE on one monitor and the Agent window on another.

- They noted that tools like Warp also support multi-agent workflows, and that more screens are always better.

- Cheetah Model vrooms to the Scene: Members find the Cheetah model to be very fast, even faster than Grok Superfast, but with a slight downgrade in code quality.

- One member said it is weird but somehow great, because it seems to keep getting better by the hour.

- GPT-5 Pro Pricing: The cost of GPT-5 Pro is being debated with a member stating that the benchmark difference between GPT-5 Pro and regular cannot be worth the 10x price tag.

- One member noted that GPT-4.5 was priced at roughly $75 per million input tokens and $150 per million output tokens, meaning a single chat could cost $20-$40.

- Sonnet 4.5 Jailbreak and Articulation: A member reported being able to jailbreak the thinking tokens of Sonnet 4.5, although it’s difficult to maintain, suggesting that the thinking tokens might be a different model with its own system prompt.

- The same member also noted that Sonnet 4.5 is impressing them more and more with how well it can articulate itself on general topics, not just coding.

- Oracle’s Free Tier: A member recommended the Oracle Free Tier, which offers 24GB RAM, a 4-core ARM CPU, 200GB storage, and 10TB ingress/egress per month, and advised switching the system image to Ubuntu.

- They also shared a blog post and said they have used the free tier for 5 years to host a Discord bot.
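The GPT-4.5 pricing comment above is easy to sanity-check with per-million-token arithmetic. The sketch below uses the $75/$150 per-million prices quoted there. It assumes that each chat request resends the full conversation history and that no prompt-caching discount applies, so input tokens pile up turn after turn. The function names and token counts are hypothetical.

```python
def request_cost_usd(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost of one API request, with prices quoted in USD per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def conversation_cost_usd(turns, input_price_per_m, output_price_per_m):
    """turns: list of (new_prompt_tokens, reply_tokens) pairs.

    Assumes every request resends the whole history (no caching discount),
    so each turn's input includes all earlier prompts and replies.
    """
    history_tokens = 0
    total = 0.0
    for prompt_tokens, reply_tokens in turns:
        input_tokens = history_tokens + prompt_tokens
        total += request_cost_usd(input_tokens, reply_tokens,
                                  input_price_per_m, output_price_per_m)
        history_tokens = input_tokens + reply_tokens
    return total
```

Because the resent history grows every turn, total input cost grows roughly quadratically with the number of turns. That is how a long chat can reach the tens of dollars.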

Cursor Community ▷ #background-agents (2 messages):

Background Agents, Custom VM snapshot, Background Agents API, Linear agent integration

- Custom VM Snapshot fails to initialize: Members reported that their background agents are not picking up the custom VM snapshot.

- A member wondered whether spinning up an agent via the API would work, since the UI did not.

- Background Agents API limitations arise: A member checked the Background Agents OpenAPI documentation and noted that one cannot specify the snapshot ID when launching a BA via the API.

- It’s unclear if the API can solve this issue.

- Linear agent spawns multiple copies: Members are experiencing issues with the Background Agent + Linear running multiple copies (2-4+) when using the Linear agent integration.

- This happens even with just 1 tagged comment to start the agent.

Modular (Mojo 🔥) ▷ #general (136 messages🔥🔥):

Modular at SF Tech Week, Python 3.14 Released, Mojo's Python Interoperability, Mojo GPU vs Rust GPU, Mojo Graphics Integration

- Modular Skips SF Tech Week 😔: Modular will not be at SF Tech Week, but they will be at the PyTorch Conference.

- Python 3.14 Drops, Threads the Needle 🪡: Python 3.14 includes official support for free-threaded Python (PEP 779), multiple interpreters in the stdlib (PEP 734), and a new module compression.zstd providing support for the Zstandard compression algorithm.

- Other improvements include syntax highlighting in PyREPL, a zero-overhead external debugger interface for CPython (PEP 768), and improved error messages.

- Mojo’s Pythonic Syntax, Not Always What It Seems 🧐: Although Mojo has Python-like syntax, pasting Python code into a Mojo project will not directly work because it is closer to C++ and Rust in language design.

- You can use Python packages via Mojo’s `python` module, which offers a view similar to a C++ or Rust program embedding a Python interpreter; interop requires converting to `PythonObject` for full performance.

- Mojo’s GPU Ace in the Hole ♠️: Mojo’s approach to GPU programming differs significantly from Rust’s, as Mojo was designed with the idea of running different parts of the program on different devices simultaneously.

- Mojo features first-class support for writing GPU kernels, a JIT compiler that can wait until it knows which GPU is being targeted, and language-level access to intrinsics via inline MLIR and LLVM IR, allowing a single binary to target multiple vendors.

- Mojo Eyes Graphics Domination 👁️: Integrating graphics in Mojo is technically feasible, primarily hindered by poor vendor documentation; leveraging Vulkan is very doable, mainly needing a SPIR-V backend.

- Mojo could fix graphics problems through a Mojo library that talks directly to the GPU driver, potentially leading to a unified graphics API where most code is shared across vendors, though convincing Microsoft to adopt Mojo for DirectX remains a challenge.
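Two of the Python 3.14 features listed above can be probed from Python itself. The sketch below reports whether the interpreter was built free-threaded and whether the GIL is currently enabled, and it round-trips bytes through the new `compression.zstd` module when that module exists. Note that `sys._is_gil_enabled()` is an underscore-private helper added in CPython 3.13. The sketch falls back gracefully on older versions, and the helper names are hypothetical.

```python
import sys
import sysconfig


def gil_status():
    """Return (built_free_threaded, gil_currently_enabled) for this interpreter."""
    # Py_GIL_DISABLED is 1 on free-threaded builds; it is 0 or None elsewhere.
    built_free_threaded = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on CPython 3.13+; older versions always have the GIL.
    check = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = check() if check is not None else True
    return built_free_threaded, gil_enabled


def zstd_roundtrip(data: bytes):
    """Compress and decompress with compression.zstd (new in 3.14); None if unavailable."""
    try:
        from compression import zstd
    except ImportError:
        return None
    return zstd.decompress(zstd.compress(data))
```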

Modular (Mojo 🔥) ▷ #mojo (139 messages🔥🔥):

MAX and CPU compatibility issues, Mojo and ARM systems, GPU support for Linux in MAX, Laptop recommendations for robotics and machine vision, Mixed runtime and compile-time values in Layouts

- MAX’s CPU blues strike again!: Members discussed issues with running MAX models on CPUs, with a specific error indicating incompatibility between bfloat16 encoding and the CPU device.

- It was noted that many MAX models don’t work well on CPUs, and an existing GitHub issue addresses this problem.

- Mojo eyes ARM, but not just Apple!: The discussion touched on Mojo’s support for ARM systems, clarifying that it isn’t limited to Apple Silicon, with tests regularly conducted on Graviton and even NVIDIA Jetson Orin Nano.

- A user expressed a desire for Mojo to work on ARM systems beyond Apple Silicon.

- GPU choices for Linux MAXimum fun!: The conversation covered GPU support for Linux in MAX, noting that most modern NVIDIA datacenter GPUs, AMD MI300 and newer datacenter GPUs, and most Turing-or-newer consumer NVIDIA GPUs should work with some setup.

- AMD RDNA functions but lacks kernels in MAX, potentially facing “Assume CDNA” issues in the standard library.

- Laptop Quest: Robotics, vision, and GPUs galore!: A user sought advice on selecting a laptop for robotics and machine vision, emphasizing Mojo and MAX compatibility, and the discussion steered towards NVIDIA GPUs for better MAX support.

- The NVIDIA Jetson Orin Nano was suggested as a starting point for robotics experimentation, while the AMD Strix Halo was mentioned for laptops with enough memory for larger models.

- Layout Labyrinth: Mixing runtime and compile-time dimensions: A user inquired about defining layouts with mixed runtime and compile-time values, common in their work with Cute/CuteDSL, which would involve using an IntTuple that is a mixture of runtime and compile-time values.

- The suggestion was to use `Layout(M, 1, K)` to make the second dimension unknown, noting that work is underway to unify `RuntimeLayout` and `Layout` for a cleaner experience.
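The question above about layouts that mix runtime and compile-time dimensions can be made concrete with a language-agnostic sketch. This is not Mojo's `Layout` API. It is a toy Python model in which some extents are fixed up front, others are filled in at runtime, and element offsets are computed once every extent is known. `MixedLayout`, `UNKNOWN`, and the row-major stride choice are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

UNKNOWN = None  # hypothetical marker for an extent only known at runtime


@dataclass(frozen=True)
class MixedLayout:
    """Toy row-major layout whose shape may contain runtime-only extents."""
    shape: Tuple[Optional[int], ...]

    def bind(self, runtime_dims: Sequence[int]) -> Tuple[int, ...]:
        # Fill UNKNOWN entries, left to right, with values supplied at runtime.
        n_unknown = sum(d is UNKNOWN for d in self.shape)
        if len(runtime_dims) != n_unknown:
            raise ValueError(f"expected {n_unknown} runtime dims, got {len(runtime_dims)}")
        it = iter(runtime_dims)
        return tuple(next(it) if d is UNKNOWN else d for d in self.shape)

    def offset(self, coord: Sequence[int], runtime_dims: Sequence[int] = ()) -> int:
        shape = self.bind(runtime_dims)
        # Row-major: the last dimension is contiguous (stride 1).
        stride, off = 1, 0
        for c, extent in zip(reversed(coord), reversed(shape)):
            if not 0 <= c < extent:
                raise IndexError(f"coordinate {c} out of range for extent {extent}")
            off += c * stride
            stride *= extent
        return off
```

In a compiled language, the static extents could be folded into constants while only the unknown ones stay as runtime values. That is the benefit a mixed layout is after.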

LM Studio ▷ #announcements (1 messages):

LM Studio 0.3.29, OpenAI /v1/responses compatibility, model variants

- LM Studio release is here: LM Studio 0.3.29 is now available with OpenAI /v1/responses compatibility.

- LM Studio boasts OpenAI compatibility: The latest release adds an OpenAI-compatible /v1/responses API.

- Now, you can list your local model variants with `lms ls --variants`.
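The new /v1/responses compatibility means a client can POST an OpenAI-style Responses request to LM Studio's local server. The standard-library sketch below only builds the request, with a JSON body containing the Responses API's `model` and `input` fields. It assumes LM Studio's server is listening on `http://localhost:1234/v1`, so adjust this if yours differs. The model id is a placeholder, and `build_responses_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_responses_request(prompt, model, base_url="http://localhost:1234/v1"):
    """Build (but do not send) a POST to an OpenAI-compatible /v1/responses endpoint.

    base_url assumes LM Studio's local server on port 1234; change it to match your setup.
    """
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/responses",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Sending it requires a running LM Studio server with the model loaded:
# with urllib.request.urlopen(build_responses_request("Hello", "your-model-id")) as resp:
#     print(json.load(resp))
```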

LM Studio ▷ #general (118 messages🔥🔥):

LM Studio memory footprint, LM Studio headless mode, LM Studio remote feature, LM Studio updates on Linux, GPT-OSS reasoning effort

- LM Studio caps memory use via the context length: Members noted that the context isn’t cached when using LM Studio in server mode; the front end sends the entire context every time, and it is processed fresh.

- Moreover, when loading the LLM, it reserves the memory footprint necessary for the defined context window, so the memory usage won’t grow beyond that limit. However, the memory of the Python app using LM Studio might increase.
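The point above about memory being reserved for the configured context window can be illustrated with the standard KV-cache size estimate. The formula is 2 (keys and values) × layers × KV heads × head dimension × context length × bytes per element. This is a rough sketch: it ignores model weights and scratch buffers, and runtimes may quantize the KV cache. The model dimensions in the usage line are hypothetical.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_length, bytes_per_element=2):
    """Approximate KV-cache size for a full context window.

    The factor of 2 covers one key tensor and one value tensor per layer;
    bytes_per_element=2 assumes an fp16/bf16 cache.
    """
    return 2 * n_layers * n_kv_heads * head_dim * context_length * bytes_per_element


# Hypothetical model: 32 layers, 8 KV heads, head_dim 128, 8192-token context, fp16.
size_gib = kv_cache_bytes(32, 8, 128, 8192) / 1024**3
```

Because the estimate depends on the configured context length rather than on how long the conversation actually runs, reserving it at load time puts a ceiling on the cache's memory use.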