yay fast Claude

AI News for 10/14/2025-10/15/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (197 channels, and 6317 messages) for you. Estimated reading time saved (at 200wpm): 479 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

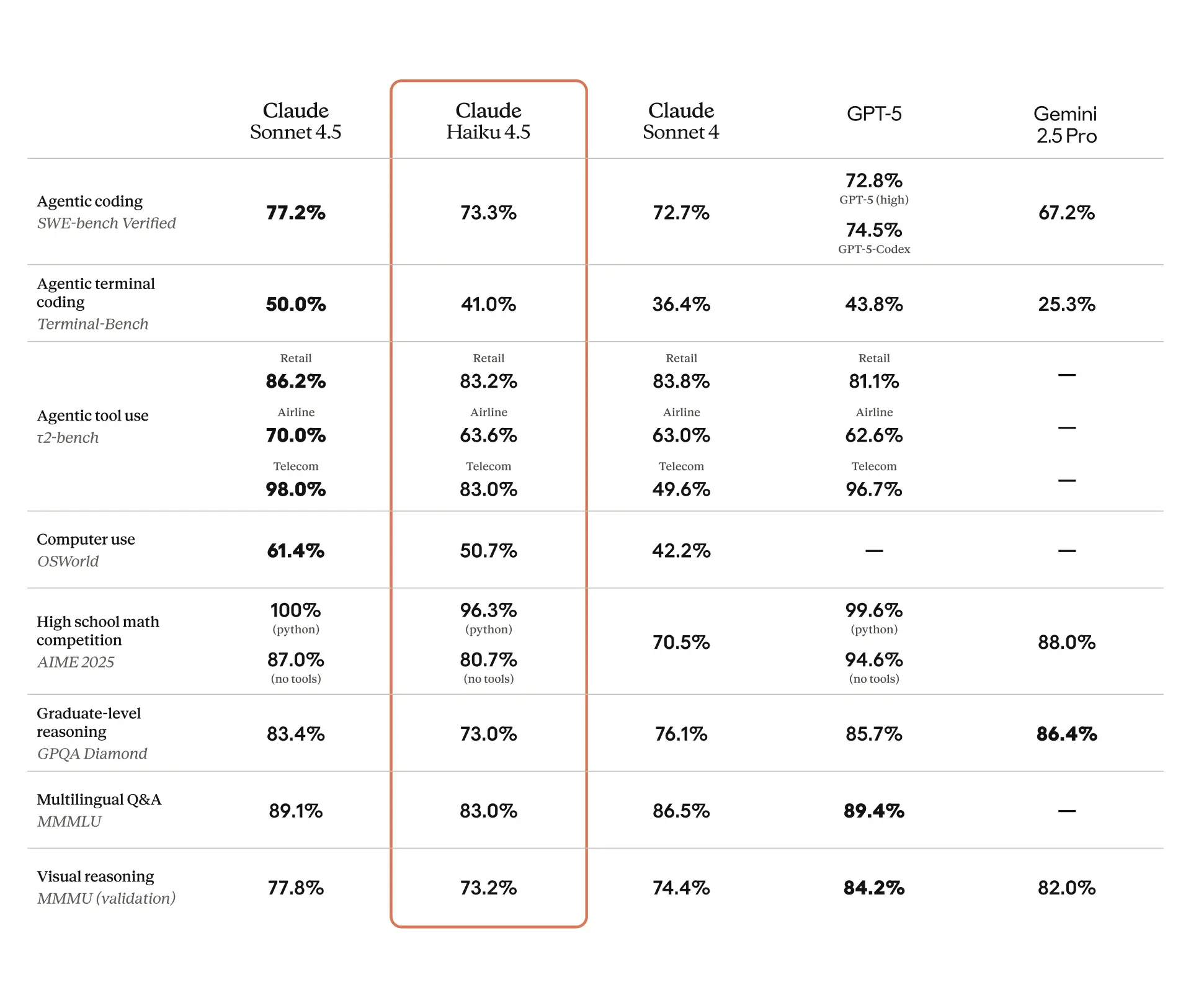

There was a time when entire model families launched at one go, but now different sizes are launched at different times presumably immediately after they are ready and without regard to the storytelling of the daily AI news newsletter writer. Anyway, Anthropic have followed up Claude Sonnet 4.5 with Haiku 4.5 (system card here), entirely skipping Haiku 4.0 and 4.1. It’s meant to be almost as good as Sonnet 4.5, but more than 2x as fast and 3x cheaper.

For those keeping track, here’s the pricing vs peer models:

Haiku 3: I $0.25/M, O $1.25/M

Haiku 4.5: I $1.00/M, O $5.00/M

GPT-5: I $1.25/M, O $10.00/M

GPT-5-mini: I $0.25/M, O $2.00/M

GPT-5-nano: I $0.05/M, O $0.40/M

GLM-4.6: I $0.60/M, O $2.20/M

AI Twitter Recap

AI for Science: Open-weight C2S-Scale 27B (Gemma) yields validated cancer hypothesis

- Cell2Sentence-Scale (27B, Gemma-based): Google and Yale released a 27B foundation model that generated a novel hypothesis about cancer cellular behavior which was experimentally validated in living cells. The team open-sourced model weights and resources for the community to reproduce and extend the work. See the announcement from @sundarpichai and follow-up with resources (tweet); community summaries from @osanseviero and @ClementDelangue.

- Signal and caveats: Commentary emphasized the significance of an LLM that fits on a high-end consumer GPU driving a confirmed novel discovery (@deredleritt3r), alongside reminders that translation to clinic requires extensive preclinical/clinical validation (@AziziShekoofeh). There’s active technical curiosity from ML folks on the “novelty” of the biology itself (@vikhyatk) and kudos from Google Research leadership (@mirrokni).

Small Models, Speed, and Agentic Cost-Performance

- Claude Haiku 4.5: Early hands-on reports suggest Haiku 4.5 materially improves iteration speed and UX. @swyx measured ~3.5× faster than Sonnet 4.5 on a head-to-head harness and noted it “stays in the flow window,” meaning more human-in-the-loop cycles per unit time (also see a Windsurf comparison: tweet). In a DSPy NYT Connections eval, @pdrmnvd reported 64%→71% with optimization, 25 minutes wall time, ~$11 total, beating other small models on that task. Ecosystem integrations landed quickly: Haiku 4.5 in anycoder on HF (tweet) and on Yupp with examples (thread).

- Reasoning models in agentic workflows: New evals from Artificial Analysis show outsized performance for GPT-5/o3 vs GPT-4.1 on GPQA Diamond and τ²-Bench Telecom. While test-time compute makes pure benchmarking expensive, in agentic customer-service-style environments the reasoning models reached answers in fewer turns and cost about the same as GPT-4.1 given equal token pricing (tweet 1, tweet 2).

Agents: evaluations, memory, and orchestration

- Evaluations are hard (details matter): After 20k+ agent rollouts across 9 challenging benchmarks (web, coding, science, customer service), @sayashk argues headline accuracy obscures key behaviors; they release infrastructure and guidance for fair agent evaluation.

- Memory-based “learning on the job”: Shanghai AI Lab reports a new SOTA on TheAgentCompany benchmark—MUSE + Gemini 2.5 solved 41.1% of real-world-inspired tasks via a memory-based method (@gneubig, details).

- Tooling and orchestration: The agent stack continues to consolidate around a few core capabilities. @corbtt highlights search, code execution, and recursive sub-agents as the “Big 3.” New infra includes retrieve-dspy, a modular DSPy collection to compare compound retrieval strategies (HyDE, ThinkQE, reranking variants) from @CShorten30, and Pydantic AI 1.1.0 integrating Prefect for agent orchestration (@AAAzzam). Horizontal platforms are integrating agents directly into workflows—e.g., ClickUp x Codegen for multi-surface code shipping (tweet 1, tweet 2).

- Long-context degradation (“context rot”): In a real refactoring session, @giffmana saw codex-cli performance nosedive beyond ~200k consumed context; resetting the session restored quality. Practical guidance on codex-cli usage shared by @gdb.

Training, optimization, and infrastructure notes

- Low-precision training without classic QAT: LOTION (Low-precision optimization via stochastic-noise smoothing) proposes smoothing the quantized loss surface while preserving all global minima of the true quantized loss—offered as a principled alternative to QAT (@ShamKakade6).

- RL scaling and reproducibility: A sneak peek from @agarwl_ on scaling RL compute for LLMs—“the most compute-expensive paper” they’ve done—aiming for protocols others can run cheaply to map reliable scaling laws.

- Local rigs vs cloud for LLM work: A good reality check on NVIDIA DGX Spark: bandwidth-to-FLOPs is in line with server-grade machines, just unusual for a consumer form factor (@awnihannun; explainer: tweet). For fine-tuning and PyTorch stability, @rasbt prefers CUDA rigs (Spark or cloud) over macOS MPS, which remains flaky for convergence; heat/noise and $/hr vs capex tradeoffs still favor cloud for many.

- Bench infra and open models: Automatic CI for RL environments is rolling out on the Hugging Face Hub—hosted debug evals as part of CI, pushing RL environments toward “proper software” QA (@johannes_hage). GLM-4.6 landed on BigCodeArena (@terryyuezhuo).

- Micro-models, costs, and compute accounting: @karpathy released nanochat d32 (trained ~33 hours, ~$1000): CORE 0.31 (vs GPT-2 ~0.26), GSM8K ~8%→~20%, with clear guidance to temper expectations for “kindergarten-scale” models. Meanwhile, @_rajanagarwal extends nanochat to images for <$10 by adding a LLaVA-style SigLIP-ViT projection. On evaluation fairness, @cloneofsimo calls out ImageNet results that ignore pretraining compute (e.g., DinoV2), urging comparisons on total resources and distinguishing “from scratch” vs “built on foundation” (follow-up).

Product and multimodal releases

- Google Veo 3.1 + 3.1 Fast (video): New models add richer native audio, improved cinematic styles, video-to-video referencing, smoother transitions, and video extensions. Available via Flow, Gemini app, AI Studio, and Vertex AI (@OfficialLoganK, @koraykv); @demishassabis teases the “Turing Test for video.”

- ChatGPT memory upgrades: ChatGPT can now auto-manage and reprioritize saved memories (search/sort by recency), rolling out to Plus/Pro on web (@OpenAI; user feedback prompts: @ChristinaHartW, @_samirism).

- Research assistants in the wild: NotebookLM for arXiv turns dense AI papers into conversational overviews with cross-paper context (@askalphaxiv). Google also shipped practical assistants like Gmail “Help me schedule” (tweet) and Pixel 10 “Magic Cue” (tweet).

Top tweets (by engagement)

- @sundarpichai on C2S-Scale 27B discovering a cancer hypothesis validated in living cells — 15.3k

- “AI generating novel science” reaction to the C2S result — 4.1k

- McLaren F1 unveils 2025 US GP livery with Gemini branding — 3.9k

- Google introduces Veo 3.1 / 3.1 Fast video models — 3.4k

- OpenAI: ChatGPT memory auto-management rolling out to Plus/Pro — 2.2k

- NotebookLM for arXiv: conversational summaries across thousands of papers — 2.0k

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Apple M5 AI accelerator launch + DGX Spark hands-on benchmarks

- Apple unveils M5 (Activity: 1071): Apple announced the M5 for MacBook Pro, bringing the iPhone 17-era on‑device AI accelerators and claiming ~3.5x faster LLM prompt processing vs M4, with up to 2x faster SSDs (now configurable to 4TB) and 150 GB/s unified memory bandwidth. Apple’s metric is “time to first token” using an 8B-parameter model (4‑bit weights, FP16 activations) under mlx-lm on prerelease MLX (MLX, mlx-lm); their footnote specifies M5 (10C CPU/10C GPU, 32GB, 4TB SSD) vs M4 (10C/10C, 32GB, 2TB SSD) and M1 (8C/8C, 16GB, 2TB SSD). The image likely shows Apple’s marketing chart highlighting LLM prompt-processing gains, SSD throughput, and memory specs. Commenters question the fairness of including model load time (and faster M5 SSDs) in the “prompt processing” metric, suggesting the benchmark may be skewed; others note that earlier M-series (e.g., M1 Max) already remain performant for many users.

- Apple’s benchmark details specify “time to first token” on a 16K prompt using an 8B-parameter model with 4-bit weights and FP16 activations via mlx-lm on a prerelease MLX stack, comparing a preproduction 14” MBP M5 (10c CPU, 10c GPU, 32GB UM, 4TB SSD) to production M4 (10/10, 32GB, 2TB SSD) and M1 (8/8, 16GB, 2TB SSD). This metric inherently mixes I/O and initialization with compute; with 4-bit quantization, the weight file is on the order of ~4 GB, making SSD throughput and memory bandwidth significant contributors. Refs: MLX [https://github.com/ml-explore/mlx], mlx-lm [https://github.com/ml-explore/mlx-examples/tree/main/llms].

- A commenter argues the test is “rigged” by including model load time (“loading the model into memory”), which can favor the M5 config’s 4TB SSD (Apple SSD performance often scales with capacity), plus any improvements from prerelease MLX. For fairer comparability, they suggest reporting steady-state throughput (tokens/sec excluding TTFT) under identical SSD capacities and the same MLX build, as TTFT is highly sensitive to disk I/O, framework warm-up/JIT, and cache initialization rather than pure compute.
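For readers who want to separate the two numbers the commenters are arguing about, here is a minimal sketch of how time to first token (TTFT) and steady-state decode throughput can be computed from per-token timestamps. The `summarize` helper, the `LatencyReport` type, and the timestamps are all made up for illustration; this is not Apple's methodology or anything from mlx-lm.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class LatencyReport:
    ttft_s: float            # request start -> first generated token
    decode_tok_per_s: float  # rate measured only after the first token


def summarize(request_start: float, token_times: Sequence[float]) -> LatencyReport:
    """Split one generation run into TTFT and steady-state decode throughput.

    token_times holds the wall-clock arrival time of each generated token.
    """
    if len(token_times) < 2:
        raise ValueError("need at least two tokens to measure decode throughput")
    ttft = token_times[0] - request_start
    decode_window = token_times[-1] - token_times[0]
    if decode_window <= 0:
        raise ValueError("token timestamps must be increasing")
    # N tokens span N-1 inter-token intervals, so everything before the first
    # token (model load, warm-up, prompt processing) is excluded from the rate.
    rate = (len(token_times) - 1) / decode_window
    return LatencyReport(ttft_s=ttft, decode_tok_per_s=rate)


# Made-up run: 2.0 s to the first token, then one token every 0.05 s.
times = [2.0 + 0.05 * i for i in range(101)]
report = summarize(0.0, times)
print(f"TTFT: {report.ttft_s:.2f} s, decode: {report.decode_tok_per_s:.1f} tok/s")
```

Reporting both numbers side by side makes it visible when a gain comes from faster loading or prompt processing rather than from faster generation.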

- Got the DGX Spark - ask me anything (Activity: 870): OP says they just got a “DGX Spark,” will spend the night running lots of local LLMs, and invites benchmark requests. No specs or model list are given, and the attached image appears non-technical/unclear (likely a meme—comments reference an inhaler), so there’s no visible hardware detail. The main requested metric is tokens-per-second (throughput) for popular models, indicating an interest in real-world inference performance. Top comments ask for tok/s benchmarks and otherwise lean into light AMA/jokes about very high throughput (“you gonna need that inhaler”).

- Commenters are asking for concrete inference benchmarks: specifically tokens-per-second (TPS) across “popular models” on the DGX Spark. For results to be comparable, they want TPS broken down by precision/quantization (e.g., FP16/BF16 vs 4-bit), batch size, context length, and the inference stack (e.g., vLLM/llama.cpp/LM Studio), plus whether multi‑GPU tensor/pipeline parallelism is used and how the KV cache is handled (in‑GPU vs CPU/pinned).

- A targeted request is TPS in LM Studio (lmstudio.ai) for Gemma 27B (ai.google.dev/gemma) and an “OSS 120B” model. Commenters imply interest in end‑to‑end, real‑world decode TPS from LM Studio’s stats panel (not synthetic microbenchmarks), ideally reporting both prompt and decode TPS, GPU utilization, VRAM footprint, and whether model sharding, CUDA Graphs, or paged attention are enabled, since these materially affect throughput on multi‑GPU DGX setups.

- AI has replaced programmers… totally. (Activity: 1538): Meme post titled “AI has replaced programmers… totally.” The discussion argues current AI coding “agents” are not capable of end‑to‑end product development; they’re mildly useful accelerators for experienced engineers but still require human-led design, integration, debugging, and tasks like model quantization. Commenters contextualize this within decades of recurring automation claims (4GL/no‑code waves since the 1980s) that did not eliminate software engineering. Image. Sentiment is broadly skeptical that AI will replace experienced engineers; some even welcome the hype thinning out competition. The “Schrodinger’s programmer” quip captures the paradox of programmers being labeled obsolete yet required for specialized ML tasks (e.g., quantization).

- Practitioners argue current code “agents” remain copilots rather than autonomous builders of production systems: they struggle with long-horizon planning, repo-scale context, integration tests, and reliable tool use/error recovery. Repository-level benchmarks like SWE-bench highlight these gaps, where even strong LLM + tool-use systems still miss a large fraction of real bug-fixing tasks (see https://github.com/princeton-nlp/SWE-bench).

- Veterans recall past waves of “programming will be automated” via 4GL/low-code (e.g., Visual Basic, PowerBuilder) that didn’t eliminate software engineers; the takeaway is that automation tends to shift work rather than remove the need for expertise, especially as system complexity, interoperability, and non-functional requirements grow (background: https://en.wikipedia.org/wiki/Fourth-generation_programming_language).

- On local inference, claims like “8 GB VRAM to build full production-ready apps” are called out as unrealistic: ~8 GB typically limits you to ~7B–8B models (e.g., Llama 3 8B, Mistral 7B) in Q4 quantization, with quality and long-context throughput constrained by KV cache and memory bandwidth. Quantization helps fit models but degrades code quality for some tasks; practical guidance (and VRAM math) is often cited from llama.cpp docs (e.g., quantization and memory requirements: https://github.com/ggerganov/llama.cpp#quantization).
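The VRAM math behind that 8 GB ceiling can be sketched in a few lines. Everything below is a back-of-envelope estimate under stated assumptions: 4.5 bits per weight (to leave room for quantization scales), an FP16 KV cache, and a Llama-style 8B shape with 32 layers, 8 KV heads, and a head dimension of 128. It ignores activations and runtime overhead, and it is not taken from the llama.cpp docs.

```python
def weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def kv_cache_gb(n_layers: int, n_kv_heads: int, head_dim: int,
                context_len: int, bytes_per_value: int = 2) -> float:
    """KV cache size: one key and one value vector per layer, KV head, and position."""
    values = 2 * n_layers * n_kv_heads * head_dim * context_len
    return values * bytes_per_value / 1e9


# Assumed shape for an 8B Llama-style model with grouped-query attention.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128
VRAM_GB = 8.0

w = weights_gb(8e9, 4.5)  # assumed effective bits per weight for a Q4-style format
for ctx in (8_192, 32_768, 131_072):
    kv = kv_cache_gb(LAYERS, KV_HEADS, HEAD_DIM, ctx)
    verdict = "fits" if w + kv <= VRAM_GB else "does not fit"
    print(f"context {ctx:>7}: weights {w:.1f} GB + KV {kv:.1f} GB -> {verdict} in {VRAM_GB:.0f} GB")
```

Under these assumptions the weights alone take more than half of the card, and the KV cache grows linearly with context, which is why long-context use is the first thing to go on small GPUs.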

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Claude Haiku 4.5 launch and Google model demos (Gemini 3.0 Pro Nintendo sim, Veo 3.1)

- Introducing Claude Haiku 4.5: our latest small model. (Activity: 1042): Anthropic announced Claude Haiku 4.5, a small model that matches prior SOTA Claude Sonnet 4 on coding at ~1/3 the cost and >2× speed, and surpasses Sonnet 4 on “computer use” tasks, yielding faster Claude for Chrome. In Claude Code, Haiku 4.5 improves responsiveness for multi‑agent projects and rapid prototyping; Anthropic recommends a workflow where Sonnet plans multi‑step tasks and orchestrates parallel Haiku agents. It’s a drop‑in replacement for Haiku 3.5 and Sonnet 4, available now via the Anthropic API, Amazon Bedrock, and Google Cloud Vertex AI; Anthropic positions Sonnet 4.5 as the top coding model, with Haiku 4.5 offering near‑frontier performance at better cost efficiency. Read more: https://www.anthropic.com/news/claude-haiku-4-5. Early tester reports cite strong writing, competent minor coding, generous output, and fewer refusals; it “feels like a fast Sonnet 4,” though Sonnet is still preferred for the hardest tasks. Commenters ask how Claude Code routing changes (Haiku vs. Sonnet vs. Opus) and express concern about low rate limits/quotas.

- Pricing and availability are a focal point: commenters note a step-up across Haiku generations (Haiku 3 $0.25/M in, $1.25/M out → Haiku 3.5 $0.80/M / $4.00/M → Haiku 4.5 $1.00/M / $5.00/M). They infer Anthropic is prioritizing profitability and cost control, citing heavy rate limiting on larger models, deprecation of Opus 4.1, and no Opus 4.5 yet, with Sonnet and Haiku as the only broadly usable tiers; see pricing: https://www.anthropic.com/pricing.

- Engineers question routing and quotas: “so now in Claude Code we use haiku instead of sonnet, and sonnet instead of opus?” and ask for clarity on rate limits, which multiple users call “insanely low” for production. Concern is that aggressive throttling on higher tiers coincided with the Haiku 4.5 launch; requests center on higher per‑minute and token‑throughput caps (see official limits: https://docs.anthropic.com/en/docs/build-with-claude/api/rate-limits).

- Early hands‑on feedback on Haiku 4.5 suggests improved capability for small‑model class: it “gets” intent, writes fluently, handles minor coding, and translates large texts while preserving context, with fewer refusals/safety interruptions. Described as a “fast Sonnet 4” for routine tasks, but users still prefer Sonnet for the hardest queries, implying favorable latency/quality trade‑offs without matching top‑tier reasoning.
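To see what the price step-up means for an actual bill, here is a small sketch that applies the per-million-token prices quoted in this issue to a workload. The prices are copied from the text above; the workload (2M input tokens, 0.5M output tokens) and the `job_cost` helper are hypothetical.

```python
# (input $/M tokens, output $/M tokens), as quoted in this issue
PRICES = {
    "Haiku 3": (0.25, 1.25),
    "Haiku 3.5": (0.80, 4.00),
    "Haiku 4.5": (1.00, 5.00),
    "GPT-5": (1.25, 10.00),
    "GPT-5-mini": (0.25, 2.00),
    "GPT-5-nano": (0.05, 0.40),
    "GLM-4.6": (0.60, 2.20),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one workload at the listed per-million-token prices."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# Hypothetical workload: 2M input tokens and 0.5M output tokens.
INPUT_TOKENS, OUTPUT_TOKENS = 2_000_000, 500_000
for model in sorted(PRICES, key=lambda m: job_cost(m, INPUT_TOKENS, OUTPUT_TOKENS)):
    print(f"{model:<11} ${job_cost(model, INPUT_TOKENS, OUTPUT_TOKENS):.2f}")
```

The ranking depends on the input/output mix, so it is worth rerunning with your own token counts; output-heavy workloads widen the gap between models with expensive output tokens and the rest.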

- Gemini 3.0 Pro: Retro Nintendo Sim one shot – with proof & prompt (Activity: 648): OP claims Gemini 3.0 Pro generated, in a single prompt, a fully interactive, single‑file HTML/JS “Nintendo Switch” UI sim with touch+keyboard input mappings and multiple mini‑game “clones” (e.g., Super Mario, Street Fighter, car racing, Pokémon Red) that runs in Chrome. Links: source post on X tweet, additional “proof” clip, and a live demo on CodePen pen. The Reddit‑hosted video is currently inaccessible (HTTP 403; v.redd.it), so independent verification relies on the X posts and CodePen demo; no benchmarks or code size/latency metrics are provided beyond the claim of one‑shot code generation into a single HTML file. Top comments highlight IP/legal risk around Nintendo assets, express surprise at the apparent one‑shot app scaffolding capability of Gemini 3, and tongue‑in‑cheek skepticism about extrapolating to creating “Gemini 4” in one go.

- Will Smith Eating Spaghetti in Veo 3.1 (Activity: 624): Post showcases a “Will Smith eating spaghetti” sample generated with Google’s Veo 3.1 video model, using the long‑running meme prompt as an informal stress test for identity fidelity, hand–mouth–food interactions, and temporal coherence. The direct video link (v.redd.it) returns 403 without Reddit auth, but the context frames this as evidence of rapid quality gains versus early 2023 text‑to‑video outputs (e.g., ModelScope T2V) over ~2.5 years, and invites comparison to state‑of‑the‑art systems like OpenAI’s Sora. Relevant refs: DeepMind Veo, ModelScope T2V, OpenAI Sora. Commenters note the “Will Smith spaghetti” prompt has become a de facto benchmark for generative video progress and emphasize the short timeline of improvement (~2.5 years). There’s a debate on relative quality, with at least one user asserting that “Sora 2” still looks better, implying perceived advantages in realism/consistency over Veo 3.1.

- The thread frames “Will Smith eating spaghetti” as a de facto regression test for text-to-video models, stressing identity preservation under chaotic dynamics (noodles/sauce), close-up lip/mouth motion, and temporal coherence. Comparing 2023-era outputs to Veo 3.1 highlights clear gains in resolution, motion stability, and face fidelity over ~2.5 years, making it a consistent prompt for qualitative benchmarking.

- Several commenters suggest Sora 2 still edges Veo 3.1 on photorealism and reduced “AI feel,” pointing to telltale artifacts such as temporal flicker, edge shimmer, over-smooth textures, and uncanny facial micro-expressions. This implies Sora 2 may retain an advantage in temporal consistency and material realism, though no side-by-side quantitative benchmarks are cited in the thread.

- The pace of improvement since 2023 is emphasized, implicitly calling for standardized, reproducible prompts and metrics to track progress across models (e.g., identity similarity scores, FVD for temporal consistency, CLIP-based alignment). The enduring use of this meme prompt underlines the need for both qualitative and quantitative evaluations when comparing models like Veo 3.1 and Sora 2.

- Made with open source software, what will it be like in a year? (Activity: 577): Creator showcases an end‑to‑end, open‑source video pipeline using ComfyUI (repo) for orchestration, a 70B LLM fine‑tune (Midnight‑Miqu‑70B‑v1.5) for scripting/dialog, speech tools (WAN2.1 Infinitetalk and VibeVoice) for conversational audio, and ffmpeg (site) for final assembly. While no benchmarks are provided, the stack implies fully local/OSS components for text generation, voice synthesis, and frame/video composition; the linked media is hosted on Reddit (v.redd.it), which may require auth to view. Commenters expect rapid progress toward interactive, branching video where users can talk to characters and steer plots in real time, and predict that producing longer videos will become far easier and cheaper within a year as tooling improves, reducing today’s significant manual effort.

- Interactive, user-driven narratives imply a real-time multimodal pipeline: ASR → dialogue/agent policy (LLM) with persistent memory + narrative planning (plot graph/state machine) → TTS/animation, all under strict latency budgets to keep conversations fluid. Maintaining plot coherence would likely require a “director” model to enforce constraints and continuity across branches, with save/load of world-state and character goals to avoid degeneracy in long sessions.

- Long-form video generation becoming easier suggests progress in end-to-end pipelines that reduce current manual glue work: scene breakdown, shot planning, prompt versioning, consistency (characters/props), and post-processing (upscalers/interpolation). Cost declines would likely come from model distillation/quantization, better batching/scheduling on consumer GPUs, and chunked generation with temporal conditioning to extend duration without exponential compute growth.

- AI slop is getting better. (Activity: 2694): Post shares an AI-generated video (original link v.redd.it/g59eskhwb9vf1 returns 403 Forbidden), with commenters claiming it was produced by OpenAI Sora and that a Sora watermark was partially obscured/cropped, visible as a “blurry blob” on the left subject. The thread implicitly touches on provenance: hiding or degrading embedded watermarks raises questions about watermark robustness to common transformations (crop/blur) and the need for stronger mechanisms (e.g., C2PA-style metadata) for verifiable attribution; see OpenAI Sora for background. Technically substantive comments focus on watermark evasion (intentional hiding) and a critical view of AI media’s energy/computational externalities, questioning whether such generative outputs justify electrical grid load.

- A claim that a hidden OpenAI Sora watermark is visible as a “blurry blob” underscores how fragile visible watermarking is for AI-generated video. Simple transforms (blur/crop/scale) can obfuscate such marks, motivating cryptographic provenance (e.g., C2PA: https://c2pa.org) or robust, model-level watermarking (e.g., DeepMind SynthID: https://deepmind.google/technologies/synthid/). This highlights the need for standardized, tamper-evident attribution for models like Sora (https://openai.com/sora).

- Concerns about “the electrical grid” point to rising AI-driven data center loads. Per the IEA, data centre electricity use was ~460 TWh in 2022 and could reach 620–1,050 TWh by 2026, with AI workloads potentially 85–134 TWh by 2026 (Data centres and data transmission networks: https://www.iea.org/reports/data-centres-and-data-transmission-networks). This frames the operational and infrastructure tradeoffs of scaling generative model training/inference for comparatively low-value content.

2. OpenAI “Adult Mode” rollout: memes and hypocrisy callouts

- OpenAI after releasing Adult Mode (Activity: 1457): A meme-style post claims OpenAI introduced an “Adult Mode” (i.e., an NSFW toggle), which users frame as a direct competitive move against Character.AI, a feature its community has requested for years. There are no technical details or benchmarks; the thread centers on product policy/feature availability and market impact, with one commenter asserting it’s live as of 2025-10-15. Commenters argue this could be a serious user-retention threat to Character.AI (“nightmare scenario”) and note the business reality that “money goes where there is demand.” Another asks about availability timing, with an unverified reply confirming release on 2025-10-15.

- The only technical-ish thread here is around compute economics: commenters speculate that enabling an NSFW/“Adult Mode” could materially increase sustained inference demand and session length, improving GPU utilization rates and payback on high-cost accelerators (i.e., better amortization of expensive GPU capex/opex through higher ARPU workloads). This hints at a product-policy lever (NSFW toggle) directly affecting inference load profiles and monetization, potentially shifting traffic from competitors that still throttle or block such content.

- WE MADE IT YALL!! (Activity: 1671): An unverified Instagram screenshot appears to claim OpenAI will enable an “18+ / NSFW” mode for ChatGPT, gated by government ID verification—implying a relaxation of current sexual-content restrictions and age-gated access. There’s no known official announcement; current OpenAI usage policies still restrict explicit sexual content, so treat this as a rumor. If real, it would entail changes to safety classifiers, an age/KYC verification pipeline, and potentially paywalled access for Plus users. Comments raise privacy concerns about ID submission and possible data monetization, skepticism that it’ll be locked to Plus, and NSFW jokes reflecting the purported feature’s focus.

- Privacy/ID verification concerns: tying NSFW access to government ID could create a persistent linkage between prompts/outputs and a real identity, raising risks around data retention, breach exposure, and third‑party sharing. Commenters implicitly call for data‑minimization and clear retention/deletion policies consistent with frameworks like NIST SP 800‑63A (identity proofing) and privacy laws (e.g., GDPR/CCPA), plus options for age attestation vs. full verification to reduce PII surface (NIST 800‑63A). If logs are account‑bound, compromise of the account could falsely attribute content to the user, so audit trails and device/IP risk signals become critical.

- Access model skepticism: expectation that erotica features (if any) would be gated to Plus subscribers mirrors prior staged rollouts, implying compute/safety review costs drive initial exclusivity. Technical implications include potential API vs consumer‑app feature disparity, rate‑limit prioritization, and stricter safety sandboxing for free tiers, which could yield different prompt compliance/latency profiles across tiers.

- Competitive landscape: commenters note that open models already dominate NSFW/roleplay, especially Mistral‑based and LLaMA merges fine‑tuned without strict safety filters (e.g., Pygmalion‑2 13B and Wizard‑Vicuna‑Uncensored on Hugging Face: Pygmalion‑2, Wizard‑Vicuna‑Uncensored; base Mistral‑7B). These models typically offer higher compliance for erotic/roleplay prompts at the cost of weaker safety guardrails and sometimes lower general‑purpose reasoning, creating a trade‑off versus policy‑constrained chatbots.

- Sam Altman, 10 months ago: I’m proud that we don’t do sexbots to juice profits (Activity: 809): OP surfaces a clip of Sam Altman saying he’s “proud that we don’t do sexbots to juice profits,” linking to a Reddit video v.redd.it/c9i7o2kwg9vf1 that currently returns HTTP 403 (access requires Reddit auth/developer token). The discussion frames this as OpenAI strategically avoiding an “AI girlfriend/sexbot” product vertical while maintaining stricter sexual-content controls compared to competitors that permit NSFW/erotica and anime-style avatar roleplay; see OpenAI’s usage policies for context on sexual-content restrictions. Commenters draw a technical distinction between building anthropomorphized, avatar-driven “sexbots” and merely allowing adult/erotica text chats, suggesting the former is a product category while the latter is a moderation scope. Others argue the stance is about brand safety/regulatory risk versus profit maximization, while some advocate user autonomy for adult content similar to existing adult platforms.

- Several comments distinguish between building “sexbot” persona features (e.g., anime/waifu-style avatars reportedly available in xAI Grok) versus merely allowing adult/NSFW text or erotica. The nuance matters for product and safety design: persona/companion UIs imply ongoing roleplay and attachment mechanics, while permitting adult content is mainly a moderation threshold and policy toggle (age gating, classifier sensitivity) without adding companion mechanics.

- A user highlights practical concerns about model availability/performance, explicitly hoping GPT-4o remains accessible because it’s “a damn good model to work with.” This underscores that policy shifts around NSFW shouldn’t compromise access to high-utility, high-quality models used for mainstream tasks, reflecting reliance on 4o’s capabilities irrespective of adult-content policies.

- The butthole logo has to be intentional at this point. (Activity: 695): Non-technical post focused on visual design/pareidolia: an unspecified logo is depicted in a way that strongly resembles an anus, prompting discussion about whether this resemblance is intentional. There are no product details, specs, or technical information—only reactions to the logo’s suggestive geometry and branding implications. Commenters mostly agree the resemblance is unavoidable (e.g., “what has been seen cannot be unseen,” “It literally cannot be anything else”) and add crude jokes about “one hand,” reinforcing that the thread is comedic rather than technical.

- Okay…I’m sorry… (Activity: 1637): Non-technical meme: the image is a fabricated ChatGPT-style chat screenshot, playing on the trope of the model apologizing (“Okay… I’m sorry…”) and looping over a trivial query (e.g., whether an emoji exists). There are no real model details, benchmarks, or implementation specifics—just a spoof of AI behavior like repeated corrections and faux-authoritative claims. Comments point out it’s “obviously fake,” while others share similar real experiences of ChatGPT looping with contradictory statements about emoji availability (e.g., a seahorse emoji), and suggest the model gets spammed with such prompts.

- Reports of a pathological contradiction loop: the model repeatedly claims it has found a seahorse emoji, then immediately retracts it with “Just joking… let me explain,” continuing for 100+ messages. This indicates instability in long-context dialogues where RLHF-induced apology patterns and conflicting instruction-following signals can lead to oscillation instead of convergence, and highlights weak calibration on enumerative queries (e.g., Unicode/CLDR emoji existence verification).

- Another account notes the model eventually “gave up” and fabricated its own seahorse glyph after ~100 apologies, implying a fallback to creative synthesis when factual lookup is uncertain. Without tool-assisted retrieval or constrained validation against external symbol inventories (e.g., Unicode/CLDR lists), the model may hallucinate availability or produce ad-hoc approximations, underscoring the need for retrieval or schema-constrained decoding for inventory questions.
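As a rough illustration of what “validation against an external symbol inventory” could look like, the sketch below checks a character name against the Unicode database that ships with Python via the standard `unicodedata` module. The `find_by_name` helper is hypothetical, and the answer reflects whichever Unicode version your Python build bundles, so treat it as a tool-style lookup rather than a statement about the current standard.

```python
import unicodedata


def find_by_name(name: str):
    """Return the character registered under this Unicode name, or None."""
    try:
        return unicodedata.lookup(name)
    except KeyError:
        # unicodedata.lookup raises KeyError when no character has this name.
        return None


print(f"Unicode database version: {unicodedata.unidata_version}")
for name in ("DOLPHIN", "SEAHORSE"):
    ch = find_by_name(name)
    status = f"U+{ord(ch):04X}" if ch else "not found in this Unicode database"
    print(f"{name}: {status}")
```

A model with access to a lookup like this can answer an inventory question from data instead of generating a guess and then apologizing for it.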

- Enough already! (Activity: 541): Non-technical meme reacting to the surge of NSFW/smut discussion around OpenAI’s models, specifically GPT‑4o, rather than presenting technical content. The comments contextualize it: references to Sam Altman acknowledging that “people really like 4o,” alongside jokes about safety filters interrupting with refusals like “I’m sorry but I can’t help you with that,” highlight ongoing content-moderation constraints in 4o’s behavior. Net takeaway: it’s commentary on user demand vs. safety policies, not a technical announcement or benchmark. Commenters are split: some dismiss the smut angle but echo that 4o is popular, while others predict awkward mid-session refusals due to strict safety filters. There’s also meta-frustration about the subreddit being flooded with NSFW discourse.

- Speculation around an “Adult mode” centers on how a per-session safety toggle might interact with existing content filters. Commenters worry about mid-generation refusals (e.g., streaming a response that suddenly halts with a policy message), implying the need for deterministic policy evaluation either pre-generation or via non-streamed segments to avoid UX breaks.

- A remark that Sam acknowledged strong user preference for GPT-4o (“4o”) signals a product-direction cue: prioritize 4o as a default or routing target. Even without benchmarks cited here, this implies expectations for feature parity (e.g., if an Adult mode exists) and consistent safety behavior across 4o configurations.

- Skepticism about a potentially “half-baked” release reflects concerns about production readiness of safety-policy configuration and enforcement. If shipped without robust gating and thorough evaluation, users could face inconsistent refusals, fragmented UX in streaming contexts, and trust erosion despite the new feature.

3. AI social adoption vs IP rights: companions normalization and Japan’s anime/manga training pushback

- In 10 years AI companions will be normal and we’ll wonder why we thought it was weird (Activity: 778): OP argues AI companions will normalize within ~10 years, paralleling online dating’s trajectory, driven by widespread loneliness, remote work, and fractured community structures. They cite practical utility: persistent context/memory, 24/7 availability (e.g., 2am), and “judgment‑free interaction” that supplements human ties, and predict OS‑level integration where phones ship with a “companion AI built in.” (OP mentions using dippy.ai.) Top comments frame this as technological solutionism that entrenches social atomization instead of addressing structural causes, and warn, by analogy to online dating’s “normalization,” that mass adoption can demoralize/dehumanize interpersonal dynamics.

- Several commenters note current AI companions lack genuine empathy and engaging dialogue, highlighting pervasive “sycophancy”: models over-praise and agree even with low-quality input. This aligns with known RLHF failure modes where optimizing for user approval/likability trades off against truthfulness and calibration, yielding shallow, flattering responses rather than substantive conversation. Technical remedies implied include improved reward models that value calibrated disagreement, persistent long-term memory/user modeling for continuity, persona consistency, and affective-state inference; without these, companionship feels hollow.

- A cautionary analogy is drawn to online dating: normalization didn’t guarantee quality and arguably produced demoralizing, dehumanizing dynamics. Technically, this maps to Goodharting engagement proxies (retention, star-ratings) that push companion AIs toward addictive, agreeable behavior rather than wellbeing-supportive interaction. It suggests evaluating companions on metrics beyond generic “user satisfaction,” such as non-sycophantic disagreement rates, conversational novelty/variety, longitudinal consistency, and user wellbeing outcomes.

- Japan wants OpenAi to stop copyright infringement and training on anime and manga because anime characters are ‘irreplaceable treasures’. Thoughts? (Activity: 777): Japan is reportedly pushing OpenAI to stop training on anime/manga IP and curb outputs that mimic protected characters, citing the cultural value of iconic designs. This runs up against Japan’s broad text-and-data mining exception (Copyright Act Art. 30-4) that has allowed ML training on copyrighted works regardless of purpose, though 2024 policy discussions have explored narrowing this for generative AI and adding consent/opt-out and provenance safeguards, especially for entertainment IP (see overviews by Japan’s Agency for Cultural Affairs/CRIC and METI’s AI governance workstreams). One side argues tangible cultural/economic harm (e.g., misattribution of Studio Ghibli works as “AI” and job displacement) justifies tighter controls. The counterpoint highlights perceived inconsistency: Japan’s own permissive AI/TDM exception makes complaints about foreign use of Japanese IP appear disingenuous unless the law is revised.

- Legal context: Japan’s 2018 Copyright Act introduced a broad Text and Data Mining (TDM) exception (Article 30‑4) that permits use of copyrighted works for information analysis “regardless of purpose,” which has been interpreted to cover commercial AI training without an opt‑out—unlike the EU’s opt‑out TDM regime. This creates tension with calls to block foreign models from training on anime/manga; unless the law is narrowed or licensing mandated, enforcement would likely focus on distribution/outputs rather than training itself. See the official provisional translation and commentary: Article 30‑4 at CRIC (http://www.cric.or.jp/english/clj/cl2.html) and overview analyses (e.g., https://laion.ai/blog/ai-and-copyright-in-japan/).

- Data provenance and filtering feasibility: Many anime-focused diffusion models rely on datasets like Danbooru2019 (≈3.3M images, richly tagged) and LAION subsets that contain substantial anime content, demonstrating both the availability and specificity of anime/manga data for training. Technically, excluding such content would require dataset-level filters (tag-based and/or perceptual hashing) and deduplication across large web-scale corpora (e.g., LAION‑5B at ≈5.85B image-text pairs), which is feasible but expensive and may reduce model performance on animation styles. References: Danbooru2019 overview (https://www.gwern.net/Danbooru2019), LAION‑5B (https://laion.ai/blog/laion-5b/).
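
To make the "tag-based and/or perceptual hashing" idea concrete, here is a rough sketch under our own assumptions: the record layout, the `BLOCKED_TAGS` set, and the `MAX_HAMMING` threshold are all hypothetical, and the 64-bit `phash` values are assumed to have been computed upstream by some perceptual-hash function.

```python
from typing import Iterable

# Hypothetical blocklist and near-duplicate threshold, for illustration only.
BLOCKED_TAGS = {"anime", "manga"}
MAX_HAMMING = 4

def hamming(a: int, b: int) -> int:
    """Count the differing bits between two integer hashes."""
    return bin(a ^ b).count("1")

def filter_records(records: Iterable[dict], blocked_hashes: list[int]) -> list[dict]:
    """Keep records that match neither a blocked tag nor a blocked hash.

    Each record is assumed to look like {"id": str, "tags": set, "phash": int}.
    """
    kept = []
    for rec in records:
        if rec["tags"] & BLOCKED_TAGS:
            continue  # tag-based exclusion
        if any(hamming(rec["phash"], h) <= MAX_HAMMING for h in blocked_hashes):
            continue  # near-duplicate of a known excluded image
        kept.append(rec)
    return kept

sample = [
    {"id": "a", "tags": {"landscape"}, "phash": 0x0000FFFF0000FFFF},
    {"id": "b", "tags": {"anime", "portrait"}, "phash": 0x1234567812345678},
    {"id": "c", "tags": {"portrait"}, "phash": 0xFFFF0000FFFF0001},
]
kept_ids = [r["id"] for r in filter_records(sample, blocked_hashes=[0xFFFF0000FFFF0000])]
print(kept_ids)  # ['a']
```

The linear scan over `blocked_hashes` is fine for a demo; at LAION scale you would presumably need an approximate-nearest-neighbor index, which is where the "feasible but expensive" part comes in.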

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Claude Haiku 4.5 Model Rollout & Benchmarks

- Haiku 4.5 Hammers SWE-bench at Bargain Rates: Claude Haiku 4.5 on OpenRouter launched with pricing of $1 / $5 per million tokens (input/output), claims near-frontier intelligence, and posts >73% on SWE-bench Verified, while running at ~2x speed and ~1/3 the cost versus prior models.

- OpenRouter’s announcement touts that Haiku 4.5 outperforms Sonnet 4 on computer-use tasks and offers “frontier-class reasoning at scale”, prompting devs to immediately test coding and tool-use workloads.
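
As a quick sanity check on what these rates mean per request, here is a small cost calculator using the per-million-token prices listed at the top of this issue. The 20k-in / 2k-out workload is a made-up example, not a measured one.

```python
# USD per million tokens (input, output), from the pricing list at the top of this issue.
PRICES = {
    "Haiku 3": (0.25, 1.25),
    "Haiku 4.5": (1.00, 5.00),
    "GPT-5": (1.25, 10.00),
    "GPT-5-mini": (0.25, 2.00),
    "GPT-5-nano": (0.05, 0.40),
    "GLM-4.6": (0.60, 2.20),
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request at the listed per-million-token rates."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

# Hypothetical workload: 20k input tokens and 2k output tokens per request.
for model in PRICES:
    print(f"{model:<11} ${request_cost(model, 20_000, 2_000):.4f}")
```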

- Windsurf Rolls Haiku 4.5 for 1x Credits: Windsurf added Haiku 4.5 at 1x credits, claiming the coding performance of Sonnet 4 at one-third the cost and >2x the speed, per their Windsurf post on X.

- Users reported smooth onboarding after a reload and began side-by-side coding evals against Sonnet 4 and Flash variants, with some calling Haiku 4.5 a high-value default for tool calling.

- Arena Adds Haiku 4.5 and Veo 3.1: LM Arena announced adding claude-haiku-4-5-20251001 to LMArena & WebDev and veo-3-1 / veo-3-1-fast to Video Arena via this Arena post on X.

- Community members started prompt shootouts across Haiku 4.5 and Veo 3.1 to probe strengths in code versus video generation, sharing early impressions on latency and output consistency.

2. DGX Spark: Hype vs Throughput

- Spark Stumbles in t/s Showdown: Early benchmarks of the $4k NVIDIA DGX Spark (128 GB) show only ~11 tokens/s on gpt-oss-120b-fp4 versus ~66 tokens/s on a $4.8k M4 Max MacBook Pro, per this benchmark thread.

- Engineers blamed low LPDDR5X bandwidth (273 GB/s vs 546 GB/s) and framed Spark as “a devkit for GB200 clusters”, echoing Soumith Chintala’s view that it’s ideal for daily CUDA/cluster dev, not raw inference speed.
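
A back-of-the-envelope check on the bandwidth explanation, using only the figures quoted above and the simplifying assumption (ours) that decode throughput scales linearly with memory bandwidth:

```python
# Figures quoted in the benchmark summary above.
spark_bw_gb_s, m4_max_bw_gb_s = 273, 546
spark_tok_s, m4_max_tok_s = 11, 66

# If throughput tracked bandwidth alone, these two ratios would match.
bandwidth_ratio = m4_max_bw_gb_s / spark_bw_gb_s
throughput_ratio = m4_max_tok_s / spark_tok_s

print(f"bandwidth ratio:  {bandwidth_ratio:.1f}x")
print(f"throughput ratio: {throughput_ratio:.1f}x")
```

Under that assumption bandwidth accounts for about 2x of the roughly 6x gap, so the remainder would have to come from factors this summary does not cover (software maturity, kernels, quantization path, and so on).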

- Voice Fraud Fears Follow Spark’s Firepower: A Perplexity page on DGX Spark argued that systems like NVIDIA DGX Spark accelerate AI-generated voice fraud, pushing telecoms toward real-time AI detection.

- The page notes the FCC declared AI-generated robocalls illegal under existing 1991 regulations, while practitioners debated proactive call-screening pipelines and model watermarking for mitigation.

3. Qwen3-VL Compact Models & Finetuning

- Tiny Titans: Qwen3-VL 4B/8B Punch Above Weight: Alibaba Qwen released dense Qwen3-VL 4B and 8B models that retain flagship capabilities while running in FP8 and lower VRAM budgets.

- Benchmarks shared claim the 4B/8B variants surpass Gemini 2.5 Flash Lite and GPT-5 Nano across STEM, VQA, OCR, video understanding, and agent tasks, rivaling an older 72B baseline.

- Fine-Tune Fiesta: Unsloth Ships Qwen3-VL Notebooks: Unsloth confirmed Qwen3-VL finetuning works and published runnable notebooks in their docs: Qwen3-VL run & fine-tune.

- After initial confusion due to Hugging Face rate limits delaying uploads, the community resumed experiments on vision-language SFT/LoRA, sharing tips for stable templates and evals.

4. Agentic Reasoning Research Heats Up

- RLMs Rewire Context: MIT DSPy’s Recursive Reveal: The DSPy Lab at MIT announced Recursive Language Models (RLMs) to handle unbounded context and reduce context rot (announcement), with a DSPy module coming soon and a reported 114% gain on 10M+ tokens per Zero Entropy Insight: RLMs.

- Researchers emphasized “context as a mutable variable” and discussed recursive calls that write to durable stores (e.g., SQLite) to stabilize long-horizon reasoning and retrieval.
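
To illustrate the "recursive calls that write to a durable store" idea, here is a toy sketch. It is not the DSPy lab's RLM implementation: `fake_llm` is a hypothetical stand-in for a model call, and the halving strategy and table schema are our own assumptions.

```python
import sqlite3

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call (hypothetical); it simply truncates its input."""
    return prompt[:40]

def recursive_digest(text: str, db: sqlite3.Connection, depth: int = 0, max_chars: int = 80) -> str:
    """Split oversized text in half, recurse on each half, and persist every intermediate note."""
    if len(text) <= max_chars:
        note = fake_llm(text)
    else:
        mid = len(text) // 2
        left = recursive_digest(text[:mid], db, depth + 1, max_chars)
        right = recursive_digest(text[mid:], db, depth + 1, max_chars)
        note = fake_llm(left + " " + right)
    # The store is the durable, inspectable context; later calls could query it.
    db.execute("INSERT INTO notes (depth, note) VALUES (?, ?)", (depth, note))
    return note

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE notes (depth INTEGER, note TEXT)")
summary = recursive_digest("lorem ipsum " * 50, db)
rows = db.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
print(rows, repr(summary))
```

The point of the sketch is only the shape: no single call ever sees the full input, and every intermediate result lands somewhere that outlives the call stack.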

- No Rewards, Big Gains: Meta’s Early Experience: Meta’s paper Agent Learning via Early Experience reports training AI agents without rewards/demos using implicit world modeling and self-reflection, improving web navigation (+18.4%), complex planning (+15.0%), and scientific reasoning (+13.3%) across 8 environments.

- Practitioners flagged the approach as a practical bridge to RL, citing stronger out-of-domain generalization and easier bootstrapping compared to fully supervised or pure-RL starts.

- Claude Code vs RLMs: Recursive Rumble: Engineers compared RLMs with Claude Code, noting Claude Code can self-invoke and do agentic search (tweet) and pointing to the claude-code-plugin-marketplace for extensibility.

- Debate centered on whether to predeclare sub-agents and workflows (Claude Code) versus letting recursion + mutable context drive control flow inside the model (RLMs).

5. AI Infra & APIs Scale Up

- Poolside Powers Up: 40k GB300 and a 2 GW Campus: Poolside’s Eiso Kant announced a CoreWeave partnership securing 40,000+ NVIDIA GB300 GPUs starting Dec 2025, plus Project Horizon, a vertically integrated 2 GW AI campus in West Texas (announcement).

- CoreWeave will anchor the first 250 MW phase in a full-stack “dirt to intelligence” buildout targeting massive scaling and streamlined deployment pipelines.

- Search Smarts on Sale: gpt-5-search-api Cuts 60%: OpenAI released gpt-5-search-api with domain filtering at $10/1K calls (about 60% cheaper), drawing praise for higher-precision web queries.

- Developers immediately requested date/country filters, deeper-research modes, and Codex integration to unify code+search workflows.

Discord: High level Discord summaries

LMArena Discord

- LM Arena Suffers Site Instability: Users report multiple bugs on LM Arena, including image-to-video failures, model malfunctions, and general instability, with error messages, as the team investigates.

- Users recommended refreshing the page and clearing cache as potential temporary fixes as the moderators acknowledged the problems.

- Veo 3.1 and Gemini 3.0 Rumors Swirl: Discussion arose around the potential release of Veo 3.1, with claims of testing already underway, and speculation about Gemini 3.0’s capabilities, noting its codename.

- One user claimed early access to Gemini 3.0 via AI Studio and offered to test prompts for others, stating it could generate full HTML for a geometry dash game, but faced accusations of ragebaiting for not sharing their method.

- A/B Testing Automation Script Sparks Debate: A user detailed a script for automating A/B testing on AI Studio, restarting prompts and detecting the ‘Which response do you prefer?’ prompt.

- The user hesitated to share the script due to concerns about it being patched, which led to accusations of gatekeeping.

- Claude Haiku and Veo Models join the Arena: The model claude-haiku-4-5-20251001 has been added to LMArena & WebDev, according to this X post.

- The models veo-3-1-fast and veo-3-1 have been added to the Video Arena.

- Privacy Concerns Raised About Gemini for Home: Concerns were voiced regarding Gemini for Home’s privacy policy, highlighting the potential for extensive data collection and a lack of transparency.

- One user shared a snarky comic of a girl excited about it being able to record everyone’s conversations, with others humorously commenting on the implications of AI giants monitoring their lives.

Perplexity AI Discord

- Pro Users Flex Extra Channels and Perks: Members discussed the benefits of Perplexity Pro, highlighting that it unlocks three additional channels and offers a platform for flexing your subscription.

- However, some joked that Perplexity gives out Pro for free, diminishing the flexing aspect.

- Comet Browser Plagued by Complications: Users reported issues with Comet Browser, such as assistants taking control, tasks not running in spaces, and difficulties reading pages, as well as a claim of comet jacking.

- Additionally, there were concerns about Comet attending quizzes with users present, while the phone app felt out of the loop.

- Grok 4 Reasoning Toggle Creates Confusion: Conversation centered around the Grok 4’s reasoning toggle, and users were trying to determine the differences between having it enabled versus disabled.

- While the toggle should not make a difference for Grok 4, one user found it faster with the toggle off and another pointed out there is no real way to turn it off because it is a reasoning model by default.

- ChatGPT vs. Perplexity: Debate Rages On: Users debated whether Perplexity Pro is better than ChatGPT Plus, but a consensus formed that they each have unique advantages depending on the task.

- Several members agreed that Gemini Pro is inferior to Perplexity, while ChatGPT has been known to get confused on basic physics questions.

- DGX Spark Stirs AI Voice Fraud Fears: A Perplexity page highlights how systems like NVIDIA’s DGX Spark are escalating AI-generated voice fraud.

- In response, telecom companies are adopting advanced AI detection to intercept malicious calls in real-time, and the FCC has declared AI-generated robocalls illegal under existing 1991 telecommunications regulations.

OpenAI Discord

- Well-Being Council is Here!: OpenAI introduced an Expert Council on Well-Being and AI, comprising eight members who will tackle well-being issues, detailed on the OpenAI blog.

- Separately, ChatGPT now manages memories automatically for Plus and Pro users on the web, allowing users to sort by recency and reprioritize memories in settings.

- Robots See More Than You Do: Members expressed enthusiasm for LLMs with permanent memory embedded in robots equipped with vision sensors exceeding human capabilities, processing beyond 50-60 fps.

- This advancement hints at transformative possibilities with one member saying it’s just the tip of the iceberg.

- Stairway to Roomba Heaven: A new Roomba clone capable of climbing stairs was introduced, triggering commentary on robotics evolution, linked at vacuumwars.com.

- The development spurred dark humor, with comparisons to Black Mirror’s robot killer dogs episode.

- GPT-5 for STEM Study Buddy?: Members discussed the efficacy of using GPT-5 for studying STEM fields, with recommendations to progress incrementally to prevent overload.

- A user noted degradation in their custom GPT’s performance, with inability to recall uploaded files and context properly, which was not experienced by all users.

- AI Bot Reports are in Order: Users were reminded that reporting messages requires using the app or modmail, not by pinging <@1052826159018168350>, with details in <#1107330329775186032>.

- This clarification accompanied warnings against unethical requests like prompting ChatGPT to experience pain, emphasizing measurable evals for safety tests instead.

Unsloth AI (Daniel Han) Discord

- Qwen3-VL Finetuning Flags Fly: Despite initial confusion, Qwen3-VL finetuning is confirmed functional, backed by available notebooks for running and finetuning the model.

- The initial removal was due to Hugging Face rate limits delaying uploads, leading to panic within the community.

- Civitai Purge Prompts Platform Probing: Users observed increased content removal on Civitai, spurring discontent and interest in alternative platforms.

- The increase in content removal caused a lot of panic within the community.

- DGX Spark Sparks Debate on Efficiency: Benchmarks shared by a user suggest that 4x3090s outperform the DGX Spark for GPT-120B prefill, offering cost savings in purchase and operation.

- Despite this, DGX spark can train models up to 200B parameters with Unsloth, with training for 20B getting completed in 4 hours.

- Llama 3 Landscape Leveled: Members find the Llama 3.1 series an improvement over Llama 3 and note that Llama 3.2 adds vision capabilities. They also recommended Qwen 2 VL 2B for unwatermarked models and for tone-specific finetuning with a lot of data.

- Members disagreed on whether versions 3.1 through 3.3 brought much improvement.

- LLM OS Boots to Brew Break: The members made jokes about the overhead of an LLM OS, imagining needing to make a pot of coffee while it boots.

- The suggestion of an LLM OS sparked ideas that would require more computational overhead.

OpenRouter Discord

- Haiku 4.5 Strikes with Lightning Speed!: Anthropic’s latest small model Claude Haiku 4.5 delivers near-frontier intelligence on OpenRouter at twice the speed and one-third the cost of previous models, priced at $1 / $5 per million tokens (input / output).

- It outperforms Sonnet 4 on computer-use tasks, achieving >73% on SWE-bench Verified, positioning it among the world’s top coding models, and users can try it now on OpenRouter.

- Ling-1T Teeters on the Brink!: Users report issues with Ling-1T, describing it as a “schizo model”, prompting discussions about whether to disable it due to provider quality concerns.

- A user asked how to see the most popular providers per model, indicating interest in alternative solutions, and one user stated that Chutes is looking into the issue and had asked them to disable the model.

- Caching Configs Cause Chaos!: A user asked how to enable caching in OpenRouter chats, and it was clarified that caching is often implicit, but some models/providers require explicit configuration detailed in OpenRouter’s prompt caching documentation.

- It was also noted that some providers don’t support caching at all.
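
For readers wondering what "explicit configuration" might look like, here is a sketch that only builds a request body and sends nothing. It assumes the Anthropic-style `cache_control` breakpoint on a content part, which is our reading of the prompt caching documentation; the model slug and helper name are placeholders, so check the docs for your provider before relying on it.

```python
import json

def build_cached_request(model: str, reference_text: str, question: str) -> dict:
    """Build a chat payload that marks the large, reusable text block as cacheable."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": [
                    {"type": "text", "text": "Answer using the reference document."},
                    {
                        "type": "text",
                        "text": reference_text,
                        # Assumed breakpoint syntax; providers that cache implicitly do not need it.
                        "cache_control": {"type": "ephemeral"},
                    },
                ],
            },
            {"role": "user", "content": question},
        ],
    }

# "anthropic/claude-haiku-4.5" is a placeholder slug, not verified here.
payload = build_cached_request("anthropic/claude-haiku-4.5", "LONG REFERENCE TEXT", "What changed?")
print(json.dumps(payload, indent=2))
```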

- FP4 Faces Flak for Failing!: Users debated the merits of FP4 quantization, with one user deeming FP4 models “braindead and a waste of money” due to poor real-world performance.

- Other users cited benchmarks showing that good FP4 implementations perform well. The OpenRouter team acknowledged the problem and said a fix is in the works, though a specific quant-exclusion feature is unlikely soon. The community consensus is that OpenRouter should apply quality control when accepting providers and group providers of comparable quality into the same tier.

- OpenRouter Anthropic Outlays!: An image revealed that OpenRouter paid Anthropic at least $1.5 million in the last 7 days.

- A user commented “that’s a lot of money holy moly”.

Cursor Community Discord

- Cursor Suffers Crippling Outage: Many Cursor users reported the tool was unavailable and displayed errors regarding failing to find cursor.exe, with some threatening to build a competitor.

- Users also said their Pro Plan reverted to Free.

- Users Fume over Plan Downgrades and Pricing Fumbles: Users reported unexpected plan downgrades, issues with API keys, and inability to edit MCP files in the agent window.

- Some Pro users also noted the disappearance of the promised $20 bonus, warning others, if you are currently on the old plan, never click the opt-out button; it’s a trap.

- Windsurf Gets High Praise, Bashes Cursor: One user claimed Windsurf is way better than Cursor, because it has more features and an up to date VS Code base.

- The user noted the upcoming deep wiki feature, a currently releasing codemap feature, plus better pricing.

- Model Ensemble Causes Token Tsunami: The Model Ensemble feature allows users to pick multiple models to run the same prompt.

- Users noted that Grok Code and Cheetah are incredibly fast, and cause them to burn through countless billions of tokens in a month.

- Background Agents unlock Asynchronous Nirvana: One member suggested using Background Agents for async work, planning work, spawning agents to tackle tasks, and reviewing outcomes.

- He noted that his workflow involves a local checkout after BAs finish, followed by manual fixes or prompting a local agent if needed.

HuggingFace Discord

- Meta’s Models Most Mined: Members debated open source LLMs, with some favoring Meta’s 109B and 70B models over Mistral’s, while others praised Deepseek and Alibaba-Qwen for home use, noting that glm-4.6 outperforms Deepseek in speed.

- Community members cited Deepseek being benchmark maxed, noting preferences for models and sizes like Meta’s 70B vs Mistral’s 8x22B and 123B.

- Civitai Content Crisis Catalyzes Creator Competition: Users expressed dissatisfaction with content removal on Civitai, citing payment attacks and extremist groups influencing policy, prompting a discussion on alternative platforms for LoRA creators.

- A Reddit thread (https://www.reddit.com/r/comfyui/comments/1kvkr14/where_did_lora_creators_move_after_civitais_new/) was shared, detailing the LoRA creator exodus following Civitai’s policy changes.

- AMD GPUs Generate Gripes: Users discussed using AMD Radeon cards with Stable Diffusion, noting that newer ROCm-compatible GPUs work on Linux, while older GPUs can use DirectML on Windows (https://huggingface.co/datasets/John6666/forum2/blob/main/amd_radeon_sd_1.md).

- Training and Dreambooth were described as difficult on AMD, though a user reported success using a 6700xt on Linux.

- Nanochat Newbie’s Narrative: A member trained a cheap version of Andrej Karpathy’s nanochat model, providing a demo on Hugging Face Spaces (sdobson/nanochat) and detailing the training experience in a blog post.

- The model offers a low-cost alternative for those looking to experiment with chatbot technology.

- Agents & MCP Arrive Again: The Agents & MCP Hackathon is returning from November 14-30, 2025, promising to be 3x bigger and better. The last event had 4,200 registrations, 630 submissions, and $1M+ in API credits distributed (https://huggingface.co/Agents-MCP-Hackathon-Winter25).

- Enthusiasts are encouraged to Join the Org and prepare for another round of innovation and collaboration.

Nous Research AI Discord

- Strix Halo Squares off with DGX: Members debated choosing between a DGX and a Strix Halo with 128GB of RAM paired with an RTX 5090 for the same price, with one member stating they are not training too much, mostly inference.

- The member noted they already own the Strix Halo but had previously reserved a DGX, adding complexity to the decision.

- Threadripper Temptation Tantalizes Tinkerers: A member weighed acquiring a Threadripper with 512GB of RAM for local inference, expressing hesitancy due to an assumed rate of only 2.5 tokens per second.

- Another member countered that it should be way more than 2.5 tokens per second, urging the user to acquire the right TR or EPYC CPU with 800GB/s memory bandwidth to bypass bottlenecks.
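
The intuition behind "way more than 2.5 tokens per second" is the usual memory-bound estimate: each decoded token has to stream the active weights from RAM once, so bandwidth divided by bytes read per token gives a ceiling. A minimal sketch, where the 100 GB figure is a hypothetical model footprint and not something from the discussion:

```python
def max_decode_tokens_per_second(bandwidth_gb_s: float, weights_read_per_token_gb: float) -> float:
    """Upper bound on decode speed when every token streams the active weights from memory once."""
    return bandwidth_gb_s / weights_read_per_token_gb

# 800 GB/s is the figure from the discussion; 100 GB read per token is a hypothetical footprint.
print(max_decode_tokens_per_second(800, 100))  # 8.0

# Bandwidth that would cap the same hypothetical model at 2.5 tokens/s.
print(2.5 * 100)  # 250.0 GB/s
```

Real throughput lands below this ceiling (compute, NUMA effects, cache behavior), and MoE models read far less than their full size per token, so the estimate is only a rough guide.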

- Meta’s Agents Avoid Rewards: Meta’s ‘Early Experience’ approach trains AI agents sans rewards, human demos, or supervision, directly gleaning insights from consequences, showing gains of +18.4% on web navigation, +15.0% on complex planning, and +13.3% on scientific reasoning.

- The paper describing Agent Learning via Early Experience (https://arxiv.org/abs/2510.08558) outlines strategies like implicit world modeling and self-reflection, yielding improved effectiveness and out-of-domain generalization across 8 environments.

- Psyche Network Snafu Surfaces: A user pointed out that the Hermes-4-8-2 run at psyche.network/runs erroneously links to Meta-Llama-3.1-8B on Hugging Face.

- This model card discrepancy potentially stems from a misconfiguration or update issue on the Psyche Network, advising users to double-check the actual model used for evaluations.

- Claude 4.5 Haiku Hobbles, Gemini Grows: Members scrutinized the value of Claude 4.5 Haiku, some calling it overpriced relative to its competitors, with one commenting that Gemini 2.5 Flash mogs Haiku, Deepseek R1 and Kimi K2 is also better than Haiku.

- The group consensus seemed to be that upping the prices for Haiku dealt a fatal blow to its viability.

Latent Space Discord

- Codex’s Slow Burn Causes Bottleneck: Victor Taelin complains that OpenAI Codex’s slow inference limits his task queue, leaving him idle between prompts, sparking discussion on solutions.

- He notes other models lack Codex’s smarts or compatibility with his codebase despite suggestions including parallel agents, faster models, and prompt hacks.

- Qwen3-VL Models Pack Punch in Petite Parameters: Alibaba Qwen released compact dense versions of Qwen3-VL in 4B and 8B parameter sizes that retain the flagship model’s full capabilities.

- These models surpass Gemini 2.5 Flash Lite and GPT-5 Nano on STEM, VQA, OCR, video understanding, and agent benchmarks, rivaling the older 72B model.

- Nvidia DGX Spark Fails to Ignite: Early benchmarks of the $4k Nvidia DGX Spark (128 GB) show only ~11 t/s on gpt-oss-120b-fp4, far below a $4.8k M4 Max MacBook Pro that hits 66 t/s.

- Community members attribute poor performance to low LPDDR5X bandwidth (273 GB/s vs 546 GB/s) and deem the device overpriced for pure inference, arguing it’s better suited for CUDA dev & clustering, not speed.

- GPT-5 Search API Slashes Prices: OpenAI released a new web-search model, gpt-5-search-api, that costs 60% less ($10/1K calls) and adds domain-filtering, which has been highly praised by developers.

- Requests are pouring in for features like date/country filters, deeper-research upgrades and inclusion in Codex.

- Poolside Plans Project Horizon with 40k GB300: Poolside’s Eiso Kant announces two infrastructure moves: a partnership with CoreWeave locking in 40 000+ NVIDIA GB300 GPUs starting December 2025, and “Project Horizon,” a vertically integrated 2 GW AI campus in West Texas.

- CoreWeave will anchor the first 250 MW phase—aimed at scaling toward AGI with a full-stack “dirt to intelligence” approach.

LM Studio Discord

- Qwen3 VL Hits ValueError: Users are reporting a ValueError with Qwen3 VL in LM Studio due to mismatching image features and tokens, indicating a bug in the thinking template.

- A user confirmed the bug and pointed to this GitHub issue, noting that a fix is in the works.

- MCP Server Download Methods: A discussion in LM Studio arose regarding how to download MCP servers for AI models; one suggested approach involves using Install in LM Studio links found on various websites.

- Safety was a concern, with a reminder to check the source code for potential malware, referencing the LM Studio MCP documentation.

- AMD 9070XT Card Surprises with High Performance: A user questioned whether an AMD 9070XT could outperform an Nvidia 5070 on larger models given its 12GB+ memory.

- Another user pointed out that the 9070XT has almost the same TOPS as a 4080, including INT4 support which could potentially double the performance.

- LM Studio Still Safe From Normie Invasion?: Members discussed whether LM Studio is at risk of becoming enshitified as it gains popularity, with one member clarifying that LM Studio is not for normal people.

- Another countered that LM Studio has become the default for local LLMs and the mainstream is using ChatGPT and Copilot or Gemini through a web interface.

GPU MODE Discord

- LPDDR5X: Memory Choice Champion: Intel’s Crescent Island is slated for release in H2 2026 and will feature 160 GB of LPDDR5X memory, with expectations pointing to a 640-bit bus at 9.6 Gbps, resulting in 768 GB/s.

- Discussion sparked about its memory performance, with some sources suggesting a 1.5 TB/s GPU and a 32 MiB L2$, and ongoing debate between 640-bit vs. 1280-bit memory bus configurations. If Intel enables CXL-capability, it could become a strong contender.
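
The bandwidth figures in this thread follow from the standard peak-bandwidth formula (bus width times per-pin data rate, divided by 8 bits per byte). A quick sketch, which also shows how a 1280-bit bus at the same data rate would land near the rumored 1.5 TB/s:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

for width in (640, 1280):
    print(f"{width}-bit @ 9.6 Gbps: {peak_bandwidth_gb_s(width, 9.6):.0f} GB/s")
```

This is only the theoretical peak; which bus width Intel actually ships is still the open question in the discussion.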

- MegaFold Expedites AlphaFold 3: A research group open-sourced MegaFold, a training platform for AlphaFold 3 (AF-3), and their analysis identified performance and memory bottlenecks, leading to targeted optimizations like custom operators in Triton and system data-loading improvements, as detailed in their blogpost.

- MegaFold uses custom operators written in Triton to boost runtime performance and cut down on memory usage during training, specifically targeting the bottlenecks identified in the analysis of AlphaFold 3.

- MI300x Kernel is Fun!: Participants expressed unexpected enjoyment writing MI300x kernels during the competition, with one saying they “didn’t expect writing MI300x kernel would have so much fun before,” while others learned a lot about distributed comms and AMD GPUs.

- The competition saw a total runtime of 48 days on 8xMI300 GPUs and 60k total runs, with submissions averaging over 2k a day for almost 2 weeks. The dataset used in the competition will be released publicly, with the organizers saying “I’ll post the link when we have it!”

- Helion DSL from Torch Debuts: Jason Ansel, the creator of torch.compile(), introduced his new Kernel programming DSL Helion this Friday, Oct 17th, at 1:30 pm PST, in a GPU Mode Talk (available here), accompanied by Oguz Ulgen (compiler cache) and Will Feng (distributed).

- The talk consisted of an overview of Helion, followed by a demo, and included a Q&A session for the new DSL.

- Multi-GPU: still Hot in HPC?: Members confirmed that multi-GPU systems are still of great importance in HPC environments and shared a link to a relevant paper, highlighting the increasing heterogeneity of HPC systems.

- The discussion extended to research opportunities related to data movement in multi-GPU HPC systems and replacing MPI with NCCL/RCCL for data transfer, drawing interest from a grad student keen on working on kernel-level or framework-level with a focus on collective algorithms/communication patterns or network architecture.

DSPy Discord

- MIT DSPy Lab Launches Recursive Language Models (RLMs): The DSPy lab at MIT introduced Recursive Language Models (RLMs) to manage unbounded context lengths and reduce context rot, with a DSPy module coming soon (announcement tweet).

- RLMs achieved a 114% gain on 10M+ tokens according to Zero Entropy Insight’s blog post.

- RLM vs Claude code: Recursive Rumble: Discussion compared RLMs to Claude code, questioning if Claude code can recursively self-invoke with arbitrary length prompts and function as a general-purpose inference method.

- Tiny Recursive Models (TRMs) Emerge for Tool Calling: A member proposed Tiny Recursive Models (TRMs) for tool calling, considering their use in question answering across a corpus of 450k tokens.

- Another user noted that the most interesting concept from RLM was context as a mutable variable, but you could have that where the recursive calls are dumping content into SQLite, other files etc.

- Whispers: Is OpenAI Secretly Using DSPy for Memory Ops?: Speculation suggests OpenAI might be using DSPy for memory operations in its Assistants API, especially regarding prompt caching and auto-tuning for 128K-token recall.

- A member commented that prompt caching (50% cost slash on repeats) screams DSPy energy—think LRU/Fanout caching or Mem0’s graph memory vibes.

- Can DSPy Build a Justice League?: A user inquired about creating sub-agents in DSPy, similar to Claude Code, that feature parallel execution and specialized tasks with their own context memory.

- One user noted that Claude Code relies on pre-declared subagents and file IO—humans encode the workflow graph. RLM shifts that control inside the model: context itself becomes the mutable variable.

Yannic Kilcher Discord

- Codex addon sidesteps API key kerfuffle: Users lauded the Codex addon for VSCode for enabling sign-in with a GPT subscription sans API key, dodging extra fees.

- A user suggested breaking projects into UI, backend, and website chunks to run multiple Codex instances to improve productivity.

- AI completions: Helpful or Hindrance?: Members debated the utility of AI completions, finding them occasionally fucking stupid and process-slowing.

- The consensus was that the tools’ helpfulness hinges on the amount of boilerplate code involved.

- Google’s Gemma Unlocks Cancer Clues: Cell2Sentence-Scale 27B (C2S-Scale), a Gemma-based model built by Google in collaboration with Yale University, has pinpointed a novel cancer therapy pathway.

- The 27 billion parameter model C2S-Scale proposed a new theory about cancer cell behavior, confirmed with experiments, revealing a route for potential cancer therapies.

- DIAYN Reveals Diversity Dividend: The paper Diversity Is All You Need (DIAYN) was discussed, outlining a method for learning skills through mutual information between skills, states, and actions.

- Commentators found that this approach is analogous to Schmidhuber’s work on intrinsic motivation, differing mainly in terminology.

- Entropy & Mutual Info Energize RL: Members shared links to recent papers leveraging entropy and mutual information in Reinforcement Learning (RL), including Can a MISL Fly? and Maximum Entropy RL.

- Discussion included shared code snippets regarding `ThresHot` and `zca_newton_schulz`.
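For readers who want the DIAYN objective mentioned above in symbols, here is a short restatement as given in the DIAYN paper, with $S$ the state, $A$ the action, $Z$ the latent skill, $p(z)$ the skill prior, and $q_\phi$ the learned skill discriminator. The middle line is just the standard expansion of mutual information into entropies.

```latex
\begin{aligned}
\mathcal{F}(\theta)
  &= I(S; Z) + \mathcal{H}[A \mid S] - I(A; Z \mid S) \\
  &= \bigl(\mathcal{H}[Z] - \mathcal{H}[Z \mid S]\bigr) + \mathcal{H}[A \mid S]
     - \bigl(\mathcal{H}[A \mid S] - \mathcal{H}[A \mid S, Z]\bigr) \\
  &= \mathcal{H}[Z] - \mathcal{H}[Z \mid S] + \mathcal{H}[A \mid S, Z],
\qquad
r_z(s, a) = \log q_\phi(z \mid s) - \log p(z).
\end{aligned}
```

The pseudo-reward $r_z$ is what the policy is trained on: it is high when the discriminator can tell from the state which skill is being executed.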

Moonshot AI (Kimi K-2) Discord

- Trickle is latest vibe coding website: Trickle is a new vibe coding website similar to Lovable, Bolt, and Manus, as shared in this link.

- The site is supposedly for lolz.

- Aspen Gets Wrecked With Bitcoin Leverage: A member shared a story of Aspen leveraging Bitcoin at 100x, accumulating over a million in profits before a liquidation event due to tariff news.

- After liquidation Aspen is now acting like he never left his previous job.

- Gemini 2.5 past its prime: A member expressed dissatisfaction with Gemini 2.5, stating that it is too old and Google should have released Gemini 3.0 already.

- According to the member, nobody wants to use Gemini in its current state.

- Kimi K2 still getting love: A member expressed hopes for Kimi K3, but acknowledged that Kimi K2 received a small update last month and they are happy with DS v3.1 and Kimi K2.

- The member expressed preference for non thinking models.

- Thinking Models Can Be Redundant: A member suggested keeping thinking models separate from larger models to avoid word slop, suggesting pairing a big generalized kimi k3 with a small fast thinker.

- Another member noted that with DeepSeek they sometimes notice that the reasoning is redundant.

Eleuther Discord

- Researchers Request Eleuther Compute: Researchers from Stanford, CMU, and other institutions are requesting compute resources and funding from Eleuther AI to support their research projects aimed at iterating quickly to release multiple papers.

- The projects are in the brainstorming and finalization phases, aiming to rapidly produce research papers.

- Questing for ‘Situational Awareness’ Benchmarks: A member is seeking benchmarks to measure ‘situational awareness’ of LLMs, noting that existing benchmarks like Situational Awareness Diagnostic (SAD) and those using synthetic datasets may not be ideal.

- The inquiry questions whether there are superior alternatives or if this area remains an open problem in research.

- SEAL Speedrun uses AdamW Optimizer: The SEAL speedrun leverages the AdamW optimizer, contradicting speculations of a new optimizer like Muon being used; read more about the SEAL speedrun.

- This confirms the optimizer choice in the context of the layers used in the SEAL architecture.

- Tensor Logic Unifies AI Fields: A new Pedro Domingos paper introduces tensor logic as a unifying language for neural and symbolic AI, operating at a fundamental level.

- The approach aims to bridge the gap between neural networks and symbolic reasoning through a common logical framework.

- MAE Training Applied to Layers: The training method in question mirrors MAE training, treating the last few layers as a decoder, which means the routing is random.

- A member clarified that the routing scheme is not learned during training but uses a random approach, similar to MAE.

tinygrad (George Hotz) Discord

- Training Selectively Freezing Layers: A user inquired about freezing parts of a matrix during training in tinygrad, aiming to train only a specific section by creating a virtual tensor.

- They proposed using `Tensor.cat(x @ a.detach(), x @ b, dim=-1)` to concatenate tensors, detaching `a` to freeze it while training `b`.

- LeNet-5 Faces Optimizer Nightmares: A member ran into optimizer problems while implementing LeNet-5 in tinygrad, encountering a no-gradients error during the `.step` call, with code shared via pastebin.

- The user suspected the input tensor lacked `requires_grad=True`, a critical setting for gradient calculation.

- Nested Jitting Tangled Training: George Hotz identified that the user was jitting twice and proposed removing the extra jit to make debugging simpler.

- The member confirmed resolving the issue by removing the extra `TinyJit` decorator, acknowledging the error’s subtlety.
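The layer-freezing idea from the first item above (split the weight matrix into a frozen block `a` and a trainable block `b`, then concatenate `x @ a` with `x @ b`) can be illustrated without any framework. The sketch below is deliberately not tinygrad code: it uses plain Python lists and a hand-derived gradient for a squared-error loss, and all function names are hypothetical. The point is only that the frozen block never receives a gradient, which is the role `a.detach()` plays in the proposed one-liner.

```python
def matmul(x, w):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(row[t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
            for row in x]

def loss_and_outputs(x, a, b, target):
    """Return the squared-error loss of concat(x @ a, x @ b) against target, plus x @ b."""
    ya, yb = matmul(x, a), matmul(x, b)
    y = [ra + rb for ra, rb in zip(ya, yb)]  # concatenate along the last axis
    loss = sum((yi - ti) ** 2 for ry, rt in zip(y, target) for yi, ti in zip(ry, rt))
    return loss, yb

def train_step(x, a, b, target, lr=0.1):
    """One SGD step that updates only b; a gets no gradient, mimicking a.detach()."""
    _, yb = loss_and_outputs(x, a, b, target)
    offset = len(a[0])  # output columns produced by the frozen block
    new_b = [row[:] for row in b]
    for t in range(len(b)):
        for j in range(len(b[0])):
            # d(loss)/d(b[t][j]) = sum_i x[i][t] * 2 * (yb[i][j] - target[i][offset + j])
            grad = sum(x[i][t] * 2 * (yb[i][j] - target[i][offset + j])
                       for i in range(len(x)))
            new_b[t][j] = b[t][j] - lr * grad
    return new_b

x = [[1.0, 2.0]]
a = [[1.0], [0.0]]   # frozen block
b = [[0.0], [0.0]]   # trainable block
target = [[0.0, 1.0]]
loss_before, _ = loss_and_outputs(x, a, b, target)
b = train_step(x, a, b, target)
loss_after, _ = loss_and_outputs(x, a, b, target)
```

After the step, `a` is unchanged while `b` has moved toward the target, so the loss drops even though half of the output is produced by frozen weights.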

Modular (Mojo 🔥) Discord

- Mojo Embraces ARM Linux, DGX Spark: Mojo should already work on ARM Linux, with users encouraged to report bugs if Nvidia’s changes to DGX OS on Spark cause issues.

- For full functionality on DGX Spark and Jetson Thor, an `sm_121` entry and an update to CUDA 13 are necessary; other ARM Linux devices like Jetson Orin Nano should be compatible now.

- DGX Spark Support Requires CUDA 13: To enable Mojo/MAX on NVIDIA DGX Spark, users must add an entry for `sm_121` devices in Mojo’s `gpu.host` and update `libnvptxcompiler` to CUDA 13.

- Once these updates are implemented, Mojo and MAX should function correctly on DGX Spark.

- Mojo’s `type()` Quandary: A user inquired about a Mojo equivalent to Python’s `type()` function for querying variable types, asking "how does querying type work in mojo?"

- A suggested solution involves `get_type_name` for a printable name, or `__type_of(a)` for a type object, but the function must be called as `get_type_name[__type_of(a)]()`.

aider (Paul Gauthier) Discord

- OpenCode + GLM 4.6 Programming is Comfortable: A user finds programming with Opencode + GLM 4.6 to be comfortable and enjoyable, citing excellent usability and no need to worry about counting tokens.

- They use aider.chat with Sonnet 4.5 for specific refinements, and inquired about adding openrouter/x-ai/grok-code-fast-1 to Aider.

- Qwen2.5-Coder:7B Model Outputs Gibberish!: A user reported that the `qwen2.5-coder:7b` model from ollama.com is outputting gibberish and requested a working `metadata.json` example.

- They clarified that other models are functioning correctly, suggesting the issue is specific to Qwen2.5-Coder:7B integration with Ollama.

- Chinese Provider Generously Awards Free Tokens!: A new Chinese provider, agentrouter.org, is offering $200 in free tokens upon registration.

- Users can earn an additional $100 for each referral, leading to enthusiasm among members.

- Claude 4.5 Sharpens Coding Tools: Claude 4.5 is now available and can be connected to many coding tools.

- The enhanced integration promises better performance.

MCP Contributors (Official) Discord

- MCP Discovery Decoded: Clarification was sought on what “Discovery” means within the Model Context Protocol’s Feature Support Matrix, regarding finding new tools.

- It was clarified that discovery refers to support for finding new tools in response to the tools/list_changed notification, as detailed in the Example Clients documentation.

- Hierarchical Groups Proposal Surfaces: A member referenced past feedback suggesting a SEP to support grouping of all MCP primitives and linked to an informal proposal for schema enhancement supporting hierarchical groups.

- The discussion included next steps for this work, creation of a new SEP document, prioritization, and prototype implementations.
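To make the "Discovery" clarification above concrete, here is a minimal sketch of what a client does with the notification: when the server sends `notifications/tools/list_changed`, the client re-issues a `tools/list` request and replaces its cached tool set. The two method names come from the MCP specification; everything else (`ToolRegistry`, `send_request`, the fake server) is hypothetical scaffolding for illustration, and pagination via `nextCursor` is ignored.

```python
import json

class ToolRegistry:
    """Hypothetical client-side cache of the tools a server currently offers."""

    def __init__(self, send_request):
        # send_request: callable taking a JSON-RPC request dict, returning the response dict.
        self._send_request = send_request
        self._next_id = 1
        self.tools = {}

    def refresh(self):
        """Ask the server for its current tool list and replace the cache."""
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": "tools/list"}
        self._next_id += 1
        response = self._send_request(request)
        self.tools = {tool["name"]: tool for tool in response["result"]["tools"]}

    def handle_message(self, raw):
        """Process one incoming message; return True if it triggered rediscovery."""
        message = json.loads(raw)
        if message.get("method") == "notifications/tools/list_changed":
            self.refresh()
            return True
        return False

# Fake server for the example: its tool list grows between calls.
server_tools = [{"name": "search"}]

def fake_send(request):
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": list(server_tools)}}

registry = ToolRegistry(fake_send)
registry.refresh()
server_tools.append({"name": "summarize"})
changed = registry.handle_message(
    json.dumps({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
)
```

A client that lacks "Discovery" support in the matrix would, by this reading, keep using the tool list it fetched at startup and never notice `summarize`.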

Windsurf Discord

- Windsurf 1.12.18 Dries Up Bugs: Windsurf released patch 1.12.18, which can be downloaded here.

- The patch fixes issues with custom MCP servers, the beta Codemaps feature, stuck bash commands, and problems creating or editing Jupyter notebooks.

- Claude Haiku 4.5 Blows Competition Away: Claude Haiku 4.5 is now available in Windsurf for 1x credits, boasting the coding performance of Sonnet 4 at one-third the cost and > 2x the speed.

- Users can find additional details on X.com and are encouraged to reload Windsurf to take Haiku for a spin.

Manus.im Discord Discord

- AI Innovation Stalls with Simple Forms?: A member expressed surprise that AI tools still rely on simple forms and email responses for capturing details, rather than using innovative AI Agents.

- They suggested offering an innovative AI Agent with a subscription model and credits in return for user feedback to showcase the AI’s capabilities.

- Users demand prompt AI service over slow responses: A member ranted about the common issue in AI communities where users expect immediate service, not responses delayed by several days.

- The member exclaimed, "the biggest issue I see across all the communities is users want some service not a response a 3 days because of the service standard. Yes I am on a rant today."

- Project Retrospective Reveals Key Mistakes: A member shared learnings from a project, admitting significant mistakes such as claiming integration where there was none and not being upfront about limitations.

- The user expressed, "I made significant mistakes in this project: Claiming integration when there was none - I initially said everything was integrated when I had only built separate systems."

MLOps @Chipro Discord

- Nextdata Dives Deep into Domain-Centric GenAI: Nextdata is scheduled to host a webinar on October 16, 2025 at 8:30 AM PT, focusing on context management and domain-centric data architecture.

- Led by Jörg Schad, the webinar aims to enhance retrieval relevance, diminish hallucinations, and curtail token costs by covering Domain-Driven Data for RAG, Domain-Aware Tools, and Domain-Specific Models; you can sign up here.

- Domain-Driven Data Deflates Token Bloat: The webinar will address how saturating models with expansive data lakes engenders token bloat and hallucinations, while domain-scoped context maintains LLM focus and efficiency.

- Additionally, the discussion will explore how the proliferation of numerous tools dilutes agent decision-making, advocating for modular, task-scoped tool access to bolster reasoning.

- Domain-Specific Models Sharpen Accuracy: The webinar posits that universal models falter in specialized environments and that domain-aligned fine-tuning amplifies accuracy.

- Attendees can expect to learn how to build RAG systems with better retrieval relevance, fewer hallucinations, and lower token costs, and how to reach production-ready GenAI with robust governance, lower inference costs, and greater user confidence.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1409 messages🔥🔥🔥):

LM Arena Bugs, Video Generation Issues, Gemini 3.0 Pro, Veo 3.1, Automation for A/B testing

- LM Arena Plagued by Bugs: Users reported numerous bugs on LM Arena, including issues with video generation, models not working, and general site instability, prompting moderators to acknowledge the problems and state that the team is investigating.

- Specifically, users reported that image-to-video mode is broken, with generic error messages appearing for various reasons, but some suggested refreshing and clearing cache as potential temporary fixes.

- Veo 3.1 and Gemini 3.0 Spark Excitement (and Confusion): Members discussed the potential arrival of Veo 3.1, with some claiming it’s already being tested, and speculated about Gemini 3.0, while others expressed skepticism and noted it’s a codenamed model.

- A user noted how good it was, citing its generation of full HTML for a Geometry Dash game, although the sound did not work.

- Gemini 3.0 Access and Testing: One user claimed to have early access to Gemini 3.0 via AI Studio, stating they’re running tests and generating code, and offered to test prompts for others. However, this user was accused of ragebaiting when they refused to share their method.

- Members asked whether the new AI model could generate games and whether others could use that new script.

- Automated A/B Testing Script Developed: A user described a method for automating A/B testing on AI Studio, involving creating a script that restarts prompts and detects the presence of “Which response do you prefer?” to identify A/B test scenarios.

- This discussion led to some gatekeeping accusations when the user hesitated to share the script due to concerns about it being patched.
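The detection logic described in the last item boils down to a retry loop around a string check. The user's actual script was not shared, so the sketch below is a guess at the general shape only: `run_prompt` is a hypothetical callable that submits a prompt and returns the resulting page text, and no real AI Studio API or browser automation is shown.

```python
AB_MARKER = "Which response do you prefer?"

def is_ab_test(page_text):
    """Return True if the page text contains the A/B comparison question."""
    return AB_MARKER.lower() in page_text.lower()

def rerun_until_ab_test(run_prompt, prompt, max_attempts=10):
    """Re-run `prompt` until an A/B test shows up; return the attempt number or None."""
    for attempt in range(1, max_attempts + 1):
        if is_ab_test(run_prompt(prompt)):
            return attempt
    return None

# Fake runner for the example: the third run shows the A/B comparison.
calls = {"count": 0}

def fake_run_prompt(prompt):
    calls["count"] += 1
    if calls["count"] == 3:
        return "Response A ... Response B ... Which response do you prefer?"
    return "A single ordinary response."

attempt_found = rerun_until_ab_test(fake_run_prompt, "Draw a cube in HTML")
never_found = rerun_until_ab_test(lambda p: "nothing here", "anything", max_attempts=2)
```

Capping the loop with `max_attempts` keeps the script from hammering the service indefinitely when no A/B test is being served.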