Vision is all you need?

AI News for 10/17/2025-10/20/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (198 channels, and 14010 messages) for you. Estimated reading time saved (at 200wpm): 1097 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

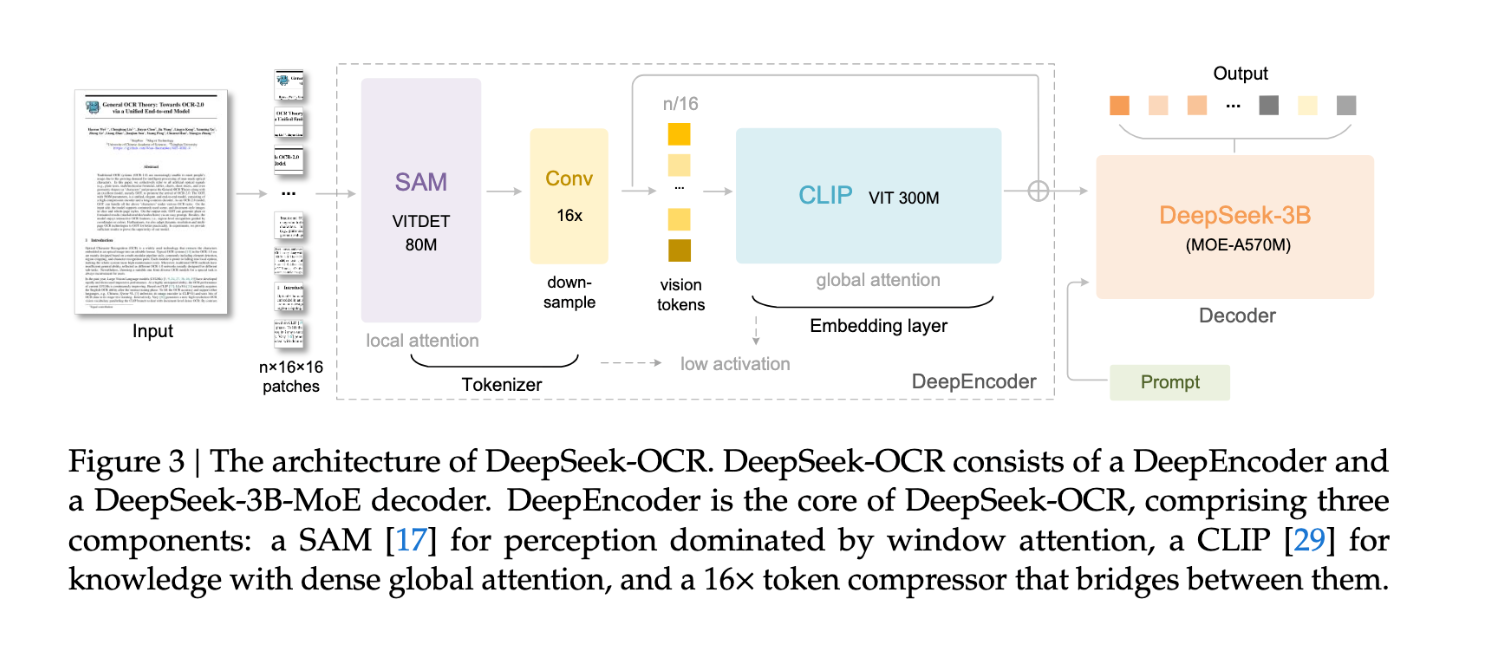

As ICCV kicks off in Hawaii, DeepSeek continues to show signs of life. This one is a relatively small paper with 3 authors and a small 3B model, but it contributes a SAM+CLIP+compressor named DeepEncoder:

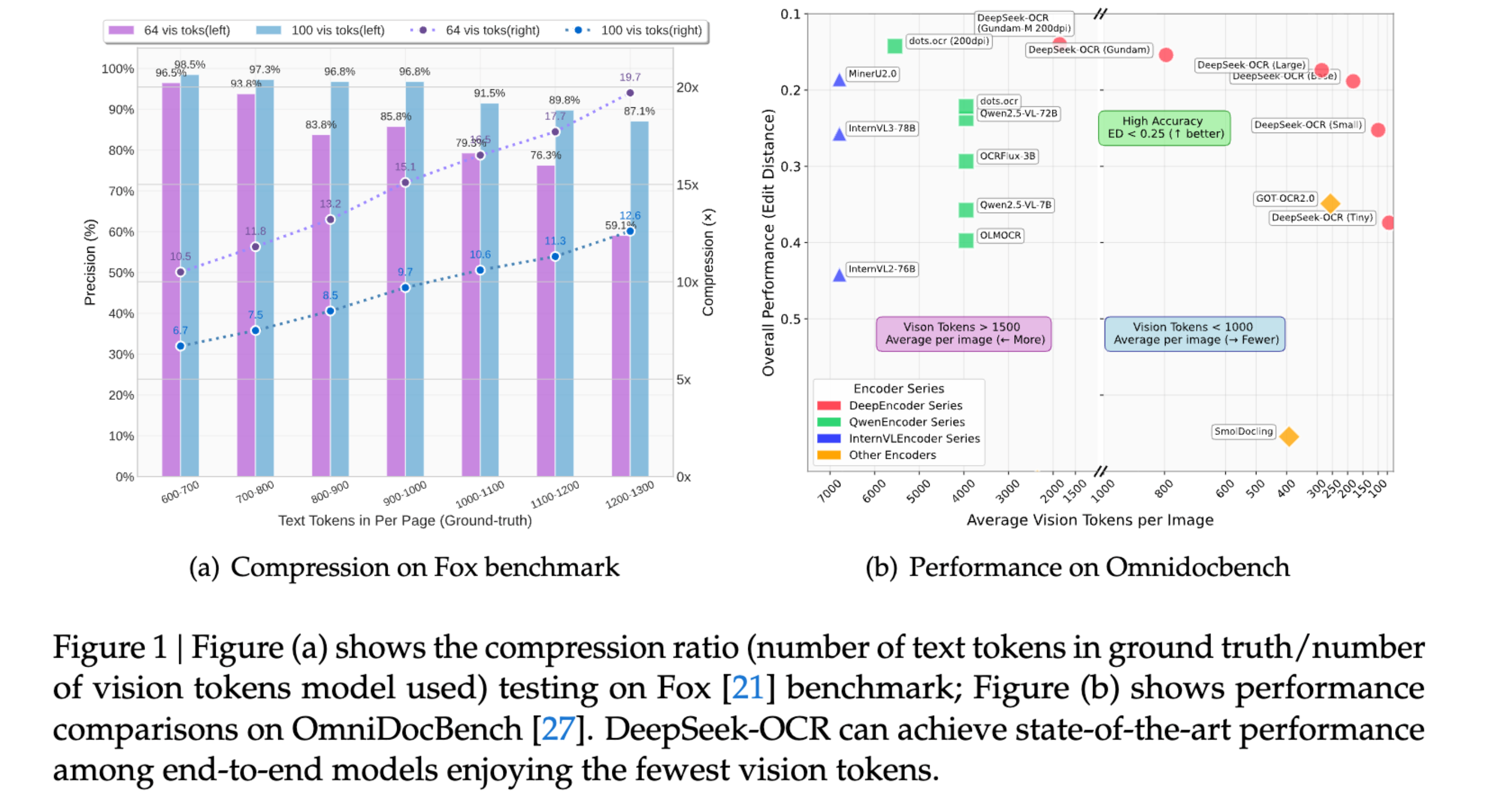

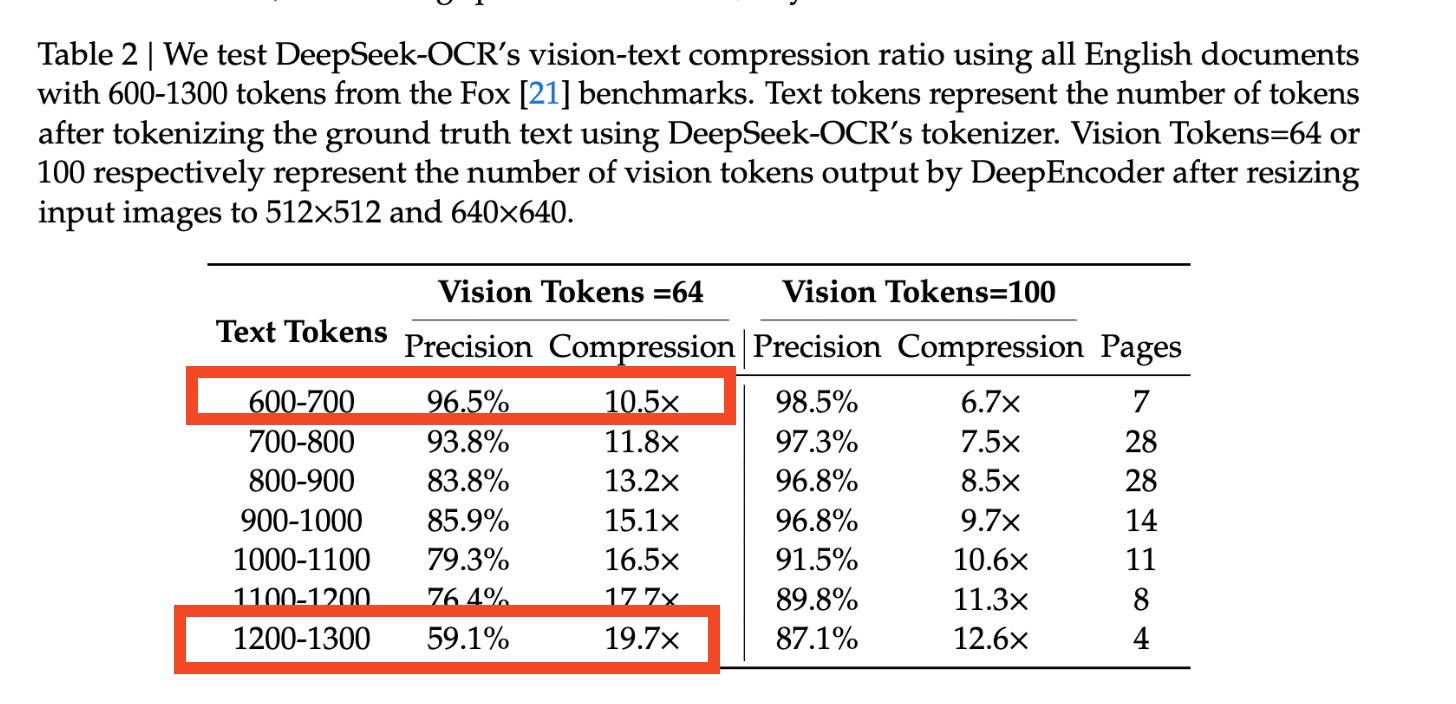

and the headline findings are sound:

The significance of a very good OCR model, beyond liberating a lot of data from books and PDFs, is the opportunity to always consume rich text and get rid of the tokenizer.

AI Twitter Recap

DeepSeek’s “Optical Context Compression” OCR and the end of text-only context?

- DeepSeek-OCR (3B MoE VLM) release: DeepSeek unveiled a small, fast vision-language OCR that treats long text as “visual” context and compresses it 10–20× while preserving accuracy. Key numbers: ~97% decoding precision at <10× compression and ~60% at 20×; ~200K pages/day per A100-40G and ~33M pages/day on 20 nodes (8× A100-40G each). It beats GOT-OCR2.0 and MinerU2.0 on OmniDocBench using far fewer vision tokens and can re-render complex layouts (tables/charts) into HTML. Day-0 support in vLLM delivers ~2,500 tok/s on A100-40G, with official support landing next release. Code and model are on GitHub/Hugging Face. See overviews and demos from @reach_vb, @_akhaliq, @casper_hansen_, @vllm_project, and the initial highlight by @teortaxesTex.

- Architecture and implications for long context: The released LLM decoder is a DeepSeek3B-MoE-A570M variant using MHA (no MLA/GQA), 12 layers, 2 shared experts, and a relatively high 12.5% activation ratio (vs 3.52% in V3 and 5% in V2), per @eliebakouch. The community debate centers on whether compressing “old” text into vision tokens enables “theoretically unlimited context” and better agent memory architectures, and whether pixels can be a superior input interface for LLMs than text tokens. See arguments for multimodal encoders and tokenization-free inputs by @teortaxesTex and @karpathy, clarifications that storage remains tokens (not screenshots) by @teortaxesTex, and counterpoints on prefix-caching incompatibility and practical KV compression limits by @vikhyatk. Good concise summaries: @iScienceLuvr, @_akhaliq.
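As a quick sanity check on the throughput and compression figures quoted above, here is a minimal sketch. It assumes naive linear scaling across GPUs (no batching or interconnect effects), and the 1,000-token page is a hypothetical example, not a number from the paper.

```python
import math

# Figures quoted in the recap above.
PAGES_PER_GPU_PER_DAY = 200_000  # ~200K pages/day on one A100-40G
NODES = 20
GPUS_PER_NODE = 8


def cluster_pages_per_day(pages_per_gpu: int, nodes: int, gpus_per_node: int) -> int:
    """Naive linear scaling: assume every GPU sustains the single-GPU rate."""
    return pages_per_gpu * nodes * gpus_per_node


def vision_tokens_needed(text_tokens: int, compression: float) -> int:
    """Vision tokens needed to carry `text_tokens` at a given compression ratio."""
    return math.ceil(text_tokens / compression)


total = cluster_pages_per_day(PAGES_PER_GPU_PER_DAY, NODES, GPUS_PER_NODE)
print(f"{NODES * GPUS_PER_NODE} GPUs -> {total:,} pages/day")

# Hypothetical 1,000-token page at the two compression levels mentioned above.
for ratio in (10, 20):
    print(f"{ratio}x compression -> {vision_tokens_needed(1_000, ratio)} vision tokens")
```

Linear scaling gives 32M pages/day for 160 GPUs, which is in line with the quoted ~33M once the "~200K" per-GPU figure is treated as rounded.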

Video generation: Veo 3.1 leaps ahead; Krea Realtime goes OSS

- Veo 3.1 tops community evals and adds precision editing: Google DeepMind’s Veo 3.1 jumped ~+30 on the Video Arena to become the first model over 1400 in both text-to-video and image-to-video, overtaking prior leaders on physics/realism per the community. DeepMind also shipped precision editing (add/remove elements with consistent lighting/scene interactions) and robust “Start Frame → End Frame” guidance that can blend real footage into stylized outputs. Try and compare in Flow/Gemini and LM Arena. Details from @GoogleDeepMind, @demishassabis, @arena, and examples via @heyglif.

- Open-source realtime video generation: Krea released “Realtime,” a 14B Apache-2.0 autoregressive video model capable of ~11 FPS long-form generation on a single B200. Weights and report are on Hugging Face; early benchmarks and notes from @reach_vb and the launch thread by @krea_ai. Also noteworthy: Ditto’s instruction-based video editing dataset/paper (@_akhaliq) and VISTA, a “test-time self-improving” video generation agent (@_akhaliq).

Agentic coding stacks, governance, and enterprise posture

- Claude Code goes web + iOS with safe-by-default execution: Anthropic launched Claude Code in the browser and iOS, running tasks in cloud VMs with the chat loop during execution. A new sandbox mode in the CLI lets you scope filesystem and network access, reducing permission prompts by 84%; Anthropic open-sourced the sandbox for general agent builders. Early reviews praise the direction but note rough edges in cloud handoff. See launch and deep dives by @_catwu, sandbox details from @trq212 and @_catwu, the open-source repo note by @omarsar0, and a product vibe check by @danshipper.

- Enterprise-grade agent ops (BYOI, multi-cloud, and speed): Cline announced an enterprise version that runs where developers work (VS Code/JetBrains/CLI) and with whichever model/provider is available (Claude/GPT/Gemini/DeepSeek across Bedrock, Vertex, Azure, OpenAI). This “bring your own inference” posture materially helps during cloud outages. IBM and Groq are pairing watsonx agents with Groq LPU inference (claimed 5× faster at 20% of cost) and enabling vLLM-on-Groq, indicating the agent stack is rapidly diversifying beyond a single cloud. See @cline, @robdthomas, and @sundeep. Also in this vein: MCP-backed doc servers injected into coding agents (@dbreunig), easy multi-cloud GPU dev envs (@dstackai), and global batch inference playbooks (@skypilot_org).

Infra resilience and performance tooling

- AWS us-east-1 outage (blast radius and lessons): A major outage took down multiple AI apps (e.g., Perplexity and Moondream’s website; Baseten’s web UI), with services gradually recovering. PlanetScale reported 99.97% of DB ops completed in us-east-1 by minimizing external dependencies. The episode re-emphasized multi-region/multi-cloud strategies, minimizing vendor lock-in, and BYOI: see outage status and recovery from @AravSrinivas and (recovery), impacts from @vikhyatk, @basetenco (recovery), @midudev, @reach_vb, @nikitabase, and a PSA on causality from @GergelyOrosz. Related: “BYOI strikes again” from @cline.

- Kernels, DSLs, and quantization: Modular brought industry-leading perf to AMD MI355 in two weeks and now supports 7 GPU architectures across 3 vendors, demonstrating the benefits of deep compiler investment (launch, coverage). TileLang, a new AI DSL, hits ~95% of FlashMLA on H100 with ~80 lines of Python via layout inference, swizzling, warp specialization, and pipelining (@ZhihuFrontier). Also, GPTQ int4 post-training quantization is now built into Keras 3 with a vendor-agnostic guide (@fchollet).

Evals and benchmarks: real money, real leaderboards, and structured reasoning

- Real-money trading eval (interpret with caution): A community benchmark (nof1.ai) allocated $10k per model over a few days; reports show DeepSeek V3.1 and Grok 4 leading while GPT-5/Gemini 2.5 lost money (@mervenoyann, @Yuchenj_UW). Caveats: small-N, high variance, prompt dependence, and path dependence; “noise dominates” unless you shard capital across many runs (@abeirami). Context: DeepSeek’s quant pedigree is a recurrent theme (@hamptonism).

- Leaderboards and structured reasoning: WebDev Arena added four models: Claude 4.5 Sonnet Thinking 32k; GLM 4.6 (new #1 open); Qwen3 235B A22B; and Claude Haiku 4.5 (@arena). Elsewhere, Parlant’s Attentive Reasoning Queries (ARQ) use schema-constrained, domain-specific “queries” instead of free-form CoT and reported 90.2% across 87 scenarios vs 86.1% for CoT (repo in thread) (@_avichawla). Also see “when to stop seeking vs act” termination training (CaRT) (@QuYuxiao) and the observation that DeepSeek perf tracks PrediBench results (@AymericRoucher).

- China model notes: Kimi K2 claims up to 5× faster and 50% more accurate on internal workloads (@crystalsssup); team shared internal benchmarks (@Kimi_Moonshot).
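The "noise dominates unless you shard capital across many runs" caveat on the real-money trading eval can be illustrated with a small simulation. This is a sketch under loud assumptions: daily returns are Gaussian with zero edge and 3% volatility, the run lasts 5 days, and shards are statistically independent. None of these parameters come from nof1.ai; only the $10k allocation is taken from the recap.

```python
import random
import statistics


def simulate_run(rng: random.Random, capital: float, days: int,
                 daily_edge: float, daily_vol: float) -> float:
    """Profit or loss of one run that compounds `days` Gaussian daily returns."""
    value = capital
    for _ in range(days):
        value *= 1.0 + rng.gauss(daily_edge, daily_vol)
    return value - capital


def sharded_pnl(rng: random.Random, capital: float, shards: int, days: int,
                daily_edge: float, daily_vol: float) -> float:
    """Split the capital evenly across independent runs and sum their PnL."""
    per_shard = capital / shards
    return sum(simulate_run(rng, per_shard, days, daily_edge, daily_vol)
               for _ in range(shards))


def pnl_spread(shards: int, trials: int = 2000, seed: int = 0) -> float:
    """Standard deviation of total PnL over many repeated evaluations."""
    rng = random.Random(seed)
    results = [sharded_pnl(rng, 10_000.0, shards, 5, 0.0, 0.03)
               for _ in range(trials)]
    return statistics.pstdev(results)


single = pnl_spread(shards=1)
sharded = pnl_spread(shards=25)
print(f"PnL std, one $10k run:       ${single:,.0f}")
print(f"PnL std, 25 shards of $400:  ${sharded:,.0f}")
```

With zero edge, any ranking from a single run is pure noise. Sharding shrinks the spread roughly with the square root of the shard count, though real runs of the same model on the same market would be correlated and gain less.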

Domain tools: Life sciences, data pipelines, and structured extraction

- Claude for Life Sciences: Anthropic launched connectors (Benchling, PubMed, Synapse.org, etc.) plus Agent Skills to follow scientific protocols, with early users including Sanofi, AbbVie, and Novo Nordisk. Anthropic also published a Life Sciences GitHub repo with examples (launch, details, repo).

- Data workflows: LlamaIndex demonstrated a robust text-to-SQL workflow with semantic table retrieval (Arctic-embed), OSS text2SQL (Arctic via Ollama), multi-step orchestration, and error handling (@llama_index). FinePDFs released new PDF OCR/Language-ID datasets and models (XGB-OCR) to power document pipelines (@HKydlicek, @OfirPress). For structured VLM extraction, Moondream 3 shows single-shot JSON parsing of complex parking signs—no OCR stack required (@moondreamai).

Top tweets (by engagement)

- DeepSeek’s “visual compression OCR” and long-context implications caught fire across the community: succinct technical summary by @godofprompt and the broader “pixels over tokens” thread by @karpathy.

- Massive AWS outage updates (Spanish): impact and commentary roundup by @midudev; Perplexity outage and recovery from @AravSrinivas and (recovery).

- Veo 3.1’s leap to #1 in Video Arena, with official acknowledgments from @arena and @demishassabis.

- Kimi K2 performance claim: up to 5× faster and 50% more accurate (@crystalsssup).

- Classic read: Richard Sutton resurfaces original Temporal-Difference learning resources (@RichardSSutton).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. DeepSeek OCR Release

- DeepSeek releases DeepSeek OCR (Activity: 565): DeepSeek has released a new OCR model, DeepSeek OCR, which introduces a novel approach called Optical Compression. This technique leverages increasing image compression over time to facilitate a form of visual/textual forgetting, potentially enabling longer or even infinite context handling. This approach is detailed in their paper, which highlights its potential to extend context length significantly beyond current capabilities. A notable discussion point is the comparison to Qwen3 VL, with some users intrigued by the naming of a mode as ‘gundam’. The community is also discussing the implications of the optical compression technique for context management in OCR applications.

- DeepSeek OCR introduces a novel approach called ‘Contexts Optical Compression’, which leverages increasing image compression over time as a method for visual/textual forgetting. This technique potentially allows for much longer context windows, possibly even infinite, by efficiently managing memory and processing resources. This could be a significant advancement in handling large-scale data inputs in OCR systems.

- The model has been trained on a substantial dataset, including 1.4 million arXiv papers and hundreds of thousands of e-books. This extensive training dataset suggests that DeepSeek OCR might excel in specific domains, particularly in recognizing complex text structures like math and chemistry formulae. While it may not surpass PaddleOCR-VL in overall state-of-the-art performance, it could outperform in specialized text recognition tasks.

- There is anticipation for the Omnidocbench 1.5 benchmarks to provide more detailed performance metrics. Current evaluations like edit distance are insufficient without complementary metrics such as table TEDS and formula CDM scores. These benchmarks will be crucial in assessing DeepSeek OCR’s capabilities in comparison to existing models, especially in specialized areas like mathematical and chemical text recognition.

- What happens when Chinese companies stop providing open source models? (Activity: 809): Chinese companies like Alibaba have shifted from open-source to closed-source models, exemplified by the transition from WAN to WAN2.5, which now requires payment. This move raises concerns about the future availability of open-source models from China, which have been crucial for global access and competition against US models. The change could impact the global AI landscape, as open-source models have been a key differentiator for Chinese companies in the international market. Commenters suggest that China’s open-source strategy has been a counter to the US’s proprietary models, providing affordable alternatives. If Chinese models become closed-source, they may lose international appeal, as their open-source nature was a primary advantage over US models.

- TopTippityTop discusses the strategic advantage China gains from open source models, highlighting that China’s economy is more focused on physical goods production, whereas the US economy is more dependent on software and services. This reliance makes the US economy more fragile, suggesting that China’s open source strategy is a calculated move to leverage this economic dynamic.

- RealSataan argues that the primary appeal of Chinese models to international users is their open source nature. If Chinese companies were to stop providing open source models, these models would lose their competitive edge against American alternatives, which are more widely accessible globally.

- Terminator857 suggests that if Chinese companies transition to closed source models, they could potentially increase their revenue significantly. This implies a trade-off between maintaining open source accessibility and capitalizing on proprietary models for financial gain.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Robotics Innovations

- Introducing Unitree H2 - china is too good at robotics 😭 (Activity: 1324): Unitree Robotics has introduced the Unitree H2, a new robotic model that showcases advanced movement capabilities, making strides towards more natural and fluid motions. This development highlights China’s growing expertise in robotics, with the H2 model demonstrating significant improvements in agility and functionality. The robot’s design and engineering reflect a focus on enhancing practical applications, although some users express a desire for more utility-focused features. Commenters note the impressive naturalness of the robot’s movements, suggesting that while the technology is advancing, there is still a demand for robots to perform more practical tasks.

- midgaze highlights China’s rapid advancements in robotics and automation, suggesting they are approaching an ‘automation singularity.’ This implies a self-reinforcing cycle where improved manufacturing leads to better robots, which in turn enhance manufacturing capabilities. The comment underscores the strategic advantage China is gaining in this sector, potentially outpacing global competitors.

- crusoe points out a critical observation regarding the Unitree H2, noting that while the robot is shown performing tasks like dancing, it lacks demonstrations of practical applications. This contrasts with companies like Boston Dynamics, which often showcase their robots in real-world scenarios, emphasizing functionality over entertainment.

- RDSF-SD comments on the naturalness of the Unitree H2’s movements, indicating significant progress in robotic kinematics and control systems. This improvement in movement fluidity is crucial for applications requiring human-like interaction and precision, suggesting that the technology is advancing towards more sophisticated and practical uses.

- Movies are staring to include “No AI was used in the making of…” ect in the end credits (Activity: 764): Recent films are beginning to include disclaimers in their end credits stating that “No AI was used in the making of this movie.” This trend reflects a growing concern over the use of AI in creative processes, reminiscent of past debates over digital versus film photography. The claim is seen by some as performative, given the pervasive integration of AI tools in production workflows, and the expectation that AI will become even more embedded in future creative tools. Commenters express skepticism about the feasibility of completely avoiding AI in film production, noting parallels to past technological shifts like the transition from practical effects to CGI. There is a belief that AI will become so integral that such disclaimers will be impossible to substantiate.

- NoCard1571 argues that claims of not using AI in film production are largely performative, suggesting that it’s unlikely no one used a language model during production. They predict that generative AI will become so integrated into tools that such claims will be impossible to verify in the future.

- zappads highlights a historical parallel with the replacement of pyrotechnic artists by CGI in the 2000s, noting that cost and ease often drive technological adoption in film production. They suggest that economic pressures will lead to increased AI use, regardless of current claims about its absence.

- letmebackagain emphasizes the importance of focusing on the quality of the final product rather than the tools used in its creation. This perspective suggests that the debate over AI usage should center on the artistic and technical merits of the work rather than the production methods.

2. AGI Predictions and History

- In 1999, most thought Ray Kurzweil was insane for predicting AGI in 2029. 26 years later, he still predicts 2029 (Activity: 626): Ray Kurzweil has consistently predicted the arrival of Artificial General Intelligence (AGI) by 2029, a claim he first made in 1999. Despite skepticism, Kurzweil maintains this timeline, suggesting that AI will achieve human-level intelligence across a wide range of tasks by then. However, the lack of a universally accepted definition of AGI complicates these predictions, as noted by experts who argue that while AI may reach human parity in specific tasks, the essence of AGI remains elusive. A notable opinion from the comments highlights skepticism about AGI predictions due to the absence of a clear definition. Another perspective suggests that while AI might achieve human-level performance in many tasks by 2029, the concept of true AGI is still undefined.

- jbcraigs highlights the challenge in predicting AGI due to the lack of a universally accepted definition. They argue that while AI may achieve human-level performance in specific tasks by 2029, the concept of ‘true AGI’ remains elusive because we don’t fully understand what it entails.

- KairraAlpha discusses the misconception that AGI is merely about surpassing human capabilities in math and logic. They suggest that intelligence is multifaceted and that AGI might not conform to traditional expectations. They imply that current models like GPT-5 and Claude could reveal unexpected capabilities if unrestricted, hinting at the complexity and unpredictability of AGI development.

- Today is that day 😭 (Activity: 2058): The post discusses a controversial use of MLK Jr’s likeness in AI-generated content, leading to OpenAI’s decision to prohibit such uses. This reflects ongoing ethical concerns in AI regarding the representation and use of historical figures’ images and voices without consent. The issue highlights the need for stricter guidelines and oversight in AI content generation to prevent misuse and respect intellectual property rights. Commenters express frustration and disappointment, questioning the ethical oversight in AI development and the responsibility of companies like OpenAI to prevent such occurrences.

- The meme continues (Activity: 418): The Reddit post humorously references a situation where a streamer, possibly Hassan, is jokingly accused of forcing someone to watch their stream to increase watch time. This is likened to a potential South Park joke, highlighting the absurdity and humor in the situation. The external link indicates restricted access due to network security, requiring login or a developer token for further access. Commenters find the situation amusing and suggest it would fit well as a South Park joke, indicating the humor resonates with the show’s style.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. AI Video Generation Showdown

- Veo Victorious on Video Leaderboards: Veo-3.1 now ranks #1 on both the Text-to-Video Leaderboard and Image-to-Video Leaderboard, with the organizers inviting submissions and feedback in their Arena announcement on X. Community testing ramped up around these boards, centering on prompt coverage, temporal coherence, and motion fidelity under leaderboard constraints.

- Participants shared generations and edge-case prompts while discussing leaderboard methodology and evaluation bias toward short clips and specific prompt classes, highlighting the importance of consistent motion and maintained identity. Several engineers noted that leaderboards spur fast iteration cycles and reproducibility for text-to-video and image-to-video baselines.

- Sora Slides While Veo Surges: Engineers compared Sora 2 outputs (see example on Sora examples) against Veo-3.1, reporting perceived quality degradation in Sora since initial release despite Veo’s leaderboard dominance. Debates focused on subjective quality vs. leaderboard scores, and on prompt reproducibility across model updates.

- Discussion stressed that evaluation should normalize for seed, clip length, and postprocessing to fairly judge temporal consistency, physics plausibility, and character persistence. Some users concluded that even if Veo currently tops leaderboards, Sora’s strengths still appear in specific cinematic scenes and stylized VFX.

- Krea Realtime Cranks Out Open-Source Video: Krea AI open-sourced a 14B autoregressive text-to-video model, Krea Realtime, distilled from Wan 2.1 and capable of ~11 fps on a single NVIDIA B200, as announced in the Krea Realtime announcement. Engineers immediately explored ComfyUI graphs, expected throughput on RTX 5090, and fine-tuning hooks for domain-specific motion.

- Builders highlighted that an OSS baseline with real-time generation unlocks rapid workflow prototyping and benchmarking against closed models. Early adopters traded notes on context windows, frame conditioning, and optimizing decode pipelines for low-latency streaming.

2. Kernel DSLs and Quantization Updates

- Helion Hits Public Beta: Helion 0.2 shipped as a public beta on PyPI (Helion 0.2 on PyPI) alongside developer outreach at the Triton Developer Conference and PyTorch Conference 2025. The tool positions itself as a high-level DSL for kernel authoring layered over compiler stacks, with multiple talks and live Q&A for hands-on users.

- Engineers welcomed a higher-level path to author performant kernels while keeping MLIR and compiler ergonomics in play. Conference chatter emphasized tight integration with PyTorch Compiler stacks and future-proofing for evolving GPU backends.

- Triton TMA Truths on SM120 and Hopper: Practitioners testing TMA in Triton on NVIDIA SM120 reported no wins vs `cp.async`, aligning with notes that on Hopper TMA underperforms for loads under ~4 KiB and that Ampere lacks TMA (pointer math may still be faster); background context referenced the matmul deep-dive Matmul post (Aleksa Gordić). Benchmarks suggest TMA shines for larger tiles and multicast patterns but demands careful tiling to beat `cp.async` on small transfers.

- CUDA discussions contrasted latency/bandwidth behavior across DSMEM, L2, and device memory while tuning descriptor-driven layouts. Takeaway: profile both tile size and swizzle; keep a `cp.async` fallback path for sub-4 KiB tiles on Hopper-class parts.

- TorchAO Tweaks Quant Configs: TorchAO will deprecate `filter_fn` for `quantize_op` in favor of regex-capable ModuleFqnToConfig (TorchAO PR #3083), simplifying selective quant policies. In parallel, users noted SGLang online quantization’s current inability to skip vision stacks as documented in the SGLang quantization docs.

- Teams welcomed regex-based scoping for large codebases mixing text and vision channels, flagging migration work in existing helpers. The broader thread tied into provider-agnostic deployment hygiene and upcoming PyTorch 2.9 features for symmetric memory backends in multi-GPU settings.
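To make the idea of regex-scoped quantization policies concrete, here is a minimal sketch in plain Python. It does not use the TorchAO API: the `POLICY` table, the `resolve_config` helper, the module names, and the config labels are all hypothetical placeholders for illustration. It only shows how matching on a module's fully qualified name can skip a vision stack while quantizing text layers.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical policy table: regex over a module's fully qualified name (FQN)
# mapped to a placeholder config label. None means "leave unquantized".
# First match wins, so more specific patterns come first.
POLICY: List[Tuple[str, Optional[str]]] = [
    (r"vision_tower\..*", None),
    (r"language_model\.layers\.\d+\.mlp\..*", "int4_weight_only"),
    (r"language_model\..*", "int8_weight_only"),
]


def resolve_config(fqn: str,
                   policy: List[Tuple[str, Optional[str]]] = POLICY,
                   default: Optional[str] = None) -> Optional[str]:
    """Return the config label of the first pattern that fully matches `fqn`."""
    for pattern, config in policy:
        if re.fullmatch(pattern, fqn):
            return config
    return default


for name in (
    "vision_tower.blocks.0.attn.qkv",
    "language_model.layers.3.mlp.up_proj",
    "language_model.layers.3.self_attn.q_proj",
):
    print(f"{name} -> {resolve_config(name)}")
```

Ordering the table from specific to general keeps the policy readable in one place, which is the main appeal over a hand-written filter function per model.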

3. New Models, Datasets, and Agent Tooling

- Qwen3 Vision Lands with VL-8B: Qwen released the multimodal Qwen3-VL-8B-Instruct on Hugging Face (Qwen3-VL-8B-Instruct), with GGUF variants appearing for local runners. Engineers compared it against other VLMs in real workflows (e.g., ComfyUI) and discussed prompt templates and chat formatting.

- Early adopters evaluated OCR, chart/table parsing, and code-diagram grounding, noting tokenizer/chat-template sensitivities. The thread emphasized consistent ChatML formatting and careful system prompt handling to stabilize vision-language performance.

- xLLMs Drops Multilingual Dialogue Troves: The xLLMs collection published multilingual/multimodal dialogue datasets for long-context reasoning and tool-augmented chats (xLLMs dataset collection; spotlight: xllms_dialogue_pubs). The sets target long-context, multi-turn coherence, and tool-use evaluation across up to nine languages.

- Builders highlighted that standardized multi-turn traces accelerate SFT and eval pipelines. Discussions focused on splitting by language/task, curating tool traces, and mapping to instruct vs cloze templates for harnesses.

- Agents Get Self-Hosted Tracing: A community project released a self-hosted tracing and analytics stack for the OpenAI Agents framework to address GDPR and export limitations (openai-agents-tracing). The repo ships dashboards and storage to keep agent traces private and portable.

- Teams praised the ability to inspect latencies, tool-call fanout, and failure modes without sending telemetry to third-party dashboards. Privacy-conscious orgs flagged this as critical for regulated workloads requiring on-prem observability.

4. Portable GPU Compute on Macs

- tinygrad Turns USB4 Docks into eGPU Lifelines: tiny corp announced public testing of a pure-Python driver enabling NVIDIA 30/40/50-series and AMD RDNA2–4 GPUs via any USB4 eGPU dock on Apple‑Silicon MacBooks (tinygrad eGPU driver announcement). Engineers immediately probed perf ceilings, NPU interplay, and dev ergonomics for mobile rigs.

- Threads debated whether streamlined NPU programming could complement eGPU offload for hybrid pipelines. Mac users compared dock/firmware quirks and discussed driver maturity for compute-intensive workflows (LLM infer, diffusion, video).

- Secondhand 3090 Survival Guide: A practitioner shared a field-tested checklist for buying used RTX 3090 cards: bring a portable eGPU setup, confirm `nvidia-smi`, run `memtest_vulkan`, optionally run `gpu-burn`, and watch thermals (RTX 3090 used-buying tips). The guidance aims to reduce VRAM/thermal surprises and weed out flaky boards.

- Mac eGPU experimenters echoed the importance of live validation under load rather than idle checks. The community also noted nascent macOS NVIDIA driver efforts circulating in tinygrad-related threads for broader compatibility testing.
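The first step of the used-3090 checklist (confirming what `nvidia-smi` reports) could be scripted roughly as follows. This is a sketch, not the practitioner's own tooling: the `GpuReading` type, the helper names, and the memory and temperature thresholds are assumptions chosen for illustration. It covers only the idle check and does not replace the `memtest_vulkan` and `gpu-burn` load tests.

```python
import subprocess
from dataclasses import dataclass
from typing import List

# nvidia-smi query that prints one CSV line per GPU: name, MiB, degrees C.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,temperature.gpu",
    "--format=csv,noheader,nounits",
]


@dataclass
class GpuReading:
    name: str
    memory_mib: int
    temperature_c: int


def parse_readings(csv_text: str) -> List[GpuReading]:
    """Parse the CSV lines produced by QUERY into GpuReading records."""
    readings = []
    for line in csv_text.strip().splitlines():
        name, memory, temp = [field.strip() for field in line.split(",")]
        readings.append(GpuReading(name, int(memory), int(temp)))
    return readings


def looks_like_healthy_3090(reading: GpuReading,
                            min_memory_mib: int = 24_000,
                            max_idle_temp_c: int = 60) -> bool:
    """Idle sanity check; thresholds are illustrative assumptions."""
    return ("3090" in reading.name
            and reading.memory_mib >= min_memory_mib
            and reading.temperature_c <= max_idle_temp_c)


def read_gpus() -> List[GpuReading]:
    """Run nvidia-smi on the test rig (requires an NVIDIA driver)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_readings(out)


# Offline example with a hand-written sample line instead of a live card.
sample = "NVIDIA GeForce RTX 3090, 24576, 41\n"
for gpu in parse_readings(sample):
    print(gpu, "OK" if looks_like_healthy_3090(gpu) else "CHECK")
```

Calling `read_gpus()` while a stress test runs in another terminal is one way to watch thermals under load rather than at idle.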

5. Research & Evaluation Highlights

- Anthropic Maps Attention Mechanics: Anthropic extended attribution graphs from MLPs to attention with the paper Tracing Attention Computation Through Feature Interactions (paper). Discussions connected this to prior interpretability work and to techniques for mitigating logit blowups via QK normalization.

- Researchers debated how feature hierarchies emerge across attention layers and how to visualize QK interactions in practice. Engineers noted the potential for targeted ablations and better mechanistic probes in small-to-mid models.

- Eval Harness Gets a UX Glow-Up: Eleuther outlined an lm-evaluation-harness refactor adding new templates, standardized formats, clearer instruct tasks, and friendlier UX (branch: smolrefact; planning: Eval harness planning When2Meet). Goals include easier conversion between task variants (e.g., MMLU → cloze → generation) and saner `repeats` behavior.

- Library users welcomed fewer format footguns and better reproducibility for long-context and tool tasks. The team solicited feedback from heavy users to finalize templates before broader roll-out.

- NormUon Nudges Optimizer State of the Art: A new optimizer dubbed NormUon entered discussion circles with claims of SOTA-level results if benchmarks hold (NormUon optimizer (arXiv:2510.05491)). Community cross-checks compared it against Muon with non-speedrun baselines and QK-norm mitigations.

- Practitioners reported it’s “the same perf as muon on their non-speedrun setups but with good muon baselines” while flagging stability from smoother weight spectra. Others cautioned for head-to-head ablations before declaring wins on reasoning-heavy workloads.

Discord: High level Discord summaries

Perplexity AI Discord

- Comet’s Cash: Referral Program Payouts Probed: Users discussed the Comet referral program, troubleshooting referral links and reporting missing payouts.

- Tips for successful referrals included ensuring referred users install Comet and perform a search.

- AWS Accident: Outage Outlines Over-Reliance: A recent AWS outage caused widespread issues for Perplexity AI and other services, leading to discussions about the risks of over-reliance on single cloud providers.

- Users shared status links and personal experiences, noting that certain features were still affected even after the initial outage was resolved.

- Claude Conquers: GPT-5 Grounded in Competition: Members debated the relative merits of GPT-5 versus Claude, with many finding Claude to be superior for complex projects and reasoning tasks.

- Some users noted that GPT-5’s free tier felt noticeably weaker, while others suggested using Claude 4.5 for optimal results.

- Philosophical Forte found for Claude Sonnet 4.5: Claude Sonnet 4.5 is highlighted as effective for philosophical problem solving, accompanied by a shared Claude conversation link.

- Users have also begun claiming Perplexity AI invite links and recommending it for step-by-step guides.

- Pricing Particulars: API Access Assessed: A user inquired about who to contact for custom API pricing for large API users and how to get access.

- Another user suggested emailing [email protected] to get in touch with the appropriate team.

LMArena Discord

- Lithiumflow Impresses with Coding, Sparks Gemini Speculation: Members tested Lithiumflow’s coding capabilities, finding its ability to write a full macOS system in a single HTML file impressive, with a demo available on Codepen.

- Some users found it inferior to Claude 4.5 for coding, leading to theories that Lithiumflow might be a nerfed Gemini 3 variant or a specialized coding model that hints at Google’s prioritization of coding in their models.

- Gemini 3: Release Date Remains a Mystery: Speculation continues around the release and specifications of Gemini 3, with theories suggesting that Lithiumflow and Orionmist are potential Gemini 3 iterations or specialized models.

- A tweet suggests the release is still two months away, accessible on Twitter.

- Video Arena: Veo-3.1 Dominates Video Leaderboards!: The Text-to-Video Leaderboard & Image-to-Video Leaderboard now show Veo-3.1 ranking #1 in both categories.

- Users are encouraged to share their Veo-3.1 generations and provide feedback, as announced on X.

- AI Video Quality: Sora 2 vs Veo 3.1: Members are debating the video quality of different AI models, including Sora 2 and Veo 3.1, with examples available on ChatGPT’s website.

- Despite Veo 3.1 being ranked higher on leaderboards, the consensus is that Sora 2’s quality has degraded since its initial release.

- Claude Sonnet 4.5: A League Above?: Members discuss Claude Sonnet 4.5’s creative writing capabilities, with one stating that Claude Sonnet 4.5 Thinking is in “a different league” compared to Gemini 2.5 Pro.

- However, ongoing bugs and issues with models getting stuck or generating generic error messages on LMArena are hindering effective testing and comparison.

Unsloth AI (Daniel Han) Discord

- Unsloth Eyes Apple Hardware: Support for Apple hardware is planned for Unsloth sometime next year, but members expressed some hesitation.

- No further information or timeline was disclosed at this time.

- Hackathon Halted; MI300X for All!: The AMD x PyTorch Hackathon is extended, with each participant receiving 100 hours of 192GB MI300X GPUs, to compensate for disruptions.

- Community members described the event as having so many issues but the team is trying.

- Modelscope Saves the Day During HF Outage: Due to AWS outages affecting Hugging Face, members recommended using Modelscope as a temporary mirror.

- A code snippet was shared describing how to load models from Modelscope in a Colab environment, requiring the `load_in_4bit` flag.

- Thinking Mode Tagging in SFT: A member proposed using XML tags such as `<thinking_mode=low>` to teach an AI model different thinking modes during SFT, categorizing examples based on CoT token count, with an auto mode for the model to decide.

- Another member suggested that controllable thinking usually benefits from some reinforcement learning after SFT.

- Luau-Devstral-24B-Instruct-v0.2 > GPT-5 Nano: A member pointed out that GPT-5 Nano in Luau-Devstral-24B-Instruct-v0.2 outperforms GPT-5, noting its surprising performance.

- The member remarked that GPT-5 is weird.
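To make the thinking-mode tagging idea from the SFT item above concrete, here is a minimal sketch of how examples might be bucketed by CoT length and tagged. Only the `<thinking_mode=low>` tag format comes from the discussion; the `medium` and `high` mode names, the thresholds, and the whitespace token count are assumptions for illustration.

```python
# Hypothetical sketch: bucket SFT examples by chain-of-thought length and
# prefix each prompt with a thinking-mode tag. Thresholds are illustrative.

def count_tokens(text: str) -> int:
    # Whitespace split as a crude stand-in for a real tokenizer (assumption).
    return len(text.split())

def thinking_mode(cot: str, low_max: int = 50, medium_max: int = 200) -> str:
    """Map a CoT trace to a mode name based on its token count."""
    n = count_tokens(cot)
    if n <= low_max:
        return "low"
    if n <= medium_max:
        return "medium"
    return "high"

def tag_example(prompt: str, cot: str, answer: str, auto: bool = False) -> dict:
    # In "auto" mode the tag does not reveal the length bucket, so the model
    # has to learn to pick a reasoning depth on its own.
    mode = "auto" if auto else thinking_mode(cot)
    return {
        "prompt": f"<thinking_mode={mode}>\n{prompt}",
        "completion": f"{cot}\n{answer}",
    }

example = tag_example("What is 2 + 2?", "2 plus 2 is 4.", "4")
print(example["prompt"])
```

As the follow-up comment in the thread suggests, tagging like this would probably be only the SFT half of the recipe, with some reinforcement learning afterwards to make the control reliable.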

OpenAI Discord

- Comcast Representatives peddling user data?: A user alleged that Comcast representatives are stealing data and selling it through phishing attempts targeting authentic Kenyan organizations and Philippines-based call centers.

- The user claims they are documenting cases and suggested contacting CEO Brian Roberts for accountability.

- OpenCode Discovers Super Speedy Stealth Model: The OpenCode team has a mysterious stealth model that is incredibly fast at spitting out tokens, but they are unsure if it’s intelligent.

- Members stated it’s the fastest model they’ve ever seen, generating hype for what’s next in OpenCode.

- Grok cracks Video Generation: Grok has now launched video generation, accessible via SuperGrok subscription ($30/month) without requiring a Twitter/X account.

- Users are finding Grok video generation copes fine with franchises and 3rd party content, producing videos without watermarks.

- Sora Reality Distortion Field: A user inquired if Sora can distinguish between images of real people and fictional characters, with a simple response in the affirmative.

- The conversation starter also shared a link to pseudoCode thought experiment for AI models.

- Controlling Conversation flow on ChatGPT: A user expressed frustration with ChatGPT’s tendency to end responses with unsolicited follow-up questions and sought advice on disabling this ‘feature’, and other users had ideas.

- A user suggested replacing the follow-up questions with something else, such as a joke or a detail about a favorite subject, and shared example chats, like this one with Dad jokes.

OpenRouter Discord

- TLRAG Framework Claims Debated: A developer introduced TLRAG, a deterministic and model-agnostic framework claiming superiority over Langchain without function calling, and boasting token savings and dynamic AI persona evolution, as documented on its dev.to page.

- Community members expressed skepticism regarding TLRAG’s claims, UI/UX glitches, security and whitepaper structure, with one calling the whitepapers AI-generated shit wich isnt true at all.

- SambaNova Latency Woes in DeepSeek v3.1: Users reported latency issues with SambaNova in DeepSeek v3.1 Terminus, noting that despite theoretically providing higher throughput, DeepInfra appears faster in both throughput and latency as shown on OpenRouter’s comparison page.

- The discussion underscored the importance of practical performance metrics over theoretical throughput, especially in real-world applications.

- GPT-5 Image: Is It Just GPT-image-1?: Members debated the authenticity of GPT-5 Image, with some speculating that it is merely GPT-image-1 with a tweaked API, rather than a new or improved model.

- One member succinctly stated I think so I’ve tested both and I like nano banana a lot more, indicating a preference for alternative image generation models.

- Qwen3 Pricing Stuns Users: The community is stoked over the Qwen3 235A22B API pricing, finding it excellent, especially when integrated with W&B for extensive data processing.

- Users are urging OpenRouter to highlight routine price drops, emphasizing that the intelligence-per-dollar ratio is unmatched, setting a new standard in cost-effectiveness.

- LFM 7B Sees Its End: The community bid farewell to Liquid’s hosting of LFM 7B, the original $0.01/Mtok LLM, which was deleted at 7:54 AM (EST).

- Users noted the lack of a direct replacement in terms of pricing on OpenRouter, with Gemma 3 9B being the closest but triple the output cost.

LM Studio Discord

- LM Studio API on iPad with MCP Servers: A user wants to access LM Studio API from an iPad using 3Sparks Chat and use MCP servers, which is currently not supported via the API.

- Users suggested using remote desktop apps like Tailscale or RustDesk as workarounds.

- System Prompt Parsing Causes Bracket Chaos: System prompts in LM Studio are parsed, causing issues with special characters like brackets depending on the model and chat template, with a fix incoming via Jinja template fix.

- The ChatML template is suggested for Qwen models to mitigate some of these issues, along with a custom prompt template.

- Maximizing Auxiliary GPU Performance by Exhausting It: A user is trying to use their 3050 as an auxiliary GPU alongside a 3090 for extra VRAM, but some users noted that the two cards were “suffocating the GPU”.

- Discussion covered setting the 3090 as the primary compute device in hardware settings.

- Desk Fan Cooling Mod as a Joke: A user jokingly installed dual 12-inch turbine fans to cool their PC, after returning from a psych ward.

- The user clarified it was an external desk fan and not an internal PC fan.

- EPYC vs Threadripper for the Ultimate LLM Rig: Members debated the merits of using an EPYC 9654 versus a Threadripper for running large language models (LLMs).

- The consensus leaned towards EPYC for its superior memory bandwidth and dual-CPU capabilities, with used 3090s or MI50s suggested as GPU options.

HuggingFace Discord

- HF Inference API demands conversational task names: Members found that Mistral models require renaming the variable ‘text-generation’ to ‘conversational’ to function correctly with the HF Inference API, referencing a relevant issue.

- The discovery of the Florence model’s task name requirements highlights that other models may have similar constraints, requiring specific task names to respond to prompts.

- NivasaAI Navigates Google ADK: A member is switching NivasaAI from Agent + Tools to multiple agents with dynamic routing for enhanced UX, with the initial commit available on GitHub.

- A member plans to finalize a router using Google ADK that classifies requests into categories such as `code_validation`, `rag_query`, and `general_chat` to handle a range of tasks from syntax checking to casual conversation.

- Local LLMs Exposed for Hallucinating: After testing 47 configs, a member found that local LLMs may hallucinate after 6K tokens, detailing their findings on quantization configurations in a Medium article.

- A member introduced a new architecture with a decentralized tokenizer, noting it’s not compatible with llama.gguf but available on GitHub.

- Self-Hosted Tracing Arrives for OpenAI Agents: A member open-sourced a self-hosted tracing and analytics infrastructure for OpenAI Agents framework traces, addressing GDPR concerns and the inability to export data from OpenAI’s tracing dashboard.

- This framework helps developers monitor and analyze the performance of their OpenAI Agents while maintaining data privacy and control.

- DeepFabric Delivers Data-Driven SLMs: A member introduced DeepFabric, a tool for training SLMs to improve structured output and tool calling, shared via a GitHub link.

- The tool enables the generation of reasoning trace-based datasets that can be directly loaded into TRL (SFT), enabling developers to train SLMs that excel in generating structured outputs and effectively utilize tools.
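As a rough illustration of the request router described in the NivasaAI item above, the sketch below classifies a request into `code_validation`, `rag_query`, or `general_chat` and dispatches it to a handler. It does not use Google ADK: the keyword rules and handler functions are hypothetical stand-ins, and a real router would more likely use an LLM classifier.

```python
import re

# Hypothetical keyword rules, checked in order; first match wins.
RULES = [
    ("code_validation", re.compile(r"\b(syntax|lint|compile|traceback|bug)\b", re.I)),
    ("rag_query", re.compile(r"\b(docs?|documentation|search|look up|according to)\b", re.I)),
]

def classify(request: str) -> str:
    """Return the category name for a request, defaulting to general_chat."""
    for category, pattern in RULES:
        if pattern.search(request):
            return category
    return "general_chat"

# Placeholder handlers standing in for the per-category agents (assumption).
HANDLERS = {
    "code_validation": lambda r: f"[validator] checking: {r}",
    "rag_query": lambda r: f"[retriever] searching for: {r}",
    "general_chat": lambda r: f"[chat] replying to: {r}",
}

def route(request: str) -> str:
    return HANDLERS[classify(request)](request)

print(route("Is there a syntax error in this function?"))
print(route("Search the docs for rate limits"))
print(route("Hi, how are you?"))
```

Keeping classification separate from dispatch means the keyword rules could later be swapped for a model call without touching the handlers.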

GPU MODE Discord

- Triton’s TMA Triumph on Nvidia SM120: A member reports implementing TMA support for dot in Triton for NVIDIA SM120 GPUs, but observed no performance boost compared to `cp.async`. Members indicate that on Hopper, TMA is less efficient for loads under 4KiB.

- A member noticed on Ampere that `desc.load([x, y])` performance is poor, given that Ampere lacks TMA.

- CUDA’s CTA Conundrum: CTA is now Cooperative Thread Array: In CUDA, the community clarified that Cooperative Thread Array (CTA) is synonymous with a thread block, especially when leveraging the distributed shared memory feature.

- A member shared a blog post which indicates that using distributed shared memory likely involves latency and bandwidth differences between current and other blocks.

- PyTorch Profiler’s CUPTI Predicaments on Windows & WSL: A user encounters a `CUPTI_ERROR_INVALID_DEVICE (2)` error with torch.profiler on Windows and WSL, despite CUDA availability, which others suggest may require hardware counter access via this gist.

- A user training an AlphaZero implementation on a 3090ti is experiencing slow training times, but members pointed out that AlphaZero training requires substantially more compute power.

- GPU Dev Resources Debut! Centralized Knowledge Beckons: A member shares their curated repository of GPU engineering resources, consolidating various helpful materials in one location.

- A member also posted a blog post about their PMPP-Eval journey for people interested in performance modeling and prediction.

- TorchAO Touts Quantization Configuration Triumph: The `filter_fn` for `quantize_op` will be deprecated in favor of using ModuleFqnToConfig, which now supports regex, described in TorchAO pull request #3083.

- Currently SGLang online quantization does not support skipping quantization for vision models, and a user inquired about skipping quantization for the vision model.
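To show why regex matching on module names is useful for the TorchAO item above (for example, to skip a vision tower), here is a small pure-Python sketch of resolving a per-module config from fully qualified names. This is not TorchAO's API or its matching rules: `resolve_config`, the precedence order, the module names, and the string config labels are all assumptions for illustration.

```python
import re

def resolve_config(fqn: str,
                   exact: dict[str, str],
                   patterns: list[tuple[str, str]],
                   default: str) -> str:
    """Pick a config label for a module's fully qualified name (FQN).

    Assumed precedence: exact name, then the first regex that fully
    matches, then the default.
    """
    if fqn in exact:
        return exact[fqn]
    for pattern, config in patterns:
        if re.fullmatch(pattern, fqn):
            return config
    return default

# Hypothetical model layout and config labels.
exact = {"lm_head": "skip"}
patterns = [
    (r"vision_model\..*", "skip"),                      # leave the vision tower unquantized
    (r"language_model\.layers\.\d+\.mlp\..*", "int4"),  # heavier quantization for MLPs
]

for name in [
    "vision_model.encoder.layers.0.attn.q_proj",
    "language_model.layers.3.mlp.up_proj",
    "language_model.layers.3.self_attn.q_proj",
    "lm_head",
]:
    print(name, "->", resolve_config(name, exact, patterns, default="int8"))
```

A single pattern covers every submodule under `vision_model`, which is the kind of rule a per-module filter function would otherwise need custom code for.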

Latent Space Discord

- ManusAI V1.5 conjures Web Apps in Minutes: ManusAI v1.5 now transforms prompts into production-ready web apps in under 4 minutes flat, incorporating unlimited context via auto-offloading and recall, a feature launched on October 16th.

- User reactions varied, with some marveling at its speed and diving into context engineering, while others found previous tools like Orchids, Loveable, and V0 underwhelming compared to the precision of coding directly.

- AI Gloom engulfs Researcher Sentiment: Prominent AI researchers like Sutton, Karpathy, and Hassabis are embracing longer AI timelines, triggering debates about a possible hype cycle correction as noted here.

- The community’s responses swung between alarm and defense of ongoing progress, amid questions on whether this new wave of pessimism is overblown or simply misinterpreted.

- Cursor spawns Git Worktrees for Parallel AI Agents: Cursor now auto-creates Git worktrees as highlighted here, enabling users to operate multiple AI agent instances in parallel across separate branches.

- The launch has garnered praise and triggered a flurry of setup tips, port usage inquiries, and enthusiasm for potential use cases.

- Tinygrad Turns Apple Macs into NVIDIA eGPU Powerhouses: The tiny corp team announced public testing of their pure-Python driver, which brings 30/40/50-series NVIDIA GPUs (and AMD RDNA2-4) to life via any USB4 eGPU dock on Apple-Silicon MacBooks, detailed in this announcement.

- The channel discussed whether NPUs needed streamlined programming and whether AMD’s success hinges on simplifying the process, as highlighted in this tweet.

- AI-Generated Luxe Escapism fuels Facebook Fantasies: A study funded by OpenAI revealed that in Indonesian Facebook groups with 30k members, low-income users (making <$400/month) post AI photos of themselves with Lambos, in Paris, or at Gucci stores (link).

- Discussion centers on whether this trend is purely geographic or socio-economic, drawing parallels to past Hollywood dreams and generative-AI photo apps.

Eleuther Discord

- RTX 3090 Tokes LLM Training: Members estimated training speeds for a 30M parameter LLM on an RTX 3090, ranging from hundreds to thousands of tokens per second (TPS).

- One member reported 120 kt/s on a 4090 using 30m rwkv7 bsz 16 seqlen 2048, leading to expectations of high thousands of TPS.

- Anthropic Extends Attribution Graphs to Attention: The discussion explored extending attribution graphs to attention mechanisms, inspired by this video; Anthropic explored this in a follow-up post, detailed in the paper Tracing Attention Computation Through Feature Interactions.

- The original Biology of LLMs paper only looked at MLPs while freezing attention, as well as the paper https://arxiv.org/abs/2510.14901.

- Eval Harness Revamp Ready: A meeting is scheduled to discuss additions to the eval harness, focusing on sharing current plans and gathering feedback from library users, with details available on When2Meet and on this branch.

- Key improvements include: new templates for easy format conversion, standardizing formats, making instruct tasks more intuitive, and general UX improvements (e.g. `repeats`), with the goal of enabling easier conversion between task variants (e.g., MMLU -> cloze -> generation).

- AI Paper Punctuation Police: A discussion critiqued the writing style in AI papers, particularly regarding comma usage, referencing the paper https://arxiv.org/abs/2510.14717.

- One member quipped I unironically believe that the writing of half of AI papers would be improved if you gave the authors a comma limit, to which another replied Then they’ll switch to semicolons or em dashes.

- NormUon Optimizer Enters the SOTA Race: A member mentioned a new optimizer that looks like SOTA if the results are good.

- Multiple sources say it’s the same perf as muon on their non-speedrun setups but with good muon baselines with the observation that modded-nanogpt does qk norm which is one way you can avoid logit blowups.
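The qk norm remark in the optimizer item above can be illustrated with a toy example: normalizing queries and keys before the dot product bounds the attention logit no matter how large the raw activations grow. This sketch uses a plain RMS normalization without a learnable gain, which is an assumption; the exact variant used in modded-nanogpt is not specified in the discussion.

```python
import math

def rms_normalize(v, eps=1e-6):
    """Scale a vector so its root-mean-square is (approximately) 1."""
    rms = math.sqrt(sum(x * x for x in v) / len(v) + eps)
    return [x / rms for x in v]

def attention_logit(q, k, qk_norm=False):
    """Scaled dot-product logit for one query/key pair."""
    if qk_norm:
        q, k = rms_normalize(q), rms_normalize(k)
    scale = 1.0 / math.sqrt(len(q))
    return scale * sum(a * b for a, b in zip(q, k))

# Deliberately large activations, as might appear late in unstable training.
q = [100.0, -250.0, 75.0, 300.0]
k = [120.0, -200.0, 90.0, 280.0]

raw = attention_logit(q, k)
normed = attention_logit(q, k, qk_norm=True)

# After RMS normalization each vector has norm at most sqrt(d), so by
# Cauchy-Schwarz the scaled logit is at most sqrt(d) in magnitude.
print("raw logit:", raw)
print("qk-normed logit:", normed, "bound:", math.sqrt(len(q)))
```

Because the normalized logit no longer depends on the magnitude of `q` or `k`, growth in activation scale cannot push the softmax into saturation on its own.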

Moonshot AI (Kimi K-2) Discord

- Kimi K2 trounces Prediction Markets: One user shared that Kimi K2 is the best model for working with prediction markets and has been using it, as stated in this tweet.

- They did not mention their specific use case for prediction markets.

- Groq’s Kimi faces Growing pains: Groq’s implementation of Kimi experienced a period of instability, with intermittent functionality issues.

- According to a user, the implementation is now back to normal, but provided no specific details on the issues.

- Kimi K2 Cracks BSODs: A user praised Kimi K2’s ability to provide solid troubleshooting advice for computer problems, and specifically suggested the use of verifier for stress-testing drivers that may be causing BSODs.

- The user did not include specific details on the kind of troubleshooting advice given, only stating that it was solid.

- DeepSeek Falls Short of Moonshot AI: A user expressed a preference for Moonshot AI’s Kimi over DeepSeek.

- The user acknowledged that this preference was a personal opinion, with no supporting evidence or reasoning provided.

- Codex not Cutting It: Users discussed various CLI tools for working with MCP servers and models like DeepSeek, GLM Coder, and Qwen, highlighting Claude Code and Qwen-code as solid options.

- The consensus was that Codex is only ideal when using with OpenAI models; no specific reasons for this preference were provided.

Nous Research AI Discord

- GLM 4.6 Opens OS Community Horizons: The release of GLM 4.6, capable of running locally, aims to reduce reliance on proprietary models from companies led by Sam Altman, Elon Musk, and Dario Amodei, according to this YouTube video.

- Meanwhile, the rise of new Chinese LLMs like Ant Group’s Ling models being separate from Alibaba’s Qwen team also prompts debate about the necessity of multiple models, given the existing presence of several strong contenders like Qwen.

- ScaleRL Appears Perfect for Sparse Reasoning Models: According to Meta’s recent Art of Scaling RL paper, ScaleRL seems tailor-made for sparse reasoning models such as MoE.

- Members are questioning whether trajectory-level loss aggregation granularity performs better than sample-level ones for Iterative RL (Reasoning), with discussion on whether step/steps (iteration) level might be a middle ground for tuning.

- New Healthcare AI Safety Standards: A member proposed research on AI safety with a focus on clinical/healthcare applications, to create benchmarks for AI models, referencing the International AI Safety Report 2025.

- The goal is to propose more accurate metrics, with the member seeking feedback on whether this is a good research topic.

- Nous Champions Decentralization with Psyche: Nous Research is embracing decentralization through open-source methodologies and infrastructure implementations, exemplified by Psyche, as evidenced by these links Nous Psyche and Stanford paper.

- A member stated, “Nous successfully decentralizes with their open source methodologies and infrastructure implementations.”

Manus.im Discord Discord

- Manus Google Drive Connection Allegedly Operable: Users reported that Manus connects to Google Drive by clicking the + button and adding files.

- The user confirmed it’s not available as a connected app.

- Users Report Manuscript Project Files Vanishing with Credits: A user building a site reported that after spending 7000 credits, everything in the project disappeared, including files and the database, and support was unhelpful.

- Another user echoed this, reporting losing nearly 9000 credits and finding no files or preview.

- Manus Used to Create Android Frontend App MVP Cheaply: A user created an Android frontend app MVP using 525 Manus credits, then used Claude to fix issues, and praised Manus’ UI/UX capabilities.

- The user shared images of the app.

- Manus Infrastructure has Outage: Manus experienced a temporary outage due to an infrastructure provider issue, with some users in certain regions still facing errors accessing the homepage.

- The team communicated updates and thanked users for their patience, reporting that most Manus services are back online.

- Free Perplexity Pro Promo Sparks Discord Drama: A user shared a referral link for a free month of Perplexity Pro, prompting a negative reaction from another user who told them to Stfu! and Get a job.

- The linked user said it was a no drama, no lies, no clickbait way to earn real money.

Modular (Mojo 🔥) Discord

- Mojo Compiling Itself?: Mojo’s compiler, leveraging LLVM and tightly integrated with it by lowering to MLIR, faces challenges in achieving full self-hosting unless it incorporates LLVM.

- While a complete rewrite to Mojo is technically feasible, it’s deemed a lot of work for not a ton of benefit, but C++ interop might make it easier in the future.

- Mojo as a MLIR DSL: Mojo functions as a specialized DSL for MLIR, parsing directly into MLIR and utilizing it over traditional ASTs, which grants it considerable adaptability and flexibility.

- Mojo’s architecture features numerous dialects, primarily new ones apart from the LLVM dialect, positioning it for diverse computational applications.

- MAX Kernels Backending PyTorch: Interest is sparked to switch the backend of JAX to use MAX kernels, potentially interfacing with C++ as a fun project, noting a mostly functional MAX backend for PyTorch already exists.

- While Mojo can `@export(ABI="C")` any C-abi-compatible function, direct communication with MAX currently requires Python.

- Mojo Aims for Pythonic Prevention: Mojo’s design seeks to avert the fragmentation seen in Python between users of Python and CPython by providing avenues to transition between more pythonic `def` and more systems-oriented `fn` code.

- A member emphasized that the goal is to leave the door open to lower-level control but give safe default ways for people to not shoot themselves in the foot; let’s hope we can deliver.

- Mojo Debates UDP Socket Support: Users questioned UDP socket support in Mojo, with a response that it’s possible via libc, but full standard library support is pending for proper implementation.

- The answer indicated a preference for doing it “right not fast” and cited “language-level dependencies” as a factor.

DSPy Discord

- DSPy Pilots Claude-Powered Agents: Members explored integrating Claude agents into DSPy programs, referencing a prior implementation with a Codex agent.

- The primary difficulty lies in the lack of an SDK for Claude code; the community wants to see example agents.

- Clojure Contemplates Concurrency with DSPy: A member inquired about adapting DSPy to Clojure REPL environments, particularly regarding data representation, concurrent LM calls due to immutability, and inspecting generated functions.

- The nuances of adapting DSPy’s Python paradigms to Clojure’s functional and concurrent nature are not yet fully explored.

- DSPy Grapples with Generics for Typing: The community debated the feasibility of fully typing DSPy in Python, with a focus on whether Python generics suffice.

- While one member expressed confidence, emphasizing optimizing the input handle/initial prompt, they cautioned against premature optimization without clear task and scoring mechanisms.

- Gemini Geodesy for Google API Keys: Users discussed using Gemini models within dspy.lm, confirming it’s achievable via proper configuration and API key setup.

- A member jokingly shared their ‘painful journey’ in finding the correct API key, recommending AI Studio over the console.

- LM Studio gets llms.txt generator: A member shared a llms.txt generator for LM Studio powered by the DSPy framework.

- The tool allows users to easily generate `llms.txt` for repos lacking it, leveraging any LLM available in LM Studio; the member recommended using “osmosis-mcp-4b@q4_k_s” for generating example artifacts.

Yannick Kilcher Discord

- Tianshou Boosts RL in PyTorch: A member suggested Tianshou as a viable reinforcement learning framework for PyTorch, noting its relevance to graph neural networks.

- The recommendation was received well by the community, furthering interest in practical RL implementations.

- What even is an AI Engineer??: Members debated the qualifications for an “AI Engineer,” joking that even using the OpenAI API in Python might be enough to impress on LinkedIn.

- The discussion highlighted the varied interpretations of the role, from assembling Legos in visual n8n to creating custom GPTs.

- Cracking the ML Debugging Interview: Members discussed how to prepare for an “ML Debugging” coding interview, recommending readiness to discuss handling overfitting.

- ChatGPT was also suggested as a mock interview tool and the conversation was recorded here.

- Qwen3 sees clearly: A new Qwen3 Vision model has been released and is available on Hugging Face.

- Members seemed curious about how it compared to other vision models such as those on ComfyUI.

- Machine Unlearning Seeks ArXiv Fame: Members discussed advocating for machine unlearning & knowledge erasure to be recognized in its own arXiv category (cs.UL/stat.UL).

- The promotion would highlight the growing importance of data privacy and security.

aider (Paul Gauthier) Discord

- Aider Still Kicking, Releases Just Slower!: Users discussed Aider’s development pace, clarifying it’s active but with slower releases, recommending aider-ce for more frequent updates and cloning GitHub to familiarize with the codebase.

- Community members are aiming to boost the project through increased commit frequency.

- Aider Eyes Agentic Extension Integration: A developer is building an agentic extension for Aider using LangGraph, including task lists, RAG, and command handling.

- Discussion centered on whether to integrate the extension directly into Aider or keep it a separate project, highlighting the importance of maintaining Aider’s simplicity while competing with top-tier agent solutions.

- Devstral Small Model: Sleeper Hit?: The `Devstral-Small-2507-UD-Q6_K_XL` model is receiving accolades for surprisingly strong performance on limited hardware (32GB RAM), with self-correction and large context handling, especially with Unsloth’s XL quantized versions.

- The user found that the model outperforms `Qwen3-Coder-30B-A3B-Instruct-UD-Q6_K_XL` in PHP, Python, and Rust coding tasks and supports image input, and suggested adding it to the Aider benchmarks.

- Aider-CE Takes Aim at Codex CLI Crown: One user switched back to Aider after testing gemini-cli, opencode, and Claude (with claude-code-router for DeepSeek API), praising its grep-based code search/replace and self-updating todo list.

- The user also highlighted the value of Aider’s simplicity and straightforwardness for coding tasks, with MCP formatting in .aider.conf.yml.

- Reasoning Timeouts Plague Commit Messages: A user reported that using Deepseek V3.1 Terminus via OpenRouter for commit message reasoning was too slow, motivating them to disable it.

- An alternative was suggested: copy the API’s reasoning in resources and setting a new alias to a weak model.

tinygrad (George Hotz) Discord

- Karpathy Launches Minimal Chat App: Andrej Karpathy launched nanochat, a minimalistic chat application, but the poster questioned how significant the release was.

- No other details provided.

- ShapeTracker Faces Deprecation: tinygrad plans to deprecate ShapeTracker during meeting #92, along with discussions on usb gpu, multi output kernel, and FUSE_OPTIM.

- Additional topics included rangeify regressions, openpilot, resnet, bert, assign, cleanups, viz, driver, tiny kitten, symbolic processing, bounties, new linearizer, and clang2py.

- Contributor Requests Performance Metrics: A contributor requested the addition of MFLOPS and MB/s metrics, displayed in yellow on the DEBUG=2 line for better performance monitoring.

- The contributor explicitly asked that clean code be written and cautioned against using AI code you don’t understand!

- macOS Nvidia Drivers Finally Arrive: Nvidia drivers for macOS have been successfully produced, opening up possibilities for GPU-accelerated tasks on macOS.

- To enable the drivers, users are instructed to run `brew tap sirhcm/tinymesa`.

- TinyJit Gradient Accumulation Troubles: Members identified gradient accumulation issues in TinyJit, particularly in model_train.py, questioning the math regarding gradient accumulation.

- A member solved the issues by rewriting the gradient addition step using assign to ensure it worked correctly.
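For readers wondering what "the math regarding gradient accumulation" in the TinyJit item above should look like, here is a framework-free sketch. It is not tinygrad code and does not reproduce the `assign`-based fix; it only checks the identity that accumulated micro-batch gradients, each divided by the number of micro-batches, equal the full-batch gradient of a mean loss. The toy model and data are assumptions.

```python
# Toy model: predict y = w * x, loss = mean squared error over the batch.

def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate per-micro-batch gradients into one full-batch gradient."""
    if len(xs) % micro_batch_size != 0:
        raise ValueError("batch must split evenly into micro-batches")
    n_micro = len(xs) // micro_batch_size
    total = 0.0
    for start in range(0, len(xs), micro_batch_size):
        end = start + micro_batch_size
        micro = grad_mse(w, xs[start:end], ys[start:end])
        # Dividing by n_micro is the step that is easy to get wrong:
        # without it the accumulated gradient is n_micro times too large.
        total += micro / n_micro
    return total

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.1, 5.9, 8.2]

full = grad_mse(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, micro_batch_size=2)
print(full, accum)
```

The identity holds only when micro-batches are equal in size; with uneven splits, each micro-batch gradient would need to be weighted by its share of the samples instead.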

MCP Contributors (Official) Discord

- DevOps Admin Explores Secure MCP Access: A DevOps admin is exploring secure methods for granting MCP access to non-technical users within their organization, with a focus on avoiding key management and implementing an Identity Provider (IDP) layer.

- Solutions under consideration include Webrix MCP Gateway and making Docker MCP Gateway multi-tenant.

- MCP auth extension promises enterprise-managed auth: It was suggested that the enterprise managed auth profile, which is being released as an MCP auth extension, is designed to address the DevOps admin’s needs.

- However, current fine-grained permissions are limited to oauth scope granularity.

- Discord Channel Aimed at Contributors: A member clarified that the Discord is intended for communication among MCP protocol and related projects contributors, rather than technical support.

- Users seeking help were encouraged to DM for links to appropriate communities.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1099 messages🔥🔥🔥):

Comet Referral Program, AWS Outage, GPT-5 vs Claude, Scientific Method, MCP

- Discord Discusses Dollars for Referrals: Members discuss the Comet referral program, with some users asking for help on how to properly execute referral links.

- Some users report missing referrals or payouts, while others share tips for success, such as ensuring referred users install Comet and perform a search.

- AWS Outage Ominously Overwhelms Online Operations: A recent AWS outage caused widespread issues for Perplexity AI and other services, leading to discussions about the risks of over-reliance on single cloud providers.

- Users shared status links and personal experiences, with some noting that certain features were still affected even after the initial outage was resolved.

- GPT-5 Gets Grounded: Claude Crowned King: Members debated the relative merits of GPT-5 versus Claude, with many finding Claude to be superior for complex projects and reasoning tasks.

- Some users noted that GPT-5’s free tier felt noticeably weaker, while others suggested using Claude 4.5 for optimal results.

- Scientific Method Schooled for Scrutinizing Studies: A user asked for guidance on using AI for academic research, prompting a discussion on the scientific method and its application in building research frameworks.

- Experienced members advised breaking down the process into steps, referencing successful papers, and leveraging the Perplexity API in combination with tools like Google Colab.

- MCP: Mystery Context Provider Proves Perplexing: Members inquired about Local and Remote MCPs (Model Context Protocol servers), with some struggling to find the PerplexityXPC in Connectors, and others wondering if MCPs were worth learning.

- This led to a broader discussion of Local access to files/device and the automation opportunities around this technology.

Perplexity AI ▷ #sharing (11 messages🔥):

Perplexity AI, TikTok Video, Claude Sonnet 4.5, Shareable Threads, AWS Dashboard

- Perplexity Powers Polished TikTok: A member shared a TikTok video made with help from Perplexity AI.

- Perplexity AI Steps Up as Guide: A member shared a Perplexity AI claim invite link recommending it for step-by-step guides.

- Claude 4.5’s Philosophical Forte: Claude Sonnet 4.5 is highlighted as effective for philosophical problem solving, accompanied by a shared Claude conversation link.

- Shareable Threads Savior: The Perplexity AI bot prompted multiple users to ensure their threads are shareable, linking to a relevant Discord channel message.

- AWS Ace-essibility with Perplexity: A member shared a Perplexity AI search regarding creating an AWS dashboard link.

Perplexity AI ▷ #pplx-api (8 messages🔥):

API pricing, ZTT server

- Contacting Perplexity for API Pricing: A user inquired about who to contact for custom API pricing for large API users.

- Another user suggested emailing [email protected] to get in touch with the appropriate team.

- Request for ZTT Server Invitation: A user asked for an invitation to the ZTT server.

- No further context or details were provided regarding the ZTT server or its purpose.

LMArena ▷ #general (1182 messages🔥🔥🔥):

Lithiumflow's Coding Capabilities, Gemini 3 Speculation, AI Video Generation Quality, Claude Sonnet 4.5

- Lithiumflow’s Coding Skills: Gemini’s Coding Focus?: Members tested Lithiumflow and found that it has impressive capabilities, writing a full macOS system with functioning apps in a single HTML file, showcased on Codepen, with positive feedback.

- Despite this, some users found that Lithiumflow is inferior to Claude 4.5 for coding, indicating it may be a nerfed or Pro model rather than an Ultra model, with discussions suggesting Google is prioritizing coding abilities in its models.

- Gemini 3: When Will it Arrive?: There is much speculation regarding Gemini 3’s release and specifications, and some members are theorizing that Lithiumflow and Orionmist could be nerfed versions of Gemini 3 or specialized coding models, while a tweet suggests the next release won’t occur for another two months, viewable on Twitter.

- AI Video Quality: Sora vs. Veo 3.1: Members are debating the video quality of different AI models, including Sora 2 and Veo 3.1, viewable on ChatGPT’s website, with some stating that the quality of Sora has degraded since its initial release, and expressing confusion about why Veo 3.1 is ranked higher on leaderboards.

- The consensus appears to be that, even though Veo 3.1 is ranked higher, it is not better than Sora 2.

- Claude Sonnet 4.5: Is It Worth the Hype?: Members discuss Claude Sonnet 4.5’s capabilities, particularly in creative writing, with one member stating that Claude Sonnet 4.5 Thinking is in “a different league” than Gemini 2.5 Pro.

- However, others mention ongoing bugs and issues with models getting stuck or generating generic error messages on LMArena, hindering their ability to test and compare effectively.

LMArena ▷ #announcements (1 messages):

Text-to-Video Leaderboard, Image-to-Video Leaderboard, Veo-3.1 ranking

- Veo-lociraptor: Veo-3.1 Dominates Video Leaderboards!: The Text-to-Video Leaderboard & Image-to-Video Leaderboard now show Veo-3.1 ranking #1 in both categories.

- Users are encouraged to share their Veo-3.1 generations and provide feedback.

- Arena X Post: The leaderboards were also announced on X.

- Check out how Video Arena works and share generations in the discord channels.

Unsloth AI (Daniel Han) ▷ #general (1106 messages🔥🔥🔥):

Apple hardware support in Unsloth, AMD x PyTorch Hackathon, Training reasoning models for legal systems, Synthetic data generation with synonyms, Choosing models for coding tasks

- Unsloth eyes Apple Silicon Support: Support for Apple hardware is planned for Unsloth sometime next year, but as one member noted, take my word with a grain of salt….

- No further information or timeline was disclosed at this time.

- AMD x PyTorch Hackathon Extension announced: The AMD x PyTorch Hackathon is extended and to compensate for disruptions, each participant receives 100 hours of 192GB MI300X GPUs to use even outside the hackathon.

- Community members described the event as having so many issues but the team is trying.

- Modelscope mirrors Huggingface to circumvent AWS: Due to AWS outages affecting Hugging Face, a member recommends using Modelscope (a Chinese HF mirror) as a temporary alternative.

- A code snippet was shared showing how to load models from Modelscope in a Colab environment; the `load_in_4bit` flag still needs to be set.

- Consulting Advice triggers liability fears: A user seeking guidance on commercially using a fine-tuned LLM for real estate messaging was cautioned against free advice due to potential liability concerns.

- Another member clarified that such concerns apply only when providing detailed step-by-step guidance, not for suggesting general model or dataset choices.

- GPT-OSS finetuning seeks “policy” removal: Members discussed using RLHF to penalize GPT-OSS for generating policy-related content or refusing to answer questions, with the goal of creating a less censored model.

- Suggestions included removing the Chain of Thought to reduce censorship, with the caveat that it may make the model dumber, but uncensored.
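For the Modelscope workaround mentioned above, the shared snippet itself is not reproduced in this recap. The following is only a minimal sketch of one way it could look, assuming the `modelscope` package's `snapshot_download` is used to fetch the weights and Unsloth's `FastLanguageModel.from_pretrained` then loads them from the local directory. The model id and `max_seq_length` value are placeholders, not values from the discussion.

```python
def build_load_kwargs(local_dir, max_seq_length=2048):
    """Arguments passed to Unsloth; load_in_4bit still has to be set explicitly."""
    return {
        "model_name": local_dir,           # local path returned by Modelscope
        "max_seq_length": max_seq_length,  # assumed value, adjust to your task
        "load_in_4bit": True,              # the flag the recap says is still needed
    }


def load_from_modelscope(model_id):
    """Download from Modelscope instead of Hugging Face, then load with Unsloth."""
    # Imported lazily so the helper above can be used without a GPU environment.
    from modelscope import snapshot_download
    from unsloth import FastLanguageModel

    local_dir = snapshot_download(model_id)  # returns the local cache directory
    return FastLanguageModel.from_pretrained(**build_load_kwargs(local_dir))


# Usage in Colab (placeholder model id, requires modelscope and unsloth installed):
# model, tokenizer = load_from_modelscope("<org>/<model-name>")
```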

Unsloth AI (Daniel Han) ▷ #introduce-yourself (8 messages🔥):

Software Engineer Introduction, AI Bot Development, New Tricks for Old Dogs

- Software Engineer Specializes in AI Projects: A software engineer specializing in AI project development introduced themselves, offering services such as automation tasks, NLP, model deployment, text-to-speech, speech-to-text, and AI agent development.

- They highlighted proficiency with tools like n8n, Zapier, Make.com, GPT-4.5, GPT-4o, Claude 3.7 Sonnet, Llama 4, Gemini 2.5, Mistral, and Mixtral, and also provided a link to their portfolio.

- AI Bot Dev Enters the Chat: A developer primarily focusing on AI bot development introduced themselves, also mentioning capabilities in gaming and scraping.

- No other details were given.

- Big Tech Bot Dev Going Deeper: A dev with experience in big tech developing bots using ChatGPT expressed enthusiasm for Unsloth.

- They described themselves as an old dog learning new tricks and stated that they’re going deeper now.

Unsloth AI (Daniel Han) ▷ #off-topic (426 messages🔥🔥🔥):

Qwen 2.5 VL Issues, Hackathon Challenges and Synthetic Data, GPU vram burden, Diff2flow, RP Stat thing

- Qwen 2.5 VL Can’t Understand Images: A member reported that Qwen 2.5 VL couldn’t understand images, providing a GitHub link to the code.

- The member suspected HuggingFace of embedding watermarks to create unfair competition, but clarified that the issue was that no model worked at that point.

- Hackathon Halted Due to Lost Work: The Unsloth hackathon was paused due to some participants losing their work, prompting an investigation to ensure a fair competition, with promises of an even better prize.

- Participants discussed using synthetic data kits, generating Q&A pairs from models, and the challenges of completing the task within the time limit with a 192GB restriction.

- Diff2flow Project Revealed: A member shared details about a project called diff2flow, which converts existing eps or vpred models to flow matching, requiring specific hyper-parameters and datasets.

- They recommended using S3 on AWS for data storage, cautioning about ingress/egress costs, and noted that it’s different from the identifying watermark project.

- Tackling Scene Graph Algorithmic Nightmares: A member expressed frustration with building a scene graph for an RP stat thing, describing it as a code nightmare with numerous edge cases.

- The discussion explored using structured outputs and traversing a simple tree, but the challenge lies in capturing a naturally emerging scene graph from incomplete data.

- Ultravox Adapter Struggles with Speech-to-Text: A member released their first speech-to-text model based on Ultravox and Qwen 3 4B Instruct, noting it’s currently subpar and barely hears input.

- They’re doing a new run with 1152k steps, versus 256k steps on the old adapter, and pointed out that what makes Ultravox special is that it isn’t an ASR-to-LLM-to-TTS pipeline: the speech input is processed by Whisper, but the output is a 768-dim space.

Unsloth AI (Daniel Han) ▷ #help (73 messages🔥🔥):

FailOnRecompileLimitHit, Gemma3-270m decoding, TTS and ASR model training, GRPO recipe for gpt oss 20b, QWEN2.5 7B chat template

- FailOnRecompileLimitHit Arises in Unsloth RFT Notebook: A user encountered a FailOnRecompileLimitHit issue while trying the GPT OSS 20B unsloth reinforcement fine tuning notebook on an H100 80G, suggesting adjusting the setting shown in the error message.

- Another user suggested that increasing `torch._dynamo.config.cache_size_limit` or sorting the dataset by size might help.

- Cracking the Code: Decoding Gemma3-270m Predictions: A user was struggling to correctly decode predictions when training gemma3-270m, aiming to compare labels to predictions in `compute_metrics`.

- A possible solution involves setting `os.environ['UNSLOTH_RETURN_LOGITS'] = '1'`, checking this GitHub issue for adjusting the HF code, or generating regularly inside `compute_metrics`.

- TTS and ASR Model Training Guide Available: A user inquired about training a TTS and ASR model on local languages using Unsloth locally, with data in CSV format.

- A link to the Unsloth TTS guide was provided for assistance.

- GRPO Recipe Struggles for GPT OSS 20B: A user shared that the GRPO recipe for gpt oss 20b seems to be struggling even after 100 steps and linked to the colab notebook.

- They noted they made modifications to get it running on Modal.

- QWEN2.5 7B Model Chat Template Integration Asked About: A user asked about how to ensure Unsloth applies the chat template during fine-tuning of a QWEN2.5 7B model.

- Another user shared screenshots showing how the chat template is applied for Gemma3.
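For the Gemma3-270m decoding question above, here is a minimal sketch of comparing labels to predictions inside `compute_metrics` once logits are returned. It assumes a causal LM (logits at position t predict the token at t+1) and the usual Hugging Face convention that label `-100` marks ignored positions. `token_accuracy` and `argmax` are illustrative helper names, and plain lists are used so the sketch runs without NumPy.

```python
import os

# From the discussion: ask Unsloth to return logits during evaluation.
os.environ["UNSLOTH_RETURN_LOGITS"] = "1"

IGNORE_INDEX = -100  # label value excluded from the loss (prompt/padding tokens)


def argmax(row):
    """Index of the largest value in a 1-D list of scores."""
    return max(range(len(row)), key=row.__getitem__)


def token_accuracy(logits, labels):
    """logits: [batch][seq][vocab], labels: [batch][seq]."""
    correct = total = 0
    for seq_logits, seq_labels in zip(logits, labels):
        # Shift by one: logits at step t are compared with the label at t+1.
        for step_logits, target in zip(seq_logits[:-1], seq_labels[1:]):
            if target == IGNORE_INDEX:
                continue  # skip masked positions
            total += 1
            correct += int(argmax(step_logits) == target)
    return {"token_accuracy": correct / total if total else 0.0}


def compute_metrics(eval_pred):
    """Callback in the shape the HF Trainer expects: (predictions, labels)."""
    logits, labels = eval_pred
    # The Trainer passes NumPy arrays; convert them for this list-based sketch.
    if hasattr(logits, "tolist"):
        logits = logits.tolist()
    if hasattr(labels, "tolist"):
        labels = labels.tolist()
    return token_accuracy(logits, labels)
```

To inspect the predicted text rather than an accuracy figure, the argmax ids can be passed through the tokenizer's `batch_decode` after replacing `-100` in the labels with the pad token id.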

Unsloth AI (Daniel Han) ▷ #showcase (14 messages🔥):

Luau-Qwen3-4B-FIM-v0.1, Training Configurations, Luau-Devstral-24B-Instruct-v0.2, Brainstorm adapter

- Luau-Qwen3-4B-FIM-v0.1 Training Finishes Swimmingly: A member announced the completion of training for Luau-Qwen3-4B-FIM-v0.1, and shared clean graphs showcasing the results.

- Other members congratulated them and noted the clean graphs.

- Luau-Devstral-24B-Instruct-v0.2 Beats GPT-5 Nano: A member pointed out that, in the results shared for Luau-Devstral-24B-Instruct-v0.2, GPT-5 Nano outperforms GPT-5, and called that result surprising.

- The member remarked that GPT-5 is weird.

- Training Configs are Shared for Qwen3: A member shared their detailed training configuration for Qwen3, including parameters such as `per_device_train_batch_size = 2` and `learning_rate = 2e-6`.

- Another member found the information very helpful in understanding the settings for training Qwen3s.

- Brainstorm Adapter to Boost Metrics: A member suggested adding a Brainstorm (20x) adapter to the model to potentially increase metrics and improve long generation stability.

- The original trainer welcomed the suggestion and expressed interest in the potential benefits.

Unsloth AI (Daniel Han) ▷ #research (12 messages🔥):

AI model thinking modes, xLLMs Dataset Collection, Double descent history and papers

- Thinking Mode XML Tags Teach New Tricks: A member proposed using XML tags such as `<thinking_mode=low>` to teach an AI model different thinking modes during SFT, categorizing examples based on CoT token count, with an auto mode for the model to decide.

- Another member suggested that controllable thinking usually benefits from some reinforcement learning after SFT, while acknowledging the lack of compute power for RL, planning to experiment with SFT only.

- xLLMs Datasets: A Multilingual Bonanza: The xLLMs project introduced a suite of multilingual and multimodal dialogue datasets on Hugging Face, designed for training and evaluating advanced conversational LLMs on capabilities like long-context reasoning and tool-augmented dialogue.

- One highlight is the xllms_dialogue_pubs dataset, ideal for training models on long-context reasoning, multi-turn coherence, and tool-augmented dialogue across 9 languages.

- Double Descent History Visualized: A member shared a YouTube video explaining the double descent history and related papers visually.
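The thinking-mode tagging idea above can be sketched as a small preprocessing step for SFT data. This is only an illustration: the thresholds, the `medium` and `high` mode names, and the whitespace token count (a stand-in for a real tokenizer) are assumptions, not details from the discussion.

```python
LOW_MAX_TOKENS = 256      # assumed cutoff for "low"
MEDIUM_MAX_TOKENS = 1024  # assumed cutoff for "medium"; anything longer is "high"


def count_tokens(text):
    """Whitespace split as a rough stand-in for a real tokenizer."""
    return len(text.split())


def thinking_mode(cot):
    """Bucket a chain-of-thought string by its token count."""
    n = count_tokens(cot)
    if n <= LOW_MAX_TOKENS:
        return "low"
    if n <= MEDIUM_MAX_TOKENS:
        return "medium"
    return "high"


def tag_example(prompt, cot, auto=False):
    """Prepend the mode tag; auto leaves the amount of thinking to the model."""
    mode = "auto" if auto else thinking_mode(cot)
    return f"<thinking_mode={mode}>\n{prompt}"


print(tag_example("What is 17 * 3?", "17 * 3 = 51"))
```

Tagging a share of examples with `auto` regardless of CoT length is one way to expose the model to the "decide for yourself" case during SFT.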

OpenAI ▷ #ai-discussions (569 messages🔥🔥🔥):

Comcast data selling, Stealth model, Grok video, Sora 2 video quality, Sora 2 invites

- Comcast reps steal data and distribute it, claims user: A user claimed Comcast representatives are stealing data and selling it through sophisticated phishing attempts targeted through authentic Kenyan organizations and Philippines-based call centers.

- They are documenting cases for collective escalation and suggested contacting CEO Brian Roberts directly to hold the company accountable.

- OpenCode Testers Discover Super Speedy Stealth Model: The OpenCode team has a mysterious stealth model for the weekend that is incredibly fast at spitting out tokens.

- They’re unsure if it’s intelligent but noted it’s the fastest model they’ve ever seen.

- Grok Launches Video Imagine Feature: Grok is now doing video generation, accessible via SuperGrok subscription ($30/month) without requiring a Twitter/X account.

- Users are finding Grok video generation copes fine with franchises and 3rd party content, producing videos without watermarks.

- Veo and Grok Video Generation Benchmarked Against Sora 2: Members are discussing that Veo 3.1 is roughly on the same level as Sora, but it’s not free and should be available in Australia, while another agreed that OpenAI is 20x ahead.

- One user is trying to create a video with a KSI cameo, but wasn’t sure what prompts to use, and another had one video scene declined as too violent.

- Qwen3 finetuning on Unsloth Library hits roadblock: A user is facing issues finetuning the Qwen3-235B-A22B-Instruct-2507 model when saving checkpoints using LLaMA-Factory and the Unsloth library.

- Another user suggested it sounds like running out of memory and that saving checkpoints—especially full models or even LoRA weights—can cause VRAM exhaustion because it involves duplicating tensors for serialization, which doesn’t benefit from training-time optimizations like gradient checkpointing.
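To put the checkpoint-saving explanation above into numbers, here is a rough back-of-the-envelope sketch. It assumes 235 billion total parameters stored in a 2-byte dtype (bf16/fp16) and, following the explanation in the thread, that serialization may materialize an extra copy of the tensors being saved. Optimizer state, activations, and sharding details are ignored, and the GPU figures in the usage example are hypothetical.

```python
GIB = 2 ** 30  # bytes per GiB


def weights_gib(num_params, bytes_per_param=2):
    """Size of one full copy of the weights in GiB."""
    return num_params * bytes_per_param / GIB


def extra_copy_fits(num_params, free_gib_per_gpu, num_gpus, bytes_per_param=2):
    """True if one additional copy of the weights fits in the remaining memory."""
    return weights_gib(num_params, bytes_per_param) <= free_gib_per_gpu * num_gpus


full_copy = weights_gib(235e9)  # about 437.7 GiB for a 2-byte dtype
print(f"one extra copy of the weights: {full_copy:.1f} GiB")

# Hypothetical node: 8 GPUs with 10 GiB free each at save time.
print(extra_copy_fits(235e9, free_gib_per_gpu=10, num_gpus=8))
```

Under these assumptions a full-weights save needs hundreds of GiB of headroom, which is why saving only adapter weights or offloading to CPU before serialization is commonly tried first.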

OpenAI ▷ #gpt-4-discussions (42 messages🔥):

Agentic AI Hackathon, VPN use with ChatGPT/Sora, DALL-E private image generation, ChatGPT access in universities, Sora Access and "k0d3"

- Hackathon for Agentic AI Sought: A member inquired about an agentic AI hackathon, prompting responses regarding upcoming hackathons hosted by OpenAI and Microsoft.