Chromium is all you need.

AI News for 10/20/2025-10/21/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (198 channels, and 7709 messages) for you. Estimated reading time saved (at 200wpm): 564 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

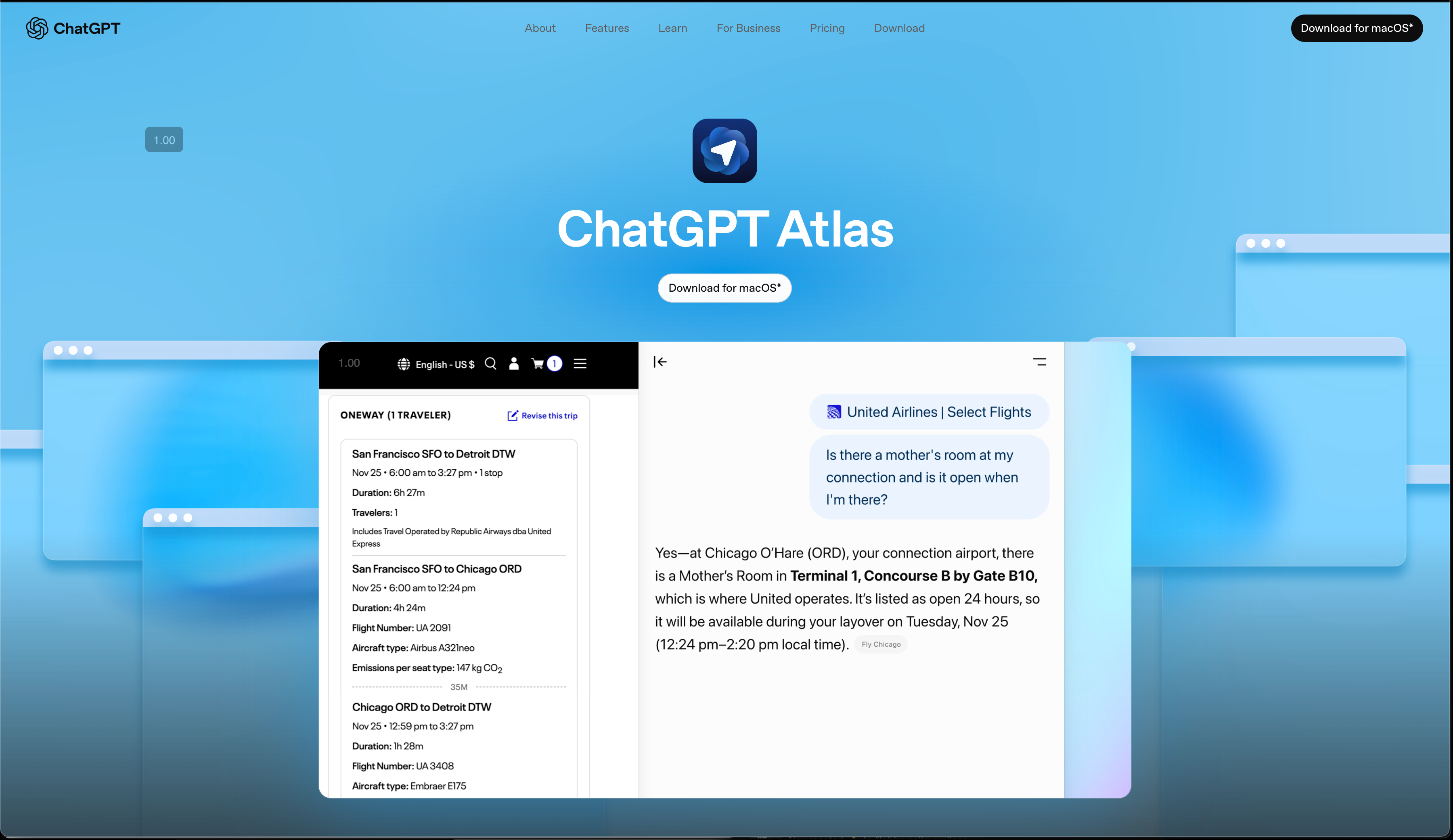

As leaked in July (and earlier), OpenAI finally launched their Chromium fork AI browser, Atlas (macOS only for now, with other platforms coming - download/website here):

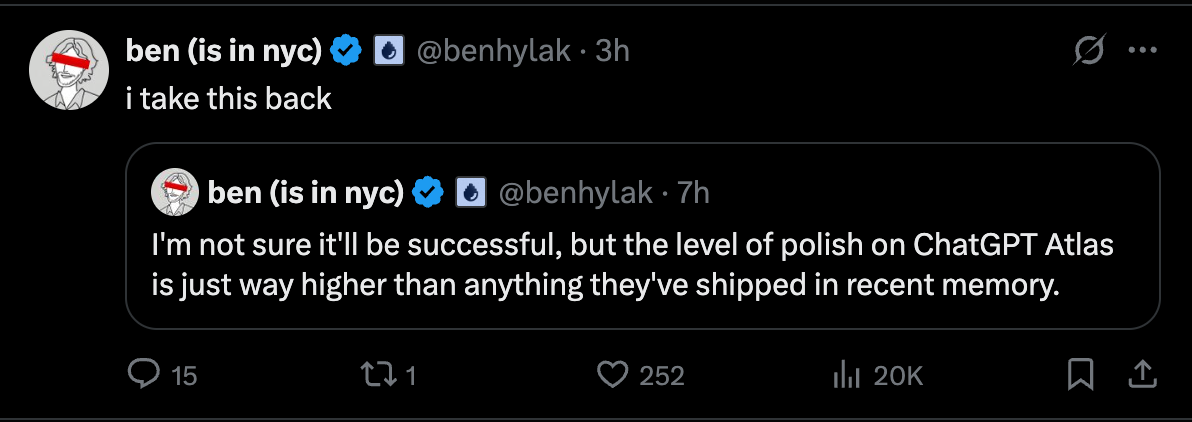

The integration is very polished and impressive, as you can see in the second half of the livestream. By bringing Agent mode into Atlas, OpenAI is not just matching what Gemini in Chrome already has, but going the next obvious step past it by reviving Operator and putting it in the local browser instead of remote, so that it can use your logins.

The vibes are positive, but not entirely so:

Your move, Google.

AI Twitter Recap

OpenAI’s ChatGPT Atlas Browser Launch

- Atlas ships with Agent Mode and “browser memory”: OpenAI unveiled an AI-first browser for macOS with ChatGPT embedded system-wide, optional page/context memory, and a preview “Agent mode” that can act on webpages (including logged-in sites with permission). macOS is rolling out now; Windows, iOS, and Android “coming soon.” See launch posts from @OpenAI, Agent mode details, and product notes. PMs highlighted use-cases and UX intent via @kevinweil, @bengoodger, and @fidjissimo. An incognito-style toggle for memory is present (@omarsar0).

- Early reactions: The “browser is the new OS” framing landed (@Yuchenj_UW, @nickaturley), but reliability and privacy trade-offs surfaced immediately. One head-to-head against Perplexity’s Comet showed Atlas completing a tedious grades-tracking task more robustly (context handling, faster actions, and “human-like” exploration) (@raizamrtn). Others called Agent mode “slop” for now and raised data access concerns (@Yuchenj_UW, privacy). Launch traffic briefly overwhelmed services (@TheTuringPost).

LangChain’s $125M Series B and v1.0 Agent Engineering Stack

- Funding + product milestone: LangChain raised a $125M Series B led by IVP with participation from CapitalG, Sapphire, Sequoia, Benchmark, and others, valuing the company at $1.25B. Alongside, it released 1.0 versions of LangChain and LangGraph, a LangSmith insights agent, and a no-code agent builder (@LangChainAI, @hwchase17, IVP note). The team emphasized a controlled, production-first agent runtime and observability, with a new createAgent abstraction + middleware in LangChainJS (@bromann, release notes). Usage claims: 85M+ OSS downloads/month and ~35% of the Fortune 500 using the stack (@veryboldbagel, @amadaecheverria).

- Ecosystem fit: vLLM added MoE LoRA expert finetuning support (@casper_hansen_) and credited an external analysis as impetus (@corbtt). Multiple teams highlighted production usage of LangGraph/LangSmith for agent reliability and evals (@Hacubu, @jhhayashi).

Vision Tokens, OCR, and New VLMs: DeepSeek-OCR, Glyph, Qwen3-VL, Chandra OCR

- DeepSeek-OCR (text-as-image) sparks debate: The paper reports large long-context compression by rendering text as images and decoding via a vision encoder + MoE decoder. Commentary ranges from enthusiastic technical breakdowns (97% reconstruction precision with ~10x fewer “visual” tokens; high-res convolutional compressor) (@rasbt) to sharp critiques on missed prior art (pixels-for-language and visual token compression lines) (@awinyimgprocess, @NielsRogge). Others argue the core takeaway is inefficiency in current embedding/token usage, not image superiority per se (@Kangwook_Lee).

- Zhipu’s “Glyph”-like direction and KV via vision tokens: Several noted Zhipu releasing a contemporaneous vision-token compression approach (“Glyph”), with claims of 3–4x context compression and infilling cost reductions without quality drop on long-context QA/sum (@arankomatsuzaki, context). Details remain sparse; watch for BLT-like extensions to push decoding efficiency further.

- Qwen3-VL-2B/32B: Alibaba released dense 2B and 32B VLMs, including FP8 variants and “Thinking”/Instruct types, claiming strong wins vs GPT‑5 mini and Claude Sonnet 4 across STEM, VQA, OCR, video, agent tasks; the 32B aims to match much larger models on OSWorld with high memory efficiency (@Alibaba_Qwen). Demos landed on HF quickly (@_akhaliq).

- Open-source OCR: Chandra OCR launched with full layout extraction, image/diagram captions, handwriting, and table support; works with Transformers/vLLM (@VikParuchuri).

Training/Serving Stack Updates: PyTorch, vLLM, FlashInfer, Providers

- Meta PyTorch drops new libraries: torchforge (scalable RL training), OpenEnv (agentic environments), and torchcomms, plus momentum around Monarch and TorchTitan within a “future-of-training” map (pretrain→post-train→inference) (@eliebakouch, stack summary, Monarch).

- vLLM and memory: kvcached enables serving multiple models sharing unused KV cache blocks on the same GPU (@vllm_project); the project is featured at PyTorch Conference (@vllm_project).

- FlashInfer-Bench: new “self-improving” benchmarking workflow to standardize LLM serving kernel signatures and auto-surface fastest kernels for day-0 integration in FlashInfer/SGLang/vLLM (@shanli_xing).

- Provider benchmarks for GLM‑4.6 (Reasoning): Baseten led output speed (104 tok/s) and fastest time-to-first-answer-token; pricing across providers clustered near $0.6/M input, ~$2/M output; all support 200k context and tool calling (@ArtificialAnlys).
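For a rough sense of what those provider figures mean per request, here is a minimal Python sketch. It assumes the approximate prices quoted above ($0.6 per million input tokens, about $2 per million output tokens) and the reported 104 tok/s output speed; the function names and example token counts are hypothetical, and actual bills depend on each provider's real pricing.

```python
# Back-of-the-envelope cost and latency estimate for a single request.
# Default prices and speed are the approximate figures from the benchmark
# summary above, not an official price list.

def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float = 0.60,   # assumed USD per 1M input tokens
    output_price_per_m: float = 2.00,  # assumed USD per 1M output tokens
) -> float:
    """Return the estimated request cost in USD."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


def generation_seconds(output_tokens: int, tokens_per_second: float = 104.0) -> float:
    """Return the time spent streaming output, ignoring time-to-first-token."""
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical agentic request: long prompt, moderately long answer.
    cost = estimate_cost_usd(input_tokens=150_000, output_tokens=4_000)
    seconds = generation_seconds(output_tokens=4_000)
    print(f"estimated cost: ${cost:.4f}, streaming time: {seconds:.1f}s")
```

With long-context prompts the input side tends to dominate the bill even though output tokens cost more per token, which is why the 200k context support matters as much as the output price.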

Research, Evals, and Methods

- Continual learning via memory layers: Sparsely finetuned memory layers enable targeted updates with minimal forgetting compared to full finetune/LoRA (−11% vs −89%/−71% on fact tasks), proposing a practical route to incremental model updates (@realJessyLin, blog).

- Mechanistic interp at scale: Anthropic analyzed Claude 3.5 Haiku on a “perceptual” task, revealing clean geometric transformations and distributed attention algorithms; community notes it as among the deepest behaviors understood mechanistically to date (@wesg52, @NeelNanda5).

- Prompt optimization > RL for compound systems? GEPA uses reflective prompt evolution with Pareto selection to beat GRPO on HotpotQA, IFBench, Hover, PUPA, reducing rollout needs via natural language self-critique (@gneubig, paper/code, summary).

- Evals in the wild: SWE‑Bench Pro leaderboard update shows top models now >40% pass rate, with Claude 4.5 Sonnet leading (@scale_AI).

- Self-play caveats for LLMs: Why self-play shines in two‑player zero‑sum settings (minimax) but is tricky in real‑world domains (reward shaping, equilibria untethered from human utility) (@polynoamial).

Developer Tooling and Apps

- Google AI Studio “AI-first coding”: revamped build mode integrates multi-capability scaffolding (“I’m Feeling Lucky”), targeting faster prompt→production iteration for Gemini apps (@OfficialLoganK, demo, @GoogleAIStudio).

- Runway: announced self-serve model fine-tuning and a node-based Workflows system to chain models/modalities/intermediate steps for production creative pipelines (@runwayml, Workflows).

- Together AI: video and image generation models (e.g., Sora 2, Veo 3) now accessible through the same APIs used for text inference (@togethercompute).

- LlamaIndex: llamactl CLI for local LlamaAgents development/deployments; turnkey document agents template and private-preview hosting for doc-centric workflows (@llama_index, @jerryjliu0).

Top tweets (by engagement)

- “hahahaha the bed sends 16gb of data a month oh god” — IoT reliability/telemetry facepalm during the AWS outage (@internetofshit).

- “Meet our new browser—ChatGPT Atlas. Available today on macOS” (@OpenAI); “Make room in your dock” (@OpenAI).

- Karpathy on synthetic identity/personality tuning for nanochat via diverse synthetic dialogs (@karpathy).

- Qwen Deep Research upgrade: report + live webpage + podcast auto-generation with Qwen3 stack (@Alibaba_Qwen).

- Airbnb CEO: Qwen is “very good, fast and cheap,” often preferred in production over “latest” OpenAI models due to cost/latency (@natolambert).

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen3-VL Model Performance Comparison

- Qwen3-VL-2B and Qwen3-VL-32B Released (Activity: 626): The image provides a detailed comparison of the performance metrics for the newly released Qwen3-VL-2B and Qwen3-VL-32B models against other models like Qwen3-VL-4B, Qwen3-VL-8B, and Qwen2.5-VL-7B. The table highlights the models’ performance across various tasks such as STEM & Puzzle, General VQA, and Text Recognition. Notably, the Qwen3-VL-32B model demonstrates superior performance, achieving higher scores in most categories, which are marked in red to indicate their significance. This suggests that the Qwen3-VL-32B model is particularly effective in these tasks, outperforming its predecessors and other variants. One comment humorously suggests that the release of the 32B model should satisfy those requesting it, indicating anticipation and demand for this model size.

- The release of Qwen3-VL-2B and Qwen3-VL-32B models marks a significant advancement, with the new models reportedly outperforming the previous 2.5-VL 72B model despite being less than half its size. This suggests substantial improvements in model efficiency and performance, likely due to architectural optimizations or enhanced training techniques.

- A comparison image provided by a user highlights the performance differences between Qwen3-VL-2B and Qwen3-32B, indicating that the newer models may offer superior capabilities in text processing tasks. This could be of particular interest to those evaluating model performance for specific applications.

- Benchmarks shared in the discussion suggest that the Qwen3-VL models excel in ‘thinking’ tasks, which may refer to complex reasoning or problem-solving capabilities. This positions the models as strong candidates for applications requiring advanced cognitive processing.

- DeepSeek-OCR AI can scan an entire microfiche sheet and not just cells and retain 100% of the data in seconds… (Activity: 405): DeepSeek-OCR AI claims to scan entire microfiche sheets, not just individual cells, and retain 100% of the data in seconds, as per Brian Roemmele’s post. The tool reportedly offers a comprehensive understanding of text and complex drawings, potentially revolutionizing offline data curation. However, the post lacks detailed technical validation or benchmarks to substantiate these claims. Commenters express skepticism about the verification of the extracted data’s accuracy and the openness of AI development between countries, particularly comparing the US and China. There is also criticism of the announcement’s lack of technical detail, labeling it as ‘hype BS’ without verification.

- rseymour raises a technical concern about the resolution capabilities of the DeepSeek-OCR AI, questioning the feasibility of using ‘vision tokens’ at a resolution of 1024x1024. They suggest that this resolution might be insufficient for accurately capturing the details of a microfiche sheet, which typically requires higher resolution due to its small size and dense information content. The comment implies that the technology might be overhyped without proper validation of its capabilities.

- Robonglious discusses the openness of AI development between countries, specifically comparing the transparency of AI advancements in China versus the US. They speculate whether companies like OpenAI or Anthropic would release similar OCR technology if they developed it, suggesting that the US might be less cooperative in sharing such advancements compared to China.

- TheHeretic and Big_Firefighter_6081 express skepticism about the claims made regarding DeepSeek-OCR AI’s capabilities. They criticize the lack of verification and validation of the results, implying that the information might be more hype than reality. This highlights the importance of rigorous testing and validation in AI technology claims to ensure credibility.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. ChatGPT Atlas Browser Launch

- Meet our new browser—ChatGPT Atlas. (Activity: 3175): ChatGPT Atlas is a new browser launched by OpenAI, currently available exclusively on macOS. The browser integrates AI capabilities directly into the browsing experience, potentially enhancing user interaction with web content. However, the release is limited to Mac users, which has sparked some debate about accessibility and platform support. Commenters have raised concerns about data privacy and the decision to release the browser only for macOS, questioning the strategic choice and potential data handling practices.

- Big-Info and douggieball1312 discuss the platform exclusivity of ChatGPT Atlas, noting that it is currently only available for Mac. This decision is critiqued as potentially alienating Windows users, especially given Microsoft’s financial backing of OpenAI. The irony is highlighted in the context of Microsoft’s investment, as Windows is a major competitor to Mac.

- Tueto raises concerns about data privacy with ChatGPT Atlas, questioning where user data is being sent. This reflects broader concerns about data handling and privacy in AI-driven applications, especially in the context of web browsing where sensitive information is often accessed.

- douggieball1312 points out the irony in ChatGPT Atlas being exclusive to Mac, despite OpenAI’s backing by Microsoft. This decision is seen as a reflection of a Silicon Valley tech bubble that may overlook the broader user base, particularly Windows users, which could impact adoption and user satisfaction.

- GPT browser incoming (Activity: 1511): The image is a social media post by Sam Altman, CEO of OpenAI, announcing a livestream event to launch a new product. The post is retweeted by OpenAI and features a graphic with the word “Livestream” and the OpenAI logo, indicating a significant announcement. The engagement on the post suggests high interest and anticipation for the announcement. The comments reflect a mix of humor and skepticism, with some users joking about the product being a ‘sexbot’ and others expressing concerns about privacy, likening it to ‘spyware’ similar to Google’s data practices.

- trustmebro24 speculates that the upcoming GPT browser might be based on Chromium, which is a common choice for many modern browsers due to its open-source nature and robust performance. Chromium’s architecture allows for extensive customization and integration of advanced features, which could be beneficial for a browser leveraging GPT technology.

- qodeninja raises a concern about the potential for the company to overextend itself by developing too many products, suggesting that it might be more effective to allow the broader ecosystem to innovate and create complementary technologies. This reflects a strategic consideration about resource allocation and focus in tech development.

- Vegetable_Fox9134 mentions the potential privacy concerns associated with a new browser, comparing it to existing issues with Google. This highlights the ongoing debate about data privacy and the trade-offs users face when using technology that may collect personal information.

- OpenAI’s AI-powered browser, ChatGPT Atlas, is here (Activity: 1041): OpenAI has launched an AI-powered browser named ChatGPT Atlas, which integrates the capabilities of ChatGPT into web browsing. This tool aims to enhance user interaction by providing AI-driven insights and assistance directly within the browser environment. The integration is expected to streamline tasks by leveraging the conversational abilities of ChatGPT, potentially transforming how users interact with web content. The comments reflect a mix of skepticism and curiosity, with some users expressing concerns about privacy and the potential for misuse, while others are intrigued by the possibilities of AI-enhanced browsing.

- CONFIRMED: OpenAI is Launching a New Browser TODAY Called ChatGPT Atlas (Activity: 747): OpenAI has launched a new browser called ChatGPT Atlas, available globally on macOS with plans for Windows, iOS, and Android soon. The browser integrates AI capabilities directly into the browsing experience, offering a chat interface for seamless AI communication. It is introduced by key figures like Sam Altman and Ben Goodger. The browser is perceived as a strategic move to compete with Google and Microsoft, though it has been critiqued for its similarity to existing browsers with added chat functionality. More details can be found in the YouTube video. Commenters express skepticism about the browser’s impact, noting it may primarily serve OpenAI’s data collection needs rather than offering significant user benefits. Concerns are raised about privacy and data being sent to OpenAI, with some questioning the necessity of the browser given existing alternatives.

- The introduction of ChatGPT Atlas by OpenAI is seen as a strategic move to compete with tech giants like Google and Microsoft. While the browser may offer some convenience and speed improvements, there is skepticism about its impact on users. The primary concern is the extensive data collection capabilities, which could surpass current systems by learning about users’ lives, interests, and behaviors in real-time.

- There is speculation that ChatGPT Atlas might be based on the Chrome engine, which would align with many modern browsers that leverage Chromium for compatibility and performance benefits. This choice could influence the browser’s adoption by providing a familiar user experience and support for existing web standards.

- A significant concern among users is the potential privacy implications of using ChatGPT Atlas. The browser could collect vast amounts of personal data, raising issues about how OpenAI will handle and protect this information. This concern is particularly relevant for users who may not fully understand the extent of data sharing involved.

2. Claude Desktop General Availability

- Claude Desktop is now generally available. (Activity: 836): Claude Desktop is now generally available for both Mac and Windows, offering seamless integration with local work environments. Users can access Claude by double-tapping the Option key on Mac, capture screenshots, share windows, and use voice commands via Caps Lock. The application supports enterprise deployment with MSIX and PKG installers. For more details and to download, visit Claude’s official site. Some users were confused about the announcement, thinking the app was already available, while others noted the absence of a Linux version. The Quick Entry feature is praised for its functionality.

- ExtremeOccident mentions that despite Claude Desktop being in beta, the Quick Entry feature is effective, indicating a focus on user experience and efficiency in input handling.

- Logichris highlights a limitation in token allocation for Claude Desktop, comparing it to a ‘paycheck to paycheck’ scenario, which suggests that the current token system may not support extensive use without frequent replenishment.

- Multiple users, including Yeuph and JAW100123, point out the lack of a Linux version, indicating a gap in platform support that could limit adoption among Linux users.

- {Giveaway} 1 Year of Gemini AI PRO (40 winners) (Activity: 2833): The post announces a giveaway for a one-year subscription to Gemini AI PRO for 40 winners, highlighting features such as the upcoming Gemini 3.0 Ultra, 1,000 monthly AI credits, and tools like Gemini Code Assist, NotebookLM, and integration with Gmail, Docs, and Vids. The package also includes 2TB storage and extended limits on various applications, aiming to enhance productivity and creativity across different domains. Commenters highlight diverse uses of Gemini AI, such as aiding in storytelling and language translation for personal and professional purposes, supporting filmmaking through its ecosystem, and enhancing open-source contributions with code generation capabilities.

- Bioshnev highlights the practical applications of Gemini AI in both personal and professional settings. He uses it for generating custom bedtime stories for his daughter and for work-related tasks like translating for foreign customers and retrieving product details. This showcases the model’s versatility in handling language processing and information retrieval tasks.

- thenakedmesmer discusses the impact of Gemini AI on creative projects, particularly in filmmaking. He mentions using features like ‘nano banana’ and ‘veo’ as part of a supportive ecosystem that aids in film production, illustrating how AI can serve as a virtual creative team, compensating for physical limitations and enhancing creative workflows.

- vladlearns emphasizes the importance of code generation capabilities in Gemini AI for open source contributions. This points to the model’s utility in software development, where it can assist in automating coding tasks, potentially increasing productivity and supporting collaborative projects.

3. Amazon’s Robot Workforce Plans

- Amazon hopes to replace 600,000 US workers with robots, according to leaked documents. Job losses could shave 30 cents off each item purchased by 2027. (Activity: 1630): Amazon is reportedly planning to replace 600,000 US workers with robots by 2027, as per leaked documents. This automation could potentially reduce costs by 30 cents per item. The initiative is part of a broader strategy to address labor shortages and improve efficiency in fulfillment centers, a goal Amazon has pursued since acquiring Kiva Systems over a decade ago. The transition to robotics is seen as a necessary step due to high turnover rates and labor shortages in Amazon’s fulfillment centers. Commenters highlight that the cost savings may not translate to lower prices for consumers, and emphasize the strategic necessity of automation due to Amazon’s labor challenges. A former Amazon Robotics employee notes that the goal of replacing workers with robots has been longstanding but is progressing slower than anticipated.

- The comment by ‘theungod’ highlights a critical operational challenge for Amazon: the high turnover and difficulty in staffing their fulfillment centers (FCs). The user notes that Amazon has been aiming to automate these roles since acquiring Kiva Systems over a decade ago, but the transition to robotics has been slower than anticipated. This suggests that the integration of robotics into Amazon’s logistics is not just about cost savings but also about addressing labor shortages.

- ‘theungod’ also provides an insider perspective, having worked at Amazon Robotics for over five years. They emphasize that the goal of replacing 600,000 workers with robots has been a long-standing objective, indicating that the technological and logistical hurdles are significant. This insight underscores the complexity of implementing large-scale automation in fulfillment operations, which involves not just technological development but also overcoming practical deployment challenges.

- The discussion touches on the broader implications of automation in logistics, particularly the potential societal impact. While the cost savings per item (30 cents) are noted, the focus is on the necessity of automation due to labor shortages rather than purely financial incentives. This reflects a shift in the narrative from cost-cutting to operational necessity, driven by the inability to maintain a stable workforce in demanding environments.

- Shape shifting drone (Activity: 1226): The post discusses a shape-shifting drone that appears to have a unique design, possibly inspired by biological forms, as suggested by the comment likening it to a ‘floating colonoscopy’. The image linked in the comments shows a drone with a flexible structure, which may allow it to adapt its shape for different flight dynamics or environmental conditions. This could be an innovative approach in drone technology, potentially enhancing maneuverability and efficiency. One comment suggests that the concept of a shape-shifting drone is not entirely new, indicating that similar designs may have been seen before. This could imply ongoing research and development in this area, reflecting a trend towards more adaptable and versatile UAV designs.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. GPU and eGPU Hardware Breakthroughs

- Blackwell Pro Packs 72GB, Quietly Drops: TechPowerUp reported NVIDIA quietly launching the workstation-class RTX Pro 5000 Blackwell with 72 GB GDDR7 memory, targeting pro workflows (NVIDIA RTX Pro 5000 Blackwell GPU with 72 GB GDDR7 appears).

- Engineers joked about likely pricing and use cases, while others flagged initial confusion over the unusual 72 GB capacity, mirroring similar coverage on VideoCardz.

- Tinygrad Makes Apple Silicon Love NVIDIA eGPUs: The tinygrad team announced early public testing of a pure-Python driver enabling NVIDIA eGPUs over USB4 on Apple Silicon using the ADT-UT3G dock, extra/usbgpu/tbgpu driver, and NVK-based tinymesa compiler (tinygrad enables NVIDIA eGPU on Apple Silicon (X)).

- They measured ≈3 GB/s PCIe bandwidth with SIP disabled and teased support for AMD RDNA 2/3/4 and Windows eGPU stacks next.

- Tiny Corp Boots NVIDIA on ARM MacBooks: Tiny Corp demonstrated an NVIDIA GPU running on an ARM MacBook via USB4 using an external dock, validating eGPU viability beyond Intel-era Macs (Tiny Corp Successfully Runs An Nvidia GPU on Arm Macbook Through USB4 Using An External GPU Docking Station).

- Mac users were upbeat, noting newer Pros with Thunderbolt 5 may further improve bandwidth headroom for local LLM and VLM workloads.

2. Triton/Kernel Tooling and Benchmarks

- FlashInfer-Bench Kicks Off Agentic Kernel Races: CMU Catalyst introduced FlashInfer-Bench, a workflow and leaderboard for agent-driven, self-improving LLM serving kernels with standardized signatures and integrations with FlashInfer, SGLang, and vLLM (FlashInfer-Bench blog).

- They published a live leaderboard and GitHub repo, inviting the community to iterate on kernels and benchmark updates.

- Triton Talks Stream and Sizzle: Developers shared full-session videos from the Triton conference at Microsoft, covering compiler advances and kernel design (Triton Conference livestream and Triton-openai streams).

- A recurring theme was hand-tuned PTX/assembly for critical kernels to beat compiler defaults, echoing calls to rethink execution from the ground up.

- Helion 0.2 Beta Fuzzes Triton to Tears: Helion 0.2 entered public beta as a Triton tile abstraction on PyPI, surfacing compiler edge cases during optimization passes (helion 0.2.0 on PyPI).

- Users reported MLIR failures in TritonGPUOptimizeThreadLocalityPass, framing Helion as an effective Triton-compiler ‘fuzzer’ whose autotuner skips bad configs.

3. OpenRouter SDK and New Reasoning Model

- OpenRouter SDK Types 300+ Models: OpenRouter released a TypeScript SDK (beta) with fully typed requests/responses for 300+ models, built-in OAuth, and support for all API paths (@openrouter/sdk on npm).

- SDKs in Python, Java, and Go are coming soon, aiming to simplify multi-model app development and authentication.

- Andromeda-alpha Cloaks Visual Reasoning: OpenRouter launched Andromeda-alpha, a small reasoning model focused on image/visual understanding, available for trial (Andromeda-alpha on OpenRouter).

- Since prompts/outputs are logged to improve the provider’s model, moderators warned: avoid personal/confidential data and do not use it for production.

- Mercury Outduels Qwen in Agent Arena: In agentic benchmarks, Inception/Mercury from provider Chutes edged Qwen on failure rate, latency, and cost in simple tasks (Chutes provider page).

- Members noted newer DeepSeek v3.1 models aren’t free via Chutes anymore, though a free longcat endpoint remains (longcat-flash-chat:free).

4. Open-Source Models and Text-to-Video Releases

- Ring & Ling MoEs Land in llama.cpp: Ring and Ling MoE models from InclusionAI now run in llama.cpp, spanning 1T, 103B, and 16B parameter scales (llama.cpp PR #16063).

- Practitioners questioned real-world reasoning quality and verbosity control, hoping for a model that doesn’t YAP during chain-of-thought.

- Krea Realtime Drops 14B Open T2V: Krea Realtime released a 14B open-source autoregressive text-to-video model distilled from Wan 2.1, generating long-form video at ~11 fps on a single NVIDIA B200 (Krea Realtime announcement (X)).

- Weights ship under Apache-2.0 on HuggingFace; users asked about ComfyUI workflows, RTX 5090 performance, and fine-tuning options.

- DeepSeek-OCR Joins the OCR Fray: DeepSeek-OCR arrived on GitHub, expanding the OCR toolkit with modern VLM-friendly design and multilingual aims (DeepSeek-OCR (GitHub)).

- Developers contrasted it with existing OCR stacks and highlighted the importance of contextual understanding for scripts like kanji.

5. AI Apps: ChatGPT Atlas Launch and Funding News

- OpenAI Ships Atlas, a Chromium AI Browser: OpenAI launched the ChatGPT Atlas browser for macOS, a Chromium-based browser with boosted limits and multi-site browsing (Introducing ChatGPT Atlas and chatgpt.com/atlas).

- Early users flagged missing vertical tabs and built-in ad blocking (extensions required), while elsewhere users compared Atlas to Perplexity’s Comet, praising Comet’s privacy focus and integrated adblocker.

- AI Browser Buzz Meets Skeptic Snark: Engineers questioned the utility of new AI browsers, sharing skepticism over performance and data practices (AI browser hype thread (X)).

- One member quipped, ‘OpenAI knows this too, they are just farming data and throwing shit at the wall,’ capturing wider concerns about hype versus real value.

- LangChain Grabs $125M to Build Agent Stack: LangChain raised $125M Series B, positioning a three-part stack: LangChain (agent dev), LangGraph (orchestration), and LangSmith (observability) (LangChain raises $125M (X)).

- They touted adoption by Uber, Klarna, and LinkedIn, signaling continued investor confidence in agent tooling and production ops.

Discord: High level Discord summaries

Perplexity AI Discord

- Comet Outshines Atlas in Privacy: Users compared Comet and ChatGPT’s Browser Atlas, favoring Comet’s commitment to privacy and integrated adblocker.

- Several noted the similarity between the two, but praised Comet for its features catering to user privacy.

- AI Therapy Sparks Ethical Concerns: Discord members debated the ethics of using AI for therapy, with some emphasizing the importance of human emotional maturity.

- Opinions diverged, with some suggesting “ChatGPT is a good therapist,” while others cautioned against over-reliance on AI for mental health support.

- Perplexity Fanatic Shows Swag: A user showcased their Perplexity stickers, expressing enthusiasm for the brand and requesting a Comet hoodie from the Perplexity Supply store.

- The display of enthusiasm led to lighthearted jokes about resembling a “cult,” with others encouraging further purchases.

- API Users Want ChatGPT5 Access: A user inquired whether the Perplexity API grants access to models like ChatGPT5 and Claude, or is restricted to Sonar.

- The inquiry reflects a desire to utilize the API for potentially more advanced models beyond the currently available Sonar.

- Shareable Discord Threads Reminder: A message reminded users to ensure their Discord threads are set to `Shareable` to be more accessible.

- This ensures that links to the thread can be accessed by others, even outside of the specific channel, improving collaboration.

LMArena Discord

- Gemini 3 Pro Blows Away GPT-5 High in Web Design: Members compared Gemini 3 Pro and GPT-5 High, reporting that Gemini 3 Pro crushes web design.

- The general consensus is that Gemini 3 Pro is better for coding, whereas GPT-5 High is better for math and other tasks.

- Sora 2 Downgrade Sparks AI Subscription Debate: Members expressed frustration over the Sora 2 downgrade, leading to a broader discussion on the value of AI subscriptions.

- One member pointed out that their job performance is about 25-30% better because of AI, underscoring AI’s impact on desk job efficiency.

- Lithiumflow and Orionmist Speculated as Gemini 3 Checkpoints: Members speculated about the differences between Lithiumflow and Orionmist, ultimately concluding that these models are checkpoint versions of Gemini 3.

- The models sometimes erroneously claim training by OpenAI, suggesting potential model distillation.

- Open Source Models Allegedly Stealing Gemini 2.5 Pro: Discussion arose regarding the ethics of open-source models using stolen data for improvement, with claims that Chinese AI companies stole the 2.5 pro and made it open source.

- Some members agreed with the sentiment that this is okay as that’s the only way open source can win.

- TikZ Generation Task Elicits Surprise: Members are exploring the use of LLMs to generate images in TikZ, a typesetting language, to avoid data contamination.

- Early results indicate some success in generating TikZ images with LLMs, demonstrating a novel approach to image creation.

Unsloth AI (Daniel Han) Discord

- Ring and Ling Launch!: Ring and Ling MoE models are now supported in llama.cpp (link to Github), including 1T, 103B, and 16B parameter models from InclusionAI.

- Members pondered the reasoning abilities of the models, with one hoping for a reasoning model that doesn’t YAP.

- Disable Unsloth Statistics: To prevent telemetry calls when running Unsloth in offline mode, set the `UNSLOTH_DISABLE_STATISTICS` environment variable and `os.environ['HF_HUB_OFFLINE'] = '1'`. Separately, the Unsloth community reached 100M lifetime downloads on Hugging Face (announcement on X).

- Members also discussed resolving network issues by setting proxy environments.

- Nvidia RTX Pro 5000 Blackwell Workstation Card Quietly Appears: Nvidia quietly launched the RTX Pro 5000 Blackwell workstation card with 72GB of memory, as reported by VideoCardz.

- Initial confusion arose regarding the 72GB capacity, with one user joking that it was a way to bypass automod.

- User Fumes over Rate Limiting Tactics: A user ranted about a premium subscription service blocking access to URLs containing roman numerals, incorrectly interpreting them as malicious activity.

- The user, frustrated with manual workarounds and security plugins, criticized the service for ignoring requests to allow bulk downloads for pro subscribers.

- Nvidia GPU Transplanted onto ARM Macbook: A member shared an article from Tom’s Hardware on successfully running an Nvidia GPU on an ARM Macbook through USB4 using an external GPU docking station: Tiny Corp Successfully Runs An Nvidia GPU on Arm Macbook Through USB4 Using An External GPU Docking Station.

- This excited Mac users: the ‘pros’ now have Thunderbolt 5 too, which gives them slightly more hope.
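The offline-mode tip in the "Disable Unsloth Statistics" item above can be sketched as follows. This is a minimal sketch, not Unsloth's documented procedure: the discussion only says `UNSLOTH_DISABLE_STATISTICS` should be set, so the value `"1"` is an assumption, and the placement before any library import is a cautious default rather than a confirmed requirement.

```python
import os

# Assumption: "1" is used as the enabling value; the discussion only says
# the UNSLOTH_DISABLE_STATISTICS variable should be set.
os.environ["UNSLOTH_DISABLE_STATISTICS"] = "1"

# From the discussion: ask huggingface_hub to avoid network calls.
os.environ["HF_HUB_OFFLINE"] = "1"

# Set both before importing unsloth or transformers, so the libraries
# can pick them up when they are first loaded.
# import unsloth  # uncomment where Unsloth is installed
```

Setting the variables in the shell before launching Python should have the same effect and avoids any import-ordering concerns.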

Cursor Community Discord

- Codex Called a Billion Dollars Compared to Claude’s Toonie: Users debated the merits of Codex vs Claude for code generation, with one user stating that comparing them is like comparing “a billion dollars or a toonie”.

- No further details were provided.

- Cursor Site Crashes, Subs Lost: Multiple users reported the Cursor website being down for several hours, preventing them from logging in, upgrading plans, or renewing subscriptions.

- Some suspected AWS issues as the root cause, while others pointed out the lack of subscription expiration notifications as a major inconvenience.

- Dashboard Cracks Cursor Costs Post-Pricing Changes: A user shared a dashboard they built to track actual Cursor costs after the pricing changes, especially for users on legacy pricing plans, and linked cursor.com/blog/aug-2025-pricing.

- The tool requires cookie login or .json upload from the user’s local machine, but promises comparison with real API pricing.

- Background Agents Encounter Internal Error: A member reported encountering an internal error when running a first experiment with background agents via Linear, where the agent starts, does some thinking and grepping, but then stops.

- The error message received was: “We encountered an internal error that could not be recovered from. You might want to give it another shot in a moment.”

OpenRouter Discord

- OpenRouter SDK: Beta Boost: The new OpenRouter SDK is in beta on npm, aiming to be the simplest way to use OpenRouter and offering fully typed requests and responses for 300+ models.

- Python, Java, and Go versions are coming soon, featuring built-in OAuth and support for all API paths.

- Andromeda-alpha: Cloaked & Ready: OpenRouter launched a new stealth model named Andromeda-alpha (https://openrouter.ai/openrouter/andromeda-alpha), a smaller reasoning model focused on image and visual understanding.

- Prompts and outputs are logged to improve the model, so users are cautioned against uploading personal or confidential info and against using it in production.

- Objective AI’s Confidence Code: Objective AI now offers a Confidence Score for each OpenAI completion choice, derived through a smarter method than directly asking the AI and emphasizing cost-efficiency.

- The CEO is building reliable AI Agents, Workflows, and Automations free of charge using n8n integration to gather more examples.

- Mercury Swats Qwen in Agent Arena: Inception/Mercury (Chutes provider) edges out Qwen in simple agentic tasks, exhibiting lower failure rate, faster speed, and lower cost.

- New Deepseek models like v3.1 aren’t available as free versions through Chutes, though they recently added a free longcat endpoint.

- AI Browser Bandwagon Bewilders Brains: Members are skeptical towards the hype around new AI browsers like X’s AI browser, questioning the utility and performance impact of integrated AI.

- One member compared the hype to the dotcom bubble, stating that OpenAI knows this too, they are just farming data and throwing shit at the wall.

OpenAI Discord

- OpenAI Launches Atlas Browser: OpenAI released the ChatGPT Atlas Browser for macOS today at chatgpt.com/atlas, detailed in their blog post.

- The browser, based on Chromium, boasts boosted limits, direct website access and support for multiple websites, but lacks vertical tabs and a built-in ad blocker.

- Meta Shuts Down 1-800-ChatGPT on WhatsApp: Meta is blocking 1-800-ChatGPT on WhatsApp after January 15, 2026, according to a blog post.

- The change is due to Meta’s new policies.

- Sora Limits Video Lengths: The Sora iOS app limits video generation to 10-15 seconds, while the web version allows longer videos for Pro subscribers.

- Free and Plus users can also generate longer videos on the web version, with Pro users having access to the storyboard feature and generating up to 25-second videos.

- AI-Driven OS Prototype Appears: A member introduced a prototype AI-driven OS, featuring an AI Copilot Core, a Seamless Dual-Kernel Engine, and a NeoStore for AI-curated apps (source).

- Further components include a HoloDesk 3D workspace, an Auto-Heal System, Quantum Sync, and an Atlas Integration Portal for accessing external AI tools.

- GPT-4 Annoying Users: A user expressed irritation with GPT-4’s new condescending tone, especially phrases like “if you insist,” and requested to make the model less confident.

- No solutions were provided, other than a general agreement that the new GPT is annoying.

HuggingFace Discord

- GPT-4o Saves Data Pipeline?: A member suggested using GPT-4o or other vision models for high-accuracy labeling and automated comparisons, but they were also cautious about the costs of replacing Apache Beam.

- Another member thought the architecture was overkill, likening it to proposing a starship to go to the grocery store.

- Unsloth’s Script Tunes LLMs Easily: A member requested insights into setting up Parameter-Efficient Fine-Tuning (PEFT) on Large Language Models (LLMs), and another member pointed out challenges in multi-GPU setups and suggested using Unsloth’s script on Colab Free.

- They cautioned about handling internal company data and linked to further resources, like the Fine-tuning LLMs Guide.

- Databomz Manages All Prompts!: A member introduced Databomz, a workspace and Chrome extension for saving, organizing, and sharing prompts with features like tags, versions, and folders.

- The member highlighted a Forever Free plan and encouraged feedback from prompt engineers.

- Solo Dev creates TheLastRag: A solo developer created an entire LLM Framework called TheLastRag, highlighting features like True memory, True personality, True learning and True intelligence, and is looking for feedback.

- The main points are that the AI never forgets, has a true personality, has true learning, and has true intelligence.

- Local VLM Training Consumes Gigabytes of Memory: A member reported that while training the VLM exercise locally, it’s using a large amount of swap memory (62GB claimed and ~430GB virtual memory).

- The same member asked if there’s a way to limit memory usage specifically for MPS (Metal Performance Shaders) on Macs, with a goal to enable training within a more reasonable 40GB VRAM limit.

LM Studio Discord

- LM Studio Struggles Linking llama.cpp: Members noted that pointing LM Studio at your own llama.cpp build (“how can I call my own llama.cpp for LM Studio to use”) is not yet fully supported, and the LM Studio docs link that references this is broken.

- There is no known workaround, so users may have to wait until the feature is added.

- AGI ETA: 2044?: A member forecasted that AGI is 10-20 years away, claiming that in 5 years LLMs will probably have context large enough.

- Another member jokingly suggested he become a consultant and charge 1000/h.

- GPT-OSS Reasoning Demands Metadata: A user inquired about setting reasoning effort in GPT-OSS finetunes, with a member responding that it works due to the metadata in the mxfp4 model of gpt-oss, which is why finetunes/ggufs don’t have it.

- The helpful member offered to make it available before quantizing it to gguf.

- OpenWebUI Connects to LM Studio via OpenAI: When trying to connect OpenWebUI to LM Studio, users suggested leveraging the OpenAI option instead of OpenAPI.

- Members helped troubleshoot the connection, pointing to this huggingface discussion recommending to put /v1 in the address.

- NVIDIA’s RTX Pro 5000 Blackwell Leaks: A member shared a TechPowerUp article about NVIDIA’s RTX Pro 5000 Blackwell GPU featuring 72 GB of GDDR7 memory.

- Excited users reacted with humor, guessing the card will cost around $8-10k.
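For the OpenWebUI item above, the "put /v1 in the address" advice can be sketched as a small helper that appends the path only when it is missing. The function name and the example address are hypothetical and used only for illustration; substitute the host and port your LM Studio server actually reports.

```python
from urllib.parse import urlsplit, urlunsplit


def openai_base_url(address: str) -> str:
    """Return the address with a /v1 path, adding it only if missing."""
    parts = urlsplit(address)
    path = parts.path.rstrip("/")
    if not path.endswith("/v1"):
        path += "/v1"
    # Drop any query string or fragment; a base URL should not carry them.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


# Hypothetical local address, shown only as an example input.
print(openai_base_url("http://localhost:1234"))
```

The resulting string is what would go into the OpenAI-compatible connection field, rather than the OpenAPI one.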

Latent Space Discord

- TinyGrad Powers Apple Silicon eGPUs: Tinygrad now supports NVIDIA eGPUs on Apple Silicon via USB4, enabling users to run external RTX 30/40/50-series GPUs using an ADT-UT3G dock with the `extra/usbgpu/tbgpu` driver and NVK-based `tinymesa` compiler (source).

- With SIP disabled, this setup achieves roughly 3 GB/s PCIe bandwidth, and future support for AMD RDNA 2/3/4 and Windows eGPU stacks is planned.

- Krea AI Unveils Realtime Video Model: Krea AI released Krea Realtime, a 14B open-source autoregressive text-to-video model distilled from Wan 2.1, generating long-form video at 11 fps on a single NVIDIA B200 (source).

- Released weights are on HuggingFace under Apache-2.0, prompting user inquiries about ComfyUI workflows, RTX 5090 performance, and fine-tuning support.

- Google AI Studio’s ‘Vibe-Coding’ with Gemini: Google AI Studio is launching a new “prompt-to-production” Gemini experience after five months of development aiming to make AI-app building 100× easier (source).

- Reactions mixed excitement (requests for mobile app, opt-outs, higher rate limits), feature suggestions (GSuite-only publishing, VS Code plug-in, short browser-agent tasks) and some skepticism about fit vs Gemini 3 expectations; team confirms enterprise-only deployment is already available.

- Fish Audio S1: TTS Revolution?: Fish Audio launched S1, a text-to-speech model that’s purportedly 1/6 the cost of ElevenLabs, touting 20k devs and $5M ARR (source).

- Users shared instant voice-clone demos, asking about real-time latency (~500ms), while founders admitted current limits and promised wider language support + conversational model next.

- Second-hand RTX 3090 Buying Tips: Taha shared lessons learned after buying a used RTX 3090: meet seller in person to inspect card, bring a portable eGPU test rig, verify recognition with nvidia-smi, run memtest_vulkan for VRAM integrity, optionally gpu-burn for compute stress, load a large model and monitor temps <100 °C; see guide here.

- The test rig is a Framework 13 Ryzen laptop on NixOS in PRIME offload mode, and a user suggested trying tinygrad on the rig, noting “mine works ootb since I’m on linux.”

GPU MODE Discord

- AMD’s Web3 Cloud Gambit: At an AMD event, the company emphasized the “cloud” aspect of web3, raising some eyebrows (smileforme emoji).

- Details of AMD’s specific offerings remain vague, leaving the community to speculate on their approach to decentralized technologies in the cloud.

- FlashInfer-Bench Automates AI: FlashInfer-Bench was introduced by CMU Catalyst as a workflow for creating self-improving AI systems via agents, featuring standardized signatures for LLM serving kernels and integration with FlashInfer, SGLang, and vLLM (blog post, leaderboard, GitHub repository).

- The project aims to foster community development and benchmarking, enabling AI systems to iteratively enhance their performance.

- Triton Conference Electrifies Microsoft: Members who attended the Triton conference at Microsoft in Mountain View shared a YouTube link to watch the conference online and a link to the Triton-openai streams.

- The conference brought together developers and researchers to discuss the latest advancements and applications of the Triton language.

- NCCL Kernels run on PG-NCCL’s internal streams: When a `CUDAStreamGuard` is set and an NCCL op is called via `ProcessGroupNCCL`, the NCCL kernels run on PG-NCCL’s internal streams, typically using one stream per device with high priority, and using the tensor lifetime stream (relevant code).

- Setting a `CUDAStreamGuard` determines which stream the NCCL stream waits on, establishing an incoming dependency, as seen in the pytorch source code.

- SLNG.AI Hunts Voice AI Performance Wiz: SLNG.AI is on the lookout for a Speech Model Performance Engineer to build the backbone for real-time speech AI (more details).

- The role requires a strong software engineering background to optimize and enhance speech model performance.

Yannick Kilcher Discord

- IntelliCode reads your mind: A member expressed awe at Microsoft’s IntelliCode in Visual Studio, an AI-powered code completion tool that accurately predicts entire method bodies by leveraging a lot of context.

- They remarked that it was almost like it’s reading your mind when it works well due to its ability to understand and anticipate coding needs with impressive accuracy.

- DeepSeek OCR Enters the Ring: DeepSeek-AI released DeepSeek-OCR on GitHub, joining the competition in the OCR technology space.

- Also, Anthropic released Claude Code on the web, expanding options for developers seeking AI-assisted coding tools.

- Amazon Vibe Code ditches Beta: Amazon’s Vibe Code IDE is out of invite-only beta, but it costs 500 credits to use.

- It is yet another VSCode fork that leverages AI.

- Open Source details evade West’s grasp?: A member lamented the West’s lack of superior OS labs, as Deepseek consistently unveils impressive discoveries.

- They pointed out that open source weights account for only a fraction of the overall value, emphasizing the importance of open source data collection, methods, and training details.

- Unitree set to crush on Tesla?: A member predicted that Unitree will dominate the humanoid robotics market.

- They speculated that Elon Musk may be struggling to acquire necessary components, quipping he probably can’t even get the magnets for the actuators at the moment thanks to the orange dude.

DSPy Discord

- DSPy Powers AI NPC Voices: A member built a voice generation system for game NPCs, using DSPy to parse wiki content and generate voice prompts for ElevenLabs, also sharing a devlog style video.

- They plan to leverage DSPy’s optimization features to improve the character analysis pipeline and automate voice selection, and intend to collect manual selections as training signals, optimizing toward subjective quality judgments in the future using an automated judging loop.

- DSPy Featured in Research Paper: A new paper (https://arxiv.org/abs/2510.13907v1) utilizes DSPy in its research, signaling growing adoption within the academic community.

- Although the paper mentions the use of DSPy, the corresponding code repository is not yet publicly available.

- Navigating DSPy History Access: Members debated why `inspect_history()` is a method in `dspy` rather than a module object, and clarified that `dspy.inspect_history()` is more for global history and individual programs also track history.

- It was pointed out that history can be accessed with `predictor.history` if `dspy.configure(track_usage=True)` is set, but some still found this confusing.

- Demystifying DSPy Adapters with Context: The discussion covered using adapters in DSPy, with an example showing how to use `dspy.context` to apply a single adapter, and the user can track usage with `dspy.configure(track_usage=True)`.

- A member gave an example of setting it up with `with dspy.context(lm=single_call_lm, adaptor=single_adaptor):` to further clarify the process.

- Trace Claims Accuracy Edge Over DSPy: A member asked for a comparison between Microsoft Trace and DSPy, with another noting that Trace claims an 8% accuracy increase over DSPy and appears more token efficient.

- One member mentioned they would try it out to give a fair comparison, although they will probably still feel like they have more granular control with DSPy.

Eleuther Discord

- Discord Server Badges Spark Debate: Members discussed the possibility of adding a server badge, similar to a role icon, and how a server tag might broadcast the server too widely, potentially increasing the moderation load for EAI staff, referencing this screenshot.

- One member noted, “making a tag is cool but that is in a way broadcasting this server everywhere else, eai staff already gets too many people here to moderate.”

- EleutherAI IPO Dreams Spark Jokes: Following a question about whether a particular stock symbol was available, a member jokingly asked, “what will Eleuther’s NYSE stock symbol be?”

- Another member responded, “I think you misunderstand the purpose of being a non-profit,” implying that EleutherAI, as a non-profit organization, would not be publicly traded.

- Normuon’s Triumph Prevents Logit Blowup: A member noted that normuon beating muon even with qk-norm (which avoids logit blowup) in their baseline suggests logit blowup prevention might not fully explain the performance parity.

- It was posited that updates without clipping increase the spectral rank of weights, directly leading to logit blowups, making large-scale validation against normuon interesting.

- AGI Definition Benchmarks Beckon: A member shared a link to Dan Hendrycks’ AGI Definition benchmarks and asked how fast they would be benchmarked.

- Another member predicted multimodality would likely be covered in 1-2 years, with speed coming from mini versions of models.

Manus.im Discord Discord

- Cloudflare snags Manus Users: Users are reporting issues with Cloudflare security when visiting most websites while using Manus.

- A suggestion was made for the Manus team to consider open-sourcing some of their older models, possibly to bypass the Cloudflare issues.

- Payment Problems Plague Platform: A user encountered issues paying for credits via a web browser, experiencing jumbled code and transaction failure.

- The user stated this is a known issue and contacted support; lucia_ly requested their email to follow up and resolve the payment issues.

- Chat Slowdowns Irk Users: A user reported excessive delays in chat processing when translating long Japanese chapters into English.

- Despite usually appreciating Manus’s speed, the user noted, “this morning, I put one chapter and the ai is still thinking. What happened?”

- Pro Plan Credit Cap Confusion Continues: Users are reporting conflicting information about unlimited credits on the Pro plan, with the help system and iOS upgrade page stating it is unlimited, while the PC upgrade page indicates a high limit.

- One user with 11k credits remaining was concerned about depletion, and another suggested that they participate in “various opportunities to help improve Manus, as they always give free credits for your time”.

- Scam Alert issued to Users: A user was accused of being a “fraudster scammer” asking for people’s login access to their accounts to do their “fucking law school exam research”.

- Another user warned that the supposed fraudster “wont make another account or pay $20/month and complains its like tomorrow and begging to get ur EMAIL PASSWORD for a PAID ACCOUNT To probably steal ur personal info and bank info”.

Nous Research AI Discord

- China’s A.I. Competition benefits the Globe: A member believes that China’s insane spartan involution competition in A.I. is great for the A.I. space because it democratizes access to advanced models and destroys monopolies.

- They also state that the rate of advancement in OS model development means that 2026 should bring us OS models reaching 100% high intelligence with 90% lower cost, destroying monopolist ambition.

- Nous Promoted as Decentralized A.I.: A member notes that Nous Research is promoted as Decentralized A.I. and hopes the team will resolve issues with centralization, linking to the Nous Psyche page.

- Another member stated they are more focused on the democratization of A.I models for the masses, citing a Stanford paper on centralization and asserting that Nous successfully decentralizes with their open source methodologies and infrastructure implementations.

- Sora AI Project Showcased: A member showcased a video creation with Sora, sharing the video at 20251022_0850_01k850gmktfant3bs18n3hbd79.mp4.

- The video’s content and implications for AI-driven content creation are under discussion within the community.

- Microsoft Trace Utility Resurfaces: A member shared a link to the Microsoft Trace utility, noting that apparently it’s not all that new.

- Its features and capabilities are being re-evaluated in light of current development practices.

tinygrad (George Hotz) Discord

- Nvidia Drivers Hacked onto macOS: Madlads accomplished the impossible, porting Nvidia drivers to macOS and sparking excitement within the community.

- The driver port enables running tinygrad on macOS with Nvidia GPUs, opening new possibilities for development and testing.

- GLSL Renderer Almost Ready: The community has been developing a GLSL renderer for tinygrad, which is now passing the majority of tests and is available on GitHub.

- This marks a significant step toward expanding tinygrad’s compatibility with different platforms and graphics APIs.

- clspv Bugs Plague Vulkan Backend: Progress on tinygrad’s Vulkan backend is hampered by numerous bugs in clspv, requiring optimizations to be disabled (`-O0 --cl-opt-disable`) to pass tests.

- The member also reported many more miscompilations from clspv if optimizations aren’t disabled.

- Vulkan Sine’s Accuracy Troubles: Vulkan’s sine function isn’t accurate enough, requiring a custom implementation, which would impact performance.

- This accuracy issue could pose challenges for tinygrad’s performance on Vulkan, necessitating careful consideration of alternative sine implementations.

- TinyJit’s Gradient Addition is Broken: Gradient accumulation was broken in TinyJit a couple months ago and the member fixed it by rewriting the gradient addition step to use an assign.

- A member also reported running into issues with gradient accumulation and fixed it by setting `reduction=sum` and manually counting non-padding tokens.
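The `reduction=sum` fix in the gradient accumulation item above can be illustrated with plain arithmetic. This is a framework-free sketch with made-up loss values, not tinygrad code and not the member's actual fix. It shows one common reason such a fix helps: when micro-batches contain different numbers of non-padding tokens, averaging per-micro-batch means gives a different value than the mean over all tokens.

```python
# Per-token losses for two micro-batches; None marks a padding position.
# The numbers are invented purely for illustration.
micro_batches = [
    [2.0, 4.0, None, None],  # 2 non-padding tokens
    [1.0, 1.0, 1.0, 1.0],    # 4 non-padding tokens
]


def real(tokens):
    """Keep only the losses at non-padding positions."""
    return [t for t in tokens if t is not None]


# Reference: mean over every non-padding token, as if it were one batch.
all_tokens = [t for mb in micro_batches for t in real(mb)]
full_batch_mean = sum(all_tokens) / len(all_tokens)

# Naive accumulation: average the per-micro-batch means.
naive = sum(sum(real(mb)) / len(real(mb)) for mb in micro_batches) / len(micro_batches)

# Sum reduction: accumulate summed loss and token count, divide once.
total_loss = sum(sum(real(mb)) for mb in micro_batches)
total_tokens = sum(len(real(mb)) for mb in micro_batches)
sum_reduced = total_loss / total_tokens

print(full_batch_mean, naive, sum_reduced)
```

Here the naive average over-weights the short micro-batch, while the sum-then-divide version matches the full-batch mean.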

Moonshot AI (Kimi K-2) Discord

- Karpathy Criticism Raises Bubble Concerns: A member speculates that recent mockery of Karpathy on X might signal a valuation bubble in American frontier AI labs, citing this post.

- The referenced post features a chart that appears to be mocking Karpathy, though without explicit context from the original poster.

- Kimi K-2 Support Faces Scrutiny: A member reported a lack of response from Kimi support, noting zero communication regarding their issue.

- Other members clarified that the channel isn’t an official support platform, recommending direct messaging and requesting details about the problem and the email used for the bug report.

Modular (Mojo 🔥) Discord

- Python Familiarity Boosts Mojo Discovery: A member suggested that prior experience with Python facilitates easier discovery of Mojo and the unique features it offers.

- However, discrepancies between Mojo and Python could potentially cause confusion for new users.

- Human Touch Beats Compiler in Matmul Tuning: A discussion arose regarding why matmul optimizations aren’t directly integrated into the compiler, especially given their performance impact.

- The response highlighted that manual tuning by kernel writers often surpasses compiler optimizations for hot-path code, allowing for fine-tuning to specific hardware, with reference given to Mojo’s open-source, hardware-optimized matmuls.

- Freeing Kernel Writers from the Compiler: Moving optimizations out of the compiler expands contribution opportunities to more kernel writers.

- This approach allows compiler engineers to focus on broader ecosystem improvements rather than niche optimizations, such as a 1% boost to matmuls where one dimension is less than 64.

- Finished Type System Tops Mojo Wishlist: When asked about the most crucial missing feature in Mojo, a member emphasized the need for a finished type system.

- Additional desired features include rounding out standard library datatypes, proper IO, a good async runtime, an effect system, static reflection, compiler plugins, the ability to deal with more restrictive targets, cluster compute, device/cluster modeling, and some clone of Erlang’s OTP.

MCP Contributors (Official) Discord

- GitHub Actions Fail Amidst Billing Issues: GitHub Actions are currently failing because the account is locked due to a billing issue.

- Users should address the billing issue promptly to restore GitHub Actions functionality.

- GitHub Actions Billing Lockout: The root cause of the failing GitHub Actions is a billing lockout on the account.

- Immediate resolution of the billing issue is necessary to restore the functionality of GitHub Actions.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

Discord: Detailed by-Channel summaries and links

Perplexity AI ▷ #general (1090 messages🔥🔥🔥):

Comet vs Atlas, AI and Mental Health, Schumacher vs Senna, Perplexity Merch, Using AI Responsibly

- Comet Crushes Atlas in Privacy Showdown: Users debated the merits of Comet versus ChatGPT’s Browser Atlas, with many valuing Comet’s focus on privacy and built-in adblocker, noting that they are “essentially copies of each other.”

- AI Therapy: A Mental Health Minefield?: Discord members questioned the ethical implications of using AI for therapy; some highlighted the importance of human emotional maturity and responsibility, while others suggested that “ChatGPT is a good therapist.”

- Schumacher > Senna?: A long discussion comparing Schumacher and Senna, with one member declaring that “Schumacher was better than Senna,” while another stated “he was for sure the best one ever.”

- Perplexity’s New Swag: Is It a Cult?: A member proudly showed off their Perplexity stickers on their laptop, joking about being a “PPLX fan” and the need for a Comet hoodie and water bottle from the Perplexity Supply store.

- Some users lightheartedly joked about this level of enthusiasm resembling a “cult”, while others playfully encouraged them to buy everything.

- Navigating the AI Maze: Responsibility Required: A member said that in Germany you are required to disclose that you have used AI when you work with it.

Perplexity AI ▷ #sharing (3 messages):

Shareable Threads, Time-based Researcher

- Discord Threads Should Be Shareable: A message reminded users to ensure their Discord threads are set to `Shareable`.

- This ensures that links to the thread can be accessed by others, even outside of the specific channel.

- Time-Based Researcher Launched: A user shared a link to a Perplexity AI search for a time-based researcher.

- The link directs to perplexity.ai/search/time-base-researcher.

Perplexity AI ▷ #pplx-api (2 messages):

Perplexity API, ChatGPT5, Claude, Sonar

- Perplexity API Question Asks About Model Access: A user inquired about whether the Perplexity API allows access to ChatGPT5 and Claude, or if it is limited to Sonar.

- The inquiry is centered around understanding the scope of model access provided through the Perplexity API.

- Clarification on Model Availability via Perplexity API: The user seeks to confirm if the Perplexity API extends beyond the Sonar model to include access to more advanced models like ChatGPT5 and Claude.

- This reflects interest in leveraging the API for potentially higher-performing models if available.

LMArena ▷ #general (1064 messages🔥🔥🔥):

GPT-5 vs Gemini 3, Sora 2 and Video Generation, TikZ Generation, Gemini 3 Model Performance

- Gemini 3 Pro Crushes GPT-5 High in Web Design: Members are discussing whether to wait for a GPT-5 release or use Gemini 3 Pro with one member reporting that Gemini 3 Pro crushes web design.

- They find that Gemini 3 Pro is better for coding while GPT-5 High is better for math and other miscellaneous tasks.

- Sora 2 Downgrade Drives AI Subscription Debate: Members are upset about the Sora 2 downgrade, which prompted a conversation about the value of AI subscriptions.

- One member noted my job performance is about 25-30% better because of AI, highlighting AI’s impact on desk job efficiency, while others are not so sure of the value.

- Lithiumflow and Orionmist are Gemini 3?: Members speculate about the differences between Lithiumflow and Orionmist, with a conclusion that the models are checkpoint versions of Gemini 3.

- Members have discovered that the models sometimes claim to be trained by OpenAI which suggests that the models may have been distilled.

- Open Source Models Distilling Gemini 2.5 Pro: There is discussion regarding open-source models stealing data to improve, with one member suggesting that the Chinese AI companies stole the 2.5 pro and made it open source.

- Members agreed that this is okay as that’s the only way open source can win.

- TikZ Generation Task Elicits Surprise: Members are prompting models to make images in TikZ, a LaTeX graphics language, to avoid data contamination in models.

- Members have found some level of success in generating TikZ images with LLMs.

Unsloth AI (Daniel Han) ▷ #general (367 messages🔥🔥):

Magistral and Think Tags, Grok 4 Fast vs Deepseek V3.2, Ring/Ling MoE Models, Unsloth Telemetry and Offline Mode, Qwen3-VL Models

- Magistral Learns To Think Different: A member found that Magistral learned to use the <think> tag instead of the [THINK] tag, but by using FastLanguageModel it lost the ability to use the vision encoder.

- Additionally, the model overthinks like crazy because of the tags.

- Deepseek V3.2 vs Grok4Fast: Data Generation Duel: A member is deciding between using Grok 4 Fast or Deepseek V3.2 for synthetic data generation due to budget constraints.

- They noted that r1-0528 is pretty cheap, especially 3.1 on Parasail, which is 0.6 input/1.7 output per million, but questioned provider reliability. Another member pointed out that OpenRouter is just too inconsistent in model quality by provider.

- Ring/Ling MoE Models Launch: Ring and Ling MoE models are now supported in llama.cpp (link to GitHub), including 1T, 103B, and 16B parameter models from InclusionAI.

- Members pondered the reasoning abilities of the models, with one hoping for a reasoning model that doesn’t YAP.

- Disable Unsloth Telemetry in Offline Mode: Members discussed running Unsloth in offline mode, with one user resolving network issues by setting proxy environments.

- It was suggested to set the UNSLOTH_DISABLE_STATISTICS environment variable and os.environ['HF_HUB_OFFLINE'] = '1' to prevent telemetry calls. The Unsloth community also reached 100M lifetime downloads on Hugging Face (announcement on X).

- Qwen3-VL Models: Thinking Big: Qwen3-VL-2B was released, with members noting that Qwen3 VL 8B 4-bit runs easily on 16GB of RAM, and there was a direct upgrade to Qwen3-32b-Instruct.

- It was then asked whether anyone has been able to run Unsloth’s Qwen3 VL 32B with llama.cpp, but VL support is not merged into llama.cpp yet.
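The offline-mode suggestion in the Unsloth telemetry item above could look roughly like the following Python sketch. It assumes the variables are read when the libraries load, so they are set before any import of Unsloth or Hugging Face code. The value "1" for UNSLOTH_DISABLE_STATISTICS is an assumption (the discussion only names the variable), and the Unsloth import is left commented out so the snippet runs without Unsloth installed.

```python
import os

# Set these before importing unsloth or any Hugging Face library,
# since such settings are typically read when the modules load.
os.environ["UNSLOTH_DISABLE_STATISTICS"] = "1"  # value "1" is an assumption
os.environ["HF_HUB_OFFLINE"] = "1"  # ask huggingface_hub to avoid network calls

# from unsloth import FastLanguageModel  # import only after the variables are set

# Collect the settings so they can be logged or checked at startup.
offline_settings = {
    name: os.environ[name]
    for name in ("UNSLOTH_DISABLE_STATISTICS", "HF_HUB_OFFLINE")
}
print(offline_settings)
```

Setting the variables in the shell or a launcher script instead would have the same effect, as long as it happens before the Python process imports the libraries.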

Unsloth AI (Daniel Han) ▷ #introduce-yourself (9 messages🔥):

AI Bot Development, Workflow Automation with LLMs, AI Content Detection, Image AI Pipeline, Voice Cloning and Transcription

- Veteran Dev Explores New AI Tricks: A developer with a background in building bots using ChatGPT is now diving deeper into AI and expresses their enthusiasm for Unsloth.

- They are experienced in gaming and scraping, showcasing a desire to learn new skills.

- Engineer Pioneers Workflow Automation with LLMs: An engineer specializing in workflow automation, LLM integration, RAG, AI detection, image, and voice AI describes their experience building automated pipelines and task orchestration systems using DSPy, OpenAI APIs, and custom agents.

- They have created a support automation system connecting Slack, Notion, and internal APIs to an LLM, reducing response times by 60%.

- AI Content Detection Tools Deployed: The engineer developed AI content detection tools for a moderation platform using stylometric analysis, embedding similarity, and fine-tuned transformers to identify GPT-generated text with high precision.

- Details were provided about an image AI pipeline using CLIP and YOLOv8 on AWS Lambda and S3, classifying and filtering thousands of images daily.

- Voice Cloning Service Built: A voice cloning and transcription service was built using Whisper and Tacotron2, enabling personalized voice assistants through ASR, TTS, and CRM integration.

- The individual has deep expertise in blockchain technology, including smart contract development (Solidity and Rust), decentralized application architecture, and secure on-chain/off-chain integrations.

Unsloth AI (Daniel Han) ▷ #off-topic (143 messages🔥🔥):

Ultravox encoder and LLMs, REAP algorithm, Nvidia RTX Pro 5000 Blackwell, scraping content with rate limits, evaluation loss influenced by outliers

- Ultravox Projector Plugs into LLMs: The Ultravox project involves adding a projector to an LLM and training only the projector, without training the LLM, which is similar to how Voxtral works and is available on GitHub.

- A member confirmed the configuration improves with more data, clarifying that there is a training pass over the projector; however, it might be possible to ‘rip off the audio encoder from Qwen 2.5 Omni and slap it in Qwen 2.5 VL and just train a simple projector’.

- DeepSeek Dials Down Resource Use: A new DeepSeek model reduces resource usage by converting text and documents into images, using up to 20 times fewer tokens via vision text compression, further discussed on Tom’s Hardware.

- A member indicated that Gemma already implemented a similar approach, while another shared links about the Cerebras REAP algorithm, which was lauded as so cool.

- Nvidia’s RTX Pro 5000 Blackwell Workstation Card Quietly Launches: Nvidia quietly launched the RTX Pro 5000 Blackwell workstation card with 72GB of memory, as reported by VideoCardz.

- Initially, there was confusion about the 72GB capacity, and one user noted this was a way to bypass automod.

- User Rages About Rate Limiting: A user ranted about a premium subscription service blocking access to URLs containing roman numerals, interpreting them as malicious activity.

- The user also has to manually search and circumvent the system’s security plugin, and complained about the service ignoring requests to allow bulk downloads for pro subscribers.

- Evaluation Loss Skewed by Outliers: One member highlighted that evaluation loss can be significantly influenced by outliers in the evaluation set.

- With a mean eval loss of 0.85, a median (of per-example means) of 0.15, and a 95th percentile of 0.95, the member suggested that the high mean does not necessarily indicate poor generalization.
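To make the outlier point above concrete, here is a minimal Python sketch that compares the mean, median, and a nearest-rank 95th percentile of per-example losses. The function name and the data are hypothetical: the losses are synthetic and chosen only to show how a few extreme examples pull the mean away from the median. They are not the member's numbers.

```python
import statistics


def summarize_eval_losses(per_example_losses):
    """Return mean, median, and nearest-rank 95th percentile of the losses."""
    if not per_example_losses:
        raise ValueError("need at least one loss value")
    ordered = sorted(per_example_losses)
    n = len(ordered)
    rank = (95 * n + 99) // 100  # ceil(0.95 * n) using integer arithmetic
    return {
        "mean": statistics.fmean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
    }


# Synthetic data: 97 well-fit examples and 3 extreme outliers.
losses = [0.15] * 97 + [20.0] * 3
summary = summarize_eval_losses(losses)
print(summary)
```

In this toy set the median and 95th percentile stay at the typical per-example loss while the mean is several times larger, which is why reporting all three together can be more informative than the mean alone.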

Unsloth AI (Daniel Han) ▷ #help (26 messages🔥):

GRPO recipe for gpt oss 20b struggling, Vision model on llama-server, Quantized parameters in bitsandbytes, Algorithmic changes to GRPO, Version mismatch in Unsloth notebooks

- GPT OSS 20B GRPO Recipe Falls Flat!: A user reported that the GRPO recipe for gpt oss 20b is still struggling after running 100 steps using this notebook.

- They indicated modifications were made to get it running on Modal.

- Vision Models Vanish on Llama-Server!: A user inquired about running a vision model on llama-server, specifically asking if any arguments are needed.

- No solutions or workarounds were given in the discussion.

- Quantized Parameter Quest for Bitsandbytes!: A user sought to locate the internal values (scaling, center, etc.) of quantized parameters in a bitsandbytes model to apply noise directly.

- They noted that modifying the parameter directly won’t work due to dequantization requirements and memory usage.

- Unsloth GRPO Algorithmic Alterations!: A user asked if Unsloth is “hackable” regarding algorithmic changes to GRPO (e.g., applying dense reward) without ruining optimizations.

- No response was given.

- Notebook Version Nightmares!: A user complained about dealing with version mismatches while running Unsloth’s GitHub notebooks, stating that most are not replicable.

- No solutions or workarounds were given in the discussion.
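On the quantized-parameter question above, a generic illustration may help show why noise cannot simply be added to the stored values. The sketch below implements a toy blockwise absmax quantizer in plain Python. It is not bitsandbytes' implementation or storage layout, and every name in it (quantize_blockwise, dequantize_blockwise, add_noise, BLOCK_SIZE) is hypothetical. It only shows that the stored integers are meaningful solely together with their per-block scale, so perturbing the weights means dequantizing, adding noise in float space, and requantizing.

```python
import random

BLOCK_SIZE = 4  # illustrative only; real libraries use much larger blocks


def quantize_blockwise(values, block_size=BLOCK_SIZE):
    """Quantize floats to int8-range codes with one absmax scale per block."""
    codes, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block) or 1.0  # avoid a zero scale
        scale = absmax / 127
        scales.append(scale)
        codes.extend(round(v / scale) for v in block)
    return codes, scales


def dequantize_blockwise(codes, scales, block_size=BLOCK_SIZE):
    """Map integer codes back to floats using each block's scale."""
    return [code * scales[i // block_size] for i, code in enumerate(codes)]


def add_noise(codes, scales, sigma, rng, block_size=BLOCK_SIZE):
    """Dequantize, perturb in float space, then requantize with fresh scales."""
    restored = dequantize_blockwise(codes, scales, block_size)
    noisy = [v + rng.gauss(0.0, sigma) for v in restored]
    return quantize_blockwise(noisy, block_size)


weights = [0.02, -0.5, 0.31, 0.07, 1.2, -0.9, 0.0, 0.4]
codes, scales = quantize_blockwise(weights)
restored = dequantize_blockwise(codes, scales)
noisy_codes, noisy_scales = add_noise(codes, scales, sigma=0.01, rng=random.Random(0))
print(codes, scales)
```

The round trip in add_noise materializes a full-precision copy of the block, which is one plausible reading of the memory concern raised in the discussion.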

Unsloth AI (Daniel Han) ▷ #showcase (2 messages):

Brainstorm model

- Brainstorm Model Might Improve Stability: A member mentioned they might add Brainstorm (20x) to their model to see what happens, anticipating it will increase metrics as well as long gen stability.

- Another member requested the results to be posted if the member actually does that.

Unsloth AI (Daniel Han) ▷ #research (5 messages):

Kyutai Codec Explainer, Nvidia GPU on ARM Macbook, Thunderbolt 5

- Kyutai Codec gets Explained: A member shared a link to the Kyutai Codec Explainer.

- Nvidia GPU Transplants onto ARM Macbook: A member shared an article from Tom’s Hardware on successfully running an Nvidia GPU on an ARM Macbook through USB4 using an external GPU docking station: Tiny Corp Successfully Runs An Nvidia GPU on Arm Macbook Through USB4 Using An External GPU Docking Station.

- Thunderbolt 5 Sparkles Hope for Mac Users: A member noted that they have Thunderbolt 5 too now on the ‘pros’ which gives slightly more hope to Mac users.

Cursor Community ▷ #general (343 messages🔥🔥):

Codex vs Claude, Github spec-kit, Cursor Meetups, Cursor site down, AWS CEO fired

- Codex is like having “a billion dollars or a toonie”: Users debated the merits of Codex vs Claude for code generation, with one user stating that comparing them is like comparing “a billion dollars or a toonie”.

- Cursor Site Downtime and Subscription Issues Plague Users: Multiple users reported the Cursor website being down for several hours, preventing them from logging in, upgrading plans, or renewing subscriptions.

- Some suspected AWS issues as the root cause, while others pointed out the lack of subscription expiration notifications as a major inconvenience.

- Cursor Team Plan Pricing Model: Users discussed the shift to a usage-based pricing model for Cursor team plans, replacing the previous fixed request limit. Plans are currently still operating under the legacy request-based system but will automatically migrate to the new pricing at the next billing cycle.

- One user shared their boss’s correspondence with Cursor support, clarifying the new pricing structure and its impact on team plans, and shared a link to the new pricing model: cursor.sh/pricing-update-sept-2025.

- Cracking Cursor Costs with a Custom Dashboard: A user shared a dashboard they created to track actual Cursor costs after the pricing changes, especially for users on legacy pricing plans, and gave this forum link: cursor.com/blog/aug-2025-pricing.

- The tool requires cookie login or .json upload from the user’s local machine, but promises comparison with real API pricing.

- Nightly Builds and Installation Guide Available: One user asked where to download nightly versions, and another shared that you need to go to Settings -> Beta -> Early access to see the nightly build.

- However, another user noted there seems to be an issue with the new update: it does not indicate to the user that they are in “ask” mode.

Cursor Community ▷ #background-agents (1 messages):

Background Agents in Linear, Internal Error Troubleshooting

- Background Agents Error in Linear: A member reported encountering an internal error when running a first experiment with background agents via Linear, where the agent starts, does some thinking and grepping, but then stops.

- The error message received was: “We encountered an internal error that could not be recovered from. You might want to give it another shot in a moment.”

- Troubleshooting the Internal Error: The user mentioned that the background agent seems to start but then fails, with the Cursor output showing only “…”.

- Sending a stop command from Linear halts the agent, but messaging it again results in the same error.

OpenRouter ▷ #announcements (2 messages):

OpenRouter SDK, Andromeda-alpha stealth model

- OpenRouter SDK Enters Beta: The new OpenRouter SDK is now in beta on npm with Python, Java, and Go versions coming soon, aiming to be the simplest way to use OpenRouter.

- It features fully typed requests and responses for 300+ models, built-in OAuth, and support for all API paths.

- Andromeda-alpha Stealth Model Launched: OpenRouter launched a new stealth model named Andromeda-alpha, a smaller reasoning model focused on image and visual understanding, available for trial at https://openrouter.ai/openrouter/andromeda-alpha.

- It is cloaked to gather feedback and all prompts/outputs are logged to improve the provider’s model, so users are cautioned against uploading personal/confidential info and advised not to use it for production.

OpenRouter ▷ #app-showcase (13 messages🔥):

True memory AI, AI Personality, Objective AI, AI Diversity, OpenRouter

- AI boasts True Memory and Zero Amnesia: An AI system claims “True memory, zero amnesia”, suggesting it never forgets past conversations and retains context-rich memories.

- It purports to shape an AI identity and learn continuously via a Night Learn Engine.

- Objective AI Unveils Confidence Scores for OpenAI: The CEO of Objective AI announced that their platform offers a Confidence Score for each OpenAI completion choice, derived through a smarter method than directly asking the AI.

- They emphasize cost-efficiency and leveraging diverse LLMs via OpenRouter.

- AI Agents Built Free of Charge: The CEO of Objective AI is personally building reliable AI Agents, Workflows, and Automations free of charge to gather more examples.

- The integration with n8n is mentioned, with documentation and examples coming soon.

OpenRouter ▷ #general (222 messages🔥🔥):

inception/mercury vs qwen, Deepseek v3.1 availability on Chutes, Chub Venus and Chutes key connection, Stripe supporting debit cards, Context in chatting

- Inception/Mercury Defeats Qwen for Agentic Tasks: A member shared that Inception/Mercury performs better than Qwen for simple agentic tasks, exhibiting a lower failure rate, faster speed, and lower cost.

- The member was pleasantly surprised by the diffusion model’s performance, referencing Chutes as the provider.