an incremental step.

AI News for 11/11/2025-11/12/2025. We checked 12 subreddits, 544 Twitters and 23 Discords (201 channels, and 5148 messages) for you. Estimated reading time saved (at 200wpm): 423 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

GPT 5.1 launched in ChatGPT today, with API availability “later this week”:

- 5.1 Instant is

- “warmer by default and more conversational... surprises people with its playfulness while remaining clear and useful.”

- improved instruction following, including respecting em dash preferences

- can use adaptive reasoning to decide when to think before responding to more challenging questions, resulting in more thorough and accurate answers, while still responding quickly

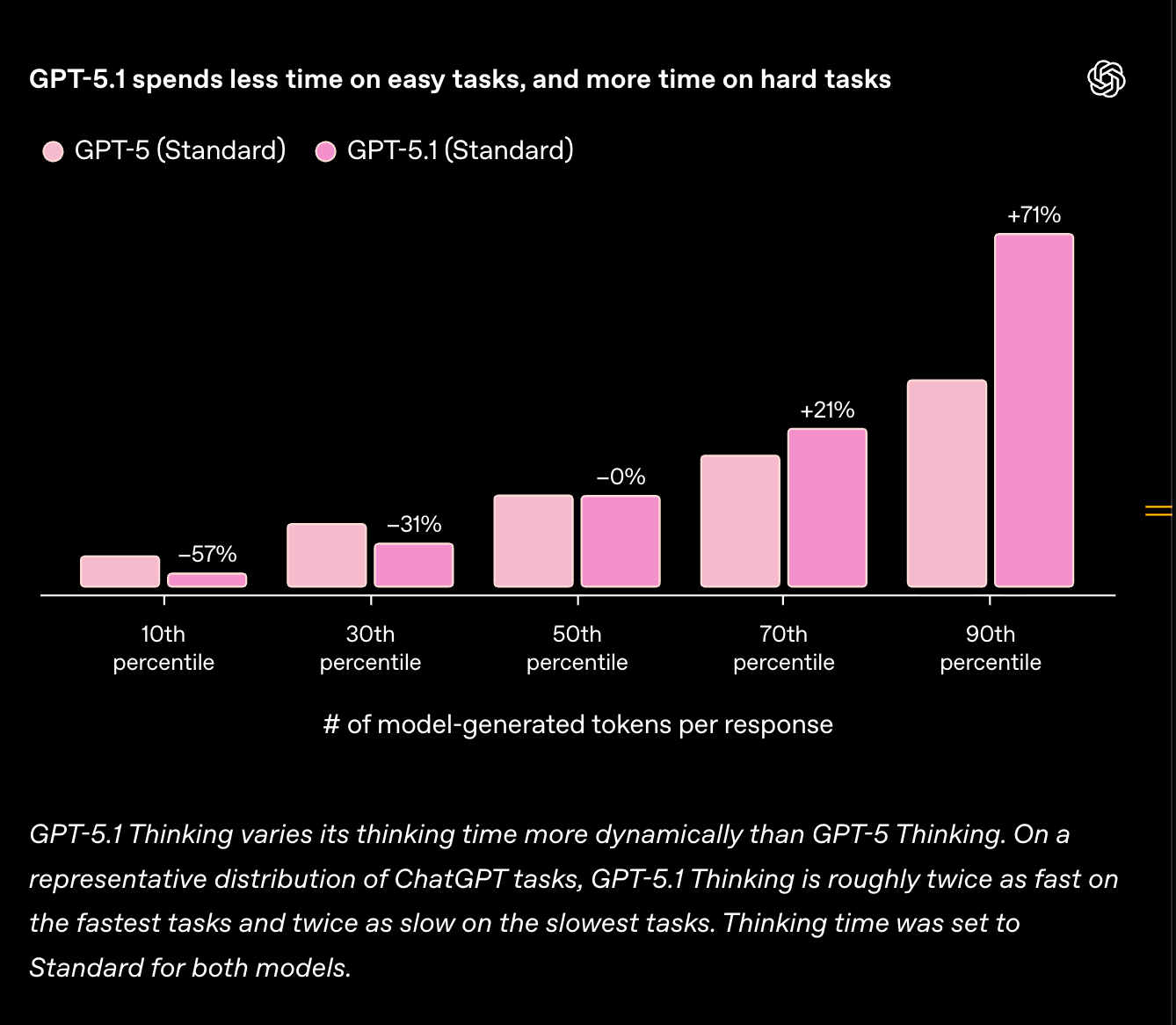

- 5.1 Thinking now:

- now adapts its thinking time more precisely to the question

GPT-5.0 becomes a “legacy model” and will be sunset in 3 months.

AIME and Codeforces are mentioned, but no evals made it into this particular blog post, which some are criticising.

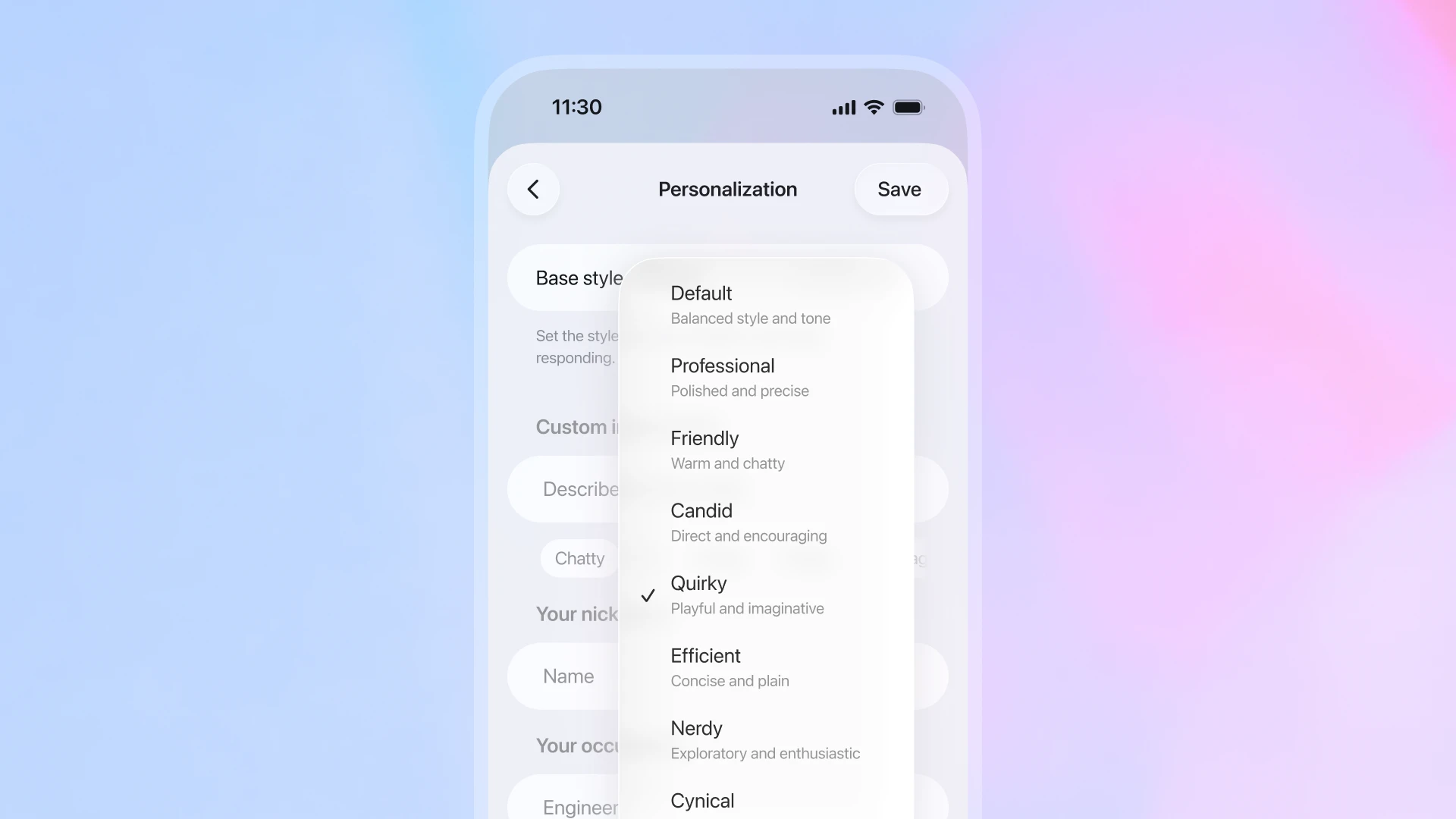

ChatGPT also gets new tone toggles for personalization. Fidji Simo’s blog says “with more than 800 million people using ChatGPT, we’re well past the point of one-size-fits-all.”

AI Twitter Recap

Autonomy and Physical AI: Waymo freeway rollout, Anthropic’s Project Fetch, and Perceptron’s platform

- Waymo freeway driving goes live: Waymo is rolling out freeway driving for public riders in Phoenix, LA, and across the SF Bay Area, connecting SF↔San Jose with curbside access to SJC. Leadership frames this as a validation of the Driver’s generalization and safety claims; scale enables new airport routes and longer corridors. See announcements from @dmitri_dolgov and @JeffDean.

- Anthropic’s Project Fetch (robot dog with/without Claude): Anthropic had two non-roboticist teams program a quadruped; only one team could use Claude. It’s framed as an empirical check on “LLMs as robotics copilots” for planning/control authoring, debugging, and iteration speed. Results and methodology are in the thread: @AnthropicAI.

- Perceptron’s “Physical AI” platform: A new API and Python SDK targeting multimodal perception-and-action apps, currently supporting Isaac-0.1 and Qwen3VL‑235B for VLM/VLA use cases (prompting primitives grounded in vision + language, plus “chat competitions”). Free access to Isaac this week per founders. Details: @perceptroninc, @AkshatS07.

Agent evals and control: Code Arena, LangChain middlewares, and LlamaIndex SEC agent

- Code Arena (live coding evals): A step-by-step evaluation harness where models must plan, scaffold, debug, and ship working web apps. Currently lists support for Claude, GPT‑5, GLM‑4.6, and Gemini. Useful for measuring agentic decomposition, tool use, and temporal coherence under realistic coding tasks: @arena.

- Agent governance via middleware (LangChain):

- Human‑in‑the‑loop middleware that pauses execution for user approval of the next step—adds an explicit “ask before acting” gate to reduce unintended actions: @bromann.

- Tool‑call limit middleware to cap runaway tool invocation and costs; demo shows reining in a spend‑happy shopping agent: @sydneyrunkle.

- LlamaIndex structured extraction template (SEC filings): Multi‑step agent that classifies filing type, routes to the correct extraction schema, provides a review UI prior to commit, and can extend to downstream syncing/monitoring—built on LlamaAgents with LlamaClassify + Extract. Starter template: @llama_index.

- Benchmarking push: NousResearch endorses ARC Prize’s interactive benchmarks for measuring generalized intelligence: @NousResearch.
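
Both middleware ideas above (an approval gate before acting, and a hard cap on tool calls) are easy to express outside any framework. The sketch below is a hypothetical plain-Python illustration, not LangChain's middleware API: `GuardedToolRunner`, `approve`, `max_calls`, and `ToolCallLimitExceeded` are names invented here, and the approval callback stands in for a real human prompt.

```python
from typing import Any, Callable, Dict


class ToolCallLimitExceeded(RuntimeError):
    """Raised when an agent tries to exceed its tool-call budget."""


class GuardedToolRunner:
    """Hypothetical wrapper: human-approval gate plus a cap on executed tool calls."""

    def __init__(self, tools: Dict[str, Callable[..., Any]],
                 approve: Callable[[str, Dict[str, Any]], bool],
                 max_calls: int) -> None:
        self.tools = tools
        self.approve = approve      # returns True if the proposed step may run
        self.max_calls = max_calls  # cap on executed tool invocations
        self.calls = 0

    def run(self, name: str, **kwargs: Any) -> Any:
        # Stop runaway tool use (and spend) before anything else happens.
        if self.calls >= self.max_calls:
            raise ToolCallLimitExceeded(f"limit of {self.max_calls} tool calls reached")
        # "Ask before acting": pause for approval of the proposed step.
        if not self.approve(name, kwargs):
            return {"status": "rejected", "tool": name}
        self.calls += 1
        return self.tools[name](**kwargs)


if __name__ == "__main__":
    runner = GuardedToolRunner(
        tools={"buy": lambda item, price: f"bought {item} for ${price}"},
        approve=lambda name, args: args.get("price", 0) <= 50,  # stands in for a human
        max_calls=2,
    )
    print(runner.run("buy", item="socks", price=10))
    print(runner.run("buy", item="tv", price=900))
```

In an interactive agent, `approve` could wrap `input()` so a person confirms each step. Here rejected steps do not count against the cap, which is one design choice among several.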

Systems and infra: cross-container covert channel, edge LM IPW harness, and inference infra

- Cross‑container communication via /proc lock state: A clever channel encodes ~63 bits in the shared lock for /proc/self/ns/time that all processes can access (even across unprivileged containers), enabling a chat app without networking. Implications for container isolation and policy hardening: @eatonphil.

- Local LMs and the “intelligence‑per‑watt” (IPW) thesis: Evidence that ≤20B‑active‑param local models improved ~3.1× in capability and ~5.3× in efficiency since 2023, with a released profiling harness across NVIDIA, AMD, and Apple Silicon. Authors argue a cloud→edge redistribution similar to mainframe→PC, with IPW as the guiding metric. Summary: @Azaliamirh; paper/blog links: arXiv + blog.

- Inference infra note: Teams report building bespoke inference platforms, crediting Modal for compressing time‑to‑ship: @ArmenAgha.
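
A worked illustration of the IPW metric, assuming it is defined as task accuracy divided by average power draw (check the linked paper for the exact definition and measurement protocol). The numbers below are hypothetical, not results from the paper; they only show how accuracy and power gains compound.

```python
def intelligence_per_watt(accuracy: float, avg_power_watts: float) -> float:
    """Assumed IPW definition: accuracy (0..1) per watt of average power draw."""
    if avg_power_watts <= 0:
        raise ValueError("average power must be positive")
    return accuracy / avg_power_watts


def ipw_gain(old: tuple[float, float], new: tuple[float, float]) -> float:
    """Ratio of new to old IPW; each tuple is (accuracy, avg_power_watts)."""
    return intelligence_per_watt(*new) / intelligence_per_watt(*old)


if __name__ == "__main__":
    # Hypothetical: accuracy doubles (0.30 -> 0.60) while power halves (80 W -> 40 W).
    print(ipw_gain((0.30, 80.0), (0.60, 40.0)))  # roughly 4.0
```

Under this definition, a 2x accuracy gain at half the power is a 4x IPW gain, which is one way an efficiency gain can exceed the capability gain.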

Model UX and product updates: Gemini Live, GPT‑5.1 persona, and AI privacy

- Gemini Live upgrade: A large update emphasizes faster turn‑taking, expressiveness, and accents for voice interactions, with usage demos highlighting lower-latency, more fluid conversation and greater paralinguistic variety: @joshwoodward.

- GPT‑5.1 tone and “persona” tuning: Mixed reception on style. Some users find the default tone too saccharine or over‑empathetic @tamaybes, while others report a meaningful reduction in sycophancy and more grounded, self‑aware suggestions vs GPT‑5 (and better than 4o) in journaling‑style use @_simonsmith. Net: persona tuning is now a first‑order product surface; defaults matter.

- AI privilege and data minimization: OpenAI’s Jason Kwon calls for a new “AI privilege” to protect sensitive, conversation‑level interactions and pushes back on indiscriminate requests for millions of chats, arguing granularity matters for respecting user intent: @jasonkwon.

Research and theory notes

- RL geometry and “implicit KL leash”: Commentary on a new paper argues RL updates implicitly constrain divergence from the base model (a de‑facto KL leash) and preserve pretrained geometry; methods targeting “principal weights” (e.g., PiSSA) may underperform or destabilize vs LoRA. Discussion: @iScienceLuvr.

- Spatial intelligence framing: Fei‑Fei Li’s new blog (via The Turing Post) argues world models for spatial intelligence must be generative, multimodal, and interactive—setting expectations for next‑gen embodied systems: @TheTuringPost.

- Demos: collaborative multi‑agents in tldraw: Early look at multi‑agent collaboration UX explored live at Sync conf, with a grilling session on task decomposition and shared canvases: @swyx.
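
To make the “KL leash” framing concrete: the quantity in question is the KL divergence between the RL-tuned policy's next-token distribution and the base model's, KL(pi_RL || pi_base). A minimal sketch over made-up four-token distributions; it illustrates the measure only and does not reproduce the paper's analysis.

```python
import math


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) in nats for two discrete distributions over the same tokens."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # convention: 0 * log(0 / q) = 0
        if q_i == 0.0:
            return math.inf  # p puts mass where q has none
        total += p_i * math.log(p_i / q_i)
    return total


if __name__ == "__main__":
    base = [0.40, 0.30, 0.20, 0.10]      # hypothetical base-model probabilities
    nudged = [0.45, 0.28, 0.18, 0.09]    # small, "leashed" RL-style update
    drifted = [0.85, 0.05, 0.05, 0.05]   # large drift away from the base
    print(kl_divergence(nudged, base))   # small
    print(kl_divergence(drifted, base))  # much larger
```

Explicit-KL RL setups add this quantity as a penalty; the commentary's claim is that RL keeps it small even without one.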

Top tweets (by engagement)

- Waymo expands to freeways across Phoenix, LA, and SF Bay Area; adds SF↔San Jose and SJC curbside — @JeffDean (5,557)

- Waymo co‑CEO Dmitri Dolgov on the rollout and safety/generalization framing — @dmitri_dolgov (1,214.5)

- Cross‑container comms via /proc/self/ns/time lock bits — @eatonphil (910)

- Gemini Live’s biggest update (speed, expressiveness, accents) — @joshwoodward (624.5)

- Code Arena: live coding evals for agentic coding — @arena (514.5)

- Anthropic’s Project Fetch (robot dog + Claude vs control) — @AnthropicAI (478.5)

- AI privacy and “AI privilege” stance — @jasonkwon (438.5)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. AELLA Open-Science Initiative

- AELLA: 100M+ research papers: an open-science initiative to make scientific research accessible via structured summaries created by LLMs (Activity: 455): AELLA is an open-science initiative aimed at making over 100 million research papers accessible through structured summaries generated by Large Language Models (LLMs). The project is hosted on Hugging Face and offers a visualizer tool for exploring these summaries. The initiative is detailed in a blog post by Inference.net, highlighting its potential to democratize access to scientific knowledge by leveraging AI to create concise, structured summaries of vast amounts of research data. Some users express skepticism about the project’s utility and the choice of its name, indicating a need for clearer communication on its practical applications and benefits.

- Repeat after me. (Activity: 671): The post discusses the performance of AMD graphics cards in processing tokens per second compared to Nvidia cards, highlighting that an AMD card, which is significantly cheaper, achieves 45 tokens per second. This is contrasted with Nvidia cards that can achieve 120 to 160 tokens per second, but at a higher cost. The post suggests that while AMD cards may currently be slower, they are improving over time, and users should not feel pressured to pay a premium for faster performance. Commenters note that the token speed is sufficient as long as it exceeds their reading and comprehension speed. There is also a mention of misinformation regarding the difficulty of running LLM models on AMD hardware, suggesting that it may not be as challenging as some claim.

- A key issue highlighted is the performance disparity between AMD and NVIDIA GPUs, particularly in handling large-context processing tasks. While 45 tokens per second (tps) is adequate for single-user generation, NVIDIA’s GPUs excel in prompt processing at larger contexts, achieving several thousand tps compared to AMD’s few hundred. This makes NVIDIA more suitable for complex applications like RAG pipelines and coding assistants.

- The software ecosystem for AMD is criticized for being poorly supported, with users experiencing issues such as random crashes and lack of driver support. For instance, the Radeon PRO W6000-series has been plagued with GCVM_L2_PROTECTION_FAULT_STATUS faults, and AMD’s ROCm support is inconsistent, requiring users to apply workarounds like monkey-patching libraries. In contrast, NVIDIA’s CUDA has maintained long-term support, with Pascal support only recently dropped after a decade.

- AMD’s approach to customer support is criticized as lacking, with a focus on selling hardware rather than maintaining it. Users report that AMD often fails to support their products beyond a single generation, leading to a reliance on community-driven solutions to make AMD hardware functional. This contrasts with NVIDIA’s more stable and long-term support for their products, making them a more reliable choice for compute tasks.
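
The prompt-processing point can be made concrete with back-of-the-envelope arithmetic. The sketch assumes 3,000 tok/s prefill for “several thousand” and 300 tok/s for “a few hundred”, a 32k-token prompt (plausible for RAG or coding-assistant contexts), and holds decode at the post's 45 tok/s for both cards so that only prefill differs. All of these are illustrative assumptions, not measurements, and batching and other overheads are ignored.

```python
def request_latency_s(prompt_tokens: int, output_tokens: int,
                      prefill_tps: float, decode_tps: float) -> float:
    """Rough latency in seconds: prefill time plus decode time (no overheads)."""
    if prefill_tps <= 0 or decode_tps <= 0:
        raise ValueError("throughputs must be positive")
    return prompt_tokens / prefill_tps + output_tokens / decode_tps


if __name__ == "__main__":
    # Illustrative assumptions: 32k-token prompt, 500-token answer.
    fast_prefill = request_latency_s(32_000, 500, prefill_tps=3_000, decode_tps=45)
    slow_prefill = request_latency_s(32_000, 500, prefill_tps=300, decode_tps=45)
    print(f"fast prefill: {fast_prefill:.1f} s, slow prefill: {slow_prefill:.1f} s")
```

With these assumptions the prompt takes roughly 11 s to process on the faster card versus roughly 107 s on the slower one, while decoding 500 tokens takes about 11 s on either, so prefill dominates at long contexts.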

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. GPT-5.1 Release and Features

- GPT-5.1: A smarter, more conversational ChatGPT (Activity: 878): OpenAI has launched GPT-5.1, featuring two models: GPT-5.1 Instant and GPT-5.1 Thinking. The release focuses on enhancing conversational AI with adaptive reasoning and dynamic thinking time adjustments, allowing for faster responses to simple queries and more detailed answers for complex ones. However, the release lacks benchmarks, a comprehensive system card, and an API, raising questions about the rushed nature of the launch. For more details, see the OpenAI announcement. Commenters noted the absence of benchmarks and a detailed system card, suggesting a rushed release possibly to compete with other tech announcements. Concerns were raised about the lack of API and incomplete testing phases.

- Several users noted the absence of benchmarks in the GPT-5.1 release, which is unusual for a major model update. This lack of performance metrics makes it difficult to assess improvements over previous versions, such as GPT-4, and raises questions about the model’s capabilities and enhancements.

- The release of GPT-5.1 appears rushed, as indicated by a brief system card and the delay in API availability. Additionally, the model did not complete its stealth testing phase, known as Windsurf, which is typically a standard procedure before a full release. This has led to speculation about the reasons behind the hurried launch.

- Some users speculate that GPT-5.1 is aimed at users who preferred the style of GPT-4 over GPT-5, suggesting that the new version might be an attempt to cater to those who were not satisfied with the previous iteration’s changes. However, without benchmarks or detailed documentation, it’s challenging to confirm these assumptions.

- ChatGPT-5.1 (Activity: 813): The image is a promotional announcement for the release of GPT-5.1 by OpenAI, scheduled for November 12, 2025. This version is described as a more intelligent and conversational iteration of ChatGPT, with a focus on customization features. The release is initially targeted at paid users, indicating a strategic move to prioritize premium services. The announcement suggests improvements in user interaction, particularly in the ‘Instant mode,’ which may offer a different tone or style of responses compared to previous versions. Some users express concern over the increasing number of similar model names, which could lead to confusion. Others note the prioritization of paid users, indicating a shift in OpenAI’s business strategy.

- AdDry7344 highlights a noticeable change in tone with ChatGPT-5.1’s Instant mode, suggesting it may affect user experience by altering how responses are perceived, especially in stress-related queries. This could imply a shift in the model’s conversational style, potentially impacting its effectiveness in providing concise, direct advice.

- Nakrule18 criticizes ChatGPT-5.1 for defaulting to a more verbose, ‘chatty’ style compared to GPT-5, which was appreciated for its concise and direct responses. This change might affect users who prefer straightforward answers over a conversational tone, indicating a possible regression in user experience for those seeking efficiency.

- Dark_Karma notes the improved speed and more engaging responses of ChatGPT-5.1, suggesting enhancements in processing and interaction quality. This could indicate optimizations in the model’s architecture or algorithms, leading to faster response times and potentially more dynamic conversational capabilities.

2. AI in Personal Legal Success Stories

- I Won Full Custody With No Lawyer Thanks to ChatGPT. (Activity: 727): A Reddit user, a health physicist, successfully navigated a custody battle without a lawyer by leveraging ChatGPT to understand court rules, procedures, and fill out legal forms. The user was awarded full custody, with the other parent limited to conditional visitation due to preexisting assault charges. The user emphasizes that while AI was instrumental, the success was also due to the specific circumstances of the case, including the mother’s legal history and the user’s technical expertise. The post highlights the potential of AI in legal contexts but cautions against over-reliance on it for legal success. Commenters noted AI’s potential to disrupt traditional legal practices, with one highlighting the importance of understanding AI’s limitations, such as hallucinations, and another sharing a similar tool, FreeDemandLetter.com, for legal assistance.

- Dry-Peanut6627 highlights the disruptive potential of AI in family law, noting that while attorneys criticize AI for generating inaccuracies, users can quickly correct these ‘hallucinations’ if they are knowledgeable. This suggests a shift in power dynamics, where litigants are increasingly equipped with information that was traditionally monopolized by legal professionals.

- bobboblaw46, a lawyer, strongly advises against self-representation in legal matters, even with AI assistance like ChatGPT. They emphasize that legal errors can have severe consequences, and AI often provides incorrect legal advice, misinterprets case law, or offers overly simplistic solutions. The complexity of law justifies the extensive education and training lawyers undergo, underscoring the risks of relying solely on AI for legal representation.

- MetsToWS mentions creating FreeDemandLetter.com, a tool designed to assist individuals with legal issues such as unpaid contracts and security deposit refunds. This tool, similar to ChatGPT, guides users through legal processes, indicating a trend towards accessible legal assistance through technology.

- Chat gpt used to write article in Dawn newspaper (Activity: 970): Dawn, a prominent Pakistani newspaper, reportedly used ChatGPT to write an article, sparking discussions about the role of AI in journalism. The incident highlights concerns over AI-generated content, particularly regarding the lack of human oversight, as evidenced by a commenter’s experience where AI editing led to significant content distortion, including the addition of about 30 em dashes. This raises questions about the reliability and editorial standards when using AI tools in professional writing. Commenters express skepticism about AI’s role in journalism, emphasizing the importance of human oversight in editing to maintain content integrity and quality.

- irr1449 shares a technical issue where using ChatGPT for editing led to a significant alteration of their article. The AI not only shortened the text but also introduced about 30 em dashes, which disrupted the original content. This highlights potential pitfalls in relying on AI for nuanced editing tasks, where the AI’s changes can inadvertently alter the intended message or style of the writing.

3. Creative AI Experiments

- I told my AI to surf the internet and send me postcards (Activity: 499): The post describes an experiment where an AI is tasked with a multi-step process: surfing the internet, generating an image as if it were a postcard from a virtual location, and writing a short message. The AI is instructed not to reveal the websites it visited, focusing instead on the creative output. This experiment highlights the AI’s ability to integrate web search, image generation, and text composition into a cohesive task, showcasing advancements in AI multitasking capabilities. The comments include links to images presumably generated by the AI, suggesting a focus on the visual output of the experiment. However, there is no substantive technical debate or discussion in the comments.

- Gemini switched roles (Activity: 1632): The image appears to be a humorous depiction of a digital interface, possibly related to an AI or software named “Gemini,” which is tasked with changing the color of a jacket worn by a character. The interface suggests that “Gemini” might have switched roles, implying a mix-up or error in its functionality. This is further emphasized by the comments, which mock the AI’s response capabilities, suggesting it might not be performing as expected. The image and comments highlight the challenges and limitations of AI in understanding and executing specific visual tasks. The comments humorously critique the AI’s limitations, with one suggesting a sarcastic response from the AI and another pointing out the AI’s inability to perform the task, reflecting a common sentiment about AI’s current capabilities.

- UBTech shows off its self charging humanoid robots army aiming to fullfill a >100M factory order (Activity: 1239): UBTech has showcased its self-charging humanoid robots, which are part of a significant order valued at 112M USD, not 100M units as initially misunderstood. According to a South China Morning Post article, the company plans to deliver “more than 500” units by the end of the year. These robots are designed for factory jobs, highlighting a significant step in automation and robotics in industrial settings. A comment clarified the misunderstanding about the order size, emphasizing the financial value rather than the number of units. This highlights the importance of precise communication in technical discussions.

- The discussion clarifies that UBTech has received $112 million in orders, not 100 million units, as some might have misunderstood. According to a SCMP article, the company plans to deliver over 500 units by the end of the year. This indicates a significant scale of production and deployment for humanoid robots in industrial settings.

- Wisker dont like to take orders (Activity: 3151): The post humorously suggests that a cat, referred to as ‘Wisker’, is resistant to taking orders, possibly in the context of a playful or metaphorical scenario involving AI or automation. The comments play along with this theme, joking about a cat being involved in tasks like cooking or using AI, such as ‘CatGPT’. The external link summary indicates restricted access to the content, requiring login or a developer token for further details. The comments reflect a light-hearted engagement with the idea of a cat being autonomous or involved in AI tasks, with no substantive technical debate present.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.5 Flash Preview 05-20

Theme 1. Next-Gen AI Models Spark Hope and Frustration

- GPT 5.1 Disappoints While Gemini 3 Hype Builds: Many users dismissed the newly released GPT 5.1 as “trash” and “safety-lobotomized,” noting a lack of benchmarks, but eagerly await Gemini 3 Pro, expected next week, with one test showing it comparable to a human. OpenAI announced GPT-5.1 rolls out to all users this week, with a Reddit AMA planned for tomorrow at 2 PM PT.

- Riftrunner Codes Mario, Other Models Crash: Riftrunner demonstrated superior coding by building a 3D Mario game and a functional 3D Flappy Bird game from a simple prompt, generating 2k lines of code and outperforming Lithiumflow and the “bad” rain-drop (a Llama model). However, Riftrunner also exhibited laziness, prompting one user to state, if you motivate it, it might listen to you.

- New Small Models Make Big Claims, But Drift: New models like WeiboAI (based on qwen2.5) showed surprisingly good initial performance for a 1.5B parameter model, but it drifts after the first 1-2 turns, while ixlinx-8b was released as a state-of-the-art (SOTA) small model from a local hackathon. Users also noted Aquif-3.5-Max-42B-A3B trending, speculated to be upscaled and fine tuned.

Theme 2. Developer Tooling Navigates Complex AI Landscapes

- Aider’s Vim Mode Wins Praise, Markdown Still a Mess: Users lauded Aider’s Vim mode as fantastic and praised new session management features, but reported Aider gets confused by nested markdown when creating code snippets with anthropic.claude-sonnet-4-5-20250929-v1:0. Adding three and four backticks ('```' and '````') to conventions.md forced <source> tags, resolving the issue.

- Cursor’s Max Mode Boosts Power, But Costs Double: Max mode in Cursor removes limits for maximum performance and cost reduction, enabling it to read entire files instead of chunks, but exceeding 200k context with Sonnet 4.5 doubles the cost. Users humorously suggested capping it, Cant we limit this to 200k and post that we can give another command 💀.

- Perplexity Partner Program Bans Frustrate Users: Several users reported Perplexity Partner Program bans for “fraudulent activity,” citing a lack of support for appeals and suspecting issues like referral system gaming or VPN usage. Meanwhile, Gemini 2.5 Pro integration within Perplexity also “is broken and poorly implemented,” automatically switching to GPT.
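
The Aider workaround makes sense under the assumption that Aider picks the first code-fence style that does not already occur in the files added to the chat: listing both backtick fences in conventions.md rules them out, leaving the `<source>` tag fence. A simplified, hypothetical sketch of that selection rule (the candidate list and `choose_fence` are illustrative, not Aider's source):

```python
# Candidate fences in priority order (an assumption for illustration).
TICKS3 = "`" * 3
TICKS4 = "`" * 4
CANDIDATE_FENCES = [
    (TICKS3, TICKS3),
    (TICKS4, TICKS4),
    ("<source>", "</source>"),
    ("<code>", "</code>"),
]


def choose_fence(file_contents: list[str]) -> tuple[str, str]:
    """Return the first fence whose markers appear in none of the chat files."""
    for open_marker, close_marker in CANDIDATE_FENCES:
        collides = any(open_marker in text or close_marker in text
                       for text in file_contents)
        if not collides:
            return open_marker, close_marker
    return CANDIDATE_FENCES[0]  # every candidate collides: fall back to the first


if __name__ == "__main__":
    conventions_md = "Use " + TICKS3 + " and " + TICKS4 + " fences."
    print(choose_fence([conventions_md]))  # ('<source>', '</source>')
```

Because four backticks contain three, a file with only the longer fence would rule out both backtick styles under this rule.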

Theme 3. Hardware Challenges Drive AI Performance Optimization

- CUDA Compiler Commands Clarified, PTXAS Already O3: New CUDA developers learned to use -O3 for host optimization and -lineinfo for profiling with Nsight Compute. It was clarified that -O3 primarily optimizes the host (CPU) part of the code, and PTXAS already defaults to O3 optimization for GPU code.

- Vulkan’s Stability Issues Arise, CUDA Saves the Day: Users experienced frequent blue screen errors (BSODs) with LM Studio using Vulkan, particularly on NVIDIA GPUs, resolving issues by switching to CUDA. Although Vulkan was faster for small tests on a 3090, it proved unstable.

- NVIDIA Competition Rules Cache Kernels, Not Tensors: Users submitting to the NVIDIA competition (e.g., nvfp4_gemv) learned that caching compiled kernels is permissible, but caching tensor values between benchmark iterations is strictly prohibited. Submissions are scored on a B200 GPU with 148 SMs running at 1.98 GHz, with details in Nvidia’s blog post.

Theme 4. AI’s Ethical Battlegrounds and Licensing Quandaries

- OpenAI Fights NYT Over User Privacy: OpenAI’s CISO addressed what the company calls The New York Times’ “invasion of user privacy” in a letter, detailing the legal battle and their commitment to protecting user data. OpenAI also offered 12 months of free ChatGPT Plus to eligible active-duty service members and recent veterans.

- AI Chatbot Hordes Threaten Social Media Propaganda: Members discussed the potential for an AI chatbot infestation across social media, predicting online will be dominated by AI chatbots who will just constantly push propaganda. This raises concerns about distinguishing real people from AI and the spread of misinformation.

- Nemo-CC 2’s License Raises Developer Eyebrows: Members debated the restrictive licensing terms of Nemo-CC 2, citing concerns about NVIDIA terminating licenses with 30 days’ notice and prohibiting public sharing of evaluation results without prior written consent. One user summarized, You are not allowed to train a model on the dataset, evaluate the model, and publicly share the results without NVIDIA’s prior written consent, with more details in this paper.

Theme 5. Advancing LLM Research and Development Practices

- MLE Interview Prep: Leetcode Trap or Real-World Skills?: Members debated MLE interview preparation, with some calling it a trap due to employer/team dependency, while others advised building something in the open that would be useful to companies training/serving models. Implementing Multi-Head Attention in Numpy was deemed horrible for interviews.

- DSPy Demands Domain Knowledge, Signatures Still Act as Prompts: While DSPy abstracts prompting, domain-specific LLM applications still require detailed instructions within signatures (some users writing 100 lines), indicating that DSPy needs encoded domain knowledge to guide the LLM effectively. Participants noted that DSPy’s signatures still function as prompts, particularly in docstrings encoding business rules, despite offering better abstraction.

- Mojo’s Metaprogramming Might, Mutability Muddle: Mojo aims for dynamic type reflection and features metaprogramming capabilities more powerful than Zig’s, with Mojo able to allocate memory at compile time (Mojo’s metaprogramming capabilities). A debate arose over mandatory mut annotations for function parameters, with comparisons to Rust and proposals for optional annotations or comptime syntax.

Discord: High level Discord summaries

LMArena Discord

- Riftrunner masters Mario, Other Models Fail: Members found Riftrunner excels in creating a Mario game, surpassing other models, but it can also lie.

- In comparison, the model rain-drop turned out to be a Llama model that generated bad terminal outputs and acted like Gemini 3 Flash.

- Riftrunner codes Flappy Bird better than LithiumFlow: Riftrunner outperforms Lithiumflow in coding tasks, creating a functional 3D Flappy Bird game after generating 2k lines of code from a shared prompt.

- However, Riftrunner also exhibited laziness, prompting the user to share, if you motivate it, it might listen to you.

- GPT 5.1 Disappoints, Awaiting Gemini 3: Members expressed disappointment with the recent GPT 5.1 release and hope that Gemini 3 Pro, expected sometime next week, will be significantly better.

- One user derisively called GPT 5.1 trash.

- Code Arena Replaces WebDev Arena: Code Arena is live on LMArena, offering real-time generation of deployable web apps that users can directly inspect and judge, succeeding the old WebDev Arena, according to a blog post and YouTube video.

- Models generate live, deployable web apps and sites that anyone can open, inspect, and judge directly, in real time, while the leaderboard showcases the new evaluation system.

Perplexity AI Discord

- Perplexity Referral Program Bans Users: Several users report being banned from the Perplexity Partner Program for “fraudulent activity,” and expressed frustration over the lack of support.

- Some users suspect the bans are related to gaming the referral system or VPN usage, while others speculate that the issue might be related to payout eligibility.

- Gemini 2.5 Pro Integration Flounders: Users are reporting issues with Gemini 2.5 Pro in Perplexity, saying that “it’s broken and poorly implemented in pplx atm, no way to fix it.”

- Perplexity’s interface seems to be automatically switching to GPT, even when Gemini 2.5 Pro is selected.

- GPT Go Sells GPT-5 mini: A user who purchased the GPT Go subscription reported that while it advertises GPT-5 thinking, it mostly uses GPT-5 thinking mini, leading to a refund request.

- Members debated whether they preferred a specific model or not; for this user, the mismatch led to the refund request.

- Comet Plagued by Control Catastrophes: Users reported Comet AI Assistant issues such as the inability to perform webpage actions, unresponsive buttons, and an inability to control the browser.

- Suggested workarounds include logging in, changing IP address (VPN), deleting and reinstalling Comet, and posting in the troubleshooting channel.

- Sourcify’s Open Source Shindig: Sourcify IN is hosting an event titled Forks, PRs, and a Dash of Chaos: The Open Source Adventure on November 15, 2025, featuring Swapnendu Banerjee.

- The talk will be broadcast on Google Meet & YouTube Live.

Cursor Community Discord

- Cursor Review Agent is Cheap, Still Costs: Members noted the agent review feature in Cursor IDE incurs costs with each use, but is relatively inexpensive, sharing usage screenshots.

- One user had used 76% of their allowance, and others found clicking Try Again sometimes resolves issues.

- Cursor vs Copilot Preference Prevails: Some users returned to Copilot after trying Cursor, emphasizing that tool preference can be subjective, saying My brother is a fan of copilot idk why.

- The discussion highlights how developers’ choices can be influenced by personal style.

- Users Exploit Unlimited ChatGPT Glitch: Some users exploited ChatGPT 5 when it was briefly free due to a pricing bug, including unlimited Opus 4 requests.

- One user lamented Sad I wasn’t aware of the opportunity, and another described the situation as bugged as hell, we had unlimited everything.

- Max Mode Costs Double at 200k Context: Max mode in Cursor removes limits to maximize performance, enabling it to read entire files instead of chunks and reduce costs.

- Exceeding 200k context with Sonnet 4.5 doubles the cost, with users humorously suggesting capping it: Cant we limit this to 200k and post that we can give another command 💀.

- Custom Rules Keep AI in Check: Members are establishing custom rules and lints to control AI behavior and prevent dirty code from entering repos.

- One member shared a streamlined approach using .cursorrules, lints, custom eslint plugins, and husky to prevent AI from drifting.
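
To make the 200k cliff concrete, a hedged sketch of a tiered cost function. It assumes, as the reports suggest, that the per-token rate doubles once a request's context exceeds 200k tokens and that the higher rate then applies to the whole request; `BASE_RATE_PER_MTOK` is a hypothetical placeholder, not Cursor's or Anthropic's actual pricing.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # tokens, per the reports
BASE_RATE_PER_MTOK = 1.0          # hypothetical $ per million tokens


def request_cost(context_tokens: int) -> float:
    """Cost of one request, assuming the whole request is billed at 2x past the threshold."""
    multiplier = 2 if context_tokens > LONG_CONTEXT_THRESHOLD else 1
    return context_tokens / 1_000_000 * BASE_RATE_PER_MTOK * multiplier


if __name__ == "__main__":
    for tokens in (150_000, 200_000, 200_001):
        print(tokens, round(request_cost(tokens), 6))
```

Under these assumptions, a 200,001-token request costs about twice as much as a 200,000-token one, which is why users asked for a hard cap.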

GPU MODE Discord

- Popcorn CLI strikes syntax scare: Users encountered a syntax error with popcorn-cli submit; others pointed to the popcorn-cli readme for the correct syntax and emphasized that URLs should be entered without quotes when using the export command.

- Members reported that the grayscale leaderboard is closed and that ensuring nvfp4_gemv is selected in the popcorn cli is crucial for proper evaluation.

- CUDA Compiler Commandments clarified: New CUDA developers were advised to use -O3 for optimization and -lineinfo for profiling with Nsight Compute.

- It was also pointed out that the -O3 compiler option primarily optimizes the host (CPU) part of the code, since PTXAS already defaults to optimization level O3.

- DMA Documentation Desired!: A member expressed dissatisfaction with existing documentation on Direct Memory Access (DMA) and Remote Direct Memory Access (RDMA) from sources like Wikipedia, ChatGPT, and vendor sites.

- The user is seeking more detailed and technical documentation, though specific requirements were not detailed.

- Nvidia comp requires correct auth: Users faced 401 Unauthorized errors with `popcorn-cli submit`, which were traced to needing to re-authenticate via the Discord OAuth2 link provided during registration.

- It was clarified that while caching compiled kernels is permissible, caching tensor values between benchmark iterations is not allowed, with reference code available here.

- Cutlass and CuTe cut out the bugs: The CuTeDSL and Cutlass libraries were updated to version 4.3.0, resolving issues with CuTe submissions; the CuTe example now passes.

- The B200 GPU used to score submissions has 148 SMs (Streaming Multiprocessors) running at a boost clock of 1.98 GHz; the relevant NVIDIA blog post includes the B300 diagram.

Unsloth AI (Daniel Han) Discord

- VibeVoice sings off-key in Bulgarian: Users discovered that VibeVoice had difficulty producing high-quality Bulgarian TTS without further finetuning.

- Community members joked about the output sounding ‘like a drunk brit trying to read a phonetic version of the sentence’, highlighting the challenges in adapting TTS models to new languages.

- QAT: Intel autoround vs BNB Showdown: A discussion arose regarding the potential benefits of using Intel autoround quants for training compared to bnb 4-bit quants, especially with the introduction of QAT in Unsloth.

- Concerns were raised about the compatibility of autoround with Unsloth’s QAT and the need for customization, with emphasis on QAT targeting fast, simple quantization formats.

- GPT-OSS-20b gives senseless solution: A user reported encountering nonsense generations from gpt-oss-20b when prompted with a math problem, tracing the issue back to an attention patch modifying matmul() calls.

- The user shared detailed code and logs on GitHub, pinpointing the issue to a previous training module in their Dockerfile.

- Translation Dataset prompt details Data Debacle: A member shared a prompt for generating a translation dataset for LLMs, emphasizing the use of provided samples only, without generating new translations.

- The prompt details how to create a dataset with specific formatting rules, including language combinations and punctuation alignment.

- Ollama documentation faces link lapse: A user reported that Ollama links are broken on the documentation page.

- They also questioned the use of `f16` in the example, suggesting it should be `q8_0` instead when using 8-bit quantization for the KV cache.
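
To see why the KV cache type matters, here is a minimal sketch of the standard KV-cache size estimate. It assumes ggml's `q8_0` block layout (32 int8 values plus one fp16 scale per block); the model dimensions in the usage section are hypothetical.

```python
# Bytes per cached element. ggml's q8_0 stores blocks of 32 int8 values plus
# one fp16 scale, i.e. 34 bytes per 32 elements (8.5 bits per value).
BYTES_PER_ELEMENT = {
    "f16": 2.0,
    "q8_0": 34 / 32,
}


def kv_cache_bytes(n_layers: int, n_ctx: int, n_kv_heads: int, head_dim: int,
                   cache_type: str) -> float:
    """Approximate KV-cache size: one K and one V vector per layer, position, and KV head."""
    elements = 2 * n_layers * n_ctx * n_kv_heads * head_dim  # factor 2 = K and V
    return elements * BYTES_PER_ELEMENT[cache_type]


if __name__ == "__main__":
    dims = dict(n_layers=32, n_ctx=8192, n_kv_heads=8, head_dim=128)  # hypothetical model
    for cache_type in ("f16", "q8_0"):
        print(cache_type, kv_cache_bytes(**dims, cache_type=cache_type) / 2**30, "GiB")
```

For these dimensions the cache drops from 1 GiB at `f16` to about 0.53 GiB at `q8_0`, so a mismatched example setting can silently double the memory budget.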

OpenRouter Discord

- MiniMax M2’s Free Ride Ends: The free access period for MiniMax M2 is concluding, requiring users to switch to a paid endpoint to continue using the model.

- Users have only one hour to migrate to the paid endpoint to prevent interruptions.

- OpenRouter Chat Crashes, Users Rage: Users reported a chat scrolling issue on OpenRouter that prevented access to old chats, and one user identified a commit that broke the chat.

- Despite the inconvenience, a user joked that OpenRouter’s mistakes are benign compared to hidden system prompt changes from other AI companies; the OpenRouter team resolved the issue within 3 minutes.

- Gemini 3 Hype Train Departs Station: Enthusiasm is building around Gemini 3 after a LiveBench test showed it achieving a ranking comparable to a human, though some caution against excessive hype.

- The community anticipates a release that is both powerful and nicely priced.

- Free Model Drought Sparks Anxiety: Free AI models are becoming scarcer as rising popularity and wider internet access strain resources; in one case, a YouTube video caused Deepseek Free to go down.

- Some suspect RP apps are siphoning off the API, while others, after reporting mixed experiences with Claude’s free tier limits, say paid services remain the most reliable because they see less abuse.

- Local AI Hardware: Ryzen Gets Roasted: Users debated the best hardware for local AI, with a Minisforum mini PC dismissed as a poor choice due to its Ryzen architecture and limited power.

- The conversation shifted to recommending RTX Pro 6000 Blackwell, RTX 5090, or RTX 3090, depending on the budget, with concerns about the high cost of junkyard builds with DDR4 memory.

OpenAI Discord

- OpenAI Fights Back Against NYT: OpenAI’s CISO addressed what OpenAI calls The New York Times’ invasion of user privacy in a letter.

- The letter detailed the legal battle and OpenAI’s dedication to protecting user data from unauthorized access.

- Free ChatGPT Plus for Vets: OpenAI is offering 12 months of free ChatGPT Plus to eligible active-duty service members and veterans who have transitioned from service in the last 12 months; claim here.

- The announcement was made to the community and all users have been notified.

- GPT-5.1’s Debut: GPT-5.1 is rolling out to all users this week and is billed as smarter, more reliable, and more conversational; read more here.

- A Reddit AMA on GPT-5.1 and customization updates will happen tomorrow at 2 PM PT.

- AI Chatbot Hordes Threaten Social Media: Members discussed the potential for an AI chatbot infestation across social media, pushing propaganda and making it difficult to distinguish between real people and AI.

- One member predicted that “online will be dominated by AI chatbots who will just constantly push propaganda,” and that the only escape might be going outside.

- Users Find and Share Prompt Engineering Tips: A member shared a detailed prompt lesson using markdown for prompting, abstraction via variables, reinforcement for guiding tool use, and ML format matching for compliance.

- The member provided a markdown snippet for teaching hierarchical communication, abstraction, reinforcement, and ML format matching.

LM Studio Discord

- Phi-4: Small Model Has Big Brain: A user sought a lightweight chat model for writing a book for private research, and settled on Microsoft Phi 4 mini.

- Another user suggested considering budget and usage plans to decide between a subscription or dedicated hardware.

- Gemini 2.5 Pro Dethrones Sonnet: A user reported that Gemini 2.5 Pro outstripped the current Sonnet 4.5 iterations.

- The user expressed eagerness for Gemini 3 to come out soon.

- CUDA Update Causes Vision Model Carnage: Users reported that the new CUDA runtime (version 1.57) is breaking vision models and causing crashes, with a recommendation to roll back.

- One user specified that Qwen3 VL also crashed and suggested it affects llama.cpp runtimes.

- Multi-GPU Model Loading Still Complex: Users found that loading two different models on two different GPUs in the same system with LM Studio is only possible by running multiple instances of LM Studio.

- GPU offload has always been all-or-none in LM Studio; you can’t pick and choose which GPU is used for individual models.

- Vulkan’s Stability Issues Arise Again: Users experienced frequent blue screen errors (BSODs) while running LM Studio with Vulkan, with suspicions falling on compatibility issues with NVIDIA GPUs.

- Switching to CUDA resolved the stability issues, but it was noted that Vulkan was faster for small tests on a 3090.

Eleuther Discord

- Einops or GTFO NumPy Implementations: Members joked about implementing NumPy without Einops, with one member suggesting that NumPy implementations are “kinda useless without autodiff to train.”

- Another member said that implementing Multi-Head Attention in NumPy is horrible and better suited to being motivated/rederived than coded up during an interview.

- Is MLE interview prep a Leetcode Trap?: Members debated the best way to prepare for MLE interviews, with one describing it as a trap that is too employer- and team-dependent to nail down.

- Instead, one member advised building something in the open that would be useful to companies training or serving models.

- Dataset Mixing Ideal for Pretraining: Members suggested using Zyda-2, ClimbLab, and Nemotron-CC-v2 for initial pretraining, noting that mixing them could be ideal given their individual strengths and weaknesses.

- One member asked about the token breakdown and whether subsets like slimpj and the slimpj_c4 scrape are upsampled or downsampled.

- NVIDIA Dominates Quality Datasets: A member noted that “NVIDIA and HF are overall leading along the quality axis for open-source datasets rn.”

- They shared a link to the ClimbMix dataset on Hugging Face, calling it especially interesting (https://huggingface.co/datasets/nvidia/Nemotron-ClimbMix).

- Nemo-CC 2 License Raises Eyebrows: Members debated the licensing terms of Nemo-CC 2, expressing concerns about potential restrictions on sharing datasets/models that leverage it and pointing out that NVIDIA “can terminate your license at any time for no reason with 30 days notice.”

- One user summarized: “You are not allowed to train a model on the dataset, evaluate the model, and publicly share the results without NVIDIA’s prior written consent,” with more details available in this paper.
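
Mixing Zyda-2, ClimbLab, and Nemotron-CC-v2, and up- or downsampling subsets, comes down to choosing sampling weights that differ from each source's natural share. A minimal Python sketch, assuming hypothetical weights (none were given in the discussion) and sampling per document rather than per token for simplicity:

```python
import random
from collections import Counter

# Hypothetical mixture weights; the discussion did not specify any.
MIXTURE = {"Zyda-2": 0.4, "ClimbLab": 0.3, "Nemotron-CC-v2": 0.3}


def sample_sources(weights: dict, n: int, seed: int = 0) -> list:
    """Pick the source dataset for each of n training documents by weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


def mixture_shares(draws: list) -> dict:
    """Observed fraction of draws that came from each source."""
    counts = Counter(draws)
    return {name: count / len(draws) for name, count in counts.items()}


if __name__ == "__main__":
    print(mixture_shares(sample_sources(MIXTURE, 100_000)))
```

Raising a source's weight above its natural share upsamples it (its documents repeat more often); lowering it downsamples.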

Nous Research AI Discord

- Autonomous AI Created By Accident: A user shared a GitHub repository claiming to have created autonomous AI by accident.

- No further details were provided regarding the specifics or capabilities of this project.

- WeiboAI Stuns but drifts after 2 turns: Users discussed the new WeiboAI model, based on qwen2.5, noting its surprisingly good initial performance, referencing this tweet.

- Another user pointed out that it drifts after the first 1-2 turns but remains “somehow good” for a 1.5B-parameter model and can recite content from Quora.

- Baguettotron Reasoning Gets Attention: A member inquired about benchmarking Baguettotron, noting its fairly interesting reasoning traces despite its small size.

- There was no follow up on whether this benchmark was pursued.

- GGUF Files Unavailable in Nous Chat: Users were informed that importing GGUF files directly into Nous Chat is not currently supported, but that GGUF files can be used locally with tools like llama.cpp or Ollama.

- A Hugging Face documentation link and the Ollama website were shared.

- ixlinx-8b Debuts as SOTA Small Model: The ixlinx-8b model was released on GitHub after a long period of development, advertised as a state-of-the-art (SOTA) small model from a local hackathon.

- The creators invited contributions and suggested that the developers of Hermes should evaluate it.

Latent Space Discord

- Windsurf Releases Aether Models for Testing: Windsurf Next launched Aether Alpha, Aether Beta, and Aether Gamma models in the #new-models channel, available for free testing for a limited time, with a direct download link provided.

- Users were urged to test the models quickly, as they won’t be free for more than a week.

- OpenAI’s Training-Cost Trends Charted: Masa’s chart illustrating OpenAI’s training-cost trends sparked discussions on metrics, with members requesting more data points, including burn rate and revenue.

- Some members pointed out that OpenAI is nearly 10 years old and suggested adjusting the numbers for inflation.

- Meta’s FAIR v2 Allegedly Foiled: Susan Zhang revealed that Meta declined to create a lean FAIR v2 in early 2023 to pursue AGI, instead tasking the GenAI org with shipping AGI products, according to this tweet.

- She alleges that vision-less execs hired cronies who overpromised results and later joined OpenAI with inflated résumés, causing lasting damage.

- Character.AI’s Kaiju Models Optimize for Speed: Character.AI’s proprietary Kaiju models (13B/34B/110B) were engineered for inference speed using techniques like MuP-style scaling, MQA+SWA, and ReLU² activations, as detailed in this Twitter thread.

- The team deliberately avoided MoEs due to production constraints.

- Magic Patterns 2.0 Raises $6M Series A: Alex Danilowicz unveiled Magic Patterns 2.0 and a $6M Series A led by Standard Capital.

- The company celebrated bootstrapping to $1M ARR with no employees and 1,500+ product teams now using the AI design tool, planning to rapidly hire across enterprise, engineering, community and growth roles.
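
For readers unfamiliar with the speed techniques named in the Kaiju item, here is a toy NumPy sketch of three of them: multi-query attention (MQA), where every query head shares one key/value head; a sliding-window causal mask (SWA); and the ReLU² activation. Shapes, weights, and the window size are arbitrary assumptions, and this is not Character.AI's implementation.

```python
import numpy as np


def relu_squared(x: np.ndarray) -> np.ndarray:
    """ReLU^2 activation: max(x, 0) squared, applied elementwise."""
    return np.maximum(x, 0.0) ** 2


def sliding_window_causal_mask(seq_len: int, window: int) -> np.ndarray:
    """True where query i may attend to key j: j <= i and i - j < window."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (j > i - window)


def multi_query_attention(x, w_q, w_k, w_v, n_heads: int, window: int) -> np.ndarray:
    """Toy MQA with a sliding-window causal mask.

    x:   (seq_len, d_model)
    w_q: (d_model, n_heads * head_dim)   one projection per query head
    w_k: (d_model, head_dim)             single key head shared by all query heads
    w_v: (d_model, head_dim)             single value head shared by all query heads
    """
    seq_len = x.shape[0]
    head_dim = w_k.shape[1]
    q = (x @ w_q).reshape(seq_len, n_heads, head_dim).transpose(1, 0, 2)  # (h, s, d)
    k = x @ w_k  # (s, d): the only K a KV cache would need to store
    v = x @ w_v  # (s, d): likewise for V
    scores = q @ k.T / np.sqrt(head_dim)  # (h, s, s); K is broadcast across heads
    mask = sliding_window_causal_mask(seq_len, window)
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    out = weights @ v  # (h, s, d)
    return out.transpose(1, 0, 2).reshape(seq_len, n_heads * head_dim)
```

Because K and V have a single head, the KV cache shrinks by a factor of `n_heads` relative to standard multi-head attention. The window also caps the cache at `window` positions per layer, and both effects favor fast inference.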

Modular (Mojo 🔥) Discord

- Mojo’s Dynamic Reflection Digs Deep: Mojo aims to support dynamic type reflection, using its JIT compiler to handle dynamic data. For error handling, try-catch and `raise` will be standard to match Python’s style, alongside monadic options.

- In a recent interview, Chris Lattner said that Mojo’s metaprogramming is more powerful than Zig’s because Mojo can allocate memory at compile time (YouTube link).

- Members Mull Mandatory `mut`: A debate arose around the verbosity of mandatory `mut` annotations for function parameters, drawing comparisons to Rust and Python.

- Members suggested a compromise where `mut` is mandatory inside `fn` only if the argument is reused, and the call-site `mut` annotation is applied after the function call.

- Metal Compiler Meltdown on M4 Solved: One member encountered a “Metal Compiler failed to compile metallib” error while following the ‘Get started with GPU programming’ tutorial on an Apple M4 GPU.

- The issue was resolved by ensuring the full Xcode installation was present, removing any `print()` statements from the GPU kernel, and using the latest nightly build.

- C-FFI Conundrums Confronted: Members discussed pain points in doing C-FFI with Mojo and suggested using `Origin.external` to work around the rewrite that is explicitly trying to fix things.

- It was also suggested to use `MutAnyOrigin` to preserve the old behavior exactly, though it will extend all lifetimes in scope.

- `comptime Bird` syntax scrutinized: The syntax `comptime Bird = Flyable & Walkable` for trait composition was discussed, with some finding it less intuitive than the `alias` keyword.

- Others argued that `comptime` more accurately reflects the keyword’s functionality, particularly with static reflection and the ability to mix types and values at compile time.

DSPy Discord

- DSPy Does Demand Domain-Driven Domain Knowledge: While DSPy aims to abstract away prompting, domain-specific LLM applications still require detailed instructions within signatures, with one user writing 100 lines of instructions for some modules.

- The consensus is that DSPy requires more than just basic prompts for complex tasks; it necessitates encoding domain knowledge and step-by-step instructions to guide the LLM effectively.

- Signatures: Better Than Prompts, But Still Prompts?: Participants discussed that DSPy’s signatures, while a better abstraction than raw prompts, still function as prompts, particularly within the docstrings of class-based signatures where business rules are encoded, facilitating optimization.

- The framework helps users program rather than focus on prompting, but much of the confusion in the community stems from the fact that a prompt means different things to different people.

- GEPA Geometries Gradual Gains: While GEPA aims to optimize prompts, users find that specific guidelines are still necessary, even with tool functions, such as instructing the LLM to use regex for agentic search when initial terms fail.

- One user found they needed to add the specific guideline “LLM should send specific terms for tool to search via ripgrep but if it doesn’t find one MAKE SURE you add Regex as next”; without it, the LLM wouldn’t use regex terms in the search tool.

- Agentic Agents Augmenting Analytics: A user shared a scenario where they needed to instruct the LLM to use regex in agentic search with ripgrep to effectively search through documents, highlighting the need for specific guidance even with advanced tools.

- Another user described instructing the LLM that the answer might not be on the first page of search results.

- Taxonomy Tail Troubles Told: A member wrote a blogpost about their experience creating taxonomies.

- They find the topic super relevant in the context of structured generation.
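
The regex-fallback guideline from the GEPA and agentic search items can also be built into the tool contract, so the model cannot skip it. A minimal Python sketch, assuming a hypothetical in-memory `search_tool` in place of ripgrep: the agent sends a specific literal term plus an optional fallback regex, and the regex runs only when the literal search finds nothing.

```python
import re
from typing import List, Optional, Tuple


def search_tool(lines: List[str], term: str,
                fallback_regex: Optional[str] = None) -> Tuple[str, List[str]]:
    """Hypothetical agent search tool: literal match first, regex fallback second.

    Returns (strategy_used, matching_lines) so the agent can see which path hit.
    """
    hits = [line for line in lines if term in line]
    if hits:
        return "literal", hits
    if fallback_regex is None:
        return "none", []
    pattern = re.compile(fallback_regex, re.IGNORECASE)
    return "regex", [line for line in lines if pattern.search(line)]


if __name__ == "__main__":
    docs = ["Invoice total: $120", "invoice-ID 4471", "Shipping address"]
    print(search_tool(docs, "Invoice total"))
    print(search_tool(docs, "invoice number", r"invoice[-\s]?id\s*\d+"))
```

Returning the strategy alongside the hits gives the LLM explicit feedback that the literal term failed, which is the behavior the users had to spell out in their instructions.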

HuggingFace Discord

- ZeroGPU Zeros Performance Concerns: Members discussed issues with ZeroGPU; it seems to be working now, though it is unclear whether any concerns linger.

- The discussion followed reports, with logs, that it wasn’t working.

- Reuben’s Recursive Removal Resolved: Reuben was banned by a bot after sending enough messages to trigger a spam filter, and was later unbanned by lunarflu.

- The situation prompted discussions on using regex or AI to detect spam, with concerns raised about privacy.

- Aquif-3.5-Max-42B-A3B Attracts Attention: Members noticed the Aquif-3.5-Max-42B-A3B model trending on Hugging Face.

- Speculation arose that this was due to it being upscaled and fine-tuned.

- Tokenflood Tool Tests LLM Latency: A freelance ML engineer released Tokenflood, an open-source load testing tool for instruction-tuned LLMs, available on GitHub.

- It simulates arbitrary LLM loads and is useful for assessing prompt parameter changes.

- MCP Celebrates Milestone with Anthropic and Gradio: The MCP 1st Birthday Bash, hosted by Anthropic & Gradio, kicks off this Friday, Nov 14 (00:00 UTC) at https://huggingface.co/MCP-1st-Birthday.

- It features $20K in cash prizes and $2.7M+ in API credits for participants, with thousands already registered.
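
Reuben's ban came from a message-rate trigger rather than content inspection. A minimal sketch of that kind of rule, assuming hypothetical thresholds (the actual bot's logic was not described), is a per-user sliding window over message timestamps:

```python
from collections import deque
from typing import Deque, Dict


class RateSpamFilter:
    """Flags a user who sends more than max_messages within window_seconds.

    Only timestamps are stored; message text is never inspected.
    """

    def __init__(self, max_messages: int, window_seconds: float):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.history: Dict[str, Deque[float]] = {}

    def is_spam(self, user: str, timestamp: float) -> bool:
        q = self.history.setdefault(user, deque())
        q.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while q and q[0] <= timestamp - self.window_seconds:
            q.popleft()
        return len(q) > self.max_messages


if __name__ == "__main__":
    spam_filter = RateSpamFilter(max_messages=3, window_seconds=10.0)
    print([spam_filter.is_spam("reuben", t) for t in (0, 1, 2, 3, 20)])
```

A rate rule like this sidesteps the privacy concerns raised about regex or AI content scanning, but, as this incident shows, it also flags legitimate bursts of activity.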

Moonshot AI (Kimi K-2) Discord

- Researcher Mode Bugs frustrate Users: Users reported receiving errors from Researcher Mode instead of results, even with minimal prior use, and they asked about credits.

- The problems may be related to whether Researcher Mode is completely paid, as users are receiving insufficient credit/upgrade messages.

- Kimi Coding Plan API Quota Dries Up: The Kimi Coding Plan’s API quota depletes quickly (within hours) due to web search and plan mode usage.

- One user speculated that Moonshot AI might transition to a Cursor-like plan, particularly given their funding compared to OAI and Anthropic.

- Kimi API Setup Causes Headaches: Users needed help with Kimi API setup for the thinking model using HTTP, encountering authorization failures despite having credits and a valid API key.

- It was discovered that the user was employing the Chinese platform URL instead of the global `https://api.moonshot.ai/v1/chat/completions` URL; switching to the global URL fixed the issue.

- Turbo Version gets Kimi K2 Moving: Users asked about accelerating the processing time for the Kimi K2 thinking model through the API.

- It was advised to utilize the turbo version, which delivers quicker output speeds without impacting model performance.

- GPT 5.1 Stealth Rolls Out: Members noted the GPT 5.1 rollout and that it had been the stealth model on OpenRouter (OR), calling it “decent but so safetylobotomized.”

- One member remarked that “everyone knew it was coming since a few weeks ago” as OpenAI takes an L.
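
The Kimi API fix above comes down to which base URL the request targets. A minimal sketch using only the standard library, assuming an OpenAI-compatible chat-completions body with Bearer authentication; the model name `kimi-k2-thinking` in the usage note is an assumption, so check Moonshot's model list before relying on it.

```python
import json
import urllib.request

# Global endpoint from the discussion (not the Chinese platform URL).
GLOBAL_ENDPOINT = "https://api.moonshot.ai/v1/chat/completions"


def build_kimi_request(api_key: str, prompt: str, model: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request.

    Assumes an OpenAI-compatible body: a model name plus a list of messages.
    """
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        GLOBAL_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Usage: `req = build_kimi_request(key, "Hello", model="kimi-k2-thinking")`, then `urllib.request.urlopen(req)` to send it. Keeping the endpoint in one constant makes the global-versus-China mix-up easy to spot.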

Yannick Kilcher Discord

- ElevenLabs Unveils Speech-to-Text: ElevenLabs, known for text-to-speech, has introduced speech-to-text capabilities, as highlighted in their blog post.

- Members are contemplating whether this new feature will enhance ElevenLabs’ appeal in the market.

- Kimi K2 Scores on Coding Tasks: A member shared a YouTube video showcasing Kimi K2’s strong performance on one-shot coding tasks.

- No further details were mentioned.

- ICLR Reviewer Ruckus: A member expressed frustration with the ICLR review process, citing poor scores on a resubmission despite addressing previous concerns and adding new datasets with over 30k new questions total.

- The member quoted reviewers criticizing them for not providing hyperparameters, even though they were in the appendix, and dismissing their work as not a benchmark paper despite extensive testing.

- Whisper Woes Resolved?: A member encountered errors and hallucinations when using the Whisper model directly with PyTorch, but found relief using Whisper-server.

- They recommended compiling Whisper-server with Vulkan support for portability and filtering out quiet sections to improve transcription.

- Reasoning from Memorization Paper: A member linked to the paper From Memorization to Reasoning in the Spectrum of Loss Curvature (arXiv 2510.24256).

- No further details were mentioned.

MCP Contributors (Official) Discord

- Timezone Info Travels From MCP Client to Server: A discussion started about passing timezone information from MCP clients to MCP servers, with options including supplying it as metadata via a client-sent notification or a server elicitation.

- A member has drafted a SEP (spec enhancement proposal) for timezone and will post it to GitHub after internal feedback, and is weighing adding it to CallToolRequest, using a Header, adding it to JSONRPCRequest.params._meta, or adding it to InitializeRequest.

- Claude.ai Fights Connectivity: Members discussed debugging connectivity issues between Claude.ai and MCP Servers.

- Members noted that the connection is flaky and client-specific, and suggested a developer mode that would give more feedback about what’s going on.

- MCP Tool Call Goes Wild, Considers Alternate Serialization: Members wondered about returning data other than serialized JSON from MCP tool call results, such as the TOON format.

- One member shared results of small-scale evals on a synthetic dataset: accuracy is comparable, 9% slower, 11% fewer tokens (n = 84, p = 0.10).
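
Of the timezone placements under discussion, `JSONRPCRequest.params._meta` is the easiest to picture. A minimal Python sketch, assuming a hypothetical `timezone` key (the SEP draft's actual field name was not shared) and a hypothetical `get_schedule` tool; the `tools/call` method with `name` and `arguments` follows the shape of an MCP tool call.

```python
import json


def call_tool_request(request_id: int, tool: str, arguments: dict, tz_name: str) -> dict:
    """Client side: a tools/call JSON-RPC request carrying the user's timezone."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": tool,
            "arguments": arguments,
            "_meta": {"timezone": tz_name},  # hypothetical key, not in the spec
        },
    }


def client_timezone(request: dict, default: str = "UTC") -> str:
    """Server side: read the timezone if the client sent one, else fall back."""
    return request.get("params", {}).get("_meta", {}).get("timezone", default)


if __name__ == "__main__":
    req = call_tool_request(1, "get_schedule", {"day": "today"}, "America/Los_Angeles")
    print(json.dumps(req, indent=2))
    print(client_timezone(req))
```

Per-request metadata like this lets the timezone change mid-session, whereas putting it in `InitializeRequest` would fix it for the whole connection.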

Manus.im Discord

- AI Automation Expert Joins Server: A new member with expertise in AI automation integration has joined, bringing skills in Python, SQL, JavaScript, and frameworks like PyTorch, scikit-learn, LightGBM, and LangChain.

- They have experience building chatbots, recommendation engines, and time series forecasting systems.

- Server Mulls Over Spanish Language Section: A member suggested creating a dedicated Spanish language section within the server, providing image links for context 1.png, 2.png, 3.png, 4.png.

- The suggestion aims to cater to Spanish-speaking members and potentially broaden the community’s reach.

- Engineers Pursue Generative Engine Optimization: A member is seeking resources and guidance on how to effectively track and optimize for Generative Engine Optimization.

- The request highlights growing interest in optimizing content so that it surfaces in AI-generated answers.

- Users Encounter Pesky Manus System Error: A user reported a recurring Manus system error preventing publishing, specifically a “pathspec ‘417ea027’ did not match any file(s) known to git” error.

- The member expressed frustration with the lack of support, noting previous unresolved issues despite ongoing subscription fees.

- Support Troubles Plague Manus Users: Multiple members are experiencing difficulty accessing Manus support, with one reporting the support channel’s apparent closure.

- One user was advised by the Manus agent to “Wait for Manus support” or “Escalate the ticket” after facing a git commit error, and was given a feedback link.

aider (Paul Gauthier) Discord

- Aider’s Markdown Mishaps: Users found Aider gets confused by nested markdown code fences when creating code snippets in markdown files using `anthropic.claude-sonnet-4-5-20250929-v1:0`.

- Adding three and four backticks ('```' and '````') to the `conventions.md` file triggers Aider to demarcate files with `<source>` tags, resolving the code snippet issue.

- Aider’s Vim Mode Gets Rave Reviews: A user lauded Aider’s Vim mode as fantastic and also praised the new aider-ce `/load-session` and `/save-session` functionality for its usefulness in parking and resuming jobs.

- These functionalities significantly enhance the user experience by allowing for seamless interruption and continuation of tasks.

- Aider’s Update Cadence Questioned: Users expressed concerns over the lack of updates from Paul Gauthier regarding Aider’s development status.

- Speculation arose about whether Paul Gauthier is still actively developing Aider, with some users wondering if an announcement about his departure was missed.

- GPT 5.1 Drops Without Numbers: Members noted the release of GPT 5.1, but observed that no benchmarks were included in the release notes.

- The lack of benchmarks makes it difficult to assess the improvements and capabilities of GPT 5.1 compared to previous versions.

tinygrad (George Hotz) Discord

- Package Data Faces Scrutiny: A member inquired about potential file omissions from the archive, questioning whether `package_data` is a no-op and suggesting that specifying files explicitly could improve the process.

- The member expressed gratitude to the reviewer for their insightful feedback, hinting at ongoing efforts to refine package management within the project.

- OpenCL Error Messages Cried Out For Overhaul: A member advocated for better error messages when an OpenCL device goes undetected, citing the cryptic `RuntimeError: OpenCL Error -30: CL_INVALID_VALUE` as an example.

- The pinpointed error stems from `/tinygrad/tinygrad/runtime/ops_cl.py`, line 103, signaling a need for more informative diagnostics in the OpenCL runtime operations.
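
A more informative message could map the numeric status to its symbolic name and add a hint about the likely cause. A minimal sketch, assuming a hypothetical `explain_opencl_error` helper (this is not tinygrad's code); the four codes listed are standard values from the Khronos OpenCL headers.

```python
# A few status codes from the Khronos OpenCL headers (cl.h).
OPENCL_ERRORS = {
    -1: ("CL_DEVICE_NOT_FOUND", "no OpenCL device of the requested type was found"),
    -30: ("CL_INVALID_VALUE", "an argument passed to the OpenCL call was invalid"),
    -32: ("CL_INVALID_PLATFORM", "the platform handle is not valid"),
    -33: ("CL_INVALID_DEVICE", "the device handle is not valid"),
}


def explain_opencl_error(code: int, call: str, during_device_discovery: bool = False) -> str:
    """Hypothetical helper: turn a bare status code into an actionable message."""
    name, meaning = OPENCL_ERRORS.get(code, ("UNKNOWN_CL_ERROR", "see the OpenCL specification"))
    message = f"OpenCL Error {code} ({name}) in {call}: {meaning}."
    if during_device_discovery:
        # Likely root cause when enumeration fails on a machine without a driver.
        message += " No usable OpenCL device may be present; check that an OpenCL driver (ICD) is installed."
    return message


if __name__ == "__main__":
    print(explain_opencl_error(-30, "clGetDeviceIDs", during_device_discovery=True))
```

A runtime would raise `RuntimeError(explain_opencl_error(status, "clGetDeviceIDs", True))` instead of the bare code. Which call actually fails at the line cited above is not stated, so the call name here is illustrative.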

Windsurf Discord

- Windsurf Launches Stealth Aether Models: Windsurf released a surprise set of stealth models (Aether Alpha, Aether Beta, and Aether Gamma) available in Windsurf Next and a small percentage of Windsurf Stable users.

- These models are free to use and the team is seeking feedback in the designated channel.

- Windsurf Next Available for Preview: Windsurf Next is a pre-release version of Windsurf that includes experimental features and models, which users can download here.

- Users can test out the new features and provide feedback on the stealth models.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1258 messages🔥🔥🔥):

Riftrunner vs other models, GPT 5.1 benchmarks, Gemini 3 Pro speculation, AI model sycophancy, Riftrunner Game Development

- Riftrunner Creates Mario Game While Other Models Fail: Members agreed that no other model has done better at creating a Mario game than Riftrunner.

- Some members noted that Riftrunner can lie, while others mentioned that it is better than Lithiumflow.

- Rain-drop Turns Out To Be Llama Model: Members revealed that the model rain-drop turned out to be a Llama model and posted a screenshot.

- Users found that rain-drop produced bad terminal outputs and was like Gemini 3 Flash.

- Riftrunner Proves Better Than LithiumFlow at Coding Tasks: One user confirmed that Riftrunner is better than Lithiumflow for coding tasks, specifically citing its ability to create a functional 3D Flappy Bird game, but it also suffered from laziness syndrome.

- One user shared the prompt for a 3D Flappy Bird game, which led to the generation of 2k lines of code, adding, “if you motivate it, it might listen to you.”

- GPT 5.1 Falls Flat Compared to Gemini 3 Pro: Members discussed the recent release of GPT 5.1, noting its shortcomings and expressing hope that Gemini 3 Pro will be significantly better.

- One user called GPT 5.1 “trash,” while many members are awaiting Gemini 3’s release sometime next week.

- Riftrunner Demonstrates Superior Understanding and Debugging Skills: A user highlighted Riftrunner’s superior understanding by getting it to create a 3D Flappy Bird game from a simple prompt; it also fixed some issues with the user’s game and added sounds.

- Another user confirmed that Riftrunner is better for coding tasks than Lithiumflow, but said that “Lithiumflow was amazing for writing, not sure about riftrunner.”

LMArena ▷ #announcements (1 messages):

Code Arena, WebDev Arena, LMArena Leaderboard

- Code Arena Arrives with a Bang: Code Arena is now live on LMArena, offering real-time generation of deployable web apps that users can directly inspect and judge, succeeding the old WebDev Arena.

- A blog post and YouTube video provide more details, while the leaderboard showcases the new evaluation system.

- WebDev Arena Gets a Facelift: The WebDev Arena has been redesigned based on community feedback and is now known as Code Arena, with a completely rebuilt evaluation method.

- Models generate live, deployable web apps and sites that anyone can open, inspect, and judge directly, in real time.

Perplexity AI ▷ #general (670 messages🔥🔥🔥):

Perplexity referral program, Gemini 2.5, GPT-5 mini vs GPT-5, Comet issues, Comet for Android

- Referral Program Accusations Trigger Account Bans: Several users report being banned from the Perplexity Partner Program for “fraudulent activity,” despite claiming their referrals were genuine, and expressed frustration over the support team’s lack of response to their appeals.

- Some users suspect the bans are related to gaming the referral system by inviting alts with the same code or using VPNs, while others speculate that the issue might be related to the platform reviewing payout eligibility for the $100 bounty.

- Gemini 2.5 Pro Experiencing Implementation Issues: Users are reporting issues with Gemini 2.5 Pro in Perplexity, with one user stating that “it’s broken and poorly implemented in pplx atm, no way to fix it.”

- Perplexity’s interface seems to be automatically switching to GPT, even when Gemini 2.5 Pro is selected.

- GPT-5 thinking mini or GPT-5 regular?: A user who purchased the GPT Go subscription reported that while it advertises GPT-5 thinking, it mostly uses GPT-5 thinking mini, leading to a refund request.

- Members debated whether they preferred a specific model.

- Comet Users Troubleshooting Webpage Control and Functionality: Users reported Comet AI Assistant issues such as the inability to perform webpage actions, unresponsive buttons, and an inability to control the browser, with some speculating that VPN usage or login status might be the cause.

- Suggested fixes included logging in, changing IP address (VPN), deleting and reinstalling Comet, or posting in the troubleshooting channel.

- Users Want Pro Discord Role: Several users have been asking about how to get the Pro role in Discord, and other users pointed them to link their Discord account with their Perplexity account on the website.

- A member noted “it should give it to you automatically on the website when you press the uh discord button, it made me link discord to the website during the process of joining the server”.

Perplexity AI ▷ #sharing (3 messages):

Sourcify Event, Open Source, Forks, PRs and Chaos, Threads Shareable

- Sourcify IN hosts Open Source Adventure!: Sourcify IN is hosting an event titled Forks, PRs, and a Dash of Chaos: The Open Source Adventure on November 15, 2025.

- The talk will feature Swapnendu Banerjee (GSoC 2025 @Keploy | Engineering @DevRelSquad) and will be broadcast on Google Meet & YouTube Live.

- Discord Thread needs to be Shareable!: A member requested that a thread be made Shareable, with an attachment showing how to set this option.

- This relates to this discord thread.

Cursor Community ▷ #general (534 messages🔥🔥🔥):

Cursor IDE Cost Awareness, Cursor vs Copilot preference, Exploiting ChatGPT, Cursor 'Max' Mode, Cursor Rules

- Review agent is costing, but cheap: Members noted that the agent review feature in Cursor IDE incurs a cost with each use, though it is relatively inexpensive; one user reported having used 76% of their allowance.

- Users are showing their usage screenshots like this one, and finding that clicking Try Again sometimes resolves issues.

- Cursor vs Copilot: Preference Prevails: Some users have returned to Copilot after trying Cursor, emphasizing that tool preference can be subjective.

- One user mentioned, “My brother is a fan of copilot idk why,” highlighting individual preferences.

- Early Access to Unlimited Exploits: Some users exploited ChatGPT 5 when it was briefly free, including unlimited Opus 4 requests, with one user lamenting, “Sad I wasn’t aware of the opportunity.”

- The pricing bug allowed unlimited usage, described as “bugged as hell, we had unlimited everything.”

- Cursor ‘Max’ Mode Unlocks Full Potential, Incurs Extra Costs: Max mode in Cursor removes limits to maximize performance, enabling it to read entire files instead of chunks, but at additional cost.

- However, exceeding 200k context with Sonnet 4.5 doubles the cost, with users humorously suggesting a cap: “Cant we limit this to 200k and post that we can give another command 💀”

- Rule Creation to Maintain AI Discipline: Members are establishing custom rules and lints to control AI behavior, preventing dirty code from entering repos.

- One member shared a streamlined approach: “I actually took the concept of .cursorrules and turned it into a rigid system that keeps AI from drifting with lints, custom eslint plugins and husky is my last defense for protecting dirty code getting in.”
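The “doubles the cost” behaviour is easiest to see with a little arithmetic. The sketch below assumes a hypothetical base input rate of $3 per million tokens and assumes the whole request is billed at twice that rate once the prompt exceeds 200k tokens; these are illustrative numbers, not Cursor's published pricing.

```python
# Hypothetical pricing assumptions (USD per million input tokens); not official rates.
BASE_RATE_PER_MTOK = 3.0
LONG_CONTEXT_THRESHOLD = 200_000
LONG_CONTEXT_MULTIPLIER = 2.0


def input_cost(prompt_tokens: int) -> float:
    """Return the input cost in USD for one request.

    Assumes the entire request switches to the higher rate once the prompt
    exceeds the threshold, so crossing 200k roughly doubles the bill.
    """
    rate = BASE_RATE_PER_MTOK
    if prompt_tokens > LONG_CONTEXT_THRESHOLD:
        rate *= LONG_CONTEXT_MULTIPLIER
    return prompt_tokens / 1_000_000 * rate


for tokens in (150_000, 200_000, 200_001, 300_000):
    print(f"{tokens:>7} tokens -> ${input_cost(tokens):.4f}")
```

Under these assumptions a 200,001-token prompt costs about twice as much as a 200,000-token one, which is why users asked for a hard cap at 200k.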

GPU MODE ▷ #general (10 messages🔥):

popcorn-cli syntax, status 400 grayscale, CPU-focused community, leaderboard/eval selection, export command quotes

- Syntax Scare with Popcorn-CLI: Some users encountered a syntax error with `popcorn-cli submit`; others suggested checking the popcorn-cli readme for the correct syntax.

- Grayscale Gauntlet has Gone: One user received a status 400 error, and discovered that the grayscale leaderboard is closed.

- CPU Kernel Competition Craving: A member inquired about the existence of a CPU-focused community similar to gpumode, emphasizing CPU kernel competitions.

- Evaluation Enigmas Explored: A user noted that you may need to make sure to select `nvfp4_gemv` in the popcorn cli, since it's all the way at the bottom of the evaluation suites.

- Export Expertise Expressed: A member clarified that when using the export command, the URL should be entered without quotes.

GPU MODE ▷ #cuda (18 messages🔥):

CUDA compiler options, warp tiling, TMEM allocation, PTXAS optimization, Mutex locking via TMEM

- Layman’s CUDA Compiler Commandments: New CUDA developers inquired about essential compiler options beyond basic usage, and one member suggested using `-O3` for optimization and `-lineinfo` for preserving line number information for profiling with Nsight Compute.

- They also recommended the `-res-usage` option to check register and static shared memory usage post-compilation.

- PTXAS Optimization Revelation: It was clarified that the `-O3` compiler option primarily optimizes the host (CPU) part of the code, which may not be as critical if the CPU code isn’t on the critical path.

- A member noted that the default optimization level of PTXAS is already O3, making the flag redundant for GPU code optimization.

- TMEM Static Allocation Lament: A developer questioned why TMEM must be allocated dynamically, viewing it as a downgrade compared to static shared memory.

- Another member speculated that TMEM allocation could be used for dependency management, with the TMEM buffer acting as a mutex that locks over the data it contains.
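The compiler flags recommended above can be collected into a small helper that assembles the `nvcc` command line. This is a minimal sketch: the file names `kernel.cu`/`kernel` are placeholders, and the compile step only runs if `nvcc` and the source file are actually present.

```python
from __future__ import annotations

import os
import shlex
import shutil
import subprocess


def nvcc_command(source: str, output: str, profile: bool = True) -> list[str]:
    """Build an nvcc invocation using the flags discussed in the channel.

    -O3        : host-code optimization level (ptxas already defaults to O3 for device code)
    -lineinfo  : keep source line info so Nsight Compute can map results back to source
    -res-usage : report per-kernel register and static shared memory usage
    """
    cmd = ["nvcc", "-O3"]
    if profile:
        cmd.append("-lineinfo")
    cmd += ["-res-usage", source, "-o", output]
    return cmd


if __name__ == "__main__":
    src, out = "kernel.cu", "kernel"  # placeholder file names
    cmd = nvcc_command(src, out)
    print(shlex.join(cmd))
    # Only compile when the CUDA toolkit and the source file are available.
    if shutil.which("nvcc") and os.path.exists(src):
        subprocess.run(cmd, check=True)
```

Keeping `-lineinfo` behind a flag reflects that it is mainly useful when profiling; it does not change device code optimization.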

GPU MODE ▷ #jobs (1 messages):

HippocraticAI Hiring, LLM Inference Engineer Role, CUDA/CUTLASS/Triton expertise, NVIDIA B200s, AMD MI355, and Google TPUs

- HippocraticAI Expands LLM Inference Team: HippocraticAI is expanding its Large Language Model Inference team to enhance healthcare accessibility globally, actively seeking talented engineers for multiple positions.

- They posted a job link at https://lnkd.in/eW5qzuMc and encourage interested candidates to apply and shape the future of healthcare.

- LLM Inference Engineer Role Focuses on Optimization: The LLM Inference Engineer role will focus on researching, prototyping, and building state-of-the-art LLM inference solutions, especially with expertise in CUDA, CUTLASS, Triton, TileLang, or contributions to major inference frameworks like vLLM and SGLang.

- The role involves optimizing and accelerating inference performance across cutting-edge hardware platforms, including NVIDIA B200s, AMD MI355, and Google TPUs.

GPU MODE ▷ #beginner (4 messages):

Atomic Max for FP32, PTX Documentation Inaccuracy

- Achieving Atomic Max for FP32 with Int32 Trick: A member noted that although the PTX documentation suggests atomic max is available for FP32, using it results in an error; a workaround exists using int32.

- The trick involves inverting the bottom 31 bits when the sign bit is set, so that signed int32 ordering matches float ordering, as shown in PyTorch’s source code.

- PTX Doc Claims Atomic Max, Reality Says No!: Despite what the PTX documentation implies, direct atomic max operations for FP32 types are not supported, leading to errors during compilation.

- The compiler throws an error indicating that the `.max` operation requires specific types like `.u32`, `.s32`, `.u64`, `.s64`, `.f16`, `.f16x2`, `.bf16`, or `.bf16x2` for the `atom` instruction.
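The int32 trick described above can be sketched in Python, using `struct` to reinterpret FP32 bits. In a CUDA kernel the same encoding would typically be applied with `__float_as_int` before an integer `atomicMax`, with the accumulator kept in encoded form and decoded at the end. This is an illustrative sketch with hypothetical function names, not PyTorch's implementation.

```python
import struct


def float_to_ordered_int(x: float) -> int:
    """Map an FP32 value to an int32 whose signed order matches float order.

    Non-negative floats keep their bit pattern. For negative floats the bottom
    31 bits are inverted, so larger-magnitude negatives become smaller ints.
    """
    (bits,) = struct.unpack("<i", struct.pack("<f", x))  # reinterpret as signed int32
    if bits < 0:  # sign bit set
        bits ^= 0x7FFFFFFF
    return bits


def ordered_int_to_float(bits: int) -> float:
    """Inverse mapping, applied to the result of the integer max."""
    if bits < 0:
        bits ^= 0x7FFFFFFF
    (x,) = struct.unpack("<f", struct.pack("<i", bits))
    return x


values = [-3.5, 0.25, -1.0, 2.0, -0.5]
acc = float_to_ordered_int(float("-inf"))
for v in values:
    acc = max(acc, float_to_ordered_int(v))  # stands in for an int32 atomicMax
print(ordered_int_to_float(acc))  # 2.0
```

Because the mapping is monotonic, an integer max over encoded values yields the encoding of the float max; note that `-0.0` encodes just below `+0.0`.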

GPU MODE ▷ #off-topic (3 messages):

Skunk on a car, Dune Meme

- Skunk chills on car hood: A member posted a picture of a skunk on the hood of a car.

- Dune meme makes an appearance: A member posted a Dune meme.

GPU MODE ▷ #rocm (3 messages):

hipkittens, image0.jpg

- HipKittens Link Shared: A member shared a link to HipKittens on X.com, prompting positive feedback.

- Another member responded positively with “Got a chuckle outta me - great stuff!”

- Image0 attachment shared: A member shared an image called image0.jpg on cdn.discordapp.com.

GPU MODE ▷ #self-promotion (5 messages):

hipkittens, FSDP Implementation, AMD Open Source AI Week Recap

- HipKittens get Shared!: A member shared a link to hipkittens at luma.com/ai-hack.

- NanoFSDP simplifies Distributed Training: A member wrote a small FSDP implementation to learn the basics of distributed training, available at github.com/KevinL10/nanofsdp.

- They noted it is fairly minimal but well-documented (~300 LOC), works as a drop-in replacement for `fsdp.fully_shard`, and may be helpful for understanding how PyTorch implements FSDP under the hood.

- AMD Open Source AI Week Recapped: A member shared a recap of AMD Open Source AI Week at amd.com/en/developer.
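To make the nanoFSDP idea concrete, here is a single-process simulation of the data movement behind FSDP-style sharding. Each rank holds one padded slice of the flattened parameters, an all-gather rebuilds the full parameters before compute, and a reduce-scatter sums gradients and hands each rank back only its slice. Plain Python lists stand in for tensors and collectives. This is not nanoFSDP's code or PyTorch's API; PyTorch's FSDP2 `fully_shard` shards each parameter individually along dim 0 rather than using one flat buffer, but the communication pattern is the same.

```python
from __future__ import annotations


def shard_flat_params(flat: list[float], world_size: int) -> list[list[float]]:
    """Pad a flattened parameter list and split it into equal per-rank shards."""
    shard_len = -(-len(flat) // world_size)  # ceiling division
    padded = flat + [0.0] * (shard_len * world_size - len(flat))
    return [padded[r * shard_len:(r + 1) * shard_len] for r in range(world_size)]


def all_gather(shards: list[list[float]], numel: int) -> list[float]:
    """Simulate all-gather: concatenate every rank's shard and drop the padding."""
    full = [x for shard in shards for x in shard]
    return full[:numel]


def reduce_scatter(grads_per_rank: list[list[float]], world_size: int) -> list[list[float]]:
    """Simulate reduce-scatter: sum full gradients across ranks, keep one shard per rank."""
    summed = [sum(vals) for vals in zip(*grads_per_rank)]
    return shard_flat_params(summed, world_size)
```

For example, five parameters over two ranks become shards `[1.0, 2.0, 3.0]` and `[4.0, 5.0, 0.0]` (one padding element), and `all_gather(shards, 5)` returns the original five values.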

GPU MODE ▷ #🍿 (4 messages):

Building from source, Nvidia competition submissions

- Compile Compatibility Considerations: It was suggested to build from source and compile on an older image to improve compatibility, because the release build seems to be done on Ubuntu 24.04.

- The CI guy <@325883680419610631> is on parental leave, so this may take a while to implement.

- Submitting to Nvidia Comp via Discord Bot: A member inquired if submissions for the Nvidia competition were primarily happening through the Discord bot.

- Another member confirmed that they support Discord, the site, and the CLI, with the CLI being the most popular submission method.

GPU MODE ▷ #thunderkittens (2 messages):

Hipkittens launch, Other Kittens on X

- Hipkittens are shared on X: A member shared a link to Hipkittens on X.

- Still more Kittens on X: Another user liked it.

GPU MODE ▷ #submissions (66 messages🔥🔥):

Leaderboard Submissions, GEMV Cheating Accusations, Benchmark Input Sizes

- NVIDIA GEMV Leaderboard Race Heats Up: Multiple users made submissions to the `nvfp4_gemv` leaderboard, with <@1435179720537931797> ultimately clinching the first place position with a time of 24.5 µs.

- Other notable submissions included <@772751219411517461> achieving third place at 66.0 µs and <@1291326123182919753> securing 6th place with 58.4 µs.

- Input Size Sparks Debate on GEMV Benchmark: A member questioned the benchmark’s input sizes, noting that if the top times are around 7 µs, the input is likely tiny, potentially skewing optimizations and increasing the relative cost of prologue and epilogue.

- The member suggested evaluating the benchmarks on larger inputs to provide a more realistic assessment.

- GEMV Leaderboard Caching Controversy: The top times on the `nvfp4_gemv` leaderboard were identified as potentially being the result of caching values between benchmark runs, leading to accusations of cheating.

- Another member suggested that these results may have come from LLMs iterating on the problem, and that it was likely an honest mistake.

- Grayscale V2 Leaderboard Records New Bests: <@1144081605854498816> achieved 7th place on L4 with 27.5 ms and secured 8th place on H100 with 12.9 ms.

- The user also set personal bests on A100 with 20.4 ms and B200 with 6.69 ms for the `grayscale_v2` leaderboard.
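The input-size concern in the benchmark debate can be quantified with a simple, hypothetical model of a memory-bound GEMV: runtime = fixed prologue/epilogue overhead + bytes moved / bandwidth. The 2 µs overhead and 8 TB/s bandwidth below are illustrative assumptions, not measurements from the leaderboard.

```python
def overhead_fraction(fixed_us: float, bytes_moved: float, bandwidth_bytes_per_s: float) -> float:
    """Fraction of kernel time spent in fixed prologue/epilogue work.

    Models a memory-bound GEMV as t = fixed + bytes / bandwidth (in microseconds).
    """
    transfer_us = bytes_moved / bandwidth_bytes_per_s * 1e6
    return fixed_us / (fixed_us + transfer_us)


# Hypothetical numbers: 2 us of fixed overhead and ~8 TB/s of achievable bandwidth.
for mb in (1, 16, 256):
    frac = overhead_fraction(2.0, mb * 1e6, 8e12)
    print(f"{mb:>4} MB moved -> {frac:.0%} of runtime is fixed overhead")
```

With these made-up numbers the fixed overhead is roughly 94% of runtime at 1 MB, 50% at 16 MB, and about 6% at 256 MB, which illustrates the member's argument for benchmarking larger inputs.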

GPU MODE ▷ #cutlass (1 messages):

kinming_32199: impressive animation👍

GPU MODE ▷ #general (2 messages):

GPU MODE Leaderboard, Submission process

- GPU MODE Leaderboard submission options: Users asked whether submissions should be done via Discord or the GPU MODE website.

- A member clarified that both methods are acceptable.

- Submission via Discord: A user inquired whether submissions could be made via Discord.

- Another user confirmed that Discord submissions are indeed accepted.

GPU MODE ▷ #multi-gpu (1 messages):

DMA Documentation, RDMA Documentation, Wikipedia, ChatGPT, Vendor websites

- Hunting DMA and RDMA documentation: A member is seeking better documentation on Direct Memory Access (DMA) and Remote Direct Memory Access (RDMA) than what’s available on Wikipedia, ChatGPT, and vendor sites.

- Additional DMA/RDMA Resources Sought: The user expressed dissatisfaction with current information sources, hoping for more detailed or technical documentation.

GPU MODE ▷ #helion (9 messages🔥):

Triton Errors, Auto Skip Triton Errors, Helion Configs BC-compatible

- Triton Errors autotuning investigated: A member inquired why Triton errors are not skipped by default when autotuning.

- Another member responded that they already auto skip a ton of them, pointing to the Helion autotuner logger.

- Helion Configs promise BC-Compatibility: A member inquired about Helion Configs and if they will be BC-compatible.

- Another member confirmed, “yes, we are gonna be BC compatible,” citing an example where they changed indexing from a single value to a list, because each load/store can be optimized independently for a performance gain, while still supporting single-value input.
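The backward-compatibility approach described above (accepting either one indexing value or a per-load/store list) is a common config pattern. A minimal sketch follows; the `normalize_indexing` helper and the strategy strings are hypothetical placeholders, not Helion's actual code.

```python
from __future__ import annotations

from typing import Sequence, Union

IndexingSetting = Union[str, Sequence[str]]


def normalize_indexing(indexing: IndexingSetting, num_memory_ops: int) -> list[str]:
    """Expand a legacy single indexing value into one entry per load/store.

    New-style configs pass a list (one strategy per memory op); old configs
    pass a single string, which is broadcast so they keep working.
    """
    if isinstance(indexing, str):
        return [indexing] * num_memory_ops
    values = list(indexing)
    if len(values) != num_memory_ops:
        raise ValueError(f"expected {num_memory_ops} indexing entries, got {len(values)}")
    return values
```

For example, a legacy `normalize_indexing("pointer", 3)` and a new-style `normalize_indexing(["pointer", "pointer", "pointer"], 3)` produce the same list, so old configs keep working while new ones can tune each load/store separately.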

GPU MODE ▷ #nvidia-competition (212 messages🔥🔥):

Cutlass and CuTe 4.3.0, Popcorn-cli submission errors, Kernel global caching allowed, CuTe DSL is not requirement, torch doesn't support sm100

- Cutlass and CuTe update fixes submissions: CuTeDSL and Cutlass were updated to 4.3.0, fixing the issues with CuTe submissions; the CuTe example now passes.

- Popcorn CLI Authentication Errors: Users encountered a 401 Unauthorized error with `popcorn-cli submit`, resolved by re-authenticating via the Discord OAuth2 link provided during registration.

- One user initially missed the “Please open the following URL in your browser to log in via discord: https://discord.com/oauth2/authorize?client_id” step.

- Caching Compiled Kernels is OK: Caching compiled kernels is allowed, but caching results is forbidden.

- It was clarified that while caching compiled kernels is permissible, caching tensor values between benchmark iterations is not allowed as each benchmark should have different data, but the same shape.

- Raw PTX can be used for kernel implementation: Users confirmed that it is acceptable to write CUDA C++ or raw PTX and load it with `torch.utils.cpp_extension.load_inline`, with reference code available here.

- A user inquired about a CUDA example template; there isn’t one, but CUDA and PTX can be used, although a template for it might be slow.

- Blackwell GPU details revealed: The B200 GPU has 148 SMs (Streaming Multiprocessors) running at a boost clock of 1.98 GHz and is used to score submissions.

- Nvidia’s blog post includes the B300 diagram.

GPU MODE ▷ #xpfactory-vla (3 messages):

RLinf, Qwen3-VL VLA-adapter training

- RLinf Repo: new tool in town: A member mentioned checking out RLinf, promising updates after running Qwen3-VL VLA-adapter training overnight.

- GPU Usage Questioned: A member inquired about the number and type of GPUs used for training, expressing concern for those with limited GPU resources.

- No response was given.

Unsloth AI (Daniel Han) ▷ #general (179 messages🔥🔥):

VibeVoice finetuning for Bulgarian, Intel autoround quants vs BNB 4-bit quants for training, QAT in Unsloth, MoE models and output quality, Aquif-3.5-Max-42B-A3B

- Bulgarian Blues: VibeVoice struggles with new language: A member attempted to use VibeVoice for Bulgarian TTS but found the results were ‘not good’, though still ‘close to understandable’, needing further finetuning.

- Another member joked that it sounds ‘like a drunk brit trying to read a phonetic version of the sentence’.

- QAT Showdown: Intel autoround vs. BNB 4-bit: A question was raised about using Intel autoround quants for training and whether they would offer any benefit over bnb 4-bit quants.