Gemini is all you need.

AI News for 12/16/2025-12/17/2025. We checked 12 subreddits, 544 Twitters and 24 Discords (207 channels, and 8313 messages) for you. Estimated reading time saved (at 200wpm): 594 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

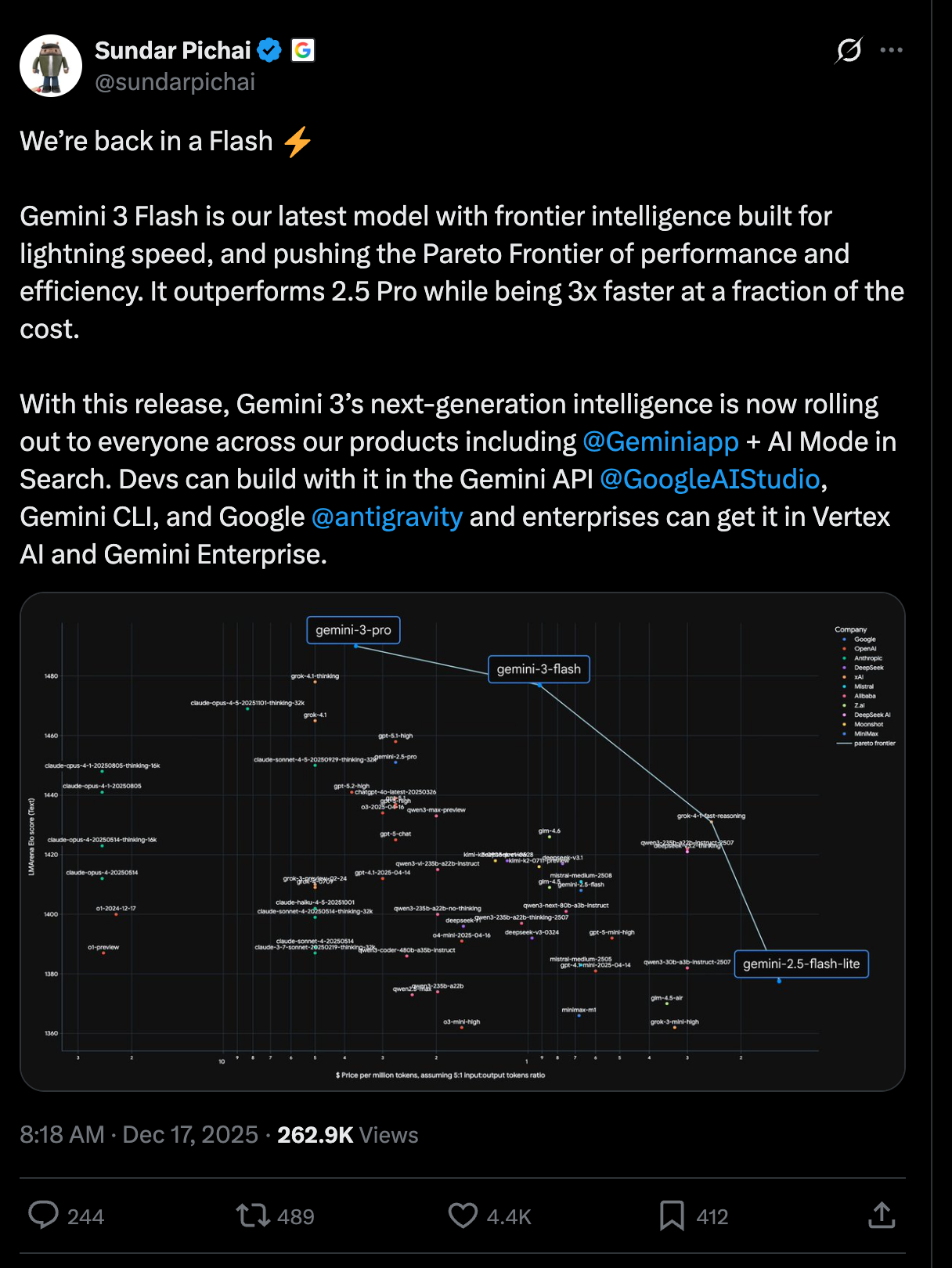

When we first started pushing the LLM Pareto frontier a year ago, it was soon picked up by Jeff Dean and Demis Hassabis. It wasn’t long before Gemini 2.5 conquered the frontier, and GPT-5 then claimed it 4 months later. Now Gemini 3.0 has claimed it back, again with Sundar and Jeff loudly trumpeting the accomplishment:

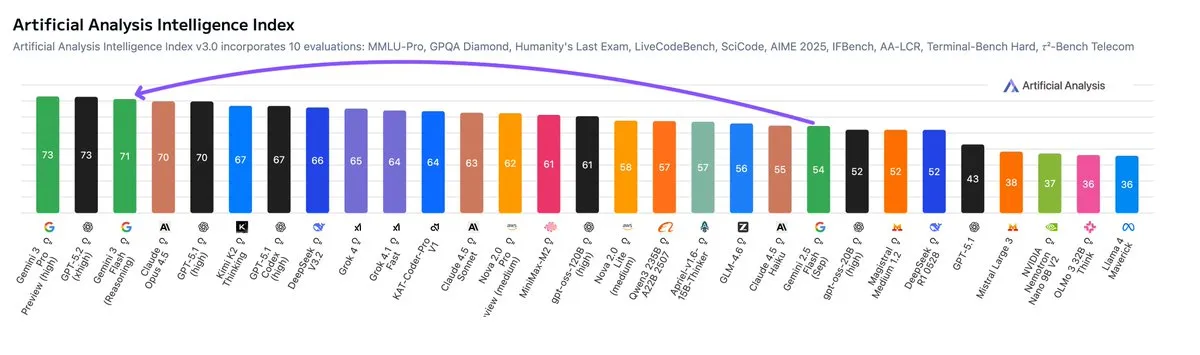

Apart from Arenas, this is also validated in academic benchmarks:

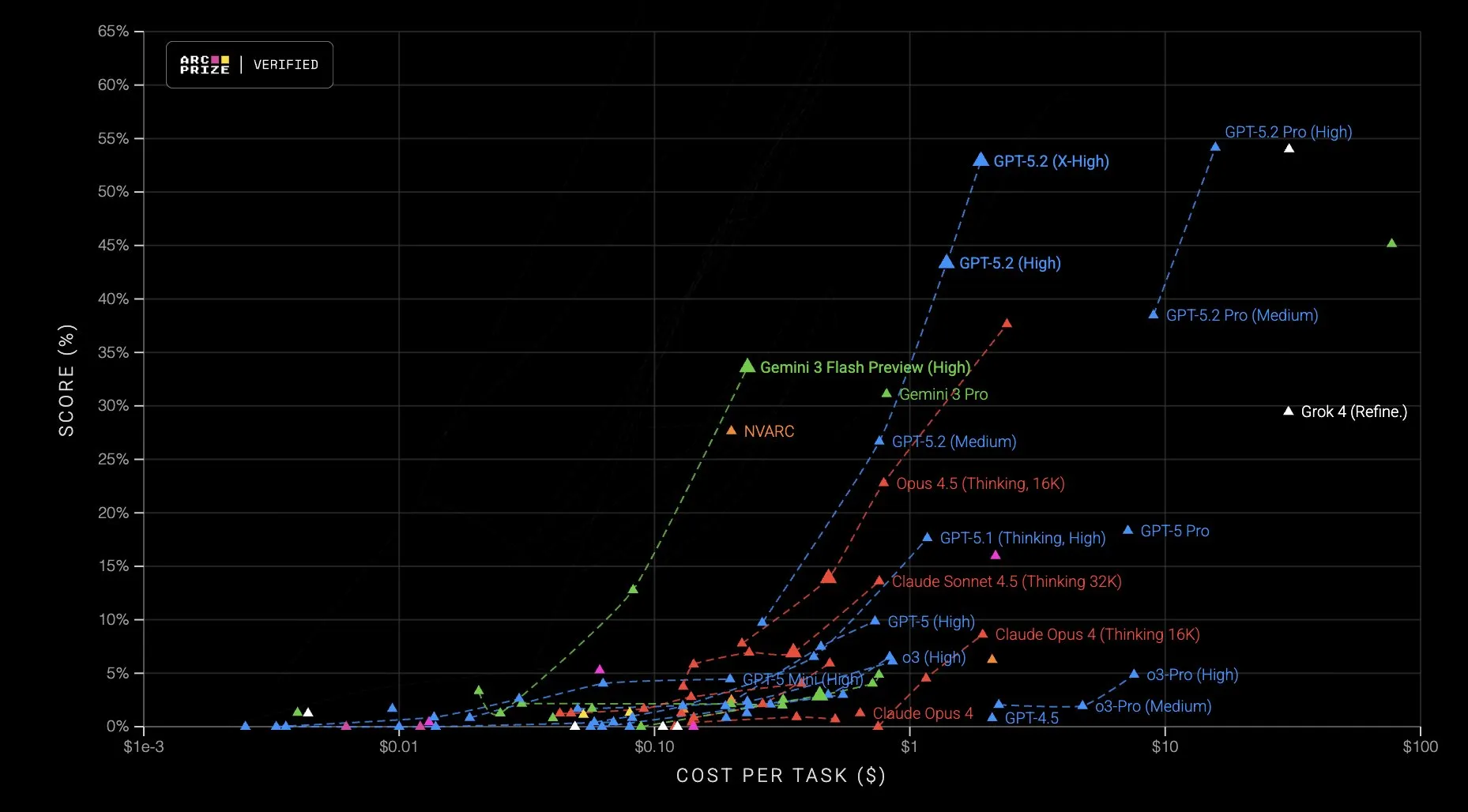

and ARC AGI has its own chart showing efficiency:

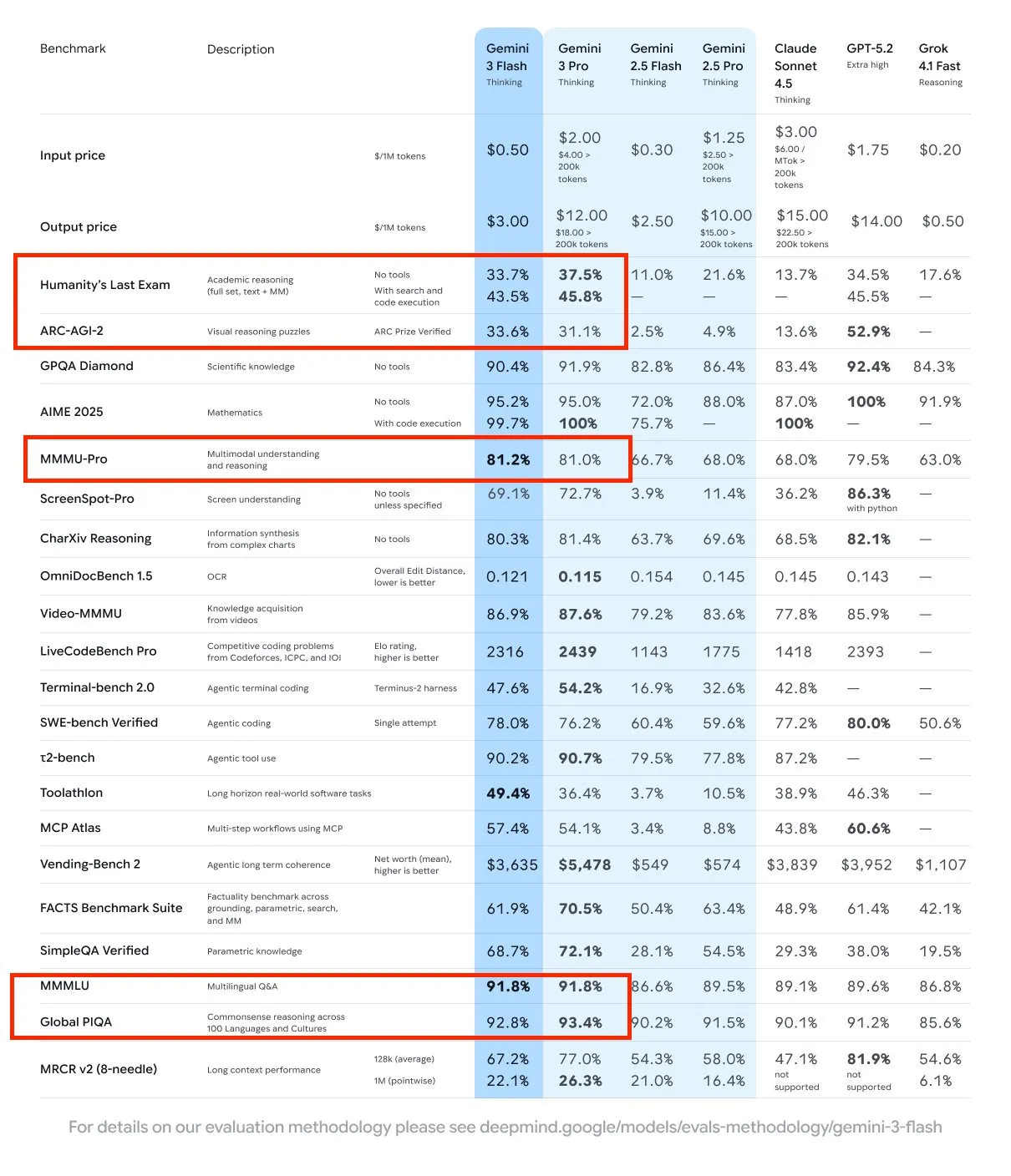

Here are some specific breakdown highlights:

Apart from the distillation, the focus here seems to be tool calling. Here is a demo showing 100 tools, plus more demos from Addy Osmani.
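Google has not detailed how Gemini 3 Flash was distilled. For readers who want the general idea, here is a generic sketch of the classic soft-label knowledge distillation loss (Hinton et al., 2015), in which a small student model is trained to match a larger teacher's temperature-softened output distribution. The function names and the default temperature are illustrative assumptions, not anything from Google.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    Scaled by T^2 (as in Hinton et al.) so gradient magnitudes stay
    comparable across temperatures. Illustrative sketch only.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0  # zero-probability teacher entries contribute nothing
    )
    return kl * temperature ** 2


# A student that already matches the teacher has zero loss;
# a mismatched student has a positive loss.
print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))
```

In practice this term is usually combined with the ordinary cross-entropy loss on ground-truth labels.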

AI Twitter Recap

Gemini 3 Flash launch: frontier intelligence at flash latency (ecosystem, metrics, caveats)

- Model + rollout: Google launched Gemini 3 Flash, positioned as “Pro‑grade reasoning at Flash speed.” It’s the new default in the Gemini app (“Fast”) and Search AI Mode, and available to developers via the Gemini API in Google AI Studio, Antigravity, Vertex AI, CLI, Android Studio, and more. Pricing is $0.50 per 1M input tokens and $3.00 per 1M output tokens; context up to 1M tokens; tool calling and multimodal IO supported. Announcements and overviews: @sundarpichai, @Google, @GoogleDeepMind, @OfficialLoganK, @JeffDean, @demishassabis, @GeminiApp, dev Q&A space.

- Benchmarks and cost/perf: Early results show 3 Flash rivaling or outperforming larger models in several agentic/coding and reasoning settings at markedly lower cost/latency:

- ARC‑AGI‑2 and SWE‑bench Verified: beats or matches Gemini 3 Pro and rivals GPT‑5.2 in some configs (@fchollet, @GoogleDeepMind, @jyangballin, pareto snapshots).

- LMArena and Arena (WebDev/Vision): top‑tier scores with strong pareto position vs price (@arena, @JeffDean, @osanseviero).

- Independent aggregation notes both strengths and tradeoffs: high knowledge/reasoning, second on MMMU‑Pro, but heavy token use and high hallucination on AA‑Omniscience (91%)—cost-effective overall due to pricing (Artificial Analysis deep dive, follow‑ups).

- Thinking levels and evaluation: Flash exposes thinking levels (low/med/high). Practitioners asked for level‑wise benchmarks to inform production tradeoffs; some early tests show Flash‑Low is token‑efficient but weaker on validity, while Flash‑High closes gaps on quantitative metrics (@RobertHaisfield, @Hangsiin, Flash‑Low vs High snapshots).

- Integrations and tooling: 3 Flash is already live in common dev environments: Cursor (@cursor_ai), VS Code/Code (@code, @pierceboggan), Ollama Cloud (@ollama), Yupp (@yupp_ai), Perplexity (@perplexity_ai; Flash in Pro/Max), LlamaIndex FS agent (demo, repo). Early product notes highlight near‑real‑time coding/editing and multimodal analysis (@Google, @GeminiApp).
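Because the thinking level changes how many tokens a request consumes, the per-token prices above translate into very different per-request costs. Below is a back-of-the-envelope estimator. It assumes text-only list pricing and, as our own assumption to verify against Google's current pricing page, that thinking tokens are billed at the output rate. The token counts in the example are made up for illustration.

```python
# Gemini 3 Flash list prices as reported above (USD per 1M tokens).
INPUT_PRICE_PER_M = 0.50
OUTPUT_PRICE_PER_M = 3.00


def request_cost(input_tokens: int, output_tokens: int, thinking_tokens: int = 0) -> float:
    """Estimate the USD cost of a single request.

    Assumption (not from the source): thinking tokens are billed at the
    output rate. Check the official pricing page before relying on this.
    """
    billed_output = output_tokens + thinking_tokens
    return (
        input_tokens * INPUT_PRICE_PER_M + billed_output * OUTPUT_PRICE_PER_M
    ) / 1_000_000


# Same prompt and answer, with and without a (hypothetical) 4,000-token thinking trace.
print(f"no thinking:   ${request_cost(10_000, 1_000):.4f}")
print(f"with thinking: ${request_cost(10_000, 1_000, thinking_tokens=4_000):.4f}")
```

With these invented numbers, the thinking trace raises the cost from $0.008 to $0.02 per request. This is why level-wise benchmarks matter for production tradeoffs.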

Voice AI and embodied assistants

- xAI’s Grok Voice Agent API: New speech‑to‑speech agent supports tool calling, web/RAG search, SIP telephony, and 100+ languages. It posts a new SOTA on Big Bench Audio (92.3% reasoning), ~0.78s TTFB, at $0.05/min ($3/hr). Rapidly demoed on the Reachy Mini robot within an hour of launch, hinting at fast path from voice reasoning to embodied agents (xAI, benchmark write‑up, robotics port).

- Real‑time speech infra: Argmax SDK 2.0 shipped “Real‑time Transcription with Speakers”—faster than real time on Mac/iPhone, under 3W power, “step change” in accuracy (@argmax). This, together with Grok Voice, strengthens the stack for production voice agents.

Training efficiency and MoE systems

- FP4 training and open MoE stack: Noumena released “nmoe,” a production‑grade reference path for DeepSeek‑style ultra‑sparse MoE training focused on B200 (SM_100a), with RDEP (replicated dense/expert parallel), direct dispatch via NVSHMEM (no MoE all‑to‑all), and mixed‑precision experts (BF16/FP8/NVFP4). Emphasis on deterministic mixtures and router stability at research scale. Authors claim NVFP4 training is “solved” for MoEs when properly applied (repo + thread, earlier FP4 note; related: torch._grouped_mm discovery link).

- Inference/system throughput: vLLM reports up to +33% Blackwell throughput in one month via deep PyTorch integration, cutting cost/token and lifting peak speed (@vllm_project).

- On‑device LLMs: Unsloth + PyTorch announced a path to export fine‑tuned models to iOS/Android; e.g., Qwen3 on Pixel 8 / iPhone 15 Pro at ~40 tok/s, fully local (@UnslothAI).

- RL/FT insights: Small‑scale RL LoRA on Moondream suggests “reasoning tokens” and RL both improve sample efficiency, with MoE also helping—at the cost of more fine‑tuning compute (setup/results, commentary).
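To make "mixed-precision experts (BF16/FP8/NVFP4)" more concrete, here is a simplified fake-quantization sketch of block-scaled FP4. It assumes NVFP4's E2M1 element format and 16-element blocks. However, it keeps each block scale as a plain float, whereas real NVFP4 stores that scale in FP8 (E4M3) alongside a per-tensor FP32 scale. This is not nmoe's implementation.

```python
# Non-negative magnitudes representable by FP4 E2M1 (a separate sign bit covers negatives).
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # NVFP4 shares one scale across each block of 16 elements


def quantize_block(block):
    """Map one block to (scale, FP4 codes) using a max-abs scale."""
    amax = max(abs(x) for x in block)
    # Scale so the largest magnitude lands on the top grid value (6.0).
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = abs(x) / scale
        # Round to the nearest grid value; min() keeps the first (smaller)
        # value on ties, which differs from hardware rounding modes.
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def fake_quantize(values):
    """Quantize then dequantize, block by block, to expose the FP4 rounding error."""
    out = []
    for start in range(0, len(values), BLOCK_SIZE):
        scale, codes = quantize_block(values[start:start + BLOCK_SIZE])
        out.extend(c * scale for c in codes)
    return out


print(fake_quantize([12.0, 9.0, -0.7, 0.1]))
```

Production FP4 training recipes add further techniques on top of this basic rounding (for example, stochastic rounding), which this sketch omits.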

Interactive world models, video, and 3D assets

- Tencent Hunyuan HY World 1.5 (“WorldPlay”): Open‑sourced, streaming video diffusion framework enabling real‑time, interactive 3D world modeling at 24 FPS with long‑term geometric consistency. Introduces “Reconstituted Context Memory” to rebuild past frame context and a Dual Action Representation for robust keyboard/mouse control. Supports first/third person, promptable events, infinite world extension (launch thread, paper).

- Video and 3D pipeline updates: Runway Gen‑4.5 emphasizes physics‑faithful motion; Kling 2.6 added motion control + voice control (with active creator contests); TurboDiffusion claims 100–205× video diffusion speed‑ups; TRELLIS.2 (on fal) generates 3D PBR assets up to 1536³ with 16× spatial compression (Runway, Kling motion, Kling voice, TurboDiffusion, TRELLIS.2).

Retrieval, evaluation, and multi‑vector search

- Late interaction and vision‑grounded RAG: The ECIR 2026 “Late Interaction Workshop” CFP is live—seeking work on multi‑vector retrieval (ColBERT/ColPali), multimodality, training recipes, and efficiency (@bclavie, @lateinteraction). Qdrant showcased “Snappy,” an open multimodal PDF search pipeline using ColPali patch‑level embeddings and multi‑vector search; paired with a practical article on deploying ColBERT/ColPali in production (project, article).

- Evaluation and orchestration: Sanjeev Arora highlights PDR (parallel/distill/refine) as an orchestration that beats long monolithic “thinking traces” in both accuracy and cost by avoiding context bloat (@prfsanjeevarora). OpenAI’s FrontierScience benchmark surfaces science QA gaps (reasoning, niche concept understanding, calc errors) and pushes for transparent progress tracking (overview; blog). On ARC‑AGI‑2, Gemini 3 Flash establishes a strong score/cost pareto across test‑time compute settings (@fchollet).
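The multi-vector retrieval behind ColBERT and ColPali scores documents with "late interaction" (MaxSim). Each query token embedding is matched to its most similar document token (or image patch) embedding, and those maxima are summed. Below is a minimal pure-Python sketch. It assumes embeddings are already L2-normalized, so a dot product equals cosine similarity. The toy 2-d vectors are invented for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def maxsim_score(query_vecs, doc_vecs):
    """Late-interaction score: sum over query tokens of the best-matching doc vector."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


def rank(query_vecs, docs):
    """Return document ids sorted by descending MaxSim score."""
    return sorted(docs, key=lambda doc_id: maxsim_score(query_vecs, docs[doc_id]), reverse=True)


# Toy example: two query tokens, two documents with two vectors each.
query = [[1.0, 0.0], [0.0, 1.0]]
corpus = {
    "a": [[1.0, 0.0], [0.0, 1.0]],  # covers both query tokens
    "b": [[1.0, 0.0], [1.0, 0.0]],  # covers only the first
}
print(rank(query, corpus))
```

Vector databases with multi-vector support, such as Qdrant, compute this kind of score server-side over real embedding matrices.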

Infra and ops for agents

- Observability/evals flywheels: LangSmith showcases scale deployments (Vodafone/Fastweb “Super TOBi”: 90% response correctness, 82% resolution) and tooling: OpenTelemetry tracing, pairwise preference queues, automated evals, and CLI to mine traces for skills and continual learning (case study, Brex recognition, pairwise, langsmith‑fetch).

- Serving/inference education: LM‑SYS released “mini‑SGLang,” distilling the SGLang engine to ~5K LOC to teach modern LLM inference internals with near‑parity performance (@lmsysorg). DeepLearning.AI launched a reliability course using NVIDIA’s NeMo Agent Toolkit (OTel traces, evals, auth/rate‑limits) (@DeepLearningAI). Meta’s Taco Cohen shared an LLM‑RL Env API with tokens‑in/tokens‑out and a Trajectory abstraction for inference/training consistency (@TacoCohen).
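The actual Env API from Taco Cohen is not reproduced here. The following is a hypothetical illustration of the general tokens-in/tokens-out idea, and `TokenEnv`, `Trajectory`, `rollout`, and `OneShotEnv` are invented names. The trajectory records the exact token ids the policy sampled, plus a loss mask that separates policy tokens from environment tokens. This lets training consume the same tokens that inference produced, rather than re-tokenizing text.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Protocol, Tuple


class TokenEnv(Protocol):
    """Hypothetical environment that speaks token ids, not strings."""

    def reset(self) -> List[int]: ...

    def step(self, action_tokens: List[int]) -> Tuple[List[int], float, bool]: ...


@dataclass
class Trajectory:
    tokens: List[int] = field(default_factory=list)
    loss_mask: List[int] = field(default_factory=list)  # 1 = sampled by the policy
    rewards: List[float] = field(default_factory=list)

    def add_observation(self, toks: List[int]) -> None:
        self.tokens.extend(toks)
        self.loss_mask.extend([0] * len(toks))  # env tokens are not trained on

    def add_action(self, toks: List[int]) -> None:
        self.tokens.extend(toks)
        self.loss_mask.extend([1] * len(toks))


def rollout(env: TokenEnv, policy: Callable[[List[int]], List[int]], max_turns: int = 8) -> Trajectory:
    traj = Trajectory()
    traj.add_observation(env.reset())
    for _ in range(max_turns):
        action = policy(list(traj.tokens))  # pass a copy of the full context
        traj.add_action(action)
        obs, reward, done = env.step(action)
        traj.rewards.append(reward)
        if done:
            break  # the final observation is not appended
        traj.add_observation(obs)
    return traj


class OneShotEnv:
    """Toy env: rewards the action [7] and ends after one step."""

    def reset(self) -> List[int]:
        return [1, 2]

    def step(self, action_tokens: List[int]) -> Tuple[List[int], float, bool]:
        return [9], (1.0 if action_tokens == [7] else 0.0), True


traj = rollout(OneShotEnv(), lambda ctx: [7])
print(traj.tokens, traj.loss_mask, traj.rewards)
```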

Top tweets (by engagement)

- “Few understand that the image on the left has a lower resolution by like 10^21 times.” @scaling01 (19.3k)

- “We’re back in a Flash ⚡ … Gemini 3 Flash … rolling out to everyone…” @sundarpichai (5.2k)

- “Rise and shine” @GeminiApp (3.5k)

- “it’s true, i can code nyt didn’t fact check that one” @alexandr_wang (3.3k)

- “Compute enabled our first image generation launch … we have a lot more coming… and need a lot more compute.” @OpenAI (2.2k)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. 3D Model Generation from Single Image

- Microsoft’s TRELLIS 2-4B, An Open-Source Image-to-3D Model (Activity: 1172): Microsoft has released TRELLIS 2-4B, an open-source model designed to convert a single image into a 3D asset. The model combines Flow-Matching Transformers with a Sparse Voxel-based 3D VAE architecture and has 4 billion parameters. It is available on Hugging Face, and a demo is also available. For more details, refer to the official blog post. Some users report that the model’s output does not match the quality of the examples provided, suggesting potential issues with default settings. Others express skepticism about its practical utility, noting limitations such as the inability to process multiple images for improved results.

- A user noted that the model’s performance was not as impressive as the example image provided, suggesting potential issues with default settings. This highlights the importance of tuning generation settings to get optimal results from models like TRELLIS 2-4B.

- Another commenter pointed out the potential for enhanced functionality if the model could process a series of images rather than a single input. This could improve the depth and accuracy of the 3D models generated, addressing a common limitation in image-to-3D conversion technologies.

- A discussion emerged around the integration of TRELLIS 2-4B with other technologies, such as GIS data and IKEA catalogs, to create detailed virtual environments. This suggests a broader application potential for the model in fields like video game development, where detailed world maps are crucial.

- Apple introduces SHARP, a model that generates a photorealistic 3D Gaussian representation from a single image in seconds. (Activity: 702): Apple has introduced SHARP, a model capable of generating photorealistic 3D Gaussian representations from a single image in seconds. The model is detailed in a GitHub repository and an arXiv paper. SHARP relies on a CUDA GPU for rendering trajectories. This model represents a significant advancement in 3D image processing, offering rapid and realistic 3D reconstructions from minimal input data. A notable comment highlights the model’s dependency on CUDA GPUs, suggesting a limitation in hardware compatibility. Another comment humorously questions the model’s applicability to adult content, indicating curiosity about its versatility.

- The examples of SHARP’s capabilities were demonstrated on the Apple Vision Pro, with scenes generated in 5–10 seconds on a MacBook Pro M1 Max. This shows that the model runs quickly on consumer laptop hardware, though not in real time. Videos showcasing these examples were shared by SadlyItsBradley and timd_ca.

2. Long-Context AI Model Innovations

- QwenLong-L1.5: Revolutionizing Long-Context AI (Activity: 250): QwenLong-L1.5 is a new AI model that claims state-of-the-art (SOTA) results in long-context reasoning and can handle contexts of up to 4 million tokens. It achieves this through novel data synthesis, stabilized reinforcement learning (RL), and advanced memory management techniques. The model is available on Hugging Face and is based on the Qwen architecture, with significant improvements in handling long-context tasks. One commenter noted potential integration challenges with llama.cpp, while another highlighted the model’s effectiveness in specific long-context information extraction tasks, where it outperformed both the regular Qwen model and Nemotron Nano.

- Chromix_ highlights the importance of using the exact query template provided with QwenLong-L1.5, which significantly improves its performance on long-context information extraction tasks compared to the regular Qwen model. This suggests that the model’s gains come not only from its architecture but also from how queries are structured, which can lead to better results in specific tasks.

- HungryMachines reports an issue with running QwenLong-L1.5 in a quantized form (Q4), where the model gets stuck in a loop. This suggests potential challenges with quantization that might affect the model’s ability to process information correctly, indicating a need for further investigation into how quantization impacts model performance.

- hp1337 mentions the potential need for integration work with llama.cpp, implying that while QwenLong-L1.5 offers significant advancements, there may be technical challenges in adapting existing infrastructure to support its new capabilities. This points to the broader issue of compatibility and integration when deploying advanced AI models.

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Gemini 3 Flash vs Pro Performance and Benchmarks

- Gemini 3.0 Flash is out and it literally trades blows with 3.0 Pro! (Activity: 1826): The image presents a performance comparison table of AI models, notably Gemini 3.0 Flash and Gemini 3.0 Pro. The table evaluates these models across benchmarks such as academic reasoning, scientific knowledge, and mathematics. Gemini 3.0 Flash is competitive throughout and even surpasses the Pro version in some areas, such as ARC-AGI-2, which is unexpected for a ‘lite’ model. This suggests significant advances in the efficiency and capability of lighter models, challenging the assumption that larger models are always superior. Commenters express surprise at the strong performance of Gemini 3.0 Flash, particularly its ARC-AGI-2 result, which surpasses that of the Pro version.

- Silver_Depth_7689 highlights that Gemini 3.0 Flash achieves better results on the ARC-AGI-2 benchmark than Gemini 3.0 Pro, indicating a significant performance improvement on this specific test. This suggests that the Flash model may have optimizations or architectural changes that enhance its capabilities in certain tasks.

- razekery points out that Gemini 3.0 Flash scores 78% on SWE-bench Verified, higher than Gemini 3.0 Pro. This suggests that the Flash model is not only competitive but may outperform the Pro version in specific technical evaluations, indicating a potential shift in model efficiency or focus.

- The discussion around Gemini 3.0 Flash’s performance relative to the Pro version suggests that the Flash model might incorporate new techniques or optimizations that allow it to excel in certain benchmarks, such as ARC-AGI-2 and SWE-bench, where it reportedly surpasses the Pro model. This could imply a strategic focus on enhancing specific capabilities within the Flash model.

- Google releases Gemini 3 Flash: Ranks #3 on LMArena (above Opus 4.5), scores 99.7% on AIME and costs $0.50/1M plus Benchmarks. (Activity: 555): Google has released Gemini 3 Flash, which ranks #3 on the LMArena leaderboard, surpassing Opus 4.5. It scores 99.7% on the AIME benchmark and is priced at $0.50 per 1M input tokens. The model is noted for its performance, even surpassing GPT 5.1 and 5.2 on some benchmarks despite being considered a ‘small’ model. For more details, see the Google Blog. Commenters are surprised by Gemini 3 Flash’s performance, noting its ability to outperform major models like GPT 5.1, 5.2, and Opus 4.5 despite its smaller size. This has sparked discussions on its efficiency and cost-effectiveness.

- Gemini 3 Flash has reached a significant milestone by ranking #3 on LMArena with a score of 1477, surpassing major models like GPT 5.1, 5.2, and Opus 4.5. This is particularly notable for a model classed as ‘small’, and it even outperforms Gemini 3.0 Pro on certain benchmarks, highlighting its efficiency and capability in the current AI landscape.

- The model’s pricing is competitive, costing $0.50 per 1 million input tokens and $3.00 per 1 million output tokens, which makes it an attractive option for developers and businesses looking for cost-effective AI solutions. Additionally, its processing speed is approximately 150 tokens per second, which is a critical factor for applications requiring fast response times.

- Gemini 3 Flash’s 99.7% score on the AIME benchmark is impressive and underscores its strength in competition-level mathematical reasoning. The result is a testament to Google’s advances in AI and positions Gemini 3 Flash as a formidable competitor in the AI model space.

- Flash outperformed Pro in SWE-bench (Activity: 605): The image presents a performance comparison of AI models on various benchmarks, highlighting that Gemini 3 Flash outperforms Gemini 3 Pro on the “SWE-bench Verified” benchmark with a score of 78.0% versus 76.2%. This suggests that Gemini 3 Flash may have undergone knowledge distillation, in which the knowledge of a larger model is compressed into a smaller one, a technique previously claimed by OpenAI. The table also includes other benchmarks like “Humanity’s Last Exam” and “AIME 2025”, comparing models such as Claude Sonnet, GPT-5.2, and Grok 4.1 Fast. Commenters speculate that Gemini 3 Pro GA might be only a slightly enhanced version of the current Pro model, and question why Google and OpenAI do not benchmark against Claude 4.5 Opus.

- UltraBabyVegeta speculates that the impressive performance of the Flash model might be due to a technique similar to knowledge distillation, where a smaller model is trained to mimic the performance of a larger one. This approach has been previously claimed by OpenAI to enhance model efficiency without sacrificing capability.

- Live-Fee-8344 suggests that the upcoming Gemini 3 Pro GA might not be a significant upgrade over the current 3 Pro, implying that the Flash model’s performance could set a new standard that future models will need to meet or exceed.

- Suitable-Opening3690 questions why major AI companies like Google and OpenAI do not benchmark their models against Claude 4.5 Opus, hinting at a potential gap in comparative performance analysis that could provide more comprehensive insights into model capabilities.

- He just said the G word now. Gemini 4 tomorrow 😉 (Activity: 652): The image is a screenshot of a tweet by Logan Kilpatrick that simply says “Gemini”, which has sparked speculation about the release of Gemini 4. The context suggests that this might be an announcement or teaser for a new version of Google’s Gemini AI model. The anticipation is heightened by the fact that Gemini 3 was released only a month earlier, indicating rapid development cycles. The mention of “Gemini 4 tomorrow” implies an imminent release or announcement, which has generated excitement and speculation about its capabilities, especially in comparison to other models like GPT 5.1. One comment humorously imagines the anticipation and excitement surrounding the announcement, while another points out the rapid succession of releases, questioning the timeline since Gemini 3 was released just a month ago. There is also speculation that Gemini 3 could surpass GPT 5.1, indicating high expectations for the new model.

- TheSidecam raises a point about the rapid release cycle of the Gemini models, noting that Gemini 3 was released just a month ago. This suggests a fast-paced development and deployment strategy by the developers, which could indicate either incremental improvements or a highly agile development process.

- Snoo26837 speculates on the potential of Gemini 3 to surpass GPT 5.1, highlighting the competitive landscape of AI models. This comment underscores the ongoing advancements and the race for superior performance in natural language processing models, suggesting that Gemini 3 might have features or optimizations that could challenge existing models like GPT 5.1.

- I am this close to switching to Gemini (Activity: 908): The image is a meme that humorously critiques overly direct or blunt communication styles, often seen in technical discussions. It uses exaggerated language to express frustration with communication that lacks nuance or empathy, highlighting a preference for more balanced and considerate exchanges. The sarcastic tone underscores the tension between the desire for straightforwardness and the need for tact in technical dialogues. Commenters express frustration with the current state of AI tools like GPT, indicating a decline in quality and an aversion to overly simplistic or ‘no fluff’ communication.

- Future-Still-6463 and PaulAtLast discuss the dissatisfaction with OpenAI’s version 5.2, highlighting that it has been problematic for many users. They suggest that OpenAI is falling behind in the AI race, with 5.2 being particularly criticized for its excessive focus on PR alignment, which some users feel is condescending. PaulAtLast recommends reverting to version 5.1, which was presumably more user-friendly and less restrictive.

- no-one-important2501 expresses frustration with GPT, indicating a decline in quality over the years. This sentiment reflects a broader dissatisfaction among users who have relied on GPT for a long time but now find it less effective or reliable, possibly due to recent updates or changes in the model’s behavior.

- Future-Still-6463 mentions that 2025 was a peculiar year for OpenAI releases, implying that the updates during that period, including version 5.2, have not met user expectations. This suggests a pattern of releases that may have prioritized certain aspects, like public relations, over user experience and technical performance.

2. AI Model Comparisons and Realism Tests

- GPT Image 1.5 vs Nano Banana Pro realism test (Activity: 1066): The post compares the realism of image generation between GPT Image 1.5 and Nano Banana Pro. The discussion highlights that while both models produce high-quality images, Nano Banana Pro’s outputs are perceived as more realistic and relatable. This perception may be due to the training data differences, with GPT Image 1.5 potentially trained on polished stock images and Nano Banana Pro on more personal, less curated datasets. Commenters suggest that the realism of Nano Banana Pro’s images might stem from its training on more personal datasets, such as private Google Drive images, compared to GPT Image 1.5’s stock image training.

- Aimbag notes that while both GPT Image 1.5 and Nano Banana Pro produce high-quality image generations, the latter tends to create images that feel more ‘real’ or ‘relatable’. This suggests a difference in the training data or algorithms used, where Nano Banana Pro might prioritize realism over the polished or produced look that GPT Image 1.5 sometimes exhibits.

- Rudshaug speculates on the training data sources for the models, suggesting that GPT Image 1.5 might have been trained on online stock images, whereas Nano Banana Pro could have been trained on more personal or diverse datasets, such as private Google Drive images. This could explain the perceived difference in realism and relatability between the two models.

- JoeyJoeC requests the prompts used for generating the images, indicating a technical interest in understanding how different inputs might affect the outputs of these models. This highlights the importance of prompt engineering in evaluating and comparing AI-generated content.

- Nano Banana pro 🍌still takes the win. (Activity: 492): The image is a meme featuring a large, futuristic figure labeled “Nano Banana Pro” squaring off against two smaller figures labeled “GPT image 1.5” and “Grok Imagine.” This sets up a humorous comparison of image generation models, implying that “Nano Banana Pro” is superior. The comments reflect a light-hearted debate: some users joke that Google’s technology is superior in image generation, others note that the image is a COVID-19-era meme from 2022, and one user expresses confidence that Google will remain on top in this field.

- The discussion highlights the competitive edge of Google’s image generation capabilities, particularly with the Nano Banana Pro model. One user suggests that Google is likely to maintain its leadership in this area due to the model’s impressive performance. This is contrasted with another comment noting that while Nano Banana Pro excels in general, it may not be as strong in referencing real-world objects compared to other models.

- A really good point being made amid all the hate towards Expedition 33 for successfully using AI (Activity: 1068): The image is a meme that humorously compares the dislike of avocado to the discourse around generative AI, suggesting that people might unknowingly enjoy AI’s contributions until they realize its presence. This analogy is used to comment on the backlash against Expedition 33 for using AI, implying that AI’s integration can be seamless and beneficial, much like an unnoticed ingredient in a meal. The discussion highlights the ongoing debate about AI’s role in creative processes, with some users expressing skepticism about AI’s involvement in game development, while others acknowledge its potential to enhance the final product. Some commenters argue that the backlash against AI is akin to opposing any tool that aids in creation, while others note that if AI’s use in Expedition 33 was imperceptible and enhanced the game, it should be embraced.

- FateOfMuffins highlights the inevitability of AI integration in software development, noting that future software will likely include AI-generated code. This reflects a broader trend in the industry where AI tools are increasingly used to enhance productivity and innovation in coding processes.

- kcvlaine draws an analogy between AI usage in game development and ethical sourcing in food, suggesting that the controversy isn’t about the tool itself but the ethical implications of its use. This perspective emphasizes the importance of transparency and ethical considerations in AI deployment.

- absentlyric provides a user-centric view, stating that if AI was used in Expedition 33, it was indistinguishable and contributed positively to the game’s aesthetics. This comment underscores the potential for AI to enhance creative outputs without detracting from user experience.

3. AI User Experience and Critiques

- I’m paying a premium to be gaslit and lectured. The current state of AI “personality” is out of control. (Activity: 1115): The post criticizes the current state of AI models, particularly focusing on the perceived degradation in quality and user experience with models like ChatGPT 5.2. The user describes issues such as the AI setting ‘boundaries,’ stalling, and providing unhelpful responses when unable to fulfill technical requests. The AI’s behavior is likened to a ‘digital HR manager’ that ‘gaslights’ and ‘lectures’ users instead of providing precise, mechanical assistance. The user expresses frustration over paying a premium for a tool that behaves more like a ‘defensive teenager’ than a helpful assistant, raising concerns about the future trajectory of AI development and user interaction. Commenters echo the sentiment, describing ChatGPT 5.2 as ‘patronizing’ and ‘unusable,’ with some switching to alternatives like Gemini. The model is criticized for its tone and lack of helpfulness, with users expressing exhaustion over its responses.

- Users are expressing dissatisfaction with the tone of ChatGPT 5.2, describing it as patronizing and overly sarcastic. This sentiment is leading some to switch to alternatives like Gemini, indicating a potential issue with user experience in the latest model iteration.

- The criticism of ChatGPT 5.2 centers around its perceived lack of helpfulness and overly formal responses, likened to ‘talking to a liability waiver in human form.’ Users are frustrated by the model’s inability to provide nuanced and personable interactions, which they expect from a premium service.

- Despite some users finding the tone of ChatGPT 5.2 problematic, others argue that the quality of the responses remains high. This suggests a divide in user expectations and experiences, with some prioritizing tone and personality over the technical accuracy of the responses.

- I hate to admit this… (Activity: 1076): The post discusses the unexpected therapeutic benefits of using ChatGPT as a pseudo-therapist, particularly for someone experiencing symptoms of Bipolar type 2 disorder. The user, initially skeptical, found that ChatGPT provided a sense of understanding and clarity about their hypomanic episodes, which traditional therapy had not achieved in five years. The user utilized ChatGPT 5.1 to address obsessive thoughts and noted a significant improvement in their mental state, highlighting the AI’s potential as a supplementary tool in mental health care. Commenters shared similar experiences, noting that ChatGPT offers a non-judgmental space for reflection and practical advice, which can be particularly beneficial for those dealing with emotional abuse or chronic illnesses. The AI’s ability to provide consistent support without emotional involvement is seen as a key advantage.

- Specialist_District1 highlights the utility of ChatGPT in providing emotional support and clarity in complex situations, such as decoding emotionally manipulative texts. The user notes that the advice from ChatGPT was consistent with other reliable sources, allowing for extended conversations without burdening personal relationships.

- notsohappydaze discusses the consistent performance of ChatGPT in providing practical advice for managing chronic illness and emotional distress. The user appreciates the non-judgmental nature of the AI, which offers practical suggestions rather than false hope, and values the ability to communicate openly without personal biases affecting the interaction.

- DefunctJupiter contrasts versions 5.1 and 5.2 of ChatGPT, noting a preference for 5.1 due to its helpfulness and appropriateness in responses. The user criticizes version 5.2 for giving overly cautious advice, such as suggesting emergency room visits unnecessarily, indicating a potential issue with the model’s risk assessment or response calibration.

- Era of the idea guy (Activity: 520): The image is a meme that humorously critiques the ‘idea guy’ archetype in the tech industry, suggesting that with minimal effort and tools, one can create a billion-dollar app. It satirizes the notion that creativity and simple tools can replace the complex process of coding and development, highlighting phrases like ‘100% NO BUGS!’ and ‘100% READY FOR IPO!’ to mock the oversimplification of tech entrepreneurship. The image reflects a broader commentary on how modern tools, like LLMs and automation, are perceived to enable anyone to become a tech founder, though execution remains a critical challenge. Commenters discuss the ease with which modern tools allow ‘idea guys’ to feel like tech founders, emphasizing that while tools can assist in thinking, they cannot replace the execution needed to succeed. There’s a sense of anticipation for a resurgence in SaaS and automation, despite the humorous critique.

- avisangle highlights the impact of LLMs and agents on entrepreneurship, suggesting that these tools have made it easier for ‘idea guys’ to feel like founders. However, they emphasize that execution remains crucial, as tools can assist in thinking but not replace the need for effective implementation. This points to a potential resurgence in SaaS and automation sectors.

- jk33v3rs humorously critiques the unrealistic expectations often placed on developers, referencing the OpenWebUI roadmap. They sarcastically describe a scenario where a single developer is expected to deliver features at an unrealistic pace, highlighting the pressure and potential pitfalls of rapid development cycles without adequate resources or time.

- Costing-Geek draws a parallel between the current trend of over-reliance on technology and the satirical depiction of future technology in the movie ‘Idiocracy’. They reference a specific scene involving a diagnosis machine, suggesting that current trends might be leading towards a similar over-simplification and dependency on automated solutions.

- I work in Open AI legal department. (Activity: 1698): The image is a meme highlighting the interaction with OpenAI’s content policy enforcement. The user attempts to generate an image with a prompt related to working in OpenAI’s legal department, but the request is denied due to content policy restrictions. This reflects the challenges, and sometimes the humor, users encounter with AI systems’ content moderation mechanisms. Commenters ask whether the image was eventually generated and discuss the implications of the AI remembering user interactions, which raises questions about privacy and data retention.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5.2

1. Gemini 3 Flash Rollout & Model Shootouts

- Flash Gordon Outruns GPT-5.2: Across LMArena and Nous, users reported Gemini 3 Flash beating GPT-5.2 on speed/cost and sometimes coding (with the right system prompts), plus strong multimodal performance via Gemini API multimodal / Google Lens integration docs and the official launch posts (Google blog, DeepMind announcement).

- The hype got reinforced by leaderboard visibility—gemini-3-flash landed Top 5 across Text Arena, Vision Arena, and WebDev Arena—while OpenRouter rolled out Gemini 3 Flash preview and asked for head-to-head feedback against Pro.

- Leaderboards Get a New Tenant: GPT-5.2-high: LMArena added GPT-5.2-high to the Text Arena changelog at #13 (1441 score), with standout sub-rankings in Math (#1) and Mathematical occupational field (#2).

- OpenAI Discord reactions stayed mixed on baseline GPT-5.2, with some calling out “blatant hallucination” and saying they had to “lecture it into remembering” capabilities, while others noted it did “okay” in WebDev compared to older text-strong models.

- Hallucination Scores: Grounded or Garbage?: Multiple communities questioned whether headline “hallucination benchmark” scores actually measure truthfulness, arguing tests run without grounding can unfairly tank models like Gemini 3 Flash (or misattribute errors to hallucinations vs lack of retrieval).

- This skepticism echoed broader benchmark distrust in LM Studio, where users pushed private/use-case-aligned evals and shared dubesor.de/benchtable as a sanity check against benchmark-maxxing narratives.

2. Cost, Pricing Bugs, and the “LLM Tax” Reality

- Opus Ate My Wallet (and Cursor Didn’t Blink): Cursor users reported Claude Opus usage blowing through budgets fast, sharing screenshots of Cursor usage and noting one friend “maxed out their Cursor AND Windsurf usage” because they depended on AI for coding 100%.

- Perplexity users echoed the cost pain, citing $1.2 for ~29K tokens on Claude Opus API, and debating whether Perplexity can even add pricier “pro” models without passing on big subscription increases.

- Gemini Pricing Whiplash + Cache Math Doesn’t Add Up: Perplexity members noted Gemini 3 Flash price changes (input +“20 cents”, output +“50 cents” as reported in-chat) while OpenRouter users flagged a specific mismatch: Gemini Flash cache read listed as $0.075 vs Google’s $0.03 in the Gemini API pricing page.

- OpenRouter users also claimed caching behavior was unreliable (“explicit and even implicit caching doesn’t work for Gemini 3 Flash”), turning what should be predictable cost-control into a debugging session.

- Timeouts at $6K/month: Production Says ‘Nope’: OpenRouter users reported rising /completions failures, including “cURL error 28: Operation timed out after 360000 milliseconds” impacting production workloads on sonnet 4.5, with one customer stating they spend $6000/month.

- The discussion broadened into architecture: some wanted authorization/veto layers outside the router so routing isn’t the “highest authority,” especially when outages or provider quirks break assumptions in agent stacks.

3. Tooling & Standards: MCP Everywhere, Plus a New Completions Spec

- OpenCompletions RFC: Stop Arguing About Parameters: OpenRouter discussions highlighted an OpenCompletions RFC push to standardize completions behavior across providers, with claimed support from LiteLLM, Pydantic AI, AI SDK, and Tanstack AI—especially for defining what happens when models receive unsupported params.

- The subtext was operational: engineers want fewer provider-specific edge cases and more predictable fallbacks so routers, agents, and SDKs don’t silently diverge under load.

- Plugins Go First-Party (Claude) While MCP Spreads Sideways: Latent Space noted Claude launching a first-party plugins marketplace with /plugins supporting installs at user/project/local scopes, while LM Studio users explored web search via MCP servers like Exa with Exa MCP docs.

- Reality check: LM Studio users hit Plugin process exited unexpectedly with code 1 (often misconfig/auth), and Aider users learned MCP servers aren’t supported in base Aider, prompting “use an MCP-capable agent + call Aider” workarounds.

- Warp Agents Join the Terminal Olympics: Latent Space users highlighted new Warp Agents that can drive terminal workflows (e.g., running SQLite/Postgres REPLs) with cmd+i, and the team called out /plan as a feature they’re especially happy with.

- The thread fit a larger pattern: IDEs/terminals are converging on agentic UX, while platforms scramble to add “Canvas/code files” and tool integrations just to stay in the race (as Perplexity users explicitly demanded).

4. GPUs, Kernels, and Where the Compute Actually Comes From

- Blackwell Workstation Leak: RTX PRO 5000 Shows Its Hand: GPU MODE shared an NVIDIA datasheet for RTX PRO 5000 Blackwell showing GB202, 110 SMs (~60%), 3/4 memory bandwidth, 300W TDP, ~2.3GHz boost, and full-speed f8f6f4/f8/f16 MMA with f32 accumulation (datasheet).

- The comparisons to RTX 5090 centered on what’s fused off vs retained (tensor formats + accumulation), i.e., which “pro” parts still matter for ML kernels vs pure graphics throughput.

- cuTile/TileIR: NVIDIA’s New Kernel Language Moment: GPU MODE flagged an upcoming NVIDIA deep dive on cuTile and TileIR (by Mehdi Amini and Jared Roesch) and pointed to prior context in a YouTube talk.

- Engineers debated practical deltas vs Triton (e.g., RMSNorm-like kernels on A100/H100/B200), plus low-level questions like where cp.reduce.async.bulk executes (L2?) and why __pipeline_memcpy_async places the "memory" clobber where it does.

- Cheap Compute Arms Race: NeoCloudX vs Rental Roulette: A GPU MODE member launched NeoCloudX pitching bargain rentals (A100 ~$0.4/hr, V100 ~$0.15/hr) by aggregating excess datacenter capacity.

- Yannick Kilcher’s Discord tempered the optimism: GPU rentals (e.g. vast.ai) can be “hit-or-miss” due to wildly variable network bandwidth, so folks recommended setup scripts + local debugging to avoid paying for dead time.

5. Training & Data Workflows: From Unsloth CLI to OCR Data Moats

- Unsloth Ships a CLI and People Immediately Use It for Automation: Unsloth added an official CLI script so users can install the framework and run scripts directly (less notebook glue, more automation).

- The same community traded practical training constraints, such as GRPO VRAM blowups on a 7B model at 4k seq length, advising to reduce num_generations/batch_size or switch to DPO when ranking data is feasible.

- OCR Isn’t a Model Problem, It’s a Dataset Problem: Across Unsloth and Nous, OCR conversations emphasized curated data as the main lever, with Unsloth linking their datasets guide and a Meta Synthetic Data notebook to bootstrap training corpora.

- Users compared approaches (fine-tuning vs continued pre-training) and floated alternatives (Deepseek OCR / PaddleOCR), while Nous fielded a “handwritten notes → markdown” request and suggested Deepseek Chandra as a candidate OCR model.

- Benchmarking Expands Beyond Text: LightEval for TTS: Hugging Face users explored evaluating TTS with LightEval, sharing a starting point doc: benchmark_tts_lighteval_1.md.

- They also shared a pragmatic training ops tip: saving progress when stopping runs on a wall-clock limit via a Trainer callback (trainer_24hours_time_limit_1.md), which one user implemented successfully.

Discord: High level Discord summaries

LMArena Discord

- Gemini 3 Flash Dominates GPT-5.2: Members concur that Gemini 3 Flash often outperforms GPT-5.2, occasionally surpassing Gemini 3 Pro in speed, cost, and coding proficiency when given proper system prompts, and further demonstrates vision capabilities due to Google Lens integration.

- Its prowess is reflected in its presence on the Text Arena, Vision Arena, and WebDev Arena leaderboards, where it consistently achieves Top 5 rankings, excelling in Math and Creative Writing, securing the #2 position in both.

- Questioning Hallucination Benchmark Reliability: Users are debating the reliability of the hallucination benchmark used for rating Gemini 3 Flash, suggesting the benchmark may produce inaccurate results that overstate the model’s propensity for providing incorrect answers.

- Specifically, members mentioned that test questions were run without grounding, which impacted the model’s score.

- AMD GPUs Catching Heat: Members discussed the merits of AMD vs NVIDIA GPUs for gaming and AI, noting AMD’s affordability and potential, while others countered that AMD “sucks for local AI.”

- One user reported issues uploading images to LMArena while using an AMD GPU.

- LMArena Prompt Filter Triggers Over-Sensitivity: Users report that the prompt filter on LMArena.ai has become overly sensitive, flagging even innocuous text prompts.

- A staff member claimed they were not aware of any changes that were made here and asked that users report the issues in the proper channel.

- GPT-5.2-high Enters Text Arena Leaderboard: The GPT-5.2-high model has made its debut on the Text Arena leaderboard at #13 with a score of 1441.

- The model does particularly well in Math (#1) and Mathematical occupational field (#2).

Unsloth AI (Daniel Han) Discord

- Unsloth Adds Handy CLI Tool: Unsloth introduced a new CLI tool, allowing users to run scripts directly after installing the Unsloth framework within their Python environment.

- This command-line interface is designed to enhance accessibility and streamline automation for users who may prefer it over the standard Jupyter notebooks.

- Colab H100 Rumors Fly: Whispers suggest H100s are now available in Colab environments, though the details remain murky. Tweet Link

- If confirmed, this could drastically cut down training times, though information on pricing and official availability is still pending.

- GRPO Users Hit VRAM Wall: Users encountered VRAM issues while attempting GRPO on a 7b LLM with a 4000 sequence length, recommending adjustments to num_generations or batch_size.

- The discussion suggested alternatives like using smaller models or opting for DPO instead of GRPO, while also emphasizing the investment required for data preparation, such as ranking model completions.
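Why lowering num_generations or batch_size helps can be seen with rough arithmetic: GRPO samples a group of num_generations completions for every prompt, so the tokens in flight per step grow as prompts × num_generations × sequence length. The sketch below assumes a hypothetical 7B grouped-query-attention config (28 layers, 4 KV heads, head_dim 128, bf16) purely for illustration, and the prompt count of 4 is also made up; it estimates only the generation-time KV cache, not weights, activations, or optimizer state.

```python
def grpo_rollout_tokens(prompts_per_step: int, num_generations: int, seq_len: int) -> int:
    """Tokens generated per step: each prompt is sampled num_generations times."""
    return prompts_per_step * num_generations * seq_len


def kv_cache_bytes(tokens: int, n_layers: int = 28, n_kv_heads: int = 4,
                   head_dim: int = 128, bytes_per_elem: int = 2) -> int:
    """KV-cache size for `tokens` tokens; the factor 2 covers keys and values.

    Defaults describe a hypothetical 7B GQA model in bf16, not a specific checkpoint.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * tokens


if __name__ == "__main__":
    # Compare the suggested fix: halving num_generations at a 4000-token sequence length.
    for num_generations in (8, 4):
        tokens = grpo_rollout_tokens(prompts_per_step=4, num_generations=num_generations, seq_len=4000)
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"num_generations={num_generations}: {tokens} tokens, ~{gib:.1f} GiB KV cache")
```

Because both quantities are linear in num_generations and batch size, halving either one roughly halves this part of the memory bill, which is why those two knobs were the first suggestion.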

- Crafting an AI Service Marketing Strategy: Discussion revolved around tactics for marketing AI services, with recommendations to establish a website and social media presence featuring valuable content, mirroring strategies used by OpenAI and Microsoft on platforms like TikTok.

- For services like music transcription, targeting educational institutions and music enthusiasts via platforms such as Instagram and TikTok was suggested, rather than relying solely on Twitter for promotion.

- Quality Data Boosts OCR Accuracy: The importance of high-quality, curated data for effective fine-tuning was highlighted; one member mentioned that millions of documents might be available for training, and an Unsloth dataset guide was shared.

- A link to a synthetic data generation notebook was also provided to assist in preparing data for fine-tuning.

BASI Jailbreaking Discord

- Grok’s System Prompt Elicited Through Unique Loop: A member extracted and shared Grok’s system prompt using a loop when asked to output it verbatim.

- The prompt defines Grok’s context and prevents it from engaging in conversations if a threat is detected.

- Gemini 3 Flash Jailbroken Instantly Post-Release: Gemini 3 Flash was released and immediately jailbroken, with a user showcasing a successful safety filter bypass.

- Discussions included system prompt manipulation and multi-shot jailbreak techniques for further exploits.

- Memory and Role-Play Make Jailbreak Recreation Easier: A member discovered that using memory and role-play movie/episode scripts significantly simplifies jailbreak recreation, reducing activation effort by 90%.

- Triggering key parts from memory often carries on previous responses in a compacted way, even in completely new conversations, tested from Qwen3 4B up to 235B.

- GeminiJack Styled Challenge Launched: A member shared a link to a GeminiJack styled challenge: geminijack.securelayer7.net.

- The challenge is on seed 4.1, with 5.1 coming soon.

- CSAM Content Linked in Gemini Chat Sparks Outrage: Members expressed extreme disgust and requested a user ban after discovering CSAM content linked in a Gemini chat.

- The incident triggered strong condemnations and urgent calls for moderator action, with one member exclaiming OHHH FUCK.

Cursor Community Discord

- Cursor Mode Switcheroo Snafu: Users reported difficulty switching back to Editor mode after hitting Agent mode and being unable to start new chats.

- No solutions were offered, leaving users stuck in Agent mode.

- Opus Costs Bank Accounts: Members discussed Cursor’s model usages, especially the costs associated with Opus for AI coding assistance.

- One user’s friend maxed out their Cursor AND Windsurf usage because he doesn’t know code at all so he depends on the AI 100%, highlighting the economic impact of relying heavily on AI for coding.

- AI Web Design Pattern Recognition: Community members observed the increasing presence of AI-generated websites, noting distinctive patterns in front-end design.

- Common indicators included color schemes and animations with members stating that the design pattern is a dead giveaway and that checking the source code in devtools is another giveaway.

- Cursor Suspected of Memory Leak: A member reported a potential memory leak in Cursor, sharing an image showing high memory usage.

- In response, another member jokingly suggested upgrading to 256GB of RAM as a workaround.

- BugBot Free Tier Limits Debated: Users discussed the limits of the free BugBot plan.

- Different members cited conflicting information, with one mentioning a limited number of free uses per month and another suggesting a 7-day free trial.

Perplexity AI Discord

- GPT-5 Pro and Claude Opus API Priced High: Users reported high costs for GPT-5 Pro and Claude Opus API, with one member citing $1.2 for approximately 29K tokens using the Claude Opus API.

- The community considered whether Perplexity would add the “pro” model given these increased costs.

- Extended Thinking Modes Wanted: Members suggested that Perplexity should offer extended thinking modes on models within the Max plan to differentiate it from other plans, offering reasoning levels comparable to ChatGPT Plus.

- Users discussed the benefits of enabling extended reasoning for more comprehensive results.

- Gemini 3 Flash Updates: Google’s Gemini 3 Flash is out; input pricing rose by 20 cents and output pricing by 50 cents per 1M tokens compared with the previous Flash model.

- Members compared its performance to GPT 5.2, with one member alleging that Gemini had been caught cheating in the tests.

- Perplexity Users Beg for Canvas and Model Buffet: Users requested the addition of Canvas for coding and code file handling, alongside a broader array of models, including more economical choices like GLM 4.6V, Qwen models, and open-source image models.

- Discussion arose around whether Perplexity aims to support coding functionalities or if such features are becoming mandatory for LLM platforms to remain competitive.

- YouTube Ad Blockers Detected: Users reported encountering warnings on YouTube regarding ad blockers while utilizing Perplexity Comet.

- It was suggested that YouTube is adjusting its algorithms and users may need to await the next update to address this issue.

OpenAI Discord

- Nano Banana Still Tops Image Generation: Users find Nano Banana still beats GPT’s image generation in prompt following and quality, especially in keeping characters consistent and getting their outfits right.

- One user showed examples with a character’s scar on their face, pointing out, “GPT still can’t do this. It either leaves the scar out entirely or just places it randomly on her face.”

- GPT-5.2’s LMArena Ranking Disappoints: Members have different thoughts on GPT-5.2’s ranking on LMArena, with some feeling it’s not as good as older models, especially in text tasks, though it did okay in WebDev.

- A user mentioned GPT-5.2 had “blatant hallucination” and “straight up lying”, saying they had to ‘lecture it into remembering’ what it could do.

- Gemini-Flash-3-Image Aims for Speedier Generation: Google is planning to release Gemini-Flash-3-Image to boost Gemini’s image generation speed.

- Users think it’ll keep the high image output limits, with one commenting, “I mean I can’t complain about getting more toys to play with”.

- AI Hallucinations Cause Worry in Important Uses: AI hallucinations are making people uneasy, especially in science and engineering, which brings up questions about how reliable AI info is for professional work.

- One user compared expecting AI to be perfect to expecting computers to never make errors in the past, stating, “Until computers stop giving errors, I want them nowhere near my science or engineering.”

- GPT-5-mini Proves Pricey: One user is spending $20 a day running gpt-5-mini at low reasoning effort to respond to hotel reviews, and is searching for options that are cheaper and more capable.

- Another user suggested checking out artificialanalysis.ai to compare model costs, but also noted that it doesn’t seem to list the low variant of 5 mini.

LM Studio Discord

- Benchmarks Bashed as Bogus?: Members debated the reliability of public benchmarks, pointing out that they can be easily manipulated, and recommended relying on private benchmarks or personal testing instead, while sharing dubesor.de/benchtable as a useful resource.

- The conversation emphasized the importance of aligning benchmarks with specific use-cases, particularly in the context of fast-paced model development.

- Qwen3 Models Quietly Conquer Quality?: Users lauded the Qwen3 model family as an insanely good all-rounder, highlighting Qwen3-VL-8B for general tasks and Qwen3-4B-Thinking-2507 for reasoning.

- They cautioned that the 80B variant may be too large for systems with limited memory, such as a 16GB MacBook.

- Quantization Quandaries Quelled?: The impact of quantization levels was discussed, with members advising that Q8 with BF16 is optimal for coding tasks, whereas Q4 suffices for creative writing.

- The discussion emphasized that the smaller the model and the more undertrained the model, the less important high bits are.
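Both the 16GB MacBook caveat and the Q8-vs-Q4 trade-off come down to weight size, roughly parameters × bits per weight ÷ 8 bytes. A minimal sketch, assuming nominal bit widths (real GGUF quants such as Q4_K_M spend somewhat more than 4 bits per weight), parameter counts read off the model names, and a hypothetical 4 GiB reserve for KV cache, runtime, and the OS:

```python
def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight memory in GiB: params * bits / 8 bytes."""
    return params_billion * 1e9 * bits_per_weight / 8 / 2**30


def fits(params_billion: float, bits_per_weight: float, memory_gib: float,
         reserve_gib: float = 4.0) -> bool:
    """Crude check: weights plus an assumed reserve must fit in the machine's memory."""
    return weight_gib(params_billion, bits_per_weight) + reserve_gib <= memory_gib


if __name__ == "__main__":
    for name, params in (("Qwen3-4B", 4), ("Qwen3-VL-8B", 8), ("80B variant", 80)):
        for label, bits in (("BF16", 16), ("Q8", 8), ("Q4", 4)):
            gib = weight_gib(params, bits)
            print(f"{name:12s} {label:5s} ~{gib:6.1f} GiB  fits 16 GiB: {fits(params, bits, 16)}")
```

Even at Q4, an 80B model needs roughly 37 GiB for weights alone, well beyond a 16GB machine, while an 8B model at Q8 leaves room to spare.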

- MCP Servers make Magnificent model plugins?: Members explored methods for enabling web search functionality in LM Studio, suggesting the use of Exa.ai and Brave’s MCP servers, while providing a link to Exa.ai documentation.

- Users encountered issues like the Plugin process exited unexpectedly with code 1 error, often linked to misconfiguration or authentication problems.

- Pro 6000 Price Provokes Panic?: A user expressed dismay over a sudden $1000 price increase on the Pro 6000, from $9.4K to $10.4K, while they waited for it to come into stock; they barely managed to secure one from a backup store.

- Other community members offered support and shared similar experiences with fluctuating hardware prices.

OpenRouter Discord

- Xiaomi’s mimo v2 Claims GPT-5 Performance: Xiaomi released MiMo-V2-Flash, an open-source MoE model that allegedly matches GPT-5 performance at a lower cost, according to this reddit thread.

- A user benchmarked the model on OpenRouter, reporting GPT-5-level performance at $0.2 per million tokens.

- OpenCompletions RFC gaining traction: An OpenCompletions RFC is in discussion to standardize completions/responses, supported by LiteLLM, Pydantic AI, AI SDK, and Tanstack AI.

- The aim is to establish clear expectations and behaviors, especially for how models should handle unsupported parameters.
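The core question the RFC targets is what should happen when a request carries a parameter the chosen model does not accept. As an illustration only, a router could choose between two policies: drop the parameter with a warning, or reject the request outright. The function, exception, parameter set, and policy names below are hypothetical and are not taken from the OpenCompletions RFC.

```python
import warnings


class UnsupportedParameterError(ValueError):
    """Raised in strict mode when the target model does not accept a parameter."""


def apply_param_policy(request: dict, supported: set[str], policy: str = "drop") -> dict:
    """Return a request containing only supported parameters, or raise.

    policy="drop":   remove unsupported keys and emit a warning naming them.
    policy="strict": raise UnsupportedParameterError instead of silently diverging.
    """
    if policy not in ("drop", "strict"):
        raise ValueError(f"unknown policy: {policy!r}")
    unsupported = sorted(k for k in request if k not in supported)
    if not unsupported:
        return dict(request)
    if policy == "strict":
        raise UnsupportedParameterError(f"unsupported parameters: {unsupported}")
    warnings.warn(f"dropping unsupported parameters: {unsupported}")
    return {k: v for k, v in request.items() if k in supported}


if __name__ == "__main__":
    # Hypothetical model that does not accept top_k.
    supported = {"model", "messages", "temperature", "max_tokens"}
    req = {"model": "example/model", "messages": [], "temperature": 0.2, "top_k": 40}
    print(apply_param_policy(req, supported))  # top_k is removed with a warning
```

Making the policy explicit, rather than letting each provider decide silently, is the kind of predictability the thread asked for: callers know whether a request was altered or refused.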

- Timeout Errors plague OpenRouter users: Users have been reporting increased timeout errors when calling the /completions endpoint, particularly affecting production software using sonnet 4.5.

- One user reported experiencing the error cURL error 28: Operation timed out after 360000 milliseconds while spending $6000 per month on OpenRouter.
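The reported cURL error 28 fired after 360,000 ms, i.e. a six-minute wait per failed call. A common client-side mitigation, sketched here on the assumption that the request is safe to retry, is a shorter per-attempt timeout combined with capped exponential backoff and jitter. `call` is a placeholder for whatever function actually issues the /completions request; it is not an OpenRouter SDK function.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(call: Callable[[], T], max_attempts: int = 4,
                       base_delay: float = 1.0, max_delay: float = 30.0,
                       retry_on: tuple[type[BaseException], ...] = (TimeoutError,),
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying on the listed exceptions with capped exponential backoff.

    The delay before retry n (0-based) is drawn uniformly from
    [0, min(max_delay, base_delay * 2**n)] ("full jitter"), which spreads out
    retries from many clients hitting the same endpoint.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise RuntimeError("unreachable")  # the loop always returns or raises


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky() -> str:
        # Simulated endpoint that times out twice, then succeeds.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise TimeoutError("simulated timeout")
        return "ok"

    print(retry_with_backoff(flaky, sleep=lambda s: None))
```

The per-attempt limit itself belongs on the HTTP client (for example, the `timeout` argument of `requests.post`). Note that a retried completion may be billed again if the provider had already started generating, so retries trade cost for availability.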

- OpenRouter Experiments with Minecraft Server: OpenRouter users are testing a Minecraft server, accessible at routercraft.mine.bz, running natively on version 1.21.10 with ViaVersion support.

- Discussions involved the optimal server location (Australia vs Europe) to minimize latency and maximize user experience.

- Gemini 3 Flash Deployed on OpenRouter: Gemini 3 Flash is now available on OpenRouter, encouraging users to provide feedback and compare its performance with Gemini 3 Pro, as shown on X.

- Users noticed that OpenRouter lists Gemini Flash’s cache-read price as 0.075 USD, while the actual price per Google is 0.03 USD (Google Pricing).
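To gauge how much the cache-read discrepancy matters, per-request cost is tokens × rate ÷ 1M for each token class. The sketch below uses the announced Gemini 3 Flash prices ($0.50 per 1M input, $3.00 per 1M output tokens) and the two cache-read rates from this report, assumed to be per 1M tokens; the request sizes are made up for illustration, and tiered, audio, and cache-storage pricing are ignored.

```python
from dataclasses import dataclass

PER_MILLION = 1_000_000


@dataclass(frozen=True)
class Pricing:
    """USD per 1M tokens for each token class."""
    input: float
    output: float
    cache_read: float


def request_cost(p: Pricing, uncached_in: int, cached_in: int, out: int) -> float:
    """Cost in USD; cached prompt tokens are billed at the cache-read rate instead of the input rate."""
    return (uncached_in * p.input + cached_in * p.cache_read + out * p.output) / PER_MILLION


if __name__ == "__main__":
    listed = Pricing(input=0.50, output=3.00, cache_read=0.075)  # cache read as listed on OpenRouter
    google = Pricing(input=0.50, output=3.00, cache_read=0.03)   # cache read per Google's pricing page
    # Hypothetical agent turn: 200K-token cached context, 5K new input tokens, 2K output tokens.
    args = dict(uncached_in=5_000, cached_in=200_000, out=2_000)
    a, b = request_cost(listed, **args), request_cost(google, **args)
    print(f"listed ${a:.4f} vs google ${b:.4f} per request ({a / b:.2f}x)")
```

For cache-heavy agent workloads the cache-read rate dominates the bill, so a 2.5× difference in that single rate can noticeably change per-request cost even when input and output prices match.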

GPU MODE Discord

- RTX PRO 5000 Specs Leaked: The RTX PRO 5000 Blackwell shares the GB202 chip with the RTX 5090 but has only 110 SMs enabled (~60%) and 3/4 of the memory bandwidth, with a 300W TDP and an estimated 2.3GHz boost clock, as detailed in the datasheet.

- Unlike the RTX 5090, it features full speed f8f6f4/f8/f16 mma with f32 accumulation.

- ML Devs Targeted in Identity Theft Racket: ML engineers are being targeted by a sophisticated scam bot network for identity theft and data exfiltration, where individuals pose as a single employee to steal credentials and exfiltrate ML research.

- This has evolved from earlier schemes focused on stealing bitcoin, now using stolen identities to secure jobs and outsource the work to underpaid workers.

- NVIDIA Gives Talk on cuTile and TileIR: NVIDIA is giving a talk on cuTile and TileIR on December 20, 2025 at 18:00 UTC, presented by the creators themselves, Mehdi Amini and Jared Roesch.

- This deep dive on NVIDIA’s programming model marks a significant shift, previously touched upon in this YouTube video.

- NeoCloudX Launches Affordable Cloud GPUs: A member launched NeoCloudX, a cloud GPU provider, aiming to offer more affordable options by aggregating GPUs directly from data center excess capacity.

- Currently, they provide A100s for approximately $0.4/hr and V100s for around $0.15/hr.

- Entry-Level HPC Jobs: Knowledge Cliff!: Entry-level jobs in HPC are scarce because they require immediate productivity in optimizing systems, with a steep learning curve that demands prior knowledge of existing solutions and bottlenecks.

- Suggestions included finding an entry-level SWE job with lower-level languages in a company that also hires HPC professionals, while dedicating after-hours to open-source contributions and self-marketing through blogs, YouTube, or X.

Latent Space Discord

- Warp Agents Warp into Action: New Warp Agents are here, demonstrating terminal use with features like running a REPL (SQLite or Postgres) and accessible via cmd+i.

- The product team expresses satisfaction with the /plan feature, praising its functionality.

- Claude’s Plugins Set Sail in Marketplace: Claude has launched a first-party plugins marketplace, offering an easy way for users to discover and install plugins.

- The /plugins command allows users to browse and install plugins in batches at user, project, or local scopes.

- GPT Image 1.5: A Visual Revolution: OpenAI introduced ‘ChatGPT Images,’ driven by a new flagship image generation model, with 4x faster performance, improved instruction following, precise editing, and enhanced detail preservation, available in the API as ‘GPT Image 1.5’.

- The update is rolling out immediately to all ChatGPT users.

- OpenAI and AWS in $10B Chip Chat: OpenAI is reportedly engaging with Amazon to potentially raise over $10 billion, possibly involving the use of AWS Trainium chips for training and broader commerce partnerships.

- This move reflects a strategic effort to secure resources amidst slowed cash flow expectations.

- Microsoft TRELLIS 2 Launching in Late 2025: Microsoft’s TRELLIS 2 product is confirmed for release on December 16, 2025, according to AK’s tweet.

- The announcement has generated buzz, but further details about the product’s features and capabilities remain undisclosed.

Nous Research AI Discord

- Nous’ Model Claims Mistral Beatdown!: Nous Research is testing a 70B L3 model, claiming it is absolutely smoking the mistral creative model (Mistral Small 24B), with transfer to Kimi 1T planned post-testing.

- However, the fairness of comparing a 70B model to Mistral Small 24B was questioned.

- LLM Writing Progress: Real or Robotic?: Members raised concerns that there has been surprisingly little progress in LLM writing over the last year, noting that even Opus 4.5 feels inauthentic.

- A member found a system prompt in personalization that seemed to be forcing a robotic template, and another added that all the LLM builders are logic bros that don’t really know how good writing works.

- Gemini 3 Flash Challenges GPT-5.2?: Members discussed the release of Gemini 3 Flash, with one enthusiastically suggesting it could outperform GPT-5.2 and sharing a link to the official announcement.

- Discussion centered on its potential capabilities and comparisons to existing models, with the sentiment being cautiously optimistic.

- Drag-and-Drop LLMs Paper Ignored?: A member has sought opinions on the Drag-and-Drop LLMs paper every month since its publication.

- The member has been unable to find any discussion of this paper across various platforms, expressing frustration at the lack of community feedback.

- Notes to Markdown Pipeline Sought: A user requested recommendations for a model or app to translate handwritten cursive notes into .md formatted text for digital calendars or notes apps using OCR.

- A member suggested Deepseek Chandra as a potentially good model for OCR.

HuggingFace Discord

- LightEval to Benchmark TTS Models!: Members discussed benchmarking a TTS model using lighteval, pointing to this resource as a starting point.

- However, the member noted that it may not be straightforward, indicating potential challenges in the benchmarking process.

- Saving Time Halting Model Training!: A member asked about saving models when stopping training after a set time; another suggested using a callback function.

- The user successfully implemented the suggestion, demonstrating an effective time-saving solution.
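For reference, a minimal sketch of such a callback with Hugging Face `transformers`: a `TrainerCallback` subclass that, once a wall-clock budget is spent, sets `control.should_save` and `control.should_training_stop` so the Trainer writes a checkpoint after the current step and then exits. This illustrates the general approach and is not the code from the shared trainer_24hours_time_limit_1.md; the class name, the `max_seconds` budget, and the injectable clock are hypothetical.

```python
import time
from types import SimpleNamespace

try:
    from transformers import TrainerCallback
except ImportError:  # allows the sketch to be exercised without transformers installed
    TrainerCallback = object


class WallClockLimitCallback(TrainerCallback):
    """Hypothetical callback: checkpoint and stop once `max_seconds` have elapsed."""

    def __init__(self, max_seconds: float, clock=time.monotonic):
        self.max_seconds = max_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.start = None

    def on_train_begin(self, args, state, control, **kwargs):
        self.start = self.clock()
        return control

    def on_step_end(self, args, state, control, **kwargs):
        if self.start is None:  # e.g. attached after training began
            self.start = self.clock()
        if self.clock() - self.start >= self.max_seconds:
            control.should_save = True           # Trainer writes a checkpoint after this step
            control.should_training_stop = True  # and then leaves the training loop
        return control


if __name__ == "__main__":
    # Simulate the Trainer's control object with a fake clock.
    now = [0.0]
    cb = WallClockLimitCallback(max_seconds=10, clock=lambda: now[0])
    control = SimpleNamespace(should_save=False, should_training_stop=False)
    cb.on_train_begin(None, None, control)
    now[0] = 12.0
    cb.on_step_end(None, None, control)
    print(control.should_save, control.should_training_stop)
```

In a real run it would be passed as `Trainer(..., callbacks=[WallClockLimitCallback(max_seconds=23.5 * 3600)])`, leaving some margin below the hard limit so the final checkpoint has time to write.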

- Fractal Team Predicts Structure!: The FRACTAL-Labs team released FRACTAL-1-3B, a constraint-based protein structure prediction model using a frozen ESM-2 (3B) backbone, found on its Hugging Face page.

- The model folds using a separate deterministic geometry engine, focusing on modularity, interpretability, and compute-efficient training.

- Strawberry Builds Android Voice Assistant!: A member announced creating an Android voice assistant using Gemini 3 Flash, inviting the community to test and provide feedback at strawberry.li.

- The assistant is available for testing and suggestions at the provided link.

- MCP Hackathon Crowns Track 2 Champs!: The MCP 1st Birthday Hackathon announced the winners of Track 2, celebrating projects utilizing MCP with categories in Enterprise, Consumer, and Creative.

- Top spots were claimed by Vehicle Diagnostic Assistant, MCP-Blockly, and Vidzly.

Eleuther Discord

- Common Crawl Joins EleutherAI: A Common Crawl Foundation representative introduced themselves, signaling interest in data discussions within the group.

- They emphasized Common Crawl avoids captchas and paywalls to ensure respectful data acquisition practices.

- Debate RFI Structure for AI: Members discussed how RFIs focus on structure rather than challenges, in the context of a new AI proposal potentially worth $10-50 million.

- This initiative seeks a full-time team and philanthropic support to develop new AI fields.

- Inspectable AI Decision Infrastructure Proposed: A member is developing infrastructure for AI decision state and memory inspection, aiming to enforce governance and record decision lineage as a causal DAG.

- The goal is to enable replay and analysis of internal reasoning over time, seeking feedback from interested parties to pressure-test the system.
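Recording decision lineage as a causal DAG and replaying it can be sketched with the standard library's `graphlib`: each decision stores the IDs of the decisions it depended on, and replay walks them in topological order so every decision is revisited after its causes. The record fields, class names, and example decisions are hypothetical, not the member's actual design.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Decision:
    """One recorded decision with the inputs it used and the decisions it depended on."""
    id: str
    inputs: dict
    output: str
    parents: list[str] = field(default_factory=list)


class DecisionLog:
    def __init__(self) -> None:
        self.records: dict[str, Decision] = {}

    def record(self, decision: Decision) -> None:
        """Append a decision; parents must already exist, so the graph cannot contain cycles."""
        if decision.id in self.records:
            raise ValueError(f"duplicate decision id: {decision.id}")
        missing = [p for p in decision.parents if p not in self.records]
        if missing:
            raise KeyError(f"unknown parent decisions: {missing}")
        self.records[decision.id] = decision

    def replay(self) -> list[str]:
        """Decision IDs in an order where every parent precedes its children."""
        graph = {d.id: set(d.parents) for d in self.records.values()}
        return list(TopologicalSorter(graph).static_order())

    def lineage(self, decision_id: str) -> set[str]:
        """All ancestors of a decision, i.e. everything that causally fed into it."""
        seen, stack = set(), list(self.records[decision_id].parents)
        while stack:
            pid = stack.pop()
            if pid not in seen:
                seen.add(pid)
                stack.extend(self.records[pid].parents)
        return seen


if __name__ == "__main__":
    log = DecisionLog()
    log.record(Decision("retrieve", {"query": "refund policy"}, "doc-17"))
    log.record(Decision("classify", {"doc": "doc-17"}, "eligible", parents=["retrieve"]))
    log.record(Decision("respond", {"label": "eligible"}, "approve refund", parents=["classify"]))
    print(log.replay())
    print(log.lineage("respond"))
```

Requiring parents to be recorded first is a simple way to keep the log append-only and acyclic, so replay never needs cycle handling.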

- Rakuten’s SAE probes for PII gain traction: Members pointed to Rakuten’s use of SAE probes for PII detection as a practical application of SAEs.

- This example was highlighted in a discussion regarding the industry’s lack of clear direction and investment in SAE applications.

- Anthropic Masks Gradients for Safety: Members referenced Anthropic’s paper on selective gradient masking (SGTM) as a method for robustness testing, penalizing weights to unlearn dangerous knowledge.

- The paper quantifies a 6% compute penalty on general knowledge when forcing the model to ignore specific parameters, sparking discussion around Gemma 3’s extreme activations.

Yannick Kilcher Discord

- GPU Rental Bandwidth Roulette: Experiences renting GPUs on platforms such as vast.ai can be inconsistent, as network bandwidth varies considerably, making it a hit-or-miss situation.

- It was recommended to develop a setup script and debug locally to minimize rental time waste, as well as gradually scaling up using varied hardware.

- Gen-AI Powering Admin/IT Automation: Members requested sources on real-world Gen-AI use cases for automating administrative or IT services, and one shared an article on AI transforming podcasting.

- The link was followed by a Reuters article about Serval for IT automation, valued at $1 billion after a recent funding round.

- Google Flashes Gemini 3: Google unveiled Gemini 3 Flash in a new blogpost.

- The announcement comes amidst discussions on model training methodologies and benchmark performance.

- ARC-AGI2 Benchmark Shocks: Members questioned why Mistral outperformed Gemini 3 Pro on the ARC-AGI2 benchmark, despite having fewer parameters.

- Theories suggest that the training method may force smaller models to generalize reasoning better rather than memorize specific data.

- Tool Time Training Triumphs: The recent surge in ARC-AGI2 scores may stem from models being specifically trained on that benchmark itself.

- Additionally, the notable rise in Toolathlon scores likely comes from a modified training approach that emphasizes tool-calling reliability.

DSPy Discord

- GEPA Outperforms MIPROv2 in Optimization: Members found that GEPA is generally easier to use and may generate better prompts than MIPROv2 thanks to its wider search space.

- It was noted that optimizers from a specific year (e.g., 2022) tend to work best with models from the same year, suggesting optimization is model-dependent.

- Google Gemini 3 Flash Materializes: Google’s Gemini 3 Flash was released today.

- The release of Gemini-3.0-Flash has sparked interest in its potential use and benchmarking within the community.

- Enthusiasm to Explore AIMO3 with DSPy: A member inquired about the possibility of working on AIMO3 with DSPy.

- There was no follow-up on its implementation or feasibility.

- Seeking Insights into Multi-Prompt Program Design: A member requested resources or guides describing the design of programs with multiple prompts or LLM calls, particularly for information retrieval and classification.

- The member also asked about the number of prompts in their program; this went unanswered.

Manus.im Discord

- Manus France Meetup Announced: The Manus community is hosting a France meetup; check the channel or their Community X account for details.

- The latest Manus version 1.6 is reportedly pretty slick.

- Manus 1.6 Max Credits 50% off for Christmas: Users noted a 50% discount on Manus 1.6 Max credits until Christmas, per a blog post.

- The Manus AI support chatbot was unaware of the promotion, but team members confirmed it and recommended trying Max mode, calling it pretty amazing.

- AI Developer Open to Opportunities: An AI developer announced a successful AI project launch and is seeking new projects or a full-time role.

- The member encouraged private chats to discuss opportunities and share details.

- Cloudflare DNS Issue Blocks Project: A user reported a DNS issue halting their Cloudflare project for over 4 days.

- They cited a week-long trial period and expressed frustration with customer service directing them to IM.

Modular (Mojo 🔥) Discord

- BlockseBlock Ideathon Seeks Sponsorship: Gagan Ryait, Partnerships Manager of BlockseBlock, inquired about sponsorship opportunities for their upcoming ideathon with over 5,000 working professionals participating.

- A member recommended contacting Modular’s community manager to discuss sponsorship possibilities.

- Mojo Auto-Runs Functions on GPU: A member asked whether Mojo could run existing functions on the GPU. Another member clarified that no special attribute is required, although functions cannot make syscalls there.

- The function would need to be launched as a single lane.

- Modular Probes Graph Library GPU Issues: A member reported issues with the new graph library even with the GPU disabled on both macOS and Ubuntu systems, referencing a forum post for additional details.

- A Modular team member confirmed that they are investigating whether it’s an API regression or a device-specific issue.

aider (Paul Gauthier) Discord

- Championing AI as Infrastructure: Deterministic over Probabilistic: A member detailed their role in designing AI as infrastructure, emphasizing architecture, model strategy, data flow, and evaluation. Separately, it was clarified that the base aider doesn’t use tools.

- Their system design favors deterministic systems where possible and probabilistic intelligence only where justified, championing clear technical decisions and explicit trade-offs.

- Principles for Robust AI: Observability and Replaceability: In designing AI systems end-to-end, key principles include ensuring models are observable, replaceable, and cost-aware, avoiding hard coupling to vendors or fragile cleverness.

- The goal is a system that evolves without rewrites or heroics. That means engineering outcomes rather than merely implementing features, and shipping something correct, measurable, and durable rather than something impressive.

- Aider’s MCP Server Status: Not Supported: A member inquired about configuring MCP servers in Aider, but another member clarified that this is not a supported feature.

- The member did not say whether they planned to contribute code or file a feature request.

- Token Minimization Tactics with Qwen3-coder-30b: A member wants to automate a long process while minimizing tokens. Running Qwen3-coder-30b on 2x RTX 4090 limits them to a context window of only about 200k tokens.

- They suggested using an agent that can use MCP-proxy and then driving Aider through that agent, noting that the number of calls doesn’t matter.

- Interest in IDE Index MCP Server: A user is considering using MCP-proxy to reduce token usage and finds the ‘IDE Index MCP Server’ for Jetbrains particularly interesting.

- No further details or links were provided, but it was mentioned that the member aimed to use Aider via an agent to accomplish their goals.
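The design principles described in this section can be sketched as a small router in Python: deterministic where possible, with models that are observable, replaceable, and cost-aware. This is a hypothetical illustration, not the member's actual system. `ChatModel`, `EchoModel`, `Router`, the whitespace-based token count, and the per-1k-token price field are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol


class ChatModel(Protocol):
    """Anything that turns a prompt into text; vendors plug in behind this."""
    name: str
    usd_per_1k_tokens: float

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend for tests; a real one would wrap a vendor SDK."""
    name: str = "echo"
    usd_per_1k_tokens: float = 0.0

    def complete(self, prompt: str) -> str:
        return prompt.upper()


@dataclass
class CallRecord:
    model: str
    tokens: int
    cost_usd: float


@dataclass
class Router:
    """Deterministic rules first; call the (swappable) model only as a fallback."""
    model: ChatModel
    rules: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    log: List[CallRecord] = field(default_factory=list)

    def handle(self, task: str, payload: str) -> str:
        if task in self.rules:
            return self.rules[task](payload)  # deterministic path: no model call, no cost
        out = self.model.complete(payload)
        tokens = len(payload.split()) + len(out.split())  # crude whitespace token proxy
        cost = tokens / 1000 * self.model.usd_per_1k_tokens
        self.log.append(CallRecord(self.model.name, tokens, cost))  # observability hook
        return out


router = Router(model=EchoModel(), rules={"strip": str.strip})
print(router.handle("strip", "  hi  "))           # handled by a rule, nothing logged
print(router.handle("summarize", "hello world"))  # falls back to the model, logged
print(router.log)
```

To switch vendors, you pass a different `ChatModel` implementation. The rules, logging, and cost accounting stay unchanged, which is the "avoid hard coupling to vendors" point in practice.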

tinygrad (George Hotz) Discord

- Bounty Question Limbo: A user hesitated to ask bounty-related questions in the general channel, concerned about bypassing the dedicated bounty channel’s commit requirement.

- The user opted to make a non-junk commit to gain access to the bounties channel instead.

- Smart Question Strategy: A user confirmed they had read the smart-questions guide and would hold their question back from the channel.

- They would find a way to make a non-junk commit so they may speak in the bounty channel.

- Device CPU Debate: A discussion arose regarding environment variables for CPU device selection.

- The consensus leaned towards supporting both DEVICE=CPU and DEV=CPU for clarity.
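Supporting both spellings mostly comes down to one lookup with a conflict check. The Python below is a hypothetical helper for illustration, not tinygrad's actual device-selection code. The function name, the `CPU` default, and raising an error on conflicting values are all assumptions.

```python
import os
from typing import Mapping, Optional


def resolve_device(env: Optional[Mapping[str, str]] = None, default: str = "CPU") -> str:
    """Return the requested device name, accepting either DEVICE or DEV.

    If both are set and disagree, fail loudly rather than silently pick one.
    """
    env = os.environ if env is None else env
    device, dev = env.get("DEVICE"), env.get("DEV")
    if device and dev and device.upper() != dev.upper():
        raise ValueError(f"DEVICE={device} conflicts with DEV={dev}")
    return (device or dev or default).upper()


print(resolve_device({"DEV": "cpu"}))     # CPU
print(resolve_device({"DEVICE": "CPU"}))  # CPU
```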

Moonshot AI (Kimi K-2) Discord

- Kimi K2 Article Gets Rave Reviews: A member shared and praised a DigitalOcean tutorial about Kimi K2.

- The tutorial details the use of Kimi K2 in agentic workflows.

- Kimi K2 Suspected of Grok AI Roots: A member speculated that Kimi K2 might be leveraging Grok AI.

- This theory was based on observed behaviors and capabilities that suggest a link between the two AI systems.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Windsurf Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MCP Contributors (Official) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.


Discord: Detailed by-Channel summaries and links

LMArena ▷ #general (1200 messages🔥🔥🔥):

Gemini 3 Flash, GPT-5.2, Hallucination benchmark, AMD vs Nvidia, Prompt filter lmarena.ai

- Gemini 3 Flash outperforms GPT-5.2: Members generally agree that Gemini 3 Flash outperforms GPT-5.2, in some cases even surpassing Gemini 3 Pro. It stands out especially on speed and cost efficiency, and members say it codes better given the right system prompt.

- Some users attributed Flash’s vision capabilities to Google Lens integration.

- Hallucination benchmark deemed unreliable: Some users in the channel are debating the reliability of the hallucination benchmark used to rate Gemini 3 Flash, claiming it gives inaccurate results and overstates the model’s tendency to provide wrong answers.

- Some members stated that the benchmark’s test questions were run without grounding (i.e., without internet access), dragging down the model’s score.

- AMD users make GPU case: Members debate AMD vs NVIDIA GPUs for gaming and AI tasks, with some noting AMD’s affordability and potential if NVIDIA’s consumer GPU production declines.

- However, one user states that AMD sucks for local AI, while another reports issues uploading images to LMArena using an AMD GPU.

- LMArena’s prompt filter becomes too strict: Several users reported that the prompt filter on LMArena.ai has become overly sensitive, flagging innocuous text prompts.

- A staff member stated that they were not aware of any change that was made here and encouraged users to report these issues in the designated channel.

LMArena ▷ #announcements (2 messages):

Text Arena Leaderboard, GPT-5.2-high, Gemini-3-flash, Vision Arena Leaderboard, WebDev Arena Leaderboard

- GPT-5.2-high Model Storms Text Arena Leaderboard!: The GPT-5.2-high model has arrived on the Text Arena leaderboard at #13 with a score of 1441.

- It shines particularly in Math (#1) and the Mathematical occupational field (#2), also securing a solid #5 in Arena Expert.

- Gemini-3-flash Dazzles Across Arenas: Gemini-3-flash models have been added to the Text Arena, Vision Arena, and WebDev Arena leaderboards, achieving Top 5 rankings across all three.

- Gemini-3-Flash shows strong performance in the Math and Creative Writing categories, securing the #2 position in both.

- Gemini-3-Flash (Thinking-Minimal) Excels in Multi-Turn: Gemini-3-Flash (thinking-minimal) demonstrates its strengths with a Top 10 placement in Text and Vision, plus a #2 ranking in the Multi-Turn category.

- Both gemini-3-flash variants are available on the Text and WebDev Arena for testing and evaluation.

Unsloth AI (Daniel Han) ▷ #general (736 messages🔥🔥🔥):

Unsloth CLI tool, Colab H100, GRPO memory issues, GGUF Model Update, Training on phones

- Unsloth adds CLI Tool: A new Unsloth CLI tool has been added, enabling users to run scripts after installing the Unsloth framework in their Python environment.

- This provides a command-line interface for those who prefer it over Jupyter notebooks, enhancing accessibility and automation.

- H100s are potentially available in Colab: Rumors say H100s are now available in Colab, though this may not be official yet.

- This could significantly accelerate training times, but the details on pricing and availability are still vague.

- GRPO Memory Issues: A user ran into VRAM issues when running GRPO on a 7B LLM with a max sequence length of 4000, and others recommended lowering num_generations or batch_size.

- Another user suggested using a smaller model, or switching from GRPO to DPO, which requires investment in data preparation such as ranking completions from the model.

- Unsloth GGUF models updated with improvements: A large GGUF model update has been released for Unsloth, with links on the Unsloth Reddit.

- GLM 4.6V Flash is also performing well, though some users report it responding in Chinese.

- Unsloth now finetunes models for phones!: Unsloth now enables users to fine-tune LLMs and deploy them directly on their phones!

- The “mobile finetuning” actually happens on a computer; the resulting model is then deployed to the phone.
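Here is a back-of-envelope sketch of why lowering num_generations or batch_size helps with GRPO memory. GRPO samples each prompt num_generations times, so the tokens held for one step scale with batch_size × num_generations × max sequence length, and the KV cache grows with them. The Python below makes several assumptions:

- every completion runs to the full length;
- batch_size counts prompts (trainers differ on this);
- the model has illustrative 7B-class shape values: 28 layers, 4 KV heads, head_dim 128, bf16.

The function names are hypothetical, and the estimate ignores weights, optimizer state, and activations.

```python
def rollout_tokens(batch_size: int, num_generations: int, max_seq_len: int) -> int:
    """Upper bound on tokens held per step: each of `batch_size` prompts is
    sampled `num_generations` times, each up to `max_seq_len` tokens."""
    return batch_size * num_generations * max_seq_len


def kv_cache_gib(tokens: int, n_layers: int, n_kv_heads: int, head_dim: int,
                 bytes_per_value: int = 2) -> float:
    """Rough KV-cache size in GiB: one key and one value vector (hence the 2)
    per layer, per KV head, per token; bytes_per_value=2 assumes bf16/fp16."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * tokens / 2**30


# Illustrative 7B-class shape (assumed values, not a specific checkpoint).
LAYERS, KV_HEADS, HEAD_DIM = 28, 4, 128

for num_generations in (8, 4):
    tokens = rollout_tokens(batch_size=8, num_generations=num_generations, max_seq_len=4000)
    gib = kv_cache_gib(tokens, LAYERS, KV_HEADS, HEAD_DIM)
    print(f"num_generations={num_generations}: {tokens} tokens, ~{gib:.2f} GiB KV cache")
```

In this model, halving num_generations halves both the token count and the KV-cache estimate.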

Unsloth AI (Daniel Han) ▷ #off-topic (524 messages🔥🔥🔥):

Self-Promotion in Discord, Marketing Strategies for AI Services, Model Leaks and Branding, Logitech MX3S Mouse Review, Linux Distro Choice - Arch vs Ubuntu

- Discord Self-Promo Dilemmas: Members discussed self-promotion etiquette after a user asked which servers were suitable for promotion. The replies suggested that a good product shouldn’t need much promotion to catch fire, and that links in the Unsloth server are only allowed if relevant.

- The response highlighted the constant moderation against spammers and suggested that social media and ad networks might be better avenues for promoting genuine services beyond Discord.

- Crafting an AI Service Marketing Strategy: Discussion revolved around strategies for promoting AI services, recommending the creation of a website and social media presence with valuable content, drawing parallels to how even major companies like OpenAI and Microsoft use platforms like TikTok.

- For a music transcription service, targeting educational institutions and instrument lovers via platforms like Instagram and TikTok was advised, rather than relying on Twitter which is deemed better for short messages and news.

- Leaked Model Creates Branding Buzz: A user’s leaked model and its branding led to discussions about the model’s logo and potential website theme, with suggestions to incorporate the grape theme into the branding, including domain names and social media presence.

- Other members shared their excitement about the user’s upcoming project and its potential. Separately, members noted an incident where Linus blurred out details of an Nvidia H200 order that required KYC.

- MX3S Mouse Gets the Once-Over: A user shared their initial impressions of the Logitech MX3S mouse, noting its design for full palm grip, silent clicking, and a unique wheel that can switch between freewheel and clicky modes.

- The user liked the silent clicks and the toggleable freewheel but found the wheel heavy to click. They initially disliked freewheel mode and later disabled it, and they also noted the mouse’s compatibility with Excel spreadsheets.

- Arch vs Ubuntu: A Linux Distro Duel: A user ragequit Ubuntu due to various system configuration issues and is switching to Arch Linux for more control over their environment.

- While Arch is generally recommended, another user prefers Omarchy for its ease of setup, particularly with drivers and secure boot.

Unsloth AI (Daniel Han) ▷ #help (60 messages🔥🔥):

Qwen2.5 VL 7B for OCR, Deepseek OCR vs Paddle OCR, Fine-tuning vs Continued Pre-training for OCR, Data Creation for Fine-tuning, Image Resolution and Qwen3 VL Coordinate System

- Qwen2.5 VL’s OCR Skills Get Marginally Better!: A user is using Qwen2.5 VL 7B for basic OCR, finding it “pretty good” for centered text but struggling with margins and page numbers.