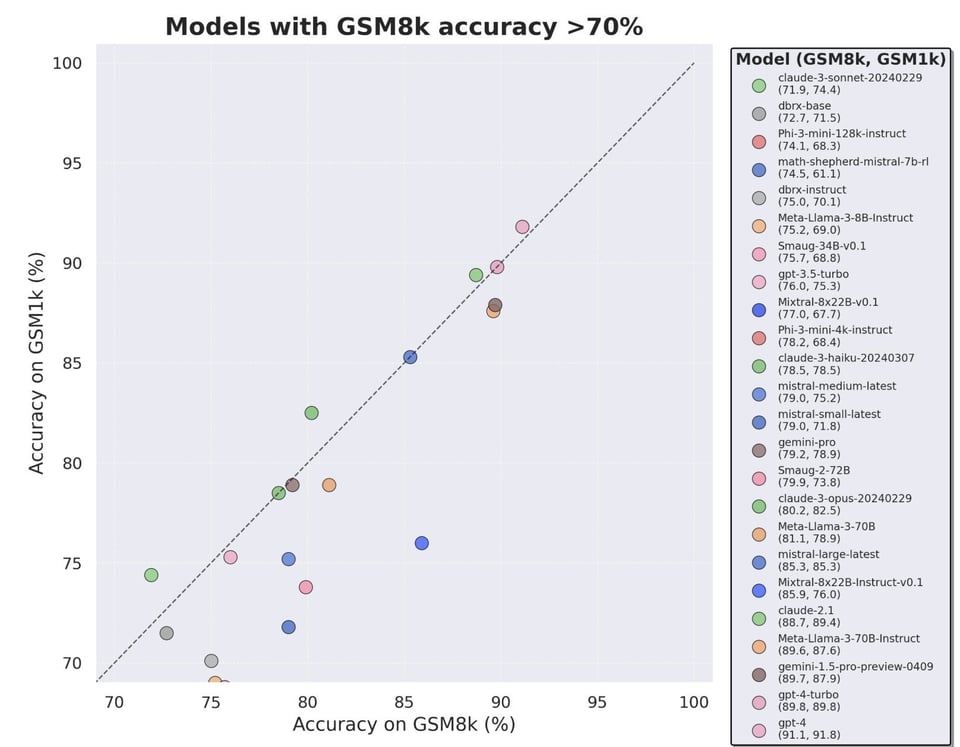

The problem of data/benchmark contamination is often a passing joke but this year is reaching a breaking point with decreasing trust in the previous practice of self reported scores on well known academic benchmarks like MMLU and GSM8K. Scale AI released A Careful Examination of Large Language Model Performance on Grade School Arithmetic which proposed a new GSM8K-like benchmark that would be less contaminated, and plotted the deviations - Mistral seems to overfit notably on GSM8k, and Phi-3 does remarkably well:

Reka has also released a new VibeEval benchmark for multimodal models, their chosen specialty. They tackle the well known MMLU/MMMU issues with multiple choice benchmarks not being a good/stable measure for chat models.

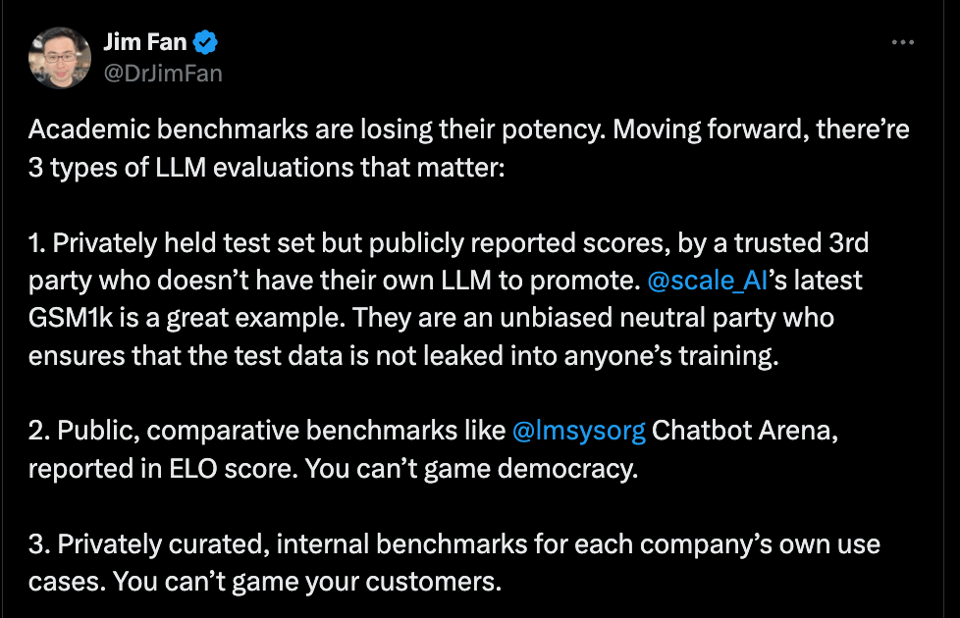

Lastly we’ll feature Jim Fan’s thinking on the path forward for evals:

Table of Contents

[TOC]

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Development and Capabilities

-

GPT-4 and beyond: In various talks and interviews, OpenAI CEO Sam Altman has referred to GPT-4 as “dumb” and “embarrassing,” hinting at the imminent release of GPT-5, which is expected to be a substantial improvement. Altman believes AI agents that can assist users with tasks and access personal information will be the next big breakthrough, envisioning a “super-competent colleague” that knows everything about the user’s life.

-

Jailbreaking GPT-3.5: A researcher demonstrated how to jailbreak GPT-3.5 using OpenAI’s fine-tuning API, bypassing safety checks by training the model on a dataset of harmful questions and answers generated by an unrestricted LLM.

AI Regulation and Safety

-

Calls for banning AI weapons: World leaders are calling for a ban on AI-powered weapons and “killer robots,” comparing the current moment in AI development to the “Oppenheimer moment” of the atomic bomb. However, some believe such a ban will be difficult to enforce.

-

Human control over nuclear weapons: A US official is urging China and Russia to declare that only humans, not AI, should control nuclear weapons, highlighting growing concerns over AI-controlled weapons systems.

AI Applications and Partnerships

-

Ukraine’s AI consular avatar: Ukraine’s Ministry of Foreign Affairs has announced an AI-powered avatar to provide updates on consular affairs, aiming to save time and resources for the agency.

-

Moderna and OpenAI partnership: Moderna and OpenAI have partnered to accelerate the development of life-saving treatments using AI, with the potential to revolutionize medicine.

-

Sanctuary AI and Microsoft collaboration: Sanctuary AI and Microsoft are collaborating to accelerate the development of AI for general-purpose robots, aiming to create more versatile and intelligent machines.

AI Research and Advancements

-

Kolmogorov-Arnold networks: MIT researchers have developed “Kolmogorov-Arnold networks” (KANs), a new type of neural network with learnable activation functions on edges instead of nodes, demonstrating improved accuracy, parameter efficiency, and interpretability compared to traditional MLPs.

-

Meta’s Llama 3 models: Meta is training Llama 3 models with over 400 billion parameters, expected to be multi-modal, have longer context lengths, and potentially specialize in different domains.

-

mRNA cancer vaccine breakthrough: A new mRNA cancer vaccine technique using “onion-like” multi-lamellar RNA lipid particle aggregates has shown success in treating brain cancer in four human patients.

-

First prime editing therapy: The FDA has cleared Prime Medicine’s IND for the first prime editing therapy, a novel gene editing technique with the potential to treat genetic diseases more precisely than existing methods.

-

AI-powered animal longevity studies: Olden Labs has introduced AI-powered smart cages that automate animal longevity studies, aiming to deliver low-cost, data-rich studies while improving animal welfare.

Memes and Humor

- Regulating AI meme: A humorous image depicts a person attempting to regulate AI by physically restraining a robot with a leash, poking fun at the challenges of AI governance.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

LLMs in Space and Efficient Inference

- Hardening LLMs for Space: @karpathy proposed hardening LLM code to pass NASA standards, making it safe to run in space. LLMs are well-suited with a fixed array of floats and bounded, well-defined dynamics. Sending LLM weights to space could allow them to “wake up” and interact with aliens.

- Efficient Inference with Groq: @awnihannun highlighted @GroqInc leading the way in reducing $/token for high quality LLMs. @virattt found Llama-3 70B on @GroqInc has best performance and pricing on benchmarks. @svpino encouraged trying Groq for huge model speed.

- 4-bit Quantization: @awnihannun calculated 4-bit 70B Llama-3 on M2 Ultra costs $0.2/million tokens, consuming 60W power. @teortaxesTex showed quantization levels of “external brain”.

Evaluating and Improving LLMs

- Evaluating LLMs: @DrJimFan proposed 3 types of evals: private test sets with public scores by trusted 3rd parties like @scale_AI, public comparative benchmarks like @lmsysorg Chatbot Arena ELO, and private internal benchmarks for each company’s use case.

- GSM1K Benchmark: @alexandr_wang and @scale_AI introduced GSM1K, a new test set showing up to 13% accuracy drops in LLMs, with Phi and Mistral overfitting. @SebastienBubeck noted phi-3-mini 76.3% accuracy as “pretty good for a 3.8B model”.

- Inverse Scaling in Multimodal Models: @YiTayML observed inverse scaling being more prominent in multimodal vs text-only models, where smaller models outperform larger ones. Still anecdotal.

- Evaluating Reasoning: @omarsar0 shared a paper on interpreting the inner workings of transformer LMs for reasoning tasks.

Open Source Models and Frameworks

- Reka Releases Evals: @RekaAILabs released a subset of internal evals called Vibe-Eval, an open benchmark of 269 image-text prompts to measure multimodal chat progress. 50%+ prompts unsolved by any current model.

- LlamaIndex Typescript Release: @llama_index released LlamaIndex.TS v0.3 with agent support, web streams, typing, and deployment enhancements for Next.js, Deno, Cloudflare and more.

Emerging Models and Techniques

- Jamba Instruct from AI21: @AI21Labs released Jamba-Instruct based on SSM-Transformer Jamba architecture. Leads quality benchmarks, has 256K context, and competitive pricing.

- Nvidia’s Llama Finetune: @rohanpaul_ai noted Nvidia’s competitive Llama-3 70B finetune called ChatQA-1.5 with good benchmarks.

- Kolmogorov-Arnold Networks: @hardmaru shared a paper on KANs as alternatives to MLPs for approximating nonlinear functions. @rohanpaul_ai explained KANs use learnable spline activation functions vs fixed in MLPs.

- Meta’s Multi-Token Prediction: @rohanpaul_ai broke down Meta’s multi-token prediction for training LMs to predict multiple future tokens for higher sample efficiency and up to 3x inference speedup.

Industry Developments

- Anthropic’s Claude iOS App: @AnthropicAI released the Claude iOS app, putting “frontier intelligence” in your pocket. @alexalbert__ shared how it helped launch tool use.

- Lamini AI Raises $25M Series A: @realSharonZhou announced @LaminiAI’s $25M Series A to help enterprises develop in-house AI capabilities. Investors include @AndrewYNg, @karpathy, @saranormous and more.

- Google I/O May 14: @GoogleDeepMind announced Google I/O developer conference on May 14 featuring AI innovations and breakthroughs.

- Anthropic Introduces Claude Team Plan: @AnthropicAI released a new Team plan for Claude with increased usage, user management, billing, and a 200K context window.

Memes and Humor

- Meme: @DeepLearningAI shared a meme about AI from buildooors on Reddit.

AI Discord Recap

A summary of Summaries of Summaries

-

Model Advancements and Fine-Tuning:

- Increasing LoRA rank to 128 for Llama 3 to prioritize understanding over memorization, adding over 335M trainable parameters [Tweet]

- Exploring multi-GPU support for model training with Unsloth, currently limited to single GPU [GitHub Wiki]

- Releasing Llama-3 8B Instruct Gradient with RoPE theta adjustments for longer context handling [HuggingFace]

- Introducing Hermes 2 Pro based on Llama-3 architecture, outperforming Llama-3 8B on benchmarks like AGIEval [HuggingFace]

-

Hardware Optimization and Deployment:

- Discussions on optimal GPU choices for LLMs, considering PCIe bandwidth, VRAM requirements (ideally 24GB+), and performance across multiple GPUs

- Exploring local deployment options like RTX 4080 for smaller LLMs versus cloud solutions for privacy

- Optimizing VRAM usage during training by techniques like merging datasets without increasing context length

- Integrating DeepSpeed’s ZeRO-3 with Flash Attention for efficient large model fine-tuning

-

Multimodal AI and Computer Vision:

- Introducing Motion-I2V for image-to-video generation with diffusion-based motion modeling [Paper]

- Sharing resources on PyTorch Lightning integration with models like SegFormer, Detectron, YOLOv5/8 [Docs]

- Accelerating diffusion models like Stable Diffusion XL by 3x using PyTorch 2 optimizations [Tutorial]

- Unveiling Google’s Med-Gemini multimodal models for medical applications [Video]

-

Novel Neural Network Architectures:

- Proposing Kolmogorov-Arnold Networks (KANs) as interpretable alternatives to MLPs [Paper]

- Introducing Universal Physics Transformers for versatile simulations across datasets [Paper]

- Exploring VisualFactChecker (VFC) for high-fidelity image/3D object captioning without training [Paper]

- Sharing a binary vector representation approach for efficient unsupervised image patch encoding [Paper]

-

Misc:

-

Stable Diffusion Model Discussions and PC Builds: The Stability.ai community shared insights on various Stable Diffusion models like ‘4xNMKD-Siax_200k’ from HuggingFace, and discussed optimal PC components for AI art generation like the 4070 RTX GPU. They also explored AI applications in logo design with models like harrlogos-xl.

-

LLaMA Context Extension Techniques: Across multiple communities, engineers discussed methods to extend the context window of LLaMA models, such as using the PoSE training method for 32k context or adjusting the rope theta. The RULER tool was mentioned for identifying actual context sizes in long-context models.

-

Quantization and Fine-Tuning Discussions: Quantization of LLMs was a common topic, with the Unsloth AI community increasing LoRA rank to 128 from 16 on Llama 3 to prioritize understanding over memorization. The OpenAccess AI Collective introduced Llama-3 8B Instruct Gradient with RoPE theta adjustments for minimal training on longer contexts (Llama-3 8B Gradient).

-

Retrieval-Augmented Generation (RAG) Techniques: Several communities explored RAG techniques for enhancing LLMs. A new tutorial series on RAG basics was shared (YouTube tutorial), and a paper on Adaptive RAG for dynamically selecting optimal strategies based on query complexity was discussed (YouTube overview). Plaban Nayak’s guide on post-processing with a reranker to improve RAG accuracy was also highlighted.

-

Introducing New Models and Architectures: Various new models and architectures were announced, such as Hermes 2 Pro from Nous Research built on Llama-3 (Hermes 2 Pro), Snowflake Arctic 480B and FireLLaVA 13B from OpenRouter, and Kolmogorov-Arnold Networks (KANs) as alternatives to MLPs (KANs paper).

PART 1: High level Discord summaries

CUDA MODE Discord

CUDA Debugging Tips and Updates: Members exchanged insights on CUDA debugging, recommending resources such as a detailed Triton debugging lecture, and the importance of using the latest version of Triton, citing recent bug fixes in the interpreter.

CUDA Profiling Woes and Wisdom: Engineers grappled with inconsistent CUDA profiling results, suggesting the utilization of NVIDIA profiling tools like Nsight Compute/Systems over cudaEventRecord. A tinygrad patch for NVIDIA was shared, aiming to aid similar troubleshooting efforts.

Torch and PyTorch Prowess: Discussions mentioned the need for expertise in PyTorch internals, specifically ATen/linalg, while TorchInductor aficionados were pointed to a learning resource (though unspecified). A call went out to any PyTorch contributors for in-depth platform knowledge.

Advances in AI Model Training Constructs: Conversations in #llmdotc revealed a considerable volume of activity centered on model training. From FP32 master copy of params to CUDA Graphs, the talks included a range of technical challenges related to performance, precision, and complexity, coupled with links to various GitHub issues and pull requests for collaborative problem-solving.

Diving Deeper into Engineering Sparsity: Engineers mulled over the Effort Engine, debating its benchmark performances and the balance between speed and quality. Points of contemplation included parameter importance over precision, the quality trade-offs in weight pruning, and potential model improvements.

Forward-Thinking with AMD and Intel Tech: Enthusiasm was shown for AMD’s HIP language with a tutorial playlist on the AMD ROCm platform, indicating a growing interest in diversified programming languages for GPUs. Additionally, a mention of Intel joining the PyTorch webpage suggested movement toward broader support across different architectures.

LM Studio Discord

CLI’s New Frontier: The release of LM Studio 0.2.22 introduced a new command-line interface, lms, enabling functionalities such as loading/unloading LLMs and starting/stopping the local server, with development open for contributions on GitHub.

Tackling LLM Installation Chaos: Community discussions highlighted installation issues of LM Studio 0.2.22 Preview, which were surmounted by providing a corrected download link; meanwhile, users exchanged ideas on model performance improvements and quantization techniques, especially for the Llama 3 model.

Headless Operation Innovations: Members shared strategies for running LM Studio headlessly on systems without a graphical user interface, suggesting xvfb and other workarounds, creating a pathway for containerization possibilities like Docker.

ROCm and AMD Under the Lens: Conversations centered on the compatibility of different AMD GPUs with ROCm, alongside the challenges of ROCm’s Linux support, highlighting the community’s quest for efficient use of diverse hardware infrastructures.

Hardware Discourse Goes Deep: Discussions delved into the nitty-gritty of hardware choices, especially on suitable GPUs for running LLMs and the impact of PCIe 3.0 vs 4.0 on multi-GPU VRAM performance, culminating in a consensus that a minimum of 24GB VRAM is ideal for formidable models like Meta Llama 3 70B.

Stability.ai (Stable Diffusion) Discord

-

Stable Diffusion Gets an Upgrade: Discussions centered on the latest Stable Diffusion models such as ‘Juggernaut X’ and ‘4xNMKD-Siax_200k’, with many users sourcing from HuggingFace for the 4xNMKD-Siax_200k model.

-

PC Build Recommendations for AI Workloads: Community members exchanged advice on the most suitable PC components for AI art creation, stressing the potential benefits of the upcoming Nvidia 5000 series and the current high-performing 4070 RTX GPU for running models efficiently.

-

AI Enters the Arena of Design: There was an in-depth conversation around using AI for logo design, highlighted by harrlogos-xl, which specializes in generating custom text within Stable Diffusion and touched on legal implications.

-

Tips on Upscaling and Inpainting for Enhanced Realism: The dialogue included tips on achieving higher image quality with upscale techniques LDSR, and sharing a Reddit guide on repurposing models for inpainting, though successes varied among users.

-

Securing Digital Artistic Endeavors with Open-Source Solutions: In the push for better security, some members recommended open-source alternatives like Aegis for Android and Raivo for iOS for one-time passwords (OTP), noting the importance of features, such as device syncing and secure backup options.

Unsloth AI (Daniel Han) Discord

-

LoRA Levels Up Llama 3: Engineers have been increasing LoRA rank on Llama 3 to 128 from 16, pushing trainable parameters past 335 million to prioritize understanding over rote memorization.

-

VRAM and Training Dynamics: Clarifications around VRAM usage in model training were made; merging datasets affects training time, not VRAM, unless it increases context length. Running Phi3 on Raspberry Pi was deemed feasible, with a successful instance of Gemma 2b running on an Orange Pi Zero 3.

-

Browsers Host Phi3: A Twitter post showcased Phi 3 running in a browser, sparking member interest. Meanwhile, Phi3 Mini 4k was flagged to outperform the 128k version on the Open LLM Leaderboard.

-

Fine-Tuning Finesse: Fine-tuning Llama 3 with Unsloth does not require ref_model in DPOTrainer; fine-tuning techniques and community collaboration were emphasized, and llama.cpp successfully deployed for a Discord bot.

-

Collaborative Coding Call: Members rallied for a designated collaboration channel leading to the creation of <#1235610265706565692> for project collaboration. The FlagEmbedding repository was shared for those interested in retrieval-augmented LLM work.

-

Improving AI Reasoning: To amplify task performance, an AI model was forced to memorize 39 reasoning modules from the Self-Discover paper, integrating advanced reasoning levels into tasks.

Nous Research AI Discord

-

Hermes 2 Pro: A New Model Champion: The introduction of Hermes 2 Pro by Nous Research, built on Llama-3 architecture, boasts superior performance on benchmarks like AGIEval and TruthfulQA when compared to Llama-3 8B Instruct. Its unique features include Function Calling and Structured Output capabilities, and it comes in both standard and quantized GGUF versions for enhanced efficiency, available on Hugging Face.

-

Exploring the Frontiers of Context Length: Research discussions revealed strategies to tackle out-of-distribution (OOD) issues for longer sequence contexts by normalizing outlier values, alongside arXiv:2401.01325 deemed influential for context length extension. Techniques from

llama.cpphighlight normalization approaches with debates concerning the efficacy of truncated attention to truly manifest “infinite” contexts. -

AI-Assisted Unreal Development and LLM Inquiries: Announcing the deployment of an AI assistant for Unreal Engine 5 on neuralgameworks.com, the guild also addressed the integration of GPT-4 vision in UE5 development. Questions around computational resources for AI research, such as access to A100 GPUs, were considered alongside tools and techniques for model training, like the Kolmogorov Arnold neural network’s performance on CIFAR-10.

-

AI Orchestration and Prompt Structuring Insights: The shared orchestration framework MeeseeksAI makes waves among AI agents, while the knowledge base on prompt structuring grows with insights into using special tokens and guidance on generating certain output formats. Evidence of structured prompt benefits is exhibited in Hermes 2 Pro’s approach to JSON output, detailed at Hugging Face.

-

Mining and Networking in AI Communities: Prospective finetuners of Large Language Models (LLMs) seek wisdom on suitable datasets, while WebSim’s introduction of an expansive game spanning epochs promises updates that may reshape gaming experiences. The anticipation for testing environments like world-sim and ongoing discourse suggests community eagerness for collaborative developments and shared research pursuits.

Perplexity AI Discord

-

Opus Gains Buzz Over GPT-4 Amongst Techies: Technical discussions on Perplexity AI have compared the Claude Opus and GPT-4, noting a preference for Opus in maintaining conversation continuity and for GPT-4 regarding technical accuracy, despite a temporary daily limit of 50 uses on Opus.

-

New Pages Feature Ignites Creative Sparks: The Perplexity AI community is keen on the new Pages feature, chatting about its potential for transforming threads into formatted articles and hopes for future enhancements including image embedding.

-

AI Content Assistance A Hot Potato: Ranging from debates over drone challenges to the utility of AI in making food choices, links to Perplexity AI’s insights were shared, with notable discussions on the proper consumption of raw pasta, DJI drones, and the Binance founder’s legal woes, indicating a broad set of interests.

-

Bridge Building Between UI and API: The pplx-7b-online model yielded different results between Pro UI and API implementations, prompting users to seek an understanding of ‘online models’ and celebrate the addition of the Sonar Large model to the API, despite some material confusion over its parameter count.

-

Members Seek Solutions for Platform Gremlins: Users encountered bugs with Perplexity AI on browsers like Safari and Brave, along with issues in correctly citing from attachments, leading to shared troubleshooting methods and a collective look for fixes.

Note: For the detailed and latest updates on API offerings and models like Sonar Large, check the official documentation.

Eleuther Discord

-

Binary Brains Beat CNNs in Unsupervised Learning: Revolutionary research on binary vector representation for image patches suggests superior efficiency compared to supervised CNNs. The discussion, inspired by biological neural systems, spotlighted a repulsive force loss function for binary vectors, potentially mimicking neuron efficiency, as detailed in arXiv.

-

KANs Kickoff as MLP Rivals: The AI community is buzzing about Kolmogorov-Arnold Networks (KANs), which may offer interpretability improvements over MLPs. The conversation also tackled mode collapse in LLMs and the promise of Universal Physics Transformers, accompanied by a critique of GLUE test server anomalies and considerations of SwiGLU’s unique scaling properties (KANs paper, Physics paper).

-

Interpreting the Uninterpretable: A rigorous dialogue explored the difficulties in articulating a model’s “true underlying features”, the role of tied embeddings in prediction models, and the definition of computation in next-token prediction. Celebrations were due for academic paper acceptances and the inception of the Mechanistic Interpretability Workshop at ICML 2024, with the community encouraged to contribute (workshop website).

-

MT-Bench: Mounting Expectation for Integration: A single yet significant request surfaced regarding the assimilation of MT-Bench into the lm-evaluation-harness, hinting at an eagerness for more rigorous conversational AI benchmarks.

OpenRouter (Alex Atallah) Discord

-

Introducing Snowflake Arctic & FireLLaVA: OpenRouter unveiled two disruptive models: the Snowflake Arctic 480B, excelling in coding and multilingual tasks at $2.16/M tokens, and FireLLaVA 13B, a rapid, open-source multimodal model with a cost of $0.2/M tokens. Both models represent significant strides in language and image processing; Arctic combines dense and MoE transformer architectures, while FireLLaVA is designed for speed and multimodal understanding, as detailed in their release post.

-

Maximizing Performance with Load Balancing: In response to high traffic demands, OpenRouter introduced enhanced load balancing features and now enables providers to track performance statistics such as latency and completion reasons on the Activity page.

-

LLaMA Context Expanding Tactics Revealed: Engineers examined strategies to extend LLaMA’s context window, featuring the PoSE training method for 32k context using 8 A100 GPUs, and the adjustment of rope theta. The discussion touched on RULER, a tool used to identify actual context size in long-context models, which can be explored further on GitHub.

-

Google Gemini Pro 1.5 Scrutinized for NSFW Handling: The community critiqued Google Gemini Pro 1.5 for its abrupt curtailment of NSFW content and noted substantial changes post-update, which seemed to diminish the model’s ability to follow instructions.

-

AI Deployment Risks & Corporate Influence Examined: The debates dived into the deployment of “orthogonalized” models, the implications of unaligned AI, and the political sway injected by model creators into their AIs. There was critical reflection on corporate budget allocations, evidenced by Google’s Gemini project, which contrasted marketing expenditures against those for research and development.

OpenAI Discord

-

Music Descriptors and DALL-E Developments Hit High Note: Engineers sought a tool to describe music tracks, while others updated that DALL-E 3 is being improved without a yet-announced DALL-E 4. The Claude IOS app was praised for its human-like responses by a middle school teacher, and discussions on leveraging AI in education emerged.

-

Chatbot Benchmarks Spark Conversation: A vigorous debate took place over the utility of benchmarks in gauging chatbot abilities, revealing a divide between those who see benchmarks as a beneficial metric and those who argue they do not accurately reflect nuanced real-world use.

-

ChatGPT Plus: Big on Tokens, Small on Limits: Users exchanged insights on ChatGPT’s token limit; with clarification that ChatGPT Plus has a 32k token limit, although the actual GPT supports up to 128k via the API. Skepticism was advised when considering ChatGPT’s self-referential answers about its architecture or limits, despite a participant’s experience sending texts exceeding the supposed 13k character limit.

-

Prompt Engineering Prodigy or Illusion?: The community deliberated on prompt engineering, with strategies like few-shot prompting with negative examples and meta-prompting thrown into the mix. The extraction of Ideal Customer Persona (ICP) from social media analytics using personal branding was also discussed, and the use of GPT-4-Vision coupled with OCR was shared as a method for extracting information from documents.

-

LLM Recall: Room for Improvement: Conversation centered on enhancing Long-Lived Memory (LLM) recall; members considered how context window limits affect platforms like ChatGPT Plus and contemplated over the combination of GPT-4-Vision with OCR for better data extraction, acknowledging the ongoing challenges with data retrieval from extensive texts.

HuggingFace Discord

-

Fashionable AI Pondering: In search of an AI that can manipulate images to show a shirt on a kid in multiple poses while maintaining logo position, community members discussed the potential of existing AI solutions but did not point to a specific tool.

-

Community Constructs Visionary Course: A newly launched, community-developed computer vision course open for contributions on GitHub has been met with enthusiasm, aiming to enrich the know-how in the realm of computer vision.

-

SDXL Inference Acceleration with PyTorch 2: A Hugging Face tutorial illustrates how to reduce the inference time of text-to-image diffusion models like Stable Diffusion XL by up to 3x, leveraging optimizations in PyTorch 2.

-

Google Unleashes Multimodal GenAI in Medicine: Med-Gemini, Google’s multimodal GenAI model tailored for medical applications, was highlighted in a YouTube video, aiming to raise awareness about the model’s capabilities and applications.

-

PyTorch Lightning Shines on Object Detection: A member sought examples of PyTorch Lightning for object detection evaluation and visualization, which led to the sharing of comprehensive tutorials on PyTorch Lightning integration with SegFormer, Detectron, YOLOv5, and YOLOv8.

-

RARR Clarification Sought in NLP: Queries were raised about the RARR process, an approach that investigates and revises language model outputs, although further discussion on its implementation amongst the community appeared limited.

LlamaIndex Discord

LlamaIndex 0.3 Heralds Enhanced Interoperability: Version 0.3 of LlamaIndex.TS introduces Agent support for ReAct, Anthropic, and OpenAI, a generic AgentRunner class, standardized Web Streams, and a bolstered type system detailed in their release tweet. The update also outlines compatibility with React 19, Deno, and Node 22.

AI Engineers, RAG Tutorial Awaits: A new tutorial series on Retrieval-Augmented Generation (RAG) by @nerdai progresses from basics to managing long-context RAG, accompanied by a YouTube tutorial and a GitHub notebook.

Llamacpp Faces Parallel Dilemmas: In Llamacpp, concerns have been voiced about deadlocks while processing parallel queries, stemming from the lack of continuous batching support on a CPU server. Sequential request processing is seen as a potential workaround.

Word Loom Proposes Language Exchange Framework: The Word Loom specification is proposed for separating code from natural language, enhancing both composability and mechanical comparisons, with an aim to be globalization-friendly, as outlined in the Word Loom update proposition.

Strategies for Smarter AI Deployments: Discussions highlighted the sufficiency of the RTX 4080’s 16 GB VRAM for smaller LLMs operations, while privacy concerns have some users shifting towards local computation stations over cloud alternatives like Google Colab for fine-tuning language models. Additionally, integrating external APIs with QueryPipeline and techniques for post-processing with a reranker to improve RAG application accuracy emerged as strategic considerations.

Modular (Mojo 🔥) Discord

Mojo’s Anniversary Dominates Discussions: The Mojo Bot community commemorated its 1-year anniversary with speculations about a significant update release tomorrow. There were fond reflections on the progress Mojo made, particularly enhancements in traits, references, and lifetimes.

Modular Updates Celebrated: Community contributions have shaped the latest Mojo 24.3 release, leading to positive evaluations of its integration in platforms like Ubuntu 24.04. Concurrently, MAX 24.3 was announced, showcasing advancements in AI pipeline integration through the Engine Extensibility API, enhancing developer experiences in managing low-latency, high-throughput inferences as detailed in the MAX Graph APIs documentation.

CHERI’s Potential Game-Changer for Security: The CHERI architecture is touted to significantly reduce vulnerability exploits by 70%, according to discussions referencing a YouTube video and the Colocation Tutorial. Talk of its adoption hinted at the possibility of transforming operating system development, empowering Unix-style software development, and potentially rendering conventional security methods obsolete.

Evolving Language Design and Performance: AI engineers continue to digest and deliberate on Mojo’s language design objectives, aspiring to infer lifetimes and mutability akin to Hylo and debating the merit and safety of pointers over references. Community members leveraged Mojo’s atomic operations for multi-core processing, achieving 100M records processing in 3.8 seconds.

Educational Content Spreads Mojo and MAX Awareness: Enthusiasm for learning and promotion of Mojo and MAX is evident with shared content like a video with Chris Lattner discussing Mojo, referenced as “Tomorrow’s High Performance Python,” and a PyCon Lithuania talk promoting Python’s synergy with the MAX platform.

OpenInterpreter Discord

Bridging the Gap for AI Vtubing: Two AI Vtuber resources are now available, with one kit needing just a few credentials for setup on GitHub - nike-ChatVRM, as announced on Twitter. The other, providing an offline and uncensored experience, is shared along with a YouTube demo and source code on GitHub - VtuberAI.

Speed Boost for Whisper RKNN Users: A Git branch is now available that provides up to a 250% speed boost for Whisper RKNN on SBC with Rockchip RK3588, which can be accessed at GitHub - rbrisita/01 at rknn.

Ngrok Domain Customization Steps Outlined: Someone detailed a process for ngrok domain configuration, including editing tunnel.py and using a specific command line addition, with a helpful resource at ngrok Cloud Edge Domains.

Solving Independent Streaks in Ollama Bot: Trouble arose with Ollama, hinting at quirky autonomous behavior without waiting for user prompts, yet specific steps for resolution were not provided.

Eager for OpenInterpreter: There was speculation about the roll-out timeline for the OpenInterpreter app, the seamless inclusion of multimodal capabilities, and a sharing of community-driven assistance on various technical aspects. Solutions such as using the --os flag with GPT-4 for Windows OS mode compatibility, and a cooperative spirit were highlighted in the discussions.

Latent Space Discord

-

Mamba Zooms into Focus: Interest in the Mamba model peaked with a Zoom meeting and mention of the comprehensive Mamba Deep Dive document, sparking discussions around selective copying as a recall test in Mamba and considerations of potential overfitting during finetuning.

-

Semantic Precision in Chunking: Participants discussed advanced text chunking approaches, with a focus on semantic chunking as a technique for document processing. This included mention of practical resources such as LlamaIndex’s Semantic Chunker and LangChain.

-

Local LLMs? There’s an App for That: Engineers debated running large language models (LLMs) on MacBooks, highlighting tools and applications for local operations like Llama3-8B-q8 and expressing interest in efficiency and performance.

-

AI Town Packs 300 Agents on a MacBook: An exciting project, AI Town with a World Editor was showcased, depicting 300 AI agents functioning smoothly on a MacBook M1 Max 64G, likened to a miniature Westworld.

-

OpenAI’s Web Woes?: Feedback on OpenAI’s website redesign sparked conversations about user experience issues, with engineers noting performance lag and visual glitches on the new OpenAI platform.

OpenAccess AI Collective (axolotl) Discord

Time to Mask Instruct Tags?: Engineers debated masking instruct tags during training to enhance ChatML performance, using a custom ChatML format, and considered the impact on model generation.

Llama-3 Leaps to 8B: Llama-3 8B Instruct Gradient is now available, featuring RoPE theta adjustments for improved context length handling, with discussions on its implementation and limitations at Llama-3 8B Gradient.

Axolotl Devs Patch Preprocessing Pain Points: A pull request was submitted to address a single-worker problem in the Orpo trainer and similarly in the TRL Trainer, allowing multithreading for speedier preprocessing, captured in PR #1583 on GitHub.

Python 3.10 Sets the Stage: A new baseline has been set within the community, where Python 3.10 is now the minimum version required for developing with Axolotl, enabling the use of latest language features.

Optimizing Training with ZeRO-3: Talks revolved around integrating DeepSpeed’s ZeRO-3 and Flash Attention for finetuning to accelerate training, where ZeRO-3 optimizes memory without affecting quality, when appropriately deployed.

LAION Discord

-

AI’s Role in Education Raises Eyebrows: A member highlighted potential dependence issues with AI use in education, suggesting that an over-reliance could impede learning crucial problem-solving skills.

-

From Still Frames to Motion Pictures: The Motion-I2V framework was introduced for converting images to videos via a two-step process incorporating diffusion-based motion predictors and augmenting temporal attention, detailed in the paper found here.

-

Promising Results with LLaMA3, Eager Eyes on Specialized Fine-tunes: A discussion ensued on LLaMA3’s performance post-4bit quantization, with members expressing optimism about future fine-tunes in specific fields and anticipating further code releases from Meta.

-

Enhancing Model Quality Under Scrutiny: There was a request for advice on improving the MagVit2 VQ-VAE model, with potential solutions revolving around the integration of new loss functions.

-

Coding the Sound of Music: Technical difficulties in implementing the SoundStream codec were brought to the table, with members collaborating to interpret omitted details from the original paper and pointing to resources and possible meanings.

-

Project Timelines and Advances Debate: The community engaged in discussions around project deadlines, using phrases like “Soon TM” informally, and expressed curiosity about the configurations used in LAION’s stockfish dataset.

-

Exploring Innovative Network Architectures: The guild touched upon novel network alternatives to MLPs with the introduction of Kolmogorov-Arnold Networks (KANs), which are highlighted in a research paper emphasizing their improved accuracy and interpretability.

-

Quality Captioning Sans Training: Distinctions were made regarding the VisualFactChecker (VFC), which is a training-free method for generating accurate visual content captions, and its implications for enhanced image and 3D object captioning as described in this paper.

AI Stack Devs (Yoko Li) Discord

- The Allure of AI in Nostalgic Gaming: Discussions have surfaced about leveraging AI in reviving classic social media games, taking the example of Farmville, and extending to the creation of a 1950s themed AI town with a communist spy intrigue.

- Hexagen World Recognized for Sharp AI Imagery: Users are praising Hexagen World for its high-quality diffusion model outputs, and have been discussing its platform’s potential for hosting AI-driven games.

- Possible Tokenizer Bug in AI Chat: Technical issues with ollama and llama3 8b configurations in ai-town, resulting in odd messages and strings of numbers, have been tentatively attributed to a tokenizer fault.

- Linux for Gamers? A Viable Option!: Among members, there’s talk about shifting from Windows to Linux with reassurances shared about gaming compatibility, such as Stellaris running smoothly on Mac and Linux, and advice to set up a dual boot system.

- Invitation to Explore AI Animation: A Discord invite to an AI animation server was shared, aiming to bring in individuals interested in the intersection of AI and animation techniques.

LangChain AI Discord

Groq, No Wait Required: Direct sign-up to Groq’s AI services is confirmed through a provided link to Groq’s console, eliminating waitlist concerns for those eager to tap into Groq’s capabilities.

AI’s Script Deviation Head-Scratcher: Strategies to mitigate AI veering off script in human-AI interaction projects are sought after, highlighting the need for maintaining conversational flow without looping responses.

Adaptive RAG Gains Traction: A new Adaptive RAG technique, which selects optimal strategies based on query complexity, is discussed alongside a YouTube video explaining the approach.

LangChain Luminaries Launch Updates and Tools: An improved LangChain v0.1.17, Word Loom’s open spec, deployment of Langserve on GCP, and Pydantic-powered tool definitions for GPT showcase the community’s breadth of innovation with available resources on GitHub for Word Loom, a LangChain chatbot, and a Pydantic tools repository.

Feedback Loop Frustration in LangServe: A member’s experience with LangServe’s feedback feature highlights the importance of clear communication channels when submitting feedback, even after a successful submission response; changes may not be immediate or noticeable.

tinygrad (George Hotz) Discord

tinygrad Tackles Conda Conundrum: The tinygrad environment faced hitches on M1 Macs due to an AssertionError linked to an invalid Metal library, with potential fixes on the horizon, as well as a bounty posted for a solution to conda python issues after system updates, with progress reported recently.

From Podcast to Practice: One member’s interest in tinygrad spiked after a Lex Fridman podcast, leading to recommendations to dive into the tinygrad documentation on GitHub for further understanding and comparing it with PyTorch.

Hardware Head-Scratcher for tinygrad Enthusiasts: A member deliberated over the choice between an AMD XT board and a new Mac M3 for their tinygrad development rig, highlighting the significance of choosing the right hardware for optimal development.

Resolving MNIST Mysteries with Source Intervention: An incorrect 100% MNIST accuracy alert prompted a member to ditch the pip version and successfully compile tinygrad from source, solving the version discrepancy and underscoring the approachability of tinygrad’s build process.

CUDA Clarifications and Symbolic Scrutiny: Questions bubbled up about CUDA usage in scripts impacting performance, while another member pondered the differentiation between RedNode and OpNode, and the presence of blobfile was affirmed to be crucial for loading tokenizer BPE in tinygrad’s LLaMA example code.

Mozilla AI Discord

-

Matrix Multiplication Mysteries: A user was baffled by achieving 600 gflops with np.matmul while a blog post by Justine Tunney mentioned only 29 gflops, leading to discussions about various methods for calculating flops and their implications for performance measurement.

-

File Rename Outputs Inconsistent: When running a file renaming task using llamafile, outputs varied, suggesting discrepancies across versions of llamafile or their executions, with one output example mentioned as

een_baby_and_adult_monkey_together_in_the_image_with_the_baby_monkey_on.jpg. -

Llamafile on Budget Infrastructure: A member queried the guild about the most effective infrastructure for experimenting with llamafile, debating between services like vast.ai and colab pro plus given their limited resources at hand.

-

GEMM Function Tips for the Fast Lane: Advice was sought on boosting a generic matrix-matrix multiplication (GEMM) function in C++ to cross the 500 gflops mark, with discussions revolving around data alignment and microtile sizes, in light of numpy’s capability to exceed 600 gflops.

-

Running Concurrent Llamafiles: It was shared that multiple instances of llamafile can be executed simultaneously on different ports, but it was emphasized that they will compete for system resources as managed by the operating system, rather than having a specialized management of resources between them.

Cohere Discord

-

Stockholm’s LLM Scene Wants Company: An invitation is open for AI enthusiasts to meet in Stockholm to dive into LLM discussions over lunch, highlighting the community’s collaborative spirit.

-

Cohere for Cozy Welcomes: The Discord guild actively fosters a welcoming atmosphere, with members like sssandra and co.elaine greeting newcomers with warmth.

-

Tune Into Text Compression Tips: An upcoming session focusing on Text Compression using LLMs was announced, reinforcing the guild’s commitment to continuous learning and skill enhancement.

-

Navigating the API Maze: Users shared real-world challenges involving AI API integration and key activation issues, with some hands-on guidance from co.elaine, including referencing the Cohere documentation on preambles.

-

Unlocking Document Search Strategies: A user sought advice on constructing a document search system tuned to natural language querying, contemplating the application of document embeddings, summaries, and extraction of critical information.

Interconnects (Nathan Lambert) Discord

-

Ensemble Techniques Tackled Mode Collapse: Discussions emphasized the potential of ensemble reward models in Reinforcement Learning for AI alignment, as demonstrated by DeepMind’s Sparrow, to mitigate mode collapse through techniques such as “Adversarial Probing” despite a KL penalty.

-

Llama 3’s Method Mix Raises Eyebrows: The community pondered why Llama 3 employed both Proximal Policy Optimization (PPO) and Decentralized Proximal Policy Optimization (DPO), with the full technical rationale still under wraps, possibly due to data timescale constraints.

-

Bitnet Implementation Interrogated: Curiosity was stoked about the practical application of the Bitnet approach to training large models, with successful small-scale reproductions such as Bitnet-Llama-70M and updates from Agora on GitHub; discussions also suggested significant hardware investments are necessary for efficiency in large model training.

-

Specialized Hardware for Bitnet a Tough Nut to Crack: The necessity for specialized hardware for Bitnet to be efficient was illuminated, referencing the need for chips supporting 2-bit mixed precision and recalling IBM’s historical efforts, alongside the hype around CUDA’s recent fp6 kernel.

-

AI Drama Unfolds Over Model Origins: The community dissected a speculative tweet about the unauthorized release of a model resembling one created by Neel Nanda and Ashwinee Panda, with a slight against its legitimacy and a call for more testing or release of the model weights. The tweet in question is Teortaxes’ tweet.

-

Anthropic Lights Up with Claude: Anthropic’s release of their Claude app stirred the community, with anticipation for comparative reviews against OpenAI’s products, while member conversations expressed admiration for the company’s branding.

-

A Pat on the Back for Performance Upticks: Positive feedback was given in acknowledgment of the notable improvement in performance by an individual post-critical review.

Alignment Lab AI Discord

Since there was only a single, non-technical message shared, which read “Hello,” by user manojbh, there is no relevant technical discussion to summarize. Please provide messages that contain technical, detail-oriented content for a proper summary.

Datasette - LLM (@SimonW) Discord

Seeking a Language Model Janitor: Discussions highlighted the need for a Language Model capable of identifying and deleting numerous localmodels from a hard drive, underscoring a practical use case for AI in system maintenance and organization.

DiscoResearch Discord

-

Qdora’s New Tactic for LLM Enhancement: A user brought attention to Qdora, a method that enables Large Language Models (LLMs) to learn additional skills while sidestepping catastrophic forgetting, building on earlier model expansion tactics.

-

LLaMA Pro-8.3B Adopts Block Expansion Strategy: Members discussed a block expansion research approach, which allows LLMs like LLaMA to evolve into more capable versions (e.g., CodeLLaMA) while retaining previously learned skills, marking a promising development in the field of AI.

AI21 Labs (Jamba) Discord

Jamba-Instruct Rolls Out: AI21 Labs announced the release of Jamba-Instruct, as per a tweet linked by a member. This could signal new developments in instruction-based AI models.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

CUDA MODE ▷ #general (7 messages):

- Ban Hammer Strikes: A member successfully identified and banned an unauthorized user, highlighting the group’s monitoring efficiency.

- CUDA Best Practices Shared: CUDA C++ Core Libraries best practices and techniques were shared, including a link to a Twitter post and slides via Google Drive, although the shared folder currently appears empty. See the tweet

- Autograd Hessian Discussion: A member initiated a discussion on whether the

torch.autograd.gradfunction’s second derivative returns the diagonal of the Hessian matrix. It was clarified that with specific parameters likecreate_graph=Trueit would, while another member pointed out that it is actually a Hessian vector product. - Estimating the Hessian’s Diagonal: A different technique to estimate the Hessian’s diagonal involving randomness plus the Hessian vector product was mentioned, referencing an approach seen in a paper.

Link mentioned: CCCL - Google Drive: no description found

CUDA MODE ▷ #triton (11 messages🔥):

-

Seeking Triton Debugging Wisdom: A member sought advice on the best approach to debug Triton kernels, expressing difficulties using

TRITON_INTERPRET=1anddevice_print, withTRITON_INTERPRET=1not allowing normal program execution anddevice_printyielding repetitive results. The best debugging practices were discussed, including watching a detailed Triton debugging lecture on YouTube and ensuring that they have the latest Triton version by installing from source or using triton-nightly. -

Triton Development Insights Shared: In the course of the discussion, it was suggested to ensure Triton is up-to-date due to recent fixes in the interpreter bugs. However, there is no confirmed date for the next release beyond the current 2.3 version.

-

Troubleshooting Gather Procedure in Triton: A newcomer to Triton presented an issue with implementing a simple gather procedure, specifically encountering an

IncompatibleTypeErrorImplwhen executing a store-load sequence. The desire to use Python breakpoints inside Triton kernel code for debugging was also mentioned, with difficulties in getting them to trigger.

Link mentioned: Lecture 14: Practitioners Guide to Triton: https://github.com/cuda-mode/lectures/tree/main/lecture%2014

CUDA MODE ▷ #cuda (14 messages🔥):

-

CUDA Profiling Puzzlement: A member discussed difficulties with inconsistent timing reports when profiling CUDA kernels using

cudaEventRecord. They observed unexpected timing results when adjusting tile sizes in a matrix multiplication kernel and questioned the robustness of the timing mechanism. -

NVIDIA Tools to the Rescue: In response to profiling concerns, a suggestion was made to try NVIDIA profiling tools like Nsight Compute or Nsight System, which are specifically designed for such tasks.

-

Discrepancies in Profiling Data: The member continued to note discrepancies between timings reported by

cudaEventRecordand theDurationfield in the NCU (Nsight Compute) report. It was clarified that profiling itself incurs overhead, potentially affecting the captured timings. -

Nsight Systems as an Alternative: A further recommendation was made to use Nsight Systems, which handles profiling without the explicit use of

cudaEventRecord. -

Sharing Solutions for Tinygrad on NVIDIA: A post was shared regarding a tinygrad patch for the NVIDIA open driver, documenting issues and solutions encountered during installation, potentially aiding others with similar issues.

CUDA MODE ▷ #torch (3 messages):

- Learning Opportunity for TorchInductor Enthusiasts: A member encouraged those interested in learning more about TorchInductor to check out an unspecified resource.

- Seeking PyTorch Contributor Insight: A user requested to connect with any PyTorch contributors with knowledge of the platform’s internals.

- In Search of ATen/linalg Experts: The same user further specified a need for expertise in ATen/linalg, a component of PyTorch.

CUDA MODE ▷ #algorithms (11 messages🔥):

- Effort Engine Discussion Unfolds: The creator of Effort Engine joined the chat, teased about an upcoming article, and indicated that despite new benchmarks, effort/bucketMul is inferior to quantization in terms of speed/quality.

- Quality Over Quantity: It was noted that Effort Engine’s method shows less quality degradation when compared to pruning the smallest weights, and charts are promised for visual comparison.

- Understanding Sparse Matrices: A comparison was shared contrasting the removal of least important weights from a matrix versus skipping the least important calculations, commencing a deeper dive into the subject of sparsity.

- Matrix Dimensions Matter: A discrepancy in matrix/vector dimensions was pointed out and acknowledged, with a commitment to rectify the vector orientation errors mentioned in the documentation.

- Exploration of Parameter Importance Over Precision: A member reflects on Effort Engine and recent advances suggesting that the number of parameters in AI models might be more important than their precision, citing examples such as quantization to 1.58 bits.

CUDA MODE ▷ #cool-links (2 messages):

- Exploring Speed Enhancements: A member mentioned that random_string_of_character is currently very slow, expressing curiosity about potential methods to accelerate its performance.

CUDA MODE ▷ #beginner (2 messages):

- Seeking CUDA Code Feedback: A member inquired if there is a dedicated channel or individuals available for feedback on their CUDA code. Another member directed them to post in a specific channel identified by its ID (<#1189607726595194971>) where such discussions are encouraged.

CUDA MODE ▷ #youtube-recordings (1 messages):

- Request for Taylor Robie’s Scripts on Lightning: A member expressed interest in having Taylor Robie upload his scripts as a studio to Lightning for the benefit of beginners. There was a suggestion that this could be a helpful resource.

CUDA MODE ▷ #torchao (1 messages):

- FP6 datatype welcomes CUDA enthusiasts: An announcement about new fp6 support was shared with a link to the GitHub issue (FP6 dtype! · Issue #208 · pytorch/ao). Those interested in developing a custom CUDA extension for this feature were invited to collaborate and offered support to get started.

Link mentioned: FP6 dtype! · Issue #208 · pytorch/ao: 🚀 The feature, motivation and pitch https://arxiv.org/abs/2401.14112 I think you guys are really going to like this. The deepspeed developers introduce FP6 datatype on cards without fp8 support, wh…

CUDA MODE ▷ #off-topic (2 messages):

- Creating Karpathy-style Explainer Videos: A member seeks advice on creating explainer videos following the style of Andrej Karpathy, particularly combining a live screen share with a face cam overlay. They provided a YouTube video link to illustrate, showing Karpathy building a GPT Tokenizer.

- OBS Streamlabs Recommended for Video Creation: In response to the inquiry on making explainer videos, a member recommended using OBS Streamlabs, highlighting the availability of numerous tutorials for it.

Link mentioned: Let’s build the GPT Tokenizer: The Tokenizer is a necessary and pervasive component of Large Language Models (LLMs), where it translates between strings and tokens (text chunks). Tokenizer…

CUDA MODE ▷ #triton-puzzles (2 messages):

- Confusion Over Problem Information: A member mentioned that the problem description contains contradictory details, assuming that N0 = T led to avoiding conflicting information.

- Acknowledgment of Error in Problem Description: It was acknowledged that the problem description was incorrect, and a member confirmed an update would be released with a clearer version.

CUDA MODE ▷ #llmdotc (813 messages🔥🔥🔥):

<ul>

<li><strong>Master Params Mayhem</strong>: A recent merge enabling FP32 master copy of params by default disrupted expected model behavior, causing significant loss mismatches.</li>

<li><strong>Stochastic Rounding to the Rescue</strong>: Tests showed that incorporating stochastic rounding during parameter updates aligns results more closely with expected behavior.</li>

<li><strong>CUDA Concerns</strong>: Discussion raised around the substantial size and compilation time of cuDNN and possible optimizations for better usability within the llm.c project.</li>

<li><strong>CUDA Graphs Glow Dimly</strong>: CUDA Graphs, which improve kernel launch overhead, were briefly mentioned as a possible performance booster, but current GPU idle times imply limited benefits.</li>

<li><strong>Aiming for NASA Level C Code?🚀</strong>: Ideation around improving llm.c code to potentially meet safety-critical standards, with a side dream of LLMs in space and discussions on optimizing for more significant model sizes.</li>

</ul>Links mentioned:

- no title found: no description found

- The Power of 10: Rules for Developing Safety-Critical Code - Wikipedia: no description found

- (beta) Compiling the optimizer with torch.compile — PyTorch Tutorials 2.3.0+cu121 documentation: no description found

- Performance Comparison between Torch.Compile and APEX optimizers: Note On torch.compile Generated Code I’ve been receiving a lot of questions on what exactly torch.compile generates when compiling the optimizer. For context the post on foreach kernels contains the m...

- cuda::discard_memory: CUDA C++ Core Libraries

- Release Notes — NVIDIA cuDNN v9.1.0 documentation: no description found

- Compiler Explorer - CUDA C++ (NVCC 12.2.1): #include <cuda_runtime.h> #include <cuda_bf16.h> typedef __nv_bfloat16 floatX; template<class ElementType> struct alignas(16) Packed128 { __device__ Pack...

- Performance Comparison between Torch.Compile and APEX optimizers: TL;DR Compiled Adam outperformed SOTA hand-optimized APEX optimizers on all benchmarks; 62.99% on Torchbench, 53.18% on HuggingFace, 142.75% on TIMM and 88.13% on BlueBerries Compiled AdamW performed...

- Which Compute Capability is supported by which CUDA versions?: What are compute capabilities supported by each of: CUDA 5.5? CUDA 6.0? CUDA 6.5?

- karpa - Overview: karpa has 13 repositories available. Follow their code on GitHub.

- LLM.c Speed of Light & Beyond (A100 Performance Analysis) · karpathy/llm.c · Discussion #331: After my cuDNN Flash Attention implementation was integrated yesterday, I spent some time profiling and trying to figure out how much more we can improve performance short/medium-term, while also t...

- mixed precision utilities for dev/cuda by ngc92 · Pull Request #325 · karpathy/llm.c: cherry-picked from #315

- fixes to keep master copy in fp32 of weights optionally · karpathy/llm.c@795f8b6: no description found

- cuDNN Flash Attention Forward & Backwards BF16 (+35% performance) by ademeure · Pull Request #322 · karpathy/llm.c: RTX 4090 with BF16 and batch size of 24: Baseline: 232.37ms (~106K tokens/s) cuDNN: 170.77ms (~144K tokens/s) ==> +35% performance! Compile time: Priceless(TM) (~2.7s to 48.7s - it's a big dep...

- single adam kernel call handling all parameters by ngc92 · Pull Request #262 · karpathy/llm.c: First attempt at a generalized Adam kernel

- feature/cudnn for flash-attention by karpathy · Pull Request #323 · karpathy/llm.c: Builds on top of PR #322 Additional small fixes to merge cudnn support, and with it flash attention

- pytorch/torch/_inductor/fx_passes/fuse_attention.py at main · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - pytorch/pytorch

- What's the difference of flash attention implement between cudnn and Dao-AILab? · Issue #52 · NVIDIA/cudnn-frontend: Is this link a flash attention?

- first draft for gradient clipping by global norm by ngc92 · Pull Request #315 · karpathy/llm.c: one new kernel that calculates the overall norm of the gradient, and updates to the adam kernel. Still TODO: clip value is hardcoded at function call site error handling for broken gradients would...

- Add NSight Compute ranges, use CUDA events for timings by PeterZhizhin · Pull Request #273 · karpathy/llm.c: CUDA events allow for more accurate timings (as measured by a GPU) nvtxRangePush/nvtxRangePop Adds simple stack traces to NSight Systems: Sample run command: nsys profile mpirun --allow-run-as-roo...

- Add NSight Compute ranges, use CUDA events for timings by PeterZhizhin · Pull Request #273 · karpathy/llm.c: CUDA events allow for more accurate timings (as measured by a GPU) nvtxRangePush/nvtxRangePop Adds simple stack traces to NSight Systems: Sample run command: nsys profile mpirun --allow-run-as-roo...

- feature/fp32 weight master copy by karpathy · Pull Request #328 · karpathy/llm.c: optionally keep a master copy of params in fp32 the added flag is -w 0/1, where 1 is default (i.e. by default we DO keep the fp32 copy) increases memory for the additional copy of params in float, ...

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- Pull requests · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- Second matmul for fully custom attention by ngc92 · Pull Request #227 · karpathy/llm.c: So far, just in the /dev files, because for the main script we also need to touch backward. For some reason, I see considerable speed-up in the benchmarks here, but in my attempts to use this in ...

- onnxruntime/onnxruntime/python/tools/transformers/onnx_model_gpt2.py at main · microsoft/onnxruntime: ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator - microsoft/onnxruntime

- Faster Parallel Reductions on Kepler | NVIDIA Technical Blog: Parallel reduction is a common building block for many parallel algorithms. A presentation from 2007 by Mark Harris provided a detailed strategy for implementing parallel reductions on GPUs…

- Updated adamw to use packed data types by ChrisDryden · Pull Request #303 · karpathy/llm.c: Before Runtime total average iteration time: 38.547570 ms After Runtime: total average iteration time: 37.901735 ms Kernel development file specs: Barely noticeable with the current test suite: Bef...

- option to keep weights as fp32 by ngc92 · Pull Request #326 · karpathy/llm.c: adds an optional second copy of the weights in fp32 precision TODO missing free

- Foreach kernel codegen in inductor by mlazos · Pull Request #99975 · pytorch/pytorch: design doc Add foreach kernel codegen for a single overload of foreach add in Inductor. Coverage will expand to more ops in subsequent PRs. example cc @soumith @voznesenskym @penguinwu @anijain2305...

- Packing for Gelu backwards by JaneIllario · Pull Request #306 · karpathy/llm.c: Update gelu backwards kernel to do packing into 128 bits, and create gelu brackward cuda file Previous kernel: block_size 32 | time 0.1498 ms | bandwidth 503.99 GB/s block_size 64 | time 0.0760...

- convert all float to floatX for layernorm_forward by JaneIllario · Pull Request #319 · karpathy/llm.c: change all kernels to use floatX

- Update residual_forward to use packed input by JaneIllario · Pull Request #299 · karpathy/llm.c: Update residual_forward to use 128 bit packed input, with floatX Previous Kernel: block_size 32 | time 0.1498 ms | bandwidth 503.99 GB/s block_size 64 | time 0.0760 ms | bandwidth 993.32 GB/s b...

- [inductor] comprehensive padding by shunting314 · Pull Request #120758 · pytorch/pytorch: Stack from ghstack (oldest at bottom): -> #120758 This PR adds the ability to pad tensor strides during lowering. The goal is to make sure (if possible) tensors with bad shape can have aligned st...

CUDA MODE ▷ #rocm (2 messages):

- Expressing Enthusiasm: A participant indicated strong interest with a brief message “super interesting”.

- AMD HIP Tutorial Compilation: A YouTube playlist was shared, titled “AMD HIP Tutorial,” providing a series of instructional videos on programming AMD GPUs with the HIP language on the AMD ROCm platform.

Link mentioned: AMD HIP Tutorial: In this series of videos, we will teach how to use the HIP programming language to program AMD GPUs running on the AMD ROCm platform. This set of videos is a…

CUDA MODE ▷ #oneapi (1 messages):

neurondeep: also added intel on pytorch webpage

LM Studio ▷ #💬-general (240 messages🔥🔥):

-

Command Line Interface Arrives: Members enthusiastically discussed the release of LM Studio 0.2.22, which introduces command line functionality with a focus on server mode operations. Issues and solutions for running the app in headless mode without a GUI on Linux machines were a key topic, with members actively troubleshooting and sharing advice (LM Studio CLI setup).

-

Flash Attention Sparks Intrigue: There were several discussions about Flash Attention at 32k context, highlighting its ability to allegedly improve processing speed by 3 times for document analysis with long contexts. This upgrade is poised to revolutionize interactions with large text blocks, like books.

-

Beta Testing Invites Sent: Users shared excitement over the latest beta build for LM Studio 0.2.22 and discussed the potential for community feedback to influence development. Mentioned were pull requests on llama.cpp that are relevant to the updates.

-

Headless Mode Workarounds and Hacks: Various members brainstormed workarounds for running LM Studio in a headless state on systems without a graphical user interface, suggesting methods like using a virtual X server or utilizing

xvfb-runto avoid display-related errors. -

Helpful YouTubing Advice: When asked about adding voice to read generated text, one member pointed to sillytavern or external solutions utilizing xtts, suggesting that tutorials are available on YouTube for implementation guidance.

Links mentioned:

- Pout Christian Bale GIF - Pout Christian Bale American Psycho - Discover & Share GIFs: Click to view the GIF

- Squidward Oh No Hes Hot GIF - Squidward Oh No Hes Hot Shaking - Discover & Share GIFs: Click to view the GIF

- elija@mx:~$ xvfb-run ./LM_Studio-0.2.22.AppImage: 20:29:24.712 › GPU info: '1c:00.0 VGA compatible controller: NVIDIA Corporation G A104 [GeForce RTX 3060 Ti] (rev a1)' 20:29:24.721 › Got GPU Type: nvidia 20:29:24.722 › LM Studio: gpu type = NVIDIA 2...

- Perfecto Chefs GIF - Perfecto Chefs Kiss - Discover & Share GIFs: Click to view the GIF

- GitHub - lmstudio-ai/lms: LM Studio in your terminal: LM Studio in your terminal. Contribute to lmstudio-ai/lms development by creating an account on GitHub.

- GitHub - lmstudio-ai/lms: LM Studio in your terminal: LM Studio in your terminal. Contribute to lmstudio-ai/lms development by creating an account on GitHub.

- GitHub - N8python/rugpull: From RAGs to riches. A simple library for RAG visualization.: From RAGs to riches. A simple library for RAG visualization. - N8python/rugpull

- Attempt at OpenElm by joshcarp · Pull Request #6986 · ggerganov/llama.cpp: Currently failing on line 821 of sgemm.cpp, still some parsing of ffn/attention head info needs to occur. Currently hard coded some stuff. Fixes: #6868 Raising this PR as a draft because I need hel...

LM Studio ▷ #🤖-models-discussion-chat (159 messages🔥🔥):

-

Exploring LLAMA’s Bible Knowledge: A member expressed curiosity about how well LLAMA 3 model can recite known texts like the Bible. They shared an experiment to graph the model’s recall of Genesis and John, revealing a poor recall rate for Genesis and only a 10% correct recall for John.

-

Quality Matters for Storytelling AI: One member discussed the importance of “quality” for story writing with AI, stating a preference for Goliath 120B Q3KS for better prose over faster models like LLAMA 3 8B. They emphasized that while they have high standards, no AI is perfect, and human rewriting is still essential.

-

The Right Tool for Biblical Recall: In response to the Bible recall rate test, another member mentioned trying the CMDR+ model, which seemed to handle the task better than LLAMA 3. It showed strong recall for specific biblical passages.

-

Technical Discussions on AI Model Quantization: There was a technical exchange about GGUF models and the process of quantization, with users sharing insights and seeking advice on techniques to quantize models like Gemma.

-

Exploring Agents and AutoGPT: A conversation about the potential of “agents” and AutoGPT took place, discussing the ability to create multiple AI instances that communicate with one another to optimize outputs. However, no consensus was reached on their effectiveness compared to larger models.

-

LLAMA’s Vision Abilities Questioned: Members discussed the capabilities of LLAMA models with vision, comparing LLAMA 3 with other models and mentioning LLAVA’s ability to understand but not generate images. There were also queries about the best text-to-speech (TTS) models, with a nod to Coqui still being on top.

Links mentioned:

- GoldenSun3DS's unclaimed Humblebundle Games: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- Dont Know Idk GIF - Dont Know Idk Dunno - Discover & Share GIFs: Click to view the GIF

- mradermacher/Goliath-longLORA-120b-rope8-32k-fp16-GGUF · Hugging Face: no description found

- High Quality Story Writing Type Third Person: no description found

- Meta AI: Use Meta AI assistant to get things done, create AI-generated images for free, and get answers to any of your questions. Meta AI is built on Meta's latest Llama large language model and uses Emu,...

- LLM In-Context Learning Masterclass feat My (r/reddit) AI Agent: LLM In-Context Learning Masterclass feat My (r/reddit) AI Agent👊 Become a member and get access to GitHub and Code:https://www.youtube.com/c/AllAboutAI/join...

- High Quality Story Writing Type First Person: no description found

- Reddit - Dive into anything: no description found

LM Studio ▷ #announcements (1 messages):

- LM Studio Gets a CLI Companion: LM Studio introduces its command-line interface,

lms, featuring capabilities to load/unload LLMs and start/stop the local server. Interested parties can installlmsby runningnpx lmstudio install-cliand ensure they update to LM Studio 0.2.22 to use it. - Streamline Workflow Debugging: Developers can now use

lms log streamto debug their workflows more effectively with the newlmstool. - Join the Open Source Effort:

lmsis MIT licensed and available on GitHub for community contributions. The team encourages developers to smash that ⭐️ button and engage in discussions in the #dev-chat channel. - Pre-requisites for Installation: A reminder was given that users need to have NodeJS installed before attempting to install

lms, with installation steps available on the GitHub repository.

Link mentioned: GitHub - lmstudio-ai/lms: LM Studio in your terminal: LM Studio in your terminal. Contribute to lmstudio-ai/lms development by creating an account on GitHub.

LM Studio ▷ #🧠-feedback (4 messages):

- Respect for Different Projects: A member pointed out that expressing preference for one project doesn’t equate to disparaging another, emphasizing that both can be great and there’s no value in trashing other projects.

- Strawberry vs Chocolate Milk Analogy: In an attempt to illustrate that a preference doesn’t imply criticism of the alternatives, a member compared their liking for LM Studio over Ollama to preferring strawberry milk over chocolate, without trashing the latter.

- Standing Firm on Opinions: Another member reaffirmed their stance by recognizing the worth of other programs but maintaining a personal preference for LM Studio, indicating that this shouldn’t be seen as attacking the other project.

LM Studio ▷ #⚙-configs-discussion (3 messages):

- Restoring Configs to Defaults: A member needed to reset the config presets for llama 3 and phi-3 after an update to version 22. Another member advised deleting the configs folder to repopulate it with the default configurations upon the next app launch.

LM Studio ▷ #🎛-hardware-discussion (247 messages🔥🔥):

-

RAM Speeds and CPU Compatibility: Members discussed RAM speed limitations based on CPU compatibility, specifically mentioning that E5-2600 v4 CPUs support faster RAM than 2400MHz. However, the specific capabilities of different Intel Xeon processors were examined, with links to Intel’s official specifications provided to clarify.

-

Choosing GPUs for LLM: Participants talked about the optimal GPU choices for running language models, debating between models such as P100s and P40s, and K40’s lower compatibility with certain backends. Tesla P40’s performance was appraised through a Reddit post, and concerns were raised about second-hand market supply and shipping times from China for desired GPU models.

-

NVLink and SLI: Users clarified that while SLI is not a feature for enterprise GPUs, NVLink bridges can be used, however, the physical layout of the P100 might inhibit easy connection of NVLink bridges due to shroud design.

-

PCIe Bandwidth and Card Performance: Discussions occurred about PCIe 3.0 versus 4.0 bandwidth and how it impacts VRAM performance across multiple GPUs. Real-world performance drop was noted when incorporating Gen 3 cards into a system heavily utilizing Gen 4 cards.

-

VRAM Requirements for LLMs: There was a back-and-forth about the amount of VRAM required to efficiently run models, such as Meta Llama 3 70B, with the consensus being that a minimum of 24GB VRAM is ideal. The recommendation was to use GPUs with full offloading capabilities for the best speed, with 70B models still proving challenging for even 24GB cards, while 7/8B models are comfortably runnable across the board.

Links mentioned:

- no title found: no description found

- MACKLEMORE & RYAN LEWIS - THRIFT SHOP FEAT. WANZ (OFFICIAL VIDEO): The Heist physical deluxe edition:http://www.macklemoremerch.comThe Heist digital deluxe on iTunes: http://itunes.apple.com/WebObjects/MZStore.woa/wa/viewAlb...

- Reddit - Dive into anything: no description found

LM Studio ▷ #🧪-beta-releases-chat (152 messages🔥🔥):

-

Chaos in 0.2.22 Preview Installation: Users experienced issues when installing LM Studio 0.2.22 Preview, with downloads leading to version 0.2.21 instead. After several updates and checks by community members, a fresh download link was shared which resolved the version discrepancy.

-

In Search of Improved Llama 3 Performance: Participants discussed ongoing issues with the Llama 3 model, especially GGUF versions, underperforming on reasoning tasks. It was recommended to use the latest quantizations that incorporate recent changes to the base llama.cpp.

-

Quantization Quandaries and Contributions: Users shared GGUF quantized models and debated over the discrepancy in performance across different Llama 3 GGUFs. Bartowski1182 confirmed that 8b models were on latest quants, and was in the process of updating the 70b models.

-

Server Troubles Spotted in 0.2.22: One user flagged potential server issues on LM Studio 0.2.22, with odd prompts being added to each server request and suggested using the

lms log streamutility for an accurate diagnosis. -

Cross-Compatibility and Headless Operation: A conversation on how to run LM Studio on Ubuntu was concluded with simple installation steps, and there was excitement about the possibility of creating a Docker image thanks to headless operation capability.

Links mentioned:

- no title found: no description found

- no title found: no description found

- no title found: no description found

- GGUF My Repo - a Hugging Face Space by ggml-org: no description found

- bartowski/Meta-Llama-3-8B-Instruct-GGUF · Hugging Face: no description found

- Tweet from bartowski (@bartowski1182): Ran into multiple issues making llamacpp quants for 70b instruct, it'll be up soon I promise :) eta is tomorrow morning

- Qawe Asd GIF - Qawe Asd - Discover & Share GIFs: Click to view the GIF

- NousResearch/Hermes-2-Pro-Llama-3-8B-GGUF · Hugging Face: no description found

- Doja Cat GIF - Doja Cat Star - Discover & Share GIFs: Click to view the GIF

- Ojo Huevo GIF - Ojo Huevo Pase de huevo - Discover & Share GIFs: Click to view the GIF

- Build software better, together: GitHub is where people build software. More than 100 million people use GitHub to discover, fork, and contribute to over 420 million projects.

- GitHub - lmstudio-ai/lms: LM Studio in your terminal: LM Studio in your terminal. Contribute to lmstudio-ai/lms development by creating an account on GitHub.

LM Studio ▷ #amd-rocm-tech-preview (25 messages🔥):

- Curiosity About LM Studio and OpenCL: A member mentioned surprise upon discovering that LM Studio can utilize OpenCL, although they noted it is slow. This underscores a general interest in alternative compute backends for machine learning software.

- ROCm Compatibility Quandaries: Members discussed the compatibility of ROCm with various AMD GPUs, specifically the 6600 and 6700 XT models. For instance, a member shared that their 6600 does not appear to be supported by ROCm, as evidenced by the official AMD ROCm documentation.

- Lack of Linux Support for ROCm Build: A direct question about the availability of a ROCm build for Linux led to the revelation that there currently is no such build specifically for Linux. Participants were seeking alternatives for utilizing their AMD hardware efficiently.

- Comparing GPU Prices Across Regions: The conversation took a lighter turn as members considered international travel to the UK to procure an AMD 7900XTX GPU at a significantly lower price compared to local costs, revealing the impact of regional pricing variances on consumer decisions.

- New CLI Tool for LM Studio ROCm Preview: A detailed announcement introduced

lms, a new CLI tool designed to assist with managing LLMs, debugging workflows, and interacting with the local server for LM Studio users with the ROCm Preview Beta. The information included a link to LM Studio 0.2.22 ROCm Preview and the lms GitHub repository, encouraging community involvement and contributions.

Links mentioned: