AI News for 6/12/2024-6/13/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (414 channels, and 3646 messages) for you. Estimated reading time saved (at 200wpm): 404 minutes. You can now tag @smol_ai for AINews discussions!

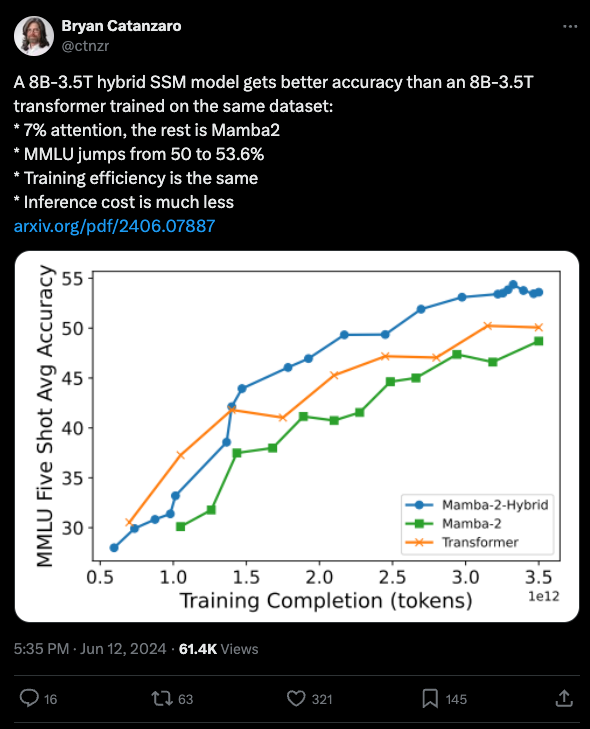

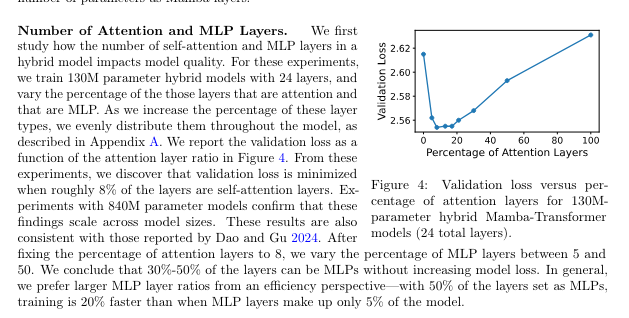

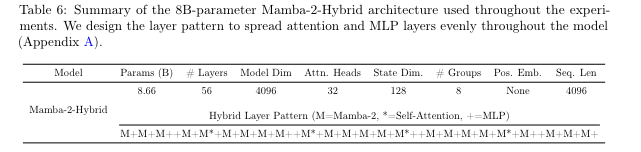

Lots of fun image-to-video and canvas-to-math demos flying around today, but not much technical detail, so we turn elsewhere, to Bryan Catanzaro of NVIDIA calling attention to their new paper studying Mamba models:

As Eugene Cheah remarked in the Latent Space Discord, this is the third team (after Jamba and Zamba) to independently find that mixing Mamba and Transformer blocks does better than either can alone. The paper also concludes empirically that the optimal amount of Attention is <20%, FAR from being all you need.


AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

LLM Capabilities and Evaluation

- Mixture-of-Agents Enhances LLM Performance: @bindureddy noted that Mixture-of-Agents (MoA) uses multiple LLMs in a layered architecture to iteratively enhance generation quality, with the MoA setup using open-source LLMs scoring 65.1% on AlpacaEval 2.0 compared to GPT-4 Omni’s 57.5%.

- LiveBench AI Benchmark: @bindureddy and @ylecun announced LiveBench AI, a new LLM benchmark with challenges that can’t be memorized. It evaluates LLMs on reasoning, coding, writing, and data analysis, aiming to provide an independent, objective ranking.

- Mamba-2-Hybrid Outperforms Transformer: @ctnzr shared that an 8B-3.5T hybrid SSM model using 7% attention gets better accuracy than an 8B-3.5T Transformer on the same dataset, with MMLU jumping from 50 to 53.6% while having the same training efficiency and lower inference cost.

- GPT-4 Outperforms at Temperature=1: @corbtt found that GPT-4 is “smarter” at temperature=1 than temperature=0, even on deterministic tasks, based on their evaluations.

- Qwen 72B Leads Open-Source Models: @bindureddy noted that Qwen 72B is the best performing open-source model on LiveBench AI.
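To make the layered Mixture-of-Agents idea above concrete, here is a minimal sketch under stated assumptions. It is not Together AI's implementation: the `Agent` type, `build_prompt`, the prompt wording, and `mixture_of_agents` are all hypothetical names invented for illustration, and the "agents" are plain callables standing in for real LLM calls.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns a response.
Agent = Callable[[str], str]


def build_prompt(prompt: str, references: List[str]) -> str:
    """Attach the previous layer's responses to the original prompt."""
    if not references:
        return prompt
    numbered = "\n".join(f"{i + 1}. {r}" for i, r in enumerate(references))
    return f"{prompt}\n\nResponses from the previous layer:\n{numbered}"


def mixture_of_agents(prompt: str, layers: List[List[Agent]], aggregator: Agent) -> str:
    """Pass the prompt through each layer of agents, then aggregate once."""
    previous: List[str] = []
    for agents in layers:
        # Every agent in a layer sees the prompt plus all responses
        # produced by the layer before it (none for the first layer).
        layer_prompt = build_prompt(prompt, previous)
        previous = [agent(layer_prompt) for agent in agents]
    # A final agent synthesizes the last layer's responses into one answer.
    return aggregator(build_prompt(prompt, previous))
```

In practice each `Agent` would wrap a call to a different model; the structure shown here is only the iterative "each layer reads the previous layer's answers" pattern described in the recap.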

LLM Training and Fine-Tuning

- Memory Tuning for 95%+ Accuracy: @realSharonZhou announced @LaminiAI Memory Tuning, which uses multiple LLMs as a Mixture-of-Experts to iteratively enhance a base LLM. A Fortune 500 customer case study showed 95% accuracy on a SQL agent task, up from 50% with instruction fine-tuning alone.

- Sakana’s Evolutionary LLM Optimization: @andrew_n_carr highlighted Sakana AI Lab’s work using evolutionary strategies to discover new loss functions for preference optimization, outperforming DPO.

Multimodal and Video Models

- Luma Labs Dream Machine: @karpathy and others noted the impressive text-to-video capabilities of Luma Labs’ new Dream Machine model, which can extend images into videos.

- MMWorld Benchmark: @_akhaliq introduced MMWorld, a benchmark for evaluating multimodal language models on multi-discipline, multi-faceted video understanding tasks.

- Table-LLaVa for Multimodal Tables: @omarsar0 shared the Table-LLaVa 7B model for multimodal table understanding, which is competitive with GPT-4V and outperforms existing MLLMs on multiple benchmarks.

Open-Source Models and Datasets

- LLaMA-3 for Image Captioning: @_akhaliq and @arankomatsuzaki highlighted a paper that fine-tunes LLaVA-1.5 to recaption 1.3B images from the DataComp-1B dataset using LLaMA-3, showing benefits for training vision-language models.

- Stable Diffusion 3 Release: @ClementDelangue and others noted the release of Stable Diffusion 3 by Stability AI, which quickly became the #1 trending model on Hugging Face.

- Hugging Face Acquires Argilla: @_philschmid and @osanseviero announced that Argilla, a leading company in dataset creation and open-source contributions, is joining Hugging Face to enhance dataset creation and iteration.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Stable Diffusion 3 Medium Release

- Resource-efficient model: In /r/StableDiffusion, Stable Diffusion 3 Medium weights were released, a 2B parameter model that is resource-efficient and capable of running on consumer GPUs.

- Improvements over previous models: SD3 Medium overcomes common artifacts in hands and faces, understands complex prompts, and achieves high quality text rendering.

- New licensing terms: In /r/OpenAI, Stability AI announced new licensing terms for SD3: free for non-commercial use, $20/month Creator License for limited commercial use, and custom pricing for full commercial use.

- Mixed initial feedback: First testers are reporting mixed experiences, with some facing issues replicating results and others giving positive feedback on prompt adherence, detail richness, and lighting/colors.

Issues and Limitations of SD3 Medium

- Struggles with human anatomy: In /r/StableDiffusion, users report that SD3 Medium struggles with human anatomy, especially when generating images of people lying down or in certain poses. This issue is further discussed with nuanced thoughts on the model’s limitations.

- Heavy censorship: The model appears to have been heavily censored, resulting in poor performance when generating nude or suggestive content.

- Difficulty with artistic styles: SD3 Medium has difficulty adhering to artistic styles and concepts, often producing photorealistic images instead.

Comparisons with Other Models

- Varying strengths and weaknesses: Comparisons between SD3 Medium, SDXL, and other models like Stable Cascade and PixArt Sigma show varying strengths and weaknesses across different types of images (photorealism, paintings, landscapes, comic art). Additional comparison sets further highlight these differences.

- Outperforms in specific areas: SD3 Medium outperforms other models in certain areas, such as generating images of clouds or text, but falls short in others like human anatomy.

Community Reactions and Speculation

- Disappointment with release: Many users in /r/StableDiffusion express disappointment with the SD3 Medium release, citing issues with anatomy, censorship, and lack of artistic style. Some even call it a “joke”.

- Speculation on causes: Some users speculate that the poor performance may be due to bugs in adopting the weights or the model architecture.

- Reliance on fine-tuning: Others suggest that the community will need to rely on fine-tuning and custom datasets to improve SD3’s capabilities, as was done with previous models.

Memes and Humor

- Poking fun at shortcomings: Users share memes and humorous images poking fun at SD3’s shortcomings, particularly its inability to generate anatomically correct humans. Some even sarcastically claim “huge success” with the model.

AI Discord Recap

A summary of Summaries of Summaries

-

Stable Diffusion 3 Faces Scrutiny but Offers Alternatives:

- SD3 Faces Criticism for Model Quality: Users conveyed dissatisfaction with SD3—highlighting anatomical inaccuracies and prompt issues—while medium models can be downloaded on Huggingface.

- Preferred Interfaces & Tools Discussed: ComfyUI emerged as the favored interface, with suggested samplers like uni_pc and ddim_uniform for optimal performance. Alternatives like Juggernaut Reborn and Playground are highlighted for their specific capabilities.

-

Boosting AI Performance and Infrastructure Insights:

- LLM Performance Boosted by Higher Model Rank: Shifting from rank 16 to 128 resolved Qwen2-1.5b’s gibberish output, aligning it with llama-3 caliber outputs.

- Perplexity AI’s Efficient LLM Use: Quick results are achieved by leveraging NVIDIA A100 GPUs, AWS p4d instances, and TensorRT-LLM optimizations.

-

Innovations in Fine-Tuning and Quantization:

- Fine-Tuning LLMs with New Models: The discussion covered the legal aspects of using GPT-generated data, referencing OpenAI’s business terms. Experimentations with ToolkenGPT show creative approaches to synthetic data for fine-tuning.

- CUDA Quantization Project discussions: Projects like the BiLLM showcase rapid quantization of large models, essential for efficient AI deployments.

-

Model Management and Deployment Techniques:

- Strategies for Handling Large Embeddings: Queries about 170,000 embedding indexes led to recommendations on using Qdrant or FAISS for faster retrieval. Specific fixes for erroneous queries were shared here.

- Docker and GPU Configuration Troubleshooting: Users dealing with Docker GPU detection on WSL found solutions by consulting the official NVIDIA toolkit guide.

-

AI Community Trends and Updates:

- OpenAI’s Revenue Milestone and Focus Shift: OpenAI’s revenue doubled, reflecting direct sales of ChatGPT and other services rather than sales facilitated primarily by Microsoft (source).

- Partnerships and Conferences Engage Community: Aleph Alpha and Silo AI joined forces to advance European AI (read more), and Qwak’s free virtual conference promises deep dives into AI mechanisms and networking opportunities.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

SD3’s Rocky Release: Users have expressed dissatisfaction with Stable Diffusion 3 (SD3), citing issues such as anatomical inaccuracies and non-compliance with prompts compared to SDXL and SD1.5. Despite the critiques, the medium model of SD3 is now downloadable on Huggingface, requiring form completion for access.

Preferred Interfaces and Samplers: ComfyUI is currently the go-to interface for running SD3, and users are advising against Euler samplers. The favored samplers for peak performance with SD3 are uni_pc and ddim_uniform.

Exploring Alternatives: Participants in the channel have highlighted alternative models and tools like Juggernaut Reborn and Divinie Animemix to achieve more realism or anime style, respectively. Other resources include Playground and StableSwarm for managing and deploying models.

Keep Discussions Relevant: Moderators have had to direct conversations back on topic after detours into global politics and personal anecdotes sidetracked from the technical AI discussions.

Big Models, Bigger Needs: The 10GB model of SD3 was mentioned as a very sought-after option among the community, showing the desire for larger, more powerful models despite the mixed reception of the SD3 release.

Unsloth AI (Daniel Han) Discord

-

Boosting Model Performance with Higher Rank: Increasing the model rank from 16 to 128 resolved issues with Qwen2-1.5b producing gibberish during training, aligning the output quality with results from llama-3 training.

-

scGPT’s Limited Practical Applications: Despite interesting prompting and tokenizer implementation, scGPT, a custom transformer written in PyTorch, is deemed impractical for use outside of an academic setting.

-

Embracing Unsloth for Efficient Inference: Implementing Unsloth has significantly reduced memory usage during both training and inference activities, offering a more memory-efficient solution for artificial intelligence models.

-

Mixture of Agents (MoA) Disappoints: The MoA approach by Together AI, meant to layer language model agents, has been criticized for being overly complex and seemingly more of a showpiece than a practical tool.

-

Advancing Docker Integration for LLMs: AI engineers are recommending the creation of command-line interface (CLI) tools for facilitating workflows and better integrating notebooks with frameworks like ZenML for substantial outcomes in real-world applications.

HuggingFace Discord

SD3 Revolutionizes Stable Diffusion: Stable Diffusion 3 (SD3) has dropped with a plethora of enhancements: it now sports three formidable text encoders (CLIP L/14, OpenCLIP bigG/14, T5-v1.1-XXL), a Multimodal Diffusion Transformer, and a 16-channel AutoEncoder. Details of SD3’s implementation can be found on the Hugging Face blog.

Navigating SD3 Challenges: Users encountered difficulties with SD3 on different platforms, with recommendations such as applying pipe.enable_model_cpu_offload() for faster inference and ensuring dependencies like sentencepiece are installed. GPU setup tips include using RTX 4090, employing fp16 precision, and making sure paths are correctly formulated.

Hugging Face Extends Family With Argilla: In an exciting turn of events, Hugging Face welcomes Argilla into its fold, a move celebrated by the community for the potential to advance open-source AI initiatives and new collaborations.

Community and Support in Action: From universities, such as the newly created University of Glasgow organization on Hugging Face, to individual contributions like Google Colab tutorials for LLM, members have been contributing resources and sourcing support for their various AI undertakings.

Enriched Learning Through Shared Resources: Members are actively exchanging knowledge, with highlighted assets including a tutorial for LLM setup on Google Colab, a proposed reading group discussion on the MaPO technique for text-to-image models, and an Academic paper on NLP elucidating PCFGs.

OpenAI Discord

-

Path to AI Expertise: Aspiring AI engineers were directed towards resources by Andrej Karpathy and a YouTube series by sentdex on creating deep learning chatbots. The discussions revolved around the necessary knowledge and skillsets for AI engineering careers.

-

GPT-4.5 Turbo Speculations Ignite Debate: A leaked mention of GPT-4.5 Turbo, speculated to have a 256k context window and a knowledge cutoff in June 2024, sparked debate, including speculation about a potential continuous pretraining feature.

-

Demystifying ChatGPT’s Storage Strategy: It was suggested that better memory management within ChatGPT could involve techniques for collective memory summary and cleanup to resolve current limitations.

-

Teamwork Makes the Dream Work: Key points about ChatGPT Team accounts were shared, emphasizing the double prompt limit and the financial commitment for multiple team seats when not billed annually.

-

Breaking Down Big Data: There was advice on managing substantial text data, like 300MB files, by chunking and trimming them down for practicality. Useful tools and guides were linked, including a forum post with practical tips for large documents and a notebook on handling lengthy texts through embeddings.
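As a rough illustration of the chunking advice above, here is a minimal sketch that splits text into fixed-size, overlapping character chunks. The function name `chunk_text` and the default sizes are assumptions for illustration, not taken from the linked forum post or notebook; real pipelines often chunk by tokens rather than characters.

```python
from typing import Iterator


def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 100) -> Iterator[str]:
    """Yield chunks of at most `chunk_size` characters that overlap by `overlap`."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    for start in range(0, len(text), step):
        yield text[start:start + chunk_size]
        # Stop once a chunk has reached the end of the text, so no
        # trailing chunk consists only of already-covered overlap.
        if start + chunk_size >= len(text):
            break
```

The overlap keeps sentences that straddle a boundary visible in both neighbouring chunks, at the cost of some duplicated text.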

LLM Finetuning (Hamel + Dan) Discord

-

Fine-Tuning LLMs: Adding New Knowledge: AI enthusiasts discussed fine-tuning large language models (LLMs) like “Nous Hermes” to introduce new knowledge, despite costs. A legal debate ensued concerning the use of GPT-generated data, with users consulting OpenAI’s business terms; a separate mention was made of generating synthetic data referenced in the ToolkenGPT paper.

-

Technical Glitches and Advice: In the realm of LLMs, users reported preprocessing errors with models like llama-3-8b and mistral-7b. On the practical side, members traded tips on maintaining SSH connections via nohup, with recommendations found on this SuperUser thread.

-

Innovative Model Frameworks on Spotlight: Model frameworks gained attention, with LangChain and LangGraph sparking diverse opinions. The introduction of the glaive-function-calling-v1 prompted talk about function execution capabilities in models.

-

Deployments and Showcases in Hugging Face Spaces: Several users announced their RAG-based applications, such as the RizzCon-Answering-Machine, built with Gradio and hosted on Hugging Face Spaces, though some noted the need for speed improvements.

-

Credits and Resources Quest Continues: Queries arose about missing credits and who to contact for platforms like OpenPipe. Users who haven’t received credits shared their usernames (e.g., anopska-552142, as-ankursingh3-1-817d86), and a mention of a second round of credits expected on the 14th was made.

Nous Research AI Discord

-

LLM Objective Discovery Without Human Experts: A paper on arXiv details a method for discovering optimization algorithms for large language models (LLMs) driven by the models themselves, which could streamline the optimization of LLM preferences without needing expert human input. The approach employs iterative prompting of an LLM to enhance performance according to specified metrics.

-

MoA Surpasses GPT-4 Omni: A Mixture-of-Agents (MoA) architecture, as highlighted in a Hugging Face paper, shows that combining multiple LLMs elevates performance, surpassing GPT-4 Omni with a 65.1% score on AlpacaEval 2.0. For AI enthusiasts wanting to contribute or delve deeper, the MoA model’s implementation is available on GitHub.

-

Stable Diffusion 3: A Mixed Bag of Early Impressions: While Stable Diffusion 3 garners both applause and criticism in its initial release, discussions around GPT-4’s counterintuitive better performance with higher temperature settings fuel the debate on model configuration. Conversely, a community member circulates an uncensored version of the OpenHermes-2.5 dataset, and a paper on eliminating MatMul operations promises remarkable memory savings.

-

In Search of the Lost Paper: Engagement is seen around the task of locating a forgotten paper on interleaving pretraining with instructions, suggesting active interest in cutting-edge research sharing within community channels.

-

RAG Dataset Development Continues: The dataset schema for RAG is still a work in progress, with further optimization needed for Marker’s document conversion tool, where setting min_length could boost processing speeds. Simultaneously, Pandoc and make4ht emerge as possible conversion solutions for varied document types.

-

World-sim Project Status Quo: There’s no change yet in the closed-source status of the World-sim project, despite discussions and potential for future reconsideration. Additionally, calls for making the world-sim AI bolder and adapting it for mobile platforms reflect the community’s forward-looking thoughts.
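For readers following the temperature debate mentioned above, here is a minimal sketch of what the temperature parameter conventionally does to a model's next-token distribution. It is the textbook temperature-scaled softmax, not a description of how any particular API implements sampling, and it does not explain why GPT-4 might score better at temperature=1; the function name is an assumption.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing them by `temperature`."""
    if temperature <= 0:
        # Temperature 0 is usually handled as greedy argmax instead.
        raise ValueError("temperature must be positive")
    scaled = [logit / temperature for logit in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

Lower temperatures concentrate probability on the highest-scoring token, while temperature=1 leaves the model's distribution unchanged.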

Perplexity AI Discord

-

Enthusiasm for Perplexity’s Search Update: Members showed great excitement for the recently introduced search feature in Perplexity AI, with immediate interest expressed for an iOS version.

-

Musk and OpenAI Legal Battle Closes: Elon Musk withdrew his lawsuit against OpenAI a day before the court hearing. The suit alleged a shift from a mission-driven to a profit-oriented organization and included claims of prioritizing investor interests such as those of Microsoft (CNBC).

-

Perplexity AI Speed with Large Language Models: Perplexity.ai is achieving fast results despite using large language models by utilizing NVIDIA A100 GPUs, AWS p4d instances, and software optimizations like TensorRT-LLM (Perplexity API Introductions).

-

Custom GPT Woes on Perplexity: Engineers are experiencing connectivity issues with Custom GPTs; problems seem confined to the web version of the platform as no issues are reported on desktop applications, suggesting potential API or platform-specific complications.

-

Email’s Environmental Footprint: An average email emits about 4 grams of CO2; the carbon impact can be mitigated by preferential use of file share links over attachments (Mailjet’s Guide to Email Carbon Footprint).

CUDA MODE Discord

Compute Intensity Discussion Left Hanging: A member inquired whether the compute intensity calculation should consider only floating-point operations on data from Global Memory. The topic remained open for discussion without a conclusive answer.
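The question above was left open, so the following is only one common convention, sketched under assumptions: compute (arithmetic) intensity taken as floating-point operations divided by bytes moved to and from global memory, applied to a matrix multiply where each matrix is read or written exactly once. The function name and the perfect-reuse assumption are illustrative, not something the channel agreed on.

```python
def matmul_arithmetic_intensity(m: int, n: int, k: int, bytes_per_element: int = 4) -> float:
    """FLOPs per byte of global-memory traffic for C[m,n] = A[m,k] @ B[k,n]."""
    # Each of the m*n outputs needs k multiplies and k adds.
    flops = 2 * m * n * k
    # Idealized traffic: read A and B once, write C once (perfect on-chip reuse).
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved
```

For square fp32 matrices of size 1024 this gives 1024/6 FLOPs per byte; real kernels that re-read tiles from global memory will land lower.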

Streamlined Triton 3.0 Setup: Two practical installation methods for Triton 3.0 surfaced; one guide details installing from source, while another involves using make triton with a specific version from the PyTorch repository.

Optimizing Optimizers in PyTorch: A robust conversation covered creating a fast 8-bit optimizer using pure PyTorch and torch.compile, and making it a drop-in replacement for 32-bit optimizers with comparable accuracy, drawing inspiration from the bitsandbytes implementation.

Breakthroughs in Quantization and Training Dynamics: The BiLLM project boasts rapid quantization of large language models, while torchao members debate the trade-offs in speed and accuracy across various numeric representations during matrix multiplication, from INT8 to FP8 and even INT6.
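To ground the INT8 discussion above, here is a minimal sketch of symmetric absmax quantization, the simplest way to map floats to 8-bit integers. This is explicitly not BiLLM's method, torchao's implementation, or the blockwise scheme used by bitsandbytes; the function names are assumptions and the code uses plain Python lists rather than tensors.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single absmax scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Fall back to a scale of 1.0 when every value is zero (or the list is empty).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the integers and the stored scale."""
    return [q * scale for q in quantized]
```

The round trip loses at most about half a quantization step per value, which is the accuracy side of the speed-versus-accuracy trade-off being debated.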

Hardware Showdown and Quantization Innovations: AMD’s MI300X showcases higher throughput for LLM inference than NVIDIA’s H100, and Bitnet sees progress with refactoring and nightly build strategies, but a lingering build issue remains due to an unrelated mx format test.

LM Studio Discord

Gemini 1.5 JSON Woes: Engineers report that Gemini 1.5 flash struggles with JSON mode, causing intermittent issues with output. Users are invited to share insights or solutions to this challenge.

Tess Takes the Stage: The Tess 2.5 72b q3 and q4 quant models are now live on Hugging Face, offering new tools for experimentation.

AVX2 Instruction Essential: Users facing direct AVX2 errors should verify their CPU’s support for AVX2 instructions to ensure compatibility with application requirements.

LM Studio Limitations and Solutions: LM Studio cannot run on headless web servers and does not support safetensors files, but it successfully uses the GGUF format, and Flash Attention can be enabled via alternatives like llama.cpp.

Hardware Market Fluctuations: There’s a spike in the price of electronically scrapped P40 GPUs with current prices over $200, as well as a humorous note on sanctions possibly affecting Russian P40 stocks. A community member shares specs for an efficient home server build: R3700X, 128GB RAM, RTX 4090, and multiple storage options.

Eleuther Discord

-

LLAMA3 70B Shows Diverse Talents: LLAMA3 70B displays a wide-ranging output capability, producing 60% AI2 arc format, 20% wiki text, and 20% code when prompted from an empty document, suggesting a tuning for specific formats. In a separate query, there’s guidance on finetuning BERT for longer texts with a sliding window technique, pointing to resources such as a NeurIPS paper and its implementation.

-

Samba Dances Over Phi3-mini: Microsoft’s Samba model, trained on 3.2 trillion tokens, notably outperforms Phi3-mini in benchmarks while maintaining linear complexity and achieving exceptional long-context retrieval capabilities. A different conversation delves into Samba’s passkey retrieval for 256k sequences, discussing Mamba layers and SWA effectiveness.

-

Magpie Spreads Its Wings: The newly introduced method Magpie prompts aligned Large Language Models (LLMs) to auto-generate high-quality instruction data, circumventing manual data creation. Along this innovative edge, another discussion highlights the controversial practice of tying embedding and unembedding layers, sharing insights from a LessWrong post.

-

Debating Normalization Standards: Within the community, the metrics for evaluating models spurred debate, particularly whether to normalize accuracy by tokens or by bytes for models with identical tokenizers. A related log from a test on Qwen1.5-7B-Chat was shared, discussing solutions for troubleshooting empty responses in truthfulqa_gen tasks.

-

Open Flamingo Spreads Its Wings: A brief message pointed members to LAION’s blog post about Open Flamingo, a likely reference to their multimodal model work.
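The tokens-versus-bytes normalization debate above is easier to follow with a toy example. The sketch below picks a multiple-choice answer from summed log-likelihoods under different length normalizations. The `Candidate` layout, the function name, and the numbers in the usage note are all made up for illustration; this is not the evaluation harness's code.

```python
from typing import List, Tuple

# (completion text, summed log-likelihood, number of tokens) -- hypothetical layout
Candidate = Tuple[str, float, int]


def pick_answer(candidates: List[Candidate], normalize_by: str) -> int:
    """Return the index of the best-scoring candidate under the given normalization."""

    def score(candidate: Candidate) -> float:
        text, log_likelihood, num_tokens = candidate
        if normalize_by == "none":
            return log_likelihood
        if normalize_by == "tokens":
            return log_likelihood / num_tokens
        if normalize_by == "bytes":
            return log_likelihood / len(text.encode("utf-8"))
        raise ValueError(f"unknown normalization: {normalize_by}")

    return max(range(len(candidates)), key=lambda i: score(candidates[i]))
```

With the invented candidates `("yes", -2.0, 1)` and `("certainly not", -6.0, 2)`, token normalization prefers the first answer while byte normalization prefers the second, which is why the choice of normalizer can change reported accuracy.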

LlamaIndex Discord

TiDB AI Experimentation on GitHub: PingCap demonstrates a RAG application using their TiDB database with LlamaIndex’s knowledge graph, all available as open-source code with a demo and the source code on GitHub.

Paris AI Infrastructure Meetup Beckons: Engineers can join an AI Infrastructure Meetup at Station F in Paris featuring speakers from LlamaIndex, Gokoyeb, and Neon; details and sign-up are available here.

Vector Database Solutions for Quick Queries: For indexes containing 170,000 embeddings, use of Qdrant or FAISS Index is recommended; discussion includes fixing an AssertionError related to FAISS queries and direct node retrieval from a VectorStoreIndex with Chroma.
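For context on why a dedicated index helps at 170,000 embeddings, here is a minimal sketch of the exhaustive cosine-similarity search that vector stores are built to speed up. It is a pure-Python stand-in with assumed function names, not the Qdrant, FAISS, or LlamaIndex API.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], embeddings: List[Sequence[float]], k: int = 3) -> List[Tuple[int, float]]:
    """Return (index, similarity) for the k most similar embeddings, best first."""
    # Score every stored vector: cost grows linearly with the collection size.
    scored = [(cosine(query, emb), i) for i, emb in enumerate(embeddings)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(i, s) for s, i in scored[:k]]
```

Every query here touches every vector, which is the cost that optimized or approximate indexes aim to reduce.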

Adjacent Node Retrieval from Qdrant: A user inquiring about fetching adjacent nodes for law texts in a Qdrant vector store is advised to leverage node relationships and the latest API features for directional node retrieval.

Pushing LLM-Index Capabilities with PDF Embedding: An AI Engineer discusses embedding PDFs and documents into Weaviate using LLM-Index, demonstrating interest in expanding the ingestion of complex data types into vector databases.

Cohere Discord

- Command-R Takes the Stage: Coral has been rebranded as Command-R, yet both Command-R and the original Coral remain operational, facilitating model-related tasks.

- To Tune or Not to Tune: In the pursuit of optimal model performance, debate flourished with some engineers emphasizing prompt engineering over parameter tuning, while others exchanged noteworthy configurations.

- Navigating Cohere’s Acceptable Use: A collective effort was noted to decode the Cohere Acceptable Use Policy, with a focus on delineating private versus commercial usage in the context of personal projects.

- Trials and Tribulations with Trial Keys: The community exchanged frustrations regarding trial keys encountering permission issues and limitations, contrasting these experiences with the smoother sailing reported by production key users.

- Hats Off to Fluent API Support: A quick nod was given in the conversations to the preference for Fluent API and appreciation for its inclusion by Cohere, evidenced by a congratulatory tone for a recent project release featuring Cohere support.

LAION Discord

-

Gender Imbalance Troubles in Stable Diffusion: The community discussed that Stable Diffusion has problems generating images of women, clothed or otherwise, due to censorship, suggesting the use of custom checkpoints and img2img techniques with SD1.5 as workarounds. Here’s the discussion thread.

-

Dream Machine Debuts: Luma AI’s Dream Machine, a text-to-video model, has been released and is generating excitement for its potential, though users note its performance is inconsistent with complex prompts. Check out the model here.

-

AI Landscape Survey: Comparisons across models such as SD3 Large, SD3 Medium, Pixart Sigma, DALL E 3, and Midjourney were discussed, alongside the reopening of the /r/StableDiffusion subreddit and Reddit’s API changes. The community is keeping an eye on these models and these issues, and the Reddit post offers a comparison.

-

Model Instability Exposed: A study revealed that models like GPT-4o break down dramatically when presented with the Alice in Wonderland scenario involving minor changes to input, highlighting a significant issue in reasoning capabilities. Details can be found in the paper.

-

Recaptioning the Web: Enhancements in AI-generated captions for noisy web images are on the horizon, with DataComp-1B aiming to improve model training by better aligning textual descriptions. For further insights, review the overview and the scientific paper.

LangChain AI Discord

-

Chasing the Best Speech-to-Text Solution: Engineers discussed speech-to-text solutions, seeking datasets with MP3s and diarization, beyond tools like AWS Transcribe, OpenAI Whisper, and Deepgram Nova-2. The need for robust processing that could handle simple responses without tools and manage streaming responses without losing context was also highlighted.

-

LangChain Links Chains and States: In LangChain AI, integration of user and thread IDs in state management was explicitly discussed, and tips to leverage LangGraph for maintaining state succinctly across various interactions were shared. For message similarity checks within LangChain, both string and embedding distance metrics were suggested with practical use cases.

-

Simplifying LLMs for All: A GitHub project called tiny-ai-client was presented to streamline LLM interactions, and a YouTube tutorial showed how to set up local executions of LLMs with Docker and Ollama. Meanwhile, another member shared a GitHub tutorial to set up LLM on Google Colab utilizing the 15GB Tesla T4 GPU.

-

Code Examples and Conversations to Streamline Development: Throughout the discussions, various code examples and issues were referenced to aid in troubleshooting and streamlining LLM development processes, with links like the Chat Bot Feedback Template and methodologies for evaluating off-the-shelf evaluators and maintaining Q&A chat history in LangChain.

-

Community Knowledge Sharing: Members actively shared their own works, methods, and problem-solving strategies, creating a community knowledge base that included how-tos and responses to non-trivial LangChain scenarios, affirming the collaborative ethos of the engineering community.
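As an illustration of the string-distance option raised for message similarity checks above, here is a minimal sketch of a normalized Levenshtein (edit) distance. It is a standalone implementation with assumed function names, not LangChain's evaluator API, and edit distance is only one of several string metrics that could be used.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop to save memory
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def normalized_distance(a: str, b: str) -> float:
    """Edit distance scaled to [0, 1]; 0.0 means the strings are identical."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0
```

String distance catches near-duplicate wording cheaply, whereas embedding distance is the better fit when two messages say the same thing in different words.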

Modular (Mojo 🔥) Discord

-

Windows Woes and Workarounds: Engineers discussed Windows support for Modular (Mojo 🔥), with a predicted release in the Fall and a livestream update anticipated. Meanwhile, some have turned to WSL as a temporary solution for Mojo development.

-

Mojo Gets Truthy with Strings: It was noted that non-empty strings in Mojo are considered truthy, potentially causing unexpected results in code logic. Meanwhile, Mojo LSP can be set up in Neovim with configurations available on GitHub.

-

Optimizing Matrix Math: Benchmarks revealed that Mojo outperforms Python in small, fixed-size matrix multiplications, with the gap attributed to the high overhead of Python’s numpy for such tasks.

-

Loop Logic and Input Handling: The peculiar behavior of for loops in Mojo led to a suggestion to use while loops for iterations requiring variable reassignment. Additionally, the current lack of stdin support in Mojo was confirmed.

-

Nightly Updates and Compiler Quips: Mojo compiler release 2024.6.1305 was announced, sparking conversations about update procedures, with advice to use modular update nightly/max and consider aliases for simplification. Discussions also addressed compiler limitations and the potential benefits of the ExplicitlyCopyable trait for avoiding implicit copies in the language.

Interconnects (Nathan Lambert) Discord

-

OpenAI’s Indirect Profit Path: OpenAI’s revenue surges without Microsoft’s aid, nearly doubling in the last six months primarily due to direct sales of products like ChatGPT rather than relying on Microsoft’s channels, contradicting industry expectations. Read more.

-

AI Research Goes Full Circle: Sakana AI’s DiscoPOP, a state-of-the-art preference optimization algorithm, boasts its origins in AI-driven discovery, suggesting a new era where LLMs can autonomously improve AI research methods. Explore the findings in their paper and contribute via the GitHub repo.

-

Hardware Hype and Research Revelations: Anticipation builds around Nvidia’s potentially forthcoming Nemotron as teased in a tweet, while groundbreaking advancements are discussed with the release of a paper exploring speech modeling by researchers like Jupinder Parmar and Shrimai Prabhumoye.

-

SSMs Still in the Game: The community holds its breath with a 50/50 split on the future of State Space Models (SSMs), with interest leaning towards hybrid SSM/transformer architectures, since attention layers may not be needed at each step.

-

Benchmarks Blasted by New Architecture: Samba 3.8B, an architecture merging Mamba and Sliding Window Attention, significantly outclasses models like Phi3-mini in major benchmarks while offering infinite context length with linear complexity. Details of Samba’s prowess are found in this paper.

Latent Space Discord

- Haize Labs Takes on AI Guardrails: Haize Labs launched a manifesto on identifying and fixing AI failure modes, demonstrating breaches in leading AI safety systems by successfully jailbreaking their protection mechanisms.

- tldraw Replicates iPad Calculator with Open Source Flair: The team behind tldraw has reconstructed Apple’s iPad calculator as an open-source project, showcasing their commitment to sharing innovative work.

- Amazon’s Conversational AI Missteps Analyzed: An examination of Amazon’s conversational AI progress, or lack thereof, pointed to a culture and operational process that prioritizes products over long-term AI development, according to insights from former employees in an article shared by cakecrusher.

- OpenAI’s Surging Fiscal Performance: OpenAI has achieved an annualized revenue run rate of nearly $3.4 billion, igniting dialogue about the implications of such earnings, including sustainability and spending rates.

- Argilla Merges with Hugging Face for Better Datasets: Argilla has merged with Hugging Face, setting the stage for improved collaborations to drive forward improvements in AI dataset and content generation.

OpenInterpreter Discord

- Open Interpreter Empowers LLMs: Open Interpreter is being discussed as a means to transform natural language into direct computer control, offering a bridge to future integrations with tailored LLMs and enhanced sensory models.

- Vision Meets Code in Practical Applications: The community shared experiences and troubleshooting tips on running code alongside vision models using Open Interpreter, particularly focusing on the `llama3-vision.py` profile, and strategies for managing server load during complex tasks.

- Browser Control Scores a Goal: A real-world application saw Open Interpreter successfully navigating a browser to check live sports scores, showcasing the simplicity of user prompts and the implications on server demand.

- DIY Approach to Whisper STT: While seeking a suitable Whisper Speech-To-Text (STT) library, a guild member ended up crafting a unique solution themselves, reflecting the community’s problem-solving ethos.

- Tweaking for Peak Performance: Discussions on fine-tuning Open Interpreter, such as altering core.py, highlighted the ongoing efforts to address performance and server load challenges to meet the particular needs of users.

OpenAccess AI Collective (axolotl) Discord

- Apple’s 3 Billion Parameter Breakthrough: Apple has unveiled a 3 billion parameter on-device language model at WWDC, achieving the same accuracy as uncompressed models through a strategy that mixes 2-bit and 4-bit configurations, averaging 3.5 bits-per-weight. The approach optimizes memory, power, and performance; more details are available in their research article.

- Dockerized AI Hits GPU Roadblock: An engineer encountered issues when Docker Desktop failed to recognize a GPU on an Ubuntu virtual machine over Windows 11 despite commands like `docker run --gpus all --rm -it winglian/axolotl:main-latest`. Suggested diagnostic steps include checking GPU status with `nvidia-smi` and confirming the installation of the CUDA toolkit.

- CUDA Confusions and WSL 2 Workarounds: The conversation shifted towards whether the CUDA toolkit should be set up on Windows or Ubuntu, with a consensus forming around installation within WSL 2 for Ubuntu. A user has expressed intent to configure CUDA on Ubuntu WSL, armed with the official NVIDIA toolkit installation guide.
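The 3.5 bits-per-weight average in the Apple item can be sanity-checked with a little arithmetic. The sketch below assumes only two widths (2-bit and 4-bit) are mixed and ignores overhead such as quantization scales; the function name is illustrative and does not come from Apple's article.

```python
def fraction_high_bits(avg_bits: float, low: float = 2.0, high: float = 4.0) -> float:
    """Fraction of weights stored at `high` bits so the mean width equals `avg_bits`.

    Solves high * p + low * (1 - p) = avg_bits for p.
    Assumption: exactly two bit widths, no per-group metadata counted.
    """
    if not low <= avg_bits <= high:
        raise ValueError("average must lie between the two bit widths")
    return (avg_bits - low) / (high - low)


p = fraction_high_bits(3.5)
print(f"{p:.0%} of weights at 4-bit, {1 - p:.0%} at 2-bit")
```

Under these assumptions, roughly three quarters of the weights would sit at 4-bit and the rest at 2-bit.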

OpenRouter (Alex Atallah) Discord

Param Clamping in OpenRouter: Alex Atallah specified that parameters exceeding support, like Temp > 1, are clamped at 1 for OpenRouter, and parameters like Min P aren’t passed through the UI, despite UI presentation suggesting otherwise.

Mistral 7B’s Lag Time Mystery: Users noticed increased response times for Mistral 7B variants, attributing it to context length changes and potential rerouting, supported by data from an API watcher and a model uptime tracker.

Blockchain Developer on the Market: A senior full-stack & blockchain developer is on the lookout for new opportunities, showcasing experience in the field and eagerness to engage.

Vision for Vision Models: A request surfaced for the inclusion of more advanced vision models such as cogvlm2 in OpenRouter to enhance dataset captioning capabilities.
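The clamping behavior described above can be pictured with a minimal sketch. This is not OpenRouter's code: the function name is hypothetical, the upper bound of 1 comes from the discussion, and the lower bound of 0 is an assumption.

```python
def clamp_temperature(temperature: float, max_supported: float = 1.0) -> float:
    """Clamp a requested temperature into [0, max_supported].

    Hypothetical helper: values above the supported maximum are
    silently reduced to it rather than rejected.
    """
    return max(0.0, min(temperature, max_supported))


for requested in (0.4, 1.0, 1.7):
    print(requested, "->", clamp_temperature(requested))
```

A consequence of clamping (rather than erroring) is that a request with Temp 1.7 and one with Temp 1.0 would behave identically, which is easy to miss when comparing outputs.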

tinygrad (George Hotz) Discord

- Bounty for RDNA3 Assembly in tinygrad: George Hotz sparked interest with a bounty for RDNA3 assembly support in tinygrad, inviting collaborators to work on this enhancement.

- Call for Qualcomm Kernel Driver Development: An opportunity has arisen for developing a “Qualcomm Kernel level GPU driver with HCQ graph support,” targeting engineers with expertise in Qualcomm devices and Linux systems.

- tinygrad’s Mobile Capabilities Confirmed: Confirmation was given that tinygrad is functional within the Termux app, showcasing its adaptability to mobile environments.

- In Discussion: Mimicking Mixed Precision in tinygrad: A discourse on implementing mixed precision through casting between bfloat16 and float32 during matrix multiplication revealed potential speed benefits, especially when aligned with tensor core data types.

- Tensor Indexing and UOp Graph Execution Queries: Efficient tensor indexing techniques are being explored, referencing boolean indexing and UOp graph execution with `MetalDevice` and `MetalCompiler`, with an emphasis on streamlined kernel execution using `compiledRunner`.
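To make the mixed-precision item concrete, here is a pure-Python emulation of the idea: inputs are reduced to bfloat16 precision, while the products are accumulated at higher precision. This is not tinygrad code. It assumes truncation instead of round-to-nearest, and it accumulates in Python floats as a stand-in for float32; both function names are made up for illustration.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Emulate bfloat16 by keeping only the top 16 bits of the float32 encoding."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


def matmul_mixed(a, b):
    """Multiply matrices with bfloat16-truncated inputs and wider accumulation."""
    a16 = [[to_bfloat16(v) for v in row] for row in a]
    b16 = [[to_bfloat16(v) for v in row] for row in b]
    cols = list(zip(*b16))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a16]


# 1 + 2**-10 needs more mantissa bits than bfloat16 has, so it collapses to 1.0.
a = [[1.0, 1.0 + 2 ** -10]]
b = [[1.0], [1.0]]
print(matmul_mixed(a, b))
```

The example shows the trade-off being discussed: the low-precision inputs lose small differences, while the wider accumulator keeps the sum itself from degrading further.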

Datasette - LLM (@SimonW) Discord

- A Dose of Reality in AI Hype: A blog from dbreunig suggests that the AI industry is predominantly filled with grounded, practical work, comparing the current stage of LLM work to the situation of data science circa 2019. His article hit Hacker News’ front page, signaling a high interest in the pragmatic approach to AI beyond the sensationalist outlook.

- CPU Yesteryear, GPU Today: The search for computing resources has shifted from RAM and Spark capacity to GPU cores and VRAM, illustrating the changing technical needs as AI development progresses.

- Token Economics in LLM Deployment: Databricks’ client data shows a 9:1 input-to-output ratio for Large Language Models (LLMs), highlighting that input token cost can be more critical than output, which has economic implications for those operating LLMs.

- Spotlight on Practical AI Application: The recognition of dbreunig’s observations at a Databricks Summit by Hacker News underscores community interest in discussions about the evolution and realistic implementation of AI technologies.
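A quick worked example shows why a 9:1 input-to-output ratio shifts attention to input pricing. The prices below are hypothetical (output priced at three times input) and are not taken from Databricks or any provider; only the 9:1 ratio comes from the summary above.

```python
def input_cost_share(input_tokens: float, output_tokens: float,
                     input_price: float, output_price: float) -> float:
    """Share of total spend attributable to input tokens."""
    input_cost = input_tokens * input_price
    output_cost = output_tokens * output_price
    return input_cost / (input_cost + output_cost)


# 9:1 token ratio from the summary; prices are illustrative assumptions.
share = input_cost_share(9, 1, input_price=1.0, output_price=3.0)
print(f"input share of cost: {share:.0%}")
```

Even with output tokens priced three times higher in this sketch, input tokens still account for most of the bill.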

DiscoResearch Discord

- European AI Synergy: A strategic partnership has been formed between Aleph Alpha and Silo AI to push the frontiers of open-source AI and tailor enterprise-grade solutions for Europe. This collaboration leverages Aleph Alpha’s advanced tech stack and Silo AI’s robust 300+ AI expert team, with an eye to accelerate AI deployment in European industrial sectors. Read about the partnership.

Torchtune Discord

- Participate in the Poll, Folks: A user has requested the community’s participation in a poll regarding how they serve their finetuned models, with an undercurrent of gratitude for their engagement.

- Tokenizers Are Getting a Makeover: An RFC for a comprehensive tokenizer overhaul has been proposed, promoting a more feature-rich, composable, and accessible framework for model tokenization, as found in a pull request on GitHub.

MLOps @Chipro Discord

- Free Virtual Conference for AI Buffs: Infer: Summer ‘24, a free virtual conference happening on June 26, will bring together AI and ML professionals to discuss the latest in the field, including recommender systems and AI application in sports.

- Industry Experts Assemble: Esteemed professionals such as Hudson Buzby, Solutions Architect at Qwak, and Russ Wilcox, Data Scientist at ArtifexAI, will be sharing their insights at the conference, representing companies like Lightricks, LSports, and Lili Banking.

- Live Expert Interactions: Attendees at the conference will have the chance for real-time engagement with industry leaders, providing a platform to exchange practical knowledge and innovative solutions within ML and AI.

- Hands-On Learning Opportunity: Scheduled talks promise insights into the pragmatic aspects of AI systems, such as architecture and user engagement strategies, with a focus on building robust, predictive technologies.

- Networking with AI Professionals: Participants are encouraged to network and learn from top ML and AI professionals by registering for free access to the event. The organizers emphasize the event as a key opportunity to broaden AI understanding and industry connections.

Mozilla AI Discord

- Don’t Miss the Future of AI Development: An event discussing how AI software development systems can amplify developers is on the horizon. Further info and RSVP can be found here.

- Catch Up on Top ML Papers: The newest Machine Learning Paper Picks has been curated for your reading pleasure, available here.

- Engage with CambAI Team’s Latest Ventures: Join the upcoming event with CambAI Team to stay ahead in the field. RSVP here to be part of the conversation.

- Claim Your AMA Perks: Attendees of the AMA, remember to claim your 0din role to get updates about swag like T-shirts by following the customized link provided in the announcement.

- Contribute to Curated Conversations with New Tag: The new `member-requested` tag is now live for contributions to a specially curated discussion channel, reflecting community-driven content curation.

- Funding and Support for Innovators: The Builders Program is calling for members seeking support and funding for their AI projects, with more details available through the linked announcement.

YAIG (a16z Infra) Discord

- GitHub Codespaces Roll Call: A survey was launched in #tech-discussion to determine the usage of GitHub Codespaces among teams, using ✅ for yes and ❌ for no as response options.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Stability.ai (Stable Diffusion) ▷ #general-chat (854 messages🔥🔥🔥):

- Members express disappointment with SD3 quality: Users have criticized the quality of the newly released SD3, often comparing it unfavorably with SDXL and 1.5 versions. Concerns include anatomical inaccuracies, the model not obeying prompts, and reduced quality in photographic styles.

- SD3 medium released on Huggingface: The SD3 medium model is now available for download on Huggingface, although users must fill out a form for access. There have been some issues with git cloning, and a 10GB model option is popular.

- ComfyUI favored for SD3 usage: ComfyUI is currently the preferred interface for running SD3, with users suggesting the best samplers and schedulers for optimal results. Recommendations include avoiding Euler samplers and using uni_pc and ddim_uniform for better performance.

- Alternative model suggestions and tools: Members shared alternatives and tools like Juggernaut reborn for realistic styles and Divinie animemix for anime styles. Other recommended resources for running models include Playground and StableSwarm.

- Discussion off-topic around global and political issues: At times, the channel veered into off-topic discussions about politics, international relations, and personal interactions, distracting from the core focus on AI models and technical help. Mods reminded community members to maintain topical relevance and report inappropriate content.

Links mentioned:

- stabilityai/stable-diffusion-3-medium · Hugging Face: no description found

- Muajaja Risa Malvada GIF - Muajaja The Simpsons - Discover & Share GIFs: Click to view the GIF

- Free AI image generator: Art, Social Media, Marketing | Playground: Playground (official site) is a free-to-use online AI image creator. Use it to create art, social media posts, presentations, posters, videos, logos and more.

- SD3 IS HERE!! ComfyUI Workflow.: SD3 is finally here for ComfyUI! Topaz Labs: https://topazlabs.com/ref/2377/ HOW TO SUPPORT MY CHANNEL - Support me by joining my Patreon: https://www.patreon.co...

- Crycat Crying Cat GIF - Crycat Crying Cat Crying Cat Thumbs Up - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- Installation Guides: Stable Diffusion Knowledge Base (Setups, Basics, Guides and more) - CS1o/Stable-Diffusion-Info

- imgur.com: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- GitHub - RocketGod-git/stable-diffusion-3-gui: GUI for Stable Diffusion 3 written in Python: GUI for Stable Diffusion 3 written in Python. Contribute to RocketGod-git/stable-diffusion-3-gui development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- Blender - Open Data: Blender Open Data is a platform to collect, display and query the results of hardware and software performance tests - provided by the public.

- Stability AI releases DeepFloyd IF, a powerful text-to-image model that can smartly integrate text into images — Stability AI: DeepFloyd IF is a state-of-the-art text-to-image model released on a non-commercial, research-permissible license that allows research labs to examine and experiment with advanced text-to-image genera...

- Stable Assistant Gallery — Stability AI: Stable Assistant delivers image creation capabilities like never before. Explore our gallery and be amazed by what's possible!

- imgsys.org | an image model arena by fal.ai: A generative AI arena where you can test different prompts and pick the results you like the most. Check-out the model rankings and try it yourself!

- Mobius - v1.0 | Stable Diffusion Checkpoint | Civitai: Mobius: Redefining State-of-the-Art in Debiased Diffusion Models Mobius, a diffusion model that pushes the boundaries of domain-agnostic debiasing ...

Unsloth AI (Daniel Han) ▷ #general (446 messages🔥🔥🔥):

- Rank boosts give better training results: “I can confirm that the issues with Qwen2-1.5b training and it outputting gibberish is to do with too low of a rank. I switched from rank 16 to rank 128, and my outputs matched the quality of my outputs from my llama-3 train.”

- scGPT might not be practical outside academia: “That’s a custom transformer implemented in torch 😅 Unusable outside academia as is…interesting what they did on the prompting and the tokenizer!”

- Inference support and memory efficiency in Unsloth: A user asked if Unsloth affects inference and received confirmation that Unsloth reduces memory usage significantly during both training and inference.

- Mixture of Agents (MoA) does not impress: Users discussed Together AI’s MoA approach to layering LLM agents but found it “kind of just for show” and overly complex.

- Dockerizing for seamless pipelines: “The notebooks are a great ‘jump in’ but in real-world apps you want to integrate that with other stuff. Making CLI tools for consistent workflows and easier integrations with frameworks like ZenML.”
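For a sense of scale in the rank discussion above, the standard LoRA formulation adds two low-rank matrices per adapted layer, so trainable parameters grow linearly with rank. The sketch below uses a hypothetical 1536-wide square projection; it only counts parameters and does not explain why the lower rank produced gibberish in that user's run.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in one LoRA adapter.

    A has shape (rank, d_in) and B has shape (d_out, rank),
    so the total is rank * (d_in + d_out).
    """
    return rank * (d_in + d_out)


d = 1536  # hypothetical layer width, chosen for illustration
for rank in (16, 128):
    print(f"rank {rank}: {lora_params(d, d, rank):,} trainable parameters per layer")
```

Moving from rank 16 to rank 128 multiplies the adapter's capacity by eight for every adapted layer.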

Links mentioned:

- Let's reproduce GPT-2 (124M): We reproduce the GPT-2 (124M) from scratch. This video covers the whole process: First we build the GPT-2 network, then we optimize its training to be really...

- Together MoA — collective intelligence of open-source models pushing the frontier of LLM capabilities: no description found

- google/recurrentgemma-9b · Hugging Face: no description found

- Let's build the GPT Tokenizer: The Tokenizer is a necessary and pervasive component of Large Language Models (LLMs), where it translates between strings and tokens (text chunks). Tokenizer...

- Smh Shaking GIF - Smh Shaking My - Discover & Share GIFs: Click to view the GIF

- Introducing Apple’s On-Device and Server Foundation Models: At the 2024 Worldwide Developers Conference, we introduced Apple Intelligence, a personal intelligence system integrated deeply into…

- scGPT/tutorials/zero-shot at main · bowang-lab/scGPT: Contribute to bowang-lab/scGPT development by creating an account on GitHub.

- GitHub - bowang-lab/scGPT: Contribute to bowang-lab/scGPT development by creating an account on GitHub.

- GitHub - bowang-lab/scGPT at integrate-huggingface-model: Contribute to bowang-lab/scGPT development by creating an account on GitHub.

- ollama/examples at main · ollama/ollama: Get up and running with Llama 3, Mistral, Gemma, and other large language models. - ollama/ollama

- Download the complete genome for an organism: no description found

- Turbo Sparse: Achieving LLM SOTA Performance with Minimal Activated Parameters: Exploiting activation sparsity is a promising approach to significantly accelerating the inference process of large language models (LLMs) without compromising performance. However, activation sparsit...

- PowerInfer-2: Fast Large Language Model Inference on a Smartphone: This paper introduces PowerInfer-2, a framework designed for high-speed inference of Large Language Models (LLMs) on smartphones, particularly effective for models whose sizes exceed the device's ...

- GitHub - sebdg/unsloth at cli-trainer: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - GitHub - sebdg/unsloth at cli-trainer

- Neuroplatform - FinalSpark: no description found

- Replete-AI/code_bagel_hermes-2.5 · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #help (190 messages🔥🔥):

- Trainer and TrainingArguments Confusion: A user expressed confusion about the specifics of Trainer and TrainingArguments in the Huggingface documentation. They noted that the descriptions do not fully explain how to use these classes.

- Saving as gguf Issues: A user faced a `ValueError` when attempting to save a model as gguf, but found success after specifying `f16` as the quantization method. They shared this solution along with the syntax: `model.save_pretrained_gguf("model", tokenizer, quantization_method = "f16")`.

- Untrained Tokens Error: Another user encountered a `ValueError` related to untrained tokens while training a model, suggesting that `embed_tokens` and `lm_head` must be included in the training process. This issue was linked to adding new tokens that require enabling training on certain model parts.

- Dataset Formatting for Multilabel Classification: One member sought advice on finetuning Llama 3 for multilabel classification and whether the same prompt format could be used. While responses acknowledged the need for consistent dataset templates, no specific solution for multilabel classification was provided.

- Citing Unsloth: Users discussed how to cite Unsloth in a paper, with a suggestion to reference it as: “Daniel Han and Michael Han. 2024. Unsloth, Unsloth AI.” followed by the Unsloth GitHub page.

Links mentioned:

- afrizalha/Kancil-V1-llama3-fp16 · Hugging Face: no description found

- How do you calculate max steps: I am looking to understand the math behind how max steps is calculated when left alone, I’ve tried to work backwards from making changes to epoch, batch, and micro-batch to see if I could figure out t...

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Trainer: no description found

HuggingFace ▷ #announcements (8 messages🔥):

- Stable Diffusion 3 Launches with Enhanced Features: SD3 is a diffusion model that includes three text encoders (CLIP L/14, OpenCLIP bigG/14, and T5-v1.1-XXL), a Multimodal Diffusion Transformer, and a 16 channel AutoEncoder model. Check the complete details and code on the Stable Diffusion 3 Medium space.

- Community Highlights #62: Featuring new tools and projects like a formula 1 prediction model, Simpletuner v0.9.7, and Tiny LLM client. Check out the 1M+ Dalle 3 captioned dataset and MARS v5 for more cool stuff.

- Argilla Joins Hugging Face: Today’s a huge day for Argilla, Hugging Face, and the Open Source AI community: Argilla is joining Hugging Face! Emphasizing the community and data-centric, open source AI approach.

- Community Welcomes Argilla: Members congratulated Argilla on joining Hugging Face and expressed excitement for future projects and collaborations. “Looking forward to my first distilabel x arguilla project (of course hosted on hf!)”.

Links mentioned:

- stabilityai/stable-diffusion-3-medium-diffusers · Hugging Face: no description found

- stabilityai/stable-diffusion-3-medium · Hugging Face: no description found

- Diffusers welcomes Stable Diffusion 3: no description found

HuggingFace ▷ #general (185 messages🔥🔥):

- Troubleshooting SD3 Issues with Diffusers: Users faced multiple errors while trying to run the Stable Diffusion 3 (SD3) model with the latest diffusers library update. One user shared that adding `pipe.enable_model_cpu_offload()` significantly improved inference time.

- University of Glasgow Organization on HuggingFace: A member announced the creation of the University of Glasgow organization on HuggingFace, inviting faculty, researchers, and students to join using their university email. Link to the organization.

- Error in LLM Session Management: A user sought help for maintaining sessions with their local LLM using the Ollama interface with Llama3. Another member provided a Python script solution for continuous session management using the OpenAI API format.

- Generalized LoRA (GLoRA) Implementation Help: A user working on integrating GLoRA into the PEFT library requested assistance with an error in their forked implementation. They provided a link to the GitHub repository and related research paper.

- Tutorial for LLM on Google Colab: A member shared a tutorial for setting up LLM on Google Colab to take advantage of the free GPU for both GPU-accelerated and CPU-only inference. They highlighted the tutorial as beneficial for others encountering similar issues.
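The member's session-management script is not reproduced in this summary, so the following is only a sketch of the general idea: keep the running list of role/content messages and resend it on every turn. The `ChatSession` class and the `send` callable are hypothetical; in practice `send` would wrap a call to an OpenAI-compatible chat endpoint, and a stand-in backend is used here so the example runs on its own.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keeps the running message list so each turn sees the earlier ones."""

    def __init__(self, send: Callable[[List[Message]], str], system: str = ""):
        self.send = send  # hypothetical transport: messages in, reply text out
        self.messages: List[Message] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        reply = self.send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_backend(messages: List[Message]) -> str:
    """Stand-in for a real model call: reports how much history it received."""
    return f"seen {len(messages)} messages"


session = ChatSession(fake_backend)
print(session.ask("hello"))
print(session.ask("still there?"))
```

Keeping the transport behind a callable means the same history logic works whether the backend is a local server or a hosted API.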

Links mentioned:

- Stable Diffusion 3 - a Hugging Face Space by nroggendorff: no description found

- Ilaria RVC - a Hugging Face Space by TheStinger: no description found

- UniversityofGlasgow (University of Glasgow): no description found

- stabilityai/stable-diffusion-3-medium-diffusers · Hugging Face: no description found

- Video LLaVA - a Hugging Face Space by LanguageBind: no description found

- One-for-All: Generalized LoRA for Parameter-Efficient Fine-tuning: We present Generalized LoRA (GLoRA), an advanced approach for universal parameter-efficient fine-tuning tasks. Enhancing Low-Rank Adaptation (LoRA), GLoRA employs a generalized prompt module to optimi...

- Helsinki-NLP/opus-mt-ko-en · Hugging Face: no description found

- GitHub - viliamvolosv/peft: 🤗 PEFT: State-of-the-art Parameter-Efficient Fine-Tuning.: 🤗 PEFT: State-of-the-art Parameter-Efficient Fine-Tuning. - viliamvolosv/peft

- GitHub - casualcomputer/llm_google_colab: A tutorial on how to set up a LLM on Google Colab for both GPU-accelerated and CPU-only session.: A tutorial on how to set up a LLM on Google Colab for both GPU-accelerated and CPU-only session. - casualcomputer/llm_google_colab

- GitHub - VedankPurohit/LiveRecall: Welcome to **LiveRecall**, the open-source alternative to Microsoft's Recall. LiveRecall captures snapshots of your screen and allows you to recall them using natural language queries, leveraging semantic search technology. For added security, all images are encrypted.: Welcome to **LiveRecall**, the open-source alternative to Microsoft's Recall. LiveRecall captures snapshots of your screen and allows you to recall them using natural language queries, leverag...

- GitHub - continuedev/deploy-os-code-llm: 🌉 How to deploy an open-source code LLM for your dev team: 🌉 How to deploy an open-source code LLM for your dev team - continuedev/deploy-os-code-llm

- Smol Illegally Smol Cat GIF - Smol Illegally smol cat Cute - Discover & Share GIFs: Click to view the GIF

- Google Colab: no description found

HuggingFace ▷ #cool-finds (10 messages🔥):

- Distill’s invaluable yet inactive resources: Despite being inactive, Distill offers highly illustrative articles on various ML and DL topics. Highlights include Understanding Convolutions on Graphs and A Gentle Introduction to Graph Neural Networks.

- Enterprise LLMs security query sparks interest: A member inquired if anyone is working on security for Enterprise level LLMs. This reflects a growing concern in the community regarding AI model security.

- Academic dive into NLP and Graph Models: Various academic resources were shared including NLP notes on PCFGs and a pioneering approach to neural ODE models on arXiv.

- DreamMachine by Luma AI hailed as Pikalabs successor: DreamMachine by Luma AI was celebrated as a successor to Pikalabs, offering 30 free videos a month with img2vid and text2vid features. Prompting tips were discussed to optimize results, with a suggestion to keep prompts simple and let the system’s model assume control.

- Nodus Labs’ ACM paper: A member shared a paper from ACM which may be insightful for those interested in further technical details.

Links mentioned:

- Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow: We present rectified flow, a surprisingly simple approach to learning (neural) ordinary differential equation (ODE) models to transport between two empirically observed distributions π_0 and π_1, henc...

- Distill — Latest articles about machine learning: Articles about Machine Learning

- Archives - Bartosz Ciechanowski: no description found

HuggingFace ▷ #i-made-this (11 messages🔥):

- Colab Tutorial tackles LLM setup: A member shared a tutorial on setting up a LLM on Google Colab to leverage the free 15GB Tesla T4 GPU for both GPU-accelerated and CPU-only inference sessions. They hope it will assist others struggling with existing solutions and troubleshooting problems.

- Tiny-AI-Client simplifies LLM usage: Another member introduced a tiny, intuitive client for LLMs that supports vision and tool use, aiming to be an alternative to langchain for simpler use cases. They offered to help with bugs and invited others to try it out.

- SimpleTuner release integrates SD3: A new release of SimpleTuner was announced, fully integrating Stable Diffusion 3’s unet and lora training. The member shared their dedication to the project, working tirelessly to achieve this integration.

- French Deep Learning Notebooks: A GitHub repository containing Deep Learning notebooks in French was shared, aimed at making Deep Learning more accessible. The course, inspired by resources from Andrej Karpathy and DeepLearning.ai, is a work in progress.

- Conceptual Captions dataset: A member shared a massive dataset with 22 million high-quality captions for 11 million images from Google’s CC12M, created using LLaVaNext.

Links mentioned:

- Pixel Prompt - a Hugging Face Space by Hatman: no description found

- @Draichi on Hugging Face: "Hey Hugging Face Community 🤗 I’m excited to share my latest project that…”: no description found

- Release v0.9.7 - stable diffusion 3 · bghira/SimpleTuner: Stable Diffusion 3 To use, set STABLE_DIFFUSION_3=true in your sdxl-env.sh and set your base model to stabilityai/stable-diffusion-3-medium-diffusers. What’s Changed speed-up for training sample…

- GitHub - casualcomputer/llm_google_colab: A tutorial on how to set up a LLM on Google Colab for both GPU-accelerated and CPU-only session.: A tutorial on how to set up a LLM on Google Colab for both GPU-accelerated and CPU-only session. - casualcomputer/llm_google_colab

- GitHub - SimonThomine/CoursDeepLearning: Un regroupement de notebooks de apprendre le deep learning à partir de 0: Un regroupement de notebooks de apprendre le deep learning à partir de 0 - SimonThomine/CoursDeepLearning

- GitHub - piEsposito/tiny-ai-client: Tiny client for LLMs with vision and tool calling. As simple as it gets.: Tiny client for LLMs with vision and tool calling. As simple as it gets. - piEsposito/tiny-ai-client

- CaptionEmporium/conceptual-captions-cc12m-llavanext · Datasets at Hugging Face: no description found

HuggingFace ▷ #reading-group (1 messages):

- Proposal to discuss MaPO: A member suggested inviting one of the authors to present the paper MaPO, highlighting the potential interest within the community. The paper discusses a novel alignment technique for text-to-image diffusion models that avoids the limitations of divergence regularization and is more flexible in handling preference data (MaPO).

Link mentioned: MaPO Project Page: SOCIAL MEDIA DESCRIPTION TAG TAG

HuggingFace ▷ #computer-vision (28 messages🔥):

- Help on Image Retrieval Models: A newcomer seeks advice on the best models for image retrieval, mentioning success with Microsoft’s Vision API and CLIP. A suggestion includes using Mobilenets for real-time apps, OpenCLIP for best scores, and Faiss for versatile search engine capabilities.

- Recommendation of smol-vision: A member shares a GitHub link for smol-vision, which provides recipes for shrinking, optimizing, and customizing cutting edge vision models.

- Channel Redirection: Guidance was given to post in a more relevant channel, <#1019883044724822016>, for deeper insights and recommendations.
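The retrieval setup suggested above (an embedding model plus a vector search engine) boils down to nearest-neighbor search over embeddings. The sketch below uses made-up three-dimensional vectors in place of real CLIP or OpenCLIP embeddings and a brute-force linear scan where a library such as Faiss would normally be used; the function names are illustrative.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 1) -> List[Tuple[str, float]]:
    """Return the k (name, score) pairs most similar to the query embedding."""
    scored = [(name, cosine(query, vec)) for name, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy embeddings, invented for illustration only.
index = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.1, 0.9, 0.0],
    "car.jpg": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.0, 0.0], index, k=2))
```

A linear scan like this is fine for a few thousand images; an approximate index becomes worthwhile as the collection grows.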

Links mentioned:

- Smash GIF - Smash - Discover & Share GIFs: Click to view the GIF

- GitHub - merveenoyan/smol-vision: Recipes for shrinking, optimizing, customizing cutting edge vision models. 💜: Recipes for shrinking, optimizing, customizing cutting edge vision models. 💜 - GitHub - merveenoyan/smol-vision: Recipes for shrinking, optimizing, customizing cutting edge vision models. 💜

HuggingFace ▷ #NLP (1 messages):

- Finetuning Llama3 for MultiLabel Classification: A member inquired about the method for finetuning Llama3 for MultiLabel classification, asking if the same prompt format used in a specific Kaggle notebook should be followed for each row, or if there exists another method similar to BERT.

HuggingFace ▷ #diffusion-discussions (79 messages🔥🔥):

- Different results between schedulers with SD3: A user noted they were getting different results between Comfy and Diffusers when testing different schedulers with SD3. They solicited input from others to see if anyone else had similar experiences.

- Stable Diffusion 3 Medium model released: Stable Diffusion 3 Medium with 2B parameters has been released and is available on the Hugging Face Hub. The model includes integrations with Diffusers and comes with Dreambooth and LoRA training scripts (blog post).

- Common issues while running Diffusers scripts: Multiple users ran into issues running Diffusers scripts for SD3 with errors related to tokenizer and environment setup. Installing `sentencepiece` and ensuring proper paths and dependencies were suggested fixes.

- Training SD3 LoRA with single GPU: Users discussed the possibility of training SD3 LoRA using a single high-end GPU like the RTX 4090. Recommendations included adjusting batch sizes, using fp16 precision, and validating paths are absolute and properly formatted.

- Troubleshooting GPU configurations: Users with issues running scripts on Windows and Linux shared solutions like ensuring correct NVIDIA driver installations and accelerate configurations. It was suggested to use Hugging Face model hub to manually download models and check dependencies.

Links mentioned:

- Diffusers welcomes Stable Diffusion 3: no description found

- stabilityai/stable-diffusion-3-medium-diffusers at main: no description found

- stabilityai/stable-diffusion-3-medium-diffusers · Hugging Face: no description found

- diffusers/examples/dreambooth/README_sd3.md at main · huggingface/diffusers: 🤗 Diffusers: State-of-the-art diffusion models for image and audio generation in PyTorch and FLAX. - huggingface/diffusers

OpenAI ▷ #ai-discussions (223 messages🔥🔥):

- AI Pathways Explored with Potential Guidance: A user asked how to become an AI engineer and was pointed to resources from Andrej Karpathy, an OpenAI cofounder and former Director of AI at Tesla. Another user linked the YouTube series by sentdex on creating a chatbot with deep learning.

- Speculation on GPT-4.5 Turbo Model: There was a heated discussion about a temporarily visible page suggesting OpenAI’s release of GPT-4.5 Turbo with a 256k context window and a knowledge cutoff of June 2024. Some users believed it could have been a test page or a mistake while others speculated about continuous pretraining capabilities.

- Memory Limits and Management in ChatGPT: Several users discussed issues related to memory limits in ChatGPT. One member recommended checking and collectively summarizing memory to free up space as a workaround.

- Team Account Benefits and Conditions: There were clarifications about the benefits and restrictions of ChatGPT Team accounts, including doubled prompt limits and billing policies. A user shared that the ChatGPT Team plan requires a minimum commitment of two seats and has a higher monthly cost if not billed annually.

- New UI and Behavioral Changes in ChatGPT: Users observed new UI changes and more expressive text responses in the latest ChatGPT updates, sparking interest and speculation about improvements in the model’s behavior.

Link mentioned: DROP: A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs: Reading comprehension has recently seen rapid progress, with systems matching humans on the most popular datasets for the task. However, a large body of work has highlighted the brittleness of these s…

OpenAI ▷ #gpt-4-discussions (3 messages):

- Clarification on model names: The model known as GPT-4 Turbo is referenced with the identifier `"gpt-4-turbo"` in the OpenAI API. Another member confirmed that the model based on the GPT-4 architecture is often referred to with identifiers like `"gpt-4"` or `"gpt-4-turbo"`.

- Customizing GPT roles generates confusion: A member expressed confusion over the customization of GPT roles with model names like `"gpt-4"` and `"gpt-4-turbo"`.

- Temporary messages in GPTs possible: A user inquired whether GPTs can have temporary messages. The same user confirmed that it is possible, expressing excitement with “it is! awesome”.
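As a rough illustration of where these identifiers go, the sketch below builds the JSON body of a Chat Completions request with the model name in the `model` field. Nothing is sent to the API here, and `build_chat_request` is a hypothetical helper name used only for this example.

```python
import json


def build_chat_request(model: str, user_message: str) -> str:
    """Return a JSON request body that names the model by its API identifier."""
    body = {
        "model": model,  # e.g. "gpt-4" or "gpt-4-turbo", as discussed above
        "messages": [{"role": "user", "content": user_message}],
    }
    return json.dumps(body)


if __name__ == "__main__":
    print(build_chat_request("gpt-4-turbo", "Hello"))
```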

OpenAI ▷ #prompt-engineering (3 messages):

- Chunk and Trim Large Text Data: A member suggested that for managing “300MB of text data”, you “chunk that up, and cut it down.” This implies breaking down the data into smaller, manageable parts.

- Practical Tips for Large Documents: A link to a forum post offering practical tips for dealing with large documents was shared. This resource likely provides strategies to handle large text inputs within the constraints of token limits.

- Handling Long Texts with Embeddings: Another link to a notebook on embedding long inputs demonstrates handling texts exceeding a model’s maximum context length. The guide uses embeddings from `text-embedding-3-small` and refers to the OpenAI Embeddings Guide for further learning.
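The "chunk that up" advice can be sketched as a small overlapping-window splitter. This is a minimal illustration, not the method from the linked posts: it counts characters rather than tokens, and the function name, `chunk_size`, and `overlap` values are assumptions chosen for the example.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share `overlap` characters so that sentences cut at a
    boundary still appear whole in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks: list[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


if __name__ == "__main__":
    for piece in chunk_text("abcdefghijklmnopqrstuvwxy", chunk_size=10, overlap=2):
        print(piece)
```

For real token limits, the character counts would need to be replaced with token counts from the model's tokenizer.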

Links mentioned:

- Practical Tips for Dealing with Large Documents (>2048 tokens): Dear Mr Plane, Please correspond with the OpenAI team and they will reply with your specific parameters. Kind Regards, Robinson

- Embedding texts that are longer than the model's maximum context length | OpenAI Cookbook: no description found

OpenAI ▷ #api-discussions (3 messages):

- Chunk your data for processing: One member advised splitting large text data files, particularly files around 300MB, to manage them better. They noted that smaller chunks are easier to handle.

- Practical tips for large documents: A link to a community forum post was shared, providing guidance on managing documents exceeding 2048 tokens in length.

- OpenAI’s embedding models limit: A resource on embedding long inputs was shared, explaining that OpenAI’s embedding models have maximum text length limits measured by tokens. The post includes a notebook that demonstrates handling over-length texts and links to the OpenAI Embeddings Guide.
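One common way to get a single vector for an over-length text is to embed each chunk separately, average the chunk vectors weighted by chunk length, and normalize the result. The sketch below shows only that combination step in plain Python. The chunk embeddings are assumed to come from whatever embedding model is in use, and `combine_chunk_embeddings` is a hypothetical name for this example.

```python
import math


def combine_chunk_embeddings(
    embeddings: list[list[float]], chunk_lengths: list[int]
) -> list[float]:
    """Length-weighted average of chunk embeddings, scaled to unit length."""
    if not embeddings or len(embeddings) != len(chunk_lengths):
        raise ValueError("need one length per embedding and at least one chunk")

    total = sum(chunk_lengths)
    dim = len(embeddings[0])
    # Weighted mean per dimension: longer chunks contribute more.
    averaged = [
        sum(vec[d] * n for vec, n in zip(embeddings, chunk_lengths)) / total
        for d in range(dim)
    ]
    norm = math.sqrt(sum(x * x for x in averaged))
    # Leave an all-zero vector unchanged to avoid dividing by zero.
    return [x / norm for x in averaged] if norm else averaged


if __name__ == "__main__":
    # Two toy 2-dimensional "embeddings" for chunks of 3 and 1 tokens.
    print(combine_chunk_embeddings([[1.0, 0.0], [0.0, 1.0]], [3, 1]))
```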

Links mentioned:

- Practical Tips for Dealing with Large Documents (>2048 tokens): Dear Mr Plane, Please correspond with the OpenAI team and they will reply with your specific parameters. Kind Regards, Robinson

- Embedding texts that are longer than the model's maximum context length | OpenAI Cookbook: no description found

LLM Finetuning (Hamel + Dan) ▷ #general (15 messages🔥):

- Finding example data for LLM finetuning: A user inquired about sourcing examples for finetuning when lacking a large dataset. No specific solutions were discussed in the responses.

- Recordings for June sessions: It was clarified that there wouldn’t be a single recording for June’s sessions, but each event has its own recording.

- Memorization experiment reveals interesting results: A user shared results from an experiment on LLM memorization, showing significant improvement with 10x example sets but little increase with higher multiples. Detailed findings and a GitHub repo with datasets and scripts were shared for further exploration.

- Call for LLM experts to join the team: An open position was posted looking for an expert in developing and fine-tuning LLMs to help create a model for Hugging Face’s leaderboard, emphasizing the need for innovative evaluation metrics. Interested candidates were invited to submit resumes and cover letters for consideration.

- Morale-boosting reminder with a new channel announcement: In response to a humorous complaint about a member enjoying free time, a new channel, <#1250895186616123534>, was announced for showcasing and demoing projects. Users were encouraged to use the General voice channel for live demonstrations.

Links mentioned:

- Tweet from Hamel Husain (@HamelHusain): Its so nice to have free time!

- Fine-Tuning-Memorisation-v2: Results-all-v2 Examples,Run 1 or 2,Object,No,Question,Expected Answer,Response,Extracted Number,Correct,Temperature 1-50-Examples,One,Apple,Q1,What is the number for Apple?,65451,The number for Apple...

LLM Finetuning (Hamel + Dan) ▷ #🟩-modal (4 messages):

- Trouble with text-to-SQL template in finetuning llama3: An issue was raised about finetuning llama3 for text-to-SQL using the `llama-3.yml` config and `sqlqa.subsample.jsonl` dataset. The preprocessing stage did not format the data correctly, resulting in `<|begin_of_text|>` tags instead of `[INST]` and `[SQL]` markers.

- Extra credit confusion: Several members discussed missing out on extra credits due to the deadline on June 11th. One member expressed the complexity of keeping up with many channels and appreciated the ease of starting an app with Modal.

- Modal credits gratitude: Multiple members expressed thanks for receiving $1000 in credits, and one shared their email because they had not received the $500 in extra credits despite running the starter app before the deadline.
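For the text-to-SQL formatting issue raised in this channel, a quick sanity check on preprocessed samples can catch the problem before a training run. The sketch below only tests whether the expected markers appear in a rendered prompt. The marker strings come from the report, while `missing_markers` and the sample strings are hypothetical and not part of any library.

```python
def missing_markers(
    rendered_prompt: str, expected: tuple[str, ...] = ("[INST]", "[SQL]")
) -> list[str]:
    """Return the expected template markers that do not appear in the prompt."""
    return [marker for marker in expected if marker not in rendered_prompt]


if __name__ == "__main__":
    # Hypothetical preprocessed sample resembling the reported symptom.
    sample = "<|begin_of_text|>How many users signed up? SELECT COUNT(*) FROM users"
    print(missing_markers(sample))
```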

LLM Finetuning (Hamel + Dan) ▷ #learning-resources (1 messages):

- Seeking resources for LLM input & output validations: A user asked for recommendations on resources to write input & output validations for LLMs. They also requested suggestions for favorite tools in this context.

LLM Finetuning (Hamel + Dan) ▷ #hugging-face (2 messages):

- Trouble Uploading SQLite DB to Huggingface Spaces: A user struggled with uploading a 3MB SQLite file to Huggingface Spaces, initially unable to update it due to file size restrictions. The solution they discovered was to use git lfs to manage the file upload.

LLM Finetuning (Hamel + Dan) ▷ #langsmith (2 messages):

- LangSmith Free Developer Plan Info: Users on the free Developer plan have a “$0/seat cost and 5K free traces a month.” You won’t be charged for anything until your credits are exhausted.

LLM Finetuning (Hamel + Dan) ▷ #berryman_prompt_workshop (1 messages):

hamelh: humanloop, promptfoo are both popular

LLM Finetuning (Hamel + Dan) ▷ #clavie_beyond_ragbasics (4 messages):

- Victory in building a working system: A member expressed relief and satisfaction after successfully building a working system, mentioning it took several hours to accomplish.

- Library issues with chunk separation: Another message raised an issue about the fasthtml library not getting the correct answers due to separation in chunks, hinting at possible performance impacts.

- Seeking examples of Colbert embeddings: A member inquired about good examples showing how Colbert embeddings are used for retrieval, suggesting a need for clarity or learning resources.

- Deployed app to Hugging Face Spaces: The deployed app includes a feedback mechanism and is slow but functional. The app took longer to deploy than build, covers 20-21 talks, and stores feedback in a SQLite DB.
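Storing feedback in SQLite, as the deployed app does, can be sketched with Python's standard `sqlite3` module. The table name, columns, and helper functions below are assumptions made for illustration. The actual app's schema is not described in the discussion.

```python
import sqlite3


def init_db(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) feedback table if it does not exist yet."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS feedback (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            question TEXT NOT NULL,
            answer TEXT NOT NULL,
            rating INTEGER NOT NULL,
            created_at TEXT DEFAULT CURRENT_TIMESTAMP
        )
        """
    )
    conn.commit()


def save_feedback(conn: sqlite3.Connection, question: str, answer: str, rating: int) -> int:
    """Insert one feedback row and return its row id."""
    with conn:  # commits on success, rolls back on error
        cursor = conn.execute(
            "INSERT INTO feedback (question, answer, rating) VALUES (?, ?, ?)",
            (question, answer, rating),
        )
    return cursor.lastrowid


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")  # use a file path to persist
    init_db(connection)
    row_id = save_feedback(connection, "What is RAG?", "Retrieval-augmented generation.", 1)
    print(row_id)
```

Parameterized queries (the `?` placeholders) keep user-entered feedback from being interpreted as SQL.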

Link mentioned: RizzConn Answering Machine - a Hugging Face Space by t0mkaka: no description found

LLM Finetuning (Hamel + Dan) ▷ #jason_improving_rag (2 messages):

- Evaluating Category Selection Approaches: A member discussed challenges in selecting from 500 categories when filtering documents. They mentioned testing this approach with GPT-3.5 and 4 without success and are considering revisiting it with GPT-4.0 for different product feeds to explore potential improvements.

- ParadeDB Installation Highlight: A message recommended installing ParadeDB extensions, emphasizing its ability to “unify operational and analytical data” and “unlock insights faster and simplify the data stack with an all-in-one search and analytical database.” ParadeDB’s ACID-compliant transaction control and Postgres compatibility were also mentioned as key benefits.

Link mentioned: ParadeDB - Postgres for Search and Analytics: ParadeDB is a modern Elasticsearch alternative built on Postgres.

LLM Finetuning (Hamel + Dan) ▷ #saroufimxu_slaying_ooms (7 messages):

- Member Requests Training Profiling Lecture: A member asked if experts could give a lecture on training profiling, covering tools, setup, and log interpretation. They emphasized the importance of maximizing GPU utilization and reducing training costs, noting a “BIG gap in speed between frameworks.”

- Poll for Lecture Demand: A suggestion was made to gauge interest through a poll. Another member received superpowers to create the poll to measure demand.

- Potential Speaker Shows Interest: The potential speaker preliminarily agreed to give the lecture if there was enough interest. They also humorously agreed to create a private lesson if community interest was lacking.

LLM Finetuning (Hamel + Dan) ▷ #paige_when_finetune (4 messages):

- Gemini models differ in approach: One user asked whether the model behind gemini.google.com combines a language model with RAG/search, while Gemini 1.5 Pro is solely a language model. They were trying to reconcile differing outputs to the same prompt across these models.

- Detailed description of the Adolescent Well-being Framework: The user shared a comprehensive breakdown of the Adolescent Wellbeing Framework (AWF) from gemini.google.com, emphasizing its five interconnected domains such as mental and physical health and community and connectedness. The framework, developed by the WHO, aims to assess and promote well-being in adolescents.

- Comparing frameworks for adolescent well-being: Another response from aistudio.google.com with Gemini 1.5 Pro provided a broader view, highlighting several well-known models like the Five Cs of Positive Youth Development and the Whole School, Whole Community, Whole Child (WSCC) Model. This alternative response presented various dimensions such as physical health, mental and emotional health, and economic well-being, noting these are crucial for adolescent development.

LLM Finetuning (Hamel + Dan) ▷ #gradio (2 messages):

- RAG-based app launched on Huggingface Spaces: A member announced that they built a RAG-based app with Gradio and uploaded it to Huggingface Spaces. RizzCon-Answering-Machine is presented as “Refreshing,” processing data for the first 20 talks.

- Performance needs improvement for RAG-based app: The same member noted that their RAG-based app is presently too slow and needs some profiling for better performance.

Link mentioned: RizzConn Answering Machine - a Hugging Face Space by t0mkaka: no description found

LLM Finetuning (Hamel + Dan) ▷ #axolotl (9 messages🔥):

- Llama-3-8b preprocessing warning plagues user: A member reported encountering a warning while preprocessing llama-3-8b, stating: “copying from a non-meta parameter in the checkpoint to a meta parameter in the current model, which is a no-op”. The warning seems to repeat for each layer and also appears with the mistral-7b model.

- VSCode disconnects hinder fine-tuning tasks: A member experienced frequent disconnections when running fine-tunes on a DL rig via SSH in VSCode. Another member suggested using `nohup` or switching to manual execution on the physical machine, with additional advice to consider using SLURM for better handling.

- Nohup to the rescue for background processes: Members discussed using