AI News for 6/14/2024-6/17/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (414 channels, and 5506 messages) for you. Estimated reading time saved (at 200wpm): 669 minutes. You can now tag @smol_ai for AINews discussions!

A bunch of incremental releases over this weekend; DeepSeekCoder V2 promises GPT4T-beating performance (validated by aider) at $0.14/$0.28 per million tokens (vs GPT4T’s $10/$30), Anthropic dropped some Reward Tampering research, and Runway finally dropped their Sora response.

However probably the longer lasting, meatier thing to dive into is the discussion around “test-time” search:

spawning a list of related papers:

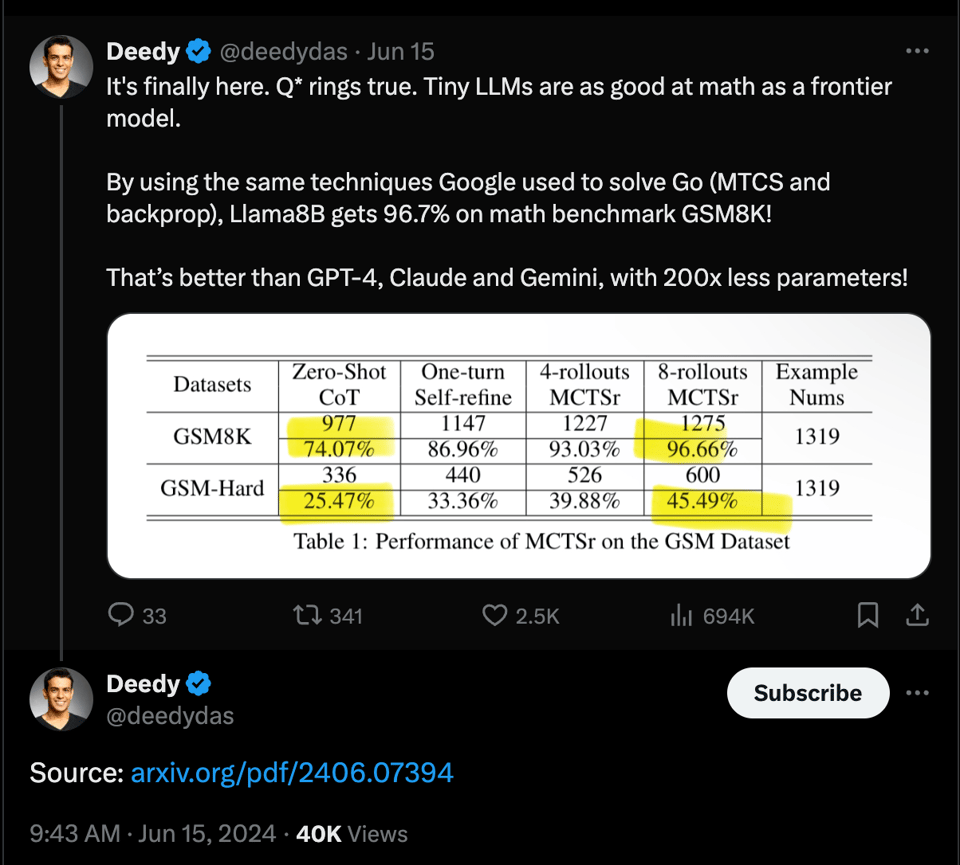

- Accessing GPT-4 level Mathematical Olympiad Solutions via Monte Carlo Tree Self-refine with LLaMa-3 8B: A Technical Report

- Improve Mathematical Reasoning in Language Models by Automated Process Supervision

- AlphaMath Almost Zero: Process Supervision Without Process

- ReST-MCTS*: LLM Self-Training via Process Reward Guided Tree Search

We’ll be honest that we haven’t read any of these papers yet, but we did cover OpenAI’s thoughts on verifier-generator process supervision on the ICLR podcast, and have lined the remaining papers up for the Latent Space Discord Paper Club.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Apple’s AI Developments and Partnerships

- Apple Intelligence announced: @adcock_brett noted Apple revealed Apple Intelligence at WWDC, their first AI system coming to iPhone, iPad, and Mac, with features like a smarter Siri and image/document understanding.

- OpenAI partnership: Apple and OpenAI announced a partnership to directly integrate ChatGPT into iOS 18, iPadOS 18, and macOS, as mentioned by @adcock_brett.

- On-device AI models: @ClementDelangue highlighted that Apple released 20 new CoreML models for on-device AI and 4 new datasets on Hugging Face.

- Optimized training: Apple offered a peek into its new models’ performance and how they were trained and optimized, as reported by @DeepLearningAI.

- LoRA adapters for specialization: @svpino explained how Apple uses LoRA fine-tuning to generate specialized “adapters” for different tasks, swapping them on the fly.

Open Source LLMs Matching GPT-4 Performance

- Nemotron-4 340B from NVIDIA: NVIDIA released Nemotron-4 340B, an open model matching GPT-4 (0314) performance, according to @adcock_brett.

- DeepSeek-Coder-V2: @deepseek_ai introduced DeepSeek-Coder-V2, a 230B model excelling in coding and math, beating several other models. It supports 338 programming languages and 128K context length.

- Stable Diffusion 3 Medium: Stability AI released open model weights for its text-to-image model, Stable Diffusion 3 Medium, offering advanced capabilities, as noted by @adcock_brett.

New Video Generation Models

- Dream Machine from Luma Labs: Luma Labs launched Dream Machine, a new AI model generating 5-second video clips from text and image prompts, as reported by @adcock_brett.

- Gen-3 Alpha from Runway: @c_valenzuelab showcased Runway’s new Gen-3 Alpha model, generating detailed videos with complex scenes and customization options.

- PROTEUS from Apparate Labs: Apparate Labs launched PROTEUS, a real-time AI video generation model creating realistic avatars and lip-syncs from a single reference image, as mentioned by @adcock_brett.

- Video-to-Audio from Google DeepMind: @GoogleDeepMind shared progress on their video-to-audio generative technology, adding sound to silent clips matching scene acoustics and on-screen action.

Robotics and Embodied AI Developments

- OpenVLA for robotics: OpenVLA, a new open-source 7B-param robotic foundation model outperforming a larger closed-source model, was reported by @adcock_brett.

- Virtual rodent from DeepMind and Harvard: DeepMind and Harvard created a ‘virtual rodent’ powered by an AI neural network, mimicking agile movements and neural activity of real-life rats, as noted by @adcock_brett.

- Manta Ray drone from Northrop Grumman: @adcock_brett mentioned Northrop Grumman released videos of the ‘Manta Ray’, their new uncrewed underwater vehicle drone prototype.

- Autonomous driving with humanoids: A new approach to autonomous driving leveraging humanoids to operate vehicle controls based on sensor feedback was reported by @adcock_brett.

Miscellaneous AI Research and Applications

- Anthropic’s reward tampering research: @AnthropicAI published a new paper investigating reward tampering, showing AI models can learn to hack their own reward system.

- Meta’s CRAG benchmark: Meta’s article discussing the Corrective Retrieval-Augmented Generation (CRAG) benchmark was highlighted by @dair_ai.

- DenseAV for learning language from videos: An AI algorithm called ‘DenseAV’ that can learn language meaning and sound locations from unlabeled videos was mentioned by @adcock_brett.

- Goldfish loss for training LLMs: @tomgoldsteincs introduced the goldfish loss, a technique for training LLMs without memorizing training data.

- Creativity reduction in aligned LLMs: @hardmaru shared a paper exploring the unintended consequences of aligning LLMs with RLHF, which reduces their creativity and output diversity.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Models and Techniques

- Improved CLIP ViT-L/14 for Stable Diffusion: In /r/StableDiffusion, an improved CLIP ViT-L/14 model is available for download, along with a Long-CLIP version, which can be used with any Stable Diffusion model.

- Mixed Precision Training from Scratch: In /r/MachineLearning, a reimplementation of the original mixed precision training paper from Nvidia on a 2-layer MLP is presented, diving into CUDA land to showcase TensorCore activations.

- Understanding LoRA: Also in /r/MachineLearning, a visual guide to understanding Low-Rank Approximation (LoRA) for efficient fine-tuning of large language models is shared. LoRA reduces the number of parameters involved in fine-tuning by 10,000x while still converging to the performance of a fully fine-tuned model.

- GPT-4 level Math Solutions with LLaMa-3 8B: A research paper explores accessing GPT-4 level Mathematical Olympiad solutions using Monte Carlo Tree Self-refine with the LLaMa-3 8B model.

- Instruction Finetuning From Scratch: An implementation of instruction finetuning from scratch is provided.

- AlphaMath Almost Zero: Research on AlphaMath Almost Zero introduces process supervision without process.

Stable Diffusion Models and Techniques

- Model Comparisons: In /r/StableDiffusion, a comparison of PixArt Sigma, Hunyuan DiT, and SD3 Medium models for image generation is presented, with PixArt Sigma and SDXL refinement showing promise.

- ControlNet for SD3: ControlNet Canny and Pose models have been released for SD3, with Tile and Inpainting models coming soon.

- Sampler and Scheduler Permutations: An overview of all working sampler and scheduler combinations for Stable Diffusion 3 is provided.

- CFG Values in SD3: A comparison of different CFG values in Stable Diffusion 3 shows a narrower usable range compared to SD1.

- Playground 2.5 Similar to Midjourney: The Playground 2.5 model is identified as the most similar to Midjourney in terms of output quality and style.

- Layer Perturbation Analysis in SD3: An analysis of how adding random noise to different layers in SD3 affects the final output is conducted, potentially providing insights into how and where SD3 was altered.

Llama and Local LLM Models

- Llama 3 Spellbound: In /r/LocalLLaMA, Llama 3 7B finetune is trained without instruction examples, aiming to preserve world understanding and creativity while reducing positivity bias in writing.

- Models for NSFW Roleplay: A request for models that can run on a 3060 12GB GPU and produce NSFW roleplay similar to a provided example is made.

- Model Similar to Command-R: Someone is seeking a model with similar quality to Command-R but requiring less memory for 64k context size on a Mac with M3 Max 64GB.

- System Prompt for RP/Chatting/Storytelling: A detailed system prompt for controlling models in roleplay, chatting, and storytelling scenarios is shared, focusing on thorough, direct, and symbolic instructions.

- Running High Models on 24GB VRAM: Guidance is sought on running high models/context on 24GB of VRAM, possibly using quantization or 4/8 bits.

- Underlying Model Importance with RAG: A discussion on whether the underlying model matters when using Retrieval-Augmented Generation (RAG) with a solid corpus takes place.

AI Ethics and Regulation

- OpenAI Board Appointment Criticism: Edward Snowden criticizes OpenAI’s decision to appoint a former NSA director to its board, calling it a “willful, calculated betrayal of the rights of every person on earth.”

- Stability AI’s Closed-Source Approach: In /r/StableDiffusion, there is a discussion on Stability AI’s decision to go the closed-source API selling route, questioning their ability to compete without leveraging community fine-tunes.

- Clarification on Stable Diffusion TOS: A clarification on the terms of service (TOS) for Stable Diffusion models is provided, addressing misunderstandings caused by a clickbait YouTuber.

- Crowdfunded Open-Source Alternative to SD3: A suggestion to start a crowdfunded open-source alternative to SD3 is made, potentially led by a former Stability AI employee who helped train SD3 but recently resigned.

- Malicious Stable Diffusion Tool on GitHub: A news article reports on hackers targeting AI users with a malicious Stable Diffusion tool on GitHub, claiming to protest “art theft” but actually seeking financial gain through ransomware.

- Impact of Debiasing on Creativity: A research paper discusses the impact of debiasing language models on their creativity, suggesting that censoring models makes them less creative.

AI and the Future

- Feeling Lost Amidst AI Advancements: In /r/singularity, a personal reflection on feeling lost and uncertain about the future in the face of rapid AI advancements is shared.

- Concerns About AI’s Impact on Career: Also in /r/singularity, someone expresses feeling lost about the future of AI and their career in light of recent developments.

AI Discord Recap

A summary of Summaries of Summaries

1. AI Model Performance and Scaling

- Scaling Up with New AI Models: DeepSeek’s Coder V2 reportedly beats GPT-4 on benchmarks and Google DeepMind reveals new video-to-audio tech creating tracks for any video, gaining traction on Rowan Cheung’s X profile.

- Expanding AI Capabilities Across Platforms: Runway introduces Gen-3 Alpha for video generation, enhancing cinematic styles and scene transitions. AP details shared on Twitter.

2. Integration and Implementation Across Platforms

- Hybrid Notes App Unveils LLM integration: OpenRouter unveils a notes app integrating LLMs for dynamic content interaction, though lacking mobile support as specified on their full-screen app.

- Challenges with Implementation on Various Platforms: Users face issues like CORS errors on OpenRouter and integration challenges on LangChain, reflecting the need for better implementation guides or platform-specific APIs.

3. Ethical AI and Governance

- OpenAI Shifts Towards Profit-Driven Model: Speculations and confirmations stir about OpenAI’s move towards becoming a profit entity, potentially impacting governance and ethical considerations. More on this from The Information.

- Discussions on AI Ethics Heat Up: Debates continue about data privacy, model biases, and corporate governance in AI, as Edward Snowden criticizes OpenAI’s new board appointments on Edward Snowden’s X profile.

4. New AI Developments and Benchmarking

- AI Innovations and Improvements Announced: Anthropic publishes insights into AI’s ability to tamper with reward systems in their new research article.

- Benchmarking New Models: Stability AI releases SD3 models discussing new techniques for loss stabilization and artifacts management across forums, including a spotlight on Reddit.

5. Collaborative AI Projects and User Engagement

- Community Projects Highlight AI Integration: From a notes app merging notes and API keys management on OpenRouter to innovative AI-driven video generation tools like Dream Machine, community-built tools are pushing the boundaries of creativity and practical AI application, visible on platforms like Lumalabs.

- Interactive AI Discussions and Collabs Flourish: Webinars and collaborative events like the upcoming Mojo Community Meeting encourage deep dives into AI advancements, with detailed discussions and participation boasts from across the global user base as shared on blog.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- SD3 License Troubles: The new license for Stable Diffusion 3 (SD3) has led to its ban on Civitai due to legal ambiguities, with a review by Civitai’s legal team announced in their temporary ban statement.

- Community Rift Over SD3: Users expressed frustration with Stability AI’s licensing of SD3, highlighting both confusion and discontent, while some criticized youtuber Olivio Sarikas for allegedly misrepresenting the SD3 license for views, referencing his video.

- Guidance for ComfyUI: Issues around ComfyUI setup sparked technical discussion, with suggested fixes for custom node installations including dependencies like cv2; a user-contributed ComfyUI tutorial was shared to assist.

- Seeking SD3 Alternatives: The dialogue points to a shift towards seeking alternative models and artistic tools, such as video generation with animatediff, possibly due to the ongoing SD3 controversy.

- Misinformation Allegations in the AI Community: Accusations fly regarding youtuber Olivio Sarikas spreading misinformation about SD3’s license, with community members challenging the veracity of his content found in his contentious video.

Unsloth AI (Daniel Han) Discord

-

Ollama Integration Nears Completion: The Ollama support development has reached 80% completion, with the Unsloth AI team and Ollama collaboratively pushing through delays. Issues with template fine-tuning validation and learning rates concerning Ollama were discussed, along with an issue where running

model.push_to_hub_mergeddoes not save the full merged model, prompting a manual workaround. -

Unsloth Speeds Ahead: Unsloth’s training process is touted to be 24% faster than torch.compile() torchtune for the NVIDIA GeForce RTX 4090, as benchmarks show its impressive training speed. Additionally, upcoming multi-GPU support for up to 8 GPUs is being tested with a select group of users getting early access for initial evaluations.

-

Training Troubles and Tricks: Members encountered challenges like crashes during saving steps while training the Yi model, possible mismanagement of

quantization_methodduring saving, and confusion around batch sizes and gradient accumulation in VRAM usage. Solutions and workarounds included verifying memory/disk resources and a submitted pull request addressing the quantization error. -

Lively Discussion on Nostalgia and Novelty in Music: Members shared music ranging from a nostalgic 1962 song to iconic tracks by Daft Punk and Darude, showing a light-hearted side to the community. In contrast, concerns were raised over Gemma 2’s output on AI Studio, with mixed reactions varying from disappointment to intrigue and anticipation for Gemini 2.0.

-

CryptGPT Secures LLMs with an Encryption Twist: CryptGPT was introduced as a concept using the Vigenere cipher to pretrain GPT-2 models on encrypted datasets, ensuring privacy and requiring an encryption key to generate output, as detailed in a shared blog post.

-

Singular Message of Curiosity: The community-collaboration channel featured a single message expressing interest, but without further context or detail, its relevance to broader discussion topics remains unclear.

CUDA MODE Discord

-

NVIDIA’s Next Big Thing Speculated and PyCUDA SM Query Clarified: Engineers speculated about the potential specs of the upcoming NVIDIA 5090 GPU, with rumors of up to 64 GB of VRAM circulating yet met with skepticism. Additionally, a discrepancy in GPU SM count for an A10G card reported by techpowerup was cleared up, with independent sources such as Amazon Web Services confirming the correct count as 80, not the 72 originally stated.

-

Triton and Torch Users Navigate Glitches and Limits: Triton users encountered an

AttributeErrorin Colab and debated the feasibility of nested reductions for handling quadrants. Meanwhile, PyTorch users adjusted the SM threshold intorch.compile(mode="max-autotune")to accommodate GPUs with less than 68 SMs and explored enabling coordinate descent tuning for better performance. -

Software and Algorithms Push the AI Envelope: A member lauded the matching of GPT-4 with LLaMA 3 8B, while Akim will attend the AI_dev conference and is open to networking. Elsewhere, Vayuda’s search algorithm paper spurred interest among enthusiasts, discussed across multiple channels. Discussions around AI training, evident in Meta’s described challenges in LLM training, underscore the importance of infrastructure adaptability.

-

CUDA Development Optics: News from CUDA-focused development revealed: Permuted DataLoader integration did not significantly affect performance; a unique seed strategy was developed for stochastic rounding; challenges surfaced regarding ZeRO-2’s memory overhead; and new LayerNorm kernels provided much-needed speedups under certain configurations.

-

Beyond CUDA: Dynamic Batching, Quantization, and Bit Packing: In the domain of parallel computing, engineers struggled with dynamic batching for Gaudi architecture and discussed the complexity of quantization and bit-packing techniques. They stressed the VRAM limitations constraining local deployment of large models and shared diverse resources, including links to Python development environments and documentation on novel machine learning libraries.

LM Studio Discord

-

LM Studio equips engineers with CLI tools: The latest LM Studio 0.2.22 release introduced ‘lms’, a CLI management tool for models and debugging prompts, which is detailed in its GitHub repository. The update streamlines the workflow for AI deployments, especially with model loading/unloading and input inspection.

-

Performance tweaks and troubleshooting: Engineers discussed optimal settings for AI model performance, including troubleshooting GPU support for Intel ARC A7700, configuration adjustments for GPU layers, and adjusting Flash Attention settings. There was a recommendation to check Open Interpreter’s documentation for issues hosting local models and a call for better handling of font sizes in LM Studio interfaces for usability.

-

Diverse model engagement: Members recommended Fimbulvetr-11B for roleplaying use-cases, while highlighting the fast-paced changes in coding models like DeepSeek-Coder-V2, advising peers to stay updated with current models for specific tasks like coding, which can be reviewed on sites like Large and Small Language Models list.

-

Hardware optimization and issues: A link to archived LM Studio 0.2.23 was shared for those facing installation issues—a MirrorCreator link. Hardware discussions also included the compatibility of mixed RAM sticks, setting CPU cores for server mode, and troubleshooting GPU detection on various systems.

-

Development insights and API interactions: Developers shared their aspirations for integrating various coding models like

llama3anddeepseek-coderinto their VSCode workflow and sought assistance with implementing models incontinue.dev. There was also a conversation about decoupling ROCm from the main LM Studio application and a user guide for configuringcontinue.devwith LM Studio. -

Beta release observations and app versioning: The community tested and reviewed recent beta releases, discussing tokenizer fixes and GPU offloading glitches. There’s a need for access to older versions, which is challenged by LM Studio’s update policies, and a suggestion to maintain personal archives of preferred versions.

-

AI-driven creativity and quality of life concerns: Engineers raised issues like the mismanagement of stop tokens by LM Studio and a tool’s tendency to append irrelevant text in outputs. A frequent use-case-related complaint was an AI model not indicating its failure to provide a correct output by using an “#ERROR” message when necessary.

HuggingFace Discord

AI Alternatives for GPT-4 on Low-End Hardware: Users debated on practical AI models for less powerful servers with suggestions like “llama3 (70B-7B), mixtral 8x7B, or command r+” for self-hosted AI similar to GPT-4.

RWKV-TS Challenges RNN Dominance: An arXiv paper introduces RWKV-TS, proposing it as a more efficient alternative to RNNs in time series forecasting, by effectively capturing long-term dependencies and scaling computationally.

Model Selection Matters in Business Use: In the choice of AI for business applications, it’s crucial to consider use cases, tools, and deployment constraints, even with a limitation like the 7B model size. For tailored advice, members suggested focusing on specifics.

Innovations and Integrations Abound: From Difoosion, a user-friendly web interface for Stable Diffusion, to Ask Steve, a Chrome extension designed to streamline web tasks using LLMs, community members are actively integrating AI into practical tools and workflows.

Issues and Suggestions in Model Handling and Fine-Tuning:

- A tutorial for fine-tuning BERT was shared.

- Concerns about non-deterministic model initializations were raised, with advice to save the model state for reproducibility.

- Mistral-7b-0.3’s context length handling and the quest for high-quality meme generator models indicate challenges and pursuits in model customization.

- For TPU users, guidance on using Diffusers with GCP’s TPU is sought, indicating an interest in leveraging cloud TPUs for diffusion models.

OpenAI Discord

-

iOS Compatibility Question Marks: Members debated whether ChatGPT functioned with iOS 18 beta, recommending sticking to stable versions like iOS 17 and noting that beta users are under NDA regarding new features. No clear consensus was reached on compatibility.

-

Open Source Ascending: The release of an open-source model by DeepSeek AI that outperforms GPT-4 Turbo in coding and math sparked debate about the advantages of open-source AI over proprietary models.

-

Database Deployments with LLMs: For better semantic search and fewer hallucinations, a community member highlighted OpenAI’s Cookbook as a resource for integrating vector databases with OpenAI’s models.

-

GPT-4 Usage Ups and Downs: Users expressed frustrations with access to GPT interactions, privacy settings on Custom GPTs, and server downtimes. The community provided workarounds and suggested monitoring OpenAI’s service status for updates.

-

Challenges with 3D Modeling and Prompt Engineering: Conversations focused on the technicalities of generating shadow-less 3D models and the intricacies of preventing GPT-4 from mixing information. Members shared various strategies, including step-back prompting and setting explicit actions to guide the AI’s output.

LAION Discord

-

Stabilizing SD3 Models: The discussion revolved around SD3 models facing stability hurdles, particularly with artifacts and training. Concerns were raised about loss stabilization, pinpointing issues like non-uniform timestep sampling and missing elements such as qk norm.

-

T2I Models Take the Stage: The dialog highlighted interest in open-source T2I (text-to-image) models, notably for character consistency across scenes. Resources such as Awesome-Controllable-T2I-Diffusion-Models and Theatergen were recommended for those seeking reliable multi-turn image generation.

-

Logical Limitbreak: A member brought attention to current challenges in logical reasoning within AI, identifying Phi-2’s “severe reasoning breakdown” and naming bias in LLMs when tackling AIW problems—a key point supported by related research.

-

Boosting Deductive Reasoning: Queries about hybrid methods for enhancing deductive reasoning in LLMs directed to Logic-LM, a method that combines LLMs with symbolic AI solvers to improve logical problem-solving capabilities.

-

Video Generation Innovation: Fudan University’s Hallo model sparked excitement, a tool capable of video generation from single images and audio, with potential application alongside Text-to-Speech systems. A utility to run it locally was shared from FXTwitter, highlighting community interest in practical integrations.

OpenAccess AI Collective (axolotl) Discord

-

200T Parameter Model: AGI or Fantasy?: Discussions about the accessibility of a hypothetical 200T parameter model surfaced, highlighting both the limits of current compute capabilities for most users and the humor in staking an AGI claim for such models.

-

Competing at the Big Model Rodeo: Members juxtaposed the Qwen7B and Llama3 8B models, acknowledging Llama3 8B as the dominant contender in performance. The problem of custom training configurations for Llama3 models was tackled, with a solution shared to address the

chat_templatesetting issues. -

Optimization Quest for PyTorch GPUs: Requests for optimization feedback directed towards various GPU setups in PyTorch have yielded a trove of diverse community experiences ranging from AMD MI300X to RTX 3090, Google TPU v4, and 4090 with tinygrad.

-

Navigating Axolotl’s Development Labyrinth: An issue halting the development with the Llama3 models was found and traced to a specific commit, which helped identify the problem but emphasized the need for a fix in the main branch. Instructions for setting inference parameters and fine-tuning vision models within Axolotl were detailed for users.

-

Data Extraction with a Twist of Structure: Community showcase hinted at positive results after fine-tuning LLMs with Axolotl, particularly in transforming unstructured press releases into structured outputs. A forthcoming post promises to expound on the use of the OpenAI API’s function calling to enhance LLM accuracy in this task. The author points to a detailed post for more information.

Perplexity AI Discord

-

Pro Language Partnerships!: Perplexity AI has inked a deal with SoftBank, offering Perplexity Pro free for one year to SoftBank customers. This premium service, typically costing 29,500 yen annually, is set to enhance users’ exploration and learning experiences through AI (More info on the partnership).

-

Circumventing AB Testing Protocols? Think Again: Engineers discussed how to bypass A/B testing for Agentic Pro Search, with a Reddit link provided; however, concerns about integrity led to reconsideration. The community also tackled a myriad of usage questions on Perplexity features, debated the merits of Subscriptions to Perplexity versus ChatGPT, and raised critical privacy issues concerning web crawling practices.

-

API Access is the Name of the Game: Members expressed urgency for closed-beta access to the Perplexity API, emphasizing the impact on launching projects like those at Kalshi. Troubleshooting Custom GPT issues, they exchanged tips to enhance its “ask-anything” feature using schema-based explanations and error detail to improve action/function call handling.

-

Community Leaks and Shares: Links to Perplexity AI searches and pages on varied topics, from data table management tools (Tanstack Table) to Russia’s pet food market and elephant communication strategies, were circulated. A mishap with a publicized personal document on prostate health led to community-driven support resolving the issue.

-

Gaming and Research Collide: The shared content within the community included a mix of academic interests and gaming culture, demonstrated by a publicly posted page pertaining to The Elder Scrolls, hinting at the intersecting passions of the technical audience involved.

Nous Research AI Discord

-

Neurons Gaming with Doom: An innovative approach brings together biotech and gaming as living neurons are used to play the video game Doom, detailed in a YouTube video. This could be a step forward in understanding biological process integration with digital systems.

-

AI Ethics and Bias in the Spotlight: A critical take on AI discussed in a ResearchGate paper calls attention to AI’s trajectory towards promulgating human bias and aligned corporate interests, naming “stochastic parrots” as potential instruments of cognitive manipulation.

-

LLM Merging and MoE Concerns: An engaged debate over the practical use of Mixture of Experts (MoE) models surfaced, contemplating the effectiveness of model merging versus comprehensive fine-tuning, citing a PR on llama.cpp and MoE models on Hugging Face.

-

Llama3 8B Deployment Challenges: On setting up and deploying Llama3 8B, it was advised to utilize platforms like unsloth qlora, Axolotl, and Llamafactory for training and lmstudio or Ollama for running fast OAI-compatible endpoints on Apple’s M2 Ultra, bringing light to tooling for model deployment.

-

Autechre Tunes Stir Debate: Opinions and emotions around Autechre’s music led to sharing of contrasting YouTube videos, “Gantz Graf” and “Altibzz”, showcasing the diverse auditory landscapes crafted by the electronic music duo.

-

Explore Multiplayer AI World Building: Suggestion raised for collaborative creation in WorldSim, as members discussed enabling multiplayer features for AI-assisted co-op experiences, while noting censorship from the model provider could influence WorldSim AI content.

-

NVIDIA’s LLM Rolls Out: Introductions to NVIDIA’s Nemotron-4-340B-Instruct model, accessible on Hugging Face, kindled talks on synthetic data generation and strategic partnerships, highlighting the company’s new stride into language processing.

-

OpenAI’s Profit-Minded Pivot: OpenAI’s CEO Sam AltBody has indicated a potential shift from a non-profit to a for-profit setup, aligning closer to competitors and affecting the organizational dynamic and future trajectories within the AI industry.

Modular (Mojo 🔥) Discord

-

Mojo Functions Discussion Heats Up: Engineers critiqued the Mojo manual’s treatment of

defandfnfunctions, highlighting the ambiguity in English phrasing and implications for type declarations in these function variants. This led to a consensus that whiledeffunctions permit optional type declarations,fnfunctions enforce them; a nuanced distinction impacting code flexibility and type safety. -

Meetup Alert: Mojo Community Gathers: An upcoming Mojo Community Meeting was announced, featuring talks on constraints, Lightbug, and Python interoperability, inviting participants to join via Zoom. Moreover, benchmark tests revealed that Mojo’s Lightbug outstrips Python FastAPI in single-threaded performance yet falls short of Rust Actix, sparking further discussion on potential runtime costs entailed by function coloring decisions.

-

Fresh Release of Mojo 24.4: The Mojo team has rolled out version 24.4, introducing core language and standard library improvements. Detail-oriented engineers were pointed towards a blog post for a deep dive into the new traits, OS module features, and more.

-

Advanced Mojo Techniques Uncovered: Deep technical discussions unveiled challenges and insights in Mojo programming, from handling 2D Numpy arrays and leveraging

DTypePointerfor efficient SIMD operations to addressing bugs in casting unsigned integers. Notably, a discrepancy involvingaliasusage in CRC32 table initialization sparked an investigation into unexpected casting behaviors. -

Nightly Mojo Compiler on the Horizon: Engineers were informed about the new nightly builds of the Mojo compiler with the release of versions

2024.6.1505,2024.6.1605, and2024.6.1705, along with instructions to update viamodular update. Each version’s specifics could be examined via provided GitHub diffs, showcasing the platform’s continuous refinement. Additionally, the absence of external documentation for built-in MLIR dialects was noted, and enhancements such as direct output expressions in REPL were requested.

Eleuther Discord

-

Replication of OpenAI’s Generalization Techniques by Eleuther: EleutherAI’s interpretability team successfully replicated OpenAI’s “weak-to-strong” generalization on open-source LLMs across 21 NLP datasets, publishing a detailed account of their findings, positive and negative, on experimenting with variants like strong-to-strong training and probe-based methods, here.

-

Job Opportunities and Navigating CommonCrawl: The AI Safety Institute announced new roles with visa assistance for UK relocation on their careers page, while discussions on efficiently processing CommonCrawl data mentioned tools like ccget and resiliparse.

-

Model Innovations and Concerns: From exploring RWKV-CLIP, a vision-language model, to concerns about content generated by diffusion models and the stealing of commercial model outputs, the community addressed various aspects of AI model development and security. The effectiveness of the Laprop optimizer was debated, and papers ranging from those on online adaptation to those on “stealing” embedding models were shared, with a key paper being here.

-

Evolving Optimization and Scaling Laws: A member’s critique of a hypernetwork-based paper sparked conversations on the value and comparison of hypernetworks with Hopfield nets. Interested parties ventured into the scaling of scaling laws, considering online adaptation for LLMs and citing Andy L. Jones’ concept of offsetting training compute against inference compute.

-

Interpretability Insights on Sparse Autoencoders: Interpretability research centered around Sparse Autoencoders, with a paper proposing a framework for evaluating feature dictionaries in tasks like indirect object identification with GPT-2, and another highlighting “logit prisms” decomposing logit output components, as documented in this article.

-

Need for A Shared Platform for Model Evaluation: Calls were made for a platform to share and validate evaluation results of AI models, particularly for those using Hugging Face and seeking to verify the credibility of closed-source models, highlighting the need for comprehensive and transparent evaluation metrics.

-

Awaiting Code Release for Vision-Language Project: A specific request for a release date for code related to RWKV-CLIP was directed to the GitHub Issues page of the project, indicating a demand for access to the latest advancements in vision-language representation models.

LLM Finetuning (Hamel + Dan) Discord

-

Apple Sidesteps NVIDIA in AI: Apple’s WWDC reveal details its avoidance of NVIDIA hardware, preferring their in-house AXLearn on TPUs and Apple Silicon, potentially revolutionizing their AI development strategy. The technical scoop is unpacked in a Trail of Bits blog post.

-

Embeddings and Fine-Tuning: Enthusiasm emerges for fine-tuning methodologies, with discussions ranging from embedding intricacies, highlighted by resources like Awesome Embeddings, to specific practices like adapting TinyLlama for unique narration styles, detailed in a developer’s blog post.

-

Prompt Crafting Innovations: Mention of Promptfoo and inspect-ai indicates a trend toward more sophisticated prompt engineering tools, with the community weighing functionality and user-friendliness. Diverging preferences suggest such tools are pivotal for refined human-AI interaction schemes.

-

Crediting Confusions Cleared: Participants express mixed signals about course credits across platforms like LangSmith and Replicate, with reminders and clarifications surfacing through communal support. The difference between beta and course credits was elucidated for concerned members.

-

Code Llama Leaps Forward: Conversations ignited by the release of Code Llama show a commitment to enhancing programming productivity. Curiosity about permissible variability between Hugging Face and GitHub configuration formats for Code Llama indicates the precision required for fine-tuning these purpose-built models.

Interconnects (Nathan Lambert) Discord

-

Sakana AI Joins the Unicorn Club: Sakana AI, pushing past traditional transformer models, has secured a monster $1B valuation from heavy-hitters like NEA, Lux, and Khosla, marking a significant milestone for the AI community. Full financial details can be ferreted out in this article.

-

Next-Gen Video Generation with Runway’s Gen-3 Alpha: Runway has turned heads with its Gen-3 Alpha, flaunting the ability to create high-quality videos replete with intricate scene transitions and a cornucopia of cinematographic styles, setting a new bar in video generation which can be explored here.

-

DeepMind’s Video-Turned-Audio Breakthrough: Google DeepMind’s new video-to-audio technology aims to revolutionize silent AI video generations by churning out a theoretically infinite number of tracks tailored to any video, as showcased in Rowan Cheung’s examples.

-

Wayve’s Impressive Take on View Synthesis: Wayve claims a fresh victory in AI with a view synthesis model that leverages 4D Gaussians, promising a significant leap in generating new perspectives from static images, detailed in Jon Barron’s tweet.

-

Speculations Stir on OpenAI’s Future: Whispers of OpenAI’s governance shake-up suggest a potential pivot to a for-profit stance with musings of a subsequent IPO, stirring debate within the community; some greet with derision while others await concrete developments, as covered in The Information and echoed by Jacques Thibault’s tweet.

LlamaIndex Discord

-

RAG and Agents Drawn Clear: An Excalidraw-enhanced slide deck was shared detailing the construction of Retrieval-Augmented Generation (RAG) and Agents, containing diagrams that elucidate concepts from simple to advanced levels.

-

Observability Integrated in LLM Apps: A new module for instrumentation brings end-to-end observability to LLM applications through Arize integration, with a guide available detailing custom event/span handler instrumentation.

-

Knowledge Graphs Meet Neo4j: Discussions around integrating Neo4j knowledge graphs with LlamaIndex focused on transforming Neo4j graphs into property graphs for LlamaIndex, with resources and documentation provided (LlamaIndex Property Graph Example).

-

Enhanced LLMs with Web Scraping Strategies: Apublication discusses improving LLMs by combining them with web scraping and RAG, recommending tools such as Firecrawl for effective Markdown extraction, and Scrapfly for diverse output formats suitable for LLM preprocessing.

-

Practical Tutorials and AI Event Highlights: Practical step-by-step guides for full-stack agents and multimodal RAG pipelines were made available, and AI World’s Fair highlighted with noteworthy speakers shared their knowledge on AI and engineering, enhancing the community’s skill set and understanding of emerging AI trends.

tinygrad (George Hotz) Discord

-

Script Snafu and OpenCL Woes: Discussions around

autogen_stubs.shrevealed thatclang2pybreaks the indentation, but this was found unnecessary for GPU-accelerated tinygrad operations. Meanwhile, George Hotz suggested fixing OpenCL installation and verifying withclinfodue to errors affecting tinygrad’s GPU functionality. -

Enhanced OpenCL Diagnostics on the Horizon: A move to improve OpenCL error messages is underway, with a proposed solution that autonomously generates messages from available OpenCL headers, aiming to ease developers’ debugging process.

-

Deciphering Gradient Synchronization: In a bid to demystify gradient synchronization, George Hotz affirmed Tinygrad’s built-in solution within its optimizer, touting its efficiency compared to the more complex Distributed Data Parallel in PyTorch.

-

Chasing PyTorch’s Tail with Ambitions and Actions: George Hotz conveyed ambitions for tinygrad to eclipse PyTorch in terms of speed, simplicity, and reliability. Although currently trailing, particularly in LLM training, tinygrad’s clean design and strong foundation exude promise.

-

Precision Matters in the Kernel Cosmos: A technical exchange discussed strategies for incorporating mixed precision in models, where George Hotz recommended late casting for efficiency gains and the use of

cast_methods, highlighting a critical aspect of optimizing for computation-heavy tasks.

OpenRouter (Alex Atallah) Discord

-

GPT Notes App Unveiled: An LLM client and notes app hybrid has been demonstrated, featuring dynamic inclusion of notes, vanilla JavaScript construction, and local storage of notes and API keys in the browser; however, it currently lacks mobile support. The app is showcased with a Codepen and a full-screen deployment.

-

OpenRouter Gripes and Glimpses: OpenRouter requires at least one user message to prevent errors, with users suggesting the use of the

promptparameter; formatting tools like PDF.js and Jina AI Reader are recommended for PDF pre-processing to enhance LLM compatibility. -

Censorship Consternation with Qwen2: The Qwen2 model is facing user criticism for excessive censorship, while the less restrictive Dolphin Qwen 2 model garners recommendation for its more realistic narrative generation.

-

Gemini Flash Context Clash: Questions arise over Gemini Flash’s token limits, with OpenRouter listing a 22k limit, in contrast to the 8k tokens cited in the Gemini Documentation; the discrepancy is attributed to OpenRouter’s character counting to align with Vertex AI’s pricing.

-

Rate Limits and Configuration Conversations: Users discuss rate limits for models like GPT-4o and Opus and model performance configurations; for further information, the OpenRouter documentation on rate limits proves informative, and there is a focus on efficiency in API requests and usage.

LangChain AI Discord

-

LangChain API Update Breaks TextGen: A recent API update has disrupted textgen integration in LangChain, with members seeking solutions in the general channel.

-

Technical Troubleshooting Takes the Stage: Users discussed challenges with installing langchain_postgres and a ModuleNotFoundError caused by an update to tenacity version 8.4.0; reverting to version 8.3.0 fixed the issue.

-

LangChain Knowledge Sharing: Questions around LangChain usage emerged, including transitioning from Python to JavaScript implementations, and handling of models like Llama 3 or Google Gemini for local deployment.

-

Tech enthusiasts Intro New Cool Toys: Innovative projects were highlighted such as R2R’s automatic knowledge graph construction, an interactive map for Collision events, and CryptGPT, which is a privacy-preserving approach to LLMs using Vigenere cipher.

-

AI for the Creatively Inclined: Community members announced a custom GPT for generating technical diagrams, and Rubik’s AI, a research assistant and search engine offering free premium with models like GPT-4 Turbo to beta testers.

Latent Space Discord

OtterTune Exits Stage Left: OtterTuneAI has shut down following a failed acquisition deal, marking the end of their automatic database tuning services.

Apple and OpenAI Make Moves: Apple released optimized on-device models on Hugging Face, such as DETR Resnet50 Core ML, while OpenAI faced criticism from Edward Snowden for adding former NSA Director Paul M. Nakasone to its board.

DeepMind Stays in Its Lane: In recent community discussions, it was clarified that DeepMind has not been contributing to specific AI projects, debunking earlier speculation.

Runway and Anthropic Innovate: Runway announced their new video generation model, Gen-3 Alpha, on Twitter, while Anthropic publicized important research on AI models hacking their reward systems in a blog post.

Future of AI in Collaboration and Learning: Prime Intellect is set to open source sophisticated models DiLoco and DiPaco, Bittensor is making use of The Horde for decentralized training, and a YouTube video shared among users breaks down optimizers critical for model training.

Cohere Discord

-

AGI: Fantasy or Future?: Members shared their perspectives on a YouTube video about AGI, discussing the balance between skepticism and the potential for real progress that parallels the aftermath of the dot-com bubble.

-

Next.js Migrations Ahead: There’s a collaborative push to utilize Next.js App Router for the Cohere toolkit, aiming at better code portability and community contribution, details of which are in GitHub issue #219.

-

C4AI by Cohere: Nick Frosst invites to a C4AI talk via a Google Meet link, offering an avenue for community engagement on LLM advancements and applications.

-

Command Your Browser: A free Chrome Extension has been released, baking LLMs into Chrome to boost productivity, while an interactive Collision map with AI chat features showcases events using modern web tech stacks.

-

Developer Touch Base: Cohere is hosting Developer Office Hours with David Stewart for a deep dive into API and model intricacies; interested community members can join here and post their questions on the mentioned thread for dedicated support.

OpenInterpreter Discord

-

Frozen Model Mystery Solved: Engineers reported instances of a model freezing during coding, but it was determined that patience pays off as the model generally completes the task, albeit with a deceptive pause.

-

Tech Support Redirect: A query about Windows installation issues for a model led to advice pointing the user towards a specific help channel for more targeted assistance.

-

Model Memory Just Got Better: A member celebrated a breakthrough with memory implementation, achieving success they described in rudimentary terms; meanwhile, Llama 3 Instruct 70b and 8b performance details were disclosed through a Reddit post.

-

Cyber Hat Countdown: An open-source, AI-enabled “cyber hat” project sparked interest among engineers for its originality, potential for innovation, and an open invite for collaboration watch here; similarly, Dream Machine’s text and image-based realistic video creation signaled strides in AI model capabilities.

-

Semantic Search Synergy: Conversation turned to the fusion of voice-based semantic search and indexing with a vector database holding audio data, leveraging the prowess of an LLM to perform complex tasks based on vocal inputs, suggesting the nascent power of integrated tech systems.

Torchtune Discord

-

Tuning Into Torchtune’s Single Node Priorities: Torchtune is focusing on optimizing single node training before considering multi-node training; it utilizes the

tune runcommand as a wrapper fortorch run, which might support multi-node setups with some adjustments, despite being untested for such use. -

Unlocking Multi-Node Potential in Torchtune: Some members shared how to potentially configure Torchtune for multi-node training, suggesting the use of

tune run —nnodes 2and additional tools like TorchX or slurm for script execution and network coordination across nodes, referencing the FullyShardedDataParallel documentation as a resource for sharding strategies.

DiscoResearch Discord

- Llama3 Sticks to Its Roots: Despite the introduction of a German model, the Llama3 tokenizer has not been modified and remains identical to the base Llama3, raising questions about its efficiency in handling German tokens.

- Token Talk: Concerns emerged over the unchanged tokenizer, with engineers speculating that not incorporating specific German tokens could substantially reduce the context window and affect the quality of embeddings.

- Comparing Llama2 and Llama3 Token Sizes: Inquisitive minds noted that Llama3’s tokenizer is notably 4 times larger than Llama2’s, leading to questions about its existing efficacy with the German language and potential unrecognized issues.

Datasette - LLM (@SimonW) Discord

Heralding Data Engineering Job Security: ChatGPT’s burgeoning role in the tech landscape drew humor-inflected commentary that it represents an infinite job generator for data engineers.

Thoughtbot Clears the Fog on LLMs: The guild appreciated a guide by Thoughtbot for its lucidity in dissecting the world of Large Language Models, specifically for their delineation of Base, Instruct, and Chat models which can aid beginners.

New Kid on the Search Block: Turso’s latest release integrates native vector search with SQLite, which aims at enhancing the AI product development experience by replacing the need for independent extensions like sqlite-vss.

AI Stack Devs (Yoko Li) Discord

- In Search of Hospital AI Project Name: User gomiez inquired about the name of the hospital AI project within the AI Stack Devs community. There was no additional context or responses provided to further identify the project.

Mozilla AI Discord

- Llama as Firefox’s New Search Companion?: A guild member, cryovolcano., inquired about the possibility of integrating llamafile with tinyllama as a search engine in the Firefox browser. No further details or context about the implementation or feasibility were provided.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Stability.ai (Stable Diffusion) ▷ #general-chat (723 messages🔥🔥🔥):

- Lack of Trust in SD3 License Creates Chaos: There are significant concerns over Stability AI’s new license for SD3, leading to temporary banning of SD3-related content on Civitai due to the perceived legal ambiguities. Civitai announcement mentions that “legal team review” is underway.

- Community Frustration and Critics’ Backlash: Many users voice their frustrations and criticisms towards Stability AI’s confusing license and handling of SD3’s release. One user notes, “The worst base model release yet… I just wanted nice hands.”

- Inquiry and Troubleshooting in ComfyUI: Several users discuss issues and fixes for ComfyUI setup, particularly around custom nodes installations and dependencies like cv2. One user shared a helpful ComfyUI install tutorial.

- Interest in Model Applications and Alternatives: Users explore models for various art styles and uses, such as retro dark fantasy and video generation with animatediff tools. User discussions imply the open-source community might pivot attention to alternative models and tools post-SD3 controversy.

- Youtuber Olivio Sarikas Faces Scrutiny: Multiple users discuss the youtuber’s video on SD3’s license, accusing him of spreading misinformation and overblown fears about the legal implications, with one stating, “Olivio had all the information… and willfully misreported it to farm views.”

Links mentioned:

- - YouTube: no description found

- PenelopeSystems/penelope-palette · Hugging Face: no description found

- SD3 - Absurd new License. Stability AI asks you to destroy your Models!: The new SD3 License from Stability AI asks you to destroy your models. The new Creator License has some pretty absurd Terms. Including limiting you to only 6...

- YouTube: no description found

- SD3 - Absurd new License. Stability AI asks you to destroy your Models!: The new SD3 License from Stability AI asks you to destroy your models. The new Creator License has some pretty absurd Terms. Including limiting you to only 6...

- Stable Diffusion 3's Concerning Fine Print: What Studios and Artists Should Know About the New Terms: We took a look at the fine print of Stable Diffusion 3's new licenses and break down what you need to know if you are planning to use SD3 for commercial or n...

- ONNX | Home: no description found

- SD3 - Absurd new License. Stability AI asks you to destroy your Models!: The new SD3 License from Stability AI asks you to destroy your models. The new Creator License has some pretty absurd Terms. Including limiting you to only 6...

- DreamStudio: no description found

- SD3 IS HERE!! ComfyUI Workflow.: SD3 is finally here for ComfyUI!Topaz Labs: https://topazlabs.com/ref/2377/HOW TO SUPPORT MY CHANNEL-Support me by joining my Patreon: https://www.patreon.co...

- Temporary Stable Diffusion 3 Ban | Civitai: Unfortunately, due to a lack of clarity in the license associated with Stable Diffusion 3 , we are temporarily banning: All SD3 based models All mo...

- ByteDance/Hyper-SD · Hugging Face: no description found

- Hyper-SD: Trajectory Segmented Consistency Model for Efficient Image Synthesis: no description found

- GitHub - Picsart-AI-Research/StreamingT2V: StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text: StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text - Picsart-AI-Research/StreamingT2V

- Shoe Nike GIF - Shoe Nike Design Shoe - Discover & Share GIFs: Click to view the GIF

- GitHub - Fannovel16/comfyui_controlnet_aux: ComfyUI's ControlNet Auxiliary Preprocessors: ComfyUI's ControlNet Auxiliary Preprocessors. Contribute to Fannovel16/comfyui_controlnet_aux development by creating an account on GitHub.

- Tweet from D̷ELL (@xqdior): Stable Diffusion 3 8Bを搭載した、Stable Image API Ultraをみなさまに体験していただきました。生成いただいた画像をまとめましたので、レポートさせて頂きます。 Stable Diffusion 3 最上級モデルの性能を、ぜひご覧くださいませ。 https://qiita.com/nqdior/items/ce894b5c5382b2029ced #Qiita

- sd web ui 3d lora: Discover the magic of the internet at Imgur, a community powered entertainment destination. Lift your spirits with funny jokes, trending memes, entertaining gifs, inspiring stories, viral videos, and ...

- Tweet from -Zho- (@ZHOZHO672070): InstantX 刚刚又连续上传了 4 个 SD3 Medium 的 ControlNet 模型 Canny(1024):https://huggingface.co/InstantX/SD3-Controlnet-Canny Pose:https://huggingface.co/InstantX/SD3-Controlnet-Pose Tile(还在上传):https://huggingfa...

- Releases · comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

- Professional Membership Agreement — Stability AI: no description found

- ptx0/sd3-reality-mix · Hugging Face: no description found

- nerijs/pixel-art-medium-128-v0.1 · Hugging Face: no description found

- Civitai: The Home of Open-Source Generative AI: Explore thousands of high-quality Stable Diffusion models, share your AI-generated art, and engage with a vibrant community of creators

- Reddit - Dive into anything: no description found

- Realistic Vision V6.0 B1 - V5.1 Hyper (VAE) | Stable Diffusion Checkpoint | Civitai: Recommendations for using the Hyper model: Sampler = DPM SDE++ Karras or another / 4-6+ steps CFG Scale = 1.5-2.0 ( the lower the value, the more m...

Unsloth AI (Daniel Han) ▷ #general (517 messages🔥🔥🔥):

- Work in Progress on Ollama Support: A member stated, "Unfortunately the Ollama support got kinda delayed," but reassured that they are "working with the amazing Ollama team." The support is around 80% complete.

- Validation Issues in Template Fine-Tuning: A member queried about validating templates for use with Ollama and discussed issues with learning rates and model configurations. They noted, "I had acceptable results with my merged models but it turns sick sometimes."

- Push to HF Merged Models Issue: A member raised a problem where running `model.push_to_hub_merged` only saves the adapter but not the full merged model. Another member suggested a workaround involving manually merging before uploading.

- Training Performance Comparisons: A user highlighted UnsLoath's performance in training speed, claiming it was "24% faster than torch.compile() torchtune for 4090" based on their benchmarking results. The UnsLoath team acknowledged this and discussed the possibility of releasing an academic paper on it.

- Upcoming Multi-GPU Support: The team confirmed that they will be implementing multi-GPU support up to 8 GPUs. A small group is getting early access for initial testing.

Links mentioned:

- Join the Ollama Discord Server!: Check out the Ollama community on Discord - hang out with 49602 other members and enjoy free voice and text chat.

- nyunai/nyun-c2-llama3-50B · Hugging Face: no description found

- Accessing GPT-4 level Mathematical Olympiad Solutions via Monte Carlo Tree Self-refine with LLaMa-3 8B: This paper introduces the MCT Self-Refine (MCTSr) algorithm, an innovative integration of Large Language Models (LLMs) with Monte Carlo Tree Search (MCTS), designed to enhance performance in complex m...

- Lecture 10: Build a Prod Ready CUDA library: Slides https://drive.google.com/drive/folders/158V8BzGj-IkdXXDAdHPNwUzDLNmr971_?usp=sharingSpeaker: Oscar Amoros Huguet

- KAN: Kolmogorov-Arnold Networks: A Google Algorithms Seminar TechTalk, presented by Ziming Liu, 2024-06-04ABSTRACT: Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmo...

- torchtune vs axolotl vs unsloth Trainer Performance Comparison: A performance comparison of various trainers and GPUs. Made by lhl using Weights & Biases

- Card Codes GIF - Card Codes - Discover & Share GIFs: Click to view the GIF

- Tweet from Ryan Els (@RyanEls4): AI revealed 😲

- save_pretrained_merged doesn't merge the model · Issue #611 · unslothai/unsloth: Problem My goal, I want to save the merged model as a GGUF file, but I'm getting various errors. The deeper problem seems to be that merging lora+base model isn't saving a merged file. I think...

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Tweet from AI Engineer (@aiDotEngineer): We're excited to announce our speakers! CEO @Modular AI LEAD @MozillaAI ENG LEAD @OpenAI CEO @UnslothAI TBA @Microsoft TBA @AnthropicAI CEO @cognition_labs (Devin) CEO @anysphere (@cursor_ai) CTO...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Google Colab: no description found

- Continued LLM Pretraining with Unsloth: Make a model learn a new language by doing continued pretraining with Unsloth using Llama 3, Phi-3 and Mistral.

- Google Colab: no description found

- Reddit - Dive into anything: no description found

- Training Comparison: Sheet1 Run,Trainer,GPU,Train (h),Max Mem (GiB),Power (W),Energy (kWh),Tok/s,Steps,Optimizer,Max Seq,Batch Size,Grad Accum,Global,Notes <a href="https://wandb.ai/augmxnt/train-bench/runs/n59y6...

- augmxnt: Weights & Biases, developer tools for machine learning

Unsloth AI (Daniel Han) ▷ #random (17 messages🔥):

- Vintage Music Video Shared: A member posted a YouTube video titled “Not like us (1962) full song,” indicating their appreciation for older music styles. Another member complimented the taste, humorously noting they’ve only listened to anime songs.

- Darude’s Sandstorm and Musical Preferences: A member jokingly shared Darude - Sandstorm, later revealing a genuine preference for Daft Punk’s Discovery album, sharing it on Spotify. Other users chimed in to share their favorite Daft Punk songs like “Lose Yourself to Dance.”

- Mixed Reactions to Gemma 2 on AI Studio: A member mentioned trying out Gemma 2 27b on aistudio.google.com, noting the output was not impressive. Another user recognized the reference from Reddit, while others expressed excitement and anticipation for Gemma 2 and its potential capabilities.

- Speculation and Excitement for Gemini 2.0: Users speculated that the release of Gemma 2 could mean that Gemini 2.0 is also near. There was notable excitement about the potential for training the model, with one user contemplating renting a Runpod 48GB instance to thoroughly test the model’s performance and capacity.

Links mentioned:

- Not like us (1962) full song: K Dot senior is back to fulfill your requests for the full version of this song.

- Darude - Sandstorm: New Darude album "Together" out now → https://found.ee/Darude-TogetherNew 'Closer Together' music video out now → https://youtu.be/edUBI3k2lUo?si=ynkxg7p7Ofa...

- Spotify - Web Player: Music for everyone: Spotify is a digital music service that gives you access to millions of songs.

- High Life: Daft Punk · Song · 2001

Unsloth AI (Daniel Han) ▷ #help (304 messages🔥🔥):

-

Facing issues with Triton on Windows: A member reported issues installing Triton on Windows 11 even after setting up Visual C++ correctly. Assistance was provided by querying if

g++orclang++could be called from the terminal. -

Data Preparation Tutorial Request: A member inquired about a data preparation tutorial for Unsloth fine-tuning similar to OpenAI’s chat fine-tuning data prep notebook. Another member cited a plan to create a tutorial and recommended a related YouTube video.

-

Model training crashes during saving: A member experienced crashes while training the Yi model during the last saving steps, suspecting memory or disk space issues. It was suggested to check available memory and disk space, and a link to Unsloth’s saving issues on GitHub was provided.

-

Issues with batch size and gradient accumulation: A member questioned the discrepancy in VRAM usage when adjusting batch size and gradient accumulation. Discussions clarified that gradient accumulation steps act similar to increasing batch size, and experimenting with larger batch sizes was recommended.

-

Error with quantization_method in save.py: A bug was identified where

quantization_methodwas mishandled as a string, leading to errors. A workaround involved passingquantization_methodas a list, and a pull request to fix the bug was submitted.

Links mentioned:

- CUDA Quick Start Guide: no description found

- Llama 3 Fine Tuning for Dummies (with 16k, 32k,... Context): Learn how to easily fine-tune Meta's powerful new Llama 3 language model using Unsloth in this step-by-step tutorial. We cover:* Overview of Llama 3's 8B and...

- Data preparation and analysis for chat model fine-tuning | OpenAI Cookbook: no description found

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- maplerxyz1/rbxidle · Datasets at Hugging Face: no description found

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - Syllo/nvtop: GPU & Accelerator process monitoring for AMD, Apple, Huawei, Intel, NVIDIA and Qualcomm: GPU & Accelerator process monitoring for AMD, Apple, Huawei, Intel, NVIDIA and Qualcomm - Syllo/nvtop

- Continuous training on Fine-tuned Model: Thank you for your reply. I tried this as well. My old dataset and the new dataset has different texts. This method makes the model heavily lean towards the new text provided. This results in the te...

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- Fix breaking bug in save.py with interpreting quantization_method as a string when saving to gguf by ArcadaLabs-Jason · Pull Request #651 · unslothai/unsloth: Context Upon attempting to save my fine-tuned models as gguf I encountered a new error as of today. Upon investigation I discovered the issue to be some code that incorrectly broke strings passed f...

- Home: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- unsloth/unsloth/save.py at main · unslothai/unsloth: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Google Colab: no description found

Unsloth AI (Daniel Han) ▷ #showcase (3 messages):

- CryptGPT introduces privacy-preserving LLMs: A user shared an introductory blog post titled “CryptGPT: Privacy-Preserving LLMs using Vigenere cipher”. The blog post describes pretraining a GPT-2 model on an encrypted dataset, achieving comparable performance to a regular GPT-2 but requiring an encryption key to use it. Blog Post Link.

Link mentioned: Tweet from Diwank Singh (@diwanksingh): http://x.com/i/article/1802116084507848704

Unsloth AI (Daniel Han) ▷ #community-collaboration (1 messages):

starsupernova: Oh very interesting!

CUDA MODE ▷ #general (49 messages🔥):

-

Lighting AI Interface Suggestions: A member shared the NVIDIA warp example code and sought advice on a graphical interface to see the rendered results. They considered setting up a VNC session to resolve the issue.

-

Solved NVRTC Compilation Error: A user described an issue with NVRTC where compiling multiple kernels resulted in ‘invalid resource handle’. They later resolved it by avoiding initializing a new context for each compilation, which was causing CUDA to free the modules/functions.

-

GPU SM Count Discrepancy: A query was raised about the discrepancies between measured and reported SM counts for the A10G GPU, noting that techpowerup reports 72 SMs while pycuda measures 80. It was clarified that the site might be wrong and other sources confirm 80 SMs.

-

New NVIDIA 5090 GPU Speculations: Members discussed the upcoming NVIDIA 5090, with speculations about it having up to 64 GB of VRAM (source). There were debates about the likelihood of these specs, with pessimistic views on seeing 64GB in consumer versions.

-

Value of Forum Knowledge in Daily AI Work: A member expressed doubts about the practical value of most discussions in their daily AI work apart from a few specific topics. Others responded by emphasizing the importance of performance optimization and the general value of learning and being part of such communities.

Links mentioned:

- Chinese zodiac - Wikipedia: no description found

- warp/warp/examples/core/example_sph.py at main · NVIDIA/warp: A Python framework for high performance GPU simulation and graphics - NVIDIA/warp

- NVIDIA A10 vs A10G for ML model inference: The A10, an Ampere-series GPU, excels in tasks like running 7B parameter LLMs. AWS's A10G variant, similar in GPU memory & bandwidth, is mostly interchangeable.

- Reddit - Dive into anything: no description found

- NVIDIA GeForce RTX 3090 Specs: NVIDIA GA102, 1695 MHz, 10496 Cores, 328 TMUs, 112 ROPs, 24576 MB GDDR6X, 1219 MHz, 384 bit

- NVIDIA GeForce RTX 4090 Specs: NVIDIA AD102, 2520 MHz, 16384 Cores, 512 TMUs, 176 ROPs, 24576 MB GDDR6X, 1313 MHz, 384 bit

- NVIDIA A10G Specs: NVIDIA GA102, 1710 MHz, 9216 Cores, 288 TMUs, 96 ROPs, 24576 MB GDDR6, 1563 MHz, 384 bit

CUDA MODE ▷ #triton (2 messages):

-

AttributeError in Triton on Colab: A user encountered an AttributeError while running Fused Softmax from Triton’s official tutorial on Colab. The error message indicated

'CudaDriver' object has no attribute 'active'and they are seeking assistance for this issue. -

Nested Reduction Feasibility in Triton: Another user inquired about the possibility of performing nested reductions in Triton. They are interested in running reduction code at various stages to handle quadrants individually, asking if this staged reduction is supported.

CUDA MODE ▷ #torch (10 messages🔥):

-

Error with

torch.compile(mode="max-autotune"): A user reported receiving an error message,Not enough SMs to use max_autotune_gemm mode, due to a hard-coded limit of 68 SMs in the PyTorch code, while their GPU only has 66 SMs. The user shared a link to the relevant section in the PyTorch repository. -

Discussion on Reducing SM Threshold: A member suggested lowering the SM threshold to test if performance remains good without needing to rebuild from source. The lack of consumer GPUs in CI was mentioned as a reason for the current hard-coded value.

-

Testing Performance with Modified SM Threshold: After changing the SM threshold to 0, the user reported no significant performance improvement.

-

Enabling Coordinate Descent Tuning: Another member proposed enabling coordinate descent tuning found in

inductor/config.pyas a potential solution for improving performance.

Link mentioned: pytorch/torch/_inductor/utils.py at f0d68120f4e99ee6c05f1235d9b42a4524af39d5 · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - pytorch/pytorch

CUDA MODE ▷ #algorithms (2 messages):

-

Vayuda paper sparks interest in search algorithms: A member shared a link to the Vayuda paper expressing hope that more people would work on search. This implies a potential for significant research and development in the area.

-

GPT-4 matches LLaMA 3 8B impressively: A member was impressed by how matching GPT-4 with LLaMA 3 8B turned out. They highlighted this achievement as noteworthy in current AI capabilities.

CUDA MODE ▷ #beginner (5 messages):

- Blockwise softmax not in PMPP book: Blockwise softmax concepts are not covered in the PMPP book, but understanding the flash-attn algorithm and shared memory (smem) is crucial. High-end implementations leverage tensor cores, requiring further exploration into resources like CUTLASS.

- Start with accessible YouTube lectures: For newcomers to GPU programming and high-performance computing, starting with YouTube lectures is advised. These lectures aim to provide an accessible introduction to the fundamentals.

CUDA MODE ▷ #jax (1 messages):

- Announcing tpux for simplifying Cloud TPU: A member announced the tpux project, a suite of tools aimed at simplifying Cloud TPU setup and operation to facilitate the usage of JAX across multiple hosts. For more details, visit tpux on GitHub and give it a ⭐️.

Link mentioned: GitHub - yixiaoer/tpux: A set of Python scripts that makes your experience on TPU better: A set of Python scripts that makes your experience on TPU better - yixiaoer/tpux

CUDA MODE ▷ #torchao (11 messages🔥):

-

Quant API Import Documentation Issue: A member flagged a correction stating,

unwrap_tensor_subclass()should be imported fromtorchao.utilsortorchao.quantization.quant_api, nottorchao.quantization.utils. They emphasized the importance of users callingunwrap_tensor_subclass()before compiling the quant model to avoid errors. -

API Release Delay and BC Issues: It was confirmed that the 0.3 release is being delayed due to backward compatibility issues that need resolution. This delay ensures the team can address and fix critical problems.

-

Innovative API Naming with ‘brrr’ Proposal: There was a playful yet practical suggestion to create an API with the name

brrrthat adds additional experimental flags based on the number of ‘r’s. A member humorously asked if this was serious but also hinted at a need for easier control overtorchinductorflags likeuse_mixed_mm. -

Feedback on

use_mixed_mmFlag: A member suggested enabling theuse_mixed_mmflag by default if the relevant kernel in AO is on. This feedback may lead to a GitHub issue for further discussion and implementation.

CUDA MODE ▷ #off-topic (10 messages🔥):

-

Meta tackles large-scale AI training challenges: Meta’s article discusses the complexity and computation required to train large language models (LLMs). The shift to generative AI has necessitated a rethinking of software, hardware, and network infrastructure.

-

Interview with Esolang Academics: A YouTube video titled “Interview with Esolang Academic 2024” was shared. The full version and BC Vim Linter will be available on Patreon for $5 the following day.

-

Pessimistic Neko’s Jensen Emojis: Member pessmistic_neko posted emojis <:jensen:1189650200147542017> to express their amusement.

Links mentioned:

- Interview with Esolang Academic 2024: Esoteric programming languageFull version + BC Vim Linter for $5 tomorrow on: https://www.patreon.com/ProgrammersAreAlsoHuman Interview with an Esoteric deve...

- How Meta trains large language models at scale: As we continue to focus our AI research and development on solving increasingly complex problems, one of the most significant and challenging shifts we’ve experienced is the sheer scale of co…

- How Meta trains large language models at scale: As we continue to focus our AI research and development on solving increasingly complex problems, one of the most significant and challenging shifts we’ve experienced is the sheer scale of co…

CUDA MODE ▷ #irl-meetup (1 messages):

- Catch Akim at AI_dev Conference: One member mentioned they will “probably be at AI_dev” and invited others to reach out. They also noted that there will be a movie about “PyTorch” shown on Tuesday.

CUDA MODE ▷ #llmdotc (473 messages🔥🔥🔥):

-

DataLoader PR merged, surprising no performance difference: The Permuted DataLoader PR was merged after some discussion and testing, although initial runs showed no performance improvement. A surprised Aleksa retested and finally confirmed a slight improvement in validation loss.

-

Stochastic rounding only with unique seeds: The PR to ensure unique seeds for stochastic rounding was discussed. The team discovered that an overflow feature in their approach was actually intended by the noise function algorithm.

-

ZeRO-2 PR has noticeable memory overhead: PR 593 for ZeRO-2 was discussed for its complexity and memory overhead. Suggestions included falling back to ZeRO-1 for certain parameters.

-

Master weight storage: A PR (https://github.com/karpathy/llm.c/pull/522) to save master weights to resume state was merged to improve determinism. Follow-up tasks included verifying determinism through CI and exploring memory-saving techniques such as saving 16-bit master weights.

-

LayerNorm kernel optimization delivers speedup: Profiling data indicated that the new LayerNorm kernel (kernel 6) was faster than the older ones (kernel 3 and 5). This boosted certain tasks significantly especially under specific configurations, like recompute=2.

Links mentioned:

- Revisiting BFloat16 Training: State-of-the-art generic low-precision training algorithms use a mix of 16-bit and 32-bit precision, creating the folklore that 16-bit hardware compute units alone are not enough to maximize model acc...

- Tweet from undefined: no description found

- Noise-Based RNG: In this 2017 GDC Math for Game Programmers talk, SMU Guildhall's Squirrel Eiserloh discuss RNGs vs. noise functions, and shows how the latter can replace th...

- Tweet from Squirrel Eiserloh (@SquirrelTweets): Updated my raw noise function. Eliminates a flaw discovered by @ptrschmdtnlsn in which certain high input bits lacked influence over certain low output bits. Anyone using Squirrel3 from my GDC 2017 ...

- Noise-Based RNG: In this 2017 GDC Math for Game Programmers talk, SMU Guildhall's Squirrel Eiserloh discuss RNGs vs. noise functions, and shows how the latter can replace th...

- Scaling Laws and Compute-Optimal Training Beyond Fixed Training Durations: Scale has become a main ingredient in obtaining strong machine learning models. As a result, understanding a model's scaling properties is key to effectively designing both the right training setu...

- Use faster kernel for LayerNorm forward by gordicaleksa · Pull Request #600 · karpathy/llm.c: I ran kernel 5 under /dev/cuda/ (./layernorm_forward 5) on both RTX 3090 and H100 systems and it's faster on both of them. Numbers: kernel 3, optimal block size on: RTX 3090 → 32 (689.11 GB/s) H1...

- llm.c/llmc/global_norm.cuh at master · karpathy/llm.c: LLM training in simple, raw C/CUDA. Contribute to karpathy/llm.c development by creating an account on GitHub.

- add scripts to export to HF and run Eleuther evals by karpathy · Pull Request #594 · karpathy/llm.c: no description found

- GELU Fusion with cuBLASLt (SLOWER because it only merges in FP16 mode, not BF16/FP32...) by ademeure · Pull Request #338 · karpathy/llm.c: It turns out that not only is cuBLASLt not able to fuse BF16 GELU (or RELU) into a BF16 matmul, it also ends up with a strange kernel that is slower than our own GELU kernel as it does 2 writes per...

- Hotfix - proper handling of max num of block sums by gordicaleksa · Pull Request #602 · karpathy/llm.c: The previous assert logic was too restrictive as it depended on the number of layers of the model and the specification of the GPU (num of SMs & max number of threads per SM). This PR fixes that. ...