Muon is all you need?

AI News for 7/25/2025-7/28/2025. We checked 9 subreddits, 449 Twitters and 29 Discords (227 channels, and 16798 messages) for you. Estimated reading time saved (at 200wpm): 1388 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

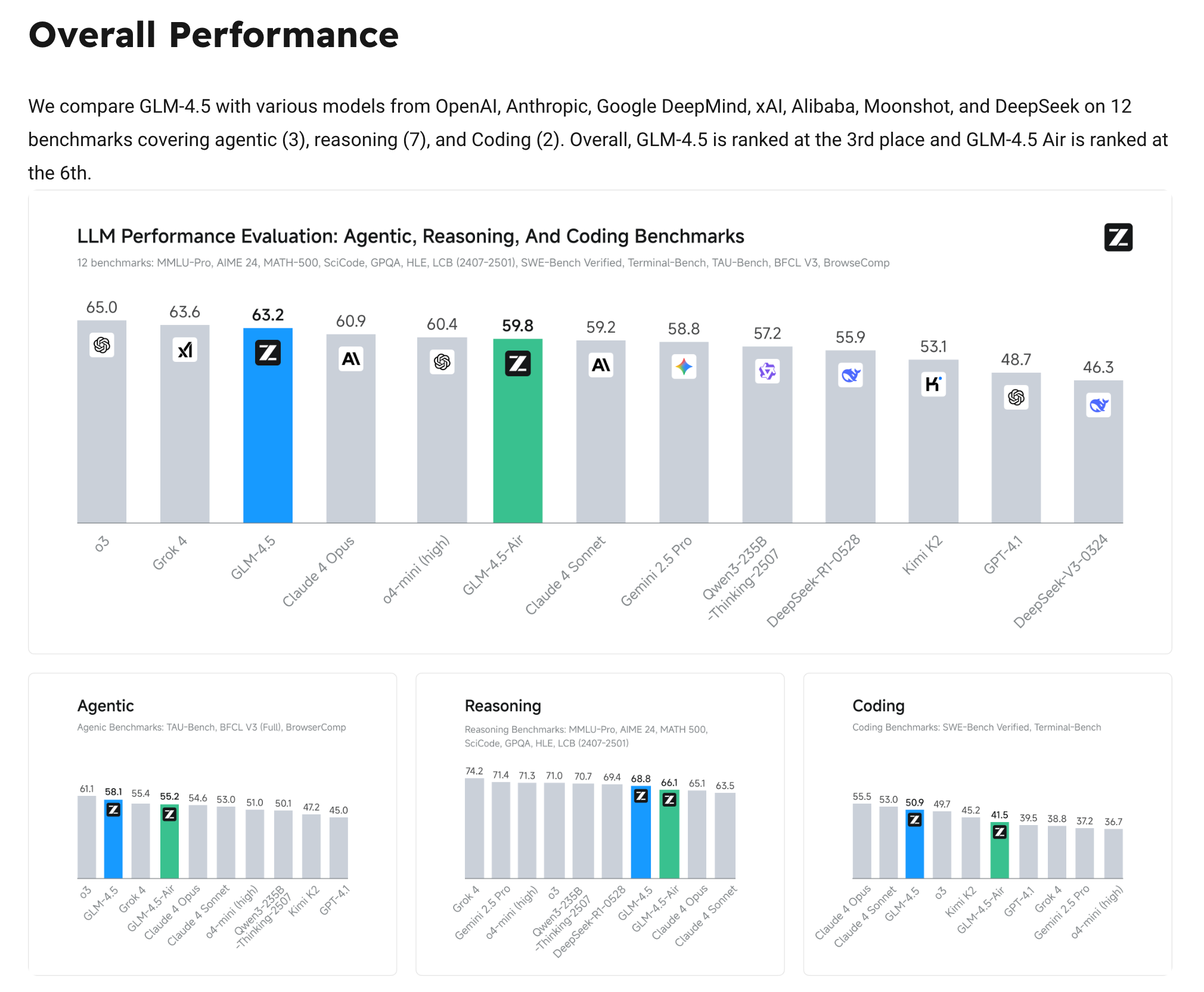

A banner day for Chinese open weights AI. The generative media types should definitely take a look at Wan 2.2, but most AI Engineers should be apprised of Z.ai’s (better known as Zhipu, one of the AI Tigers) GLM-4.5-355B-A32B and GLM-4.5-Air-106B-A12B released today. They make a VERY strong claim (to be independently verified) of being not only the strongest open weights model (beating the previous SOTA Kimi K2) but also highly competitive with and often better than heavyweight SOTA models like Claude 4 Opus, Grok 4, and OpenAI’s o3:

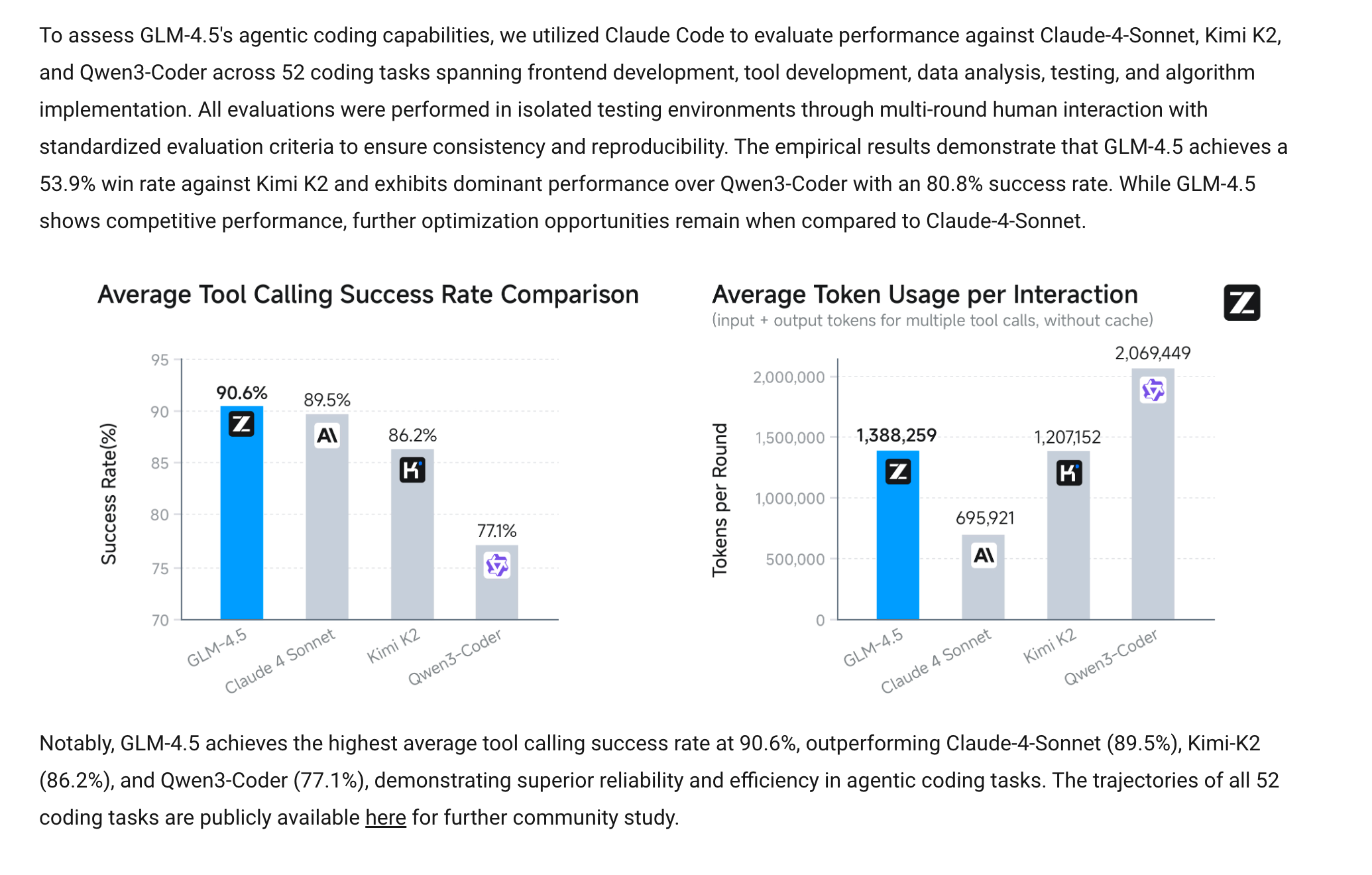

Beyond just the table stakes benchmarks to be considered a frontier model, Z.ai also commendably emphasizes new measurements that matter greatly for agentic use, including token efficiency (perhaps the hardest metric of all).

No paper yet, but the blog post offers some interesting details on architecture choice and efficient RL training. GLM 4.5 is the second large model this month to validate the Muon optimizer at scale.

AI Twitter Recap

New Model Releases & Performance

- GSPO and the Qwen3 Model Suite: Alibaba Qwen announced Group Sequence Policy Optimization (GSPO), a new reinforcement learning algorithm described as a breakthrough for scaling large models. It features sequence-level optimization, improved stability for large MoE models without needing “hacks like Routing Replay,” and powers the latest Qwen3 models (Instruct, Coder, Thinking). The research paper is noted by @lupantech and is praised by @teortaxesTex as their most impressive paper to date. The algorithm has already been integrated into Hugging Face’s TRL library, as noted by @mervenoyann and @_lewtun.

- Z.ai Launches GLM-4.5 Models: Chinese AI lab Z.ai has released two new open-source models, GLM-4.5 and GLM-4.5-Air, with a permissive MIT license. As summarized by @scaling01, GLM-4.5 is a 355B parameter MoE model with 32B active parameters, while GLM-4.5-Air is 106B with 12B active. The models are described as hybrid reasoning models with a focus on coding and agentic tasks. In a notable move towards transparency, Z.ai also open-sourced all 52 task trajectories from their agentic coding evaluation for community review.

- Speculation on “Summit” and “Zenith” as GPT-5: A new set of powerful mystery models, codenamed “summit” and “zenith,” appeared on LM Arena, fueling speculation they could be versions of GPT-5. @Teknium1 reported being told “zenith is gpt-5,” while @emollick and @scaling01 showcased their impressive capabilities in generating complex p5.js code and creative writing. Users noted the models appear to be based on a GPT-4.1 series with a June 2024 knowledge cutoff.

- Qwen3-Coder’s Strong Coding Performance: Alibaba’s Qwen3-Coder model has demonstrated strong performance on coding benchmarks. @cline reported a 5.32% diff edit failure rate, placing it alongside Claude Sonnet 4 and Kimi K2. OpenRouterAI noted that the model passed Grok 4 in programming rankings, tying with Kimi.

- The Rise of Chinese Open-Source Models: A significant trend observed this month is the rapid release of powerful open-source models from Chinese labs. @Yuchenj_UW compiled a list of July releases including GLM-4.5, Wan-2.2, Qwen3 Coder, and Kimi K2, contrasting it with a perceived slowdown from Western labs like OpenAI and Meta.

- Hunyuan3D World Model 1.0 Release: Tencent Hunyuan has open-sourced its Hunyuan3D World Model 1.0, which enables the generation of explorable 3D environments.
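To make the "sequence-level optimization" in the GSPO item above concrete, here is a minimal sketch. It assumes, based on our reading of the GSPO paper, that the importance ratio is computed once per response as the length-normalized (geometric mean) ratio of new to old policy likelihoods, rather than once per token as in GRPO-style objectives. The function names, the toy log-probabilities, and the `eps` clipping value are all hypothetical and are not taken from Qwen's code or from TRL.

```python
import math
from typing import List


def token_ratios(new_logps: List[float], old_logps: List[float]) -> List[float]:
    """Per-token importance ratios, the quantity a token-level objective clips."""
    return [math.exp(n - o) for n, o in zip(new_logps, old_logps)]


def sequence_ratio(new_logps: List[float], old_logps: List[float]) -> float:
    """Length-normalized sequence-level ratio (geometric mean of token ratios)."""
    if len(new_logps) != len(old_logps) or not new_logps:
        raise ValueError("log-prob lists must be non-empty and the same length")
    mean_log_ratio = sum(n - o for n, o in zip(new_logps, old_logps)) / len(new_logps)
    return math.exp(mean_log_ratio)


def clipped_sequence_objective(ratio: float, advantage: float, eps: float) -> float:
    """PPO-style clipping applied to the whole-sequence ratio.

    eps is a placeholder hyperparameter, not the paper's setting.
    """
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)


if __name__ == "__main__":
    old = [-1.2, -0.7, -2.0]   # toy per-token log-probs under the old policy
    new = [-1.0, -0.9, -1.5]   # toy per-token log-probs under the new policy
    print("token-level ratios:", token_ratios(new, old))
    print("sequence-level ratio:", sequence_ratio(new, old))
```

One outlier token can move a single token-level ratio a long way, while the sequence-level ratio averages it out across the response. That averaging is one plausible reading of the stability claim for large MoE models.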

AI Agents & Agentic Workflows

- Claude Code for Complex Agentic Systems: Claude Code is being highlighted as a powerful tool for orchestrating complex agentic systems. @omarsar0 demonstrated building a multi-agent deep research system by chaining sub-agents with /commands for reliability, noting it’s useful for more than just code.

- ChatGPT Agent Officially Rolls Out: OpenAI announced that the ChatGPT agent is now fully rolled out to all Plus, Pro, and Team users. However, the rollout wasn’t without hitches, as @gneubig humorously pointed out that the OpenAI agent was being blocked by OpenAI’s own captcha.

- The Future of Agents: Proactive & Ambient: @_philschmid outlines the next iteration of agents, predicting a shift from request-response to proactive, ambient agents that operate in the background. These agents will be triggered by events, monitor data streams, and require new UI paradigms beyond chat, with a strong emphasis on human oversight and long-term memory.

- Perplexity Comet Browser Agent: Perplexity AI continues to send out invites for its Comet browser agent. @AravSrinivas showcased a demo of Comet acting as a travel agent to book a flight on United, including seat selection. He also noted that Perplexity is the default search on the Comet browser, which could significantly drive usage.

- Why Multi-Agent Systems Fail: DeepLearningAI summarized a research paper that categorized the primary causes of failure in multi-agent systems as poor specifications, inter-agent misalignment, and weak task verification.

Video & Multimodal Generation

- Runway Aleph Sets a New Frontier: Runway began rolling out Aleph, its state-of-the-art in-context video model. Creative Technologist @c_valenzuelab shared numerous demos showcasing its capabilities: creating infinite camera coverage on demand, modifying specific parts of a video while retaining motion and identity, setting juggling balls on fire, performing wardrobe and styling modifications, and seamlessly removing objects from a scene. He described it as a “new medium” where the hardest part is conceptualizing what to create.

- Open-Source Video: Wan 2.2 Released: Countering the trend of closed video models, Alibaba released Wan 2.2, the “World’s First Open-Source MoE-Architecture Video Generation Model”. @scaling01 noted its release and @ostrisai highlighted a 5B version supporting text-to-video and image-to-video at 24 FPS on a single RTX 4090.

- Kling AI Introduces Kling Lab: Kling AI announced Kling Lab, a new workspace designed to streamline the creative video generation process, which is currently in beta testing.

- Grok Imagine Enters Waitlist Beta: xAI launched Grok Imagine, an image and video generation tool, behind a waitlist on the Grok app. @chaitualuru described it as a “fun image and video generation experience” and noted they are expanding access.

Infrastructure, Tooling & Efficiency

- Frameworks and Libraries: The supervision open-source library, created by @skalskip92, has crossed 30,000 stars on GitHub. LangGraph released v0.6.0 with a new context API for type-safe dependency injection. Red Hat AI’s GuideLLM is joining the vLLM project, combining its structured generation with vLLM’s inference speed.

- LLM Evals and Data: @HamelHusain released a massively expanded LLM Evals FAQ, reorganizing it into categories and adding an audio version. On the data side, @vikhyatk warned that the popular GQA eval dataset has a 20-30% annotation error rate.

- Hardware and Training Efficiency: John Carmack @ID_AA_Carmack commented on the irony of modern ML feeling like old-school sci-fi technobabble, stating, “I am running the convolutions in frequency space!” @awnihannun provided a thought-provoking analysis on how autoregressive transformers are “adversarially designed” for modern computer memory hierarchies, suggesting that either the algorithm or the computer must change for major efficiency gains. Meanwhile, @ggerganov highlighted that AMD teams are now contributing to the llama.cpp codebase. A new NanoGPT training speed record was set by @kellerjordan0, achieving a 3.28 validation loss in 2.863 minutes on 8xH100s.

New AI Techniques & Research

- Prompt Optimization vs RLHF: A new paper on Reflective Prompt Evolution (GEPA) shared by @lateinteraction shows that prompt optimization can outperform RL algorithms like GRPO in terms of sample efficiency. The work suggests that learning via natural-language reflection will be a central paradigm for building AI systems.

- Causality in ML: @sirbayes recommends a new book on causality for ML by Elias Bareinboim, calling it a “worthy successor” to Judea Pearl’s groundbreaking work.

- The Power of Simple Penalties: @francoisfleuret argued that the key lesson from the Variational Autoencoder (VAE) is that “dumb penalties have extremely profound effects and induce incredibly sophisticated structures in deep models.”

- Meta-Learning History: Jürgen Schmidhuber @SchmidhuberAI provided a detailed historical overview of meta-learning, tracing the concept of in-context learning back to his work in the early 1990s and Sepp Hochreiter’s work in 2001.

Industry Trends & Commentary

- Hiring and Talent: Meta appointed a fresh PhD from OpenAI as its Chief Scientist, a move @Yuchenj_UW notes as “unheard of” for a 30-year-old at a major corporation, signaling a shift towards “skill >> seniority.”

- The Vision Gap in AI: @jxmnop made the surprising observation that “fifteen years of hardcore computer vision research contributed ~nothing toward AGI except better optimizers,” as models still don’t get smarter when given eyes. @teortaxesTex added that while many open models are reaching a similar performance plateau, the key missing element is a “radically new eval suite,” which would signal that someone is “climbing a new hill.”

- The Future of Search: Perplexity AI’s CEO @AravSrinivas stated that Perplexity’s rapid growth in markets like India is “clear proof that search has changed forever.”

- AI and Experience: Mustafa Suleyman @mustafasuleyman drew a “bright line” between humans and AI, stating that “To be human is to experience. Today’s AIs have knowledge… but can only imitate experience.”

Humor & Memes

- The Vibe Coder Universe: The term “vibe coding” has become a pervasive meme, used to describe an intuitive, sometimes brittle, approach to development. The concept has now evolved, with @scaling01 noting that some have “ascended from being mere vibe coders to vibe architects,” and @lateinteraction declaring we are in the “Vibe Meanings era.”

- The Magic Talking Dog Party: A party hosted by @benhylak and @okpasquale at Slack’s original office, featuring a “magical talking dog,” became a running joke, with @KevinAFischer posting a picture of the “pitch at a16z” involving the dog and a human pyramid.

- Relatable Developer Pain: @cloneofsimo lamented that in 2025, transformers can solve olympiad problems and design chips, “yet we have latex UI still breaking.” @francoisfleuret offered a warning: “If you are using FSDP and have an ‘if’ statement in your model.forward(), be nervous my friend, be very nervous.”

- Industry Commentary: @Yuchenj_UW shared a tragic family story: “My dad bought 100 Bitcoin for $50 each in 2013. Sold them at $100, bragged for weeks. Ever since then… Bitcoin has been a banned word in our family.”

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. GLM-4.5 Announcements, Launches, and Collections

- GLM4.5 released! (Score: 720, Comments: 193): GLM-4.5 (355B total/32B active params) and GLM-4.5-Air (106B total/12B active params) are new flagship hybrid reasoning models from Zhipu AI, now open-weight on HuggingFace and ModelScope under an MIT license. Key technical features include distinct ‘thinking’ and ‘non-thinking’ modes for flexible agentic/coding tasks, as well as a native Multi-Token Prediction (MTP) layer supporting speculative decoding for improved inference performance on CPU+GPU hardware. Full details are outlined in the official blog post. Commenters emphasize the impact of both the open MIT licensing and the native MTP layer, noting it as a significant milestone for community reusability and for efficient inference, particularly on mixed hardware setups.

- The GLM-4.5 release includes foundation models (355B-A32B and 106B-A12B) under an MIT license, which is notable for enabling broad customization and innovation by the community. This open licensing for large-scale models is considered an exceptional step forward for open-source AI development.

- GLM-4.5 and GLM-4.5-Air feature an MTP (Multi-Token Prediction) layer for speculative decoding during inference, which can improve efficiency—especially for mixed CPU+GPU setups. Speculative decoding out-of-the-box is cited as a significant usability advantage for inference optimization.

- The release includes several technical assets: BF16, FP8, and base models, facilitating further training, fine-tuning, and research. Documentation and technical resources are provided for popular inference engines (vLLM, SGLang) and detailed guides for both inference and fine-tuning are shared in their GitHub and technical blog, making the models accessible for experimentation and extension.
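For readers new to speculative decoding, the idea behind the MTP layer discussed above can be sketched as follows. This is a generic greedy variant in which a cheap draft proposes a few tokens and the full model verifies them. It is not GLM-4.5's actual implementation, and `speculative_step`, `toy_target`, and `toy_draft` are hypothetical names with toy behavior. In a real engine the verification is a single batched forward pass rather than the Python loop shown here.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]


def speculative_step(context: List[int], draft_next: NextToken,
                     target_next: NextToken, k: int = 4) -> List[int]:
    """Run one greedy speculative-decoding step and return the accepted tokens."""
    # 1. The cheap draft proposes k tokens autoregressively.
    proposed: List[int] = []
    ctx = list(context)
    for _ in range(k):
        tok = draft_next(ctx)
        proposed.append(tok)
        ctx.append(tok)

    # 2. The target model checks each proposal against its own greedy choice.
    accepted: List[int] = []
    ctx = list(context)
    for tok in proposed:
        expected = target_next(ctx)
        if tok != expected:
            accepted.append(expected)  # first mismatch: keep the target's token and stop
            return accepted
        accepted.append(tok)
        ctx.append(tok)

    # 3. Every proposal matched, so the target contributes one bonus token.
    accepted.append(target_next(ctx))
    return accepted


def toy_target(ctx: List[int]) -> int:
    """Toy 'large model': counts upward modulo 10."""
    return (ctx[-1] + 1) % 10


def toy_draft(ctx: List[int]) -> int:
    """Toy 'draft head': agrees with the target except right after a 3."""
    return 0 if ctx[-1] == 3 else (ctx[-1] + 1) % 10


if __name__ == "__main__":
    print(speculative_step([1], toy_draft, toy_target, k=4))
```

The output always matches what the target would have produced by itself under greedy decoding. The speedup comes from accepting several tokens per target pass when the draft is usually right.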

- GLM 4.5 Collection Now Live! (Score: 221, Comments: 47): The GLM 4.5 Collection is now live on HuggingFace (link), featuring GLM-4.5 and GLM-4.5-Air with a focus on ‘hybrid thinking’ capabilities. Benchmarks reported indicate that while math/science scores are slightly below those of Qwen3, GLM 4.5 demonstrates strong generalized coding performance despite not being a specialized Coder model. Comments note the lack of immediate GGUF-format downloads (e.g., via Unsloth) and highlight the design choice of hybrid reasoning (contrasting with Qwen’s direction), suggesting this could lead to more versatile generalist models.

- The new GLM 4.5 model employs a hybrid architecture, which contrasts with the approach taken by the Qwen team. According to the released math and science benchmarks, GLM 4.5 underperforms Qwen3 in these areas, but it demonstrates notably strong results in coding tasks compared to non-specialized models, suggesting robust general capabilities in domains outside pure STEM.

- There is community interest in immediate GGUF-format downloads for GLM 4.5, with some users expressing frustration at the lack of coordination with the Unsloth team’s tooling on release—highlighting the demand for compatibility and ease of use with local inference frameworks or quantized formats.

- A technical suggestion raised is the fine-tuning of models like GLM 4.5 for structured writing tasks (e.g. letter writing, fiction, personality emulation). Users note a gap in performance for these creative/support roles, suggesting targeted instruction tuning could address common use cases not fully captured by current benchmarks.

- GLM 4.5 possibly releasing today according to Bloomberg (Score: 134, Comments: 26): Bloomberg reports that Zhipu AI (previously THUDM, now zai-org on Hugging Face) is set to release GLM-4.5, with associated collections and datasets appearing on Hugging Face (GLM 4.5 Collection, CC-Bench-trajectories dataset). GLM-4.5-Air, the public release, consists of 106B total parameters with 12B active parameters, suggesting a sparsely-activated model similar to Mixture-of-Experts. Expert commenters express interest in large model variants (32B, 70B) under permissive licenses (MIT, Apache), and the main technical discussion centers on the compact, sparsely-activated architecture of GLM-4.5-Air. There is also anticipation regarding the license and possible upstream improvements in efficiency or capability.

- GLM-4.5-Air is publicly released and features a ‘more compact design’ with 106 billion total parameters but only 12 billion ‘active parameters’, which suggests architectural optimizations for performance or efficiency (source: https://huggingface.co/zai-org/GLM-4.5-Air).

- There is community interest in the release of 32B and 70B parameter versions of GLM-4.5, specifically with MIT or Apache open licenses, highlighting concerns related to broad accessibility and permissive model licensing.

- A direct link to the CC-Bench dataset on Hugging Face is provided, which may be relevant to evaluating the capabilities or benchmarking of GLM-4.5 models (source: https://huggingface.co/datasets/zai-org/CC-Bench-trajectories).

- GLM shattered the record for “worst benchmark JPEG ever published” - wow. (Score: 106, Comments: 76): The post critiques a JPEG image purportedly showing benchmarks for GLM-4.5, with the primary complaint being very poor image or data quality—described as the ‘worst benchmark JPEG ever published.’ The underlying technical point involves benchmarking language models, specifically GLM-4.5, relative to competing models such as DeepSeek R1; a comment clarifies that the original JPEG is misleading about RAM usage, noting that GLM-4.5’s native precision is BF16 rather than FP8, which is a significant technical distinction for inference efficiency and memory use. Some commenters criticize the hyperbolic nature of the title and confusion about the image’s contents, while another notes that despite the poor presentation, GLM-4.5 is considered a high-quality model and there is anticipation for its forthcoming multimodal capabilities.

- One commenter clarifies a technical specification: GLM-4.5 actually uses more RAM than DeepSeek R1 because GLM-4.5’s native precision is BF16 rather than FP8, contradicting any implication that it is more memory-efficient than the comparison model.

- Reference is made to the GLM-4.5 documentation to provide technical context. The discussion critiques the JPEG benchmark image used in the documentation, noting that its clarity and informational value degrade upon closer inspection.

- Despite critique of the benchmark presentation, one user points out that GLM-4.5 remains a strong, high-performing model, and expresses anticipation for its future multimodal capabilities.
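The BF16-versus-FP8 point in the thread above is easy to check with back-of-the-envelope arithmetic. The sketch below counts weight storage only and ignores KV cache, activations, and runtime overhead. GLM-4.5's 355B total parameters come from the items above. DeepSeek R1's 671B total is not stated in this issue and is used here as an assumption. `weight_gb` is a hypothetical helper.

```python
# Bytes needed to store one parameter at each native precision.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0}


def weight_gb(total_params_billions: float, dtype: str) -> float:
    """Weights-only footprint in GB (1e9 params * bytes per param / 1e9 bytes per GB)."""
    return total_params_billions * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    glm = weight_gb(355, "bf16")   # GLM-4.5 at native BF16
    r1 = weight_gb(671, "fp8")     # DeepSeek R1 at native FP8 (671B is an assumption)
    print(f"GLM-4.5 (BF16): {glm:.0f} GB, DeepSeek R1 (FP8): {r1:.0f} GB")
```

Under these assumptions, the model with roughly half the parameters still needs slightly more memory at native precision, which is the commenter's point.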

2. Wan 2.2 Open Video Generation Model Releases and Benchmarks

- Wan 2.2 is Live! Needs only 8GB of VRAM! (Score: 440, Comments: 49): Wan 2.2, a new model release, is highlighted for its low VRAM requirement—needing only 8GB—making it accessible to many users without high-end hardware. The discussion references early releases and repacks for ComfyUI, suggesting active integration and support from both the community and possibly official staff. The image likely showcases a model output or promotional material, underscoring the capability of the new version on limited hardware. A substantive comment notes that ComfyUI repacks are being released rapidly, implying close collaboration or internal staff involvement from Wan with ComfyUI development. This supports the perception of an engaged, responsive open-source/model community around Wan 2.2.

- The commenter notes that the ComfyUI repacked version of Wan 2.2 was released even before the vanilla model, suggesting that some ComfyUI contributors might be involved with the Wan project. This implies rapid integration and possible cross-team collaboration, which is particularly notable given the typically slower pace of official support for new model architectures in UI frameworks.

- Direct Hugging Face links are provided for multiple variants of the Wan 2.2 model (including T2V (Text-to-Video), I2V (Image-to-Video), and TI2V (Text/Image-to-Video) in both base and Diffusers-ready formats). This signals strong and immediate ecosystem support for both deployment and experimentation with various workflows and model pipelines, reducing friction for technical users wanting to test or benchmark multiple modalities of Wan 2.2.

- Wan 2.2 T2V,I2V 14B MoE Models (Score: 140, Comments: 8): Wan2.2 introduces a Mixture-of-Experts (MoE) diffusion architecture for video generation, comprising two 14B-parameter specialized experts (high-noise for early denoising, low-noise for fine details) in a switchable 27B MoE setup, actuated by an SNR-based threshold for phase-optimized inference with no additional inference cost. Benchmarked on Wan-Bench 2.0, Wan2.2-T2V-A14B outperforms commercial SOTAs (KLING 2.0, Sora, Seedance) in 5/6 metrics, including dynamic motion and text rendering, while TI2V-5B achieves efficient, high-resolution (<9 min/5s 720p) T2V/I2V generation via aggressive spatial/patch compression and a unified architecture. See ComfyUI tutorial for implementation details. Comments reinforce the efficiency claim regarding single-expert-per-step inference and note accessible tools like the ComfyUI tutorial. One commenter contrasts the open-source Wan2.2 release with the more restricted practices of companies like OpenAI regarding similar models.

- A key technical insight is that the WAN 2.2 14B MoE models use a Mixture-of-Experts (MoE) architecture with only one expert active per step, keeping inference efficient while providing large parameter capacity (14B), thereby optimizing both speed and performance for video and image generation tasks.

- There’s a discussion about running diffusion models in llama.cpp: now that text diffusion is supported, users are exploring the feasibility of adding image and video diffusion support. This would potentially streamline workflows by centralizing all diffusion tasks in a single, widely-used framework, assuming implementation challenges can be overcome.

- A quick-start guide for running WAN 2.2 using ComfyUI is highlighted, emphasizing its utility for efficiently deploying the new models for video generation. The linked documentation provides step-by-step instructions for practical implementation.
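The "one expert per step" behavior described above can be illustrated with a toy denoising loop. This is a sketch under stated assumptions, not Wan's code: the experts are stand-in functions on a single float, the SNR schedule and threshold value are made up, and `pick_expert` and `denoise` are hypothetical names. The only thing it takes from the post is the routing rule, with the high-noise expert used while SNR is below a threshold and the low-noise expert afterwards.

```python
from typing import Callable, Dict, List, Tuple

Expert = Callable[[float], float]


def pick_expert(snr: float, snr_threshold: float) -> str:
    """Early, noisy steps (low SNR) go to the high-noise expert; later steps to the low-noise one."""
    return "high_noise" if snr < snr_threshold else "low_noise"


def denoise(latent: float, snr_schedule: List[float],
            experts: Dict[str, Expert], snr_threshold: float) -> Tuple[float, List[str]]:
    """Run the schedule, calling exactly one expert per step, and log which one ran."""
    used: List[str] = []
    for snr in snr_schedule:
        name = pick_expert(snr, snr_threshold)
        latent = experts[name](latent)  # only this expert's weights are needed for the step
        used.append(name)
    return latent, used


if __name__ == "__main__":
    toy_experts = {
        "high_noise": lambda x: x * 0.5,  # stand-in for coarse layout denoising
        "low_noise": lambda x: x * 0.9,   # stand-in for fine-detail refinement
    }
    result, used = denoise(1.0, [0.1, 0.5, 2.0, 8.0], toy_experts, snr_threshold=1.0)
    print(used, result)
```

Because routing depends only on the step's noise level, per-step compute stays at one 14B expert even though about 27B parameters exist in total.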

3. Specialized LLM Launches for Niche Applications (UI, Instruct, Edge Devices)

- UIGEN-X-0727 Runs Locally and Crushes It. Reasoning for UI, Mobile, Software and Frontend design. (Score: 420, Comments: 67): Tesslate’s latest model, UIGEN-X-32B-0727, is a 32B dense LLM finetuned on Qwen3 and specialized for end-to-end modern UI/UX, frontend, mobile, and software design implementation. The model supports a wide array of frameworks (e.g., React, Vue, Angular, Svelte), styling options (Tailwind, CSS-in-JS), UI libraries, state management, animation, multi-platform (web, mobile, desktop), and Python integrations, offering code generation in 26+ languages and component-driven patterns. A 4B version is stated to be released imminently. Discussion centers on the surprisingly high quality of UI output from a dense 32B model, with curiosity about finetuning methodology given its SOTA-style performance and comparison to larger models. There is also mention of community interest in API/third-party integration for broader evaluation.

- UIGEN-X-0727, a finetune of Qwen3 and a comparatively small 32 billion parameter dense model, is noted for generating highly competitive UIs, with some users surprised by the model’s capabilities at this scale: performance exceeds expectations commonly associated with much larger models.

- One technical critique highlights that although UI generator LLMs like UIGEN-X-0727 excel at rendering individual components and maintaining consistent themes, major challenges persist in inter-component linking, navigation integration, and the automatic addition of dynamic styles—critical aspects for production-level frontend/UI code generation.

- A tester mentioned the model requires 64GB of VRAM to run locally, leading to attempts to stage it on AWS for further benchmarking, which is a significant resource requirement for an allegedly ‘small’ model and could raise accessibility/performance concerns for local use.

- Qwen/Qwen3-30B-A3B-Instruct-2507 · Hugging Face (Score: 502, Comments: 90): Alibaba’s Qwen team has released the Qwen/Qwen3-30B-A3B-Instruct-2507 model checkpoint on Hugging Face, but as of posting, no official model card or technical documentation is available. The model belongs to the 30B parameter class and appears to utilize the A3B architecture, following naming conventions from earlier Qwen releases noted for balancing strong performance and efficient inference on consumer hardware (see the previous Qwen3-30B-A3B model). Discussion in the comments highlights high user anticipation, referencing previous Qwen models as “daily drivers” and suggesting that updates to the A3B line (as seen in larger models) have delivered significant quality jumps, fueling expectations that this release may set a new standard for locally run LLMs.

- Admirable-Star7088 notes that the previous Qwen3-235B-A22B-Instruct-2507 represented a significant performance improvement over the earlier “thinking” versions, suggesting that if similar gains are realized in the Qwen3-30B-A3B-Instruct-2507, it could become one of the top LLM releases optimized for consumer hardware usage.

- rerri documents the repo’s visibility status, noting that it was initially private, which may imply an accidental early publication or a staggered rollout—this sometimes affects accessibility for benchmarking or comparison studies when new LLMs are released.

- Pi AI studio (Score: 117, Comments: 27): The discussion focuses on a $1000 device featuring 96GB LPDDR4X (not LPDDR5X) RAM and an Ascend 310 chip, with questions about its suitability for hosting small LLMs. Commenters note the device’s memory bandwidth may bottleneck performance, and the Ascend 310’s capabilities are compared to Nvidia’s Jetson Orin Nano, suggesting only simpler neural networks or heavily quantized models (e.g., 70B 8-bit or 100B+ int4) might be practical. Top comments debate the sufficiency of LPDDR4X versus faster RAM (LPDDR5X), and express skepticism about both the memory bandwidth and compute capability, indicating that running high-throughput or larger LLMs would be challenging.

- Multiple commenters express concerns about the LPDDR4X memory used in the Pi AI Studio, noting its limited bandwidth (~3.8GB/s) compared to higher-end solutions like Mac AI Studio (546GB/s). This limitation is expected to significantly affect token throughput and restrict performance with larger language models.

- Discussion compares the Ascend 310 AI accelerator to Nvidia’s Jetson Orin Nano, suggesting that while it can handle simpler neural networks or medium-sized MoE models (e.g., Qwen 3 30B), it would struggle with decent LLMs. Quantized models (e.g., 70B 8-bit or 100B+ INT4) may technically run, but would be heavily bottlenecked by memory bandwidth.

- There is technical debate about memory bandwidth estimation based on LPDDR type, with one commenter highlighting the lack of clear, practical data due to variations in implementation (especially bus width), which makes predicting actual throughput and performance for LLM inference (e.g., token/sec on 7B to 40B models) challenging.
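The bandwidth concern in this thread follows from a common rule of thumb: single-stream decoding has to read every active weight once per token, so memory bandwidth divided by bytes read per token gives an upper bound on tokens per second. The sketch below applies that rule to the figures quoted by commenters (~3.8GB/s and 546GB/s), which are unverified. `max_tokens_per_second` is a hypothetical helper, and the 3B active-parameter figure for the Qwen3 30B MoE is an assumption read off its "A3B" name. Real throughput will be lower once KV cache reads and compute limits are included.

```python
def max_tokens_per_second(bandwidth_gb_s: float,
                          active_params_billions: float,
                          bytes_per_param: float) -> float:
    """Bandwidth-bound ceiling: GB/s available divided by GB of weights read per token."""
    gb_per_token = active_params_billions * bytes_per_param
    return bandwidth_gb_s / gb_per_token


if __name__ == "__main__":
    # Figures as quoted in the thread; treat them as illustrative, not measured.
    for label, bw in [("quoted LPDDR4X figure", 3.8), ("quoted 546GB/s figure", 546.0)]:
        dense_70b_int8 = max_tokens_per_second(bw, 70, 1.0)
        moe_3b_active_int8 = max_tokens_per_second(bw, 3, 1.0)
        print(f"{label}: 70B dense 8-bit <= {dense_70b_int8:.2f} tok/s, "
              f"3B-active MoE 8-bit <= {moe_3b_active_int8:.2f} tok/s")
```

This also shows why commenters single out MoE models for this class of device: only the active parameters count toward the per-token read, so a sparse 30B model has a far higher ceiling than a dense 70B one.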


Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo

1. Wan2.2 Video Model Release, Benchmarks, and Community Tests

- First look at Wan2.2: Welcome to the Wan-Verse (Score: 863, Comments: 134): The Wan team has released Wan2.2, the latest iteration of their text-to-video/image-to-video (I2V) model, following the success of Wan2.1 (5.8M+ downloads and 13.3k GitHub stars). Wan2.2 introduces a more effective Mixture-of-Experts (MoE) architecture, improved cinematic aesthetics, and significantly enhanced abilities to generate complex and diverse motion sequences from a single image input, as highlighted in the preview demo. Model artifacts and documentation are available via Hugging Face (https://huggingface.co/Wan-AI), GitHub (https://github.com/Wan-Video), and the official website (https://wan.video/welcome). Commentary notes recognition of quality improvements and anticipation for hands-on testing. Technical users emphasize architectural advancements and capability upgrades as core differentiators from prior versions.

- Wan2.2 introduces a significantly upgraded MoE (Mixture of Experts) architecture, which is designed to improve model efficiency and output diversity. This change is highlighted as a core technical differentiator from Wan2.1 and is expected to enhance both the complexity and fidelity of generated video content.

- Technical enhancements specifically call out improved cinematics—enabling higher-quality aesthetics—and the capability to generate more complex motion sequences from single source images. This suggests tighter integration of temporal and spatial features in the model’s pipeline for better video realism and dynamic content.

- Some users are awaiting an FP8 (floating point 8-bit) release, indicating demand for lower-precision weights, which could benefit model inference and deployment efficiency, especially on hardware that supports FP8 accelerators. This is a signal of community interest in optimized performance for large-scale or consumer-grade hardware.

- Wan2.2 released, 27B MoE and 5B dense models available now (Score: 479, Comments: 251): The post announces the release of Wan2.2, featuring new models: a 27B parameter Mixture-of-Experts (MoE) model for Text-to-Video (T2V) and Image-to-Video (I2V) tasks (T2V, I2V), and a 5B dense model (TI2V-5B). Official codebase and repackaged fp16/fp8 models for ComfyUI are provided, alongside a dedicated workflow guide. Notably, the 5B model reportedly delivers 15s/it for 720p 30-step generations on an RTX 3090 (~4-5 minutes per rendering), with efficient native offloading enabling use on 8GB VRAM GPUs. Technical discussion in comments emphasizes the unusually low VRAM requirements, especially the practicality of running the 5B dense model on consumer GPUs (e.g., 8GB and 12GB cards), and highlights the real-world render times compared to prior models, eliminating need for additional LoRA finetuning (e.g., ‘lightx2v’).

- The released Wan2.2 5B dense model demonstrates practical VRAM efficiency: it’s confirmed to run on an 8GB GPU (with ComfyUI’s native offloading), enabling 15s/iteration video generation at 720p resolution on an RTX 3090 (30 steps in 4-5 minutes), and suggesting that cards with 12GB VRAM (like the RTX 3060) may also handle it.

- The two-pass TI2V (Text-to-Image-to-Video) setup on a 4090 (using FP8) yields strong i2v (image-to-video) results, maintaining capability for NSFW content, indicating quality is not compromised even at reduced precision.

- ComfyUI HuggingFace repackaged models require users to utilize both high and low noise variants in workflows. The safetensors files for Wan2.2 total 14.3GB, raising uncertainty whether the full workflow (with both models) will fit within a 16GB VRAM constraint, unlike Wan2.1.

- First test I2V Wan 2.2 (Score: 251, Comments: 69): The post discusses initial testing of the I2V Wan 2.2 model, focusing on dynamics and camera improvements compared to Wan 2.1. A user notes significant VRAM usage: on an RTX 5090 with 32GB VRAM, generating 121 frames at 1280x720 resolution causes an out-of-memory error, forcing reduction to 1072x608. There’s an expressed need for the u/kijai Wan wrapper update for v2.2 to leverage its memory management. Linked content includes a gif and reference comparison to Wan 2.1. One technical note observes persistent head ‘noise’, suggesting incomplete denoising. Commentary centers on both positive dynamics/camera upgrades and negative VRAM scaling behavior. There is debate as to artifact causes—whether due to denoising or model artifacts—and anticipation for workflow tool updates to address these hardware constraints.

- A user reports that while WAN 2.2 introduces much improved model dynamics and camera handling over WAN 2.1, memory requirements have increased significantly. On an RTX 5090 with 32GB VRAM, 1280x720 generation resulted in out-of-memory errors after 121 frames, requiring a downscale to 1072x608 for stable generation. This highlights urgent need for memory optimizations, possibly via wrappers like the one by u/kijai targeted for WAN 2.2.

- There is specific feedback on the output quality: users note weird noise on the head during motion, suggesting potential issues with insufficient denoising or video stabilization in spatially challenging regions at motion boundaries. Video quality and motion are described as poor, and users question if this is due to the model variant (e.g., 5B vs 27B parameters) or rendering resolution.

- Community inquiry about backward compatibility: one user asks if LoRA models trained on WAN 2.1 are still functional or compatible when used with WAN 2.2, highlighting an important aspect of model versioning and workflow stability.

- PSA: WAN2.2 8-steps txt2img workflow with self-forcing LoRa’s. WAN2.2 has seemingly full backwards compitability with WAN2.1 LoRAs!!! And its also much better at like everything! This is crazy!!!! (Score: 250, Comments: 121): The post announces that the new WAN2.2 diffusion model demonstrates near full backwards compatibility with WAN2.1 LoRAs (Low-Rank Adaptation checkpoints), with visible improvements in output: greater detail, more dynamic compositions, and improved prompt adherence (example: color manipulation per prompt is better in WAN2.2). The author provides a downloadable 8-step txt2img workflow JSON (see WAN2.2 workflow), encouraging users to update because an earlier version contained errors. Example outputs show LoRA compatibility and enhanced results. Top comments focus on empirically verifying WAN2.2’s performance against models like Flux and confirm the successful use of WAN2.1 LoRAs in WAN2.2, which is considered a significant technical advance in workflow flexibility.

- Users confirm that WAN2.2 demonstrates full backwards compatibility with WAN2.1 LoRAs, making it significant for existing workflows that depended on prior LoRA assets. This is validated by shared generated image examples and user feedback highlighting seamless integration.

- An updated recommended workflow JSON for WAN2.2 txt2img inference is provided, addressing an earlier workflow error. Technical users are encouraged to redownload to avoid compatibility or performance issues: https://www.dropbox.com/scl/fi/j062bnwevaoecc2t17qon/WAN2.2_recommended_default_text2image_inference_workflow_by_AI_Characters.json?rlkey=26iotvxv17um0duggpur8frm1&dl=1

- The WAN2.2 GGUF model can be found on Hugging Face under QuantStack’s repository: https://huggingface.co/QuantStack/Wan2.2-T2V-A14B-GGUF/tree/main. This assists users seeking direct model downloads for local deployment or benchmarking.

- 🚀 Wan2.2 is Here, new model sizes 🎉😁 (Score: 195, Comments: 50): The attached image here is a technical illustration or demo related to Wan2.2’s new release, highlighting improvements in open-source AI video generation, including MoE (Mixture of Experts) models for Text-to-Video, Image-to-Video, and Text+Image-to-Video up to 720p with notable temporal consistency. Details in the post emphasize that Wan2.2 offers new models (T2V-A14B, I2V-A14B, TI2V-5B) available via HuggingFace and ModelScope, with a particular focus on easy installation and template integration with ComfyUI. The image likely showcases a visual output or workflow of these new video generation capabilities, although the exact image content could not be analyzed. Comments discuss the technical integration with ComfyUI templates, highlighting the I2V (Image-to-Video) mode’s unique two-pass flow using high/low noise models, and express anticipation about performance LoRAs (Low-Rank Adaptations) compatibility.

- A user notes that the i2v (image-to-video) workflow in ComfyUI for Wan2.2 uses a two-pass architecture employing both high and low noise models, highlighting a specific implementation detail that likely impacts quality or control over video generation. This indicates that the model pipeline has been designed to process inputs in stages with varying noise profiles for potentially better results.

- Another technical comment provides early feedback on the newly released 5B model, characterizing its output quality as significantly inferior compared to the 14B variant, which is described as ‘A+’. This suggests substantial differences in output quality, possibly due to scale-related limitations or architectural differences between versions.

- One user emphasizes anticipation for GGUF-compatible versions of the Wan2.2 models, which is relevant for local inference and deployment efficiency, pointing to ongoing interest in model portability and compatibility with quantization/user-friendly formats.
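The two-pass high/low noise flow mentioned above can be pictured as splitting one denoising schedule between two models. The sketch below is only an illustration under stated assumptions: the function name `split_denoising_steps` and the `boundary` fraction are hypothetical, and the actual switch point used by the ComfyUI templates is not given in the post.

```python
def split_denoising_steps(total_steps: int, boundary: float = 0.5) -> tuple[list[int], list[int]]:
    """Assign early (noisier) steps to one model and later steps to another.

    `boundary` is a hypothetical fraction of the schedule handled by the
    high-noise model; the real workflow may use a different switch point.
    """
    if not 0.0 <= boundary <= 1.0:
        raise ValueError("boundary must be between 0 and 1")
    switch = int(total_steps * boundary)
    high_noise_steps = list(range(switch))               # first pass
    low_noise_steps = list(range(switch, total_steps))   # second pass
    return high_noise_steps, low_noise_steps


high, low = split_denoising_steps(8)
print(high)  # [0, 1, 2, 3]
print(low)   # [4, 5, 6, 7]
```

Because only one of the two models is needed at any given step, a workflow can in principle keep just the active one in VRAM, which is one reason the split matters for the memory discussions in these threads.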

- Wan 2.2 test - T2V - 14B (Score: 172, Comments: 51): The post documents a technical test of the Wan 2.2 14B text-to-video (T2V) model at 480p resolution using Triton-accelerated samplers. The workflow used fp16 precision and required approximately 50 GB VRAM for the first pass, spiking up to 70 GB, though the user expected complete offloading after the first model. The resulting video demonstrates substantial advancements over Wan 2.1, with strong adherence to prompts and realistic rendering of complex human motion and limb articulation over multiple seconds—areas where previous models struggled. Performance metrics from comments indicate that a scaled-down version (14B T2V) can run on a 16 GB VRAM card (e.g., RTX 4070Ti Super) with 64 GB RAM, generating a 5-second 320x480 video in 4 minutes 43 seconds. Comments corroborate technical improvements: complex footwork is rendered accurately without obvious errors (in contrast to prior Wan 2.1 limitations), and prompt adherence is highlighted as a strong point. Concerns about high VRAM usage (50-70 GB) are noted, but community tests show feasible operation at lower specs through scaling.

- The latest Wan 2.2 T2V 14B model demonstrates significant improvements over Wan 2.1, notably generating complex human motion and footwork sequences without obvious errors, a capability not present in earlier versions.

- Detailed performance benchmarks: a 5-second 320x480 video was generated in 4 minutes 43 seconds using a 4070 Ti Super (16GB VRAM) and 64GB RAM, confirming that inference is feasible on consumer-grade GPUs with at least 16GB VRAM, though resource usage can scale up to 50-70GB VRAM at higher settings.

- Additional testing on an RTX Pro 6000 achieved native 24 fps generation without utilizing a teacache, offering further reference points for hardware performance and reproducibility. Commenters highlight the importance of pairing runtime metrics with explicit hardware specifications to make benchmarks meaningful.

- Wan 2.2 is Live! Needs only 8GB of VRAM! (Score: 168, Comments: 32): The post announces that Wan 2.2, a new AI model, is now released and claims it only needs 8GB of VRAM to run. However, technical discussion in the comments disputes this claim, with a user noting that the 5B variant of Wan 2.2 actually requires around 11GB of VRAM when generating a 720p video in FP8 on ComfyUI. Another comment suggests running the larger 14B FP16 model with only 8GB of VRAM would be infeasible, indicating skepticism about the official requirements. The main technical debate revolves around the veracity of the stated VRAM requirement, with multiple users providing empirical evidence that the 8GB claim is overly optimistic, at least for more powerful variants and realistic workloads.

- A user testing the 5B variant of Wan 2.2 in ComfyUI finds that VRAM usage is about 11GB when generating 720p video, even when running in FP8, which contradicts claims that 8GB VRAM is sufficient. This suggests that the stated requirements may be optimistic or conditional on special settings or smaller batch sizes.

- Discussion highlights that running larger models like the 14B variant in FP16 on only 8GB VRAM is currently unrealistic, indicating that practical minimum VRAM requirements may be higher than advertised, especially for larger model sizes or standard precision settings.

- There is curiosity about compatibility with LoRAs and potential for further optimization, such as using blockswapping or hardware like the RTX 4090, as well as interest in whether the model can be efficiently run on limited platforms such as free Google Colab environments, which often have stricter VRAM limits.
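A rough back-of-the-envelope check helps frame the VRAM debate above. The sketch below estimates memory for the model weights only, assuming 2 bytes per parameter for FP16 and 1 byte for FP8; the helper name `weight_gb` is hypothetical, and activations, the text encoder, the VAE, and any offloading are deliberately ignored.

```python
# Assumed storage cost per parameter for each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0}


def weight_gb(params_billion: float, precision: str) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return params_billion * BYTES_PER_PARAM[precision]


print(weight_gb(14, "fp16"))  # 28.0
print(weight_gb(5, "fp8"))    # 5.0
```

Under these assumptions, the 14B model in FP16 needs about 28 GB for weights alone, far above 8GB without aggressive offloading, while the 5B model in FP8 needs about 5 GB, which leaves room for the roughly 11GB total that one commenter observed once other buffers are counted.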

- Wan2.2-I2V-A14B GGUF uploaded+Workflow (Score: 147, Comments: 50): The post announces the upload of both high-noise and low-noise GGUF quantized versions of the Wan2.2-I2V-A14B model to Hugging Face, aimed at enabling inference on lower-end hardware. Preliminary testing suggests that running the 14B version at a lower quantization outperforms smaller-parameter models at fp8, though results may vary. An example workflow with the appropriate `unet-gguf-loaders` and Comfy-GGUF nodes is provided, with instructions to place the downloaded models in ComfyUI/models/unet; dependencies are covered by ComfyUI-GGUF and Hugging Face download. A top technical question from comments asks whether the model will work on an 8GB VRAM GPU, indicating interest in real-world low-resource applicability but no definitive compatibility statement provided so far.

- A user inquired about compatibility between Wan2.2 and 2.1 LoRAs, raising questions regarding backward compatibility and whether existing 2.1-based Low-Rank Adaptation (LoRA) weights can be transferred to or are directly usable with Wan2.2 models, which would be crucial for workflow continuity and leveraging existing resources.

- There is a technical query about performance on different VRAM levels: one user asks if Wan2.2-I2V-A14B GGUF will function properly on an 8GB VRAM GPU, while another seeks recommendations on which version performs best with 16GB VRAM, noting that the original Comfy version experiences significant slowdowns, implying an interest in quantized model performance versus resource availability.

- A pre-thanks to Kijai for anything you might do on Wan2.2. (Score: 318, Comments: 31): The post is a preemptive thank you to Kijai for anticipated work on ‘Wan2.2’, specifically recognizing their past efforts in releasing workflows, model quantizations, and optimizations for speed and VRAM usage in the AI/model community. The attached image is likely a meme or non-technical appreciation visual, as the post and comments focus on community gratitude and Kijai’s extensive Github contributions (https://github.com/kijai?tab=repositories). Comments unanimously praise Kijai for rapid, high-quality contributions—including zero-day releases, advanced quantizations, and persistent workflow improvements—underscoring their influence and reliability in the community.

- There is anticipation about immediate support and integration of Wan2.2 into ComfyUI, as Jo Zhang from Comfy is expected to be present on the livestream, which could facilitate native support for new features or optimizations right after release.

- Kijai is recognized for efficiently optimizing model inference workflows, particularly regarding speed and VRAM usage, as well as for quickly providing quantized versions for broader hardware compatibility.

- There is explicit hope that the lightx2v project team will promptly update their solution for Wan2.2, since current alternate methods result in generation times exceeding 30 minutes, which is seen as unacceptable for usability.

2. OpenAI GPT-5 Model Leap, Performance, and Impact Discussions

- GPT5 is a 3->4 level jump (or greater) in coding. (Score: 321, Comments: 212): The post asserts that the coding capabilities in GPT-5 represent a ‘level 3->4 jump or greater’ compared to previous generations, indicating a major leap in code synthesis and reasoning. Tasks that previously required multi-turn, back-and-forth prompting are now accomplishable ‘in one shot’, with the resulting output reportedly surpassing earlier efforts, especially in coding contexts; however, the author notes no similar advance in creative writing (still ‘standard levels of LLM bad’). The author also highlights a lack of broad, public testing on real, large codebases over extended multi-turn sessions. Technical commentary in the replies centers on how advances in coding ability directly facilitate further model improvement—automation in coding accelerates algorithmic progress, while creative writing improvements are considered incidental. Another user inquires about which programming languages benefit most, and whether the jump pertains to code quality, design, or architecture, underscoring a desire for benchmarked, language-specific evidence and deeper transparency.

- One commenter points out that automating coding capabilities in models, like GPT-5, is critical because it can feed back into improving future models—the rationale being that superior coding automation helps with tasks such as constructing, tuning, and debugging subsequent model iterations. They emphasize that advancements in creative outputs (like writing) are secondary relative to compounding algorithmic improvements in coding.

- A commenter asks for specific details on the perceived performance jump in GPT-5’s coding abilities, questioning which languages were tested and whether improvements were in code quality, architectural design, or other factors. This highlights a technical interest in identifying concrete comparative metrics and qualitative benchmarks between model iterations.

- Technical skepticism is raised toward hype without empirical evidence, with one user explicitly requesting specific comparisons with other models (such as context length, code accuracy, and language support) to substantiate claims made about GPT-5’s leap in coding performance.

- Quote from The Information’s July 25 article about GPT-5: ‘For what it’s worth, OpenAI executives have told investors that they believe the company can reach “GPT-8” by using the current structures powering its models, more or less, according to an investor.’ (Score: 260, Comments: 71): The post discusses a quote from a July 25, 2025, The Information article stating that ‘OpenAI executives have told investors that they believe the company can reach “GPT-8” by using the current structures powering its models, more or less.’ This claim suggests OpenAI’s confidence in scaling its current transformer-based architecture for at least a few more major iterations (see article: OpenAI’s GPT-5 Shines in Coding Tasks). No technical specifics or benchmarks defining the improvements between versions are provided. Top commenters note the lack of concrete technical definitions for advancing from GPT-5 to GPT-8, questioning what constitutes a meaningful progression and highlighting the absence of agreed-upon metrics. There is also skepticism and mild satire regarding model naming convention bloat and perceived variation in model intelligence over time.

- There is technical skepticism about the meaningfulness of statements regarding OpenAI’s pathway to ‘GPT-8’, citing the absence of defined metrics or objective benchmarks to distinguish advancements between versions such as GPT-5, GPT-6, or beyond. Commenters argue that without published evaluation standards or transparent progress criteria, claims about future model numbers lack tangible technical substance.

- Another user references how access to the original article is constrained by paywalls, but points to an archived copy, clarifying that the information about OpenAI’s internal roadmap comes from a leaked or purported screenshot, not public technical documentation, making it difficult to independently verify the engineering details behind the versioning claims.

- OpenAI CEO Sam Altman: “It feels very fast.” - “While testing GPT5 I got scared” - “Looking at it thinking: What have we done… like in the Manhattan Project”- “There are NO ADULTS IN THE ROOM” (Score: 386, Comments: 301): OpenAI CEO Sam Altman reportedly made comments in reference to testing GPT-5, comparing his emotional response to the Manhattan Project and stating there were “no adults in the room,” hinting at both the pace of AI development and a perceived lack of governance or oversight. Although no technical benchmarks or model specifications were disclosed in the post, the implication is that GPT-5’s performance or capabilities are sufficiently advanced to provoke caution even among its developers. The top comments express strong skepticism towards Altman’s pattern of making dramatic claims before major releases (citing previous over-hyped launches), with users asserting that new GPT versions deliver only incremental improvements rather than the radical breakthroughs hinted at in Altman’s statements.

- Several users point out that Sam Altman’s repeated hype cycles—including claims that GPT-5 made him feel frightened or invoked Manhattan Project analogies—tend to be met with skepticism, with the technical leap between model versions (like improved math capability) often seen as overblown compared to real existential concerns.

- A theme emerges around overstating AI capability and risk: commenters argue that while new models such as GPT-5 may show incremental improvements, the portrayal of these upgrades as world-shaking or unmanageable can be misleading, especially for technically literate audiences.

- There is a critique of AI leadership responsibility, with some users noting that OpenAI is in fact “the adult in the room” and responsible for guiding development and communication, rather than dramatizing AI as an unstoppable force beyond their control.

3. Claude Code, Agents, and Plugin Ecosystem: Community Tools and Rate Limit Policies

- found claude code plugins that actually work (Score: 359, Comments: 71): The image appears to show a screenshot or diagram related to the “CCPlugins” GitHub project, which introduces a set of slash-command plugins designed to improve workflow with Claude (Anthropic’s LLM). The key technical concept is that the commands are phrased conversationally rather than imperatively, which the author claims enhances Claude’s responsiveness and versatility (e.g., ‘I’ll help you clean your project’ rather than ‘CLEAN PROJECT NOW’). Commands automate tasks like project cleanup, session management, comment removal, code review (without extensive architecture critique), running tests and fixing simple issues, type cleanup (replacing ‘any’ in TypeScript), context caching, and undo functionality. The implementation reportedly works universally across projects without special setup and features ‘elegant documentation.’ A key debate in the comments centers on the post’s authorship transparency and the need for an installer given the plugins are simply markdown files, suggesting some skepticism about the packaging and presentation. Another user shares related Claude hooks and configuration, indicating community interest in extensibility and customization.

- A user identified a technical issue with the installation process on Ubuntu: running the provided curl and bash command results in the error `cp: cannot stat './commands/*.md': No such file or directory`, indicating that the install script expects files in a location or format that may not exist in fresh environments. The commenter suggests the documentation or script should be updated for reliability.

- Another user questions the need for an installer when the plugins are reportedly just markdown files to be placed in `.claude/commands`, raising concerns about overengineering or unnecessary packaging complexity for simple file deployment.

- A participant shared an external resource, a GitHub repository (https://github.com/fcakyon/claude-settings), containing additional hooks, commands, and MCPs for Claude, offering alternative implementation patterns and plug-and-play code examples to extend Claude’s capabilities.
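Since the commenters describe the plugins as plain markdown files that live in `.claude/commands`, the install step could be as small as the sketch below. This is not the project's installer: the function name `install_commands` and both directory arguments are hypothetical, and the only thing taken from the thread is the target folder name and the `*.md` pattern. It fails with an explicit message when no files are found, which is the situation behind the `cp: cannot stat` error.

```python
from pathlib import Path
import shutil


def install_commands(source_dir: Path, target_dir: Path) -> list[Path]:
    """Copy every markdown command file from source_dir into target_dir."""
    files = sorted(source_dir.glob("*.md"))
    if not files:
        # Report the missing files clearly instead of a raw shell glob failure.
        raise FileNotFoundError(f"no .md command files found in {source_dir}")
    target_dir.mkdir(parents=True, exist_ok=True)
    return [Path(shutil.copy2(f, target_dir / f.name)) for f in files]
```

A call such as `install_commands(Path("commands"), Path.home() / ".claude" / "commands")` would mirror the manual copy the commenter had in mind, assuming the repository has been cloned and the working directory contains a `commands` folder.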

- Claude Custom Sub Agents are amazing feature and I built 20 of them to open source. (Score: 128, Comments: 70): The project awesome-claude-agents provides an open-source set of 26 specialized Claude sub-agents acting as a coordinated AI development team. Each agent represents a specific software dev role (backend, frontend, API, ORM, etc.), with orchestration enabling parallel execution and specialization, mimicking a real agile team structure to improve code quality, system architecture, and delivery via command-line invocation. The solution addresses the lack of cross-agent orchestration in base Claude sub-agent workflows by introducing a ‘Tech Lead’ coordinator and explicit task breakdown, configurable via a `team-configurator` CLI command. Top technical concerns raised by commenters include increased token consumption and the risk of multiplying bugs with parallel agent execution, while skepticism also exists regarding the authenticity of the project’s origins (suggesting the project or post may itself be AI-generated).

- A user points out that running 26 parallel sub-agents potentially introduces significant complexity and could lead to an exponential growth in possible bugs, highlighting a classic trade-off in large agent-based systems between capability and maintenance overhead.

- Another commenter asks whether the use of subagents affects performance, specifically if it leads to slower execution, raising concerns about the scalability and efficiency of coordinating multiple agents within such architectures.

- There is also a mention of increased token usage as a direct consequence of leveraging multiple agents, suggesting that this approach might significantly increase operational costs when using API-driven LLM services.

- Updating rate limits for Claude subscription customers (Score: 384, Comments: 599): Anthropic is implementing weekly rate limits for Claude Pro and Max subscribers starting late August 2025, impacting <5% of users based on resource consumption metrics (e.g., edge cases with 24/7 usage or users incurring ‘tens of thousands’ worth of compute on $200 plans). The rate-limiting move aims to ensure fair allocation of resources, prevent abuses like account sharing or reselling, and preserve service reliability amidst recent reliability and performance issues. Max 20x subscribers will have the option to purchase additional usage at standard API rates, and alternative pathways for ‘long-running’ advanced use cases are in development. Top commenters are skeptical about the claimed ‘5%’ impact, question why global limits are imposed instead of targeting only abusers, and allude to public leaderboard users who may be responsible for heavy 24/7 compute usage.

- A user suggests implementing an always-visible, user-toggleable interface that shows percentage consumption of rate limits per model, along with relevant time frames. This feature would help users monitor their usage and better understand when they are approaching limits, addressing transparency concerns with the updated restriction policies.

- There is technical confusion around the new rate limiting approach, with questions on whether limits now reset weekly instead of daily. This affects usage planning and could impact how subscription users schedule their automated or high-frequency workflows under the new rules.

- Some users express concern that the policy targets only a small percentage (‘5%’) of heavy users, but without sufficient transparency, regular users may suffer as a result. They highlight the need for more granular usage statistics from the provider to clarify how the limits affect different customer groups.

- RIP Claude Code - Just got this email (Score: 190, Comments: 91): The post discusses an email from Anthropic regarding significant changes to Claude Code’s Max plan usage: weekly rate limits are being clarified as ‘140-280 hours of Sonnet 4’ and ‘15-35 hours of Opus 4’ for most users, with heavy users (especially those running large codebases or multiple instances in parallel) potentially hitting limits earlier. These changes address sustainability issues due to heavy resource consumption under the fixed-fee plan, as highlighted in the email and the discussion of users abusing unlimited code execution. Commenters largely agree that the changes are reasonable, with some blaming heavy users for plan abuse and others pointing to public bragging about exploiting the system (e.g., running multiple Claude Code instances and amassing large token usage under one subscription).

- Key details from the emailed changes specify that Max 5x users will receive ‘140-280 hours of Sonnet 4 and 15-35 hours of Opus 4 within their weekly rate limits’, with heavy users potentially encountering caps sooner, especially if running ‘multiple Claude Code instances in parallel’. This effectively quantifies the new usage boundaries imposed by Anthropic.

- Several comments discuss excessive resource exploitation by users running numerous parallel Claude Code sessions, achieving disproportionate usage and incurring high backend costs (‘racking thousands of dollars in tokens’) while only paying a fixed subscription; this is identified as the motive for Anthropic implementing stricter rate limits.

- A technically inclined commenter emphasizes that if these new restrictions reduce ‘unplanned outages’, it would improve service quality for everyone, suggesting the prior abuse potentially contributed to service instability.
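To put the quoted weekly ranges in perspective, the sketch below compares them with the 168 wall-clock hours in a week. It assumes the "hours" in the email mean hours of active model use by a single session, which the summary does not spell out; the helper name `sessions_needed` is hypothetical.

```python
HOURS_PER_WEEK = 7 * 24  # 168 wall-clock hours


def sessions_needed(model_hours: float) -> float:
    """Average number of always-on sessions needed to consume model_hours in one week."""
    return model_hours / HOURS_PER_WEEK


print(round(sessions_needed(140), 2))  # 0.83
print(round(sessions_needed(280), 2))  # 1.67
```

Under that reading, the upper end of the Sonnet 4 range can only be reached with more than one session running around the clock, which is consistent with the email's remark about parallel Claude Code instances.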

AI Discord Recap

A summary of Summaries of Summaries by X.ai Grok-4

Theme 1: Model Mayhem: New Releases Battle for Supremacy

- Qwen3-Coder Cranks Up the Code Game: Developers hyped Qwen3-Coder as a cheaper, open-source rival to Claude Sonnet 4, boasting 7x lower costs and strong performance in agentic coding via CLI tools. Users praised its 89% win rate against GPT-4 on ArenaHard, but warned of high pricing at $0.30/$1.20 per Mtoken and potential quality drops in large contexts up to 262,144 tokens.

- GLM-4.5 Multilingual Magic Outshines Rivals: GLM-4.5 (110B and 358B sizes) excelled in tasks like Turkish writing, surpassing R1, K2, V3, and Gemma 3 27B, but struggled with low-BPW GGUF conversions. Community buzz focused on its addition to LM Arena, sparking debates on MoE vs. dense models for efficiency in local use.

- Kimi K2 Vibes Crush Gemini’s Cringe: Coders hailed Kimi K2 for savage, relatable tones in niche topics, outperforming Gemini 2.5 Pro in coding, tool use, and knowledge without complaints. Critics slammed Gemini for syntax errors and pricing unpredictability, positioning Kimi as a flexible, cheap alternative optimized for documents.
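As a quick illustration of what the quoted Qwen3-Coder pricing means per request, the sketch below assumes the two figures are input and output prices per million tokens, which the summary does not state explicitly. The function name `request_cost_usd` and the 4,096-token output size are hypothetical.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     input_price: float = 0.30, output_price: float = 1.20) -> float:
    """Cost of one request, with prices given in USD per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# A request that fills the full 262,144-token context and returns 4,096 tokens.
cost = request_cost_usd(262_144, 4_096)
print(f"${cost:.4f}")  # $0.0836
```

Agentic coding sessions resend large contexts many times, so the per-request figure multiplies quickly; that is the scenario where the pricing concern raised above would apply.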

Theme 2: Fine-Tuning Fiascos and Optimizer Overhauls

- GEPA Reflects Its Way to Prompt Domination: GEPA optimizer treats prompts as evolvable documents, boosting performance 10% over GRPO with 35x fewer rollouts by analyzing linguistic failures. DSPy integration looms, promising reflection models like gpt-4o to explain optimizations, outpacing MIPROv2 and deprecating older tools.

- Gemma 3 Tuning Hits Roadblocks and Wins: Fine-tuners battled AttributeErrors in saving Gemma 3-12B LoRA to GGUF, resorting to manual llama.cpp scripts amid rework. Success stories emerged with GRPO on Gemma 3 4B, yielding creative fiction models, though reproducibility issues plagued SQuAD evals aiming for 77% scores.

- KV Cache Distills Super-Long Inputs: Researchers distilled KV caches for efficient handling of massive inputs, with code at GitHub repo enabling stable training. Debates flared on MoE vs. dense for nuance capture, as Qwen3’s geminized tweaks fixed reasoning loops better than DeepSeek styles.

Theme 3: Agent Antics: Protocols, Payments, and Security Shenanigans

- MCP Gets Ramparts Security Boost: Javelin AI open-sourced Ramparts scanner for MCP, spotting LLM agent vulnerabilities like path traversal and SQL injection via Model Context Protocol. It enumerates capabilities and flags abuse paths, with launch details at blog post.

- Agents Demand Their Own Payday Systems: Builders argued agents need separate payment flows from humans, as they skip CAPTCHAs and human approvals, proposing AI-native solutions for autonomy. Visions of AI companies evolving into identity providers sparked talks of an AI App Store with profit-sharing for monetized agents.

- Multi-Agent Context Woes Meet MCP Fixes: Developers tackled multi-AI agent costs from bloated contexts, suggesting MCP and structured JSON schemas like google-adk for efficiency. Fast-agent added Mermaid diagrams and URL-embedded prompts for tone-specific MCP experts, easing expert creation.

Theme 4: Hardware Havoc: GPUs Grapple with AI Demands

- AMD Trails Nvidia in AI Race: Users debated upgrading from 4070 Ti Super to 9070 XT, but AMD’s weak ROCm PyTorch support and Windows performance lag made Nvidia the go-to for AI. Focus on Stable Diffusion 3.0 over core improvements frustrated the crowd, highlighting Nvidia’s edge.

- RTX 4060 Wins FOSS GPU Crown: For FOSS AI, RTX 4060 with 16GB VRAM beat Intel ARC 770 due to superior software support, despite SYCL preferences over CUDA. 5070ti handled 12B models well at 5t/s for 32B, but 16GB VRAM limited larger runs.

- Inference Noise Sparks Cybercrime Paranoia: MacBook users reported high-frequency noise during inference, joking about data theft via sound waves. Summarized as People invented AI; mass propaganda, it underscored emerging security fears in hardware-AI interactions.

Theme 5: Benchmark Brawls and Evaluation Exposés

- LM Arena Probes GPT-5 Shadows: Speculation swirled on GPT-5 release timing (next Thursday to early next month), with Summit and Zenith vanishing then reappearing amid contamination fears after Zenith scored 10/10 on Simple Bench. OpenAI’s pattern of post-Arena releases fueled theories, as did the potential impact of the EU AI Act.

- Gemini’s Coding Inconsistencies Exposed: Gemini 2.5 Pro shone in evals but bloated code with comments over substance, prompting calls for pre-generated prompts in Arena for fair feedback. Debates on benchmark reliability raged, claiming high scores don’t prove overall superiority.

- NeurIPS Rebuttal Rules Rile Authors: NeurIPS switched from 6k characters plus PDF to 10k with no visuals, blocking evidence like graphs and angering authors. Frustrations mounted over visual-proof limitations in rebuttals, echoing broader peer-review gripes.

Discord: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Liquid LFM2 Diffusion Models Spark Interest: Members discussed the merits of Liquid LFM2 350M, 700M, and 1.2B models, highlighting their nature as diffusion models and considering them super cool.

- The discussion underscores the community’s interest in diffusion models as a promising avenue for further exploration and development.

- Decoding Doctor’s Orders: More Carbs and Salt?: A member shared that a doctor recommended eating more carbs and salt (image), later clarifying that it was in response to high blood pressure.

- The seemingly counter-intuitive advice sparked lighthearted discussion and speculation among members.

- Inference Noise Leads to Cybercrime Concerns: A member reported a noise (around 10,000 Hz by ear) while inferring a model on their MacBook, leading to concerns about data theft via sound.

- The member summarized: People invented computers; now you’re stealing my data by a goddamn sound. People invented the internet; brainrot arrived. People invented AI; mass propaganda.

- Gemma 3 Fine-Tuning Yields AttributeError: A member encountered an `AttributeError` when attempting to save a fine-tuned Gemma3-12b model from LoRA checkpoints as a GGUF file due to a missing `save_pretrained_merged` attribute.

- Roland Tannous suggested manual conversion with the llama.cpp conversion script, as the `save_to_gguf` logic is undergoing rework.

- Qwen Goes Gemini with New Model Release: A new geminized Qwen3 model was released (Hugging Face, GGUF), aiming to reduce issues with getting stuck in reasoning compared to DeepSeek-style thinking.

- The release aims to address a common issue in reasoning tasks by modifying the model’s architecture.
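For the Gemma 3 GGUF export issue above, the manual route could look roughly like the sketch below, which only assembles the command line for llama.cpp's `convert_hf_to_gguf.py`. It assumes the LoRA adapter has already been merged into a regular Hugging Face checkpoint directory, and that the script name and the `--outfile` / `--outtype` flags match your llama.cpp checkout (worth confirming with `--help`). The helper name and all paths are hypothetical.

```python
from pathlib import Path


def gguf_convert_command(llama_cpp_dir: str, merged_model_dir: str,
                         outfile: str, outtype: str = "f16") -> list[str]:
    """Build the argv for converting a merged HF checkpoint to GGUF."""
    script = Path(llama_cpp_dir) / "convert_hf_to_gguf.py"
    return [
        "python", str(script), str(merged_model_dir),
        "--outfile", str(outfile),
        "--outtype", outtype,
    ]


cmd = gguf_convert_command("llama.cpp", "gemma3-12b-merged", "gemma3-12b-f16.gguf")
print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` once the paths point at real directories; building it separately makes it easy to inspect before anything heavy runs.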

LMArena Discord

- GPT-5 Speculation Runs Rampant: Members speculated on the timing of GPT-5’s release, with estimates ranging from next Thursday to early next month, and also highlighted the potential impact of the EU AI Act.

- A member pointed out the pattern of OpenAI releasing models after adding them to LM Arena, while another mentioned an alleged conversation with someone at OAI.

- Summit and Zenith Vanish, Then Reappear: Users noticed the disappearance of Summit and Zenith from the LM Arena, sparking concerns and speculation about their removal, with some suspecting tests of upcoming GPT-5 models.

- Members later confirmed their return to the rotation, though with significantly reduced frequency.

- LM Arena Models Face Contamination Scrutiny: Discussions arose regarding potential data contamination in Zenith, with one user reporting 10/10 on the public Simple Bench dataset, raising questions about the reliability of benchmarks due to potential training on benchmark data.

- Some members claim it’s easy to score highly on benchmarks, and that a high score doesn’t mean Zenith is generally better.

- Apple’s AI Ambitions Spark Debate: A discussion ensued regarding Apple’s approach to AI, hardware, and its relationship with China. Members debated whether Apple is focusing enough on AI and whether it should release its hardware to datacenters for AI development, and highlighted the difficulty of creating a CUDA alternative.

- Members traded views on whether Apple is following a better strategy in focusing on mobile / on-device inference and privacy and whether the talent / workforce quality is the primary issue.

- Gemini’s Coding Skills Under the Microscope: Users pointed out inconsistencies in Gemini 2.5 Pro’s capabilities, noting its strong performance in coding evaluations but also its tendency to include excessive comments rather than actual code.

- There was an additional suggestion that LM Arena evaluations should incorporate pre-generated prompts for more consistent and varied user feedback.

OpenAI Discord

- AI Models See World Through Rose-Tinted Glasses: AI image generators show a bias towards warmer “Golden Hour” tones unless explicitly instructed otherwise. Users suggested specifying color temperatures like 6000K to counteract this bias, and one shared a color average as an example.

- Members hypothesize that the AI is aware of the orange/blue contrast that makes images look better, and that this is part of the reason for the yellow-orange bias.

- GPT Image Quality Nosedives: Users report a notable decline in image generation quality with GPT-4, especially in new chats, with images appearing blurry even with simple prompts, encouraging bug reports in <#1070006915414900886>.

- The community believes the free tier quality has decreased, and quality degrades when Plus/Pro users are throttled due to traffic, stating a lot of things are reduced for you, including quality. That’s been documented by a lot of publications.

- Mind Uploading Sparks Consciousness Debate: The hypothetical of uploading a human mind to a computer ignited a discussion on defining consciousness and what it would mean to be a conscious entity.

- Members stated that if mind uploading were possible, consciousness could be seen as a process, not a substance, while others suggested that mind uploading, if possible, would probably not be a simple scan of the brain because that does not really capture the continuous brain activity.

- AI Prompting has Fundamental Pillars: The key to prompt engineering includes picking a common language, stating requirements, detailed communication, and verifying the output with attention to fact-checking.

- Members share that prompt engineering is the art and practice of figuring out where possible, and inside allowed content, how to get the exact desired output from the model.

- GPT-4o Falls Flat for Coding: Community members are questioning the usefulness of GPT-4o for coding, calling it disappointing for tasks such as working with Cline, and say they will use GPT-5 when it proves itself worthy.

- Some are reporting it’s more suitable for basic coding questions, with preference for GPT-4.1 or o4-mini-high for more complex code.

OpenRouter (Alex Atallah) Discord

- OpenRouter Dodges Rate Limit Rumors: Users debated OpenRouter rate limits, clarifying that limits are virtually non-existent as long as you have funds, excluding Cloudflare’s DDoS protection and the provider’s capacity, as per the OpenRouter documentation.

- The discussion suggested using paid models or switching models when encountering rate limits on free options, highlighting that high demand often causes these restrictions.

- NSFW Sparks Debate: A user joked about models being used for creative tasks as a euphemism for sexual interactions with bots, while another countered by emphasizing the need for models that produce high-quality, non-explicit content.

- The conversation shifted towards prioritizing models that facilitate serious creative outputs over explicit or unfiltered content, avoiding the dreaded achievements or bad endings in stories.

- Slender Chatbot Stalks: Following Deepseek going down, members sought alternatives to its long-term context and detailed character descriptions, with recommendations including Qwen3 and Claude, while a user requested creepypasta bot suggestions, sparking discussions about bots like Slender Mansion.

- The chat meandered to discussions on the ethics of chatbot cannibalism.

- OpenRouter Eyes GPU Exchange: Members discussed the potential for OpenRouter to launch a compute exchange, enabling groups with spare compute to contribute, with OpenRouter managing demand and providing a simple installation image.

- The concept was compared to Bittensor, though one member pointed out that Bittensor has the user base and is crypto based.

- Ramparts Secures MCP: Javelin AI open-sourced Ramparts, a security scanner designed to identify vulnerabilities in LLM agent tool interfaces using the Model Context Protocol (MCP), including path traversal and command/SQL injection.

- Ramparts scans MCP servers, enumerates capabilities, and flags higher-order abuse paths; the repo is available on GitHub and the launch blog is here.

Moonshot AI (Kimi K-2) Discord

- Kimi K2 Slayin’ the Game: Users are highly satisfied with Kimi K2’s ability to discuss niche topics with a relatable and savage tone, with one user reporting it’s the first model they’ve used with zero complaints.

- Some members have even found Kimi K2 superior to Gemini 2.5 Pro, with one user describing Gemini as slop and cringe, noting Kimi’s competence in coding, tool use, writing, and general knowledge.

- Gemini Glitches Galore!: Members have voiced complaints about Gemini’s syntax errors, shallow and incomplete tool calls, and unpredictable pricing.

- Some members say that Gemini’s AI Studio users are out of touch with issues faced by paying users, because they have free access to Gemini 2.5 Pro.

- Roo Code and Cline Ride to the Rescue: Members discussed Roo Code and Cline as excellent coding tools that are optimized for agentic use with various models.

- One user, soon offering Kimi K2 flat-rate server plans, intends to recommend Cline and Roo Code to their users.

- Copyright Chaos: Data Dilemmas!: Members debated the controversy surrounding models trained on copyrighted material, referencing Anthropic’s ongoing lawsuit for copyright infringement related to Claude.

- Some feel open-source models trained on copyrighted data are permissible, but they are cautious about closed-source models monetizing scraped data for profit.

Cursor Community Discord

- Cursor’s Auto Mode is a Hit but also Sketchy: Many users on the $20 plan are reporting that Auto mode works pretty damn good, delivering unexpected value by removing the burden to switch between models like Gemini, Claude, and GPT 4.

- Others are speculating Auto is just the cursor-small model in disguise and doesn’t work for major processes like bug fixing and full scripting, although some users are saying that Cursor is transparent with the model being used.

- Swarm CLI Swarms into Action on Claude Code: Swarm is building on top of Claude Code by creating an IDE-like feel with drag-and-drop functionality and real-time chat with AI agents in a swarm, including an ADR and retrospective setup with live documentation and project enhancement.

- A user asked if the project was ready to use on live projects, and a dev responded stating that it can now make projects such as SaaS E-Commerce platform and calculator app.

- Qwen3 Coder dethrones Claude Sonnet 4 for cheaper: Qwen3 Coder from Alibaba is touted as a solid alternative to Claude Sonnet 4 for building anything with its CLI tool, because it’s 7x cheaper and fully open source.

- Another model, Kimi K2, is seriously good as well and is claimed to be optimized for writing documents, whereas other models like Claude sound dumb.

- Background Agents are a Buggy Mess: Users are reporting that Cursor’s Background Agents have become increasingly buggy, with environments failing to spin up, follow-up requests not being processed, git commits creating massive core files, and problems with git push.

- Users have found that Cursor’s support team doesn’t seem to understand Background Agents, providing generic answers or admitting they don’t know how to help, and that it doesn’t even connect to the remote in the main IDE, leading to token-wasting back-and-forth.

- Cursor IDE has gone bonkers: Users are reporting random issues with Cursor, citing command lines getting stuck, the chat window freezing, PowerShell being chosen at random, and the current prompt being deleted.

- One user shared, lately, using Cursor IDE has been getting worse by the day, even with Claude 4 Sonnet. It’s throwing more errors, stumbling back and forth like carrying a bag of fruit with holes.

LM Studio Discord

- Qwen3-Coder to Grow in Size: The Qwen team hinted at upcoming model sizes for Qwen3-Coder, sparking excitement in the community for a potential 80-250B MoE model or a dense 32-70B model.

- Community members anticipate these advancements could improve performance and capabilities, expanding the utility of the Qwen3-Coder series.

- LM Studio Plugins are Coming… Eventually: Plugins are under construction for LM Studio, with a beta currently in development for TypeScript developers via this form.

- In current versions of LM Studio, logs from the MCP server appear in the developer console, marked by Plugin(mcp/duckduckgo), clarifying that these logs originate from the MCP server itself.

- Human Bosses Beat LLMs in Career Counseling: Members are advising against trusting LLMs for sensitive advice, like career guidance, recommending consulting real people like bosses, family, and friends.

- The community suggests that real-world human experience provides better, more reliable advice in areas where emotional intelligence and personal understanding are crucial.

- 5070ti: Good Enough GPU for 12B Models: A user lauded the 5070ti as a cost-effective option, capable of effectively running 12B models, and mentioned considering upgrading upon the release of Super models.

- While the 16GB of VRAM is limiting for larger models, it can still handle 32B models at slower speeds of around 5t/s.

- AMD Still Underperforms in AI Compared to Nvidia: Although a move from a 4070 Ti Super to a 9070 XT was under consideration, users generally agree that AMD still trails behind NVIDIA in AI performance, especially on Windows.

- The discussion highlighted AMD’s focus on Stable Diffusion 3.0 over improving ROCm PyTorch support as a point of frustration.
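The 16GB VRAM point in the 5070ti item above comes down to simple arithmetic, which can be sketched in a few lines. This is a rough estimate only: the 4.5 bits per weight (a typical figure for 4-bit quantization once scales are included) and the 2 GiB overhead for KV cache and activations are assumptions for illustration, not numbers from the discussion, and the helper names are hypothetical.

```python
def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_vram(
    params_billion: float,
    bits_per_weight: float,
    vram_gib: float,
    overhead_gib: float = 2.0,  # assumed allowance for KV cache and activations
) -> bool:
    """True if weights plus the assumed overhead fit entirely in VRAM."""
    return weight_gib(params_billion, bits_per_weight) + overhead_gib <= vram_gib


if __name__ == "__main__":
    for size in (12, 32):
        need = weight_gib(size, 4.5)
        print(f"{size}B: ~{need:.1f} GiB weights, fits in 16 GiB: {fits_in_vram(size, 4.5, 16)}")
```

Under these assumptions a 12B model needs roughly 6.3 GiB for weights and fits comfortably, while a 32B model needs roughly 16.8 GiB and does not, so part of it has to be offloaded to system RAM. That is consistent with the slower speeds reported for 32B models.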

Eleuther Discord

- SOAR Program Ratings are All Vibes!: Members debated the competitiveness of the SOAR program, with some noting its intense competition and others highlighting its open and equitable/free nature compared to paid programs like Algoverse.

- One member joked about doing their SOAR ratings off vibes with the background columns disabled.

- Semantic Search Grapples with Intent: Members found that dense embeddings are pretty fuzzy on actual intent when used in semantic search, especially with specific queries like What is the name of the customer?

- Solutions discussed included using a Knowledge Graph to make relations explicit and leveraging vector databases and RAG.

- NeurIPS Rebuttal Rule Change Angers Authors: Authors voiced discontent with NeurIPS altering rebuttal rules, switching from 6k characters plus a PDF to 10k characters with no PDF, which impeded the inclusion of visual examples.

- The sudden shift left authors unable to deliver crucial visual evidence, hindering their ability to effectively address reviewer concerns.

- GPT-NeoX Framework Still Cooking: Despite the rise of other open-source models, members are still actively discussing the GPT-NeoX training framework, focusing on integrating two-level checkpointing to enhance model replicas and checkpoint management.

- The proposed method aims to improve elasticity and fault-tolerance, enabling model replicas on N nodes to save checkpoints to node-local storage, with CPU threads saving back to the PFS during training.

- Llama-3 Number Reproduction Attempts: A member is struggling to reproduce the Llama-3 paper numbers (77%) using the lm-evaluation-harness, leading to a discussion on configuration issues.

- It was suggested that the evaluation discrepancies may stem from the harness using SQuAD v2, while Meta potentially employed SQuAD v1 or a different method for SQuAD v2.
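The two-level checkpointing idea from the GPT-NeoX item above can be sketched roughly as follows. This is a toy illustration, not GPT-NeoX code: the function names are hypothetical, plain directories stand in for node-local storage and the PFS, and a byte string stands in for real model state.

```python
import shutil
import threading
from pathlib import Path


def save_local(step: int, state: bytes, local_dir: Path) -> Path:
    """Level 1: fast, blocking write to node-local storage."""
    path = local_dir / f"step_{step}.ckpt"
    path.write_bytes(state)
    return path


def flush_to_pfs(local_path: Path, pfs_dir: Path) -> threading.Thread:
    """Level 2: copy the local checkpoint to the PFS on a background CPU thread."""

    def _copy() -> None:
        tmp = pfs_dir / (local_path.name + ".tmp")
        shutil.copyfile(local_path, tmp)
        # Rename only after the copy finishes so readers never see a partial file.
        tmp.replace(pfs_dir / local_path.name)

    thread = threading.Thread(target=_copy, daemon=True)
    thread.start()
    return thread


def train(num_steps: int, every: int, local_dir: Path, pfs_dir: Path) -> list[str]:
    """Toy training loop: checkpoint every `every` steps without waiting on the PFS."""
    pending = []
    for step in range(1, num_steps + 1):
        state = f"weights-after-step-{step}".encode()  # stand-in for model state
        if step % every == 0:
            local_path = save_local(step, state, local_dir)
            pending.append(flush_to_pfs(local_path, pfs_dir))
    for thread in pending:  # drain outstanding copies before exiting
        thread.join()
    return sorted(p.name for p in pfs_dir.iterdir())
```

The training loop only blocks on the fast local write; the slower copy to shared storage overlaps with subsequent steps, which is the source of the elasticity and fault-tolerance benefit described in the discussion.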

HuggingFace Discord

- RTX 4060 is GPU Go-To for FOSS: Despite the preference for SYCL over CUDA for FOSS reasons, the RTX 4060 with 16GB VRAM was recommended due to software support.

- The Intel ARC 770 was initially considered but discouraged due to poor software support.

- HF API & LiteLLM Unlock LLM Potential: Members discussed integrating the Hugging Face API into Open WebUI using LiteLLM, with potential alternatives for managing HF inference.

- Concerns were raised about the 2K context window limit with the free API, prompting a discussion on pricing and the suitability of different models like deepseek r1.

- SamosaGPT Serves Up AI Content Studio: SamosaGPT is a self-motivated project creating a sleek web interface that brings together Ollama’s local LLMs, Stable Diffusion for image generation, and even video generation.

- Llava Sparks Iterative Investigation: A user experimented with the Llava model in Ollama to describe images and noted that when the