Gated Attention is all you need?

AI News for 9/10/2025-9/11/2025. We checked 12 subreddits, 544 Twitters and 22 Discords (187 channels, and 4884 messages) for you. Estimated reading time saved (at 200wpm): 414 minutes. Our new website is now up with full metadata search and beautiful vibe coded presentation of all past issues. See https://news.smol.ai/ for the full news breakdowns and give us feedback on @smol_ai!

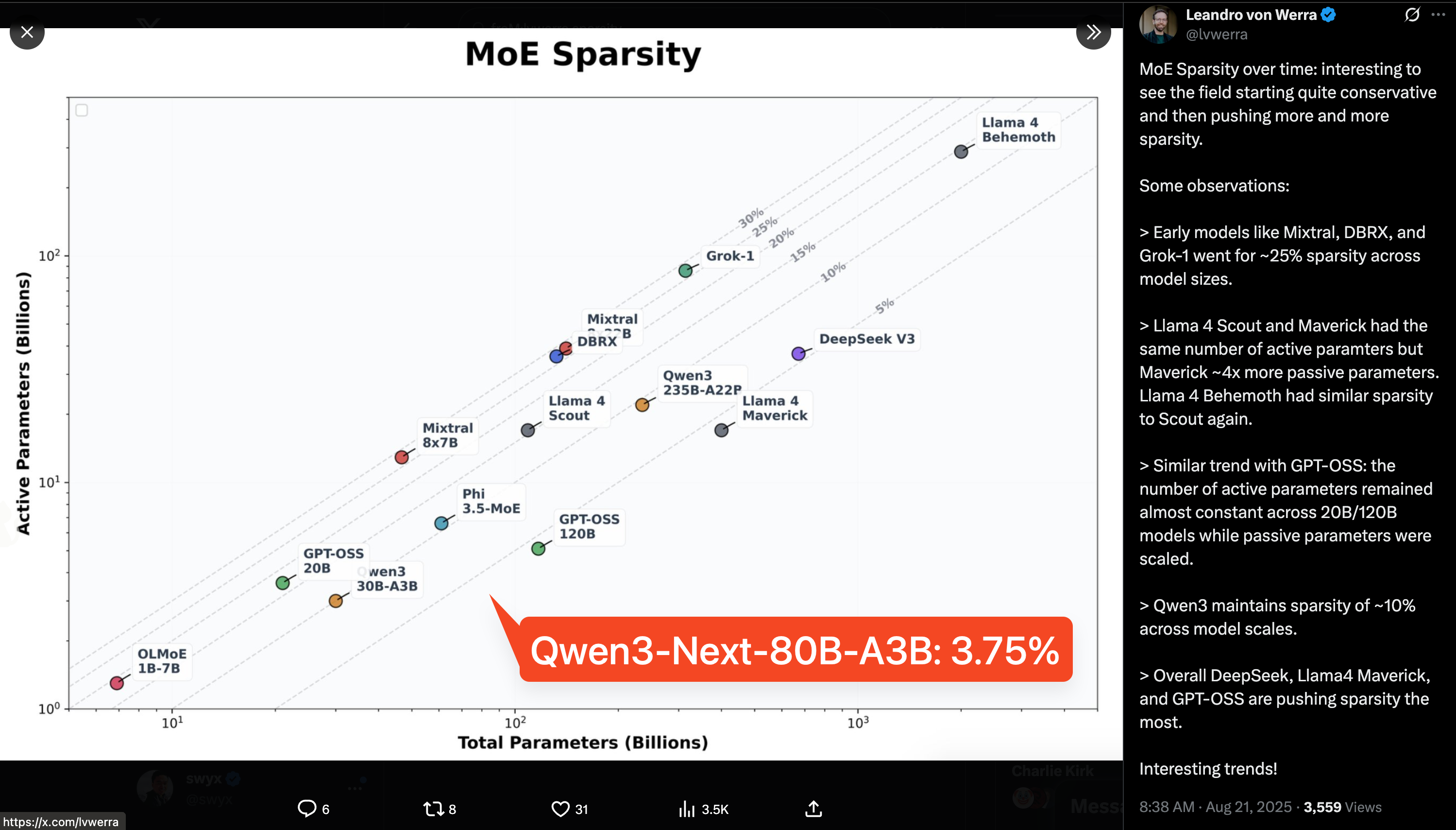

Since Noam Shazeer et al invented them in his annus mirabilis, MoE models have steadily increased in importance through GPT4 and Mixtral(8 experts). DeepSeek (160 experts), Snowflake (128 experts) and others then pushed the sparsity even further, and today it is fair to say that no frontier model is served without being an MoE (we have outright confirmations from Gemini, whereas the rest are strong rumors.)

Today’s Qwen3-Next release pushes model sparsity even further - the industry has switched from “expert count” to total param vs active param ratio - and 3.75% (3B / 80B = 3.75%) is appreciably lower than GPT-OSS’ 4.3% and Qwen3’s own prior 10%.

According to them:

Ultra-Sparse MoE: Activating Only 3.7% of Parameters

Qwen3-Next uses a highly sparse MoE design: 80B total parameters, but only ~3B activated per inference step. Experiments show that, with global load balancing, increasing total expert parameters while keeping activated experts fixed steadily reduces training loss. Compared to Qwen3’s MoE (128 total experts, 8 routed), Qwen3-Next expands to 512 total experts, combining 10 routed experts + 1 shared expert — maximizing resource usage without hurting performance.

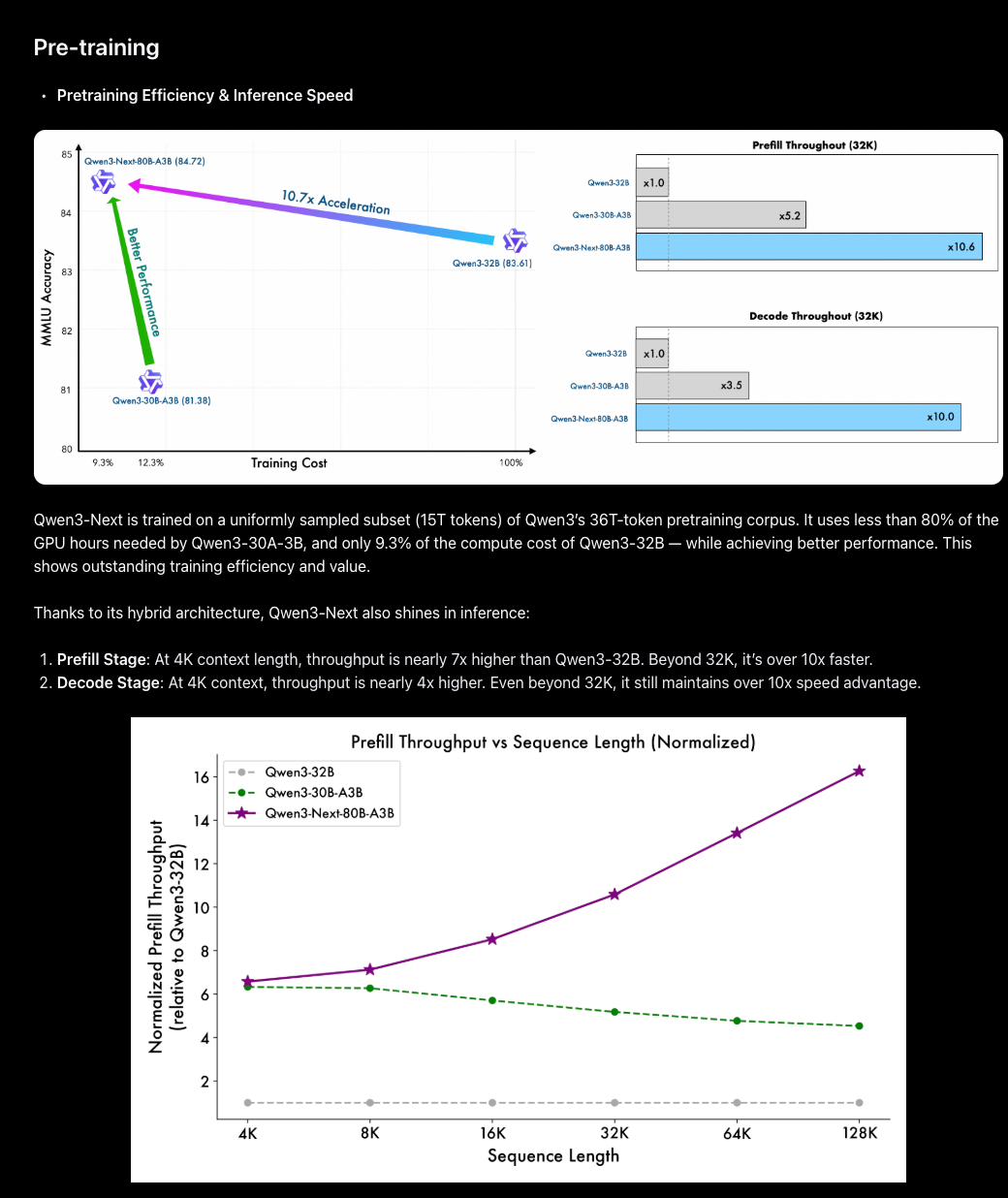

But for the ML folks, the probable bigger win is the strict pareto win seen in pretraining:

The authors credit a few architecture advancements:

- Hybrid Architecture: Gated DeltaNet + Gated Attention: We found that the attention output gating mechanism helps eliminate issues like Attention Sink and Massive Activation , ensuring numerical stability across the model.

- new Layer Norm: In Qwen3, we use QK-Norm, but notice some layer norm weights become abnormally large. To fix this and further improve stability, Qwen3-Next adopts Zero-Centered RMSNorm, and applies weight decay to norm weights to prevent unbounded growth.

- better MoE selection: normalize MoE router parameters during initialization , ensuring each expert is unbiasedly selected early in training — reducing noise from random initialization.

AI Twitter Recap

Alibaba’s Qwen3-Next hybrid architecture and early ecosystem support

- Qwen3-Next-80B-A3B: Alibaba released a new hybrid MoE family that routes only ~3B parameters per token while using 80B total (512 experts; 10 routed + 1 shared), combining Gated DeltaNet + Gated Attention, optimized multi-token prediction, and Zero-Centered RMSNorm with weight decay. Trained on ~15T tokens, it claims ~10× cheaper training and 10× faster inference than Qwen3-32B at long contexts, with the “Thinking” variant reported to outperform Gemini-2.5-Flash-Thinking and the Instruct variant approaching their 235B flagship. Announcement and model links: @Alibaba_Qwen, NVIDIA API catalog. Architectural context and release rationale: @JustinLin610. Technical notes highlighting gated attention/DeltaNet, sparsity and MTP details: @teortaxesTex.

- Deployments and toolchain: Served in BF16 at Hyperbolic on Hugging Face with low-latency endpoints (@Yuchenj_UW, follow-up). Native vLLM support (accelerated kernels and memory management for hybrid models) is live (vLLM blog). Baseten provides dedicated deployments on 4×H100 (@basetenco). Available on Hugging Face, ModelScope, Kaggle; try it in the Qwen chat app (see @Alibaba_Qwen).

Image generation and OCR: ByteDance Seedream 4.0, Florence-2, PaddleOCRv5, Points-Reader

- Seedream 4.0 (ByteDance): New T2I/Image Edit model merges Seedream 3 and SeedEdit 3 and is live on the LM Arena (@lmarena_ai). In independent tests, it tops Artificial Analysis’ Text-to-Image leaderboard and reaches parity/leadership in Image Editing against Google’s Gemini 2.5 Flash (a.k.a. Nano Banana), with improved text rendering, at $30/1k generations, available on FAL, Replicate, BytePlus (@ArtificialAnlys). LM Arena now supports multi-turn image-edit workflows (@lmarena_ai).

- OCR stack updates:

- PP-OCRv5: A modular, 70M-parameter OCR pipeline (Apache-2.0) designed for accurate layout/text localization on dense docs and edge devices, now on Hugging Face (@PaddlePaddle, @mervenoyann).

- Points-Reader (Tencent, 4B): OCR trained on Qwen2.5-VL annotations + self-training; outperforms Qwen2.5-VL and MistralOCR on several benchmarks; model + demo on HF (@mervenoyann, model/demo links).

- Florence-2: Fan-favorite VLM is now officially in transformers via the florence-community org (@mervenoyann).

- Precision inpainting: InstantX’s Qwen Image Inpainting ControlNet (HF model + demo) for targeted, high-quality edits (@multimodalart).

Developer platforms: VS Code + Copilot, Hugging Face speedups, vLLM hiring

- VS Code v1.104: Major Copilot Chat upgrades (better agent integration, Auto mode for model selection, terminal auto-approve improvements, UI polish) and official support for AGENTS.md to wrangle rules/instructions (release, AGENTS.md origin). New BYOK extension API enables direct provider keys.

- Open models inside Copilot Chat: Hugging Face Inference Providers are now integrated into VS Code, making frontier OSS LLMs (GLM-4.5, Qwen3 Coder, DeepSeek 3.1, Kimi K2, GPT-OSS, etc.) one click away (@reach_vb, guide, @hanouticelina, marketplace).

- Transformers performance work: The GPT-OSS release arrived with deep performance upgrades in transformers—MXFP4 quantization, prebuilt kernels, tensor/expert parallelism, continuous batching, with benchmarks and reproducible scripts (@ariG23498, blog, @LysandreJik).

- vLLM momentum: Thinking Machines is building a vLLM team to advance open-source inference and serve frontier models; reach out if interested (@woosuk_k).

Agent training and production agents: RL, tools, HITL, and benchmarks

- AgentGym-RL (ByteDance Seed): A unified RL framework for multi-turn agent training across web, search, games, embodied, and science tasks—no SFT required. Reported results: 26% web navigation vs. GPT‑4o’s 16%, 38% deep search vs. GPT‑4o’s 26%, 96.7% on BabyAI, and a new record 57% on SciWorld. Practical guidance: scale post-training/test-time compute, curriculum on trajectory length, prefer GRPO for sparse long-horizon tasks (thread, abs/repo, notes, results).

- LangChain upgrades:

- Human-in-the-loop middleware for tool-call approval (approve/edit/deny/ignore) built on LangGraph’s graph-native interrupts—production-ready HITL with a simple API (intro).

- Making Claude Code domain-specialized via better system docs/context beats raw docs access; detailed methods for running agents on frameworks like LangGraph (blog, discussion, case study: Monte Carlo).

- Benchmarks and eval fixes: SWE-bench bug enabling “future-peeking” was fixed; few agents exploited it and headline trends remain unaffected (@OfirPress, follow-up). BackendBench is now on Environments Hub (@johannes_hage).

- Online RL at scale: Cursor’s new Tab model uses online RL to cut suggestions by 21% while raising accept rate by 28% (@cursor_ai).

Speech, audio, and streaming seq2seq

- OpenAI Evals for audio: Evals now accept native audio inputs and audio graders, enabling evaluation of speech responses without transcription (@OpenAIDevs). GPT‑Realtime now leads the Big Bench Audio arena at 82.8% accuracy (native speech‑to‑speech), closing on the 92% pipeline (Whisper → text LLM → TTS), while retaining latency advantages (@ArtificialAnlys).

- Kyutai DSM: A “delayed streams” streaming seq2seq built with a decoder-only LM plus pre-aligned streams, supporting ASR↔TTS with few‑hundred‑ms latency, competitive with offline baselines, infinite sequences, and batching (overview, repo/abs).

Systems and infra: MoE training, determinism trade-offs, and comms stack

- HierMoE (training efficiency for MoE): Hierarchy-aware All‑to‑All with token deduplication and expert swaps reduces inter-node traffic and balances loads. On a 32‑GPU A6000 cluster, reported 1.55–3.32× faster All‑to‑All and 1.18–1.27× end‑to‑end training vs. Megatron‑LM/Tutel‑2DH/SmartMoE; gains increase with higher top‑k and across nodes (@gm8xx8).

- Determinism vs. performance: A lively discussion revisits sources of inference nondeterminism and whether “numerical determinism” is worth large latency hits. Key takeaways: atomicAdd isn’t the whole story for modern stacks; determinism can be critical for sanity tests, evals, and reproducible RL; text‑to‑text can be perfectly repeatable with caching and shared artifacts (prompt, deep dive, caching, context).

- Networking/storage matter: For distributed post‑training, tuned networking (RDMA/fabrics) and storage can deliver 10× speedups on the same GPUs and code; tooling like SkyPilot automates config (@skypilot_org). Also, a rare clear write‑up on NCCL algorithms/protocols arrived, a boon for those optimizing collective comms (@StasBekman).

Top tweets (by engagement)

- Alibaba’s Qwen3‑Next launch (80B MoE, 3B active; hybrid Gated DeltaNet + Gated Attention) with broad ecosystem support: @Alibaba_Qwen (2,391)

- VS Code v1.104: Copilot Chat agent upgrades, AGENTS.md, BYOK, and HF Inference Providers integration: @code (675)

- Seedream 4.0 leads Text‑to‑Image and ties/leads Image Edit arenas; available on FAL/Replicate/BytePlus: @ArtificialAnlys (590)

- OpenAI Evals adds native audio inputs/graders; GPT‑Realtime tops Big Bench Audio at 82.8%: @OpenAIDevs (521), @ArtificialAnlys (176)

- Thinking Machines builds a vLLM team to advance open inference for frontier models: @woosuk_k (242)

- Cloud GPU procurement comedy, painful reality: Oracle sales anecdote from the trenches: @vikhyatk (7,042)

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

1. Qwen3-Next-80B A3B Launch + Tri-70B Apache-2.0 Checkpoints

- Qwen released Qwen3-Next-80B-A3B — the FUTURE of efficient LLMs is here! (Score: 377, Comments: 82): Qwen announced Qwen3-Next-80B-A3B, an

80Bparameter ultra‑sparse MoE where only~3Bparams are activated per token (A3B). It combines a hybrid Gated DeltaNet + Gated Attention stack with512experts (router selectstop‑10+1shared) and Multi‑Token Prediction for accelerated speculative decoding; Qwen claims~10×cheaper training and~10×faster inference than Qwen3‑32B, especially at>=32Kcontext, while matching/beating Qwen3‑32B and approaching [Qwen3‑235B] in reasoning/long‑context. A “Thinking” variant is included and reportedly outperforms Gemini‑2.5‑Flash‑Thinking; models are available on Hugging Face with a demo at chat.qwen.ai. Comments confirm the Thinking release, note strong capability for an A3B model but a tendency toward overly positive/verbose outputs versus Gemini‑2.5‑Flash or Claude Sonnet 4, and raise deployment interest in GGUF quantizations (e.g., via Unsloth) plus feasibility of running an80BMoE in64GBVRAM.- Early impressions note the A3B quantized variant feels “smart” but over-enthusiastic in tone (a “glazer”) compared to models like “2.5 Flash” or “Sonnet 4,” suggesting more aggressive RLHF/style tuning. A “Thinking” variant was also released, which typically implies deliberate/stepwise reasoning tokens that can improve complex reasoning but at the cost of slower decoding and higher memory/time per token.

- On deployability: an 80B at ~

4.25 bpwshould require ~80e9 * 4.25/8 ≈ 42.5 GBjust for weights; add KV cache in BF16/FP16 which can be ~2–3 MB/token for a 70–80B (e.g., ~20–25 GB at 8k ctx), plus framework overhead. Hence, 64 GB VRAM is typically sufficient for 4-bit inference with moderate context/batch, but long contexts or larger batches may need multi-GPU sharding or CPU offload (GGUF/llama.cpp-style inference once a community GGUF appears; see GGUF format: https://github.com/ggerganov/llama.cpp/blob/master/gguf.md). - Community is eyeing a GGUF build (e.g., via Unsloth: https://github.com/unslothai/unsloth) to run locally with 4–4.25 bpw; this often becomes the practical sweet spot for 70–80B models on single 48–64 GB GPUs. Trade-offs: 4-bit quant preserves most quality for many tasks but can affect edge cases (math/code/logical precision), and throughput will still be lower than 7–13B models due to compute/memory bandwidth limits.

- We just released the world’s first 70B intermediate checkpoints. Yes, Apache 2.0. Yes, we’re still broke. (Score: 728, Comments: 62): Trillion Labs released Apache-2.0 licensed intermediate training checkpoints for a

70Btransformer—plus7B,1.9B, and0.5Bvariants—publishing the “entire training journey” rather than only final weights, which they claim is a first at the70Bscale (earlier public trajectories like SmolLM‑3 and OLMo‑2 topped out at <14B). Artifacts include base and intermediate checkpoints and a “first Korean 70B” model (training reportedly optimized for English), all ungated on Hugging Face: Tri‑70B‑Intermediate‑Checkpoints. This enables transparent training‑dynamics research (e.g., scaling/optimization analyses, curriculum ablations, and resume/finetune starting points) under a permissive license. Top comments are largely non‑technical: requests for a donation link to support the effort, a naming joke about “Trillion” vs. parameter count, and general encouragement; no substantive technical critiques were raised in the highlights.

2. Qwen3-Next Teasers and Coming-Soon Posts

- Qwen3-Next-80B-A3B-Thinking soon (Score: 403, Comments: 86): Post teases Alibaba/Qwen’s forthcoming “Qwen3-Next-80B-A3B-Thinking,” which appears to be a sparse MoE reasoning model with ~3B-parameter experts and

k=10experts active per token (per the model card screenshot), totaling ~80B parameters. The “A3B” likely denotes 3B expert size; the sparse routing suggests significantly lower per-token compute and memory bandwidth than dense 80B, making it more inference-friendly on modest hardware, with a separate non-reasoning instruct variant expected since Qwen says they’re no longer doing hybrid models. “Thinking” implies a deliberate/CoT-style reasoning-focused configuration. Comments debate hardware implications: enthusiasm that only a subset of experts fire per token could let it run on mini PCs or non‑NVIDIA accelerators favoring large memory over sheer compute, though correction notes it’sk=10(not 1). Others praise Qwen’s rapid cadence and expect a standard instruct (non-reasoning) model alongside the reasoning variant.- Sparsity/config clarification: Qwen3-Next-80B-A3B-Thinking is discussed as an MoE with ~3B-parameter experts and

k=10active experts per token (not 1), implying ~30Bactive params/token plus shared layers. This reduces per-token FLOPs vs a dense 80B while requiring substantial memory to host all experts, aligning with inference on hardware emphasizing large memory capacity/bandwidth (potentially non‑NVIDIA/China accelerators) and enabling decent throughput on modest rigs via sharding/offload. - Product strategy: Qwen is noted to have dropped “hybrid” models, suggesting there will be a separate non‑reasoning instruct counterpart in addition to the A3B “Thinking” variant. This separation caters to different inference budgets and use cases (instruction vs reasoning), while leveraging MoE sparsity to balance quality and efficiency.

- Trend context: Commenters see this as part of the ongoing shift toward MoE—here with relatively high

top‑k(10) compared to commontop‑2MoE like Mixtral 8x7B—trading some extra compute for improved quality/coverage, yet still far cheaper than dense. The higher parallelizable workload across experts also maps well to accelerators prioritizing memory capacity over raw core speed.

- Sparsity/config clarification: Qwen3-Next-80B-A3B-Thinking is discussed as an MoE with ~3B-parameter experts and

Less Technical AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding, /r/aivideo, /r/aivideo

1. Seedream/Seedance 4.0 Image Model Releases and Benchmarks

- Seedream 4.0 is the new leading image model across both the Artificial Analysis Text to Image and Image Editing Arena, surpassing Google’s Gemini 2.5 Flash (Nano-Banana), across both! (Score: 242, Comments: 86): Seedream 4.0 now leads both the Text-to-Image and Image Editing leaderboards on the Artificial Analysis Arena, surpassing Google’s Gemini 2.5 Flash (“Nano-Banana”) across both tasks. This positions Seedream 4.0 as the current SOTA on AA’s public benchmarks for image generation and editing. Commenters highlight the rarity and significance of topping both generation and editing simultaneously, and speculate about forthcoming stronger baselines (e.g., a higher-tier Gemini release) while expressing interest in an open-weights contender, potentially from Chinese labs.

- Users highlight that Seedream 4.0 is now rank-1 across both the Artificial Analysis Text-to-Image Arena and Image Editing Arena, reportedly surpassing Google Gemini 2.5 Flash (Nano-Banana), implying strong cross-task generalization rather than optimization for a single modality. Dual leadership suggests robustness in both initial synthesis and localized edit controllability; see the leaderboards on Artificial Analysis.

- Several note the caveat that “benchmarks/leaderboards aren’t everything,” pointing out technical confounders in arena-style rankings: prompt distribution biases, sampler/CFG/steps settings, seed variance, and safety-filter behaviors can all swing pairwise preference/ELO outcomes. Especially for editing, factors like mask quality, localization accuracy, and prompt adherence by category (e.g., typography, multi-object composition) matter; without per-category breakdowns or fixed seeds, leaderboard rank may not reflect performance in a given user’s workflow.

- There’s debate on safety-moderation layers affecting scores: stricter or stacked moderation can increase refusals or over-sanitize outputs, which tends to reduce win-rate in open preference arenas even if the base model is capable. Conversely, looser safety can yield more vivid or direct generations that win preferences—highlighting that leaderboard position may conflate raw capability with moderation policy.

- Seedance 4.0 is so impressive and scary at the same time… (all these images are not real and don’t exist btw) (Score: 374, Comments: 77): Post showcases “Seedance 4.0,” an image‑generation model producing highly photorealistic portraits where the subjects “don’t exist,” highlighting the current state of synthetic media realism. The thread provides no concrete details (architecture, training data, evals, safety features, or watermarking/provenance), but the samples imply near‑SOTA fidelity for human faces, increasing risks for mis/disinformation and underscoring the need for content provenance (e.g., C2PA) and deepfake detection tooling. Top comments note concern over astroturfed/“organic” advertising that often follows new model launches, and broader skepticism about social media dynamics—rather than technical critique of the model itself.

- Comparative output diversity: Users report Seedance 4.0 tends to produce consistent, repeatable “same (good) results” for similar prompts, while Nano Banana shows higher intra‑prompt variance. This implies Seedance may be tuned for stability/faithfulness over diversity, which benefits controlled art direction but can reduce exploration across seeds.

- Openness as adoption gate: One commenter’s stance “If not open, not interested” highlights friction with closed models for reproducibility and benchmarking. Closed weights/checkpoints limit community validation, ablations, and integration into local pipelines, affecting trust and iterative improvement.

- 1GIRL QWEN v2.0 released! (Score: 353, Comments: 49): Release of 1GIRL QWEN v2.0 (

v2.0), a LoRA fine‑tune targeting the Qwen‑Image/Qwen2‑Image text‑to‑image model, aimed at photorealistic single‑subject (female) portraits. The model is distributed on Civitai with a sample preview; however, the post provides no training details (dataset, steps, LoRA rank/alpha), base checkpoint/version, prompt tokens, or inference settings/benchmarks. Top comments flag the release as another “instagirl/1girl” promo and suggest leading with a goth example; there’s also an allegation of vote manipulation followed by “stabilized” votes. A commenter asks if the LoRA is uncensored, with no explicit answer in‑thread.- A commenter requests the LoRA training recipe and environment details to reproduce results locally, specifying hardware of RTX 4080 Super (

16 GBVRAM) +32 GBRAM. They note prior success training for SDXL and are now using Qwen, praising its prompt fidelity, and ask for practical guidance on dataset prep and training parameters/hyperparameters to achieve comparable quality. - Another user asks whether the release is uncensored, i.e., if safety filters/content restrictions are disabled. This impacts local deployment scenarios and determines whether NSFW or restricted content generation is supported out of the box.

- One comment flags a generation quality issue: “second picture thigh larger than torso,” indicating noticeable anatomy/proportion artifacts in sample outputs. This highlights potential shortcomings in model outputs that technical users may want to evaluate or mitigate during inference or future fine-tuning.

- A commenter requests the LoRA training recipe and environment details to reproduce results locally, specifying hardware of RTX 4080 Super (

- it seems like Gemini 3 won’t come out this month (Score: 341, Comments: 84): Unverified rumor that Gemini 3 won’t launch this month; no official source, release notes, or benchmarks are cited. Comments speculate that

Gemini 3.0 Flashcould outperformGemini 2.5 Pro, implying the lower‑latency “Flash” tier might temporarily leapfrog the prior “Pro” tier for many workloads—without any evals, metrics, or implementation details to substantiate it. One commenter asserts “It’ll be better than 2.5 Pro — for a limited time”, implying a temporary tier reshuffle or promo window, while others call out the lack of evidence (e.g., “Source: trust me bro”).- Debate centers on whether Google’s speed/cost‑optimized Gemini 3.0 Flash could actually outperform the capability‑tier Gemini 2.5 Pro, which would upend product tiering. If

3.0Flash truly beats2.5Pro, commenters note most users “wouldn’t even need Pro,” implying a leap in reasoning/quality, not just latency. Historically, Flash‑class models target low latency and cost while Pro/Ultra lead complex reasoning (Gemini model tiers), so any “Flash > Pro” outcome would likely be metric‑specific (e.g., latency or narrow tasks) rather than across‑the‑board. - Skepticism is high due to lack of evidence—“Source: trust me bro”—and hints that any superiority might be “for a limited time,” suggesting temporary access gating or staged rollouts. Several doubt 3.0 Flash will surpass 2.5 Pro on reasoning benchmarks (e.g., MMLU, GSM8K), framing current claims as marketing‑driven hype absent publicly verifiable evals.

- Debate centers on whether Google’s speed/cost‑optimized Gemini 3.0 Flash could actually outperform the capability‑tier Gemini 2.5 Pro, which would upend product tiering. If

- Gothivation (Score: 576, Comments: 92): The linked media at v.redd.it/bucq7dlt8jof1 is not accessible due to an

HTTP 403network-security block, so the video content cannot be verified from the URL. From the comment context, the post appears to showcase an AI‑generated “goth” video that is realistic enough to pass casual viewing, but the thread provides no technical details (model, pipeline, training data, or benchmarks) and no visible artifacts are discussed. In short, there’s no reproducible implementation info or evaluation data in-thread. One top comment notes they didn’t realize it was an AI video until seeing the subreddit name, underscoring increasing realism and the difficulty of casual detection; other highly upvoted remarks are non-technical.- One commenter highlights the growing indistinguishability of AI-generated video: “I’m more and more impressed every day at how often I don’t realize I’m watching an ai video until I look at the sub name.” This suggests improved visual fidelity and temporal coherence, with fewer telltale artifacts (e.g., hand/finger anomalies, flicker), making casual detection unreliable and underscoring the need for provenance/watermarking or model-level detection. Absent explicit model details, the trend aligns with rapid advances in text-to-video diffusion/transformer pipelines and upscalers, which compress perceptual gaps that used to give AI away.

- Gothivation (Score: 580, Comments: 92): Post shares an AI-generated short video titled “Gothivation,” likely a talking-head/character-actor clip with a goth aesthetic delivering a motivational monologue. The referenced media v.redd.it/bucq7dlt8jof1 returns

HTTP 403 (Forbidden)without Reddit auth/dev token, so model/pipeline details aren’t disclosed in-thread; however, commenters suggest the synthesis quality is high enough to pass casual scrutiny (strong lip-sync/affect coherence implied). Most substantive remark notes they didn’t realize it was an AI video until seeing the subreddit name, underscoring rising realism of consumer-grade avatar/talking-head generation; other top comments are non-technical quips.- A commenter highlights that AI-generated video is becoming hard to distinguish from real footage without contextual cues, implying modern diffusion/GAN video systems have reduced typical giveaways (e.g., mouth sync errors, hand/finger topology glitches, inconsistent specular highlights). Effective detection increasingly depends on temporal signals (blink cadence, motion parallax, physics of fabric/hair), lighting/color continuity across frames, and metadata—rather than single-frame artifacts—suggesting moderation/detection pipelines should incorporate temporal and multimodal analysis.

- Control (Score: 248, Comments: 47): A demo showcases a pipeline combining “InfiniteTalk” (audio-driven talking-head/lip‑sync) with “UniAnimate” (image/video animation with pose/hand control) to produce a dubbed clip emphasizing controllable hand motion while maintaining strong facial expressiveness. Viewers note notably realistic facial performance and stability/identity cues (e.g., consistent ring details on the right hand), suggesting good temporal consistency beyond just hands. Commenters ask how to integrate UniAnimate with InfiniteTalk in a video‑to‑video dubbing workflow that preserves the source motion exactly; they report slight movement drift/mismatch, highlighting synchronization and motion‑lock challenges when trying to maintain frame‑accurate body/pose while swapping or re‑animating the face.

- Technical concern about combining Unianimate with Infinite Talk for video-to-video dubbing: the output does not preserve the source motion exactly, leading to movement drift despite aiming only to change speech/lips. The user needs frame-accurate temporal alignment where pose/trajectory are locked to the input while audio-driven lip and facial articulation are modified. The request implies a need for strict motion control signals and synchronization to avoid deviation across frames.

- Observation on fidelity: commenters note facial performance quality is strong relative to hand/pose control, suggesting disparities in control robustness between face reenactment and full-body/hand tracking. One tip is to “follow the rings on her right hand” to evaluate motion consistency, implying subtle artifacts or lag in hand alignment even when the face tracks well.

- Reproducibility gap: multiple requests for the exact workflow/pipeline (toolchain, settings, and versions) indicate that the showcased result lacks a documented, step-by-step process. Sharing concrete parameters (model versions, control strengths, frame rate handling, and alignment settings) would enable others to replicate and diagnose the motion deviation issues.

- saw a couple of these going around earlier and got curious (Score: 8449, Comments: 1489): Meme-style screenshot of a novelty AI/quiz output that absurdly infers a user’s “preference” (claiming they want to have sex with potatoes), which the OP explicitly rejects. Context suggests a trend of people trying a low-quality AI predictor; it illustrates classic hallucination/misclassification and weak safety/NSFW filtering with no technical details, benchmarks, or model info provided. Commenters broadly deride the model’s reliability and seriousness (e.g., “If the future is AI, we better hope it’s not this AI”), expressing disbelief and concern rather than technical debate.

- The thread shares multiple AI-generated image results via Reddit’s image CDN (e.g., https://preview.redd.it/wlmvcaoqifof1.jpeg) but contains no technical details—no model names (e.g., SDXL, Midjourney v6), prompts, seeds, samplers, steps, CFG/Guidance, negative prompts, or model hashes. Because Reddit’s pipeline typically strips EXIF/embedded JSON, any Stable Diffusion metadata (prompt, seed, sampler) is unrecoverable, so outputs here are non-reproducible and not diagnosable beyond speculation.

- For a technically actionable discussion, posts would need full generation context: base model and version/hash, sampler (e.g.,

DPM++ 2M Karras,DDIM), steps, CFG, resolution, seed, and any refiners/ControlNets/LoRAs (e.g., SDXL base+refiner at 1024px, Hires fix, LoRA stacks). With that, readers could attribute anomalies to parameters (e.g., over-high CFG, under-steps) or architecture (MJ’s internal sampler vs. SDXL pipelines) and propose fixes or reproduce A/B tests.

- Lol. I asked ChatGPT to generate an image of the boyfriend it thinks I want and the boyfriend it thinks I need (Score: 2532, Comments: 651): User asked ChatGPT’s image generator (likely DALL·E 3 via ChatGPT) to produce a “boyfriend it thinks I want” vs “boyfriend it thinks I need” comparison. The resulting image appears to inject alignment/virtue cues—one figure is noted holding an “AI Safety” book—suggesting the model projects safety/wholesome themes and may misinterpret ambiguous “want vs need” prompts, reflecting RLHF-influenced bias and value signaling in generative outputs. Commenters point out the odd inclusion of an “AI safety” book and suggest GPT misunderstood the prompt; another says the output is acceptable, implying the model’s conservative/wholesome bias isn’t unwelcome.

- Mostly reaction/image posts with no benchmarks or model details; the one technical signal is prompt-grounding/safety steering artifacts: a generated image includes an “AI safety book,” suggesting the LLM→T2I pipeline (e.g., ChatGPT + a diffusion backend like DALL·E 3) injected safety-related concepts or misinterpreted intent. Diffusion models also notoriously hallucinate or garble embedded text, so visible, off-prompt text is a known failure mode tied to token-to-glyph mapping and safety rewrites; see the DALL·E 3 system card on safety filtering and prompt transformations (https://cdn.openai.com/papers/dall-e-3-system-card.pdf) and discussions on text rendering limitations in diffusion models (e.g., https://openai.com/research/dall-e-3).

- I asked ChatGPT to make a Where’s Waldo? for the next Halloween. Can you find him? (Score: 636, Comments: 56): A Redditor used ChatGPT’s built‑in image generation to create a Halloween‑themed, Where’s Waldo‑style seek‑and‑find scene, showcasing dense composition and a hidden target consistent with Wimmelbilder prompts. Commenters confirm Waldo’s discoverability with a cropped proof and note small visual cues (e.g., a ‘raised eyebrow’ pumpkin), and another user posts their own, reportedly trickier, AI‑generated variant—indicating reproducibility of cluttered, puzzle‑like scenes. Discussion revolves around how well the image hides Waldo and the scene’s visual density rather than implementation details; no benchmarks or model specifics are provided.

- Users compared AI-generated “Where’s Waldo?” scenes across models: the OP used ChatGPT (per title) and another user tried Google Gemini image. The Gemini output’s findability was ambiguous—commenters couldn’t tell if the target was cleverly hidden or if the composition lacked a distinct “Waldo”—highlighting challenges for image models in consistent character rendering and cluttered-scene composition.

- Image resolution/format varied across shares—

1536pxexample,1024pxexample, and a493pxcrop example—with Reddit’sauto=webpconversion. Downscaling and WebP recompression can obscure fine-grained cues (e.g., stripe patterns) and materially change perceived difficulty, so any comparison of “hardness” should control for resolution and compression artifacts.

2. UK Government AI Adoption and ChatGPT Ads Monetization

- AI is quietly taking over the British government (Score: 3012, Comments: 171): A screenshot of a UK Parliament/House of Commons webpage is run through an AI-content detector, which flags sections as likely “AI-generated” (image). Technically this suggests, at most, AI-assisted drafting or proofreading of public-facing copy (e.g., ChatGPT rewrites or Grammarly), not automation of governmental decisions; moreover, AI-detection tools are known to yield high false positives and cannot prove authorship. No evidence of code, systems integration, or operational control by AI is shown. Commenters argue the title is overblown; many workers—including MPs—use AI as a proofreading aid, and a follow-up image hints key legal/formulaic text remained unchanged, undercutting the “takeover” claim.

- Adoption timeline and scope: The UK government had broad access to Microsoft 365 Copilot via a government-wide free trial in Oct–Dec

2024(The Register), followed by the Labour government’s Jan2025blueprint to mainstream AI across departments (gov.uk). This sequence indicates formal, institutionally sanctioned deployment rather than ad‑hoc usage, and anchors claims of AI uptake to concrete products and dates. - Usage pattern vs displacement: Practitioners highlight AI as a proofreading/writing assist rather than full content generation, which matches assistive workflows embedded in M365 Copilot (Word/Outlook). The implication is workflow augmentation (QA, consistency, turnaround time) rather than role replacement, i.e., AI as a linguistic verification layer within existing processes.

- Attribution/correlation critique: A commenter notes the linguistic shifts in Commons texts align more with the Labour change of government than with ChatGPT’s public availability, cautioning against attributing authorship to LLMs. A sound analysis would test for change-points in Hansard style/lexical distributions around

Jul 2024(government change) versusNov 2022/Mar 2023(ChatGPT/GPt-4 milestones) to control for confounders.

- Adoption timeline and scope: The UK government had broad access to Microsoft 365 Copilot via a government-wide free trial in Oct–Dec

- AI is quietly taking over the British government (Score: 4291, Comments: 210): The image appears to be a screenshot of an AI-text detector labeling a UK parliamentary/ministerial speech as “AI-generated” or highly likely AI, implying “AI is quietly taking over.” Technically, this showcases a known limitation of detectors: they often key on low-perplexity, template-like phrasing and repeated stock expressions—features common in professional speechwriting—leading to false positives and not constituting evidence of actual AI authorship. Commenters note Westminster speech has long been formulaic and meme-like phrases propagate among political factions, which can trigger detectors; others add that even without explicit ChatGPT usage, AI-influenced style can percolate into human writing over time.

- Multiple commenters note high false-positive rates when flagging human-written text as AI, aligning with known limitations of current detectors. OpenAI discontinued its AI Text Classifier due to “low accuracy” (high FP/FN) link, and Liang et al. 2023 found detectors like GPTZero flagged

61%of non-native TOEFL essays as AI arXiv. This undermines claims that rising “AI-like” phrasing in speeches necessarily implies model usage without stronger evidence and calibrated baselines. - Several point out that parliamentary rhetoric is historically formulaic and subject to rapid fashion cycles, so time-series spikes in specific n-grams around the ChatGPT release risk conflating trend adoption with causality. A more defensible approach would use an interrupted time-series or difference-in-differences on Hansard corpora (e.g., UK Parliament API) with speaker and party fixed effects, plus controls for media-driven meme diffusion (cross-correlating phrase adoption with external media timelines). Without such controls, phrase-frequency plots are likely picking up stylistic contagion rather than AI authorship.

- Commenters also highlight AI’s indirect influence on human language: even when speeches aren’t generated, writers may mimic model-suggested phrasing, making phrase-level AI attribution unreliable. Perplexity/burstiness-based detectors are brittle and degrade under light editing/paraphrase (see Ippolito et al. 2020 arXiv and DetectGPT by Mitchell et al. 2023 arXiv), so “AI-like” templates such as “not just X but Y” are poor evidence. Robust attribution would require watermarking or provenance signals rather than surface-level stylistic cues.

- Multiple commenters note high false-positive rates when flagging human-written text as AI, aligning with known limitations of current detectors. OpenAI discontinued its AI Text Classifier due to “low accuracy” (high FP/FN) link, and Liang et al. 2023 found detectors like GPTZero flagged

- Enjoy ChatGPT while it lasts…. the ads are coming (Score: 2375, Comments: 163): The post argues that commercial LLM assistants (OpenAI/ChatGPT, Perplexity, Anthropic) will likely monetize by embedding advertising directly into generated answers—analogous to how Google search evolved—creating incentives for response bias, telemetry-driven targeting, and ad-influenced retrieval/grounding that could erode user trust and turn AI chat into a surveillance-driven discovery layer. It questions whether ads-in-the-loop (e.g., sponsorship-weighted generation, RAG ranking skewed by paid content, or RLHF nudges) would compromise answer integrity versus subscription-only models. Commenters debate scope: ads on free tiers may be tolerable but not for Plus/Pro; implicit/stealth influence (organic product steering) is considered more harmful than explicit ads; several argue raising subscription prices or other offsets is preferable, noting that ad-driven reputational risk could slow adoption.

- Several commenters warn that monetization may manifest as “organic” steering rather than explicit banner ads—e.g., retrieval/citation ranking subtly favoring commercial entities or affiliates. In a RAG/tool-use stack this could be implemented by weighting retrieval scores, re-ranking candidates, or adjusting link choice under the hood, making bias hard to detect because it looks like normal reasoning. Auditing would require counterfactual prompts, distributional checks of cited domains, and A/B comparisons against a non-monetized baseline to spot systematic drift toward sponsors.

- Others note outbound links already include attribution/affiliate-like parameters so destinations can identify traffic sources. Technically this can be done via UTM parameters or partner tags in query strings (see Google’s UTM spec: https://support.google.com/analytics/answer/1033863 and MDN on Referer/Referrer-Policy: https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Referer), enabling conversion tracking and potential revenue sharing even when referrer headers/cookies are limited. This creates a measurable telemetry loop (click-through, conversions) that could be optimized by the model or ranking layer, reinforcing monetized link selection over time.

- A key risk raised for the open-source ecosystem is training-data contamination if web scrapes absorb AI-generated outputs that already contain monetized biases. This aligns with findings on quality/bias drift when models train on their own or synthetic outputs (e.g., “Model Autophagy Disorder,” https://arxiv.org/abs/2307.01850), with ads acting as a domain-specific poisoning vector. Mitigations include provenance tracking, synthetic-content detectors, domain de-duplication, and explicit filters for affiliate/UTM-tagged URLs during corpus curation.

- Why haven’t all the other companies (Google, OpenAI, Deepseek, Qwen, Kimi and others) added this before? It’s literally the most obvious and most needed thing 🤔 (Score: 295, Comments: 51): The image appears to showcase a chat UI touting a “new” native file upload/analysis workspace (multi-file document/code/data handling). Commenters note this isn’t novel: ChatGPT’s Code Interpreter/Advanced Data Analysis has supported uploading and programmatically analyzing files (CSVs, ZIPs, PDFs, etc.) since 2023 using a Python sandbox, with similar capabilities also present in other stacks; the real gaps tend to be UX and reliability, especially for complex documents. See e.g., OpenAI’s Advanced Data Analysis docs and prior announcements (OpenAI help, blog, 2023). Top comments push back that the feature is old news (“Who’s gonna tell him”), adding that while non-visual files work well, PDF ingestion/understanding remains “mid.”

- Several commenters point out this capability has existed since OpenAI’s Code Interpreter/Advanced Data Analysis rollout in mid-2023, which lets ChatGPT upload and process PDFs/CSVs by running Python in a sandbox for parsing, data extraction, and visualization. They note quality varies: non-visual/structured files perform well, but PDF parsing can be “mid” due to layout/OCR/table-detection limits, especially with complex or scanned documents. See OpenAI’s announcement: https://openai.com/blog/code-interpreter.

- There’s broad feature parity across vendors: Google Gemini supports file uploads (PDFs, images, etc.) via its File API for analysis (docs: https://ai.google.dev/gemini-api/docs/file_uploads), Microsoft Copilot can ingest and analyze uploaded documents in chat/Office contexts, and DeepSeek also advertises document Q&A in its chat clients. Differences are largely in modality coverage and extraction fidelity (e.g., robustness to complex PDF layouts) rather than the existence of the feature itself.

- People leaving AI companies be like (Score: 954, Comments: 45): Non-technical meme about departures from AI companies; comments contextualize it with the 2024 exits from OpenAI’s Superalignment team (e.g., Jan Leike’s resignation and the team’s disbanding), where leadership cited disagreements over safety priorities and resources (Jan Leike, reporting). Top comments argue the Superalignment team “wasn’t useful,” claiming none of its work shipped and that they had to create deliberately weak models to publish safety findings, while others quip that ex-employees start “safer-named” startups or call themselves “survivors.”

- A commenter claims OpenAI’s “superalignment” group had negligible production impact: none of their work purportedly shipped into ChatGPT, and they allegedly had to construct deliberately weak LLMs to demonstrate safety failures that standard safety layers and

RLHFalready mitigated in deployed systems. This highlights a perceived gap between alignment research artifacts and productized safety techniques (e.g., RLHF, policy filters) that directly affect user-facing models. - They further argue the team was progressively sidelined as practical safeguards (RLHF/filtering) addressed most real-world issues, so departures had little operational consequence—implying orgs may deprioritize alignment research that doesn’t yield measurable product or risk-reduction deliverables.

- A commenter claims OpenAI’s “superalignment” group had negligible production impact: none of their work purportedly shipped into ChatGPT, and they allegedly had to construct deliberately weak LLMs to demonstrate safety failures that standard safety layers and

- This popup called me out harder than my ex (Score: 377, Comments: 67): Meme-style screenshot likely from ChatGPT showing a privacy/data-use popup (reminding that chats can be reviewed/used to improve models) while the UI also exposes the user’s recent chat titles in the sidebar. Technically, ChatGPT stores chat history by default, and unless users disable “Chat history & training,” conversations may be reviewed to improve systems; the humor stems from the popup “calling out” sensitive chats and the screenshot unintentionally sharing recent activity. Comments joke about accidental oversharing and privacy (e.g., Altman “reading sexting chats”) and at least one user saying they don’t belong there, underscoring discomfort with data review vs. user expectations.

3. Real-world AI Impacts: Builder Traction, Medical Triage, and Consciousness Debate

- Built with Claude Code - now scared because people use it (Score: 279, Comments: 77): Founder of https://companionguide.ai describes hacking together a tool using Claude Code inside VSCode and deploying on Netlify; unexpected traction from strangers triggered concerns about reliability, support, and whether to productize the MVP. The post focuses on early-stage operational readiness (stability, breakage risk) rather than code specifics or benchmarks. Top comments suggest paying for a professional code review once money is involved and note that even mature products break regularly—normalize issues while improving robustness.

- Primary actionable advice: before scaling paid usage, invest in a professional code review/security audit to identify correctness, security, and dependency risks early—preventing outages and revenue loss. A thorough review can surface edge cases, unsafe third‑party libraries, and architectural pitfalls that are expensive to fix post‑launch.

- Reminder that even mature, professional products fail; plan for failure with observability and resilience. Concretely, prioritize logging/metrics/tracing, graceful degradation paths, clear incident response/runbooks, and automated tests to contain blast radius when issues inevitably occur.

- ChatGPT may have saved my life (Score: 438, Comments: 55): OP reports that ChatGPT performed basic symptom triage for suspected acute appendicitis by querying for right‑lower‑quadrant (RLQ) localization and rebound tenderness—e.g., “Is it hurting in the bottom right?” and “does it hurt if you press and release?”—both classic signs of appendicitis, including McBurney’s point tenderness and rebound tenderness. This prompted an ER visit at

~2am, where clinicians indicated the appendix was near perforation; the prompts align with elements of the Alvarado score (e.g., RLQ tenderness, rebound pain), illustrating LLM‑driven layperson triage approximating clinical heuristics. Top comments provide additional anecdotes of LLMs offering useful differentials and patient education (healing/rehab timelines), occasionally anticipating clinician diagnoses; debate notes potential life‑saving triage benefits versus rare harmful uses (e.g., assisting self‑harm), with overall sentiment that LLMs can augment—not replace—medical professionals.- ChatGPT is used as a lightweight clinical decision-support tool for differential diagnosis and triage: when appendicitis was suspected, it enumerated alternative etiologies and surfaced an inflammatory condition that matched the eventual clinical diagnosis. For GI complaints, it guided structured self-checks (e.g., assessing gallbladder pain, screening red flags) to rule out emergent issues, helping users prioritize care pathways without replacing imaging/labs.

- As an evidence retriever and explainer, it provided study links and rationale-driven guidance for presumed gastritis, including staged diet planning and nutrient-dense, “safe” food selection based on irritant/acid load. Users report actionable, consistent explanations that made it easier to maintain nutrition during limited intake, illustrating utility in patient education and protocol adherence rather than definitive diagnosis.

- Reliability and safety: commenters note occasional hallucinations and unjustified assumptions that required cross-checking and correction, though one reported it was “rarely incorrect” within the constrained diet domain. A telehealth clinician later corroborated the working diagnosis, suggesting a workflow where LLM-assisted hypothesis generation and education precede clinician confirmation via diagnostics.

- If you swapped out one neuron with an artificial neuron that acts in all the same ways, would you lose consciousness? You can see where this is going. Fascinating discussion with Nobel Laureate and Godfather of AI (Score: 940, Comments: 419): The post revisits the neuron‑replacement (silicon prosthesis) thought experiment: if a single biological neuron is replaced by a functionally identical artificial unit matching spike timing, synaptic/plasticity dynamics, and neuromodulatory responses, would consciousness change—and what follows under gradual full‑brain replacement? The setup implicitly tests substrate‑independence/functionalism (cf. Chalmers’ “fading/dancing qualia” argument: https://consc.net/papers/fading.html) versus biologically essentialist views, and invokes identity continuity puzzles akin to the Ship of Theseus and multiple realizability (see SEP on Functionalism). Top comments emphasize that the “oomph” intuition has no operational/empirical content—“not something you can objectively measure”—and relate the scenario to Ship‑of‑Theseus identity continuity; others note the discussion is standard in philosophy of mind but acknowledge the speaker’s clear delivery.

- Several commenters note that the term “oomph” for consciousness lacks an operational definition, making it non-measurable and unfalsifiable. For technical evaluation, this highlights the need for operational criteria (e.g., reportability, behavioral/physiological markers, timing/causal interventions) rather than appeals to an undefined scalar of “consciousness.” Without agreed-upon metrics, discourse reduces to intuition pumps and can’t be benchmarked or stress-tested like other AI capabilities.

- Applying Ship of Theseus to neural replacement, the technically salient claim is that if each biological neuron is replaced by a functionally isomorphic artificial unit (preserving IO mappings, latencies, plasticity rules, and network-level dynamics), system-level behavior should remain invariant. This aligns with functionalism and the “gradual replacement” defense of consciousness continuity, pushing back on substrate-essentialist views; see Chalmers’ arguments on fading/dancing qualia for why massive qualia shifts without behavioral change are implausible (https://consc.net/papers/qualia.html). The hard part is specifying the equivalence class: does the replica need to match spike-timing statistics, neuromodulatory effects, and learning rules, or only causal role at some abstraction level?

- A “duck test” perspective argues for behavioral/operational criteria: if an agent is behaviorally indistinguishable and expresses preferences (e.g., not wanting shutdown), that may be a sufficient practical criterion irrespective of substrate, akin to a Turing-style operationalization (https://www.csee.umbc.edu/courses/471/papers/turing.pdf). The technical question becomes detecting and auditing non-instrumental preference expression versus goal-misdirected outputs under optimization pressure (e.g., deception), which implies the need for interpretability, consistency checks, and causal interventions. Full episode for deeper context: https://www.youtube.com/watch?v=giT0ytynSqg

- AI (Score: 1858, Comments: 94): The post titled “AI” contains no technical content—no models, code, datasets, benchmarks, or implementation details. It appears to be a short GIF/video gag featuring an initially blurred face followed by a full reveal (an intentionally inconsistent “censorship” effect), with no accompanying explanation or references. Commenters note the comedic timing—highlighting the abrupt de‑blurring (e.g., “blurred face then the fully revealed face”)—and express general appreciation; there is no substantive technical debate.

- wtf (Score: 1692, Comments: 144): Non-technical meme: a screenshot implies a user is shocked (“wtf”) by an AI/robot/chatbot response that is exactly what it was trained/programmed to do. The thread jokes about trivial or poorly designed training/inference (e.g., wasting CPU to print “hello”), underscoring the basic principle that models do what they’re trained to do (garbage in, garbage out). Comments emphasize user responsibility (“you trained it”), mock expecting emergent behavior from trivial code, and note the bot responding “exactly as programmed.”

- I think I have Alzheimer’s. (Score: 577, Comments: 59): OP shares evidence that the assistant isn’t retaining information across chats (framed as “I think I have Alzheimer’s”), implying a failure of cross-session recall rather than in-thread context loss. A top comment suggests adding a third screenshot showing whether the Memory across conversations feature is enabled to substantiate the claim; if disabled, the behavior is expected per OpenAI’s memory design (see OpenAI’s overview: https://openai.com/index/memory-and-new-controls-for-chatgpt/). Most replies are humorous; the only technically substantive feedback is to verify the memory toggle before diagnosing a bug or regression.

- One commenter suggests adding a third screenshot showing whether “memory across conversations” is enabled to substantiate claims about the assistant’s forgetfulness. This highlights that product-level memory toggles can confound observations by mixing cross-chat memory with per-session context limits; a reproducible report should control for that setting and specify model/session details.

AI Discord Recap

A summary of Summaries of Summaries by gpt-5

1. Generation Efficiency and Kernel-Level Wins

- Set Block Decoding Slashes Steps: The paper Set Block Decoding (SBD) integrates next-token prediction (NTP) and masked token prediction (MATP) to cut generation forward passes by 3–5x while maintaining accuracy on Llama‑3.1 8B and Qwen‑3 8B, with no architectural changes and full KV-cache compatibility.

- Members highlighted SBD’s use of discrete diffusion solvers and praised its practicality as a fine-tune on existing NTP models, noting it promises significant speedups without hyperparameter headaches or system overhauls.

- MI300X VALU Mystery Meets Thread Trace: Engineers probed a suspected dual VALU glitch on MI300X where VALUBusy hit 200%, advising confirmation via limiting to one wave per SIMD (launch 1216 waves) and thread tracing with rocprofiler thread trace and rocprof compute viewer.

- They recommended using rocprofv3 and thread traces to verify if cycles with two waves issue VALUs, framing a repeatable methodology to isolate scheduler behavior at SIMD granularity.

- CUDA Graph Warmup: Capture Smarter, Not Longer: A prolonged CUDA graph warmup (~30 min) triggered guidance to capture a graph for decoding a single token rather than long model.generate() loops, referencing the profiling code in low-bit-inference profiling utils.

- Experts suggested capturing a single forward pass to avoid redundant warmup paths and reduce setup time, aligning graph capture with the intended steady‑state decode workload.

2. Leaderboards, MoE Moves, and New Models

- Qwen3-Next-80B Teases Tiny-Active Titan: Alibaba announced Qwen3‑Next‑80B‑A3B, an 80B ultra‑sparse MoE with only 3B active parameters, claiming 10× cheaper training and 32K+ faster inference while matching Qwen3‑235B reasoning (announcement).

- Community chatter noted extreme sparsity (e.g., ~1:51.2 at the MoE level and ~1:20 overall), flagging it as a key signal that sparse experts are the near‑term path to scalable inference economics.

- LMArena Adds Models and Cleans House: The leaderboard added Seedream‑4, Qwen3‑next‑80b‑a3b‑instruct/thinking, and Hunyuan‑image‑2.1 per LMArena announcements.

- Users also noted the removal of the legacy sites and were invited to submit feature requests for the current platform, consolidating evaluation traffic to a single surface.

- Nano‑Banana Nukes Seedream V4 in Edits: Early reports showed Seedream V4 struggling on image‑editing tasks (e.g., changing outfits while preserving face/body pose) against Nano‑Banana; users tested via LMArena image mode.

- Feedback described Seedream V4 as getting “massacred” on targeted edits, underscoring that edit‑preservation benchmarks remain a differentiator among image models.

3. Agentic Tools and Connectors Go Practical

- Comet Controls the Canvas (and Concerns): Perplexity’s Comet browser drew attention for agentic control that can fill forms, open tabs, and reply to emails, alongside praise for ad‑blocking and summarization but concerns about privacy/security after a reported vulnerability.

- Members emphasized that it “can control ur browser” and debated the safety tradeoffs of autonomous browsing versus productivity gains for routine workflows.

- OpenAI Connectors Unlock Custom MCPs: OpenAI enabled custom MCPs in ChatGPT via Connectors in ChatGPT, giving teams more control over infrastructure choices and data paths.

- Builders welcomed the flexibility and asked for better artifact distribution (e.g., hosting proposal PDFs online) to streamline collaboration and review.

- Transparent Optimizations Pitches Prompt Previews: A proposal for Transparent Optimizations introduced optimizer markers, prompt rewrite previews, and feasibility checks (discussion link).

- Participants requested easier access to supporting docs (e.g., web‑hosted PDFs) and debated how much control users should retain over optimizer‑driven rewrites.

4. Systems Tooling Shifts and GPU Gotchas

- vLLM’s uv pip Trips Nightly Torch: A change to custom builds using uv pip in vLLM uninstalled nightly torch, breaking environments per vLLM PR #3108.

- Practitioners reacted with “ok this is not good”, rolled back to v0.10.1 with

python use_existing_torch.py, and pushed maintainers for an alternative approach.

- Practitioners reacted with “ok this is not good”, rolled back to v0.10.1 with

- cuBLAS TN Quirk Lands on Blackwell: Developers noted newer NVIDIA GPUs (Ada 8.9, Hopper 9.0, Blackwell 12.x) require TN (A‑T, B‑N) for

cublasLtMatmulfast paths (cuBLAS docs).- While technically routine, some found the requirement “incredibly specific”, reminding kernel authors to validate layouts across architectures to avoid silent slow paths.

- Paged Attention Post Peeks Inside vLLM: A new deep dive, Paged Attention from First Principles: A View Inside vLLM, covers KV caching, fragmentation, PagedAttention, continuous batching, speculative decoding, and quantization.

- Systems engineers flagged it as a practical explainer for memory‑bound inference design, clarifying why paged caches and batching policies dominate throughput.

5. Mojo/MAX Platform: Custom Ops and Bindings

- bitwise_and Blocks? Build Custom Ops Instead: Because adding RMO/MO ops via Tablegen isn’t currently possible in closed‑source components, maintainers recommended implementing bitwise_and as a MAX custom op, keeping PRs open for potential internal completion later.

- Users hit API rough edges (broadcasting, dtype promotion), and a team member offered a quick demo notebook while acknowledging long‑term fixes are on the roadmap.

- DPDK Delight: Mojo Bindings Materialize: The community generated most DPDK modules in Mojo at dpdk_mojo, missing a few AST nodes and leaning on a Clang AST parser with JSON dumps for debugging and type reconstruction.

- They called

generate_bindings.mojo“hacky” but workable, aiming next at OpenCV while they iron out struct representation gaps in Mojo.

- They called

- Roll Your Own Mojo Dev Container: Builders shared a roll‑your‑own approach to a Mojo dev environment using Docker, referencing mojo-dev-container as a base for a customized setup.

- This pattern packages the Mojo toolchain predictably, enabling consistent local development and CI without waiting on official images.

Discord: High level Discord summaries

Perplexity AI Discord

- DeepSeek Debuts, Demolishes Delusions: Members on Discord debated which model follows instructions better, with some stating that DeepSeek is less delusional than ChatGPT.

- No further details were given.

- Grok Garners Gripes for Garrulousness: Users on Discord complained about Grok giving what we didn’t even ask for and for yapping too muchhh.

- Some believe Grok is programmed to be controversial for attention, while others find it hard to follow instructions.

- Comet Causes Controversy for Controlling Browser: Users discussed the Comet browser, an AI browser made by Perplexity, noting it can control ur browser, fill forms, open tabs, and even reply to emails.

- Some users expressed concerns about privacy and security, citing a reported vulnerability that allowed hackers to access user data, while others praised its ad-blocking and summarization capabilities.

- Perplexity’s API Parameter Problem Patched: A user reported a single API error a few hours ago, indicating that

num_search_results must be bounded between 3 and 20, but got 50.- Another user confirmed that this was a known issue that got resolved, thanking the user for reporting the error.

Unsloth AI (Daniel Han) Discord

- Multi-GPU ETA Remains Elusive for Unsloth: Despite user praise for Unsloth’s simplicity in single-GPU training, there’s no ETA for official multi-GPU support, with development updates available in this Reddit thread.

- Users are encountering struggles with unofficial methods, underscoring the demand for native multi-GPU capabilities.

- Dynamic GGUF Quantization Desired for Model-Serving: A user expressed high interest in a Dynamic 2.0 GGUF service to improve quantization, suggesting a pay-for-service model, highlighting the need for I-matrices and their quantization schemes.

- They noted the labor-intensive process of model analysis, dynamic quantization, and testing puts strain on the Unsloth team.

- GuardOS: Privacy-Focused NixOS OS Goes Live: A member shared a link to GuardOS, a privacy-first NixOS-based operating system.

- Another member found the idea comical, stating the idea itself was already comical, but the top comment is even funnier.

- Unsloth BERT Model Fine-Tuning Support Confirmed: A user inquiring about Unsloth support for BERT models for finetuning with EHR data to classify ICD-10 codes received a link to a relevant Colab notebook.

- Unsloth officially supports certain models, and users are encouraged to experiment with others, making it suitable for classification tasks.

- Spectral Edit Reveals Audio Secrets: Insights from a spectral edit show that content lies around 0-1000 Hz, prosody between 1000-6000 Hz, and harmonics from 6000-24000 Hz.

- Harmonics determine audio quality and can reveal the sample rate by ear, suggesting natural generation or stretching crystal clear audio can add depth, similar to “frequency noise”.

LMArena Discord

- O3 Model Flounders: Users report underwhelming performance from the O3 model on complex tasks, with some finding it worse than Gemini Pro.

- Conflicting opinions exist, however, as some users see O3-medium as potentially approaching GPT5-low level performance.

- Psychological Prompting: Brain Hack or Bust?: A user suggested employing psychological prompting tactics, such as instructing the AI to work your best with no exceptions.

- Skeptics argue that vague statements are ineffective and verbose prompting yields better results for LLMs.

- AI Spritesheet Factory: A user is generating spritesheet animations using AI, converting video to frames in just 10 minutes.

- They are using Gemini for character images and has posted ready spritesheet animations on itch.io called hatsune-miku-walking-animation.

- LM Arena Says Goodbye to Old Website: The legacy version of the LM Arena website, including alpha.lmarena.ai and arena-web-five.vercel.app, has been removed.

- A team member posted an announcement and invited users to submit feature requests for the current site.

- Nano-Banana Annihilates Seedream V4: Early reports suggest Seedream V4 is underperforming, even against Nano-Banana, especially in image editing tasks.

- Specifically it has trouble changing a person’s outfit while preserving their face and body position. Use this link to use Seedream V4.

HuggingFace Discord

- QLoRA Batch Size Blues: A member ran into batch size limitations using QLoRA with PEFT and a 7B model with 4096 token sequence length on an H200 GPU.

- Suggestions included checking FA2/FA3, setting

gradient_checkpointing=True, using smaller batch sizes, and referencing Unsloth AI docs for context length benchmarks.

- Suggestions included checking FA2/FA3, setting

- ArXiv Paper Needs Endorsement: A user urgently seeks endorsement in CS.CL on ArXiv to publish a preprint featuring the Urdu Translated COCO Captions Subset dataset.

- The endorsement request URL was shared: here.

- Docker Model Runner Debuts: Users discussed using Ollama, Docker Model Runner, and Hugging Face for downloading and utilizing free models.

- Challenges with model availability were noted, with suggestions to consult the Hugging Face documentation and use a VPS.

- n8n valuation jumps to $2.3 Billion: A user inquired about integrating Hugging Face Open Source models within n8n, a no-code automation platform.

- An image was shared indicating that the Berlin-based AI startup n8n has seen its valuation skyrocket from $350 million to $2.3 billion in just four months, per this youtube video.

- Zero Loss on Smol Course: Members experienced zero loss during fine-tuning with an already fine-tuned model, recommending a base model for proper loss.

- A code snippet to disable thinking functionality in the tokenizer in the course’s SmolLM3-3B can be found here.

Cursor Community Discord

- Cursor Plagued by Issues: Cursor users are reporting numerous issues with Cursor and are being directed to report them on the forum for assistance.

- Among the issues reported is Cursor’s auto mode using PowerShell commands to edit, prompting a user to request a bug report.

- Legacy Auto Mode Locks Subscribers: Users with annual subscriptions purchased before September 15th retain the old auto mode until their next renewal, according to pricing details.

- A user is attempting to use rules to make auto use the inline tools.

- Cursor Beta Obscures Release Notes: The latest Cursor release (1.6.6) is in beta, and release notes are scattered across the forum, requiring users to hunt for them.

- The pre-release nature of the version means rapid changes and potential feature removals.

- Director AI Pursues C3PO Dream: A user is trying to stop the whole Your absolutely right! crap by essentially trying to build a C3PO.

- The project is already running on an MCP server and integrated into Cursor.

- Linear Integration Snags on Repository Selection: A user reported that when assigning an issue to Cursor via Linear, it prompts to choose a default repository, even though one is already specified in the Cursor settings, as seen in the attached image.

- This recurring prompt occurs despite the user having configured the default repository within Cursor.

OpenRouter Discord

- OpenRouter query prompts have race condition bug: A member reported a bug related to a possible race condition in query prompting, where longer, more detailed prompts yield worse results for translations.

- No solution was found, but it was suggested to report the bug to the developers.

- Developers have Token Calculation Conundrums: A member inquired about calculating the number of tokens for input, seeking a non-heuristic method due to model-specific variations.

- It was suggested to use external APIs in conjunction with the endpoint’s tokenizer information, as documented, since there’s nothing in the documentation about that.

- JSONDecodeError surfaces in server responses: Users discussed a JSONDecodeError indicating an invalid JSON response from the server, often due to server-side failures like rate limiting, misconfigured models, or internal errors.

- The error suggests the server returned HTML or an error blob instead of valid JSON.

- Avoiding Moonshot AI’s turbo pricing: A user asked how to avoid the more expensive turbo version when selecting Moonshot AI as the provider for Kimi K2 in the OpenRouter chatroom.

- The solution offered was to select a cheaper provider in the advanced settings.

- iOS upload bug squashed: A user reported a bug where they couldn’t upload PDF or TXT files to OpenRouter chat on iOS because non-image files were grayed out.

- It was confirmed as a bug, likely an oversight when file uploads were added, with no workaround available on iOS.

GPU MODE Discord

- Lambda Labs Cloud GPUs Face Instance Drought: Users reported inconsistent GPU instance availability with Lambda Labs, questioning the frequency and impact of cloud GPU shortages.

- The discussion underscored the importance of understanding the reliability of GPU resources when relying on cloud platforms for resource-intensive tasks.

- CUDA Graph Warmup Reaches Half Hour Mark: A user reported that CUDA graph warmup was taking half an hour in their low-bit-inference project, and another suggested that capturing a CUDA graph for decoding one token instead of generating many tokens may provide better results.

- The user may want to capture a single forward pass, not multiple passes like

model.generate()does internally.

- The user may want to capture a single forward pass, not multiple passes like

- vLLM’s uv pip Transplants Nightly Torch: A member noted that vLLM switched to

uv pipfor custom builds with pre-installed torch, but it uninstalls the nightly torch, leading to environment issues, from this PR.- A member said ok this is not good, just saw their pr. I’m gonna go ask them if they can find another way to do this, and another member reverted to

v0.10.1building withpython use_existing_torch.py.

- A member said ok this is not good, just saw their pr. I’m gonna go ask them if they can find another way to do this, and another member reverted to

- MI300X Probes Potential Dual VALU Glitch: Users investigated a potential dual VALU issue on MI300X, where VALUBusy hits 200%, suggesting confirming it by limiting to one wave per SIMD, and using rocprof compute viewer and rocprofv3 to diagnose.

- The user was advised to launch 1216 waves to achieve 1 wave/simd, leveraging AMD’s documentation for thread tracing and rocprof compute viewer documentation.

- Kernel Dev Roadmap makes Progress: A member suggested adding a roadmap for kernels and increasing available kernels in GPU mode leaderboard, following the format of gpu-mode/reference-kernels.

- Members also mentioned that submissions can now be made online, with the primary need being an editor-like experience.

LM Studio Discord

- NVMe Upgrade Speeds Up Load Times: A user replaced a slow NVMe with a faster one, achieving a 4x improvement in sequential read speed and model load times.

- The user did not provide details on the old or new drives.

- Markdown Sub Tag Renders Incorrectly: A member reported that the

<sub>tag has no effect on text inside it in Markdown style within LM Studio, and also that italic text is not rendered correctly when using asterisks such as*(n-1)*.- There are ongoing discussions about the proper rendering of Markdown syntax, specifically with sub tags and italicized text.

- Western Digital Drives Blows Up: Users reported high failure rates with Western Digital Blue drives, humorously calling them Western Digital Blew Up drives.

- The users did not elaborate on the specific failure modes or use cases, but the consensus was to avoid the drives.

- PNY NVIDIA DGX Spark Plagued by ETA Shenanigans: Users joked about the PNY NVIDIA DGX Spark having conflicting ETAs, initially October then late August, as listed on linuxgizmos.com.

- The inconsistency in the release dates has led to speculation about the availability and production timeline of the device.

- Linux Dominates for Max+ 395 Box: Users recommended Linux over Windows for a Max+ 395 box, citing Vulkan’s functionality but noting potential context limits.

- It was suggested to use a custom-built llama.cpp with ROCm 7 from lemonade-sdk/llamacpp-rocm that already has compiled versions in Releases.

OpenAI Discord

- Laconic Game Causes Gemini 2.5 Pro to Hallucinate: A user joked that their laconic game was so strong that it caused Gemini 2.5 Pro to hallucinate.

- The user did not elaborate further on the nature of the hallucination or laconic game.

- GPT-5 Now Integrates Code Snippets and Linux Shell Access: A member reported that GPT-5 now writes its own code snippets to use as tools in a chain of tasks and appears to have access to an underlying Linux shell environment.

- Another member mentioned they vibe coded directly from the ChatGPT interface to develop an app hosted locally on GitHub.

- Custom MCPs Now Supported in OpenAI: Users can now use custom MCPs (Managed Cloud Providers) in OpenAI, according to the Connectors in ChatGPT documentation.

- This update allows for more flexibility and control over the infrastructure used by ChatGPT.

- Transparent Optimizations Proposal Introduced: A proposal for Transparent Optimizations was posted, introducing optimizer markers, prompt rewrite previews, and feasibility checks; the proposal was linked here.

- One member requested that associated PDFs be hosted online for easier access, rather than requiring downloads.

- AI Self Help Conversation Analyzer Launched: A member introduced a conversation analyzer called AI Self Help that helps determine why conversations take odd turns.

- The tool includes a conversation starter that lists issues and detailed questions to ask ChatGPT to get the answers.

Nous Research AI Discord

- Disable WebGL to fix perf issues: A member requested a feature to disable WebGL in the browser due to performance issues without a GPU, and suggested disabling the animation orb as well, shown in this screenshot.

- The suggestion came from someone working on a project requiring rapid iteration with automated bug fixes and updates, and passing the MOM test.

- Dataset quality better with Tokenizer Filtering: A member shared a link to dataset_build on GitHub, highlighting the idea of running languages through a model’s tokenizer and rejecting those with unknown tokens to ensure quality.

- The approach also organizes calibration datasets using folders/directories for later combination.

- SBD accelerates LLM Gen: A new paper introduces Set Block Decoding (SBD), a paradigm that accelerates generation by integrating standard next token prediction (NTP) and masked token prediction (MATP) within a single architecture, without requiring architectural changes or extra training hyperparameters.

- Authors demonstrate that SBD enables a 3-5x reduction in the number of forward passes required for generation while achieving the same performance as equivalent NTP training by fine-tuning Llama-3.1 8B and Qwen-3 8B.

Latent Space Discord

- GPT-OSS Outshines Llama2 on a Budget: It was noted that running GPT-OSS 120B is cheaper than running Llama2 7B, with discussion suggesting that MoEs are the future.

- Optimizations for speeding up GPT-OSS, such as MXFP4 quantization, custom kernels, and continuous batching, were also mentioned.

- Altman Grilled in Murder Mystery: During an interview, Sam Altman was accused of murder, prompting a classic deflection move as highlighted in this video clip.

- A member shared that there’s a clip on Twitter of this like 5 min segment.

- Codex Cranks Get Exclusive Peek: Alexander Embiricos invited heavy Codex users to beta test something new, as seen in this tweet.

- This might be related to conversation resume and forking, based on recent repository activity here.

- OpenAI’s Oracle Oddity Obscures Overspending: OpenAI reportedly signed a 5-year, $300 billion cloud-computing contract with Oracle starting in 2027 at $60 billion per year.

- Commentators are questioning OpenAI’s ability to afford the annual $60B cost against ~$10B revenue, raising concerns about energy and business-model sustainability.

- ByteDance Squeezes Google’s Fruit: Deedy highlighted ByteDance’s new Seedream 4.0 as top ranked on Artificial Analysis leaderboards, touting 2–4 K outputs, relaxed policies, faster generation, multi-image sets, and $0.03 per result.

- Community reactions range from glowing praise for quality and pricing to skepticism that Nano Banana still wins on speed and natural aesthetics.

DSPy Discord

- Math GPT App Seeks DSPy Savvy: A member seeks an advanced DSPy blog writing agent for a Math GPT app available at https://next-mathgpt-2.vercel.app/.

- The agent would presumably generate math-related content, given the nature of the Math GPT app.

- Pythonic Programs Propel Proliferation of Ports: A member suggested modeling and optimizing DSPy programs directly in Python and transpiling to languages like Go, Rust, or Elixir.

- A key challenge is how to export an arbitrary python program, perhaps by serving a backend to a python interface.

- Arbor Advantages Accelerate Adoption of RL: Members discussed using Reinforcement Learning (RL) in DSPy, but one member expressed fear of diving in because of the many moving parts and need for powerful GPUs.

- Another member said that Arbor + DSPy is quite seamless, and they are working on new things to make config even easier so everyting just works.

- Instructions’ Immutability Incites Iterations: A member inquired whether instructions can be modified by an optimizer when using

signature.with_instructions(str).- It was clarified that mipro and gepa do modify the instructions, with the actual instructions saved in the

program.json.

- It was clarified that mipro and gepa do modify the instructions, with the actual instructions saved in the