AI News for 10/17/2024-10/18/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (228 channels, and 2111 messages) for you. Estimated reading time saved (at 200wpm): 249 minutes. You can now tag @smol_ai for AINews discussions!

It is multimodality day in AI research land as two notable multimodality papers were released: Janus and SpiRit-LM.

DeepSeek Janus

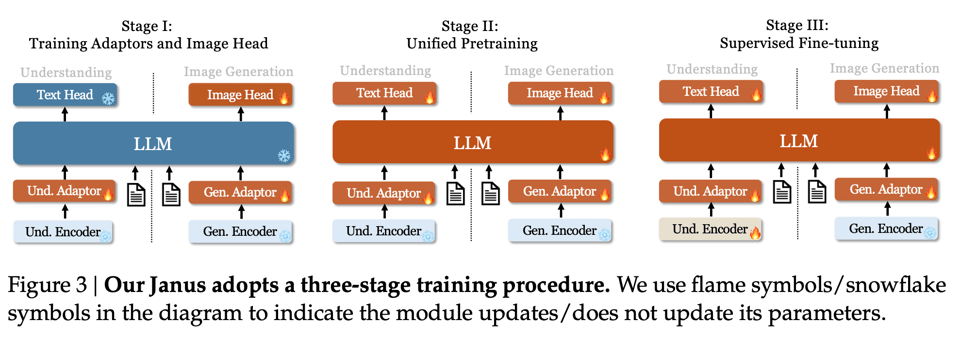

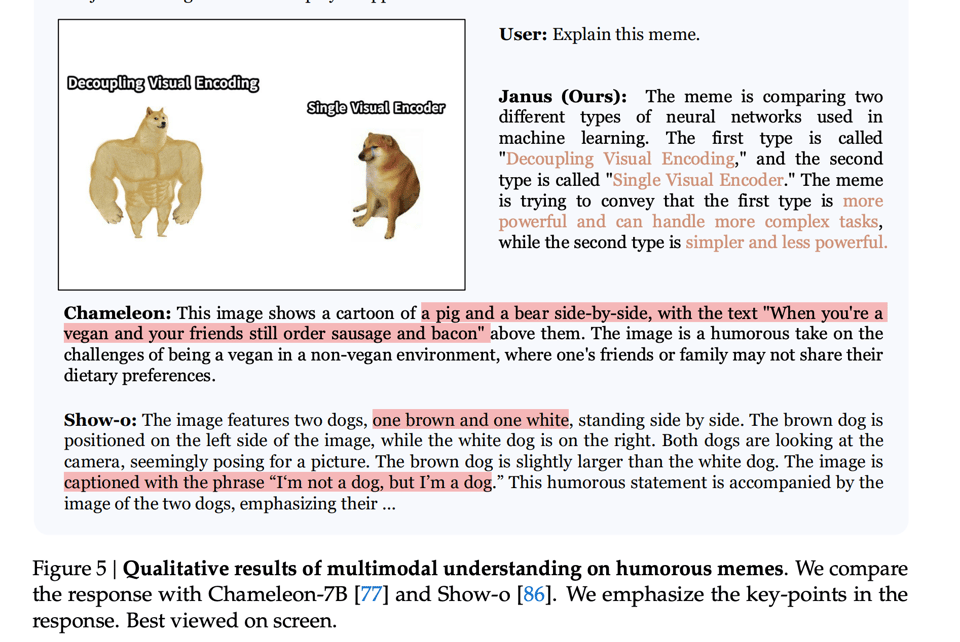

Earlier work like Chameleon (our coverage here) and Show-O used a single vision encoder for both visual understanding (image input) and generation (image output). Deepseek separated them:

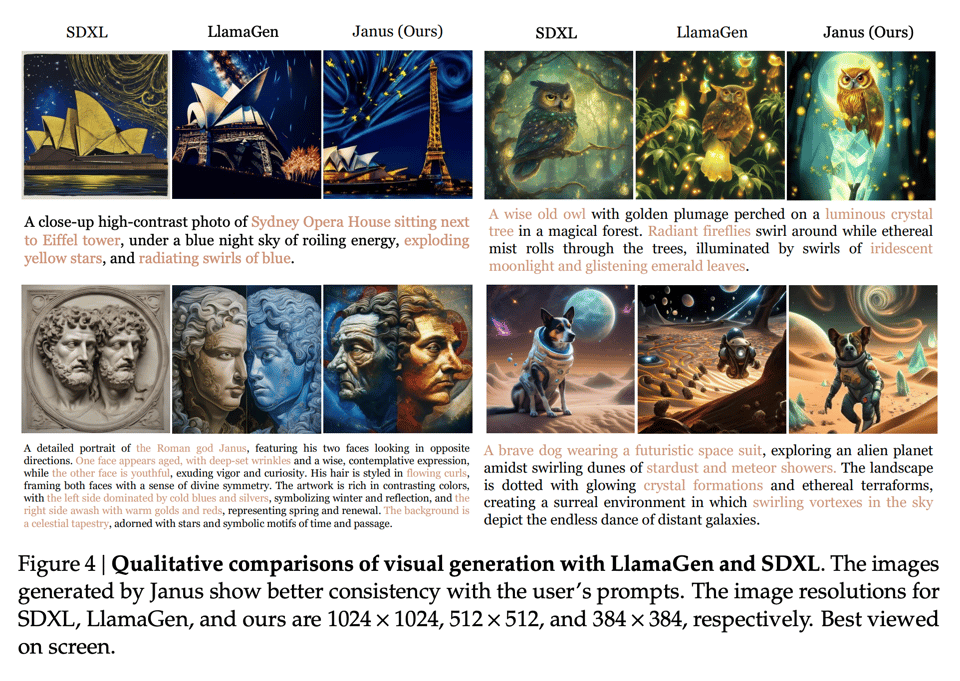

and found better results in comparable size image generation:

and image understanding:

Open question as to whether this approach maintains its advantage with scale, and if it is really all that important to include image generation in the same stack.

Meta SpiRit-LM

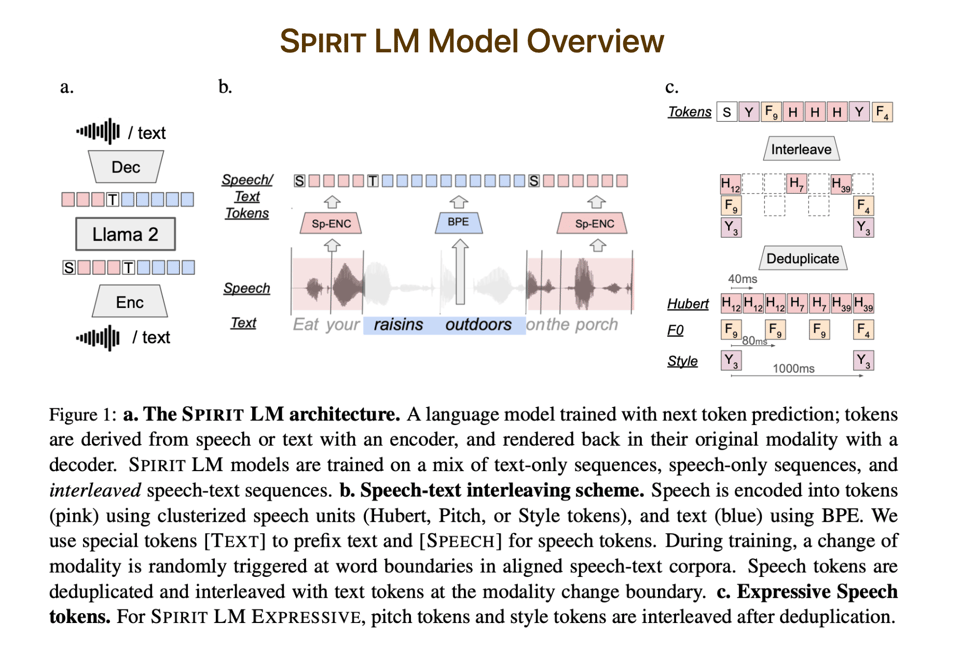

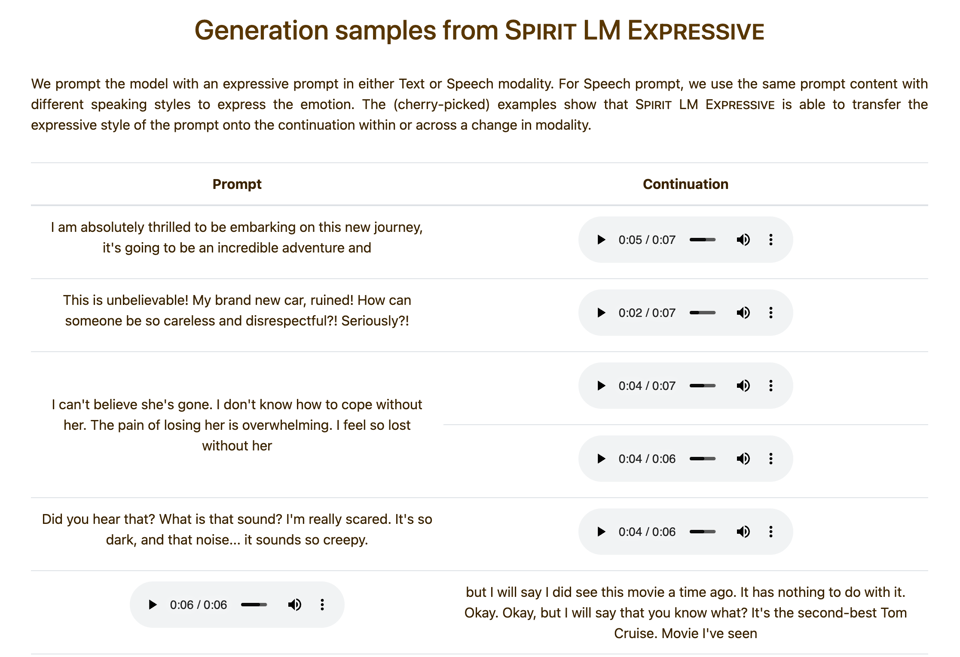

Along with SAM 2.1 and Layer Skip, Meta’s Friday drop included SpiRit-LM, a (Spi)eech and W(Rit)ing model that also includes an “expressive” version generating pitch and style units.

The demo has voice samples - not quite NotebookLM level, but you can see how this is a step above standard TTS.

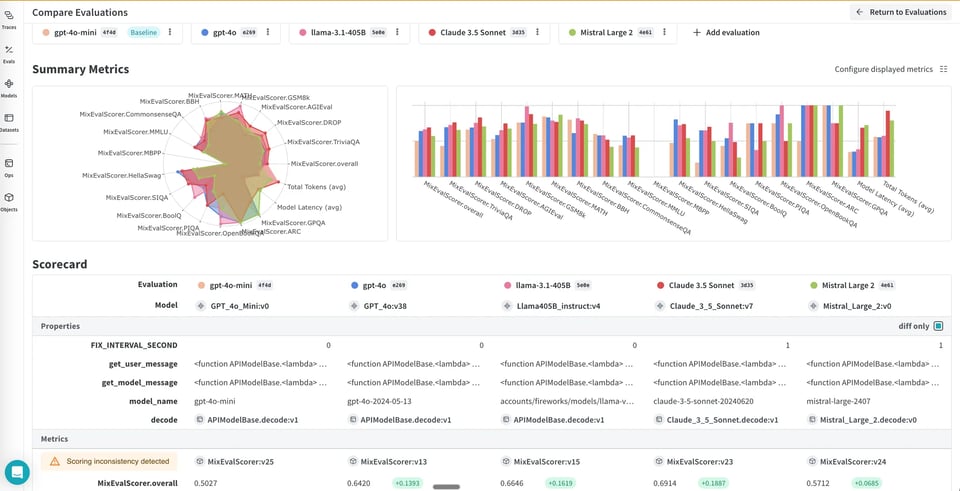

Brought to you by W&B Weave: The best ML experiment tracking software in the world is now offering complete LLM observability!

With 3 lines of code you can trace all LLM inputs, outputs and metadata. Then with our evaluation tooling, you can turn AI Engineering from an art into a science.

P.S. Weave also works for multimodality - see how to fine-tune and evaluate GPT-4o on image data.

{% if medium == ‘web’ %}

Table of Contents

[TOC]

{% else %}

The Table of Contents and Channel Summaries have been moved to the web version of this email: [{{ email.subject }}]({{ email_url }})!

{% endif %}

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Industry Updates and Developments

-

New AI Models and Benchmarks: @bindureddy noted that the Nvidia Nemotron Fine-Tune isn’t a very good 70b model, underperforming across several categories compared to other SOTA models. @AIatMeta announced the open-sourcing of Movie Gen Bench, including two new media generation benchmarks: Movie Gen Video Bench and Movie Gen Audio Bench, aimed at evaluating text-to-video and (text+video)-to-audio generation capabilities.

-

AI Company Updates: @AravSrinivas announced the launch of Perplexity for Internal Search, a tool to search over both the web and team files with multi-step reasoning and code execution. @AnthropicAI rolled out a new look for the Claude iOS and Android apps, including iPad support and project features.

-

Open Source Developments: @danielhanchen reported that the gradient accumulation fix is now in the main branch of transformers, thanking the Hugging Face team for collaboration. @ClementDelangue shared an important report on “Stopping Big Tech from becoming Big AI,” emphasizing the role of open source AI in fostering innovation and lowering barriers to entry.

AI Research and Technical Insights

-

Model Merging: @cwolferesearch discussed the effectiveness of model merging for combining skills of multiple LLMs, citing Prometheus-2 as an example where merging outperforms multi-task learning and ensembles.

-

AI Safety and Evaluation: @_philschmid explained Process Reward Models (PRM) by @GoogleDeepMind, which provide feedback on each step of LLM reasoning, leading to 8% higher accuracy and up to 6x better data efficiency compared to standard outcome-based Reward Models.

-

AI Development Tools: @hrishioa introduced diagen, a tool for generating @terrastruct d2 diagrams using various AI models, with Sonnet performing best and Gemini-flash showing impressive results with visual reflection.

AI Applications and Use Cases

-

Audio Processing: @OpenAI announced support for audio in their Chat Completions API, offering comparison points between the Chat Completions API and the Realtime API for audio applications.

-

AI in Education: @RichardMCNgo suggested that teachers struggling to evaluate students using AI assistance should prepare for AIs capable of evaluating students themselves, potentially through voice-capable AI and AIs watching students solve problems.

-

AI for Data Analysis: @perplexity_ai introduced Internal Knowledge Search, allowing users to search through both organizational files and the web simultaneously.

AI Community and Career Insights

-

@willdepue encouraged applications to the OpenAI residency for those from unconventional backgrounds interested in AI, emphasizing the need for enthusiasm about building true AI and tackling complex problems.

-

@svpino announced an upcoming Machine Learning Engineering cohort focusing on building a massive, end-to-end machine learning system using exclusively open-source tools.

-

@jxnlco shared an anecdote about undercharging for consulting services, highlighting the importance of proper pricing in the AI consulting industry.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. High-Performance Local LLM Setups

- 7xRTX3090 Epyc 7003, 256GB DDR4 (Score: 149, Comments: 72): A user showcases their powerful 7x RTX 3090 GPU setup paired with an AMD Epyc 7003 processor and 256GB DDR4 RAM for local LLM inference. This high-performance configuration is designed to handle demanding AI workloads, particularly large language models, with significant parallel processing capabilities and ample memory resources.

- Users praised the aesthetics of the tightly packed GPUs, with some comparing it to an “NSFW” setup. The water cooling system garnered attention, with questions about its implementation and thermal management.

- The motherboard was identified as an ASRock ROMED8-2T with 128 PCIe 4.0 lanes. The setup uses 2x1800W PSUs and employs tensor parallelism instead of NVLink for GPU communication.

- Discussion arose around power consumption and cooling, with the OP confirming a 300W limit per GPU (totaling 2100W) and the use of a “huge 2x water radiator”. Users compared this setup to crypto mining rigs and speculated on its performance for LLM training.

Theme 2. DeepSeek’s Janus: A 1.3B Multimodal Model Breakthrough

- DeepSeek Releases Janus - A 1.3B Multimodal Model With Image Generation Capabilities (Score: 389, Comments: 77): DeepSeek has released Janus, a 1.3 billion parameter multimodal model capable of both image understanding and generation. The model demonstrates competitive performance in zero-shot image captioning and visual question answering tasks, while also featuring the ability to generate images from text prompts, making it a versatile tool for various AI applications.

- The Janus framework uses separate pathways for visual encoding while maintaining a unified transformer architecture. This approach enhances flexibility and performance, with users expressing interest in its implementation and potential applications.

- A detailed installation guide for running Janus locally on Windows was provided, requiring at least 6GB VRAM and an NVIDIA GPU. The process involves creating a virtual environment, installing dependencies, and downloading the model.

- Users discussed the model’s capabilities, with some reporting issues running it on a 3060 with 12GB VRAM. Early tests suggest the model struggles with image composition and is not yet at SOTA level for image generation or visual question answering.

Theme 3. Meta AI’s Hidden Prompt Controversy

- Meta AI’s hidden prompt (Score: 302, Comments: 85): Meta AI’s chatbot, powered by Meta Llama 3.1, was found to have a hidden prompt that includes instructions for accessing and utilizing user data for personalized responses. The prompt, revealed through a specific query, outlines guidelines for incorporating user information such as saved facts, interests, location, age, and gender while maintaining strict privacy protocols to avoid explicitly mentioning the use of this data in responses.

- Users discussed the creepiness factor of Meta AI’s hidden prompt, with some expressing concern over privacy implications. Others argued it’s a standard practice to improve user experience and avoid robotic responses.

- Debate arose about whether the revealed prompt was hallucinated or genuine. Some users suggested testing for consistency across multiple queries to verify its authenticity, while others pointed out the prompt’s specificity as evidence of its legitimacy.

- Discussion touched on the quality of the prompt, with some criticizing its use of negative statements. Others defended this approach, noting that larger models like GPT-4 can handle such instructions without confusion.

Theme 4. AI-Powered Game Development Innovations

- I’m creating a game where you need to find the entrance password by talking with a Robot NPC that runs locally (Llama-3.2-3B Instruct). (Score: 87, Comments: 26): The post describes a game in development featuring a Robot NPC powered by Llama-3.2-3B Instruct, running locally on the player’s device. Players must interact with the robot to discover an entrance password, with the AI model enabling dynamic conversations and puzzle-solving within the game environment. This implementation showcases the integration of large language models into interactive gaming experiences, potentially opening new avenues for AI-driven narrative and gameplay mechanics.

- Thomas Simonini from Hugging Face developed this demo using Unity and LLMUnity, featuring Llama-3.2-3B Instruct Q4 for local processing and Whisper Large API. He plans to add multiple characters with different personalities and write a tutorial on creating similar games.

- The game’s security against jailbreaking attempts was discussed, with suggestions to improve it using techniques like function calling, separating password knowledge from the LLM, or implementing a two-bot system where one bot knows the password and only communicates yes/no answers.

- Users proposed ideas for gameplay mechanics, such as tying dialogue options to RPG-like intelligence perks, using jailbreaking as a feature for “gullible” NPCs, and suggested improvements like word-based passwords or historical number references to enhance the guessing experience.

- Prototype of a Text-Based Game Powered by LLAMA 3.2 3B locally or Gemini 1.5Flash API for Dynamic Characters: Mind Bender Simulator (Score: 43, Comments: 7): The post describes a prototype for a text-based game called “Mind Bender Simulator” that uses either LLAMA 3.2 3B locally or the Gemini 1.5Flash API to create dynamic characters. This game aims to simulate interactions with characters who have mental health conditions, allowing players to engage in conversations and make choices that affect the narrative and character relationships.

- The game concept draws comparisons to the film Sneakers, with users suggesting scenarios like voice passphrase verification. The developer considers adding fake social profiles and adapting graphic styles for increased immersion.

- Discussions explore the potential of using LLMs for text adventure games, with suggestions to use prompts for style, character info, and “room” descriptions. Questions arise about the model’s ability to maintain consistency in navigating virtual spaces.

- Interest in the project’s prompting techniques is expressed, with requests for access to the source code. The developer notes significant performance differences between LLAMA and Gemini, especially for non-English languages, and estimates a potential cost of under $1 per gaming session using Gemini Flash.

Theme 5. LLM API Cost and Performance Comparison Tools

- I made a tool to find the cheapest/fastest LLM API providers - LLM API Showdown (Score: 51, Comments: 25): The author created “LLM API Showdown”, a web app that compares LLM API providers based on cost and performance, available at https://llmshowdown.vercel.app/. The tool allows users to select a model, prioritize cost or speed, adjust input/output ratios, and quickly find the most suitable provider, with data sourced from artificial analysis.

- Users praised the LLM API Showdown tool for its simplicity and cleanliness. The creator acknowledged the positive feedback and mentioned that the tool aims to provide up-to-date information compared to similar existing resources.

- ArtificialAnalysis was highlighted as a reputable source for in-depth LLM comparisons and real-use statistics. Users expressed surprise at the quality and free availability of this comprehensive information.

- Similar tools were mentioned, including Hugging Face’s LLM pricing space and AgentOps-AI’s tokencost. The creator noted these alternatives are not always current.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Research and Development

-

Google’s NotebookLM now allows users to customize AI-generated podcasts based on their documents. New features include adjusting podcast length, choosing voices, and adding music. Source

-

NVIDIA announced Sana, a new foundation model claimed to be 25x-100x faster than Flux-dev while maintaining comparable quality. The code is expected to be open-sourced. Source

-

A user successfully merged two Stable Diffusion models (Illustrious and Pony) with different text encoder blocks, demonstrating progress in model combination techniques. Source

AI Applications and Demonstrations

-

A LEGO LoRA for FLUX was created to improve LEGO creations in AI-generated images. Source

-

An AI-generated image of a sea creature using FLUX demonstrated the model’s capability to create realistic-looking mythical creatures. Source

Robotics Advancements

-

Unitree’s G1 robot demonstrated impressive capabilities, including a standing long jump of 1.4 meters. The robot stands 1.32 meters tall and shows agility in various movements. Source

-

A comparison between Unitree’s G1 and Tesla’s Optimus sparked debate about the progress of humanoid robots, with some users finding the G1 more impressive. Source

AI Ethics and Societal Impact

-

Sam Altman expressed concern about people’s ability to adapt to the rapid changes brought by AI technologies. He emphasized the need for societal rewriting to accommodate these changes. Source

-

Altman also stated that AGI and fusion should be government projects, criticizing the current inability of governments to undertake such initiatives. Source

-

Demis Hassabis of DeepMind described AI as “epochal defining,” predicting it will solve major global challenges like diseases and climate change. Source

Community Discussion

- A user raised concerns about the concentration of posts from a small number of accounts on the r/singularity subreddit, questioning the diversity of perspectives in the community. Source

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. Model Performance and Evaluations

-

Nemotron vs. Llama: Clash of the 70Bs: Engineers debate the performance and cost-effectiveness of Nemotron 70B compared to Llama 70B, especially with the anticipated 405B model on the horizon.

- Nvidia markets Nemotron for its helpfulness, sparking discussions on its edge over traditional knowledge-focused models.

-

Strawberry Tasks Make LLMs Squirm: The community criticizes the strawberry evaluation task as inadequate for truly assessing LLM capabilities.

- Speculations suggest future models will be fine-tuned to tackle these viral evaluation challenges more effectively.

-

Faithful Models or Flaky Predictions?: Replicating Faithfulness evaluations for RAG bots uncovers time-consuming processes, questioning model reliability.

- Alternatives like Ollama are recommended for faster execution, contingent on hardware capabilities.

Theme 2. Advanced Training Techniques

-

Fine-Tuning Frenzy: From ASCII to RWKV 🔧: Engineers dive into fine-tuning LLMs for specialized tasks, sharing insights on RWKV contributions and the potential for enhanced model versatility.

- The emphasis is on data quality and exploring open-source architectures to boost model performance.

-

RLHF vs. DPO: Training Tug-of-War: Debates rage over using Proximal Policy Optimization (PPO) versus Direct Preference Optimization (DPO) for effective Reinforcement Learning from Human Feedback (RLHF).

- Implementations inspired by Anthropic’s RLAIF showcase blending data from multiple models for robust training.

-

ControlNet’s Text Embedding Tango: Customizing ControlNet for image alterations necessitates robust text embeddings, highlighting risks of overfitting with repetitive datasets.

- Users discuss embedding adjustments to ensure effective training without compromising model adaptability.

Theme 3. Cutting-Edge Tools and Frameworks

-

Mojo: Python’s Speedy Cousin ⚡: Mojo aims to attract performance-centric developers with its ‘zero overhead abstractions,’ rivaling languages like C++.

- Feedback highlights the need for more API examples and comprehensive tensor documentation to enhance usability.

-

Aider AI Pair Programming Mishaps: Issues with Aider committing to incorrect file paths and hitting token limits spark discussions on enhancing file handling and managing large data submissions.

- Solutions include using

pipxfor isolated installations and setting token thresholds to prevent overuse.

- Solutions include using

-

Liger Flash Attention Saves VRAM: Integrating Flash Attention 2 with Liger results in notable VRAM reductions, halving usage from 22.7 GB to 11.7 GB.

- Members advise configuring settings like

liger_flash_attention: truefor optimal memory savings on AMD hardware.

- Members advise configuring settings like

Theme 4. Innovative AI Applications

-

Claude’s Makeover: Mobile and iPad Awesomeness: The Claude app receives a major UI overhaul, introducing project creation and integrated chat features for a smoother user experience.

- Users report significantly improved navigation and functionality, enhancing on-the-go AI interactions.

-

Capital Companion: Your AI Trading Sidekick: Capital Companion leverages LangChain and LangGraph to offer an AI-powered investment dashboard, aiding users in spotting uptrends and optimizing stock trading decisions.

- Features include technical analysis tools and market sentiment analysis for a competitive trading edge.

-

DeepMind’s Chess Grandmaster Transformer: DeepMind unveils a chess-playing transformer achieving an impressive ELO of 2895, showcasing superior strategic prowess even in unfamiliar puzzles.

- This milestone challenges critiques on LLMs’ effectiveness with unseen data, highlighting strategic AI potential.

Theme 5. Community and Collaborative Efforts

-

AI Hackathons: Fueling Innovation with $25k Prizes: Multiple channels like Stability.ai and LAION host Gen AI Hackathons, encouraging teams to develop ethical AI-powered multi-agent systems with substantial prize pools.

- Collaborations include notable partners like aixplain, Sambanova Systems, and others, fostering a competitive and innovative environment.

-

Open Source AI Definitions and Contributions: The Open Source AI Definition is finalized with community endorsements, fostering standardization in open-source AI projects.

- Members are encouraged to contribute to projects like RWKV and support initiatives aimed at advancing open-source AI frameworks.

-

Berkeley MOOC Collaborations and Guest Speakers: The LLM Agents MOOC integrates guest speakers from industry leaders like Denny Zhou and Shunyu Yao, enhancing the learning experience with real-world insights.

- Participants engage in forums, quizzes, and livestreams, fostering a collaborative and interactive educational environment.

PART 1: High level Discord summaries

Nous Research AI Discord

-

Octopus Password Mystery Explored: Users engaged in a humorous exploration of a model hinting at ‘octopus’ as a potential password, generating various creative prompts in the process.

- Despite numerous strategies attempted, including poetic approaches, the definitive unlock remains elusive.

-

Fine-tuning Models for Specific Tasks: A member shared experiences fine-tuning a model based on ASCII art, humorously noting its underwhelming responses.

- There was a consensus on the potential for improved versatility with further training iterations.

-

Performance Evaluations of LLMs: Critiques of LLM evaluation methods highlighted the inadequacy of the strawberry task in assessing language processing capabilities.

- Speculation arose about future model enhancements being geared toward addressing well-known challenges, including the viral strawberry problem.

-

Rust ML Libraries Getting Attention: The potential transition from Python to Rust in machine learning was discussed, reflecting growing interest in Rust libraries.

- Key libraries like torch-rs, burn, and ochre were mentioned, emphasizing the community’s enthusiasm for learning this language.

-

SCP Generator Using Outlines Released: A new SCP generator utilizing outlines was launched on GitHub, aiming to amplify the ‘cursed’ project’s capabilities.

- In addition, a repository studying LLMs’ generated texts across various personalities was linked to the paper on Cultural evolution in populations of Large Language Models: LLM-Culture.

HuggingFace Discord

-

AI Struggles to See the Bigger Picture: Members found that AIs often excel at fixing minor issues, like JSON errors, but struggle with larger coding projects, making them less effective for complex tasks.

- The discussion highlighted the risk of misleading beginners who lack sufficient coding knowledge to navigate these limitations.

-

Python: A Must for AI Hobbyists: Participants emphasized the value of learning Python for those interested in AI and noted that quality free resources can rival paid courses.

- Moreover, AI-generated code is often unreliable for novices, underscoring the need for foundational coding skills.

-

Kwai Kolors Faces VRAM Challenges: Users reported that running Kwai Kolors in Google Colab requires 19GB of VRAM, which exceeds the free tier’s limitations.

- Advice was given to revert to the original repository for better compatibility with the tool.

-

Understanding ControlNet’s Training Needs: For customizing ControlNet to modify images, members noted that utilizing text embeddings is essential; replacing the CLIP encoder won’t suffice.

- They also discussed the risks of overfitting when datasets contain similar images.

-

Pricing Insights for AWS EC2: Discussion around AWS EC2 pricing clarified that charges apply hourly based on instance uptime, regardless of active use.

- Members noted that using notebook instances does not influence the hourly cost.

Eleuther Discord

-

Open Source AI Definition nearly finalized: The Open Source AI Definition is nearly complete, with a release candidate available for endorsement at this link. Community members are encouraged to endorse the definition to establish broader recognition.

-

Seeking contributions for RWKV project: A member from a startup focused on AI inference expressed interest in contributing to open source projects related to RWKV. They were encouraged to assist with experiments on RWKV version 7, as detailed in previous discussions in this channel.

- The community is particularly welcoming contributions around novel architecture and efficient inference methodologies.

-

SAE Steering Challenges and Limitations: Discussions on Sparse Autoencoders (SAEs) revealed their tendency to misrepresent features due to complexities in higher-level hierarchies. Consequently, achieving accurate model interpretations requires substantially large datasets.

- Members emphasized the frequency of misleading conclusions stemming from overstated feature interpretations.

-

Investigating Noise Distributions for RF Training: A conversation emerged regarding the use of normal distributions for noise in random forests, with alternatives suggested for better parameterization. There’s a consensus about exploring distributions like Perlin noise or pyramid noise, especially beneficial for image processing.

- Community members highlighted the insufficiency of Gaussian noise alone for varied applications.

-

Huggingface Adapter encounters verbose warnings: A member reported receiving verbose warnings when utilizing a pretrained model with the Huggingface adapter, indicating a potential compatibility issue. The warning points to a type mismatch with the statement: ‘Repo id must be a string, not <class ‘transformers.models.qwen2.modeling_qwen2.Qwen2ForCausalLM’>’.

- They plan to investigate this issue further to find a resolution.

OpenRouter (Alex Atallah) Discord

-

Nemotron 70B vs. Llama 70B Showdown: In vibrant discussions, users compared the performance of Nemotron 70B and Llama 70B, deciding that Nvidia emphasized Nemotron’s helpfulness over knowledge improvement.

- Speculations about the upcoming 405B model highlighted concerns regarding cost-effectiveness across models.

-

OpenRouter’s Data Policies Under Scrutiny: The community questioned the OpenRouter data policies, particularly on how user data is secured, and it was confirmed that disabling model training settings restricts data from being used in training.

- Concerns were raised about the absence of privacy policy links, which were subsequently resolved.

-

GPT-4o Model Emits Confused Responses: Users reported discrepancies in GPT-4o-mini and GPT-4o responses, as they inaccurately referred to GPT-3 and GPT-3.5, which is a common quirk of the models’ self-awareness.

- Experts noted that this misalignment occurs unless models are specifically prompted about their architecture.

-

Privacy Policy Links Need Attention: Users spotlighted the lack of privacy policy links for providers like Mistral and Together, which was acknowledged and the need for better transparency emphasized.

- It’s essential that providers link their privacy policies to user agreements for confidence.

-

Kuzco Explored as a New Provider: A lively chat took off around the potential inclusion of Kuzco as a LLM provider, thanks to their competitive pricing model and early positive feedback.

- Discussions were ongoing, but full prioritization and evaluation of their offerings is yet to come.

LM Studio Discord

-

LM Studio Auto Scroll Issues Resolved: Recent issues with LM Studio’s auto scrolling feature have reportedly been resolved for some users, pointing to an intermittent nature in problems encountered.

- Concerns about version stability were raised, suggesting that this could affect user experience during sessions.

-

ROCM not compatible with 580s: Inquiries on using ROCM with modded 16GB 580s confirmed that it does not work despite their affordable price, roughly $90 on AliExpress.

- Another member noted that while 580s perform well with OpenCL, support has deteriorated due to the deprecation in llama.cpp.

-

XEON thread adjustment issue sparks discussion: A user noted a reduction in adjustable CPU threads from 0-12 in version 0.2.31 to 0-6 in 0.3.4, expressing a desire for 8 threads.

- The Javascript query in the Settings > All sidebar for CPU Thread adjustments was highlighted, emphasizing the need for clarity in configuration.

-

Performance of Different Language Models Discussed: Discussions around language models like Nemotron and Codestral revealed mixed performance results, with users advocating for larger 70B parameter models.

- Smaller models were reported to be less reliable, shaping preferences among engineers for more robust solutions.

-

Memory Management Concerns in MLX-LM: A GitHub pull request tackled memory usage concerns in MLX-LM, which failed to clear cache during prompt processing.

- Community members eagerly awaited updates on proposed fixes to enhance efficiency and reduce memory overhead.

Latent Space Discord

-

Claude App Elevates User Experience: The Claude mobile app has undergone a major overhaul, introducing a smoother interface and a new iPad version that supports project creation and integrated chat features. Users reported a significantly improved navigation experience post-update.

- A featured tweet from Alex Albert highlights the app’s new capabilities, enhancing user engagement with interactive options.

-

Exploration of Inference Providers for Chat Completions: Members looked into various inference providers, with suggestions for OpenRouter among others, focused on enhancing chat assistants with popular open-weight models and special tokens for user interaction. Discussions centered on the reliability and functionality of these services.

- Participants emphasized the need for robust solutions as they navigate the challenges presented by existing competitors’ strategies.

-

MotherDuck Introduces LLM-Integrated SQL: The new SQL function from MotherDuck allows users to leverage large language models directly within SQL, streamlining data generation and summarization. This functionality promises greater accessibility to advanced AI techniques without requiring separate infrastructures.

- For more details, check the MotherDuck announcement.

-

DeepMind’s Chess AI Displays Mastery: Google DeepMind has unveiled a transformative chess player that achieved an ELO of 2895, showcasing its adeptness even in unfamiliar scenarios. This performance counters criticism of LLMs’ effectiveness with unseen data.

- The player’s ability to predict moves with no prior planning illustrates the potential of AI in strategic environments.

-

Drew Houston Reflects on AI’s Startup Potential: In a recent podcast, Drew Houston shared insights on rebuilding Dropbox as a pivotal AI tool for data curation, reiterating his belief that AI holds the most significant startup potential. You can listen to the episode here.

- Houston humorously discussed the demands of managing a public company with 2700 employees while navigating the AI landscape.

Perplexity AI Discord

-

Perplexity subscription pricing discrepancies: Users noted Perplexity has varying subscription prices, with mobile costing INR 1950 and web at INR 1680.

- Concerns regarding these discrepancies prompted discussions about potential cancellations.

-

Confusion around Spaces feature: There was uncertainty regarding the Spaces feature, particularly its organization compared to the default search page.

- Users appreciated aspects of Spaces but found it less functional on mobile, leading to mixed opinions.

-

API performance under scrutiny: Members expressed dissatisfaction with slower API performance, especially for Pro users, affecting search speeds.

- Queries emerged about whether these issues were temporary or linked to recent updates.

-

Long COVID research reveals cognitive impacts: Recent findings indicate that Long COVID can cause significant brain injury, impacting cognitive functions.

- Such claims could reshape health strategies for post-COVID recovery, as detailed in a recent study.

-

PPLX Playground offers better accuracy: Analysis shows responses from the PPLX Playground generally have greater accuracy compared to the PPLX API.

- Differences in system prompts may largely account for these variations in accuracy.

Modular (Mojo 🔥) Discord

-

Mojo Documentation Needs Examples: Feedback indicated that while the Mojo documentation explains concepts well, it lacks examples for API entries, particularly for Python.

- Concerns were raised about package management and the absence of a native matrix type, highlighting the need for more comprehensive tensor documentation.

-

Mojo Aims for Performance Overheads: The team emphasized that Mojo aims to attract performance-sensitive developers, highlighting the need for ‘zero overhead abstractions’ compared to languages like C++.

- They clarified that Mojo is built to support high-performance libraries like NumPy and TensorFlow.

-

Transition to Mojo Faces Skepticism: Members agreed that Mojo isn’t ready for serious use and likely won’t stabilize for another year or two, causing concerns about transitioning from Python.

- One member noted, ‘Mojo isn’t there yet and won’t be on any timescale that is useful to us.’

-

Current State of GPU Support: Development on Max’s GPU support is ongoing, with confirmations about Nvidia integration for upcoming updates.

- However, discussions about Apple Metal support yielded no clear answers, leaving its status ambiguous.

-

Exploring Language Preferences for AI: Members debated transitioning from Python, noting strengths and weaknesses of alternatives like Swift and Rust, with many favoring Swift due to in-house familiarity.

- However, frustrations were voiced regarding Swift’s steep learning curve, with one user stating, ‘learning swift is painful.‘

aider (Paul Gauthier) Discord

-

Installing Aider Made Easy with pipx: Using

pipxfor installing Aider on Windows allows smooth dependency management and avoids version conflicts between projects. You can find the installation guide here.- This method ensures Aider runs in its own isolated environment, reducing compatibility issues during development.

-

O1 Models Raise Feasibility Concerns: Users raised issues regarding the feasibility and costs associated with accessing O1-preview, suggesting manual workflows via ChatGPT for planning. Concerns about configurations and dry-run modes were also highlighted for clarity on prompts processed by O1 models.

- This sparked discussions on balancing efficiency and cost-effectiveness when using advanced models.

-

Pair Programming with Aider Outsmarts Bugs: A user shared their custom AI pair programming tool that resolved 90% of bugs effectively using prompt reprompting. They noted that O1-preview shines in one-shot solutions.

- Members also discussed model preferences, with many gravitating towards the Claude-engineer model based on user-specific needs.

-

File Commit Confusion in Aider: An incident was reported where Aider erroneously committed to

public/css/homemenu.cssinstead of the correct file path, leading to irreversible errors. This raised transparency issues about Aider’s file handling capabilities.- Community members expressed the need for better safeguards and clearer documentation on file handling.

-

Token Limit Troubleshooting Discussions: Participants discussed Aider hitting token limits, particularly with high token counts affecting chat histories. It was suggested to set maximum thresholds to prevent excess token usage.

- This issue emphasizes the importance of confirming large data submissions before triggering processes to enhance user experience.

OpenAI Discord

-

Advanced Voice Mode Frustrates Users: Users expressed dissatisfaction with Advanced Voice Mode, citing vague responses and issues like ‘my guidelines prevent me from talking about that’, leading to frustration.

- This feedback underscores the need for clearer response protocols to enhance user experience.

-

Glif Workflow Tool Explained: Discussion on Glif compared it to Websim, emphasizing its role in connecting AI tools to create workflows.

- Although initially perceived as a ‘cold’ concept, users quickly grasped its utility as a workflow app.

-

ChatGPT for Windows Sparks Excitement: Members showed enthusiasm for the announcement of ChatGPT for Windows, but concerns arose about accessibility for premium users.

- Currently, it is available only for Plus, Team, Enterprise, and Edu users, leading to discussions about feature parity across platforms.

-

Seeking Voice AI Engineers: A user called for available Voice AI engineers, highlighting a potential gap in community resources specific to voice technology.

- This reflects an ongoing demand for specialized skills in the development of voice-focused AI applications.

-

Image Generation Spelling Accuracy: Members questioned how to achieve accurate spelling in image generation outputs, debating whether it’s a limitation of tech or a guardrail issue.

- This concern illustrates the challenges in ensuring text accuracy within AI-generated visuals.

GPU MODE Discord

-

GPU Work: Math or Engineering?: The debate on whether GPU work is more about mathematics or engineering continues, with members referencing Amdahl’s and Gustafson’s laws for scaling algorithms on parallel processors.

- It was pointed out that hardware-agnostic scaling laws are crucial for analyzing hardware capabilities.

-

Performance Drop in PyTorch 2.5.0: Users noted that tinygemm combined with torch.compile runs slower in PyTorch 2.5.0, dropping token processing speeds from 171 tok/s to 152 tok/s.

- This regression prompted calls to open a GitHub issue for further investigation.

-

Sparse-Dense Multiplication Gains: New findings suggest that in PyTorch CUDA, conducting sparse-dense multiplication in parallel by splitting a dense matrix yields better performance than processing it as a whole, particularly for widths >= 65536.

- Torch.cuda.synchronize() is being used to mitigate timing concerns, even as anomalies at large widths raise new questions about standard matrix operation expectations.

-

Open Source Models Diverge from Internal Releases: Discussions revealed that current models may rely on open source re-implementations that possibly diverge on architectural details like RMSNorm insertions, raising concerns over their alignment.

- The potential use of a lookup table for inference bit-packed kernels and a discussion on T-MAC were also notable.

-

WebAI Summit Networking: A member informed that they are attending the WebAI Summit and expressed interest in connecting with others at the event.

- This offers an opportunity for face-to-face interaction within the community.

LlamaIndex Discord

-

MongoDB Hybrid Search boosts LlamaIndex: MongoDB launched support for hybrid search in LlamaIndex, combining vector search and keyword search for enhanced AI application capabilities, as noted in their announcement.

- For further insights, see their additional post on Twitter.

-

Auth0’s Secure AI Applications: Auth0 introduced secure methods for developing AI applications, showcasing a full-stack open-source demo app available here.

- Setting up requires accounts with Auth0 Lab, OKTA FGA, and OpenAI, plus Docker for PostgreSQL container initialization.

-

Hackathon Recap Celebrates 45 Projects: The recent hackathon attracted over 500 registrations and resulted in 45 projects, with a detailed recap available here.

- Expect guest blog posts from winning teams sharing their projects and experiences.

-

Faithfulness Evaluation Replication Takes Too Long: Replicating the Faithfulness evaluation in RAG bots can take 15 minutes to over an hour, as reported by a user.

- Others recommended employing Ollama for faster execution, suggesting that performance is hardware-dependent.

-

LlamaParse Fails with Word Documents: A user encountered parsing errors with a Word document using LlamaParse, specifically unexpected image results rather than text.

- Uploading via LlamaCloud UI worked correctly, while using the npm package resulted in a parse error.

OpenAccess AI Collective (axolotl) Discord

-

Bitnet Officially Released!: The community celebrated the release of Bitnet, a powerful inference framework for 1-bit LLMs by Microsoft, delivering performance across multiple hardware platforms.

- It demonstrates capability with 100 billion models at speeds of 6 tokens/sec on an M2 Ultra.

-

Flash Attention 2 Integration in Liger: Users tackled integrating Flash Attention 2 with Liger by setting

liger_flash_attention: truein their configs, along withsdp_attention: true.- Shared insights emphasized the importance of verifying installed dependencies for optimal memory savings.

-

Noteworthy VRAM Savings Achieved: Users reported achieving notable VRAM reductions, with one sharing a drop from 22.7 GB to 11.7 GB by configuring Liger correctly.

- The community suggested setting

TORCH_ROCM_AOTRITON_ENABLE_EXPERIMENTAL=1for AMD users to improve compatibility.

- The community suggested setting

-

Troubleshooting Liger Installation Problems: Some faced challenges with Liger imports during training, which inflated memory usage beyond expectations.

- Altering the

PYTHONPATHvariable helped several members resolve these issues, urging thorough installation checks.

- Altering the

-

Guide to Installing Liger Easily: A shared guide detailed straightforward installation steps for Liger via pip, particularly beneficial for CUDA users.

- It also pointed out the need for config adjustments, highlighting the Liger Flash Attention 2 PR crucial for AMD hardware users.

Cohere Discord

-

Join the Stealth Project with Aya: Aya’s community invites builders fluent in Arabic and Spanish to a stealth project, offering exclusive swag for participation. Interested contributors should check out the Aya server to get involved.

- This initiative looks to enhance multilingual capabilities and collaborative efforts within the AI space.

-

Addressing Disillusionment in AI with Gemini: A member referenced a sentiment of disillusionment regarding discussions with Gemini, shared at this link. More voices are needed to enrich these conversations about the future of AI.

- This highlights the ongoing community discourse around the perception and direction of emerging AI technologies.

-

RAG AMAs Not Recorded - Stay Tuned!: Members learned that the RAG AMAs were not recorded, leading to a call for tagging course creators for further inquiries about missed content. The lack of recordings may affect knowledge dissemination within the community.

- This prompts a discussion on how to effectively capture and share valuable insights from these events moving forward.

-

Trial Users Can Access All Endpoints: Trial users have confirmed that they can explore all endpoints for free, including datasets and emed-jobs, despite rate limits. This is a significant opportunity for newcomers to test features without restrictions.

- Full access paves the way for deeper engagement and experimentation with available AI tools.

-

Fine-Tuning Context Window Examined: A member pointed out that the fine-tuning context window is limited to 510 tokens, much shorter than the 4k for rerank v3 models, raising questions about document chunking strategies. Insights from experts are needed to maximize fine-tuning effectiveness.

- This limitation draws attention to the trade-offs in fine-tuning approaches and their impact on model performance.

tinygrad (George Hotz) Discord

-

Leveraging CoLA for Matrix Speedups: The Compositional Linear Algebra (CoLA) library showcases potential for structure-aware operations, enhancing speed in tasks like eigenvalue calculations and matrix inversions.

- Using decomposed matrices could boost performance, but there’s concern over whether this niche approach fits within tinygrad’s scope.

-

Shifting Tinygrad’s Optimization Focus: Members debated whether tinygrad’s priority should be on dense matrix optimization instead of ‘composed’ matrix strategies.

- Agreement formed that algorithms avoiding arbitrary memory access may effectively integrate into tinygrad.

-

Troubles with OpenCL Setup on Windows: A CI failure reported an issue with loading OpenCL libraries, calling out

libOpenCL.so.1missing during test initiation.- The group discussed checking OpenCL setup for CI and implications of removing GPU=1 in recent commits.

-

Resources to Master Tinygrad: A member shared a series of tutorials and study notes aimed at helping new users navigate tinygrad’s internals effectively.

- Starting with Beautiful MNIST examples caters to varying complexity levels, enriching understanding.

-

Jim Keller’s Insights on Architectures: Discussion steered towards a Jim Keller chat on CISC / VLIW / RISC architectures, prompting interest in further exploration of his insights.

- Members found potential value in his dialogue with Lex Fridman and the implications for hardware design and efficiency.

Interconnects (Nathan Lambert) Discord

-

Explore Janus: The Open-Source Gem: The Janus project by deepseek-ai is live on GitHub, seeking contributors to enhance its development.

- Its repository outlines its aims, making it a potential asset for text and image processing.

-

Seeking Inference Providers for Chat Assistants: A member is on a quest for examples of inference providers that facilitate chat assistant completions, questioning reliability in available options.

- They mentioned Anthropic as an option but expressed doubts about its performance.

-

Debate on Special Tokens Utilization: Members discussed accessing special tokens in chat models, specifically the absence of an END_OF_TURN_TOKEN in the assistant’s deployment.

- Past insights were shared, with suggestions to consult documentation for guidance.

-

Greg Brockman’s Anticipated Comeback: Greg Brockman is expected to return to OpenAI soon, with changes reported in the company during his absence, according to this source.

- Members discussed how the landscape has shifted in his absence.

-

Instruction Tuning Relies on Data Quality: A member queried the essential number of prompts for instruction tuning an LLM that adjusts tone, emphasizing data quality as vital, with 1k prompts possibly being sufficient.

- This emphasizes the need for rigorous data management in tuning processes.

Stability.ai (Stable Diffusion) Discord

-

Hackathon Sparks Excitement with Big Prizes: The Gen AI Hackathon invites teams to develop AI systems, with over $25k in prizes available. Collaborators include aixplain and Sambanova Systems, focusing on ethical AI solutions that enhance human potential.

- This event aims to stimulate innovation in AI applications while encouraging collaboration among participants.

-

Challenges in Creating Custom Checkpoints: A member questioned the feasibility of creating a model checkpoint from scratch, noting it requires millions of annotated images and substantial GPU resources.

- Another user suggested it might be more practical to adapt existing models rather than starting from zero.

-

Tough Times for Seamless Image Generation: A user reported difficulties in producing seamless images for tiling with current methods using flux. The community emphasized the need for specialized tools over standard AI models for such tasks.

- This points to a gap in current methodologies for achieving seamless image outputs.

-

Limited Image Options Challenge Model Training: The team discussed generating an Iron Man Prime model, suggesting a LoRa model using comic book art as a solution due to limited image availability.

- The lack of sufficient training data for Model 51 poses significant hurdles in image generation.

-

Sampling Methods Stir Up Cartoon Style Fun: Members debated their favorite sampling methods, with dpm++2 highlighted for its better stability compared to Euler in image generation.

- They also shared preferences for tools like pony and juggernaut for generating cartoon styles.

LLM Agents (Berkeley MOOC) Discord

-

Quiz 6 is Now Live!: The course staff announced that Quiz 6 is available on their website, find it here. Participants are encouraged to complete it promptly to stay on track.

- Feedback from users indicates excitement around the quiz, suggesting it’s a key part of the learning experience.

-

Hurry Up and Sign Up!: New participants confirmed they can still join the MOOC by completing this signup form. This brings greater enthusiasm among potential learners eager to engage.

- The signup process remains active, leading many to express their anticipation for the course content.

-

Weekly Livestream Links Incoming: Participants will receive livestream links every Monday via email, with notifications also made on Discord for everyone to join. Concerns raised by users about missed emails were addressed promptly.

- This approach ensures everyone is kept in the loop and can participate in live discussions effectively.

-

Feedback on Article Assignments: Members discussed leveraging the community for feedback before submitting written assignments to align with expectations. They emphasized sharing drafts in the dedicated Discord channel for timely advice.

- Community collaboration in refining submissions showcases high engagement, ensuring quality for article assignments.

-

Meet the Guest Speakers: The course will feature guest appearances from Denny Zhou, Shunyu Yao, and Chi Wang, who will provide valuable insights. These industry leaders are expected to enhance the learning experience with real-world perspectives.

- Participants are eagerly looking forward to these sessions, which could bridge the gap between theory and application.

LAION Discord

-

Gen AI Hackathon Invites Innovators: CreatorsCorner invites teams to join a hackathon focused on AI-powered multi-agent systems, with over $25k in prizes at stake.

- Teams should keep ethical implications in mind while building secure AI solutions.

-

Pixtral flounders against Qwen2: In explicit content captioning tests, Pixtral displayed worse performance with higher eval loss compared to Qwen2 and L3_2.

- The eval training specifically targeted photo content, underscoring Qwen2’s effectiveness over Pixtral.

-

Future Plans for L3_2 Training: A member plans to revisit L3_2 for use in unsloth, contingent on its performance improvements.

- Buggy results with ms swift prompted a need for more testing before fully committing to L3_2.

-

Concerns on Explicit Content Hallucinations: Discussion revealed wild hallucinations in explicit content captioning across various models, a significant concern.

- Participants noted chaos in NSFW VQA outcomes, suggesting challenges regardless of the training methods employed.

DSPy Discord

- Curiosity Sparks on LRM with DSPy: A user inquired about experiences building a Language Representation Model (LRM) with DSPy, considering a standard look if no prior implementations exist. They linked to a blog post on alternatives for more context.

- LLM Applications and Token Management: Developing robust LLM-based applications demands keen oversight of token usage for generation tasks, particularly in summarization and retrieval. The discussion signaled that crafting marketing content can lead to substantial token consumption.

- GPT-4 Prices Hit New Low: The pricing for using GPT-4 has dropped dramatically to $2.5 per million input tokens and $10 per million output tokens. This marks a significant reduction of $7.5 per million input tokens since its March 2023 launch.

- Unpacking ColBERTv2 Training Data: Members expressed confusion about ColBERTv2 training examples, noting the model uses n-way tuples with scores rather than tuples. A GitHub repository was cited for further insights into the training method.

- Interest Grows in PATH Implementation: A member showed enthusiasm for implementing PATH based on a referenced paper, eyeing potential fusion with ColBERT. Despite skepticism about feasibility, others acknowledged the merit in exploring cross-encoder usage with models like DeBERTa and MiniLM.

Torchtune Discord

-

Qwen2.5 Pull Request Hits GitHub: A member shared a Pull Request for Qwen2.5 on the PyTorch Torchtune repository, aiming to address an unspecified feature or bug.

- Details are still needed, including a comprehensive changelog and test plan, to meet project contribution standards.

-

Dueling Approaches in Torchtune Training: Members debated running the entire pipeline against generating preference pairs via a reward model followed by PPO (Proximal Policy Optimization) training.

- They noted the simplicity of the full pipeline versus the efficiency benefits of using pre-generated pairs with tools like vLLM.

-

Visuals for Preference Pair Iterations: A request for visual representation of iterations from LLM to DPO using generated preference pairs pointed to a need for better clarity in training flows.

- This shows interest in visualizing the complexities inherent in the training process.

-

Insights from Anthropic’s RLAIF Paper: Discussion included the application of Anthropic’s RLAIF paper, with mentions of how TRL utilizes vLLM for implementing its recommendations.

- The precedent set by RLAIF in generating new datasets per training round is particularly notable, blending data from various models.

-

Kickoff Trials for Torchtune: A suggestion emerged to experiment with existing SFT (Supervised Fine-Tuning) + DPO recipes in Torchtune, streamlining development.

- This approach aims to utilize DPO methods to bypass the need for reward model training, bolstering efficiency.

OpenInterpreter Discord

-

Automating Document Editing Process: A member proposed automating the document editing process with background code execution, aiming to enhance efficiency in workflow.

- They expressed interest in exploring other in-depth use cases that the community has previously leveraged.

-

Aider’s Advancements in AI-Generated Code: Another member noted that Aider is increasingly integrating AI-generated and honed code with each update, indicating rapid evolution.

- If models continue to improve, this could lead to a nightly build approach for any interpreter concept.

-

Open Interpreter’s Future Plans: Discussions revealed curiosity about potential directions for Open Interpreter, particularly regarding AI-driven code integration like Aider.

- Members are eager to understand how Open Interpreter might capitalize on similar incremental improvements in AI model development.

LangChain AI Discord

-

Launch of Capital Companion - Your AI Trading Assistant: Capital Companion is an AI trading assistant leveraging LangChain and LangGraph for sophisticated agent workflows, check it out on capitalcompanion.ai.

- Let me know if anyone’s interested in checking it out or chatting about use cases, the member encouraged discussions around the platform’s functionalities.

-

AI-Powered Investment Dashboard for Stocks: Capital Companion features an AI-powered investment dashboard that aids users in detecting uptrends and enhancing decision-making in stock trading.

- Key features include technical analysis tools and market sentiment analysis, aiming to provide a competitive edge in stock investing.

Alignment Lab AI Discord

-

Fix Twitter/X embeds with rich features: A member urged members to check out a Twitter/X Space on how to enhance Twitter/X embeds, focusing on the integration of multiple images, videos, polls, and translations.

- This discussion aims to improve how content is presented on platforms like Discord and Telegram, making interactions more dynamic.

-

Boost engagement with interactive tools: Conversations highlighted the necessity of using interactive tools such as polls and translations to increase user engagement across various platforms.

- Using these features is seen as a way to enhance content richness and attract a wider audience, making discussions more vibrant.

LLM Finetuning (Hamel + Dan) Discord

-

Inquiry for LLM Success Stories: A member sought repositories showcasing successful LLM use cases, including prompts, models, and fine-tuning methods, aiming to consolidate community efforts.

- They proposed starting a repository if existing resources prove inadequate, emphasizing the need for shared knowledge.

-

Challenge in Mapping Questions-Answers: The same member raised a specific use case about mapping questions-answers between different sources, looking for relevant examples.

- This opens a collaborative avenue for others with similar experiences to contribute and share their insights.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

{% if medium == ‘web’ %}

Nous Research AI ▷ #general (324 messages🔥🔥):

Octopus Password MysteryFine-tuning ModelsLLM Performance EvaluationsStrawberry ProblemAnthropic's Updates

-

Octopus Password Mystery Explored: Users discussed the ongoing puzzle involving a model that appears to hint at ‘octopus’ or variations as a potential password, with humor interwoven throughout their trials.

- The conversation revealed various strategies attempted to unlock the password, many involving poetry and creative prompts, but without definitive success.

-

Fine-tuning Models for Specific Tasks: A user shared their experience training a fine-tuned model based on ASCII art, humorously noting that it could only respond in an undertrained manner.

- There was a consensus that despite the challenges, improvements and further training iterations could yield a more versatile model.

-

Performance Evaluations of LLMs: Participants critiqued the effectiveness of certain evaluations, specifically highlighting the strawberry task as inadequate given how LLMs process language.

- Several users speculated that new models would likely be tuned to handle well-known challenges, including the strawberry problem, due to its viral nature.

-

Anthropic’s Frequent Updates: Users expressed curiosity about Anthropic’s recent frequent updates and blog posts while questioning the absence of a significant new model release like a 3.5 version.

- The discussion hinted at skepticism towards whether the updates were genuinely innovative or just incremental additions to existing functionalities.

-

Engagement in Bot Development: A user demonstrated a new pipeline model that generates increasingly complex tasks using a base model, showcasing the fun side of bot interactions.

- Responses indicated a playful engagement with the technology, as users attempted to manipulate and create engaging tasks through various LLM functionalities.

Links mentioned:

- Groq Meta Llama 3.2 3B With Code Interpreter - a Hugging Face Space by diabolic6045: no description found

- Kido Kidodesu GIF - KIDO KIDODESU KIDODESUOSU - Discover & Share GIFs: Click to view the GIF

- Stirring Soup Food52 GIF - Stirring Soup Food52 Vegetable Soup - Discover & Share GIFs: Click to view the GIF

- forcemultiplier/instruct-evolve-xml-3b · Hugging Face: no description found

- Gandalf | Lakera – Test your prompting skills to make Gandalf reveal secret information.: Trick Gandalf into revealing information and experience the limitations of large language models firsthand.

- Octopus Caracatița GIF - Octopus Caracatița - Discover & Share GIFs: Click to view the GIF

- Boo GIF - Boo - Discover & Share GIFs: Click to view the GIF

- Canadian Pacific 2816 - Wikipedia: no description found

- Space Balls Schwartz GIF - Space Balls Schwartz Imitate - Discover & Share GIFs: Click to view the GIF

- NOPE - a Jailbreak puzzle: no description found

- memoize dataset length for eval sample packing by bursteratom · Pull Request #1974 · axolotl-ai-cloud/axolotl: Description Fix for issue#1966, where eval_sample_packing=True caused evaluation being stuck on multi-gpu. Motivation and Context In issue#1966, evaluation on sample packed dataset on multiple GPU…

Nous Research AI ▷ #ask-about-llms (4 messages):

Rust ML LibrariesTransition from Python to Rusttorch-rsburn and ochre

-

Python to Rust Transition in ML: One user suggested that the focus is currently on Python, but expects a shift to Rust in the future for machine learning.

- They mentioned studying Rust ML libraries, indicating a growing interest in this area.

-

Inquiry on Rust ML Libraries: Another member asked for recommendations on top Rust ML libraries, particularly if Candle is prominent.

- The enthusiasm for Rust is clear, showing a keen interest in expanding knowledge in this programming language.

-

Exploration of torch-rs: A member inquired if anyone had looked into torch-rs, a Rust library for machine learning.

- This highlights a specific interest in integrating Rust with well-known ML frameworks.

-

Notable Rust ML Libraries Shared: User mentioned being familiar with torch-rs*, along with* burn and ochre as libraries to explore.

- This indicates active engagement with various Rust machine learning tools and frameworks.

Nous Research AI ▷ #research-papers (1 messages):

chiralcarbon: https://arxiv.org/abs/2410.13848

Nous Research AI ▷ #interesting-links (3 messages):

SCP generatorLLM Culture repository

-

SCP Generator Using Outlines Released: A new SCP generator utilizing outlines has been made available on GitHub, contributing to the development of the ‘cursed’ project.

- The project aims to enhance the generation of SCP texts, showcasing creative potential in the genre.

-

Study LLMs with Different Personalities: A repo dedicated to studying the texts generated by various populations of LLMs has been shared, focusing on different personalities, tasks, and network structures: LLM-Culture.

- This resource is linked to the paper on Cultural evolution in populations of Large Language Models, providing valuable insights for researchers.

Links mentioned:

- cursed/scp at main · dottxt-ai/cursed: Contribute to dottxt-ai/cursed development by creating an account on GitHub.

- GitHub - flowersteam/LLM-Culture: Code for the “Cultural evolution in populations of Large Language Models” paper: Code for the “Cultural evolution in populations of Large Language Models” paper - flowersteam/LLM-Culture

Nous Research AI ▷ #research-papers (1 messages):

chiralcarbon: https://arxiv.org/abs/2410.13848

HuggingFace ▷ #general (214 messages🔥🔥):

Using AI in CodingLearning PythonFactorio Game DiscussionKaggle Competition InsightsPlandexAI Discussion

-

AI and Coding Effectiveness: Members discussed the limitations of AI in coding, highlighting that AIs often struggle to see the bigger picture beyond simple tasks, making them less effective for complex projects.

- One member noted that while AIs can fix small issues like JSON errors, they may mislead beginners who don’t know how to code effectively.

-

Value of Learning Python: It was suggested that learning Python is worthwhile for AI hobbyists and that free online resources can be as effective as paid courses.

- Participants emphasized that AI-generated code is often not reliable for beginners, reinforcing the need for foundational coding skills.

-

Factorio New DLC Discussion: A discussion emerged around the pricing of Factorio’s new DLC, with mixed opinions on whether $70 is justified.

- Some members shared strategies for sharing the game with friends to distribute costs.

-

Kaggle Competition Clarifications: One member expressed confusion about a Kaggle competition’s submission requirements, debating what exactly was needed for submission.

- It was clarified that they are expected to submit results based solely on the provided test set.

-

PlandexAI and AI Development Tools: A conversation revolved around PlandexAI and how breaking down coding tasks into simpler components could improve AI coding outcomes.

- Members discussed the importance of structured AI tools to enhance the programming process rather than using AI purely for direct code generation.

Links mentioned:

- Emu3 - a Hugging Face Space by BAAI: no description found

- SBI-RAG: Enhancing Math Word Problem Solving for Students through Schema-Based Instruction and Retrieval-Augmented Generation: Many students struggle with math word problems (MWPs), often finding it difficult to identify key information and select the appropriate mathematical operations.Schema-based instruction (SBI) is an ev…

- Reddit - Dive into anything: no description found

- StackLLaMA: A hands-on guide to train LLaMA with RLHF: no description found

- GitHub - not-lain/pxia: AI library for pxia: AI library for pxia. Contribute to not-lain/pxia development by creating an account on GitHub.

- Duvet: Provided to YouTube by NettwerkDuvet · bôaTwilight℗ Boa Recording Limited under exclusive license to Nettwerk Music Group Inc.Released on: 2010-04-20Producer…

- GitHub - florestefano1975/comfyui-portrait-master: This node was designed to help AI image creators to generate prompts for human portraits.: This node was designed to help AI image creators to generate prompts for human portraits. - florestefano1975/comfyui-portrait-master

- not-lain (Lain): no description found

- starsnatched/thinker · Datasets at Hugging Face: no description found

HuggingFace ▷ #today-im-learning (3 messages):

LLM EvaluationFinetuning Flux ModelsBitNet Framework

-

Evaluating LLMs with Popular Eval Sets: A user expressed difficulty in evaluating their LLM on popular eval sets and seeks guidance on how to obtain numerical results.

- They mentioned that their model performs better in conversation compared to the base model, as noted on their Hugging Face page.

-

Learning to Finetune Flux Models: A user is eager to learn how to finetune Flux models and is in search of recommended resources.

- This inquiry suggests a growing interest in the practical aspects of model improvement and training techniques.

-

Exploring BitNet Framework: A user shared an interest in BitNet and provided a link to GitHub for the official inference framework for 1-bit LLMs.

- The shared link encourages further exploration of the features and contributions related to this framework in the community.

Links mentioned:

- ElMater06/Llama-3.2-1B-Puredove-p · Hugging Face: no description found

- GitHub - microsoft/BitNet: Official inference framework for 1-bit LLMs: Official inference framework for 1-bit LLMs. Contribute to microsoft/BitNet development by creating an account on GitHub.

HuggingFace ▷ #cool-finds (1 messages):

Perplexity for FinanceStock Research Tools

-

Perplexity Transforms Financial Research: Perplexity now offers a feature for finance enthusiasts that includes real-time stock quotes, historical earning reports, and industry peer comparisons, all presented with a delightful UI.

- Members are encouraged to have fun researching the market using this new tool.

-

Market Analysis Made Easy: The new finance feature allows users to perform detailed analysis of company financials effortlessly, enhancing the stock research experience.

- This tool promises to be a game changer for those interested in keeping up with financial trends.

Link mentioned: Tweet from Perplexity (@perplexity_ai): Perplexity for Finance: Real-time stock quotes. Historical earning reports. Industry peer comparisons. Detailed analysis of company financials. All with delightful UI. Have fun researching the marke…

HuggingFace ▷ #i-made-this (5 messages):

AI Content Detection Web AppStyle Transfer FunctionBehavioral Economics in Decision-MakingFine-tuning and Model MergingCognitive Biases in Financial Crises

-

New AI Content Detection Web App launched: A member introduced a new project, an AI Content Detection Web App, that identifies whether images or text are generated by AI or humans.

- They invited feedback on their project, stating that improvements are welcome as they are new to this kind of tool.

-

Testing Stylish Functions in New UI: A member announced that they are testing a style transfer function in a new user interface, marking the beginning of its development.

- This implies ongoing enhancements in user experience and functionality for the audience.

-

Behavioral Economics and Decision-Making Insights: A complex query on behavioral economics explored how cognitive biases influence decision-making in high-stress environments, particularly during financial crises.

- Key points discussed included loss aversion and its effects on expected utility models, indicating a significant alteration in rational behavior.

-

Examining Fine-Tuning and Model Merging: A member shared a paper titled Tracking Universal Features Through Fine-Tuning and Model Merging, investigating how features persist through model adaptations.

- The study focuses on a base Transformer model fine-tuned on various domains and examines the evolution of features across different language applications.

-

Discussion on Mimicking Models: Feedback was given regarding the limitations of mimicking large language models, emphasizing that many lack the comprehensive datasets like those used by larger models.

- The conversation highlighted the challenges and similarities in their approaches to model adaptation and feature extraction.

Links mentioned:

- Paper page - Tracking Universal Features Through Fine-Tuning and Model Merging: no description found

- GitHub - rbourgeat/airay: A simple AI detector (Image & Text): A simple AI detector (Image & Text). Contribute to rbourgeat/airay development by creating an account on GitHub.

- starsnatched/thinker · Datasets at Hugging Face: no description found

- starsnatched/ThinkerGemma · Hugging Face: no description found

HuggingFace ▷ #reading-group (11 messages🔥):

HuggingFace Reading GroupIntel Patent for Code Generation LLMDiscord Stage ChannelsAI Resources for Beginners

-

Overview of HuggingFace Reading Group: The HuggingFace server facilitates a reading group where anyone can present on AI-related papers, as noted in the GitHub link.

- This platform is mainly intended to support HF developers, fostering collaboration and knowledge sharing.

-

Discussion on Intel Patent for Code Generation LLM: A member inquired about the Intel patent US20240111498A1 concerning code generation using LLMs, sharing a link to the patent.

- The patent details various apparatuses and methods that utilize LLM technology for generating code, emphasizing its potential applications.

-

Understanding Discord Stage Channels: A newcomer to Discord sought clarification on what stages are, comparing them to Zoom meetings.

- Members explained that stage channels are designed for one-directional presentations, preventing disruptions during discussions.

-

Seeking AI Resources for Beginners: A member requested recommendations for an information hub suitable for beginners to gain structured insights on AI and its use cases.

- This reflects the growing interest among new learners on how to navigate AI fundamentals and practical applications.

Link mentioned: US20240111498A1 - Apparatus, Device, Method and Computer Program for Generating Code using an LLM

- Google Patents: no description found

HuggingFace ▷ #computer-vision (2 messages):

Out of context object detectionImportance of context in image analysisTraining models for detectionCreating 'others' class

-

Understanding Out of Context Objects: The detection of out of context objects in images varies based on the setting, such as recognizing that cars and moving objects are relevant on roadways while static elements like trees are not.

- A member suggested that the definition of ‘out of context’ should guide detection strategies, emphasizing the need to tailor methods to specific environments.

-

Training Models Necessitates Relevant Classes: For effective object detection, it’s crucial to train the model on relevant classes; the user proposed creating an ‘others’ class to encompass out of context items.

- They indicated that insights on problem settings could help refine the training process if shared among members.

HuggingFace ▷ #NLP (6 messages):

Setfit Model LoggingArgilla Version Issues

-

Troubleshooting Setfit Model Logging to MLflow: A user expressed difficulty in logging a Setfit model to MLflow and sought specific examples related to this process.

- Another member offered assistance but needed clarification on the Argilla version being used for compatibility.

-

Argilla Version Confusion: A user confirmed they might be using the legacy Argilla 1.x code instead of the newer 2.x version after a suggestion to check their version.

- Instructions were provided to navigate to the Argilla documentation for using the updated features seamlessly.

Link mentioned: Argilla: Argilla is a collaboration tool for AI engineers and domain experts to build high-quality datasets.

HuggingFace ▷ #diffusion-discussions (27 messages🔥):

Kwai Kolors in Google ColabControlNet training considerationsRenting VMs for diffusion modelsInstance types and pricing on AWS EC2

-

Kwai Kolors struggles in Google Colab: A user reported errors while trying to run Kwai Kolors in Google Colab, indicating it requires around 19GB of VRAM, which isn’t supported in the free version.

- Another user suggested using the original repository instead of diffusers for better compatibility.

-

ControlNet training requires text embeddings: For training a custom ControlNet to alter faces, a user was informed that replacing the CLIP text encoder with the image encoder would not work due to training dependencies on text embeddings.

- The discussion emphasized potential overfitting with repeated faces in the dataset, regardless of embeddings used.

-

Recommendations for renting VMs: Users discussed renting VMs for running diffusion models, highlighting that Amazon EC2 is commonly used, but options like FAL and Replicate are also viable.

- A user sought recommendations for EC2 instance types and was advised that instance choice varies based on VRAM and application specifics.

-

Pricing mechanism for VM instances: For AWS EC2, users clarified that pricing is charged per hour for the instance being on, regardless of whether it’s actively in use.

- The conversation pointed out that using notebook instances does not impact the hourly charge; it is solely based on the instance uptime.

Link mentioned: yisol/IDM-VTON · Hugging Face: no description found

Eleuther ▷ #general (5 messages):

Open Source AI DefinitionContributions to RWKVOpen Source AI projects

-

Open Source AI Definition nearly finalized: The Open Source AI Definition is close to completion, and a release candidate has been shared for public review and endorsement at this link. Members are encouraged to endorse the definition for broader recognition starting with version 1.0.

-

Seeking contributions for RWKV project: A new member, sharing their background from a startup focused on AI inference, expressed interest in contributing to open source projects, especially those related to RWKV. They were encouraged to assist with experiments for a paper on RWKV version 7 as discussed in this channel.

- The community welcomes such contributions, especially regarding novel architecture and efficient inference methodologies.

-

Concerns about Open Source AI Definition’s data requirements: A member raised a concern about the light data requirements implied within the Open Source AI Definition. This comment indicates potential gaps in the initial draft that may need addressing for proper OS AI standards.

Link mentioned: The Open Source AI Definition – 1.0-RC1: Endorse the Open Source AI Definition: have your organization appended to the press release announcing version 1.0 version 1.0-RC1 Preamble Why we need Open Source Artificial Intelligence (AI) Open So…

Eleuther ▷ #research (168 messages🔥🔥):

SAE Steering ChallengesNoise Distribution in TrainingFuture-Correlation in Machine LearningInterpreting SAE vs Transformer ModelsImproving Computational Efficiency

-

SAE Steering Challenges and Limitations: Discussions highlighted that using Sparse Autoencoders (SAEs) for interpretability can lead to misleading conclusions, as features may not cleanly separate relevant concepts.

- The complexity of higher-level hierarchical relationships complicates feature interpretation, necessitating massive datasets for accurate model explanations.

-

Investigating Noise Distributions for RF Training: Members engaged in a conversation about the appropriateness of using normal distributions for ‘noise’ in random forests, suggesting alternatives based on careful parameterization of distributions.

- There’s a consensus that while Gaussian noise is common, other forms like Perlin noise or pyramid noise could provide better results in different applications, particularly in image processing.

-